Abstract

The aim of this paper is to introduce a field of study that has emerged over the last decade, called Bayesian mechanics. Bayesian mechanics is a probabilistic mechanics, comprising tools that enable us to model systems endowed with a particular partition (i.e. into particles), where the internal states (or the trajectories of internal states) of a particular system encode the parameters of beliefs about external states (or their trajectories). These tools allow us to write down mechanical theories for systems that look as if they are estimating posterior probability distributions over the causes of their sensory states. This provides a formal language for modelling the constraints, forces, potentials and other quantities determining the dynamics of such systems, especially as they entail dynamics on a space of beliefs (i.e. on a statistical manifold). Here, we will review the state of the art in the literature on the free energy principle, distinguishing between three ways in which Bayesian mechanics has been applied to particular systems (i.e. path-tracking, mode-tracking and mode-matching). We go on to examine a duality between the free energy principle and the constrained maximum entropy principle, both of which lie at the heart of Bayesian mechanics, and discuss its implications.

Keywords: free energy principle, active inference, Bayesian mechanics, information geometry, maximum entropy, gauge theory

1. Introduction

In this paper, we aim to introduce a field of study that has begun to emerge and consolidate over the last decade, called Bayesian mechanics, which might provide the first steps towards a general mechanics of self-organizing and complex adaptive systems [1–6]. Bayesian mechanics involves modelling physical systems that look as if they encode probabilistic beliefs about the environment in which they are embedded, and in particular, about the ways in which they are coupled to that environment. Bayesian mechanics thereby purports to provide a mathematically principled explanation of the striking property of all things that exist over some time period, namely: that they come to acquire the statistics of their embedding environment, and seem thereby to encode a probabilistic representation of that environment [7,8]. Bayesian mechanics is premised on the idea that the physical mechanics of particular kinds of systems are systematically related to a mechanics of information, or the mechanics of the probabilistic beliefs that such systems encode. Bayesian mechanics describes physical systems in terms of a pair of complementary spaces linked by certain laws of motion: the space of probability distributions over the physical states of a system (the beliefs of an observer about its environment, say), and a simultaneous space of probability distributions encoded or entailed by the system, which are linked by approximate Bayesian inference. Bayesian mechanics is premised on the conjugation of the dynamics of the beliefs of a system (i.e. their time evolution in a space of beliefs) and the physical dynamics of the system encoding those beliefs (i.e. their time evolution in a space of possible states or trajectories) [2,6]; the resulting mathematical structure is known as a ‘conjugate information geometry’ in [1], where one should note that ‘conjugate’ is a synonym for ‘adjoint’ or ‘dual’. Using the tools of Bayesian mechanics, we can form mechanical theories for a self-organizing system that looks as if it is modelling its embedding environment. Thus, Bayesian mechanics describes the image of a physical system as a flow in a conjugate space of probabilistic beliefs held by the system, and describes the systematic relationships between both perspectives.

It is often said that systems that are able to preserve their organization over time, such as living systems, appear to resist the entropic decay and dissipation that are dictated by the second law of thermodynamics (this view is often attributed to Schrödinger [9]). This is, in fact, untrue, and something of a sleight of hand, as Schrödinger himself knew well: self-organizing systems, and living systems in particular, not only conform to the second law of thermodynamics, which states that the internal entropy of an isolated system always increases, but conform to it exceptionally well—and in doing so, they also maintain their structural integrity [4,9–14]. The foundations of Bayesian mechanics have been laid out by the pioneers of the physics of complex adaptive systems and of the study of natural and artificial intelligence. Bayesian mechanics builds on these foundational methods and tools, which have been applied to develop mathematical theories and computational models, allowing us to study the seemingly paradoxical emergence of stable structure as a special case of entropic dissipation [15–17]. Bayesian mechanics has origins in variational principles from other fields of physics and statistics, such as Jaynes’ principle of maximum entropy [18] and the principle of stationary action, and draws on a broad, multidisciplinary array of results from information theory and geometry [19–21], cybernetics and artificial intelligence [22,23], computational neuroscience [24,25], gauge theories for statistical inference and statistical physics [6,26–29], as well as stochastic thermodynamics and non-equilibrium physics [17,30,31]. Bayesian mechanics builds on these tools and technologies, allowing us to write down mechanical theories for the particular class of physical systems that look as if they are estimating posterior probability densities over (i.e. estimating and updating their beliefs about) the causes of their observations.

In this paper, we discuss the relationship between dynamics, mechanics and principles. In physics, the ‘dynamics’ of a system usually refers to descriptions (i.e. phenomenological accounts) of how something behaves: dynamics tell us about changes in position and the forces that cause such changes. Dynamics are descriptive, but are not necessarily explanatory: they are not always directly premised on things like laws of motion. We move from description to explanation via mechanics, or mechanical theories: specific mathematical theories formulated to explain where dynamics come from, by providing a formulation of the relationships between change, movement, energy (or force) and position. Finally, principles are prescriptive: they are compact mathematical statements in light of which mechanical theories can be interpreted. That is, if a mechanical theory explains how a system behaves the way that it does, a principle explains why. For instance, classical mechanics provides us with equations of motion to explain how the dynamics of non-relativistic bodies are generated, relating the changes in position of the system to its potential and kinetic energies; while the principle of stationary action tells us why such relations obtain, i.e. the real path of the system is the one where the accumulated difference between these two energies is stationary (typically, at a minimum). Likewise, Bayesian mechanics is a set of mechanical theories designed to explain the dynamics of systems that look as if they are driven by probabilistic beliefs about an embedding environment.

We have said that mechanics rest on prescriptive principles. At the core of Bayesian mechanics is the variational free energy principle (FEP). The FEP is a mathematical statement that says something fundamental (i.e. from first principles) about what it means for a system to exist, and to be ‘the kind of thing that it is’. The FEP provides an interpretation of mechanical theories for systems that look as if they have beliefs. The FEP is thereby purported to explain why self-organizing systems seem to resist the local tendency to entropic decay, by acting to preserve their structure. The FEP builds on decades of previous work redefining classical and statistical mechanics in terms of surprisal and entropy (e.g. the pioneering work of [15–17]). Surprisal is defined as the negative log-probability of an event: heuristically, it quantifies how implausible a given state of a process or outcome of a measurement is, where high surprisal is associated with states or outcomes with a low probability of being observed (in other words, those states something would not, typically, be found in).1 Entropy is the expected or average surprisal of states or outcomes. It is also a measure of the spread of some probability distribution or density, and quantifies the average information content of that distribution [34]. Variational free energy is a tractable (i.e. computable) upper bound on surprisal; negative free energy is known as the evidence lower bound or ELBO in machine learning [35]. The FEP describes self-organization as a flow towards a free energy minimum. It has been known that one can use the FEP to write down the flow of dynamical systems as self-organizing by avoiding surprising exchanges with the environment and thereby minimizing entropic dissipation over time (e.g. [1,36]). The FEP consolidates this into a modelling method, analogous to the principles of maximum entropy or stationary action. That is, the FEP is not a metaphysical statement about what things ‘really are’. Rather, the FEP starts from a stipulative, particular definition of what it means to be a thing, and then can be used to write down mechanical theories for systems that conform to this definition of thing-ness [1,3].
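To make the relationship between surprisal, entropy and variational free energy concrete, the following minimal Python sketch uses a toy discrete generative model. The model (three external states, two outcomes), its numerical values and all function names are illustrative assumptions, not constructs taken from the literature reviewed here. The sketch checks numerically that the free energy of an arbitrary belief is an upper bound on the surprisal of an observation, and that the bound becomes tight when the belief is the exact posterior.

```python
import numpy as np

# Hypothetical toy generative model: 3 external states x, 2 outcomes y.
prior = np.array([0.5, 0.3, 0.2])        # p(x)
likelihood = np.array([[0.9, 0.1],       # p(y | x), one row per state x
                       [0.4, 0.6],
                       [0.2, 0.8]])

def surprisal(y):
    """Surprisal of outcome y: the negative log evidence, -ln p(y)."""
    return float(-np.log(prior @ likelihood[:, y]))

def posterior(y):
    """Exact Bayesian posterior p(x | y)."""
    joint = prior * likelihood[:, y]
    return joint / joint.sum()

def free_energy(q, y):
    """Variational free energy F[q] = E_q[ln q(x) - ln p(x, y)]."""
    joint = prior * likelihood[:, y]
    return float(np.sum(q * (np.log(q) - np.log(joint))))

def entropy(p):
    """Entropy as expected surprisal, in nats."""
    return float(-np.sum(p * np.log(p)))

y_obs = 1
q_guess = np.full(3, 1.0 / 3.0)          # an arbitrary (uniform) belief
S = surprisal(y_obs)
F_guess = free_energy(q_guess, y_obs)
F_exact = free_energy(posterior(y_obs), y_obs)

print(f"surprisal            = {S:.4f}")
print(f"F (uniform belief)   = {F_guess:.4f}")
print(f"F (exact posterior)  = {F_exact:.4f}")
print(f"entropy of the prior = {entropy(prior):.4f}")
```

In this sketch, the gap between the free energy and the surprisal is the Kullback-Leibler divergence between the belief and the exact posterior, which is why it vanishes only for the exact posterior.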

Before proceeding, we highlight the distinction between two meanings of the word ‘belief’: a probabilistic one, where the term ‘belief’ is used in the technical sense of Bayesian statistics, to denote a probability density over some support, and thereby formalizes a belief of sorts about that support; and a propositional or folk understanding of the term, common in philosophy and cognitive science, which entails some kind of semantic content with verification conditions (e.g. truth-conditional ones). In this paper, we always mean the former, probabilistic sense of ‘belief’; and we will use the terms ‘belief’ and ‘probability density’ interchangeably.

With that caveat in place, Bayesian mechanics is specialized for particular systems that have a partition of states, with one subset parametrizing probability distributions or densities over another. Bayesian mechanics articulates mathematically a precise set of conditions under which physical systems can be thought of as being endowed with probabilistic (conditional or Bayesian) beliefs about their embedding environment. Formally, Bayesian mechanics is about so-called ‘particular systems’ that are endowed with a ‘particular partition’ [1]—i.e. into particles, which are coupled to, but separable from, their embedding environment. By ‘particular system’ we mean a system that has a specific (i.e. ‘particular’) partition into internal states, external states, and intervening blanket states, which instantiate the coupling between inside and outside (the ‘Markov blanket’). The internal and blanket states can then be cast as constituting a ‘particle’, hence the name of the partition.2 Under the FEP, the internal states of a physical system can be modelled as encoding the parameters of probabilistic beliefs, which are (probability density) functions whose domain are quantities that characterize the system (e.g. states, flows, trajectories, other measures).
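As a rough illustration of what a blanket does statistically, the following Python sketch uses a static, three-variable Gaussian. This is a deliberate simplification and an assumption on our part: the literature defines the particular partition for dynamical systems, whereas here we only use the standard property of a Markov blanket, namely that internal and external states are conditionally independent given blanket states. The precision matrix and variable names are hypothetical.

```python
import numpy as np

# Index convention (hypothetical): 0 = external, 1 = blanket, 2 = internal.
EXT, BLK, INT = 0, 1, 2

# Precision (inverse covariance) matrix. The zero entry between the external
# and internal states means they interact only through the blanket state.
precision = np.array([[ 2.0, -1.0,  0.0],
                      [-1.0,  2.0, -1.0],
                      [ 0.0, -1.0,  2.0]])
cov = np.linalg.inv(precision)

# Marginally, external and internal states are correlated...
marginal_cov_ext_int = cov[EXT, INT]

# ...but conditioning on the blanket removes the correlation.
# Conditional covariance: S_AA - S_Ab S_bb^{-1} S_bA, with A = {ext, int}.
A = [EXT, INT]
S_AA = cov[np.ix_(A, A)]
S_Ab = cov[np.ix_(A, [BLK])]
S_bb = cov[np.ix_([BLK], [BLK])]
cond_cov = S_AA - S_Ab @ np.linalg.inv(S_bb) @ S_Ab.T

print("marginal cov(external, internal):", marginal_cov_ext_int)
print("cov(external, internal | blanket):", cond_cov[0, 1])
```

The design choice here is to encode the partition in the sparsity of the precision matrix, since for Gaussian variables a zero entry in the precision matrix is equivalent to conditional independence given all remaining variables.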

In a nutshell, Bayesian mechanics is a set of physical, mechanical theories about the beliefs encoded or embodied by internal states and how those beliefs evolve over time: it provides a formal language to model the constraints, forces, fields, manifolds, and potentials that determine how the internal states of such systems move in a space of beliefs (i.e. on a statistical manifold). Because these probabilistic beliefs depend on parameters that are physically encoded by the internal states of a particle, the resulting statistical manifolds (or belief spaces) and the flows along them have a non-trivial, systematic relationship to the physics of the system that sustains them. This is operationalized by applying the FEP: we model the behaviour of a particular system by a path of stationary action over free energy, given a function (called a synchronization map) that defines the manner in which internal and external states are synchronized across the boundary (or Markov blanket) that partitions any such dynamical system (should that partition exist). In summary, Bayesian mechanics is about the image of a physical system in the space of beliefs, and the connections between these representations: that is, it takes the internal states of a particular system (and their dynamics) and maps them into a space of probability distributions (and trajectories or paths in that space), and vice versa.

Two related mathematical objects form part of the core of the FEP, and will play a key role in our account of Bayesian mechanics: (i) ontological potentials or constraints and (ii) the mechanics of systems that are driven by such potentials. An ontological potential, on this account, is similar to other potentials in physics, e.g. gravitational or electromagnetic potentials. It is a scalar quantity that defines an energy landscape, the gradients of which determine the forces (vector fields) to which the system is subject. Such potentials are ontological because they characterize what it is to be the kind of thing that a thing is: they allow us to specify equations of motion that a system must satisfy to remain the kind of thing that it is.

Ontological potentials or constraints provide a mathematical definition of what it means for a particular system to be the kind of system that it is: they enable us to specify the equations of motion of particular systems (i.e. their characteristic paths through state space, the way that they evolve over time, the states that they visit most often, etc.), based on a description of what sorts of states or paths are typical of that kind of system. We review these notions in technical detail in §§3 and 4. In particular, Bayesian mechanics is concerned with the relationship between the ontological potentials or constraints, and the flows, paths, and manifolds that characterize the time evolution of systems with such potentials, allowing us to stake out a new view on adaptive self-organization in physical systems.

We shall see that this description via the FEP always comes with a dual, or complementary, perspective on the same dynamics, which is derived from maximum entropy. This view is about the probability density that the system samples under, and how that density is enforced or evolves over time.3 We consider at length the duality between the FEP and the constrained maximum entropy principle (CMEP), showing that they are two perspectives on the same thing. This provides a unifying point of view on adaptive, self-organizing dynamics, which embraces the duality of perspectives: that of adaptive systems on their environment (and themselves), and that of the ambient heat bath, in which they are embedded (and into which all organized things ultimately decay).

These points of view might seem opposed, at least prima facie: after all, persistent, complex adaptive systems appear organized to resist entropic decay and dissipation; while the inevitable, existential thermodynamic imperative of all organized things embedded in the heat bath is to dissipate into it [9]. The resolution of this apparent tension is a core motivation for dualizing the entire construction. Just as we can think of controlled systems that maintain their states around characteristic, unsurprising set-points [2]—despite perturbations from the environment—we can view a self-organizing system as a persistent, cohesive locus of states that is embedded within an environment, and which is countering the tendency of the environment to dissipate it. This ‘agent–environment’ or ‘relational’ symmetry is fundamental to almost all formal approaches to complex systems, which are rooted in the interactions between open systems [38–43], making it an attractive framework for understanding complexity.

In particular, self-organization can be viewed in two ways. One is from the perspective of the ‘self’, inhabiting the point of view of the individuated thing that is distinct from other things in its environment. From this perspective, provided by the FEP, one can ask how particular systems interpret their environment and maintain their ‘selves’—the kinds of structure typical of the kind of thing that they are. This requires engaging in inference about the causes of internal or sensory states. Dually, one can view this from the perspective of ‘organization’—i.e. from the outside peering in, modelling what it looks like for a structure to remain cohesive, and not dissipate into its environment over some appreciable timescale. This latter perspective is like asking about the internal states of some system, rather than the beliefs carried by internal states (as one might under an FEP-theoretic lens). That both stories concern the self-organization of the system, but model it in different ways, is no accident.

In the same, dual sense, asking about organization is like being an observer or a modeller situated in the external world, formulating beliefs about the internal states of a particular system. These viewpoints are equivalent, in that they tell the same story about inference and the dynamics of self-organization. This duality allows us to view the FEP and Bayesian mechanics through a number of complementary lenses. The advantage to changing our perspective is that we can compare the FEP to the view from maximum entropy, which is more familiar in standard mathematics and physics. In particular, it should provide us with a systematic method to relate the dynamics and mechanics of organized systems to the dynamics and mechanics of the beliefs encoded or embodied by these organized systems, recovering fundamental predecessor formulations to Bayesian mechanics and the FEP in the language of the physics of self-organization.

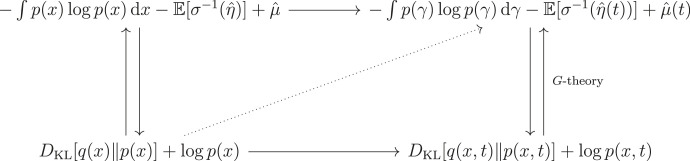

The argumentative sequence of this paper is as follows. The overall paper is divided into three main parts. The first part of the paper is written as a (relatively) reader-friendly, high-level descriptive summary of the FEP literature, which spans nearly two decades of development. We first offer some preliminary material on dynamics, mechanics, field theories, and principles, and provide some motivation for the emergence of Bayesian mechanics. We then discuss the state of the art of Bayesian mechanics in quite some depth. We provide a narrative review of the core formalisms and results of Bayesian mechanics. We comprehensively review the FEP as it appears in the literature, and distinguish three main ways in which it has been applied to model the dynamics of particular systems. We call these path-tracking, mode-tracking and mode-matching. The second part of the paper introduces, again at a high level, a new set of results that have become available only recently, which concern the duality between the FEP and the principle of maximum entropy subject to a particular constraint; and which are more mathematically involved, drawing in particular on gauge theory. We make a short detour to discuss gauge theories, maximum entropy, and dualization. With this in place, we examine the duality of the FEP and the CMEP. The final part of the paper discusses the burgeoning philosophy of Bayesian mechanics. We discuss the implications of the duality between the FEP and the CMEP for Bayesian mechanics, and sketch some directions for future work. Finally, taking stock, we chart a path to a generalization of this duality to even more complex systems, allowing for the systematic study of systems that exist far from equilibrium and elude steady-state densities or stationary statistics. We designate this area of study as G-theory, covering the duality of Bayesian mechanics of paths and entropy on paths (or calibre), and beyond.

The reader should note that this paper is not a standalone treatment of Bayesian mechanics and the free energy principle, and should instead be seen as a more conceptually oriented companion paper to the technical material that is reviewed; as such, we will often opt for qualitative descriptions instead of explicit equations, and refer the reader to the technical material for detailed examinations of assumptions and proofs. It should be noted that the fields encompassing Bayesian mechanics, the free energy principle, and the maximum entropy principle are inherently technical ones that presuppose and leverage detailed formal constructs and concepts. We aim for this paper to be relatively self-contained, and provide some introductory material to facilitate reading; but we assume that the reader has a working knowledge of dynamical systems theory (specifically, the state or phase space formalism), calculus (especially ordinary and stochastic differential equations), and probability or information theory. A familiarity with gauge theory is also useful in reading the second main part of the paper. The philosophical denouement of the paper should be accessible to readers with relatively little background in mathematics and physics.

2. An overview of the idea of mechanics

Before diving into Bayesian mechanics proper, we begin by reviewing some of the core concepts that underwrite contemporary theoretical physics.

In formal approaches to the study of physics, a description of the behaviour of a specific object is the very bottom of a hierarchy of theory-building. As discussed in the Introduction, the dynamics of a system constitute a description of the forces to which that system is subject, which is typically specified via laws or equations of motion (i.e. a mechanics). Before we can derive a mathematical description of the behaviour of something, we need a large amount of other information accounting for where those equations of motion come from. A mechanical theory, for instance, is a mathematical theory that tells us how force, movement, change and position relate to each other. In other words, mechanical theories tell us how a thing should behave; and, given some specific system, we can use the mechanical theory to specify its dynamics. This distinction between a phenomenological (or merely descriptive) model and a physical, mechanical theory usually lies in that mechanical theories can be derived from an underlying principle, like the stationary action principle. Thus, the resultant mechanical theory specifies precisely what systems that obey that principle do—and conversely, the principle provides an interpretation of that mechanical theory, given a set of system-level details relevant for the sought dynamical picture.

The word ‘theory’ is polysemous. A quick overview of the key notions in the philosophy of scientific modelling is useful to clarify what we mean here (see [44] for an excellent overview; also see [45–47]). What we have called ‘dynamics’, ‘mechanics’ and ‘principles’ are, ultimately, mathematical structures (in mathematics, these are also called mathematical theories). The content of a mathematical theory or structure is purely formal: e.g. clearly, the axioms and theorems of calculus and probability theory are not intrinsically about any real empirical thing in particular. What are usually called ‘scientific theories’ or ‘empirical theories’ comprise a mathematical structure and what might be called an empirical application, interpretation or construal of that structure, which relates the constructs of the mathematical structure to things in the world, e.g. to specific observable features of systems that exist.4

It is sometimes said that principles in physics, such as the principle of stationary action, are not falsifiable, strictly speaking; and this, despite playing an obviously prominent role in scientific investigation, which is ultimately grounded in empirical validation (at least prima facie). We can make sense of this in light of the distinction between mathematical theories (i.e. what we have called mechanical theories and principles) and their empirical applications. The resolution of the tension lies in noting that absent some specific empirical application, mathematical structures are not meant to say anything specific about empirical phenomena. Indeed, as [45] and [6, remark 5.1] argue, the possibility of introducing productive ‘abuses of notation’ by deploying one same mathematical structure to explain quite different phenomena is part of what makes formal modelling such a powerful scientific tool in the first place.

We have said that dynamics are descriptive, but that they are not necessarily explanatory. There is a long tradition of argument according to which dynamical systems models are not inherently explanatory (e.g. [48–50]), since they do not necessarily appeal to an explanatory mechanism, and instead provide a convenient formal summary of behaviour. This is the main difference between, for example, Kepler’s account of the motion of celestial bodies, which was merely descriptive (and so, a dynamics according to our definition), and Newton’s universal laws of motion, which provide us with a mechanics, apt to explain these dynamics. That is, Kepler’s laws of planetary motion are not really equations of motion in the contemporary sense; they are descriptions of heliocentric orbits as elliptical trajectories, and do not provide an explanation of the shape of these orbits (e.g. in terms of things such as mass and gravitational attraction, as Newton would later do; and which we would label as mechanics).

Since the pioneering work of Maxwell, nearly all of modern physics has been formulated in terms of field theories. After the turn of the twentieth century, all of physics was reformulated in terms of spatially extended fields, due to their descriptive advantages [51]. Fields are a way to formally express how a mechanical theory applies to a system within the confines of a single path on space–time, a so-called world-line. That is, a field constrains equations of motion to apply to specific, physically realizable trajectories in space–time. (Likewise, most of modern physics has been geometrized due to geometry’s descriptive advantages [52]. Later, we will see that contemporary physics augments the field theoretical apparatus with the geometric tools of gauge theory.) Mathematically speaking, a field is an n-dimensional, abstract object that assigns a value to each point in some space; when that value is a scalar, we call the field a scalar field, of which a special case is a potential function. For example, the electromagnetic field assigns a charge density to each point in space (i.e. the electric and magnetic potential energies); the gradient of this potential, in turn, determines the force undergone by a particle in that potential. Similarly, a gravitational field assigns a value to each point in space–time, in terms of the work per unit mass that is needed to move a particle away from its inertial trajectory.

We are concerned here with what we have called Bayesian mechanics. In general, when one speaks about a mechanical theory for some sort of physics—such as quantum mechanics (a theory describing the behaviour of things at high energies, i.e. very small things moving very quickly, like quantum particles), statistical mechanics (a theory producing the behaviour of systems with probabilistic degrees of freedom, especially the ensemble-level behaviour of large numbers of functionally identical things), or classical mechanics (a theory producing the behaviour of objects and particles in the absence of noise or quantum effects, and at non-relativistic speeds)—mechanics, which provide the equations of motion that we are interested in, are themselves deduced from some sort of symmetry or optimization principle. Mechanical theories can then be fed data about a specific system, like an initial or boundary condition for the evolution of that system, and the equation will return the dynamics of that system.5

An example of a mechanical theory operationalizing a mathematical principle, which thereby allows us to specify the dynamics of a system, is in the fact that classical objects obey Newton’s second law, i.e. that

$$m\,\ddot{q}(t) = -\frac{\partial}{\partial q} V(q)$$

for the position q of some object (of mass m) at a time t, and a force on that position, −(∂/∂q)V(q). Note that, as discussed above, the force is expressed as the space-derivative (∂/∂q) of a gravitational potential, V(q), with respect to position q. Newtonian classical mechanics, which is embodied by this equation, gives us the dynamics (i.e. a trajectory) of some classical object when we specify precisely what the potential V(q), initial velocity $\dot{q}(0)$, initial position q(0), other appropriate boundary conditions, and finally the domain, are.

If, instead, we had specified energy functions for our system based on the force observed, we could have produced the dynamics of this object via Lagrangian mechanics. In classical physics, and more generally, a quantity which summarizes the dynamics of a system in terms of the energy on a trajectory through the space of possible states, or path, is called a Lagrangian [53]. Physically, this is defined in terms of a kinetic energy. We can use a Lagrangian to determine the principle from which classical mechanics follows. Letting $L(q, \dot{q}) = \tfrac{1}{2} m \dot{q}^2 - V(q)$, Newton’s second law comes from the principle of stationary action, since the variation of the following integral of the Lagrangian along a path,

$$S[q] = \int L(q(t), \dot{q}(t))\, dt$$

yields the Euler–Lagrange equation

$$\frac{\partial L}{\partial q} - \frac{d}{dt} \frac{\partial L}{\partial \dot{q}} = 0$$

when minimized. The integral mentioned is called an action functional, and the variation of the action leads to a rule describing any path of stationary action [54]. We can see this in our example: the Euler–Lagrange equation reduces to

$$-\frac{\partial}{\partial q} V(q) = m\,\ddot{q}(t)$$

after some algebra. This is Newton’s second law (noting that the acceleration a is the second derivative of position, i.e. a = $\ddot{q}$). This result is a summarization of the fact that systems tend not to use6 any more energy than they need to accomplish some motion (see [55] for a pedagogical overview); this translates to the use of the least possible amount of energy to perform a given task. In other words, along the path of stationary action, the change in potential energy is precisely equal to the change in kinetic energy (i.e. their difference is zero), such that no ‘extra’ energy is used, and no ‘extra’ motion is performed. The fact that the accumulated difference in kinetic energy and potential energy is zero reflects this desired law for the exchange of the two quantities; indeed, this is what underwrites the conservation of energy in physics more generally. It is also what justifies the observation that systems tend to accelerate along forces, and do so by precisely the force applied, no more and no less. As such, classical mechanics tells us that systems accelerate along forces because they conserve their energy and follow paths of stationary action (i.e. paths over which its variation is zero), and conversely, the theory of classical mechanics comes from the stationary action principle. See figure 1.

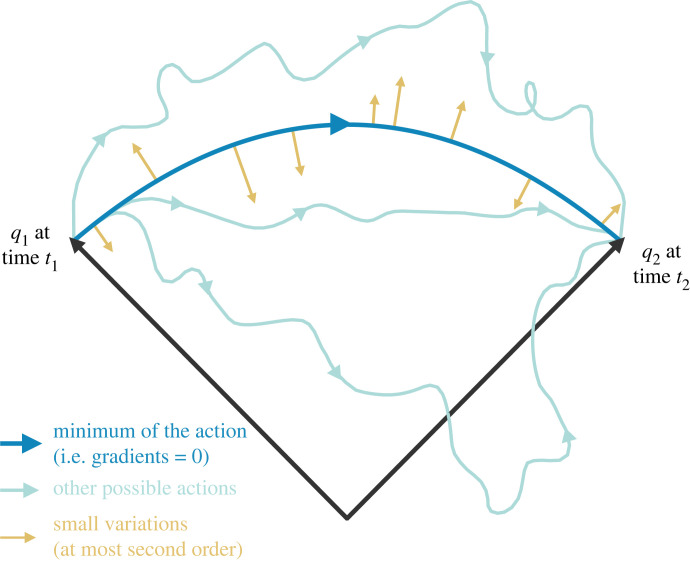

Figure 1.

Depiction of the principle of stationary action. This figure shows that the path of least action (darker blue) is the path which is a stationary point of the action—a path for which the gradient of the action is zero. Here, the trajectory is a parabola, like the kind of path one might observe through a gravitational field. On this path, the action changes at most quadratically under the variations in yellow. Other paths in lighter blue are less ‘ideal’, in that they break the precise balance between kinetic and potential energies. That is, these paths do not move in the potential well.
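The content of figure 1 can be checked numerically. The Python sketch below is a minimal illustration under stated assumptions: a particle of unit mass in a uniform gravitational potential V(q) = mgq, with fixed endpoints q(0) = q(T) = 0, and a discretized action (midpoint rule). The parameter values, the sinusoidal perturbation and the function names are all hypothetical choices made for illustration. It compares the action of the parabolic path with that of perturbed paths sharing the same endpoints.

```python
import numpy as np

# Illustrative parameters (assumptions): unit mass, uniform gravity, unit duration.
m, g, T, n = 1.0, 9.81, 1.0, 1000
t = np.linspace(0.0, T, n + 1)
dt = T / n

def action(q):
    """Discretized action: sum over segments of (kinetic - potential) * dt."""
    velocity = np.diff(q) / dt                 # finite-difference velocity
    midpoint = 0.5 * (q[:-1] + q[1:])          # position at segment midpoints
    lagrangian = 0.5 * m * velocity**2 - m * g * midpoint
    return float(np.sum(lagrangian) * dt)

# Parabolic path solving m * q'' = -m * g with q(0) = q(T) = 0.
q_true = 0.5 * g * t * (T - t)

# A variation that (up to rounding) vanishes at both endpoints.
bump = np.sin(np.pi * t / T)

S0 = action(q_true)
S_plus = action(q_true + 0.1 * bump)
S_minus = action(q_true - 0.1 * bump)

# Central finite difference of the action along the variation, at the true path.
h = 1e-3
dS = (action(q_true + h * bump) - action(q_true - h * bump)) / (2 * h)

print(f"action of parabola      : {S0:.6f}")
print(f"action of perturbed (+) : {S_plus:.6f}")
print(f"action of perturbed (-) : {S_minus:.6f}")
print(f"first-order variation   : {dS:.2e}")
```

In this particular example, the first-order change in the action vanishes at the parabola and the action grows quadratically in the size of the perturbation, in either direction, which is the sense in which the path is stationary (here, a minimum).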

For us, important examples of a principle (which are accompanied by mechanical theories) include the principle of stationary action (which we have just discussed), the maximum entropy principle, and the free energy principle. According to Jaynes, the maximum entropy principle is the principle whereby the mechanics of statistical objects lead to diffusion [56–58].7 Likewise, the FEP is the principle according to which organized systems remain organized around system-like states or paths, and the mechanical theory induced by the FEP can be understood as entailing the dynamics required to self-organize. We can understand the former as statistical mechanics (the behaviour of particles under diffusion) and we have called the latter Bayesian mechanics. Interestingly, to every physical theory is paired some sort of characteristic geometry, such as symplectic geometry in classical mechanics; moreover, as discussed, mechanical theories are usually seen as the restrictions of field theories to a worldline. By focusing on the aspects of the FEP that relate a physical principle of symmetry to a mechanical theory for the dynamics of some given system, we implicitly introduce notions of geometry and field theory to the FEP, both of which are enormously powerful. We review these ideas in §§5 and 6.
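As a small worked instance of maximum entropy under a constraint, the following Python sketch finds the distribution over four discrete states that maximizes entropy subject to a fixed expected value of a constraint function. The state space, the constraint values (labelled `energies`), the target mean and the bisection solver are all illustrative assumptions; the sketch relies only on the standard result that the constrained maximizer has the exponential (Gibbs) form, with a Lagrange multiplier fixed by the constraint.

```python
import numpy as np

# Hypothetical constraint function on four states, and its required average.
energies = np.array([0.0, 1.0, 2.0, 3.0])
target_mean = 1.0

def gibbs(lam):
    """Exponential-family distribution p_i proportional to exp(-lam * E_i)."""
    weights = np.exp(-lam * energies)
    return weights / weights.sum()

def entropy(p):
    """Shannon entropy in nats."""
    return float(-np.sum(p * np.log(p)))

def solve_multiplier(target, lo=-20.0, hi=20.0, iters=200):
    """Bisection on the Lagrange multiplier; the mean decreases as lam grows."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if gibbs(mid) @ energies > target:
            lo = mid      # mean too high: increase the multiplier
        else:
            hi = mid      # mean too low: decrease the multiplier
    return 0.5 * (lo + hi)

lam = solve_multiplier(target_mean)
p_maxent = gibbs(lam)

# Another distribution that satisfies the same constraint, for comparison.
p_other = np.array([0.4, 0.3, 0.2, 0.1])

print("multiplier          :", lam)
print("maxent distribution :", p_maxent)
print("constraint check    :", p_maxent @ energies, p_other @ energies)
print("entropies           :", entropy(p_maxent), entropy(p_other))
```

Both distributions satisfy the constraint, but the Gibbs form has the larger entropy, as expected of the constrained maximizer.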

3. The free energy principle and Bayesian mechanics: an overview

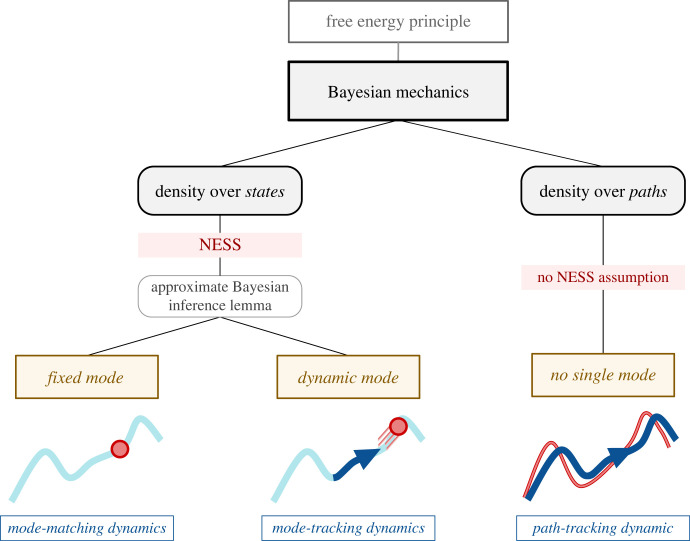

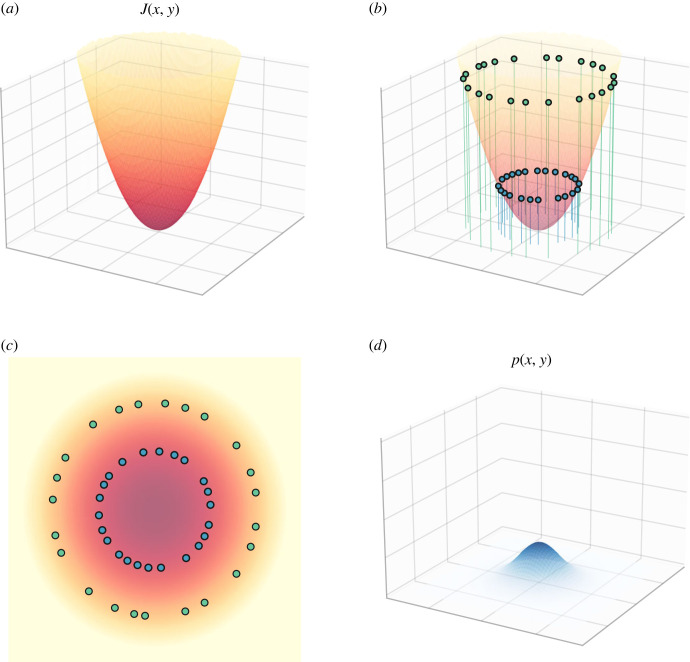

This section reviews key results that have been derived in the literature on the variational FEP, situating them within the broader Bayesian mechanical perspective. We first provide a general introduction to the FEP. We then outline a fairly comprehensive typology of formal applications of the FEP that one can find in the literature; these are applications to different kinds of systems with different mathematical properties, which are not often distinguished explicitly. We begin by examining the simplest and most general formulation of the FEP, applied to model probability densities over paths of a particular system, often written in generalized coordinates of motion. This general paths-based formulation of the FEP assumes very little about the dynamics of the system, and in particular, does not assume that a non-equilibrium steady state with a well-defined mode exists. We then turn to a formulation of the FEP in terms of the dynamics of a probability density over states (i.e. the density dynamics formulation of the FEP). This density dynamics formulation, in turn, has taken two main forms in the literature, where the external partition of states has, and does not have, any dynamics to it, respectively. The density dynamics formulation makes stronger assumptions than the paths-based formulation, namely, that the mechanics of the system admit a steady state solution, which allows us to say specific things about the form of the flow of a particular system. We discuss a result known as the approximate Bayesian inference lemma that follows from the density dynamics formulation. See figure 2.

Figure 2.

Three faces of Bayesian mechanics. Under the FEP, we can define specific mechanical theories for beliefs, which define what kinds of self-evidencing are possible in a given regime of mathematical systems. The literature contains three main applications of Bayesian mechanics, which we represent as a tree with two branching points. On the one hand, the FEP has been applied to densities over paths or trajectories of a particular system (the paths-based formulation of the FEP, leading to what we call path-tracking dynamics); on the other hand, it has been applied to densities over states (the density dynamics formulation), which depend on a NESS solution to the mechanics of the system. The density dynamics formulation, in turn, applies to systems with a static mode, and to systems with a dynamic mode; we call the former mode-matching, and the latter mode-tracking.

3.1. An introduction to the free energy principle

In §2, we said that a principle is a piece of mathematical theory or structure that is used to write down mechanical theories for a given class of systems. The FEP is precisely such a mathematical principle, which we can leverage to write down mechanical theories for ‘things’ or ‘particles’, defined in a particular way. The FEP is the mathematical statement that if something persists over time with a given structure, then it must encode or instantiate a statistical (generative) model of its environment. In other words, the FEP tells us that things that maintain their structure in an embedding environment necessarily acquire the statistical structure of that environment.

Like most of contemporary statistical physics, the FEP starts from a probabilistic description of a system, usually a system of stochastic differential equations (SDEs). SDEs are used to describe the time evolution or flow of a system (i.e. to write down a mechanical theory) in the space of possible states or configurations that it can occupy (what is known as the state or phase space of that system). The SDEs allow us to formulate mechanical theories to explain dynamics with a deterministic component (also known as the drift of the SDE) and a stochastic component (the noise of the SDE). In the absence of noise, an SDE reduces to an ordinary differential equation (ODE), whereby the system evolves deterministically in the direction of the flow.

Formal treatments of the FEP usually begin with a physical system described by an Itô SDE of the form

$$\dot{x}(t) = f(x(t)) + C\,\xi(t),$$

where f(x) is the drift of the flow of x and ξ(t) is white noise; heuristically, the time derivative of a standard Wiener process, $\mathrm{d}W_t/\mathrm{d}t$. The volatility matrix C encodes the spatial directions and magnitude of the noise and yields the diffusion tensor $\Gamma = CC^{\top}/2$, which encodes the covariance of random fluctuations.8
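To make the roles of the drift and the volatility concrete, here is a minimal sketch of an Euler–Maruyama integrator for a scalar SDE with drift f and volatility C. The linear drift, the parameter values and the helper name `euler_maruyama` are assumptions made for illustration, and the diffusion coefficient is taken to be C²/2, which is one common convention.

```python
import numpy as np

def euler_maruyama(f, C, x0, dt, n_steps, rng):
    """Integrate dx = f(x) dt + C dW for a batch of independent scalar paths."""
    x = np.array(x0, dtype=float)
    for _ in range(n_steps):
        dW = rng.normal(0.0, np.sqrt(dt), size=x.shape)  # Wiener increments
        x = x + f(x) * dt + C * dW
    return x

rng = np.random.default_rng(0)
theta, C = 1.0, 0.8            # hypothetical drift rate and volatility
Gamma = C**2 / 2               # diffusion coefficient (a scalar here)

# Drift pulls towards zero, noise pushes away: the ensemble settles to a steady spread.
x_T = euler_maruyama(lambda x: -theta * x, C, np.zeros(20_000), 0.01, 2_000, rng)
empirical_var = x_T.var()
predicted_var = Gamma / theta  # stationary variance of this linear SDE

# With C = 0 the same scheme is forward Euler for the ODE dx/dt = f(x).
x_ode = euler_maruyama(lambda x: -theta * x, 0.0, np.ones(1), 0.01, 100, rng)
```

The final call illustrates the noise-free limit mentioned above: without fluctuations the path simply follows the drift, here approximating exp(−1) after one unit of time.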

With such a general setup in place, most of contemporary physics, from classical to statistical and quantum mechanics, proceeds to ask whether we can say anything interesting about the probability distribution of different paths or states of the system. The probability distribution over paths or states of a system is usually called a generative model in the FEP literature [62]. In statistics, a generative model or probability density is a joint probability density function over some variables. In the FEP literature, the generative model can be analysed in two complementary ways, leading to two main formulations of the FEP: either as a density over states, specifying their probability of being encountered (as opposed to encountering surprising states); or as a density over paths, quantifying the probability of that path (as opposed to other, less probable paths). One can visualize the generative model as a curved (probability density shaped) surface over the space of states or paths of a particular system; the probability of a state or path is associated with the height of the image of the function over the space of states or paths.

The next step concerns the particular partition. We said that the FEP applies to ‘things’ defined in a particular way, which become models of their embedding environment. Clearly, to talk about ‘a model’ necessitates a partition into two entities: something that we can identify as the model, and something being modelled. Accordingly, the FEP applies informatively to ‘things’ defined in a particular way, via a sparse causal dependence structure or sparse coupling, which is the key construction from which the rest of the FEP follows [3,63,64]. In other words, the FEP is a principle that we can apply to specify the mechanics of systems that have a specific, particular partition (i.e. into a thing that is a model and a thing that is being modelled). For such a distinction to hold in physical systems, it must be the case that the causal coupling between the thing that instantiates the model and the thing that is modelled evinces some kind of sparsity. Consider the informal proof by contradiction: if everything were causally affected by everything else (i.e. if there were no sparse coupling, as in a gas) over some meaningful timescale, then we would not be able to speak of any one ‘thing’ as against a backdrop of other things.

So, we partition the entire system x into four components.9 Explicitly, we set x = (η, s, a, μ), where μ denotes variables pertaining to the model (termed ‘internal states’ or ‘internal paths’), η denotes variables pertaining to the generative process (termed ‘external states’ or ‘external paths’) and b = (s, a) denotes variables that couple internal and external states—the Markov blanket—which, here, comprises sensory and active states (or paths).10 Generically speaking, the Markov blanket of the particular partition is precisely the set of degrees of freedom that separate—but also couple—one particle (or open system) to another, within a given overarching system [65]. For instance, in [66], the Markov blanket becomes the variable whose space–time path distinguishes one particle from another; this is similar to the argument presented in [67]. The aim of introducing a particular partition is exactly to introduce the degrees of freedom allowing us to separate one system from another, in such a way that they could in principle engage in inference about (i.e. track) each other. In this sense, the Markov blanket is not special—it simply entails separability given some variable b.11

Sensory states are a subset of the Markov blanket: they are those blanket states that are affected by external states and that affect internal states, but that are not affected by internal states. Active states are those blanket states that are affected by internal states and that affect external states, but that are not affected by external states.12

As indicated, this partition assumes sparse coupling [64,68] among the subset of states or paths (i.e. some subset of the partition evolves independently of another given the dynamics of the blanket), which has the following form:

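$$\begin{bmatrix} \dot{\eta}(t) \\ \dot{s}(t) \\ \dot{a}(t) \\ \dot{\mu}(t) \end{bmatrix} = \begin{bmatrix} f_\eta(\eta, s, a) \\ f_s(\eta, s, a) \\ f_a(s, a, \mu) \\ f_\mu(s, a, \mu) \end{bmatrix} + C\,\xi(t), \tag{3.1}$$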

where subscripts denote the subset to which each flow applies. The key point to note is that the flow of internal and active components (i.e. their trajectory through state space) does not depend upon external components (and reciprocally, the flow of external and sensory states or paths does not depend upon internal states or paths). It should be stressed that the blanket is an interface or boundary in state space, i.e. it is not necessarily a boundary in space–time [69] (although in some cases, it coincides with one, e.g. the walls of a cell). The internal states (or paths) and their blanket states (or paths) are generally referred to as the particular states or paths (i.e. states or paths of a particle); while the internal and active states (or paths) are together called autonomous states or paths because they are not influenced by external states (or paths).
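The dependency structure just described can be read off the Jacobian of the flow. The sketch below uses a hypothetical linear drift with one scalar per subset, ordered as (η, s, a, μ); the coefficients are arbitrary and serve only to show where the structural zeros sit.

```python
import numpy as np

# State ordering x = (eta, s, a, mu); one scalar per subset for illustration.
ETA, S, A, MU = range(4)

def flow(x):
    """Hypothetical sparsely coupled drift (coefficients are illustrative only)."""
    eta, s, a, mu = x
    return np.array([
        -eta + 0.5 * s + 0.3 * a,   # f_eta(eta, s, a): no dependence on mu
        0.7 * eta - s + 0.1 * a,    # f_s(eta, s, a):   no dependence on mu
        0.4 * s - a + 0.6 * mu,     # f_a(s, a, mu):    no dependence on eta
        0.2 * s + 0.5 * a - mu,     # f_mu(s, a, mu):   no dependence on eta
    ])

def jacobian(f, x, h=1e-6):
    """Central finite-difference Jacobian, J[i, j] = d f_i / d x_j."""
    n = len(x)
    J = np.zeros((n, n))
    for j in range(n):
        e = np.zeros(n)
        e[j] = h
        J[:, j] = (f(x + e) - f(x - e)) / (2 * h)
    return J

J = jacobian(flow, np.array([0.3, -0.2, 0.5, 0.1]))

# Sparse coupling shows up as structural zeros in the Jacobian.
external_blind_to_internal = np.allclose(J[[ETA, S], MU], 0.0)
autonomous_blind_to_external = np.allclose(J[[A, MU], ETA], 0.0)
```

Both flags are true for this drift: external and sensory flows ignore internal states, and autonomous (active and internal) flows ignore external states, while all four still interact through the blanket.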

The key point of this construction is that, given such a partition, under the FEP, we can interpret the autonomous partition of the particular system as engaging in a form of Bayesian inference, the exact form of which depends on additional assumptions made about the kind of sparse coupling and conditional independence structures of the particular system being considered [68]. The particular partition is ultimately what licenses our analogy with Bayesian inference per se, because it licenses our interpretation of the internal states of the system as performing (approximate Bayesian or variational) inference. In variational inference, we approximate some ‘true’ probability density function p by introducing another probability density called the variational density (also known as the recognition density), denoted q, with parameters μ. Using variational methods, we vary the parameters μ until q becomes a good approximation to p. In a nutshell, the FEP says that, given a particular partition, the internal states of a particular system encode the sufficient statistics of a variational density over external states (e.g. the mean and precision of a Gaussian probability density). This, as we shall see, induces a statistical manifold in the internal space of states or paths, along with an accompanying information geometry.

As stated in §1, the point of this sort of inference is to minimize the surprisal of particular states or paths of such states. We encountered the idea of minimization of some action as a principle for mechanics in a more general fashion in §2. This remains the case here: we can write down the FEP as a ‘principle of least surprisal’. When applied to different kinds of formal systems, we get different types of Bayesian mechanics, in the same sense as we get various sorts of classical mechanics in different mathematical contexts, depending on the assumptions made about the underlying state spaces and action functionals (Newtonian or Lagrangian mechanics, gravitational mechanics, continuum mechanics, and so forth).

In [66,70], the surprisal on a path (and in particular, a path conditioned on an initial state)

$$\mathcal{A}[x(t)] = -\log p(x(t) \mid x_0)$$

is suggested as an action for Bayesian mechanics. Here, x(t) is a path of system states at a set of times parametrized by t. The path of least action is the expected flow of the system, $f(x_t)$.
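A time-discretized version of this idea can be sketched as follows. Assuming Euler–Maruyama transitions for a scalar SDE with drift f and diffusion coefficient Γ (so that each step is Gaussian with variance 2Γ dt), the negative log density of a path given its first point is a sum of squared deviations from the flow. The drift, the parameter values and the function name `path_action` are hypothetical.

```python
import numpy as np

def path_action(x, f, Gamma, dt):
    """Negative log density of a discretized scalar path, given its first point,
    under transitions x[k+1] ~ N(x[k] + f(x[k]) dt, 2 Gamma dt)."""
    resid = np.diff(x) - f(x[:-1]) * dt      # deviation of each step from the flow
    var = 2.0 * Gamma * dt
    return np.sum(0.5 * resid**2 / var + 0.5 * np.log(2.0 * np.pi * var))

f = lambda x: -x                             # hypothetical drift
Gamma, dt, n = 0.25, 0.01, 300

# Path that follows the drift exactly (no fluctuations).
x_flow = np.empty(n + 1)
x_flow[0] = 1.0
for k in range(n):
    x_flow[k + 1] = x_flow[k] + f(x_flow[k]) * dt

# Same start point, but deviating from the flow along the way.
t = np.arange(n + 1) * dt
x_other = x_flow + 0.1 * np.sin(2 * np.pi * t / t[-1])

A_flow = path_action(x_flow, f, Gamma, dt)
A_other = path_action(x_other, f, Gamma, dt)
```

In this discretization the path that follows the flow has zero residuals and therefore the smallest action of any path with the same starting point, so `A_flow < A_other`.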

A crucial aspect of this formulation is that we can recover Bayesian inference from the minimization of surprisal. Suppose we apply the surprisal to paths of particular states, i.e. π(t) = (a(t), s(t), μ(t)). The mechanics of π(t), in terms of the mechanics of the beliefs that π(t) holds, is where the Bayesian mechanical story starts. As we just said, the presence of this particular partition implies the existence of a variational density

$$q_{\mu(t)}(\eta(t)) = p(\eta(t) \mid \pi(t)) \tag{3.2}$$

at a given time—in other words, a belief about external states parametrized by internal states. Heuristically, we can think of this as a probabilistic specification of how causes generate consequences. Indeed, we can now say

and using arguments typical in variational Bayesian inference, this allows us to claim that such systems do engage in inference, via the identity

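$$F(\pi) = \mathbb{E}_{q_\mu}\!\left[\log q_\mu(\eta) - \log p(\eta, \pi)\right] = D_{\mathrm{KL}}\!\left[q_\mu(\eta) \,\|\, p(\eta \mid \pi)\right] - \log p(\pi) = -\log p(\pi), \tag{3.3}$$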

which holds if and only if the system is inferring the causes of its observations, such that (3.2) holds (in which case the KL divergence above is zero). In that sense, any system which minimizes its surprisal automatically minimizes the variational free energy, licensing an interpretation as approximate Bayesian inference.

Crucially, this variational free energy is a functional of a probability density over external states or paths that is parametrized by internal states or paths (given some blanket states or paths), and it plays the role of (the negative logarithm of) a marginal likelihood or model evidence in Bayesian statistics. This is a key step of the construction, because it connects the entropy of the system to the entropy of its beliefs, i.e. the entropy of the distribution over internal states H[p(μ)] and the entropy of the variational (or recognition) density over external states, parametrized by internal states H[q(η)].
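The relationship between surprisal, variational free energy and the KL divergence can be verified on a toy example. The sketch below assumes a small discrete generative model over three external states and two particular states; the table `p_joint` and the trial density `q_rough` are made up for illustration.

```python
import numpy as np

# Hypothetical discrete generative model p(eta, pi): 3 external x 2 particular states.
p_joint = np.array([[0.20, 0.05],
                    [0.10, 0.25],
                    [0.10, 0.30]])
pi_obs = 1                                 # index of the observed particular state

p_pi = p_joint[:, pi_obs].sum()            # marginal likelihood p(pi)
posterior = p_joint[:, pi_obs] / p_pi      # exact posterior p(eta | pi)
surprisal = -np.log(p_pi)

def free_energy(q):
    """F[q] = E_q[log q(eta) - log p(eta, pi)]."""
    return np.sum(q * (np.log(q) - np.log(p_joint[:, pi_obs])))

def kl(q, p):
    """Kullback-Leibler divergence between two discrete densities."""
    return np.sum(q * (np.log(q) - np.log(p)))

q_rough = np.array([0.5, 0.3, 0.2])        # an arbitrary variational density
F_rough = free_energy(q_rough)
F_exact = free_energy(posterior)           # free energy at the exact posterior
gap = kl(q_rough, posterior)               # equals F_rough - surprisal
```

Here `F_rough` exceeds the surprisal by exactly `gap`, and `F_exact` coincides with the surprisal, which is the sense in which free energy bounds surprisal and the bound is tight when the variational density matches the posterior.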

3.2. Applications of the free energy principle to paths, without stationarity or steady-state assumptions

The simplest, most general, and in many ways most natural expression of the FEP, is formulated for the paths of evolution of a particular system [66]. It is often underappreciated that the FEP was originally formulated in terms of paths, in generalized coordinates of motion [71,72]; it is, after all, a way of expressing the principle of stationary action, which tells us about the most likely path that a particle will take under some potential. Much of the mathematics of the FEP goes back to work done in signal processing and Bayesian filtering, which is dynamical, and was developed for neuroimaging [73,74]. It would, however, be inaccurate to say that the work on the path integral formulation was abandoned in favour of other formulations. Indeed, the astute reader will note that the main monograph on the FEP, namely [1], was written (albeit sometimes implicitly) in terms of generalized coordinates.

When we operate in the paths-based formulation, we operate in what are known as ‘generalized coordinates’, where the temporal derivatives of a system’s flow are considered separately as components of the ‘generalized states’ of the system [66,74,75]. We can use these generalized states to define an instantaneous path in terms of the state space of a system, because they can be read as the coefficients of a Taylor expansion of the states as a function of time [66,70]. In this formulation, a ‘point’ in generalized coordinates corresponds to a possible instantaneous path, i.e. a trajectory over state space or ordered sequence of states; and the FEP, as formulated over paths, concerns probability densities over such instantaneous paths.
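The reading of generalized states as Taylor coefficients can be illustrated with a short sketch. The example path sin(t), the expansion point and the truncation order are assumptions chosen for illustration; `local_path` is a hypothetical helper name.

```python
import numpy as np
from math import factorial

def local_path(generalized_state, tau):
    """Evaluate x(t + tau) from generalized coordinates (x, x', x'', ...) at time t,
    read as the Taylor coefficients of the path around t."""
    tau = np.asarray(tau, dtype=float)
    return sum(c * tau**n / factorial(n) for n, c in enumerate(generalized_state))

# Hypothetical example: x(t) = sin(t), generalized state at t0 up to fourth order.
t0 = 0.3
gen_state = [np.sin(t0), np.cos(t0), -np.sin(t0), -np.cos(t0), np.sin(t0)]

tau = np.linspace(-0.5, 0.5, 11)
approx = local_path(gen_state, tau)
max_err = np.max(np.abs(approx - np.sin(t0 + tau)))
```

A single point in generalized coordinates (`gen_state`) thus specifies a short stretch of trajectory around t0, with an error that shrinks as more orders of motion are included.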

Formally, the paths-based formulation of the FEP says that, for any given path of sensory states, the most likely path of autonomous states (i.e. the path in the joint space of internal and active states) is a minimizer or stationary point of a free energy functional.13 This can be expressed as a variational principle of stationary action, where the action is defined as the path integral of free energy [66]; in the sense that variations of the most likely path do not appreciably change the integral along a trajectory of the free energy F, i.e.

$$\delta \int F \,\mathrm{d}t = 0.$$

See [65,66] for more details. There is no need, here, for assumptions as to steady state densities or stationary modes: this is a straightforward path of least action, where autonomous states minimize their action.

A central application of this formalism is active inference, where the path of active states is a minimizer of expected free energy (EFE) [76].14 Denoting the action of a conditional probability density as A[ − | − ], we can formulate active states as minimizers of an action,

and thus of the EFE

under certain exchangeability conditions [66,70]. Note that EFE is very different from the variational free energy explored in the density-over-states formulation of the FEP, in that EFE is not a bound on surprisal. This is consistent with the fact that the density-over-paths formulation describes systems very differently from the density-over-states formulation.

In short, under the FEP, we can say in full generality (i.e. without making any assumptions about stationarity or steady state) that the existence of a particular partition, defined in terms of sparse coupling of paths of internal and external subsets of a particular system, licenses an interpretation of the most likely internal and active paths, given sensory paths, as instantiating an elemental form of Bayesian inference.15 Thus, the dynamics of such systems appear to engage in path-tracking dynamics: autonomous paths look as if they track (i.e. predict) external paths. This is sometimes called self-evidencing [6,79]. This has both a sentient aspect (i.e. responsive to sensory information [80]) and an elective or enactive aspect (i.e. decision- and planning-related [8]). Formally, these are attributed to the internal and active paths, respectively; and in the case of the latter, following a path of stationary action (i.e. minimizing EFE) is known as active inference.

There are two aspects to the notion of prediction in this setting. The first is that these equations of motion constitute mechanical theories, as we have defined them; they provide the laws of motion that explain the dynamics of a system. As such, they constitute a predictive (i.e. generative) model that allows us (as experimenters or modellers) to predict the behaviour of systems, given some initial conditions, as in [81]. A complementary aspect is the kind of prediction in which the particles that we are modelling engage. Briefly, if a system has a particular partition, then it will look as if subsets of that system (i.e. internal and external states) track each other, or equivalently, infer the statistical structure of each other. This allows us to make predictions (as modellers) about the kinds of inferences or predictions that are made by particles themselves.

We should note that this result is more minimalistic than the ones we review next. In particular, it says nothing about the specific form of the flow taken by autonomous states (indeed, this formulation leaves open an entire equivalence class of trajectories that minimize the variational free energy equally well, a more general problem for inference [58]).

3.3. From paths of stationary action to density dynamics: applications of the free energy principle to systems with a steady-state solution

This section moves to the density dynamics formulation of the FEP. Recent literature on the FEP (circa 2012–2019) has tended to focus on the density dynamics formulation, which defines a probability density over states that evolves over time, as opposed to a probability density over paths. In this setting, we are still dealing with paths of stationary action. However, in the new setting, we assume that the statistics of the underlying probability density have what is called a steady-state solution. We discuss a result known as the approximate Bayesian inference lemma (ABIL), which takes on two main forms in the literature, depending on the statistics of the target system. Additional assumptions can be made about the sparse coupling and conditional independence of a particular system. When they are, they are helpful in that they make some of the mathematical derivations simpler and also, importantly, in that they allow us to say more informative things about the flow of target systems.

The density dynamics formulation has been explored for a number of reasons. The first is that non-equilibrium steady state, which features the breaking of detailed balance and a recurrence implied by solenoidal flow (see below), is an interesting model of biorhythms and other biological regularities. As such, looking at how far one can go under the assumption of a steady state density is an interesting exercise for modelling purposes. Since the path-based formulation is asymptotically equivalent to the density dynamics formulation (i.e. since maximum calibre is asymptotically equivalent to maximum entropy), nothing is lost by looking at the more restricted, special case—provided, of course, that the limitations of this approximation are noted. The second reason is pedagogical: it is that the derivations of the relevant equations of motion are less involved in ordinary coordinates when one assumes a steady state density.

The reader should note that, perhaps a bit confusingly, to say that a system is at steady state, or has a steady state solution, is not a characterization of the states of a system per se, but rather a characterization of the time evolution of the underlying probability density of the system. To say that a system has a steady state solution means that, if left unperturbed, the system would flow on the manifold defined by that solution until it arrives at a stationary point (or orbit) of that solution, where the variation of the action is zero. To say that the system is at steady state, in turn, means that the density has stopped evolving and that the system is now at (or near, in the presence of random fluctuations) a stationary point of its action functional, at which the action cannot further be minimized. It does not mean that the system has evolved to a fixed point.

More formally, when the FEP is applied to the density over states, as opposed to paths, we assume that the equations of motion for the system admit a non-equilibrium steady-state (NESS) density. Formally, a NESS density is a stationary solution to the Fokker–Planck equation for the density dynamics [82] describing (3.1). The assumption that this density exists makes the FEP less generally applicable (how much less is still being debated; see [83] and the responses to that paper); but under these conditions, it can be used to say interesting things about the flow of self-organizing systems.

Under the FEP, a NESS density satisfies the following properties:

1. The NESS density p(x) is a stationary solution of the Fokker–Planck equation associated with (3.1), i.e.

$$\partial_t\, p(x, t) = 0. \tag{3.4}$$

2. The flow vector field f under the NESS density can be written, via the Helmholtz decomposition (see figure 3), in the form [2,3]

$$f(x) = \big(Q(x) - \Gamma(x)\big)\nabla J(x) - \Lambda(x), \tag{3.5}$$

where Q(x) is a skew-symmetric matrix, $Q(x) = -Q(x)^{\top}$, Γ(x) is a positive semidefinite matrix, J(x) is a potential function and ∇ denotes the gradient operator. Λ(x) is a term of the form

$$\Lambda_j(x) = \sum_i \frac{\partial}{\partial x_i}\big(Q_{ji}(x) - \Gamma_{ji}(x)\big),$$

where i is used to index the variables in x. Λ(x) contains the sum of the partial derivatives of each entry in both Q(x) and Γ(x). It has been introduced as the ‘housekeeping’ or correction term in the FEP literature [3,70].

3. The potential function J(x) in (3.5) equals the surprisal; that is, J(x) = −log p(x), where p(x) is the NESS density satisfying (3.4) [2,3].

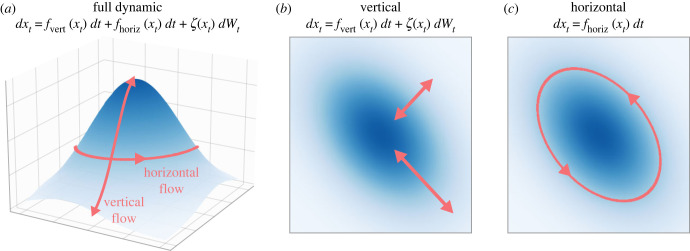

Figure 3.

Helmholtz decomposition. Splitting of flows referred to as the Helmholtz decomposition. The vertical direction consists of a gradient ascent on the log-density given by $-\Gamma \nabla J(x)$ and random fluctuations pushing the system away from a mode (preventing the system from collapsing to a point). The horizontal flow is a solenoidal, energetically conservative but temporally directed flow, given by a matrix operator Q.
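The two components of the flow can be examined in a simple linear case. The following sketch assumes a two-dimensional system with a Gaussian steady state and constant matrices, written in the sign convention f(x) = (Q − Γ)∇J(x) (with constant Q and Γ, the correction term vanishes); the numerical entries of `Pi`, `Gamma` and `Q` are hypothetical. It checks that the Gaussian with precision `Pi` is indeed stationary under this drift, and that the solenoidal part circulates along contours of the surprisal while the dissipative part descends it.

```python
import numpy as np

# Hypothetical 2-D example with a Gaussian steady state: J(x) = 0.5 x^T Pi x + const.
Pi = np.array([[2.0, 0.5],
               [0.5, 1.0]])          # precision of the steady-state density
Gamma = np.array([[0.3, 0.0],
                  [0.0, 0.2]])       # diffusion tensor (symmetric, positive definite)
Q = np.array([[0.0, 0.7],
              [-0.7, 0.0]])          # solenoidal part (skew-symmetric)

grad_J = lambda x: Pi @ x
flow = lambda x: (Q - Gamma) @ grad_J(x)   # drift in Helmholtz form

# Stationarity check: Sigma = Pi^{-1} must solve the Lyapunov equation
# B Sigma + Sigma B^T + 2 Gamma = 0 for the linear drift f(x) = B x.
B = (Q - Gamma) @ Pi
Sigma = np.linalg.inv(Pi)
lyapunov_residual = B @ Sigma + Sigma @ B.T + 2.0 * Gamma

x = np.array([0.4, -1.1])
solenoidal = Q @ grad_J(x)
dissipative = -Gamma @ grad_J(x)
along_contours = solenoidal @ grad_J(x)    # zero: circulates on level sets of J
downhill = dissipative @ grad_J(x)         # negative: descends the surprisal
```

The vanishing `lyapunov_residual` confirms that, in this linear sketch, any skew-symmetric `Q` leaves the steady-state density unchanged; it alters how the system moves around the density, not the density itself.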

The NESS assumption is interesting in particular systems because it allows us to say something very fundamental and informative about the kinds of flows that one finds in such systems. In these formulations, the NESS density functions as a potential function of a Lagrangian for the system’s dynamics [70].

Crucially, under the FEP, the surprisal, defined in the third point of the above definition of the NESS density, can be cast as the ontological potential of a system (when it exists). We define an ontological potential as an abstract potential that induces an attractor for the dynamics of some system. It is ontological in the sense that it characterizes what it is to be the kind of system being considered. This is simply because the system is attracted to sets of states or paths that are characteristic of the kind of system that it is, by definition (since they are attractor regions of that system).

An ontological potential can also be written as a set of constraints on what constitutes a system-like state. Indeed, as we will see later, for the maximum entropy solution to an inference problem, the log-probability is equal to the constraints on the particular system; so it is also a potential in the literal sense of constraining the particular system to visit a set of characteristic states. That is, mathematically, we can think of the surprisal as a potential, analogous to a gravitational or electromagnetic potential, the gradients of which allow us to specify the forces to which the particular system is subject. These determine its evolution in state space, as well as in the conjugate belief space. (Correspondingly, the log-probability of a probability density constrained to weight states in a certain way reproduces that weight, i.e. that $\log \exp\{-J(x)\} = -J(x)$. We will explore this further in the notion of an ontological constraint, which is dual to the notion of an ontological potential, and introduced in §5.)

When we consider the statistics of sampling dynamics that converge towards the mode of an intended NESS density, the ontological potential acquires another interpretation in terms of the system’s preferences [1,84–88]. We can view the NESS density as providing a set of prior preferences which the particular system looks as if it attempts to enact or bring about through action [2]. Indeed, we can think of this solution to the dynamics as a naturalized account of the teleology of cognitive systems [7,89].

With these assumptions in play, we can derive a stronger version of the claim that particular systems engage in a form of approximate Bayesian inference. This ABIL can be stated as follows: when a system has a steady-state solution, we can define a synchronization map that systematically relates the conditional mode of external states to that of internal states.16 Under these conditions, we can say that the particular system looks as if it performs inference about an optimal conditional mode, by internally encoding the statistics of the outside environment. The ABIL itself says that under a synchronization map and a variational free energy functional (or its equivalent), this mode matching is both necessary and sufficient for approximate Bayesian inference [6].

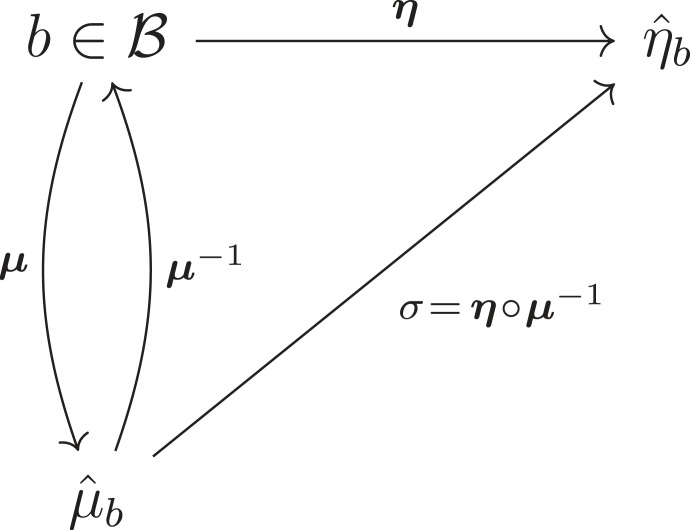

We can define the synchronization map σ formally. The map σ is a function that sends the most likely internal state, given a blanket state, to the most likely external state, given that same blanket state. These internal states are what really flow on variational free energy, a variational ‘move’ that allows us to talk about inference—since this flow shares the same minimum as the flow on surprisal, we can read these states as performing inference. As we said, this licenses an interpretation of the dynamics of a system as instantiating an elemental form of approximate Bayesian inference [91].

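Let $\hat{\mu}_b$ be the maximum a posteriori estimate $\arg\max_{\mu} p(\mu \mid b)$, and μ(b) be a function taking b to $\hat{\mu}_b$ (and identically for η). Usually, there exists a synchronization map

$$\sigma(\hat{\mu}_b) = \hat{\eta}_b,$$

described in [1,2,31,83]. We can depict this relation in the following diagram (adapted from [2]):

$$\begin{array}{ccc} & b \in \mathcal{B} & \\ {\scriptstyle \mu}\swarrow & & \searrow{\scriptstyle \eta} \\ \hat{\mu}_b & \xrightarrow{\ \sigma \,=\, \eta \circ \mu^{-1}\ } & \hat{\eta}_b \end{array}$$

where $\mathcal{B}$ is the set of possible blanket states, and where we have assumed, for illustration’s sake only, that μ is invertible.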

To elaborate more informally, what this means is that, for every blanket state, there is an average internal state or internal mode that parametrizes a probability density over or belief about an average external state or external mode [2]. The claim behind the ABIL is that systems which match these conditional modes across a Markov blanket are storing models of their environment (or, can be read as such) and thus are engaging in a sort of inference. One should note that the existence of $\mu^{-1}$ is not guaranteed: we can prove that σ exists if and only if μ is invertible on its image, i.e. is an injection (see [2] or [6]), but a priori cannot claim that $\mu^{-1}$ necessarily exists on any domain. That said, it is important to also note that we do not at all need μ to be bijective.
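A linear-Gaussian toy case makes the synchronization map explicit. The sketch below assumes scalar external, blanket and internal states with a zero-mean Gaussian steady state; the precision matrix `Pi` is hypothetical, and its zero entry between η and μ encodes their conditional independence given b. In this setting the conditional modes are linear in b, so the internal mode is injective and σ can be written down directly.

```python
import numpy as np

# Hypothetical joint precision over (eta, b, mu). The zero between eta and mu
# makes them conditionally independent given the blanket state b.
Pi = np.array([[2.0, 0.8, 0.0],
               [0.8, 3.0, 0.6],
               [0.0, 0.6, 1.5]])

def eta_mode(b):
    """Most likely external state given b (conditional mean of a zero-mean Gaussian)."""
    return -Pi[0, 1] / Pi[0, 0] * b

def mu_mode(b):
    """Most likely internal state given b."""
    return -Pi[2, 1] / Pi[2, 2] * b

def sigma(mu_hat):
    """Synchronization map eta o mu^{-1}; well defined because mu_mode is injective."""
    b = -Pi[2, 2] / Pi[2, 1] * mu_hat      # invert mu_mode
    return eta_mode(b)

blanket_states = np.linspace(-2.0, 2.0, 9)
commutes = np.allclose(sigma(mu_mode(blanket_states)), eta_mode(blanket_states))
```

For every blanket state, applying `sigma` to the internal mode returns the external mode. If the coupling `Pi[2, 1]` were zero, `mu_mode` would map every blanket state to the same value, the inversion step would fail, and no such map could be constructed this way, which illustrates the injectivity requirement.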

Furthermore, note that—given its dependence on the existence of a NESS solution to the mechanics of a particular system—this description is only taken to hold in the asymptotic limit [32,33,92].

Now, the conditional external mode may or may not have any interesting dynamics to it. This is where we encounter the second arm of the typology of formal applications (figure 2), as the FEP applies informatively to the former case, and vacuously to the latter. For instance, in the class of one-dimensional linear systems analysed in [83], there are no dynamics to the mode at all: the only source of variation in the flow is the random fluctuations. As discussed in [92], in linear systems, the dynamics simply dissipate to a stationary fixed point and remain there. Now, a system that conforms to the FEP will still match the external mode via the synchronization map, but since there are no dynamics to the external mode, by construction, there can be no proactive ‘tracking’ that might be interpreted as sentient, proactive sampling. Accordingly, [83] find that free energy gradients in these cases are uninformative about the real dynamics of the system; but this is because there are no dynamics about which to say anything interesting. We can think of this behaviour as mode matching, a kind of static Bayesian inference (e.g. apt to describe Bayesian inference that statisticians apply to data under a general linear model).

In systems where there is a dynamical aspect to the external mode, we instead obtain a much richer, proactive kind of mode-tracking behaviour, where the external state is changing over time. In these conditions, the internal mode will seem to be tracking the external mode. Since mode tracking entails the most likely flow following the deterministic component of equation (3.1), this is like the classical limit—i.e. the limit of infinite certainty—of the path integral of free energy [70]. That is to say, certain mode tracking particular systems are macroscopic Bayesian ‘particles’ for which we can ignore random fluctuations.

The following of a mode as beliefs change induces a conjugate information geometry and a corresponding flow on the conjugate statistical manifolds, in the sense that such a system performs inference at each time point to determine what belief its internal state should be parametrizing, and flows towards that optimal parameter [31,92]. As indicated, in one case, we consider the ‘intrinsic geometry’ of probability densities defined over the physical states (or paths, i.e. sequences of states) of a system, i.e. the probability of these states or paths; and in the other case, we consider the ‘extrinsic geometry’ of probability densities that are parametrized by these states or paths, i.e. we treat them as the parameters of probability densities over some other set of states or paths (see [1,93]).

In §4, we will see that we can use the technology of the CMEP to reformulate the ontological potential (i.e. the NESS potential) as a set of constraints against which entropy is maximized as the system dissipates.

3.4. Some remarks about the state of the art

Before turning to the CMEP and its connection to the FEP, we comment on some important developments in the technical literature on the FEP. Recent work [83,94] has questioned whether Markov blankets are as ubiquitous as claimed by theorists of the FEP. We briefly address this work. In summary, we argue that Markov blankets (as defined in the appropriate sense, in terms of a particular partition) are ubiquitous in physical systems: essentially all physical systems feature Markov blankets.

According to the so-called sparse coupling conjecture (SCC), all sufficiently large, sparsely coupled, random dynamical systems have a Markov blanket, defined in the usual way. Recent work has shown that the SCC holds generically in an approximate form for systems with quadratic surprisals (including quadratic surprisals with state-dependent Helmholtz matrices). That is, we now know that as systems in an extremely generic class of random dynamical systems increase in size (i.e. as they become higher dimensional), the probability of finding a Markov blanket in such a system, defined in the appropriate way (i.e. between subsets of a particular partition), tends to one.

In [64], a weakened version of the SCC is proven. The cited results show that the Hessian condition used to investigate Markov blankets, even in a large class of nonlinear systems, is obtained as dimension increases. Those results build on previous work [68], which identified a sufficient condition for claiming that a system displays a Markov blanket in the case of systems with Gaussian steady-state densities. This condition is that the inner product of the Hessian of the steady-state distribution of the system (the entries of which encode the curvature, or second partial derivatives, of the surprisal) and the matrix field that captures the solenoidal part of the flow be identically zero. When this inner product is identically zero, we always have a Markov blanket in the appropriate sense. Now, in [68], it was merely conjectured that the probability of finding a blanket increases with the size of the system considered. The intuition was that, as a system increases in size, there is more ‘room’ for it to be sparse, and thereby, to evince a Markov blanket between subsets. In [64], it is proven that the Markov blanket property holds with probability one for many coupled random dynamical systems of sufficient size. The proof involves defining a ‘blanket index’, which scores the degree to which the inner product discussed is nonzero. Using this technology, one can explicitly quantify the degree to which systems depart from the strict Markov blanket condition. More interestingly, the probability of the blanket index vanishing tends to one with dimension. Crucially, most physical systems are large in the relevant sense. A mere teaspoon of water, for instance, contains approximately 10²³ molecules. The brain contains roughly 100 billion neurons, with each individual neuron making thousands of connections. Other examples abound.
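For the Gaussian case, the link between sparsity in the Hessian of the surprisal and the Markov blanket property can be checked directly. The following is a minimal sketch, assuming a hypothetical six-dimensional system partitioned into external, blanket and internal states; it illustrates only the standard fact that a zero block in the precision matrix (the Hessian of a quadratic surprisal) yields conditional independence given the blanket. It does not implement the blanket index of [64] or the solenoidal condition of [68].

```python
import numpy as np

# Hypothetical partition of a 6-dimensional state space.
eta, blanket, mu = [0, 1], [2, 3], [4, 5]   # external, blanket, internal

rng = np.random.default_rng(1)
coupling = rng.uniform(-1.0, 1.0, size=(6, 6))
coupling = (coupling + coupling.T) / 2       # symmetrize
np.fill_diagonal(coupling, 0.0)

# Sparse coupling: no direct interaction between external and internal states.
coupling[np.ix_(eta, mu)] = 0.0
coupling[np.ix_(mu, eta)] = 0.0

# Strict diagonal dominance guarantees a positive definite precision matrix,
# i.e. a valid Hessian for a quadratic surprisal.
precision = coupling + np.diag(np.abs(coupling).sum(axis=1) + 1.0)
covariance = np.linalg.inv(precision)

# Covariance of (external, internal) conditioned on the blanket (Schur complement).
rest = eta + mu
cov_rr = covariance[np.ix_(rest, rest)]
cov_rb = covariance[np.ix_(rest, blanket)]
cov_bb = covariance[np.ix_(blanket, blanket)]
conditional_cov = cov_rr - cov_rb @ np.linalg.solve(cov_bb, cov_rb.T)

marginal_coupling = np.abs(covariance[np.ix_(eta, mu)]).max()
conditional_coupling = np.abs(conditional_cov[:2, 2:]).max()
```

Here `marginal_coupling` is generically nonzero, since external and internal states are correlated through the blanket, whereas `conditional_coupling` vanishes up to floating-point error once the blanket states are fixed.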

Now, it may be true that the results in [94] undermine the original derivation of the ABIL, as it can be found in the well-known paper [95]. However, newer work has re-derived the ABIL using conventional mathematics [6]. The results in [94] only pertain to the derivations found in [95]; and we note that the latter is also critically discussed in [63]. Thus, the appropriate conclusion to draw from [94] is that one ought not to cite [95] to make points about the ABIL or the Markov blanket property; and more subtly, that one should move on from that formalism. But we have independent reasons to believe that the ABIL is true; and indeed, the literature has moved on from that formalism.

As discussed above, it would be misleading to conclude that the FEP does not apply to the systems analysed in [83]. The application of the FEP there is uninformative because that work focuses on linear, low-dimensional mathematical edge-cases, i.e. systems with a small number of states [92,96]. More precisely, the paper considers whether the FEP can be applied usefully to one-dimensional dissipative systems; physically, these are coupled, damped springs with one degree of freedom each. The paper cogently shows that it is difficult to construct a Markov blanket for such systems. However, this does not undermine the FEP or the obtaining of the Markov blanket property in the general case. Instead, these results constitute an interesting application of the FEP to very low-dimensional (i.e. one-dimensional) linear systems. As such, the conclusion to draw from this work is not that the Markov blanket property does not obtain in general, but rather, that Markov blankets are rare or difficult to construct in small, low-dimensional systems; they remain ubiquitous in appropriately large ones (which comprise most physical systems). Thus, the FEP is not a ‘theory of everything’ in the sense that it could be applied informatively to any kind of mathematical system whatsoever; it is only a theory of everything that features a Markov blanket. Indeed, [83] shows that the FEP applies vacuously to all sorts of systems; e.g. it applies to, but has nothing particularly interesting to say about, linear stochastic systems. This is by design: there is nothing interesting to say, FEP-theoretically, about such systems.

In summary, the FEP is a method or principle that applies to ‘things’ that are defined stipulatively in terms of Markov blankets, sparse coupling, and particular partitions. The FEP is not concerned with systems that do not contain any ‘thing’, so defined. On this view, the critical literature above focuses on whether or not any given system can be partitioned into some ‘thing’ and every ‘thing’ else. If it can, then the FEP applies—but not otherwise.

4. Some mathematical preliminaries on the maximum entropy principle, gauge theory and dualization

In the Introduction, we discussed how changing our perspective on self-organization entailed an exchange of points of view across a boundary: rather than asking how a particular system or particle maintains its ‘self’ and what beliefs it ought to hold about the environment, which is what the FEP is interested in, we can instead ask what that self is, and what it looks like from the perspective of an outsider observing the system. Likewise, dualizing the objects that we ask about also implies dualizing our application of Bayesian mechanics, to ask about our beliefs about a system, rather than the beliefs encoded or carried by the system. The idea, then, is that we can leverage this dual perspective to model self-organization as we conventionally would, restoring the symmetry of the problem and allowing us to apply the FEP to model self-organized systems.

We have said that the FEP is dual to the CMEP. Duality in this category-theoretic sense means that two objects, formally called an adjoint pair, share some common set of intrinsic features, but exhibit relationships to other objects in opposite directions. An adjunction (the existence of an adjoint pair) usually suggests some interesting structure hiding in a problem; in this case, it is the peculiar agent–environment symmetry which is definitional of coupled systems that infer each other’s states. It can be proven that exchanging (i) free energy for constrained entropy and (ii) internal for external states recovers all aspects of the ABIL and a simple case of self-evidencing, and thus much of the FEP, especially as it pertains to self-organization; see [6] for a proof of the ABIL (lemma 4.2 and theorem 4.1).

Thus, the motivation for dualization is threefold: (i) it recaptures the original spirit of the FEP, which is that of an observer modelling an agent exhibiting self-organization; (ii) it allows us to ground the mathematics of the FEP in the well-established foundations of maximum entropy and stationary systems at equilibrium; and (iii) it allows us to extend the existing methods of the FEP to scenarios that are new to the FEP literature, such as constraint-based formalisms. As a technical tool, changing our viewpoint introduces constrained self-entropy as the dual to the free energy of beliefs. In doing so, we can relate the FEP to existing insights in probability theory and dynamical systems theory. This new viewpoint turns out to be independently interesting for our reading of the FEP, and possibly extends it to new phenomena or systems. New approaches developed for the maximum entropy principle (such as gauge-theoretic results) are reflected in useful ways within the FEP via this relationship.

We begin not with maximum entropy, per se, but with a somewhat unconventional geometric view on the CMEP, to be related later to the density-over-states formulation of the FEP (and especially the Helmholtz decomposition). The core elements of the CMEP needed for this particular construction have their origins in gauge theory, a theory in mathematical physics that relates the dynamics of particles to the geometry of their state spaces. Later, this will allow us to discuss the decomposition of flows under maximum entropy in the same fashion as under the FEP, as well as tie it in explicitly to the updating of probability distributions in response to changes in constraints. We recommend [97] as a reference with a good introductory tone, and [98] or [99] for more details.

A gauge theory begins with a field theory like electromagnetism or quantum electrodynamics (QED), describing the dynamics of matter and the particles that comprise it. The dynamics of ‘matter fields’ are typically described by applying a principle of stationary action (cf. §2) and thus are related to a special integral called an action functional, which is a quantity that gets minimized by the field (or in quantum field theory, a description of the field’s most likely state). A functional, as we have said, is a function of a function: in this case, the action functional is a function of the Lagrangian of a system, which, to repeat, summarizes the energies involved in the mechanics of the system. It follows that the minimizer of the action functional, which is a point in a function space, gives us the configuration of the field where the action is stationary.
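The idea that the action singles out a point in a function space can be made concrete with a discretized path. The following is a minimal sketch, assuming the simplest possible case of a free particle with Lagrangian L = ½mv² rather than a field theory; the function name `action`, the grid and the perturbation `bump` are illustrative assumptions. The straight-line path between fixed endpoints is compared with perturbed paths that share the same endpoints.

```python
import numpy as np

def action(path, dt, mass=1.0):
    """Discretized action: sum of L dt with the free-particle Lagrangian L = (1/2) m v^2."""
    velocity = np.diff(path) / dt
    return np.sum(0.5 * mass * velocity**2) * dt

n_steps, dt = 100, 0.01
t = np.linspace(0.0, n_steps * dt, n_steps + 1)
classical = t.copy()                         # straight line from q(0) = 0 to q(1) = 1

# A perturbation that vanishes at both endpoints, preserving the boundary conditions.
bump = np.sin(np.pi * t / t[-1])
actions = {eps: action(classical + eps * bump, dt) for eps in (-0.1, 0.0, 0.1)}
```

The unperturbed path has the smallest action of the three, and perturbing it in either direction raises the action by the same amount to leading order, which is what stationarity means.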

In principle, the action functional gives us everything we need to know about a matter field. However, in many field theories, the action admits some sort of symmetry—this is a transformation that leaves the action invariant, so that an arbitrary change in some particular quantity has no effect on the equations of motion of the matter field predicted by the action. A loose example includes the gravitational field under changes in reference frame: the principle of general relativity is that we do not have absolute coordinates in which to describe physics, and things like motion appear different from different perspectives, despite the underlying physics being the same. Hence, gravity has a coordinate invariance, which means it stays the same under changes in coordinates: in other words, it has a symmetry under this transformation. In other theories, we have other symmetries: for instance, in QED, we can choose and change the complex phase of a particle arbitrarily, with no change in the associated action. The quantity under which the theory is symmetric is called a gauge. In gauge theory, the symmetry itself is referred to as gauge invariance, which is characterized by a free choice of gauge and invariance under changes in gauge called gauge transformations.
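Invariance of an action under a change of phase can be checked numerically. The following is a minimal sketch, assuming a toy lattice action for a complex field with a difference (kinetic) term and a mass term; it exhibits only a global phase symmetry and is not a model of QED, where the local symmetry additionally involves a gauge field. The function name `action` and the chosen phases are illustrative assumptions.

```python
import numpy as np

def action(psi, mass=1.0):
    """Toy lattice action for a complex field: kinetic (difference) term plus mass term."""
    kinetic = np.sum(np.abs(np.diff(psi)) ** 2)
    potential = mass**2 * np.sum(np.abs(psi) ** 2)
    return kinetic + potential

rng = np.random.default_rng(2)
psi = rng.standard_normal(50) + 1j * rng.standard_normal(50)   # arbitrary field configuration

reference = action(psi)

# Multiply the field by a global phase factor exp(i * theta) for several arbitrary phases.
rotated_actions = [action(np.exp(1j * theta) * psi) for theta in (0.3, 1.0, 2.5)]
```

Every entry of `rotated_actions` agrees with `reference` up to floating-point error, because the action depends on the field only through absolute values that the phase factor leaves unchanged.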

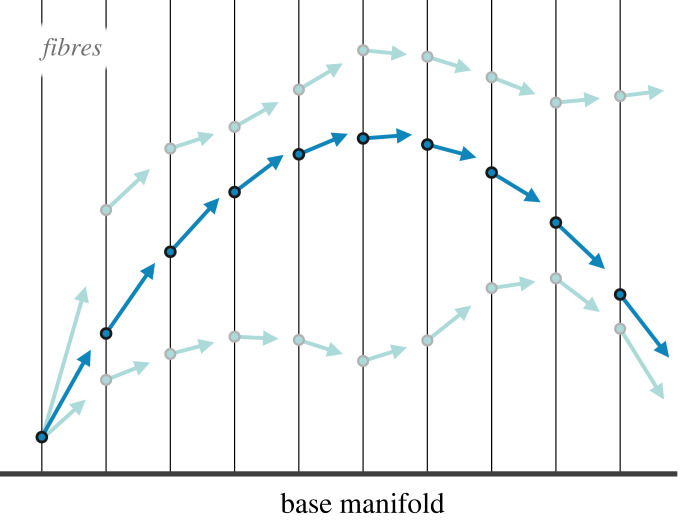

One reason why gauge theories are interesting is that, unlike the action functional, the matter field itself is generally gauge covariant; this means that it changes with a change in gauge. While we can, in principle, deduce all the information we need about a matter field from the action functional, this symmetry is not manifest in the field: the equation expressing the evolution of the field changes with the choice of gauge. This is what is meant by covariance (varying together). Imagine choosing a reference frame: gauge symmetry only says that we can choose any new frame and still observe motion consistent with the laws of physics (e.g. motion which conserves total energy), but the expression of that motion within a frame is still dependent on the choice of frame (for instance, choosing a moving frame of reference will convert inertial trajectories into moving ones, relative to that frame of reference). Gauge covariance has a very literal relationship with the idea of changing the coordinate basis in which we express the components of a vector. (For an example of this, see figure 4.)
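The analogy with a change of coordinate basis can be spelled out in a few lines. The following is a minimal sketch, assuming a two-dimensional vector and a hypothetical rotation of the coordinate frame by 30 degrees; the names `rotation`, `velocity` and `frame` are illustrative. The components of the vector vary with the choice of frame (covariance), while its length does not (invariance).

```python
import numpy as np

def rotation(angle):
    """2x2 matrix whose columns are the basis vectors of a frame rotated by `angle`."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s], [s, c]])

velocity = np.array([3.0, 4.0])       # components in the original frame
frame = rotation(np.pi / 6)           # hypothetical new frame, rotated by 30 degrees

# Components of the same vector expressed in the rotated frame.
velocity_new = frame.T @ velocity

speed_old = np.linalg.norm(velocity)
speed_new = np.linalg.norm(velocity_new)
```

The two component vectors differ, but `speed_old` and `speed_new` coincide: the description depends on the frame, while the frame-independent quantity is preserved.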

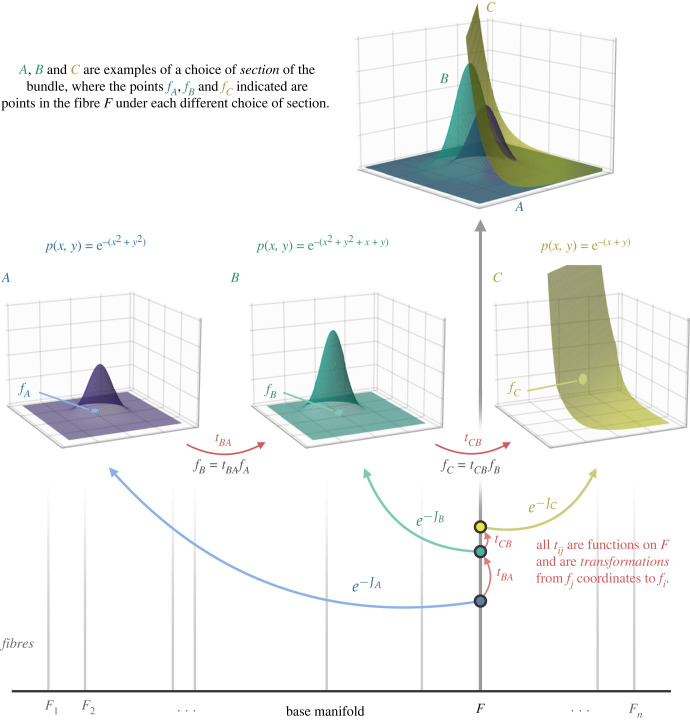

Figure 4.

Illustration of fibre bundles, sections and transformations. A fibre bundle exists on a base manifold, situated over points in that space. Here, the fibre is a copy of the real line attached to each point in the base. Different choices of section of the bundle correspond to different choices of constraint function. The section is a function which assigns coordinates in ‘probability space’—by which we mean values of probability, or specific points in the fibre F over some base point (x, y)—to points on the base. For fA, fB, fC ∈ F, these points lie over the base point (x, y). The height of any fi(x, y) corresponds to the probability of (x, y), and is a lift of (x, y) within the section p. Three simple choices of section are shown, with the same point (x, y) mapping to different regions of the corresponding probability density due to a different choice of constraint function (A, B and C). The inset over F shows that all of these densities are sections over the same base space.
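The caption's point that different constraint functions assign different probabilities to the same base point can be illustrated numerically. The following is a minimal sketch, assuming a one-dimensional base space (rather than the two-dimensional one in the figure), the standard Gibbs form p(x) ∝ exp(−λJ(x)) of a maximum entropy density under a constraint on the expectation of J, and a Lagrange multiplier fixed at λ = 1 instead of being solved for. The three constraint functions are hypothetical stand-ins for A, B and C.

```python
import numpy as np

x = np.linspace(-3.0, 3.0, 601)       # grid on a one-dimensional base space
dx = x[1] - x[0]

def maxent_density(constraint, multiplier=1.0):
    """Gibbs-form density proportional to exp(-multiplier * J(x)), normalized on the grid."""
    weights = np.exp(-multiplier * constraint(x))
    return weights / (weights.sum() * dx)

# Three hypothetical constraint functions, each defining a different section.
sections = {
    "A": maxent_density(lambda s: s**2),
    "B": maxent_density(lambda s: (s - 1.0) ** 2),
    "C": maxent_density(lambda s: np.abs(s)),
}

# The same base point x = 1 is lifted to a different height by each section.
base_point = np.argmin(np.abs(x - 1.0))
heights = {name: p[base_point] for name, p in sections.items()}
```

All three densities are normalized over the same grid, yet the base point x = 1 receives a different probability density under each choice of constraint function.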