Abstract

Emotion perception is essential for successful social interactions and maintaining long-term relationships with friends and family. Individuals with autism spectrum disorder (ASD) experience social communication deficits and have reported difficulties in facial expression recognition. However, emotion recognition depends on more than just processing facial expressions; context is critically important to correctly infer the emotions of others. Whether context-based emotion processing is impacted in those with Autism remains unclear. Here, we used a recently developed context-based emotion perception task, called Inferential Emotion Tracking (IET), and investigated whether individuals who scored high on the Autism Spectrum Quotient (AQ) had deficits in context-based emotion perception. Using 35 videos (including Hollywood movies, home videos, and documentaries), we tested 102 participants as they continuously tracked the affect (valence and arousal) of a blurred-out, invisible character. We found that individual differences in AQ scores were more strongly correlated with IET task accuracy than with accuracy on traditional face emotion perception tasks. This correlation remained significant even when controlling for potential covarying factors, general intelligence, and performance on traditional face perception tasks. These findings suggest that individuals with ASD may have impaired perception of contextual information, reveal the importance of developing ecologically relevant emotion perception tasks in order to better assess and treat ASD, and provide a new direction for further research on context-based emotion perception deficits in ASD.

Subject terms: Perception, Human behaviour

Introduction

Emotion recognition and processing are essential for successful social interactions. Emotions play an important role in our social lives and in our understanding of others, and thus, shape the way that we understand the world around us. For example, individuals with autism spectrum disorder (ASD) have reported impairments in facial expression recognition, which could have knock-on consequences for other perceptual and social functions and could be a contributing factor in the reported deficits in social communication1,2. ASD is a neurodevelopmental disorder with an early onset and is characterized by impairments in social interaction and repetitive behaviors2. These deficits have often been attributed to an impairment in Theory of Mind, which is the ability to infer the mental states of others3–5.

One popular measure of Theory of Mind is the Reading the Mind in the Eyes Test6, also known as the Eyes Test, which requires participants to infer the emotion of a person based on their eyes alone, without any other information about the face or context. In this task, participants choose, among a selection of mental states, a single emotion label that they believe reflects the expression in the pair of isolated eyes. The Eyes Test distinguishes between typical controls and individuals with ASD6–9: performance on the Eyes Test is lower in individuals diagnosed with ASD compared to controls7 and it is correlated with Autism Spectrum Quotient (AQ) scores6. However, the Eyes Test has also been criticized on several grounds: it does not mimic how emotion is experienced in the real world; it lacks spatial context that is naturally experienced alongside facial expressions10–14; and it lacks temporal context, including how emotions change over time and how recent events influence an individual’s current emotional state15,16. Moreover, some studies have found no difference in performance on the Eyes Test between individuals with ASD and other disorders like schizophrenia17 and alexithymia18. Additionally, some researchers argue that Theory of Mind, as operationalized by the Eyes Test, is not the key component of the underlying deficit in social communication that is normally observed in ASD. Instead, they suggest that ASD is not characterized by a single cognitive impairment but a deficit in a collection of higher-order cognitive abilities19–24. Specifically, alternative theories suggest that there are deficits in meta-learning19 and deficits in updating priors23 in individuals with ASD.

Despite the debate over the Eyes Test, it is well known that individuals with ASD have emotion perception deficits1–3,25–30, and this is true especially in more ecologically valid and dynamic situations31–33. These deficits have been attributed to moderators like emotion complexity34 and abnormal holistic processing of faces30,35–37 (though more recent studies suggest that individuals with ASD are able to utilize holistic face processing38–40). In addition, previous studies have also found that individuals with ASD display impairments in daily tasks that involve social interaction41,42, even when there may be no impairment in contrived, less ecologically valid experimental tasks of social cognition. These findings raise the possibility that the current assessments of social cognition in ASD may lack some critical attributes that are normally experienced during everyday social interactions10,11,13. In particular, existing popular tests6,43,44 do not measure or capture the role of spatial and temporal context in emotion perception.

Contextual information is critical for emotion perception. It influences emotion perception even at the early stages of face processing45,46, it is unintentionally and effortlessly integrated with facial expressions47, and it is an integral part of emotion perception in the real world13,14. More surprisingly, observers have been found to accurately and rapidly infer the emotions of characters in a scene without access to facial expressions, while using only contextual information10–12. The idea that “contextual blindness” may be a key component in ASD has been discussed before48 and has been attributed to the weak central coherence hypothesis49. Central coherence is the ability to combine individual pieces of information together into a coherent whole and has been suggested to be a key problem in ASD49. Weak central coherence in ASD could manifest as a reduced ability to integrate contextual information with face and body information when inferring the emotions of people in the real world. Previous research has found that individuals with ASD are less able to use contextual cues to infer the emotions of blurred-out faces50 supporting the idea that weak central coherence may affect how individuals with ASD integrate emotional cues in the real world.

Contextual information is not only present in the spatial properties of background scenes (e.g., background environment, scene information, surrounding faces and bodies, etc.), but there is also a temporal context which involves the integration of social information over time. Temporal context refers to the idea that information about emotions is dynamic, unfolds over time, and is subject to change15,51,52. Both spatial and temporal context can be informative in emotion perception. One example of temporal context relates to noticing when the emotion of another individual has changed. For example, when having a conversation with a friend, if you were to say something that offends them, then their emotion will change depending on the intensity of the offense. To successfully navigate the conversation, you would need to have noticed that the emotion of your friend has changed, in a timely manner, and either apologize or change the topic of conversation. Individuals with ASD may be impaired in such circumstances, because they have impairments processing dynamic complex scenes53. Moreover, many studies have reported differences in the processing of temporal context in typical individuals compared to those with ASD54–57. However, there is currently a dearth of research on how emotion perception of dynamic stimuli, which includes natural spatial context, is affected in individuals with ASD.

In the present study, we investigated whether individuals who scored high on the Autism Quotient58 (AQ) have an impaired ability to infer the emotion of a blurred-out (invisible) character using dynamic contextual information. To investigate this, we recruited a fairly large sample (n = 102) and had participants complete an Inferential Emotion Tracking10,11 (IET) task, where participants used a 2D valence-arousal rating grid to continuously track the emotion of a blurred-out (invisible) character while watching a series of short (1–3 min) movie clips. Each participant watched and rated a total of 35 different movie clips (which included Hollywood movies, documentaries, and home videos), and then completed a battery of questionnaires at the end of the experiment. To foreshadow the results, we found that individual differences on the IET task correlated strongly with AQ scores, suggesting that context-based emotion perception may be impaired in ASD, while the correlation between participants' scores on the Eyes Test and on the AQ questionnaire was not significant.

Results

All analysis scripts and datasets are available at the Open Science Framework (https://osf.io/zku24/). All statistical analyses were performed using Python.

IET task performance

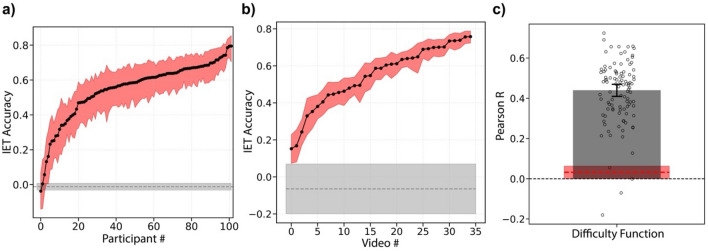

Descriptive statistics of all variables are presented in Table 1. We first quantified the individual differences in IET task accuracy, to assess whether there was systematic variability in accuracy across observers. We calculated each participant's IET accuracy for each video (for both valence and arousal ratings) and compared the average accuracy across participants (Fig. 1b; see “Methods”). IET task accuracy was calculated as the Pearson correlation between the participant’s ratings on each video and the “correct” ratings retrieved from an Informal Cultural Consensus Model59 (see “Methods”). The correct response computed from the Cultural Consensus Model is found by performing principal component analysis on all ratings for a given video and selecting the first set of factor scores (a weighted, linear combination of ratings)59. The first factor of the principal component analysis will contain individual responses that are the most correlated with each other. Essentially, the Cultural Consensus Model is a measure of the consensus judgments of valence and arousal over time for each video. It is a proxy for ground truth that is well supported for situations without an objective ground truth59–62. We found that the participants' IET accuracy varied significantly (Fig. 2a). We then investigated whether there were video-specific individual differences by performing a similar analysis on the accuracy of each video. Again, we found that task accuracy for each video varied significantly (Fig. 2b). To ensure that low performers in the task did not just respond randomly, we recalculated the video-specific individual differences (as shown in Fig. 2b) using a leave-one-out procedure for each participant. This allows us to organize the videos by their average accuracy across participants, which we call the difficulty function, and compare the leave-one-out group-averaged difficulty function to participants’ own difficulty functions. 
If each participant’s difficulty function is correlated with the leave-one-out group-averaged difficulty function, then this suggests that the participant’s accuracy on each video was correlated with the tracking difficulty of each video. That is, participants should have higher accuracy for the easier videos and lower accuracy for the harder videos. If participants have a low correlation with the group-averaged difficulty function, then this may suggest that they frequently lapsed, randomly responded, or did not actively participate in the task. We found that ~98% of participants' difficulty function correlations fell outside of the permuted null distribution correlation values (Fig. 2c). This indicates that the vast majority of participants actively and consistently participated in the task. While two participants' difficulty functions fell within the 95% confidence interval of the permuted null distribution, we did not remove these subjects from the main analysis. However, in a separate analysis, we removed the two participants who fell within the permuted null distribution and found no significant difference in our results (Fig. S2).
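The accuracy and leave-one-out steps described above can be sketched in a few lines of Python. This is a minimal, dependency-free illustration under stated assumptions, not the authors' analysis code (which is available at the OSF link above). It assumes the ratings for one video are stored as a time-by-rater matrix, it approximates the first principal component by power iteration rather than a full PCA, and it assumes a Pearson correlation for the difficulty-function comparison, which the text does not specify. The function names and the synthetic data are hypothetical.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def consensus_rating(ratings, n_iter=200):
    """First principal-component scores of a (time x rater) rating matrix.

    Power iteration on the rater covariance matrix stands in for a full PCA.
    Starting from equal positive weights keeps the component oriented with
    the average rating when raters broadly agree (an assumption).
    """
    n_raters = len(ratings[0])
    means = [sum(row[j] for row in ratings) / len(ratings) for j in range(n_raters)]
    centered = [[row[j] - means[j] for j in range(n_raters)] for row in ratings]
    cov = [[sum(r[a] * r[b] for r in centered) for b in range(n_raters)]
           for a in range(n_raters)]
    weights = [1.0] * n_raters
    for _ in range(n_iter):
        new = [sum(cov[a][b] * weights[b] for b in range(n_raters))
               for a in range(n_raters)]
        norm = math.sqrt(sum(w * w for w in new))
        weights = [w / norm for w in new]
    # Factor scores: weighted linear combination of the centered ratings.
    return [sum(r[j] * weights[j] for j in range(n_raters)) for r in centered]


def video_accuracy(ratings):
    """Pearson correlation of each rater's trace with the consensus trace."""
    consensus = consensus_rating(ratings)
    return [pearson([row[j] for row in ratings], consensus)
            for j in range(len(ratings[0]))]


def loo_difficulty_correlations(acc):
    """acc[i][v] is the accuracy of participant i on video v.

    Correlates each participant's per-video accuracy with the mean
    per-video accuracy of all other participants (leave-one-out).
    """
    n, n_videos = len(acc), len(acc[0])
    totals = [sum(acc[i][v] for i in range(n)) for v in range(n_videos)]
    return [pearson(acc[i], [(totals[v] - acc[i][v]) / (n - 1)
                             for v in range(n_videos)])
            for i in range(n)]


# Synthetic demo: 8 raters track a shared signal, 1 rater responds at random.
rng = random.Random(0)
signal = [math.sin(t / 10) for t in range(120)]
ratings = [[s + rng.gauss(0, 0.3) for _ in range(8)] + [rng.gauss(0, 1)]
           for s in signal]
accuracy = video_accuracy(ratings)
print([round(a, 2) for a in accuracy])
```

In this toy example the eight raters who follow the shared signal should obtain high accuracy, while the random responder should not. A participant whose leave-one-out difficulty correlation is indistinguishable from a permuted null would be flagged in the same spirit as in Fig. 2c.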

Table 1.

Descriptive statistics.

| Variable | M | Median | SD | Min | Max | Skewness | Kurtosis |
|---|---|---|---|---|---|---|---|
| Reading the mind in the eyes | 25.17 | 26.00 | 5.37 | 5.00 | 33 (91.7%) | − 1.61 | 3.17 |
| Films facial expression test | 27.53 | 28.00 | 3.45 | 10.00 | 32 (100%) | − 2.23 | 7.98 |
| Matrices | 28.61 | 29.00 | 4.39 | 12.00 | 35 (100%) | − 1.33 | 2.27 |
| Vocabulary | 13.17 | 13.00 | 3.60 | 1.00 | 20 (100%) | − 0.42 | 0.49 |
| Age | 20.15 | 20.00 | 3.00 | 18.00 | 42.00 | 4.62 | 29.06 |
| Satisfaction w/life | 23.23 | 24.00 | 6.62 | 5.00 | 35 (100%) | − 0.55 | 0.06 |
| Empathy quotient | 43.69 | 44.00 | 12.45 | 15.00 | 68 (85%) | − 0.34 | − 0.27 |
| Autism quotient | 18.95 | 18.00 | 5.13 | 9.00 | 33 (66%) | 0.46 | 0.11 |
| State anxiety | 42.54 | 42.50 | 12.08 | 20.00 | 70 (87.5%) | 0.18 | − 0.60 |
| Trait anxiety | 45.86 | 47.00 | 10.46 | 20.00 | 74 (92.5%) | − 0.15 | − 0.02 |
| Beck depression | 9.64 | 8.00 | 7.89 | 0.00 | 37 (58.7%) | 1.12 | 1.31 |
| CAPE psychosis | 72.33 | 71.50 | 15.39 | 46.00 | 126 (75%) | 0.65 | 0.96 |
| CAPE depressive | 16.96 | 16.50 | 5.36 | 7.00 | 33 (75%) | 0.37 | − 0.39 |
| CAPE negative psychosis | 28.02 | 27.00 | 7.68 | 16.00 | 61 (89.7%) | 1.61 | 4.21 |
| CAPE positive psychosis | 27.35 | 26.00 | 5.81 | 16.00 | 44 (47.8%) | 0.53 | − 0.24 |
| Valence accuracy | 0.63 | 0.68 | 0.19 | − 0.18 | 0.87 | − 1.85 | 4.12 |
| Arousal accuracy | 0.49 | 0.54 | 0.18 | − 0.02 | 0.82 | − 0.82 | 0.18 |

Percentages under Max column indicate the percentage of the max possible score for each survey obtained by our subject pool (100% indicates that the maximum possible score was obtained by our subject pool).

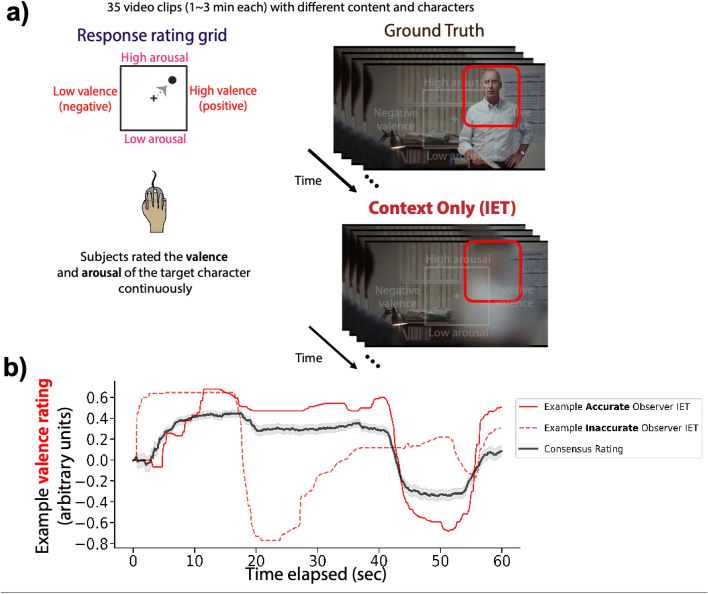

Figure 1.

Inferential Emotion Tracking (IET) task paradigm. (a) One hundred and two participants rated a total of 35 different video clips, which included Hollywood movies, documentaries, and home videos. A 2D valence-arousal rating grid was superimposed on the video and participants were required to rate the emotion of the target character. The red outline indicating the target character for a given trial was only shown on a single frame before the start of the trial. (b) An example of an accurate observer (solid red line) and an inaccurate observer (dashed red line) compared to the averaged ratings (consensus rating) of the context only condition (black line). Shaded regions on the consensus rating represent 1 standard error of the mean. Videos shown in this figure and study are publicly available (https://osf.io/f9rxn).

Figure 2.

Individual differences in participant accuracy and video difficulty. (a) IET accuracy scores for each individual participant, ranked. (b) IET accuracy scores for each individual video, ranked, which we call the difficulty function. Shaded red regions depict 95% bootstrapped confidence intervals for each individual participant or video. Shaded gray regions show 95% confidence intervals around the permuted null distribution; dashed gray line shows mean permuted IET accuracy. (c) Correlation between participants' own stimulus difficulty function and the leave-one-out group averaged difficulty function. Error bars represent bootstrapped 95% CI. Dashed red line shows bootstrapped mean permuted IET accuracy and red shaded areas show 95% confidence intervals on the permuted null distribution.

Correlations between IET performance and questionnaire items

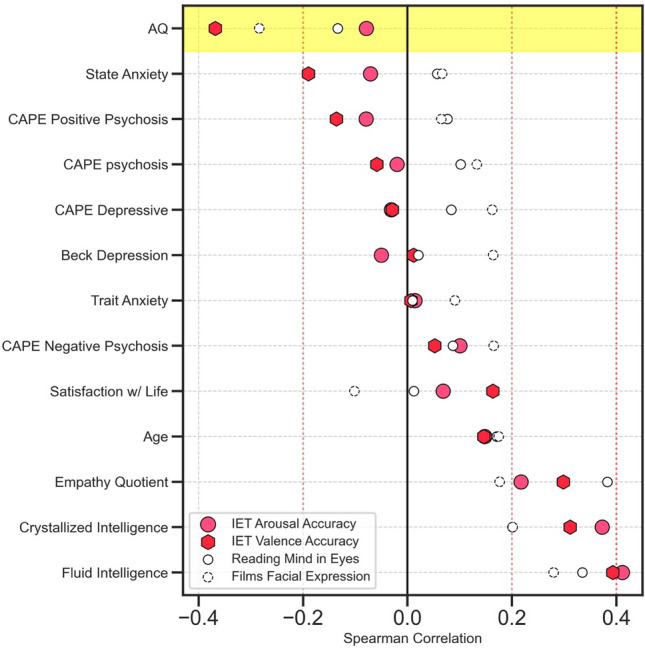

Our main goal was to investigate whether low accuracy on the IET task was correlated with high scores on the AQ in order to explore whether individuals with ASD have impaired context-based emotion processing. We also wanted to compare this relationship to that of other popular emotion perception tasks: the Eyes Test6 and the Films Facial Expression Task63. We calculated the Spearman correlation, rather than the Pearson correlation, between all variables in our data (Fig. S1) to avoid any assumptions about the distribution of the data. We report both uncorrected and Bonferroni corrected significance (for 17 comparisons made in the main results; Fig. 3). We found a significant negative correlation between participants' accuracy on the IET task for their valence ratings and their AQ scores (rho = − 0.368, p = 0.002, Bonferroni corrected; p < 0.001, uncorrected). Negative, but non-significant, correlations were found for the Films Facial Expression Task and AQ (rho = − 0.284, p = 0.065, Bonferroni corrected; p = 0.004, uncorrected), the Eyes Test and AQ (rho = − 0.134, p = 0.180, uncorrected), and IET arousal accuracy and AQ (rho = − 0.079, p = 0.431, uncorrected) (Fig. 3). Significant positive correlations were also found between IET valence accuracy and the Empathy Quotient (rho = 0.298, p = 0.04, Bonferroni corrected; p = 0.002, uncorrected) and between the Eyes Test and the Empathy Quotient (rho = 0.383, p < 0.001, Bonferroni corrected; p < 0.001, uncorrected). We found no significant correlations between the Films Facial Expression Task and the Empathy Quotient (rho = 0.176, p = 0.076, uncorrected) or between IET arousal accuracy and the Empathy Quotient (rho = 0.217, p = 0.478, Bonferroni corrected; p = 0.028, uncorrected).
Significant correlations were also found between Fluid Intelligence and IET valence accuracy (rho = 0.393, p < 0.001, Bonferroni corrected; p < 0.001, uncorrected), IET arousal accuracy (rho = 0.411, p < 0.001, Bonferroni corrected; p < 0.001, uncorrected), and the Eyes Test (rho = 0.335, p = 0.01, Bonferroni corrected; p < 0.001, uncorrected), but no significant correlation was found between Fluid Intelligence and the Films Facial Expression Task (rho = 0.28, p = 0.075, Bonferroni corrected; p = 0.004, uncorrected). Significant correlations were also found between Crystallized Intelligence and IET valence accuracy (rho = 0.311, p = 0.025, Bonferroni corrected; p < 0.001, uncorrected) and IET arousal accuracy (rho = 0.373, p = 0.002, Bonferroni corrected; p < 0.001, uncorrected). However, no significant correlation was present between Crystallized Intelligence and the Eyes Test (rho = 0.201, p = 0.733, Bonferroni corrected; p = 0.043, uncorrected) or the Films Facial Expression Task (rho = 0.201, p = 0.723, Bonferroni corrected; p = 0.043, uncorrected). We also recalculated the correlation between IET valence accuracy and AQ while removing the two subjects who fell within the permuted null in Fig. 2c and found that the correlation remained significant (rho = − 0.361, p = 0.004, Bonferroni corrected; p < 0.001, uncorrected).
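To make the reporting convention above concrete, the following is a minimal sketch of a Spearman correlation (Pearson correlation of tie-averaged ranks) and a Bonferroni adjustment that multiplies an uncorrected p-value by the number of comparisons and caps it at 1. It is an illustration under assumptions rather than the authors' code: the helper names and the six-participant data are hypothetical, and the sketch computes rho only, taking p-values as given. In practice a library routine such as `scipy.stats.spearmanr` would be the usual choice.

```python
import math


def ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=values.__getitem__)
    out = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            out[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return out


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x, y):
    """Spearman's rho: Pearson correlation computed on ranks."""
    return pearson(ranks(x), ranks(y))


def bonferroni(p, n_comparisons):
    """Bonferroni-adjusted p-value, capped at 1."""
    return min(1.0, p * n_comparisons)


# Hypothetical scores for six participants (not the study data).
aq = [12, 15, 18, 18, 24, 30]
iet_valence = [0.81, 0.74, 0.70, 0.66, 0.52, 0.40]
rho = spearman(iet_valence, aq)
print(round(rho, 3))

# Rounded uncorrected p-values from the text, adjusted for 17 comparisons.
for label, p in [("Films vs AQ", 0.004), ("Eyes vs Crystallized", 0.043)]:
    print(label, round(bonferroni(p, 17), 3))
```

Applied to the rounded uncorrected values in the text, this adjustment gives roughly 0.068 and 0.731, close to the reported corrected values of 0.065 and 0.733; the small gaps are what one would expect if the reported uncorrected p-values were rounded before printing.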

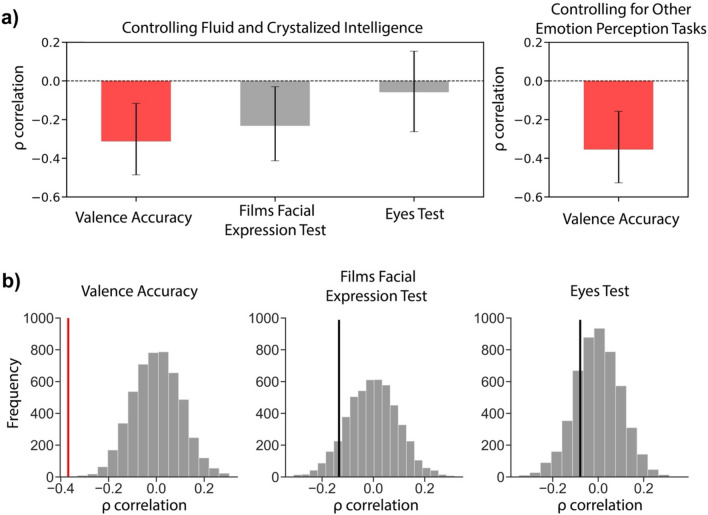

Figure 3.

Correlations between IET and questionnaires. Correlations between IET valence (red hexagon) and arousal (pink circle) accuracy scores, the Eyes Test (solid circle), Films Facial Expression Task (dashed circle), and questionnaires completed by participants. Highlighted row shows the correlation between each task and Autism Quotient (AQ) scores.

Controlling for general intelligence and covarying factors

We further investigated the correlation between IET valence accuracy and AQ by controlling for Fluid and Crystallized Intelligence. This ensures that the correlation between the two variables is not simply driven by general intelligence. We computed partial correlations between IET valence accuracy and AQ while controlling for Fluid and Crystallized Intelligence and plotted the bootstrapped mean and 95% confidence intervals, revealing that the confidence interval does not cross 0 and the correlation remains significant (m = − 0.311, CI [− 0.485, − 0.117], 5000 iterations) (Fig. 4a). The correlation between the Films Facial Expression Task and AQ also remained significant when controlling for general intelligence (m = − 0.232, CI [− 0.413, − 0.03], 5000 iterations). However, the correlation between the Eyes Test and AQ was not significant (m = − 0.058, CI [− 0.263, 0.154], 5000 iterations). We also calculated partial correlations for IET valence accuracy and AQ while controlling for both Films Facial Expression Task and Eyes Test performance, which revealed that the correlation remained significant (m = − 0.355, CI [− 0.527, − 0.157], 5000 iterations). This suggests that the correlation between IET valence accuracy and AQ is not explained by participants’ emotion perception abilities as measured by other popular face recognition tests.
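A bootstrapped partial correlation of this kind can be sketched as follows. The sketch assumes a rank-based (Spearman-style) partial correlation computed with the standard recursive formula, participant-level resampling with replacement, and a percentile confidence interval; the text does not state these details, so they are assumptions, and all names and data below are hypothetical.

```python
import math
import random


def ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=values.__getitem__)
    out = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            out[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return out


def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_corr(x, y, covariates):
    """Partial correlation of x and y given covariates (recursive formula)."""
    if not covariates:
        return pearson(x, y)
    z, rest = covariates[0], covariates[1:]
    rxy = partial_corr(x, y, rest)
    rxz = partial_corr(x, z, rest)
    ryz = partial_corr(y, z, rest)
    return (rxy - rxz * ryz) / math.sqrt((1 - rxz ** 2) * (1 - ryz ** 2))


def partial_spearman(x, y, covariates):
    """Rank-transform every variable, then take the partial correlation."""
    return partial_corr(ranks(x), ranks(y), [ranks(c) for c in covariates])


def bootstrap_ci(x, y, covariates, n_boot=5000, seed=0):
    """Bootstrapped mean and 95% percentile interval, resampling participants."""
    rng = random.Random(seed)
    n = len(x)
    stats = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        stats.append(partial_spearman([x[i] for i in idx], [y[i] for i in idx],
                                      [[c[i] for i in idx] for c in covariates]))
    stats.sort()
    mean = sum(stats) / n_boot
    return mean, stats[int(0.025 * n_boot)], stats[int(0.975 * n_boot) - 1]


# Synthetic demo: two measures that are related only through a shared covariate.
rng = random.Random(1)
iq = [rng.gauss(0, 1) for _ in range(150)]           # hypothetical covariate
task = [v + rng.gauss(0, 0.5) for v in iq]
trait = [v + rng.gauss(0, 0.5) for v in iq]
raw = partial_spearman(task, trait, [])              # no covariates: plain rho
adjusted = partial_spearman(task, trait, [iq])
mean, lo, hi = bootstrap_ci(task, trait, [iq], n_boot=500)
print(round(raw, 2), round(adjusted, 2), (round(lo, 2), round(hi, 2)))
```

In the synthetic demo the raw correlation is large but shrinks toward zero once the shared covariate is controlled. The pattern reported above is the opposite: the IET and AQ relationship survives the adjustment, with a bootstrapped interval that excludes zero.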

Figure 4.

Significance tests for AQ correlations. (a) Partial correlations between AQ and IET valence accuracy, Films Facial Expression Task, and the Eyes Test while controlling for Fluid and Crystallized Intelligence (left). We also computed partial correlations for AQ and IET valence accuracy while controlling for both the Eyes Test and Films Facial Expression Task performance (right). Error bars represent bootstrapped 95% CI. (b) Permutation tests for AQ correlations showing Valence accuracy, Films Facial Expression Task, and the Eyes Test from left to right. Gray distributions represent the permuted null distributions for each relationship. The solid vertical lines (red, black) represent the observed empirical correlations for each task, respectively.

In order to control for potential covarying factors, we computed new partial correlations between all tasks and AQ while controlling for general intelligence and the Empathy Quotient, which had significant correlations with IET valence accuracy and the Eyes Test. We found that the correlation between IET valence accuracy and AQ remained significant (m = − 0.26, CI [− 0.447, − 0.057], 5000 iterations), and so did the correlation between the Films Facial Expression Task and AQ (m = − 0.21, CI [− 0.4, − 0.003], 5000 iterations) (Fig. S3). The correlation between the Eyes Test and AQ was not significant when controlling for potential covarying factors (m = 0.05, CI [− 0.15, 0.25], 5000 iterations) (Fig. S3a). We also computed partial correlations between AQ and the face emotion perception tasks while controlling for both IET valence and arousal accuracy. We found no significant correlation between AQ and the Films Facial Expression Task (m = − 0.17, CI [− 0.37, 0.04], 5000 iterations) or the Eyes Test (m = − 0.10, CI [− 0.29, 0.01], 5000 iterations) (Fig. S3b). This indicates that these popular tests of face emotion recognition do not account for significant variance in AQ once IET accuracy is controlled. Permutation tests were also conducted as additional statistical tests and revealed significant correlations between IET valence accuracy and AQ (p = 0.001, permutation test) and between the Films Facial Expression Task and AQ (p = 0.023, permutation test) (Fig. 4b). The correlation between the Eyes Test and AQ was not significant (p = 0.174, permutation test).
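A permutation test for a rank correlation can be sketched as below. The sketch assumes a two-sided test in which one variable is shuffled across participants, with an add-one correction so that the estimated p-value is never exactly zero; the number of permutations and these details are not given in the text and are assumptions, as are the function name and the ten-participant data.

```python
import math
import random


def ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=values.__getitem__)
    out = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            out[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return out


def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def permutation_test(x, y, n_perm=5000, seed=0):
    """Observed Spearman rho and a two-sided permutation p-value."""
    rng = random.Random(seed)
    rx, ry = ranks(x), ranks(y)
    observed = pearson(rx, ry)
    shuffled = list(ry)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # break the pairing between x and y
        if abs(pearson(rx, shuffled)) >= abs(observed):
            extreme += 1
    # Add-one correction keeps the estimate away from an impossible p = 0.
    return observed, (extreme + 1) / (n_perm + 1)


# Hypothetical data for ten participants (not the study data).
aq = [12, 15, 18, 18, 24, 30, 21, 16, 27, 14]
accuracy = [0.81, 0.74, 0.70, 0.66, 0.52, 0.40, 0.58, 0.77, 0.49, 0.69]
rho, p = permutation_test(accuracy, aq, n_perm=2000)
print(round(rho, 2), round(p, 4))
```

The gray null distributions in Fig. 4b correspond to the shuffled correlations collected inside the loop, and the vertical lines correspond to `observed`.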

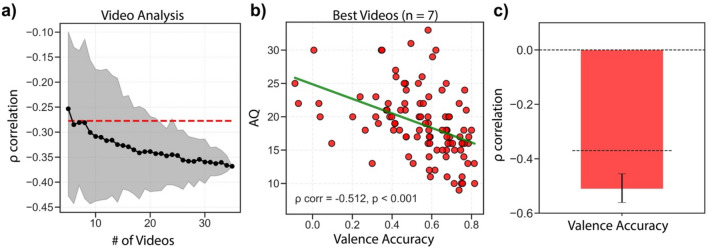

Video analysis: isolating the best videos for predicting AQ

Our next goal was to investigate which videos in the task were the best videos for assessing the relationship between IET and ASD. The original set of videos in this experiment was taken from a previous study11 and was not chosen specifically to investigate traits associated with ASD. Nevertheless, it is interesting that the correlation between IET task accuracy and AQ scores was so strong. To investigate which videos were the best for assessing ASD traits, akin to an item analysis, we calculated the minimum number of videos needed to reach 75% of the original effect size of |rho| = 0.37 (i.e., threshold |rho| = 0.277). We first selected 5 videos at random, without replacement, from the list of videos and used these videos to calculate each participant's IET valence accuracy. We then calculated the Spearman correlation between participants' IET valence accuracy for the currently chosen videos and AQ. At each step, we increased the number of videos used to calculate IET valence accuracy. This process was repeated 5000 times for each step in the analysis, and the Fisher-Z mean correlation coefficient of the 5000 iterations between IET valence accuracy and AQ was compared to the 75% threshold. The results show that only 7 videos were needed to reach 75% of the effect size originally observed (Fig. 5a). We chose the 7 videos with the highest correlation between IET valence accuracy and AQ for further analysis, which revealed a significant negative correlation (AQ versus IET: rho = − 0.512, p < 0.001) (Fig. 5b). In order to verify the strength and reliability of this relationship, we conducted a reliability test: we first split the data, at random, into five chunks as evenly as possible and then recalculated the AQ correlation in each of the five chunks. We then calculated the average correlation of the five chunks, using the Fisher-Z transformation, and ran the same analysis for 5000 iterations.
Using only the best videos, we found that the correlation remained significant and was significantly stronger than when using all the videos in the original analysis (rho = − 0.51, CI [− 0.56, − 0.45], p < 0.001) (Fig. 5c). This reveals that the IET task has a substantial amount of power: it only takes a few videos to reveal a strong negative relationship with AQ scores. It further supports our original findings, that individuals with ASD may have deficits in context-based emotion perception.
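The Monte Carlo video-subset procedure described above can be sketched as follows. This is a hedged illustration, not the authors' implementation: it assumes that a participant's accuracy for a subset is the mean of their per-video accuracies, it averages correlations in Fisher z space (`atanh`, then `tanh`), and it clamps correlations slightly inside (−1, 1) so the transform stays finite. The function names and the synthetic data are hypothetical.

```python
import math
import random


def ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=values.__getitem__)
    out = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            out[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return out


def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x, y):
    return pearson(ranks(x), ranks(y))


def fisher_z_mean(rhos):
    """Average correlations in Fisher z space, then transform back."""
    clamped = [max(-0.999999, min(0.999999, r)) for r in rhos]
    return math.tanh(sum(math.atanh(r) for r in clamped) / len(clamped))


def subset_curve(acc, aq, sizes, n_iter=5000, seed=0):
    """acc[i][v]: accuracy of participant i on video v. Returns {k: mean rho}."""
    rng = random.Random(seed)
    n_videos = len(acc[0])
    curve = {}
    for k in sizes:
        rhos = []
        for _ in range(n_iter):
            chosen = rng.sample(range(n_videos), k)  # k videos, no replacement
            scores = [sum(row[v] for v in chosen) / k for row in acc]
            rhos.append(spearman(scores, aq))
        curve[k] = fisher_z_mean(rhos)
    return curve


def videos_needed(curve, full_rho, fraction=0.75):
    """Smallest subset size whose mean |rho| reaches the target fraction."""
    target = fraction * abs(full_rho)
    for k in sorted(curve):
        if abs(curve[k]) >= target:
            return k
    return None


# Synthetic demo (hypothetical data): accuracy falls slightly as AQ rises.
rng = random.Random(1)
aq = [rng.randint(9, 33) for _ in range(60)]
acc = [[0.7 - 0.01 * (a - 18) + rng.gauss(0, 0.15) for _ in range(12)]
       for a in aq]
curve = subset_curve(acc, aq, sizes=range(1, 13), n_iter=200)
full_rho = curve[12]  # with k = 12 every draw uses all videos
print(videos_needed(curve, full_rho))
```

Averaging in z space rather than averaging the raw coefficients avoids the bias that arises because the sampling distribution of a correlation is skewed when its magnitude is large.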

Figure 5.

Isolating the best videos for predicting AQ. (a) Example of the video analysis, showing only 100 iterations for each step. In a Monte Carlo simulation, we randomly selected N videos (abscissa) from the 35 videos and bootstrapped the correlation between IET valence accuracy and AQ (ordinate). The black dots represent the bootstrapped mean Spearman correlation for each number of videos used. The red dashed line represents 75% of the original effect size observed with all the videos. Only 7 videos were needed to achieve an average effect size of 75% of the original correlation. (b) Correlation between the 7 best videos identified from the analysis in (a) and AQ scores. Green solid line represents the fitted linear regression model. (c) Cross-validated correlation between IET valence accuracy and AQ. The data were split into 5 close-to-equal chunks, the correlation between IET valence accuracy and AQ was calculated for each chunk and then averaged, and this procedure was repeated for 5000 iterations. Dashed black line represents the originally observed correlation with all videos used. Error bars represent bootstrapped 95% CI.

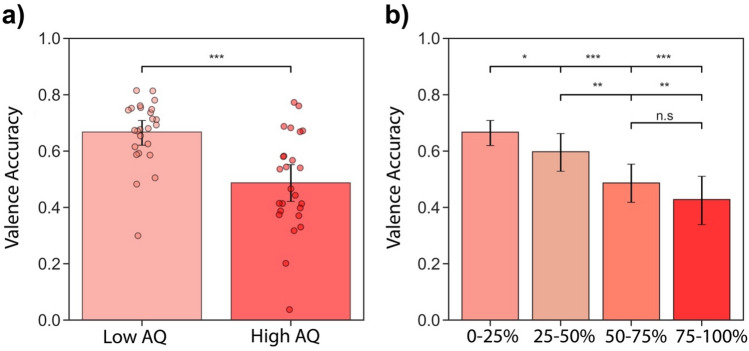

Finally, we further investigated the relationship between IET accuracy and AQ for the best videos by comparing IET accuracy using a split-half analysis. We split the data into two halves using the median AQ score and categorized individuals who had an AQ score less than 18 as the “Low AQ” group and individuals who scored higher than 18 as the “High AQ” group. We found that the High AQ group had significantly lower IET accuracy than the Low AQ group (Fig. 6a, p < 0.001, bootstrap test). We then explored whether the IET task is sensitive to subtle differences in AQ by splitting the data into four quartiles: the 0–25% AQ group includes scores 9–16 (n = 25), the 25–50% AQ group includes scores 16–18 (n = 25), the 50–75% AQ group includes scores 18–22 (n = 25), and the 75–100% AQ group includes scores 22–33 (n = 27). The 0–25% AQ group had significantly higher IET accuracy than the 25–50% AQ group (p = 0.037, bootstrap test), the 50–75% AQ group (p < 0.001, bootstrap test), and the 75–100% AQ group (p < 0.001, bootstrap test) (Fig. 6b). The 25–50% AQ group had significantly higher IET accuracy than the 50–75% AQ group (p = 0.017, bootstrap test) and the 75–100% AQ group (p = 0.001, bootstrap test). IET accuracy did not differ significantly between the last two groups (50–75% and 75–100%; p = 0.149, bootstrap test) (Fig. 6b). These results suggest that the IET task can measure subtle changes in AQ scores, including within the typical range of AQ scores (scores of 9–33)58.
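The group comparison above can be sketched as a median split followed by a bootstrap test on the difference in group means. The text says only "bootstrap test", so the procedure here is an assumption: each group is resampled with replacement, the difference in means is recomputed, and a two-sided p-value is taken from the share of resampled differences that fall on the far side of zero. Under the strict inequalities stated in the text, scores equal to the median belong to neither group. All names and data are hypothetical.

```python
import random
import statistics


def median_split(scores, values):
    """Split `values` by whether the paired score is below or above the median."""
    med = statistics.median(scores)
    low = [v for s, v in zip(scores, values) if s < med]
    high = [v for s, v in zip(scores, values) if s > med]
    return low, high


def bootstrap_mean_diff(a, b, n_boot=5000, seed=0):
    """Observed mean(a) - mean(b) and a two-sided bootstrap p-value."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(n_boot):
        ra = [rng.choice(a) for _ in a]  # resample group a with replacement
        rb = [rng.choice(b) for _ in b]  # resample group b with replacement
        diffs.append(sum(ra) / len(ra) - sum(rb) / len(rb))
    below = sum(d <= 0 for d in diffs)
    above = sum(d >= 0 for d in diffs)
    # Add-one correction keeps the estimate away from an impossible p = 0.
    p = min(1.0, 2 * (min(below, above) + 1) / (n_boot + 1))
    observed = sum(a) / len(a) - sum(b) / len(b)
    return observed, p


# Hypothetical data for eight participants (not the study data).
aq = [10, 12, 14, 16, 20, 22, 24, 26]
iet = [0.80, 0.75, 0.70, 0.72, 0.50, 0.45, 0.40, 0.42]
low_aq, high_aq = median_split(aq, iet)
diff, p = bootstrap_mean_diff(low_aq, high_aq, n_boot=999)
print(round(diff, 3), round(p, 4))
```

The same function could be applied to any pair of quartile groups, for example the 0–25% and 25–50% AQ groups, to mirror the pairwise comparisons in Fig. 6b.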

Figure 6.

IET valence accuracy across different ranges of AQ scores for the best videos. (a) IET valence accuracy for low AQ scores (AQ < 18, n = 51) and high AQ scores (AQ > 18, n = 51). Each dot represents an individual participant. (b) IET accuracy as a function of AQ quartiles: 0–25% AQ scores (9–16 AQ, n = 25), 25–50% AQ scores (16–18 AQ, n = 25), 50–75% AQ scores (18–22 AQ, n = 25), 75–100% AQ scores (22–33 AQ, n = 27). Error bars represent bootstrapped 95% CI.

Discussion

In the present study, we investigated whether context-based emotion perception is impaired in individuals who score high on the Autism Quotient (AQ), using a recently developed context-based emotion recognition task (Inferential Emotion Tracking; IET). We also compared this relationship to that of more popular assessments that use static face stimuli isolated from context. We found that participants' accuracy in IET was significantly correlated with their AQ scores, such that high AQ scores correlated with low IET task accuracy. These results indicate that context-based emotion recognition may be specifically impacted in those with Autism. Additionally, we found that the correlation between IET and AQ was stronger than the correlation between the Eyes Test and AQ, and higher than the correlation between the Films Facial Expression Task and AQ. Our results suggest that individuals with ASD may have deficits in processing emotion specifically from contextual information and they also highlight the importance of establishing ecological validity of stimuli and tasks to improve future assessments of ASD. Our result may also help explain the contradictory findings in the literature of facial emotion recognition in individuals with ASD1.

Whether facial emotion recognition is impaired in individuals with Autism has been under debate with some studies finding clear impairments25,64–66 while other studies have not67–74. These equivocal findings in the literature may reflect the heterogeneity of social cognition impairments in ASD, or they may be due to differences in demographic characteristics, task design (e.g. ceiling effects, variables measured, low powered studies), or task demands (e.g. context-based, dynamic, or static facial emotion recognition)1,73. Alternatively, this conflict may also be due to a lack of sensitivity in the behavioral measures used to assess emotion perception deficits in individuals with ASD, as studies using eye-tracking and neuroimaging methods are much more likely to find a group difference between individuals with ASD and typical controls than behavioral methods (for review, see Harms et al.1). Our results suggest that the inconsistent findings in the literature may be due to the lack of control or absence of contextual and dynamic information in previous studies. Moreover, our results suggest that future assessments should consider improving the ecological validity of stimuli and tasks by incorporating spatial and temporal context, thereby prioritizing the social-cognitive structure of scenes that humans typically experience in the real world75.

The relationship between the Eyes Test and Autism has been extensively studied6,7,9,76. However, we found that participants' scores on the Eyes Test and the AQ questionnaire were not significantly correlated. This is consistent with some of the literature18, but may be surprising since the Eyes Test is commonly used to assess Theory of Mind in individuals with ASD and has previously been found to correlate with AQ6. While these results may be explained by the lack of clinically diagnosed individuals with ASD in the present study, they may also suggest that the Eyes Test is simply less sensitive: it was unable to differentiate between low and mid-range AQ scores and was not sensitive to subtle individual differences in emotion perception across participants. More popular tests used to assess ASD, like the Eyes Test, lack both temporal and spatial context, the processing of which our findings reveal to be a potential core impairment in individuals with ASD. Thus, previous findings of no difference in performance on the Eyes Test between healthy controls and individuals with ASD18,77 may be due to the lack of contextual information in the task. Additionally, low performance on the Eyes Test in individuals with ASD could reflect an impairment in facial emotion recognition due to alexithymia, which often co-occurs with ASD78,79.

The strength of the IET task, compared to more popular tests, is that it selectively removes the facial information of the character whose emotion is being inferred. Observers must therefore use the context to infer the emotion of the target character. While some of the videos used in our study do include other faces, the information retrieved from these faces is not enough to accurately track the emotion of a blurred-out character11. Consequently, given the design of the IET task, the relationship between task performance and participants’ AQ scores should not be accounted for by co-occurring alexithymia in individuals with ASD. However, we did not measure alexithymia80 in our subject pool, and future studies should investigate whether context-based emotion perception is impaired in individuals with alexithymia.

Another strength of the IET task is that it is novel. To the best of our knowledge, only one other study has used context-only stimuli while investigating emotion recognition ability in ASD, and it used only static natural photos as stimuli50. Additionally, in the IET task participants must infer emotion dynamically, in real time, meaning that they must identify changes in emotion as they occur. This is a fundamental component of the IET task, and it reveals a potentially critical role of dynamic information in ASD. This echoes findings from previous studies, which have reported that differences in emotion recognition found in ASD may be specific to dynamic stimuli: individuals with ASD can successfully identify emotions from static images but fail to identify emotions in dynamic stimuli53,72. This might help explain why performance on the IET task, which requires participants to dynamically infer emotions from spatial and temporal context in real time, would have a stronger relationship with AQ than the Eyes Test and Films Facial Expression Task, both of which use static stimuli isolated from context.

Low performance on the IET task in individuals with high AQ scores may also be due to deficits in cognitive control, which is believed to be impaired in individuals with ASD81–84, especially when processing social stimuli85. Consequently, the high cognitive demand that is required to actively infer both valence and arousal of a blurred-out character may be difficult for individuals with ASD. However, we found that IET arousal tracking did not significantly correlate with AQ scores. If a general deficit in cognitive control were driving the correlations, then we should have also found that higher AQ scores correlated with lower IET arousal tracking accuracy. It could be that individuals with higher AQ scores attended primarily to the arousal dimension instead of both dimensions, but it is not clear why this would occur consistently across individuals. Finally, low performance on the IET task may also reflect a lack of experience in social interactions in individuals with ASD. In other words, participants with high AQ scores potentially have less experience with a variety of social situations compared to participants with low AQ scores. This could affect performance on the IET task because familiarity with a diverse range of contexts may be valuable when inferring emotion in the videos.

Context-based emotion perception as a core deficit in ASD could be consistent with the weak central coherence hypothesis, which states that perception in individuals with ASD is oriented towards local properties of a stimulus and leads to impaired global processing35,86. Accurate perception of emotion, though, requires global processing. For example, context often disambiguates the natural ambiguity that is present in facial expressions87. To access this kind of global information, contextual information needs to be successfully integrated with facial information, and observers must make connections between multiple visuo-social cues across scenes and over time11,12. Impaired access to this global information in ASD could therefore impair emotion processing. The IET task may exacerbate the impaired central coherence in individuals with ASD, as they only have the context as a source of information when inferring the emotions of the blurred-out character in the scene. Global processing of contextual cues would be even more difficult for individuals with ASD, as they have been found to have relatively slow global processing86,88 and need long exposures to stimuli in order to improve global performance89. Thus, the dynamic nature of IET may further tax individuals with ASD, because the task not only involves spatial context (e.g., visual scene information and other faces) but also involves temporal context.

While IET valence accuracy was strongly correlated with AQ scores, IET arousal accuracy had a much weaker correlation with AQ scores (Fig. 3). The Affective Circumplex Model states that emotions can be described by a linear combination of two independent neurophysiological systems90: valence and arousal. Previous studies have found that the dimensional shape of valence and arousal values is constricted in individuals with ASD compared to typical controls91 and have found that individuals with ASD have deficits in detecting emotional valence92–95. Interestingly, Tseng et al. (2014)91 found that while children with ASD perceived a constricted range of both valence and arousal, adults with ASD perceived a constricted range of valence only, and not arousal. These findings may explain why we found that IET tracking of valence, and not arousal, was negatively correlated with AQ scores. However, previous research investigating valence and arousal processing in individuals with ASD has found contradictory results56,96. One neuroimaging study found abnormal activation and deactivation in individuals with ASD while passively viewing dynamically changing facial expressions, suggesting that processing of valence information in individuals with ASD may be impaired56. However, in a more recent study, Tseng et al. investigated differences in neural activity for both valence and arousal in individuals with ASD while they actively rated the emotion of facial expressions, and group differences in neural activity were found only for ratings of arousal, not for valence96. These contradictory results may be due to the difference in the use of static and dynamic stimuli when investigating valence and arousal perception in ASD.

While the main objective of this study was to investigate whether context-based emotion perception is impaired in individuals who score high on AQ, we also investigated its relationship with a variety of cognitive and social abilities in order to control for potential covarying factors. In addition to the relationship with AQ, we found a significant relationship between IET valence accuracy and Empathy Quotient scores. More importantly, the direction of the correlations between these surveys and IET accuracy supports previous research that has found deficits in emotional intelligence in individuals with depression97,98, schizophrenia99, and anxiety98. These relationships, and all others observed in this study, suggest that IET might also be useful to evaluate an individual's emotional intelligence. IET would have great advantages in evaluating emotional intelligence, as it can be considered an “ability”-based measure of emotional intelligence. Ability-based measures of emotional intelligence have strong advantages since the task is engaging and performance on the task cannot be faked, unlike common self-report measures of emotional intelligence100. One criticism of ability-based measures is that they commonly have high correlations with general intelligence, suggesting that they may not actually be measuring emotional intelligence101. However, we controlled for this and found that the correlation between IET valence accuracy and AQ remained significant even when general intelligence was factored out (Fig. 4a). Another criticism of ability-based measures of emotional intelligence is that they often do not correlate with outcomes that they theoretically should correlate with102,103. However, we found IET accuracy for both valence and arousal to be correlated in the expected direction with measures of depression97,98, schizophrenia99, and anxiety98.
Consensus-based scoring has also been criticized in measures of emotional intelligence104. In our study, however, we used an alternative form of consensus scoring based on Cultural Consensus Theory59–61. While establishing IET as a measure of emotional intelligence is beyond the scope of this study, our results hint that IET may be useful as a component of emotional intelligence metrics. This is worth investigating further in the future.
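To make concrete what factoring out general intelligence can involve, the following is a minimal Python sketch of a partial correlation: the covariate is regressed out of both variables, and the residuals are then correlated. This is an assumption about one common way to control for a covariate, not a description of the analysis code used in this study. The function names (`residualize`, `partial_corr`) and the variable names in the usage example are hypothetical.

```python
import numpy as np


def residualize(y, covariates):
    """Return the part of y not linearly explained by the covariates.

    y: 1D array of length n.
    covariates: 1D array of length n, or 2D array of shape (n, k).
    """
    y = np.asarray(y, dtype=float)
    # Design matrix with an intercept column followed by the covariate(s).
    design = np.column_stack([np.ones(len(y)), np.asarray(covariates, dtype=float)])
    # Ordinary least-squares fit of y on the covariates.
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return y - design @ beta


def partial_corr(x, y, covariates):
    """Pearson correlation between x and y after removing the covariates from both."""
    rx = residualize(x, covariates)
    ry = residualize(y, covariates)
    return float(np.corrcoef(rx, ry)[0, 1])
```

With hypothetical per-participant arrays `aq`, `iet_valence`, and `iq`, a call such as `partial_corr(aq, iet_valence, iq)` would give the AQ and IET correlation with the linear contribution of `iq` removed. Significance testing is omitted from this sketch.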

In conclusion, we investigated whether context-based emotion perception is impaired in individuals who score high on the AQ and compared this relationship with other emotion perception tasks such as the Eyes Test and Films Facial Expression Task. Our results show that performance on IET was negatively correlated with participants' AQ scores, raising the intriguing possibility that context-based emotion perception is a core deficit in ASD. Our results bring into focus a range of previous mixed findings on the relationship between emotion perception and ASD, and they shed light on possible avenues for assessing and treating ASD in future work.

Methods

Participants

In total, we tested 102 healthy participants (39 men and 63 women, age range 18–42, M = 20.19, SD = 2.98) on an online website created for this experiment. To set the sample size a priori, we used Chen and Whitney11 as a reference; their study also used the IET task and had 50 participants. However, since we were interested in investigating the relationship between task performance and AQ scores, we aimed to at least double their sample size, which led to a final sample size of 102 participants. Informed consent was obtained from all participants and the study was approved by the UC Berkeley Institutional Review Board. All methods were performed in accordance with relevant guidelines and regulations of the UC Berkeley Institutional Review Board. Participants were affiliates of UC Berkeley and participated in the experiment for course credit. All participants were naive to the purpose of the study.

Inferential Emotion Tracking

We used 35 videos from a previous study by Chen and Whitney11 as stimuli for our experiment (materials available at https://osf.io/f9rxn). The videos consist of short 1–3 min clips from Hollywood movies containing single or multiple characters, home videos, and documentaries. In total, there were 25 Hollywood movie clips, 8 home videos, and 2 documentary clips used in the experiment. Participants used a 2D valence-arousal rating grid that was superimposed on each video clip to continuously rate the emotion of a blurred-out target character in each movie clip (Fig. 1; the video shown in the figure is publicly available at https://osf.io/f9rxn). Participants were shown who the target character was before the start of the trial and were given the following instructions: “The following character will be occluded by a mask and become blurred out. Your task is to track the real emotions of this character throughout the entire video (but NOT other characters NOR the general emotion of the clip) in real-time”.

Emotion perception tasks

Our main goal was to investigate the difference between the relationship of IET task accuracy with AQ and the relationship of the Eyes Test with AQ. We used the revised version of the Eyes Test in this study, which consisted of 36 questions where participants had to choose, from a group of words, the mental state that best fit the pair of eyes shown6. In order to compare the results of the IET task to a general emotion perception task, we also used the Films Facial Expression Task, which investigates an individual's ability to recognize the emotional expression of others63. In this task, participants were presented with an adjective that represented an emotional state and had to select the one of three images (of the same actor) that best displayed the emotional state for that trial. This task controls for general ability in recognizing emotion from facial expressions, allowing us to compare context-based emotion perception with facial expression recognition ability.

Questionnaires

Following the completion of the IET experiment, participants were asked to complete a short (20–25 min) questionnaire. The questionnaire included a demographic section as well as a series of surveys meant to assess cognitive and social ability. The first section of the questionnaire asked about gender, age, and education level. The second section contained the Satisfaction with Life Scale105, Autism-Spectrum Quotient58, Community Assessment of Psychic Experiences106, State-Trait Anxiety Inventory107, Beck Depression Inventory-II108, and the Empathy Quotient109. These surveys are designed to assess the participant's satisfaction with life, autism-like tendencies or characteristics, incidence of psychotic experiences, general trait anxiety, severity of depression, and ability to empathize, respectively. Participants also completed segments from the Wechsler Adult Intelligence Scale in order to test their fluid and crystallized intelligence110. Specifically, we used the Vocabulary and the Matrix Reasoning subtests of the scale to measure crystallized and fluid intelligence, respectively. These tests are well known, are frequently used in psychology, and have historically been used for these measures.

Cultural consensus theory

One issue that arises with many emotion perception tasks, like the Eyes Test, is that there is no “correct answer”, and thus the consensus is often used as the correct answer for many emotion perception and emotional intelligence tasks111. For example, target words for the Eyes Test were first chosen by the authors, and a set of judges then selected which target word was the most suitable for each stimulus6. Five out of the eight judges needed to agree on a target word in order to label it as “correct”. One theory of consensus scoring is that the judgment of non-experts is equivalent to expert judgments except that the responses are more distributed and less reliable; thus, the consensus of non-expert judgments should equal the responses of experts112. However, consensus scoring can be limited by the equal weighting of participants' responses. Averaging the responses of all participants assumes that all participants are equally knowledgeable, which can be invalid in emotion perception tasks113. In our study, we calculated the consensus using Cultural Consensus Theory, which estimates the correct answers to a series of questions by assessing an individual's knowledge or competency compared to that of the group59,61.

We measured accuracy on the IET task by calculating each participant's Cultural Consensus Theory accuracy on their context-only ratings. We used the Informal Cultural Consensus Model in our analysis as it makes fewer assumptions about the data and does not require a correction for guessing59. Cultural Consensus Theory accuracy is calculated as the Pearson correlation between an individual observer's ratings for a given video and the first set of factor scores from the principal component analysis of the context-only ratings. We conceptualize an individual’s IET accuracy as their ability to track and infer the emotions of a blurred-out character in a movie clip by using only contextual information. We computed the average IET accuracy by first applying the Fisher Z transformation to all individual correlations, averaging the transformed values, and then transforming the mean back to Pearson’s r.
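The accuracy computation described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the analysis code released with the study: it assumes the ratings for one video and one dimension (valence or arousal) are arranged as a time-by-observer array, that each observer's ratings are z-scored before the principal component analysis, and that the arbitrary sign of the first component is oriented to agree with the mean rating. The function names (`consensus_scores`, `cct_accuracy`, `fisher_mean`) are hypothetical.

```python
import numpy as np


def consensus_scores(ratings):
    """First principal-component scores of a (n_timepoints, n_observers) array.

    Assumes every observer's ratings vary over time (non-zero variance).
    """
    ratings = np.asarray(ratings, dtype=float)
    # Standardize each observer so no single observer dominates the component.
    z = (ratings - ratings.mean(axis=0)) / ratings.std(axis=0)
    # PCA via singular value decomposition; u[:, 0] * s[0] are the first PC scores.
    u, s, _ = np.linalg.svd(z, full_matrices=False)
    scores = u[:, 0] * s[0]
    # The sign of a principal component is arbitrary; align it with the mean rating.
    if np.corrcoef(scores, z.mean(axis=1))[0, 1] < 0:
        scores = -scores
    return scores


def cct_accuracy(ratings):
    """Pearson r between each observer's ratings and the consensus scores."""
    ratings = np.asarray(ratings, dtype=float)
    consensus = consensus_scores(ratings)
    return np.array([np.corrcoef(ratings[:, j], consensus)[0, 1]
                     for j in range(ratings.shape[1])])


def fisher_mean(rs):
    """Average correlations through the Fisher Z transformation."""
    # Clip to keep arctanh finite when a correlation is exactly +1 or -1.
    rs = np.clip(np.asarray(rs, dtype=float), -0.999999, 0.999999)
    return float(np.tanh(np.mean(np.arctanh(rs))))
```

In this sketch, an observer's average accuracy would be obtained by calling `cct_accuracy` once per video and passing that observer's per-video correlations to `fisher_mean`.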

Author contributions

Testing and data collection were performed by Z.C. J.O. performed the data analysis and interpretation under the supervision of D.W. J.O. drafted the manuscript, and D.W. provided critical revisions. All authors approved the final version of the manuscript for submission. This work was supported in part by the National Institutes of Health (grant no. 1R01CA236793-01) to D.W.

Funding

This study was funded by the National Institutes of Health (1R01CA236793-01).

Data availability

Data are available at the OSF (https://osf.io/zku24/).

Code availability

Data analysis was conducted using Python. Code is available at the OSF (https://osf.io/zku24/).

Competing interests

The authors declare no competing interests.

Footnotes

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-023-35371-6.

References

- 1. Harms MB, Martin A, Wallace GL. Facial emotion recognition in autism spectrum disorders: A review of behavioral and neuroimaging studies. Neuropsychol. Rev. 2010;20:290–322. doi: 10.1007/s11065-010-9138-6.

- 2. Lord C, Elsabbagh M, Baird G, Veenstra-Vanderweele J. Autism spectrum disorder. Lancet. 2018;392:508–520. doi: 10.1016/S0140-6736(18)31129-2.

- 3. Baron-Cohen S, Leslie AM, Frith U. Does the autistic child have a “theory of mind”? Cognition. 1985;21(1):37–46. doi: 10.1016/0010-0277(85)90022-8.

- 4. Baron-Cohen, S. Theory of mind and autism: A review. in International Review of Research in Mental Retardation vol. 23, 169–184 (Academic Press, 2000).

- 5. Frith C, Frith U. Theory of mind. Curr. Biol. 2005;15:R644–R646. doi: 10.1016/j.cub.2005.08.041.

- 6. Baron-Cohen S, Wheelwright S, Hill J, Raste Y, Plumb I. The “Reading the Mind in the Eyes” test revised version: A study with normal adults, and adults with asperger syndrome or high-functioning autism. J. Child Psychol. Psychiatry. 2001;42:241–251. doi: 10.1111/1469-7610.00715.

- 7. Peñuelas-Calvo I, Sareen A, Sevilla-Llewellyn-Jones J, Fernández-Berrocal P. The “Reading the Mind in the Eyes” test in autism-spectrum disorders comparison with healthy controls: A systematic review and meta-analysis. J. Autism Dev. Disord. 2019;49:1048–1061. doi: 10.1007/s10803-018-3814-4.

- 8. Sato W, et al. Structural correlates of reading the mind in the eyes in autism spectrum disorder. Front. Hum. Neurosci. 2017;11:361. doi: 10.3389/fnhum.2017.00361.

- 9. Holt RJ, et al. “Reading the Mind in the Eyes”: An fMRI study of adolescents with autism and their siblings. Psychol. Med. 2014;44:3215–3227. doi: 10.1017/S0033291714000233.

- 10. Chen Z, Whitney D. Inferential emotion tracking (IET) reveals the critical role of context in emotion recognition. Emotion. 2020. doi: 10.1037/emo0000934.

- 11. Chen Z, Whitney D. Tracking the affective state of unseen persons. Proc. Natl. Acad. Sci. USA. 2019;116:7559–7564. doi: 10.1073/pnas.1812250116.

- 12. Chen Z, Whitney D. Inferential affective tracking reveals the remarkable speed of context-based emotion perception. Cognition. 2021;208:104549. doi: 10.1016/j.cognition.2020.104549.

- 13. Barrett LF, Mesquita B, Gendron M. Context in emotion perception. Curr. Dir. Psychol. Sci. 2011;20:286–290. doi: 10.1177/0963721411422522.

- 14. Aviezer H, Ensenberg N, Hassin RR. The inherently contextualized nature of facial emotion perception. Curr. Opin. Psychol. 2017;17:47–54. doi: 10.1016/j.copsyc.2017.06.006.

- 15. Kuppens P, Verduyn P. Emotion dynamics. Curr. Opin. Psychol. 2017;17:22–26. doi: 10.1016/j.copsyc.2017.06.004.

- 16. Ong DC, Zaki J, Goodman ND. Computational models of emotion inference in theory of mind: A review and roadmap. Top. Cogn. Sci. 2019;11:338–357. doi: 10.1111/tops.12371.

- 17. Fernandes JM, Cajão R, Lopes R, Jerónimo R, Barahona-Corrêa JB. Social cognition in schizophrenia and autism spectrum disorders: A systematic review and meta-analysis of direct comparisons. Front. Psychiatry. 2018;9:504. doi: 10.3389/fpsyt.2018.00504.

- 18. Oakley BFM, Brewer R, Bird G, Catmur C. Theory of mind is not theory of emotion: A cautionary note on the Reading the Mind in the Eyes Test. J. Abnorm. Psychol. 2016;125:818–823. doi: 10.1037/abn0000182.

- 19. Van de Cruys S, et al. Precise minds in uncertain worlds: predictive coding in autism. Psychol. Rev. 2014;121:649–675. doi: 10.1037/a0037665.

- 20. Rajendran G, Mitchell P. Cognitive theories of autism. Dev. Rev. 2007;27:224–260. doi: 10.1016/j.dr.2007.02.001.

- 21. Velikonja T, Fett A-K, Velthorst E. Patterns of nonsocial and social cognitive functioning in adults with autism spectrum disorder: A systematic review and meta-analysis. JAMA Psychiat. 2019;76:135–151. doi: 10.1001/jamapsychiatry.2018.3645.

- 22. van Boxtel JJA, Lu H. A predictive coding perspective on autism spectrum disorders. Front. Psychol. 2013;4:19. doi: 10.3389/fpsyg.2013.00019.

- 23. Pellicano E, Burr D. When the world becomes “too real”: A Bayesian explanation of autistic perception. Trends Cogn. Sci. 2012;16:504–510. doi: 10.1016/j.tics.2012.08.009.

- 24. Boucher J. Putting theory of mind in its place: Psychological explanations of the socio-emotional-communicative impairments in autistic spectrum disorder. Autism. 2012;16:226–246. doi: 10.1177/1362361311430403.

- 25. Hudepohl MB, Robins DL, King TZ, Henrich CC. The role of emotion perception in adaptive functioning of people with autism spectrum disorders. Autism. 2015;19:107–112. doi: 10.1177/1362361313512725.

- 26. Kanner L. Autistic disturbances of affective contact. Nerv. Child. 1943;2:217–250.

- 27. Kennedy DP, Adolphs R. Perception of emotions from facial expressions in high-functioning adults with autism. Neuropsychologia. 2012;50:3313–3319. doi: 10.1016/j.neuropsychologia.2012.09.038.

- 28. Eack SM, Mazefsky CA, Minshew NJ. Misinterpretation of facial expressions of emotion in verbal adults with autism spectrum disorder. Autism. 2015;19:308–315. doi: 10.1177/1362361314520755.

- 29. Walsh JA, Creighton SE, Rutherford MD. Emotion perception or social cognitive complexity: What drives face processing deficits in autism spectrum disorder? J. Autism Dev. Disord. 2016;46:615–623. doi: 10.1007/s10803-015-2606-3.

- 30. Yeung MK. A systematic review and meta-analysis of facial emotion recognition in autism spectrum disorder: The specificity of deficits and the role of task characteristics. Neurosci. Biobehav. Rev. 2022;133:104518. doi: 10.1016/j.neubiorev.2021.104518.

- 31. He Y, et al. The characteristics of intelligence profile and eye gaze in facial emotion recognition in mild and moderate preschoolers with autism spectrum disorder. Front. Psychiatry. 2019;10:402. doi: 10.3389/fpsyt.2019.00402.

- 32. Su, Q., Chen, F., Li, H., Yan, N. & Wang, L. Multimodal emotion perception in children with autism spectrum disorder by eye tracking study. in 2018 IEEE-EMBS Conference on Biomedical Engineering and Sciences (IECBES) 382–387 (ieeexplore.ieee.org, 2018).

- 33. de Jong MC, van Engeland H, Kemner C. Attentional effects of gaze shifts are influenced by emotion and spatial frequency, but not in autism. J. Am. Acad. Child Adolesc. Psychiatry. 2008;47:443–454. doi: 10.1097/CHI.0b013e31816429a6.

- 34. Fridenson-Hayo S, et al. Basic and complex emotion recognition in children with autism: cross-cultural findings. Mol. Autism. 2016;7:52. doi: 10.1186/s13229-016-0113-9.

- 35. Happé F, Frith U. The weak coherence account: Detail-focused cognitive style in autism spectrum disorders. J. Autism Dev. Disord. 2006;36:5–25. doi: 10.1007/s10803-005-0039-0.

- 36. Teunisse J-P, de Gelder B. Face processing in adolescents with autistic disorder: The inversion and composite effects. Brain Cogn. 2003;52:285–294. doi: 10.1016/S0278-2626(03)00042-3.

- 37. Gauthier I, Klaiman C, Schultz RT. Face composite effects reveal abnormal face processing in Autism spectrum disorders. Vision Res. 2009;49:470–478. doi: 10.1016/j.visres.2008.12.007.

- 38. Brewer R, Bird G, Gray KLH, Cook R. Face perception in autism spectrum disorder: Modulation of holistic processing by facial emotion. Cognition. 2019;193:104016. doi: 10.1016/j.cognition.2019.104016.

- 39. Ventura P, et al. Holistic processing of faces is intact in adults with autism spectrum disorder. Vis. cogn. 2018;26:13–24. doi: 10.1080/13506285.2017.1370051.

- 40. Naumann S, Senftleben U, Santhosh M, McPartland J, Webb SJ. Neurophysiological correlates of holistic face processing in adolescents with and without autism spectrum disorder. J. Neurodev. Disord. 2018;10:27. doi: 10.1186/s11689-018-9244-y.

- 41. Barendse EM, Hendriks MPH, Thoonen G, Aldenkamp AP, Kessels RPC. Social behaviour and social cognition in high-functioning adolescents with autism spectrum disorder (ASD): Two sides of the same coin? Cogn. Process. 2018;19:545–555. doi: 10.1007/s10339-018-0866-5.

- 42. Senju A. Atypical development of spontaneous social cognition in autism spectrum disorders. Brain Dev. 2013;35:96–101. doi: 10.1016/j.braindev.2012.08.002.

- 43. Bölte S, et al. The development and evaluation of a computer-based program to test and to teach the recognition of facial affect. Int. J. Circumpolar Health. 2002;61(Suppl 2):61–68. doi: 10.3402/ijch.v61i0.17503.

- 44. Nowicki S, Duke MP. Manual for the Receptive Tests of the Diagnostic Analysis of Nonverbal Accuracy 2 (DANVA2). Department of Psychology, Emory University; 2008.

- 45. Righart R, de Gelder B. Rapid influence of emotional scenes on encoding of facial expressions: An ERP study. Soc. Cogn. Affect. Neurosci. 2008;3:270–278. doi: 10.1093/scan/nsn021.

- 46. Righart R, de Gelder B. Context influences early perceptual analysis of faces—An electrophysiological study. Cereb. Cortex. 2006;16:1249–1257. doi: 10.1093/cercor/bhj066.

- 47. Aviezer H, Bentin S, Dudarev V, Hassin RR. The automaticity of emotional face-context integration. Emotion. 2011;11:1406–1414. doi: 10.1037/a0023578.

- 48. Vermeulen P. Context blindness in autism spectrum disorder: Not using the forest to see the trees as trees. Focus Autism Other Dev. Disabl. 2015;30:182–192. doi: 10.1177/1088357614528799.

- 49. Frith, U. Autism: Explaining the Enigma, 2nd edn. 2, 249 (2003).

- 50. Da Fonseca D, et al. Can children with autistic spectrum disorders extract emotions out of contextual cues? Res. Autism Spectr. Disord. 2009;3:50–56. doi: 10.1016/j.rasd.2008.04.001.

- 51. Ortega J, Chen Z, Whitney D. Serial dependence in emotion perception mirrors the autocorrelations in natural emotion statistics. J. Vis. 2023;23:12. doi: 10.1167/jov.23.3.12.

- 52. Cunningham WA, Dunfield KA, Stillman PE. Emotional states from affective dynamics. Emot. Rev. 2013;5:344–355. doi: 10.1177/1754073913489749.

- 53. Speer LL, Cook AE, McMahon WM, Clark E. Face processing in children with autism: Effects of stimulus contents and type. Autism. 2007;11:265–277. doi: 10.1177/1362361307076925.

- 54. Weisberg J, et al. Social perception in autism spectrum disorders: Impaired category selectivity for dynamic but not static images in ventral temporal cortex. Cereb. Cortex. 2014;24:37–48. doi: 10.1093/cercor/bhs276.

- 55. Alaerts K, et al. Underconnectivity of the superior temporal sulcus predicts emotion recognition deficits in autism. Soc. Cogn. Affect. Neurosci. 2014;9:1589–1600. doi: 10.1093/scan/nst156.

- 56. Rahko JS, et al. Valence scaling of dynamic facial expressions is altered in high-functioning subjects with autism spectrum disorders: An fMRI study. J. Autism Dev. Disord. 2012;42:1011–1024. doi: 10.1007/s10803-011-1332-8.

- 57. Pelphrey KA, Morris JP, McCarthy G, Labar KS. Perception of dynamic changes in facial affect and identity in autism. Soc. Cogn. Affect. Neurosci. 2007;2:140–149. doi: 10.1093/scan/nsm010.

- 58. Baron-Cohen S, Wheelwright S, Skinner R, Martin J, Clubley E. The autism-spectrum quotient (AQ): Evidence from Asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. J. Autism Dev. Disord. 2001;31:5–17. doi: 10.1023/A:1005653411471.

- 59. Weller SC. Cultural consensus theory: Applications and frequently asked questions. Field Methods. 2007;19:339–368. doi: 10.1177/1525822X07303502.

- 60. Batchelder & Anders. Cultural consensus theory. of experimental psychology … (2018).

- 61.Romney AK, Batchelder WH, Weller SC. Recent applications of cultural consensus theory. Am. Behav. Sci. 1987;31:163–177. doi: 10.1177/000276487031002003. [DOI] [Google Scholar]

- 62.Batchelder WH, Anders R. Cultural Consensus Theory: Comparing different concepts of cultural truth. J. Math. Psychol. 2012;56:316–332. doi: 10.1016/j.jmp.2012.06.002. [DOI] [Google Scholar]

- 63.Banissy MJ, et al. Superior facial expression, but not identity recognition, in mirror-touch synesthesia. J. Neurosci. 2011;31:1820–1824. doi: 10.1523/JNEUROSCI.5759-09.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Bal E, et al. Emotion recognition in children with autism spectrum disorders: Relations to eye gaze and autonomic state. J. Autism Dev. Disord. 2010;40:358–370. doi: 10.1007/s10803-009-0884-3. [DOI] [PubMed] [Google Scholar]

- 65.Corden B, Chilvers R, Skuse D. Avoidance of emotionally arousing stimuli predicts social–perceptual impairment in Asperger’s syndrome. Neuropsychologia. 2008;46:137–147. doi: 10.1016/j.neuropsychologia.2007.08.005. [DOI] [PubMed] [Google Scholar]

- 66.Wallace S, Coleman M, Bailey A. An investigation of basic facial expression recognition in autism spectrum disorders. Cogn. Emot. 2008;22:1353–1380. doi: 10.1080/02699930701782153. [DOI] [Google Scholar]

- 67.Rutherford MD, Towns AM. Scan path differences and similarities during emotion perception in those with and without autism spectrum disorders. J. Autism Dev. Disord. 2008;38:1371–1381. doi: 10.1007/s10803-007-0525-7. [DOI] [PubMed] [Google Scholar]

- 68.Neumann D, Spezio ML, Piven J, Adolphs R. Looking you in the mouth: abnormal gaze in autism resulting from impaired top-down modulation of visual attention. Soc. Cogn. Affect. Neurosci. 2006;1:194–202. doi: 10.1093/scan/nsl030. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69. Adolphs R, Sears L, Piven J. Abnormal processing of social information from faces in autism. J. Cogn. Neurosci. 2001;13:232–240. doi: 10.1162/089892901564289.

- 70. Ogai M, et al. fMRI study of recognition of facial expressions in high-functioning autistic patients. NeuroReport. 2003;14:559–563. doi: 10.1097/00001756-200303240-00006.

- 71. Loveland KA, Steinberg JL, Pearson DA, Mansour R, Reddoch S. Judgments of auditory–visual affective congruence in adolescents with and without autism: A pilot study of a new task using fMRI. Percept. Mot. Skills. 2008;107:557–575. doi: 10.2466/pms.107.2.557-575.

- 72. Stagg S, Tan L-H, Kodakkadan F. Emotion recognition and context in adolescents with autism spectrum disorder. J. Autism Dev. Disord. 2022;52:4129–4137. doi: 10.1007/s10803-021-05292-2.

- 73. Ashwin C, Chapman E, Colle L, Baron-Cohen S. Impaired recognition of negative basic emotions in autism: A test of the amygdala theory. Soc. Neurosci. 2006;1:349–363. doi: 10.1080/17470910601040772.

- 74. Williams D, Happé F. Recognising ‘social’ and ‘non-social’ emotions in self and others: A study of autism. Autism. 2010;14:285–304. doi: 10.1177/1362361309344849.

- 75. Risko EF, Laidlaw K, Freeth M, Foulsham T, Kingstone A. Social attention with real versus reel stimuli: Toward an empirical approach to concerns about ecological validity. Front. Hum. Neurosci. 2012;6:143. doi: 10.3389/fnhum.2012.00143.

- 76. Baron-Cohen S, Jolliffe T, Mortimore C, Robertson M. Another advanced test of theory of mind: Evidence from very high functioning adults with autism or Asperger syndrome. J. Child Psychol. Psychiatry. 1997;38:813–822. doi: 10.1111/j.1469-7610.1997.tb01599.x.

- 77. Spek AA, Scholte EM, Van Berckelaer-Onnes IA. Theory of mind in adults with HFA and Asperger syndrome. J. Autism Dev. Disord. 2010;40:280–289. doi: 10.1007/s10803-009-0860-y.

- 78. Cook R, Brewer R, Shah P, Bird G. Alexithymia, not autism, predicts poor recognition of emotional facial expressions. Psychol. Sci. 2013;24:723–732. doi: 10.1177/0956797612463582.

- 79. Bird G, Cook R. Mixed emotions: The contribution of alexithymia to the emotional symptoms of autism. Transl. Psychiatry. 2013;3:e285. doi: 10.1038/tp.2013.61.

- 80. Sifneos PE. Alexithymia: Past and present. Am. J. Psychiatry. 1996;153:137–142. doi: 10.1176/ajp.153.7.137.

- 81. Solomon M, et al. The neural substrates of cognitive control deficits in autism spectrum disorders. Neuropsychologia. 2009;47:2515–2526. doi: 10.1016/j.neuropsychologia.2009.04.019.

- 82. Mackie M-A, Fan J. Reduced efficiency and capacity of cognitive control in autism spectrum disorder. Autism Res. 2016;9:403–414. doi: 10.1002/aur.1517.

- 83. Solomon M, Ozonoff SJ, Cummings N, Carter CS. Cognitive control in autism spectrum disorders. Int. J. Dev. Neurosci. 2008;26:239–247. doi: 10.1016/j.ijdevneu.2007.11.001.

- 84. Poljac E, Bekkering H. A review of intentional and cognitive control in autism. Front. Psychol. 2012;3:436. doi: 10.3389/fpsyg.2012.00436.

- 85. Dichter GS, Belger A. Social stimuli interfere with cognitive control in autism. Neuroimage. 2007;35:1219–1230. doi: 10.1016/j.neuroimage.2006.12.038.

- 86. Booth RDL, Happé FGE. Evidence of reduced global processing in autism spectrum disorder. J. Autism Dev. Disord. 2018;48:1397–1408. doi: 10.1007/s10803-016-2724-6.

- 87. Hassin RR, Aviezer H, Bentin S. Inherently ambiguous: Facial expressions of emotions, in context. Emot. Rev. 2013;5:60–65. doi: 10.1177/1754073912451331.

- 88. Van der Hallen R, Evers K, Brewaeys K, Van den Noortgate W, Wagemans J. Global processing takes time: A meta-analysis on local-global visual processing in ASD. Psychol. Bull. 2015;141:549–573. doi: 10.1037/bul0000004.

- 89. Wang L, Mottron L, Peng D, Berthiaume C, Dawson M. Local bias and local-to-global interference without global deficit: A robust finding in autism under various conditions of attention, exposure time, and visual angle. Cogn. Neuropsychol. 2007;24:550–574. doi: 10.1080/13546800701417096.

- 90. Posner J, Russell JA, Peterson BS. The circumplex model of affect: An integrative approach to affective neuroscience, cognitive development, and psychopathology. Dev. Psychopathol. 2005;17:715–734. doi: 10.1017/S0954579405050340.

- 91. Tseng A, et al. Using the circumplex model of affect to study valence and arousal ratings of emotional faces by children and adults with autism spectrum disorders. J. Autism Dev. Disord. 2014;44:1332–1346. doi: 10.1007/s10803-013-1993-6.

- 92. Herpers PCM, et al. Emotional valence detection in adolescents with oppositional defiant disorder/conduct disorder or autism spectrum disorder. Eur. Child Adolesc. Psychiatry. 2019;28:1011–1022. doi: 10.1007/s00787-019-01282-z.

- 93. Celani G, Battacchi MW, Arcidiacono L. The understanding of the emotional meaning of facial expressions in people with autism. J. Autism Dev. Disord. 1999;29:57–66. doi: 10.1023/A:1025970600181.

- 94. Quinde-Zlibut JM, et al. Multifaceted empathy differences in children and adults with autism. Sci. Rep. 2021;11:19503. doi: 10.1038/s41598-021-98516-5.

- 95. Teh EJ, Yap MJ, Rickard Liow SJ. Emotional processing in autism spectrum disorders: Effects of age, emotional valence, and social engagement on emotional language use. J. Autism Dev. Disord. 2018;48:4138–4154. doi: 10.1007/s10803-018-3659-x.

- 96. Tseng A, et al. Differences in neural activity when processing emotional arousal and valence in autism spectrum disorders. Hum. Brain Mapp. 2016;37:443–461. doi: 10.1002/hbm.23041.

- 97. Downey LA, Johnston PJ, Hansen K. The relationship between emotional intelligence and depression in a clinical sample. Behav. Processes. 2008;22(2):93–98.

- 98. Fernandez-Berrocal P, Alcaide R. The role of emotional intelligence in anxiety and depression among adolescents. Individ. Differ. Res. 2006;4(1).

- 99. Kee KS, et al. Emotional intelligence in schizophrenia. Schizophr. Res. 2009;107:61–68. doi: 10.1016/j.schres.2008.08.016.

- 100. O’Connor PJ, Hill A, Kaya M, Martin B. The measurement of emotional intelligence: A critical review of the literature and recommendations for researchers and practitioners. Front. Psychol. 2019;10:1116. doi: 10.3389/fpsyg.2019.01116.

- 101. MacCann C, Joseph DL, Newman DA, Roberts RD. Emotional intelligence is a second-stratum factor of intelligence: Evidence from hierarchical and bifactor models. Emotion. 2014;14:358–374. doi: 10.1037/a0034755.

- 102. Miao C, Humphrey RH, Qian S. A meta-analysis of emotional intelligence and work attitudes. J. Occup. Organ. Psychol. 2017;90:177–202. doi: 10.1111/joop.12167.

- 103. O’Boyle EH, Jr, Humphrey RH, Pollack JM, Hawver TH, Story PA. The relation between emotional intelligence and job performance: A meta-analysis. J. Organ. Behav. 2011;32:788–818. doi: 10.1002/job.714.

- 104. Maul A. The validity of the Mayer–Salovey–Caruso Emotional Intelligence Test (MSCEIT) as a measure of emotional intelligence. Emot. Rev. 2012;4:394–402. doi: 10.1177/1754073912445811.

- 105. Diener E, Emmons RA, Larsen RJ, Griffin S. The satisfaction with life scale. J. Pers. Assess. 1985;49:71–75. doi: 10.1207/s15327752jpa4901_13.

- 106. Konings M, Bak M, Hanssen M, van Os J, Krabbendam L. Validity and reliability of the CAPE: A self-report instrument for the measurement of psychotic experiences in the general population. Acta Psychiatr. Scand. 2006;114:55–61. doi: 10.1111/j.1600-0447.2005.00741.x.

- 107. Spielberger CD. State-trait anxiety inventory for adults. 1983. doi: 10.1037/t06496-000.

- 108. Beck AT, Steer RA, Brown G. Beck depression inventory–II. Psychol. Assess. 1996. doi: 10.1037/t00742-000.

- 109. Baron-Cohen S, Wheelwright S. The empathy quotient: An investigation of adults with Asperger syndrome or high functioning autism, and normal sex differences. J. Autism Dev. Disord. 2004;34:163–175. doi: 10.1023/B:JADD.0000022607.19833.00.

- 110. Benson N, Hulac DM, Kranzler JH. Independent examination of the Wechsler Adult Intelligence Scale—Fourth Edition (WAIS-IV): What does the WAIS-IV measure? Psychol. Assess. 2010;22:121–130. doi: 10.1037/a0017767.

- 111. MacCann C, Roberts RD, Matthews G, Zeidner M. Consensus scoring and empirical option weighting of performance-based Emotional Intelligence (EI) tests. Pers. Individ. Dif. 2004;36:645–662. doi: 10.1016/S0191-8869(03)00123-5.

- 112. Legree PJ. Evidence for an oblique social intelligence factor established with a Likert-based testing procedure. Intelligence. 1995;21:247–266. doi: 10.1016/0160-2896(95)90016-0.

- 113. Hamann S, Canli T. Individual differences in emotion processing. Curr. Opin. Neurobiol. 2004;14:233–238. doi: 10.1016/j.conb.2004.03.010.

Data Availability Statement

Data are available at the OSF (https://osf.io/zku24/).

Data analysis was conducted using Python. Code is available at the OSF (https://osf.io/zku24/).