Abstract

The present study investigates the use of algorithm selection for automatically choosing an algorithm for any given protein–ligand docking task. In the drug discovery and design process, conceptualizing protein–ligand binding is a major problem. Targeting this problem through computational methods is beneficial, as it can substantially reduce the resource and time requirements of the overall drug development process. One way of addressing protein–ligand docking is to model it as a search and optimization problem. A variety of algorithmic solutions have been proposed in this respect. However, there is no ultimate algorithm that can efficiently tackle this problem in terms of both docking quality and speed. This argument motivates devising new algorithms tailored to particular protein–ligand docking scenarios. To this end, this paper reports a machine learning-based approach for improved and robust docking performance. The proposed set-up is fully automated, operating without any expert opinion or involvement on either the problem or the algorithm side. As a case study, an empirical analysis was performed on a well-known protein, Human Angiotensin-Converting Enzyme (ACE), with 1428 ligands. For general applicability, AutoDock 4.2 was used as the docking platform. The candidate algorithms are also taken from AutoDock 4.2. Twenty-eight distinctly configured Lamarckian Genetic Algorithm (LGA) variants are chosen to build an algorithm set. ALORS, a recommender system-based algorithm selection system, was used to automate the selection from those LGA variants on a per-instance basis. To realize this selection automation, molecular descriptors and substructure fingerprints were employed as the features characterizing each target protein–ligand docking instance. The computational results revealed that algorithm selection outperforms all those candidate algorithms. Further assessment is reported on the algorithm space, discussing the contributions of LGA’s parameters. As it pertains to protein–ligand docking, the contributions of the aforementioned features are examined, shedding light on the critical features affecting the docking performance.

Subject terms: Computational biology and bioinformatics, Chemistry, Mathematics and computing

Introduction

In the wake of emerging diseases and rising awareness of the desire to improve human well-being, there has been a persistent effort to implement new medical innovations. A broad array of concepts in Drug Discovery/Design (DD)1 have been leading topics of interest. The DD process, however, is time-consuming and expensive. The entire DD pipeline can last as long as 15 years, requiring high budgets and the participation of large groups of scientists. In that respect, the traditional DD process often comes with a high cost and risk and a low success rate, factors that discourage new research and hinder substantive advances in this field2. A major contributing factor is that DD is essentially a search over an enormous chemical space to detect druggable compounds3,4. Arguably, the most critical step in this arduous process is identifying the new chemical compounds that could be developed into new medicines.

Computational approaches have been practical, in general, as they are effective mechanisms to move the DD process forward at an increased pace, with improved successful outcomes. Computer-Aided DD (CADD)5–10 is an umbrella term covering those computational procedures. To be specific, CADD is a collection of mathematical and data-driven tools that cut across disciplines with respect to their utilization in DD. These tools are implemented as computer programs and are accommodated in conjunction with varying experimental methodologies to expedite the discovery of new chemical entities. The CADD strategies can quickly triage a very large number of compounds, identifying hits that can be converted to leads. The laboratory methods then take over for testing and finalizing the drug. This process is iterative and reciprocal. The outcomes of the CADD methods are exploited to devise compounds that are subjected to chemical synthesis and biological assay. The information derived from those experiments is exploited to further develop the structure activity relationships (SARs) and quantitative SARs (QSARs) that are embedded in the CADD approaches.

Among the CADD methods, molecular docking has been particularly popular. Molecular docking is the process by which the interaction of a small molecule, generally referred to as a ligand, with a protein or other biomolecule is simulated computationally, without any laboratory work. Procedurally, it varies the ligand’s conformation and orientation in limited and stochastic steps. Its goal is to seek the best docking conformation, or pose, that minimizes the binding energy. The results returned by molecular docking programs are usually the binding energy value and a protein–ligand complex file, which are indicative of the actual binding affinity and position when the ligand is co-crystallized with the receptor. Molecular docking has been used in different CADD procedures, including virtual screening, a process that queries the binding of a large number of molecules to a particular disease (biological) target.

This study aimed at applying Algorithm Selection (AS)11,12 to automatically suggest algorithms that best solve the Protein–Ligand Docking problem (PLDP). The idea of AS is motivated by the No Free Lunch Theorem (NFLT)13. The NFLT essentially states that every algorithm performs the same on average when it is applied to all possible problem instances. Thus, every algorithm has its own strengths and weaknesses, no matter how complex and advanced it is. AS basically attempts to choose the most suitable algorithm from an existing pool of algorithms to address a given problem instance of any domain. The objective of this work was to identify the most suitable algorithm from a fixed pool of PLDP algorithms for each given PLDP instance. AutoDock414 was preferred as it is a widely used PLDP tool, supplying a favorable algorithm pool. An existing AutoDock solver, Lamarckian GA (LGA)15, which integrates the Genetic Algorithm (GA)7 and the Local Search (LS)16, was used in a parameterized manner such that a suite of candidate algorithms was derived. This step resulted in 28 LGA variants, including the LGA with its default parameter values. They were used on 1428 PLDP instances, each concerning one ligand out of 1428 ligands and a single target protein of Human Angiotensin-Converting Enzyme (ACE). Those 28 algorithms are managed by ALORS17, which is a recommender systems-based AS approach. To be able to use AS, a feature set is derived for representing the PLDP instances, including the widely adopted molecular descriptors as well as the substructure fingerprints. Following this setup, an in-depth experimental analysis is reported, initially comparing each standalone LGA variant against ALORS. Concerning the analysis capabilities of ALORS, the resemblance of the candidate algorithms—in terms of the LGA parameter values in this case—and the PLDP instance similarities besides the importance of the LGA parameters and PLDP instance features are investigated. 
The consequent assessment provides practical insights into how to use LGA with increased performance and what to consider when solving a particular PLDP scenario.

In the remainder of the paper, Sections "Protein ligand docking" and "Algorithm selection" discuss the relevant literature on PLDP and AS after formally describing them. The AS method employed for choosing algorithms is detailed in Section "Methods". A comprehensive computational analysis and discussion are provided in Section "Results and discussion".

Protein ligand docking

Protein–ligand docking plays a crucial role in modern pharmaceutical research and drug development. Docking algorithms estimate the structure of the ligand-receptor complex through sampling and ranking. They first sample the conformation of the ligands in the active site of a receptor. Next, they rank all the generated poses based on specific scoring functions or simply by calculating the binding energy18. Docking algorithms are thus capable of simulating the best orientation of a ligand when it is bound to a protein receptor.

The initial docking technique is based on Fischer’s lock-and-key assumption19. This assumption treats both the ligand and the receptor as rigid bodies with their affinity proportional to their geometric forms. In most elementary rigid-body systems, the ligand is searched over a six-dimensional rotational and translational space to fit the binding site. Later, Koshland proposed the induced-fit theory20, which implies that ligand interactions continuously modify the active site of a receptor. In essence, the docking procedure is considered dynamic and adaptable. In the last several decades, numerous docking technologies and tools have been developed, such as DOCK21, AutoDock22, GOLD23, and Glide24. Besides the differences in the implementation of 3D pose investigation, protein receptor modeling, etc., the major variation among them is the evaluation of the binding affinity, performed by different Scoring Functions (SFs)25. The existing scoring functions can be categorized as (1) force field based, (2) empirical function based, and (3) knowledge based26. Because of the heterogeneity of how protein–ligand interaction is modeled in different scoring functions, it is likely that diverse performance can be observed if one scoring function is applied to all docking tasks.

This study utilized AutoDock4 as it is an open-source, widely used system. It is the first docking software that can model ligands with complete flexibility27. AutoDock4 consists of two fundamental software components: AutoDock and AutoGrid. While AutoDock is the main software, AutoGrid calculates the noncovalent energy of interactions and produces an electrostatic potential grid map28. As a feature of AutoDock427, it is possible to model receptor flexibility by shifting side chains. To deal with side-chain flexibility, a simultaneous sampling method is provided. While the other chains stay stiff, the user-selected chains are sampled by a certain method with the ligand. With AutoGrid, the rigid portion is processed as a grid energy map. The grid maps together with the receptor’s flexible portion direct the selected ligands’ docking process28.

AutoDock4 adopts the physics-based force field scoring function with van der Waals, electrostatic, and directional hydrogen-bond potentials derived from an early version of the AMBER force field29. In addition, a pairwise-additive desolvation term based on partial charges, and a simple conformational entropy penalty are included26. The scoring function consists of electrostatic and Lennard–Jones VDW terms:

$$E = \sum_{i} \sum_{j} \left( \frac{A_{ij}}{r_{ij}^{12}} - \frac{B_{ij}}{r_{ij}^{6}} + \frac{q_i q_j}{\varepsilon(r_{ij})\, r_{ij}} \right)$$

where $A_{ij}$ and $B_{ij}$ are the VDW parameters, $r_{ij}$ refers to the distance between the protein atom $i$ and the ligand atom $j$, and $q_i$ and $q_j$ are atomic charges. $\varepsilon(r_{ij})$ is introduced as a simple distance-dependent dielectric constant in the Coulombic term. However, the desolvation effect cannot be represented in the Coulombic term26. The ignored solvent effect will lead to a biased scoring function that will not consider those relatively low-charged ligands.
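As an illustration only, the Lennard–Jones and Coulombic terms described above can be sketched in Python as follows. The function names, the atom tuple layout, and the choice epsilon(r) = 4r for the distance-dependent dielectric are assumptions made for this sketch, and the Coulomb unit-conversion constant is omitted; this is not AutoDock4’s actual implementation.

```python
import math


def pairwise_energy(r, a, b, q_i, q_j, dielectric=lambda r: 4.0 * r):
    """12-6 Lennard-Jones plus Coulomb energy for one protein-ligand atom pair.

    The default dielectric, epsilon(r) = 4r, is an assumed simple
    distance-dependent form chosen for illustration.
    """
    if r <= 0.0:
        raise ValueError("distance must be positive")
    vdw = a / r**12 - b / r**6                 # repulsive and attractive VDW terms
    coulomb = q_i * q_j / (dielectric(r) * r)  # electrostatic term
    return vdw + coulomb


def total_energy(protein_atoms, ligand_atoms, vdw_params):
    """Sum the pairwise energy over all protein-ligand atom pairs.

    Atoms are (x, y, z, charge, atom_type) tuples (hypothetical layout);
    vdw_params maps (protein_type, ligand_type) to the pair (A, B).
    """
    total = 0.0
    for xi, yi, zi, qi, ti in protein_atoms:
        for xj, yj, zj, qj, tj in ligand_atoms:
            r = math.dist((xi, yi, zi), (xj, yj, zj))
            a, b = vdw_params[(ti, tj)]
            total += pairwise_energy(r, a, b, qi, qj)
    return total
```

Because the sum runs over every protein–ligand atom pair, its cost grows with the product of the two atom counts, which is one reason grid-based precomputation is attractive in practice.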

A knowledge-based scoring function25 is further established based on the statistical mechanics of interacting atom pairs. A pairwise additive desolvation term is introduced, which is directly obtained from the frequency of occurrence of atom pairs by the Boltzmann relation. The energy potentials derived from structural information are also included in determining atomic structures26. The potentials are calculated by

$$w(r) = -k_B T \ln\left[g(r)\right], \qquad g(r) = \frac{\rho(r)}{\rho^{*}(r)}$$

where $k_B$ is the Boltzmann constant, $T$ is the absolute temperature of the system, $\rho(r)$ is the number density of the protein–ligand atom pair at distance $r$, and $\rho^{*}(r)$ is the pair density when interatomic interactions are zero. The inverse Boltzmann relation stands for the mean-force potentials, not the true potentials, which are quite different from the simple fluid system26. Thus, although it excludes the effects of volume, composition, etc., it still helps to convert the atom–atom distances into a function suitable for complex protein systems.
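The inverse Boltzmann relation described above can be illustrated with a minimal Python sketch. The function name, the default temperature, and the use of kcal/(mol·K) units are assumptions made here for illustration.

```python
import math

K_B = 0.0019872041  # Boltzmann constant in kcal/(mol*K)


def mean_force_potential(rho, rho_ref, temperature=298.15):
    """Inverse Boltzmann relation: w = -k_B * T * ln(rho / rho_ref).

    rho is the observed pair density at a given distance and rho_ref is the
    pair density of the interaction-free reference state.
    """
    if rho <= 0.0 or rho_ref <= 0.0:
        raise ValueError("densities must be positive")
    return -K_B * temperature * math.log(rho / rho_ref)
```

Atom pairs observed more often than in the reference state receive a negative (favorable) potential, while under-represented pairs receive a positive one.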

Most AutoDock4 users, as well as users of other molecular docking platforms, tend to follow the recommended docking protocol with the given default values. This practice is mainly followed to avoid tweaking the docking program. Furthermore, some docking programs, including AutoDock4, only provide a limited set of options for executing the search with a particular scoring function, but there still remain many other combinations. In the case of AutoDock4, the recommended choice of algorithm is the Lamarckian Genetic Algorithm (LGA). That being said, it is possible to show docking scenarios where LGA performs relatively poorly.

Algorithm selection

The selection of appropriate algorithms for problem solving in a variety of contexts has drawn increasing attention in the last few decades30. A phenomenon known as performance complementarity argues, based on empirical research, that one algorithm may perform well in one setting while others perform better in other conditions12.

The concept of per-instance algorithm selection was proposed and examined11. This idea refers to finding which algorithm is the best for a given instance12. Its rationale is the need to select a suitable algorithm from a huge number of diverse existing algorithms. However, it took decades for the idea to become widespread, when it was applied to Boolean satisfiability (SAT) and other difficult combinatorial problems31. In the designated procedure, a rule is developed that maps a certain scenario to an appropriate algorithm. Per-instance algorithm selection has therefore become prominent in optimization problems.
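To make performance complementarity concrete, the following sketch compares the single best algorithm (one algorithm used for every instance) with an oracle that picks the best algorithm per instance. The cost matrix is made-up toy data and the function names are hypothetical.

```python
def single_best(perf):
    """Return (index, mean cost) of the algorithm with the lowest mean cost.

    perf is a list of rows, one per instance, each holding one cost per
    algorithm (lower is better).
    """
    n_alg = len(perf[0])
    means = [sum(row[a] for row in perf) / len(perf) for a in range(n_alg)]
    best = min(range(n_alg), key=means.__getitem__)
    return best, means[best]


def oracle(perf):
    """Return the per-instance best choices and the resulting mean cost."""
    choices = [min(range(len(row)), key=row.__getitem__) for row in perf]
    mean_cost = sum(row[c] for row, c in zip(perf, choices)) / len(perf)
    return choices, mean_cost


# Toy data: algorithm 0 wins on the first two instances, algorithm 1 on the third.
toy_perf = [
    [1.0, 3.0],
    [1.0, 3.0],
    [4.0, 2.0],
]
```

The gap between the two mean costs is the room for improvement that a per-instance selector tries to capture.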

As machine learning methods have proven competent in many tasks, automatically learning such rules has been studied12. Detailed and insightful instructions32 have been provided on the first automatic algorithm selection process, addressing a number of important issues, including the choice between regression and classification and the distinction between dynamic and static features. However, continuous problems have been omitted. Furthermore, a generalization to the continuous optimization problem33 has been proposed by highlighting the benefits of discrete problems.

Methods

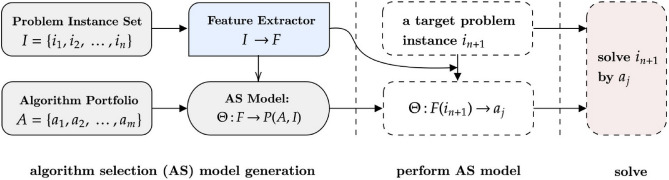

The main component of the proposed approach is the algorithm selection (AS) module as visualized in Fig. 1. It is responsible for choosing an algorithm in a per-instance manner, matching a suitable algorithm to a given PLDP instance. Referring to the earlier AS description, a group of PLDP algorithms, A, should initially be provided. Although these algorithms can be determined and used in a fixed way, algorithm portfolio generation strategies34–36 can be incorporated to derive candidate algorithms. Alongside an algorithm set, an instance set should be accommodated to model the AS system. Although AS is a problem-independent strategy, the behavior of AS is highly affected by the choice of those instances. If the AS is planned to be used for a rather specific family of docking tasks, the instance set can include the instances from that particular family. Otherwise, to have a generalized AS model, it is beneficial for the instance set to contain a wide range of diverse PLDP instances. In the current study, there is only one target protein, yet a rather large set of ligands. Thus, any built AS model here is specific to that target protein while having some level of generality regarding the ligands. In relation to this diversity aspect, having a high diversity through complementarity in A can potentially offer improved and robust AS models. Complementarity, here, denotes having algorithms with varying problem-solving capabilities. While an algorithm works well on a certain type of instance, another algorithm can perform well on instances where the former performs poorly. The chosen algorithm and instance sets are then used to generate performance data, denoting the performance of each candidate algorithm on each problem instance. During this performance data generation step, it is critical to take into account the stochastic / non-deterministic nature of candidate algorithms. This means that if an algorithm may deliver a different solution after each run on the exact same problem instance, it will be misleading to run that algorithm only once and use that value in the performance data. In such cases, it is reasonable to run those algorithms multiple times and use their mean or median values as their per-instance performance indicators. One last element required to build an AS model is to specify a set of features adequately describing the characteristics of the target problem instances. With data manipulation or data format conversions, this step can be skipped as the features are automatically derived37. Otherwise, with the help of chemistry experts, reasonably representative instance features can be collected. Yet, it is potentially possible to come up with such features by referring to the relevant literature, without the actual presence of experts. That said, depending on the target problem, it might be good enough to solely utilize basic statistical measures and values achieved via landmarking38. At this point, traditionally, an AS model can be built in the form of performance prediction, or other existing AS strategies can be employed.
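The performance data generation step for stochastic algorithms can be sketched as below. The solver is a hypothetical toy stand-in (an instance is represented by a base score and an algorithm by its noise level), not a docking engine; only the repeat-and-aggregate pattern is the point.

```python
import random
import statistics


def toy_docking_run(instance, algorithm, rng):
    """Hypothetical stand-in for one stochastic docking run (lower is better)."""
    base_score, noise_level = instance, algorithm
    return base_score + rng.gauss(0.0, noise_level)


def build_performance_data(instances, algorithms, n_runs=50, seed=0,
                           aggregate=statistics.mean):
    """Run every algorithm n_runs times per instance and aggregate the scores.

    Returns a matrix with one row per instance and one column per algorithm.
    Pass aggregate=statistics.median to use the median instead of the mean.
    """
    rng = random.Random(seed)  # fixed seed for repeatability
    data = []
    for instance in instances:
        row = []
        for algorithm in algorithms:
            scores = [toy_docking_run(instance, algorithm, rng)
                      for _ in range(n_runs)]
            row.append(aggregate(scores))
        data.append(row)
    return data
```

Aggregating over repeated runs makes each matrix entry an estimate of typical behavior rather than the outcome of a single, possibly lucky, run.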

Figure 1.

Illustration of Algorithm Selection. The traditional per instance Algorithm Selection (AS) process.

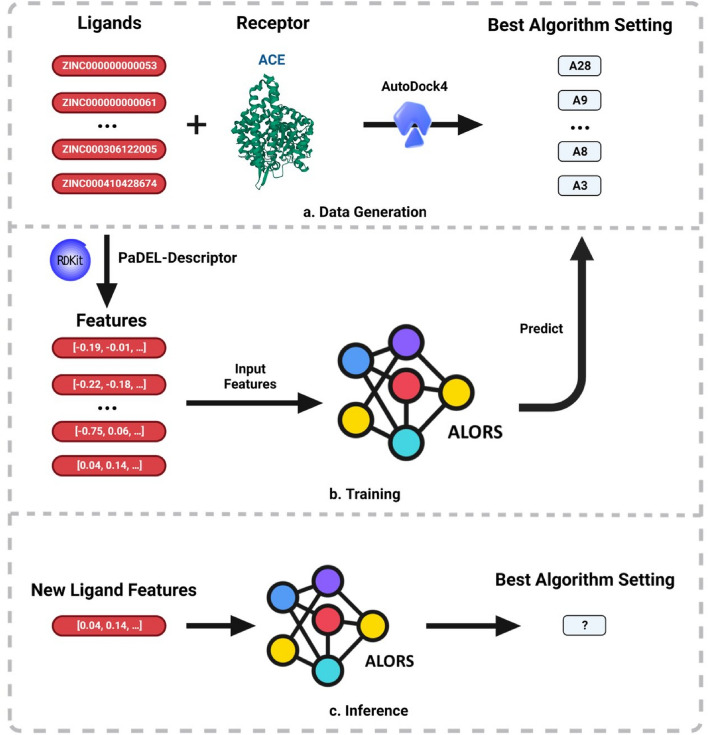

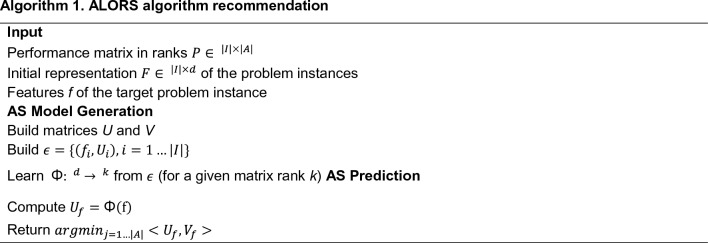

Following the given framework, Fig. 2 visualizes the AS setting performed in this article. The data generation step is achieved based on AutoDock 4.2. For the AS method, an existing technique, ALORS17, is employed. ALORS is an algorithm recommendation system based on collaborative filtering (CF)39. It has been successfully applied to different selection decisions on varying problem domains40–43, including those on a relevant protein-structure prediction problem44,45. CF is a type of recommendation approach that predicts how much users like certain items such as movies and products. It makes predictions by relating similar entries at both the user and item levels. Unlike other recommendation methods, CF works with sparse entries. ALORS accommodates the CF idea by considering problem instances as the users and algorithms as the items; that is, how much an instance likes an algorithm depends on the relative success of the algorithm compared to all the candidate algorithms. Similar to the CF applications, ALORS also works with rank-based data, namely the ranks of all the present algorithms on all the problem instances. In that respect, ALORS performs algorithm selection (AS) as a rank-prediction task. However, unlike the existing AS systems, ALORS performs rank predictions indirectly. Essentially, a prediction model derived by ALORS is a feature-to-feature model, as detailed in Algorithm 1. It maps a set of hand-picked features characterizing the target problem instances to another group of instance features. The latter features are automatically extracted from the rank performance data by Matrix Factorization (MF). To be specific, Singular Value Decomposition (SVD)46 is used as the MF method for dimensionality reduction.
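The feature-to-feature idea can be sketched roughly as follows, assuming NumPy is available. This is not the ALORS implementation: in particular, a linear least-squares regressor is swapped in for the Random Forest model to keep the sketch dependency-free, and all function names are hypothetical.

```python
import numpy as np


def fit_alors_like(features, ranks, k=5):
    """Learn a map from hand-picked features to SVD-derived latent features.

    features: (n_instances, n_features); ranks: (n_instances, n_algorithms).
    """
    u, s, vt = np.linalg.svd(ranks, full_matrices=False)
    u_k, s_k, vt_k = u[:, :k], s[:k], vt[:k, :]  # rank-k truncation
    # Stand-in regressor (assumption): least squares with a bias column,
    # used here instead of a Random Forest.
    x = np.hstack([features, np.ones((features.shape[0], 1))])
    weights, *_ = np.linalg.lstsq(x, u_k, rcond=None)
    return weights, s_k, vt_k


def select_algorithm(model, new_features):
    """Predict ranks for an unseen instance and return the best algorithm index."""
    weights, s_k, vt_k = model
    x = np.append(new_features, 1.0)
    latent = x @ weights                     # predicted latent instance features
    predicted_ranks = (latent * s_k) @ vt_k  # map back to per-algorithm ranks
    return int(np.argmin(predicted_ranks)), predicted_ranks
```

The regressor never predicts ranks directly; it predicts the latent instance representation, and the algorithm-side factors from the SVD turn that representation back into a rank per algorithm.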

Figure 2.

Framework of ALORS for Protein–Ligand Docking. All ligands are docked with ACE using 28 algorithms, each with a different parameter configuration in AutoDock4 during the data generation procedure. The algorithm configuration that produces the lowest docking score averaged over 50 runs is selected as the best algorithm for the given instance, such as the 28th algorithm setting (A28). The ALORS model is trained using molecular descriptors and fingerprints, and the best algorithm labels corresponding to each ligand. At inference, our model uses the features of a single new ligand to determine the best algorithm configuration.

ALORS is applied here with k = 5 as the rank of the MF by SVD. Regarding the modelling component, Random Forest (RF)47, the number of trees is set to 100, which is the default value in scikit-learn.

The candidate algorithm set is composed of 28 algorithms while the number of docking scenarios, instances, is 1428. The algorithms are essentially specified by setting distinct parameter configurations of a Lamarckian Genetic Algorithm (LGA), as detailed in Table 1. The evaluation is realized through tenfold cross-validation (10-cv).
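A tenfold split over the 1428 instances can be sketched with the standard library alone. The shuffling seed and the round-robin fold assignment are assumptions made for this sketch; the study's exact splitting procedure is not specified here.

```python
import random


def k_fold_indices(n_instances, n_folds=10, seed=0):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_instances))
    random.Random(seed).shuffle(indices)
    # Round-robin assignment keeps fold sizes within one instance of each other.
    folds = [indices[i::n_folds] for i in range(n_folds)]
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test
```

With 1428 instances, eight folds hold 143 instances and two hold 142, and every instance is used for testing exactly once.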

Table 1.

Docking configurations.

| Algorithm | Population size | Mutation rate | Window size |
|---|---|---|---|
| A1 | 50 | 0.02 | 10 |
| A2 | 150 | 0.02 | 10 |
| A3 | 200 | 0.02 | 10 |
| A4 | 150 | 0.5 | 10 |
| A5 | 150 | 0.8 | 10 |
| A6 | 150 | 0.02 | 30 |
| A7 | 150 | 0.02 | 50 |
| A8 | 50 | 0.02 | 30 |
| A9 | 50 | 0.02 | 50 |
| A10 | 200 | 0.02 | 30 |
| A11 | 50 | 0.5 | 10 |
| A12 | 50 | 0.5 | 30 |
| A13 | 50 | 0.5 | 50 |
| A14 | 50 | 0.8 | 10 |
| A15 | 50 | 0.8 | 30 |
| A16 | 50 | 0.8 | 50 |
| A17 | 150 | 0.5 | 30 |
| A18 | 150 | 0.5 | 50 |
| A19 | 150 | 0.8 | 30 |
| A20 | 150 | 0.8 | 50 |
| A21 | 200 | 0.02 | 50 |
| A22 | 200 | 0.5 | 10 |
| A23 | 200 | 0.5 | 30 |
| A24 | 200 | 0.5 | 50 |
| A25 | 200 | 0.8 | 10 |
| A26 | 200 | 0.8 | 30 |
| A27 | 200 | 0.8 | 50 |
| A28 | 150 | 0.02 | 10 |

The standalone docking algorithms are derived from a Lamarckian Genetic Algorithm (LGA). A28 uses the classical Solis and Wets local searcher (sw), while the rest use the pseudo-Solis and Wets local searcher (psw). Population size: the number of individuals, i.e., solutions, maintained in each generation of LGA. A larger population size typically requires more computational resources as more searches are performed before convergence. Mutation rate: the probability that an individual is mutated, i.e., a solution is manipulated. A higher mutation rate will lead to more exploratory searches while a lower rate renders the search more exploitative. Window size: the size of the energy window that is used to determine which individuals will be subjected to the local search procedure. A smaller window size will result in more focused refinement of the best individuals, while a larger one keeps more individuals for the local search.
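The 28 configurations of Table 1 correspond to the full 3 × 3 × 3 grid over the three parameters plus one extra variant with the classical local searcher, which can be sketched as below. The dictionary keys follow what we understand to be AutoDock4 docking parameter file keywords (`ga_pop_size`, `ga_mutation_rate`, `ga_window_size`, `ga_run`, `ga_num_evals`); they should be checked against the user guide. The helper names are hypothetical, and the enumeration order does not follow the A1 to A27 labelling.

```python
from itertools import product

POPULATION_SIZES = (50, 150, 200)
MUTATION_RATES = (0.02, 0.5, 0.8)
WINDOW_SIZES = (10, 30, 50)


def build_configurations():
    """Return the 27 psw grid configurations plus the default setting with sw."""
    configs = [
        {"ga_pop_size": p, "ga_mutation_rate": m, "ga_window_size": w,
         "local_search": "psw"}
        for p, m, w in product(POPULATION_SIZES, MUTATION_RATES, WINDOW_SIZES)
    ]
    # A28: default parameter values with the classical Solis and Wets searcher.
    configs.append({"ga_pop_size": 150, "ga_mutation_rate": 0.02,
                    "ga_window_size": 10, "local_search": "sw"})
    return configs


def to_dpf_lines(config, n_runs=50, n_evals=2_500_000):
    """Render the GA-related lines of a docking parameter file (sketch only)."""
    return [
        f"ga_pop_size {config['ga_pop_size']}",
        f"ga_mutation_rate {config['ga_mutation_rate']}",
        f"ga_window_size {config['ga_window_size']}",
        f"ga_num_evals {n_evals}",
        f"ga_run {n_runs}",
    ]
```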

The ligands are molecules approved by the U.S. Food and Drug Administration (FDA) in the ZINC15 database48. Human Angiotensin-Converting Enzyme (ACE), a critical membrane protein for the SARS-COV virus and for renal and cardiovascular function, is chosen as the target receptor (PDB ID: 1O86)49. The original ligand files are in MOL2 format and are converted to PDB format for docking via Open Babel50. Receptors and ligands are preprocessed by AutoDock Tools, which includes the addition of hydrogen atoms and charges, and are stored in PDBQT format. The whole docking process is performed via AutoDock 4.2. The random seed is fixed for the repeatability of the experiment. Each algorithm is run 50 times for each ligand, and the number of energy evaluations is set to 2,500,000. Both are fixed to control the computational resources each algorithm can utilize. The rest of the settings are default, with details described in AutoDock4’s user guide. For feature extraction, RDKit51 is used to generate molecular descriptors, and the PubChem Substructure Fingerprints are computed by PaDEL-Descriptor52. Molecular descriptors are numerical values of a molecule’s properties computed by algorithms51. After the removal of the descriptors with the value 0 across all ligands, 208 features are obtained. Following this step, the features with almost the same values across different ligands are discarded, which results in 119 usable features. All the features are rescaled through min–max normalization, fitting each feature’s values to [0, 1]. A PubChem Substructure Fingerprint is an ordered list of binary values (0/1), each representing the existence of a specific substructure, such as a ring structure53. In our case, for each ligand, the length of the binary-encoded list is 881.
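The descriptor clean-up and scaling steps can be sketched in plain Python as follows. The near-constant criterion (a value range no larger than `min_range`) is an assumption made for this sketch, since the exact threshold used in the study is not stated; the function name is hypothetical.

```python
def clean_and_scale(rows, min_range=1e-6):
    """Drop all-zero and near-constant columns, then min-max scale to [0, 1].

    rows: list of equal-length lists of floats (one row per ligand).
    Returns (kept_column_indices, scaled_rows).
    """
    kept = []
    for c in range(len(rows[0])):
        column = [row[c] for row in rows]
        lo, hi = min(column), max(column)
        if all(v == 0 for v in column):
            continue  # descriptor is 0 for every ligand
        if hi - lo <= min_range:
            continue  # descriptor is (almost) the same for every ligand
        kept.append((c, lo, hi))
    scaled = [[(row[c] - lo) / (hi - lo) for c, lo, hi in kept] for row in rows]
    return [c for c, _, _ in kept], scaled
```

For example, a table whose first column is all zeros and whose second column is constant keeps only the remaining columns, each rescaled so that its minimum maps to 0 and its maximum to 1.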

Results and discussion

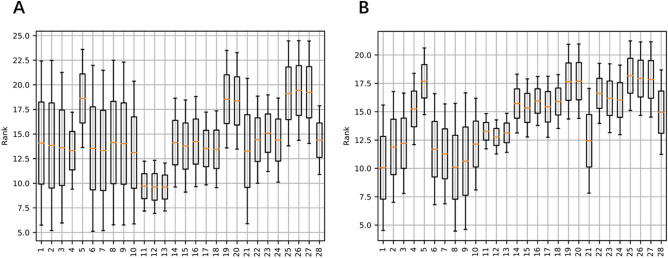

Figure 3 illustrates the ranks of each algorithm across all the docking scenarios for AVG and BEST, respectively. It can be seen that while some algorithms perform better than others in general, their relative performances vary. Beyond that, there is no ultimate algorithm that consistently outperforms the remaining algorithms on all the protein–ligand docking instances. This view suggests that algorithm selection is likely to beat all these algorithms by automatically matching each instance with an algorithm that can solve it effectively.

Figure 3.

Ranks of Docking Algorithms. (A) The ranks of the docking algorithms across all the instances, based on the AVG performance. (B) The ranks of the docking algorithms across all the instances, based on the BEST performance.

Table 2 reports the ranking of each standalone algorithm alongside ALORS. All those algorithms are accommodated as the candidate algorithms for ALORS. Two separate performance evaluations are delivered. The first one focuses on the algorithms’ average performance (AVG), considering that all the utilized algorithms are stochastic. The second relates to the best docking solutions (BEST) out of all the runs on each docking instance. For both scenarios, ALORS outperforms all the standalone algorithms, while the performance difference in the AVG case is more drastic than in the BEST case.
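A rank-based summary of this kind can be sketched as follows: algorithms are ranked per instance (rank 1 for the lowest score), and the mean and standard deviation of each algorithm's ranks are then reported. Sharing the average rank among ties and using the population standard deviation are assumptions made for this sketch.

```python
import statistics


def rank_row(scores):
    """Rank scores in ascending order (1 = lowest); ties share the average rank."""
    order = sorted(range(len(scores)), key=scores.__getitem__)
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the block of entries tied with position i.
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for pos in range(i, j + 1):
            ranks[order[pos]] = average_rank
        i = j + 1
    return ranks


def rank_summary(perf):
    """Return (mean rank, rank standard deviation) for each algorithm column."""
    rank_rows = [rank_row(row) for row in perf]
    summary = []
    for a in range(len(perf[0])):
        column = [ranks[a] for ranks in rank_rows]
        summary.append((statistics.mean(column), statistics.pstdev(column)))
    return summary
```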

Table 2.

Algorithm performance.

| Algorithm | AVG Perf (Mean ± SD) | BEST Perf (Mean ± SD) |
|---|---|---|
| A1 | 8.48 ± 8.00 | 6.82 ± 6.40 |
| A2 | 8.04 ± 6.82 | 9.92 ± 6.22 |
| A3 | 8.59 ± 6.41 | 9.96 ± 5.95 |
| A4 | 17.13 ± 5.03 | 18.22 ± 6.70 |
| A5 | 23.25 ± 5.25 | 20.55 ± 6.71 |
| A6 | 7.90 ± 6.79 | 9.09 ± 5.88 |
| A7 | 7.91 ± 6.62 | 8.99 ± 5.89 |
| A8 | 8.50 ± 8.17 | 6.80 ± 6.31 |
| A9 | 8.48 ± 8.07 | 7.09 ± 6.65 |
| A10 | 8.38 ± 6.15 | 10.16 ± 5.89 |
| A11 | 12.47 ± 4.27 | 15.16 ± 6.28 |
| A12 | 12.50 ± 4.19 | 14.61 ± 6.20 |
| A13 | 12.29 ± 4.31 | 15.12 ± 6.35 |
| A14 | 18.40 ± 5.46 | 18.33 ± 6.83 |
| A15 | 18.17 ± 5.45 | 17.92 ± 6.87 |
| A16 | 18.56 ± 5.52 | 18.26 ± 6.70 |
| A17 | 17.20 ± 4.98 | 18.12 ± 6.63 |
| A18 | 17.27 ± 5.08 | 18.37 ± 6.56 |
| A19 | 23.16 ± 5.29 | 20.77 ± 6.65 |
| A20 | 22.94 ± 5.35 | 20.80 ± 6.73 |
| A21 | 8.45 ± 6.24 | 10.05 ± 6.18 |
| A22 | 18.63 ± 5.35 | 19.28 ± 6.51 |
| A23 | 18.91 ± 5.17 | 19.12 ± 6.64 |
| A24 | 18.49 ± 5.29 | 18.98 ± 6.73 |
| A25 | 24.04 ± 5.33 | 21.14 ± 6.71 |
| A26 | 24.12 ± 5.16 | 21.00 ± 6.78 |
| A27 | 24.05 ± 5.44 | 20.98 ± 6.80 |
| A28 | 12.66 ± 6.05 | 13.29 ± 6.77 |
| ALORS | **6.00 ± 5.14** | **6.75 ± 5.90** |

The ranking of the protein–ligand docking algorithms together with ALORS, which utilizes all those standalone algorithms as a high-level approach. (The results in bold refer to the overall best ones).

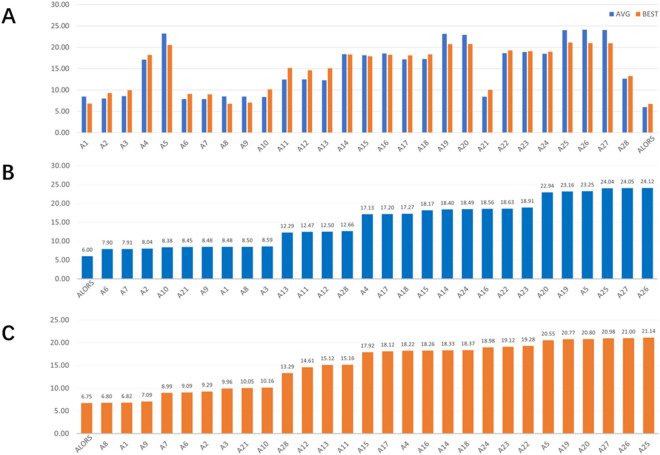

Overall, ALORS consistently delivers the top and most robust performance across all docking instances. The robustness aspect can be verified from the standard deviation values. Taking a closer look at the results and referring to the AVG performances, A6 happens to be the best standalone algorithm, i.e., the algorithm that would traditionally be used as the sole algorithm for all the docking instances, unlike AS, which chooses one docking algorithm for each docking instance. While A6’s mean rank is 7.90, ALORS achieves a mean rank of 6.00. A6 is followed by A7, with a mean rank of 7.91. Additionally, the default algorithm setting that is built into AutoDock, A2, is found to be the third best standalone approach on the present test scenarios. As to delivering the BEST docking results, unlike the AVG case, A8 offers the top mean rank of 6.80 among the constituent algorithms, following ALORS’ mean rank of 6.75. A1 offers a performance quite close to A8, with a mean rank of 6.82. The closest performer after A1 is A9, with a mean rank of 7.09. According to Table 2, the default configuration, A2, takes the sixth place among these standalone methods.

Figure 4 visualizes the mean rank changes for both AVG and BEST, referring to the top chart. It is noteworthy that the relative performance trend among all the algorithms is somewhat maintained. The remaining charts show the sorted docking methods on AVG and BEST, separately. Just by visually analyzing the charts, groups of closely ranked methods can be detected. For instance, A5, A19, A20, A25, A26, and A27 clearly deliver the worst performance among all algorithms.

Figure 4.

Mean Ranks of Docking Algorithms. The mean ranks of all the tested docking methods. (A) relative comparison on both AVG and BEST, (B) sorted comparison on AVG, (C) sorted comparison on BEST.

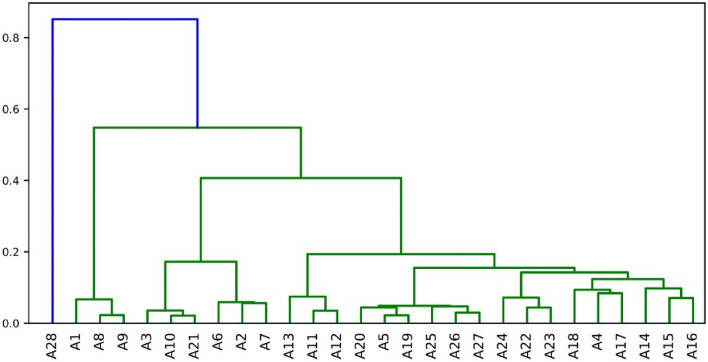

Figure 5 illustrates the similarities between all the constituent algorithms in terms of hierarchical clustering.

Figure 5.

Clustering of Docking Algorithms. A hierarchical clustering of the constituent docking algorithms based on the latent features extracted by SVD (k = 5) on the AVG case.

At the lowest level of the clusters, the following groups of algorithms happen to be highly similar: {A8, A9}, {A10, A21}, {A2, A7}, {A11, A12}, {A5, A19}, {A26, A27}, {A22, A23}, {A14, A17}, {A15, A16}. Referring to Table 1, except for the {A14, A17} pair, all the grouped algorithms share the same population size and mutation rate. The third parameter varied across the configurations, the window size, does not cause any drastic changes in the behavior of those algorithms.

Regarding this aspect of algorithm similarity, by only keeping one algorithm from similar ones, a potential sub-portfolio offering comparable performance would be {A1, A2, A3, A4, A5, A6, A8, A10, A11, A13, A14, A15, A18, A20, A22, A24, A25, A26, A28}, involving 19 algorithms out of 28 options. The portfolio can be further reduced by referring to large algorithm clusters by going one level higher on the hierarchical cluster. Then, an example portfolio would be {A1, A3, A6, A13, A14, A18, A20, A24, A28}.
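The first reduction step can be reproduced with a few lines of Python. Keeping the lower-numbered member of each similar pair is an assumption that happens to match the sub-portfolio listed above; the function name is hypothetical.

```python
def reduce_portfolio(algorithms, similar_pairs):
    """Keep one algorithm per similar pair (the lower-numbered one)."""
    dropped = set()
    for a, b in similar_pairs:
        _, drop = sorted((a, b), key=lambda name: int(name[1:]))
        dropped.add(drop)
    return [alg for alg in algorithms if alg not in dropped]


ALGORITHMS = [f"A{i}" for i in range(1, 29)]
SIMILAR_PAIRS = [
    ("A8", "A9"), ("A10", "A21"), ("A2", "A7"), ("A11", "A12"), ("A5", "A19"),
    ("A26", "A27"), ("A22", "A23"), ("A14", "A17"), ("A15", "A16"),
]
SUB_PORTFOLIO = reduce_portfolio(ALGORITHMS, SIMILAR_PAIRS)
```

Dropping one member from each of the nine pairs leaves 19 of the 28 candidate algorithms.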

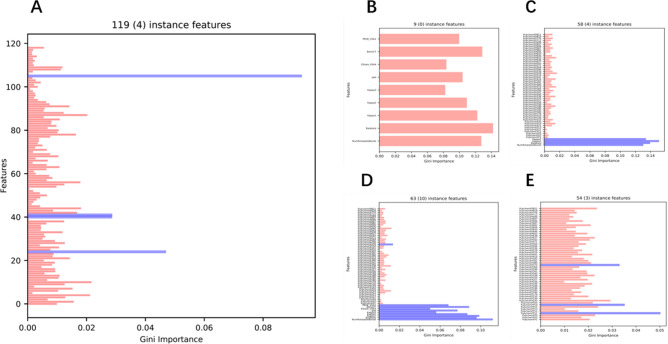

Figure 6A visualizes the importance of the PLDP instance features. The importance aspect is determined through the Gini importance values obtained while building the Random Forest (RF) prediction models under ALORS. Among these 119 features, 4 obtain much higher Gini importance values and are thus significantly more critical than the rest. The corresponding features are:

NumRotatableBonds

BalabanJ

Kappa1

Kappa2

Figure 6.

Gini Importance of Features. The blue ones are significantly more critical than the rest concerning their Gini values. (A) The Gini importance values of all the docking instance features, (B) the Gini importance values of the features in a top-ranked molecular descriptor subset, (C) and (D) the Gini importance values of the features in the combined molecular descriptor and fingerprint subsets, (E) the Gini importance values of the top 54 fingerprint features.

In addition to molecular descriptors such as characteristics, , substructure fingerprints, , are used to perform AS. Fingerprints are binary features, each representing the presence of a highly specific substructure. In that respect, it is relatively hard to benefit from the individual features, unlike the case for molecular descriptors. Table 3 reports the performance of ALORS with varying feature sets. The results indicate that is more informative than as expected. Focusing on , two subsets are additionally evaluated, which are and . They are essentially the top features measured by their Gini values extracted from the original ALORS model. As mentioned above, denotes the main significantly influential features, while has 5 additional features besides the ones in . They are chosen with a cut-off of 0.15 on the Gini importance value. Both subsets suffice to outperform the standalone algorithms without using the complete set of 119 features. However, the larger subset provides better results than . Figure 6B visualizes the contributions of each feature from when an AS model is built with . A similar approach is followed for , resulting in a subset of 54 features, . In relation to that, Fig. 6E illustrates the importance of each of these features. The use of 54 features out of 881 provided further performance improvement. Considering that the complete fingerprint feature set is rather large, an extra ALORS model is built using a higher number of trees for RF, increasing from 100 to 500. Although superior performance, with a mean rank of 6.39 ± 5.62, is achieved compared to the default ALORS setting, the performance is still worse than the scenario using ,top54. The final evaluation on the features is carried out utilizing both and , in particular their aforementioned subsets, and . These combinations improved both the sole, and , feature subset based results. This outcome suggests that the substructure fingerprints carry extra information that does not come directly from the molecular descriptors. The corresponding feature importance values are provided in Fig. 6C and D for and respectively.
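The cut-off-based subset selection described in this paragraph can be sketched in a few lines. This is only an illustration under stated assumptions, not the authors' code: the function name `select_by_cutoff`, the feature names, and the importance values are hypothetical placeholders, and treating the cut-off as inclusive is an assumption as well.

```python
def select_by_cutoff(importances, cutoff):
    """Return the feature names whose importance is at least `cutoff`,
    ordered from most to least important."""
    kept = [(name, value) for name, value in importances.items() if value >= cutoff]
    kept.sort(key=lambda item: item[1], reverse=True)
    return [name for name, _ in kept]


# Hypothetical importance values, for illustration only (not from the study).
example_importances = {"feat_a": 0.40, "feat_b": 0.05, "feat_c": 0.22, "feat_d": 0.15}
selected = select_by_cutoff(example_importances, cutoff=0.15)
print(selected)
```

A reduced model would then be retrained on the selected columns only, which is how the smaller feature subsets can be compared against the complete set.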

Table 3.

Ranking of different feature sets.

| Feature set | Mean ± Sd |
|---|---|
| | 6.00 ± 5.14 |
| | 6.30 ± 5.32 |
| | 6.11 ± 5.16 |
| | 6.57 ± 5.83 |
| | 6.29 ± 5.45 |
| | 6.10 ± 5.20 |
| | 08 ± 5.26 |

The ranking of the ALORS recommendation models with distinct instance feature sets on AVG.

Molecular descriptor analysis

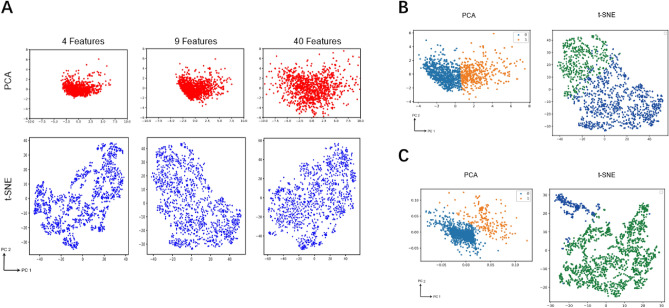

Considering the Gini importance, the top 4, top 9, and top 40 features are picked for analyzing the instance space. To visualize the instances in 2-dimensional space, Principal Component Analysis (PCA) and t-distributed Stochastic Neighbor Embedding (t-SNE) are applied to reduce those features to 2 dimensions. The instance representations obtained by PCA and t-SNE are shown in Fig. 7A. Compared to the PCA components, t-SNE delivers more clearly separated instance clusters. Visual inspection suggests that the top 9 features are the most discriminative. Thus, the k-means algorithm54 is applied to cluster the instances using those 9 features. After trying different values of k ∈ [2, 15], the best k is determined to be 2 with respect to the silhouette score, which is the mean of the silhouette coefficients55 across all the instance points.
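The procedure of choosing k by the silhouette score can be sketched as follows. This is a minimal standard-library illustration, not the pipeline used in the study: the toy 2-D points, the deterministic farthest-point initialization, and the reduced range k ∈ [2, 5] are all assumptions made so that the example is small and reproducible.

```python
import math


def kmeans(points, k, iters=100):
    """Plain Lloyd's k-means with a deterministic farthest-point start."""
    centers = [points[0]]
    while len(centers) < k:
        # Next center: the point farthest from its nearest existing center.
        centers.append(max(points, key=lambda p: min(math.dist(p, c) for c in centers)))
    labels = None
    for _ in range(iters):
        new_labels = [min(range(k), key=lambda j: math.dist(p, centers[j])) for p in points]
        if new_labels == labels:
            break
        labels = new_labels
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the old center if a cluster becomes empty
                centers[j] = tuple(sum(c) / len(members) for c in zip(*members))
    return labels


def silhouette_score(points, labels):
    """Mean silhouette coefficient s = (b - a) / max(a, b) over all points."""
    clusters = {}
    for p, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(p)
    total = 0.0
    for p, lab in zip(points, labels):
        own = clusters[lab]
        if len(own) == 1:
            continue  # a singleton cluster contributes a coefficient of 0
        # a: mean distance to the other members of the same cluster
        a = sum(math.dist(p, q) for q in own) / (len(own) - 1)
        # b: smallest mean distance to the members of any other cluster
        b = min(sum(math.dist(p, q) for q in other) / len(other)
                for other_lab, other in clusters.items() if other_lab != lab)
        total += (b - a) / max(a, b)
    return total / len(points)


# Toy data: two well-separated groups of four points each.
points = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (11, 11)]
scores = {k: silhouette_score(points, kmeans(points, k)) for k in range(2, 6)}
best_k = max(scores, key=scores.get)
print(best_k, round(scores[best_k], 3))
```

Note that the score requires at least two clusters, so it cannot rate the option of leaving all instances in one group.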

Figure 7.

Feature visualization with PCA, t-SNE and k-means. (A) Visualization of the top 4, 9 and 40 features with PCA and t-SNE. (B) k-means clustering results for the 9 features in 2-D PCA and t-SNE space. (C) k-means clustering results for 5 latent features, extracted by SVD, for a different feature set in 2-D PCA and t-SNE space.

The final clustering results are shown in Fig. 7B. As the silhouette score indicates, it is best to divide the instances, described by the top 9 features, into two clusters. A distinct divide is observed in the middle of the data. Although the points are spread more diversely in t-SNE, the division is relatively indistinct. In PCA, where distinct groups are clustered more tightly, the clustering is clearer for the other feature set if it is divided into two groups. Also, in t-SNE, the part in the top left corner from -10 to 40 PC2 is more concentrated, whereas the other part is dispersed and sparse. Figure 7C reflects a striking situation for the second feature set, where five latent features are used. The data are distributed unevenly between the two clusters, with one group greatly outnumbering the other. Consequently, the pattern of a particular group can be captured.

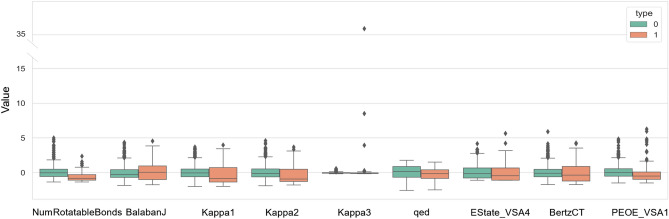

It should be noted that the silhouette score cannot assess the case in which all points are considered as a single group. Although the score therefore cannot tell us how a single group would fare, we can still observe that the points are evenly dispersed in both PCA and t-SNE. This suggests that it is best to consider them as one group; that is, there is no clear division or clustered pattern when considering these features. As shown in Fig. 8, group 0 (type 0), denoted by the green color, is clustered more closely in general. Group 0 shows a higher median for every feature except BalabanJ. Although most of the data in group 0 are clustered, there are more outliers than in group 1. Strikingly, kappa3 shows an unusual pattern in which the data are tightly concentrated, with several outliers two to three times larger than most of the data.

Figure 8.

Boxplot of features. Type 0 denotes group 0 from the PCA and t-SNE analysis, and type 1 denotes group 1. The distributions of the 9 selected features in the two clusters are given to show the possible patterns for each group. Group 0 is more tightly clustered but has more outliers than group 1.

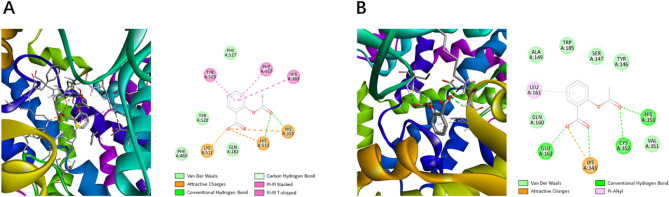

Figures 9A and B show the differences in conformation and interactions for an instance docked with the default algorithm and with the best algorithm. As more hydrogen bonds are observed, the docking pose predicted by the best parameter configuration is likely to yield more stable binding with the receptor protein than the pose predicted by the algorithm with the default parameter configuration.

Figure 9.

Interaction Plot of Ligand ZINC000000000053 and ACE. (A) under default parameter configuration, (B) under best parameter configuration in AutoDock4.

As mentioned above, using the chemical descriptors provided by the open-source Python library RDKit51, 208 features (molecular descriptors) are generated for each of the molecules involved in the docking process. In order of importance, starting from the most important one, the top 9 features are (1) the number of rotatable bonds, (2) Balaban’s J index, (3, 4, 5) the Kappa molecular shape indices Kappa 1, 2, and 3, (6) the quantitative estimate of drug-likeness index, (7) the electrotopological state index, (8) the Bertz molecular complexity index, and (9) the partial equalization of orbital electronegativity index. Although these features have been highlighted by ALORS, their use in QSAR studies still needs to be examined to establish whether they can be interpreted in the context of the docking process.

Number of bond rotations

The number of rotatable bonds reflects the flexibility of a molecule56. Previous studies suggest that this molecular descriptor helps to differentiate between drugs and other small molecules, as drugs have lower flexibility57,58. Essentially, molecular docking is a search for the best positions and poses within a constrained docking space. Varying the number of rotatable bonds directly affects the potential docking poses returned by AutoDock. Thus, it is important to adjust the number of rotatable bonds when ligands are preprocessed via AutoDock Tools27.

Balaban’s J index

Balaban’s J index is one of the topological indices that treat molecules as connected graphs and represent the molecular structure by a single number59. The J index improves the discriminating power, especially for isomers, since it employs the average sums of distances inside the molecule. It is sensitive to differences in the number of bonds or atoms. The calculation of the index is computationally efficient while preserving the physical and structural information of the molecule60,61.
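To make the isomer-discriminating behavior concrete, the sketch below computes the basic form of the index, J = m / (μ + 1) · Σ (D_i · D_j)^(-1/2) summed over bonds, where m is the number of bonds, μ the number of rings, and D_i the sum of topological distances from atom i. It is an illustrative standard-library implementation for hydrogen-suppressed carbon skeletons only; it ignores the bond-order and heteroatom weighting that cheminformatics toolkits may apply, and the adjacency-dictionary encoding of the two butane isomers is an assumption made for this example.

```python
import math
from collections import deque


def distance_sums(adjacency):
    """Sum of shortest-path (bond-count) distances from each atom to all others."""
    sums = {}
    for start in adjacency:
        seen = {start: 0}
        queue = deque([start])
        while queue:  # breadth-first search over the molecular graph
            node = queue.popleft()
            for nxt in adjacency[node]:
                if nxt not in seen:
                    seen[nxt] = seen[node] + 1
                    queue.append(nxt)
        sums[start] = sum(seen.values())
    return sums


def balaban_j(adjacency):
    """Basic Balaban J index of a connected, hydrogen-suppressed graph."""
    n = len(adjacency)
    edges = {frozenset((i, j)) for i in adjacency for j in adjacency[i]}
    m = len(edges)
    mu = m - n + 1  # cyclomatic number (number of rings)
    d = distance_sums(adjacency)
    total = 0.0
    for edge in edges:
        i, j = tuple(edge)
        total += 1.0 / math.sqrt(d[i] * d[j])
    return m / (mu + 1) * total


# Two C4H10 isomers with the same number of atoms and bonds.
n_butane = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
isobutane = {0: [1, 2, 3], 1: [0], 2: [0], 3: [0]}
print(round(balaban_j(n_butane), 4), round(balaban_j(isobutane), 4))
```

Both isomers have four atoms and three bonds, yet the branched skeleton receives a clearly higher J value than the linear one.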

Kappa 1/2/3

The Kappa molecular shape index is another type of topological index that focuses on molecular shape information. The kappa molecular shape index quantifies the difference between the most complex and the potentially simplest conformation62. Kappa 1, 2, and 3 are able to discriminate between isomers that cannot be distinguished if measured by the number of atoms or bonds63. Therefore, kappa molecular shape indices are reliable descriptors for measuring the overall connectivity of a molecule.
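As a small illustration of how a shape index separates isomers, the sketch below evaluates the first- and second-order indices in their basic form, κ1 = A(A − 1)² / P1² and κ2 = (A − 1)(A − 2)² / P2², where A is the number of non-hydrogen atoms and P1 and P2 are the numbers of one-bond and two-bond paths. It is an assumption-laden example rather than a reference implementation: it omits the atom-type correction that toolkits may add, it requires at least one two-bond path, and the adjacency-dictionary encoding of the two butane isomers is introduced here only for illustration.

```python
from math import comb


def kappa_1_2(adjacency):
    """Basic first- and second-order Kier shape indices of a hydrogen-suppressed graph."""
    a = len(adjacency)  # number of atoms A
    # Each bond appears twice in the adjacency lists, hence the division by 2.
    p1 = sum(len(nbrs) for nbrs in adjacency.values()) // 2
    # Every pair of bonds meeting at one atom forms a two-bond path.
    p2 = sum(comb(len(nbrs), 2) for nbrs in adjacency.values())
    kappa1 = a * (a - 1) ** 2 / p1 ** 2
    kappa2 = (a - 1) * (a - 2) ** 2 / p2 ** 2
    return kappa1, kappa2


n_butane = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
isobutane = {0: [1, 2, 3], 1: [0], 2: [0], 3: [0]}
print(kappa_1_2(n_butane), kappa_1_2(isobutane))
```

In this form κ1 depends only on the atom and bond counts, so it is identical for the two isomers, whereas κ2 drops for the branched skeleton because branching creates more two-bond paths.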

QED

QED is short for quantitative estimation of drug-likeness, which was proposed to provide practical guidance in drug selection as a refined alternative to Lipinski's rule of five64. QED is an integrated index that combines 8 physical properties of molecules: the molecular weight, the octanol–water partition coefficient, the number of hydrogen bond donors, the number of hydrogen bond acceptors, the molecular polar surface area, the number of rotatable bonds, the number of aromatic rings, and the number of structural alerts. QED has been applied in virtual screening of large compound databases to filter favorable molecules65 and to aid the building and benchmarking of deep learning models for de novo drug design66. QED’s strength is also mirrored by the given Gini importance.
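As a compact restatement of how the eight properties are merged into one index, the unweighted QED of Bickerton et al.64 is the geometric mean of per-property desirability scores. The symbols $d_i$ (the desirability of property $i$, a value between 0 and 1) and $n = 8$ are notation introduced here for illustration.

$$
\mathrm{QED} = \exp\left(\frac{1}{n}\sum_{i=1}^{n}\ln d_i\right)
$$

Because the mean is geometric, a single property with very low desirability pulls the whole score down, while no individual property acts as a hard pass or fail filter.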

EState_VSA4

The EState_VSA descriptor combines the EState (electrotopological state) index and the VSA index. The EState index contains atom-level and molecule-level topology information67. Unlike the Kappa molecular shape index, which emphasizes the structure of molecules, the electrotopological state index reveals the electronegativity of each atom as well as the weighted electronic effect. It has been validated by its strong correlation with the 17O NMR shift in ethers and the binding affinity of various ligands68,69. VSA is the van der Waals surface area value of an atom, and it is used to determine whether EState indices are calculated. Regarding molecular docking, the electrostatic interaction between the ligand and the receptor is a significant component of the energy evaluation in AutoDock’s semi-empirical force field computation, which may explain why it ranks among the top nine of the 208 descriptors.

BertzCT

The Bertz index was defined to represent the complexity of a molecule quantitatively, derived from molecular graphs70. It comprises two properties of the molecule: the number of lines in the line graph and the number of heteroatoms. As both heterogeneity and connectivity are integrated into one index, abundant information is extracted from the molecule. BertzCT is particularly useful in organic synthesis. It can be used to monitor the complexity of synthetic products and thus to evaluate an intended synthesis route prior to its implementation71.

PEOE_VSA1

PEOE_VSA is another hybrid descriptor consisting of the partial equalization of orbital electronegativity and the van der Waals surface area. The partial equalization of orbital electronegativity (PEOE) was first presented to assess reactivity in chemical synthetic design72. PEOE obtains the partial charges iteratively, based on the atomic orbital electronegativity, throughout the entire molecule. The electronegativity of atoms can be accurately computed in complex organic molecules even with electron withdrawing and donating effects. PEOE was first tested to model the taste of compounds and later applied to QSAR studies that included prediction of anesthetic activity and inhibition of HIV integrase73,74. To simulate an in vivo environment, it is strongly recommended to assign partial charges to ligands in order to obtain a reliable binding energy in AutoDock.

Conclusion

This paper aimed to introduce and further evaluate ALORS as a recommender system-based algorithm selection system that automatically selects LGA variants on a per-instance basis in AutoDock. Features comprising molecular descriptors and fingerprints pertaining to each protein–ligand docking instance have been employed to characterize the chemical compounds. The study has shown that ALORS delivers better results than all candidate algorithms from a fixed algorithm pool. Nine features have been highlighted as significant determinants of the protein–ligand interaction and are analyzed to inspire exploration into chemical features that are critical to docking performance. The findings of this research underline the value of a suitable algorithm selector and suitable features in approaching a molecular docking task that searches for druggable compounds. ALORS has the potential to become the preferred choice for performing protein–ligand docking tasks in CADD research. Moreover, the results of our study add to the rapidly expanding applications of automatic algorithm selection.

However, one limitation of our study is that ACE was the only protein adopted for the docking data generation. Although ALORS works well in the docking case with ACE, the generalizability of our model to other proteins remains to be determined. More proteins should be incorporated into our model to increase the diversity of protein–ligand interactions. Extending the docking scenarios with varied target proteins may therefore present a more comprehensive evaluation of the performance of ALORS as an AS tool. At the same time, hand-selected characteristics of molecules derived from empirical evidence are equally viable options. Hand-selected features that are more specific and relevant can be mixed with algorithm-selected features to achieve more relevance and precision.

Other protein–ligand docking programs such as DOCK, Glide, and CABSdock are also recommended, and the underlying algorithm of each docking platform may be tailored to specific docking situations. AutoDock performs well in automated ligand docking to macromolecules because of its improved LGA search algorithm and empirical binding free energy scoring function, but it remains to be seen whether exhaustive search-based docking programs such as Glide and DOCK that use the Geometric Matching Algorithm perform better in other areas. Further focus can be directed towards evaluating and automatically selecting the best docking programs in different docking scenarios.

During the study, we noticed the increasing prevalence of neural networks (NNs) in protein–ligand interaction prediction. Neural networks, which are composed of layers and neurons to recognize patterns in data such as numerical vectors, images, texts, sounds, and even time series, are widely used for classification or prediction tasks. Within the family of neural networks, graph neural networks (GNNs) rely on characterizing data as graphs that consist of nodes and edges and excel in capturing the nonlinear relation in images compared with traditional regression or classification models75. GNNs are particularly useful for graph data that have relational information. As molecules are bonded structures, natural information for chemicals can be represented as irregular molecular graphs. The image-based features derived from molecules bring about more promising results than the traditional characteristics derived from molecular descriptors76. Consequently, more effort can be put into the implementation of GNNs for better prediction of protein–ligand interaction.

Acknowledgements

This work is supported by Duke Kunshan University Interdisciplinary Research Seed Grant.

Abbreviations

- ACE: Human angiotensin-converting enzyme

- LGA: Lamarckian-genetic algorithm

- ALORS: Algorithm recommender system

- DD: Drug discovery/design

- CADD: Computer-aided drug discovery/design

- SARs: Structure activity relationships

- QSARs: Quantitative structure activity relationships

- AS: Algorithm selection

- PLDP: Protein-ligand docking problem

- NFLT: No free lunch theorem

- GA: Genetic algorithm

- LS: Local search

- CF: Collaborative filtering

- MF: Matrix factorization

- SVD: Singular value decomposition

- RF: Random forest

- FDA: Food and Drug Administration

- MOL: Molecular data file

- PDB: Protein Data Bank

- PDBQT: Protein Data Bank, partial charge (Q), & atom type (T)

- AVG: Average

- PCA: Principal component analysis

- t-SNE: t-distributed stochastic neighbor embedding

- QED: Quantitative estimation of drug-likeness

- PEOE: Partial equalization of orbital electronegativity

- HIV: Human immunodeficiency virus

- NNs: Neural networks

- GNNs: Graph neural networks

Author contributions

M. M. and F. B. conceived and designed the study. T. C. and H. Z. carried out the data generation. M. M., X. S., T. C., and H. Z. implemented the model and visualization. All authors discussed the results and contributed equally to the final manuscript.

Data availability

The receptor, ACE, can be found under PDB ID 1O86, and the docking ligands are in the ZINC15 database: https://zinc15.docking.org/catalogs/dbfda/.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Floyd A. Beckford, Email: floyd.beckford@duke.edu

Mustafa Misir, Email: mustafa.misir@dukekunshan.edu.cn.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-023-35132-5.

References

- 1. Everhardus JA. Drug Design: Medicinal Chemistry. Elsevier; 2017.

- 2. Jeffrey C, Carl R, Parvesh K. The price of progress: Funding and financing alzheimer’s disease drug development. Alzheimer Dementia Trans. Res. Clin. Inter. 2018;20:875. doi: 10.1016/j.trci.2018.04.008.

- 3. Reymond J-L. The chemical space project. Acc. Chem. Res. 2015;48(3):722–730. doi: 10.1021/ar500432k.

- 4. Mullard A. 2020 fda drug approvals. Nat. Rev. Drug Discov. 2021;20(2):85–91. doi: 10.1038/d41573-021-00002-0.

- 5. Edgar L-L, Jurgen B, Jose LM-F. Informatics for chemistry, biology, and biomedical sciences. J. Chem. Inf. Model. 2020;61(1):26–35. doi: 10.1021/acs.jcim.0c01301.

- 6. Wenbo Y, Alexander DM. Computer-Aided Drug Design Methods. In: Jack E, editor. Antibiotics. Springer; 2017. pp. 85–106.

- 7. Stephani JYM, Vijayakumar G, Sunhye H, Sun C. Role of computer-aided drug design in modern drug discovery. Arch. Pharm. Res. 2015;38(9):1686–1701. doi: 10.1007/s12272-015-0640-5.

- 8. Duch W, Swaminathan K, Meller J. Artificial intelligence approaches for rational drug design and discovery. Curr. Pharm. Des. 2007;13(14):1497–1508. doi: 10.2174/138161207780765954.

- 9. Mohammad HB, Khurshid A, Sudeep R, Jalaluddin MA, Mohd A, Mohammad HS, Saif K, Mohammad AK, Ivo P, Inho C. Computer aided drug design: success and limitations. Curr. Pharm. Des. 2016;22(5):572–581. doi: 10.2174/1381612822666151125000550.

- 10. Fernando DP-M, Edgar L-L, Juarez-Mercado KE, Jose LM-F. Computational drug design methods—current and future perspectives. In Silico Drug Des. 2019;2:19–44.

- 11. Rice JR. The algorithm selection problem. Adv. Comput. 1976;15:65–118. doi: 10.1016/S0065-2458(08)60520-3.

- 12. Pascal K, Holger HH, Frank N, Heike T. Automated algorithm selection: Survey and perspectives. Evol. Comput. 2019;27(1):3–45. doi: 10.1162/evco_a_00242.

- 13. Wolpert DH, Macready WG. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997;1:67–82. doi: 10.1109/4235.585893.

- 14. David SG, Garrett MM, Arthur JO. Automated docking of flexible ligands: Applications of autodock. J. Mol. Recogn. 1996;9(1):1–5. doi: 10.1002/(SICI)1099-1352(199601)9:1<1::AID-JMR241>3.0.CO;2-6.

- 15. Garrett MM, David SG, Robert SH, Ruth H, William EH, Richard KB, Arthur JO. Automated docking using a lamarckian genetic algorithm and an empirical binding free energy function. J. Comput. Chem. 1998;19(14):1639–1662. doi: 10.1002/(SICI)1096-987X(19981115)19:14<1639::AID-JCC10>3.0.CO;2-B.

- 16. Emile A, Emile HLA, Jan KL. Local Search in Combinatorial Optimization. Princeton University Press; 2003.

- 17. Mısır M, Sebag M. ALORS: An algorithm recommender system. Artif. Intell. 2017;244:291–314. doi: 10.1016/j.artint.2016.12.001.

- 18. Meng X-Y, Zhang H-X, Mezei M, Cui M. Molecular docking: A powerful approach for structure-based drug discovery. Curr. Comput. Aided Drug Des. 2011;7(2):146–157. doi: 10.2174/157340911795677602.

- 19. Fischer E. Einfluss der configuration auf die wirkung der enzyme. Ber. Dtsch. Chem. Ges. 1894;27(3):2985–2993. doi: 10.1002/cber.18940270364.

- 20. Koshland DE Jr. Correlation of structure and function in enzyme action: Theoretical and experimental tools are leading to correlations between enzyme structure and function. Science. 1963;142(3599):1533–1541. doi: 10.1126/science.142.3599.1533.

- 21. Cherayathumadom MV, Xiaohui J, Tom O, Marvin W. Ligandfit: A novel method for the shape-directed rapid docking of ligands to protein active sites. J. Mol. Gr. Model. 2003;21(4):289–307. doi: 10.1016/S1093-3263(02)00164-X.

- 22. Fredrik O, Garrett MM, Michel FS, Arthur JO, David SG. Automated docking to multiple target structures: incorporation of protein mobility and structural water heterogeneity in autodock. Proteins Struct. Funct. Bioinf. 2002;46(1):34–40. doi: 10.1002/prot.10028.

- 23. Gareth J, Peter W, Robert CG, Andrew RL, Robin T. Development and validation of a genetic algorithm for flexible docking. J. Mol. Biol. 1997;267(3):727–748. doi: 10.1006/jmbi.1996.0897.

- 24. Richard AF, Jay LB, Robert BM, Thomas AH, Jasna JK, Daniel TM, Matthew PR, Eric HK, Mee S, Jason KP, et al. Glide: A new approach for rapid, accurate docking and scoring. 1. Method and assessment of docking accuracy. J. Med. Chem. 2004;47(7):1739–1749. doi: 10.1021/jm0306430.

- 25. Isabella AG, Felipe SP, Laurent ED. Empirical scoring functions for structure-based virtual screening. Front. Pharmacol. 2018;9:1089. doi: 10.3389/fphar.2018.01089.

- 26. Huang S-Y, Grinter SZ, Zou X. Scoring functions and their evaluation methods for protein-ligand docking: Recent advances and future directions. Phys. Chem. Chem. Phys. 2010;12(40):12899–12908. doi: 10.1039/c0cp00151a.

- 27. Garrett MM, Ruth H, William L, Michel FS, Richard KB, David SG, Arthur JO. Autodock4 and autodocktools4: Automated docking with selective receptor flexibility. J. Comput. Chem. 2009;30(16):2785–2791. doi: 10.1002/jcc.21256.

- 28. Gromiha MM. Chapter 7-Protein Interactions. In: Gromiha MM, editor. Protein Bioinformatics. Academic Press; 2010. pp. 247–302.

- 29. Elaine CM, Brian KS, Irwin DK. Automated docking with gridbased energy evaluation. J. Comput. Chem. 1992;13(4):505–524. doi: 10.1002/jcc.540130412.

- 30. Alexander T, Lukas G, Tanja T, Marcel W, Eyke H. Algorithm selection on a meta level. Mach. Learn. 2022;5:417.

- 31. Lin, X., Frank, H., Holger, H. H., & Kevin, L.-B. Satzilla-07: The design and analysis of an algorithm portfolio for sat. In International Conference on Principles and Practice of Constraint Programming 712–727 (Springer, 2007).

- 32. Lars, K. Algorithm selection for combinatorial search problems: A survey. In Data Mining and Constraint Programming 149–190 (Springer, 2016).

- 33. Mario, A. M., Michael, K., & Saman, K. H. The algorithm selection problem on the continuous optimization domain. In Computational Intelligence in Intelligent Data Analysis 75–89 (Springer, 2013).

- 34. Gomes CP, Selman B. Algorithm portfolios. Artif. Intell. 2001;126(1):43–62. doi: 10.1016/S0004-3702(00)00081-3.

- 35. Xu, L., Hoos, H. H. & Leyton-Brown, K. Hydra: Automatically configuring algorithms for portfolio-based selection. In Proceedings of the 24th AAAI Conference on Artificial Intelligence (AAAI) 210–216 (2010).

- 36. Aldy, G., Hoong, C. L., & Mustafa, M. Designing and comparing multiple portfolios of parameter configurations for online algorithm selection. In Proceedings of the 10th Learning and Intelligent OptimizatioN Conference (LION), Vol. 10079 of LNCS 91–106 (Naples, Italy, 2016).

- 37. Andrea, L., Yuri, M., Horst, S., & Vijay, A. S. Deep learning for algorithm portfolios. In Proceedings of the 13th Conference on Artificial Intelligence (AAAI) 1280–1286 (2016).

- 38. Bernhard, P., Hilan, B., & Christophe, G.-C. Tell me who can learn you and i can tell you who you are: Landmarking various learning algorithms. In Proceedings of the 7th International Conference on Machine Learning (ICML) 743–750 (2000).

- 39. Xiaoyuan S, Taghi MK. A survey of collaborative filtering techniques. Adv. Artif. Intell. 2009;2009:4.

- 40. Mustafa, M. Algorithm selection on adaptive operator selection: A case study on genetic algorithms. In the 15th Learning and Intelligent Optimization Conference (LION), LNCS 12931 (2021).

- 41. Mustafa, M., Aldy, G., & Pieter, V. Algorithm selection for the team orienteering problem. In European Conference on Evolutionary Computation in Combinatorial Optimization (EvoCOP) (Part of EvoStar), Vol. 13222 of LNCS 33–45 (Springer, 2022).

- 42. Mustafa, M. Algorithm selection across algorithm configurators: A case study on multi-objective optimization. In IEEE Symposium Series on Computational Intelligence (SSCI). IEEE (2022).

- 43. Mustafa, M. Cross-domain algorithm selection: Algorithm selection across selection hyper-heuristics. In IEEE Symposium Series on Computational Intelligence (SSCI). IEEE (2022).

- 44. Mustafa, M. Generalized automated energy function selection for protein structure prediction on 2d and 3d hp models. In IEEE Symposium Series on Computational Intelligence (SSCI) (2021).

- 45. Mustafa, M. Selection-based per-instance heuristic generation for protein structure prediction of 2d hp model. In IEEE Symposium Series on Computational Intelligence (SSCI). IEEE (2021).

- 46. Gene HG, Christian R. Singular value decomposition and least squares solutions. Numerische Mathematik. 1970;14(5):403–420. doi: 10.1007/BF02163027.

- 47. Breiman L. Random forests. Mach. Learn. 2001;45(1):5–32. doi: 10.1023/A:1010933404324.

- 48. Sterling T, Irwin JJ. Zinc 15-ligand discovery for everyone. J. Chem. Inf. Model. 2015;55(11):2324–2337. doi: 10.1021/acs.jcim.5b00559.

- 49. Ramanathan N, Sylva LUS, Edward DS, Acharya KR. Crystal structure of the human angiotensin-converting enzyme–lisinopril complex. Nature. 2003;421(6922):551–554. doi: 10.1038/nature01370.

- 50. Noel MO, Michael B, Craig AJ, Chris M, Tim V, Geoffrey RH. Open babel: An open chemical toolbox. J. Cheminf. 2011;3(1):1–14.

- 51. Greg L, et al. Rdkit: A software suite for cheminformatics, computational chemistry, and predictive modeling. Greg Landrum. 2013;2:47.

- 52. Chun Wei Yap. Padel-descriptor: An open source software to calculate molecular descriptors and fingerprints. J. Comput. Chem. 2011;32(7):1466–1474. doi: 10.1002/jcc.21707.

- 53. Sunghwan K, Jie C, Tiejun C, Asta G, Jia H, Siqian H, Qingliang L, Benjamin AS, Paul AT, Bo Y, et al. Pubchem in 2021: New data content and improved web interfaces. Nucleic Acids Res. 2021;49(D1):D1388–D1395. doi: 10.1093/nar/gkaa971.

- 54. Anil KJ, Narasimha MM, Patrick JF. Data clustering: A review. ACM Comput. Surv. 1999;31(3):264–323. doi: 10.1145/331499.331504.

- 55. Peter JR. Silhouettes: A graphical aid to the interpretation and validation of cluster analysis. J. Comput. Appl. Math. 1987;20:53–65. doi: 10.1016/0377-0427(87)90125-7.

- 56. Khanna V, Ranganathan S. Physicochemical property space distribution among human metabolites, drugs and toxins. BMC Bioinf. 2009;10(15):S10. doi: 10.1186/1471-2105-10-S15-S10.

- 57. Tudor IO, Andrew MD, Simon JT, Paul DL. Is there a difference between leads and drugs? A historical perspective. J. Chem. Inform. Comput. Sci. 2001;41(5):1308–1315. doi: 10.1021/ci010366a.

- 58. Daniel FV, Stephen RJ, Hung-Yuan C, Brian RS, Keith WW, Kenneth DK. Molecular properties that influence the oral bioavailability of drug candidates. J. Med. Chem. 2002;45(12):2615–2623. doi: 10.1021/jm020017n.

- 59. Alexandru TB. Highly discriminating distance-based topological index. Chem. Phys. Lett. 1982;89(5):399–404. doi: 10.1016/0009-2614(82)80009-2.

- 60. Roy K. Topological descriptors in drug design and modeling studies. Mol. Diversity. 2004;8(4):321–323. doi: 10.1023/B:MODI.0000047519.35591.b7.

- 61. Zlatko M, Nenad T. A Graph-Theoretical Approach to Structure-Property Relationships. Springer; 1992.

- 62. Lowell HH, Lemont BK. The molecular connectivity chi indexes and kappa shape indexes in structure-property modeling. Rev. Comput. Chem. 1991;5:367–422.

- 63. Lemont BK. A shape index from molecular graphs. Quant. Struct.-Activity Relation. 1985;4(3):109–116. doi: 10.1002/qsar.19850040303.

- 64. Bickerton GR, Paolini GV, Besnard J, Muresan S, Hopkins AL. Quantifying the chemical beauty of drugs. Nat. Chem. 2012;4(2):90–98. doi: 10.1038/nchem.1243.

- 65. Artem C, Eugene NM, Denis F, Alexandre V, Igor IB, Mark C, John D, Paola G, Yvonne CM, Roberto T, et al. Qsar modeling: Where have you been? Where are you going to? J. Med. Chem. 2014;57(12):4977–5010. doi: 10.1021/jm4004285.

- 66. Rafael G-B, Jennifer NW, David D, Jose MH-L, Benjamin S-L, Dennis S, Jorge A-I, Timothy DH, Ryan PA, Alan A-G. Automatic chemical design using a data-driven continuous representation of molecules. ACS Central Sci. 2018;4(2):268–276. doi: 10.1021/acscentsci.7b00572.

- 67. Lowell HH, Brian M, Lemont BK. The electrotopological state: an atom index for qsar. Quant. Struct. Activity Relation. 1991;10(1):43–51. doi: 10.1002/qsar.19910100108.

- 68. Lemont BK, Lowell HH. An electrotopological-state index for atoms in molecules. Pharm. Res. 1990;7(8):801–807. doi: 10.1023/A:1015952613760.

- 69. de Carolina G, Lemont BK, Lowell HH. Qsar modeling with the electrotopological state indices: Corticosteroids. J. Comput. Aided Mol. Des. 1998;12(6):557–561. doi: 10.1023/A:1008048822117.

- 70. Steven HB. The first general index of molecular complexity. J. Am. Chem. Soc. 1981;103(12):3599–3601. doi: 10.1021/ja00402a071.

- 71. Steven HB. Convergence, molecular complexity, and synthetic analysis. J. Am. Chem. Soc. 1982;104(21):5801–5803. doi: 10.1021/ja00385a049.

- 72. Gasteiger J, Marsili M. Iterative partial equalization of orbital electronegativity—a rapid access to atomic charges. Tetrahedron. 1980;36(22):3219–3228. doi: 10.1016/0040-4020(80)80168-2.

- 73. Sven H, Svante W, William JD, Johann G, Michael GH. The anesthetic activity and toxicity of halogenated ethyl methyl ethers, a multivariate QSAR modelled by PLS. Quant. Struct. Activity Relation. 1985;4(1):1–11. doi: 10.1002/qsar.19850040102.

- 74. Hongbin Y, Abby LP. QSAR studies of HIV-1 integrase inhibition. Bioorganic Med. Chem. 2002;10(12):4169–4183. doi: 10.1016/S0968-0896(02)00332-2.

- 75. Zhou J, Cui G, Shengding Hu, Zhang Z, Yang C, Liu Z, Wang L, Li C, Sun M. Graph neural networks: A review of methods and applications. AI Open. 2020;1:57–81. doi: 10.1016/j.aiopen.2021.01.001.

- 76. Dejun J, Zhenxing W, Chang-Yu H, Guangyong C, Ben L, Zhe W, Chao S, Dongsheng C, Jian W, Tingjun H. Could graph neural networks learn better molecular representation for drug discovery? a comparison study of descriptor-based and graph-based models. J. Cheminform. 2021;13(1):1–23. doi: 10.1186/s13321-020-00479-8.
