Abstract

The human brain is constantly subjected to a multimodal stream of probabilistic sensory inputs. Electroencephalography (EEG) signatures, such as the mismatch negativity (MMN) and the P3, can give valuable insight into neuronal probabilistic inference. Although reported for different modalities, mismatch responses have largely been studied in isolation, with a strong focus on the auditory MMN. To investigate the extent to which early and late mismatch responses across modalities represent comparable signatures of uni‐ and cross‐modal probabilistic inference in the hierarchically structured cortex, we recorded EEG from 32 participants undergoing a novel tri‐modal roving stimulus paradigm. The employed sequences consisted of high and low intensity stimuli in the auditory, somatosensory and visual modalities and were governed by unimodal transition probabilities and cross‐modal conditional dependencies. We found modality‐specific signatures of MMN (~100–200 ms) in all three modalities, which were source localized to the respective sensory cortices and shared right‐lateralized prefrontal sources. Additionally, we identified a cross‐modal signature of mismatch processing in the P3a time range (~300–350 ms), for which a common network with frontal dominance was found. Across modalities, the mismatch responses showed highly comparable parametric effects of stimulus train length, which were driven by standard and deviant response modulations in opposite directions. Strikingly, P3a responses across modalities were increased for mispredicted stimuli with low cross‐modal conditional probability, suggesting sensitivity to multimodal (global) predictive sequence properties. Finally, model comparisons indicated that the observed single‐trial dynamics were best captured by Bayesian learning models tracking unimodal stimulus transitions as well as cross‐modal conditional dependencies.

Keywords: Bayesian inference, cross‐modal, mismatch negativity, multisensory, P3, predictive processing, surprise

Using a novel tri‐modal version of the roving stimulus paradigm, we elicited modality‐specific mismatch negativity and modality‐independent P3 responses in the auditory, somatosensory, and visual modalities, which were source localized to sensory‐specific cortices as well as shared frontal sources. Across modalities, P3 responses were sensitive to cross‐modal predictability. Model comparison suggests that single‐trial mismatch responses reflect signatures of Bayesian inference tracking stimulus transitions and cross‐modal conditional dependencies.

1. INTRODUCTION

Humans inhabit a highly structured environment governed by complex regularities. The brain receives information about these regularities through a multimodal stream of sensory inputs, from which it ultimately constructs a perceptual representation of the world. The sensory system is thought to capitalize on statistical regularities to efficiently guide interaction with the world, enabling anticipation and rapid detection of sensory changes (Bregman, 1994; Dehaene et al., 2015; Friston, 2005; Frost et al., 2015; Gregory, 1980; Winkler et al., 2009).

Neuronal responses to deviations from sensory regularities can offer valuable windows into how the brain processes statistical properties of the environment and forms corresponding sensory predictions. The presentation of rare deviant sounds within a sequence of repeating standard sounds induces well‐known mismatch responses (MMRs) that can be recorded with electroencephalography (EEG), such as the mismatch negativity (MMN; Naatanen et al., 1978; Naatanen et al., 2007) and the P3 (or P300; Polich, 2007; Squires et al., 1975; Sutton et al., 1965). The MMN is defined as a negative EEG component, obtained by subtracting standard from deviant responses, between ~100 and 200 ms poststimulus. Although the MMN has primarily been researched in the auditory modality, similar early mismatch components have been reported for other sensory modalities, including the visual (Kimura et al., 2011; Pazo‐Alvarez et al., 2003; Stefanics et al., 2014) and, to a lesser extent, the somatosensory modality (Andersen & Lundqvist, 2019; Hu et al., 2013; Kekoni et al., 1997). The P3 is a later, positive‐going component elicited by novelty between 200 and 600 ms and recorded around central electrodes; it has been described for the auditory, somatosensory, and visual modalities and is known for its modality‐independent characteristics (Escera et al., 2000; Friedman et al., 2001; Knight & Scabini, 1998; Polich, 2007; Schroger, 1996).

Despite being one of the most well‐studied EEG components, the neuronal generation of the MMN remains the subject of ongoing debate (Garrido, Kilner, Stephan, & Friston, 2009; May & Tiitinen, 2010; Naatanen et al., 2005). Two prominent but opposing accounts cast the MMN as adaptation‐based or memory‐based, respectively. Adaptation‐based accounts argue that the observed differences between standard and deviant responses primarily result from neuronal attenuation leading to stimulus specific adaptation (SSA; Jaaskelainen et al., 2004; May et al., 1999). In animals, SSA has been shown to result in response patterns similar to the MMN (Ulanovsky et al., 2003; Ulanovsky et al., 2004) and simulation work suggests that different types of MMN‐like responses can be reproduced by pure adaptation models (May & Tiitinen, 2010). However, it remains unclear if the full range of MMN characteristics can be explained by adaptation alone (Fitzgerald & Todd, 2020; Garrido, Kilner, Stephan, & Friston, 2009; Wacongne et al., 2012). The memory‐based view, on the other hand, suggests that the MMN is a marker of change detection based on sensory memory trace formation (Näätänen, 1990; Naatanen et al., 2005; Naatanen & Näätänen, 1992). The memory trace stores local information on stimulus regularity, against which incoming sensory inputs are compared to detect changes in the current sensory stream.

Although this aspect was largely neglected by earlier interpretations of the MMN, it is becoming increasingly clear that key empirical features of MMRs concern stimulus predictability rather than stimulus change per se. The MMN has been reported in response to abstract rule violations (Paavilainen, 2013), unexpected stimulus repetitions (Alain et al., 1994; Horvath & Winkler, 2004; Macdonald & Campbell, 2011) and unexpected stimulus omissions (Heilbron & Chait, 2018; Hughes et al., 2001; Salisbury, 2012; Wacongne et al., 2011; Yabe et al., 1997). Similar characteristics have been reported for P3 MMRs (Duncan et al., 2009; Prete et al., 2022) and both MMN and P3 responses have been shown to increase for unexpected compared to expected deviants (Schroger et al., 2015; Sussman, 2005; Sussman et al., 1998). Insights concerning the predictive nature of MMRs have led to further development of the memory‐based account of MMN generation into the model‐adjustment hypothesis (Winkler, 2007). This view assumes a perceptual model that is informed by previous stimulus exposure and continually predicts incoming sensory inputs. The model is updated whenever inputs diverge from current predictions, and the MMN is hypothesized to constitute a marker of such divergence.

The model‐adjustment hypothesis is in line with the increasingly influential view that the brain engages in perceptual inference to anticipate future sensory inputs (Friston, 2005; Gregory, 1980; Von Helmholtz, 1867). Related theories regard the brain as an inference engine and come with neuronal implementation schemes that accomplish probabilistic (Bayesian) inference in a biologically plausible manner (Bastos et al., 2012; Friston, 2005, 2010). Process theories such as predictive coding assume that the brain maintains a generative model of its environment, which is continuously updated by comparing incoming sensory information with model predictions at different levels of the cortical hierarchy (Friston, 2005, 2010; Rao & Ballard, 1999; Winkler & Czigler, 2012). Within this framework, the differential contributions of SSA and change detection to the MMN are proposed to result from the same underlying process of prediction error minimization, mediated by different post‐synaptic changes to (predicted) sensory inputs (Auksztulewicz & Friston, 2016; Garrido et al., 2008; Garrido, Kilner, Kiebel, & Friston, 2009). As such, predictive coding has the potential to unify previously opposing theories of MMN generation (Garrido et al., 2008; Garrido, Kilner, Kiebel, & Friston, 2009; Garrido, Kilner, Stephan, & Friston, 2009) while accounting for its key empirical features (Heilbron & Chait, 2018; Wacongne et al., 2012).

Given that predictive accounts of brain function are proposed to be universal, reports of comparable MMRs across different modalities are of particular interest. So far, mismatch signals have been primarily studied in isolation, with a strong focus on the auditory system. However, key properties of the auditory MMN, such as omission responses and modulations by predictability, have also been reported for the visual (Czigler et al., 2006; Kok et al., 2014) and the somatosensory MMN (Andersen & Lundqvist, 2019; Naeije et al., 2018), and modeling studies in all three modalities suggest that MMRs may reflect signatures of Bayesian learning (BL; Gijsen et al., 2021; Lieder et al., 2013; Maheu et al., 2019; Ostwald et al., 2012; Stefanics et al., 2018). While studies directly investigating mismatch signals in response to multimodal sensory inputs are rare, previous research indicates a ubiquitous role for cross‐modal probabilistic learning. The brain tends to automatically integrate auditory, somatosensory, and visual stimuli during sequence processing (Bresciani et al., 2006, 2008; Frost et al., 2015) and cross‐modal perceptual associations can influence statistical learning of sequence regularities (Andric et al., 2017; Parmentier et al., 2011), modulate MMRs (Besle et al., 2005; Butler et al., 2012; Friedel et al., 2020; Kiat, 2018; Zhao et al., 2015) and influence subsequent unimodal processing in various ways (Shams et al., 2011). Recent advances in modeling Bayesian causal inference suggest that the main computational stages of multimodal inference evolve along a multisensory hierarchy involving early sensory segregation followed by mid‐latency sensory fusion and late Bayesian causal inference (Cao et al., 2019; Rohe et al., 2019; Rohe & Noppeney, 2015). However, the extent to which the MMN and P3 reflect these stages, and whether they should be considered sensory‐specific signatures of regularity violation or the result of modality‐independent computations in an underlying predictive network, is not fully understood.

The current study aimed to investigate the commonalities and differences between MMRs in different modalities in a single experiment and to elucidate to what extent they reflect local (unimodal) or global (cross‐modal) computations. To this end, we employed a roving stimulus paradigm, in which auditory, somatosensory, and visual stimuli were simultaneously presented in a probabilistic tri‐modal stimulus stream.

Typically, MMRs are studied with the oddball paradigm, in which rarely presented “oddball” stimuli deviate from frequently presented standard stimuli in some physical feature, such as sound pitch or stimulus intensity. The roving stimulus paradigm, on the other hand, defines deviants and standards in terms of their local sequence position, while the frequency of occurrence of their stimulus features across the sequence is equal (Baldeweg et al., 2004; Cowan et al., 1993). The deviant is defined as the first stimulus that breaks a train of repeating (standard) stimuli. With repetition, the deviant subsequently becomes the new standard, defining a train of stimulus repetitions. Thus, the roving stimulus paradigm is an excellent tool to experimentally induce MMRs, while controlling for differences in physical stimulus features.
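The roving logic can be made concrete with a short sketch that labels each stimulus of one modality's binary sequence (0 = low, 1 = high) as standard or deviant. The function name `label_roving` and the counting convention are illustrative assumptions: a standard's count is its position among the repetitions of the current train (first repetition = 1), and a deviant's count is the number of repetitions that preceded it.

```python
from typing import List, Tuple


def label_roving(seq: List[int]) -> List[Tuple[str, int]]:
    """Label a binary roving sequence (hypothetical helper, illustrative convention).

    Returns one (role, count) pair per stimulus:
      - 'standard': count = position among repetitions of the current train (1, 2, ...)
      - 'deviant':  count = number of repetitions that preceded the change
      - 'first':    the very first stimulus has no predecessor
    """
    labels: List[Tuple[str, int]] = []
    run = 0  # repetitions since the last change
    for t, x in enumerate(seq):
        if t == 0:
            labels.append(("first", 0))
        elif x == seq[t - 1]:
            run += 1                      # repetition: extend the current train
            labels.append(("standard", run))
        else:
            labels.append(("deviant", run))
            run = 0                       # the deviant starts a new train
    return labels
```

For example, `label_roving([0, 0, 0, 1, 1])` yields `[("first", 0), ("standard", 1), ("standard", 2), ("deviant", 2), ("standard", 1)]`: the same physical stimulus (high intensity) is a deviant on the fourth trial and a standard on the fifth, which is why the paradigm controls for physical stimulus features.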

Based on a probabilistic model, we generated sequences of high and low intensity stimuli that were governed by unimodal transition probabilities as well as cross‐modal conditional dependencies. This allowed us to test to what extent early and late MMRs are sensitive to local and global violations of statistical regularities and to draw conclusions regarding their potential role in cross‐modal hierarchical inference. Specifically, we extracted the MMN and P3 MMRs for each modality and investigated their modality specific and modality general response properties regarding stimulus repetition and change, as well as their sensitivity to cross‐modal predictive information. Further, we used source localization to investigate modality specific and modality general neuronal generators of MMRs. Finally, we complemented our average‐based analyses with single‐trial modeling to investigate if signatures of unimodal and cross‐modal Bayesian inference can account for trial‐to‐trial fluctuations in the MMN and P3 amplitudes.

2. MATERIALS AND METHODS

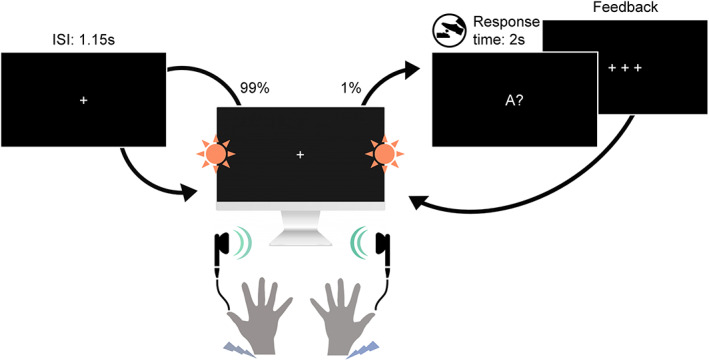

Participants underwent a novel multimodal version of the roving stimulus paradigm. Our paradigm, depicted in Figure 1, consisted of simultaneously presented auditory (A), somatosensory (S), and visual (V) stimuli, which each alternated between two different intensity levels (“low” and “high”). The tri‐modal stimulus sequences originated from a single probabilistic model (described in Section 2.3), resulting in different combinations of low and high stimuli across the three modalities in each trial.

FIGURE 1.

Experimental paradigm. Participants were seated in front of a screen and received sequences of simultaneously presented bilateral auditory beep stimuli (green), somatosensory electrical pulse stimuli (purple) and visual flash stimuli (orange) each at either low or high intensity. On consecutive trials, stimuli within each modality either repeated the previous stimulus intensity of that modality (standard) or alternated to the other intensity (deviant). This created tri‐modal roving stimulus sequences, where the repetition/alternation probability in each modality was determined by a single probabilistic model (see Section 2.3). In 1% of trials (catch trials) the fixation cross changed to one of the three letters A, T, or V, interrupting the stimulus sequence. The letter prompted participants to indicate whether the last auditory (letter A), somatosensory (letter T for “tactile”) or visual (letter V) stimulus, respectively, was of high or low intensity. Responses were given with a left or right foot pedal press using the right foot.

2.1. Participants

Thirty‐four healthy volunteers (19–43 years old, mean age: 26, 22 females, all right‐handed), recruited from the student body of the Freie Universität Berlin and the general public, participated for monetary compensation or an equivalent in course credit. The study was approved by the local ethics committee of the Freie Universität Berlin and written informed consent was obtained from all participants prior to the experiment.

2.2. Experimental setup

Each trial consisted of three bilateral stimuli (A, S, and V) that were presented simultaneously by triggering three instantaneous outputs of a data acquisition card (National Instruments Corporation, Austin, Texas, USA) every 1150 ms (inter‐stimulus interval).

Auditory stimuli were presented via in‐ear headphones (JBL, Los Angeles, California, USA) to both ears and consisted of sinusoidal waves of 500 Hz and 100 ms duration that were modulated by two different amplitudes. The amplitudes were individually adjusted with the participants to obtain two clearly distinguishable intensities (mean of the low intensity stimulus: ; mean of the high intensity stimulus: ).

Somatosensory stimuli were administered with two DS5 isolated bipolar constant current stimulators (Digitimer Limited, Welwyn Garden City, Hertfordshire, UK) via adhesive electrodes (GVB‐geliMED GmbH, Bad Segeberg, Germany) attached to the wrists of both arms. The stimuli consisted of electrical rectangular pulses of 0.2 ms duration. To account for interpersonal differences in sensory thresholds, the two intensity levels used in the experiment were determined on an individual basis. The low intensity level (mean: ) was set in proximity to the detection threshold yet high enough to be clearly perceivable (and judged to be the same intensity on both sides). The high intensity level (mean: ) was determined for each participant to be easily distinguishable from the low intensity level yet remaining non‐painful and below the motor threshold.

Visual stimuli were presented via light emitting diodes (LEDs) and transmitted through optical fiber cables mounted, vertically centered, on both sides of a monitor. The visual flashes consisted of rectangular waves of 100 ms duration that were modulated by two different amplitudes (low intensity stimulus: ; high intensity stimulus: ) that were determined to be clearly perceivable and distinguishable prior to the experiment. Participants were seated at a distance of about 60 cm from the screen such that the LEDs were placed within the visual field at a visual angle of about 67°.

In each of six experimental runs of 11.5 min, a sequence of 600 stimulus combinations was presented. To ensure that participants maintained attention throughout the experiment and to encourage monitoring of all three stimulation modalities, participants were instructed to respond to occasional catch trials (target questions) via foot pedals. In six trials randomly placed within each run the fixation cross changed to one of the letters A, T, or V followed by a question mark. This prompted participants to report if the most recent stimulus (directly before appearance of the letter) in the auditory (letter A), somatosensory (letter T for “tactile”), or visual (letter V) modality was presented with low or high intensity. The right foot was used to press either a left or a right pedal, and the pedal assignment (left = low/right = high or left = high/right = low) was counterbalanced across participants.

It should be noted that our MMR paradigm, in the form of an attended roving stimulus sequence with a relatively long ISI (1.15 s), differs from the classic oddball protocol for MMN elicitation, in which participants are engaged in a primary task, often attending a separate modality. Since this is not easily possible with our paradigm (containing auditory, somatosensory, and visual stimuli), we used catch trials in each modality to ensure that attentional resources were distributed largely equally across the simultaneous stimulus streams. The ISI, at the upper end of the range used for MMN elicitation, was set during piloting so that the perceptually demanding tri‐modal bilateral stimulation was not judged to cause sensory overload.

2.3. Probabilistic sequence generation

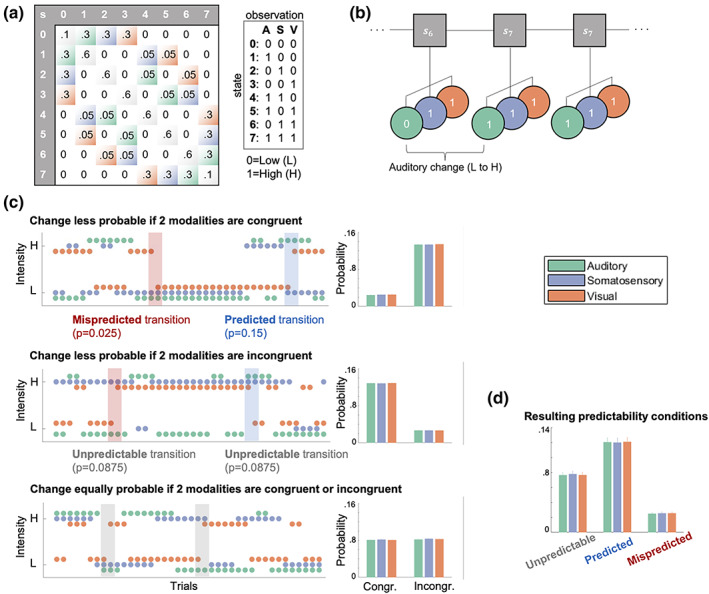

Each of the three sensory modalities (A, S, V) was presented as a binary (low/high) stimulus sequence originating from a common probabilistic model. The model consists of a state $s_t$ at time $t$ evolving according to a Markov chain, with each state deterministically emitting a combination of three binary observations $o_t = [o_t^A o_t^S o_t^V]$ conditional on the preceding observation combination $o_{t-1}$. For example, a transition expressed as [100|000] indicates a unimodal auditory change ($o_{t-1}^A = 0$, $o_t^A = 1$) with repeating somatosensory and visual modalities ($o_t^S = o_{t-1}^S = 0$ and $o_t^V = o_{t-1}^V = 0$). For each stimulus modality, in each state, the other two modalities form either congruent observations ([00] and [11]) or incongruent observations ([01] and [10]), a distinction that was used to manipulate the predictability of transitions in the sequences across different runs of the experiment.

Three types of stimulus sequences, depicted in Figure 2, were generated with different probability settings. The settings determine the change probability within each modality given the arrangement of the other two modalities (i.e., either congruent or incongruent). The first setting defines a lower change probability if the other two modalities are congruent (e.g., for any change in modality A from $o_{t-1}^A$ to $o_t^A \neq o_{t-1}^A$, $p = .025$ if S and V were congruent and $p = .15$ if S and V were incongruent). The second setting defines a lower change probability if the other two modalities are incongruent (e.g., for any change in modality A, $p = .15$ if S and V were congruent and $p = .025$ if S and V were incongruent). The third setting defines equal change probabilities regardless of whether the other two modalities are congruent or incongruent (e.g., for any change in modality A, $p = .0875$ in both cases).
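The generative scheme can be sketched as a Markov chain over the eight (A, S, V) combinations in which, at each step, at most one modality flips and the flip probability of each modality depends on whether the other two are congruent. The change probabilities below are those given for Figure 2c; the setting names, the random initial state, and the function names are hypothetical, and this is an illustration of the described scheme rather than the authors' generator.

```python
import random
from typing import List, Tuple

# Hypothetical setting names; values from Figure 2c:
# (p_change | other two congruent, p_change | other two incongruent)
SETTINGS = {
    "congruent_unlikely": (0.025, 0.15),
    "congruent_likely": (0.15, 0.025),
    "unpredictable": (0.0875, 0.0875),
}

Obs = Tuple[int, int, int]  # (A, S, V), 0 = low, 1 = high


def change_probs(obs: Obs, setting: str) -> List[float]:
    """Per-modality change probability given the congruency of the other two modalities."""
    p_cong, p_incong = SETTINGS[setting]
    probs = []
    for m in range(3):
        a, b = (obs[k] for k in range(3) if k != m)
        probs.append(p_cong if a == b else p_incong)
    return probs


def generate_sequence(n: int, setting: str, seed: int = 0) -> List[Obs]:
    """Sample n tri-modal observations; multimodal changes have probability zero."""
    rng = random.Random(seed)
    obs: Obs = tuple(rng.randint(0, 1) for _ in range(3))  # random start state (assumption)
    seq = [obs]
    for _ in range(n - 1):
        probs = change_probs(obs, setting)
        u, cum = rng.random(), 0.0
        new = list(obs)
        for m, p in enumerate(probs):
            cum += p
            if u < cum:  # flip exactly one modality (unimodal change)
                new[m] = 1 - new[m]
                break
        # if no modality was selected, all three repeat (tri-modal repetition)
        obs = tuple(new)
        seq.append(obs)
    return seq
```

Because at most one modality changes per trial, the remaining probability mass (1 minus the three change probabilities) corresponds to the gray diagonal of tri‐modal repetitions in Figure 2a.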

FIGURE 2.

Probabilistic sequence generation. (a) Schematic of state transition matrix (left). Light color shading depicts transitions in the respective modality which were assigned specific transition probabilities: Green = auditory change, purple = somatosensory change, orange = visual change, gray diagonal = tri‐modal repetition, white = multimodal change (set to zero). States 0–7 correspond to a specific stimulus combination (right), that is, eight permutations of low (0) and high (1) stimuli across three modalities (A = auditory; S = somatosensory; V = visual) as described in the main text. (b) Visualization of states (s) evolving according to a Markov chain emitting tri‐modal binary outcomes. (c) Probability settings of stimulus sequences. Left column: Sequences. Right column: Averaged empirical change probabilities across all sequences. Top: Transition probabilities determine that for each modality a change is unlikely (p = .025) if the other two modalities are congruent (and likely if they are incongruent; p = .15). Middle: Transition probabilities determine that for each modality a change is likely (p = .15) if the other two modalities are congruent (and unlikely if they are incongruent; p = .025). Bottom: Transition probabilities determine that for each modality a change is equally likely (p = .0875) if the other two modalities are congruent or incongruent. (d) Averaged empirical change probabilities for predictability conditions.

In each of six experimental runs, the stimulus sequence was defined by one of the three different probability settings. Each probability setting was used twice during the experiment and the order of the six different sequences was randomized. Participants were unaware of the sequence probabilities and any learning of sequence probabilities was considered to be implicit and task irrelevant.

Following the nomenclature suggested by Arnal and Giraud (2012), the resulting stimulus transitions for each modality within the different sequences can be defined as being either predicted (here higher change probability conditional on congruency/incongruency), mispredicted (here lower change probability conditional on congruency/incongruency) or unpredictable (here equal change probability). For each modality, repetitions are more likely than changes regardless of the type of probability setting and stimulus, resulting in classic roving standard sequences for each modality (mean stimulus train length: 5, mean range of train length: 2–34 stimuli).
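The mapping from probability setting and context to the three predictability labels can be written as a small lookup. In this sketch the setting names are hypothetical, and "others congruent" is taken to refer to the other two modalities on the preceding trial, i.e., the state from which the transition starts. Following the Predictability model described below, the label characterizes the context (whether a change was likely), so it applies to standards and deviants alike.

```python
VALID_SETTINGS = {"congruent_unlikely", "congruent_likely", "unpredictable"}


def predictability(setting: str, others_congruent: bool) -> str:
    """Label a trial of one modality as predicted, mispredicted, or unpredictable.

    setting: hypothetical name of the run's probability setting
    others_congruent: True if the other two modalities form [00] or [11]
    """
    if setting not in VALID_SETTINGS:
        raise ValueError(f"unknown setting: {setting}")
    if setting == "unpredictable":
        return "unpredictable"  # equal change probability in both contexts
    # A change is likely exactly when the setting favors change under congruency
    # and the other two modalities are congruent (or neither holds).
    change_likely = (setting == "congruent_likely") == others_congruent
    return "predicted" if change_likely else "mispredicted"
```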

2.4. EEG data collection and preprocessing

Data were collected using a 64‐channel active electrode EEG system (ActiveTwo, BioSemi, Amsterdam, Netherlands) at a sampling rate of 2048 Hz, with head electrodes placed in accordance with the extended 10–20 system. Individual electrode positions were recorded using an electrode positioning system (zebris Medical GmbH, Isny, Germany).

Preprocessing of the EEG data was performed using SPM12 (Wellcome Trust Centre for Neuroimaging, Institute for Neurology, University College London, London, UK) and in‐house MATLAB scripts (MathWorks, Natick, MA). First, the data were referenced against the average reference, high‐pass filtered (0.01 Hz), and downsampled to 512 Hz. Subsequently, eye blinks were corrected using a topographical confound approach (Berg & Scherg, 1994; Ille et al., 2002). The data were epoched using a peri‐stimulus time interval of −100 to 1050 ms, all trials were visually inspected, and artifactual data were removed. Likewise, catch trials were omitted from all further analyses. Furthermore, the EEG data of two consecutive participants were found to contain excessive noise due to hardware issues, resulting in their exclusion from further analyses and leaving data of 32 participants. Finally, a low‐pass filter was applied (45 Hz) and the preprocessed EEG data were baseline corrected with respect to the pre‐stimulus interval of −100 to −5 ms. To use the general linear model (GLM) implementation of SPM, the electrode data of each participant were linearly interpolated onto a 32 × 32 grid for each time point, resulting in one three‐dimensional image (with dimensions 32 × 32 × 590) per trial. These images were then spatially smoothed with a 12 × 12 mm full‐width at half‐maximum Gaussian kernel to meet the requirements of random field theory, which the SPM software uses to control the family‐wise error rate.
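As an arithmetic check and a sketch of the baseline step, the snippet below builds the epoch time axis at 512 Hz and subtracts each channel's mean over the −100 to −5 ms window. It assumes that stimulus onset falls exactly on a sample (index 0); under that assumption the −100 to 1050 ms epoch contains 590 samples, matching the third dimension of the trial images. This is a NumPy illustration, not the SPM12 routine that was actually used.

```python
import numpy as np

FS = 512.0  # sampling rate (Hz) after downsampling
# Sample grid from -100 to 1050 ms, assuming onset lies exactly on sample index 0
times = np.arange(round(-0.100 * FS), round(1.050 * FS) + 1) / FS


def baseline_correct(epochs: np.ndarray, times: np.ndarray,
                     window=(-0.100, -0.005)) -> np.ndarray:
    """Subtract the mean of the baseline window from every trial and channel.

    epochs: array of shape (n_trials, n_channels, n_times)
    """
    mask = (times >= window[0]) & (times <= window[1])
    return epochs - epochs[..., mask].mean(axis=-1, keepdims=True)


print(times.size)  # number of samples per epoch
```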

2.5. Event‐related responses and statistical analysis

First, to extract basic MMR signals of each modality from the EEG data, we contrasted standard and deviant trials of each modality with paired t‐tests corrected for multiple comparisons by using cluster‐based permutation tests implemented in FieldTrip (Maris & Oostenveld, 2007). Two time windows of interest were defined based on the literature (Duncan et al., 2009) to search for earlier negative clusters between 50 and 300 ms, corresponding to the MMN, and later positive clusters between 200 and 600 ms, corresponding to the P3. Clusters were defined as adjacent electrodes with a cluster‐defining threshold of .
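The logic of a cluster‐based permutation test for a paired standard/deviant contrast can be illustrated in a simplified form: a single channel, clusters formed only over adjacent time points, cluster mass as the sum of supra‐threshold t values, and a null distribution obtained by randomly flipping the sign of each participant's difference wave. The threshold `t_thresh=2.0` and all function names are illustrative assumptions; the actual analysis used FieldTrip with clusters over space and time and the threshold stated above.

```python
import numpy as np


def _runs(mask):
    """Start/stop indices of contiguous runs of True values in a 1-D boolean array."""
    runs, start = [], None
    for i, above in enumerate(mask):
        if above and start is None:
            start = i
        elif not above and start is not None:
            runs.append((start, i))
            start = None
    if start is not None:
        runs.append((start, len(mask)))
    return runs


def paired_t(diff):
    """Paired t-statistic per time point; diff has shape (n_subjects, n_times)."""
    n = diff.shape[0]
    return diff.mean(axis=0) / (diff.std(axis=0, ddof=1) / np.sqrt(n))


def cluster_masses(diff, t_thresh, sign):
    """Supra-threshold temporal clusters and their masses (summed t values)."""
    t = sign * paired_t(diff)
    runs = _runs(t > t_thresh)
    return runs, [float(t[a:b].sum()) for a, b in runs]


def cluster_perm_test(dev, std, t_thresh=2.0, sign=-1, n_perm=1000, seed=0):
    """sign=-1 searches negative clusters (MMN-like), sign=+1 positive ones (P3-like)."""
    rng = np.random.default_rng(seed)
    diff = dev - std
    runs, masses = cluster_masses(diff, t_thresh, sign)
    null = np.zeros(n_perm)
    for i in range(n_perm):
        # Under H0 the labels are exchangeable within a subject: flip the difference sign
        flips = rng.choice([-1.0, 1.0], size=(diff.shape[0], 1))
        _, perm_masses = cluster_masses(diff * flips, t_thresh, sign)
        null[i] = max(perm_masses, default=0.0)  # maximum cluster mass per permutation
    p_values = [(np.sum(null >= m) + 1) / (n_perm + 1) for m in masses]
    return runs, p_values
```

Comparing each observed cluster against the permutation distribution of the *maximum* cluster mass is what controls the family‐wise error rate across all time points.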

For further analyses, GLMs were set up as implemented in SPM12, which allows conditions to be defined at the single‐trial level. To test for effects of stimulus repetitions on standards, deviants, and MMRs (deviants minus standards), a TrainLength model was defined that consisted of 45 regressors: an intercept regressor; 36 regressors coding the repetition train length (trials binned into 1, 2, 3, 4–5, 6–8, and >8 repetitions) for standards (i.e., the position of the standard in the current train) and deviants (i.e., the number of standards preceding the deviant) in each modality; and four global standard and four global deviant regressors. The binning was chosen such that trials were roughly balanced across the bins, corresponding, on average, to around 100 deviant trials and around 500 standard trials in each category for each modality. The global regressors captured the train length (1, 2, 3, >3 repetitions) of standards and deviants regardless of their modality: trials in which standards occurred in all three modalities were coded as global standards, whereas trials in which a deviant occurred in any of the three modalities were coded as global deviants.
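The binning and the regressor count of the TrainLength model can be checked with a short sketch. The bin edges come from the text; the regressor names and helper functions are hypothetical. Note that a deviant directly following a deviant would have zero preceding standards, which these bins do not cover; the text does not state how such trials were handled, so the sketch simply rejects them.

```python
MODALITIES = ("A", "S", "V")
ROLES = ("standard", "deviant")
TRAIN_BINS = ("1", "2", "3", "4-5", "6-8", ">8")  # modality-specific bins
GLOBAL_BINS = ("1", "2", "3", ">3")               # modality-independent bins


def train_bin(n: int) -> str:
    """Map a repetition count (>= 1) to its modality-specific bin."""
    if n < 1:
        raise ValueError("train length must be >= 1")
    if n <= 3:
        return str(n)
    if n <= 5:
        return "4-5"
    if n <= 8:
        return "6-8"
    return ">8"


def global_bin(n: int) -> str:
    """Map a repetition count (>= 1) to its global bin."""
    if n < 1:
        raise ValueError("train length must be >= 1")
    return str(n) if n <= 3 else ">3"


# 1 intercept + 3 modalities x 2 roles x 6 bins + 2 global roles x 4 bins = 45
regressors = (
    ["intercept"]
    + [f"{m}_{r}_{b}" for m in MODALITIES for r in ROLES for b in TRAIN_BINS]
    + [f"global_{r}_{b}" for r in ROLES for b in GLOBAL_BINS]
)
```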

To test for the implicit effect of cross‐modal predictability arising from the different conditional probability settings of the sequences, a Predictability model was defined that consisted of 37 regressors. Besides an intercept regressor, 18 conditions coded standards and deviants of each modality for each of the three conditions described above: unpredictable (trials originating from sequences without conditional dependence between modalities), predicted (trials originating from sequences with conditional dependence, in which a change was likely), and mispredicted (trials originating from sequences with conditional dependence, in which a change was unlikely). On the single‐participant level, these conditions were coded separately for congruent and incongruent trials, resulting in 36 regressors (37 including the intercept). By definition, the number of trials was lowest for regressors of mispredicted trials, on average corresponding to around 60 deviant trials and 800 standard trials per modality.
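The regressor count of the Predictability model follows from crossing modality, trial type, predictability condition, and congruency. In the sketch below the regressor names are hypothetical, and collapsing over congruency to obtain the 18 conditions is an assumption about how the single‐participant regressors relate to the conditions of interest.

```python
from itertools import product

MODALITIES = ("A", "S", "V")
ROLES = ("standard", "deviant")
CONDITIONS = ("unpredictable", "predicted", "mispredicted")
CONGRUENCY = ("congruent", "incongruent")

# Single-participant design: intercept + 3 x 2 x 3 x 2 = 36 condition regressors
regressors = ["intercept"] + [
    "_".join(cell) for cell in product(MODALITIES, ROLES, CONDITIONS, CONGRUENCY)
]

# Dropping the congruency suffix recovers the 18 conditions of interest (assumption)
conditions = sorted({name.rsplit("_", 1)[0] for name in regressors[1:]})
```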

Finally, a P3‐Conjunction model was specified that consisted of seven regressors: an intercept regressor and six regressors coding all standards and deviants for each of the three modalities. This model was used to apply SPM's second level conjunction analysis, contrasting standards and deviants across modalities in search of common P3 effects across modalities.

Each GLM was estimated on the single‐trial data of each participant using restricted maximum likelihood estimation. This yielded β‐parameter estimates for each model regressor over (scalp‐) space and time, which were subsequently analyzed at the group level. Second‐level analyses consisted of a mass‐univariate multiple regression analysis of the individual scalp‐time images with a design matrix specifying regressors for each condition of interest as well as a subject factor. Second‐level beta estimates were contrasted for statistical inference, and multiple comparison correction was achieved with SPM's random field theory‐based FWE correction (Kilner et al., 2005).
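A minimal sketch of a first‐level single‐trial GLM and a deviant‐minus‐standard contrast is shown below. It swaps in ordinary least squares (`numpy.linalg.lstsq`) for SPM's restricted maximum likelihood estimation, and the toy data are simulated. Because the design contains an intercept plus indicators for every condition, it is rank deficient; `lstsq` then returns the minimum‐norm solution, for which only estimable contrasts such as differences between conditions are meaningful.

```python
import numpy as np


def fit_glm_ols(X: np.ndarray, Y: np.ndarray) -> np.ndarray:
    """OLS fit of a single-trial GLM (stand-in for SPM's ReML estimation).

    X: (n_trials, n_regressors) design matrix, possibly rank deficient
    Y: (n_trials, n_features) data, e.g. each trial's scalp-time image flattened
    Returns betas of shape (n_regressors, n_features).
    """
    betas, *_ = np.linalg.lstsq(X, Y, rcond=None)
    return betas


# Toy example: intercept + standard + deviant indicators (simulated data)
rng = np.random.default_rng(0)
is_dev = rng.random(600) < 0.15
X = np.column_stack([np.ones(600), ~is_dev, is_dev]).astype(float)
Y = np.where(is_dev, 3.0, 1.0)[:, None] + 0.1 * rng.normal(size=(600, 5))
betas = fit_glm_ols(X, Y)
contrast = np.array([0.0, -1.0, 1.0]) @ betas  # deviant minus standard, per feature
```

In this toy case the true deviant‐minus‐standard difference is 2.0 at every feature, and the estimated contrast recovers it up to noise even though the individual betas are not uniquely determined.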

2.6. Source localization

To investigate the most likely underlying neuronal sources for the MMN and P3 MMR we applied distributed source reconstruction as implemented in SPM12 to the ERP data. For each participant, the MMN of each modality (auditory, somatosensory, visual) was source localized within a time window of 100–200 ms. For the P3, the average MMR at 330 ms was chosen for source localization as this time point showed the strongest overlap of P3 responses between modalities (based on the results of the P3 conjunction contrast).

Participant‐specific forward models were created using an 8196‐vertex template cortical mesh co‐registered with the individual electrode positions via fiducial markers. An EEG head model based on the boundary element method was used to construct the forward model's lead field. For the participant‐specific source estimates, multiple sparse priors under group constraints were applied. The source estimates were subsequently analyzed at the group level using the GLM implemented in SPM12. Second‐level contrasts consisted of one‐sample t tests for each modality as well as (global) conjunction contrasts across modalities. The resulting statistical parametric maps were thresholded at the peak level with p < .05 after FWE correction. The anatomical correspondence of the MNI coordinates of the cluster peaks was identified via cytoarchitectonic references using the SPM Anatomy toolbox (Eickhoff et al., 2005).

2.7. Single‐trial modeling of EEG data

In addition to the analysis of event‐related potentials, the study aimed to compare different computational strategies of sequence processing potentially underlying neuronal generation of MMRs. To this end, we generated regressors from different BL models as well as a train length dependent change detection (TLCD) model making different predictions for the single‐trial EEG data.

Theories of MMN generation hypothesize that adaptation and memory‐trace‐dependent change detection contribute to the MMN. With prior repetition of stimuli, the response to standard stimuli tends to decrease while the response to deviant stimuli tends to increase. We defined the TLCD model to reflect such reciprocal dynamics of responses to stimulus repetition and change without invoking assumptions of probabilistic inference. The model is defined for each modality separately and tracks the stimulus train length $c_t$ for a given modality by counting stimulus repetitions: $c_t = \delta_t (c_{t-1} + 1)$, where $\delta_t$ takes on the value 1 whenever the current observation $o_t$ is a repetition of the previous observation $o_{t-1}$ and 0 otherwise, which resets the current train length to zero. To form single‐trial predictors of the EEG data, the model outputs values that increase linearly with train length and have opposite signs for standards and deviants:
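The train length counter $c_t = \delta_t (c_{t-1} + 1)$ and a signed single‐trial output can be sketched as follows. The sign convention (negative for standards, positive for deviants) and the choice to read out the preceding train length $c_{t-1}$ at a deviant are assumptions made for illustration; since a regression weight absorbs a global sign, only the opposite signs for standards and deviants matter. The function name `tlcd_regressor` is hypothetical.

```python
from typing import List


def tlcd_regressor(seq: List[int]) -> List[float]:
    """Hypothetical TLCD single-trial regressor for one modality's binary sequence.

    c counts repetitions: c_t = delta_t * (c_{t-1} + 1), reset to 0 on a change.
    Standards output -c_t and deviants output +c_{t-1} (sign convention assumed).
    """
    out: List[float] = []
    c = 0
    for t, x in enumerate(seq):
        if t == 0:
            out.append(0.0)  # no predecessor, so no train length yet (assumption)
            continue
        if x == seq[t - 1]:
            c += 1                # delta_t = 1: extend the train
            out.append(-float(c))  # standard: magnitude grows with train length
        else:
            out.append(float(c))   # deviant: length of the train it breaks
            c = 0                 # delta_t = 0: reset the train length
    return out
```

For `[0, 0, 0, 1, 1]` this gives `[0.0, -1.0, -2.0, 2.0, -1.0]`: the predicted response to standards shrinks with repetition while the predicted deviant response grows with the length of the preceding train.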

In addition to the TLCD model, different BL models were created to contrast the static train length based TLCD model with dynamic generative models tracking transition probabilities. The BL models consist of conjugate Dirichlet‐Categorical models estimating probabilities of observations read out by three different surprise functions: Bayesian surprise (BS), predictive surprise (PS), and confidence‐corrected surprise (CS).

BS quantifies the degree to which an observer adapts their generative model to incorporate new observations (Baldi & Itti, 2010; Itti & Baldi, 2009) and is defined as the Kullback–Leibler (KL) divergence between the belief distributions prior and posterior to the update: $\mathrm{BS}(y_t) = \mathrm{KL}\big(p(s \mid y_{1:t-1}) \,\|\, p(s \mid y_{1:t})\big)$. PS is based on Shannon's (1948) definition of information and is defined as the negative logarithm of the posterior predictive distribution, assigning high surprise to observed events with a low estimated probability of occurrence: $\mathrm{PS}(y_t) = -\ln p(y_t \mid y_{1:t-1})$. CS additionally considers the commitment of the generative model and scales with the negative entropy of the prior distribution (Faraji et al., 2018). It is defined as the KL divergence between the (informed) prior distribution at the current time step and a flat prior distribution $\hat{p}$ updated with the most recent event $y_t$: $\mathrm{CS}(y_t) = \mathrm{KL}\big(p(s \mid y_{1:t-1}) \,\|\, \hat{p}(s \mid y_t)\big)$.
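The three read‐out functions can be made concrete for a single Dirichlet‐Categorical belief. The following Python sketch (standard library only; all helper names are hypothetical) takes the Dirichlet parameters before the update and the index of the observed category, and returns PS, BS, and CS as defined in words above. It uses the closed‐form KL divergence between Dirichlet distributions and a flat Dirichlet(1, ..., 1) prior for CS. It illustrates the definitions and is not the implementation used in the study.

```python
import math


def digamma(x):
    """Digamma function via upward recurrence and an asymptotic series (x > 0)."""
    result = 0.0
    while x < 6.0:
        result -= 1.0 / x
        x += 1.0
    f = 1.0 / (x * x)
    series = f * (1 / 12 - f * (1 / 120 - f * (1 / 252 - f * (1 / 240 - f / 132))))
    return result + math.log(x) - 0.5 / x - series


def kl_dirichlet(a, b):
    """KL( Dir(a) || Dir(b) ) in nats."""
    a0, b0 = sum(a), sum(b)
    kl = math.lgamma(a0) - math.lgamma(b0)
    kl += sum(math.lgamma(bi) - math.lgamma(ai) for ai, bi in zip(a, b))
    kl += sum((ai - bi) * (digamma(ai) - digamma(a0)) for ai, bi in zip(a, b))
    return kl


def surprises(alpha, y):
    """Return (PS, BS, CS) for category index y given pre-update Dirichlet parameters alpha."""
    posterior = [ai + (i == y) for i, ai in enumerate(alpha)]
    flat_updated = [1.0 + (i == y) for i in range(len(alpha))]
    ps = -math.log(alpha[y] / sum(alpha))      # negative log posterior predictive
    bs = kl_dirichlet(alpha, posterior)        # belief before vs. after the update
    cs = kl_dirichlet(alpha, flat_updated)     # informed prior vs. flat prior updated with y
    return ps, bs, cs
```

With a flat belief `[1, 1]`, BS and CS coincide (both 1 − ln 2 ≈ 0.31 nats). With a committed belief `[10, 1]`, the unexpected category 1 yields a much larger CS (about 3.64 nats), illustrating how CS scales with belief commitment.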

Following Faraji et al. (2018), surprise quantifications can be categorized as puzzlement or enlightenment surprise. While puzzlement refers to the mismatch between sensory input and the beliefs of the internal model, closely related to the concept of prediction error, enlightenment refers to the update of beliefs to incorporate new sensory input. In the current study, we were interested in quantifying model inadequacy by means of an unsigned prediction error, as reflected by surprise. Accordingly, throughout the manuscript, by prediction error we do not refer to the specific (signed) reward prediction error used, for example, in reinforcement learning, but rather to the signaling of a prediction mismatch. While both PS and CS are instances of puzzlement surprise, CS is additionally scaled by belief commitment and captures the notion that a low‐probability event is more surprising if commitment to the belief (of this estimate) is high. BS, on the other hand, is an instance of enlightenment surprise and is considered a measure of the update to the generative model resulting from new incoming observations.

A detailed description of the Bayesian observer, its transition probability version, and the surprise read‐out functions can be found in our previous work on somatosensory MMRs (Gijsen et al., 2021). Here, we briefly describe the specifics of the two Dirichlet‐Categorical observer models used in this study: a unimodal and a cross‐modal model. Both observer models receive the stimulus sequence of their respective modality as input and iteratively update a set of parameters with each new observation. In each iteration, the estimated parameters are read out by the surprise functions (BS, PS, and CS) to produce an output that is subsequently used as a predictor for the EEG data.

For each modality, the unimodal Dirichlet‐Categorical model considers a binary sequence with two possible stimulus identities (low and high), $y_t \in \{1, 2\}$, and estimates transition probabilities $p(y_t \mid y_{t-1} = i) = \mathrm{Cat}(y_t; s_i)$ for $i \in \{1, 2\}$, with a set of hidden parameters $s_i$ for each possible transition from $y_{t-1} = i$. This unimodal model does not capture any cross‐modal dependencies in the sequence (i.e., the alternation and repetition probabilities conditional on the tri‐modal stimulus configuration). Therefore, we defined a cross‐modal Dirichlet‐Categorical model to address the question of whether the brain used the conditional dependencies during sequence processing to predict stimulus change. The dependencies in the sequence were independent of stimulus identity but provided information about the probability of repetition or alternation ($a_t = 0$ if $y_t = y_{t-1}$ and $a_t = 1$ otherwise) conditional on the congruency of the other modalities. The cross‐modal model thus estimates alternation probabilities $p(a_t \mid k_t = k) = \mathrm{Cat}(a_t; s^{k})$ for $k \in \{\mathrm{inc}, \mathrm{con}\}$, with a set of hidden parameters $s^{\mathrm{inc}}$ when the other modalities are incongruent and $s^{\mathrm{con}}$ when the other modalities are congruent. Therefore, while the unimodal model learns the probability of stimulus transitions within a modality, the cross‐modal model learns the probability of stimulus alternations within a modality conditional on the congruency of the other modalities. As such, the cross‐modal model provides a minimal implementation of a Bayesian observer that captures the cross‐modal dependencies in the sequences.
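The difference between the two observers lies only in what serves as the conditioning context and what counts as the observed category. The Python sketch below makes this explicit under three assumptions that are ours, not the study's: (i) a flat Dirichlet(1, 1) prior per context, (ii) exponential forgetting implemented by multiplying all stored pseudo‐counts by exp(−τ) at each update, and (iii) a congruency coding in which the two other modalities count as congruent when their current stimuli have the same identity. The class and function names are hypothetical. Only the PS read‐out is shown; BS and CS would be computed from the same pseudo‐counts.

```python
import math


class ConditionalDirichletObserver:
    """Dirichlet-Categorical observer with one flat Dirichlet(1, 1) prior per context."""

    def __init__(self, n_contexts=2, n_categories=2, tau=0.0):
        self.decay = math.exp(-tau)  # assumed forgetting: counts shrink by exp(-tau) per update
        self.counts = [[0.0] * n_categories for _ in range(n_contexts)]

    def predictive_surprise(self, context, category):
        alpha = [1.0 + c for c in self.counts[context]]
        return -math.log(alpha[category] / sum(alpha))

    def update(self, context, category):
        self.counts = [[c * self.decay for c in row] for row in self.counts]
        self.counts[context][category] += 1.0


def unimodal_ps(seq, tau=0.0):
    """PS of each transition y[t-1] -> y[t] in a binary (0 = low, 1 = high) sequence."""
    obs = ConditionalDirichletObserver(tau=tau)
    out = [float("nan")]  # the first stimulus has no transition
    for prev, cur in zip(seq, seq[1:]):
        out.append(obs.predictive_surprise(prev, cur))  # context = previous stimulus
        obs.update(prev, cur)
    return out


def crossmodal_ps(seq, other_a, other_b, tau=0.0):
    """PS of alternation (y[t] != y[t-1]) conditional on congruency of the other two modalities."""
    obs = ConditionalDirichletObserver(tau=tau)
    out = [float("nan")]
    for t in range(1, len(seq)):
        context = int(other_a[t] == other_b[t])  # hypothetical coding: 1 = congruent, 0 = incongruent
        alternation = int(seq[t] != seq[t - 1])
        out.append(obs.predictive_surprise(context, alternation))
        obs.update(context, alternation)
    return out
```

With τ = 0 every past observation keeps full weight; a large τ makes the observer rely almost entirely on the most recent observation in each context.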

2.7.1. Model fitting procedure

The technical details of the model fitting and subsequent Bayesian model selection (BMS) procedures are identical to Gijsen et al. (2021), to which the interested reader is referred for further information. First, the stimulus‐sequence‐specific regressors of each model were obtained for each participant. After z‐score normalization, the regressors were fitted to the single‐trial, event‐related electrode data using a free‐form variational inference algorithm for multiple linear regression (Flandin & Penny, 2007; Penny et al., 2003; Penny et al., 2005). The resulting model‐evidence maps were subsequently subjected to the BMS procedure implemented in SPM12 (Stephan et al., 2009) to draw inferences across participants, with well‐established handling of the accuracy‐complexity trade‐off (Woolrich, 2012).

In total, eight regression models were fit: a null model (offset only), a TLCD regression model and, for each of the three surprise read‐out functions, one regression model including only the unimodal regressors and one additionally including the cross‐modal regressors. The purely unimodal regression model will be called UM, and the regression model including both unimodal and cross‐modal regressors will be called UCM. The design matrix of the TLCD regression model consisted of four regressors: an offset and the predicted parametric change responses for each of the three modality sequences (auditory, somatosensory, visual). Similarly, the design matrix of the UM regression model consisted of four regressors: an offset and the surprise responses of the unimodal model for each of the three modalities. The UCM regression model was identical to the UM regression model but included three additional regressors containing the cross‐modal surprise responses for each modality. Because the UCM regression model is more complex, it is assigned higher model evidence than the reduced UM regression model only if the additional regressors improve the model fit enough to outweigh the added complexity (Stephan et al., 2009).
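The nesting of these design matrices can be illustrated with a small Python helper. The names are hypothetical, and z‐scoring with the population standard deviation is an assumption. The null model has only the offset column, the TLCD and UM models have four columns, and the UCM model has seven.

```python
from statistics import fmean, pstdev


def zscore(x):
    """Standardize one regressor (population standard deviation; assumed choice)."""
    mu, sd = fmean(x), pstdev(x)
    return [(v - mu) / sd for v in x]


def design_matrix(n_trials, regressors):
    """Rows = trials: an offset column followed by one z-scored column per regressor."""
    cols = [zscore(r) for r in regressors]
    return [[1.0] + [col[i] for col in cols] for i in range(n_trials)]
```

For example, `design_matrix(n, [a_um, s_um, v_um])` gives a UM‐style matrix, and appending the three cross‐modal regressors gives a UCM‐style matrix (`a_um` and the like are placeholder names for per‐modality surprise regressors).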

To allow for the possibility of different timescales of stimulus integration (Maheu et al., 2019; Ossmy et al., 2013; Runyan et al., 2017), the integration parameter of the Dirichlet‐Categorical model was optimized for each model, participant, and peri‐stimulus time bin before model selection. To this end, model regressors were fit for a range of 11 tau parameter values ([0, 0.001, 0.0015, 0.002, 0.003, 0.005, 0.01, 0.02, 0.05, 0.1, 0.2]), where tau = 0 corresponds to no forgetting and the nonzero values correspond to integration windows with a half‐life (i.e., the number of stimuli after which an observation's weight has decayed to 0.5) of [600, 462, 346, 231, 138, 69, 34, 13, 6, 3] stimuli. For each case, the value with the best model evidence was chosen.
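Assuming the weight of a past observation decays as exp(−τ·n) over n stimuli, the half‐life is ln 2 / τ. The short Python check below (function name hypothetical) computes it for the tau grid. Under this assumed decay form, the rounded‐down values reproduce the listed half‐lives from τ = 0.0015 upward, whereas τ = 0.001 would give about 693 stimuli.

```python
import math

# tau grid as listed in the Methods; tau = 0 means no forgetting
TAUS = [0, 0.001, 0.0015, 0.002, 0.003, 0.005, 0.01, 0.02, 0.05, 0.1, 0.2]


def half_life(tau):
    """Stimuli until an assumed weight exp(-tau * n) has decayed to 0.5."""
    if tau == 0:
        return math.inf
    return math.log(2) / tau


for tau in TAUS:
    hl = half_life(tau)
    print(f"tau = {tau:<6} -> half-life = {'inf' if math.isinf(hl) else math.floor(hl)} stimuli")
```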

2.7.2. Bayesian model comparison

The estimated model‐evidence maps were used to evaluate the models' relative performance across participants via family‐wise BMS (Penny et al., 2010). The model space was partitioned into three types of families to draw inferences on different aspects of the models. Because the literature provides some evidence that each of the three surprise read‐out functions (BS, PS, CS) captures aspects of EEG MMRs, we included all of them in the family‐wise comparisons to avoid biasing the comparison of the different BL models.

The first model comparison considered the full space of BL models as a single family (BL family) and compared it to the TLCD model (TLCD family) and the null model (NULL family). Since the BL models had their tau parameter optimized, which was not possible for the TLCD model, we applied the same penalization method used in our previous study (Gijsen et al., 2021): the degree to which the optimization inflated model evidence on average was subtracted from the BL models prior to BMS. Specifically, for each participant, electrode, and poststimulus time bin, the difference between the model evidence of the optimized parameter and the average model evidence across all parameter values was computed, and these differences were then averaged across time bins, electrodes, and participants.
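The penalization step can be sketched as follows, assuming the log model evidence of one BL model is available as a nested list indexed by participant, electrode, poststimulus time bin, and tau value. The function name is hypothetical, and whether the penalty was computed per BL model or pooled across BL models is not specified here.

```python
from statistics import fmean


def optimization_penalty(lme):
    """Average evidence inflation caused by picking the best tau.

    lme[p][e][b][k]: log model evidence for participant p, electrode e,
    poststimulus time bin b, and tau value k (one BL model).
    """
    gaps = [
        max(per_tau) - fmean(per_tau)  # optimized tau minus the average over all taus
        for participant in lme
        for electrode in participant
        for per_tau in electrode
    ]
    return fmean(gaps)
```

The penalized evidence of an optimized BL model would then be its best log evidence minus `optimization_penalty(lme)`.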

Subsequent analyses grouped the different BL models into separate families: The second comparison grouped the BL models into two families of UM and UCM models, as well as the null model, to test which electrodes and time points showed influences of unimodal versus cross‐modal processing. The third comparison grouped the BL models into three surprise families and the null model, to test whether the observed MMRs were best captured by PS, BS, or CS.
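For readers unfamiliar with family‐wise random‐effects BMS, the following standard‐library Python sketch follows the variational scheme of Stephan et al. (2009) and the family aggregation described by Penny et al. (2010). Model priors are set so that each family has equal total prior mass, posterior Dirichlet parameters are summed within families, and exceedance probabilities are estimated by Monte Carlo sampling from the family‐level Dirichlet. It is an illustration under these assumptions, not the SPM12 implementation, and the function names are hypothetical.

```python
import math
import random


def digamma(x):
    """Digamma function via upward recurrence and an asymptotic series (x > 0)."""
    result = 0.0
    while x < 6.0:
        result -= 1.0 / x
        x += 1.0
    f = 1.0 / (x * x)
    series = f * (1 / 12 - f * (1 / 120 - f * (1 / 252 - f * (1 / 240 - f / 132))))
    return result + math.log(x) - 0.5 / x - series


def rfx_bms(lme, alpha0, n_iter=200):
    """Variational random-effects BMS; lme[n][k] is the log evidence of model k for subject n."""
    alpha = list(alpha0)
    for _ in range(n_iter):
        dg_total = digamma(sum(alpha))
        beta = [0.0] * len(alpha)
        for row in lme:
            # unnormalized log posterior probability that this subject uses model k
            log_u = [ev + digamma(a) - dg_total for ev, a in zip(row, alpha)]
            top = max(log_u)
            u = [math.exp(v - top) for v in log_u]
            norm = sum(u)
            for k, uk in enumerate(u):
                beta[k] += uk / norm
        alpha = [a0 + b for a0, b in zip(alpha0, beta)]
    return alpha


def family_exceedance(lme, families, n_samples=20000, seed=0):
    """Family exceedance probabilities; families[k] is the family index of model k."""
    n_fam = max(families) + 1
    sizes = [families.count(f) for f in range(n_fam)]
    alpha0 = [1.0 / sizes[f] for f in families]  # equal total prior mass per family
    alpha = rfx_bms(lme, alpha0)
    fam_alpha = [sum(a for a, f in zip(alpha, families) if f == g) for g in range(n_fam)]
    rng = random.Random(seed)
    wins = [0] * n_fam
    for _ in range(n_samples):
        # Dirichlet draw via independent gamma variates (normalizing does not change the argmax)
        draws = [rng.gammavariate(a, 1.0) for a in fam_alpha]
        wins[draws.index(max(draws))] += 1
    return [w / n_samples for w in wins]
```

For example, ten participants whose log evidence favors the single model in family 0 by 10 nats over both models in family 1 give family 0 an exceedance probability close to 1.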

3. RESULTS

3.1. Behavioral results

Participants showed consistent performance in responding to the catch trials during each experimental run, indicating their ability to globally maintain their attention to the tri‐modal stimulus stream. Of the 85.5% responses made in time, 75.3% were correct with an average reaction time of .

3.2. Event‐related potentials

3.2.1. Unimodal MMRs

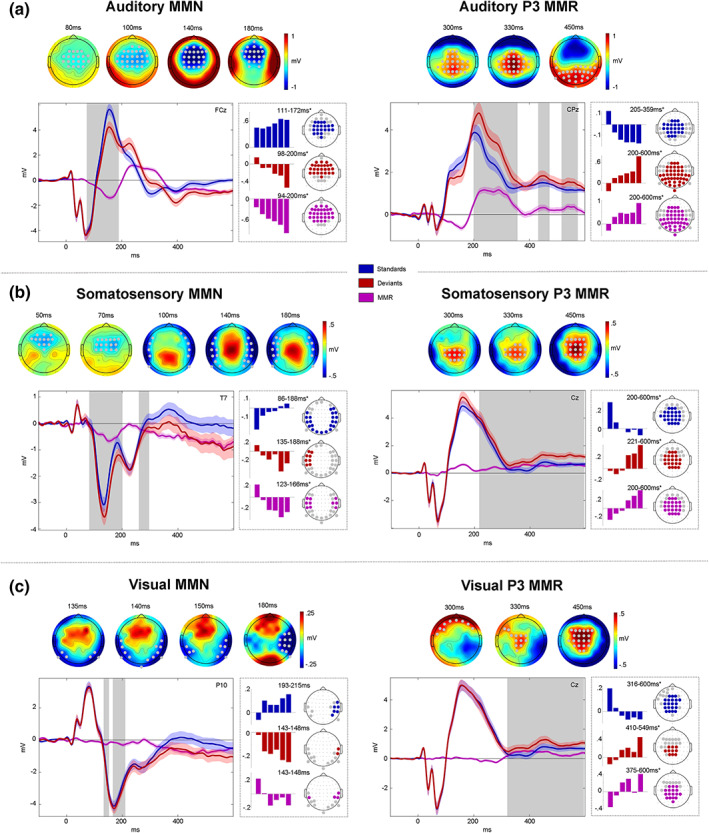

Cluster‐based permutation tests confirmed the presence of early modality specific MMN components as well as later P3 MMRs for all three modalities. Both early and late MMRs showed a modulation by the number of stimulus repetitions, the details of which will be described in the following sections.

3.2.2. Auditory MMRs

The MMN, as the classic MMR, has originally been studied in the auditory modality and is commonly described as the ERP difference wave calculated by subtraction of standard trials from deviant trials (deviants‐standards). This difference wave typically shows a negative deflection at fronto‐central electrodes and corresponding positivity at temporo‐parietal sites, ranging from around 100 to 250 ms (Naatanen et al., 1978; Naatanen et al., 2007). Correspondingly, we find a significant negative fronto‐central auditory MMN cluster between 80 and 200 ms (Figure 3a). Within the MMN cluster, deviants appear to deflect from the standard ERP around the peak of the auditory N1 component and reach their maximum difference around the peak of the subsequent P2 component. In the later time window, we observe positive MMRs at central electrodes between 200 and 400 ms, corresponding to a P3 modulation, as well as beyond 400 ms at progressively more posterior electrodes.

FIGURE 3.

Mismatch responses. Panels (a–c) show mismatch responses (MMRs) of auditory (a), somatosensory (b), and visual (c) modalities. Within panels: Left: mismatch negativity (MMN). Right: P3 MMR. Gray dots (top) and gray boxes (bottom) indicate significant MMR electrodes and time points with . Top row: MMR scalp topographies (deviants‐standards). Bottom row: Grand average ERPs (left panels) and beta parameter estimates of significant linear contrast clusters (right panels). Colored bars depict six beta parameter estimates of the TrainLength GLM (1, 2, 3, 4–5, 6–8, >8 repetitions) averaged across electrodes within linear contrast clusters. Asterisks indicate significance of the linear contrast ().

Within early and late auditory MMR clusters, the response to both standards and deviants was modulated by the number of standard repetitions. The auditory system is known to be sensitive to stimulus repetitions, particularly within the roving standard paradigm (Baldeweg et al., 2004; Cowan et al., 1993; Ulanovsky et al., 2003; Ulanovsky et al., 2004). Therefore, we hypothesized a gradual increase of the auditory response to standard stimuli around the time of the MMN, known as repetition positivity (Baldeweg, 2006; Baldeweg et al., 2004; Haenschel et al., 2005), as well as a reciprocal negative modulation of the corresponding deviant response (Bendixen et al., 2007; Naatanen et al., 2007). Together, these effects should result in a gradual increase of the MMN amplitude with stimulus repetition. Indeed, linear contrasts applied to the GLM beta parameter estimates of the TrainLength model revealed that the MMN increased with the number of standard repetitions preceding a deviant (94–200 ms, cluster ). This effect was driven by a negative linear modulation of the deviant response (98–200 ms, cluster ) as well as a repetition positivity effect on the standards (111–172 ms, cluster ). Similarly, the later P3 MMR increased with standard repetitions (200–600 ms, cluster ), and this effect was driven by an increase of deviant responses (200–600 ms, cluster ) and a decrease of standard responses (205–359 ms, cluster ). Given the temporal difference between standard (around 200–350 ms) and deviant (200–600 ms) train length effects, the parametric modulation of the late MMR beyond 350 ms seems to be primarily driven by the increase in deviant responses.
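The six TrainLength bins shown in Figure 3 (1, 2, 3, 4–5, 6–8, >8 repetitions) and a linear contrast over their beta estimates can be sketched in Python as follows. The contrast weights are an assumption (equally spaced over the ordered bins and centered so that they sum to zero); the exact weights used in the study are not stated in this section, and the function names are hypothetical.

```python
# Train-length bins of the TrainLength GLM: 1, 2, 3, 4-5, 6-8, >8 repetitions
BINS = [(1, 1), (2, 2), (3, 3), (4, 5), (6, 8), (9, None)]

# Assumed contrast weights: equally spaced over the six ordered bins, summing to zero
LINEAR_WEIGHTS = [-5, -3, -1, 1, 3, 5]


def train_length_bin(n_repetitions):
    """Return the index of the bin containing a given number of standard repetitions."""
    for index, (low, high) in enumerate(BINS):
        if n_repetitions >= low and (high is None or n_repetitions <= high):
            return index
    raise ValueError("expected at least one repetition")


def linear_contrast(betas):
    """Weighted sum of the six bin-wise betas; positive when betas increase with train length."""
    if len(betas) != len(LINEAR_WEIGHTS):
        raise ValueError("expected one beta per bin")
    return sum(w * b for w, b in zip(LINEAR_WEIGHTS, betas))
```

Because the weights sum to zero, a constant offset shared by all bins does not contribute to the contrast.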

3.2.3. Somatosensory MMRs

We hypothesized somatosensory MMRs to consist of early bilateral (fronto‐) temporal negativities, resulting primarily from increased N140 components (Kekoni et al., 1997), with a corresponding central positivity extending into a later central P3 component.

After an early mismatch effect starting at ~50 ms at fronto‐central electrodes, a more pronounced bilateral temporal cluster emerged that extended from ~90 to 190 ms and can be considered the somatosensory equivalent of the auditory MMN (Figure 3b). A reversed positive central component can be observed at the time of the somatosensory MMN (sMMN) and throughout the entire later time window (200–600 ms) at which point it can be considered a putative P3 MMR.

Early and late somatosensory MMRs were significantly modulated by stimulus repetition. Bilateral electrodes within the sMMN cluster showed an increase of the sMMN amplitude with repetition (123–166 ms, cluster ). This effect was driven by an increase of deviant negativity (135–188 ms, cluster ) in combination with a positive shift of the standard response (86–188 ms, cluster ). Similarly, the later P3 MMR increased with repetition of standards (200–600 ms, cluster ), jointly driven by increasing deviant responses (221–600 ms, cluster ) and decreasing standard responses (200–600 ms, cluster ).

3.2.4. Visual MMRs

We hypothesized visual MMRs to present as an early MMN at occipital to parieto‐temporal electrodes and a later P3 component at central electrodes. Although less pronounced than its auditory and somatosensory counterparts, we indeed observed a negative visual mismatch component that developed from occipital to parieto‐temporal electrodes between ~130 and 200 ms (Figure 3c). In the later time window, we found a central positive component between ~300 and 600 ms, corresponding to a P3 MMR.

Within the significant visual MMN (vMMN) cluster, the linear contrast testing for repetition effects did not reach significance when correcting clusters for multiple comparisons (). However, some electrodes in this cluster appeared to show a pattern of response increases and decreases similar to that in the auditory and somatosensory modalities, which became apparent at more lenient thresholds. The vMMN tended to become more negative with repetition of standards (143–148 ms, peak ), with opposite tendencies of deviant negative increase (143–148 ms, peak ) and standard decrease (193–215 ms, peak ). Thus, although we cannot conclude with any certainty that the vMMN is modulated by standard repetition, the observed beta parameters are in principle compatible with the effects observed in the auditory and somatosensory modalities (see the discussion for potential reasons for the reduced vMMN in our data).

Within the P3 MMR cluster, on the other hand, we find significant clusters of linear increase of the MMR (375–600 ms, cluster ), again constituted by an increase in deviant responses (410–549 ms, cluster ) and concomitant decrease in standard responses (316–600 ms, cluster ).

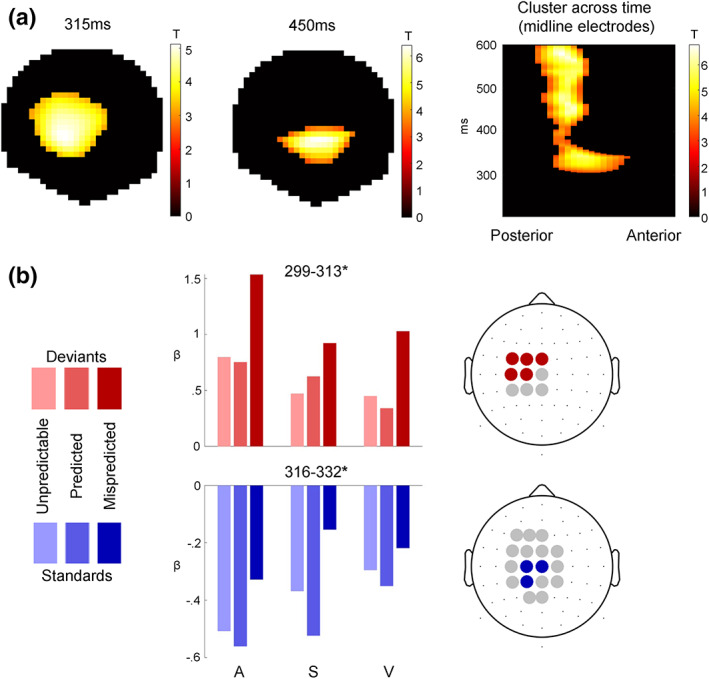

3.2.5. Cross‐modal P3 effects

In search of a common P3 effect to deviant stimuli, we created conjunctions of the deviants > standards contrasts across the auditory, somatosensory, and visual modalities. The conjunction revealed a common significant cluster starting at ~300 ms (cluster ) that comprised anterior central effects around 300–350 ms followed by more posterior effects from 400 to 600 ms (Figure 4a).

FIGURE 4.

Cross‐modal P3 effects. (a) T‐Maps of the conjunction of deviant > standard contrasts across the auditory, somatosensory, and visual modalities. (b) Beta estimates averaged across electrodes within significant clusters with peak p < .05, resulting from two‐way ANOVAs testing for differences between unpredictable, predicted, and mispredicted deviants (red) and standards (blue).

To investigate the modulation of the P3 MMR by predictability, we used two‐way ANOVAs with the three‐level factor modality (auditory, somatosensory, visual) and the three‐level factor predictability condition (predicted, mispredicted, unpredictable). Separate ANOVAs were applied to deviants and standards. We hypothesized that the cross‐modal P3 MMR might be sensitive to multisensory predictive information in the sequence, as the P3 has been shown to be sensitive to global sequence statistics (Bekinschtein et al., 2009; Wacongne et al., 2011) and to be modulated by stimulus predictability (Horvath et al., 2008; Horvath & Bendixen, 2012; Max et al., 2015; Prete et al., 2022; Ritter et al., 1999; Sussman et al., 2003). Indeed, within the common P3 cluster, both deviants (299–313 ms, peak ) and standards (316–332 ms, peak ) show significant differences between predictability conditions. No significant interaction of predictability condition with modality was observed.

Post hoc t tests were applied to the peak beta estimates to investigate the differences between the three pairs of conditions. For the ANOVA concerning the deviant trials, post hoc t tests show a significant difference for mispredicted > predicted (t = 14.667; p < .001, Bonferroni corrected), mispredicted > unpredictable (t = 14.76; p < .001, Bonferroni corrected) and no significant difference between unpredictable > predicted conditions (t = 0.01; p > .05). Similarly, for the ANOVA concerning the standard trials, post hoc t tests show that there is a significant difference for mispredicted > predicted (t = 10.67; p < .001, Bonferroni corrected), mispredicted > unpredictable (t = 6.87; p < .001, Bonferroni corrected) and unpredictable > predicted conditions (t = 3.83; p < .001, Bonferroni corrected).

Taken together, this result suggests that stimuli which were mispredicted based on the predictive multisensory configuration resulted in increased responses within the common P3 cluster compared to predicted or unpredictable stimuli, regardless of their role as standards or deviants in the current stimulus train.

For completeness, we also tested the effect of predictability in the earlier MMN cluster, but we did not observe any significant modulations here (results not shown).

3.3. Source localization

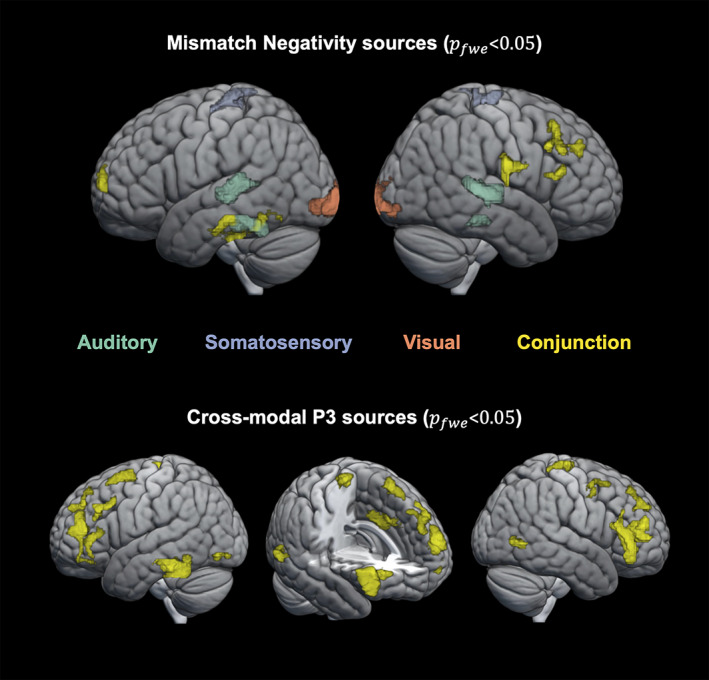

The source reconstruction analysis resulted in significant clusters of activation for each modality's MMN as well as the P3 MMR. The results are depicted in Figure 5 and cytoarchitectonic references are described in Table 1.

FIGURE 5.

Source localization. Top row: significant sources () for the auditory (green), somatosensory (purple), and visual (orange) mismatch negativity (MMN) as well as their conjunction (yellow). Bottom row: significant sources () for the conjunction (yellow) of the P3 mismatch response (MMR) in the auditory, somatosensory, and visual modalities.

TABLE 1.

Source localization and cytoarchitectonic reference

| Contrast | Hemisphere | Cytoarchitecture (probability) | MNI coord. at cytoarch. | t‐Statistics at cytoarch. (p‐value) |
|---|---|---|---|---|
| aMMN | Left | Auditory areas: | | |
| | | TE 4 (67.3%) | −52 −26 0 | 6.2 () |
| | | TE 3 (15.1%) | −59.8 −17.6 5.4 | 4.81 () |
| | | TE 1 (50.7%) | −50.3 −19.2 5.8 | 4.05 () |
| aMMN | Right | Auditory areas: | | |
| | | TE 4 (54.1%) | −56 −26 0 | 7.64 () |
| | | TE 3 (33.6%) | −64.2 −16.4 5 | 5.85 () |
| | | TE 1 (61.9%) | 53 −10.1 3.8 | 3.53 () |
| sMMN | Left | Somatosensory areas: | | |
| | | 3b [S1] (31.4%) | −15.7 −33.7 68.6 | 4.8 () |
| | | OP4 [S2] (38.6%) | −65 −14.8 20.1 | 3.72 () |
| | | OP1 [S2] (16%) | | |
| sMMN | Right | Somatosensory areas: | | |
| | | 3b [S1] (40.3%) | 13.3 −33.7 68.2 | 4.76 () |
| | | OP4 [S2] (46.9%) | 66.5 −10.6 20.9 | 3.51 () |
| | | OP1 [S2] (9.1%) | | |
| vMMN | Left | Visual areas: | | |
| | | hOc1 [V1] (84.6%) | −10 −100 0 | 6.18 () |
| | | hOc2 [V2] (11.1%) | | |
| | | hOc4v [V4] (51%) | −30 −87.9 −11.7 | 5.51 () |
| | | hOc3v [V3] (24.6%) | | |
| | | FG4 (89.5%) | −43.5 −49.4 −13.3 | 3.83 () |
| vMMN | Right | Visual areas: | | |
| | | hOc1 [V1] (86.4%) | 20 −100 −4 | 5.17 () |
| | | hOc2 [V2] (11%) | | |
| | | hOc3 [V3] (43.7%) | 34.4 −88.7 −7.7 | 4.7 () |
| | | hOc4 [V4] (23.8%) | | |
| | | FG4 (59.8%) | 50.3 −42.5 −18.8 | 3.82 () |
| MMN conjunction | Left | Frontal pole (28%) | −14 62 8 | 2.56 () |
| | | Inferior temporal gyrus (49%) | −50 −24 −28 | 2.52 () |
| MMN conjunction | Right | Middle frontal gyrus (36%) | 42 28 30 | 2.89 () |
| | | Inferior frontal gyrus (53%) | 53 27.6 17.3 | 2.96 () |
| | | Frontal pole (65%) | 30 42 32 | 2.28 () |
| | | Inferior parietal lobe (46.8%) | 62 −14 24 | 2.85 () |
| P3 conjunction | (Left) | Anterior cingulate gyrus (51%) | −6 14 36 | 4.34 () |
| P3 conjunction | Left | Frontal pole (74%) | −34 44 20 | 3.31 () |
| | | Inferior frontal gyrus (35%) | 52 26 18 | 2.74 () |
| | | Middle frontal gyrus (78%) | −44 30 32 | 2.87 () |
| | | Superior frontal gyrus (43%) | −22 12 60 | 3.13 () |
| | | Inferior temporal gyrus (34%) | −48 −44 −24 | 3.21 () |
| | | Lateral occipital [hOc4la] (81%) | −46 −80 −8 | 2.99 () |
| P3 conjunction | Right | Frontal pole (86%) | 45 42 10 | 3.57 () |
| | | Inferior frontal gyrus (39.5%) | 52 26 18 | 3.45 () |
| | | Middle frontal gyrus (38%) | 36 2 54 | 3.0 () |
| | | Precentral gyrus [4a] (19%) | 6 −32 64 | 2.64 () |
| | | Lateral occipital cortex [hOc5] (55%) | 56 −62 0 | 3.45 () |

For each modality, the MMN was localized to source activations in the respective modality's sensory cortex and frontal cortex. Source localization of the auditory MMN shows the strongest activation in bilateral superior temporal areas (; left cluster: peak t = 6.20; right cluster: peak t = 7.64) corresponding to auditory cortex and in inferior temporal areas (; left cluster: peak t = 5.63; right cluster: peak t = 5.60). The somatosensory MMN shows highest source activation in postcentral gyrus (; left cluster: peak t = 5.22; right cluster: peak t = 4.92) corresponding to primary somatosensory cortex. Similarly, the vMMN shows highest source activation in the occipital cortex (; left cluster: peak t = 6.18; right cluster: peak t = 5.17), around the occipital pole, corresponding to visual areas (V1–V4). Lowering the threshold to (only shown in Table 1) suggests additional activation of hierarchically higher sensory areas such as secondary somatosensory cortex for the sMMN (; left cluster: peak t = 4.21; right cluster: peak t = 5.01) and lateral occipital cortex (fusiform gyrus) for vMMN (part of the primary visual cluster). In addition to the sensory regions, common frontal sources with dominance on the right hemisphere were identified using a conjunction analysis for the MMN of all three modalities. In particular, significant common source activations were found in the right inferior frontal gyrus (IFG; ; cluster: peak t = 3.15) and right middle frontal gyrus (MFG; ; cluster: peak t = 2.89). Additional significant common sources include frontal pole (; left cluster: peak t = 2.56; right cluster: peak t = 2.28), left inferior temporal gyrus (; cluster: peak t = 2.52) and right inferior parietal lobe (; cluster: peak t = 2.85).

For the late P3 MMR, a wide range of sources was expected to contribute to the EEG signal (Linden, 2005; Sabeti et al., 2016). To identify those that underlie the P3 MMR common to all modalities, we used a conjunction analysis. Significant clusters were found primarily in anterior cingulate cortex (; cluster: peak t = 4.34) and bilateral (pre‐)frontal cortex (; left IFG cluster: peak t = 3.57; left superior frontal gyrus cluster: peak t = 3.13; left MFG cluster: peak t = 2.87; left frontal pole cluster: peak t = 3.31; right IFG cluster: peak t = 3.45; right MFG cluster: peak t = 3.0; right frontal pole cluster: peak t = 3.57). Additional significant sources were found in left inferior temporal gyrus (; cluster: peak t = 3.21), left and right lateral occipital cortex (; left cluster: peak t = 2.99; right cluster: peak t = 3.45) and right precentral gyrus (; cluster: peak t = 2.64).

3.4. Single‐trial modeling

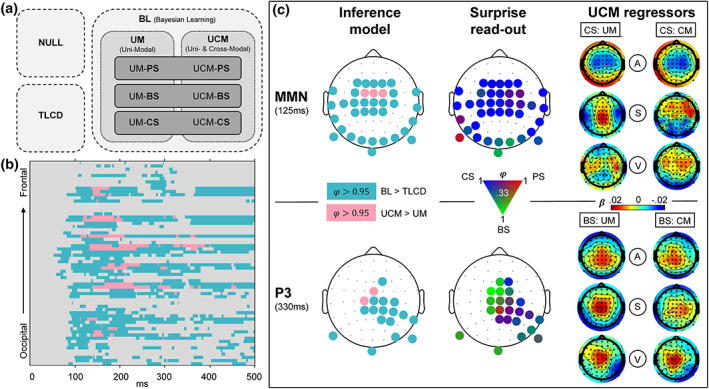

As described in the previous sections, the responses of standards and deviants show specific sensitivity to (1) stimulus repetition and (2) cross‐modal conditional probability. To investigate the computational principles underlying these response profiles, eight different models capturing various learning strategies were fit to the single‐trial EEG data and compared via family‐wise BMS. A summary of the modeling results is depicted in Figure 6.

FIGURE 6.

Modeling results. (a) Schematic overview of models. Model comparison 1 (light‐gray box, dashed contour): Null model family (NULL), train length‐dependent change detection model family (TLCD), and Bayesian learning model family (BL). Comparison 2 (gray box, dotted contour): Unimodal regression model family (UM), cross‐modal regression model family (UCM). Comparison 3 (dark‐gray box, line contour): Read‐out model family comparison of predictive surprise family (PS), Bayesian surprise family (BS), and confidence‐corrected surprise family (CS). (b) Results of comparison 1 and 2 shown for all electrodes and poststimulus time points. Color depicts exceedance probability (EP) . Light‐blue = BL > TLCD, pink = UCM > UM. (c) Topography of modeling results at time windows of MMN (top row) and P3 (bottom row). Left column: Results of comparison 1 (same colors as (b), depicting ). Middle column: Results of comparison 3. EPs between 0.33 and 1 of the three surprise functions are represented by a continuous three‐dimensional RGB scale (red = predictive surprise [PS]; green = Bayesian surprise [BS]; blue = confidence‐corrected surprise [CS]). Right column: Beta estimates of the model regressors of the UCM model (regressors: A = auditory; S = somatosensory; V = visual; CM = cross‐modal; UM = unimodal) for CS read‐out models (top) and BS read‐out models (bottom).

The first model comparison aimed to further investigate observation (1) and the question of whether the observed parametric modulation of standard and deviant EEG responses merely reflects a combination of neuronal adaptation and change detection dynamics or whether the observed response patterns are indicative of an underlying generative model engaged in probabilistic inference. To this end, we ran family‐wise BMS, as schematically depicted in Figure 6a. The first comparison concerned the TLCD model, a model family containing all BL models, and a null model. In the fronto‐central, temporal, occipital, and central electrodes showing MMN and P3 effects, the model comparison shows strong evidence in favor of the BL model family with an exceedance probability (corresponding to expected posterior probability ) from ~70 ms onward. On the other hand, the TLCD model did not exceed for any electrode or time point. Therefore, the TLCD model was not considered further, and subsequent analyses focused on the different BL models.

The second comparison set out to investigate observation (2) and evaluate the contribution of stimulus alternation tracking conditional on multimodal configurations beyond unimodal transition probability inference. Within the electrodes and time‐points with sufficient evidence for BL signatures (as established by the first model comparison), a comparison of a purely unimodal model family (UM) with a cross‐modally informed model family (UCM) was performed. Those electrodes and time‐points where the additional inclusion of cross‐modal regressors (UCM models) provided better model fits than purely unimodal models are highlighted in Figure 6b,c. The UCM family outperforms the UM family at central and fronto‐central electrodes at ~100–400 ms (with , corresponding to ).

Inspection of the beta estimates of auditory, somatosensory, and visual regressors of the UCM regression models shows that the beta maps of the unimodal predictors of the model resemble the ERP mismatch topographies of the respective modalities (depicted in Figure 6c). The cross‐modal predictor, on the other hand, rather shows (fronto‐) central activations which appear to resemble frontal aspects of the respective auditory, somatosensory, and visual MMRs.

The third comparison concerned the three surprise measures used as read‐out functions for the probabilistic models. Overall, the family comparison does not show overwhelming evidence for any specific surprise function, as only a few electrodes reach exceedance probabilities of . Nevertheless, a tendency of the MMN and the P3 to reflect different surprise dynamics can be observed. Although around the time of the MMN only some electrodes show in favor of CS, inspection of the topographies without thresholding (as depicted in Figure 6c) shows CS to be dominant throughout the spatio‐temporal range of the MMN (as suggested by higher EPs compared to BS and PS). On the other hand, at the time of the P3, BS appears to be the dominant surprise computation, with multiple (fronto‐) central electrodes showing . Overall, the surprise comparison provides some evidence for a reflection of CS dynamics in the earlier mismatch signals around the time of the MMNs and suggests a tendency of the P3 to reflect BS dynamics.

In a final analysis, the optimal observation integration parameter was inspected. For each modality, the significant MMN clusters of the ERP analyses were used to inspect the optimal integration window of the regression models. For the UM regression model, highly similar optimal integration parameters were found within the electrodes and time‐points of the different MMN clusters, with no significant difference between the modalities. The optimal integration parameters were found to correspond to windows of stimulus integration with a half‐life of (50% weighting at) around 5 to 25 stimuli ( across participants within the MMN clusters: [8–16 stimuli], [6–9 stimuli], [9–18 stimuli], [6–9 stimuli]; across participants within the P3 clusters: [9–21 stimuli], [6–11 stimuli], [10–25 stimuli], [6–10 stimuli]). Overall, the same range of stimulus integration was found for the UM and UCM regression models, and CS models tended to have longer integration windows (~10–20 stimuli) compared to BS models (~5–10 stimuli).

4. DISCUSSION

The present study set out to compare mismatch signals in response to tri‐modal sequence processing in the auditory, somatosensory, and visual modalities and to investigate influences of predictive cross‐modal information. We found comparable but modality specific signatures of MMN‐like early mismatch processing between 100 and 200 ms in all three modalities, which were source localized to their respective sensory specific cortices and shared right lateralized frontal sources. An additional cross‐modal signature of mismatch processing was found in the P3 MMR, for which a common network with frontal dominance was identified. With the exception of the vMMN, both mismatch signals (MMN and P3) showed parametric modulation by stimulus train length, driven by reciprocal tendencies of standards and deviants across modalities. Strikingly, standard and deviant responses within the cross‐modal P3 cluster were sensitive to predictive information carried by the tri‐modal stimulus configuration. Comparisons of computational models indicated that BL models, tracking transitions between observations, captured the observed dynamics of single‐trial responses to the roving stimulus sequences better than a static model reflecting TLCD. Moreover, a BL model that additionally captured the cross‐modal conditional dependence of stimulus alternation outperformed a purely unimodal BL model, primarily at central electrodes. The comparison of different read‐out functions for the BL models provides tentative evidence that the early MMN may reflect dynamics of CS, whereas later P3 MMRs seem to reflect dynamics of BS.

4.1. Modality specific mismatch signatures in response to tri‐modal roving stimuli

By using a novel tri‐modal roving stimulus sequence originating from an underlying Markov process of state transitions, we were able to elicit and extract unique EEG signatures in each of the three sensory modalities (auditory, somatosensory, and visual).
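For readers unfamiliar with roving designs, the sketch below shows how a binary stimulus stream (low versus high intensity) can be drawn from a two‐state Markov chain, and how each trial can then be labelled as a deviant (a change relative to the preceding stimulus) together with the length of the preceding train. The fixed repetition probability `p_repeat`, the labelling scheme, and the function names are hypothetical; the actual paradigm additionally couples transitions across the three modalities, which this sketch omits.

```python
import numpy as np


def roving_sequence(n_trials, p_repeat, rng):
    """Draw a binary roving sequence (0 = low, 1 = high intensity) from a two-state Markov chain.

    p_repeat is a hypothetical, fixed probability that a stimulus repeats its predecessor.
    """
    seq = np.empty(n_trials, dtype=int)
    seq[0] = rng.integers(2)
    for t in range(1, n_trials):
        seq[t] = seq[t - 1] if rng.random() < p_repeat else 1 - seq[t - 1]
    return seq


def label_trials(seq):
    """Label each trial as deviant (change) or repetition, plus the preceding train length.

    train_length[t] is the number of identical stimuli in the run ending at trial t - 1:
    for a deviant, the length of the train it interrupts; for a repetition, how often the
    current stimulus has already been presented in its train. The first trial gets 0.
    """
    n = len(seq)
    is_deviant = np.zeros(n, dtype=bool)
    train_length = np.zeros(n, dtype=int)
    run = 1  # length of the run ending at the previous trial
    for t in range(1, n):
        train_length[t] = run
        if seq[t] != seq[t - 1]:
            is_deviant[t] = True
            run = 1
        else:
            run += 1
    return is_deviant, train_length
```

Because the same intensity serves as the standard in one train and as the deviant after the next change, standard and deviant responses can be compared for physically identical stimuli.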

Of the EEG mismatch signatures, the auditory MMN is one of the most widely researched responses to deviation from an established stimulus regularity (Näätänen et al., 1978; Winkler et al., 2009). Contrasting responses to standard and deviant stimuli of the auditory sequence in the current study resulted in the expected fronto‐central MMN signature, with more negative responses to deviants than to standards. The temporal extent of the MMN might suggest an underlying negative mismatch component, as proposed by Näätänen et al. (2005), which drives a more negative‐going ERP starting around the N1 and extending beyond the P2 component. Such post‐N1 effects have been suggested as markers of a “genuine” mismatch component, as opposed to confounding modulations of auditory ERP components by stimulus properties (Näätänen et al., 2007), and might speak against a pure N1 adaptation account (as suggested by Jääskeläinen et al., 2004; May et al., 1999).

The somatosensory equivalent to the auditory MMN (sMMN) reported in the current study shows negative polarity at bilateral temporal electrodes and a corresponding central positivity. The sMMN likely reflects an enhanced N140 component, as suggested by Kekoni et al. (1997). However, most previous sMMN studies used oddball paradigms, in which it remains debated whether the sMMN can be distinguished from a modulation of the N140 by stimulus properties alone. Here, we report an sMMN around the N140 that can be assumed to be independent of stimulus confounds, owing to the reversed roles of standard and deviant stimuli in the roving paradigm. Although several previous studies have reported somatosensory mismatch responses, conflicting evidence exists regarding the exact components that may constitute an equivalent to the auditory MMN. Some studies report a more fronto‐centrally oriented negativity (Kekoni et al., 1997; Shen et al., 2018; Spackman et al., 2007; Spackman et al., 2010), while others observed such a pronounced central positivity that they concluded that the central positivity itself should be considered the somatosensory equivalent of the aMMN (Akatsuka et al., 2005; Shinozaki et al., 1998). However, some evidence appears to converge on a temporally centered negativity with a corresponding central positivity as the primary sMMN around 140 ms (Gijsen et al., 2021; Ostwald et al., 2012).

While the auditory and somatosensory MMNs in the current study were highly comparable in signal strength, their hypothesized counterpart in the visual modality showed a comparatively weaker response. Nevertheless, we found a significant vMMN at occipital electrodes extending to temporal electrodes within a time window of 100–200 ms poststimulus, with a corresponding (fronto‐) central positivity. This observation is in line with previous research reporting posterior (Cleary et al., 2013; Kimura et al., 2010; Urakawa et al., 2010) and temporal (Heslenfeld, 2003; Kuldkepp et al., 2013) patterns of vMMN with corresponding central positivity (Cleary et al., 2013; Czigler et al., 2006; File et al., 2017).

4.2. Neuronal generators of MMN signatures

Source reconstruction analyses were used to identify underlying neuronal generators of the modality specific MMN signatures. Interestingly, for each sensory modality, we found generators in the primary and higher order sensory cortices as well as additional frontal generators in IFG and MFG.

The sensory specific neuronal sources underlying the auditory MMN were identified as bilateral auditory cortex, with a dominance of hierarchically higher auditory areas. Together with an additional modality independent contribution of right lateralized frontal sources, this set of neuronal generators identified for the aMMN is in line with previous research suggesting primary auditory cortex and higher auditory areas in superior temporal sulcus as well as right IFG as underlying the aMMN (Garrido et al., 2008; Garrido, Kilner, Kiebel, & Friston, 2009; Molholm et al., 2005; Näätänen et al., 2005; Opitz et al., 2002), and with the proposal of an additional frontal generator in MFG (Deouell, 2007).

The sources underlying the sMMN were identified in the current study as primary (S1) and secondary (S2) somatosensory cortices, with additional frontal generators in right IFG and MFG. This finding is in accordance with previous research showing that a combined response of S1 and S2 underlies the sMMN (Akatsuka, Wasaka, Nakata, Kida, Hoshiyama, et al., 2007; Akatsuka, Wasaka, Nakata, Kida, & Kakigi, 2007; Andersen & Lundqvist, 2019; Butler et al., 2012; Gijsen et al., 2021; Naeije et al., 2016, 2018; Ostwald et al., 2012; Spackman et al., 2010), together with an involvement of (inferior) frontal regions (Allen et al., 2016; Downar et al., 2000; Fardo et al., 2017; Huang et al., 2005; Ostwald et al., 2012).

For the visual modality, we identified sources in visual areas (V1–V4) and additional frontal activations in IFG and MFG as the neuronal generators underlying the vMMN. Previous studies have shown similar combinations of visual and prefrontal areas (Kimura et al., 2010; Kimura et al., 2011; Kimura et al., 2012; Urakawa et al., 2010; Yucel et al., 2007) and have particularly highlighted the IFG as a frontal generator of the vMMN (Downar et al., 2000; Hedge et al., 2015). Similarly, an fMRI study of perceptual sequence learning in the visual system has shown right lateralized prefrontal activation in addition to activations in visual cortex in response to regularity violations (Huettel et al., 2002). Yet another study has suggested a role for right prefrontal areas in interaction with hierarchically lower visual areas for the prediction of visual events (Kimura et al., 2012), all in line with our results.

Overall, our finding of inferior and middle frontal sources for the MMN in all three modalities provides further evidence for a modality independent role of these generators, as previously suggested by Downar et al. (2000). As such, these modality‐independent frontal generators might reflect higher stages of a predictive hierarchy that operates across modalities in interaction with lower, modality specific regions, as previously suggested primarily for the auditory modality (Garrido, Kilner, Stephan, & Friston, 2009).

4.3. Modulation of the MMN by stimulus repetition

An important feature of the MMN that theories of its generation aim to account for is its sensitivity to stimulus repetition. The MMN is known to increase with prior repetition of standards (Imada et al., 1993; Javitt et al., 1998; Naatanen & Näätänen, 1992; Sams et al., 1983). Correspondingly, in the current study, we found a significant increase of the auditory and somatosensory MMN with the length of the preceding stimulus train, as well as a comparable tendency for the vMMN. Moreover, we show that this increase was driven by a reciprocal pattern, namely a negative modulation of deviant and a positive modulation of standard responses, suggesting a combined influence of repetition dependent change detection and dynamics akin to stimulus adaptation.
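The reciprocal decomposition described above follows from the linearity of least‐squares regression: with the same train‐length regressor, the slope of the difference wave (deviant minus standard) equals the deviant slope minus the standard slope, so a negative deviant slope and a positive standard slope combine into a steeper (more negative) MMN slope. The sketch below makes this explicit with `numpy.polyfit`; the function name is hypothetical and the example amplitudes are toy numbers, not data from this study.

```python
import numpy as np


def train_length_slopes(train_length, deviant_amp, standard_amp):
    """OLS slopes of deviant, standard and difference-wave amplitudes against train length.

    Because least squares is linear in the response, the difference-wave (MMN) slope
    equals the deviant slope minus the standard slope.
    """
    x = np.asarray(train_length, dtype=float)
    dev = np.asarray(deviant_amp, dtype=float)
    std = np.asarray(standard_amp, dtype=float)
    slope_dev = np.polyfit(x, dev, 1)[0]
    slope_std = np.polyfit(x, std, 1)[0]
    slope_mmn = np.polyfit(x, dev - std, 1)[0]
    return slope_dev, slope_std, slope_mmn
```

For hypothetical toy amplitudes of −1 − 0.5·L µV for deviants and 0.2 + 0.3·L µV for standards (L = train length), the function returns slopes of about −0.5, 0.3 and −0.8 µV per stimulus, so the MMN grows in magnitude faster than either response alone.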

The observed positive modulation of standard responses, particularly in the auditory modality, is in line with the repetition positivity account of Baldeweg and colleagues (Baldeweg, 2006; Baldeweg, 2007; Baldeweg et al., 2004; Haenschel et al., 2005). In the auditory modality, repetition positivity has been isolated as a positive slow wave that accounts for repetition‐dependent increases of auditory ERPs up to the P2 component (Haenschel et al., 2005). With regard to its functional role, it has been argued to reflect auditory sensory memory trace formation (Baldeweg et al., 2004; Costa‐Faidella, Baldeweg, et al., 2011; Costa‐Faidella, Grimm, et al., 2011). Interestingly, MMN studies using the oddball paradigm often report an increasing MMN with standard repetition without further dissecting the contributions of standard and deviant dynamics. A contribution of the standard repetition positivity appears to be particularly dominant in roving stimulus paradigms (Cooper et al., 2013), potentially because a memory trace of the standard stimulus identity must be re‐established after each change of roles of standard and deviant stimuli. It has even been suggested that the memory trace dynamics of the standard observed in response to roving oddball sequences might in fact be the primary driver of train length effects on MMN amplitudes (Baldeweg et al., 2004; Costa‐Faidella, Baldeweg, et al., 2011; Costa‐Faidella, Grimm, et al., 2011; Haenschel et al., 2005). Importantly, although some evidence suggests an additional role for train‐length‐dependent deviant modulation in roving paradigms as well (Cowan et al., 1993; Haenschel et al., 2005), a dissection of the combined standard and deviant contributions, as performed here, is rarely described.

Similar to the aMMN, we found the sMMN to be modulated by stimulus repetition. An early repetition positivity effect in the responses to standards was observed prior to 100 ms, indicating sensory adaptation dynamics comparable to those described for the aMMN. Subsequently, the negative deviant and sMMN responses increased with repetition around the N140 (i.e., around the sMMN peak). While somatosensory deviant responses have previously been shown to decrease with increasing stimulus probability (Akatsuka, Wasaka, Nakata, Kida, & Kakigi, 2007), only a few other studies have reported sensitivity of the sMMN to stimulus repetition. Interestingly, in our previous study on somatosensory MMRs (Gijsen et al., 2021), we reported the same reciprocal pattern found here: a negative modulation of the deviant and a positive modulation of the standard response, which together result in an increase of the sMMN amplitude with stimulus train length.

In the visual modality, a train length effect comparable to those in the auditory and somatosensory modalities was observed but did not reach statistical significance in the vMMN time window. Given the overall weaker vMMN response in the current study, this might not be surprising. Moreover, discussions about the repetition modulation of vMMN responses are often based on findings from the auditory system rather than on direct findings in the visual modality. While sensory adaptation to stimulus repetition is generally found throughout the visual system (e.g., Clifford et al., 2007; Grill‐Spector et al., 2006), it is rarely reported directly in vMMN studies (but see Kremláček et al., 2016). Overall, the vMMN literature seems to suggest that the vMMN may be a rather unstable phenomenon. In fact, after controlling for confounding effects, one study has called into question the existence of the vMMN for low level features such as the ones used here (Male et al., 2020). The vMMN appears to show a much less pronounced spatiotemporal pattern than its auditory and somatosensory equivalents, which is reflected in the larger variance of the topographies and time windows reported in vMMN studies (but see Section 11 for a discussion of alternative explanations regarding the current study).

4.4. MMN as a signature of predictive processing

Recent research supports the view that Bayesian perceptual learning mechanisms underlie the generation of mismatch responses such as the MMN (Friston, 2005, 2010; Garrido, Kilner, Stephan, & Friston, 2009). Given the proposal of Bayesian inference and predictive processing as universal principles of perception and perceptual learning in the brain (Friston, 2005, 2010), comparable mismatch responses are expected across sensory modalities. Akin to key findings from the auditory modality, evidence for the predictive nature of mismatch responses comes, for instance, from studies showing somatosensory (Andersen & Lundqvist, 2019; Naeije et al., 2018) and visual (Czigler et al., 2006; Kok et al., 2014) MMNs in response to predicted but omitted stimuli. Moreover, Ostwald et al. (2012) and Gijsen et al. (2021) have shown that single‐trial somatosensory MMN and P3 MMRs can be accounted for in terms of surprise signatures of Bayesian inference models tracking stimulus transitions. Similarly, the vMMN has been described as a signature of predictive processing (Kimura et al., 2011; Stefanics et al., 2014), signaling prediction error rather than basic change detection (Stefanics et al., 2018).

Correspondingly, we found comparable mismatch signatures in the auditory, somatosensory, and visual modalities. The train length effects observed across modalities in our study have previously been related to predictive processing. Repetition positivity in the auditory modality has been interpreted as a reflection of repetition suppression resulting from fulfilled predictions (Auksztulewicz & Friston, 2016; Baldeweg, 2007; Costa‐Faidella, Baldeweg, et al., 2011; Costa‐Faidella, Grimm, et al., 2011). A corresponding negative modulation of deviant responses, on the other hand, would signal a failure to suppress prediction error after a violation of the regularity established by the current stimulus train. Under such a view, longer trains of repetitions lead to a higher precision of the probability estimate, which in turn scales up the prediction error elicited by a prediction violation (Auksztulewicz & Friston, 2016; Friston, 2005; Friston & Kiebel, 2009). In line with these hypotheses, Garrido and colleagues (Garrido et al., 2008; Garrido, Kilner, Kiebel, & Friston, 2009) used dynamic causal modeling (DCM) to show that the MMN elicited in a roving stimulus paradigm is best explained by the combined dynamics of auditory adaptation and model adjustment. Their network, proposed to underlie MMN generation, was set up as an implementation of hierarchical predictive processing involving bottom‐up signals from auditory cortex and top‐down modulations by inferior frontal cortex. Similarly, another DCM study proposed a predictive coding model of pain processing in response to somatosensory oddball sequences, highlighting the role of inferior frontal cortex in top‐down modulations of somatosensory potentials (Fardo et al., 2017). As we find involvement of such modality specific sensory and modality independent frontal areas in MMN responses across modalities, our results suggest comparable roles for these sources in a predictive hierarchy.
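A minimal worked example of the precision argument, under simplifying assumptions: a learner with a flat Beta(1, 1) prior over the probability of the current standard, no forgetting, and n standards observed in the current train. Its predictive probability of another standard is (n + 1)/(n + 2) (Laplace's rule of succession), so the surprise (negative log predictive probability) of a deviant grows as ln(n + 2), while the surprise of a further standard shrinks and the posterior precision rises. Surprise serves here only as a stand‐in for a precision‐weighted prediction error, and the function name and setup are hypothetical.

```python
import math


def after_train(n):
    """Read-outs after n standards under a flat Beta(1, 1) prior without forgetting.

    The posterior over P(standard) is Beta(n + 1, 1). Returns the surprise (in nats) of a
    further standard, the surprise of a deviant, and the posterior precision (1 / variance).
    """
    a, b = n + 1.0, 1.0
    p_std = a / (a + b)  # Laplace's rule of succession: (n + 1) / (n + 2)
    variance = a * b / ((a + b) ** 2 * (a + b + 1.0))
    return -math.log(p_std), -math.log(1.0 - p_std), 1.0 / variance


for n in (1, 5, 20):
    s_std, s_dev, precision = after_train(n)
    print(f"n={n:2d}  standard {s_std:.3f}  deviant {s_dev:.3f}  precision {precision:.1f}")
```

In this toy setting, the opposite trends of standard and deviant surprise with train length mirror the reciprocal standard and deviant modulations discussed above.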

4.5. P3 mismatch responses reflect cross‐modal processing