Abstract

Visual estimates of stimulus features are systematically biased toward the features of previously encountered stimuli. Such serial dependencies have often been linked to how the brain maintains perceptual continuity. However, serial dependence has mostly been studied with simple two-dimensional stimuli. Here, we present the first attempt at examining serial dependence in three dimensions with natural objects, using virtual reality (VR). In Experiment 1, observers were presented with 3D virtually rendered objects commonly encountered in daily life and were asked to reproduce their orientation. The rotation plane of the object and its distance from the observer were manipulated. Large positive serial dependence effects were observed. Most notably, biases were larger when the object was rotated in depth and when it was rendered farther away from the observer. In Experiment 2, we tested the object specificity of serial dependence by varying object identity from trial to trial. Similar serial dependence was observed irrespective of whether the test item was the same object, a different exemplar from the same object category, or a different object from a separate category. In Experiment 3, we manipulated the retinal size of the stimulus in conjunction with its distance. Serial dependence was modulated more strongly by retinal size than by VR depth cues. Our results suggest that the additional uncertainty introduced by the third dimension in VR increases serial dependence. We argue that investigating serial dependence in VR may provide more accurate insights into the nature of these biases and the mechanisms behind them.

Keywords: serial dependence, orientation, virtual reality (VR), sequential effects, perceptual stability

Introduction

Recent years have seen a growing interest in understanding how the brain anticipates and predicts the environment (Rao & Ballard, 1998; Chetverikov & Kristjánsson, 2016; Kristjánsson, 2022). Many overarching frameworks, from predictive coding to the Bayesian brain (Friston, 2009; Hohwy, 2017; Girshick, Landy, & Simoncelli, 2011; Seriès & Seitz, 2013; Tanrikulu, Chetverikov, Hansmann-Roth, & Kristjánsson, 2021; Trapp, Pascucci, & Chelazzi, 2021), suggest that the brain uses prior experience to predict present sensory input, in a constant attempt at minimizing prediction errors (Hohwy, 2017). This scheme has been invoked as a causal explanation of many well-known biases that originate from long-term priors, such as the slow-speed prior (Weiss, Simoncelli, & Adelson, 2002) or the light-from-above assumption (Adams, 2007), as well as of biases that arise from the recent history of perceptual input (Brascamp et al., 2008; Cicchini & Kristjánsson, 2015).

From a computational perspective, using the recent past as a prior for current perception seems a reasonable strategy to maintain continuity. Despite systematic changes in viewpoints, in illumination levels and various other incidental factors, such as moment-to-moment differences in reflectance or occlusion, the objects around us remain the same. Using this knowledge can help to maintain the constancy and continuity of perceptual representations, an “inner necessity” in perception (Wertheimer, 1923; see Westheimer, 1999). This notion lies at the core of recent theories about the nature of serial dependence in perceptual decisions.

Serial dependence

In serial dependence, when observers are asked to reproduce a visual feature, such as the orientation of a Gabor patch, their judgments are systematically biased toward the orientation of the Gabors seen on preceding trials. This bias has been demonstrated in a variety of visual tasks (for reviews see Kiyonaga, Scimeca, Bliss, & Whitney, 2017; Pascucci et al., 2023), suggesting that it reflects a general principle of visual processing, which might originate from our constant exposure to a visual world made of temporally correlated features (Kalm & Norris, 2018; van Bergen & Jehee, 2019). Yet, most of the evidence has come from studies using synthetic two-dimensional stimuli that are far simpler than the objects encountered in the natural world. This leaves two main questions open: how large are these effects in everyday perception, and how specific are they to real objects?

How large do serial dependence biases need to be?

In the plethora of experiments with two-dimensional stimuli reported so far, the magnitude of serial dependence biases has varied considerably and seems to be paradigm and stimulus dependent. For instance, in Fischer and Whitney (2014) the average serial dependence in orientation judgments was around 8 degrees, but in many subsequent studies on orientation, the measured size was smaller than this, often around 1 to 2 degrees (Cicchini, Mikellidou, & Burr, 2017; Fritsche, Mostert, & de Lange, 2017; Pascucci et al., 2019; Kim, Burr, Cicchini, & Alais, 2020; Rafiei, Chetverikov, Hansmann-Roth, & Kristjánsson, 2021a; Rafiei, Chetverikov, Hansmann-Roth, & Kristjánsson, 2021b). One may wonder whether such variability depends upon the use of stimuli that depart from real-world objects and are too specific to summon strong and consistent priors about object continuity. Estimates of the size of serial dependence effects in real-world environments are therefore lacking.

Object specificity of serial dependence

Another important question is how specific serial dependence is to visual objects. With typical psychophysical stimuli, for example, serial dependence in orientation occurs even when the entire stimulus changes (e.g. from a Gabor patch to a symmetric dot pattern; Ceylan, Herzog, & Pascucci, 2021; see also Fornaciai & Park, 2019; Fornaciai & Park, 2022; Goettker & Stewart, 2022). These results indicate that, at least when elementary visual features are at stake, serial dependence is not object dependent (but see also Liberman, Zhang, & Whitney, 2016; Liberman, Manassi, & Whitney, 2018; Fischer et al., 2020; Collins, 2022).

All these studies, however, have used simple two-dimensional stimuli, which ignores what could be the determinant of serial dependence in the real world: the recognition of a familiar object as the same, from one viewpoint to the next. Virtual reality allows a step forward in bridging this gap, enabling the use of realistic visual objects and environments that still allow detailed control of elementary parameters.

Current aims

Kristjánsson and Draschkow (2021) have recently argued for the use of more real-world-like scenarios in the study of visual cognition, including gamification and virtual reality (VR), which can provide more ecologically valid and generalizable knowledge about the functioning of perception. Following this goal, we set out to test whether and how serial dependence occurs in a three-dimensional environment rendered with VR. Demonstrations of serial dependence in more interactive and realistic scenarios are lacking. Moreover, novel questions about serial dependence can clearly be asked within such real-world applications. One pertinent example is orientation in three dimensions instead of the two dimensions that have typically been tested. More broadly, it is timely and important to assess what role serial dependence biases may play in perception. Our general goal here is to provide an initial step in this direction that can later be built on to test serial dependence in even more ecologically valid environments.

The specific aims of this work are therefore to test (1) the size and consistency of serial dependence effects with real-world objects and (2) the specificity of these effects to the identity of familiar objects. We addressed these aims in a series of three experiments. In Experiment 1, we used VR to assess serial dependence for objects rotating in depth (in three dimensions) versus the same objects rotating in the fronto-parallel plane (in two dimensions). Additionally, we tested the effect of perceived distance. In Experiment 2, we contrasted three types of trial-to-trial transitions: between two presentations of the same object, between two different items from the same category, and between two items from different categories. Experiment 3 then contrasted the role of perceived distance versus retinal size.

Experiment 1 – Serial dependence for a realistic object in virtual reality

Our goal in Experiment 1 was to replicate standard serial dependence effects in orientation judgments using a realistic everyday object in a basic VR environment. We manipulated two factors: (1) whether the object was rotated in depth or in the fronto-parallel plane; and (2) the distance of the object from the observer. Observers provided their orientation judgments by adjusting a 3D bar using the VR controller.

Methods

Participants

The study involved 16 participants (Mage = 33.75, SDage = 9.1), who had normal or corrected-to-normal vision and gave written informed consent. All participants were staff or students at the University of Iceland and participated voluntarily. All aspects of the experiment were performed in accordance with the requirements of the local bioethics committee and with the Declaration of Helsinki for testing human participants.

Stimuli and equipment

The experiment ran on a Windows 10 PC with an Intel i7-8700 3.20 GHz CPU and an Nvidia GTX 1080 GPU. The experiment was built using the Vizard software (www.worldviz.com), with VR rendered on an HTC Vive VR system. The HTC Vive (first generation) had a display resolution of 1080 × 1200 pixels per eye (2160 × 1200 pixels combined), a refresh rate of 90 Hz, and a field of view of approximately 110 degrees. Its sensors comprised SteamVR Tracking, a G-sensor, a proximity sensor, and a gyroscope. Observers used an HTC Vive controller with a SteamVR Tracking sensor to interact with the virtual environment and reported the perceived orientation by adjusting a multifunction trackpad on the controller. Stimulus presentation and response collection were carried out with a custom VR application written in Vizard. Two base stations, located 3.13 m apart, conveyed signals to the headset and controller.

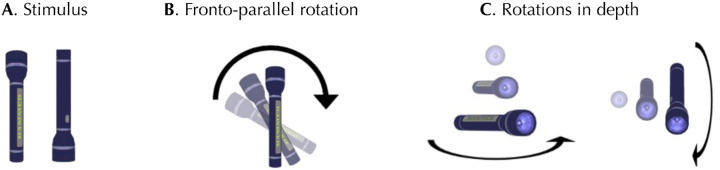

The stimulus for the orientation judgment task was a 3D model of a portable blue flashlight that appeared along the horizontal line of sight, at the observers’ eye height when seated (Figure 1A). The flashlight was taken from the default object library of the Vizard software. It was rendered with dimensions of 90 cm × 18 cm × 18 cm (footnote 1) against a uniform gray background, and it appeared either 4 m or 12 m away from the observer, corresponding to retinal sizes of 12.8 degrees × 0.9 degrees × 0.9 degrees and 4.3 degrees × 0.3 degrees × 0.3 degrees, respectively.
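As a check on these retinal sizes, the visual angle subtended by an extent s viewed from distance d is 2·arctan(s / 2d). The minimal sketch below assumes the extent is perpendicular to the line of sight (so for rotation in depth the projected length will be shorter than these values) and reproduces the figures given for the flashlight's 90 cm length and for the response bar described below.

```python
import math

def visual_angle_deg(size_m: float, distance_m: float) -> float:
    """Visual angle (deg) subtended by an extent of size_m metres
    viewed face-on from distance_m metres."""
    return math.degrees(2 * math.atan(size_m / (2 * distance_m)))

# Flashlight length (0.90 m) at the two rendered distances
for distance in (4.0, 12.0):
    print(f"flashlight at {distance:g} m: {visual_angle_deg(0.90, distance):.1f} deg")

# Response bar: 1.00 m long and 0.10 m thick, rendered 3 m away
print(f"bar length: {visual_angle_deg(1.00, 3.0):.1f} deg")
print(f"bar thickness: {visual_angle_deg(0.10, 3.0):.1f} deg")
```

The bar length comes out at about 18.9 degrees, which the text rounds to 19 degrees.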

Figure 1.

(A) Two different views of the stimulus object used in Experiment 1. (B) Depiction of the rotation of the object in the fronto-parallel (xy-) plane. (C) Two different types of rotation in depth. On the left, the object rotates within the depth plane that is parallel to the ground. On the right, the object rotates within the depth plane that is perpendicular to the ground.

A rectangular 3D adjustment bar was rendered for observers and they made their judgments by adjusting its orientation using the VR controllers. This bar appeared 3 m away from the observer and its size was 100 cm × 10 cm × 10 cm, yielding a retinal size of 19 degrees × 1.9 degrees × 1.9 degrees. The pattern on the bar consisted of alternating black and white stripes (width = 1.25 cm per stripe) parallel to the central axis of the bar. The objects in the VR environment were illuminated by a “head-light” attached to the observer's viewpoint.

Design

The experiment involved a 2 × 2 design where we manipulated the plane of rotation and the distance of the object from the observer. Rotation type was blocked, and the object was either rotated in-depth or in the fronto-parallel plane (as in classic 2D serial dependence displays; see Figures 1B, 1C). For the fronto-parallel condition, the object was rendered such that its central longitudinal axis was within the fronto-parallel (xy) plane and its rotation axis (z-axis) was orthogonal to its central longitudinal axis (see Figure 1B). For the in-depth condition, the object was rendered such that its central longitudinal axis was either in the depth plane that is parallel (xz-plane) or perpendicular (yz-plane) to the ground (see Figure 1C). In all conditions, the rotation axis of the object was perpendicular to the plane that the object's central longitudinal axis was placed within. The bottom of the flashlight was the fixed center of rotation, and it was located at the same horizontal and vertical location as the observer's head. On half of the trials, the distance of the object from the observer was rendered to be 4 m, and 12 m in the other half. The distance of the object was determined pseudo-randomly on each trial to ensure that each experimental block had an equal number of trials from each distance condition.
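The rotation planes above can be made concrete with a small geometric sketch. It assumes a hypothetical head-centred frame (x rightward, y upward, z straight ahead) rather than Vizard's own coordinate conventions, and measures orientation θ from the first of the two basis vectors e1, e2 spanning each plane. The object's long axis is then cos θ·e1 + sin θ·e2, and the rotation axis is e1 × e2, which is perpendicular to the plane containing the long axis, as described above.

```python
import numpy as np

# Hypothetical head-centred frame (an assumption, not Vizard's coordinate API):
# x = rightward, y = upward, z = straight ahead along the line of sight.
BASIS = {
    "x": np.array([1.0, 0.0, 0.0]),
    "y": np.array([0.0, 1.0, 0.0]),
    "z": np.array([0.0, 0.0, 1.0]),
}

# Each rotation plane is spanned by two basis vectors; theta = 0 lies along the first.
PLANES = {
    "fronto-parallel": ("x", "y"),   # xy-plane, rotation about the z-axis
    "depth-horizontal": ("x", "z"),  # xz-plane, parallel to the ground
    "depth-vertical": ("y", "z"),    # yz-plane, perpendicular to the ground
}

def object_axes(plane, theta_deg):
    """Return (unit vector along the object's long axis, unit rotation axis)."""
    e1, e2 = (BASIS[name] for name in PLANES[plane])
    t = np.radians(theta_deg)
    long_axis = np.cos(t) * e1 + np.sin(t) * e2
    rotation_axis = np.cross(e1, e2)  # normal to the rotation plane
    return long_axis, rotation_axis

def tip_position(base, plane, theta_deg, length_m):
    """Tip of an object whose base (the fixed centre of rotation) is at `base`."""
    long_axis, _ = object_axes(plane, theta_deg)
    return np.asarray(base, dtype=float) + length_m * long_axis

# Example: flashlight base 4 m straight ahead at eye level, rotated 90 deg in depth
print(np.round(tip_position([0.0, 0.0, 4.0], "depth-horizontal", 90.0, 0.90), 3))
```

In the example, the flashlight points directly away from the observer, so its 0.9 m length lies entirely along the line of sight; this kind of foreshortening arises only for rotation in depth.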

The experiment consisted of 288 trials administered in eight blocks. The object rotated in the fronto-parallel plane in four blocks and in depth in the other four (two blocks involved rotation in a plane parallel to the ground, and the other two involved rotation in a plane perpendicular to the ground). Fronto-parallel and in-depth blocks were presented in alternation, and the order of blocks was counterbalanced between subjects.

The orientation of the central longitudinal axis of the object was randomly determined on the first trial of each block, and the orientation on subsequent trials was selected pseudo-randomly to keep Δθ (previous-trial orientation − current-trial orientation) within ±60 degrees, in steps of 15 degrees. We also added ±5 degrees of jitter to Δθ on each trial. This yielded 288 experimental trials: two object distances (4 m or 12 m) times two rotation planes (fronto-parallel or in depth) times nine Δθ values times eight repetitions.
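The sequence constraints above can be sketched as a block generator. This is a hypothetical illustration, not the authors' code: it assumes the ±5 degree jitter is drawn uniformly, treats orientation as axial (wrapped to [0, 180) degrees), and adds one unconstrained starting trial per block so that every Δθ × distance cell appears equally often afterwards.

```python
import random
from typing import List, Optional, Tuple

DELTAS = list(range(-60, 61, 15))   # nine nominal delta-theta values (deg)
DISTANCES = (4.0, 12.0)             # rendered object distances (m)

Trial = Tuple[float, float, Optional[float]]  # (orientation, distance, delta)

def make_block(n_reps: int = 2, jitter: float = 5.0,
               seed: Optional[int] = None) -> List[Trial]:
    """Hypothetical generator for one block of orientation trials.

    Every (delta, distance) cell appears n_reps times in shuffled order.
    Delta is previous orientation minus current orientation, and
    orientations are treated as axial (wrapped to [0, 180) degrees).
    """
    rng = random.Random(seed)
    cells = [(d, dist) for d in DELTAS for dist in DISTANCES] * n_reps
    rng.shuffle(cells)
    theta = rng.uniform(0.0, 180.0)  # random orientation on the starting trial
    trials: List[Trial] = [(theta, rng.choice(DISTANCES), None)]
    for delta, dist in cells:
        delta_j = delta + rng.uniform(-jitter, jitter)  # uniform jitter (assumption)
        theta = (theta - delta_j) % 180.0               # current = previous - delta
        trials.append((theta, dist, delta_j))
    return trials

block = make_block(seed=1)
print(len(block), "trials; first three:", block[:3])
```

With two repetitions per cell, one block contains 36 constrained trials (9 Δθ values × 2 distances × 2), matching 288 / 8, plus the assumed starting trial.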

Procedure

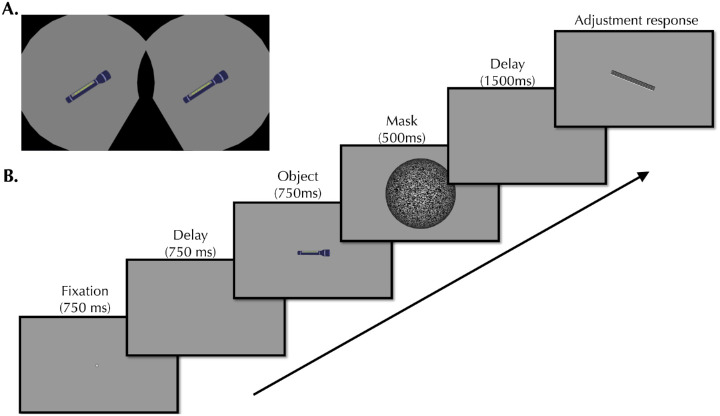

Observers were seated in a chair in the middle of the room. The VR headset was adjusted to the interpupillary distance of each observer. Before experimental blocks, all participants received 36 practice trials where they became familiar with the headset and used the controller to provide their orientation estimates.

Figure 2 illustrates a typical experimental trial. A trial started with the presentation of a fixation dot (radius = 1 cm, color = red, located 0.5 m away from the observer at the height of the observer's head) for 750 ms, followed by a 750 ms delay. Then, the object appeared and remained visible for 750 ms, followed by a mask for 500 ms. The mask was a sphere with a random-dot texture and a radius of 1 m, rendered 4 m away from the observer at the height of the observer's head. After a 1500 ms delay, the response bar appeared; its orientation could be adjusted using the trackpad on the Vive VR controller. The task was to report the orientation of the target object by adjusting the orientation of the response bar. The initial orientation of the response bar was randomly selected on each trial. Once observers finished their adjustment, they confirmed their response by clicking the trackpad, which immediately started the next trial. Observers could only rotate the response bar within the plane containing the central longitudinal axis of the target object on that trial (i.e. the target object and the response bar shared the same rotation plane within each trial). The center of the bar was the fixed center of rotation, and it was located at the same horizontal and vertical location as the observer's head.

Figure 2.

(A) Example of the images presented to the observer's left and right eyes during the 750 ms of object presentation. (B) Display sequence of a typical trial in Experiment 1. A video demonstrating the display sequence can be found at: https://osf.io/e6vgd.

After each block, observers were given the opportunity to rest; those who chose not to rest between blocks were required to take a break after the fourth block. If observers moved their chair outside a circle (radius = 40 cm) during the experiment, the experiment was automatically paused until they moved the chair back to the marked central location in the VR environment (footnote 2). An experimental session took around 60 minutes on average.

Results

Before merging all the observers’ data, trials containing adjustment errors larger than three standard deviations from the individual observer's mean were excluded from the analysis (2% of all trials). The average absolute adjustment error was 8.77 degrees. Even though the mean errors were larger when the object was 12 m away from the observer (M = 9.29 degrees, SD = 2.01 degrees) compared to 4 m away (M = 8.29 degrees, SD = 1.60 degrees), this difference was not statistically significant (t(30) = 1.56, p > 0.05). However, absolute adjustment errors were significantly larger when the object was rotated in-depth (M = 9.80 degrees, SD = 1.97 degrees) compared to when it rotated in the fronto-parallel plane (M = 7.79 degrees, SD = 1.84 degrees) (t(30) = 2.98, p < 0.01).
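The error computation and exclusion step can be sketched per observer as follows. Two details are assumptions not stated in the text: signed errors are wrapped to [−90, 90) degrees on the premise that orientation reports are axial, and the standard deviation uses the n − 1 denominator.

```python
import numpy as np

def adjustment_error(response_deg, target_deg):
    """Signed adjustment error wrapped to [-90, 90) degrees (axial orientations assumed)."""
    diff = np.asarray(response_deg, float) - np.asarray(target_deg, float)
    return (diff + 90.0) % 180.0 - 90.0

def exclude_outliers(errors, n_sd=3.0):
    """Boolean mask keeping trials within n_sd SDs of this observer's mean error."""
    errors = np.asarray(errors, float)
    mu, sd = errors.mean(), errors.std(ddof=1)
    return np.abs(errors - mu) <= n_sd * sd

# Example for one (synthetic) observer: one wildly wrong trial is excluded
errors = np.r_[np.tile([-2.0, 0.0, 2.0], 20), 40.0]
keep = exclude_outliers(errors)
print(keep.sum(), "trials kept; mean absolute error:", np.abs(errors[keep]).mean())
```

Applying the mask separately for each observer before pooling matches the order of operations described in the text.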

To assess serial dependence, adjustment errors were mean-corrected and then plotted as a function of the actual change in orientation (Δθ). First derivatives of Gaussians (DoGs) were then fitted to the data (Figures 3A, 3B). This function suits the purpose well: the fitted curve should be a flat line if there is no bias (i.e. observers report the orientation accurately), whereas any significant deviation from a flat line indicates a serial bias in orientation judgments either toward or away from the previous trial. We then took the peak amplitude of the fitted DoG as a measure of the size of the serial dependence effect. The DoG fits were bootstrapped by resampling trials from the data pooled across observers (the aggregate observer), and the distributions of the peak amplitudes were plotted (as depicted in Figure 3C). With this approach, a positive amplitude means that the bias is attractive (judgments of the orientation of the current stimulus are pulled toward the previously presented one), whereas a negative amplitude denotes a repulsive bias (judgments are pushed away from the preceding stimulus). The statistical significance of the DoG amplitudes and of the differences between the amplitudes of different conditions was tested using permutation tests with 10,000 samples.
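The DoG fit and bootstrap can be sketched as follows. This is a minimal illustration, not the authors' analysis code: it uses a DoG parameterization common in the serial dependence literature, y = a·w·c·x·exp(−(w·x)²) with c = √2 / e^(−0.5), which makes the parameter a equal to the curve's peak height. The starting values, the positivity bound on the width parameter w (needed because (a, w) and (−a, −w) describe the same curve), and the number of bootstrap samples are assumptions.

```python
import numpy as np
from scipy.optimize import curve_fit

C = np.sqrt(2) / np.exp(-0.5)  # scales the curve so that `a` equals its peak height

def dog(x, a, w):
    """First derivative of a Gaussian; a = peak amplitude, w = width parameter."""
    return x * a * w * C * np.exp(-(w * x) ** 2)

def fit_dog(delta, error, p0=(1.0, 0.05)):
    """Fit a DoG to mean-corrected errors; returns (amplitude, width)."""
    delta = np.asarray(delta, float)
    error = np.asarray(error, float)
    error = error - error.mean()                   # mean-correct the errors
    (a, w), _ = curve_fit(dog, delta, error, p0=p0,
                          bounds=([-np.inf, 1e-6], [np.inf, np.inf]))
    return a, w

def bootstrap_amplitude(delta, error, n_boot=1000, seed=0):
    """Bootstrap distribution of the DoG amplitude by resampling trials."""
    rng = np.random.default_rng(seed)
    delta = np.asarray(delta, float)
    error = np.asarray(error, float)
    amps = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, delta.size, delta.size)  # resample with replacement
        amps[i] = fit_dog(delta[idx], error[idx])[0]
    return amps

# Synthetic example: attractive bias with a 3 deg peak plus 1 deg of noise
rng = np.random.default_rng(1)
x = np.repeat(np.arange(-60, 61, 15), 40).astype(float)
y = dog(x, 3.0, 0.04) + rng.normal(0.0, 1.0, x.size)
amps = bootstrap_amplitude(x, y, n_boot=200)
print("amplitude:", fit_dog(x, y)[0], "95% interval:", np.percentile(amps, [2.5, 97.5]))
```

Because Δθ is defined as previous minus current orientation, an attractive bias produces errors with the same sign as Δθ near zero, and hence a positive a.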

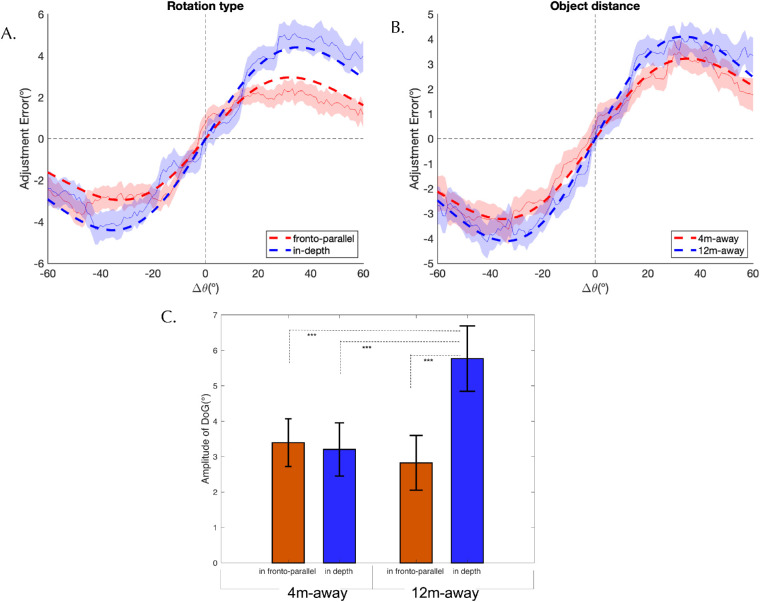

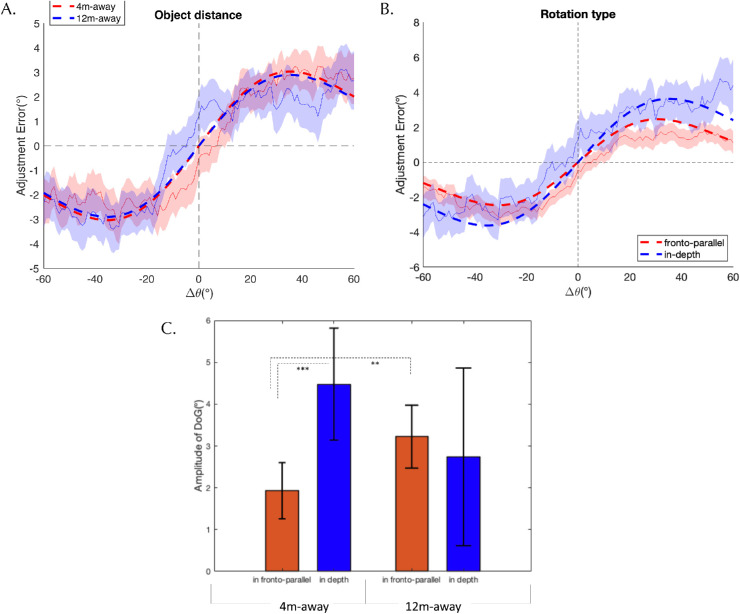

Figure 3.

Results of Experiment 1. Adjustment errors as a function of the orientation difference between the previous and current trial. The DoG curves are fitted to the aggregate group data. The thin solid lines show the raw data and the shaded areas denote the 95% bootstrap intervals. The thick dashed lines indicate the fitted DoG curves. (A) DoG curves for different types of object rotation. (B) DoG curves for different distances of the object from the observer. (C) The amplitude of the DoG curves for the interaction of the two conditions. Error bars denote the 95% bootstrap intervals. The number of stars indicates the level of significance of the difference between conditions (*p < 0.05; **p < 0.01; ***p < 0.001).

The first important result is that we found strong attractive serial dependence (large amplitudes of the fitted DoGs) in our VR paradigm. Judgments of the orientation of the stimulus were strongly pulled toward the orientation on the preceding trial, with an overall amplitude of 3.7 degrees (p < 0.001). Figures 3A and 3B show the DoG curves obtained for the different rotation types and the different object distances.

Second, serial dependence differed markedly depending on whether the object was rotated in depth or in the fronto-parallel plane. The positive bias was larger for rotation in depth (amplitude = 4.4 degrees) than for rotation in the fronto-parallel plane (amplitude = 2.9 degrees, p < 0.01). Third, the farther away the object was rendered in VR, the larger the positive bias (amplitude at 12 m = 4.1 degrees vs. 3.2 degrees at 4 m, p = 0.03).

Figure 3C shows the size of serial dependence biases as a function of the apparent distance from the observer and of whether the objects were rotated in depth or in the fronto-parallel plane. Pair-wise permutation tests between the conditions indicate that both effects were mostly driven by a large attractive bias when the object was farther away and rotated in depth. When the current object was at 4 m, the rotation type did not significantly affect DoG amplitudes; when the objects were 12 m away, however, the amplitude for in-depth rotation (5.7 degrees) was significantly larger than that for fronto-parallel rotation (2.6 degrees, p < 0.001). We did not observe any significant effect of the distance of the previous object (i.e. the inducer) on serial dependence on the current trial (p = 0.4). Nor did we observe location specificity: whether the current object was at the same distance as the object on the previous trial or at a different one did not significantly affect DoG amplitudes (p = 0.13).
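Pair-wise comparisons of DoG amplitudes between conditions can be sketched as a label-shuffling permutation test: condition labels are randomly reassigned across trials, DoGs are refitted to both groups, and the observed amplitude difference is compared with the resulting null distribution. The sketch below assumes trials pooled across observers, mean correction within each group, a two-sided test, and 1,000 permutations by default (the text reports 10,000); it is not necessarily the exact procedure used here.

```python
import numpy as np
from scipy.optimize import curve_fit

C = np.sqrt(2) / np.exp(-0.5)  # makes `a` the peak height of the DoG

def dog(x, a, w):
    """First derivative of a Gaussian with peak amplitude a."""
    return x * a * w * C * np.exp(-(w * x) ** 2)

def dog_amplitude(delta, error):
    """Peak amplitude of a DoG fitted to mean-corrected errors."""
    error = error - error.mean()
    (a, _), _ = curve_fit(dog, delta, error, p0=(1.0, 0.05),
                          bounds=([-np.inf, 1e-6], [np.inf, np.inf]))
    return a

def permutation_test(delta, error, is_cond_b, n_perm=1000, seed=0):
    """Two-sided permutation test on the amplitude difference (B minus A)."""
    delta = np.asarray(delta, float)
    error = np.asarray(error, float)
    labels = np.asarray(is_cond_b, bool)
    observed = (dog_amplitude(delta[labels], error[labels])
                - dog_amplitude(delta[~labels], error[~labels]))
    rng = np.random.default_rng(seed)
    null = np.empty(n_perm)
    for i in range(n_perm):
        perm = rng.permutation(labels)  # shuffle condition labels across trials
        null[i] = (dog_amplitude(delta[perm], error[perm])
                   - dog_amplitude(delta[~perm], error[~perm]))
    p = (np.sum(np.abs(null) >= abs(observed)) + 1) / (n_perm + 1)
    return observed, p
```

Adding one to both the numerator and the denominator of the p-value is a common convention that prevents a permutation p-value of exactly zero.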

In sum, in Experiment 1, we found a large serial dependence effect on perceptual decisions in a virtual reality environment. Judgments of the most recently shown item were strongly biased toward the item presented on the preceding trial. Notably, these effects were large compared with previous estimates from two-dimensional orientation tasks (Fritsche et al., 2017; Pascucci et al., 2019; Rafiei et al., 2021a), although such estimates vary between experiments. Perhaps the most novel and interesting finding is that serial dependence was larger for rotation in depth than in the fronto-parallel plane. Previous work has shown that serial dependence tends to be larger when visual information is less reliable (Cicchini, Mikellidou, & Burr, 2018; van Bergen & Jehee, 2019). Here, mean adjustment errors were significantly larger when the object was rotated in depth than in the fronto-parallel plane, suggesting that uncertainty is likely higher for in-depth rotation than for two-dimensional fronto-parallel rotation, which may in turn produce larger serial dependence.

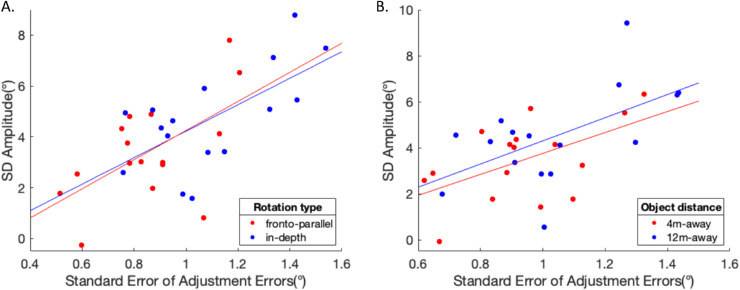

To further investigate the potential influence of uncertainty on serial dependence, we examined how DoG amplitudes change as a function of individual observers’ response “scatter” (Figure 4), which we used as a proxy for the uncertainty in performing the task with a given stimulus. Although there were individual differences, serial dependence amplitudes correlated positively with response scatter regardless of whether the object was rotated in the fronto-parallel plane (R² = 0.34, F(1, 14) = 7.2, p < 0.05) or in depth (R² = 0.41, F(1, 14) = 9.69, p < 0.01). We observed the same positive correlation for both object distances (Figure 4B): individuals with higher response scatter tended to have higher amplitudes, both when the object was 4 m away (R² = 0.29, F(1, 14) = 5.65, p < 0.05) and when it was 12 m away (R² = 0.32, F(1, 14) = 6.58, p < 0.05). These results are in line with our previous interpretation: as uncertainty increases, the amplitude of serial dependence increases as well.
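The regressions of DoG amplitude on response scatter can be sketched as a simple linear regression across observers. In this minimal sketch, response scatter is computed as the standard error of an observer's adjustment errors (the definition given in the Figure 4 caption); the function names are hypothetical. With one predictor, F(1, n − 2) = R²(n − 2) / (1 − R²), which equals the squared t statistic of the slope.

```python
import numpy as np
from scipy import stats

def response_scatter(errors):
    """Standard error of one observer's adjustment errors (proxy for uncertainty)."""
    errors = np.asarray(errors, float)
    return errors.std(ddof=1) / np.sqrt(errors.size)

def scatter_regression(scatter, amplitude):
    """Regress DoG amplitude on response scatter across observers.

    Returns (R^2, F, residual df, p). With a single predictor, F(1, n - 2)
    equals the squared t statistic of the slope, so p matches linregress.
    """
    res = stats.linregress(scatter, amplitude)
    df_resid = len(scatter) - 2
    r2 = res.rvalue ** 2
    f = r2 * df_resid / (1.0 - r2)
    p = stats.f.sf(f, 1, df_resid)
    return r2, f, df_resid, p
```

For example, R² = 0.34 with 16 observers gives F(1, 14) = 0.34 × 14 / 0.66 ≈ 7.2, consistent with the value reported for fronto-parallel rotation.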

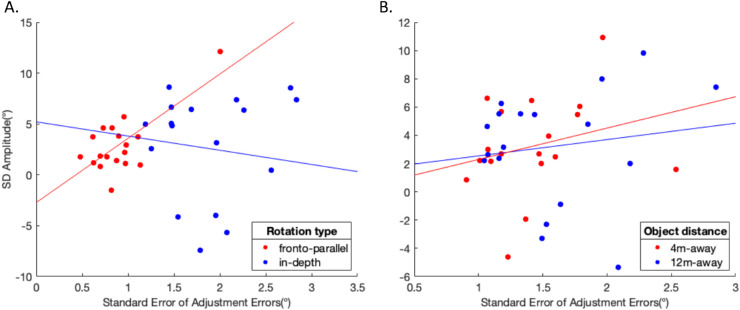

Figure 4.

(A) Amplitude of DoG curves as a function of response scatter (i.e. standard error of adjustment errors) of each individual observer for different rotation types. Each point corresponds to data from one rotation type condition of an individual observer. Red and blue data points are obtained from the frontoparallel and in-depth rotation conditions, respectively. The red and the blue lines are the best fitting linear models for the data from frontoparallel and in-depth rotation conditions, respectively. (B) Amplitude of DoG curves as a function of standard error of adjustment errors made by each individual observer for different object distances. Each point corresponds to data from one distance condition of an individual observer. Red and blue data points are obtained from the 4 m-away and 12 m-away conditions, respectively. The red and the blue lines are the best fitting linear models for the data from the 4 m-away and 12 m-away conditions, respectively.

Experiment 2 – Object specificity of serial dependence

In Experiment 1, the object identity was constant across trials, leaving open the question of whether serial dependence is object specific. In Experiment 2, we tested object specificity by comparing serial dependence between identical objects, different objects from the same category, and objects from different categories. We again chose orientation as the key feature of the objects to enable easy comparison with the large number of previous serial dependence studies on orientation biases.

Methods

Participants

Eleven participants (Mage = 26.36, SDage = 5.28), who had normal or corrected-to-normal vision, gave written informed consent and took part in the study. All were volunteers and staff or students at the University of Iceland. All aspects of the experiment were performed in accordance with the requirements of the local bioethics committee and with the Declaration of Helsinki for testing human participants.

Stimulus

The equipment and the stimulus properties were identical to those reported for Experiment 1, except for the following. Rather than using a single object throughout the experiment, we generated four different objects: two types of swords (size = 90 cm) and two types of toothbrushes (size = 30 cm). They were rendered in VR to appear 1.5 m away from the observer (Figure 5).

Figure 5.

Objects used in Experiment 2. On the left: The two different types of swords. On the right: The two different types of toothbrushes.

Procedure and design

We tested three conditions for object transitions from the previous to the current trial:

1) Identical object: the second object was the same as the first one (e.g. red toothbrush -> red toothbrush).
2) Same category: the second object was a different exemplar from the same category as the first one (e.g. red toothbrush -> electric toothbrush).
3) Different category: the second object came from a different category than the first one (e.g. red toothbrush -> sword).

In addition to object transitions, we also manipulated the rotational plane of the object (in-depth or fronto-parallel), as in Experiment 1.
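The three transition types can be derived from the sequence of presented objects by comparing each trial's object with the one on the preceding trial. In the minimal sketch below, the two sword models are given hypothetical labels ("sword A", "sword B"); only the red and electric toothbrushes are named in the text.

```python
from typing import Dict, List

# Object -> category mapping; the sword labels are hypothetical placeholders.
CATEGORY: Dict[str, str] = {
    "red toothbrush": "toothbrush",
    "electric toothbrush": "toothbrush",
    "sword A": "sword",
    "sword B": "sword",
}

def transition_type(previous: str, current: str) -> str:
    """Classify the change from the previous trial's object to the current one."""
    if previous == current:
        return "identical"
    if CATEGORY[previous] == CATEGORY[current]:
        return "same category"
    return "different category"

def label_sequence(objects: List[str]) -> List[str]:
    """Label every trial after the first by its transition from the preceding trial."""
    return [transition_type(p, c) for p, c in zip(objects, objects[1:])]

print(label_sequence(["red toothbrush", "red toothbrush",
                      "electric toothbrush", "sword A"]))
```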

The procedure was identical to Experiment 1, except that each observer completed a total of 216 trials: three object transitions (identical object, same category, or different category) times two rotation planes (fronto-parallel or in depth) times nine Δθ values times four repetitions. These trials were split into four blocks of 54 trials. Observers could rest between blocks if they wished; those who chose not to rest were required to take a break after the second block. The experiment took roughly 50 minutes to complete.

Results

Before all the observers’ data was aggregated, trials containing adjustment errors larger than three standard deviations from the mean were excluded (1.4%). The average absolute adjustment error was significantly larger when the objects were rotated in depth (M = 10.36 degrees, SD = 1.81 degrees) compared to fronto-parallel rotation (M = 6.42 degrees, SD = 0.99 degrees) (t(20) = 6.32, p < 0.001).

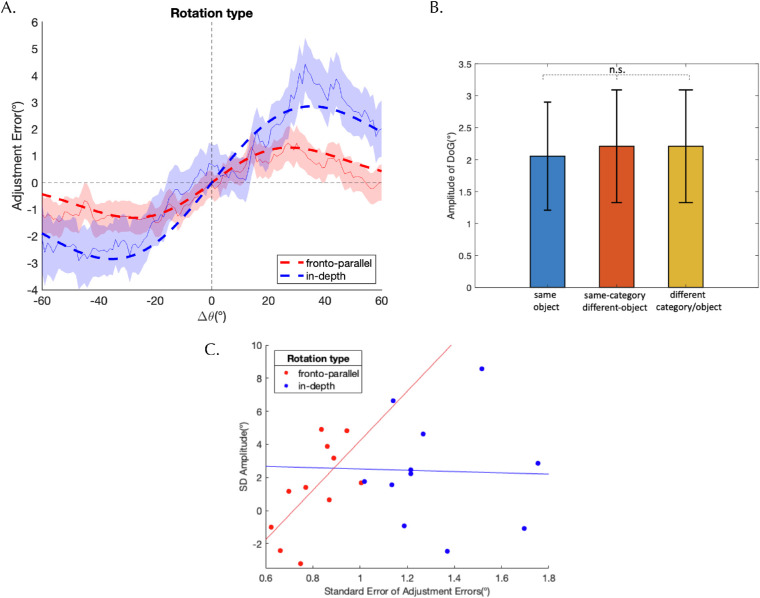

Overall, we found robust serial dependence, although it was smaller than in Experiment 1. Importantly, we replicated the finding that serial dependence was larger when the object rotated in depth than when it rotated in the fronto-parallel plane. The bias amplitude for in-depth rotation (2.8 degrees) was significantly larger than for fronto-parallel rotation (1.3 degrees, p = 0.008; Figure 6A).

Figure 6.

(A) Adjustment errors as a function of the orientation difference between the previous and current object in Experiment 2. The DoG curves are fitted to the aggregate group data. The thin solid lines show the raw data and the shaded areas denote the 95% bootstrap intervals. The thick dashed lines indicate the fitted DoG curves. Red and blue colors indicate fronto-parallel and in-depth rotation, respectively. (B) The amplitude of the DoG curves for the three object transition conditions. Error bars denote the 95% bootstrap intervals. n.s.: not significant. (C) Amplitude of DoG curves as a function of response scatter (i.e. standard error of adjustment errors) of each individual observer for different rotation types. Each point corresponds to data from one rotation type for an individual observer. Red and blue data points represent the fronto-parallel and in-depth rotation conditions, respectively, and the red and blue lines are the best-fitting linear models.

Figure 6B shows the serial dependence amplitude in Experiment 2 as a function of whether the two consecutively presented objects were the same object, were different objects from the same category, or whether they came from different categories. Interestingly, there was no effect of object or category switch upon serial dependence amplitude. All pair-wise permutation tests yielded nonsignificant results (p > 0.05). Apart from object switches, we also compared whether the category of the object on the current trial (swords versus toothbrushes) influenced the amplitude of serial dependence. However, whether the object was a sword (amplitude = 2.17 degrees) or a toothbrush (amplitude = 1.81 degrees) did not significantly influence the bias amplitude (p > 0.05).

We also assessed the influence of stimulus uncertainty on serial dependence by plotting the DoG amplitudes as a function of response scatter (Figure 6C). Rotation in the depth plane resulted in higher response scatter than fronto-parallel rotation. The amplitudes correlated positively with response scatter for fronto-parallel rotation (R² = 0.43, F(1, 9) = 6.7, p < 0.05), but not for in-depth rotation.

Experiment 3 – Retinal size versus actual distance

Experiment 3 was conducted to address an issue regarding the distance effect observed in Experiment 1. In Experiment 1, serial dependence increased when the object was rendered farther away from observers in VR. However, retinal size was not controlled for in Experiment 1: because the object's rendered size was fixed, the farther object also subtended a smaller visual angle. In Experiment 3, we therefore investigated the distance effect while controlling for retinal size. Indeed, one of the advantages of rendering visual stimuli with VR technology is that perceived distance can be manipulated independently of retinal size.

Methods

We recruited 18 participants (Mage = 23.94, SDage = 5.47) for this experiment. The methods were identical to Experiment 1, except for the following changes. First, the rendered size of the object was scaled with its distance from the observer so that its retinal size was constant on all trials. The length of the object was rendered to be either 30 cm or 90 cm depending on whether it was 4 m or 12 m away, respectively. Therefore, the length of the object subtended 4.3 degrees of visual angle regardless of its distance from the observer.
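The object lengths required for a constant retinal size follow from inverting the visual-angle formula: an object subtending θ degrees at distance d must be 2·d·tan(θ/2) long. The minimal sketch below computes this for a 4.3 degree target at both rendered distances, assuming the object is viewed face-on.

```python
import math

def size_for_visual_angle(angle_deg: float, distance_m: float) -> float:
    """Linear size (m) that subtends angle_deg when viewed face-on at distance_m."""
    return 2 * distance_m * math.tan(math.radians(angle_deg) / 2)

def visual_angle_deg(size_m: float, distance_m: float) -> float:
    """Inverse check: visual angle (deg) of a face-on extent of size_m metres."""
    return math.degrees(2 * math.atan(size_m / (2 * distance_m)))

for d in (4.0, 12.0):
    length = size_for_visual_angle(4.3, d)
    print(f"{d:g} m: {length * 100:.0f} cm -> {visual_angle_deg(length, d):.1f} deg")
```

This yields lengths of about 30 cm at 4 m and about 90 cm at 12 m.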

Second, instead of using a single object throughout the experiment and for all observers, each observer was shown two objects randomly chosen from three possible objects (the flashlight from Experiment 1, and the sword and red toothbrush from Experiment 2). There were six possible ordered object pairs from this set of three objects, and the object pairs were counterbalanced across participants to avoid any object-specific effects. For each observer, one object was used in the first half of the experiment and the other in the second half. Otherwise, the design and the procedure of the experiment were identical to Experiment 1.
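With three candidate objects and the first and second halves of the session distinguished, there are 3 × 2 = 6 ordered object pairs. Below is a minimal sketch of one possible counterbalancing scheme that cycles participants through the six orders; the assignment rule and object labels are assumptions, since the text does not specify them.

```python
from itertools import permutations
from typing import List, Tuple

OBJECTS = ("flashlight", "sword", "red toothbrush")
# Six ordered (first-half object, second-half object) pairs
ORDERS: List[Tuple[str, str]] = list(permutations(OBJECTS, 2))

def assign_orders(n_participants: int) -> List[Tuple[str, str]]:
    """Cycle through the six orders so each is used equally often (hypothetical scheme)."""
    return [ORDERS[i % len(ORDERS)] for i in range(n_participants)]

for participant, order in enumerate(assign_orders(6), start=1):
    print(participant, order)
```

With 18 participants, each order is used three times.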

Results

As in the previous analyses, trials with adjustment errors more than three standard deviations from the individual observer's mean were excluded (2.5% of all trials). The results (see Figure 7) show that the average absolute adjustment error did not differ depending on whether the object was 4 m (M = 11.89 degrees, SD = 3.39 degrees) or 12 m away (M = 13.05 degrees, SD = 4.29 degrees) (t(34) = 0.90, p > 0.05). However, the average error was again significantly larger when the objects were rotated in depth (M = 17.13 degrees, SD = 5.45 degrees) than when they were rotated in the fronto-parallel plane (M = 8.00 degrees, SD = 2.59 degrees) (t(34) = 6.42, p < 0.001).
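The error computation and trial exclusion described above can be sketched as follows. Several details are assumptions. Errors are taken in the 180-degree orientation space of the symmetric response bar and wrapped to [-90, 90). The sample standard deviation is computed per observer on signed errors. The function names are hypothetical.

```python
import statistics

# Illustrative sketch; wrapping convention and function names are assumptions.


def orientation_error(response_deg: float, target_deg: float) -> float:
    """Signed adjustment error in an axial (180-degree) orientation space,
    wrapped to the interval [-90, 90)."""
    return (response_deg - target_deg + 90.0) % 180.0 - 90.0


def exclude_outliers(errors: list[float], n_sd: float = 3.0) -> list[float]:
    """Keep only errors within n_sd standard deviations of this observer's mean error."""
    mean = statistics.mean(errors)
    sd = statistics.stdev(errors)
    return [e for e in errors if abs(e - mean) <= n_sd * sd]


def mean_absolute_error(errors: list[float]) -> float:
    """Average absolute adjustment error (degrees) for one observer and condition."""
    return statistics.mean(abs(e) for e in errors)
```

For example, a response of 0 degrees to a target at 100 degrees yields an error of +80 degrees rather than -100, because orientations 180 degrees apart are equivalent on the response bar.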

Figure 7.

The results of Experiment 3. Adjustment errors as a function of the orientation difference between the previous and current object. The DoG curves are fitted to the aggregate group data. The thin solid lines show the raw data, and the shaded areas denote 95% bootstrapped confidence intervals. The thick dashed lines indicate the fitted DoG curves. (A) DoG curves for different object distances from the observer. (B) DoG curves for different types of object rotation. (C) DoG amplitudes for each combination of object distance and rotation type. Error bars denote 95% bootstrapped confidence intervals. The number of stars indicates the level of significance between conditions (*p < 0.05; **p < 0.01; ***p < 0.001).

When retinal size was controlled for, serial dependence amplitude did not differ significantly (p = 0.41) between objects shown 4 m and 12 m away from the observer. The amplitude of the bias was 2.97 degrees when the object was 4 m away and 2.83 degrees when it was 12 m away. This suggests that the effects of distance found in Experiment 1 were due to changes in the retinal size of the object. However, an effect of distance was observed when the object was rotated in the fronto-parallel plane (compare the red bars in Figure 7C), but not when it was rotated in depth, which instead yielded large individual differences (the blue bars in Figure 7C).
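The DoG amplitudes and bootstrapped intervals reported above can be sketched as follows. The sketch assumes the derivative-of-Gaussian parameterisation commonly used since Fischer and Whitney (2014): y = x·a·w·c·exp(−(wx)²), with c = √2 / exp(−0.5), so that a equals the peak bias in degrees. Here x is the previous-minus-current orientation difference and y is the signed adjustment error. The following are all illustrative assumptions rather than the authors' analysis pipeline:

* the coarse-to-fine grid search over w;
* the grid ranges;
* resampling observers for a percentile bootstrap of the amplitude difference;
* all function names.

```python
import math
import random

# Illustrative sketch; fitting routine, grids and names are assumptions.
# Scaling constant chosen so that the parameter `a` equals the peak bias (degrees).
C = math.sqrt(2) / math.exp(-0.5)


def dog(x: float, a: float, w: float) -> float:
    """Derivative-of-Gaussian bias curve; its maximum, a, lies at x = 1 / (w * sqrt(2))."""
    return x * a * w * C * math.exp(-((w * x) ** 2))


def _fit_for_width(w: float, deltas: list[float], errors: list[float]) -> tuple[float, float]:
    """For a fixed width w the model is linear in a, so a has a closed-form least-squares value."""
    basis = [dog(x, 1.0, w) for x in deltas]
    denom = sum(b * b for b in basis)
    if denom == 0.0:
        return 0.0, math.inf
    a = sum(b * e for b, e in zip(basis, errors)) / denom
    sse = sum((e - a * b) ** 2 for b, e in zip(basis, errors))
    return a, sse


def fit_dog(deltas: list[float], errors: list[float]) -> tuple[float, float]:
    """Return (amplitude a, width w) from a coarse-to-fine grid search over w."""
    def best_on(grid: list[float]) -> tuple[float, float, float]:
        best = (0.0, grid[0], math.inf)
        for w in grid:
            a, sse = _fit_for_width(w, deltas, errors)
            if sse < best[2]:
                best = (a, w, sse)
        return best

    _, w0, _ = best_on([i * 0.01 for i in range(1, 101)])  # coarse grid: 0.01 to 1.00
    fine = [w0 + j * 0.0005 for j in range(-20, 21) if w0 + j * 0.0005 > 0]
    a, w, _ = best_on(fine)
    return a, w


def pool(observers: list[tuple[list[float], list[float]]]) -> tuple[list[float], list[float]]:
    """Concatenate the (deltas, errors) trials of several observers into one data set."""
    xs = [x for deltas, _ in observers for x in deltas]
    ys = [y for _, errors in observers for y in errors]
    return xs, ys


def bootstrap_amplitude_difference(cond1, cond2, n_boot=1000, seed=0):
    """cond1[i], cond2[i]: (deltas, errors) of observer i in two conditions.
    Resample observers with replacement, refit both pooled conditions, and return
    the 2.5th and 97.5th percentiles of the amplitude difference (cond1 - cond2)."""
    rng = random.Random(seed)
    n = len(cond1)
    diffs = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        a1, _ = fit_dog(*pool([cond1[i] for i in idx]))
        a2, _ = fit_dog(*pool([cond2[i] for i in idx]))
        diffs.append(a1 - a2)
    diffs.sort()
    return diffs[int(0.025 * (n_boot - 1))], diffs[int(0.975 * (n_boot - 1))]
```

Resampling whole observers, rather than individual trials, keeps each observer's trials together, so the interval reflects between-observer variability. In pure Python this is slow for large n_boot. A vectorised numerical library would be the practical choice for real data.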

Importantly, we once again replicated the effect of the rotation plane on the amplitude of the serial biases. Serial dependence was larger when the object was rotated in depth (amplitude = 3.84 degrees) than when it was rotated in the fronto-parallel plane (amplitude = 2.45 degrees, p = 0.02). However, this effect was driven by a large attractive serial dependence bias when the object was 4 m away and rotated in depth (see Figure 7C, left side of the plot). When the object was 12 m away, DoG amplitudes for in-depth rotation varied considerably across individuals.

To assess the influence of stimulus uncertainty, we examined the correlation between response scatter and serial dependence amplitudes. Amplitude correlated with response scatter when the object was rotated in the fronto-parallel plane (R² = 0.51, F(1, 16) = 16.6, p < 0.01), but not when it was rotated in depth (Figure 8A). The positive relation between serial dependence amplitude and response scatter was not significant when the data were divided by object distance (Figure 8B). This probably reflects large individual differences in serial dependence for in-depth rotation. As in Experiment 2, in-depth rotation yielded higher uncertainty and larger individual differences. This may have prevented any correlation between response scatter and serial dependence from emerging.
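For a regression with a single predictor, the reported R² and F are linked: F(1, n − 2) = R² / (1 − R²) · (n − 2), where n is the number of data points (here, one per observer). The sketch below computes both from raw data with ordinary least squares. The function names are hypothetical.

```python
# Illustrative sketch; function names are hypothetical.


def f_from_r2(r2: float, n: int) -> float:
    """F statistic of a one-predictor regression: F = R^2 / (1 - R^2) * (n - 2)."""
    return r2 / (1.0 - r2) * (n - 2)


def linear_fit_r2_f(x: list[float], y: list[float]) -> tuple[float, float]:
    """Ordinary least-squares regression of y on x (one predictor).
    Returns (R^2, F), where F has (1, n - 2) degrees of freedom."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    r2 = sxy ** 2 / (sxx * syy)  # squared Pearson correlation
    return r2, f_from_r2(r2, n)
```

With 18 observers (so df = 16), f_from_r2(0.51, 18) gives roughly 16.7. This is consistent with the reported F(1, 16) = 16.6 once the rounding of R² is taken into account.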

Figure 8.

Amplitude of DoG curves as a function of response scatter (i.e. standard error of adjustment errors) of each individual observer for the different conditions. (A) Each point corresponds to data from one rotation type condition of an individual observer. Red and blue data points represent fronto-parallel and in-depth rotation, respectively. The red and the blue lines are the best-fitting linear models. (B) Each point corresponds to data from one distance condition for an individual observer. Red and blue data points represent the 4 m and 12 m conditions, respectively, and the red and the blue lines are the best-fitting linear models for the corresponding conditions.

General discussion

Although serial dependence has been extensively investigated, it has mostly been studied with simple 2D stimuli (such as Gabor patches, drifting dot patterns, or the stimuli used in numerosity judgments). Serial dependence is considered to be an adaptive strategy that serves to preserve continuity in naturalistic environments. We therefore argue that examining serial dependence in VR can provide more informative and potentially more accurate insights into these perceptual history biases (Kristjánsson & Draschkow, 2021).

Our study is an initial attempt at characterizing serial dependence in a more realistic setting, with three-dimensional objects rendered in a virtual environment. Observers were presented with objects commonly encountered in daily life. To match our paradigm to common two-dimensional serial dependence studies, we used objects with a distinct orientation axis (e.g. a flashlight or a toothbrush). This allowed comparison with orientation-based serial dependence studies. Observers reported the orientation of the most recent item in an adjustment task within the three-dimensional VR environment. Importantly, the object was rotated either in depth or in the fronto-parallel plane. VR therefore enabled us, for the first time in serial dependence studies, to assess the effect of the rotation plane. We also manipulated the distance between the observer and the object.

There are a few important points to take away from these studies.

i) Strong positive serial biases were observed in all conditions. Interestingly, these biases were larger than most of those reported in recent studies of orientation (e.g. Cicchini et al., 2017; Fritsche et al., 2017; Pascucci et al., 2019; Kim et al., 2020; Rafiei et al., 2021a; Rafiei et al., 2021b). In all conditions, observers' orientation judgments were biased toward the orientation seen on previous trials, showing standard attractive serial dependence.

ii) Across all three experiments, serial dependence was larger overall when the items were rotated in depth than when they were rotated in the fronto-parallel plane. These results may therefore indicate that the additional uncertainty introduced by the third dimension yields larger and more robust positive serial dependence than in standard two-dimensional studies.

iii) We found no evidence that serial dependence is selective for object-level representations. This is in many ways analogous to the results reported by Ceylan et al. (2021) and Goettker and Stewart (2022).

iv) In Experiment 1, larger biases were observed when the object was rendered further away from the observer. Experiment 3 showed that the retinal size of the object could account for this.

Non-specificity of serial dependence

The non-specificity of serial dependence in Experiment 2 is reminiscent of the findings of Ceylan and colleagues (2021) and Goettker and Stewart (2022). They reported serial dependence even between clearly dissimilar stimuli (i.e. the orientation of Gabor patches versus dot patterns, or the speed of a car versus a dynamic blob). In addition, Houborg, Pascucci, Tanrikulu, and Kristjánsson (in press) recently reported that serial dependence for the orientation of Gabor patches occurred independently of changes in their color. Collectively, these findings suggest that serial dependence for orientation does not operate on integrated objects, even when observers judge the orientation of familiar real-world objects.

Ceylan et al. (2021) interpreted this non-specificity as indicating that serial dependence is not a lower-level phenomenon. Our results seem consistent with that interpretation. Alternatively, the orientation feature may have been abstracted away from the other features of the object. It would then have affected the processing of another object's orientation on the next trial (Houborg et al., in press; Kwak & Curtis, 2022). This would be consistent with object-independent serial dependence that still operates at the level of early orientation processing (Murai & Whitney, 2021).

Previous studies have demonstrated that serial dependence can occur between different visual objects (Manassi, Liberman, Chaney, & Whitney, 2017; Ceylan et al., 2021; Fornaciai & Park, 2019, 2022). Here, we show that serial dependence for an elementary feature like orientation occurs independently of changes in the object. This holds even with stimuli resembling real objects that one might encounter in daily life. We manipulated the semantic properties of the object (the collection of all the features that describe its unique identity and its category). Even so, observers were still biased to reproduce an object's orientation as similar to that of a different object seen in the recent past. Object identity does seem to play a role in serial dependence when contextual and semantic properties are task-relevant, that is, when the task involves conjunctions of visual features or multidimensional aspects of an object (Fischer et al., 2020; Collins, 2022). When the task involves elementary visual features, however, object identity appears to play no role, as in the present study. This again highlights the importance of the task demands, the type of response, and the processing level of the target feature for the object selectivity of serial dependence.

Stimulus uncertainty and serial dependence

Overall, we confirmed the ubiquitous nature of serial dependence, even in a VR environment. Every condition of all three experiments revealed strong positive serial biases towards orientations from preceding trials (up to 6–7 degrees in certain conditions). These amplitudes are larger than those observed in previous studies. The exception is Fischer and Whitney (2014), who observed amplitudes of up to approximately 8 degrees. Moreover, previous studies have observed repulsive biases when the orientation difference between the previous and current object was large (Bliss, Sun, & D'Esposito, 2017; Fritsche et al., 2017; Samaha, Switzky, & Postle, 2019). In our experiments, however, the DoG curves were so wide that no repulsive effects appeared at larger orientation differences.

One potential reason for the strength of serial dependence found here is the higher uncertainty introduced by the third dimension (i.e. depth). For example, in all three experiments we observed stronger positive serial dependence when the object was rotated in depth. This manipulation may have increased stimulus uncertainty in orientation estimates, leading to larger positive serial dependence. This would be consistent with previous studies showing a positive relationship between stimulus uncertainty and serial dependence biases (Cicchini et al., 2018; van Bergen & Jehee, 2019; Ceylan et al., 2021; Kondo, Murai, & Whitney, 2022).
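Why more uncertainty should yield more serial dependence can be illustrated with reliability-weighted (Kalman-filter-like) integration, in the spirit of accounts such as Cicchini et al. (2018). The sketch treats the previous orientation as a Gaussian prior and combines it with a noisy current measurement. The weight on the previous stimulus is σ_current² / (σ_current² + σ_previous²). This is an illustrative model under simple Gaussian assumptions, not a model fitted in this study. The function name and the noise values in the test are hypothetical.

```python
# Illustrative sketch of reliability-weighted integration (hypothetical model,
# not fitted to the present data).


def integrate_with_previous(current_deg: float, previous_deg: float,
                            sigma_current: float, sigma_previous: float) -> tuple[float, float]:
    """Combine the current orientation measurement with the previous stimulus
    orientation, treated as a Gaussian prior.
    Returns (estimate in [0, 180), weight given to the previous stimulus)."""
    # Signed axial difference (previous - current), wrapped to [-90, 90)
    delta = (previous_deg - current_deg + 90.0) % 180.0 - 90.0
    # The noisier the current measurement, the more weight the previous stimulus gets
    w_prev = sigma_current ** 2 / (sigma_current ** 2 + sigma_previous ** 2)
    return (current_deg + w_prev * delta) % 180.0, w_prev
```

Doubling sigma_current, for instance by making the orientation harder to read in depth, increases the pull toward the previous orientation. In this pure weighting scheme, the bias grows linearly with the orientation difference, unlike the DoG shape. Fuller models typically also make the weight depend on how similar successive stimuli are, so the sketch only illustrates the uncertainty dependence.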

Other factors may also have contributed to uncertainty in our experiments. Our stimuli were familiar real-life objects whose shapes are far more complex than simple bars or oriented Gabors. For example, our objects had a clear longitudinal axis with a specific orientation. Even so, their borders were not straight contours but included undulations. This could lead to noisier orientation encoding than the orientation signal from a straight line. Furthermore, VR head-mounted displays have lower resolution than the high-resolution screens used to show two-dimensional stimuli, which could add further noise to the encoding process. Another difference is that a line or an oriented Gabor repeats itself every 180 degrees. Our objects, in contrast, were not symmetric about their midpoint, so technically their orientations repeat only every 360 degrees. We do not think this should have had a large effect, however. The response bar was symmetric about its midpoint, so participants reported their estimates in a 180-degree orientation space, as in previous serial dependence studies. Among these display and stimulus differences, it is difficult to pin down the exact reasons for the large DoG amplitudes we observed. These differences may also explain why we observed no repulsive biases when the orientation difference between the current and the previous object was large. Such repulsive biases are generally attributed to adaptation effects (Pascucci et al., 2019), and our displays do not seem to have produced such effects.

This relationship between stimulus uncertainty and serial dependence may explain the effects observed in all three experiments, especially the effects of rotation type on serial dependence biases. DoG amplitude was positively correlated with response scatter, at least for fronto-parallel rotation. This suggests that uncertainty contributed to the large serial biases observed here. Stimulus uncertainty (as inferred from task performance) was presumably higher in some conditions, such as in-depth rotation in Experiments 2 and 3. In those conditions the correlation disappeared and individual differences in serial biases emerged. This becomes apparent when response scatter for the two rotation types is compared across the three experiments (Figures 4A, 6C, and 8A). The separation between the two rotation-type conditions (the blue and the red data points in these plots) is very clear in Experiments 2 and 3. Neither experiment yielded a positive correlation for in-depth rotation. In contrast, response scatter was lower overall in Experiment 1, which might have allowed a significant positive correlation to emerge for both rotation types. These results should nevertheless be interpreted with caution, because our experiments were not designed to manipulate or measure the effect of stimulus uncertainty. Although they do not allow strong conclusions about the effect of stimulus uncertainty, they suggest a direction for future studies: manipulating uncertainty by introducing depth (via stereoscopic disparity).

Further comparison between experiments may uncover the factors that influence serial biases, but it also raises questions. In Experiment 1, the effect of rotation type was observed only when the object was 12 m away. In Experiment 3, it was observed only when the object was 4 m away. This suggests that the crucial factor determining the DoG amplitudes was the retinal size of the object rather than its distance. The retinal size of the 12 m-away object in Experiment 1 was equal to that of the 4 m-away object in Experiment 3. The pattern of results was similar in these two conditions, and what they had in common was the retinal size of the object. However, we also observed an effect of distance on DoG amplitudes in Experiment 3 when the object was rotated in the fronto-parallel plane. Because retinal size was held constant in Experiment 3, this effect was independent of the retinal size of the object. In addition, in Experiment 2, the swords were three times larger than the toothbrushes, yet this difference did not affect serial dependence amplitude. To sum up, we observed separate effects of retinal size and of object distance on serial dependence, but not in all experimental conditions. In principle, serial dependence could arise before stereo depth cues, such as those created by the rendered distance in VR, have their effect. Our results cannot answer this, however. Future studies could disentangle the effects of stimulus uncertainty and distance. This would show whether depth cues (such as stereo-disparity) influence serial biases independently of stimulus uncertainty, and would in turn help determine whether the bias depends on retinal or perceived size.

Conclusions

Our experiments were motivated by the call from Kristjánsson and Draschkow (2021) to put phenomena of visual cognition to the real-world test, a call we agree with. As an initial step, we tested serial dependence of orientation for real-world objects rendered in VR. We believe that our results provide a proof of concept for serial dependence effects within a 3D environment. The serial dependence effects were notably large, exceeding those in many recent serial dependence studies. The other important finding is that the biases were larger when the object was rotated in depth than when it was rotated in the fronto-parallel plane. This could suggest that realistic visual environments involve higher stimulus uncertainty overall than the stripped-down conditions used in most other serial dependence studies.

Future studies can utilize VR to focus on more interactive visual tasks that observers might typically perform in their daily life. This could provide insights into what particular aspects of the scene, object, task, or action contribute to the direction and amplitude of serial biases. Our current initial step in testing serial dependence in a VR environment will hopefully inspire further serial dependence studies with naturalistic stimuli and environments to aid the understanding of the relationship between such history effects in vision and how humans perform complex visual tasks in the real world.

Acknowledgments

The authors are grateful to Tómas Ragnarsson for his help in making some of the figures in this article, as well as for his help in collecting the data for the first two experiments. We are also grateful to Katrin Fjóla Aspelund for her help in collecting the data for Experiment 3.

Supported by funding from the Swiss National Science Foundation (grant nos. PZ00P1_179988 and PZ00P1_179988/2 to D.P.), from the Icelandic Research Fund (#207045-052 to A.K. and D.P.) and the Research Fund of the University of Iceland (to A.K.).

The data from the experiments reported in this paper, scripts for running the experiments, and demo videos of typical trials can be found at https://osf.io/e6vgd.

Commercial relationships: none.

Corresponding author: Ömer Dağlar Tanrikulu.

Email: ot1031@unh.edu.

Address: Department of Psychology, University of New Hampshire, 105 Main St, Durham, NH 03824, USA.

Footnotes

This is the size of the rectangular bounding box of the 3D model of the object (length × width × depth). All following size measurements of objects reported in this article also refer to the size of the bounding box.

However, this never occurred in any of the experiments.

References

- Adams, W. J. (2007). A common light-prior for visual search, shape, and reflectance judgments. Journal of Vision, 7(11), 1–7, 10.1167/7.11.11.

- Bliss, D. P., Sun, J. J., & D'Esposito, M. (2017). Serial dependence is absent at the time of perception but increases in visual working memory. Scientific Reports, 7, 14739, 10.1038/s41598-017-15199-7.

- Brascamp, J. W., Knapen, T. H., Kanai, R., Noest, A. J., van Ee, R., & van den Berg, A. V. (2008). Multi-timescale perceptual history resolves visual ambiguity. PLoS One, 3(1), e1497, 10.1371/journal.pone.0001497.

- Ceylan, G., Herzog, M. H., & Pascucci, D. (2021). Serial dependence does not originate from low-level visual processing. Cognition, 212, 104709, 10.1016/j.cognition.2021.104709.

- Chetverikov, A., & Kristjánsson, Á. (2016). On the joys of perceiving: Affect as feedback for perceptual predictions. Acta Psychologica, 169, 1–10, 10.1016/j.actpsy.2016.05.005.

- Cicchini, G. M., & Kristjánsson, Á. (2015). Guest editorial: On the possibility of a unifying framework for serial dependencies. i-Perception, 6(6), 10.1177/2041669515614148.

- Cicchini, G. M., Mikellidou, K., & Burr, D. (2017). Serial dependencies act directly on perception. Journal of Vision, 17(14), 6, 10.1167/17.14.6.

- Cicchini, G. M., Mikellidou, K., & Burr, D. C. (2018). The functional role of serial dependence. Proceedings of the Royal Society B, 285(1890), 20181722, 10.1098/rspb.2018.1722.

- Collins, T. (2022). Serial dependence occurs at the level of both features and integrated object representations. Journal of Experimental Psychology: General, 151(8), 1821–1832, 10.1037/xge0001159.

- Fischer, C., Czoschke, S., Peters, B., Rahm, B., Kaiser, J., & Bledowski, C. (2020). Context information supports serial dependence of multiple visual objects across memory episodes. Nature Communications, 11(1), 1–11.

- Fischer, J., & Whitney, D. (2014). Serial dependence in visual perception. Nature Neuroscience, 17(5), 738–743.

- Fornaciai, M., & Park, J. (2019). Serial dependence generalizes across different stimulus formats, but not different sensory modalities. Vision Research, 160, 108–115, 10.1016/j.visres.2019.04.011.

- Fornaciai, M., & Park, J. (2022). The effect of abstract representation and response feedback on serial dependence in numerosity perception. Attention, Perception, & Psychophysics, 84(5), 1651–1665, 10.3758/s13414-022-02518-y.

- Friston, K. (2009). The free-energy principle: A rough guide to the brain? Trends in Cognitive Sciences, 13(7), 293–301.

- Fritsche, M., Mostert, P., & de Lange, F. P. (2017). Opposite effects of recent history on perception and decision. Current Biology, 27(4), 590–595, 10.1016/j.cub.2017.01.006.

- Girshick, A. R., Landy, M. S., & Simoncelli, E. P. (2011). Cardinal rules: Visual orientation perception reflects knowledge of environmental statistics. Nature Neuroscience, 14(7), 926–932.

- Goettker, A., & Stewart, E. (2022). Serial dependence for oculomotor control depends on early sensory signals. Current Biology, 32(13), 2956–2961.e3, 10.1016/j.cub.2022.05.011.

- Hohwy, J. (2017). Priors in perception: Top-down modulation, Bayesian perceptual learning rate, and prediction error minimization. Consciousness and Cognition, 47, 75–85.

- Houborg, C., Pascucci, D., Tanrikulu, Ö. D., & Kristjánsson, Á. (in press). The role of secondary features in serial dependence. Journal of Vision.

- Kalm, K., & Norris, D. (2018). Visual recency bias is explained by a mixture model of internal representations. Journal of Vision, 18(7), 1.

- Kim, S., Burr, D., Cicchini, G. M., & Alais, D. (2020). Serial dependence in perception requires conscious awareness. Current Biology, 30(6), R257–R258, 10.1016/j.cub.2020.02.008.

- Kiyonaga, A., Scimeca, J. M., Bliss, D. P., & Whitney, D. (2017). Serial dependence across perception, attention, and memory. Trends in Cognitive Sciences, 21(7), 493–497, 10.1016/j.tics.2017.04.011.

- Kondo, A., Murai, Y., & Whitney, D. (2022). The test-retest reliability and spatial tuning of serial dependence in orientation perception. Journal of Vision, 22(4), 5, 10.1167/jov.22.4.5.

- Kristjánsson, Á. (2022). Priming of probabilistic attentional templates. Psychonomic Bulletin & Review, 30(1), 22–39, 10.3758/s13423-022-02125-w.

- Kristjánsson, Á., & Draschkow, D. (2021). Keeping it real: Looking beyond capacity limits in visual cognition. Attention, Perception, & Psychophysics, 83(4), 1375–1390.

- Kwak, Y., & Curtis, C. E. (2022). Unveiling the abstract format of mnemonic representations. Neuron, 110(11), 1822–1828.e5, 10.1016/j.neuron.2022.03.016.

- Liberman, A., Manassi, M., & Whitney, D. (2018). Serial dependence promotes the stability of perceived emotional expression depending on face similarity. Attention, Perception, & Psychophysics, 80(6), 1461–1473.

- Liberman, A., Zhang, K., & Whitney, D. (2016). Serial dependence promotes object stability during occlusion. Journal of Vision, 16(15), 16, 10.1167/16.15.16.

- Manassi, M., Liberman, A., Chaney, W., & Whitney, D. (2017). The perceived stability of scenes: Serial dependence in ensemble representations. Scientific Reports, 7(1), 1971, 10.1038/s41598-017-02201-5.

- Murai, Y., & Whitney, D. (2021). Serial dependence revealed in history-dependent perceptual templates. Current Biology, 31(14), 3185–3191.e3, 10.1016/j.cub.2021.05.006.

- Pascucci, D., Mancuso, G., Santandrea, E., Della Libera, C., Plomp, G., & Chelazzi, L. (2019). Laws of concatenated perception: Vision goes for novelty, decisions for perseverance. PLoS Biology, 17(3), e3000144, 10.1371/journal.pbio.3000144.

- Pascucci, D., Tanrikulu, Ö. D., Ozkirli, A., Houborg, C., Ceylan, G., Zerr, P., & Kristjánsson, Á. (2023). Serial dependence in visual perception: A review. Journal of Vision, 23(1), 9, 10.1167/jov.23.1.9.

- Rafiei, M., Chetverikov, A., Hansmann-Roth, S., & Kristjánsson, Á. (2021a). You see what you look for: Targets and distractors in visual search can cause opposing serial dependencies. Journal of Vision, 21(10), 3, 10.1167/jov.21.10.3.

- Rafiei, M., Chetverikov, A., Hansmann-Roth, S., & Kristjánsson, Á. (2021b). The role of attention and feature-space proximity in perceptual biases from serial dependence. Journal of Vision, 21(9), 2543.

- Rao, R. P., & Ballard, D. H. (1998). Development of localized oriented receptive fields by learning a translation-invariant code for natural images. Network: Computation in Neural Systems, 9(2), 219.

- Samaha, J., Switzky, M., & Postle, B. R. (2019). Confidence boosts serial dependence in orientation estimation. Journal of Vision, 19(4), 25, 1–13, 10.1167/19.4.25.

- Seriès, P., & Seitz, A. R. (2013). Learning what to expect (in visual perception). Frontiers in Human Neuroscience, 7, 668, 10.3389/fnhum.2013.00668.

- Tanrikulu, Ö. D., Chetverikov, A., Hansmann-Roth, S., & Kristjánsson, Á. (2021). What kind of empirical evidence is needed for probabilistic mental representations? An example from visual perception. Cognition, 217, 104903, 10.1016/j.cognition.2021.104903.

- Trapp, S., Pascucci, D., & Chelazzi, L. (2021). Predictive brain: Addressing the level of representation by reviewing perceptual hysteresis. Cortex, 141, 535–540, 10.1016/j.cortex.2021.04.011.

- van Bergen, R. S., & Jehee, J. F. (2019). Probabilistic representation in human visual cortex reflects uncertainty in serial decisions. Journal of Neuroscience, 39(41), 8164–8176.

- Weiss, Y., Simoncelli, E. P., & Adelson, E. H. (2002). Motion illusions as optimal percepts. Nature Neuroscience, 5(6), 598–604.

- Wertheimer, M. (1923). Untersuchungen zur Lehre von der Gestalt II. Psychologische Forschung, 4, 301–350. Available online at: http://psychclassics.yorku.ca/Wertheimer/forms/forms.htm.

- Westheimer, G. (1999). The rise and fall of a theory of vision–Hecht's photochemical doctrine. Perception, 28(9), 1055–1058, 10.1068/p2809ed.