Abstract

Across European countries, the SHAPES Project is piloting AI-based technologies that could improve healthcare delivery for people over 60 years old. This article presents a study developed within the SHAPES Project to establish a theoretical framework focused on AI-assisted technology in healthcare for older people living at home, to assess the SHAPES AI-based technologies using the ALTAI tool, and to derive ethical recommendations regarding AI-based technologies for ageing and healthcare. The study has highlighted concerns and reservations about AI-based technologies, namely regarding living at home, mobility, accessibility, data exchange procedures in cross-border cases, interoperability, and security. A list of recommendations has been built not only for the healthcare sector but also for other pilot studies.

Keywords: healthcare, aging, artificial intelligence, assessment, ethics, ALTAI, SHAPES Project

1. Introduction

According to forecasts, by 2050, Europe will have approximately 130 million inhabitants, of which almost a third will be 65 years or older [1]. Studies have shown the benefits of ageing in place, both in the home and community. Nevertheless, older people living at home and in the community also need healthcare services [1,2,3,4,5].

Ambient Assisted Living (AAL) technologies, which range from cheap devices to complex Artificial Intelligence (AI) and Machine Learning (ML) technologies, aim to support the care of the growing number of older people [6]. They are intended to detect things and events that would otherwise go unnoticed. AI and ML can enable and support disease prevention in the healthcare sector in parallel with disease treatment. According to recent studies, wearable devices with AI can save up to 313,000 lives across the EU, around EUR 50 million, and about 300 million hours of healthcare professionals’ working time [7].

1.1. Artificial Intelligence in Healthcare

The quality of technologies, especially AI-based technologies, is based on learning systems that use personal data and algorithms, often non-public trade secrets [8]. Additionally, the public guidelines have no coherent and consolidated approaches for technology adoption by AI developers [9] and healthcare professionals. Recent studies have highlighted that healthcare professionals’ (physicians and nurses) perceptions are a critical issue in adopting AI technologies (such as AAL) in healthcare delivery; the negative perception of the trustworthiness of AI is tantamount to a rejection of AI [10,11,12,13].

Shinners et al. developed and validated a survey tool (questionnaire) to investigate healthcare professionals’ perceptions of AI. The questionnaire comprised 11 items, some covering respondent demographics such as age and others centered on perceptions of AI’s impact on the future role of healthcare professionals and their professional preparedness. Shinners et al. conducted a reliability test of the survey tool. They found that the use of AI had a significant effect on healthcare professionals’ perceptions of their preparedness and future role in the healthcare sector. This initial outcome showed that the survey instrument helps explore AI perceptions [14].

Another study covering different health fields (ophthalmology, dermatology, radiology, oncology) demonstrated that clinicians’ acceptance of AI technologies was determined by how far the AI’s performance surpasses the average performance threshold of human specialists. In ranking the perceived advantages of AI, participants placed improved diagnostic confidence, which borders on trustworthiness, second only to improved patient access to disease screening. Reduced time spent on monotonous tasks appeared third in the ranking. Despite these perceived advantages of AI, concerns remain, as the study further indicated. The top-ranked concerns raised by respondents are the divestiture of healthcare to technology and data companies, medical liability owing to machine error, and a decrease in reliance on medical specialist diagnosis and treatment counselling. The second concern highlights the need for trustworthy AI [15].

In a French study, Laï et al. found that, among healthcare professionals, AI is considered a myth requiring debunking. These professionals find AI tools useful in delivering healthcare. However, they see less and less incorporation of AI in their daily practice. This outcome may be connected with the legal bottlenecks that healthcare industry partners perceive around access to individual health data, which hamper AI adoption. According to this study, AI acceptability is often influenced by accountability, hence the concern about the possible harm that AI clinical tools might cause patients [16].

In addressing accountability, Habli et al. highlighted the role of moral accountability of AI harm for patients and safety assurance to protect patients against resulting harm caused by AI tools. The authors called for a review of the current practice of accountability and safety in using AI tools for decision making. To mitigate AI potential harm to patients and for an encompassing evaluation of AI tools, Habli et al. argued that stakeholders such as AI developers and systems safety engineers need to be considered when assessing moral accountability for patient harm [17].

A lack of transparency is also a barrier to AI tool adoption. Markus et al. proposed a framework that guides AI developers and practitioners in choosing between different categories of explainable AI methods. Relying on explainability to create trustworthy AI requires determining what should be explained about the AI tool. The authors argued that applying explainable modelling could help make AI more trustworthy, even though the perceived benefits still require proof in practice, especially in healthcare [18].

Other studies pointed out the patients’ perspectives. For example, Nichols et al. showed that patients who participated in a survey investigating confidence levels between AI and clinician-assisted interpretation of radiographic images favored the clinician-led radiograph interpretation. In addition, based on participant demographics, younger and more educated patients tend to favor AI-assisted image interpretation [19]. Another piece of research revealed that security and privacy are key factors that are perceived to influence medical assistive technologies, including AI technologies. The authors found that young and middle-aged individuals are more concerned about security and privacy standards than the ailing elderly population who participated in the study [20].

1.2. Ethical Framework for Trustworthy AI

Since the integration of AI-assisted technologies in healthcare interacts with the perceptions, acceptability, accountability, transparency, explainability, privacy, security, and literacy of both patients and physicians, the development of new ethical frameworks is required [21]. Morley et al. proposed three ethical levels to discuss AI in healthcare: (a) epistemic, related to misleading, incomplete, or unexamined evidence; (b) normative, related to unjust outcomes and transformative effects; and (c) overarching, related to traceability [22].

Nevertheless, there is still no consensus because what can be effective and desirable for society may not necessarily be desirable for an individual [23]. At the same time, some stakeholders agree on ethical issues of AI at the level of principles. In contrast, others disagree on how the principles are interpreted, why they are important, which issues, domains, or actors they apply to, and how they should be implemented [24].

It is also unclear how the principles should be prioritized, how conflicts between ethical principles should be resolved, who should monitor AI’s ethics, and how different parties can comply with the principles. Different interpretations of the principles only become apparent when the principles or concepts are tested in their context, which is important to understand [25]. According to Yin et al., these results indicate a gap between creating principles and practical implementation [26].

In the preceding context, effective collaborations with all stakeholders (IT developers, healthcare professionals and patients, universities and research centers, and governments) in healthcare are mandatory to build critical structures that promote trust in AI technologies. These could include appropriate safeguards to protect and maintain patient agency, clinical decision making and support for medical diagnosis, checklists and guidelines for AI developers, and user and technical requirements for professionals and services [27].

The legitimacy of an AI implies that it is lawful in that it respects all applicable laws and regulations. The ethics of an AI means that the AI application complies with all known ethical principles, societal values, and technical requirements. There are several parallel global efforts towards achieving international standards (e.g., ISO/IEC 42001 AI Management System) [28], regulations (e.g., AI Act) [29], and individual–organizational policies relevant to AI and its trustworthiness features [30].

The European Commission’s High-Level Expert Group on Artificial Intelligence (AI HLEG) published the European Commission’s Ethical Guidelines for Trustworthy AI in 2019. These guidelines set standards for three main requirements when developing an AI system: legitimacy, ethics, and reliability [31]. These EU recommendations are based on human rights, which, according to the EU Treaties and the EU Charter, comprise values such as human dignity, equality, freedom, solidarity, justice, and civil rights. Developed by AI HLEG, the Assessment List for Trustworthy AI (ALTAI) is an assessment list that aims to safeguard against AI harms in healthcare and requires a shared global effort. The AI HLEG report offers room for localized solutions that may not foster trustworthy AI globally [32,33]. Furthermore, there is an online version of this tool [34].

2. Materials and Methods

2.1. Context, Purposes, and Methodology

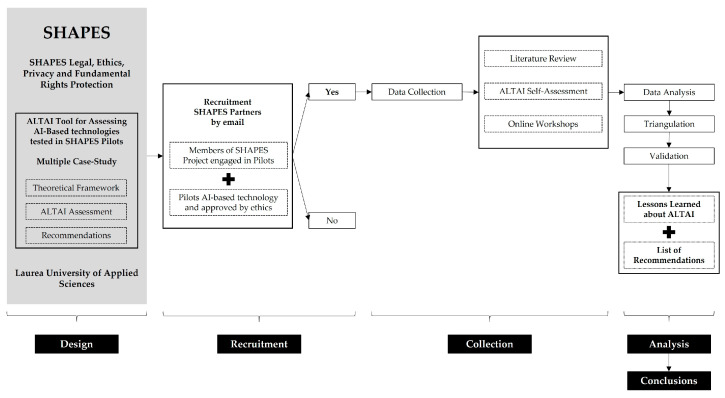

The current study is integrated into the SHAPES Project work group “SHAPES Legal, Ethics, Privacy and Fundamental Rights Protection,” led by Laurea University of Applied Sciences (Laurea) [10]. The objectives are (1) to find a theoretical framework focused on AI-assisted technology in healthcare for older people living at home; (2) to assess the SHAPES pan-European pilots using the ALTAI tool; and (3) to derive ethical recommendations regarding AI-based technologies for ageing and healthcare. The study adopts a multiple case study methodology [32,35] in order to explore AI-based technology in healthcare for older people in a fine-grained, in-depth, and contextualized way; it also compares cases, maps their differences and similarities, and identifies emerging patterns.

2.2. Recruitment, Collection, and Analysis and Validation

The study was initiated by Laurea’s researchers through emails to all SHAPES partners (consortium), which explained the study’s framework (context, purposes, methodology) and invited the SHAPES Pilots’ leaders to take part. In this email, the researchers defined five criteria for participation in the study: (1) members of the SHAPES consortium; (2) partners directly engaged in SHAPES Pilots as researchers, IT developers, or healthcare providers; (3) partners who participate in all the study’s phases; (4) pilots that are testing AI-based technology to provide healthcare in the home; and (5) pilots approved by ethical committees. Three of the seven SHAPES Pilots met all these criteria.

Laurea’s researchers, supported by the leaders of the selected SHAPES Pilots, produced a literature review to define a theoretical framework for the study. They limited the search to two databases (PubMed and Scopus) and used different combinations of five keywords (Ageing, Healthcare, Artificial Intelligence, Ethics, and ALTAI). The results are presented in the Introduction section.
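The keyword combinations described above can be enumerated programmatically. The following is a minimal sketch only: the study does not report the exact query strings, so the `AND` operator, the quoting, the minimum of two terms per query, and the function name `build_queries` are all assumptions made for illustration.

```python
from itertools import combinations

# The five keywords reported in the study.
KEYWORDS = ["Ageing", "Healthcare", "Artificial Intelligence", "Ethics", "ALTAI"]


def build_queries(keywords, min_terms=2):
    """Return AND-joined query strings for every keyword combination.

    Assumption: each query combines at least `min_terms` keywords; the
    study itself does not state how the keywords were combined.
    """
    queries = []
    for size in range(min_terms, len(keywords) + 1):
        for combo in combinations(keywords, size):
            queries.append(" AND ".join(f'"{term}"' for term in combo))
    return queries


queries = build_queries(KEYWORDS)
print(len(queries))   # number of candidate queries
print(queries[0])     # first query in enumeration order
```

Each resulting string could then be submitted to the search interface of a bibliographic database; the syntax actually accepted by PubMed or Scopus should be checked before use.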

The leaders (coordinators) of the selected SHAPES Pilots completed the online version of the ALTAI tool. The ALTAI tool is a list of seven requirements that evaluate AI trustworthiness: (1) Human Agency and Oversight; (2) Privacy and Data Governance; (3) Technical Robustness and Safety; (4) Accountability; (5) Transparency; (6) Societal and Environmental Well-being; and (7) Diversity, Non-discrimination, and Fairness. At the end of each section, a self-assessment is performed on a 5-level assessment scale (Likert scale): ‘non-existing’ corresponds to 0; ‘completely inadequate’ to 1; ‘almost adequate’ to 2; ‘adequate’ to 3; and ‘fully adequate’ corresponds to 4 [34].
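For readers who wish to record such self-assessments in a structured form, the seven requirements and the 0 to 4 scale can be represented as follows. This is an illustrative sketch, not part of the ALTAI tool: the names `Adequacy`, `REQUIREMENTS`, and `validate_self_assessment` are hypothetical, and the ratings in the example are placeholders rather than results from this study.

```python
from enum import IntEnum


class Adequacy(IntEnum):
    """The 5-level self-assessment scale described in the text."""
    NON_EXISTING = 0
    COMPLETELY_INADEQUATE = 1
    ALMOST_ADEQUATE = 2
    ADEQUATE = 3
    FULLY_ADEQUATE = 4


# The seven ALTAI requirements, in the order listed in the text.
REQUIREMENTS = (
    "Human Agency and Oversight",
    "Privacy and Data Governance",
    "Technical Robustness and Safety",
    "Accountability",
    "Transparency",
    "Societal and Environmental Well-being",
    "Diversity, Non-discrimination, and Fairness",
)


def validate_self_assessment(ratings):
    """Check that all seven requirements are rated and return numeric scores.

    Raises ValueError if a requirement is missing, unknown, or rated
    outside the 0-4 scale.
    """
    missing = set(REQUIREMENTS) - set(ratings)
    unknown = set(ratings) - set(REQUIREMENTS)
    if missing or unknown:
        raise ValueError(f"missing={sorted(missing)}, unknown={sorted(unknown)}")
    return {name: int(Adequacy(ratings[name])) for name in REQUIREMENTS}


# Placeholder ratings for illustration only (not data from the pilots).
example = {name: Adequacy.ADEQUATE for name in REQUIREMENTS}
print(validate_self_assessment(example))
```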

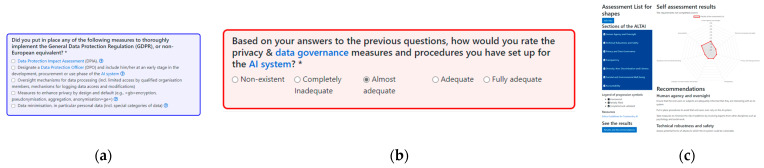

The ALTAI is an interactive self-assessment tool; different questions are suggested according to the answers provided. All the questions can be consulted in the tool guideline: “ETHICS GUIDELINES FOR TRUSTWORTHY AI: High-Level Expert Group on Artificial Intelligence” [31] (pp. 26–31). Each question has a glossary and examples from the Ethics Guidelines for Trustworthy AI. The questions are color-coded to describe the features of the AI system; blue questions will contribute to generating the recommendations, and red questions aim to self-assess compliance with the requirement/blue answer. When the ALTAI questionnaire is completed, a visual graphic (diagram) with the evaluation and a recommendations list is generated automatically (Figure 1). The following link accesses the platform: https://altai.insight-centre.org/Identity/Account/Login (accessed on 15 March 2023).

Figure 1.

ALTAI color code: (a) Blue answers will contribute to recommendations (the asterisk symbol denotes that it is obligatory to answer); (b) Red answers will contribute to self-assessing compliance (the asterisk symbol denotes that it is obligatory to answer); (c) Results and Recommendations presentation.

Laurea’s researchers have conducted three online workshops, one per SHAPES Pilot selected. The workshops were targeted at the partners that directly engaged in the pilots (researchers, IT developers/technology owners, and healthcare providers). These workshops aimed to present the ALTAI self-assessment and literature review results, collect data regarding the pilots’ design and lessons learned, and inquire about the partners’ perspectives on ALTAI assessment, namely the recommendations.

A triangulation analysis was performed to understand similarities and differences between the SHAPES Pilots (case studies) and to validate the lessons learned and the final list of recommendations derived from the ALTAI recommendations. Figure 2 depicts the aforementioned steps of the study.

Figure 2.

Study design.

2.3. Limits and Ethics

This study involved a small number of participants, namely one person (leader/coordinator) per pilot representing the team engaged in that pilot, which reduces the recommendations’ scalability. There was no requirement for ethical approval for this study because all participants are members of a consortium, the SHAPES Project (Grant Agreement No. 857159), which already has an ethics and GDPR framework required by the funding program (the European Union’s Horizon 2020 research and innovation program). Each SHAPES Pilot is supported by the corresponding ethical approval from national ethical committees.

3. Results

3.1. SHAPES Pilots

The SHAPES Project is building and piloting a large-scale, EU-standardized open platform that integrates digital solutions and sociotechnical models to promote long-term healthy and active ageing and maintain a high-quality standard of life. Using digital solutions (and respective sociotechnical models) in the community and home (APPs, Voice Assistants, Sensors, Smart Wearables, Software, Medical Devices), older people, caregivers (formal and informal), and healthcare providers could be better integrated. Healthcare delivery could have a higher impact (e.g., preventive care, self-care, reducing costs and hospitalizations) [10].

This ‘vision’ is being tested by the SHAPES Pan-European Pilot Campaign, which includes building and demonstrating in a real-life context several digital solutions and sociotechnical models across different European countries and users, namely older people, informal caregivers, formal caregivers (e.g., nursing homes), and healthcare providers (e.g., health authorities). The pilot campaign is divided into seven thematic areas or “pilot themes” (PTs) to cover many domains related to healthy ageing, independent living, and integrated care: (PT1) Smart Living Environment for healthy ageing at Home; (PT2) Improving In-Home and Community-based Care; (PT3) Medicine Control and Optimization; (PT4) Psycho-social and Cognitive Stimulation Promoting Wellbeing; (PT5) Caring for Older Individuals with Neurodegenerative Diseases; (PT6) Physical Rehabilitation at Home; and (PT7) Cross-border Health Data Exchange [10].

All the SHAPES Pilots were expected to test AI-based technologies; however, only three met all recruitment criteria at the time of this study. There were two key reasons for not participating: the pilots had not yet obtained ethics approval or did not complete all phases of the study. Within this context, this study considered only three pilot themes (i.e., PT5, PT6, and PT7). Table 1 describes these pilots under four topics: (1) Persona and Use Case, (2) Digital Solution, (3) the AI ‘role’, and (4) SHAPES Protocol.

Table 1.

SHAPES Pilots description.

| | PT5 Caring for Older Individuals with Neurodegenerative Diseases | PT6 Physical Rehabilitation at Home | PT7 Cross-border Health Data Exchange |
|---|---|---|---|
| Persona and Use Case | Older individuals (+60 years old) living in a home. They complain about cognitive decline (e.g., daily errors, forgetting things) and require ‘attention’ from caregivers (formal and informal). The informal caregivers (e.g., children) install a digital solution in the home to monitor behaviors and health indicators, support daily activities, and provide cognitive and physical activities in the home. | Older individuals (+60 years old) living at home who either need to recover from a health issue at home (e.g., stroke, fall, surgery, rheumatoid arthritis, osteoarthritis, orofacial disorder, etc.) or need a digital solution to improve physical activity, preventing or improving frailty conditions. | Older individuals (+60 years old) living in a community with chronic diseases (e.g., Atrial Fibrillation, Diabetes, Chronic Obstructive Pulmonary Disease, Visual or Hearing impairment, Physical Disability) who need a digital solution to improve their mobility during holidays, tourism activities, and leisure, and to stay connected with informal caregivers and healthcare providers. |
| Digital Solution | Smart-mirror-based platform composed of hardware and software based on a smart mirror, which is equipped with a set of digital solutions (smart band, individual fall sensor, panic button, motion home sensors, cognitive and physical training programs, video call, smart agenda, and notification system). | Physical rehabilitation tool that provides a web-based platform (Phyx.io) for users and care providers. The personalized exercises prescribed in the platform are performed in front of a totem or smart mirror solution that autonomously tracks body movement and performance while doing the exercises. | Combination of smart mobile devices (smartphone, smart-band, smartwatch, tablet) connected to a healthcare platform for remote monitoring of key health parameters (heart rate, blood pressure, SpO2, and ECG), but also to enable healthcare providers’ remote evaluation and consultation (telemedicine). |
| AI-based | The fall detection sensor and the physical training tool use machine learning to identify certain movement patterns based on the information extracted from an Inertial Measurement Unit (IMU) and a video camera. | The physical training tool uses machine learning to identify certain movement patterns via a video camera and a pose estimation model. | Advanced filtering techniques to show a result easier to read or recognize on the screen. Data gathering methods to provide the locations with available accessibility assets. AI algorithms to identify images and detect food dishes and calories. Sensor data analysis to identify health problems. |
| SHAPES Protocol | Pilots will be assessed three times (Baseline, Post-Intervention, Follow-up) with the same protocol: WHOQOL-Bref (10 items); EQ-5D-5L (10 items); General self-efficacy scale (10 items); OSSS-3 (10 items); Participation Questionnaire (10 items); Health Literacy Measure (10 items); SUS (10 items); and Technology Acceptance Model (10 items). | | |

3.2. ALTAI Assessment

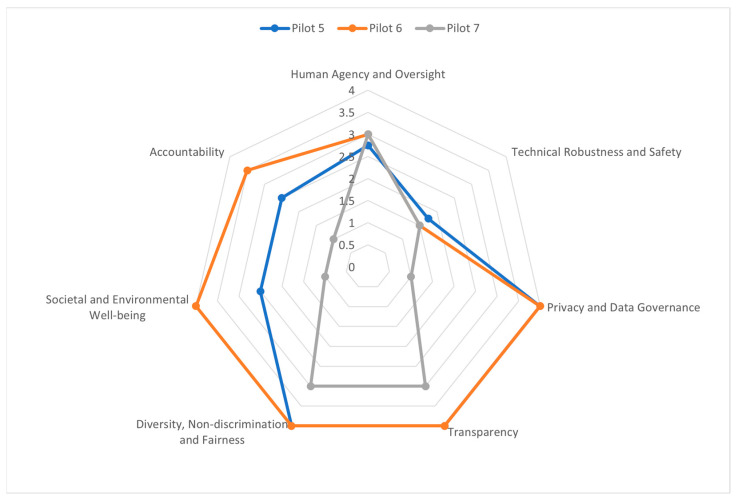

Based on the answers provided by the pilots’ partners, a cross-case analysis (Figure 3) was conducted to understand the similarities and differences between different pilots (case studies). Globally, the best result is obtained from the evaluation point of ‘transparency’ and the worst from ‘technical robustness and safety’. Moreover, each partner has received a list of recommendations (Appendix A).

Figure 3.

ALTAI results per SHAPES partner and cross-analysis.

Regarding human agency and oversight, three recommendations were given to more than one pilot, namely Pilots 6 and 7. They aim to promote the responsible use of AI systems by avoiding over-reliance on the system, preventing inadvertent effects on human autonomy, and providing appropriate training and oversight to individuals responsible for monitoring the system’s decisions.

Two pilots received no recommendations for the technical robustness and safety requirement. Pilot 5 received five recommendations, which aim to identify and manage the risks associated with using AI systems, including potential attacks and threats, possible consequences of system failure or malfunction, and the need for ongoing monitoring and evaluation of the system’s technical robustness and safety.

Concerning the Privacy and Data Governance requirement, Pilot 5 received no recommendations. Pilots 6 and 7 were advised to ‘establish mechanisms that allow flagging issues related to privacy or data protection concerning the AI system’. Pilot 7 received four individual recommendations, which aim to ensure that privacy and data protection are considered throughout the lifecycle of the AI system, from data collection to processing and use, and that appropriate mechanisms are in place to protect individuals’ privacy rights.

Regarding the Transparency requirement, Pilots 5 and 6 were recommended to consider regularly surveying the users to inquire about their comprehension of the AI system’s decision-making process. Pilots 6 and 7 were advised in the case of interactive AI systems to consider informing the users that they are engaging with a machine. Pilot 6 was advised to take steps to continuously assess the quality of input data used by their AI systems, explain the decisions made or suggested by the system to end-users, and regularly survey users to ensure they understand these decisions.

The Diversity, Non-discrimination, and Fairness requirement received the most recommendations: 43 in total, of which 17 were given to at least two pilots. These shared recommendations can be further divided into the following subcategories:

Data and algorithm design: This includes recommendations related to the input data and algorithm design used in the AI system, such as avoiding bias and ensuring diversity and representativeness in the data, using state-of-the-art technical tools to understand the data and model, and testing and monitoring for potential biases throughout the AI system’s lifecycle.

Awareness and education: This includes recommendations on educating AI designers and developers about the potential for bias and discrimination in their work, establishing mechanisms for flagging bias issues, and ensuring that information about the AI system is accessible to all users, including those who use assistive technologies.

Fairness definition: This includes recommendations related to defining fairness and consulting with impacted communities to ensure that the definition is appropriate and inclusive. It also includes suggestions for establishing quantitative metrics to measure and test the meaning of fairness.

Risk assessment: This includes recommendations relevant to assessing the possible unfairness of the AI system’s outcomes for end-users’ or subjects’ communities and identifying groups that might be disproportionately affected by the system’s outcomes.

The Societal and Environmental Well-being requirement received 11 recommendations, of which one recommendation was given to all three pilots, and three recommendations were given to two pilots. All three pilots were advised to establish strategies to decrease the environmental impact of their AI system throughout its lifecycle and participate in contests focused on creating AI solutions that address this issue.

Regarding Accountability, all three pilots received a recommendation stating that, if AI systems are used for decision-making, it is important to ensure that the impact of these decisions on people’s lives is fair, in line with uncompromisable values, and accountable. Therefore, any conflicts or trade-offs between values should be documented and explained thoroughly.
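The counts of recommendations shared by two or more pilots, as reported in this section, can be derived mechanically from the per-pilot lists. The sketch below illustrates one way to do so; the function name `shared_recommendations` and the identifiers `R1` to `R4` are hypothetical placeholders, not actual ALTAI recommendations or study data.

```python
from collections import Counter


def shared_recommendations(per_pilot, min_pilots=2):
    """Return recommendations received by at least `min_pilots` pilots.

    `per_pilot` maps a pilot label to the collection of recommendations
    it received; the result maps each shared recommendation to the
    number of pilots that received it.
    """
    counts = Counter()
    for recommendations in per_pilot.values():
        # Use a set so a recommendation counts once per pilot.
        counts.update(set(recommendations))
    return {rec: n for rec, n in counts.items() if n >= min_pilots}


# Placeholder data for illustration only.
example = {
    "PT5": {"R1", "R2"},
    "PT6": {"R1", "R3"},
    "PT7": {"R1", "R3", "R4"},
}
shared = shared_recommendations(example)
print(shared)
```

In this placeholder example, `R1` is shared by all three pilots and `R3` by two, while `R2` and `R4` are individual recommendations.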

4. Discussion

4.1. Lessons Learned

SHAPES partners have acknowledged that the ALTAI tool is a reliable and easy-to-use self-assessment tool for Trustworthy AI-based technology in pilot studies such as SHAPES pilots, which are of interest in the market. Similar analyses [11,12] have reported the importance of the ALTAI tool to provide reliable recommendations towards the improvement of trustworthiness in AI solutions. Specifically, although authors in [11] have slightly altered the ALTAI tool questions in their study, their results’ analysis showed an awareness of some of the broader key areas of trustworthy factors as indicated by the ALTAI tool, such as accuracy of the performance of the AI solution. Moreover, the study revealed that the participating researchers were unsure whether their work was covered by the various definitions and how applicable the recommendations could be to their solutions. They recommended that a repository of ALTAI tool experiences along with examples of good practices would be the key to the tradeoff between AI complexity and the compliance with ALTAI’s trustworthiness recommendations. Similarly, the study in [12] reported that industry and public bodies should both be engaged to efficiently regulate the incorporation of the ALTAI tool or its future improvements in the development of AI solutions.

Although ALTAI is not directly addressed to the market, the tool would allow corporations to compare results with others at a similar level of sophistication or in similar application domains or locations. Before an AI tool is released to the market and during its evaluation process, developers and researchers could adopt the ALTAI tool to increase the trustworthiness level of their AI tools. Effective use of the ALTAI tool and other assessment and rating schemes is crucial for achieving trustworthy governance in AI, promoting consumer confidence in AI, and facilitating its adoption.

Nevertheless, as mentioned before, we also identified limitations, such as the lack of regulations, worldwide or in the EU, that could be used alongside the tool. This makes it difficult to convince AI tool providers to fully address the ALTAI findings. Furthermore, although the ALTAI tool has the benefits of simplicity and ease of implementation, an independent assessor could offer valuable insights for improvement. For future EU regulation around AI to be successful, an ecosystem of organizations, auditors, and rating schemes needs to be developed to address the challenges created by the industry.

The SHAPES partners also have pointed out an ALTAI weakness regarding the relative risk of AI systems in the assessment process. While there is an ongoing debate about the best approach to risk assessment, it is essential to embed risk weighting fully in any evaluation of the appropriateness of the level of governance for an AI system.
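To make the idea of risk weighting concrete, one possible reading is a weighted mean of the per-requirement scores, in which requirements judged riskier for a given AI system count more. This is purely an illustrative assumption: neither ALTAI nor the SHAPES partners define such a formula, and the function name `risk_weighted_score`, the weights, and the scores below are hypothetical.

```python
def risk_weighted_score(scores, risk_weights):
    """Return the weighted mean of requirement scores.

    `scores` maps requirement names to self-assessment scores (0-4);
    `risk_weights` maps the same names to non-negative weights chosen
    by the assessor. Both are assumptions, not ALTAI outputs.
    """
    if set(scores) != set(risk_weights):
        raise ValueError("scores and risk_weights must cover the same requirements")
    total_weight = sum(risk_weights.values())
    if total_weight <= 0:
        raise ValueError("at least one weight must be positive")
    return sum(scores[name] * risk_weights[name] for name in scores) / total_weight


# Placeholder values for illustration only.
scores = {"Transparency": 4, "Technical Robustness and Safety": 2}
weights = {"Transparency": 1, "Technical Robustness and Safety": 3}
print(risk_weighted_score(scores, weights))
```

With these placeholder values, the weighted result is lower than the plain average of the two scores because the lower-scoring requirement carries the larger weight.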

It should be noted here that the spider diagram is automatically generated by the ALTAI tool as described before. The final scores produced by the spider diagram are associated with the number of recommendations. Precisely, a high score corresponds to a high number of recommendations. However, the ALTAI method developers have not published how each answer to the ALTAI questions contributes to the final scores shown in the spider diagram.

Furthermore, it is important to include specific co-creation processes in the iterative design methodologies employed by most developers of AI solutions. These processes involve different stakeholders, such as end-users or associated institutions (i.e., organizations or chambers that will use the AI technology, such as doctors, retail branches, etc.). AI trustworthiness checks could therefore be part of the co-creation activities. After each co-creation step is completed, the ALTAI recommendations should be taken into account without degrading the performance of the AI technology under development. Some of the recommendations given by the ALTAI tool should be incorporated from the beginning of the development procedure, as a design principle. Moreover, some of the stakeholders involved in the self-assessments noted that it would be useful to know how far publicly available machine learning models and datasets comply with trustworthy AI and the ALTAI tool before incorporating them into their AI solutions, so as not to alter the performance of their designs. Additionally, the recommendations provided by the ALTAI tool regarding opportunities for improvement are invaluable for organizations seeking to build a road map for the maturity of their governance [12].

4.2. Recommendations

Based on the Horizon Europe ethics self-assessment orientations [13], the ALTAI tool could become the standard for any Horizon Europe project involving AI-based technology. However, the ALTAI recommendations seem extensive, repetitive, and difficult to understand and use. Stakeholders were also unable to interpret the ALTAI score in terms of what should be improved, as was also reported by the authors in [12]. Furthermore, we received a few inquiries from the evaluators who performed the self-assessment requesting clarifications about some points of the questionnaire. This was likely due to the fact that the ALTAI tool offers guidance for each question through references to relevant parts of the Ethics Guidelines for Trustworthy AI and a specific glossary. Therefore, the partners engaged in this study have defined a list of recommendations that is easy to use and understand, so that other researchers, IT developers, and healthcare providers can determine the necessary level of action and urgency to tackle AI-related risks. Table 2 provides this list of recommendations.

Table 2.

List of recommendations.

| Category | Recommendations |
|---|---|
| Human agency and oversight | |
| Technical robustness and safety | |
| Privacy and data governance | |
| Transparency | |
| Diversity, non-discrimination, and fairness | |
| Societal and environmental well-being | |
| Accountability | |

5. Conclusions

The theoretical framework provided by this study has pointed out that AI-assisted technology in the healthcare field remains a ‘good promise’ for, among other uses, clinical diagnosis, improved decision making, and remote monitoring. Nevertheless, the integration of AI-assisted technology in healthcare is influenced by professionals’ and patients’ perspectives on acceptability, accountability, transparency, explainability, privacy, security, and literacy.

The ALTAI tool is a self-assessment tool for trustworthiness in AI that rates an AI system against seven requirements and generates a list of recommendations. This study presented the results from three SHAPES Pilots that used the ALTAI tool to self-assess the robustness and trustworthiness of their AI systems. The ALTAI category ‘transparency’ received the best results, in contrast to ‘technical robustness and safety,’ which obtained the worst outcomes. This study also evaluated the functionality of the tool, which is easy to use. However, the recommendations it produces are extensive and not easy to understand and apply. Therefore, a new list of recommendations was developed and validated so that stakeholders can easily understand and act on it.

Appendix A

Table A1. List of per-pilot recommendations provided by the ALTAI tool.

| ALTAI Requirements | PT5: Caring for Older Individuals with Neurodegenerative Diseases | PT6: Physical Rehabilitation at Home | PT7: Cross-border Health Data Exchange |
|---|---|---|---|
| Human agency and oversight | No Recommendations | | |
| Technical robustness and safety | | No Recommendations | No Recommendations |
| Privacy and data governance | No Recommendations | | |
| Transparency | | | |
| Diversity, non-discrimination and fairness | | | |
| Societal and environmental well-being | | | |
| Accountability | | | |

Author Contributions

Conceptualization, J.R.; methodology, J.R., F.G., P.A.L.R., X.d.T.G., P.O. and J.T.; validation, J.R., F.G., P.A.L.R., X.d.T.G., P.O. and J.T.; formal analysis, J.R., F.G., P.A.L.R., X.d.T.G., P.O. and J.T.; investigation, J.R., F.G., P.A.L.R., X.d.T.G., P.O. and J.T.; resources, J.R., P.O. and J.T.; data curation, J.R., F.G., P.A.L.R., X.d.T.G., P.O. and J.T.; writing—original draft preparation, J.R., F.G., P.A.L.R., X.d.T.G., P.O. and J.T.; writing—review and editing, J.R., F.G., P.A.L.R., X.d.T.G., P.O. and J.T.; Visualization, J.R., P.O. and J.T.; supervision, J.R.; project administration, J.R. and F.G. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

PT5. Ethics Committee: Ethical Committee of School of Medicine and Biomedical Sciences and Central Hospital of Santo António. Approval Code: GS/HCC/123-2022/CE/P20(P398/CETI/ICBAS). Approval Date: 28 October 2022. PT6. Ethics Committee: The Social Research Ethics Committee (SREC) of the University of Castilla-La Mancha. Approval Code: CEIS-643333-Y9W4-R1. Approval Date: 28 July 2022. PT7. Ethics Committee: Scientific Council for Primary Healthcare (Ethics Committee) of the 5th Regional Health Authority of Thessaly & Sterea Greece. Approval Code: 4530/ΕΣ 09. Approval Date: 5 January 2023.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This work was supported by the SHAPES Project, which has received funding from the European Union’s Horizon 2020 research and innovation program under the grant agreement number 857159.

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

1. Amián J.G., Alarcón D., Fernández-Portero C., Sánchez-Medina J.A. Aging Living at Home: Residential Satisfaction among Active Older Adults Based on the Perceived Home Model. Int. J. Environ. Res. Public Health. 2021;18:8959. doi: 10.3390/ijerph18178959.

2. Gefenaite G., Björk J., Schmidt S.M., Slaug B., Iwarsson S. Associations among housing accessibility, housing-related control beliefs and independence in activities of daily living: A cross-sectional study among younger old in Sweden. J. Hous. Built Environ. 2019;35:867–877. doi: 10.1007/s10901-019-09717-4.

3. Pettersson C., Slaug B., Granbom M., Kylberg M., Iwarsson S. Housing accessibility for senior citizens in Sweden: Estimation of the effects of targeted elimination of environmental barriers. Scand. J. Occup. Ther. 2018;25:407–418. doi: 10.1080/11038128.2017.1280078.

4. Slaug B., Granbom M., Iwarsson S. An Aging Population and an Aging Housing stock—Housing Accessibility Problems in Typical Swedish Dwellings. J. Aging Environ. 2020;34:156–174. doi: 10.1080/26892618.2020.1743515.

5. Clark A., Rowles G., Bernard M., editors. Environmental Gerontology: Making Meaningful Places in Old Age. Springer Publishing Company; New York, NY, USA: 2013. p. 336.

6. Blackman S., Matlo C., Bobrovitskiy C., Waldoch A., Fang M., Jackson P., Mihailidis A., Nygård L., Astell A., Sixsmith A. Ambient Assisted Living Technologies for Aging Well: A Scoping Review. J. Intell. Syst. 2016;25:55–69. doi: 10.1515/jisys-2014-0136.

7. Segers K. The Socio-Economic Impact of AI on European Health Systems. Deloitte Belgium. 2020. [(accessed on 28 March 2023)]. Available online: https://www2.deloitte.com/be/en/pages/life-sciences-and-healthcare/articles/the-socio-economic-impact-of-AI-on-healthcare.html.

8. Rajamäki J., Helin J. Ethics and Accountability of Care Robots; Proceedings of the European Conference on the Impact of Artificial Intelligence and Robotics; Virtual. 1–2 December 2022.

9. Nevanperä N., Rajamäki J., Helin J. Design Science Research and Designing Ethical Guidelines for the SHAPES AI Developers. Procedia Comput. Sci. 2021;192:2330–2339. doi: 10.1016/j.procs.2021.08.223.

10. Seidel K., Labor M., Lombard-Vance R., McEvoy E., Cooke M., D’Arino L., Desmond D., Ferri D., Franke P., Gheno I., et al. Implementation of a pan-European ecosystem and an interoperable platform for Smart and Healthy Ageing in Europe: An Innovation Action research protocol [version 1; peer review: Awaiting peer review]. Open Res. Eur. 2022;2:85. doi: 10.12688/openreseurope.14827.1.

11. Stahl B.C., Leach T. Assessing the ethical and social concerns of artificial intelligence in neuroinformatics research: An empirical test of the European Union Assessment List for Trustworthy AI (ALTAI). AI Ethics. 2022:1–23. doi: 10.1007/s43681-022-00201-4.

12. Radclyffe C., Ribeiro M., Wortham R. The assessment list for trustworthy artificial intelligence: A review and recommendations. Front. Artif. Intell. Sec. AI Bus. 2023;6:37. doi: 10.3389/frai.2023.1020592.

13. European Commission. EU Grants: How to Complete Your Ethics Self-Assessment—V2.0. Brussels. 2021. [(accessed on 28 March 2023)]. Available online: https://ec.europa.eu/info/funding-tenders/opportunities/docs/2021-2027/common/guidance/how-to-complete-your-ethics-self-assessment_en.pdf.

14. Shinners L., Aggar C., Grace S., Smith S. Exploring healthcare professionals’ perceptions of artificial intelligence: Validating a questionnaire using the e-Delphi method. Digit. Health. 2021;7:20552076211003433. doi: 10.1177/20552076211003433.

15. Scheetz J., Rothschild P., McGuinness M., Hadoux X., Soyer H.P., Janda M., Condon J.J.J., Oakden-Rayner L., Palmer L.J., Keel S., et al. A survey of clinicians on the use of artificial intelligence in ophthalmology, dermatology, radiology and radiation oncology. Sci. Rep. 2021;11:5193. doi: 10.1038/s41598-021-84698-5.

16. Laï M.C., Brian M., Mamzer M.F. Perceptions of artificial intelligence in healthcare: Findings from a qualitative survey study among actors in France. J. Transl. Med. 2020;18:14. doi: 10.1186/s12967-019-02204-y.

17. Habli I., Lawton T., Porter Z. Artificial intelligence in health care: Accountability and safety. Bull. World Health Organ. 2020;98:251–256. doi: 10.2471/BLT.19.237487.

18. Markus A.F., Kors J.A., Rijnbeek P.R. The role of explainability in creating trustworthy artificial intelligence for health care: A comprehensive survey of the terminology, design choices, and evaluation strategies. J. Biomed. Inform. 2021;113:103655. doi: 10.1016/j.jbi.2020.103655.

19. Nichols E., Szoeke C.E., Vollset S.E., Abbasi N., Abd-Allah F., Abdela J., Aichour M.T.E., Akinyemi R.O., Alahdab F., Asgedom S.W., et al. Global, regional, and national burden of Alzheimer’s disease and other dementias, 1990–2016: A systematic analysis for the Global Burden of Disease Study 2016. Lancet Neurol. 2019;18:88–106. doi: 10.1016/S1474-4422(18)30403-4.

20. Wilkowska W., Ziefle M. Privacy and data security in E-health: Requirements from the user’s perspective. Health Inform. J. 2012;18:191–201. doi: 10.1177/1460458212442933.

21. Floridi L., Cowls J., Beltrametti M., Chatila R., Chazerand P., Dignum V., Luetge C., Madelin R., Pagallo U., Rossi F., et al. AI4People—An Ethical Framework for a Good AI Society: Opportunities, Risks, Principles, and Recommendations. Minds Mach. 2018;28:689–707. doi: 10.1007/s11023-018-9482-5.

22. Morley J., Machado C.C.V., Burr C., Cowls J., Joshi I., Taddeo M., Floridi L. The ethics of AI in health care: A mapping review. Soc. Sci. Med. 2020;260:113172. doi: 10.1016/j.socscimed.2020.113172.

23. Rajamäki J., Lebre Rocha P.A., Perenius M., Gioulekas F. SHAPES Project Pilots’ Self-assessment for Trustworthy AI; Proceedings of the 2022 12th International Conference on Dependable Systems, Services and Technologies (DESSERT); Athens, Greece. 9–11 December 2022; pp. 1–7.

24. Jobin A., Ienca M., Vayena E. The global landscape of AI ethics guidelines. Nat. Mach. Intell. 2019;1:389–399. doi: 10.1038/s42256-019-0088-2.

25. Mittelstadt B. Principles alone cannot guarantee ethical AI. Nat. Mach. Intell. 2019;1:501–507. doi: 10.1038/s42256-019-0114-4.

26. Hollweck T. Case Study Research Design and Methods (5th ed.), Robert K. Yin. Can. J. Program Eval. 2015;30:108–110. doi: 10.3138/cjpe.30.1.108.

27. Berger S., Rossi F. AI and Neurotechnology: Learning from AI Ethics to Address an Expanded Ethics Landscape. Commun. ACM. 2023;66:58–68. doi: 10.1145/3529088.

28. Information Technology—Artificial Intelligence—Management System. International Organization for Standardization; Geneva, Switzerland: 2022. [(accessed on 16 May 2023)]. Available online: https://www.iso.org/standard/81230.html.

29. Artificial Intelligence Act: Proposal for a Regulation of the European Parliament and the Council Laying down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) and Amending Certain Union Legislative. 2021. [(accessed on 28 March 2023)]. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELLAR:e0649735-a372-11eb-9585-01aa75ed71a1.

30. Lewis D., Filip D., Pandit H.J. An Ontology for Standardising Trustworthy AI. Factoring Ethics in Technology, Policy Making, Regulation and AI. IntechOpen; Rijeka, Croatia: 2021. [(accessed on 16 May 2023)]. Available online: https://www.intechopen.com/chapters/76436.

31. European Commission’s High-Level Expert Group on Artificial Intelligence. Ethics Guidelines for Trustworthy AI. Apr 8, 2019. [(accessed on 28 March 2023)]. Available online: https://digital-strategy.ec.europa.eu/en/library/ethics-guidelines-trustworthy-ai.

32. European Commission. Welcome to the ALTAI Portal! [(accessed on 4 May 2023)]. Available online: https://futurium.ec.europa.eu/en/european-ai-alliance/pages/welcome-altai-portal.

33. Bærøe K., Miyata-Sturm A., Henden E. How to achieve trustworthy artificial intelligence for health. Bull. World Health Organ. 2020;98:257–262. doi: 10.2471/BLT.19.237289.

34. Insight. The Assessment List for Trustworthy Artificial Intelligence. 2020. [(accessed on 4 May 2023)]. Available online: https://altai.insight-centre.org/

35. Crowe S., Cresswell K., Robertson A., Huby G., Avery A., Sheikh A. The case study approach. BMC Med. Res. Methodol. 2011;11:100. doi: 10.1186/1471-2288-11-100.
