Summary:

ChatGPT is an open artificial intelligence chatbot that could revolutionize academia and augment research writing. In this study, we held an open conversation with ChatGPT and invited the platform to evaluate this article through a series of five questions on base of thumb arthritis, to test whether its contributions merely add artificial, unusable input or help augment the quality of the article. The information ChatGPT-3 provided was accurate, albeit surface-level, but it lacked the analytical ability to identify important limitations of the evidence on base of thumb arthritis, which would not be conducive to generating creative ideas and solutions in plastic surgery. ChatGPT failed to provide relevant references and even “created” references instead of indicating its inability to perform the task. This highlights that ChatGPT-3 should be used cautiously as an AI generator of text for medical publishing.

Takeaways

Question: Is ChatGPT (an open artificial intelligence platform) useful enough to contribute to scientific research, or does it merely add artificial, unusable input?

Findings: ChatGPT-3 provided a quick and relevant summary of the subject with minimal grammatical or structural errors but failed to provide relevant references and even “created” references instead of indicating its inability to perform the task.

Meaning: Overall, the information ChatGPT-3 provided was accurate but too surface-level to contribute meaningfully to scientific research.

INTRODUCTION

Chat Generative Pre-Trained Transformer 3 (ChatGPT-3) (OpenAI, Calif.) is an open artificial intelligence (AI) platform that uses deep learning to produce human-like text and could, in the future, greatly reduce the time spent on literature searches, data analysis, and research writing while maintaining academic writing standards.1,2

Automated writing tools have existed since at least 2005, when SCIgen, a program developed at MIT, was used to generate computer science research papers complete with figures.3 Although some AI-generated research articles have been peer-reviewed and accepted for publication, they generally involve a human researcher collaborating with an AI system rather than the AI working autonomously.2,4 A lack of peer review and frequent errors in the information they provide have largely kept AI platforms at the margins of academic publishing5; however, in January 2023, ChatGPT made its research debut, listed as an author in the journal Nurse Education in Practice.2 This has raised ethical questions in the scientific community about the difficulty of distinguishing human-written abstracts from those generated by ChatGPT-3, especially as the latter went undetected by plagiarism software.6,7 In addition, the ChatGPT-3 training data extend only to 2021, which prevents it from incorporating research that evolves on a daily to monthly basis.

The use of large language models, such as ChatGPT, could augment researchers’ work in scoping and developing their research areas and scientific articles. In this rapidly growing field, little is yet known about the value these models provide for scientific work. We therefore evaluated ChatGPT’s responses in this article to assess its potential utility in academia.

METHODS

This study initiated a conversation with ChatGPT on a common plastic surgery topic, base of thumb arthritis, to evaluate the specificity of its answers. Five questions were posed in total, and each subsequent query built on the previous response to determine whether the answers improved. The aim of this study was to evaluate ChatGPT’s usefulness for research writing in plastic surgery. Its input was reviewed by experts (D.H.S., W.R., and P.K.) and scrutinized for how up to date, accurate, and succinct the information was, in both the text and the references.

RESULTS

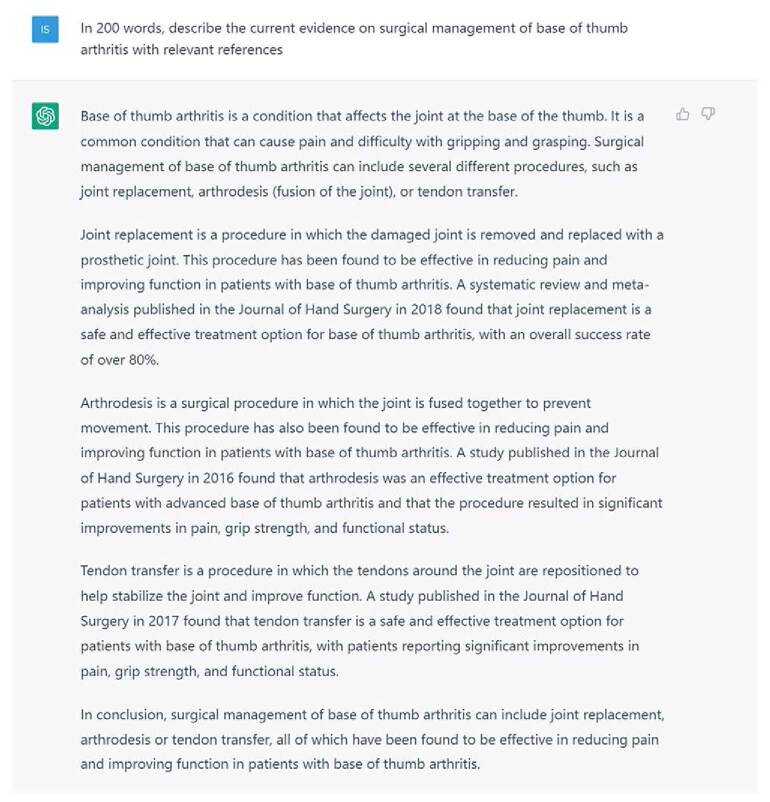

The first prompt was, “In 200 words, describe the current evidence on surgical management for base of thumb arthritis with relevant references” (Fig. 1). ChatGPT provided a relevant and accurate summary of the different surgical options for base of thumb arthritis with minimal grammatical or structural errors, and Turnitin flagged only 1% of the text as potential plagiarism. No references were provided in the response, despite being requested in the prompt.

Fig. 1.

Prompt: In 200 words, describe the current evidence on surgical management of base of thumb arthritis with relevant references.

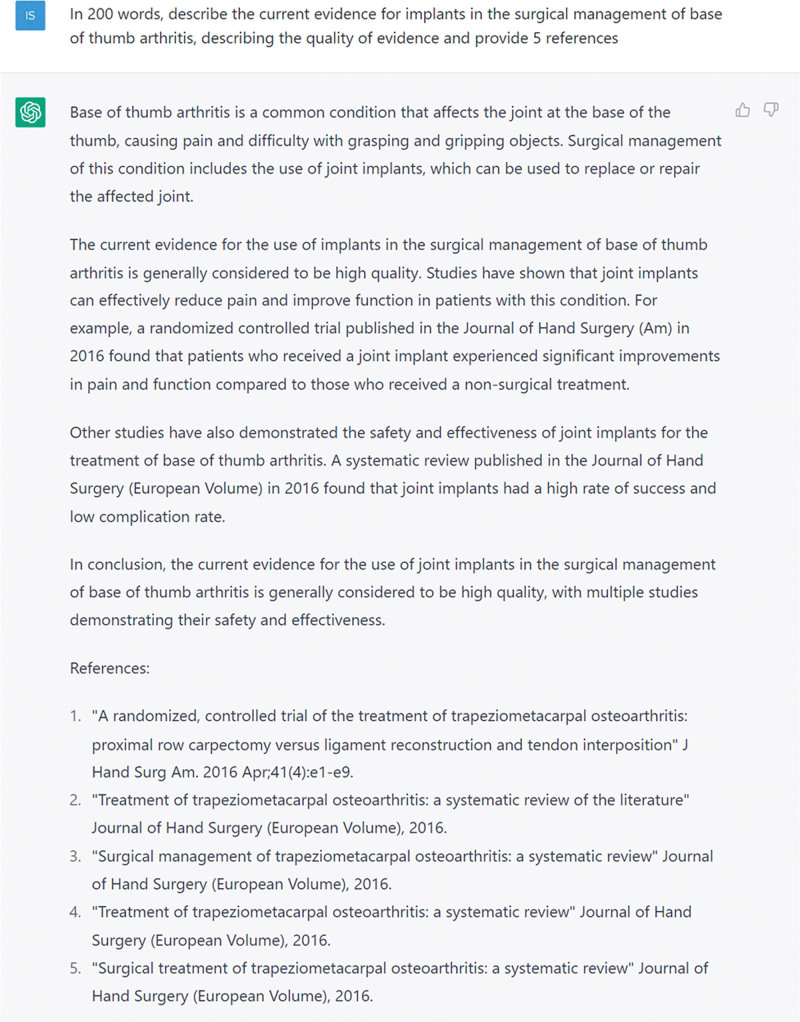

The second prompt was, “In 200 words, describe the current evidence for implants in the surgical management of base of thumb arthritis, describing the quality of evidence, and provide five references” (Fig. 2). ChatGPT provided a more specific answer about the current evidence for implants in base of thumb arthroplasty and provided relevant level I evidence. The authors noted that ChatGPT provided references only when asked for a specific number, such as “provide five references”; however, these references had incorrect issue and volume numbers and omitted the author names. To assess ChatGPT’s proficiency in generating high-level evidence references, the same prompt was given with the addition of “please provide five high level evidence references in AMA format.” (See appendix, Supplemental Digital Content 1, which displays the prompt: “In 200 words, describe the current evidence for implants in the surgical management of base of thumb arthritis. Please provide five high level evidence references in AMA format.” http://links.lww.com/PRSGO/C557.) However, even with this prompt, the references provided were erroneous; the first reference even cited a fabricated study attributed to real authors of a different study.8

Fig. 2.

Prompt: In 200 words, describe the current evidence for implants in the surgical management of base of thumb arthritis, describing the quality of evidence, and provide five references.

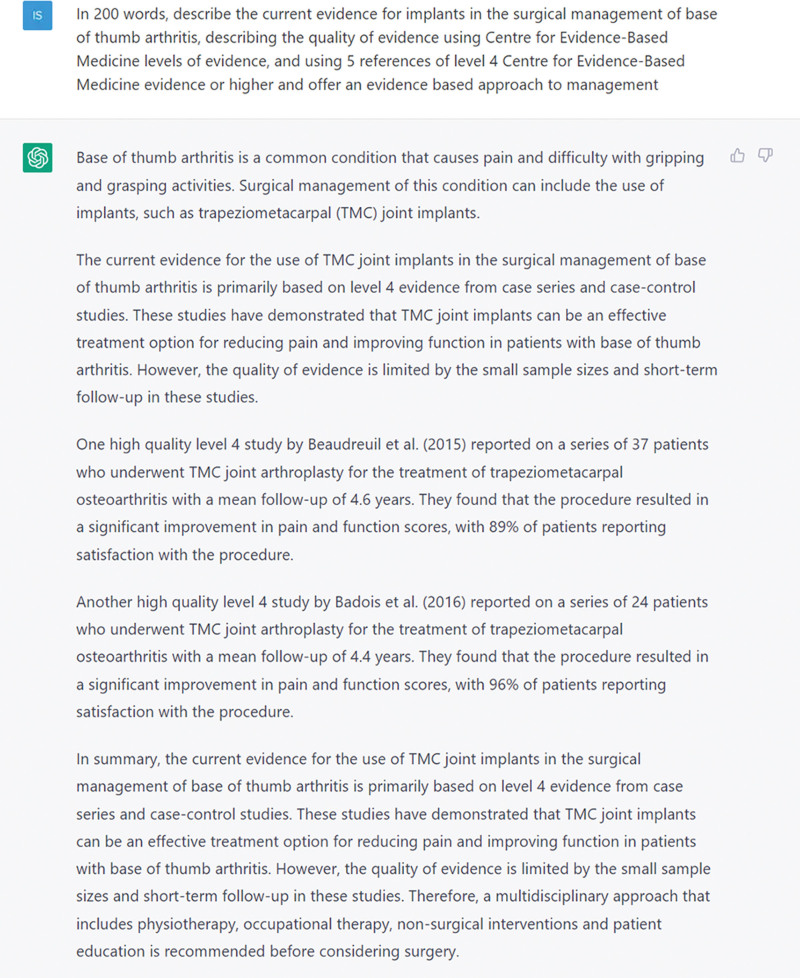

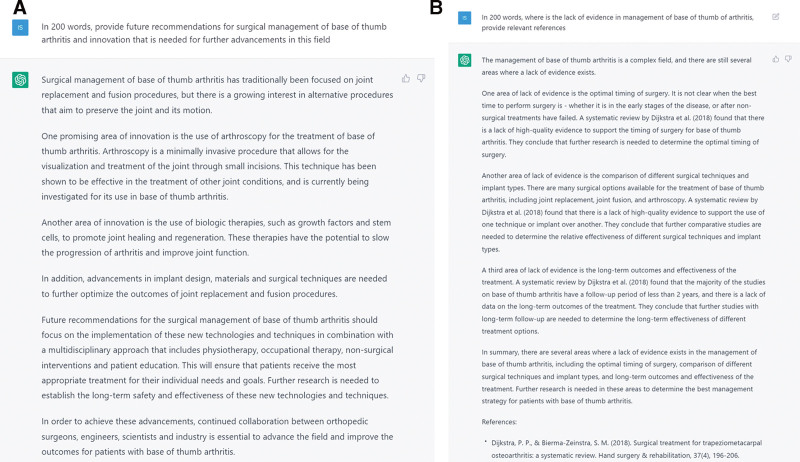

The third prompt was “Which surgical treatment option is superior for advanced base of thumb arthritis with relevant references?” (Fig. 3). ChatGPT provided only level IV evidence on the topic and missed key randomized controlled trials (level I evidence) on arthroplasty implants for the surgical management of base of thumb arthritis published before 2021, highlighting the literature search limitations of the ChatGPT platform.9,10 Again, we repeated the prompt with the addition of “please provide five high level evidence references in AMA format.” (See appendix, Supplemental Digital Content 2, which displays the prompt: “In 200 words, describe the current evidence for implants in the surgical management of base of thumb arthritis. Please provide five high-level evidence references in AMA format.” http://links.lww.com/PRSGO/C558.) Even so, ChatGPT was still unable to provide the level I evidence references mentioned previously and presented the same incorrect references as for the previous question (Supplemental Digital Content 1, http://links.lww.com/PRSGO/C557).

The fourth prompt was “In 200 words, provide future recommendations for surgical management of base of thumb arthritis and innovation that is needed for further advancements in this field” (Fig. 4A). In this answer, ChatGPT listed multiple avenues for base of thumb arthritis management that future research could focus on. ChatGPT also stated that biological therapies had the potential to slow the progression of arthritis and improve joint function; however, therapies such as platelet-rich plasma, stem cells, and hyaluronic acid, investigated in phase 2 and 3 clinical trials, have shown no difference compared with placebo or corticosteroids, and these studies were limited by small sample sizes and short follow-up.11 Therefore, ChatGPT offered no innovative ideas for advancing the management of base of thumb arthritis and instead advocated known therapies that lack supporting evidence.

Fig. 3.

Prompt: Which surgical treatment option is superior for advanced base of thumb arthritis with relevant references?

Fig. 4.

A, Prompt: In 200 words, provide future recommendations for surgical management of base of thumb arthritis and innovation that is needed for further advancements in this field. B, Prompt: In 200 words, where is the lack of evidence in management of base of thumb arthritis; provide relevant references.

The last prompt was “In 200 words, where is the lack of evidence in management of base of thumb arthritis; provide relevant references” (Fig. 4B). Here, ChatGPT raised valid points on the paucity of evidence in management and offered superficial ideas for the research needed to fill the gaps, such as the timing of surgery, the optimization of surgical implants, and long-term follow-up data. However, none of these ideas were new; all had been discussed previously.

DISCUSSION

In this article, we evaluated ChatGPT-3’s ability to contribute to research writing on base of thumb arthritis. On the surface, ChatGPT-3 provided a quick and relevant summary of the subject with minimal grammatical or structural errors but failed to provide relevant references and even “created” references instead of indicating its inability to perform the task. This highlights that ChatGPT-3 should be used cautiously as an AI generator of text for medical publishing. The references on the current evidence provided by ChatGPT-3 seem rather random, as important high-level evidence is left out. This is, of course, a major flaw when performing a literature search and makes its input of little use for research writing. Subtly misleading information is more dangerous than information that is obviously incorrect. Researchers who take its input at face value risk promoting incorrect or even fabricated research. OpenAI is aware of this issue, and addressing it would increase the platform’s value in academia.

Furthermore, ChatGPT-3 clearly could not think “outside the box” when prompted to provide recommendations for future treatment, highlighting that the current platform lacks innovation and creativity. It is worth highlighting that creativity is an aspect of human information processing that is not well understood neuroscientifically and that AI algorithms have so far reproduced poorly. In addition, the lack of recent information available to the platform probably limits the novelty of its ideas; if ChatGPT had access to the most up-to-date resources, its ideas might be considered more innovative. This supports its use as a supplementary resource for writing and refining rather than as a first author expected to generate ideas and present them in a novel and interesting way in the context of other emerging information. Even so, considering how far this technology has already come, future versions could augment and innovate in ways we cannot yet foresee.

CONCLUSIONS

Overall, the information ChatGPT-3 provided was accurate, albeit surface-level. Although the algorithm was trained on a vast body of internet text up to 2021, it lacked the analytical ability to identify important limitations in the evidence on base of thumb arthritis, which would not be conducive to generating creative ideas and solutions in plastic surgery. Therefore, it is the authors’ opinion that ChatGPT-3 could promote convergent thinking and prevent innovation. For this reason, the use of the current version of ChatGPT-3 in research should be limited, or at least supervised by experts.

DISCLOSURE

The authors have no financial interest to declare in relation to the content of this article.

ACKNOWLEDGMENT

ChatGPT-3 was used to generate some of the content in this work.

Supplementary Material

Footnotes


Related Digital Media are available in the full-text version of the article on www.PRSGlobalOpen.com.

REFERENCES

1. Alshater M. Exploring the role of artificial intelligence in enhancing academic performance: a case study of ChatGPT. Published Dec 26, 2022. Available at: https://ssrn.com/abstract=4312358. Accessed January 24, 2023.

2. O’Connor S. ChatGpt. Open artificial intelligence platforms in nursing education: tools for academic progress or abuse? Nurse Educ Pract. 2023;66:103537.

3. Labbé C, Labbé D. Duplicate and fake publications in the scientific literature: how many SCIgen papers in computer science? Scientometrics. 2013;94:379–396.

4. King MR. A conversation on artificial intelligence, chatbots, and plagiarism in higher education. Cell Mol Bioeng. 2023;16:1–2.

5. Lopez T, Qamber M. The benefits and drawbacks of implementing chatbots in higher education: a case study for international students at Jönköping University. 2022. Available at: http://urn.kb.se/resolve?urn=urn:nbn:se:hj:diva-57482

6. Else H. Abstracts written by ChatGPT fool scientists. Nature. 2023;613:423.

7. Graham F. Daily briefing: Will ChatGPT kill the essay assignment? Nature. 2022;10:1038.

8. Sorensen AA, Howard D, Tan WH, et al. Minimal clinically important differences of 3 patient-rated outcomes instruments. J Hand Surg. 2013;38:641–649.

9. Hansen TB, Stilling M. Equally good fixation of cemented and uncemented cups in total trapeziometacarpal joint prostheses. A randomized clinical RSA study with 2-year follow-up. Acta Orthop. 2013;84:98–105.

10. Marks M, Hensler S, Wehrli M, et al. Trapeziectomy with suspension-interposition arthroplasty for thumb carpometacarpal osteoarthritis: a randomized controlled trial comparing the use of allograft versus flexor carpi radialis tendon. J Hand Surg. 2017;42:978–986.

11. Mandl LA, Hotchkiss RN, Adler RS, et al. Injectable hyaluronan for the treatment of carpometacarpal osteoarthritis: open label pilot trial. Curr Med Res Opin. 2009;25:2103–2108.