Abstract

In 2017, the Medicare Shared Savings Program (MSSP) began incorporating regional spending into Accountable Care Organization (ACO) benchmarks, introducing new incentives favoring the participation of ACOs and practices with lower baseline spending in their region. To characterize providers’ response to these incentives, we isolated changes in spending due to changes in the mix of ACOs and practices participating in the MSSP. In contrast to earlier participation patterns, the composition of the MSSP after 2017 increasingly shifted to providers with lower pre-existing levels of spending relative to their region, consistent with a selection response. Changes occurred through the entry of new ACOs with lower baseline spending, exit of higher-spending ACOs, and reconfiguration of participant lists favoring lower-spending practices within continuing ACOs. These participation patterns varied meaningfully by ACO type. While compositional changes could not be definitively tied to benchmarking changes, the disproportionate participation of providers with lower baseline spending nevertheless implies substantial costs and the need for ACO benchmarking reforms.

Medicare relies on population-based payment models like accountable care organizations (ACOs) to encourage more efficient care delivery and control spending growth. These models set targets for total annual spending per beneficiary and allow providers to earn bonuses as a function of spending below these benchmarks; in some ACO contracts, providers must also pay a penalty if their spending exceeds a certain threshold above their benchmark.

Benchmarks and Provider Participation Incentives

When population-based payment models are voluntary (as all Medicare ACO initiatives currently are), how benchmarks are set is a key determinant of participation. All else equal, the more an ACO’s expected spending under the status quo (i.e., without attempts to slow spending growth) exceeds (or falls below) its benchmark, the weaker (or stronger) its incentive to participate.

If ACO benchmarks are based on average risk-adjusted spending in the ACO’s region, providers with already lower risk-adjusted spending for their region have a stronger incentive to participate. Such selective participation in response to differences between pre-existing spending levels and expected benchmarks (“selection on levels”) is costly on two fronts.[1] First, absent savings from changes in care delivery, selection results in a net subsidy to participating providers as bonus payouts increase and recouped penalties decrease. Second, foregoing the participation of providers with higher risk-adjusted spending, and thus greater potential savings, means foregoing program success in reducing waste.

In contrast, benchmarks based on ACOs’ own historical spending and adjusted for changes in ACO composition largely negate the incentives favoring ACOs or practices with lower pre-existing spending levels. Under a historical benchmarking scheme, providers are still incentivized to participate based on expected or realized spending reductions (“selection on slopes”).[1] It is challenging for ACOs to know ex ante how their spending trends under the status quo would deviate from benchmark trends because spending levels and trends are weakly predictive of future spending trends.[2] Still, ACOs can, ex post, selectively exit based on their realized spending growth relative to benchmarks, and they may have some ex ante knowledge of their savings potential. For example, ACOs with higher risk-adjusted spending have been found to achieve greater savings, consistent with greater opportunities to reduce waste.[3]

Although historical benchmarks can limit costly selection on levels, they raise other problems. If the baseline period is not updated over time, initially less efficient providers are favored with more opportunities to earn bonuses that may advantage them over more efficient competitors, potentially increasing spending as the former grow and the latter contract. Moreover, if the baseline period is updated (or “rebased”), ACOs face a ratchet effect that makes success difficult to sustain and diminishes incentives to reduce spending.

Benchmarking Changes in the Medicare Shared Savings Program

These design issues have played out in the Medicare Shared Savings Program (MSSP) as benchmarks changed from a purely historical to partially regional basis. In the MSSP, an ACO is defined by its participant list, the official roster of participating medical groups and facilities, identified by taxpayer identification numbers (TINs) and Centers for Medicare and Medicaid Services (CMS) Certification Numbers (CCNs). Beneficiaries are attributed to these TINs and CCNs, which we collectively refer to as “practices,” during a baseline period to determine the historical basis for the benchmark and during each performance year to determine performance-year spending.

In the original MSSP design, benchmarks were based on ACOs’ own historical spending during a baseline period trended forward at a national rate of Medicare spending growth. In response to concerns about unequal benchmarks for ACOs in the same market, CMS introduced a regional adjustment that effectively blended an ACO’s historical spending with the average spending in its region.[4,5] These changes were finalized in June 2016 and first affected ACOs renewing their contracts in 2017 and later. Subsequent changes introduced by the Pathways to Success redesign in 2019 further accelerated ACOs’ transition to regionalized benchmarking, introducing a historical-regional blend in newly entering ACOs’ first contract period.

The inclusion of regional spending into MSSP benchmarks fundamentally altered participation incentives. Pure historical benchmarking favored practices achieving greater spending reductions but not those with lower baseline risk-adjusted spending because CMS recalculated ACOs’ baseline spending to account for participant list changes. Beyond selection on expected spending reductions (slopes), providers had limited opportunities for strategic selection at the ACO or TIN/CCN level because such selection was netted out of bonus calculations.

The regional benchmark adjustment, which we refer to as regionalized benchmarks, introduced new incentives to select on spending levels. Providers with lower baseline risk-adjusted spending in their region mechanically received higher benchmarks than they would under purely historical benchmarking, and vice versa for providers with higher baseline spending.
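As a rough illustration of this mechanical effect, the sketch below blends an ACO's historical spending with its regional average. The function name, the dollar figures, and the 35% regional weight are illustrative assumptions (the 35% figure mirrors the weight assumed in the Results), and the actual MSSP calculation involves risk adjustment and other steps that are not shown.

```python
def regionalized_benchmark(historical, regional, regional_weight=0.35):
    """Blend an ACO's historical spending with its region's average spending.

    All arguments are hypothetical per-beneficiary dollar amounts. The default
    weight of 0.35 is an assumption for illustration only.
    """
    return historical + regional_weight * (regional - historical)


# Hypothetical ACO spending $500 below its region: benchmark rises above history.
low_spender = regionalized_benchmark(historical=9_500, regional=10_000)
# Hypothetical ACO spending $500 above its region: benchmark falls below history.
high_spender = regionalized_benchmark(historical=10_500, regional=10_000)

print(round(low_spender - 9_500, 2))    # benchmark gain for the lower-spending ACO
print(round(high_spender - 10_500, 2))  # benchmark loss for the higher-spending ACO
```

Under these assumptions the lower-spending ACO's benchmark sits above its own history and the higher-spending ACO's benchmark sits below it, which is the asymmetry that creates the incentive to select on spending levels.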

Providers could act on these new incentives by entering the MSSP with a favorable set of providers (ACO-level entry), exiting the program if they had an unfavorable set (ACO-level exit), or reconfiguring their participant list after entry to favor practices with lower baseline spending (within-ACO reconfiguration). Reconfiguration simply required ACOs to submit updated participant lists ahead of the next performance year. Selective reconfiguration could then mechanically raise ACOs’ benchmarks and allow them to earn bonuses more easily – a strategy not rewarded under the original historical benchmarking design.

In both periods, under historical or regionalized benchmarking, ACOs had incentives to select patients with favorable risks or clinicians with lower spending within participating TINs/CCNs. Past work found limited evidence of these types of selection in the early period, but whether this activity has evolved over time is unknown.[6] Similarly, in both periods ACOs had incentives to select providers who could reduce spending, all else equal. Our study focuses on new incentives at the ACO and practice levels to select on established spending levels, which constitute the primary change introduced by benchmark regionalization.

Recent evidence suggests that selection on baseline spending at ACO and practice levels in response to regionalized benchmarking should be expected. Evidence from the Comprehensive Care for Joint Replacement (CJR) program, a bundled payment model with similarly regionalized benchmarks, suggests that selective participation after half of the program became voluntary was substantial and inefficient.[1,7] Whether similar behavior and implications apply with ACOs remains unclear, though early descriptive evidence is consistent with selection. In the first year following benchmark regionalization, ACOs exiting the MSSP tended to have higher spending in their region relative to those that stayed, and new entrants had lower spending in their region relative to those that entered before.[8,9]

In this study, we conducted a series of analyses to investigate the evidence for selective participation at the ACO or practice level in the MSSP in response to regionalized benchmarking. First, we assessed the strength of the new incentives to select on baseline spending relative to region. Second, we characterized how compositional changes in MSSP participation over time changed ACOs’ spending relative to their region. Specifically, we decomposed observed changes in spending into those driven by ACO-level entry, ACO-level exit, and within-ACO reconfiguration, comparing participation patterns before and after benchmark regionalization. Finally, we compared findings between different subgroups to explore ACO characteristics that might facilitate selective participation.

STUDY DATA & METHODS

Study Data & Population

For each performance year from 2013 through 2019, we used the MSSP Provider-level Research Identifiable Files to identify each ACO’s participant list. We used traditional Medicare claims from a random 20% sample of beneficiaries and the Medicare Data on Provider Practice and Specialty (MD-PPAS) to attribute beneficiaries to practices and calculate spending measures. For each claim year, we attributed beneficiaries to the practice accounting for the plurality of spending on qualifying primary care services (appendix). Practices were then assigned to ACOs based on performance year-specific ACO configurations. Since ACOs could not participate simultaneously in multiple Medicare ACO programs, our analyses excluded ACOs ever participating in Pioneer or Next Generation programs to avoid misclassifying transfers from the MSSP to those models as program exits (appendix). While CMS provides official beneficiary lists for each ACO, these rosters are only available for performance years and thus do not support comparison of counterfactuals (e.g., ACO spending in a given year under different configurations). Nevertheless, our application of the assignment algorithm resulted in beneficiary assignments that were highly concordant with the CMS beneficiary assignments where the two could be compared (appendix).

Risk-Adjusted Regional Spending Deviation

We characterized practices, ACOs, and cohorts of ACOs entering in the same year by their risk-adjusted regional spending deviation, mirroring the method used in the MSSP to adjust benchmarks for regional efficiency (appendix). This measure represented the risk-adjusted difference between providers’ average spending and the average spending in providers’ service area, adjusting for beneficiary enrollment type and Hierarchical Condition Category (HCC) risk score. For brevity, we refer to the risk-adjusted regional spending deviation as simply the “spending deviation.”

Assessing the Strength of New Selection Incentives

Because regionalized benchmarks are a blend of ACOs’ historical and region’s spending, a regional adjustment that increases an ACO’s benchmark is equal to only a portion of the ACO’s negative baseline spending deviation. Also, some of this advantage may be offset by regression to the mean. For example, ACOs with lower historical spending at baseline due to random fluctuations should experience more rapid spending growth in the near term as their spending reverts toward the average. Incentives for selective participation then depend on the extent to which the regional adjustment outweighs any countervailing effects from mean reversion: the more stable spending deviations are over time (i.e., less mean reversion), the stronger the incentives to select on baseline spending deviations. We therefore assessed the stability of ACO spending deviations across the six-year period prior to entry into the MSSP (appendix).

Characterizing Compositional Changes Over Time

In our main analyses, we investigated the extent to which the composition of MSSP participants shifted to lower-spending providers before versus after CMS finalized rules to regionalize benchmarks in June 2016. Our analyses considered four types of newly incentivized selection behavior: ACO-level entry, ACO-level exit, and practice-level entry and exit within continuing ACOs through participant list reconfiguration. In all analyses, we calculated average spending deviations weighted by the number of attributed beneficiaries.

To examine changes in ACO-level entry patterns, we calculated ACOs’ spending deviation at entry using claims from their three-year baseline period. We then assessed the average spending deviation in the entry year for ACOs that entered the MSSP before versus after introduction of regionalized benchmarks in 2017.

In each performance year after MSSP entry, ACOs decided whether to exit the MSSP or make participant list changes. To investigate selective ACO-level exit and within-ACO participant list reconfiguration, we calculated the change in each cohort’s average spending deviation attributable to these participation changes occurring between performance years. Because we wanted to measure only exit and reconfiguration effects, absent any savings from the effects of ACOs on care delivery, we held constant the claim years used to calculate spending deviations for each pair of consecutive performance years (Exhibit A1). These claim years were the three years prior to each pair of performance years. Thus, changes in spending deviations between performance years reflect only the change in participating ACOs and practices between those years. We provide more detail on these methods and examples in the appendix.

For each pair of years, we also estimated annual changes in cohort spending deviation due to reconfiguration alone by restricting to ACOs that participated in both years. These estimates quantified the extent to which ACOs that continued to participate from one year to the next raised or lowered their spending deviation via participant list changes.
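The logic of these two estimates can be sketched as follows. This is a simplified illustration, not the study's implementation: the record layout, the names, and the example figures are hypothetical, and each practice's spending deviation and beneficiary count are treated as fixed across the two years so that only the participant list changes.

```python
def weighted_mean_deviation(practices):
    """Beneficiary-weighted mean spending deviation for a list of practices."""
    total = sum(p["beneficiaries"] for p in practices)
    if total == 0:
        raise ValueError("no attributed beneficiaries in this participant list")
    return sum(p["beneficiaries"] * p["deviation"] for p in practices) / total


def participation_effects(year1, year2):
    """Return (net change, reconfiguration-only change) in cohort deviation.

    Deviations come from the same fixed claim years in both lists, so any
    difference reflects who participates rather than savings.
    """
    net = weighted_mean_deviation(year2) - weighted_mean_deviation(year1)
    # Restrict to ACOs present in both years to isolate reconfiguration.
    continuing = {p["aco"] for p in year1} & {p["aco"] for p in year2}
    before = [p for p in year1 if p["aco"] in continuing]
    after = [p for p in year2 if p["aco"] in continuing]
    reconfiguration = weighted_mean_deviation(after) - weighted_mean_deviation(before)
    return net, reconfiguration


# Hypothetical cohort: ACO "A" drops a higher-spending practice; ACO "B" exits.
year1 = [
    {"aco": "A", "practice": "a1", "beneficiaries": 1000, "deviation": -50},
    {"aco": "A", "practice": "a2", "beneficiaries": 1000, "deviation": 100},
    {"aco": "B", "practice": "b1", "beneficiaries": 2000, "deviation": 150},
]
year2 = [
    {"aco": "A", "practice": "a1", "beneficiaries": 1000, "deviation": -50},
]

net, reconfiguration = participation_effects(year1, year2)
print(net, reconfiguration)
```

In this toy cohort, the gap between the net change and the reconfiguration-only change is the part attributable to the exit of ACO "B".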

In addition to these main exit and reconfiguration analyses, we also conducted sensitivity analyses that used alternative approaches (appendix).

Subgroup Analyses

To explore potential subgroups exhibiting stronger selective participation, we estimated exit and reconfiguration effects stratified by certain ACO characteristics. First, an ACO’s size should affect its selection incentives because larger ACOs should have more stable spending deviations over time. Larger ACOs may also have more resources and opportunities (if they include more practices) to respond to reconfiguration incentives than smaller ACOs. Thus, we compared exit and reconfiguration effects among small (less than 1,500 beneficiaries based on our 20% sample), medium (1,500 to 3,500), and large (more than 3,500) ACOs.

Second, the extent to which an ACO’s beneficiaries are concentrated in a few practices versus more evenly distributed across many practices may affect an ACO’s capacity for practice-level selection. For example, ACOs with more dispersed beneficiaries may have more opportunities for strategic reconfiguration compared to concentrated ACOs. With greater practice-level spending variation within dispersed ACOs, more higher-spending practices could exit while keeping the ACO above minimum size thresholds. To characterize each ACO’s distribution of beneficiaries, we calculated a Herfindahl-Hirschman Index (HHI) ranging from 0 to 10,000 based on each practice’s share of its ACO’s attributed beneficiaries in the baseline period. We then defined concentrated and dispersed ACOs as those with HHIs greater than and less than 2,500, respectively.[10]
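For concreteness, the HHI described above is the sum of squared percentage shares. A minimal sketch follows, using hypothetical beneficiary counts. The function names are illustrative, and treating an HHI of exactly 2,500 as concentrated follows the notes to Exhibit 3.

```python
def herfindahl_hirschman_index(beneficiaries_by_practice):
    """Return the HHI (0 to 10,000) from attributed-beneficiary counts per practice."""
    total = sum(beneficiaries_by_practice)
    if total <= 0:
        raise ValueError("an ACO needs at least one attributed beneficiary")
    # Sum of squared percentage shares across practices.
    return sum((100 * n / total) ** 2 for n in beneficiaries_by_practice)


def classify_aco(beneficiaries_by_practice, threshold=2_500):
    """Label an ACO as concentrated or dispersed using the 2,500 cutoff."""
    hhi = herfindahl_hirschman_index(beneficiaries_by_practice)
    return "concentrated" if hhi >= threshold else "dispersed"


# Hypothetical ACOs: one dominated by a single practice, one spread over ten.
dominated = [8_000, 1_000, 1_000]
spread = [1_000] * 10

print(herfindahl_hirschman_index(dominated), classify_aco(dominated))
print(herfindahl_hirschman_index(spread), classify_aco(spread))
```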

Finally, ACOs formed by or involved with convener organizations (or “aggregators”) may have unique characteristics that predict selective participation. Conveners play a key administrative role, often tasked with building an ACO’s network of practices, providing data resources, and managing reporting and participation.[11] These same capabilities may also position ACOs to respond to new selection incentives. Furthermore, the reliance on conveners to participate could reflect ACOs that were more likely to be on the margin of MSSP participation and potentially more sensitive to disadvantages imposed by benchmarking changes. Thus, conveners may mediate selective participation by jointly encouraging and informing participation among less advanced providers. To identify these ACOs, we used the CMS Public Use Files and internet searches (appendix). In addition, because conveners may tend to be involved with more dispersed ACOs that have more opportunities for selective participation, we compared convener versus non-convener ACOs within concentrated and dispersed ACOs.

For all analyses of the effects of selective participation on spending deviation, standard errors were estimated based on 1,000 bootstrapped samples (appendix).
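The study's bootstrap procedure is detailed in the appendix. The sketch below shows only the general idea for a beneficiary-weighted mean, assuming that whole units (for example, ACOs) are resampled with replacement. The function names, the seed, and the example values are hypothetical.

```python
import random
import statistics


def weighted_mean(values, weights):
    """Weighted mean of values, e.g. deviations weighted by beneficiaries."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def bootstrap_se(values, weights, n_boot=1_000, seed=0):
    """Standard error of a weighted mean from resampling units with replacement."""
    rng = random.Random(seed)
    n = len(values)
    estimates = []
    for _ in range(n_boot):
        # Draw n units with replacement and recompute the weighted mean.
        idx = [rng.randrange(n) for _ in range(n)]
        estimates.append(
            weighted_mean([values[i] for i in idx], [weights[i] for i in idx])
        )
    return statistics.stdev(estimates)


# Hypothetical annual changes in spending deviation and beneficiary counts.
changes = [-30.0, -12.5, 4.0, -45.0, -8.0, 15.0, -22.0, -3.5]
sizes = [2_000, 1_500, 900, 4_000, 1_200, 700, 3_100, 1_000]

se = bootstrap_se(changes, sizes)
print(round(weighted_mean(changes, sizes), 2), round(se, 2))
```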

Limitations

Our study was subject to several limitations. First, our main analysis was limited to pre-post comparisons of MSSP participation patterns because a suitable control group was not available. Although regionalization applied to some ACO cohorts in later calendar years, these cohorts could not be used as controls due to potential anticipatory effects; ACOs likely made participation decisions based on long-run expectations of bonuses or losses. Given these limitations, observed compositional changes could not be interpreted as definitively attributable to the introduction of regionalized benchmarks. Other unmeasured factors may have driven observed changes, such as the presence of other MSSP changes occurring simultaneously. To address such risks, we controlled for other known MSSP changes, such as attribution rule changes (appendix).

Other sources of confounding may have come from changes elsewhere in Medicare or provider markets that disproportionately affected higher-spending providers’ participation decisions. However, we could not identify, and did not find evidence of, a clear alternative explanation. As expected, including transfers to the Next Generation ACO model (starting in 2016) as exits slightly attenuated our exit estimates (Exhibit A2). Benchmarks in the Next Generation model were also disproportionately attractive to lower-spending providers. Thus, excluding transfers in our main analysis should better characterize the overall selective retention of lower-spending ACOs. Findings remained largely unchanged when including ACOs with prior Pioneer model participation (Exhibit A3).

Second, because we measured spending deviations using claims before each pair of performance years, estimated exit and reconfiguration effects could not distinguish between selection on lower spending achieved prior to MSSP entry versus lower spending achieved during earlier MSSP performance years leading up to the participation decision. To distinguish the former from the latter, we conducted a sensitivity analysis using a fixed set of pre-MSSP baseline claim years (appendix). While helping to isolate selection on lower spending achieved prior to MSSP entry, this sensitivity analysis was constrained by inherent drawbacks with TIN/CCNs, such as limited lookback periods. Still, sensitivity estimates were only slightly smaller than those from the main analysis, suggesting that the majority of exit and reconfiguration favoring lower spending providers was driven by selection on lower baseline spending prior to MSSP entry (Exhibit A4).

RESULTS

Study Sample and Program Churn

Our study included 528 ACOs that entered the MSSP between 2012 and 2016, prior to benchmark regionalization, and 285 ACOs that entered between 2017 and 2019 (Exhibit A5). Consistent with other research, we found that although a relatively steady number of ACOs entered each year, the number exiting the program has grown (Exhibit A6).[12] ACOs also made participant list changes, with net growth within ACOs in early performance years and increased practice-level exit over time (Exhibit A7).

Stability of Spending Relative to Region

Past ACO spending deviation strongly predicted future spending deviation during the six-year period prior to MSSP entry, suggesting that ACOs could anticipate whether they would face advantageous or disadvantageous benchmarks based on their baseline spending deviations. On average, an ACO could expect a persistent -$92 spending deviation over the first three performance years for every $100 their baseline three-year spending was below the regional average (95% confidence interval [CI]: -$109, -$75; Exhibit A8). Assuming a 35% weight given to an ACO’s baseline spending deviation in its benchmark calculation, this translated into an approximate net advantage of $27 for every $100 of baseline spending below the regional average (0.35 × $100 − $8, where $8 is the portion of the $100 deviation expected to be lost to mean reversion). As expected, larger baseline spending deviations and baseline spending deviations for larger ACOs more reliably predicted subsequent spending deviations (Exhibit A8).
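The arithmetic behind the $27 figure can be written out as a short sketch. The function and parameter names are hypothetical, and the decomposition into a benchmark gain and a mean-reversion loss is our reading of the calculation above, with the 35% weight and the 0.92 persistence taken from the text.

```python
def net_benchmark_advantage(baseline_gap, regional_weight=0.35, persistence=0.92):
    """Approximate net advantage from spending below the regional average.

    baseline_gap: dollars by which baseline spending is below the region.
    regional_weight: share of the gap added to the benchmark (35% assumed).
    persistence: share of the gap expected to persist (0.92 from the estimate).
    """
    benchmark_gain = regional_weight * baseline_gap
    mean_reversion_loss = (1 - persistence) * baseline_gap
    return benchmark_gain - mean_reversion_loss


# Net advantage per $100 of baseline spending below the regional average.
advantage = net_benchmark_advantage(100)
print(round(advantage, 2))
```

With full persistence (persistence=1.0) the advantage would equal the entire benchmark gain, and it shrinks as more of the baseline gap reverts toward the regional mean.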

Overall Changes in Participation Patterns

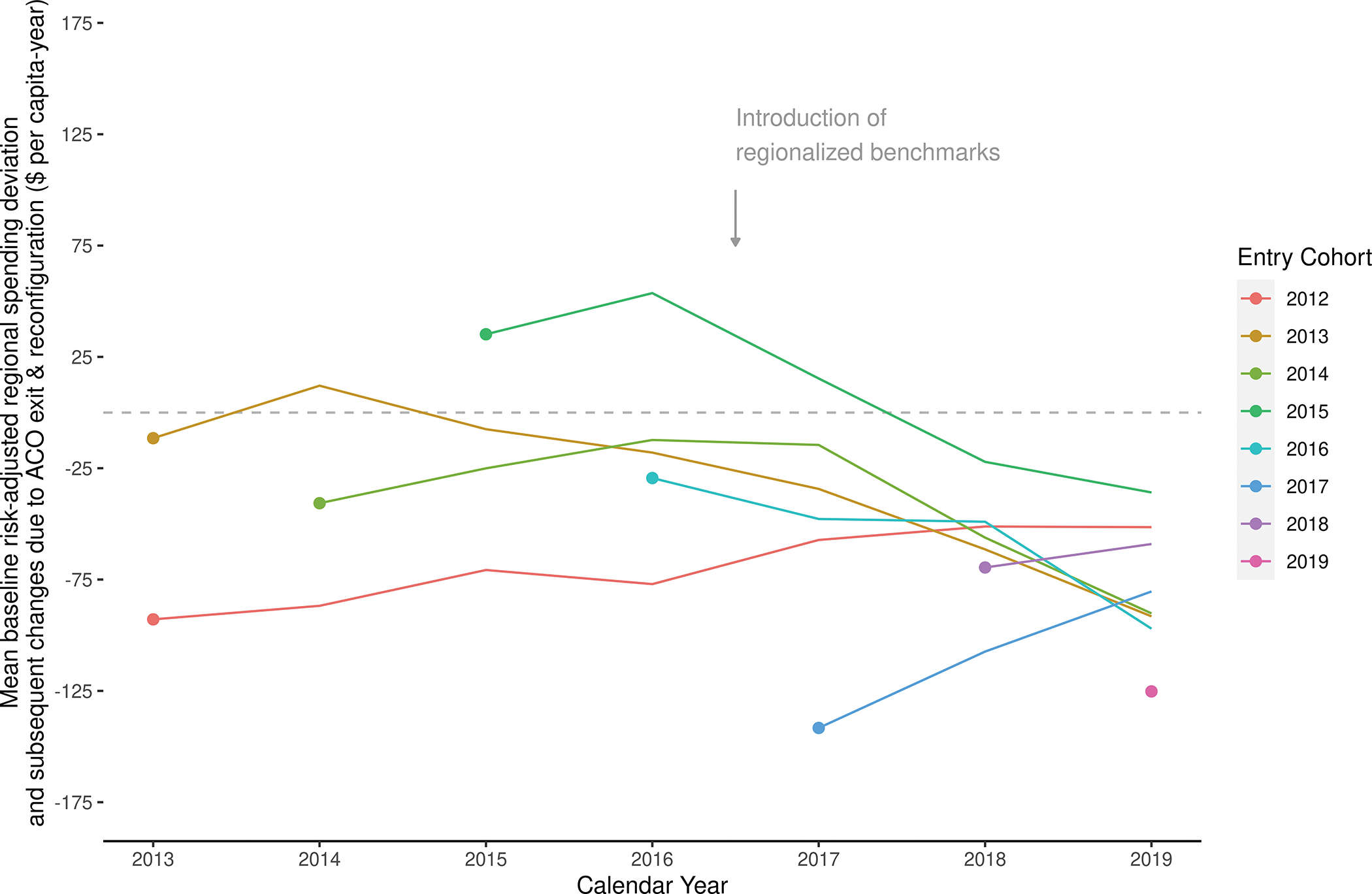

Exhibit 1 illustrates the average baseline spending deviation for each ACO cohort at their initial entry into the MSSP and changes to these deviations thereafter due to ACO-level exit and within-ACO participant list reconfiguration. Thus, Exhibit 1 characterizes the compositional changes in the MSSP attributable to ACO-level entry (changes in the entry points over time) and through ACO exit and reconfiguration (the trends over time) in terms of each cohort’s spending deviation.

Exhibit 1.

Medicare Shared Savings Program ACO cohorts’ initial average baseline spending deviation and subsequent changes over time attributable to ACO-level exit and within-ACO participant list reconfiguration.

SOURCE Authors’ analysis of CMS MSSP Provider-level Research Identifiable Files, Medicare claims, and MD-PPAS data. NOTES Points represent average baseline risk-adjusted regional spending deviation based on ACOs’ initial participant list configuration, weighted by number of attributed beneficiaries, and lines represent net changes within a cohort due to ACO-level exit and within-ACO participant list reconfiguration over time. For example, an ACO cohort with no exits and no participant list changes would be represented as a straight horizontal line, shifted vertically depending on its initial baseline risk-adjusted regional spending deviation.

ACOs entering the MSSP after the introduction of regionalized benchmarks had baseline spending deviations that were significantly more negative than those for ACOs entering before. Specifically, the average baseline spending deviation for the more recent entrants was $81.48 per beneficiary more negative (95% CI: -$104.02, -$58.94), a difference nearly 3.5 times the magnitude of the average deviation among ACOs that entered before 2017 (-$23.46 per beneficiary; Exhibit A9).

After the introduction of benchmark regionalization, ACO-level exit and within-ACO participant list reconfiguration also shifted each cohort’s composition toward lower-spending participants. Among cohorts entering the MSSP before benchmark regionalization, exits and reconfigurations within each cohort prior to 2017 led to modestly positive changes in spending deviations (average annual change: $9.45 per beneficiary-year, 95% CI: $3.61, $15.29; Exhibit 2). In the years following benchmark regionalization, exits and reconfigurations instead led to more negative spending deviations (average annual change: -$20.50 per beneficiary-year, 95% CI: -$24.88, -$16.11). By 2019, ACOs remaining in the MSSP had an average baseline spending deviation at entry of -$39.36 (vs. -$23.46 on average among all ACOs in our analysis; data not shown).

Exhibit 2.

Average annual change in risk-adjusted regional spending deviation within ACO cohorts due to participation changes before and after the introduction of regionalized benchmarks

Mean annual within-cohort change in risk-adjusted regional spending deviation, $ per beneficiary-year (SE)

| | During Pre-Benchmark Regionalization Period, 2013–2016 | During Post-Benchmark Regionalization Period, 2017–2019 |
|---|---|---|
| Net changes due to ACO-level exit or within-ACO participant list reconfiguration | 9.45 (2.98)** | −20.50 (2.24)*** |
| Net changes due to within-ACO participant list reconfiguration only | 6.13 (2.71)* | −12.13 (1.86)*** |

SOURCE Authors’ analysis of CMS MSSP Provider-level Research Identifiable Files, Medicare claims, and MD-PPAS data.

NOTES * p<0.05, ** p<0.01, *** p<0.001, based on two-sided z-test using bootstrapped standard errors and null hypothesis of zero average change. Means were weighted by number of attributed beneficiaries and based on study ACOs that entered the Medicare Shared Savings Program prior to benchmarking changes (i.e., cohorts 2012 through 2016). Net changes due to within-ACO participant list reconfiguration were estimated among the subset of ACOs participating in the MSSP in the following year (see Appendix for details). If ACOs never exited and never changed their participant list from their initial entry through 2019, all values above would equal zero.

Reconfigurations within ACOs prior to 2017 raised ACOs’ spending deviation slightly, by an average of $6.13 per beneficiary-year (95% CI: $0.83, $11.43; Exhibit 2). In contrast, within-ACO reconfigurations in 2017 and later led to more negative spending deviations (average annual change: -$12.13 per beneficiary-year, 95% CI: -$15.78, -$8.49; Exhibit 2).

Subgroup Participation Patterns

Exit and reconfiguration effects that reduced cohorts’ spending relative to region were more pronounced among ACOs that were medium- to large-sized, involved with a convener organization, and whose attributed beneficiaries were more evenly dispersed across practices. ACO types also varied in whether selective participation was driven by ACO-level exit versus within-ACO reconfiguration.

Among large ACOs, for example, annual exits and reconfigurations after benchmark regionalization led to increasingly negative spending deviations, averaging -$17.49 per beneficiary-year (95% CI: -$25.74, -$9.24; Exhibit 3). Among large ACOs that continued to participate, similar changes were observed due to reconfiguration alone (average annual change: -$17.13, 95% CI: -$24.00, -$10.25; Exhibit 3), implying that selective participation among large ACOs occurred largely through reconfigurations. In contrast, selective participation among medium-sized ACOs occurred through a mix of exits and reconfigurations, as the average annual change in these ACOs’ spending deviation due to exit and reconfiguration (-$19.25, 95% CI: -$30.70, -$7.80; Exhibit 3) was only partially explained by estimated changes due to reconfiguration alone (-$11.42, 95% CI: -$20.41, -$2.43; Exhibit 3).

Exhibit 3.

Average annual change in risk-adjusted regional spending deviation within ACO cohorts attributable to participation changes before and after the introduction of regionalized benchmarks, measured by ACO subgroups

Mean annual within-cohort change in risk-adjusted regional spending deviation, $ per beneficiary-year (SE)

| | During Pre-Benchmark Regionalization Period, 2013–2016 | During Post-Benchmark Regionalization Period, 2017–2019 |
|---|---|---|
| Changes due to ACO-level exit or within-ACO reconfiguration | | |
| By ACO’s size at entry (number of beneficiaries) | | |
| Small (less than 1,500) | −8.52 (9.02) | −9.87 (6.54) |
| Medium (1,500 to 3,500) | 17.73 (5.52)* | −19.25 (4.03)* |
| Large (more than 3,500) | 5.39 (3.65) | −17.49 (2.91)* |
| By convener involvement | | |
| Convener-involved | −13.30 (10.74) | −76.04 (8.80)* |
| Non-convener | 12.66 (3.11)* | −16.40 (2.32)* |
| By distribution of beneficiaries within the ACO at entry | | |
| Concentrated | 9.93 (3.63) | −8.55 (2.42)* |
| Dispersed | 6.18 (4.74) | −28.23 (4.26)* |
| By convener involvement × beneficiary distribution | | |
| Convener + concentrated | −8.45 (13.08) | −41.48 (10.35)* |
| Convener + dispersed | −8.35 (14.43) | −103.87 (13.10)* |
| Non-convener + concentrated | 11.50 (3.75)* | −8.28 (2.41)* |
| Non-convener + dispersed | 10.07 (5.07) | −16.56 (4.59)* |
| Changes due to within-ACO reconfiguration only | | |
| By ACO’s size at entry (number of beneficiaries) | | |
| Small (less than 1,500) | 2.19 (8.19) | 5.37 (5.72) |
| Medium (1,500 to 3,500) | 5.36 (4.65) | −11.42 (3.17)* |
| Large (more than 3,500) | 9.11 (3.51) | −17.13 (2.42)* |
| By convener involvement | | |
| Convener-involved | 11.90 (9.32) | −10.57 (7.15) |
| Non-convener | 5.27 (2.87) | −14.07 (1.96)* |
| By distribution of beneficiaries within the ACO at entry | | |
| Concentrated | −4.21 (3.17) | −4.59 (1.96) |
| Dispersed | 12.69 (4.42)* | −17.59 (3.43)* |
| By convener involvement × beneficiary distribution | | |
| Convener + concentrated | −15.66 (11.11) | 2.45 (7.56) |
| Convener + dispersed | 22.39 (12.33) | −24.92 (10.35) |
| Non-convener + concentrated | −3.55 (3.28) | −5.55 (1.96) |
| Non-convener + dispersed | 9.61 (4.84) | −20.26 (3.70)* |

SOURCE Authors’ analysis of CMS MSSP Provider-level Research Identifiable Files, Medicare claims, and MD-PPAS data.

NOTES * p<0.0045 based on two-sided z-test using bootstrapped standard errors (null hypothesis of zero average change) and after applying Bonferroni correction for multiple comparisons within column and panel (for family-wise p<0.05). Means are weighted by number of attributed beneficiaries and based on study ACOs that entered the Medicare Shared Savings Program prior to benchmarking changes (i.e., cohorts 2012 through 2016). Net changes due to within-ACO participant list reconfiguration only were estimated among the subset of ACOs participating in the MSSP in the following year (see Appendix for details). If ACOs never exited and never changed their participant list from their initial entry through 2019, all values above would equal zero. ACO size represents the number of attributed beneficiaries based on the study’s 20% sample. Concentrated ACOs are defined as those where the Herfindahl-Hirschman Index (HHI), based on shares of attributed beneficiaries across taxpayer identification number (TIN) or CMS certification number (CCN), is ≥2,500.
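The significance flags in Exhibit 3 can be approximated from the reported estimates and standard errors. The sketch below is illustrative only. The helper name is hypothetical, and dividing 0.05 by eleven tests (the eleven subgroup rows in each column and panel) is our reading of how the p<0.0045 threshold arises.

```python
from statistics import NormalDist


def two_sided_p(estimate, se):
    """Two-sided p-value from a z-test of the null of zero average change."""
    z = abs(estimate / se)
    return 2 * (1 - NormalDist().cdf(z))


# Eleven subgroup rows per column and panel (assumed count of comparisons).
N_TESTS = 11
threshold = 0.05 / N_TESTS

# Estimates and standard errors copied from Exhibit 3
# (exit or reconfiguration panel, post-regionalization column).
p_dispersed_post = two_sided_p(-28.23, 4.26)
p_small_post = two_sided_p(-9.87, 6.54)

print(round(threshold, 4))
print(p_dispersed_post < threshold, p_small_post < threshold)
```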

The interaction between convener involvement and the distribution of beneficiaries within the ACO also revealed different participation patterns following benchmark regionalization. Exit and reconfiguration effects were greater among ACOs involved with convener organizations, particularly those that were also more dispersed. Following the start of regionalized benchmarking, exits and reconfigurations among these ACOs led to an average annual change in spending deviations of -$103.87 per beneficiary-year (95% CI: -$141.03, -$66.71; Exhibit 3). Among non-convener ACOs, selective participation of lower-spending providers was more pronounced among the subset of dispersed non-convener ACOs (average annual change: -$16.56; 95% CI: -$29.57, -$3.55; Exhibit 3). Notably, exit and reconfiguration effects among convener-involved ACOs, concentrated and dispersed alike, appeared mostly driven by ACO-level exit. Dispersed non-convener ACOs instead appeared to select lower-spending providers through reconfiguration (Exhibit 3).

DISCUSSION

Our study found that ACO- and practice-level participation in the MSSP increasingly shifted to providers with lower spending in their region following the introduction of regionalized benchmarking that rewarded such selection. The disproportionate participation of providers with more negative spending deviations led to higher benchmarks than would have occurred if only historical spending was used to set benchmarks and thus larger bonuses than would have occurred through changes in practice patterns alone. Because a suitable control group was not available, we could not conclusively identify whether recent participation changes were directly caused by benchmark regionalization. However, the results were consistent in several ways with strategic selection behavior related to benchmarking changes.

First, we found that the incentive to select on baseline spending deviations was strong. Under the status quo, providers could expect their spending relative to region to remain largely stable over time, particularly for ACOs with greater volume. These findings aligned with observed exit and reconfiguration effects, which were most pronounced for larger ACOs.

Second, more recent trends in MSSP participation significantly differed from those prior to benchmark regionalization. Before 2017, annual compositional changes within cohorts did not shift toward providers with lower spending relative to region and actually tended to favor those with higher spending. In this earlier period, the MSSP relied on pure historical benchmarking, and ACOs with less efficient practices and greater potential savings were found to achieve greater savings.[13] As realized financial performance in the program (earning bonuses) predicts subsequent participation in the voluntary MSSP, the modest rise in spending deviations in the early years is thus consistent with exit of ACOs failing to achieve savings.[3]

These trends reversed in 2017, coinciding with the introduction of regionalized benchmarking. In contrast to earlier patterns, annual exits and reconfigurations after the benchmarking change resulted in the disproportionate participation of providers whose spending was already lower than their region. Furthermore, ACOs that entered the MSSP following the benchmarking change had significantly lower baseline spending deviation compared to those that had entered before.

Our subgroup findings also shed light on how different ACO characteristics might predict different selection behavior. We found the largest effects among those involved with convener organizations, consistent with the hypothesized responsiveness of these types of ACOs. Importantly, these findings do not imply that the role of convener organizations is necessarily problematic. To the contrary, we found no evidence of strategic practice-level selection behavior among convener-involved ACOs, as selective participation was driven by ACO-level exit. However, we did not examine whether conveners facilitated selective configurations at entry or subsequent re-entry into the MSSP.

Instead, convener organizations may have supported participation of ACOs with greater sensitivity to benchmark adjustments. Research has found that many providers participate in ACOs only with the external operational and financial support offered by companies like conveners, and these external partners often share in ACOs’ financial risk.[11] Facing new disadvantageous participation incentives, such ACOs that required more assistance to facilitate entry may have been more likely to exit.

Among non-convener ACOs, selective participation effects were largest among dispersed ACOs and driven by reconfiguration rather than exit. The latter finding may reflect the greater opportunity for practice-level selection in ACOs where attributed beneficiaries are evenly distributed across more participating practices. CMS provides ACOs with claims data that could be used for practice profiling and participation decisions. Although some ACOs may initially lack advanced data infrastructure to support strategic reconfiguration, qualitative evidence has found that successful ACOs develop such capabilities over time or rely on consultants.[14]

Alternatively, strategic practice-level selection need not be based on practice-level information. ACOs likely add or subtract practices for reasons unrelated to practice spending deviations, introducing variation in ACO-level spending deviations. For example, practices join independent practice associations or clinically integrated networks to contract with other payers. ACOs that happened to remove practices with positive spending deviations (or add those with negative spending deviations) then would be less likely to exit, creating a pattern of reconfiguration among continuing ACOs that may appear to be mediated by decisions based on practice-level spending deviations when in fact it is mediated by ACO exit based on ACO-level performance.

Policy Implications

Whether explained by strategic behavior or other factors, selective participation under regionalized benchmarking likely led to sizeable subsidies for lower pre-existing spending levels. Importantly, our study could not directly evaluate the overall merits of regionalized benchmarks. Decoupling benchmarks from past performance (removing ratchet effects) strengthens incentives, but it seems unlikely that ACOs remaining in the program reduced inefficient spending (in response to those stronger incentives) enough to compensate for the subsidies they received and the loss of participants with greater savings potential. ACO policy must better preserve incentives to participate as it strengthens incentives to save.

One way to encourage MSSP participation is to make FFS less attractive by slowing fee growth. However, Medicare FFS growth rates are already set to be slow, and the effect of constrained fee growth on participation may be too gradual to meaningfully mitigate selective participation in the near term.

Therefore, MSSP benchmarking methods must be reformed. At a minimum, slower or delayed convergence of historical benchmarks toward a regional average would be more palatable to higher-spending providers, and ACO-specific ratchet effects from rebasing must be removed. Furthermore, benchmarks that converge to average realized FFS spending will continue to incentivize selective participation in voluntary settings, as approximately half of providers will always fall above average spending. Thus, to set a common benchmark for all ACOs in a region while permitting program expansion, benchmarks must converge to a rate above realized spending. This can be achieved by letting ACOs retain more of the savings they achieve. Specifically, administratively set benchmark growth rates that diverge from observed spending growth can help address various ratchet effects related to rebasing and high MSSP penetration rates while facilitating convergence in benchmarks to a common regional rate set above, rather than at, average realized FFS spending.[15]

CMS recently finalized changes in this direction, as well as other refinements aimed at strengthening incentives to participate and save in the MSSP.[16] These include additional caps on downward benchmark adjustments for ACOs with spending above their regional average, opportunities to remain in one-sided risk longer, new health equity adjustments, and shielding bonuses from rebasing to mitigate incentive-weakening ratchet effects.

Conclusion

Our study found meaningful changes in MSSP participation patterns that were consistent with the incentives introduced by key changes to benchmarking methods. ACO entry, exit, and participant list reconfiguration all resulted in disproportionate participation of providers with lower baseline spending for their region. These findings support recently proposed changes to MSSP benchmarking policy but emphasize the importance of continued improvements.

Contributor Information

Peter F. Lyu, RTI International, Research Triangle Park, North Carolina

Michael E. Chernew, Harvard University, Boston, Massachusetts

J. Michael McWilliams, Harvard University and Brigham and Women’s Hospital, Boston, Massachusetts

References

- 1. Einav L, et al., Voluntary Regulation: Evidence from Medicare Payment Reform. Q J Econ, 2022. 137(1): p. 565–618.

- 2. McWilliams JM and Song Z, Implications for ACOs of variations in spending growth. N Engl J Med, 2012. 366(19): p. e29.

- 3. Bleser WK, et al., Why Do Accountable Care Organizations Leave The Medicare Shared Savings Program? Health Aff (Millwood), 2019. 38(5): p. 794–803.

- 4. Centers for Medicare and Medicaid Services, Medicare Program; Medicare Shared Savings Program: Accountable Care Organizations, 80 FR 32785–32796. 2015.

- 5. Douven R, McGuire TG, and McWilliams JM, Avoiding unintended incentives in ACO payment models. Health Aff (Millwood), 2015. 34(1): p. 143–9.

- 6. McWilliams JM, et al., Savings or Selection? Initial Spending Reductions in the Medicare Shared Savings Program and Considerations for Reform. Milbank Q, 2020. 98(3): p. 847–907.

- 7. Wilcock AD, et al., Hospital Responses to Incentives in Episode-Based Payment for Joint Surgery: A Controlled Population-Based Study. JAMA Intern Med, 2021. 181(7): p. 932–940.

- 8. McWilliams JM, et al., Getting More Savings from ACOs - Can the Pace Be Pushed? N Engl J Med, 2019. 380(23): p. 2190–2192.

- 9. Chernew ME, et al., MSSP Participation Following Recent Rule Changes: What Does It Tell Us? Health Affairs Blog 2019. Nov 22; Available from: 10.1377/hblog20191120.903566/full/.

- 10. U.S. Department of Justice and Federal Trade Commission, Horizontal Merger Guidelines. 2010.

- 11. Lewis VA, et al., The Hidden Roles That Management Partners Play In Accountable Care Organizations. Health Aff (Millwood), 2018. 37(2): p. 292–298.

- 12. Muhlestein D, et al., All-Payer Spread Of ACOs And Value-Based Payment Models In 2021: The Crossroads And Future Of Value-Based Care, in Health Affairs Forefront. 2021, Health Affairs.

- 13. McWilliams JM, et al., Early Performance of Accountable Care Organizations in Medicare. N Engl J Med, 2016. 374(24): p. 2357–66.

- 14. Gonzalez-Smith J, et al., How to Better Support Small Physician-led Accountable Care Organizations: Recent Program Updates, Challenges, and Policy Implications. 2020, Duke University Margolis Center for Health Policy.

- 15. McWilliams JM, Chen A, and Chernew ME, From Vision to Design in Advancing Medicare Payment Reform: A Blueprint for Population-based Payments. 2021, USC-Brookings Schaeffer Initiative for Health Policy.

- 16. Centers for Medicare and Medicaid Services. Calendar Year (CY) 2023 Medicare Physician Fee Schedule Final Rule - Medicare Shared Savings Program. 2022. Nov 1; Available from: https://edit.cms.gov/files/document/mssp-fact-sheet-cy-2023-pfs-final-rule.pdf.
