Abstract

The number of databases that provide various measurements of lexical properties for psycholinguistic research has increased rapidly in recent years. The proliferation of lexical variables, and the multitude of associated databases, makes the choice, comparison, and standardization of these variables in psycholinguistic research increasingly difficult. Here, we introduce The South Carolina Psycholinguistic Metabase (SCOPE), which is a metabase (or a meta-database) containing an extensive, curated collection of psycholinguistic variable values from major databases. The metabase currently contains 245 lexical variables, organized into seven major categories: General (e.g., frequency), Orthographic (e.g., bigram frequency), Phonological (e.g., phonological uniqueness point), Orth-Phon (e.g., consistency), Semantic (e.g., concreteness), Morphological (e.g., number of morphemes) and Response variables (e.g., lexical decision latency). We hope that SCOPE will become a valuable resource for researchers in psycholinguistics and affiliated disciplines such as cognitive neuroscience of language, computational linguistics, and communication disorders. The availability and ease of use of the metabase with comprehensive set of variables can facilitate the understanding of the unique contribution of each of the variables to word processing, and that of interactions between variables, as well as new insights and development of improved models and theories of word processing. It can also help standardize practice in psycholinguistics. We demonstrate use of the metabase by measuring relationships between variables in multiple ways and testing their individual contribution towards a number of dependent measures, in the most comprehensive analysis of this kind to date. The metabase is freely available at go.sc.edu/scope.

Keywords: psycholinguistic, database, lexical characteristics, word recognition

How words are processed is a central question in psycholinguistics and cognitive science in general. A rich body of research has addressed this question using behavioral studies, most commonly using lexical decision and word naming tasks. This has provided valuable insights into properties that affect word processing, shedding light on mechanisms behind both visual and auditory word processing. In the last 15 years, large scale studies (“megastudies”), often including tens of thousands of words, have greatly boosted this research. For example, the English Lexicon Project (ELP) provides measures of both visual lexical decision and speeded naming tasks for over 40,000 words, along with numerous psycholinguistic properties (Balota et al., 2007). Other megastudies provide data for visual lexical decision of British English (Keuleers et al., 2012), auditory lexical decision (Goh et al., 2020; Tucker et al., 2019), and semantic decision (Pexman et al., 2017). Instead of relying on a small sample of carefully chosen words that may be idiosyncratic in some fashion, these studies enable development and testing of potentially more robust word processing models on a larger scale. At the same time, the number of studies that measure word properties by collecting ratings on psycholinguistic variables for thousands of words has also increased rapidly (Taylor et al., 2020), providing rich and robust measures of many lexical properties (e.g., Brysbaert et al., 2014; Lynott et al., 2020; Pexman et al., 2019; Scott et al., 2019).

A welcome result of this work is an extensive and ever-increasing list of psycholinguistic variables that are found to affect, to varying extents, how words are processed. When investigating the contribution of a particular variable, researchers control for effects of other variables that are deemed to be standard control variables. However, what is considered “standard” often differs significantly between studies. Typically, a handful of variables such as frequency, number of letters or phonemes, and imageability or concreteness are used, in addition to a few other variables that vary. However, the number of psycholinguistic variables known to affect word processing is much larger. The justification for using a particular set of variables is often not clear. Investigators’ research interest and expertise are understandably important factors in the choice, as well as convenience or ease of obtaining values for these variables. With the proliferation of megastudies that provide ratings on myriad of variables, it is increasingly difficult to identify and obtain values for all potentially relevant variables. This is because these variables are often spread across many datasets, most of which provide ratings for partially different sets of words. Some databases also contain values for multiple senses of the same word forms (e.g., Scott et al., 2019).

Moreover, in some cases, a nominally single variable has several subtlety different versions. For example, word frequency has well over a dozen measures. Many other variables, such as affective ratings or imageability also have multiple measures available. The contribution of different versions of a variable to a dependent variable is likely different (Brysbaert & New, 2009). Investigators often use a particular version of a variable that is familiar, or customary in their lab. For example, one lab may often use the log_Freq_HAL variable from the ELP as frequency measure as a convention or habit, while another may use log_SUBTLEX as standard practice. Ideally, this choice should be driven by a systematic comparison between different versions taking into account factors such as variances explained by a particular version of a variable, differences of tasks instructions, goals of the study, and dependent measures of interest.

To overcome these challenges in psycholinguistic research, we introduce a new meta-database or metabase named SCOPE (South CarOlina Psycholingusitic mEtabase). It aims to provide the most comprehensive collection of variables to date, by integrating megastudies and other major databases in the form of a curated metabase. It also contains additional variables for both words and nonwords not found in other databases. We have attempted to be extensive in our coverage, while acknowledging that the number of possible lexical variables is virtually unlimited. No database can be truly comprehensive, because new variables are being proposed and measured all the time, but we hope to update the metabase periodically to include new variables. Our hope is that this metabase will be, for many cases, a “one stop shop” for psycholinguistics and for affiliated disciplines such as cognitive neuroscience of language, computational linguistics, and communication disorders. Ease of use and availability may enhance incorporation of many of the provided variables in psycholinguistic and neurolinguistic studies, potentially leading to faster progress.

Our aims are threefold. First, we expect that the metabase will enable a more comprehensive examination of word processing, which will result in a better understanding of the unique contribution of each of the variables and their interactions. It may promote development of improved models and theories of word and language processing and a better exchange of insights from different facets of language research, such as reading, speech perception, and semantics. Second, we hope that such a metabase will help standardize practice in psycholinguistics and will lead to a wider agreement over which variables, and which versions of those variables, are the most informative and should be routinely used or controlled for in different contexts. Finally, we present a preliminary analysis that measures the relationship between a large subset of the variables, and their individual contributions to several dependent variables, in the most comprehensive analysis of this type to date.

The variables in the metabase are organized in seven groups. The first group, “General”, contains variables that have elements of orthography, phonology, and semantics, and are often strongly predictive of word processing performance. Variables such as frequency and age-of-acquisition are contained in this group. Three groups correspond to major components of a lexical item: “Orthographic”, “Phonological” and “Semantis”. A fifth group, “Orth-Phon”, contains variables that represent the relationship between orthography and phonology. A sixth group is “Morphology,” which contains variables such as length of morpheme and morpheme frequency. Finally, the seventh group contains “Response” variables represented by mean response times and accuracies. In addition to words, the database also contains orthographic and phonological measures as well as dependent measures, when available, for some pseudowords and nonwords.

We describe the distribution of each of the variables, and the relationship between each of the independent variables and the dependent variables. The metabase contains 245 variables (some of which are multi-dimensional) and a total of 105,992 words and 81,934 nonwords in the current version, with varying number of variable values available for each item. Finally, with each variable, we provide associated information such as the definition and the citation of its source that should be used when that variable is included in a study, as it is essential to credit the original creators of these databases.

Description of Database

The variables in the SCOPE metabase (Supplemental Table 1; Supplemental material can be found at https://osf.io/9qbjz/) were divided into General, Orthographic, Phonological, Semantic, Orth-Phon, Morphological, and Response Variable groups. Each variable is briefly described below.

General Variables

Freq_HAL

Log10 version of frequency norms based on the Hyperspace Analogue to Language (HAL) corpus (Lund & Burgess, 1996). It contains text from approximately 3,000 Usenet newsgroups that is very conversional and noisy like spoken language.

Freq_KF

Log10 version of frequency norms based on the Kucera and Francis corpus (Kučera & Francis, 1967).

Freq_SUBTLEXUS

Log10 version of frequency norms based on the SUBTLEXus corpus (Brysbaert & New, 2009). The main sources of SUBTLEXus corpus are American television and film subtitles.

Freq_SUBTLEXUS_Zipf

A standardized version of Freq_SUBTLEXUS that can be interpreted independently of the corpus size, and it is calculated based on the equation: , in which the size of corpus and number of word types are in millions. It is a standardized measure with the same interpretation irrespective corpus size, and it has multiple advantages compared with frequency per million words (Brysbaert & New, 2009; Van Heuven et al., 2014).

Freq_SUBTLEXUK

Log10 version of the frequency norms based on SUBTLEXuk corpus (Van Heuven et al., 2014). Like Freq_SUBTLEXUS, it is based on British film and television subtitles rather than books and other written sources.

Freq_SUBTLEXUK_Zipf

A standardized version of Freq_SUBTLEXUK that can be interpreted independently of the corpus size and it is computed in the same way as Freq_SUBTLEXUS_Zipf, for which (Van Heuven et al., 2014).

Freq_Blog

Log10 version of the frequency norms based on sources from blogs (Gimenes & New, 2016).

Freq_Twitter

Log10 version of the frequency norms based on sources from Twitter (Gimenes & New, 2016).

Freq_News

Log10 version of the frequency norms based on sources from newspapers (Gimenes & New, 2016).

Freq_Cob

Log10 of word frequencies in English based on COBUILD corpus (Baayen et al., 1996).

Freq_CobW

Log10 of word frequencies in written English based on COBUILD corpus (Baayen et al., 1996).

Freq_CobS

Log10 of word frequencies in spoken English based on COBUILD corpus (Baayen et al., 1996).

Freq_Cob_Lemmas

Log10 of lemma frequencies in English based on COBUILD corpus (Baayen et al., 1996), which is the sum of frequencies of all the infected forms of a particular word.

Freq_CobW_Lemmas

Log10 of lemma frequencies in written English based on COBUILD corpus (Baayen et al., 1996).

Freq_CobS_Lemmas

Log10 of lemma frequencies in spoken English based on COBUILD corpus (Baayen et al., 1996).

CD_SUBTLEXUS

Log10 version of the contextual diversity of a word, which refers to the number of passages in the SUBTLEXus corpus containing a particular word (Brysbaert & New, 2009).

CD_SUBTLEXUK

Log10 version of the contextual diversity of a word based on the SUBTLEXuk corpus (Van Heuven et al., 2014).

CD_Blog

Log10 version of the contextual diversity of a word based on blog sources (Gimenes & New, 2016).

CD_Twitter

Log10 version of the contextual diversity of a word based on Twitter sources (Gimenes & New, 2016).

CD_News

Log10 version of the contextual diversity of a word based on news sources (Gimenes & New, 2016).

Fam_Glasgow

A word’s rated subjective familiarity on a 1 (unfamiliar) to 7 (familiar) scale (Scott et al., 2019).

Fam_Brys

Percentage of participants who know the word well enough to rate its concreteness (Brysbaert et al., 2014).

Prevalence_Brys

The proportion of participants who know the word. Participants were asked to indicate whether they knew the stimulus (yes or no) in a list of words and nonwords. Percentages were translated to z values based on cumulative normal distribution. A word known by 2.5% of the participants corresponds to a word prevalence of −1.96; a word known by 97.5% of the participants corresponds to a prevalence of +1.96 (Brysbaert et al., 2019).

AoA_Kuper

The age at which people acquired the word. Participants were asked to enter the age (in years) at which they estimated they had learned the word (Kuperman et al., 2012).

AoA_LWV

The age at which people acquired the word, in which a three-choice test was administered to participants in grades 4 to 16 (college) (Living Word Vocabulary database) (Dale & O’Rourke, 1981).

AoA_Glasgow

Rated age of acquisition, which indicates the age at which people estimate they acquired the word on 1 (early) to 7 (late) scale (Scott et al., 2019).

Freqtraj_TASA

How experience with a word is distributed over time based on TASA corpus. It was computed by first taking logarithms of the frequencies and then transforming them to z-values for low (grades 1–3) and high grades (grades 11–13) respectively (Brysbaert, 2017).

Cumfreq_TASA

Total amount of exposure to a word across time based on TASA corpus. It was computed by first taking logarithms of the frequencies at different grade levels from grade 1 to 13, transforming them to z-values and then obtaining the sum of the z-values (Brysbaert, 2017).

DPoS_Brys

The dominant grammatical category to which a word is assigned in accordance with its syntactic functions (Brysbaert et al., 2012).

DPoS_VanH

The dominant grammatical category to which a word is assigned in accordance with its syntactic functions (Van Heuven et al., 2014).

SCOPE_ID

Unique ID for each word. This was chosen to be the same as the ELP ID (Balota et al., 2007) for words/nonwords that are in the ELP database, and new values were created for other items.

ELP_ID

Unique ID for each word from the ELP database (Balota et al., 2007), when available.

Orthographic Variables

NLett

Number of letters in a word.

UnigramF_Avg_C

UnigramF_Avg_C_Log

The average frequency of the constrained unigrams of a word and its log10 version. A constrained unigram is defined as a specific letter in a specific position, for words of a specific length (Medler & Binder, 2005).

UnigramF_Avg_U

UnigramF_Avg_U_Log

The average frequency of the unconstrained unigrams for a word and its log10 version. An unconstrained unigram is defined as a specific letter within a word, regardless of its position, or the word length (Medler & Binder, 2005).

BigramF_Avg_C

BigramF_Avg_C_Log

The average frequency of the constrained bigrams for a word and its log10 version. A constrained bigram is defined as a specific two letter combination (bigram) within a word, in a specific position, for words of a specific length (Medler & Binder, 2005).

BigramF_Avg_U

BigramF_Avg_U_Log

The average frequency of the unconstrained bigrams for a word and its log10 version. An unconstrained bigram is defined as a specific two letter combination (bigram) within a word, regardless of its position, or word length (Medler & Binder, 2005).

TrigramF_Avg_C

TrigramF_Avg_C_Log

The average frequency of the constrained trigrams for a word and its log10 version. A constrained trigram is defined as a specific three letter combination (trigram) in a specific position, for words of a specific length (Medler & Binder, 2005).

TrigramF_Avg_U

TrigramF_Avg_U_Log

The average frequency of the constrained trigrams for a word. An unconstrained trigram is defined as a specific three letter combination (trigram) within a word, regardless of its position, or the word length (Medler & Binder, 2005).

OLD20

The Orthographic Levenshtein Distance 20, a measure of orthographic neighborhood defined as the mean Levenshtein distance of a word to its 20 closest orthographic neighbors (Balota et al., 2007; Yarkoni et al., 2008). Levenshtein Distance is the minimum number of substitution, insertion or deletion operations required to change one word to another (Levenshtein, 1966).

OLD20F

The mean log HAL frequency of the closest 20 Levenshtein Distance orthographic neighbors (Balota et al., 2007; Yarkoni et al., 2008).

Orth_N

Orthographic neighborhood (or Coltheart’s N), which is the number of words that can be obtained by changing one letter while preserving the identity and positions of the other letters (Balota et al., 2007; Coltheart, 1977).

Orth_N_Freq

The average frequency of the orthographic neighborhood of a particular word (Balota et al., 2007).

Orth_N_Freq_G

The number of words in the orthographic neighborhood of an item with a frequency greater than the frequency of the item (Balota et al., 2007).

Orth_N_Freq_G_Mean

The average frequency of the orthographic neighbors who have a frequency greater than the given word (Balota et al., 2007).

Orth_N_Freq_L

The number of orthographic neighbors with a frequency less than that of a given item (Balota et al., 2007).

Orth_N_Freq_L_Mean

The average frequency of the orthographic neighbors who have a frequency lower than the given word (Balota et al., 2007).

Orth_Spread

The number of letter positions that can be changed to form a neighbor that differs by a single letter (Chee et al., 2020).

OUP

Orthographic uniqueness point of a word. It indicates which letter position within the word distinguishes it from all other words. An index of one greater than the number of letters is assigned if even the final letter of the word does not make it unique (Tucker et al., 2019; Weide, 2005).

Phonological Variables

NPhon

The number of phonemes in a word (Balota et al., 2007).

NSyll

The number of syllables in a word (Balota et al., 2007).

UniphonP_Un

UniphonP_St

The average likelihood of each phoneme occurring in each position of a word weighted by SUBTLEXus frequency, with vowel-stress ignored (Un) or distinct stress-vowels considered (St) (Vaden et al., 2009). It was calculated by averaging the positional probabilities of the constituent phonemes of a word in their respective positions (i.e., frequency of each phoneme occurring in a specific position).

UniphonP_Un_C

UniphonP_St_C

The length-constrained average likelihood of each phoneme occurring in each position of a word weighted by SUBTLEXus frequency, with vowel-stress ignored (Un) or distinct stress-vowels considered (St) (Vaden et al., 2009).

BiphonP_Un

BiphonP_St

The relative frequency of the sound sequences of a word at the level of its phoneme pairs (i.e., number of items a phoneme pair occurrs among all words divided by all pairwise counts) weighted by SUBTLEXus frequency, with vowel-stress ignored (Un) or stress-vowels accounted for (St) (Vaden et al., 2009; Vitevitch & Luce, 1999).

TriphonP_Un

TriphonP_St

The relative frequency of the sound sequences of a word at the level of its phoneme triplets weighted by SUBTLEXus frequency, with vowel-stress ignored (Un) or distinct stress placement distinguished (St) (Vaden et al., 2009).

PLD20

Phonological Levenshtein Distance 20, which is the mean Levenshtein distance of a word to its 20 closest phonological neighbors (Balota et al., 2007; Yarkoni et al., 2008).

PLD20F

The mean log frequency of the closest 20 Levenshtein distance phonological neighbors (Balota et al., 2007; Yarkoni et al., 2008).

Phon_N

Phonological neighborhood, measured by the number of words that can be obtained by changing one phoneme while preserving the identity and positions of the other phonemes (Balota et al., 2007).

Phon_N_Freq

The average logHAL frequency of the phonological neighborhood of a particular word (Balota et al., 2007).

Phon_Spread

The number of phoneme positions that can be changed to form a neighbor that differs by a single phoneme (Chee et al., 2020).

PUP

Phonological uniqueness point of a word based on CMU Pronouncing Dictionary, which indicates which phoneme position within the word distinguishes it from all other words. An index of one greater than the number of letters is assigned if even the final sound of the word does not make it unique (Tucker et al., 2019; Weide, 2005).

Phon_Cluster_Coef

The fraction of neighbors of a word that are also phonological neighbors of each other (Goldstein & Vitevitch, 2014).

First_Phon

The first phoneme of a word based on the CMU Pronouncing Dictionary (Weide, 2005). It includes the following 14 dimensions coded with a binary code: bilabial, labiodental, interdental, alveolar, palatal, velar, glottal, stop, fricative, affricate, nasal, liquid, glide, and voiced.

IPA Transcription

Phonemic transcription using the International Phonetic Alphabet, based on the CMU Pronouncing Dictionary (Weide, 2005).

Semantic Variables

Conc_Brys

The degree to which the concept can be experienced directly through the senses on a 1 (abstract) to 5 (concrete) scale (Brysbaert et al., 2014).

Conc_Glasgow

The degree to which the concept can be experienced directly through the senses on a 1 (abstract) to 7 (concrete) scale (Scott et al., 2019).

Imag_Glasgow

The degree of effort involved in generating a mental image of something on a 1 (unimageable) to 7 (imageable) scale (Scott et al., 2019).

Imag_Composite

The degree of effort involved in generating a mental image of a concept on a scale from 1 (unimageable) to 7 (imageable) (See Graves et al., 2010 for details). This measure was obtained from a database compiled from six sources, and ratings of words present in multiple databases were averaged (Bird et al., 2001; Clark & Paivio, 2004; Cortese & Fugett, 2004; Gilhooly & Logie, 1980; Paivio et al., 1968; Toglia & Battig, 1978).

Nsenses_WordNet

Number of senses based on the WordNet database (Miller, 1995). A sense is a discrete representation of one aspect of the meaning of a word.

Nsenses_Wordsmyth

Number of senses based on the Wordsmyth dictionary (Rice et al., 2019).

Nmeanings_Wordsmyth

Number of meanings based on the Wordsmyth dictionary (Rice et al., 2019).

Nmeanings_Websters

Number of meanings based on the Websters dictionary, which was computed in the current paper by counting the number of distinct entries under the same wordform presented in the Websters dictionary.

NFeatures

Number of features listed for a word (Buchanan et al., 2019). This measure was obtained by asking participants to provide lists of features for each concept presented.

Visual_Lanc

To what extent one experiences the referent by seeing, from 0 (not experienced at all) to 5 (experienced greatly) (Lynott et al., 2020).

Auditory_Lanc

To what extent one experiences the referent by hearing, from 0 (not experienced at all) to 5 (experienced greatly) (Lynott et al., 2020).

Haptic_Lanc

To what extent one experiences the referent by feeling through touch, from 0 (not experienced at all) to 5 (experienced greatly) (Lynott et al., 2020).

Olfactory_Lanc

To what extent one experiences the referent by smelling, from 0 (not experienced at all) to 5 (experienced greatly) (Lynott et al., 2020).

Gustatory_Lanc

To what extent one experiences the referent by tasting, from 0 (not experienced at all) to 5 (experienced greatly) (Lynott et al., 2020).

Interoceptive_Lanc

To what extent one experiences the referent by sensations inside one’s body, from 0 (not experienced at all) to 5 (experienced greatly) (Lynott et al., 2020).

Head_Lanc

To what extent one experiences the referent by performing an action with the head, from 0 (not experienced at all) to 5 (experienced greatly) (Lynott et al., 2020).

Torso_Lanc

To what extent one experiences the referent by performing an action with the torso, from 0 (not experienced at all) to 5 (experienced greatly) (Lynott et al., 2020).

Mouth_Throat_Lanc

To what extent one experiences the referent by performing an action with the mouth/throat, from 0 (not experienced at all) to 5 (experienced greatly) (Lynott et al., 2020).

Hand_Arm_Lanc

To what extent one experiences the referent by performing an action with the hand/arm, from 0 (not experienced at all) to 5 (experienced greatly) (Lynott et al., 2020).

Foot_Leg_Lanc

To what extent one experiences the referent by performing an action with the foot/leg, from 0 (not experienced at all) to 5 (experienced greatly) (Lynott et al., 2020).

Mink_Perceptual_Lanc

Minkowski distance at m = 3 of an 11-dimension sensorimotor vector from the origin. It represents a composite measure of the perceptual strength in all dimensions, with the influence of weaker dimensions attenuated (Lynott et al., 2020).

Mink_Action_Lanc

Minkowski distance at m = 3 of an 11-dimension sensorimotor vector from the origin. It represents a composite measure of the action strength in all dimensions, with the influence of weaker dimensions attenuated (Lynott et al., 2020).

Compo_attribs [65]

Componential Attributes: A set of 65 experiential attributes based on neurobiological considerations, comprising sensory, motor, spatial, temporal, affective, social, and cognitive experiences on a 0 (Not at all) to 6 (very much) scale (Binder et al., 2016).

BOI

Body-Object Interaction, which is the ease with which the human body can interact with a word’s referent on a scale from 1 (low interaction) to 7 (high interaction) (Pexman et al., 2019).

Sem_Size_Glasgow

Magnitude of an object or concept expressed in either concrete (physical) or abstract terms on a 1 (small) to 7 (big) scale (Scott et al., 2019).

Gender_Assoc_Glasgow

The degree to which words are associated with male or female behavior on a 1 (feminine) to 7 (masculine) scale (Scott et al., 2019).

Feature_Visual

The word is associated with sense of vision as indicated by 0 or 1 rated by two English speakers; disagreements were discussed and agreed upon (Vinson & Vigliocco, 2008).

Feature_Perceptual

The word describes information gained through sensory input, including body state and proprioception as indicated by 0 or 1 rated by two English speakers; disagreements were discussed and agreed upon (Vinson & Vigliocco, 2008).

Feature_Functional

The word refers to the purpose of a thing, or the purpose or goal of an action as indicated by 0 or 1 rated by two English speakers; disagreements were discussed and agreed upon (Vinson & Vigliocco, 2008).

Feature_Motoric

The word describes a motor component of an action as indicated by 0 or 1 rated by two English speakers; disagreements were discussed and agreed upon (Vinson & Vigliocco, 2008).

Sensory_Experience

The extent to which a word evokes a sensory and/or perceptual experience in the mind of the reader on a 1 to 7 scale, with higher numbers indicating a greater sensory experience (Juhasz et al., 2012).

Socialness

The extent to which a word’s meaning has social relevance on a seven-point Likert scale from 1 (not social) to 7 (highly social) (Diveica et al., 2022).

Valence_Warr

The pleasantness of a stimulus on a 1 (happy) to 9 (unhappy) scale (Warriner et al., 2013).

Valence_Extremity_Warr

The absolute value of the difference between valence rating from 5, the neutral point on the scale (Warriner et al., 2013).

Valence_Glasgow

The pleasantness of a stimulus on a 1 (happy) to 9 (unhappy) scale (Scott et al., 2019).

Valence_NRC

Word-emotion association built by manual annotation using Best-Worst Scaling method, with scores ranging from 0 (negative) to 1 (positive) (Mohammad & Turney, 2010; Mohammad & Turney, 2013).

Arousal_Warr

The intensity of emotion evoked by a stimulus on a 1 (aroused) to 9 (calm) scale (Warriner et al., 2013).

Arousal_Glasgow

The intensity of emotion evoked by a stimulus on a 1 (aroused) to 9 (calm) scale (Scott et al., 2019).

Arousal_NRC

Word-emotion association built by manual annotation using Best-Worst Scaling method, with scores ranging from 0 (low arousal) to 1 (high arousal) (Mohammad & Turney, 2010; Mohammad & Turney, 2013).

Dominance_Warr

The degree of control exerted by a stimulus on a 1 (controlled) to 9 (in control) scale (Warriner et al., 2013).

Dominance_Glasgow

The degree of control exerted by a stimulus on a 1 (controlled) to 9 (in control) scale (Scott et al., 2019).

Dominance_NRC

Word-emotion association built by manual annotation using Best-Worst Scaling method, with scores ranging from 0 (low dominance) to 1 (high dominance) (Mohammad & Turney, 2010; Mohammad & Turney, 2013).

Humor_Male_Enge

Humor_Female_Enge

Humor_Young_Enge

Humor_Old_Enge

Humor_Overall_Enge

Humor ratings on a scale from 1 (humorless) to 5 (humorous) for group of raters that are male/female or young/old, and an overall rating (Engelthaler & Hills, 2018).

Emot_Assoc [10]

Word-emotion association built by manual annotation, with 0 (not associated) and 1 (associated) ratings for 10 emotions: positive, negative, anger, anticipation, disgust, fear, joy, sadness, surprise, and trust (Mohammad & Turney, 2010; Mohammad & Turney, 2013).

Sem_Diversity

The degree to which different contexts associated with a word vary in their meanings. In other words, similarity of different contexts in which a word can appear. This is a computationally derived measure of semantic ambiguity, which is more objective compared to the measure by summing the number of senses or dictionary definitions (Hoffman et al., 2013).

Sem_N

The number of semantic neighbors within a threshold determined in a co-occurrence space. The space was created from a sparse matrix that contains all co-occurrence information for each word with window size and weighting scheme applied. The threshold is calculated by randomly sampling many word pairs and calculating their interword distances to obtain the mean and standard deviation of this distance distribution (Shaoul & Westbury, 2006, 2010). The threshold was 1.5 SDs below the mean distance.

Sem_N_D

The average radius of co-occurrence, which is the average distance between the words in the semantic neighborhood and the target word (Shaoul & Westbury, 2006, 2010).

Sem_N_D_Taxonomic_N3

Sem_N_D_Taxonomic_N10

Sem_N_D_Taxonomic_N25

Sem_N_D_Taxonomic_N50

The mean distance of nearest 3, 10, 25, or 50 semantic neighbors of a word based on taxonomic similarity. Similarity is calculated using vector representations (calculated from a corpus) of words that emphasize taxonomic (as opposed to thematic or associative) relations (Reilly & Desai, 2017; Roller & Erk, 2016).

Assoc_Freq_Token

The number of times that a word is the first associate across all target words. The task instruction was to elicit free associations in the broadest possible sense wherein participants were asked to provide multiple responses per cue (De Deyne et al., 2019).

Assoc_Freq_Type

The number of unique words that produce the target word first in a free association task (De Deyne et al., 2019).

Assoc_Freq_Token123

The number of times that a word is one of the first three associates across all target words in a free association task (De Deyne et al., 2019).

Assoc_Freq_Type123

The number of unique words that produce the target word in the first three associates in a free association task (De Deyne et al., 2019).

Cue_SetSize

The number of different responses or targets given by two or more participants in the normative sample, which provides a relative index of the set size of a particular word by providing a reliable measure of how many strong associates it has (Nelson et al., 2004).

Cue_MeanConn

The number of connections among the associate set of a word, divided by the size of the set, which captures the density and in some sense the level of organization among the strongest associates of the cue (Nelson et al., 2004).

Cue_Prob

The probability that each associate in a set produces the normed cue as an associate (Nelson et al., 2004).

Cue_ResoStrength

Resonance strength between the cue and its associates, calculated by cross-multiplying cue-to-associate strength by associate-to-cue strength for each associate in a set and then summing the result (Nelson et al., 2004).

Word2Vec [300]

Vector representation of a word created from 300 hidden layer linear units in the neural net model trained on the Google news dataset (Mikolov et al., 2013).

GloVe [300]

Vector representation of a word created from an unsupervised learning algorithm. Training is performed on aggregated global word-word co-occurrence statistics from a corpus (Pennington et al., 2014).

Taxonomic [300]

Vector representation of a word created from a model that uses a narrow window of co-occurrence, effectively emphasizing taxonomic similarities between words as opposed to associations (Roller & Erk, 2016). In most distributional models, words such as cow and milk have similar representations due to their high association, while the distance between cow and bull is relatively greater. These representations reverse this relationship, and assign a greater similarity to cow and bull.

Orth-Phon Variables

Phonographic_N

The number of words that can be obtained by changing one letter and one phoneme while preserving the identity and position of the other letters and phonemes (Balota et al., 2007; Peereman & Content, 1997).

Phonographic_N_Freq

The average frequency of the phonographic neighborhood of the particular word (Balota et al., 2007).

Consistency_Token_FF

The spelling-to-sound consistency measure, in which a given word’s log frequencies of friends are divided by its total log frequencies of friends and enemies. In addition to the composite value, this measure also includes token feedforward onset (Consistency_Token_FF_O), nucleus (Consistency_Token_FF_N), coda (Consistency_Token_FF_C), oncleus (Consistency_Token_FF_ON), and rime (Consistency_Token_FF_R) consistency (Chee et al., 2020).

Consistency_Token_FB

The sound-to-spelling consistency measure, in which a given word’s log frequencies of friends are divided by its total log frequencies of friends and enemies. In addition to the composite value, this measure also includes token feedback onset (Consistency_Token_FB_O), nucleus (Consistency_Token_FB_N), coda (Consistency_Token_FB_C), oncleus (Consistency_Token_FB_ON), and rime (Consistency_Token_FB_R) consistency (Chee et al., 2020).

Consistency_Type_FF

The spelling-to-sound consistency measure, in which a given word’s number of friends were divided by its total number of friends and enemies. In addition to the composite value, this measure also includes type feedforward onset (Consistency_Type_FF_O), nucleus (Consistency_Type_FF_N), coda (Consistency_Type_FF_C), oncleus (Consistency_Type_FF_ON), and rime (Consistency_Type_FF_R) consistency (Chee et al., 2020).

Consistency_Type_FB

The sound-to-spelling consistency measure, in which a given word’s number of friends were divided by its total number of friends and enemies. In addition to the composite value, this measure also includes type feedback onset (Consistency_Type_FB_O), nucleus (Consistency_Type_FB_N), coda (Consistency_Type_FB_C), oncleus (Consistency_Type_FB_ON), and rime (Consistency_Type_FB_R) consistency (Chee et al., 2020).

Morphological Variables

NMorph

The number of morphemes in a word (Sánchez-Gutiérrez et al., 2018).

PRS_signature

A prefix-root-suffix signature (Sánchez-Gutiérrez et al., 2018). For example, words that include one suffix and one root, but no prefix, share a 0-1-1 PRS signature.

ROOT1_Freq_HAL

ROOT2_Freq_HAL

ROOT3_Freq_HAL

The summed frequency of all members in the morphological family of a morpheme occurring as the first (ROOT1), second (ROOT2), or third (ROOT3) root (Sánchez-Gutiérrez et al., 2018).

SUFF1_Freq_HAL

SUFF2_Freq_HAL

SUFF3_Freq_HAL

SUFF4_Freq_HAL

The summed frequency of all members in the morphological family of a morpheme occurring as the first (SUFF1), second (SUFF2), third (SUFF3), or fourth (SUFF4) suffix (Sánchez-Gutiérrez et al., 2018).

ROOT1_FamSize

ROOT2_FamSize

ROOT3_FamSize

The number of word types in which a given morpheme is a constituent as the first (ROOT1), second (ROOT2), or third (ROOT3) root. It was computed by counting all its types in the ELP database (Sánchez-Gutiérrez et al., 2018).

SUFF1_FamSize

SUFF2_FamSize

SUFF3_FamSize

SUFF4_FamSize

The number of word types in which a given morpheme is a constituent as the first (SUFF1), second (SUFF2), third (SUFF3), or fourth (SUFF4) suffix. It was computed by counting all its types in the ELP database (Sánchez-Gutiérrez et al., 2018).

ROOT1_PFMF

ROOT2_PFMF

ROOT3_PFMF

Percentage of other words in the family that are more frequent for the first (ROOT1), second (ROOT2), or third (ROOT3) root. It was computed by dividing the number of more frequent words in the family by the total number of members in the family minus one (Sánchez-Gutiérrez et al., 2018).

SUFF1_PFMF

SUFF2_PFMF

SUFF3_PFMF

SUFF4_PFMF

Percentage of other words in the family that are more frequent for the first (SUFF1), second (SUFF2), third (SUFF3), or fourth (SUFF4) suffix. It was computed by dividing the number of more frequent words in the family by the total number of members in the family minus one (Sánchez-Gutiérrez et al., 2018).

SUFF1_length

SUFF2_length

SUFF3_length

SUFF4_length

The number of letters of the first (SUFF1), second (SUFF2), third (SUFF3), or fourth (SUFF4) suffix (Sánchez-Gutiérrez et al., 2018).

SUFF1_P

SUFF2_P

SUFF3_P

SUFF4_P

Affix productivity measured by the probability that a given affix, i.e., the first (SUFF1), second (SUFF2), third (SUFF3), or fourth (SUFF4) will be encountered in a hapax (words that appear only once). It was computed by dividing all hapaxes in the corpus that contain a morpheme by the summed token frequency of a morpheme (Sánchez-Gutiérrez et al., 2018).

SUFF1_Px

SUFF2_Px

SUFF3_Px

SUFF4_Px

Affix productivity measured by the probability that a hapax (words that appear only once) contains a certain affix, i.e., the first (SUFF1), second (SUFF2), third (SUFF3), or fourth (SUFF4). It was computed by dividing all hapaxes in the corpus that contain a morpheme by the total of all hapax legomena in the corpus (Sánchez-Gutiérrez et al., 2018).

Response Variables

LexicalD_RT_V_ELP

LexicalD_RT_V_ELP_z

LexicalD_ACC_V_ELP

The mean visual lexical decision latency (in msec) and its normalized (z-scored) version, and the proportion of accurate responses for a particular word across participants from the English Lexicon Project (Balota et al., 2007).

LexicalD_RT_V_ECP

LexicalD_RT_V_ECP_z

LexicalD_ACC_V_ECP

The mean latency (in msec) and its normalized version, and the proportion of accurate responses for a particular word in the word knowledge task across participants from the English Crowdsourcing Project (Mandera et al., 2019). This task is similar, but not identical, to the traditional lexical decision task. Participants were asked to indicate whether each item “is a word you know or not.” Their results showed that RTs in this task correlate well with those from lexical decision in ELP and BLP, and hence we have labelled it as such. It should be noted that in this task, participants were not instructed to respond quickly, and were discouraged to guess (large penalty for labelling a nonword as a known word).

LexicalD_RT_V_BLP

LexicalD_RT_V_BLP_z

LexicalD_ACC_V_BLP

The mean visual lexical decision latency (in msec) and its normalized version, and the proportion of accurate responses for a particular word across participants from the British Lexicon Project (Keuleers et al., 2012).

LexicalD_RT_A_MALD

LexicalD_RT_A_MALD_z

LexicalD_ACC_A_MALD

The mean auditory lexical decision latency (in msec) and its normalized version, and the proportion of accurate responses for a particular word from the Massive Auditory Lexical Decision database (Tucker et al., 2019).

LexicalD_RT_A_AELP

LexicalD_RT_A_AELP_z

LexicalD_ACC_A_AELP

The mean auditory lexical decision latency (in msec) and its normalized version, and the proportion of accurate responses for a particular word from the Auditory English Lexicon Project (Goh et al., 2020).

Naming_RT_ELP

Naming_RT_ELP_z

Naming_ACC_ELP

The mean naming latency (in msec) and its normalized version, and the proportion of accurate responses for a particular word across participants from the English Lexicon Project (Balota et al., 2007).

SemanticD_RT_Calgary

SemanticD_RT_Calgary_z

SemanticD_ACC_Calgary

The mean latency (in msec) and its normalized version, and the proportion of accurate responses of concrete/abstract semantic decision (i.e., does the word refer to something concrete or abstract?) for a particular word from the Calgary database (Pexman et al., 2017).

Recog_Memory

Recognition memory performance indicated by d’ (hits minus false alarms) (Khanna & Cortese, 2021).

Data Analyses

As an initial effort, we examined the relationship between independent variables and reported the correlations between independent and dependent variables. We aimed to include the largest number of variables over the maximum number of words. Because variable values are available for partially different sets of words, including more variables leads to a smaller set of words, and selecting a larger set of words leads to a smaller variable set. As a compromise between these competing factors, we created a subset of the data containing 1,728 words with measurements on 130 independent variables (28 General, 17 Orthographic, 17 Phonological, 38 Semantic, 26 Orth-Phon, 4 Morphological) and 13 response variables (3 visual lexical decision reaction times, 3 visual lexical decision accuracies, 2 auditory lexical decision times, 2 auditory lexical decision accuracies, 1 naming time, 1 naming accuracy, and 1 recognition memory). To create this subset, we excluded variables that are available for relatively small sets of words or those that have low overlap with other variables (in terms of the words that the values are available for; e.g., NFeatures, Feature_Perceptual, Emot_Assoc), are categorical (i.e., DPoS_Brys, DPoS_VanH, and First_Phon), or are in a vector form (e.g., Word2vec).

Interrelations Between Variables

Spearman’s Correlation Between Variables

To summarize the description of the relationship between each of the independent variables, we computed the Spearman’s correlation among the 130 independent variables and created a similarity plot using these correlations.

t-Distributed Stochastic Neighbor Embedding (t-SNE)

We also examined the interrelations between variables with t-Distributed Stochastic Neighbor Embedding (t-SNE) and hierarchical cluster analyses. Barnes-Hut t-SNE (perplexity = 30; theta = 0) was used to visualize high-dimensional data. This method converts high-dimensional Euclidean distances between variables into conditional probabilities and then projects these distances onto a two-dimensional embedding space using the Student-t distribution by minimizing the Kullback-Leibler divergence. It has the advantage of revealing global structure while also capturing local structure of the high-dimensional data (Van der Maaten & Hinton, 2008).

Hierarchical Cluster Analysis

As an additional method of visualizing clustering among independent variables, hierarchical cluster analysis was performed using Ward’s criterion (Murtagh & Legendre, 2014).

Exploratory Factor Analysis

A parallel analysis was performed to determine the appropriate number of latent factors (Crawford et al., 2010; Horn, 1965). The exploratory factor analysis was performed using the principal axis factoring extraction method and oblimin rotation.

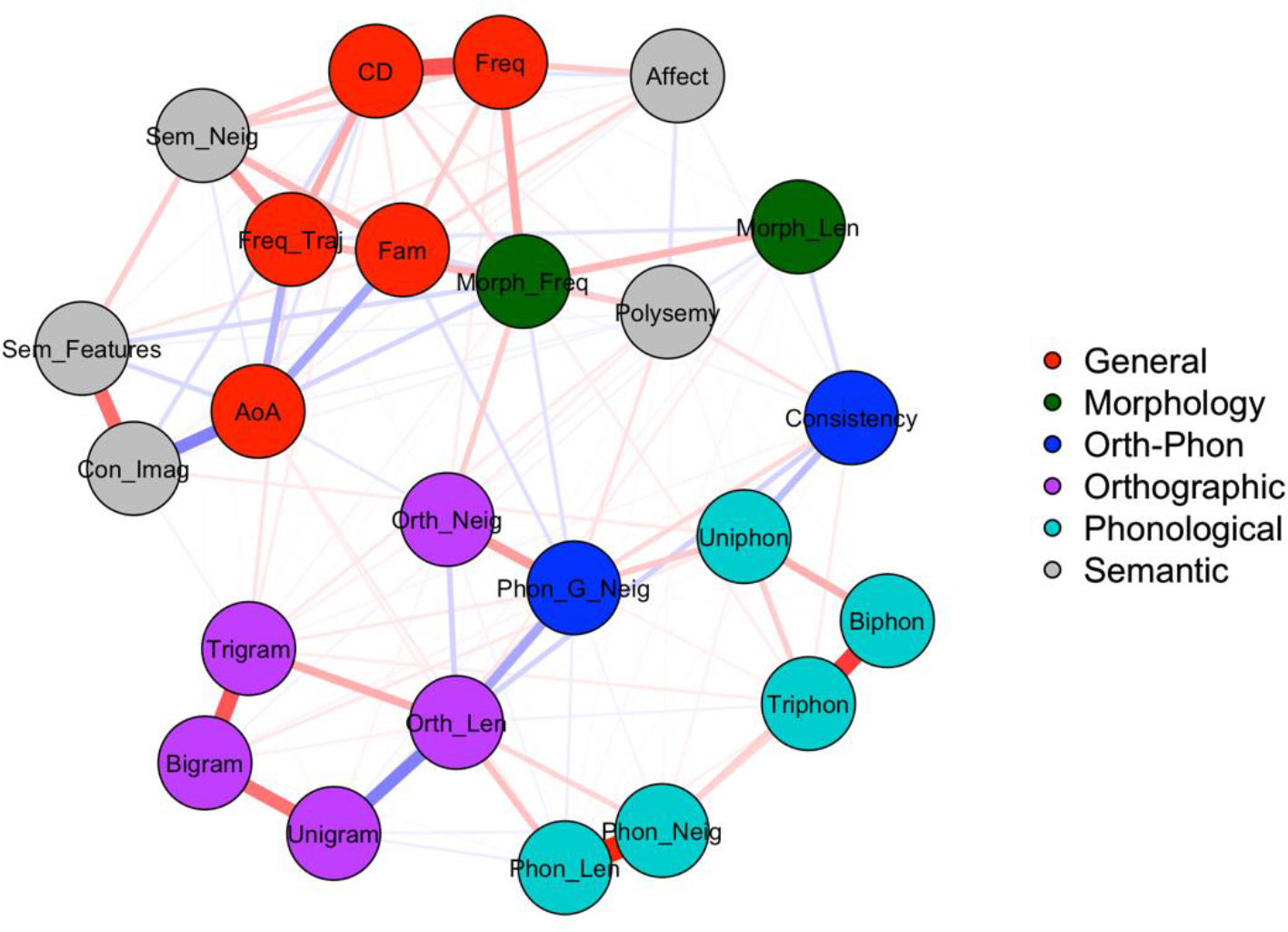

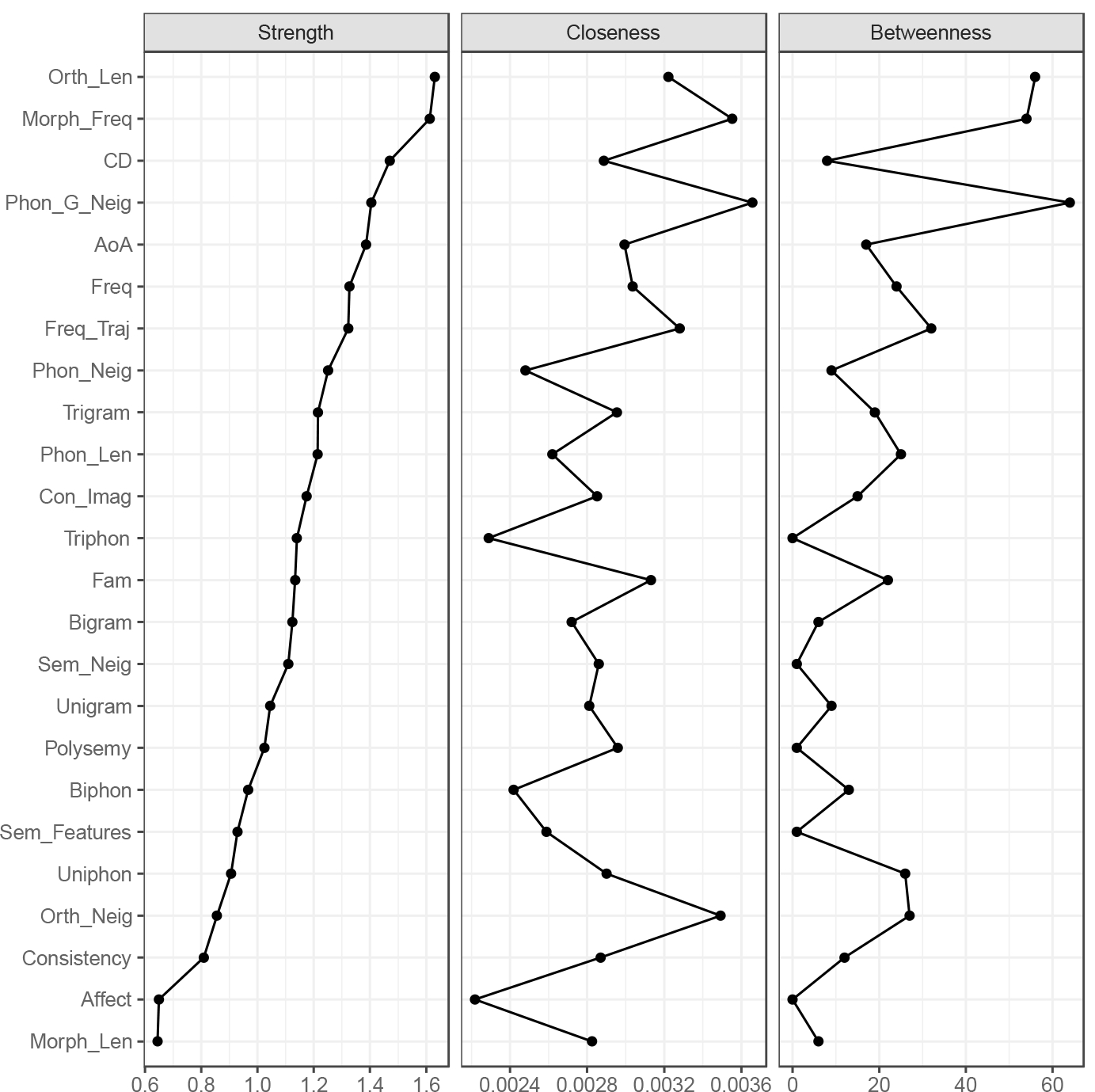

Network Analysis

To further determine the interrelations between variables, we performed a psychometric network modeling analysis in which each observed variable was modelled as a node and partial correlations among variables were modelled as edges (Epskamp et al., 2018; Epskamp & Fried, 2018). Three centrality indices were computed to examine the relative importance of a node in the network: strength, closeness and betweenness. Strength refers to the sum of absolute partial correlation values for each node. Closeness refers to the inverse of the sum of distances from one node in the network to all other nodes. Betweenness refers to the number of the shortest paths that one node was passed through. A “best” measure of each individual variable group, defined as the measure that has the highest overall weighted correlation with dependent measures, was chosen to represent the group in the network analysis (e.g., CD_SUBTLEXUS for all contextual diversity measures). This ensures that that centrality indices are not biased towards one particular variable because of the unequal number of measures (e.g., having many frequency measures but few age-of-acquisition measures).

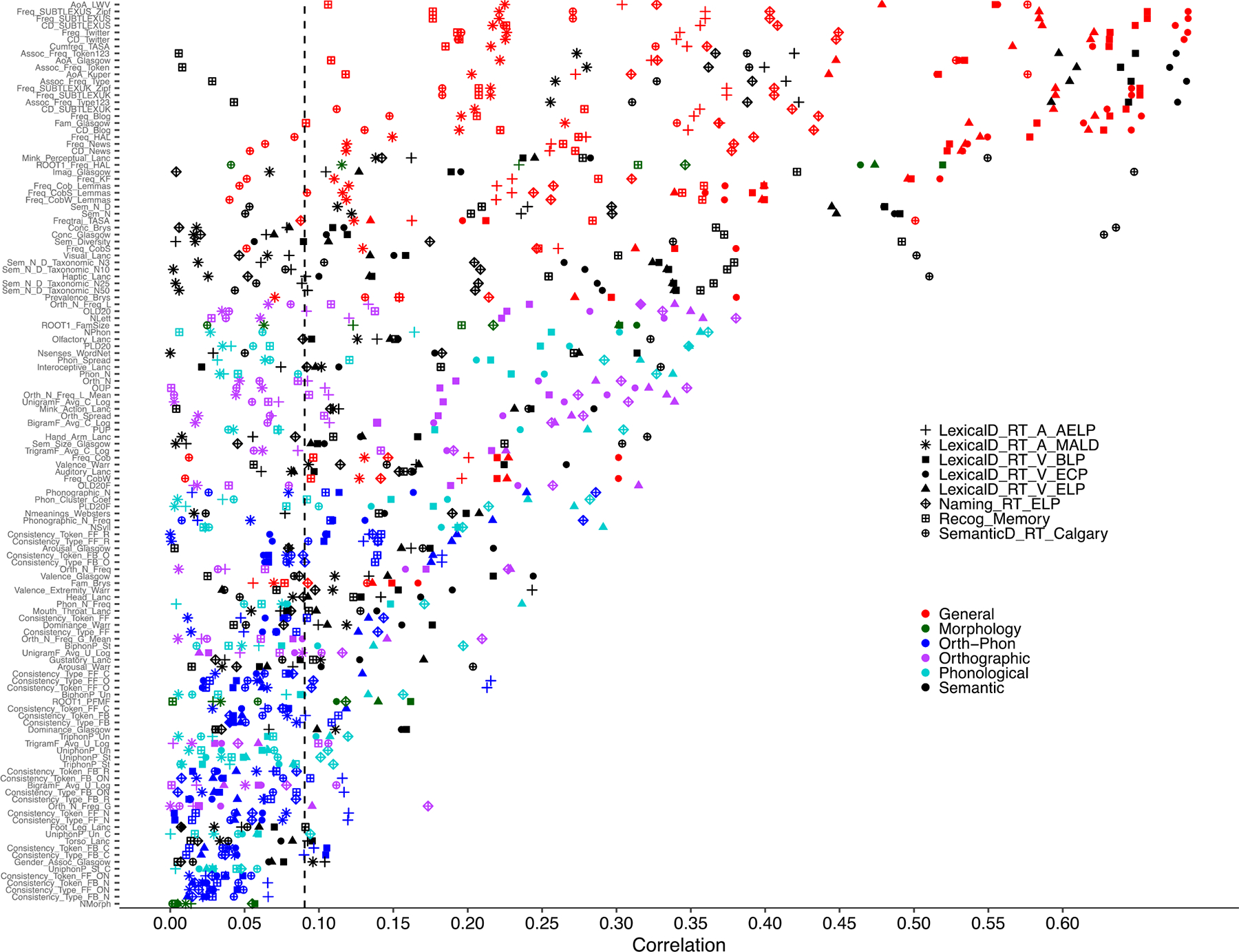

Correlations Between Variables/Factors and the Dependent Variables

In addition to the analyses on the dataset that excluded the semantic decision task (n=1,728), we also performed analyses for correlations between variables/factors and the dependent variables after including semantic decision (SemanticD_RT_Calgary), resulting in n=471 words.

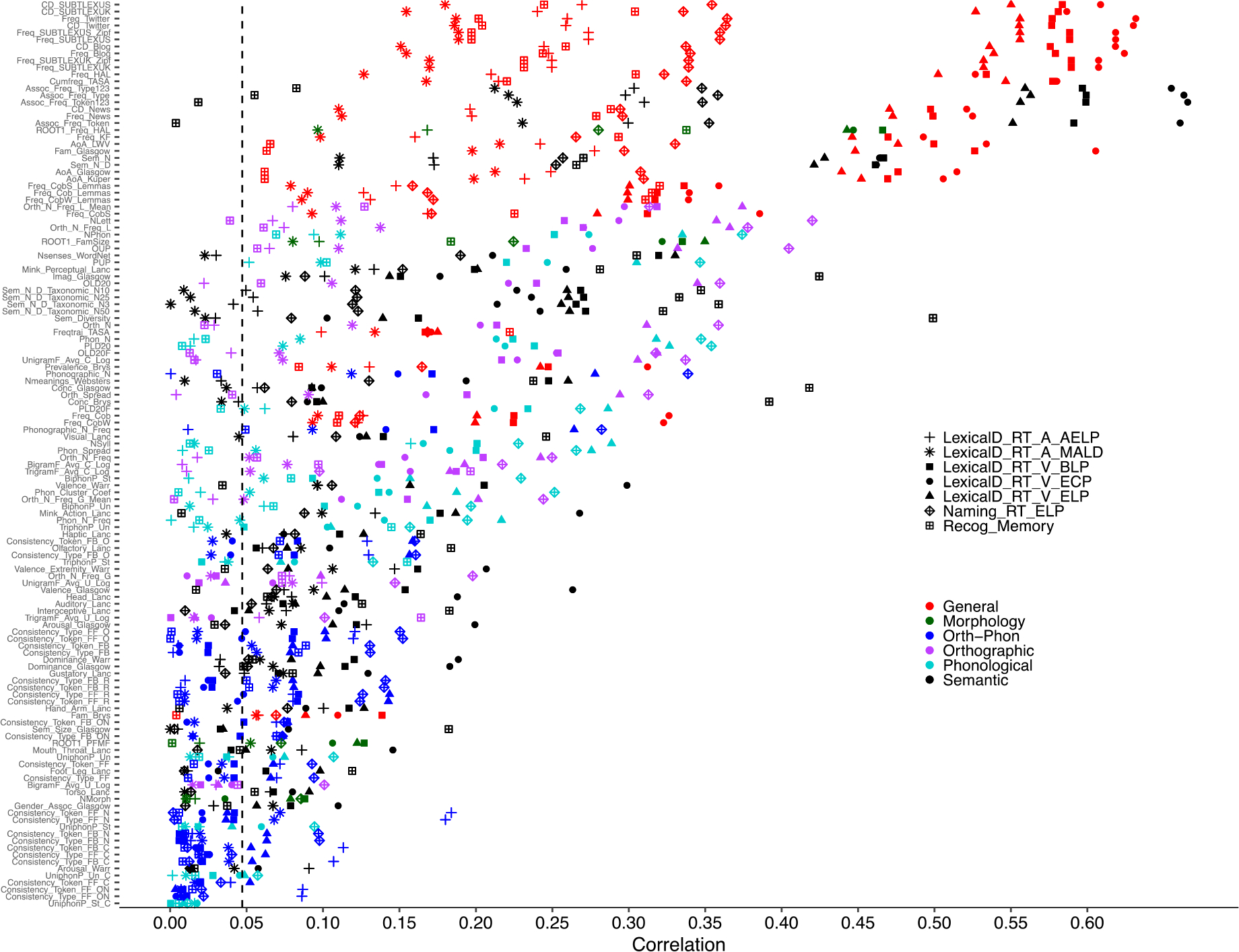

Correlations Between Each Independent Variable and the Dependent Variables

To examine bivariate relationships between the dependent and independent variables, normalized reaction time measures (zRTs) for each of 7 dependent variables (i.e., LexicalD_RT_V_ELP_z, LexicalD_RT_V_ECP_z, LexicalD_RT_V_BLP_z, LexicalD_RT_A_MALD_z, LexicalD_RT_A_AELP_z, Naming_RT_ELP_z, and Recog_Memory) were correlated with each of the 130 variables over 1,728 words using Spearman’s correlation. In addition to correlations between independent variables and each of the dependent variables separately, we also computed an overall weighted absolute correlation that gives equal weight to each task (visual lexical decision, auditory lexical decision, naming, and recognition memory), so that the overall value is not dominated by tasks such as visual lexical decision that have multiple measures. It was computed using Spearman’s R for each measure as [(visual lexical decision R of ELP + visual lexical decision R of ECP + visual lexical decision R of BLP)/3 + (auditory lexical decision R of MALD + auditory lexical decision R of AELP)/2 + naming R + recognition memory R]/4. A similar weighted absolute correlation was computed for the smaller dataset (n=471) that included semantic decision times, using five different tasks. We also provide ranks of different measures for each variable (e.g., frequency) by overall weighted correlation or correlation values for response variables from each dataset (e.g., ELP, ECP etc). The measure of each independent variable that has the highest overall weighted correlation was chosen for the network analysis.

Correlations Between Each Factor and the Dependent Variables

We also performed Spearman’s correlation between factor scores of each factor obtained from the exploratory factor analysis, and normalized reaction time measures (zRTs) for each of 7 dependent variables (i.e., LexicalD_RT_V_ELP_z, LexicalD_RT_V_ECP_z, LexicalD_RT_V_BLP_z, LexicalD_RT_A_MALD_z, LexicalD_RT_A_AELP_z, Naming_RT_ELP_z, and Recog_Memory).

Contributions of Distributional Semantic Vectors to the Dependent Variables

We also performed multiple regression analyses with three distribution semantic vectors (Word2Vec, GloVe, and Taxonomic) as predictors and each of the 7 dependent variables as response variables. Adjusted multiple R was obtained from each of the multiple regression analyses for comparison with correlation values of other variables. Overall weighted R for the distributional semantic vectors was computed in a similar way as for other independent variables.

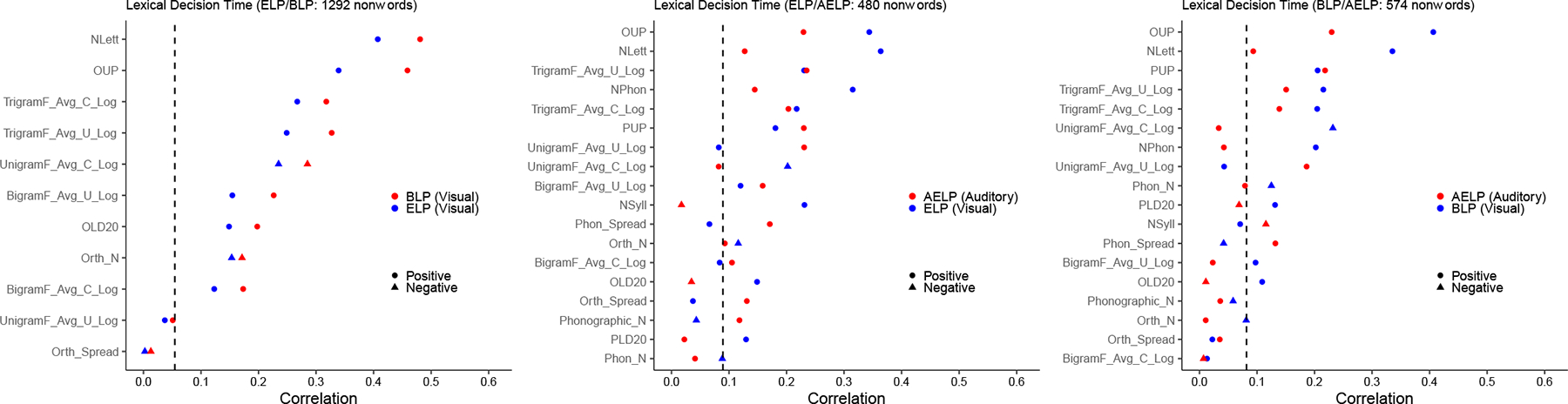

Correlations Between Each Independent Variable and Dependent Variables for Nonwords

To further examine the bivariate relationships between the dependent and independent variables, we also correlated normalized reaction time measures (zRTs) of nonwords for LexicalD_RT_V_ELP_z, LexicalD_RT_V_BLP_z, LexicalD_RT_A_MALD_z with a set of independent variables. These variables include NLett, Orth_N, OLD20, OUP, Orth_Spread obtained using LexiCAL (Chee et al., 2021); and UnigramF_Avg_C_Log, BigramF_Avg_C_Log, TrigramF_Avg_C_Log, UnigramF_Avg_U_Log, BigramF_Avg_U_Log, and TrigramF_Avg_U_Log retrieved from MCWord database (Medler & Binder, 2005). Given that IPA transcription is available for AELP database (Goh et al., 2020), we were able to compute additional measures including NPhon, NSyll, PLD20, PUP, Phon_Spread, and Phonographic_N using LexiCAL for AELP. We then correlated these measures with reaction time measure of nonwords for LexicalD_RT_A_AELP.

To compare the correlations between reaction time measures and independent variables across databases, we merged across databases and compared the databases that have a reasonable sample size of overlapping nonwords. This resulted in 1,292 nonwords shared between ELP and BLP, 480 nonwords shared between ELP and AELP, and 574 nonwords shared between AELP and BLP.

Results

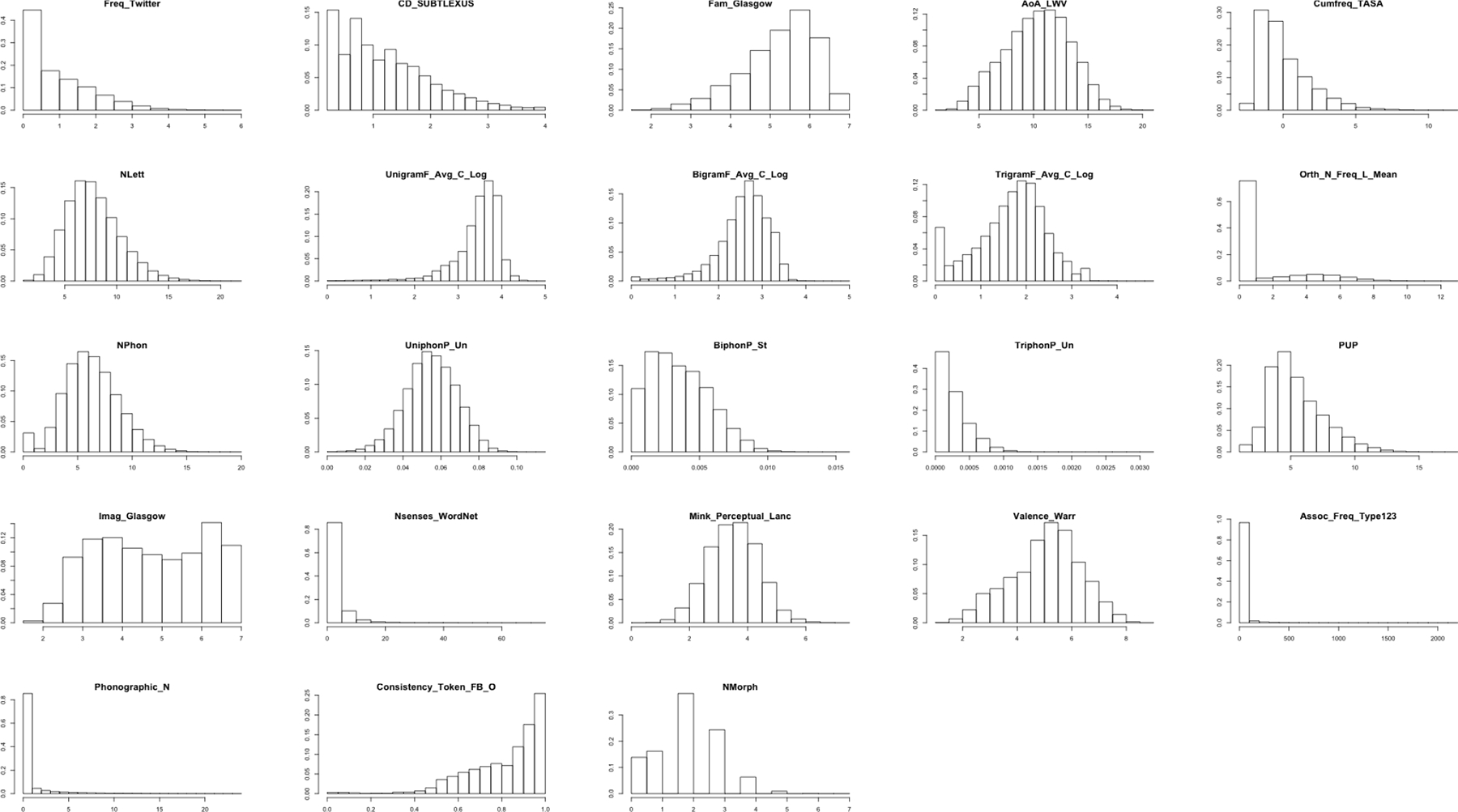

We present the distribution for a sample of representative variables in Figure 1. The distribution of all 143 variables for all available words for that variable is shown separately for each of the seven groups (i.e., General, Orthographic, Phonological, Semantic, Orth-Phon, Morphological, Response) in Supplemental Figure 1–7 (Supplemental material can be found at https://osf.io/9qbjz/). Frequency measures from SUBTLEX and Worldlex were widely distributed across the whole range. Compared to constrained unigram, bigram, or trigram frequencies, values for the unconstrained versions of frequency measures were more distributed. The concreteness measures had relatively uniform distributions. The measures of reaction times generally had skewed distributions as expected. The accuracies were generally very high.

Figure 1.

Distribution of a sample of representative variables over all words for which the variable is available.

Interrelations Between Variables

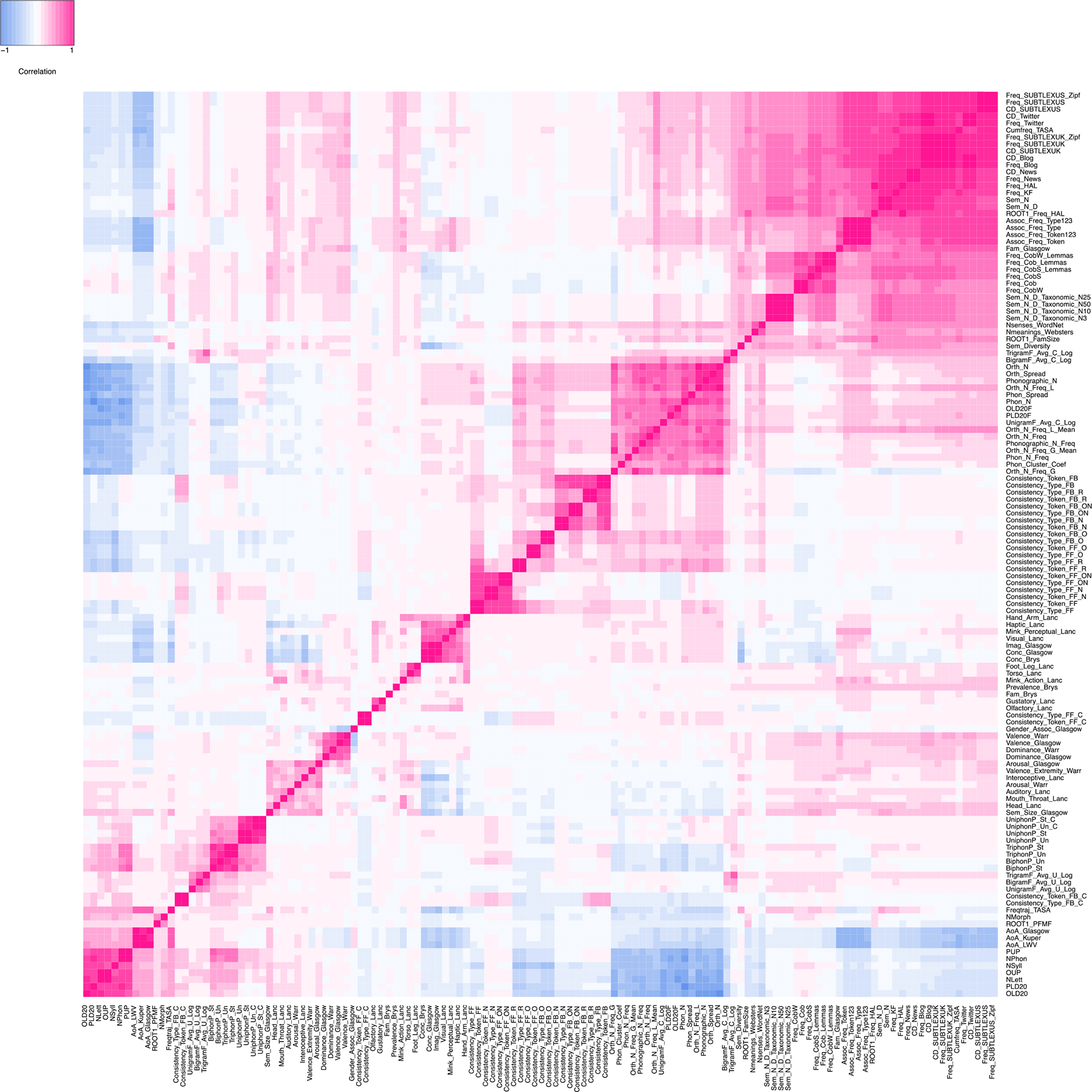

Spearman’s Correlations Between Variables

The similarity among 130 predictor variables based on Spearman’s correlation is shown in Figure 2. Several clusters can be identified from this visualization. The largest cluster included a series of frequency measures such as those from SUBTLEX, CELEX, HAL and Worldlex databases; cumulative frequency; semantic neighbourhood measures; association frequency measures and familiarity. The second cluster included orthographic neighbourhood measures, phonological neighbourhood measures, frequencies for the orthographic and phonological neighbours, orthographic, phonological and phonographic neighbourhood measures, as well as orthographic and phonological spread measures. The third cluster included length and uniqueness-related variables, such as the number of letters, number of syllables, number of phonemes, number of morphemes, orthographic and phonological Levenshtein distances, orthographic and phonological uniqueness point, and age of acquisition measures. The fourth cluster included a series of positional probability measures, biphon and triphon probability measures. The fifth cluster included sensory and motor semantic variables, including visual features, haptic features, Minkowski perceptual strength, imageability, concreteness, and strength of experiences with hand/arm. The last cluster as also semantic, and included strength of experiences with head or mouth/throat, auditory feature, interoceptive feature, arousal, and semantic size (Figure 2). Similarity plots for each of the General, Orthographic, Phonological and Semantic groups are also shown in Supplemental Material (Supplemental Figure 8–11; other groups are not shown due to small number of variables).

Figure 2.

Visualization of the similarity between 130 variables computed over 1,728 words based on Spearman’s correlation. Color represents correlation coefficients between different variables, ranging from −1 (blue) to 1 (pink).

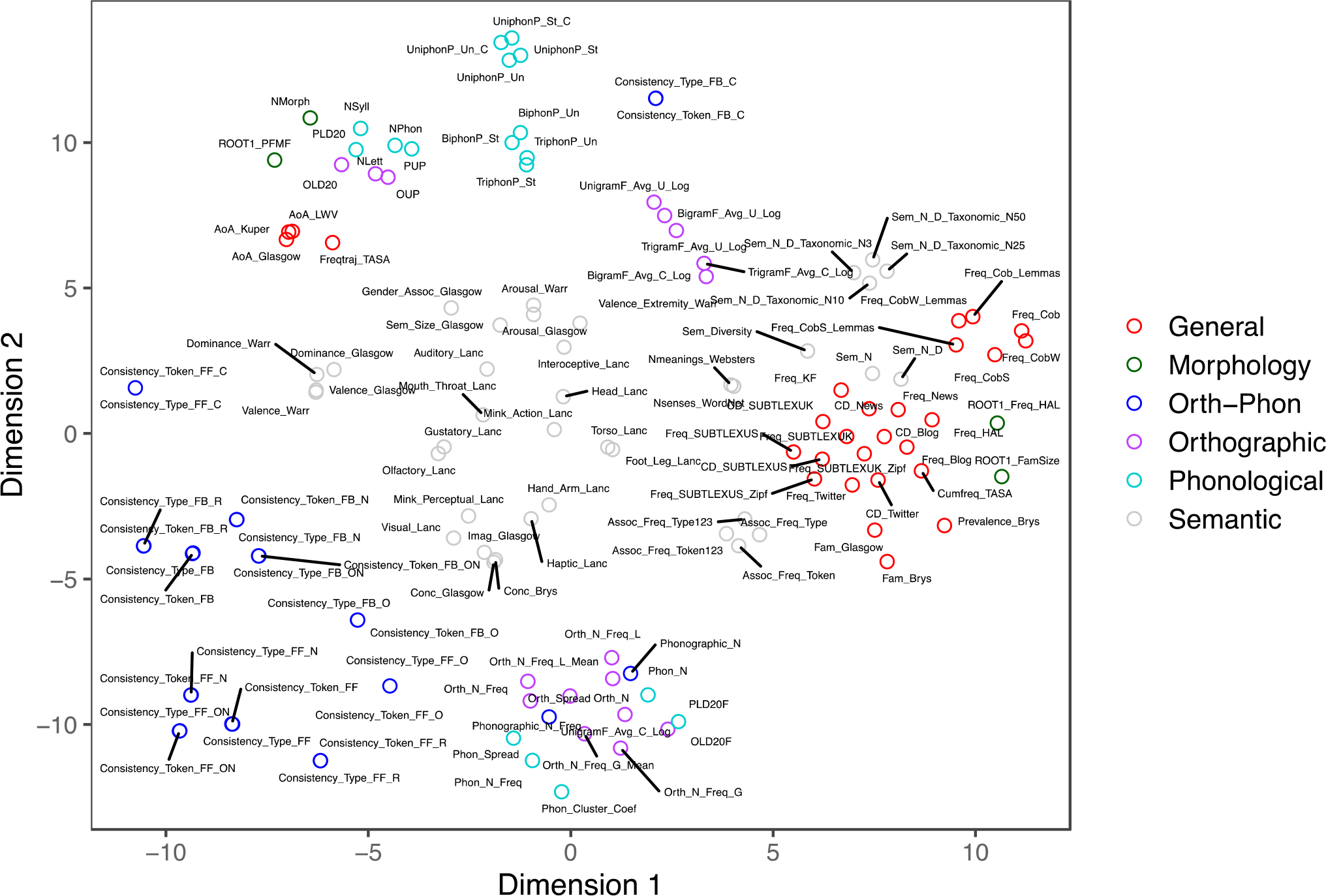

t-Distributed Stochastic Neighbor Embedding (t-SNE)

Variable groupings based on t-SNE visualization partially followed our categorical assignments (Figure 3). Most General variables clustered together, with those related to development (age of acquisition and frequency trajectory) forming a distinct cluster. Orthographic variables also clustered together, with those related to unconstrained and constrained ngrams forming a different cluster. Phonological variables formed two categories, with one related to neighbourhood measures, while the other mainly related to phonotactic variables and length. Semantic variables were spread out and formed three clusters, with the largest cluster, with sensory-motor and affective features, occupying the centre of the space. A second cluster was related to semantic neighbourhood measures, while the third was related to the frequency of the associates. Though Orth-Phon variables were mostly related to consistency, they were still relatively spread out, suggesting that different aspects of consistency capture different properties, and should not be treated in a unitary manner.

Figure 3.

Two-dimensional t-distributed stochastic neighbour embedding (t-SNE) results for 130 variables over 1,728 words. Colors indicate six theoretically defined groups (i.e., General, Orthographic, Phonological, Semantic, Orth-Phon, Morphological).

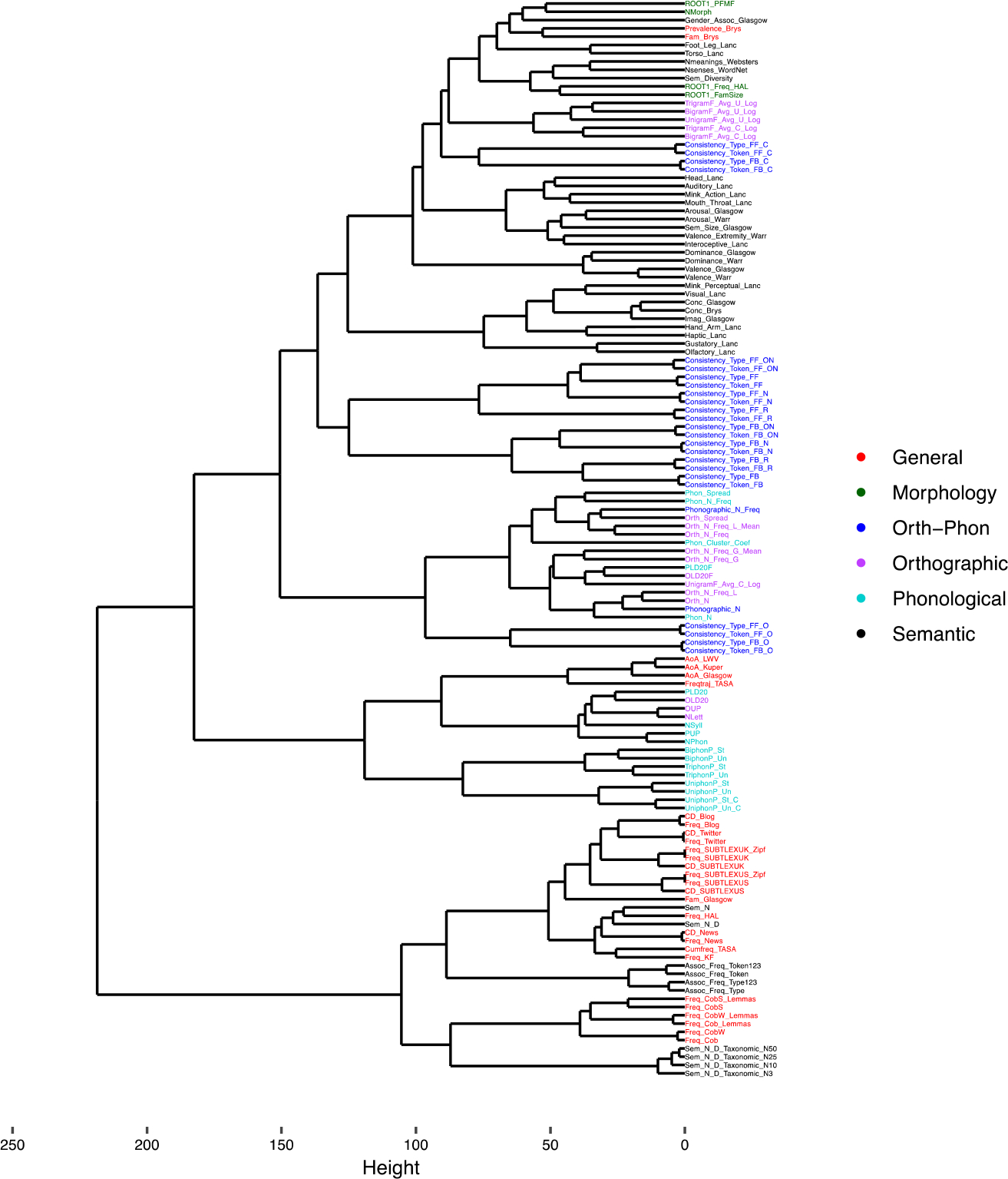

Hierarchical Cluster Analysis

Hierarchical clustering showed similar groupings as the similarity and t-SNE results (Figure 4). This visualization is useful for identifying within- and between-category similarities. For example, some semantic variables clustered with General variables, while others with Phonological and Orthographic variables.

Figure 4.

Hierarchical clustering results using Ward’s criterion for 130 variables over 1,728 words.

Exploratory Factor Analysis

The exploratory factor analysis with a number of 24 latent factors determined by the parallel analysis was performed. Variables with factor loadings larger than 0.4 are presented in Table 1. A full table with factor loading values is provided in Supplemental Table 2. Based on the table of factor loadings, we labelled these factors as following: Freq_CD, Consistency_FF_ONN, Sem_N_Taxonomic, Assoc_Freq, Freq_Cob, Consistency_FB_ONN, ConcImage, OrthPhon_OLD, Uniphon_P, OrthPhon_N, BiTriPhon_P, Valence_Dominance, Consistancy_FB_CR, AoA, NLettPhon_Unique, Consistency_FF_R, NGramLog, Consistency_FF_O, Action, Arousal, Consistency_FB_O, Consistency_FF_C, Gust_Olfac, Haptic.

Table 1.

Variables with factor loadings larger than 0.4 for the exploratory factor analysis over 1,728 words.

|

PA1: Freq_CD Freq_HAL Freq_KF Freq_SUBTLEXUS Freq_SUBTLEXUS_Zipf Freq_SUBTLEXUK Freq_SUBTLEXUK_Zipf Freq_Blog Freq_Twitter Freq_News CD_SUBTLEXUS CD_SUBTLEXUK CD_Blog CD_Twitter CD_News Fam_Glasgow Cumfreq_TASA Sem_N Sem_N_D |

PA3: Consistency_FF_ONN Consistency_Token_FF_ON Consistency_Type_FF_ON Consistency_Token_FF_N Consistency_Type_FF_N Consistency_Token_FF Consistency_Type_FF |

PA7: Sem_N_Taxonomic Sem_N_D_Taxonomic_N10 Sem_N_D_Taxonomic_N25 Sem_N_D_Taxonomic_N50 Sem_N_D_Taxonomic_N3 |

PA15: Assoc_Freq Assoc_Freq_Token123 Assoc_Freq_Token Assoc_Freq_Type Assoc_Freq_Type123 |

PA13: Freq_Cob Freq_Cob Freq_CobW Freq_Cob_Lemmas Freq_CobS Freq_CobW_Lemmas Freq_CobS_Lemmas |

PA6: Consistency_FB_ONN Consistency_Type_FB Consistency_Token_FB Consistency_Token_FB_ON Consistency_Token_FB_N Consistency_Type_FB_N Consistency_Type_FB_ON |

|

PA4: ConcImage Interoceptive_Lanc Sem_Diversity Conc_Glasgow Imag_Glasgow Conc_Brys Visual_Lanc Mink_Perceptual_Lanc |

PA22: OrthPhon_OLD OLD20 Orth_N_Freq Phonographic_N_Freq Orth_N_Freq_L_Mean Orth_Spread Phon_Spread Phon_N_Freq Orth_N_Freq_G_Mean |

PA5: Uniphon_P UniphonP_Un_C UniphonP_St_C UniphonP_St UniphonP_Un |

PA23: OrthPhon_N Orth_N Phonographic_N Orth_N_Freq_L Phon_N Orth_N_Freq_G |

PA17: BiTriPhon_P TriphonP_Un TriphonP_St BiphonP_St BiphonP_Un |

PA8: Valence_Dominance Valence_Glasgow Valence_Warr Dominance_Warr Dominance_Glasgow |

|

PA9: Consistancy_FB_CR Consistency_Type_FB_R Consistency_Token_FB_R Consistency_Token_FB_C Consistency_Type_FB_C Consistency_Type_FB Consistency_Token_FB |

PA20: AoA AoA_LWV AoA_Glasgow AoA_Kuper Freqtraj_TASA |

PA2: NLettPhon_Unique OLD20F PLD20F NLett OUP NPhon PUP |

PA19: Consistency_FF_R Consistency_Type_FF_R Consistency_Token_FF_R |

PA12: NGramLog BigramF_Avg_U_Log TrigramF_Avg_U_Log UnigramF_Avg_U_Log BigramF_Avg_C_Log TrigramF_Avg_C_Log |

PA21: Consistency_FF_O Consistency_Type_FF_O Consistency_Token_FF_O |

|

PA11: Action Hand_Arm_Lanc Mink_Action_Lanc Torso_Lanc Foot_Leg_Lanc |

PA18: Arousal Arousal_Glasgow Arousal_Warr Sem_Size_Glasgow Valence_Extremity_Warr |

PA10: Consistency_FB_O Consistency_Token_FB_O Consistency_Type_FB_O |

PA14: Consistency_FF_C Consistency_Token_FF_C Consistency_Type_FF_C |

PA16: Gust_Olfac Gustatory_Lanc Olfactory_Lanc Mouth_Throat_Lanc |

PA24: Haptic Head_Lanc Auditory_Lanc Haptic_Lanc Hand_Arm_Lanc |

Network Analysis

The network analysis showed that the clusters partially reflected the theoretically defined groups: General, Orthographic, Phonological, Semantic, Orth-Phon, and Morphological (Figure 5). Semantic features were especially distributed. While sensory-motor semantic features and concreteness cluster together, other semantic groups representing semantic neighborhood, affect, and polysemy were distinct from them as well as from each other. Overall, morphological frequency and orthographic length were the variables that most strongly connected to other variables (Figure 6). Notably, phonographic neighborhood was the variable most close to other nodes in the network and a hub that other variables passed through. Contextual diversity, frequency and age of acquisition were also among the variables that had strong connections with other variables. We also performed network analyses on the data after including semantic decision with n=471 words. The psychometric network model and centrality indices for these analyses are provided in Supplemental Figures 12 and 13.

Figure 5.

Psychometric network model describing relationships between independent variables over 1,728 words. Line thickness refers to the edge strength, which is the size of the partial correlation between variables. Line colors indicate the sign of the correlation, in which red lines correspond to a positive correlation while blue lines correspond to a negative correlation.

Figure 6.

Three centrality indices of the psychometric network model over 1,728 words: strength, closeness and betweenness. Strength refers to the sum of absolute partial correlation values for each node. Closeness refers to the inverse of the sum of distances from one node in the network to all other nodes. Betweenness refers to the number of the shortest paths that one node was passed through. The variables are ordered in an ascending order by strength.

Correlations Between Variables/Factors and the Dependent Variables

Correlations Between Each Independent Variable and the Dependent Variables

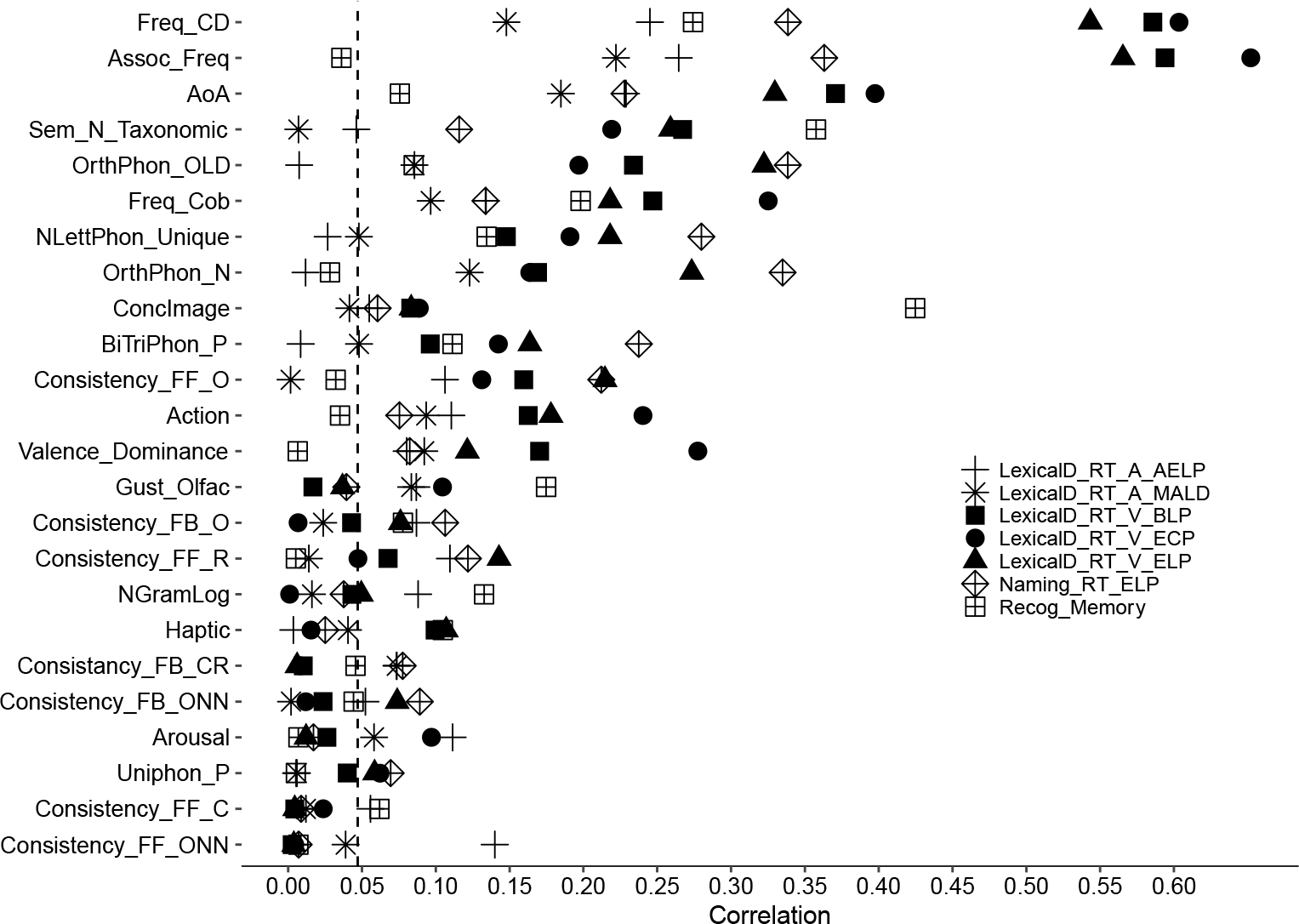

The Spearman’s correlation between 130 variables and each of the 7 dependent variables of reaction times is shown in Figure 7. Separate correlation plots for each dependent measure are presented in supplemental material (Supplemental Figures 14–20). The absolute correlation values of the ‘best’ variable (highest correlation) in each group, and its ranking, is given in Table 2. The full table containing correlations and rankings for each task for each variable is provided in Supplemental Table 3.

Figure 7.

Absolute Spearman’s correlation values for 130 variables with each of the seven dependent variables (six zRTs and d’ for recognition memory) over 1,728 words. The variables are ordered in an ascending order by overall weighted absolute correlation values. The vertical dashed line indicates a threshold for p < 0.05 level of significance.

Table 2.

Absolute correlation values of the ‘best’ independent variable within each subcategory with seven dependent variables as well as rankings over 1,728 words.

| Variable Name | Group (Category) | Group (Subcategory) | Weighted_Overall_R | Weighted_Overall_Rank |

|---|---|---|---|---|

|

| ||||

| Freq_Twitter | General | Frequency | 0.344 | 6 |

| CD_SUBTLEXUS | General | Contextual Diversity | 0.351 | 4 |

| Fam_Glasgow | General | Familiarity | 0.281 | 25 |

| AoA_LWV | General | Age of Acquisition | 0.282 | 24 |

| Cumfreq_TASA | General | Frequency Trajectory | 0.329 | 15 |

| NLett | Orthographic | Orthographic Length | 0.213 | 35 |

| UnigramF_Avg_C_Log | Orthographic | Graphotactic Probabilities: Unigram | 0.162 | 55 |

| BigramF_Avg_C_Log | Orthographic | Graphotactic Probabilities: Bigram | 0.128 | 70 |

| TrigramF_Avg_C_Log | Orthographic | Graphotactic Probabilities: Trigram | 0.125 | 71 |

| Orth_N_Freq_L_Mean | Orthographic | Orthographic Neighborhood | 0.216 | 33 |

| NPhon | Phonological | Phonological Length | 0.208 | 37 |

| UniphonP_Un | Phonological | Phonotactic Probabilities: Uniphon | 0.053 | 112 |

| BiphonP_St | Phonological | Phonotactic Probabilities: Biphon | 0.118 | 72 |

| TriphonP_Un | Phonological | Phonotactic Probabilities: Triphon | 0.102 | 79 |

| PUP | Phonological | Phonological Neighborhood | 0.195 | 41 |

| Imag_Glasgow | Semantic | Concreteness/Imageability | 0.189 | 43 |

| Nsenses_WordNet | Semantic | Polysemy | 0.202 | 40 |

| Mink_Perceptual_Lanc | Semantic | Specific Semantic Features | 0.195 | 42 |

| Valence_Warr | Semantic | Affect | 0.114 | 73 |

| Assoc_Freq_Type123 | Semantic | Semantic Neighborhood | 0.323 | 16 |

| Phonographic_N | Orth-Phon | Phonographic Neighborhood | 0.157 | 57 |

| Consistency_Token_FB_O | Orth-Phon | Consistency | 0.101 | 81 |

| NMorph | Morphology | Morphological Length | 0.044 | 118 |

| ROOT1_Freq_HAL | Morphology | Morphological Frequency | 0.301 | 22 |

| GloVe | Semantic | Vector Representation | 0.484 | 1 |

Notes. Weighted_Overall_R indicates the overall weighted absolute correlation that gives equal weight to each task (visual lexical decision, auditory lexical decision, naming, and recognition memory). Weighted_Overall_Rank indicates the rank based on Weighted_Overall_R among all 130 variables.

Notes. Weighted_Overall_R indicates the overall weighted absolute correlation that gives equal weight to each task (visual lexical decision, auditory lexical decision, naming, and recognition memory). Weighted_Overall_Rank indicates the rank based on Weighted_Overall_R among all 130 variables.

Overall, General variables had the largest correlation with visual and auditory lexical decision reaction times. Contextual diversity, along with frequency measures, were overall the most significant predictors. Association frequency measures were also among the most significant predictors, especially for visual and auditory lexical decision tasks. Overall, auditory lexical decision correlations were much lower than those of other measures. Age of acquisition and familiarity were near the top for auditory lexical decision, differentiating this task from other measures.

A subgroup of Semantic variables was the next most informative overall, followed by several Orthographic and a few Phonological variables. Orth-Phon variables, as a group, were the least informative on average. However, we note that more emphasis was given to lexical decision tasks (due to the presence of both visual and auditory lexical decision), whereas Orth-Phon variables were relevant especially for naming. Indeed, they showed much higher correlation in the naming task, but still lagged behind several General, Semantic, and Orthographic variables. Naming was the only task where Orthographic variables (length and uniqueness point) were strongest predictors (Supplementary Figure 17). Recognition memory was differentiated by the fact that semantic diversity and imageability/concreteness were the top predictors, followed by taxonomic semantic neighborhood measures (Supplementary Figure 20). Unlike other measures, lemma frequencies were found to be more predictive than wordform frequencies for recognition memory.

For the 471 words when semantic decision was included, the results for lexical decision reaction times and naming latencies were generally consistent with previous findings with the larger subset of words shown in Figure 7. The correlation values of the ‘best’ variable in each group, and its ranking, is given in Table 3, with the full table provided in Supplemental Table 4. The overall ranking of the variables changes somewhat due to the inclusion of the semantic decision task. The strongest predictors for semantic decision were Semantic and General variables such as concreteness/imageability, and age of acquisition measures. In contrast to other measures, frequency and contextual diversity were ranked relatively lower for semantic decision (Figure 8). Correlation plot for semantic decision reaction times over 471 words was presented in Supplemental Figure 21.

Table 3.

Absolute correlation values of the ‘best’ independent variable within each subcategory with eight dependent variables including Semantic Decision, as well as rankings, over 471 words.

| Variable Name | Group (Category) | Group (Subcategory) | Weighted_Overall_R | Weighted_Overall_Rank |

|---|---|---|---|---|

|

| ||||

| Freq_SUBTLEXUS | General | Frequency | 0.357 | 5 |

| CD_SUBTLEXUS | General | Contextual Diversity | 0.356 | 7 |

| Fam_Glasgow | General | Familiarity | 0.339 | 21 |

| AoA_LWV | General | Age of Acquisition | 0.361 | 4 |

| Cumfreq_TASA | General | Frequency Trajactory | 0.352 | 10 |

| NLett | Orthographic | Orthographic Length | 0.168 | 49 |

| UnigramF_Avg_C_Log | Orthographic | Graphotactic Probabilities: Unigram | 0.146 | 61 |

| BigramF_Avg_C_Log | Orthographic | Graphotactic Probabilities: Bigram | 0.143 | 64 |

| TrigramF_Avg_C_Log | Orthographic | Graphotactic Probabilities: Trigram | 0.134 | 68 |

| Orth_N_Freq_L | Orthographic | Orthographic Neighborhood | 0.181 | 47 |

| NPhon | Phonological | Phonological Length | 0.166 | 51 |

| UniphonP_Un | Phonological | Phonotactic Probabilities: Uniphon | 0.058 | 111 |

| BiphonP_St | Phonological | Phonotactic Probabilities: Biphon | 0.082 | 96 |

| TriphonP_Un | Phonological | Phonotactic Probabilities: Triphon | 0.063 | 109 |

| PLD20 | Phonological | Phonological Neighborhood | 0.160 | 53 |

| Imag_Glasgow | Semantic | Concreteness/Imageability | 0.266 | 28 |

| Nsenses_WordNet | Semantic | Polysemy | 0.155 | 54 |

| Mink_Perceptual_Lanc | Semantic | Specific Semantic Features | 0.275 | 26 |

| Valence_Warr | Semantic | Affect | 0.133 | 70 |

| Assoc_Freq_Token123 | Semantic | Semantic Neighborhood | 0.349 | 11 |

| Phonographic_N | Orth-Phon | Phonographic Neighborhood | 0.127 | 74 |

| Consistency_Token_FF_R | Orth-Phon | Consistency | 0.114 | 80 |

| NMorph | Morphology | Morphological Length | 0.019 | 133 |

| ROOT1_Freq_HAL | Morphology | Morphological Frequency | 0.272 | 27 |

| Word2Vec | Semantic | Vector Representation | 0.567 | 1 |

Notes. Weighted_Overall_R indicates the overall weighted absolute correlation that gives equal weight to each task (visual lexical decision, auditory lexical decision, naming, recognition memory, and semantic decision). Weighted_Overall_Rank indicates the rank based on Weighted_Overall_R among all 130 variables.

Figure 8.

Absolute Spearman’s correlation values for 130 variables with each of the eight dependent variables (seven zRTs and d’ for recognition memory) over 471 words. The variables were ordered in an ascending order by overall weighted absolute correlation values. The vertical dashed line indicates a threshold for p < 0.05 level of significance.

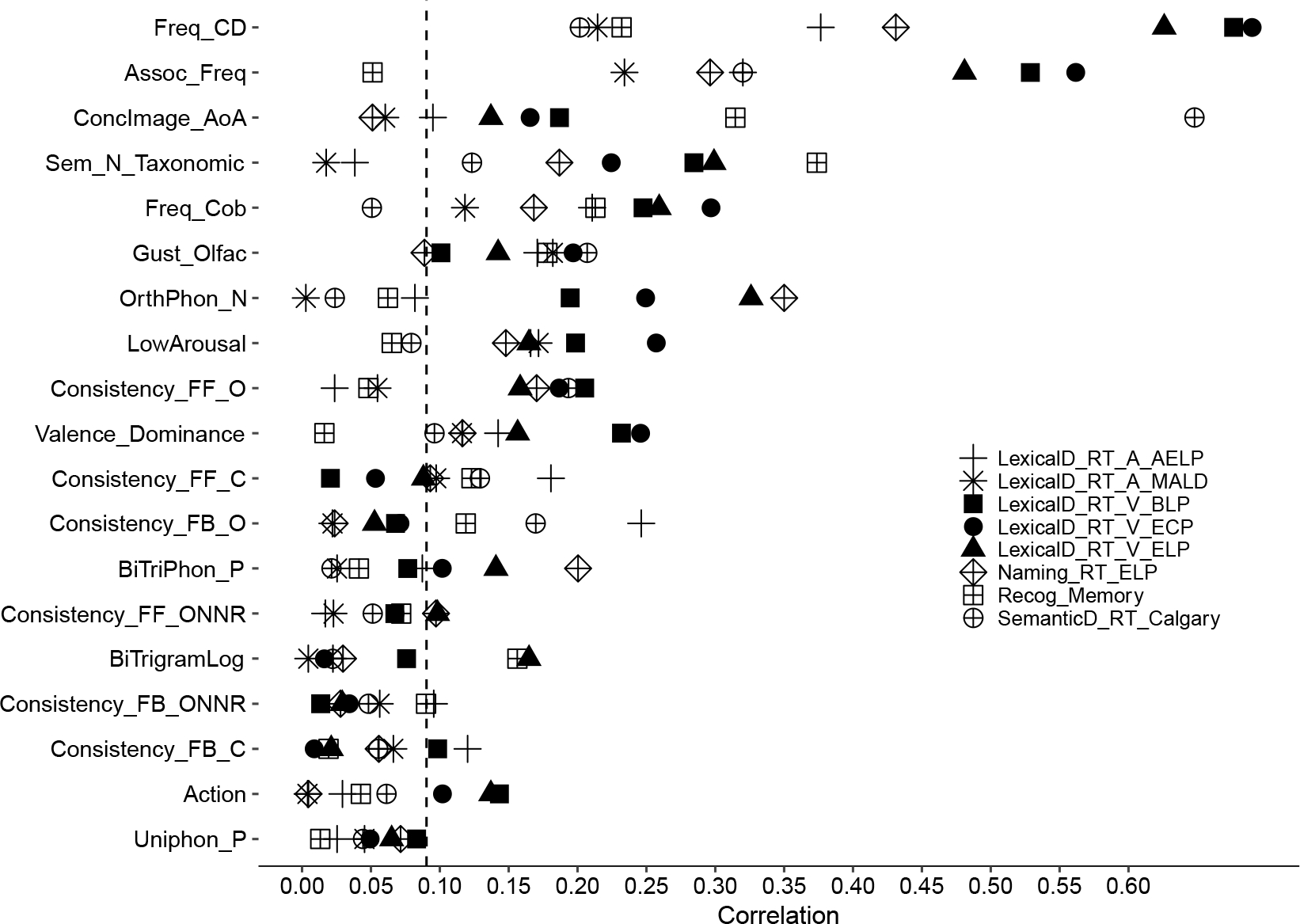

Correlations Between Each Factor and the Dependent Variables

The correlations between 24 factors and each of the 7 dependent variables of reaction times are shown in Figure 9 and Table 4. Same as the correlation between 130 individual variables and the 7 dependent variables, factors representing general variables such as frequency and contextual diversity had the largest correlation with visual and auditory lexical decision reaction times. Orth-Phon variables were also relevant especially for naming. We also found a high contribution of imageability/concreteness and semantic neighborhood to recognition memory.

Figure 9.

Absolute correlation values for 24 factors with each of the seven dependent variables (six zRTs and d’ for recognition memory) over 1,728 words. The variables are ordered in an ascending order by overall weighted absolute correlation values. The vertical dashed line indicates a threshold for p < 0.05 level of significance.

Table 4.

Actual correlation values for 24 factors with each of the seven dependent variables (six zRTs and d’ for recognition memory) over 1,728 words.

| Factor_ID | FactorNames | Weighted_Overall_R | LexicalD_RT_V_ELP_R | LexicalD_RT_V_ECP_R | LexicalD_RT_V_BLP_R | LexicalD_RT_A_MALD_R | LexicalD_RT_A_AELP_R | Naming_RT_ELP_R | Recog_Memory_R |

|---|---|---|---|---|---|---|---|---|---|

|

| |||||||||

| PA1 | Freq_CD | −0.347 | −0.543 | −0.603 | −0.586 | −0.148 | −0.245 | −0.339 | −0.274 |

| PA15 | Assoc_Freq | −0.312 | −0.565 | −0.652 | −0.594 | −0.222 | −0.265 | −0.363 | −0.036 |

| PA20 | AoA | 0.219 | 0.33 | 0.398 | 0.371 | 0.185 | 0.229 | 0.228 | −0.076 |

| PA7 | Sem_N_Taxonomic | −0.187 | −0.259 | −0.219 | −0.267 | −0.007 | −0.046 | −0.116 | −0.358 |

| PA22 | OrthPhon_OLD | −0.18 | −0.322 | −0.197 | −0.234 | −0.086 | 0.008 | −0.338 | −0.085 |

| PA13 | Freq_Cob | −0.178 | −0.218 | −0.325 | −0.247 | −0.097 | −0.134 | −0.134 | −0.198 |

| PA2 | NLettPhon_Unique | 0.159 | 0.218 | 0.191 | 0.148 | 0.048 | 0.027 | 0.28 | −0.134 |

| PA23 | OrthPhon_N | −0.158 | −0.273 | −0.164 | −0.169 | −0.123 | −0.012 | −0.335 | −0.028 |

| PA4 | ConcImage | −0.155 | −0.083 | −0.089 | −0.083 | −0.041 | −0.055 | −0.061 | 0.425 |

| PA17 | BiTriPhon_P | 0.128 | 0.164 | 0.142 | 0.096 | 0.048 | −0.008 | 0.238 | −0.111 |

| PA21 | Consistency_FF_O | −0.117 | −0.215 | −0.131 | −0.16 | −0.002 | 0.106 | −0.212 | −0.032 |

| PA11 | Action | −0.102 | −0.178 | −0.24 | −0.163 | −0.094 | −0.111 | −0.075 | −0.035 |

| PA8 | Valence_Dominance | −0.091 | −0.121 | −0.278 | −0.17 | −0.092 | −0.08 | −0.082 | 0.007 |

| PA16 | Gust_Olfac | −0.088 | −0.037 | −0.105 | −0.017 | −0.083 | −0.087 | −0.039 | 0.175 |

| PA10 | Consistency_FB_O | −0.07 | −0.076 | 0.007 | −0.043 | −0.024 | 0.087 | −0.106 | −0.078 |

| PA19 | Consistency_FF_R | −0.069 | −0.143 | −0.047 | −0.068 | −0.014 | 0.11 | −0.122 | 0.005 |

| PA12 | NGramLog | −0.063 | −0.05 | 0.001 | −0.043 | 0.016 | 0.088 | 0.038 | −0.133 |

| PA24 | Haptic | −0.057 | −0.107 | 0.016 | −0.099 | 0.041 | 0.004 | −0.025 | −0.105 |

| PA9 | Consistancy_FB_CR | −0.051 | −0.006 | 0.008 | 0.01 | −0.074 | −0.073 | −0.078 | −0.046 |

| PA6 | Consistency_FB_ONN | −0.049 | −0.074 | 0.012 | −0.024 | −0.002 | 0.052 | −0.089 | −0.044 |

| PA18 | Arousal | 0.039 | −0.012 | −0.097 | −0.026 | −0.058 | −0.111 | 0.017 | 0.007 |

| PA5 | Uniphon_P | 0.033 | 0.059 | 0.062 | 0.04 | −0.006 | −0.005 | 0.069 | 0.005 |

| PA14 | Consistency_FF_C | −0.029 | −0.004 | 0.024 | −0.004 | −0.012 | −0.056 | 0.009 | −0.062 |

| PA3 | Consistency_FF_ONN | −0.027 | 0.004 | −0.004 | −0.003 | −0.039 | −0.14 | −0.007 | −0.007 |

Notes. Weighted_Overall_R indicates the overall weighted correlation that gives equal weight to each task (visual lexical decision, auditory lexical decision, naming, and recognition memory).

LexicalD_RT_V_ELP_R indicates the correlation for lexical decision (i.e., LexicalD) time (i.e., RT) of visual modality (i.e., V) from ELP. Similar naming convention for other variables.

To examine the correlations between each factor and the dependent variables for the 471 words when semantic decision was included, we first ran an exploratory factor analysis with a number of 19 latent factors determined by the parallel analysis. Factor loadings (> 0.4) are presented in Supplemental Table 5. Based on the table of factor loadings, we named these factors as follows: Freq_CD, OrthPhon_N, ConcImage_AoA, Consistency_FB_ONNR, Consistency_FF_ONNR, Sem_N_Taxonomic, Freq_Cob, Assoc_Freq, Uniphon_P, Valence_Dominance, BiTriPhon_P, Consistency_FB_C, Consistency_FB_O, Consistency_FF_C, BiTrigramLog, Gust_Olfac, Action, Consistency_FF_O, and LowArousal. The correlations between 19 factors and each of the 7 dependent variables of reaction times are shown in Figure 10 and Table 5. Similarly, we found that General variables such as frequency and contextual diversity had the largest correlation with visual and auditory lexical decision reaction times and semantic neighborhood was highly correlated with recognition memory. For semantic decision, the strongest factor was concreteness/imageability, which was combined with other AoA.

Figure 10.

Absolute correlation values for 19 factors with each of the eight dependent variables (seven zRTs and d’ for recognition memory) over 471 words. The variables are ordered in an ascending order by overall weighted absolute correlation values. The vertical dashed line indicates a threshold for p < 0.05 level of significance.

Table 5.

Actual correlation values for 19 factors with each of the eight dependent variables (seven zRTs and d’ for recognition memory) over 471 words.

| Factor_ID | FactorNames | Weighted_Overall_R | LexicalD_RT_V_ELP_R | LexicalD_RT_V_ECP_R | LexicalD_RT_V_BLP_R | LexicalD_RT_A_MALD_R | LexicalD_RT_A_AELP_R | Naming_RT_ELP_R | Recog_Memory_R | SemanticD_RT_Calgary_R |

|---|---|---|---|---|---|---|---|---|---|---|

|

| ||||||||||

| PA1 | Freq_CD | −0.365 | −0.626 | −0.69 | −0.676 | −0.214 | −0.377 | −0.431 | −0.232 | −0.202 |

| PA11 | Assoc_Freq | −0.294 | −0.481 | −0.562 | −0.529 | −0.234 | −0.32 | −0.296 | 0.051 | −0.32 |

| PA3 | ConcImage_AoA | 0.251 | 0.137 | 0.166 | 0.187 | 0.06 | 0.095 | 0.051 | −0.315 | 0.648 |

| PA7 | Sem_N_Taxonomic | −0.196 | −0.299 | −0.225 | −0.285 | 0.017 | −0.038 | −0.187 | −0.374 | 0.123 |

| PA12 | Freq_Cob | −0.173 | −0.259 | −0.297 | −0.248 | −0.118 | −0.211 | −0.168 | −0.213 | 0.051 |

| PA16 | Gust_Olfac | −0.159 | −0.142 | −0.197 | −0.101 | −0.182 | −0.171 | −0.089 | 0.178 | −0.207 |

| PA2 | OrthPhon_N | −0.147 | −0.326 | −0.249 | −0.195 | −0.003 | −0.082 | −0.35 | −0.062 | −0.024 |

| PA19 | LowArousal | 0.134 | 0.165 | 0.257 | 0.199 | 0.172 | 0.166 | 0.148 | −0.065 | 0.079 |

| PA18 | Consistency_FF_O | −0.127 | −0.158 | −0.187 | −0.205 | −0.055 | −0.024 | −0.17 | −0.048 | −0.193 |

| PA8 | Valence_Dominance | −0.114 | −0.157 | −0.246 | −0.232 | −0.116 | −0.142 | −0.117 | 0.016 | −0.096 |

| PA13 | Consistency_FF_C | −0.108 | −0.088 | 0.053 | −0.021 | 0.097 | 0.181 | −0.093 | −0.123 | 0.129 |

| PA10 | Consistency_FB_O | −0.102 | −0.053 | 0.071 | 0.068 | −0.022 | 0.246 | −0.024 | −0.119 | 0.17 |

| PA17 | BiTriPhon_P | 0.085 | 0.141 | 0.102 | 0.077 | 0.025 | −0.087 | 0.2 | −0.041 | 0.021 |

| PA6 | Consistency_FF_ONNR | −0.064 | −0.098 | −0.068 | −0.067 | −0.023 | −0.017 | −0.097 | −0.072 | 0.051 |

| PA14 | BiTrigramLog | −0.061 | −0.165 | −0.016 | −0.076 | −0.005 | 0.022 | −0.03 | −0.156 | 0.022 |

| PA4 | Consistency_FB_ONNR | −0.054 | −0.029 | 0.034 | 0.014 | −0.056 | 0.096 | −0.028 | −0.09 | 0.048 |

| PA9 | Consistency_FB_C | −0.053 | 0.021 | 0.009 | 0.098 | −0.066 | −0.12 | −0.056 | 0.019 | −0.055 |

| PA15 | Action | −0.051 | −0.137 | −0.102 | −0.143 | 0.004 | −0.029 | 0.005 | −0.043 | −0.061 |

| PA5 | Uniphon_P | 0.046 | 0.065 | 0.05 | 0.083 | 0.045 | 0.025 | 0.072 | 0.013 | −0.044 |

Notes. Weighted_Overall_R indicates the overall weighted correlation that gives equal weight to each task (visual lexical decision, auditory lexical decision, naming, recognition memory, and semantic decision). LexicalD_RT_V_ELP_R indicates the correlation for lexical decision (i.e., LexicalD) time (i.e., RT) of visual modality (i.e., V) from ELP. Similar naming convention for other variables.