Abstract

Patients with age-related hearing loss face hearing difficulties in daily life. The causes of age-related hearing loss are complex and include changes in peripheral hearing, central processing, and cognitive-related abilities. Furthermore, how aging affects hearing through changes in auditory processing ability remains unclear. In this cross-sectional study, we evaluated 27 older adults (over 60 years old) with age-related hearing loss, 21 older adults (over 60 years old) with normal hearing, and 30 younger adults (18–30 years old) with normal hearing. We used the outcomes of suprathreshold tests, namely the time-compressed threshold and the speech recognition threshold in noise, as behavioral indicators of auditory processing ability. We also recorded resting-state electroencephalography to identify presbycusis-related abnormalities in spontaneous brain activity. The time-compressed threshold and the speech recognition threshold differed significantly among the groups. In patients with age-related hearing loss, informational masking (babble noise) impaired speech processing more than energetic masking (speech-shaped noise). In the resting-state electroencephalography signals, we observed enhanced frontal lobe (Brodmann’s area, BA11) activation in the older adults with normal hearing compared with the younger participants with normal hearing, and greater activation in the parietal (BA7) and occipital (BA19) lobes in the individuals with age-related hearing loss compared with the younger adults. Our functional connectivity analysis suggested that, compared with younger people, the older adults with normal hearing exhibited enhanced connections among networks, including the default mode network, sensorimotor network, cingulo-opercular network, occipital network, and frontoparietal network.
These results suggest that both normal aging and age-related hearing loss impair advanced auditory processing, and that hearing loss accelerates the decline in speech comprehension, especially in situations with competing speech. Older adults with normal hearing may compensate by recruiting additional attentional resources through a top-down active listening mechanism, whereas those with age-related hearing loss show decompensation of network connections involved in multisensory integration.

Key Words: age-related hearing loss, aging, electroencephalography, fast-speech comprehension, functional brain network, functional connectivity, resting-state, sLORETA, source analysis, speech reception threshold

Introduction

The most prevalent sensory deficit in older adults is hearing loss. According to the World Health Organization’s World Report on Hearing, the worldwide prevalence of hearing loss of moderate or greater severity rises exponentially with age, from 15.4 percent among people in their 60s to 58.2 percent among those over 90 years old (World Health Organization, 2021). Age-related hearing loss (ARHL) is a complex condition characterized by deteriorating auditory function, such as elevated hearing thresholds and poor frequency resolution (Yamasoba et al., 2013), and results from the cumulative effects of aging on the auditory system. ARHL can cause communication barriers, depression, and cognitive decline, and has recently been proposed as the most easily modifiable risk factor for dementia (Slade et al., 2020).

Speech comprehension is a critical problem for people with ARHL. Mitchell et al. (2011) showed that only 23% of ARHL patients truly benefit from hearing aids. This suggests that restoring peripheral auditory input is not sufficient: specific auditory mechanisms are also needed to track subtle differences in complex spectral and temporal information (Dobreva et al., 2011). These mechanisms underpin auditory processing tasks such as understanding speech in noise (Humes et al., 2013), recognizing fast, time-compressed speech (Adel Ghahraman et al., 2020), and localizing sound sources. The ability to process such spectral and temporal information gradually declines with age (Otte et al., 2013). Moreover, the correlation between speech reception and the processing of visual information reported by George et al. (2007) indicates that cognitive ability influences how the auditory system processes speech. Although differences in study populations and methods have limited the extent to which a correlation between cognitive ability and speech comprehension can be confirmed, speech comprehension in complex situations, such as those with high background noise or a fast speech rate, is considered to depend on both auditory and nonauditory factors.

A routine clinical audiology examination mainly assesses the peripheral auditory system and neural activity along the 8th cranial nerve and the brainstem auditory pathway, and rarely addresses central information processing or cognitive processes (Lan and Wang, 2022). Beyond routine audiological tests, speech audiometry is important for assessing the challenges patients face in daily life (National Research Council (US) Committee on Disability Determination for Individuals with Hearing Impairments et al., 2004). A previous study evaluated speech perception using recognition scores for test materials presented at a fixed speech rate, an approach that is prone to ceiling or floor effects (Ye et al., 2015). This problem can be addressed by adaptively varying the signal-to-noise ratio to estimate a speech recognition threshold (SRT) (Schlueter et al., 2015). When the same adaptive technique is used to vary the speech rate instead, the resulting threshold is referred to as the time-compression threshold (TCT) (Phatak et al., 2018). Time-compressed speech is generated by periodically deleting short segments throughout the speech signal (Fu et al., 2001). As a result, the spectral characteristics of the original signal are largely preserved, but the phonetic information is presented over a shorter interval (Dias et al., 2019). Versfeld and Dreschler (2002) performed SRT and TCT tests in fluctuating and stationary noise and found that, for assessing individuals’ speech comprehension, TCT tests or SRT tests in fluctuating noise were superior to SRT tests in stationary noise.
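The periodic-deletion principle behind time-compressed speech can be illustrated with a minimal Python/NumPy sketch. It assumes the simplest "sampling" scheme: each short frame keeps its first portion and discards the rest, with no cross-fading at the joins. The 20-ms frame length and the function name `time_compress` are hypothetical choices, not the procedure of Fu et al. (2001) or of the platform used in this study.

```python
import numpy as np


def time_compress(signal, fs, keep_ratio, frame_ms=20.0):
    """Shorten a signal by keeping the first part of every frame and dropping the rest.

    keep_ratio is the fraction of each frame that is retained (0 < keep_ratio <= 1),
    so the output lasts about keep_ratio times as long as the input and the speech
    rate rises by roughly 1 / keep_ratio. frame_ms is a hypothetical default.
    """
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    frame = max(1, int(round(fs * frame_ms / 1000.0)))  # samples per frame
    keep = max(1, int(round(frame * keep_ratio)))       # samples kept per frame
    # Periodic deletion: copy `keep` samples, then skip the remaining `frame - keep`.
    pieces = [signal[start:start + keep] for start in range(0, len(signal), frame)]
    return np.concatenate(pieces)


fs = 16_000  # the stimuli in this study were sampled at 16 kHz
sentence = np.random.default_rng(0).standard_normal(fs)  # 1-s placeholder, not real speech
fast = time_compress(sentence, fs, keep_ratio=0.5)
print(len(sentence) / fs, len(fast) / fs)  # durations in seconds
```

Practical implementations usually overlap-add the retained segments (for example, with a waveform-similarity method) to avoid audible clicks at the joins; the hard cuts here keep the example short.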

Because difficulties in speech-in-noise tests are not fully explained by peripheral hearing loss and cognitive decline, they may be related to central auditory processing at the brainstem and cortical levels (Briley and Summerfield, 2014). Several MRI studies have shown that cortical structure and the activation of the central auditory system differ between older and younger individuals (Profant et al., 2014; Fitzhugh et al., 2019). Moreover, specific age-related changes in the central auditory system that underlie central presbycusis appear to be independent of changes in the inner ear (Ouda et al., 2015). These central changes are associated with reduced gray matter in the frontal cortex. Resting-state functional connectivity describes the temporal correlation of spontaneous activity between brain regions, which is organized into coherent networks. A previous study indicated that resting-state functional connectivity is significantly associated with aging and hearing impairment (Onoda et al., 2012). Schmidt et al. (2013) found that in people with hearing impairment, dorsal attention network (DAN) connectivity was increased while default mode network (DMN) connectivity was decreased. However, studies have produced conflicting results regarding the activation and connectivity of brain networks in individuals with ARHL. In contrast to Schmidt et al. (2013), Husain et al. (2014) found reduced connectivity in the DAN, left insula, and left postcentral gyrus, as well as increased DMN connectivity between seeds and the left middle frontal gyrus, in individuals with hearing loss. In addition, there is evidence that the decline in resting-state functional connectivity in the frontal lobe of older individuals is related to increased daily listening effort rather than to hearing loss (Rosemann and Thiel, 2019).
Therefore, the relationship between changes in brain network connectivity and behavioral indicators of auditory processing ability in individuals with presbycusis merits further exploration.

In the current study, we hypothesized that older individuals with normal hearing ability would exhibit a decline in speech comprehension compared with younger people (18–30 years old). Furthermore, we hypothesized that, compared with individuals with normal audition, those with ARHL would exhibit a more severe decline in speech comprehension. These hypotheses are based on evidence from previous studies, i.e., the finding that speech processing is affected by a variety of peripheral and central factors, particularly in complex situations (Harris and Dubno, 2017), and data indicating that the TCT is a useful measurement for revealing individual differences in speech comprehension (Phatak et al., 2018).

Finally, based on previous work (Griffiths et al., 2020), we hypothesized that individuals with ARHL experience increased cognitive demands, such as greater attentional demands, enhanced activation of cross-modal pathways, and abnormal functional connectivity between networks. There is evidence that reduced auditory input depletes cognitive resources. For instance, Rosemann et al. (2018) found that hearing loss elicited stronger audio-visual integration with altered brain activation involving the frontal regions, while Xing et al. (2021) reported that abnormal frontal lobe-related connectivity in people with ARHL reflected executive dysfunction at the central level. To address these issues, we sought to characterize speech processing abilities and the spontaneous activity of neural networks in older adults with normal hearing and in those with ARHL.

Methods

Study design

This cross-sectional study included individuals over 60 years old with presbycusis and individuals over 60 years old with normal hearing; younger people (18–30 years old) with normal hearing served as the control group. The sample size was estimated from the results of our preliminary experiment: using PASS 15.0 software (NCSS, LLC, Kaysville, UT, USA), we calculated a minimum sample size of 16 participants per group for a statistical power (1–β) of 0.9 and an α error probability of 0.05. A post hoc power analysis was performed using G*Power 3.1.3 software (Heinrich-Heine-Universität Düsseldorf, Düsseldorf, Germany; http://www.gpower.hhu.de/). Table 1 lists the basic demographic and audiological characteristics of the older adults with normal hearing and those with ARHL. Data were collected at Sun Yat-sen Memorial Hospital, Sun Yat-sen University, Guangzhou, Guangdong, China from October 2019 to May 2021. All enrolled participants completed the Mini-Mental State Examination and underwent pure-tone audiometry, tympanometry, a speech discrimination test, and resting-state electroencephalogram (EEG) recording.
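The a priori sample-size step can be sketched with SciPy's noncentral F distribution. This is not the PASS 15.0 computation used in the study: it assumes a one-way fixed-effects ANOVA across the three groups with noncentrality λ = f²·N (the convention used by G*Power), and the effect size f = 0.5 is a hypothetical placeholder because the preliminary effect size is not reported here.

```python
from scipy.stats import f as f_dist, ncf


def anova_power(effect_f, n_per_group, k_groups=3, alpha=0.05):
    """Power of a one-way fixed-effects ANOVA with equal group sizes."""
    n_total = n_per_group * k_groups
    df1, df2 = k_groups - 1, n_total - k_groups
    nc = effect_f ** 2 * n_total                 # noncentrality parameter (lambda = f^2 * N)
    crit = f_dist.ppf(1 - alpha, df1, df2)       # critical F under the null hypothesis
    return ncf.sf(crit, df1, df2, nc)            # P(F > crit) under the alternative


def min_n_per_group(effect_f, target_power=0.9, k_groups=3, alpha=0.05):
    """Smallest equal group size that reaches the target power."""
    n = 2
    while anova_power(effect_f, n, k_groups, alpha) < target_power:
        n += 1
    return n


n = min_n_per_group(0.5)  # hypothetical effect size f = 0.5
print(n, round(anova_power(0.5, n), 3))
```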

Table 1.

Demographic information and hearing thresholds of older participants with normal hearing and with ARHL

| | Normal elderly | ARHL | P-value |
|---|---|---|---|
| n | 21 | 27 | |
| Age (yr) | 66.2 ± 4.5 | 68.9 ± 4.9 | 0.060 |
| Gender (male/female, n) | 5/16 | 11/16 | 0.217 |
| MMSE | 27.70 ± 1.40 | 27.40 ± 1.00 | 0.454 |
| Hearing threshold, right ear (dB HL) | 20.00 ± 7.50 | 31.25 ± 15.00 | < 0.001 |
| Hearing threshold, left ear (dB HL) | 20.00 ± 6.25 | 31.25 ± 18.75 | < 0.001 |

Data are represented as the mean ± SD. ARHL: Age-related hearing loss; MMSE: Mini-Mental State Examination.

Participants

Twenty-eight older adults with age-related hearing loss were recruited from the Ear, Nose, and Throat clinic at Sun Yat-sen Memorial Hospital, Sun Yat-sen University. The inclusion and exclusion criteria were as follows:

- Bilateral, symmetrical, progressive hearing loss, predominantly affecting high frequencies, defined as an average pure-tone threshold (PTA) at 0.5, 1, 2, and 4 kHz greater than 25 dB HL according to the World Health Organization criteria (WHO, 1997);

- No history of head trauma or central nervous system disorders;

- For participants in the presbycusis group, neither current conductive hearing loss nor a previous history of middle ear surgery (e.g., mastoidectomy).

Of the initially recruited individuals, one was excluded because of poor data quality. Complete datasets from 27 patients (11 men and 16 women; mean age 68.9 ± 4.9 years) were included in the analysis.

We also recruited 21 older control individuals with normal hearing (5 men and 16 women; mean age 66.2 ± 4.5 years) and 30 healthy younger adults with normal hearing (18 men and 12 women; mean age 21.6 ± 2.9 years). The older controls did not differ significantly from the presbycusis group in age (t = –1.923, P = 0.06, independent-samples t-test) or sex composition (χ2 = 1.524, P = 0.217, chi-square test). The average hearing threshold between 0.5 kHz and 4 kHz in all control participants was less than or equal to 25 dB HL (WHO, 1997). No control participants had a history of neurological, otological, or systemic disease. All older adults scored at least 26 on the Mini-Mental State Examination (Li et al., 2016), indicating that their overall cognitive ability was within the normal range.
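The age and sex comparisons above can be checked from the reported summary statistics with SciPy (a sketch; the study itself used SPSS). Without continuity correction, the 2 × 2 chi-square test on the sex counts reproduces χ2 ≈ 1.524 and P ≈ 0.217. Because the means and SDs are rounded to one decimal place, the t statistic recomputed from them (about -1.96) can differ slightly from the reported –1.923.

```python
import numpy as np
from scipy.stats import chi2_contingency, ttest_ind_from_stats

# Sex counts from the text: rows are groups, columns are (men, women).
counts = np.array([[5, 16],    # older adults with normal hearing
                   [11, 16]])  # ARHL group
chi2, p_chi2, dof, expected = chi2_contingency(counts, correction=False)

# Independent-samples t-test (pooled variance) from rounded means and SDs.
t, p_t = ttest_ind_from_stats(mean1=66.2, std1=4.5, nobs1=21,
                              mean2=68.9, std2=4.9, nobs2=27,
                              equal_var=True)

print(f"chi2 = {chi2:.3f}, P = {p_chi2:.3f}, dof = {dof}")
print(f"t = {t:.3f}, P = {p_t:.3f}")
```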

Prior to the experiment, all participants signed a written consent form (Additional file 1) after they had been properly informed regarding the experimental aims and procedures. This study was approved by the institutional review board of Sun Yat-sen Memorial Hospital at Sun Yat-sen University, China (approval No. SYSEC-KY-KS-2021-311) on November 25, 2021 (Additional file 2) and conducted in accordance with the Declaration of Helsinki.

Audiological examinations

Routine audiological assessments consisted of otoscopy, tympanometry, and PTA. Air-conduction thresholds were measured for both ears at 0.125, 0.25, 0.5, 1, 2, 4, and 8 kHz, and bone-conduction thresholds were measured from 0.25 kHz to 4 kHz in a soundproof booth. Tympanometry used a 226-Hz probe tone. For each ear, we calculated the PTA as the average threshold at 500, 1000, 2000, and 4000 Hz.

All participants in the younger and older normal-hearing groups had average audiometric thresholds ≤ 25 dB HL between 250 and 4000 Hz, with interaural asymmetries of less than 15 dB at all tested frequencies. The participants with ARHL had audiograms typical for their age, with symmetrical, bilateral, mild hearing loss at 4–8 kHz. In the ARHL group, the lower quartile, median, and upper quartile of the right-ear threshold were 27.50, 31.25, and 42.50 dB HL, respectively. The younger participants and the older participants with normal hearing had right-ear medians of 8.75 dB HL (interquartile range: 7.50–11.56) and 20.00 dB HL (interquartile range: 16.25–23.75), respectively. Audiometric thresholds for both ears are shown in Figure 1.
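The four-frequency PTA and the quartile summaries above can be computed as in the following sketch. The audiogram values are hypothetical, and `np.percentile` uses linear interpolation by default, so its quartiles may differ slightly from those produced by other statistical software.

```python
import numpy as np

PTA_FREQS_HZ = (500, 1000, 2000, 4000)


def pta4(thresholds_db_hl):
    """Average threshold (dB HL) at 0.5, 1, 2, and 4 kHz for one ear.

    thresholds_db_hl maps frequency in Hz to threshold in dB HL.
    """
    return float(np.mean([thresholds_db_hl[f] for f in PTA_FREQS_HZ]))


def exceeds_who_1997(pta_db_hl, cutoff=25.0):
    """True when the PTA exceeds the 25 dB HL criterion used in the text."""
    return pta_db_hl > cutoff


def quartiles(values):
    """Lower quartile, median, and upper quartile (linear interpolation)."""
    q1, med, q3 = np.percentile(values, [25, 50, 75])
    return float(q1), float(med), float(q3)


# Hypothetical right-ear audiogram of one listener (dB HL).
right_ear = {500: 20, 1000: 25, 2000: 35, 4000: 45, 8000: 60}
value = pta4(right_ear)
print(value, exceeds_who_1997(value))
```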

Figure 1.

Audiograms for all included participants.

Audiograms for all participants; bold lines indicate the median of each group. Data for younger participants with normal hearing are shown in gray, data for older participants with normal hearing in green, and data for participants with presbycusis in yellow. Right-ear thresholds are plotted on a red background and left-ear thresholds on a blue background.

Mandarin speech discrimination in noise

Environment and equipment

This part of the experiment was carried out in a soundproof room at Sun Yat-sen Memorial Hospital, Sun Yat-sen University, with background noise in the sound field below 25 dBA. Stimuli were presented using a Chinese speech audiometry platform developed at South China University of Technology and implemented in MATLAB (R2019b; The MathWorks Inc., Natick, MA, USA); the sound files were sampled at 16 kHz. Audio was presented via a loudspeaker connected to the computer at a level at which the participants could hear the sounds clearly and comfortably (approximately 65 dBA, adjusted according to the PTA). To minimize the risk of inducing peripheral hearing damage, the participants could adjust the output volume to a subjectively comfortable level. The sound pressure level was calibrated using a sound level meter placed at the position of the center of each participant’s head.

Materials and procedures

The speech stimuli were taken from a standard Mandarin speech database used in Mainland China. The Mandarin Hearing in Noise Test (MHINT) corpus (Wong et al., 2007) contains 12 sentence lists, each with 20 short sentences of 10 monosyllabic words. The sentences were spoken by monolingual native Mandarin speakers with no regional accent or speech disorder.

For each participant, the experimenters randomly selected four different sentence lists, one for each of the four test conditions, with list selection roughly balanced across participants. The 20 sentences in each list were presented in random order by the computer program. After each sentence, the participant was asked to repeat its words as accurately as possible. The experimenter scored the response in real time and recorded it using the software interface. Sentences that could not be repeated were replayed up to two more times, so the final score for each sentence reflects the participant’s performance after at most three attempts. The software calculated the result automatically after all sentences in the list had been presented. The MHINT procedure comprised three blocks, as follows.

In the first block, the original (unaltered) sentences from the selected list were presented to obtain the speech recognition score in quiet. The percentage of correctly recognized syllables/words at a comfortable presentation level was recorded as the word recognition score (WRS).

In the second block, the time-compressed threshold (TCT) was measured using an adaptive one-up, one-down staircase procedure (Kociński and Niemiec, 2016), in which 20 short sentences were presented in random order, each time-compressed according to a variable ratio R. A trial was scored as “intelligible” when more than half of the syllables in the sentence were repeated correctly. When a trial was intelligible, R was increased for the next trial; otherwise, i.e., if no more than half of the syllables were repeated correctly after up to three attempts, R was decreased. R was initially set to 0.5 and was changed by a factor of 1.5 until the second reversal, by a factor of 1.25 until the sixth reversal, and by a factor of 1.1 thereafter until the end of the list. The TCT was computed as the arithmetic mean of the speaking rate (in syllables/s) of the last eight sentences.
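The TCT staircase can be sketched in Python under stated assumptions. Following the text, a larger R makes the next sentence harder (faster). The mapping from R to speaking rate (`BASE_RATE * R`, reusing the 5.1 syllables/s quiet-condition rate) and the simulated listener are hypothetical, a reversal is counted whenever the direction of change flips, and the up-to-three-attempts rule is folded into the listener's final syllable count.

```python
from statistics import mean

BASE_RATE = 5.1  # syllables/s; hypothetical rate of a sentence at R = 1


def factor_for(reversals):
    """Step factor: 1.5 until the 2nd reversal, 1.25 until the 6th, then 1.1."""
    if reversals < 2:
        return 1.5
    if reversals < 6:
        return 1.25
    return 1.1


def is_intelligible(n_correct, n_syllables=10):
    """A sentence is scored intelligible when more than half its syllables are correct."""
    return n_correct > n_syllables / 2


def run_tct_staircase(score_sentence, r_start=0.5, n_trials=20, n_average=8):
    """One-up, one-down staircase on R; returns (TCT in syllables/s, list of R values)."""
    r, history, last_up, reversals = r_start, [], None, 0
    for _ in range(n_trials):
        history.append(r)
        up = is_intelligible(score_sentence(BASE_RATE * r))  # intelligible -> harder
        if last_up is not None and up != last_up:
            reversals += 1                                   # direction flipped
        last_up = up
        step = factor_for(reversals)
        r = r * step if up else r / step
    return mean(BASE_RATE * x for x in history[-n_average:]), history


def simulated_listener(rate):
    """Hypothetical listener: 10/10 syllables correct up to 8 syllables/s, 3/10 above."""
    return 10 if rate <= 8.0 else 3


tct, history = run_tct_staircase(simulated_listener)
print(f"TCT = {tct:.2f} syllables/s after {len(history)} sentences")
```

The SRT block described next uses the same one-up, one-down logic, with additive dB steps in place of multiplicative factors.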

In the third block, we measured the SRT in babble noise and in speech-shaped noise (SSN) using the same adaptive staircase program as in the TCT block; each noise condition used 20 short sentences from a list not used in any other condition. SSN was generated by imposing the long-term average amplitude spectrum of all sentences in the database on white noise using the Fourier method. Babble noise was generated by summing five sentences pseudorandomly selected from the MHINT practice lists, yielding babble with the same long-term average spectral-temporal pattern as the target sentences (Meng et al., 2019). The background noise was presented at a fixed level, and the signal-to-noise ratio (SNR) was varied adaptively by changing the level of the target speech. The step size was 8 dB until the second reversal, 4 dB until the fourth reversal, and 2 dB thereafter until the end of the list. The SRT was estimated as the arithmetic mean of the SNRs (in dB) of the last eight sentences.
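The noise-generation and SNR-setting steps can be sketched with NumPy. Assumptions: the long-term spectrum is taken from a single FFT of the concatenated sentences, the white-noise phase is kept while its magnitude is replaced, babble sentences are truncated or zero-padded to a common length, and RMS level defines the SNR. The placeholder signals are synthetic colored noise, not MHINT recordings, and all function names are hypothetical.

```python
import numpy as np


def rms(x):
    return float(np.sqrt(np.mean(np.square(x))))


def speech_shaped_noise(sentences, rng):
    """White noise whose amplitude spectrum is replaced by that of the pooled sentences."""
    speech = np.concatenate(sentences)
    target_mag = np.abs(np.fft.rfft(speech))  # long-term amplitude spectrum
    noise_phase = np.angle(np.fft.rfft(rng.standard_normal(speech.size)))
    ssn = np.fft.irfft(target_mag * np.exp(1j * noise_phase), n=speech.size)
    return ssn / rms(ssn)  # normalize to unit RMS


def fit_length(x, length):
    """Truncate or zero-pad x to exactly `length` samples."""
    out = np.zeros(length)
    n = min(length, len(x))
    out[:n] = x[:n]
    return out


def babble(pool, rng, length, n_talkers=5):
    """Sum n_talkers sentences drawn without replacement from `pool`."""
    picks = rng.choice(len(pool), size=n_talkers, replace=False)
    mix = np.sum([fit_length(pool[i], length) for i in picks], axis=0)
    return mix / rms(mix)


def scale_target_to_snr(target, noise, snr_db):
    """Keep the masker fixed and rescale the target to reach the requested SNR (dB)."""
    gain = rms(noise) * 10.0 ** (snr_db / 20.0) / rms(target)
    return target * gain


rng = np.random.default_rng(0)
# Placeholder "sentences": first-order colored noise standing in for recorded speech.
sentences = [np.convolve(rng.standard_normal(1600), [1.0, 0.9], mode="same") for _ in range(8)]
ssn = speech_shaped_noise(sentences, rng)
masker = babble(sentences, rng, length=1600)
target = scale_target_to_snr(sentences[0], masker, snr_db=-2.0)
```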

EEG recording

Resting-state brain activity was recorded via dense-array surface EEG using the 128-channel HydroCel Geodesic Sensor Net (Electrical Geodesics, Inc., Eugene, OR, USA) and a NetAmps 200 amplifier. All recordings took place in an electromagnetically and acoustically shielded room, with impedances kept below 50 kΩ. Data were sampled at 1000 Hz with a high-pass filter at 0.1 Hz, a low-pass filter at 70 Hz, and a 50-Hz notch filter. The participants kept their eyes open for the first 7 minutes and closed for the last 7 minutes, so the recording session lasted approximately 14 minutes. Before recording, the experimenter explained the purpose of the EEG session as well as the safety precautions taken. The participants sat in a comfortable position with their head fixed and were asked to limit blinking and body movement. During the eyes-open condition, they were instructed to remain calm and to fixate on a small cross presented on a computer screen in front of them.

EEG data analysis

Preprocessing of EEG data

The raw data files from the 128-channel HydroCel Geodesic Sensor Net were converted to MAT-file format and imported into MATLAB R2019a using the EEGLAB v14.1.0 toolbox. The data were first re-referenced to the bilateral mastoid electrodes (E56, E107); the EEG signals were then resampled to 250 Hz, high-pass filtered at 1 Hz, and low-pass filtered at 50 Hz. EEG components corresponding to eye blinks, eye movements, frontal or temporal muscle artifacts, or cardiovascular artifacts were removed using independent component analysis (ICA)-based correction.
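For readers working outside MATLAB, the re-referencing, resampling, and filtering steps can be approximated in Python with SciPy. This is an analogue, not the EEGLAB pipeline: it uses a zero-phase Butterworth band-pass rather than EEGLAB's FIR filters, assumes that electrodes E56 and E107 are rows 55 and 106 of a zero-based channel array, and omits the ICA artifact-correction step.

```python
from math import gcd

import numpy as np
from scipy.signal import butter, resample_poly, sosfiltfilt


def preprocess(raw, fs_in=1000, fs_out=250, ref_channels=(55, 106),
               band=(1.0, 50.0), order=4):
    """raw: array of shape (n_channels, n_samples) sampled at fs_in Hz."""
    # 1. Re-reference to the mean of the two mastoid channels (assumed row indices).
    ref = raw[list(ref_channels), :].mean(axis=0, keepdims=True)
    data = raw - ref
    # 2. Downsample with a polyphase anti-aliasing filter (1000 Hz -> 250 Hz).
    g = gcd(fs_out, fs_in)
    data = resample_poly(data, fs_out // g, fs_in // g, axis=1)
    # 3. Zero-phase band-pass: high-pass at 1 Hz, low-pass at 50 Hz.
    sos = butter(order, band, btype="bandpass", fs=fs_out, output="sos")
    return sosfiltfilt(sos, data, axis=1)


rng = np.random.default_rng(0)
raw = rng.standard_normal((128, 4000))  # 4 s of placeholder data at 1000 Hz
clean = preprocess(raw)
print(clean.shape)
```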

After ICA, we used a fast Fourier transform to compute the power spectrum with a frequency resolution of 0.5 Hz. The spectrum was divided into the following bands: delta (1.5–6.0 Hz), theta (6.5–8.0 Hz), alpha1 (8.5–10.0 Hz), alpha2 (10.5–12.0 Hz), beta1 (12.5–18.0 Hz), beta2 (18.5–21.0 Hz), and beta3 (21.5–30 Hz) (Kubicki et al., 1979).
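Band power in the seven bands above can be computed from a 0.5-Hz-resolution spectrum. The sketch assumes Welch averaging of 2-s Hann-windowed FFT segments (500 samples at 250 Hz), which is one common way to obtain such a spectrum but not necessarily the exact procedure used here. Because the bins fall on multiples of 0.5 Hz, the 0.5-Hz gaps between adjacent band edges contain no bins.

```python
import numpy as np
from scipy.signal import welch

BANDS_HZ = {
    "delta": (1.5, 6.0), "theta": (6.5, 8.0),
    "alpha1": (8.5, 10.0), "alpha2": (10.5, 12.0),
    "beta1": (12.5, 18.0), "beta2": (18.5, 21.0), "beta3": (21.5, 30.0),
}


def band_powers(x, fs=250.0, resolution_hz=0.5):
    """Integrated power per band from a Welch spectrum with the given bin spacing."""
    nperseg = int(round(fs / resolution_hz))  # 500 samples -> 0.5 Hz bins at 250 Hz
    freqs, psd = welch(x, fs=fs, nperseg=nperseg)
    df = freqs[1] - freqs[0]
    tol = 1e-9  # guard against floating-point error at the band edges
    return {name: float(psd[(freqs >= lo - tol) & (freqs <= hi + tol)].sum() * df)
            for name, (lo, hi) in BANDS_HZ.items()}


fs = 250.0
t = np.arange(int(20 * fs)) / fs
powers = band_powers(np.sin(2 * np.pi * 10.0 * t), fs=fs)  # 10 Hz test tone
print(max(powers, key=powers.get))
```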

Source localization

We used standardized low-resolution brain electromagnetic tomography (sLORETA), implemented in LORETA-KEY v20081103 software (Zurich, Switzerland; http://www.uzh.ch/keyinst/loreta.htm), to estimate the sources of EEG activity in the three groups, with the aim of identifying abnormal brain activity in the older participant groups in the eyes-closed condition. sLORETA is a distributed source imaging method for solving the EEG inverse problem; the sources of EEG activity were located using a three-shell spherical head model (skin, skull, cortex) registered to the Talairach human brain atlas provided by the Montreal Neurological Institute (MNI) (Dümpelmann et al., 2012). After applying an average reference transformation, we used the sLORETA algorithm to estimate neuronal activity as current density (A/m²) without assuming a predefined number of active sources. The intracerebral volume was partitioned into 6239 voxels at a spatial resolution of 5 mm (Lancaster et al., 2000).
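The core sLORETA computation can be illustrated with a toy NumPy example. It is a simplified sketch, not LORETA-KEY: each source has a single fixed orientation, the lead field is random rather than derived from a head model, and the regularization parameter `alpha` is arbitrary. The current density is estimated with a regularized minimum-norm operator and then standardized by the corresponding diagonal element of the resolution matrix; with a single noise-free source, this standardized estimate peaks at the true location.

```python
import numpy as np


def centering_matrix(n):
    """Average-reference operator H = I - 11^T / n."""
    return np.eye(n) - np.ones((n, n)) / n


def sloreta_power(K, phi, alpha=0.05):
    """Standardized current-density power per source (fixed orientation).

    K: lead field (n_electrodes, n_sources); phi: one EEG sample (n_electrodes,).
    """
    n = K.shape[0]
    H = centering_matrix(n)
    K = H @ K      # average-reference the lead field
    phi = H @ phi  # average-reference the measurement
    J = np.ones((n, n)) / n
    # Pseudo-inverse of (K K^T + alpha H): its null space is the constant vector,
    # so adding the projector J makes it invertible, and J is subtracted afterwards.
    G = np.linalg.inv(K @ K.T + alpha * H + J) - J
    T = K.T @ G                                 # regularized minimum-norm inverse
    j = T @ phi                                 # current density estimate
    resolution_diag = np.einsum("vj,jv->v", T, K)  # diag(T K)
    return j ** 2 / resolution_diag


rng = np.random.default_rng(0)
K = rng.standard_normal((32, 200))  # hypothetical 32-electrode, 200-source lead field
true_source = 123
phi = 2.0 * K[:, true_source]       # noise-free scalp potentials of one active source
power = sloreta_power(K, phi)
print(int(np.argmax(power)))
```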

Functional connectivity measurements

We constructed functional connectivity maps to assess whether and how changes in brain activity were related to age and hearing loss. We used the lagged phase synchronization (LPS) analysis implemented in the LORETA-KEY software (The KEY Institute for Brain-Mind Research, Zurich, Switzerland) to characterize differences in functional connectivity among the regions of interest (ROIs; Table 2) between the older and younger groups in the frequency bands defined in the “Preprocessing of EEG data” section. ROI locations are given as x, y, and z stereotactic MNI coordinates in millimeters, with positive values indicating the right, anterior, and superior directions. Similar to previous studies (Dümpelmann et al., 2012), we used the Hilbert transform to calculate the instantaneous phase of the preprocessed data and the discrete Fourier transform to decompose the data into a finite number of cosine and sine waves at the Fourier frequencies. The current density time series for each ROI was extracted using sLORETA. At each time point, the power across all voxels was normalized to a total of 1 and log-transformed, so that the ROI values reflect the log-transformed fraction of the total power across all voxels at each frequency. We analyzed both signal power and connectivity for regions in the default mode, sensorimotor, frontoparietal, occipital, and cingulo-opercular networks, covering cognitive and auditory-related cortices in the frontal, cingulate, temporal, and parieto-occipital lobes. These areas were chosen because previous studies indicate that critical structures associated with aging include the frontal lobes and anterior cingulate gyrus (Pardo et al., 2007), as well as the precentral and postcentral gyri (Zhou et al., 2020).
Auditory processing and speech comprehension predominantly involve the temporal cortex, and the effect of age on central information integration mainly involves changes in the default mode, cingulo-opercular, and frontoparietal control networks (Geerligs et al., 2015). The ROI coordinates were based on fMRI-defined network nodes (Dosenbach et al., 2010).
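Lagged phase synchronization discounts zero-lag (instantaneous) phase coupling, which is largely attributable to volume conduction. A minimal sketch using Hilbert-transform phases, as described above, assuming already band-pass-filtered inputs: the complex mean c of the phase difference is computed, and the statistic is Im(c)² / (1 - Re(c)²). LORETA-KEY computes its version from Fourier coefficients of the current density, so this illustrates the measure rather than reproducing its implementation; the function name is hypothetical.

```python
import numpy as np
from scipy.signal import hilbert


def lagged_phase_sync(x, y):
    """Lagged phase synchronization between two band-limited signals.

    Undefined (division by zero) when the coupling is purely zero-lag, i.e. |Re(c)| = 1.
    """
    phase_x = np.angle(hilbert(x))
    phase_y = np.angle(hilbert(y))
    c = np.mean(np.exp(1j * (phase_x - phase_y)))  # complex phase-locking value
    return float(c.imag ** 2 / (1.0 - c.real ** 2))


fs = 250.0
t = np.arange(2500) / fs                 # 10 s, an integer number of 10-Hz cycles
x = np.sin(2 * np.pi * 10.0 * t)
y = np.cos(2 * np.pi * 10.0 * t)         # same rhythm, lagged by a quarter cycle
print(round(lagged_phase_sync(x, y), 3))
```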

Table 2.

The regions of interest created in sLORETA for the functional connectivity analysis based on lagged phase synchronization

| Network | ROI label | x (mm) | y (mm) | z (mm) |
|---|---|---|---|---|
| Cingulo-opercular | Temporal | –59 | –47 | 11 |
| | ACC | –2 | 30 | 27 |
| | dACC | 9 | 20 | 34 |
| | Temporal | 51 | –30 | 5 |
| Default | IPS | –36 | –69 | 40 |
| | vmPFC | –6 | 50 | –1 |
| | Posterior cingulate | –5 | –52 | 17 |
| | Occipital | –2 | –75 | 32 |
| | mPFC | 0 | 51 | 32 |
| | Posterior cingulate | 1 | –26 | 31 |
| | vmPFC | 8 | 42 | –5 |
| Frontoparietal | dlPFC | –44 | 27 | 33 |
| | IPL | –41 | –40 | 42 |
| | aPFC | –29 | 57 | 10 |
| | aPFC | 29 | 57 | 18 |
| | dlPFC | 40 | 36 | 29 |
| | IPL | 44 | –52 | 47 |
| Occipital | Occipital | –18 | –50 | 1 |
| | Posterior occipital | –5 | –80 | 9 |
| | Posterior occipital | 13 | –91 | 2 |
| | Occipital | 19 | –66 | –1 |
| Sensorimotor | Postcentral gyrus | –47 | –18 | 50 |
| | SMA | 0 | –1 | 52 |
| | Pre-SMA | 10 | 5 | 51 |
| | Superior parietal | 34 | –39 | 65 |
| | Postcentral gyrus | 41 | –23 | 55 |
| | Precentral gyrus | 46 | –8 | 24 |

Coordinates are MNI coordinates in millimeters. ACC: Anterior cingulate cortex; aPFC: anterior prefrontal cortex; dACC: dorsal anterior cingulate cortex; dlPFC: dorsolateral prefrontal cortex; IPL: inferior parietal lobule; IPS: intraparietal sulcus; MNI: Montreal Neurological Institute; mPFC: medial prefrontal cortex; sLORETA: standardized low-resolution brain electromagnetic tomography; SMA: supplementary motor area; vmPFC: ventromedial prefrontal cortex.

EEG statistical analysis

We investigated the contribution of each frequency band by dividing the spectrum into seven bands: delta, theta, alpha1, alpha2, beta1, beta2, and beta3.

We compared resting-state EEG activity between groups in the eyes-closed condition using statistical nonparametric mapping (SnPM) of the LORETA-KEY images, based on the built-in voxel-wise randomization test (Holmes et al., 1996) in the LORETA-KEY software (Zurich, Switzerland). Lagged phase connectivity was compared between groups using independent-samples t-tests with a significance threshold of α = 0.05, corrected for multiple comparisons; the corrected threshold was estimated by permuting the network strength values 5000 times. Correcting for multiple comparisons in SnPM with random permutations has been shown to yield results similar to those obtained with statistical parametric mapping using a general linear model with multiple-comparison correction.
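The permutation-based correction can be sketched as a max-statistic test in the spirit of Holmes et al. (1996): group labels are shuffled, the maximum |t| across all connections is recorded for each permutation, and each observed |t| is compared with that null distribution, which controls the family-wise error rate. This is a generic NumPy illustration with simulated data and hypothetical function names, not the LORETA-KEY code.

```python
import numpy as np


def pooled_t(a, b):
    """Independent-samples t statistic (pooled variance) for each column."""
    na, nb = len(a), len(b)
    va, vb = a.var(axis=0, ddof=1), b.var(axis=0, ddof=1)
    sp2 = ((na - 1) * va + (nb - 1) * vb) / (na + nb - 2)
    return (a.mean(axis=0) - b.mean(axis=0)) / np.sqrt(sp2 * (1 / na + 1 / nb))


def max_t_permutation(a, b, n_perm=5000, seed=0):
    """Observed t values and family-wise-error-corrected p values."""
    rng = np.random.default_rng(seed)
    data = np.vstack([a, b])
    na = len(a)
    t_obs = pooled_t(a, b)
    max_null = np.empty(n_perm)
    for i in range(n_perm):
        perm = rng.permutation(len(data))  # shuffle group membership
        max_null[i] = np.abs(pooled_t(data[perm[:na]], data[perm[na:]])).max()
    # Corrected p: share of permutations whose maximum |t| reaches the observed |t|.
    exceed = (max_null[None, :] >= np.abs(t_obs)[:, None]).sum(axis=1)
    return t_obs, (exceed + 1) / (n_perm + 1)


rng = np.random.default_rng(1)
older = rng.standard_normal((21, 50))    # simulated connection strengths, 50 connections
younger = rng.standard_normal((30, 50))
older[:, 0] += 2.0                       # one truly different connection
t_vals, p_corr = max_t_permutation(older, younger, n_perm=1000)
```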

Statistical analysis

Statistical analysis was performed using SPSS software (version 25.0; IBM Corporation, Armonk, NY, USA). WRS and SRT results were first compared among the three groups using the Kruskal-Wallis test; when a significant difference was found, pairwise post hoc comparisons were corrected using the Holm-Bonferroni method. For the SRT results, the type of background noise (SSN or babble) was treated as a within-subject factor and group membership as a between-subjects factor, and within-subject differences were compared using the Wilcoxon signed-rank test. TCT results were compared among the groups using a one-way analysis of variance followed by Bonferroni correction. Statistical significance was set at P < 0.05.
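The nonparametric group comparison can be sketched in SciPy. The Holm step-down adjustment is written out explicitly; pairwise Mann-Whitney U tests (equivalent to the Wilcoxon rank sum test named in the Figure 2 legend) are an assumed choice of post hoc test, and the within-subject SSN versus babble comparison would use `scipy.stats.wilcoxon` on paired scores. The group data below are placeholders.

```python
from itertools import combinations

import numpy as np
from scipy.stats import kruskal, mannwhitneyu


def holm_adjust(pvals):
    """Holm-Bonferroni step-down adjusted p values, returned in the original order."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    adjusted = np.empty(m)
    running_max = 0.0
    for rank, idx in enumerate(np.argsort(p)):
        running_max = max(running_max, (m - rank) * p[idx])  # enforce monotonicity
        adjusted[idx] = min(1.0, running_max)
    return adjusted


def compare_groups(groups, alpha=0.05):
    """Kruskal-Wallis omnibus test, then Holm-corrected pairwise rank-sum tests."""
    h, p_omnibus = kruskal(*groups.values())
    result = {"H": h, "p": p_omnibus, "pairwise": {}}
    if p_omnibus < alpha:
        pairs = list(combinations(groups, 2))
        raw = [mannwhitneyu(groups[a], groups[b], alternative="two-sided").pvalue
               for a, b in pairs]
        result["pairwise"] = dict(zip(pairs, holm_adjust(raw)))
    return result


scores = {  # placeholder scores, not study data
    "younger": np.arange(30) + 100.0,
    "older_normal": np.arange(21) + 50.0,
    "arhl": np.arange(27) + 0.0,
}
summary = compare_groups(scores)
```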

Results

Comparison of MHINT measurements

Figure 2A shows boxplots of word recognition scores in quiet at a fixed speech rate of 5.1 syllables/s for all groups. The median WRS in the younger group was significantly higher than that in both the ARHL group (Z = 4.64, P < 0.001) and the older normal-hearing group (Z = 3.02, P = 0.007). However, the ARHL group and the older normal-hearing group did not differ significantly (Z = 1.27, P = 0.61).

Figure 2.

Results of speech discrimination tests.

(A) Word recognition scores for the three participant groups. Each box extends from the 25th to the 75th percentile, and the line represents the median (Kruskal-Wallis test followed by pairwise Wilcoxon rank sum tests). (B) Time-compression thresholds in the three groups. Dots represent means, and error bars represent standard errors (one-way analysis of variance followed by Bonferroni correction). (C) Speech reception thresholds in speech-shaped noise (SSN) and babble noise for the three groups (Kruskal-Wallis test followed by between-subject pairwise Wilcoxon rank sum tests and within-subject Wilcoxon signed rank tests). Boxes summarize the data for each group, and lines represent medians. Data are expressed as means ± standard errors of the mean (B, n = 78) or medians (interquartile ranges) (A, C, n = 78). *P < 0.05, **P < 0.01, ***P < 0.001.

The TCT results are shown in Figure 2B. The TCT in a quiet environment was significantly lower in participants with hearing loss and older adults with normal hearing compared with younger subjects (presbycusis-younger: t = 16.13, P < 0.001; normal older-younger: t = 10.66, P < 0.001, Holm-Bonferroni corrected), and the TCT in participants with presbycusis was significantly lower than that in older adults with normal hearing (t = 4.29, P < 0.001, Holm-Bonferroni corrected).

The SRT results are shown in Figure 2C. In all groups, median SRTs were significantly higher in the babble noise condition than in the SSN condition (P < 0.001), and SRTs differed significantly among the three groups in both conditions. Pairwise comparisons indicated that participants with presbycusis required the highest SNR, older adults with normal hearing the second highest, and younger participants the lowest, in both the SSN condition (presbycusis-normal older: χ2 = 2.662, P = 0.023; presbycusis-younger: χ2 = 7.518, P < 0.001; normal older-younger: χ2 = 4.288, P < 0.001, Holm-Bonferroni corrected) and the babble condition (presbycusis-normal older: χ2 = 2.465, P = 0.041; presbycusis-younger: χ2 = 6.687, P < 0.001; normal older-younger: χ2 = 3.714, P = 0.001, Holm-Bonferroni corrected).

We performed a post hoc power analysis on the TCT data using G*Power 3.1.3 software (F = 137.919, observed effect size d = 2.49, power = 0.99).
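For a one-way ANOVA, G*Power expresses the effect size as Cohen's f. The short Python sketch below converts the reported F statistic into η² and Cohen's f, assuming that all 78 participants (27 + 21 + 30) entered the TCT analysis, which gives 2 and 75 degrees of freedom. It is illustrative only and does not reproduce how the reported d = 2.49 was derived.

```python
import math


def cohens_f_from_anova(f_stat, df_between, df_within):
    """Convert a one-way ANOVA F statistic to eta squared and Cohen's f."""
    # Eta squared recovered from the F ratio and its degrees of freedom
    eta_sq = f_stat * df_between / (f_stat * df_between + df_within)
    # Cohen's f, the effect-size metric G*Power uses for F tests
    f_effect = math.sqrt(eta_sq / (1 - eta_sq))
    return eta_sq, f_effect


# Assumed design: 3 groups, 78 participants -> df_between = 2, df_within = 75
eta_sq, f_effect = cohens_f_from_anova(137.919, 2, 75)
print(round(eta_sq, 3), round(f_effect, 2))  # 0.786 1.92
```

An f of this size is far above Cohen's conventional "large" benchmark (f = 0.40), which is consistent with the near-maximal power reported above.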

Resting state EEG data

Source localization

Compared with the younger subjects, older subjects with normal hearing showed significantly greater activation in the middle frontal gyrus (Brodmann’s area 11, BA11) in the alpha1 frequency band (8.5–10 Hz) (P < 0.05; Figure 3A). Compared with the younger subjects, the presbycusis patients exhibited increased activity in the precuneus (BA7) and cuneus (BA19) in the alpha1 frequency band (P < 0.01; Figure 3B). No significant differences were found between older participants with normal hearing and those with presbycusis in any frequency band.
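The alpha1 band in this study is defined as 8.5–10 Hz. The reported effects are source-level estimates from multichannel EEG, but the frequency restriction itself can be illustrated on a single time series. The Python sketch below is a simplified illustration under stated assumptions: one hypothetical channel, a plain discrete Fourier transform without windowing or epoch averaging, and made-up sampling parameters (250 Hz, 2 s). It is not the sLORETA source-localization pipeline.

```python
import cmath
import math


def band_power(signal, fs, f_low, f_high):
    """Mean DFT power of `signal` over frequency bins with f_low <= f <= f_high (Hz).

    The frequency resolution is fs / len(signal); no windowing is applied.
    Returns 0.0 if no bin falls inside the band.
    """
    n = len(signal)
    powers = []
    for k in range(n // 2 + 1):
        freq = k * fs / n
        if f_low <= freq <= f_high:
            # Discrete Fourier transform evaluated at bin k only
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal)
            )
            powers.append(abs(coeff) ** 2 / n)
    return sum(powers) / len(powers) if powers else 0.0


# Hypothetical single channel: 2 s at 250 Hz containing a 9-Hz oscillation
fs = 250
signal = [math.sin(2 * math.pi * 9 * t / fs) for t in range(2 * fs)]
alpha1 = band_power(signal, fs, 8.5, 10.0)  # alpha1 band as defined in the text
beta = band_power(signal, fs, 20.0, 25.0)
print(alpha1 > beta)  # True
```

With a 2-s window the bins are 0.5 Hz apart, so the alpha1 band covers the 8.5, 9, 9.5, and 10 Hz bins; shorter epochs would give coarser bins and a less precise band boundary.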

Figure 3.

Standardized low-resolution brain electromagnetic tomography (sLORETA) source localization: comparisons with the younger participant group.

(A) Compared with the younger group, alpha1-band activation in the middle frontal gyrus (BA11) was significantly enhanced in older individuals with normal hearing (P < 0.05). (B) Compared with the younger group, alpha1-band activity in the precuneus (BA7) and the cuneus (BA19) was significantly enhanced in patients with presbycusis (P < 0.01). L: Left; R: right.

Functional connectivity

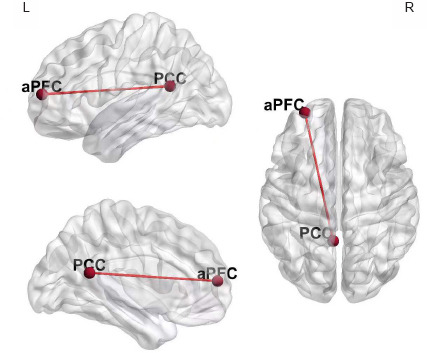

Compared with the younger participants with normal hearing, older participants with normal hearing showed significant and extensive enhancement of functional connectivity in the beta3 band (P < 0.05; Figure 4A). This included connections between the default mode network and the sensorimotor network, specifically between the medial prefrontal cortex (mPFC, BA9) and the postcentral gyrus (BA2/3), between the mPFC (BA9) and the pre-supplementary motor area (pre-SMA, BA24), and between the ventromedial prefrontal cortex (vmPFC, BA10) and the postcentral gyrus (BA2/3) (P < 0.05; Figure 4B). In the eyes-closed condition, older participants with normal hearing also showed significantly increased functional connectivity relative to the younger group between the postcentral gyrus (BA2/3) and precentral gyrus (BA6) and between the anterior cingulate (BA32) and superior parietal lobule (BA2) (P < 0.05; Figure 4C). The lagged phase synchronization analysis also showed enhanced connections between the inferior parietal lobule (BA40) and lingual gyrus (BA19) and between the inferior parietal lobule (BA40) and mPFC (BA9) (P < 0.05; Figure 4D).

In the theta band, the lagged phase synchronization analysis indicated stronger functional connectivity between the posterior cingulate (BA30) and anterior prefrontal cortex (aPFC, BA10) in participants with age-related hearing loss than in the younger participants (P < 0.05; Figure 5). However, we found no significant differences in connectivity between participants with presbycusis and older participants with normal hearing.
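Lagged phase synchronization, as defined by Pascual-Marqui, keeps only the phase of each Fourier coefficient and discards the zero-lag part of the coherency, which is the part most affected by volume conduction. The minimal Python sketch below computes that quantity for one pair of regions at one frequency. It reflects our reading of the published definition, the input coefficients are hypothetical, and the group-level statistics used in the study are not included.

```python
import cmath


def lagged_phase_synchronization(x_coeffs, y_coeffs):
    """Lagged phase synchronization between two signals at a single frequency.

    x_coeffs, y_coeffs: complex Fourier coefficients of the two signals at the
    same frequency, one value per epoch (hypothetical inputs here).
    """
    n = len(x_coeffs)
    # Keep only phase information by normalizing each coefficient to unit modulus
    cross = [(x / abs(x)) * (y / abs(y)).conjugate() for x, y in zip(x_coeffs, y_coeffs)]
    # Complex phase coherency, averaged over epochs
    c = sum(cross) / n
    denom = 1 - c.real ** 2
    if denom <= 1e-12:
        # Coupling is entirely at zero (or 180-degree) lag, so nothing lagged remains
        return 0.0
    # Only the imaginary (lagged) part of the coherency enters the numerator
    return c.imag ** 2 / denom


# Four hypothetical epochs with a constant 90-degree phase lag between regions
phases = [0.1, 1.3, 2.7, 4.0]
x = [cmath.exp(1j * a) for a in phases]
y = [cmath.exp(1j * (a - cmath.pi / 2)) for a in phases]
print(round(lagged_phase_synchronization(x, y), 6))  # 1.0
```

Passing the same signal twice (a pure zero-lag relationship) returns 0, which is the property that makes this measure less sensitive to volume conduction than ordinary coherence.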

Figure 4.

Functional connectivity in older adults with normal audition compared with younger participants.

According to the lagged phase synchronization analysis, functional connectivity was enhanced in the older participants with normal audition compared with the younger participants (P < 0.05). (A) Extensive, significantly enhanced connections were found between networks and within the sensorimotor network. (B) Increased functional connections in the beta3 band between the default mode network and sensorimotor network: between the mPFC (Brodmann’s area, BA9) and the postcentral gyrus (BA2/3), between the mPFC (BA9) and the pre-SMA (BA24), and between the vmPFC (BA10) and the postcentral gyrus (BA2/3). (C) Significantly increased functional connections between the postcentral gyrus (BA2/3) and precentral gyrus (BA6), and between the anterior cingulate (BA32) and the superior parietal lobule (BA2). (D) Enhanced connections between the inferior parietal lobule (BA40) and lingual gyrus (BA19) and between the inferior parietal lobule (BA40) and mPFC (BA9). IPL: Inferior parietal lobule; LinG: lingual gyrus; mPFC: medial prefrontal cortex; PostCG.L: left postcentral gyrus; PostCG.R: right postcentral gyrus; PreCG: precentral gyrus; pre-SMA: pre-supplementary motor area; SPG: superior parietal gyrus; vmPFC: ventromedial prefrontal cortex.

Figure 5.

Functional connectivity in participants with ARHL compared with younger participants.

The lagged phase synchronization analysis revealed significantly enhanced connections between the posterior cingulate (BA30) and aPFC (BA10) in ARHL patients compared with younger participants (P < 0.05). aPFC: Anterior prefrontal cortex; ARHL: age-related hearing loss; PCC: posterior cingulate cortex.

Discussion

In this study, we used resting-state source localization and functional connectivity analysis to examine differences between older people with normal hearing and those with ARHL. We used the TCT as an indicator of rapid speech comprehension when assessing the effects of aging and hearing loss on speech processing and brain network connections. Compared with younger people, older individuals exhibited lower time-compression thresholds (that is, poorer recognition of rapid speech) and significantly reduced speech comprehension in noise. ARHL appeared to further reduce auditory processing ability. The TCT captures differences in auditory processing ability in complex environments that are not accurately reflected by the word recognition test. In terms of EEG data, our results suggest that, regardless of whether the participants had peripheral hearing loss, older individuals may compensate for a lack of bottom-up resources through top-down mechanisms. Older individuals with normal hearing appeared to increase their attentional resources and maintain auditory processing ability through extensive compensation, whereas participants with ARHL appeared to compensate for changes in multiple sensory pathways by enhancing attentional resources and increasing their dependence on vision.

The negative effect of aging and hearing loss on upper-threshold speech processing

In this study, we adopted several tests: the WRS, which is commonly used in clinical practice, and the newer TCT and SRT tests. Our results suggest that, compared with the WRS conducted in a quiet environment, the TCT and SRT are less susceptible to the ceiling effect. Thus, these tests may be good clinical indicators of auditory processing ability.

A previous study (Ye et al., 2015) documented a ceiling effect for the word recognition test in quiet conditions. In this study, the ceiling effect manifested as difficulty in distinguishing speech comprehension between older participants with normal audition and those with ARHL. We found that the average TCT in younger individuals was 15.6 words/s, which is consistent with Meng et al. (2019) and suggests that the test has good reproducibility. Furthermore, the decline in TCT with age and hearing loss across our groups matches the trend reported by Schlueter et al. (2015). This decline in the ability to understand fast speech may be related to aging- and hearing loss-related decreases in the processing of temporal and spectral fine structure. Tsukasa et al. (2018) demonstrated the effects of time-compressed speech training on resting-state intrinsic activity and connectivity in cortical regions that play a key role in listening comprehension. Dias et al. (2019) used time-compressed sentences to assess auditory processing ability. They used the P1 and N1 latencies of click-evoked auditory potentials to index auditory neural processing speed and found that the processing of time-distorted speech was affected by lower-level auditory neural processing as well as by higher-level perceptuomotor and executive processes.

Our SRT results in noise (SSN and babble) indicate that aging and hearing loss both contribute to decreased speech processing ability under noisy conditions. This is consistent with previous research suggesting that speech recognition in noise is related to both hearing loss and cognition (Mukari et al., 2020). Across groups, speech recognition was more difficult under informational masking (babble noise) than under energetic masking (SSN). Energetic masking is sometimes called peripheral masking because the masker competes with the target in the peripheral auditory system, whereas masking by competing speech is called informational masking. Previous studies have indicated that speech recognition under informational masking imposes a higher cognitive load and is more affected by age-related decline in temporal processing and central executive function (Goossens et al., 2017).
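SRTs are expressed as the signal-to-noise ratio (SNR) at which speech is recognized at a criterion level, so each trial requires scaling the masker (SSN or babble) relative to the speech. The Python sketch below shows one way to do this from mean signal power; the function names are hypothetical, the signals are plain lists of samples, and the calibration procedure actually used in the study is not described in this section.

```python
import math


def mix_at_snr(speech, noise, snr_db):
    """Scale `noise` so that the speech-to-noise power ratio equals snr_db, then add."""
    p_speech = sum(s * s for s in speech) / len(speech)
    p_noise = sum(n * n for n in noise) / len(noise)
    # Target noise power is p_speech / 10**(snr_db / 10); scale amplitude by the square root
    gain = math.sqrt(p_speech / (p_noise * 10 ** (snr_db / 10)))
    scaled = [gain * n for n in noise]
    mixture = [s + n for s, n in zip(speech, scaled)]
    return mixture, scaled


def snr_db(speech, noise):
    """SNR in dB computed from mean signal powers."""
    p_s = sum(s * s for s in speech) / len(speech)
    p_n = sum(n * n for n in noise) / len(noise)
    return 10 * math.log10(p_s / p_n)


# Hypothetical "speech" and deterministic pseudo-noise, mixed at -5 dB SNR
speech = [math.sin(0.3 * t) for t in range(1000)]
noise = [((t * 7919) % 101) / 50 - 1 for t in range(1000)]
mixture, scaled = mix_at_snr(speech, noise, -5.0)
print(round(snr_db(speech, scaled), 6))  # -5.0
```

The SNR is computed identically for both maskers, so the higher SRTs for babble reflect the content of the masker (informational masking) rather than any difference in how the SNR is defined.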

The recognition of time-compressed speech and the processing of speech in noise both rely on coordination between high-fidelity encoding of bottom-up acoustic features, such as the temporal fine structure of the stimulus, and top-down cognitive components of active listening, including attention, memory, and multisensory integration (Parthasarathy et al., 2020). Our TCT and SRT results support the idea that speech perception declines with normal aging even in the absence of hearing loss. As a test methodology, adaptive upper-threshold tests could capture the effects of both hearing loss and declining cognitive ability. We believe that hearing thresholds alone are not sufficient to describe the auditory processing difficulties that individuals face in complex everyday environments. Therefore, speech tests in simulated real-world environments should be used for assessments, and brain activation and functional connectivity could also be used to characterize changes in brain activity.
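Adaptive threshold tests such as the SRT and TCT adjust the difficulty of each trial according to the previous response. The Python sketch below shows a generic 1-up/1-down staircase, which converges on the 50%-correct point. The step size, starting level, number of reversals, and the deterministic simulated listener are all assumptions; the exact adaptive rules used in this study are not given in this section.

```python
def one_up_one_down(respond, start_snr, step_db, n_reversals=8, discard=2, max_trials=200):
    """Generic 1-up/1-down adaptive track converging on the 50%-correct SNR.

    respond(snr) -> True if the (simulated) listener repeats the sentence correctly.
    The estimate is the mean SNR at the reversals, excluding the first `discard`.
    """
    snr = start_snr
    last_direction = None
    reversals = []
    for _ in range(max_trials):
        if len(reversals) >= n_reversals:
            break
        # Correct response -> harder (lower SNR); incorrect -> easier (higher SNR)
        direction = -1 if respond(snr) else 1
        if last_direction is not None and direction != last_direction:
            # A change of direction marks a reversal at the current level
            reversals.append(snr)
        last_direction = direction
        snr += direction * step_db
    if len(reversals) < n_reversals:
        raise ValueError("track ended before enough reversals were collected")
    kept = reversals[discard:]
    return sum(kept) / len(kept)


# Hypothetical deterministic listener: always correct at or above -4 dB SNR
estimate = one_up_one_down(lambda snr: snr >= -4.0, start_snr=10.0, step_db=2.0)
print(estimate)  # -5.0
```

With a deterministic listener the track simply oscillates between -6 and -4 dB, so the estimate falls midway (-5 dB); with real listeners, responses near threshold are probabilistic and averaging over more reversals stabilizes the estimate.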

Increased demand for cognitive resources in older individuals with normal audition

We found increased alpha1-band activity in BA11 in the middle frontal gyrus of older participants with normal audition compared with the younger participants. The middle frontal gyrus (BA11), which is part of the vmPFC, is considered to be involved in the evaluation and response stages of emotion generation (Ochsner et al., 2012). In addition, the vmPFC is a key node linking cortical and subcortical networks and participates in network activities supporting social, cognitive, and emotional functions (Hiser and Koenigs, 2018). Williams et al. (2006) suggested that normal aging may be related to a transition from automatic to more controlled emotional processing or emotional regulation, driven by enhanced recruitment of the PFC. At the same time, the increased frontal lobe activity observed in older individuals appears to be related to increased listening effort (Rosemann and Thiel, 2019).

The functional network architecture of older people with normal hearing differs from that of younger people, particularly in the extensive enhancement of functional connectivity between networks, including the default mode network, cingulo-opercular network, occipital network, frontoparietal network, and sensorimotor network. Age-related decreases in network segregation may reflect disrupted neuronal functioning in older adults. Increased inter-network connectivity and enhanced connectivity within the sensorimotor network in healthy older adults may represent effective compensation for this reduction in neuronal activity.
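The notion of network segregation can be made concrete with the system-segregation index introduced by Chan and colleagues (2014): the difference between mean within-network and mean between-network connectivity, divided by mean within-network connectivity. The Python sketch below computes it for a hypothetical four-region connectivity matrix. This index was not computed in the present study; it is shown only to illustrate why stronger inter-network connections correspond to lower segregation.

```python
from itertools import combinations


def system_segregation(conn, labels):
    """(mean within-network FC - mean between-network FC) / mean within-network FC.

    conn: symmetric connectivity matrix (list of lists) between regions.
    labels: network label for each region, e.g. "DMN" or "SMN".
    """
    within, between = [], []
    # Visit each pair of distinct regions once (upper triangle of the matrix)
    for i, j in combinations(range(len(labels)), 2):
        (within if labels[i] == labels[j] else between).append(conn[i][j])
    w = sum(within) / len(within)
    b = sum(between) / len(between)
    return (w - b) / w


# Hypothetical regions: two DMN nodes and two sensorimotor (SMN) nodes
labels = ["DMN", "DMN", "SMN", "SMN"]
conn = [
    [1.0, 0.8, 0.2, 0.2],
    [0.8, 1.0, 0.2, 0.2],
    [0.2, 0.2, 1.0, 0.6],
    [0.2, 0.2, 0.6, 1.0],
]
print(round(system_segregation(conn, labels), 3))  # 0.714

# Strengthening only the between-network connections lowers segregation
stronger_between = [[0.4 if v == 0.2 else v for v in row] for row in conn]
print(round(system_segregation(stronger_between, labels), 3))  # 0.429
```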

The mPFC and vmPFC, which are considered part of the default mode network (DMN), facilitate the flexible use of information during the construction of self-relevant mental simulations (Buckner et al., 2008). We found enhanced connections between the mPFC and postcentral gyrus, between the vmPFC and postcentral gyrus, and between the pre-SMA and mPFC in older compared with younger individuals with normal hearing. The postcentral gyrus and pre-SMA are nodes of the sensorimotor network. Decreased segregation of neural networks is thought to indicate diminished sensorimotor ability and reduced neural specificity of cortical networks. Thus, enhanced compensatory connections between the DMN (especially its prefrontal nodes) and other networks, which may arise from age-related declines in neural processing ability and changes in brain structure, may be the central manifestation of declining suprathreshold auditory processing in the absence of obvious peripheral hearing loss.

The sensorimotor network and salience network overlap in the postcentral gyrus. The sensorimotor network includes somatosensory and motor areas (Chenji et al., 2016). In this network, the postcentral gyrus plays a major role in integrating somatosensory stimuli and in memory formation (Chen et al., 2008). The salience network is implicated in communication, social behavior, and self-awareness through the integration of sensory, affective, and cognitive information (Seeley et al., 2007). We observed increased resting-state functional connectivity (rsFC) between the postcentral gyrus and the precentral gyrus, anterior cingulate cortex (ACC), and vmPFC in older individuals. This enhanced information integration may be related to previously observed age-related physiological changes, such as declining white matter integrity in the postcentral and precentral gyri (Zhou et al., 2020). Studies have also reported that the resting-state connectivity of sensorimotor functional networks increases with age (Moezzi et al., 2019). This enhanced connectivity between networks may reflect a decline in cognitive and sensorimotor abilities in older individuals. Moreover, there is evidence that connectivity between the postcentral gyrus and other networks is negatively correlated with cognitive reserve (Conti et al., 2021). A meta-analysis identified the ACC and vmPFC as regulatory components of the central autonomic network (Beissner et al., 2013). These regions are also thought to play a crucial role in the control of visceral functions, the maintenance of homeostasis, and adaptation to internal or external challenges (Hagemann et al., 2003).
The vmPFC participates in a unique integration of information from systems involved in higher-level cognition with those involved in the most basic forms of affective experience and physiological regulation (Roy et al., 2012), and the ACC has multiple functions, perhaps most importantly in attention and mood regulation (Posner and Rothbart, 1998). One study reported a pattern of functional connectivity changes after cognitive-related training, where reduced functional connectivity led to decreased neuron recruitment and increased efficiency (Karni et al., 1995). Therefore, the extensive functional connectivity between the postcentral gyrus and multiple cortical areas in older individuals, especially in areas related to cognitive and executive control, might represent an age-related decline in efficiency and increased resource recruitment.

We found that the connections between the inferior parietal lobule (IPL) and lingual gyrus (LinG) and between the IPL and mPFC were enhanced in older individuals. The IPL is part of the frontoparietal control network, which is involved in working memory and language functions and participates in executive control processes (Dixon et al., 2018). The increase in cross-network connections observed in the present study is consistent with the previously described mechanism by which older people with normal audition compensate for changes in global efficiency (Geerligs et al., 2015). Imaging studies have confirmed that the most significant decline in metabolic activity in healthy brains occurs in the prefrontal cortex (PFC) and ACC (Pardo et al., 2007), and the enhancement of these extensive connections may be related to nonselective recruitment of the frontal lobe (Logan et al., 2002). The LinG is widely regarded as an important element of emotional priming and visual regulation pathways (Meppelink et al., 2009). As with the IPL, abnormalities in the LinG are thought to be related to impaired selective attention and working memory (Desseilles et al., 2009). Based on these studies, the enhanced connection between the IPL and LinG that we observed in older individuals may indicate that aging leads to confusion between visual and verbal expression pathways and increased recruitment of emotional and cognitive resources.

The increased alpha1-band activity found in the middle frontal gyrus in the present study may reflect increased emotional processing and cognitive demands in older people with normal audition. Furthermore, this finding could indicate that auditory effort increases before peripheral hearing loss becomes apparent. Our results suggest that although the decline in metabolism associated with physiological aging reduces the local efficiency of the brain, overall brain efficiency may still be improved through numerous connections between networks in a normally aging brain. Among these networks, changes in the DMN and sensorimotor network are prominent. The widespread increases in the connectivity of these networks may result from declining cognitive, sensorimotor, and emotional processing capabilities in older individuals and the corresponding mutual compensation.

Increased demand for attention and multisensory integration in patients with ARHL

In older individuals with obvious peripheral hearing loss, alpha1-band activation extended into the cuneus and precuneus compared with that of younger people with normal audition. The precuneus, located in BA7, is part of the parietal association cortex, which is the key hub of visuospatial imagery processing. This region plays an important role in visuospatial positioning and spatial perception, and participates in the cognitive processing underlying episodic memory retrieval and self-processing (Cavanna and Trimble, 2006). The cuneus is a portion of the left temporo-occipital junction located in BA19 and is related to high-order processing of complex visual stimuli (Cavanna and Trimble, 2006; Vogel et al., 2012). In addition, as part of the DMN, BA19 is also considered to be involved in cognitive processes (Jones et al., 2011). In general, the precuneus and cuneus are considered important areas for visual and motor coordination and are thought to participate in cognitive processes to some extent.

Although we found an overall trend towards decreased functional connectivity in patients with ARHL compared with older individuals with normal audition, our results may have been limited by insufficient differences in hearing thresholds; indeed, we obtained no direct evidence that brain activity differed significantly between these two groups. Unlike the efficient compensatory mechanism of enhanced connectivity across wide-ranging networks in healthy older adults, our data suggest that participants with ARHL develop decompensation of brain network connectivity because of chronically reduced auditory input and increased auditory load. Compared with the younger group, individuals with ARHL showed enhanced connectivity between the aPFC and PCC. The aPFC (BA10) is part of the frontoparietal control network. The aPFC may play a unique role in communication between the DMN and the dorsal attention network (DAN), integrating information from the two systems and arbitrating between potentially competing internally and externally guided processes (Vincent et al., 2008), and it participates in combining working memory with the allocation of attentional resources (Shaw et al., 2015). Koechlin and Hyafil (2007) suggested that the aPFC sits at the apex of the cognitive control hierarchy, where it coordinates information processing and transmission among multiple brain regions (Ramnani and Owen, 2004). The PCC (BA30) is a key node of the classic default network in the resting state and plays an important role in cognitive functions such as episodic memory, spatial attention, and self-evaluation (Greicius et al., 2003). The abnormal connectivity observed in patients with ARHL may indicate that this population compensates for insufficient auditory cues through attention, memory, and multisensory integration.

Yu et al. (2017) found that the directional connection between the left prefrontal lobe (BA10/11) and the PCC was significantly increased in patients with Alzheimer’s disease (AD), and previous studies have shown that AD lesions originate in the limbic system, including the hippocampus and posterior cingulate gyrus, and gradually spread to the parietal and frontal association areas. The connection between the aPFC and PCC is also thought to be involved in regulating human social-emotional behavior (Bramson et al., 2018), and increased connectivity between frontal regions and DAN and DMN seed regions has been suggested to be related to hearing loss-induced decline in emotional processing ability in older individuals (Husain et al., 2014).

Our findings suggest that the plastic compensation caused by hearing loss manifests as increased activation in the DMN, including enhanced theta-band connectivity between the aPFC and PCC. This increase may be driven by dysfunction in cognitive processes, such as memory and attention integration, and by an increased demand for emotional processing. The increased activity in the parieto-occipital junction region observed in ARHL patients may indicate integrated compensation of visual and motor information, reflecting enhanced activation of visuospatial perception in the resting state. The finding of increased functional connectivity in only one pair of brain regions may also reflect decompensation of brain function following hearing loss and increased auditory load.

This study had several limitations. First, it was a cross-sectional study with a limited sample size. Because bilateral symmetrical high-frequency hearing loss is rare among younger people, it was not feasible to include a matched group of younger people with hearing loss for comparison. In addition, we mainly studied differences in resting-state cortical activity and connectivity between the groups. Although both groups of older participants differed significantly from the younger participants, we found no significant differences between older individuals with and without hearing loss. Finally, we did not explore changes in brain networks under task conditions. Examining changes under dynamic cognitive load in future work could provide a better understanding of the characteristics of presbycusis.

Conclusion

Our results suggest that both normal aging and the development of ARHL have a negative effect on advanced auditory processing capabilities, and that hearing loss accelerates the decline in speech comprehension, especially in speech competition situations. The time-compressed speech test effectively reflected the upper-threshold speech comprehension ability of individuals with presbycusis and distinguished changes associated with age and hearing status. The observed differences in speech cognitive ability were also reflected in changes in resting-state brain activation and functional connectivity. Our EEG data shed light on possible mechanisms of speech-related cognitive decline, particularly in the comprehension of fast speech, in older individuals with and without presbycusis. Specifically, compared with younger people, individuals undergoing normal aging appear to show a large number of compensatory functional connections, whereas patients with ARHL exhibit decompensation involving abnormal activation of the visual area.

Overall, our results indicate that the time-compressed speech threshold test and the speech recognition threshold test in noise can effectively reflect the auditory difficulties faced by listeners. Thus, both may be useful in clinical audiology assessments. We also found significant changes in auditory-related neural activation and connectivity in older individuals, which may inform clinical rehabilitation and further research on auditory brain processes.

Additional files:

Additional file 1 (191.8KB, pdf) : Informed consent form (Chinese).

Additional file 2 (1.7MB, pdf) : Ethical document (Chinese).

Acknowledgments:

We thank Dr. Christopher Wigham (Swansea University) for his valuable comments and for proofreading the manuscript.

Footnotes

Author contributions: Study conception and design: YQZ, YXC, HMH; data acquisition: HMH, GSC, HWD, ZYL, XWT, JLG, XMC; data analysis and interpretation: HMH, GSC, JHL; methodology: QLM, JHL; statistical analysis: HMH, GSC; drafting of the article: HMH; critical revision of the article: YCC, FZ; study supervision: YQZ, YXC. All authors approved the final version of this paper.

Conflicts of interest: The authors declare that there is no conflict of interest.

Data availability statement: The data are available from the corresponding author on reasonable request.

C-Editor: Zhao M; S-Editor: Li CH; L-Editors: Li CH, Song LP; T-Editor: Jia Y

Contributor Information

Yue-Xin Cai, Email: caiyx25@mail.sysu.edu.cn.

Yi-Qing Zheng, Email: zhengyiq@mail.sysu.edu.cn.

References

- 1. Adel Ghahraman M, Ashrafi M, Mohammadkhani G, Jalaie S. Effects of aging on spatial hearing. Aging Clin Exp Res. 2020;32:733–739. doi: 10.1007/s40520-019-01233-3.

- 2. Beissner F, Meissner K, Bär KJ, Napadow V. The autonomic brain: an activation likelihood estimation meta-analysis for central processing of autonomic function. J Neurosci. 2013;33:10503–10511. doi: 10.1523/JNEUROSCI.1103-13.2013.

- 3. Bramson B, Jensen O, Toni I, Roelofs K. Cortical oscillatory mechanisms supporting the control of human social-emotional actions. J Neurosci. 2018;38:5739–5749. doi: 10.1523/JNEUROSCI.3382-17.2018.

- 4. Briley PM, Summerfield AQ. Age-related deterioration of the representation of space in human auditory cortex. Neurobiol Aging. 2014;35:633–644. doi: 10.1016/j.neurobiolaging.2013.08.033.

- 5. Buckner RL, Andrews-Hanna JR, Schacter DL. The brain's default network: anatomy, function, and relevance to disease. Ann N Y Acad Sci. 2008;1124:1–38. doi: 10.1196/annals.1440.011.

- 6. Cavanagh JF. Electrophysiology as a theoretical and methodological hub for the neural sciences. Psychophysiology. 2019;56:e13314. doi: 10.1111/psyp.13314.

- 7. Cavanna AE, Trimble MR. The precuneus: a review of its functional anatomy and behavioural correlates. Brain. 2006;129:564–583. doi: 10.1093/brain/awl004.

- 8. Chen TL, Babiloni C, Ferretti A, Perrucci MG, Romani GL, Rossini PM, Tartaro A, Del Gratta C. Human secondary somatosensory cortex is involved in the processing of somatosensory rare stimuli: an fMRI study. Neuroimage. 2008;40:1765–1771. doi: 10.1016/j.neuroimage.2008.01.020.

- 9. Chenji S, Jha S, Lee D, Brown M, Seres P, Mah D, Kalra S. Investigating default mode and sensorimotor network connectivity in amyotrophic lateral sclerosis. PLoS One. 2016;11:e0157443. doi: 10.1371/journal.pone.0157443.

- 10. Conti L, Riccitelli GC, Preziosa P, Vizzino C, Marchesi O, Rocca MA, Filippi M. Effect of cognitive reserve on structural and functional MRI measures in healthy subjects: a multiparametric assessment. J Neurol. 2021;268:1780–1791. doi: 10.1007/s00415-020-10331-6.

- 11. Desseilles M, Balteau E, Sterpenich V, Dang-Vu TT, Darsaud A, Vandewalle G, Albouy G, Salmon E, Peters F, Schmidt C, Schabus M, Gais S, Degueldre C, Phillips C, Luxen A, Ansseau M, Maquet P, Schwartz S. Abnormal neural filtering of irrelevant visual information in depression. J Neurosci. 2009;29:1395–1403. doi: 10.1523/JNEUROSCI.3341-08.2009.

- 12. Dias JW, McClaskey CM, Harris KC. Time-compressed speech identification is predicted by auditory neural processing, perceptuomotor speed, and executive functioning in younger and older listeners. J Assoc Res Otolaryngol. 2019;20:73–88. doi: 10.1007/s10162-018-00703-1.

- 13. Dixon ML, De La Vega A, Mills C, Andrews-Hanna J, Spreng RN, Cole MW, Christoff K. Heterogeneity within the frontoparietal control network and its relationship to the default and dorsal attention networks. Proc Natl Acad Sci U S A. 2018;115:E1598–1607. doi: 10.1073/pnas.1715766115.

- 14. Dobreva MS, O'Neill WE, Paige GD. Influence of aging on human sound localization. J Neurophysiol. 2011;105:2471–2486. doi: 10.1152/jn.00951.2010.

- 15. Dümpelmann M, Ball T, Schulze-Bonhage A. sLORETA allows reliable distributed source reconstruction based on subdural strip and grid recordings. Hum Brain Mapp. 2012;33:1172–1188. doi: 10.1002/hbm.21276.

- 16. Fitzhugh MC, Hemesath A, Schaefer SY, Baxter LC, Rogalsky C. Functional connectivity of Heschl's gyrus associated with age-related hearing loss: a resting-state fMRI study. Front Psychol. 2019;10:2485. doi: 10.3389/fpsyg.2019.02485.

- 17. Fu QJ, Galvin JJ 3rd, Wang X. Recognition of time-distorted sentences by normal-hearing and cochlear-implant listeners. J Acoust Soc Am. 2001;109:379–384. doi: 10.1121/1.1327578.

- 18. Geerligs L, Renken RJ, Saliasi E, Maurits NM, Lorist MM. A brain-wide study of age-related changes in functional connectivity. Cereb Cortex. 2015;25:1987–1999. doi: 10.1093/cercor/bhu012.

- 19. George EL, Zekveld AA, Kramer SE, Goverts ST, Festen JM, Houtgast T. Auditory and nonauditory factors affecting speech reception in noise by older listeners. J Acoust Soc Am. 2007;121:2362–2375. doi: 10.1121/1.2642072.

- 20. Goossens T, Vercammen C, Wouters J, van Wieringen A. Masked speech perception across the adult lifespan: impact of age and hearing impairment. Hear Res. 2017;344:109–124. doi: 10.1016/j.heares.2016.11.004.

- 21. Gordon-Salant S, Fitzgibbons PJ. Temporal factors and speech recognition performance in young and elderly listeners. J Speech Hear Res. 1993;36:1276–1285. doi: 10.1044/jshr.3606.1276.

- 22.Greicius MD, Krasnow B, Reiss AL, Menon V. Functional connectivity in the resting brain:a network analysis of the default mode hypothesis. Proc Natl Acad Sci U S A. 2003;100:253–258. doi: 10.1073/pnas.0135058100. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Hagemann D, Waldstein SR, Thayer JF. Central and autonomic nervous system integration in emotion. Brain Cogn. 2003;52:79–87. doi: 10.1016/s0278-2626(03)00011-3. [DOI] [PubMed] [Google Scholar]

- 24.Hiser J, Koenigs M. The multifaceted role of the ventromedial prefrontal cortex in emotion, decision making, social cognition, and psychopathology. Biol Psychiatry. 2018;83:638–647. doi: 10.1016/j.biopsych.2017.10.030. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Holmes AP, Blair RC, Watson JD, Ford I. Nonparametric analysis of statistic images from functional mapping experiments. J Cereb Blood Flow Metab. 1996;16:7–22. doi: 10.1097/00004647-199601000-00002. [DOI] [PubMed] [Google Scholar]

- 26.Humes LE, Busey TA, Craig J, Kewley-Port D. Are age-related changes in cognitive function driven by age-related changes in sensory processing? Atten Percept Psychophys. 2013;75:508–524. doi: 10.3758/s13414-012-0406-9.

- 27.Husain FT, Carpenter-Thompson JR, Schmidt SA. The effect of mild-to-moderate hearing loss on auditory and emotion processing networks. Front Syst Neurosci. 2014;8:10. doi: 10.3389/fnsys.2014.00010.

- 28.Jones DT, Machulda MM, Vemuri P, McDade EM, Zeng G, Senjem ML, Gunter JL, Przybelski SA, Avula RT, Knopman DS, Boeve BF, Petersen RC, Jack CR Jr. Age-related changes in the default mode network are more advanced in Alzheimer disease. Neurology. 2011;77:1524–1531. doi: 10.1212/WNL.0b013e318233b33d.

- 29.Karni A, Meyer G, Jezzard P, Adams MM, Turner R, Ungerleider LG. Functional MRI evidence for adult motor cortex plasticity during motor skill learning. Nature. 1995;377:155–158. doi: 10.1038/377155a0.

- 30.Kociński J, Niemiec D. Time-compressed speech intelligibility in different reverberant conditions. Appl Acoust. 2016;113:58–63.

- 31.Koechlin E, Hyafil A. Anterior prefrontal function and the limits of human decision-making. Science. 2007;318:594–598. doi: 10.1126/science.1142995.

- 32.Kubicki S, Herrmann WM, Fichte K, Freund G. Reflections on the topics: EEG frequency bands and regulation of vigilance. Pharmakopsychiatr Neuropsychopharmakol. 1979;12:237–245. doi: 10.1055/s-0028-1094615.

- 33.Lan L, Wang QJ. Auditory brainstem response clinical practice-technology application to disease diagnosis. Zhongguo Tingli Yuyan Kangfu Kexue Zazhi. 2022;20:161–168.

- 34.Lancaster JL, Woldorff MG, Parsons LM, Liotti M, Freitas CS, Rainey L, Kochunov PV, Nickerson D, Mikiten SA, Fox PT. Automated Talairach atlas labels for functional brain mapping. Hum Brain Mapp. 2000;10:120–131. doi: 10.1002/1097-0193(200007)10:3<120::AID-HBM30>3.0.CO;2-8.

- 35.Li H, Jia J, Yang Z. Mini-mental state examination in elderly Chinese: a population-based normative study. J Alzheimers Dis. 2016;53:487–496. doi: 10.3233/JAD-160119.

- 36.Logan JM, Sanders AL, Snyder AZ, Morris JC, Buckner RL. Under-recruitment and nonselective recruitment: dissociable neural mechanisms associated with aging. Neuron. 2002;33:827–840. doi: 10.1016/s0896-6273(02)00612-8.

- 37.Maruyama T, Takeuchi H, Taki Y, Motoki K, Jeong H, Kotozaki Y, Nakagawa S, Nouchi R, Iizuka K, Yokoyama R, Yamamoto Y, Hanawa S, Araki T, Sakaki K, Sasaki Y, Magistro D, Kawashima R. Effects of time-compressed speech training on multiple functional and structural neural mechanisms involving the left superior temporal gyrus. Neural Plast. 2018;2018:6574178. doi: 10.1155/2018/6574178.

- 38.Meng Q, Wang X, Cai Y, Kong F, Buck AN, Yu G, Zheng N, Schnupp JWH. Time-compression thresholds for Mandarin sentences in normal-hearing and cochlear implant listeners. Hear Res. 2019;374:58–68. doi: 10.1016/j.heares.2019.01.011.

- 39.Meppelink AM, de Jong BM, Renken R, Leenders KL, Cornelissen FW, van Laar T. Impaired visual processing preceding image recognition in Parkinson's disease patients with visual hallucinations. Brain. 2009;132:2980–2993. doi: 10.1093/brain/awp223.

- 40.Moezzi B, Pratti LM, Hordacre B, Graetz L, Berryman C, Lavrencic LM, Ridding MC, Keage H, McDonnell MD, Goldsworthy MR. Characterization of young and old adult brains: an EEG functional connectivity analysis. Neuroscience. 2019;422:230–239. doi: 10.1016/j.neuroscience.2019.08.038.

- 41.Mitchell P, Gopinath B, Wang JJ, McMahon CM, Schneider J, Rochtchina E, Leeder SR. Five-year incidence and progression of hearing impairment in an older population. Ear Hear. 2011;32:251–257. doi: 10.1097/AUD.0b013e3181fc98bd.

- 42.Mukari S, Yusof Y, Ishak WS, Maamor N, Chellapan K, Dzulkifli MA. Relative contributions of auditory and cognitive functions on speech recognition in quiet and in noise among older adults. Braz J Otorhinolaryngol. 2020;86:149–156. doi: 10.1016/j.bjorl.2018.10.010.

- 43.National Research Council (US) Committee on Disability Determination for Individuals with Hearing Impairments. Dobie RA, Van Hemel S. Hearing loss: determining eligibility for social security benefits. Washington (DC): National Academies Press (US); 2004.

- 44.Ochsner KN, Silvers JA, Buhle JT. Functional imaging studies of emotion regulation: a synthetic review and evolving model of the cognitive control of emotion. Ann N Y Acad Sci. 2012;1251:E1–24. doi: 10.1111/j.1749-6632.2012.06751.x.

- 45.Onoda K, Ishihara M, Yamaguchi S. Decreased functional connectivity by aging is associated with cognitive decline. J Cogn Neurosci. 2012;24:2186–2198. doi: 10.1162/jocn_a_00269.

- 46.Otte RJ, Agterberg MJ, Van Wanrooij MM, Snik AF, Van Opstal AJ. Age-related hearing loss and ear morphology affect vertical but not horizontal sound-localization performance. J Assoc Res Otolaryngol. 2013;14:261–273. doi: 10.1007/s10162-012-0367-7.

- 47.Ouda L, Profant O, Syka J. Age-related changes in the central auditory system. Cell Tissue Res. 2015;361:337–358. doi: 10.1007/s00441-014-2107-2.

- 48.Pardo JV, Lee JT, Sheikh SA, Surerus-Johnson C, Shah H, Munch KR, Carlis JV, Lewis SM, Kuskowski MA, Dysken MW. Where the brain grows old: decline in anterior cingulate and medial prefrontal function with normal aging. Neuroimage. 2007;35:1231–1237. doi: 10.1016/j.neuroimage.2006.12.044.

- 49.Parthasarathy A, Hancock KE, Bennett K, DeGruttola V, Polley DB. Bottom-up and top-down neural signatures of disordered multi-talker speech perception in adults with normal hearing. Elife. 2020;9:e51419. doi: 10.7554/eLife.51419.

- 50.Phatak SA, Sheffield BM, Brungart DS, Grant KW. Development of a test battery for evaluating speech perception in complex listening environments: effects of sensorineural hearing loss. Ear Hear. 2018;39:449–456. doi: 10.1097/AUD.0000000000000567.

- 51.Posner MI, Rothbart MK. Attention, self-regulation and consciousness. Philos Trans R Soc Lond B Biol Sci. 1998;353:1915–1927. doi: 10.1098/rstb.1998.0344.

- 52.Profant O, Tintěra J, Balogová Z, Ibrahim I, Jilek M, Syka J. Functional changes in the human auditory cortex in ageing. PLoS One. 2014;10:e0116692. doi: 10.1371/journal.pone.0116692.

- 53.Ramnani N, Owen AM. Anterior prefrontal cortex: insights into function from anatomy and neuroimaging. Nat Rev Neurosci. 2004;5:184–194. doi: 10.1038/nrn1343.

- 54.Rosemann S, Thiel CM. The effect of age-related hearing loss and listening effort on resting state connectivity. Sci Rep. 2019;9:2337. doi: 10.1038/s41598-019-38816-z.

- 55.Roy M, Shohamy D, Wager TD. Ventromedial prefrontal-subcortical systems and the generation of affective meaning. Trends Cogn Sci. 2012;16:147–156. doi: 10.1016/j.tics.2012.01.005.

- 56.Schlueter A, Brand T, Lemke U, Nitzschner S, Kollmeier B, Holube I. Speech perception at positive signal-to-noise ratios using adaptive adjustment of time compression. J Acoust Soc Am. 2015;138:3320–3331. doi: 10.1121/1.4934629.

- 57.Schmidt SA, Akrofi K, Carpenter-Thompson JR, Husain FT. Default mode, dorsal attention and auditory resting state networks exhibit differential functional connectivity in tinnitus and hearing loss. PLoS One. 2013;8:e76488. doi: 10.1371/journal.pone.0076488.

- 58.Seeley WW, Menon V, Schatzberg AF, Keller J, Glover GH, Kenna H, Reiss AL, Greicius MD. Dissociable intrinsic connectivity networks for salience processing and executive control. J Neurosci. 2007;27:2349–2356. doi: 10.1523/JNEUROSCI.5587-06.2007.

- 59.Shaw EE, Schultz AP, Sperling RA, Hedden T. Functional connectivity in multiple cortical networks is associated with performance across cognitive domains in older adults. Brain Connect. 2015;5:505–516. doi: 10.1089/brain.2014.0327.

- 60.Slade K, Plack CJ, Nuttall HE. The effects of age-related hearing loss on the brain and cognitive function. Trends Neurosci. 2020;43:810–821. doi: 10.1016/j.tins.2020.07.005.

- 61.Versfeld NJ, Dreschler WA. The relationship between the intelligibility of time-compressed speech and speech in noise in young and elderly listeners. J Acoust Soc Am. 2002;111:401–408. doi: 10.1121/1.1426376.

- 62.Vincent JL, Kahn I, Snyder AZ, Raichle ME, Buckner RL. Evidence for a frontoparietal control system revealed by intrinsic functional connectivity. J Neurophysiol. 2008;100:3328–3342. doi: 10.1152/jn.90355.2008.

- 63.Vogel AC, Petersen SE, Schlaggar BL. The left occipitotemporal cortex does not show preferential activity for words. Cereb Cortex. 2012;22:2715–2732. doi: 10.1093/cercor/bhr295.

- 64.WHO. With adaptations from report of the first informal consultation on future organization development for the prevention of deafness and hearing impairment. Geneva: WHO/PDH/97.3; 1997.

- 65.Williams GV, Castner SA. Under the curve: critical issues for elucidating D1 receptor function in working memory. Neuroscience. 2006;139:263–276. doi: 10.1016/j.neuroscience.2005.09.028.

- 66.Wong LL, Soli SD, Liu S, Han N, Huang MW. Development of the Mandarin Hearing in Noise Test (MHINT). Ear Hear. 2007;28:70s–74s. doi: 10.1097/AUD.0b013e31803154d0.

- 67.World Health Organization. World Report on Hearing, WHO, Geneva. 2021. [Accessed July 8, 2022]. https://www.who.int/publications/i/item/world-report-on-hearing.

- 68.Yamasoba T, Lin FR, Someya S, Kashio A, Sakamoto T, Kondo K. Current concepts in age-related hearing loss: epidemiology and mechanistic pathways. Hear Res. 2013;303:30–38. doi: 10.1016/j.heares.2013.01.021.

- 69.Ye W, Ya S, Ying F, Qian W, Yifei F, Xin X. Study of ceiling effect of commonly used Chinese recognition materials in post-lingual deafened patients with cochlear implant. Zhonghua Erbiyanhou Toujing Waike Zazhi. 2015;29:298–303.

- 70.Yu E, Liao Z, Mao D, Zhang Q, Ji G, Li Y, Ding Z. Directed functional connectivity of posterior cingulate cortex and whole brain in Alzheimer's disease and mild cognitive impairment. Curr Alzheimer Res. 2017;14:628–635. doi: 10.2174/1567205013666161201201000.

- 71.Zhou L, Tian N, Geng ZJ, Wu BK, Dong LY, Wang MR. Diffusion tensor imaging study of brain precentral gyrus and postcentral gyrus during normal brain aging process. Brain Behav. 2020;10:e01758. doi: 10.1002/brb3.1758.

Associated Data
