Abstract

This study examines the visibility, impact, and applications of bibliometric software tools in the peer-reviewed literature through a “Cited Reference Search” in the Web of Science (WOS) database. A total of 2882 research articles citing eight bibliometric software tools were extracted from the WOS Core Collection for the period 2010 to 2021. These citing articles are analyzed by publication year, country, publication title, publisher, open access level, funding agency, and WOS category. Mentions of bibliometric software tools in Author Keywords and KeyWords Plus are also compared. VOSviewer is used to identify discipline-specific research areas from the keyword co-occurrences of the citing articles. The findings reveal that while bibliometric software tools are making a noteworthy impact and contribution to research, their visibility through referencing, Author Keywords, and KeyWords Plus is limited. This study serves as a clarion call to raise awareness and initiate discussions on the citing practices of software tools in scholarly publications.

Keywords: Bibliometric software, Citation analysis, Scholarly publications, Author keywords, KeyWords plus, VOSviewer

Introduction

Software is a set of instructions or commands that tell a computer what to do (Bainbridge, 2004; Chun, 2005). Scientific (i.e., non-trivial) software, such as bibliometric software tools, is a complex program with a broad range of functionality used for advanced tasks; it requires regular updates to fix bugs, improve performance, address security issues, and maintain compatibility. Non-scientific (i.e., trivial) software, by contrast, refers to basic programs with minimal functionality designed for general tasks (e.g., a unit converter).

Researchers are becoming increasingly dependent on scientific software, particularly in the STEM fields. Content analysis studies of software in specific scientific journals confirm that software is significantly involved in research [e.g., PLOS ONE (Li et al., 2017; Pan et al., 2015, 2016), Nature (Nangia & Katz, 2017), and Science (Katz & Chue Hong, 2018)]. Software is thus a creative and critical aspect of research that merits recognition and credit. Despite its importance, scholarly publications have traditionally overlooked the citation of software, highlighting a need for greater awareness of how software should be acknowledged in the research process. The tendency to view software as a technical contribution rather than an original scholarly work may contribute to this lack of recognition (Soito & Hwang, 2016). Moreover, because software, along with its authorship, roles, and credits, changes over time, it is difficult to cite and reference accurately.

According to Alliez et al. (2020), “First, software authorship is extremely varied, involving many roles: software architect, coder, debugger, tester, team manager, and so on. Second, software itself is a complex object: the lifespan can range from a few months to decades, the size can range to a few dozens of lines of code to several millions, and it can be stand-alone or rely on multiple external packages. And finally, sometimes one may want to reference a particular version of a given software (this is crucial for reproducible research), while at other times one may want to cite the software as a whole” (pp. 1–2). Bouquin et al. (2020) point out, “It is also important to recognize that software citation standards are still being normalized in all disciplines, and software developed for one discipline may be used in entirely different contexts.” Further, studies reveal that LIS scholars tend to cite a related publication, whereas biologists tend to cite the software directly (Pan et al., 2019; Yang et al., 2018).

Software citation faces challenges not only in recognition and standardization but also from academic culture and disciplinary norms (Katz & Chue Hong, 2018). However, these hurdles can be mitigated by working with diverse communities, including researchers, librarians, indexers, publishers, professional societies, editors, reviewers, archivists, funders, and other stakeholders, to raise awareness and facilitate the changes needed for proper software citation practices (Katz et al., 2019a, 2019b; Niemeyer et al., 2016).

Bibliometric software tools are one type of non-trivial software, designed to support tasks that are essential for conducting bibliometric and scientometric analyses (Bales et al., 2020; Bankar & Lihitkar, 2019; Cobo et al., 2011; Moral-Muñoz et al., 2020; Pradhan, 2016; van Eck & Waltman, 2014). The emergence of sophisticated software tools has revolutionized how data are analyzed, visualized, and differentiated, enabling researchers to capture, refine, and analyze large datasets that would otherwise have been impossible to process. Accordingly, the present study examines the visibility, impact, and applications of bibliometric software tools within scholarly articles, thereby highlighting their significance in academic research.

Background

International efforts toward software citation

International efforts have been made to promote the visibility, reuse, and standardization of software citation in academic research so that the contributions of software developers are recognized. The FORCE11 Software Citation Working Group, a collaboration of researchers, librarians, and other experts, outlined the key elements to include when citing software, along with best practices, in its “Software Citation Principles” guidelines (Smith et al., 2016). These principles rest on the idea that software should be treated in the same way as other research outputs, such as articles, so that a citation gives credit to the developers and allows the software to be correctly traced and evaluated (Katz et al., 2021; Smith et al., 2016). The Joint Declaration of Data Citation Principles (JDDCP), developed by international scientific organizations, also explains the importance of citing data and software to ensure the transparency and reproducibility of research. It recommends including a persistent unique identifier [e.g., a Digital Object Identifier (DOI)] together with the software’s name, version, and creators in the citation (Cousijn et al., 2018; Martone, 2014). The Software Sustainability Institute (SSI), a UK-based organization, has crafted a set of guidelines and practices for software citation (Crouch et al., 2013). Task Groups organized by the Committee on Data for Science and Technology (CODATA) and the International Council for Scientific and Technical Information (ICSTI) have provided recommendations to improve data citation practice, which has, in turn, influenced how software is cited (Task Group on Data Citation Standards and Practice, 2013).
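To make the recommended elements (name, version, creators, and a persistent identifier) concrete, the following minimal Python sketch assembles a software reference from them. The class name `SoftwareCitation` and the APA-like output layout are illustrative assumptions, not a format prescribed by FORCE11 or the JDDCP; the VOSviewer version and year are taken from this study's Methodology, and the URL is the tool's public website.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SoftwareCitation:
    """Hypothetical container for the core elements of a software citation."""
    creators: List[str]               # e.g., "van Eck, N. J."
    year: int
    name: str
    version: str
    identifier: Optional[str] = None  # persistent identifier such as a DOI, if one exists
    url: Optional[str] = None         # fallback locator when no identifier is available

    def apa_like(self) -> str:
        """Render an APA-style reference string (an approximation, not an official style)."""
        if len(self.creators) == 1:
            authors = self.creators[0]
        else:
            authors = ", ".join(self.creators[:-1]) + ", & " + self.creators[-1]
        ref = f"{authors} ({self.year}). {self.name} (Version {self.version}) [Computer software]."
        # Prefer the persistent identifier; otherwise fall back to the URL.
        locator = f"https://doi.org/{self.identifier}" if self.identifier else self.url
        return f"{ref} {locator}" if locator else ref


vosviewer_ref = SoftwareCitation(
    creators=["van Eck, N. J.", "Waltman, L."],
    year=2022,
    name="VOSviewer",
    version="1.6.18",
    url="https://www.vosviewer.com",
)
print(vosviewer_ref.apa_like())
# van Eck, N. J., & Waltman, L. (2022). VOSviewer (Version 1.6.18) [Computer software]. https://www.vosviewer.com
```

Keeping the version as its own field reflects the point that reproducible research may need to cite a particular release rather than the software as a whole.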

Bibliometrics and citation analysis

Bibliometrics is an interdisciplinary research field that uses statistical methods to analyze publications and citation data. Research in bibliometrics has evolved from manual counts of items to in-depth analysis, mapping, and visualization of data. Studies in bibliometrics measure, assess, and evaluate the output of scholarly information (Borgman & Furner, 2002). The bibliometric technique of citation analysis examines the frequency and patterns of citation in documents (Garfield, 1979; Smith, 1981) and is based on the number of times a work has been cited by others. The central premise of citation analysis is that references direct researchers to valuable sources. As a result, citations can be used to measure the visibility and impact of scholarly works, authors, co-authorship, publication titles, institutions, countries, disciplines, research fields, and non-indexed sources.

Much of the growth in bibliometrics and citation analysis can be attributed to bibliometric and other visualization tools. Bibliometric software tools can create and map visual representations of information in an understandable way based on citation data, keywords, and other bibliographic metadata. Additionally, this type of software is vital for evaluating and analyzing large datasets to shape and forecast the future of academia. Bibliometric software tools have therefore gained importance in research evaluation, management, science policy, scholarship, and subject-specific disciplines.

Scholars have underscored the impact of bibliometric research. Haustein and Larivière (2015) emphasized, “Over the last 20 years, the increasing importance of bibliometrics for research evaluation and planning led to an oversimplification of what scientific output and impact were which, in turn, lead to adverse effects such as salami publishing, honorary authorships, citation cartels, and other unethical behavior to increase one’s publication and citation scores, without actually increasing one’s contribution to the advancement of science” (p. 122). Mokhnacheva and Tsvetkova (2020) highlighted, “Bibliometrics makes it possible to understand what new scientific directions are emerging and how quickly they are developing, what scientific topics are most in demand at the present stage of the development of society, how and at what pace multidisciplinary research is developing, what role globalization plays in scientific productivity, etc.” (p. 159). Mou et al. (2019) stated, “The importance of Bibliometrics has skyrocketed in both the management field and academic research. As a result, a lot of software for bibliometric and co-citation analysis has recently developed and been applied to various fields” (p. 221). Dhillon and Gill (2014) similarly stressed, “… the importance of bibliometrics which allows for analysis of scientific productivity and also helps to segregate information in a manner that it can be easily retrieved and utilized” (p. 201).

Citations to bibliometric software

If a specific software tool is used in research, it should be properly cited in the reference list. In practice, the cited reference may point to the software tool itself, the website from which the tool can be downloaded, the tool’s manual, the publication in which the tool was introduced, or the repository containing the source code. The format for citing software can also vary with the citation style a publication requires. Occasionally, the name of the software tool is mentioned only in the main text, a footnote, or a table, so its use is not captured in times-cited counts.

Scholars have analyzed how bibliometric software tools are cited using content analysis methods. Pan et al. (2017) conducted a context analysis study investigating how articles published in English and Chinese journals cite and use CiteSpace. The study showed that although CiteSpace was used in China, many Chinese authors did not provide adequate information for identifying the software in their articles. Pan et al. (2018) further examined 481 review papers and articles for patterns of citations to CiteSpace, HistCite, and VOSviewer. Their study showed that even though all three tools are increasingly used, researchers’ citing practices lack standardized formatting. The authors stated, “The three software tools were adopted earlier and used more frequently in their field of origin—library and information science. They were then gradually adopted in other domains, initially at a lower diffusion speed but afterward at a rapidly growing rate” (Pan et al., 2018, p. 489).

Osinska and Klimas (2021) investigated the popularity of Gephi, Sci2 Tool, VOSviewer, Pajek, CiteSpace, and HistCite in the literature and on social media. They found that social media and video tutorials have little influence on the use of bibliometric software tools. Orduña-Malea and Costas (2021) applied a webometric approach to track VOSviewer usage, examining URL mentions of the software in scholarly publications, web pages, and social media through Google Scholar, Majestic, and Twitter. They specified, “Google Scholar mentions shows how VOSviewer is used as a research resource, whilst mentions in web-pages and tweets show the interest on VOSviewer’s website from an informational and a conversational point of view” (Orduña-Malea & Costas, 2021, p. 8153).

Cited reference searching

A cited reference search is a method used to find scholarly works that have cited a specific author or source. By following citations forward from an original work, one can understand how that work has been used and built upon over time. For instance, if many researchers cite a work, it may indicate that the work is used and respected in its field. The process can also identify potential collaborators and experts in the research field. By regularly performing a cited reference search, researchers can keep track of the latest developments and trends in a specific research area. In this way, a cited reference search shows how research is being applied or related, suggesting ideas to use or build upon. A cited reference search can also be used to find citations to non-indexed sources such as theses, databases, and software applications (Scalfani, 2021).
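Conceptually, the forward lookup behind a cited reference search can be sketched as an inverted index that maps each cited source to the set of articles citing it; the size of that set is the times-cited count used in citation analysis. The records and reference strings below are hypothetical, and real databases also reconcile variant forms of the same reference, which this sketch does not attempt.

```python
from collections import defaultdict

# Hypothetical citing records: article ID -> reference strings it cites.
# Real databases derive these from the cited-references field of each record.
citing_records = {
    "A1": ["van Eck 2010 Scientometrics", "Chen 2006 JASIST"],
    "A2": ["van Eck 2010 Scientometrics"],
    "A3": ["Aria 2017 J Informetr", "van Eck 2010 Scientometrics"],
}


def build_cited_by_index(records):
    """Invert 'article -> references' into 'reference -> set of citing articles'."""
    index = defaultdict(set)
    for article_id, references in records.items():
        for ref in references:
            index[ref].add(article_id)  # a set ignores duplicate listings
    return index


cited_by = build_cited_by_index(citing_records)

# Forward lookup: which later articles cite a given source?
print(sorted(cited_by["van Eck 2010 Scientometrics"]))  # ['A1', 'A2', 'A3']
# The times-cited count is the size of that set.
print(len(cited_by["Chen 2006 JASIST"]))  # 1
```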

Studies that examine the citation of sources in scholarly publications have traditionally relied on content analysis, which involves selecting and analyzing articles individually. Manual evaluation of information is time-consuming and expensive, and it is often limited by the sheer quantity of data. A manual approach also requires defining refined selection criteria and then painstakingly selecting and collecting the initial set of documents to review.

Instead of relying on traditional methods, researchers can discover papers that cite indexed or non-indexed sources by using the “Cited Reference Search” feature in databases such as Web of Science (WOS) and Scopus. Because retrieval is automated, this approach can capture and analyze thousands of documents far more quickly than manual selection. It also provides finer control over search parameters than manual screening and retrieves all indexed records that match the specified cited reference. Furthermore, databases with advanced search capabilities allow documents to be separated and analyzed by bibliographic fields such as authors, countries, publication titles, funding agencies, disciplines, and research categories. The records can then be exported to a bibliometric software tool for processing and analysis, helping researchers understand, visualize, and draw inferences from large volumes of data.

The limitations of a cited reference search in a database include restricted coverage of the information indexed by the resource, control over search parameters that is limited to what the database interface offers, incomplete or outdated citation information, subscription costs, time constraints when working with large datasets, and bias from citation patterns. Further, some documents could be overlooked, over-cited, or under-cited because of factors such as subject matter. Despite these limitations, cited reference searching is a valuable technique for tracking the impact, influence, and prevalence of documents and other sources in the scholarly literature.

The “Cited Reference Search” tool has been successfully used to study citations to Wikipedia (Li et al., 2021; Park, 2011; Tomaszewski & MacDonald, 2016), chemistry handbooks (Tomaszewski, 2017), chemical encyclopedias (Tomaszewski, 2018), STEM databases (Tomaszewski, 2019, 2021), MATLAB software (Tomaszewski, 2022), conference proceedings (Cardona & Marx, 2007), industry standards (Rowley & Wagner, 2019), news stories (Kousha & Thelwall, 2017), and tweets (Haunschild & Bornmann, 2023). This inspired the idea that a “Cited Reference Search” could be used to analyze citations to multiple bibliometric software tools.

Research questions

The visibility, impact, and applications of bibliometric software tools in scholarly research articles were examined through the following four research questions (RQs):

RQ1: How frequently are bibliometric software tools cited in research articles?

RQ2: Which countries, publication titles, publishers, funding agencies, and levels of Open Access (OA) cite bibliometric software tools?

RQ3: What subject-specific research areas cite bibliometric software tools?

RQ4: Are bibliometric software tools included in Author Keywords and KeyWords Plus?

Methodology

The WOS database is a multidisciplinary resource owned and maintained by Clarivate Analytics (Clarivate Analytics, 2023a). It is recognized as one of the world’s largest and most authoritative resources for scholarly information. The WOS platform is a powerful tool for document retrieval and data analysis, covering approximately 1.9 billion searchable cited references from over 171 million records (Clarivate Analytics, 2023a). To be considered for inclusion in the WOS Core Collection, journals must undergo an evaluation process that includes a peer-review requirement (Clarivate Analytics, 2023b). The content in the WOS Core Collection is organized into three major indexes (i.e., Science Citation Index Expanded, Social Sciences Citation Index, and Arts & Humanities Citation Index), which makes it well suited for in-depth analysis of specialized research fields within the three major disciplines of the Sciences, Social Sciences, and Arts & Humanities.

Eight bibliometric software mapping tools were selected from recent review papers as the most commonly used in research (Bales et al., 2020; Bankar & Lihitkar, 2019; Moral-Muñoz et al., 2020). The selection criterion was software used predominantly for constructing, analyzing, and visualizing bibliometric networks based on bibliographic data. General network analysis tools (e.g., Gephi, NetDraw, and Pajek) were excluded because they lack significant unique functionality for extracting information from bibliographic data. Table 1 lists the references to the eight bibliometric software tools in the present study.

Table 1.

References associated with bibliometric software tools

| Bibliometric software tool | References |
|---|---|
| Bibexcel | Persson, O., Danell, R., & Schneider, J. W. (2009). How to use Bibexcel for various types of bibliometric analysis. Celebrating scholarly communication studies: A Festschrift for Olle Persson at his 60th Birthday, 9–24. http://lup.lub.lu.se/record/1458990/file/1458992.pdf |
| Bibliometrix/BiblioShiny | Aria, M., & Cuccurullo, C. (2017). Bibliometrix: An R-tool for comprehensive science mapping analysis. Journal of Informetrics, 11(4), 959–975. 10.1016/j.joi.2017.08.007 |
| BiblioMaps/BiblioTools | Grauwin, S., & Jensen, P. (2011). Mapping scientific institutions. Scientometrics, 89(3), 943–954. 10.1007/s11192-011-0482-y |
| CiteSpace | Chen, C. (2006). CiteSpace II: Detecting and visualizing emerging trends and transient patterns in scientific literature. Journal of the American Society for Information Science and Technology, 57(3), 359–377. 10.1002/asi.20317 |
| CitNetExplorer | van Eck, N. J., & Waltman, L. (2014). CitNetExplorer: A new software tool for analyzing and visualizing citation networks. Journal of Informetrics, 8(4), 802–823. 10.1016/j.joi.2014.07.006 |
| SciMAT | Cobo, M. J., López‐Herrera, A. G., Herrera‐Viedma, E., & Herrera, F. (2012). SciMAT: A new science mapping analysis software tool. Journal of the American Society for Information Science and Technology, 63(8), 1609–1630. 10.1002/asi.22688 |
| Sci2 Tool | Sci2 Team. (2009). Science of Science (Sci2) Tool. Indiana University and SciTech Strategies. https://sci2.cns.iu.edu |
| VOSviewer | van Eck, N. J., & Waltman, L. (2010). Software survey: VOSviewer, a computer program for bibliometric mapping. Scientometrics, 84(2), 523–538. 10.1007/s11192-009-0146-3 |

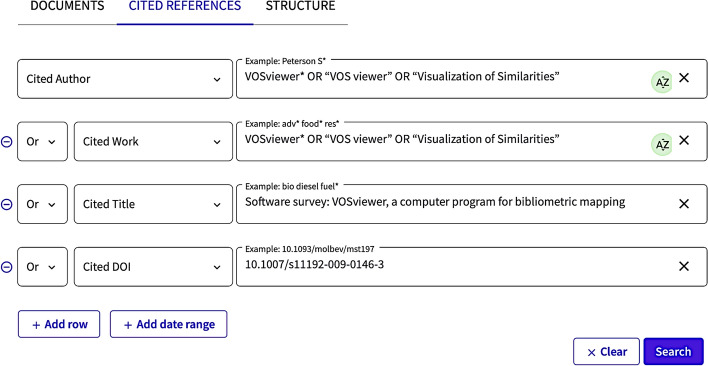

For each software tool, a cited reference search was performed in the “Cited References” tab, combining the “Cited Author,” “Cited Work,” “Cited Title,” and “Cited DOI” fields with the OR operator. Each field refers to a specific aspect of the cited reference (Clarivate Analytics, 2021b). “Cited Author” is the name of the first author of the cited item. “Cited Work” is the source in which the cited item was published, such as a journal, conference, book, or book chapter. “Cited Title” is the title of the cited document, such as an article title. “Cited DOI” is the Digital Object Identifier of the cited electronic document. The reference listed in Table 1 for each tool was used to fill the “Cited Title” and “Cited DOI” fields, while Table 2 presents the Boolean strings used for the “Cited Author” and “Cited Work” fields. An example of a cited reference search for VOSviewer is illustrated in Fig. 1.
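The Boolean strings in Table 2 rely on two devices: right truncation with `*` and quoted phrases joined by `OR`. The minimal Python sketch below approximates how such a string behaves when applied to cited-reference text, using regular expressions. The matching rules (case-insensitive, word-bounded, `*` matching any trailing word characters) are assumptions for illustration and are not the WOS search engine; the sketch also expects straight quotes rather than the typographic quotes printed in Table 2. It shows why a truncated term such as `SciMAT*` also retrieves unrelated strings like “Scimatic,” which is why irrelevant cited references had to be deselected manually.

```python
import re


def term_to_regex(term):
    """Translate one search term into a regex fragment (assumed semantics)."""
    term = term.strip()
    if len(term) >= 2 and term.startswith('"') and term.endswith('"'):
        term = term[1:-1]  # quoted phrase: words must appear together, in order
    truncated = term.endswith("*")
    if truncated:
        term = term[:-1]  # right truncation: keep only the stem
    stem = re.escape(term)
    # (?<!\w) and (?!\w) act as word boundaries that also work next to
    # punctuation such as the parentheses in "(Sci2)".
    tail = r"\w*" if truncated else r"(?!\w)"
    return r"(?<!\w)" + stem + tail


def compile_or_query(query):
    """Combine OR-separated terms into one case-insensitive pattern."""
    terms = query.split(" OR ")
    return re.compile("|".join(f"(?:{term_to_regex(t)})" for t in terms), re.IGNORECASE)


vosviewer = compile_or_query('VOSviewer* OR "VOS viewer" OR "Visualization of Similarities"')
scimat = compile_or_query('SciMAT* OR "Sci MAT" OR "Science Mapping Analysis Software Tool"')

print(bool(vosviewer.search("Software survey: VOSviewer, a computer program")))  # True
print(bool(scimat.search("Sci. Mater.")))  # False
print(bool(scimat.search("SCIMATIC")))     # True: truncation over-matches
```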

Table 2.

Citing articles to bibliometric software tools from a Cited Reference Search, Author Keywords, and KeyWords Plus

| Bibliometric software tool | Boolean string used in “Cited Author” and “Cited Work” fields | # Citing review articles: Cited Reference Searchᵃ | # Citing research articles: Cited Reference Searchᵃ | # Citing articles: Author Keywords | # Citing articles: KeyWords Plus |
|---|---|---|---|---|---|
| BibExcel | BibExcel* OR “Bib Excel” | 81 | 181 | 7 | 0 |
| BiblioMaps/BiblioToolsᵇ | BiblioMaps* OR BiblioTools* OR “Biblio Maps” OR “Biblio Tools” | 2 | 33 | 0 | 0 |
| Bibliometrix/Biblioshinyᶜ | Bibliometrix* OR Biblioshiny* OR “Biblio mertrix” OR “Biblio shiny” | 293 | 389 | 33 | 0 |
| CiteSpace | CiteSpace* OR “Cite Space” | 390 | 851 | 274 | 14 |
| CitNetExplorer | CitNetExplorer* OR “CitNet Explorer” OR “Citation Network Explorer” | 50 | 123 | 4 | 6 |
| SciMAT | SciMAT* OR “Sci MAT” OR “Science Mapping Analysis Software Tool” | 56 | 188 | 34 | 0 |
| Sci2 Tool | “Sci2 Tool*” OR “Science of Science (Sci2)” OR “Science of Science Tool” | 5 | 19 | 2 | 0 |
| VOSviewer | VOSviewer* OR “VOS viewer” OR “Visualization of Similarities” | 950 | 1664 | 308 | 0 |

ᵃ Cited Reference Search = Cited Author OR Cited Work OR Cited Title OR Cited DOI. The “Cited Title” and “Cited DOI” information is available in Table 1

ᵇ BiblioTools are the Python scripts that form the base of BiblioMaps

ᶜ Biblioshiny is the web interface of Bibliometrix

Fig. 1.

Example of a cited reference search for VOSviewer

The resulting cited references were selected, and irrelevant references were deselected (e.g., cited references matched by the SciMAT search that actually pointed to Scientific Materialism, Science et Materialism, Scimatic, The Science of Materials, or Sci. Mater. were excluded). The “See Results” button was then clicked to view the citing documents. These documents were refined by selecting all years from 2010 to 2021 (inclusive) under the “Publication Years” facet. The numbers of research articles and review articles were then compared using the “Document Types” facet, and research articles were isolated by selecting the “Articles” constraint. Journal articles were chosen as the document type because they report original research. Restricting the analysis to a single document type yields a more homogeneous dataset by eliminating potential biases or confounding factors that could arise from mixing document types, which makes the results of the bibliometric analysis more accurate, comparable, and meaningful.
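The same year and document-type refinement can be reproduced offline on exported records. The Python sketch below filters a tiny stand-in for a WOS tab-delimited export; it assumes the WOS field tags `UT` (accession number), `DT` (document type), and `PY` (publication year), and the rows are invented. Treating a record whose document type list includes “Article” (e.g., “Article; Proceedings Paper”) as an article is an assumption about how the “Articles” constraint behaves.

```python
import csv
import io

# Tiny stand-in for a WOS tab-delimited export; the rows are invented.
sample_export = (
    "UT\tDT\tPY\tTI\n"
    "WOS:0001\tArticle\t2015\tMapping a field\n"
    "WOS:0002\tReview\t2018\tA review of tools\n"
    "WOS:0003\tArticle\t2009\tEarly study\n"
    "WOS:0004\tArticle; Proceedings Paper\t2021\tConference spin-off\n"
)


def refine(rows, first_year=2010, last_year=2021, doc_type="Article"):
    """Keep records inside the year window whose document types include doc_type."""
    kept = []
    for row in rows:
        year = int(row["PY"]) if row["PY"].strip() else None  # PY can be blank
        types = {t.strip() for t in row["DT"].split(";")}
        if year is not None and first_year <= year <= last_year and doc_type in types:
            kept.append(row)
    return kept


rows = list(csv.DictReader(io.StringIO(sample_export), delimiter="\t"))
articles = refine(rows)
print([r["UT"] for r in articles])  # ['WOS:0001', 'WOS:0004']
```

Passing `doc_type="Review"` to the same function gives the review-article counterpart, mirroring the comparison of the two document types.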

The citing articles from each bibliometric software tool were combined by going to the “View your search history” tab and clicking the “Advanced Search” link. The “Analyze Results” button was used to analyze articles by publication years, countries, publication titles, publishers, funding agencies, open access publications, and WOS categories. The articles were also separated by the three discipline indexes under the “Web of Science Index” facet.
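Combining the per-tool searches keeps each citing article only once, which is why the research-article column of Table 2 sums to 3448 while the combined set contains 2882 unique articles: some articles cite more than one tool. The Python sketch below illustrates this with invented accession numbers; the variable names are hypothetical.

```python
from collections import Counter

# Hypothetical WOS accession numbers (UT) of citing articles for three tools.
citing_by_tool = {
    "VOSviewer": {"WOS:01", "WOS:02", "WOS:03"},
    "CiteSpace": {"WOS:02", "WOS:04"},
    "Bibliometrix": {"WOS:03", "WOS:04", "WOS:05"},
}

# Summing per-tool counts counts an article once for every tool it cites...
per_tool_total = sum(len(ids) for ids in citing_by_tool.values())
# ...whereas combining the searches with OR keeps each article once.
unique_articles = set().union(*citing_by_tool.values())

# Articles citing more than one tool account for the gap between the two numbers.
tool_counts = Counter(uid for ids in citing_by_tool.values() for uid in ids)
multi_tool = sorted(uid for uid, n in tool_counts.items() if n > 1)

print(per_tool_total)        # 8
print(len(unique_articles))  # 5
print(multi_tool)            # ['WOS:02', 'WOS:03', 'WOS:04']
```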

The retrieved citing article records were exported by clicking on the “Export” button and selecting “Tab delimited file,” followed by “Full Record and Cited References.” The records were saved 500 at a time. The data records were subsequently uploaded into the VOSviewer software (Visualization of Similarities, version 1.6.18) and analyzed for keyword co-occurrences (van Eck & Waltman, 2010, 2022). A thesaurus file was constructed and uploaded to the VOSviewer to remove irrelevant terms and combine spelling variations, related terminology, and synonyms. Lastly, mentions of bibliometric software tools were compared through a basic search using the Boolean strings from Table 2 in the fields of Author Keywords and KeyWords Plus.
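The thesaurus step and the keyword co-occurrence count can be approximated in Python as follows. The file layout (two tab-separated columns, “label” and “replace by,” with an empty replacement removing a term) follows the VOSviewer thesaurus format as we understand it from the VOSviewer manual; the thesaurus entries and keyword records are hypothetical, and the sketch lower-cases keywords as a simplifying assumption. The counting corresponds to full counting (each record adds one to every keyword pair it contains); VOSviewer also offers fractional counting, and this sketch does not reproduce its normalization or mapping.

```python
import csv
import io
from collections import Counter
from itertools import combinations

# Hypothetical thesaurus: two tab-separated columns, "label" and "replace by".
# An empty "replace by" value removes the term from the analysis.
thesaurus_tsv = (
    "label\treplace by\n"
    "bibliometric analysis\tbibliometrics\n"
    "bibliometric\tbibliometrics\n"
    "vos viewer\tvosviewer\n"
    "study\t\n"
)


def load_thesaurus(text):
    """Read the tab-delimited thesaurus into a {label: replacement} dict."""
    reader = csv.DictReader(io.StringIO(text), delimiter="\t")
    return {row["label"]: row["replace by"] for row in reader}


def normalize(keywords, thesaurus):
    """Lower-case keywords, apply replacements, and drop removed terms."""
    cleaned = set()
    for kw in keywords:
        kw = kw.strip().lower()
        kw = thesaurus.get(kw, kw)
        if kw:  # an empty string means the thesaurus removed the term
            cleaned.add(kw)
    return cleaned


def co_occurrences(records, thesaurus):
    """Count the records in which each unordered keyword pair appears (full counting)."""
    counts = Counter()
    for keywords in records:
        terms = sorted(normalize(keywords, thesaurus))
        counts.update(combinations(terms, 2))
    return counts


# Hypothetical Author Keywords (DE) values; WOS separates keywords with semicolons.
records = [
    "Bibliometric analysis; VOSviewer; Sustainability".split(";"),
    "bibliometrics; vos viewer; study".split(";"),
    "CiteSpace; Bibliometric; Sustainability".split(";"),
]

thesaurus = load_thesaurus(thesaurus_tsv)
pairs = co_occurrences(records, thesaurus)
print(pairs[("bibliometrics", "vosviewer")])       # 2
print(pairs[("bibliometrics", "sustainability")])  # 2
```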

Results and findings

RQ1: How frequently are bibliometric software tools cited in research articles?

To address RQ1, the combined number of articles citing the eight bibliometric software tools was captured using the “Cited References” option in WOS. This research found that 2882 research articles contain at least one citation to a bibliometric software tool, indicating that 0.015% of all scholarly articles in the three WOS disciplines cite one or more bibliometric software tools. Table 2 provides the number of articles that cite each software tool. Although bibliometric software tools are mainly cited in research articles, the study revealed that they are also cited in review articles, which often use these tools to analyze and map a scientific field and to identify key research trends and gaps in the literature. VOSviewer and CiteSpace are the most frequently cited tools in both research articles and review articles.

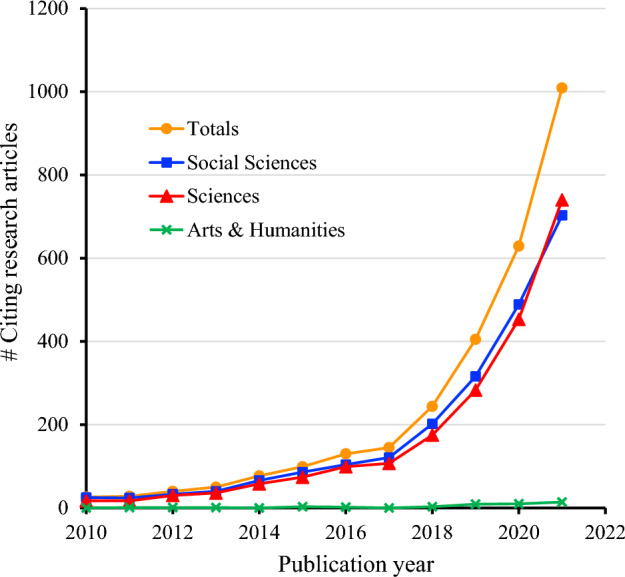

The number of citing research articles varies among the bibliometric software tools, from 19 for the Sci2 Tool to 1664 for VOSviewer between 2010 and 2021. In terms of article counts, the Social Sciences show the greatest visibility and impact (2209 citing research articles), followed by the Sciences (2089) and the Arts & Humanities (43); because a journal can be indexed in more than one WOS index, these counts overlap and sum to more than 2882. Figure 2 compares the yearly number of citing research articles across the three disciplines. Citing research articles increased steadily in both the Social Sciences (from 24 to 703) and the Sciences (from 17 to 740), whereas the Arts & Humanities showed minimal growth (from 0 to 14). Over the past decade, the total number of citing research articles rose from 26 in 2010 to 1009 in 2021, reflecting a substantial increase in the use of these tools, driven mainly by the Sciences and Social Sciences. However, most of these citations are attributable to VOSviewer (48% of citings) and CiteSpace (25%).
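The 48% and 25% shares can be checked against Table 2. They appear to use the sum of per-tool citings (3448, where an article citing two tools is counted twice) as the denominator rather than the 2882 unique articles; against 2882, VOSviewer's 1664 articles would be about 58%. The Python sketch below reproduces the arithmetic from the Table 2 research-article counts.

```python
# Citing research articles per tool, 2010 to 2021 (Table 2).
research_articles = {
    "BibExcel": 181,
    "BiblioMaps/BiblioTools": 33,
    "Bibliometrix/Biblioshiny": 389,
    "CiteSpace": 851,
    "CitNetExplorer": 123,
    "SciMAT": 188,
    "Sci2 Tool": 19,
    "VOSviewer": 1664,
}

# This total counts an article once for each tool it cites.
total_citings = sum(research_articles.values())
shares = {tool: n / total_citings for tool, n in research_articles.items()}

print(total_citings)                     # 3448
print(round(100 * shares["VOSviewer"]))  # 48
print(round(100 * shares["CiteSpace"]))  # 25
print(round(100 * 1664 / 2882))          # 58 (share of unique citing articles)
```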

Fig. 2.

Citing research articles to bibliometric software tools by publication year

RQ2: Which countries, publication titles, publishers, funding agencies, and levels of Open Access (OA) cite bibliometric software tools?

To answer RQ2, the “Analyze Results” feature in WOS was used to determine the number of citing research articles by country, publication title, publisher, funding agency, and level of OA. The results of the analysis are presented in Table 3.

Table 3.

Highest incidence of citing research articles to bibliometric software tools by country, publication title, publisher, and funding agency

| Country | # Citing research articles | Publication title | # Citing research articles | Publisher | # Citing research articles | Funding agency | # Citing research articles |
|---|---|---|---|---|---|---|---|
| People’s Republic of China | 928 | Scientometrics | 295 | Elsevier | 607 | National Natural Science Foundation of China | 410 |
| USA | 430 | Sustainability | 162 | Springer Nature | 603 | Conselho Nacional de Desenvolvimento Científico e Tecnológico | 56 |
| Spain | 410 | International Journal of Environmental Research and Public Health | 62 | MDPI | 350 | Coordenação de Aperfeiçoamento de Pessoal de Nível Superior | 54 |
| England | 211 | PLOS ONE | 48 | Wiley | 220 | European Commission | 52 |
| Australia | 185 | Journal of Informetrics | 46 | Taylor & Francis | 173 | Fundamental Research Funds for the Central Universities | 52 |
| Brazil | 158 | Technological Forecasting and Social Change | 41 | Emerald Group Publishing | 142 | Spanish Government | 52 |
| Italy | 156 | Environmental Science and Pollution Research | 36 | Sage | 98 | National Science Foundation | 50 |
| Netherlands | 143 | Journal of Cleaner Production | 34 | Frontiers Media SA | 76 | UK Research & Innovation | 39 |
| Germany | 135 | Journal of the Association for Information Science and Technology | 29 | IEEE | 71 | China Postdoctoral Science Foundation | 38 |
| India | 131 | IEEE Access | 27 | Public Library Science | 50 | China Scholarship Council | 34 |

Countries of origin

The Research Software Alliance (2023) offers a wealth of international resources on software policies and strategies that can help establish best software practices. Because the bibliometric software tools analyzed in this study are freely accessible worldwide, their availability may encourage scholars from low- and middle-income countries to engage in bibliometric research. The analysis of 2882 research articles reveals that scholars from 94 countries have cited one or more bibliometric software tools. The People’s Republic of China accounts for the largest share of citing research articles (32.2% of the total), followed by the USA (14.9%), Spain (14.2%), and England (7.3%). Apart from China, most of the leading countries are economically advanced, although researchers from developing countries such as Brazil and India also appear among the top ten.
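The country, journal, and publisher percentages in this section are shares of the 2882 unique citing research articles. The Python sketch below reproduces them from the Table 3 counts; the helper name `percent_of_total` is hypothetical. Because WOS credits a multinational article to each of its countries, country shares can overlap and need not sum to 100%.

```python
TOTAL_CITING_ARTICLES = 2882  # unique citing research articles, 2010 to 2021


def percent_of_total(count, total=TOTAL_CITING_ARTICLES, digits=1):
    """Share of all citing research articles, in percent."""
    return round(100 * count / total, digits)


# Country counts from Table 3.
top_countries = {
    "People's Republic of China": 928,
    "USA": 430,
    "Spain": 410,
    "England": 211,
}

for country, n in top_countries.items():
    print(f"{country}: {percent_of_total(n)}%")
# People's Republic of China: 32.2%
# USA: 14.9%
# Spain: 14.2%
# England: 7.3%

# The same helper reproduces the journal and publisher figures.
print(percent_of_total(295))             # 10.2 (Scientometrics)
print(percent_of_total(607 + 603, 2882, 0))  # 42.0 (Elsevier + Springer Nature)
```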

Publication titles

The practice of citing bibliometric software tools in discipline-specific journals is evident in the study. Specifically, the analysis of the WOS Core Collection from 2010 to 2021 revealed one or more citations to bibliometric software tools in 1104 journals, representing 6.5% of the total indexed journals across the three disciplines examined. Further, 29 journals had ten or more citing research articles, while only eight had 30 or more. Among the journals with the highest incidence of citing articles, Scientometrics ranked first with 295 (10.2% of the total), followed by Sustainability (5.6%) and the International Journal of Environmental Research and Public Health (2.2%). Journals that frequently cite bibliometric software tools may therefore tend to accept papers that focus on bibliometric research. Author manuscript guidelines should include a comprehensive reference list of commonly used bibliometric software tools, as exemplified in Table 1, to foster the proper citation of software tools. Additionally, editors and reviewers should be advised to address uncited software in their evaluations of research papers, thereby raising awareness and promoting good citation practices in the scholarly community.

Publishers

An examination of the 2882 citing research articles by publisher found that Elsevier and Springer Nature publish the largest share of them (1210 citing research articles; 42% of the total). Eleven of the 217 publishers each have 20 or more citing research articles, together accounting for 84% of the total. To improve software citation practices, major publishers could ask their editors to incorporate guidelines for software referencing (both in-text mentions and reference-list entries) in their publications. Such an approach could also encourage smaller and independent publishers to follow suit. Further, software developers can play a key role in this process by proactively recommending how their software should be cited, thereby facilitating transparency and appropriate referencing in scholarly publications.

Funding agencies

Many funding agencies have made efforts in their policy statements and guidelines to promote the citation of software in research publications. The US National Science Foundation (NSF), for instance, requires software citation in its Grant Proposal Guide (GPG) and mandates that investigators include a plan for preserving and sharing any software they develop (NSF, 2013). The National Institutes of Health (NIH) similarly encourages software citation in its Research Performance Progress Report (RPPR) and grant applications (NIH Office of Science Policy, 2017). Likewise, the European Research Council (ERC) expects researchers to adhere to the FAIR principles when managing and disseminating data, including software, so that it is easy to discover and seamlessly accessible (European Commission, Directorate-General for Research and Innovation, 2020).

Citing software is becoming increasingly common as research funding policies and guidelines in North America and Europe call for it. It is therefore reasonable to expect that a significant proportion of research publications referencing bibliometric software tools would be supported by these major funding agencies. The study identified 1808 different funding agencies across the 2882 citing research articles. Of all the funders identified over the 12-year study period, only 1.9% (35 funders) were associated with ten or more citing research articles. The largest number of citing articles was funded by the National Natural Science Foundation of China (410 citing research articles; 14.2% of the total), which is consistent with the data for the most influential country (Table 3). Other funders with high counts included two Brazilian agencies, the Conselho Nacional de Desenvolvimento Científico e Tecnológico (56; 1.9%) and the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior (54; 1.9%). The European Commission, the Fundamental Research Funds for the Central Universities, and the Spanish Government were each associated with 52 citing research articles (1.8%). In comparison, the NSF (50; 1.7%) and the NIH (12; 0.4%) were associated with relatively few citing research articles. This gap highlights the importance of effective outreach and follow-up strategies to promote and monitor proper in-text and reference citation practices.
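The shares and relative positions reported in this paragraph can be re-derived from the stated counts. The Python sketch below is illustrative only: it uses just the counts given above, abbreviates the two Brazilian agencies by their common acronyms (CNPq and CAPES), and computes each funder's share of the 2882 citing articles together with a standard competition ranking, in which tied counts share a position. The positions are relative to the funders named here, not to all 1808 funders in the data set.

```python
# Funder counts as reported in the text (citing research articles, N = 2882).
TOTAL_CITING_ARTICLES = 2882

funders = {
    "National Natural Science Foundation of China": 410,
    "CNPq": 56,
    "CAPES": 54,
    "European Commission": 52,
    "Fundamental Research Funds for the Central Universities": 52,
    "Spanish Government": 52,
    "NSF": 50,
    "NIH": 12,
}


def share(count: int, total: int = TOTAL_CITING_ARTICLES) -> float:
    """Percentage of the total, rounded to one decimal place."""
    return round(100 * count / total, 1)


def competition_rank(counts: dict[str, int]) -> dict[str, int]:
    """Standard competition ranking ("1224"): tied counts share a position,
    and the next position skips past the tied entries."""
    return {
        name: 1 + sum(other > count for other in counts.values())
        for name, count in counts.items()
    }


ranks = competition_rank(funders)
for name, count in sorted(funders.items(), key=lambda kv: -kv[1]):
    print(f"{ranks[name]:>2}  {name}: {count} ({share(count)}%)")
```

The same `share` helper reproduces the 1.9% figure for the 35 of 1808 funders with ten or more citing articles (`share(35, 1808)`).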

Open access journals

Since bibliometric software tools are frequently open-source, it would be reasonable to expect OA journals to take greater responsibility for mandating the inclusion of relevant software references in scholarly articles. This would not only promote the availability of free software but also encourage its use in the research community, which is particularly important when barriers such as paywalls limit access to similar types of software. Figure 3 shows a treemap chart of the distribution of OA articles among the 2882 citing research articles, revealing 1468 OA citing articles (50.9% of the total in the study). Of these, 874 (59.5%) are Gold OA. These Gold OA citing articles represent 0.027% of all Gold OA articles from the three disciplines in the WOS Core Collection, compared with 0.013% for non-OA articles, or roughly twice the rate. Notably, all of the journals with the highest incidence of citing articles in Table 3 are OA journals except Scientometrics. The data suggest that OA journals tend to prioritize the citation and use of open-source bibliometric software tools in their research papers.

Fig. 3.

Citing research articles to bibliometric software tools by the level of open access

RQ3: What subject-specific research areas cite bibliometric software tools?

To address RQ3, the retrieved citing papers were analyzed by WOS category. The VOSviewer software was then used to visually analyze the co-occurring keywords derived from the Author Keywords and KeyWords Plus attributed to each research paper. This approach made it possible to investigate the research trends and patterns associated with the bibliometric software tools under study.

WOS categories

Each journal in WOS is assigned at least one subject category that appears in a publication record (Clarivate Analytics, 2020). Table 4 presents the ten subject categories with the highest number of citing research articles to bibliometric software tools. Citations to bibliometric software tools occur in 216 different WOS categories within the disciplines of Social Sciences (184 WOS categories), Sciences (182), and Arts & Humanities (24); because these discipline counts overlap, they sum to more than 216. The Information & Library Science category leads with the highest number of citing articles (20.1% of the total), followed by Environmental Sciences (15.0%) and Computer Science, Interdisciplinary Applications (13.2%). Other notable categories occur in business, management, and health (e.g., Health Care Sciences & Services, Nursing, Emergency Medicine, and Medical Ethics).

Table 4.

Highest incidence of citing research articles to bibliometric software tools by Web of Science category

| Web of Science category | Citing research articles (n) |
|---|---|
| Information & Library Science | 578 |
| Environmental Sciences | 433 |
| Computer Science, Interdisciplinary Applications | 381 |
| Green & Sustainable Science & Technology | 235 |
| Environmental Studies | 234 |
| Management | 221 |
| Business | 195 |
| Computer Science, Information Systems | 187 |
| Public, Environmental & Occupational Health | 158 |
| Multidisciplinary Sciences | 119 |

Keyword co-occurrences

The WOS database categorizes keywords as Author Keywords (uncontrolled vocabulary) and KeyWords Plus (controlled vocabulary) (Clarivate Analytics, 2021a; Garfield, 1990). Author Keywords are supplied by the author(s) when submitting an article to highlight the paper’s main research focus and core concepts; they have been included in WOS records since 1991 (Clarivate Analytics, 2021a). KeyWords Plus are index terms generated from words and phrases that appear at least twice in the titles of an article’s cited references. They therefore serve as an additional, independent source of information on research trends. Together, Author Keywords and KeyWords Plus provide an overview of each paper’s research context and trends.
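To make the KeyWords Plus idea concrete, the Python sketch below extracts candidate terms from the titles of an article's cited references and keeps those that occur in at least two titles. This is a simplified, hypothetical illustration and not Clarivate's actual algorithm: the stopword list, the restriction to one- and two-word phrases, and the function names are assumptions. The sample titles are taken from this article's reference list.

```python
import re
from collections import Counter

# Hypothetical stopword list; this is NOT Clarivate's term-selection rule.
STOPWORDS = {"a", "an", "and", "for", "in", "of", "on", "the", "to", "with"}


def candidate_terms(title: str, max_words: int = 2) -> set[str]:
    """Return the distinct 1- to max_words-word phrases in a title,
    skipping any phrase that contains a stopword."""
    tokens = re.findall(r"[a-z0-9]+", title.lower())
    terms = set()
    for n in range(1, max_words + 1):
        for i in range(len(tokens) - n + 1):
            gram = tokens[i:i + n]
            if not any(t in STOPWORDS for t in gram):
                terms.add(" ".join(gram))
    return terms


def keywords_plus_like(cited_titles: list[str], min_titles: int = 2) -> list[str]:
    """Keep terms that appear in at least `min_titles` distinct cited titles."""
    counts = Counter()
    for title in cited_titles:
        # candidate_terms returns a set, so each title counts a term only once.
        counts.update(candidate_terms(title))
    return sorted(term for term, n in counts.items() if n >= min_titles)


cited_titles = [
    "Software citation principles",
    "Software citation implementation challenges",
    "Software citation in theory and practice",
    "Software survey: VOSviewer, a computer program for bibliometric mapping",
]
print(keywords_plus_like(cited_titles))  # ['citation', 'software', 'software citation']
```

In this toy run, "vosviewer" occurs in only one cited title and is therefore not retained, which illustrates, under these assumptions, how a software name that rarely appears in cited titles can be missed by a title-derived index term.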

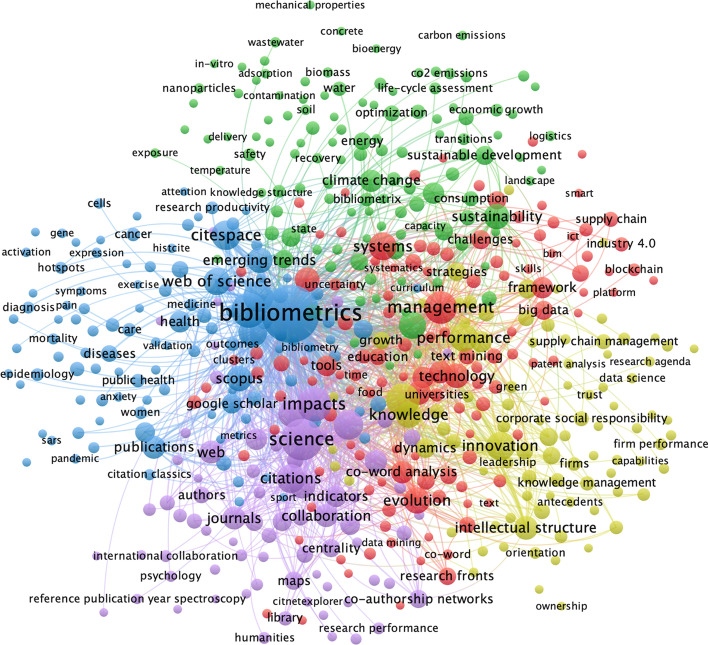

The VOSviewer software created a network map of the citing articles based on their Author Keywords and KeyWords Plus. Applying a minimum threshold of seven occurrences yielded 527 keywords from the 2882 citing research articles. Five major interconnected clusters were identified (Fig. 4). Table 5 (Appendix 1) lists the cluster names by major research field, with examples of extracted keywords. Each color marks the keywords assigned to one cluster. Interestingly, big data research is evident in all clusters.

Fig. 4.

Citing research articles to bibliometric software tools by keyword cluster (VOSviewer software)
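The occurrence threshold and co-occurrence links described above can be illustrated with a small sketch. The Python code below is a simplified, hypothetical stand-in for what VOSviewer does, not its actual implementation: each keyword is counted at most once per article, keywords below a minimum number of occurrences are dropped (the study used seven; the toy example uses two), and a link between two retained keywords is weighted by the number of articles in which both appear. The sample keyword lists are invented for illustration, and the clustering step that produces the five clusters is not reproduced.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_network(docs, min_occurrences):
    """Return (retained keywords, co-occurrence link weights).

    docs: one list of keywords per article (e.g., Author Keywords + KeyWords Plus).
    A keyword is retained if it occurs in at least `min_occurrences` articles;
    a link (a, b) counts the articles in which both retained keywords occur.
    """
    # Normalize case and drop duplicate keywords within an article.
    doc_sets = [{kw.strip().lower() for kw in doc} for doc in docs]
    occurrences = Counter(kw for kws in doc_sets for kw in kws)
    kept = {kw for kw, n in occurrences.items() if n >= min_occurrences}

    links = Counter()
    for kws in doc_sets:
        # Sorting gives each unordered keyword pair a single canonical key.
        for a, b in combinations(sorted(kws & kept), 2):
            links[(a, b)] += 1
    return kept, links


# Hypothetical keyword lists for four citing articles.
docs = [
    ["VOSviewer", "Bibliometrics", "Climate change"],
    ["CiteSpace", "bibliometrics", "COVID-19"],
    ["VOSviewer", "Bibliometrics", "COVID-19"],
    ["Tourism", "VOSviewer"],
]
kept, links = cooccurrence_network(docs, min_occurrences=2)
print(sorted(kept))
print(dict(links))
```

Raising `min_occurrences` shrinks the map to its most frequent keywords, which is the role the threshold of seven plays in producing the 527-keyword network.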

Table 5.

Selected keywords extracted from citing research articles to bibliometric software tools (VOSviewer)

| Cluster # (color) | Cluster name | Examples of keywords |
|---|---|---|
| Cluster 1 (red) | Information & Library Science | Information, library and information science, higher education, information literacy, databases, internet, journal analysis, knowledge discovery, deep learning, internet of things, big data, network visualization, data visualization, data mining, augmented reality, software, technology, emerging technology, disruptive technology |
| Cluster 2 (green) | Environment | Environment, environmental sustainability, climate change, CO2 emissions, coastal, ecosystems, greenhouse-gas emission, forest, soil, energy consumption, wastewater, biomass, biodiversity, toxicity, pollution, oxidation, nitrogen, heavy metals, GIS, data envelopment analysis |
| Cluster 3 (blue) | Health Sciences | Health, healthcare, public health, mental health, medicine, diagnosis, nursing, surgery, risk factors, diseases, infectious diseases, pandemic, SARS, COVID-19, pneumonia, obesity, meta-analysis, bibliometrics, Scopus, Web of Science, cancer, breast cancer, proteins, cells, metabolism, gene, mutations |
| Cluster 4 (yellow) | Business & Management | Business, business intelligence, management research, supply chain management, knowledge management, market orientation, financial performance, firm performance, predictive analysis, customer satisfaction, entrepreneurship, hospitality, tourism, sharing economy, globalization, leadership, patent analysis, data science |
| Cluster 5 (violet) | Citation Impact | Citation impact, citation analysis, cited references, research impact, authors, scientific impact, impact factors, ranking, h-index, international collaboration, institutions, productivity, output, patterns, centrality, historical roots, scholarly communication, big data research, altmetrics |

The first cluster (red) is the largest and focuses on Information & Library Science. This cluster also contains technology-related terms such as technology, disruptive technology, emerging technology, and augmented reality. The second-largest cluster (green) is grouped as Environment and is composed of keywords reflecting environmental studies (e.g., climate change, CO2 emissions, ecosystems, energy consumption, pollution, and sustainability). Cluster 3 (blue) relates to Health Sciences, recognized by terms such as health, healthcare, public health, mental health, medicine, nursing, surgery, and pandemic. This cluster further reveals applications of bibliometric software tools in research on diseases (e.g., cancer, breast cancer, infectious diseases, pneumonia, COVID-19, and obesity) and the biological sciences (e.g., cells, brain, gene, mutations, and proteins). The fourth cluster (yellow) focuses on applications of software mapping tools in Business & Management. Characteristic broad terms include business, hospitality, business models, entrepreneurship, tourism, and management research. Cluster 5 (violet) is designated as Citation Impact, encapsulating pertinent terms such as citation impact, research impact, metrics, h-index, citation analysis, big data research, international collaboration, institutions, and scholarly communication. Overall, the data demonstrate that bibliometric software tools are used in subject-specific fields across numerous disciplines.

RQ4: Are bibliometric software tools included in Author Keywords and KeyWords Plus?

To address RQ4, a basic WOS search was run for each bibliometric software tool in the Author Keywords and KeyWords Plus fields. Table 2 provides the corresponding number of research articles that mention each software tool in these fields. The results show that bibliometric software tools are cited in reference lists more often than they appear as Author Keywords or KeyWords Plus. Article counts are generally higher for Author Keywords than for KeyWords Plus. VOSviewer and CiteSpace have the highest number of mentions as Author Keywords, each in over 200 research papers. Only two of the eight software tools (CiteSpace and CitNetExplorer) were found in KeyWords Plus. The uncontrolled vocabulary of Author Keywords therefore provides more visibility to these software tools than the controlled vocabulary of KeyWords Plus. Notably, the KeyWords Plus process largely fails to capture bibliometric software tools because the software names are generally absent from the titles of the articles in the reference lists.
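The per-tool searches described above can be scripted as query strings. The Python sketch below assumes the Web of Science advanced-search field tags AK (Author Keywords) and KP (Keyword Plus) and covers only the three tools named in this section; the study does not state its exact query syntax, and the helper name `wos_field_query` is hypothetical. Each generated string would be entered in the WOS Advanced Search box, one per tool and field, to obtain counts of the kind reported in Table 2.

```python
# Three of the eight tools examined in the study (the others are not named in this section).
TOOLS = ["VOSviewer", "CiteSpace", "CitNetExplorer"]

# Assumed WOS advanced-search field tags.
FIELD_TAGS = {"Author Keywords": "AK", "KeyWords Plus": "KP"}


def wos_field_query(tool: str, tag: str) -> str:
    """Build a single-field WOS advanced-search query for one tool name.
    Quotation marks keep the name together as an exact phrase."""
    return f'{tag}=("{tool}")'


# One query per (tool, field) combination.
queries = {
    (tool, field): wos_field_query(tool, tag)
    for tool in TOOLS
    for field, tag in FIELD_TAGS.items()
}

for (tool, field), query in queries.items():
    print(f"{tool:<15} {field:<16} {query}")
```

Keeping one query per tool and field, rather than combining names with OR, preserves the per-tool counts needed for a comparison like Table 2.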

Because software tools are usually not the primary focus of the research reported in articles, they are typically not included as Author Keywords. Authors are also limited in the number of Author Keywords they can use to describe the nature of their research. Since indexing plays a critical role in information discovery and visibility, it would be advantageous to incorporate a separate field that allows authors to add specific software names. Scopus, for example, already offers a “Trade Name” field to identify commercial products and services. A software field would be valuable for scholars who wish to search for information or ideas regarding the use of specific software in research.

Limitations and future work

This research is restricted to documents indexed in the WOS database, so publications not indexed there could not be analyzed. The WOS Core Collection covers varying numbers of journals and articles across its citation indexes, which introduces an inherent bias in which citation indexes and subject areas appear to cite software frequently. The study also depends on authors including a formal citation for the software in their reference lists; the “Cited Reference Search” feature does not capture informal mentions of software in the body of a publication.

The application of bibliometric software tools in systematic literature reviews in fields outside Information & Library Science is expanding rapidly, making it a promising area for future investigation. It may be interesting to study whether publications that explicitly include a cited reference to a software tool differ from those that merely mention the tool’s name without a cited reference. Future work could also analyze citations to specific data sets, software source code or code fragments, and AI chatbots such as ChatGPT-4 (OpenAI, 2021).

Conclusions

This study utilized a “Cited Reference Search” approach to examine the impact and contribution of eight bibliometric software tools in research articles published between 2010 and 2021. A comprehensive analysis of 2882 citing articles retrieved from the WOS Core Collection revealed that these tools have a noteworthy impact and contribution to research, but their visibility through referencing, Author Keywords, and KeyWords Plus is generally low.

The findings showed an upward trend in yearly citation counts within the Social Sciences and Sciences disciplines, while the Arts & Humanities remained relatively stable. The citation analysis indicates that VOSviewer and CiteSpace have emerged as the most frequently cited bibliometric software tools. Scientometrics and Sustainability were the journals with the highest number of citations to bibliometric software tools; notably, only eight of the 1104 journals analyzed had 30 or more citing articles each during the 12-year study period. The People’s Republic of China was the most influential country, and the National Natural Science Foundation of China was the leading funder. Elsevier and Springer Nature were the dominant publishers of articles citing bibliometric software tools, together accounting for 42% of the citing articles. Lastly, OA articles cited bibliometric software tools at a higher rate than non-OA articles.

The versatility and significant impact of bibliometric software tools in research across different disciplines are evidenced by the variety of WOS categories of the citing papers. Moreover, the cluster map generated by the VOSviewer software identified the subject-specific research areas in which these tools are employed. The outcomes of this research are valuable to a diverse range of stakeholders: researchers, academic librarians, publishers, editors, reviewers, and research evaluation agencies can all benefit from these insights into the impact and contributions of bibliometric software tools.

Acknowledgements

I thank the reviewers for their thorough and insightful feedback, which has significantly enhanced the quality and clarity of the manuscript.

Appendix 1

See Table 5.

Declarations

Conflict of interest

The author has no funding or conflict of interest to declare.

References

- Alliez, P., Di Cosmo, R., Guedj, B., Girault, A., Hacid, M. S., Legrand, A., & Rougier, N. (2020). Attributing and referencing (research) software: Best practices and outlook from Inria. Computing in Science & Engineering, 22(1), 39–52. 10.1109/MCSE.2019.2949413

- Bainbridge, D. I. (2004). Introduction to computer law (5th ed.). Pearson Education Limited.

- Bales, M. E., Wright, D. N., Oxley, P. R., & Wheeler, T. R. (2020). Bibliometric visualization and analysis software: State of the art, workflows, and best practices. Retrieved March 27, 2023, from https://hdl.handle.net/1813/69597

- Bankar, R. S., & Lihitkar, S. R. (2019). Science mapping and visualization tools used for bibliometric and scientometric studies: A comparative study. Journal of Advancements in Library Sciences, 6(1), 382–394.

- Borgman, C. L., & Furner, J. (2002). Scholarly communication and bibliometrics. Annual Review of Information Science and Technology, 36, 3–72.

- Bouquin, D. R., Chivvis, D. A., Henneken, E., Lockhart, K., Muench, A., & Koch, J. (2020). Credit lost: Two decades of software citation in astronomy. The Astrophysical Journal Supplement Series, 249(1), 8. 10.3847/1538-4365/ab7be6

- Cardona, M., & Marx, W. (2007). Anatomy of the ICDS series: A bibliometric analysis. Physica B: Condensed Matter, 401–406, 1–6. 10.1016/j.physb.2007.08.101

- Chun, W. H. K. (2005). On software, or the persistence of visual knowledge. Grey Room, 18, 26–51. 10.1162/1526381043320741

- Clarivate Analytics. (2020). Web of Science core collection help. Retrieved March 27, 2023, from https://images.webofknowledge.com/images/help/WOS/hp_subject_category_terms_tasca.html

- Clarivate Analytics. (2021a). Core collection full record details – Keywords. Retrieved March 27, 2023, from https://webofscience.help.clarivate.com/en-us/Content/wos-core-collection/wos-full-record.htm

- Clarivate Analytics. (2021b). Web of Science core collection search fields – Cited reference fields. Retrieved March 27, 2023, from https://webofscience.help.clarivate.com/en-us/Content/wos-core-collection/woscc-search-fields.htm

- Clarivate Analytics. (2023a). Web of Science. Retrieved March 27, 2023, from https://clarivate.com/webofsciencegroup/solutions/web-of-science

- Clarivate Analytics. (2023b). Web of Science journal evaluation process and selection criteria – Web of Science core collection. Retrieved March 27, 2023, from https://clarivate.com/products/scientific-and-academic-research/research-discovery-and-workflow-solutions/web-of-science/core-collection/editorial-selection-process/editorial-selection-process/

- Cobo, M. J., López-Herrera, A. G., Herrera-Viedma, E., & Herrera, F. (2011). Science mapping software tools: Review, analysis, and cooperative study among tools. Journal of the American Society for Information Science and Technology, 62(7), 1382–1402. 10.1002/asi.21525

- Cousijn, H., Kenall, A., Ganley, E., Harrison, M., Kernohan, D., Lemberger, T., Murphy, F., Polischuk, P., Taylor, S., Martone, M., & Clark, T. (2018). A data citation roadmap for scientific publishers. Scientific Data, 5(1), 1–11. 10.1038/sdata.2018.259

- Crouch, S., Hong, N. P. C., Hettrick, S., Jackson, M., Pawlik, A., Sufi, S., Carr, S., De Roure, D., Goble, C. A., & Parsons, M. (2013). The Software Sustainability Institute: Changing research software attitudes and practices. Computing in Science & Engineering, 15(6), 74–80. 10.1109/MCSE.2013.133

- Dhillon, J. K., & Gill, N. C. (2014). Contribution of Indian pediatric dentists to scientific literature during 2002–2012: A bibliometric analysis. Acta Informatica Medica, 22(3), 199–202. 10.5455/aim.2014.22.199-202

- European Commission, Directorate-General for Research and Innovation. (2020). Scholarly infrastructures for research software: Report from the EOSC Executive Board Working Group (WG) Architecture Task Force (TF) SIRS, Publications Office. Retrieved March 27, 2023, from https://data.europa.eu/doi/10.2777/28598

- Garfield, E. (1979). Citation indexing: Its theory and application in science, technology, and humanities. Wiley.

- Garfield, E. (1990). KeyWords Plus: ISI's breakthrough retrieval method. 1. Expanding your searching power on Current Contents on Diskette. Current Contents, 32, 5–9.

- Haunschild, R., & Bornmann, L. (2023). Which papers cited which tweets? An exploratory analysis based on Scopus data. Journal of Informetrics, 17(2), 101383. 10.1016/j.joi.2023.101383

- Haustein, S., & Larivière, V. (2015). The use of bibliometrics for assessing research: Possibilities, limitations and adverse effects. In I. M. Welpe, J. Wollersheim, S. Ringelhan, & M. Osterloh (Eds.), Incentives and performance: Governance of research organizations (pp. 121–139). Springer. 10.1007/978-3-319-09785-5_8

- Katz, D. S., Bouquin, D., Chue Hong, N. P., Hausman, J., Jones, C., Chivvis, D., Clark, T., Crosas, M., Druskat, S., Fenner, M., Gillespie, T., Gonzalez-Beltran, A., Gruenpeter, M., Habermann, T., Haines, R., Harrison, M., Henneken, E., Hwang, L., Jones, M. B., & Zhang, Q. (2019a). Software citation implementation challenges. Retrieved March 27, 2023, from http://arxiv.org/abs/1905.08674

- Katz, D. S., McInnes, L. C., Bernholdt, D. E., Mayes, A. C., Chue Hong, N. P., Duckles, J., Gesing, S., Heroux, M. A., Hettrick, S., Jimenez, E. C., Pierce, E. C., Weaver, B., & Wilkins-Diehr, N. (2019). Community organizations: Changing the culture in which research software is developed and sustained. Computing in Science & Engineering, 21(2), 8–24. 10.1109/MCSE.2018.2883051

- Katz, D. S., & Chue Hong, N. P. (2018). Software citation in theory and practice. In J. H. Davenport, M. Kauers, G. Labahn, & J. Urban (Eds.), Mathematical Software – ICMS 2018, Lecture Notes in Computer Science (pp. 289–296). Springer. 10.1007/978-3-319-96418-8

- Katz, D. S., Chue Hong, N. P., Clark, T., Muench, A., Stall, S., Bouquin, D., Cannon, M., Edmunds, S., Faez, T., Feeney, P., Fenner, M., Friedman, M., Grenier, G., Harrison, M., Heber, J., Leary, A., MacCallum, C., Murray, H., Pastrana, E., & Yeston, J. (2021). Recognizing the value of software: A software citation guide. F1000Research, 9, 1257. 10.12688/f1000research.26932.2

- Kousha, K., & Thelwall, M. (2017). News stories as evidence for research? BBC citations from articles, books, and Wikipedia. Journal of the Association for Information Science and Technology, 68(8), 2017–2028. 10.1002/asi.23862

- Li, K., Yan, E., & Feng, Y. (2017). How is R cited in research outputs? Structure, impacts, and citation standard. Journal of Informetrics, 11(4), 989–1002. 10.1016/j.joi.2017.08.003

- Li, X., Thelwall, M., & Mohammadi, E. (2021). How are encyclopedias cited in academic research? Wikipedia, Britannica, Baidu Baike, and Scholarpedia. Profesional de la Información, 30(5), e300508. 10.3145/epi.2021.sep.08

- Martone, M. (2014). Joint declaration of data citation principles. FORCE11. 10.25490/a97f-egyk

- Mokhnacheva, Y. V., & Tsvetkova, V. A. (2020). Development of bibliometrics as a scientific field. Scientific and Technical Information Processing, 47(3), 158–163. 10.3103/S014768822003003X

- Moral-Muñoz, J. A., Herrera-Viedma, E., Santisteban-Espejo, A., & Cobo, M. J. (2020). Software tools for conducting bibliometric analysis in science: An up-to-date review. El Profesional de la Información, 29(1), e290103. 10.3145/epi.2020.ene.03

- Mou, J., Cui, Y., & Kurcz, K. (2019). Bibliometric and visualized analysis of research on major e-commerce journals using CiteSpace. Journal of Electronic Commerce Research, 20(4), 219–237. Retrieved March 27, 2023, from http://www.jecr.org/node/590

- Nangia, U., & Katz, D. S. (2017). Understanding software in research: Initial results from examining Nature and a call for collaboration. In 2017 IEEE 13th International Conference on e‐Science (e‐Science), (pp. 486–487). 10.1109/eScience.2017.78

- National Science Foundation (NSF). (2013). Grant Proposal Guideline: NSF 13–1 GPG Summary of Changes. Retrieved March 27, 2023, from http://nsf.gov/pubs/policydocs/pappguide/nsf13001/gpg_sigchanges.jsp

- Niemeyer, K. E., Smith, A. M., & Katz, D. S. (2016). The challenge and promise of software citation for credit, identification, discovery, and reuse. Journal of Data and Information Quality, 7(4), 1–5. 10.1145/2968452

- NIH Office of Science Policy. (2017). Compiled Public Comments on NIH Request for Information: Strategies for NIH Data Management, Sharing, and Citation. Retrieved March 27, 2023, from https://osp.od.nih.gov/wp-content/uploads/Public_Comments_Data_Managment_Sharing_Citation.pdf

- OpenAI. (2021). ChatGPT-4: A large-scale generative language model for conversational AI. Retrieved May 2, 2023, from https://openai.com/blog/chat-gpt-4/

- Orduña-Malea, E., & Costas, R. (2021). Link-based approach to study scientific software usage: The case of VOSviewer. Scientometrics, 126(9), 8153–8186. 10.1007/s11192-021-04082-y

- Osinska, V., & Klimas, R. (2021). Mapping science: Tools for bibliometric and altmetric studies. Information Research. 10.47989/irpaper909

- Pan, X., Cui, M., Yu, X., & Hua, W. (2017). How is CiteSpace used and cited in the literature? An analysis of the articles published in English and Chinese core journals. In ISSI 2017–16th International Conference on Scientometrics and Informetrics, Conference Proceedings (pp. 158–165). International Society for Scientometrics and Informetrics. Retrieved March 27, 2023, from https://www.issi-society.org/proceedings/issi_2017/2017ISSI%20Conference%20Proceedings.pdf

- Pan, X., Yan, E., Cui, M., & Hua, W. (2018). Examining the usage, citation, and diffusion patterns of bibliometric mapping software: A comparative study of three tools. Journal of Informetrics, 12(2), 481–493. 10.1016/j.joi.2018.03.005

- Pan, X., Yan, E., Cui, M., & Hua, W. (2019). How important is software to library and information science research? A content analysis of full-text publications. Journal of Informetrics, 13(1), 397–406. 10.1016/j.joi.2019.02.002

- Pan, X., Yan, E., & Hua, W. (2016). Disciplinary differences of software use and impact in scientific literature. Scientometrics, 109(3), 1593–1610. 10.1007/s11192-016-2138-4

- Pan, X., Yan, E., Wang, Q., & Hua, W. (2015). Assessing the impact of software on science: A bootstrapped learning of software entities in full-text papers. Journal of Informetrics, 9(4), 860–871. 10.1016/j.joi.2015.07.012

- Park, T. K. (2011). The visibility of Wikipedia in scholarly publications. First Monday, 16(8–1). 10.5210/fm.v16i8.3492

- Pradhan, P. (2016). Science mapping and visualization tools used in bibliometric & scientometric studies: An overview. Inflibnet Newsletter, 23(4), 19–33.

- Research Software Alliance. (2023). Guidelines. Retrieved March 27, 2023, from https://www.researchsoft.org/guidelines/

- Rowley, E. M., & Wagner, A. B. (2019). Citing of industry standards in scholarly publications. Issues in Science and Technology Librarianship, (92). 10.29173/istl27

- Scalfani, V. F. (2021). Finding citations to non-indexed resources in Web of Science. R-LIB: A blog of Rodgers Library for Science and Engineering. Retrieved March 27, 2023, from https://apps.lib.ua.edu/blogs/rlib/2021/01/14/finding-citations-to-non-indexed-resources-in-web-of-science/

- Smith, A. M., Katz, D. S., & Niemeyer, K. E. (2016). Software citation principles. PeerJ Computer Science, 2, e86. 10.7717/peerj-cs.86

- Smith, L. C. (1981). Citation analysis. Library Trends, 30(1), 83–106.

- Soito, L., & Hwang, L. J. (2016). Citations for software: Providing identification, access and recognition for research software. International Journal of Digital Curation, 11(2), 48–63. 10.2218/ijdc.v11i2.390

- Task Group on Data Citation Standards and Practices. (2013). Out of cite, out of mind: The current state of practice, policy, and technology for the citation of data. Data Science Journal, 12, CIDCR1–CIDCR75. 10.2481/dsj.OSOM13-043

- Tomaszewski, R. (2017). Citations to chemical resources in scholarly articles: CRC Handbook of Chemistry and Physics and the Merck Index. Scientometrics, 112(3), 1865–1879. 10.1007/s11192-017-2437-4

- Tomaszewski, R. (2018). A comparative study of citations to chemical encyclopedias in scholarly articles: Kirk-Othmer Encyclopedia of Chemical Technology and Ullmann’s Encyclopedia of Industrial Chemistry. Scientometrics, 117(1), 175–189. 10.1007/s11192-018-2844-1

- Tomaszewski, R. (2019). Citations to chemical databases in scholarly articles: To cite or not to cite? Journal of Documentation, 75(6), 1317–1332. 10.1108/JD-12-2018-0214

- Tomaszewski, R. (2021). A study of citations to STEM databases: ACM Digital Library, Engineering Village, IEEE Xplore, and MathSciNet. Scientometrics, 126(2), 1797–1811. 10.1007/s11192-020-03795-w

- Tomaszewski, R. (2022). Analyzing citations to software by research area: A case study of MATLAB. Science & Technology Libraries. 10.1080/0194262X.2022.2070329

- Tomaszewski, R., & MacDonald, K. I. (2016). A study of citations to Wikipedia in scholarly publications. Science & Technology Libraries, 35(3), 246–261. 10.1080/0194262X.2016.1206052

- van Eck, N. J., & Waltman, L. (2010). Software survey: VOSviewer, a computer program for bibliometric mapping. Scientometrics, 84(2), 523–538. 10.1007/s11192-009-0146-3

- van Eck, N. J., & Waltman, L. (2014). Visualizing bibliometric networks. In Y. Ding, R. Rousseau, & D. Wolfram (Eds.), Measuring scholarly impact: Methods and practice (pp. 285–320). Springer. 10.1007/978-3-319-10377-8_13

- van Eck, N. J., & Waltman, L. (2022). VOSviewer manual. Version 1.6.18. Centre for Science and Technology Studies. Leiden University. Retrieved March 27, 2023, from http://www.vosviewer.com/getting-started#VOSviewer%20manual

- Yang, B., Rousseau, R., Wang, X., & Huang, S. (2018). How important is scientific software in bioinformatics research? A comparative study between international and Chinese research communities. Journal of the Association for Information Science and Technology, 69(9), 1122–1133. 10.1002/asi.24031