Abstract

Hearing aid fitting formulas intended for the pediatric population can differ by 6 to 25 dB in prescribed output across frequency, leading to large variations in aided audibility. Children perceive these differences and have expressed preferences that favor more audibility for quiet speech and less audibility for noisy speech. In this study, the effect of variations in audibility consistent with hearing aid fittings for children was examined. Sixteen children and adolescents (9–17 years) with mild-to-moderate hearing loss participated. Hearing aids programmed to National Acoustic Laboratories or Desired Sensation Level v5.0a targets were fitted to each participant. Also, separate programs with and without a low-level adaptive gain feature were provided with each prescription. Speech reception threshold (SRT) was measured as well as performance for four suprathreshold auditory tasks that increased in cognitive demand. These tasks were word recognition, nonword detection, multiword recall, and rapid word learning. A significant effect of fitting formula, but not low-level adaptive gain, was observed for SRT. Significant effects of presentation level, fitting formula, and low-level gain were observed for word recognition. The effect of presentation level was significant for nonword detection, multiword recall, and rapid word learning but no other main effects or interactions were significant. Finally, word recognition and nonword detection increased significantly with audibility while multiword recall and word learning did not. The results suggest that audibility assists with the initial perception of auditory input but plays a smaller role in memory formation and learning.

Keywords: pediatric, short-term memory, inhibitory control, auditory perception, speech intelligibility index

Introduction

When fitting hearing aids to pediatric patients, a fitting formula is used to generate an individualized prescription for each child. Of the many formulas available, two are used widely with pediatric patients around the world: the Desired Sensation Level v5.0a (DSL; Scollie et al., 2005) and the National Acoustic Laboratories (NAL) Non-Linear 2 (Byrne et al., 2001). These formulas are favored over manufacturer prescriptions because they rest on a foundation of empirical evidence for the pediatric population (Keidser et al., 2012; Seewald et al., 2005). Despite their popularity, there are nontrivial differences between the gain and amplitude compression prescribed by the two. Most significantly, DSL prescribes an average of 15 dB more amplification than NAL for children with the same hearing loss (Ching et al., 2010b). Children are sensitive to these level differences and have expressed a preference for DSL for quiet speech and for NAL for speech in noise (Scollie et al., 2010b). While it is reasonable to expect better outcomes with the DSL fitting formula, because it provides more audibility, children's early speech and language outcomes and the ability to perceive conversational speech are similar for the two prescriptions (Ching et al., 2013; Scollie et al., 2010b). This suggests that while children can perceive the differences between the DSL and NAL prescriptions, the impact on overall communication is small. Audiologists would benefit from evidence showing the effect of fitting formula and other hearing aid features that affect the audibility provided to children for a broad range of behavioral measures.

NAL Versus DSL Formulas

Both the NAL and DSL formulas generate individualized hearing-aid prescriptions based on several parameters, including the child's hearing thresholds, age, and language environment. Gain is adjusted to accommodate the small ear canals of young children and the frequency response is shaped to accommodate tonal and nontonal languages. However, hearing thresholds have the most influence over the output of the device. Each formula calculates the amount of amplification needed to raise the level of an incoming signal to a value that exceeds the hearing threshold by a specific amount. The hearing aid then amplifies and compresses the naturally wide range of sound levels in the environment into the child's narrowed dynamic range of hearing (AAA, 2013). The DSL formula uses low, frequency-specific, compression thresholds to provide more gain for low input levels to support the recognition of soft speech (Scollie et al., 2005). The NAL-NL2 formula also employs frequency-specific gain but the compression thresholds are higher to promote overall loudness comfort. Gain is prescribed according to a speech intelligibility model that provides sufficient audibility for speech understanding based on the tonality of the language (Keidser et al., 2012).

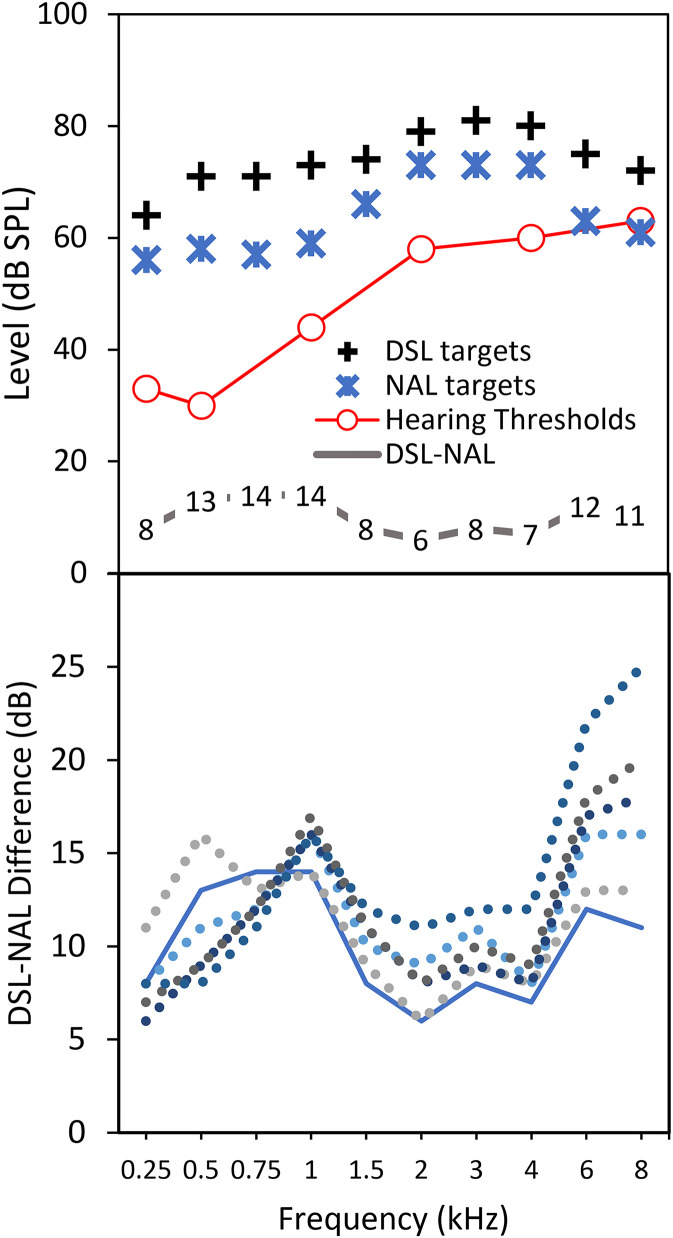

The primary difference between the NAL and DSL formulas is the prescription of the overall gain. For children, the DSL formula yields significantly higher outputs than the NAL formula for a wide range of hearing losses. As an example, the upper panel of Figure 1 shows NAL and DSL fitting targets for a hypothetical 10-year-old with mild-to-moderate hearing loss. Also shown in the upper panel is the difference in decibels between the two prescriptions. On average, the formulas differed by 10 dB with a range of 6 to 14 dB. The DSL/NAL difference for this loss is also shown in the lower panel of Figure 1 (solid line) along with five other difference functions for the same loss increased in increments of 10 dB (dashed lines). As hearing loss increases, the difference between the formulas increases at all frequencies, with the largest differences at 1, 6, and 8 kHz. DSL prescribed 6 to 25 dB more gain than NAL for this representative sample of losses for children, substantially greater than the 5 to 7 dB differences in prescribed gain for adults (Furuki et al., 2023; Shyekhaghaei et al., 2021).

Figure 1.

Upper panel: fitting targets for DSL (black crosses) and NAL (blue asterisks) for a hypothetical mild-to-moderate hearing loss (red circles). Targets represent prescribed output at audiometric frequencies for a 65 dB SPL speech input. Also shown are the difference values between the targets at each frequency. Lower panel: Difference values for six sets of DSL-NAL targets (dotted lines) corresponding to the hypothetical hearing thresholds increased in 10-dB steps. The solid line is replicated from the upper panel.

Note. DSL = Desired Sensation Level v5.0a; NAL = National Acoustic Laboratories NL2.

Recognizing the similarly large differences in output in earlier versions of these formulas (Ching et al., 2010a), the developers collaborated to examine the impact of NAL-NL1 and DSL[i/o] prescriptions on children's communication in various contexts (Ching et al., 2010a, 2010b, 2010c; Scollie et al., 2010a, 2010b). In one study, preference for one prescription over the other was examined in an 8-week field trial (Scollie et al., 2010a). Using identical procedures in Canada and Australia, children and adolescents with hearing loss were fitted with devices that provided NAL and DSL prescriptions in separate programs. The participants were blinded to the contents of each program and were instructed to use them as they wished. The results revealed two clear priorities when selecting one program over the other. First, for soft speech in quiet environments, the children chose the DSL prescription. Second, for listening to speech in noise, the children chose the NAL prescription. Similar results have been reported in several studies representing the broader population of hearing aid users. These studies spanned the age range (young children and adults), hearing levels (mild to profound), and outcome measures (objective and subjective; Ching et al., 2018; Marriage et al., 2018; Quar et al., 2013; Scollie et al., 2010a). Overall, participants expressed a preference for NAL when listening to loud speech or speech in noise, and for DSL when listening to soft speech or speech originating from behind.

Clinical and Laboratory Measures

While children and adults perceive differences between the NAL and DSL prescriptions in daily life, minimal effects have been observed in clinical and laboratory settings. The only significant effect reported in the literature is better perception of speech near threshold in quiet with DSL than with NAL (Marriage & Moore, 2003; Quar et al., 2013; Scollie et al., 2010b). However, the benefit of the additional amplification prescribed by DSL for low-level speech in quiet did not generalize to better perceptual thresholds in noise for words or sentences, nor did performance improve with additional amplification for a range of speech perception measures at higher sensation levels (Ching et al., 2010a, 2018; Marriage et al., 2018; Quar et al., 2013; Scollie et al., 2010b). These outcomes suggest that the difficulties reported by hearing aid users in quiet environments may be limited to difficulty perceiving speech near threshold rather than at conversational levels where the additional audibility provided by DSL is of no benefit or detriment.

However, it is possible that traditional measures of speech perception do not reflect listening effort. It is not uncommon for two listening conditions to require different levels of effort but result in the same level of performance. The Cognitive Load Hypothesis theorizes that more than the normal amount of cognitive resources is required to decode an auditory signal degraded by hearing loss (Sweller et al., 2011; Tun et al., 2009; Uchida et al., 2019; Wayne & Johnsrude, 2015). These resources must be diverted from other cognitive processes, leaving too few resources to comprehend, respond, and remember the auditory message as well as listeners with normal hearing. Similarly, the Information Degradation Hypothesis (Baltes & Lindenberger, 1997; Lindenberger & Baltes, 1994) theorizes that the challenge of hearing loss to auditory perception cascades upwards. That is, the consequences of hearing loss increase with increasing cognitive complexity when more resources must be allocated to auditory perception (Pichora-Fuller & Singh, 2006). Indeed, evidence from older adults with moderate-to-severe hearing loss suggests that memory for auditory input is compromised compared to normal hearing peers (Deal et al., 2017). While increased listening effort due to hearing loss is thought to be prevalent in older adults, children and adolescents with hearing loss are no less susceptible to these effects and may experience greater listening effort during periods of language development.

A tenet shared by the Cognitive Load and Information Degradation hypotheses is that hearing aid use should free cognitive resources and improve the balance of resources across auditory processing domains. Certain cognitive processes may be more sensitive than others to the amount of amplification provided, especially processes that occur after the initial perception of speech. For example, the ability to perceive and repeat one word at a time requires, in general, auditory perception while the ability to detect a target word or words within a sentence requires both auditory perception and selective inhibition. Likewise, recalling familiar words after a period of time requires auditory perception and short-term memory. Finally, learning new words requires all three of these cognitive skills. If greater gain frees cognitive resources as the Cognitive Load and Information Degradation hypotheses suggest, a direct relationship should exist between the audibility of a signal and the ability to perceive, retain, and learn information communicated in the signal.

Purpose

The purpose of this study was to examine the effect of variations in audibility consistent with hearing aid fittings for children and adolescents with mild to moderate hearing loss. Audibility varied according to NAL and DSL prescriptions and an adaptive gain feature that engaged in the presence of low-level speech. Speech reception threshold (SRT) was measured as well as performance for four suprathreshold auditory tasks that increased in cognitive demand. Performance was expected to improve as audibility increased for both the threshold and suprathreshold tasks, with the largest improvement for tasks requiring the greatest cognitive demand.

Materials and Methods

Participants

Participants were 16 children and adolescents (eight males, eight females) between the ages of 9 and 17 years (M = 14 years, SD = 2 years) with mild-to-moderate, bilateral, sensorineural hearing loss. The participants communicated orally and fluently in English and used bilateral air-conduction hearing aids for daily use. The good health of each participant's outer and middle ears was confirmed using otoscopy and tympanometry during the first of two test sessions. In the same session, pure-tone hearing thresholds were obtained at audiometric frequencies between 0.25 and 8 kHz in a sound-treated test booth. The demographics for each participant are provided in Appendix A.

Each participant's hearing thresholds were used to program two pairs of receiver-in-canal hearing aids. One pair of hearing aids was programmed with the DSL prescription and the other with the NAL prescription according to the parameters listed in Appendix B. Noncustom earpieces (domes) were selected according to the acoustic coupling prescribed by the fitting software (Phonak Target v7.1), based on each participant's hearing thresholds. Real-ear measures (Audioscan, Verifit2) were used to verify that each fitting achieved the targets for the NAL and DSL prescriptions to within 5 dB root-mean-square error (RMSE) of the target. Figure 2 shows the average (±1 SD) unaided hearing thresholds for the right and left ears as well as the average (±1 SD) binaural aided thresholds for the DSL and the NAL prescriptions. Aided thresholds were 2 to 8 dB lower (better) across frequency with the DSL prescription than the NAL prescription.
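The verification criterion above can be illustrated with a short calculation. The following is a minimal sketch, assuming that the RMSE is taken across audiometric frequencies between the measured real-ear output and the prescribed target, and that a fit passes when the RMSE is at or below 5 dB. The function names and the example levels are hypothetical and are not taken from the study.

```python
import math


def rmse_db(measured, target):
    """Root-mean-square error (dB) between measured output and targets."""
    if len(measured) != len(target) or not measured:
        raise ValueError("inputs must be non-empty and the same length")
    squared = [(m - t) ** 2 for m, t in zip(measured, target)]
    return math.sqrt(sum(squared) / len(squared))


def within_tolerance(measured, target, limit_db=5.0):
    """True when the fit-to-target RMSE does not exceed limit_db (assumed <=)."""
    return rmse_db(measured, target) <= limit_db


# Hypothetical output levels (dB SPL) at five frequencies; not study data.
target = [60.0, 65.0, 70.0, 72.0, 68.0]
measured = [62.0, 64.0, 73.0, 70.0, 69.0]

fit_error = rmse_db(measured, target)      # about 1.95 dB for these values
fit_ok = within_tolerance(measured, target)
```

Because the RMSE pools deviations across frequency, a fit can pass even when one frequency deviates by more than 5 dB, provided the remaining frequencies are close to target.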

Figure 2.

Average (±1 SD) hearing thresholds for the right (o) and left (x) ears of the participants. Also shown are the average binaural thresholds of these participants obtained in the sound field with the DSL prescription (filled squares) and the NAL prescription (open squares).

Note. DSL = Desired Sensation Level v5.0a; NAL = National Acoustic Laboratories NL2.

Large differences in gain across formulas were observed at suprathreshold input levels. Figure 3 shows average output levels as a function of frequency for the right and left aids in response to a 65 dB SPL passage of speech (“Carrot story”). Results for DSL and NAL are shown as well as the difference between the two formulas. On average, the DSL formula prescribed 14 dB more gain for frequencies at and below 1 kHz and 7 dB more gain for frequencies above 1 kHz. These DSL/NAL differences are larger than those for the aided warble-tone thresholds, suggesting that differences between the formulas at low levels were limited by the internal noise of the hearing devices (27 dB SPL A-weighted equivalent input noise; Lewis & Moss, 2013).

Figure 3.

Average (±1 SD) real-ear hearing aid output as a function of frequency for the left (blue) and right (red) devices programmed to DSL targets (filled squares) and to NAL targets (open squares). Also shown are the average left- and right-ear hearing thresholds from Figure 2. The dashed line represents the difference between the DSL and NAL levels averaged across ears.

Note. DSL = Desired Sensation Level v5.0a; NAL = National Acoustic Laboratories NL2.

Adaptive Situational Gain

The participants were fit binaurally with Phonak Audéo P90-R hearing aids. This device is capable of a dual-path compression scheme implemented in the Adaptive Phonak Digital (APD) 2.0 proprietary fitting formula. A mix of fixed-slow and adaptive-fast dynamic range compression (DRC) is implemented in speech-in-noise, music, and streaming programs within AutoSense OS. In all other programs, including the quiet (“calm situation”) program used in the present study, APD applies adaptive DRC that engages rapidly during speech onsets (Fulton & Moritz, 2018; Woodward et al., 2020). These compression approaches are maintained in each program when DSL v5 or DSL [i/o] is chosen as the fitting formula. When the NAL-NL2 fitting formula is selected, the dual compression approach (predominantly slow DRC) is applied in all programs.

These devices were selected because they include a feature designed to improve the perception of soft speech. The feature (speech enhancer) adaptively increases gain above prescribed levels when low-level speech is detected in quiet; thus, this feature is engaged only in the calm situation program. Hereafter, this feature is referred to as adaptive situational gain (ASG). While the compression knee point is typically 50 to 55 dB SPL for speech, ASG adaptively lowers the knee point to 30–50 dB when soft speech is detected in a quiet environment (≥10 dB SNR). When activated, the gain is increased between 300 and 3000 Hz. As an example, the upper panel of Figure 4 shows the output of a Phonak Audéo P90-R for a 40 dB SPL speech input with and without ASG activated. The lower panel shows the output for the same hearing device for 40 dB SPL pink noise with and without ASG activated. Gain with ASG increased by 6 dB between 0.5 and 3 kHz for speech input but not for noise.

Figure 4.

Test-box measures for a Phonak Audéo P90-R device fitted to DSL targets and configured with ASG on and off. The same hypothetical hearing thresholds are shown in the two panels. The effect of the ASG feature on speech and pink noise is shown in the upper and lower panels, respectively.

Note. DSL = Desired Sensation Level v5.0a; ASG = adaptive situational gain.

Two programs were configured for each pair of hearing aids: one with ASG enabled, and one with it disabled. The program assignments were counterbalanced across participants. All other programming options and features were the same for each pair of hearing aids (see Appendix B). The programs were saved to the participant's profile in the fitting software database and loaded into the hearing aids as needed during testing. The same four hearing aids were fitted to each participant and only used during testing in the lab. Standard infection control procedures were administered after each test session.

Personal Hearing Aid Prescription

Attempts were made to ascertain the fitting formula used to program each participant's personal devices. DSL and NAL targets generated by the real-ear analyzer for the participant's hearing thresholds were compared to the output of each device to identify the closest match. Measures were available for 20 personal devices from 10 of the 16 participants. Using a criterion of ±5 dB at three or more frequencies, the outputs of six devices were found to exceed targets prescribed by DSL while the outputs of five devices fell below targets for NAL. The remaining nine devices matched targets for NAL (4) or DSL (5) although the right- and left-ear devices of three participants matched different formulas. Overall, amplification for seven of the 10 participants did not conform to a recognized clinical standard. Deviations from target are not uncommon in the pediatric population (McCreery et al., 2013) and can occur for many reasons, including failure to verify device output during fitting, changes in hearing threshold levels following the fitting, and the selection of a fitting formula other than NAL or DSL. Ultimately, this assessment indicates that the participants were not uniformly accustomed to one or the other fitting formula prior to participation in this project.

Procedure

Each participant attended two test sessions separated by about one week, each lasting no more than 2 h. In each session, the participant was fitted with hearing aids programmed according to one of the two prescriptive formulas. The order of prescriptions (NAL, DSL) and ASG programs (on, off) was counterbalanced across participants. All assessments were completed with one hearing-aid program before administering the tests again with the second program. The process was repeated during the second test session with the other prescription. Participants received monetary compensation following each session. This study was conducted in the Pediatric Amplification Lab in the Speech and Hearing Science Department at Arizona State University and was approved by the local Institutional Review Board.

Stimuli

All stimuli were presented in quiet from a loudspeaker positioned 1 m from the participant at 0° azimuth in a double-walled, sound-treated test booth. The stimuli for the speech perception tasks were prerecorded and produced by a female talker with a standard American English accent. The stimulus lists were counterbalanced across hearing aid conditions to distribute any effects of unequal list difficulty. Stimulus levels for measurement of SRTs were controlled using a clinical audiometer. Administration of the suprathreshold tests required custom laboratory software that controlled the presentation level and timing of the stimuli, data capture, and visual reinforcement. For the suprathreshold tasks, the stimuli were presented randomly at low and high levels (40 and 70 dB SPL). Randomized presentation levels were chosen to represent speech originating at different distances.

Measures

Table 1 shows the general cognitive processes associated with five behavioral tests administered at threshold or suprathreshold levels. Note that the general cognitive demand varied across the suprathreshold tasks.

Table 1.

Overview of the Behavioral Measures in Two Domains.

| Domain | Measure (units) | Processes |
|---|---|---|
| Threshold | Spondees (dB HL) | Long-term memory, auditory perception |
| Suprathreshold | Word recognition (%) | Long-term memory, auditory perception |
| | Nonword detection (%) | Long-term memory, auditory perception + inhibitory control |
| | Multiword recall (%) | Long-term memory, auditory perception + short-term memory |
| | Rapid word learning (%) | Long-term memory, auditory perception + inhibitory control + short-term memory |

Speech Reception Threshold

SRTs for spondees (e.g., “baseball,” “sidewalk”) were determined using an ascending, bracketing procedure. Participants were instructed to repeat each word after it was presented. The presentation level was increased by 5 dB after each incorrect response and decreased by 10 dB after each correct response. Testing continued until at least three reversals occurred. The SRT was defined as the lowest level at which a correct response was obtained for at least two reversals.
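The bracketing rule described above can be sketched as a short simulation. This is an illustrative sketch only: the starting level, the idealized listener, and the reading of the stopping rule (stop once at least three reversals have occurred and some level has produced at least two correct responses, then report the lowest such level) are assumptions, and the function name `bracketing_srt` is hypothetical.

```python
from collections import Counter


def bracketing_srt(respond, start_db=0, up_db=5, down_db=10,
                   min_reversals=3, max_trials=200):
    """Simulate an ascending bracketing search for the SRT.

    respond(level) returns True for a correct repetition at that level.
    """
    level = start_db
    last_direction = None          # +1 = level will rise, -1 = level will fall
    reversals = 0
    correct_at = Counter()         # number of correct responses per level

    for _ in range(max_trials):
        correct = respond(level)
        if correct:
            correct_at[level] += 1
        direction = -1 if correct else 1
        if last_direction is not None and direction != last_direction:
            reversals += 1
        last_direction = direction

        repeated = [lvl for lvl, n in correct_at.items() if n >= 2]
        if reversals >= min_reversals and repeated:
            return min(repeated)

        # Down 10 dB after a correct response, up 5 dB after an incorrect one.
        level += -down_db if correct else up_db

    raise RuntimeError("no threshold found within max_trials")


# Idealized (deterministic) listener who repeats every word at or above 25 dB HL.
srt = bracketing_srt(lambda level: level >= 25)   # 25 for this listener
```

A real listener responds probabilistically near threshold, so an actual track would typically contain more reversals than this deterministic example.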

Suprathreshold Tasks

Word Recognition. Perception of familiar words was assessed using a traditional clinical word recognition task. Performance for this task is directly related to signal audibility (Studebaker et al., 1993). Stimuli were 20 monosyllabic words drawn from the Northwestern University word lists. Half of the words in each list were presented at 40 dB SPL and half at 70 dB SPL. The level was randomized within each list. The participant's verbal responses were recorded digitally and scored offline for accuracy by independent examiners with clinical experience in audiology.

Nonword Detection. A nonword detection task was administered to assess the participants’ ability to differentiate familiar and unfamiliar words within a sentence. Performance is sensitive to age (Pittman et al., 2017), signal-to-babble ratio (Stewart & Pittman, 2021), bandwidth (Pittman, 2019), and hearing loss (Pittman & Schuett, 2013). For this task, 20 three-word phrases (10 per level, randomized) were presented in each condition. Each phrase was based on a familiar statement (e.g., “sip your drink”) that was altered to include nonsense words by replacing a single phoneme in one or more of the words (e.g., “fip your drink”). The number of nonsense words per phrase varied from 0 to 3 with equal representation of each combination. The participants were instructed to listen to the three-word phrase, ignore the real words, and indicate the nonsense words by selecting numbered response buttons corresponding to the position of each word in the phrase. Reinforcement was provided in the form of a video game that advanced if each word in the phrase was categorized correctly. To perform well on this task, the listener must respond to nonsense words and inhibit responses to real words.
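One way to score such responses is sketched below, assuming that every word position in a phrase counts as one scored item: a nonsense word is correct when its button is selected, and a real word is correct when its button is left unselected. The function name, data layout, and example trials are hypothetical and do not describe the laboratory software used in the study.

```python
def score_nonword_detection(trials, words_per_phrase=3):
    """Score trials given as (nonsense_positions, selected_positions) set pairs."""
    correct = misses = false_alarms = 0
    n_nonsense = n_real = 0

    for nonsense, selected in trials:
        for position in range(1, words_per_phrase + 1):
            chosen = position in selected
            if position in nonsense:
                n_nonsense += 1
                if chosen:
                    correct += 1       # nonsense word detected
                else:
                    misses += 1        # nonsense word missed
            else:
                n_real += 1
                if chosen:
                    false_alarms += 1  # real word not inhibited
                else:
                    correct += 1       # real word correctly ignored

    return {
        "proportion_correct": correct / (n_nonsense + n_real),
        "nonsense_error_rate": misses / n_nonsense if n_nonsense else 0.0,
        "real_error_rate": false_alarms / n_real if n_real else 0.0,
    }


# Hypothetical trials: positions of nonsense words, then positions selected.
example_trials = [
    ({1}, {1}),              # "fip your drink", correctly marked
    (set(), {2}),            # all real words, one false alarm
    ({1, 3}, {1}),           # one nonsense word missed
    ({1, 2, 3}, {1, 2, 3}),  # all nonsense, all marked
]
scores = score_nonword_detection(example_trials)
```

Separating misses from false alarms mirrors the distinction between errors on nonsense words and errors on real words.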

Multiword Recall. A multiword recall task was administered to assess the participants’ ability to recall sets of unrelated words. Performance for this task improves significantly with age and education (Schmidt, 1996) and is poorer for people with dementia (Schoenberg et al., 2006) and for children with hearing loss compared to normal hearing peers (Pittman, 2008). For this task, 14 highly familiar mono- and bi-syllabic words (seven words per level) were presented randomly for verbal repetition. The stimuli and procedures were adapted from the Auditory Verbal Learning Test (Taylor, 1959), which is commonly used to evaluate auditory encoding, consolidation, memory, and retrieval (Schmidt, 1996). The participants listened to all 14 words before responding. Words recalled correctly in any order were tallied, and then the process was repeated immediately four times. The presentation level and word order were identical in each trial. The total number of words recalled correctly was summed across the five trials. To perform well on this task, the listener must retain multiple items in memory for a short time.

Rapid Word Learning. The combined effects of perception and memory for unfamiliar words were assessed using a rapid word-learning paradigm. Performance for this task is sensitive to age (Pittman et al., 2017), specific language impairment (Gray et al., 2014), bandwidth (Pittman et al., 2005), digital noise reduction (Pittman et al., 2017), and hearing loss (Pittman, 2008). Participants learned to associate novel words with novel images using an interactive game. Five novel images were displayed on response buttons below a reinforcement area. One novel word was assigned to each novel image. To learn the word–picture pair, the participants selected one image following the presentation of a randomly selected nonsense word. Reinforcement was provided in the form of a video game that advanced after each correct response but not after incorrect responses. Thus, learning improved through a process of trial and error. The five nonsense words were repeated 20 times each for a total of 100 trials, which required approximately 7 to 8 min to complete. Each word was presented at both the low and high levels. To perform well on this task, the listener must perceive the nonsense words accurately, retain the results of previous trials in memory, and inhibit previous incorrect choices.

Audibility of Speech

The speech intelligibility index (SII; ANSI, 2007) was used to quantify the audibility of the stimuli for each suprathreshold task. The SII is a personalized calculation based on the level of the stimuli relative to the listener's hearing thresholds. SII values represent the proportion of the effective dynamic range of speech that is above the threshold. The sensation level of speech in contiguous frequency bands is multiplied by frequency-specific weights. These weights represent the relative contribution of each band to the overall perception. The values are summed and scaled relative to the dynamic range of speech. The result is a value from 0 to 1 where SII = 0 indicates that the entire speech signal is below threshold and inaudible to the listener while SII = 1 indicates that the entire speech signal is above threshold and fully audible.
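The weighted-audibility idea behind the SII can be sketched as follows. This is a deliberately simplified illustration, not the full ANSI procedure: it assumes a 30 dB speech dynamic range with peaks 15 dB above the band level, ignores masking, noise, and level distortion, and uses hypothetical band weights that are not the standard's band-importance values. Speech levels and thresholds are assumed to be expressed in the same reference for each band.

```python
def simplified_sii(speech_db, threshold_db, weights,
                   dynamic_range_db=30.0, peak_offset_db=15.0):
    """Weighted proportion of the speech dynamic range above threshold (0 to 1)."""
    if not (len(speech_db) == len(threshold_db) == len(weights)):
        raise ValueError("all inputs must have one value per band")
    if abs(sum(weights) - 1.0) > 1e-6:
        raise ValueError("band weights must sum to 1")

    total = 0.0
    for level, threshold, weight in zip(speech_db, threshold_db, weights):
        # Fraction of the band's speech range that exceeds threshold.
        audible = (level - threshold + peak_offset_db) / dynamic_range_db
        audible = min(1.0, max(0.0, audible))
        total += weight * audible
    return total


# Hypothetical five-band example; weights are illustrative, not ANSI values.
weights = [0.10, 0.20, 0.30, 0.25, 0.15]
speech = [50.0, 50.0, 45.0, 40.0, 35.0]        # aided speech band levels
thresholds = [20.0, 30.0, 40.0, 50.0, 60.0]    # hearing thresholds

sii = simplified_sii(speech, thresholds, weights)   # about 0.54 here
```

In this example the two lowest bands are fully audible, the highest band is inaudible, and the middle bands contribute partially, which is why the index falls between 0 and 1.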

For this investigation, the SII was calculated by the hearing-aid analyzer (Verifit 2, Audioscan) using a concatenated file of RMS normalized stimuli for each task. The stimuli were accessed by the analyzer through a USB flash drive connected to an external port. The SII was calculated for both programs in the left and right devices fitted to NAL/DSL targets at 40 and 70 dB SPL input levels. To achieve the 40 dB SPL presentation level, which is lower than the minimum setting provided by the analyzer (50 dB SPL), the file name of a separate but identical set of stimuli included a modifier (e.g., −10) which the analyzer interpreted and applied to the presentation level selected during testing. The receivers of each device were connected to a 0.4 cc coupler and venting corrections appropriate for coupler-based simulated real-ear measures were applied (Scollie et al., 2022).

Statistical Analyses

The data representing proportion correct were arcsine transformed prior to statistical analyses. All data were subjected to linear mixed model (LMM) analyses. This method of analysis was selected because, unlike repeated measures ANOVA, LMM is more robust against missing data, it controls for random effects associated with variable performance, and the covariance matrix is modeled directly from the data. For each measure, the hearing aid formula (DSL, NAL) and ASG setting (on, off) were entered as fixed factors while the individual participants were entered as a random factor. An additional fixed factor of presentation level (40, 70 dB SPL) was added to the analyses of the suprathreshold speech measures. Significance was indicated by p < .05 and Cohen's d was calculated to indicate effect size (Cohen, 1988).
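The transformation and effect-size steps can be illustrated with a brief sketch. The variant of the arcsine transform and the way Cohen's d was derived from the mixed models are not specified above, so this example assumes the simple form, asin(sqrt(p)), and a pooled-standard-deviation d for two sets of scores. The function names and example values are hypothetical.

```python
import math
import statistics


def arcsine_transform(proportion):
    """Simple arcsine (angular) transform of a proportion, in radians."""
    if not 0.0 <= proportion <= 1.0:
        raise ValueError("proportion must lie between 0 and 1")
    return math.asin(math.sqrt(proportion))


def cohens_d(group_a, group_b):
    """Cohen's d using the pooled sample standard deviation (assumed form)."""
    n_a, n_b = len(group_a), len(group_b)
    var_a = statistics.variance(group_a)
    var_b = statistics.variance(group_b)
    pooled_sd = math.sqrt(((n_a - 1) * var_a + (n_b - 1) * var_b)
                          / (n_a + n_b - 2))
    return (statistics.mean(group_a) - statistics.mean(group_b)) / pooled_sd


# Hypothetical proportion-correct scores for two conditions; not study data.
condition_a = [arcsine_transform(p) for p in (0.60, 0.75, 0.90)]
condition_b = [arcsine_transform(p) for p in (0.50, 0.65, 0.80)]
effect_size = cohens_d(condition_a, condition_b)
```

The transform stretches proportions near 0 and 1, which helps stabilize variance for scores close to floor or ceiling before they are entered into the model.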

Data collection continued until significant differences were observed across level, prescription, and ASG setting for the word recognition task, which is the only task in the test battery for which performance is known to vary significantly with audibility. While no data were excluded, two participants completed only one hearing aid prescription condition because they did not return for the second of the two sessions. Thus, data for 15 participants were analyzed for each measure.

Results

Speech Reception Threshold

Figure 5 shows the SRT for the DSL and NAL fitting formulas and ASG settings. The box plots show the mean, median, first and third quartiles, and the 5th and 95th percentiles. The SRT ranged from 24 to 27 dB HL (39 to 42 dB SPL) across conditions. LMM analyses revealed a significant main effect of fitting formula (F(46) = 4.514, p = .039, d = −.436) but no effect of ASG (F(44) = 0.245, p = .623, d = .094) and no interaction (F(44) = 0.000, p = 1.00). The small differences between formulas and ASG setting (on vs. off) for this task are similar to those for the aided warble-tone thresholds. Perception of simple words at threshold may be limited by the internal noise of the hearing devices.

Figure 5.

Box plots show the average SRT (x), the median (horizontal line), first and third quartiles (boxes), and the 5th and 95th percentiles (whiskers). The formula is represented by blue (NAL) and yellow (DSL) shading while the ASG setting is represented by bolder (off) and lighter (on) shading of the boxes.

Note. SRT = speech reception threshold; DSL = Desired Sensation Level v5.0a; NAL = National Acoustic Laboratories NL2.

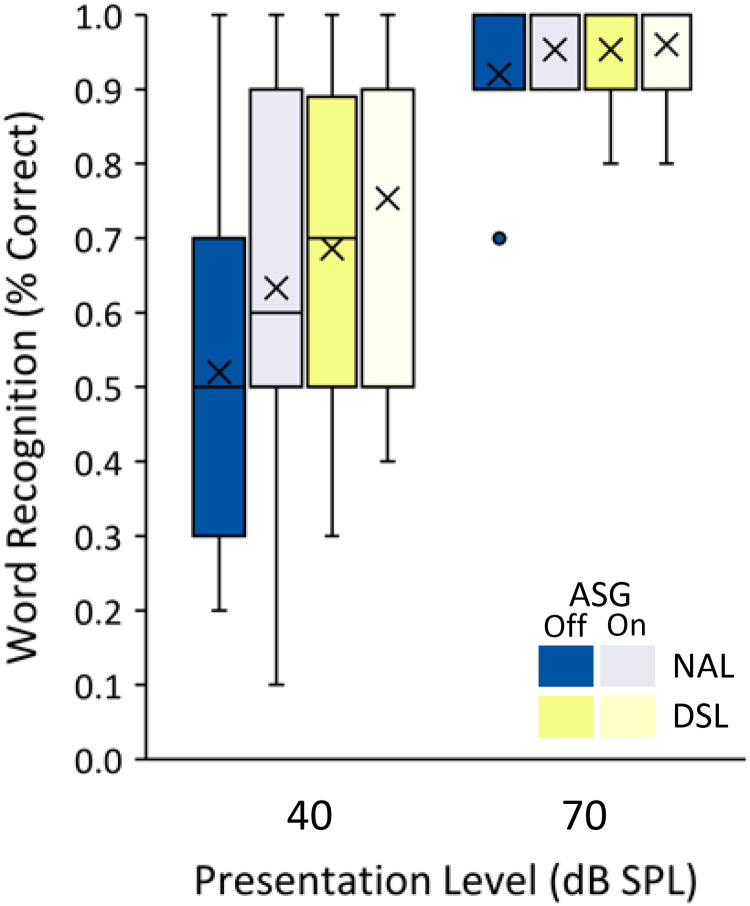

Word Recognition

Word recognition accuracy is shown in Figure 6 for the high and low presentation levels using the same box plot configuration as in Figure 5. Similar performance was achieved at the high level for the two formulas and the two ASG settings, while performance at the low level varied across the four formula/ASG combinations. LMM analyses revealed a significant main effect of level (F(104) = 172.331, p < .001, d = −1.173), fitting formula (F(107) = 8.36, p = .005, d = .321) and ASG setting (F(104) = 5.247, p = .024, d = −.229) with no significant interactions. Independent analysis of data for the low level showed significantly better performance with DSL than with NAL (F(45) = 12.297, p = .001, d = .523), better performance with ASG than without (F(44) = 6.454, p = .015, d = −.357), and no interaction (F(44) = 0.319, p = .575). While the children were able to recognize the words equally well with each formula/ASG combination when audibility was high (70 dB SPL), their performance at the low level varied predictably with the gain provided by the prescriptive formula and adaptive gain feature.

Figure 6.

Word recognition at low and high presentation levels using the box-plot configuration from Figure 5. Each box is based on a different list of counterbalanced words.

Nonword Detection

Average nonword detection is shown as a function of level in Figure 7. Performance represents the proportion of nonsense and real words that were correctly identified or ignored, respectively. Overall, identification of nonsense words was 19% higher at the 70 dB SPL level than at the 40 dB SPL level. Smaller differences between NAL and DSL were noted at the lower level. While there was a significant effect of level (F(103) = 110.645, p < .001, d = −1.604), there were no main effects of fitting formula (F(108) = 1.774, p = .186) or ASG (F(103) = 0.708, p = .402). For the low level, there was a main effect of formula (F(45) = 2.815, p = .033, d = .419) but not of ASG (F(43) = 0.088, p = .768), and no interaction (F(43) = 0.975, p = .321). To understand the nature of errors that occurred at the low presentation level, the error rates for the real and nonsense words are provided in Figure 7 for each hearing aid condition. On average, the error rate for nonsense words was more than twice that for real words. These results indicate that at low levels, unfamiliar words are more difficult to detect than familiar words.

Figure 7.

Nonword detection at low and high presentation levels using the box-plot configuration from Figure 5. Solid and dashed lines show error rates for the nonsense words and real words in each condition at the low presentation level.

Multiword Recall

Figure 8 shows multiword recall for each level. The 10–18% difference in recall between the low and high levels was significant (F(103) = 31.12, p < .001, d = −.860). There were no main effects of formula (F(108) = .019, p = .890) or ASG (F(103) = 2.106, p = .150) nor any interactions. For the low level, the 5% effect of ASG was not significant (F(42) = 2.389, p = .129). The main effect of formula (F(45) = 0.027, p = .871) and the interaction (F(42) = 0.500, p = .483) were also nonsignificant.

Figure 8.

Multiword recall at low and high presentation levels using the box-plot configuration from Figure 5.

Rapid Word Learning

Figure 9 shows rapid word learning for each fitting formula. The data are arranged according to ASG setting with the level indicated in each bar. Note that each set of bars represents learning for one set of words. Overall, learning was 5% better at the higher level than at the lower level, but this difference was not significant (F(101) = 3.108, p = .081). The small (<3%) difference between formulas favoring NAL approached significance (F(103) = 3.797, p = .054, d = −.130). The main effect of ASG (F(102) = 0.087, p = .769) and the interactions were not significant. Independent analysis for the low level also showed no effect of formula (F(43) = 0.894, p = .350) or ASG setting (F(42) = 0.003, p = .824) and no interaction (F(42) = 0.033, p = .856). These results indicate that this aspect of rapid word learning is independent of variations in hearing aid output.

Figure 9.

Rapid word learning with the NAL and DSL fitting formula. The presentation level is indicated in each box. Each box shows results for one set of five words. Box-plot parameters are the same as in Figure 5.

Note. DSL = Desired Sensation Level v5.0a; NAL = National Acoustic Laboratories NL2.

Correlation Between Measures

Table 2 shows the bivariate correlations between demographic factors and each of the experimental measures. The p-values were adjusted for multiple comparisons (p < .007). Results revealed the effects of age and hearing loss as well as associations between cognitive tasks. Performance for the word learning task was significantly correlated with age, indicating that the adolescents performed better on this task than the children. No effect of age was observed for any of the other tasks. The degree of hearing loss was significantly correlated with SRT and with word recognition such that as average hearing thresholds increased, SRTs increased, and word recognition decreased. Performance for the more cognitively demanding tasks was not related to hearing levels.

Table 2.

Bivariate Correlation Coefficients Among Demographic Variables and Performance on the Experimental Tasks

| Measure | Age | BPTA | SRT | WR | NWD | MWR | RWL |
|---|---|---|---|---|---|---|---|
| Speech reception threshold (SRT) | .02 | .47* | – | −.43* | −.28* | −.03 | .03 |
| Word recognition (WR) | .02 | −.43* | −.43* | – | .58* | .29* | .07 |
| Nonword detection (NWD) | .09 | −.22 | −.28* | .58* | – | .41* | .35* |
| Multiword recall (MWR) | .16 | −.07 | −.03 | .29* | .41* | – | .30* |
| Rapid word learning (RWL) | .32* | .06 | .03 | .07 | .35* | .30* | – |

BPTA = bilateral pure-tone average of unaided hearing thresholds at 500, 1000, and 2000 Hz

*Significant at p ≤ .007, adjusted for multiple comparisons.
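The adjusted criterion of .007 is what a Bonferroni-style division of α = .05 by seven would give. The article does not name the correction that was used, so the short sketch below is only an illustration under two assumptions: that the method was Bonferroni, and that the seven variables in Table 2 define the family of comparisons.

```python
# Assumption: Bonferroni correction with alpha = .05 across the seven
# variables listed in Table 2. The article reports only the adjusted
# criterion, not the method or the number of comparisons.
ALPHA = 0.05
N_VARIABLES = 7  # Age, BPTA, SRT, WR, NWD, MWR, RWL


def bonferroni_threshold(alpha: float, n_comparisons: int) -> float:
    """Per-comparison criterion that holds the familywise error rate at alpha."""
    return alpha / n_comparisons


threshold = bonferroni_threshold(ALPHA, N_VARIABLES)
print(f"Adjusted criterion: p <= {threshold:.3f}")
```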

Correlations between the scores for suprathreshold speech tasks revealed two interesting relationships. First, scores for each of the suprathreshold tasks were significantly correlated with scores for the other tasks except for the correlation between word recognition and rapid word learning. Thus, these two tasks may represent unique, rather than related, cognitive processes. Second, nonword detection was the only task whose scores were significantly correlated with those for each of the other speech tasks. These results suggest that nonword detection may involve a wide range of cognitive processes such that the scores for this one task may be predictive of those for other perception tasks.
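The two observations above can be checked directly against Table 2. The following sketch transcribes the correlations among the five speech measures, together with their significance flags, and then asks which pairs of suprathreshold tasks were not significantly correlated and which measure was significantly correlated with all of the others. The variable and function names are illustrative and are not taken from the study.

```python
# Correlations among the speech measures, transcribed from Table 2.
# Each value is (r, significant at the adjusted criterion).
MEASURES = ["SRT", "WR", "NWD", "MWR", "RWL"]
SUPRATHRESHOLD = ["WR", "NWD", "MWR", "RWL"]
CORRELATIONS = {
    ("SRT", "WR"): (-0.43, True),
    ("SRT", "NWD"): (-0.28, True),
    ("SRT", "MWR"): (-0.03, False),
    ("SRT", "RWL"): (0.03, False),
    ("WR", "NWD"): (0.58, True),
    ("WR", "MWR"): (0.29, True),
    ("WR", "RWL"): (0.07, False),
    ("NWD", "MWR"): (0.41, True),
    ("NWD", "RWL"): (0.35, True),
    ("MWR", "RWL"): (0.30, True),
}


def is_significant(a: str, b: str) -> bool:
    """Look up the significance flag for a pair given in either order."""
    entry = CORRELATIONS.get((a, b)) or CORRELATIONS[(b, a)]
    return entry[1]


# Pairs of suprathreshold tasks that were NOT significantly correlated.
nonsignificant = [
    (a, b)
    for i, a in enumerate(SUPRATHRESHOLD)
    for b in SUPRATHRESHOLD[i + 1:]
    if not is_significant(a, b)
]

# Measures significantly correlated with every other speech measure.
hubs = [
    m for m in MEASURES
    if all(is_significant(m, other) for other in MEASURES if other != m)
]

print("Nonsignificant suprathreshold pairs:", nonsignificant)
print("Correlated with all other speech measures:", hubs)
```

Encoding the table this way keeps the two claims tied to specific cells, which makes it easy to see that the word recognition and rapid word learning pair is the only exception among the suprathreshold tasks.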

The Performance–Audibility Relationship

Figure 10 shows average performance as a function of the average aided SII for each task. The eight data points in each panel represent the two presentation levels (40, 70 dB SPL), the two fitting formulas (DSL, NAL), and the two ASG settings (off, on). The SII varied from 0.20 to 0.77 and each hearing aid manipulation changed the SII as expected. For example, ASG effectively increased the SII at low levels for both formulas. Overall, the results show a diminishing effect of SII as cognitive demand increased across tasks. A nearly perfect relationship existed between SII and word recognition (r = .99, slope = 0.83) as well as SII and nonword detection (r = .94), although performance for the latter improved with SII at a lower rate (slope = 0.53). Performance increased with SII for multiword recall (r = .92) as well, but for this task the slope was substantially lower (0.32). Finally, the weakest performance–audibility relationship was observed for rapid word-learning (r = .69) with almost no change in performance as SII increased (slope = 0.10). Thus, the perception of individual words increased predictably with SII (far left panel) while learning new words did not (far right panel).
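To make the slopes concrete, the sketch below multiplies each reported slope by the full SII range reported above (0.20 to 0.77) to obtain the change in proportion correct implied by each linear fit. It assumes that performance is expressed as a proportion and that the fits hold across the whole range. The resulting values are arithmetic on the reported slopes, not results reported by the authors.

```python
# Slopes of the linear fits reported in the text
# (assumed unit: change in proportion correct per unit SII).
SLOPES = {
    "word recognition": 0.83,
    "nonword detection": 0.53,
    "multiword recall": 0.32,
    "rapid word learning": 0.10,
}
SII_MIN, SII_MAX = 0.20, 0.77  # range of aided SII reported in the text


def implied_change(slope: float, sii_low: float = SII_MIN,
                   sii_high: float = SII_MAX) -> float:
    """Change in proportion correct implied by a linear fit over an SII span."""
    return slope * (sii_high - sii_low)


changes = {task: implied_change(slope) for task, slope in SLOPES.items()}
for task, delta in changes.items():
    print(f"{task}: {100 * delta:.0f} percentage points")
```

Under these assumptions the fitted lines imply changes of roughly 47, 30, 18, and 6 percentage points across the SII range. Because these values come from the fitted lines, they need not equal improvements computed directly from the condition means.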

Figure 10.

Average performance as a function of SII for each task. Data points represent all combinations of fitting formulas, ASG settings, and presentation levels. Data for each suprathreshold task are shown in separate panels. Solid lines are linear fits to the data. Dashed lines are linear fits to stimulus repetition and are labeled according to trial number (multiword recall) or block of trials (rapid word learning).

Note. SII = speech intelligibility index; ASG = adaptive situational gain.

One explanation for these results is that the influence of SII was obscured for tasks involving repeated stimulus presentations. That is, the dependence on SII diminished when there were multiple “glimpses” of the words over time. This possibility was tested by calculating performance for each of the five trials in the multiword recall task. Similarly, performance for the rapid word learning task was calculated after every 20 trials at each level. The results are shown as dashed lines in Figure 10. Had the dependence on audibility diminished with repetition, the slopes of the functions would decrease with each repetition. Instead, the slopes of the functions are similar, indicating that performance across SII values improved similarly with repetition. The multivariate analysis of variance shown in Table 3 confirmed that performance improved significantly with repetition, but not SII, for both tasks.

Table 3.

Multivariate Analyses of Variance for the Multiword Recall and Word Learning Tasks With Speech Intelligibility Index (SII) and Repetition as Independent Variables

| Parameter | df | F | p |
|---|---|---|---|
| Multiword recall | | | |
| Repetition | 4(575) | 65.845 | <.001* |
| SII | | 0.0 | 1.00 |
| Word learning | | | |
| Repetition | 4(595) | 48.68 | <.001* |
| SII | | 0.0 | 1.00 |

* Significant at p < .05

Finally, the results of the multiword recall task show that memory improved by 16% when the SII increased by 0.56 in the first trial. However, the same improvement in performance could be achieved with one repetition (second trial) and no increase in audibility. Repetition at higher levels of audibility is even more beneficial. Performance after one repetition (second trial) at the highest SII was equivalent to five repetitions at the lowest SII. Similar benefits of repetition occurred during rapid word learning but for this task, the performance at any SII represents the cumulative learning from previous trials at both high and low levels. Together these results suggest that repetition at any level of audibility can support memory and learning.

Discussion

The purpose of this study was to examine the effect of audibility on children's performance for a series of tasks that vary in cognitive demand. Audibility was varied through the use of different fitting prescriptions (NAL and DSL), adaptive gain (ASG), and presentation levels. The SRT was measured as well as word recognition, nonword detection, multiword recall, and rapid word learning. Better performance was expected with the hearing aid settings and conditions that resulted in more audibility. Also, the impact of audibility was expected to be most apparent for input levels near the threshold where unaided performance is the poorest and hearing aid gain is highest. Likewise, the benefit of audibility was expected to be most apparent for tasks requiring more, rather than less, cognitive demand.

As expected, the results revealed significant effects of fitting formula for speech at low levels. SRTs and word recognition performance at low levels were better with DSL than with NAL. Word recognition improved further with the additional gain provided by ASG for both formulas. These results are consistent with previous reports showing better outcomes with DSL than with NAL for populations differing widely in age and hearing impairment. There are several reports of better word and sentence reception threshold with the DSL formula (Bertozzo & Blasca, 2019; Marriage et al., 2018; Quar et al., 2013) as well as better word recognition at low levels (30 and 50 dB SPL) (Marriage et al., 2018). However, at higher levels (i.e., 55, 65, 70, and 80 dB SPL) no differences between formulas have been observed (Marriage et al., 2018; Scollie et al., 2010b). Together with the results of the present study, differences between formulas point to better perception of soft speech with DSL than with NAL, with little difference between formulas at conversational levels.

The expectation that the benefit of audibility would extend to tasks requiring greater cognitive demand was not observed. Instead, the simplest tasks improved with audibility while the more cognitively demanding tasks did not. These findings are consistent with those of Stiles et al. (2012), who examined word recognition and word learning for younger children (6 to 9 years old) with hearing loss. They reported a significant correlation between aided audibility and word recognition but not between aided audibility and word learning.

Our results appear to conflict with those of Wiseman et al. (2023), who reported an association between aided SII and language composite scores for a cohort of younger children with hearing loss (4- to 8-year-olds). The authors acknowledged that this association was questionable given the variability in the data and the influence of a small proportion of the children at the lowest SII levels. Further, there was no difference in language outcomes between children with well-fit and poorly-fit hearing aids. Together with the substantial and unexplained variability in the data, the Wiseman et al. (2023) results suggest a weak relationship between aided audibility and language outcomes. Our results are consistent with those findings and confirm that performance for tasks that support language development is largely insensitive to widely varying levels of audibility. Initial perception of speech appears to depend heavily on the audibility of the input while memory formation and learning do not.

Implications for Pediatric Hearing Research

The observation that the performance–audibility relationship differs across cognitive domains challenges the assumption that more audibility is the solution to poor outcomes. That is not to say that amplification is not beneficial, or that early hearing loss identification and intervention are not critical to overall language development and communication success. Rather, some deficits associated with hearing loss can be resolved by increasing the output of hearing aids while others cannot. For example, the increase in audibility that produced a 43% improvement in individual word recognition produced a 22% improvement in the detection of unfamiliar words, a 19% improvement in recall of familiar words, and a 5% improvement in word learning. One interpretation is that a little audibility goes a long way. These children and adolescents were able to perform complex auditory tasks with minimal audibility (0.20–0.29 SII). Another interpretation is that repetition may be a valuable contributor to successful auditory processing following hearing aid fitting. In the present study, repetition at every level of audibility supported memory and learning for speech.

Implications for Clinical Practice

The results of this study also have two implications for fitting hearing aids to children and adolescents with mild-to-moderate hearing loss. First, the presentation levels used in this study were lower than levels used routinely in clinical practice to assess hearing aid benefits. Unaided and aided SRTs and word recognition at levels near threshold could be more sensitive than at higher presentation levels to the benefit that a particular fitting formula or hearing aid feature will provide. Second, although deviations from prescriptive targets are commonly observed in the pediatric population (McCreery et al., 2015), our results and those of Wiseman et al. (2023) suggest that the effect of these deviations on language development may be smaller than expected.

Limitations Leading to Further Research

There are several important limitations to consider when interpreting these results within the larger context of pediatric audiology. Each of these limitations is also an opportunity for further research. First, the results are based on a relatively small number of participants. While this sample size was sufficient to satisfy the objectives of the study, a much larger sample would be required to calculate the effect size, or lack of effect, for tasks other than word recognition. Second, our results are limited to older children and adolescents. Younger children may show a greater, or lesser, dependence on audibility due to their more limited experience with auditory processing. Third, our results are limited to participants with mild to moderate hearing loss. Differences between prescriptive formulas may be reduced for more severe hearing losses due to their limited dynamic range of hearing (Marriage et al., 2018; Quar et al., 2013). Fourth, effects observed with Phonak devices and their ASG feature may not generalize to devices and features offered by other manufacturers. Outcomes may vary for devices with different compression characteristics. Fifth, standardized measures of cognition and language were not included in this study and may explain some of the variance in the data. Finally, a measure of recall following the word learning task may provide a more comprehensive understanding of the relationship between audibility and language learning in this population (Stelmachowicz et al., 2004; Wiseman et al., 2023).

Acknowledgments

The authors would like to thank Carol Samson for her extraordinary assistance with data collection during the challenging days of the pandemic as well as Hsiaohsiuan Chou, Sarahi Perez, and the amazing children and parents who shared their precious time with us.

This study was registered with ClinicalTrials.gov (Identifier: NCT05150964).

Appendix A. Demographics and hearing levels for each participant.

Columns give thresholds (dB) at .25 to 8 kHz for the right ear (R), the left ear (L), and the DSL and NAL binaural aided conditions.

| ID | Sex | Age (yrs) | Age at ID (yrs) | R .25 | R .5 | R 1 | R 2 | R 4 | R 8 | L .25 | L .5 | L 1 | L 2 | L 4 | L 8 | DSL .25 | DSL .5 | DSL 1 | DSL 2 | DSL 4 | DSL 8 | NAL .25 | NAL .5 | NAL 1 | NAL 2 | NAL 4 | NAL 8 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | F | 9 | UN | 40 | 50 | 60 | 60 | 75 | 75 | 30 | 45 | 60 | 65 | 70 | 70 | – | – | – | – | – | – | 20 | 30 | 25 | 35 | 40 | 50 |
| 2 | F | 11 | 4 | 55 | 60 | 65 | 70 | 70 | 90 | 60 | 55 | 60 | 70 | 80 | 90 | 15 | 20 | 15 | 20 | 40 | 55 | 15 | 15 | 20 | 30 | 40 | 60 |
| 3 | M | 12 | UN | 35 | 25 | 15 | 15 | 10 | 20 | 40 | 30 | 20 | 25 | 65 | 55 | 5 | 10 | 5 | 15 | 10 | 15 | 5 | 15 | 5 | 15 | 15 | 20 |
| 4 | M | 12 | UN | 20 | 20 | 35 | 25 | 10 | 15 | 30 | 30 | 35 | 20 | 30 | 30 | 25 | 20 | 15 | 20 | 20 | 10 | 30 | 15 | 15 | 15 | 20 | 5 |
| 5 | M | 13 | 6 | 60 | 65 | 65 | 70 | 85 | 80 | 65 | 70 | 70 | 70 | 65 | 75 | 20 | 25 | 20 | 30 | 35 | 50 | 25 | 35 | 25 | 30 | 35 | 50 |
| 6 | F | 13 | 4 | 40 | 35 | 50 | 50 | 50 | 10 | 40 | 50 | 55 | 55 | 45 | 5 | 10 | 15 | 15 | 25 | 25 | 10 | 40 | 30 | 25 | 30 | 35 | 10 |
| 7 | M | 13 | 0 | 60 | 65 | 70 | 75 | 70 | 60 | 50 | 55 | 60 | 65 | 60 | 55 | 25 | 25 | 20 | 30 | 40 | 30 | – | – | – | – | – | – |
| 8 | F | 14 | 5 | 45 | 45 | 45 | 40 | 45 | 45 | 50 | 45 | 45 | 35 | 40 | 40 | 30 | 20 | 25 | 30 | 40 | 20 | 25 | 20 | 20 | 25 | 40 | 20 |
| 9 | M | 14 | 0 | 35 | 50 | 50 | 70 | 60 | 60 | 35 | 50 | 50 | 50 | 60 | 50 | 15 | 25 | 15 | 20 | 25 | 15 | 20 | 30 | 20 | 20 | 35 | 20 |
| 10 | F | 14 | UN | 40 | 35 | 30 | 20 | 10 | 10 | 25 | 30 | 35 | 25 | 15 | 10 | 25 | 15 | 5 | 15 | 20 | 5 | 25 | 40 | 15 | 20 | 20 | 5 |
| 11 | F | 15 | 6 | 30 | 40 | 50 | 55 | 30 | −5 | 35 | 35 | 55 | 55 | 20 | −5 | 20 | 20 | 20 | 25 | 20 | 5 | 35 | 30 | 25 | 35 | 20 | 20 |
| 12 | M | 15 | 1 | 35 | 40 | 50 | 55 | 50 | 50 | 35 | 40 | 50 | 60 | 50 | 45 | 20 | 15 | 5 | 25 | 25 | 20 | 20 | 25 | 15 | 30 | 30 | 20 |
| 13 | M | 15 | UN | 55 | 60 | 80 | 85 | 80 | 70 | 20 | 20 | 35 | 65 | 80 | 100 | 15 | 10 | 15 | 30 | 55 | 40 | 25 | 15 | 15 | 35 | 50 | 40 |
| 14 | M | 16 | 4 | 40 | 50 | 65 | 75 | 75 | NR | 40 | 55 | 65 | 70 | 70 | 70 | 15 | 20 | 20 | 35 | 40 | 30 | 30 | 20 | 25 | 30 | 45 | 40 |
| 15 | F | 17 | 0 | 30 | 45 | 45 | 55 | 50 | 45 | 30 | 40 | 45 | 55 | 50 | 45 | 30 | 25 | 20 | 30 | 35 | 30 | 25 | 25 | 15 | 30 | 30 | 25 |
| 16 | F | 17 | 1 | 20 | 30 | 40 | 55 | 55 | 55 | 20 | 30 | 40 | 55 | 60 | 55 | 10 | 15 | 10 | 25 | 30 | 20 | 30 | 35 | 20 | 40 | 40 | 35 |

UN = unknown; M = male; F = female; dB = decibel; DSL = Desired Sensation Level; ID = identification; NA = not available; NAL = National Acoustic Laboratories

Appendix B. Hearing aid fitting parameters using Phonak Target v7.1 fitting software.

| Software interface | Customizable options | Setting |
|---|---|---|
| Selection | Long-term user | |
| Fitting | Feedback and real-ear test | |
| Programs | Gain | 100% |
| | Occlusion compensation | Off |
| | Compression | Default |
| | Hearing aid microphone | 4 (Real-ear sound) |
| | Dynamic noise cancellation | 0 (Off) |
| | WhistleBlock (transient feedback canceller) | 0 (Off) |
| | SoundRelax (impulse noise dampening) | 8 (Weak) |
| | NoiseBlock (single-channel noise reduction) | 8 (Weak) |
| | Soft noise reduction | 0 (Off) |
| | WindBlock (wind noise suppression) | 16 (Moderate) |
| | Fitting rationale | NAL-NL2 / DSLv5a Pediatric |
| | Speech enhancer | 14 (Moderate) / 0 (Off) |
| Buttons and indicators | Buttons | Program change |

DSL: Targets-DSL-Child, HL transducer-Insert + foam, RECD-DSL average; NAL: Targets-NAL-NL2, RECD-NL2 average, Binaural-Yes, Language-Non-tonal.

Footnotes

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article. This work was supported by Phonak.

ORCID iD: Andrea L Pittman https://orcid.org/0000-0003-2730-383X

References

- AAA (2013). American Academy of Audiology Clinical Practice Guidelines on Pediatric Amplification.

- ANSI (2007). Methods for calculation of the speech intelligibility index (ANSI S3.5-1997 [R2007]). New York, NY.

- Baltes P. B., Lindenberger U. (1997). Emergence of a powerful connection between sensory and cognitive functions across the adult life span: A new window to the study of cognitive aging? Psychology and Aging, 12(1), 12–21. 10.1037//0882-7974.12.1.12

- Bertozzo M. C., Blasca W. Q. (2019). Comparative analysis of the NAL-NL2 and DSL v5.0a prescription procedures in the adaptation of hearing aids in the elderly. Codas, 31(4), e20180171. 10.1590/2317-1782/20192018171

- Byrne D., Dillon H., Ching T., Katsch R., Keidser G. (2001). NAL-NL1 procedure for fitting nonlinear hearing aids: Characteristics and comparisons with other procedures. Journal of the American Academy of Audiology, 12(1), 37–51. PMID: 11214977. 10.1055/s-0041-1741117

- Ching T. Y., Dillon H., Hou S., Zhang V., Day J., Crowe K., Thomson J. (2013). A randomized controlled comparison of NAL and DSL prescriptions for young children: Hearing-aid characteristics and performance outcomes at three years of age. International Journal of Audiology, 52(Suppl 2), S17–S28. 10.3109/14992027.2012.705903

- Ching T. Y., Scollie S. D., Dillon H., Seewald R., Britton L., Steinberg J., King K. A. (2010c). Evaluation of the NAL-NL1 and the DSL v.4.1 prescriptions for children: Paired-comparison intelligibility judgments and functional performance ratings. International Journal of Audiology, 49(Suppl 1), S35–S48. 10.3109/14992020903095791

- Ching T. Y., Scollie S. D., Dillon H., Seewald R., Britton L., Steinberg J. (2010b). Prescribed real-ear and achieved real-life differences in children's hearing aids adjusted according to the NAL-NL1 and the DSL v.4.1 prescriptions. International Journal of Audiology, 49(Suppl 1), S16–S25. 10.3109/14992020903082096

- Ching T. Y., Scollie S. D., Dillon H., Seewald R. (2010a). A cross-over, double-blind comparison of the NAL-NL1 and the DSL v4.1 prescriptions for children with mild to moderately severe hearing loss. International Journal of Audiology, 49(Suppl 1), S4–S15. 10.3109/14992020903148020

- Ching T. Y. C., Zhang V. W., Johnson E. E., Van Buynder P., Hou S., Burns L., McGhie K. (2018). Hearing aid fitting and developmental outcomes of children fit according to either the NAL or DSL prescription: Fit-to-target, audibility, speech and language abilities. International Journal of Audiology, 57(Suppl 2), S41–S54. 10.1080/14992027.2017.1380851

- Cohen J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Lawrence Erlbaum Associates.

- Deal J. A., Betz J., Yaffe K., Harris T., Purchase-Helzner E., Satterfield S., Health ABC Study Group (2017). Hearing impairment and incident dementia and cognitive decline in older adults: The health ABC study. The Journals of Gerontology Series A: Biological Sciences and Medical Sciences, 72(5), 703–709. 10.1093/gerona/glw069

- Fulton B., Mortiz M. (2018). Phonak Insight: Slow compression for people with severe to profound hearing loss. White Paper. https://www.phonakpro.com/content/dam/phonakpro/gc_hq/en/resources/evidence/white_paper/documents/technical_paper/insight_slow_compression_for_severe_to_profound.pdf

- Furuki S., Sano H., Kurioka T., Nitta Y., Umehara S., Hara Y., Yamashita T. (2023). Investigation of hearing aid fitting according to the national acoustic laboratories’ prescription for non-linear hearing aids and the desired sensation level methods in Japanese speakers: a crossover-controlled trial. Auris, Nasus, Larynx. 10.1016/j.anl.2023.01.004

- Gray S., Pittman A., Weinhold J. (2014). Effect of phonotactic probability and neighborhood density on word-learning configuration by preschoolers with typical development and specific language impairment. Journal of Speech, Language, and Hearing Research, 57(3), 1011–1025. 10.1044/2014_JSLHR-L-12-0282

- Keidser G., Dillon H., Carter L., O'Brien A. (2012). NAL-NL2 empirical adjustments. Trends in Amplification, 16(4), 211–223. 10.1177/1084713812468511

- Lewis J., Moss B. (2013). MEMS microphones, the future for hearing aids. Analog Dialogue, 47(4), 1–3.

- Lindenberger U., Baltes P. B. (1994). Sensory functioning and intelligence in old age: A strong connection. Psychology and Aging, 9(3), 339–355. 10.1037//0882-7974.9.3.339

- Marriage J. E., Moore B. C. (2003). New speech tests reveal benefit of wide-dynamic-range, fast-acting compression for consonant discrimination in children with moderate-to-profound hearing loss. International Journal of Audiology, 42(7), 418–425. 10.3109/14992020309080051

- Marriage J. E., Vickers D. A., Baer T., Glasberg B. R., Moore B. C. J. (2018). Comparison of different hearing aid prescriptions for children. Ear & Hearing, 39(1), 20–31. 10.1097/AUD.0000000000000460

- McCreery R. W., Bentler R. A., Roush P. A. (2013). Characteristics of hearing aid fittings in infants and young children. Ear & Hearing, 34(6), 701–710. 10.1097/AUD.0b013e31828f1033

- McCreery R. W., Walker E. A., Spratford M., Bentler R., Holte L., Roush P., Moeller M. P. (2015). Longitudinal predictors of aided speech audibility in infants and children. Ear & Hearing, 36(Suppl 1), 24S–37S. 10.1097/AUD.0000000000000211

- Pichora-Fuller M. K., Singh G. (2006). Effects of age on auditory and cognitive processing: Implications for hearing aid fitting and audiologic rehabilitation. Trends in Amplification, 10(1), 29–59. 10.1177/10847138060100010

- Pittman A. L. (2008). Short-term word-learning rate in children with normal hearing and children with hearing loss in limited and extended high-frequency bandwidths. Journal of Speech, Language, and Hearing Research, 51(3), 785–797. 10.1044/1092-4388(2008/056)

- Pittman A. L. (2019). Bone conduction amplification in children: Stimulation via a percutaneous abutment versus a transcutaneous softband. Ear and Hearing. 10.1097/AUD.0000000000000710

- Pittman A. L., Lewis D. E., Hoover B. M., Stelmachowicz P. G. (2005). Rapid word-learning in normal-hearing and hearing-impaired children: Effects of age, receptive vocabulary, and high-frequency amplification. Ear and Hearing, 26(6), 619–629. 10.1097/01.aud.0000189921.34322.68

- Pittman A. L., Schuett B. C. (2013). Effects of semantic and acoustic context on nonword detection in children with hearing loss. Ear and Hearing, 34(2), 213–220. 10.1097/AUD.0b013e31826e5006

- Pittman A. L., Stewart E. C., Odgear I. S., Willman A. P. (2017a). Detecting and learning new words: The impact of advancing age and hearing loss. American Journal of Audiology, 26(3), 318–327. 10.1044/2017_AJA-17-0025

- Pittman A. L., Stewart E. C., Willman A. P., Odgear I. S. (2017b). Word recognition and learning: Effects of hearing loss and amplification feature. Trends in Hearing, 21, article no. 2331216517709597. 10.1177/2331216517709597

- Quar T. K., Ching T. Y., Newall P., Sharma M. (2013). Evaluation of real-world preferences and performance of hearing aids fitted according to the NAL-NL1 and DSL v5 procedures in children with moderately severe to profound hearing loss. International Journal of Audiology, 52(5), 322–332. 10.3109/14992027.2012.755740

- Schmidt M. (1996). (RAVLT) Rey Auditory Verbal Learning Test, handbook. Western Psychological Services.

- Schoenberg M. R., Dawson K. A., Duff K., Patton D., Scott J. G. (2006). Test performance and classification statistics for the Rey Auditory Verbal Learning Test in selected clinical samples. Archives of Clinical Neuropsychology, 21(7), 693–703. 10.1016/j.acn.2006.06.010

- Scollie S., Ching T. Y., Seewald R., Dillon H., Britton L., Steinberg J., Corcoran J. (2010a). Evaluation of the NAL-NL1 and DSL v4.1 prescriptions for children: Preference in real world use. International Journal of Audiology, 49(Suppl 1), S49–S63. 10.3109/14992020903148038

- Scollie S., Folkeard P., Pumford J., Abbasalipour P., Pietrobon J. (2022). Venting corrections improve the accuracy of coupler-based simulated real-ear verification for use with adult hearing aid fittings. Journal of the American Academy of Audiology, 33(5), 277–284. 10.1055/a-1808-1275

- Scollie S., Seewald R., Cornelisse L., Moodie S., Bagatto M., Laurnagaray D., Pumford J. (2005). The desired sensation level multistage input/output algorithm. Trends in Amplification, 9(4), 159–197. 10.1177/108471380500900403

- Scollie S. D., Ching T. Y., Seewald R. C., Dillon H., Britton L., Steinberg J., King K. (2010b). Children's speech perception and loudness ratings when fitted with hearing aids using the DSL v.4.1 and the NAL-NL1 prescriptions. International Journal of Audiology, 49(Suppl 1), S26–S34. 10.3109/14992020903121159

- Seewald R., Moodie S., Scollie S., Bagatto M. (2005). The DSL method for pediatric hearing instrument fitting: Historical perspective and current issues. Trends in Amplification, 9(4), 145–157. 10.1177/108471380500900402

- Shyekhaghaei S., Sameni S. J., Rahbar N. (2021). The comparison of gains prescribed for digital behind-the-ear hearing aids using the manufacturer-specific and conventional prescriptive formulas. Auditory and Vestibular Research, 30(2), 119–127. 10.18502/vavr/v30i2.6097 [DOI] [Google Scholar]

- Stelmachowicz P. G., Pittman A. L., Hoover B. M., Lewis D. E. (2004). Novel-word learning in children with normal hearing and hearing loss. Ear & Hearing, 25(1), 47–56. 10.1097/01.AUD.0000111258.98509.DE [DOI] [PubMed] [Google Scholar]

- Stewart E. C., Pittman A. L. (2021). Learning and retention of novel words in musicians and nonmusicians. Journal of Speech, Language, and Hearing Research, 64(7), 2870–2884. 10.1044/2021_JSLHR-20-00482

- Stiles D. J., Bentler R. A., McGregor K. K. (2012). The speech intelligibility index and the pure-tone average as predictors of lexical ability in children fit with hearing aids. Journal of Speech, Language, and Hearing Research, 55(3), 764–778. 10.1044/1092-4388(2011/10-0264)

- Studebaker G. A., Sherbecoe R. L., Gilmore C. (1993). Frequency-importance and transfer functions for the Auditec of St. Louis recordings of the NU-6 word test. Journal of Speech, Language, and Hearing Research, 36(4), 799–807. 10.1044/jshr.3604.799

- Sweller J., Ayres P. H., Kalyuga S. (2011). Cognitive load theory. Springer.

- Taylor E. M. (1959). Psychological appraisal of children with cerebral defects. Harvard University Press.

- Tun P. A., McCoy S., Wingfield A. (2009). Aging, hearing acuity, and the attentional costs of effortful listening. Psychology and Aging, 24(3), 761–766. 10.1037/a0014802

- Uchida Y., Sugiura S., Nishita Y., Saji N., Sone M., Ueda H. (2019). Age-related hearing loss and cognitive decline - the potential mechanisms linking the two. Auris, Nasus, Larynx, 46(1), 1–9. 10.1016/j.anl.2018.08.010

- Wayne R. V., Johnsrude I. S. (2015). A review of causal mechanisms underlying the link between age-related hearing loss and cognitive decline. Ageing Research Reviews, 23(Pt B), 154–166. 10.1016/j.arr.2015.06.002

- Wiseman K. B., McCreery R. W., Walker E. A. (2023). Hearing thresholds, speech recognition, and audibility as indicators for modifying intervention in children with hearing aids. Ear and Hearing. 10.1097/AUD.0000000000001328

- Woodward J., Jansen S., Kühnel V. (2020). Hearing inspired by nature: the new APD 2.0 fitting formula with adaptive compression by Phonak. White paper. https://www.phonakpro.com/content/dam/phonakpro/gc_hq/en/resources/evidence/white_paper/documents/technical_paper/PH_Insight_APD_2_0_fitting_formula_EN_028-2166-02.pdf