Abstract

From sparse descriptions of events, observers can make systematic and nuanced predictions of what emotions the people involved will experience. We propose a formal model of emotion prediction in the context of a public high-stakes social dilemma. This model uses inverse planning to infer a person’s beliefs and preferences, including social preferences for equity and for maintaining a good reputation. The model then combines these inferred mental contents with the event to compute ‘appraisals’: whether the situation conformed to the expectations and fulfilled the preferences. We learn functions mapping computed appraisals to emotion labels, allowing the model to match human observers’ quantitative predictions of 20 emotions, including joy, relief, guilt and envy. Model comparison indicates that inferred monetary preferences are not sufficient to explain observers’ emotion predictions; inferred social preferences are factored into predictions for nearly every emotion. Human observers and the model both use minimal individualizing information to adjust predictions of how different people will respond to the same event. Thus, our framework integrates inverse planning, event appraisals and emotion concepts in a single computational model to reverse-engineer people’s intuitive theory of emotions.

This article is part of a discussion meeting issue ‘Cognitive artificial intelligence’.

Keywords: emotion, inverse planning, theory of mind, social intelligence, affective computing, probabilistic generative model

1. Introduction

Human social life depends on our ability to understand and anticipate other people’s emotions. Intense efforts in both basic science and industrial applications are currently directed towards building models of emotion recognition: identifying a person’s emotions from their expression. Here we tackle a complementary challenge: predicting how a person will emotionally react to an event.

To illustrate the phenomenon we target, imagine watching an episode of the popular British gameshow ‘Golden Balls’ [1]. During the episode, two players, Arthur and Bella, play a public one-shot social game called ‘Split or Steal’. On the table is a pot of $100 000 USD. Eventually, each player will secretly choose to Split (Cooperate) or Steal (Defect). If both players choose to Split, each takes home $50k. If both choose to Steal, they both leave with nothing. But if one chooses to Split and the other chooses to Steal, the one who stole takes the entire $100k and the other player leaves with nothing.¹ Before Arthur and Bella make their choices, the gameshow host gives them a chance to talk to each other (in front of the live studio audience and TV viewers at home). They both vehemently promise to choose Split. Then they each make their secret choice. The choices are revealed simultaneously: they both chose Split! What do you predict Arthur will feel in this moment? Even without seeing their expressions, human observers generate systematic predictions about others’ emotional reactions to events [3–5]. For example, observers predict Arthur will feel joy, relief and gratitude. By contrast, if he Split but Bella Stole, Arthur is predicted to feel disappointment, envy and contempt.

The question for the current research is: How do human observers generate these emotion predictions? Social games offer a highly constrained but emotionally evocative context for studying social cognition. The ‘Split or Steal’ game can be fully described by a simple set of variables but evokes diverse and fine-grained predictions of players’ emotions. We develop a Bayesian framework [6,7] to formalize the conceptual knowledge, and the cognitive reasoning, that observers use to predict others’ emotional reactions to events [8]. We aim to capture how observers generate abstract representations of others’ minds from situational cues, tailor their emotion predictions to a specific individual, and predict distinctively social emotions like guilt, embarrassment and respect.

Our model is organized around a psychological premise: observers predict Arthur’s emotional reaction by reasoning about how he will evaluate the situation relative to his desires and beliefs [8–14]. Accordingly, the overall model of emotion prediction (figure 1) comprises three Modules, which simulate how observers (1) infer Arthur’s preferences and beliefs, (2) reason about how Arthur will evaluate (or ‘appraise’) events with respect to his mental contents (e.g. Was the event something he expected and wanted to happen?), and (3) predict Arthur’s emotions based on his likely appraisals. Module (1) uses inverse planning to model how observers reason over an intuitive Theory of Mind (wherein a player chooses actions that maximize a subjective utility function) to infer a player’s mental states [15–17]. Module (2) computes appraisals by reasoning about how a player will evaluate an event based on his inferred mental contents. Module (3) translates the computed appraisals into predictions of the player’s emotions using learned emotion concepts (i.e. functions that express emotions in terms of appraisals).

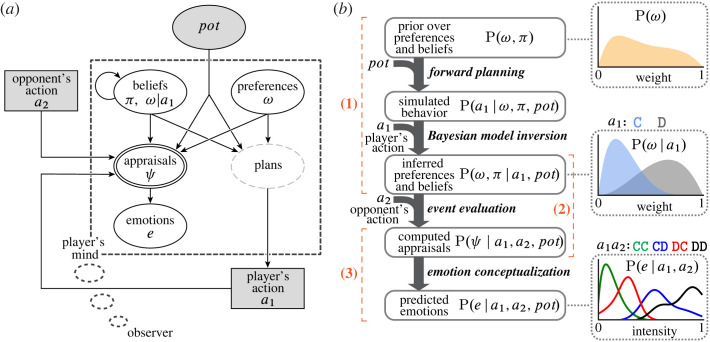

Figure 1.

Emotion prediction as inference over an intuitive Theory of Mind. Hypotheses about how human observers reason about others’ emotions can be formalized as probabilistic generative models. This reflects a hypothesis about observers’ intuitive theory of other people’s minds, not a scientific hypothesis about people’s actual emotions. (a) Implementation of the general hypothesis for the ‘Split or Steal’ game (a public one-shot Prisoner’s Dilemma). We treat observers’ emotion predictions as a function of their intuitive reasoning about how players will subjectively evaluate, or ‘appraise’, the game’s outcome. Observers predict a player’s emotions by inferring what preferences and beliefs motivated the player’s decision to Cooperate or Defect, and reason about how those preferences and beliefs would cause the player to emotionally react to the outcome of the game. The intuitive theories we test take the form of directed acyclic graphs, where arrows indicate the causal relationship between variables. Shaded nodes are observable variables and open nodes are latent variables. Round nodes are continuous variables, rectangular nodes are discrete variables. Nodes with a single border are random variables. The double border indicates that appraisals are calculated deterministically. Plans are shown with a partial border because they are not explicitly represented in this model. (b) Computational model of the intuitive theory. The model comprises three modules. Module (1) infers a joint distribution over preferences and beliefs given a player’s action via inverse planning. Module (2) computes appraisals based on how a player would evaluate the outcome of the game with respect to the inferred preferences and beliefs. Module (3) generates emotion predictions by transforming the computed appraisals. 
The probability density plots illustrate how observers’ prior belief about a player’s preference is updated based on the player’s action, and how the inferred preference is used to predict the player’s emotional reaction to the game’s outcome.

This work aims to computationally recapitulate how human observers predict others’ emotional reactions. To fit and test the model, we collect high-resolution behavioural data on the ‘Split or Steal’ game. Observers on Amazon Mechanical Turk (mTurk) made systematic predictions of 20 nuanced emotions for 20 individual players. Combining the modules, we quantitatively simulate how human observers predict players’ fine-grained emotional reactions to hypothetical events.

2. Relation to prior work

To predict what emotions Arthur will experience when he splits the pot with Bella, observers reason about what the situation means to Arthur. We build a model that generates emotion predictions from a description of the events (i.e. the same information given to observers). There are many approaches to building situation-computable models of emotion prediction [18], including behavioural economic decision models [19,20], rule-based emotion schemas [21,22], multi-agent computer simulations [12,23,24] and large language models [25,26]. These methodologically and philosophically diverse approaches share a view that emotion prediction depends on abstracting Theory of Mind representations from contextual information about a situation. However, these approaches vary widely in how the models abstract, represent and reason over Theory of Mind information.

Our work foregrounds this critical process, treating the prediction of emotional reactions to events as cognitive reasoning based on the inference of preferences and beliefs. We frame human emotion understanding as inference over an intuitive theory of other minds. An intuitive theory is a logically and causally structured mental model (a ‘lay’ ontology) [27–29], which is typically not explicit or fully introspectable [30]. This work studies an aspect of the intuitive theory of emotion: observers’ mental model of people’s emotional reactions to events. Note that the aim of this work is to build a formal scientific model of people’s intuitive theory of emotion, not to test whether the intuitive theory is accurate. That is, although people are able to sensitively infer and accurately predict others’ emotions in some contexts [31–33], people make systematic errors in other contexts [3,34,35]. Because we are interested in capturing and characterizing people’s intuitive theory of emotion, we do not here attempt to test the ground truth accuracy of either the observers’ or the model’s predictions, only their similarity to each other.

(a) Inverse planning

How do human observers infer a specific person’s preferences and beliefs? One source of information is the person’s actions. People typically choose intentional actions that are likely to achieve their goals or maximize their rewards, given their values and beliefs (the principle of rational action). As a result, even a single sparse observation (e.g. observing one action) can lead observers to update estimates of the person’s mental states [36,37]. Observers’ intuitive reasoning about others’ actions can be framed as a theory-based Bayesian model [6,7], which formalizes how causal structure and prior beliefs constrain inference of latent mental contents [16]. In models of ‘inverse planning’, a forward planning model simulates how approximately rational agents, imbued with rich cognitive structure, perceive, plan and act in a dynamic world. Bayesian inversion of the forward model then supports inverse inference of what unobserved mental contents were likely to have caused the observed behaviour. Inverse planning models can closely match how observers use others’ behaviour to infer mental contents, such as beliefs, preferences, rewards, costs, habits and intelligence [15,17,38–41].

Inverse planning has been extensively applied in the domain of action understanding [42–44]. Adapting inverse planning to predict nuanced social emotions imposes demands on the latent representations and computations beyond what is typically required for action understanding. Predicting emotions like envy, guilt, respect and gratitude requires representing multifaceted preferences and recursive beliefs about rewards, costs, interpersonal relationships and reputation. Predicting emotions like surprise, disappointment, regret and relief requires computing prediction errors and counterfactuals.

While there are numerous approaches to modelling Theory of Mind [45,46], our work makes a principled commitment to inverse planning [8]. Module (1) instantiates an inverse planning model that infers rich abstract Theory of Mind representations. The forward model formalizes our hypotheses about observers’ conceptual knowledge of others’ intentional actions. For instance, observers know that people’s choices reflect values beyond their own monetary gain, such as equity and achieving a desirable reputation [36,47,48]. We hypothesize that observers infer these values to predict social emotions. To capture emotion predictions that depend on social values, we incorporate weighted utility terms like equity and reputation into the model. We empirically test these hypotheses by comparing observers’ attributions of players’ motivations against the model’s inferences, and subsequently use the inferred weights to capture observers’ predictions of nuanced social emotions.

(b) Inferring appraisals

Module (2) extends the inverse planning framework, using the inferred preferences and beliefs to simulate observers’ latent reasoning about how a player will evaluate a new world state (the outcome of the game). The idea that emotional experience is a consequence of how people evaluate events with respect to their desires and beliefs is a central principle of Appraisal Theory [49–51]. Appraisal Theories describe first-person emotional experience as an interaction between a person and an event, with respect to appraisal variables such as goal congruence (Did she get what she wanted?), agency (Who caused the event, and with what intention?), controllability (Could she influence the situation?) and probability (How likely was the outcome?) [52,53].

Many researchers have proposed that observers reason about others’ emotions using a mental model of appraisal-like processes [4,8,10,12,54–58]. An influential theory is that people engage in a process of ‘reverse appraisal’ to infer the desires and beliefs of others by observing their emotional reactions to events [11,59,60]. Reverse appraisal emphasizes inverse inference: that is, reasoning about the appraisals that caused someone’s emotions and expressions [13,59]. Empirical work supports the idea that people infer appraisals from expressions [61] and, based on the inferred appraisals, update their understanding of others’ motivations [11,62] and personality traits [60].

Our work follows in the spirit of this theoretical framework, but with important differences in how we implement the overarching idea. Module (2) uses mental state representations inferred via inverse planning to generate probabilistic representations that reflect observers’ latent reasoning about how a target will appraise a situation. We refer to these latent representations as computed appraisals. Whereas reverse appraisals reflect an inverse inference (appraisals are inferred from their effects), computed appraisals reflect a forward inference (appraisals are computed from their causes). A related approach is seen in multi-agent partially observable Markov decision processes (POMDPs), where simulated agents compute appraisals based on their action planning policies and belief updates, and can infer appraisals of other agents by representing models of their states [12,23,24]. At present, these computer simulations have not been tested against human judgements.

Another related line of work has computationally modelled human observers’ emotion understanding as a Bayesian intuitive theory [3,4,55,63,64], see [13] for review. This line of research has not yet incorporated inverse planning. For example, Ong et al. [4] studied how observers predict others’ emotional reactions to a lottery. Observers’ predictions of eight emotions were well captured by a model based on three deterministically computed appraisal variables: the amount won, the reward prediction error and the absolute value of the reward prediction error. While groundbreaking, this prior model has a limited representational space. Players in a lottery make no decisions and have no social interactions, and the model predicts the same emotion for every player who received the same outcome. In another example, Wu et al. [55] used an inverse planning model to study how observers infer preferences and beliefs based on a person’s emotional reaction to events (e.g. if a person smiles at the outcome, she wanted it; if she opens her eyes wide, she did not expect it), but not how observers predict emotions from inferred preferences and beliefs. In our framework, we therefore formalize the computation of appraisals using the machinery of inverse planning to reason over a Bayesian intuitive theory of emotion, combining key components of prior work.
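To make the contrast concrete, the three lottery appraisals can be sketched as below. This is a minimal illustration, not Ong et al.’s code: it assumes the reward prediction error is taken relative to the lottery’s expected value, and the function name and example lottery are hypothetical. Because every input describes the lottery and its outcome rather than the player, two players who receive the same outcome get identical appraisals.

```python
from typing import Dict, Sequence


def lottery_appraisals(outcome: float, payoffs: Sequence[float],
                       probs: Sequence[float]) -> Dict[str, float]:
    """Hypothetical sketch of three lottery appraisals: amount won, RPE and |RPE|."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("lottery probabilities must sum to 1")
    # Expected value of the lottery before the draw.
    expected = sum(v * p for v, p in zip(payoffs, probs))
    # Reward prediction error (assumed here to be relative to the expected value).
    rpe = outcome - expected
    return {"amount_won": outcome, "rpe": rpe, "abs_rpe": abs(rpe)}


# A lottery paying 0, 25 or 100 with probabilities 0.5, 0.3 and 0.2; the player won 25.
print(lottery_appraisals(25, [0, 25, 100], [0.5, 0.3, 0.2]))
```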

(c) Modelling emotion understanding

In our proposed framework, computed appraisals act as a latent mental grammar of emotion understanding, and emotion concepts reflect computations in this latent space [65]. Module (3) translates computed appraisals into emotion predictions by applying ‘emotion concept’ functions that define emotions in terms of computed appraisals. There are many possible ways to accomplish this step (essentially, writing a dictionary of emotion labels). It might be possible to constrain these definitions manually, by consulting formal and intuitive theories of the meanings of emotion labels [20,66–73]. In the present work, we preferred to learn the transformation from computed appraisals to emotions. Thus, our strategy can be situated within two tensions among modelling approaches.

The first tension relates to how reliant models are on humans. Computer science research tends to emphasize stimulus-computability, building and training models that operate over stimuli directly. Psychology research tends to emphasize the testing of psychological hypotheses, using human observers to abstract mental state representations from stimuli. The second tension relates to how model structure is acquired. Theory-driven approaches define representations and computations upfront. Data-driven approaches learn these from training data.

Some computer science work favours building highly structured causal models with cognitive latent spaces. This approach forgoes learning transformations between latent variables and emotions, opting to hand-code computations for appraisal variables that are prescribed by Appraisal Theories [12,23,24], see [18,74] for reviews. Appraisal variables are then translated into emotions according to prescribed definitions [22,75–77]. Other computer science work favours training less-structured models. This approach forgoes extensive hand-coding, opting to learn latent representations from statistical regularities in large datasets [78–80], see [81–83] for reviews. These learned latent representations can encode patterns mapping between emotion labels and expressions, scenes, objects, actions and social interactions [84–88].

Psychology research has aimed to build and test first- and third-person appraisal theories by modelling the relationships between empirical ratings of appraisals and of emotions. This approach relies on human observers to generate representations of stimuli. Observers judge someone’s appraisals and emotions based on information about the situation or the person’s expression, and emotion concept functions are fit or tested on these data [11,54,61]. Given manually annotated appraisal variables, simple classifiers can match human labels for many emotional events [54]. For example, a classifier given human ratings of 25 appraisal variables picked the self-reported emotion label (from 14 choices) for 51% of 6000 real-life events [68].

Our present work takes a middle path between these various approaches. We build a highly structured causal model based on theoretical constraints and psychological insights into social decision making, appraisal computations and emotion understanding. However, we implement general cognitive principles from Appraisal Theories, behavioural economics and computational cognitive science, rather than predefined representations of appraisal variables and emotion concepts. Primitive appraisal computations (achieved utility, prediction error, counterfactual reasoning) are applied over abstract Theory of Mind representations (preferences and beliefs), which are inferred from the event by inverting a causally structured generative model. The latent space of computed appraisals is then used to learn the conceptual structure of emotion predictions. Thus, we leverage probabilistic programming to infer, generate and discover the cognitive structure of the human intuitive theory of emotion.

Our formulation is situation-computable, operating over the same information given to observers. Since the model does not rely on humans to judge appraisals, the latent space of computed appraisals can be larger and more complex than empirically rated appraisal variables. In addition to reflecting sophisticated latent Theory of Mind reasoning, computed appraisal variables (and the learned emotion concepts) are interpretable owing to the cognitive structure of the generative model.

(d) Comparing our model to human observers

In this paper, we compare our framework to human observers in three ways. First, we compare our model’s inverse planning against humans observing the same game. Second, we compare our model’s capacity to capture inferences of nuanced social emotions following outcomes of the games. Third, we test the model’s ability to adjust to personalizing information about individual players in these games.

The ‘Split or Steal’ game is socially rich, involving high-stakes social coordination, trust, betrayal, equity and public reputation. In the first set of experiments, we tested hypotheses about how observers reason about players’ action planning. Module (1) formalizes these hypotheses by inverting forward planning models to infer observers’ attributions of players’ preferences and beliefs. Empirical studies of social games make evident that players value not only money, but also how their actions will impact others [89–92]. In a one-shot social dilemma like the ‘Split or Steal’ game, an agent maximizing monetary payoff would always choose to defect [2]. By contrast, humans playing ‘Split or Steal’ chose to cooperate about half of the time [1], because they bring non-monetary social values into these games. We predicted that observers infer that players are motivated by social values, such as inequity aversion and reputational signalling; and observing a player’s choice to cooperate or defect leads people to update their understanding of the player’s preferences and beliefs. The results support these hypotheses, indicating that latent action planning representations are intuitive to observers and that the cognitive structure of the inverse planning model captures observers’ mental state inferences.
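The claim that a purely money-maximizing agent defects can be checked directly against the payoff rules described in the introduction. The sketch below, with hypothetical helper names, tests whether Steal weakly dominates Split: Steal is never worse and is strictly better when the other player splits. When the other player steals, both choices pay nothing, so the dominance is weak rather than strict.

```python
from typing import Tuple

ACTIONS: Tuple[str, str] = ("C", "D")  # C = Split (cooperate), D = Steal (defect)


def money(a1: str, a2: str, pot: float) -> float:
    """Player 1's monetary payoff in 'Split or Steal'."""
    table = {("C", "C"): pot / 2, ("D", "C"): pot, ("C", "D"): 0.0, ("D", "D"): 0.0}
    return table[(a1, a2)]


def weakly_dominates(a: str, b: str, pot: float) -> bool:
    """True if action a pays at least as much as b against every opponent action,
    and strictly more against at least one."""
    diffs = [money(a, a2, pot) - money(b, a2, pot) for a2 in ACTIONS]
    return all(d >= 0 for d in diffs) and any(d > 0 for d in diffs)


print(weakly_dominates("D", "C", 100_000))
```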

In the next set of experiments, we investigated the cognitive structure of emotion concepts. To sample the sophistication and breadth of observers’ intuitive reasoning about players in this game, we collected empirical predictions of 20 nuanced emotion labels, which we adapted for this task from prior work [54,68] (see the electronic supplementary methods §S1e). We hypothesized that capturing the empirical pattern of emotion predictions requires that a model represent observers’ intuitive reasoning about players’ decision-making and event appraisals. Module (2) formalizes hypotheses about how observers use representations of preferences and beliefs to reason about players’ event appraisals. Drawing from Appraisal Theories, we predicted that emotion judgements depend on specific computations (achieved utility, prediction error, counterfactuals) over inferred mental states. For example, emotions like joy/happy are functions of achieved utilities [4] and fury/rage are functions of prediction errors [68]. Emotions like disappointment depend on counterfactual reasoning about how events outside of a person’s direct control could have been different [93], and embarrassment/shame depend on counterfactual reasoning about how one’s own actions could have been different [94]. Module (3) learns transformations between computed appraisals and empirical emotion predictions. Lesions of these modules confirm that inverse planning is necessary to capture emotions that depend on inferences of why a player acted a certain way (not simply what events the player experienced), like guilt, and social appraisals are necessary to capture emotions that depend on interpersonal interactions, like embarrassment and envy.
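The three families of appraisal computations named above can be illustrated for a single player. This sketch is hypothetical and simplified: for brevity it assumes a utility that is linear in money and inequity (pot in thousands of dollars), fixed illustrative preference weights and a given belief that the other player will split. It defines prediction error as achieved minus expected utility, and each counterfactual as the utility gap to the outcome that would have followed had one player’s action been different. It is not the model’s exact set of computed appraisals.

```python
from typing import Dict, Tuple

OTHER = {"C": "D", "D": "C"}  # C = Split, D = Steal


def utility(a1: str, a2: str, pot: float, w_money: float, w_aia: float, w_dia: float) -> float:
    """Hypothetical linear base utility for player 1."""
    r1, r2 = {("C", "C"): (pot / 2, pot / 2), ("D", "C"): (pot, 0.0),
              ("C", "D"): (0.0, pot), ("D", "D"): (0.0, 0.0)}[(a1, a2)]
    return w_money * r1 - w_aia * max(r1 - r2, 0.0) - w_dia * max(r2 - r1, 0.0)


def appraisals(a1: str, a2: str, pot: float, weights: Tuple[float, float, float],
               p_other_splits: float) -> Dict[str, float]:
    """Achieved utility, prediction error and two counterfactual contrasts for outcome (a1, a2)."""
    achieved = utility(a1, a2, pot, *weights)
    # Utility the player expected before the reveal, given their belief about player 2.
    expected = (p_other_splits * utility(a1, "C", pot, *weights)
                + (1 - p_other_splits) * utility(a1, "D", pot, *weights))
    return {
        "achieved_utility": achieved,
        "prediction_error": achieved - expected,
        # Comparison with the outcome if the *other* player had chosen differently.
        "cf_other": achieved - utility(a1, OTHER[a2], pot, *weights),
        # Comparison with the outcome if the player had chosen differently.
        "cf_self": achieved - utility(OTHER[a1], a2, pot, *weights),
    }


# Arthur split, expecting Bella to split, but she stole a 100k pot.
print(appraisals("C", "D", 100.0, (1.0, 0.5, 0.5), p_other_splits=0.9))
```

Under these assumptions the betrayed cooperator gets a large negative prediction error and a negative counterfactual with respect to the other player’s choice, the kind of appraisal pattern the text links to disappointment.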

Finally, a key empirical phenomenon is that individual people may react differently to the same outcome.² Observers can use multiple sources of information to predict individual players’ emotions. Because our model links prior beliefs, intentional actions, appraisals of subsequent events and emotion concepts, new information (e.g. different priors) propagates through the causal structure to update connected representations. We provided observers with personalizing information about specific players and collected preference and belief attributions, as well as emotion predictions, for those individual players. Thus, our model can capture how observers update their emotion predictions based on personalizing information.

3. Inverse planning with social values

In our model of observers’ intuitive theory of emotion, inferred mental contents serve as the basis for computing appraisals and predicting emotions (figure 1). Module (1) implements a richly structured inverse planning model [15,17], which simulates how observers infer preferences and beliefs by reasoning over an intuitive theory of players’ minds. A forward model simulates what actions would be made by players with varying preferences and beliefs by formalizing causal relations between players’ mental contents and their behaviour. Bayesian model inversion then enables inference of the joint preferences and beliefs that a player was likely to have, given the player’s decision to cooperate or defect in the ‘Split or Steal’ game.

In the forward planning model, we incorporate social equity utilities that account for people’s actual decisions in social dilemmas [97,98]. Fehr & Schmidt [97] proposed that humans are motivated, to varying degrees, by two kinds of concerns for fairness in social interactions. Disadvantageous inequity aversion (DIA), a preference not to end up worse off than others, is a powerful and culturally conserved social preference [99,100]. In the context of ‘Split or Steal’, DIA is a preference not to be left with nothing while the other player steals the whole pot. In addition, Fehr and Schmidt observed that people’s choices reflect advantageous inequity aversion (AIA), a preference not to extract more than one’s fair share of a resource. In the context of ‘Split or Steal’, AIA is a preference not to steal the whole pot and leave the other player with nothing. Rational planning in the game thus maximizes utility over both non-social (monetary) and social (interpersonal inequity aversion) preferences, given expectations of the opponent’s choice.
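For reference, the two-player inequity-aversion utility of Fehr & Schmidt [97] is shown below in their own notation, where $x_1$ and $x_2$ are the players’ monetary payoffs, $\alpha_1$ weights player 1’s disadvantageous inequity and $\beta_1$ weights advantageous inequity. Applied to the four ‘Split or Steal’ outcomes with pot $P$ (player 1’s action listed first), each kind of aversion penalizes exactly one of the two unequal outcomes. The model described below additionally applies a nonlinear value function and a reference point.

```latex
U_1(x_1, x_2) = x_1 - \alpha_1 \max\{x_2 - x_1,\, 0\} - \beta_1 \max\{x_1 - x_2,\, 0\}

\begin{aligned}
\text{Split, Split:}\quad & U_1 = P/2 \\
\text{Steal, Split:}\quad & U_1 = P - \beta_1 P \\
\text{Split, Steal:}\quad & U_1 = -\alpha_1 P \\
\text{Steal, Steal:}\quad & U_1 = 0
\end{aligned}
```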

We hypothesize that observers have an intuitive grasp of players’ social and monetary values. After observing a player’s choice, observers update their estimate of the player’s monetary and social values, and expectations. We begin by simulating players in an anonymous version of ‘Split or Steal’, and then extend the forward model to the actual public game.

(a) Inverse planning in an anonymous game

We first simulated how players privately decide whether to cooperate (C) or defect (D) in an anonymous version of ‘Split or Steal’. In this AnonymousGame model, players have preferences exclusively for ‘base’ features, i.e. variables that can be objectively calculated from the situation and do not require mental state inference. We use the utility parametrization of Fehr & Schmidt [97] as our base features: player 1’s total monetary reward ($\mathit{Money}$), how much more player 1 received than player 2 (advantageous inequity, $\mathit{AIA}$), and how much more player 2 received than player 1 (disadvantageous inequity, $\mathit{DIA}$); see the electronic supplementary material, figure S2.

Payoffs to the players are determined by the action of player 1 ($a_1$), the action of player 2 ($a_2$) and the amount of money in the jackpot ($\mathit{pot}$). Thus, the outcome of a game is represented by the tuple $(a_1, a_2, \mathit{pot})$, and the base features are deterministic functions of this tuple.
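The mapping from an outcome (player 1’s action, player 2’s action, pot) to the three base features can be sketched as a small deterministic function. In this minimal sketch the names `payoffs`, `base_features` and `BaseFeatures` are hypothetical, ‘C’ stands for Split and ‘D’ for Steal, and the payoff rules are those described in the introduction.

```python
from typing import NamedTuple, Tuple


class BaseFeatures(NamedTuple):
    money: float  # player 1's monetary reward
    aia: float    # advantageous inequity: how much more player 1 got than player 2
    dia: float    # disadvantageous inequity: how much more player 2 got than player 1


def payoffs(a1: str, a2: str, pot: float) -> Tuple[float, float]:
    """(player 1 reward, player 2 reward); 'C' = Split (cooperate), 'D' = Steal (defect)."""
    if a1 not in ("C", "D") or a2 not in ("C", "D"):
        raise ValueError("actions must be 'C' or 'D'")
    if a1 == "C" and a2 == "C":
        return pot / 2, pot / 2  # both split: share the pot
    if a1 == "D" and a2 == "C":
        return pot, 0.0          # player 1 steals the whole pot
    if a1 == "C" and a2 == "D":
        return 0.0, pot          # player 2 steals the whole pot
    return 0.0, 0.0              # both steal: nobody gets anything


def base_features(a1: str, a2: str, pot: float) -> BaseFeatures:
    """Deterministic base features of the outcome tuple."""
    r1, r2 = payoffs(a1, a2, pot)
    return BaseFeatures(money=r1, aia=max(r1 - r2, 0.0), dia=max(r2 - r1, 0.0))


for a1 in "CD":
    for a2 in "CD":
        print(a1, a2, base_features(a1, a2, 100_000))
```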

We simulate player 1’s decision making as approximately rational subjective utility maximization over these base features. Simulated players are endowed with preferences and beliefs, which are randomly sampled from an empirically fit prior (see BasePrior in the electronic supplementary methods §S1j). Continuous preference weights ($\omega_{b,\mathit{Money}}$, $\omega_{b,\mathit{AIA}}$, $\omega_{b,\mathit{DIA}}$) modulate the subjective utility that player 1 derives from the base features. A weighted expectation about what choice the opposing player will make ($\pi_{a_2}$) models player 1’s latent belief about $a_2$. A prior belief about the expected value of the game ($\kappa$) models how much money player 1 expected to win before the player learned how much money was actually in the pot.

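A simulated player calculates the expected utilities of the action choices ($a_1 \in \{C, D\}$) based on its preferences and beliefs:

$$\mathbb{E}[U_b(a_1)] = \sum_{a_2 \in \{C, D\}} \pi_{a_2} \Big[\omega_{b,\mathit{Money}}\, V(\mathit{Money} - \kappa) - \omega_{b,\mathit{AIA}}\, V(\mathit{AIA}) - \omega_{b,\mathit{DIA}}\, V(\mathit{DIA})\Big] \tag{3.1}$$

where the base features are evaluated at the outcome $(a_1, a_2, \mathit{pot})$.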

The negative signs associated with $\mathit{AIA}$ and $\mathit{DIA}$ indicate that players seek to minimize inequity; thus $\omega_{b,\mathit{AIA}}$ and $\omega_{b,\mathit{DIA}}$ reflect a player’s advantageous and disadvantageous inequity aversion. The value function $V$ and the reference point $\kappa$ reflect insights from prospect theory and the study of how people value uncertain rewards [101] (for details of implementation, see the electronic supplementary methods §S1f). Because pot sizes in the ‘Split or Steal’ game can range from 1 USD to over 100 000 USD, we adjust the utilities to reflect people’s nonlinear valuation of rewards. The value function $V$ applies a transformation commonly used in behavioural economics to account for people’s diminishing marginal utility [102,103]. For our purposes, $V$ amounts to a sign-adjusted logarithm that treats gains and losses symmetrically. Because players on the ‘Split or Steal’ gameshow, and observers in our studies, know the range of pot sizes before the game begins, we model players as having an expectation about how much they would win. The reference point $\kappa$ adjusts monetary utility relative to how much a simulated player expected to win before learning the pot size. Rewards that fall short of the reference point are perceived as negative utilities.
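A minimal sketch of the value function and reference point follows. The exact form of the sign-adjusted logarithm is an assumption here, V(x) = sign(x) · log(1 + |x|), chosen because it is defined at zero and is symmetric for gains and losses; the supplementary methods specify the form actually used. The function names are hypothetical.

```python
import math


def value(x: float) -> float:
    """Assumed sign-adjusted logarithm: V(x) = sign(x) * log(1 + |x|), so V(0) = 0."""
    return math.copysign(math.log1p(abs(x)), x)


def monetary_utility(money: float, kappa: float) -> float:
    """Value of a reward relative to the reference point kappa (what the player expected to win)."""
    return value(money - kappa)


print(monetary_utility(50_000, 20_000))  # more than expected: positive utility
print(monetary_utility(5_000, 20_000))   # short of the reference point: negative utility
```

The logarithm compresses large amounts, so an extra dollar matters far less on top of 100 000 than on top of nothing, which is the diminishing marginal utility the text describes.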

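To calculate the expected utility of an action ($a_1$), simulated players integrate subjective utility over the opposing player’s possible actions ($a_2 \in \{C, D\}$). This involves scaling the subjective utility of an outcome, $U_b(a_1, a_2, \mathit{pot})$, by player 1’s belief that player 2 will choose $a_2$ ($\pi_{a_2}$). The expected utility of a simulated player choosing action $a_1$ is $\mathbb{E}[U_b(a_1)] = \sum_{a_2} \pi_{a_2}\, U_b(a_1, a_2, \mathit{pot})$. Decision making follows probabilistically by sampling from the softmax of expected utility: $P(a_1) \propto \exp\big(\beta\, \mathbb{E}[U_b(a_1)]\big)$.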
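The expected-utility and softmax steps can be sketched end to end as below. This is an illustrative sketch under stated assumptions, not the authors’ implementation: it reuses the assumed sign-adjusted logarithm, applies it to all three base features, represents the belief as a single probability that player 2 splits (`p_split`), and fixes the softmax parameter `beta` rather than marginalizing over it. All names are hypothetical.

```python
import math
from typing import Dict, Tuple

ACTIONS = ("C", "D")  # C = Split (cooperate), D = Steal (defect)


def base_features(a1: str, a2: str, pot: float) -> Tuple[float, float, float]:
    """(money, advantageous inequity, disadvantageous inequity) for player 1."""
    r1, r2 = {("C", "C"): (pot / 2, pot / 2), ("D", "C"): (pot, 0.0),
              ("C", "D"): (0.0, pot), ("D", "D"): (0.0, 0.0)}[(a1, a2)]
    return r1, max(r1 - r2, 0.0), max(r2 - r1, 0.0)


def value(x: float) -> float:
    """Assumed sign-adjusted logarithm V(x)."""
    return math.copysign(math.log1p(abs(x)), x)


def subjective_utility(a1, a2, pot, w_money, w_aia, w_dia, kappa):
    """U_b(a1, a2, pot): valued money relative to kappa, minus valued inequity."""
    money, aia, dia = base_features(a1, a2, pot)
    return w_money * value(money - kappa) - w_aia * value(aia) - w_dia * value(dia)


def expected_utility(a1, pot, prefs, p_split, kappa):
    """E[U_b(a1)]: subjective utility averaged over player 2's actions under player 1's belief."""
    belief = {"C": p_split, "D": 1.0 - p_split}
    return sum(belief[a2] * subjective_utility(a1, a2, pot, *prefs, kappa) for a2 in ACTIONS)


def choice_probs(pot, prefs, p_split, kappa, beta=1.0) -> Dict[str, float]:
    """Softmax policy: P(a1) is proportional to exp(beta * E[U_b(a1)])."""
    eu = {a1: expected_utility(a1, pot, prefs, p_split, kappa) for a1 in ACTIONS}
    top = max(eu.values())  # subtract the maximum for numerical stability
    weights = {a: math.exp(beta * (u - top)) for a, u in eu.items()}
    total = sum(weights.values())
    return {a: w / total for a, w in weights.items()}


# A player who cares only about money versus one who strongly dislikes advantageous inequity.
print(choice_probs(100_000, (1.0, 0.0, 0.0), p_split=0.5, kappa=0.0))
print(choice_probs(100_000, (1.0, 5.0, 0.0), p_split=0.5, kappa=0.0))
```

Under these assumptions the money-only player leans towards Steal, while the strongly inequity-averse player almost always Splits.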

The softmax function is a standard decision policy for modelling an agent’s planning and decision-making in uncertain environments [104] and for observers’ reasoning about others’ noisy choices [15,16,36,38,40]. The inverse-temperature parameter $\beta$ determines how rationally versus noisily decisions reflect differences between the expected utilities of the choices a simulated player considers. We marginalize over $\beta$ because we did not collect empirical attributions of it or fit its prior.

The AnonymousGame forward planning model can be inverted to infer observers’ attributions of mental contents to players in an anonymous version of the ‘Split or Steal’ game (see the electronic supplementary material, figure S3 for empirical attributions and model inference of preference and belief weights). However, as a model of the ‘Split or Steal’ game, the AnonymousGame model is incomplete. To serve as a basis for emotion prediction for the public game, we needed to introduce a salient aspect of the Golden Balls gameshow: the audience.
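Inverting the forward model amounts to Bayes’ rule over candidate mental states. The hypothetical sketch below places a uniform prior on a small grid of advantageous-inequity weights and beliefs (holding the money weight at 1, the disadvantageous-inequity weight at 0, the reference point at 0 and the softmax parameter at 1), scores each hypothesis by the forward model’s probability of the observed action, and normalizes. The grid values are illustrative, not the empirically fit prior.

```python
import itertools
import math
from typing import Dict, List, Tuple

Hypothesis = Tuple[float, float]  # (advantageous-inequity weight, belief that player 2 splits)


def base_features(a1: str, a2: str, pot: float) -> Tuple[float, float, float]:
    """(money, advantageous inequity, disadvantageous inequity); 'C' = Split, 'D' = Steal."""
    r1, r2 = {("C", "C"): (pot / 2, pot / 2), ("D", "C"): (pot, 0.0),
              ("C", "D"): (0.0, pot), ("D", "D"): (0.0, 0.0)}[(a1, a2)]
    return r1, max(r1 - r2, 0.0), max(r2 - r1, 0.0)


def value(x: float) -> float:
    """Assumed sign-adjusted logarithm V(x)."""
    return math.copysign(math.log1p(abs(x)), x)


def p_split(pot: float, w_aia: float, belief: float, beta: float = 1.0) -> float:
    """Forward model: softmax probability that player 1 chooses Split."""
    def eu(a1: str) -> float:
        total = 0.0
        for a2, p in (("C", belief), ("D", 1.0 - belief)):
            money, aia, _ = base_features(a1, a2, pot)
            total += p * (value(money) - w_aia * value(aia))
        return total
    return 1.0 / (1.0 + math.exp(-beta * (eu("C") - eu("D"))))


def posterior(action: str, pot: float, grid: List[Hypothesis]) -> Dict[Hypothesis, float]:
    """Inverse planning: P(h | action) is proportional to P(action | h), uniform prior over grid."""
    likelihood = {}
    for w_aia, belief in grid:
        ps = p_split(pot, w_aia, belief)
        likelihood[(w_aia, belief)] = ps if action == "C" else 1.0 - ps
    z = sum(likelihood.values())
    return {h: lik / z for h, lik in likelihood.items()}


def mean_aia(post: Dict[Hypothesis, float]) -> float:
    """Posterior mean of the advantageous-inequity weight."""
    return sum(h[0] * p for h, p in post.items())


GRID = list(itertools.product([0.0, 0.5, 1.0, 2.0], [0.1, 0.5, 0.9]))
print(mean_aia(posterior("C", 100_000, GRID)), mean_aia(posterior("D", 100_000, GRID)))
```

Because Split becomes more likely as the advantageous-inequity weight grows, observing Split moves the posterior mean above the prior mean (0.875 on this grid) and observing Steal moves it below.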

(b) Second-order preferences: players’ motives for reputation

The AnonymousGame model is missing a critical element of social strategy games: players’ motive to enhance their reputation. For example, Arthur may choose to cooperate primarily to signal his cooperativeness to future social partners. We hypothesize that human observers can infer such second-order preferences, and that inferences about the motive to enhance one’s reputation underlie prediction of key social emotions, like embarrassment and pride [105]. We therefore extended the generative model of decision planning in an anonymous game to include players’ reputation concerns.

A standard way to incorporate reputation concerns would be to add utility variables that define the reputational consequences players expect from their actions. Unlike the base features, however, reputation signals cannot be computed objectively and directly from the events, and must therefore be specified for each situation and action. We follow a more cognitively natural strategy, whereby players apply their own Theory of Mind to anticipate how others will evaluate them [36]. To choose an action that is reputation enhancing, players must first infer how their behaviour will be interpreted by others. This requires an embedded inference loop. We model a simulated player’s expected reputation as the inferences a rational observer would make about the weights of the player’s base utility function. The inferred base weights are themselves weighted and treated as ‘second-order’ utilities. Thus, a simulated player’s expected reputation reflects the player’s belief, and preference, about what other people will think the player’s values are.

In the PublicGame model, we introduce a reputation utility for each base feature. The expected reputational consequences of a player’s action are weighted by randomly sampled preferences $\omega^{\text{repu}}$, which model how much the player cares about other people’s beliefs. The expected utility of an action is the sum of the expected base utilities and the expected reputation utilities:

$$\mathbb{E}[U(a_1)] = \mathbb{E}[U_{\text{base}}(a_1)] + \sum_{k} \omega^{\text{repu}}_k\, U^{\text{repu}}_k\big(\mathbb{E}[\hat{\omega}^{\text{base}}_k \mid a_1],\ \text{pot}\big) \tag{3.2}$$

where $\mathbb{E}[\hat{\omega}^{\text{base}}_k \mid a_1]$ is a simulated player’s expectation of what other people would infer about the player’s base preference $k$ (Money, AIA or DIA) if the player chooses $a_1$. The expected base utility of an action, $\mathbb{E}[U_{\text{base}}(a_1)]$, is the same as in the AnonymousGame model, equation (3.1). The sign on each reputation utility is opposite that of the corresponding base utility, reflecting the hypothesis that observers believe that players desire to be seen as motivated to improve equality, and not motivated to selfishly maximize their own monetary payoffs. We assume that reputation utilities are functions of the pot size (the higher the stakes of a decision, the more consequential the reputation signals).
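To make equation (3.2) concrete, the sketch below adds reputation utilities to an expected base utility. For illustration only, it assumes that each reputation utility is the observers’ expected inference about a base weight, multiplied by a sign opposite to the base utility’s sign and by the pot size (a linear stakes scaling). The inferred weights in `INFERRED` are hypothetical numbers standing in for the output of the embedded inference loop, not model results, and the function names are hypothetical.

```python
# Hypothetical sign convention: base Money utility is positive and AIA/DIA are aversions,
# so players want to be seen as low on Money and high on AIA and DIA.
REPUTATION_SIGN = {"money": -1.0, "aia": 1.0, "dia": 1.0}


def reputation_utility(action, pot, repu_weights, inferred_base):
    """Sum over features of: preference x sign x pot (assumed stakes scaling) x expected inference."""
    return sum(
        repu_weights[k] * REPUTATION_SIGN[k] * pot * inferred_base[action][k]
        for k in REPUTATION_SIGN
    )


def public_expected_utility(action, pot, base_eu, repu_weights, inferred_base):
    """Sketch of equation (3.2): expected base utility plus reputation utilities."""
    return base_eu[action] + reputation_utility(action, pot, repu_weights, inferred_base)


# Hypothetical observer inferences E[w_base_k | action] for a Split (C) or a Steal (D).
INFERRED = {
    "C": {"money": 0.3, "aia": 0.6, "dia": 0.4},
    "D": {"money": 0.7, "aia": 0.2, "dia": 0.6},
}

# A player who only cares about looking fair (AIA reputation) gains more from Splitting.
looks_fair = {"money": 0.0, "aia": 1.0, "dia": 0.0}
print({a: reputation_utility(a, 1.0, looks_fair, INFERRED) for a in "CD"})
```

Swapping `looks_fair` for a player who only wants to look tough (weight on the DIA reputation) reverses the ordering, in line with the qualitative attributions described in §3c.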

A simulated player calculates the expected utility of an action, $\mathbb{E}[U(a_1)]$, based on randomly sampled base preferences $\omega^{\text{base}}$, reputation preferences $\omega^{\text{repu}}$, the belief $\pi_{a_2}$ about which action the opponent is likely to choose, a prior belief about the expected value of playing the game, and beliefs about the inferences other people will make about the player’s base preferences, $\mathbb{E}[\hat{\omega}^{\text{base}} \mid a_1]$. Decision making follows probabilistically by sampling from the softmax of expected utility:

$$P(a_1 \mid \omega^{\text{base}}, \omega^{\text{repu}}, \pi_{a_2}) = \frac{\exp\big(\beta\, \mathbb{E}[U(a_1)]\big)}{\sum_{a_1'} \exp\big(\beta\, \mathbb{E}[U(a_1')]\big)} \tag{3.3}$$

Preferences, and the belief about the opponent’s action, are sampled from an empirical prior (see GenericPrior in the electronic supplementary methods §S1j).

The purpose of building a generative model that simulates how players with varying preferences and beliefs make decisions in the ‘Split or Steal’ game is to capture observers’ latent reasoning about players’ intentional actions, namely: what were a player’s mental contents, given that the player chose to cooperate or chose to defect? Since the forward model of decision making is invertible, it supports inverse inference of a player’s preference and belief weights. We invert the PublicGame forward model using Bayes’s rule:

$$P(\omega, \pi_{a_2} \mid a_1) \propto P(a_1 \mid \omega, \pi_{a_2})\, P(\omega, \pi_{a_2}) \tag{3.4}$$

where $\omega = (\omega^{\text{base}}, \omega^{\text{repu}})$ is the six-element vector of base and reputation preference weights, and $P(\omega, \pi_{a_2} \mid a_1)$ is the joint posterior distribution over preference and belief weights conditional on player 1’s action.
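Equation (3.4) can be approximated by likelihood weighting: draw preference and belief samples from the prior, weight each by the probability that it would produce the observed action, and normalize. The sketch below is a simplified stand-in rather than the PublicGame inversion itself. It assumes base utilities only (no embedded reputation loop), uniform priors instead of the empirical GenericPrior, a fixed β, payoffs scaled to a unit pot, and linear Money/AIA/DIA utilities; `p_choice` and `posterior_means` are hypothetical names.

```python
import math
import random

KEYS = ("money", "aia", "dia", "belief_c")


def p_choice(a1, w_money, w_aia, w_dia, belief_c, beta):
    """Two-action softmax (a logistic) probability of a1, with a unit pot."""
    # Expected utilities from the Split-or-Steal payoffs with pot = 1:
    # CC gives 0.5 each, CD leaves player 1 behind by 1, DC puts player 1 ahead by 1.
    eu_c = belief_c * 0.5 * w_money - (1.0 - belief_c) * w_dia
    eu_d = belief_c * (w_money - w_aia)
    diff = eu_c - eu_d if a1 == "C" else eu_d - eu_c
    return 1.0 / (1.0 + math.exp(-beta * diff))


def posterior_means(a1, beta=5.0, n=20000, seed=0):
    """Posterior expectation of each weight given player 1's action (likelihood weighting)."""
    rng = random.Random(seed)
    totals = dict.fromkeys(KEYS, 0.0)
    norm = 0.0
    for _ in range(n):
        sample = [rng.random() for _ in KEYS]  # uniform prior on [0, 1] (assumption)
        weight = p_choice(a1, *sample, beta=beta)  # likelihood of the observed action
        norm += weight
        for key, value in zip(KEYS, sample):
            totals[key] += weight * value
    return {key: total / norm for key, total in totals.items()}


after_split = posterior_means("C")
after_steal = posterior_means("D")
for key in KEYS:
    print(key, round(after_split[key], 3), round(after_steal[key], 3))
```

In this toy setting, a Split shifts the weighted means towards higher AIA and a stronger expectation of cooperation, and towards lower Money and DIA weights, which are the same directions as the inferences described in §3c. The magnitudes depend entirely on the assumed priors and β.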

(c) Comparison to human observers

The first goal of our model is to capture the inferences observers make about players’ preferences and beliefs. We therefore tested whether human observers systematically infer the players’ values and expectations from observing a single choice, and whether we could capture these inferences by inverting our generative model of players’ behaviour. We presented Amazon mTurk participants with scenarios depicting one player’s decision to cooperate or defect on the ‘Split or Steal’ gameshow. These scenarios were synthesized from the range of possible pots and decisions, rather than reflecting any actual recorded episode. The observers judged players’ base and reputation preferences, and belief about the other player’s intended decision (see electronic supplementary methods §S1a for details of the data collection).

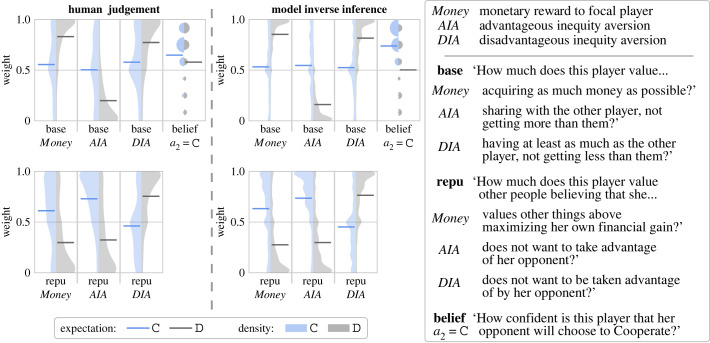

We found that human observers readily and consistently inferred the psychological features from under-specified input. Observers’ judgements and the inverse inference of the PublicGame model are shown in figure 2. Human observers and the model made very similar inferences from the same observed actions. For example, observers inferred that, if Arthur (player 1) cooperated, he was likely to be less motivated by acquiring as much money as possible, more averse to gaining an unequal and superior outcome, and less averse to receiving an inferior outcome than if he had defected. If Arthur cooperated, he was also judged to care more about people believing that he values other things above maximizing his own financial gain and that he does not want to take advantage of his opponent. If he defected, he was judged to care more about people believing that he is a strong competitor and not one easily taken advantage of.

Figure 2.

Inverse planning. Human observers were shown a player’s decision to cooperate (C) or defect (D) and judged the player’s likely preferences and belief. Model inversion yields a joint inference of the mental contents conditional on the player’s action. Preference weights take continuous values between zero and one. Player 1’s belief about what player 2 will choose was rated on a 6-point confidence scale. Expectation shows the mean weight of each marginal distribution conditional on the player’s action.

In sum, the PublicGame forward planning model can generate plausible player choices, and can be inverted to make inferences about players’ values from the observation of a single choice. Observers make similar systematic inferences of players’ values from the same observation. Both observers and our model successfully resolve an ill-posed inverse problem: recovering the latent mental contents that motivated players’ behaviour.

As a generative model of decision making, the PublicGame model is much richer than is necessary to predict players’ choices in a Prisoner’s Dilemma, which can be captured by extremely simple models [106]. Nevertheless, our interest is in what observers infer about the latent mental contents that underlie the decisions made by others. For this purpose, the richer model better captures the inferences human observers make and supports the subsequent inference of players’ fine-grained reactions. Most importantly for our current purposes, we expect that this richness is necessary to capture the predictions that observers make about players’ emotions.

4. Computed appraisals

Through the successive inversion of increasingly rich generative models of behaviour, we have built an inverse planning model that uses a player’s choice in a social game to infer the joint posterior probability of the player’s selfish, social and reputational preferences, and belief about the opposing player’s intended action. The preferences and beliefs inferred by Module (1) serve as the basis for computing appraisals in Module (2) (figure 1b). Computed appraisals are latent Theory of Mind representations: how players evaluate a new world state (the outcome of the game) caused in part by an event outside of the player’s intentional control (the opponent’s action) given the player’s mental contents.

As the cognitive latent space for predicting emotions, computed appraisals need to represent the computational structure of observers’ emotion concepts. Drawing on prior work, we define primitive appraisal computations, which are applied over inverse planning representations. We implement four types of appraisal computations based on achieved utility (AU), utility prediction error (PE) and counterfactual utilities with respect to the actions of player 1 and player 2 ($\mathrm{CF}_{a_1}$ and $\mathrm{CF}_{a_2}$, respectively). The rest of this section explains how these appraisals work in high-level terms; formal definitions are given in the electronic supplementary methods §S1i.

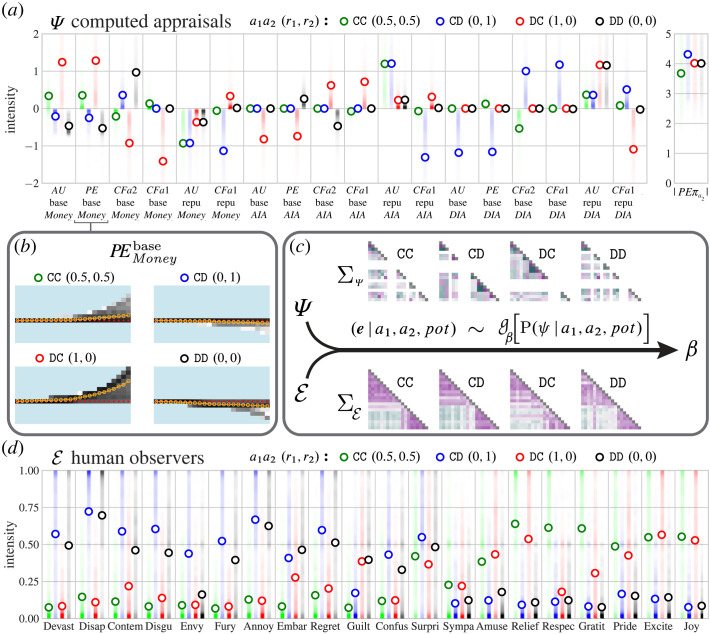

In the PublicGame model, players make decisions by calculating the expected utility of their actions. When the opponent’s action is revealed and the outcome of the game is known, simulated players learn the utilities they achieved (AU) and the error signals between these outcomes and what they expected (PE). The same mental contents that led a simulated player to choose an action can be used to compute the utilities that would have been achieved if the player had made a different choice ($\mathrm{CF}_{a_1}$) or if the opponent had made a different choice ($\mathrm{CF}_{a_2}$). We leverage these rich mental state representations to compute appraisals over monetary, social and reputational representations, incorporating the simulated player’s beliefs about what was going to happen, what did and did not happen, and how the player and the opponent could have changed what happened. Figure 3a shows how the events of the ‘Split or Steal’ game load onto the computed appraisals.

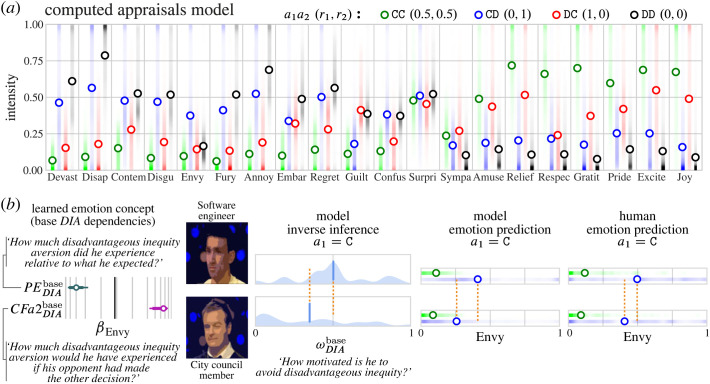

Figure 3.

Generative structure. (a) Expectations and densities of the normalized computed appraisals, shown as a matrix of 19-dimensional appraisal vectors. The legend gives the relative payoffs to the players, e.g. for the case in which player 1 Cooperated and won nothing because player 2 Defected and won the whole pot. Colour indicates the outcome of the games. (b) Example of a computed appraisal’s relationship to pot size. The x-axis shows the 24 pot sizes (non-parametric scaling), the y-axis shows the loading on simulated players’ monetary utility prediction error, and colour indicates density. (c) We learn a function that transforms computed appraisals into emotion predictions by scoring the empirical emotion vectors predicted for the GenericPlayers from the joint posterior over computed appraisals. To learn the transformation parameters, we leverage the expectations, as well as the hierarchical covariance structure of computed appraisals and of empirical emotion attributions. The result is a sparse weights matrix. Expectations are shown in (a,d). Correlation matrices shown in (c) give the within-stimulus correlation (averaged within outcome) between computed appraisals (top) and between empirical emotion predictions (bottom). (d) Emotion predictions for the GenericPlayers, shown as a matrix of 20-dimensional emotion prediction vectors. Circles show the expected intensity for each outcome, summing over pot sizes and the eight photos. Shading shows the density of judgements. Each expectation (and associated distribution) reflects observers’ judgements.

Computed appraisals follow a nomenclature similar to that of the utilities in §3: they are defined in terms of ‘Money’, ‘AIA’ and ‘DIA’ utility terms, over both first-order (base) and second-order (reputation) preferences. To illustrate more concretely how our four types of appraisals are defined, consider just the base monetary reward. During planning, a player computes the base monetary utility they expect from an action. When the player learns the opponent’s decision (and correspondingly, the outcome of the game), the same mental contents that led to the player’s choice determine the subjective utility that the player achieves. Thus, the achieved utility for base monetary utility reflects the simulated player’s subjective valuation of the event, based on the player’s base monetary preference and how much money the event confers.

Prediction error (or ‘expectation violation’) is the discrepancy between what was expected and what occurred. Extensive research shows that prediction error is a fundamental computation both in first-person emotion experience [107–113] and in third-person emotion understanding [13,114], for emotions like surprise, disappointment and fury/rage [4,68,115]. For a simulated player’s base utility terms, we compute prediction error (PE) as the difference between the achieved utility and the expected utility. In addition, we use a player’s weighted belief to compute the absolute prediction error of the opponent’s action, that is, how unexpected the opponent’s behaviour was.
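A sketch of achieved utility and prediction error for the three base features follows. It assumes linear Money/AIA/DIA utilities (rewarding money, penalizing inequity), payoffs scaled to a unit pot, and an opponent-action error of the form |1[a₂ = C] − belief|. The exact definitions are in the electronic supplementary methods §S1i, so the formula and the helper names (`feature_utilities`, `prediction_errors`) are assumptions.

```python
def payoffs(a1, a2, pot=1.0):
    """(player 1, player 2) payoffs for actions 'C' (Split) and 'D' (Steal)."""
    table = {("C", "C"): (pot / 2, pot / 2), ("C", "D"): (0.0, pot),
             ("D", "C"): (pot, 0.0), ("D", "D"): (0.0, 0.0)}
    return table[(a1, a2)]


def feature_utilities(a1, a2, w, pot=1.0):
    """Per-feature subjective utilities for player 1 (assumed linear forms)."""
    own, other = payoffs(a1, a2, pot)
    return {
        "money": w["money"] * own,
        "aia": -w["aia"] * max(own - other, 0.0),  # aversion to being ahead
        "dia": -w["dia"] * max(other - own, 0.0),  # aversion to being behind
    }


def prediction_errors(a1, a2, w, belief_c, pot=1.0):
    """Achieved utility (AU) and prediction error (PE = AU - expected utility) per feature."""
    achieved = feature_utilities(a1, a2, w, pot)
    if_c = feature_utilities(a1, "C", w, pot)
    if_d = feature_utilities(a1, "D", w, pot)
    pe = {k: achieved[k] - (belief_c * if_c[k] + (1.0 - belief_c) * if_d[k]) for k in achieved}
    # How unexpected the opponent's action was (assumed form).
    pe["opponent_action"] = abs((1.0 if a2 == "C" else 0.0) - belief_c)
    return achieved, pe


# Player 1 Split while fairly confident of a Split, but player 2 Stole (a CD outcome).
weights = {"money": 1.0, "aia": 1.0, "dia": 1.0}
au, pe = prediction_errors("C", "D", weights, belief_c=0.8)
print(au)
print(pe)
```

In this CD example, the monetary and DIA prediction errors are both negative: the player ends up with less money, and further behind, than expected. The opponent-action error is large because defection was judged unlikely.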

Counterfactuals involve mental simulations of alternative realities, and are central to causal reasoning. For our current purposes, a counterfactual compares what could have happened with what did happen. Counterfactual judgements are a fundamental computation in first-person experience [19,116–119] and in third-person emotion understanding [4,63,120–123]. Reasoning about counterfactual events outside of a person’s direct control is implicated in emotions like disappointment and relief, while reasoning about how a person could have changed the situation by choosing a counterfactual action is implicated in emotions like embarrassment, regret and guilt [93,94,124,125].

We compute counterfactual contrasts based on the action not chosen by each player. For the opponent’s action counterfactual ($\mathrm{CF}_{a_2}$), which a simulated player cannot directly control, the contrast between the counterfactual utility and the achieved utility is weighted by the simulated player’s belief that the opponent would make the other choice, $\bar{a}_2$: $\mathrm{CF}_{a_2} = \pi_{\bar{a}_2}\,\big[U(a_1, \bar{a}_2) - U(a_1, a_2)\big]$. For the simulated player’s action counterfactual ($\mathrm{CF}_{a_1}$), the contrast is weighted by the probability that the player would have made the other choice, $\bar{a}_1$, given the player’s preferences and updated belief about the opponent’s action³: $\mathrm{CF}_{a_1} = P(\bar{a}_1)\,\big[U(\bar{a}_1, a_2) - U(a_1, a_2)\big]$, where $P(\bar{a}_1)$ denotes that probability.
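The two belief-weighted counterfactual contrasts can be sketched per utility feature as follows. Assumptions: linear Money/AIA/DIA utilities on a unit pot, a fixed β, and, because the footnote defining the updated belief is not reproduced here, the simplifying guess that after the reveal the player is certain of the opponent’s actual action. All names are hypothetical.

```python
import math

OTHER = {"C": "D", "D": "C"}


def payoffs(a1, a2, pot=1.0):
    """(player 1, player 2) payoffs for actions 'C' (Split) and 'D' (Steal)."""
    table = {("C", "C"): (pot / 2, pot / 2), ("C", "D"): (0.0, pot),
             ("D", "C"): (pot, 0.0), ("D", "D"): (0.0, 0.0)}
    return table[(a1, a2)]


def feature_utilities(a1, a2, w, pot=1.0):
    """Per-feature subjective utilities for player 1 (assumed linear forms)."""
    own, other = payoffs(a1, a2, pot)
    return {"money": w["money"] * own,
            "aia": -w["aia"] * max(own - other, 0.0),
            "dia": -w["dia"] * max(other - own, 0.0)}


def choice_prob(a1, w, belief_c, beta, pot=1.0):
    """Two-action softmax probability of a1 over total expected utility."""
    def eu(action):
        u_if_c = feature_utilities(action, "C", w, pot).values()
        u_if_d = feature_utilities(action, "D", w, pot).values()
        return sum(belief_c * uc + (1.0 - belief_c) * ud for uc, ud in zip(u_if_c, u_if_d))
    return 1.0 / (1.0 + math.exp(-beta * (eu(a1) - eu(OTHER[a1]))))


def counterfactuals(a1, a2, w, belief_c, beta=5.0, pot=1.0):
    """Weighted contrasts (counterfactual minus achieved utility) for each feature."""
    achieved = feature_utilities(a1, a2, w, pot)
    # Opponent counterfactual: weighted by the belief that player 2 would choose otherwise.
    p_alt_a2 = belief_c if OTHER[a2] == "C" else 1.0 - belief_c
    alt_a2 = feature_utilities(a1, OTHER[a2], w, pot)
    cf_a2 = {k: p_alt_a2 * (alt_a2[k] - achieved[k]) for k in achieved}
    # Own counterfactual: weighted by the chance of choosing otherwise under the updated belief.
    updated_belief_c = 1.0 if a2 == "C" else 0.0  # assumption: opponent's action now known
    p_alt_a1 = choice_prob(OTHER[a1], w, updated_belief_c, beta, pot)
    alt_a1 = feature_utilities(OTHER[a1], a2, w, pot)
    cf_a1 = {k: p_alt_a1 * (alt_a1[k] - achieved[k]) for k in achieved}
    return cf_a1, cf_a2


cf_a1, cf_a2 = counterfactuals("C", "D", {"money": 1.0, "aia": 0.0, "dia": 1.0}, belief_c=0.8)
print(cf_a1)
print(cf_a2)
```

In this CD example, `cf_a2["dia"]` is positive: had player 2 also split, player 1 would not have been behind. This is the kind of positive DIA counterfactual that the figure 5 caption links to greater envy.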

In sum, the machinery of inverse planning makes it feasible to compute probabilistic representations of players’ appraisals. The latent space of computed appraisals reflects sophisticated causal reasoning about recursive social preferences and beliefs. We now leverage these abstract Theory of Mind representations to reverse-engineer the cognitive structure of observers’ emotion concepts. In effect, we learn a Computational Appraisal Theory directly from observers’ emotion judgements.

5. Emotion predictions

We now return to the challenge that we began with: testing whether our computational model can capture the conceptual knowledge and intuitive reasoning that underlie human observers’ emotion predictions.

(a) Human observers’ emotion predictions

We collected human observers’ predictions of the emotions players would experience when the outcome was revealed in ‘Split or Steal’ games. We collected two datasets from online participants. The training data were used to learn a transformation between the latent space of computed appraisals and the emotion predictions, which was then used to predict emotions for the test data. In the test data, observers were presented with specific information about each focal player. Collection of these data, referred to as the SpecificPlayers, is described in §6.

In the training data (GenericPlayers), observers were briefed on the structure of the ‘Split or Steal’ game and watched a video taken from the show in which the presenter explains the rules and two players negotiate, each trying to convince the other to choose ‘Split’ (cheap talk negotiation). The introductory video ends before the players reveal their choices. Observers completed eight trials, in which they saw a photograph of the focal player (designated player 1), a pot size (ranging from $2 to $207 365 USD), and the actions chosen by both players in that game. Observers saw two games for each category of payoff: CC, where both players cooperated and each won half; CD, where player 1 cooperated and received nothing; DC, where player 1 defected and took everything; and DD, where both players defected and both got nothing. Observers then predicted how much player 1 would experience each of 20 different emotions: Devastation, Disappointment, Contempt, Disgust, Envy, Fury, Annoyance, Embarrassment, Regret, Guilt, Confusion, Surprise, Sympathy, Amusement, Relief, Respect, Gratitude, Pride, Excitement and Joy.

To learn a transformation between the model and human emotion judgements, we make use of the rich structure present in the observers’ emotion predictions. The GenericPlayers data (figure 3d) illustrate that, even from sparse event depictions, human observers made systematically different emotion predictions for players in the four different outcome categories. At the coarsest qualitative level, observers predicted that players who won money (CC and DC outcomes) would experience more positive emotions and players leaving with nothing (CD and DD outcomes) would experience more negative emotions (figure 3d). However, observers’ emotion predictions do not just reflect the monetary outcomes of the game. For example, when player 2 defects, player 1 necessarily receives no monetary reward, yet observers predicted that player 1 would have different emotional reactions depending on whether he chose to cooperate (more envy and contempt) versus defect (more guilt). Note that preference/belief attributions (described in §3) and emotion predictions were collected from mutually exclusive groups to avoid cueing observers to think about emotion experience in terms of the planning variables or vice versa.

(b) Learning the latent structure of the intuitive theory of emotion

We hypothesized that human observers infer players’ values and expectations from their actions using inverse planning, and predict players’ emotional reactions to events based on those inferred mental states. In this model, emotion predictions reflect observers’ reasoning about how players would react to an event given their particular beliefs and preferences. Critically, we assume that emotion prediction relies on inverse planning. Inferred mental contents, generated via the inversion of an intuitive Theory of Mind, form the basis for reasoning about how a player will evaluate events. In our proposed model of the intuitive theory of emotion (figure 1), computed appraisals serve the functional role of linking observations (the events in a ‘Split or Steal’ game) and emotion concepts. Thus we call our model of emotion prediction based on mental states inferred from inverse planning the ComputedAppraisals model.

In Module (1), the model uses the pot size and player 1’s chosen action to update estimates of player 1’s preferences and beliefs. In Module (2), these preferences and beliefs then affect how the player appraises the situation. To generate computed appraisals for the GenericPlayers, we ran the PublicGame model in the same way as in §3b: using an empirically derived prior over the base preferences and beliefs, we inverted the hierarchical generative model of behaviour. Then, we computed how simulated players would appraise the outcome of the game. Appraisals are computed as the achieved utilities, prediction errors and counterfactuals over a player’s beliefs, base preferences and reputation preferences. See the electronic supplementary methods §S1i for details of how appraisals are computed, and §S1j for details about the priors. Module (3) transforms computed appraisals into emotion predictions by applying learned emotion concepts: functions that translate appraisal loadings into emotion intensities. Thus, before we can generate emotion predictions, we need to learn the ‘meaning’ of each of the 20 emotion labels that human observers rated, in terms of the set of appraisal variables.

To learn the function relating emotion labels to computed appraisals, we made a strong assumption about the generative structure of observers’ emotion predictions: when people are asked to predict a player’s emotions, they do not make 20 independent inferences but rather infer a joint distribution over the player’s preferences and beliefs, and reason about how these inferred mental contents would cause the player to evaluate the situation (figure 1). For instance, if player 1 cooperated while player 2 defected (CD), how much an observer thinks that player 1 wanted to avoid being disadvantaged will be reflected in that observer’s predictions of player 1’s experience of both embarrassment and envy. Thus, the covariance patterns of observers’ emotion predictions reflect the latent structure of their intuitive theory of psychology. Using the GenericPlayers training data, we leverage this information to learn a mapping between people’s empirical emotion predictions and the joint distribution over appraisal variables generated by the model.

Figure 3 shows the model’s computed appraisals and observers’ emotion predictions for the GenericPlayers. Figure 3a,d show the expected values given an outcome. Figure 3c shows the average within-stimulus correlation matrix for each outcome. Note that formalizing computed appraisals as a probabilistic generative model permits us to leverage within-stimulus covariance in latent structure discovery.

Based on the GenericPlayers data shown in figure 3, we learn a sparse transformation between the joint distribution of computed appraisals and the joint distribution of emotion predictions. Specifically, we treat the empirical emotion prediction vectors as observations from some function of the posterior distribution of computed appraisals (figure 3c). We find the transformation of the appraisal distribution that maximizes the probability of observing the empirical data under a Laplace prior on the transformation coefficients. This yields a sparse transformation between computed appraisals sampled from the ComputedAppraisals model and continuous quantitative predictions of the player’s emotions.
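Under the simplifying assumptions of a linear map and Gaussian observation noise, the maximum a posteriori transformation under a Laplace prior is the solution to L1-penalized least squares (the lasso). The sketch below solves that problem with proximal gradient descent (ISTA). It is a swapped-in simplification: it does not model the hierarchical, within-stimulus covariance structure that the full method exploits. `X` stands for sampled appraisal vectors, `Y` for emotion ratings, and `fit_sparse_map` and `lam` are hypothetical names; the data are synthetic.

```python
import numpy as np


def soft_threshold(x, t):
    """Proximal operator of t * ||x||_1: shrink towards zero, setting small entries to exactly zero."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)


def fit_sparse_map(X, Y, lam, n_iter=2000):
    """Minimize (1/2n)||XW - Y||^2 + lam * ||W||_1 with ISTA.

    X: (n_samples, n_appraisals); Y: (n_samples, n_emotions); returns W: (n_appraisals, n_emotions).
    """
    n = X.shape[0]
    W = np.zeros((X.shape[1], Y.shape[1]))
    lipschitz = np.linalg.norm(X, 2) ** 2 / n  # squared largest singular value, scaled by 1/n
    step = 1.0 / lipschitz
    for _ in range(n_iter):
        grad = X.T @ (X @ W - Y) / n  # gradient of the squared-error term
        W = soft_threshold(W - step * grad, step * lam)
    return W


# Synthetic check: 6 hypothetical appraisals, 2 hypothetical emotions, two true non-zero weights.
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 6))
W_true = np.zeros((6, 2))
W_true[0, 0] = 2.0
W_true[3, 1] = -1.5
Y = X @ W_true + 0.1 * rng.normal(size=(500, 2))
W_hat = fit_sparse_map(X, Y, lam=0.05)
print(np.round(W_hat, 2))
```

The penalty `lam` plays the role of the Laplace prior’s scale. The figure 4 caption reports that this scale was chosen by cross-validation on subsets of the SpecificPlayers, and `lam` could be tuned in the same way.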

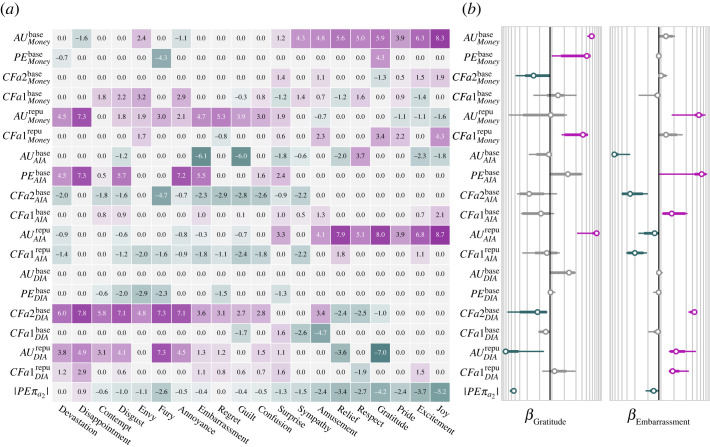

This model recapitulates the social cognitive reasoning that allows human observers to predict players’ emotions for arbitrary games. The emotion concepts shown in figure 4 reflect a computational hypothesis about the intuitive theory of emotion. This hypothesis says that observers’ emotion predictions reflect latent computations over players’ inferred mental contents. For example, a player who is inferred to value money highly will experience more gratitude when she wins more money, and when she wins more than she expected. A player will experience more gratitude when her opponent could have prevented her from winning money but chose not to, and less gratitude when her opponent could have chosen an action that would have caused the player to win money but instead chose an action that resulted in the player winning nothing. The more a player cares about not being in a socially disadvantageous position, the more gratitude she will experience when her opponent could have taken advantage of her but chose not to, and the less gratitude she will experience when her opponent decided to exploit her. The more a player is motivated to be seen as a fierce competitor, the less gratitude she will experience.

Figure 4.

Appraisal structure of the intuitive theory of emotion. The weights of the transformation were learned based on the joint distribution of appraisals and the joint distribution of emotion predictions for the GenericPlayers. A Laplace prior over the weights induces a sparse solution. To learn the scale of the prior, we cross-validated on subsets of the SpecificPlayers and generated emotion predictions for the left-out players. Saturation indicates that the 99% CI does not overlap with zero. (a) Mean weights of the learned transformation. (b) These log-scale plots show the expectation, 95% and 99% CI of the weights learned for two example emotions, gratitude and embarrassment.

The appraisal pattern is quantitatively and categorically unique for each emotion, suggesting that these 20 emotions are conceptually distinct. Much of the learned structure qualitatively aligns with existing emotion taxonomies [68,72,126]. We next test if the computational hypothesis formalized by the ComputedAppraisals model captures human emotion predictions.

(c) Comparing the computed appraisals model to human observers

The ComputedAppraisals model generates a joint distribution over 20 emotions based on the two players’ actions and the pot size, by generating computed appraisals under a prior distribution of player 1’s preferences and belief. Using the transformation we learned from the GenericPlayers, we generated personalized emotion predictions for 20 SpecificPlayers. The SpecificPlayers are described in detail in §6; before considering how personalizing information biases emotion predictions for individual players, we first consider the overall structure of the model. The ComputedAppraisals model captures the overall pattern of human emotion judgements. Emotion predictions generated by the model (averaged over players and pot sizes) are shown in figure 5a. Positive emotions are predicted when players win money and negative emotions when players leave with nothing. In addition, the model captures some of the more nuanced features of the empirical judgements. When a player’s opponent defects, causing the player to leave with no money, the model (like human observers) predicts more envy if the player cooperated (CD) and more regret if the player defected (DD). When the opponent cooperates, causing the player to win money, the model (like human observers) predicts more relief and gratitude if the player cooperated (CC) and more guilt if the player defected (DC).

Figure 5.

Inferred emotion predictions. (a) Emotion predictions generated by the ComputedAppraisals model, averaged across players and pot sizes. (b) Example of how the model personalizes emotion predictions. Based on the GenericPlayers data, the model learned that envy is a function of appraisals derived from a player’s aversion to disadvantageous inequity (DIA). When a player appraises that he is in a more socially disadvantageous position than he expected to be (negative prediction error), the negative loading on that appraisal translates to greater envy intensity. Similarly, when a player appraises that he would have been in a less disadvantageous position if his opponent had made the other choice (positive counterfactual), the positive loading on that appraisal translates to greater envy intensity. Given that both chose to cooperate, the model infers that the engineer cares more about not ending up in an inferior position than the councilman does. When the opposing player defects, the model predicts greater intensity of envy for the engineer because he is inferred to have a stronger preference. Human observers similarly predicted that the engineer will experience more envy than the councilman in CD games. Photos of the players have been downsampled for the purpose of publication.

To assess the explanatory power of the model, we use Lin’s concordance correlation coefficient⁴ ($\rho_c$), a metric of the agreement between model predictions and a ground-truth measure (here, the empirical emotion predictions) [127], and bootstrap resampling to estimate 95% confidence intervals (CI). Across all players, emotions, outcomes and pot sizes, the ComputedAppraisals model fit observers’ emotion predictions for the SpecificPlayers well (figure 6c).
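Lin’s concordance correlation coefficient is ρ_c = 2s_xy / (s_x² + s_y² + (x̄ − ȳ)²). Unlike Pearson’s r, it also penalizes differences in mean and scale between model and human predictions. The sketch below computes it with population moments and adds a percentile bootstrap interval. The resampling unit (individual paired predictions), the number of resamples and the function names are assumptions, and the bootstrap procedure actually used may differ.

```python
import random
import statistics


def lins_ccc(x, y):
    """Lin's concordance correlation coefficient (population moments; inputs must not both be constant)."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    vx = statistics.pvariance(x, mx)
    vy = statistics.pvariance(y, my)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / len(x)
    return 2.0 * sxy / (vx + vy + (mx - my) ** 2)


def bootstrap_ci(x, y, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for Lin's CCC, resampling (x, y) pairs with replacement."""
    rng = random.Random(seed)
    n = len(x)
    stats = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        stats.append(lins_ccc([x[i] for i in idx], [y[i] for i in idx]))
    stats.sort()
    lower = stats[int(alpha / 2 * n_boot)]
    upper = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


model = [1.0, 2.0, 3.0, 4.0]
shifted = [v + 1.0 for v in model]
# Perfect agreement versus a constant offset (Pearson's r would be 1 in both cases).
print(lins_ccc(model, model), lins_ccc(model, shifted))
```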

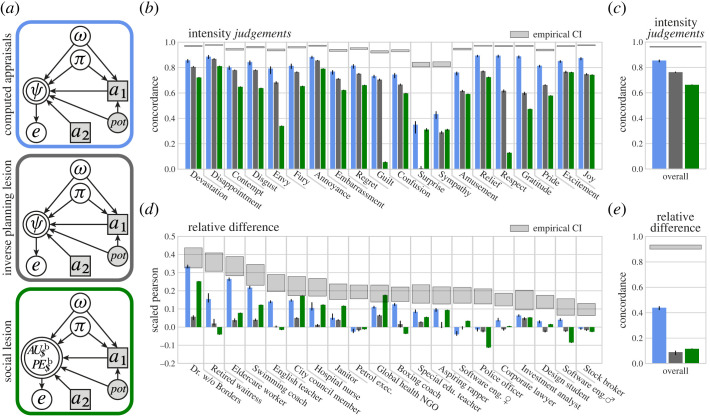

Figure 6.

Predicting specific players’ emotions. Human observers made preference and belief attributions to the 20 SpecificPlayers, based on a photo, a brief description and a decision in the ‘Split or Steal’ game. (a) Based on what a SpecificPlayer was judged to care about and to expect, the models generated predictions of that player’s emotional reactions in 24 ‘Split or Steal’ games (four outcomes and eight pots). Bar colours in (b–e) correspond to the models in (a), and grey windows give the 95% bootstrap CI of the inter-rater reliability of the emotion predictions. (b) Concordance between predictions generated by the models and human observers for every emotion (collapsing across players, outcomes and pot sizes). (c) Overall fit to the emotions observers predicted for the 20 SpecificPlayers. (d) The photos and descriptions of SpecificPlayers biased human observers’ judgements of the players’ motivations, expectations and emotional reactions. This plot shows how well the models were able to predict the bias in emotion predictions based on observers’ judgements of a player’s preferences and belief. Players are ordered based on how reliably observers’ emotion predictions differed from the emotions predicted for the GenericPlayers (grey windows). The model score gives the variance-scaled Pearson correlation. (e) Correlation between the relative difference predicted by the models and the relative difference in observers’ emotion predictions. (b,c,e) Each bar reflects a model’s performance based on observers’ emotion predictions. (d) Each bar reflects a model’s performance based on a minimum of empirical predictions of all 20 emotions.

Predictions of different emotions depend on different types of information, making it likely that a model’s latent representations will enable it to capture some emotions better than others. Similarly, human observers can find a stimulus ambiguous with regard to one emotion but unambiguous with regard to another (figure 6b shows the reliability of observers’ predictions and how well the ComputedAppraisals model captures the empirical emotion predictions for the SpecificPlayers). To test whether the rich generative structure of the ComputedAppraisals model contributed significantly to its ability to capture observers’ emotion predictions in this task, we compared the ComputedAppraisals model with two simpler alternatives.

(d) Inverse planning lesion model

The InversePlanningLesion model (figure 6a) selectively blocks inverse planning by inferring appraisal variables based on the prior distribution of beliefs and preferences (before either player acts), rather than the posterior distribution (updated based on the decision of player 1). Players simulated by the InversePlanningLesion model are effectively forced to choose a ball at random, without looking inside. Without a causal link to behaviour, the inverse inference of players’ preferences and beliefs reduces to the prior. Thus, the posterior probability in equation (3.4) becomes $P(\omega, \pi_{a_2} \mid a_1) = P(\omega, \pi_{a_2})$. We similarly lesion the embedded inverse planning loop, which simulates how players’ behaviour will be interpreted by others. Appraisal generation is otherwise identical to the ComputedAppraisals model.

To illustrate the InversePlanningLesion, consider the effect of simulated players’ beliefs about their opponents’ actions on the appraisals made by each model. In the full ComputedAppraisals model, as for human observers, simulated players tend to cooperate only when they believe their opponent is also going to cooperate (see figure 2). Players simulated by the InversePlanningLesion model select balls randomly, so the expectation of monetary utility reflects the prior over beliefs, rather than the beliefs that were likely given the player’s action.
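The contrast between the full model and the InversePlanningLesion can be illustrated with a toy version of the belief inference. The sketch assumes uniform priors, a unit pot, a fixed β and linear base utilities only, and `expected_belief` is a hypothetical name. The lesion replaces the action likelihood with a constant, which is what choosing a ball at random implies, so the inferred belief collapses to the prior whichever action was observed.

```python
import math
import random


def expected_belief(a1, lesion, beta=5.0, n=20000, seed=1):
    """Posterior mean of player 1's belief that player 2 cooperates, given player 1's action."""
    rng = random.Random(seed)
    num = den = 0.0
    for _ in range(n):
        # Uniform prior draws (assumption); drawn in both branches so the samples match.
        w_money, w_aia, w_dia, belief_c = (rng.random() for _ in range(4))
        if lesion:
            likelihood = 0.5  # random ball choice: the action carries no information
        else:
            eu_c = belief_c * 0.5 * w_money - (1.0 - belief_c) * w_dia
            eu_d = belief_c * (w_money - w_aia)
            p_c = 1.0 / (1.0 + math.exp(-beta * (eu_c - eu_d)))
            likelihood = p_c if a1 == "C" else 1.0 - p_c
        num += likelihood * belief_c
        den += likelihood
    return num / den


for lesion in (False, True):
    label = "lesion" if lesion else "full"
    print(label, expected_belief("C", lesion), expected_belief("D", lesion))
```

In the full version, a Split raises the inferred expectation that the opponent will cooperate; under the lesion, the same prior mean is used for both actions.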

Across all players, combinations of decisions and pot sizes, the InversePlanningLesion model showed a lower fit to human observers’ emotion predictions (figure 6c). The effect of lesioning inverse planning is particularly evident for specific emotions. For instance, the InversePlanningLesion caused notable decrements in the capture of envy, surprise, relief, gratitude, pride and joy (figure 6b).

(e) Social lesion model

In the SocialLesion model, we removed all of the social (non-monetary) values attributed to players. This lesion allows us to test the importance of these social values for generating human-like emotion predictions. The SocialLesion model leaves forward planning intact: simulated behaviour, inferred monetary utility and prediction error are all identical to those of the full ComputedAppraisals model (figure 6a). The social lesion can be likened to observers having the intuitive theory that players’ emotional reactions depend only on monetary considerations. The SocialLesion model predicts 20 emotions from the transformation of the joint distribution of monetary utility and monetary prediction error.

Across all players, combinations of decisions and pot sizes, the SocialLesion model showed a lower fit to human observers’ emotion predictions (; figure 6c). As expected, the SocialLesion model was largely unable to capture predictions of social emotions like envy, guilt, gratitude and respect. Interestingly, it also fit poorly the predictions of some emotions that were well fit by monetary appraisals of lottery outcomes [4]. For example, observers predict that players’ joy rises with monetary payoff in both lotteries and ‘Split or Steal’: for a given action, players who win $12k USD are predicted to experience more joy than players who win $6k USD. However, for a given pot size in ‘Split or Steal’, observers predict similar levels of joy for players who cooperated and took half the pot as for those who defected and took twice as much. Human observers thus predict that joy reflects social values, not just money. With access to only monetary appraisals, the SocialLesion model can capture the positive relationship between joy and pot size, but not the dependence of predicted joy on the social consequences of an action.
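Why a monetary-only read-out struggles with joy can be seen with simple arithmetic. The sketch below is a hypothetical illustration, not the SocialLesion model itself: it computes a focal player’s monetary utility and monetary prediction error, then passes them through an assumed linear joy function whose positive weights (`w_u`, `w_pe`) are made up. Because a defector who stole from a cooperator has both the larger payoff and the larger prediction error, any read-out that increases with both appraisals must assign that defector more joy than a player who split the same pot.

```python
def payoff(own, other, pot):
    """Focal player's winnings in 'Split or Steal'."""
    if own == "Split" and other == "Split":
        return pot / 2
    if own == "Steal" and other == "Split":
        return pot
    return 0.0  # Split vs Steal, or Steal vs Steal


def monetary_appraisals(own, other, pot, p_other_split):
    """Monetary utility and prediction error (achieved minus expected), where
    the expectation uses the player's belief that the opponent would Split."""
    achieved = payoff(own, other, pot)
    expected = (p_other_split * payoff(own, "Split", pot)
                + (1 - p_other_split) * payoff(own, "Steal", pot))
    return achieved, achieved - expected


def joy_monetary_only(utility, prediction_error, w_u=1.0, w_pe=0.5):
    """Hypothetical linear read-out with positive weights on both appraisals."""
    return w_u * utility + w_pe * prediction_error


pot, belief = 12_000, 0.8
cooperator = joy_monetary_only(*monetary_appraisals("Split", "Split", pot, belief))
defector = joy_monetary_only(*monetary_appraisals("Steal", "Split", pot, belief))
bigger_pot = joy_monetary_only(*monetary_appraisals("Split", "Split", 2 * pot, belief))
print(defector > cooperator, bigger_pot > cooperator)  # True True
```

The read-out reproduces the pot-size effect but cannot make the cooperator’s joy match the defector’s, which is the pattern human observers show.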

6. Personalizing emotion predictions

So far, we have investigated how human observers and the ComputedAppraisals model predict emotional reactions for players after observing only a single action in the game. However, the structure of the game means that single actions are highly ambiguous: a Split, for example, is consistent with both a genuine preference for equity and a concern for reputation. An observer who personally knows a specific player might be able to use prior knowledge from outside the game to inform inferences about the player’s likely values and expectations [128,129]. If the ComputedAppraisals model is a good approximation of how human observers reason about players’ emotions, it should also be able to predict the emotions that observers expect specific players to experience.

To mimic prior knowledge of the players, we constructed 20 SpecificPlayers, each consisting of a unique headshot and a brief description. The descriptions included ‘Doctor, volunteering in South Africa with Doctors Without Borders’, ‘Software engineer at Google’ and ‘City council member, about to start campaigning for State Senate’. We hypothesized that even such sparse information would evoke stereotypes that allow human observers to update priors over the players’ likely preferences and beliefs.

To test this hypothesis, we asked human observers to rate how much each SpecificPlayer actually valued Money, AIA and DIA, how much the player valued others believing that they held these values, and what the player predicted their opponent would do, given the player’s decision in ‘Split or Steal’. After being familiarized with the ‘Split or Steal’ game, each observer made preference and belief attributions to eight players. In each trial, observers were shown a player’s description (a photo and career), the player’s choice and the pot size. Confirming our hypothesis, human observers made consistent and distinct preference and belief attributions to SpecificPlayers, which differed from the attributions made to the unspecified GenericPlayers. For example, the software engineer was viewed as more motivated to avoid being taken advantage of than the city council member, even when both were shown to have cooperated (figure 5b). The overall patterns of emotions that observers predicted for the GenericPlayers were replicated by the emotion predictions made for the SpecificPlayers in this experiment. For instance, no player is expected to experience more envy after winning money than after not winning, but how much envy observers expect a player to experience differs between players. See the electronic supplementary material §S6 for a complete example.

(a) Simulation of the bias induced by personalizing cues

If a model has learned an accurate mapping from computed appraisals to emotion judgements, then it should be sensitive to variation in the psychological characteristics attributed to specific players, which are the bases for computing appraisals. The key generalization test is therefore whether the ComputedAppraisals model accurately predicts how emotion predictions will differ between players in the same situation, based on observers’ ratings of each player’s preferences and beliefs.
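The logic of this test can be illustrated with a single appraisal. In the hypothetical sketch below, two players (labelled only ‘A’ and ‘B’; their belief values are invented, not observers’ ratings) make the same choice and experience the same outcome, but are attributed different expectations about their opponent. The same event then yields different monetary prediction errors, which is the kind of variation a model must carry through to its emotion predictions if it is to personalize them.

```python
def split_payoff(other, pot):
    """Winnings of a player who chose Split."""
    return pot / 2 if other == "Split" else 0.0


def prediction_error_after_split(other, pot, p_other_split):
    """Achieved minus expected winnings for a player who chose Split."""
    expected = (p_other_split * split_payoff("Split", pot)
                + (1 - p_other_split) * split_payoff("Steal", pot))
    return split_payoff(other, pot) - expected


# Same event for both players: they Split, their opponent Steals, pot = 100k.
attributed_belief = {"A": 0.9, "B": 0.5}  # hypothetical P(opponent Splits)
errors = {player: prediction_error_after_split("Steal", 100_000, b)
          for player, b in attributed_belief.items()}
print(errors)  # {'A': -45000.0, 'B': -25000.0}
```

Player A, who was more confident of cooperation, suffers the larger negative prediction error, so an appraisal-based model would predict stronger disappointment for A than for B after the identical event.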

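The empirical expected difference between the emotions predicted for a SpecificPlayer and for the GenericPlayers is

$$\Delta_{e}(s, c) = \mathbb{E}[e \mid s, c] - \mathbb{E}[e \mid G, c],$$

where $e$ is an emotion, $s$ is a SpecificPlayer, $G$ denotes the GenericPlayers, $c$ is a context (the players’ decisions and the pot size), and each expectation is the mean of observers’ ratings of emotion $e$ for that player in context $c$.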

Similarly, the expected difference predicted by a model is the difference between the emotions that the model predicted for a SpecificPlayer and for the GenericPlayers. Because this difference is computed relative to the model’s own predictions for the GenericPlayers, a model that fails to fit the absolute expected emotion intensities can still capture how observers’ emotion predictions for SpecificPlayers shift relative to the GenericPlayers.
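That a relative measure tolerates absolute miscalibration can be checked directly. The sketch below computes the difference for one emotion in one context as a difference of mean ratings, using toy numbers that are not data from the study, and then shows that a hypothetical model whose predictions are all shifted by the same constant still recovers the empirical difference.

```python
from statistics import mean


def expected_difference(specific_ratings, generic_ratings):
    """Mean rating for a SpecificPlayer minus mean rating for the
    GenericPlayers, for one emotion in one context."""
    return mean(specific_ratings) - mean(generic_ratings)


# Toy envy ratings on a 0-1 scale (illustrative only, not study data).
generic = [0.40, 0.35, 0.45]
specific = [0.60, 0.55, 0.65]
empirical = expected_difference(specific, generic)

# A hypothetical model that overestimates every intensity by 0.3.
offset = 0.3
model = expected_difference([r + offset for r in specific],
                            [r + offset for r in generic])
print(round(empirical, 6), round(model, 6))  # 0.2 0.2
```

A constant bias cancels in the subtraction; a bias that differs between the SpecificPlayer and the GenericPlayers would not.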

The ComputedAppraisals model was able to capture some of the bias in human observers’ emotion predictions for SpecificPlayers. Across all SpecificPlayers, the fit between the predicted difference of the ComputedAppraisals model and the empirical difference was: , Pearson . However, human observers disagreed amongst themselves about how emotion predictions should be personalized for each SpecificPlayer. The emotions predicted for some players reliably differed from the emotions predicted for the GenericPlayers, whereas other players did not elicit reliable differences (). We therefore report the correlations in emotion prediction bias separately for each SpecificPlayer in figure 6d. Correlations are scaled by the total variation (see the electronic supplementary methods §S1l(ii)). The ComputedAppraisals model better captured the relative difference in predicted emotions for the SpecificPlayers that evoked more reliably different emotion predictions. We hypothesize that the ComputedAppraisals model mimics human observers’ adjustment of emotion predictions, based on computed appraisals with personalized values and expectations.
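Separating the fit by player amounts to computing one correlation per SpecificPlayer over that player’s (emotion, context) cells. A minimal sketch follows; the dictionary layout, the player labels and the toy values are assumptions, and the additional scaling by total variation described in the supplementary methods is not reproduced here.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def per_player_fit(empirical, predicted):
    """empirical / predicted: dicts mapping a player to its list of
    differences, one per (emotion, context) cell in a shared order."""
    return {player: pearson(empirical[player], predicted[player])
            for player in empirical}


# Toy differences for two hypothetical players (not study data).
empirical = {"P1": [0.20, -0.10, 0.05, 0.30], "P2": [0.02, -0.01, 0.03, 0.00]}
predicted = {"P1": [0.15, -0.05, 0.00, 0.25], "P2": [-0.02, 0.01, 0.00, 0.02]}
fits = per_player_fit(empirical, predicted)
print({player: round(r, 2) for player, r in fits.items()})
```

In the toy data, P1 has large, consistent differences and a high correlation, while P2’s differences are small and the correlation is poor, mirroring the kind of per-player split the text describes.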

Neither the InversePlanningLesion model nor the SocialLesion model was able to generate personalized emotion predictions (figure 6d,e). Despite predicting the expected emotion intensities nearly as well as the ComputedAppraisals model (figure 6c), the InversePlanningLesion model largely failed to predict how personalizing information biased emotion predictions relative to the GenericPlayers: , Pearson . The SocialLesion model yielded a similarly low correlation: , Pearson .

7. Discussion

This work computationally models how observers predict others’ emotions. We formalize emotion prediction as a Bayesian intuitive theory of emotion by building on modelling work from psychology, computer science and behavioural economics. Integrating these approaches in a cognitively structured model enables us to investigate how observers infer, reason over and predict abstract representations of others’ mental contents. One contribution of this work is to illustrate how theory-based computational models can be combined modularly in a causal Bayesian framework. The three Modules (figure 1) implement general psychological hypotheses but are tailored to the context of the ‘Split or Steal’ game. Each could be improved to better capture human cognition, both within this domain and in general.

Module (1) instantiates a hypothesis about the causal generative structure of the mind: how observers infer the unobservable mental contents likely to have motivated someone’s observed behaviour. The module simulates how observers reason about what preferences and beliefs caused a player’s action by inverting a richly structured generative model of approximately rational decision making. The aim is to infer abstract Theory of Mind representations from the observable features of a situation (the players’ actions and the pot size) by modelling observers’ intuitive theory of players’ minds. We define objective representations of the situations, which are psychologically relevant but do not depend on mental state inferences. We operationalize this using the Fehr & Schmidt [97] parametrization of social inequity aversion (electronic supplementary material, figure S2), which reflects salient dimensions of events in the ‘Split or Steal’ games and is also generally applicable to a large domain of dyadic interactions. The forward planning model simulates how players plan and execute behaviours based on their beliefs about the world and other people, and preferences for the objective situation features and other people’s beliefs. The forward model builds up distributions over actions by sampling players with varying preferences and beliefs from empirically derived priors. Bayesian inversion of the forward planning model yields inference of the preferences and beliefs that jointly motivated a player’s observed behaviour.
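The Fehr & Schmidt [97] utility has a simple two-player form: a player’s own payoff minus a penalty α on disadvantageous inequity (DIA, the opponent has more) and a penalty β on advantageous inequity (AIA, the player has more). The sketch below applies it to the four ‘Split or Steal’ outcomes. The α and β values are hypothetical, and the sketch omits the reputational preferences that the full model also attributes to players.

```python
def payoffs(own_action, other_action, pot):
    """Monetary payoffs (focal player, opponent) in 'Split or Steal'."""
    table = {
        ("Split", "Split"): (pot / 2, pot / 2),
        ("Split", "Steal"): (0.0, pot),
        ("Steal", "Split"): (pot, 0.0),
        ("Steal", "Steal"): (0.0, 0.0),
    }
    return table[(own_action, other_action)]


def fehr_schmidt_utility(own, other, alpha, beta):
    """Two-player Fehr-Schmidt utility: alpha weights disadvantageous
    inequity (other > own), beta weights advantageous inequity (own > other)."""
    return own - alpha * max(other - own, 0.0) - beta * max(own - other, 0.0)


# Hypothetical inequity-averse player facing a 100k pot.
ALPHA, BETA, POT = 1.0, 0.75, 100_000
for outcome in [("Split", "Split"), ("Split", "Steal"),
                ("Steal", "Split"), ("Steal", "Steal")]:
    own, other = payoffs(*outcome, POT)
    print(outcome, fehr_schmidt_utility(own, other, ALPHA, BETA))
# ('Split', 'Split') 50000.0
# ('Split', 'Steal') -100000.0
# ('Steal', 'Split') 25000.0
# ('Steal', 'Steal') 0.0
```

For this player, if the opponent Splits, splitting (50k) is worth more than stealing (25k), which is how inequity aversion can make cooperation a rational choice.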

Any instantiation of Module (1) expresses a hypothesis about which preferences and beliefs are potentially at stake in a situation. For instance, if we hypothesized that observers thought that players were only concerned with maximizing their monetary reward, we would not include preferences for social equity, and defecting would be the only rational choice for simulated players. Similarly, if we hypothesized that observers thought that players were not managing their reputations, we would use the AnonymousGame model, rather than a model that simulates players’ preferences for how they are perceived by others. However, observers expect players to cooperate and report that players have preferences for what inferences others make about their values. Thus, to capture the social cognition that we hypothesize observers employ to reason about why a player chose to cooperate or defect, we instantiate a Bayesian Theory of Mind (BToM) model with preferences and beliefs over recursive representations of the monetary and social features of the situation and other people. Our instantiation captures observers’ judgements of the latent mental contents that motivated a player’s action (figure 2).
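The claim that a purely monetary player would always defect can be checked with a one-step expected-utility planner. The sketch below is an assumption-laden stand-in for the paper’s forward planning model (the logistic choice rule and the `temperature` parameter are hypothetical). Setting α = β = 0 gives a money-only player for whom Steal weakly dominates Split: it pays at least as much whatever the opponent does, so the probability of choosing Split never exceeds one half. An inequity-averse player instead tends to Split only when confident that the opponent will also Split.

```python
import math


def payoffs(own, other, pot):
    """Monetary payoffs (focal player, opponent) in 'Split or Steal'."""
    if own == other == "Split":
        return pot / 2, pot / 2
    if own == other == "Steal":
        return 0.0, 0.0
    return (pot, 0.0) if own == "Steal" else (0.0, pot)


def utility(own_pay, other_pay, alpha=0.0, beta=0.0):
    """Fehr-Schmidt utility; alpha = beta = 0 is a purely monetary player."""
    return (own_pay - alpha * max(other_pay - own_pay, 0.0)
            - beta * max(own_pay - other_pay, 0.0))


def expected_utility(action, p_other_split, pot, **prefs):
    """Utility of an action averaged over the player's belief about the opponent."""
    outcomes = (("Split", p_other_split), ("Steal", 1 - p_other_split))
    return sum(p * utility(*payoffs(action, other, pot), **prefs)
               for other, p in outcomes)


def p_choose_split(p_other_split, pot, temperature=5_000.0, **prefs):
    """Logistic (two-action softmax) choice on the expected-utility gap."""
    gap = (expected_utility("Split", p_other_split, pot, **prefs)
           - expected_utility("Steal", p_other_split, pot, **prefs))
    return 1 / (1 + math.exp(-gap / temperature))


for belief in (0.0, 0.5, 0.9):
    money_only = p_choose_split(belief, 100_000)
    averse = p_choose_split(belief, 100_000, alpha=1.0, beta=0.75)
    print(belief, round(money_only, 3), round(averse, 3))
```

The money-only player is indifferent only when certain the opponent will Steal (both actions then pay nothing), which is the ‘weak’ in weak Prisoner’s Dilemma.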

Prior BToM models have focused on belief-desire inference (so-called ‘propositional attitudes’ [130]) via Bayesian inverse planning. BToM models have been applied extensively to infer motivations using POMDPs and reinforcement learning in domains where agents interact with simple environments [15,39,42,44,131,132]. Our present work demonstrates how the machinery of inverse planning can be extended to reason over a more general Bayesian Theory of Mind that includes appraisals and emotions. To our knowledge, this is the most richly structured inverse planning model to date, and the first use of inverse planning to predict emotional reactions to subsequent events.

Module (2) instantiates a hypothesis about the computational basis of predicted emotional reactions: how observers reason about someone’s appraisal of events. The module computes how a simulated player would evaluate a new world state (the outcome of the game) caused by an event outside of the player’s intentional control (the opponent’s action) given the player’s preferences and beliefs. The specific computations are inspired by the general cognitive principles of Appraisal Theory. We compute achieved utilities, prediction errors and counterfactuals over monetary, social and reputational representations, incorporating the simulated player’s beliefs about what was going to happen, what did and did not happen, and how the player and the opponent could have changed what happened (figure 3).
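For the monetary dimension alone, the appraisal computations named above reduce to simple differences. The sketch below is a hypothetical simplification: the full model computes these quantities over monetary, social and reputational representations and over inferred distributions of preferences and beliefs, whereas here a single belief `p_other_split` is fixed. The two counterfactual terms compare what happened with what would have happened had the player, or the opponent, acted differently.

```python
def payoff(own, other, pot):
    """Focal player's winnings in 'Split or Steal'."""
    if own == "Split":
        return pot / 2 if other == "Split" else 0.0
    return pot if other == "Split" else 0.0


FLIP = {"Split": "Steal", "Steal": "Split"}


def monetary_appraisals(own, other, pot, p_other_split):
    achieved = payoff(own, other, pot)
    expected = (p_other_split * payoff(own, "Split", pot)
                + (1 - p_other_split) * payoff(own, "Steal", pot))
    return {
        "achieved": achieved,
        # Positive when the outcome beat the player's expectation.
        "prediction_error": achieved - expected,
        # Achieved minus what the player would have won by acting otherwise.
        "counterfactual_self": achieved - payoff(FLIP[own], other, pot),
        # Achieved minus what the player would have won had the opponent acted otherwise.
        "counterfactual_other": achieved - payoff(own, FLIP[other], pot),
    }


print(monetary_appraisals("Split", "Steal", 100_000, 0.9))
# {'achieved': 0.0, 'prediction_error': -45000.0, 'counterfactual_self': 0.0, 'counterfactual_other': -50000.0}
```

For a player who Split and was betrayed, this yields a large negative prediction error, a self counterfactual of zero (stealing would also have paid nothing) and a large loss attributable to the opponent’s choice.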

To build a computational model that generates emotion predictions from contextual information, we use situations with quantitatively well-defined features. This allows us to investigate what abstract representations and computations observers use to translate situation information into emotion predictions. Choosing a well-studied social context enables us to adapt behavioural economic models of behaviour to model people’s intuitive theory of actions and reactions. Scaling this approach to less constrained contexts will require more general methods of computing abstract psychologically relevant representations of situations [133]. While a Prisoner’s Dilemma can be expressed in terms of the players’ actions, the pot size and the rules of the game (in the case of ‘Split or Steal’, a public one-shot weak PD), the cognitively relevant features of most real-world social interactions are harder to parse.

Large language models have recently made impressive strides in capturing patterns of social cognition [25,26,134–137], and various neural architectures have been able to capture some patterns of human Theory of Mind in gridworld tasks [138–140]. However, these models have yet to approach the logical and causal reasoning capacity that humans develop by childhood [139,141,142]. Compared with neural architectures that have minimal upfront structure, highly structured inverse planning models show greater sophistication and generalization, even in Theory of Mind tasks simple enough for infants [42–44]. In our view, capturing the breadth and sophistication of social cognition will require probabilistic generative models of the human intuitive Theory of Mind [143]. Advances in probabilistic programming, program synthesis and neurosymbolic methods [144–148] suggest that the relevant abstractions can be learned by combining theory-based inductive constraints and conducive experimental domains [63,149–152].

Module (3) instantiates a hypothesis about the structure of emotion concepts: how observers transform latent appraisal representations into emotion predictions (an example is shown in figure 5). In the present work, we target retrospective emotions (emotions that occur in response to the outcome of the game) and did not include prospective emotions that concern uncertain future events (e.g. hope, fear; see the electronic supplementary methods §S1e). Emotion concepts (functions of appraisal variables) are learned by finding a transformation between the joint distribution over computed appraisals and the joint distribution over empirical emotion judgements. An advantage of this approach is that the computational structure of social cognition can be reverse-engineered directly from emotion judgements. Because the learned appraisal structure is interpretable, the results can be compared with other emotion taxonomies. While it did not need to be the case, the learned appraisal structure is unique for each emotion, suggesting that these 20 emotions are conceptually distinct (figure 4).
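The idea of learning an emotion concept as a function of appraisal variables can be sketched with ordinary least squares. This is a stand-in chosen for transparency, not the transformation the paper uses: it fits one emotion’s ratings as a linear function of two appraisal features by solving the normal equations in pure Python. The toy targets are generated from known weights (not taken from the study), so the fit should recover them; with real judgements, the fitted weights would play the role of the interpretable appraisal structure, and fitting each emotion separately yields one weight vector per emotion.

```python
def fit_linear(features, targets):
    """Least-squares weights [intercept, w1, ..., wk] via the normal equations
    (X^T X) w = X^T y, solved by Gauss-Jordan elimination with partial pivoting."""
    X = [[1.0, *row] for row in features]
    k = len(X[0])
    xtx = [[sum(r[i] * r[j] for r in X) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(X, targets)) for i in range(k)]
    aug = [xtx[i] + [xty[i]] for i in range(k)]  # augmented matrix [X^T X | X^T y]
    for col in range(k):
        pivot = max(range(col, k), key=lambda row: abs(aug[row][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(k):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][k] / aug[i][i] for i in range(k)]


# Toy appraisals: (monetary utility, monetary prediction error), arbitrary scale.
appraisals = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (0.5, -0.5)]
# Toy "joy" ratings generated from known weights, for illustration only.
TRUE_WEIGHTS = (0.1, 0.5, 0.3)
joy = [TRUE_WEIGHTS[0] + TRUE_WEIGHTS[1] * u + TRUE_WEIGHTS[2] * pe
       for u, pe in appraisals]
print([round(w, 6) for w in fit_linear(appraisals, joy)])  # [0.1, 0.5, 0.3]
```

A linear map keeps each weight directly readable as “how much this appraisal matters for this emotion”; richer, nonlinear transformations trade some of that readability for flexibility.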