Abstract

Incorporating argument writing as a learning activity has been found to increase students’ mathematics performance. However, teachers report receiving little to no preservice or inservice preparation in using writing to support students’ learning. This gap is especially concerning for special education teachers who provide highly specialized mathematics instruction (i.e., Tier 3) to students with mathematics learning disabilities (MLD). The purpose of this study was to examine the effectiveness of teachers’ use of content-focused, open-ended questioning strategies, addressing both argument writing and foundational fraction content, when teachers were prepared through Practice-Based Professional Development (PBPD) and Self-Regulated Strategy Development (SRSD) to implement a writing-to-learn strategy called FACT-R2C2. We report the relative number of higher-order mathematical content questions that teachers asked during instruction across three question levels: Level 1, yes/no questions focused on the mathematics content; Level 2, questions eliciting one-word responses focused on the mathematics content; and Level 3, higher-order questions eliciting open-ended responses centered on four mathematical practices from the Common Core State Standards for Mathematics. Within a well-controlled single-case multiple-baseline design, seven special education teachers were each randomly assigned to one tier of the PBPD + FACT-R2C2 intervention. Results indicated that: (1) teachers’ relative use of Level 3 questions increased following the introduction of the FACT intervention; (2) this increase was above and beyond any effect of the professional development training that the teachers had initially received; and (3) students’ writing quality improved to some extent as teachers’ relative use of Level 3 questions increased. Implications and future directions are discussed.

Keywords: Writing-to-learn, Self-regulated strategy development, Professional development, Fraction knowledge

Mathematical reasoning involves multiple processes through which teachers can engage students in mathematics learning, such as interpreting language and mathematical symbols accurately, justifying the choices they make when solving a problem, persevering to make sense of problems, using tools strategically when solving problems, and communicating their findings with peers (Casa et al., 2016; Jonsson et al., 2014; National Mathematics Advisory Panel [NMAP], 2008; Common Core State Standards for Mathematics [CCSS-M], 2010b). However, research suggests that students who are eligible to receive specialized instruction in mathematics and are classified with a mathematics learning disability (MLD): (1) may struggle with reasoning about and communicating their problem-solving processes [National Assessment of Education Progress (NAEP) for Writing, 2011]; and (2) may exhibit comorbid difficulties with language development, which make learning mathematics harder (Korhonen et al., 2012). Effective mathematics teaching of students with MLD requires teachers to set high expectations for learning while providing the opportunities and support needed to develop their students’ foundational mathematics skills (National Council of Teachers of Mathematics [NCTM], 2000). Research further suggests that, to address students’ language and mathematics challenges effectively, teachers should: use explicit and systematic instruction; incorporate mathematical vocabulary and language to help students communicate their understanding; and provide multiple representations of the mathematical concepts learned during instruction (Fuchs et al., 2021; NMAP, 2008; Schleppegrell, 2010).

Fraction learning for struggling learners

Understanding fractions is essential for learning algebraic reasoning and sense-making (NMAP, 2008; Siegler et al., 2010, 2012). Yet many K-8 students with and without learning difficulties lack proficiency with fractions (CCSS-M, 2010b; National Assessment for Educational Progress [NAEP] for Mathematics, ), especially students classified with MLD (Bailey et al., 2015; Krowka & Fuchs, 2017; Namkung & Fuchs, 2016). Unlike the magnitude of a whole number, the magnitude of a fraction is represented by two numbers, which requires a conceptual change: students must understand that two numbers together can represent a single numerical value (Bailey et al., 2015; Hallet et al., 2010; Jordan et al., 2013). Moreover, when learning fractions, struggling learners may also exhibit challenges with working memory capacity, using symbolic notation to represent their understanding, visualizing numerical representations of abstract concepts, and self-regulating their learning while carrying out a series of tasks (Case et al., 1992; Forsyth & Powell, 2017; Geary, 2004; Montague & Jitendra, 2012).

Using argument writing to develop mathematical reasoning

Using argument writing to learn mathematics has been shown to be effective for increasing K-12 students’ learning (Graham et al., 2020) and offers K-12 teachers a way to address state core curriculum standards in both mathematics and English language arts (CCSS-M, 2010b; CCSS-LA, 2010a). The majority of state mathematics core standards taught in K-12 classrooms across the United States include the Standards for Mathematical Practice, which describe the processes through which teachers engage students in developing their reasoning and proficiency in mathematics (CCSS-M, 2010b; NMAP, 2008). Among these practice standards is constructing arguments, which is also a core English language arts standard in many states and requires students to provide logical claims and counterclaims in arriving at a conclusion or a solution to a problem (CCSS-LA, 2010a; CCSS-M, 2010b). Argument writing provides a rhetorical structure for students to justify their position, support their claims with evidence, and refute a position that they might disagree with or that may be inaccurate (Klein, 1999; Nussbaum & Kardash, 2005; Wiley & Voss, 1999). However, teachers receive little to no preservice or inservice professional development in teaching writing (Brindle et al., 2015; Gillespie et al., 2014; Kiuhara et al., 2009; Ray et al., 2022). For teachers to implement writing activities that support mathematics learning for students who struggle with mathematics, professional learning must further develop teachers’ knowledge of the argument-writing genre, in addition to the mathematics domain taught and the learning challenges that students with MLD may encounter when learning mathematics (Fuchs et al., 2021; Graham & Harris, 2018; NCTM, 2000).

Evidence of professional learning of fractions

Research suggests that developing teachers’ mathematical content knowledge strengthens the quality of their instruction and increases student learning (Ball et al., 2005; Darling-Hammond et al., 2017; NMAP, 2008). Thus, it is essential to identify ways to increase teachers’ content knowledge and pedagogical skills, especially for special education teachers who provide specialized instruction in mathematics. Two important studies have examined the effects of professional learning about fractions for teachers. Jayanthi et al. (2017) conducted a large-scale, multistate effort in the U.S. to increase fourth-grade general education teachers’ content knowledge of the fraction domain as specified for Grades 3 to 6 in the CCSS-M (2010b). Teachers were randomly placed in either the treatment condition (n = 129 teachers; n = 2,091 students) or the control condition (n = 135 teachers; n = 2,113 students). The professional learning included two 3-h sessions per day, held once per month over four consecutive months. The results for teachers’ fraction knowledge were inconclusive (p = 0.51; g = 0.19). There was also no statistically significant difference in fraction learning between students whose teachers participated in the training and students whose teachers did not (p = 0.637, g = -0.03). As the authors noted, further research is needed to better understand how implementing meaningful, high-quality instructional activities and identifying students’ understandings and misconceptions about fractions could transfer to the classroom and positively affect both teachers’ and students’ fraction knowledge.

Faulkner and Cain (2013) compared the pre- and posttest scores of general education and special education teachers (n = 85) who had not attended mathematics professional learning in the previous seven years. The teachers participated in a 40-h professional development seminar that targeted their understanding of the quantity and magnitude of whole numbers, numeration, equality, proportional reasoning, and algebraic thinking. Both general and special education teachers demonstrated meaningful gains from pre- to posttest on a fraction knowledge test (d = 0.58, p < 0.05), with no statistically significant differences between the two groups. Faulkner and Cain did not measure student learning outcomes; however, the findings from these two studies underscore the relevance of the present study’s mathematics domain and confirm the importance of providing content-focused professional learning on foundational fraction concepts and of measuring student learning outcomes, especially for students with MLD.

Developing students’ conceptual understanding through questioning strategies

One way to incorporate meaningful activities into professional learning of fractions is to provide activities for teachers to develop students’ conceptual understanding through questioning strategies. Borko et al. (2015) and Polly et al. (2014) examined teachers’ mathematics content and pedagogical knowledge by combining foundational mathematics concepts with content-focused questioning strategies. Content-focused questioning is intended for teachers to extend students’ thinking and facilitate meaningful discussions about students’ learning (Borko et al., 2015; NMAP, 2008; Polly et al., 2014).

Borko et al. (2015) provided 54 middle-school teachers with professional learning that targeted ratios and proportions, along with activities for engaging students in meaningful discussions, in which teachers watched video-recorded sessions of their mathematics instruction with students. During the training sessions, the teachers discussed and identified ways to expand their students’ thinking about their problem-solving processes and how they might prompt students, through direct and open-ended questioning, to make further connections about the concepts they were learning. On a scale measuring teachers’ mathematical content knowledge, the results indicated a statistically significant gain from pre- to posttest, t(52) = 3.53, p < 0.01, d = 0.49. Although the researchers did not measure student learning outcomes, their findings warrant further investigation of how teachers can work collaboratively during professional learning activities and how types of content-focused questioning introduced during training can be used to develop students’ mathematical reasoning.

Polly et al. (2014) observed K-3 teachers’ use of four critical question types intended to develop conceptual understanding of number sense using representations (e.g., cubes, number lines, and pictures): Level 0, questions that elicited only one answer (e.g., What was your answer?); Level 1, questions that elicited a summary of steps (e.g., How did you get your answer?); Level 2, questions that elicited students’ explanation of their reasoning (e.g., How do you know your answer is correct?); and Level 3, questions that elicited student-to-student discussion to explain their thinking (e.g., What do you think your peer did to find the answer?). The mathematical concepts were establishing equivalence and place value when solving algebraic tasks by identifying missing variables and representing algebraic problems with pictorial and concrete examples. The 28 teachers completed 84 h of professional learning over 13 months. On a scale measuring teacher knowledge, teachers increased the number of correct answers from pre- to posttest, t(27) = 6.66, p < 0.01, d = 1.26. A notable shift also occurred in the types of questions teachers posed from the beginning to the end of the study. For example, three teachers who were randomly selected as case-study participants were initially observed posing primarily Level 0 and Level 1 questions and no Level 2 or Level 3 questions, which elicit elaboration and student-to-student discussion. As the study progressed, these teachers posed more Level 2 questions but still did not pose any Level 3 questions prompting student-to-student discussion. These results prompt further investigation of questioning strategies with special education teachers as a way to elicit student discussion about problem-solving processes.

Practice-based professional development for self-regulated strategy development

We draw upon the work of Harris and colleagues for providing effective practice-based professional development (PBPD) for implementing the Self-Regulated Strategy Development (SRSD) instructional framework, an evidence-based practice (Graham & Harris, 2018; Harris et al., 2008; What Works Clearinghouse [WWC], 2017; see description of SRSD below). As noted above, effective professional learning provides a context in which teachers further develop specific content knowledge and pedagogical skills to facilitate students’ learning. Professional learning should also focus on developing students’ agency as self-directed learners, problem-solvers, and collaborative learning partners with their peers (Ball & Cohen, 1999; Darling-Hammond et al., 2017; Desimone, 2009; Graham & Harris, 2018; Harris et al., 2012).

PBPD centers on principles of effective teacher learning that help ensure the new skills teachers learn during training sessions change classroom practice, by creating an instructional community, increasing teachers’ motivation to implement SRSD, and improving student learning outcomes (Ball & Cohen, 1999; Graham & Harris, 2018; Harris et al., 2012; McKeown et al., 2016). PBPD includes the following principles for effective professional learning: (a) collective participation of teachers who have similar teaching responsibilities, (b) a focus on specific learning needs of students in the participating teachers’ classrooms, (c) identification of both content and pedagogy needed to implement the SRSD strategies, (d) modeling of expected procedures and skills, (e) extensive opportunities for active learning, (f) use of the curriculum that is expected to be implemented in the classroom, (g) continual feedback and support from trainers and peers on teachers’ understanding of the content and activities they are expected to implement in their classrooms, and (h) feedback and support that continues into the classroom (Harris et al., 2008).

Self-regulated strategy development

The SRSD instructional framework provides teachers with an explicit and systematic approach in which students are supported until they take full responsibility for their learning. SRSD embeds self-regulation strategies (e.g., goal setting and self-monitoring of learning) to help students manage the affective, behavioral, and cognitive demands of learning to write as they become independent and successful writers (Graham & Harris, 2018; Harris et al., 2008). Briefly, the SRSD instructional framework consists of six recursive stages (see Harris et al., 2008): (a) the teacher develops students’ background knowledge, skills, and language needed to learn and use the learning strategies, and activates students’ knowledge and prior learning experiences in preparation for learning the SRSD strategies; (b) the teacher discusses the targeted learning strategies, introduces and examines each step, and presents the graphic organizers the students will use, and the students and the teacher discuss their learning challenges and the importance of effort, perseverance, and positive attributions when tackling difficult tasks; (c) the teacher models each step of the SRSD strategy using a think-aloud, allowing students to observe the teacher use the learning and self-regulation strategies while planning and composing text (e.g., self-regulation strategies include setting goals for learning, self-monitoring progress toward those goals, and identifying statements to say to themselves as they work through challenges or celebrate successes); (d) students paraphrase each step of the strategy in their own words and practice memorizing the steps so that they can recall them, apply them during the planning and problem-solving process, and generalize their learning across settings; (e) the teacher gradually releases responsibility for writing and self-regulation to the students and provides multiple opportunities for students to practice using the strategies collaboratively with peers, while supporting the maintenance and generalization of the strategies; and (f) students apply the writing and self-regulation strategies independently while the teacher continues to monitor their use of the strategies, providing either a review or reteaching of lesson components for students who need additional support. At each SRSD stage, the teacher can further assess students’ writing and self-regulation and individualize instruction and support accordingly.

Math-writing with FACT using SRSD

Based on our prior efficacy research (see Hacker et al., 2019; Kiuhara et al., 2020), our aim was to provide opportunities for teachers to facilitate active discussion with students during the implementation of a new writing-to-learn intervention called FACT-R2C2 (hereafter FACT), as a way for teachers to further support students’ understandings and address their misconceptions about fractions through argument writing. FACT was designed using the SRSD instructional framework (Graham & Harris, 2018; Harris et al., 2008). What makes FACT unique is that it teaches mathematics within a context that supports self-regulated learning and mathematical language development, engaging students in writing activities in which they must justify their learning about fractions and address their understandings and misconceptions about fractions. Each letter of the strategy represents a step that systematically engages students in the problem-solving and argument writing processes: F = Figure it out; A = Act on it; C = Compare your reasoning with a peer; T = Tie it up in an argument. The “T” step is expanded by R2C2, four steps for constructing a written argument: R = Restate the task; R = Provide reasons; C = Present the counterclaim; and C = Conclude the paragraph.

Using a pre-posttest cluster-randomized controlled trial, Kiuhara et al. (2020) found that students with and at risk for MLD who received FACT instruction (n = 28) made meaningful gains in their fraction knowledge (g = 0.60) compared to students in the control condition (n = 31). Students in the FACT condition showed statistically significant and substantial improvement in: their mathematical language and quality of mathematical reasoning (g = 1.82); the number of argumentative writing elements (g = 3.20); and total words written (g = 1.92); moreover, students with MLD who received such instruction (n = 16) demonstrated statistically significant gains in fraction knowledge compared to their same-aged peers without MLD (n = 12, g = 1.04). These results suggest that explicit and systematic writing-to-learn mathematics instruction benefited students with MLD. Teacher implementation of SRSD has also been shown to significantly increase students’ writing quality, with an effect size (ES) of 1.52 (Graham & Harris, 2018). However, our treatment fidelity data from prior research indicated that some teachers required further support to understand foundational components of both argument writing and fraction content. Because FACT involves both writing and mathematics, we found that teachers who implemented instructional components below the 85% criterion benefited from additional instructional support on the writing and mathematics concepts underlying the lessons (Hacker et al., 2019; Kiuhara et al., 2020).
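For readers less familiar with the g values reported above, the sketch below computes Hedges’ g, a standardized mean difference with a small-sample bias correction, from two groups’ summary statistics. It assumes the pooled standard deviation and the common approximation J = 1 − 3/(4(n1 + n2) − 9) for the correction factor. The function name and the inputs in the usage line are hypothetical, not data from Kiuhara et al. (2020), whose exact computation (e.g., any adjustment for clustering or covariates) may differ.

```python
import math


def hedges_g(mean_t, mean_c, sd_t, sd_c, n_t, n_c):
    """Bias-corrected standardized mean difference (Hedges' g).

    Assumes a pooled SD and the approximation J = 1 - 3 / (4 * (n_t + n_c) - 9).
    Hypothetical helper for illustration; not the authors' analysis code.
    """
    if n_t < 2 or n_c < 2:
        raise ValueError("each group needs at least two observations")
    # Pooled variance weights each group's variance by its degrees of freedom.
    pooled_var = ((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / (n_t + n_c - 2)
    d = (mean_t - mean_c) / math.sqrt(pooled_var)  # Cohen's d with pooled SD
    j = 1 - 3 / (4 * (n_t + n_c) - 9)  # small-sample correction factor
    return j * d


# Hypothetical summary statistics, for illustration only.
print(round(hedges_g(10.0, 8.0, 2.0, 2.0, 10, 10), 3))
```

With equal group sizes of 10, the correction shrinks a raw d of 1.0 by about 4%, which shows why g is preferred over d in small samples like those in this line of research.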

Aims of the present study

As a way for teachers to facilitate meaningful discussion with students during FACT instruction, we extended the PBPD protocol for writing and teacher implementation of SRSD to include professional learning of questioning strategies centered around the Standards for Mathematical Practice (CCSS-M, 2010b). We incorporated mathematics-focused, open-ended questioning strategies that prompted students to engage in mathematical practices for planning and constructing written arguments, with the goal of increasing their fraction knowledge and the quality of their mathematical reasoning. We previously reported on students’ performance associated with FACT instruction (Kiuhara et al., in press). Here we focus on teachers’ use of higher-level questions to help develop the fraction knowledge and mathematical reasoning of fifth- and sixth-grade students with MLD. We also report student learning outcomes on a far-learning fraction measure.

We developed a new 3-level taxonomy of question types that extended the questioning strategies used in Borko et al. (2015) and Polly et al. (2014) and focused on specific questioning strategies for teachers to elicit active discussion from students during FACT instruction. Level 1 questions were yes/no questions that focused on the fractions content; Level 2 questions elicited a fraction-focused one-word response; and Level 3 questions elicited open-ended responses that centered around four Standards for Mathematical Practice (see the Method section below for examples of each question type). Based on the literature reviewed above, we hypothesized that as students progressed through the FACT lessons, which scaffolded argument writing opportunities to problem solve while using the FACT strategies, the teachers’ proportional use of Level 3 questions would increase relative to the number of Level 1 and 2 questions during FACT instruction. Three primary research questions (RQs) guided the study, each of which, in one form or another, addressed the proportion of teachers’ questions that were coded as Level 3 questions (i.e., the number of Level 3 questions divided by the total number of questions).
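The outcome defined above (Level 3 questions divided by all coded questions) can be illustrated with a small sketch that tallies the coded questions from one observed session. The function name, the session data, and the choice to return None for a session with no coded questions are illustrative assumptions, not the study’s coding protocol.

```python
from collections import Counter
from typing import Iterable, Optional


def level3_proportion(question_levels: Iterable[int]) -> Optional[float]:
    """Return (# Level 3 questions) / (total # Level 1-3 questions) for one session.

    question_levels holds one code (1, 2, or 3) per question the teacher asked.
    Returns None when no questions were coded, because the ratio is undefined.
    Hypothetical helper for illustration only.
    """
    counts = Counter(question_levels)
    unexpected = set(counts) - {1, 2, 3}
    if unexpected:
        raise ValueError(f"unexpected question levels: {sorted(unexpected)}")
    total = sum(counts.values())
    if total == 0:
        return None
    return counts[3] / total


# Hypothetical session: 4 Level 1, 3 Level 2, and 5 Level 3 questions.
session = [1, 1, 2, 3, 1, 3, 2, 3, 1, 3, 2, 3]
print(level3_proportion(session))  # 5 of 12 questions are Level 3
```

Using a proportion rather than a raw count keeps sessions comparable when teachers ask different total numbers of questions.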

RQ1: Did teachers’ proportion of Level 3 questions increase following the introduction of the FACT intervention?

The Level 3 questions in this study aligned with four Standards for Mathematical Practice (CCSS-M, 2010b) that prompt students to justify their reasoning about solutions, use representations to further develop their conceptual understanding of fractions, and increase their opportunities to share their written understandings with their peers. We hypothesized that as students progressed through the FACT lessons, which scaffolded argument writing-to-learn activities, the teachers’ proportional use of Level 3 questions would increase relative to the number of Level 1 and 2 questions, because Level 3 questions prompt students to reflect on these practices as they compose a written paragraph addressing their mathematical understandings and common misconceptions about fractions.

We posed two additional research questions: one, to assess the potential positive contributory effects of PBPD to teachers’ provision of Level 3 questions; and a second, to examine whether the anticipated increases in teachers’ issuance of Level 3 questions were connected to a hoped-for improvement in students’ mathematical reasoning. Specifically:

RQ2: If teachers’ proportion of Level 3 questions increases, is the improvement associated with the FACT intervention above and beyond the PBPD component that preceded the intervention?

RQ3: Is there a connection between teachers’ increased proportion of Level 3 questions asked and the quality of students’ written responses? More generally, does a relationship exist between the type of questions teachers pose during FACT instruction and the quality of students’ mathematical reasoning?

Based on the findings from Borko et al. (2015) and Polly et al. (2014), we wanted to assess the effects of the questioning-strategies component embedded in PBPD on the quality of students’ mathematical reasoning. Because the four Standards for Mathematical Practice were aligned with the FACT intervention, we hypothesized that when teachers explicitly prompted students to reflect on their problem solving and reasoning while composing, students would provide more cohesive and supportable arguments to justify their solutions.

Method

Setting and participants

After receiving institutional review board approval to conduct this study, we contacted key district personnel of a large and diverse school district located in the intermountain region of the United States, in which 51% of students are from historically underrepresented groups. Key district personnel identified one special education teacher at each of 12 schools who was licensed to teach special education and provided specialized mathematics instruction (i.e., Tier 3) to 5th and 6th graders with MLD for 45 min per day, four times per week. Eight female teachers from eight different schools consented to participate in the study. Five of these schools qualified for Title I services. On average, the teachers had taught for 13.4 years (range = 5 to 27 years). Four teachers had a master’s degree, two had a bachelor’s degree, and one had a Juris Doctor degree.

The student participants (n = 27) included 15 (56%) fifth-graders and 12 (44%) sixth-graders, 14 (52%) males, and 10 (37%) English learners (ELs). Fifteen students (56%) were Latinx, 8 (30%) were White, 2 (7%) were Black, and 2 (7%) were multiracial. The 5th and 6th grade students from each teacher’s Tier 3 class were initially administered two screening measures: (a) the mathematics subtest of the Wide Range Achievement Test, 4th Ed. (WRAT-4; Wilkinson & Robertson, 2006) and (b) a writing subtest from the Wechsler Individual Achievement Test, 3rd Ed. (WIAT-III; Wechsler, 2009), in which students wrote a short essay in response to an expository prompt. Students’ writing was scored for word count, theme development, and text organization. All students scored below the 14th percentile on the mathematics subtest (M = 6.92; range = 3.17–13.36). The students’ average score on the written expression subtest was at the 7th percentile (range = 3.17–13.67). It is important to note that one teacher was removed from the study’s formal data analysis because an unforeseen family emergency considerably delayed her participation in the initial professional learning phase of the study and her implementation of the FACT intervention (discussed below). The results and discussion are based on the remaining seven teachers and their classes.

Single-case intervention design

A single-case randomized multiple-baseline design (MBD), with random assignment of classrooms to the staggered multiple-baseline levels (“tiers”), was adopted to evaluate the effectiveness of the FACT intervention because of its high degree of scientific credibility (Kratochwill & Levin, 2010; Levin et al., 2018). A nonconcurrent version of the design was implemented (Kratochwill et al., 2022; Morin et al., 2023; Slocum et al., 2022a, 2022b) because, in school settings, multiple intermittent unplanned events (e.g., irregularities in school-schedule timing, teacher absences, weather conditions, within-school events) “conspire” to disturb the concurrency of intervention administration. Although the current What Works Clearinghouse Single-Case Standards document (WWC, 2022) gives somewhat shorter shrift to nonconcurrent MBDs than to concurrent ones, the authors just referenced suggest that nonconcurrent and concurrent MBDs stand on near-equal footing with respect to their internal-validity characteristics. As Slocum et al. (2022a) note: “In our previous article on threats to internal validity of multiple baseline design variations…we argued that nonconcurrent multiple baseline designs (NCMB) are capable of rigorously demonstrating experimental control and should be considered equivalent to concurrent multiple baselines (CMB) in terms of internal validity.”

An MBD was implemented because the skills and knowledge that teacher and student participants may have acquired through participation were unlikely to be reversed, and this single-case intervention design allowed each teacher’s class to serve as its own control (e.g., Horner & Odom, 2014; Kiuhara et al., 2017). The nonconcurrency of the design was necessitated by the commencement of each teacher’s intervention on pre-specified calendar dates. Hence, the stagger of the design reported here is represented by the number of pre- and post-intervention “sessions” rather than by actual chronological dates (Slocum et al., 2022b). The purpose of implementing randomization in the design and the associated statistical analyses was: (a) to improve, respectively, the internal and statistical-conclusion validities of the study, thereby providing a small-scale proxy for a randomized controlled trial (Kratochwill et al., 2022; Shadish et al., 2002); and (b) to allow for a formal statistical assessment of the intervention’s effectiveness based on a well-controlled Type I error probability while furnishing adequate statistical power for uncovering the intervention effects of interest (Levin et al., 2018).
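To make the logic of randomization-based inference in a multiple-baseline design concrete, the sketch below implements a simplified randomization test in the spirit of the Wampold–Worsham procedure. The staggered start sessions are fixed, the assignment of classrooms to those start points is what was randomized, and the observed statistic is compared with its distribution over every possible assignment. This is an illustration under stated assumptions, not necessarily the specific procedure of Levin et al. (2018) used in this study. The test statistic (mean of post-minus-pre phase differences across units), the function names, and the series values are hypothetical, and the sketch ignores nonconcurrency details.

```python
from itertools import permutations
from statistics import mean
from typing import List, Sequence, Tuple


def mean_phase_difference(series: Sequence[Sequence[float]], starts: Sequence[int]) -> float:
    """Average across units of (intervention-phase mean - baseline-phase mean).

    series[i] holds unit i's outcome per session; starts[i] is the index of
    unit i's first intervention session. Hypothetical statistic for illustration.
    """
    diffs = []
    for y, s in zip(series, starts):
        if not 0 < s < len(y):
            raise ValueError("each unit needs at least one baseline and one intervention session")
        diffs.append(mean(y[s:]) - mean(y[:s]))
    return mean(diffs)


def mbd_randomization_test(
    series: Sequence[Sequence[float]],
    tier_starts: Sequence[int],
    observed_assignment: Sequence[int],
) -> Tuple[float, float]:
    """One-sided randomization test for an MBD with units randomly assigned to tiers.

    tier_starts[t] is the fixed start session of tier t; observed_assignment[i]
    is the tier that unit i actually received. Under the null hypothesis, every
    permutation of units to tiers is equally likely.
    """
    if len(tier_starts) != len(series):
        raise ValueError("need exactly one tier per unit")
    observed = mean_phase_difference(series, [tier_starts[t] for t in observed_assignment])
    stats: List[float] = [
        mean_phase_difference(series, [tier_starts[t] for t in perm])
        for perm in permutations(range(len(series)))
    ]
    # Proportion of assignments whose statistic is at least as large as observed
    # (a small tolerance guards against floating-point ties).
    p_value = sum(stat >= observed - 1e-12 for stat in stats) / len(stats)
    return observed, p_value


# Hypothetical data: three units, eight sessions each, tiers starting at sessions 3, 4, 5.
series = [
    [0.1] * 3 + [0.6] * 5,
    [0.1] * 4 + [0.6] * 4,
    [0.1] * 5 + [0.6] * 3,
]
obs, p = mbd_randomization_test(series, tier_starts=[3, 4, 5], observed_assignment=[0, 1, 2])
print(obs, p)
```

With only three units there are 3! = 6 possible assignments, so the smallest attainable p-value is 1/6; with seven classrooms, as in this study, there are 7! = 5,040 assignments, which is what allows a randomized MBD to reach conventional significance levels.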

Intervention: PBPD for FACT

The PBPD protocol for FACT was designed to support special education teachers’ use of argument writing to learn fractions. The overall learning objective for PBPD for FACT was to increase teachers’ use of content questions while implementing the FACT lessons with fidelity. Teachers received 2 days of professional learning before implementing the FACT intervention. Table 1 presents the CCSS-M and CCSS-LA () standards taught across the FACT lessons. The fraction content included understanding equivalence, comparing fractions that refer to the same whole, composing and decomposing fractions, adding and subtracting fractions with like and unlike denominators, and using equivalent fractions to solve problems with unlike denominators. The writing content included writing arguments to support claims with reasons and evidence. The fraction content was taught using a sequence of multiple representations (e.g., fraction blocks, number lines, area models, and numerical and mathematical notation; Hughes et al., 2014; Witzel et al., 2008).

Table 1.

FACT scope and sequence

| CCSS mathematics and English language arts standards | Content | Lesson(s) |
|---|---|---|
| Extend understanding of fraction equivalence and ordering (4.NF.1; 4.NF.2) | Develop Background Information with Fractions. Explain equivalent fractions using visual models. Explain equivalent fractions with different denominators referring to the same whole. Compare fractions with different numerators and denominators when referring to the same whole. Introduce FACT: Introduce and model a strategy for writing opinion pieces or arguments on topics, supporting reasons and claims with evidence; content focuses on comparing the magnitude of two fractions and justifying stated claims. Discuss general academic and domain-specific words and phrases, and gather vocabulary knowledge when considering a word or phrase important to comprehension or expression when learning fractions | 1, 2–3 |
| Write opinion pieces on topics, supporting a point of view with reasons and information. Introduce content clearly and create an organizational structure in which ideas are logically supported (W.4.1, W.5.1, W.6.1; L.6.6) | Continue FACT. Support students’ use of argument writing to support their reasons and claims with evidence. Focus on using general academic and domain-specific words and phrases; gather vocabulary knowledge when considering a word or phrase important to comprehension or expression when learning fractions; and present claims and counterclaims with evidence (i.e., use of transition words and phrases) | 4 |
| Build fractions from unit fractions by applying and extending previous understandings of operations on whole numbers (4.NF.3–4). Solve real-world problems involving addition and subtraction of fractions referring to the same whole, including cases of unlike denominators (5.NF.2) | FACT to Build Fractions Knowledge. Compose fractions as joining equal-sized parts referring to the same whole. Decompose fractions as separating parts with like denominators that refer to the same whole. Understand that a mixed number (a whole number and a fraction) can also be represented as a fraction greater than one. Interpret problems using strategies and multiple representations, such as concrete manipulatives, area models, or number lines. FACT + R2C2 to Solve Word Problems. Solve real-world problems. Use benchmark fractions and number sense of fractions to estimate and assess the reasonableness of answers | 5 |

The PBPD for FACT incorporated specific questioning strategies teachers could use to engage students in constructing written arguments to support learning fractions. Level 1 questions were yes/no questions that focused on mathematics content; Level 2 questions elicited a one-word response; and Level 3 questions elicited open-ended responses that encouraged students to communicate their conceptual and procedural knowledge of fractions. Table 2 presents the Level 3 questions teachers could pose during instruction. These questions centered around four mathematical practices: (a) make sense of problems and persevere in solving them, (b) construct viable arguments and critique the reasoning of others, (c) use appropriate tools strategically, and (d) attend to precision (CCSS-M, 2010b; NCTM, 2014). Content questions focusing on these practices are assumed to allow teachers to engage students in learning mathematics content standards while developing their strategic competence and adaptive reasoning. For example, when students are asked to compare two fractions for magnitude, such as and , a teacher might ask, “How did you decide which fraction was greater?” to engage students in explaining their problem-solving approach. Ideally, students’ responses would demonstrate their mathematical reasoning by identifying the strategy they used to solve the problem or how they made sense of it. By contrast, if the teacher asked a lower-level question (e.g., “What is the answer to the problem?”), the students’ responses might only indicate whether the answer was correct, giving no indication of their mathematical reasoning, conceptual understanding, or processes used to solve the problem. When teachers ask content questions, students can better connect concepts to operations and reason through problem-solving processes (NCTM, 2014).

Table 2.

Level 3 questions: focused content questions

| Standard of mathematical practice | Definition | Examples |
|---|---|---|
| Make sense of problems and persevere when solving them | Questions prompt students to plan a solution to a problem, explain connections between strategies, use reasoning to find a solution, and check their reasoning using another approach | What information is given in the problem? How would you describe the problem in your own words? How might you use one of your previous problems to help you? How might you organize, represent, or show your thinking or problem-solving? Describe what you tried and what you might change. |
| Construct arguments and critique the reasoning of peers | Questions encourage students to use assumptions, definitions, and previous understandings to explain and justify their mathematical reasoning, and to listen to others’ reasoning and use questions to clarify or build on it | How did you decide what you needed to use? How would you prove your answer? Is there another way to solve this problem? Why or why not? What mathematical evidence did you use? |
| Use appropriate tools strategically | Questions encourage students to choose tools or strategies that are relevant and useful for solving the problem. Tools can be drawings, technology, manipulatives, estimation, or algorithms | What strategies could we use to visualize and represent the problem? What information do you already have? What approach are you considering trying first or next? Why was the tool you chose helpful? What other tools can you use to solve this problem? |
| Attend to precision | Questions prompt students to communicate precisely by giving careful explanations, using precise mathematics vocabulary, describing relationships clearly, and calculating accurately and efficiently | What symbols are important to solve this problem? What would be a more efficient or precise strategy? What mathematical language, definitions, or vocabulary can you use to explain your answer? Explain to peers how you solved the problem using precise vocabulary. |

Adapted from National Council of Teachers of Mathematics (2014). Principles to actions: Ensuring mathematical success for all

PBPD for FACT: day one

The purpose of Day 1 was to (a) establish the importance of using argument writing to understand the equivalence and magnitude of fractions, (b) learn the purpose and steps of the FACT strategy, (c) model and role-play teaching the intervention lessons, and (d) give teachers a homework assignment in which they prepared a lesson demonstrating how they would solve a fraction problem by modeling the steps of FACT.

At the beginning of Day 1, the trainers welcomed the teachers, facilitated introductions, and discussed the professional learning agenda (the trainers used a detailed agenda as a checklist of the essential components of the training sessions and checked off each component as it was addressed on Days 1 and 2). To ensure the teachers’ collective participation, the teachers were invited to share their current practices and experiences in teaching fractions to their students, the curriculum and materials they used, and the degree to which students were successfully learning the core standards for fractions and argument writing (CCSS-LA, 2010a; CCSS-M, 2010b). The trainers and teachers also discussed common misconceptions that must be addressed during instruction and students’ common errors when solving fraction problems.

The trainers presented an overview of the evidence for developing students’ understanding of fractions and how writing can support their learning, the learning characteristics of students with MLD, the challenges students with MLD face when learning mathematics, and the importance of providing students with learning strategies to help overcome those challenges (Geary, 2004). Table 2 presents examples of the content questions, centered around the four mathematical practices, that were used during the training. The trainers and teachers reviewed the example questions and discussed how the questions could encourage students to engage in learning and doing mathematics (Ball & Forzani, 2009; NCTM, 2014). The trainers explained that focused questions during instruction are designed to encourage students to deepen their understanding of mathematics concepts and to allow them to use writing to grapple with or make sense of the problem at hand.

First, the trainers introduced the SRSD framework and its six stages. To provide a model of teacher implementation of SRSD, the teachers watched a commercially produced video that explained and showed examples of the steps in the SRSD instructional framework (Harris & Graham, 2012). Second, the trainers presented the importance of teaching fractions using multiple representations (Hallett et al., 2010; Namkung & Fuchs, 2016; Siegler et al., 2012). The approach adopted in the intervention was the Concrete-Representational-Abstract (CRA) framework, in which students first represented the mathematics concept or procedure with concrete manipulatives (e.g., fraction blocks and paper folding), then progressed to two-dimensional representations (e.g., area models and number lines), and finally represented the concept or procedure abstractly (e.g., using mathematical notation to find the least common multiple to solve + ) (Witzel, 2005; Witzel et al., 2008). Finally, the trainers introduced FACT and discussed the research supporting the use of argument writing to learn fractions (Bangert-Drowns et al., 2004; Graham et al., 2020).

The trainers and the teachers reviewed Lessons 1 and 2 of the intervention. For each lesson, the trainers discussed the fraction or writing content and had the teachers take turns teaching the writing and fraction activities and asking Level 3 questions. The teachers were given an advance organizer that included the lesson content, activities, lesson-specific vocabulary, instructional targets, common student misconceptions about lesson topics, and examples of Level 3 questions that would encourage mathematical thinking.

PBPD for FACT: day two

Day 2 provided opportunities for teachers to model the activities included in the remaining FACT lessons and to give and receive feedback from the trainers and each other. At the beginning of Day 2, the teachers modeled their prepared lessons, incorporating Level 3 questions into their demonstrations. After modeling the steps for writing the paragraph, they reflected on what worked in their demonstration and on areas where they needed more support. The teachers practiced with each other until they felt confident modeling the FACT strategies independently. If a teacher did not feel confident with the process, the trainers and teachers collaboratively repeated the modeling of the steps.

Teacher measures

Question types

An observational protocol was developed for this study to record the number of content questions teachers asked during each observed instructional session. Teachers were observed approximately every third instructional day. The observational protocol consisted of two sections. Section I included general information about the mathematical content the teacher covered during the observation period and space for the observer to take field notes on the classroom setting. For example, if the teacher was in the baseline phase, the observer noted the topic of the mathematics lesson (e.g., subtraction with regrouping, the area of a square, or identifying the variable in an expression). If the teacher was in the intervention phase, the observer recorded the FACT lesson number and the fraction content taught during the observation period (e.g., fraction comparison, addition and subtraction of fractions, or fraction magnitude). The observer also noted any event that may have disrupted instruction, such as a scheduling change due to a snow day, an emergency fire drill, a field trip, or the teacher’s challenges with classroom management and noncompliant student behaviors.

In Section II, the observer recorded each question posed by the teacher by making a tick mark under one of three levels: Level 1 consisted of yes/no questions (e.g., “Is ¼ the correct answer?”); Level 2 consisted of questions that required a single-word response related to the fraction content (e.g., “What is the top number of a fraction called?”); and Level 3 consisted of open-ended questions, including questions centered around the four mathematical practices, that asked students to explain or describe their thinking with more than a one-word response. The Level 3 content questions addressed the four mathematical practices as follows: (a) sense-making and perseverance questions prompted students to plan a solution to a math problem (e.g., How might you represent your problem-solving process?); (b) argument and critique questions encouraged students to explain and justify their mathematical reasoning (e.g., What mathematical evidence did you use to solve this problem?); (c) use-of-appropriate-tools questions asked students to articulate the tools or strategies they could use when solving a problem (e.g., What strategies or tools would be the best choice in solving a fraction comparison problem?); and (d) attending-to-precision questions asked students to use mathematics vocabulary or recalculate the problem accurately (e.g., How would you explain your answer to your peer?).
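
The Section II tally lends itself to a simple per-session summary. The sketch below is ours, not part of the study's protocol: it stores the three tick-mark counts for one observed session and computes the proportion of Level 3 questions, assuming (as the Results imply but do not state outright) that the denominator is the total number of Level 1, 2, and 3 questions asked. `SessionTally` and its methods are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SessionTally:
    """Tick-mark counts for one observed session (hypothetical structure)."""
    level1: int = 0  # yes/no content questions
    level2: int = 0  # one-word-response content questions
    level3: int = 0  # open-ended, practice-centered content questions

    def record(self, level: int) -> None:
        """Add one tick mark for a question at the given level (1, 2, or 3)."""
        if level == 1:
            self.level1 += 1
        elif level == 2:
            self.level2 += 1
        elif level == 3:
            self.level3 += 1
        else:
            raise ValueError(f"unknown question level: {level}")

    @property
    def total(self) -> int:
        return self.level1 + self.level2 + self.level3

    def proportion_level3(self) -> Optional[float]:
        """Share of all tallied questions that were Level 3; None if no questions were asked."""
        if self.total == 0:
            return None
        return self.level3 / self.total


# Usage: a session with one Level 1, one Level 2, and two Level 3 questions.
tally = SessionTally()
for level in (1, 2, 3, 3):
    tally.record(level)
print(tally.total, tally.proportion_level3())  # 4 0.5
```

Returning `None` for a session with no questions keeps such sessions from being silently counted as 0% Level 3.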

Each observed session was audio recorded. An independent rater listened to a randomly selected 20% of the recordings across the baseline and intervention phases (What Works Clearinghouse, 2020) and used the observational protocol to code the level of each question asked. Interrater reliability for frequency agreement was calculated by dividing the lower of the two raters’ frequency counts by the higher count and multiplying by 100 (O’Neill et al., 2011). Interobserver agreement averaged 82% (range = 71% to 95%).
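
As a minimal sketch of the frequency-agreement index described above, the function below divides the smaller count by the larger and multiplies by 100. How the counts were paired (per session, or per session and question level) and how a 0-versus-0 session was handled are not stated, so treating identical counts, including 0 and 0, as 100% agreement is our assumption. The function names are illustrative.

```python
from statistics import mean


def frequency_agreement(count_a: int, count_b: int) -> float:
    """Percentage agreement for two raters' frequency counts: lower / higher x 100."""
    if count_a < 0 or count_b < 0:
        raise ValueError("counts must be non-negative")
    if count_a == count_b:
        # Identical counts agree perfectly; this also covers 0 vs. 0,
        # which we treat as 100% by assumption (the ratio is undefined there).
        return 100.0
    low, high = sorted((count_a, count_b))
    return 100.0 * low / high


def mean_agreement(pairs: list[tuple[int, int]]) -> float:
    """Average agreement over double-coded sessions (or session-by-level cells)."""
    return mean(frequency_agreement(a, b) for a, b in pairs)


# Hypothetical counts, not study data: the raters tallied 10 vs. 12 and 5 vs. 5 questions.
print(round(mean_agreement([(10, 12), (5, 5)]), 1))  # 91.7
```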

Mathematical knowledge for teachers

The Mathematical Knowledge for Teachers (MKT): Numbers and Operations (Hill et al., 2004) is a standardized assessment used to evaluate teachers’ mathematical content knowledge (parallel-form reliability = 0.85). It consists of 24 one-point questions aligned with the core domain of Numbers and Operations, which includes fractions. The MKT was individually administered before each teacher participated in PBPD and after the teacher completed the last lesson of the FACT intervention. To ensure the reliability of each teacher’s total score (O’Neill et al., 2011), two independent scorers who were blinded to the purpose and conditions of the study scored 100% of the pre-baseline and post-intervention assessments. The scorers entered their scores into a spreadsheet and matched the data for agreement at each level. Interrater agreement was 100% on the first pass.

Student measures

Students were administered 20 equivalent untimed writing probes each week, in which they were prompted to construct an argumentative paragraph to justify their solution to a fraction problem. At the end of the study, students’ papers were scored by two independent scorers who had no knowledge of the purpose of the study. The third author placed the papers in random order so that the scorers were blind to the chronological sequence of the probes. Each probe was scored for writing quality, argumentative elements, and total words.

Quality of mathematical reasoning

Students’ papers were scored holistically on a scale from 0 to 12, with higher scores representing better reasoning quality and computational accuracy (e.g., 0 = no response or no understanding of the problem shown, such as “I don’t know”; 6 = the fraction problem was solved correctly, but the student showed gaps in reasoning to support the answer or did not provide supporting evidence in a counterclaim; 12 = an accurate and focused justification of the student’s solution with a fully supported position and counterclaim presented cohesively with vital transition phrases). Two independent scorers scored 100% of the probes. Disagreements of more than 3 points between the two scorers were resolved by discussion. Interrater reliability was 90%.

Argumentative elements

Each probe was scored for the following six argumentative elements (0 to 36 points): (a) included a statement that represented the fraction problem to be solved (e.g., My task was to compare the fractions and to find out which fraction was greater.); (b) stated a claim or answer to the mathematics problem (e.g., four-sixths is greater than five-eighths); (c) provided reasons and elaborations to support the claim (e.g., I used a number line to figure out the answer, and is closer to one whole.); (d) provided a counterclaim or an incorrect solution to the problem (e.g., others may argue that is greater.); (e) provided reasons and elaborations to support the counterclaim (e.g., my peer might think is greater because 4 is greater than 3); and (f) provided a concluding statement (e.g., however, the number line shows that is greater than because it is closer to 1). Two independent scorers scored 100% of the probes. Disagreements of more than 3 points between the two scorers were resolved by discussion. Interrater reliability was 96%.

Total words

Students’ writing was scored for total words written. The third author typed the students’ writing probes verbatim into a word-processing program to eliminate scorer bias due to handwriting, spelling, and grammar errors (Kiuhara et al., 2012). A second scorer checked the typed probes for accuracy and resolved any differences with the first scorer. The word-processing program calculated the total number of words written.

Fraction operations measure

The easyCBM Math Number and Operations assessment (Tindal & Alonzo, 2012) was modified to include 27 fraction items (0 to 27 points possible). The test items were multiple-choice questions focused on magnitude, equivalence, comparing two fractions, and adding and subtracting fractions with like and unlike denominators. Two equivalent forms of the test were counterbalanced and administered to students before and at the end of the intervention. The tests were placed in random order so that the two independent scorers, who were blind to the purpose of the study, were also blind to the pre- and post-intervention conditions. The scorers scored 100% of the assessments, and interrater reliability was 100%.

FACT treatment fidelity

On average, the teachers completed the intervention in 28 instructional days (range = 16–42 days). During the intervention phase, each teacher was observed and audio recorded approximately every third instructional session to assess implementation of the specific instructional components aligned with each FACT lesson. An independent rater listened to the audio recording of each observed lesson; across all teachers, the average percentage of instructional components on which the two raters agreed was 92% (range = 83% to 97%). Based on findings from our earlier MBD study (Hacker et al., 2019), we determined that teachers required further coaching and support if their treatment fidelity during an observed session did not reach a 90% criterion.
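
The fidelity check can be sketched as the percentage of a lesson's planned components observed as implemented, compared against a coaching criterion. The checklist structure and component names below are hypothetical, and the 90% default follows this section; the criterion is left as a parameter.

```python
def fidelity_percent(checklist: dict[str, bool]) -> float:
    """Percentage of a lesson's planned instructional components observed as implemented."""
    if not checklist:
        raise ValueError("checklist must contain at least one component")
    return 100.0 * sum(checklist.values()) / len(checklist)


def needs_coaching(percent: float, criterion: float = 90.0) -> bool:
    """Flag a session for follow-up coaching when fidelity falls below the criterion."""
    return percent < criterion


# Hypothetical lesson checklist; component names are illustrative, not the FACT protocol.
observed = {
    "reviewed previous lesson": True,
    "modeled FACT steps aloud": True,
    "used number line": False,
    "asked Level 3 questions": True,
}
pct = fidelity_percent(observed)
print(pct, needs_coaching(pct))  # 75.0 True
```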

Approach to analyses

As was already noted, a randomized single-case MBD and associated randomization tests (Levin et al., 2018) were adopted for the present study. Following those procedures, eight participating teachers were randomly assigned to the design’s staggered tiers, with a planned stagger of two outcome observations between tiers. Subsequently, one teacher was excluded because excessive absences created gaps in her scheduled implementation of the intervention. Moreover, because of intervention-scheduling constraints for the seven remaining teachers, the study proceeded with an actual between-tier stagger of only one weekly outcome assessment (equivalent to about four instructional lessons, depending on the teacher’s pace) rather than the planned two (about eight instructional lessons). The resulting weaker differentiation among the staggered tiers reduces the typically high scientific credibility of a randomized MBD (Levin et al., 2018, Figs. 2 and 3, pp. 298–300). All randomization tests were based on the average outcome performance of each teacher or each teacher’s class (with class sizes ranging from 2 to 7 students), and those tests were directional (viz., positing that the mean of the intervention phase would exceed the mean of the baseline phase), with a Type I error probability of 0.05. In addition, because previous related research (e.g., Hacker et al., 2019) suggested that intervention effects would not emerge immediately, a two-observation delay was built into the randomization-test analyses of all measures of mean between-phase change, based on the “data-shifting” procedure of Levin et al. (2017, p. 24) as operationalized in Gafurov and Levin’s (2023) freely available ExPRT single-case randomization-test package.
Each randomization test was accompanied by two effect-size measures: Busk and Serlin’s (1992) “no-assumptions” average ds and Parker et al.’s (2014) average NAPs. After rescaling to range from .00 to 1.00, the NAPs represent the average proportion of nonoverlapping observations between the seven teachers’ baseline and intervention phases.
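
The two effect sizes can be illustrated as follows. This sketch assumes the common definitions: Busk and Serlin's "no-assumptions" d as the difference between phase means divided by the baseline standard deviation, and NAP as the proportion of all baseline-intervention pairs in which the intervention observation is higher (ties counted as one half). The rescaling 2 × NAP − 1 is our assumption about how NAPs were mapped onto the .00 to 1.00 range; it goes negative when intervention values tend to fall below baseline. Function names are illustrative, and this is not the ExPRT code.

```python
from statistics import mean, stdev


def busk_serlin_d(a: list[float], b: list[float]) -> float:
    """'No-assumptions' d: B-phase mean minus A-phase mean, divided by the A-phase SD.

    Needs at least two baseline points; raises ZeroDivisionError if the baseline is flat.
    """
    return (mean(b) - mean(a)) / stdev(a)


def nap(a: list[float], b: list[float]) -> float:
    """Nonoverlap of All Pairs: share of (A, B) pairs in which B exceeds A; ties count one half."""
    score = sum(1.0 if y > x else 0.5 if y == x else 0.0 for x in a for y in b)
    return score / (len(a) * len(b))


def rescaled_nap(a: list[float], b: list[float]) -> float:
    """Assumed linear rescaling: chance-level overlap (NAP = .50) maps to 0, complete nonoverlap to 1."""
    return 2.0 * nap(a, b) - 1.0


def average_effects(cases: list[tuple[list[float], list[float]]]) -> tuple[float, float]:
    """Average d and average rescaled NAP across teachers; each case is (A series, B series)."""
    return (mean(busk_serlin_d(a, b) for a, b in cases),
            mean(rescaled_nap(a, b) for a, b in cases))


# Hypothetical series, not study data.
d, rnap = average_effects([([1, 2, 3], [3, 4, 5])])
print(round(d, 2), round(rnap, 3))  # 2.0 0.889
```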

Because of scheduling necessities, the PBPD sessions had to be administered to a pair of teachers at the same time. Consequently, a “pure” staggered between-tier MBD and associated randomization-test analysis (Wampold & Worsham, 1986) could not be applied to certain measures, in particular those for the study’s first two research questions, where participants’ intervention start points overlapped between tiers. For those two questions, a close proxy, namely a replicated AB design with associated randomization tests, was used instead (Levin et al., 2018; Marascuilo & Busk, 1988).
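
For readers unfamiliar with replicated AB randomization tests, the following is a simplified sketch in the spirit of Marascuilo and Busk (1988), not the ExPRT implementation. It assumes each teacher's intervention start point was randomly drawn from a known set of permissible start points, uses the across-teacher average of B-minus-A phase means as the directional test statistic, and enumerates every combination of start points to build the reference distribution. The two-observation delay is modeled by shifting the first `delay` intervention observations into the baseline (A*) phase, which is our reading of the data-shifting idea; all names are hypothetical.

```python
from itertools import product
from statistics import mean

# (series, actual start index, permissible start indices) for one teacher
Case = tuple[list[float], int, list[int]]


def phase_difference(series: list[float], start: int, delay: int = 0) -> float:
    """Mean of B* minus mean of A*, where the first `delay` B-phase points are shifted into A*."""
    boundary = start + delay
    if not 0 < boundary < len(series):
        raise ValueError("each phase needs at least one observation")
    return mean(series[boundary:]) - mean(series[:boundary])


def replicated_ab_test(cases: list[Case], delay: int = 0) -> tuple[float, float]:
    """Directional randomization test of the across-case average B*-minus-A* difference.

    The reference distribution enumerates every combination of permissible
    start points (one per case); p is the share of combinations whose statistic
    is at least as large as the observed one.
    """
    observed = mean(phase_difference(s, start, delay) for s, start, _ in cases)
    reference = [
        mean(phase_difference(s, start, delay) for (s, _, _), start in zip(cases, combo))
        for combo in product(*(starts for _, _, starts in cases))
    ]
    # Small tolerance so floating-point noise does not drop exact ties.
    p = sum(stat >= observed - 1e-12 for stat in reference) / len(reference)
    return observed, p


# Hypothetical data: three cases that shift from 0.1 to 0.5 at observation 4,
# with start points 3, 4, or 5 equally permissible for each case.
series = [0.1] * 4 + [0.5] * 4
cases = [(series, 4, [3, 4, 5]) for _ in range(3)]
obs, p = replicated_ab_test(cases)
print(round(obs, 2), round(p, 4))  # 0.4 0.037
```

With seven teachers and, say, three permissible start points each, full enumeration involves 3^7 = 2,187 combinations, small enough to compute exactly rather than by Monte Carlo sampling.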

Results

We now present, in turn, the three primary research questions stated previously and the evidence bearing on them.

Research question 1: Did teachers’ proportion of level 3 questions increase following the introduction of the FACT intervention?

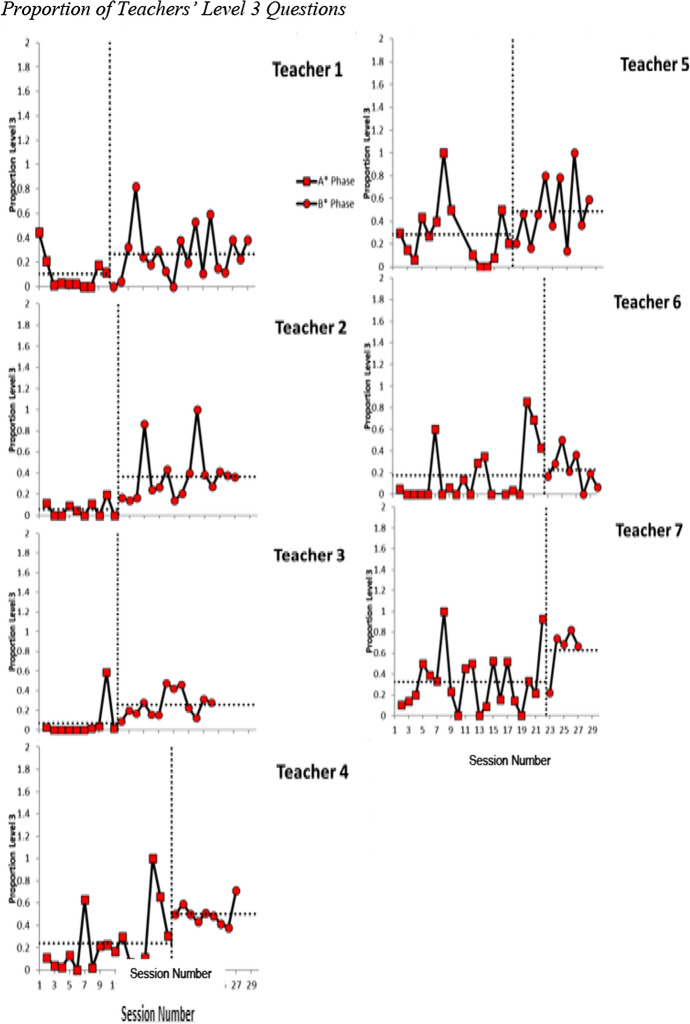

To address this single-case question, for each of the seven teachers, the A-phase series consisted of the proportion of the teacher’s Level 3 questions in the baseline series plus those in the PBPD phase, and the B-phase series consisted of the proportion of the teacher’s Level 3 questions during the FACT intervention phase. The results are summarized in the seven graphs in Fig. 1, with the two-observation delayed phases labeled Phase A* and Phase B* and the horizontal dotted line in each phase representing that phase’s mean. The across-teachers average total number of questions asked ranged from 2.00 in the first session to 31.00 after the seven teachers had completed the FACT intervention. The across-teachers average proportion of Level 3 questions increased from 0.18 during the A phase to 0.39 during the B phase (range = 0.06 to 0.63), with all seven teachers exhibiting an A-phase to B-phase mean increase in their proportion of higher-level questions. A randomization test of that increase was statistically significant, p = 0.008, average d = 1.38, average rescaled NAP = 0.62. Thus, the proportion of Level 3 questions asked by the teachers increased in concert with the staggered introduction of the FACT intervention. Note, however, that although Fig. 1 suggests that certain teachers (e.g., Teachers 6 and 7) did not produce a clear, unambiguous improvement from Phase A* to Phase B*, the randomization test assesses the across-teachers average between-phase change in level and is not sensitive to individual teachers’ data patterns. Such individual inconsistencies would be pinpointed in a complementary traditional visual analysis (e.g., Barton et al., 2018).

Fig. 1.

Proportion of teachers’ level 3 questions

Research question 2: Was the just-noted increase associated primarily with the FACT intervention per se or with the initial PBPD training component?

The answer to this question derives from two separate analyses. In the first analysis, to assess the effect of PBPD on the proportion of Level 3 questions that teachers asked, the A-phase series consisted of the initial baseline series of outcome observations, and the B-phase series consisted of only the professional development outcome observations. The across-teachers average proportion of Level 3 questions asked was 0.15 during the A phase and 0.10 during the B phase, and the randomization-test p-value was greater than 0.50. Thus, there is no evidence that PBPD training increased the proportion of Level 3 questions teachers asked.

In the second analysis, to assess the effect of the FACT intervention above and beyond what might have been acquired through the professional development training, the A-phase series consisted of only the professional development series of teachers’ proportions of Level 3 questions asked, and the B-phase series consisted of the FACT intervention series. In contrast to the preceding analysis, there was a moderate FACT intervention effect that paralleled the finding for Research Question 1. Again, the across-teachers average proportion of Level 3 questions increased, from 0.21 during the A phase to 0.39 during the B phase (range = 0.06 to 0.63). The randomization test of that increase was statistically significant, p = 0.016, average d = 1.37, average rescaled NAP = 0.55.

Taken together with the results for Research Question 1, these two analyses indicate that FACT instruction following PBPD training elicited a greater proportion of higher-level teacher questions than PBPD training alone.

Research question 3: Is there a connection between teachers’ increased proportion of level 3 questions asked and the quality of students’ written responses?

We have now seen that FACT instruction likely had a positive effect on teachers’ production of Level 3 questions. In a previous report of the student data from this study (Kiuhara et al., in press), we found large positive effects of FACT instruction on the quality of students’ written responses, in particular on the mathematical reasoning and argumentative elements measures (average ds = 6.86 and 6.14, average rescaled NAPs = 0.74 and 0.78, respectively). To determine whether the proportion of teachers’ Level 3 questions and the quality of student writing were connected, we correlated the two measures within each classroom over the course of the study. For teacher questions and students’ production of argumentative elements, the across-classrooms average r (based on a Fisher z transformation) was a modest 0.37; for teacher questions and students’ mathematical reasoning, the across-classrooms average r was only 0.23. The former was statistically significant according to a one-sample randomization test of the seven individual classroom rs (p < 0.05), whereas the latter was not (p = 0.09). Thus, as the study progressed, both teachers’ production of higher-order questions and the quality of students’ mathematical writing increased; however, the two measures showed only a small degree of coincident improvement.
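
The averaging and testing of classroom correlations can be sketched as below. Averaging on the Fisher z scale and back-transforming to r follows the text; the sign-flipping form of the one-sample randomization test is our assumption, since the exact procedure is not spelled out here. With seven classrooms there are 2^7 = 128 sign patterns, so the smallest attainable one-sided p is 1/128 ≈ .008. Each r must lie strictly between −1 and 1 for `math.atanh` to be defined; function names are illustrative.

```python
import math
from itertools import product


def fisher_average_r(rs: list[float]) -> float:
    """Average correlations on the Fisher z scale, then back-transform to r."""
    zs = [math.atanh(r) for r in rs]
    return math.tanh(sum(zs) / len(zs))


def sign_flip_p(rs: list[float]) -> float:
    """One-sided one-sample randomization test that the classroom rs are centered above 0.

    Under the null hypothesis each Fisher z is equally likely to be positive or
    negative, so the reference distribution flips the sign of every z in all 2**k ways.
    """
    zs = [math.atanh(r) for r in rs]
    observed = sum(zs)
    flips = list(product((1, -1), repeat=len(zs)))
    hits = sum(sum(s * z for s, z in zip(signs, zs)) >= observed - 1e-12 for signs in flips)
    return hits / len(flips)


# Hypothetical classroom correlations, not study data.
print(sign_flip_p([0.3] * 7))  # 0.0078125
```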

Additional findings

Three additional findings are briefly noted, two of which were reported previously (Kiuhara et al., in press). First, as in prior FACT studies, students exhibited a statistically significant gain from pretest to posttest in the total number of words written, from an average of 2.40 to 5.22 words, p = 0.01. Second, students showed a statistically significant 3-item increase on the fraction operations measure between the beginning and end of the study, p < 0.001. When interpreting these two-measurement pre-post findings, one cannot conclude that any gains resulted from FACT instruction alone, because the students with MLD may have received additional mathematics instruction during both the A and B phases of the study. Finally, on the standardized teacher mathematical knowledge test, teachers’ scores improved only slightly, and nonsignificantly, from pretest to posttest, from an average of 16.3 to 17.9 items correct, p = 0.11. Six of the original eight teachers showed an improvement in their fraction knowledge.

Discussion

Our purpose was to determine whether special education teachers increased the proportion of Level 3 questions that engaged students in developing their mathematical reasoning, and the degree to which PBPD affected the proportion of Level 3 questions posed. We also examined whether a connection existed between the teachers’ increased proportion of Level 3 questions and students’ writing quality. We extended the PBPD protocol for SRSD from our previous work (Hacker et al., 2019; Kiuhara et al., 2020, in press). To our knowledge, this is the first examination of the effects of questioning types introduced in PBPD for SRSD and the first to document the role of PBPD in students’ writing-to-learn mathematics outcomes. A writing-to-learn mathematics approach presents several challenges for successful teacher implementation. In the case of FACT instruction, professional training for special education teachers must further develop their knowledge and skills to provide instruction in both writing and mathematics in order to improve the writing and mathematics outcomes of students with MLD.

The generally positive effects of FACT instruction combined with PBPD on teachers’ use of Level 3 questions were similar to findings from previous studies that examined content-questioning strategies (Borko et al., 2015; Polly et al., 2014) and support the importance of embedding content-focused pedagogical questioning in professional development training (Borko et al., 2015; NMAP, 2008; Polly et al., 2014). Although Polly et al. (2014) did not find teachers asking more Level 3 questions during classroom instruction, the Level 3 questions used in the current study were aligned with four mathematical practice standards relevant to our writing-to-learn fraction intervention and therefore differ from the Level 3 questions in Polly et al. (2014).

The increase in teachers’ proportion of Level 3 questions following the introduction of FACT may support the view that embedding questions centered on mathematical practices can positively change teachers’ classroom practices (Ball & Forzani, 2009; NMAP, 2008). The proportional increase may also reflect the sequencing of the FACT lessons (i.e., argument writing is not introduced until Lessons 2 and 3 and continues throughout the remaining lessons), so teachers would not have had opportunities to ask Level 3 questions during the early part of the intervention. In addition, SRSD is taught to criterion, which means teachers can reteach lessons until students master the content before advancing to the next lesson. On average, teachers completed the FACT lessons in 28 days; Teacher 7 took the fewest days (16), whereas Teacher 1 took the most (42). Four of the seven students in Teacher 1’s class were classified as English learners (ELs) with MLD, suggesting that some students with MLD who were also ELs needed additional time to master the FACT strategies.

Second, although the single-case design used in this study allowed us to examine whether the PBPD training affected the proportional increase in Level 3 questions posed, our findings regarding the potential benefits of PBPD were inconclusive. Based on our present analyses, the increase in Level 3 questions was not directly attributable to the initial two days of PBPD training that took place before the implementation of FACT. A tenet of PBPD is that teachers receive expert support in the classroom as they implement new approaches (Ball & Cohen, 1999; Harris & McKeown et al., 2016, 2018), which may partly explain the proportional increase in Level 3 questions. Teachers have an opportunity to ask questions about the curriculum and receive feedback on their instruction. In addition, trainers may model an activity for the teacher, or the teacher may discuss with the trainer how to further accommodate the math and writing activities for students. Indeed, extending support to teachers during classroom instruction has been shown to be an essential component of PBPD (Harris & McKeown, 2022; McKeown et al., 2016, 2019). Most of the current study’s teachers implemented the FACT lessons with high fidelity; only one teacher did not meet the 85% criterion on more than one observed lesson. The third author met with this teacher regularly to review the omitted fidelity components and why they were essential, and modeled using the number line for the fraction activities. Providing teachers with pedagogical and content support in the classroom after professional learning adheres to the principles of PBPD for SRSD (Harris & McKeown, 2022; McKeown et al., 2019). Thus: (1) FACT instruction combined with PBPD was effective in increasing teachers’ proportional issuance of Level 3 questions; and (2) the initial two-day PBPD training alone was not similarly effective.

Providing teachers with PBPD with extensive support and feedback during classroom instruction is an essential combination for changing classroom practice (Ball & Cohen, 1999; Darling-Hammond et al., 2017; Harris et al., 2012; McKeown et al., 2016). When professional learning continues in the classroom, teachers become engaged in their own learning, are allowed time to receive extensive feedback and support, and can reflect on their practice (Darling-Hammond et al., 2017; Graham & Harris, 2018; Harris et al., 2012; McKeown et al., 2016). Thus, conceptualizing PBPD with FACT, rather than PBPD for FACT as we did in this study, may better describe the professional learning approach for implementing multiple curricula components, such as FACT. Investigating the effects of FACT instruction per se (i.e., in the absence of ongoing accompanying PBPD support) is an academic question left open for future investigation, but one that likely does not require attention from a classroom-application perspective.

Finally, the data showed only modest relationships (average across-classroom rs of 0.37 and 0.23) between the proportion of Level 3 questions teachers posed and the quality of students’ writing. We can surmise that the seven teachers who participated in PBPD for FACT in this study were better prepared to facilitate students’ understanding of the mathematical concepts taught; however, the modest size of these relationships suggests that additional steps and strategies may be needed when providing teachers with explicit content-focused questions during PBPD implementation of a writing-to-learn mathematics intervention using SRSD, such as FACT.

Limitations and future directions

Several limitations must be considered when interpreting the results of this study. First, even though we suggest that the teacher and student gains reported here and in Kiuhara et al. (in press) might be attributable, at least to some extent, to the PBPD for FACT intervention, we cannot rule out the plausible alternative interpretation that such gains were merely the product of teacher and student “growth” and improvement over time. Although our between-tiers staggered single-case design partially counters that interpretation, the study as conducted was far from perfect. Because of logistical constraints and personal difficulties experienced by teachers, the planned amount of stagger (namely, two sessions between successive tiers) was not achieved. As a result, strong FACT intervention-based effects cannot be claimed here; establishing them awaits subsequent research with tighter control and more successful intervention implementation.
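Whether the planned stagger was achieved can be checked mechanically from the session at which each tier began the intervention. This is a minimal sketch, assuming start points are recorded as session numbers ordered by tier; the helper name and the start sessions are hypothetical, and the check is an illustration, not the authors’ procedure.

```python
PLANNED_STAGGER = 2  # sessions between successive tiers' intervention starts, per the text


def stagger_shortfalls(
    start_sessions: list[int], planned: int = PLANNED_STAGGER
) -> list[tuple[int, int]]:
    """Return (tier_number, observed_gap) for each tier that started too soon after the previous one.

    start_sessions[i] is the session at which tier i + 1 began the intervention.
    """
    gaps = [later - earlier for earlier, later in zip(start_sessions, start_sessions[1:])]
    # gaps[i] is the gap between tier i + 1 and tier i + 2, so report tier i + 2.
    return [(i + 2, gap) for i, gap in enumerate(gaps) if gap < planned]


# Hypothetical start sessions for five tiers (not the study's actual schedule).
starts = [4, 6, 7, 9, 9]
print(stagger_shortfalls(starts))
```

A gap of zero means two tiers began the intervention in the same session, which removes the staggered comparison between them that the design relies on to rule out change over time alone.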

Second, the results observed with the teachers in this study might not be similarly obtained with other teachers in different contexts, particularly because the PBPD training was delivered to teachers in pairs. The present trainers were also experienced mathematics teachers with a personal interest in providing mathematics learning to special education teachers, which may have influenced the participating teachers. Although the trainers followed a checklist of essential components for each day of PBPD, training fidelity was not evaluated by an independent observer for interobserver reliability. Moreover, student outcomes may differ in other studies because students with MLD have individual learning strengths and challenges and should not be regarded as a homogeneous group.

Regarding future inquiry, the CCSS-M (2010b) lists eight mathematical practices, including the four used in this study. Providing teachers with systematic learning for embedding additional questioning strategies, specifically for using argument writing to learn mathematics, holds promise for developing students’ competence in justifying their solutions and understanding mathematical concepts and misconceptions. Second, the PBPD package requires refinement and further study so that its classroom support systematically embeds opportunities for teachers to practice posing open-ended questions aligned with the specific writing and fraction activities of the FACT lessons, and to ask questions or seek clarification during instruction. This may lead to more stable and increasing trends during teachers’ implementation of FACT.

Third, although most teachers who participated in the study improved their fraction teaching knowledge, we did not measure their knowledge for teaching argument writing. Our findings also suggest that identifying teachers’ current levels of content knowledge prior to PBPD may better inform trainers on how to structure the learning activities. The student participants in this study represent a diverse community of learners: most students received free or reduced-price lunch, 29% were English learners, and although none were classified with an emotional disturbance (ED), teachers reported that several students received behavioral intervention support. Future work should consider adding PBPD and intervention components, such as incorporating technology, focusing more on students’ language development, and providing teachers with classroom management strategies. Future research is also needed to understand how Level 3 questions can be used to increase learning opportunities for students with MLD who are English learners.

Conclusion

Investigating how teachers can effectively implement writing-to-learn mathematics in their classrooms requires reconceptualizing writing as more than a transcription or summarizing skill, especially for struggling learners whose mathematics achievement has likely declined as a result of gaps in formal schooling stemming from the COVID-19 pandemic (NAEP, 2022). The findings we report here point to a research-to-practice gap that calls for further intervention research, especially in the areas of professional learning and supports for both teachers and students that incorporate evidence-based writing-to-learn practices. Our FACT mathematics intervention is one such practice that warrants additional research attention.

Footnotes

The authors thank the following people for their support: the teachers and students who participated in this study, Drs. Noelle Converse, Leah Vorhees, the Utah State Board of Education Special Education Services, and the WING Institute for Student Research.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Sharlene A. Kiuhara, Email: s.kiuhara@utah.edu

Joel R. Levin, Email: jrlevin@arizona.edu

Malynda Tolbert, Email: malynda.tolbert@uvu.edu

Breda V. O’Keeffe, Email: breda.okeeffe@utah.edu

Robert E. O’Neill, Email: rob.oneill@utah.edu

J. Matt Jameson, Email: matt.jameson@utah.edu

References

- Bailey DH, Zhou X, Zhang Y, Cui J, Fuchs LS, Jordan NC, Gersten R, Siegler RS. Development of fraction concepts and procedures in US and Chinese children. Journal of Experimental Child Psychology. 2015;129:68–83. doi: 10.1016/j.jecp.2014.08.006.

- Ball DL, Cohen DK. Developing practice, developing practitioners: Toward a practice-based professional education. In: Sykes G, Darling-Hammond L, editors. Teaching as the learning profession: Handbook of policy and practice. Jossey-Bass; 1999. pp. 2–21.

- Ball DL, Forzani FM. The work of teaching and the challenge for teacher education. Journal of Teacher Education. 2009;60:497–511. doi: 10.1177/0022487109348479.

- Bangert-Drowns RL, Hurley MM, Wilkinson B. The effects of school-based writing-to-learn interventions on academic achievement: A meta-analysis. Review of Educational Research. 2004;74:29–58. doi: 10.3102/00346543074001029.

- Barton EE, Lloyd BP, Spriggs AD, Gast D. Visual analysis of graphic data. In: Ledford JR, Gast DL, editors. Single case research in behavioral sciences. 3rd ed. Routledge; 2018. pp. 179–214.

- Borko H, Jacobs J, Koellner K, Swackhamer LE. Mathematics professional development: Improving teaching using the problem-solving cycle and leadership preparation models. National Council of Teachers of Mathematics; 2015.

- Brindle M, Graham S, Harris KR, Hebert M. Third and fourth grade teacher’s classroom practices in writing: A national survey. Reading and Writing: An Interdisciplinary Journal. 2015;29(5):929–954. doi: 10.1007/s11145-015-9604-x.

- Busk PL, Serlin RC. Meta-analysis for single-case research. In: Kratochwill TR, Levin JR, editors. Single case research design and analysis: New directions for psychology and education. Lawrence Erlbaum Associates Inc; 1992. pp. 187–212.

- Casa TM, Firmender JM, Cahill J, Cardetti F, Choppin JM, Cohen J, ... Zawodniak R. Types of and purposes for elementary mathematics writing: Task force recommendations. 2016. https://mathwriting.education.uconn.edu.

- Case LP, Harris KR, Graham S. Improving the mathematical problem-solving skills of students with learning disabilities: Self-regulated strategy development. The Journal of Special Education. 1992;26:1–19. doi: 10.1177/002246699202600101.

- Common Core State Standards Initiative. Common core state standards for language arts. 2010a. https://learning.ccsso.org/common-core-state-standards-initiative.

- Common Core State Standards Initiative. Common core state standards for mathematics. 2010b. https://learning.ccsso.org/common-core-state-standards-initiative.

- Darling-Hammond L, Hyler ME, Gardner M. Effective teacher professional development. Palo Alto, CA: Learning Policy Institute; 2017. https://learningpolicyinstitute.org/product/teacher-prof-dev.

- Desimone LM. Improving impact studies of teachers’ professional development: Toward better conceptualizations and measures. Educational Researcher. 2009;38(3):181–199. doi: 10.3102/0013189X08331140.

- Faulkner VN, Cain CR. Improving the mathematical content knowledge of general and special educators: Evaluating a professional development module that focuses on number sense. Teacher Education and Special Education. 2013;36(2):115–131. doi: 10.1177/0888406413479613.

- Forsyth SR, Powell SR. Differences in the mathematics vocabulary knowledge of fifth-grade students with and without learning difficulties. Learning Disabilities Research & Practice. 2017;32:231–245. doi: 10.1111/ldrp.12144.

- Fuchs LS, Newman-Gonchar R, Schumacher R, Dougherty B, Bucka N, Karp KS, Woodward J, Clarke B, Jordan NC, Gersten R, Jayanthi M, Keating B, Morgan S. Assisting students struggling with mathematics: Intervention in the elementary grades (WWC 2021). Washington, DC: National Center for Education Evaluation and Regional Assistance (NCEE), Institute of Education Sciences, US Department of Education; 2021. http://whatworks.ed.gov/.

- Gafurov BS, Levin JR. ExPRT (Excel Package of Randomization Tests): Statistical analyses of single-case intervention data. Current version 4.3; 2023 May. http://ex-prt.weebly.com.

- Geary DC. Mathematics and learning disabilities. Journal of Learning Disabilities. 2004;37(1):4–15. doi: 10.1177/00222194040370010201.

- Gillespie A, Graham S, Kiuhara SA, Hebert M. High school teachers use of writing to support students’ learning: A national survey. Reading & Writing. 2014;27(6):1043–1072. doi: 10.1007/s11145-013-9494-8.

- Graham S, Harris KR. An examination of the design principles underlying self-regulated strategy development study. Journal of Writing Research. 2018;10(2):139–197. doi: 10.17239/jowr-2018.10.01.02.

- Graham S, Kiuhara SA, MacKay M. The effects of writing on learning in science, social studies, and mathematics: A meta-analysis. Review of Educational Research. 2020;90(2):179–226. doi: 10.3102/0034654320914744.

- Hacker DJ, Kiuhara SA, Levin JR. A metacognitive intervention for teaching fractions to students with or at-risk for learning disabilities in mathematics. ZDM. 2019. doi: 10.1007/s11858-019-01040-0.

- Hallett D, Nunes T, Bryant P. Individual differences in conceptual and procedural knowledge when learning fractions. Journal of Educational Psychology. 2010;102(2):395–406. doi: 10.1037/a0017486.

- Hallett D, Nunes T, Bryant P, Thorpe CM. Individual differences in conceptual and procedural fraction understanding: The role of abilities and school experience. Journal of Experimental Child Psychology. 2012;113(4):469–486. doi: 10.1016/j.jecp.2012.07.009.

- Harris KR, Graham S, Mason L, Friedlander B. Powerful writing strategies for all students. Brookes; 2008.

- Harris KR, Lane KL, Graham S, Driscoll SA, Sandmel K, Brindle M, Schatschneider C. Practice-based professional development for self-regulated strategies development in writing: A randomized controlled study. Journal of Teacher Education. 2012;63:103–119. doi: 10.1177/0022487111429005.

- Harris KR, McKeown D. Overcoming barriers and paradigm wars: Powerful evidence-based writing instruction. Theory into Practice. 2022;61(4):429–442. doi: 10.1080/00405841.

- Hill HC, Schilling SG, Ball DL. Developing measures of teachers’ mathematics knowledge for teaching. Elementary School Journal. 2004;105:11–30. doi: 10.1086/428763.

- Hill H, Rowan B, Ball D. Effects of teachers’ mathematical knowledge for teaching on student achievement. American Educational Research Journal. 2005;42:371–406. doi: 10.3102/00028312042002371.