Abstract

Language has been proposed as a potential mechanism for young children’s developing understanding of emotion. However, much remains unknown about this relation at the level of individual differences. The present study investigated 15- to 18-month-old infants’ perception of emotions across multiple pairs of faces. Parents reported their child’s productive vocabulary, and infants participated in a nonlinguistic emotion perception task via an eye tracker. Infant vocabulary did not predict nonverbal emotion perception when accounting for infant age, gender, and general object perception ability (β=−.15, p=.300). However, we observed a gender difference: only girls’ vocabulary scores related to nonverbal emotion perception when controlling for age and general object perception ability (β=.42, p=.024). Further, boys showed a stronger preference for the novel emotion face than girls did (t(48)=2.35, p=.023, d=0.67). These data suggest that the pathways through which infants process emotional information (e.g., language vs. visual information) may differ for girls and boys in late infancy.

Keywords: Infancy, Emotion, Eye tracking, Face perception, Language acquisition, Social cognition

The ability to perceive others’ emotional cues and reactions and then use that information to infer their internal states and future behavior is a crucial aspect of healthy social-cognitive development. Understanding emotions is a complex social skill that develops early (Denham, 1986) and has long-term implications for an individual’s social and academic development (Fabes et al., 2011; Izard et al., 2001; Voltmer & Von Salisch, 2017). Understanding emotions relies on emotion perception. That is, individuals must first identify emotional information before they can form categories that can then be extended to various people and situations. Considering the significance of early emotion perception for healthy development, it is important to identify factors that contribute to individual differences in emotion perception, as well as mechanisms behind its development. In particular, given the interplay between cognitive processes and emotional development in older age groups (e.g., Blair, 2002; Bodrova & Leong, 2006), it is important to examine whether other cognitive skills play a mechanistic role in the development of early emotion perception. The present study focuses on one such possible cognitive mechanism, language, and investigates how it may relate to individual differences in infant emotion perception.

How children come to perceive and understand emotional information is currently a debated topic. In particular, two major groups of theories have differing perspectives on the role of language in the development of emotion perception and understanding. Basic Emotions theory suggests that emotions and emotion understanding have a strong evolutionary and biological basis (Ekman, 1992). On this view, language is a device for labeling emotions but is not crucial for the development of emotion understanding or perception (Ekman & Cordaro, 2011). In contrast, constructionist theories such as the Theory of Constructed Emotion (Barrett, 2017; Lindquist et al., 2015) suggest that emotion categories are built over time through contexts and experiences. The Theory of Constructed Emotion proposes that emotions are abstract categories because emotions comprise highly variable instances that do not all look alike (e.g., one person may appear fearful by jumping backwards, widening their eyes, and gasping, while another person may freeze). The theory further suggests that language may aid in creating cognitive representations of abstract emotion categories because applying labels and language to different examples of the same emotion may facilitate perceiving the similarities among these instances (cf. Balaban & Waxman, 1997), thereby scaffolding learning about emotions. That is, the Theory of Constructed Emotion holds that language may be crucial for the development of emotion perception and emotion understanding.

Adult research has previously investigated the relation between language and emotion perception. Adults who engage in semantic satiation of emotion words (repeating an emotion word 30 times in a row, which causes it to temporarily lose its meaning) show disrupted processing of the corresponding emotional faces (Gendron et al., 2012). Additionally, when identifying whether two stimuli represent the same emotion, adults are quicker and more accurate when a face is followed by an emotion word as opposed to another face (Nook et al., 2015). Further, a meta-analysis demonstrated that the mere presence of emotion words changes the neural representation of emotions (Brooks et al., 2017), and the presence of labels leads to enhanced performance on a task categorizing chimpanzee facial configurations (Fugate et al., 2011). Research with adults therefore suggests that language and labeling may support the perception of emotion (Roberson et al., 2010). However, whether language is crucial to the development of emotion understanding and perception remains less clear.

Previous research with children has demonstrated a relation between children’s emotion understanding and general language abilities (Cutting & Dunn, 1999; Pons et al., 2003). In fact, covarying for language abilities when investigating emotion understanding in childhood has become common (e.g., Cook et al., 1994; Denham et al., 1994; Steele et al., 1999), indicating that researchers recognize language as related to emotion understanding. There are two possible explanations for this relation in childhood. First, it is possible that child language relates to emotion understanding because of the linguistic demands involved in common emotion understanding tasks. That is, children with more advanced general language skills may score higher than their peers on emotion understanding tasks simply because they are better at following along with the information presented in the task and/or verbalizing what they know. Even tasks that attempt to limit the requirement for verbal responses by the child (e.g., the Test of Emotion Comprehension, Pons et al., 2003) nevertheless require that the child comprehend a brief scenario that is told to them and respond appropriately. The second possibility is that child language fundamentally relates to emotion understanding in a causal fashion as proposed by constructionist theories (Barrett, 2017; Lindquist et al., 2015). If this is the case, the relation between general language abilities and emotion understanding and emotion perception should be task independent.

In studying emotion perception and emotion category development, it is important to distinguish between general language abilities and knowledge of emotion words specifically. This is because language and category development have been powerfully linked in other domains (e.g., Balaban & Waxman, 1997; Fulkerson & Waxman, 2007). Although much theoretical emphasis has been placed on the importance of emotion words and studies have connected emotion labels with emotion understanding in children (Russell & Widen, 2002; Widen & Russell, 2004), more general language has also been associated with emotion understanding (e.g., Cutting & Dunn, 1999; Pons et al., 2003). This may be because language provides children with information which they can extend to emotion categories. That is, perhaps children with larger vocabularies are better able to interpret sentences and situations around them, and therefore more readily draw connections between emotional events even in the absence of specific emotion words. If understanding emotions is not distinct from understanding and perceiving other categories, then general language abilities should similarly facilitate the development of emotion perception. Supporting this notion, research with 6- to 25-year-olds has shown that the relation between age and a more mature conceptualization of emotions (i.e., increased focus on both valence and arousal dimensions of emotion) was mediated only by verbal ability (Nook et al., 2017). This mediation was for general verbal language abilities and not emotion language. Additionally, although toddlers and preschoolers with general language impairments are capable of discriminating emotional stimuli, they are worse than their peers at emotion identification and attributing an emotion to another person in an emotion-eliciting situation (Rieffe & Wiefferink, 2017).

Together, these studies indicate that general language abilities may play a role in the development of emotion perception and understanding. However, to our knowledge there is no published research addressing this question in a manner that disentangles emotion understanding or perception from the linguistic demands of the task. That is, there has not yet been a direct comparison of individual differences in children’s general language abilities and emotion perception or emotion understanding in a task that includes no language at all. It is important to assess this relation early in life given previous suggestions that the influence of language on emotion perception may be most pronounced within the first two to three years after birth, when basic language skills are rapidly developing (Eisenberg et al., 2005).

Assessing infant perception of emotional faces using a purely nonlinguistic task would allow the role of language in early emotion understanding to be directly addressed. One methodology that assesses potential precursors to emotion understanding without language is the facial emotion discrimination task, which simply measures whether infants spend more time looking to one emotional face than another. A consistent preference for one emotional face over another indicates that the infants can tell the two stimuli apart and may prefer one over the other (e.g., LaBarbera et al., 1976; Schwartz et al., 1985). This is useful for determining when infants can distinguish between different facial configurations (though it provides little information about whether infants perceive differences in emotion beyond those specific instances or understand emotions more broadly). Thus, in the present study we used an emotion perception task that tests for generalization of facial configurations across different individuals. In this way, our task required perception of emotion beyond a specific perceptual match (children had to generalize the emotion to a new identity), but still allowed for a task completely devoid of language. This facial emotion perception task yielded substantial variability in individual infants’ performance, allowing us to test for relations between individual differences in emotion perception and general language ability. We reasoned that evidence from this emotion perception task, alongside a measure of children’s language abilities, would allow for a clearer answer to the question of how language relates to perception of emotional faces at the level of individual differences. This question is of theoretical importance, and is also potentially important for interventions, as it could identify whether different language skills relate to different emotion perception skills in early development. 
More broadly, addressing this question will shed important light on how language relates to emotion perception development, and therefore will provide potential insight into how such cognitive factors may influence emotional development beginning in infancy.

The Present Study

We assessed perception of emotional faces in late infancy (15 to 18 months of age) using a nonlinguistic task. Late infancy is characterized by variability in individual infants’ language acquisition and emotion perception, and we aimed to capitalize on this variability to address the question of how individual differences in language relate to emotion perception development. We assessed perception of angry, happy, sad, and fearful faces as these are typically the earliest-learned emotion categories and are commonly used in early emotion understanding tasks (e.g., Denham, 1986). Due to the abstract nature of emotions, stronger language abilities may help some infants to identify similarities among various examples of the same emotion category, as proposed by constructionist theories (Barrett, 2017; Lindquist et al., 2015). For this reason, we hypothesized that individual differences in the vocabulary size of 15- to 18-month-olds would significantly relate to their performance on a nonlinguistic eye tracking facial emotion perception task. Infants often demonstrate a novelty preference as evidence of categorization (Hunter & Ames, 1988), and, consistent with this possibility, a novelty preference on an emotion matching task at 15 months related to later emotion understanding (Ogren & Johnson, 2020). Therefore, we predicted that infant vocabulary would relate to a novelty preference (i.e., a preference for the new emotion category) in the present emotion perception task. Further, previous research has shown that child gender relates to infant attention to emotional stimuli (Caron et al., 1985), with boys looking longer at faces than girls. Therefore, we also accounted for child gender, age, and general object perception abilities (as a control for individual differences in discrimination) in our analyses, as these variables may confound relations between language and emotion perception, and our goal was to identify the unique contribution of language.

Method

Participants

Fifty healthy, full-term infants (23 female) ranging in age from 15.05 to 18.50 months (Mage=16.54, SDage=0.99) participated in the study. Infant age was comparable for female (M=16.59, SD=1.01) and male (M=16.49, SD=1.00) infants. An additional 22 infants were excluded from the final dataset due to fussiness or crying (N=17), limited English exposure (less than 90% of the time; N=2), inconsistent eye track (N=1), or failure to meet data inclusion criteria as described in the Results section (N=2). Attrition due to fussiness did not seem to be attributable to any common causes across infants. This age range was selected to capture early infant language and emotion perception abilities, while still providing substantial individual variability in both skills. As previous research correlating 15-month-olds’ eye-tracking performance on a similar task with other measures has shown medium-large effects (Ogren & Johnson, 2020), we conducted a power analysis to determine what sample size would be needed to identify a medium-large effect (f²=0.20). This power analysis indicated that a sample size of 42 would be necessary to achieve power of .8, which we rounded up to a sample size of 50 to ensure adequate power. Of the final sample, 45 infants had at least one parent who had completed four years of college. The ethnic/racial background of participants was as follows: White (N=31), Multiracial (N=10), African-American (N=5), Asian (N=1), Latino (N=1), Chose not to answer (N=2). Infants were recruited from lists of birth records and received a small gift (e.g., a T-shirt or toy) for their participation. The study received formal approval from the university ethics committee (IRB approval #10-000619).

Materials

Surveys.

Parents provided written informed consent and all data were anonymized and kept separate from the consent form with participants identified by ID number only. Parents also completed a demographic questionnaire and the MacArthur Bates Communicative Development Inventory (MCDI; Fenson et al., 2007), a measure of early childhood vocabulary. For this survey, parents were asked to check off every word that their child produces out of those provided. For the present study, the “Words and Sentences” version of the MCDI was utilized. Parents were only asked to identify their child’s productive vocabulary, as it is less subjective than asking parents to make judgments about their child’s receptive vocabulary. Further, parents are more accurate at judging their child’s productive vocabulary compared to receptive vocabulary (Eriksson et al., 2002; Tomasello & Mervis, 1994), and by the second year after birth children’s receptive vocabularies typically become extensive enough that it is challenging for parents to report it accurately (Law & Roy, 2008). This version of the MCDI included four emotion words: Mad, Happy, Sad, and Scared. Only one parent in our sample reported that their child produced any of these emotion words, and so we did not assess relations to emotion-specific vocabulary.

Stimulus creation.

To create emotional stimuli for the eye tracking task, 29 undergraduate women were recruited. This allowed us to create a large set of faces from which we could use the most stereotypical exemplars for our study. Regardless of child gender, mothers tend to contribute the most to childcare across early development (Fillo et al., 2015), making female faces more familiar to infants. Further, infants raised primarily by their mothers show a preference for female faces (Quinn et al., 2002). Thus, we reasoned that using female faces as stimuli would facilitate infants’ interest and performance. To keep the stimuli as standardized as possible, each undergraduate was asked to remove earrings, glasses, hair accessories, and makeup, and participants with long hair were asked to place their hair behind their shoulders. Each undergraduate wore a black shirt, stood in front of the same white background, and was recorded from the shoulders up. To keep luminosity and visual angle as comparable as possible across undergraduates, all recordings occurred in the same room against the same wall, with the camera positioned approximately 55 cm from the undergraduate’s face and at her eye level. All emotional stimuli were recorded within a single session for each undergraduate, which typically lasted fewer than 10 minutes. They were asked to think about a time when they felt a target emotion (anger, fear, happiness, and sadness) and to convey this through their face while the camera recorded them. All adults were instructed to keep their mouths closed and look directly at the camera to control for the potential salience of these perceptual features to infants. Images were extracted from these video recordings at the peak intensity of each posed emotion. The peak intensity was determined by the research team as the moment in the video when the intended emotion was presented most clearly through the actor’s facial muscle configurations. 
Images were edited such that the faces were all centered and approximately the same size. Additionally, pictures of unfamiliar objects were taken for control trials, as described subsequently.

Stimulus validation.

A Qualtrics survey was conducted with adults to determine whether there was agreement regarding the emotion associated with the faces. Fifty-one adult raters (15 male) each viewed 116 images (29 women, 4 emotions per woman) and were asked which emotion (angry, fearful, happy, or sad) best described each image. Adults were then asked to rate how confident they were in each response (Likert scale 1–5 from “not at all confident” to “very confident”). Based on these responses, we selected all four images from each of the 16 women whose facial configurations had the highest agreement across the 4 emotions. On average for these images, the adult raters agreed on the intended emotion 94.6% of the time, with an average confidence rating of 4.1 (Agreement ratings by emotion: Angry=94.9%; Fearful=91.8%; Happy=97.4%; Sad=94.5%. Confidence ratings by emotion: Angry=4.0; Fearful=3.6; Happy=4.4; Sad=4.1). Results of a one-way ANOVA revealed no significant difference in agreement ratings by emotion (F(3, 60)=1.73, p=.171), but confidence ratings did differ by emotion (F(3, 60)=14.45, p<.001). This may be because direct (rather than averted) eye gaze can make fearful faces more challenging to detect (e.g., Milders et al., 2011), or because fearful faces may have been confused with surprise, which was not one of our forced-choice options, leading to lower confidence ratings. However, it is important to note that no single selected image was agreed upon less than 70% of the time, which is substantially above the chance rate of 25%. Further, no single woman’s average across her 4 images had lower than 85% agreement. Thus, we determined that the selected images were commonly viewed as appropriate representations of the four intended emotion categories.
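The agreement computation described above can be illustrated with a short sketch. It assumes a hypothetical data layout (`ratings[actor][emotion]` holding the forced-choice labels from all raters for that image) and assumes that "highest agreement" means ranking actors by their mean agreement across their four images; the study's actual selection procedure may have differed. The 70% image floor and 85% actor floor are the values reported above, used here only as a check.

```python
from statistics import mean

EMOTIONS = ("angry", "fearful", "happy", "sad")


def image_agreement(responses, intended):
    """Proportion of raters whose forced-choice label matches the intended emotion."""
    return sum(r == intended for r in responses) / len(responses)


def actor_agreement(by_emotion):
    """Mean agreement across one actor's images (hypothetical layout: emotion -> list of labels)."""
    return mean(image_agreement(labels, emo) for emo, labels in by_emotion.items())


def select_actors(ratings, n_actors=16):
    """Rank actors by mean agreement and keep the top n (assumed selection rule)."""
    ranked = sorted(ratings, key=lambda a: actor_agreement(ratings[a]), reverse=True)
    return ranked[:n_actors]


def meets_reported_thresholds(by_emotion, image_min=0.70, actor_min=0.85):
    """Check the reported floors: every image >= 70% agreement and the actor's mean >= 85%."""
    per_image = [image_agreement(labels, emo) for emo, labels in by_emotion.items()]
    return min(per_image) >= image_min and mean(per_image) >= actor_min


# Usage with made-up responses from 10 raters per image (not study data):
ratings = {
    "w1": {e: [e] * 10 for e in EMOTIONS},
    "w2": {e: [e] * 5 + ["happy"] * 5 for e in EMOTIONS},
}
print(select_actors(ratings, n_actors=1))  # ['w1']
```

Ranking by the mean keeps the rule simple; a per-image minimum could be used as a tie-breaker if two actors had the same average.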

Apparatus.

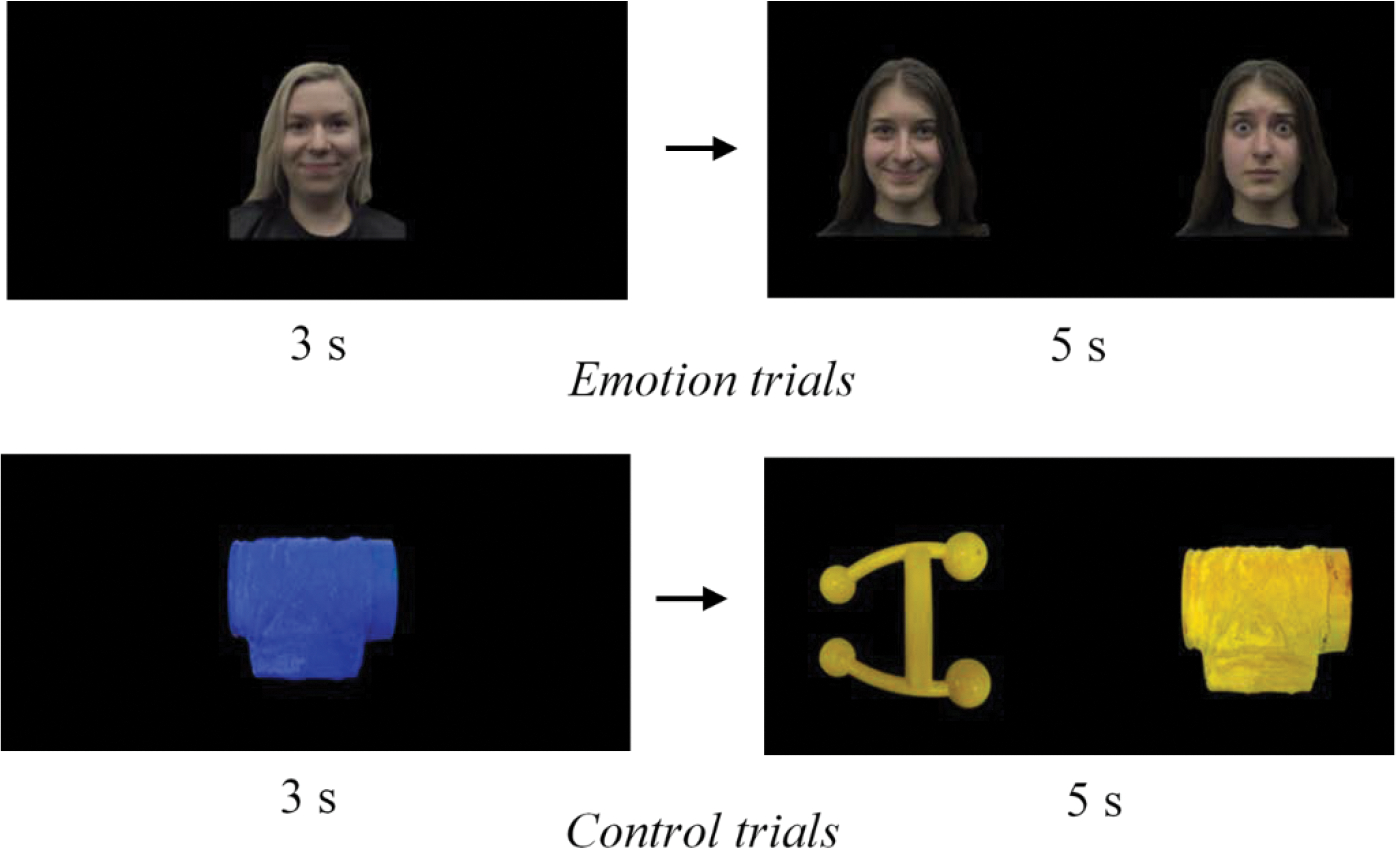

An SR Research EyeLink 1000 eye tracker was used to collect information about infants’ looking time to the stimuli. Infants viewed 36 trials, 24 of which included emotional faces as stimuli, and the remaining 12 contained unfamiliar objects for control trials. Infant eye movements were tracked for all trials. For each emotional trial, infants first viewed one woman posing a particular emotion (e.g., Woman 1 posing happiness) for 3 seconds (see Figure 1). After this, the image disappeared and was immediately replaced by two new faces on the screen. The two new faces were both from the same woman (e.g., Woman 2) to control for any spontaneous preferences for one person over another, or for perceptual features that differed across actors, which may have influenced looking time. In one image, Woman 2 displayed the same emotion as Woman 1 had (in this case, happiness), and in the other image she displayed a different emotion (e.g., fear). These images remained on the screen for 5 seconds, allowing infants sufficient time to visually scan the two faces and show a preference. This design ensured that any emotion perception was more than a direct match because infants had to generalize the emotion from one woman to another. Thus, this task involved more advanced emotion perception in that it required infants to perceive emotions across multiple identities. Twenty-four of these emotional trials were presented, with each emotion followed by a combination of that emotion and one of the three other emotions on two separate trials (e.g., anger followed by anger-sadness appeared twice per infant). Side of presentation for the stimulus that matched the category of the previous emotion was counterbalanced, and the emotion that was presented alone for 3 seconds could not repeat for more than 2 trials in a row. 
The specific pairs of actors (i.e., which actor was present for 3 seconds followed by which actor was presented side-by-side for 5 seconds), as well as which two emotions were presented side-by-side for a given actor were randomized across participants.
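The 24-trial emotional design (4 first-image emotions × 3 alternative emotions × 2 repetitions) and its ordering constraint can be sketched as follows. This is a minimal illustration, not the study's presentation script: it assumes that side counterbalancing means the matching face appears once on the left and once on the right across the two repetitions of each emotion combination, and it enforces the "no more than 2 trials in a row" rule by rejection sampling. The actor numbering (1 to 16) and all field names are hypothetical.

```python
import random
from collections import Counter
from itertools import groupby

EMOTIONS = ("anger", "fear", "happiness", "sadness")


def build_emotion_trials():
    """Each first-image emotion is paired with each of the other three emotions on two trials."""
    trials = []
    for first in EMOTIONS:
        for novel in EMOTIONS:
            if novel == first:
                continue
            # Assumed counterbalancing: matching face on the left once and on the right once.
            for match_side in ("left", "right"):
                trials.append({"first": first, "novel": novel, "match_side": match_side})
    return trials


def longest_run(values):
    """Length of the longest run of identical consecutive values."""
    return max(len(list(group)) for _, group in groupby(values))


def order_trials(trials, max_repeat=2, rng=random, max_tries=10_000):
    """Shuffle until no first-image emotion occurs more than max_repeat times in a row."""
    trials = list(trials)
    for _ in range(max_tries):
        rng.shuffle(trials)
        if longest_run(t["first"] for t in trials) <= max_repeat:
            return trials
    raise RuntimeError("no valid order found")


def assign_actors(trials, n_actors=16, rng=random):
    """Pick two different actors per trial: one for the single face, one for the pair."""
    for t in trials:
        t["single_actor"], t["pair_actor"] = rng.sample(range(1, n_actors + 1), 2)
    return trials


rng = random.Random(0)
order = assign_actors(order_trials(build_emotion_trials(), rng=rng), rng=rng)
print(len(order), Counter(t["first"] for t in order))
```

Rejection sampling is the simplest way to satisfy a run-length constraint for a list this short; a random order of these 24 trials passes the check often enough that only a few reshuffles are typically needed.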

Figure 1.

An example of a possible emotional (top) and control (bottom) trial. First, one image was presented for 3 seconds. Then this was immediately replaced by two new images which remained on the screen for 5 seconds.

Additionally, 12 control (nonsocial) trials were mixed in randomly among the emotional trials with the constraint that no more than two control trials were presented in a row. Stimuli for control trials consisted of novel objects (Figure 1). For control trials, one image of a novel object of a particular color was presented on the screen by itself for 3 seconds. Then, the image disappeared and was immediately replaced by two images of objects of the same color as each other (but a different color from the original object). Of these two new objects, one matched the shape of the previous object and one did not. These two images remained on the screen for 5 seconds. In this way, the control trials mirrored the emotional trials without any emotional content. These control trials allowed us to determine each individual infant’s ability to discriminate objects, and thereby to account for overall memory/object perception ability independent of emotional content. See Figure 1 for example images of how the emotional and control trials were presented to infants. Areas of interest (AOIs) were created as rectangles surrounding each face and control object. These AOIs allowed us to calculate looking time to each face and object for each individual trial.
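A similar sketch covers the control trials and their placement in the sequence. It uses hypothetical shape and color labels, randomizes the side of the two test objects, and interleaves the 12 control trials among the 24 emotional trials (keeping the emotional trials' own order) by rejecting any sequence with more than two control trials in a row. The original randomization code is not described in the text, so this is only one way to meet the stated constraints.

```python
import random
from itertools import groupby


def make_control_trial(shapes, colors, rng=random):
    """One control trial: a single object, then two objects of a new color, one sharing its shape."""
    familiar_shape, novel_shape = rng.sample(shapes, 2)
    first_color, pair_color = rng.sample(colors, 2)  # pair color differs from the first object's
    pair = [(familiar_shape, pair_color), (novel_shape, pair_color)]
    rng.shuffle(pair)  # randomize which side the shape match appears on
    return {"first": (familiar_shape, first_color), "pair": pair, "novel_shape": novel_shape}


def interleave_controls(emotion_trials, control_trials, max_control_run=2,
                        rng=random, max_tries=10_000):
    """Place control trials at random positions, allowing at most max_control_run in a row."""
    n_total = len(emotion_trials) + len(control_trials)
    for _ in range(max_tries):
        slots = set(rng.sample(range(n_total), len(control_trials)))
        kinds = ["control" if i in slots else "emotion" for i in range(n_total)]
        longest = max((len(list(g)) for kind, g in groupby(kinds) if kind == "control"), default=0)
        if longest <= max_control_run:
            emotion_iter = iter(emotion_trials)
            control_iter = iter(rng.sample(control_trials, len(control_trials)))
            return [next(control_iter) if k == "control" else next(emotion_iter) for k in kinds]
    raise RuntimeError("no valid sequence found")


# Usage with placeholder labels (hypothetical stimulus names, not the study's objects):
rng = random.Random(1)
controls = [make_control_trial(["blob", "star", "knot"], ["red", "blue", "green"], rng)
            for _ in range(12)]
sequence = interleave_controls([f"E{i}" for i in range(24)], controls, rng=rng)
print(len(sequence))  # 36
```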

Procedure

To participate in the eye tracking task, infants sat on a parent’s lap approximately 60 cm from a 56-cm monitor. The eye tracker recorded infants’ eye movements at 500 Hz. Prior to stimulus presentation, each infant’s gaze was calibrated using the standard calibration routine provided by the eye tracker. After calibration, stimulus presentation began immediately. Each 8-second trial was preceded by an attention-getting stimulus in the center of the screen to re-center the child’s gaze. Stimulus presentation continued until all 36 trials were complete, which lasted approximately 5 minutes per participant. Parents were asked to hold their infants on their lap and not to interfere with the child’s gaze or attention. During calibration and stimulus presentation, parents also wore sunglasses which had been painted black to ensure that the parents could not see the stimuli and inadvertently influence their child’s looking behavior.

Results

Descriptive Statistics

Average vocabulary for our infant participants was 40.3 words (SD=66.9), which is comparable to previously reported norms for the 50th percentile at this age (Frank et al., 2017). One infant had a vocabulary value that was more than 3 standard deviations above the mean. To maintain the usable data provided by this participant (valid MCDI score and sufficient attention to the emotion perception task), but to reduce the influence of this outlier, we completed a 95% Winsorization of the data by replacing the lowest and highest vocabulary values with the next most extreme values. This process has been previously used to reduce the influence of outliers in data from a comparable infant age range (e.g., Crivello & Poulin-Dubois, 2019), and resulted in the final average vocabulary for our analyses of 36.0 words (SD=45.2).
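The Winsorization step described above replaces the single lowest and single highest vocabulary values with the next most extreme values. A minimal sketch, assuming scores are held in a plain list; the example values are made up, not study data.

```python
from statistics import mean


def winsorize_extremes(values):
    """Replace the lowest value with the second-lowest and the highest with the second-highest.

    With ties at an extreme (e.g., several infants producing 0 words), the replacement
    value equals the original, so only a unique outlier actually changes.
    """
    if len(values) < 3:
        raise ValueError("need at least three values")
    order = sorted(range(len(values)), key=lambda i: values[i])
    out = list(values)
    out[order[0]] = values[order[1]]
    out[order[-1]] = values[order[-2]]
    return out


vocab = [5, 1, 300, 20, 2]  # hypothetical MCDI word counts
clipped = winsorize_extremes(vocab)
print(clipped)  # [5, 2, 20, 20, 2]
print(round(mean(vocab), 1), round(mean(clipped), 1))  # 65.6 9.8
```

Unlike deleting the outlier, this keeps the participant in the sample while capping the influence of the extreme score on the mean and on regression estimates.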

For the eye tracking data, trials were removed if infants looked to the screen for less than 500 ms (out of 3000 ms possible) during the single-image presentation or less than 1000 ms (out of 5000 ms possible) during the paired-image presentation. Thus, trials were only included when infants attended to both the first image and the subsequent pair of images. This process resulted in removal of 7.6 trials (SD=5.5) out of 36 total per participant on average: 2.4 control trials (out of 12) and 5.2 emotional trials (out of 24). Thus, the average participant provided 28.4 trials which were included in the final data analysis. Additionally, we required that each participant provide at least 2 usable trials for each emotion (anger, fear, happiness, and sadness) and 4 usable control trials. This inclusion criterion resulted in removal of data from two participants, who therefore were not included in the final sample of 50 participants. These exclusion criteria are consistent with previous infant eye tracking studies (e.g., Geangu & Vuong, 2020) and ensure that all participants and trials included in the final sample had sufficient infant attention to the stimuli.
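The trial-level and participant-level inclusion rules above can be expressed as two small functions. The field names (`condition`, `single_look_ms`, `pair_look_ms`) are hypothetical, and looking times are assumed to be total looking to the screen, in milliseconds, during each phase of a trial.

```python
from collections import Counter

EMOTIONS = ("anger", "fear", "happiness", "sadness")
MIN_SINGLE_MS = 500   # out of 3000 ms in the single-image phase
MIN_PAIR_MS = 1000    # out of 5000 ms in the paired-image phase


def trial_is_usable(trial):
    """Keep a trial only if the infant attended to both the single image and the pair."""
    return trial["single_look_ms"] >= MIN_SINGLE_MS and trial["pair_look_ms"] >= MIN_PAIR_MS


def screen_participant(trials, min_per_emotion=2, min_control=4):
    """Return (included?, usable trials); 'condition' is the first-image emotion or 'control'."""
    usable = [t for t in trials if trial_is_usable(t)]
    counts = Counter(t["condition"] for t in usable)
    included = (all(counts[e] >= min_per_emotion for e in EMOTIONS)
                and counts["control"] >= min_control)
    return included, usable


def trial(condition, single_ms, pair_ms):
    """Hypothetical helper for building example trials."""
    return {"condition": condition, "single_look_ms": single_ms, "pair_look_ms": pair_ms}


example = [trial(e, 2000, 4000) for e in EMOTIONS for _ in range(2)]
example += [trial("control", 1500, 3000)] * 4
example.append(trial("fear", 400, 4500))  # dropped: under 500 ms on the single image
ok, kept = screen_participant(example)
print(ok, len(kept))  # True 12
```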

Statistical Analyses

To assess emotion perception, we analyzed visual attention during the portion of the task when two emotions were presented side-by-side. For each trial, we calculated the proportion of time that each infant spent looking to the face showing the novel emotion (relative to the category of the previously displayed emotion) out of the total time the infant spent looking to the two faces (maximum 5 s). All usable trials for each infant were then averaged to create one “emotion perception” score per infant. Similarly, object perception was calculated as the proportion of time that each infant spent looking to the object of the novel shape category (relative to the previously displayed object), which was also averaged across usable trials. On average, infants spent 51.0% of the time looking to the novel face on emotion perception trials (SD=4.6%), and spent a similar proportion of time looking to the novel object on the control trials (M=51.6%, SD=6.7%). We therefore interpreted these results as indicating that the two tasks were of roughly comparable difficulty. Compared with chance (50% looking to each face), infants as a group did not demonstrate a statistically significant novelty preference for the emotion trials (t(49)=1.51, p=.139, d=0.21) or for the control trials (t(49)=1.73, p=.090, d=0.24), but there was notable variability in performance on both tasks. Infant novelty preference did not significantly differ between the emotional and control trials (t(49)=−0.52, p=.605, d=0.12). Thus, average performance indicates that the task was challenging for infants at this age; this was intended, so that the task would allow for a comparison of individual differences. Previous research has demonstrated that even when overall infant performance is near chance on an eye tracking task, individual differences can still provide meaningful predictions of later performance (Ogren & Johnson, 2020).
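The scoring and the comparison with chance can be sketched as follows. The per-trial score is looking to the novel face divided by total looking to the two faces, averaged over usable trials; the one-sample test uses the usual formulas d = (M - 0.5) / SD and t = d·√n. Skipping trials with no looking to either face is an assumption of this sketch, and p-values are omitted because they need a t-distribution function that the Python standard library does not provide.

```python
from math import sqrt
from statistics import mean, stdev


def novelty_score(trials):
    """trials: (novel_ms, familiar_ms) looking times per usable trial; mean proportion to novel."""
    proportions = [n / (n + f) for n, f in trials if n + f > 0]  # assumed: skip zero-looking trials
    return mean(proportions)


def compare_to_chance(scores, chance=0.5):
    """One-sample t-test against chance; returns (t, df, Cohen's d)."""
    n = len(scores)
    d = (mean(scores) - chance) / stdev(scores)
    return d * sqrt(n), n - 1, d


print(novelty_score([(3000, 1000), (1000, 1000)]))  # 0.625
t, df, d = compare_to_chance([0.50, 0.60, 0.70])
print(round(t, 2), df, round(d, 2))  # 1.73 2 1.0
```

Averaging per-trial proportions (rather than pooling milliseconds across trials) gives each usable trial equal weight regardless of how long the infant looked.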

To analyze the relation between infant language and nonverbal emotion perception, we used a multiple regression analysis that included infant age, gender, and object perception performance as covariates. MCDI values were used to determine the unique effect of infant vocabulary on nonverbal emotion perception when controlling for the effect of age, gender, and object perception. Table 1 presents bivariate correlations among these variables, computed separately from the overall model; these correlations should be interpreted with caution given the large number of comparisons being made. All reported analyses were conducted with the full sample (50 participants) unless otherwise specified. Further, all statistical tests were non-directional (two-tailed).

Table 1.

Correlation coefficients for each pair of variables included in the final model

| | Emotion Perception | Vocabulary | Child Age | Child Gender | Object Perception |
|---|---|---|---|---|---|
| Emotion Perception | 1 | .05 | −.01 | .32* | .31* |
| Vocabulary | | 1 | .19 | −.09 | −.23 |
| Child Age | | | 1 | −.05 | −.06 |
| Child Gender | | | | 1 | .18 |
| Object Perception | | | | | 1 |

Note: Asterisks (*) indicate correlations significant at the p<.05 level. Correlations involving child gender are point-biserial.

Vocabulary and Nonverbal Emotion Perception

A multiple regression analysis was used to address the relation between infant vocabulary and nonverbal emotion perception. In this model, infant age did not uniquely account for variability in emotion perception as measured by a preference for the novel face (β=−.01, p=.945), but child gender and control object perception did (Gender: β=.28, p=.046; Object perception: β=.29, p=.044). Accounting for the effect of infant age, gender, and object perception, infant vocabulary did not relate to infant nonverbal emotion perception (β=.15, p=.300). Thus, across all 15- to 18-month-old participants, vocabulary did not uniquely relate to infant emotion perception when accounting for other potential confounds.
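To show what the standardized coefficients (β) in this model represent, the sketch below z-scores the outcome and every predictor and solves the least-squares normal equations. It is a dependency-free illustration that assumes gender is dummy-coded 0/1; it returns β only (no standard errors or p-values), and is not the software the authors used. In practice a statistics package would be used for the reported analysis.

```python
from statistics import mean, stdev


def zscore(xs):
    m, s = mean(xs), stdev(xs)
    return [(x - m) / s for x in xs]


def solve(a, b):
    """Solve the small dense system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def standardized_betas(y, predictors):
    """predictors: {name: values}. With every variable z-scored the intercept is 0,
    so the least-squares slopes are the standardized coefficients."""
    names = list(predictors)
    zy = zscore(y)
    zx = [zscore(predictors[name]) for name in names]
    xtx = [[sum(p * q for p, q in zip(xi, xj)) for xj in zx] for xi in zx]
    xty = [sum(p * q for p, q in zip(xi, zy)) for xi in zx]
    return dict(zip(names, solve(xtx, xty)))


# Usage with made-up data (not study data): y is an exact linear function of two predictors.
x1 = [1, 2, 3, 4, 5, 6]
x2 = [2, 1, 4, 3, 6, 5]
y = [3 * a + 2 * b for a, b in zip(x1, x2)]
print(standardized_betas(y, {"x1": x1, "x2": x2}))
```

In the study's model the predictor dictionary would hold vocabulary, age, gender, and object perception, and each β is the expected change in emotion perception (in SD units) per SD change in that predictor with the others held constant.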

Analyses Separated by Emotion

We conducted post-hoc analyses to investigate whether infant vocabulary related to nonverbal emotion perception for each of the four emotions independently, again accounting for age, gender, and object perception. We separated our emotion perception outcome variable into four outcome variables based on the emotion presented during the first 3 seconds of the trial (i.e., the emotion shown during the first part of the trial, when only a single face was on the screen), and ran four separate regressions. Because of the post-hoc nature of this analysis, we adjusted our alpha level to account for multiple comparisons (.05/4=.0125 rather than .05). There were no significant relations for any of the four emotional conditions (Anger: β=−.13, p=.392; Fear: β=.17, p=.263; Happiness: β=.15, p=.296; Sadness: β=.03, p=.830). That is, infant vocabulary did not uniquely relate to emotion perception for any individual emotion category. We further examined whether the interaction between vocabulary and emotion significantly predicted infant nonverbal emotion perception, and this interaction was also non-significant (β=.09, p=.512).

Gender Differences

Because gender was related to overall emotion perception in the multiple regression model (consistent with previous reports of gender differences in child language and emotion understanding), we conducted follow-up analyses of gender differences in vocabulary, object perception, and emotion perception. There were no significant gender differences in our sample in vocabulary (t(48)=−0.65, p=.521, d=0.18) or object perception (t(48)=−1.28, p=.208, d=0.36). However, there was a significant gender difference in emotion perception (t(48)=−2.35, p=.023, d=0.67): boys devoted a greater proportion of their looking to the novel face (M=0.52, SD=0.05) than did girls (M=0.49, SD=0.04). Further, one-sample t-tests (27 boys, 23 girls) revealed that boys looked to the novel face significantly more than chance (t(26)=2.58, p=.016, d=0.50), whereas girls’ looking to the novel face did not differ significantly from chance (t(22)=−0.72, p=.481, d=0.15).
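The reported effect sizes can be recovered from the t statistics and group sizes, assuming Cohen's d was computed with the standard conversions: d = |t| * sqrt(1/n1 + 1/n2) for a pooled-variance independent-samples t-test, and d = |t| / sqrt(n) for a one-sample t-test. The section does not state which formula was used, so this is a consistency check rather than a description of the authors' procedure; the function names are hypothetical.

```python
import math

def d_independent(t, n1, n2):
    """Cohen's d for two independent groups, recovered from a pooled-variance t."""
    return abs(t) * math.sqrt(1 / n1 + 1 / n2)

def d_one_sample(t, n):
    """Cohen's d for a one-sample t-test (mean difference from chance divided by SD)."""
    return abs(t) / math.sqrt(n)

n_boys, n_girls = 27, 23
print(round(d_independent(2.35, n_boys, n_girls), 2))  # emotion perception -> 0.67
print(round(d_independent(-0.65, n_boys, n_girls), 2)) # vocabulary -> 0.18
print(round(d_independent(-1.28, n_boys, n_girls), 2)) # object perception -> 0.36
print(round(d_one_sample(2.58, n_boys), 2))            # boys vs. chance -> 0.5
print(round(d_one_sample(-0.72, n_girls), 2))          # girls vs. chance -> 0.15
```

The degrees of freedom also line up with these group sizes: 27 + 23 − 2 = 48 for the between-gender tests, and n − 1 (26 and 22) for the one-sample tests.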

To further examine the role of gender identified in the original model, we ran two post-hoc models, separately for boys and girls, predicting nonverbal emotion perception from vocabulary while controlling for infant age and object perception. For the 27 boys, neither age (β=.11, p=.607) nor object perception (β=.10, p=.652) uniquely related to emotion perception, and, accounting for these variables, vocabulary was not related to nonverbal emotion perception (β=−.03, p=.901). In the same model for the 23 girls, age again did not uniquely relate to nonverbal emotion perception (β=.12, p=.459), but object perception did (β=.69, p=.001). Additionally, accounting for girls’ age and object perception, vocabulary was significantly related to nonverbal emotion perception (β=.42, p=.024). That is, for girls only, object perception uniquely related to nonverbal emotion perception, and vocabulary contributed over and above object perception. However, the interactions between gender and vocabulary (β=−.16, p=.480) and between gender and age (β=1.71, p=.469) did not significantly predict nonverbal emotion perception in these data.
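The gender × vocabulary test above can be sketched as a single OLS model with a product term. This is a minimal sketch under stated assumptions: the model is assumed to contain age, object perception, vocabulary, gender, and gender × vocabulary; gender is dummy-coded 0/1 (the study's coding is not given here); vocabulary is mean-centered before the product is formed; and only unstandardized coefficients are returned. The function name and simulated data are hypothetical.

```python
import numpy as np

def fit_gender_interaction(age, obj, vocab, gender, y):
    """OLS fit of y on age, object perception, vocabulary, gender, and gender x vocabulary.

    Returns unstandardized coefficients keyed by term name. gender must be a 0/1 dummy.
    """
    age, obj, vocab, gender, y = (np.asarray(v, dtype=float) for v in (age, obj, vocab, gender, y))
    vocab_c = vocab - vocab.mean()  # center vocabulary before forming the product term
    X = np.column_stack([
        np.ones_like(y),   # intercept
        age,
        obj,
        vocab_c,           # vocabulary slope for the group coded 0
        gender,            # group difference at mean vocabulary
        gender * vocab_c,  # difference between the two groups' vocabulary slopes
    ])
    coefs, *_ = np.linalg.lstsq(X, y, rcond=None)
    names = ["intercept", "age", "object", "vocab", "gender", "gender_x_vocab"]
    return dict(zip(names, coefs))

# Hypothetical simulated sample (NOT the study's data): vocabulary matters only when gender == 0.
rng = np.random.default_rng(0)
n = 50
gender = np.tile([0, 1], n // 2)
age = rng.uniform(15, 18, n)
obj = rng.normal(0.5, 0.05, n)
vocab = rng.normal(40, 15, n)
vc = vocab - vocab.mean()
y = 0.5 + 0.3 * (obj - 0.5) + 0.002 * vc * (1 - gender) + rng.normal(0, 0.03, n)
print(fit_gender_interaction(age, obj, vocab, gender, y))
```

A single interaction model tests whether the vocabulary slope differs by gender; fitting separate models within each gender, as in the post-hoc analyses, additionally lets the age and object-perception slopes differ, which is one reason the two approaches can disagree in small samples.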

Discussion

The present study investigated individual differences in nonverbal emotion perception and language abilities in late infancy. When accounting for age and general object perception abilities, we found a statistically significant relation between the two variables, but only for girls. Further, boys performed significantly better than girls on the nonverbal emotion perception task, although the infants as a group did not perform above chance. These results suggest that the relation between general language ability and emotion perception before 18 months of age may be modulated by multiple factors, including child gender, and therefore that the interplay between emotion and cognitive processes such as language in infancy may be complex. Boys may have shown an advantage in nonverbal emotion perception because the task involved no language, and girls with larger productive vocabularies may have had an advantage over girls with smaller vocabularies. We consider why this may be the case in the following paragraphs.

The results of the present study are novel in that they appear to indicate more advanced emotion perception (i.e., a stronger novelty preference) among boys than girls. Some prior work has shown that girls outperform boys on typical emotion understanding and perception tasks (e.g., Brown & Dunn, 1996; Caron et al., 1982; Denham et al., 2015; Dunn et al., 1991; Ontai & Thompson, 2002), whereas other studies have tested for gender differences and found none (Dunn et al., 1991; Fabes et al., 2001; Grazzani et al., 2016). In the current study, boys showed an advantage over girls in emotion perception under the tested conditions. This may be due to the nonverbal nature of our task, and possibly to distinct pathways of perceiving, interpreting, and processing emotional information for boys and girls. Because young girls generally have better language abilities than same-age boys (Fenson et al., 1994), and typical emotion understanding tasks involve language (e.g., Denham, 1986), it is possible that the advantage for girls demonstrated in previous research has been driven by their language abilities. That is, perhaps girls rely more than boys on language for perceiving and processing emotion information. In contrast, boys in infancy and childhood tend to show enhanced visual and spatial processing relative to girls (Moore & Johnson, 2008; Tsang et al., 2018; Vederhus & Krekling, 1996; Wilcox et al., 2012). Boys may therefore have performed well on this task because it relied on visual emotion perception, a domain in which their visual and spatial processing skills may give them an advantage. This interpretation is further supported by the finding that object perception, in addition to vocabulary, uniquely predicted nonverbal emotion perception for girls. This likely points to the importance of visual processing for performing well on this nonverbal task, as the absence of audio places greater emphasis on visual perception. Thus, boys may perform well in general due to stronger visual processing skills, while girls with stronger visual processing and language relative to other girls may also have an advantage on this task.

Although we did not observe a gender difference in language ability in our sample, it is possible that, without any linguistic cues to guide their performance, girls may not have been able to process emotions as well, whereas the absence of language and the focus on visual processing posed less of a challenge for boys. Thus, the gender differences observed in the present study could be attributed to language development, emotion perception development, or a combination of the two. This aligns with results from our multiple regression analyses, and with prior research suggesting that both neurological and socialization factors should be examined when accounting for sex differences in facial expression processing (McClure, 2000); doing so in infancy will provide further insight into how emotion perception develops. However, the analyses separated by gender were conducted post-hoc. They therefore relied on small samples (27 boys and 23 girls) and require replication.

A further intriguing possibility is that gender differences in input may account for some of our findings. Previous work has indicated that parents tend to use emotion language more with their preschool-aged daughters than with their sons (Denham et al., 2010). Further, girls tend to have larger vocabularies than their same-age male peers (Fenson et al., 1994), and parents generally use more complex language with children whose language abilities are more advanced (van Dijk et al., 2013). Girls in late infancy may therefore have been exposed to more emotion language, and to more complex language in general, than boys, and prior research has linked linguistic input from parents to later child emotion understanding (e.g., Taumoepeau & Ruffman, 2006). If young girls are exposed to more emotion language and more complex language in general, they may have expected such language in the task and struggled more than boys to interpret the emotional faces without it. At the same time, girls with more language knowledge may have been able to draw on it to aid them in the task more than girls with lower language abilities. The possible role of parental input in our findings remains an important question for future research.

Recent theories have proposed that language should relate to the perception of emotion categories (e.g., Barrett, 2017) because words provide “invitations to form categories” (Brown, 1958). Our results with 15- to 18-month-old infants provide some evidence in support of this constructionist perspective: infant vocabulary size was related to an advantage in emotion perception (i.e., increased looking to the novel face; Ogren & Johnson, 2020; Palama et al., 2018) across different faces for girls, but not for boys. At least among girls, these correlational results suggest that early access to a larger general vocabulary may provide an advantage for processing and perceiving emotional information. This may be because language helps children interpret and categorize information in their environment in general (Balaban & Waxman, 1997; Fulkerson & Waxman, 2007), so greater vocabulary skills may also be beneficial for interpreting and categorizing emotional content. In this way, children may leverage the language they hear to actively construct emotion categories based on their experiences. However, additional research is necessary to support this causal hypothesis, particularly among older age groups. Perhaps late infancy is too early to observe a relation between language and emotion perception for boys; because our participants were limited to 15- to 18-month-old infants, it is possible that language predicts emotion perception for both genders after 18 months of age.

In everyday social environments, there is substantial variability among emotion displays within emotion categories (Barrett et al., 2019). Not all angry faces, for example, look alike. Our emotional stimuli were validated by adults, yet it is possible that variability in emotional displays from one person to the next, without the aid of any audio, made this task particularly challenging for infants. If so, a relation between language and nonverbal emotion perception may become apparent for both boys and girls among older age groups, as children gain further experience with this variability. This may be particularly important to investigate given that emotion words have been shown to influence emotion face categorization among 6-, 9-, and 12-year-olds (Vesker et al., 2018), whereas their effects in children between 18 months and 6 years of age remain largely unknown. Such work may also help tease apart the roles of general language abilities and emotion-specific vocabulary. In late infancy, emotion-specific vocabulary is limited (as noted previously, only one of the 50 participants in our sample produced any emotion words). However, previous work has suggested that by the preschool years, learning emotion words may play an important role in learning an emotion concept (Widen & Russell, 2008). Examining the role of emotion vocabulary versus general vocabulary as children get older and expand their emotion vocabulary (e.g., as toddlers) will afford further insight into the mechanisms behind the development of emotion understanding.

It is also important to note a limitation that is common in emotional development research: the present paradigm assessed infant perception of emotional faces, not of emotion itself. Although infants had to generalize emotional information across different identities, infant performance on the present task likely involved matching some perceptual features across individuals (e.g., furrowed eyebrows for anger). Because emotions are highly complex and involve multiple components, future research should assess infant perception of emotion in other domains, such as vocal tone or body posture, as well as cues with less distinct perceptual features, such as the situation in which the emotion is embedded. It is possible that perceptual features such as actor skin tone influenced infant looking in the present study. However, this seems unlikely, given that images of the same woman were paired side-by-side, thereby controlling for skin tone, and that the order of presentation of specific actors was randomized across participants. Nonetheless, such perceptual features are important to consider. Additionally, infants overall did not perform above chance on the task, which contrasts with prior research indicating that infants can perceive facial configurations of emotion (LaBarbera et al., 1976; Schwartz et al., 1985). This likely reflects our design: a large number of trials with relatively short durations made the task challenging and allowed individual differences to emerge. Future research may therefore wish to examine the relation between emotion perception and language in late infancy using a less challenging task, as perhaps the added challenge of perceiving an emotional match within an 8-second trial prevented a relation with language from emerging for boys.
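The chance benchmark discussed above is 0.50 if performance is scored as the proportion of paired-face looking directed to the novel face. The scoring rules are not restated in this section, so the sketch below assumes that definition, averages it over each infant's trials that contain any looking, and compares a group of infant scores with chance using a one-sample t statistic. The function names and looking times are hypothetical.

```python
import math
import statistics

def novelty_preference(novel_s, familiar_s):
    """Share of paired-face looking directed to the novel face (0.5 = chance).

    Returns None when the infant looked at neither face on the trial.
    """
    total = novel_s + familiar_s
    return novel_s / total if total > 0 else None

def infant_score(trials):
    """Mean novelty preference over an infant's trials that contain any looking.

    trials: list of (novel_seconds, familiar_seconds) tuples.
    """
    scores = [novelty_preference(nov, fam) for nov, fam in trials]
    return statistics.mean(s for s in scores if s is not None)

def compare_to_chance(scores, chance=0.5):
    """One-sample t statistic (df = n - 1) and Cohen's d of group scores against chance."""
    n = len(scores)
    m = statistics.mean(scores)
    sd = statistics.stdev(scores)
    return (m - chance) / (sd / math.sqrt(n)), (m - chance) / sd

# Hypothetical looking times (seconds) for one infant on three trials;
# the last trial has no looking and is excluded from the average.
print(infant_score([(2.1, 1.9), (1.0, 2.0), (0.0, 0.0)]))
```

Short trials leave few seconds of paired-face looking per trial, so individual proportions are noisy; averaging across many trials, as the design above did, is what lets stable individual differences emerge even when the group mean sits near 0.50.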

In conclusion, the present study assessed infant language abilities and nonverbal emotion perception. For the full sample of infants, we did not find evidence that infant vocabulary size was significantly related to individual differences in nonverbal emotion perception when controlling for infant age, gender, and object perception abilities. However, we identified a gender difference: boys demonstrated a significantly greater novelty preference than girls in the emotion perception task. Further, when nonverbal emotion perception was examined separately for boys and girls, vocabulary was significantly related to it, controlling for age and object perception, for girls only. This suggests that pathways of processing emotional information (particularly with regard to the role of language) may differ for boys and girls in late infancy.

Highlights for Nonverbal Emotion Perception and Vocabulary in Late Infancy:

Infant emotion perception assessed using nonverbal task

No relation between nonverbal emotion perception and language overall

Language related to nonverbal emotion perception only for girls

Boys performed better than girls on nonverbal emotion perception task

Acknowledgments

The authors would like to thank Shannon Brady, Alexis Baird, Bryan Nguyen, participating families, and members of the UCLA Baby Lab. This work was supported by the National Institutes of Health [Grant numbers R01-HD082844, F31-HD100067, and F32-HD105316].

Footnotes

The authors declare no conflicts of interest.

Data will be made available upon request.

Contributor Information

Marissa Ogren, Rutgers University, Newark.

Scott P. Johnson, University of California, Los Angeles

References

- Balaban MT, & Waxman SR (1997). Do words facilitate object categorization in 9-month-old infants? Journal of Experimental Child Psychology, 64, 3–26. doi: 10.1006/jecp.1996.2332

- Barrett LF (2017). How emotions are made: The secret life of the brain. New York, NY: Houghton Mifflin Harcourt.

- Barrett LF, Adolphs R, Marsella S, Martinez AM, & Pollak SD (2019). Emotional expressions reconsidered: Challenges to inferring emotion from human facial movements. Psychological Science in the Public Interest, 20, 1–68. doi: 10.1177/1529100619832930

- Blair C (2002). School readiness: Integrating cognition and emotion in a neurobiological conceptualization of children’s functioning at school entry. American Psychologist, 57, 111–127. doi: 10.1037/0003-066X.57.2.111

- Bodrova E, & Leong DJ (2006). Self-regulation as a key to school readiness: How early childhood teachers can promote this critical competency. In Zaslow M & Martinez-Beck I (Eds.), Critical issues in early childhood professional development (pp. 203–224). Paul H Brookes Publishing.

- Brooks JA, Shablack H, Gendron M, Satpute AB, Parrish MH, & Lindquist KA (2017). The role of language in the experience and perception of emotion: A neuroimaging meta-analysis. Social Cognitive and Affective Neuroscience, 12, 169–183. doi: 10.1093/scan/nsw121

- Brown R (1958). Words and things. Glencoe, IL: Free Press.

- Brown JR, & Dunn J (1996). Continuities in emotion understanding from three to six years. Child Development, 67, 789–802. doi: 10.1111/j.1467-8624.1996.tb01764.x

- Caron RF, Caron AJ, & Myers RS (1982). Abstraction of invariant face expressions in infancy. Child Development, 53, 1008–1015. doi: 10.2307/1129141

- Caron RF, Caron AJ, & Myers RS (1985). Do infants see emotional expressions in static faces? Child Development, 56, 1552–1560. doi: 10.2307/1130474

- Cook ET, Greenberg MT, & Kusche CA (1994). The relations between emotional understanding, intellectual functioning and disruptive behavior problems in elementary school aged children. Journal of Abnormal Child Psychology, 22, 205–219. doi: 10.1007/BF02167900

- Crivello C, & Poulin-Dubois D (2019). Infants’ ability to detect emotional incongruency: Deep or shallow? Infancy, 24, 480–500. doi: 10.1111/infa.12277

- Cutting AL, & Dunn J (1999). Theory of mind, emotion understanding, language, and family background: Individual differences and interrelations. Child Development, 70, 853–865. doi: 10.1111/1467-8624.00061

- Denham SA (1986). Social cognition, prosocial behavior, and emotion in preschoolers: Contextual validation. Child Development, 57, 194–201. doi: 10.1111/j.1467-8624.1986.tb00020.x

- Denham SA, Bassett H, Brown C, Way E, & Steed J (2015). “I know how you feel”: Preschoolers’ emotion knowledge contributes to early school success. Journal of Early Childhood Research, 13, 252–262. doi: 10.1177/1476718X13497354

- Denham SA, Bassett HH, & Wyatt T (2010). Gender differences in the socialization of preschoolers’ emotional competence. In Kennedy RA & Denham SA (Eds.), The Role of Parent and Child Gender in the Socialization of Emotional Competence (pp. 29–49). San Francisco: Jossey-Bass.

- Denham SA, Zoller D, & Couchoud EA (1994). Socialization of preschoolers’ emotion understanding. Developmental Psychology, 30, 928–936. doi: 10.1037//0012-1649.30.6.928

- Dunn J, Brown JR, & Beardsall L (1991). Family talk about feeling states and children’s later understanding of others’ emotions. Developmental Psychology, 27, 448–455. doi: 10.1037/0012-1649.27.3.448

- Dunn J, Brown JR, Slomkowski C, Tesla C, & Youngblade L (1991). Young children’s understanding of other people’s feelings and beliefs: Individual differences and their antecedents. Child Development, 62, 1352–1366. doi: 10.1111/j.1467-8624.1991.tb01610.x

- Eisenberg N, Sadovsky A, & Spinrad TL (2005). Associations of emotion-related regulation with language skills, emotion knowledge, and academic outcomes. New Directions for Child and Adolescent Development, 109, 109–118. doi: 10.1002/cd.143

- Ekman P (1992). An argument for basic emotions. Cognition and Emotion, 6, 169–200. doi: 10.1080/02699939208411068

- Ekman P, & Cordaro D (2011). What is meant by calling emotions basic. Emotion Review, 3, 364–370. doi: 10.1177/1754073911410740

- Eriksson M, Westerlund M, & Berglund E (2002). A screening version of the Swedish communicative development inventories designed for use with 18-month-old children. Journal of Speech, Language, and Hearing Research, 45, 948–960. doi: 10.1044/1092-4388(2002/077)

- Fabes RA, Eisenberg N, Hanish LD, & Spinrad TL (2001). Preschoolers’ spontaneous emotion vocabulary: Relations to likability. Early Education and Development, 12, 11–27. doi: 10.1207/s15566935eed1201_2

- Fenson L, Dale PS, Reznick JS, Bates E, Thal DJ, & Pethick SJ (1994). Variability in early communicative development. Monographs of the Society for Research in Child Development, 59, 1–173. doi: 10.1111/j.1540-5834.1994.tb00173.x

- Fenson L, Marchman VA, Thal DJ, Dale PS, Reznick JS, & Bates E (2007). MacArthur-Bates Communicative Development Inventories: User’s guide and technical manual (2nd ed.). Baltimore, MD: Brookes.

- Fillo J, Simpson JA, Rholes WS, & Kohn JL (2015). Dads doing diapers: Individual and relational outcomes associated with the division of childcare across the transition to parenthood. Journal of Personality and Social Psychology, 108, 298–316. doi: 10.1037/a0038572

- Frank MC, Braginsky M, Yurovsky D, & Marchman VA (2017). Wordbank: An open repository for developmental vocabulary data. Journal of Child Language, 44, 677–694. doi: 10.1017/S0305000916000209

- Fugate JMB, Gouzoules H, & Barrett LF (2011). Reading chimpanzee faces: Evidence for the role of verbal labels in categorical perception of emotion. Emotion, 10, 544–554. doi: 10.1037/a0019017

- Fulkerson AL, & Waxman SR (2007). Words (but not tones) facilitate object categorization: Evidence from 6- and 12-month-olds. Cognition, 105, 218–228. doi: 10.1016/j.cognition.2006.09.005

- Geangu E, & Vuong QC (2020). Look up to the body: An eye-tracking investigation of 7-month-old infants’ visual exploration of emotional body expressions. Infant Behavior and Development, 60, 101473. doi: 10.1016/j.infbeh.2020.101473

- Gendron M, Lindquist KA, Barsalou L, & Barrett LF (2012). Emotion words shape emotion percepts. Emotion, 12, 314–325. doi: 10.1037/a0026007

- Grazzani I, Ornaghi V, Agliati A, & Brazzelli E (2016). How to foster toddlers’ mental-state talk, emotion understanding, and prosocial behavior: A conversation-based intervention at nursery school. Infancy, 21, 199–227. doi: 10.1111/infa.12107

- Hunter MA, & Ames EW (1988). A multifactor model of infant preferences for novel and familiar stimuli. In Lipsitt LP (Ed.), Advances in child development and behavior (pp. 69–95). New York: Academic.

- Izard CE, Fine S, Schultz D, Mostow AJ, Ackerman B, & Youngstrom E (2001). Emotion knowledge as a predictor of social behavior and academic competence in children at risk. Psychological Science, 12, 18–23. doi: 10.1111/1467-9280.00304

- LaBarbera JD, Izard CE, Vietze P, & Parisi SA (1976). Four- and six-month-old infants’ visual responses to joy, anger, and neutral expressions. Child Development, 47, 533–538. doi: 10.2307/1128816

- Law J, & Roy P (2008). Parental report of infant language skills: A review of the development and application of the Communicative Development Inventories. Child and Adolescent Mental Health, 13, 198–206. doi: 10.1111/j.1475-3588.2008.00503.x

- Lindquist KA, MacCormack JK, & Shablack H (2015). The role of language in emotion: Predictions from psychological constructionism. Frontiers in Psychology, 6, Article 444. doi: 10.3389/fpsyg.2015.00444

- McClure EB (2000). A meta-analytic review of sex differences in facial expression processing and their development in infants, children, and adolescents. Psychological Bulletin, 126, 424–453. doi: 10.1037/0033-2909.126.3.424

- Milders M, Hietanen JK, Leppänen JM, & Braun M (2011). Detection of emotional faces is modulated by the direction of eye gaze. Emotion, 11, 1456–1461. doi: 10.1037/a0022901

- Moore DS, & Johnson SP (2008). Mental rotation in human infants: A sex difference. Psychological Science, 19, 1063–1066. doi: 10.1111/j.1467-9280.2008.02200.x

- Nook EC, Lindquist KA, & Zaki J (2015). A new look at emotion perception: Concepts speed and shape facial emotion recognition. Emotion, 15, 569–578. doi: 10.1037/a0039166

- Nook EC, Sasse SF, Lambert HK, McLaughlin KA, & Somerville LH (2017). Increasing verbal knowledge mediates development of multidimensional emotion representations. Nature Human Behaviour, 1, 881–889. doi: 10.1038/s41562-017-0238-7

- Ogren M, & Johnson SP (2020). Intermodal emotion matching at 15 months, but not 9 or 21 months, predicts early childhood emotion understanding: A longitudinal investigation. Cognition and Emotion, 1–14. doi: 10.1080/02699931.2020.1743236

- Ontai LL, & Thompson RA (2002). Patterns of attachment and maternal discourse effects on children’s emotion understanding from 3 to 5 years of age. Social Development, 11, 433–450. doi: 10.1111/1467-9507.00209

- Palama A, Malsert J, & Gentaz E (2018). Are 6-month-old human infants able to transfer emotional information (happy or angry) from voices to faces? An eye-tracking study. PLOS One, 13(4), e0194579. doi: 10.1371/journal.pone.0194579

- Pons F, Lawson J, Harris PL, & De Rosnay M (2003). Individual differences in children’s emotion understanding: Effects of age and language. Scandinavian Journal of Psychology, 44, 347–353. doi: 10.1111/1467-9450.00354

- Quinn PC, Yahr J, Kuhn A, Slater AM, & Pascalis O (2002). Representation of the gender of human faces by infants: A preference for female. Perception, 31, 1109–1121. doi: 10.1068/p3331

- Rieffe C, & Wiefferink CH (2017). Happy faces, sad faces: Emotion understanding in toddlers and preschoolers with language impairments. Research in Developmental Disabilities, 62, 40–49. doi: 10.1016/j.ridd.2016.12.018

- Russell JA, & Widen SC (2002). A label superiority effect in children’s categorization of facial expressions. Social Development, 11, 30–53. doi: 10.1111/1467-9507.00185

- Schwartz GM, Izard CE, & Ansul SE (1985). The 5-month-old’s ability to discriminate facial expressions of emotion. Infant Behavior and Development, 8, 65–77. doi: 10.1016/S0163-6383(85)80017-5

- Steele H, Steele M, Croft C, & Fonagy P (1999). Infant-mother attachment at one year predicts children’s understanding of mixed emotions at six years. Social Development, 8, 161–178. doi: 10.1111/1467-9507.00089

- Taumoepeau M, & Ruffman T (2006). Mother and infant talk about mental states relates to desire language and emotion understanding. Child Development, 77, 465–481. doi: 10.1111/j.1467-8624.2006.00882.x

- Tomasello M, & Mervis CB (1994). The instrument is great, but measuring comprehension is still a problem. Monographs of the Society for Research in Child Development, 59, 174–179. doi: 10.1111/j.1540-5834.1994.tb00186.x

- Tsang T, Ogren M, Peng Y, Nguyen B, Johnson KL, & Johnson SP (2018). Infant perception of sex differences in biological motion displays. Journal of Experimental Child Psychology, 173, 338–350. doi: 10.1016/j.jecp.2018.04.006

- van Dijk M, van Geert P, Korecky-Kröll K, Maillochon I, Laaha S, Dressler WU, & Bassano D (2013). Dynamic adaptation in child-adult language interaction. Language Learning, 63, 243–270. doi: 10.1111/lang.12002

- Vederhus L, & Krekling S (1996). Sex differences in visual spatial ability in 9-year-old children. Intelligence, 23, 33–43. doi: 10.1016/S0160-2896(96)80004-3

- Vesker M, Bahn D, Kauschke C, Tschense M, Dege F, & Schwarzer G (2018). Auditory emotion word primes influence emotional face categorization in children and adults, but not vice versa. Frontiers in Psychology, 9, 618. doi: 10.3389/fpsyg.2018.00618

- Voltmer K, & Von Salisch M (2017). Three meta-analyses of children’s emotion knowledge and their school success. Learning and Individual Differences, 59, 107–118. doi: 10.1016/j.lindif.2017.08.006

- Widen SC, & Russell JA (2004). The relative power of an emotion’s facial expression, label, and behavioral consequence to evoke preschoolers’ knowledge of its cause. Cognitive Development, 19, 111–125. doi: 10.1016/j.cogdev.2003.11.004

- Widen SC, & Russell JA (2008). Children acquire emotion categories gradually. Cognitive Development, 23, 291–312. doi: 10.1016/j.cogdev.2008.01.002

- Wilcox T, Alexander GM, Wheeler L, & Norvell JM (2012). Sex differences during visual scanning of occlusion events in infants. Developmental Psychology, 48, 1091–1105. doi: 10.1037/a0026529