Abstract

Objectives: Applications of artificial intelligence (AI) have the potential to improve aspects of healthcare. However, studies have shown that healthcare AI algorithms also have the potential to perpetuate existing inequities in healthcare, performing less effectively for marginalised populations. Studies on public attitudes towards AI outside of the healthcare field have tended to show higher levels of support for AI among socioeconomically advantaged groups that are less likely to suffer algorithmic harms. We aimed to examine the sociodemographic predictors of support for scenarios related to healthcare AI.

Methods: The Australian Values and Attitudes toward AI survey was conducted in March 2020 to assess Australians’ attitudes towards AI in healthcare. Using an innovative weighting methodology, a non-probability web-based panel was weighted against results from a shorter omnibus survey distributed to a representative sample of Australians. We used multiple logistic regression to examine the relationship between support for AI and a suite of sociodemographic variables in various healthcare scenarios.

Results: Whereas support for AI in general was predicted by measures of socioeconomic advantage such as education, household income and Socio-Economic Indexes for Areas index, the same variables were not predictors of support for the healthcare AI scenarios presented. Variables associated with support for healthcare AI included being male, having computer science or programming experience and being aged between 18 and 34 years. Other Australian studies suggest that these groups may have a higher level of perceived familiarity with AI.

Conclusion: Our findings suggest that while support for AI in general is predicted by indicators of social advantage, these same indicators do not predict support for healthcare AI.

Keywords: Artificial intelligence, Machine Learning, Health Equity, Delivery of Health Care

WHAT IS ALREADY KNOWN ON THIS TOPIC

Artificial intelligence (AI) has the potential to perpetuate existing biases in healthcare data sets, which may be more harmful for marginalised populations. Support for the development of AI tends to be higher among more socioeconomically privileged groups.

WHAT THIS STUDY ADDS

While general support for the development of AI was higher among socioeconomically privileged groups, support for the development of healthcare AI was not. Groups that were more likely to support healthcare AI were males, those with computer science experience and younger people.

HOW THIS STUDY MIGHT AFFECT RESEARCH, PRACTICE OR POLICY

Healthcare AI is becoming more relevant for the public as new applications are developed and implemented. Understanding how public attitudes differ among sociodemographic subgroups is important for future governance of healthcare AI.

Background

There are currently many applications for healthcare artificial intelligence (HCAI) in various stages of development and implementation.1 Defined as technologies that allow computer programs to perform tasks and solve problems without explicit human guidance,2 HCAI-based systems employ algorithms to complete the tasks typically performed by health professionals. Algorithms have been trained to read ECGs,3 detect skin cancer from smartphone images4 and predict people’s risk of disease using large-scale national data sets5 with ostensibly comparable accuracy to current approaches.

While these technologies have the potential to improve aspects of healthcare, they also have the potential to cause harm to patients.6 Algorithmic harms are exacerbated in already marginalised populations,7 8 as the causes and effects of historical structural disadvantage are embedded in healthcare data sets, and training sets often exclude marginalised groups. Obermeyer et al9 audited an algorithm used in the USA for determining whether patients should be referred to high-risk care, and found that patients who identified as black were less likely to be flagged by the algorithm as needing high-risk care, despite having more comorbidities than non-black-identifying counterparts. Similarly, Seyyed-Kalantari et al,8 using data from the USA, found that women, people aged under 20, those with lower socioeconomic status and black or Hispanic-identifying people were less likely to be diagnosed correctly by a chest radiograph algorithm. Factors preventing marginalised groups from accessing care in the past exist implicitly in many healthcare data sets, and algorithms trained on these data sets perpetuate these inequities.9

Surveys examining public attitudes towards artificial intelligence (AI) have found that certain sociodemographic characteristics are associated with higher levels of support for AI. Zhang and Dafoe10 in a survey in the USA found that younger people, males, those with computer science experience and those with a high annual household income were more likely to be supportive of the development of AI. A survey study in the Netherlands, using a representative panel of the Dutch population, studied trust in HCAI and found that the sociodemographic characteristics associated with higher levels of trust were being male, having a higher level of education, being employed or a student and having not stayed in hospital in the past 12 months.11 It is suggested that those who are less likely to suffer from the negative impacts of AI are more supportive of its implementation.10–12

We conducted a survey to examine whether Australians’ attitudes towards HCAI vary with different sociodemographic characteristics.

Method

Our aims for this study were threefold. We aimed to (1) examine the sociodemographic variables associated with support for AI in Australia, (2) examine the sociodemographic variables associated with support for HCAI and (3) determine whether sociodemographic characteristics were associated with different preferences in AI-integrated healthcare.

This paper reports results from an analysis of the Australian Values and Attitudes toward AI (AVA-AI) survey. The survey was conducted with the Social Research Centre’s Life in Australia (LIA) study, which regularly engages a representative panel of Australians in independent surveys.13 A shortened version of the AVA-AI questionnaire was included in the 36th wave of the LIA study, disseminated in March 2020. The full version of the questionnaire was disseminated to a non-probabilistically sampled online panel. We used the shortened version of the questionnaire as a reference survey to produce weights for the non-probability sample that account for characteristics that influence people’s propensity to participate in the online panel. A more detailed description of the data collection and weighting methodology is provided in Isbanner et al’s study.14 For this analysis, we report on results from the weighted non-probability sample using data obtained from the full questionnaire.

Predictor variables

We selected predictor variables analogous to those used in two other surveys on public attitudes towards AI: Zhang and Dafoe’s study in the USA10 and Selwyn and colleagues’ study in Australia.15 Variables used in the analysis included age group, gender, self-identification as having a chronic health condition or disability, living in a capital city, highest level of educational attainment, area of socioeconomic advantage, household income, computer science or programming experience and speaking a language other than English at home. Area of socioeconomic advantage was measured using the Australian Bureau of Statistics’ Socio-Economic Indexes for Areas (SEIFA), which capture the relative advantage and disadvantage of areas.16 Participants were classified into quintiles based on the SEIFA of their area (ie, postcode) of residence, with those in quintiles 4 and 5 coded as ‘least socioeconomic disadvantage’, those in quintiles 2 and 3 coded as ‘moderate disadvantage’ and those in quintile 1 coded as ‘most socioeconomic disadvantage’. Additionally, we included self-reported health status as a predictor variable because evidence elsewhere indicated that health-related metrics were associated with attitudes towards HCAI.11 A copy of the questionnaire is provided in online supplemental file 1.
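The three-level SEIFA coding described above can be illustrated with a short sketch. This is not the code used in the study (the analysis was conducted in R); the function name `seifa_category` is hypothetical, and the sketch assumes the SEIFA quintile has already been derived from the participant's postcode.

```python
def seifa_category(quintile: int) -> str:
    """Collapse a SEIFA quintile (1-5) into the three categories used in the analysis.

    Assumes quintile 1 is the most disadvantaged fifth of areas and
    quintile 5 the least disadvantaged, as described in the text.
    """
    if quintile not in (1, 2, 3, 4, 5):
        raise ValueError(f"SEIFA quintile must be an integer from 1 to 5, got {quintile!r}")
    if quintile == 1:
        return "most socioeconomic disadvantage"
    if quintile in (2, 3):
        return "moderate disadvantage"
    return "least socioeconomic disadvantage"  # quintiles 4 and 5


# Example: recode a handful of (made-up) participant quintiles.
example_quintiles = [1, 3, 5]
example_categories = [seifa_category(q) for q in example_quintiles]
```

Keeping the mapping in one function makes the cut-points explicit and easy to change if a different grouping of quintiles is preferred.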


We removed any responses where the participant had not responded to all predictor and outcome variables (n=17). One participant identified with a gender outside of the male/female binary. This response was removed,17 and the limitations of this exclusion are discussed below. In total, n=1983 responses were analysed.

We calculated Spearman’s r coefficients to identify multicollinearity between predictor variables (table 1). Some pairs of variables were moderately correlated. Those with high self-reported health status were less likely to identify as having a disability, and those living in a capital city were more likely to live in postcodes with less socioeconomic disadvantage. We deemed these moderate correlations unlikely to have a detrimental effect on model fitting or interpretation.

Table 1.

Correlation matrix of predictor variables (Spearman’s r coefficients)

| | Computer science experience | Self-reported disability | Age group | Education | Gender | Household income | Languages other than English | Living in a capital city | SEIFA index |
|---|---|---|---|---|---|---|---|---|---|
| Self-reported disability | 0.04 | | | | | | | | |
| Age group | −0.16 | 0.23 | | | | | | | |
| Education | 0.26 | −0.12 | −0.22 | | | | | | |
| Gender | 0.13 | 0.04 | 0.11 | 0.05 | | | | | |
| Household income | 0.07 | −0.22 | −0.17 | 0.24 | 0.04 | | | | |
| Speaks languages other than English at home | 0.20 | −0.13 | −0.23 | 0.24 | 0.00 | 0.02 | | | |
| Living in a capital city | 0.08 | −0.14 | −0.19 | 0.18 | −0.01 | 0.12 | 0.22 | | |
| SEIFA | 0.05 | −0.14 | −0.06 | 0.15 | −0.01 | 0.11 | 0.08 | 0.32 | |
| Self-reported health status | 0.08 | −0.34 | −0.23 | 0.14 | −0.02 | 0.17 | 0.10 | 0.08 | 0.10 |

0 indicates no correlation. Coefficients closer to 1 or −1 indicate stronger positive and negative correlations, respectively.

SEIFA, Socio-Economic Indexes for Areas.
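As an illustration of how a rank correlation such as those in table 1 is obtained, the sketch below computes Spearman's coefficient as the Pearson correlation of average ranks. It is a minimal standard-library Python sketch, not the study's R code; the function names and the example data are hypothetical, and it does not incorporate survey weights.

```python
from math import sqrt


def average_ranks(values):
    """Return 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        tied_rank = (i + j) / 2 + 1
        for k in order[i:j + 1]:
            ranks[k] = tied_rank
        i = j + 1
    return ranks


def pearson(a, b):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((p - mean_a) * (q - mean_b) for p, q in zip(a, b))
    var_a = sum((p - mean_a) ** 2 for p in a)
    var_b = sum((q - mean_b) ** 2 for q in b)
    return cov / sqrt(var_a * var_b)


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Made-up ordinal codes for two variables (eg, education level and income band).
education = [1, 2, 2, 3, 4]
income = [1, 1, 2, 3, 3]
rho = spearman(education, income)
```

Because most of the predictors are ordinal or binary, ties are common, which is why the ranking step averages tied ranks rather than breaking ties arbitrarily.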

Outcome variables

Eleven outcome variables were selected for the three aims of the study (table 2). Item 1 replicated a question from Zhang and Dafoe’s study,10 asking participants to indicate their level of support for the development of AI on a 5-point semantic scale from strongly oppose to strongly support. Item 2 was a question that asked participants to consider their support for HCAI in a scenario where an unexplainable algorithm was being used to analyse patient health records and suggest treatments. Item 3 asked participants to consider their support for an algorithm that diagnosed diseases more accurately than physicians but required patients to share their health record. Item 4 asked participants to consider their support for HCAI in a scenario where its development leads to physicians becoming less skilled at tasks that were replaced by AI. Each of these questions asked participants to indicate their level of support on a 5-point scale.

Table 2.

Aims and outcome variables

| Aim | Items used | Predictor variables |
|---|---|---|
| Aim 1: examine the sociodemographic variables associated with support for AI in Australia | 1. Level of support for the development of AI (B01) | Age group; gender; chronic health condition or disability; living in a capital city*; highest level of educational attainment; SEIFA*; household income; computer science or programming experience; speaking a language other than English at home; self-reported health status |
| Aim 2: examine the sociodemographic variables associated with support for HCAI | 2. Level of support for HCAI that is unexplainable (C03); 3. Level of support for HCAI that requires sharing personal data (C04); 4. Level of support for HCAI that leads to clinician deskilling (C05) | Same as for aim 1 |
| Aim 3: determine whether sociodemographic characteristics were associated with different preferences in AI-integrated healthcare | 5. Importance of explainability (C01a); 6. Importance of getting an answer quickly (C01b); 7. Importance of getting an accurate answer (C01c); 8. Importance of being able to talk to a person about one’s health (C01d); 9. Importance of knowing who is responsible for one’s care (C01e); 10. Importance of reducing health system costs (C01f); 11. Importance of knowing the system treats everyone fairly (C01g) | Same as for aim 1 |

*Residing in a capital city and SEIFA are derived from self-reported postcode.

AI, artificial intelligence; HCAI, healthcare artificial intelligence; SEIFA, Socio-Economic Indexes for Areas.

Items 5–11 were preceded by a scenario asking participants to imagine a situation where an algorithm was reading a medical test, diagnosing them with a disease and recommending treatments. Participants were asked to consider the importance of (5) explainability, (6) speed, (7) accuracy, (8) human oversight, (9) accountability, (10) cost to the healthcare system and (11) equity. Participants responded on a 5-point scale from not at all important to extremely important. Each outcome variable was recoded to binary categories, where the two highest categories (ie, strongly support and somewhat support, very important and extremely important) were recoded to 1 and remaining categories were coded to 0.
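The binary recoding of the 5-point items can be sketched as follows. This is an illustrative Python sketch rather than the study's R code; it assumes responses are stored as integers from 1 (lowest category) to 5 (highest category), and the function name `recode_top_two` is hypothetical.

```python
def recode_top_two(response: int, scale_points: int = 5) -> int:
    """Recode an ordinal response to 1 if it is in the two highest categories, else 0.

    Assumes responses are coded 1..scale_points, with higher values meaning
    more support (or more importance).
    """
    if not 1 <= response <= scale_points:
        raise ValueError(f"response must be between 1 and {scale_points}, got {response!r}")
    return 1 if response >= scale_points - 1 else 0


# Example: the full 5-point scale maps to 0, 0, 0, 1, 1.
recoded_scale = [recode_top_two(r) for r in range(1, 6)]
```

Under this coding, the neutral midpoint is grouped with the opposing (or less important) categories, so an outcome of 1 indicates active support rather than the absence of opposition.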

Statistical analysis

We generated frequency tables that incorporated the survey weights using the questionr package.18 We fit separate multiple logistic regression models for each of the outcome variables using the same suite of sociodemographic variables as predictors for each. All analyses were conducted in R.19 The survey package20 was used to incorporate survey weights in the analysis and calculation of SEs. ORs are reported with accompanying p values and 95% CIs. We considered results significant where p<0.05 and commented on all results where p<0.10.
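For readers less familiar with how ORs, CIs and p values relate to a fitted logistic regression, the sketch below converts a log-odds coefficient and its SE into an OR with a Wald-type 95% CI and a two-sided p value. It is illustrative only: the analysis itself was run in R with the survey package, the function names are hypothetical, the Wald interval and normal approximation are assumptions about the method, and the inputs in the usage lines are made up rather than taken from the study.

```python
from math import erfc, exp, sqrt

Z_95 = 1.959964  # two-sided 95% critical value of the standard normal distribution


def odds_ratio_with_ci(beta: float, se: float, z: float = Z_95):
    """Return (OR, lower, upper) from a log-odds coefficient and its SE."""
    return exp(beta), exp(beta - z * se), exp(beta + z * se)


def wald_p_value(beta: float, se: float) -> float:
    """Two-sided p value for H0: beta = 0, using a normal approximation."""
    z_stat = abs(beta / se)
    return erfc(z_stat / sqrt(2))


# Made-up coefficient and SE, for illustration only.
example_or, example_lower, example_upper = odds_ratio_with_ci(beta=0.5, se=0.2)
example_p = wald_p_value(beta=0.5, se=0.2)
significant = example_p < 0.05        # threshold treated as significant in the text
worth_comment = example_p < 0.10      # threshold for results commented on
```

Because the interval is built on the log-odds scale and then exponentiated, it is asymmetric around the OR, and it excludes 1 exactly when the corresponding p value falls below 0.05.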

Results

n=1983 responses were analysed. Weighted and unweighted sample demographics are shown in table 3. Weights primarily affected distributions in self-reported health, chronic health condition or disability status and speaking languages other than English at home.

Table 3.

Weighted and unweighted sample demographics

| Characteristic | Unweighted n | Unweighted % | Weighted n | Weighted % |
|---|---|---|---|---|
| Computer science or programming experience | | | | |
| No | 1598 | 85.0 | 1603.4 | 85.3 |
| Yes | 281 | 15.0 | 275.6 | 14.7 |
| Has chronic health condition or disability | | | | |
| No | 1361 | 72.4 | 1457.2 | 77.6 |
| Yes | 518 | 27.6 | 421.8 | 22.4 |
| Age group | | | | |
| 18–34 | 572 | 30.4 | 593.2 | 31.6 |
| 35–54 | 630 | 33.5 | 640.5 | 34.1 |
| 55+ | 677 | 36.0 | 645.3 | 34.3 |
| Highest level of educational attainment | | | | |
| High school | 603 | 32.1 | 632.3 | 33.7 |
| Trade certificate/diploma | 630 | 33.5 | 709.3 | 37.7 |
| Bachelor’s degree | 452 | 24.1 | 374.7 | 19.9 |
| Postgraduate degree | 194 | 10.3 | 162.7 | 8.7 |
| Gender | | | | |
| Female | 947 | 50.4 | 968.1 | 51.5 |
| Male | 932 | 49.6 | 910.9 | 48.5 |
| Household income (per week) | | | | |
| <$500 | 361 | 19.2 | 340.2 | 18.1 |
| $500–$1999 | 1095 | 58.3 | 1051.5 | 56.0 |
| $2000+ | 423 | 22.5 | 487.3 | 25.9 |
| Speaks languages other than English at home | | | | |
| No | 1598 | 85.0 | 1473.8 | 78.4 |
| Yes | 281 | 15.0 | 405.2 | 21.6 |
| Lives in capital city | | | | |
| No | 626 | 33.3 | 626.7 | 33.4 |
| Yes | 1253 | 66.7 | 1252.3 | 66.6 |
| SEIFA | | | | |
| Most disadvantage | 281 | 15.0 | 294.9 | 15.7 |
| Moderate | 1185 | 63.1 | 1160.2 | 61.7 |
| Least disadvantage | 413 | 22.0 | 423.8 | 22.6 |
| Self-reported health | | | | |
| Excellent/very good | 735 | 39.1 | 1015.1 | 54.0 |
| Good/fair/poor | 1144 | 60.9 | 863.9 | 46.0 |

SEIFA, Socio-Economic Indexes for Areas.

Support for development of AI

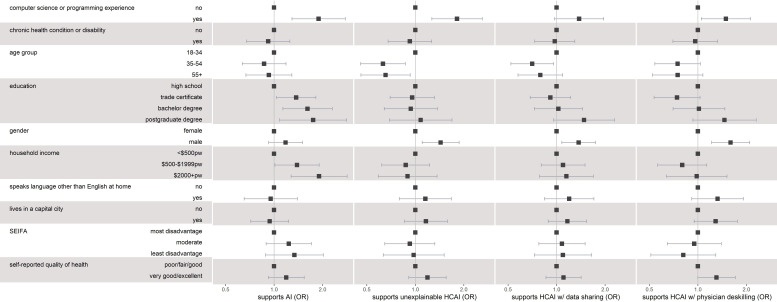

Logistic regression results are displayed in figure 1 with weighted proportions in online supplemental file 2. Overall, 56.7% of the weighted sample supported the development of AI. Support was significantly higher among those with computer science experience (weighted proportion supportive=72.1%; OR=1.89; p=0.001) compared with those without such experience; those with moderate (55.6%; OR=1.39; p=0.043) or high (66.3%; OR=1.90; p=0.002) household incomes compared with those with low income; and those with trade certificates/diplomas (57.4%; OR=1.37; p=0.028), bachelor’s degrees (65.6%; OR=1.61; p=0.008) and postgraduate degrees (69.0%; OR=1.75; p=0.022) compared with those with only high school-level education.

Figure 1.

OR plot of weighted logistic regression results. Error bar indicates 95% CI. Index categories displayed with OR=1. Plots indicate (1) participants’ level of support for artificial intelligence (AI), (2) participants’ level of support for unexplainable AI in healthcare, (3) participants’ support for AI in healthcare that necessitates sharing data and (4) participants’ support for healthcare artificial intelligence (HCAI) that leads to physician deskilling. pw, per week; SEIFA, Socio-Economic Indexes for Areas.


Support for the development of HCAI and trade-offs

Participants were asked to consider whether they supported the development of HCAI in three scenarios. Across the weighted sample, only 27.0% were supportive of HCAI that led to physician deskilling, 28.7% were supportive of unexplainable HCAI and 41.9% were supportive of HCAI that necessitated sharing personal data. Logistic regression results are displayed in figure 1.

Support for unexplainable HCAI was significantly higher among those with computer science experience (43.4%; OR=1.82; p=0.001) and males (32.5%; OR=1.44; p=0.007). Support was significantly lower among those aged 35–54 (25.3%; OR=0.63; p=0.005) and those aged 55+ (25.0%; OR=0.65; p=0.018) compared with those aged 18–34 (36.4%).

Support for AI that necessitates data sharing was significantly higher among males (46.0%; OR=1.37; p=0.011). Participants aged 35–54 (38.4%; OR=0.71; p=0.025) were less likely than those aged 18–34 (48.4%) to be supportive of HCAI that necessitates data sharing.

Support for HCAI that leads to physician deskilling was significantly higher among those with computer science experience (40.0%; OR=1.49; p=0.025) and males (31.6%; OR=1.60; p=0.001).

The analysis did not show an association between household income, living in areas with less social disadvantage, living in a capital city, speaking languages other than English at home or having a chronic health condition/disability and support for the HCAI trade-offs.

Importance of different features in AI-integrated healthcare

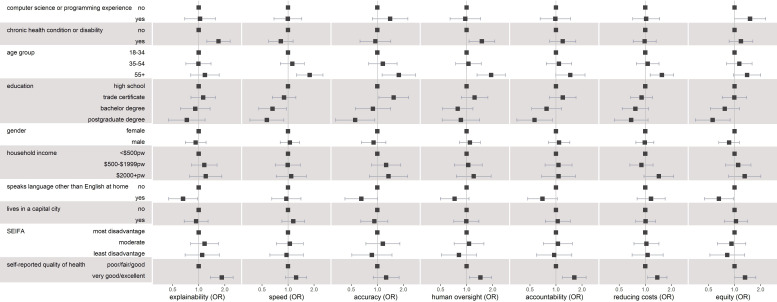

Participants were asked to respond to a series of questions about the importance of various aspects of HCAI implementation. Logistic regression results can be found in figure 2 and weighted proportions for each subgroup can be found in online supplemental file 3. Across all sociodemographic groups, accuracy was the feature most often regarded as important, whereas reducing costs to the healthcare system was least often regarded as important, followed by speed.

Figure 2.

OR plots of weighted logistic regression results. Error bar indicates 95% CI. Index categories displayed with OR=1. Plots indicate level of importance attributed to each aspect of artificial intelligence (AI)-enabled care. pw, per week; SEIFA, Socio-Economic Indexes for Areas.


Socioeconomic characteristics

Socioeconomic factors had little effect on the perceived importance of the features. Having a high (>$2000 per week) income had a weak positive effect on perceived importance of reducing costs to the healthcare system (64.5%; OR=1.44; p=0.073). SEIFA was not associated with perceived importance for any of the features.

Demographic characteristics

Demographic characteristics had some associations with perceived importance of the features. Those who spoke languages other than English at home were significantly less likely to regard explainability (68.0%; OR=0.66; p=0.035) and equity (65.1%; OR=0.66; p=0.035) as very/extremely important. There was weaker evidence (p<0.10) that they were also less likely to perceive accuracy (77.7%; OR=0.65; p=0.056) and accountability (70.6%; OR=0.70; p=0.074) as very/extremely important. Those aged 55 and over were more likely than those aged 18–34 to perceive all features as very/extremely important, particularly human oversight (85.0%; OR=1.92; p=0.001); however, this difference was not significant for equity or explainability. Gender and living in a capital city had no significant association with any of the features.

Educational characteristics

Those with postgraduate degrees were less likely than those with a high school-level education to see accuracy (73.9%; OR=0.55; p=0.027), equity (64.0%; OR=0.56; p=0.014), speed (61.1%; OR=0.57; p=0.015) and accountability (61.1%; OR=0.57; p=0.018) as very/extremely important. Those with computer science or programming experience were slightly more likely to see equity (76.0%; OR=1.51; p=0.052) as very/extremely important.

Health-related characteristics

Those with higher self-reported health were significantly more likely to perceive all features as important, except for equity (at p=0.056), speed and accuracy. Those who identified as having a chronic health condition were significantly more likely than those who did not to perceive explainability (81.1%; OR=1.69; p=0.001) and human oversight (83.2%; OR=1.5; p=0.02) as very/extremely important.

Discussion

In this study we examined sociodemographic differences in preference for healthcare AI using a large weighted Australian sample that was calibrated to the LIA probability sample using a range of behavioural and lifestyle questions, as well as major sociodemographic variables. Overall, 56.7% (95% CI 53.8%–59.0%) of the participants were supportive of the development of AI, slightly lower than results from another recent Australian study that also used an online panel, which found 62.4% were supportive.15 In a separate analysis of the same AVA-AI survey, combining the LIA probability sample results with the online panel results,14 it was found that 60.3% (95% CI 58.4%–62.0%) of Australians were supportive of the development of AI. In the unweighted non-probability sample, 54.8% (95% CI 52.5%–57%) of participants supported the development of AI, suggesting that the use of an extensive set of variables in the weighting led to some improvement, but the potential of self-selection in online panels may not have been corrected fully by the sophisticated weighting methodology.

Similar to Zhang and Dafoe’s10 study in the USA, we found that support for the development of AI was higher among those with computer science experience, higher levels of education and higher household incomes. It has been suggested that support for AI is lower among groups with less education and more social disadvantage, whose livelihoods may be more threatened by automation.10 12 The potential for AI to threaten people’s livelihoods through taking jobs appears to be a salient concern in Australia, where Selwyn et al15 found that the prospect of automation and job loss was the most commonly mentioned fear among their Australian sample. Results from our survey appear to support these findings, in that metrics for social advantage (ie, household income and education) were strongly associated with support for development of AI.

The sociodemographic characteristics associated with support for HCAI were different from those associated with support for AI in general. The items assessing support for HCAI required participants to consider whether they supported the development of HCAI, on balance, when it involved a trade-off (lack of explainability, data sharing or physician deskilling). For each of the HCAI questions, household income and education were no longer predictors of support. For example, 66.3% of the weighted sample with incomes >$2000 per week supported the development of AI in general, and only 30.5% supported the development of unexplainable HCAI. In contrast, 45.9% of those with incomes <$500 per week supported AI in general and 29.7% supported the development of unexplainable HCAI. This suggests that measures of socioeconomic advantage are linked to a general support of the development of AI, but when assessing specific and potentially harmful applications of HCAI, there is a low level of support regardless of socioeconomic characteristics.

Qualitative research on HCAI with members of the public has found that attitudes towards HCAI are shaped by complex evaluations of the alignment of the technologies with the values of medicine.21 If this is the case, then support for HCAI may be driven less by economic values and more by values relating to healthcare.

The characteristics that we found to be consistent predictors of support for HCAI and their specified trade-offs were having computer science experience, being male and being aged 18–34. Similarly, Zhang and Dafoe10 found that younger people and those with computer science degrees expressed less concern about AI governance challenges than those who were older or did not have computer science qualifications.

Being male, having computer science experience and being in a younger age category were three of the characteristics that Selwyn et al15 found to be associated with higher levels of familiarity with AI. It is possible that subgroups more familiar with AI are more tolerant of its risks. However, Selwyn and colleagues’ study did not control for potential confounding relationships between age, gender and computer science experience. It is therefore unclear from their work whether age and gender were themselves associated with greater familiarity with AI, or whether a greater proportion of their younger male sample had computer science experience, which is itself likely to be associated with higher levels of familiarity with AI. The relationship between familiarity with AI and tolerance of its risks may warrant further investigation.

Our investigation into subgroup differences in the perceived importance of features of HCAI found that accuracy was regarded as particularly important by all subgroups. This differs from Ploug et al22 who found, in a choice experiment in Denmark, that factors like explainability, equity and physicians being responsible for decisions were regarded as more important than accuracy. The Danish experiment, however, offered the qualifier that the algorithm would at least be as accurate as a human doctor, whereas our questionnaire did not. Further research could test whether algorithmic performance is more important than other features in circumstances where there are no assurances that the algorithm is as accurate as a human doctor.

Health-related characteristics such as self-reported health and having a chronic health condition or disability had a strong effect on perceived importance attributed to traditionally human aspects of healthcare like explainability, human oversight and accountability. This result is echoed by Richardson et al’s21 finding that people’s discussions about the value of HCAI were often framed by their previous experiences with the healthcare system. Participants with complex health needs may have been more inclined to reflect on whether automated systems could meet all aspects of those needs.

Subgroups that were more likely to be supportive of HCAI were not necessarily more likely to see the features of care that they were trading off as less important. While those who identified as male, those aged 18–34 and those with computer science or programming experience were more likely to support the development of unexplainable AI in healthcare, they were just as likely as others to perceive explainability (‘knowing why a decision is made’) as an important aspect of AI-integrated care. This hints at a complex relationship between people’s support for the development of HCAI and their willingness to make compromises to their healthcare.

Limitations

Given the quickly shifting landscape around AI, it is possible that public support for AI has changed in the 2 years since the questionnaire was administered. In addition, the AVA-AI survey includes an online panel obtained by non-probability sampling, which is subject to self-selection biases. The weighting methodology helps to reduce these effects by accounting for more than basic demographic variables: it includes age by education, gender, household structure, language spoken at home, self-reported health, early adopter status and television streaming. Any selection effects due to the predictor variables included in the analysis are also accounted for. However, it is possible that support for HCAI is influenced by confounding factors that were not considered in the weighting methodology or included in the analysis.

One key population not represented in the study was people who identified as a gender outside of the male/female binary. Only one participant identified as a gender outside of the binary, and this response was excluded from the analysis because there were too few participants to form a third gender category. Given that support for AI is lower among certain marginalised groups, consulting gender diverse individuals about their support for AI is an important consideration for future research.

Finally, the present study is a cross-sectional analysis, from which causation between predictor and outcome variables cannot be inferred. While we found an association between certain sociodemographic characteristics, such as education, and outcomes such as level of support for AI, we cannot ascertain the reasons for this association. These reasons are likely complex and multifaceted and should be explored in further research.

Conclusion

Respondents who reported having greater ill health or disability were more likely to consider human aspects of healthcare, such as explainability, human oversight and accountability, as important. While factors indicating socioeconomic advantage (higher income, higher education) were associated with general support for AI, these factors were not necessarily related to support for HCAI scenarios. Instead, support for HCAI scenarios was higher among males, younger people and those with computer science or programming experience. Based on other research, these groups may have a higher level of familiarity with AI. Further research should examine the relationship between familiarity with AI and support for the development of AI.

Acknowledgments

Statistical consulting was provided by Dr Brad Wakefield at the National Institute for Applied Statistics Research Australia (NIASRA) Statistical Consulting Centre.

Footnotes

Twitter: @EmmaKFrost, @yvessj_aquino

Contributors: EKF contributed to the design of the study, cleaned the data, conducted the analysis, drafted the manuscript, and acts as guarantor. PO'S contributed to the design of the survey instrument, contributed to the design of the study, oversaw the analysis and edited the manuscript. DS contributed to the design of the survey and edited the manuscript. AB-M and YSJA edited the manuscript. SMC contributed to the design of the survey instrument, contributed to the design of the study and edited the manuscript. All authors approved the final version of the manuscript for publication.

Funding: Funding for this survey was provided by the University of Wollongong’s Global Challenges Program.

Competing interests: None declared.

Provenance and peer review: Not commissioned; externally peer reviewed.

Supplemental material: This content has been supplied by the author(s). It has not been vetted by BMJ Publishing Group Limited (BMJ) and may not have been peer-reviewed. Any opinions or recommendations discussed are solely those of the author(s) and are not endorsed by BMJ. BMJ disclaims all liability and responsibility arising from any reliance placed on the content. Where the content includes any translated material, BMJ does not warrant the accuracy and reliability of the translations (including but not limited to local regulations, clinical guidelines, terminology, drug names and drug dosages), and is not responsible for any error and/or omissions arising from translation and adaptation or otherwise.

Data availability statement

No data are available.

Ethics statements

Patient consent for publication

Not applicable.

Ethics approval

This study involves human participants and was approved by the University of Wollongong Human Research Ethics Committee (HREC; 2019/458). Participants gave informed consent before taking part in the study.
