Abstract

Magnetic resonance imaging and computed tomography from multiple batches (e.g. sites, scanners, datasets, etc.) are increasingly used alongside complex downstream analyses to obtain new insights into the human brain. However, significant confounding due to batch-related technical variation, called batch effects, is present in this data; direct application of downstream analyses to the data may lead to biased results. Image harmonization methods seek to remove these batch effects and enable increased generalizability and reproducibility of downstream results. In this review, we describe and categorize current approaches in statistical and deep learning harmonization methods. We also describe current evaluation metrics used to assess harmonization methods and provide a standardized framework to evaluate newly-proposed methods for effective harmonization and preservation of biological information. Finally, we provide recommendations to end-users to advocate for more effective use of current methods and to methodologists to direct future efforts and accelerate development of the field.

1. Introduction

Brain imaging acquired via magnetic resonance imaging (MRI) or computed tomography (CT) from multiple batches, such as different sites or scanners, has shown promise in providing increased sample sizes for imaging-based neuroscience studies, prediction efforts, and more (Bethlehem et al., 2022; Casey et al., 2018; Choudhury et al., 2014; Di Martino et al., 2014; Horn et al., 2004; Marek et al., 2022; Mueller et al., 2005; Poldrack and Gorgolewski, 2014; van Erp et al., 2014; Van Essen et al., 2013). These multi-batch neuroimaging data are known to suffer from non-biological, technical variability between subjects from different batches, which we refer to as batch effects. Batch effects can be due to differences in acquisition protocol, magnetic field strength, scanner manufacturer, scanner drift, hardware imperfections, and more (Badhwar et al., 2020; Byrge et al., 2022; Cai et al., 2021; Han et al., 2006; Jovicich et al., 2006; Shinohara et al., 2017; Takao et al., 2014, 2011). These batch effects may explain, in part, challenges with reproducibility of neuroscience studies, generalizability of prediction algorithms, and incorporation of radiomics-derived imaging biomarkers in clinical practice (Crombé et al., 2021; Fournier et al., 2021; Mårtensson et al., 2020; Schwarz, 2021; Thieleking et al., 2021). Notably, batch effects have been shown to be significantly easier to detect than biological effects, both by statistical testing and machine learning algorithms (Bell et al., 2022; Fortin et al., 2018, 2017; Nielson et al., 2018). Additionally, due to the complex nature of batch effects, traditional statistical techniques for adjusting for confounders, such as inclusion of batch in a linear model as a mean effect, may be inadequate to sufficiently account for batch effects.

There is also growing interest in using neuroimaging to evaluate new treatments across a range of neurologic, psychiatric, and other clinical trials (Cash et al., 2014; Dercle et al., 2022; Polman et al., 2006; Saunders et al., 2016; Tariot et al., 2011; Tondelli et al., 2020; van Dyck et al., 2023). While clinical trial treatments are usually randomized within batches such that conclusions from unharmonized images are asymptotically unbiased, prespecified approaches to account for known confounders, including batch, allow for increased power and improved estimation of treatment effects (Hernández et al., 2006, 2004; Kent et al., 2009; Neuhaus, 1998; Optimising the Analysis of Stroke Trials (OAST) Collaboration et al., 2009). This is especially important when randomized treatment assignments are not completely balanced within each batch. Ultimately, in clinical trials where imaging biomarkers are measured across multiple centers, addressing batch effects allows for the detection of smaller treatment effects while requiring fewer subjects, minimizing participant burden, and reducing costs.

In observational settings where batch effects are present, such as when multiple small neuroimaging datasets are aggregated into one larger sample, addressing batch effects is even more important to obtain valid conclusions (Grech-Sollars et al., 2015; Keshavan et al., 2016; Stonnington et al., 2008; Takao et al., 2014). In these settings, failure to account for the known confounding of batch effects may lead to decreased power, less replicable findings, and potentially-biased findings. Effective removal of batch effects has been shown to enable detection of otherwise-undetected biological effects as well as increase the replicability of biological effects of interest in simulations of discovery-validation study designs (Bashyam et al., 2022; Bell et al., 2022; Carré et al., 2022; Fortin et al., 2017; Zhang et al., 2022; Zuo et al., 2021). Additionally, when batch-wise differences in participant populations are present, failure to address batch effects may result in biased conclusions (Suttorp et al., 2015).

Various solutions have been proposed and implemented to address this problem at different points in data collection and analysis pipelines. For example, in study design, batch effects can be minimized by collecting data from only one scanner, one manufacturer, one field strength, one acquisition protocol, or some combination of these criteria (Clarke et al., 2020; De Stefano et al., 2022; Ihalainen et al., 2004; Malyarenko et al., 2013; Meeter et al., 2017; Satterthwaite et al., 2014; van de Bank et al., 2015; Vogelbacher et al., 2021). However, when data collection is limited to only one batch, it is challenging to collect large sample sizes, and design-based solutions cannot address batch effects in data that has already been collected (Harms et al., 2018). Additionally, even when acquisition properties or scanner manufacturer are tightly controlled, batch effects can still arise due to residual differences, such as hardware imperfections, site or operator characteristics, software or hardware upgrades in long-running studies, or otherwise non-controllable scanner properties (Jovicich et al., 2016; Shinohara et al., 2017).

At other stages of the data analysis pipeline, such as during the image pre-processing step, standardization of images using methods for gradient distortion correction, bias field correction, and intensity normalization can also reduce batch effects (Brown et al., 2020; Fortin et al., 2016; Guan et al., 2022; Hellier, 2003; Jovicich et al., 2006; Nyúl and Udupa, 1999; Shinohara et al., 2014; Tustison et al., 2010; Wang et al., 1998; Wrobel et al., 2020). These normalization methods act on inter-subject variability without explicitly modeling batch effects and, as a result, can only reduce batch effects that coincide with inter-subject variability.

Additionally, some approaches account for batch effects using batch-aware downstream statistical or machine learning analyses. For example, data aggregation can be carried out in post-analysis through the use of meta-analysis or mega-analysis techniques, where estimates of interest are first calculated within batches and then analyzed jointly (Jahanshad et al., 2013). In certain settings, the simple approach of training models on large datasets across many batches can be considered, as these models are theoretically able to learn generalizable parameters that are invariant to batch, especially if the models are able to explicitly incorporate batch status. This approach has been used in normative modeling settings (Bayer et al., 2022a; Bethlehem et al., 2022; Kia et al., 2020; Kim et al., 2022). However, in many prediction or classification settings, complex machine learning algorithms are used that are not able to learn batch-invariant decision boundaries; in these settings, if outcome distributions differ across batches, models may incorrectly learn to use batch effects to make predictions. Here, transfer learning approaches have been used (Aderghal et al., 2020; Chen et al., 2020; Dar et al., 2020; He et al., 2021; Yang et al., 2019). In transfer learning, instead of reducing batch effects in the data itself, these methods seek to train deep learning models in a reference batch and then recalibrate these models for prediction in new batches.

Finally, batch effects can be explicitly modeled and addressed in image pre-processing, such that raw data are mapped from multiple batches into one common batch and the resulting harmonized dataset can then be analyzed as if it originated from a common batch. We refer to this process as image harmonization, which is the focus of this review.

This review is broadly organized into four sections. In the first and second sections, we describe statistical harmonization methods and deep learning harmonization methods, respectively. These two sections are additionally subdivided based on whether methods are designed for retrospective or prospective study designs. We define prospective study designs as those where some subjects, commonly called “traveling subjects,” are purposefully scanned across multiple batches within a short time interval; these paired data across batches can then be used to facilitate harmonization of these batches at the time of analysis. In retrospective study designs, no such paired data are available. In the third section, we discuss the evaluation of harmonization methods, including the various domains under which harmonization should be evaluated as well as specific tests to perform that evaluation. Finally, in the fourth section, we provide recommendations to both end-users and methodologists. For end-users, we suggest harmonization methods for each data type and study design based on ease of use, theoretical behavior, and empirical validation. For methodologists, we provide guidance for further work in harmonization, a standardized framework of evaluation, and improved comparability of novel harmonization methods.

2. Literature search

We performed a literature search across the PubMed database using the following search term: (“magnetic resonance” OR “MRI”) AND (“harmonization” OR “harmonizing” OR “harmonize” OR “harmonisation” OR “harmonising” OR “harmonise” OR “scanner effect” OR “site effect” OR “batch effect” OR “batch correct” OR “domain effect” OR “domain transfer” OR “technical variability” OR “style transfer”).

This search returned 583 candidate publications, as of January 17th, 2023, which were screened by title and abstract. Publications were included if they proposed or validated a statistical or deep learning approach to image harmonization. Other literature that the authors were aware of but that was not found in this search was also included, as were relevant citations from included publications.

Notably, we identified five relevant review articles on the topic (Bayer et al., 2022b; Bento et al., 2022; Da-Ano et al., 2020b; Pinto et al., 2020; Stamoulou et al., 2022). Da-Ano et al., (2020b), Bayer et al., (2022b), and Stamoulou et al., (2022) described statistical methods; Bento et al., (2022) described deep learning methods; and Pinto et al., (2020) described harmonization methods specifically for diffusion MRI. In this review, we seek to add to this literature by unifying statistical and deep learning methods for diffusion and non-diffusion MRI. Additionally, we describe common evaluation techniques for validating harmonization methods and provide a framework for proposing and evaluating new methods to direct future efforts in the field.

2.1. Statistical methods

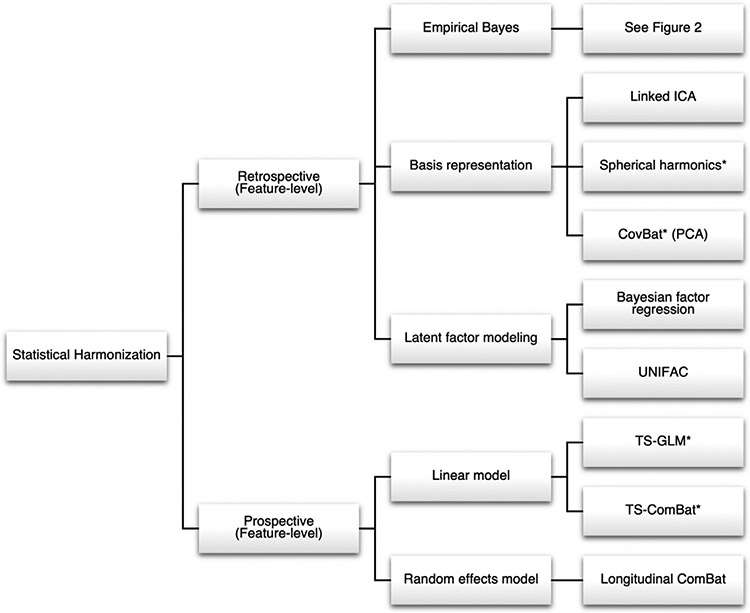

Several overarching statistical models have been used for image harmonization, including linear models, basis representations, latent factor models, and others (Figure 1). In this review, we provide an overview of methods for harmonization of imaging features across known batch labels. These statistical methods can largely be divided into retrospective and prospective harmonization methods. Retrospective harmonization is performed after data collection and aims to mitigate biases due to scanner with the available data. Prospective harmonization needs to be integrated into the study design and often involves collecting repeated measures for downstream analyses.

Fig. 1. Flowchart of statistical models organized by study design and underlying model class. Asterisks indicate methods that have been evaluated in more than one study.

2.2. Retrospective harmonization

2.2.1. ComBat

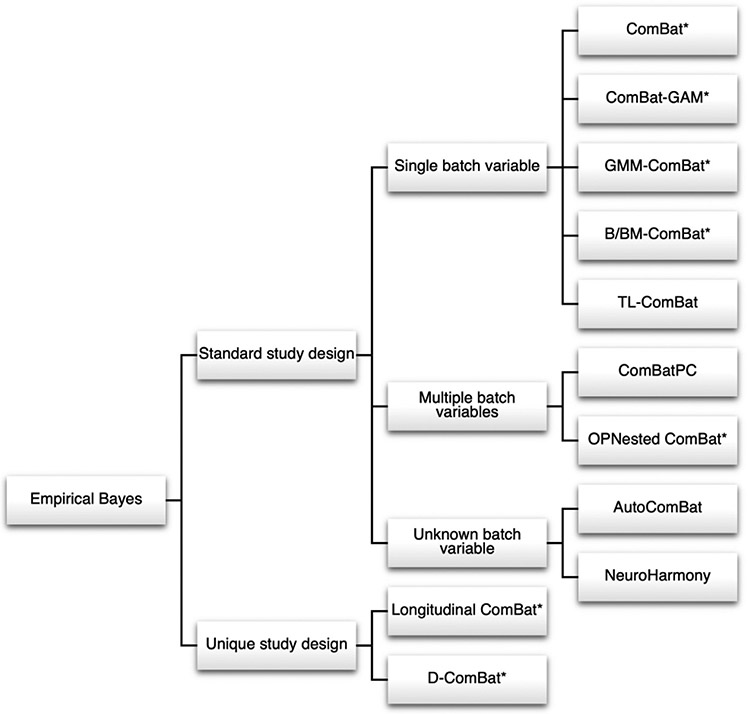

Fortin et al., (2017) proposed that ComBat, a method first designed for batch effect correction in genomics, could be used to harmonize MRI images and derived features (Johnson et al., 2007). ComBat and its various extensions, discussed below, have been widely used in neuroimaging and are organized in Figure 2.

Fig. 2. Flowchart of ComBat-based models organized by study design and underlying model class. All models presented in this figure perform feature-level harmonization in retrospective settings. Asterisks indicate methods that have been evaluated in more than one study.

ComBat employs an empirical Bayes linear model framework, which we briefly review. Let $\mathbf{y}_{ij} = (y_{ij1}, \dots, y_{ijV})^T$, $i = 1, \dots, M$, $j = 1, \dots, n_i$, denote the $V$-dimensional vectors of observed data where $i$ indexes site, $j$ indexes subjects within sites, $n_i$ is the number of subjects acquired on site $i$, and $V$ is the number of features. The observed data can be measured across voxels, regions of interest, or any other parcellation of the brain. Our goal is to harmonize these features across the $M$ sites. ComBat assumes that the data follow

$$y_{ijv} = \alpha_v + \mathbf{x}_{ij}^T \boldsymbol{\beta}_v + \gamma_{iv} + \delta_{iv} e_{ijv}$$

where $\alpha_v$ is the intercept, $\mathbf{x}_{ij}$ is the vector of covariates, $\boldsymbol{\beta}_v$ is the vector of regression coefficients, $\gamma_{iv}$ is the mean site effect, and $\delta_{iv}$ is the variance site effect. ComBat assumes that the errors $e_{ijv}$ independently follow $N(0, \sigma_v^2)$. First, least-squares estimates $\hat{\alpha}_v$ and $\hat{\boldsymbol{\beta}}_v$ are obtained for each feature. ComBat then assumes that the site effects follow the same distribution across features. That is, ComBat assumes the mean site effects $\gamma_{iv}$ follow independent normal distributions and the variance site effects $\delta_{iv}^2$ follow independent inverse gamma distributions. The empirical Bayes step estimates the hyperparameters via method of moments using data across all features. The empirical Bayes point estimates $\gamma_{iv}^*$ and $\delta_{iv}^*$ are then obtained as the means of the posterior distributions. The ComBat-harmonized data are then obtained as

$$y_{ijv}^{\text{ComBat}} = \frac{y_{ijv} - \hat{\alpha}_v - \mathbf{x}_{ij}^T \hat{\boldsymbol{\beta}}_v - \gamma_{iv}^*}{\delta_{iv}^*} + \hat{\alpha}_v + \mathbf{x}_{ij}^T \hat{\boldsymbol{\beta}}_v \tag{1}$$
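To make the location-and-scale adjustment concrete, the following is a minimal NumPy sketch of a ComBat-style correction on simulated data. It is a simplification under stated assumptions, not the published implementation: the empirical Bayes shrinkage step is omitted, so the site effects are plain per-feature least-squares and residual-scale estimates. The function name `location_scale_harmonize` and the simulated values are hypothetical.

```python
import numpy as np

def location_scale_harmonize(Y, batch, X):
    """Simplified ComBat-style adjustment (no empirical Bayes shrinkage).

    Y: (n, V) features, batch: (n,) site labels, X: (n, p) covariates
    without an intercept column. All names here are illustrative.
    """
    Y = np.asarray(Y, dtype=float)
    X = np.asarray(X, dtype=float)
    sites, idx = np.unique(np.asarray(batch), return_inverse=True)
    n = Y.shape[0]

    # Design matrix: one indicator column per site, then the covariates.
    B = np.eye(len(sites))[idx]
    D = np.hstack([B, X])
    coef, *_ = np.linalg.lstsq(D, Y, rcond=None)
    site_coef, beta = coef[: len(sites)], coef[len(sites):]

    # Intercept as the sample-size-weighted mean of the site coefficients,
    # so the additive site effects average to zero over subjects.
    alpha = (B.sum(axis=0) / n) @ site_coef
    gamma = site_coef - alpha

    # Multiplicative site effect: per-site residual SD relative to pooled SD.
    resid = Y - D @ coef
    sigma = np.sqrt((resid ** 2).mean(axis=0))
    delta = np.vstack(
        [resid[idx == s].std(axis=0) for s in range(len(sites))]
    ) / sigma

    # Remove site location and scale, then restore intercept and covariates.
    biological = alpha + X @ beta
    return (Y - biological - gamma[idx]) / delta[idx] + biological

# Simulated example: two sites with different mean shifts and noise scales.
rng = np.random.default_rng(0)
n = 400
batch = np.repeat([0, 1], n // 2)
age = rng.normal(size=(n, 1))
gamma_true = np.array([[-1.0], [2.0]])
delta_true = np.array([[0.5], [1.5]])
noise = rng.normal(size=(n, 3))
Y = 10.0 + 0.8 * age + gamma_true[batch] + delta_true[batch] * noise
Y_h = location_scale_harmonize(Y, batch, age)
```

In this sketch the covariate effect is estimated jointly with the site indicators and added back after the adjustment, which is the mechanism by which ComBat-style methods aim to preserve biological variation while removing site location and scale differences.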

ComBat was first applied to voxel-level fractional anisotropy (FA) values from two diffusion MRI datasets where, within each dataset, all subjects were imaged on the same scanner (Fortin et al., 2017). Subsequent studies validated ComBat on other neuroimaging features including cortical thickness and functional connectivity (Fortin et al., 2018; Yu et al., 2018). Since its publication, ComBat has been widely validated and used in the field of MRI (Acquitter et al., 2022; Barth et al., 2022; Bourbonne et al., 2021; Campello et al., 2022; Castaldo et al., 2022; P. Chen et al., 2022; A. Crombé et al., 2020; Dai et al., 2022; Haddad et al., 2022; Ingalhalikar et al., 2021; Leithner et al., 2022; Liu et al., 2022; Luna et al., 2021; Meyers et al., 2022; Onicas et al., 2022; Orlhac et al., 2021; Pagani et al., 2023; Radua et al., 2020; Saint Martin et al., 2021; Verma et al., 2019; Wengler et al., 2021; Whitney et al., 2021; H.M. 2020; Xia et al., 2022, 2019; Zavaliangos-Petropulu et al., 2019).

ComBat was also shown to be effective in magnetic resonance spectroscopy, and its applications to radiomics have been recently reviewed (Bell et al., 2022; Da-Ano et al., 2020b). To study its robustness, analyses have evaluated how ComBat behaves at various sample sizes (Parekh et al., 2022) and validated ComBat correction against correction based on traveling phantoms (Treit et al., 2022). ComBat has been recommended for harmonizing large-scale open-source neuroimaging datasets, such as the UK Biobank (Bijsterbosch et al., 2020; Bordin et al., 2021), ABIDE (Horien et al., 2021), ENIGMA (Hatton et al., 2020; Radua et al., 2020), ADNI (Ma et al., 2019), and ABCD (Hagler et al., 2019; Marek et al., 2019) datasets. Limitations of ComBat have been previously described in the field of genomics (T. Li et al., 2021; Nygaard et al., 2016; Zindler et al., 2020). These limitations are described in depth in the “Recommendations for End-Users” section of the Discussion.

2.2.2. ComBat extensions

Extensions of the standard ComBat model have sought to relax certain model-based assumptions. Many of these methods and their methodological details are covered in a recent review (Bayer et al., 2022b). One popular extension is ComBat-GAM, which allows for preservation of non-linear covariate effects through use of the generalized additive model (GAM) (Pomponio et al., 2020). Such estimation of non-linear covariate effects has been shown to be necessary in certain data settings, such as in diffusion MRI (Cetin-Karayumak et al., 2020b). Another model-based extension incorporates Gaussian mixture models (GMM) into GMM-ComBat to account for multimodal feature distributions (Horng et al., 2022b).

Other extensions of ComBat retain the original model but modify its construction and estimation. A recent study used a fully Bayesian approach with Monte Carlo sampling in the ComBat model for estimating posterior distributions and found that fully-Bayesian ComBat could provide more accurate harmonization results and unconstrained posterior distributions compared to the standard Empirical-Bayes ComBat model (Reynolds et al., 2022). B-ComBat and BM-ComBat estimate site parameters via bootstrapping and allow for robust harmonization to the pooled feature distribution or a reference batch, respectively (Da-Ano et al., 2020a). TL-ComBat provides an algorithm for applying ComBat parameters learned on training data to new subjects from a known batch (Da-Ano et al., 2021). Another study found that applying intensity normalization via RAVEL followed by ComBat provides greater removal of batch effects (Eshaghzadeh Torbati et al., 2021).

ComBat has been adapted to various study designs. In longitudinal studies where subjects may be imaged one or more times, Longitudinal ComBat accounts for intra-subject correlation by incorporating random effects into the model (Beer et al., 2020). The ComBat framework has also been independently extended by two groups to work in a distributed data setting via Decentralized ComBat/Distributed ComBat (D-ComBat), where data is collected across multiple sites but data-privacy concerns only allow summary statistics from each site to be shared (Bostami et al., 2022b; A. A. Chen et al., 2022b). Many of the above ComBat extensions have been externally validated and used in applied studies (Bostami et al., 2022a; Richter et al., 2022; Saponaro et al., 2022; Singh et al., 2022; Sun et al., 2022; Tafuri et al., 2022).

Finally, methodologists have extended the ComBat model to settings where batch status could be defined by multiple batch covariates, or an unseen batch must be harmonized to a set of known batches. ComBatPC proposed that secondary batch variables to remove could be modeled as additional mean effects in the ComBat model, while the primary batch variable remained in the model as both a mean and variance effect (Wachinger et al., 2021). Additionally, borrowing from the field of genome-wide association studies (GWAS), they showed that including the first principal component as one of the secondary batch variables could capture unobserved subpopulations and therefore improve harmonization performance. Applicable to similar settings, OPNested ComBat, an extension of Nested ComBat, learns an optimal order for correcting multiple batch variables and then performs iterative correction for each batch variable individually via the ComBat or GMM-ComBat model (Horng et al., 2022b; Horng et al., 2022a). AutoComBat sidesteps the issue of multiple batches by clustering subjects into automatically-identified batches, implicitly learning which combinations of metadata, such as image acquisition tags or image summary statistics, best define batch status before applying the standard ComBat model (Carré et al., 2022). For settings where an unseen batch must be harmonized to a set of known batches, NeuroHarmony has also been proposed to learn to predict appropriate ComBat parameters for correcting the unseen batch using scanner-associated image quality metrics (Garcia-Dias et al., 2020).

2.2.3. Basis representation

Several harmonization approaches represent the original data using basis vectors or functions estimated from the data and then remove batch effects from the representation. Compared to methods that treat features individually, basis representations can capture more complex batch effects and enable harmonization while preserving joint structure among features. The basis chosen varies depending on the imaging modality but includes principal components, independent components, and spherical harmonics.

Correcting Covariance Batch Effects (CovBat) performs multivariate harmonization by projecting residuals from ComBat onto their principal component axes and applying batch-specific shifts in the principal component space (A. A. Chen et al., 2022a). This study was the first to show that batch effects are present not only in individual features, but also in the covariance structure between features. CovBat first employs standard ComBat to globally shift and scale each feature, but additionally harmonizes in the principal component space to shift batch-specific covariance matrices towards the global covariance matrix. CovBat was shown to outperform existing harmonization methods in both multivariate statistical evaluations and prediction-based machine learning metrics in cortical structure measurements from the ADNI (A. A. Chen et al., 2022a). In functional connectivity harmonization, CovBat was shown to more effectively harmonize community structure, when compared to ComBat, in sites from the iSTAGING consortium as well as based on information theoretic metrics in the ABIDE, IMPAC, and ADHD-200 studies (A. A. Chen et al., 2022c; Roffet et al., 2022). CovBat has also been shown to remove batch effects in the cortical and volumetric measures in the ENIGMA study and diffusion tensor imaging features from the ADNI study (Larivière et al., 2022; Sinha et al., 2021; Thomopoulos et al., 2021).
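The principal-component step can be illustrated with a short NumPy sketch. It assumes the input is a matrix of residuals that has already been adjusted feature-by-feature, and it replaces CovBat's model-based estimation of score-level batch effects with simple per-batch centering and rescaling of each score. The function name `harmonize_pc_scores` and the simulated covariance structure are hypothetical.

```python
import numpy as np

def harmonize_pc_scores(resid, batch, n_components):
    """Center and rescale the leading PC scores within each batch.

    resid: (n, V) residuals after feature-wise harmonization (assumed input).
    batch: (n,) batch labels. Illustrative sketch, not the CovBat package.
    """
    resid = np.asarray(resid, dtype=float)
    batch = np.asarray(batch)
    mean = resid.mean(axis=0)
    centered = resid - mean

    # PCA via SVD: the rows of Vt are the principal axes.
    _, _, Vt = np.linalg.svd(centered, full_matrices=False)
    scores = centered @ Vt.T
    adjusted = scores.copy()

    for k in range(n_components):
        pooled_sd = scores[:, k].std()
        for b in np.unique(batch):
            mask = batch == b
            s = scores[mask, k]
            # Remove the batch-specific mean and scale of this score.
            adjusted[mask, k] = (s - s.mean()) / s.std() * pooled_sd

    # Map the adjusted scores back to feature space.
    return adjusted @ Vt + mean

# Simulated example: site 1 has extra variance along one shared direction.
rng = np.random.default_rng(1)
n_per = 500
u = np.ones(3) / np.sqrt(3)
site0 = rng.normal(size=(n_per, 3))
site1 = rng.normal(size=(n_per, 3)) + 2.0 * rng.normal(size=(n_per, 1)) * u
resid = np.vstack([site0, site1])
batch = np.repeat([0, 1], n_per)
resid_h = harmonize_pc_scores(resid, batch, n_components=1)
```

Because the extra variance in the simulated second site lies along a single direction shared by all features, no feature-wise rescaling could remove it, whereas adjusting the leading principal component score brings the two batch covariance matrices much closer together.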

Independent component analysis (ICA) has been a widely used data-driven approach to identify and remove structured noise components, such as head motion-related, physiological, and scanner-induced noise, from fMRI signals (McKeown et al., 2003; Mckeown et al., 1998). Specifically, one study (Feis et al., 2015) used the Functional Magnetic Resonance Imaging of the Brain Centre’s (FMRIB’s) ICA-based X-noiseifier (FIX, Griffanti et al., 2014; Salimi-Khorshidi et al., 2014) implemented in FMRIB’s Software Library (FSL) to reduce scanner-related effects in resting-state networks (RSNs). This study found that ICA-based FIX was useful to remove separate noise components in individual subjects’ ICA, but it cannot deal with hardware differences in sensitivity to RSNs (in relation to configurations) or RSN spatial variability (in relation to head coils). Additionally, ICA-based FIX cannot remove scanner-related differences in the magnitude of the BOLD effect. A recently developed linked ICA method was shown to outperform standard general linear model and ICA in removing batch effects from multimodal MRI data collected on the same scanner, but with hardware and software upgrades and different acquisition parameters. Linked ICA used data fusion of multiple MRI modalities to identify and remove scanner-related noise components in multimodal spatial maps. It has yet to be shown whether linked ICA is efficient for removing batch effects from data collected from different scanners.

For diffusion tensor imaging (DTI), voxel-wise signal intensity can be represented in a spherical harmonics (SH) basis, which is an orthonormal basis for functions defined on a unit sphere. Projection of the original intensities into the SH basis yields rotation invariant spherical harmonic (RISH) features. Harmonization from a target batch to a reference batch has been proposed by representing complex batch effects as mean shifts in RISH features, often referred to as RISH harmonization (Mirzaalian et al., 2015). Extensions of the RISH harmonization method have been proposed (Cetin Karayumak et al., 2019; Mirzaalian et al., 2018; Mirzaalian et al., 2016) and covered in a recent review (Pinto et al., 2020). Recent studies have compared statistical and deep learning SH-based harmonization methods, finding that the methods effectively mitigate batch effects but vary in performance on different metrics (Ning et al., 2020; Tax et al., 2019). A recent study found that RISH harmonization outperformed ComBat for preservation of biological effects in large-scale multi-center studies (de Brito Robalo et al., 2022, 2021). RISH harmonization has also been validated in traveling subjects studies (De Luca et al., 2022; Ning et al., 2020) and several major studies (Cetin Karayumak et al., 2019; Cetin-Karayumak et al., 2020a).
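As a small illustration of how RISH features are formed, the sketch below computes one feature per SH order as the sum of squared coefficients of that order. It assumes real SH coefficients for even orders only, stored contiguously by order (order 0 first, then the five order-2 coefficients, and so on); this storage convention and the function name `rish_features` are assumptions for illustration, and toolboxes may order coefficients differently.

```python
import numpy as np

def rish_features(sh_coeffs, max_order):
    """Per-order energy of SH coefficients (one RISH feature per even order).

    sh_coeffs: (..., n_coef) array, even orders 0..max_order stored by order.
    The ordering convention is an assumption of this sketch.
    """
    sh_coeffs = np.asarray(sh_coeffs, dtype=float)
    expected = (max_order + 1) * (max_order + 2) // 2
    if sh_coeffs.shape[-1] != expected:
        raise ValueError(f"expected {expected} coefficients")

    features = []
    start = 0
    for order in range(0, max_order + 1, 2):
        width = 2 * order + 1          # number of coefficients at this order
        block = sh_coeffs[..., start:start + width]
        features.append((block ** 2).sum(axis=-1))
        start += width
    return np.stack(features, axis=-1)

# Two voxels, orders 0 and 2 (1 + 5 = 6 coefficients per voxel).
coeffs = np.ones((2, 6))
feats = rish_features(coeffs, max_order=2)
```

Each feature summarizes the signal energy at one angular frequency, so scaling all coefficients by a constant scales every RISH feature by the square of that constant.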

2.2.4. Latent factor modeling

Another approach to retrospective harmonization uses latent factors to model biological or batch effects in order to separate wanted and unwanted variation. A latent factor model was first used in Removal of Artificial Voxel Effect by Linear regression (RAVEL) for neuroimaging normalization to model technical variability as latent factors estimated using a set of control voxels not associated with biological variables of interest (Fortin et al., 2016). RAVEL assumes that the $V \times n$ matrix of features $Y$, where $n$ is the total number of subjects, follows

$$Y = \beta X^T + \gamma Z^T + R \tag{2}$$

where $X$ is the $n \times p$ matrix of known covariates, $\beta$ is the $V \times p$ matrix of regression coefficients, $Z$ is the $n \times q$ matrix of unwanted latent factors, $\gamma$ is the $V \times q$ coefficient matrix associated with $Z$, and $R$ is the matrix of residuals. For a subset of voxels $Y_c$ where there is no association between the voxels and $X$, an estimate $\hat{Z}$ of $Z$ can be obtained by performing factor analysis on $Y_c$. Then, estimates $\hat{\gamma}$ for $\gamma$ are obtained by fitting separate linear regressions for each voxel under the model in (2), and the RAVEL-corrected features are obtained as $Y - \hat{\gamma} \hat{Z}^T$.
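The RAVEL-style correction can be sketched in a few lines of NumPy. In this sketch a singular value decomposition of the centered control voxels stands in for the factor analysis step, which is an assumption made for simplicity; the function name `ravel_correct`, the number of factors, and the simulated data are likewise hypothetical.

```python
import numpy as np

def ravel_correct(Y, X, control_idx, n_factors):
    """Estimate unwanted factors from control voxels and regress them out.

    Y: (V, n) voxels by subjects; X: (n, p) covariates including an intercept.
    SVD is used here as a simple stand-in for factor analysis.
    """
    Y = np.asarray(Y, dtype=float)
    X = np.asarray(X, dtype=float)

    # Control voxels are assumed to carry no biological signal of interest.
    Yc = Y[control_idx]
    Yc = Yc - Yc.mean(axis=1, keepdims=True)
    _, _, Vt = np.linalg.svd(Yc, full_matrices=False)
    Z = Vt[:n_factors].T                        # (n, q) estimated factors

    # Voxel-wise regression on covariates and estimated factors.
    D = np.hstack([X, Z])
    coef, *_ = np.linalg.lstsq(D, Y.T, rcond=None)
    gamma = coef[X.shape[1]:].T                 # (V, q) factor coefficients

    # Subtract only the unwanted-factor component.
    return Y - gamma @ Z.T

# Simulated example: one unwanted factor z affects every voxel.
rng = np.random.default_rng(2)
n, V, n_ctrl = 100, 50, 20
group = np.repeat([0.0, 1.0], n // 2)
X = np.column_stack([np.ones(n), group])
z = rng.normal(size=n)
beta = np.zeros((V, 2))
beta[n_ctrl:, 1] = 1.0                          # no group effect in controls
Y = beta @ X.T + 2.0 * z[None, :] + 0.5 * rng.normal(size=(V, n))
Y_corr = ravel_correct(Y, X, np.arange(n_ctrl), n_factors=1)
```

The design choice worth noting is that the covariates stay in the regression when the factor coefficients are estimated, so only the component attributed to the estimated unwanted factors is subtracted.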

The model in (2) was adapted as a Bayesian harmonization method by representing wanted variation through the latent factors, including known batch indicators in the linear model, and yielding harmonized low-dimensional features as the estimated latent factors (Avalos-Pacheco et al., 2022). Their model extends (2) by including a known $n \times M$ batch indicator matrix $B$ via

$$Y = \beta X^T + \gamma Z^T + \theta B^T + R \tag{3}$$

where $M$ is the number of batches and $\theta$ is the coefficient matrix associated with $B$. In contrast to RAVEL, this model also allows the variance of $R$ to vary by batch. They develop a non-local spike-and-slab prior to induce sparsity on the factor loadings $\gamma$. The authors then develop an expectation maximization algorithm for estimation of the posterior distribution, and the harmonized reconstructions are obtained from the mean of the posterior. In an application to gene expression data, they demonstrate that their method performs dimension reduction while adjusting for distinct covariance patterns across batches and benefits downstream survival analyses.

The UNIFAC harmonization method proposes a generalization of the latent factor model, allowing for flexible removal of multivariate batch effects (Zhang et al., 2022). Their main assumption is that the batch effects are low-rank and represented as matrix-valued shifts. Similar to ComBat and CovBat, UNIFAC harmonization first fits a linear model with known covariates and batch indicators, standardizes the data to have homogeneous variance, and obtains standardized data $Y = [Y_1, \dots, Y_M]$ where $Y_i$ denotes data from batch $i$, $i = 1, \dots, M$. The method then assumes that $Y$ follows

$$Y = R + [I_1, \dots, I_M] + [\delta_1 E_1, \dots, \delta_M E_M]$$

where $R$ is low-rank latent structure, $I_i$ are low-rank latent patterns associated with batch, $E_i$ are full-rank noise matrices with unit variance, and $\delta_i$ capture batch-specific scale shifts. UNIFAC harmonization estimates these latent patterns by optimizing a loss function with a nuclear norm penalty, which yields low-rank structures.

The UNIFAC-harmonized data are then obtained by removing the estimated batch-associated patterns $\hat{I}_i$ and scale shifts $\hat{\delta}_i$ and rescaling the result using the estimated population variance from the standardization step. Unlike ComBat and CovBat, the UNIFAC harmonization method can capture multivariate batch effects that differ across subjects within the same batch. Compared to CovBat, UNIFAC harmonization can model batch effects that are not constrained to principal component directions. The authors compare UNIFAC harmonization to existing methods in a schizophrenia study conducted across three sites. They show that UNIFAC harmonization outperforms ComBat, CovBat, and several multivariate harmonization approaches on reducing differences in covariance, obscuring prediction of site, and statistical power in detecting age-by-disease interactions.
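To see why a nuclear norm penalty produces low-rank estimates, the sketch below implements singular value soft-thresholding, the standard proximal step for a nuclear norm penalty paired with a squared-error loss. It is not the UNIFAC estimation algorithm, whose objective and optimization scheme are not reproduced here; the function name `singular_value_threshold` and the example matrix are hypothetical.

```python
import numpy as np

def singular_value_threshold(A, lam):
    """Minimize 0.5 * ||A - L||_F^2 + lam * ||L||_* over L.

    The solution shrinks every singular value of A by lam and sets
    those below lam to zero, which lowers the rank of the result.
    """
    U, s, Vt = np.linalg.svd(np.asarray(A, dtype=float), full_matrices=False)
    s_shrunk = np.maximum(s - lam, 0.0)
    return (U * s_shrunk) @ Vt

# A matrix with two strong components and one weak one.
A = np.diag([5.0, 3.0, 0.5])
L = singular_value_threshold(A, lam=1.0)
rank_before = np.linalg.matrix_rank(A)
rank_after = np.linalg.matrix_rank(L)
```

With a threshold of 1.0, the weak component (singular value 0.5) is removed entirely while the two strong components are shrunk, so the estimate has rank 2 rather than 3.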

2.3. Prospective harmonization

2.3.1. Traveling subjects linear models

Typical multi-center neuroimaging studies collect separate subjects from each study center, which leads to challenges in separating biological and technical variability. A recent study design addresses this issue by recruiting a subset of participants to travel to every scanner used in the study, often referred to as traveling subjects (Noble et al., 2017). Subsequent studies demonstrated that linear models effectively estimated and removed scanner-related biases from the traveling subjects subset (Yamashita et al., 2019). Increasingly, this study design has been employed in several large-scale multi-site studies (Hawco et al., 2022; Tanaka et al., 2021).

In these traveling subjects studies, $n$ subjects are acquired multiple times across $M$ scanners. Let $y_{ijv}$, $i = 1, \dots, M$, $j = 1, \dots, n$, $v = 1, \dots, V$, denote the observed data where $i$ indexes site, $j$ indexes subject, and $v$ indexes feature. Furthermore, let $\mathbf{x}_j$ denote a $p$-dimensional vector of participant factors, which can include indicators for each participant, diagnosis labels, sample, or any other relevant label. The traveling-subject harmonization model, TS-GLM, assumes that batch effects can be modeled as mean shifts within subjects across batches (Yamashita et al., 2019). Notably, unlike many of the retrospective harmonization methods described above, TS-GLM does not model batch effect as a scale component in the variance of the residuals. The model is expressed as

$$y_{ijv} = \mathbf{x}_j^T \boldsymbol{\beta}_v + \gamma_{iv} + e_{ijv}$$

where $\boldsymbol{\beta}_v$ is the vector of regression coefficients, $\gamma_{iv}$ is the mean site effect, and $e_{ijv}$ are errors assumed to independently follow $N(0, \sigma_v^2)$. Depending on the choice of indicators in $\mathbf{x}_j$, this model can have many more parameters than observations. Identifiability of the parameters in this model requires constraints on the estimators $\hat{\boldsymbol{\beta}}_v$ and $\hat{\gamma}_{iv}$. In the simple case where $\mathbf{x}_j$ is an $n$-dimensional vector of participant indicators, one constraint is placed on each of $\hat{\boldsymbol{\beta}}_v$ and $\hat{\gamma}_{iv}$ for each feature $v$. Once estimates are obtained, the mean site parameters $\hat{\gamma}_{iv}$ can be applied to any subject acquired on scanner $i$, even those not included in the traveling subjects dataset. This model has been applied and validated across multiple studies (Koike et al., 2021; Yamashita et al., 2021; A. 2020).
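A minimal sketch of the traveling-subject idea for a single feature follows. It assumes participant indicators as the only participant factors, no separate intercept, and a sum-to-zero constraint on the site effects; that particular constraint is an assumption chosen here for identifiability and may differ from the constraints used in the cited work. The function name `fit_ts_glm` and all simulated values are hypothetical.

```python
import numpy as np

def fit_ts_glm(y, subject, site):
    """Least-squares fit of participant means plus sum-to-zero site effects.

    y: (N,) one feature; subject, site: (N,) integer labels starting at 0.
    The sum-to-zero constraint is an assumption of this sketch.
    """
    y = np.asarray(y, dtype=float)
    n_subj = int(subject.max()) + 1
    n_site = int(site.max()) + 1

    P = np.eye(n_subj)[subject]            # participant indicators
    S = np.eye(n_site)[site]               # site indicators
    # Effect coding: the last site's effect is minus the sum of the others.
    C = S[:, :-1] - S[:, [-1]]

    D = np.hstack([P, C])
    coef, *_ = np.linalg.lstsq(D, y, rcond=None)
    beta = coef[:n_subj]
    free = coef[n_subj:]
    gamma = np.append(free, -free.sum())
    return beta, gamma

# Five traveling subjects, each scanned once at each of three sites.
n_subj, n_site = 5, 3
subject = np.repeat(np.arange(n_subj), n_site)
site = np.tile(np.arange(n_site), n_subj)
subj_mean = np.array([10.0, 11.0, 9.5, 10.5, 12.0])
gamma_true = np.array([1.0, -0.25, -0.75])     # sums to zero
y = subj_mean[subject] + gamma_true[site]
beta_hat, gamma_hat = fit_ts_glm(y, subject, site)

# Apply the estimated site effect to a new measurement from site 0.
y_new_harmonized = 13.0 - gamma_hat[0]
```

Because every subject is observed at every site, the site effects are separated from between-subject differences by design, and the estimated effects can then be subtracted from measurements of subjects who were scanned at only one site.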

ComBat has been extended to the traveling subjects study design, accounting for batch effects in the scale of measurements and leveraging information across features in parameter estimation (Maikusa et al., 2021). This traveling subjects ComBat (TS-ComBat) model is formulated as

$$y_{ijv} = \mathbf{x}_j^T \boldsymbol{\beta}_v + \gamma_{iv} + \delta_{iv} e_{ijv}$$

where $\delta_{iv}$ is the variance scanner effect and the remaining terms are defined as in TS-GLM. As in ComBat, the model assumes the mean batch effects $\gamma_{iv}$ follow independent normal distributions and the variance batch effects $\delta_{iv}^2$ follow independent inverse gamma distributions. Estimation also requires identifiability constraints on $\hat{\boldsymbol{\beta}}_v$ and $\hat{\gamma}_{iv}$. The batch effects are obtained as empirical Bayes point estimates $\gamma_{iv}^*$ and $\delta_{iv}^*$, taken as the means of the posterior distributions. Comparison of TS-ComBat and the model in Yamashita et al., (2019) showed that both models performed well in multiple harmonization tasks, but TS-ComBat is superior in smaller sample sizes.

Limitations of TS-GLM and TS-ComBat restrict applicability to common scenarios. Both models require that sufficient subjects are scanned on all scanners in order to ensure that batch effects are not confounded with biological effects. Furthermore, these models do not account for time of scan, so any batch effects may also be driven by changes in imaging measurements over time. Since participants may be lost to follow-up and are acquired at multiple distant time points, these limitations are often relevant and impact the results of harmonization.

2.3.2. Longitudinal ComBat

An alternative for harmonization in traveling subjects studies is Longitudinal ComBat, which flexibly models repeated measures across time (Beer et al., 2020). Compared to other models, Longitudinal ComBat efficiently captures subject effects as random intercepts and incorporates time of scan into the harmonization. While this method was originally designed for longitudinal studies, it has recently been applied in a traveling subjects study to effectively mitigate batch effects (Richter et al., 2022).

Let $y_{ijv}(t)$ denote the observed data, where $i$ indexes site, $j$ indexes subject, $v$ indexes feature, and $t$ is a continuous or categorical time variable. The Longitudinal ComBat model is expressed as

$$y_{ijv}(t) = \alpha_v + x_{ij}(t)^T \beta_v + \eta_{jv} + \gamma_{iv} + \delta_{iv} e_{ijv}(t),$$

where $\alpha_v$ is the mean of feature $v$ at baseline, $\gamma_{iv}$ is the mean scanner effect, $\delta_{iv}$ is the variance scanner effect, $x_{ij}(t)$ is a potentially time-varying vector of covariates, $\beta_v$ is a vector of regression coefficients, and $\eta_{jv}$ is a subject-specific random intercept. The errors $e_{ijv}(t)$ are assumed to be independent from the random intercepts $\eta_{jv}$. ComBat assumptions are placed on the mean and variance scanner parameters, and estimation proceeds through standard mixed model estimation followed by a modified empirical Bayes step.

3. Deep learning methods

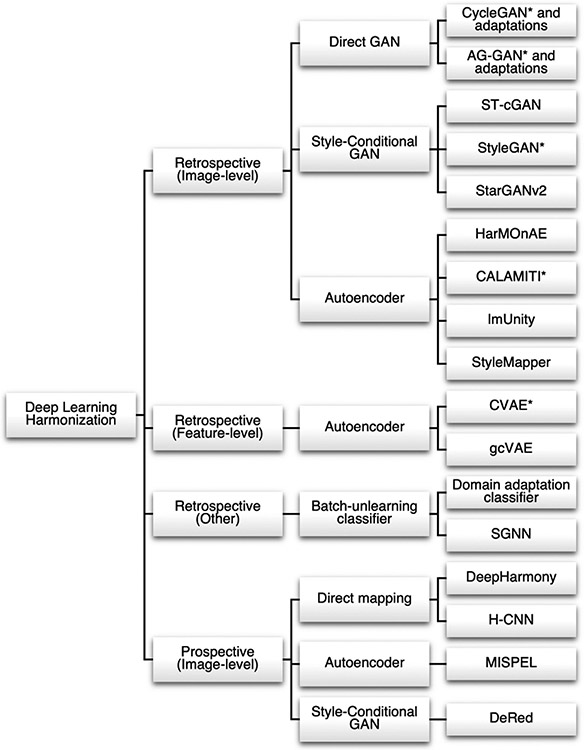

In recent years, a wide range of deep learning methods have been proposed as powerful and flexible tools to correct batch effects. These methods have especially shown promise for harmonization of unstructured data, such as images themselves, and for harmonization jointly across multivariate feature matrices. In the unpaired subject setting, popular approaches have used unpaired image-to-image translation frameworks as well as autoencoder networks designed to embed subjects into batch-invariant latent spaces. In paired subject data, methods have used specialized U-Net architectures adapted to imaging data as well as autoencoder methods to estimate direct mappings from one batch to another. Methods are categorized in Figure 3.

Fig. 3.

Flowchart of deep learning models organized by study design and underlying model class. Asterisks indicate methods that have been evaluated in more than one study.

3.1. Retrospective harmonization

3.1.1. Cycle-consistency GANs (Image-level)

Zhu et al., (2017) proposed the cycle-consistent generative-adversarial network (CycleGAN) to address the problem of unpaired image-to-image translation. The goal of this network is to learn a mapping between two image batches, $X$ and $Y$, using two generator-discriminator pairs. One generator, $G$, seeks to learn a mapping $G: X \to Y$ such that its corresponding discriminator, $D_Y$, cannot distinguish the distribution of images from $G(X)$ from that of images from $Y$. Similarly, generator $F$ and discriminator $D_X$ learn the inverse mapping $F: Y \to X$. Finally, a cycle-consistency loss is introduced as an additional constraint to push the network to preserve image-level features, $F(G(x)) \approx x$. This cycle-consistency loss enforces that an image translated from batch $X$ to batch $Y$ and then back to batch $X$ should resemble the untranslated image. Thus, classical CycleGAN attempts to minimize the following objective function: $L(G, F, D_X, D_Y) = L_{GAN}(G, D_Y, X, Y) + L_{GAN}(F, D_X, Y, X) + \lambda L_{cyc}(G, F)$, where $\lambda$ is a hyperparameter controlling relative importance of the loss components.
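
The cycle-consistency term can be illustrated with a minimal Python sketch. The generators `G` and `F` below are hypothetical scalar intensity maps standing in for the convolutional networks used in practice, the adversarial terms are passed in as plain numbers rather than computed by discriminators, and the default weight of 10 is an assumed value for illustration only.

```python
# Toy stand-ins for the two CycleGAN generators (hypothetical, for illustration):
# G maps batch X to batch Y, F maps batch Y back to batch X.

def G(x):
    """Hypothetical X -> Y generator: stretch and brighten intensities."""
    return [1.2 * v + 0.1 for v in x]

def F(y):
    """Hypothetical Y -> X generator: approximate inverse of G."""
    return [(v - 0.1) / 1.2 for v in y]

def l1(a, b):
    """Mean absolute difference between two equally sized images."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)

def cycle_consistency_loss(x, y):
    """L1 distance between each image and its round-trip translation."""
    return l1(F(G(x)), x) + l1(G(F(y)), y)

def total_objective(adv_g, adv_f, x, y, lam=10.0):
    """Two adversarial terms plus the weighted cycle-consistency term."""
    return adv_g + adv_f + lam * cycle_consistency_loss(x, y)

x = [0.0, 0.25, 0.5, 1.0]  # flattened image from batch X (made-up values)
y = [0.1, 0.4, 0.7, 1.3]   # flattened image from batch Y (made-up values)
cyc = cycle_consistency_loss(x, y)
```

Because `F` is constructed here as the exact inverse of `G`, the cycle term is numerically zero; with learned generators it would instead act as a penalty that discourages translations that cannot be undone.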

In image harmonization, this architecture has been leveraged for unpaired image-to-image translation in many contexts with minor additions to the original CycleGAN loss function and architecture (Dar et al., 2019; Hognon et al., 2019; Kieselmann et al., 2021; Liu et al., 2020; Sinha et al., 2021; Tixier et al., 2021; Zhao et al., 2019; Zhong et al., 2020). Zhao et al., (2019) proposed surface-to-surface GAN (S2SGAN), a variation of CycleGAN using spherical U-Net layers instead of standard convolutional layers, in order to perform harmonization on subject-wise cortical thicknesses projected to a spherical surface. Additionally, they added a cycle-consistency correlation loss component to the original CycleGAN loss such that corresponding vertices between input and cycled images are highly correlated. Dar et al., (2019) demonstrated that a CycleGAN network could generate T1-weighted images from T2-weighted images, and vice versa. Hognon et al., (2019) and Tixier et al., (2021) developed a two-stage framework, where the original CycleGAN network is first used with early stopping criteria to generate “pseudo-paired” data and then a pix2pix network is used on this “pseudo-paired” data to learn the final source-to-reference batch mapping. This two-stage framework differs markedly from other CycleGAN-based approaches; the authors claimed that it allows for better preservation of content information in their data setting where all reference batch subjects were controls while a significant subset of source batch subjects had anatomical pathologies. 
To validate the beneficial effects of CycleGAN on performance of downstream tasks, Liu et al., (2020) demonstrated that use of the standard CycleGAN model across a multi-batch dataset drastically increased the performance of a fully-convolutional segmentation neural network trained on reference batch images; however, they noted that post-harmonization performance remained substantially lower compared to performance on reference batch images.

Other adaptations of CycleGAN have imposed additional assumptions on the nature of batch effects – namely, that there should be no distortions in anatomy across batches. Previous studies have described distortions in anatomical features across batches, such as cortical thicknesses (Fortin et al., 2018), so the validity of this assumption depends on whether these previously described anatomical differences are actually due to true distortions or instead due to errors in automated segmentation because of batch-wise intensity differences. For example, Kieselmann et al., (2021) added a cycle-consistency geometric loss, where binary geometric masks (1 inside the brain and 0 otherwise) generated from input and cycled images are encouraged to be similar. Meanwhile, Chang et al., (2022) proposed semi-supervised harmonization (SSH), a variation of CycleGAN that uses a two-stage framework to perform harmonization in a manner similar to intensity normalization. In the first stage, the standard CycleGAN model is used to generate an initial harmonized image for each raw image. In the second stage, these initial harmonized images are used along with raw data to perform intensity normalization – that is, histogram matching is used to match each raw intensity to its corresponding initial harmonized intensity. Finally, to generate the output harmonized image, the raw intensities within the raw image are swapped out for their corresponding initial harmonized intensities. Thus, SSH can maintain the high resolution and anatomical fidelity of the raw image, but with brightness and contrast characteristics of the desired reference batch. The authors showed that SSH was able to improve the performance, when compared to ComBat and standard CycleGAN, of a cervical cancer classifier that was trained on subjects from the reference batch and tested on subjects from the source batch that were harmonized to the reference batch. 
The authors did not compare SSH performance against standard intensity normalization techniques (Nyúl and Udupa, 1999; Shinohara et al., 2014).
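
The histogram matching step in the second stage of SSH can be sketched as a rank-based quantile mapping. This is a minimal illustration under assumptions, not the authors' implementation: `histogram_match` is a hypothetical helper, images are flattened lists, and `initial_harmonized` stands for a first-stage CycleGAN output with made-up values.

```python
def histogram_match(raw, reference):
    """Map each raw intensity to the reference intensity at the same quantile."""
    n, m = len(raw), len(reference)
    ref_sorted = sorted(reference)
    # Voxel indices ordered by raw intensity (ties keep their input order).
    order = sorted(range(n), key=lambda i: raw[i])
    matched = [0.0] * n
    for rank, idx in enumerate(order):
        q = rank / (n - 1) if n > 1 else 0.0  # quantile of this voxel in raw
        pos = q * (m - 1)                     # fractional index into reference
        lo = int(pos)
        hi = min(lo + 1, m - 1)
        frac = pos - lo
        # Linear interpolation between neighbouring reference order statistics.
        matched[idx] = (1 - frac) * ref_sorted[lo] + frac * ref_sorted[hi]
    return matched

raw_image = [10, 30, 20, 40]               # raw intensities (made-up)
initial_harmonized = [0.2, 0.1, 0.4, 0.3]  # hypothetical first-stage output
output = histogram_match(raw_image, initial_harmonized)
```

Since the mapping is monotone, the ordering of voxel intensities in the raw image is preserved, which is one way to read the claim that anatomy is retained while brightness and contrast follow the reference batch.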

3.1.2. Attention-Mechanism GANs (Image-level)

A further extension of the CycleGAN network called attention-guided GAN (AG-GAN) incorporated attention guidance in both generators and discriminators, where the network is able to learn which parts of an image are most different between batches and focus its attention on accurately translating these parts (Tang et al., 2019). It has been applied to the image harmonization setting with minimal alterations (Sinha et al., 2021). This model leverages the same cycle-consistency idea as CycleGAN, but additionally seeks to decompose generated images into an attention-weighted linear combination of the input image and a restyled image, such that voxels that do not differ between batches can be left mostly unchanged. The attention-guided discriminators then focus on the regions of the generated image that are most artificial. The AG-GAN loss function consists of the original CycleGAN loss with additional attention-guided adversarial components, a pixel-wise loss to minimize unnecessary pixel-wise changes, and an attention mask loss to prevent attention masks from globally saturating to 1. Thus, in AG-GAN, the regions of generated images that are similar between batches are largely reconstructed from the input image, allowing generator-discriminator pairs to focus on style transfer in the regions that differ. Other CycleGAN-based models that include attention mechanisms have also been introduced by Selim et al., (2022) and Gutierrez et al., (2023).
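
The attention-weighted combination described above can be written in a few lines of Python. This is a sketch under assumptions: images and the attention mask are flattened lists with made-up values, and `attention_blend` is a hypothetical helper name; in AG-GAN both the mask and the restyled image are produced by the generator.

```python
def attention_blend(input_img, restyled_img, attention):
    """Per-voxel blend: attention 1 takes the restyled value, 0 keeps the input."""
    return [a * r + (1.0 - a) * x
            for x, r, a in zip(input_img, restyled_img, attention)]

input_img = [0.2, 0.5, 0.8]      # source-batch voxels (made-up)
restyled_img = [0.9, 0.6, 0.1]   # generator's restyled voxels (made-up)
attention = [0.0, 0.5, 1.0]      # attention mask values in [0, 1] (made-up)
generated = attention_blend(input_img, restyled_img, attention)
```

The first voxel is copied from the input, the last is fully restyled, and the middle voxel is an even mix, which is how voxels that do not differ between batches can be left mostly unchanged.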

3.1.3. Style-conditional GANs (Image-level)

While CycleGAN-based methods perform style transfer conditional only on an input image, adaptations to the CycleGAN framework allow for GAN-based style transfer that is conditional on both an input image as well as a desired output style (Bashyam et al., 2022; Choi et al., 2020; Fetty et al., 2020; Karras et al., 2019; Liu et al., 2021; Tian et al., 2022; Yao et al., 2022). These methods implicitly learn continuous style features such that subtle batch features, like different acquisition parameters within the same manufacturer, can potentially be corrected. Additionally, since these models include no explicit constraints to disentangle batch from non-batch style features, such as age and sex, non-batch styles may also be incorporated into style representations. Notably, style-conditional GANs share key characteristics with other broad classes of methods described in this review; these methods incorporate cycle-consistency loss components, similarly to CycleGAN, and also attempt to learn a latent representation of data where content and style information are disentangled, similarly to autoencoder-based models discussed further below.

Qin et al., (2022) draw strongly from the original CycleGAN framework and perform harmonization between two batches using two paired style-conditional GANs, which they call style transfer conditional GAN (ST-cGAN). In each pair, an encoder takes two images as input – one image is encoded into a content representation while the other is encoded into a style representation. Then, these two components are fused via adaptive instance normalization (AdaIN, Huang and Belongie, 2017) by the generator to create an output with the content of the first image and style of the second. The loss function involves the cycle-consistency and paired discrimination loss components along with an additional constraint of identity loss, which enforces that “harmonization” of an image directly to its own true batch should reproduce itself.
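
Adaptive instance normalization rescales content features so that their mean and standard deviation match those of the style features. The sketch below applies it to a single flattened feature channel; the feature values are made up, and adding `eps` to the standard deviation (rather than to the variance) is a simplification assumed here for brevity.

```python
from statistics import fmean, pstdev

def adain(content, style, eps=1e-5):
    """Shift and scale one content channel to the style channel's statistics."""
    mu_c, sd_c = fmean(content), pstdev(content)
    mu_s, sd_s = fmean(style), pstdev(style)
    # Normalize the content channel, then apply the style mean and spread.
    return [sd_s * (v - mu_c) / (sd_c + eps) + mu_s for v in content]

content_channel = [0.0, 1.0, 2.0, 3.0]    # content features (made-up)
style_channel = [10.0, 14.0, 18.0, 22.0]  # style features (made-up)
fused = adain(content_channel, style_channel)
```

The relative ordering of the content features is unchanged, while the channel-level statistics now follow the style input, which is the sense in which the output carries the content of the first image and the style of the second.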

Meanwhile, other style-conditional GANs deviate more from the CycleGAN. One such model, StyleGAN, was proposed by Karras et al., (2019) and later applied to imaging data by Fetty et al., (2020) and Liu et al., (2021). StyleGAN consists of one style-mapping network, one generator, one image discriminator, and one style discriminator. First, StyleGAN uses the style-mapping network to create a style representation from a random-noise latent space. Then, the generator encodes an image, combines it with this style representation using adaptive instance normalization, and attempts to generate a new image in that style, such that the image discriminator cannot tell the image is generated and the style discriminator can recover the input style representation. Since this generative process is under-constrained, a cycle-consistency loss component is added as well as a style diversification loss component. Thus, the network learns to sample diverse styles, generate realistic images in those styles that retain content, and implicitly learn the original style of each image.

A similar concept is employed by StarGANv2 and has been used in the multi-batch image harmonization setting (Bashyam et al., 2022; Choi et al., 2020). This model incorporates a style encoder that directly learns style representations from training images, in contrast to the StyleGAN mapping network which generates style representations from noise and then associates these randomly-generated style representations with relevant images. Once style representations as well as realistic image generation are learned by StarGANv2, style transfer can be achieved by combining content representations with desired style representations. Again, both cycle-consistency and style diversification loss components are used. Harmonization using this model has been shown to improve out-of-sample performance of an age-prediction network trained in the reference batch. A model based on similar style-disentangling mechanisms has been shown to improve the performance of a 3D segmentation network trained on the reference batch when applied to source batch images (Yao et al., 2022). Notably, like autoencoder-based models, StyleGAN, StarGANv2, and the model by Yao et al. rely on one common generator that is able to take any content representation and combine it with any style representation.

3.1.4. Autoencoder models (Feature-level)

Sohn et al., (2015) introduced the conditional variational autoencoder (CVAE) in order to generate new data conditional on additional covariates. This model can be best understood through its predecessor, the variational autoencoder (VAE), which in turn builds on the standard autoencoder, a simple neural network architecture that seeks to learn a non-linear, low-dimensional representation of input data that contains sufficient information for reconstruction (Kingma and Welling, 2014). The VAE architecture and loss function, discussed below, allow for additional constraints compared to the standard autoencoder and seek to improve organization of the latent space as well as reduce potential for overfitting. In this model, the encoder seeks to embed the input data $x$ into a lower-dimensional latent distribution, $q(z \mid x)$, which approximates some pre-specified “prior” distribution, $p(z)$. In practice, $p(z)$ is usually chosen to be the standard multivariate normal distribution. The probabilistic decoder then takes a random sample from this latent distribution and attempts to reconstruct the data using this sample. The VAE seeks to minimize the loss function $L_{VAE} = L_{recon} + D_{KL}(q(z \mid x) \,\|\, p(z))$, where $L_{recon}$ is the reconstruction loss and $D_{KL}$ is the Kullback-Leibler divergence between the latent distribution and prior distribution. The reconstruction loss component encourages latent-space distributions to efficiently retain information, while the Kullback-Leibler divergence component creates a trade-off that pulls representations toward the origin and injects noise into them. Together, these constraints organize the latent space such that nearby points produce similar reconstructions.
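
For a diagonal Gaussian latent distribution and a standard normal prior, the Kullback-Leibler term has a closed form, so the VAE loss can be sketched directly. The squared-error reconstruction term and the log-variance parameterization below are assumptions made for illustration; actual implementations vary in both.

```python
import math

def kl_to_standard_normal(mu, log_var):
    """KL divergence from N(mu, diag(exp(log_var))) to N(0, I)."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv
                     for m, lv in zip(mu, log_var))

def vae_loss(x, x_recon, mu, log_var):
    """Reconstruction error (assumed squared error) plus the KL penalty."""
    recon = sum((a - b) ** 2 for a, b in zip(x, x_recon))
    return recon + kl_to_standard_normal(mu, log_var)

# A latent code that already matches the prior incurs no KL penalty.
kl_at_prior = kl_to_standard_normal([0.0, 0.0], [0.0, 0.0])
# Moving one latent mean away from the origin is penalized.
kl_shifted = kl_to_standard_normal([1.0, 0.0], [0.0, 0.0])
```

The second call shows how the KL term penalizes latent means that drift from the origin, which is the trade-off against reconstruction accuracy described above.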

CVAE builds on the VAE architecture by concatenating additional covariates, $c$, onto the inputs for both the encoder and the decoder in order to condition the latent space on these covariates. In this model, since the decoder has necessary information from additional covariates readily available for reconstruction, the encoder no longer benefits from encoding covariate-dependent information in the latent space.

At the feature-level, a number of methodologies have harnessed CVAE ideas to learn a latent-space representation that is independent of the imaging batch, along with the corresponding batch-conditioned encoder-decoder pair (An et al., 2022; Moyer et al., 2020). Then, these methods perform harmonization by first encoding samples into the batch-invariant latent space using each sample’s actual batch, and then decoding those latent-space representations using the desired output batch.
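
The encode-then-decode procedure can be sketched with a deliberately simple stand-in for the trained networks. Everything below is hypothetical: the batch effect is assumed to be a purely additive shift stored in `BATCH_SHIFT`, and `encode` and `decode` replace the learned batch-conditioned encoder and decoder.

```python
# Hypothetical additive batch effect per site; a trained CVAE would learn a
# far more flexible, non-linear dependence on batch.
BATCH_SHIFT = {"A": 0.0, "B": 2.0}

def encode(x, batch):
    """Stand-in batch-conditioned encoder: strips the batch-specific shift."""
    return [v - BATCH_SHIFT[batch] for v in x]

def decode(z, batch):
    """Stand-in batch-conditioned decoder: re-applies a batch-specific shift."""
    return [v + BATCH_SHIFT[batch] for v in z]

def harmonize(x, source_batch, target_batch):
    """Encode with the sample's actual batch, decode with the desired batch."""
    z = encode(x, source_batch)     # batch-invariant latent representation
    return decode(z, target_batch)  # reconstruction in the target batch

features_b = [3.0, 4.5]  # features measured in batch B (made-up)
harmonized_to_a = harmonize(features_b, "B", "A")
```

Decoding with the source batch itself returns the input unchanged, mirroring ordinary reconstruction; harmonization only differs in the batch label supplied to the decoder.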

Moyer et al., (2020) leveraged a deep learning model using the CVAE structure to perform unsupervised image-based harmonization on diffusion MRI images. First, this model maps diffusion-weighted imaging (DWI) signal for each voxel to a vector of spherical harmonics representations. Then, for each voxel, spherical harmonics vectors from itself and its six immediate neighbors are concatenated along with the batch covariate and fed into the CVAE to learn the batch-invariant latent representation. The loss function consists of the standard VAE loss; a reconstruction error for the projection of spherical harmonics vectors back into DWI space; an adversarial loss for detecting batch on the reconstruction as estimated by a discriminator; and a penalty on the mutual information between the latent space and batch, enforced via the sum of pairwise Kullback-Leibler divergences between latent-space representations.

An extension of this model, called goal-specific conditional variational autoencoder (gcVAE), has been proposed to perform harmonization on image-derived features that is explicitly aware of desired downstream applications – in this case, the prediction of Alzheimer disease diagnosis and Mini-Mental State Examination (MMSE) scores (An et al., 2022). gcVAE trains two neural networks independently. First, a CVAE model is pre-trained to learn a conditionally-independent latent-space representation and the corresponding conditional decoders. Second, a generic feed-forward prediction network is trained on reference batch data to predict Alzheimer disease diagnoses and MMSE scores from unharmonized features, and its weights are frozen. Finally, data from both batches are harmonized through the pre-trained CVAE and then fed through the frozen prediction network; the loss function for this step seeks to minimize the error in prediction network outputs. This loss is used along with a small learning rate and limited training epochs to fine-tune the CVAE model to retain information relevant to diagnosis and MMSE prediction in the harmonized reconstruction.

3.1.5. Autoencoder models (Image-level)

In image-level harmonization, methods have used ideas from the CVAE as well as from the standard autoencoder to disentangle content information from batch and other style features (Cackowski et al., 2021; Cao et al., 2022; Fatania et al., 2022; Zuo et al., 2021). These methods seek to decompose images into low-dimensional style-invariant content representations in the encoding step, and then in the generation step, inject these content representations with style information.

Zuo et al., (2021) introduced a harmonization method named Contrast Anatomy Learning and Analysis for MR Intensity Translation and Integration (CALAMITI) that uses similar tools to CVAE as well as style-conditional GANs. This model was based on previous work by the same group (Dewey et al., 2020). However, CALAMITI additionally leverages the fact that neuroimaging subjects are often imaged under multiple contrasts, such as T1-weighted and T2-weighted acquisitions. These intra-subject contrast pairs can be thought to share identical anatomical content with differing styles. Meanwhile, intra-batch images – those taken under the same contrast and scanner, but on different subjects – can be thought to share identical style but differing anatomical content. CALAMITI uses these two sets of pseudo-paired data to train a content encoder, style encoder, generator, and batch discriminator. Content representations within intra-subject pairs are constrained to be interchangeable and independent of batch as assessed by the batch discriminator. Style representations necessary to reconstruct a given image are obtained entirely from a random intra-batch image with no shared content. Harmonization is then performed by providing a trained decoder with image-specific content representations along with style representations from the desired reference batch. Finally, to account for the 3D structure of the brain despite using 2D slices, this procedure is performed in axial, coronal, and sagittal directions and the three “directional” brain volumes are unified into a final image through a 3D fusion network, an idea borrowed from DeepHarmony, described below (Dewey et al., 2019).

CALAMITI has been validated by Shao et al., (2022), who showed that training a 3D thalamus-segmentation network on images harmonized to the reference batch resulted in better out-of-sample performance on true images from the reference batch when compared to the same segmentation network trained on unharmonized images. Meanwhile, in-sample performance of the network did not decrease after harmonization, suggesting minimal degradation of anatomy. Additionally, the direct predecessor to CALAMITI, proposed by Dewey et al., has been shown to allow for improved harmonization, when compared to CycleGAN, of diffusion MRI across multiple batches as well as simultaneously allow for estimation of multi-shell diffusion MRI from single-shell data (Dewey et al., 2020; Hansen et al., 2022).

Inspired by the use of imaging data structure in CALAMITI, ImUnity sought to apply these ideas to the harmonization of not only batches available in the training dataset, but also unseen batches (Cackowski et al., 2021). At each training iteration, ImUnity takes two random slices from the same image as input, such that the slices can be thought to have different content but share the same style. Next, both slices are modified using the gamma transformation, an image processing function that changes the relative intensity of gray levels. One modified slice is then embedded into a latent content representation, the other modified slice is embedded into a style representation, and these content and style representations are used to reconstruct a slice that should have the same content as the content-encoded slice and the same style as the style-encoded slice. Additionally, this model applies both a batch discriminator and optional biological information classifier to the latent content representation which serve to promote the removal of batch information and maintenance of biological information, respectively. Through this process, content information can be disentangled from style in a self-supervised manner without additional imaging contrasts, and image harmonization can be carried out by inputting source batch slices to the content encoder and reference batch slices to the style encoder. If unseen batches are similar enough to training batches such that the content encoder can appropriately embed slices from unseen batches, the model can be easily extended to these settings.
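
The gamma transformation used to perturb slice style can be sketched as a power-law mapping of normalized intensities. The slice values and the choice of exponent below are made up for illustration; how ImUnity samples the exponent is not specified here, so `gamma` is simply a free parameter.

```python
def gamma_transform(slice_2d, gamma):
    """Raise intensities in [0, 1] to the power gamma (a power-law remapping)."""
    return [[v ** gamma for v in row] for row in slice_2d]

slice_2d = [[0.0, 0.5],
            [1.0, 0.25]]  # intensities normalized to [0, 1] (made-up)
darker = gamma_transform(slice_2d, 2.0)     # gamma > 1 darkens mid-range values
unchanged = gamma_transform(slice_2d, 1.0)  # gamma = 1 leaves the slice as-is
```

The mapping is monotone on [0, 1], so the ordering of intensities is kept while contrast changes, which is why the transformed slice can be treated as having the same content in a different style.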

StyleMapper also takes advantage of the ability to apply various image transformation functions to raw images in order to generate images that are known to have the same content but different styles (Cao et al., 2022). In this approach, each raw image is transformed to seven different styles using the following transformation functions: original, negative, logarithmic, gamma transformation, piecewise linear, Sobel X filter, and Sobel Y filter. Then, for each iteration, two raw images and two randomly-sampled corresponding transformed images (both using the same transformation function) are fed to a model consisting of one content encoder, one style encoder, and one generator, where the generator seeks to reconstruct an image with desired style using the content and style representations. Notably, no discriminator is used in the StyleMapper model. To constrain this process, a number of loss function components are used: reconstruction of both raw images; reconstruction of both transformed images; similarity of style representations between raw images; similarity of style representations between transformed images; similarity of content representations between raw images and their corresponding transformed image; and cross-reconstruction, where swapping content and style representations across input images should result in an output image that is similar to the corresponding “ground-truth” image. Thus, StyleMapper is able to create pseudo-paired data with the same content but different styles, learn to disentangle content and style within this dataset, and perform harmonization, given that differences across batches are somewhat similar to the transformations used in training.

Finally, HarMOnAE removes batch effects using style transfer within a standard convolutional autoencoder (Fatania et al., 2022). In this model, style representations are explicitly defined as the batch covariate and directly injected into the decoder via adaptive instance normalization. To enforce the learning of batch-invariant content representations, an adversarial loss is imposed on the content representation space.

3.1.6. Batch-unlearning classifiers (Other)

Related to standard harmonization methods, some deep learning methods have been developed to simultaneously perform harmonization and downstream classification tasks, such that classification should be robust to batch effects (Dinsdale et al., 2021; Hong et al., 2022). Notably, unlike other harmonization methods described in this review, these batch-unlearning classifiers do not attempt to produce a harmonized output dataset that can then be used for any generic downstream analysis.

Dinsdale et al., (2021) proposed a domain-adaptation classifier that could be used to improve the generalizability of age predictions across multiple batches where age distributions differed. The three-module network consists of a convolutional feature extractor, a batch discriminator, and a main task classifier, where the goal of the feature extractor is to learn a latent space representation of raw images that is useful for the main task classifier and can simultaneously fool the batch discriminator. Thus, the feature extractor learns to extract batch-invariant features, and the main task classifier learns generalizable decision boundaries. Importantly, the batch-unlearning classifier is trained using a subsample of the data where the outcome of interest is balanced across batches in order to avoid confounding. The authors showed this strategy is especially useful in settings where one batch makes up a large majority of the dataset and the distribution of the outcome of interest differs greatly in this batch compared to others. The method also improved performance of age prediction in an unseen batch. Similarly, Hong et al., (2022) showed a non-convolutional version of this network, which they call scanner-generalization neural network (SGNN), could be used to improve prediction of general psychopathology factors (Caspi and Moffitt, 2018) using functional connectivity matrices within the ABCD study.

3.2. Prospective harmonization

3.2.1. Direct mapping

In specially-curated multi-batch studies where traveling subjects are available, the “ground truth” batch-specific scans for these subjects are known under the assumption that all differences between these scans are entirely due to technical artifacts. This allows for a class of much more powerful and accurate methods that leverage this unique pairing of data to learn a mapping from one batch to another. Then, this mapping can be applied to unpaired images to remove batch effects, under the assumption that data from traveling subjects are a representative sample of those from unpaired subjects. However, despite the benefits of prospective harmonization methods, datasets where the required traveling subjects are available are expensive to obtain and can be limited in terms of subjects. Additionally, the assumption that traveling subjects are representative of all subjects should be verified; traveling subjects could, for example, be healthier or wealthier than non-traveling subjects.

Dewey et al., (2019) proposed DeepHarmony, a convolutional U-Net-based architecture that could be applied to 2D patches across multiple contrasts from twelve subjects each scanned under each of two batches in order to directly harmonize the images themselves. In this architecture, the network attempts to jointly use multiple contrasts (T1-weighted, T2-weighted, FLAIR, and proton density) from each subject collected under one protocol. These multiple contrasts are used simultaneously to reconstruct the corresponding contrasts for that subject collected under another protocol. This “many-to-many” reconstruction approach can be thought of as allowing for the use of complementary information across contrasts. Additionally, DeepHarmony slightly modifies the vanilla U-Net architecture such that, in the final convolutional layer, the input contrasts are concatenated to the final feature map. Thus, instead of having to recreate reference contrasts entirely from scratch, the network can instead focus on learning an appropriate transform of the input data to reconstruct the intended output. Finally, as with CALAMITI, DeepHarmony sought to learn three independent image-to-image mappings for slices in each of the axial, sagittal, and coronal directions. These “directional” images are then aggregated using voxel-wise medians to produce a final harmonized image.
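
The final aggregation step can be sketched as a voxel-wise median over the three directional outputs. In this minimal illustration the volumes are flattened to lists of made-up intensities and `fuse_by_median` is a hypothetical helper name.

```python
from statistics import median

def fuse_by_median(axial, coronal, sagittal):
    """Take the median of the three directional predictions at each voxel."""
    return [median(vals) for vals in zip(axial, coronal, sagittal)]

axial = [1.0, 5.0, 3.0]     # prediction from axial slices (made-up)
coronal = [2.0, 4.0, 9.0]   # prediction from coronal slices (made-up)
sagittal = [3.0, 6.0, 0.0]  # prediction from sagittal slices (made-up)
fused_volume = fuse_by_median(axial, coronal, sagittal)
```

With three inputs the median discards the most extreme directional prediction at each voxel, as in the last voxel above, where the values 9.0 and 0.0 are both ignored.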

For diffusion imaging, Tong et al., (2020) showed that deep learning can be applied to pre-processed DWI images across traveling subjects in order to estimate derived diffusional kurtosis imaging (DKI) measures that are harmonized across batches. This study leveraged a 3D hierarchical-structured convolutional neural network (H-CNN) designed to take 3 × 3 × 3 voxel patches as input and jointly produce eight scalar DKI measures as output (axial diffusivity, radial diffusivity, mean diffusivity, fractional anisotropy, axial kurtosis, radial kurtosis, mean kurtosis, kurtosis fractional anisotropy) (Li et al., 2019). To perform harmonization, Tong et al. used DWI images from traveling subjects in the reference batch to calculate DKI measures for each image using an iteratively-reweighted linear least squares method. Then, these DKI measures were non-linearly registered to corresponding paired DWI images in source batches to create a training dataset, where the input is a DWI image from a source batch while the output is the set of DKI measures extracted from the paired image in the reference batch. Next, H-CNN was trained on this dataset in order to learn a mapping from source batch DWI images to reference batch DKI measures. Finally, this trained H-CNN was applied to other DWI images from the source batches in order to estimate DKI measures harmonized to the reference batch.

3.2.2. Content-style disentanglement

Another approach for directly harmonizing images, Multi-scanner Image harmonization via Structure Preserving Embedding Learning (MISPEL), was introduced by Torbati et al., (2022). Unlike DeepHarmony, MISPEL aims to perform harmonization across multiple batches, possibly more than two, through the use of a set of batch-specific convolutional autoencoders that are trained via a two-step algorithm. Importantly, the encoders are allowed to be deep networks while the decoders merely perform a linear combination of the latent-space representations. In step one, MISPEL seeks to train each batch-specific encoder to embed slices from its batch into a common latent space and then train the corresponding decoder to use those latent-space representations to reconstruct slices in the style of its batch. To do so, MISPEL trains each batch-specific autoencoder separately in a self-supervised fashion using a reconstruction loss and additionally enforces a common latent space between all autoencoders through a representation similarity loss, which penalizes high variance across all latent-space representations. In step two, all encoders are frozen and only the decoders are updated such that all decoders produce similar harmonized output slices and the outputs are also similar to the input slice. Thus, intuitively, MISPEL can be thought of as disentangling images into content and style representations, where the latent-space representations contain content information and differences in how those representations are linearly combined by the decoder describe style differences.
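
One plausible reading of the representation similarity loss is the variance of paired latent representations across batches, averaged over latent elements. The sketch below follows that reading only; the exact form used in MISPEL is not given here, and the function name and latent values are hypothetical.

```python
from statistics import fmean, pvariance

def representation_similarity_loss(latents):
    """Mean, over latent elements, of the variance across batch encoders.

    `latents` holds one latent vector per batch for the same paired slice.
    """
    per_element_variance = [pvariance(vals) for vals in zip(*latents)]
    return fmean(per_element_variance)

# Latent vectors for one traveling subject's slice from two encoders (made-up).
aligned = [[0.5, 1.0], [0.5, 1.0]]
misaligned = [[0.0, 2.0], [2.0, 2.0]]
loss_aligned = representation_similarity_loss(aligned)
loss_misaligned = representation_similarity_loss(misaligned)
```

The loss is zero when all encoders agree on the latent representation of a paired slice and grows as they diverge, which pushes the batch-specific encoders toward a common latent space.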

Tian et al., (2022) address the setting of paired data in a multiple-batch setting via their model, DeRed. This model can be thought of as an adaptation of CycleGAN and especially ST-cGAN, discussed in the style-conditional GAN section. Similarly to ST-cGAN, DeRed uses paired GANs to perform harmonization – however, to adapt the paired-GAN framework to the multiple-batch setting, DeRed trains a separate style encoder and generator for each batch-to-batch harmonization task, such that each set of networks harmonizes images either to or from the reference batch. Then, DeRed is able to harmonize any batch to the reference batch by combining a source-batch content representation with a reference-batch style representation. Additionally, harmonization to any source batch can be achieved through a two-step process, where all other source batches are first harmonized to the reference batch and then these generated reference-batch images are harmonized to the desired source batch. The loss function takes advantage of data from paired subjects and consists of four components: 1) batch consistency, where style representations should be similar within each batch; 2) content consistency, where content representations should be similar within paired subjects even from different batches; 3) reconstruction, where content and style representations from the same image should result in reconstruction of that image; and 4) cross-reconstruction, where content and style representations from different images of the same subject should result in reconstruction of the image that corresponds to the style representation.

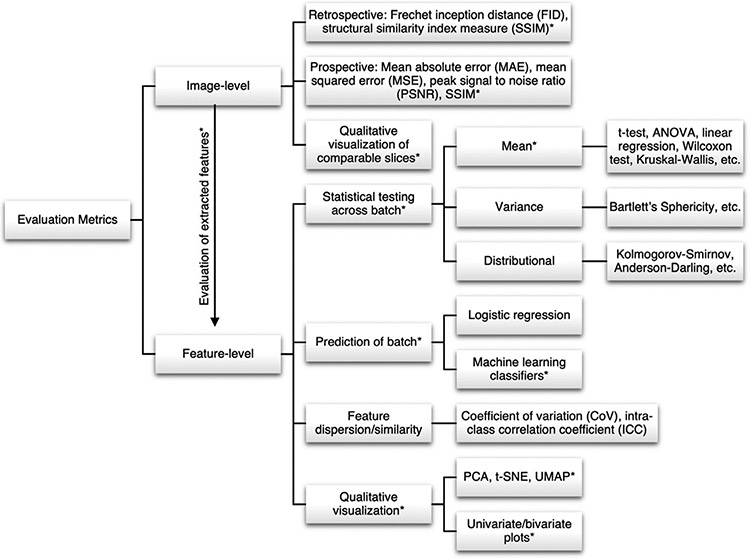

4. Evaluation metrics

Increasing interest in the development and application of harmonization methods requires standardized and effective metrics that quantify performance. Harmonization evaluation metrics can largely be grouped into two categories, harmonization performance metrics and predictive performance metrics (Figure 4). Harmonization performance metrics aim to detect or quantify batch effects and can be separated into metrics measured at the feature level and at the image level. These metrics can often be interpreted as summary statistics, requiring accompanying visualizations to complement their findings. Predictive performance metrics measure the effects of harmonization on performance in downstream analyses. Importantly, effective harmonization methods should reduce detectable batch effects in the data while preserving performance in downstream analyses.

Fig. 4.

Flowchart of evaluation metrics for harmonization organized by data type and evaluation types. Asterisks indicate the set of standardized evaluation types that we believe should be included in the evaluation of novel harmonization methods, depending on data type and study design. Note that metrics included here are only for evaluating harmonization and do not include metrics for evaluating performance in downstream analyses.

4.1. Harmonization performance

4.1.1. Feature-level metrics

Evaluation approaches for methods that perform feature-level harmonization can be broadly grouped into four general paradigms: statistical testing for differences in distribution across batches, predictive modeling of batch, assessing feature dispersion and similarity, and qualitative visualization.

Features can be interpreted as each having their own distribution that can be split along batch variables such that in the absence of batch effects, these sub-distributions should be identical. Harmonization methods can thus be evaluated based on their ability to remove differences in feature distribution across batch groups. This can be evaluated using statistical testing, where the test used depends on the assumed form of the distributional differences. Location effects can be assessed using tests for differences in mean (e.g. Student’s and paired t-tests, ANOVA, linear regression to control for covariates, Wilcoxon rank-sum and signed rank tests, and Kruskal-Wallis test) while scale effects can be detected using tests for differences in variance (e.g. Bartlett’s sphericity test) (Fortin et al., 2018; Y. Li et al., 2021; Wengler et al., 2021; Yu et al., 2018). To test for more general differences in distribution beyond disparity in mean and variance, the Kolmogorov-Smirnov or Anderson-Darling tests can be used (Da-Ano et al., 2020a; Fatania et al., 2022; H.M. Whitney et al., 2020). These tests are all completed at the feature level such that if harmonization is effective, significant differences in distribution due to batch will be detected before but not after harmonization. This result would indicate that the harmonization tool has removed differences in distribution associated with batch variables. In settings where a p-value would be inappropriate, effect size measures (e.g. Cohen’s d, Hedges’ g) can be used (Radua et al., 2020; Reardon et al., 2021). In the specific setting of functional connectivity matrices, which can be studied from the network theory perspective, Roffet et al., (2022) demonstrated the utility of the Kruskal-Wallis test on batch-wise differences between Normalized Network Shannon Entropy and Normalized Network Fisher Information metrics.
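
As a minimal sketch of the effect-size and distributional comparisons described above, the following computes Cohen's d, Hedges' g, and the two-sample Kolmogorov-Smirnov statistic for one feature split by batch. The feature values and function names are hypothetical and chosen only for illustration; p-values are deliberately omitted, and in practice library routines such as `scipy.stats.ttest_ind`, `scipy.stats.bartlett`, and `scipy.stats.ks_2samp` would likely be used for the tests themselves.

```python
import numpy as np


def cohens_d(a, b):
    """Standardized mean difference between two batches using the pooled SD."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    n_a, n_b = a.size, b.size
    pooled_var = ((n_a - 1) * a.var(ddof=1) + (n_b - 1) * b.var(ddof=1)) / (n_a + n_b - 2)
    return float((a.mean() - b.mean()) / np.sqrt(pooled_var))


def hedges_g(a, b):
    """Cohen's d multiplied by the usual approximate small-sample correction."""
    n_total = len(a) + len(b)
    return cohens_d(a, b) * (1.0 - 3.0 / (4.0 * n_total - 9.0))


def ks_statistic(a, b):
    """Two-sample KS statistic: largest gap between the two empirical CDFs."""
    a = np.sort(np.asarray(a, dtype=float))
    b = np.sort(np.asarray(b, dtype=float))
    grid = np.concatenate([a, b])
    cdf_a = np.searchsorted(a, grid, side="right") / a.size
    cdf_b = np.searchsorted(b, grid, side="right") / b.size
    return float(np.max(np.abs(cdf_a - cdf_b)))


# Hypothetical values of a single feature measured in two batches.
batch_a = [2.0, 4.0, 6.0]
batch_b = [1.0, 3.0, 5.0]
print(f"d={cohens_d(batch_a, batch_b):.2f}, "
      f"g={hedges_g(batch_a, batch_b):.2f}, "
      f"KS={ks_statistic(batch_a, batch_b):.2f}")
```

Running the same functions on the feature before and after harmonization gives a per-feature summary of how much of the batch-related shift remains.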

If biological covariates are imbalanced across batches, this imbalance may lead to differences in marginal batch-wise feature means that should not be corrected by harmonization. In these settings, it is instead important to evaluate harmonized outputs for differences in biological-covariate-conditional batch-wise feature means. One common approach is to use linear regression or linear mixed effects regression, where batch and biological covariates (e.g. age, sex) are used to jointly model the feature. The estimated regression coefficients for batch and biological covariates can be tested for significant effects on each feature, where a significant regression coefficient for the batch covariate corresponds to statistically-detectable batch effects (Badhwar et al., 2020; Bell et al., 2022; Wengler et al., 2021; Zavaliangos-Petropulu et al., 2019). Notably, this approach will provide a valid assessment of batch effects even if the biological covariates are not imbalanced across batches. Looking beyond batch, this evaluation procedure allows for simultaneous assessment of preservation of biological covariates; comparing regression coefficients for biological covariates before and after harmonization can provide insight into whether biological information is preserved.
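
The regression-based check can be sketched as follows, assuming a single feature, a binary batch indicator, and age as the only biological covariate. The data are simulated, the "harmonized" feature is an idealized stand-in rather than the output of any particular method, and `batch_effect_t_stats` is a hypothetical helper written with ordinary least squares only.

```python
import numpy as np


def batch_effect_t_stats(feature, batch, covariates):
    """OLS of one feature on intercept + batch indicator + covariates.

    Returns (coefficients, t_statistics); index 1 is the batch term.
    """
    y = np.asarray(feature, dtype=float)
    X = np.column_stack([np.ones_like(y), np.asarray(batch, dtype=float), covariates])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    dof = y.size - X.shape[1]
    sigma2 = resid @ resid / dof
    se = np.sqrt(sigma2 * np.diag(np.linalg.inv(X.T @ X)))
    return beta, beta / se


rng = np.random.default_rng(0)
n = 200
batch = np.repeat([0, 1], n // 2)
# Age is deliberately imbalanced across batches (batch 1 is older on average).
age = rng.normal(40, 10, n) + 15 * batch
noise = rng.normal(0, 0.1, n)
# Simulated feature: age effect of -0.02 per year plus an additive batch shift of 0.5.
raw = 3.0 - 0.02 * age + 0.5 * batch + noise
# Idealized "harmonized" feature: same biology, batch shift removed.
harmonized = 3.0 - 0.02 * age + noise

for name, y in [("raw", raw), ("harmonized", harmonized)]:
    beta, t = batch_effect_t_stats(y, batch, age)
    print(f"{name}: batch coef={beta[1]:.3f} (t={t[1]:.1f}), age coef={beta[2]:.4f}")
```

In this toy setting the batch coefficient should be large before and near zero after harmonization, while the age coefficient should stay close to its simulated value in both fits, which is the pattern expected when biological information is preserved.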

Another approach uses features as predictors in a machine learning classifier – random forests, support vector machines (SVM), AdaBoost, and others – in order to predict batch as an outcome. If harmonization is effective, there will be reduced signal from batch in the data and therefore reduced classifier performance (An et al., 2022; A. A. Chen et al., 2022a; Saponaro et al., 2022). While this approach is more general than using a linear model, this comes at the cost of interpretability. When using a statistical test for differences in distribution or on linear model regression coefficients, there is a clear null hypothesis about the nature of batch effects – that is, whether they are differences in mean, variance, or distribution. This contrasts with the machine learning classifier approach, where detection of batch effects is easy, but understanding the nature of these detected batch effects is challenging. While there are methods for measuring feature importance for machine learning classifiers, further visualization is necessary to fully characterize batch effects. Additionally, it is challenging to account for confounders when using this machine learning approach; for example, if there is significant imbalance in a biological covariate such that batch can be easily predicted by this biological covariate, preservation of biological information in the harmonized data would also result in predictability of batch, even if batch effects were perfectly removed.
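
The batch-prediction idea can be illustrated with a deliberately simple classifier. The sketch below uses a nearest-centroid rule as a stand-in for the random forests, SVMs, or AdaBoost models named above; the data are simulated with a purely additive batch shift, and removing each batch's mean plays the role of an idealized harmonization. All names are hypothetical.

```python
import numpy as np


def nearest_centroid_accuracy(X, batch, train_idx, test_idx):
    """Fit one centroid per batch on the training rows, score on the test rows."""
    labels = np.unique(batch)
    X_train, y_train = X[train_idx], batch[train_idx]
    centroids = np.stack([X_train[y_train == lab].mean(axis=0) for lab in labels])
    dists = np.linalg.norm(X[test_idx][:, None, :] - centroids[None, :, :], axis=2)
    predicted = labels[dists.argmin(axis=1)]
    return float((predicted == batch[test_idx]).mean())


rng = np.random.default_rng(0)
n_per_batch, n_features = 200, 5
batch = np.repeat([0, 1], n_per_batch)
# Simulated features with an additive shift of 3 SD in batch 1.
X_raw = rng.normal(size=(2 * n_per_batch, n_features)) + 3.0 * batch[:, None]
# Idealized harmonization for this toy case: remove each batch's mean.
X_harm = X_raw.copy()
for lab in (0, 1):
    X_harm[batch == lab] -= X_raw[batch == lab].mean(axis=0)

# Hold out half of the subjects so accuracy is measured on unseen data.
order = rng.permutation(2 * n_per_batch)
train_idx, test_idx = order[:n_per_batch], order[n_per_batch:]
acc_raw = nearest_centroid_accuracy(X_raw, batch, train_idx, test_idx)
acc_harm = nearest_centroid_accuracy(X_harm, batch, train_idx, test_idx)
print(f"batch accuracy before: {acc_raw:.2f}, after: {acc_harm:.2f}")
```

Accuracy close to chance after harmonization suggests little remaining batch signal for this classifier, subject to the confounding caveat above: if a biological covariate differs by batch, a well-preserved biological signal can keep accuracy high.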

A more direct metric for identifying variation associated with batch in feature-level data is the coefficient of variation (CoV). The CoV is the ratio of the standard deviation to the mean and can be used to measure between-batch variability by calculating the CoV within each batch for each feature (Cai et al., 2021; Garcia-Dias et al., 2020; Treit et al., 2022). The resulting set of CoV values is then described using summary statistics, and if harmonization is effective, the differences in CoV distributions between batch groups will be reduced post-harmonization.
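
A minimal sketch of the per-batch CoV calculation, using the standard definition (standard deviation divided by mean) and a small hypothetical feature matrix with subjects in rows and features in columns:

```python
import numpy as np


def cov_per_batch(X, batch):
    """Coefficient of variation (SD / mean) of every feature within each batch.

    Returns a dict mapping batch label -> array of per-feature CoV values.
    """
    X, batch = np.asarray(X, dtype=float), np.asarray(batch)
    return {
        lab.item(): X[batch == lab].std(axis=0, ddof=1) / X[batch == lab].mean(axis=0)
        for lab in np.unique(batch)
    }


# Hypothetical data: three subjects per batch, two features.
features = [[9, 1], [10, 2], [11, 3], [15, 2], [20, 4], [25, 6]]
batch = ["A", "A", "A", "B", "B", "B"]
for label, values in cov_per_batch(features, batch).items():
    print(label, np.round(values, 2))
```

The per-batch CoV vectors can then be summarized (e.g. by their medians or ranges) and compared before and after harmonization.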

In traveling subject studies or other datasets where matched-subject data is available, another direct metric for measuring feature similarity across batches is correlation coefficients, including the intra-class correlation coefficient (ICC), Spearman’s correlation, and Pearson’s correlation. If batch effects are not present in the data, then a feature extracted from scans associated with the same subject under different acquisition protocols should be highly similar across protocols (Crombé et al., 2021; A. 2020; Kurokawa et al., 2021). Effective harmonization tools should increase the correlation coefficient for a given feature across batch groups provided the scans are from the same subject. The discriminability statistic may also be a reasonable metric for this data setting, though this statistic has not yet been used in the context of harmonization (Bridgeford et al., 2021).
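
As an illustration for matched-subject data, the sketch below computes a one-way random-effects ICC, often written ICC(1,1), for one feature measured on the same subjects in several batches. This is only one of several ICC forms, and the choice of form is an assumption made here for simplicity; the data are hypothetical.

```python
import numpy as np


def icc_oneway(scores):
    """ICC(1,1) for an (n_subjects, n_batches) array of one feature."""
    scores = np.asarray(scores, dtype=float)
    n, k = scores.shape
    subject_means = scores.mean(axis=1)
    grand_mean = scores.mean()
    # Between-subject and within-subject mean squares from a one-way ANOVA.
    ms_between = k * np.sum((subject_means - grand_mean) ** 2) / (n - 1)
    ms_within = np.sum((scores - subject_means[:, None]) ** 2) / (n * (k - 1))
    return float((ms_between - ms_within) / (ms_between + (k - 1) * ms_within))


# Hypothetical traveling subjects: rows are subjects, columns are scanners.
raw = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]         # scanner 2 reads 1 unit higher
harmonized = [[1.0, 1.0], [3.0, 3.0], [5.0, 5.0]]  # offset removed
print(f"ICC before: {icc_oneway(raw):.3f}, after: {icc_oneway(harmonized):.3f}")
```

In this toy example the constant scanner offset lowers the ICC to roughly 0.88, and removing it brings the ICC to 1.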

Finally, visualizations are an essential tool for characterizing batch effects more comprehensively than summary metrics. Visualization methods pertinent to harmonization can be broadly grouped into decomposition-based approaches and displays of feature distributions. Decomposition-based approaches condense high-dimensional data into a two- or three-dimensional space suitable for visualization and include methods such as principal components analysis (PCA), t-distributed stochastic neighbor embedding (t-SNE), and uniform manifold approximation and projection (UMAP). In low-dimensional space, batch effects can be seen as increased distances between points of differing batch groups. Harmonization should reduce these distances and bring points from different batches closer together (Acquitter et al., 2022; A. A. Chen et al., 2022c; Guan et al., 2021).
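
A small sketch of the decomposition-based view, assuming PCA computed through a singular value decomposition and a simulated additive batch shift. The distance between batch centroids in the projected space is used here only as a rough numerical companion to the scatterplot one would normally draw; plotting is omitted, and all names and data are hypothetical.

```python
import numpy as np


def pca_project(X, n_components=2):
    """Project rows of X onto the leading principal components (via SVD)."""
    Xc = X - X.mean(axis=0)
    _, _, vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ vt[:n_components].T


def centroid_gap(scores, batch):
    """Distance between the two batch centroids in the projected space."""
    labels = np.unique(batch)
    c0 = scores[batch == labels[0]].mean(axis=0)
    c1 = scores[batch == labels[1]].mean(axis=0)
    return float(np.linalg.norm(c0 - c1))


rng = np.random.default_rng(0)
n_per_batch, n_features = 100, 20
batch = np.repeat([0, 1], n_per_batch)
# Simulated features with an additive shift of 2 SD in batch 1.
X_raw = rng.normal(size=(2 * n_per_batch, n_features)) + 2.0 * batch[:, None]
# Idealized harmonization for this toy case: remove each batch's mean.
X_harm = X_raw.copy()
for lab in (0, 1):
    X_harm[batch == lab] -= X_raw[batch == lab].mean(axis=0)

gap_raw = centroid_gap(pca_project(X_raw), batch)
gap_harm = centroid_gap(pca_project(X_harm), batch)
print(f"centroid gap before: {gap_raw:.2f}, after: {gap_harm:.2e}")
```

The two columns returned by `pca_project` are what would be plotted, colored by batch, to inspect separation visually.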

However, decomposition-based methods condense information from all features into a single figure, necessitating visualizations of univariate or bivariate feature distributions (e.g. feature density plots, box-plots, scatterplots) to further characterize distributional differences affiliated with batch. Effective harmonization should reduce visual differences in distribution across batch groups (Bethlehem et al., 2022; Clarke et al., 2020; Da-Ano et al., 2021; Saint Martin et al., 2021). These visualizations can also be used to identify cases in which distributional assumptions of model-based methods are violated (e.g. non-Gaussian for ComBat) and further troubleshoot harmonization methods by providing comprehensive information regarding the effects of harmonization on feature distributions (Horng et al., 2022b).

4.1.2. Image-level metrics

Applications of deep learning to harmonize image-level data have emerged as promising approaches for correcting unstructured data. Consequently, their evaluation requires metrics that quantify the effects of harmonization at the image level. Because the goal of image-level harmonization can be viewed as mapping an image from one batch to another, the resulting evaluation is often based around measuring the distance between images of different batches.

When paired data are available, this distance can be directly quantified as the voxel-level difference between the harmonized image and the true image from the reference batch using metrics such as Mean Absolute Error (MAE) or Mean Squared Error (MSE). Also included in this category is the peak signal-to-noise ratio (PSNR), a measure of image quality based on the log-scaled ratio of the maximum image value to the root MSE. For example, Dewey et al., (2019) use the MAE as a component of their loss function as well as a final measure of image similarity to compare paired images from the same subject scanned with different MRI acquisition protocols. While this approach likely provides the most accurate quantification of image differences associated with batch, it is not as commonly used because datasets of sufficient sample size to train deep learning algorithms that also contain paired samples from each batch are rare. A possible solution to this problem is to use unpaired data for training and use a more limited paired dataset for testing and evaluation (Denck et al., 2021).
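
For paired images, these voxel-level metrics can be sketched in a few lines. The example assumes both images are co-registered arrays of the same shape and that `max_value` is the largest possible intensity after any normalization; the toy images and function names are hypothetical.

```python
import numpy as np


def mae(harmonized, target):
    """Mean absolute voxel-wise difference."""
    return float(np.mean(np.abs(harmonized - target)))


def mse(harmonized, target):
    """Mean squared voxel-wise difference."""
    return float(np.mean((harmonized - target) ** 2))


def psnr(harmonized, target, max_value):
    """PSNR in decibels: 20 * log10(max_value / sqrt(MSE)).

    Diverges to infinity when the two images are identical (MSE of zero).
    """
    return float(20.0 * np.log10(max_value / np.sqrt(mse(harmonized, target))))


# Hypothetical paired slices with intensities scaled to [0, 1].
target = np.linspace(0.0, 0.8, 16).reshape(4, 4)
harmonized = target + 0.1  # uniform residual error of 0.1
print(f"MAE={mae(harmonized, target):.3f}, "
      f"MSE={mse(harmonized, target):.3f}, "
      f"PSNR={psnr(harmonized, target, max_value=1.0):.1f} dB")
```

Lower MAE and MSE and higher PSNR indicate that the harmonized image is closer to the paired reference-batch image.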

The scenario of unpaired data is more common, but this setting requires more indirect measures of image similarity because no “ground truth” is available. The two most common metrics used in this context are the structural similarity index measure (SSIM) and Fréchet Inception Distance (FID) (Heusel et al., 2018; Wang et al., 2004). SSIM, as the name implies, measures the degree to which structures are preserved post-transformation. While historically used in paired data, SSIM can be applied in unpaired data under the assumption that key structures are largely the same between subjects. FID is a common evaluation metric for GANs that measures the distance between the ground truth and generated image distributions as opposed to the images themselves. Both FID and SSIM have been employed in the evaluation of adversarial networks used for image-level harmonization (Liu et al., 2021; Sinha et al., 2021). Notably, while SSIM measures presence of similar anatomy and FID measures “realism” of generated images – both important metrics for assessing the quality of generated images – neither explicitly evaluates whether generated images match the distribution of the reference batch or how well the images are harmonized. Additionally, FID is based on features learned on natural scenes from the ImageNet database; such features may not be applicable to medical images, so FID may not be a reliable measure of realism in this setting (Deng et al., 2009).
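
To make the two metrics more concrete, the sketch below implements simplified versions of each. `global_ssim` evaluates the SSIM formula once over the whole image rather than in local sliding windows as in common implementations, and `frechet_distance` computes the Fréchet distance between Gaussians fitted to two sets of feature vectors; in FID those features come from a pretrained Inception network, whereas here they are arbitrary simulated vectors. Both simplifications are assumptions made for illustration.

```python
import numpy as np


def global_ssim(x, y, data_range=1.0):
    """SSIM computed once over the whole image (no sliding window)."""
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)


def frechet_distance(feats_a, feats_b):
    """Fréchet distance between Gaussians fitted to two feature sets.

    Each input has one row per image and one column per feature.
    """
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    # trace(sqrt(cov_a @ cov_b)) equals the sum of square roots of the
    # eigenvalues of cov_a @ cov_b, which are real and non-negative in theory.
    eigvals = np.linalg.eigvals(cov_a @ cov_b)
    trace_sqrt = np.sum(np.sqrt(np.clip(eigvals.real, 0.0, None)))
    mean_term = np.sum((mu_a - mu_b) ** 2)
    return float(mean_term + np.trace(cov_a) + np.trace(cov_b) - 2.0 * trace_sqrt)


rng = np.random.default_rng(0)
image = rng.random((32, 32))
print(f"SSIM with itself: {global_ssim(image, image):.3f}")

reference_feats = rng.normal(size=(500, 3))
generated_feats = reference_feats + 2.0  # same spread, shifted mean
print(f"Frechet distance: {frechet_distance(reference_feats, generated_feats):.2f}")
```

With identical covariances, the distance reduces to the squared distance between the feature means, which makes the toy example easy to check by hand (three features shifted by 2 give a value of about 12).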

Finally, qualitative visualizations may include side-by-side image slices representing unharmonized slices, harmonized slices, and reference slices. The “directionality” of visualized slices (i.e. axial, coronal, sagittal) matters, since many image-level methods correct images at the individual slice level. Thus, visualization using slices in the same direction as the harmonization as well as slices in different directions may be revealing.
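
As a small sketch of extracting slices in all three directions from a volume, the helper below assumes the array axes are ordered (left-right, posterior-anterior, inferior-superior); real images may use a different orientation, so this ordering and the function name are assumptions.

```python
import numpy as np


def orthogonal_slices(volume, index=None):
    """Return one sagittal, coronal, and axial slice from a 3D volume.

    Uses the middle slice along each axis unless `index` = (i, j, k) is given.
    Assumes axes are ordered (left-right, posterior-anterior, inferior-superior).
    """
    i, j, k = index if index is not None else [size // 2 for size in volume.shape]
    return {
        "sagittal": volume[i, :, :],
        "coronal": volume[:, j, :],
        "axial": volume[:, :, k],
    }


# Hypothetical volume; in practice this would be an unharmonized,
# harmonized, or reference image loaded from disk.
volume = np.arange(24, dtype=float).reshape(2, 3, 4)
for name, image_slice in orthogonal_slices(volume).items():
    print(name, image_slice.shape)
```

Applying the same helper to the unharmonized, harmonized, and reference volumes yields matching slices for side-by-side display, including in directions other than the one in which a slice-wise method operated.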