Abstract

The classical approach to analyzing time-to-event data, e.g. in clinical trials, is to fit Kaplan–Meier curves and to summarize the treatment effect by the hazard ratio between treatment groups. Afterwards, a log-rank test is commonly performed to investigate whether there is a difference in survival or, depending on additional covariates, a Cox proportional hazards model is used. However, in numerous trials these approaches fail due to the presence of non-proportional hazards, which makes the hazard ratio difficult to interpret and leads to a loss of power. When considering equivalence or non-inferiority trials, the commonly performed log-rank based tests are similarly affected by a violation of this assumption. Here we propose a parametric framework to assess equivalence or non-inferiority for survival data. We derive pointwise confidence bands for both the hazard ratio and the difference of the survival curves. Further, we propose a test procedure addressing non-inferiority and equivalence by directly comparing the survival functions at certain time points or over an entire range of time. Once the model's suitability is established, the method provides a noticeable power benefit, irrespective of the shape of the hazard ratio. On the other hand, model selection should be carried out carefully, as misspecification may cause type I error inflation in some situations. We investigate the robustness and demonstrate the advantages and disadvantages of the proposed methods by means of a simulation study. Finally, we illustrate the methods by a clinical trial example.

Supplementary Information

The online version contains supplementary material available at 10.1007/s10985-023-09589-5.

Keywords: Equivalence, Non-inferiority, Non-proportional hazards, Survival analysis, Time-to-event data

Introduction

Time-to-event outcomes are frequently observed in medical research, for instance in oncology or cardiovascular diseases. A commonly addressed issue is the comparison of a test treatment to a reference treatment with regard to survival (see Kudo et al. (2018) and Janda et al. (2017) among many others). For this purpose, an analysis based on Kaplan–Meier curves (Kaplan and Meier 1958), followed by a log-rank test (Kalbfleisch and Prentice 2011) or a modified log-rank test (see, for example, Peto and Peto 1972 and Yang and Prentice 2010), is still the most popular approach. Further, in order to investigate treatment differences over time, simultaneous confidence bands for the difference of two survival curves have been considered (Parzen et al. 1997). To adjust for multiple covariates, Cox's proportional hazards model (Cox 1972) has been used extensively over the last decades (for some examples see Cox and Oakes (1984) and Klein and Moeschberger (2006) among many others). For addressing non-inferiority or equivalence, extensions of the log-rank test investigating the vertical distance between the survival curves have been proposed by Wellek (1993) and Com-Nougue et al. (1993). These approaches owe much of their popularity to the fact that they do not rely on assumptions about the distribution of event times. Moreover, they allow a direct interpretation by summarizing the treatment effect in a single parameter, the hazard ratio of the two treatments, which is assumed to be constant over time.

However, this assumption has been heavily criticized (Hernán 2010; Uno et al. 2014), and in practice it is rarely assessed or is even obviously violated (Li et al. 2015; Jachno et al. 2019). In particular, if the short- and long-term benefits of the two treatments differ, for instance when a surgical treatment is compared to a non-surgical one (Howard et al. 1997), the assumption of proportional hazards is questionable. The most obvious sign of a violation of this assumption is crossing survival curves. In less obvious cases, however, graphical methods (Grambsch and Therneau 1994) or statistical tests (Gill and Schumacher 1987) are needed to detect non-proportional hazards.

One of the advantages of the standard methodology based on Kaplan–Meier curves and the log-rank test is that equivalence hypotheses can be formulated in terms of one parameter, the hazard ratio. If this ratio changes over time, both an alternative measure of the treatment effect and an appropriate definition of equivalence must be found. For instance, Royston and Parmar (2011) advocate the restricted mean survival time to overcome this issue. Another non-parametric measure for comparing survival times of two groups with right-censored data is the Mann–Whitney effect (Dobler and Pauly 2018). Regarding test procedures, an alternative to the commonly used log-rank based tests of equivalence has been proposed by Martinez et al. (2017). These authors show that the type I error rates of the classical log-rank test exceed the nominal level if hazards are non-proportional, and they present an alternative based on a proportional odds assumption that yields a robust $\alpha$-level test in this setting. In situations where neither hazards nor odds are proportional, Shen (2020) recently proposed an alternative test for equivalence based on a semiparametric log transformation model. Finally, based on a review of recent two-armed clinical oncology trials, Dormuth et al. (2022) give a user-friendly overview of which test to use when survival curves cross.

Methods based on parametric survival models are less common than the semiparametric or non-parametric methods mentioned above. However, a correctly specified parametric survival model offers numerous advantages, such as more accurate estimates (Klein and Moeschberger 2006) and the ability to make predictions. Inference based on parametric models can be very precise even under misspecification, as demonstrated by Subramanian and Zhang (2013), who develop simultaneous confidence bands for parametric survival curves and compare them to non-parametric approaches based on Kaplan–Meier estimates.

In this paper, we develop new methodology in two directions. We address non-inferiority and equivalence testing by presenting a parametric alternative to the classical methodology that does not assume proportional hazards. First, we derive pointwise confidence bands for the difference of two survival curves and for the hazard ratio over time, using asymptotic inference and a bootstrap approach. Second, we use these confidence bands to assess equivalence or non-inferiority of two treatments, both for pointwise comparisons and for entire time intervals. A similar approach has been proposed by Liu et al. (2009) and Bretz et al. (2018), who derive such confidence bands for assessing the similarity of dose-response curves. Finally, all our methods are illustrated by a clinical trial example and by means of a simulation study, in which we also investigate the robustness of our approach.

Methods

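Consider two samples of size $n_1$ and $n_2$, respectively, resulting in a total sample size of $n = n_1 + n_2$. Let $T_{1j}$, $j = 1, \dots, n_1$, and $T_{2j}$, $j = 1, \dots, n_2$, denote independent random variables representing the survival times of individuals allocated to two (treatment) groups, observed over the time range $[0, t_{\max}]$, where 0 denotes the start of the observations and $t_{\max}$ a fixed time point of last follow-up. Assume that the distribution functions $F_1$ and $F_2$ of $T_{1j}$ and $T_{2j}$, respectively, are absolutely continuous with densities $f_1$ and $f_2$. Consequently, the probability that individual $j$ of the $\ell$-th sample experiences an event before time $t$ can be written as $F_\ell(t) = P(T_{\ell j} < t) = \int_0^t f_\ell(u)\,du$, $\ell = 1, 2$. Further, denote the corresponding survival functions by $S_\ell(t) = 1 - F_\ell(t)$ and the hazard rates by $h_\ell(t) = f_\ell(t)/S_\ell(t)$. The cumulative hazard function is given by $H_\ell(t) = \int_0^t h_\ell(u)\,du = -\log S_\ell(t)$, $\ell = 1, 2$.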

For the sake of simplicity, we do not consider additional covariates. Further, in addition to a fixed end of the study, we assume all observations to be randomly right-censored and denote the censoring times of the two samples by $C_{1j}$ and $C_{2j}$, and the corresponding distribution functions and densities by $G_1$, $G_2$ and $g_1$, $g_2$, respectively. Note that these distributions can differ from each other and are assumed to be independent of the $T_{\ell j}$. We define $\delta_{\ell j} = I(T_{\ell j} \le C_{\ell j})$, indicating whether an individual is censored ($\delta_{\ell j} = 0$) or experiences an event ($\delta_{\ell j} = 1$), where $I$ denotes the indicator function. Consequently, the observed data are a realization of the bivariate random variable $(Y_{\ell j}, \delta_{\ell j})$, where $Y_{\ell j} = \min(T_{\ell j}, C_{\ell j})$. In order to make inference on the underlying distributions, we consider the likelihood function for group $\ell$ given by

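$$L_\ell(\theta_\ell, \psi_\ell) = \prod_{j=1}^{n_\ell} \big\{f_\ell(y_{\ell j}, \theta_\ell)\,[1 - G_\ell(y_{\ell j}, \psi_\ell)]\big\}^{\delta_{\ell j}} \big\{g_\ell(y_{\ell j}, \psi_\ell)\,S_\ell(y_{\ell j}, \theta_\ell)\big\}^{1 - \delta_{\ell j}}, \quad \ell = 1, 2, \qquad (1)$$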

as censoring times and survival times are assumed to be independent. Hence, assuming parametric models $f_\ell(\cdot, \theta_\ell)$ and $g_\ell(\cdot, \psi_\ell)$, we can estimate the densities of the survival times and of the censoring times by determining the parameters $\hat\theta_\ell$ and $\hat\psi_\ell$ that maximize $L_\ell(\theta_\ell, \psi_\ell)$, $\ell = 1, 2$. Note that if one is not interested in estimating the distribution of the censoring times, this optimization can be simplified by considering only the factors in (1) that depend on $\theta_\ell$, resulting in the objective function

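$$L_\ell(\theta_\ell) = \prod_{j=1}^{n_\ell} f_\ell(y_{\ell j}, \theta_\ell)^{\delta_{\ell j}}\, S_\ell(y_{\ell j}, \theta_\ell)^{1 - \delta_{\ell j}}, \quad \ell = 1, 2, \qquad (2)$$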

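as $f_\ell$ and $g_\ell$ have no common parameters, so the factors of (1) that depend only on $\psi_\ell$ do not affect the maximization over $\theta_\ell$.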
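As a minimal sketch of maximizing (2), the following Python code fits a single group under a Weibull model by numerical optimization with SciPy. The parameterization $S(t) = \exp\{-(t/b)^a\}$ (shape $a$, scale $b$) and the function names are our own assumptions for illustration; as in (2), the censoring factors of (1) are ignored.

```python
import numpy as np
from scipy.optimize import minimize


def weibull_loglik(log_par, y, delta):
    """Logarithm of objective (2) for a Weibull model.

    Assumed parameterization (hypothetical, not from the paper): shape a,
    scale b, S(t) = exp(-(t / b)**a) and h(t) = (a / b) * (t / b)**(a - 1).
    Parameters are passed on the log scale so the search is unconstrained.
    """
    a, b = np.exp(log_par)
    log_hazard = np.log(a / b) + (a - 1.0) * np.log(y / b)
    cum_hazard = (y / b) ** a  # H(y) = -log S(y)
    # events contribute log f = log h + log S, censored cases only log S
    return np.sum(delta * log_hazard - cum_hazard)


def fit_weibull(y, delta):
    """Maximize (2) numerically and return the MLE (shape, scale)."""
    y = np.asarray(y, dtype=float)
    delta = np.asarray(delta, dtype=float)
    start = np.array([0.0, np.log(y.mean())])  # shape 1, i.e. exponential
    res = minimize(lambda p: -weibull_loglik(p, y, delta), start,
                   method="Nelder-Mead",
                   options={"xatol": 1e-8, "fatol": 1e-10, "maxiter": 4000})
    return np.exp(res.x)


# Usage: one simulated group with exponential random censoring
rng = np.random.default_rng(1)
t = 3.0 * rng.weibull(1.5, size=4000)       # true shape 1.5, true scale 3
c = rng.exponential(scale=10.0, size=4000)   # censoring times
y, delta = np.minimum(t, c), (t <= c).astype(float)
shape_hat, scale_hat = fit_weibull(y, delta)
```

Optimizing on the log scale is a design choice that keeps both parameters positive without a constrained optimizer.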

Confidence bands

In the following, we construct pointwise confidence bands for the difference of the survival functions and for the hazard ratio. First, we derive an asymptotic approach based on the delta method (Oehlert 1992); second, we propose an alternative based on a bootstrap procedure. The latter can also be used when samples are very small or when asymptotic inference is not feasible because no explicit expression for the asymptotic variance of the maximum likelihood estimator (MLE) obtained by maximizing (1) or (2), respectively, is available. In order to simplify calculations, we consider the log hazard ratio, and therefore the two measures of interest are given by

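$$\Delta(t, \theta) = S_1(t, \theta_1) - S_2(t, \theta_2), \qquad \xi(t, \theta) = \log \frac{h_2(t, \theta_2)}{h_1(t, \theta_1)}, \qquad (3)$$

where $\theta = (\theta_1, \theta_2)$.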

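Under certain regularity conditions (Bradley and Gart 1962), the MLE $\hat\theta_\ell$, $\ell = 1, 2$, is asymptotically normally distributed. Precisely,

$$\sqrt{n_\ell}\,(\hat\theta_\ell - \theta_\ell) \xrightarrow{\mathcal{D}} \mathcal{N}\big(0, I_\ell^{-1}(\theta_\ell)\big), \quad n_\ell \to \infty,$$

where $I_\ell^{-1}(\theta_\ell)$ denotes the inverse of the (per-observation) Fisher information matrix, $\ell = 1, 2$. This result can be used to make inference about the asymptotic distribution of the estimated survival curves. Using the delta method, we obtain for every $t \in [0, t_{\max}]$

$$\sqrt{n_\ell}\,\big(S_\ell(t, \hat\theta_\ell) - S_\ell(t, \theta_\ell)\big) \xrightarrow{\mathcal{D}} \mathcal{N}\Big(0, \Big(\frac{\partial S_\ell(t, \theta_\ell)}{\partial \theta_\ell}\Big)^{\top} I_\ell^{-1}(\theta_\ell)\, \frac{\partial S_\ell(t, \theta_\ell)}{\partial \theta_\ell}\Big), \quad \ell = 1, 2.$$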

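Consequently, since the two samples are independent, the asymptotic variance of $\Delta(t, \hat\theta)$ is given by

$$\sigma^2_\Delta(t) = \sum_{\ell=1}^{2} \frac{1}{n_\ell} \Big(\frac{\partial S_\ell(t, \theta_\ell)}{\partial \theta_\ell}\Big)^{\top} I_\ell^{-1}(\theta_\ell)\, \frac{\partial S_\ell(t, \theta_\ell)}{\partial \theta_\ell}. \qquad (4)$$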

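By replacing $\theta_\ell$ by its estimate $\hat\theta_\ell$ and $I_\ell(\theta_\ell)$ by the observed information matrix $\hat I_\ell = -n_\ell^{-1}\, \partial^2 \log L_\ell(\theta_\ell) / \partial \theta_\ell\, \partial \theta_\ell^{\top} \big|_{\theta_\ell = \hat\theta_\ell}$, $\ell = 1, 2$, a consistent estimator $\hat\sigma^2_\Delta(t)$ of the asymptotic variance in (4) is obtained (Bradley and Gart 1962). For sufficiently large samples, this asymptotic result can be used to construct pointwise lower and upper $(1-\alpha)$-confidence bands, respectively, by

$$L(t) = \Delta(t, \hat\theta) - z_{1-\alpha}\, \hat\sigma_\Delta(t), \qquad U(t) = \Delta(t, \hat\theta) + z_{1-\alpha}\, \hat\sigma_\Delta(t), \qquad (5)$$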

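where $z_{1-\alpha}$ denotes the $(1-\alpha)$-quantile of the standard normal distribution. More precisely, if $L(t)$ and $U(t)$ denote the pointwise lower and upper confidence bands, respectively, it holds that

$$\lim_{n_1, n_2 \to \infty} P\big(\Delta(t, \theta) \le U(t)\big) = \lim_{n_1, n_2 \to \infty} P\big(L(t) \le \Delta(t, \theta)\big) = 1 - \alpha \qquad (6)$$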

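for all $t \in [0, t_{\max}]$, where $\alpha$ denotes the prespecified significance level. The construction of pointwise confidence bands for the log hazard ratio is done similarly, and an estimate of the asymptotic variance of $\xi(t, \hat\theta)$ is given by

$$\hat\sigma^2_\xi(t) = \sum_{\ell=1}^{2} \frac{1}{n_\ell} \Big(\frac{\partial \log h_\ell(t, \hat\theta_\ell)}{\partial \theta_\ell}\Big)^{\top} \hat I_\ell^{-1}\, \frac{\partial \log h_\ell(t, \hat\theta_\ell)}{\partial \theta_\ell}. \qquad (7)$$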

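Consequently, the bands $L_\xi(t)$ and $U_\xi(t)$ for the log hazard ratio are given by

$$L_\xi(t) = \xi(t, \hat\theta) - z_{1-\alpha}\, \hat\sigma_\xi(t), \qquad U_\xi(t) = \xi(t, \hat\theta) + z_{1-\alpha}\, \hat\sigma_\xi(t). \qquad (8)$$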
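The bands (5) and (8) can be sketched in Python for two Weibull groups. This is a minimal illustration under assumptions of our own, not the closed-form Weibull computation of the Appendix: we assume $S(t) = \exp\{-(t/b)^a\}$, work with log-parameters, replace the derivatives in (4) and (7) by central finite differences, and replace the observed information by a numerical Hessian of the negative log-likelihood of (2). The inverse of that Hessian equals $\hat I_\ell^{-1}/n_\ell$, so no explicit division by $n_\ell$ appears, and the delta-method variance does not depend on the (smooth) reparameterization. All function names are hypothetical.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.stats import norm


def neg_loglik(p, y, d):
    """Negative log of (2); p = (log shape, log scale) of a Weibull model."""
    a, b = np.exp(p)
    return -np.sum(d * (np.log(a / b) + (a - 1) * np.log(y / b)) - (y / b) ** a)


def fit(y, d):
    start = np.array([0.0, np.log(np.mean(y))])
    return minimize(neg_loglik, start, args=(y, d), method="Nelder-Mead",
                    options={"xatol": 1e-8, "fatol": 1e-10, "maxiter": 4000}).x


def surv(t, p):
    a, b = np.exp(p)
    return np.exp(-(t / b) ** a)


def log_haz(t, p):
    a, b = np.exp(p)
    return np.log(a / b) + (a - 1) * np.log(t / b)


def num_grad(f, p, eps=1e-6):
    """Central finite-difference gradient of a scalar function f at p."""
    return np.array([(f(p + e) - f(p - e)) / (2 * eps) for e in np.eye(len(p)) * eps])


def num_hessian(f, p, eps=1e-4):
    """Central finite-difference Hessian of a scalar function f at p."""
    steps = np.eye(len(p)) * eps
    hess = np.empty((len(p), len(p)))
    for i, ei in enumerate(steps):
        for j, ej in enumerate(steps):
            hess[i, j] = (f(p + ei + ej) - f(p + ei - ej)
                          - f(p - ei + ej) + f(p - ei - ej)) / (4 * eps ** 2)
    return hess


def asymptotic_bands(t, group1, group2, alpha=0.05):
    """Pointwise bands (5) for Delta(t) = S_1(t) - S_2(t) and (8) for
    xi(t) = log h_2(t) - log h_1(t); each group is a tuple (y, d)."""
    z = norm.ppf(1 - alpha)
    est_delta = est_xi = var_delta = var_xi = 0.0
    for sign, (y, d) in ((1.0, group1), (-1.0, group2)):
        p = fit(y, d)
        # inverse Hessian of the total negative log-likelihood = I_hat^{-1} / n_l
        cov = np.linalg.inv(num_hessian(lambda q: neg_loglik(q, y, d), p))
        g_s = num_grad(lambda q: surv(t, q), p)
        g_h = num_grad(lambda q: log_haz(t, q), p)
        est_delta += sign * surv(t, p)
        est_xi -= sign * log_haz(t, p)
        var_delta += g_s @ cov @ g_s  # independent groups: variances add
        var_xi += g_h @ cov @ g_h
    sd_d, sd_x = np.sqrt(var_delta), np.sqrt(var_xi)
    return {"delta": (est_delta - z * sd_d, est_delta, est_delta + z * sd_d),
            "xi": (est_xi - z * sd_x, est_xi, est_xi + z * sd_x)}


# Usage: two simulated Weibull groups with exponential censoring
rng = np.random.default_rng(7)


def simulate(shape, scale, n, cens_scale=10.0):
    t_ev = scale * rng.weibull(shape, size=n)
    c = rng.exponential(cens_scale, size=n)
    return np.minimum(t_ev, c), (t_ev <= c).astype(float)


bands = asymptotic_bands(2.0, simulate(1.5, 3.0, 200), simulate(1.5, 2.5, 200))
```

Finite differences keep the sketch model-agnostic: swapping in another parametric family only requires changing `neg_loglik`, `surv` and `log_haz`.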

A concrete numerical example for calculating the estimates $\Delta(t, \hat\theta)$ and $\xi(t, \hat\theta)$ and the corresponding confidence bands assuming a Weibull distribution is deferred to Section A2 of the Appendix. If sample sizes are rather small or the variability in the data is high, we propose to estimate the variances $\sigma^2_\Delta(t)$ and $\sigma^2_\xi(t)$ by a bootstrap approach that takes the right-censoring into account. This method can also be used if a formula for the asymptotic variance is not available, for instance due to numerical difficulties. The following algorithm describes the procedure for $\Delta$; it can be directly adapted to $\xi$.

Algorithm 1

(Parametric) Bootstrap Confidence Bands for $\Delta(t, \theta)$.

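1. Calculate the MLEs $\hat\theta_\ell$ and $\hat\psi_\ell$, $\ell = 1, 2$, from the data by maximizing (1).

2. (a) Generate survival times $T^*_{\ell 1}, \dots, T^*_{\ell n_\ell}$ from $F_\ell(\cdot, \hat\theta_\ell)$, $\ell = 1, 2$. Further, generate the corresponding censoring times $C^*_{\ell 1}, \dots, C^*_{\ell n_\ell}$ by sampling from $G_\ell(\cdot, \hat\psi_\ell)$, $\ell = 1, 2$. If $C^*_{\ell j} < T^*_{\ell j}$, the observation is censored (i.e. $\delta^*_{\ell j} = 0$), $j = 1, \dots, n_\ell$. The observed data are given by $(y^*_{\ell j}, \delta^*_{\ell j})$, where $y^*_{\ell j} = \min(T^*_{\ell j}, C^*_{\ell j})$, $j = 1, \dots, n_\ell$, $\ell = 1, 2$.

(b) Calculate the MLE $\hat\theta^*_\ell$ for the bootstrap sample from the $(y^*_{\ell j}, \delta^*_{\ell j})$, $\ell = 1, 2$, and calculate the difference of the corresponding survival functions at $t$, that is

$$\hat\Delta^*(t) = S_1(t, \hat\theta^*_1) - S_2(t, \hat\theta^*_2). \qquad (9)$$

3. Repeating steps 2a and 2b $B$ times yields $\hat\Delta^*_1(t), \dots, \hat\Delta^*_B(t)$. Calculate an estimate for the variance by

$$\hat\sigma^2_{\Delta, B}(t) = \frac{1}{B - 1} \sum_{b=1}^{B} \big(\hat\Delta^*_b(t) - \bar\Delta^*(t)\big)^2, \qquad (10)$$

where $\bar\Delta^*(t)$ denotes the mean of the $\hat\Delta^*_b(t)$.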

Finally, the estimate in (10) is used instead of $\hat\sigma^2_\Delta(t)$ to calculate the confidence bands in (5). The procedure described in Algorithm 1 is a parametric bootstrap based on estimating the parameters $\theta_\ell$ and $\psi_\ell$. A non-parametric alternative, based on resampling the observations, could also be implemented (Efron 1981; Akritas 1986). However, the parametric bootstrap has been shown to be more accurate if the underlying parametric model is correctly specified (Efron and Tibshirani 1994). Note that the asymptotic approach to obtaining confidence bands does not require estimating the censoring distributions. Consequently, the MLE $\hat\theta_\ell$ can be obtained by maximizing the likelihood function in Eq. (2), $\ell = 1, 2$. In contrast, the bootstrap in Algorithm 1 additionally requires estimating the censoring distributions and therefore the more involved maximization of Eq. (1).
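A minimal Python sketch of Algorithm 1 follows. It assumes, for illustration only, Weibull event times with $S(t) = \exp\{-(t/b)^a\}$ and exponential censoring times in both groups; for an exponential censoring model, the censoring factor of (1) has the closed-form MLE (number of censored cases)/(sum of observed times). Function names are hypothetical, and administrative censoring at $t_{\max}$ is not included, matching the algorithm as stated.

```python
import numpy as np
from scipy.optimize import minimize


def neg_loglik(p, y, d):
    """Negative log of (2) for a Weibull model, p = (log shape, log scale)."""
    a, b = np.exp(p)
    return -np.sum(d * (np.log(a / b) + (a - 1) * np.log(y / b)) - (y / b) ** a)


def fit_weibull(y, d):
    start = np.array([0.0, np.log(np.mean(y))])
    res = minimize(neg_loglik, start, args=(y, d), method="Nelder-Mead",
                   options={"xatol": 1e-8, "fatol": 1e-10, "maxiter": 4000})
    return np.exp(res.x)  # (shape, scale)


def fit_exp_censoring(y, d):
    """Closed-form MLE of an exponential censoring rate from the censoring
    factor of (1): censored cases play the role of events."""
    return np.sum(1 - d) / np.sum(y)


def surv(t, shape, scale):
    return np.exp(-(t / scale) ** shape)


def bootstrap_variance(t, group1, group2, n_boot=200, seed=0):
    """Algorithm 1 for Delta(t) = S_1(t) - S_2(t), assuming Weibull event
    times and exponential censoring times in both groups."""
    rng = np.random.default_rng(seed)
    # Step 1: MLEs of the event-time and censoring models for each group
    fits = [(len(y), fit_weibull(y, d), fit_exp_censoring(y, d)) for y, d in (group1, group2)]
    deltas = np.empty(n_boot)
    for rep in range(n_boot):
        s_star = []
        for n, (shape, scale), rate in fits:
            # Step 2a: parametric resampling of event and censoring times
            t_star = scale * rng.weibull(shape, size=n)
            c_star = rng.exponential(1.0 / rate, size=n) if rate > 0 else np.full(n, np.inf)
            y_star = np.minimum(t_star, c_star)
            d_star = (t_star <= c_star).astype(float)
            # Step 2b: refit the event-time model and evaluate S at t
            s_star.append(surv(t, *fit_weibull(y_star, d_star)))
        deltas[rep] = s_star[0] - s_star[1]  # Eq. (9)
    return deltas.var(ddof=1)  # Eq. (10)


# Usage: two simulated groups with n_1 = n_2 = 100
data_rng = np.random.default_rng(3)


def simulate(shape, scale, n):
    t_ev = scale * data_rng.weibull(shape, size=n)
    c = data_rng.exponential(8.0, size=n)
    return np.minimum(t_ev, c), (t_ev <= c).astype(float)


g1, g2 = simulate(1.5, 3.0, 100), simulate(1.5, 2.5, 100)
sd_boot = np.sqrt(bootstrap_variance(2.0, g1, g2, n_boot=100))
```

The resulting variance would then replace $\hat\sigma^2_\Delta(t)$ in (5); the same loop with $\log h_2 - \log h_1$ in place of $S_1 - S_2$ gives the analogue for $\xi$.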

Equivalence and non-inferiority tests

We aim to compare the survival functions of two (treatment) groups, which is commonly addressed by testing the null hypothesis that the two survival functions are identical against the alternative that they differ at least at a single time point (for a review, see, e.g., Klein and Moeschberger 2006). More precisely, the classical hypotheses are given by

$$H_0: S_1(t) = S_2(t) \ \text{for all } t \in [0, t_{\max}] \quad \text{vs.} \quad H_1: S_1(t) \neq S_2(t) \ \text{for some } t \in [0, t_{\max}].$$

Sometimes one is more interested in demonstrating the non-inferiority of one treatment to another or the equivalence of the two treatments, meaning that we allow the survival curves to deviate by up to a prespecified threshold instead of testing for equality. This can be done for a particular point in time or over an entire interval, for example the whole observation period.

Comparing survival for one particular point in time

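We start by considering the difference in survival at a particular point in time $t_0 \in [0, t_{\max}]$. The corresponding hypotheses are then given by

$$H_0: \Delta(t_0, \theta) \ge \delta \quad \text{vs.} \quad H_1: \Delta(t_0, \theta) < \delta \qquad (11)$$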

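for a non-inferiority trial investigating whether a test treatment is non-inferior to the reference treatment (which is stated in the alternative hypothesis). Considering equivalence, we test

$$H_0: |\Delta(t_0, \theta)| \ge \delta \quad \text{vs.} \quad H_1: |\Delta(t_0, \theta)| < \delta. \qquad (12)$$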

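The same questions can be addressed in terms of the (log) hazard ratio, resulting in the hypotheses analogous to (11), given by

$$H_0: \xi(t_0, \theta) \ge \varepsilon \quad \text{vs.} \quad H_1: \xi(t_0, \theta) < \varepsilon \qquad (13)$$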

for a non-inferiority trial and

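$$H_0: |\xi(t_0, \theta)| \ge \varepsilon \quad \text{vs.} \quad H_1: |\xi(t_0, \theta)| < \varepsilon \qquad (14)$$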

for addressing equivalence. The margins $\delta$ and $\varepsilon$ have to be specified in advance with great care, combining statistical and clinical expertise. From a regulatory point of view there is no fixed rule, but general advice can be found in a guideline of the EMA (2014). In the recent literature, margins for the survival difference are frequently chosen between 0.1 and 0.2 (D'Agostino Sr et al. 2003; Da Silva et al. 2009; Wellek 2010).

In the following, we only consider the hypotheses in Eqs. (11) and (12), referring to the difference of the survival curves; a similar procedure can be applied for testing the hypotheses in Eqs. (13) and (14). We use the confidence bands derived in Eq. (5) to define an asymptotic $\alpha$-level test for (11). The null hypothesis in Eq. (11) is rejected and non-inferiority is claimed if the upper bound of the confidence band is below the margin, that is

$$U(t_0) < \delta. \qquad (15)$$

Further, an equivalence test for the hypotheses in Eq. (12) is defined by rejecting $H_0$ whenever

$$-\delta < L(t_0) \quad \text{and} \quad U(t_0) < \delta. \qquad (16)$$
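Given the pointwise band values $L(t_0)$ and $U(t_0)$ from (5), the decision rules (15) and (16) reduce to two comparisons. The short Python sketch below makes this explicit; the function names and the band values in the usage lines are hypothetical. Note that both rules use the same one-sided $(1-\alpha)$ bounds, without any adjustment of the level.

```python
def non_inferiority_test(upper, margin):
    """Test (15): reject H0 in (11), i.e. claim non-inferiority at t0,
    if the upper band U(t0) lies below the margin."""
    return upper < margin


def equivalence_test(lower, upper, margin):
    """Test (16): reject H0 in (12), i.e. claim equivalence at t0,
    if -margin < L(t0) and U(t0) < margin."""
    return -margin < lower and upper < margin


# Usage with hypothetical band values L(t0) = -0.04, U(t0) = 0.12
print(non_inferiority_test(0.12, 0.15))       # True
print(equivalence_test(-0.04, 0.12, 0.15))    # True
print(equivalence_test(-0.04, 0.12, 0.10))    # False: U(t0) >= 0.10
```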

Note that, according to the intersection–union principle (Berger 1982), the $(1-\alpha)$-confidence bands $L(t)$ and $U(t)$ are used for both the non-inferiority and the equivalence test, without any adjustment of the level. The following lemma states that this yields an asymptotic $\alpha$-level test.

Lemma 1

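The test described in Eq. (16) yields an asymptotic $\alpha$-level equivalence test for the hypotheses in Eq. (12). More precisely, for all $\theta$ with $|\Delta(t_0, \theta)| \ge \delta$ it holds that

$$\limsup_{n_1, n_2 \to \infty} P_\theta\big(-\delta < L(t_0),\ U(t_0) < \delta\big) \le \alpha.$$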

The proof is deferred to Section A1 of the Appendix.

Comparing survival over an entire period of time

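From a practical point of view, there are situations where it is of interest to compare survival not only at one particular point in time but over an entire period of time $[t_1, t_2] \subseteq [0, t_{\max}]$, which could also be the entire observation period $[0, t_{\max}]$. This means, for instance, that the hypotheses in Eq. (12) are extended by investigating $|\Delta(t, \theta)| < \delta$ simultaneously for all $t \in [t_1, t_2]$. This yields the hypotheses

$$H_0: \max_{t \in [t_1, t_2]} |\Delta(t, \theta)| \ge \delta \quad \text{vs.} \quad H_1: \max_{t \in [t_1, t_2]} |\Delta(t, \theta)| < \delta, \qquad (17)$$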

and a similar extension can be formulated for the non-inferiority test (11) and for the tests on the hazard ratio stated in Eqs. (13) and (14). In this case, we conduct the test defined in Eq. (16) at each time point of the interval and reject the null hypothesis in Eq. (17) if every pointwise null hypothesis as stated in (12) is rejected. Consequently, the null hypothesis in Eq. (17) is rejected if, for all $t \in [t_1, t_2]$, the confidence bands $L(t)$ and $U(t)$ derived in (5) are contained in the equivalence region $(-\delta, \delta)$, which can also be formulated as

$$-\delta < \min_{t \in [t_1, t_2]} L(t) \quad \text{and} \quad \max_{t \in [t_1, t_2]} U(t) < \delta. \qquad (18)$$
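In practice, the bands are evaluated on a finite grid of time points (as in the simulation study below, which uses equidistant grids), so the extremes in (18) become a minimum and maximum over the grid. A minimal Python sketch, assuming the band values are already available as arrays; the function names and values are hypothetical:

```python
import numpy as np


def interval_non_inferiority_test(upper, margin):
    """Interval version of (15): reject only if U(t) < margin at every grid point."""
    return bool(np.max(upper) < margin)


def interval_equivalence_test(lower, upper, margin):
    """Test (18) on a finite grid: reject H0 of (17) only if
    -margin < min L(t) and max U(t) < margin over all grid points."""
    return bool(-margin < np.min(lower) and np.max(upper) < margin)


# Usage with hypothetical band values on a grid of four time points
lower = np.array([-0.05, -0.03, -0.02, 0.01])
upper = np.array([0.04, 0.07, 0.09, 0.11])
print(interval_equivalence_test(lower, upper, 0.10))  # False: U(t) = 0.11 at the last point
print(interval_equivalence_test(lower, upper, 0.15))  # True: band inside (-0.15, 0.15)
```

The grid is an approximation of the continuum $[t_1, t_2]$: a finer grid can only make the test reject less often.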

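In order to prove that this yields an asymptotic $\alpha$-level test, we first note that the rejection region of $H_0$ in Eq. (17) is the intersection of the rejection regions of the two sub-hypotheses $H_0^{+}: \max_{t \in [t_1, t_2]} \Delta(t, \theta) \ge \delta$ and $H_0^{-}: \min_{t \in [t_1, t_2]} \Delta(t, \theta) \le -\delta$. Again, due to the intersection–union principle (Berger 1982), it is therefore sufficient to show that each of these individual tests is an asymptotic $\alpha$-level test. Without loss of generality, we consider the non-inferiority test of $H_0^{+}$, where we reject the null hypothesis if $\max_{t \in [t_1, t_2]} U(t) < \delta$. Denoting by $t^*$ a point in $[t_1, t_2]$ with $\Delta(t^*, \theta) \ge \delta$ yields

$$P\Big(\max_{t \in [t_1, t_2]} U(t) < \delta\Big) \le P\big(U(t^*) < \delta\big) \le P\big(U(t^*) < \Delta(t^*, \theta)\big),$$

and, as $\lim_{n_1, n_2 \to \infty} P\big(U(t^*) < \Delta(t^*, \theta)\big) = \alpha$, the assertion follows with (6).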

Of note, this result also implies that it is not necessary to construct simultaneous confidence bands, which are wider than pointwise ones and hence would result in a more conservative test.

Finite sample properties

In the following, we investigate the finite sample properties of the proposed methods by means of a simulation study. Survival times follow a Weibull distribution or a log-logistic distribution, where the latter scenario is used to investigate the robustness of the approach. In all scenarios, we assume (randomly) right-censored observations in combination with an administrative censoring time. All results are obtained from … simulation runs with … bootstrap repetitions. For all three scenarios, we calculate confidence bands for both the difference of the survival curves and the log hazard ratio and examine their coverage probabilities. For the difference of the survival curves, we investigate the non-inferiority and equivalence tests proposed in Eqs. (15), (16) and (18). To this end, we vary both the particular time point $t_0$ under consideration and the non-inferiority/equivalence margin $\delta$. More precisely, we consider three choices for this margin, namely $\delta = 0.1$, $0.15$ and $0.2$. Additionally, we evaluate all scenarios using a non-parametric approach as described in Sect. 5.2 of Com-Nougue et al. (1993). Precisely, we construct confidence bands for the difference of two Kaplan–Meier curves by estimating the variance using Greenwood's formula (Greenwood 1926). This approach also does not require the assumption of proportional hazards and can consequently be compared directly to our method, which will be referred to as "the parametric approach" in the following. Further, we investigate the performance of the test when comparing survival over the entire observation period (that is, $[t_1, t_2] = [0, t_{\max}]$) as described in Sect. 2.2.2. For the sake of brevity, the detailed results of this analysis are deferred to Section 4 of the Supplementary Material.
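The non-parametric comparator can be sketched as follows: a Kaplan–Meier estimate per group, Greenwood's variance formula, and a pointwise band for $S_1(t) - S_2(t)$ built in the same way as (5). This is our own reading of such a band, offered as an illustration; the exact construction in Com-Nougue et al. (1993) may differ in details, and the function names are hypothetical.

```python
import numpy as np
from scipy.stats import norm


def km_greenwood(y, d, t):
    """Kaplan-Meier estimate of S(t) and its Greenwood variance estimate.

    Var(S_hat(t)) = S_hat(t)**2 * sum over event times t_i <= t of
    d_i / (r_i * (r_i - d_i)), where r_i is the number at risk and d_i the
    number of events at t_i.
    """
    y, d = np.asarray(y, dtype=float), np.asarray(d, dtype=int)
    s_hat, greenwood_sum = 1.0, 0.0
    for u in np.unique(y[(d == 1) & (y <= t)]):
        at_risk = np.sum(y >= u)
        events = np.sum((y == u) & (d == 1))
        s_hat *= 1.0 - events / at_risk
        if at_risk > events:  # once S_hat hits 0 its variance estimate is 0 anyway
            greenwood_sum += events / (at_risk * (at_risk - events))
    return s_hat, s_hat ** 2 * greenwood_sum


def km_difference_band(t, group1, group2, alpha=0.05):
    """Pointwise lower/upper bounds for S_1(t) - S_2(t) from two
    independent Kaplan-Meier curves; each group is a tuple (y, d)."""
    s1, v1 = km_greenwood(*group1, t)
    s2, v2 = km_greenwood(*group2, t)
    half_width = norm.ppf(1 - alpha) * np.sqrt(v1 + v2)
    diff = s1 - s2
    return diff - half_width, diff, diff + half_width


# Usage with small hypothetical data sets (times y, event indicators d)
ref = ([1.2, 2.0, 2.5, 3.1, 4.0, 5.2], [1, 1, 0, 1, 1, 0])
test = ([0.8, 1.5, 2.2, 2.9, 3.5, 4.8], [1, 1, 1, 0, 1, 1])
lower, diff, upper = km_difference_band(3.0, ref, test)
```

Without censoring, Greenwood's formula reduces to the binomial variance $\hat S(1-\hat S)/n$, which gives a quick sanity check.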

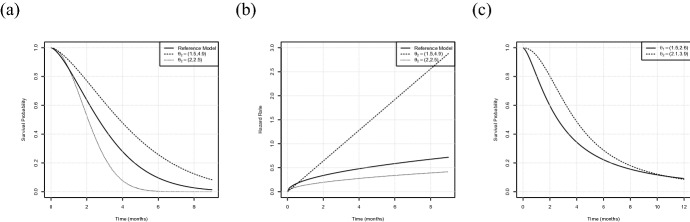

For the first two scenarios, we assume the data in both treatment groups to follow a Weibull distribution, that is $T_{\ell j} \sim \mathrm{Weibull}(a_\ell, b_\ell)$, $\ell = 1, 2$, where $a_\ell$ denotes the shape parameter and $b_\ell$ the scale parameter of treatment group $\ell$. We consider a time range (in months) given by $[0, t_{\max}]$, where $t_{\max}$ is the latest point of follow-up. For the first configuration we choose

$$\theta_1 = (a_1, b_1), \qquad \theta_2 = (a_1, b_2), \qquad (19)$$

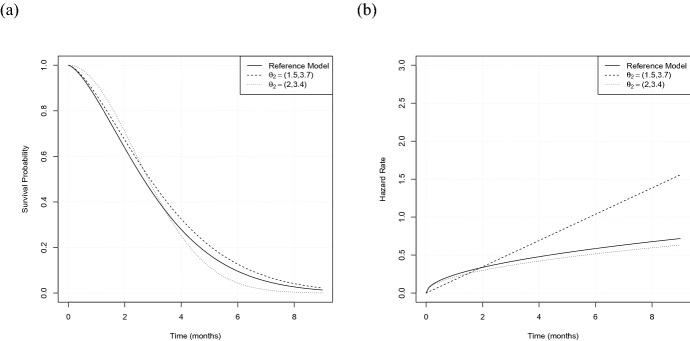

where $\theta_1$ corresponds to the reference model and the second model is varied by its scale parameter $b_2$. One choice of $b_2$ is used for investigating the type I errors and coverage probabilities and another one for simulating the power (see Figs. 1 and 2). As an example, Fig. 1a displays the survival curves for the type I error configuration. Both configurations result in a constant log hazard ratio, representing the situation of proportional hazards. We assume the censoring times to be exponentially distributed and choose the rate for each group such that a censoring rate of approximately …% results in both groups. In order to investigate the effect of non-proportional hazards, we consider a second scenario with intersecting survival curves, where we keep the reference model specified by $\theta_1$ and all other configurations as above, but vary the parameters of the second model, resulting in

$$\theta_1 = (a_1, b_1), \qquad \theta_2 = (a_2, b_2), \quad a_2 \neq a_1. \qquad (20)$$

Here, one choice of $\theta_2$ is used for investigating the type I errors and coverage probabilities and another one for simulating the power. Again, we consider censoring rates of approximately …% for both treatment groups, obtained by choosing the exponential censoring rate separately for each choice of $\theta_2$. In the following, scenario (19) is denoted as "PH" (proportional hazards) and scenario (20) as "NPH" (non-proportional hazards).
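To see why configuration (19) yields proportional hazards whereas (20) does not, assume (our assumption, since the parameterization is not spelled out above) that $S_\ell(t) = \exp\{-(t/b_\ell)^{a_\ell}\}$. The hazard, the log hazard ratio $\xi$ from (3) and the median survival time then take the closed forms

$$
\begin{aligned}
h_\ell(t) &= \frac{a_\ell}{b_\ell}\Big(\frac{t}{b_\ell}\Big)^{a_\ell - 1}, \qquad \log h_\ell(t) = \log a_\ell + (a_\ell - 1)\log t - a_\ell \log b_\ell,\\
\xi(t, \theta) &= \log\frac{a_2}{a_1} + a_1 \log b_1 - a_2 \log b_2 + (a_2 - a_1)\log t,\\
t_{\mathrm{med}, \ell} &= b_\ell\, (\log 2)^{1/a_\ell}.
\end{aligned}
$$

For equal shapes $a_1 = a_2 = a$, as in (19), the last term of $\xi$ vanishes and $\xi(t, \theta) = a \log(b_1/b_2)$ is constant in $t$. For $a_2 \neq a_1$, as in (20), $\xi$ is linear in $\log t$ and changes sign exactly once on $(0, \infty)$, namely at $\log t = \{\log(a_1/a_2) + a_2 \log b_2 - a_1 \log b_1\}/(a_2 - a_1)$, so the hazard curves cross.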

Fig. 1.

The three scenarios under consideration used for simulating type I error rates and coverage probabilities. a Survival curves for Scenarios (19) and (20). b Corresponding hazard rates. c Survival curves for Scenario (21)

Fig. 2.

The two scenarios used for simulating the power. a Survival curves for Scenarios (19) and (20). b Corresponding hazard rates

In order to investigate the effect of different censoring rates on both procedures, we additionally consider the NPH scenario (20) with a fixed sample size, but vary the censoring parameters such that, given the latest time point of follow-up $t_{\max}$, between 10% and …% of the individuals are censored. In particular, we also consider unbalanced situations in which censoring rates differ between the two groups. The results are presented and discussed in Sect. 2 of the Supplementary Material.
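Choosing a censoring parameter that produces a target censoring proportion is a one-dimensional root-finding problem. The Python sketch below shows one possible way to do this for exponential censoring; it is not stated in the text how the rates were actually determined. It assumes Weibull event times with $S(t) = \exp\{-(t/b)^a\}$ and counts an individual as censored if either the random censoring time or the administrative censoring at $t_{\max}$ comes first; whether the reported rates include administrative censoring is our assumption. Function names are hypothetical.

```python
import numpy as np
from scipy.integrate import quad
from scipy.optimize import brentq


def weibull_surv(t, shape, scale):
    return np.exp(-(t / scale) ** shape)


def censoring_proportion(rate, shape, scale, t_max):
    """P(individual is censored) for Weibull event times T, exponential
    censoring times C with the given rate, and administrative censoring at
    t_max: P(C < T, C < t_max) + P(C >= t_max, T > t_max)."""
    random_part, _ = quad(
        lambda c: rate * np.exp(-rate * c) * weibull_surv(c, shape, scale), 0, t_max)
    admin_part = np.exp(-rate * t_max) * weibull_surv(t_max, shape, scale)
    return random_part + admin_part


def calibrate_rate(target, shape, scale, t_max, lo=1e-8, hi=100.0):
    """Exponential censoring rate that yields the target censoring proportion.
    The target must exceed the administrative part S(t_max)."""
    return brentq(lambda r: censoring_proportion(r, shape, scale, t_max) - target, lo, hi)


# Usage: rate giving about 30% censoring for shape 1.5, scale 3, t_max = 6
rate = calibrate_rate(0.30, shape=1.5, scale=3.0, t_max=6.0)
```

A sanity check: for exponential event times with hazard $1/b$ and no administrative censoring, the censoring proportion is $\lambda/(\lambda + 1/b)$.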

Afterwards, we analyze the robustness of the approach in two ways. First, we use the NPH scenario (20) to investigate the effect of misspecifying the distribution of the censoring times. Precisely, we assume the censoring times to follow a uniform distribution instead of the true underlying exponential distribution. Note that this only affects the bootstrap-based confidence bands described in Algorithm 1, as the asymptotic bands do not require any estimation of the censoring distribution. The detailed results of this analysis are deferred to Sect. 1 of the Supplementary Material; we briefly summarize them in Sect. 3.1. For further robustness investigations, we consider a third scenario, where we generate survival times according to a log-logistic distribution, that is $T_{\ell j} \sim \mathrm{LL}(\theta_\ell)$, $\ell = 1, 2$. We now generate censoring times according to a uniform distribution on an interval whose bounds are chosen such that a censoring rate of approximately …% results in group $\ell = 1, 2$. We consider a time range (in months) given by $[0, t_{\max}]$ and define the scenario by the set of parameters given by

$$\theta_1 = (\kappa_1, \lambda_1), \qquad \theta_2 = (\kappa_2, \lambda_2), \qquad (21)$$

where $\theta_1$ corresponds to the reference model, see Fig. 1c. We use this configuration to investigate the performance of the proposed method under misspecification of the event-time distribution. Precisely, we assume that the event times follow a Weibull distribution instead of the log-logistic distribution. The results are presented in Sects. 3.1 and 3.2 and compared to the non-parametric approach.

Coverage probabilities

In order to investigate the performance of the confidence bands derived in Eqs. (5) and (8), we consider the scenarios described above for three different sample sizes, resulting in total sample sizes of $n = \dots$, $\dots$ and 200. We choose a nominal level of 0.95 and calculate both the asymptotic (two-sided) confidence bands obtained by the delta method and the bands based on the bootstrap described in Algorithm 1, which we call asymptotic bands and bootstrap bands, respectively, in the following. All bands were constructed on an equidistant grid of 23 time points ranging from 1.5 to 6 months for the first scenario and on a grid of 14 points ranging from 1.5 to 4 months for the second one. For the investigations under misspecification, we consider 21 time points ranging from 1 to 5 months.

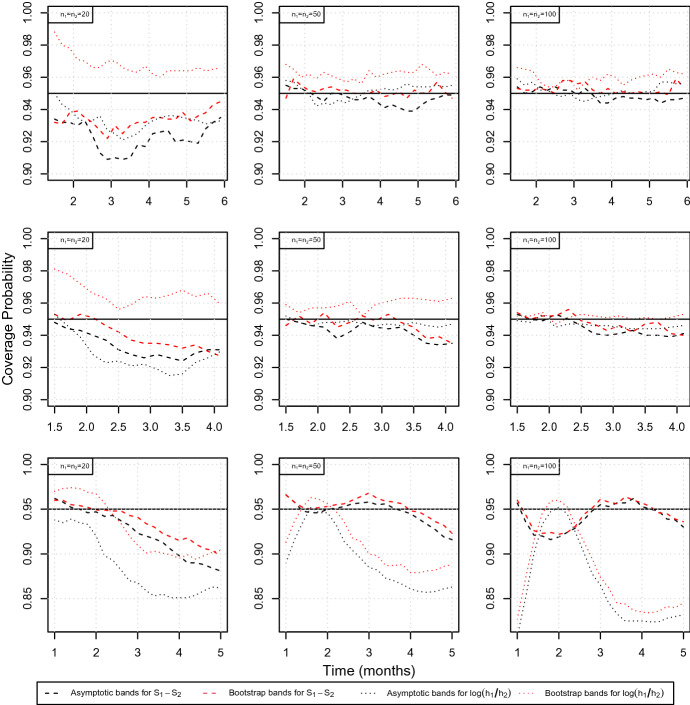

We first consider the two correctly specified scenarios (the event distributions of reference and test group are Weibull and modelled as such). For the first configuration, the hazard ratio is constant over time; for the second, it varies between 0.5 and 2 on the grid described above or, equivalently, between $-0.7$ and 0.7 on the log scale. The first two rows of Fig. 3 summarize the simulated coverage probabilities for these scenarios. In general, for both scenarios and all approaches, i.e. the asymptotic bands and the bootstrap bands for $\Delta$ and $\xi$, respectively, the approximation becomes very precise as sample sizes increase, with coverage probabilities very close to the desired value of 0.95. Further, the confidence bands obtained by estimating the variance by bootstrap (10) are always slightly more conservative than their asymptotic versions based on (4) and (7), respectively.

Fig. 3.

Simulated coverage probabilities for the PH scenario (19) (first row), the NPH scenario (20) (second row) and the scenario of misspecification (21) (third row) at different time points for the three sample sizes under consideration (left, middle, right column). The dashed lines correspond to the confidence bands for the difference of the survival functions (5), the dotted lines to the confidence bands for the log hazard ratio (8). Black lines display the asymptotic bands, red lines the confidence bands based on bootstrap

However, considering the bands for $\Delta$ at the smallest sample size, the coverage probability of the asymptotic bands lies between 0.91 and 0.94, and hence these bands are rather liberal. The bootstrap bands perform slightly better but still have coverage probabilities around 0.93 instead of 0.95, see the first column of Fig. 3. This effect already disappears for the medium sample size, where a much improved accuracy can be observed. The asymptotic bands for $\xi$ perform similarly, whereas the bootstrap bands for $\xi$ behave differently: they are rather conservative for small sample sizes but also become more precise with increasing sample sizes. For smaller sample sizes, all confidence bands under consideration vary in their behaviour over time. This effect is particularly visible in the NPH scenario (20), see the second row of Fig. 3. The coverage probabilities of the bands for $\Delta$ start with a very accurate approximation during the first two months but then decrease to 0.93. This can be explained by the fact that, for a very small sample, only very few patients remain after this period (note that the median survival of the reference model is 2.6 months), and hence the uncertainty in estimating the variance increases. The same holds for all bands under consideration, explaining the decreasing accuracy at later time points.

We further investigated the effect of misspecifying the censoring distribution. Of note, this misspecification only affects the bootstrap bands and not the asymptotic confidence bands, as the latter do not take the censoring mechanism into account. To this end, we considered the NPH scenario (20) and assumed a uniform distribution of the censoring times instead of the true underlying exponential distribution. A figure comparing the coverage probabilities to those in the correctly specified situation is deferred to the Supplementary Material. The effect of misspecification turns out to be rather small. The bands tend to be slightly more conservative, with coverage probabilities close to 1 for the smallest sample size. However, in general, they are above the desired value of 0.95 in all scenarios, and for increasing sample sizes the approximation is very precise. We therefore conclude that the bootstrap bands are very robust against misspecification of the censoring distribution.

Finally, we consider the log-logistic scenario of misspecification (21), where we erroneously assumed a Weibull distribution instead of the underlying log-logistic distribution. Further, for the bootstrap approach, the censoring distribution was assumed to be exponential and was hence misspecified as well. In this scenario, the hazard ratio varies from 2.5 to 0.8 between 1 and 5 months, meaning that hazards are non-proportional. The corresponding coverage probabilities are shown in the third row of Fig. 3. The performance is worse than for a correctly specified model, as the coverage lies between 0.85 and 0.9 and hence below the desired value of 0.95. However, considering $\Delta$, the coverage is above 0.9 in the majority of cases, even for the smallest sample size, where the bootstrap approach performs slightly better than its asymptotic analogue despite suffering from an additional misspecification of the censoring distribution. This result is in line with our findings for the NPH scenario (20), demonstrating that the bootstrap confidence bands are very robust against misspecification of the censoring distribution. For increasing sample sizes, the coverage of the bands for $\Delta$, which varies over time, approaches 0.95, whereas the bands for $\xi$ still do not come sufficiently close to this desired value, even for the largest sample size of $n = 200$. Consequently, we conclude that the bands for $\xi$ suffer more from misspecification than the ones for $\Delta$, the latter proving to be robust if sample sizes are sufficiently large.

Type I errors

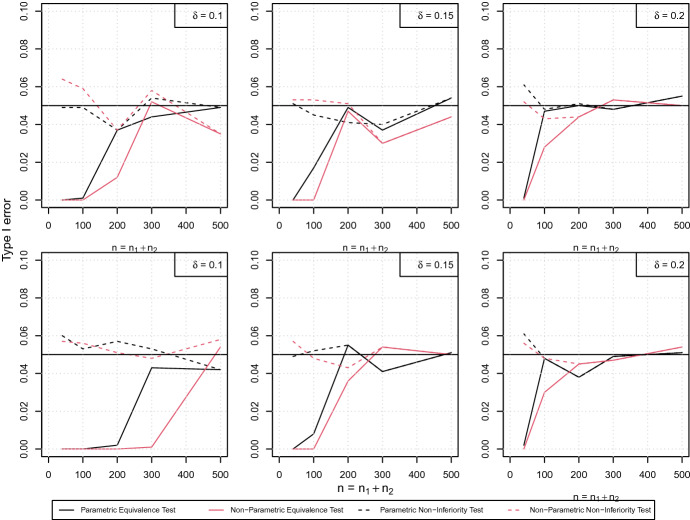

In the following, we first investigate type I error rates for the non-inferiority test (15) and the equivalence test (16) on the pointwise difference of survival curves. Second, we consider entire time intervals, as described in (18). We set $\alpha = 0.05$ and consider several sample sizes, with total sample sizes ranging up to $n = 500$. As already indicated by the simulated coverage probabilities presented in Fig. 3, the difference between asymptotic and bootstrap-based bands is very small, in particular for total sample sizes larger than 50. This also holds for the tests and hence, for the sake of brevity, we only display the results for the asymptotic version here.

We start with the PH scenario (19) and choose the second model such that the difference curve attains values of 0.1, 0.15 and 0.2 at time points 1.6, 2.3 and 4, respectively, see Fig. 1a. The median survival times of the two groups are 3.8 months and 2.7 months, respectively. The first row of Fig. 4 displays the type I errors simulated on the margin of the null hypothesis, i.e. at the time point $t_0$ with $\Delta(t_0, \theta) = \delta$, for every choice of $\delta$, for both the non-inferiority tests (dashed lines) and the equivalence tests (solid lines). The approximation of the level turns out to be very precise for the non-inferiority test (15) in general and for the equivalence test (16) for sufficiently large sample sizes, with type I errors very close to 0.05. For small samples, the equivalence test (16) is conservative, as the obtained type I errors are close to zero. The same conclusions can be drawn for the non-parametric approach (red lines): again, the non-inferiority test approximates the significance level very precisely in all scenarios under consideration, whereas the equivalence test is conservative but becomes more precise with increasing sample sizes. Similar arguments hold for the NPH scenario (20), see the second row of Fig. 4. All results obtained here are qualitatively the same as for the PH scenario (19), demonstrating that a non-constant hazard ratio does not affect the performance of the test. In general, for all procedures the most precise approximation of the significance level is obtained for the large equivalence/non-inferiority margin $\delta = 0.2$. For situations where the (absolute) difference of the survival curves is even larger than $\delta$, the type I errors are practically zero for all configurations. The corresponding tables, which also include the numbers visualized in Fig. 4, are deferred to Section 3 of the Supplementary Material.

Fig. 4.

Simulated type I errors for the PH scenario (19) (first row) and the NPH scenario (20) (second row) depending on the sample size. Type I errors have been simulated on the margin of the null hypothesis, that is (left, middle, right column). The dashed lines correspond to the non-inferiority test, the solid lines to the equivalence test. Black lines display the new, parametric approach ((15), (16)), red lines the non-parametric method (Color figure online)

Finally, we used the log-logistic scenario (21) to investigate the robustness of the approach regarding the type I error. It turns out that the robustness depends on the equivalence/non-inferiority margin. For the large margin and for a few configurations of we observe a low to moderate type I error inflation, whereas for no type I error exceeds its nominal level. Of note, these results depend on the chosen scenario, i.e. on the true underlying models and the time points under consideration. As expected, the non-parametric approach does not suffer from this misspecification issue, which is a direct consequence of its construction, as it does not assume a particular distribution. We conclude that the correct specification of the underlying distribution is very important for the performance of the parametric approach. Although the choice of the equivalence/non-inferiority margin should clearly be determined by practical considerations rather than statistical properties, it should be noted that the risk of a type I error can be reduced by choosing a conservative, i.e. smaller, margin. The detailed tables presenting the simulation results can be found in Section 1 of the Supplementary Material.
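Why a misspecified family can distort the estimated difference is easy to see by comparing a Weibull and a log-logistic survival function with the same median: they coincide at the median but diverge elsewhere, in particular in the tail, so fitting the wrong family biases the survival estimate at other time points. The parametrizations below (both written in terms of the median) and the parameter values are hypothetical and are not those of scenario (21).

```python
import math


def weibull_surv(t, median, shape):
    """Weibull written via its median: S(t) = exp(-ln 2 * (t / median) ** shape)."""
    return math.exp(-math.log(2) * (t / median) ** shape)


def loglogistic_surv(t, median, shape):
    """Log-logistic with scale equal to its median: S(t) = 1 / (1 + (t / median) ** shape)."""
    return 1.0 / (1.0 + (t / median) ** shape)


median, shape = 3.0, 2.0  # hypothetical values (months)
for t in (0.5, 1.0, 3.0, 6.0, 9.0):
    w, ll = weibull_surv(t, median, shape), loglogistic_surv(t, median, shape)
    print(f"t={t:4.1f}  Weibull={w:.3f}  log-logistic={ll:.3f}  diff={w - ll:+.3f}")
```

Both curves equal 0.5 at the median, while the log-logistic curve has a much heavier tail, which is the kind of discrepancy a wrongly chosen parametric model cannot capture.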

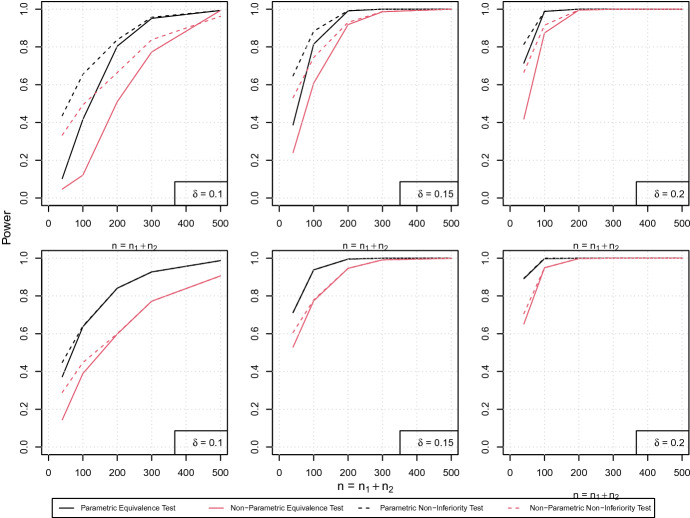

Power

For investigations on the power we consider the same configurations as given above. We now choose the PH scenario (19) such that the difference curve attains values of 0.01, 0.02 and 0.04 at time points 0.7, 1.2 and 2.3, respectively. Hence all chosen configurations belong to the alternatives in (15) and (16). For the NPH scenario (20) we consider , resulting in a difference of 0.01 at time points 0.2 and 3.2 and a difference of 0.04 at time points 0.6 and 2.7 (see Fig. 2). Figure 5 visualizes the power for both scenarios as a function of the sample size. To this end, we chose two specific configurations, that is for the PH scenario (19) (first row) and for the NPH scenario (20) (second row). In general, the power of all tests clearly increases with increasing sample size and a wider equivalence/non-inferiority margin . For instance, when considering , the maximum power is close to or larger than for all sample sizes and both tests. Overall, the parametric approach is more powerful than the non-parametric method. Of note, considering a medium threshold of , both tests have a power of approximately 1 if the sample size is sufficiently large, i.e. . However, for smaller sample sizes or a smaller margin the parametric approach provides a power benefit of up to 0.2, which underlines the theoretical findings.
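The qualitative pattern (power increasing with sample size and margin, and equivalence being harder to claim than non-inferiority) follows from the normal approximation underlying the tests. If the estimated difference is approximately N(d, σ²/n) with total sample size n, both rejection probabilities have closed forms. The per-subject standard deviation σ = 1.0, the true difference d = 0.02 and the margins are hypothetical illustration values, not the simulation settings of this section, and the standard error is treated as known.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()


def power_ni(d: float, sigma: float, n: int, margin: float, alpha: float = 0.05) -> float:
    """P(upper bound < margin) when the estimate is N(d, sigma**2 / n)."""
    se = sigma / sqrt(n)
    z = STD_NORMAL.inv_cdf(1 - alpha)
    return STD_NORMAL.cdf((margin - z * se - d) / se)


def power_eq(d: float, sigma: float, n: int, margin: float, alpha: float = 0.05) -> float:
    """P(-margin + z*se < estimate < margin - z*se) when the estimate is N(d, sigma**2 / n)."""
    se = sigma / sqrt(n)
    z = STD_NORMAL.inv_cdf(1 - alpha)
    lo, hi = -margin + z * se, margin - z * se
    if hi <= lo:  # empty rejection region: equivalence can never be claimed
        return 0.0
    return STD_NORMAL.cdf((hi - d) / se) - STD_NORMAL.cdf((lo - d) / se)


# Hypothetical: true difference 0.02, per-subject standard deviation 1.0
for n in (40, 100, 200, 300, 500):
    print(n, [round(power_eq(0.02, 1.0, n, m), 3) for m in (0.1, 0.15, 0.2)])
```

For small n the rejection region of the equivalence test is empty, which mirrors both the conservativeness and the low power observed for small samples.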

Fig. 5.

Simulated power for the PH scenario (19) at (first row) and the NPH scenario (20) at (second row) depending on the sample size for different non-inferiority/equivalence margins (left, middle, right column). The dashed lines correspond to the non-inferiority test, the solid lines to the equivalence test. Black lines display the new, parametric approach ((15), (16)), red lines the non-parametric method (Color figure online)

Considering different time points, the results for the PH scenario (19) can be found in Table 1 for the parametric approach and in Table 2 for the non-parametric approach. Similarly, Tables 3 and 4 display the power of the two methods for the NPH scenario (20), taking four different time points into consideration. For the latter, the power decreases at later time points even when the distance between the two survival curves is the same, in particular for small sample sizes. This can be explained by the fact that the number of subjects remaining at risk decreases over time, resulting in a higher uncertainty after 3 months compared to 0.2 and 0.6 months, respectively. Of note, at 3 months more than half of the subjects have experienced an event in this scenario. Again, comparing the results of the two approaches demonstrates a clear superiority of the parametric approach if sample sizes are small. For example, considering the PH scenario (19) with and a sample size of , we observe a maximum power of 0.121 for the equivalence test based on the non-parametric approach, whereas it is 0.416 for the new parametric approach.
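A simple heuristic that ignores censoring shows why equal distances are harder to detect near the median: without censoring, the empirical survival proportion at time t is a binomial proportion with standard error sqrt(S(t)(1 − S(t))/n), which is largest at S(t) = 0.5. Early time points such as 0.2 and 0.6 months have S(t) close to 1 and hence small uncertainty. The group size and survival values below are hypothetical, and censoring would inflate the variance further.

```python
import math


def empirical_survival_se(surv: float, n: int) -> float:
    """Standard error of the empirical survival proportion without censoring:
    sqrt(S(t) * (1 - S(t)) / n), the binomial standard error of the event-free fraction."""
    return math.sqrt(surv * (1.0 - surv) / n)


n = 20  # hypothetical group size
for surv in (0.95, 0.8, 0.5):
    print(f"S(t)={surv:.2f}  se={empirical_survival_se(surv, n):.3f}")
```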

Table 1.

Simulated power of the non-inferiority test (15) (numbers in brackets) and the equivalence test (16) for the PH scenario (19) with at three different time points for different sample sizes and equivalence margins . The nominal level is chosen as

| Group sizes | (Time point, true difference) | Margin 1 | Margin 2 | Margin 3 |
|---|---|---|---|---|
| (20, 20) | (0.7, 0.01) | 0.103 (0.436) | 0.387 (0.647) | 0.714 (0.814) |
| | (1.2, 0.02) | 0.002 (0.242) | 0.019 (0.370) | 0.191 (0.560) |
| | (2.3, 0.04) | 0.000 (0.133) | 0.000 (0.187) | 0.000 (0.263) |
| (50, 50) | (0.7, 0.01) | 0.416 (0.655) | 0.855 (0.883) | 0.988 (0.988) |
| | (1.2, 0.02) | 0.004 (0.344) | 0.371 (0.584) | 0.716 (0.793) |
| | (2.3, 0.04) | 0.000 (0.153) | 0.000 (0.236) | 0.149 (0.415) |
| (100, 100) | (0.7, 0.01) | 0.804 (0.838) | 0.991 (0.992) | 1.000 (1.000) |
| | (1.2, 0.02) | 0.265 (0.473) | 0.787 (0.822) | 0.979 (0.980) |
| | (2.3, 0.04) | 0.000 (0.190) | 0.240 (0.429) | 0.582 (0.652) |
| (150, 150) | (0.7, 0.01) | 0.951 (0.957) | 1.000 (1.000) | 1.000 (1.000) |
| | (1.2, 0.02) | 0.510 (0.619) | 0.940 (0.942) | 0.999 (0.999) |
| | (2.3, 0.04) | 0.004 (0.249) | 0.460 (0.527) | 0.818 (0.827) |
| (250, 250) | (0.7, 0.01) | 0.993 (0.993) | 1.000 (1.000) | 1.000 (1.000) |
| | (1.2, 0.02) | 0.803 (0.820) | 0.989 (0.989) | 1.000 (1.000) |
| | (2.3, 0.04) | 0.272 (0.375) | 0.734 (0.743) | 0.942 (0.942) |

Table 2.

Simulated power of the non-parametric approach for the non-inferiority test (numbers in brackets) and the equivalence test for the PH scenario (19) with at three different time points for different sample sizes and equivalence margins. The nominal level is chosen as

| Group sizes | (Time point, true difference) | Margin 1 | Margin 2 | Margin 3 |
|---|---|---|---|---|
| (20, 20) | (0.7, 0.01) | 0.047 (0.333) | 0.240 (0.531) | 0.418 (0.666) |
| | (1.2, 0.02) | 0.001 (0.204) | 0.021 (0.303) | 0.054 (0.429) |
| | (2.3, 0.04) | 0.000 (0.119) | 0.000 (0.197) | 0.000 (0.282) |
| (50, 50) | (0.7, 0.01) | 0.121 (0.493) | 0.608 (0.744) | 0.874 (0.915) |
| | (1.2, 0.02) | 0.002 (0.264) | 0.106 (0.435) | 0.456 (0.643) |
| | (2.3, 0.04) | 0.000 (0.160) | 0.000 (0.210) | 0.007 (0.312) |
| (100, 100) | (0.7, 0.01) | 0.510 (0.664) | 0.918 (0.930) | 0.995 (0.996) |
| | (1.2, 0.02) | 0.019 (0.341) | 0.534 (0.644) | 0.844 (0.866) |
| | (2.3, 0.04) | 0.000 (0.155) | 0.010 (0.324) | 0.315 (0.489) |
| (150, 150) | (0.7, 0.01) | 0.773 (0.838) | 0.987 (0.987) | 0.999 (0.999) |
| | (1.2, 0.02) | 0.228 (0.479) | 0.768 (0.801) | 0.959 (0.961) |
| | (2.3, 0.04) | 0.000 (0.203) | 0.175 (0.378) | 0.588 (0.664) |
| (250, 250) | (0.7, 0.01) | 0.956 (0.962) | 0.999 (0.999) | 1.000 (1.000) |
| | (1.2, 0.02) | 0.557 (0.663) | 0.936 (0.938) | 0.990 (0.990) |
| | (2.3, 0.04) | 0.012 (0.267) | 0.507 (0.575) | 0.828 (0.836) |

Table 3.

Simulated power of the non-inferiority test (15) (numbers in brackets) and the equivalence test (16) for the NPH scenario (20) with at four different time points for different sample sizes and equivalence margins . The nominal level is chosen as

| Group sizes | (Time point, true difference) | Margin 1 | Margin 2 | Margin 3 |
|---|---|---|---|---|
| (20, 20) | (0.2, 0.01) | 0.964 (0.964) | 0.996 (0.999) | 1.000 (1.000) |
| | (0.6, 0.04) | 0.372 (0.448) | 0.712 (0.712) | 0.891 (0.893) |
| | (2.7, 0.04) | 0.000 (0.124) | 0.000 (0.219) | 0.000 (0.297) |
| | (3.2, 0.01) | 0.000 (0.179) | 0.000 (0.284) | 0.000 (0.374) |
| (50, 50) | (0.2, 0.01) | 1.000 (1.000) | 1.000 (1.000) | 1.000 (1.000) |
| | (0.6, 0.04) | 0.637 (0.640) | 0.938 (0.938) | 0.997 (0.997) |
| | (2.7, 0.04) | 0.000 (0.151) | 0.047 (0.339) | 0.436 (0.563) |
| | (3.2, 0.01) | 0.000 (0.258) | 0.054 (0.468) | 0.481 (0.684) |
| (100, 100) | (0.2, 0.01) | 1.000 (1.000) | 1.000 (1.000) | 1.000 (1.000) |
| | (0.6, 0.04) | 0.818 (0.841) | 0.995 (0.995) | 1.000 (1.000) |
| | (2.7, 0.04) | 0.001 (0.246) | 0.442 (0.555) | 0.803 (0.833) |
| | (3.2, 0.01) | 0.006 (0.406) | 0.558 (0.728) | 0.869 (0.921) |
| (150, 150) | (0.2, 0.01) | 1.000 (1.000) | 1.000 (1.000) | 1.000 (1.000) |
| | (0.6, 0.04) | 0.927 (0.927) | 1.000 (1.000) | 1.000 (1.000) |
| | (2.7, 0.04) | 0.206 (0.326) | 0.678 (0.701) | 0.922 (0.928) |
| | (3.2, 0.01) | 0.267 (0.553) | 0.797 (0.859) | 0.903 (0.984) |
| (250, 250) | (0.2, 0.01) | 1.000 (1.000) | 1.000 (1.000) | 1.000 (1.000) |
| | (0.6, 0.04) | 0.987 (0.987) | 1.000 (1.000) | 1.000 (1.000) |
| | (2.7, 0.04) | 0.437 (0.471) | 0.882 (0.883) | 0.993 (0.993) |
| | (3.2, 0.01) | 0.609 (0.742) | 0.968 (0.975) | 0.998 (0.998) |

Table 4.

Simulated power of the non-parametric approach for the non-inferiority test (numbers in brackets) and the equivalence test for the NPH scenario (20) with at four different time points for different sample sizes and equivalence margins. The nominal level is chosen as

| Group sizes | (Time point, true difference) | Margin 1 | Margin 2 | Margin 3 |
|---|---|---|---|---|
| (20, 20) | (0.2, 0.01) | 0.709 (0.755) | 0.951 (0.953) | 0.968 (0.968) |
| | (0.6, 0.04) | 0.144 (0.289) | 0.529 (0.606) | 0.651 (0.706) |
| | (2.7, 0.04) | 0.000 (0.124) | 0.000 (0.168) | 0.000 (0.263) |
| | (3.2, 0.01) | 0.000 (0.169) | 0.000 (0.229) | 0.000 (0.298) |
| (50, 50) | (0.2, 0.01) | 0.961 (0.963) | 0.999 (0.999) | 1.000 (1.000) |
| | (0.6, 0.04) | 0.389 (0.447) | 0.774 (0.779) | 0.949 (0.949) |
| | (2.7, 0.04) | 0.000 (0.150) | 0.000 (0.272) | 0.130 (0.425) |
| | (3.2, 0.01) | 0.000 (0.230) | 0.000 (0.349) | 0.125 (0.506) |
| (100, 100) | (0.2, 0.01) | 0.998 (0.998) | 1.000 (1.000) | 1.000 (1.000) |
| | (0.6, 0.04) | 0.598 (0.600) | 0.946 (0.946) | 0.998 (0.998) |
| | (2.7, 0.04) | 0.000 (0.188) | 0.183 (0.425) | 0.590 (0.657) |
| | (3.2, 0.01) | 0.000 (0.307) | 0.232 (0.581) | 0.668 (0.807) |
| (150, 150) | (0.2, 0.01) | 1.000 (1.000) | 1.000 (1.000) | 1.000 (1.000) |
| | (0.6, 0.04) | 0.772 (0.772) | 0.991 (0.991) | 1.000 (1.000) |
| | (2.7, 0.04) | 0.000 (0.255) | 0.465 (0.530) | 0.790 (0.806) |
| | (3.2, 0.01) | 0.000 (0.411) | 0.537 (0.702) | 0.857 (0.902) |
| (250, 250) | (0.2, 0.01) | 1.000 (1.000) | 1.000 (1.000) | 1.000 (1.000) |
| | (0.6, 0.04) | 0.906 (0.906) | 0.999 (0.999) | 1.000 (1.000) |
| | (2.7, 0.04) | 0.229 (0.329) | 0.703 (0.718) | 0.949 (0.949) |
| | (3.2, 0.01) | 0.316 (0.575) | 0.848 (0.889) | 0.980 (0.984) |

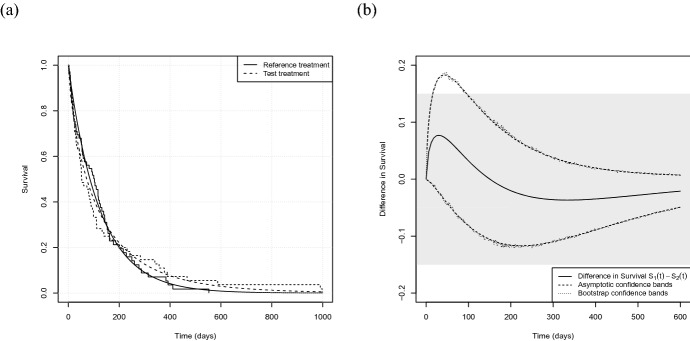

Case study

In the following we investigate a well-known benchmark dataset in survival analysis. The data set veteran from the Veterans' Administration Lung Cancer Trial (Kalbfleisch and Prentice 2011), available in the R package survival (Therneau 2020), describes a two-treatment, randomized trial for lung cancer. In this trial, male patients with advanced inoperable lung cancer were allocated to either a standard therapy (reference treatment, ) or a chemotherapy (test treatment, ). Besides numerous covariates, the time to death was recorded for each patient, which is the primary endpoint of our analysis. In total, 137 observations are given, allocated to patients in the reference group and in the test group. The code reproducing the results presented in the following is implemented in the R package EquiSurv (Möllenhoff 2020). As our analysis is model-based, we start with a model selection step. More precisely, we split the data into the reference group and the test group and consider six candidate distributions, namely the Weibull, exponential, Gaussian, logistic, log-normal and log-logistic distributions. We fit the corresponding models separately per treatment group, resulting in 12 models in total, and compare the six models within each group using Akaike's Information Criterion, AIC (Sakamoto et al. 1986). For the group receiving the reference treatment, the Weibull and the exponential model provide the best fits (AICs of 749.1, 747.1, 799.9, 794.7, 755.1 and 758.1 in the order of the models listed above), whereas in the test group the log-logistic, the log-normal and the Weibull model fit best (AICs of 749.1, 750.1 and 751.7, respectively). We therefore base our analyses on Weibull models for both groups.
However, all tests could also be performed assuming different distributions for the two treatments. We assume the censoring times to be exponentially distributed, and maximizing the likelihood (1) yields and . These low censoring rates reflect the fact that only 9 of the 137 individuals were censored in total, namely 7.3% in the reference treatment group and 5.8% in the test treatment group. Fig. 6a displays the corresponding Weibull models together with their non-parametric analogue, the Kaplan–Meier curves. For both treatment groups, the parametric and the non-parametric curves are very close to each other. Further, the survival curves of the two treatment groups cross each other, which indicates that the assumption of proportional hazards is not justified here. Indeed, the estimated hazard ratio ranges from 0.55 to 1.93 between the first event time (3 days) and the end of the observation period (999 days), so an analysis based on a proportional hazards model is not appropriate here. The log-rank test yields a p-value of 0.928 and thus detects no difference between the two groups.
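The AIC comparison in the model selection step can be sketched for two of the six candidate families. For right-censored data, events contribute the log density and censored observations the log survival function; for a Weibull model with S(t) = exp(−(t/scale)^shape) the scale can be profiled out in closed form, leaving a one-dimensional search over the shape, and the exponential model is the special case shape = 1. AIC is 2 × (number of parameters) − 2 × (maximized log-likelihood), smaller being better. This is a minimal pure-Python sketch on hypothetical toy data, not the veteran data and not the fitting routine of EquiSurv or the survival package.

```python
import math


def weibull_profile_loglik(shape, times, events):
    """Right-censored Weibull log-likelihood for S(t) = exp(-(t/scale)**shape),
    maximized over the scale for fixed shape: scale**shape = sum(t**shape) / #events."""
    d = sum(events)
    theta = sum(t ** shape for t in times) / d
    sum_log_event_times = sum(math.log(t) for t, e in zip(times, events) if e)
    return d * math.log(shape) - d * math.log(theta) + (shape - 1) * sum_log_event_times - d


def fit_weibull_shape(times, events, lo=0.05, hi=20.0, iters=100):
    """Golden-section search for the shape (the profile log-likelihood is concave in shape)."""
    g = (math.sqrt(5) - 1) / 2
    a, b = lo, hi
    for _ in range(iters):
        c, e = b - g * (b - a), a + g * (b - a)
        if weibull_profile_loglik(c, times, events) < weibull_profile_loglik(e, times, events):
            a = c  # maximum lies in [c, b]
        else:
            b = e  # maximum lies in [a, e]
    shape = (a + b) / 2
    return shape, weibull_profile_loglik(shape, times, events)


def aic(loglik, n_params):
    return 2 * n_params - 2 * loglik


def compare_models(times, events):
    """AICs of the exponential model (Weibull with shape fixed at 1) and the Weibull model."""
    loglik_exp = weibull_profile_loglik(1.0, times, events)
    _, loglik_weib = fit_weibull_shape(times, events)
    return {"exponential": aic(loglik_exp, 1), "weibull": aic(loglik_weib, 2)}


# Hypothetical toy data: follow-up times (days) and event indicators (1 = death, 0 = censored)
times = [3, 8, 12, 19, 25, 31, 44, 52, 73, 95, 110, 140, 180, 250, 400]
events = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0, 1, 1, 1, 1, 1]
scores = compare_models(times, events)
print(scores, "-> lowest AIC:", min(scores, key=scores.get))
```

Because the exponential model is nested in the Weibull model, the Weibull log-likelihood is never smaller; the AIC penalty decides whether the extra shape parameter is worth it, which is why the two models can be close, as for the reference group above.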

Fig. 6.

a Survival curves (Kaplan–Meier curves and Weibull models) for the veteran data. Solid lines correspond to the reference group, dashed lines to the test treatment. b Difference in survival, pointwise confidence bands obtained by the asymptotic approach (dashed) and bootstrap (dotted), respectively, on the interval . The shaded area indicates the equivalence margins with
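Why crossing Weibull curves go together with a time-varying hazard ratio can be seen from the Weibull hazard: with S(t) = exp(−(t/scale)^shape) the hazard is (shape/scale)(t/scale)^(shape − 1), so the ratio of two Weibull hazards is proportional to t^(shape_test − shape_ref) and is constant only if the two shape parameters coincide. The parametrization and the parameter values below are hypothetical and are not the estimates for the veteran data.

```python
def weibull_hazard(t, shape, scale):
    """Hazard of S(t) = exp(-(t/scale)**shape): h(t) = (shape/scale) * (t/scale)**(shape - 1)."""
    return (shape / scale) * (t / scale) ** (shape - 1)


def hazard_ratio(t, ref, test):
    """Hazard ratio test vs. reference at time t; ref and test are (shape, scale) pairs."""
    return weibull_hazard(t, *test) / weibull_hazard(t, *ref)


# Hypothetical parameters in days, not the fitted values for the veteran data
ref, test = (1.1, 130.0), (0.8, 100.0)
for t in (3, 30, 100, 300, 999):
    print(f"t={t:4d}  HR={hazard_ratio(t, ref, test):.3f}")

# Equal shapes give a constant ratio (scale_ref / scale_test) ** shape
print(hazard_ratio(10, (1.2, 100.0), (1.2, 80.0)), hazard_ratio(500, (1.2, 100.0), (1.2, 80.0)))
```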

We now perform a similar analysis using the parametric models and the theory derived in Sect. 2. For the sake of brevity we only consider the difference in survival; analyses concerning the (log) hazard ratio can be conducted in the same manner. We consider the first 600 days of the trial. We set and calculate lower and upper -pointwise confidence bands according to (5) at several points . Variance estimates are obtained both by the asymptotic approach and by the bootstrap described in Algorithm 1. Figure 6b displays the estimated difference curve and the pointwise confidence bands on the interval . The asymptotic and the bootstrap approach yield almost identical results here, which can be explained by the reasonably large sample size combined with the very low censoring rate. We start our analysis considering , which is close to the median survival of both treatment groups. The difference in survival is and the asymptotic confidence interval at this point is given by , while the bootstrap yields . Note that these are two-sided -confidence intervals, as we use -upper and -lower confidence bands for the test decisions. If we instead assume a uniform censoring distribution, we obtain , which is slightly wider but still very close to the other two intervals. Continuing with the narrower confidence bands and investigating the hypotheses (11) and (12), we observe that both non-inferiority and equivalence can be claimed for all (0.162), respectively. Consequently, for , which is indicated by the shaded area in Fig. 6b, cannot be rejected in either case, meaning in particular that the treatments cannot be considered equivalent with respect to the 80-day survival. Figure 6b further displays these investigations for simultaneously at all time points under consideration.
We conclude that the chemotherapy is non-inferior to the standard therapy regarding survival after 96 days, as this is the earliest time point at which the upper confidence bound is smaller than , which means that the null hypothesis can be rejected for the first time. The same conclusion holds for equivalence, as for all t in , the lower confidence bands are completely contained in the rejection region, meaning that they are larger than . Consequently, we can conclude that both treatments are equivalent with respect to, for example, the 6-month or one-year survival. Finally, we observe that when considering, for instance, , equivalence would be claimed at all time points under consideration.
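The decisions over a range of time points amount to pointwise checks on a grid. The sketch below assumes that the lower and upper one-sided bands have already been computed at each grid point; the numbers are hypothetical and are not the values shown in Fig. 6b. Equivalence on an interval is claimed here only if it holds at every grid point, which evaluates the "for all t" statements above on a finite grid.

```python
def non_inferior_times(times, upper, margin):
    """Grid points at which the upper one-sided bound lies below the margin."""
    return [t for t, up in zip(times, upper) if up < margin]


def earliest_non_inferior(times, upper, margin):
    """Earliest grid point at which non-inferiority can be claimed, or None."""
    hits = non_inferior_times(times, upper, margin)
    return min(hits) if hits else None


def equivalent_on_grid(lower, upper, margin):
    """True if both bounds lie inside (-margin, margin) at every grid point."""
    return all(-margin < lo and up < margin for lo, up in zip(lower, upper))


# Hypothetical bands on a grid of days (not the values shown in Fig. 6b)
times = [30, 60, 90, 120, 180, 365]
lower = [-0.05, -0.04, -0.08, -0.09, -0.07, -0.03]
upper = [0.20, 0.17, 0.14, 0.11, 0.09, 0.06]
print(earliest_non_inferior(times, upper, margin=0.15))       # 90
print(equivalent_on_grid(lower, upper, margin=0.15))          # False
print(equivalent_on_grid(lower[2:], upper[2:], margin=0.15))  # True
```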

Discussion

In this paper, we addressed the problem of survival analysis in the presence of non-proportional hazards. In this setting, commonly used methods such as Cox's proportional hazards model or the log-rank test are not optimal and suffer from a loss of power. We therefore proposed an alternative approach for investigating equivalence or non-inferiority of time-to-event outcomes, which is based on the construction of (pointwise) confidence bands and is applicable irrespective of the shape of the hazard ratio. We proposed two ways of constructing confidence bands for both the (log) hazard ratio and the difference of the survival curves: one is based on asymptotic theory, the other on a parametric bootstrap procedure. Both approaches show a similar performance. The latter has the advantage that it can be used for small sample sizes and does not require calculating the asymptotic variance. Our approach provides a framework for investigating survival in many ways. Apart from specific time points, entire periods of time can be considered and the difference in survival compared with a given equivalence margin. We demonstrated that the presence of non-proportional hazards does not affect the performance of the confidence bands and the non-inferiority and equivalence tests, respectively, as they do not rely on this assumption. Consequently, this framework provides a much more flexible tool than standard methodology.
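The parametric bootstrap mentioned here can be sketched in a few lines. For brevity and to avoid dependencies, the sketch swaps the Weibull models for exponential event and censoring distributions, whose MLEs are available in closed form (rate = number of events / total follow-up time). The recipe (simulate new data sets from the fitted models, re-estimate, recompute the survival difference, and plug the bootstrap standard deviation into normal-based bounds) is our reading of the procedure; Algorithm 1 may differ in its details. Data, rates and function names are hypothetical.

```python
import math
import random
from statistics import NormalDist, stdev


def fit_exponential(times, events):
    """Closed-form MLEs of the exponential event rate and the exponential censoring rate."""
    total = sum(times)
    n_events = sum(events)
    return n_events / total, (len(times) - n_events) / total


def simulate(rate_event, rate_cens, n, rng):
    """Draw n right-censored observations: observed time min(T, C), event indicator T <= C."""
    times, events = [], []
    for _ in range(n):
        t = rng.expovariate(rate_event)
        c = rng.expovariate(rate_cens) if rate_cens > 0 else math.inf
        times.append(min(t, c))
        events.append(int(t <= c))
    return times, events


def surv_diff(rate_ref, rate_test, t):
    """S_ref(t) - S_test(t) for exponential survival functions S(t) = exp(-rate * t)."""
    return math.exp(-rate_ref * t) - math.exp(-rate_test * t)


def bootstrap_bounds(ref, test, t, n_boot=500, alpha=0.05, seed=1):
    """Parametric bootstrap standard error plugged into one-sided (1 - alpha) normal bounds."""
    rng = random.Random(seed)
    fit_ref, fit_test = fit_exponential(*ref), fit_exponential(*test)
    diff = surv_diff(fit_ref[0], fit_test[0], t)
    boot = []
    for _ in range(n_boot):
        # Simulate new data sets from the fitted models and re-estimate the event rates
        rate_ref = fit_exponential(*simulate(*fit_ref, len(ref[0]), rng))[0]
        rate_test = fit_exponential(*simulate(*fit_test, len(test[0]), rng))[0]
        boot.append(surv_diff(rate_ref, rate_test, t))
    z = NormalDist().inv_cdf(1 - alpha)
    se = stdev(boot)
    return diff, diff - z * se, diff + z * se


# Toy data simulated from hypothetical rates (not the veteran data)
gen = random.Random(2023)
ref_data = simulate(1 / 120, 1 / 1500, 60, gen)
test_data = simulate(1 / 110, 1 / 1500, 60, gen)
print(bootstrap_bounds(ref_data, test_data, t=80.0))
```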

Our methods are based on estimating parametric survival functions, which can be an effective tool once the model's suitability is established. The latter has to be assessed in a preliminary study, using, for instance, model selection criteria such as the AIC. If a model has to be prespecified, which can be necessary in some clinical trials, the model selection step cannot be performed properly and the model may be misspecified. We therefore investigated the robustness of our approach, and it turned out that if the underlying distribution of the event times is not correctly specified, a type I error inflation occurred in some configurations with a relatively large equivalence/non-inferiority margin. In general, the choice of the margin should clearly be determined by clinicians and practical considerations rather than by statistical properties. If one wants to reduce the risk of a type I error, a conservative, i.e. smaller, margin should be chosen, as no type I error inflation occurred for these configurations. However, for such margins the power, that is, the probability of correctly claiming equivalence/non-inferiority, decreases. Further, as another non-parametric alternative, one could also investigate confidence bands based on the classical bootstrap for survival data (Efron 1981; Akritas 1986).

In clinical research, there might be situations where it makes sense to consider metrics other than the (maximum) difference between survival curves. Such a metric could, for example, be the area between the survival curves, that is, the difference in mean survival times. We leave the extension of the proposed methods to this situation for future research.
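For orientation, the area between two survival curves over a window [0, τ] equals the difference in restricted mean survival times (cf. Royston and Parmar 2011), and it approaches the difference in mean survival times as τ grows. The sketch integrates two hypothetical Weibull curves numerically with the trapezoidal rule; the horizon τ and all parameters are illustrative assumptions, and the Weibull mean scale·Γ(1 + 1/shape) serves as a check.

```python
import math


def weibull_surv(t, shape, scale):
    """S(t) = exp(-(t/scale)**shape)."""
    return math.exp(-(t / scale) ** shape)


def area_between(s1, s2, tau, steps=100_000):
    """Trapezoidal approximation of the integral of S1(t) - S2(t) over [0, tau]."""
    h = tau / steps
    total = 0.5 * ((s1(0.0) - s2(0.0)) + (s1(tau) - s2(tau)))
    for i in range(1, steps):
        t = i * h
        total += s1(t) - s2(t)
    return total * h


def s_ref(t):
    return weibull_surv(t, 1.2, 100.0)  # hypothetical reference curve


def s_test(t):
    return weibull_surv(t, 0.9, 90.0)  # hypothetical test curve


# Restricted to [0, 365]: difference in restricted mean survival times (days)
print(area_between(s_ref, s_test, tau=365.0))
# With tau large enough that both curves are essentially 0, compare with the exact means
print(area_between(s_ref, s_test, tau=5000.0),
      100.0 * math.gamma(1 + 1 / 1.2) - 90.0 * math.gamma(1 + 1 / 0.9))
```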


Appendix

A1. Proof of Lemma 1

In the following we prove that the equivalence test described in Eq. (16) is an asymptotic -level test. Under the null hypothesis in Eq. (12) the probability of rejecting is given by

| 22 |

We now consider the margin of the null hypothesis (that is ). Without loss of generality we assume that and (22) becomes

due to (6). The same arguments hold for . When considering the interior of the null hypothesis, that is , the probability in Eq. (22) becomes even smaller and, moreover, converges to zero due to the asymptotic normality of , as or .
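The argument can be checked numerically under the idealized assumption that the estimator is exactly normal with known standard error se, and that the test rejects when both one-sided (1 − α) bounds lie inside (−margin, margin). On the margin, the rejection probability is Φ(−z) − Φ((−2·margin + z·se)/se) ≤ α, approaching α as se shrinks; in the interior of the null hypothesis it tends to zero. The margin and the standard errors are hypothetical values.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def reject_prob(d: float, se: float, margin: float, alpha: float = 0.05) -> float:
    """Probability that both one-sided (1 - alpha) bounds fall inside (-margin, margin)
    when the estimator is exactly N(d, se**2)."""
    z = STD_NORMAL.inv_cdf(1 - alpha)
    lo, hi = -margin + z * se, margin - z * se
    if hi <= lo:  # bands too wide: rejection is impossible
        return 0.0
    return STD_NORMAL.cdf((hi - d) / se) - STD_NORMAL.cdf((lo - d) / se)


margin = 0.15  # hypothetical margin
for se in (0.1, 0.05, 0.02, 0.01):
    on_margin = reject_prob(margin, se, margin)       # boundary of H0: at most alpha
    interior = reject_prob(1.2 * margin, se, margin)  # interior of H0: tends to zero
    print(f"se={se:.2f}  margin: {on_margin:.4f}  interior: {interior:.6f}")
```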

A2. Asymptotic variances for a Weibull distribution

In order to calculate confidence bands as given in Eqs. (5) and (8), respectively, we need formulas for the gradients yielding the asymptotic variances described in Eqs. (4) and (7). We now present an exemplary calculation assuming a Weibull distribution, that is , . The gradients are given by

and

Substituting these gradients into formulas (4) and (7), respectively, and using the MLE and the corresponding Fisher information matrix obtained by maximizing (1) finally yields the asymptotic variances of and , respectively. The estimates can be obtained using standard statistical software such as R; an implementation can be found in the R package EquiSurv (Möllenhoff 2020).
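A sketch of this delta-method step for the Weibull case follows. It assumes the parametrization S(t) = exp(−(t/scale)^shape) with hazard h(t) = (shape/scale)(t/scale)^(shape − 1), which may differ from the one used in the paper, and it takes the covariance matrix of the MLE (the inverse Fisher information) as given. The two groups are assumed independent, so the variances of the group-wise terms add. All parameter values are hypothetical.

```python
import math


def weibull_surv(t, shape, scale):
    return math.exp(-(t / scale) ** shape)


def grad_surv(t, shape, scale):
    """Gradient of S(t) = exp(-(t/scale)**shape) with respect to (shape, scale)."""
    u = (t / scale) ** shape
    s = math.exp(-u)
    return (-s * u * math.log(t / scale), s * shape * u / scale)


def weibull_log_hazard(t, shape, scale):
    return math.log(shape / scale) + (shape - 1) * math.log(t / scale)


def grad_log_hazard(t, shape, scale):
    """Gradient of log h(t) with respect to (shape, scale)."""
    return (1 / shape + math.log(t / scale), -shape / scale)


def quad_form(g, cov):
    """Delta method: g^T cov g, with cov the covariance matrix of the MLE."""
    k = len(g)
    return sum(g[i] * cov[i][j] * g[j] for i in range(k) for j in range(k))


def se_difference(t, ref, test):
    """Standard error of S_ref(t) - S_test(t); ref/test = ((shape, scale), covariance)."""
    (p1, c1), (p2, c2) = ref, test
    return math.sqrt(quad_form(grad_surv(t, *p1), c1) + quad_form(grad_surv(t, *p2), c2))


def se_log_hazard_ratio(t, ref, test):
    """Standard error of log h_test(t) - log h_ref(t)."""
    (p1, c1), (p2, c2) = ref, test
    return math.sqrt(quad_form(grad_log_hazard(t, *p1), c1)
                     + quad_form(grad_log_hazard(t, *p2), c2))


# Hypothetical MLEs (shape, scale) and covariance matrices, not fitted to any data
ref = ((1.0, 120.0), [[0.008, 0.05], [0.05, 200.0]])
test = ((0.8, 110.0), [[0.006, 0.04], [0.04, 250.0]])
print(se_difference(80.0, ref, test), se_log_hazard_ratio(80.0, ref, test))
```

The analytic gradients can be verified against central finite differences, which is a cheap safeguard when deriving them by hand.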

Funding Information

Open Access funding enabled and organized by Projekt DEAL.

Declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Akritas MG. Bootstrapping the Kaplan–Meier estimator. J Am Stat Assoc. 1986;81:1032–1038.

- Berger RL. Multiparameter hypothesis testing and acceptance sampling. Technometrics. 1982;24:295–300. doi: 10.2307/1267823.

- Bradley R, Gart J. The asymptotic properties of ML estimators when sampling from associated populations. Biometrika. 1962;49:205–214. doi: 10.1093/biomet/49.1-2.205.

- Bretz F, Möllenhoff K, Dette H, Liu W, Trampisch M. Assessing the similarity of dose response and target doses in two non-overlapping subgroups. Stat Med. 2018;37:722–738. doi: 10.1002/sim.7546.

- Com-Nougue C, Rodary C, Patte C. How to establish equivalence when data are censored: a randomized trial of treatments for B non-Hodgkin lymphoma. Stat Med. 1993;12(14):1353–1364. doi: 10.1002/sim.4780121407.

- Cox D. Regression models and life-tables. J R Stat Soc Ser B. 1972;34:187–202.

- Cox D, Oakes D. Analysis of survival data. CRC Press; 1984.

- Da Silva G, Logan B, Klein J. Methods for equivalence and noninferiority testing. Biol Blood Marrow Transplant. 2009;15:120–127. doi: 10.1016/j.bbmt.2008.10.004.

- D’Agostino R Sr, Massaro J, Sullivan L. Non-inferiority trials: design concepts and issues – the encounters of academic consultants in statistics. Stat Med. 2003;22:169–186. doi: 10.1002/sim.1425.

- Dobler D, Pauly M. Bootstrap- and permutation-based inference for the Mann–Whitney effect for right-censored and tied data. Test. 2018;27:639–658. doi: 10.1007/s11749-017-0565-z.

- Dormuth I, Liu T, Xu J, Yu M, Pauly M, Ditzhaus M. Which test for crossing survival curves? A user’s guideline. BMC Med Res Methodol. 2022;22:1–7. doi: 10.1186/s12874-022-01520-0.

- Efron B. Censored data and the bootstrap. J Am Stat Assoc. 1981;76:312–319. doi: 10.1080/01621459.1981.10477650.

- Efron B, Tibshirani R. An introduction to the bootstrap. CRC Press; 1994.

- EMA. Committee for Medicinal Products for Human Use (CHMP): guideline on the choice of non-inferiority margin. 2014. Available at https://www.ema.europa.eu/en/documents/scientific-guideline/guideline-choice-non-inferiority-margin_en.pdf

- Gill R, Schumacher M. A simple test of the proportional hazards assumption. Biometrika. 1987;74:289–300. doi: 10.1093/biomet/74.2.289.

- Grambsch P, Therneau T. Proportional hazards tests and diagnostics based on weighted residuals. Biometrika. 1994;81:515–526. doi: 10.1093/biomet/81.3.515.

- Greenwood M. The natural duration of cancer. Reports on public health and medical subjects. London: Her Majesty’s Stationery Office; 1926.

- Hernán M. The hazards of hazard ratios. Epidemiology. 2010;21:13. doi: 10.1097/EDE.0b013e3181c1ea43.

- Howard G, Chambless L, Kronmal R. Assessing differences in clinical trials comparing surgical vs nonsurgical therapy: using common (statistical) sense. JAMA. 1997;278:1432–1436. doi: 10.1001/jama.1997.03550170062033.

- Jachno K, Heritier S, Wolfe R. Are non-constant rates and non-proportional treatment effects accounted for in the design and analysis of randomised controlled trials? A review of current practice. BMC Med Res Methodol. 2019;19:103. doi: 10.1186/s12874-019-0749-1.

- Janda M, Gebski V, Davies L, Forder P, et al. Effect of total laparoscopic hysterectomy vs total abdominal hysterectomy on disease-free survival among women with stage I endometrial cancer: a randomized clinical trial. JAMA. 2017;317:1224–1233. doi: 10.1001/jama.2017.2068.

- Kalbfleisch J, Prentice R. The statistical analysis of failure time data. Wiley; 2011.

- Kaplan E, Meier P. Nonparametric estimation from incomplete observations. J Am Stat Assoc. 1958;53:457–481. doi: 10.1080/01621459.1958.10501452.

- Klein J, Moeschberger M. Survival analysis: techniques for censored and truncated data. Springer; 2006.

- Kudo M, Finn R, Qin S, Han K, Ikeda K, Piscaglia F, Baron A, et al. Lenvatinib versus sorafenib in first-line treatment of patients with unresectable hepatocellular carcinoma: a randomised phase 3 non-inferiority trial. Lancet. 2018;391:1163–1173. doi: 10.1016/S0140-6736(18)30207-1.

- Li H, Han D, Hou Y, Chen H, Chen Z. Statistical inference methods for two crossing survival curves: a comparison of methods. PLoS One. 2015;10:e0116774. doi: 10.1371/journal.pone.0116774.

- Liu W, Bretz F, Hayter AJ, Wynn HP. Assessing non-superiority, non-inferiority or equivalence when comparing two regression models over a restricted covariate region. Biometrics. 2009;65:1279–1287. doi: 10.1111/j.1541-0420.2008.01192.x.

- Martinez E, Sinha D, Wang W, Lipsitz S, Chappell R. Tests for equivalence of two survival functions: alternative to the tests under proportional hazards. Stat Methods Med Res. 2017;26:75–87. doi: 10.1177/0962280214539282.

- Möllenhoff K. EquiSurv: modeling, confidence intervals and equivalence of survival curves. R package. 2020. Available at https://CRAN.R-project.org/package=EquiSurv

- Oehlert G. A note on the delta method. Am Stat. 1992;46:27–29.

- Parzen M, Wei L, Ying Z. Simultaneous confidence intervals for the difference of two survival functions. Scand J Stat. 1997;24(3):309–314. doi: 10.1111/1467-9469.t01-1-00065.

- Peto R, Peto J. Asymptotically efficient rank invariant test procedures. J R Stat Soc Ser A. 1972;135:185–198. doi: 10.2307/2344317.

- Royston P, Parmar M. The use of restricted mean survival time to estimate the treatment effect in randomized clinical trials when the proportional hazards assumption is in doubt. Stat Med. 2011;30:2409–2421. doi: 10.1002/sim.4274.

- Sakamoto Y, Ishiguro M, Kitagawa G. Akaike information criterion statistics. Dord Neth D Reidel. 1986;81:26853.

- Shen P. Tests for equivalence of two survival functions: alternatives to the PH and PO models. J Biopharmaceut Stat. 2020:1–12.

- Subramanian S, Zhang P. Model-based confidence bands for survival functions. J Stat Plan Inference. 2013;143:1166–1185. doi: 10.1016/j.jspi.2013.01.012.

- Therneau T. A package for survival analysis in R. R package. 2020. Available at https://CRAN.R-project.org/package=survival

- Uno H, Claggett B, Tian L, Inoue E, Gallo P, et al. Moving beyond the hazard ratio in quantifying the between-group difference in survival analysis. J Clin Oncol. 2014;32:2380. doi: 10.1200/JCO.2014.55.2208.

- Wellek S. A log-rank test for equivalence of two survivor functions. Biometrics. 1993:877–881.

- Wellek S. Testing statistical hypotheses of equivalence and noninferiority. CRC Press; 2010.

- Yang S, Prentice R. Improved logrank-type tests for survival data using adaptive weights. Biometrics. 2010;66:30–38. doi: 10.1111/j.1541-0420.2009.01243.x.
