Abstract

A workshop held at the 18th Annual Conference of the Pharmaceutical Contract Management Group in Krakow on 9 September 2022 asked over 200 delegates what the clinical trial landscape would look like in 2050. Issues considered included who will be running the pharmaceutical industry in 2050; how ‘health chips’, wearables and diagnostics will affect finding the right patients to study; how artificial intelligence will design and control clinical trials; and what the role of the Clinical Research Associate (the critical observer, documenter and conductor of a clinical trial) will need to look like by 2050. The consensus was that, by 2050, if you are working in clinical trials, you will be a data scientist. We can expect to see an increasing role for new technologies and a new three-phase registration model for novel therapies. The first phase will involve quality evaluation and biological proof-of-concept, probably relying on more preclinical modelling and engineered human cell lines, and fewer animal studies, than are currently used. Once registered, new products will enter a period of adaptive clinical development (delivered as a single study) intended to establish safety. This phase will most likely take around 1–2 years and explore tailored options for administration. Investigations will most likely be conducted in patients, possibly in a ‘patient-in-a-box’ setting (hospital or healthcare centre, virtual or microsite). On completion of safety licensing, drugs will begin an assessment of efficacy in partnership with those responsible for reimbursement; testing will be performed in patients, possibly with individual patients’ involvement in safety testing earning them a reimbursement deal for future treatment. Change is coming, though its precise form will likely depend on the creativity and vision of sponsors, regulators and payers.

Keywords: artificial intelligence, clinical trials, innovation, regulatory framework, trial data

Introduction

A workshop held at the 18th Annual Conference of the Pharmaceutical Contract Management Group (PCMG) in Krakow on 9th September 2022 asked over 200 delegates what the clinical trial landscape would look like in 2050. (Quotes presented throughout the text were obtained from the participant responses.)

As Confucius tells us, if we are to divine the future, we must first study the past. Scientists appreciate the value of extrapolation, and in this case the delegates collectively brought more than 2500 years of experience gained over the last 30+ years. Looking back, the last three decades have seen many changes, including the introduction of novel trial designs, the data revolution and transformative technologies. Many of these modifications have come as a series of bolt-ons, and stakeholders might be forgiven for thinking that the current clinical framework, built on Good Clinical Practice and the ICH guidelines, is struggling to remain fit for purpose.1,2 So, where do we go next?

It is said that true wisdom comes from asking the right questions, and the workshop focused its discussions around four key questions:

Who will be running the pharmaceutical industry in 2050?

How will ‘health chips’, wearables and diagnostics impact on finding the right patients to study?

How will artificial intelligence be designing and controlling clinical trials?

What will the role of the Clinical Research Associate (CRA), the critical observer, documenter and conductor of clinical trials need to look like by 2050?

The questions were posed in response to the unprecedented changes that have occurred throughout the industry.3–5 It would be fair to say that any prediction of what clinical trials will look like in the next 25, 10 or even 5 years can be little more than speculation. However, the question arises as to how an industry that has been reticent and resistant to change for over three decades will respond to the challenges ahead.

Who will be running the pharmaceutical industry in 2050?

Discussions around the question confirmed the understanding that it is the data that sits firmly at the centre of clinical trials. The 2021 Tufts report on the state of clinical trials highlighted how more complex clinical trial designs are collecting much higher volumes of data from a variety of sources.5–9 We are seeing the consequences of biopharmaceutical companies engaging in more ambitious and customized drug development activity: targeting a growing number of rare diseases, stratifying participant subgroups using biomarker and genetic data, and relying on more structured and unstructured patient data coming from an increasing number of sources.

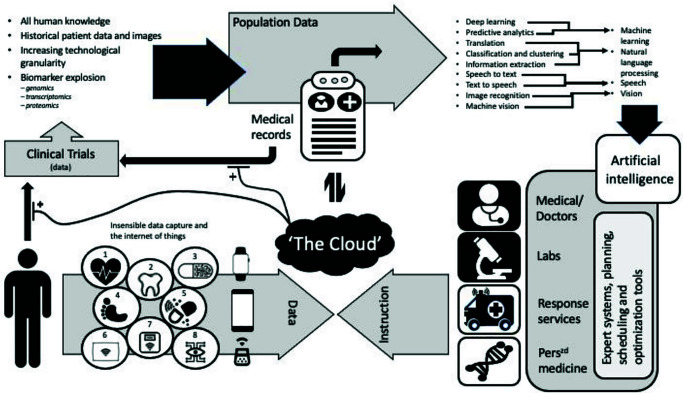

Clinical trial designs are expected to become more complex in the future, generating even greater data volume and diversity. Figure 1 summarizes the discussions around possible future data flows and gives a taste of the types of data all of us will soon be ‘providing’ – irrespective (possibly) of whether we have agreed to take part in a clinical study.10 It seems that most of us will be ‘voluntarily’ monitored by 2050 and whole populations might serve as trial participants.

Figure 1.

Diagram summarizing a proposed interaction between an individual’s data sources and collection, storage, and analytical processes in 2050.

Numbered items identify imperceptible data collection devices: (1) Implanted device for heart health monitoring. (2) Artificial tooth, monitors temperature, nutrition and oral health. (3) Smart pill, monitors digestive system. (4) Electronic tattoo, monitors activity, steps and falls. (5) Acute (emergency)/chronic drug delivery system. (6) Smart pillow, monitors sleep and breathing patterns. (7) Scales, monitor weight, body mass index and hydration. (8) Contact lens, monitors sugar levels/general eye health.

The general consensus was that, with the expected revolutions in data collection, the clinical study teams that devise and run studies must also change. Equally, the amount and type of data we can expect dictates that it will not be clinical pharmacologists or clinicians but more likely algorithms managed by data scientists that will be driving drug development. It seemed likely to the delegates that the true leadership in development will lie with those who own the algorithms that we will rely on to establish both the safety and efficacy of new treatments.11,12

Mergers such as that between Quintiles and IMS appear to reflect the growing acceptance of end-to-end data exploitation solutions (from development to reimbursement) for pharmaceutical companies. But look closer. More significant change is coming. Over the last few decades, we have seen pharmaceutical companies divest expertise, reduce their employee base and adopt outsourcing models for specialist functions.13 Larger pharmaceutical companies have coupled this with an emphasis on asset acquisition over in-house development.14 It was generally agreed that, in focusing on recouping profit rather than investing in the engine of future development, the pharmaceutical industry has taken its eye off the ball. Clinical development was always about data – and now the gods of data are coming to call.

“Who will run pharma? Google, Amazon, Apple – the big tech companies.”

The opinion was that, try as they might, blue-chip pharmaceutical companies and mega contract research organization (CRO) conglomerates have already lost the data initiative. Delegate feedback made it clear that we are already seeing technology companies come to the fore as pharma’s trillion-dollar spend attracts predators like Google, IBM and Microsoft.

How will ‘health chips’, wearables and diagnostics impact on finding the right patients to study?

Perhaps the greatest focus of comment and debate was around the future impact that technologies would have and how that technology would be employed in clinical studies of the future. Over the past few years, the general public have become more aware of the various wearable technologies in the form of sensors and diodes intended to monitor health data such as heart rate, lung capacity and body temperature. Such technology already exists in the guise of smartwatches and smart clothing and, while currently used in professional sports to track progress and fitness, the real capabilities of wearable medical technology are only just emerging.

“Implanted biosensors will be commonplace by 2050.”

At the forefront of the discussions on wearable technology was the revolution in, and clinical potential of, responsive ‘drug delivery’. People with chronic health conditions can often feel that their lives revolve around medication; appropriate use of medicine was considered to mean better-managed disease and a reduced burden on patients. An example offered was that of diabetes. Instead of manually checking blood sugar and administering insulin as needed, it was proposed that the patient would rely on an electromedical device, not just for monitoring but also for administering correct (and variable) doses based on their specific needs.

Although diabetes is a common disease and a likely target for new technologies, the delegates considered a broader application, not only in the treatment of disease but also in the testing of new agents. Delegates recalled how, during the COVID-19 pandemic, healthcare agencies approved and released more convenient at-home chemotherapy treatments for cancer in a bid to minimize possible exposure to infection and keep more hospital spaces free. The consensus was that, although this approach was adopted out of necessity, it showed a reliance on the drug-administration technology associated with at-home treatments: a definite step towards trust in wearable technology that can be employed in the treatment of a variety of diseases and chronic conditions.

The wearable drug delivery market is exploding – from simple patches and medical wearable devices on the skin to subcutaneous needle-free injectors, the market is expected to exceed US$240 billion in the next 2 years alone.15 The innovations coming from this corner of our industry are immense, and the audience considered the potential of technology to manage disease and promote healing to be nothing short of astounding. It was not hard for the delegates to imagine that implantable devices will be monitoring a broad range of factors in both health and disease, providing live, real-world population data long before 2050.

“We are already approaching true personalized medicine. The future will see ‘dose’ being a term consigned to the past – instead, drug delivery will be responsive.”

The consensus was that the new technologies will not only facilitate the recruitment of participants but also aid the identification of more appropriate patients, empowered by what could be long-term ‘baseline’ data. As wearables become more commonplace and broader in what they offer, they could, when combined with more informed genetic testing, result in earlier and more definitive diagnoses of a broad range of diseases – a real-life realization of the Theranos dream.16
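To make the idea of a long-term personal baseline more concrete, the sketch below flags new wearable readings that depart sharply from an individual’s own history, using a simple z-score. It is an illustration only: the resting heart-rate values, the function name `flag_deviations` and the threshold of 3 standard deviations are hypothetical choices, not a validated diagnostic method and not something proposed by the delegates.

```python
from statistics import mean, stdev


def flag_deviations(baseline, new_readings, z_threshold=3.0):
    """Return the indices of new readings that depart from a personal baseline.

    baseline: one person's historical readings (hypothetical wearable data).
    new_readings: readings to screen against that baseline.
    z_threshold: illustrative cut-off, not a clinical standard.
    """
    if len(baseline) < 2:
        raise ValueError("need at least two baseline readings")
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation
    if sigma == 0:
        # Perfectly flat baseline: treat any change as a deviation.
        return [i for i, x in enumerate(new_readings) if x != mu]
    return [i for i, x in enumerate(new_readings)
            if abs(x - mu) / sigma > z_threshold]


# Hypothetical resting heart-rate history (beats per minute).
resting_hr = [62, 64, 61, 63, 65, 62, 63, 64, 61, 62]
print(flag_deviations(resting_hr, [63, 66, 78, 60]))
```

With this sample data, only the third new reading (78 beats per minute) lies more than three baseline standard deviations from the mean of 62.7, so the call prints `[2]`. A real system would also need to handle drift, daily rhythms and sensor noise, all of which this sketch ignores.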

“There will be rapid, home diagnostic testing – like Theranos, but that actually works.”

How will AI be designing and controlling clinical trials?

Discussions and opinions regarding how artificial intelligence (AI) may affect the design and conduct of clinical trials focused on three areas: optimization, facilitation and simulation.

In terms of optimization, delegates envisaged how historical data available on clinical trial registries such as ClinicalTrials.gov will empower the study design process. This information will be used to reduce the time it currently takes to prepare protocols, minimize errors and the need for amendments, and guide clinical teams in the selection of the most appropriate measurements/biomarkers, milestones and endpoints. It was envisaged that wider adoption of AI would speed the progress of studies from the planning stage to delivery. It was also proposed that algorithms programmed to deliver protocols would allow clinical teams to explore different design options in ‘real time’, a development that would foster more creative and complex study designs most likely to provide high-value scientific and regulatory data.

Consideration was given to how AI might facilitate trial delivery. Delegates discussed how various algorithms are already being employed to identify disease-specific centres of excellence, high-performing trialists (those that consistently hit recruitment targets and have low ‘drop-out’ or failure rates) and potential opinion leaders.17,18 Delegates recognized that further exploitation, almost down to the identification of individual patients, will be necessary to reduce the cost burden of sites that fail to recruit. Emphasis was placed on an expected increase in the uptake and utilization of personal health monitoring devices and the rationalization of health data. It was proposed that patient identification could be simplified by using algorithms to identify the most appropriate patients for trials (with their medical history and details entered automatically into trial databases), although it was agreed that these proposed reductions in administrative burden would need to fit within the current and any future data privacy framework (assuming that data privacy regulations are still in place by 2050). In addition, this ambition overlooks the fact that current electronic health record systems rely on coding systems to identify medical diagnoses: data protection and governance guidelines currently mean that we have access only to coded data, not to the unstructured text that captures the wider patient history. Finally, it was proposed that trial costs could be further reduced by using large-scale (population), real-life patient pathway data to dispense with the need for placebo groups in clinical trials.
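The site-selection idea can be illustrated with a deliberately simple scoring rule that rewards sites for meeting recruitment targets and penalizes drop-outs. The `Site` fields, the scoring formula (recruitment attainment capped at 1, multiplied by retention) and the example figures are all hypothetical; the algorithms mentioned above are not described in the source, and real tools would weigh many more factors.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    enrolled: int      # participants actually recruited
    target: int        # recruitment target agreed for the site
    dropped_out: int   # enrolled participants who did not complete


def site_score(site: Site) -> float:
    """Hypothetical score: recruitment attainment (capped at 1) times retention."""
    if site.target <= 0:
        raise ValueError("target must be positive")
    attainment = min(site.enrolled / site.target, 1.0)
    # A site that enrolled nobody has no retention to speak of.
    retention = 1.0 - site.dropped_out / site.enrolled if site.enrolled else 0.0
    return attainment * retention


def rank_sites(sites):
    """Return sites ordered from highest to lowest score."""
    return sorted(sites, key=site_score, reverse=True)


sites = [
    Site("Site A", enrolled=40, target=40, dropped_out=2),
    Site("Site B", enrolled=25, target=50, dropped_out=1),
    Site("Site C", enrolled=0, target=30, dropped_out=0),
]
for s in rank_sites(sites):
    print(f"{s.name}: {site_score(s):.2f}")
```

Here Site A scores 0.95 (full recruitment, 5% drop-out), Site B 0.48 (half its target) and Site C, which recruited no one, 0.00. Multiplying the two factors means a site must do reasonably well on both to rank highly, which is one simple way of capturing ‘hits targets and keeps participants’.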

Simulation guided by AI was expected to play a key role in clinical trials by 2050. Although simulation lies outside the general expertise of the conference attendees, it was generally agreed that it would contribute to the preclinical characterization of new therapies and the identification of optimal dosing strategies; enable, through the availability of actual patient data, the modelling of large-scale simulated/synthetic cohorts; provide instantaneous safety and population profiling; and deliver estimates of the economic benefits that could be achieved following the deployment of new medicines.

How will the process of registration respond to innovation?

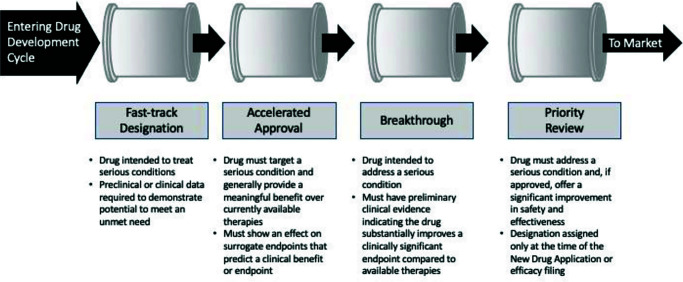

Debate around how changes in technology are likely to affect the conduct of clinical research introduced an unplanned question: how will the process of registration respond to innovation? It was clear from the various discussion streams that, even if we continue to identify new therapies at the current rate, we still have the problem of getting them into patients. Delegates felt that, if anything needs addressing, it is the process of drug testing in man, which is recognized as slow, expensive and inefficient. The statistics are well known: it takes 7–10 years to bring a drug to market, and the best estimates suggest that only ~1 in 10,000 candidates cross the finishing line. Despite our best efforts, we have not found solutions for the dual curse of attrition and protracted development times. Modifications to the FDA registration pathway intended to speed delivery have made small, incremental improvements (Figure 2), and preliminary estimates suggest that even the modifications suggested earlier will not make a substantial impact on either timelines or attrition.19

Figure 2.

Summary of the FDA accelerated (expedited) development pathways.

Source data: www.fda.gov/media/86377/download.

“Given the leaps forward in personalized medicine and genetic screening to identify which drugs will work best for specific patients, this is only going to increase the number of compounds we need to get on to the market to treat the same number of conditions … and as the number of patients each can treat will go down, either we have to increase the unit price of sale, or make the development a whole lot faster and cheaper.”

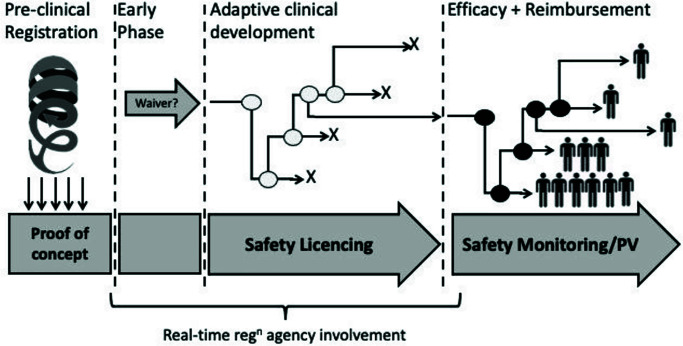

Delegates suggested that registration of new therapies in 2050 will have moved away from the traditional serial trial approach (Figure 3). We currently segment development into individual phases and trials, each incorporating different treatment groups, often with the focus of the study being to establish some statistical significance. This approach ties up considerable resources. For example, writing and approving each clinical study protocol can take anything from 5 to 20 weeks, and each protocol undergoes (at least) four substantial amendments, bringing further delays and not insubstantial additional regulatory costs. Depending on the number of countries involved, a substantial amendment can cost between $250k and $750k in submission effort and fees. Once the study has been delivered, data review and analysis must be performed before the final report is written and published. If each drug development programme involves anything from 5 to 10 clinical studies, it can be estimated that regulatory ‘administration’ alone takes up over 3 years – a third of the current clinical development time.

Figure 3.

A proposed continuous and integrated drug registration and development framework.
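The arithmetic behind the administration estimate above can be checked with a few lines of code, using only the ranges quoted in the text (5–10 studies per programme, 5–20 weeks per protocol, at least four substantial amendments per protocol at $250k–$750k each). The function names are illustrative, and the sketch covers only protocol preparation and amendment fees, not data review or report writing.

```python
WEEKS_PER_YEAR = 52


def protocol_weeks(n_studies: int, weeks_per_protocol: int) -> int:
    """Total weeks spent writing and approving protocols across a programme."""
    return n_studies * weeks_per_protocol


def amendment_cost_range(n_studies: int, amendments_per_study: int = 4,
                         cost_low: int = 250_000,
                         cost_high: int = 750_000) -> tuple[int, int]:
    """Low/high total cost (US$) of substantial amendments across a programme."""
    n_amendments = n_studies * amendments_per_study
    return n_amendments * cost_low, n_amendments * cost_high


low = protocol_weeks(5, 5)      # fewest studies, fastest protocols
high = protocol_weeks(10, 20)   # most studies, slowest protocols
print(f"protocol preparation: {low}-{high} weeks "
      f"({low / WEEKS_PER_YEAR:.1f}-{high / WEEKS_PER_YEAR:.1f} years)")

for n in (5, 10):
    lo_cost, hi_cost = amendment_cost_range(n)
    print(f"{n} studies: amendments cost ${lo_cost:,}-${hi_cost:,}")
```

On these assumptions, the upper end of protocol preparation alone (200 weeks, about 3.8 years) already exceeds 3 years before amendments, data review and reporting are counted, and amendment fees range from $5 million for a 5-study programme to $30 million for a 10-study one.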

It was suggested that regulatory authorities will begin to take a more proactive approach to registration, using improvements in data collection coupled with integrated data management systems to regulate in real time. Considering the EMA’s current stated regulatory strategy, the delegates felt that it already contains many of the necessary components for real-time programme coordination and monitoring.20 It was proposed that, with a few modest modifications, protocols could become less rigid, even infinitely amendable, managed online and approved on an ‘as required’ basis. This would open the possibility of turning a whole development programme into a single, infinitely adaptive clinical trial, perhaps managed by a global authority. The savings in administration time alone would be significant.

“Technology is not the hurdle to more efficient drug development and registration. Agencies need to work as a global institution.”

Even if a global regulatory authority does not emerge, it is expected that agencies would share access to the same study design information (for example, patient profiling, endpoints and milestones), removing the need for sponsors to justify features of their protocols and modifications. It was even proposed that building the registration data package itself could fall fully under the umbrella of the agencies.

What will the role of the CRA need to look like by 2050?

The last of the planned questions focused on the role of the CRA, currently a critical stakeholder in the clinical trial delivery team. The challenge of sourcing sufficiently trained and experienced CRAs to deliver clinical trials has been the focus of PCMG events over the last 5 years. In line with the law of supply and demand, CRA salaries have risen markedly. The constantly moving goalposts are driving movement across the clinical trial workforce: employees are reaping the reward in terms of golden handshakes, salaries and perks, whereas sponsors and CROs are finding it difficult to retain teams.21,22

The overall conclusion was that improved data accountability, technology and automation will mean that there is no longer any need for CRAs. Clinical trials were expected to be managed centrally, with data being provided by a variety of tools (wearables, etc.). Investigations will most likely be conducted directly in patients, doing away with the involvement of healthy participants in clinical development programmes. Studies may be conducted in a ‘patient-in-a-box’ setting (hospital or healthcare centre, virtual or microsite, or within the home).

Beyond the four planned questions, the discussion sessions generated three additional questions that the delegates felt were important in determining the industry’s future.

What impact will the changes have on reimbursement?

Discussions ranged across all aspects of clinical development, and the focus often returned to the impact the changes would have on existing reimbursement mechanisms. Our expenditure on healthcare has been increasing and is expected to double by 2030, less than 10 years from now.23 Although it was agreed that the proposed changes would likely reduce the time and costs of developing new treatments, profit will remain a key industry driver. Although increases in healthcare costs will not be due solely to the price of medicines, the pharmaceutical industry and the price of medicines have come under increasing scrutiny. Looking at some of the medicines at the upper end of the market, it is clear that their costs are many times greater than any single patient could ever afford (Table 1) – and this is for treatments that are often no more than life-extending therapies. It was agreed that, at both the individual and the healthcare-service level, these costs are unsustainable.

Table 1.

Most expensive drugs in the world.

| Agent | Cost (US$, annual unless stated) | Target condition |
|---|---|---|
| Spinraza (nusinersen) | $375,000 ($750,000 in year 1) | Spinal muscular atrophy |
| Lumizyme (alglucosidase alfa) | $520,000 (up to $625,000) | Pompe disease |
| Elaprase (idursulfase) | $657,000 (for a child of 35 kg) | Hunter syndrome |
| Brineura (cerliponase alfa) | $700,000 | Neuronal ceroid lipofuscinosis type 2 disease |
| Soliris (eculizumab) | Up to $700,000 | A rare group of diseases that affect red blood cells |
| Carbaglu (carglumic acid) | Up to $790,000 | Elevated blood ammonia |
| Ravicti (glycerol phenylbutyrate) | Up to $794,000 | Urea cycle disorders |
| Luxturna (voretigene neparvovec) | $850,000 (one-time treatment) | Retinal dystrophy due to mutations in gene RPE65 |
| Zokinvy (lonafarnib) | $1,032,480 | Hutchinson–Gilford progeria syndrome |
| Zolgensma (onasemnogene abeparvovec-xioi) | $2,125,000 (one-time treatment) | Spinal muscular atrophy (gene therapy) |

Estimates from 2022 (ref.31).
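The projected doubling of healthcare expenditure by 2030 implies a steep compound growth rate. Assuming, for illustration only, a 2022 baseline (the year of the workshop) and constant annual growth r, the rate follows from (1 + r)^years = 2, that is r = 2^(1/years) - 1. The function name is hypothetical.

```python
def implied_annual_growth(multiple: float, years: float) -> float:
    """Constant yearly growth rate r such that (1 + r) ** years == multiple."""
    if years <= 0 or multiple <= 0:
        raise ValueError("years and multiple must be positive")
    return multiple ** (1 / years) - 1


# Assumption: spending in 2022 (the workshop year) doubles by 2030.
rate = implied_annual_growth(2.0, 2030 - 2022)
print(f"implied growth: {rate:.1%} per year")
```

This prints an implied growth of 9.1% per year, in line with the rule of 72 (72 / 8 = 9). A different baseline year would change the figure, which is why the assumption is stated explicitly.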

Whichever estimate of the cost of developing a new drug you believe, US$1.1 billion or even US$3.2 billion,24 it represents a significant investment for a single organization, especially given that success is not guaranteed. In 2016, for example, the FDA approved only 22 novel drugs of the 41 filed.
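As a quick check on the 2016 figures above, 22 approvals from 41 filings is a little over half, which is the roughly 50% success rate discussed next. The helper below is an illustrative calculation only and uses no data beyond the two numbers given in the text.

```python
def approval_stats(approved: int, filed: int) -> tuple[float, float]:
    """Return (share of filings approved, filings needed per approval)."""
    if approved <= 0 or filed < approved:
        raise ValueError("need 0 < approved <= filed")
    return approved / filed, filed / approved


rate, filings_per_approval = approval_stats(22, 41)
print(f"approval rate: {rate:.1%}")                          # 53.7%
print(f"filings per approval: {filings_per_approval:.2f}")   # 1.86
```

Put the other way round, a sponsor would on average need to file almost two candidates to secure one approval at the 2016 rate.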

A success rate of around 50% is a considerable risk when you are spending a billion dollars. How have sponsor companies responded over the last few decades? We have seen many mergers and acquisitions as companies have attempted to minimize overheads. There has been reduced investment in maintaining internal teams and increased use of outsourcing models. Sponsors are investigating ‘shared risk’ models with CROs, and there has been increased investment in specialty/orphan submissions. However, perhaps the most newsworthy development has been the drive to increase reimbursement.

The general consensus was that pressures to drive change aimed at reducing the cost of development will continue. One change may be to link a patient’s access to treatment, and the cost of that treatment, to their involvement in the development pathway or in other research studies.

“In the future, rather than paying full price for a product, you allow the ‘developer’ to use your data and you are rewarded with a price reduction.”

Delegates were also convinced that the current speculation over reimbursement models based on efficacy (that is, if your drug does not work for a patient, you do not receive payment) will have matured. Such models could bring a significant swing in the development landscape by shifting the efficacy relationship to one between payers and sponsors, a relationship that could reduce the role of regulatory agencies to monitoring safety. It was believed that this approach has the potential to slash the time it takes to get drugs into patients.

The future of disease

Another aspect of the pharmaceutical industry landscape touched on in the discussions was how treatments and the practice of medicine will have changed by 2050. The delegates agreed that our fundamental understanding of disease will itself change. For most of our medical history, we have applied a symptomatic approach to the classification, diagnosis and treatment of disease. Our understanding has been based on what we could measure and what we could observe; for example, we defined high blood pressure as hypertension and increased body mass as obesity. However, daily advances in our understanding of the underlying pathophysiology of disease, and the involvement of increasingly complex technology, are changing what we know of disease. It is expected that we will start to see many diseases in a new light. The future will no longer rely on textbook descriptions and diagnoses based on symptoms; our new ‘electronic dissection kit’ will be more subtle than the scalpels of Edwardian clinicians.

“I suspect we will be re-writing medical textbooks for years to come – except (obviously) there won’t be any textbooks.”

Delegates discussed how we are collecting more data at every level. This is not only newly generated data; we are also gaining access to more and more historical patient data as records are scanned and transcribed into databases, or as genetic material is extracted from bones in plague pits. The latter, for example, is giving insights into genetic differences between those who survived (and those who did not) and how those differences may have contributed to the increased prevalence of autoimmune diseases 700 years later.25

Following calls for transparency, we are also sharing more and more clinical study data making it available for anyone to access through registries and databanks. With the explosion in biomarkers, we are monitoring more and more parameters6; we have also seen increasing technological granularity as our ‘measuring’ devices are becoming more sensitive, providing continuous multi-layered data streams.

There are few limits on the ability of our technology to process these volumes of data, plus the data coming from our mobile phones, health apps, wearables and online sources from Amazon to Google to Zoom.

“The future is all about data.”

Previous PCMG conferences have drawn attention to how an ever-increasing array of computer-based systems are looking for ‘understanding’ at a level that individuals are not equipped to comprehend. We already have a veritable armoury of analytical tools to use in our clinical studies and the computer power to run them. In short, the delegates concluded that our technological wizardry is providing new insights as well as earlier and more precise diagnoses than clinicians can. The utility of these tools is already being reported across the clinical spectrum – from acute kidney disease to automatic imaging assessment and patient triaging.26–28

The future of medicines

In the same way that our understanding of disease is changing, it is only logical to assume that our approach to treatment will also change. Even if you ignore the growing issue of antibacterial resistance, we are in desperate need of new medicines because our population itself is changing. Estimates suggest that more than 1.4 billion people will be over 60 years of age by 2030.29 Although many of us can expect to remain active, old age is also associated with a plethora of chronic, non-communicable diseases and associated disabilities that we have failed to understand for decades – hypertension, obesity, diabetes … even ageing itself? By 2030, one in three people over 50 will be suffering from chronic disease. At best, these diseases bring a host of morbidities that will require the co-administration of a broad range of therapies, and they will account for 70% of deaths.30 It seems clear that the clinical trial paradigm involving healthy young participants will need to adapt to reflect these changes. Multimorbidity is the new norm, and we need to acknowledge that by testing drugs in populations that better reflect the ultimate recipients of those drugs.

“Many existing medicines may find themselves having a second life, repurposed for ‘new’ indications.”

Over the last 100 years, we have pursued small-molecule solutions for symptomatic relief through the receptor theory of pharmacology. We have had some notable successes, but we have long known the limitations of our approach. People’s responses to medicines are variable; medicines do not work in everyone. All drugs are potential poisons, and we have battled with the challenge of getting the right amount of that poison to the right place at the right time. Because trial participants do not all respond in exactly the same way, the only rational option has been to establish ‘optimal’ doses based on group responses in relatively small, homogeneous cohorts. It was proposed that we will start to see more and more ‘smart’ medicines: self-regulating therapies that adjust to the dynamic nature of our own pathophysiologies. For some time, we have been running trials of novel ‘devices’ that employ creative release mechanisms to provide more subtle and targeted delivery. We are also seeing medications that exploit mechanisms far beyond the receptor theory – CRISPR, targeted protein degradation, immuno-oncology treatments, silencing and cellular therapies. Current experience indicates that these treatments take much less time to register.

“Gene and epigenetic profiling coupled with biomarker-targeted monitoring will see medicines able to ‘hit hard and hit early’ – potentially reducing the burden of chronically under-treated or ‘late to diagnosis’ disease.”

These new medicines raise some interesting questions. Are we equipped to validate these treatments before they are used in actual patients and will regulators believe the industry even if it claims it can? Do such clinical trials tell us anything more than a ‘sanitized’ safety and tolerability profile? It might seem a bit of a leap but, if we are investigating treatments for diseases we did not previously understand and those medicines are exploiting subtle mechanisms we cannot measure, our technology becomes the only way to ‘detect’ changes indicative of benefit (and this would only be achievable in patients).

Conclusion

The consensus was that, by 2050, if you are working in clinical trials and you are not the janitor, then you are a data scientist, probably working at Google. We can expect to see a new, three-phase registration model. The first phase will involve an aspect of quality evaluation and biological proof-of-concept probably involving more preclinical modelling and engineered human cell lines and fewer animal studies.

Once a new product is registered, it will enter a period of adaptive clinical development (delivered as a single study) intended to establish safety. This phase will most likely take ~1–2 years and explore tailored options for administration. Investigations will most likely be conducted in patients, possibly in a ‘patient-in-a-box’ setting (hospital or healthcare centre, virtual, or microsite). On completion of safety licensing, drugs will begin an assessment of efficacy in partnership with those responsible for reimbursement; testing will be performed in patients, possibly with individual patients’ involvement in safety testing earning them a reimbursement deal for future treatment. Change is coming, though its precise form will likely depend on the creativity and vision of sponsors, regulators and payers. In the words of Vladimir Lenin: “It is impossible to predict the time and progress of revolution as it is governed by its own mysterious laws.”

Acknowledgements

The Pharmaceutical Contract Management Group (PCMG) is a not-for-profit association of clinical development outsourcing and procurement professionals from across the pharmaceutical and biotechnology industry who seek to advance best outsourcing practices. The contents of this communication are a summary of collated statements from participants of the PCMG 2022 annual meeting and do not represent the views or position of any company whose employees contributed to the event.

Footnotes

Contributions: All authors contributed equally to the preparation of this manuscript. All named authors meet the International Committee of Medical Journal Editors (ICMJE) criteria for authorship for this article, take responsibility for the integrity of the work as a whole and have given their approval for this version to be published.

Disclosure and potential conflicts of interest: The authors declare that they have no conflicts of interest relevant to this manuscript. The International Committee of Medical Journal Editors (ICMJE) Potential Conflicts of Interests form for the authors is available for download at: https://www.drugsincontext.com/wp-content/uploads/2023/05/dic.2023-2-2-COI.pdf

Funding declaration: There was no funding associated with the preparation of this article.

Correct attribution: Copyright © 2023 Hardman TC, Aitchison R, Scaife R, Edwards J, Slater G on behalf of the Committee of the Pharmaceutical Contract Management Group. https://doi.org/10.7573/dic.2023-2-2. Published by Drugs in Context under Creative Commons License Deed CC BY NC ND 4.0.

Provenance: Submitted; externally peer reviewed.

Drugs in Context is published by BioExcel Publishing Ltd. Registered office: 6 Green Lane Business Park, 238 Green Lane, New Eltham, London, SE9 3TL, UK.

BioExcel Publishing Limited is registered in England Number 10038393. VAT GB 252 7720 07.

For all manuscript and submissions enquiries, contact the Editorial office editorial@drugsincontext.com

For all permissions, rights and reprints, contact David Hughes david.hughes@bioexcelpublishing.com

References

- 1. ICH Guidelines. [Accessed September, 2022]. https://www.ich.org/page/ich-guidelines

- 2. The International Council for Harmonisation of Technical Requirements for Pharmaceuticals for Human Use. [Accessed September, 2022]. https://www.ich.org

- 3. Dukart H, Lanoue L, Rezende M, Rutten P. Emerging from disruption: the future of pharma operations strategy. [Accessed September, 2022]. https://www.mckinsey.com/capabilities/operations/our-insights/emerging-from-disruption-the-future-of-pharma-operations-strategy

- 4. Munos B. Lessons from 60 years of pharmaceutical innovation. Nat Rev Drug Discov. 2009;8:959–968. doi: 10.1038/nrd2961.

- 5. Tufts Center for the Study of Drug Development. Rising protocol design complexity is driving rapid growth in clinical trial data volume. Impact Report: Analysis and Insight into Critical Drug Development Issues. Vol. 23, No. 1. Boston: Tufts Center for the Study of Drug Development; 2021. [Accessed September, 2022]. https://f.hubspotusercontent10.net/hubfs/9468915/TuftsCSDD_June2021/images/Jan-Feb-2021.png

- 6. Ahlawat H, Graves G, Hou T, Le Deu F, Moss R, Parekh R. The Helix report: Is biopharma wired for future success? [Accessed November, 2022]. https://www.mckinsey.com/industries/life-sciences/our-insights/the-helix-report-is-biopharma-wired-for-future-success

- 7. Marsolo KA, Richesson R, Hammond WE, Smerek M, Curti L. Common real-world data sources. [Accessed September, 2022]. https://rethinkingclinicaltrials.org/chapters/conduct/acquiring-real-world-data/common-real-world-data-sources/

- 8. Tufts Center for the Study of Drug Development. Tufts-eClinical solutions, data strategies and transformation study. [Accessed September, 2022]. https://issuu.com/eclinicalsol/docs/tufts-eclinical-solutions-study-overview_data-stra?e=40343184/76341586

- 9. Assessing fitness for use of real-world data sources: section 6 – data provenance. Rethinking Clinical Trials. [Accessed September, 2022]. https://rethinkingclinicaltrials.org/chapters/conduct/assessing-fitness-for-use-of-real-world-data-sources/data-provenance/

- 10. Singh M, Sachan S, Singh A, Singh KK. Chapter 7 – Internet of Things in pharma industry: possibilities and challenges. In: Balas VE, Solanki VK, Kumar R, editors. Emergence of Pharmaceutical Industry Growth with Industrial IoT Approach. London, UK: Academic Press; 2020. pp. 195–216.

- 11. Gibney E. How many yottabytes in a quettabyte? Extreme numbers get new names. Prolific generation of data drove the need for prefixes that denote 10²⁷ and 10³⁰. Nature. 2022 Nov 18. doi: 10.1038/d41586-022-03747-9.

- 12. Hird N, Ghosh S, Kitano H. Digital health revolution: perfect storm or perfect opportunity for pharmaceutical R&D? Drug Discov Today. 2016;21(6):900–911. doi: 10.1016/j.drudis.2016.01.010.

- 13. Kinch MS, Flath R. New drug discovery: extraordinary opportunities in an uncertain time. Drug Discov Today. 2015;20(11):1288–1292. doi: 10.1016/j.drudis.2014.12.008.

- 14. Senior M. Pharma backs off biotech acquisitions. Nat Biotechnol. 2022;40:1546–1550. doi: 10.1038/s41587-022-01529-2.

- 15. Yosef A. Healthcare is ripe for full adoption of wearable drug delivery devices. Med City News. 2019 May 10. [Accessed September, 2022]. https://medcitynews.com/2019/05/healthcare-is-ripe-for-full-adoption-of-wearable-drug-delivery-devices/

- 16. Wetsman N. Theranos promised a blood testing revolution – Here’s what’s really possible. Innovation is possible, even if it’s not magic. [Accessed September, 2022]. https://www.theverge.com/22834348/theranos-blood-testing-innovation-drop-holmes

- 17. Microsoft. Digital transformation in clinical research – Accelerating change for better experiences and outcomes. [Accessed October, 2022]. https://info.microsoft.com/ww-landing-digital-transformation-in-clinical-research.html?LCID=EN-US

- 18. Toddenroth D, Sivagnanasundaram J, Prokosch H-U, Ganslandt T. Concept and implementation of a study dashboard module for a continuous monitoring of trial recruitment and documentation. J Biomed Inform. 2016;64:222–231. doi: 10.1016/j.jbi.2016.10.010.

- 19. US FDA Center for Drug Evaluation and Research. Expedited programs for serious conditions – Drugs and biologics. May, 2014. [Accessed December, 2022]. https://www.fda.gov/media/86377/download

- 20. Sweeney F. EUFEMED 2019 conference. The changing landscape of early medicines development: be prepared! Update on regulatory considerations for early clinical development (including Brexit): the EMA perspective. [Accessed September, 2022]. https://www.eufemed.eu/wp-content/uploads/Fergus-Sweeney-EMA-Update-presentation-to-EUFEMED-conference-17-May-2019.pdf

- 21. Studna A. Turnover for clinical monitoring remains high. Applied Clinical Trials. 2021 Apr 1;30(4). [Accessed September, 2022]. https://www.appliedclinicaltrialsonline.com/view/turnover-for-clinical-monitoring-remains-high

- 22. 2020/2021 CRO insights report: while compensation levels and practices remain status quo, turnover continues to be high. [Accessed September, 2022]. https://www.bdo.com/insights/tax/global-employer-services/2020-2021-cro-insights-report-while-compensation-l

- 23. Gebreyes K, Davis A, Davis S, Shukla M. Breaking the cost curve. Deloitte predicts health spending as a percentage of GDP will decelerate over the next 20 years. [Accessed September, 2022]. https://www2.deloitte.com/uk/en/insights/industry/health-care/future-health-care-spending.html

- 24. Wouters OJ, McKee M, Luyten J. Estimated research and development investment needed to bring a new medicine to market, 2009–2018. JAMA. 2020;323(9):844–853. doi: 10.1001/jama.2020.1166.

- 25. Klunk J, Vilgalys TP, Demeure CE, et al. Evolution of immune genes is associated with the Black Death. Nature. 2022;611:312–319. doi: 10.1038/s41586-022-05349-x.

- 26. Nichols JA, Herbert Chan HW, Baker MAB. Machine learning: applications of artificial intelligence to imaging and diagnosis. Biophys Rev. 2019;11:111–118. doi: 10.1007/s12551-018-0449-9.

- 27. Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts HJWL. Artificial intelligence in radiology. Nat Rev Cancer. 2018;18(8):500–510. doi: 10.1038/s41568-018-0016-5.

- 28. Yuan Q, Zhang H, Deng T, et al. Role of artificial intelligence in kidney disease. Int J Med Sci. 2020;17(7):970–984. doi: 10.7150/ijms.42078.

- 29. Department of Economic and Social Affairs Population Division. World Population Ageing: 1950–2050. New York: United Nations; 2001. [Accessed September, 2022]. https://www.un.org/development/desa/pd/sites/www.un.org.development.desa.pd/files/files/documents/2021/Nov/undesa_pd_2002_wpa_1950-2050_web.pdf

- 30. Healthy Aging Team. The top 10 most common chronic diseases for older adults. [Accessed September, 2022]. https://www.ncoa.org/article/the-top-10-most-common-chronic-conditions-in-older-adults

- 31. Badwy A. 10 most expensive drugs in the world. [Accessed September, 2022]. https://pharmaoffer.com/blog/10-most-expensive-drugs-in-the-world/