Highlights

-

•

We detail EEG psychometric reliability profiles for testing individual differences.

-

•

We review internal consistency of power, ERP, nonlinear, connectivity measures.

-

•

We review test-retest reliability of power, ERP, nonlinear, connectivity measures.

-

•

We show how denoising, data quality measures improve individual difference studies.

-

•

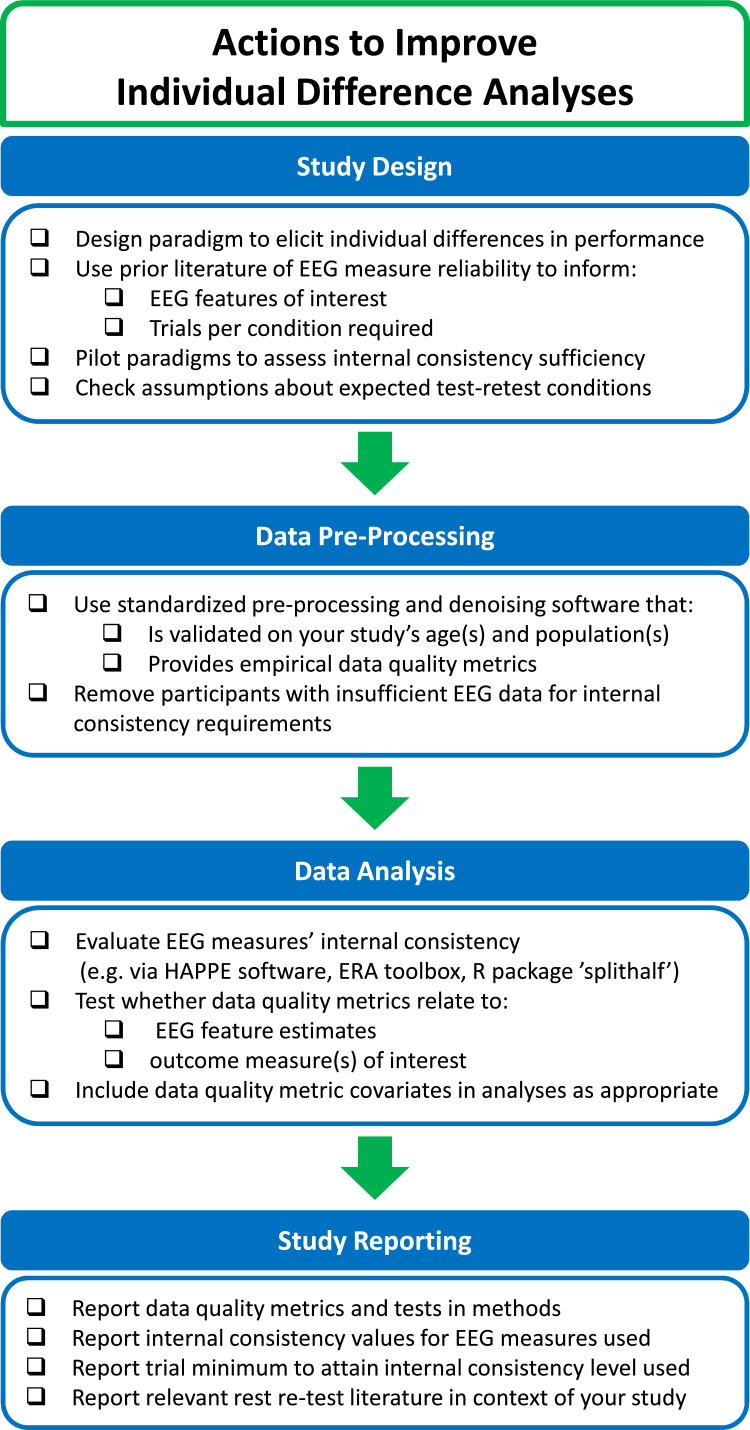

We provide actionable recommendations to improve EEG individual difference analyses.

Keywords: EEG, Individual differences, Psychometric reliability, Internal consistency, Test-retest reliability, Lifespan

Abstract

Electroencephalographic (EEG) methods have great potential to serve both basic and clinical science approaches to understand individual differences in human neural function. Importantly, the psychometric properties of EEG data, such as internal consistency and test-retest reliability, constrain their ability to differentiate individuals successfully. Rapid and recent technological and computational advancements in EEG research make it timely to revisit the topic of psychometric reliability in the context of individual difference analyses. Moreover, pediatric and clinical samples provide some of the most salient and urgent opportunities to apply individual difference approaches, but the changes these populations experience over time also provide unique challenges from a psychometric perspective. Here we take a developmental neuroscience perspective to consider progress and new opportunities for parsing the reliability and stability of individual differences in EEG measurements across the lifespan. We first conceptually map the different profiles of measurement reliability expected for different types of individual difference analyses over the lifespan. Next, we summarize and evaluate the state of the field's empirical knowledge and need for testing measurement reliability, both internal consistency and test-retest reliability, across EEG measures of power, event-related potentials, nonlinearity, and functional connectivity across ages. Finally, we highlight how standardized pre-processing software for EEG denoising and empirical metrics of individual data quality may be used to further improve EEG-based individual differences research moving forward. We also include recommendations and resources throughout that individual researchers can implement to improve the utility and reproducibility of individual differences analyses with EEG across the lifespan.

1. Introduction

There is great momentum within both basic and clinical human science to apply individual difference approaches to brain research. Examining differences between individuals is an important means to understand how variation in environmental experiences impacts the brain, and how brain measures relate to other systems and levels of measurement, like differences in cognition or behavior (e.g. Ambrosini and Vallesi, 2016; Drew and Vogel, 2008; Hakim et al., 2021; Hodel et al., 2019; Jones et al., 2020; Sanchez-Alonso and Aslin, 2020; Vogel et al., 2005; Vogel and Machizawa, 2004). These approaches are also critical for efforts towards precision clinical science, including identification of brain-based biomarkers for risk, health status, outcomes, intervention targets, and treatment response indicators (e.g. Bosl et al., 2018; de Aguiar Neto and Rosa, 2019; Frohlich et al., 2019; Furman et al., 2018; Gabard-Durnam et al., 2019; Geuter et al., 2018; Hannesdóttir et al., 2010; Jones et al., 2020; Moser et al., 2015; Stewart et al., 2011; Wilkinson et al., 2020). Developmental populations provide additional opportunities to apply individual difference approaches, including linking early brain measure differences to subsequent emergent behaviors or symptoms and mapping heterogeneity in brain development trajectories in both basic and clinical contexts (e.g. Bosl et al., 2018; Frohlich et al., 2019; Gabard-Durnam et al., 2019; Hannesdóttir et al., 2010; Hodel et al., 2019; Jones et al., 2020; Moser et al., 2015; Sanchez-Alonso and Aslin, 2020; Wilkinson et al., 2020).

Importantly, the validity of brain measurements to serve these purposes in individual differences research is constrained by their psychometric reliability (Parsons et al., 2019). That is, if there is insufficient certainty about individuals’ brain estimates due to error, it will be impossible to derive meaningful associations between those brain estimates and individual phenotypes. There are multiple approaches to conceptualizing, measuring, and interpreting psychometric reliability (e.g., Allen et al., 2004; Brandmaier et al., 2018; Tang et al., 2014; Tomarken et al., 1992). Psychometric reliability as used in this manuscript encompasses both the consistency of a feature's estimate within an individual's data obtained within a session (internal consistency as defined by Cronbach (1951); Streiner, 2010; Strube and Newman, 2007) and self-similarity in repeated measurements for individuals across sessions (test-retest reliability as measured by intraclass correlation coefficient proposed first by Fisher (1958); Bartko, 1966; Yen and Lo, 2002). While developmental and clinical studies are particularly fertile contexts for applying individual difference approaches, the changes these populations experience over maturation or clinical course also provide unique challenges in evaluating brain measure psychometric reliability for individual difference analyses (Sanchez-Alonso and Aslin, 2020).

Though issues of measurement reliability in individual differences research are neither new nor unique topics to neuroscience (Elliott et al., 2021; Greene et al., 2022; Kennedy et al., 2022; Kragel et al., 2021; Noble et al., 2021), recent communication about these issues in magnetic resonance imaging (MRI) contexts has led some to declare the entire field of cognitive neuroscience “at a crossroads” in terms of utility for individual differences analyses (“Cognitive neuroscience at the crossroads,” 2022). Is this so? Recent technological and computational advances in electroencephalography (EEG) make it timely to revisit the topic for several reasons. First, EEG has become increasingly cost-effective, mobile, and scalable with engineering advancements that facilitate increased use of this method with larger sample sizes. These technological advancements make individual differences approaches possible and powered to detect a wide range of effects in far more studies moving forward. For example, multiple large-scale, multi-site, or multi-national EEG-based studies (e.g., Healthy Brain and Child Development Study, Wellcome LEAP 1 kD Program, the Autism Biomarkers Consortium of Clinical Trials, Baby Siblings Research Consortium; Jordan et al., 2020; McPartland et al., 2020; Ozonoff et al., 2011) are underway and provide increased opportunities in the near future for performing individual differences analyses with EEG, especially in developmental contexts. There has also been tremendous innovation in the types of EEG features one can extract from the signal that requires consideration of how to optimize their reliability for individual differences research. Moreover, recent shifts in EEG pre-processing and denoising strategies also affect even well-characterized features’ measured reliability profiles across the lifespan. 
Renewed discussions about EEG measurement reliability and the implications for individual difference study designs and analyses have largely focused on specific populations or EEG measures (e.g., Boudewyn et al., 2018; Clayson, 2020), with far less work looking across types of EEG features or in contexts of change (Becht and Mills, 2020; Foulkes and Blakemore, 2018; Webb et al., 2022).

Here we take a developmental neuroscience perspective to consider progress and new opportunities for parsing the reliability and stability of individual differences in electroencephalography (EEG) measurements across the lifespan. We first conceptually map the different profiles of measurement reliability required for different types of individual difference analyses over the lifespan. Next, we summarize and evaluate the state of the field's empirical knowledge and need for testing measurement reliability, both internal consistency and test-retest reliability, across EEG measures of power, event-related potentials (ERPs), nonlinearity (e.g., entropy, complexity), and functional connectivity across ages. Finally, we highlight two salient challenges and opportunities for change in the research process that may make a substantial impact in improving EEG-based individual differences research: 1) standardized, automated pre-processing software for EEG denoising, and 2) empirical metrics of individual data quality. We conclude each topical section with recommendations and resources that individual scientists can implement immediately and in future studies with EEG to improve reproducibility of individual differences findings across the lifespan.

2. Mapping profiles of psychometric reliability for individual difference analyses

Individual difference analyses with EEG measures all test questions at the between-person level. They require greater variability in a measure between participants than within a given individual (i.e., one must be more similar to oneself than to other people for a measure). This ensures that researchers can distinguish between individuals with confidence. Thus, testing the psychometric reliability of an EEG feature (the degree to which a measure follows these conditions of self- versus other-similarity) is a critical step in conducting individual difference analyses. Beyond the psychometric reliability constraints however, there is great flexibility in individual difference analyses. They can be performed with a variety of analytic techniques from bivariate correlations to multiple regression frameworks and machine-learning predictive modeling to answer both data-driven and hypothesis-driven research questions (Botdorf et al., 2016; Figueredo et al., 2005; Hounkpatin et al., 2018; Pat et al., 2022; Qin et al., 2014; Sorella et al., 2022). Indeed, here we remain agnostic to the research questions at hand for any individual researcher, but we note the importance of theory, both topical and statistical, in deriving research questions and guiding the research process generally. We hope this manuscript will provide complementary information and guidance about how to best answer the research questions that require individual difference analyses.

It is also important to note briefly that many types of research questions, including some of the earliest and most common in human neuroscience, do not pertain to individual difference analyses at all. Indeed, many commonly-used psychological and neuroscience tasks were designed to minimize individual differences in performance and brain activity rather than reveal them (though they can be modified to reveal individual differences if desired; Dai et al., 2019; Soveri et al., 2018). These designs may instead examine differences within-person (e.g., condition differences like eyes-open vs. closed EEG power, most ERP designs with multiple task conditions, even brain-behavior analyses if performed across conditions within-individuals). Reliability considerations for strictly within-person analyses will not be considered here (though see MacDonald and Trafimow (2013) and Trafimow and Rice (2009) for important discourse around those designs). Between-group difference designs may also fall into this category historically, minimizing individual differences to reveal differences between the groups of participants (e.g., EEG-related differences between adults with schizophrenia vs. those without, or between children and adults). Thus, not all of a researcher's questions or designs will need or accommodate considering individual difference-related psychometric reliability profiles.

However, for those studies that do seek to use an individual differences approach, it is important to consider several contextual factors. That is, different contexts require different psychometric profiles of internal consistency and test-retest reliability for valid inferences to be drawn about individual differences. Additional considerations also apply when measuring reliability in contexts of change, including learning, development, and clinical course. Below, we conceptually map this landscape of reliability profiles required for different kinds of individuating EEG markers with illustrative examples. We hope this mapping provides clarity for researchers about what forms of reliability should be assessed with which study designs before conducting particular individual difference analyses of interest.

2.1. Patterns of internal consistency reliability

Internal consistency reflects measurement stability within a testing session. As a general rule, individual difference EEG markers for any purpose should be stable features with corresponding high internal consistency scores. That is, there should be high certainty about each individual's estimate for the EEG measure from that testing session. Internal consistency measurements may suffer (i.e., show low stability) for several reasons, including high measurement error, high within-person variability, or low between-person variability (Waltmann et al., 2022). This last condition is non-trivial, as recently noted by several researchers (Hedge et al., 2018; Infantolino et al., 2018), given many standard task designs prioritize within-person differences over between-person differences. Careful task selection (if measuring in a task context) that facilitates differences between participants is therefore critical to facilitate individual difference analyses. Internal consistency should always be evaluated before performing individual difference analyses to ensure both the appropriateness of that analysis and to understand the constraints (bound by internal consistency levels) on potential statistical explanation or prediction in that analysis.
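As a concrete illustration of the internal consistency index cited above (Cronbach, 1951), the following is a minimal sketch of Cronbach's α for an EEG feature estimated separately from several parts of one session. The function name, the idea of splitting a session into `k` parts, and the toy values are assumptions made for illustration, not a procedure taken from any of the cited studies.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a participants-by-parts table.

    scores: list of participants, each a list of k part-scores
    (hypothetical example: alpha power estimated from k data segments).
    """
    k = len(scores[0])
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need the same number (>= 2) of parts per participant")
    # Variance of each part across participants
    part_vars = [variance([row[j] for row in scores]) for j in range(k)]
    # Variance of each participant's summed score
    total_var = variance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - sum(part_vars) / total_var)


# Hypothetical toy data: 4 participants, 3 segments each
toy = [
    [10.1, 10.4, 9.9],
    [12.0, 11.7, 12.3],
    [8.2, 8.5, 8.0],
    [14.9, 15.2, 15.0],
]
print(f"alpha = {cronbach_alpha(toy):.2f}")
```

In this formulation α rises when the parts rank participants similarly, and it falls when measurement error or within-person variability is large relative to the spread between participants, which is the pattern of influences described in the paragraph above.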

2.1.1. Internally consistent measures of change

We highlight a special case in contexts of change where high within-person variability may nonetheless lead to high internal consistency values if measured appropriately: markers of learning, neural variability, or habituation. For this set of individual difference measures, the stable feature is the change or variability in the EEG signal itself. For example, several lines of clinical research have begun to consider differences in neural variability in conditions like Autism Spectrum Disorder and Schizophrenia (where those with the condition exhibit either increased or decreased variability in EEG measures relative to neurotypical comparisons; MacDonald et al., 2006; Trenado et al., 2018). Individual differences in how quickly brain responses habituate to stimuli provide a related set of inquiries about brain variability in clinical contexts (e.g., Cavanagh et al., 2018; Hudac et al., 2018). Similarly, neural markers of learning (e.g., learning rate) may differ between individuals in meaningful ways (e.g., Waltmann et al., 2022). In each of these cases, although changes are observed and expected over the course of the EEG recording, study design may allow for internal consistency evaluation of the changes. For example, in resting-state EEG, bootstrapped split-half analyses may reveal consistent estimates of the standard deviation of power values between iterations of data-halves. For task paradigms, multiple assessments of the habituation, learning, or variability should be included to enable calculating internal consistency. That is, degree of habituation to tones should be evaluated for two sets of tones, and learning tasks should include at least two rounds of learning to evaluate differences in learning rate or accuracy (e.g., Waltmann et al., 2022). 
This design may not be possible for all populations or contexts of interest (e.g., long ERP tasks with repeated bouts of learning may not be possible in early developmental contexts, especially for visual paradigms) and may limit when such individual markers of variability and learning are considered accordingly. There may also be interest in measuring the response differences between two conditions as an individual marker of learning or change (i.e., using difference scores as an index of change). Note that two task conditions or ERP components each demonstrating high internal consistency may not necessarily produce a difference score with corresponding high internal consistency (Infantolino et al., 2018; Thigpen et al., 2017). Thus, if difference scores are of interest as potential individual difference markers, the difference score itself must be evaluated for internal consistency rather than the two underlying conditions’ scores. The case of EEG measures of change and variation demonstrates how individual difference analyses do not necessarily preclude within-person variability that may be especially evident in developmental and clinical populations.
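One way to see why a difference score can be less consistent than its components is the classical test theory expression for the reliability of a difference $D = X - Y$. This is a sketch under the usual assumption that measurement errors in the two conditions are uncorrelated. The symbols are introduced here for illustration and do not come from the studies cited above: $\rho_{XX'}$ and $\rho_{YY'}$ are the reliabilities of the two condition scores, $\rho_{XY}$ is their correlation across participants, and $\sigma_X$, $\sigma_Y$ are their between-person standard deviations.

```latex
\rho_{DD'} =
  \frac{\sigma_X^2 \rho_{XX'} + \sigma_Y^2 \rho_{YY'} - 2 \rho_{XY} \sigma_X \sigma_Y}
       {\sigma_X^2 + \sigma_Y^2 - 2 \rho_{XY} \sigma_X \sigma_Y}

% Special case with equal variances, \sigma_X = \sigma_Y:
\rho_{DD'} = \frac{\tfrac{1}{2}\left(\rho_{XX'} + \rho_{YY'}\right) - \rho_{XY}}{1 - \rho_{XY}}

% Worked example: \rho_{XX'} = \rho_{YY'} = 0.90 and \rho_{XY} = 0.80 give
\rho_{DD'} = \frac{0.90 - 0.80}{1 - 0.80} = 0.50
```

In this hypothetical example, two conditions that are each highly reliable yield a difference score with a reliability of only 0.50, because the conditions are strongly correlated with one another and most of the shared, reliable variance cancels in the subtraction.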

2.2. Patterns of test-retest reliability

Test-retest reliability reflects stability of markers across testing sessions and time. Unlike internal consistency where higher reliability scores are always more appropriate in individual difference analyses, multiple patterns of test-retest reliability are acceptable depending on the population, analysis purpose, and timescale, as illustrated below.

2.2.1. Low test-retest reliability

Patterns of low test-retest reliability between testing sessions may indeed be valid contexts for a restricted set of individual difference analyses. Namely, if one is interested in associating within-session state-related EEG measures with other in-session state-related measures (e.g., cognition, affective state, etc.), low test-retest reliability may be expected or test-retest reliability may even be impossible to measure. For example, in a decision-making game, participants can change strategy use from session to session, so test-retest reliability of the related EEG measure can be low (each individual will look different from session to session), but there may still be important information within-session about individual differences in degree of strategy use relating to that particular EEG measure. In the context of such brain-cognitive-behavior analyses, the expected stability of the cognitive/affective/behavioral measure is important. If that measure shows high variability across testing administrations within a person, e.g., high influence of state-like (instead of trait-like) contributions, the EEG-measured correlates may similarly show low test-retest reliability while still offering robust relation with the phenomena of interest (e.g., Clarke et al., 2022).

2.2.2. High test-retest reliability

Patterns of high test-retest reliability are desired for several types of individual difference analyses. This profile applies to stable contexts, in which the brain and its relation with physiology, behavior, the environment, or disease state is not expected to vary over time. For example, individual difference analyses in healthy young adults may fit this profile, as may those examining adult EEG features related to trait-like cognitive, affective, or behavioral profiles (e.g., EEG features related to stable temperament or attachment profiles or native language(s) skills). Another case may come from EEG biomarkers of stable disease or disorder characteristics or endophenotypes. Finally, analyses assessing the influence of prior environmental factors or current stable environmental factors on brain-related features may adhere to this reliability profile. For example, how does frequency of emotional abuse in childhood influence young adult EEG-derived neural phenotypes? In each of these cases, there is very low expectation that the brain, and thus, the EEG features, should demonstrate meaningful change from testing session to testing session, and so patterns of high test-retest reliability are expected to infer meaningful individual differences.

2.2.3. High short-term, low long-term test-retest reliability

This final pattern of reliability applies to many contexts of change, including clinical course, developmental change, and intervention targeting. For these populations and/or contexts, change over longer timescales is expected, so they will have low long-term test-retest reliability (though rank order stability measurement may reveal consistent rankings if not estimates over periods of change for some features). Long-term is subjective, of course, and depends on the particular context at hand. For example, low test-retest reliability over many months in infancy may indicate the presence of developmental change rather than lack of reliability for a given EEG measure. Similarly, one may select intervention target EEG measures because they exhibit change over time (e.g., plasticity) and thus may be more modifiable than a measure with high stability over the potential intervention ages. Additionally, a reliable marker of clinical severity should not exhibit high long-term test-retest reliability if that encompasses the course of the condition within an individual (instead the marker should change as an individual's status changes with time). Thus, in all of these cases, low long-term test-retest reliability is actually desired. Immediate test-retest stability though can provide confidence that the candidate measure is a reliable indicator of that person's developmental or clinical status in the moment. That is to say, though an EEG feature may change from 9 to 12 months of age, one should still expect high test-retest reliability for that feature if measured same day or the next day. The timescale for determining sufficient measure stability is clinical condition-specific and developmentally-dependent. That is, several weeks between measurements is functionally different in infancy than in adulthood given the respective rates of change in the brain. 
Still, if a candidate measure's values change within hours of measurement at any age (absent any intervention or clinically-significant change in the interim hours), it will likely have poor utility as an individual difference marker.
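To make the test-retest index concrete, the following is a minimal sketch of an intraclass correlation computed from a participants-by-sessions table. The text above does not specify which ICC form to use. The two-way random-effects, absolute-agreement, single-measurement form (often written ICC(2,1)) is assumed here purely for illustration, and the function name and toy values are hypothetical.

```python
from statistics import mean


def icc_2_1(data):
    """ICC(2,1): two-way random effects, absolute agreement, single measure.

    data: list of n participants, each a list of k session values.
    """
    n, k = len(data), len(data[0])
    grand = mean(v for row in data for v in row)
    row_means = [mean(row) for row in data]                          # per participant
    col_means = [mean(row[j] for row in data) for j in range(k)]     # per session
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((v - grand) ** 2 for row in data for v in row)
    ss_err = ss_total - ss_rows - ss_cols
    msr = ss_rows / (n - 1)               # between-participant mean square
    msc = ss_cols / (k - 1)               # between-session mean square
    mse = ss_err / ((n - 1) * (k - 1))    # residual mean square
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


# Hypothetical toy data: 5 participants measured in 2 sessions
sessions = [
    [4.1, 4.3],
    [6.0, 5.8],
    [5.2, 5.5],
    [7.4, 7.1],
    [3.9, 4.2],
]
print(f"ICC(2,1) = {icc_2_1(sessions):.2f}")
```

Under this form, a systematic shift between sessions (for example, every participant's value increasing with maturation) lowers the coefficient even when rank order is preserved, which is one reason a rank-order stability measure can give a different picture over periods of developmental change.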

2.3. Recommendations for individual researchers

We offer the following recommendations that individual researchers can implement in designing studies and planning individual difference analyses from the outset to improve measured reliability profiles.

1) Researchers may use prior literature or pilot testing to ensure individual differences will be elicited by the study paradigm. For examples of researchers evaluating study design changes to optimize individual differences in canonical paradigms, see Dai et al., 2019; Soveri et al., 2018. Ideally, measurement error will be minimized through design and sufficient trials will be planned to ensure stable within-individual estimates can be derived (see Sections 2 and 3 below for guidance in optimizing trial number and trial retention during preprocessing, respectively). Prior test-retest literature may also inform whether the candidate EEG measure(s) of interest fit the reliability profile required for the study context (e.g., if exploring a potential intervention target, does this EEG measure show change within the developmental window of interest?).

2) For designs where learning, habituation, or EEG variability are the features of interest, consider designs that facilitate testing internal consistency of those changes before conducting individual difference analyses (e.g., two blocks of learning, sufficient trials to calculate internal consistency of the variability index like standard deviation, etc.).

3) Finally, researchers should check the assumptions about expected test-retest conditions for their study context and ensure any prior literature or within-lab pilot testing supports those test-retest expectations for the planned individual difference analyses.
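For the second recommendation, the internal consistency of a variability index can be sketched with repeated random split-halves. The example below assumes each participant contributes a list of per-epoch power values, uses the standard deviation across epochs as the feature, correlates the two half-estimates across participants, and applies the Spearman-Brown correction. It uses random splits without replacement rather than a bootstrap with replacement, and all names and the simulated data are hypothetical.

```python
import random
from statistics import mean, stdev


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def spearman_brown(r):
    """Step a half-length correlation up to the full-length estimate."""
    return 2 * r / (1 + r)


def split_half_reliability(epochs_by_person, feature=stdev, n_splits=1000, seed=0):
    """Mean Spearman-Brown corrected correlation over random half-splits."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_splits):
        first, second = [], []
        for epochs in epochs_by_person:
            shuffled = epochs[:]
            rng.shuffle(shuffled)
            half = len(shuffled) // 2
            # Feature (here: variability) estimated in each half separately
            first.append(feature(shuffled[:half]))
            second.append(feature(shuffled[half:]))
        estimates.append(spearman_brown(pearson_r(first, second)))
    return mean(estimates)


# Simulated, hypothetical data: 8 participants whose epoch-to-epoch
# variability in power truly differs, 100 epochs each
sim = random.Random(42)
people = [[sim.gauss(10.0, sd) for _ in range(100)]
          for sd in (0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0)]
print(f"split-half reliability of SD: {split_half_reliability(people, n_splits=200):.2f}")
```

Passing a different `feature` (for example `mean`) applies the same procedure to a conventional power estimate, so the variability index and the level of the signal can be checked with one routine.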

3. Reliability of EEG measurements across the lifespan

As others have noted before, psychometric reliability is a property of measurement in context rather than the EEG measure itself (e.g., Clayson et al., 2021; Thompson, 2003; Vacha-Haase, 2016). That is, the same EEG measure that may show excellent internal consistency in one population may demonstrate low consistency in a different population or differing consistency when measured in lab-based research settings relative to at-home acquisitions. Reliability may also depend on pre-processing or parameterization of the measure itself (discussed in Section 3 below). Thus, researchers and journals have begun calling for study-specific evaluation and reporting of EEG measure reliability in contexts of individual differences analyses (e.g., Carbine et al., 2021; Clayson, 2020; Clayson et al., 2021a, 2021b, 2019; Clayson and Miller, 2017a, 2017b; Hajcak et al., 2017; Thigpen et al., 2017). We support the momentum to assess and report reliability within each study, and we also believe there is value in looking at the extant reliability literature across ages and types of features for several reasons. First, while measuring internal consistency is technically feasible for each study, measuring test-retest reliability is neither pragmatic nor possible in all cases. Reviewing extant literature may provide guidance (ideally for studies matched for ages, populations, context) and highlight gaps in the field's knowledge that must be filled before measures can be used for some types of individual difference analyses. Second, reviewing reliability findings (both internal consistency and test-retest reliability) across ages and measures provides guidance for new study design and a priori feature selection and parameterization compatible with pre-registration initiatives. We note that drawing on extant literature in these cases does not negate the subsequent need to calculate internal consistency reliability within the study once underway. 
Third, such a review also provides some information about lifespan change in EEG measure reliability that may not be practical to capture within a single study but may also guide future study design in terms of participant ages or analysis planning. Finally, for several of the more recently introduced EEG features, we hope that reviewing extant literature exploring reliability will provide useful information to guide optimization of these features’ measurement and parameterization (e.g., see nonlinear EEG feature section below).

Therefore, below we evaluate the field's knowledge of reliability for the measures most commonly used with EEG data for in-lab contexts with largely neurotypical populations (unless otherwise indicated). Specifically, we evaluate what is known for power, event-related potentials (ERP), nonlinear, and functional connectivity measures by collating existing studies that have calculated internal consistency and/or test-retest reliability. We summarize the current state of the field's knowledge for each measure with regards to both internal consistency and test-retest reliability for adult populations followed by pediatric populations that experience significant brain change during maturation (here, including infants, toddlers, children, adolescents). Importantly, individual studies have used different reliability metrics and different thresholds (with different degrees of consensus for a given measure) for categorizing reliability results. Consequently, we have elected not to formally make the summary a systematic review. Instead, where there is sufficient literature converging on a single reliability method for an EEG measure, we have focused on reporting comparisons with that particular method for consistency (thus not all studies reporting reliability metrics are included below). This strategy facilitates offering summary statistics about reliability that average across measures and studies. This strategy also lets us focus the review on more contemporary contexts and acquisition setups as more recent studies show increased consensus in reliability measurement and reporting. 
Moreover, though we report the empirical values of reliability for all studies, to facilitate qualitative conclusions from the collective literature, we have used the following scale of thresholds that we found to be most frequently used in extant literature (reflecting the greatest consensus): poor (values < 0.40); fair (0.40 ≤ values ≤ 0.59); good (0.60 ≤ values ≤ 0.74); and excellent (values ≥ 0.75) for test-retest reliability (Deuker et al., 2009; Haartsen et al., 2020; Hardmeier et al., 2014; Hatz et al., 2016; Jin et al., 2011; Kuntzelman and Miskovic, 2017). We then provide recommendations for individual actions moving forward for each EEG measure and a final summary across all measures considered.
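The qualitative scale above can be written as a small helper. The thresholds are those stated in the text, which are given to two decimal places. How to treat a value falling between the listed bands (for example 0.595) is not specified, so assigning it to the lower category is an assumption of this sketch, as is the function name.

```python
def classify_reliability(value):
    """Map a reliability coefficient onto the qualitative scale used here.

    Values between the listed two-decimal bands (e.g., 0.595) fall into the
    lower category; that tie-breaking rule is an assumption of this sketch.
    """
    if value < 0.40:
        return "poor"
    if value < 0.60:
        return "fair"
    if value < 0.75:
        return "good"
    return "excellent"


for v in (0.35, 0.52, 0.66, 0.92):
    print(v, classify_reliability(v))
```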

3.1. Power

A common way to quantify EEG oscillatory activity is to compute spectral power, where power is operationalized as the peak signal amplitude squared (Mathalon and Sohal, 2015). There are multiple approaches to characterize EEG power. For example, studies may examine either baseline or task-related power, and quantify absolute power or relative power (the percentage of power in a specific frequency/frequencies relative to total power across all frequencies) (Marshall et al., 2002). Moreover, EEG power is often collapsed across frequencies into canonical frequency bands. These power features can also be combined across hemispheres or frequency bands to form what we refer to as relational power features, like the theta-beta ratio and hemispheric alpha asymmetry features. Finally, contemporary approaches parameterize the power spectrum in terms of periodic (i.e., oscillatory) and aperiodic (e.g., 1/f slope) contributions (e.g., Spectral Parameterization; Donoghue et al., 2020). We consider the internal consistency and test-retest reliability evidence across these types of power measures.
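The relationships among these power features can be sketched arithmetically, starting from absolute band power values that have already been computed. The band values below are hypothetical, the total is taken over the listed bands only, and the asymmetry score uses a natural-log right-minus-left difference, which is one common convention assumed here rather than a definition given in the text.

```python
import math


def relative_power(band_power):
    """Percentage of total power in each band (total = sum of listed bands)."""
    total = sum(band_power.values())
    return {band: 100 * p / total for band, p in band_power.items()}


def theta_beta_ratio(band_power):
    """Relational feature: theta power divided by beta power."""
    return band_power["theta"] / band_power["beta"]


def alpha_asymmetry(left_alpha, right_alpha):
    """Assumed convention: ln(right alpha power) - ln(left alpha power)."""
    return math.log(right_alpha) - math.log(left_alpha)


# Hypothetical absolute power values (arbitrary units)
absolute = {"delta": 4.0, "theta": 3.0, "alpha": 2.0, "beta": 1.0}
print(relative_power(absolute))
print(theta_beta_ratio(absolute))
print(alpha_asymmetry(left_alpha=2.0, right_alpha=2.5))
```

Because relational features combine two estimates, their reliability depends on both components and on how the components covary, so it is worth evaluating the combined feature directly rather than inferring its reliability from each band alone.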

3.1.1. Internal consistency of power measures

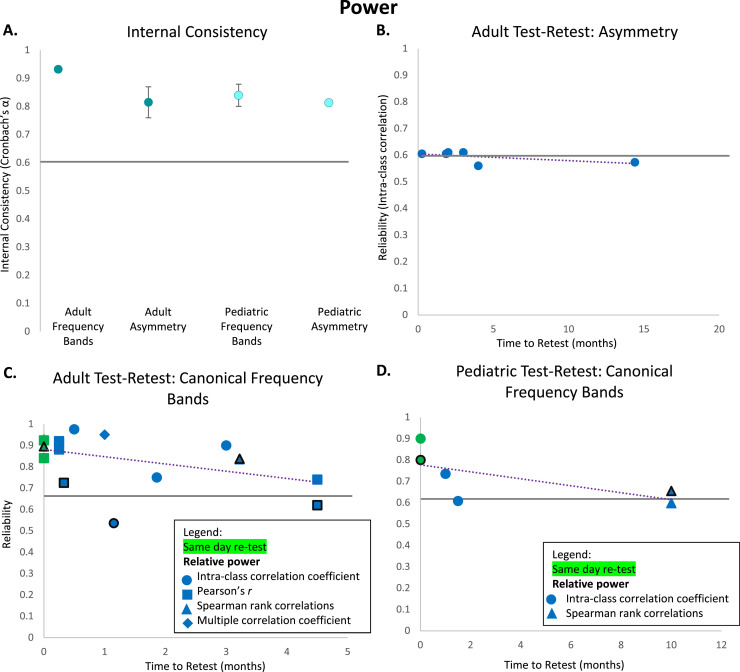

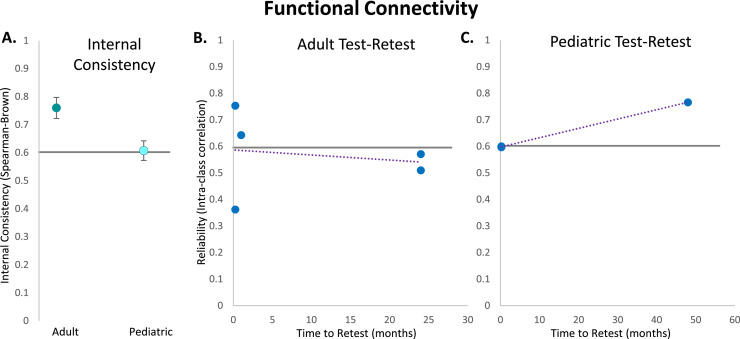

Studies calculating the internal consistency of power measures are surprisingly sparse considering the longevity of EEG power as a measure in the field, though the studies that do exist suggest power is extremely reliable within testing sessions (Tables 1 and 2). Across power measures, internal consistency values for both adult and pediatric samples are considered excellent (adult: α = 0.92, n = 20 measurements, SE = 0.01; pediatric: α = 0.83, n = 12 measurements, SE = 0.03). The existing studies evaluate both canonical power frequency bands (adult: α = 0.93, n = 18 measurements, SE = 0.007; pediatric: α = 0.84, n = 9 measurements, SE = 0.04; Anaya et al., 2021; Burgess and Gruzelier, 1993; Gold et al., 2013; Lund et al., 1995; Rocha et al., 2020, see Fig. 1A) and relational power features like frontal alpha asymmetry (adult: α = 0.82, n = 2 measurements, SE = 0.06; pediatric: α = 0.81, n = 3 measurements, SE = 0.007; Allen et al., 2003; Anaya et al., 2021; Gold et al., 2013; Hill et al., 2020; Towers and Allen, 2008, see Fig. 1A). There is also some evidence that the lowest frequency bands like delta demonstrate lower internal consistency relative to other bands in early development, perhaps because they are quite sensitive to arousal state changes that happen quickly and dramatically during testing in infancy (e.g., Anaya et al., 2021).

Table 1.

Internal consistency of adult power studies.

| Paper | Age(s) | Sample Size | Paradigm | Type of Power | Consistency Measure(s) | Frequency Band | Consistency |
|---|---|---|---|---|---|---|---|
| Hill et al. (2020) | Not specified | N = 31 | Resting-state | Broad frontal asymmetry | Spearman-Brown | Alpha | 0.99 |
| | | | | High frontal asymmetry | Spearman-Brown | Alpha | 0.99 |
| Rocha et al. (2020) | Older adults | N = 31 | Resting-state | Relative (to nearby frequencies only) | Cronbach's α | Alpha | 0.87 |
| Towers and Allen (2008) | Undergraduates | N = 204 | Resting-state | Frontal asymmetry | Spearman-Brown | Alpha | 0.91 |
| Burgess and Gruzelier (1993) | 18–39 years | N = 24 | Resting-state | Absolute | Cronbach's α | Delta | 0.92 |
| | | | | | | Theta | 0.95 |
| | | | | | | Alpha | 0.95 |
| | | | | | | Beta | 0.95 |
| | | | Task-related | Absolute | Cronbach's α | Delta | 0.90 |
| | | | | | | Theta | 0.94 |
| | | | | | | Alpha | 0.90 |
| | | | | | | Beta | 0.94 |
| Allen et al. (2003) | 18–45 years | N = 30 | Resting-state | Frontal asymmetry | Cronbach's α | Alpha | 0.87 |
| Gold et al. (2013) | 18–50 years | N = 79 | Resting-state | Frontal asymmetry | Cronbach's α | Alpha | 0.76 |
| | | | | Absolute | Cronbach's α | Theta | 0.99 |
| Lund et al. (1995) | Mean age of 28.5 years | N = 49 | Resting-state | Absolute | Cronbach's α | Delta | 0.92 |
| | | | | | | Theta | 0.96 |
| | | | | | | Alpha | 0.96 |
| | | | | | | Beta | 0.95 |
| | | | | Relative | Cronbach's α | Delta | 0.90 |
| | | | | | | Theta | 0.94 |
| | | | | | | Alpha | 0.94 |
| | | | | | | Beta | 0.90 |

Table 2.

Internal consistency of pediatric power studies.

| Paper | Age(s) | Sample Size | Paradigm | Type of Power | Consistency Measure(s) | Frequency Band | Consistency |
|---|---|---|---|---|---|---|---|
| Hill et al. (2020) | 12 months | N = 31 | Resting-state | Frontal asymmetry | Spearman-Brown | Alpha | 0.81 |
| Anaya et al. (2021) | 8 months | N = 108 | Resting-state | Frontal asymmetry | Cronbach's α | Alpha | 0.80 |
| | | | | Relative | Cronbach's α | Alpha | 0.89 |
| | | | | | | Delta | 0.66 |
| | | | | | | Beta | 0.92 |
| | 12 months | N = 71 | Resting-state | Frontal asymmetry | Cronbach's α | Alpha | 0.82 |
| | | | | Relative | Cronbach's α | Alpha | 0.91 |
| | | | | | | Delta | 0.73 |
| | | | | | | Beta | 0.91 |
| | 18 months | N = 69 | Resting-state | Frontal asymmetry | Cronbach's α | Alpha | 0.82 |
| | | | | Relative | Cronbach's α | Alpha | 0.91 |
| | | | | | | Delta | 0.67 |
| | | | | | | Beta | 0.96 |

Fig. 1.

Internal consistency and test-retest reliability of power measures. A: Average internal consistency values calculated using Cronbach's Alpha for adult canonical frequency band power, adult alpha asymmetry, pediatric canonical frequency band power, and pediatric alpha asymmetry. B: Test-retest values calculated using intra-class correlations for each adult alpha asymmetry study based on time between testing sessions (in months). C: Test-retest values calculated using various reliability methods (denoted by different shapes in figure legend) for each adult canonical frequency band power study based on time between testing sessions (in months). D: Test-retest values calculated using various reliability methods (denoted by different shapes in figure legend) for each pediatric canonical frequency band power study based on time between testing sessions (in months). Green markers denote same-day time to retest. Black marker borders denote relative power.

3.1.2. Test-retest reliability of canonical power frequency bands

Many studies in adult populations have examined the test-retest reliability of EEG power. Across methods of test-retest evaluation and timescales, baseline EEG power is generally found to be very reliable (Angelidis et al., 2016; Burgess and Gruzelier, 1993; Corsi-Cabrera et al., 2007; Fernández et al., 1993; Keune et al., 2019; McEvoy et al., 2000; Näpflin et al., 2007; Pollock et al., 1991; Rocha et al., 2020; Schmidt et al., 2012; Suárez-Revelo et al., 2015; see Table 3). For example, Ip et al. (2018) examined test-retest reliability of EEG absolute power using intra-class correlations (ICCs) in adults across multiple sessions 20–22 days apart. Adjacent timepoints showed excellent test-retest reliability (ICCs = 0.84–0.97) in theta, alpha, and beta canonical frequency bands (especially at frontal, midline, and parietal sites). Delta and gamma bands showed more variable test-retest reliability across regions (ICCs = 0.30–0.87). ICCs across the complete ∼80 day period showed similar results. Studies have also looked at test-retest reliability of EEG power during task paradigms (Fernández et al., 1993; Ip et al., 2018; Keune et al., 2019; McEvoy et al., 2000; Rocha et al., 2020; Salinsky et al., 1991; Schmidt et al., 2012; Suárez-Revelo et al., 2015; see Table 3). For example, McEvoy et al. (2000) found strong correlations between sessions an average of 7 days apart for absolute theta and alpha power during working memory (r > 0.9) and psychomotor vigilance (r > 0.8) tasks. Several studies have also offered direct comparisons with baseline EEG power reliability. Näpflin et al. were able to reliably individuate participants over a retest interval of more than one year using absolute alpha peak characteristics both during a working memory task (Näpflin et al., 2008) and from baseline EEG (Näpflin et al., 2007). However, Ip et al. (2018) found that absolute baseline EEG test-retest reliability was generally higher than absolute task-related EEG power reliability from auditory paradigms within the same individuals over four sessions 20–22 days apart. Taken together, absolute and relative power during tasks appear to be stable across short (several minutes) and long (over one year) intervals in adults, though baseline EEG power may be more reliable than power evoked during at least some task paradigms (Fig. 1C).
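Because many of the studies above report ICCs, a minimal sketch of one way to compute them may be useful. The reviewed studies do not all state which ICC form they used, so the choice here is an assumption: the code computes the two-way single-measurement forms often labeled ICC(2,1) (absolute agreement) and ICC(3,1) (consistency) from a participants-by-sessions table. The function name and the toy data are hypothetical.

```python
def icc_two_way(scores):
    """Two-way ANOVA ICCs for a table with one row per participant
    and one column per session.

    Returns (absolute agreement ICC(2,1), consistency ICC(3,1)).
    """
    n, k = len(scores), len(scores[0])
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(col) / n for col in zip(*scores)]

    # Sums of squares for participants, sessions, and residual error.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    agreement = (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )
    consistency = (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)
    return agreement, consistency


# Toy data: every participant's power rises by the same amount at retest.
session_1 = [1.0, 2.0, 3.0, 4.0, 5.0]
session_2 = [x + 1.0 for x in session_1]
agreement, consistency = icc_two_way(list(zip(session_1, session_2)))
```

In this toy case the rank order and spacing of participants are perfectly preserved, so the consistency form equals 1, while the absolute-agreement form is lower because it penalizes the uniform shift between sessions. The same distinction matters when comparing ICCs with Pearson or Spearman correlations across the studies in Table 3.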

Table 3.

Test-retest reliabilities of adult power studies.

| Paper | Age(s) at First Test | Sample Size | Time to Retest | Paradigm | Type of Power | Reliability Measure(s) | Frequency Band | Reliability |
|---|---|---|---|---|---|---|---|---|
| McEvoy et al. (2000) | 18–29 years | N = 20 | 1 hour | Resting-state | Absolute | Pearson's r | Frontal midline theta | 0.81 |
| | | | | | | | Posterior theta | 0.85 |
| | | | | | | | Alpha | 0.91 |
| | | | | Task-related (WM) | Absolute | Pearson's r | Frontal midline theta | 0.96 |
| | | | | | | | Posterior theta | 0.96 |
| | | | | | | | Alpha | 0.98 |
| | | | | Task-related (PVT) | Absolute | Pearson's r | Frontal midline theta | 0.94 |
| | | | | | | | Posterior theta | 0.94 |
| | | | | | | | Alpha | 0.96 |
| | | | 7 days | Resting-state | Absolute | Pearson's r | Frontal midline theta | 0.79 |
| | | | | | | | Posterior theta | 0.82 |
| | | | | | | | Alpha | 0.88 |
| | | | | Task-related (WM) | Absolute | Pearson's r | Frontal midline theta | 0.91 |
| | | | | | | | Posterior theta | 0.92 |
| | | | | | | | Alpha | 0.96 |
| | | | | Task-related (PVT) | Absolute | Pearson's r | Frontal midline theta | 0.88 |
| | | | | | | | Posterior theta | 0.89 |
| | | | | | | | Alpha | 0.88 |
| Angelidis et al. (2016) | 18–31 years | N = 41 | 1 week | Resting-state | Absolute | Pearson's r | Theta | 0.94 |
| | | | | | | | Beta | 0.90 |
| | | | | | | | Theta/beta ratio | 0.93 |
| Schmidt et al. (2012) | Mean age of 36.3 years | N = 33 | 1 week | Resting-state | Absolute | ICC | Frontal alpha | 0.86 |
| | | | | | | | Central alpha | 0.94 |
| | | | | | | | Parietal alpha | 0.95 |
| | | | | Task-related | Absolute | ICC | Frontal alpha | 0.86 |
| | | | | | | | Central alpha | 0.91 |
| | | | | | | | Parietal alpha | 0.89 |
| Suárez-Revelo et al. (2015) | Mean age 23.3 years | N = 15 | 4–6 weeks | Resting-state | Relative | ICC | Delta | 0.46 |
| | | | | | | | Theta | 0.76 |
| | | | | | | | Alpha | 0.75 |
| | | | | | | | Beta | 0.63 |
| | | | | | | | Gamma | 0.57 |
| | | | | Task-related | Relative | ICC | Delta | 0.25 |
| | | | | | | | Theta | 0.48 |
| | | | | | | | Alpha | 0.62 |
| | | | | | | | Beta | 0.52 |
| | | | | | | | Gamma | 0.32 |
| Corsi-Cabrera et al. (2007) | 18–29 years | N = 6 | 1 month (total of 9 months) | Resting-state | Absolute | Multiple correlation coefficient R | Total power | 0.95 |
| Pollock et al. (1991) | 56–76 years | N = 46 | 4.5 months | Resting-state | Absolute | Pearson's r | Delta | 0.50 |
| | | | | | | | Theta | 0.81 |
| | | | | | | | Alpha | 0.84 |
| | | | | | | | Beta | 0.81 |
| | | | | | Relative | Pearson's r | Delta | 0.47 |
| | | | | | | | Theta | 0.65 |
| | | | | | | | Alpha | 0.68 |
| | | | | | | | Beta | 0.68 |
| Salinsky et al. (1991) | 23–52 years | N = 19 | 5 minutes | Task-related | Absolute | Spearman rank correlations | Delta | 0.90 |
| | | | | | | | Theta | 0.91 |
| | | | | | | | Alpha | 0.95 |
| | | | | | | | Beta | 0.95 |
| | | | | | Relative | Spearman rank correlations | Delta | 0.86 |
| | | | | | | | Theta | 0.90 |
| | | | | | | | Alpha | 0.89 |
| | | | | | | | Beta | 0.93 |
| | | | 12–16 weeks | Task-related | Absolute | Spearman rank correlations | Delta | 0.81 |
| | | | | | | | Theta | 0.83 |
| | | | | | | | Alpha | 0.82 |
| | | | | | | | Beta | 0.88 |
| | | | | | Relative | Spearman rank correlations | Delta | 0.82 |
| | | | | | | | Theta | 0.85 |
| | | | | | | | Alpha | 0.80 |
| | | | | | | | Beta | 0.88 |
| Rocha et al. (2020) | Older adults | N = 31 | 10 days | Resting-state | Relative (to nearby frequencies only) | Pearson's r | Alpha | 0.62 |
| | | | | Task-related | Relative (to nearby frequencies only) | Pearson's r | Alpha | 0.83 |
| Burgess and Gruzelier (1993) | 18–39 years | N = 24 | 40 minutes | Resting-state | Absolute | Pearson's r | Delta | 0.70 |
| | | | | | | | Theta | 0.86 |
| | | | | | | | Alpha | 0.91 |
| | | | | | | | Beta | 0.89 |
| Allen et al. (2003) | 18–45 years | N = 26 | 8 weeks | Resting-state | Frontal asymmetry | ICC | Alpha | 0.61 |
| | | N = 15 | 16 weeks | Resting-state | Frontal asymmetry | ICC | Alpha | 0.56 |
| Pathania et al. (2021) | Undergraduates | N = 60 | 30 minutes | Resting-state | Spectral slope (LMER) feature | ICC | Power spectral density | 0.91 |
| | | | | | Aperiodic slope (SpecParam) | ICC | Power spectral density | 0.86 |
| Keune et al. (2019) | 18–75 years | N = 10 | 2 weeks | Resting-state/Task-related | Absolute | ICC | Theta/beta ratio | 0.96 |
| | | | | | | | Theta | 0.98 |
| | | | | | | | Beta | 0.97 |
| Gold et al. (2013) | 18–50 years | N = 79 | 3 months | Resting-state | Frontal asymmetry | ICC | Alpha | 0.61 |
| | | | | | Absolute | ICC | Theta | 0.90 |
| Vuga et al. (2006) | 19–39 years | N = 99 | 1.2 years | Resting-state | Lateral frontal asymmetry | ICC | Alpha | 0.60 |
| | | | | | Mid-frontal asymmetry | ICC | Alpha | 0.54 |
| | | | | | Parietal asymmetry | ICC | Alpha | 0.58 |
| Koller-Schlaud et al. (2020) | Mean age of 27 years | N = 23 | 7 days | Task-related | Frontomedial asymmetry | ICC | Alpha | 0.52 |
| | | | | | Frontolateral asymmetry | ICC | Alpha | 0.70 |
| | | | | | Parietomedial asymmetry | ICC | Alpha | 0.71 |
| | | | | | Parietolateral asymmetry | ICC | Alpha | 0.49 |
| Metzen et al. (2021) | 20–70 years | N = 541 | 56.7 days | Resting-state | Absolute | ICC | Alpha | 0.75 |
| | | | | | Frontal asymmetry | ICC | Alpha | 0.56 |
| | | | | | Parietal asymmetry | ICC | Alpha | 0.65 |
| Tenke et al. (2018) | 18+ years | N = 46 | 12 years | Resting-state | Absolute (CSD-fPCA) | Spearman-Brown | Alpha | 0.92 |
| | | | | | Posterior asymmetry | Spearman-Brown | Alpha | 0.73 |

In contrast, relational power measures, formed by relating power in specific frequency bands or over specific scalp topography, have shown more variable reliability, ranging from fair to excellent in adults. For example, the theta-beta ratio measured in resting-state EEG has shown consistently excellent test-retest reliability on the scale of weeks in younger and older adults (r = 0.93 in young adults (Angelidis et al., 2016); ICC = 0.96 in older adults (Keune et al., 2019)). Additionally, alpha asymmetry has shown fair to good reliability in adults when time to retest spans from one week to over a decade (ICC = 0.61 at 1 week (Koller-Schlaud et al., 2020); ICC = 0.61 at 8 weeks and ICC = 0.56 at 16 weeks (Allen et al., 2003); ICC = 0.61 at 56 days (Metzen et al., 2021); ICC = 0.61 at 3 months (Gold et al., 2013); rSB = 0.73 at 12 years (Tenke et al., 2018); see Fig. 1B). Overall, studies examining frequency band power in adults indicate adequate reliability across most bands and relational band measures over time periods longer than a year.

Few studies to date have examined test-retest reliability of EEG power bands in pediatric populations, and those that do have been conducted only for baseline EEG with older children and adolescents (Table 4). Gasser et al. (1985) used Spearman rank correlations to show that absolute and relative baseline power in most frequency bands and locations showed similar patterns of test-retest reliability in typically-developing peri-adolescents over 10 months (mean values for ρ = 0.58–0.80 for absolute power; ρ = 0.47–0.80 for relative power). Similarly, Winegust et al. (2014) have shown that baseline absolute frontal alpha power demonstrates borderline excellent test-retest reliability (ICCs = 0.73–0.74) over a one-month interval in typically-developing adolescents. Several pediatric studies have also examined whether power test-retest reliability differs between typically-developing and clinical populations. Fein et al. (1983) found that same-day test-retest reliability for power was good to excellent for both dyslexic and typically-developing children (ICCs > 0.70). Similarly, Levin et al. (2020) found that both typically-developing and autistic children demonstrated excellent reliability of baseline total power over up to several weeks when processed with standardized software (here, HAPPE and BEAPP software; typically-developing group ICC = 0.86; autistic group ICC = 0.81). However, a recent paper by Webb et al. (2022) found that typically-developing children demonstrated only fair reliability across power bands over a period of six weeks, while autistic children demonstrated good reliability over the same time period (typically-developing group ICC = 0.54; autistic group ICC = 0.68). Several of these studies have also noted higher ICCs for absolute compared to relative baseline power in development (Fein et al., 1983; Gasser et al., 1985).
Relatedly, there is limited knowledge about the test-retest reliability of relational power features (e.g., alpha asymmetry, theta-beta ratio). For example, Vincent et al. (2021) and Anaya et al. (2021) have both found that frontal alpha asymmetry scores were only weakly stable across infancy and early childhood, whether measured as correlated values or rank orders. Delta-beta ratios demonstrated similar age-related changes during this early developmental window (Anaya et al., 2021). In sum, these studies suggest high reliability of canonical frequency band power (especially absolute power) in both healthy and clinical pediatric populations in childhood through adolescence (Fig. 1D).
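Several pediatric studies above report stability either as a correlation of raw values or as rank-order stability. The sketch below contrasts the two using plain Pearson and Spearman coefficients, with Spearman computed as a Pearson correlation on average ranks. The function names and toy scores are hypothetical and are not drawn from any of the cited datasets.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for position in range(i, j + 1):
            result[order[position]] = average_rank
        i = j + 1
    return result


def spearman(x, y):
    """Rank-order stability: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


# Toy scores: ordering is preserved at retest, spacing is not.
time_1 = [1.0, 2.0, 3.0, 4.0, 5.0]
time_2 = [1.0, 4.0, 9.0, 16.0, 25.0]
r_value = pearson(time_1, time_2)
rho_value = spearman(time_1, time_2)
```

Here the rank-order coefficient is 1 while the Pearson coefficient is below 1, which shows how the two indices can diverge when a measure changes nonlinearly with development. Neither index is sensitive to a mean shift between sessions, unlike absolute-agreement ICCs.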

Table 4.

Test-retest reliabilities of pediatric power studies.

| Paper | Age(s) at First Test | Sample Size | Time to Retest | Paradigm | Type of Power | Reliability Measure(s) | Frequency Band | Reliability |
|---|---|---|---|---|---|---|---|---|
| Winegust et al. (2014) | Mean age of 15.9 years | N = 9 | 1 month | Resting-state | Absolute | ICC | Left mid-frontal alpha | 0.74 |
| | | | | | | | Right mid-frontal alpha | 0.73 |
| | | | | | Absolute | Pearson's r | Left mid-frontal alpha | 0.74 |
| | | | | | | | Right mid-frontal alpha | 0.73 |
| Levin et al. (2020) | Mean age of 6.6 years (TD group) | N = 26 | Median 6 days | Resting-state | Relative | ICC | Total power | 0.86 |
| | | | | | Spectral offset (SpecParam) | ICC | Power spectral density (PSD) | 0.48 |
| | | | | | Aperiodic slope (SpecParam) | ICC | Power spectral density (PSD) | 0.28 |
| | | | | | Number of peaks (SpecParam) | ICC | Power spectral density (PSD) | 0.02 |
| | | | | | Largest alpha peak: center (SpecParam) | ICC | Alpha | 0.70 |
| | | | | | Largest alpha peak: amplitude (SpecParam) | ICC | Alpha | 0.86 |
| | | | | | Largest alpha peak: bandwidth (SpecParam) | ICC | Alpha | 0.42 |
| | Mean age of 8 years (ASD group) | N = 21 | Median 6 days | Resting-state | Relative | ICC | Total power | 0.81 |
| | | | | | Spectral offset (SpecParam) | ICC | Power spectral density (PSD) | 0.53 |
| | | | | | Aperiodic slope (SpecParam) | ICC | Power spectral density (PSD) | 0.70 |
| | | | | | Number of peaks (SpecParam) | ICC | Power spectral density (PSD) | 0.23 |
| | | | | | Largest alpha peak: center (SpecParam) | ICC | Alpha | 0.62 |
| | | | | | Largest alpha peak: amplitude (SpecParam) | ICC | Alpha | 0.83 |
| | | | | | Largest alpha peak: bandwidth (SpecParam) | ICC | Alpha | 0.34 |
| Gasser et al. (1985) | 10–13 years | N = 26 | 10 months | Resting-state | Absolute | Spearman rank correlations | Delta | 0.59 |
| | | | | | | | Theta | 0.70 |
| | | | | | | | Alpha | 0.76 |
| | | | | | | | Beta | 0.62 |
| | | | | | Relative | Spearman rank correlations | Delta | 0.47 |
| | | | | | | | Theta | 0.63 |
| | | | | | | | Alpha | 0.76 |
| | | | | | | | Beta | 0.76 |
| Fein et al. (1983) | 10–12 years | N = 32 | 4–5 hours | Resting-state | Absolute | ICC | Total power | > 0.90 |
| | | | | | Relative | ICC | Total power | 0.70–0.90 |
| Vincent et al. (2021) | 5 months, 7 months, or 12 months | N = 149 | 24–31 months | Resting-state | FAAln | Pearson's r | Alpha | -0.02 |
| | | | | | FAAratio | Pearson's r | Alpha | -0.02 |
| | | | | | FAAlnratio | Pearson's r | Alpha | -0.07 |
| | | | | | FAAlnrel | Pearson's r | Alpha | 0.36 |
| Anaya et al. (2021) | 8 months | N = 43–89 | 4 months | Resting-state | Frontal asymmetry | Pearson's r (rank-order stability) | Alpha | 0.09 |
| | | | | | Delta-Beta coupling | Pearson's r (rank-order stability) | Delta/Beta Ratio | -0.06 |
| | | | 6 months | Resting-state | Frontal asymmetry | Pearson's r (rank-order stability) | Alpha | -0.19 |
| | | | | | Delta-Beta coupling | Pearson's r (rank-order stability) | Delta/Beta Ratio | 0.08 |
| | | | 10 months | Resting-state | Frontal asymmetry | Pearson's r (rank-order stability) | Alpha | 0.27 |
| | | | | | Delta-Beta coupling | Pearson's r (rank-order stability) | Delta/Beta Ratio | -0.05 |
| Webb et al. (2022) | 6–11.5 years (TD Group) | N = 119 | 6 weeks | Resting-state | Absolute | ICC | Delta | 0.39 |
| | | | | | | | Theta | 0.51 |
| | | | | | | | Beta | 0.68 |
| | | | | | | | Alpha | 0.67 |
| | | | | | | | Gamma | 0.45 |
| | | | | | Aperiodic slope (SpecParam) | ICC | 2–50 Hz | 0.54 |
| | 6–11.5 years (ASD Group) | N = 280 | 6 weeks | Resting-state | Absolute | ICC | Delta | 0.66 |
| | | | | | | | Theta | 0.68 |
| | | | | | | | Beta | 0.75 |
| | | | | | | | Alpha | 0.73 |
| | | | | | | | Gamma | 0.56 |
| | | | | | Aperiodic slope (SpecParam) | ICC | 2–50 Hz | 0.59 |

3.1.3. Test-retest reliability of periodic and aperiodic power spectrum features

Studies have also used Spectral Parameterization (SpecParam; formerly Fitting Oscillations and One-Over-F, or FOOOF) and other recently developed algorithms (Donoghue et al., 2020; Wen and Liu, 2016) to characterize the EEG power spectrum in terms of periodic and aperiodic features (e.g., Ostlund et al., 2021). This direction is especially important given the emerging recognition and exploration of differences in functional significance and neural underpinnings for the periodic versus aperiodic power spectrum components (Colombo et al., 2019; Demanuele et al., 2007; He et al., 2010; McDonnell and Ward, 2011; Podvalny et al., 2015). Fortunately, though this approach is quite new, several studies have already investigated the reliability of periodic/aperiodic power spectrum features with promising preliminary results. In adults, the aperiodic slope shows excellent test-retest reliability on the same day with two different methods of calculation, linear mixed-effects regression (LMER) and SpecParam (LMER ICCs = 0.85–0.95, SpecParam ICCs = 0.78–0.93; Pathania et al., 2021). Using a different approach, Demuru and Fraschini (2020) investigated how well SpecParam features can identify participants in a large dataset of baseline EEG recordings (n = 109). The SpecParam spectral offset and aperiodic slope features both performed very well as discriminators. In pediatric populations, Levin et al. (2020) found variable reliability estimates across baseline EEG SpecParam measures between sessions about one week apart in typically-developing and autistic children. For example, aperiodic slope demonstrated variable reliability estimates between participant groups, with good reliability in the autism group (ICC = 0.70) and poor reliability in the typically-developing group (ICC = 0.28). Over a longer period of six weeks, Webb et al. (2022) found that aperiodic slope had fair reliability across both a typically-developing group (ICC = 0.54) and autism group (ICC = 0.59).
Further, Levin et al. (2020) found that other features had poor reliability across all children, including the number of spectrum peaks. Thus, some SpecParam features may be more appropriate for biomarker/individual difference investigations than others over development. Overall, the studies reviewed here in pediatric and adult samples suggest that EEG aperiodic features, such as aperiodic slope and offset, may be a reliable source of interindividual variation.
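To give a sense of what the aperiodic offset and slope describe, the sketch below fits a straight line to a power spectrum in log-log space. This is a deliberately simplified stand-in and not the SpecParam algorithm: it does not model periodic peaks, so a prominent alpha peak would bias the estimate. The function name, the 2–50 Hz range, and the synthetic spectrum are assumptions chosen for illustration.

```python
import math


def aperiodic_fit(freqs, psd):
    """Least-squares line through log10(power) versus log10(frequency).

    Returns (offset, slope); the aperiodic exponent is -slope.
    """
    x = [math.log10(f) for f in freqs]
    y = [math.log10(p) for p in psd]
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, y))
             / sum((a - mx) ** 2 for a in x))
    offset = my - slope * mx
    return offset, slope


# Synthetic peak-free spectrum: power = 10 * f ** -1.5 over 2-50 Hz.
freqs = [float(f) for f in range(2, 51)]
psd = [10.0 * f ** -1.5 for f in freqs]
offset, slope = aperiodic_fit(freqs, psd)
exponent = -slope
```

On this noiseless spectrum the fit recovers the generating offset and exponent. With real recordings, estimates depend on the frequency range fitted and on how peaks are handled, which may contribute to the differences in reliability seen across features and studies.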

3.1.4. Power measurement recommendations

Given the reliability studies conducted from childhood through adulthood so far, we offer the following recommendations. 1) Power measured in canonical frequency bands typically has sufficient internal consistency and test-retest reliability from childhood through adulthood to be considered for any individual difference study design. There is some evidence that measuring power during baseline/resting-state conditions produces more reliable estimates than measuring power during task paradigms, though in studies to date, power in both contexts is adequately reliable for individual difference analyses. 2) Relational power features like frontal alpha asymmetry have shown excellent internal consistency in adult and pediatric samples but display only fair-good test-retest reliability in adulthood and inadequate test-retest reliability in studies conducted in infancy through early childhood. 3) Contemporary approaches characterizing the power spectrum through periodic and aperiodic features (e.g., Spectral Parameterization, IRASA) show promise for reliable measurement but further testing should be undertaken in both pediatric and adult populations to explore optimizing these measurements’ reliability for individual difference assessments. Tutorials are available to guide users through applying spectral parameterization methods to their data (e.g., Ostlund et al., 2022; Voytek Lab (https://fooof-tools.github.io/fooof/auto_tutorials/index.html); Wilson and Cassani (https://neuroimage.usc.edu/brainstorm/Tutorials/Fooof)). 4) Very little is reported on internal consistency and test-retest reliability of any power measurements in infants and young children. Though test-retest reliability is especially challenging to measure with fidelity at the youngest ages when change is most rapid, researchers must begin measuring and reporting internal consistency values for their power measurements at these ages with available tools before using them for individual difference analyses.
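As one way to act on the recommendation to report internal consistency, the sketch below computes Cronbach's α from a participants-by-segments table of power values, treating each data segment as an "item". How a recording is divided into segments is an analysis decision that this sketch does not address, and the function name and toy values are hypothetical.

```python
import statistics


def cronbach_alpha(scores):
    """Cronbach's alpha for a table with one row per participant and
    one column per item (e.g., a power value from each data segment)."""
    k = len(scores[0])
    item_variances = [statistics.variance(column) for column in zip(*scores)]
    total_variance = statistics.variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Toy alpha power values: four participants, three segments each.
segment_power = [
    [4.1, 3.9, 4.3],
    [5.0, 5.2, 4.8],
    [6.2, 5.9, 6.1],
    [7.0, 7.3, 6.8],
]
alpha = cronbach_alpha(segment_power)
```

Values close to 1 indicate that between-participant differences dominate segment-to-segment fluctuation within a session. Because α generally rises with the number of items, the amount of data per participant should be reported alongside the coefficient.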

3.2. Event-related potentials

Event-related potentials (ERPs) have been used extensively across the lifespan as a temporally-sensitive measure of task-evoked brain activity. Analyses often break ERPs down into components, distinct deflections in the ERP waveform characterized by location on the scalp, polarity, timing post-stimulus (i.e., latency), and sometimes task context. These different ERP components relate to specific cognitive, affective, and perceptual processes in the brain, so ERP components are being used in a variety of individual difference study designs, especially in clinical populations (e.g., Beker et al., 2021; Cremone-Caira et al., 2020; Webb et al., 2022; for best practices in using ERPs in clinical populations, see Kappenman and Luck, 2016). Fortunately, there is a large body of work evaluating the internal consistency and test-retest reliability profiles of different ERP measurements (for examples, see the recent meta-analysis from Clayson (2020) on reliability of the error-related negativity (ERN) and the thorough examination of factors influencing reliability of ERPs from Boudewyn et al. (2018)). We summarize this literature with respect to peak amplitude, mean amplitude, and latency to peak amplitude measurements, the most commonly assessed ERP measures (though see Clayson et al. (2013) and Luck and Gaspelin (2017) for arguments against using peak amplitude for reliability reasons).

3.2.1. Internal consistency of ERPs

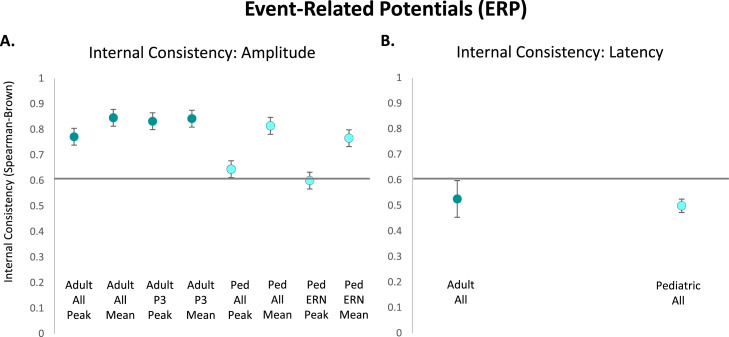

Fewer internal consistency studies than test-retest reliability studies were identified across the lifespan that used consistent reliability metrics (see Tables 5–8). Available evidence (Cassidy et al., 2012; Hämmerer et al., 2012; D.M. Olvet and Hajcak, 2009a; Sandre et al., 2020; Walhovd and Fjell, 2002) suggests ERP peak amplitude measurements in adults usually demonstrate excellent internal consistency (rSB = 0.77, n = 31 measurements, SE = 0.03; Fig. 2A). Meanwhile, adult mean amplitude studies (Bresin and Verona, 2021; Cassidy et al., 2012; Foti et al., 2013; Hajcak et al., 2017; Levinson et al., 2017; Meyer et al., 2013; Pontifex et al., 2010; Sandre et al., 2020; Xu and Inzlicht, 2015) indicated even higher internal consistency across all ERPs (rSB = 0.85, n = 22 measurements, SE = 0.02; Fig. 2A). The P3, a component related to attention and working memory, was most commonly evaluated across adult peak amplitude studies (Cassidy et al., 2012; Hämmerer et al., 2012; Walhovd and Fjell, 2002) and adult mean amplitude studies (Bresin and Verona, 2021; Cassidy et al., 2012), demonstrating excellent internal consistency for both types of amplitude measurement (peak: rSB = 0.83, n = 9 measurements, SE = 0.04; mean: rSB = 0.84, n = 8 measurements, SE = 0.04; Fig. 2A).

Table 5.

Internal consistency of adult ERP peak amplitude and mean amplitude studies.

| Paper | Age(s) | Sample Size | Consistency Measure | ERP Measure(s) | Consistency |
|---|---|---|---|---|---|
| Cassidy et al. (2012) | 19–35 years | N = 25 | Spearman-Brown | P1 Peak | 0.73 |
| | | | | N1 (PO8) Peak | 0.88 |
| | | | | N1 (PO7) Peak | 0.89 |
| | | | | P3a Peak | 0.93 |
| | | | | P3a Difference Peak | 0.66 |
| | | | | P3b Peak | 0.73 |
| | | | | P3b Difference Peak | 0.63 |
| | | | | ERN Peak | 0.64 |
| | | | | ERN Difference Peak | 0.72 |
| | | | | Pe Peak | 0.88 |
| | | | | Pe Difference Peak | 0.89 |
| | | | | P400 Peak | 0.87 |
| | | | | N170 Peak | 0.81 |
| | | | | ERN Peak-to-Peak | 0.51 |
| | | | | ERN Difference Peak-to-Peak | 0.44 |
| | | | | P3a Mean | 0.90 |
| | | | | P3a Difference Mean | 0.70 |
| | | | | P3b Mean | 0.68 |
| | | | | P3b Difference Mean | 0.71 |
| | | | | Pe Mean | 0.76 |
| | | | | Pe Difference Mean | 0.85 |
| | | | | P400 Mean | 0.88 |
| | | | | Area Under the P3a | 0.89 |
| | | | | Area Difference P3a | 0.73 |
| | | | | Area Under the P3b | 0.63 |
| | | | | Area Difference P3b | 0.74 |
| | | | | Area Under the Pe | -0.08 |
| | | | | Area Difference Pe | 0.84 |
| | | | | Area Under the P400 | 0.73 |
| Levinson et al. (2017) | Undergraduates | N = 59 | Spearman-Brown | FN Mean | 0.80 |
| | | | | RewP Mean | 0.86 |
| | | | Cronbach's α | FN Mean | 0.82 |
| | | | | RewP Mean | 0.86 |
| Walhovd & Fjell (2002) | Mean age of 56.1 years | N = 59 | Spearman-Brown | P3 (Pz) Peak | 0.92 |
| | | | | P3 (Cz) Peak | 0.93 |
| | | | | P3 (Fz) Peak | 0.84 |
| Hämmerer et al. (2012) | Younger adults (mean age = 24.27 years) | N = 47 | Spearman-Brown | Go-P3 Peak | 0.96 |
| | Older adults (mean age = 71.24 years) | N = 47 | Spearman-Brown | Go-P3 Peak | 0.89 |
| Hajcak et al. (2017) | Not specified | N = 53 | Spearman-Brown | ERN Mean | 0.75 |
| | | | Cronbach's α | ERN Mean | 0.75 |
| Meyer et al. (2013) | Mean age of 19.14 years | N = 43 | Cronbach's α | ERN Mean | 0.70 |
| D.M. Olvet and Hajcak (2009) | Undergraduates | N = 45 | Spearman-Brown | CRN Peak | 0.98 |
| | | | | ERN Peak | 0.86 |
| | | | | ERN-CRN Difference Peak | 0.80 |
| | | | | Area Under the CRN | 0.98 |
| | | | | Area Under the ERN | 0.86 |
| | | | | Area Difference ERN-CRN | 0.71 |
| | | | | Area Under the Pe | 0.87 |
| Bresin and Verona (2021) | Mean age of 29 years | N = 55 | Spearman-Brown | P3 Incongruent Mean | 0.92 |
| | | | | P3 Congruent Mean | 0.93 |
| | | | | P3 No-Go Mean | 0.95 |
| | | | | P3 Go Mean | 0.95 |
| | | | | ERN Mean | 0.80 |
| | | | | CRN Mean | 0.92 |
| | | | | Pe Mean | 0.78 |
| | | | | Pc Mean | 0.94 |
| Foti et al. (2013) | 18–65 years (Healthy Individuals) | N = 52 | Cronbach's α | ERN Mean Flanker | 0.86 |
| | | | | ΔERN Mean Flanker | 0.84 |
| | | | | Pe Mean Flanker | 0.81 |
| | | | | ΔPe Mean Flanker | 0.83 |
| | | | | ERN Mean Picture/Word Task | 0.41 |
| | | | | ΔERN Mean Picture/Word Task | 0.69 |
| | | | | Pe Mean Picture/Word Task | 0.66 |
| | | | | ΔPe Mean Picture/Word Task | 0.79 |
| | 28–68 years (Patients with Psychotic Illness) | N = 84 | Cronbach's α | ERN Mean Flanker | 0.63 |
| | | | | ΔERN Mean Flanker | 0.48 |
| | | | | Pe Mean Flanker | 0.75 |
| | | | | ΔPe Mean Flanker | 0.73 |
| | | | | ERN Mean Picture/Word Task | 0.35 |
| | | | | ΔERN Mean Picture/Word Task | 0.40 |
| | | | | Pe Mean Picture/Word Task | 0.28 |
| | | | | ΔPe Mean Picture/Word Task | 0.39 |
| Pontifex et al. (2010) | 18–25 years and 60–73 years | N = 83 | Cronbach's α | ERN Mean | 0.96 |
| | | | | Pe Mean | at least 0.90 |
| Sandre et al. (2020) | Mean age of 20.1 years | N = 263 | Spearman-Brown | ERN Peak (Cz) | 0.80 |
| | | | | ERN Peak (FCavg) | 0.76 |
| | | | | CRN Peak (Cz) | 0.82 |
| | | | | CRN Peak (FCavg) | 0.72 |
| | | | | ERN Peak-to-Peak (Cz) | 0.75 |
| | | | | ERN Peak-to-Peak (FCavg) | 0.67 |
| | | | | CRN Peak-to-Peak (Cz) | 0.54 |
| | | | | CRN Peak-to-Peak (FCavg) | 0.47 |
| | | | | ERN Mean (Cz) | 0.81 |
| | | | | ERN Mean (FCavg) | 0.78 |
| | | | | CRN Mean (Cz) | 0.97 |
| | | | | CRN Mean (FCavg) | 0.97 |
| | | | Cronbach's α | ERN Peak (Cz) | 0.73 |
| | | | | ERN Peak (FCavg) | 0.63 |
| | | | | CRN Peak (Cz) | 0.77 |
| | | | | CRN Peak (FCavg) | 0.68 |
| | | | | ERN Peak-to-Peak (Cz) | 0.74 |
| | | | | ERN Peak-to-Peak (FCavg) | 0.64 |
| | | | | CRN Peak-to-Peak (Cz) | 0.80 |
| | | | | CRN Peak-to-Peak (FCavg) | 0.66 |
| | | | | ERN Mean (Cz) | 0.63 |
| | | | | ERN Mean (FCavg) | 0.57 |
| | | | | CRN Mean (Cz) | 0.75 |
| | | | | CRN Mean (FCavg) | 0.70 |
| Xu and Inzlicht (2015) | Mean age of 19.2 years | N = 39 | Cronbach's α | ERN Mean | 0.59 |
| | | | | CRN Mean | 0.97 |
| | | | | ΔERN Mean | 0.74 |
| | | | | Pe Mean | 0.94 |
| | | | | ΔPe Mean | 0.92 |

Table 6.

Internal consistency of pediatric ERP peak amplitude and mean amplitude studies.

| Paper | Age(s) | Sample Size | Consistency Measure | ERP Measure(s) | Consistency |
|---|---|---|---|---|---|
| Jetha et al. (2021) | Kindergarten - 1st grade | N = 110 | Spearman Rho (rank order stability) | P1 Peak (O1) | 0.59 |
| | | | | P1 Peak (O2) | 0.64 |
| | | | | P1 Peak (Oz) | 0.69 |
| | | | | N170 Peak (P7) | 0.67 |
| | | | | N170 Peak (P8) | 0.54 |
| | | | | VPP Peak (Fz) | 0.59 |
| | | | | VPP Peak (FC1) | 0.62 |
| | | | | VPP Peak (FC2) | 0.56 |
| | | | | VPP Peak (Cz) | 0.65 |
| Meyer et al. (2014) | 8–13 years | N = 44 | Spearman-Brown | ERN Peak (Fz) Flanker | 0.67 |
| | | | | ERN Peak (Cz) Flanker | 0.85 |
| | | | | ERN Peak (Fz) Go-NoGo | 0.38 |
| | | | | ERN Peak (Cz) Go-NoGo | 0.50 |
| Hämmerer et al. (2012) | Children (mean age = 10.15 years) | N = 45 | Spearman-Brown | Go-P3 Peak | 0.81 |
| | Adolescents (mean age = 14.38 years) | N = 46 | Spearman-Brown | Go-P3 Peak | 0.91 |
| Luking et al. (2017) | 8–14 years | N = 177 | Spearman-Brown | ERN Gain Mean | 0.85 |
| | | | | ERN Loss Mean | 0.86 |
| | | | | ERN Difference (Gain-Loss) Mean | 0.36 |
| Morales et al. (2022) | 4–9 years | N = 326 | Spearman-Brown | ERN Correct Mean | 0.96 |
| | | | | ERN Error Mean | 0.80 |
| | | | | Pe Correct Mean | 0.98 |
| | | | | Pe Error Mean | 0.89 |
| Pontifex et al. (2010) | 8–11 years | N = 83 | Cronbach's α | ERN Mean | at least 0.90 |
| | | | | Pe Mean | at least 0.90 |

Table 7.

Internal consistency of adult ERP latencies (to peak amplitudes) studies.

| Paper | Age(s) | Sample Size | Consistency Measure | ERP Measure(s) | Consistency |
|---|---|---|---|---|---|
| Cassidy et al. (2012) | 19–35 years | N = 25 | Spearman-Brown | P1 | 0.70 |
| | | | | N1 (O2) | 0.86 |
| | | | | N1 (PO7) | 0.93 |
| | | | | P3a | 0.38 |
| | | | | P3a Difference | 0.34 |
| | | | | P3b | 0.01 |
| | | | | P3b Difference | 0.03 |
| | | | | ERN | 0.39 |
| | | | | ERN Difference | 0.15 |
| | | | | Pe | 0.52 |
| | | | | Pe Difference | 0.30 |
| | | | | P400 | 0.05 |
| | | | | N170 | 0.87 |
| Walhovd & Fjell (2002) | Mean age 56.1 years | N = 59 | Spearman-Brown | P3 (Pz) | 0.77 |
| | | | | P3 (Cz) | 0.79 |
| | | | | P3 (Fz) | 0.77 |
| D.M. Olvet and Hajcak (2009) | Undergraduates | N = 45 | Spearman-Brown | CRN | 0.86 |
| | | | | ERN | 0.56 |
| | | | | ERN-CRN Difference | 0.71 |

Table 8.

Internal consistency of pediatric ERP latencies (to peak amplitudes) studies.

| Paper | Age(s) | Sample Size | Consistency Measure | ERP Measure(s) | Consistency |
|---|---|---|---|---|---|
| Jetha et al. (2021) | Kindergarten - 1st grade | N = 110 | Spearman Rho (rank order stability) | P1 (O1) | 0.57 |
| | | | | P1 (O2) | 0.36 |
| | | | | P1 (Oz) | 0.37 |
| | | | | N170 (P7) | 0.52 |
| | | | | N170 (P8) | 0.53 |
| | | | | VPP (Fz) | 0.55 |
| | | | | VPP (FC1) | 0.55 |
| | | | | VPP (FC2) | 0.53 |
| | | | | VPP (Cz) | 0.51 |

Fig. 2.

Internal consistency of event-related potentials (ERP). A: Average internal consistency values calculated using the Spearman-Brown Formula for all adult ERP peak amplitudes, all adult ERP mean amplitudes, adult P3 peak amplitudes, adult P3 mean amplitudes, all pediatric ERP peak amplitudes, all pediatric ERP mean amplitudes, pediatric ERN peak amplitudes, and pediatric ERN mean amplitudes. B: Average internal consistency values calculated using the Spearman-Brown Formula for all adult ERP latencies (to peak amplitudes) and all pediatric ERP latencies.

In pediatric studies spanning childhood and adolescence (Hämmerer et al., 2012; Jetha et al., 2021; Meyer et al., 2014), the internal consistency of peak amplitudes across all ERPs was good (rSB = 0.65, n = 15 measurements, SE = 0.04; Fig. 2A). Meanwhile, studies measuring mean amplitude across all ERPs in childhood and adolescence (Luking et al., 2017; Morales et al., 2022; Pontifex et al., 2010) indicated excellent internal consistency (rSB = 0.81, n = 7 measurements, SE = 0.08; Fig. 2A), outperforming peak amplitude measurement in pediatric samples. However, far fewer studies have evaluated the internal consistency of mean amplitude than of peak amplitude in pediatric samples. The ERN component, related to error processing, was the most commonly measured component in pediatric samples, for both peak amplitude (Meyer et al., 2014) and mean amplitude (Luking et al., 2017; Morales et al., 2022; Pontifex et al., 2010). It demonstrated good internal consistency across studies of ERN peak amplitude (rSB = 0.60, n = 4 measurements, SE = 0.10; Fig. 2A) and excellent internal consistency across studies of ERN mean amplitude (rSB = 0.77, n = 5 measurements, SE = 0.10; Fig. 2A). Once again, the literature evaluating the ERN with either peak or mean amplitude is still quite limited, and additional evidence is needed to draw confident conclusions about its reliability. Collectively, evidence to date suggests that mean ERP amplitude measurements across all ages and peak ERP amplitude in adulthood demonstrate excellent internal consistency, while peak ERP amplitude in pediatric samples shows lower internal consistency. Measurements of mean ERP amplitude thus seem the best candidates to target for individual difference analyses across the lifespan.
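
As a rough illustration of the split-half approach behind the Spearman-Brown values (rSB) summarized above, the sketch below correlates per-participant averages from odd- and even-numbered trials and then applies the Spearman-Brown formula, rSB = 2r / (1 + r). The function names, the odd/even split, and the simulated single-trial amplitudes are assumptions made for illustration; the reviewed studies differ in how trials were split and scored.

```python
import numpy as np


def spearman_brown(r_half):
    """Step up a half-test correlation to an estimate for the full-length test."""
    return 2.0 * r_half / (1.0 + r_half)


def split_half_reliability(trial_scores):
    """Odd/even split-half reliability with Spearman-Brown correction.

    trial_scores: array-like of shape (n_participants, n_trials) holding one
    ERP score per trial (hypothetical layout, e.g., mean amplitude in a window).
    """
    scores = np.asarray(trial_scores, dtype=float)
    odd_avg = scores[:, 0::2].mean(axis=1)   # 1st, 3rd, 5th, ... trials
    even_avg = scores[:, 1::2].mean(axis=1)  # 2nd, 4th, 6th, ... trials
    r_half = np.corrcoef(odd_avg, even_avg)[0, 1]
    return spearman_brown(r_half)


# Simulated data (not from any reviewed study): 40 participants, 40 trials each.
rng = np.random.default_rng(0)
true_amplitude = rng.normal(5.0, 2.0, size=40)            # stable individual differences
noise = rng.normal(0.0, 4.0, size=(40, 40))               # trial-to-trial noise
simulated_trials = true_amplitude[:, None] + noise
r_sb = split_half_reliability(simulated_trials)
print(f"Split-half reliability (Spearman-Brown corrected): {r_sb:.2f}")
```

Because a single odd/even split is only one of many possible splits, averaging the estimate over many random splits is a common refinement when trial counts allow it.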

Internal consistency of ERP latency measurements is not as well characterized. Latencies are typically calculated as latency-to-peak amplitude. Based on the only three adult papers identified (Cassidy et al., 2012; Olvet and Hajcak, 2009a; Walhovd and Fjell, 2002), the internal consistency of ERP latencies was fair (rSB = 0.53, n = 19 measurements, SE = 0.07; Fig. 2B) across components. In pediatric samples, we identified only one paper, which found fair ERP latency internal consistency (rSB = 0.50, n = 9 measurements, SE = 0.03; Jetha et al., 2021; Fig. 2B). Acquisition set-ups may affect latency measurements in more ways than amplitude measurements, because amplitudes are usually extracted within a temporal window whereas latencies are not. For example, the precision of stimulus presentation timing may particularly impact latency measurements, though recent hardware solutions (e.g., the Cedrus Stimtracker) may improve the reliability of latency measurements relative to historical measurements. Further testing is required across the lifespan to evaluate the consistency of ERP latency measurements.
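
The summary statistics quoted in this section can be approximately reproduced from the tabulated values. The sketch below assumes an unweighted mean and a standard error of the mean across measurements, with no Fisher z-transform and no weighting by sample size. That is an assumption about how the summaries were computed, but it matches the values reported for the pediatric latency measurements in Table 8.

```python
import math
import statistics

# Pediatric ERP latency internal consistency values from Table 8 (Jetha et al., 2021).
pediatric_latency_values = [0.57, 0.36, 0.37, 0.52, 0.53, 0.55, 0.55, 0.53, 0.51]


def summarize(values):
    """Return (n, unweighted mean, standard error of the mean)."""
    n = len(values)
    mean = statistics.fmean(values)
    se = statistics.stdev(values) / math.sqrt(n)  # sample SD divided by sqrt(n)
    return n, mean, se


n, mean, se = summarize(pediatric_latency_values)
print(f"n = {n}, mean = {mean:.2f}, SE = {se:.2f}")  # n = 9, mean = 0.50, SE = 0.03
```

Treating each tabulated measurement as one observation means that papers reporting many components contribute more to the average than papers reporting a single component.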

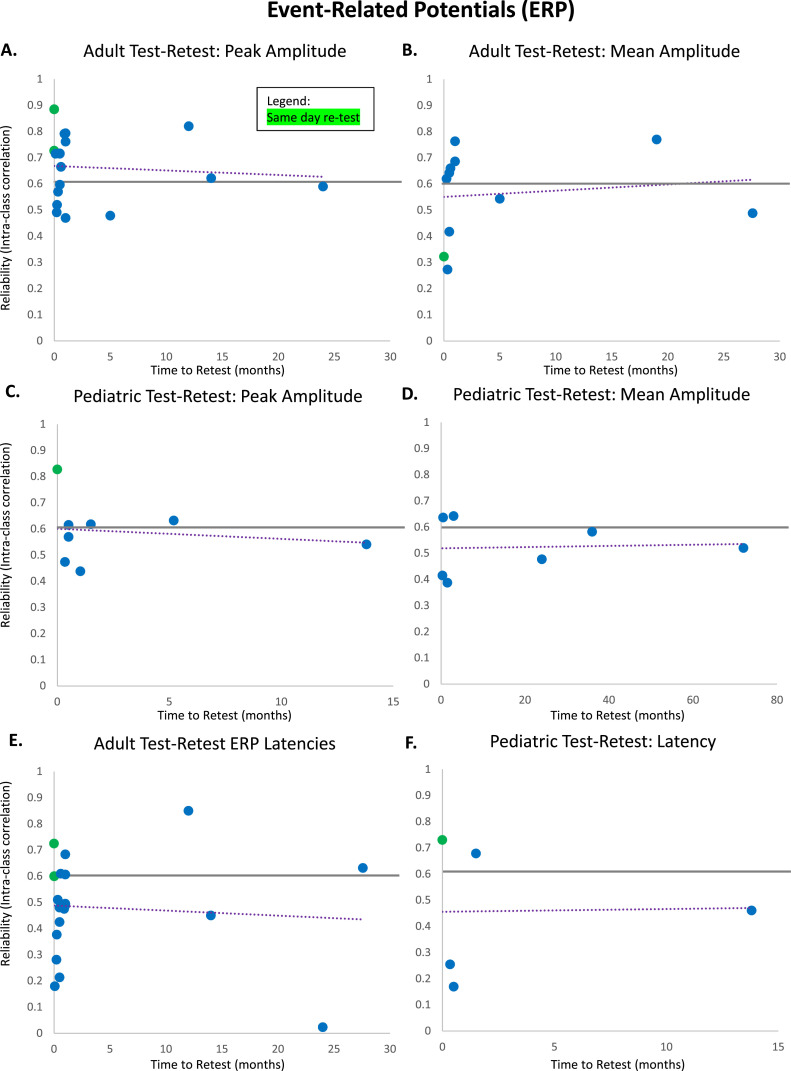

3.2.2. Test-retest reliability of ERPs

There is a robust test-retest reliability literature for ERPs across the lifespan. To compare and summarize across results, we focus here on studies that calculated intraclass correlation coefficients (ICCs) as the measure of test-retest reliability for both peak amplitude and mean amplitude (see Tables 9–12). ICCs are the most commonly used measure of reliability across ERP studies, providing the largest number of studies for direct comparison. Further, ICCs were the predominant measure used across more recent ERP reliability studies, providing the most current reliability estimates in the literature. Across all adult papers that calculated ICCs for ERP peak amplitude (Brunner et al., 2013; Cassidy et al., 2012; Fallgatter et al., 2001; Hall et al., 2006; Hämmerer et al., 2012; Kinoshita et al., 1996; Lew et al., 2007; Lin et al., 2020; Olvet and Hajcak, 2009a; Rentzsch et al., 2008; Sandre et al., 2020; Segalowitz et al., 2010; Sinha et al., 1992; Suchan et al., 2019; Taylor et al., 2016; Thesen and Murphy, 2002; Wang et al., 2021; Weinberg and Hajcak, 2011), reliability was generally good (ICC = 0.65, n = 109 measurements, SE = 0.02), though over a third of the studies did not report ICCs greater than the commonly used threshold of 0.6 for inclusion in individual difference studies (7/20 studies). The effect of time to retest across studies with adults was also considered (range = 20 min – 2 years; see Fig. 3A). Meanwhile, across all adult studies that calculated ICCs for mean amplitude (Cassidy et al., 2012; Chen et al., 2018; Hall et al., 2006; Huffmeijer et al., 2014; Larson et al., 2010; Levinson et al., 2017; Light and Braff, 2005; Malcolm et al., 2019; Sandre et al., 2020; Taylor et al., 2016), reliability was only fair (ICC = 0.56, n = 44 measurements, SE = 0.03), regardless of time to retest (range = 7 hours – 2.3 years; see Fig. 3B).

Further, ERP peak amplitude measurements in studies with pediatric populations (Beker et al., 2021; Hämmerer et al., 2012; Jetha et al., 2021; Kompatsiari et al., 2016; Lin et al., 2020; Segalowitz et al., 2010; Taylor et al., 2016; Webb et al., 2022) achieved good overall test-retest reliability (ICC = 0.60, n = 73 measurements, SE = 0.02), without much change as a function of time to retest (range = 30 min – 1.15 years; see Fig. 3C). Meanwhile, pediatric ERP mean amplitude studies (Cremone-Caira et al., 2020; Kujawa et al., 2018, 2013; Munsters et al., 2019; Taylor et al., 2016; Webb et al., 2022) only reached fair reliability (ICC = 0.52, n = 29 measurements, SE = 0.04). Pediatric mean amplitude reliability also did not demonstrate a specific trend as time to retest increased (range = 1.5 weeks – 6 years; see Fig. 3D).
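
For readers who want to compute a test-retest ICC on their own data, the sketch below implements one common variant, ICC(2,1) in the Shrout and Fleiss (1979) notation (two-way random effects, absolute agreement, single measurement). The choice of this variant is an assumption: the studies summarized here do not all use the same ICC form, and the function name and example amplitudes are hypothetical.

```python
import numpy as np


def icc_2_1(scores):
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.

    scores: array-like of shape (n_participants, n_sessions), e.g., one ERP
    amplitude per participant per recording session.
    """
    x = np.asarray(scores, dtype=float)
    n, k = x.shape
    grand_mean = x.mean()

    # Sums of squares from a two-way ANOVA without replication.
    ss_participants = k * np.sum((x.mean(axis=1) - grand_mean) ** 2)
    ss_sessions = n * np.sum((x.mean(axis=0) - grand_mean) ** 2)
    ss_total = np.sum((x - grand_mean) ** 2)
    ss_error = ss_total - ss_participants - ss_sessions

    ms_participants = ss_participants / (n - 1)
    ms_sessions = ss_sessions / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_participants - ms_error) / (
        ms_participants + (k - 1) * ms_error + k * (ms_sessions - ms_error) / n
    )


# Hypothetical amplitudes (microvolts) for five participants at test and retest.
test_retest = [
    [4.1, 3.8],
    [6.5, 7.0],
    [2.2, 3.1],
    [5.4, 5.0],
    [8.0, 7.2],
]
print(f"ICC(2,1) = {icc_2_1(test_retest):.2f}")
```

Because ICC(2,1) penalizes systematic shifts between sessions, it can be lower than consistency-type ICCs when amplitudes change uniformly over time, which matters when comparing values across studies with long retest intervals.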

Table 9.

Test-retest reliabilities of adult ERP peak amplitude and mean amplitude studies.

| Paper | Age(s) at First Test | Sample Size | Time to Retest | ERP Measure(s) | Reliability (ICC) |
|---|---|---|---|---|---|
| Taylor et al. (2016) | 19–28 years | N = 32 | 1–2 weeks | CNV O-wave Component Mean | 0.58 |
| | | | | CNV E-wave Component Mean | 0.19 |
| | | | | Total CNV Mean | 0.05 |
| | | | | N1 Baseline-to-Peak | 0.41 |
| | | | | N1 Peak-to-Peak | 0.26 |
| | | | | P2 Baseline-to-Peak | 0.74 |
| | | | | P2 Peak-to-Peak | 0.71 |
| | | | | N2 Baseline-to-Peak | 0.65 |
| | | | | N2 Peak-to-Peak | 0.47 |
| | | | | P3 Baseline-to-Peak | 0.75 |
| | | | | P3 Peak-to-Peak | 0.57 |
| Lin et al. (2020) | 18–30 years | N = 53 | 1–3 weeks | ERN Peak-to-Peak | 0.69 |
| | | | | Pe Peak-to-Peak | 0.74 |
| Weinberg and Hajcak (2011) | Mean age of 21.12 years | N = 26 | 1.5–2.5 years | ERN Peak | 0.62 |
| | | | | CRN Peak | 0.55 |
| | | | | ΔERN Peak | 0.60 |
| | | | | Area Under the ERN | 0.62 |
| | | | | Area Under the CRN | 0.72 |
| | | | | Area Difference ΔERN | 0.66 |
| | | | | Area Under the Pe | 0.68 |
| | | | | Area Around the ERN Peak | 0.66 |
| | | | | Area Around the CRN Peak | 0.66 |
| | | | | Area Around the Δpeak | 0.56 |
| Levinson et al. (2017) | Undergraduates | N = 59 | 1 week | RewP Mean | 0.62 |
| | | | | FN Mean | 0.81 |
| | | | | ΔRewP Mean | 0.43 |
| Fallgatter et al. (2001) | 22–60 years | N = 23 | 30 min | P300 Go Peak | 0.85 |
| | | | | P300 NoGo Peak | 0.92 |
| Brunner et al. (2013) | Median age 27.5 years | N = 26 | 6–18 months | P3 NoGo Wave Peak | 0.81 |
| | | | | IC P3 NoGo Early Peak | 0.85 |
| | | | | IC P3 NoGo Late Peak | 0.80 |
| Hämmerer et al. (2012) | Younger adults (mean age = 24.27 years) | N = 47 | 2 weeks | P2 Peak CPT Go Trials | 0.83 |
| | | | | N2 Peak CPT Go Trials | 0.79 |
| | | | | P3 Peak CPT Go Trials | 0.56 |
| | | | | P2-N2 Peak CPT Go Trials | 0.76 |
| | | | | P2 Peak Reinforcement Learning Task (Avg Gain/Loss) | 0.59 |
| | | | | N2 Peak Reinforcement Learning Task (Avg Gain/Loss) | 0.63 |
| | | | | P3 Peak Reinforcement Learning Task (Avg Gain/Loss) | 0.64 |
| | | | | P2-N2 Peak Reinforcement Learning Task (Avg Gain/Loss) | 0.67 |
| | Older adults (mean age = 71.24 years) | N = 47 | 2 weeks | P2 Peak CPT Go Trials | 0.75 |
| | | | | N2 Peak CPT Go Trials | 0.76 |
| | | | | P3 Peak CPT Go Trials | 0.77 |
| | | | | P2-N2 Peak CPT Go Trials | 0.67 |
| | | | | P2 Peak Reinforcement Learning Task (Avg Gain/Loss) | 0.83 |
| | | | | N2 Peak Reinforcement Learning Task (Avg Gain/Loss) | 0.73 |
| | | | | P3 Peak Reinforcement Learning Task (Avg Gain/Loss) | 0.70 |
| | | | | P2-N2 Peak Reinforcement Learning Task (Avg Gain/Loss) | 0.78 |
| Cassidy et al. (2012) | 19–35 years old | N = 25 | 1 month | P1 Peak | 0.76 |
| | | | | N1 (PO8) Peak | 0.87 |
| | | | | N1 (PO7) Peak | 0.91 |
| | | | | P3a Peak | 0.77 |
| | | | | P3a Difference Peak | 0.64 |
| | | | | P3b Peak | 0.77 |
| | | | | P3b Difference Peak | 0.52 |
| | | | | ERN Peak | 0.74 |
| | | | | ERN Difference Peak | 0.87 |
| | | | | Pe Peak | 0.71 |
| | | | | Pe Difference Peak | 0.85 |
| | | | | P400 Peak | 0.85 |
| | | | | N170 Peak | 0.84 |
| | | | | ERN Peak-to-Peak | 0.76 |
| | | | | ERN Difference Peak-to-Peak | 0.56 |
| | | | | P3a Mean | 0.78 |
| | | | | P3a Difference Mean | 0.82 |
| | | | | P3b Mean | 0.80 |
| | | | | P3b Difference Mean | 0.73 |
| | | | | Pe Mean | 0.62 |
| | | | | Pe Difference Mean | 0.74 |
| | | | | P400 Mean | 0.85 |
| | | | | Area Under the P3a | 0.78 |
| | | | | Area Difference P3a | 0.82 |
| | | | | Area Under the P3b | 0.83 |
| | | | | Area Difference P3b | 0.59 |
| | | | | Area Under the Pe | 0.54 |
| | | | | Area Difference Pe | 0.78 |
| | | | | Area Under the P400 | 0.80 |
| Huffmeijer et al. (2014) | 18–22 years | N = 10 | 4 weeks | VPP Mean | 0.95 |
| | | | | N170 Mean (Avg Left/Right) | 0.91 |
| | | | | MFN Mean | 0.09 |
| | | | | P3 Mean (Avg Left/Right) | 0.63 |
| | | | | LPP Mean | 0.85 |
| Segalowitz et al. (2010) | Mean age of 28.2 years | N = 11 | 20 min | ERN (Fz) Peak-to-Peak | 0.66 |
| | | | | ERN (FCz) Peak-to-Peak | 0.79 |
| | | | | ERN (Cz) Peak-to-Peak | 0.73 |
| Sinha et al. (1992) | Mean age of 36.48 years | N = 44 | 14 months | Visual N1 Peak (Avg Oz, Cz, Pz) | 0.69 |
| | | | | Visual N2 Peak (Avg Oz, Cz, Pz) | 0.66 |
| | | | | Visual P3 Peak (Avg Cz, Pz) | 0.69 |
| | | | | Auditory N1 Peak (Cz) | 0.66 |
| | | | | Auditory N2 Peak (Cz) | 0.47 |
| | | | | Auditory P3 Peak (Avg Cz, Pz) | 0.56 |
| Kinoshita et al. (1996) | 29–52 years | N = 10 | 1 week | P300 Baseline-to-Peak | 0.49 |
| | | | | N100 Baseline-to-Peak | 0.58 |
| | | | | N200 Baseline-to-Peak | 0.51 |
| | | | | N100-P300 Peak-to-Peak | 0.48 |
| | | | | N200-P300 Peak-to-Peak | 0.54 |
| Rentzsch et al. (2008) | 19–51 years | N = 41 | 4 weeks | P50 Base-to-Peak | 0.86 |
| | | | | N100 Base-to-Peak | 0.71 |
| | | | | P200 Base-to-Peak | 0.82 |
| | | | | P50 Peak-to-Peak | 0.89 |
| | | | | N100 Peak-to-Peak | 0.70 |
| | | | | P200 Peak-to-Peak | 0.78 |
| Malcolm et al. (2019) | Mean age of 24.2 years | N = 12 | Mean of 2.3 years | Frontocentral N2 Mean | 0.42 |
| | | | | Central N2 Mean | 0.61 |
| | | | | Centroparietal N2 Mean | 0.28 |
| | | | | Frontocentral P3 Mean | 0.61 |
| | | | | Central P3 Mean | 0.61 |
| | | | | Centroparietal P3 Mean | 0.40 |
| Thesen and Murphy (2002) | Younger adults and elderly | N = 20 | 4 weeks | N1 Baseline-to-Peak | 0.51 |
| | | | | P2 Baseline-to-Peak | 0.27 |
| | | | | P3 Baseline-to-Peak | 0.50 |
| | | | | N1-P2 Peak-to-Peak | 0.55 |
| | | | | N1-P3 Peak-to-Peak | 0.52 |
| Olvet and Hajcak (2009) | Undergraduates | N = 45 | 2 weeks | CRN Peak | 0.58 |
| | | | | ERN Peak | 0.70 |
| | | | | ERN-CRN Difference Peak | 0.51 |
| | | | | Area Under the CRN | 0.78 |
| | | | | Area Under the ERN | 0.70 |
| | | | | Area Difference ERN-CRN | 0.47 |
| | | | | Area Under the Pe | 0.75 |
| Larson et al. (2010) | 19–29 years | N = 20 | 2 weeks | ERN Mean | 0.66 |
| | | | | CRN Mean | 0.75 |
| | | | | Pe Mean (Error Trials) | 0.48 |
| | | | | Pe Mean (Correct Trials) | 0.68 |
| Sandre et al. (2020) | Mean age of 18.2 years | N = 33 | 5 months | ERN Peak (Cz) | 0.67 |