Abstract

Background

Platform trials gained popularity during the last few years as they increase flexibility compared to multi-arm trials by allowing new experimental arms entering when the trial already started. Using a shared control group in platform trials increases the trial efficiency compared to separate trials. Because of the later entry of some of the experimental treatment arms, the shared control group includes concurrent and non-concurrent control data. For a given experimental arm, non-concurrent controls refer to patients allocated to the control arm before the arm enters the trial, while concurrent controls refer to control patients that are randomised concurrently to the experimental arm. Using non-concurrent controls can result in bias in the estimate in case of time trends if the appropriate methodology is not used and the assumptions are not met.

Methods

We conducted two reviews on the use of non-concurrent controls in platform trials: one on statistical methodology and one on regulatory guidance. We broadened our searches to the use of external and historical control data. We conducted our review on the statistical methodology in 43 articles identified through a systematic search in PubMed and performed a review on regulatory guidance on the use of non-concurrent controls in 37 guidelines published on the EMA and FDA websites.

Results

Only 7/43 of the methodological articles and 4/37 guidelines focused on platform trials. With respect to the statistical methodology, in 28/43 articles, a Bayesian approach was used to incorporate external/non-concurrent controls while 7/43 used a frequentist approach and 8/43 considered both. The majority of the articles considered a method that downweights the non-concurrent control in favour of concurrent control data (34/43), using for instance meta-analytic or propensity score approaches, and 11/43 considered a modelling-based approach, using regression models to incorporate non-concurrent control data. In regulatory guidelines, the use of non-concurrent control data was considered critical but was deemed acceptable for rare diseases in 12/37 guidelines or was accepted in specific indications (12/37). Non-comparability (30/37) and bias (16/37) were raised most often as the general concerns with non-concurrent controls. Indication specific guidelines were found to be most instructive.

Conclusions

Statistical methods for incorporating non-concurrent controls are available in the literature, either by means of methods originally proposed for the incorporation of external controls or non-concurrent controls in platform trials. Methods mainly differ with respect to how the concurrent and non-concurrent data are combined and temporary changes handled. Regulatory guidance for non-concurrent controls in platform trials are currently still limited.

Supplementary Information

The online version contains supplementary material available at 10.1186/s13063-023-07398-7.

Keywords: External controls, Non-concurrent controls, Platform trials

Background

Platform trials aim at evaluating the efficacy of several experimental treatments within a single trial. Experimental arms are allowed to be added or removed as the trial progresses. Furthermore, in platform trials the efficacy compared to control can be tested using a shared control group, which increases the statistical power and reduces the number of required patients as compared to separate trials. Shared controls in platform trials may include concurrent and non-concurrent control data, where, for a given experimental arm, non-concurrent controls refer to data from patients allocated in the control arm before the arm enters the trial. Platform trials have gained popularity during the last years, and there has been much discussion and controversy regarding the use of non-concurrent controls. Non-concurrent controls can further increase the power of the trial, but as the randomisation does not occur simultaneously to treatment arms they can introduce an inflation of the type 1 error rate and bias in the estimates if time trends are present.

The use of randomisation in clinical trials has become the gold standard and the proper approach to evaluate a new therapy in a clinical trial is by using a randomised control. However, sometimes the consideration of a randomised control is not feasible as is the case, for instance, in studies for diseases with high mortality or certain rare diseases. In such cases, the use of historical controls has been considered either by substituting the randomised control or by combining them to the randomised control [1]. The combination of randomised and historical controls in clinical trials has received much attention over the past several decades, see for example [2-6] for general discussions on the use of historical controls. Considerable interest has focused on how to combine both controls without introducing bias while reducing the total sample size needed and/or the average total trial duration since Pocock’s seminal paper [7] discussed the use of historical controls in the design and analysis of randomised treatment-control trials. For reviews on methods for the inclusion of historical controls, readers may refer to [8] and [9]. As with historical controls, non-concurrent controls in platform trials may differ from concurrent controls, and therefore utilising them in the analyses could lead to biases in the estimates if naive analyses are performed [10]. In the context of historical controls, Viele et al. [8] defined “drift” as the difference between the true unknown concurrent control parameter and the observed historical control data. To mitigate this problem, several approaches have been proposed for historical controls that could be applied as well to non-concurrent controls in platform trials. The first goal of this paper is to identify the methods currently available for incorporating non-concurrent controls, clarify the key concepts and assumptions, and name the main characteristics of each method.

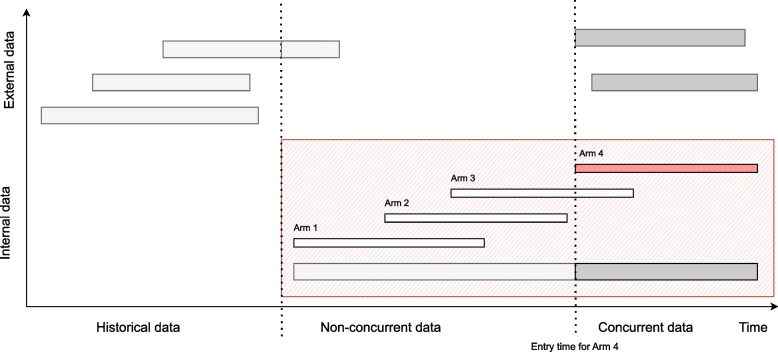

In the International Council for Harmonisation of Technical Requirements for Pharmaceuticals for Human Use (ICH) E10 guideline [11], an externally controlled trial is defined as “one in which the control group consists of patients who are not part of the randomised study as the group receiving the investigational agent, i.e. there is no concurrently randomised control group”. Categorising them by the time the subject data were collected, Jahanshahi et al. [2] distinguish between the following two types of external controls by: Concurrent external controls, as the group of patients recruited to control based on subject level data collected at the same time as the treatment arm but in another setting; and Non-concurrent external controls (also referred to as historical controls), as the group based on data collected at a time different (e.g. historical) from the treatment arm (e.g. retrospectively collected from a natural history study, or published data from a previous clinical study). Analogously, internal controls can be defined as the group of patients who are part of the same randomised trial as the group receiving the investigational agent, and divided them as well into: Non-concurrent (internal) controls: control patients who were recruited before the experimental treatment entered the trial and Concurrent (internal) controls: patients who are recruited to the control when the experimental treatment is part of the trial. Thus, concurrent control patients have a positive allocation probability of being randomised to the experimental arm. In that terminology the non-concurrent controls in platform trials are “non-concurrent internal controls”. Note here that the controls that are most important to distinguish between are: external controls from internal controls; and concurrent internal controls from non-concurrent internal controls. Also note that external control can come from several sources, such as clinical trials, but also “real world data”. See Fig. 1 for an illustration of different controls definitions.

Fig. 1.

Definition of controls depending on the source and time. The data within the red box represents the (internal) data from a platform trial, the data outside the red box represents the external data. Non-concurrent control data for arm 4 is represented in light grey boxes and concurrent controls are represented in dark grey

Although the inclusion of non-concurrent controls in analyses has been the subject of regulatory discussions for some time, it is still a young topic. Therefore, the second objective of this paper is to summarise the current regulatory view on non-concurrent controls in order to clarify the key concepts and current guidance. Therefore, we conducted a systematic search in regulatory guidelines regarding the use of non-concurrent controls. For the sake of simplicity, we will not use the term “internal” for concurrent and non-concurrent controls and distinguish them from external controls when it is unclear whether it is concurrent or non-concurrent external controls. Especially methods and regulatory opinions on historical controls and non-concurrent (internal) controls have a considerable overlap, and we will describe both and comment on commonalities and distinctions. We conclude the paper with a discussion of the advantages and potential caveats of using non-concurrent controls.

Methods

Review of methods

Search strategy

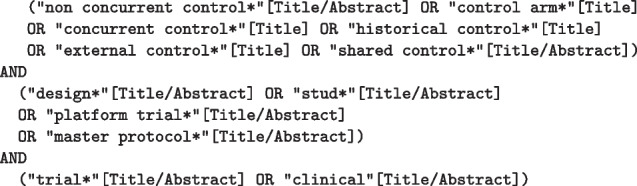

We carried out a systematic search for the methods in the PubMed database and supplemented the identified with manually searched papers. To identify methods to incorporate non-concurrent control data in PubMed, we performed the following search:

This study is reported in accordance with the PRISMA reporting guideline’s extension for scoping reviews [12]. Other options including the term “historical controls” were taken into consideration. However, such searches resulted in a large number of articles (for instance, including the term “historical control*” in the search as [Title/Abstract] returns 3209 articles) that were unfeasible to review and most of which were not of interest for this review. Therefore, it was decided to perform a narrower search, prioritising the relevance of the articles in terms of the methodology, and checking that the predefined a priori list of articles of interest was included and that the most relevant articles reviewing historical controls methods were also included.

Identification of articles

In order to be included for data extraction, the focus of the article had to be one of the following: (1) The description of a method proposed to include external/non-concurrent controls and concurrent controls in clinical trials. (2) The article considered an application of a method which included external/ non-concurrent controls together with concurrent controls in a clinical trial context with a detailed description of the method used. (3) The article is an overview of several methods to include external controls (e.g. review article). (4) The article is about the comparison of several methods (e.g. via simulation studies) to include external controls. On the other hand, the article was not considered if (1) the article focused on shared concurrent controls but not on the inclusion of external/non-concurrent controls; (2) the term external/non-concurrent control was used in another context; and (3) the article focused on a clinical trial using external/non-concurrent controls, but not on the methods.

The screening was performed by two reviewers in a three-step process: (1) Article titles were screened by a reviewer and selected based on the inclusion criteria or excluded based on the exclusion criteria. (2) Article abstracts were screened and selected by a reviewer based on the inclusion criteria or excluded based on the exclusion criteria. (3) Selected articles were fully read and selected by two reviewers based on the inclusion criteria or excluded based on the exclusion criteria. If the selected articles referred to other relevant articles not found by our search, these were added retrospectively.

Data extraction

Information from the identified articles was extracted by two independent reviewers using a standardised data extraction form (see Table 1 in the Supplementary Material 1). General information of the articles such as the year of publication, objective of paper or the type of paper (e.g. review, research article) were part of the extraction form. In addition, information on the study design (e.g. endpoints, treatment arms) was extracted. Concerning the incorporation of external/non-concurrent controls, we extracted details on the specific statistical methodology mentioned (e.g. Bayesian/Frequentist approach, covariate adjustment). Further information was collected regarding the implementation of simulation studies and the availability of code and software. Where possible, pre-specified categories were defined for each item in the extraction form. Adjudication was performed by a third reviewer in case of discrepancies. For the complete extraction form see Table 1 in the Supplementary Material 1.

Data analysis

The extracted information was analysed descriptively using R Version 4.0.3. The number of articles in each pre-specified category was determined and free-text fields were summarised in listings.

Review of guidelines

Our initial search was specifically on platform trials and non-concurrent controls in the strict sense. But since platform trials are still relatively new trial designs, there were so far not many guidelines available that addressed the use of non-concurrent controls. More precisely, only four platform trial specific guidelines were available: The recently published US Food and Drug Administration (FDA) guidance on “COVID-19: Master Protocols Evaluating Drugs and Biological Products for Treatment or Prevention” [13], the FDA guidance “Master Protocols: Efficient Clinical Trial Design Strategies to Expedite Development of Oncology Drugs and Biologics” [14] as well as the FDA guideline on “Interacting with the FDA on Complex Innovative Trial Designs for Drugs and Biological Products” [15], and, as the only European document, “Recommendation paper on the initiation and conduct of complex clinical trials” [16]. The topic of non-concurrent controls was either not considered at all or only marginally in these guidelines. Hence, we decided to broaden the review to guidance provided on the use of external or historical controls in clinical trials and to discuss the relevance and transferability of the results to non-concurrent controls.

Search strategy

Also this systematic guideline review was performed in accordance with the PRISMA reporting guideline’s extension for scoping reviews [12]. The database for our systematic review of guidelines was based on all documents available for download on 20/05/2021 from the database of the European Medicine Agency (EMA) as well as of the US Food and Drug Administration (FDA). In the EMA search engine1, we activated the filters “Topic = Scientific guidelines”, “Categories = Human”, “Type of content = Documents” and “Include Documents = Yes”. In the FDA Guidance Documents search2, we filtered the documents for “Product = Drugs” as well as “Product = Biologics”. We then used the advanced search function of Adobe Acrobat Pro 2020 to search in all pdf documents for the terms:

Duplicates and older draft versions were excluded after the keyword search.

Identification of guideline documents

We only included guidelines for data extraction which were guideline documents, Questions and Answers (QnAs), qualification opinion or reflection papers from EMA, ICH or FDA. We excluded documents (1) in which external/non-concurrent controls were not discussed in the context of an inclusion into the primary analysis (e.g. the use of external/non-concurrent controls were just mentioned in the context of sample size planning); (2) in which one of the keywords and hence the use of external/non-concurrent controls was only mentioned without further recommendation or description (e.g. mentioned only in the title of a reference); (3) in which the use of external/non-concurrent controls was only mentioned in a non-clinical or preclinical setting (4) or in the context of (secondary) safety data analyses or meta-analyses; (5) in which the use of external/non-concurrent controls was discussed in a medical device context.

Data extraction

Information from the identified guidelines was extracted by two independent reviewers using a standardised data extraction form (see Table 3 in the Supplementary Material 1). General information of the guidelines such as the year of the guideline or the type of document (e.g. guideline, reflection paper) were part of the extraction form. We documented whether the guideline discussed the use of external/non-concurrent data in early or late phase and whether the guideline was focused on methodological or clinical aspects. Concerning the use of external/non-concurrent controls, we extracted details on the specific circumstances in which the use was recommended or deemed acceptable, or unacceptable, the concerns that were raised as well as the requirements for the use. Furthermore, we identified the methods mentioned for the incorporation of external/non-concurrent data, the type of inferential question addressed and whether Bayesian methods were supported. We specifically identified whether the use of non-concurrent controls, or the joint use of external and concurrent controls in platform trials was discussed in the guideline. Where possible, pre-specified items were defined for each category in the extraction form after a first pre-screening (Supplementary Material 1 Table 3). Adjudication was performed by a third reviewer in case of discrepancies.

Data analysis

We analysed the extracted data descriptively with R Version 4.0.3. The number of guidelines in each pre-specified category was determined and free-text fields were summarised in listings.

Results of the methods review

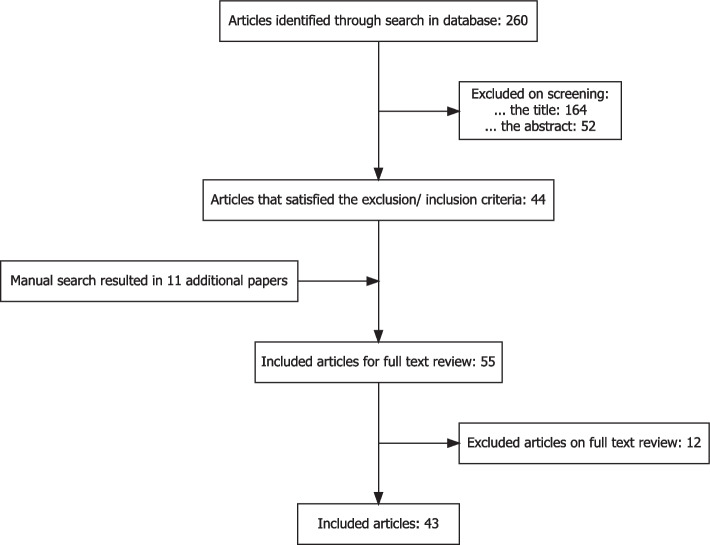

The data base search yielded 260 articles. Based on the titles 164 papers which did not address statistical methods were excluded, leaving 96 papers. We further excluded 52 articles after screening the abstracts. Hence, 44 articles satisfied the inclusion-exclusion criteria based on title and abstract screening. Additionally, 11 articles resulting from a manual search entered the full text review. Based on the full-text review further 12 articles were excluded leading to a total number of 43 relevant articles for which we performed the data extraction. The work-flow of the literature search is depicted in Fig. 2. A full list of these 43 articles can be found in Table 2 in the Supplementary Material 1.

Fig. 2.

Flow diagram of systematic article selection process. Date of search 17/08/2021

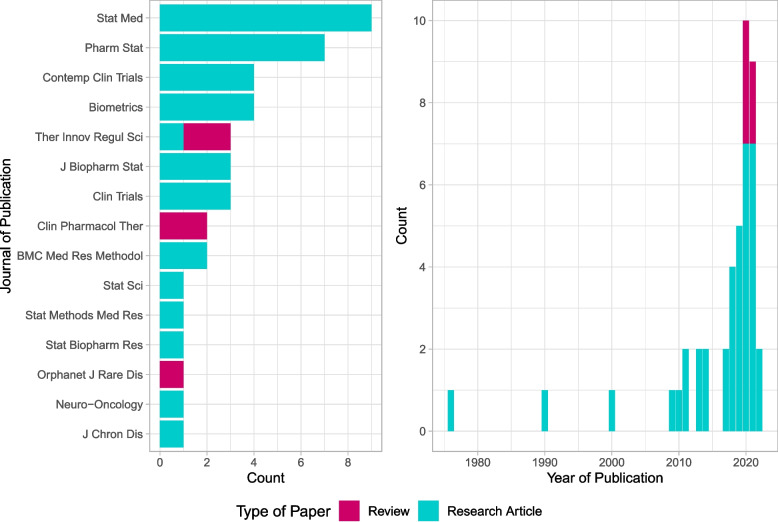

The distribution of the year of publication of the 43 identified articles shows an increase in publications, especially in the last two years (see Fig. 3). Please note that for 2021, only papers available on PubMed up to 17/08/2021 could be included. The articles included in the review are generally methodological articles, published in statistical journals. The journals with the most articles published on this topic were Statistics in Medicine with 9/43 (21%) articles and Pharmaceutical Statistics with 7/43 (16%) articles (see Fig. 3).

Fig. 3.

Journal and year of publication of the identified articles

Only 7/43 (16%) of the articles focused on platform trials while 36/43 (84%) of the articles concentrated on external controls. 28/43 (65%) articles considered the situation of a trial with only one treatment arm. In 21/43 (49%) of the identified articles, the considered primary endpoint was binary, for 16/43 (37%) of the articles a continuous endpoint was chosen, in 10/43 (23%) it was a survival endpoint, in 2/43 (5%) the described methodology was for count data and in 5/43 (12%) the articles provided high-level summaries of methodologies or discussions on the use of external controls without details on specific endpoints. With respect to the statistical methodology, in 28/43 (65% ) of the identified articles a Bayesian approach was used to incorporate external/non-concurrent controls while 7/43 (16%) used a Frequentist approach and 8/43 (19%) considered both.

We distinguish methods into two categories: downweighting-based approaches, referring to methods that downweight the non-concurrent control data in favour of the control data either using Bayesian methods, such as meta-analytic approaches, or propensity score approaches; and modelling-based approaches, referring to methods that use regression models to incorporate historical or non-concurrent control data. The majority of the articles, 34/43 (79%), considered a downweighting-based approach. A modelling-based approach was considered in 11/43 (26%) of the identified papers. Furthermore, we found that 37/43 (86%) of the articles discussed the potential biases and 11/43 (26%) covered interim analyses. Thirty-one out of 43 (72%) of the articles reported a simulation study or a case study and for 13/43 (30%) articles software or code is available.

Note that some articles fall into several categories and that is why the total sums to more than 100% in some cases.

Methods to incorporate non-concurrent controls

In this subsection, we present an overview and description of the main methods proposed in the identified articles. Most of these methods were originally proposed in the context of using historical controls and real-world evidence, but can also be applied to incorporate non-concurrent controls in platform trials.

Test-then-pool approaches

In the “test-then-pool” approach, the distributions of the non-concurrent and concurrent controls are first tested for equality using a frequentist test at level . If the null hypothesis of equality of the distribution functions is rejected, the non-concurrent control data is discarded and a separate analysis using solely the concurrent control data is conducted. If the hypothesis of equality can not be rejected, the non-concurrent and concurrent control data are assumed to be comparable and a pooled analysis is conducted [8, 17]. In the test-then-pool approach, the significance level of the pre-test can be chosen to reflect the a priori trust in the similarity of controls.

In the specific context of platform trials, Ren et al. [18] discussed the incorporation of non-concurrent controls in the analyses by means of a pooling approach in a trial with two treatment arms and a shared control, where one of the treatment arms enters later. The authors assumed that one treatment arm joins the platform later and compared different scenarios in which the treatment was tested against concurrent controls only, as well as against the pooled concurrent and non-concurrent controls without pre-testing the potential differences between controls. They evaluated the overall study power, defined as the probability of detecting at least one effective treatment, and the type I error control, as well as the optimal allocation ratio for the overlapping period between the two treatment arms. Another pooling approach was introduced by Jiao et al. [9], in which a platform trial with independent controls per treatment arm was introduced with a potential incorporation of external controls. The authors proposed a two-step test-then-pool design. In the first step, the test-then-pool approach was applied to the independent control groups in the platform trial to examine pooling of these groups to a common control. In the second step, the test-then-pool approach was applied to an external control and the platform control group (either separate or pooled control, depending on the first step).

Frequentist and Bayesian regression model approaches

In the specific context of platform trials with continuous data and without interim analyses, Lee and Wason [19] considered linear regression models that include a factor corresponding to time to adjust for time trends when using non-concurrent controls in the final analysis. They demonstrated in a simulation study that modelling time trends by a step-wise function leads to unbiased tests, even if the true time trend is linear rather than step-wise. These models were recently further investigated by Bofill Roig et al. [10] for platform trials with continuous and binary endpoints, showing that the regression model adjusting for time trends using a step-wise function relies on the assumption of equal time trends across all arms and on the correct specification of the scale of the time trends in the model. For fixed sample platform trials (without interim analysis), they show that under these assumptions the regression model gives valid treatment effect estimates and asymptotically controls the type 1 error if block randomisation is used.

For platform trials with continuous endpoints, Saville et al. [20] proposed a Bayesian generalised linear model, the so-called “Bayesian Time Machine”. The Time Machine allows to model the time trends and to perform an adjusted analysis. In this approach, in order to model the potential drift over time in response, time is divided into pre-defined time “buckets” (e.g. months or quarters) and the estimates for the time effects in different periods are smoothed using a normal dynamic linear model. The Time Machine can achieve nearly unbiased estimates if time trends are equal in all treatments and the time buckets and priors in the model are chosen appropriately. The model can also give an improvement in terms of power and mean square error as compared to approaches that estimate the time period effect independently in each time period.

Note that a difference in these model-based approaches is the definition of the time intervals. In the frequentist models in [19], the time intervals are defined by the time at which arms enter or leave the trial, but in Saville et al. [20], the intervals are defined based on the calendar times by means of the time “buckets” [21].

Propensity score approaches and baseline covariates-adjustments

Propensity score approaches have been proposed to adjust for differences between historical and concurrent controls. The scores are estimated with regression models based on baseline covariates [4].

Propensity scores were originally introduced by Rosenbaum and Rubin [22] in the context of causal inference in observational studies. The propensity score is the probability of being in one treatment group rather than the other given the observed baseline covariates. It is used as a balancing score, since patients with the same propensity score can be considered to have balanced covariates between the two groups. The balancing is then performed by matching, stratification, weighting or covariate adjustment using the propensity scores.

Yuan et al. considered in [23] two different approaches to augment a concurrent control arm with historical control data via propensity score matching. In [24], Chen et al. proposed a propensity score-integrated composite likelihood approach for augmentation of the concurrent control arm with real-world data, in which the composite likelihood is utilised to downweight the information contributed by the external controls in each propensity score stratum.

Covariate adjustment can also be directly implemented with regression models including relevant baseline covariates. For example, in the context of borrowing of historical control data, Han et al. [25] proposed a Bayesian hierarchical model that can incorporate patient-level baseline covariates to calibrate the exchangeability assumption between concurrent and historical control data.

Collignon et al. [5] discussed how the inter-study variation might be useful to quantify the amount of information that historical controls can provide, and opted for a clustered allocation design as the closest to a randomised trial.

Power prior and commensurate power prior

The Bayesian power prior approaches discount the historical data by a power parameter to account for potential differences between historical and concurrent control data. In their seminal paper, Ibrahim and Chen [26] proposed power priors in the context of regression models. For the prior specification of the regression coefficients, available historical data is used together with a scalar weight parameter that quantifies the uncertainty in the historical control data. The choice of such a parameter determines the weight of the historical data to be incorporated into the current study and hence, has implications on the operating characteristics of the trial. Although the choice of the value of this parameter is thus essential, it is often challenging to specify.

Duan et al. [27] and Neuenschwander et al. [28] proposed a modified power prior approach in which the weight parameter is considered an unknown parameter. This modified prior aligns with the Bayesian rationale and thus, introduces a prior for this weight. Banbeta et al. extended in [29] the modified power prior to incorporating multiple historical control arms. Gravestock et al. proposed in [30] an empirical Bayes approach to estimate the weight parameter based on the observed data. Bennett et al. [31] proposed a different approach to derive the weight parameter. They focused on adaptive designs with binary endpoints in which historical controls can replace the concurrent controls when there is an agreement between the historical and concurrent control data at the interim analysis. The authors [31] proposed two Bayesian methods for assessing the agreement between historical and concurrent control: the first method is based on an equivalence probability weight and the second on a weight based on tail area probabilities.

The commensurate power prior [31, 32] is another adaptive modification of the original power prior formulation [26] that uses conditional prior distributions for the concurrent controls, which adjusts the weight parameter through a measure of commensurability. In the specific context of platform trials, Normington et al. [33] also considered a trial with independent controls per treatment arm. Instead of borrowing data from an external control, the authors proposed a commensurate prior to borrow control data from the independent controls on the same platform. In comparison to an all-or-nothing approach (pool or discard completely), their design did not perform worse in simulations in terms of treatment effect bias (if control data was wrongly included) or longer study duration (if control data was wrongly discarded).

Hierarchical models

Meta-analytic-predictive (MAP) prior approaches account for heterogeneity by assuming exchangeability among the historical and concurrent control parameters and explicitly model the between-trial variation. This method performs a prediction of the control effect in a target clinical trial from historical control data using random-effects meta-analytic methods [4]. Schmidli et al. [34] defined a robust extension of the MAP (R-MAP) prior to allow for further discounting of historical data in the case of extreme discordance between the historical and concurrent control data. This prior is a mixture prior defined by two components: a MAP prior based on the historical data and a weakly-informative prior. Additionally, they proposed an adaptive design where in the interim analysis the agreement between historical controls and concurrent controls is evaluated. If the data is in agreement, then fewer concurrent controls will be recruited in the second stage.

More recently, Hupf et al. extended in [35] the MAP prior approach and proposed the Bayesian semiparametric MAP prior (BaSe-MAP). In the BaSe-MAP approach the random effects in the MAP prior are modelled nonparametrically as a Dirichlet process mixture of Gaussian distributions centred on a common mean. The BaSe-MAP borrows more conservatively than the other priors, but its performance in terms of frequentist operating characteristics is similar or better than the MAP and robust MAP methods.

Wang [36] proposed a new Bayesian model for adjusting for time trends in platform trials. The approach is based on pooling control data, using dynamic borrowing depending on how similar concurrent and non-concurrent controls are. In their setting, the standard of care control arm was permitted to be changed over time in the platform. Therefore, comparisons to the control arm were subject to change and therefore, changes over different recruitment times were expected. The authors proposed an extension of the R-MAP approach to accommodate these changes. Specifically, the approach uses mixture priors that link the most recent non-concurrent controls with those furthest away from the concurrent, and then using this to decide how far in time the non-concurrent controls should be pooled. The methods are illustrated by means of examples based on e.g. trials of Ebola virus disease therapeutics.

Elastic prior

The elastic prior method [37] uses a so-called elastic function, which is basically a congruence measure mapped to (0,1). This elastic function measures the strength of evidence for the congruence between concurrent and historical data (e.g. a test statistic). The elastic function is constructed to satisfy a set of pre-specified criteria such that the resulting prior will strongly borrow information when historical and trial data are not in conflict with each other, but refrain from information borrowing when historical and trial data are in-congruent by inflating the variance of the prior distribution. The method was extended in [38] to biosimilar studies.

Pocock’s random bias model

In his seminal paper [7], Pocock proposed a Bayesian statistical method that accounts for the difference in the historical and concurrent controls by means of a bias term, which is assumed to be a normally distributed random variable with mean zero. The method is equivalent to the commensurate prior in [32] in case of a single historical trial, except that the between-study variance is not estimated but set in advance. In addition, the random bias model is similar to the MAP and R-MAP approach. For further discussions on the similarities of these methods see [39] and [40].

Discussion and comparison of methods

Jiao et al. [9] compared several strategies to borrow historical external control data in the context of platform trials, including test-then-pool, dynamic pooling and MAP-prior, through a simulation study with respect to the type I error of rejecting the null hypothesis of a new added arm. They showed that when using the pooled approach, the type I error (T1E) might be strongly inflated if there are positive time trends. In the test-then-pool approach, there may be a T1E inflation but it depends on the significance level used in the pretest. In the same line, the dynamic pooling and the MAP approach might lead to an inflation when there are positive time trends depending on the value of the weight parameters used. A further note is that test-then-pool and MAP approaches have bounded inflation of the T1E, while for others, the T1E goes to 1 when the differences between the concurrent and non-concurrent controls become larger. Isogawa et al. compared in [41] MAP prior and the Power Prior approaches for the incorporation of historical control data in clinical trials with a binary endpoint. They summarise the results of their simulation study with the conclusion that if importance is attached to control T1E, the MAP approach based on a normal-normal hierarchical model may be preferred, while the power prior borrows in general more and hence, has larger power at the cost of a larger T1E inflation in case of conflict of historical and concurrent control data.

In Burger et al. [3], the authors elaborated on different sources of bias when using external controls including but not limited to calendar time bias, selection bias and regional bias. Platform trials were mentioned as one of the potential applications of external controls. They acknowledged that non-concurrent controls in platform trials can not be entirely considered “external” but are subject to similar biases as external controls, especially to time trends in the control group. As mentioned by Lee and Wason [19], or Saville et al. [20], regression models could be used to accommodate the time trends and lead to unbiased estimators and T1E control under the assumption of equal time trends on the model scale [10]. In [2], Jahanshahi et al. aim to better understand the use of external controls to support product development and approval. They reviewed FDA regulatory approval decisions between 2000 and 2019 for drug and biologic products to identify pivotal studies that leveraged external controls, with a focus on selected therapeutic areas. Although the scope of the paper is not to review the statistical methods on the use of external controls, they highlighted some of the methods and approaches often used. They referred to three statistical approaches often used to adjust for baseline imbalances: matching, covariate adjustment, and stratification. They discussed advantages and disadvantages of using propensity scores to match or stratify based on the score. As an alternative, they mentioned analysis approaches to evaluate the consistency among results using different sources of external control data.

Results of the guideline review

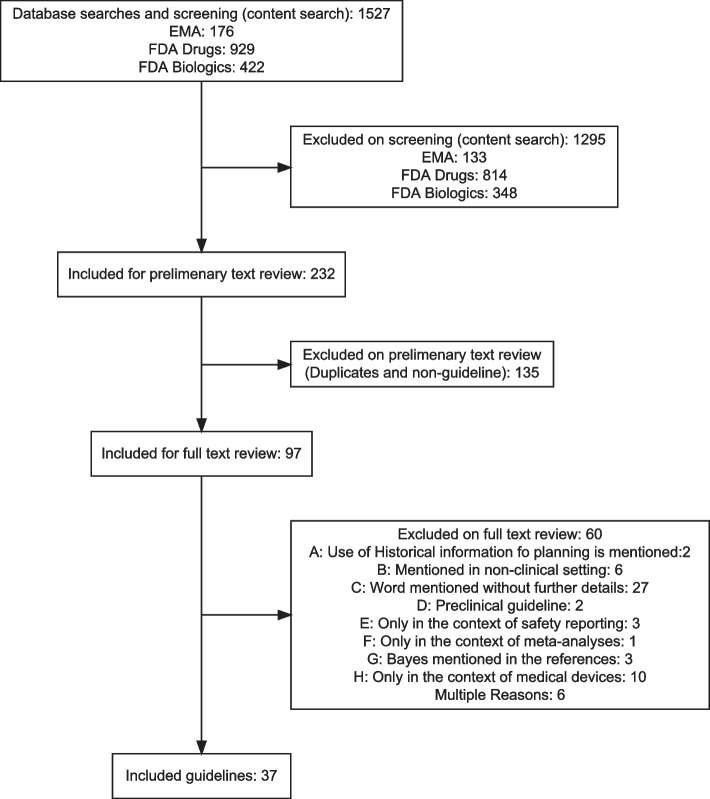

Overall, we found 1527 documents from the EMA and FDA database (see Fig. 4): 176 (11 %) documents from the EMA database and 1351 (88 %) documents from the FDA website. In all these documents, we searched for the above defined keywords which resulted in 232 documents. In a first filtering step, we excluded duplicates and old drafts for which a newer draft or final version was already included in the downloaded documents. As a result, 97 documents were included for a full text review. Based on the inclusion and exclusion criteria, 60 guidelines were excluded and 37 guidelines were identified as relevant for our final review and data extraction: 27 from the FDA, 6 from the EMA, and 4 from the ICH databases. Of these, 34 (92%) were guidelines, 2 (5%) were reflection papers and 1 (3 %) was a qualification opinion. As in the case of the methods review, we could note that there has been an increase in the number of guidelines mentioning this topic over the last few years, with 2019 being the year with more guidelines (10). The full list of included guidelines can be found in the Supplementary Material 1 Table 4. As already mentioned above, only four platform trial specific guidelines were available at the time of extraction [13-16]. All four documents raised the issue of potential time drifts. For this reason, the FDA guideline for Master Protocols in COVID-19 takes a clear position against the use of non-concurrent controls in this context. The other documents did not provide further guidance or details. Therefore, as mentioned above, we broadened our search to guidance provided on the use of external or historical controls in clinical trials and discussed the relevance and transferability of the results to non-concurrent controls in platform trials. The discussion of the regulatory guidelines is the interpretation of the authors only and cannot always be directly derived from the guidance documents.

Fig. 4.

Flow chart of guidelines identification. Date of search 20/05/2021

Circumstances in which the use of external controls is potentially acceptable

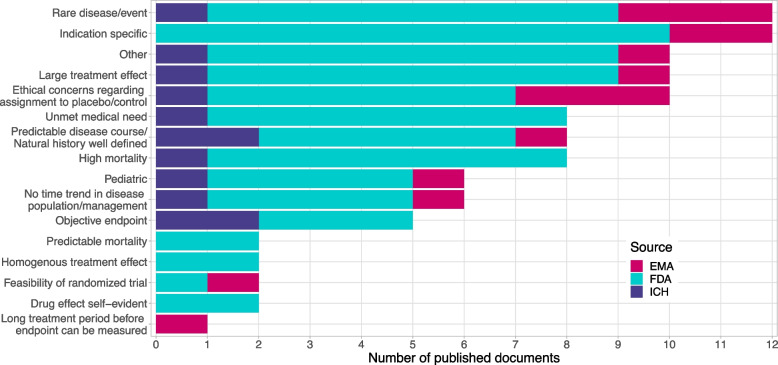

Based on a first text screening of the guidelines, we pre-specified the following circumstances in which the use of external controls might be acceptable: the targeted indication is related to a rare disease, the trial is in a paediatric context, there is an unmet medical need, the indication is related to a high mortality, a long treatment period is needed before the endpoint can be measured, a large treatment effect to be expected, no time trend in disease population or management is expected, a homogeneous treatment effect to be expected as well as there exist ethical concerns regarding assignment to the control group (see Fig. 5). In paediatric clinical trials, one of the ethical considerations is that children should not be enrolled in a clinical study unless necessary to achieve an important paediatric public health need [42]. Novel designs, including those that borrow external or historical data, might, in this case, be considered [43, 44] to conduct clinical trials in difficult experimental situations. The circumstance that was mentioned the most in the identified guideline documents was “rare disease” or was an “indication specific” concern. But also a “large treatment effect” or “ethical concerns” were mentioned quite often. All of these results relate quite generally to historical or external controls. If we look specifically at these circumstances under the focus of non-concurrent controls, we see that not all of these circumstances are one to one transferable to the question “when to use or not use non-concurrent controls” for the final analysis in a platform trial. But “large treatment effect”, “no time trend in disease course”, as well as an “objective endpoint” could, for example, be circumstances in which also the use of non-concurrent controls in a platform trial might be more appropriate.

Fig. 5.

Circumstances in which the use of external/historical/non-concurrent control is recommended or deemed acceptable. Categories are not mutually exclusive. One guideline can be present in multiple circumstance categories. Other was selected in case a guideline mentioned a circumstance which was not pre-specified such as a reference to ICH E10

Methods mentioned for the use

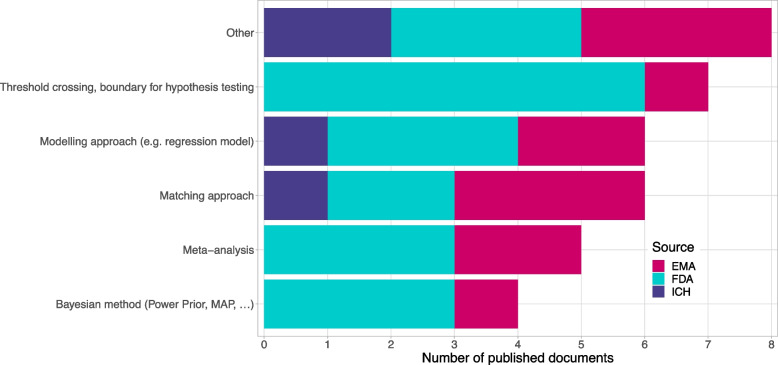

Within the methods one can of course distinguish between Bayesian as well as Frequentist methods. We furthermore decided to differentiate between (Frequentist) regression model approaches, matching approaches, a classical meta-analysis approach as well as classical threshold crossing whereby the threshold is based on historical control data (see Fig. 6). As an overall conclusion we can say, that the guidelines remain vague rather than instructive on the methods. None of the methods was mentioned conspicuously more often than another; and none of the methods was described or discussed in detail. More or less, if methods were mentioned at all, they were mentioned only in a passing sentence. Regarding the relevance of non-concurrent controls: all methods applicable are also applicable to non-concurrent controls. Due to overlapping time-periods, modelling approaches which attempt to model a time trend fit here probably even much better.

Fig. 6.

Methods mentioned for the use of historical/external/non-concurrent controls. Categories are not mutually exclusive. One guideline can be present in multiple methods categories. Other was selected in case a guideline mentioned a potential use of external data without a specific methodology (6 guidelines), or a methodology which was not pre-specified such as extrapolation or simulation (2 guidelines)

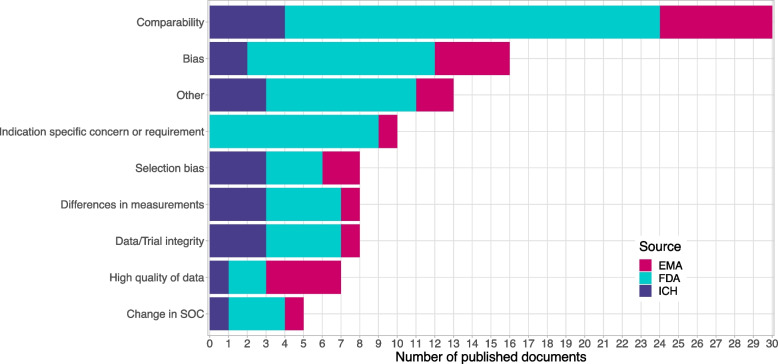

Concerns

Non-comparability and bias were raised most often as the general concerns with external controls. Selection bias, differences in measurements, data/trial integrity and changes in standard of care were raised several times as more specific concerns that can lead to non-comparability and bias (see Fig. 7). For a detailed explanation of these terms, refer to [3]. Evaluating the risks for these issues for non-concurrent controls in platform trials, the authors see the risks of selection bias and differences in measurement as significantly reduced: No selection of specific data sources and data points is done so that there cannot be any willful selection bias, nor is publication bias an issue. In platform trials, the definition of measurement systems and their quality assurance is part of the clinical trial planning, such that the use of the same instruments and standard operation procedures throughout the trial can be mandated, and e.g. for newly developed measurements, shift and drift and operator bias can be taken care of. For the risks on data and trial integrity in platform trials, the authors see platform trials comparable to adaptive trials rather than to trials using external controls, that means, they exist but can be mitigated more easily in platform trials than in trials with external controls: Changes in the behaviours of investigators, patients and carers upon revelation of results can be mitigated in platform trials by good planning and precaution measures in masking and information dissemination. This is a big advantage over external controls, where there is very limited masking possible, and often knowledge on the external controls is available to many stakeholders of the trial. Changes in the standard of care can occur in the course of a platform trial, and in that case, this clearly limits the comparability of non-concurrent controls just as it would be the case for any external control group. The advantage over external controls is the level of transparency on the timing and extent of such a change in platform trials. This allows informed decision making on which non-concurrent controls to use or not to use.

Fig. 7.

Concerns raised with the use of historical/external/non-concurrent controls. Categories are not mutually exclusive. One guideline can be present in multiple concern categories. Other was selected in case a guideline mentioned a concern which was not pre-specified such as missing unknown important prognostic factors in historical controls

Requirements

Requirements mentioned in guidelines mirror the concerns that are raised: the populations have to be comparable, and the data of high quality (see Fig. 7).

Indication specific recommendations

The most instructive guidance can be found on the use of external controls for specific indications. There is discouragement if the disease is very heterogeneous (amyotrophic lateral sclerosis [45], acute myeloid leukaemia [46]), when the disease is known to change over time (influenza [47], COVID-19 [13]), when bias or changes over time are suspected for procedures in general and endpoint evaluation (functional tests in Duchenne muscular dystrophy [48], evaluation techniques for objective response rate and progression free survival in oncology [49], dietary management [50]), and where standard of care is changing rapidly (COVID-19 [13], knee cartilage [51]). On the positive side, Table 1 gives examples of recommendations on including external controls. In case of the intravenous immunoglobulin replacement therapy trials, the FDA switched from a clear randomised clinical trial recommendation to a historically controlled recommendation between 1999 and 2000, and the process is described in more detail in [52]. These examples show how the details of trial designs help in deciding about the variance-bias trade-off for external controls, and raise the hope that instructive guidance can be developed for platform trials for specific scientific questions.

Table 1.

Recommendations for the use of external controls

| Document title | Citation |

|---|---|

| Chronic hepatitis C virus infection: developing direct-acting antiviral drugs for treatment | The primary efficacy comparison (either superiority or noninferiority depending on regimen studied) should be to a historical reference of a recommended HCV treatment regimen rather than a comparison to those receiving placebo (in the deferred treatment arm) because it is expected that no patient will respond virologically while receiving placebo. |

| Antibacterial therapies for patients with an unmet medical need for the treatment of serious bacterial diseases | We recommend randomising at least a small number of patients to the active control (e.g. through disproportionate randomisation of 4:1), if feasible and ethical based on an active control considered to be best-available therapy. This will allow for an assessment of the comparability of the external control to the trial population. Frequentist and Bayesian statistical methods can then be used to combine external control data with data from the patients randomised to the active control in assessing differences between treatment groups for the primary comparison. |

| Reflection paper on the regulatory requirements for vaccines intended to provide protection against variant strain(s) of SARS-CoV-2 | If the epidemiology of SARS-CoV-2 indicates that it is no longer in the best interest of subjects to receive primary vaccination with the parent vaccine, an alternative approach to immunobridge from the efficacy previously documented with the parent vaccine to the variant vaccine could be a comparison between immune responses elicited by primary vaccination with the variant vaccine against the variant strain and prior data on the immune response elicited by primary vaccination with the parent vaccine against the parent strain. |

| Safety, efficacy, and pharmacokinetic studies to support marketing of as immune globulin intravenous (human) replacement therapy for primary humoral immunodeficiency | The protocol should prospectively define the study analyses. We expect that the data analyses presented in the BLA will be consistent with the analytical plan submitted to the IND. Based on our examination of historical data, we believe that a statistical demonstration of a serious infection rate per person-year less than 1.0 is adequate to provide substantial evidence of efficacy. You may test the null hypothesis that the serious infection rate is greater than or equal to 1.0 per person-year at the 0.01 level of significance or, equivalently, the upper one-sided 99% confidence limit would be less than 1.0 |

Conclusions

We conducted a systematic search for statistical methods to incorporate non-concurrent controls when analysing platform trials. We identified methods originally proposed in the context of the use of historical and real-world data in clinical trials, as well as methods proposed for platform trials utilising non-concurrent controls. The approaches can be classified in two broad categories: downweighting-based approaches, which concerns methods that downweight the non-concurrent control data in favour of the concurrent control data depending on how similar the concurrent and non-concurrent control data are; and modelling-based approaches, which are methods that use regression models to incorporate historical or non-concurrent control data while adjusting for time trends by incorporating time as covariate in the model. Downweighting-based approaches have been widely discussed in the context of historical data. The validity of the results when using these downweighting approaches might strongly depend on the pre-specified parameters and assumptions made. Besides, none of these approaches control the type 1 error in all the scenarios. For example, when using down-weighting approaches with Bayesian methods, Kopp-Schneider et al. [53] have shown that strict control of the type 1 error rate is not possible when incorporating external information. In the case of model-based approaches, unbiasedness and type 1 error control depend on the assumption of equal time trends in all arms on the model scale. Some methods also use other covariates to adjust for temporal drifts. When using covariate-adjustment approaches, the unbiasedness and error control will depend on whether all covariates causing time trends are included in the model and collected in the data.

Recently, the estimand framework [54] has become an important part of clinical trial protocols. Of further note is the fact that the estimand, defined as the target of estimation, is derived from the trial objective and is therefore not directly affected by the use of non-concurrent controls. However, the inclusion of controls other than concurrent controls is an important aspect when discussing if the estimators are aligned to the estimand. Also, the methods considered to incorporate them, as the properties of estimators will depend on how non-concurrent controls are employed in the estimation of treatment effects [55].

With regard to the guidelines, it can be stated that current guidelines are rather vague when it comes to recommendations and considerations on using non-concurrent controls in a platform trial. Only four guidelines specifically for platform trials were available at the time of our search. Therefore, we broadened our search to not only non-concurrent controls in platform trials but to the general use of external/historical data in clinical trials. We discussed the relevance and transferability of our results to non-concurrent controls. The listed requirements and concerns regarding the use of external controls in the guidelines under consideration are related but cannot be applied directly on the use of non-concurrent controls. Despite the broadening, there was still a lack of clear guidance on which statistical methods would be more or less appropriate to incorporate external data for regulatory decision making. Issues about the “quality of historical data” do usually not arise with non-concurrent controls in platform trials. Furthermore, the concerns about “selection bias” and “changes in measurement” are reduced. The concern about “comparability” is decreased when inclusion and exclusion criteria are identical for concurrent and non-concurrent controls. However, that alone does not guarantee “comparability”, for example, new treatments could be added to the platform or intermediate results may become publicly available such that different patients are attracted than before. Furthermore, the concerns about “change in standard of care” as well as “time trends” of unknown origin are still an issue. Due to this, the FDA guideline for Master protocols in COVID-19 takes a clear position against the use of non-concurrent controls in this context. At the time of writing this article, the EMA [56] has published a QnA on complex clinical trials in May 2022. The issue of time trends is also raised in the document and a reference to ICH E10 [11] is made for the choice and justification of a control group. However, it is also acknowledged that platform trials are typically more complex than what is mentioned in ICH E10. Additional sources of bias may arise due to the complex features of the trial such as the introduction of new treatment arms in the trial at different time points or the use of shared controls. Especially the use of non-concurrent controls may affect trial interpretability. Therefore, early interaction with regulators [57] is also recommended in the QnA [56]. Similarly to discussions a decade ago on which adaptations [58-60] are useful or not, it is expected that regulatory experience and acceptance of methods using non-concurrent controls as supportive or primary analysis will probably grow over the next years. This scoping review was based on publicly available documents, but companies may already have had more elaborated discussions with regulators, e.g. via EMA scientific advice and protocol assistance procedures. Hence, a review on recent scientific advices would be of interest for future research as it usually takes some time until arising issues are reflected in regulatory guidance documents. The EMA has just released a new concept paper on platform [61] trials announcing that in the upcoming years a reflection paper also addressing the issue of non-concurrent control data should be addressed complementing the already existing guidelines.

One other key finding of our systematic guideline search is that indication-specific guidelines may have the highest potential to be instructive, since the question “To use or not to use” strongly depends on the specific indication and trial setting. For example, for rare diseases and indications less prone to changes over time, there might be a higher willingness to utilise non-concurrent control data within a platform trial compared to “broad” and dynamic indications.

Supplementary information

Additional file 1. In the first supplementary material, the process for the methods review, as well as the guideline review are described in detail. The list of pre-defined articles and the forms for the information extraction process are included. Besides, a list of the included articles and guidelines for extraction are presented.

Additional file 2. The second supplementary material includes the PRISMA Checklist.

Acknowledgements

The authors also wish to thank Peter Mesenbrink and Ekkehard Glimm for useful discussions, and comments on the draft manuscript. Furthermore, we would like to thank Natalia Muhlemann for the help in discussions and review of guidelines.

Abbreviations

- FDA

U.S. Food and Drug Administration

- EMA

European Medicine Agency

- ICH

International Council for Harmonisation of Technical Requirements for Pharmaceuticals for Human Use

Authors’ contributions

MBR and MP conceived and designed the research, MBR and KH jointly led the work, designed the review, and drafted the article. MBR, KH, UG, CB and QN participated in the screening process. CB and QN analysed the data regarding the methods and guidelines reviews, respectively. All authors contributed to the interpretation of findings, critically reviewed and edited the manuscript. All authors critically appraised the final manuscript. The authors read and approved the final manuscript.

Funding

EU-PEARL (EU Patient-cEntric clinicAl tRial pLatforms) project has received funding from the Innovative Medicines Initiative (IMI) 2 Joint Undertaking (JU) under grant agreement No 853966. This Joint Undertaking receives support from the European Union’s Horizon 2020 research and innovation programme and EFPIA and Children’s Tumor Foundation, Global Alliance for TB Drug Development non-profit organisation, Springworks Therapeutics Inc. This publication reflects the authors’ views. Neither IMI nor the European Union, EFPIA, or any Associated Partners are responsible for any use that may be made of the information contained herein.

Availability of data and materials

Not applicable.

Declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests regarding the content of this article. The views expressed in this article are the personal views of the authors and may not be understood or quoted as being made on behalf of or reflecting the position of the Paul-Ehrlich-Institut, Cytel or any other of the institutions involved. Also, note that the discussion of regulatory guidelines is the interpretation of the authors only and cannot be directly derived from the guidance documents.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Marta Bofill Roig, Email: marta.bofillroig@meduniwien.ac.at.

Katharina Hees, Email: katharina.hees@pei.de.

References

- 1.Collignon O, Schritz A, Spezia R, Senn SJ. Implementing Historical Controls in Oncology Trials. Oncologist. 2021;26(5):859–62. doi: 10.1002/onco.13696. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Jahanshahi M, Gregg K, Davis G, Ndu A, Miller V, Vockley J, et al. The Use of External Controls in FDA Regulatory Decision Making. Ther Innov Regul Sci. 2021;(0123456789). 10.1007/s43441-021-00302-y. [DOI] [PMC free article] [PubMed]

- 3.Burger HU, Gerlinger C, Harbron C, Koch A, Posch M, Rochon J, et al. The use of external controls: To what extent can it currently be recommended? Pharm Stat. 2021;(September 2020):pst.2120. 10.1002/pst.2120. [DOI] [PubMed]

- 4.Schmidli H, Häring DA, Thomas M, Cassidy A, Weber S, Bretz F. Beyond Randomized Clinical Trials: Use of External Controls. Clin Pharmacol Ther. 2020;107(4):806–816. doi: 10.1002/cpt.1723. [DOI] [PubMed] [Google Scholar]

- 5.Collignon O, Schritz A, Senn SJ, Spezia R. Clustered allocation as a way of understanding historical controls: Components of variation and regulatory considerations. Stat Methods Med Res. 2020;29(7):1960–1971. doi: 10.1177/0962280219880213. [DOI] [PubMed] [Google Scholar]

- 6.Lim J, Wang L, Best N, Liu J, Yuan J, Yong F, et al. Reducing patient burden in clinical trials through the use of historical controls: appropriate selection of historical data to minimize risk of bias. Ther Innov Regul Sci. 2020;54(4):850–860. doi: 10.1007/s43441-019-00014-4. [DOI] [PubMed] [Google Scholar]

- 7.Pocock SJ. The combination of randomized and historical controls in clinical trials. J Chron Dis. 1976;29(3):175–188. doi: 10.1016/0021-9681(76)90044-8. [DOI] [PubMed] [Google Scholar]

- 8.Viele K, Berry S, Neuenschwander B, Amzal B, Chen F, Enas N, et al. Use of historical control data for assessing treatment effects in clinical trials. Pharm Stat. 2014;13(1):41–54. doi: 10.1002/pst.1589. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Jiao F, Tu W, Jimenez S, Crentsil V, Chen YF. Utilizing shared internal control arms and historical information in small-sized platform clinical trials. J Biopharm Stat. 2019;29(5):845–859. doi: 10.1080/10543406.2019.1657132. [DOI] [PubMed] [Google Scholar]

- 10.Bofill Roig M, Krotka P, Burman CF, Glimm E, Gold SM, Hees K, et al. On model-based time trend adjustments in platform trials with non-concurrent controls. BMC Med Res Methodol. 2022;22(1):228. doi: 10.1186/s12874-022-01683-w. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.International Conference on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use. ICH Harmonised Tripartite Guidance: Choice of Control Group and Related Issues in Clinical Trials, E10. 2000. https://database.ich.org/sites/default/files/E10_Guideline.pdf.

- 12.Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, The PRISMA, et al. statement: an updated guideline for reporting systematic reviews. BMJ. 2020;2021:372. 10.1136/bmj.n71. https://www.bmj.com/content/372/bmj.n71.full.pdf. [DOI] [PMC free article] [PubMed]

- 13.FDA/CDER/CBER/OCE: COVID-19: Master Protocols Evaluating Drugs and Biological Products for Treatment or Prevention. 2021. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/covid-19-master-protocols-evaluating-drugs-and-biological-products-treatment-or-prevention.

- 14.FDA/CDER/CBER/OCE: Guidance for industry - Master Protocols: Efficient Clinical Trial Design Strategies to Expedite Development of Oncology Drugs and Biologics. 2022. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/master-protocols-efficient-clinical-trial-design-strategies-expedite-development-oncology-drugs-and.

- 15.FDA/CBER/CDER: Guidance for industry - Interacting with the FDA on Complex Innovative Trial Designs for Drugs and Biological Products. 2020. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/interacting-fda-complex-innovative-trial-designs-drugs-and-biological-products.

- 16.Clinical Trials Facilitation and Coordination Group (CTFG): Recommendation paper on initiation and conduct of complex clinical trials. 2019. http://www.hma.eu/fileadmin/dateien/Human_Medicines/01-About_HMA/Working_Groups/CTFG/2019_02_CTFG_Recommendation_paper_on_Complex_Clinical_Trials.pdf.

- 17.Dejardin D, Delmar P, Warne C, Patel K, van Rosmalen J, Lesaffre E. Use of a historical control group in a noninferiority trial assessing a new antibacterial treatment: A case study and discussion of practical implementation aspects. Pharm Stat. 2018;17(2):169–181. doi: 10.1002/pst.1843. [DOI] [PubMed] [Google Scholar]

- 18.Ren Y, Li X, Chen C. Statistical considerations of phase 3 umbrella trials allowing adding one treatment arm mid-trial. Contemp Clin Trials. 2021;109(August):106538. doi: 10.1016/j.cct.2021.106538. [DOI] [PubMed] [Google Scholar]

- 19.Lee KM, Wason J. Including non-concurrent control patients in the analysis of platform trials: Is it worth it? BMC Med Res Methodol. 2020;20(1):1–12. doi: 10.1186/s12874-020-01043-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Saville BR, Berry DA, Berry NS, Viele K, Berry SM. The bayesian time machine: Accounting for temporal drift in multi-arm platform trials. Clin Trials. 2022;19(5):490–501. doi: 10.1177/17407745221112013. [DOI] [PubMed] [Google Scholar]

- 21.Bofill Roig M, König F, Meyer E, Posch M. Commentary: Two approaches to analyze platform trials incorporating non-concurrent controls with a common assumption. Clin Trials. 2022;19(5):502–503. doi: 10.1177/17407745221112016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Rosenbaum PR, Rubin DB. The central role of the propensity score in observational studies for causal effects. Biometrika. 1983;70(1):41–55. doi: 10.1093/biomet/70.1.41. [DOI] [Google Scholar]

- 23.Yuan J, Liu J, Zhu R, Lu Y, Palm U. Design of randomized controlled confirmatory trials using historical control data to augment sample size for concurrent controls. J Biopharm Stat. 2019;29(3):558–573. doi: 10.1080/10543406.2018.1559853. [DOI] [PubMed] [Google Scholar]

- 24.Chen WC, Wang C, Li H, Lu N, Tiwari R, Xu Y, et al. Propensity score-integrated composite likelihood approach for augmenting the control arm of a randomized controlled trial by incorporating real-world data. J Biopharm Stat. 2020;30(3):508–520. doi: 10.1080/10543406.2020.1730877. [DOI] [PubMed] [Google Scholar]

- 25.Han B, Zhan J, John Zhong Z, Liu D, Lindborg S. Covariate-adjusted borrowing of historical control data in randomized clinical trials. Pharm Stat. 2017;16(4):296–308. doi: 10.1002/pst.1815. [DOI] [PubMed] [Google Scholar]

- 26.Ibrahim JG, Chen MH. Power prior distributions for regression models. Stat Sci. 2000;15(1):46–60. doi: 10.1214/ss/1009212673. [DOI] [Google Scholar]

- 27.Duan Y, Ye K, Smith EP. Evaluating water quality using power priors to incorporate historical information. Environmetrics: Off J Int Environmetrics Soc. 2006;17(1):95–106.

- 28.Neuenschwander B, Branson M, Spiegelhalter DJ. A note on the power prior. Stat Med. 2009;28(28):3562–3566. doi: 10.1002/sim.3722. [DOI] [PubMed] [Google Scholar]

- 29.Banbeta A, van Rosmalen J, Dejardin D, Lesaffre E. Modified power prior with multiple historical trials for binary endpoints. Stat Med. 2019;38(7):1147–1169. doi: 10.1002/sim.8019. [DOI] [PubMed] [Google Scholar]

- 30.Gravestock I, Held L, consortium CN. Adaptive power priors with empirical Bayes for clinical trials. Pharm Stat. 2017;16(5):349–60. [DOI] [PubMed]

- 31.Bennett M, White S, Best N, Mander A. A novel equivalence probability weighted power prior for using historical control data in an adaptive clinical trial design: A comparison to standard methods. Pharm Stat. 2021;20(3):462–484. doi: 10.1002/pst.2088. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Hobbs BP, Carlin BP, Mandrekar SJ, Sargent DJ. Hierarchical Commensurate and Power Prior Models for Adaptive Incorporation of Historical Information in Clinical Trials. Biometrics. 2011;67(3):1047–1056. doi: 10.1111/j.1541-0420.2011.01564.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Normington J, Zhu J, Mattiello F, Sarkar S, Carlin B. An efficient Bayesian platform trial design for borrowing adaptively from historical control data in lymphoma. Contemp Clin Trials. 2020;89:105890. doi: 10.1016/j.cct.2019.105890. [DOI] [PubMed] [Google Scholar]

- 34.Schmidli H, Gsteiger S, Roychoudhury S, Hagan AO, Spiegelhalter D, Neuenschwander B. Robust Meta-Analytic-Predictive Priors in Clinical Trials with Historical Control Information. Biometrics. 2014;(December):1023–1032. 10.1111/biom.12242. [DOI] [PubMed]

- 35.Hupf B, Bunn V, Lin J, Dong C. Bayesian semiparametric meta-analytic-predictive prior for historical control borrowing in clinical trials. Stat Med. 2021;40(14):3385–3399. doi: 10.1002/sim.8970. [DOI] [PubMed] [Google Scholar]

- 36.Wang C, Lin M, Rosner GL, Soon G. A Bayesian model with application for adaptive platform trials having temporal changes. Biometrics. 2022:0–29. 10.1111/biom.13680. arXiv:2009.06083. [DOI] [PubMed]

- 37.Jiang L, Nie L, Yuan Y. Elastic priors to dynamically borrow information from historical data in clinical trials. Biometrics. 2021;1–12. 10.1111/biom.13551. [DOI] [PMC free article] [PubMed]

- 38.Zhang W, Pan Z, Yuan Y. Elastic meta-analytic-predictive prior for dynamically borrowing information from historical data with application to biosimilar clinical trials. Contemp Clin Trials. 2021;110(June):106559. doi: 10.1016/j.cct.2021.106559. [DOI] [PubMed] [Google Scholar]

- 39.van Rosmalen J, Dejardin D, van Norden Y, Löwenberg B, Lesaffre E. Including historical data in the analysis of clinical trials: Is it worth the effort? Stat Methods Med Res. 2018;27(10):3167–3182. doi: 10.1177/0962280217694506. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Callegaro A, Galwey N, Abellan JJ. Historical controls in clinical trials: a note on linking Pocock’s model with the robust mixture priors. Biostatistics. 2023;24(2):443–8. doi: 10.1093/biostatistics/kxab040. [DOI] [PubMed] [Google Scholar]

- 41.Isogawa N, Takeda K, Maruo K, Daimon T. A Comparison Between a Meta-analytic Approach and Power Prior Approach to Using Historical Control Information in Clinical Trials With Binary Endpoints. Ther Innov Regul Sci. 2020;54(3):559–570. doi: 10.1007/s43441-019-00088-0. [DOI] [PubMed] [Google Scholar]

- 42.FDA/CDER/CBER: Guidance for industry - E11(R1) Addendum: Clinical Investigation of Medicinal Products in the Pediatric Population. 2018. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/e11r1-addendum-clinical-investigation-medicinal-products-pediatric-population.

- 43.FDA/CDER/CBER: Guidance for industry - Interacting with the FDA on Complex Innovative Trial Designs for Drugs and Biological Products. 2020. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/interacting-fda-complex-innovative-trial-designs-drugs-and-biological-products.

- 44.European Medicines Agency: Reflection paper on the use of extrapolation in the development of medicines for paediatrics. EMA/189724/2018. 2018. https://www.ema.europa.eu/en/documents/scientific-guideline/adopted-reflection-paper-use-extrapolation-development-medicines-paediatrics-revision-1_en.pdf.

- 45.FDA/CDER/CBER: Guidance for industry - Amyotrophic Lateral Sclerosis: Developing Drugs for Treatment Guidance for Industry. 2019. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/amyotrophic-lateral-sclerosis-developing-drugs-treatment-guidance-industry.

- 46.FDA/OCE/CDER/CBER: Guidance for industry - Acute Myeloid Leukemia: Developing Drugs and Biological Products for Treatment. 2022. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/acute-myeloid-leukemia-developing-drugs-and-biological-products-treatment-0.

- 47.FDA/CDER: Influenza: Developing Drugs for Treatment and/or Prophylaxis. 2011. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/influenza-developing-drugs-treatment-andor-prophylaxis.

- 48.FDA/CDER/CBER: Guidance for industry - Duchenne Muscular Dystrophy and Related Dystrophinopathies: Developing Drugs for Treatment Guidance for Industry. 2018. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/duchenne-muscular-dystrophy-and-related-dystrophinopathies-developing-drugs-treatment-guidance.

- 49.FDA: Guidance for industry - Clinical Trial Endpoints for the Approval of Cancer Drugs and Biologics. 2018. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/clinical-trial-endpoints-approval-cancer-drugs-and-biologics.

- 50.FDA/CDER/CBER: Guidance for industry - Inborn Errors of Metabolism That Use Dietary Management: Considerations for Optimizing and Standardizing Diet in Clinical Trials for Drug Product Development. 2018. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/inborn-errors-metabolism-use-dietary-management-considerations-optimizing-and-standardizing-diet.

- 51.FDA/CBER/CDER: Guidance for industry - Preparation of IDEs and INDs for Products Intended to Repair or Replace Knee Cartilage. 2011. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/preparation-ides-and-inds-products-intended-repair-or-replace-knee-cartilage.

- 52.Schroeder HW, Dougherty CJ. Review of intravenous immunoglobulin replacement therapy trials for primary humoral immunodeficiency patients. Infection. 2012;40(6):601–611. doi: 10.1007/s15010-012-0323-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Kopp-Schneider A, Calderazzo S, Wiesenfarth M. Power gains by using external information in clinical trials are typically not possible when requiring strict type I error control. Biom J. 2020;62(2):361–374. doi: 10.1002/bimj.201800395. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.International Conference on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use. ICH Harmonised Guideline: Addendum on estimands and sensitivity analysis in clinical trials to the guideline on statistical principles for clinical trials, E9(R1). 2019. https://database.ich.org/sites/default/files/E9-R1_Step4_Guideline_2019_1203.pdf.

- 55.Collignon O, Schiel A, Burman CF, Rufibach K, Posch M, Bretz F. Estimands and complex innovative designs. Clin Pharmacol Ther. 2022;112(6):1183–90. doi: 10.1002/cpt.2575. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.European Medicines Agency: Complex clinical trials - Questions and answers. EMA/298712/2022. 2022. https://health.ec.europa.eu/system/files/2022-06/medicinal_qa_complex_clinical-trials_en.pdf.

- 57.Regnstrom J, Koenig F, Aronsson B, Reimer T, Svendsen K, Tsigkos S, et al. Factors associated with success of market authorisation applications for pharmaceutical drugs submitted to the European Medicines Agency. Eur J Clin Pharmacol. 2010;66(1):39–48. doi: 10.1007/s00228-009-0756-y. [DOI] [PubMed] [Google Scholar]

- 58.Elsäßer A, Regnstrom J, Vetter T, Koenig F, Hemmings RJ, Greco M, et al. Adaptive clinical trial designs for European marketing authorization: a survey of scientific advice letters from the European Medicines Agency. Trials. 2014;15(1):1–10. doi: 10.1186/1745-6215-15-383. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.Collignon O, Koenig F, Koch A, Hemmings RJ, Pétavy F, Saint-Raymond A, et al. Adaptive designs in clinical trials: from scientific advice to marketing authorisation to the European Medicine Agency. Trials. 2018;19(1):1–14. doi: 10.1186/s13063-018-3012-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Bauer P, Bretz F, Dragalin V, König F, Wassmer G. Twenty-five years of confirmatory adaptive designs: opportunities and pitfalls. Stat Med. 2016;35(3):325–347. doi: 10.1002/sim.6472. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.European Medicines Agency: Concept paper on platform trials. EMA/CHMP/840036/2022. 2022. https://www.ema.europa.eu/en/documents/scientific-guideline/concept-paper-platform-trials_en.pdf.

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Additional file 1. In the first supplementary material, the process for the methods review, as well as the guideline review are described in detail. The list of pre-defined articles and the forms for the information extraction process are included. Besides, a list of the included articles and guidelines for extraction are presented.

Additional file 2. The second supplementary material includes the PRISMA Checklist.

Data Availability Statement

Not applicable.