Abstract

Advancing diversity in the biomedical research workforce is critical to the ability of the National Institutes of Health (NIH) to achieve its mission. The NIH Diversity Program Consortium is a unique, 10-year program that builds upon longstanding training and research capacity-building activities to promote workforce diversity. It was designed to rigorously evaluate approaches to enhancing diversity in the biomedical research workforce at the student, faculty, and institutional levels. In this chapter we describe (a) the program’s origins, (b) the consortium-wide evaluation, including plans, measures, challenges, and solutions, and (c) how lessons learned from this program are being leveraged to strengthen NIH research-training and capacity-building activities and evaluation efforts.

PROMOTING DIVERSITY IN BIOMEDICAL RESEARCH

NIH interests and activities and the origins of the DPC

The National Institutes of Health (NIH) maintains a diverse portfolio of biomedical research and workforce development. In keeping with its mission to “seek fundamental knowledge about the nature and behavior of living systems and the application of that knowledge to enhance health, lengthen life, and reduce illness and disability,” the NIH recognizes that this mission can be achieved only by supporting rigorous scientists from a wide variety of backgrounds who will bring their perspectives, life experiences, and creativity to address biomedical research challenges (NIH, 2017, 2019).

NIH funding comes through taxpayer investments; thus, to ensure stewardship and fairness, it is imperative that biomedical research careers are accessible to all Americans (Working Group on Diversity in the Biomedical Research Workforce, 2012). Moreover, the Notice of NIH’s Interest in Diversity highlights the wealth of research showing some of the benefits resulting from a diverse NIH-supported scientific workforce such as “fostering scientific innovation, enhancing global competitiveness, contributing to robust learning environments, improving the quality of research, advancing the likelihood that underserved or health disparity populations participate in and benefit from health research and enhancing public trust” (NIH, 2019). This motivates NIH support for and development of a variety of initiatives, including training and capacity-building programs, intended to promote diversity at levels throughout the biomedical research workforce.

The NIH’s earliest activities to promote diversity in biomedical research began in the 1970s with the Minority Biomedical Support and Minority Access to Research Careers (MARC) programs (Alexander, 1975; Garrison & Brown, 1985). These programs provided support for institutions to train students from underrepresented backgrounds to pursue graduate training leading to a PhD, and for faculty research, pilot projects, and research resources. Through these and other longstanding efforts, including but not limited to the Minority Biomedical Research Support (MBRS), the Research Initiative for Scientific Enhancement (RISE), the Support of Competitive Research Program (SCORE), and Research Centers in Minority Institutions (RCMI), the NIH has invested in training and research capacity-building activities to promote a strong and diverse biomedical research enterprise. As a result of these and other efforts, the pool of biomedical PhDs from backgrounds traditionally underrepresented in the biomedical sciences grew substantially from the 1980s through the early 2010s (NSF, 2021).

Despite this progress, it was clear in the 2010s that challenges remained relating to the NIH’s ability to support a diverse biomedical research workforce. For example, despite the exponential growth in PhD attainment for Black/African American, Hispanic/Latina/o/x, and Indigenous biomedical PhDs, fewer of these scientists moved on to independent research-intensive faculty careers relative to their white or Asian peers (Gibbs, Jr., Basson, Xierali, & Broniatowski, 2016; Gibbs, McGready, Bennett, & Griffin, 2014). Many minority-serving institutions also struggled to compete for significant NIH research funding. For example, despite their disproportionate share in training future Black scientists and serving the needs of Black communities, Historically Black Colleges and Universities (HBCUs) received less than 1% of funding awarded by NIH and other Health and Human Services agencies to institutions of higher education in Fiscal Year 2014 (Toldson & Washington, 2015). Further, a study by Ginther et al. (2011), “Race, Ethnicity, and NIH Research Awards,” demonstrated structural issues in NIH funding such that after controlling for “educational background, country of origin, training, previous research awards, publication record, and employer characteristics,” Black applicants were 10% less likely than white applicants to be awarded NIH research funding (p. 6).

Ginther et al.’s (2011) study prompted then-NIH Director Dr. Francis Collins to create a Working Group on Diversity in the Biomedical Research Workforce as part of the Advisory Committee to the NIH Director. This working group was tasked with

[developing] concrete recommendations toward improving the recruitment and retention of underrepresented minorities (URM), people with disabilities, and people from disadvantaged backgrounds across the lifespan of a biomedical research career from graduate study to acquisition of tenure in an academic position or the equivalent in a non-academic setting

(ACD, 2012).

The working group also noted the importance of data collection and evaluation of NIH training programs:

NIH must ensure that appropriate resources for the systematic tracking, reporting, and evaluation of the immediate and long-term outcomes of all trainees (ranging from college students engaged in summer research activities through recipients of career development awards), regardless of NIH-funding mechanism

(ACD, 2012).

As a result, in 2014, the NIH launched the 10-year Common Fund initiative called the Diversity Program Consortium (DPC). The stated goal on the Common Fund website is to:

develop, implement, assess and disseminate innovative and effective approaches to engaging, training and mentoring students; enhancing faculty development; and strengthening institutional research training infrastructure to enhance the participation and persistence of individuals from underrepresented backgrounds in biomedical research careers

The DPC: Structure and the centrality of evaluation

The DPC was developed to take a broad, empirical approach to determining what “‘works,’ for whom, in what context, and why” at three levels: (1) engaging, training, and mentoring students; (2) enhancing faculty development; and (3) strengthening institutional research training infrastructure. To address these questions, the DPC was designed to include rigorous evaluation at both the institutional and consortium-wide levels from the beginning, which set it apart from prior research training and capacity-building programs. Through applying the knowledge gained from these efforts, the NIH and grantee institutions will be better positioned to engage a more diverse field of individuals in biomedical research careers (Valantine & Collins, 2015).

The three original components of the DPC were the BUilding Infrastructure Leading to Diversity (BUILD) initiative, the National Research Mentoring Network (NRMN), and the Coordination and Evaluation Center (CEC). BUILD was designed to support institutions with modest NIH research activity (<$7.5 million in research project grants annually) that enroll undergraduate populations where at least 25% of the students are supported by Pell grants. Each of the 10 awardees developed distinct programmatic approaches and a rigorous evaluation plan intended to determine the most effective ways to engage and retain students from diverse backgrounds in biomedical research, support faculty career and research development, and increase institutional research and research training capacity. The NRMN was focused on developing: (a) a national network of mentors and mentees from biomedical disciplines to provide individualized mentoring and resources as trainees navigated career transitions; (b) opportunities to enhance the mentoring skills of biomedical researchers, such as enhancing cultural awareness (Byars-Winston et al., 2018; Womack et al., 2020); and (c) professional and skills-development opportunities, such as grant writing (Weber-Main et al., 2020).
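The two BUILD eligibility thresholds stated above can be expressed as a simple check. The following is only a minimal sketch: the `Institution` record, its field names, and the `is_build_eligible` function are hypothetical illustrations and do not correspond to any NIH system, and the actual funding announcement may have included criteria not described in this chapter.

```python
from dataclasses import dataclass

# Thresholds taken from the text above; all names below are hypothetical.
MAX_ANNUAL_RPG_FUNDING_USD = 7_500_000  # ceiling for "modest NIH research activity"
MIN_PELL_SHARE = 0.25                   # at least 25% of undergraduates supported by Pell grants


@dataclass(frozen=True)
class Institution:
    name: str
    annual_rpg_funding_usd: float  # annual NIH research project grant funding
    pell_share: float              # fraction of undergraduates supported by Pell grants


def is_build_eligible(inst: Institution) -> bool:
    """Return True when both thresholds described in the text are met."""
    return (
        inst.annual_rpg_funding_usd < MAX_ANNUAL_RPG_FUNDING_USD
        and inst.pell_share >= MIN_PELL_SHARE
    )
```

Under these assumptions, an institution with $5 million in annual research project grants and a 30% Pell share would pass the check, whereas one with $8 million in such grants would not.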

The CEC was designed to coordinate and evaluate the DPC activities. Coordinating activities have included supporting consortium-wide working groups, helping develop and plan publications, managing the DPC website, and organizing the DPC annual grantees’ meeting. The CEC’s evaluation work has included designing a detailed evaluation plan to assess the outcomes and impact of the BUILD and NRMN awardees’ training and mentoring approaches based on the consortium’s logic models and Hallmarks of Success (discussed in the next section). For the purposes of this chapter, we focus on the interventions developed by the BUILD awardees and the CEC-led evaluation of these interventions (also called the consortium-wide evaluation or the Enhance Diversity Study).

BUILD activities and evaluation

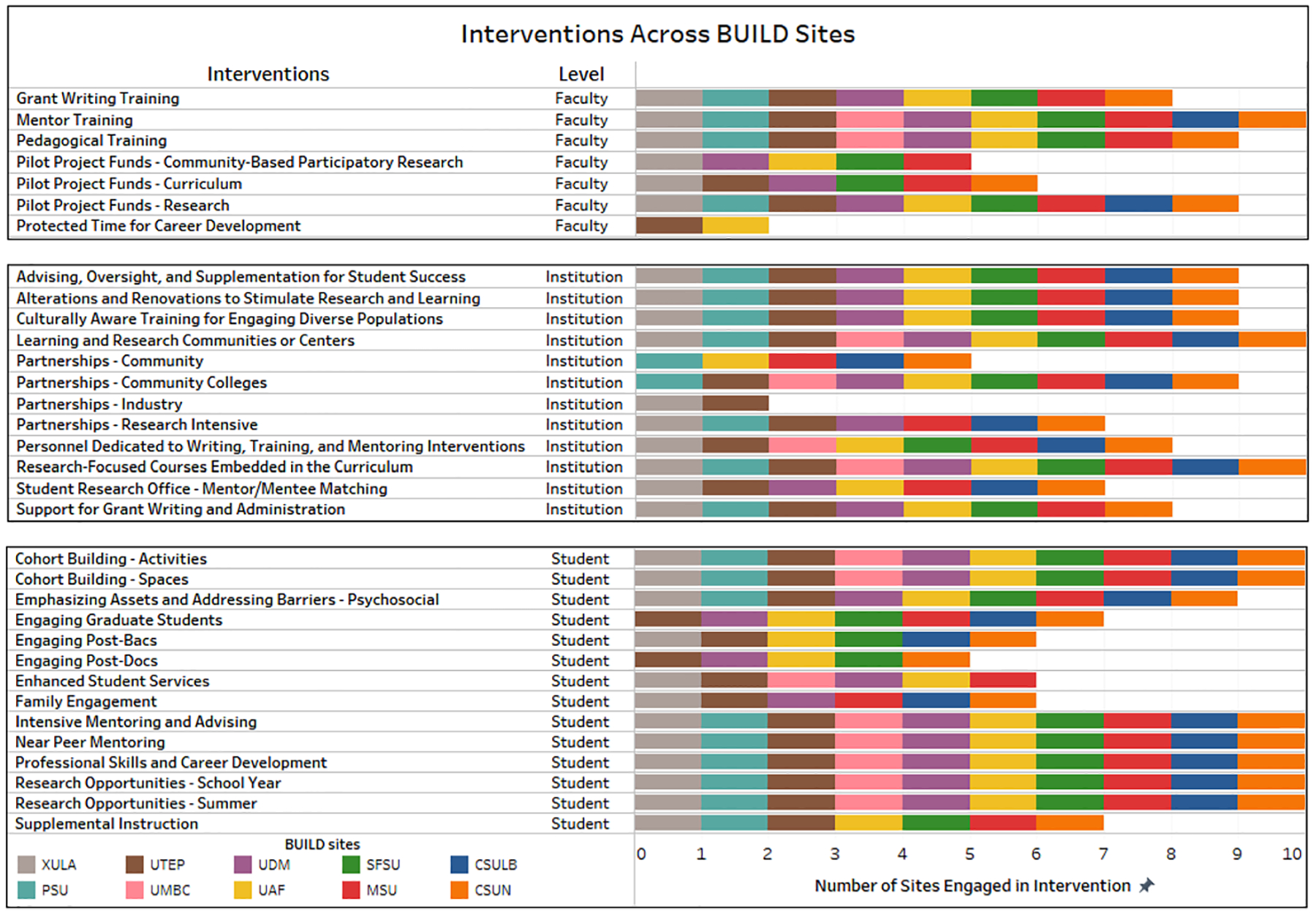

The BUILD initiative, composed of 10 awardee institutions, is the DPC’s largest research training and institutional-capacity-building initiative. Each awardee proposed site-specific approaches intended to engage students from diverse backgrounds in biomedical research, develop faculty mentoring and research skills, and promote institutional research and training capacity. Activities offered by BUILD programs include a variety of traditional interventions, including tutoring and mentored research experiences, which have been shown to increase students’ persistence, skills, and identity as scientists, as well as approaches meant to address unique geographic and cultural interests of their students. For example, the BUILD program at the University of Alaska Fairbanks is based on a “One Health” model that incorporates Alaska Native and Indigenous approaches to research as well as a focus on local environments (Hueffer, Ehrlander, Etz, & Reynolds, 2019), while the BUILD program at Morgan State University in Baltimore, Maryland has focused on training students to approach their research with an entrepreneurial mindset (Kamangar et al., 2017). Figure 1 provides a depiction of the BUILD interventions occurring across each of the sites at the student, faculty, and institutional levels.

FIGURE 1.

BUILD Program Interventions. Note. This stacked bar graph depicts many of the interventions employed by BUILD awardees at the faculty, institutional, and student levels. Each of the following awardees is represented by a distinct color: Xavier University of Louisiana (XULA – light gray), The University of Texas at El Paso (UTEP – brown), University of Detroit Mercy (UDM – lavender), San Francisco State University (SFSU – green), California State University, Long Beach (CSULB – blue), Portland State University (PSU – teal), University of Maryland Baltimore County (UMBC – pink), University of Alaska Fairbanks (UAF – golden yellow), Morgan State University (MSU – red), and California State University, Northridge (CSUN – orange).

The size and scope of the BUILD program, with over 3000 students and over 4000 faculty involved in the activities, provided an opportunity to conduct a large-scale, longitudinal evaluation designed to have relevance to research-training, faculty-development, and capacity-building programs for years to come. The evaluation proposed to compare the impacts of the BUILD initiatives within each institution (i.e., comparing outcomes of students and faculty who were BUILD participants vs. those who were not) as well as across institutional contexts (i.e., comparing outcomes at BUILD institutions to those at comparable institutions that did not have BUILD funding; Crespi, 2019).

Hallmarks of Success

The CEC’s task was distinct from many large-scale NIH studies in a few meaningful ways. In contrast to multisite clinical trials where the same clinical protocol is implemented at two or more sites, the BUILD awardees proposed and implemented distinct approaches to student training, faculty development, and institutional capacity building. Moreover, many potential outcomes of interest cannot be measured until after the funding ends (e.g., degree attainment from a graduate biomedical research program). To guide the consortium-wide evaluation and address some of these challenges, the DPC awardees developed Hallmarks of Success. These Hallmarks represent key elements at stages of the biomedical research career trajectory (e.g., attaining baccalaureate biomedical degrees for undergraduates) that lead to the development of a successful biomedical research career. They span the individual student, faculty/mentor, and institutional levels, matching the three levels of DPC program impact (NIGMS, 2019d).

Developing the Hallmarks of Success was an iterative and collaborative process in which the members of the consortium worked together to develop evaluation tools that could be used at the student, faculty, and institutional levels targeted by the BUILD and NRMN programs. The Hallmarks represent benchmarks for achievement or maintenance, depending on the baseline data.

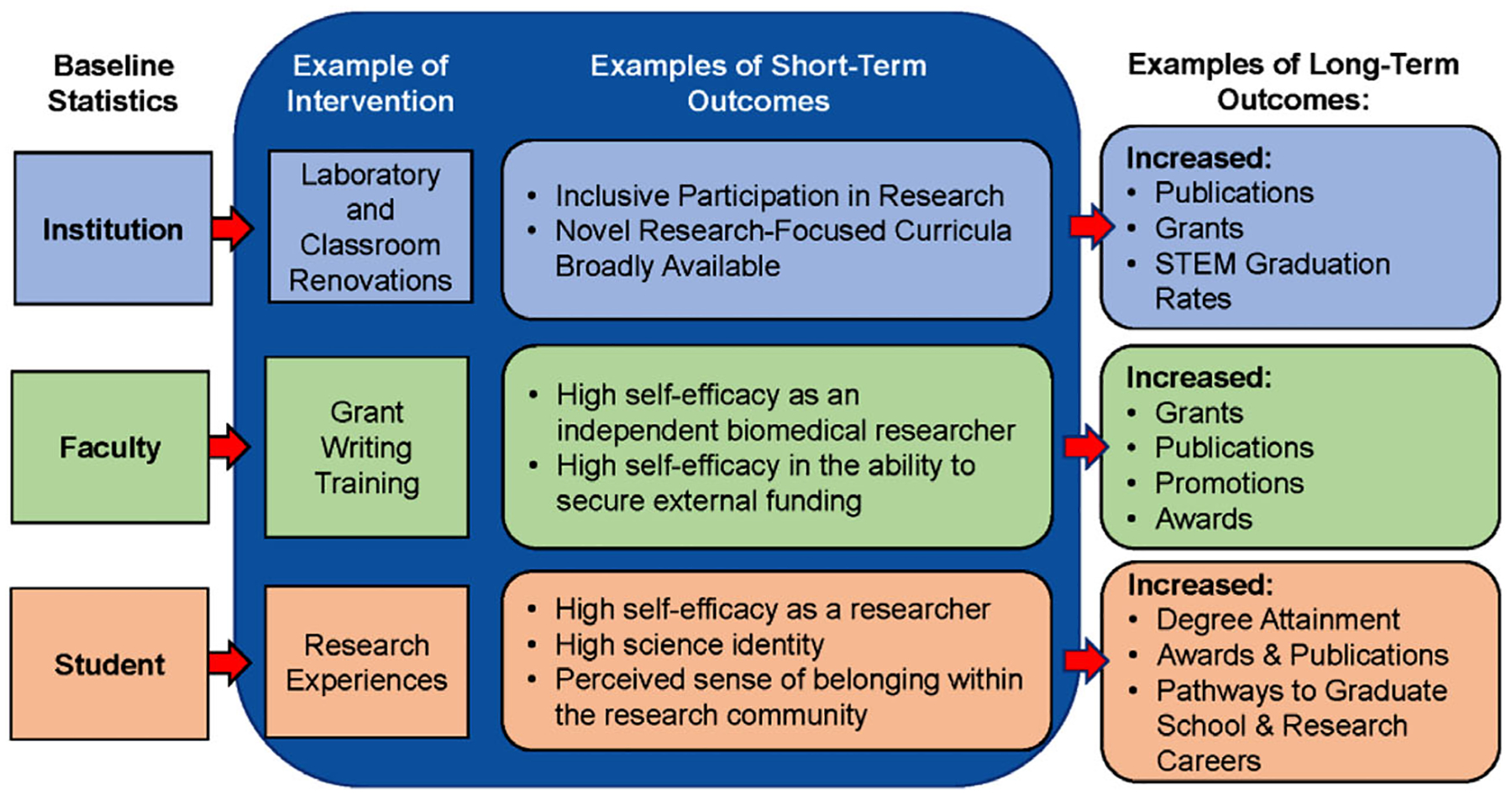

The 18 student-level Hallmarks address psychosocial elements, such as a trainee’s high science identity, high research self-efficacy, and sense of belonging within the university, as well as educational milestones, such as attaining an undergraduate degree and applying to graduate programs. The faculty-level Hallmarks (17 total) follow a similar format, including psychosocial elements as well as career- and research-development milestones, such as engaging in activities to secure research or research-training funding, advancing to the next career stage, and developing strong professional networks. There are 11 institutional-level Hallmarks covering a variety of topics focused on diversity, equity, and inclusion. Consortium-wide evaluation survey questions align with the Hallmarks of Success so that data can be consistently compared across sites and with comparator institutions. Figure 2 provides examples of BUILD interventions at the student, faculty, and institutional levels, and associated short-term and long-term outcomes.

FIGURE 2.

Examples of Interventions and Hallmarks of Success in the Consortium-Wide Evaluation Plan. Note. Baseline statistics were collected and measured at the beginning of funding and used for assessing changes in short-term and long-term Hallmarks of Success at the institutional (blue), faculty (green), and student (salmon) levels. Representative interventions and short- and long-term outcomes illustrating progress toward achieving Hallmarks are shown. STEM = science, technology, engineering, and mathematics

Consortium-wide evaluation implementation challenges and strategies for success

As could be expected for any large-scale project requiring data collection, the awardees faced challenges during the implementation of the consortium-wide evaluation. Below, we describe some of the challenges in operationalizing this evaluation and the strategies employed to overcome these obstacles.

Consortium cohesion and compliance

An early challenge involved building relationships and structures, spanning vast geographic regions, so that the BUILD sites and the CEC could operate as a cohesive unit. The nature of a consortium-wide evaluation, in which the awardee institutions’ interventions and outcomes are viewed in light of the whole, stood in contrast to traditional training and capacity-building programs where the focus of implementation and evaluation is typically at the site level. Ensuring awardees remained cognizant of and appropriately prioritized the needs of the consortium-wide evaluation (in addition to their site-level activities) added a layer of complexity to the CEC’s task relative to traditional training or capacity-building program evaluations. In the first years of the DPC, a great deal of effort was expended developing and implementing consortium activities, ranging from governance to evaluation deployment.

The DPC developed governance documents and guidelines, such as the DPC Data Sharing Agreement, charters for various working groups and subcommittees, and guidelines for collaborative manuscript development. These documents detailed policies and procedures so that disparate sites could function effectively as a consortium (NIGMS, 2019a). Because of the scale of the endeavor, communication avenues were developed to ensure that necessary information was being shared widely among all interested parties at the sites. The CEC frequently led these efforts and facilitated review and approval of the documents. For example, the nature of the DPC required a greater degree of data sharing than in traditional research training or capacity-building projects. To ensure that all involved would have fair access to data, the DPC Data Sharing Agreement outlines the types and ownership of the data collected, rules about who may access the data, as well as protocols for requesting access. Because one of the long-term goals of the DPC is to disseminate findings from these data and to make the de-identified data available to the community, setting standards early was an important milestone for the consortium.

In addition to establishing governance policies and procedures, consortium members needed to work together to follow rigorous scientific research protocols with the appropriate approvals and clearances. Initially, each site sought local institutional review board (IRB) approvals of the survey materials and methods, as is required for any research involving human subjects. Gaining IRB approval from each of the 10 BUILD sites and the CEC contributed to delays in data collection. For example, different changes were required across the sites based on the variability of institutional standards. Because of the collaborative nature of the consortium-wide surveys, changes had to be synthesized and propagated across all the sites. In later years, the CEC and BUILD sites applied for IRB approval through the review board at the University of California, Los Angeles, simplifying the process.

DPC awards are structured as cooperative agreements, resulting in more substantial NIH staff involvement than with traditional federal grants. Consequently, the CEC’s ability to carry out the evaluation was reliant on receiving approval from the U.S. Office of Management and Budget (OMB). Among other functions, the OMB ensures federal agencies are not putting undue survey burdens on the general population. The process to apply for and receive OMB clearance, which was necessary before the CEC could access and use data collected by the BUILD sites, took over a year. This was a longer time frame than originally planned and caused setbacks in data analysis, data cleaning, and data sharing; however, it also helped strengthen the partnership between BUILD awardees and the CEC, as the BUILD sites worked to collect and store data that would later be transferred to the CEC.

Identifying participants

The promise of the DPC—learning what works, for whom, in what context, and why—presented several measurement issues. The CEC needed to work with sites to determine who should be counted as a BUILD participant and who would be a comparator. As noted above, the sites designed training, mentoring, and capacity-building interventions that ranged in degree of intensity and frequency and were meant to have a transformational and sustainable impact. For example, some of the BUILD interventions included renovating laboratory and classroom spaces or buying new equipment to improve institutional research and research-training capacity. Thus, even students unaffiliated with the BUILD program might be impacted by the initiative.

From the perspective of an evaluation, did a student who participated in any BUILD activity “count” as a BUILD participant? Alternatively, should only those who had the most intensive exposure to the interventions and support (e.g., those on full scholarships and with mentored research experiences) “count”? Similarly, for faculty, the range of exposure to BUILD could include mentor training, pilot project funding, or use of equipment or facilities that were procured through BUILD funds. Discussion about definitions and clarification of inclusion criteria took time and necessitated close cooperation between the BUILD and CEC teams.

Apart from the complexity of defining the BUILD student and faculty participants, keeping track of who participated in programmatic activities—and to what extent—represented an additional challenge. To facilitate collection of participation data in the variety of activities at BUILD institutions, the CEC developed an online tool called “the Tracker.” The Tracker allowed BUILD sites to upload activity rosters (e.g., participant rosters from trainings, workshops, and conferences) and include agreed-upon keywords. Users could view bar graphs to illustrate the range of activities within the BUILD initiative, separated by student and faculty activities. Development and refinement of the Tracker required considerable effort, as did the interactions with representatives from the BUILD sites during the deployment and maintenance phases. The ultimate goal of the Tracker is to map participation in BUILD activities to survey responses and the associated Hallmarks of Success.
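The kind of linkage the Tracker is meant to support, mapping roster-based participation to survey responses, can be sketched as follows. This is an illustrative sketch only: the chapter does not describe the Tracker's actual data model, so the tuple layout, the `participant_id` key, the keyword values, and the `science_identity` survey field are all assumptions made for the example.

```python
from collections import defaultdict


def summarize_participation(rosters):
    """Count activities per participant, grouped by agreed-upon keyword.

    `rosters` is an iterable of (participant_id, activity_name, keyword)
    tuples (a hypothetical layout). Returns {participant_id: {keyword: count}}.
    """
    summary = defaultdict(lambda: defaultdict(int))
    for participant_id, _activity_name, keyword in rosters:
        summary[participant_id][keyword] += 1
    return {pid: dict(counts) for pid, counts in summary.items()}


def link_to_surveys(participation, survey_responses):
    """Attach activity counts to each survey response.

    Respondents who appear on no roster (e.g., comparators) receive an
    empty activity record rather than being dropped.
    """
    return [
        {**response, "activities": participation.get(response["participant_id"], {})}
        for response in survey_responses
    ]


# Hypothetical example data.
rosters = [
    ("s1", "Mentor workshop", "mentoring"),
    ("s1", "Summer research", "research"),
    ("s1", "Lab rotation", "research"),
    ("s2", "Mentor workshop", "mentoring"),
]
surveys = [
    {"participant_id": "s1", "science_identity": 4.2},
    {"participant_id": "s3", "science_identity": 3.1},
]
linked = link_to_surveys(summarize_participation(rosters), surveys)
```

Keeping counts per keyword, rather than a single yes/no participation flag, leaves open the question raised above of how much exposure should "count" as BUILD participation; that threshold can then be chosen at analysis time.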

Survey implementation challenges and response rates

A major component of the consortium-wide evaluation focuses on surveying BUILD participants and comparators. While important for the evaluation, the comparators had little incentive to respond because they had not received any direct benefit from the BUILD interventions. As for all surveys, the strength of the data is dependent upon response rates. Various factors contribute to achieving acceptable survey response rates—for example, understanding how best to reach the intended population, building trust with respondents, packaging the surveys in a way that encourages responsiveness, and designing surveys that are likely to be completed (Clayton, 2021; Glasow, 2005). Specific factors that may have influenced DPC response rates and the tactics to improve the numbers are detailed in this section.

Often, strategies to improve response rates are piloted during a pre-launch or testing phase. This was not possible with the DPC, given that the CEC and BUILD sites received their awards concurrently. However, the surveys were primarily derived from widely used instruments created by the Higher Education Research Institute, and therefore some of the pitfalls of new surveys were avoided.

As mentioned previously, the NIH expected rigorous evaluation and reporting at both the site and consortium-wide levels. In some cases, this resulted in multiple surveys being deployed simultaneously. This in turn had the potential to contribute to “survey fatigue” and decreased response rates. The NIH worked with the CEC and BUILD sites to better coordinate survey deployment and clarify expectations of NIH reporting requirements. In some cases, this resulted in the CEC and BUILD sites combining surveys or eliminating duplicate survey items.

Throughout the survey administration periods, a variety of methods were employed to address response rates. These included improving survey distribution methods, enhancing communication and branding, personalizing survey invitations, increasing financial incentives, and expanding the pool of individuals invited to respond. (See Chapter 3 by Ramirez et al. for more information.) For example, BUILD campuses worked with the CEC to get past email filters, to develop “influencer emails” featuring recognizable campus figures, and to create short videos and gather quotes from students involved in the programs. Increasing monetary incentives and inviting more comparators to complete the survey proved effective in improving response rates and sample sizes.

Longitudinal data analyses

One of the potentially valuable aspects of the consortium-wide evaluation is following student, faculty, and institutional progress over time. In practice, a variety of factors complicate longitudinal analyses (Derzon, 2018). We provide specific examples for the DPC in this section.

Defining who is a BUILD student or a comparator over time can complicate longitudinal data analyses. This is because, within cohorts, student progression does not always follow the same path or timescale (e.g., data sets for different cohorts may not align because students take a leave from BUILD or enter BUILD later in their course of study). Additional challenges include gaps in survey responses as well as refinements to interventions and the surveys over time. Furthermore, because BUILD sites implemented a variety of distinct interventions simultaneously, drawing robust conclusions about the impact of a single intervention over time may prove challenging.

To combat these challenges, BUILD sites worked to increase response rates, used site-level data to complement consortium-wide datasets, and collaborated on intervention data analyses to increase sample sizes and the rigor of the conclusions. The CEC also facilitated the collection of institutional records data from the BUILD-funded institutions to supplement and verify the survey outcomes data.

LESSONS LEARNED

Impact of the DPC

The long-term impact of the DPC will be in the broad dissemination of the findings and of the methods developed to evaluate training, mentoring, and capacity-building activities using short-term and long-term Hallmarks of Success. Furthermore, at the BUILD sites, the size and multileveled approach of this NIH investment promoted broader institutional “culture changes” to enhance inclusivity and support of faculty and students from backgrounds traditionally underrepresented in the biomedical sciences. Rather than simply developing activities attempting to change individuals from underrepresented backgrounds to fit into a system that was not designed with them in mind, institutions have made lasting alterations to celebrate and support faculty and student identities.

Despite the challenges described above, DPC awardees have already contributed to the evidence base (NIGMS, 2022). These findings and methods set the stage for changes in how the NIH and grantee institutions approach diversity-enhancing training, mentoring, and capacity-building activities. Below, we reflect on some of the lessons we’ve learned as they relate to future efforts to promote a diverse biomedical research workforce.

Evaluating interventions: Scope and sustainability

The scope and complexity of the DPC created challenges to implementing a true consortium-wide evaluation. For the second phase—spanning Years 6–10 of the grant—the structure changed to allow for more focused and efficient efforts. The CEC concentrated its primary evaluation activities on BUILD, while the NRMN evaluation was redesigned as a sub-consortium of research projects with an NRMN Coordination Center and Resource Center. The NRMN research projects employ focused interventions intended to explore mentoring and networking approaches to advance careers of individuals from diverse backgrounds. Its Coordination Center was designed to promote synergies among NRMN projects and provide feedback and guidance on data collection. The Resource Center continued efforts from the first phase by providing a web-based networking platform, online training sessions, and other resources for mentors and mentees throughout their biomedical education and careers.

Creating a common evaluation platform for NIH-funded workforce development and capacity-building programs is sometimes raised as a potential approach for enhancing stewardship of these important federal investments. While this idea has many positive attributes, the logistical and institutional issues that had to be addressed for the 10 BUILD sites underscored the challenges of evaluating programs with distinct settings, infrastructure, populations, methodologies, and activities. The BUILD site evaluation showed that, with targeted investments, individual institutions can develop their capacity to assess their programs effectively and rigorously. As a result, the NIGMS has developed an annual administrative supplement opportunity to allow institutions with existing institutional training awards to receive funds to focus on building evaluation capacity in a sustainable way (Gammie, 2022).

Similarly, early research outcomes from the DPC demonstrate that while much is known, there are many areas where more research is needed to understand why and how certain training, mentoring, and capacity-building practices are effective. Additionally, there is a breadth of creative approaches that are yet to be tested but have the potential to enhance the diversity of the biomedical research workforce. As a means of sustaining these efforts, the NIGMS supports a research program to increase the evidence base for effective practices. The “Research on Interventions that Promote the Careers of Individuals in the Biomedical Research Enterprise” program was developed “to support research to test interventions to enhance research-oriented individuals’ interest, motivation, persistence, and preparedness for careers in the biomedical research workforce” (NIGMS, 2021b). The expectation is that these studies, along with the findings from the DPC, will guide the implementation of interventions in a variety of academic settings and at a range of career levels to enhance the diversity of the biomedical research workforce.

Transformative impact of targeted investments

The size of the BUILD investments at the institutional level is not scalable across all contexts where biomedical research training and career development occur. However, early implementation of the BUILD program provided insights into focused areas where, with defined investments, the NIH could extend the impact of the DPC beyond the original awardee institutions. As a result, two initiatives were added to the second phase: the Sponsored Program Administration Development (SPAD) Program and the Diversity Program Consortium Dissemination and Translation Awards (DPC DaTA).

As part of a larger strategy to enhance the diversity of the biomedical research workforce, SPAD was developed so that a broader range of institutions would have the capacity to successfully compete for and administer NIH training, research, and capacity-building funds (NIGMS, 2019c). Discussions are underway on how best to sustain and expand these efforts through the NIH UNITE Initiative to Address Structural Racism so that a diversity of institutions (e.g., those with an historic mission of serving students from underrepresented groups) are able to compete for and effectively administer NIH funds (NIH, 2022a).

DPC DaTA offers an opportunity for institutions to employ DPC experimental and evaluation methods toward understanding the effectiveness of biomedical research training, mentoring, and capacity-building interventions on a smaller scale when compared to the BUILD sites. These awards provide resources to allow awardees to implement and evaluate the effectiveness of targeted interventions. Through this initiative, it is expected that the field will gain greater understanding about the effectiveness of the duration, frequency, and intensity of various interventions. The goal is to provide the scientific community with sound evidence of the efficacy of the interventions and provide a blueprint for activities that will be cost effective, practical, realistic, scalable, and sustainable at a broad range of institutions (NIGMS, 2019b).

Propagating the Hallmarks of Success

One DPC contribution that is already having an impact is the Hallmarks of Success. By defining short-, medium-, and long-term measures to assess the elements that predict career advancement in the biomedical research workforce, the Hallmarks put the research training community in a better position to identify effective activities to promote development. All NIGMS undergraduate and predoctoral training programs, from community colleges to medical scientist training programs, are asked to describe their training activities “that will build a strong cohort of research-oriented individuals while enhancing the science identity, self-efficacy, and a sense of belonging among the cohort members” (NIH, 2022b). This language, which focuses on psychosocial elements associated with advancement, is derived from the literature and found in the DPC Hallmarks of Success. The Hallmarks have also informed NIH-wide faculty-diversity initiatives, such as the Maximizing Opportunities for Scientific and Academic Independent Careers (MOSAIC) program and the new NIH Common Fund initiative Faculty Institutional Recruitment for Sustainable Transformation (FIRST).

MOSAIC is designed to facilitate the transition of promising postdoctoral researchers from diverse backgrounds into independent, tenure-track or equivalent research-intensive faculty positions (NIGMS, 2021a). The program provides a career transition award to postdoctoral scholars who are placed into a cohort-based mentoring and career-development program administered by scientific societies. These societies provide courses to promote self-efficacy and skills that align with many of the faculty Hallmarks of Success (e.g., grant writing), and a series of mentoring activities that employ practices and measures developed through NRMN.

The FIRST program aims to enhance and maintain cultures of “inclusive excellence” in the biomedical research community. Like the DPC, it consists of awards to institutions that employ distinct interventions and approaches to achieve similar goals (in this case, cohort hiring models with additional mentoring and professional development to promote institutional culture change). The program has a Coordination and Evaluation Center to work with FIRST awardees and facilitate the development of strategies to conduct a comprehensive evaluation of the program. The leadership of the FIRST Coordination and Evaluation Center has been a part of the DPC since its inception, and the effective practices from the DPC are being employed to enhance the implementation and evaluation of this new NIH initiative.

CONCLUDING REMARKS

The DPC and its consortium-wide evaluation represent a once-in-a-generation opportunity to take a rigorous approach to the science of workforce diversity, mentoring, and research capacity building. The program is already having significant impacts on NIH’s research training and capacity-building evaluation activities. As we learn more about the efficacy of various approaches through the ongoing consortium-wide and site-level evaluations, the program will extend its impact on cultivating and sustaining a research enterprise that better reflects and serves the needs of the entire American public.

Biographies

Dr. Kenneth Gibbs, Jr., is Chief of the Undergraduate and Predoctoral Cross-Disciplinary Training Branch in the Division of Training, Workforce Development and Diversity, at the National Institute of General Medical Sciences. He serves as NIH Project Scientist for the NIH Diversity Program Consortium Coordination and Evaluation Center.

Christa Reynolds, M.A., is Communications Editor for the NIH Diversity Program Consortium. She develops and implements communications and outreach strategies for the Diversity Program Consortium initiatives, and assists with analysis and data reporting.

Sabrina Epou, MPH, is Scientific Program Manager in the Division of Data Integration, Modeling, and Analytics, at the National Institute of General Medical Sciences. She serves as an analyst for the NIH Diversity Program Consortium.

Dr. Alison Gammie is the Director of the Division of Training, Workforce Development and Diversity, at the National Institute of General Medical Sciences. She serves as Program Leader for the NIH Diversity Program Consortium.

Footnotes

For more information, see the DPC Overview Webpage at https://www.nigms.nih.gov/training/dpc/Pages/default.aspx.

For more information on the Hallmarks of Success, see https://www.nigms.nih.gov/training/dpc/Pages/success.aspx.

For more information on SPAD, see https://www.nigms.nih.gov/training/dpc/Pages/SPAD.aspx; for more information on DPC DaTA, see https://www.nigms.nih.gov/training/dpc/Pages/DPC-DaTA.aspx.

REFERENCES

- ACD. (2012). Biomedical research workforce working group report. Retrieved from: https://acd.od.nih.gov/working-groups/dbr.html#:~:text=Diversity%20in%20the%20Biomedical%20Research%20Workforce%20Working%20Group%20Report

- Alexander BH (1975). Minority biomedical support: program for change predominantly minority colleges and universities. Negro History Bulletin, 38(6), 442–445.

- Byars-Winston A, Womack VY, Butz AR, McGee R, Quinn SC, Utzerath E, … Thomas S (2018). Pilot study of an intervention to increase cultural awareness in research mentoring: Implications for diversifying the scientific workforce. Journal of Clinical and Translational Science, 2(2), 86–94. 10.1017/cts.2018.25

- Clayton J (2021). Best practices in survey administration. Retrieved from: https://www.uww.edu/documents/ir/Survey%20Research/Best%20Practices%20in%20Survey%20Administration.pdf

- Crespi CM (2019). BUILD program evaluation: Identification of comparator institutions. Retrieved from: https://diversityprogramconsortium.org/briefs/pages/Identification_Comparator_Inst

- Derzon JH (2018). Challenges, opportunities, and methods for large-scale evaluations. Evaluation & the Health Professions, 41(2), 321–345. 10.1177/0163278718762292

- Gammie A (2022). Administrative supplements for NIGMS training, research education, and career development grants to develop curricular, training, and evaluation activities. NIGMS Feedback Loop Blog. Retrieved from: https://loop.nigms.nih.gov/2022/01/administrative-supplements-for-nigms-training-research-education-and-career-development-grants-to-develop-curricular-training-and-evaluation-activities-3/

- Garrison HH, & Brown PW (1985). Minority access to research careers: An evaluation of the honors undergraduate research training program. National Academy Press.

- Gibbs KD Jr., Basson J, Xierali IM, & Broniatowski DA (2016). Decoupling of the minority PhD talent pool and assistant professor hiring in medical school basic science departments in the US. eLife, 5, e21393. 10.7554/eLife.21393

- Gibbs KD, McGready J, Bennett JC, & Griffin K (2014). Biomedical science Ph.D. career interest patterns by race/ethnicity and gender. PloS One, 9(12), 10.1371/journal.pone.0114736

- Ginther DK, Schaffer WT, Schnell J, Masimore B, Liu F, Haak LL, & Kington R (2011). Race, ethnicity, and NIH research awards. Science, 333(6045), 1015–1019. Retrieved from: https://www.science.org/doi/10.1126/science.1196783

- Glasow PA (2005). Fundamentals of survey research. Retrieved from: https://www.mitre.org/sites/default/files/pdf/05_0638.pdf

- Hueffer K, Ehrlander M, Etz K, & Reynolds A (2019). One health in the circumpolar North. International Journal of Circumpolar Health, 78(1), 1607502. 10.1080/22423982.2019.1607502

- Kamangar F, Silver G, Hohmann C, Hughes-Darden C, Turner-Musa J, Haines RT, … Sheikhattari P (2017). An entrepreneurial training model to enhance undergraduate training in biomedical research. Paper presented at the BMC Proceedings.

- NIGMS. (2019a). Diversity program consortium data sharing agreement. Retrieved from: https://www.nigms.nih.gov/training/dpc/Pages/datasharing.aspx

- NIGMS. (2019b). Diversity Program Consortium Dissemination and Translation Awards (DPC DaTA) (U01). Retrieved from: https://www.nigms.nih.gov/training/dpc/Pages/DPC-DaTA.aspx

- NIGMS. (2019c). Diversity Program Consortium Sponsored Programs Administration Development (SPAD) Program (UC2). Retrieved from: https://www.nigms.nih.gov/training/dpc/Pages/SPAD.aspx

- NIGMS. (2019d). Hallmarks of Success. Retrieved from: https://www.nigms.nih.gov/training/dpc/Pages/success.aspx

- NIGMS. (2021a). Maximizing Opportunities for Scientific and Academic Independent Careers (MOSAIC) (K99/R00 and UE5). Retrieved from: https://www.nigms.nih.gov/training/careerdev/Pages/MOSAIC.aspx

- NIGMS. (2021b). Research on interventions that Promote the Careers of Individuals in the Biomedical Research Enterprise (R01/R35). Retrieved from: https://www.nigms.nih.gov/training/Pages/Interventions.aspx

- NIGMS. (2022). Diversity Program Consortium Publications. Retrieved from: https://www.nigms.nih.gov/training/dpc/Pages/DPC-Publications.aspx

- NIH. (2017). Mission and Goals. Retrieved from: https://www.nih.gov/about-nih/what-we-do/mission-goals

- NIH. (2019). Notice of NIH’s Interest in Diversity. Retrieved from: https://grants.nih.gov/grants/guide/notice-files/NOT-OD-20-031.html

- NIH. (2022a). Ending Structural Racism: UNITE. Retrieved from: https://www.nih.gov/ending-structural-racism/unite

- NIH. (2022b). Undergraduate Research Training Initiative for Student Enhancement (U-RISE) (T34). Retrieved from: https://grants.nih.gov/grants/guide/pa-files/PAR-21-146.html

- NIH Common Fund. (2019). Diversity Program Consortium: Enhancing the Diversity of the NIH Funded Workforce. Retrieved from: https://commonfund.nih.gov/diversity

- NSF. (2021). Women, Minorities, and Persons with Disabilities in Sciences and Engineering. Retrieved from: https://ncses.nsf.gov/pubs/nsf21321/

- Toldson IA, & Washington A (2015). How HBCUs can get federal sponsorship from the United States Department of Education (Editor’s Commentary). Journal of Negro Education, 84(1), 1–6.

- Valantine HA, & Collins FS (2015). National Institutes of Health addresses the science of diversity. Proceedings of the National Academy of Sciences, 112(40), 12240–12242. 10.1073/pnas.1515612112

- Weber-Main AM, McGee R, Eide Boman K, Hemming J, Hall M, Unold T, … Okuyemi KS (2020). Grant application outcomes for biomedical researchers who participated in the National Research Mentoring Network’s Grant Writing Coaching Programs. PloS One, 15(11), e0241851. 10.1371/journal.pone.0241851

- Womack VY, Wood CV, House SC, Quinn SC, Thomas SB, McGee R, & Byars-Winston A (2020). Culturally aware mentorship: Lasting impacts of a novel intervention on academic administrators and faculty. PloS One, 15(8), e0236983. 10.1371/journal.pone.0236983

- Working Group on Diversity in the Biomedical Research Workforce. (2012). Draft report of the Advisory Committee to the Director Working Group on Diversity in the Biomedical Research Workforce. Retrieved from: https://acd.od.nih.gov/documents/reports/DiversityBiomedicalResearchWorkforceReport.pdf