Abstract

Estimating the likelihood, timing, and nature of events is a major goal of modeling stochastic dynamical systems. When the event is rare in comparison with the timescales of simulation and/or measurement needed to resolve the elemental dynamics, accurate prediction from direct observations becomes challenging. In such cases a more effective approach is to cast statistics of interest as solutions to Feynman-Kac equations (partial differential equations). Here, we develop an approach to solve Feynman-Kac equations by training neural networks on short-trajectory data. Our approach is based on a Markov approximation but otherwise avoids assumptions about the underlying model and dynamics. This makes it applicable to treating complex computational models and observational data. We illustrate the advantages of our method using a low-dimensional model that facilitates visualization, and this analysis motivates an adaptive sampling strategy that allows on-the-fly identification of and addition of data to regions important for predicting the statistics of interest. Finally, we demonstrate that we can compute accurate statistics for a 75-dimensional model of sudden stratospheric warming. This system provides a stringent test bed for our method.

Keywords: neural network, rare event, Feynman-Kac equation, high-dimensional PDE, adaptive sampling, Holton-Mass model

1. Introduction

In many complex dynamical systems, behaviors of strong interest occur infrequently compared to the system’s fastest timescale phenomena. For example, most climate-related destruction is due to extreme weather events (e.g., hurricanes, heat waves, flooding) [1, 2, 3, 4, 5]. More broadly, fluid turbulence in both natural and engineered systems produces intermittent, damaging extreme events [6]. In the molecular sciences, chemical reactions and molecular rearrangements occur on timescales many orders of magnitude longer than the timescale of individual bond vibrations [7, 8]. In the biomedical sciences, it may take many mutations before a virulent strain of a pathogen emerges [9], or many heart beats before a cardiac arrhythmia becomes life-threatening [10, 11].

Among the most common computational tasks related to these rare events is prediction, that is, assessing the likelihood and extent of an event (i.e., the risk and cost in the case of a deleterious event) before it occurs. When the event is not too rare, it can often be predicted with sufficient accuracy by direct forward-in-time integration of a computer model as is frequently done, for example, in weather prediction. However, when the event is very rare, direct forward-in-time integration becomes prohibitively expensive because many simulated model trajectories are required to observe even one instance of the event, let alone compute statistics. The computational cost increases further when the goal is to gain an understanding of how the rare event develops, which requires predictions generated from many initial conditions.

One common approach to this problem is to construct a “coarse-grained” model, in which some details of the system are treated implicitly [12, 13, 14]. One example is a Markov State Model (MSM), in which one groups the states of the full system into discrete sets and then evolves the system between these sets according to transition probabilities that are estimated from trajectories of the full system [15, 16, 17, 18]. A variety of machine learning approaches that instead yield continuous coarse-grained representations of systems have come to be known as “equation discovery” [19, 20, 21, 22, 23, 24]. When an accurate coarse-grained model can be constructed, it can be simulated extensively to make predictions with statistical confidence. However, building an accurate coarse-grained model can be challenging, in particular, because it is often not clear a priori which features must be included. The construction of coarse-grained models thus remains a subject of intense inquiry.

Here, we pursue an alternative approach: directly estimating conditional expectations of a Markov process as a function of initial condition. We term these conditional expectations “prediction functions.” Prediction functions can be used to reveal how a rare event develops in remarkable detail. For example, the committor (also known as the splitting probability)—the probability that a process proceeds to a set of target states before a competing set of states—can be used to define the transition state ensemble of a molecular rearrangement [25, 26], as well as the pathways that lead between the reactant and product states [27, 28]. Prediction functions can also provide important information for decision making. For example, the committor can be used by energy, transportation, and financial sectors to measure risk due to extreme weather and allocate resources accordingly [29]. Committor estimation is a growing research focus in meteorology [30, 31, 32, 33]. In real-time settings, the lead time—the expected time until onset of the event given that it occurs—is also essential to know [34, 35, 33].

Prediction functions satisfy Feynman-Kac equations, linear equations of the operator that describes the evolution of expectations of functions of a process, the transition operator (also known as the Koopman operator [36]) and its infinitesimal generator [37, Chapter 3]. Feynman-Kac equations cannot be solved by conventional discretization approaches because they involve a high-dimensional independent variable (the state of the underlying process). Moreover, the form of the transition operator is generally not known. Nonetheless, we showed recently that Feynman-Kac equations can be solved approximately by a basis expansion in which inner products of basis functions are estimated from a data set of short trajectories [27, 38].

While this approach has been successfully applied to such diverse processes as protein folding [27], molecular dissociation [28], and sudden stratospheric warming [34], it relies on identifying an effective basis set. One choice is to use a basis of indicator functions for discrete sets, in which case the approach reduces to construction of an MSM (but with appropriate boundary conditions for the prediction function). However, just as it can be challenging to group states into sets that satisfy the Markov assumption in construction of an MSM [18, 39], the choice of basis set is not always straightforward.

Here, we address this issue through a neural network ansatz for prediction functions. Our work builds on recent studies, which showed that a neural network ansatz can be used to solve for the committor if one assumes particular, explicit forms for the dynamical operator [40, 41, 42, 43] (and see [44] for a closely related approach using tensor network approximation). Similar neural-network techniques have been devised to solve a wide variety of partial differential equations [45, 46, 47, 48, 49, 50]. Because we work directly with a data set of short trajectories, our approach is free of restrictive assumptions about the dynamics (e.g., microscopic reversibility) and does not require explicit knowledge of a model generating the data, opening the door to treating high-fidelity models, and even experimental and observational data [33], without simplifying assumptions.

In Section 2, we review prediction functions and the Feynman-Kac equation that we need to solve to estimate them. In Section 3 we introduce our neural network approach to solving Feynman-Kac equations using a data set of paired trajectories. In Section 4, we compare with Galerkin methods and explore the role of the lag time and the distribution of trajectory initial conditions on performance. In Section 5 we introduce an adaptive sampling method that enriches the data set based on the current neural network approximation. Finally, in Section 6 we apply our algorithm to estimating the probability of onset and the lead time of a sudden stratospheric warming event.

2. Prediction functions and their Feynman-Kac equations

We consider events defined by a set of target states ; often, there is also a competing set of states . For example, if we want to estimate the probability that a moderate storm develops into an intense hurricane before dissipating, we would take to include all weather states consistent with an intense hurricane and to include all quiescent states. The initial moderate storm would be a state in the domain .

Mathematically, we select states in with the indicator function

| (1) |

where denotes a particular state of the system. We define analogous indicator functions for other sets.

We assume the dynamics of the system can be described by a Markov process . In the example above, is a moderate storm state, and the probability that it develops into an intense hurricane before the weather returns to a quiescent state is the committor:

| (2) |

where the subscript indicates the initial condition, and is the stopping time, i.e., when the process leaves the domain .

Continuing the example above, we may also want to compute the lead time, i.e., the average time until a moderate storm develops into an intense hurricane, given that the intense hurricane occurs ( occurs before ). The lead time tells us how much time we have to prepare for the worst case; by definition, it is shorter than the average time until an intense hurricane develops, which can be misleadingly large if the storm has a high probability of dissipating ( occurs before ). Mathematically, the lead time is

| (3) |

When the event of interest is rare, computing or by direct forward-in-time simulation is difficult. It involves repeatedly simulating starting in a selected initial condition and running until either or is reached (which defines the stopping time ), and then assembling a sample average. This approach has significant drawbacks: first, when the time is very large, generation of a single sample trajectory may be prohibitively computationally expensive, and second, when is small, many sample trajectories will be required to observe a single trajectory reaching . For example, starting from a typical weather state, the expected time to the next extreme event may be years, and the probability that it occurs on a much shorter time scale may be very small.
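To make the cost of the direct approach concrete, the following is a minimal sketch on a hypothetical toy problem that is not taken from this paper: a symmetric random walk on the integers 0 to N, with the competing set at 0 and the target set at N. The function name, the walk, and all parameter values are illustrative assumptions. For this walk the committor from state x is known to be x/N, so the sample average can be checked against it.

```python
import random


def direct_committor_estimate(x0, n_states, n_trials, rng):
    """Fraction of walks from x0 that reach n_states (target) before 0 (competing set)."""
    hits_target = 0
    for _ in range(n_trials):
        x = x0
        # Simulate until the stopping time, i.e. until the walk leaves the domain.
        while 0 < x < n_states:
            x += 1 if rng.random() < 0.5 else -1
        if x == n_states:
            hits_target += 1
    return hits_target / n_trials


rng = random.Random(0)
N = 20
estimate = direct_committor_estimate(x0=2, n_states=N, n_trials=5000, rng=rng)
exact = 2 / N  # known result for the symmetric walk (gambler's ruin)
print(f"direct estimate: {estimate:.3f}, exact: {exact:.3f}")
```

Every trial must run all the way to the stopping time, and only about one trial in ten contributes a success here; both costs grow quickly as the event becomes rarer.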

In this paper we estimate prediction functions by solving operator equations for them approximately. In the case of the committor, the operator equation takes the form

| (4) |

where is a time interval known as the lag time and is the identity operator. Here we focus on finite ; the case of infinitesimal is discussed in Section 4.4. Above, the stopped transition operator encodes the full dynamics of the system when it is in ; it is defined by its action on an arbitrary test function :

| (5) |

where . Physically, (4) reflects the fact that the average probability that occurs before after time over all trajectories emanating from is the same as the probability that occurs before starting from . Similarly, the lead time satisfies

| (6) |

In this case, the right hand side accumulates the time until reaching , weighted by the likelihood of reaching before .

Eqs. (4) and (6) are examples of Feynman-Kac equations [37, Chapter 3], which can take more general forms, such as

| (7) |

which is solved by the prediction function

| (8) |

We recover (4) by setting and and (6) by setting and ; the latter case yields , and we must solve separately for and divide by it to obtain . Crucially, (7) exactly characterizes for any choice of . In particular, can be chosen much shorter than typical values of .
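The statement that the equation holds for any lag time can be checked exactly on a small example. The sketch below uses a hypothetical symmetric random walk on the integers 0 to N, absorbed at both ends; the walk, the function names, and the values of N and the lag are assumptions made for illustration. It applies the stopped transition operator by repeated one-step averaging and compares the known committor x/N with a function that is not a solution.

```python
def apply_stopped_operator(f, lag, n_states):
    """Return the list x -> E_x[f(X at min(lag, stopping time))] for the absorbed walk."""
    values = list(f)
    for _ in range(lag):
        new = values[:]  # entries at 0 and n_states stay fixed: the process is stopped there
        for x in range(1, n_states):
            new[x] = 0.5 * values[x - 1] + 0.5 * values[x + 1]
        values = new
    return values


def max_residual(f, lag, n_states):
    """Largest absolute difference between f and the stopped operator applied to f."""
    tf = apply_stopped_operator(f, lag, n_states)
    return max(abs(a - b) for a, b in zip(f, tf))


N = 10
committor = [x / N for x in range(N + 1)]             # exact committor of the walk
not_a_solution = [(x / N) ** 2 for x in range(N + 1)]  # same boundary values, wrong interior

for lag in (1, 5, 50):
    print(lag, max_residual(committor, lag, N), max_residual(not_a_solution, lag, N))
```

The committor residual vanishes to rounding error at every lag, including lags far shorter than the typical time to reach either boundary, while the second function leaves a clearly nonzero residual.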

On its own, (7) brings us no closer to a practically viable approximation of the prediction function. The independent variable is typically high-dimensional, rendering useless any standard discretization approach to solving (7) for . Instead, the current state-of-the-art approach involves expansion of in a problem-dependent basis [27, 28, 38]. In the next section, we explore a potentially more flexible and automated approach to solving (7).

3. Solving Feynman-Kac equations with neural networks

The goal of the present study is to solve (7) by approximating by a neural network with a vector of parameters . Specifically, we seek that minimizes a mean square difference between the left and right hand sides of the Feynman-Kac equation and boundary condition in (7):

| (9) |

with

| (10) |

The norm that we use is the -weighted norm , where is the sampling distribution. Importantly, unlike many existing estimators [40, 41, 42, 43, 44, 51, 52, 53, 54, 55], our data need not be generated from (or re-weighted according to) the invariant distribution of (which may not exist), a feature that we exploit in Section 4.5. In (10), and are both zero when equals the desired prediction function. The parameter controls the strength of the first norm, which enforces the Feynman-Kac equation, relative to the second norm, which enforces the boundary condition. Smaller values enforce the boundary conditions more strictly but can compromise the satisfaction of the Feynman-Kac equation. For our numerical tests below, we tuned by trial and error to the smallest value that still enforced the boundary conditions to the desired precision.

The gradient of includes the integral of a product of two terms of the form with and , the gradient of with respect to the parameters . While we cannot hope to evaluate exactly for any non-trivial , as long as we can evaluate we have access to the random variable whose expectation is . With only one sample of for each sample of , we would not be able to build an unbiased estimate of the product of two terms of the form . One approach, common in reinforcement learning applications, is to simply drop the term involving this product from the gradient [56]. However, given at least two independent samples of for each sample of , we can construct an unbiased estimator of the full gradient of that converges to the exact gradient of in the limit of many samples of (even when the number of independent samples of for each sample of does not increase). Below we outline a procedure that constructs an unbiased estimate of the gradient of given a data set of samples of , together with samples of for each sample of (in tests of , we found the results to be insensitive to the choice of , and we use throughout).
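The need for two independent samples can be seen in a small exact calculation. The sketch below is an illustration under assumed values, not the estimator's implementation: it considers a single initial condition with two equally likely next states, and a candidate function (the hypothetical numbers 0.3, 0.5, 0.7) whose expected residual at that point is zero. It enumerates all outcomes to compare the squared expectation with what one-sample and two-sample estimators return on average.

```python
from itertools import product

# Hypothetical values: the candidate function is 0.5 at the initial condition and
# 0.3 or 0.7 at the two possible next states, each reached with probability 1/2.
u_initial = 0.5
next_states = [(0.3, 0.5), (0.7, 0.5)]  # (candidate value at next state, probability)

# Residual of the equation for each possible outcome of one trajectory.
residuals = [(u_initial - u_next, prob) for u_next, prob in next_states]

# Quantity the loss is meant to contain: the square of the expected residual.
mean_residual = sum(r * p for r, p in residuals)
target = mean_residual ** 2

# Average of the squared residual from ONE trajectory per initial condition (biased).
one_sample = sum(p * r * r for r, p in residuals)

# Average of the product of residuals from TWO independent trajectories (unbiased).
two_sample = sum(
    p1 * p2 * r1 * r2 for (r1, p1), (r2, p2) in product(residuals, repeat=2)
)

print(f"target={target:.4f} one_sample={one_sample:.4f} two_sample={two_sample:.4f}")
```

The one-sample average equals the target plus the variance of the residual, so it penalizes a function that actually satisfies the equation at this point. The product of two independent residuals averages to the target itself, although an individual product can be negative.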

Our procedure is as follows.

Select a set of initial conditions from the sampling distribution .

From each , launch independent unbiased simulations to generate trajectories . Here we assume that .

For trajectory with initial condition , determine the index of its stopping time as .

Compute the total approximate loss function as

| (13) |

which converges to the loss in (9) as increases.

Adjust the parameters to minimize (13).

Check termination criteria and stop if met (discussed further below).

If adaptively sampling, apply the procedure in Section 5 and set to the total number of initial conditions.

Go to step 3.
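The data-generation and loss-evaluation steps of the procedure can be sketched on a toy problem. Everything below is an assumption made for illustration: the symmetric random walk on 0 to N, the lag of five steps, the function names, and the restriction to the committor case with two trajectories per initial condition. No parameters are optimized; the sketch only evaluates the paired-trajectory loss for two fixed candidate functions that both satisfy the boundary conditions, so the boundary term is zero and is omitted.

```python
import random

N = 10   # states 0..N; competing set {0}, target set {N}, domain {1, ..., N-1}
LAG = 5  # lag time measured in steps


def stopped_trajectory_end(x0, lag, rng):
    """Run the walk for at most `lag` steps, stopping once it leaves the domain."""
    x = x0
    for _ in range(lag):
        if x == 0 or x == N:
            break
        x += 1 if rng.random() < 0.5 else -1
    return x


def paired_loss(u, pairs):
    """Mean over initial conditions of the product of two independent residuals."""
    total = 0.0
    for x0, end_a, end_b in pairs:
        total += (u(x0) - u(end_a)) * (u(x0) - u(end_b))
    return total / len(pairs)


def exact_committor(x):
    return x / N


def step_function(x):
    # Satisfies the boundary conditions but not the equation inside the domain.
    return 1.0 if 2 * x >= N else 0.0


rng = random.Random(1)
# Initial conditions drawn uniformly from the domain.
initial = [1 + int(rng.random() * (N - 1)) for _ in range(20000)]
# Two independent stopped trajectories from each initial condition.
pairs = [
    (x0, stopped_trajectory_end(x0, LAG, rng), stopped_trajectory_end(x0, LAG, rng))
    for x0 in initial
]

loss_exact = paired_loss(exact_committor, pairs)
loss_step = paired_loss(step_function, pairs)
print(f"loss(exact committor)={loss_exact:.5f}  loss(step function)={loss_step:.5f}")
```

The loss for the exact committor fluctuates around zero and may come out slightly negative, whereas the step function gives a clearly positive value. In a full implementation a parameterized function would replace the fixed candidates and an optimizer would adjust its parameters.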

In principle, the loss can be minimized over any sufficiently flexible ansatz . In this work, is a fully connected feed-forward neural network, and we determine the optimal parameters via the Adam algorithm [57]. In the present study, we stop training (step 7) when the average loss for an epoch is less than zero. It is possible for to become negative because the two parenthetical factors in (11) are evaluated using independent samples of . While this could be avoided by choosing , the result would be a biased estimator of . In the limit of large , converges to , which must be non-negative. When using any sample approximation of , some regularization is required to avoid overfitting. We find early stopping at the first occurrence of a negative value of to be a natural and effective approach. Further details are given in conjunction with the numerical examples.

4. Illustration of numerical considerations

In this section, we use a model for which we are able to compute reference results to illustrate the advantages of our approach relative to existing ones. Specifically, we compare our approach with one that employs a basis expansion (a Markov State Model) and one that employs a neural network with an assumed form for the dynamical operator. Finally, we examine common choices for the sampling distribution. We show that an important practical advantage of our approach is the freedom to choose the sampling distribution with which to weight the norm in .

4.1. Müller-Brown model

The system that we study is specified by the Müller-Brown potential [58], which is a sum of four Gaussian functions:

| (14) |

For all results shown, we use , , , , , . The potential is shown in Figure 1(left).
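For readers who want to experiment, the sketch below evaluates a four-Gaussian Müller-Brown potential and its gradient. The parameter values are the ones commonly quoted in the literature for this potential and are an assumption here: the values (and any rescaling) actually used in this work are not recoverable from the text above. The function names are likewise illustrative.

```python
import math

# Commonly quoted Müller-Brown parameters (assumed; may differ from this paper's values).
AMPLITUDE = (-200.0, -100.0, -170.0, 15.0)
A_COEF = (-1.0, -1.0, -6.5, 0.7)
B_COEF = (0.0, 0.0, 11.0, 0.6)
C_COEF = (-10.0, -10.0, -6.5, 0.7)
X_CENTER = (1.0, 0.0, -0.5, -1.0)
Y_CENTER = (0.0, 0.5, 1.5, 1.0)


def mueller_brown(x, y):
    """Sum of four Gaussian-type terms."""
    total = 0.0
    for amp, a, b, c, xc, yc in zip(AMPLITUDE, A_COEF, B_COEF, C_COEF, X_CENTER, Y_CENTER):
        dx, dy = x - xc, y - yc
        total += amp * math.exp(a * dx * dx + b * dx * dy + c * dy * dy)
    return total


def mueller_brown_gradient(x, y):
    """Analytical gradient (dV/dx, dV/dy) of the potential above."""
    gx = gy = 0.0
    for amp, a, b, c, xc, yc in zip(AMPLITUDE, A_COEF, B_COEF, C_COEF, X_CENTER, Y_CENTER):
        dx, dy = x - xc, y - yc
        term = amp * math.exp(a * dx * dx + b * dx * dy + c * dy * dy)
        gx += term * (2.0 * a * dx + b * dy)
        gy += term * (b * dx + 2.0 * c * dy)
    return gx, gy


# With these parameters the deepest minimum lies near (-0.558, 1.442).
print(mueller_brown(-0.558, 1.442))
```

The negative of the gradient is the drift of the overdamped dynamics, so these two functions are what a trajectory generator for this model would need.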

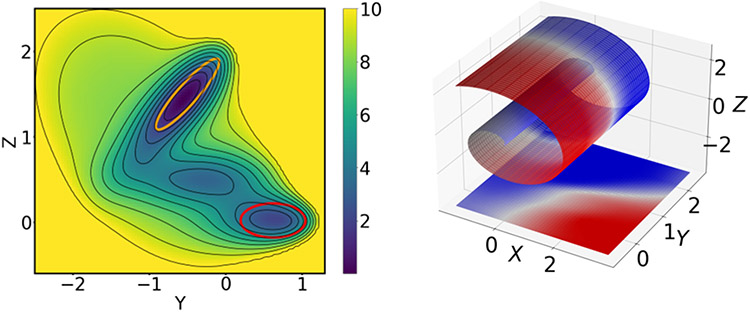

Figure 1:

The system used for the numerical experiments in Section 4. (left) Müller-Brown potential [58]. Sets and are marked by the orange and red ellipses, respectively, and contours are spaced at intervals of 1 in the units of (14). (right) Reference committor for the Müller-Brown dynamics mapped to the Swiss roll, and below on the two-dimensional surface. We compute the reference from a finite difference scheme [38] in two dimensions and then map it to the Swiss roll using (17).

We consider the overdamped Langevin dynamics associated with , discretized with the BAOAB algorithm [59]:

| (15) |

where is the time step, is the inverse temperature, , is the normal distribution with zero mean and unit standard deviation (i.e., is Gaussian noise), and with unless otherwise specified. In practice, we use a time step of , saving the configuration every time step, such that (cf. step 2 in Section 3). When the parameter is large, makes only very rare transitions between the local minima of .

We define states and as

| (16) |

neighborhoods of two of the three local minima of (Figure 1(left)).

The Müller-Brown model described above is commonly employed as a simple illustration of the features of molecular rearrangements [58, 38, 41, 42, 43]. The presence of local minima in addition to and , and the fact that, at low noise, the trajectories connecting the minima do not align with the coordinate axes are both features that can be challenging for algorithms that enhance the sampling of transitions between the reactant () and product () states. In our tests, we specifically focus on the committor. We compute a reference committor by the finite difference scheme outlined in the appendices of [38, 60] with . In all tests, we compare the estimated committor to the reference committor computed using the same potential energy function used to generate the data.

Finally, to represent the fact that one of the most challenging aspects of treating complex systems is that the manifold on which the dynamics take place is generally not known, we transform the trajectories and sets and to a new set of coordinates. Specifically, we map the two-dimensional system onto a Swiss roll (Figure 1(right)):

| (17) |

where the parameter controls how tightly the roll is wound, and is an offset to ensure that the range of is positive. Unless otherwise specified, we use and . For the remainder of the tests based on the Müller-Brown potential, we use the three-dimensional coordinates as input features for all neural networks and -means clustering. For clarity of visualization, we plot the estimated committors on the original two-dimensional coordinates. The error metric that we use is independent of coordinate system.

Unless otherwise specified, for our experiments with the Müller-Brown model below, we draw 30,000 initial conditions uniformly from the region:

| (18) |

Two independent trajectories of length (to be specified below) are then generated from each initial condition using (15).

4.2. Neural network details

For all the numerical experiments involving the Müller-Brown potential, we use fully connected feed-forward neural networks with three inputs, three hidden layers, each consisting of 30 sigmoid activation functions, and an output layer with a single sigmoid activation function. In all trials with fixed data sets, we trained for a maximum of 3000 epochs with a learning rate of 0.0005 and a batch size of 1500. Each epoch proceeds by drawing a permutation of the data set, then one step of Adam is performed using mini-batches of size 1500 (that is, 1500 pairs of trajectories) such that each trajectory pair is used exactly once per epoch (that is, the number of Adam steps is the data set size divided by the mini-batch size). The boundary term is computed with the same mini-batch as ; we use to weight the terms in the loss function. We also explored deeper networks with ReLU activation functions, and they performed comparably and generally required shorter training times (results not shown); we focus on the shallower networks with sigmoid activation functions because they allow a direct comparison with loss functions involving explicit derivatives of in Section 4.4.

4.3. Galerkin methods

As discussed in the Introduction, one of our main motivations in introducing an approach based on neural networks is that it can be difficult to identify basis functions for linear (e.g., Finite Element or other Galerkin) methods for solving Feynman-Kac equations. To illustrate this issue explicitly, we compare estimates for the committor from our approach with those obtained from dynamical Galerkin approximation [38, 27] using a basis of indicator functions, which can be considered an MSM [38]. We do so as a function of the parameter in (17) and generate data sets with .

To construct an MSM, we clustered the configurations in each data set by the -means algorithm (with as specified below) applied to the three-dimensional coordinates of the model. The indicator functions of the set of points closest to each cluster centroid form a basis for a Galerkin approximation of the committor function. A transition matrix was constructed by counting transitions of the stopped process between clusters among our trajectory data set with a lag time of . Here we use the convention that the row and column indices are zero for and their maximum values for . The committor is then computed from with the last component of the solution vector set to 1. The neural network and its training were as described in Section 4.2.
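The counting and solution steps of an MSM committor calculation can be sketched as follows. This is an illustration on an assumed toy system rather than the calculation performed here: each state of a symmetric random walk on 0 to N plays the role of one cluster, the lag is a single step, and the linear system is solved by Gauss-Seidel sweeps instead of a direct solve. The function names and sample sizes are hypothetical. The sketch follows the convention that index zero is the competing set and the last index is the target set.

```python
import random


def count_transitions(n_states, samples_per_state, rng):
    """Count one-step transitions out of every interior state of the walk."""
    counts = [[0] * (n_states + 1) for _ in range(n_states + 1)]
    for i in range(1, n_states):
        for _ in range(samples_per_state):
            j = i + (1 if rng.random() < 0.5 else -1)
            counts[i][j] += 1
    return counts


def msm_committor(counts, sweeps=2000):
    """Solve q_i = sum_j P_ij q_j on interior states with q = 0 and q = 1 at the ends."""
    n = len(counts) - 1
    # Row-normalize counts into transition probabilities (empty rows stay zero).
    P = [[c / max(sum(row), 1) for c in row] for row in counts]
    q = [0.0] * (n + 1)
    q[n] = 1.0  # last component fixed to 1 (target set); first stays 0 (competing set)
    for _ in range(sweeps):
        for i in range(1, n):
            q[i] = sum(P[i][j] * q[j] for j in range(n + 1))
    return q


rng = random.Random(3)
N = 10
counts = count_transitions(N, samples_per_state=20000, rng=rng)
q_msm = msm_committor(counts)
print([round(v, 3) for v in q_msm])
```

For this walk the result should track the exact committor i/N closely. In the Swiss roll setting the difficulty lies earlier in the pipeline, in defining clusters that do not span adjacent layers.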

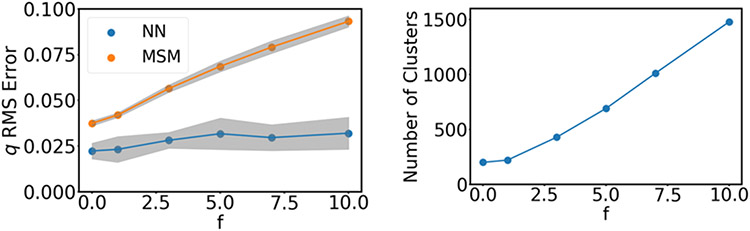

Figure 2 shows the results. We see that as the roll is wound tighter (higher ), the MSM estimates, constructed with a constant 300 clusters, decrease in accuracy, while the network estimates remain consistently good. In the right panel, we vary the number of clusters and report the number required to reach a root mean squared error threshold of 0.045. This threshold is chosen because it results in numbers of clusters in a range that is typical in MSM studies [27, 34]. We increase the number of trajectories in proportion to the number of MSM clusters to ensure that each cluster is sampled a consistent amount. In this test, we see that large numbers of MSM clusters, and hence large amounts of data, are needed. Intuitively, the MSM encounters problems when a single cluster spans adjacent layers. Therefore, it is necessary to vary the size of the clusters with the distance between layers of the Swiss roll, which is a linear function of . Consistent with this idea, in Figure 2 we find an approximately linear dependence of the number of clusters needed to achieve a certain error threshold.

Figure 2:

Comparison with Galerkin methods. (left) For an MSM estimate with clusters, the root mean square (RMS) error in the committor for the Müller-Brown model as the Swiss roll is wound tighter (higher in (17)). Shading shows the standard deviation in the error from training on ten independent data sets. (right) Number of MSM clusters needed to achieve an RMS error in the committor of less than 0.045.

We note that in practice MSMs are often constructed on coordinates obtained from a method for dimensionality reduction and/or manifold learning. With such pre-processing, linear methods can clearly be successful. However, kernel-based methods for dimensionality reduction (e.g., diffusion maps [61] or kernel time-lagged independent component analysis [62, 63]) scale poorly with the size of the data set. A neural network (e.g., an autoencoder [64, 65]) can be used for dimensionality reduction, but the approach presented here is simpler in that we go directly from model coordinates to prediction function estimates.

4.4. Lag time

As discussed in the Introduction, neural networks have been applied to estimating high-dimensional committors assuming a partial-differential form for the dynamical operator [40, 41, 42]. This form arises in the limit that one considers an infinitesimal lag time. In this case, one can write (7) as

| (19) |

where is the infinitesimal generator:

| (20) |

For a diffusion process, takes the form

| (21) |

where and are the drift and diffusion coefficients that determine the evolution of . In the limit of small , the dynamics in (15) correspond to a generator with and . In this case, the loss function becomes

| (22) |

with an appropriate boundary condition term.

The loss function in (22) differs from the one used in many recent articles on the subject of committor estimation with neural-network (or recently tensor-network) approximations [40, 41, 42, 43, 44]. Those papers focus specifically on the case of reversible overdamped diffusive dynamics. In this case the committor can be found by minimizing a sample approximation of the loss function (for constant, isotropic diffusion coefficient) where is the invariant distribution of the dynamics [55]. Relatedly, despite a resemblance to (10), the estimator that appears in [51, 52, 53, 54] is, in fact, a small approximation of .

We stress that (22) is only appropriate for diffusion processes and requires working with the full set of variables in which the dynamics are formulated. Importantly, one generally analyzes only functions of a subset of the variables (termed collective variables or order parameters) [27, 28, 33, 38]. For example, in a molecular simulation of a solute in solvent, one may include only the dihedral angles of the solute. In a weather simulation, one may focus on the wind speed and geopotential height at particular altitudes. When working with observational data, one only has access to the features that were measured. Even when the tracked variables can be described by an accurate coarse-grained model, that model is not known explicitly and is difficult to identify from data. These considerations make minimization of any loss function explicitly involving (21) impossible for many practical applications.

Nonetheless, the loss function in (22) is appealing because it involves only a single time point, so no trajectories need to be generated if explicit forms for the drift and diffusion coefficients are known. While this would appear to be an advantage, we show in this section that, even when the dynamics can be reasonably described by (21), it can be preferable to work with finite lag times.

To make this point, we consider dynamics governed by the Müller-Brown potential with a small oscillating perturbation (Figure 3A):

| (23) |

where controls the spatial frequency of the perturbation. Again we represent the data on the Swiss roll as described in Section 4.1. As shown in Figure 3B, the perturbation is sufficiently small that it makes no qualitative change to the committor.

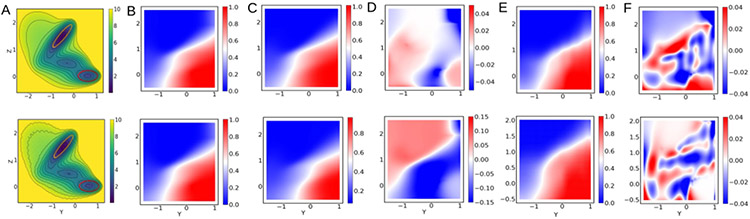

Figure 3:

Effect of potential roughness on the performance when using a loss function based on the infinitesimal lag time limit. Results shown are obtained with (22) and (23) with (top row), and (bottom row). (A) The potentials. (B) Reference committors obtained from the finite-difference scheme in [38, 60]. (C) Neural network prediction of the committors. (D) Differences between the references and the predictions. (E,F) Same as columns C and D, except for a lag time of 100 steps. Note the different scales on the difference maps in columns D and F.

Given this data set, we train neural networks to minimize the loss function in (13), using either (11) or (22) for , with and , corresponding to the committor. The network architecture was the same as above: i.e., fully connected feed-forward with three inputs, 30 activation functions per hidden layer, and one output. The neural network and its training were as described in Section 4.2.

Typical results are shown in Figure 3C and D, and the error in the committor is quantified in Figure 4. As the frequency of the perturbation increases, the drift becomes large, with rapid sign changes, and the training of the infinitesimal lag time network tends to get stuck at poor estimates of the committor (Figure 4(left)). By contrast, finite lag time networks consistently achieve low errors at longer lag times (Figure 4(center and right)). This presumably results from averaging over values of the drift. Interestingly, we found that when the potential is smooth (Figure 4(center)), slightly lower errors can be obtained using the zero lag time approach. However, in the presence of even such a small amount of roughness that the committor is qualitatively unchanged (Figure 3A and B), our finite lag time approach performs better (Figure 4(right)). We expect the latter case to be more relevant in many practical applications.

Figure 4:

Comparison of infinitesimal and finite lag time loss functions. (left) RMS error in the committor for the Müller-Brown dynamics mapped to the Swiss roll obtained with the infinitesimal lag time loss function in (22) as the frequency of the sinusoidal perturbation, , is increased. The other panels show the error as the lag time is increased with the frequency fixed at (center) or (right). Shading shows the standard deviation in the error from training on ten independent data sets.

It may be tempting to assume that the zero lag time approach has lower computational cost since there is no need to actually simulate the stochastic differential equation (here, (15)). This is not necessarily the case. With the infinitesimal lag time loss function, the drift needs to be evaluated for each data point for every pass over the data set (one epoch). By contrast, the finite lag time loss function introduced here does not require evaluation of the drift once the data set is generated. Therefore, if the number of epochs needed to train the zero lag time network is comparable to the number of time steps used to generate the data set for the two-trajectory method, the finite lag time method will be less computationally costly.

4.5. Sampling distribution

In this section, we investigate the role of the choice of sampling distribution. Following generation of a data set as described in Section 4.1, we selected initial points from the region specified in (18) with weight ( need not be the same as ) and trained over a range of values. To a good approximation when is small, the invariant density of (15) is with . When is large, the data set of initial conditions concentrates at the local minima of . As becomes small, the distribution approaches uniform. While this parametric form for the sampling distribution is convenient for the tests performed in this section, we emphasize that, unlike many existing schemes [40, 41, 42, 53, 54], our algorithm does not require explicit knowledge of the invariant density.
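The effect of the sampling parameter can be illustrated with a short resampling sketch. It assumes a Boltzmann-type weight, the exponential of minus a sampling inverse temperature times the potential, which is inferred from the description above rather than read from an equation, and it uses a hypothetical one-dimensional double-well potential in place of the model studied here. The function names are illustrative.

```python
import math
import random


def double_well(x):
    """Hypothetical 1D stand-in potential with minima at x = -1 and x = +1."""
    return (x * x - 1.0) ** 2


def select_initial_points(candidates, potential, beta_s, n_select, rng):
    """Resample candidates with weight exp(-beta_s * V); beta_s = 0 keeps them uniform."""
    weights = [math.exp(-beta_s * potential(x)) for x in candidates]
    return rng.choices(candidates, weights=weights, k=n_select)


def mean_potential(points):
    return sum(double_well(x) for x in points) / len(points)


rng = random.Random(2)
candidates = [rng.uniform(-2.0, 2.0) for _ in range(20000)]

concentrated = select_initial_points(candidates, double_well, 5.0, 5000, rng)
uniform_like = select_initial_points(candidates, double_well, 0.0, 5000, rng)

print(mean_potential(concentrated), mean_potential(uniform_like))
```

A large sampling parameter pulls the initial conditions into the wells and leaves the barrier region between them sparsely covered, which is the regime in which the estimates reported in this section degrade.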

We trained ten networks on each pair of sampling distribution and lag time following the procedure in Section 4.2, and the resulting errors and their standard deviations are plotted as a function of in Figure 5. At low , which concentrates the initial points in the minima, the network is unable to find a good solution at any lag time. As increases and the distribution becomes more uniform, the solution improves significantly. This suggests that it is important to have the regions between minima well-represented in the data set, which is consistent with previous observations [66, 38, 27, 34, 43]. In high-dimensional examples, sampling the transition regions is not straightforward, and we present a solution to this problem in the next section.

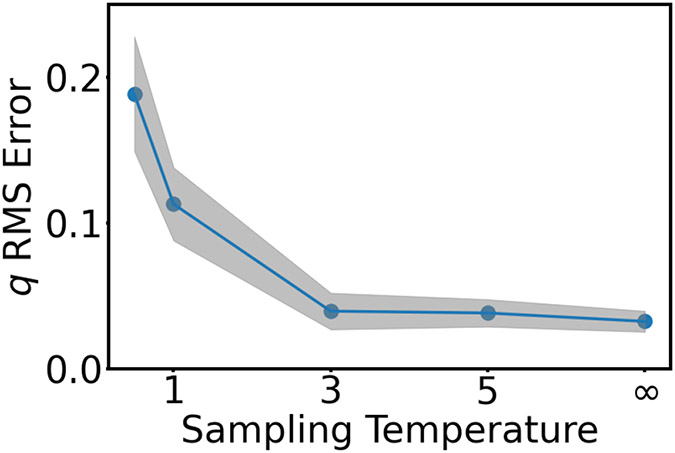

Figure 5: RMS error of the committor as the sampling temperature, , is increased. The point at corresponds to the stationary distribution for the Müller-Brown model (in the small limit) at the temperature of the dynamics. Increasing the temperature makes the distribution more uniform. The point labeled is uniform. Shading shows the standard deviation in the error from training on ten independent data sets.

5. Adaptive Sampling

As we showed in Section 4.5, the choice of sampling distribution is important. In this section, we propose a simple method for adding data as the training proceeds. Since the approach depends on constructing a spatial grid, we must first select a low-dimensional (e.g., two-dimensional) set of (possibly non-linear) coordinates, which, as noted above, we term collective variables. We then partition the space of possible values into bins of equal volume labeled , and estimate

| (24) |

for each bin. The weights are then used to select bins, and new initial points are then sampled from the selected bins with uniform probability. The essential idea is that we add data to the regions (bins) that contribute most to .

When adaptively sampling to learn the committor we approximate (24) by

| (25) |

We compute (25) for each bin, select bins with probability proportional to with replacement, and sample a single additional initial point from each selected bin. From each of the new initial points we generate a trajectory. In practice, we observed that (25) can become negative for some bins in the same way that can become negative. In this case, we set all negative probabilities to zero.
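The bin selection step described above can be sketched as follows. This is a minimal illustration under stated assumptions: the per-bin weights are taken as already computed from (25), and each bin is represented by a hypothetical pool of candidate configurations from which one point is drawn uniformly. The function and argument names are not from the original.

```python
import random
from typing import Dict, Hashable, List, Sequence


def select_new_initial_points(bin_weights: Dict[Hashable, float],
                              bin_pools: Dict[Hashable, Sequence],
                              n_new: int,
                              seed: int = 0) -> List:
    """Pick n_new initial points by sampling bins in proportion to their weights.

    bin_weights: estimated contribution of each bin (may be negative).
    bin_pools:   candidate configurations available in each bin (hypothetical container).
    """
    rng = random.Random(seed)
    # Only bins that actually contain candidate configurations can be selected.
    bins = [b for b in bin_weights if bin_pools.get(b)]
    # Negative estimates are set to zero, as described in the text.
    clipped = [max(bin_weights[b], 0.0) for b in bins]
    if sum(clipped) <= 0.0:
        raise ValueError("no bin has a positive weight")
    # Bins are selected with replacement, with probability proportional to weight.
    chosen = rng.choices(bins, weights=clipped, k=n_new)
    # One point is drawn uniformly from each selected bin.
    return [rng.choice(bin_pools[b]) for b in chosen]
```

Each returned point would then seed a new pair of short trajectories before training resumes.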

The success of our adaptive sampling approach depends on the choice of . In the absence of other knowledge, a reasonable choice is the current estimate of the committor function itself. We adopt this choice to test our adaptive sampling procedure on the Müller-Brown model. A related adaptive sampling approach using stratified sampling [67, 68] based on a current committor estimate is proposed in [43].

The simulation and Swiss roll parameters, as well as the neural network and training parameters, are the same as above. We initially train with 10,000 pairs of trajectories drawn uniformly from the region in (18) for 1000 epochs. Then we alternate between adding new pairs of trajectories and training for 500 epochs, for four cycles. We compare to 30,000 trajectory pairs drawn uniformly from the region in (18). Results are presented in Figure 6. We find that the adaptive sampling and uniform sampling perform similarly at long lag times, although the adaptive procedure gives more reproducible results, as shown by the smaller error bars. At short lag times the average error is lower as well. The adaptive sampling procedure concentrates sampling in the transition region, that is, near . In the next section, we illustrate the adaptively sampled distribution on our atmospheric model, and we again see that sampling is effectively directed to the transition region. For low-noise diffusions, the transition region becomes narrower, and this is reflected by a sharper peak than in Figure 6. In our testing, our adaptive sampling scheme remains effective, although more data are required at lower noise. We find that our method works for barriers .

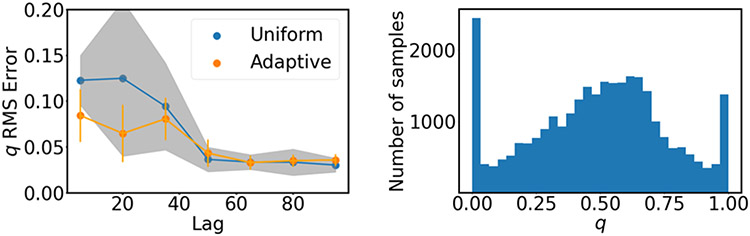

Figure 6: Adaptive sampling scheme applied to the Müller-Brown dynamics mapped to the Swiss roll. (left) Comparison of uniform and adaptive sampling. Shading and error bars show the standard deviation in the error from training on ten independent data sets. (right) Histogram of the final data set as a function of the committor from training a neural network with a lag time of 100.

6. Predicting an atmospheric transition

As a demanding test of our method, we compute the committor and lead time for a model of sudden stratospheric warming (SSW), aiming to improve upon the benchmarks computed in [34]. Like other models of geophysical flows, the dynamics are irreversible and the stationary distribution is unknown. As a consequence, many competing approaches for computing the committor (e.g., [40, 41, 42, 53, 54]) are not applicable.

Typical winter conditions in the Northern Hemisphere stratosphere support a strong polar vortex, fueled by a large equator-to-pole temperature gradient. Approximately once every two years, planetary waves rising from the troposphere impart a disturbance strong enough to weaken and destabilize the vortex, in some cases splitting it in half. Such events cause stratospheric temperatures to rise by about 50°C over several days, affecting surface weather conditions for up to three months. The polar vortex is dynamically coupled to the midlatitude (tropospheric) jetstream, which sometimes weakens in response to SSW. This can engulf the midlatitudes in Arctic air and alter storm tracks, bringing severe weather conditions to unprepared locations. Predicting SSW events is therefore a prime objective in subseasonal-to-seasonal weather prediction, but their abruptness poses a real challenge. For a review of SSW observations, predictability and modeling, see [69] and references therein.

We consider the Holton-Mass model [70], augmented by time-dependent stochastic forcing as in [34] to represent unresolved processes and excite transitions between the strong and weak vortices. Despite the simplicity of the model relative to state-of-the-art climate models, these transitions capture essential features of SSW such as the rapid upward burst of wave activity mediated by the “preconditioned” vertical structure of zonal-mean flow [71, 72]. We briefly describe the model here, but refer the interested reader to [34, 73, 70, 74] for additional background and details.

The model domain is the region of the atmosphere north of 30° and above the altitude of (the tropopause). The Holton-Mass model describes stratospheric flow in terms of a wave-mean flow interaction between two physical fields. The mean flow refers to the zonal-mean zonal wind : the horizontal wind velocity component in the east-west (zonal) direction, averaged over a ring of constant latitude (zonal-mean, denoted by the overbar). The spatial coordinate denotes the north-south (meridional) distance from the latitude line , i.e., , where is the Earth’s radius and is the latitude. The wave refers to the perturbation streamfunction : the deviation from zonal mean (denoted by a prime symbol) of the geostrophic streamfunction, which is proportional to the potential energy of a given air parcel. Holton and Mass worked with the following ansatz for the interaction:

| (26) |

where and are zonal and meridional wavenumbers, and km is a scale height. The equations in (26) prescribe the horizontal structure entirely, so the model state space consists of and , the latter being complex-valued. Insertion of (26) into the quasigeostrophic potential vorticity equation yields a system of two coupled PDEs. Following [34, 70, 73, 74], we discretize the PDEs along the dimension in 27 layers. After enforcing boundary conditions, this results in a 75-dimensional state space:

| (27) |

The two states of interest in this model are a strong polar vortex, with large positive (meaning eastward wind, marked as state in Figure 7), and a weak polar vortex, with a weak wind profile in which sometimes dips negative (marked as state in Figure 7). Specifically, we define and as spheres centered on the model’s two stable fixed points () and () in the 75-dimensional state space. The two spheres have radii of 8 and 20 respectively, with distances measured in the non-dimensionalized state space specified in [34]. In physical units, these correspond to the ellipsoids

| (28) |

| (29) |

where is the complex vector 2-norm.
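In code, membership in the two spherical sets reduces to a distance check in the non-dimensionalized state space. The sketch below is illustrative only: the fixed-point coordinates are placeholders, not the model's actual fixed points. The assignment of radius 8 to the strong-vortex state and radius 20 to the weak-vortex state follows the order in which the states are introduced above, which is an assumption.

```python
import math
from typing import Optional, Sequence

RADIUS_STRONG = 8.0   # assumed radius of the sphere around the strong-vortex fixed point
RADIUS_WEAK = 20.0    # assumed radius of the sphere around the weak-vortex fixed point


def label_state(x: Sequence[float],
                center_strong: Sequence[float],
                center_weak: Sequence[float]) -> Optional[str]:
    """Return "strong" or "weak" if x lies in the corresponding sphere, else None.

    All vectors are real, non-dimensionalized state vectors of equal length
    (75 components for the discretization described above).
    """
    if math.dist(x, center_strong) <= RADIUS_STRONG:
        return "strong"
    if math.dist(x, center_weak) <= RADIUS_WEAK:
        return "weak"
    return None
```

A short trajectory can be passed through such a function point by point to find the first time it enters either set.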

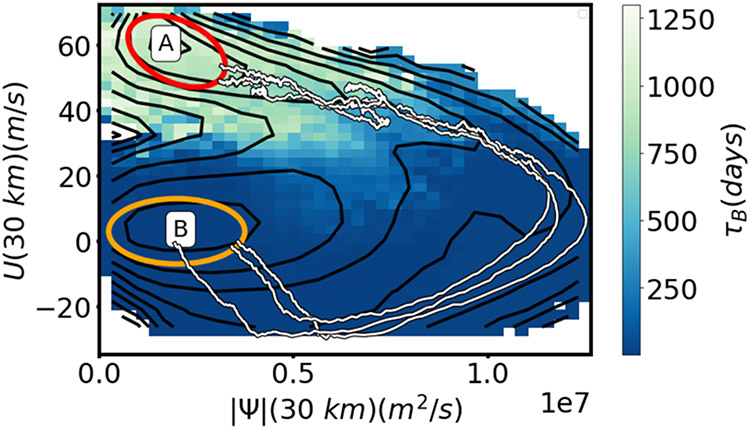

Figure 7: Illustration of some key properties of the Holton-Mass model relevant to the prediction problems considered here. Red and yellow ellipses approximately mark the projections of states and , respectively, on the collective variables. The background color shows the average time to hit state , clipped to a maximum of 1300 days to show detail. Black contours show the negative logarithm of the stationary density marginalized on these collective variables. Three transition paths harvested from a long simulation are shown in white.

Figure 7 illustrates the key features of this model relevant to the prediction problems we consider here. We see that the average time to reach starting from is over 1000 days, which is substantially longer than the longest lag times we consider here (≤ 10 days). We can also see that the transition paths do not proceed through the saddle point of the effective free energy (i.e., the negative logarithm of the stationary density, marked by the contours), indicating that non-diffusive, irreversible dynamics are important. Specifically, the transition path can be roughly divided into two stages: a “preconditioning” phase, in which the vortex gradually weakens, followed by an upward burst of wave activity that rips the vortex apart. Most of the committor’s increase happens during the preconditioning phase, which siphons enstrophy (that is, squared vorticity, a measure of vortex strength that is conserved in the absence of dissipation) away from the mean flow and into the wave activity. The wave eventually dissipates, but only after its magnitude bypasses the saddle point (Figure 7). See [35, 75] for further discussion.

To generate an initial data set, we sampled 30,000 points uniformly in and from a long (50,000 days) trajectory and ran two ten-day trajectories from each starting point. Simulation details are reported in [34]. We simulated with a time step of 0.005 days, and saved the state of the system every 0.1 days. To validate our neural network results, we use a long trajectory of 500,000 days to compute

| (30) |

where are the parameters obtained from solving (9). This is the mean reference committor over the isocommittor surfaces from the neural network function. A perfect prediction corresponds to . We use a similar construction for , which we denote . For the committor, we take the overall error to be

| (31) |

Because the lead time does not have a fixed range and scales exponentially with the noise, we instead compute its relative error

| (32) |
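The validation procedure above can be sketched numerically by binning samples from the long trajectory according to the network prediction and averaging the reference statistic within each bin, which approximates an average over level sets of the prediction. The sketch is illustrative: the function names are hypothetical, and the RMS and normalized error forms are assumptions standing in for (31) and (32), whose exact forms are not reproduced here.

```python
import math
from typing import List, Sequence, Tuple


def level_set_averages(predicted: Sequence[float],
                       reference: Sequence[float],
                       n_bins: int = 20) -> List[Tuple[float, float]]:
    """Bin samples by predicted value; return (mean prediction, mean reference) per bin."""
    lo, hi = min(predicted), max(predicted)
    width = (hi - lo) / n_bins
    if width == 0.0:
        width = 1.0  # all predictions identical: everything falls in the first bin
    sum_p = [0.0] * n_bins
    sum_r = [0.0] * n_bins
    count = [0] * n_bins
    for p, r in zip(predicted, reference):
        k = min(int((p - lo) / width), n_bins - 1)
        sum_p[k] += p
        sum_r[k] += r
        count[k] += 1
    # Empty bins are skipped rather than reported as zero.
    return [(sp / n, sr / n) for sp, sr, n in zip(sum_p, sum_r, count) if n > 0]


def rms_error(pairs: Sequence[Tuple[float, float]]) -> float:
    """Root-mean-square deviation between prediction and level-set-averaged reference."""
    return math.sqrt(sum((p - r) ** 2 for p, r in pairs) / len(pairs))


def relative_error(pairs: Sequence[Tuple[float, float]]) -> float:
    """Deviation normalized by the magnitude of the reference (for unbounded statistics)."""
    num = sum((p - r) ** 2 for p, r in pairs)
    den = sum(r ** 2 for _, r in pairs)
    return math.sqrt(num / den)
```

A perfect prediction gives pairs that lie on the diagonal, so both errors vanish.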

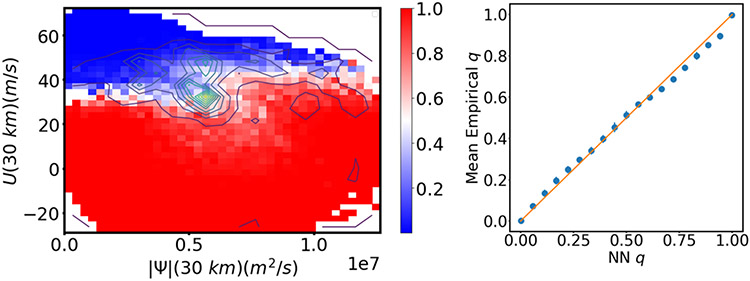

Figure 8 shows the reference committor and lead time projected onto the collective variables.

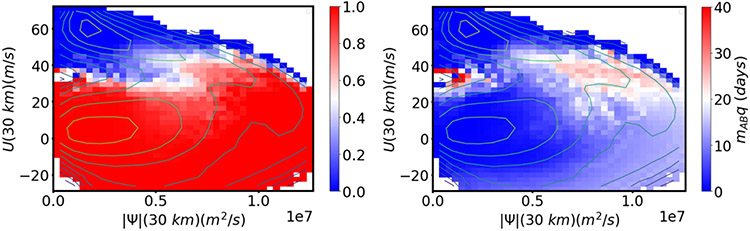

Figure 8: Reference statistics for the Holton-Mass model. (left) Committor and (right) lead time computed from a long trajectory and projected onto and . Colors show reference statistics, and contours show the effective free energy.

Figure 9(left) shows results for the committor obtained with the adaptive sampling method. As collective variables in the adaptive sampling scheme we use , . The space between and 72.5 m/s and between and 1.26 × 10⁷ m/s² is partitioned into a 20 × 20 grid of bins. We choose this collective variable space because it is physically intuitive, coming directly from the model’s state space, and because it resolves SSW events well. Physically, measures the strength of the vortex while measures the strength of the disruptive wave. Their coupling is key to the nonlinear dynamics of the model. We begin with 50,000 pairs of short trajectories and add 22,000 new pairs of ten-day trajectories every 100 epochs for a total of 10 cycles. Thus the final number of trajectory pairs is 270,000. We take in (13). The network architecture is a fully connected feed-forward network with 75 inputs, 10 hidden layers of width 50 with ReLU activation functions, and an output layer with a single sigmoid activation function. We stop training between each addition of data whenever the loss goes below zero (Figure 10). Networks for the lead time have the same structure, except that they have a quadratic output layer. The contour lines in Figure 9(left) indicate the density produced at the end of the training by the adaptive sampling procedure. The method concentrates new samples in the transition region without being given any information about its location; that is, it identifies the transition region on the fly.
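For orientation, the architecture described above can be written out as a plain forward pass. This is a dependency-free sketch of the layer structure only, not the training code; in practice a deep learning framework would be used. The initialization scheme is an assumption, and the "quadratic" output is interpreted here as squaring the final pre-activation, which is also an assumption.

```python
import math
import random

# 75 inputs, 10 hidden layers of width 50, one output unit.
LAYER_SIZES = [75] + [50] * 10 + [1]


def init_mlp(sizes, seed=0):
    """Create (weights, biases) for each layer; He-style scaling is an assumed choice."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        scale = math.sqrt(2.0 / n_in)
        weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers


def count_parameters(layers):
    """Total number of weights and biases."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)


def _sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0.0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def forward(layers, x, output="sigmoid"):
    """Evaluate the network at state x.

    output="sigmoid" maps to [0, 1] (committor); output="quadratic" squares the
    final pre-activation so the result is non-negative (lead time, assumed form).
    """
    h = list(x)
    for i, (weights, biases) in enumerate(layers):
        z = [sum(w * v for w, v in zip(row, h)) + b for row, b in zip(weights, biases)]
        is_last = i == len(layers) - 1
        h = z if is_last else [max(0.0, v) for v in z]  # ReLU on hidden layers only
    return _sigmoid(h[0]) if output == "sigmoid" else h[0] ** 2
```

With these layer sizes the network has 26,801 trainable parameters, which is small relative to the 270,000 trajectory pairs in the final data set.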

Figure 9: Committor for the Holton-Mass model. (left) Colors show predictions, and contour lines show the density of added points from the adaptive sampling scheme described in Section 5. (right) Comparison of predicted and reference committors. Symbols show (30). Error bars show the standard deviation from networks trained on ten separate data sets resulting from the adaptive sampling scheme.

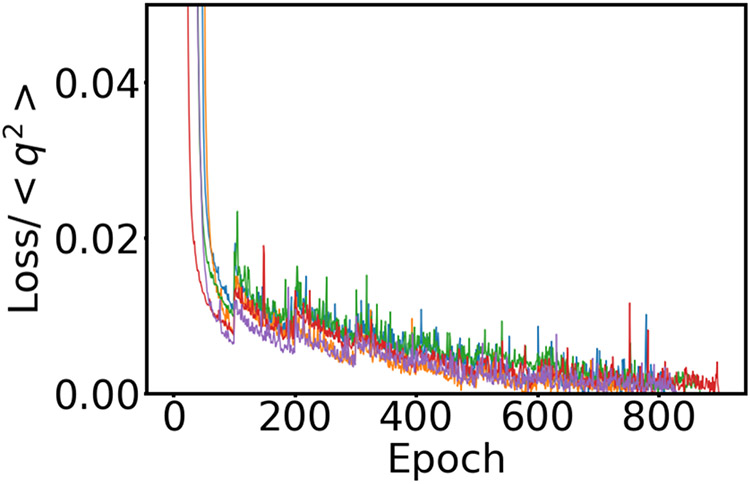

Figure 10: The value of the loss as the training progresses for several replicates. We add data adaptively every 100 epochs and halt training when the loss goes below zero. Synchronized spikes in the loss function result from adding data where the loss is high.

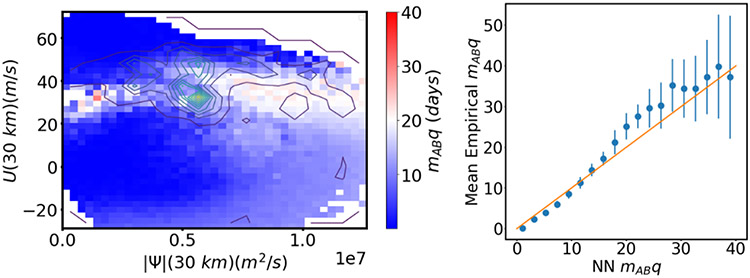

To validate the results, we trained ten networks on the data set produced by the adaptive sampling method and computed (30) (Figure 9(right)). The error bars show the standard deviation in . We see that the training is robust and consistently able to produce good estimates of the committor. We used the data set obtained from the adaptive sampling scheme for the committor to train the neural network to predict the lead time (Figure 11). Once again, we find that the method consistently produces good results compared with estimates from a long trajectory. We expect the errors in Figure 11 to be larger than those in Figure 9 because the estimated committor is used in the loss function for the lead time (as discussed below (7)), allowing errors to compound.

Figure 11: Lead time for the Holton-Mass model. (left) Colors show predictions, and contour lines show the density of points in the data set obtained from the adaptive sampling scheme for the committor. (right) Comparison of predicted and reference lead times. Symbols show . Error bars show the standard deviation from networks trained on ten independent data sets resulting from the adaptive sampling scheme used to train the committor.

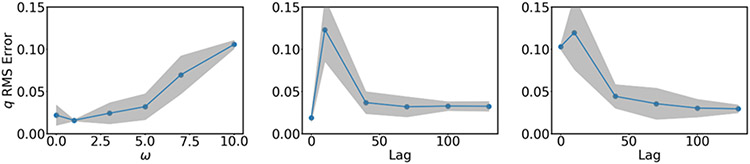

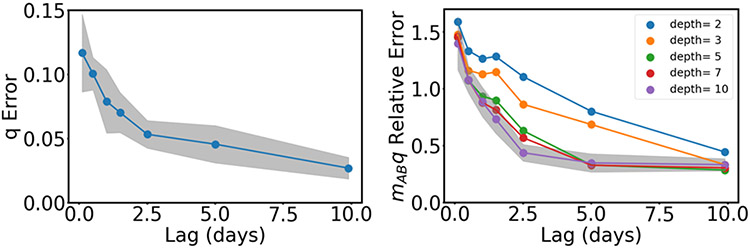

Finally, we determined how the performance of our method depended on key hyperparameters. To elucidate trends, we trained 10 networks on the data set produced by our adaptive sampling method. Figure 12 shows the error in our scheme as the lag time is increased. As we observed in the case of the rugged Müller-Brown potential, the error decreases as the lag time increases. We note that as the lag time goes to infinity, all trajectories reach , and the algorithm reduces to nonlinear regression of point estimates of the conditional expectation of being in state (see (2)). We also investigated the dependence of the performance on the network depth, as shown in the right panel of Figure 12. We found that deeper networks were able to achieve low errors at intermediate lag times, although there was relatively little sensitivity to this hyperparameter at short and long lag times.

Figure 12: Dependence of performance as key hyperparameters are varied. (left) RMS error of the committor as a function of lag time. (right) Relative error in the product used to solve for the lead time as a function of the network depth and lag time. Shading shows the standard deviation from networks trained on ten independent data sets resulting from the adaptive sampling scheme used to train the committor; on the right, shading is only shown for the deepest network for clarity.

7. Conclusions

In this work, we have proposed a machine learning method for solving prediction problems given a data set of short trajectories. By computing conditional expectations that solve Feynman-Kac equations rather than trying to learn the full dynamical law, we reduce the scope of the problem and hence render it more tractable. Our method has a number of advantages over existing ones:

- it allows computation of any statistic that can be cast in Feynman-Kac form;
- it does not require explicit knowledge of either the model underlying the data or its dynamics (e.g., the form of the generator and its parameters, such as the diffusion tensor);
- it allows for use of arbitrary lag times;
- it allows use of an arbitrary sampling distribution;
- it does not require microscopic reversibility.

We illustrate these advantages using two numerical examples. Using a three-dimensional model for which we can compute an accurate reference solution, we show that our method using short trajectory data is often more robust than related methods that instead use the differential operator form of the Feynman-Kac equation [40, 41, 42, 43]. With the same model, we demonstrate the importance of having data in the low probability regions between metastable states and adequately weighting it against the data in the high probability regions. We propose a simple adaptive sampling scheme that allows us to add data so as to target the largest contributions to the loss during training. Finally, we show that we can compute key statistics for a 75-dimensional model of SSW events (not just the committor but also the lead time) from trajectories that are significantly shorter than the times between events.

Our method opens new possibilities for the study of rare events using experimental and observational data. For example, data sets of short trajectories generated by weather forecasting centers can be analyzed by our method to study extreme weather and climate events [33]. However, the requirement that two trajectories be generated from each initial condition poses an obstacle to application of our method to many other data sets. Future work will focus on relaxing this restriction.

Highlights.

- We develop an approach that represents predicted value functions by neural networks.
- This enables long-time predictions from short-duration trajectories.
- The data are sampled adaptively during training.
- The approach does not require knowledge of or access to an underlying model.
- We demonstrate the method on data from a model of sudden stratospheric warming.

Acknowledgments

We wish to thank Adam Antoszewski, Michael Lindsey, Chatipat Lorpaiboon, and Robert Webber for useful discussions and the Research Computing Center at the University of Chicago for computational resources. This work was supported by National Institutes of Health award R35 GM136381 and National Science Foundation award DMS-2054306. J.F. was supported at the time of writing by the U.S. DOE, Office of Science, Office of Advanced Scientific Computing Research, Department of Energy Computational Science Graduate Fellowship under Award Number DE-SC0019323.

Declaration of interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Credit Author Statement

John Strahan: Conceptualization, Writing, Investigation

Justin Finkel: Conceptualization, Writing

Aaron Dinner: Conceptualization, Writing

Jonathan Weare: Conceptualization, Writing

References

- [1] Easterling DR, Meehl GA, Parmesan C, Changnon SA, Karl TR, Mearns LO, Climate extremes: Observations, modeling, and impacts, Science 289 (5487) (2000) 2068–2074.

- [2] AghaKouchak A, Cheng L, Mazdiyasni O, Farahmand A, Global warming and changes in risk of concurrent climate extremes: Insights from the 2014 California drought, Geophysical Research Letters 41 (24) (2014) 8847–8852.

- [3] Lesk C, Rowhani P, Ramankutty N, Influence of extreme weather disasters on global crop production, Nature 529 (7584) (2016) 84–87.

- [4] Mann ME, Rahmstorf S, Kornhuber K, Steinman BA, Miller SK, Coumou D, Influence of anthropogenic climate change on planetary wave resonance and extreme weather events, Scientific Reports 7 (1) (2017) 45242.

- [5] Frame DJ, Rosier SM, Noy I, Harrington LJ, Carey-Smith T, Sparrow SN, Stone DA, Dean SM, Climate change attribution and the economic costs of extreme weather events: a study on damages from extreme rainfall and drought, Climatic Change 162 (2) (2020) 781–797.

- [6] Sapsis TP, Statistics of extreme events in fluid flows and waves, Annual Review of Fluid Mechanics 53 (1) (2021) 85–111.

- [7] Brooks CL III, Karplus M, Pettitt BM, Proteins, John Wiley & Sons, New York, 1988.

- [8] Zwier MC, Chong LT, Reaching biological timescales with all-atom molecular dynamics simulations, Current Opinion in Pharmacology 10 (6) (2010) 745–752.

- [9] Sohail MS, Louie RH, McKay MR, Barton JP, MPL resolves genetic linkage in fitness inference from complex evolutionary histories, Nature Biotechnology 39 (4) (2021) 472–479.

- [10] Mirams GR, Davies MR, Cui Y, Kohl P, Noble D, Application of cardiac electrophysiology simulations to pro-arrhythmic safety testing, British Journal of Pharmacology 167 (5) (2012) 932–945.

- [11] Liu W, Kim TY, Huang X, Liu MB, Koren G, Choi B-R, Qu Z, Mechanisms linking T-wave alternans to spontaneous initiation of ventricular arrhythmias in rabbit models of long QT syndrome, Journal of Physiology 596 (8) (2018) 1341–1355.

- [12] Marrink SJ, Risselada HJ, Yefimov S, Tieleman DP, De Vries AH, The MARTINI force field: coarse grained model for biomolecular simulations, Journal of Physical Chemistry B 111 (27) (2007) 7812–7824.

- [13] Saunders MG, Voth GA, Coarse-graining methods for computational biology, Annual Review of Biophysics 42 (2013) 73–93.

- [14] Jumper JM, Faruk NF, Freed KF, Sosnick TR, Trajectory-based training enables protein simulations with accurate folding and Boltzmann ensembles in CPU-hours, PLoS Computational Biology 14 (12) (2018) e1006578.

- [15] Zwanzig R, From classical dynamics to continuous time random walks, Journal of Statistical Physics 30 (2) (1983) 255–262.

- [16] Noé F, Schütte C, Vanden-Eijnden E, Reich L, Weikl TR, Constructing the equilibrium ensemble of folding pathways from short off-equilibrium simulations, Proceedings of the National Academy of Sciences 106 (45) (2009) 19011–19016.

- [17] Bowman GR, Pande VS, Noé F, An introduction to Markov state models and their application to long timescale molecular simulation, Vol. 797, Springer Science & Business Media, 2013.

- [18] Husic BE, Pande VS, Markov state models: From an art to a science, Journal of the American Chemical Society 140 (7) (2018) 2386–2396.

- [19] Kutz JN, Deep learning in fluid dynamics, Journal of Fluid Mechanics 814 (2017) 1–4.

- [20] Rudy SH, Brunton SL, Proctor JL, Kutz JN, Data-driven discovery of partial differential equations, Science Advances 3 (4) (2017) e1602614.

- [21] O’Gorman PA, Dwyer JG, Using machine learning to parameterize moist convection: Potential for modeling of climate, climate change, and extreme events, Journal of Advances in Modeling Earth Systems 10 (10) (2018) 2548–2563.

- [22] Zanna L, Bolton T, Data-driven equation discovery of ocean mesoscale closures, Geophysical Research Letters 47 (17) (2020) e2020GL088376.

- [23] Chattopadhyay A, Hassanzadeh P, Subramanian D, Data-driven predictions of a multiscale Lorenz 96 chaotic system using machine-learning methods: reservoir computing, artificial neural network, and long short-term memory network, Nonlinear Processes in Geophysics 27 (3) (2020) 373–389.

- [24] Kashinath K, Mustafa M, Albert A, Wu J-L, Jiang C, Esmaeilzadeh S, Azizzadenesheli K, Wang R, Chattopadhyay A, Singh A, Manepalli A, Chirila D, Yu R, Walters R, White B, Xiao H, Tchelepi HA, Marcus P, Anandkumar A, Hassanzadeh P, Prabhat, Physics-informed machine learning: case studies for weather and climate modelling, Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences 379 (2194) (2021).

- [25] Du R, Pande VS, Grosberg AY, Tanaka T, Shakhnovich ES, On the transition coordinate for protein folding, Journal of Chemical Physics 108 (1) (1998) 334–350.

- [26] Bolhuis PG, Chandler D, Dellago C, Geissler PL, Transition path sampling: Throwing ropes over mountain passes in the dark, Annual Review of Physical Chemistry 53 (2002) 291–318.

- [27] Strahan J, Antoszewski A, Lorpaiboon C, Vani BP, Weare J, Dinner AR, Long-time-scale predictions from short-trajectory data: A benchmark analysis of the Trp-cage miniprotein, Journal of Chemical Theory and Computation 17 (5) (2021) 2948–2963.

- [28] Antoszewski A, Lorpaiboon C, Strahan J, Dinner AR, Kinetics of phenol escape from the insulin R6 hexamer, Journal of Physical Chemistry B 125 (42) (2021) 11637–11649.

- [29] Bloomfield HC, Brayshaw DJ, Gonzalez PLM, Charlton-Perez A, Sub-seasonal forecasts of demand and wind power and solar power generation for 28 European countries, Earth System Science Data 13 (5) (2021) 2259–2274.

- [30] Tantet A, van der Burgt FR, Dijkstra HA, An early warning indicator for atmospheric blocking events using transfer operators, Chaos: An Interdisciplinary Journal of Nonlinear Science 25 (3) (2015) 036406.

- [31] Lucente D, Rolland J, Herbert C, Bouchet F, Coupling rare event algorithms with data-based learned committor functions using the analogue Markov chain, arXiv preprint arXiv:2110.05050 (2021).

- [32] Lucente D, Herbert C, Bouchet F, Committor functions for climate phenomena at the predictability margin: The example of El Niño Southern Oscillation in the Jin and Timmermann model, Journal of the Atmospheric Sciences (2022).

- [33] Finkel J, Gerber EP, Abbot DS, Weare J, Revealing the statistics of extreme events hidden in short weather forecast data, arXiv preprint arXiv:2206.05363 (2022).

- [34] Finkel J, Webber RJ, Gerber EP, Abbot DS, Weare J, Learning forecasts of rare stratospheric transitions from short simulations, Monthly Weather Review 149 (11) (2021) 3647–3669.

- [35] Finkel J, Webber RJ, Gerber EP, Abbot DS, Weare J, Exploring stratospheric rare events with transition path theory and short simulations, arXiv preprint arXiv:2108.12727 (2021).

- [36] Williams MO, Kevrekidis IG, Rowley CW, A data-driven approximation of the Koopman operator: Extending dynamic mode decomposition, Journal of Nonlinear Science 25 (6) (2015) 1307–1346.

- [37] Pavliotis GA, Stochastic Processes and Applications: Diffusion Processes, the Fokker-Planck and Langevin Equations, Vol. 60 of Texts in Applied Mathematics, Springer New York, New York, NY, 2014.

- [38] Thiede EH, Giannakis D, Dinner AR, Weare J, Galerkin approximation of dynamical quantities using trajectory data, Journal of Chemical Physics 150 (24) (2019) 244111.

- [39] Sidky H, Chen W, Ferguson AL, High-resolution Markov state models for the dynamics of Trp-cage miniprotein constructed over slow folding modes identified by state-free reversible VAMPnets, Journal of Physical Chemistry B 123 (38) (2019) 7999–8009.

- [40] Li Q, Lin B, Ren W, Computing committor functions for the study of rare events using deep learning, Journal of Chemical Physics 151 (5) (2019) 054112.

- [41] Khoo Y, Lu J, Ying L, Solving for high-dimensional committor functions using artificial neural networks, Research in the Mathematical Sciences 6 (1) (2019) 1–13.

- [42] Li H, Khoo Y, Ren Y, Ying L, A semigroup method for high dimensional committor functions based on neural network, in: Mathematical and Scientific Machine Learning, PMLR, 2022, pp. 598–618.

- [43] Rotskoff GM, Mitchell AR, Vanden-Eijnden E, Active importance sampling for variational objectives dominated by rare events: Consequences for optimization and generalization, in: Mathematical and Scientific Machine Learning, PMLR, 2022, pp. 757–780.

- [44] Chen Y, Hoskins J, Khoo Y, Lindsey M, Committor functions via tensor networks, arXiv preprint arXiv:2106.12515 (2021).

- [45] Han J, Jentzen A, E W, Solving high-dimensional partial differential equations using deep learning, Proceedings of the National Academy of Sciences 115 (34) (2018) 8505–8510.

- [46] Han J, Lu J, Zhou M, Solving high-dimensional eigenvalue problems using deep neural networks: A diffusion Monte Carlo like approach, Journal of Computational Physics 423 (2020) 109792.

- [47] Karniadakis GE, Kevrekidis IG, Lu L, Perdikaris P, Wang S, Yang L, Physics-informed machine learning, Nature Reviews Physics 3 (6) (2021) 422–440.

- [48] Raissi M, Perdikaris P, Karniadakis GE, Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations, Journal of Computational Physics 378 (2019) 686–707.

- [49] Chen F, Huang J, Wang C, Yang H, Friedrichs learning: Weak solutions of partial differential equations via deep learning, arXiv preprint arXiv:2012.08023 (2020).

- [50] Zeng Q, Bryngelson SH, Schäfer F, Competitive physics informed networks, arXiv preprint arXiv:2204.11144 (2022).

- [51] Banushkina PV, Krivov SV, Nonparametric variational optimization of reaction coordinates, The Journal of Chemical Physics 143 (18) (2015) 11B607_1.

- [52] Krivov SV, Blind analysis of molecular dynamics, Journal of Chemical Theory and Computation 17 (5) (2021) 2725–2736.

- [53] Roux B, String method with swarms-of-trajectories, mean drifts, lag time, and committor, Journal of Physical Chemistry A 125 (34) (2021) 7558–7571.

- [54] Roux B, Transition rate theory, spectral analysis, and reactive paths, The Journal of Chemical Physics 156 (13) (2022) 134111.

- [55] E W, Ren W, Vanden-Eijnden E, Transition pathways in complex systems: Reaction coordinates, isocommittor surfaces, and transition tubes, Chemical Physics Letters 413 (1-3) (2005) 242–247.

- [56] Sutton RS, Barto AG, Reinforcement learning: An introduction, MIT Press, 2018.

- [57] Kingma DP, Ba J, Adam: A method for stochastic optimization, arXiv:1412.6980 (2017).

- [58] Müller K, Brown LD, Location of saddle points and minimum energy paths by a constrained simplex optimization procedure, Theoretica Chimica Acta 53 (1) (1979) 75–93.

- [59] Leimkuhler B, Matthews C, Rational Construction of Stochastic Numerical Methods for Molecular Sampling, Applied Mathematics Research eXpress 2013 (1) (2013) 34–56.

- [60].Lorpaiboon C, Weare J, Dinner AR, Augmented transition path theory for sequences of events, arXiv preprint arXiv:2205.05067 (2022).

- [61].Coifman RR, Lafon S, Diffusion maps, Applied and Computational Harmonic Analysis 21 (1) (2006) 5–30.

- [62].Schwantes CR, Pande VS, Modeling molecular kinetics with tICA and the kernel trick, Journal of Chemical Theory and Computation 11 (2) (2015) 600–608.

- [63].Bittracher A, Klus S, Hamzi B, Koltai P, Schütte C, Dimensionality reduction of complex metastable systems via kernel embeddings of transition manifolds, Journal of Nonlinear Science 31 (1) (2020) 3.

- [64].Kingma DP, Welling M, Auto-encoding variational Bayes, arXiv preprint arXiv:1312.6114 (2014).

- [65].Wehmeyer C, Noé F, Time-lagged autoencoders: Deep learning of slow collective variables for molecular kinetics, Journal of Chemical Physics 148 (24) (2018) 241703.

- [66].Ma A, Dinner AR, Automatic method for identifying reaction coordinates in complex systems, Journal of Physical Chemistry B 109 (14) (2005) 6769–6779.

- [67].Thiede EH, Van Koten B, Weare J, Dinner AR, Eigenvector method for umbrella sampling enables error analysis, Journal of Chemical Physics 145 (8) (2016) 084115.

- [68].Dinner AR, Thiede EH, Koten BV, Weare J, Stratification as a general variance reduction method for Markov chain Monte Carlo, SIAM/ASA Journal on Uncertainty Quantification 8 (3) (2020) 1139–1188.

- [69].Baldwin MP, Ayarzagüena B, Birner T, Butchart N, Butler AH, Charlton-Perez AJ, Domeisen DIV, Garfinkel CI, Garny H, Gerber EP, Hegglin MI, Langematz U, Pedatella NM, Sudden stratospheric warmings, Reviews of Geophysics 59 (1) (2021) e2020RG000708.

- [70].Holton JR, Mass C, Stratospheric vacillation cycles, Journal of the Atmospheric Sciences 33 (11) (1976) 2218–2225.

- [71].Sjoberg JP, Birner T, Stratospheric wave–mean flow feedbacks and sudden stratospheric warmings in a simple model forced by upward wave activity flux, Journal of the Atmospheric Sciences 71 (11) (2014) 4055–4071.

- [72].Maher P, Gerber EP, Medeiros B, Merlis TM, Sherwood S, Sheshadri A, Sobel AH, Vallis GK, Voigt A, Zurita-Gotor P, Model hierarchies for understanding atmospheric circulation, Reviews of Geophysics 57 (2) (2019) 250–280.

- [73].Finkel J, Abbot DS, Weare J, Path properties of atmospheric transitions: illustration with a low-order sudden stratospheric warming model, Journal of the Atmospheric Sciences 77 (7) (2020) 2327–2347.

- [74].Christiansen B, Chaos, quasiperiodicity, and interannual variability: Studies of a stratospheric vacillation model, Journal of the Atmospheric Sciences 57 (18) (2000) 3161–3173.

- [75].Yoden S, Dynamical aspects of stratospheric vacillations in a highly truncated model, Journal of the Atmospheric Sciences 44 (24) (1987) 3683–3695.