Abstract

Identifying the neural mechanisms underlying spatial orientation and navigation has long posed a challenge for researchers. Multiple approaches incorporating a variety of techniques and animal models have been used to address this issue. More recently, virtual navigation has become a popular tool for understanding navigational processes. Although combining this technique with functional imaging can provide important information on many aspects of spatial navigation, it is important to recognize some of the limitations these techniques have for gaining a complete understanding of the neural mechanisms of navigation. Foremost among these is that, when participants perform a virtual navigation task in a scanner, they are lying motionless in a supine position while viewing a video monitor. Here, we provide evidence that spatial orientation and navigation rely to a large extent on locomotion and its accompanying activation of motor, vestibular, and proprioceptive systems. Researchers should therefore consider the impact of the absence of these motion-based systems when interpreting virtual navigation/functional imaging experiments to achieve a more accurate understanding of the mechanisms underlying navigation.

INTRODUCTION

Is navigation in virtual reality with functional imaging (fMRI) really navigation? The short answer is yes and no. Yes, because a participant must use visual cues to solve a spatial problem. However, virtual navigation differs from real navigation, which relies heavily on motor, proprioceptive, and vestibular information, none of which is activated when a participant is lying supine in a functional imaging scanner while performing a virtual reality navigational task. Virtual navigation, of course, shares many features with real-world navigation, but the two processes are not identical. Just as there are differences in brain activity when imagining an apple and actually seeing it (Kosslyn, Ganis, & Thompson, 2001), there are many differences between navigating in the virtual world and navigating in the real world. Foremost among these differences is that vestibular and proprioceptive information (idiothetic cues) does not match the visual cues viewed by the participant on the monitor, and this mismatch has been shown to produce reorientation of the participant (Wang & Spelke, 2002). Consequently, reorientations most likely occur more frequently during virtual reality, and virtual navigation may therefore differ from real-world navigation. Although fMRI has become an important tool for understanding brain function, its use with virtual reality technology to study brain mechanisms underlying spatial orientation and navigation is limited because of the specific brain networks that it has been used to study. Our focus here is not to discuss the relevance of the BOLD signal per se but, rather, to discuss what we believe are important considerations when combining virtual reality and fMRI techniques to understand the neural processes involved in naturalistic spatial navigation.

THE NEURAL BASIS OF SPATIAL NAVIGATION: SINGLE-UNIT RECORDINGS

Researchers have made significant strides during the past decade in understanding the neural processes that underlie navigation. Approaches using both behavioral and electrophysiological recording techniques in rodents have uncovered many of the processes involved in navigation (Jeffery, 2003; Gallistel, 1990; OʼKeefe & Nadel, 1978). The existence of a “cognitive map” within the brain has historically been a controversial topic (Benhamou, 1996; Tolman, 1948), but it has provided a foundation for many studies that have sought an understanding of these processes. Neurons that form the basis for spatial orientation have been identified and extensively studied—as single-unit recordings have found various cell types that respond to different spatial aspects of an animalʼs environment. Place cells in the hippocampus (OʼKeefe & Dostrovsky, 1971) show increased or decreased firing rates based on an animalʼs location. Grid cells in the entorhinal cortex and subicular complex discharge in multiple locations, with these locations collectively forming a repeating hexagonal grid pattern (Boccara et al., 2010; Hafting, Fyhn, Molden, Moser, & Moser, 2005). Head direction (HD) cells, which have been identified in a number of limbic system areas (Taube, 2007), fire based on the animalʼs perceived directional heading within the environment, independent of the animalʼs place or behavior. Border cells (also known as boundary vector cells) fire in relation to the boundaries of the environment, regardless of head orientation, and are also found in portions of the hippocampal system (Solstad, Boccara, Kropff, Moser, & Moser, 2008). In addition to this electrophysiological approach, many lesion and behavioral studies have been performed in rodents to address the underlying brain areas and behavioral processes involved in navigation (Dolins & Mitchell, 2010; Gallistel, 1990).
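The distinction between these cell types can be illustrated with idealized tuning functions. The following is a minimal sketch, not a model taken from any of the studies cited above: the von Mises shape for the HD cell, the Gaussian place field, the three-plane-wave grid pattern, and all names and parameter values (`kappa`, `width`, `spacing`) are assumptions chosen only for illustration.

```python
import math


def hd_cell_rate(heading, preferred, peak=1.0, kappa=4.0):
    """Idealized HD cell: rate depends only on heading (radians), not on location.

    Assumed von Mises-shaped tuning that peaks at the preferred direction.
    """
    return peak * math.exp(kappa * (math.cos(heading - preferred) - 1.0))


def place_cell_rate(x, y, cx, cy, peak=1.0, width=0.2):
    """Idealized place cell: assumed Gaussian field centered at (cx, cy)."""
    d2 = (x - cx) ** 2 + (y - cy) ** 2
    return peak * math.exp(-d2 / (2.0 * width ** 2))


def grid_cell_rate(x, y, spacing=0.5, peak=1.0):
    """Idealized grid cell: sum of three plane waves oriented 60 degrees apart.

    The maxima of this sum fall on a hexagonal lattice whose vertices are
    separated by `spacing`; the result is rescaled to lie between 0 and peak.
    """
    k = 4.0 * math.pi / (math.sqrt(3.0) * spacing)
    total = 0.0
    for angle in (0.0, math.pi / 3.0, 2.0 * math.pi / 3.0):
        total += math.cos(k * (x * math.cos(angle) + y * math.sin(angle)))
    return peak * (2.0 / 3.0) * (total / 3.0 + 0.5)
```

In this sketch, the HD cell returns the same rate at every location for a given heading, the place cell returns the same rate for every heading at a given location, and the grid cell reaches its peak at multiple, regularly spaced locations.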

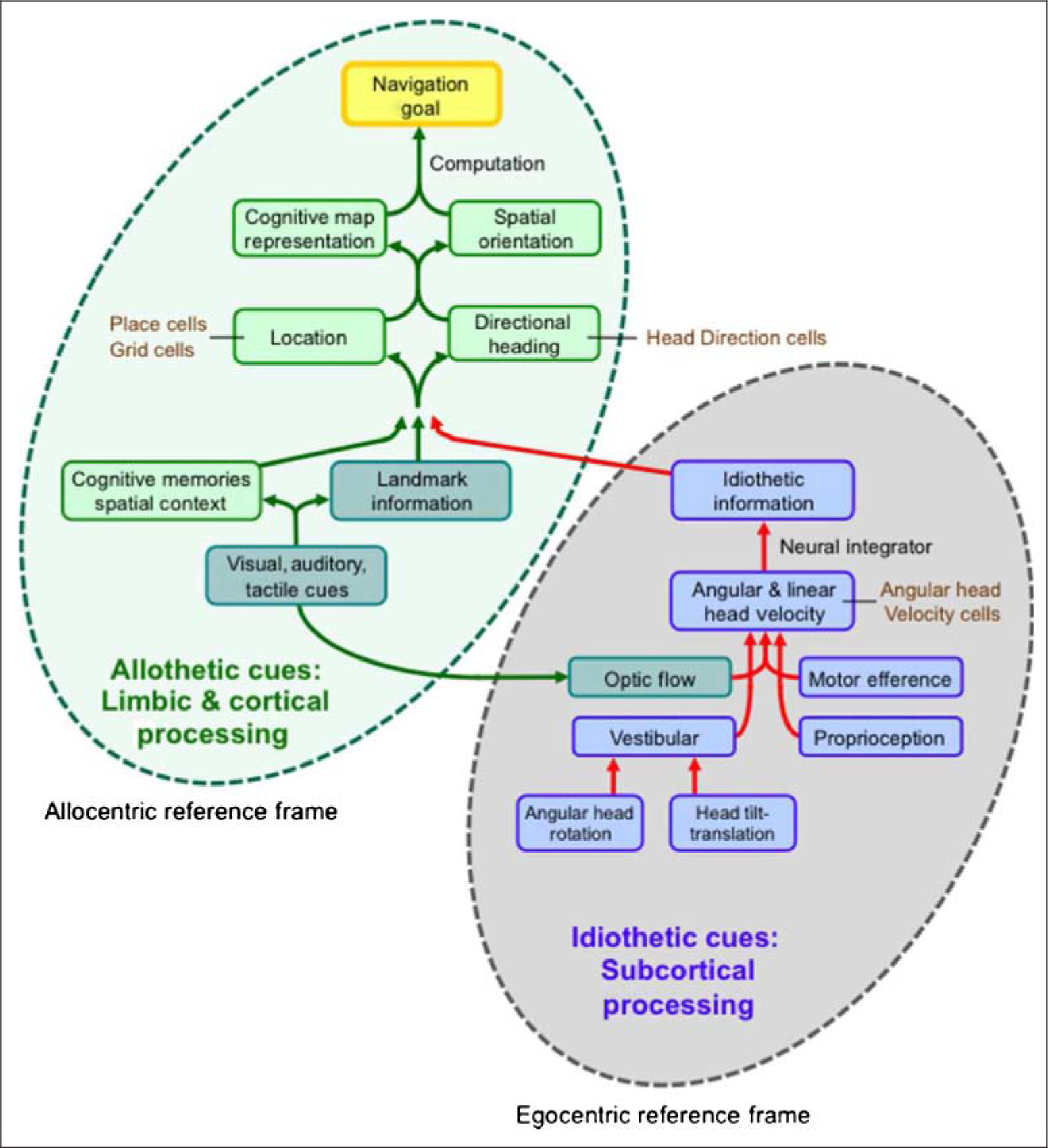

Figure 1 summarizes the processes involved in orientation and navigation, which are divided into two main components: (1) the use of idiothetic cues (vestibular, proprioceptive, motor efference) for path integration, which involves information concerning self-motion and engages more (but not solely) subcortical areas, and (2) the use of allothetic cues (visual, auditory, tactile) for processing landmark information, which engages higher level cognitive processes including contextual spatial memory. Information from the path integration and landmark systems is integrated within limbic and cortical areas to yield information about the participantʼs spatial orientation in allocentric coordinates. Combining the participantʼs spatial orientation with navigational knowledge about the location of a goal enables the computation of a route that leads to the goal. The path integration and landmark systems usually act together, but when spatial information from the two systems does not match and is in conflict, then unless the average information from the two systems is adopted, information from one system will dominate over the other (Dolins & Mitchell, 2010; Golledge, 1999). For mammals, the dominant system is usually landmark information, which can reorient the participant quickly in the face of spatial errors (Valerio & Taube, 2012; Wang & Spelke, 2002; Gallistel, 1990). Reorientation can also occur suddenly when new visual (landmark) cues are provided or existing ones are reinterpreted (Jönsson, 2002; Goodridge & Taube, 1995). Thus, since the discovery of place cells, important advances have been made in our understanding of spatial processes in the rodent brain, but the invasive methods used to gain this information cannot be used in the human brain. Recent technological advances, however, are now providing new approaches for the investigation of spatial processes in humans.

Figure 1.

Conceptual framework for spatial orientation and navigation. Two major component processes are depicted. Within the gray ellipse on the right, idiothetic cues, which use an egocentric reference frame, are primarily processed by subcortical structures and are used in the path integration system. Within the green ellipse on the left, allothetic cues shown in teal, such as visual, auditory, and tactile information, are combined to provide spatial information about landmarks. Landmark information along with spatial memories concerning context are integrated with the path integration system to yield spatial cells that provide information about the participantʼs spatial orientation with respect to the environment using an allocentric reference frame. This landmark navigational system involves higher level cognitive processes and takes place within limbic and cortical brain areas.
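The two-component framework can be sketched computationally. The following is a minimal illustration under stated assumptions, not an implementation drawn from the cited studies: path integration is reduced to accumulating (turn, distance) self-motion steps, and the conflict between the path integration and landmark estimates of heading is resolved by a hypothetical `landmark_weight` parameter, where 0.5 corresponds to adopting the average and 1.0 corresponds to complete landmark dominance.

```python
import math


def integrate_path(steps, heading=0.0):
    """Path integration from idiothetic cues alone.

    `steps` is a sequence of (turn_radians, distance) pairs; each turn is
    applied before the corresponding forward movement. Returns the
    estimated position and heading relative to the starting point.
    """
    x = y = 0.0
    for turn, distance in steps:
        heading = (heading + turn) % (2.0 * math.pi)
        x += distance * math.cos(heading)
        y += distance * math.sin(heading)
    return x, y, heading


def combine_headings(path_heading, landmark_heading, landmark_weight):
    """Weighted circular mean of the two heading estimates (radians).

    landmark_weight is a hypothetical parameter: 0.5 averages the two
    systems, and 1.0 lets the landmark system dominate completely.
    """
    w = landmark_weight
    s = (1.0 - w) * math.sin(path_heading) + w * math.sin(landmark_heading)
    c = (1.0 - w) * math.cos(path_heading) + w * math.cos(landmark_heading)
    return math.atan2(s, c) % (2.0 * math.pi)


# Two-segment path: walk 2 units, turn 90 degrees left, walk 1 unit.
x, y, heading = integrate_path([(0.0, 2.0), (math.pi / 2.0, 1.0)])
# Direction from the current position back to the origin.
homing_direction = math.atan2(-y, -x) % (2.0 * math.pi)
```

A participant lying motionless in a scanner would, in the terms of this sketch, have no reliable self-motion steps to pass to `integrate_path`, so the heading estimate would rest almost entirely on the landmark term.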

fMRI: AN ACCESS TO THE HUMAN BRAIN

Presently, only one single-unit recording study has shown the existence of spatially tuned cells in human hippocampus (Ekstrom et al., 2003), and even in that study, the spatial tuning of the cells appears different from the ordered and topographically smooth firing patterns observed in rodent place cells. But the advent of functional neural imaging techniques, such as fMRI, combined with the development of highly realistic virtual reality environments, has enabled researchers to further explore mechanisms involved in human navigation under well-controlled conditions. It is noteworthy that virtual reality technology has improved immensely during the past decade and now provides a highly realistic environment that mimics real-world navigation. This realism is accomplished by using immersion, where the participant is able to move and thus, at least partially, can use internal cues to navigate. However, because of the constraints of fMRI, this immersion technology cannot be used without eliminating the participantʼs movements. Consequently, in fMRI studies, participants have to use a “desktop virtual reality” system. Although virtual reality under these conditions is still multisensory, providing visual, auditory, and limited tactile information, the participant must lie supine in the fMRI scanner looking upwards at a video display and navigate via a joystick control within the virtual environment (Bohil, Alicea, & Biocca, 2011). These studies have described a network of brain structures that are activated during navigational tasks, including the hippocampus, the parahippocampal place area, and retrosplenial cortex (Rodriguez, 2010; Spiers & Maguire, 2006; Wolbers & Büchel, 2005; Janzen & Van Turennout, 2004; reviewed in Epstein, 2008). Some studies have also reported activation in the posterior parietal cortex and portions of the OFC (Rodriguez, 2010; Spiers & Maguire, 2006, 2007).
One common theme that has emerged from these studies is that the hippocampus proper is more involved in processing the spatial components of a navigational task, whereas parahippocampal areas are more involved in processing contextual spatial information (Brown, Ross, Keller, Hasselmo, & Stern, 2010; Rauchs et al., 2008). One interesting finding that parallels findings in animals was that, in a virtual navigation task, either the hippocampus or the caudate nucleus was activated depending on the spatial strategy used by the participant (Iaria, Petrides, Dagher, Pike, & Bohbot, 2003; Packard & McGaugh, 1996). The right hippocampus showed greater activation in the early stages of learning when participants utilized landmarks. But with increased practice, the caudate nucleus became activated, and this activity correlated with the use of a nonspatial strategy as judged from verbal reports. Activation that was common to both spatial and nonspatial strategy groups occurred in the posterior parietal cortex and areas 9 and 46 of the pFC. Importantly, however, all of these experiments only engaged high-level cognitive processes—they were performed while the participants were immobile and certainly were not moving their heads. The motor systems required to move a joystick are very different from those used to walk around in the real world. It is therefore possible that the brain processes activated under these conditions are considerably different from those that are activated during navigation in the real world, where participants are usually locomoting and making numerous head turns.

To partially address this problem, some studies (e.g., Spiers & Maguire, 2006, 2007) have used video games that are realistic renditions of real-world environments (e.g., London), where participants, mostly professional London taxi cab drivers, have learned the spatial layouts of their environment from real-world work experiences. While undergoing fMRI, the participants were then tested while performing a navigational video game that had spatial layouts similar to their experiences in the streets of London. The participants had thus learned their real-world experiences (and consequently, the virtual maps of London) while fully engaging both their path integration and landmark systems while driving around London. In contrast, other studies (e.g., Brown et al., 2010; Rodriguez, 2010; Wolbers & Büchel, 2005) have had their participants learn the testing environments completely from the video games on a desktop monitor. Under these circumstances, the participants would not have engaged their path integration/subcortical systems while learning the spatial relationships of the environment but would have relied primarily on the landmark-based cortical system to learn the spatial layouts (see Figure 1). Although the preimaging experiences of these two groups are different and various systems are activated, the imaging procedures are the same for both types of studies and, in general, lead to similar findings in terms of the brain areas activated. Note, however, that, for both preimaging procedures, when participants are scanned during navigational testing, they are lying supine and their heads are motionless—conditions in which idiothetic cues and the path integration system would not be activated. Therefore, both types of studies suffer from the same problem, namely, that only one navigational system, the landmark system, is activated during fMRI.

THE IMPORTANCE OF ACTIVE MOVEMENT FOR SPATIAL NAVIGATION

Decades of research using rodents, monkeys, and humans have repeatedly shown the important role that active movement plays in navigation. Oneʼs sense of spatial orientation depends on proprioceptive feedback and motor efference copy, which inform the participants about their body movements, and vestibular signals, which provide information about head position and movement through space. It is well known that active exploration usually results in greater spatial knowledge of that environment than passive exploration of it, and this difference is usually not accounted for by different attentional levels while navigating. For example, studies have shown that scene recognition across views was impaired when an array of objects rotated relative to a stationary observer but not when the observer moved relative to a stationary display (Simons & Wang, 1998). Additional experiments suggested that information obtained through self-motion facilitated scene recognition from novel viewpoints compared with when a participant passively viewed the scenes (Wang & Simons, 1999). Other studies have reported that participants tend to underestimate distance in virtual environments compared with moving in the real world (Witmer & Kline, 1998). The role that active locomotion serves for orientation is also highlighted by studies reporting differences in heading judgments between active locomotion and passive transport. For example, one study monitored participantsʼ abilities to update their directional heading and to point to the origin of a two-segment path that they traversed under four different conditions: physical walking, imagined walking from a verbal description, watching another person walk, and experiencing optic flow that simulated the walking (Klatzky, Loomis, Beall, Chance, & Golledge, 1998). Performance was impaired in the verbal description and watching conditions but not for physical walking. 
More importantly, simulated optic flow alone, which would occur in virtual reality conditions, was not sufficient to induce accurate turn responses (also see Riecke, Sigurdarson, & Milne, 2012). Another study compared navigational performance between participants who used a virtual reality system when walking a route (creating an impression of full immersion) and participants who viewed the same scenes but locomoted through them using a hand-operated joystick while seated (visual imagery condition; Chance, Gaunet, Beall, & Loomis, 1998). The researchers found performance to be significantly better in the immersion condition compared with the visual imagery condition. These findings emphasize the importance that physical movement and its accompanying motor, proprioceptive, and vestibular inputs play in accurate spatial orientation.

Not all studies have found impaired spatial performance when participants are deprived of this internal spatial information. In an early study that compared learning a large-scale virtual environment via a helmet-mounted display or a desktop monitor, Ruddle, Payne, and Jones (1997) found that participants who wore the helmet-mounted display navigated the buildings more quickly and developed a better sense of straight-line distances within the environment (suggesting better survey knowledge of the environment) than the desktop monitor group. However, both groups traveled the same distance to the goals and showed similar accuracy in estimating directions. In another study using a large-scale environment, participants who were driven on a mile-long car trip performed as well as participants who only viewed a video of the route on a test assessing their recall of the environmentʼs spatial layout (Waller, Loomis, & Steck, 2003). Note, however, that the participants were deprived of the motor and proprioceptive cues that would accompany a normal walk through the environment. In subsequent studies that included active locomotion, participants in a video-only viewing condition performed similarly to participants who received matching motor, proprioceptive, or vestibular cues along with the video. There was no difference between groups on tasks that estimated the distance between points and in constructing maps of the spatial layout of the environment (Waller & Greenauer, 2007; Waller, Loomis, & Haun, 2004). In contrast, participants who did not receive the matching idiothetic information were impaired at pointing accurately to the recalled locations, suggesting that their judgment about their perceived directional orientation was deficient. This inability to point accurately is somewhat surprising, given that the participants remained in an upright position during the task, a position that improves pointing accuracy relative to a supine position (Smetanin & Popov, 1997).
Whether supine participants, such as those in fMRI studies, would have been able to accurately estimate distance and construct maps of the spatial layout remains to be tested. Overall, spatial perception appears to be most accurate when motor, proprioceptive, and vestibular signals are available but can persist in some cases when one or more of these signals are absent. Furthermore, the influence of body position on spatial performance is an important consideration for the interpretation of virtual navigation experiments (see below). The processes activated in virtual reality navigation tasks of course share many of the same mechanisms that are activated in real-world navigation (Richardson, Montello, & Hegarty, 1999), but our point is that the two events are not identical, and researchers should appreciate the differences between the two events when defining the neural mechanisms underlying navigation. As we have highlighted above, the high-level cognitive processes involved in processing landmark information and the participantʼs perceived spatial orientation undoubtedly activate both limbic and cortical networks during both virtual reality and real-world navigation. However, the use of virtual reality with fMRI confines the activation to mostly these cortical brain areas and does not begin to tap into the processes that occur at the subcortical level. It is important to note that much of our perceived orientation is obtained from these subcortical systems, which are later integrated with the limbic and cortical processes (Figure 1).

THE VESTIBULAR SYSTEM: A KEY COMPONENT IN SPATIAL ORIENTATION AND NAVIGATION

Although visual information usually dominates control of perceived directional heading, vestibular signals are important for accurate heading judgments in humans (Angelaki & Cullen, 2008; Telford, Howard, & Ohmi, 1995). In darkness, the loss of vestibular signals results in impaired navigational abilities in humans (Glasauer, Amorim, Viaud-Delmon, & Berthoz, 2002; Brookes, Gresty, Nakamura, & Metcalfe, 1993; Heimbrand, Muller, Schweigart, & Mergner, 1991). And under light conditions, the loss of vestibular information leads to impaired spatial memory and navigational deficits in a virtual water maze task (Brandt et al., 2005). Similar findings have also been reported in rodent studies where vestibular dysfunction, whether complete or specific to the semicircular canals or otolith organs, produced marked disruption of both HD and place cell signals (Muir et al., 2009; Yoder & Taube, 2009; Stackman, Clark, & Taube, 2002; Stackman & Taube, 1997) and impaired performance in spatial tasks (Wallace, Hines, Pellis, & Whishaw, 2002; Ossenkopp & Hargreaves, 1993). These findings suggest that both accurate navigation and intact spatial signals rely heavily (although not totally) on vestibular signals and that active movement, which typically results in vestibular activation, is fundamental for normal spatial awareness, although additional sensory systems are obviously also important. This is not to suggest that a healthy participant requires vestibular stimulation to navigate or orient accurately. Rather, the data suggest that active movement, which results in the activation of the vestibular system, plays an important role in these processes. Of particular importance to the present discussion, neither the proprioceptive nor the vestibular system is activated when a participant is performing a virtual reality navigational task in a scanner.

THE MOTOR SIGNAL: ANOTHER IMPORTANT ELEMENT FOR NAVIGATION

Although some motor systems are activated while performing a virtual reality task in the scanner, they are not activated in the same manner as they would be during real-world navigation. In fact, virtual navigation only involves motor activation of the upper limbs, whereas the lower limbs are usually involved in real-world locomotion. Although neural structures involved in high-level navigational processes and decisions certainly become active during virtual navigation, these processes probably only engage one portion of the navigational system, namely, the one that computes routes to a goal by flexibly using associations and problem-solving skills. These areas most likely overlap with the brain areas activated during real-world navigation (Kupers, Chebat, Madsen, Paulson, & Ptito, 2010), including those areas that are particularly activated by optic flow, such as area MST (Britten & van Wezel, 1998). It is important to note, however, that the idiothetic-based systems stimulated during active movement would not be activated during virtual navigation. Furthermore, a recent study reported that participantsʼ short-term spatial memory capacity was greatest when their visual view of landmarks could be encoded either in reference to themselves (egocentric view) or in relation to the surrounding environment (allocentric view), but their memory capacity suffered when the visually viewed objects had to be spatially encoded in relation to each other, independent of the surrounding environment (Lavenex et al., 2011). These findings demonstrate that an image placed (or moved) in front of a stationary participant, such as would occur in a virtual reality environment, is not equivalent to the movement of the participant around stationary images. Thus, we contend that imaging studies using virtual reality tasks only activate a portion of the neural network that is engaged during more naturalistic conditions that involve active movement.
This limitation is especially true for how spatial cells might represent their encoded information during virtual reality tasks. In particular, the absence of self-motion during virtual reality tasks may lead to differences in how spatial information is encoded in place, grid, and HD cells. Before turning to how these spatial cells fire during virtual reality tasks, it is important to review what we know about these spatial cells in humans.

COHERENCE BETWEEN SINGLE-UNIT STUDIES AND fMRI

HD cells have yet to be identified in humans, although they have been reported in nonhuman primates (Robertson, Rolls, Georges-François, & Panzeri, 1999). Their firing was independent of the monkeyʼs eye position, suggesting that the cells were encoding true directional heading rather than attentional gaze. Place cells and grid cells appear to be present in the human hippocampus and entorhinal cortex, both of which show increased activation during performance of virtual reality spatial tasks under limited head movement conditions (Morgan, MacEvoy, Aguirre, & Epstein, 2011; Doeller, Barry, & Burgess, 2010). Indeed, single-unit recordings revealed place-specific activity in a population of hippocampal neurons during a virtual navigation task, even if the signal was not as robust as typically seen in rodent studies (Ekstrom et al., 2003). Additionally, prominent activation of medial parietal cortex, specifically Brodmannʼs area 31, also occurred when participants performed a virtual reality spatial task that was dependent on the participantʼs perceived directional heading (Baumann & Mattingley, 2010). This brain region encompasses the retrosplenial region (as defined by Epstein, 2008) and became active when participants passively viewed navigationally relevant stimuli (Epstein & Kanwisher, 1998). The types of neural correlates conveying spatial information that are present in the human retrosplenial region are currently unknown but, based on rat studies, may include HD cells. The human medial parietal/retrosplenial region, however, is different from the rat retrosplenial cortex (Brodmannʼs area 29), which contains HD cells and many other types of cells, although the extent of these differences is not fully understood (Cho & Sharp, 2001; Chen, Lin, Green, Barnes, & McNaughton, 1994).

PLACE CELL RECORDING IN STATIONARY CONDITIONS

Hippocampal place cells in rodents have also been monitored under conditions of passive movement—conditions that only provide access to vestibular and somatosensory cues. Under these conditions, a lower percentage of cells expressed location-specific firing, and those that did were found to have larger fields and lower information content values than cells recorded during active locomotion (Terrazas et al., 2005). Recent experiments have shown that robust place cell activity was present when head-restrained mice ran on a track ball that simulated their movements on a linear track within a virtual environment, where the corresponding visual stimuli (that would have been experienced by movement on the track) were projected onto a visual screen (Harvey, Collman, Dombeck, & Tank, 2009). Although vestibular activation was absent under this experimental arrangement because of the head restraint, the animals experienced all of the motor and proprioceptive features that would be present if they had indeed been moving through the environment. Specifically, their limbs were moving and simulating the active motion their movements would make if they had been physically moving in the apparatus. Note here, too, that their limb movements would coincide with the optic flow they experienced when viewing the surrounding visual screens. Thus, these experimental conditions are different from the stationary virtual navigational tasks employed when participants are scanned. In a more recent and similar experiment, Chen, King, Burgess, and OʼKeefe (2012) compared recordings from hippocampal place cells as mice either ran back and forth on a linear track or while they were head-fixed and locomoted in a stationary position in a similar but virtual reality track. 
The authors reported that visual information alone in the virtual reality environment was only sufficient to evoke normal place cell firing and theta rhythmicity in 25% of the cells, and even in these cells, there was a reduction in theta power. For the remaining 75% of cells, movement was required to elicit normal place cell firing. These findings again emphasize the point that movement contributes importantly to normal spatial representations in the hippocampus.

THETA RHYTHM AND VIRTUAL REALITY

It is also well known that the presence of theta rhythm is important for normal cognitive processing in both humans (Kahana, 2006) and rodents (Winson, 1978), and some researchers have even proposed that the precise temporal coordination between spikes and theta may provide the critical information for spatial navigation (Robbe & Buzsaki, 2009). For rodents, active movement is the most prominent correlate of theta rhythm, although theta can occur in brief epochs during immobility (Vanderwolf, 1969). Like the place cell and HD cell signals, movement-related theta depends heavily on vestibular signals, as lesions of the vestibular system reduced theta amplitude during locomotion (Russell, Horii, Smith, Darlington, & Bilkey, 2006). Thus, without the normal vestibular processes accompanying active locomotion, there may be an abnormal or reduced theta rhythm during navigation. Immobility is generally devoid of continuous theta, although continuous theta can be induced by passive rotation, which stimulates the vestibular system (Tai, Ma, Ossenkopp, & Leung, 2012). Moreover, during passive movement, theta power remained dependent on perceived movement (velocity) through the environment but was reduced in magnitude compared with active locomotion (Terrazas et al., 2005). This power reduction also led to a marked decrease in the gain of the theta power function, indicating that the animal perceived that it was moving more slowly through the environment. Whether active locomotion is required to obtain the same robust theta rhythm in humans is unclear. Theta EEG frequencies are clearly evident during virtual reality spatial tasks (Ekstrom et al., 2005; Caplan, Madsen, Schulze-Bonhage, Aschenbrenner-Scheibe, & Kahana, 2003), and some theta that was generated independently from movement was correlated with the participantʼs spatial view (Watrous, Fried, & Ekstrom, 2011). 
However, it is not known whether the amplitude and characteristics of this theta are the same as during active locomotion. To date, no studies have made a direct comparison between theta generated during active versus virtual movement in humans—an issue that warrants further investigation, particularly one using a within-subject design. This issue has obvious implications for drawing conclusions about brain mechanisms when participants are lying motionless and performing virtual navigation tasks in a scanner.

HD CELLS AND THE VESTIBULAR SYSTEM

The rodent HD cell signal is particularly important for our understanding of the human navigation system because its generation is independent of other spatial signals—HD cell activity does not depend on the place cell (Calton et al., 2003) or grid cell (Clark & Taube, 2011) signals. However, preliminary evidence indicates that the grid cell signal depends on the HD cell signal (Clark, Valerio, & Taube, 2011), suggesting that deficits in heading perception may have detrimental effects on other spatial signals. Like human heading perception, HD signal stability is known to depend on active locomotion, as rats that were passively transported to a novel arena showed considerably less HD signal stability than rats that actively walked to the novel arena (Yoder et al., 2011; Stackman, Golob, Bassett, & Taube, 2003). Although a recent study demonstrated that HD cell firing was similar between active versus passive head turns (Shinder & Taube, 2011a), other studies have found differences between these conditions in terms of cell peak firing rates (Zugaro, Tabuchi, Fouquier, Berthoz, & Wiener, 2001; Taube, 1995). Thus, if humans have HD cells, their representations of direction may be different during virtual reality tasks because active locomotion is absent, which may, in turn, affect other spatial representations such as those of location.

Studies in rodents have shown that visual information can override internally derived movement cues (e.g., Goodridge & Taube, 1995), but an intact vestibular system is crucial for the generation of HD and place cell signals (Muir et al., 2009; Russell, Horii, Smith, Darlington, & Bilkey, 2003; Stackman et al., 2002; Stackman & Taube, 1997). Although a typical participant in an fMRI experiment has an intact vestibular system, the system remains in a relatively inactive state in the motionless participant and contributes little to updating the participantʼs perceived orientation. The vestibular systemʼs contribution to spatial updating and normal hippocampal processing should not be underestimated. Stimulation of the vestibular labyrinth activates hippocampal neurons at relatively short latencies (40 msec; Cuthbert, Gilchrist, Hicks, MacDougall, & Curthoys, 2000). Similarly, electrical stimulation of the medial vestibular nucleus increases the firing rate of complex spike cells in CA1, possibly including place cells (Horii, Russell, Smith, Darlington, & Bilkey, 2004). Behaviorally, vestibular lesions disrupt performance on hippocampal-dependent spatial tasks (Smith et al., 2005). The importance of the vestibular system to normal hippocampal function is also underscored by the hippocampal atrophy and impaired spatial memory that occur in humans after loss of vestibular function (Brandt et al., 2005).

In summary, we currently have evidence that the human hippocampus and entorhinal cortex, which may contain place cells and grid cells, are active during virtual navigation, but it is not known how HD cells might respond under virtual reality conditions such as those used in fMRI studies in humans.

THE HD NETWORK IS A “BLIND SPOT” IN FUNCTIONAL IMAGING STUDIES

There is currently no evidence in humans that the brain regions that form the generative portion of the HD circuit (in rats)—the lateral mammillary nuclei, anterodorsal thalamus, and postsubiculum—are activated during virtual navigation tasks. These areas, which are certainly integral for navigation in rodents, are likely involved in human navigation, too. For example, in one case study, a participant who had an infarct in the right anterior thalamus was impaired at route finding, despite normal recognition of salient landmarks, indicating the importance of this brain area for normal navigation (Ogawa et al., 2008). But thus far, no imaging study has reported activation in the anterior thalamus when participants perform a virtual navigation task. Thus, one might wonder how accurate or complete a picture we have of brain areas activated during human navigation. Although several fMRI studies have shown activation of retrosplenial cortex in humans during navigational tasks using virtual reality (Epstein, 2008; Wolbers & Büchel, 2005), the retrosplenial cortex is not considered an integral part of the HD generative circuit, at least in rodents (Taube, 2007).

fMRI AND THE SPECIFICITY OF THE HD SIGNAL

One possible reason why HD network activation has not been reported in fMRI studies lies in the difference between how brain activity is detected by neural imaging systems and how it is recorded by electrophysiological systems. Many experimental strategies that use fMRI rely on neural adaptation, which is often referred to as repetition-related effects (Grill-Spector, Henson, & Martin, 2006). A recent study used this approach to demonstrate that distance was encoded by the hippocampus and landmark recognition was encoded by the parahippocampal place area (Morgan et al., 2011). Similarly, single-cell recordings in the inferior temporal cortex provide evidence for neural adaptation with repeated presentations of the same visual stimuli (Desimone, 1996; Ringo, 1996). Although neural adaptation is frequently used as a technique in fMRI studies, this phenomenon does not appear to occur consistently across all brain areas and can be context-dependent. For example, in area V1, the BOLD signal and local single-unit activity were strongly linked during conventional stimulus presentation but were not coupled during perceptual suppression (Maier et al., 2008). In the Baumann and Mattingley (2010) study discussed above, the authors concluded that area 31 was activated based on the participantʼs perceived directional heading in the virtual environment because the BOLD signal was attenuated when participants viewed a second image that represented the same heading direction. If true, this finding is not consistent with electrophysiological studies because rodent HD cells do not adapt over a series of passes through the same directional heading: over a span of several minutes, firing rates on the tenth pass through the cellʼs preferred direction are as robust as on the first pass (Bassett et al., 2005, see Figure 1; Taube, 2010).
Interestingly, there is a sustained ~30% decrease in an HD cellʼs instantaneous firing rate after about 4 sec when an animal maintains its heading in the cellʼs preferred firing direction (Shinder & Taube, 2011b), although this decrease is not seen over short intervals on the order of 1 sec (Taube & Muller, 1998). More importantly, though, the firing rate changes that occur during an animalʼs first pass through the cellʼs preferred firing direction are similar to the changes that occur during subsequent passes. Thus, although HD cell firing may decrease over a period of several seconds when an animal maintains the same directional heading, the amount of this decrease is generally the same across different epochs. It is therefore unclear how the relatively consistent firing rate changes seen in HD cells over repeated passes through the cellsʼ preferred firing directions relate to imaging studies that report a decrease in the BOLD signal across different episodes; the two sets of findings seem to be at odds with one another. It is vitally important for future experiments to address and understand these discrepancies.

MEASURE OF THE HD NETWORK IN fMRI: AN INTRINSIC IMPOSSIBILITY?

Whether a change in the BOLD signal can be observed in brain areas that contain HD cells during a virtual task is also questionable on the grounds that, theoretically, the directional system is always active; it is always “on” whether or not a participant is using this information at the moment. For example, HD cells reliably show an increased firing rate when the head is pointed in a single direction within the environment, regardless of whether an animal is actively navigating or sitting still and grooming (Taube, Muller, & Ranck, 1990). Accordingly, it is difficult to imagine how the directional system might be “more” or “less” active during a particular task, because the information appears to be always present. The directional information is not more, or less, present just because a participant is, or is not, navigating at the moment. What is relevant is whether the participant is utilizing the information when performing a particular task. Thus, assuming that the HD signal in humans operates with similar properties as those found in rodents, utilization of directional information should not influence the generation of the BOLD signal in HD cell areas. Along related lines, direction-specific firing of HD cells ceases or is markedly attenuated when an animal is inverted (Calton & Taube, 2005), but the cellsʼ average firing rates increase because the cells now fire at a sustained low rate at directional headings they were not firing in before. Whether human HD cells show similar changes in average firing rate, or whether these changes could be detected by fMRI, is not known.

In addition to this constant activity, which makes it difficult to find a “resting condition” for comparison, the fact that HD cells are not topographically organized is also a problem for fMRI studies. Indeed, a single voxel (~55 mm³, 5.5 million neurons; Logothetis, 2008) may contain HD cells representing the entire 360° range, which would make the BOLD signal uninformative about the directional tuning of these cells.
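The averaging argument can be illustrated with a small simulation. The sketch below is a toy model, not an analysis from any of the cited studies: it assumes idealized HD cells with triangular tuning curves, a hypothetical peak rate of 40 spikes/sec, a hypothetical 90° tuning width, and preferred directions spaced evenly around 360°. It also treats a voxel-level signal as the simple sum of firing rates, which is itself a simplification.

```python
PEAK_RATE = 40.0     # spikes/sec at the preferred direction (assumed value)
TUNING_WIDTH = 90.0  # full width of the triangular tuning curve in degrees (assumed)


def angular_distance(a, b):
    """Smallest absolute angle between two headings, in degrees (0 to 180)."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def hd_cell_rate(heading, preferred):
    """Triangular tuning: peak at the preferred direction, zero beyond the half-width."""
    half_width = TUNING_WIDTH / 2.0
    d = angular_distance(heading, preferred)
    return PEAK_RATE * max(0.0, 1.0 - d / half_width)


def voxel_summed_rate(heading, preferred_directions):
    """Summed firing of all model cells in the voxel for one heading."""
    return sum(hd_cell_rate(heading, p) for p in preferred_directions)


# 360 model cells with preferred directions at 1-degree spacing (no topography).
preferred_directions = [float(i) for i in range(360)]
headings = range(0, 360, 45)

# Response of one cell (preferred direction 90 degrees) versus the whole voxel.
single = {h: hd_cell_rate(h, 90.0) for h in headings}
summed = {h: voxel_summed_rate(h, preferred_directions) for h in headings}

for h in headings:
    print(f"heading {h:3d}: single cell {single[h]:5.1f}, voxel sum {summed[h]:8.1f}")
```

In this model the single cell is sharply tuned, whereas the voxel sum is identical at every heading, so a measure that pools across the population carries no directional information.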

THE REPETITION-RELATED EFFECT AND RATE REMAPPING

Along the same lines, another issue to consider is the relationship between the repetition-related effect and rate remapping. Hippocampal place cells undergo rate remapping when environmental changes occur, which is manifested as a change, either an increase or decrease, in the cellʼs peak firing rate when the animal is in the cellʼs place field (Leutgeb et al., 2005). Although rate remapping over a population of cells may average out when conditions change, if it does not, then there will be an overall increase or decrease in the activity across the population. If monitored via the BOLD signal, this overall firing rate change would appear as an alteration in the BOLD signal and would be interpreted as a change in the amount a brain area is contributing to a task, when in fact, the population of cells was undergoing rate remapping.
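A toy calculation makes this concern concrete. The sketch below is illustrative only: the in-field peak rates and the remapping factors are made-up numbers, not data from Leutgeb et al. (2005). It compares a case in which rate changes cancel across cells with one in which they do not; in both cases the same cells remain active in the same place fields.

```python
def population_rate(peak_rates):
    """Mean in-field peak rate across a set of place cells (spikes/sec)."""
    return sum(peak_rates) / len(peak_rates)


def remap(peak_rates, factors):
    """Rate remapping: each cell keeps its place field but scales its peak rate."""
    return [rate * factor for rate, factor in zip(peak_rates, factors)]


# Hypothetical peak rates for four place cells before the environmental change.
baseline = [10.0, 20.0, 10.0, 20.0]

# Hypothetical scaling factors for two remapping scenarios.
balanced_factors = [1.5, 0.75, 0.5, 1.25]  # increases and decreases cancel
biased_factors = [1.5, 1.25, 1.0, 1.25]    # net increase across the population

balanced = remap(baseline, balanced_factors)
biased = remap(baseline, biased_factors)

print("baseline mean:", population_rate(baseline))
print("balanced remapping mean:", population_rate(balanced))
print("biased remapping mean:", population_rate(biased))
```

A region-level measure that tracks summed activity would register the second scenario as a change in the region's involvement, even though no cell gained or lost its place field.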

When considering differences in findings between single-unit recordings and fMRI studies, it is important to keep in mind the differences between what these two techniques measure. Single-unit activity measures the firing of nearby neurons but does not provide any information on local synaptic activity—excitatory postsynaptic potentials (EPSPs) and inhibitory postsynaptic potentials (IPSPs). The BOLD signal is believed to represent more closely the local synaptic activity and not whether local neurons are discharging (Viswanathan & Freeman, 2007; Logothetis, Pauls, Augath, Trinath, & Oeltermann, 2001). Of course, EPSPs can drive cells to their threshold potentials and cause them to fire, but this input does not necessarily always lead to cell firing. Alternatively, the BOLD response, which reflects the sum of local network activity, appears to remain constant, even when the different quantitative parameters of the input are changed (Angenstein, Kammerer, & Scheich, 2009). Other studies have questioned the strength of the relationship between the BOLD fMRI signal and underlying neural activity, particularly as it relates to the spatial coincidence of these two measures (Cardoso, Sirotin, Lima, Glushenkova, & Das, 2012; Conner, Ellmore, Pieters, DiSano, & Tandon, 2011), and Ekstrom, Suthana, Millett, Fried, and Bookheimer (2009) reported that neither neural firing rates nor local field potential changes correlated well with BOLD signal changes in the hippocampus (although the local field potential changes did correlate better with the BOLD signal in the parahippocampal cortex). Together, these findings may explain some of the disparities observed in results from single-unit and fMRI studies. For example, the BOLD response decreased while spike activity increased in the caudate/putamen, whereas the BOLD response and spike activity were consistent in cortical and thalamic areas (Mishra et al., 2011). 
In summary, the extent to which the BOLD signal corresponds to spike rates in brain structures that participate in spatial processing is currently unclear.

POSITION IN THE SCANNER: A PROBLEM FOR NORMAL NAVIGATION?

A final important point to consider is the participantʼs perceived directional heading while lying supine in a scanner, looking upward, during navigation within a virtual environment. On one level, participants are immersed in the spatial video game and perceive their location within the world of the video game. On another level, their otolith organs indicate that they are lying on their back, facing upward, and motionless. The head position during virtual navigation in the scanner is thus markedly different from that of real-world navigation. This aspect may impair normal path integration in the virtual reality task, particularly if participants do not perceive themselves as upright when immersed in the navigational task, because there is a disparity between real-world idiothetic cues (the participant is lying in the scanner) and task allocentric reference frames engaged by the participant in the task (the participant appears upright when interacting with the virtual reality monitor). Head position is a particularly important consideration because both HD cell activity (Calton & Taube, 2005) and navigational performance (Valerio et al., 2010) are degraded during inverted navigation, possibly the result of altered otolith signals (Yoder & Taube, 2009). It is important to note, however, that a supine participant is not inverted while lying in the scanner, as the head and body are only pitched backward by 90°, a position that results in normal HD cell activity in rodents (Calton & Taube, 2005). Nonetheless, this situation raises the issue of whether a participant is capable of simultaneously holding multiple reference frames “on-line” in their working memory or if only one reference frame is activated and perceived at a time.

In addition to the supine orientation of their head, participants probably maintain some sense of their orientation with respect to the surrounding room environment. For example, a participant may perceive themselves as facing east and looking up at the ceiling in the scanning room, both of which may be different directions relative to their perceived directional heading within the virtual reality task. It is interesting to ponder how HD cells might respond under such circumstances. Is their firing tied to the scanning room and the real-world environment, or is it associated with the participantʼs perceived directional heading in the virtual reality spatial task? Again, could both representations be encoded simultaneously, possibly across separate HD cell networks in different brain areas? It is interesting to entertain this notion because, in rodents, different HD cell populations exist across multiple brain areas. It is noteworthy that, in rodents, the place cell population can split and encode two different reference frames simultaneously (Knierim & Rao, 2003; Zinyuk, Kubik, Kaminsky, Fenton, & Bures, 2000; Shapiro, Tanila, & Eichenbaum, 1997). For example, Zinyuk et al. (2000) showed that simultaneously recorded place cells fired in relation to different aspects of a spatial task, with some cells firing in relation to the rotating platform they were on, whereas other cells fired in relation to the stationary room reference frame. Regardless, both the cancellation of a conflicting otolith signal and the task of handling two different reference frames simultaneously may significantly influence the brain activity recorded in these experimental conditions. The nature of the interaction between different neural circuits that maintain multiple reference frames poses an interesting problem for future research.

Conclusions

In summary, the recent use of virtual reality tasks with brain imaging systems has provided important insight into the human brain mechanisms that contribute to navigation. These techniques have enabled researchers to study navigation in humans at levels that are difficult to achieve in freely moving individuals for ethical and technical reasons. Furthermore, animal models for studying the mechanisms of navigation have drawbacks of their own that methods using virtual reality can overcome. For example, virtual reality techniques easily lend themselves to large-scale, naturalistic environments that are often difficult to implement with animals, and a recent study combined calcium imaging with virtual reality to monitor calcium levels in head-fixed zebrafish as they rapidly adapted their motor output to changes in visual feedback, an experiment that would not be possible in a freely moving fish (Ahrens et al., 2012). Thus, virtual reality can exploit certain conditions that animal models cannot easily simulate. However, the vast literature on animal navigation suggests that there are clear differences between the spatial systems that are activated in virtual reality navigation tasks and the systems activated during real-world navigation. Although participants orient correctly and are able to accurately perform the virtual navigational tasks in a scanner, we question whether the brain performs these tasks in the same manner as in more naturalistic conditions, where vestibular, motor, and proprioceptive activation contribute to normal spatial processing. We therefore urge caution when comparing results across human and rodent studies. A better appreciation of these differences would lead to improved understanding of the neural mechanisms underlying navigation and spatial cognition. 
In particular, researchers should be mindful of the differences between perceptual (where I am now physically) and cognitive (where I am in the virtual spatial task) factors when performing a navigational task (Shelton & Marchette, 2010). As research moves forward in this field, particularly with developments enabling ever finer spatial and temporal resolution with fMRI techniques, it will be important that the dialogue among researchers using real-world conditions and those using virtual reality systems refer to the same thing.

Acknowledgments

The authors thank Brad Duchaine and Thomas Wolbers for comments on the manuscript. Supported by grants from NIH: NS053907 and DC009318.

REFERENCES

- Ahrens MB, Li JM, Orger MB, Robson DN, Schier AF, Engert F, et al. (2012). Brain-wide neuronal dynamics during motor adaptation in zebrafish. Nature, 485, 471–477.

- Angelaki DE, & Cullen KE (2008). Vestibular system: The many facets of a multimodal sense. Annual Review of Neuroscience, 31, 125–150.

- Angenstein F, Kammerer E, & Scheich H (2009). The BOLD response in the rat hippocampus depends rather on local processing of signals than on the input or output activity. Journal of Neuroscience, 29, 2428–2439.

- Bassett JP, Zugaro MB, Muir GM, Golob EJ, Muller RU, & Taube JS (2005). Passive movements of the head do not abolish anticipatory firing properties of head direction cells. Journal of Neurophysiology, 93, 1304–1316.

- Baumann O, & Mattingley JB (2010). Medial parietal cortex encodes perceived heading direction in humans. Journal of Neuroscience, 30, 12897–12901.

- Benhamou S (1996). No evidence for cognitive mapping in rats. Animal Behaviour, 52, 201–212.

- Boccara CN, Sargolini F, Thoresen VH, Solstad T, Witter MP, Moser EI, et al. (2010). Grid cells in pre- and parasubiculum. Nature Neuroscience, 13, 987–994.

- Bohil CJ, Alicea B, & Biocca FA (2011). Virtual reality in neuroscience research and therapy. Nature Reviews Neuroscience, 12, 752–762.

- Brandt T, Schautzer F, Hamilton DA, Brüning R, Markowitsch HJ, Kalia R, et al. (2005). Vestibular loss causes hippocampal atrophy and impaired spatial memory in humans. Brain, 128, 2732–2741.

- Britten KH, & van Wezel RJA (2002). Area MST and heading perception in macaque monkeys. Cerebral Cortex, 12, 692–701.

- Brookes GB, Gresty MA, Nakamura T, & Metcalfe T (1993). Sensing and controlling rotational orientation in normal subjects and patients with loss of labyrinthine function. The American Journal of Otology, 14, 349–351.

- Brown TI, Ross RS, Keller JB, Hasselmo ME, & Stern CE (2010). Which way was I going? Contextual retrieval supports the disambiguation of well learned overlapping navigational routes. Journal of Neuroscience, 30, 7414–7422.

- Calton JL, Stackman RW, Goodridge JP, Archey WB, Dudchenko PA, & Taube JS (2003). Hippocampal place cell instability following lesions of the head direction cell network. Journal of Neuroscience, 23, 9719–9731.

- Calton JL, & Taube JS (2005). Degradation of head direction cell activity during inverted locomotion. Journal of Neuroscience, 25, 2420–2428.

- Caplan JB, Madsen JR, Schulze-Bonhage A, Aschenbrenner-Scheibe R, & Kahana MJ (2003). Human theta oscillations related to sensorimotor integration and spatial learning. Journal of Neuroscience, 23, 4726–4736.

- Cardoso MMB, Sirotin YB, Lima B, Glushenkova E, & Das A (2012). The neuroimaging signal is a linear sum of neurally distinct stimulus- and task-related components. Nature Neuroscience, 15, 1298–1306.

- Chance SS, Gaunet F, Beall AC, & Loomis JM (1998). Locomotion mode affects the updating of objects encountered during travel: The contribution of vestibular and proprioceptive inputs to path integration. Presence, 7, 168–178.

- Chen G, King JA, Burgess N, & OʼKeefe J (2012). How vision and movement combine in the hippocampal place code. Proceedings of the National Academy of Sciences, U.S.A, 110, 379–383.

- Chen LL, Lin LH, Green EJ, Barnes CA, & McNaughton BL (1994). Head-direction cells in the rat posterior cortex: I. Anatomical distribution and behavioral modulation. Experimental Brain Research, 101, 8–23.

- Cho J, & Sharp PE (2001). Head direction, place, and movement correlates for cells in the rat retrosplenial cortex. Behavioral Neuroscience, 115, 3–25.

- Clark BJ, & Taube JS (2011). Intact landmark control and angular path integration by head direction cells in the anterodorsal thalamus after lesions of the medial entorhinal cortex. Hippocampus, 21, 767–782.

- Clark BJ, Valerio S, & Taube JS (2011). Disrupted grid and head direction cell signal in the entorhinal cortex and parasubiculum after lesions of the head direction system. Program No. 729.11. 2011 Neuroscience Meeting Planner. Washington, DC: Society for Neuroscience.

- Conner CR, Ellmore TM, Pieters TA, DiSano MA, & Tandon N (2011). Variability of the relationship between electrophysiology and BOLD–fMRI across cortical regions in humans. Journal of Neuroscience, 31, 12855–12865.

- Cuthbert PC, Gilchrist DP, Hicks SL, MacDougall HG, & Curthoys IS (2000). Electrophysiological evidence for vestibular activation of the guinea pig hippocampus. NeuroReport, 11, 1443–1447.

- Desimone R (1996). Neural mechanisms for visual memory and their role in attention. Proceedings of the National Academy of Sciences, U.S.A, 93, 13494–13499.

- Doeller CF, Barry C, & Burgess N (2010). Evidence for grid cells in a human memory network. Nature, 463, 657–661.

- Dolins FL, & Mitchell RW (2010). Spatial cognition, spatial perception: Mapping the self and space. Cambridge, UK: Cambridge University Press.

- Ekstrom AD, Caplan JB, Ho E, Shattuck K, Fried I, & Kahana MJ (2005). Human hippocampal theta activity during virtual navigation. Hippocampus, 15, 881–889.

- Ekstrom AD, Kahana MJ, Caplan JB, Fields TA, Isham EA, Newman EL, et al. (2003). Cellular networks underlying human spatial navigation. Nature, 425, 184–188.

- Ekstrom A, Suthana N, Millett D, Fried I, & Bookheimer S (2009). Correlation between BOLD fMRI and theta-band local field potentials in the human hippocampal area. Journal of Neurophysiology, 101, 2668–2678.

- Epstein R (2008). Parahippocampal and retrosplenial contributions to human spatial navigation. Trends in Cognitive Sciences, 12, 388–396.

- Epstein R, & Kanwisher N (1998). A cortical representation of the local visual environment. Nature, 392, 598–601.

- Gallistel CR (1990). The organization of learning. Cambridge, MA: MIT Press.

- Glasauer S, Amorim MA, Viaud-Delmon I, & Berthoz A (2002). Differential effects of labyrinthine dysfunction on distance and direction during blindfolded walking of a triangular path. Experimental Brain Research, 145, 489–497.

- Golledge RG (1999). Wayfinding behavior. Baltimore, MD: Johns Hopkins University Press.

- Goodridge JP, & Taube JS (1995). Preferential use of the landmark navigational system by head direction cells. Behavioral Neuroscience, 109, 49–61.

- Grill-Spector K, Henson R, & Martin A (2006). Repetition and the brain: Neural models of stimulus-specific effects. Trends in Cognitive Sciences, 10, 14–23.

- Hafting T, Fyhn M, Molden S, Moser M-B, & Moser EI (2005). Microstructure of a spatial map in the entorhinal cortex. Nature, 436, 801–806.

- Harvey CD, Collman F, Dombeck DA, & Tank DW (2009). Intracellular dynamics of hippocampal place cells during virtual navigation. Nature, 461, 941–946.

- Heimbrand S, Muller M, Schweigart G, & Mergner T (1991). Perception of horizontal head and trunk rotation in patients with loss of vestibular functions. Journal of Vestibular Research, 1, 291–298.

- Horii A, Russell NA, Smith PF, Darlington CL, & Bilkey DK (2004). Vestibular influences on CA1 neurons in the rat hippocampus: An electrophysiological study in vivo. Experimental Brain Research, 155, 245–250.

- Iaria G, Petrides M, Dagher A, Pike B, & Bohbot VD (2003). Cognitive strategies dependent on the hippocampus and caudate nucleus in human navigation: Variability and change with practice. Journal of Neuroscience, 23, 5945–5952.

- Janzen G, & Van Turennout M (2004). Selective neural representation of objects relevant for navigation. Nature Neuroscience, 7, 673–677.

- Jeffery KJ (2003). The neurobiology of spatial behavior. Oxford, UK: Oxford University Press.

- Jönsson E (2002). Inner navigation: Why we get lost and how we find our way. New York: Scribner.

- Kahana MJ (2006). The cognitive correlates of human brain oscillations. Journal of Neuroscience, 26, 1669–1672.

- Klatzky RL, Loomis JM, Beall AC, Chance SS, & Golledge RG (1998). Spatial updating of self-position and orientation during real, imagined, and virtual locomotion. Psychological Science, 9, 293–298.

- Knierim JJ, & Rao G (2003). Distal landmarks and hippocampal place cells: Effects of relative translation versus rotation. Hippocampus, 13, 604–617.

- Kosslyn SM, Ganis G, & Thompson WL (2001). Neural foundations of imagery. Nature Reviews Neuroscience, 2, 635–642.

- Kupers R, Chebat DR, Madsen KH, Paulson OB, & Ptito M (2010). Neural correlates of virtual route recognition in congenital blindness. Proceedings of the National Academy of Sciences, U.S.A, 107, 12716–12721.

- Lavenex PB, Lecci S, Prêtre V, Brandner C, Mazza C, Pasquier J, et al. (2011). As the world turns: Short-term human spatial memory in egocentric and allocentric coordinates. Behavioural Brain Research, 219, 132–141.

- Leutgeb S, Leutgeb JK, Barnes CA, Moser EI, McNaughton BL, & Moser MB (2005). Independent codes for spatial and episodic memory in hippocampal neuronal ensembles. Science, 309, 619–623.

- Logothetis NK, Pauls J, Augath M, Trinath T, & Oeltermann A (2001). Neurophysiological investigation of the basis of the fMRI signal. Nature, 412, 150–157.

- Maier A, Wilke M, Aura C, Zhu C, Ye FQ, & Leopold DA (2008). Divergence of fMRI and neural signals in V1 during perceptual suppression in the awake monkey. Nature Neuroscience, 11, 1193–1200.

- Mishra AM, Ellens DJ, Schridde U, Motelow JE, Purcaro MJ, DeSalvo MN, et al. (2011). Where fMRI and electrophysiology agree to disagree: Corticothalamic and striatal activity patterns in the WAG/Rij rat. Journal of Neuroscience, 31, 15053–15064.

- Morgan LK, MacEvoy SP, Aguirre GK, & Epstein RA (2011). Distances between real-world locations are represented in the human hippocampus. Journal of Neuroscience, 31, 1238–1245.

- Muir GM, Brown JE, Carey JP, Hirvonen TP, Della Santina CC, Minor LB, et al. (2009). Semicircular canal occlusions disrupt head direction cell activity in the anterodorsal thalamus of chinchillas. Journal of Neuroscience, 29, 14521–14533.

- Ogawa N, Kawai H, Sanada M, Nakamura H, Idehara R, Shirako H, et al. (2008). A case of defective route finding following right anterior thalamic infarction. Brain Nerve, 60, 1481–1485.

- OʼKeefe J, & Dostrovsky J (1971). The hippocampus as a spatial map: Preliminary evidence from unit activity in the freely-moving rat. Brain Research, 34, 171–175.

- OʼKeefe J, & Nadel L (1978). The hippocampus as a cognitive map. Oxford, UK: Oxford University Press.

- Ossenkopp K-P, & Hargreaves EL (1993). Spatial learning in an enclosed eight-arm radial maze in rats with sodium arsanilate-induced labyrinthectomies. Behavioral and Neural Biology, 59, 253–257.

- Packard MG, & McGaugh JL (1996). Inactivation of hippocampus or caudate nucleus with lidocaine differentially affects expression of place and response learning. Neurobiology of Learning & Memory, 65, 65–72.

- Rauchs G, Orban P, Balteau E, Schmidt C, Degueldre C, Luxen A, et al. (2008). Partially segregated neural networks for spatial and contextual memory in virtual navigation. Hippocampus, 18, 503–518.

- Richardson AE, Montello DR, & Hegarty M (1999). Spatial knowledge acquisition from maps and from navigation in real and virtual environments. Memory and Cognition, 27, 741–750.

- Riecke BE, Sigurdarson S, & Milne AP (2012). Moving through virtual reality without moving? Cognitive Processing, 13(Suppl. 1), S293–S297.

- Ringo JL (1996). Stimulus specific adaptation in inferior temporal and medial temporal cortex of the monkey. Behavioural Brain Research, 76, 191–197.

- Robbe D, & Buzsaki G (2009). Alteration of theta timescale dynamics of hippocampal place cells by a cannabinoid is associated with memory impairment. Journal of Neuroscience, 29, 12597–12605.

- Robertson RG, Rolls ET, Georges-François P, & Panzeri S (1999). Head direction cells in the primate pre-subiculum. Hippocampus, 9, 206–219.

- Rodriguez PF (2010). Human navigation that requires calculating heading vectors recruits parietal cortex in a virtual and visually sparse water maze task in fMRI. Behavioral Neuroscience, 124, 532–540.

- Ruddle RA, Payne SJ, & Jones DM (1997). Navigating buildings in “desk-top” virtual environments: Experimental investigations using extended navigational experience. Journal of Experimental Psychology: Applied, 3, 143–159.

- Russell NA, Horii A, Smith PF, Darlington CL, & Bilkey DK (2006). Lesions of the vestibular system disrupt hippocampal theta rhythm in the rat. Journal of Neurophysiology, 96, 4–14.

- Russell NA, Horii A, Smith PF, Darlington CL, & Bilkey DK (2003). Long-term effects of permanent vestibular lesions on hippocampal spatial firing. Journal of Neuroscience, 23, 6490–6498.

- Shapiro ML, Tanila H, & Eichenbaum H (1997). Cues that hippocampal place cells encode: Dynamic and hierarchical representation of local and distal stimuli. Hippocampus, 7, 624–642.

- Shelton AL, & Marchette SA (2010). Where do you think you are? Effects of conceptual current position on spatial memory performance. Journal of Experimental Psychology: Learning, Memory, and Cognition, 36, 686–698.

- Shinder ME, & Taube JS (2011a). Active and passive movement are encoded equally by head direction cells in the anterodorsal thalamus. Journal of Neurophysiology, 106, 788–800.

- Shinder ME, & Taube JS (2011b). Firing rates of head direction cells are different between when moving and when motionless. Program No. 729.11. 2011 Neuroscience Meeting Planner. Washington, DC: Society for Neuroscience.

- Simons DJ, & Wang RF (1998). Perceiving real-world viewpoint changes. Psychological Science, 9, 315–320.

- Smetanin BN, & Popov KE (1997). Effect of body orientation with respect to gravity on directional accuracy of human pointing movements. European Journal of Neuroscience, 9, 7–11.

- Smith PF, Horii A, Russell N, Bilkey DK, Zheng Y, Liu P, et al. (2005). The effects of vestibular lesion on hippocampal function in rats. Progress in Neurobiology, 75, 391–405.

- Solstad T, Boccara CN, Kropff E, Moser M-B, & Moser EI (2008). Representation of geometric borders in the entorhinal cortex. Science, 322, 1865–1868.

- Spiers HJ, & Maguire EA (2006). Thoughts, behaviour, and brain dynamics during navigation in the real world. NeuroImage, 31, 1826–1840.

- Spiers HJ, & Maguire EA (2007). A navigational guidance system in the human brain. Hippocampus, 17, 618–626.

- Stackman RW, Clark AS, & Taube JS (2002). Hippocampal spatial representations require vestibular input. Hippocampus, 12, 291–303.

- Stackman RW, Golob EJ, Bassett JP, & Taube JS (2003). Passive transport disrupts directional path integration by rat head direction cells. Journal of Neurophysiology, 90, 2862–2874.

- Stackman RW, & Taube JS (1997). Firing properties of head direction cells in rat anterior thalamic neurons: Dependence upon vestibular input. Journal of Neuroscience, 17, 4349–4358.

- Tai SK, Ma J, Ossenkopp K-P, & Leung LS (2012). Activation of immobility-related hippocampal theta by cholinergic septohippocampal neurons during vestibular stimulation. Hippocampus, 22, 914–925.

- Taube JS (1995). Head direction cells recorded in the anterior thalamic nuclei of freely moving rats. Journal of Neuroscience, 15, 70–86.

- Taube JS (2007). The head direction signal: Origins and sensory-motor integration. Annual Review of Neuroscience, 30, 181–207.

- Taube JS (2010). Interspike interval analyses reveal irregular firing at short, but not long intervals in rat head direction cells. Journal of Neurophysiology, 104, 1635–1648.

- Taube JS, & Muller RU (1998). Comparison of head direction cell activity in the postsubiculum and anterior thalamus of freely moving rats. Hippocampus, 8, 87–108.

- Taube JS, Muller RU, & Ranck JB Jr. (1990). Head direction cells recorded from the postsubiculum in freely moving rats: I. Description and quantitative analysis. Journal of Neuroscience, 10, 420–435.

- Telford L, Howard IP, & Ohmi M (1995). Heading judgments during active and passive self-motion. Experimental Brain Research, 104, 502–510.

- Terrazas A, Krause M, Lipa P, Gothard KM, Barnes CA, & McNaughton BL (2005). Self-motion and the hippocampal spatial metric. Journal of Neuroscience, 25, 8085–8096.

- Tolman EC (1948). Cognitive maps in rats and men. Psychological Review, 55, 189–206.

- Valerio S, Clark BJ, Chan JHM, Frost CP, Harris MJ, & Taube JS (2010). Directional learning, but no spatial mapping by rats performing a navigational task in an inverted orientation. Neurobiology of Learning & Memory, 93, 495–505.

- Valerio S, & Taube JS (2012). Path integration: How the head direction signal maintains and corrects spatial orientation. Nature Neuroscience, 15, 1445–1453.

- Vanderwolf C (1969). Hippocampal electrical activity and voluntary movement in the rat. Electroencephalography and Clinical Neurophysiology, 26, 407–418.

- Viswanathan A, & Freeman RD (2007). Neurometabolic coupling in cerebral cortex reflects synaptic more than spiking activity. Nature Neuroscience, 10, 1308–1312.

- Wallace DG, Hines DJ, Pellis SM, & Whishaw IQ (2002). Vestibular information is required for dead reckoning in the rat. Journal of Neuroscience, 22, 10009–10017.

- Waller D, & Greenauer N (2007). The role of body-based sensory information in the acquisition of enduring spatial representations. Psychological Research, 71, 322–332.

- Waller D, Loomis JM, & Haun DBM (2004). Body-based senses enhance knowledge of directions in large-scale environments. Psychonomic Bulletin & Review, 11, 157–163.

- Waller D, Loomis JM, & Steck S (2003). Inertial cues do not enhance knowledge of environmental layout. Psychonomic Bulletin & Review, 10, 987–993.

- Wang RF, & Simons DJ (1999). Active and passive scene recognition across views. Cognition, 70, 191–210.

- Wang RF, & Spelke ES (2002). Human spatial representation: Insights from animals. Trends in Cognitive Sciences, 6, 376–382.

- Watrous AJ, Fried I, & Ekstrom AD (2011). Behavioral correlates of human hippocampal delta and theta oscillations during navigation. Journal of Neurophysiology, 105, 1747–1755.

- Winson J (1978). Loss of hippocampal theta rhythm results in spatial memory deficit in the rat. Science, 201, 160–163.

- Witmer BG, & Kline PB (1998). Judging perceived and traversed distance in virtual environments. Presence: Teleoperators and Virtual Environments, 7, 144–167.

- Wolbers T, & Büchel C (2005). Dissociable retrosplenial and hippocampal contributions to successful formation of survey representations. Journal of Neuroscience, 25, 3333–3340.

- Yoder RM, Clark BJ, Brown JE, Lamia MV, Valerio S, Shinder ME, et al. (2011). Both visual and idiothetic cues contribute to head direction cell stability during navigation along complex routes. Journal of Neurophysiology, 105, 2989–3001.

- Yoder RM, & Taube JS (2009). Head direction cell activity in mice: Robust directional signal depends on intact otoliths. Journal of Neuroscience, 29, 1061–1076.

- Zinyuk L, Kubik S, Kaminsky Y, Fenton AA, & Bures J (2000). Understanding hippocampal activity by using purposeful behavior: Place navigation induces place cell discharge in both task-relevant and task-irrelevant spatial reference frames. Proceedings of the National Academy of Sciences, U.S.A., 97, 3771–3776.

- Zugaro MB, Tabuchi E, Fouquier C, Berthoz A, & Wiener SI (2001). Active locomotion increases peak firing rates of anterodorsal thalamic head direction cells. Journal of Neurophysiology, 86, 692–702.