Abstract

Pancreatic cancer is associated with high mortality because of insufficient diagnostic techniques; it is often diagnosed at an advanced stage, when effective treatment is no longer possible. Automated systems that can detect cancer early are therefore crucial to improving diagnosis and treatment outcomes. Several algorithms have been put into use in the medical field, where valid and interpretable data are essential for effective diagnosis and therapy, and there is still much room for cutting-edge computer systems to develop. The main objective of this research is to predict pancreatic cancer early using deep learning and metaheuristic techniques. The proposed system analyzes medical imaging data, mainly CT scans, and identifies vital features and cancerous growths in the pancreas using Convolutional Neural Network (CNN) and YOLO model-based CNN (YCNN) models. Once diagnosed, the disease cannot be effectively treated, and its progression is unpredictable, which is why recent years have seen a push to implement fully automated systems that can detect cancer at an earlier stage and improve diagnosis and treatment. The paper evaluates the effectiveness of the novel YCNN approach against other modern methods in predicting pancreatic cancer. Vital features are predicted from the CT scan, and the proportion of cancer spread in the pancreas is estimated using threshold parameters as markers. A CNN model is used to classify pancreatic cancer images, and the YCNN is used to aid the categorization process. Both biomarker and CT image datasets are used for testing. In a thorough comparison with other modern techniques, the YCNN method was reported to achieve 100% accuracy.

Subject terms: Cancer, Computational biology and bioinformatics

Introduction

Pancreatic ductal adenocarcinoma (PDAC) is the most prevalent solid pancreatic cancer, and the more general term pancreatic cancer usually refers to this aggressive, difficult-to-cure disease. Pancreatic cancer has a lower survival rate than other cancer types1, 2. Although there have been improvements in surgical methods, medication, and radiotherapy, the 5-year survival rate is still just 8.7%3. Pancreatic cancer is difficult to diagnose because most patients present with vague symptoms. While surgical resection plus chemotherapy gives the best chance of survival, with a 5-year survival rate of roughly 31.5%, only 10–20% of patients are candidates for it. The remaining 80–90% of patients do not benefit from treatment because of widespread or regional metastases4, 5.

Compared with other cancers with high death rates, such as lung, breast, and colorectal cancer, the overall incidence of pancreatic cancer is significantly lower. Age-based population screening is therefore challenging, because possible screening tests have low positive predictive value and lead to many unnecessary assessments for individuals with false-positive findings. In addition, few identified risk factors have a high penetrance for pancreatic cancer, making early identification of this illness difficult. Since the 1970s6, the risk of pancreatic cancer has been evaluated based on family history, behavioral and lifestyle factors, and, more generally, systemic biomarkers and hereditary factors. At present, serial pancreas-directed imaging is performed on some individuals at increased risk because of family history, pathogenic genetic variants, or cystic lesions of the pancreas, in order to detect pancreatic cancer at an earlier stage.

However, accurate early diagnosis is still challenging and relies primarily on imaging modalities7. Computed tomography (CT) is the most frequently used imaging modality for the first examination of suspected pancreatic cancer8, 9, ahead of ultrasonography, MRI, and endoscopic ultrasonography. Asymptomatic individuals with a high risk of developing pancreatic cancer can also be screened with CT scans. The average survival time for patients whose pancreatic cancer was identified incidentally during an imaging scan for another condition is significantly longer than for those who presented with clinical symptoms10. CT has a sensitivity of 70–90% for detecting pancreatic adenocarcinoma11. Thin-section contrast-enhanced dual-phase multidetector computed tomography is the modality of choice for diagnosing pancreatic cancer12.

The main objective of this research is to predict pancreatic cancer early using deep learning and metaheuristic techniques. A further aim is to analyze both biomarker and CT image datasets to test the performance of the developed models. Specifically, the research aims to develop a system based on deep learning and metaheuristic techniques that predicts pancreatic cancer early by analyzing medical imaging data, particularly CT scans. The objective is to identify vital features and cancerous growths in the pancreas using Convolutional Neural Network (CNN) and YOLO model-based CNN (YCNN) models.

Recent advances in deep neural networks and rising healthcare demands have shifted the focus of AI research toward computer-aided diagnosis (CAD) systems. Deep learning has produced some early breakthroughs in the evaluation of radiological images. A deep learning-aided decision-making approach has been used to help diagnose lung nodules and skin tumors13, 14. Given the severity of pancreatic cancer, it is essential to create CAD systems that can distinguish cancerous from noncancerous tissue. A convolutional neural network (CNN) extracts features from an image by exploiting its local spatial correlations. CNN models have effectively addressed a wide variety of image classification challenges15. In this paper, we used clinical CT scans to show that a deep learning approach can accurately classify pancreatic ductal adenocarcinoma, as confirmed by a pathologist.

The remainder of this paper is structured as follows. Section “Related works” discusses prior work on supervised and unsupervised learning for diagnosing pancreatic cancer. Section “Proposed YOLOv3 based CNN methodology for pancreatic cancer classification” describes the deep learning algorithm that we use. Section “Dataset” describes the experimental setup and the datasets used in the investigation. The results of the experiments are discussed in Section “Sample code and Implications”. Discussion and closing comments are given in the last section, numbered 6.

Related works

This section reviews previously published classification schemes for pancreatic tumors. Ma et al.16 developed a CNN classifier to detect pancreatic cancer in CT data automatically. The CNN was developed on a dataset of 3494 CT images from 222 patients with pathologically proven pancreatic cancer and 3751 CT images from 190 people with a normal pancreas. They extracted three datasets from the images, evaluated the approach with ternary classifiers, and assessed the algorithm's specificity, accuracy, and sensitivity with tenfold cross-validation.

In17, a CNN-based DL technique was applied to contrast-enhanced CT (CECT) images to obtain three models (arterial, venous, and combined arterial and venous), and eightfold cross-validation was used to measure performance. The optimal CECT image was used to compare the TML and DL algorithms for predicting the pathological grading of pancreatic neuroendocrine neoplasms (pNEN). Quantitative and qualitative CT data were also used to assess radiologists' performance. Using the eightfold cross-validation procedure, the authors selected the best DL approach and evaluated it on a separate testing set of 19 individuals from Hospital II who were scanned with different scanners. For the challenging task of pancreatic segmentation, Fu et al.18 introduced a novel pancreatic segmentation network that extends RCF, which was originally proposed for edge detection. The proposed network performs per-pixel classification by carefully considering the multi-resolution contextual information of the object (the pancreas). This was made possible by a multilayer up-sampling design that replaces the most basic up-sampling operation at each level. The network was trained and evaluated on CT images and produced promising results.

Manabe et al.19 proposed a modified CNN technique to improve the classification of medical images. They adapted the CNN-based AlexNet model to work with a 512-by-512 input. The filter sizes of the max-pooling and convolutional layers were reduced. Many other approaches were tested and developed using this modified CNN. The modified CNN was used to classify CT images by the presence or absence of the pancreas. Overall accuracy was measured on test images that were not used to train the network.

Malignancy classification of lung nodules has benefited from knowledge of many high-level image characteristics. In the dataset studied in20, 82% of lobulated nodules, 93% of ragged nodules, 97% of heavily spiculated nodules, and 100% of halo nodules were malignant. Automatic identification of the characteristics and types of lung nodules was investigated in21. That work aimed to categorize six distinct types of nodules (solid, non-solid, part-solid, calcified, perifissural, and spiculated nodules). The method relies on a 2D CNN, which is inadequate for assessing lung nodule malignancy. Further, benign status was assigned to 66% of the round nodules.

By using massive volumes of imaging data, artificial intelligence has the potential to aid radiologists in the early identification of PDAC. In particular, CNNs, a class of deep learning models, have demonstrated excellent accuracy in the image-based diagnosis of several cancers22, 23. With the scan as input, CNNs automatically extract features useful for the diagnostic task through a chain of convolution and pooling operations. Attempts to automate the diagnosis of PDAC have recently shifted focus to deep learning models24–29. However, the majority of studies perform binary classification, determining whether or not a given input image contains cancer, and do not localize lesions at the same time. Moreover, only one study27 reported the model’s performance for tumors less than 2 cm in size; most papers paid little attention to these early-stage lesions.

Transfer learning is a machine learning technique in which knowledge gained from solving one problem is applied to a different but related problem, and it has been used to predict pancreatic cancer30, 31. In this case, deep learning models pre-trained on large image datasets are fine-tuned to detect cancerous lesions in pancreatic images. The aim is to improve the detection of pancreatic cancer at an early stage, which is critical for better patient outcomes. The authors in32 also suggested that deep learning is more effective in predicting pancreatic cancer than other ML techniques. The article33 highlights the poor prognosis of pancreatic cancer due to late diagnosis and the importance of early detection and identification of molecular targets for treatment. It also suggests that screening and surveillance in high-risk groups, combined with new biomarkers, may offer new strategies for risk assessment, detection, and prevention of pancreatic cancer.

The paper34 describes the development of a machine-learning algorithm based on changes in 5-hydroxymethylcytosine signals in cell-free DNA from plasma for early detection of pancreatic cancer in high-risk individuals. The ML algorithm shows high specificity and sensitivity in distinguishing pancreatic cancer from noncancer subjects, with a sensitivity of 68.3% for early-stage cancer and an overall specificity of 96.9%. The article35 describes a study investigating the link between serum proteins and early-stage cancer detection. The study found that a positive history of alcohol consumption can diminish the sensitivity of serum protein-mediated liquid biopsy in detecting early-stage malignancies, resulting in a 44% decline in sensitivity. A grouped neural network is proposed in36 for early diagnosis of pancreatic cancer using laboratory health tracking. The work37 describes a study that aims to develop new methods to simplify the large volume of patient medical records to improve clinical decision-making. The study uses deep-learning architectures to create simplified patient state representations that are predictive and interpretable to physicians.

Radiology departments perform most cancer diagnostic tests, and many of these tests now use machine and deep learning algorithms38, 39. These algorithms achieve relatively high prediction accuracy. Various pancreatic conditions are diagnosed in the radiology department40. The article41 introduces multiple applications of AI in radiology. This paper discusses various applications for pancreatic cancer prediction.

The study proposes that this beneficial effect of long noncoding RNA p21 on endothelial repair is mediated by a pathway involving three protein molecules: SESN2, AMPK, and TSC242. The study proposes that the inhibitory effect of homocysteine on pro-insulin receptor cleavage is caused by a process called cysteine-homo cysteinylation. Cysteine is another amino acid containing a sulfur atom, and homocysteine can form disulfide bonds with cysteine residues on proteins, altering their function43. It involves using hyperpolarization techniques to increase the sensitivity of NMR, which allows for the detection of rare and subtle interactions between molecules44. Drug delivery systems are methods for delivering drugs to specific targets in the body, such as diseased cells or tissues, while minimizing the potential for side effects45. The method also incorporates DS evidence theory, a mathematical framework for combining different types of evidence and uncertainty in decision-making46. Deep learning is a type of machine learning that uses artificial neural networks to learn from large amounts of data and make predictions or classifications47. The study also identified some critical factors that influence the thermal behavior of solid propellants, such as the presence of additives and the effects of moisture48, 49. The study results showed that silencing GTF2B expression led to a significant decrease in the proliferation of A549 cells, suggesting that GTF2B plays a role in promoting cell growth50, 51.

The study evaluated the proposed method's effectiveness using a large lung CT image dataset. It showed that it outperformed existing image retrieval methods regarding accuracy and computational efficiency52, 53. The study evaluated the effectiveness of the proposed method using a large dataset of CT image sequences. It showed that it significantly improved retrieval time and accuracy compared to existing methods for mobile telemedicine networks54. ViT-Patch is a deep learning model based on a type of neural network called a transformer that has recently shown state-of-the-art performance on a range of image classification tasks55. The method involves first transforming the input images into a sparse representation using a sparse dictionary56.

The researchers evaluated the efficacy of their surface-functionalized biomaterials using in vitro and in vivo experiments57. The researchers also demonstrated the feasibility of using phased array technology to generate and detect guided waves in curved plates, which could have critical applications in structural health monitoring and damage detection58, 59. The results showed a significant association between the health status of family members and the health behaviors of other family members. Specifically, having a family member with good health was associated with a higher likelihood of engaging in healthy behaviors such as regular exercise and not smoking60, 61. OCT is a non-invasive imaging technique that uses light waves to capture detailed images of the retina, and it is commonly used for diagnosing and managing ERM62, 63. The results showed that treatment with the therapeutic aptamer significantly increased bone formation in the mice with OI without increasing their cardiovascular risk64, 65.

This study utilized spectral domain optical coherence tomography (SD-OCT) to examine the postoperative outcomes of vitrectomy in highly myopic macular holes66. Sclerostin is a naturally occurring protein that inhibits the activity of osteoblasts, the cells responsible for bone formation. It is crucial in regulating bone metabolism and preventing excessive bone growth67. It targets specific proteins, known as immune checkpoints, that inhibit immune cell activity68. Nanotherapeutic platforms are nanoscale materials that can be utilized for therapeutic purposes. In this case, the focus is on metal-based nanoparticles and their potential applications in treating bacterial infections69. The researchers used SRS microscopy to acquire high-resolution images of prostate core needle biopsies. These images captured the distribution and composition of different biomolecules within the tissue, enabling a detailed analysis of the cancerous features70.

The literature shows that deep learning algorithms are well suited to the diagnosis of pancreatic cancer. This research contributes by using a deep learning and metaheuristic model to predict pancreatic cancer earlier.

First, a CNN is used to classify the pancreatic cancer images.

Data pre-processing and segmentation are performed using the sailfish optimizer.

Finally, a novel YOLO-based CNN model is used to predict cancer objects and classify cancer patients with high accuracy.

The significant limitations of the studies in the literature are as follows.

Studies mentioned in the discussion may have limitations regarding evaluating their proposed techniques. For example, the review might be limited to a specific dataset or may not involve a large and diverse sample size, which could affect the generalizability of the results.

Many studies primarily focus on the binary classification of pancreatic cancer (presence or absence) and may not adequately address the detection and characterization of early-stage lesions. Early detection improves prognosis, but only some papers address this.

While deep learning models have been employed for cancer detection, most studies do not simultaneously focus on localizing lesions within the pancreas. Localization is essential for accurate diagnosis and treatment planning; more research is needed in this area.

Only one study reports the model’s performance for tumors smaller than 2 cm. The detection and characterization of small tumors are critical for early diagnosis, and further investigation is needed to assess the effectiveness of the proposed methods for these lesions.

The studies mentioned utilize various image datasets for training and evaluation. It is essential to consider the potential biases and limitations in these datasets, such as variations in image quality, patient demographics, and imaging protocols, which may affect the generalizability of the results.

Some studies may need more clinical validation or evaluation in real-world settings. Further research is necessary to assess the effectiveness of the proposed techniques in clinical practice and their integration into existing diagnostic workflows.

Proposed YOLOv3 based CNN methodology for pancreatic cancer classification

The workflow of the model is illustrated in Fig. 1. During training, we determined which of the initial abdominal CT scans contained images suitable for diagnosis. Following data augmentation, we created a deep-learning model consisting of three linked sub-networks. Such models are commonly used in medical image recognition because of their established effectiveness29, 71. ResNet50 is used to recognize the images that include the pancreas. Transverse-plane CT images that do not contain the pancreas, as shown in Fig. 1, are not used directly in the YCNN model diagnosis. The segmentation sub-network makes a prediction for each pixel of the image, which produces binary values for the pancreatic segmentation.

Figure 1.

Overall framework of the pancreatic cancer model.

This research incorporated texture characteristics of the pancreas into the segmentation outcome during the subsequent image fusion process, so that the next sub-network would have a more robust diagnostic basis. ResNet50, the last neural network in the YCNN model, is used to determine whether or not a patient has a pancreatic tumor. The loss function is calculated from the discrepancy between the output of the neural network and the label, and the back-propagation algorithm is applied to calculate how each weight must be updated from its gradient. After training, we chose the weights that resulted in the lowest loss and fixed them for later application to the testing dataset.

In Fig. 1, we depict our novel and practical framework for tumor identification. The network's core is an amalgamation of Feature Pyramid Networks (FPN) and P-CNN, and its contributions comprise three parts: an Augmented Feature Pyramid, Self-adaptive Feature Fusion (SAFF), and a Dependencies Computation Module. First, we use a convolutional neural network (CNN) to extract features from the pre-processed CT images and construct the feature pyramid using up-sampling and lateral connections. Second, a bottom-up path is set up to make the transmission of low-level localization information more efficient, which improves the overall feature hierarchy and, in turn, the detection performance. Third, we use a Region Proposal Network (RPN) at each level to create proposals before employing Self-adaptive Feature Fusion to enlarge the corresponding region of interest and encode richer context information across multiple scales. To further capture each proposal's interdependencies with its surrounding tissues, we run the Dependencies Computation Module.

Segmentation

To segment images, we use a method that combines Kapur's thresholding with the sailfish optimizer (SFO-KT)72. During image segmentation, the SFO-KT technique takes the pre-processed image as input in order to find the affected regions in the CT scan. Thus far, Kapur's method has proven the most useful for determining the best threshold in histogram-based image segmentation. The entropy criterion was at first proposed for bilevel thresholding, much like the Otsu model. It is expressed in72 as

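$$f_{kapur}(t) = E_0 + E_1 \tag{1}$$

$$E_0 = -\sum_{i=0}^{t} \frac{YR_i}{\omega_0} \ln \frac{YR_i}{\omega_0}, \qquad E_1 = -\sum_{i=t+1}^{L-1} \frac{YR_i}{\omega_1} \ln \frac{YR_i}{\omega_1} \tag{2}$$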

The equations above define Kapur's entropy function, denoted f_kapur(t), from the probability distributions of two classes of a discrete variable YR. The values YRi are non-negative real numbers (the gray-level probabilities of the image histogram), and ω0 and ω1 are the positive quantities by which they are normalized in the two classes.

The formula is split into two terms, E0 and E1, which are the entropies of YR over two different intervals. The first term, E0, is the entropy of YR over the interval [0, t], calculated by summing over all YR values in that interval. Specifically, for each YR value in that interval, we calculate its probability as YRi / ω0, where ω0 is a reference quantity, take the logarithm of this probability, and multiply it by the probability itself. This is done for all YR values in the interval, and the results are summed and negated to obtain E0.

The second term, E1, is the entropy of YR for the interval [t + 1, L-1], where L is the total number of YR values. It is calculated in a similar way as E0, except that we use ω1 as the reference quantity instead of ω0.

Finally, Kapur's entropy function, f_kapur(t), is obtained by adding E0 and E1 together. The parameter t is a threshold value that splits the YR values into two groups, [0, t] and [t + 1, L-1], and the function f_kapur(t) measures the total entropy of YR for these two groups. The optimal value of t is the one that maximizes f_kapur(t), and it is used as the criterion for selecting the best threshold value for classification or segmentation tasks.
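As a minimal sketch of the bilevel criterion described above, the following standard-library Python computes Kapur's entropy for every candidate threshold of a histogram and picks the best one by exhaustive search. It assumes the standard formulation of Kapur's method, in which the selected threshold maximizes the sum of the two class entropies; the function names and the toy histogram are illustrative assumptions and are not taken from the paper.

```python
import math

def kapur_entropy(hist, t):
    """Sum of the entropies of the two classes [0, t] and [t+1, L-1]."""
    total = sum(hist)
    probs = [h / total for h in hist]        # YR_i: normalized histogram
    w0 = sum(probs[: t + 1])                 # omega_0: mass of class [0, t]
    w1 = sum(probs[t + 1 :])                 # omega_1: mass of class [t+1, L-1]
    if w0 <= 0.0 or w1 <= 0.0:
        return float("-inf")                 # an empty class is not a valid split
    e0 = -sum((p / w0) * math.log(p / w0) for p in probs[: t + 1] if p > 0)
    e1 = -sum((p / w1) * math.log(p / w1) for p in probs[t + 1 :] if p > 0)
    return e0 + e1

def best_threshold(hist):
    """Exhaustive search for the threshold with the largest Kapur entropy."""
    return max(range(len(hist) - 1), key=lambda t: kapur_entropy(hist, t))

# Toy 8-level histogram with two separated modes (illustrative values only).
toy_hist = [10, 30, 10, 0, 0, 10, 30, 10]
t_star = best_threshold(toy_hist)
```

For a 256-level CT histogram the exhaustive search is still cheap in the bilevel case; a metaheuristic search becomes attractive mainly when several thresholds have to be chosen jointly.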

Next, the threshold values of Kapur's entropy are optimized using the sailfish optimizer (SFO). SFO is a metaheuristic approach based on the attack-alternation strategy of hunting sailfish. The position of the ith sailfish in the kth search round is denoted by SAi,k, and its corresponding fitness is evaluated as f(SAi,k). Sardines also play a significant role in the SFO technique. They are represented as a school moving through the search space; the position of the ith sardine is denoted by SRi, and its fitness is evaluated as f(SRi).

The elite sailfish, which holds the best position found so far, is selected during the SFO technique to influence the manoeuvrability and acceleration of the sardines under attack. Additionally, the best position of the injured sardines from previous rounds is retained for collaborative hunting by the sailfish, to avoid re-selecting previously discarded solutions. Based on the elite sailfish and the injured sardine, the upgraded position Ynew SAi of the ith sailfish for subsequent iterations is represented72 as,

| 3 |

| 4 |

where SRD denotes the sardine density.

| 5 |

Here, A represents the number of sailfish and sardines. The new position of each sardine is then updated. Next, the attack power of the sailfish is computed with the new position, and the sardines move to their new positions. A sardine is considered hunted when its fitness is superior to that of a sailfish. Once the threshold value has been optimized, the segmentation is performed.
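The search loop described above can be sketched as follows. This is a simplified one-dimensional illustration, assuming the commonly published SFO update rules (a density term computed from the numbers of sailfish and sardines, and an attack power that decays linearly with the iteration count). The parameter names `a` and `eps`, the hunting rule, and the toy objective are assumptions made for illustration and are not the paper's exact Eqs. 3 to 5. In the segmentation setting, the fitness would be Kapur's entropy evaluated at the rounded position.

```python
import random

def sailfish_search(fitness, low, high, n_sailfish=5, n_sardines=20,
                    iters=50, a=4.0, eps=0.001, seed=0):
    """Simplified 1-D sailfish-optimizer sketch that maximizes `fitness`."""
    rng = random.Random(seed)

    def clip(x):
        return min(max(x, low), high)

    sailfish = [rng.uniform(low, high) for _ in range(n_sailfish)]
    sardines = [rng.uniform(low, high) for _ in range(n_sardines)]
    best = max(sailfish + sardines, key=fitness)

    for k in range(iters):
        if not sardines:                      # every sardine has been hunted
            break
        elite = max(sailfish, key=fitness)    # elite sailfish
        injured = max(sardines, key=fitness)  # best ("injured") sardine
        density = 1.0 - len(sailfish) / (len(sailfish) + len(sardines))

        # Sailfish update around the elite sailfish and the injured sardine.
        moved = []
        for x in sailfish:
            lam = 2.0 * rng.random() * density - density
            step = rng.random() * (elite + injured) / 2.0 - x
            moved.append(clip(elite - lam * step))
        sailfish = moved

        # Attack power decays with the iteration count; sardines follow the elite.
        attack = a * (1.0 - 2.0 * k * eps)
        sardines = [clip(rng.random() * (elite - s + attack)) for s in sardines]

        # A sardine fitter than a sailfish is hunted: the sailfish takes its
        # position and the sardine leaves the school.
        sardines.sort(key=fitness, reverse=True)
        for i in range(len(sailfish)):
            if sardines and fitness(sardines[0]) > fitness(sailfish[i]):
                sailfish[i] = sardines.pop(0)

        best = max([best] + sailfish + sardines, key=fitness)
    return best

def toy_fitness(x):
    """Hypothetical objective with a single maximum at x = 3."""
    return -(x - 3.0) ** 2

best_position = sailfish_search(toy_fitness, 0.0, 7.0)
```

The sketch updates every sardine in every round, whereas published SFO variants update only a subset once the attack power becomes small; the best position seen so far is tracked separately so that it is never lost.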

Feature pyramid network

CNNs can glean semantic information throughout the feature extraction process. High-level feature maps respond strongly to global characteristics, making them ideal for spotting large objects. However, because the tumor is so small in CT images, the successive pooling layers risk distorting the spatial information of the feature maps. In addition, tumour recognition relies heavily on low-level, exact localization data, yet its propagation is hampered by the lengthy (over a hundred layers) information path in FPN. For this purpose, we construct an Augmented Feature Pyramid working from the bottom up. Initially, we create using FPN. Then, starting at , the enhanced route is formed, and is immediately utilised as without further transformation. The next step is to execute a convolutional operator with stride 2 on a higher resolution feature map in order to shrink the size of the map. After that, we combine the down-sampled feature map with another, coarser feature map, , by summing their respective elements. We then apply a second convolutional operator to each fused feature map to produce for subsequent feature map creation. This procedure is repeated until the level is reached. A fresh Augmented Feature Pyramid is thus obtained. Figure 2 displays the proposed YCNN architecture.

Figure 2.

The proposed CNN architecture.

Feature fusion

As a result of focusing on a single level for all of the actions after obtaining the suggested areas with RPN, certain potentially relevant details from lower levels are lost in the process. To fully use context information at many scales, we propose a Self-adaptive Feature Fusion module that integrates hierarchical feature maps from several layers. Each proposal's level of the Augmented Feature Pyramid is formally determined by assigning the ROI with dimensions and .

| 6 |

denotes the input image size 224. Through an examination of CT scans, clinicians are able to locate tumours by studying the image's global context, local geometric structures, shape changes, and, most importantly, spatial interactions with adjacent tissues. To calculate the response at a given point, which is a weighted sum of the features at all positions on the expanded region , we make use of the Dependencies Computation Module. This process gives access to one of the most helpful pieces of information for detecting tumours, because it allows the network to focus more on connections and dependencies at various scales, from the local to the global. To be more precise, the whole Dependencies Computation Module is defined as follows, with as the input.

| 7 |

| 8 |

We created a CNN model to classify CT scan images for use in the early identification of pancreatic cancer. The architecture of our proposed CNN model is shown in Fig. 3. With three convolutional layers and a fully connected layer, our model was somewhat complex. Every convolutional layer was followed by a down-sampling max-pooling layer, a rectified linear unit (ReLU) layer that applied an activation function, and a batch normalisation (BN) layer to constrain the layer's output. To further reduce the dimensionality of the feature values sent into the fully connected layer, we also added an average-pooling layer before it. To avoid overfitting, we used a dropout rate of 0.5 between the average-pooling layer and the fully connected layer. In addition, we tried applying Spatial Dropout between each max-pooling layer and the convolutional layer that followed; however, this decreased overall performance, so Spatial Dropout was not used. The network accepts the pixel values of the CT image as input and returns the probability that the image belongs to a certain class as output. The CT images were fed to the model layer by layer: every layer receives as input the values generated by the layer before it, applies its transformation, and passes the result on to the following layer.

Figure 3.

Construction of CNN model.

Our model was trained on the training set with a mini-batch size of 32 using a dataset with n target classes. The loss between our model's predictions and the true labels was computed using the cross-entropy loss function at the end of each training cycle. The Adam optimizer used this loss to update the weights of our CNN model. After each update, we evaluated the model on the validation data. Our model was trained for up to 100 iterations, and the version with the greatest accuracy on the validation set was chosen. Our methods were tested using tenfold cross-validation. The images from each phase were randomly split into 10 folds, of which 8 were used for training, 1 for validation, and 1 for testing the model. This was done ten times, with each fold serving as the test set once. Results were averaged over the ten runs. We measured the accuracy, precision, and recall of our CNN model on the test sets.
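The fold rotation and the reported metrics can be sketched in plain Python as follows. This is an illustration under stated assumptions: ten folds, each serving as the test set exactly once, with the next fold used for validation and the other eight for training. The choice of which fold validates, the function names, and the toy labels are hypothetical and not taken from the paper.

```python
import random

def tenfold_splits(n_items, seed=0):
    """Yield (train, val, test) index lists; each fold is the test set once."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::10] for i in range(10)]
    for k in range(10):
        val_k = (k + 1) % 10                  # assumed: the next fold validates
        train = [i for j, fold in enumerate(folds)
                 if j not in (k, val_k) for i in fold]
        yield train, folds[val_k], folds[k]

def accuracy_precision_recall(y_true, y_pred):
    """Binary metrics with class 1 (cancer) as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall

splits = list(tenfold_splits(100))
metrics = accuracy_precision_recall([1, 1, 0, 0, 1], [1, 0, 0, 1, 1])
```

With medical images, splitting by patient rather than by image is usually preferable, so that slices from one patient never appear in both the training and test folds; the sketch splits by item index only.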

Classification model

To improve the accuracy of our detection, we took advantage of the overall design of YOLOv3, with DarkNet53 serving as the network's backbone and a three-layer spatial pyramid serving as the neck. The BCE loss function was used as the target loss function in the detection head, and a specially optimised branch and loss function were added to the original YOLOv3 implementation. The YOLOv3 model is used to detect cancer as an object and to classify the image. Because classification accuracy was more important than the detection area for early cancer detection, we gave a larger weight to the classification loss. We initialized the network parameters randomly. This was done to ensure that the activation values of each layer at the start of training were within a reasonable range, which was necessary for the network to converge quickly.
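The idea of weighting the classification term more heavily than the detection term can be illustrated with a small sketch. It assumes a total loss formed as a weighted sum of two binary cross-entropy (BCE) terms; the weights 2.0 and 1.0 are hypothetical placeholders, since the text does not state the values used, and a full YOLOv3 loss also contains box-regression terms that are omitted here.

```python
import math

def bce(prob, target, eps=1e-7):
    """Binary cross-entropy for a single predicted probability."""
    p = min(max(prob, eps), 1.0 - eps)        # clamp to avoid log(0)
    return -(target * math.log(p) + (1 - target) * math.log(1.0 - p))

def total_loss(cls_prob, cls_target, obj_prob, obj_target,
               w_cls=2.0, w_det=1.0):
    """Weighted sum of the classification and detection BCE terms."""
    return w_cls * bce(cls_prob, cls_target) + w_det * bce(obj_prob, obj_target)

# A confident, correct classification with a less certain detection.
loss = total_loss(0.9, 1, 0.6, 1)
```

With these weights, a classification error costs twice as much as an equally sized detection error, which pushes training toward getting the image-level label right first.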

Since the dataset we utilized was much smaller than the data used for the original YOLOv3 network, overfitting was likely if we trained the network directly on it. Therefore, to determine the parameters of the DarkNet53 backbone network, we first performed preliminary training on the ImageNet image recognition task and on the dataset's object classification task. After that, we added the three-layer pyramid detection neck and fine-tuned the network on the early cancer dataset. As can be seen in Fig. 4, we normalized the images from ImageNet and from the data sources using the range and mean of the early tumor training set, so that the model parameters fit the early cancer dataset as closely as possible. During fine-tuning, each iteration used 64 images with an input size of 224 × 224 pixels. Because of the limited memory available on the GPU, each batch was split into 32 subdivisions. Training ran for one hundred epochs; the first two epochs served as warm-up training with a cosine learning rate of 0.01, after which the learning rate dropped to 0.001. We worked to provide context to the data, increasing their value.
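The fine-tuning settings above (two warm-up epochs at 0.01, then 0.001; a batch of 64 split into 32 subdivisions; normalization by the training-set mean and range) can be written down as a short sketch. The exact cosine shape of the warm-up is not specified in the text, so the schedule below is simplified to a piecewise-constant one, and all function names are hypothetical.

```python
def learning_rate(epoch, warmup_epochs=2, warmup_lr=0.01, base_lr=0.001):
    """Piecewise schedule: warm-up rate for the first epochs, base rate after.

    Simplification: the cosine shape within the warm-up is not modelled.
    """
    return warmup_lr if epoch < warmup_epochs else base_lr

def micro_batch_size(batch_size=64, subdivisions=32):
    """Images processed per forward/backward pass when a batch is subdivided."""
    if batch_size % subdivisions != 0:
        raise ValueError("batch_size must be divisible by subdivisions")
    return batch_size // subdivisions

def normalize(pixels, mean, value_range):
    """Center by the training-set mean and scale by its range."""
    return [(p - mean) / value_range for p in pixels]

schedule = [learning_rate(e) for e in range(100)]
print(schedule[:4])        # [0.01, 0.01, 0.001, 0.001]
print(micro_batch_size())  # 2
```

With these settings only two images are held on the GPU per pass, and gradients are accumulated over the 32 subdivisions before each weight update.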

Figure 4.

The sample architecture of YOLO Model used in our proposed model.

Dataset

Image dataset

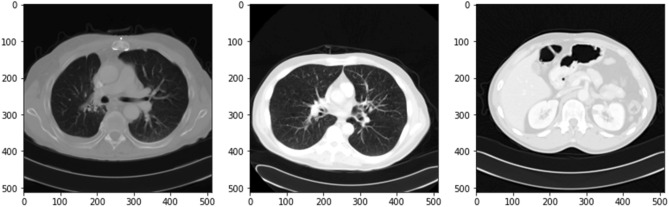

Between June 2017 and June 2018, 3494 CT images were collected from 222 patients with pathologically proven pancreatic cancer, and 3751 CT images were collected from 190 individuals with a healthy pancreas. A CNN model was developed using these images. Sample images are shown in Fig. 5.

Figure 5.

Sample CT scan images from the dataset.

We divided these images into three phase-based datasets and tested the method using tenfold cross-validation for binary classification (i.e., cancer or not), as shown in Table 1.

Table 1.

Image dataset information.

| Particulars | Data |
|---|---|
| Total pancreatic cancer images | 3494 |
| Patients with pancreatic cancer | 222 |
| Total healthy pancreas images | 3451 |
| Patients with healthy pancreas | 190 |
| Slice thickness | 5.0 mm |

Urinary biomarkers

Urinary biomarkers have been investigated for their potential role in the detection of pancreatic cancer. Pancreatic cancer is often diagnosed at an advanced stage when treatment options are limited, and the prognosis is poor. Therefore, identifying reliable and non-invasive biomarkers for early detection is critical for improving outcomes.

Several urinary biomarkers have been studied for their potential in detecting pancreatic cancer, including:

CA 19–9: A glycoprotein that is often elevated in pancreatic cancer patients and is currently used as a biomarker in clinical practice.

MUC1: A transmembrane mucin protein that has been found to be overexpressed in pancreatic cancer.

Osteopontin: A glycoprotein that has been shown to be overexpressed in pancreatic cancer and can be detected in urine.

Tumor-associated trypsin inhibitor (TATI): A protein that is often elevated in pancreatic cancer patients and has been investigated as a potential biomarker.

Human epididymis protein 4 (HE4): A glycoprotein that has been found to be overexpressed in pancreatic cancer and can be detected in urine.

This research uses the biomarkers LYVE1 (Lymphatic Vessel Endothelial Hyaluronan Receptor 1), REG1B (Regenerating islet-derived protein 1 beta), TFF1 (Trefoil factor 1), and REG1A (Regenerating islet-derived protein 1 alpha) as a dataset.

LYVE1 has been investigated as a potential target for cancer therapy, as its expression has been found to be upregulated in various types of tumors, including breast, lung, and pancreatic cancer. A study published in the journal Pancreas in 2014 found that REG1B was significantly elevated in the serum of pancreatic cancer patients compared to healthy controls and patients with pancreatitis. Another study published in the same journal in 2017 found that REG1B levels were higher in the urine of pancreatic cancer patients compared to healthy controls and patients with chronic pancreatitis. A study published in the journal PLOS ONE in 2017 found that TFF1 levels were significantly higher in the serum of pancreatic cancer patients compared to healthy controls and patients with pancreatitis. Another study published in the journal Oncotarget in 2018 found that TFF1 levels were higher in the urine of pancreatic cancer patients compared to healthy controls and patients with chronic pancreatitis. REG1A has been shown to be a prognostic indicator of pancreatic cancer, with higher levels of REG1A associated with poorer outcomes. Figure 6 shows the distribution of the biomarkers in the dataset used.

Figure 6.

Distribution of data in the biomarkers dataset.

The biomarkers were collected from the urine of three distinct patient populations:

Healthy controls

Patients with non-cancerous pancreatic conditions

Patients with pancreatic ductal adenocarcinoma

The groups were matched by age and sex where possible. The purpose was to discover a reliable method of diagnosing pancreatic cancer. Figure 7 summarizes the dataset: it contains 590 patient samples with the fields sample_id, patient_cohort, sample_origin, age, sex, and diagnosis, together with 199 stage entries, 208 diagnosis samples, 350 plasma tests, 590 creatinine measurements, and the biomarker values.

Figure 7.

The dataset information.

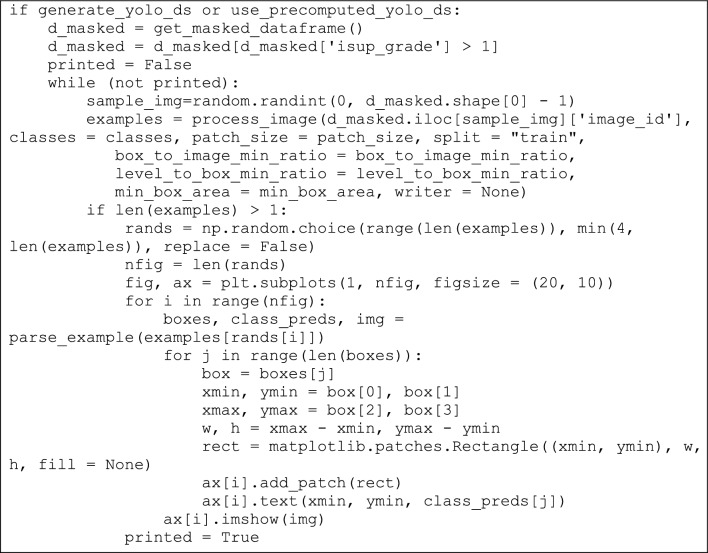
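Per-field counts such as those summarized for the biomarker dataset correspond to the number of non-missing values in each column. The sketch below shows one way to obtain them with the Python standard library. The four rows are a hypothetical excerpt invented for illustration (they are not real patient records), and the column names are assumed to follow the public Kaggle release of the urinary biomarker data.

```python
import csv
import io

# Hypothetical four-row excerpt; blank cells mark missing measurements.
SAMPLE_CSV = """sample_id,diagnosis,stage,plasma_CA19_9,creatinine,LYVE1,REG1B,TFF1,REG1A
S1,1,,11.7,1.83,0.89,52.9,654.3,1262.0
S2,2,,,0.97,2.04,94.5,209.5,228.4
S3,3,IIB,1488.0,0.72,6.21,101.3,1062.7,
S4,3,III,,1.21,8.40,250.1,1533.2,410.9
"""

def non_missing_counts(csv_text):
    """Return {column: number of rows with a non-empty value}."""
    reader = csv.DictReader(io.StringIO(csv_text))
    counts = {name: 0 for name in reader.fieldnames}
    for row in reader:
        for name, value in row.items():
            if value.strip():
                counts[name] += 1
    return counts

counts = non_missing_counts(SAMPLE_CSV)
print(counts["sample_id"], counts["stage"], counts["plasma_CA19_9"])  # 4 2 2
```

Columns such as stage and plasma CA 19-9 are only partially populated, which is why their counts fall below the total number of samples.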

Sample code and implications

This section discusses sample code. After the threshold values are computed, the YOLOv3 and DarknetRes models are run in a Python environment. Figure 8 shows code snippets of the YOLOv3 model, which detects the tumour region based on the computed values. Finally, the DarknetRes model is used for classification. A few samples are shown below.

Figure 8.

YOLOv3 code with detection strategy.

Figure 9 shows sample code snippets for the YOLO and DarknetRes neural networks. Figure 10 shows how the input is loaded into the platform for computation. The predicted output values are shown in Fig. 11, and the error value computation is shown in Fig. 12. The algorithm ran for 11 min and did not fail in any instance during the computation. The confusion matrices below confirm that the proposed model predicts the data with a high level of accuracy.

Figure 9.

Sample snippets of YOLO layer and DarknetRes computation.

Figure 10.

Input loading.

Figure 11.

Prediction values and sample predicted output.

Figure 12.

Error value computation.

Result and discussion

Using the two datasets, we tested our method on both binary and ternary classification and assessed its performance with the standard measures of accuracy, precision, and recall. Accuracy is the proportion of correctly classified images among all $N$ images. Let $TP_K$, $FP_K$, and $FN_K$ denote the numbers of true positives, false positives, and false negatives for class K. The precision of class K is the fraction of images correctly labelled as class K ($TP_K$) relative to the total number of images labelled as class K ($TP_K + FP_K$). The recall of class K is the fraction of all images that should be identified as class K ($TP_K + FN_K$) that are correctly classified as class K ($TP_K$). The F1-score is the harmonic mean of precision and recall. These measures are computed as follows:

$$\text{Accuracy} = \frac{\sum_{K} TP_K}{N} \tag{9}$$

$$\text{Precision}_K = \frac{TP_K}{TP_K + FP_K} \tag{10}$$

$$\text{Recall}_K = \frac{TP_K}{TP_K + FN_K} \tag{11}$$

$$\text{F1}_K = \frac{2 \cdot \text{Precision}_K \cdot \text{Recall}_K}{\text{Precision}_K + \text{Recall}_K} \tag{12}$$

Figure 13 depicts the accuracy on the urinary biomarkers dataset and on the image dataset. Both datasets yield an accuracy of nearly 100% in classifying pancreatic cancer.
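The per-class measures described above can be computed directly from a confusion matrix. The following is a minimal sketch under the usual convention that rows are true classes and columns are predicted classes; the 3-class matrix is hypothetical and is not taken from the paper's results.

```python
def per_class_metrics(confusion):
    """confusion[i][j] = number of samples of true class i predicted as class j."""
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(n_classes))
    report = {"accuracy": correct / total}
    for k in range(n_classes):
        tp = confusion[k][k]
        fp = sum(confusion[i][k] for i in range(n_classes)) - tp  # column minus diagonal
        fn = sum(confusion[k]) - tp                               # row minus diagonal
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        report[k] = {"precision": precision, "recall": recall, "f1": f1}
    return report

# Hypothetical 3-class confusion matrix, for illustration only.
example = [[50, 0, 0],
           [1, 48, 1],
           [0, 2, 48]]
report = per_class_metrics(example)
print(round(report["accuracy"], 4))  # 0.9733
```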

Figure 13.

Accuracy on the datasets.

Because accuracy evaluates a classifier's performance across all classes rather than for a single class K, it was the primary metric we used to assess the efficacy of our method. Figure 14 shows the loss of the model on both datasets.

Figure 14.

Loss on the dataset.

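In cancer diagnosis, sensitivity equals the recall for the malignant class, i.e., the ratio of correctly predicted malignant lesions to all malignant lesions. With malignant as the positive class and $TP$, $FN$, $TN$, and $FP$ denoting true positives, false negatives, true negatives, and false positives:

$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{13}$$

Specificity is the recall for the non-malignant class, i.e., the number of correctly predicted non-malignant cases divided by the number of all non-malignant cases:

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{14}$$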

Accuracy in cancer diagnosis is measured by the percentage of all cases, malignant and non-malignant, for which a correct prediction was made.
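For the binary cancer versus non-cancer setting, sensitivity and specificity follow from the four cells of the confusion matrix. The sketch below illustrates this with hypothetical labels (1 = cancer, 0 = non-cancer); the label vectors are invented for the example.

```python
def sensitivity_specificity(y_true, y_pred, positive=1):
    """Return (sensitivity, specificity) for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    return sensitivity, specificity

# Hypothetical labels: 1 = cancer, 0 = non-cancer.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]
sens, spec = sensitivity_specificity(y_true, y_pred)
print(sens, round(spec, 3))  # 0.75 0.833
```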

Figure 15 shows the confusion matrix for the urinary biomarker dataset. The Creatinine biomarker was predicted as Creatinine with 100% accuracy. However, Creatinine was mispredicted as LYVE1 (34%), REG1B (26%), and TFF1 (40%). LYVE1 was mispredicted as Creatinine (34%) and TFF1 (58%). REG1B was mispredicted as Creatinine (26%), LYVE1 (54%), and TFF1 (69%). TFF1 was mispredicted as Creatinine (40%) and REG1B (69%).

Figure 15.

Confusion matrix on the dataset Urinary Biomarkers.

Figure 16 shows the confusion matrix of the non-cancer and cancer predictions for the image dataset. The confusion matrices confirm that the model achieves 100% accuracy on the urinary biomarkers dataset and 99.9% accuracy on the CT image dataset.

Figure 16.

Confusion matrix on the CT image dataset.

Table 2 reports the precision, recall, and F1-score produced by our proposed YCNN model. The results indicate that the proposed model achieves 100% classification accuracy.

Table 2.

Performance evaluation of the model YCNN.

| Class | Precision | Recall | f1-score |
|---|---|---|---|
| 1 | 100% | 100% | 100% |
| 2 | 98% | 100% | 99% |
| 3 | 100% | 99% | 98% |
| Accuracy | 100% | | |
| Macro avg | 100% | 100% | 100% |
| Weighted avg | 100% | 100% | 100% |

Machine learning stands to greatly benefit disease diagnosis and treatment, care coordination, drug research and development, and precision medicine. Its application to imaging the pancreas has been hampered because the pancreas varies widely in shape, size, and position, yet occupies only a very small portion of a CT image, which makes it difficult to observe. As a direct consequence, diagnostic efficiency and accuracy have been subpar. The model consists of four stages: image screening, localization of the pancreas, segmentation of the pancreas, and diagnosis of pancreatic tumours. It achieves an area under the curve (AUC) of 1.00, an F1 score of 99.9%, and an accuracy of 100.0% on an independent testing dataset. Figure 17 shows the AUC curve of the proposed model.

Figure 17.

AUC curve of the proposed model.
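The AUC can be read as the probability that a randomly chosen cancer case receives a higher score than a randomly chosen non-cancer case. The sketch below computes it by direct pairwise comparison, which is equivalent to the area under the ROC curve. The scores are hypothetical and the function name `roc_auc` is introduced only for this example.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a positive outranks a negative (ties count 0.5)."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))

# Hypothetical scores: perfectly separated classes give an AUC of 1.0.
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.6, 0.9]))   # 1.0
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

An AUC of 1.00 therefore means that every cancer case in the test set was scored above every non-cancer case.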

Another advantage of this work is the creation of a more comprehensive dataset that covers a wider variety of pancreatic tumours than was previously available. This may help the deep learning system recognise images of the various pancreatic tumour types, and the model can distinguish between the different cancers that can occur in the pancreas. End-to-end automatic diagnosis is another strength of the system: a diagnosis takes only about 16.5 s per patient, from the input of the initial abdominal CT image to the diagnosis result. The system has great diagnostic and therapeutic promise since it can process and interpret large volumes of data quickly, accurately, and affordably in clinical settings. For instance, the model could be used for large-scale pre-diagnosis during physical examinations, or to aid diagnosis at lower-level facilities with limited resources. The model can also generate saliency maps, which highlight the image regions that matter most to a diagnostic decision and may thereby improve its reliability. Our method relies solely on evidence obtained from CT scans, whereas medical professionals have access to additional information, such as patients' medical histories and their own accounts. Consequently, the judgement of independent practitioners, and not just the output of a deep learning system, should remain the basis for diagnosis and care planning. We ran the same experiments on other baseline models such as VGG and DenseNet. Table 3 shows the results of this performance comparison. For instance, MobileNet achieves nearly 99% accuracy but does not exceed the YCNN model.

Table 3.

Accuracy comparative analysis with other models.

| Model | Urinary biomarkers accuracy (%) | Image dataset accuracy (%) |
|---|---|---|
| MLP | 75.8 | 80.6 |
| LSTM | 85.9 | 88.5 |
| CNN | 96.8 | 97.5 |
| VGG19 | 98.9 | 98.5 |
| Resnet50 | 97.5 | 98.6 |
| Inception | 96.5 | 97.8 |
| DenseNet | 98.6 | 98.4 |
| MobileNet | 98.8 | 99.5 |
| YCNN | 100 | 100 |

The results presented in Table 3 indicate the performance of different models for pancreatic cancer detection using the urinary biomarkers and image datasets. Some useful insights that can be derived from these results are as follows:

Urinary Biomarkers vs. Image Dataset Accuracy: For six of the nine models in the table, accuracy on the urinary biomarkers dataset is lower than on the image dataset. This suggests that image-based diagnostic tests might have a higher predictive power for pancreatic cancer detection compared to urinary biomarkers alone.

Model Performance: The table includes various models used for pancreatic cancer detection. Only the YCNN model achieves perfect accuracy (100%) on both the urinary biomarkers and image datasets, which demonstrates the potential for highly accurate detection of pancreatic cancer.

Performance Variations among Models: There are notable variations in performance among different models. For instance, the MLP and LSTM models achieve lower accuracy than the other models. On the other hand, CNN-based models, including VGG19, Resnet50, Inception, and DenseNet, show higher accuracy on both the urinary biomarkers and image datasets. MobileNet also performs well in terms of accuracy. These results suggest that convolutional neural network (CNN)-based models have the potential to be effective tools for pancreatic cancer detection.

Potential Clinical Applications: The strong performance of certain models, especially those based on CNN architectures, indicates their potential for clinical application in pancreatic cancer detection. These models could be integrated into computer-aided diagnostic systems to assist healthcare professionals in making more accurate and timely diagnoses. However, further research and validation studies are necessary before implementing these models in clinical practice.
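The comparisons drawn from Table 3 can be checked with a few lines of code. The sketch below transcribes the table values and counts how many models score higher on the image dataset, and which baseline comes closest to YCNN on each dataset. The variable names are introduced only for this example.

```python
# (urinary biomarkers accuracy %, image dataset accuracy %) as listed in Table 3.
TABLE_3 = {
    "MLP": (75.8, 80.6), "LSTM": (85.9, 88.5), "CNN": (96.8, 97.5),
    "VGG19": (98.9, 98.5), "Resnet50": (97.5, 98.6), "Inception": (96.5, 97.8),
    "DenseNet": (98.6, 98.4), "MobileNet": (98.8, 99.5), "YCNN": (100.0, 100.0),
}

# Models whose image-dataset accuracy exceeds their biomarker accuracy.
image_higher = [m for m, (bio, img) in TABLE_3.items() if img > bio]

# Best-performing baseline (excluding YCNN) on each dataset.
baselines = {m: v for m, v in TABLE_3.items() if m != "YCNN"}
best_bio = max(baselines, key=lambda m: baselines[m][0])
best_img = max(baselines, key=lambda m: baselines[m][1])

print(len(image_higher), "of", len(TABLE_3))  # 6 of 9
print(best_bio, best_img)                     # VGG19 MobileNet
```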

Conclusion and future work

In the realm of medicine, deep learning has contributed to developments in the analysis and prediction of a wide variety of disorders. In this study, a novel automated YCNN was presented to help the pathologist classify pancreatic cancer grades based on pathological images. The system is built on the PyCharm and Google Colab platforms and includes the YOLO model for making predictions from the data. Input images are scaled up and divided into 224 × 224 pixel patches before being fed into the YCNN all at once. The CNN then categorises the patches into their respective grades, and the patches are stitched back together into a single complete image before the final result is sent to the pathologist. On the datasets, F1-scores of 0.99 and 1.00 were obtained, which is encouraging. The proposed system could be enhanced by adopting more advanced deep learning models, expanding the image dataset, and using augmentation to improve the model's learning across a variety of colour variations. In addition, a more recent method of synthetic image generation could be used to generate more images of pancreatic cancer pathology, with the guidance of specialists, before the training process begins. At this point, the findings of this research may help give pathologists a consistent diagnosis of the grade of pancreatic cancer through a straightforward web interface that does not require any installation. In the future, we anticipate that the system will provide the pathologist with a second opinion if it is able to improve its performance and achieve an accuracy that is closer to 1.
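The patch-based processing mentioned above (dividing an image into 224 × 224 patches and stitching them back together) can be illustrated with a small sketch. It assumes the scaled image dimensions are exact multiples of the patch size and represents the image as a plain 2-D list; the function names and the 448 × 448 test image are hypothetical.

```python
def split_into_patches(image, patch=224):
    """Split a 2-D list whose height and width are multiples of `patch`."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image size must be a multiple of the patch size")
    patches = {}
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            # Key each patch by its top-left corner so it can be placed back.
            patches[(top, left)] = [row[left:left + patch]
                                    for row in image[top:top + patch]]
    return patches

def stitch_patches(patches, height, width):
    """Reassemble patches keyed by their (top, left) corner."""
    image = [[None] * width for _ in range(height)]
    for (top, left), block in patches.items():
        for dy, row in enumerate(block):
            image[top + dy][left:left + len(row)] = row
    return image

# Hypothetical 448 x 448 image filled with a simple gradient.
image = [[(y + x) % 256 for x in range(448)] for y in range(448)]
patches = split_into_patches(image)
print(len(patches))                                # 4
print(stitch_patches(patches, 448, 448) == image)  # True
```

In a full pipeline, each patch would be classified before stitching, so that per-patch predictions can be mapped back to their positions in the original image.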

Acknowledgements

Researchers Supporting Project number (RSP2023R167), King Saud University, Riyadh, Saudi Arabia.

Author contributions

M.G.D. and M.A. conducted the research and performed basic simulations; N.B. validated the experiments and wrote the initial manuscript draft; S.S.A. reviewed the manuscript and prepared the visualizations.

Funding

This project is funded by King Saud University, Riyadh, Saudi Arabia.

Data availability

The datasets generated and/or analysed during the current study are available in the Kaggle repository: https://www.kaggle.com/datasets/kmader/siim-medical-images?select=dicom_dir, https://www.kaggle.com/code/kerneler/starter-pancreas-ct-dataset-628d2558-a/data, and https://www.kaggle.com/datasets/johnjdavisiv/urinary-biomarkers-for-pancreatic-cancer.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Nebojsa Bacanin, Email: nbacanin@singidunum.ac.rs.

Mohamed Abouhawwash, Email: abouhaww@msu.edu.

References

- 1.Wolfgang CL, Herman JM, Laheru DA, Klein AP, Erdek MA, Fishman EK, Hruban RH. Recent progress in pancreatic cancer. CA Cancer J. Clin. 2013;63:318–348. doi: 10.3322/caac.21190]. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Kamisawa T, Wood LD, Itoi T, Takaori K. Pancreatic cancer. Lancet. 2016;388:73–85. doi: 10.1016/S0140-6736(16)00141-0]. [DOI] [PubMed] [Google Scholar]

- 3.Howlader, N., Noone, A. M., Krapcho, M., Miller, D., Brest, A., Yu, M., Ruhl, J., Tatalovich, Z., Mariotto, A., Lewis, D. R., Chen, H. S., Feuer, E. J., Cronin, K. A. (eds). SEER cancer statistics review, 1975–2016, National cancer institute. Bethesda. Available from: https://seer.cancer.gov/csr/1975_2016/, based on November 2018 SEER data submission, posted to the SEER web site, April (2019)

- 4.Khorana AA, Mangu PB, Berlin J, Engebretson A, Hong TS, Maitra A, Mohile SG, Mumber M, Schulick R, Shapiro M, Urba S, Zeh HJ, Katz MHG. Potentially curable pancreatic cancer: American society of clinical oncology clinical practice guideline update. J. Clin. Oncol. 2017;35:2324–2328. doi: 10.1200/JCO.2017.72.4948]. [DOI] [PubMed] [Google Scholar]

- 5.Balaban EP, Mangu PB, Khorana AA, Shah MA, Mukherjee S, Crane CH, Javle MM, Eads JR, Allen P, Ko AH, Engebretson A, Herman JM, Strickler JH, Benson AB, Urba S, Yee NS. Locally advanced unresectable pancreatic cancer: American society of clinical oncology clinical practice guideline. J. Clin. Oncol. 2016;34:2654–2668. doi: 10.1200/JCO.2016.67.5561]. [DOI] [PubMed] [Google Scholar]

- 6.Kim J, Yuan C, Babic A, Bao Y, Clish CB, Pollak MN, Amundadottir LT, et al. Genetic and circulating biomarker data improve risk prediction for pancreatic cancer in the general population. Cancer Epidemiol. Biomark. Prev. A Publ. Am. Assoc. Cancer Res. Cosponsored Am. Soc. Prev. Oncol. 2020;29(5):999–1008. doi: 10.1158/1055-9965.EPI-19-1389. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Takhar AS, Palaniappan P, Dhingsa R, Lobo DN. Recent developments in diagnosis of pancreatic cancer. BMJ. 2004;329:668–673. doi: 10.1136/bmj.329.7467.668]. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Chari ST. Detecting early pancreatic cancer: Problems and prospects. Semin. Oncol. 2007;34:284–294. doi: 10.1053/j.seminoncol.2007.05.005]. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Al-Hawary MM, Francis IR, Chari ST, Fishman EK, Hough DM, Lu DS, Macari M, Megibow AJ, Miller FH, Mortele KJ, Merchant NB, Minter RM, Tamm EP, Sahani DV, Simeone DM. Pancreatic ductal adenocarcinoma radiology reporting template: Consensus statement of the society of abdominal radiology and the American pancreatic association. Radiology. 2014;270:248–260. doi: 10.1148/radiol.13131184]. [DOI] [PubMed] [Google Scholar]

- 10.Zhou B, Xu JW, Cheng YG, Gao JY, Hu SY, Wang L, Zhan HX. Early detection of pancreatic cancer: Where are we now and where are we going? Int. J. Cancer. 2017;141:231–241. doi: 10.1002/ijc.30670]. [DOI] [PubMed] [Google Scholar]

- 11.Chen FM, Ni JM, Zhang ZY, Zhang L, Li B, Jiang CJ. Presurgical evaluation of pancreatic cancer: A comprehensive imaging comparison of CT versus MRI. AJR Am. J. Roentgenol. 2016;206:526–535. doi: 10.2214/AJR.15.15236]. [DOI] [PubMed] [Google Scholar]

- 12.Vargas R, Nino-Murcia M, Trueblood W, Jeffrey RB., Jr MDCT in Pancreatic adenocarcinoma: Prediction of vascular invasion and resectability using a multiphasic technique with curved planar reformations. AJR Am. J. Roentgenol. 2004;182:419–425. doi: 10.2214/ajr.182.2.1820419]. [DOI] [PubMed] [Google Scholar]

- 13.Yang Y, Feng X, Chi W, Li Z, Duan W, Liu H, Liang W, Wang W, Chen P, He J, Liu B. Deep learning aided decision support for pulmonary nodules diagnosing: A review. J. Thorac. Dis. 2018;10:S867–S875. doi: 10.21037/jtd.2018.02.57]. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Fujisawa Y, Otomo Y, Ogata Y, Nakamura Y, Fujita R, Ishitsuka Y, Watanabe R, Okiyama N, Ohara K, Fujimoto M. Deep-learning-based, computer-aided classifier developed with a small dataset of clinical images surpasses board-certified dermatologists in skin tumor diagnosis. Br. J. Dermatol. 2019;180:373–381. doi: 10.1111/bjd.16924. [DOI] [PubMed] [Google Scholar]

- 15.Krizhevsky, A., Sutskever, I., Hinton, G. E. Imagenet classification with deep convolutional neural networks. In: Pereira, F., Burges, C. J. C., Bottou, L., Weinberger, K. Q. (eds). Advances in Neural Information Processing Systems 25. Curran Associates Inc. 1097–1105, (2012).

- 16.Ma H, Liu Z-X, Zhang J-J, et al. Construction of a convolutional neural network classifier developed by computed tomography images for pancreatic cancer diagnosis. World J. Gastroenterol. 2020;26(34):5156–5168. doi: 10.3748/wjg.v26.i34.5156. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Luo Y, Chen X, Chen J, et al. Preoperative prediction of pancreatic neuroendocrine neoplasms grading based on enhanced computed tomography imaging: validation of deep learning with a convolutional neural network. Neuroendocrinology. 2020;110(5):338–350. doi: 10.1159/000503291. [DOI] [PubMed] [Google Scholar]

- 18.Fu M, Wu W, Hong X, et al. Hierarchical combinatorial deep learning architecture for pancreas segmentation of medical computed tomography cancer images. BMC Syst. Biol. 2018;12(4):56–127. doi: 10.1186/s12918-018-0572-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Manabe K, Asami Y, Yamada T, Sugimori H. Improvement in the convolutional neural network for computed tomography images. Appl. Sci. 2021;11(4):1505. doi: 10.3390/app11041505. [DOI] [Google Scholar]

- 20.Furuya K, Murayama S, Soeda H, Murakami J, Ichinose Y, Yauuchi H, Katsuda Y, Koga M, Masuda K. New classification of small pulmonary nodules by margin characteristics on high resolution CT. Acta Radiol. 1999;40(5):496–504. doi: 10.3109/02841859909175574. [DOI] [PubMed] [Google Scholar]

- 21.Ciompi F, Chung K, Van Riel SJ, Setio AAA, Gerke PK, Jacobs C, Scholten ET, Schaefer-Prokop C, Wille MM, Marchiano A, et al. Towards automatic pulmonary nodule management in lung cancer screening with deep learning. Sci. Rep. 2017;7:46479. doi: 10.1038/srep46479. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, Thrun S. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115–118. doi: 10.1038/nature21056. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.McKinney SM, Sieniek M, Godbole V, Godwin J, Antropova N, Ashrafian H, Back T, Chesus M, Corrado GC, Darzi A, et al. International evaluation of an AI system for breast cancer screening. Nature. 2020;577:89–94. doi: 10.1038/s41586-019-1799-6. [DOI] [PubMed] [Google Scholar]

- 24.Yasaka K, Akai H, Abe O, Kiryu S. Deep learning with convolutional neural network for differentiation of liver masses at dynamic contrast-enhanced CT: A preliminary study. Radiology. 2018;286:887–896. doi: 10.1148/radiol.2017170706. [DOI] [PubMed] [Google Scholar]

- 25.Zhu, Z., Xia, Y., Xie, L., Fishman, E. K., Yuille, A. L. Multi-scale coarse-to-fine segmentation for screening pancreatic ductal adenocarcinoma. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), (Springer: Cham, Switzerland, 2019), Volume 11769 LNCS, pp. 3–12.

- 26.Xia, Y., Yu, Q., Shen, W., Zhou, Y., Fishman, E. K., Yuille, A. L. Detecting pancreatic ductal adenocarcinoma in multi-phase CT scans via alignment ensemble. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, Volume 12263 LNCS, pp. 285–295 (2020).

- 27.Ma H, Liu ZX, Zhang JJ, Wu FT, Xu CF, Shen Z, Yu CH, Li YM. Construction of a convolutional neural network classifier developed by computed tomography images for pancreatic cancer diagnosis. World J. Gastroenterol. 2020;26:5156–5168. doi: 10.3748/wjg.v26.i34.5156. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Liu KL, Wu T, Chen PT, Tsai YM, Roth H, Wu MS, Liao WC, Wang W. Deep learning to distinguish pancreatic cancer tissue from non-cancerous pancreatic tissue: A retrospective study with cross-racial external validation. Lancet Digit. Health. 2020;2:e303–e313. doi: 10.1016/S2589-7500(20)30078-9. [DOI] [PubMed] [Google Scholar]

- 29.He, K., Zhang, X., Ren, S., Sun, J. Deep residual learning for image recognition. CVPR, 770–8 (2016).

- 30.Silvana D, O’Brien H, Algahmdi AS, Malats N, Stewart GD, Plješa-Ercegovac M, Costello E, et al. A combination of urinary biomarker panel and PancRISK score for earlier detection of pancreatic cancer: A case–control study. PLoS Med. 2020;17(12):e1003489. doi: 10.1371/journal.pmed.1003489. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Baldota, S., Sharma, S. and Malathy, C. Deep transfer learning for pancreatic cancer detection, 2021 12th International Conference on Computing Communication and Networking Technologies (ICCCNT), Kharagpur, India, pp. 1–7, (2021).

- 32.Gupta, A., Koul, A. and Kumar, Y. Pancreatic Cancer detection using machine and deep learning techniques, 2022 2nd International Conference on Innovative Practices in Technology and Management (ICIPTM), Gautam Buddha Nagar, India, pp. 151–155 (2022).

- 33.Stoffel EM, Brand RE, Goggins M. Pancreatic cancer: Changing epidemiology and new approaches to risk assessment. Early Detect. Prev. Gastroenterol. 2023;164(5):752–765. doi: 10.1053/j.gastro.2023.02.012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Haan, D., Bergamaschi, A., Friedl, V., Yuhong, G. D. G. et al.“Epigenomic blood-based early detection of pancreatic cancer employing cell-free DNA, Clin. Gastroenterol. Hepatol., (2023) [DOI] [PubMed]

- 35.Lee H, Kim SY, Kim WD, et al. Serum protein profiling of lung, pancreatic, and colorectal cancers reveals alcohol consumption-mediated disruptions in early-stage cancer detection. Heliyon. 2022;8(12):e12359. doi: 10.1016/j.heliyon.2022.e12359. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Park J, Artin MG, Lee KE, Pumpalova YS, Ingram MA, et al. Deep learning on time series laboratory test results from electronic health records for early detection of pancreatic cancer. J. Biomed. Inform. 2022;131:104095. doi: 10.1016/j.jbi.2022.104095. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Park J, Artin MG, Lee KE, May BL, Park M, et al. Structured deep embedding model to generate composite clinical indices from electronic health records for early detection of pancreatic cancer. Patterns. 2023;4(1):100636. doi: 10.1016/j.patter.2022.100636. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Kaissis G, Ziegelmayer S, Lohöfer F, et al. A machine learning model for the prediction of survival and tumor subtype in pancreatic ductal adenocarcinoma from preoperative diffusion-weighted imaging. Eur. Radiol. Exp. 2019;3:41. doi: 10.1186/s41747-019-0119-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Yokoyama S, Hamada T, Higashi M, Matsuo K, Maemura K, Kurahara H, Horinouchi M, Hiraki T, Sugimoto T, Akahane T, Yonezawa S. Predicted prognosis of patients with pancreatic cancer by machine learningprognosis of pancreatic cancer by machine learning. Clin. Cancer Res. 2020;26(10):2411–2421. doi: 10.1158/1078-0432.CCR-19-1247. [DOI] [PubMed] [Google Scholar]

- 40.Xing H, Hao Z, Zhu W, et al. Preoperative prediction of pathological grade in pancreatic ductal adenocarcinoma based on 18F-FDG PET/CT radiomics. EJNMMI Res. 2021;11:19. doi: 10.1186/s13550-021-00760-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Chu LC, Park S, Kawamoto S, Wang Y, Zhou Y, Shen W, Zhu Z, Xia Y, Xie L, Liu F, Yu Q. Application of deep learning to pancreatic cancer detection: Lessons learned from our initial experience. J. Am. Coll. Radiol. 2019;16(9):1338–1342. doi: 10.1016/j.jacr.2019.05.034. [DOI] [PubMed] [Google Scholar]

- 42.Li C, Lin L, Zhang L, Xu R, Chen X, Ji J, Li Y. Long noncoding RNA p21 enhances autophagy to alleviate endothelial progenitor cells damage and promote endothelial repair in hypertension through SESN2/AMPK/TSC2 pathway. Pharmacol. Res. 2021;173:105920. doi: 10.1016/j.phrs.2021.105920. [DOI] [PubMed] [Google Scholar]

- 43.Zhang X, Qu Y, Liu L, Qiao Y, Geng H, Lin Y, Zhao J. Homocysteine inhibits pro-insulin receptor cleavage and causes insulin resistance via protein cysteine-homocysteinylation. Cell Rep. 2021;37(2):109821. doi: 10.1016/j.celrep.2021.109821. [DOI] [PubMed] [Google Scholar]

- 44.Zeng Q, Bie B, Guo Q, Yuan Y, Han Q, Han X, Zhou X. Hyperpolarized Xe NMR signal advancement by metal-organic framework entrapment in aqueous solution. Proc. Natl. Acad. Sci. 2020;117(30):17558–17563. doi: 10.1073/pnas.2004121117. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Zhang Q, Li S, He L, Feng X. A brief review of polysialic acid-based drug delivery systems. Int. J. Biol. Macromol. 2023;230:123151. doi: 10.1016/j.ijbiomac.2023.123151. [DOI] [PubMed] [Google Scholar]

- 46.Xie, X., Tian, Y., & Wei, G, Deduction of sudden rainstorm scenarios: integrating decision makers' emotions, dynamic Bayesian network and DS evidence theory. Nat. Hazards, (2022).

- 47.Zhan C, Dai Z, Yang Z, Zhang X, Ma Z, Thanh HV, Soltanian MR. Subsurface sedimentary structure identification using deep learning: A review. Earth-Sci. Rev. 2023;239:104370. doi: 10.1016/j.earscirev.2023.104370. [DOI] [Google Scholar]

- 48.Luo H, Lou Y, He K, Jiang Z. Coupling in-situ synchrotron X-ray radiography and FT-IR spectroscopy reveal thermally-induced subsurface microstructure evolution of solid propellants. Combust. Flame. 2023;249:112609. doi: 10.1016/j.combustflame.2022.112609. [DOI] [Google Scholar]

- 49.Lei Z, Gengming W, Xiaojun L, Zhen T, Chao S, Yang G, Wei W. The role of GTF2B in the regulation of proliferation and apoptosis in A549 cells. J. Biol. Regul. Homeost. Agents. 2022;36(3):667–676. [Google Scholar]

- 50.Zhuang, Y., Chen, S., Jiang, N., & Hu, H. An Effective WSSENet-based similarity retrieval method of large lung CT image databases. KSII Transact. Internet Inform. Syst., 16(7), (2022).

- 51.Zhuang, Y., Jiang, N., Xu, Y., Xiangjie, K., & Kong, X. Progressive distributed and parallel similarity retrieval of large CT image sequences in mobile telemedicine networks. Wirel. Commun. Mobile Comput., (2022).

- 52.Feng H, Yang B, Wang J, Liu M, Yin L, Zheng W, Liu C. Identifying malignant breast ultrasound images using ViT-patch. Appl. Sci. 2023;13(6):3489. doi: 10.3390/app13063489.

- 53.Qin X, Ban Y, Wu P, Yang B, Liu S, Yin L, Liu M, Zheng W. Improved image fusion method based on sparse decomposition. Electronics. 2022;11(15):2321. doi: 10.3390/electronics11152321.

- 54.Ban Y, Wang Y, Liu S, Yang B, Liu M, Yin L, Zheng W. 2D/3D multimode medical image alignment based on spatial histograms. Appl. Sci. 2022;12(16):8261. doi: 10.3390/app12168261.

- 55.Zhang Z, Ma P, Ahmed R, Wang J, Akin D, SotoDemirci FU. Advanced point-of-care testing technologies for human acute respiratory virus detection. Adv. Mater. (Weinheim) 2021;34:2103646. doi: 10.1002/adma.202103646.

- 56.Chen H, Wang Q. Regulatory mechanisms of lipid biosynthesis in microalgae. Biol. Rev. Camb. Philos. Soc. 2021;96(5):2373–2391. doi: 10.1111/brv.12759.

- 57.Zheng, J., Yue, R., Yang, R., Wu, Q., Wu, Y., Huang, M., & Liao, Y. Visualization of Zika Virus Infection via a Light-Initiated Bio Orthogonal Cycloaddition Labeling Strategy. Front. Bioeng. Biotechnol., 1051, (2022).

- 58.Wang, Y., Zhai, W., Cheng, S. et al. Surface-functionalized design of blood-contacting biomaterials for preventing coagulation and promoting hemostasis. Friction, (2023).

- 59.Yuan Q, Kato B, Fan K, Wang Y. Phased array guided wave propagation in curved plates. Mech. Syst. Signal Process. 2023;185:109821. doi: 10.1016/j.ymssp.2022.109821.

- 60.Xu, Y., Zhang, F., Zhai, W., Cheng, S., Li, J., Wang, Y. Unraveling of Advances in 3D-Printed Polymer-Based Bone Scaffolds. Polymers, 14(3), (2022).

- 61.Hu, F., Shi, X., Wang, H., Nan, N., Wang, K., Wei, S., Zhao, S. Is health contagious?—based on empirical evidence from china family panel studies' data. Front. Public Health, 9, (2021).

- 62.Jin, K., Yan, Y., Wang, S., Yang, C., Chen, M., Liu, X., Ye, J. iERM: An interpretable deep learning system to classify epiretinal membrane for different optical coherence tomography devices: A multi-center analysis. J. Clin. Med., 12(2), (2023).

- 63.Gao, Z., Pan, X., Shao, J., Jiang, X., Su, Z., Jin, K., Ye, J. Automatic interpretation and clinical evaluation for fundus fluorescein angiography images of diabetic retinopathy patients by deep learning. Br. J. Ophthalmol., (2022).

- 64.Wang L, Yu Y, Ni S, Li D, Liu J, Xie D, Chu HY, Ren Q, Zhong C, Zhang N, Li N, Sun M, Zhang ZK, Zhuo Z, Zhang H, Zhang S, Li M, Xia W, Zhang Z, Chen L, Zhang G. Therapeutic aptamer targeting sclerostin loop3 for promoting bone formation without increasing cardiovascular risk in osteogenesis imperfecta mice. Theranostics. 2022;12(13):5645–5674. doi: 10.7150/thno.63177.

- 65.Lu L, Dong J, Liu Y, Qian Y, Zhang G, Zhou W, Zhao A, Ji G, Xu H. New insights into natural products that target the gut microbiota: Effects on the prevention and treatment of colorectal cancer. Front. Pharmacol. 2022;13:964793. doi: 10.3389/fphar.2022.964793.

- 66.Ye, X., Wang, J., Qiu, W., Chen, Y., & Shen, L. Excessive gliosis after vitrectomy for the highly myopic macular hole: A spectral domain optical coherence tomography study. RETINA, 43(2), (2023).

- 67.Yu Y, Wang L, Ni S, Li D, Liu J, Chu HY, Zhang G. Targeting loop3 of sclerostin preserves its cardiovascular protective action and promotes bone formation. Nat. Commun. 2022;13(1):4241. doi: 10.1038/s41467-022-31997-8.

- 68.Xu, H., Van der Jeught, K., Zhou, Z., Zhang, L., Yu, T., Sun, Y., Lu, X. Atractylenolide I enhances responsiveness to immune checkpoint blockade therapy by activating tumor antigen presentation. J. Clin. Investig., 131(10), (2021).

- 69.Li Y, Xia X, Hou W, Lv H, Liu J, Li X. How effective are metal nanotherapeutic platforms against bacterial infections? A comprehensive review of literature. Int. J. Nanomed. 2023;18:1109–1128. doi: 10.2147/IJN.S397298.

- 70.Ao J, Shao X, Liu Z, Liu Q, Xia J, Shi Y, Ji M. Stimulated Raman scattering microscopy enables Gleason scoring of prostate core needle biopsy by a convolutional neural network. Cancer Res. 2023;83(4):641–651. doi: 10.1158/0008-5472.CAN-22-2146.

- 71.Ronneberger O, Fischer P, Brox T. U-Net: Convolutional networks for biomedical image segmentation. Med. Image Comput. Comput. Assist. Interv. 2015;9351:234–241.

- 72.Althobaiti, M.M., Almulihi, A., Ashour, A.A., Mansour, R. F. and Gupta, D. Design of optimal deep learning-based pancreatic tumor and nontumor classification model using computed tomography scans. J. Healthcare Eng., (2022). [Retracted]

Data Availability Statement

The datasets generated and/or analysed during the current study are available in the Kaggle repository at https://www.kaggle.com/datasets/kmader/siim-medical-images?select=dicom_dir, https://www.kaggle.com/code/kerneler/starter-pancreas-ct-dataset-628d2558-a/data, and https://www.kaggle.com/datasets/johnjdavisiv/urinary-biomarkers-for-pancreatic-cancer.