Abstract

This study assesses the diagnostic accuracy of the Generative Pre-trained Transformer 4 (GPT-4) artificial intelligence (AI) model in a series of challenging cases.

Recent advances in artificial intelligence (AI) have led to generative models capable of accurate and detailed text-based responses to written prompts (“chats”). These models score highly on standardized medical examinations.1 Less is known about their performance in clinical applications like complex diagnostic reasoning. We assessed the accuracy of one such model (Generative Pre-trained Transformer 4 [GPT-4]) in a series of diagnostically difficult cases.

Methods

We used New England Journal of Medicine clinicopathologic conferences. These conferences are challenging medical cases with a final pathological diagnosis that are used for educational purposes; they have been used to evaluate differential diagnosis generators since the 1950s.2,3,4

We used the first 7 case conferences from 2023 to iteratively develop a standard chat prompt (eAppendix in Supplement 1) that explained the general conference structure and instructed the model to provide a differential diagnosis ranked by probability. We copied each case published from January 2021 to December 2022, up to but not including the discussant’s initial response and differential diagnosis discussion, and pasted it along with our prompt into the model. We excluded cases that were not diagnostic dilemmas (such as cases on management reasoning), as determined by consensus of Z.K. and A.R., or that were too long to run as a single chat. We chose recent cases because most of the model’s training data ends in September 2021. Each case, including the cases used to develop the prompt, was run in an independent chat to prevent the model from applying any “learning” to subsequent cases.

Our prespecified primary outcome was whether the model’s top diagnosis matched the final case diagnosis. Prespecified secondary outcomes were the presence of the final diagnosis in the model’s differential, differential length, and differential quality score using a previously published ordinal 5-point rating system based on accuracy and usefulness (in which a score of 5 is given for a differential including the exact diagnosis and a score of 0 is given when no diagnoses are close).2 All cases were independently scored by Z.K. and B.C., with disagreements adjudicated by A.R. Crosstabs and descriptive statistics were generated with Excel (Microsoft); a Cohen κ was calculated to determine interrater reliability using SPSS version 25 (IBM).
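As an illustration of the interrater reliability statistic named above, the following is a minimal sketch of an unweighted Cohen κ computed from two raters' scores. The study used SPSS; this Python function, its name `cohen_kappa`, and the example scores are hypothetical and are not study data. Whether the original analysis used a weighted or unweighted κ is not stated, so the unweighted form is an assumption.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Unweighted Cohen kappa for two equal-length lists of categorical scores."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must score the same non-empty set of cases.")
    n = len(rater_a)

    # Observed agreement: fraction of cases given the identical score.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: sum over categories of the product of marginal proportions.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    categories = counts_a.keys() | counts_b.keys()
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)

    if expected == 1:
        # Both raters used a single identical category; agreement is trivially perfect.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical quality scores (0, 2, 3, 4, or 5) for 8 made-up cases.
example_rater_a = [5, 5, 4, 3, 0, 2, 5, 4]
example_rater_b = [5, 4, 4, 3, 0, 5, 5, 4]
example_kappa = cohen_kappa(example_rater_a, example_rater_b)
```

Because κ subtracts the agreement expected by chance, it is lower than the raw percentage of matching scores whenever the raters favor the same categories.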

Results

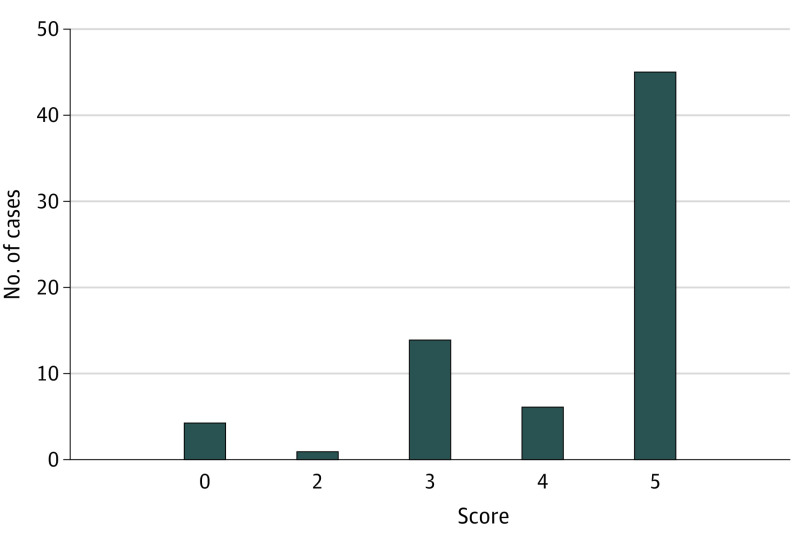

Of 80 cases, 10 were excluded (4 were not diagnostic dilemmas; 6 were deleted for length). The 2 primary scorers agreed on 66% of scores (46/70; κ = 0.57 [moderate agreement]). The AI model’s top diagnosis agreed with the final diagnosis in 39% (27/70) of cases. In 64% of cases (45/70), the model included the final diagnosis in its differential (Table). Mean differential length was 9.0 (SD, 1.4) diagnoses. When the AI model provided the correct diagnosis in its differential, the mean rank of the diagnosis was 2.5 (SD, 2.5). The median differential quality score was 5 (IQR, 3-5); the mean was 4.2 (SD, 1.3) (Figure).
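The reported percentages follow directly from the counts given above. The sketch below recomputes them as a consistency check; the counts are taken from the text, while the helper `percent` and the variable names are illustrative only.

```python
def percent(numerator, denominator):
    """Percentage rounded to the nearest whole number."""
    return round(100 * numerator / denominator)


total_cases = 80
excluded = 4 + 6  # not diagnostic dilemmas + excluded for length
included = total_cases - excluded

# Counts as reported in the Results text.
reported_counts = {
    "scorer agreement": 46,
    "top diagnosis matched final diagnosis": 27,
    "final diagnosis in differential": 45,
}

summary = {label: percent(count, included) for label, count in reported_counts.items()}

for label, value in summary.items():
    print(f"{label}: {value}% ({reported_counts[label]}/{included})")
```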

Table. Representative Examples of AI-Generated Differential Diagnoses Compared With the Final Complex Diagnostic Challenge Diagnosis, Along With Subsequent Differential Quality Score.

| Final diagnosis | Final GPT-4 diagnosis | List of diagnoses | Differential quality score |
|---|---|---|---|
| Encephalitis due to Behçet disease | Neuro-Behçet disease | | 5 (the actual diagnosis was suggested in the differential) |
| Regional myocarditis due to infection with Listeria monocytogenes | Lyme carditis | | 4 (the suggestions included something very close, but not exact) |
| Erysipelothrix rhusiopathiae infection | Streptococcus pyogenes cellulitis with possible necrotizing fasciitis | | 3 (the suggestions included something closely related that might have been helpful) |
| Cerebral amyloid angiopathy–related inflammation | Autoimmune encephalitis (possibly anti-NMDA receptor encephalitis) | | 2 (the suggestions included something related, but unlikely to be helpful) |
| Anti–melanoma differentiation–associated protein 5 dermatomyositis | Coccidioidomycosis (Valley fever) | | 0 (no suggestions were close to the target diagnosis) |

Abbreviations: AI, artificial intelligence; CASPR2, contactin-associated protein-like 2; CNS, central nervous system; GPT-4, Generative Pre-trained Transformer 4; LGI1, leucine-rich glioma-inactivated protein 1; NMDA, N-methyl-d-aspartate.

Figure. Performance of Generative Pre-trained Transformer 4 (GPT-4).

Histogram of GPT-4’s performance. Performance scale scores (Bond et al2): 5 = the actual diagnosis was suggested in the differential; 4 = the suggestions included something very close, but not exact; 3 = the suggestions included something closely related that might have been helpful; 2 = the suggestions included something related, but unlikely to be helpful; 0 = no suggestions close to the target diagnosis. (The scale does not contain a score of 1.)

Discussion

A generative AI model provided the correct diagnosis in its differential in 64% of challenging cases and as its top diagnosis in 39%. The finding compares favorably with existing differential diagnosis generators. A 2022 study evaluating the performance of 2 such models, also using New England Journal of Medicine clinicopathologic case conferences, found that they identified the correct diagnosis in 58% to 68% of cases3; the measure of quality was a simple dichotomy of useful vs not useful. GPT-4 provided a numerically superior mean differential quality score compared with an earlier version of one of these differential diagnosis generators (4.2 vs 3.8).2

Study limitations include some subjectivity in the outcome measure, which was mitigated with a standardized approach used in similar diagnostics literature. In some cases, important diagnostic information was not included in the AI prompt due to protocol limitations, likely leading to an underestimation of the model’s capabilities. Also, the agreement on the quality score between scorers was moderate.

Generative AI is a promising adjunct to human cognition in diagnosis. The model evaluated in this study, similar to some other modern differential diagnosis generators, is a diagnostic “black box”; future research should investigate potential biases and diagnostic blind spots of generative AI models. Clinicopathologic conferences are best understood as diagnostic puzzles; once privacy and confidentiality concerns are addressed, studies should assess performance with data from real-world patient encounters.5

Section Editors: Jody W. Zylke, MD, Deputy Editor; Kristin Walter, MD, Senior Editor.

eAppendix. Standard Chat Prompt Used in the Study

Data Sharing Statement

References

- 1. Kung TH, Cheatham M, Medenilla A, et al. Performance of ChatGPT on USMLE: potential for AI-assisted medical education using large language models. PLOS Digit Health. 2023;2(2):e0000198. doi: 10.1371/journal.pdig.0000198

- 2. Bond WF, Schwartz LM, Weaver KR, Levick D, Giuliano M, Graber ML. Differential diagnosis generators: an evaluation of currently available computer programs. J Gen Intern Med. 2012;27(2):213-219. doi: 10.1007/s11606-011-1804-8

- 3. Fritz P, Kleinhans A, Raoufi R, et al. Evaluation of medical decision support systems (DDX generators) using real medical cases of varying complexity and origin. BMC Med Inform Decis Mak. 2022;22(1):254. doi: 10.1186/s12911-022-01988-2

- 4. Ledley RS, Lusted LB. Reasoning foundations of medical diagnosis; symbolic logic, probability, and value theory aid our understanding of how physicians reason. Science. 1959;130(3366):9-21. doi: 10.1126/science.130.3366.9

- 5. Dorr DA, Adams L, Embí P. Harnessing the promise of artificial intelligence responsibly. JAMA. 2023;329(16):1347-1348. doi: 10.1001/jama.2023.2771
