Abstract

Despite the many mistakes we make while speaking, we can communicate effectively because we monitor our speech errors. However, the cognitive abilities and brain structures that support speech error monitoring are unclear. Different abilities and brain regions may support monitoring of phonological speech errors versus monitoring of semantic speech errors. We investigated speech, language, and cognitive control abilities that relate to detecting phonological and semantic speech errors in 41 individuals with aphasia who underwent detailed cognitive testing. Then, we used support vector regression lesion symptom mapping to identify brain regions supporting detection of phonological versus semantic errors in a group of 76 individuals with aphasia. The results revealed that motor speech deficits, as well as lesions to the ventral motor cortex, were related to reduced detection of phonological errors relative to semantic errors. Detection of semantic errors was selectively related to auditory word comprehension deficits. Across all error types, poor cognitive control was related to reduced detection. We conclude that monitoring of phonological and semantic errors relies on distinct cognitive abilities and brain regions. Furthermore, we identified cognitive control as a shared cognitive basis for monitoring all types of speech errors. These findings refine and expand our understanding of the neurocognitive basis of speech error monitoring.

INTRODUCTION

Speech Error Monitoring in Aphasia

Effective spoken communication relies on detection and correction of speech errors, an ability referred to as speech error monitoring (SEM). While there are many proposals concerning the cognitive and neural basis of SEM, there is little consensus about how these proposals fit together and how accurately each depicts SEM (Gauvin & Hartsuiker, 2020; Nozari, 2020). Our understanding of SEM might be clarified by identifying deficits and lesions that are associated with reduced SEM. Such associations can be identified in aphasia, a language impairment that commonly results from stroke. Reduced SEM in aphasia depends on the location and size of the stroke lesion and is associated with certain behavioral deficits (e.g., Mandal et al., 2020). Examining the causes of reduced SEM in aphasia could broadly inform our understanding of the cognitive and neural basis of SEM. Moreover, understanding more about SEM in aphasia could guide future therapies that aim to improve SEM, as well as treatment decisions for individuals whose SEM deficits might preclude therapeutic approaches that rely heavily on SEM.

Critical Speech and Language Processes for SEM in Aphasia

To understand the mental processes that are critical for SEM in aphasia, we must first consider the various proposed models of how speakers monitor their own speech. Speech processing encompasses various stages, including lexical access, motor aspects of speech production, speech perception, and speech comprehension (Levelt, Roelofs, & Meyer, 1999; Dell & O'Seaghdha, 1992). Correspondingly, SEM models vary by which aspects of speech processing they emphasize. Notably, however, the different stages described in SEM models are not necessarily mutually exclusive (Nozari, 2020; Postma, 2000). In addition, these models differ in the extent to which SEM relies on cognitive control (Gauvin & Hartsuiker, 2020; Roelofs, 2020; Nozari, Dell, & Schwartz, 2011). Here, the term cognitive control is used broadly to refer to cognitive abilities that support dynamic adaptation of mental processing. Because contemporary models of SEM differ on the aspects of speech-language processing and cognitive control that contribute to SEM, they make distinct predictions about which deficits should reduce SEM in aphasia. Broadly, three groups of models exist, which are discussed below.

One group of SEM models emphasizes the role of motor speech and perceptual processing in SEM. This group of models, as well as the speech processing models that contain them, goes by many names, including forward models, feedforward models, efference models, production-perception models, and sensorimotor models (Gauvin & Hartsuiker, 2020; Nozari, 2020; Hickok, 2012; Tourville & Guenther, 2011; Postma, 2000). Here, we refer to such SEM models as sensorimotor models (Figure 1, blue and magenta). Two prominent sensorimotor models are Hickok's Hierarchical State Feedback Control (HSFC) model (Hickok, 2012) and Guenther and colleagues' Directions into Velocities of Articulators (DIVA) model (Tourville & Guenther, 2011; Bohland, Bullock, & Guenther, 2010; Guenther, 2006). In both HSFC and DIVA, expectations of the perceptual consequences of speech (i.e., its somatosensory and auditory consequences) are generated during preparation for motor speech execution. These expectations are then compared with the actual perception of one's own speech. If the actual perception does not match the expectation, a speech error is detected and corrected. Because the comparison between expectation and perception is performed in perceptual modalities, these models suggest that perceptual deficits might impair SEM. Although these models describe motor speech execution, they do not clearly predict that motor speech deficits would impair SEM. On the contrary, motor speech deficits such as apraxia of speech could potentially distort perceptual expectations (Miller & Guenther, 2021). We consequently suggest that such distortions might paradoxically increase SEM, because they would increase the mismatch between expected and actual perceptions.
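To make the comparison step concrete, the following Python sketch reduces expected and perceived speech to short numeric feature vectors and flags an error when their distance exceeds a threshold. The feature values, the Euclidean distance, the threshold of 1.0, and the function name `detect_mismatch` are illustrative assumptions, not components of HSFC or DIVA as published. The last call illustrates the suggestion above that a distorted expectation enlarges the mismatch even when the produced speech is unchanged.

```python
import math


def detect_mismatch(expected, perceived, threshold=1.0):
    """Toy comparator (hypothetical): return the Euclidean distance between
    expected and perceived feature vectors and whether it exceeds threshold."""
    if len(expected) != len(perceived):
        raise ValueError("feature vectors must have the same length")
    distance = math.dist(expected, perceived)
    return distance, distance > threshold


# Hypothetical perceptual features of the intended word.
expected = [1.0, 2.0, 3.0]
# Hypothetical perceptions of one's own output.
heard_correct = [1.1, 2.0, 2.9]   # close to the expectation
heard_error = [1.0, 3.5, 3.0]     # one feature deviates, e.g., a sound substitution
# Hypothetical expectation distorted by a motor speech deficit.
distorted_expected = [1.0, 2.0, 4.0]

print(detect_mismatch(expected, heard_correct))            # small distance, not flagged
print(detect_mismatch(expected, heard_error))              # distance 1.5, flagged
print(detect_mismatch(distorted_expected, heard_correct))  # distance about 1.10, flagged
```

In this toy, the distorted expectation flags an accurate production, so the sketch speaks only to the size of the mismatch, not to whether the resulting detections would be correct.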

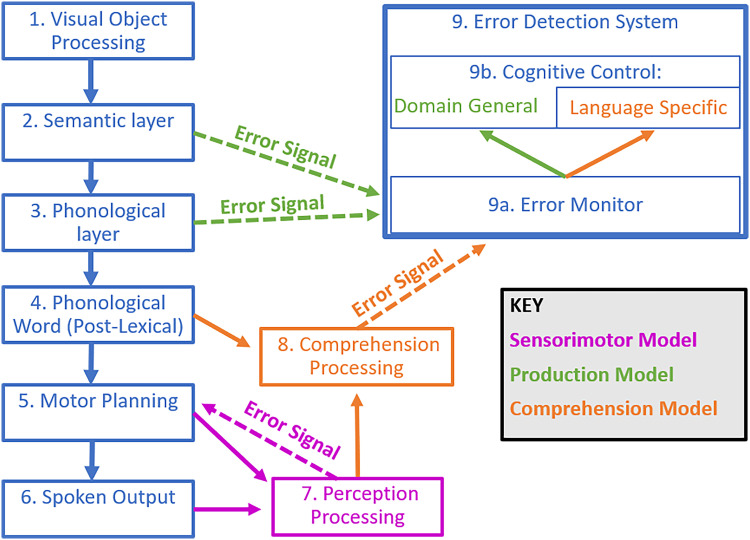

Figure 1. Models of SEM during picture naming. Adapted from Goldrick and Rapp (2007) incorporating aspects of Fama and Turkeltaub (2020), Roelofs (2020), Hickok (2012), Nozari et al. (2011), Tourville and Guenther (2011), and Levelt (1983). Theoretical components shared across families of SEM models are outlined in blue. Components specific to production models are shown in green; to comprehension models, in orange; and to sensorimotor models, in magenta.

Neither DIVA nor HSFC incorporates a role for cognitive control, so neither predicts that cognitive control deficits in aphasia should impair SEM. Importantly, as noted by Gauvin and Hartsuiker (2020) and Nozari (2020), although these models present clear mechanisms for SEM of phonological errors (i.e., errors related to the sound of the intended word), they do not present clear mechanisms for SEM of semantic errors (i.e., errors related to the meaning of the intended word). Thus, predictions by DIVA and HSFC may be more relevant to SEM of phonological errors than to SEM of semantic errors.

Notably, HSFC can be elaborated to account for SEM of lexical errors, because it includes architecture at the level of the lemma as well as a conceptual level (Hickok, 2012). Furthermore, models with principles similar to HSFC describe syntactic processing (e.g., Matchin & Hickok, 2020) and could thus account for SEM of syntactic errors, an error type not readily addressed by most models of SEM. Although HSFC and related models can potentially account for SEM of lexical and syntactic errors, they would benefit from also addressing SEM of semantic errors.

Consistent with sensorimotor models, many people with aphasia appear to be impaired at monitoring the sensory consequences of their speech. Such a sensorimotor monitoring impairment can be measured in altered auditory feedback studies, in which participants produce speech while hearing an electronically altered version of it (Sangtian, Wang, Fridriksson, & Behroozmand, 2021; Johnson, Sangtian, Johari, Behroozmand, & Fridriksson, 2020). In these paradigms, participants often adjust their speech in response to the altered feedback, despite not being explicitly instructed to do so (e.g., Burnett, Freedland, Larson, & Hain, 1998). For example, if the feedback is altered to raise pitch, a participant might compensate by lowering their pitch. These studies reveal that people with aphasia are impaired at responding to altered feedback: they are slower to compensate (Johnson et al., 2020) and less accurate at detecting feedback alterations (Sangtian et al., 2021). However, researchers have pointed out limitations of altered auditory feedback paradigms, including that the altered feedback does not approximate naturally occurring speech errors (Meekings & Scott, 2021) and that the paradigm is inherently limited to external detection of speech errors (i.e., because the error is applied externally after production, compensation cannot reflect SEM during preparation for speech production; Gauvin, De Baene, Brass, & Hartsuiker, 2016). Altered auditory feedback paradigms may also have limited relevance for SEM of semantic errors, as sensorimotor processing does not clearly explain SEM of semantic errors. Therefore, to more closely approximate naturally occurring speech errors, the current study investigated the role of motor speech and perceptual processing in SEM of spontaneous semantic and phonological speech errors during picture naming.

A second group of SEM models emphasizes the role of lexical access in SEM. This group has been referred to as production-based models (Figure 1, blue and green). One notable production-based model is the conflict-based account proposed by Nozari and colleagues (2011), which emphasizes SEM during lexical access in speech production. In the conflict-based account, semantic and phonological errors are detected when there is increased conflict during the semantic or phonological encoding stage of lexical access (Figure 1, Boxes 2 and 3). Accordingly, the conflict-based account predicts that deficits in the semantic encoding stage of lexical access would impair SEM of semantic errors, and that deficits in the phonological encoding stage would impair SEM of phonological errors. Another notable production-based model is Gauvin and Hartsuiker's Hierarchical Conflict Model for Self and Other Monitoring, here referred to as the Gauvin and Hartsuiker model (Gauvin & Hartsuiker, 2020). Like the conflict-based account, the Gauvin and Hartsuiker model describes the importance of lexical access in SEM, and it builds on the conflict-based account by adding a role for speech perception. Thus, the Gauvin and Hartsuiker model makes the same predictions as the conflict-based account regarding how lexical access deficits would impair SEM in aphasia, with the additional prediction that speech perception deficits might impair SEM in aphasia.
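The conflict-based account can be illustrated with a toy Python sketch of a single encoding stage. Here conflict is assumed, for illustration only, to be high when the two most active candidate units have similar activation (activations are assumed to lie between 0 and 1). The activation values, the 0.8 threshold, and the names `conflict` and `monitor` are hypothetical and are not taken from Nozari and colleagues' (2011) implementation.

```python
def conflict(activations):
    """Toy conflict score (assumed operationalization): 1 minus the gap between
    the two most active candidates, so close competitors yield high conflict."""
    if len(activations) < 2:
        raise ValueError("need at least two candidate units")
    top, second = sorted(activations.values(), reverse=True)[:2]
    return 1.0 - (top - second)


def monitor(activations, threshold=0.8):
    """Select the most active candidate and flag a possible error when
    conflict exceeds the (hypothetical) threshold."""
    selected = max(activations, key=activations.get)
    c = conflict(activations)
    return selected, c, c > threshold


# Target "cat": a semantic competitor narrowly wins, producing high conflict.
print(monitor({"cat": 0.5, "dog": 0.55, "hat": 0.1}))  # selects "dog", flagged
# Target "cat": clear winner, low conflict, nothing flagged.
print(monitor({"cat": 0.9, "dog": 0.2, "hat": 0.1}))   # selects "cat", not flagged
```

On this view, an error signal comes from the dynamics of encoding itself rather than from comprehending the output, which is why the account ties semantic and phonological SEM to the corresponding encoding stages.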

Consistent with production-based models, a study of spontaneous speech errors in a group of people with aphasia found that SEM of semantic errors relates to s weights, a measure of semantic aspects of lexical access derived from a computational model of picture naming performance (Nozari et al., 2011). The same study also found that SEM of phonological errors relates to p weights, a measure of phonological aspects of lexical access derived from the same model (Nozari et al., 2011). Another study in a group of people with aphasia found that SEM of spontaneous errors was related to a measure of fluency, specifically the mean length of utterance produced while describing a picture (Mandal et al., 2020). The relationship between fluency and SEM in aphasia is broadly consistent with a role of lexical access in SEM, as predicted by production-based models. Alternatively, the relationship between fluency and SEM might be explained by other aspects of speech processing, such as motor speech abilities.

A third group of SEM models emphasizes the role of comprehension in SEM. These models are referred to here as comprehension-based models of SEM (Figure 1, blue and orange). One of the most well-known comprehension-based models is Levelt's Perceptual Loop Theory (Levelt, 1983), and one of the most recent is Roelofs' WEAVER++ (Roelofs, 2014). In comprehension-based models, speakers detect errors in their own speech using the same comprehension abilities they use to understand other people's speech. Speakers may comprehend either their externally produced speech (Figure 1, Box 6) or their inner speech (Figure 1, Box 4). SEM of semantic errors occurs when speakers comprehend their own speech and notice that it differs from what they intended to say. Comprehension-based models do not describe specific mechanisms for SEM of phonological errors in depth. One mechanism discussed in these models is that comprehended speech is evaluated using a lexicality criterion (Roelofs, 2004), which could conceivably allow for SEM of nonword phonological errors. Real-word phonological errors, on the other hand, could conceivably be detected through the same mechanism as semantic errors. In their simplest interpretation, comprehension-based models predict that comprehension deficits impair SEM in aphasia.
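The following minimal Python sketch illustrates the two mechanisms just described, assuming a tiny hypothetical lexicon in which each word maps to a single concept. It is not an implementation of Perceptual Loop Theory or WEAVER++; the lexicon, concept labels, and function name are illustrative. It shows how a lexicality check could catch nonword errors, while a meaning comparison could catch both semantic errors and real-word phonological errors.

```python
# Hypothetical mini-lexicon mapping known word forms to the concepts they name.
LEXICON = {"cat": "CAT", "dog": "DOG", "hat": "HAT"}


def comprehension_monitor(intended_concept, produced_form):
    """Toy comprehension-based check (illustrative assumption):
    1. Lexicality criterion: a form absent from the lexicon is flagged.
    2. Otherwise, comprehend the word and compare its meaning with the
       intended concept; any mismatch is flagged."""
    if produced_form not in LEXICON:
        return "detected: nonword"
    if LEXICON[produced_form] != intended_concept:
        return "detected: meaning mismatch"
    return "no error detected"


print(comprehension_monitor("CAT", "dog"))  # semantic error -> meaning mismatch
print(comprehension_monitor("CAT", "hat"))  # real-word phonological error -> meaning mismatch
print(comprehension_monitor("CAT", "cag"))  # nonword phonological error -> nonword
print(comprehension_monitor("CAT", "cat"))  # correct response -> no error detected
```

Because every check here runs on the comprehended output, a comprehension deficit (modeled as a missing or wrong lexicon entry) would impair detection of all error types, which is the simplest prediction of this family of models.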

The evidence for comprehension deficits impairing SEM in aphasia is mixed. On one hand, several researchers have identified a double dissociation between SEM and comprehension in case studies of people with aphasia (for a review, see Nozari et al., 2011; but also see Roelofs, 2020, for an explanation of how double dissociations can still be consistent with a comprehension-based model). On the other hand, two studies have found that SEM of spontaneous errors in groups of people with aphasia relates to performance on an auditory word to picture matching task, suggesting some role for comprehension abilities in SEM (Mandal et al., 2020; Dean, Della Sala, Beschin, & Cocchini, 2017).

Critical Cognitive Control Abilities for SEM in Aphasia

Several domain-general error monitoring theories, and some of the SEM models discussed above, propose that error monitoring interacts with cognitive control. For example, Conflict Monitoring Theory, as described by Botvinick and colleagues, suggests that upon detecting an error, the error monitoring system recruits cognitive control (Botvinick, Braver, Barch, Carter, & Cohen, 2001). Recruitment of cognitive control can then aid in correction of the error, as well as in other posterror behavioral adjustments (Danielmeier & Ullsperger, 2011). In addition, Hierarchical Representation Theory suggests a bidirectional relationship in which error monitoring recruits cognitive control, and cognitive control can also boost error monitoring (Alexander & Brown, 2015).

In line with domain-general theories of error monitoring, both production-based models reviewed above (i.e., the conflict-based account and the Gauvin and Hartsuiker model), as well as WEAVER++, emphasize a role of cognitive control in SEM. In particular, both production-based models describe a role of domain-general cognitive control (Figure 1, Box 9B) in error monitoring. For instance, the conflict-based account proposes that dynamics in lexical access are monitored for conflict via a domain-general monitor that recruits domain-general cognitive control (Figure 1, green arrows). WEAVER++, on the other hand, posits a role for language-specific cognitive control (Figure 1, Box 9B; Roelofs, 2020). As mentioned above, in WEAVER++, errors are detected as mismatches between one's intended message and the comprehension of one's spoken output. WEAVER++ proposes that language-specific cognitive control is needed to perform this comparison (Figure 1, orange arrows). Thus, the conflict-based account and the Gauvin and Hartsuiker model predict that domain-general cognitive control deficits should impair SEM in aphasia, whereas Roelofs' WEAVER++ predicts that language-specific cognitive control deficits should impair SEM in aphasia. In contrast, sensorimotor models of SEM do not describe any role of cognitive control in SEM.

There is little evidence concerning whether cognitive control deficits underlie reduced SEM in aphasia. Mandal and colleagues (2020) found that SEM in aphasia relates to an indirect measure of cognitive control: the difference between forward and backward span performance. This measure is a broad assessment of cognitive ability in that it may reflect multiple cognitive control processes. Moreover, poor span scores have multiple interpretations that do not involve cognitive control deficits, such as impaired articulatory rehearsal (Ghaleh et al., 2019). In contrast, Dean and colleagues (2017) examined another broad measure of cognitive ability, the Brixton spatial anticipation test, and found that it had no relationship with SEM in aphasia. Overall, the available evidence relating cognitive control deficits to SEM in aphasia neither differentiates between specific cognitive control abilities nor specifies whether those abilities are domain-general or language-specific.

Critical Neural Substrates for Monitoring Phonological and Semantic Errors in Aphasia

Our neurocognitive understanding of SEM requires identifying the critical brain regions for SEM. Critical brain regions have been investigated using functional neuroimaging in neurotypical speakers and lesion studies in aphasia. In neurotypical speakers, errors in verbal and nonverbal tasks activate regions in the posterior medial frontal cortex (pMFC; Ridderinkhof, 2004). Correspondingly, functional neuroimaging of SEM of spontaneous speech errors consistently demonstrates activation of the pMFC, as well as left hemisphere (LH) regions thought to be involved in speech processing such as the inferior frontal gyrus (IFG) and insula (Runnqvist et al., 2021; Gauvin et al., 2016; Abel et al., 2009). Thus, functional neuroimaging studies suggest that the pMFC, IFG, and insula may critically support SEM.

To date, few studies have investigated the neural structures that, when lesioned, reduce SEM in aphasia. Altered auditory feedback studies in aphasia indicate that feedback compensation is impaired by lesions in the left middle and superior temporal gyri, IFG, and supramarginal gyrus (Behroozmand et al., 2018). However, as noted above, altered auditory feedback paradigms might not generalize to SEM of natural speech errors and may have limited relevance for detecting semantic errors. One prior lesion study evaluated SEM of spontaneous speech errors during picture naming, which might better approximate natural speech errors. That study found that LH frontal white matter damage reduces SEM in aphasia (Mandal et al., 2020). It further implicated frontal white matter damage in phonological SEM but did not identify lesions that reduce semantic SEM, possibly because the sample size was too small. A follow-up analysis of the participants from Mandal and colleagues (2020) directly examined white matter fiber tracts that, when lesioned, reduce SEM. Using a connectome-based approach, McCall, Dickens, and colleagues (2022) found that reduced SEM corresponded to lesioned tracts connecting lateral frontal brain regions implicated in speech production with medial frontal brain regions implicated in cognitive control. These lesioned connections corresponded to SEM of phonological errors; however, the authors did not identify lesioned connections that correspond to SEM of semantic errors.

Importantly, the SEM models described in the Introduction predict that different neural substrates may underlie SEM of phonological versus semantic errors. For example, production-based models predict that SEM of phonological errors should rely in part on brain regions involved in phonological encoding, which are thought to include dorsal stream regions spanning from the left posterior superior temporal cortex to the left precentral gyrus and the left IFG (Roelofs, 2014; Schwartz, Faseyitan, Kim, & Coslett, 2012; Indefrey, 2011). In contrast, production-based models predict that SEM of semantic errors should rely on brain regions involved in the semantic encoding stage of lexical access. Although semantic knowledge is thought to be widely distributed throughout the cortex (Lambon Ralph, Jefferies, Patterson, & Rogers, 2017; Binder, Desai, Graves, & Conant, 2009), some models suggest that semantic aspects of lexical access rely on the left middle temporal gyrus (Indefrey, 2011; Hickok & Poeppel, 2007), as well as on the left anterior temporal lobe (Schwartz et al., 2009; Damasio, Tranel, Grabowski, Adolphs, & Damasio, 2004). In contrast to production-based models, comprehension-based models do not clearly predict that different neural substrates underlie SEM of phonological versus semantic errors. In these models, SEM of all error types should rely on brain regions involved in speech comprehension, which include the superior temporal cortex (Specht, 2014).

Language comprehension more broadly has been proposed to rely on a set of brain regions organized sequentially in a posterior–anterior direction, starting posteriorly in the temporal cortex and ending anteriorly in anterior temporal cortex and/or anterior inferior frontal cortex (Matchin & Hickok, 2020; Pylkkänen, 2019; Friederici, 2017; Hickok & Poeppel, 2007). This organization, or pathway, of brain areas is often referred to as the ventral stream (Rauschecker & Scott, 2009; Hickok & Poeppel, 2007). Comprehension models accordingly predict that SEM should rely on ventral stream brain regions.

As noted above, sensorimotor models do not address how semantic errors are monitored. Because these models propose that the comparison between expected and actual auditory perception occurs in the posterior superior temporal cortex, their clearest neuroanatomical prediction is that SEM of phonological errors should rely on this region (Hickok, 2012; Tourville & Guenther, 2011). In HSFC, this prediction is tempered by the fact that the posterior superior temporal cortex also contains area Spt, which is proposed to generate perceptual expectations. Damage to area Spt could therefore distort perceptual expectations, which, as suggested above, might paradoxically increase SEM.

Although several models suggest that SEM of phonological errors relies on brain structures that are distinct from those of SEM of semantic errors, lesion–behavior studies have not investigated distinct lesions that reduce SEM of phonological errors versus SEM of semantic errors. It is important to identify such distinctions because they may aid in clinical prognosis and assessment, as well as inform our understanding of the mechanisms underlying SEM.

Present Study

Here, we investigated the behavioral and neural basis of SEM in aphasia. We specifically focused on the basis of monitoring phonological versus semantic errors because the models outlined above make specific predictions for these error types, yet these predictions have only modest empirical support. Critically, all of the SEM models predict that speech and/or language deficits should impair SEM in aphasia, but only some predict that cognitive control deficits impair SEM in aphasia. Therefore, our first goal was to investigate speech and language processes that predict SEM performance in LH stroke survivors (Experiment 1).

Next, we investigated whether cognitive control deficits reduce SEM, while accounting for the speech and language abilities identified as important for SEM (Experiment 1). To that end, we employed several cognitive control tasks to disentangle specific cognitive control abilities important for SEM, including language-specific cognitive control measures and nonlinguistic cognitive control measures. We further examined whether cognitive control of phonological representations (i.e., phonological control) relates to phonological SEM, and whether cognitive control of semantic representations (i.e., semantic control) relates to semantic SEM, as predicted by some models.

Finally, we used support vector regression lesion symptom mapping (SVR-LSM) to determine whether there are distinct neural substrates for SEM of phonological errors versus SEM of semantic errors (Experiment 2). We expected these neural substrates would correspond to the behavioral predictors of SEM in aphasia. This Experiment 2 investigation included data from participants in Experiment 1, as well as prior studies in the laboratory, namely, Mandal and colleagues (2020) and McCall, Dickens, and colleagues (2022; see Experiment 2 Methods).

EXPERIMENT 1

Methods

Experiment 1 analyzed behavioral data from an ongoing cross-sectional study of individual language outcomes after stroke (R01DC014960).

Participants

Participants were native English speakers with no history of psychiatric or neurological disorders aside from stroke. In addition, participants were screened for hearing and vision loss. This study was approved by Georgetown University's institutional review board, and all participants provided written informed consent.

Participants included 41 LH stroke survivors at least 3 months post stroke (Table 1). Prior work in this laboratory has found that sample sizes of approximately 50 participants are sufficiently powered for regression-based behavioral analyses (Fama, Henderson, et al., 2019). LH stroke survivors were only included if they committed at least five errors during confrontation picture naming. A cutoff of five errors has been adopted in prior studies of SEM (e.g., van der Stelt, Fama, McCall, Snider, & Turkeltaub, 2021; Mandal et al., 2020) to promote precision in the measurement of SEM abilities. For example, if a participant commits only one error, their SEM ability can only be measured as 0% success (did not detect the error) or 100% success (detected the error). If a participant commits two errors, their SEM ability can be measured only as 0% success (detected neither error), 50% success (detected one of the two errors), or 100% success (detected both errors). Consequently, precision improves with more errors, and at five errors, SEM ability can be measured as 0%, 20%, 40%, 60%, 80%, or 100%.
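The arithmetic behind the five-error cutoff can be made explicit: with n scored errors, the attainable detection rates are k/n for k = 0, 1, ..., n, so the smallest measurable difference is 1/n. The Python sketch below (the function name `possible_detection_rates` is illustrative) lists these values for one, two, and five errors.

```python
from fractions import Fraction


def possible_detection_rates(n_errors):
    """Return every detection rate attainable with n_errors scored errors."""
    if n_errors < 1:
        raise ValueError("need at least one error")
    return [Fraction(k, n_errors) for k in range(n_errors + 1)]


for n in (1, 2, 5):
    print(n, [f"{float(rate):.0%}" for rate in possible_detection_rates(n)])
# 1 ['0%', '100%']
# 2 ['0%', '50%', '100%']
# 5 ['0%', '20%', '40%', '60%', '80%', '100%']
```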

Table 1. Demographics for Experiment 1

| Characteristic | LH Stroke Survivors |
|---|---|
| Total | 41 |
| Sex | 27 M, 14 F |
| Age (years) | M = 59.2, SD = 11.4 [40–84] |
| Education (years) | M = 16.5, SD = 2.8 [12–21] |
| Western Aphasia Battery (WAB) Aphasia Quotient | M = 73.9, SD = 22.7 [20.1–97.3] |
| Time since stroke (months) | M = 48.6, SD = 48.3 [3.3–184.1] |
| WAB aphasia diagnosis | 21 Anomic, 10 Broca, 4 Conduction, 6 Transcortical Motor |
| Picture naming errors (of 120 items) | M = 30.1, SD = 23.8 [5–106] |
| Picture naming error detection rate | M = 0.46, SD = 0.29 [0–0.98] |

M = mean; SD = standard deviation; [min–max] = range.

Measures

We collected scores of SEM, five speech and language measures, five cognitive control measures, and two personality and motivation measures. These measures are described in detail below.

SEM measurement.

SEM was scored as detections of errors committed during picture naming. Participants named 120 black-and-white images presented one at a time on a computer. Images included two 30-item short forms of the Philadelphia Naming Test (Walker & Schwartz, 2012), as well as 60 items from an internally normed publicly available corpus (www.cognitiverecoverylab.com/researchers; Fama, Snider, et al., 2019).

Participants were instructed to give the best one-word name for each picture and were told that “what really counts is the first thing you say, but if you make a mistake you can try to fix it.” Participants had up to 20 sec to name each picture and could choose to advance to the next item sooner. No feedback was provided. Item-wise performance measures included accuracy, the type of errors committed, and whether each error was detected.

Error scoring.

Trained researchers coded picture naming errors on the basis of their semantic and/or phonological relationships to the target. Errors were coded as having a semantic relationship with the target if the error was related in meaning to the target. Errors were coded as having a phonological relationship with the target if the error and target shared any of the following: an initial phoneme, a final phoneme, a phoneme at the same syllable and word position, a stressed vowel, or two phonemes (not counting unstressed vowels) at any word position. Semantic and phonological relationships to the target were coded orthogonally. That is, an error could have both a semantic and a phonological relationship to the target, only one relationship (i.e., semantic but not phonological, or vice versa), or neither. Overall, 58.0% of errors had a phonological relationship to the target, 28.4% had a semantic relationship, and 24.6% had neither. In addition, 11.0% of errors had both a semantic and a phonological relationship; these errors are included in both the phonological and the semantic percentages.
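Because semantic and phonological relationships were coded orthogonally, the percentages above overlap and do not simply sum to 100%. The short Python check below applies inclusion–exclusion to the reported values; it uses only the figures given in the text, and the variable names are illustrative.

```python
# Percentages of errors reported in the text (categories overlap).
phonological = 58.0   # any phonological relationship to the target
semantic = 28.4       # any semantic relationship to the target
both = 11.0           # both relationships (counted in the two lines above)
neither = 24.6        # no semantic or phonological relationship

# Exclusive categories.
phonological_only = phonological - both   # 47.0
semantic_only = semantic - both           # 17.4

# Inclusion-exclusion: errors with at least one relationship.
at_least_one = phonological + semantic - both   # 75.4

total = at_least_one + neither
print(f"phonological only: {phonological_only:.1f}%")
print(f"semantic only: {semantic_only:.1f}%")
print(f"total: {total:.1f}%")   # 100.0%
```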

Error detection scoring.

Participants had the opportunity to detect and correct their errors, but they were not explicitly directed to judge the accuracy of their response on each trial. Much of the prior research on error monitoring, particularly research on the relationship between error monitoring and clinical outcomes, uses this approach (McCall, Dickens, et al., 2022; van der Stelt et al., 2021; Mandal et al., 2020; Schwartz, Middleton, Brecher, Gagliardi, & Garvey, 2016; Nozari et al., 2011; Marshall, Neuburger, & Phillips, 1994). In Experiment 1, detections were scored by trained researchers, and afterward, detection scores were verified by a separate researcher to ensure consistency with the scoring protocol.

Any verbal statement indicating awareness of an error on a trial was recorded as a detection. For example, a detection was recorded if a participant verbally declared that their response was incorrect (e.g., “garlic…no that's not it!” [Target: Onion]) or produced a second attempt to repair their initial utterance (e.g., “thermometer um uh raincoat” [Target: Umbrella]). In the absence of verbal statements indicating error awareness, nonverbal behaviors (grunts, facial expressions, etc.) were not recorded as detections.
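Given item-wise codes for error type and detection, a participant's detection rate is the proportion of their errors that were detected, optionally restricted to one error type. The Python sketch below assumes a hypothetical record format (`ErrorTrial`) and made-up trials; it is an illustration, not the study's scoring code. Because relationships were coded orthogonally, an error with both relationships contributes to both the phonological and the semantic rate.

```python
from dataclasses import dataclass


@dataclass
class ErrorTrial:
    """One picture-naming error (hypothetical record format)."""
    phonological: bool   # phonological relationship to the target
    semantic: bool       # semantic relationship to the target
    detected: bool       # verbal detection or repair attempt was recorded


def detection_rate(trials, relation=None):
    """Proportion of errors detected, optionally restricted to errors with a
    given relationship ("phonological" or "semantic"). Returns None if no
    errors qualify."""
    if relation is not None:
        trials = [t for t in trials if getattr(t, relation)]
    if not trials:
        return None
    return sum(t.detected for t in trials) / len(trials)


# Made-up errors for one participant.
errors = [
    ErrorTrial(phonological=True, semantic=False, detected=True),
    ErrorTrial(phonological=True, semantic=False, detected=False),
    ErrorTrial(phonological=False, semantic=True, detected=True),
    ErrorTrial(phonological=True, semantic=True, detected=False),
    ErrorTrial(phonological=False, semantic=False, detected=True),
]
print(detection_rate(errors))                   # 3 of 5 detected: 0.6
print(detection_rate(errors, "phonological"))   # 1 of 3 detected
print(detection_rate(errors, "semantic"))       # 1 of 2 detected: 0.5
```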

Speech and language measures.

In total, five speech and language scores were measured in LH stroke survivors (s weights, p weights, Word–Picture Matching accuracy, Pseudoword Discrimination accuracy, and Apraxia of Speech Rating Scale 3; Table 2). Each measure was collected in all 41 participants, with the exception of Word–Picture Matching, which was collected in 40 participants. In addition, Word–Picture Matching accuracy was measured in 42 age- and education-matched neurotypical controls to establish a normal performance range. These speech and language scores were selected as prospective predictors of SEM because they met at least one of the following two criteria:

(1) The score has been previously found to relate to SEM in aphasia. This criterion was met by s weights, p weights, and Word–Picture Matching accuracy (Mandal et al., 2020; Dean et al., 2017; Nozari et al., 2011).

(2) The score has strong theoretical potential to relate to SEM in aphasia. This criterion was met by Pseudoword Discrimination accuracy, as well as Apraxia of Speech Rating Scale 3.

S weights and p weights. Performance on the picture naming task was used to evaluate phonological and semantic aspects of word retrieval in individual participants based on Dell's interactive two-step model of lexical access (Dell & O'Seaghdha, 1992). Here, Foygel and Dell's semantic-phonological computational model was used to estimate s weights representing the weight of semantic–lexical connections, and p weights representing the weight of lexical–phonological connections (Foygel & Dell, 2000). These weights were estimated via the web-based interface (available at https://langprod.cogsci.illinois.edu/cgi-bin/webfit.cgi; Dell, Martin, & Schwartz, 2007).

Table 2.

Performance on Speech and Language Measures

| Measure | LH Stroke Survivors | Percent Impaired |
|---|---|---|
| Word–Picture Matching | M = 85%, SD = 20% [13–100%] | 45.0% |
| S Weights | M = 0.01, SD = 0.03 [0–0.10] | NA |
| P Weights | M = 0.02, SD = 0.02 [0–0.10] | NA |
| Pseudoword Discrimination | M = 89%, SD = 11% [48–98%] | 36.6% |
| Apraxia of Speech Rating Scale 3 | M = 9.66, SD = 8.81 [0–30] | NA |

The Percent Impaired column gives the percentage of participants categorized as impaired on each binarized measure (Word–Picture Matching and Pseudoword Discrimination); NA = not applicable. M = mean; SD = standard deviation; [min–max] = range.

Word–Picture Matching (48 items). Participants heard a spoken word and selected the target picture representing that word. The target picture was presented simultaneously in an array alongside five semantic foils. This task is a computerized version of the word–picture matching task described and normed in Rogers and Friedman (2008). Each target and foil was selected from a standardized corpus of black-and-white drawings (Rogers & Friedman, 2008; Snodgrass & Vanderwart, 1980). Accuracy on this measure correlated with participants' scores on the speech comprehension subtests of the Western Aphasia Battery–Revised (WAB; Kertesz, 2007): WAB Auditory Word Recognition r = .62, p = .000018; WAB Yes No Questions r = .56, p = .00015; WAB Sequential Commands r = .64, p = .000011.

Performance was scored as percent accuracy. Because scores showed an apparent ceiling effect and a highly skewed distribution, LH stroke survivors' accuracy scores were binarized according to whether they were impaired relative to neurotypical performance. Impairment was defined as performance worse than the entire range of neurotypical scores; thus, the cutoff for impairment was the minimum neurotypical score, 94% accuracy.

Pseudoword discrimination task (46 items). Participants indicated via button press whether two pseudowords were the same or different, in a task adapted from the Temple Assessment of Language and Short-term Memory in Aphasia (TALSA; Martin, Minkina, Kohen, & Kalinyak-Fliszar, 2018). Performance was scored as percent accuracy. Accuracy on this task has theoretical potential to relate to SEM because the task may require abilities critical for comparing a phonological error against its intended target. Although pseudoword discrimination was not measured in neurotypical controls in the present study, in-house norms were available from a prior study of 20 neurotypical participants (Fama, Snider, et al., 2019). In the present study, this measure correlated with participants' scores on the WAB speech comprehension subtests (WAB Auditory Word Recognition r = .61, p = .000027; WAB Yes No Questions r = .50, p = .00094; WAB Sequential Commands r = .46, p = .0023).

Similar to scores on the word–picture matching task, the scores on the pseudoword discrimination task had an apparent ceiling effect and skewed distribution, so they were binarized using the same approach described above. The cutoff for impairment was the minimum neurotypical control score, which was 89% accuracy.
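As a minimal sketch of the binarization rule shared by both tasks (not the authors' code; the function names and example scores are hypothetical), a patient is classified as impaired only when their accuracy falls strictly below the lowest neurotypical score, which is our reading of "worse than the entire range":

```python
from typing import Sequence


def is_impaired(score: float, control_scores: Sequence[float]) -> bool:
    """True if a patient's accuracy is worse than every neurotypical control score."""
    # "Worse than the entire range" is read as strictly below the minimum control
    # score, so a patient who ties the lowest control is classified as unimpaired.
    return score < min(control_scores)


def percent_impaired(patient_scores: Sequence[float],
                     control_scores: Sequence[float]) -> float:
    """Percentage of patients classified as impaired (cf. 'Percent Impaired', Table 2)."""
    flags = [is_impaired(s, control_scores) for s in patient_scores]
    return 100.0 * sum(flags) / len(flags)


# Hypothetical proportion-correct scores, for illustration only.
controls = [0.94, 0.96, 0.98, 1.00]
patients = [0.50, 0.93, 0.94, 0.99]
print([is_impaired(s, controls) for s in patients])  # [True, True, False, False]
print(percent_impaired(patients, controls))          # 50.0
```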

Apraxia of Speech Rating Scale 3. Apraxia of speech scores such as the Apraxia of Speech Rating Scale 3 (ASRS3) have theoretical potential to relate to SEM because deficits in motor speech planning and execution may contribute to the incidence of phonological speech errors. Lesion–symptom mapping evidence supports the contribution of motor speech brain areas to the incidence of phonological errors during picture naming in people with aphasia (Schwartz et al., 2012). Furthermore, motor-speech somatosensory (e.g., proprioceptive) feedback is thought to support online correction of the articulation of phonemes (Hickok, 2012; Guenther, 1994). To account for the potential role of motor speech deficits in SEM, we included the ASRS3 (Wambaugh, Bailey, Mauszycki, & Bunker, 2019). Performance was scored as the sum total across all 16 ASRS3 item scores. Each item-level ASRS3 score is based on a 5-point scale describing the presence and severity of motor speech characteristics as identified by the examining clinician, a trained speech-language pathologist. For each item, a higher score indicates greater severity. Therefore, a higher sum total of all scores suggests a more severe motor speech deficit. Table 2 includes the raw ASRS3 scores. Before statistical modelling, these scores were rescaled by mean-centering and dividing by the standard deviation.
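The rescaling of ASRS3 totals is an ordinary z-score. The sketch below assumes the sample standard deviation (n − 1 denominator), which is what R's scale() uses; the function name and scores are hypothetical. Under this rescaling, an ASRS3 odds ratio in the models describes the change in odds per one standard deviation of apraxia severity.

```python
import statistics


def rescale(scores):
    """Mean-center scores and divide by their sample standard deviation (z-scores)."""
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)  # n - 1 denominator, as in R's scale()
    return [(s - mean) / sd for s in scores]


asrs3_totals = [0, 4, 8, 12, 26]  # hypothetical ASRS3 sum totals
print(rescale(asrs3_totals))  # [-1.0, -0.6, -0.2, 0.2, 1.6]
```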

Cognitive control measures.

Five cognitive control scores were measured in LH stroke survivors (i.e., Simon cost, no-go accuracy, task switching cost, phonological control, semantic control; Table 3). Three of these scores were selected because they measure cognitive control of processes that do not critically rely on language (Simon cost, no-go accuracy, task switching cost). The final two scores were selected because they measure cognitive control of language-based processes (semantic control, phonological control).

Table 3.

Performance on Cognitive Control Measures

| Measure | LH Stroke Survivors |
|---|---|
| Phonological Cost | M = 0.25, SD = 0.16 [0.04–0.98] |
| Semantic Cost | M = 0.39, SD = 0.23 [0.03–1.21] |
| Switching Cost | M = 0.34, SD = 0.12 [0.11–0.64] |
| Simon Cost | M = 0.10, SD = 0.10 [−0.03–0.40] |
| No-Go Accuracy | M = 0.85, SD = 0.22 [0–1.00] |

M = mean; SD = standard deviation; [min–max] = range.

Simon task (100 items: 50 congruent, 50 incongruent). Participants select between two colored blocks (red vs. blue) on trials that are either congruent or incongruent with respect to color and location (left vs. right). Performance in each condition is measured as inverse efficiency (mean reaction time divided by accuracy; Townsend & Ashby, 1983). The behavioral index, Simon cost, is the difference in inverse efficiency between the incongruent and congruent conditions. Simon cost is thought to reflect abilities in conflict monitoring and resolution (Cespón, Hommel, Korsch, & Galashan, 2020).
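A minimal sketch of the Simon cost computation follows. It assumes, as is common for inverse efficiency although the text does not say so, that the mean reaction time is taken over correct trials only; the trial data and function names are hypothetical.

```python
from statistics import mean


def inverse_efficiency(rts, correct):
    """Mean RT on correct trials divided by the proportion of correct trials."""
    accuracy = sum(correct) / len(correct)
    # Assumption: only correct trials contribute to the mean RT.
    correct_rts = [rt for rt, ok in zip(rts, correct) if ok]
    return mean(correct_rts) / accuracy


def simon_cost(incongruent, congruent):
    """Inverse efficiency (incongruent) minus inverse efficiency (congruent)."""
    return inverse_efficiency(*incongruent) - inverse_efficiency(*congruent)


# Hypothetical trials: (reaction times in ms, correctness flags).
congruent = ([500, 500, 500, 500], [True, True, True, True])
incongruent = ([600, 600, 600, 900], [True, True, True, False])
print(simon_cost(incongruent, congruent))  # 300.0
```

Dividing by accuracy penalizes a participant who is fast but error-prone, so a larger cost indicates more difficulty resolving the incongruent trials.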

Go/no-go task (100 items: 82 go, 18 no-go). Participants are presented with shapes one at a time and press a button when they see a circle (go trials), but withhold the button press when they see any other shape (no-go trials). Performance is scored as percent accuracy on the no-go trials, which reflects inhibitory control ability because those trials require the participant to refrain from pressing the button.

Task switching.

Participants perform the nonverbal version of the Antelopes and Cantaloupes task paradigm, which is briefly described below (see McCall, van der Stelt, et al., 2022, for an in-depth description of the task and summary measures). This paradigm was completed by 40 participants. Participants select the picture of a target object in a 2 × 2 array of four pictures on a touch screen as many times as possible within each of seven 20-sec blocks. To minimize the use of inner speech-based self-cueing strategies in this nonverbal version of the task, the four pictures in the array are abstract shapes that have no canonical name. The blocks require selecting a single target repeatedly (S1), two separate targets successively (S2), or three separate targets successively (S3). Performance is calculated as the block duration divided by the number of successful selections and is referred to as time per target selection (TTS). Switching cost is calculated as the average TTS across S2 and S3 blocks minus the TTS in S1 blocks. A lower switching cost reflects greater task-switching ability.
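The TTS and switching cost definitions can be sketched as follows. We assume that TTS is computed per block and that S2 and S3 blocks are pooled into a single mean; the text does not specify how blocks are averaged or how the seven blocks are split across conditions, so the selection counts and function names are hypothetical.

```python
from statistics import mean

BLOCK_SECONDS = 20.0  # block duration stated in the text


def time_per_selection(n_selections, block_seconds=BLOCK_SECONDS):
    """TTS: block duration divided by the number of successful selections."""
    if n_selections <= 0:
        raise ValueError("TTS is undefined for a block without successful selections")
    return block_seconds / n_selections


def switching_cost(s1_counts, s2_counts, s3_counts):
    """Mean TTS over S2 and S3 blocks (pooled) minus mean TTS over S1 blocks."""
    s1 = mean(time_per_selection(n) for n in s1_counts)
    s2_s3 = mean(time_per_selection(n) for n in list(s2_counts) + list(s3_counts))
    return s2_s3 - s1


# Hypothetical successful-selection counts per block (seven blocks in total).
cost = switching_cost(s1_counts=[40, 40, 40], s2_counts=[25, 25], s3_counts=[20, 20])
print(round(cost, 3))  # 0.4
```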

Phonological control task.

Participants perform two versions of the Antelopes and Cantaloupes paradigm. In the phonological version, the four pictures in the array are phonologically related to one another. In the baseline version, the four pictures in the array are neither phonologically nor semantically related to one another. Phonological cost is measured as the difference between the TTS in the phonological version and the TTS in the baseline version.

Semantic control task.

Participants perform two versions of the Antelopes and Cantaloupes paradigm. In the semantic version, the four pictures in the array are semantically related to one another. The baseline version is the same as in the phonological control task. Semantic cost is measured as the difference between the TTS in the semantic version and the TTS in the baseline version (McCall, van der Stelt, et al., 2022).
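Both language-specific costs share the same baseline, so they can be computed with one helper. This sketch assumes 20-sec blocks and per-block TTS as in the switching task (the text does not state the block length for these versions); the counts and function names are hypothetical. A positive cost means slower selection when the pictures are related, that is, more interference and worse control.

```python
from statistics import mean


def mean_tts(selection_counts, block_seconds=20.0):
    """Mean time per target selection across blocks (block length assumed)."""
    return mean(block_seconds / n for n in selection_counts)


def interference_cost(related_counts, baseline_counts):
    """TTS in the related (phonological or semantic) version minus baseline TTS."""
    return mean_tts(related_counts) - mean_tts(baseline_counts)


# Hypothetical per-block selection counts.
baseline = [25, 25]
phonological = [20, 25]
semantic = [16, 20]
print(round(interference_cost(phonological, baseline), 3))  # 0.1
print(round(interference_cost(semantic, baseline), 3))      # 0.325
```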

Personality and motivation measures.

To measure the extent to which participants are generally motivated to monitor their errors across task domains, we scored personality and motivation in two ways: (1) We utilized a self-report personality measure, and (2) we examined spontaneous error monitoring behavior on a nonlanguage task that was designed to elicit errors.

Short Almost Perfect Scale.

As a personality measure, we utilized the Short Almost Perfect Scale (SAPS; Rice, Richardson, & Tueller, 2014), which yields two subscale scores: Standards and Discrepancy. Standards is thought to reflect positive aspects of perfectionism related to conscientiousness, whereas Discrepancy is thought to reflect negative aspects of perfectionism related to neuroticism (Rice et al., 2014). This measure was administered in 40 participants.

Picture copy.

As a measure of motivation to spontaneously self-monitor one's own errors, we designed a picture copy drawing task. In this task, participants used a touchscreen computer to copy black-and-white line drawings, each composed of either one geometric shape or two concentric geometric shapes, across 10 trials. To elicit more errors, during the final five trials the pixels drawn on the screen were offset diagonally from the location touched. All trials included clear and erase buttons that participants could use to correct their drawing. Error monitoring behavior, called picture copy corrections, was scored as the total number of clear and erase button selections across all 10 trials. This measure was administered in 40 participants.

Experimental Design and Statistical Analyses

Motivational influences on SEM.

We first set out to validate our measure of SEM. An oft-noted downside of the present measure of SEM is that it may be influenced by motivation or personality, because participants are not explicitly instructed to judge their accuracy on each trial. Consequently, participants might detect their errors but choose not to display monitoring behavior overtly. To investigate this possibility, we examined the relationship between SEM and scores on a personality scale as well as a spontaneous error monitoring task. This relationship was modeled using a mixed-effects logistic regression of error trials, with a binary dependent variable indicating whether the error was detected and, as fixed-effect independent variables, measures of personality (SAPS Standards and SAPS Discrepancy) and motivation (picture copy corrections). This analysis included 39 participants instead of 41 because SAPS scores were unavailable for two participants. Subject ID was included as a random effect to model participant-wise random intercepts. Because this model considered only error trials, which could occur on any of the 120 items, there was insufficient sampling to include item as a random effect.

Behavioral predictors of SEM in aphasia.

We set out to examine behavioral predictors of SEM while accounting for differences between SEM of phonological errors versus SEM of semantic errors.

This examination involved two rounds of analysis: (1) identifying speech and language measures that predict SEM, and (2) identifying cognitive control scores that predict SEM while accounting for the measures identified in (1).

Speech and language measures that predict SEM.

To identify speech and language measures that predict SEM, we entered candidate speech and language measures into a statistical model of SEM and eliminated measures that did not inform the model. To model SEM, we designed a mixed-effects logistic regression of error trials with a binary dependent variable indicating whether the error was detected. Trials with no response were not included in this model. The independent variables included subject-level fixed effects of scores from the speech and language measures (Table 4). To account for differences between SEM of phonological errors versus SEM of semantic errors, the phonological and semantic relatedness of the error to the target were included as separate item-level fixed effects and in interactions with selected scores. These item-level fixed effects and interactions are listed in Table 4.

Table 4.

Initial Set of Candidate Speech and Language Predictors of SEM

| Effects | Model Terms |
|---|---|
| Main effects (fixed effects) | Semantic Error, Phonological Error, Word–Picture Matching, s weights, p weights, Pseudoword Discrimination, Apraxia of Speech Rating Scale 3 (ASRS3) |
| Interactions (fixed effects) | Semantic Error * Word–Picture Matching |
| | Semantic Error * s weights |
| | Phonological Error * p weights |
| | Phonological Error * ASRS3 |
| | Phonological Error * Pseudoword Discrimination |
| Random effects | Subject code (random intercepts) |

Akaike Information Criterion (AIC) values were used in the backward elimination of speech and language measures that were not informative in predicting SEM (Burnham & Anderson, 2004; Akaike, 1974). Starting with a model containing the full initial set of candidate predictors, fixed-effect terms were removed one at a time as follows. First, the AIC was calculated for every model that would result from dropping one of the fixed-effect terms (R function drop1). For example, if a model has five fixed-effect terms, drop1 calculates the AIC of the five possible models that would result from dropping one of those terms. Next, the candidate model with the lowest AIC was selected, removing one fixed-effect term. The selected model was then entered into drop1 to proceed to selection of the next model. Iterations of drop1-based term removal continued until the model entered into drop1 had an AIC at least two points below that of every possible model with one term removed. The two-point cutoff was chosen because a difference of two points in AIC is considered substantial support for the model with the lower AIC (Burnham & Anderson, 2004). Thus, a term was retained only when its removal would substantially worsen model fit, promoting parsimony of the final model. We aimed to minimize the number of final predictors because our sample size is relatively modest (n = 41).
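The selection loop can be sketched in Python as below. This is not the authors' R code: the aic argument is a hypothetical stand-in for refitting the glmer model on a given set of terms, and the toy AIC values are invented. As an assumption consistent with the final models in Tables 7 and 8, which keep each main effect alongside its interaction, a main effect becomes eligible for removal only once no retained interaction contains it; as we understand it, this mirrors the marginality rule that drop1 applies through drop.scope() by default. Interactions are written with ":" as in R formulas.

```python
from typing import Callable, FrozenSet, Iterable, List

Terms = FrozenSet[str]


def droppable(terms: Terms) -> List[str]:
    """Terms that can be removed without orphaning a retained interaction.

    A term is eligible only if no other retained term contains all of its
    components, e.g. "ASRS3" stays while "PhonError:ASRS3" is in the model.
    """
    eligible = []
    for term in terms:
        parts = set(term.split(":"))
        if not any(parts < set(other.split(":")) for other in terms):
            eligible.append(term)
    return sorted(eligible)


def backward_eliminate(terms: Iterable[str],
                       aic: Callable[[Terms], float],
                       threshold: float = 2.0) -> Terms:
    """AIC-based backward elimination with a parsimony threshold (default 2 points)."""
    current = frozenset(terms)
    current_aic = aic(current)
    while True:
        candidates = droppable(current)
        if not candidates:
            return current
        # AIC of each model with one eligible term removed (what drop1 tabulates).
        best_aic, best_term = min((aic(current - {t}), t) for t in candidates)
        # Stop when the current model beats every reduced model by >= threshold.
        if current_aic <= best_aic - threshold:
            return current
        current, current_aic = current - {best_term}, best_aic


# Toy AIC (hypothetical): each term costs 2 points and buys back a fixed gain.
gains = {"A": 10.0, "B": 0.5, "C": 3.0}


def toy_aic(terms: Terms) -> float:
    return 100.0 + sum(2.0 - gains[t] for t in terms)


print(sorted(backward_eliminate(gains, toy_aic)))  # ['A']
```

In the toy run, term C lowers AIC by one point but is still removed, because keeping a term requires that its removal worsen AIC by at least two points.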

It can be tempting to interpret the elimination of model terms as indicating that the eliminated terms have no relationship with SEM. However, in the present model selection approach, terms might be eliminated simply because there is insufficient power to determine their relationship with SEM. To discern whether a term was eliminated because of a lack of a relationship or a lack of power, a Bayes factor for each removed term was estimated from the Bayesian Information Criterion (BIC) of the models before and after eliminating the term (Masson, 2011). Each Bayes factor was also converted to a p value representing the posterior probability that the term relates to SEM (Masson, 2011), so that small Bayes factors and p values indicate evidence for the absence of a relationship.
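Under Masson's (2011) BIC approximation, the Bayes factor and posterior probability can be computed as below. The reported pairs (e.g., Bayes factor = 0.16, p = .14; Bayes factor = 0.073, p = .068) are consistent with p = BF/(1 + BF) under equal prior odds, with small values counting as evidence against the term, so the sketch expresses the Bayes factor as evidence for the model that retains the term. The function names and BIC values are hypothetical.

```python
import math


def bic_bayes_factor(bic_with_term: float, bic_without_term: float) -> float:
    """BIC-approximated Bayes factor favoring the model that retains the term."""
    return math.exp((bic_without_term - bic_with_term) / 2.0)


def posterior_probability(bayes_factor: float) -> float:
    """Posterior probability of the favored model, assuming equal prior odds."""
    return bayes_factor / (1.0 + bayes_factor)


# Hypothetical BICs: removing the term lowers BIC by 6 points.
bf = bic_bayes_factor(bic_with_term=1000.0, bic_without_term=994.0)
print(round(bf, 3), round(posterior_probability(bf), 3))  # 0.05 0.047
# Check against a reported pair: Bayes factor = 0.16 gives p of about .14.
print(round(posterior_probability(0.16), 2))  # 0.14
```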

Cognitive control scores that predict SEM.

To identify cognitive control scores that relate to SEM, we started with the final model of speech and language predictors described above and added cognitive control scores as fixed-effect predictors (Table 5). To account for differences between SEM of phonological versus semantic errors, the phonological and semantic relatedness of the error to the target were included as separate item-level predictors and in interactions with selected scores. The model terms for these candidate cognitive control predictors are listed in Table 5. Backward elimination based on AIC, as described above, removed cognitive control scores that did not predict SEM, and Bayes factors for the eliminated terms were calculated as described above.

Table 5.

Initial Set of Candidate Cognitive Control Predictors of SEM

| Effects | Model Terms |
|---|---|
| Main effects (fixed effects) | Semantic Error, Phonological Error, Phonological Control, Semantic Control, Simon Cost, No-Go Accuracy, Switching Cost |
| Interactions (fixed effects) | Semantic Error * Phonological Control |
| | Semantic Error * Semantic Control |
| | Phonological Error * Phonological Control |
| | Phonological Error * Semantic Control |
| Random effects | Subject code (random intercepts) |

Implementation of statistical analyses.

Mixed-effects models were run in R version 4.1.0 (https://cran.r-project.org/). Logistic mixed-effects models were estimated using the glmer function (lme4 package) with default settings, except for family = binomial and control = glmerControl(optimizer = "bobyqa"). Model summary tables (Tables 6, 7, and 8) were generated using the tab_model function (sjPlot package). All other analyses were run in MATLAB R2018b (MathWorks, https://www.mathworks.com/products/matlab.html).
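The σ² = 3.29 reported in each model summary table is π²/3, the residual variance of the standard logistic distribution used for logit-link mixed models, and the ICC rows follow from it and τ00. The sketch below reproduces the tabled ICCs and adds Nakagawa-style marginal and conditional R², assuming tab_model computes them with the same π²/3 residual variance; var_fixed (the variance of the fixed-effect linear predictor) is not reported in the tables, so the R² function is shown for its form only.

```python
import math

# Residual variance of the standard logistic distribution (logit link): pi^2 / 3.
LOGIT_RESIDUAL_VARIANCE = math.pi ** 2 / 3


def icc(tau00: float, sigma2: float = LOGIT_RESIDUAL_VARIANCE) -> float:
    """Intraclass correlation: share of latent variance due to subjects."""
    return tau00 / (tau00 + sigma2)


def r2_marginal_conditional(var_fixed: float, tau00: float,
                            sigma2: float = LOGIT_RESIDUAL_VARIANCE):
    """Marginal (fixed effects only) and conditional (fixed + random) R^2."""
    total = var_fixed + tau00 + sigma2
    return var_fixed / total, (var_fixed + tau00) / total


print(round(LOGIT_RESIDUAL_VARIANCE, 2))  # 3.29
for table, tau00 in [("Table 6", 2.55), ("Table 7", 2.32), ("Table 8", 2.10)]:
    print(table, round(icc(tau00), 2))  # 0.44, 0.41, 0.39 respectively
```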

Table 6.

Logistic Mixed-Effects Regression of Motivational Influences on SEM

| Predictors | Odds Ratios | CI | p |
|---|---|---|---|
| (Intercept) | 0.86 | 0.50–1.47 | .58 |
| SAPS discrepancy | 1.21 | 0.70–2.10 | .49 |
| SAPS standards | 1.25 | 0.66–2.39 | .49 |
| Picture copy corrections | 1.31 | 0.65–2.60 | .45 |
| Random effects | | | |
| σ² | 3.29 | | |
| τ00 (Subject Code) | 2.55 | | |
| ICC | 0.44 | | |
| N (Subject Code) | 39 | | |
| Observations | 1124 | | |
| Marginal R²/conditional R² | 0.015/0.446 | | |

Dependent variable: error detection.

Table 7.

Final Model Identifying Speech and Language Predictors of SEM

| Predictors | Odds Ratios | CI | p |
|---|---|---|---|
| (Intercept) | 1.23 | 0.56–2.70 | .598 |
| Semantic error | 0.63 | 0.37–1.07 | .090 |
| Phonological error | 0.71 | 0.51–0.99 | .041 |
| Word–picture matching impairment | 1.50 | 0.52–4.32 | .453 |
| ASRS3 | 0.68 | 0.36–1.28 | .235 |
| Semantic Error * Word–Picture Matching Impairment | 0.38 | 0.19–0.76 | .006 |
| Phonological Error * ASRS3 | 0.70 | 0.50–0.96 | .027 |
| Random effects | | | |
| σ² | 3.29 | | |
| τ00 (Subject Code) | 2.32 | | |
| ICC | 0.41 | | |
| N (Subject Code) | 40 | | |
| Observations | 1245 | | |
| Marginal R²/conditional R² | 0.095/0.469 | | |

Table 8.

Final Model Identifying Cognitive Control Predictors of SEM

| Predictors | Odds Ratios | CI | p |
|---|---|---|---|
| (Intercept) | 1.14 | 0.52–2.48 | .747 |
| Semantic error | 0.65 | 0.38–1.11 | .111 |
| Phonological error | 0.57 | 0.40–0.82 | .002 |
| Word–picture matching impairment | 2.22 | 0.76–6.54 | .146 |
| ASRS3 | 0.50 | 0.26–0.96 | .036 |
| Semantic control (cost) | 0.41 | 0.24–0.70 | .001 |
| Semantic Error * Word–Picture Matching Impairment | 0.32 | 0.15–0.65 | .002 |
| Phonological Error * ASRS3 | 0.71 | 0.51–0.99 | .042 |
| Semantic Error * Semantic Control (Cost) | 1.68 | 1.16–2.44 | .006 |
| Random effects | | | |
| σ² | 3.29 | | |
| τ00 (Subject Code) | 2.10 | | |
| ICC | 0.39 | | |
| N (Subject Code) | 39 | | |
| Observations | 1139 | | |
| Marginal R²/conditional R² | 0.193/0.507 | | |

Results

Motivational Influences on SEM

First, we sought to determine whether personality and motivation play a role in our measure of SEM. To that end, we tested the relationship between SEM and scores on the SAPS and the picture copy task using a mixed-effects logistic regression. We hypothesized that SEM is driven by specific linguistic or cognitive abilities rather than by personality or motivation; therefore, we did not expect a strong relationship between SEM and scores on the SAPS or picture copy. As expected, SEM did not significantly relate to scores on the SAPS (SAPS Discrepancy: OR = 1.21, CI [0.70, 2.10], p = .49; SAPS Standards: OR = 1.25, CI [0.66, 2.39], p = .49) or to picture copy corrections (OR = 1.31, CI [0.65, 2.60], p = .45; Table 6).

Behavioral Predictors of SEM in Aphasia

Speech and language predictors of SEM.

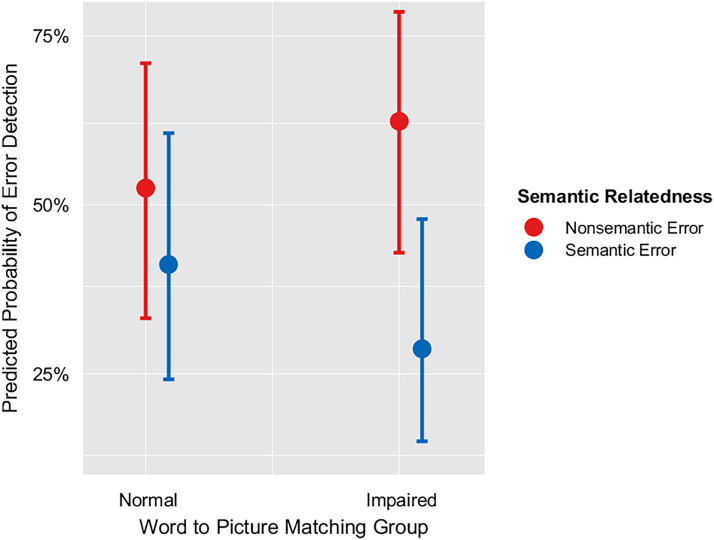

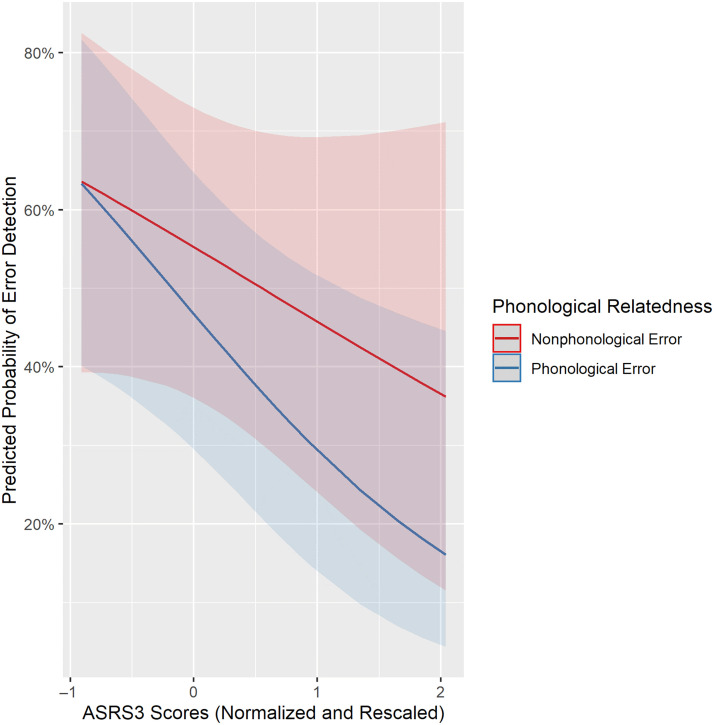

To identify speech and language predictors of SEM, we used stepwise backward elimination of terms in a mixed-effects logistic regression, starting with the initial set of terms listed in Table 4. In the final model, error type was a significant factor: phonologically related errors were less likely to be detected than non-phonologically related errors (i.e., errors that were semantically but not phonologically related to the target, and errors that were neither semantically nor phonologically related to the target; OR = 0.71, CI [0.51, 0.99], p = .041; Table 7). In addition, there was a significant interaction between Word–Picture Matching impairment and SEM of semantic errors (OR = 0.38, CI [0.19, 0.76], p = .006), indicating that impaired Word–Picture Matching relates to reduced semantic SEM (Table 7, Figure 2). This relationship was expected because Word–Picture Matching has previously been shown to relate to SEM (Dean et al., 2017) and specifically to semantic SEM (Mandal et al., 2020). There was also a significant interaction between ASRS3 and phonological errors (OR = 0.70, CI [0.50, 0.96], p = .027), indicating that more severe apraxia of speech relates to reduced detection of phonological errors (Table 7, Figure 3). This relationship between apraxia of speech and phonological SEM is in the opposite direction of what sensorimotor models of SEM would predict (see Introduction section; e.g., Hickok, 2012; Tourville & Guenther, 2011).

Figure 2.

Interaction between word–picture matching and SEM of semantic errors.

Figure 3.

Interaction between ASRS3 and SEM of phonological errors. Higher ASRS3 scores indicate greater severity of apraxia of speech.

Notably, stepwise model selection eliminated three variables: s weights, p weights, and pseudoword discrimination. For each eliminated variable, we used Bayes factors to assess the evidence for an absence of a relationship with SEM. Bayesian analysis of the elimination of s weights supported an absence of a relationship between s weights and SEM (Bayes factor = 0.028; p = .028). In contrast, Bayesian analysis of the elimination of p weights did not strongly support an absence of a relationship between p weights and SEM (Bayes factor = 0.16; p = .14). Finally, Bayesian analysis of the elimination of pseudoword discrimination supported an absence of a relationship between pseudoword discrimination and SEM (Bayes factor = 0.073; p = .068).

Cognitive control predictors of SEM.

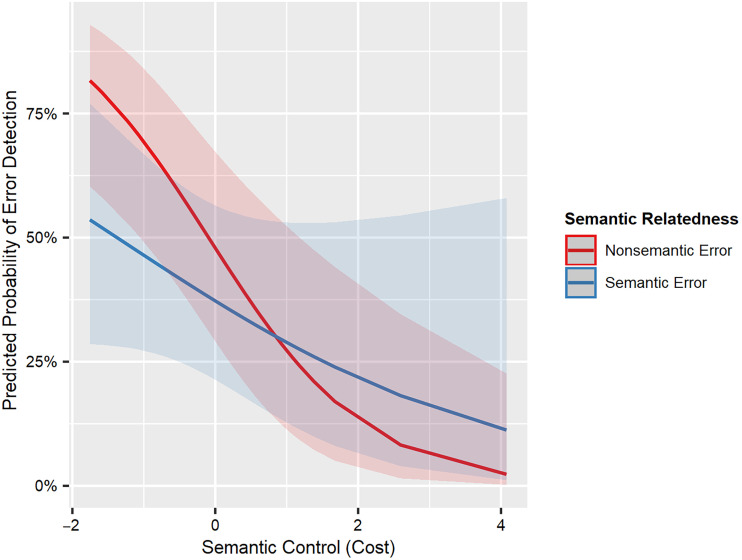

Next, we assessed the relationship between cognitive control and SEM, building on the final model of speech and language predictors. In the final model, error type was again a significant factor, such that phonologically related errors were less likely to be detected than non-phonologically related errors (OR = 0.57, CI [0.40, 0.82], p = .002; Table 8). Because we hypothesized that cognitive control deficits impair SEM in aphasia, we expected at least one cognitive control measure to relate to SEM. Indeed, one cognitive control measure (semantic control) survived backward elimination (Table 8), supporting that hypothesis. In addition, we hypothesized that if SEM relied on language-specific cognitive control, then phonological control and semantic control would relate to SEM. In support of that hypothesis, there was a significant main effect of semantic control: worse semantic control (i.e., greater cost) was related to reduced SEM (OR = 0.41, CI [0.24, 0.70], p = .001). However, we did not find evidence supporting a role of phonological control in SEM, because the phonological control measure was eliminated during stepwise selection. On the other hand, Bayes factor analysis of the removal of the phonological control measure did not strongly support an absence of a relationship between phonological control and SEM (Bayes factor = 0.16; p = .14). Therefore, we cannot strongly conclude that phonological control is unrelated to SEM.

Furthermore, we predicted that phonological control would relate to detection of phonological errors and semantic control would relate to detection of semantic errors. Although there was a significant interaction between semantic control and SEM of semantic errors (OR = 1.68, CI [1.16, 2.44], p = .006), its direction does not support our prediction. Surprisingly, semantic control influenced SEM of nonsemantic errors to a greater extent than SEM of semantic errors (Table 8, Figure 4).
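Because log-odds coefficients add, the odds ratio for semantic control on a given error type is the main-effect odds ratio multiplied by the interaction odds ratio raised to the 0/1 error-type indicator. The sketch below applies this to Table 8, assuming Semantic Error is coded 0/1 and the other predictors are held at their reference values; the function name is hypothetical. Per unit increase in semantic control cost (in the units entered into the model), the odds of detecting a nonsemantic error are multiplied by about 0.41, compared with about 0.69 for a semantic error, which is why the effect is stronger for nonsemantic errors.

```python
def conditional_odds_ratio(main_or: float, interaction_or: float, indicator: int) -> float:
    """Odds ratio of a predictor when a 0/1 error-type indicator takes a given value.

    Log-odds coefficients add (b_main + b_interaction * indicator), so the
    corresponding odds ratios multiply.
    """
    return main_or * interaction_or ** indicator


# Odds ratios from Table 8: semantic control (cost) and its interaction
# with the semantic-error indicator.
for label, is_semantic in [("nonsemantic errors", 0), ("semantic errors", 1)]:
    print(label, round(conditional_odds_ratio(0.41, 1.68, is_semantic), 2))
# nonsemantic errors 0.41
# semantic errors 0.69
```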

Figure 4.

Interaction between semantic control and SEM of semantic errors. Higher values of semantic control (cost) indicate worse semantic control.

We also hypothesized that if domain-general cognitive control were used in SEM, then nonlanguage cognitive control scores would relate to SEM. That prediction was not supported: the nonlanguage cognitive control scores of no-go accuracy, Simon cost, and task switching were all eliminated during stepwise selection. Furthermore, Bayes factor analyses of the removal of Simon cost and no-go accuracy during model selection supported an absence of a relationship between these factors and SEM (Simon cost: Bayes factor = 0.046, p = .044; no-go accuracy: Bayes factor = 0.049, p = .046). However, Bayes factor analysis of the removal of task switching did not strongly support an absence of a relationship between task switching and SEM (Bayes factor = 0.15; p = .13).

Experiment 1 interim summary.

The first experiment examined how speech, language, and cognitive control abilities relate to SEM of phonological and semantic errors in people with aphasia. As predicted by comprehension-based models, worse auditory comprehension was associated with reduced detection of semantic errors. We also found that more severe motor speech deficits, in the form of apraxia of speech, were associated with reduced SEM of phonological errors. Although sensorimotor models predict an association between motor deficits and phonological SEM, the direction of the association we observed was opposite to what these models predict. Consistent with both production and comprehension models, worse cognitive control was associated with reduced SEM. As predicted by comprehension-based models, semantic control, a language-specific cognitive control ability, was associated with SEM. Intriguingly, the interaction between semantic control and SEM of semantic errors was in the opposite direction from what we expected; this effect is elaborated in the Discussion section. Next, Experiment 2 builds upon these findings by examining the brain structures critical for SEM of phonological and semantic errors.

EXPERIMENT 2

Methods

Experiment 2 investigated lesion–behavior relationships for SEM using data from participants enrolled in three studies on language and stroke (R01DC014960, R03DC014310, Doris Duke Charitable Foundation 2012062).

Participants

As in Experiment 1, all participants were native speakers of English and did not have any psychiatric or neurological disorders aside from stroke. These participants were also screened for perceptual deficits such as hearing or vision loss. This study was approved by Georgetown University's Institutional Review Board, and all participants provided written informed consent.

In Experiment 2, we analyzed lesion–behavior relationships in a data set of 76 stroke survivors (Table 9; McCall, Dickens, et al., 2022; Mandal et al., 2020). Of these 76 participants, 30 were common to Experiment 1, and 43 were common to McCall, Dickens, and colleagues (2022), which examined a subset of the participants in Mandal and colleagues (2020). Four participants were included in all three data sets: Experiment 1, Experiment 2, and McCall, Dickens, and colleagues (2022). Power analyses of lesion–behavior investigations indicate that sample sizes of 60 or fewer can overestimate effect sizes, whereas sample sizes of 120 or more can detect weak, trivial relationships (Lorca-Puls et al., 2018). All participants were at least 6 months poststroke. Participants were included based on the types of errors they committed during confrontation picture naming: specifically, they were included if they committed at least five semantic errors or at least five phonological errors. As described in Experiment 1, this cutoff has been adopted in prior studies of SEM to promote precision in estimates of SEM ability.

Table 9.

Demographics for Experiment 2

| Characteristic | LH Stroke Survivors |
|---|---|
| Total | 76 |
| Sex | 47 M, 29 F |
| Age | M = 60.11, SD = 9.45 [43–84] |
| Education (years) | M = 16.04, SD = 2.99 [10–24] |
| Picture naming accuracy | M = 0.57, SD = 0.26 [0.08–0.98] |
| Picture naming error detection rate | M = 0.41, SD = 0.26 [0–0.97] |
| Time since stroke (months) | M = 47.41, SD = 49.81 [6.2–255.7] |
| Lesion volume (cm³) | M = 114, SD = 84 [1–415] |

M = mean; SD = standard deviation; [min–max] = range.

SEM measurement.

In Experiment 2, the only behavioral measure analyzed was SEM. As in Experiment 1, SEM was scored as detections of errors committed during picture naming, and picture naming task instructions were identical to those in Experiment 1. Here, participants named either all 120 images from Experiment 1 or a 60-image subset, presented on a computer. Because Experiment 2 pooled data across multiple studies, several participants who were enrolled in more than one study completed multiple administrations of the picture naming task; for those participants, data from all administrations were combined.

Error scoring.

Errors were identified as belonging to standard categories using coding rules adapted from the Philadelphia Naming Test. This can be contrasted with Experiment 1, where errors were coded irrespective of whether they fit into a Philadelphia Naming Test category. Furthermore, a semantic error was considered an error that had a semantic but not a phonological relationship to the target word, whereas a phonological error was considered an error that had a phonological but not semantic relationship to the target word. Thus, errors that were both semantically and phonologically related to the target were not considered for analyses.

Error detection scoring.

Trained researchers scored error detection following the same procedure as in Experiment 1. Error detection was summarized across error types for each participant. Error detection scores for each error type equaled the proportion of detected errors to committed errors. For example, phonological error detection equaled the number of detected phonological errors divided by the number of committed phonological errors. Error detection was only calculated where a participant committed a minimum of five errors of the respective type. That is, phonological error detection was not calculated for participants with fewer than five phonological errors, and semantic error detection was not calculated for participants with fewer than five semantic errors.
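The per-type detection scores and the five-error minimum can be sketched as follows. Each participant's errors, pooled across all administrations, are given as hypothetical (error_type, detected) pairs; errors related to the target both semantically and phonologically are assumed to have been excluded beforehand, as described above. The function and constant names are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Optional, Tuple

MIN_ERRORS = 5  # minimum errors of a type required to score its detection rate


def detection_by_type(errors: Iterable[Tuple[str, bool]],
                      min_errors: int = MIN_ERRORS) -> Dict[str, Optional[float]]:
    """Proportion of detected errors per error type; None if too few errors."""
    committed: Counter = Counter()
    detected: Counter = Counter()
    for error_type, was_detected in errors:
        committed[error_type] += 1
        detected[error_type] += int(was_detected)
    return {
        error_type: detected[error_type] / n if n >= min_errors else None
        for error_type, n in committed.items()
    }


# Hypothetical participant: 6 phonological errors (3 detected), 4 semantic (all detected).
errors = [("phonological", i < 3) for i in range(6)] + [("semantic", True)] * 4
print(detection_by_type(errors))  # {'phonological': 0.5, 'semantic': None}
```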

Brain Imaging Measures

Brain imaging acquisition.

Participants in Experiment 2 underwent MRI scanning on Siemens 3.0 Tesla scanners, including structural T1-weighted magnetization prepared rapid gradient echo (MPRAGE) scans and structural T2-weighted scans. Stroke lesions were manually segmented by a board-certified neurologist (author P.E.T.), aided by T2-weighted images where available. These segmented lesions were then normalized to the same template as the T1 images. Before analysis, all lesions were transformed into Montreal Neurological Institute (MNI) space with a voxel size of 2.5 × 2.5 × 2.5 mm.

Scans collected in Experiment 2 used the following sequences.

T1-weighted MPRAGE. One hundred sixty sagittal slices, voxels = 1 × 1 × 1 mm, matrix = 246 × 256, field of view = 250 mm, slice thickness = 1 mm, repetition time (TR) = 1900 msec, echo time (TE) = 2.56 msec.

T2-weighted. One hundred seventy-six sagittal slices, voxels = 0.625 × 0.625 × 1.25 mm, matrix = 384 × 384, field of view = 240 mm, slice thickness = 1.25 mm, TR = 3200 msec, variable TE and flip angle.

The scanning sequence for participants who were scanned as part of enrollment in Experiment 1 is listed below.

T1-weighted MPRAGE. One hundred seventy-six sagittal slices, voxels = 1 × 1 × 1 mm, matrix = 256 × 256, field of view = 256 mm, GeneRalized Autocalibrating Partially Parallel Acquisition (GRAPPA) = 2, slice thickness = 1 mm, TR = 1900 msec, TE = 2.98 msec.

T2-weighted (Fluid-Attenuated Inversion Recovery). One hundred ninety-two sagittal slices, voxels = 1 × 1 × 1 mm, matrix = 256 × 256, field of view = 256 mm, slice thickness = 1 mm, TR = 5000 msec, TE = 38.6 msec, TI = 1800 msec, flip angle = 120°.

Experimental design and statistical analyses.

To determine the brain bases of phonological SEM and semantic SEM, we followed two SVR-LSM approaches (Zhang, Kimberg, Coslett, Schwartz, & Wang, 2014). First, we replicated the lesion–symptom mapping study of phonological SEM and semantic SEM in Mandal and colleagues (2020) on a cohort that includes participants from Experiment 1 in addition to participants from Mandal and colleagues (2020; Table 9). Second, we investigated lesions that selectively reduce phonological SEM more than semantic SEM, as well as the reverse, by running a variant of SVR-LSM referred to below as Dissociation SVR-LSM.

The first set of SVR-LSM analyses was performed using the SVR-LSM GUI (https://github.com/atdemarco/svrlsmgui; DeMarco & Turkeltaub, 2018). The Dissociation SVR-LSM analyses were performed in MATLAB using a customized version of the SVR-LSM GUI program. All lesion–symptom mapping analyses were conducted in MATLAB R2018b.

Standard SVR-LSM.

The first SVR-LSM approach was based on Mandal and colleagues (2020) and served as a replication of their study. These analyses are referred to here as “standard” SVR-LSM analyses to contrast them with the Dissociation SVR-LSM analyses described in the following section. The SVR-LSM GUI toolbox was used to run two standard SVR-LSM analyses investigating the effect of stroke lesions on SEM: one examined SEM of phonological errors, and the other examined SEM of semantic errors. In both analyses, voxels were considered only if they were lesioned in at least 10% of participants. Before modeling, lesion volume was regressed out of both the lesion images and the behavioral scores. Voxel-wise p values were derived via permutation testing using 10,000 permutations. Next, voxel-level multiple comparisons correction was applied using the continuous family-wise error rate (CFWER) method, with an FWE rate of .05 and v set to 100 (Mirman et al., 2018). The same 10,000 permutations were also used for cluster-level correction with a p value threshold of .05.
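The two preprocessing steps described above, the 10% lesion-frequency mask and the regression of lesion volume out of both the lesion data and the behavioral scores, can be sketched with NumPy as follows. This is a minimal sketch under stated assumptions, not the SVR-LSM GUI's implementation: it assumes lesions are stored as a binary participants × voxels array, that lesion volume is the count of lesioned voxels across the whole image, and that residualization is ordinary least squares on an intercept plus volume. The function names are hypothetical.

```python
import numpy as np


def lesion_mask(lesions, min_fraction=0.10):
    """Boolean mask of voxels lesioned in at least `min_fraction` of participants."""
    return lesions.mean(axis=0) >= min_fraction


def regress_out(data, covariate):
    """Residualize `data` (shape (n,) or (n, k)) on an intercept plus one covariate."""
    design = np.column_stack([np.ones(len(covariate)), covariate])
    coef, *_ = np.linalg.lstsq(design, data, rcond=None)
    return data - design @ coef


def prepare(lesions, behavior, min_fraction=0.10):
    """Mask rarely lesioned voxels, then remove lesion volume from voxels and behavior."""
    mask = lesion_mask(lesions, min_fraction)
    volume = lesions.sum(axis=1).astype(float)  # assumed: volume = lesioned voxel count
    X = regress_out(lesions[:, mask].astype(float), volume)
    y = regress_out(behavior.astype(float), volume)
    return X, y, mask
```

After this step, each voxel column and the behavioral vector are uncorrelated with lesion volume, so any remaining lesion–behavior association cannot be driven by lesion size alone in a linear sense.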

Dissociation SVR-LSM.

To determine how lesions differentially affect behaviors, we used an extension of SVR-LSM, here referred to as Dissociation SVR-LSM. Dissociation SVR-LSM is a whole-brain technique designed to map lesions that selectively impair one behavior more than another. As in standard SVR-LSM, Dissociation SVR-LSM estimates lesion–behavior relationships in the form of voxel-wise SVR-β values. Critically, Dissociation SVR-LSM estimates these relationships separately for two behaviors, resulting in two sets of voxel-wise SVR-β values. Next, the observed difference in lesion–behavior relationships is calculated by subtracting one set of voxel-wise SVR-β values from the other; the resulting difference is called the ΔSVR-β value. Finally, voxel-wise p values are calculated by comparing the observed ΔSVR-β values to a null distribution of ΔSVR-β values derived from permutations in which the behavioral scores are shuffled. On each permutation, the two compared behaviors are shuffled identically. Both tails of the resulting distribution are examined, as each tail indicates the opposite selective impairment.
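To make the Dissociation SVR-LSM logic concrete, the sketch below computes a Δβ map and two-tailed permutation p values, applying one shared shuffle to both behaviors on each permutation. It is not the customized SVR-LSM GUI code used here: so that it runs with NumPy alone, it swaps in dual-form ridge regression (the hypothetical `beta_map`) as a stand-in for SVR, and the permutation count and regularization value are illustrative assumptions.

```python
import numpy as np


def beta_map(X, y, lam=1.0):
    """Hypothetical stand-in for an SVR-beta map: dual-form ridge weights.

    w = Xc^T (Xc Xc^T + lam * I)^(-1) yc, a weighted sum of participants'
    (centered) lesion maps. This is ridge regression, not SVR.
    """
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    dual = np.linalg.solve(Xc @ Xc.T + lam * np.eye(len(yc)), yc)
    return Xc.T @ dual


def dissociation_map(X, y1, y2, n_perm=1000, lam=1.0, seed=0):
    """Delta-beta map with two-tailed permutation p values (identical shuffles)."""
    rng = np.random.default_rng(seed)
    observed = beta_map(X, y1, lam) - beta_map(X, y2, lam)
    null = np.empty((n_perm, X.shape[1]))
    for i in range(n_perm):
        order = rng.permutation(len(y1))  # one shuffle, applied to both behaviors
        null[i] = beta_map(X, y1[order], lam) - beta_map(X, y2[order], lam)
    # Upper tail: beta for y1 exceeds beta for y2 more than expected by chance.
    p_upper = (np.sum(null >= observed, axis=0) + 1) / (n_perm + 1)
    # Lower tail: beta for y1 falls below beta for y2 more than expected by chance.
    p_lower = (np.sum(null <= observed, axis=0) + 1) / (n_perm + 1)
    return observed, p_upper, p_lower
```

Because ridge weights are linear in the behavioral scores, this stand-in's Δβ equals the ridge map of y1 − y2; SVR's epsilon-insensitive fit is not linear in the scores, so that shortcut should not be assumed for true ΔSVR-β values. Which tail corresponds to which selective impairment depends on the sign convention of the behavioral scores.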

All other settings in the Dissociation SVR-LSM analyses were identical to those in the standard SVR-LSM analyses. That is, lesion volume was regressed out of both voxels and behavior, voxels were considered only if they were lesioned in at least 10% of participants, voxel-wise p values were generated using 10,000 permutations, and multiple comparisons correction of the voxel-wise values included CFWER with v = 100 as well as cluster correction with an FWER p threshold of .05.
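The CFWER correction can be sketched as follows, following the logic described by Mirman and colleagues (2018): a p value threshold is chosen so that, across permuted maps, more than v voxels fall below it in at most a proportion α of permutations. This NumPy version is a sketch under assumptions, not the SVR-LSM GUI's code: it assumes one-tailed statistics in which larger values indicate a stronger effect, the function names are hypothetical, details such as tie handling may differ from the toolbox, and cluster-level correction is not shown.

```python
import numpy as np


def permutation_p(stat, null_stats):
    """One-tailed voxel-wise p: share of permuted maps at least as large (plus one)."""
    n_perm = null_stats.shape[0]
    return (np.sum(null_stats >= stat, axis=0) + 1) / (n_perm + 1)


def cfwer_threshold(null_stats, v=100, alpha=0.05):
    """p threshold such that more than v null voxels pass in at most alpha of permutations."""
    n_perm, n_vox = null_stats.shape
    if n_vox <= v:
        raise ValueError("need more voxels than v")
    # p values of each permuted map against the full null set (including itself).
    null_p = np.array([np.sum(null_stats >= m, axis=0) / n_perm for m in null_stats])
    # (v + 1)-th smallest p in each permuted map: more than v voxels pass a
    # threshold t (p < t) exactly when this value is below t.
    kth_smallest = np.sort(null_p, axis=1)[:, v]
    return np.quantile(kth_smallest, alpha)


def cfwer_survivors(stat, null_stats, v=100, alpha=0.05):
    """Boolean mask of voxels surviving CFWER correction."""
    p = permutation_p(stat, null_stats)
    return p < cfwer_threshold(null_stats, v, alpha)
```

Under this logic, a map with more than v surviving voxels (e.g., the 230 and 421 voxels reported in the Results, against v = 100) implies, with probability at least 1 − α, that at least one surviving voxel reflects a true lesion–behavior association.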

Results

Lesion Symptom Mapping Analyses

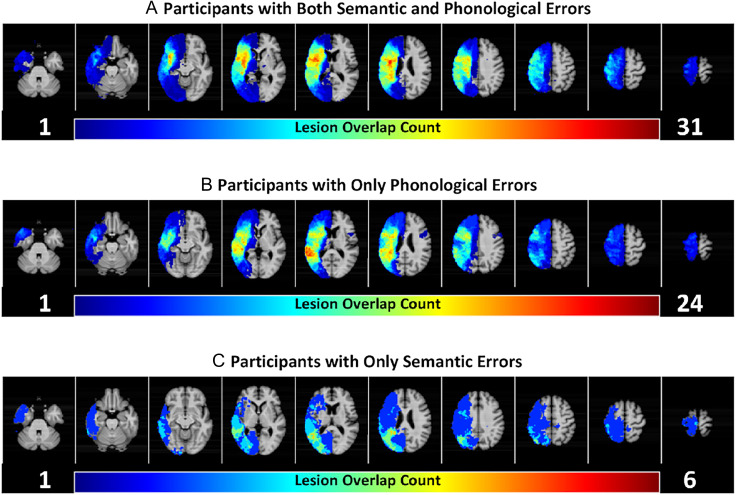

Lesion–symptom mapping analyses were used to investigate the brain basis of phonological SEM and semantic SEM. Lesion–behavior relationships for phonological SEM were investigated in participants who committed at least five phonological errors (n = 70). Lesion overlap maps revealed broad coverage of the left middle cerebral artery territory for participants included in phonological SEM analyses (Figure 5A, B). Similarly, lesion–behavior relationships for semantic SEM were investigated in participants who committed at least five semantic errors (n = 42), and lesion overlap maps again revealed broad coverage of the left middle cerebral artery territory for these participants (Figure 5A, C). Across the groups, participants had an average lesion volume of 114 cm³ (SD = 84 cm³). On average, participants detected 44% (SD = 29%) of their phonological speech errors and 34% (SD = 24%) of their semantic speech errors.

Figure 5.

Lesion coverage for included participants. Participants are grouped by whether they committed sufficient (i.e., at least five) phonological and/or semantic errors, the requirement for inclusion in analyses of phonological SEM and/or semantic SEM. (A) Participants with at least five phonological errors and at least five semantic errors (n = 36). (B) Participants with sufficient phonological errors but not semantic errors (n = 34). (C) Participants with sufficient semantic errors but not phonological errors (n = 6). Phonological SEM is analyzed in participants from (A) and (B), totaling 70 participants. Semantic SEM is analyzed in participants from (A) and (C), totaling 42 participants.

Standard Support Vector Lesion Symptom Mapping of SEM

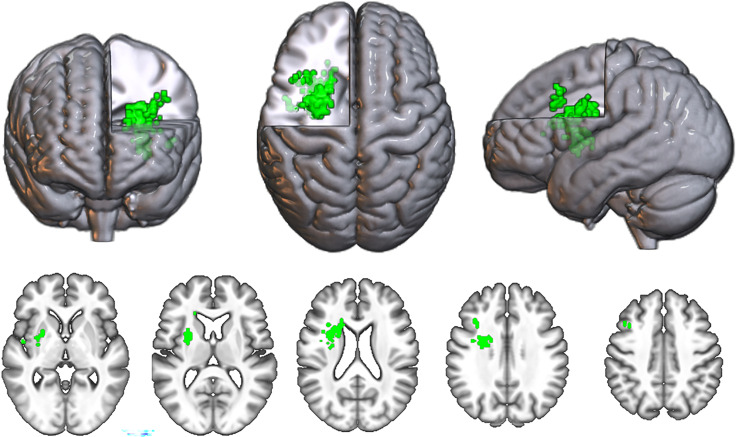

Lesion symptom map of phonological SEM.

The SVR-LSM map for phonological SEM contained 230 surviving voxels, exceeding the CFWER threshold of v = 100 voxels (Figure 6). We therefore reject the null hypothesis that there is no lesion–symptom association for phonological SEM.

Figure 6.

Standard SVR-LSM map for phonological SEM. All significant voxels at CFWER v = 100 are shown in green.

The map contained one significant cluster at FWE < .05 that consisted of 199 voxels with a center of mass at MNI coordinates −27, 3, 20. As expected, this cluster was located in and around the frontal white matter, consistent with the findings in Mandal and colleagues (2020).

Lesion symptom map of semantic SEM.

The lesion–symptom map for semantic SEM contained no voxels that survived the CFWER threshold at v = 100 voxels.

Dissociation Lesion Symptom Mapping of SEM

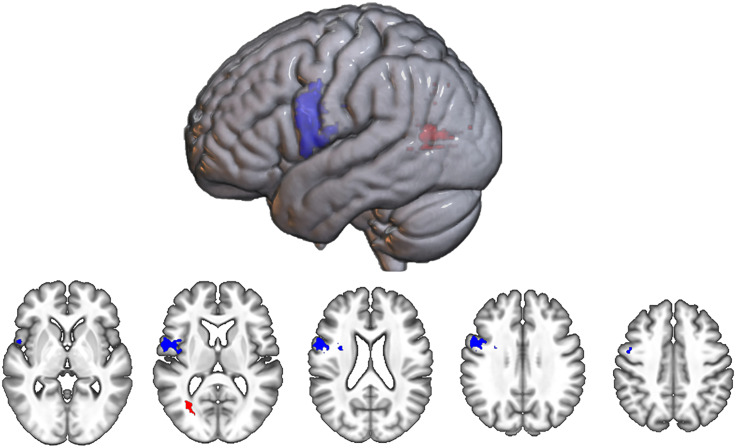

Next, we used Dissociation SVR-LSM to determine whether lesions reveal a double dissociation between phonological SEM and semantic SEM. On the basis of the results of Experiment 1, we expected lesions to brain regions associated with apraxia of speech (e.g., ventral motor and premotor cortex; see Basilakos, Rorden, Bonilha, Moser, & Fridriksson, 2015; Graff-Radford et al., 2014) to be more strongly related to phonological SEM than to semantic SEM. Conversely, we expected ventral stream lesions associated with auditory comprehension or semantic deficits to be more strongly related to semantic SEM than to phonological SEM.

Lesions that reduce phonological SEM more than semantic SEM.

The Dissociation map for lesions that reduce phonological SEM more than semantic SEM contained 421 surviving voxels, surpassing the CFWER threshold of v = 100 voxels (Figure 7), which allows us to reject the null hypothesis that there is no lesion–symptom association. The map contained one significant cluster at FWE < .05, consisting of 414 voxels. This cluster was predominantly localized to the ventral precentral gyrus with a center of mass at MNI coordinates −49, 2, 19 (Figure 7).

Figure 7.

Dissociation SVR-LSM maps for lesions that reduce phonological SEM more than semantic SEM (blue) and for lesions that reduce semantic SEM more than phonological SEM (red). All significant voxels at CFWER v = 100 are shown.

Lesions that reduce semantic SEM more than phonological SEM.

The Dissociation map for lesions that reduce semantic SEM more than phonological SEM contained 71 surviving voxels and therefore did not pass the CFWER threshold of 100 voxels (Figure 7), indicating that a result of this size could plausibly arise under the null hypothesis (i.e., with a probability greater than 5%). The map did not contain any significant clusters at FWE < .05, but one cluster centered at MNI coordinates −31, −66, 8 approached significance (56 voxels, p = .052). This cluster was located posteriorly, within white matter beneath the temporo-parieto-occipital (TPO) junction.

GENERAL DISCUSSION

Main Findings

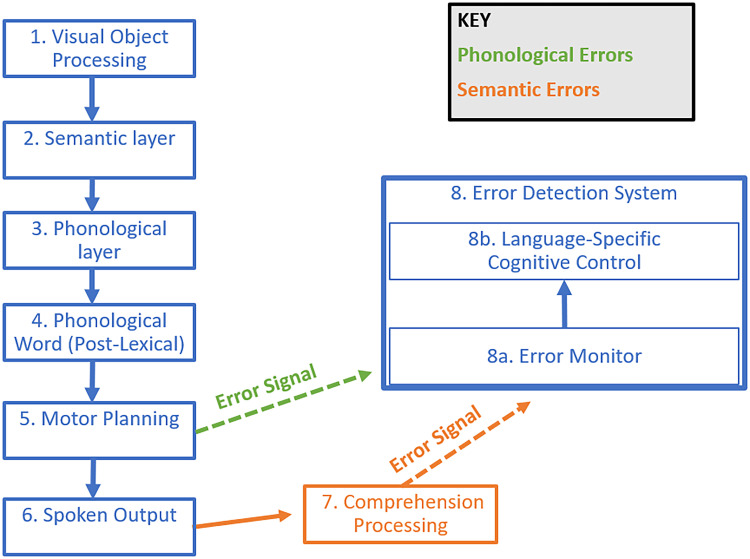

We investigated critical behavioral and neural substrates supporting SEM of phonological and semantic errors in people with aphasia. Altogether, the findings indicate that SEM of phonological errors involves different neurocognitive mechanisms than SEM of semantic errors. Motor speech deficits, as well as lesions to the ventral motor cortex, a region involved in motor speech (Conant, Bouchard, & Chang, 2014), selectively reduced SEM of phonological errors relative to SEM of semantic errors. Furthermore, deficits in auditory word comprehension related to reduced SEM of semantic errors. We also found a trend-level relationship between reduced semantic SEM and white matter lesions beneath the TPO junction. Finally, we found that semantic control deficits reduced SEM across all error types, supporting the proposition that cognitive control is a critical underlying ability for SEM.

Distinct Mechanisms for Monitoring Phonological Errors and Monitoring Semantic Errors

Critically, our neural and behavioral investigations differentiated SEM of phonological errors from SEM of semantic errors. In particular, the motor cortex and motor abilities appeared to support SEM of phonological errors, whereas auditory comprehension abilities supported SEM of semantic errors. Consequently, in line with proposals by various researchers (Nozari, 2020; Postma, 2000), we conclude that multiple SEM mechanisms are at play during speech. That is, while speaking, people monitor their motor planning for phonological errors (Figure 8, green) and also monitor their speech output for semantic errors (Figure 8, orange). Importantly, we also found evidence of mechanisms common to SEM of both semantic and phonological errors. One such common mechanism was semantic control, a language-specific cognitive control ability, which supported SEM irrespective of error type (Figure 8, Box 8B). In the following sections, we elaborate on these distinct and common mechanisms and propose finer details for each.

Figure 8.

Hypothesized accounts for SEM of phonological errors (green) versus SEM of semantic errors (orange).

Phonological Error Monitoring Leverages Conflict Monitoring of Motor Speech

Although our findings suggest that motor speech is monitored for phonological errors, it remains unclear how this monitoring takes place. To date, mechanisms for monitoring motor speech have been proposed only by sensorimotor models. For example, as mentioned above, sensorimotor models in their simplest interpretation indicate that both auditory and somatosensory perception of speech are important for SEM. However, our Bayesian analysis supported the absence of a relationship between SEM and auditory speech perception. Notably, our measure of auditory speech perception, pseudoword discrimination, required an explicit comparative judgment of two pseudowords that differed by a single phoneme, and as such may not perfectly approximate the SEM mechanisms proposed by sensorimotor models. This measure might also rely on sensorimotor processing in addition to the sensory-perceptual processing described in sensorimotor models. Nevertheless, sensorimotor models would still predict a relationship between SEM and this measure to the extent that both rely on phoneme perception. Moreover, the relationship we found between motor speech ability and phonological SEM was in the opposite direction from that predicted by sensorimotor models. In summary, although we did not examine the contribution of somatosensory perception to SEM, our findings largely run counter to sensorimotor model predictions of SEM.