Abstract

Dense pixel matching problems such as optical flow and disparity estimation are among the most challenging tasks in computer vision. Recently, several deep learning methods designed for these problems have been successful. A sufficiently large effective receptive field (ERF) and a high resolution of spatial features within a network are essential for providing higher-resolution dense estimates. In this work, we present a systematic approach to designing network architectures that provide a larger receptive field while maintaining a higher spatial feature resolution. To achieve a larger ERF, we utilized dilated convolutional layers. By aggressively increasing dilation rates in the deeper layers, we were able to achieve a sufficiently large ERF with significantly fewer trainable parameters. We used the optical flow estimation problem as the primary benchmark to illustrate our network design strategy. The benchmark results (Sintel, KITTI, and Middlebury) indicate that our compact networks can achieve comparable performance in the class of lightweight networks.

Keywords: Dense prediction, optical flow estimation, dilated convolution, compact network, network receptive field, gridding artifact

1. Introduction

Deep learning methods, especially convolutional neural networks (CNNs), have been successful and in some cases have surpassed classical methods for pixel-level prediction problems. Semantic segmentation [21, 20], next frame prediction [22, 43], depth estimation from a single image [6, 5], and optical flow estimation [31] are among the problems that can be solved effectively using machine learning algorithms.

Current models for optical flow estimation generally adapt existing CNN architectures that were designed for other computer vision tasks such as image classification or semantic segmentation [4, 14]. The optical flow problem, however, is substantially different from these tasks. Unlike image classification, which requires a holistic, scale- and shift-invariant approach, flow estimation involves pixel-level coordinate and photometric transformations and requires correspondence of spatial features.

Considering the spatiotemporal nature of the dense flow estimation problem, we have designed a receptive field-guided network that takes two images (a reference frame and its next frame) as input and generates a motion vector for every pixel in the reference image. Our systematic network design 1) has far fewer parameters than other learning-based methods; 2) can estimate large motions while maintaining fine motion resolution; 3) does not suffer from the vanishing problem when estimating optical flow in regions of a scene with smaller objects moving at a faster velocity; and 4) can perform well in the presence of excessive occlusion.

Dilated convolutions are often used to design networks with a larger receptive field, such as for semantic segmentation [38, 2]; however, none of the existing designs go beyond several consecutive convolutional layers with higher dilation rates [30, 7, 44]. Increasing dilation rates by a factor of two in successive convolutional layers doubles the receptive field of the network. This, however, introduces severe gridding artifacts, which may limit the accuracy and resolution of the estimates for dense prediction problems [37]. One of our key findings is that increasing the dilation rate linearly (instead of exponentially) results in a faster rate of increase in the size of the receptive field with minimal gridding artifacts and a desirable Gaussian ERF of the network.

The main contributions of this work are as follows:

A simpler design strategy to build compact networks for dense prediction problems such as optical flow estimation using standard network layers.

A new design strategy to effectively increase the network receptive field with no or minimal reduction in feature resolution and to minimize the gridding problem.

We introduce two lightweight networks called DDCNets that can serve as building blocks for constructing more complex networks.

2. Related Work

While the primary focus of this work is on deep learning based methods for optical flow estimation, a comprehensive review of optical flow estimation methods is available elsewhere [31].

FlowNet is a pioneering supervised CNN-based framework developed for learning optical flow from ground truth motion vectors [3]. FlowNet-Simple is designed as an encoder-decoder structure. FlowNet-Correlation is a variation of FlowNet-Simple that uses a custom layer, called a correlation layer, to explicitly match feature maps extracted from each image in a sequence. Both methods lack the ability to recover the high-resolution features needed to accurately estimate optical flow and clear motion boundaries. These methods use a variational approach as an optional final refinement to improve the estimation.

FlowNet2 improved upon the FlowNets by stacking multiple networks, adding a small-displacement estimation network, and scheduling the order in which the training data are presented to the network [13]. In a stacked network, higher-level blocks take the estimated flows from the previous blocks alongside copies of the input images as their input. Some of these FlowNet-Simple blocks were trained on specific datasets to achieve higher accuracy in estimating smaller displacements. Their experiments show that using intermediate flow estimates to warp one of the images and using the difference between the warped image and the reference image further improves the performance of FlowNet2. DispNet extends this idea of supervised learning for optical flow estimation to disparity and scene flow estimation [24].

Other methods such as a 3D Voxel2Voxel CNN [36], unsupervised learning [1, 16], and a pyramidal approach [27] have been developed based on FlowNet. Most of these methods follow an encoder-decoder architecture [18] in which convolutional layers are stacked in the encoder section to extract high-level features from the input data. Convolutional strides greater than one in the downsampling convolutional layers of the encoder section are used to increase the receptive field of the network. The decoder section attempts to recover the feature resolution using deconvolutional (or fractionally-strided convolutional) layers [39] or upsampling layers followed by a convolutional layer.

Teney et al. [35] designed a CNN for optical flow estimation inspired by the classical spatiotemporal motion-energy filters of Heeger et al. [8]. Their design requires far fewer training samples than FlowNet. They achieve this by enforcing different constraints on the learned filters at different layers of the network.

SpyNet is much smaller than the FlowNet models and performs better on some standard benchmarks but also suffers from issues associated with classical spatial-pyramid approaches [27]. PWC-Net and LiteFlowNet models use feature warping instead of image warping along with correlation layers as in FlowNet-Correlation [32]. LiteFlowNet is among the best-performing methods in the lightweight deep learning category. It uses convolution layers with strides of two to build a pyramid of feature maps. A descriptor matching approach is used to obtain a coarser estimate of optical flow using a custom layer similar to the correlation layer used in the FlowNet-Correlation network. Feature maps are warped (as in PWC-Net) from the finer levels of the pyramid using coarser flow estimates, and descriptor matching is applied to obtain a finer flow estimate [11]. The feature warping steps improve the efficiency of descriptor matching. However, errors introduced in the coarser levels are propagated to the finer levels in the presence of occlusion and homogeneous regions. LiteFlowNet2 and LiteFlowNet3 are designed to address the issues associated with pyramidal error propagation. These models are faster and more accurate than the original LiteFlowNet [12, 10].

The spatial pyramid approach has two other major issues: the vanishing problem, caused by smaller objects moving at a faster velocity in the scene, and ghosting artifacts, which arise when images are warped (as in FlowNet2 and SpyNet) using coarser-level flow estimates; for example, a ghosting artifact appears in a warped image when an occluding object moves over a stationary background [15]. In contrast to LiteFlowNet and SpyNet, our model does not utilize a coarse-to-fine approach and therefore does not suffer from ghosting artifacts [15]. Our approach is to design networks with appropriate receptive fields and allow the network to learn the parameters necessary for estimating larger motions.

All three LiteFlowNets have complicated network structures. The training procedure of LiteFlowNet3 is also complicated, as its authors introduce extra network modules after initial training, adjust the learning rates for these modules, and then proceed with training the whole network.

More recent techniques utilize attention and gating mechanisms to select pertinent features and improve performance on a wider range of flow magnitudes and in regions with occlusion. CA-DCNN is a recent design that uses a correlation volume computed from pyramidal feature maps (convolutional layers), a series of dilated convolution layers for expanding the network field of view of the correlation volume, and an attention mechanism to select only relevant feature maps for further processing [40]. In contrast, the DDCNets presented in this work use no specialized pyramidal, warping, or correlation-volume components. Among the attention-based methods, RAFT iteratively improves full-resolution optical flow estimates using 4D multi-scale correlation volumes computed from pyramidal features. The iterative improvement was achieved using convolutional gated recurrent units (convGRU) with convGRU weights fixed or tied across iterations [34]. The GMA model uses a self-attention weighting of context or appearance features of self-similarity (key) to update motion features (values) estimated from a correlation volume of pyramidal features as in RAFT [17]. To improve the estimation of large displacements, the SAMFL model uses PWC-Net as a baseline along with a self-attention mechanism to identify long-range feature dependencies and an explicit estimate of occlusion features [41]. PMC-PWC utilizes multiscale context maps for occlusion detection and a cost component defined as a function of motion edge boundaries to achieve higher optical flow contrast along the motion boundaries [42].

Several unsupervised networks have also been designed to tackle the optical flow problem. The matching task performed by the network depends on the network structure and is mostly similar to that in supervised models. The major difference between supervised and unsupervised networks is in the choice of the loss function. In general, the loss function of these methods is based on classical formulations of flow estimation, brightness constancy, and smoothness assumptions. Training an unsupervised method does not require ground-truth flow vectors; two input images are sufficient. In such methods, the cost function for training is defined without requiring knowledge of the ground truth motion in the input sequence. Typically, a backward warping procedure is used to quantify the accuracy of the estimated flow indirectly by comparing the first frame with the second frame warped using the estimated optical flow.
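The backward warping check described above can be sketched in a few lines. This is a minimal illustration, not the loss of any specific method cited here: the function names, the use of bilinear sampling, the mean absolute difference, and the choice to skip pixels whose match falls outside the frame are all assumptions made for the example.

```python
def bilinear_sample(img, x, y):
    """Sample a grayscale image (list of rows) at real-valued (x, y)."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    top = (1 - ax) * img[y0][x0] + ax * img[y0][x1]
    bottom = (1 - ax) * img[y1][x0] + ax * img[y1][x1]
    return (1 - ay) * top + ay * bottom


def photometric_loss(frame1, frame2, flow_u, flow_v):
    """Mean absolute difference between frame1 and backward-warped frame2."""
    h, w = len(frame1), len(frame1[0])
    total, count = 0.0, 0
    for y in range(h):
        for x in range(w):
            xs, ys = x + flow_u[y][x], y + flow_v[y][x]
            if not (0 <= xs <= w - 1 and 0 <= ys <= h - 1):
                continue  # the match falls outside the frame (assumed policy)
            total += abs(frame1[y][x] - bilinear_sample(frame2, xs, ys))
            count += 1
    return total / count if count else 0.0


# Toy sequence: the scene content moves one pixel to the right.
frame1 = [[float(3 * x + 5 * y) for x in range(6)] for y in range(4)]
frame2 = [[frame1[y][max(x - 1, 0)] for x in range(6)] for y in range(4)]
true_u = [[1.0] * 6 for _ in range(4)]
zero = [[0.0] * 6 for _ in range(4)]
loss_true = photometric_loss(frame1, frame2, true_u, zero)  # correct flow
loss_zero = photometric_loss(frame1, frame2, zero, zero)    # zero-motion guess
```

In this toy case the correct flow yields a loss of zero, while the zero-motion guess is penalized, which is the signal an unsupervised method relies on in the absence of ground truth.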

Dense Spatial Transform Flow (DSTFlow) is among the earlier unsupervised networks and is based on the Spatial Transformer Network (STN) [28]. DSTFlow learns optical flow by directly minimizing a photometric constancy error. The cost function is designed based on variational methods. USCNN [1], DSTFlow [28], and UnFlow [25] are among the methods in this category. Back to Basics is an end-to-end unsupervised method based on a variational loss [16]. This method uses the FlowNet-Simple network structure but with an unsupervised loss function. USCNN is also among the early unsupervised CNNs for optical flow estimation. Ahmadi et al. utilized the brightness constancy term of Horn-Schunck to train this network [1].

Several other methods such as [19] can be categorized as semi-supervised methods. These methods have more complex cost functions based on several terms from various supervised and unsupervised methods.

3. Deep Dilated Convolutional Neural Network (DDCNet)

The receptive field (RF) of a neuron in the output layer represents the spatial extent of its visibility and access to a neighborhood of pixels in the input image sequence. The effective receptive field (ERF) represents the actual RF of a trained network [23]. Therefore, to detect the presence of large motion and to estimate large flow vectors, each neuron in the output layer should have a sufficiently large ERF.

The ERF of a network depends on two main factors, namely the network architecture (arrangement and type of the layers) and the learned network weights. The size, stride length, and activation functions of the filters, the network depth, and the use of other types of layers all contribute to the final ERF of the output units in a CNN. Even in fully connected networks, the learned weights can suppress the influence of selected input neurons on the network response. Therefore, we utilized the ERF instead of the RF as a guiding criterion for designing our networks.

We defined the spatial extent of ERF based on the access and visibility of the neurons in one of the input channels with respect to the central neuron in the u channel of the output layer. We numerically estimated the ERF of a network as the partial derivative of output neuronal responses with respect to each of the input neurons [23]. For general analysis, we focus on the central unit of just one output channel (u channel) in the network. Therefore, this ERF-map represents weighted contributions of each of the input neurons (image pixels) on the response of the central unit in the u channel of the output layer as a gradient measure (defined similarly for the v channel).
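To make the gradient-based definition concrete, the following minimal sketch computes an ERF profile for a toy case. It assumes a 1-D stack of 3-tap dilated convolutions whose kernels all hold the same constant weight and whose activations stay in the linear regime, so that the gradient of the central output unit with respect to the input is simply the composition of the dilated kernels. The function names and the three-layer example are illustrative and are not taken from the DDCNet implementation.

```python
def dilated_kernel(rate, size=3):
    """1-D kernel with `size` unit taps separated by `rate - 1` zeros."""
    kernel = [0.0] * ((size - 1) * rate + 1)
    for tap in range(size):
        kernel[tap * rate] = 1.0
    return kernel


def convolve(a, b):
    """Full discrete convolution of two 1-D sequences."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out


def erf_profile(dilation_rates):
    """Gradient of the central output unit w.r.t. each input position."""
    profile = [1.0]  # gradient of the output unit with respect to itself
    for rate in reversed(dilation_rates):  # back-propagate output -> input
        profile = convolve(profile, dilated_kernel(rate))
        peak = max(profile)
        profile = [p / peak for p in profile]  # rescale so the peak is 1
    return profile


profile = erf_profile([1, 2, 3])
# A constant 3 x 3 kernel is separable, so the 2-D ERF map of the equivalent
# 2-D network is the outer product of the 1-D profile with itself.
erf_map = [[a * b for b in profile] for a in profile]
```

For a trained network with nonlinear activations this shortcut no longer holds, and the partial derivatives would have to be obtained by back-propagation through the actual model.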

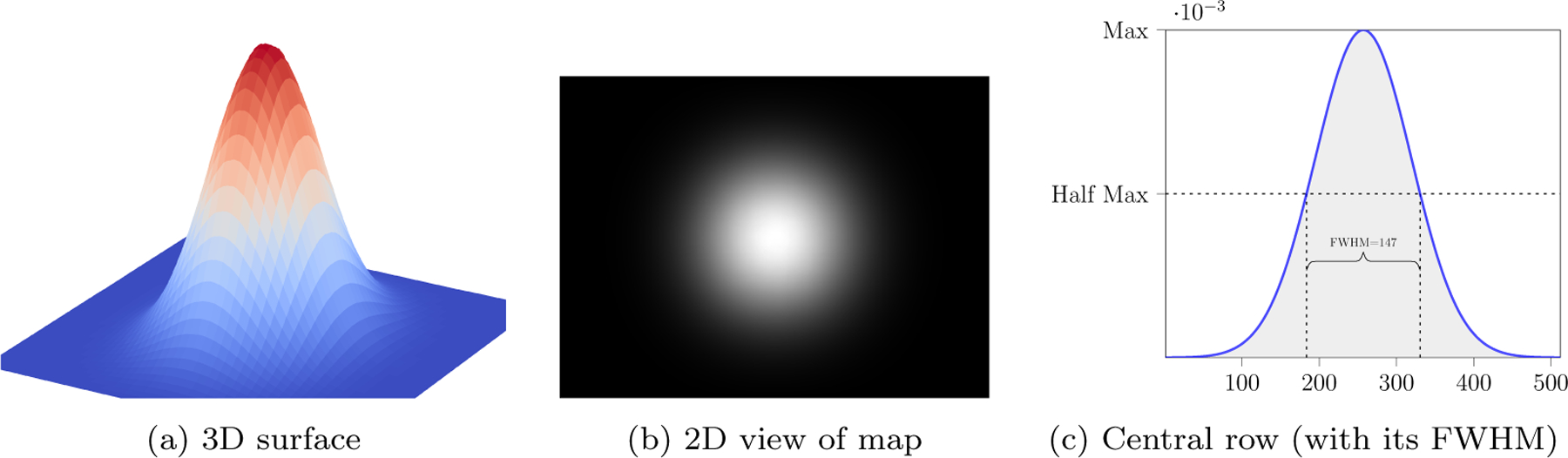

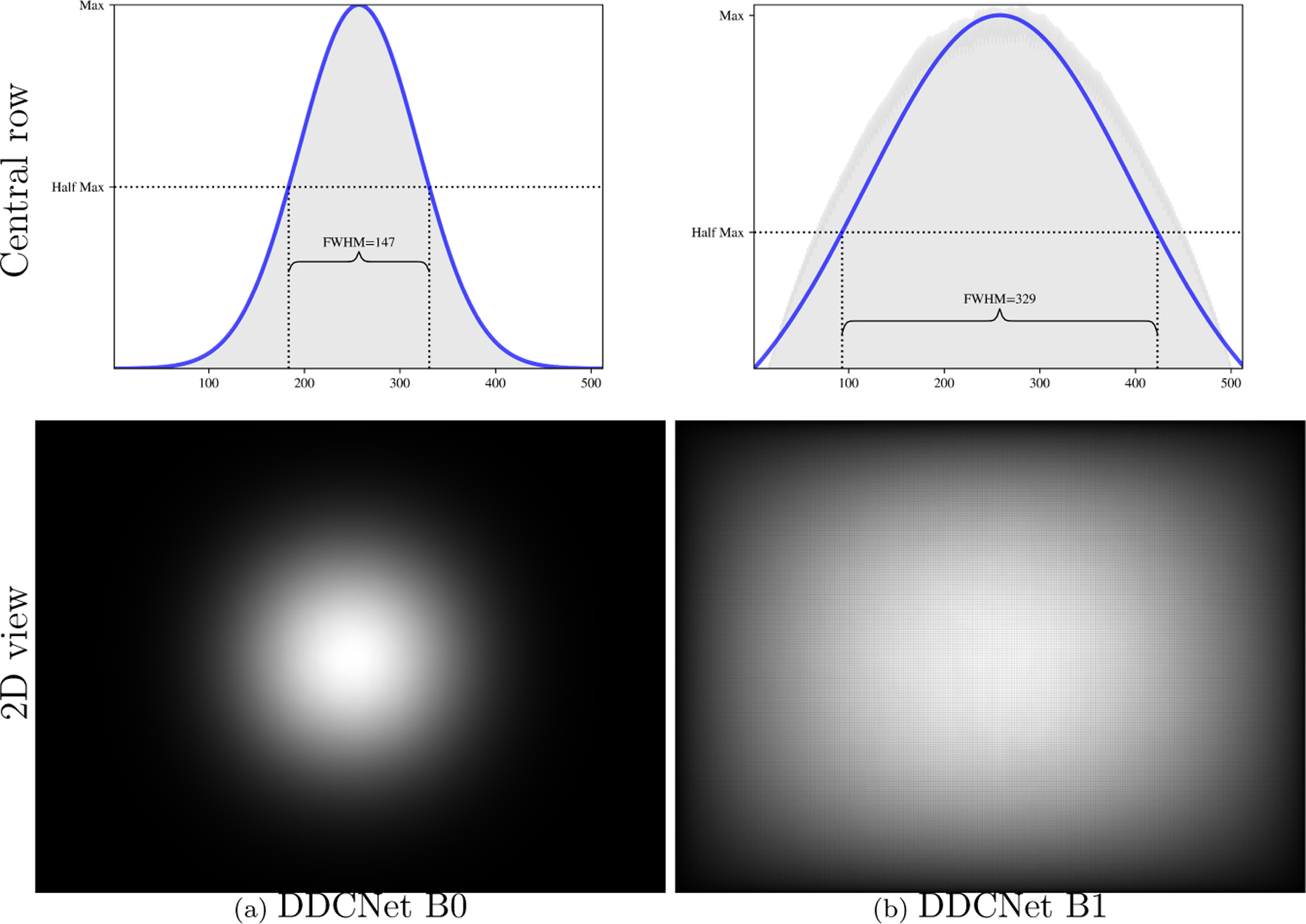

ERF maps can be visualized as 3D surfaces or gray-scale images as in Figure 1 for visual assessment of network ERF and for selecting candidate networks. For quantitative evaluation, we derived a measure of Full Width at Half Maximum (FWHM) from a Gaussian model of ERF weights along the center row of the ERF map (i.e. the row corresponding to the target output unit) as in Figure 1c.

Figure 1:

Effective Receptive Field of central output unit from DDCNet-B0. All kernels of the network were initialized with a constant weight. (a) shows surface plot of the ERF map, (b) shows the ERF as gray-scale image. (c) shows the 1-D plot of the central row in the ERF map and its FWHM.
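As an illustration of the FWHM measure, the sketch below derives it from a 1-D center-row profile. The text does not specify how the Gaussian model was fitted, so moment matching (using the weighted mean and variance of the profile) is an assumption made here, as are the function name and the synthetic test profile. For a Gaussian with standard deviation σ, FWHM = 2·sqrt(2·ln 2)·σ ≈ 2.355σ.

```python
import math


def gaussian_fwhm(profile):
    """FWHM of a Gaussian whose mean and variance match a 1-D ERF profile."""
    total = sum(profile)
    mean = sum(i * w for i, w in enumerate(profile)) / total
    variance = sum(w * (i - mean) ** 2 for i, w in enumerate(profile)) / total
    sigma = math.sqrt(variance)
    return 2.0 * math.sqrt(2.0 * math.log(2.0)) * sigma


# Synthetic center-row profile: a sampled Gaussian with sigma = 10 pixels.
sigma_true = 10.0
row = [math.exp(-0.5 * ((x - 100) / sigma_true) ** 2) for x in range(201)]
fwhm = gaussian_fwhm(row)  # close to 2.355 * sigma_true
```

A least-squares Gaussian fit would be an equally reasonable choice and may behave better on profiles with heavy tails or gridding.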

3.1. Extent and Shape of the Network ERF

Candidate optical flow networks should be able to find matching pixels in the input sequence and therefore require a sufficiently large ERF extent. More precisely, the network ERF should have coverage and visibility of matched pixels corresponding to the largest motions in the data. Therefore, our first design criterion is that the FWHM of the ERF of candidate networks should be larger than the flow magnitudes for the majority of the pixel locations.

ERF extent can also be increased by adding more convolutional layers; however, it increases only linearly with the network depth, as shown in Figure 2b. Deeper networks not only have more parameters to learn but are also more challenging to train due to the vanishing gradient problem [9]. Choosing larger filter kernels in the first network layer may provide a larger ERF, but this is less effective in CNNs as it drastically increases the number of learnable parameters [26].

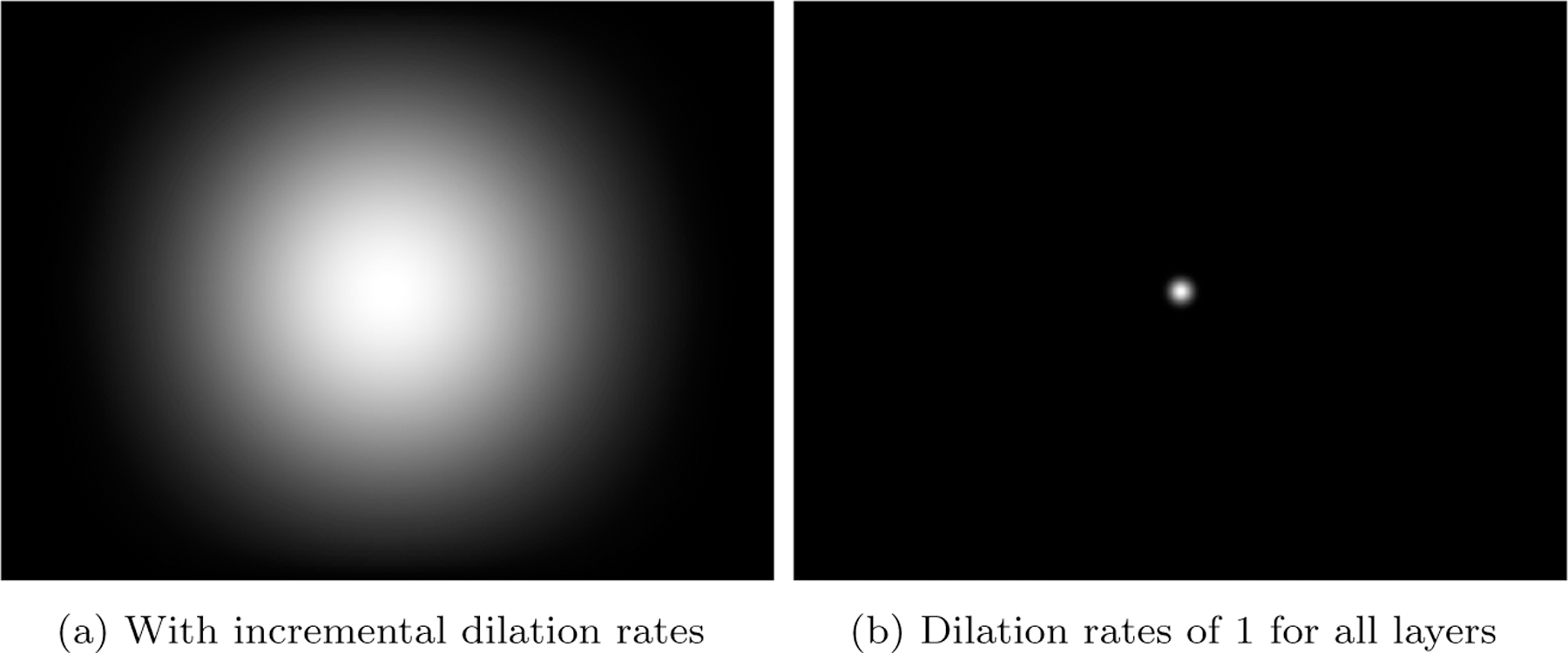

Figure 2:

ERF of dilated CNN vs classical CNN with 30 layers. (a): ERF when dilation rates increased from 1 through 30 in steps of 1. (b): ERF when dilation rate kept at 1 for all 30 layers i.e. no dilation.

Pooling layers allow a network to be spatially invariant and allow the ERF extent to grow exponentially as a function of the number of pooling layers, as in FlowNet [14, 4] and LiteFlowNet [11, 12, 10]. They are useful for classification tasks but undesirable for tasks such as optical flow, where a higher spatial resolution of features is likely desired.

Unlike pooling layers, dilated layers [38] do not cause loss of spatial resolution. We illustrate the effectiveness of dilated convolutional layers in Figure 2 by comparing the ERF of a network with 30 convolutional layers (Figure 2b) to that of a network with 30 dilated convolutional layers with increasing dilation rates (Figure 2a). In both networks, all other hyper-parameters (e.g. number of layers, size of filters, activation functions, etc.) were kept the same. It can be observed that a standard convolutional network would require hundreds of convolutional layers to achieve the ERF extent of the dilated network. Therefore, increasing the depth is not an effective approach to achieving a larger ERF.
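The gap between the two networks can be checked with the standard formula for the theoretical receptive field of stacked stride-1 convolutions, RF = 1 + Σ (k − 1)·r, where k is the kernel size and r the dilation rate of each layer. The short sketch below applies it to the two 30-layer configurations; note that it gives the theoretical RF, which is an upper bound on the extent and not the ERF measured in Figure 2. The function name is illustrative.

```python
def receptive_field(dilation_rates, kernel_size=3):
    """Theoretical receptive field (pixels per side) of stride-1 convolutions."""
    return 1 + sum((kernel_size - 1) * rate for rate in dilation_rates)


plain = receptive_field([1] * 30)        # 30 layers, dilation rate 1 throughout
dilated = receptive_field(range(1, 31))  # dilation rates 1, 2, ..., 30
# Number of undilated 3 x 3 layers needed to match the dilated extent.
layers_needed = (dilated - 1) // 2
```

Under these assumptions the undilated network spans 61 pixels and the dilated one 931 pixels, and matching the latter without dilation would take 465 layers, in line with the "hundreds of convolutional layers" noted above.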

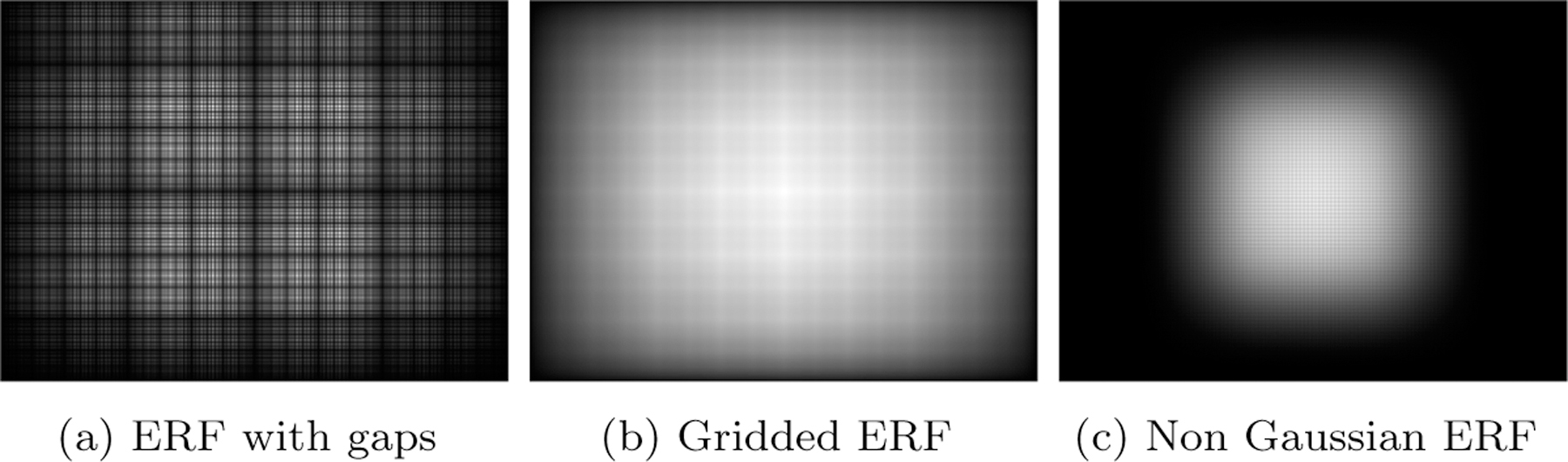

ERF shape is also an important determinant of network performance. While several approaches are available to achieve a bigger ERF, not all of them provide an optimal receptive field leading to the highest network accuracy. For example, a larger ERF with a much shallower network can be achieved by rapidly increasing the dilation rates between subsequent dilated convolution layers (e.g. doubling dilation rates). This, however, introduces undesirable ERF gridding artifacts. Figure 3 shows a few examples of undesirable ERFs.

Figure 3:

Examples of networks with undesirable ERF shapes.
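A small toy computation illustrates how the dilation schedule shapes the ERF. Assuming constant-weight 3-tap kernels and linear activations in 1-D (assumptions of this sketch, not a description of the networks in Figure 3), the ERF weight of an input position is proportional to the number of paths connecting it to the central output unit. The helper names and the "interior dips" roughness count are hypothetical choices for the example.

```python
def path_count_profile(dilation_rates, kernel_size=3):
    """Number of paths from each input position to the central output unit."""
    profile = [1]
    for rate in dilation_rates:
        span = (kernel_size - 1) * rate
        widened = [0] * (len(profile) + span)
        for i, count in enumerate(profile):
            for tap in range(kernel_size):
                widened[i + tap * rate] += count
        profile = widened
    return profile


def interior_dips(profile):
    """Count positions that are strictly lower than both neighbours."""
    return sum(
        1
        for i in range(1, len(profile) - 1)
        if profile[i] < profile[i - 1] and profile[i] < profile[i + 1]
    )


linear = path_count_profile([1, 2, 3])    # rates increased in steps of 1
doubling = path_count_profile([1, 2, 4])  # rates doubled at each layer
```

Here `linear` is `[1, 1, 2, 2, 3, 3, 3, 3, 3, 2, 2, 1, 1]`, a smooth single-peaked profile, whereas `doubling` is `[1, 1, 2, 1, 3, 2, 3, 1, 3, 2, 3, 1, 2, 1, 1]`, which is wider but jagged, with a dip at the center. The alternating pattern is a small-scale analogue of the gridding discussed above.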

In general, when the temporal sampling rate is high, it is expected that the majority of the pixels have smaller flow magnitudes. Even when the frame rate is high, several locations may exhibit larger flow magnitudes due to relative motion between segments of a scene and the observer (camera), such as the quick movement of a scene segment toward or away from the observer. Therefore, weighted spatial localization of the receptive field is important for flow estimation for the following reasons.

First, the matching pixels in the subsequent frames for any pixels in the reference frame are expected to be closer and within a certain radius. While it is important for a method to look far enough in the subsequent frames to account for large motions, the matching pursuit should give priority to matching pixels that are near the reference pixels. This property is also essential for achieving high contrast motion estimates. Second, the brightness constancy constraints typically employed in non-learning-based optical flow estimation methods are too restrictive and are likely violated in real-world sequences.

Therefore, similar to patch-matching methods, an optimal matching is achieved between a reference pixel and candidate matching pixels when there is also flow agreement among neighboring pixels. In classical optical flow methods, this is achieved through a smoothness constraint on the flow estimates. Our earlier experiments revealed that directly enforcing this smoothness constraint in the cost function is not useful for learning-based methods and introduces difficulties in correctly estimating displacements, especially in the motion boundaries. These two somewhat contradictory requirements suggest that a Gaussian-shaped ERF is ideally suited for optical flow estimation. Therefore, a sufficiently larger Gaussian ERF could be useful for estimating larger flows. And by the nature of its spatial weighting of matching pixels based on their distance from a reference pixel, a Gaussian ERF could provide sharper motion boundaries in the flow estimates.

3.2. Deep Dilated Convolutional Neural Network Basic: DDCNet-B0

Based on the desired characteristics of the learning-based models discussed earlier, we devise the following criteria to guide our network designs.

ERF Extent: The FWHM of the ERF map should cover the majority of motion vectors in the input training data.

ERF Shape: ERF of the untrained network should be Gaussian without significant gridding artifacts. It should be noted that fine gridding may be introduced by the network after training to achieve high contrast motion boundaries.

Feature Resolution: Because spatial information is critical for optical flow estimation, we propose to avoid or minimize pooling and downsampling layers in the network as much as possible. We expect that this will retain the features at their original resolution for eventual summative processing.

Compactness: Achieve a compact network by using relatively fewer layers and fewer filters to minimize the number of trainable parameters and to reduce training and testing time.

End-to-end training: After designing the network, minimum user supervision should be used to train the network. We will not apply any post-processing on the results of the network. Entire training should be performed in an end-to-end fashion.

Our basic learning-based optical flow estimation model receives two consecutive frames as input and generates optical flow estimates as output. As per our design criteria, we minimized use of downsampling to avoid losing spatial resolution. Therefore, all the convolutions are with stride one and there is no downsampling layer in the network. Two main parameters of our design were the number of layers and dilation factors of each of the convolution layers. Based on our findings, we increased the dilation rates linearly across the network to minimize gridding artifacts and to achieve a Gaussian ERF. The dilation rate was set to 1 for the first layer and was increased by 1 for each of the subsequent layers.

The optimal number of dilated convolutional layers was determined as the minimum number of dilated convolutional layers required to achieve a network whose FWHM of the ERF was large enough to cover the maximum flow magnitudes in the training data (see Figure 4). Figure 5 shows the receptive field for different networks as a function of the number of layers in the network. With 25 dilated convolutional layers, the spatial extent of the network ERF was sufficient to estimate larger flow velocities from the image sequences.

Figure 4:

Histogram of endpoint flow vectors (log scale) in Sintel training data vs Full-Width Half Maximum (FWHM) of DDCNet-Basic0 (B0). Using the histogram of flow magnitudes of training image sequences shown in Figure (a), the depth of the network was increased until the FWHM of the network ERF, shown in (b), covered the majority of the flow magnitudes in the training data.

Figure 5:

Effect of having more convolutional layers with increasing dilation rates on ERF. Each image shows the effect of the number of layers L on the network ERF. Starting with a dilation rate of 1 for the first layer, the dilation rates of each of the subsequent layers were increased in steps of 1 up to the Lth layer. For example, the network corresponding to the left-most ERF had 1 convolutional layer with a dilation rate of 1, and the network corresponding to the rightmost ERF had 30 convolutional layers with dilation rates from 1 through 30 in steps of 1. The network (DDCNet-B0) with 25 layers was chosen as an optimal choice based on our design criteria.
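The depth-selection rule (add layers until the FWHM of the ERF covers the flow magnitudes of interest) can be sketched for an idealized untrained network. The sketch assumes constant-weight 3-tap kernels, linear activations, and a moment-matched Gaussian model, in which case each layer with dilation rate r adds a variance of 2r²/3 to the ERF profile. The 100-pixel target and the function names are hypothetical; the actual design used the measured ERF together with the Sintel flow histogram of Figure 4.

```python
import math

FWHM_PER_SIGMA = 2.0 * math.sqrt(2.0 * math.log(2.0))


def untrained_fwhm(num_layers):
    """FWHM (pixels) of the idealized ERF for dilation rates 1..num_layers."""
    # Equal taps at offsets (-r, 0, r) have variance 2 * r**2 / 3, and the
    # variances of successive layers add up.
    variance = sum(2.0 * rate**2 / 3.0 for rate in range(1, num_layers + 1))
    return FWHM_PER_SIGMA * math.sqrt(variance)


def min_layers_for(target_fwhm, max_layers=200):
    """Smallest depth whose idealized FWHM reaches `target_fwhm`, or None."""
    for num_layers in range(1, max_layers + 1):
        if untrained_fwhm(num_layers) >= target_fwhm:
            return num_layers
    return None


depth = min_layers_for(100.0)  # hypothetical target of 100 pixels
```

Because this idealization ignores nonlinear activations, padding effects, and training, the depth it returns should be read only as a rough starting point for the empirical search described above.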

Figure 6 shows the architecture of our basic deep dilated CNN (DDCNet-B0) for optical flow estimation. It has 25 layers of dilated convolutions with dilation rates from 1 through 25 in steps of 1. To improve the accuracy of flow estimates and motion boundaries, we appended four more layers to the network with decreasing dilation rates of (12, 6, 3, 1). Except for the last layer, each of these layers has 64 filters of size 3 × 3 with a ReLU activation function. The last layer is a convolution layer (dilation rate is 1) with two 1 × 1 filters and a linear activation function to generate the final flow estimates (UV-map). In this and the following network diagrams, M[ℓ] is the number of filters, r[ℓ] is the dilation rate, and g[ℓ] denotes the activation function in layer ℓ.

Figure 6:

Architecture of our basic deep dilated CNN (DDCNet-B0) for optical flow estimation. M[ℓ] is the number of filters, r[ℓ] is the dilation rate, and g[ℓ] is the activation function of layer ℓ.

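Endpoint Error (EE) characterizes network performance at a given pixel location (i, j) within an image sequence and is estimated as the Euclidean distance between the ground truth and estimated flow vectors at that location as follows:

$$EE(i, j) = \sqrt{\left(u_{gt}(i, j) - u_{est}(i, j)\right)^2 + \left(v_{gt}(i, j) - v_{est}(i, j)\right)^2} \tag{1}$$

where (u_gt, v_gt) and (u_est, v_est) denote the components of the ground truth and estimated flow vectors, respectively.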

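Average Endpoint Error (AEE) characterizes network performance over all pixel locations within an image sequence. We use the AEE metric for training the networks; it is estimated as the average of the EEs at all pixel locations as follows:

$$AEE = \frac{1}{HW} \sum_{i=1}^{H} \sum_{j=1}^{W} EE(i, j) \tag{2}$$

where EE(i, j) is the endpoint error at pixel location (i, j), and H and W are the image height and width in pixels.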

An average AEE measure based on the AEEs of all image sequences in a benchmark dataset is used for assessing the network performance on benchmark datasets.
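The two error measures can be written out directly from their definitions. The sketch below is a plain-Python illustration in which a flow field is stored as a grid of (u, v) tuples; this representation and the function names are choices made for the example only.

```python
import math


def endpoint_error(u_gt, v_gt, u_est, v_est):
    """Euclidean distance between ground truth and estimated flow vectors."""
    return math.hypot(u_gt - u_est, v_gt - v_est)


def average_endpoint_error(flow_gt, flow_est):
    """Mean endpoint error over all pixels of two H x W grids of (u, v)."""
    errors = [
        endpoint_error(gu, gv, eu, ev)
        for row_gt, row_est in zip(flow_gt, flow_est)
        for (gu, gv), (eu, ev) in zip(row_gt, row_est)
    ]
    return sum(errors) / len(errors)


flow_gt = [[(3.0, 4.0), (0.0, 0.0)], [(1.0, 1.0), (2.0, 0.0)]]
flow_est = [[(0.0, 0.0), (0.0, 0.0)], [(1.0, 1.0), (2.0, 3.0)]]
aee = average_endpoint_error(flow_gt, flow_est)  # errors 5, 0, 0, 3
```

For this 2 × 2 example the per-pixel errors are 5, 0, 0, and 3, giving an AEE of 2.0 pixels.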

3.3. Strategies for Improving DDCNet-B0 Network

To increase the accuracy of the method and reduce the training time, we considered further improvements to our basic network described in the previous section. Adding more layers, more filters, and stacking several sub-networks on top of each other are some of the common strategies available for improving the accuracy of learning-based methods [14]. These strategies, however, require substantially more memory and computational resources. Therefore, we retain our focus on simplicity and compactness as we systematically improve our basic network architecture DDCNet-B0.

3.4. Spatial Feature Extractor Module

Raw pixel intensities of the image sequences are not ideal for matching pursuits as they are strongly influenced by changes in scene illumination and scene orientation. As can be observed from our preliminary results of DDCNet-B0, the network was able to internally extract relevant and robust features, identify pixel correspondences between frames, and estimate the displacement vectors using only the raw input images and a suitable cost function for optimization and learning.

One possible avenue to improve the accuracy of the estimates is by directly allowing the network to extract and utilize illumination-independent image sequences for flow estimation. The network estimation accuracy slightly improved when gradient image sequences (spatial derivatives) along with raw image sequences were used as input to the same network, thus validating the merits of this approach (results not shown). The gradient images likely provided more robust features less dependent on temporal noise and illumination changes for flow estimation. By decoupling spatial feature extraction steps from temporal matching steps in our basic network design DDCNet-B0, the network can possibly learn these spatial derivatives and other space-invariant features before matching pursuits. Therefore, we added a 3-layer section in the network for this preprocessing task which takes each image separately and generates 64 feature maps for further processing. The preprocessing section is shared between the first and second frames of the image sequence i.e. same filters are used for both images in the input image sequence. We refer to this preprocessing part of the network as a spatial feature extractor and the corresponding network segment is highlighted in light green color in Figure 7.

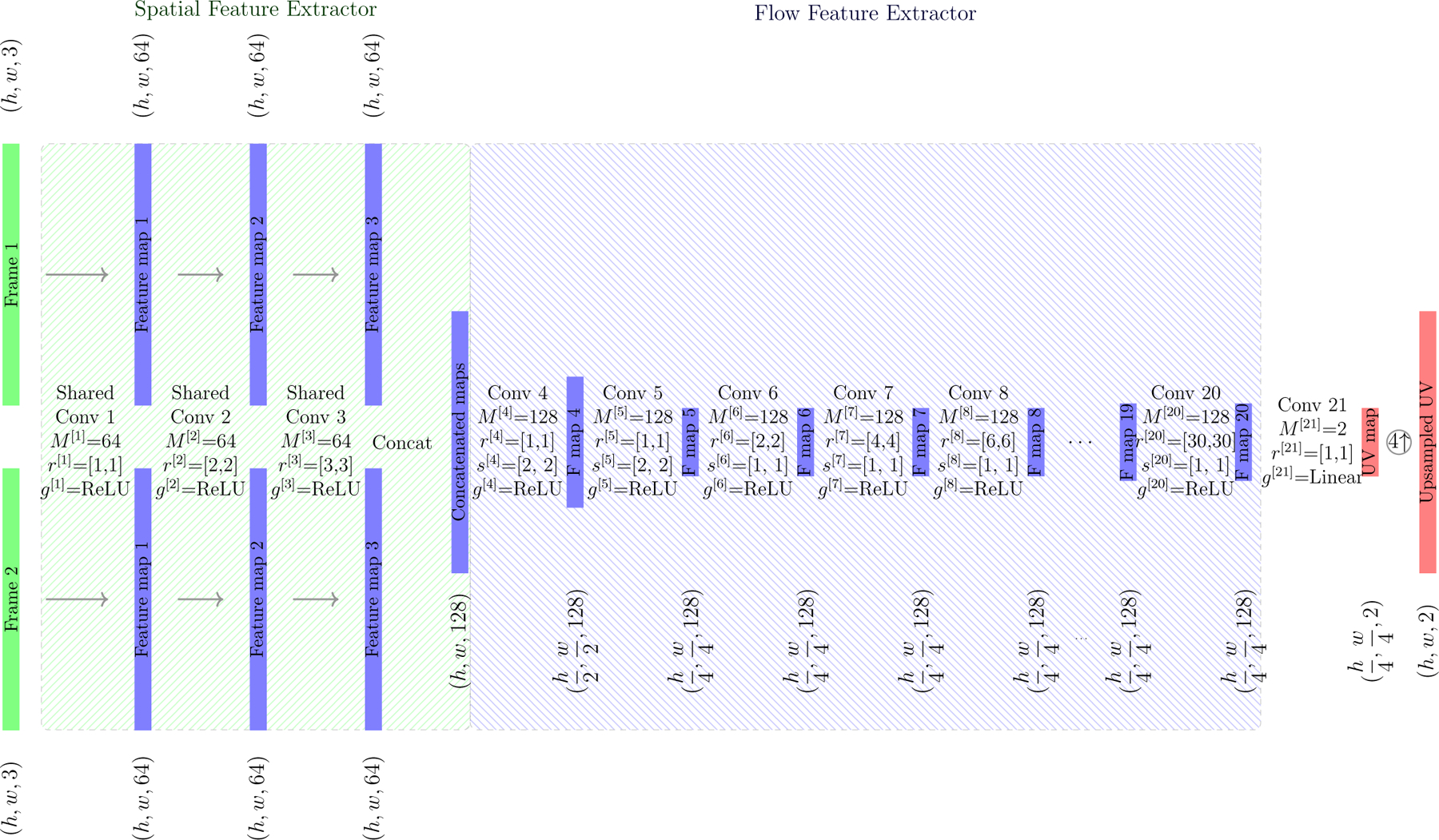

Figure 7:

Improvements to DDCNet-B0: A spatial feature extractor module (highlighted by light green) is added to the initial design DDCNet-B0 to guide the network to extract illumination invariant image features before learning to do the matching task. The second part of the design improvement, highlighted in light blue color, is called the flow feature extractor module. Compared to DDCNet-B0, the resolution of feature maps is reduced by a factor of four to increase ERF and the dilation rates were increased in steps of 2 to reduce the processing overhead.

3.5. Flow Feature Extractor Module

A second major design improvement to DDCNet-B0 is to reduce the computational demands by decreasing the depth of the network while maintaining its receptive field and accuracy. This is achieved by dropping every other layer from the DDCNet-B0. Our experiments show that an even step size of dilation rates yields better results compared to odd step sizes. By adjusting the number of layers to have the desired ERF, we ended up with a 15-layer sub-net with the dilation rates of 1, 2, 4, 6, …, 30. The resulting network segment that we refer to as a flow feature extractor module is highlighted in blue color in Figure 7.

To further reduce the processing time and increase the ERF of the network, we reduce the spatial dimensions of feature maps in the first and second layers of the flow feature extractor part by using a stride length of 2 for convolutions with an effective downsampling rate of 4:1. These parameters were experimentally determined to provide a balance between reduction in computational load and preserving spatial resolution of the feature maps.

3.6. Improved DDCNet Basic: DDCNet-B1

Figure 8 shows the full design of the improved DDCNet-B1 network. In brief, DDCNet-B1 comprises a Spatial Feature Extractor (SFE) module, a Flow Feature Extractor (FFE) module (details shown in Figure 7), a Flow Feature Refiner (FFR) module, and a Flow Estimator (FE) module. The SFE module comprises 3 dilated layers with dilation rates of 1, 2 and 3 operating on each of the raw input images (common weights for processing raw frame 1 and frame 2). Because of the shared weights in the SFE module between the raw input frames, the SFE module is expected to learn to extract spatial features from any given frame without any significant dependence on the frame sequence. The FFE module comprises 15 dilated layers with increasing dilation rates of 1, 2, 4, 6, …, 30, providing a large receptive field and thus allowing it to aggregate a larger extent of features across the two frames. It is worth noting that we increased the number of filters in the FFE module from 64 to 128 filters per layer.

Figure 8:

The full architecture of the DDCNet-B1 network. This network has an additional module called Feature Refiner which consists of 10 dilated convolutional layers with increasing dilation rates from 1 to 10. This module helps the network to generate more refined estimates. M[ℓ] is the number of filters, r[ℓ] is the dilation rate, and g[ℓ] is the activation function of layer ℓ. Details of the Spatial Feature Extractor and Flow Feature Extractor modules are presented in Figure 7.

With reduced-resolution feature maps, the networks can be trained much faster, but some flow details would be lost. One possibility is to simply upsample the generated flows at 1/4th resolution to the original resolution as shown in Figure 7. However, it is preferable to allow the network to learn this upsampling process through training. To achieve this, we upsample the feature maps from the last layer of the FFE module by a factor of 2 and pass them to the FFR module with increasing dilation rates of 1, 2, 3, …, 10. In other words, the features are refined and aggregated at half of the original resolution in the feature refiner module. Our experimental results indicated that increasing dilation rates are beneficial for this module in terms of improving the endpoint error. In contrast, decreasing dilation rates were used in the last layers of DDCNet-B0. Using the refined features at one-half resolution, flow estimates were generated at one-half resolution and upsampled using a nearest-neighbor approach to generate the final full-resolution flow estimate in the FE module.
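The final nearest-neighbor step can be illustrated with a minimal sketch in which a flow field is a grid of (u, v) tuples. The text does not state whether the flow vectors are rescaled during upsampling, so the `scale_vectors` switch is a hypothetical option for the case where the half-resolution flow is expressed in half-resolution pixel units; the function name is likewise illustrative.

```python
def upsample_nearest(flow, factor=2, scale_vectors=False):
    """Repeat each (u, v) entry `factor` times along both image axes."""
    gain = float(factor) if scale_vectors else 1.0
    out = []
    for row in flow:
        # Repeat every vector horizontally, then repeat the row vertically.
        wide = [(u * gain, v * gain) for (u, v) in row for _ in range(factor)]
        out.extend([list(wide) for _ in range(factor)])
    return out


half_res = [[(1.0, 0.0), (2.0, -1.0)]]
full_res = upsample_nearest(half_res)                       # 2 x 4 grid
rescaled = upsample_nearest(half_res, scale_vectors=True)   # vectors doubled
```

Nearest-neighbor upsampling copies values without interpolation, so it does not blur flow across motion boundaries, at the cost of blocky boundaries at the output resolution.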

4. Experiments

4.1. Network and Training Details

Details of each of the networks with 3 × 3 filters in all layers are presented in Figures 6 and 8.

As with other methods, we utilized a simpler training dataset (in terms of types of motion and texture of images in the input sequence) such as the Flying Chair sequences initially to guide the network toward an optimal network configuration for optical flow estimation followed by training on Flying Things dataset (with 80% of available sequences with ground truth for training, 10% for validation and 10% for testing). To reduce computational demand, avoid over-fitting, and enable faster convergence during training, we emphasized training of selected network segments (sub-nets) by freezing previously trained network sections. For example, for training DDCNet-B1, the SFE, FFE and FE modules were trained before adding the flow feature refiner. Once individual blocks were trained, all the layers were fine-tuned until convergence on the training dataset without overfitting on the validation dataset. A batch size of 8 (if the model can be fit in the GPU memory), 4, or 2 were used for training using the Adam optimizer with He initialization and L2 regularization of the model error.

Train-time augmentation methods were used to increase the number and quality of the training samples. Specifically, geometric augmentation using affine transformations (e.g. rotation and resizing) and photometric augmentation (e.g. contrast, saturation, hue, and brightness) were used. Starting from an initial rate of 10⁻³, we experimentally determined a learning rate that is optimal for network convergence. The learning rate was adjusted when the training error (loss function) plateaued, that is, when its rate of decrease became small without further significant improvement in the network accuracy.
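The learning-rate adjustment described above can be illustrated with a simple reduce-on-plateau rule. This is only a sketch of the general idea: the text specifies the initial rate of 10⁻³ but not the adjustment rule, so the reduction factor, the patience and the improvement threshold below (`factor`, `patience`, `min_delta`) are hypothetical values chosen for illustration.

```python
def plateau_schedule(losses, initial_lr=1e-3, factor=0.5, patience=3, min_delta=1e-4):
    """Return the learning rate in effect after each epoch.

    The rate is multiplied by `factor` whenever the loss has failed to improve
    by more than `min_delta` for `patience` consecutive epochs.
    All three knobs are hypothetical; only initial_lr comes from the text.
    """
    lr = initial_lr
    best = float("inf")
    stale = 0
    history = []
    for loss in losses:
        if best - loss > min_delta:
            best = loss      # meaningful improvement: reset the counter
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                lr *= factor  # plateau detected: lower the learning rate
                stale = 0
        history.append(lr)
    return history


# Toy loss curve that stalls at 0.7 for three epochs before improving again.
toy_losses = [1.0, 0.8, 0.7, 0.7, 0.7, 0.7, 0.6]
lr_history = plateau_schedule(toy_losses)
print(lr_history)
```

In this toy run the rate stays at 10⁻³ for the first five epochs and is halved at the sixth, when the third consecutive epoch without improvement is observed.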

4.2. Datasets

A summary of various benchmark image sequences that we utilized for assessing the performance of our receptive field guided networks is presented in Table 1. For each benchmark sequence, the ground truth optical flow was available.

Table 1:

Summary of benchmark image sequences used for assessing our receptive field guided network designs. GT: ground truth.

| Dataset | Frame pairs | Frames with GT | GT density per frame (%) | Size (Height × Width) | Type |
|---|---|---|---|---|---|
| Middlebury | 72 | 8 | 100 | 388 × 584 | Real |
| KITTI12 | 389 | 194 | ~ 50 | 375 × 1242 | Real |
| KITTI15 | 400 | 194 | ~ 50 | 375 × 1242 | Real |
| Sintel | 2,145 | 1,041 | 100 | 436 × 1024 | Synthetic |
| Flying Chairs | 22,872 | 22,872 | 100 | 384 × 512 | Synthetic |
| Flying Things3D subset | 77,757 | 77,757 | 100 | 540 × 960 | Synthetic |

4.3. Results

Average endpoint error (AEE), defined in Equation 2, was the main metric used for assessing network performance during the design and development of our networks. We also used Fl-all on the KITTI datasets. Fl-all measures the percentage of outlier estimates, where pixels with an endpoint error (EE) ≥ 3 pixels and ≥ 5% of the magnitude of their ground-truth flow vector are counted as outliers.
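As a concrete illustration of the two metrics, the sketch below computes AEE and Fl-all with NumPy. It assumes that AEE is the mean Euclidean distance between estimated and ground-truth flow vectors (the usual definition) and uses the "≥" thresholds as stated in the text. The function names and the optional `valid` mask, intended for sparse ground truth such as KITTI, are our own illustrative choices.

```python
import numpy as np


def endpoint_error(flow_est, flow_gt):
    """Per-pixel Euclidean distance between two flow fields of shape (H, W, 2)."""
    return np.linalg.norm(flow_est - flow_gt, axis=-1)


def average_endpoint_error(flow_est, flow_gt, valid=None):
    """Mean endpoint error over the pixels selected by `valid` (all pixels if None)."""
    ee = endpoint_error(flow_est, flow_gt)
    if valid is None:
        valid = np.ones(ee.shape, dtype=bool)
    return float(ee[valid].mean())


def fl_all(flow_est, flow_gt, valid=None):
    """Percentage of pixels with EE >= 3 px and EE >= 5% of the ground-truth magnitude."""
    ee = endpoint_error(flow_est, flow_gt)
    gt_mag = np.linalg.norm(flow_gt, axis=-1)
    if valid is None:
        valid = np.ones(ee.shape, dtype=bool)
    outlier = (ee >= 3.0) & (ee >= 0.05 * gt_mag)
    return float(100.0 * outlier[valid].mean())


# Toy 2 x 2 example: ground-truth flow of (10, 0) everywhere, one bad estimate.
gt = np.zeros((2, 2, 2))
gt[..., 0] = 10.0
est = gt.copy()
est[0, 0] = (14.0, 3.0)  # endpoint error sqrt(4^2 + 3^2) = 5 pixels

aee = average_endpoint_error(est, gt)
fl = fl_all(est, gt)
print(aee)  # 1.25
print(fl)   # 25.0
```

One of the four pixels has an endpoint error of 5 pixels, which exceeds both thresholds, so the AEE is 5 / 4 = 1.25 and Fl-all is 25%.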

4.3.1. ERF Analysis

Figure 9 shows the ERF of our proposed DDCNet models along with the extents of their FWHM. B0 has a smaller ERF extent, which may limit its ability to estimate large motion such as in the Sintel image sequences. However, this smaller but smoother ERF helped the network to perform well when estimating fine motions in the Middlebury dataset (see Table 3: AEE of 0.67 for B0 versus 1.2 for B1).

Figure 9:

ERFs of our receptive field guided networks DDCNet-B0 and DDCNet-B1
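The FWHM extent of an ERF can be measured in several ways. A minimal sketch, assuming a one-dimensional slice through the center of the ERF map and a simple sample-counting rule, is given below. Neither choice is stated in the text, and the Gaussian profile is only a stand-in for a measured ERF.

```python
import numpy as np


def fwhm_extent(profile):
    """Width (in samples) of the span where a 1-D profile is at least half its maximum."""
    profile = np.asarray(profile, dtype=float)
    above = np.flatnonzero(profile >= profile.max() / 2.0)
    return int(above[-1] - above[0] + 1)


# Stand-in ERF slice: a Gaussian with a standard deviation of 8 pixels.
x = np.arange(-50, 51)
sigma = 8.0
gaussian_slice = np.exp(-x**2 / (2 * sigma**2))

width = fwhm_extent(gaussian_slice)
print(width)  # 19 samples; the continuous value is 2.355 * sigma, about 18.8
```

For a Gaussian-shaped ERF the FWHM is about 2.355 times the standard deviation, so a wider FWHM in Figure 9 corresponds directly to a broader spatial extent over which input pixels influence an output unit.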

Table 3:

Average endpoint error of different methods on benchmark datasets. Entries in parentheses indicate the testing performance on data that was previously used for fine-tuning the network. Fl-all measures the percentage of outlier estimates; pixels with EE ≥ 3 pixels and EE ≥ 5% of the magnitude of their ground-truth flow vector were counted as outliers.

| Class | Method | Sintel clean (train) | Sintel clean (test) | Sintel final (train) | Sintel final (test) | KITTI12 (train) | KITTI12 (test) | KITTI15 (train) | KITTI15 train (Fl-all) | KITTI15 test (Fl-all) | Middlebury (train) | Middlebury (test) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Heavyweight CNN | FlowNetS [4] | 4.50 | 7.42 | 5.45 | 8.43 | 8.26 | - | - | - | - | 1.09 | - |
| Heavyweight CNN | FlowNetS ft-sintel [4] | (3.66) | 6.96 | (4.44) | 7.76 | 7.52 | 9.1 | - | - | - | 0.98 | - |
| Heavyweight CNN | FlowNetC [4] | 4.31 | 7.28 | 5.87 | 8.81 | 9.35 | - | - | - | - | 1.15 | - |
| Heavyweight CNN | FlowNetC ft-sintel [4] | (3.78) | 6.85 | (5.28) | 8.51 | 8.79 | - | - | - | - | 0.93 | - |
| Heavyweight CNN | FlowNet2 [14] | 2.02 | 3.96 | 3.54 | 6.02 | 4.01 | - | 10.08 | 29.99% | - | 0.35 | 0.52 |
| Heavyweight CNN | FlowNet2 ft-sintel [14] | (1.45) | 4.16 | (2.19) | 5.74 | 3.54 | - | 9.94 | 28.02% | - | 0.35 | - |
| Heavyweight CNN | FlowNet2 ft-kitti [14] | 3.43 | - | 4.83 | - | (1.43) | 1.8 | (2.36) | (8.88%) | 11.48% | 0.56 | - |
| Lightweight CNN | SPyNet [27] | 4.12 | 6.69 | 5.57 | 8.43 | 9.12 | - | - | - | - | 0.33 | 0.58 |
| Lightweight CNN | SPyNet ft-sintel [27] | (3.17) | 6.64 | (4.32) | 8.36 | 3.36 | 4.1 | - | - | 35.07% | 0.33 | 0.58 |
| Lightweight CNN | PWC-Net ft-sintel [33] | (1.7) | 3.86 | (2.21) | 5.13 | - | - | - | - | - | - | - |
| Lightweight CNN | PWC-Net ft-kitti [33] | - | - | - | - | (1.08) | 1.5 | (1.45) | (7.59%) | 7.9% | - | - |
| Lightweight CNN | PWC-Net+ ft-sintel [33] | (1.71) | 3.45 | (2.34) | 4.60 | | | | | | | |
| Lightweight CNN | LiteFlowNet [11] | 2.52 | - | 4.05 | - | 4.25 | - | 10.46 | 29.30% | - | 0.39 | - |
| Lightweight CNN | LiteFlowNet ft-sintel [11] | (1.64) | 4.54 | (2.23) | 5.38 | - | - | - | - | - | - | - |
| Lightweight CNN | LiteFlowNet ft-kitti [11] | - | - | - | - | (1.26) | 1.7 | (2.16) | (8.16%) | 10.24% | - | - |
| Lightweight CNN | LiteFlowNet2 ft-sintel [12] | (1.30) | 3.48 | (1.62) | 4.69 | - | - | - | - | - | - | - |
| Lightweight CNN | LiteFlowNet2 ft-kitti [12] | - | - | - | - | (0.95) | 1.4 | (1.33) | (4.32%) | 7.62% | - | - |
| Lightweight CNN | LiteFlowNet3 ft-sintel [10] | (1.32) | 2.99 | (1.76) | 4.45 | - | - | - | - | - | - | - |
| Lightweight CNN | LiteFlowNet3 ft-kitti [10] | - | - | - | - | (0.91) | 1.3 | (1.26) | (3.82%) | 7.34% | - | - |
| | CA-DCNN [40] | (1.97) | 5.32 | (3.75) | 7.05 | - | - | - | - | - | - | |
| | RAFT (2-frame) [34] | (0.77) | 2.08 | (1.2) | 3.41 | - | - | (0.64) | (1.5) | 5.27% | - | |
| | GMA [17] | (0.62) | 1.39 | (1.06) | 2.47 | - | - | (0.57) | (1.2) | 5.15% | - | - |
| | SAMFL [41] | - | 4.48 | - | 4.77 | - | 1.4 | - | 7.68% | - | - | |
| | PMC-PWC [42] | (1.53) | 3.17 | (2.41) | 4.56 | (0.93) | - | (1.32) | - | 7.22% | - | - |
| | DDCNet-B0 ft-sintel | (2.71) | 7.20 | (3.27) | 7.46 | 7.35 | - | 15.29 | 47.78% | - | 0.67 | - |
| | DDCNet-B1 | 4.12 | - | 5.46 | - | 9.57 | - | 16.43 | 59.03% | - | 1.2 | - |
| | DDCNet-B1 ft-sintel | (1.96) | 6.19 | (2.25) | 6.91 | 6.65 | - | 13.22 | 52.68% | - | 1.14 | - |
| | DDCNet-B1 ft-kitti | 6.65 | - | 8.38 | - | (1.76) | 4.2 | (2.57) | (15.56%) | 38.23% | 1.74 | - |

4.3.2. Model Size / Compactness and Processing Time

Table 2 presents a summary of model parameters (number of layers, and number of learnable parameters) and computational speed of processing Sintel image sequences of selected heavyweight and lightweight models.

Table 2:

Number of trainable parameters and computational speed of processing Sintel image sequences for lightweight and heavyweight models. Runtime is measured using Sintel image sequences with a frame size of 1024 × 436 pixels. General speed differences between the computational frameworks such as Tensorflow and Caffe should be considered when comparing the run times of various networks.

| Method | Number of layers | Number of parameters (M) | Framework | GPU (NVIDIA) | Time (ms) | FPS |
|---|---|---|---|---|---|---|
| DDCNet-B0 | 31 | 1.03 | TF2 | Quadro RTX 8000 | 76 | 13 |
| DDCNet-B1 | 30 | 2.99 | TF2 | Quadro RTX 8000 | 30 | 33 |
| DDCNet-B1 | 30 | 2.99 | Possible Caffe | Quadro RTX 8000 | ≈7 | 142 |
| FlowNet Simple | 17 | 38 | TF1 | Tesla K80 | 86 | 11 |
| FlowNet Simple | 17 | 38 | Caffe | GTX 1080 | 18 | 55 |
| FlowNet Correlation | 26 | 39.16 | TF1 | Tesla K80 | 179 | 5 |
| FlowNet Correlation | 26 | 39.16 | Caffe | GTX 1080 | 32 | 31 |
| FlowNet2 | 115 | 162.49 | TF1 | Tesla K80 | 692 | 1 |
| FlowNet2 | 115 | 162.49 | Caffe | GTX 1080 | 123 | 8 |
| LiteFlowNet | 94 | 5.37 | Caffe | GTX 1080 | 88.53 | 12 |
| SPyNet | 35 | 1.2 | Torch | GTX 1080 | 129.83 | 8 |
| PWC-Net+ | 59 | 8.75 | Caffe | TITAN Xp | 39.63 | 25 |
| CA-DCNN | - | - | Caffe | RTX 1080Ti | 480 | - |
| RAFT | - | 5.3 | PyTorch | RTX 2080Ti | 100 | - |
| GMA | - | 5.9 | PyTorch | RTX 2080Ti | 73 | - |
| SAMFL | - | 10.45 | PyTorch | - | - | - |
| PMC-PWC | - | 7.86 | PyTorch | RTX 1080Ti | 200 | - |

The SPyNet (1.2 M), DDCNet-B0 (1.03 M) and DDCNet-B1 (2.99 M) networks had the fewest trainable parameters among all the methods studied in this work. More elaborate, heavyweight models such as the FlowNet models have 38 million to 162 million trainable parameters.

All of our DDCNets were developed using TensorFlow. FlowNet implementations are available in both TensorFlow and Caffe. In terms of processing speed, Caffe implementations are up to several times faster than TensorFlow models. Since our GPU has more RAM, we limited the batch size to 1 to make test times comparable. With a processing time of 30 ms in TensorFlow, our DDCNet-B1 model is about 3 times faster than the TensorFlow implementation of FlowNet-Simple (86 ms), which was run on a less powerful GPU. B1 is also about 3 times faster than LiteFlowNet (88.53 ms), which was implemented in Caffe and run on a GTX 1080.
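The speed comparisons above follow directly from the per-frame times reported in Table 2. The short sketch below reproduces the arithmetic; the helper names are ours, and the ratios compare runs on different GPUs and frameworks, so they should be read as indicative rather than as controlled measurements.

```python
def frames_per_second(time_ms):
    """Convert a per-frame processing time in milliseconds to frames per second."""
    return 1000.0 / time_ms


def speedup(baseline_ms, reference_ms):
    """How many times faster the reference model is than the baseline."""
    return baseline_ms / reference_ms


# Per-frame times (ms) taken from Table 2.
ddcnet_b1_tf_ms = 30.0
flownet_simple_tf_ms = 86.0
liteflownet_caffe_ms = 88.53

b1_fps = round(frames_per_second(ddcnet_b1_tf_ms))
vs_flownet_simple = round(speedup(flownet_simple_tf_ms, ddcnet_b1_tf_ms), 2)
vs_liteflownet = round(speedup(liteflownet_caffe_ms, ddcnet_b1_tf_ms), 2)

print(b1_fps)             # 33
print(vs_flownet_simple)  # 2.87
print(vs_liteflownet)     # 2.95
```

Both ratios are just under 3, which is the basis for the "about 3 times faster" statements.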

Figure 10 shows the frame processing rate of both DDCNet models as a function of frame size. Both DDCNet models are very fast (processing time per frame < 10 ms) for frame sizes smaller than 200 × 200 pixels. B1 is the faster model across all frame sizes. Although B0 has the fewest learnable parameters, it maintains full-resolution feature maps within the network, so its number of convolution operations is much higher than that of the B1 model.
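The reason a network with fewer parameters can still be slower is that the cost of a convolution scales with the spatial size of its feature maps, whereas its parameter count does not. The sketch below counts multiply-accumulate operations (MACs) for a single 3 × 3 layer on a Sintel-sized frame at full resolution and at one-quarter resolution. The channel width of 64 is a hypothetical value used only for illustration and is not taken from the DDCNet architecture.

```python
def conv_macs(height, width, c_in, c_out, kernel_size=3):
    """Multiply-accumulate count of one stride-1, same-padded convolution.
    Dilation does not change this count: the kernel still has k * k taps."""
    return height * width * c_in * c_out * kernel_size * kernel_size


def conv_params(c_in, c_out, kernel_size=3):
    """Learnable parameters of the same layer (weights plus biases)."""
    return c_in * c_out * kernel_size * kernel_size + c_out


height, width = 436, 1024  # Sintel frame size from Table 1
channels = 64              # hypothetical channel width

macs_full = conv_macs(height, width, channels, channels)
macs_quarter = conv_macs(height // 4, width // 4, channels, channels)

print(macs_full // macs_quarter)        # 16
print(conv_params(channels, channels))  # 36928, independent of resolution
```

A layer operating at one-quarter resolution needs 16 times fewer MACs than the same layer at full resolution, while its number of learnable parameters is unchanged.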

Figure 10:

Testing time of the DDCNet models as a function of sequence frame size. DDCNet-B1 is computationally more efficient and more accurate than DDCNet-B0. Tests were performed on a single Quadro RTX 8000 GPU with a batch size of 1.

4.3.3. Qualitative Results

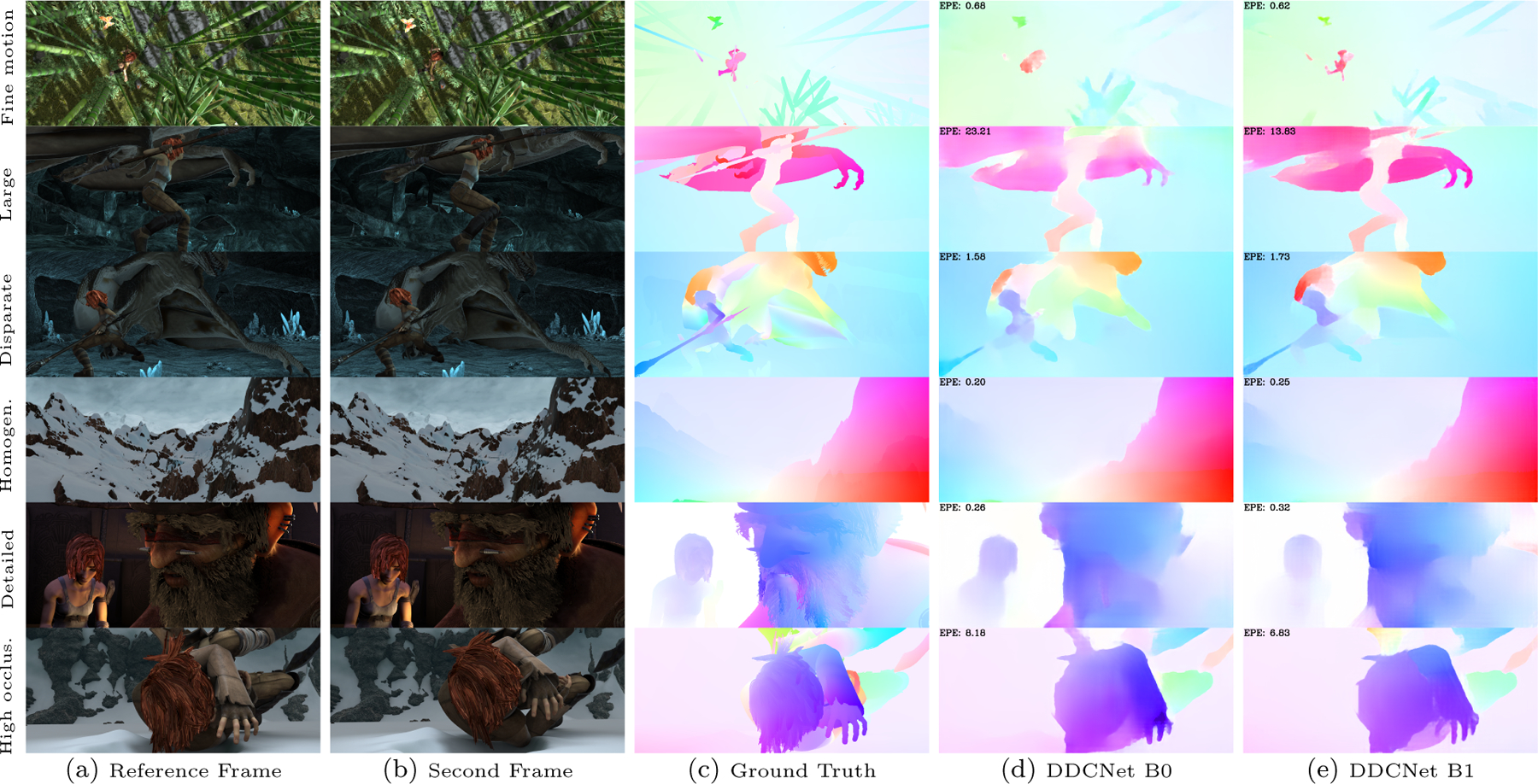

Figure 12 shows the performance of each of the DDCNet models on six example sequences with varied scene composition and motion dynamics from the Sintel Training Clean dataset. The examples are: 1) a fine motion sequence (first row in Figure 12) involving finer motions of smaller objects in various directions; 2) a large motion sequence (second row) involving higher flow velocities; 3) a disparate motion sequence (third row) involving varying flow dynamics and flow velocities in various scene segments; 4) a homogeneous texture sequence (fourth row) with motions involving scene segments with homogeneous texture; 5) a high texture sequence (fifth row) with motions involving highly textured scene segments; and 6) a high occlusion sequence (sixth row) with the aperture problem, including views of entire or partial objects entering and/or leaving the scene.

Figure 12:

Optical flow estimates of DDCNet-B0 vs DDCNet-B1 for qualitative assessment of the network performance

Figure 11 shows the corresponding error maps for each of the example sequences. The error map represents the magnitude of the difference between the estimated and ground-truth flow vector at each pixel. Therefore, locations with larger estimation errors will appear brighter and locations with lower estimation errors will appear darker in the error maps.

Figure 11:

Error maps of DDCNet-B0 vs B1 based on optical flow estimates shown in Figure 12 for quantitative assessment of the network performance

The difficulties of the B0 model in detecting larger motions and providing clear motion boundaries in the large motion sequence (e.g. near the dragon’s legs in the scene) are likely due to the smaller extent of its ERF. With a broader ERF extent, the B1 model was able to detect larger motions with significantly clearer motion boundaries. In image sequences with multi-directional flows in the scene (evident from multiple colors in the color-coded optical flow), as in the disparate motion sequence, we observed that activations of neurons in the last layers due to multi-directional flows could cancel each other, leading to less accurate flow estimates. The performance of B0 is better than that of B1 on this sequence. Both DDCNet models performed well on the homogeneous texture sequence. For the high texture sequence, both models had difficulties providing fine-grained flow estimates and motion boundaries in the highly textured zones, although B0 was able to estimate finer flow velocities than B1. For the most challenging high occlusion sequence, both models faced difficulties in the occluded zones and in zones with the aperture problem.

4.4. Quantitative Evaluation on Benchmark Datasets

The quantitative performance of our DDCNet models and of other lightweight and heavyweight models on the Sintel, KITTI12, KITTI15, and Middlebury datasets is presented in Table 3. Regional statistics elucidating the effects of occlusion and flow magnitude on model performance are presented in Table 4 and Table 5, respectively. On datasets with large displacements such as Sintel, B1 almost always outperformed B0. For example, B0 failed in regions with motion larger than 250 pixels in the ‘large motion’ sequence (second row) in Figures 11 and 12. For Middlebury, with small to moderate flow velocities, however, B0 performed better than B1. Similar behavior was observed in regions with lower flow velocities in the Sintel sequences (an s0–10 AEE of 1.18 for B0 compared to 1.51 for B1 on the Sintel Final sequences, as shown in Table 5).
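Regional statistics of the kind reported for flow velocities can be obtained by grouping pixels according to the magnitude of their ground-truth flow before averaging the endpoint error. A minimal NumPy sketch is given below. It uses the speed ranges 0 to 10, 10 to 40 and above 40 pixels per frame, and assumes that each range includes its lower bound and excludes its upper bound, which is our assumption and not a detail given in the text.

```python
import numpy as np


def aee_by_speed(flow_est, flow_gt, bins=((0, 10), (10, 40), (40, np.inf))):
    """Average endpoint error within ranges of ground-truth flow speed.

    Each range is treated as [low, high); an empty range yields NaN.
    """
    ee = np.linalg.norm(flow_est - flow_gt, axis=-1)
    speed = np.linalg.norm(flow_gt, axis=-1)
    result = {}
    for low, high in bins:
        mask = (speed >= low) & (speed < high)
        label = f"s{low}+" if np.isinf(high) else f"s{low}-{high}"
        result[label] = float(ee[mask].mean()) if mask.any() else float("nan")
    return result


# Toy 1 x 3 flow field with ground-truth speeds of 5, 20 and 50 pixels.
gt = np.array([[[5.0, 0.0], [20.0, 0.0], [50.0, 0.0]]])
est = gt + np.array([[[1.0, 0.0], [0.0, 2.0], [3.0, 4.0]]])

regional = aee_by_speed(est, gt)
print(regional)  # {'s0-10': 1.0, 's10-40': 2.0, 's40+': 5.0}
```

Reporting the error per speed range makes it possible to see, for example, that a model can do well on slow motion while failing on large displacements, which a single dataset-wide average would hide.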

Table 4:

Average end point error in Sintel dataset as a function of regions with occlusion. All methods were fine-tuned on Sintel training dataset.

| Method | Matched (Clean) | Matched (Final) | Unmatched (Clean) | Unmatched (Final) | d0–10 (Clean) | d0–10 (Final) | d10–60 (Clean) | d10–60 (Final) | d60–140 (Clean) | d60–140 (Final) |
|---|---|---|---|---|---|---|---|---|---|---|
| FlowNetS | - | - | - | - | 5.99 | 7.25 | 3.56 | 4.61 | 2.19 | 2.99 |
| FlowNetC | - | - | - | - | 5.58 | 7.19 | 3.18 | 4.62 | 1.99 | 3.30 |
| SpyNet | 3.01 | 4.51 | 36.19 | 39.69 | 5.50 | 6.69 | 3.12 | 4.37 | 1.72 | 3.29 |
| PWC-Net+ | 1.41 | 2.25 | 20.12 | 23.70 | 3.92 | 4.78 | 1.25 | 2.05 | 0.49 | 1.23 |
| LiteFlowNet | 1.63 | 2.42 | 28.29 | 29.54 | 3.27 | 4.09 | 1.44 | 2.10 | 0.93 | 1.73 |
| GMA | 0.58 | 1.24 | 7.96 | 12.50 | 1.54 | 2.86 | 0.46 | 1.06 | 0.28 | 0.65 |
| SAMFL | 1.76 | 2.28 | 26.64 | 25.01 | 3.95 | 4.21 | 1.62 | 1.85 | 0.81 | 1.45 |
| PMC-PWC | 1.19 | 2.21 | 19.37 | 23.71 | 2.88 | 4.08 | 1.01 | 1.80 | 0.56 | 1.45 |
| DDCNet-B0 | 3.85 | 4.17 | 34.53 | 34.31 | 6.10 | 6.78 | 3.89 | 4.16 | 2.61 | 2.71 |
| DDCNet-B1 | 3.52 | 4.11 | 27.88 | 29.68 | 5.53 | 6.42 | 3.54 | 4.08 | 2.31 | 2.75 |

Table 5:

Average end point error in Sintel dataset as a function of regional flow velocities. All methods were fine-tuned on Sintel training dataset.

| Method | s0–10 (Clean) | s0–10 (Final) | s10–40 (Clean) | s10–40 (Final) | s40+ (Clean) | s40+ (Final) |
|---|---|---|---|---|---|---|
| FlowNetS | 1.42 | 1.87 | 3.82 | 5.83 | 40.10 | 43.24 |
| FlowNetC | 1.62 | 2.31 | 3.97 | 6.17 | 33.37 | 40.78 |
| SpyNet | 0.83 | 1.4 | 3.34 | 5.53 | 43.44 | 49.71 |
| PWC-Net+ | 0.75 | 0.945 | 2.23 | 2.98 | 19.85 | 26.62 |
| LiteFlowNet | 0.50 | 0.75 | 1.73 | 2.75 | 31.41 | 34.72 |
| GMA | 0.33 | 0.57 | 0.96 | 1.82 | 7.66 | 13.49 |
| SAMFL | 0.62 | 0.89 | 1.86 | 2.59 | 30.00 | 29.23 |
| PMC-PWC | 0.64 | 0.88 | 1.75 | 2.51 | 19.22 | 27.78 |
| DDCNet-B0 | 0.84 | 1.18 | 3.74 | 4.94 | 47.12 | 44.68 |
| DDCNet-B1 | 1.17 | 1.51 | 3.96 | 4.93 | 36.38 | 38.40 |

Figure 13 shows the ground-truth optical flow and the optical flow estimated by FlowNet-Simple, FlowNet2, LiteFlowNet, and DDCNet-B1. As summarized in Table 2, FlowNet-Simple has about 12 times more parameters than DDCNet-B1 (38 million vs 2.99 million). Despite having an extra variational refinement step on top of the main network, FlowNet-Simple has a higher endpoint error on almost all of the benchmark datasets. The superior quality of the DDCNet-B1 estimates when compared to FlowNet-Simple can be observed in Figure 13.

Figure 13:

Performance of our DDCNet-B1, lightweight and heavyweight models for estimating optical flow for selected Sintel image sequences.

The endpoint error of FlowNet2 is lower than that of our DDCNet models, but FlowNet2 has about 54 times more learnable parameters than DDCNet-B1 (162.49 million vs 2.99 million; Table 2). Further, FlowNet2 is more than 6 times slower during testing, and it is expected to require a longer training duration since each of its constituent networks needs to be trained separately.

DDCNet-B1 outperforms FlowNetS, FlowNetC and SPyNet on nearly all datasets (Tables 3, 4 and 5) and provides lower but comparable performance to that of the LiteFlowNet, CA-DCNN, PWC-Net, SAMFL and PMC-PWC methods in the regional analysis of occlusion and flow magnitudes (Tables 4 and 5, respectively). Because we do not build explicit spatial pyramids as in LiteFlowNet, our models do not suffer from the vanishing problem for fast-moving objects with small spatial extents, which is often encountered in classical multi-resolution algorithms. This can be observed in the fourth row (sequence Ambush 3) of Figure 13, which contains a narrow, stick-like object that moves fast. DDCNet-B1 and FlowNet2 are able to estimate its motion, but LiteFlowNet has difficulties in that region.

More recent methods, namely CA-DCNN, RAFT, GMA, SAMFL and PMC-PWC, use attention and feature-gating strategies along with pyramid, warping and cost volume mechanisms and iterative/recurrent update operations to achieve higher performance. These models rely on custom layers that are specific to optical flow estimation, whereas DDCNet can be easily applied to other dense estimation tasks. Extension of the current deep dilated networks to other pixel-level prediction tasks such as semantic segmentation will be presented in future work.

When compared to LiteFlowNet, DDCNet provides lower but comparable performance. The design of DDCNet is simpler and was guided by the effective receptive field characteristics of the network. The simplicity of the design allows it to serve as a building block for more complex yet compact networks. First, for example, the accuracy of heterogeneous motion estimates can be improved using a simpler multiresolution strategy that cascades multiple DDCNets [29]. A coarse-to-fine strategy with spatial pyramids and feature warping, as in LiteFlowNet, generally introduces ghosting artifacts and vanishing problems in the optical flow estimates. In contrast, a cascaded DDCNet approach would use subnets with decreasing extents of their effective receptive fields to achieve multiresolution capability without resorting to spatial pyramids. Further, the cascaded subnet design would retain the simplicity of the DDCNet framework. Second, the performance may increase significantly by utilizing explicit matching features (cost volumes) and attention mechanisms within our deep dilated network design. While our DDCNet design allows us to engineer the extent of the effective receptive field, the specificity of the pixel matching can be improved significantly with the use of cost volumes, as demonstrated by LiteFlowNet, and attention mechanisms, as demonstrated by GMA. In other words, the impact of specialized matching features, gating and attention mechanisms may be significantly improved by integrating them with the DDCNet framework. These possible improvements to the DDCNet framework will be addressed in future studies.

4.5. Ablation Study of DDCNet-B1

After initial training on the Flying Chairs and Flying Things datasets, DDCNet-B1 provided an average endpoint error of 2.31. Upon removing the FFR module, the error increased to 2.75, highlighting the importance of this module.

5. Software

The DDCNet models, along with the necessary instructions for running the software, are publicly available at the following URL: https://github.com/Computational-Ocularscience/DDCNet.

6. Conclusion

In this work, we presented two key strategies for building a successful dense prediction network: 1) preserving spatial information throughout the network (preferably implicitly, by keeping the extracted features at each layer in their respective spatial order and avoiding spatial aggregation operations such as pooling and downsampling) and 2) designing networks with a large enough effective receptive field for the output units to enhance their ability to provide an accurate estimate for the task (e.g. spatiotemporal matching). While these strategies are necessary, they are not sufficient. A generic design with a sufficiently large receptive field and high-resolution features can be further refined and tailored to more specific computer vision tasks such as disparity estimation and semantic segmentation. Through our design strategy, we have demonstrated that CNNs can be designed to perform complex long-range matching tasks without requiring specialized layers for extracting pyramidal features, warping and cost volumes. The benchmark results (Sintel, KITTI, and Middlebury) indicate that our compact networks can achieve comparable performance in the class of lightweight optical flow networks. Further, such DDCNet models (sub-nets) can be used as building blocks and can be scaled up, either by increasing the number of sub-nets or by increasing the number of layers in each sub-net, for better performance. Using a base network built systematically with a larger network receptive field, the impact of attention and gating mechanisms used in recent methods may be significantly improved.

Acknowledgement

The research was supported in part by financial support in the form of a Herff Fellowship from the Herff College of Engineering, The University of Memphis (UoM); tuition fees support from the Department of Electrical and Computer Engineering, UoM; a summer student fellowship from the Fight for Sight organization; and in part by the National Institutes of Health, National Eye Institute Grant EY020518.

Footnotes

Full width at half maximum (FWHM) of distribution represents the width or spread of the distribution at half of the maximum frequency or density value.

References

- [1] Ahmadi Aria and Patras Ioannis. Unsupervised convolutional neural networks for motion estimation. In Image Processing (ICIP), 2016 IEEE International Conference on, pages 1629–1633. IEEE, 2016.

- [2] Chen Liang-Chieh, Papandreou George, Kokkinos Iasonas, Murphy Kevin, and Yuille Alan L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE transactions on pattern analysis and machine intelligence, 40(4):834–848, 2018.

- [3] Dosovitskiy Alexey, Fischer Philipp, Ilg Eddy, Hausser Philip, Hazirbas Caner, Golkov Vladimir, Smagt Patrick van der, Cremers Daniel, and Brox Thomas. Flownet: Learning optical flow with convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, pages 2758–2766, 2015.

- [4] Dosovitskiy Alexey, Fischer Philipp, Ilg Eddy, Hausser Philip, Hazirbas Caner, Golkov Vladimir, Smagt Patrick van der, Cremers Daniel, and Brox Thomas. Flownet: Learning optical flow with convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, pages 2758–2766, 2015.

- [5] Eigen David, Puhrsch Christian, and Fergus Rob. Depth map prediction from a single image using a multi-scale deep network. In Advances in neural information processing systems, pages 2366–2374, 2014.

- [6] Gur Shir and Wolf Lior. Single image depth estimation trained via depth from defocus cues. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 7683–7692, 2019.

- [7] Hamaguchi Ryuhei, Fujita Aito, Nemoto Keisuke, Imaizumi Tomoyuki, and Hikosaka Shuhei. Effective use of dilated convolutions for segmenting small object instances in remote sensing imagery. In 2018 IEEE winter conference on applications of computer vision (WACV), pages 1442–1450. IEEE, 2018. URL https://arxiv.org/abs/1709.00179.

- [8] Heeger David J. Optical flow using spatiotemporal filters. International journal of computer vision, 1(4):279–302, 1988. ISSN 0920-5691.

- [9] Huang Gao, Sun Yu, Liu Zhuang, Sedra Daniel, and Weinberger Kilian Q. Deep networks with stochastic depth. In European conference on computer vision, pages 646–661. Springer, 2016.

- [10] Hui Tak-Wai and Loy Chen Change. Liteflownet3: Resolving correspondence ambiguity for more accurate optical flow estimation. In European Conference on Computer Vision, pages 169–184. Springer, 2020.

- [11] Hui Tak-Wai, Tang Xiaoou, and Loy Chen Change. Liteflownet: A lightweight convolutional neural network for optical flow estimation. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 8981–8989, 2018.

- [12] Hui Tak-Wai, Tang Xiaoou, and Loy Chen Change. A lightweight optical flow cnn-revisiting data fidelity and regularization. arXiv preprint arXiv:1903.07414, 2019.

- [13] Ilg Eddy, Mayer Nikolaus, Saikia Tonmoy, Keuper Margret, Dosovitskiy Alexey, and Brox Thomas. Flownet 2.0: Evolution of optical flow estimation with deep networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 2462–2470, 2017.

- [14] Ilg Eddy, Mayer Nikolaus, Saikia Tonmoy, Keuper Margret, Dosovitskiy Alexey, and Brox Thomas. Flownet 2.0: Evolution of optical flow estimation with deep networks. In IEEE conference on computer vision and pattern recognition (CVPR), volume 2, page 6, 2017.

- [15] Janai Joel, Guney Fatma, Ranjan Anurag, Black Michael, and Geiger Andreas. Unsupervised learning of multi-frame optical flow with occlusions. In Proceedings of the European Conference on Computer Vision (ECCV), pages 690–706, 2018.

- [16] Yu Jason J, Harley Adam W, and Derpanis Konstantinos G. Back to basics: Unsupervised learning of optical flow via brightness constancy and motion smoothness. In European Conference on Computer Vision, pages 3–10. Springer, 2016.

- [17] Jiang Shihao, Campbell Dylan, Lu Yao, Li Hongdong, and Hartley Richard. Learning to Estimate Hidden Motions with Global Motion Aggregation. In Proceedings of the IEEE International Conference on Computer Vision, pages 9752–9761. Institute of Electrical and Electronics Engineers Inc., 2021. ISBN 9781665428125. doi: 10.1109/ICCV48922.2021.00963.

- [18] Kendall Alex, Badrinarayanan Vijay, and Cipolla Roberto. Bayesian segnet: Model uncertainty in deep convolutional encoder-decoder architectures for scene understanding. arXiv preprint arXiv:1511.02680, 2015.

- [19] Lai Wei-Sheng, Huang Jia-Bin, and Yang Ming-Hsuan. Semi-supervised learning for optical flow with generative adversarial networks. In Advances in Neural Information Processing Systems, pages 354–364, 2017.

- [20] Liu Chenxi, Chen Liang-Chieh, Schroff Florian, Adam Hartwig, Hua Wei, Yuille Alan L, and Fei-Fei Li. Auto-deeplab: Hierarchical neural architecture search for semantic image segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 82–92, 2019.

- [21] Long Jonathan, Shelhamer Evan, and Darrell Trevor. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 3431–3440, 2015.

- [22] Lotter William, Kreiman Gabriel, and Cox David. Deep predictive coding networks for video prediction and unsupervised learning. arXiv preprint arXiv:1605.08104, 2016.

- [23] Luo Wenjie, Li Yujia, Urtasun Raquel, and Zemel Richard. Understanding the effective receptive field in deep convolutional neural networks. arXiv preprint arXiv:1701.04128, 2017.

- [24] Mayer Nikolaus, Ilg Eddy, Hausser Philip, Fischer Philipp, Cremers Daniel, Dosovitskiy Alexey, and Brox Thomas. A large dataset to train convolutional networks for disparity, optical flow, and scene flow estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 4040–4048, 2016.

- [25] Meister Simon, Hur Junhwa, and Roth Stefan. Unflow: Unsupervised learning of optical flow with a bidirectional census loss. arXiv preprint arXiv:1711.07837, 2017.

- [26] Mishkin Dmytro, Sergievskiy Nikolay, and Matas Jiri. Systematic evaluation of convolution neural network advances on the imagenet. Computer Vision and Image Understanding, 161:11–19, 2017. URL https://arxiv.org/pdf/1606.02228v2.pdf.

- [27] Ranjan Anurag and Black Michael J. Optical flow estimation using a spatial pyramid network. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), volume 2, page 2. IEEE, 2017.

- [28] Ren Zhe, Yan Junchi, Ni Bingbing, Liu Bin, Yang Xiaokang, and Zha Hongyuan. Unsupervised deep learning for optical flow estimation. In AAAI, pages 1495–1501, 2017.

- [29] Salehi Ali and Balasubramanian Madhusudhanan. DDCNet-Multires: Effective receptive field guided multiresolution cnn for dense prediction. Neural Processing Letters, pages 1–25, 2022.

- [30] Schuster Ren, Wasenmuller Oliver, Unger Christian, and Stricker Didier. Sdc-stacked dilated convolution: A unified descriptor network for dense matching tasks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 2556–2565, 2019. URL https://openaccess.thecvf.com/content_CVPR_2019/papers/Schuster_SDC_-_Stacked_Dilated_Convolution_A_Unified_Descriptor_Network_for_CVPR_2019_paper.pdf.

- [31] Sun Deqing, Roth Stefan, and Black Michael J. A quantitative analysis of current practices in optical flow estimation and the principles behind them. International Journal of Computer Vision, 106(2):115–137, 2014.

- [32] Sun Deqing, Yang Xiaodong, Liu Ming-Yu, and Kautz Jan. Pwc-net: Cnns for optical flow using pyramid, warping, and cost volume. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 8934–8943, 2018.

- [33] Sun Deqing, Yang Xiaodong, Liu Ming-Yu, and Kautz Jan. Models matter, so does training: An empirical study of cnns for optical flow estimation. IEEE transactions on pattern analysis and machine intelligence, 42(6):1408–1423, 2019.

- [34] Teed Zachary and Deng Jia. RAFT: Recurrent All-Pairs Field Transforms for Optical Flow (Extended Abstract). In IJCAI International Joint Conference on Artificial Intelligence, pages 4839–4843, Mar 2021. ISBN 9780999241196. doi: 10.24963/ijcai.2021/662. URL http://arxiv.org/abs/2003.12039.

- [35] Teney Damien and Hebert Martial. Learning to extract motion from videos in convolutional neural networks. In Asian Conference on Computer Vision, pages 412–428. Springer, 2016.

- [36] Tran Du, Bourdev Lubomir, Fergus Rob, Torresani Lorenzo, and Paluri Manohar. Deep end2end voxel2voxel prediction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pages 17–24, 2016.

- [37] Wang Zhengyang and Ji Shuiwang. Smoothed dilated convolutions for improved dense prediction. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, pages 2486–2495, 2018. doi: 10.1145/3219819.3219944.

- [38] Yu Fisher and Koltun Vladlen. Multi-scale context aggregation by dilated convolutions. arXiv preprint arXiv:1511.07122, 2015. URL https://arxiv.org/abs/1511.07122.

- [39] Zeiler Matthew D, Krishnan Dilip, Taylor Graham W, and Fergus Rob. Deconvolutional networks. In 2010 IEEE Computer Society Conference on computer vision and pattern recognition, pages 2528–2535. IEEE, 2010.

- [40] Zhai Mingliang, Xiang Xuezhi, Zhang Rongfang, Lv Ning, and El Saddik Abdulmotaleb. Optical flow estimation using channel attention mechanism and dilated convolutional neural networks. Neurocomputing, 368:124–132, 2019. ISSN 18728286. doi: 10.1016/j.neucom.2019.08.040.

- [41] Zhang Congxuan, Zhou Zhongkai, Chen Zhen, Hu Weiming, Li Ming, and Jiang Shaofeng. Self-attention-based Multiscale Feature Learning Optical Flow with Occlusion Feature Map Prediction. IEEE Transactions on Multimedia, 9210(c), 2021. ISSN 19410077. doi: 10.1109/TMM.2021.3096083.

- [42] Zhang Congxuan, Feng Cheng, Chen Zhen, Hu Weiming, and Li Ming. Parallel multiscale context-based edge-preserving optical flow estimation with occlusion detection. Signal Processing: Image Communication, 101 (October 2021), 2022. ISSN 09235965. doi: 10.1016/j.image.2021.116560.

- [43] Zhang Junbo, Zheng Yu, Sun Junkai, and Qi Dekang. Flow prediction in spatio-temporal networks based on multitask deep learning. IEEE Transactions on Knowledge and Data Engineering, 32(3):468–478, 2019.

- [44] Zhu Yi and Newsam Shawn. Learning optical flow via dilated networks and occlusion reasoning. In 2018 25th IEEE International Conference on Image Processing (ICIP), pages 3333–3337. IEEE, 2018. URL https://arxiv.org/abs/1805.02733.