Hardly a day passes without the announcement of new successes delivered by artificial intelligence (AI) and machine learning (ML). The list of AI/ML applications that directly or indirectly touch our lives, including those in health care, is growing rapidly. But has AI/ML reached the dialysis space, and if so, where and to what extent?

Although there exists no universally accepted definition, AI can be described as computer-based intelligence that encompasses the ability to learn from data. AI combines concepts from several fields, such as computer science, mathematics, statistics, and data science. ML is a subdiscipline of AI that aims to identify associations or patterns in data by a computer using mathematical algorithms. Broadly speaking, the ML process can be categorized as either supervised or unsupervised learning. Supervised learning seeks associations in situations where both input and output data are known. By contrast, unsupervised learning aims to identify patterns in input data without a priori knowledge of a target.
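The distinction between the two learning modes can be made concrete with a minimal sketch. The example below is illustrative only: the numbers are made up (they are not patient data), the function names `fit_centroids`, `predict`, and `two_means` are hypothetical, and a nearest-mean classifier and one-dimensional two-cluster k-means stand in for the far more complex models used in practice. It assumes the input contains at least two distinct values.

```python
# Toy illustration only: the numbers below are invented, not patient data.

def fit_centroids(values, labels):
    """Supervised: inputs AND known outputs are used to learn one mean per class."""
    sums, counts = {}, {}
    for v, y in zip(values, labels):
        sums[y] = sums.get(y, 0.0) + v
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}

def predict(centroids, value):
    """Assign the class whose learned mean is closest to the new value."""
    return min(centroids, key=lambda y: abs(centroids[y] - value))

def two_means(values, iterations=10):
    """Unsupervised: only inputs are given; split them into two groups (1-D k-means)."""
    low, high = min(values), max(values)  # initial cluster centers
    for _ in range(iterations):
        group_low = [v for v in values if abs(v - low) <= abs(v - high)]
        group_high = [v for v in values if abs(v - low) > abs(v - high)]
        low = sum(group_low) / len(group_low)
        high = sum(group_high) / len(group_high)
    return sorted(group_low), sorted(group_high)

# Hypothetical predialysis systolic BP values (mm Hg) with a known outcome per session.
systolic = [95, 100, 105, 140, 150, 160]
outcome = ["event", "event", "event", "no_event", "no_event", "no_event"]

centroids = fit_centroids(systolic, outcome)   # learns from inputs and outputs
predicted = predict(centroids, 110)            # classify a new, unseen input
clusters = two_means(systolic)                 # finds groups without any outcome labels
```

In the supervised branch the outcome labels drive what is learned, whereas the unsupervised branch recovers a grouping from the inputs alone and leaves its interpretation to the user.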

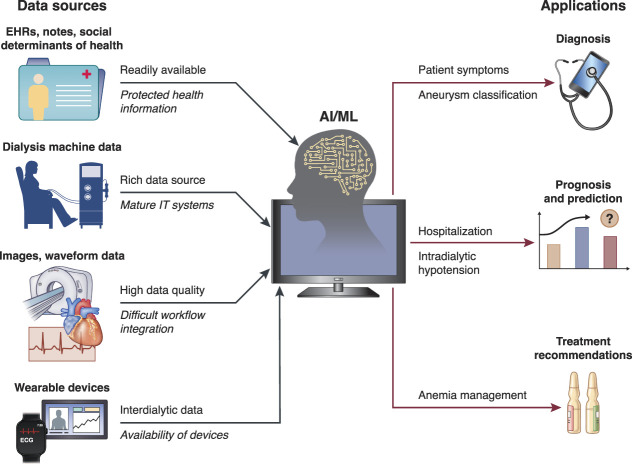

Irrespective of methodological nuances, AI/ML models usually require large amounts of training data. This makes dialysis a particularly attractive field for AI/ML applications because dialysis care is a highly standardized process that generates a large volume of longitudinal patient-level data. Dialysis prescription (e.g., treatment time, ultrafiltration volume, flow rates) and medical treatment administered on site (e.g., erythropoiesis-stimulating agents) are usually documented in electronic health records (EHRs). These data are complemented by information on patient demographics, comorbidities, and laboratory results. In many US facilities, intradialytic biosignals (e.g., BP, heart rate) are automatically recorded. In addition, electronically stored free-text notes are accessible to AI/ML-based natural language processing. In some use cases, there is potential value in incorporating other types of information, such as data on social determinants of health, which may have an effect on outcomes that is as large as, or even larger than, that of standard medical information. In the future, data from wearable devices may provide insights into the interdialytic period (Figure 1).

Figure 1.

Examples of data sources and applications of AI/ML in dialysis. The icons and arrows indicate the various components and flow pathways. On the input side, examples above the arrows indicate strengths, whereas those below the arrows (in italic) indicate challenges. On the output side, some examples of use cases are indicated. AI, artificial intelligence; ECG, electrocardiogram; EHR, electronic health records; IT, information technology; ML, machine learning.

Given the availability of large databases and multimodal data, such as text, images, and waveform data, it is not surprising that the literature describing AI/ML applications for dialysis is proliferating, although most reports describe research studies rather than real-world routine use. Broadly speaking, reports on AI/ML in dialysis focus on diagnosis, prognosis, and treatment recommendations. For example, Chan et al.1 applied natural language processing to EHRs to identify symptom burden in dialysis patients; their study revealed that natural language processing had higher sensitivity than International Classification of Diseases codes for identification of common hemodialysis-related symptoms. Zhang et al.2 successfully used cloud-based AI/ML-driven image analysis to classify vascular access aneurysms as nonadvanced or advanced with an area under the receiver operating characteristic curve of 0.96. Prognosis is the most widely used application of AI/ML in dialysis. Using data from over 260,000 hemodialysis sessions, Lee et al.3 developed a recurrent neural network model that predicted intradialytic hypotension with an area under the receiver operating characteristic curve of 0.94. Other examples are prognosis of hospitalization,4 mortality,5 coronavirus disease 2019,6 and abnormal oxygen saturation pattern.7 For treatment recommendation, Barbieri et al.8 developed an anemia control model that suggests iron and erythropoiesis-stimulating agent doses. As pointed out by Correa and Mc Causland,9 AI/ML can help improve dialysis patient safety.
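Because several of the studies above report an area under the receiver operating characteristic curve, a short sketch of how this metric can be computed may be helpful. It uses the standard rank-based interpretation: the probability that a randomly chosen positive case receives a higher model score than a randomly chosen negative case, with ties counted as one half. The function name `auroc` and the scores and labels are hypothetical and are not taken from any of the cited studies.

```python
def auroc(scores, labels):
    """Area under the ROC curve via pairwise comparison of positives and negatives.

    scores: model outputs (higher means the event is judged more likely)
    labels: 1 if the event occurred, 0 otherwise
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0      # positive case ranked above negative case
            elif p == n:
                wins += 0.5      # ties count as half
    return wins / (len(positives) * len(negatives))

# Hypothetical predicted risks for five sessions and whether the event occurred.
example_scores = [0.9, 0.8, 0.4, 0.35, 0.2]
example_labels = [1, 1, 0, 1, 0]
example_auc = auroc(example_scores, example_labels)
```

A value of 0.5 corresponds to ranking no better than chance and 1.0 to perfect separation. The pairwise loop is quadratic in the number of cases, so it is suited to explanation rather than to datasets with hundreds of thousands of sessions.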

However, as noted earlier, routine use of AI/ML applications in dialysis is rare. The previously mentioned anemia control model8 and ML-driven interventions to reduce hospitalization rates4 are notable exceptions. The hesitancy on the side of dialysis organizations and health care providers to implement AI/ML models in their daily workflow is understandable. The development and implementation of AI/ML methods in dialysis share challenges with other areas, such as data privacy considerations and the lack of a clear cause-and-effect interpretation of model output. AI/ML models only rarely allow the user to understand how a specific outcome was reached; their inherent black-box nature can be frustrating to users and may cause communication issues with health care providers and patients. Other problems arise regarding the portability of AI/ML models between patient populations. Even if a successful use case is well described in the literature and the code is made publicly available, it is likely that the respective AI/ML model needs to be adjusted when applied to a different patient population. Experience has shown that applying the same model (e.g., a model with the same neural network topology and neuron transfer functions and weights) to a different population may result in inferior performance. Therefore, if model performance is poor, retraining is a sensible step. Real-time prediction of intradialytic hypotension, for example, requires fast and reliable connectivity; as such, applications are mostly web-based. In addition, because most AI/ML models draw on data from multiple sources, such as EHRs and machine data, extracting and merging such multimodal data can be challenging.
A frequently encountered problem when developing AI/ML applications for dialysis is the different time scales of data recordings, ranging from seconds (e.g., some machine data) to minutes (e.g., BP) to days (e.g., administration of drugs) and to weeks or months (e.g., laboratory results). AI/ML methods to address this specific complication are under development. Another practical challenge is that AI/ML models require a mature technical infrastructure and experts to maintain it. The situation is further compounded by the lack of clear regulatory requirements and pathways because AI/ML applications may be classified as medical devices or clinical decision support systems.10 Given these challenges, it is not surprising that the real-world adoption of AI/ML models in the dialysis field is slow and that only a few AI/ML applications have left the realm of research and found their way into routine patient care. Despite the pervasive AI/ML hype, it is important to manage expectations and understand that AI/ML models cannot provide general solutions but are tools to serve very specific and narrowly defined tasks. AI/ML algorithms are meant to assist clinicians, not to replace their work. Any successful implementation of AI models in real practice will depend on the user experience, that is, on how smoothly the models are integrated into real-life point-of-care processes.
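To illustrate the time-scale problem described above, the following sketch aligns three hypothetical data streams to one row per BP measurement. It uses two deliberately simple, generic techniques, window averaging for the fast signal and carrying the last observation forward for the slow one; these are assumptions chosen for illustration and are not the methods under development mentioned in the text. All values, variable names, and the 5-minute window are invented.

```python
from bisect import bisect_right

def latest_before(times, values, t):
    """Most recent value recorded at or before time t (times sorted ascending), else None."""
    i = bisect_right(times, t)
    return values[i - 1] if i > 0 else None

def mean_in_window(times, values, start, end):
    """Average of a fast signal over [start, end); None if no samples fall inside."""
    window = [v for ts, v in zip(times, values) if start <= ts < end]
    return sum(window) / len(window) if window else None

# Hypothetical records; times are minutes relative to the start of one session.
signal_t = [0.0, 0.5, 1.0, 29.0, 29.5, 30.0]   # fast machine signal (sub-minute)
signal_v = [180, 182, 184, 190, 192, 194]
bp_t = [0, 30]                                 # BP readings (every 30 minutes)
bp_v = [135, 120]
lab_t = [-43200, -2880]                        # laboratory results (about 30 and 2 days earlier)
lab_v = [10.1, 10.8]                           # e.g., hemoglobin in g/dL

# One row per BP reading, enriched with the faster and slower streams.
rows = []
for t, systolic in zip(bp_t, bp_v):
    rows.append({
        "minute": t,
        "systolic_bp": systolic,
        "mean_signal_prev_5min": mean_in_window(signal_t, signal_v, t - 5, t),
        "latest_lab": latest_before(lab_t, lab_v, t),
    })
```

Using only information available at or before each BP reading avoids leaking future data into a prediction. The first row has no preceding machine samples, so its window average is left missing rather than imputed.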

The US Food and Drug Administration (FDA), Health Canada, and the United Kingdom's Medicines and Healthcare Products Regulatory Agency have jointly identified ten guiding principles that inform the development of good ML practice.11 They address issues including good software engineering practices, data collection protocols to ensure that the relevant characteristics of the intended patient population are sufficiently represented in the training and test datasets, human interpretability of the model outputs, and monitoring of models in real-world use. Validation of data elements in the process of model development is critically important.

To further advance the application of AI/ML in the dialysis space, several steps need to be taken. First, the effectiveness and safety of AI/ML tools should be rigorously and repeatedly evaluated, ideally in pragmatic clinical trials. Of note, it is important to perform validation studies before initiating pragmatic trials. Second, when developing AI/ML models, special care must be exercised to avoid implicit and explicit biases. Third, the medical workforce needs to be trained in the interpretation and communication of AI/ML model output. These measures will help to better define the place for AI/ML models in dialysis care.

Acknowledgments

This article is part of the Artificial Intelligence and Machine Learning in Nephrology series, led by series editor Girish N. Nadkarni.

The content of this article reflects the personal experience and views of the author(s) and should not be considered medical advice or recommendation. The content does not reflect the views or opinions of the American Society of Nephrology (ASN) or CJASN.

Responsibility for the information and views expressed herein lies entirely with the author(s).

Disclosures

P. Kotanko reports employment with Renal Research Institute, a wholly owned subsidiary of Fresenius Medical Care; holds stock in Fresenius Medical Care; reports research funding from Fresenius Medical Care, KidneyX, and NIH; is an inventor on patent(s) in the field of dialysis; receives author royalties from HS Talks and UpToDate; and serves on the Editorial Boards of Blood Purification, Frontiers in Nephrology, and Kidney and Blood Pressure Research. H. Zhang reports employment and patents with Renal Research Institute, a wholly owned subsidiary of Fresenius Medical Care. The remaining author has nothing to disclose.

Funding

National Institute of Diabetes and Digestive and Kidney Diseases of the National Institutes of Health (Grant/Award Number: R01DK130067).

Author Contributions

P. Kotanko conceptualized the article; and all authors wrote the original draft and reviewed and edited the manuscript.

References

- 1. Chan L, Beers K, Yau AA, et al. Natural language processing of electronic health records is superior to billing codes to identify symptom burden in hemodialysis patients. Kidney Int. 2020;97(2):383–392. doi: 10.1016/j.kint.2019.10.023

- 2. Zhang H, Preddie D, Krackov W, et al. Deep learning to classify arteriovenous access aneurysms in hemodialysis patients. Clin Kidney J. 2022;15(4):829–830. doi: 10.1093/ckj/sfab278

- 3. Lee H, Yun D, Yoo J, et al. Deep learning model for real-time prediction of intradialytic hypotension. Clin J Am Soc Nephrol. 2021;16(3):396–406. doi: 10.2215/CJN.09280620

- 4. Chaudhuri S, Han H, Usvyat L, et al. Machine learning directed interventions associate with decreased hospitalization rates in hemodialysis patients. Int J Med Inform. 2021;153:104541. doi: 10.1016/j.ijmedinf.2021.104541

- 5. Yang CH, Chen YS, Moi SH, Chen JB, Wang L, Chuang LY. Machine learning approaches for the mortality risk assessment of patients undergoing hemodialysis. Ther Adv Chronic Dis. 2022;13:20406223221119617. doi: 10.1177/20406223221119617

- 6. Monaghan CK, Larkin JW, Chaudhuri S, et al. Machine learning for prediction of patients on hemodialysis with an undetected SARS-CoV-2 infection. Kidney360. 2021;2(3):456–468. doi: 10.34067/kid.0003802020

- 7. Galuzio PP, Cherif A, Tao X, et al. Identification of arterial oxygen intermittency in oximetry data. Sci Rep. 2022;12(1):16023. doi: 10.1038/s41598-022-20493-0

- 8. Barbieri C, Molina M, Ponce P, et al. An international observational study suggests that artificial intelligence for clinical decision support optimizes anemia management in hemodialysis patients. Kidney Int. 2016;90(2):422–429. doi: 10.1016/j.kint.2016.03.036

- 9. Correa S, Mc Causland FR. Leveraging deep learning to improve safety of outpatient hemodialysis. Clin J Am Soc Nephrol. 2021;16(3):343–344. doi: 10.2215/CJN.00450121

- 10. Barbieri C, Neri L, Chermisi M, et al. How to assess the risks associated with the usage of a medical device based on predictive modeling: the case of an anemia control model certified as medical device. Expert Rev Med Devices. 2021;18(11):1117–1121. doi: 10.1080/17434440.2021.1990037

- 11. US Food and Drug Administration. Good machine learning practice for medical device development: guiding principles. Published October 27, 2021. Accessed February 2, 2023. https://www.fda.gov/medical-devices/software-medical-device-samd/good-machine-learning-practice-medical-device-development-guiding-principles