Abstract

Background:

Transcranial magnetic stimulation (TMS) can modulate neural activity by evoking action potentials in cortical neurons. TMS neural activation can be predicted by coupling subject-specific head models of the TMS-induced electric field (E-field) to populations of biophysically realistic neuron models; however, the significant computational cost associated with these models limits their utility and eventual translation to clinically relevant applications.

Objective:

To develop computationally efficient estimators of the activation thresholds of multi-compartmental cortical neuron models in response to TMS-induced E-field distributions.

Methods:

Multi-scale models combining anatomically accurate finite element method (FEM) simulations of the TMS E-field with layer-specific representations of cortical neurons were used to generate a large dataset of activation thresholds. 3D convolutional neural networks (CNNs) were trained on these data to predict thresholds of model neurons given their local E-field distribution. The CNN estimator was compared to an approach using the uniform E-field approximation to estimate thresholds in the non-uniform TMS-induced E-field.

Results:

The 3D CNNs estimated thresholds with mean absolute percent error (MAPE) on the test dataset below 2.5% and strong correlation between the CNN predicted and actual thresholds for all cell types . The CNNs estimated thresholds with a 2–4 orders of magnitude reduction in the computational cost of the multi-compartmental neuron models. The CNNs were also trained to predict the median threshold of populations of neurons, speeding up computation further.

Conclusion:

3D CNNs can estimate rapidly and accurately the TMS activation thresholds of biophysically realistic neuron models using sparse samples of the local E-field, enabling simulating responses of large neuron populations or parameter space exploration on a personal computer.

Keywords: Transcranial magnetic stimulation, Convolutional neural network, Machine learning, Neuron models, Finite element method, Threshold

1. Introduction

Transcranial magnetic stimulation (TMS) is a technique for noninvasive modulation of brain activity in which an electric field (E-field) is induced in the head by a current pulse applied through an external coil (Barker et al., 1985). TMS is FDA-cleared to treat depression, obsessive compulsive disorder, smoking addiction, and migraine (Blumberger et al., 2018; Carmi et al., 2019; Dinur-Klein et al., 2014; George et al., 2010; Levkovitz et al., 2015; O’Reardon et al., 2007), and is under investigation for numerous other psychiatric and neurological disorders (Lefaucheur et al., 2014). In addition, non-invasive stimulation of the cerebral cortex with TMS is valuable for human neuroscience research (Ziemann, 2010). Nonetheless, TMS suffers from several limitations including large inter- and intra-subject variability in responses (George, 2019; Huang et al., 2017) and modest effect sizes relative to those achieved, for example, by electroconvulsive therapy (Kolar, 2017). Improving TMS efficacy and reliability is difficult using empirical methods alone due to the vast parameter space and limited understanding of the neural mechanisms by which TMS activates neurons and produces long-lasting changes to excitability.

Computational modeling of the TMS-induced E-field distribution in subject-specific volume conductor head models enables quantifying the E-field delivery to cortical targets (Gomez-Tames et al., 2020; Opitz et al., 2011; Peterchev et al., 2012; Thielscher et al., 2011; Weise et al., 2020). However, the E-field distribution alone does not predict the response to stimulation, particularly when considering temporal characteristics of the stimulus (e.g., pulse shape and direction) or the diversity of neural elements in the brain. How TMS affects neural activity is still unclear and presents a complex problem, as the cortex is composed of various cell types, differing in morphology, electrophysiology, and connectivity, which all can contribute to the responses evoked by stimulation.

Previously, we developed models of human cortical neurons to study the response to the simulated TMS E-field (Aberra et al., 2020, 2018). This multi-scale modeling framework computes the polarization and activation of neural elements in response to arbitrary coil geometries and placements, as well as pulse waveforms, and it successfully reproduced trends in TMS thresholds as a function of pulse shape, width, and direction (Aberra et al., 2020). However, this approach has the considerable computational cost of solving numerically the large system of partial differential equations associated with each neuron model, and requires high performance computing (HPC) resources when simulating large populations of neurons or variations in stimulus parameters. For example, simulating the threshold of a single neuron in our model (Aberra et al., 2020) required 5–15 s; therefore, simulating the response of all neurons in the precentral gyrus (approximately 154 million1) to a TMS pulse would require 24–73 years run serially on a typical laptop or 3–10 months if parallelized over 100 CPUs. Thus, alternative approaches are required to advance models of the effects of TMS on neurons.

Machine learning provides a potential alternative to generate accurate, computationally efficient estimators of the neural response. Artificial neural networks (ANNs) and deep learning have achieved substantial success in multiple problem domains involving complex, high-dimensional data (Lecun et al., 2015). Convolutional neural networks (CNNs) are a class of deep, feed-forward ANNs that use convolutional kernels to extract local features from spatially structured data (Lecun et al., 2015). 3D CNNs were used to estimate TMS-induced E-field distributions in real-time (Li et al., 2022; Yokota et al., 2019) and to learn the mapping between the E-field distribution and evoked muscle responses (Akbar et al., 2020). CNNs were also used to learn the input–output properties of single neuron models for synaptic inputs (Beniaguev et al., 2021; Olah et al., 2021), but they have yet to be applied to estimating neural activation by extracellular E-fields.

We designed a CNN that learned the mapping between TMS induced E-field distributions and the firing responses of biophysically realistic, multicompartmental model neurons, providing a rapid, computationally efficient method to quantify neural activation within E-field volume conductor models. We evaluated the performance of the CNNs and found them to produce accurate estimates of activation threshold, with mean absolute percent error close to the 2% window used in the simulated threshold binary search, in comparison to a simpler estimation approach using the uniform E-field approximation, which had mean absolute percent error of over 6%. Crucially, the CNN estimators ran 2–4 orders of magnitude faster than the full neuronal simulations, with further speedup if the threshold is estimated at the neuron population level.

2. Methods

We developed computationally efficient CNN estimators of the activation thresholds of biophysically realistic, multi-compartmental cortical neuron models in response to a TMS-induced electric field in subject-specific FEM head models derived from MRI data. After determining activation thresholds in the biophysically realistic neuron models, we trained a CNN to take as input the local E-field at regularly defined points around a neuron and output the threshold E-field magnitude to activate the neuron. CNNs were trained on thresholds for neurons placed in one head model (almi5) and tested on neuron thresholds from another head model (ernie).

2.1. Multi-scale model of TMS-induced cortical activation

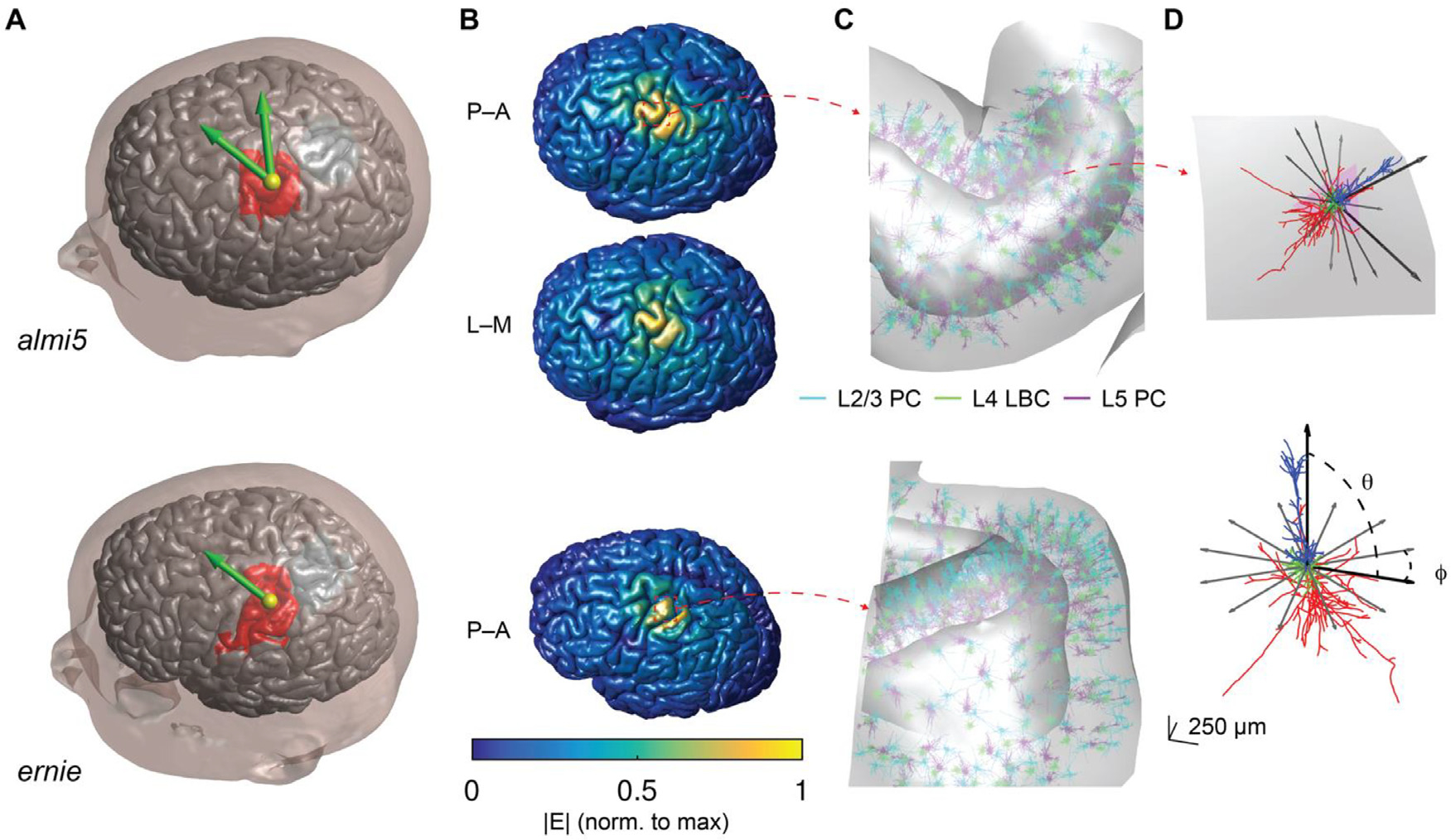

The “ground truth” neural responses were obtained by simulating biophysically realistic models of L2/3 pyramidal cells (PCs), L4 large basket cells (LBCs), and L5 PCs coupled to E-fields computed within two MRI-derived volume conductor head models in SimNIBS v3.1 (Thielscher et al., 2015).

2.1.1. E-field model

Two tetrahedral FEM meshes were generated using the almi5 and ernie datasets included with SimNIBS, consisting of both T1- and T2-weighted images and diffusion tensor imaging (DTI) data. We used the mri2mesh pipeline (Windhoff et al., 2013) with white matter surface resolution set to 60,000 vertices for the almi5 dataset and 120,000 vertices for the ernie dataset. The almi5 volume head mesh consisted of 646,359 vertices and 3.6 million tetrahedral elements, and the ernie volume head mesh consisted of 1.6 million vertices and 8.8 million tetrahedral elements. The meshes included five homogenous compartments: white matter, gray matter, cerebrospinal fluid (CSF), bone, and scalp. For the ernie mesh, all compartments were assigned default conductivity values, with anisotropic conductivity in the white matter using the DTI data and the volume normalized approach (mean conductivity = 0.126 S/m), and isotropic conductivities in the other tissues: gray matter: 0.275 S/m, CSF: 1.654 S/m, bone: 0.01 S/m, scalp: 0.25 S/m. All conductivities were the same in the almi5 mesh, except for the gray matter (0.276 S/m) and CSF (1.79 S/m), which were the values used in our previous publication (Aberra et al., 2020).

E-field distributions were simulated for the MC-B70 figure-of-8 coil (P/N 9016E056, MagVenture A/S, Farum, Denmark), which has ten turns in each of the two windings with outer and inner diameters of 10.8 and 2.4 cm, respectively (Thielscher and Kammer, 2004). The coil was positioned in both cases above the left motor hand knob, located on the precentral gyrus (Yousry et al., 1997). For the almi5 mesh, we simulated both posterior–anterior (P–A) and latero–medial (L–M) coil orientations, with the coil handle oriented 45° and 90° relative to the midline, respectively. Using these two orientations, the E-field distributions for the A–P and M–L pulse directions were generated by flipping all E-field vectors. For the ernie mesh, we simulated the P–A coil orientation of the motor hand knob. The E-field distributions were computed with a coil-to-scalp distance of 2 mm and coil current of 1 A/μs.

2.1.2. Neuron models

Previously, we adapted the multi-compartmental, conductance-based models of juvenile (P14) rat cortical neurons implemented by the Blue Brain Project (Markram et al., 2015; Ramaswamy et al., 2015) to the biophysical and geometric properties of adult, human cortical neurons in the NEURON simulation software (Hines and Carnevale, 1997). TMS activated with lowest intensity the L5 and L2/3 PCs as well as L4 large basket cells (LBCs); therefore, the current study focused on these neurons (we focused our simulations on LBCs in L4, but preliminary simulations indicated LBCs in other layers had similar thresholds (Aberra et al., 2020)). Each cell type had five “virtual clones”, which had stochastically varied morphologies but identical biophysical parameters (Aberra et al., 2020, 2018). The cell morphologies are plotted in Supplementary Figure S1.

2.1.3. Embedding neuron populations in head model

Regions of interest (ROIs) were defined within each head mesh to embed layer-specific populations of model neurons. The almi5 mesh ROI was defined as a 32 × 34 × 50 mm3 region containing the M1 hand knob on the precentral gyrus and opposing postcentral gyrus (Aberra et al., 2020). For the ernie mesh, a larger ROI was defined to include the precentral gyrus, central sulcus, and postcentral gyrus labeled regions generated by Freesurfer’s automatic cortical parcellation with the Destrieux Atlas (Destrieux et al., 2010). These regions were cropped with a 48 × 55 × 50 mm3 box. Neuron models were positioned and oriented within the gray matter by interpolating surface meshes representing each cortical layer between the gray matter and white matter surfaces and discretizing the surfaces with the number of triangular elements matching the desired number of neurons in each layer. For the almi5 and ernie ROIs, 3000 and 5000 elements were used for each layer, resulting in surfaces with mean density of 1.7 and 0.64 elements (i.e., neuron positions) per mm2, respectively. The layer depths were defined using layer depth boundaries from the recently published layer segmentation of the BigBrain histological atlas; in von Economo area FA, the boundaries between adjacent layers were at normalized depths of 0.0993 (L1–L2/3), 0.466 (L2/3–L4), 0.524 (L4–L5), 0.753 (L5–L6) (total depth of gray matter is 1) (Wagstyl et al., 2020). Accordingly, the cell placement surface meshes were positioned between these boundaries at normalized depths of L2/3: 0.4, L4: 0.5, L5: 0.75 for the ernie model and L2/3: 0.4, L4: 0.55, L5 0.65 for the almi5 model. The slight differences in the tissue conductivity values and layer depths add to the anatomical variation between the two head models which was advantageous for testing of the robustness of the CNN estimators.

Single model neurons were placed with their cell bodies centered in each element and oriented to align their somatodendritic axis normal to the element. Using the somatodendritic axis as the polar axis of the local spherical coordinate system (Fig. 1D), each model neuron was placed with initial random azimuthal orientation and then rotated to 11 additional orientations with 30° steps to sample the full range of possible orientations and generate a larger dataset for training and evaluating the CNNs (discussed in Section 2.2.4).

Fig. 1.

Multi-scale model of TMS-induced activation. (A) FEM head models used in this study to compute E-fields with simulated TMS coil positions (yellow sphere is location of coil center) and directions (green arrow direction opposite of coil handle). Model neurons were populated throughout ROI (red region) encompassing the motor hand knob and opposing postcentral gyrus. (B) E-field magnitudes plotted on gray matter surface for P–A and L–M coil orientations (top) and P–A (bottom) in corresponding meshes. (C) Neuron populations in corresponding head meshes shown with zoomed in view. (D) Example L5 PC placed in gyral crown (top), oriented with the somatodendritic axis normal to the element and reference vectors indicating azimuthal rotations simulated at all positions (tangential to element normal). Same L5 PC model neuron shown in cell-centered coordinate system with somatodendritic axis aligned to polar axis (bottom) with polar angle θ and azimuthal angle φ. The azimuthal rotations shown are the same as in the top panel.

Mesh generation, placement of neuronal morphologies, extraction of E-field vectors from the SimNIBS output, NEURON simulation control, analysis, and visualization were conducted in MATLAB (R2016a & R2017a, The Mathworks, Inc., Natick, MA, USA).

2.1.4. Neuron simulations

Applying the quasi-static approximation (Bossetti et al., 2008; Plonsey and Heppner, 1967) allows the separation of the spatial and temporal components of the TMS-induced E-field. The spatial component was derived from the E-field distributions computed in SimNIBS with a coil current rate of change of 1 A/μs by interpolating the E-field vectors at each model neuron’s compartments after placement within the head mesh. The E-field at the model neuron compartments was linearly interpolated from the 10 nearest mesh points (tetrahedral vertices in SimNIBS) within the gray and white matter volumes using the MATLAB <monospace>scatteredInterpolant</monospace> function. The E-field vectors were integrated along each neural process to generate a quasipotential (Nagarajan and Durand, 1996; Roth and Basser, 1990; Wang et al., 2018), which was coupled as an extracellular voltage to each compartment in NEURON using the <monospace>extracellular</monospace> mechanism (Hines and Carnevale, 1997). The neuron models were discretized with isopotential compartments no longer than 20 μm.

The temporal component of the E-field was included by scaling uniformly the quasipotentials over time by either a monophasic or biphasic TMS pulse recorded from a MagPro X100 stimulator (MagVenture A/S, Denmark) with a MagVenture MCF-B70 figure-of-8 coil (P/N 9016E0564) using a search coil and sampling rate of 5 MHz. The E-fields were down-sampled to twice the simulation time step and normalized to unity amplitude for subsequent scaling in the neural simulations. We used a simulation time step of 5 μs, simulation window of 1 ms, and backward Euler integration. Activation thresholds were determined by scaling the pulse waveform using a binary search algorithm to find the minimum stimulus intensity, within 2%, necessary to elicit an action potential, defined as the membrane potential in at least 3 compartments crossing 0 mV with positive slope.

2.2. Convolutional neural network for threshold estimation

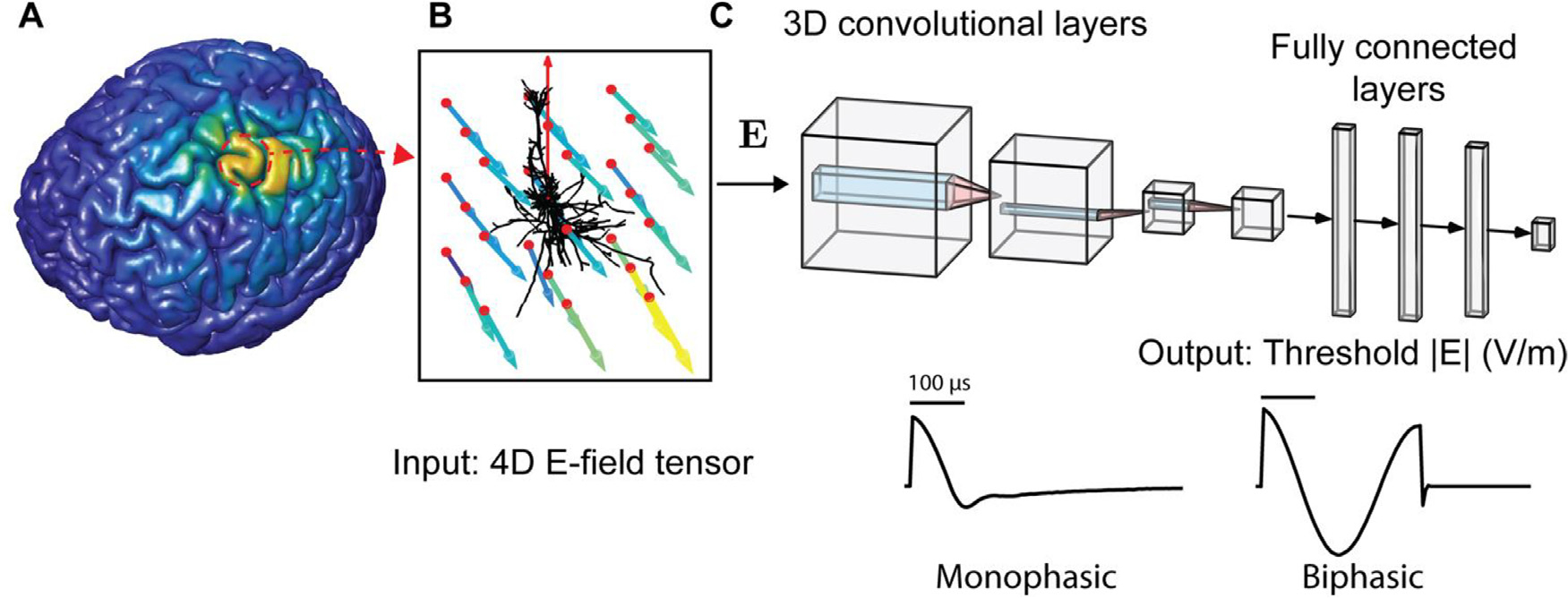

We used a two-stage 3D CNN followed by 1D dense layers to estimate the threshold to activate each model neuron (Fig. 2). The CNN does not represent the temporal dynamics of the neural response; therefore, each CNN estimates the activation thresholds for the specific TMS pulse waveform that was used to generate the training data. CNNs were trained on thresholds for neurons placed in the almi5 model and tested on neuron thresholds from the ernie model. Hyperparameters were tuned using random search with the training dataset. The result was a set of 15 trained CNNs for each of the 15 model neurons, with each CNN outputting the activation threshold of a model neuron for any local E-field distribution and the specific pulse waveform.

Fig. 2.

Estimating E-field threshold of multi-compartmental neurons using convolutional neural networks (CNNs). (A) E-field distribution computed throughout head model using SimNIBS, shown on gray matter surface. (B) For a model neuron at any location, local E-field vectors were sampled at regular grid (red points) centered on cell body and rotated into cell-centered coordinate system (red vector indicates somatodendritic axis, i.e., polar axis). Color and length of each vector indicates magnitude. (C) After normalizing the E-field vector magnitudes by that of the grid central node, the E-field vectors were structured as a 4D tensor input to the 3D convolutional layers. The output of the final 3D convolutional layer was flattened and input into dense, fully connected layers, which ended with a single linear output layer element with the predicted threshold E-field magnitude in V/m. E-field magnitude is referenced to E-field at the central grid point, as off-center points have, in general, different direction/magnitude. Separate models were trained on thresholds obtained with different pulse shapes, either a monophasic or biphasic TMS pulse, shown below.

2.2.1. E-field input and preprocessing

The input to the CNN was E-field vectors defined on an cubic grid with side length centered on the cell body and rotated into the cell-centered coordinate system, comprising a 4D tensor . This ensured the spatial relationship between the E-field sampling points and the morphology was constant for any model neuron placed in the brain. We set l to encompass approximately the cell dimensions while minimizing grid points penetrating the CSF: 2 mm for L2/3 PCs, 1.5 mm for L4 LBCs, and 1.5 mm for L5 PCs. The effect of varying the number of grid points and grid size was tested with the L5 PCs, using , and 9 points per dimension and to in 0.5 mm steps. The sampling grids used in the main results for all cells are shown in Supplementary Figure S1.

The E-field tensors were input to the CNN with E-field components in either Cartesian coordinates or spherical coordinates , again using the somatodendritic axis as the polar axis of the local spherical coordinate system. Since the azimuthal angle ranged from 0 to 360°, creating a periodic discontinuity at 0°, the azimuthal angle was separated into two components ranging from −1 to 1 using cos and sin, i.e., . We hypothesized using spherical coordinates would enable the CNN learn the strong dependence of model thresholds on the E-field magnitude (Aberra et al., 2020), which is included explicitly in the spherical coordinate representation.

2.2.2. Local E-field characterization

To determine how well the E-field sampling grids represented spatial gradients of the E-field from the FEM solution, we computed the magnitude of the directional gradients at each neuronal position, given by the norm of the E-field Jacobian,

| (1) |

and extracted the median value of this metric within each sampling grid size . The E-field vectors at each grid point were first divided by the magnitude of the E-field at the center grid point (cell body). We also computed this gradient metric with an sampling grid to capture better the higher spatial frequencies in the FEM solution. Additionally, we quantified how well the E-field sampling grids captured the E-field at the action potential (AP) initiation site by linearly interpolating the E-field vector within each sampling grid at the AP initiation site, extracted from the NEURON simulations. We compared this interpolated E-field vector to the actual E-field vector from the FEM solution by computing the absolute percent error in magnitude and error in angle between them, given by

| (2) |

and

| (3) |

2.2.3. CNN architecture

The architecture consisted of a series of 3D convolutional layers (Conv3D) followed by a flattening operation (Flatten layer) and a series of fully connected, 1D dense layers (Dense) and a final one-unit output layer corresponding to the estimated threshold E-field magnitude. This threshold can then be converted to the threshold TMS device intensity setting by scaling with the ratio between the coil current rate of change and the local E-field magnitude from the FEM simulation. The model weights (both convolutional kernels and dense layers) were initialized with Xavier uniform initialization (Glorot and Bengio, 2010) (glorot_uniform) and trained using the Adam adaptive, stochastic gradient-based optimization algorithm (Kingma and Ba, 2015) with the mean squared error loss function. The CNNs were implemented using Tensorflow 2.2 (Abadi et al., 2016) with the Keras deep-learning API in Python 3.7.

ANN hyperparameters are still commonly tuned by hand based on intuition, due to the vast parameter space and often slow training times required to evaluate each selected hyperparameter set. Here, we tuned the hyperparameters of the general architecture described above (e.g., number of convolutional layers, dense layers, etc.) using random search, based on previous work showing it was more efficient than grid search (Bergstra and Bengio, 2012), followed by some manual tuning of individual hyperparameters. Each set of hyperparameters tested in the random search was used to train a candidate CNN architecture on a third of the training dataset to speed up training time. The search ranges and best hyperparameters obtained are included in Table 1.

Table 1.

Convolutional neural network (CNN) hyperparameters. Columns include tuning range randomly searched for each hyperparameter and best hyperparameters identified.

| Hyperparameter | Tuning range | Best |

|---|---|---|

|

| ||

| Number of convolutional layers | 2–6 | 4 |

| Convolutional kernel size | 1–3 | 3 |

| Number of convolutional filters (1st layer) | 50–121 | 115 |

| Number of dense layers | 1–5 | 3 |

| Number of dense units (1st layer) | 50–1000 | 57 |

| Shrink rate | 0.5–0.9 | 0.8 |

| Batch size | 8–128 | 62 |

| Learning rate (initial) | 10−8–10−5 | 10−5 |

| Activation function | ReLU or leaky ReLU | ReLU |

| Dropout rate | 0–0.5 | 0 |

| Batch normalization | off or on | off |

| Output activation function | - | linear |

Using the <monospace>RandomizedSearchCV</monospace> function in the scikit-learn Python module (Pedgregosa et al., 2011), a total of 52 hyperparameter sets were randomly selected and evaluated based on their final test dataset loss (MSE) after 1000 epochs for each of the L5 PC clones, without using cross validation to reduce search time. We first conducted the hyperparameter search for the five L5 PCs and found that model performance was always the same across clones. Therefore, we ran random searches for a single L2/3 PC and L4 LBC clone and found that the best hyperparameters from the first search with the L5 PCs were not outperformed by any new hyperparameter sets tested.

After exploring the hyperparameter space, we found the best performing CNN architecture for the 9 × 9 × 9 E-field sampling grid consisted of four convolutional layers and three dense layers with 3 × 3 × 3 convolutional kernels (Table 1). The number of filters and dense units were defined for the initial convolutional and dense layer, respectively, and then decreased in each subsequent layer by multiplying the first layer’s value (115 filters and 57 dense units) by a shrink rate ri−1, where i is the index of the convolutional or dense layer. The output of each convolutional and dense layer was passed through rectified linear units (<monospace>ReLU</monospace>). The convolutional layers all had kernels of size 3 × 3 × 3 (stride of 1) and did not use zero-padding, resulting in layers with decreasing dimension: for the 9 × 9 × 9 E-field vector sampling grid, the first three dimensions of the layer outputs were 7, 5, 3, and 1. For the models using fewer E-field sampling points , the dimensions of the input were incompatible with this architecture, so we used two approaches to adapt the network to these inputs. The first was to reduce the kernel size to 2 × 2 × 2, and, for the case, reduce the number of convolutional layers to two (variable network size). However, since this changed the overall number of weights, we tested a second approach in which the architecture was kept constant and the E-fields were upsampled to the 9 × 9 × 9 grid using linear interpolation (constant network size).

Training was conducted using mini-batches of 62 samples and an initial learning rate of 10−5. To train the final set of CNNs with the best parameter set, we used a maximum of 2000 epochs, and training was terminated to reduce overfitting if the validation loss did not decrease after 30 epochs (<monospace>EarlyStop</monospace>). We also used learning rate scheduling to reduce the learning rate by a factor of 5 if the validation loss plateaued for 15 epochs (<monospace>ReduceLRonPlateau</monospace>). Example training curves are shown in Supplementary Fig. S2.

We tested including dropout layers between each dense layer, as well as batch normalization either before or after the non-linearity for regularization. These operations did reduce training time, but they resulted in higher prediction error and were not included in the final hyperparameter set. The same hyperparameter set was then used for each of the 15 CNNs corresponding to the model neurons.

2.2.4. Training and testing datasets

The training and test datasets for each model neuron consisted of pairings of 4D E-field tensors sampled around each model neuron position within the cortical geometry and the corresponding activation thresholds. For each of the 11 additional azimuthal rotations, the E-field sampling grids were rotated to ensure the E-field vector orientations relative to the model neuron were constant, while providing a unique E-field distribution for training the CNN. The training dataset was derived from the E-field simulations in the almi5 mesh, consisting of four stimulation directions (P–A, A–P, L–M, M–L), 2999–3000 neuron positions per cell, and 12 rotations, totaling 143,952 or 144,000 unique E-field–threshold combinations for each of the 15 model neurons (2.16 million simulations). This training dataset was split, with 85% of the data used for training the model and 15% used for validation. CNNs were trained on HPC cluster nodes each equipped with a GeForce RTX 2080 Ti graphics card.

To test model generalization to a new head model, the test dataset consisted of E-field distributions from two stimulation directions (P–A and A–P) in the ernie mesh, with 4999 neuron positions, and 12 rotations at each position, totaling 119,976 unique E-field–threshold combinations for each model neuron. After training with the training/validation dataset, the performance of each CNN was evaluated on this test dataset.

It may be preferable in some applications to estimate the threshold statistics of a given cell type directly, rather than estimating cell-specific thresholds and subsequently computing population statistics. Therefore, we also trained a set of CNNs to estimate directly the median threshold across clones and azimuthal rotations within each cell type (L2/3 PC, L4 LBC, and L5 PC) using Cartesian coordinates for the E-field and the same hyperparameters listed in Table 1. Additionally, the same training and test datasets were used but the thresholds across clones and azimuthal rotations were collapsed into one median value for each of the TMS coil directions. However, we included 12 rotated E-field sampling grids at each position in the training data set paired with identical median threshold values. This maintained the same dataset size, and we hypothesized that this approach would also reduce the influence of azimuthal neuron rotations, which were still represented in the sampled E-field vectors.

2.3. Method for estimating TMS activation thresholds with uniform E-field simulations

We implemented an additional method to estimate the neuron model thresholds that approximated the local E-field as uniform. The thresholds were pre-simulated for uniform E-field applied at range of directions spanning the polar and azimuthal directions. To simulate the response to uniform E-field, the extracellular potential was computed at each compartment with position using

| (4) |

where the direction of the uniform E-field was given by polar angle and azimuthal angle , in spherical coordinates with respect to the somatodendritic axes (Fig. 1 D), and the potential of the origin (soma) was set to zero, as in (Aberra et al., 2020, 2018). Uniform E-field was applied with the monophasic or biphasic TMS pulse at each direction with steps of 5° for both the polar and azimuthal directions, for a total of 2522 directions, generating threshold–direction maps for each model neuron.

The threshold–direction maps were then used to estimate thresholds of neurons embedded in the non-uniform FEM E-field. For a given neuron, the E-field vector at the soma was extracted and rotated into the cell-centered coordinate system used in the uniform E-field simulations (Fig. 1D). The threshold (in V/m) for the corresponding E-field direction was then interpolated from the uniform E-field threshold–direction map. This threshold was divided by the FEM E-field vector’s magnitude per A/μs current rate of change to convert to stimulator output intensity. This latter step was only necessary to determine thresholds in terms of stimulator intensity, which would be the relevant “knob” for an experimenter, rather than local E-field intensity. The threshold–direction map interpolant was implemented in MATLAB as a <monospace>griddedInterpolant</monospace> using first-order (linear) interpolation.

2.4. Code and data availability

The code and relevant data of this study can be downloaded at 10.5281/zenodo.7326454 and 10.5281/zenodo.7326394, respectively. They are also available on GitHub at https://github.com/Aman-A/TMSsimCNN_Aberra2023.

3. Results

3.1. CNN predicts accurately the threshold response of single neurons to TMS

The best CNN architecture provided remarkably accurate predictions of the activation thresholds for L2/3 PC, L4 LBC, and L5 PC neurons. Fig. 3A shows the spatial distribution of thresholds for monophasic, P–A TMS of M1 in the ernie head model (test dataset) calculated in NEURON, predicted with the ML trained on the almi5 head model, and estimated with the uniform E-field approximation. The relative errors were substantially lower for the CNN compared to the uniform E-field method (Fig. 3B). Median errors across clones and rotations for the L4 LBCs and L5 PCs ranged from −3.4 to 6.6% and −1.9 % to 3.5%, respectively, for the CNN, and −29.5 % to 38.8% and −27.5 to 49.5%, respectively, for the uniform E-field method. The L2/3 PCs had the largest errors for either approach, with median error ranging from −23.7 % to 37.1% with the CNN and −31.9 % to 97.1% with the uniform E-field method. These outer extrema of the median error distributions were driven by outliers, with 97.3% below 5% error for the CNN, compared to only 59.2% for the uniform E-field method. The full error distributions across layers for both methods are shown in Supplementary Fig. S3.

Fig. 3.

CNN accurately predicts thresholds for activation of neurons across the cortex. Actual and predicted thresholds for monophasic, P–A TMS of M1 in ernie head model (test dataset). (A) Surface plots of median threshold stimulator intensity (in coil current rate of change) across clones and rotations of L2/3 PCs (top row), L4 LBCs (middle row), and L5 PCs (bottom row) for NEURON simulations (left column), CNN prediction (middle column), and uniform E-field method (right column). (B) Surface plots of median percent error of thresholds across clones and rotations of L2/3 PCs (top row), L4 LBCs (middle row), and L5 PCs (bottom row) for CNN prediction (left column), and uniform E-field method (right column). Note the different color bar limits for the error distributions with CNN and uniform E-field method.

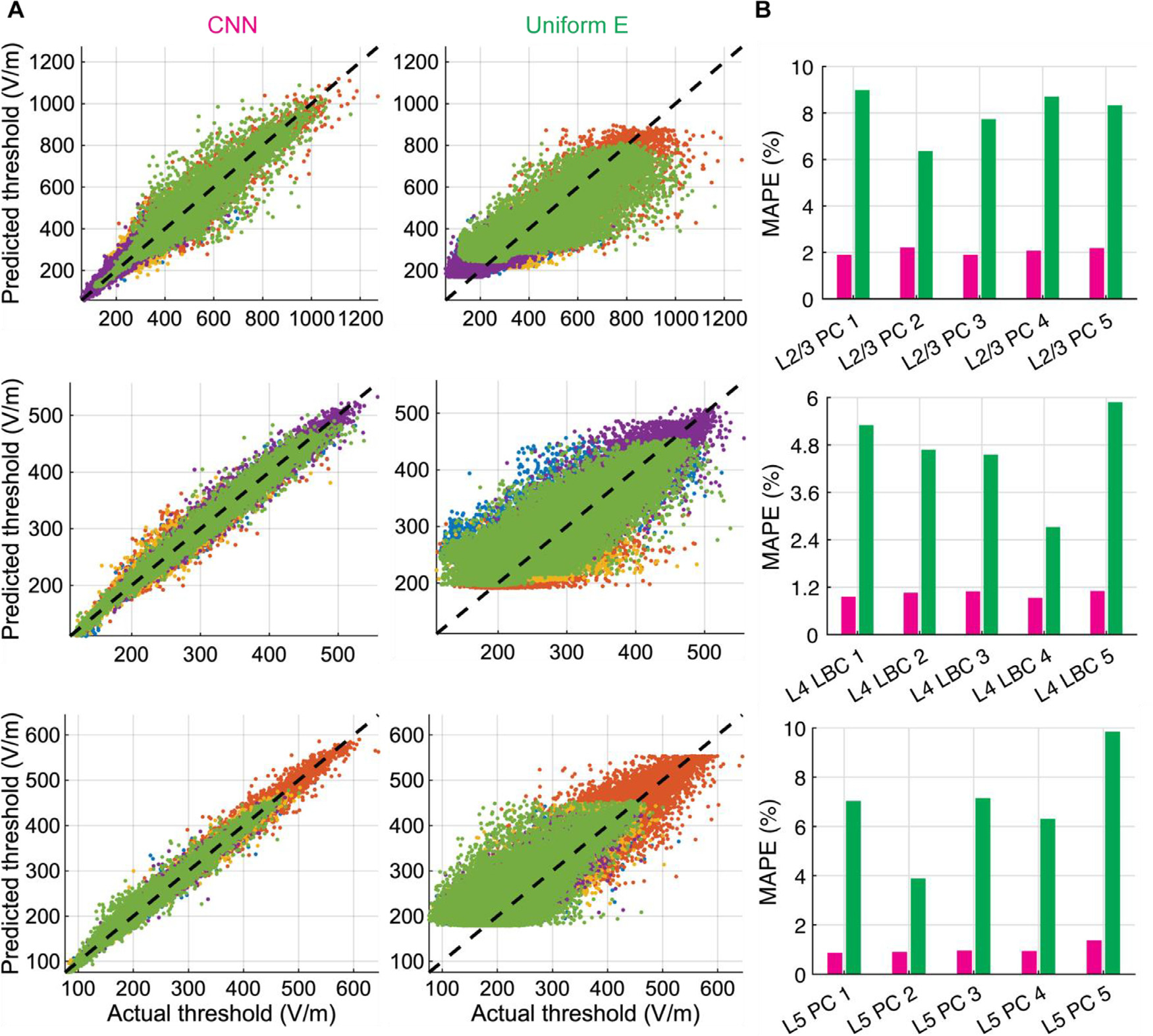

The CNN-predicted thresholds had high correlations with the thresholds calculated by repeatedly solving the non-linear cable equations across the entire set of neurons and positions, with ranging 0.959 – 0.980, 0.974 – 0.993, and 0.988 – 0.996 across the L2/3 PC, L4 LBC, and L5 PC clones, respectively (Fig. 4A). The correlations for thresholds predicted with the uniform E-field method were much weaker, with ranging 0.6371–0.8405, 0.618–0.938, and 0.645–0.914 across the L2/3 PC, L4 LBC, and L5 PC clones, respectively (Fig. 4A). The CNN outperformed the uniform E-field approach for all model neurons, yielding mean absolute percent error (MAPE) less than 2.2% (L2/3 PCs), 1.1% (L4 LBCs), and 1.4% (L5 PCs), and median absolute percent errors were less than 1.2% (L2/3 PCs), 0.75% (L4 LBCs), and 0.74% (L5 PCs). For the uniform E-field approach, MAPE was less than 9.0% (L2/3 PCs), 5.9% (L4 LBCs), and 9.8% (L5 PCs) (Fig. 4B), and median absolute percent errors were less than 5.4% (L2/3 PCs), 3.3% (L4 LBCs), and 4.5% (L5 PCs). We also trained a set of CNNs on thresholds obtained with biphasic TMS pulses and found their performance was similar to that of the monophasic pulse CNN estimators (Supplementary Fig. S4).

Fig. 4.

Distribution of threshold E-field errors for CNN and uniform E-field approximation. (A) Predicted thresholds by CNN (left column) and uniform E-field approximation (middle column) across entire test dataset plotted against thresholds from NEURON simulations (actual) in magnitude of E-field at soma for L2/3 PCs (top row), L4 LBCs (middle row), and L5 PCs (bottom row). Different colors correspond to different clones within layer. (B) Mean absolute percent error (MAPE) on test dataset for CNN (magenta) and uniform E-field approach (green), separated by clone within layer.

3.2. Dependence of CNN performance on E-field representation

The performance of ANNs often benefit from data pre-processing to assist in training or pre-identifying features that are known a priori to correlate with the output (Lathuilière et al., 2018); thus, we tested the effect of E-field representation and sampling parameters on CNN performance with the L5 PCs. Representing the E-field vectors in Cartesian coordinates yielded lower errors than spherical coordinates (Fig. 5A). This held true for the L2/3 PCs and L4 LBCs as well (Supplementary Fig. S5). Focusing on the L5 PCs, the optimal sampling grid size varied by clone, with lowest errors for the grid size that best matched the spatial extent of each specific axonal morphology (Fig. 5B). The 1.5 mm grid produced the lowest mean error across all L5 PC clones, and we used this single grid size for the remaining results. Finally, reducing the number of sampling points increased the mean error, although MAPE was still below 4% for even the 3 × 3 × 3 sampling grid (27 E-field vectors) (Fig. 5C). This trend held for both the variable and constant network sizes, with prediction errors differing by less than 1% (Supplementary Fig. S6).

Fig. 5.

Effect of E-field representation on CNN performance. (A) Mean absolute percent error metric on test dataset for L5 PC CNNs with E-field input in either spherical coordinates (represented with four variables; pre-processing described in Section 2.2.1) or Cartesian coordinates (represented with three variables). (B) MAPE of test dataset for L5 PC CNNs for varying sampling grid sizes l. (C) MAPE metric for example L5 PC (clone 1) CNN for different sampling resolutions (magenta bars), using constant network size, with uniform E-field error metric included for comparison (green bar). (D) Median magnitude of directional E-field gradients (left) and error in magnitude (middle) and direction (right) of the interpolated E-field at the AP initiation site in test dataset for different sampling resolutions for L5 PC (clone 1).

We hypothesized that increasing sampling resolution improved CNN performance by more accurately representing local E-field gradients and the E-field magnitude and direction at the site of AP initiation. As expected, the FEM E-field was represented on average with lower spatial gradients when using fewer sampling points (Fig. 5D, left), indicating higher spatial gradients of the E-field were lost due to undersampling. Furthermore, at the AP initiation site identified from the NEURON simulations, the E-field amplitude and direction were estimated less accurately using linear interpolation with fewer sampling points (Fig. 5D, middle and right). We tested the contribution of these E-field metrics to prediction errors across the test dataset for each sampling resolution using linear regression (Supplementary Fig. S7). Prediction errors were significantly correlated with all three metrics individually, with for the median magnitude of the directional E-field gradients, for the absolute percent error in the E-field magnitude at the AP initiation site, and for the angle error of the E-field at the AP initiation site (all ) (Supplementary Fig. S7D). Correlation strength was inversely related to sampling resolution, and multiple linear regression with all three metrics yielded from 0.30 to 0.20 for , respectively. Of these metrics, the strongest contributor to the overall prediction error was the error in the interpolated E-field magnitude at the AP initiation site (), followed by the E-field gradients ) and error in the E-field direction at the AP initiation site ) (Supplementary Fig. S7E).

The performance on the test dataset demonstrated that the CNN could generalize to TMS thresholds for E-fields simulated in a head model not used in training, but the space of possible E-field distributions experienced by the model neurons may not be substantially different between head models. Therefore, we tested whether the CNNs could also predict response thresholds to uniform E-fields, which are essentially zeroth order polynomial components of the non-uniform E-fields seen in the training and test datasets. Fig. 6A shows the threshold–direction maps for an example L5 PC generated with NEURON simulations and with the CNN; the CNN produced extremely low error, ranging from −2.5 to 1.9% across directions (Fig. 6B).

Fig. 6.

CNN trained on non-uniform TMS-induced E-field reproduces response to uniform E-field. (A) Threshold–direction maps generated with NEURON simulations (left) and CNN estimation (right) for an example L5 PC. Mollweide projection of thresholds on a sphere in which normal vectors represent uniform E-field direction. Arrows indicate direction of E-field relative to cell (shown on right). Crossed circle represents E-field pointing into the page, and circle with dot represents E-field pointing out of the page. (B) Percent error of CNN prediction. Inset: morphology of L5 PC used in this figure (blue are apical dendrites, green are basal dendrites, and black is axon).

The CNN trained on the TMS data also approximated the thresholds for a point current source (e.g., for intracortical microsimulation), reproducing qualitatively the current–distance relationship (Supplementary Fig. S8). However, as expected, the prediction error was much higher than for TMS, as the point source generates a highly non-uniform E-field distribution. For comparison, the mean peak magnitude of directional gradients for the point source E-field was 482.3 V/m/mm, about 340 times higher than the mean peak gradient of the TMS-induced E-fields sampled with the 9 × 9 × 9 grid. Lower distances between the point source and activated neuronal compartment, which subject the neuron to higher spatial gradients, also led to higher errors (Supplementary Fig. S8D).

3.3. Median CNNs yielded comparable accuracy with fewer inferences than cell-specific CNNs

As an initial test of the ability of the CNN architecture to generalize across morphological variants of each cell type, we trained a set of CNNs to predict the median threshold across clones and azimuthal rotations. Fig. 7 shows the median threshold and error distributions for monophasic, P–A TMS in the ernie head model compared with computing the median threshold from the output of the cell-specific CNNs. Percent error of median thresholds ranged from −22.9 to 45.8%, −10.9 to 12.2%, and −10.8 to 6.7% for the median CNNs and −19.3 to 45.5%, −5.3 to 9.9%, and −4.5 to 6.1% for the cell-specific CNNs (L2/3 PC, L4 LBC, and L5 PC, respectively). The outer extrema of errors were again driven by outliers: 98.8% of median threshold predictions for the cell-specific CNNs and 98.0% for the median CNNs were below 5% error across the test dataset. The median CNNs had slightly weaker correlations (Fig. 8A) and higher mean errors (Fig. 8B) than the cell-specific CNNs. Overall, the median CNNs provided slightly lower accuracy relative to the cell-specific CNNs but reduced the number of required CNN inferences by a factor of 60, as a single model estimated the median threshold corresponding to the twelve azimuthal rotations and five clones simulated at each position.

Fig. 7.

Threshold distributions for CNNs trained on median thresholds compared to cell-specific CNNs. Actual and predicted thresholds for monophasic, P–A TMS of M1 in ernie head model (test dataset). (A) Surface plots of median threshold stimulator intensity (in coil current rate of change) across clones and rotations of L2/3 PCs (top row), L4 LBCs (middle row), and L5 PCs (bottom row) for NEURON simulations (left column), cell-specific CNN prediction (middle column), and median CNN prediction (right column). (B) Surface plots of percent error of median thresholds computed across clones and rotations at each position for L2/3 PCs (top row), L4 LBCs (middle row), and L5 PCs (bottom row) for cell-specific CNN prediction (left column) and median CNN prediction (right column).

Fig. 8.

Distribution of median threshold E-field errors for cell-specific and median CNNs. (A) Predicted median thresholds across clones and rotations by either cell-specific or median CNNs for L2/3 PCs (top), L4 LBCs (middle), and L5 PCs (bottom) plotted against median thresholds from NEURON simulations (actual) in magnitude of E-field at soma. values for linear regression included in each panel for cell-specific CNN (magenta) and median CNN (cyan) ( for all regressions). (B) Mean absolute percent error (MAPE) on test dataset for cell-specific CNN (magenta) or median CNN (cyan).

3.4. CNN estimation provided massive speed up over neuron simulations

We quantified the computational savings resulting from using the cell-specific CNNs to estimate thresholds compared to running simulations in NEURON on a single CPU of a typical laptop (Macbook Pro-with 2.2 GHz i7–4770 CPU) or on an HPC machine with 76 CPUs (2.2 GHz Xeon Gold 6148) (Fig. 9). Determining a single threshold with a binary search algorithm requires several evaluations, depending on the proximity of the initial guess to the threshold value; on a single CPU, this required 5.0 ± 0.2 s for the L2/3 PCs (mean ± STD), 8.8 ± 0.5 s for the L4 LBCs, and 9.5 ± 1.3 s for the L5 PCs ( for all). In contrast, estimating thresholds for 1000 E-field inputs with the CNNs required 0.75 ± 0.17 ms per threshold , which was similar between cell models due to the identical model architecture. Fig. 9 illustrates how total time scales with the number of simulations (i.e., thresholds), showing sublinear increase in total time until ~100 simulations for the CNN on a single CPU laptop, at which point total time increases linearly. In addition, Fig. 9 depicts the best-case scenario for NEURON simulations by extrapolating total time based on the minimum time per simulation for each cell type if run on a single CPU or run in parallel on a 76 CPU HPC node with no parallelization overhead. By this conservative estimate, the CNN provides 2 to 4.2 orders of magnitude in computational savings compared to the NEURON simulations run serially2 and 2.1 to 2.7 orders of magnitude in computational savings compared to the NEURON simulations run in parallel on 76 CPUs on an HPC machine.

Fig. 9.

Cell-specific CNN threshold estimation is 2–4 orders of magnitude faster than NEURON. Simulation time for determining thresholds with binary search in NEURON on single CPU (light green), parallelized on single high-performance computing (HPC) node with 76 CPUs (dark green), or with CNN on single CPU (magenta) for example L2/3 PC (circles), L4 LBC (squares), and L5 PC (triangles). NEURON data points are based on average single threshold simulation time on a single CPU of laptop or HPC machine, and these were then extrapolated by assuming linear scaling (best case) and parallelization across all 76 CPUs for the latter approach. CNN data points are the actual run times for estimating the corresponding number of thresholds.

4. Discussion and conclusion

We developed a 3D CNN architecture that provided accurate estimates of the thresholds of biophysically realistic cortical neuron models for activation by TMS-induced E-fields. Using E-field vectors sampled on regularly spaced grids encompassing the neuronal morphologies, the CNNs predicted activation thresholds for TMS pulses with mean absolute percent error less than 2.5% for all models. The CNNs substantially outperformed an alternative approach in which the non-uniform E-field in the vicinity of the neurons was approximated as uniform, which yielded lower correlations and higher error. Reducing the number of E-field sampling points slightly increased error while still outperforming the uniform E-field method. The optimal E-field sampling grid size was determined by a balance between encompassing the spatial extent of the neuron without reducing significantly the sampling density or sampling extraneous distant points, e.g., E-fields outside the gray matter tissue volume. Representing the E-field vectors in spherical coordinates using four variables, compared to Cartesian coordinates using three variables, increased the error of the CNN predictions. Additionally, the CNNs were able to predict accurately thresholds for uniform E-fields, which were never seen during training, and the CNNs also performed well at predicting thresholds for point source stimulation, even though the CNNs were not trained on such data and the point source E-field is highly non-uniform compared to the TMS or uniform E-fields. On a single CPU, estimating thresholds with the CNN for 1000 unique E-field distributions, corresponding to different neuron locations and orientations in the brain, required only 670 ms, while the equivalent NEURON simulations would take, at minimum, 1.4 to 2.6 h (depending on model complexity), providing three to four orders of magnitude speedup. Training the CNNs to predict the median threshold of populations of collocated neurons sped up computation further by more than an order of magnitude.

Our approach took advantage of the ability of CNNs to extract salient features from data with regular spatial structure, as exists in 2D and 3D images. The 3D grid of E-field vectors is analogous to a volumetric image with three color channels for each E-field component. By convolving the entire image with multiple local kernels, in this case 9 × 9 × 9 images convolved with 3 × 3 × 3 kernels, CNNs extract spatially invariant features of input images for processing by deeper layers with fewer parameters than fully connected 1D networks.

In the neuron simulations, action potentials were initiated at axon terminals aligned with the local E-field, and the estimated TMS thresholds were inversely correlated with the E-field magnitude, degree of branching, myelination, and diameter (Aberra et al., 2018; Wu et al., 2016). Therefore, for a given morphology, one would expect the exact location of the E-field vectors to be important for predicting the threshold, i.e., whether the E-field is high or low near an aligned axonal branch. The CNN predicted accurately thresholds for a wide range of non-uniform and uniform E-field inputs, with mean percent error close to the window used in the simulated binary search (2%), which suggests the CNN, to some degree, encoded features of the E-field distribution relative to an internal representation of the axonal geometry. CNNs are classically thought to be insensitive to absolute spatial location; however, several studies have shown CNNs can learn implicitly spatial information by exploiting differences in convolutional kernel activations near image borders (Alsallakh et al., 2020; Islam et al., 2021; Kayhan and van Gemert, 2020).

An important consideration for our approach is the accuracy of the biophysically-realistic neuron models in predicting thresholds for TMS-induced E-fields. As discussed previously (Aberra et al., 2020), the lowest thresholds observed in our L5 PC models are close to the range of experimentally measured motor thresholds of hand muscles. For example, for the abductor digiti minimi, Sommer et al. reported resting motor thresholds of 46–103 A/μs with monophasic P–A TMS, and Bungert et al. estimated E-field magnitudes at the cortical target of 159.6 ± 15.3 V/m based on FEM models correlated with experiments (Bungert et al., 2016; Sommer et al., 2006). Other studies using E-field-based localization methods predicted E-field magnitudes in the putatively activated regions for several hand muscles below 80 V/m using biphasic TMS pulses (Numssen et al., 2021; Weise et al., 2020). While these experimental measurements overlap with the lower range of thresholds in our models, some cortical neurons are activated at intensities well below the motor threshold (Kallioniemi et al., 2014; Li et al., 2022b), and these observations suggest that our models may be overestimating thresholds. The overestimation may be due to the lack of endogenous activity or axon-specific ion channels that may reduce thresholds for E-field stimulation (Aberra et al., 2020). Alternatively, we assume the E-field computed from the macroscopic volume conductor can be directly coupled to the microscopic neuron models, and it is possible that local inhomogeneities in the neuropil microstructure may increase the E-field strength. Computational methods for incorporating microscale neuronal structures within the E-field volume conductor models would allow exploration of the impact of these effects (Agudelo-Toro and Neef, 2013). Nonetheless, these factors are unrelated to the accuracy of the CNN threshold estimation approach presented here, as the CNNs can be retrained as the underlying neuron models are refined.

Several factors may have contributed to the residual error and outliers. One factor was likely the under-sampling of E-field variations in regions with higher gradients or near tissue boundaries (i.e., gray matter / white matter and gray matter / CSF), as errors were increased by reducing the sampling resolution while keeping the CNN architecture fixed. Using fewer sampling points would be advantageous to reduce the time spent interpolating E-field vectors within the FEM mesh, which can be substantial for high sampling densities and large neuronal populations. For reference, the NEURON simulations require interpolating E-field vectors at all compartments, which number over 880 for all cell types, except one L2/3 PC with 610 compartments, and are as high as 4196 for one of the L4 LBCs, due to its dense axonal arborization. Still, even the 9 × 9 × 9 CNN E-field sampling grid (729 E-field vectors total) required fewer E-field samples than most of the biophysically realistic neuron simulations. Reducing the sampling resolution of the E-field distributions provided the network with less accurate representations of the E-field gradients and the E-field strength and direction at the site of AP initiation. Metrics related to these three factors explained nearly 30% of the variance in CNN prediction error at the lowest sampling resolution. The CNNs were able to generalize well to predict thresholds for uniform E-field (zero spatial gradient), but, as expected, prediction errors were significantly higher for the point source E-fields, which had over 100 times higher spatial gradients. Nonetheless, the CNNs reproduced qualitatively the current–distance relationship without retraining, suggesting the models could learn to estimate more accurately responses to intracortical microstimulation, as well. This would require training a set of CNNs using the desired pulse waveform (e.g., 0.2 ms cathodic square pulse) and potentially using a higher E-field sampling resolution, although alternative approaches to spatial and temporal representation of the E-field can also be considered, as discussed below.

TMS thresholds are strongly correlated with the E-field magnitude (Aberra et al., 2020; Bungert et al., 2016; Weise et al., 2020), so we expected that converting the E-field to spherical coordinates would reduce error further, since the magnitude is explicitly represented as one of the coordinate variables. However, the CNNs performed best with Cartesian E-field coordinates. This was possibly due to the additional E-field coordinate variable introduced to represent the azimuthal component; while avoiding circular discontinuities in the data (Li et al., 2017; Zhou et al., 2019), the use of spherical coordinates increased the dimensionality of the data without providing more information to the network (Bartal et al., 2019). Furthermore, prediction errors were slightly higher in the L2/3 PCs compared to the L4 LBCs and L5 PCs. The L2/3 PCs not only had a wider range of E-field thresholds due to their high threshold anisotropy (i.e., strong dependence on orientation with respect to the E-field), but they also have long horizontal axon branches that extend several millimeters at oblique and tangential angles. This made it difficult to select a grid size that encompassed the neuron models without also sampling from points in the CSF tissue volume of the FEM, potentially resulting in some positions where the CNN did not have adequate E-field samples near the activated axonal branch. Using the regular sampling grid allowed the same grid points to be used for every clone at each position and reduced the number of E-field points to interpolate by a factor of five. However, one limitation of this approach is the sampling grid shape does not always conform to the anatomy of the neuron or the cortex, depending on neuron depth, local curvature, and thickness of the cortical sheet. An alternative approach might be to instead represent the E-field on the neural compartments, e.g. using graph convolutional neural networks (Niepert et al., 2016), as the model morphologies are more reliably positioned within the gray matter. Sampling along the morphology may also more efficiently capture variations of the E-field magnitude at relevant locations without including irrelevant E-field vectors distant from an axonal branch or E-field vectors that fall in a different tissue compartment. This approach, however, will require more preprocessing of the E-field simulations to obtain unique spatial sampling for each cell morphology and orientation.

We also found the same CNN architecture could be trained to predict the median threshold within a cell type across morphological variants (“clones”) and their arbitrary azimuthal rotations about their somatodendritic axes within the cortical columns. This approach allowed direct prediction of the population response at a given cortical position using a single E-field sampling grid and CNN inference, rather than the 60 used with the cell-specific CNNs for all twelve azimuthal rotations and five clones. The accuracy of this approach of estimating the ground-truth population statistics derived from the biophysical neuron model simulations was lower than the cell-specific CNNs but still yielded mean absolute percent errors below 2%. Interestingly, these median CNNs were trained on the E-field samples in Cartesian coordinates, which suggests they were able to ignore irrelevant information about the azimuthal orientation of the cell within the E-field distributions. Alternatively, other approaches such as mean-field approximation could be deployed to estimate the average threshold of different neuronal populations (Weise et al., 2023).

This is the first study to use ANNs to represent the response of morphologically realistic, multicompartmental neuron models to stimulation with exogenous E-fields. Previously, Chaturvedi et al. used an ANN with one hidden layer to estimate the volume of tissue activated (VTA) by multi-contact configurations of deep brain stimulation as computed with multicompartmental straight axon models (Chaturvedi et al., 2013). Otherwise, recent studies used deep learning to predict the subthreshold and suprathreshold temporal dynamics of neuron models in response to synaptic inputs (Beniaguev et al., 2021; Oláh et al., 2022). Olah et al. tested the ability of multiple ANN architectures to predict somatic voltage and current time series of biophysically realistic models of L5 PCs in NEURON, similar to those included in this study (Oláh et al., 2022). They found the only architecture capable of predicting accurately the subthreshold and suprathreshold dynamics was a model with both convolutional and long short-term memory (CNN-LSTM). Interestingly, the CNN-LSTM reliably reproduced the response of the NEURON models to distributed synaptic inputs using only the somatic voltage. At the same time, it is unclear whether this approach could predict efficiently the dynamics in the rest of the neuronal compartments, which is necessary for applications involving extracellular stimulation, as in the present study. Beniaguev et al. conducted a similar study and found a temporal convolutional network (TCN) could reproduce the input–output properties of a biophysically detailed L5 PC (Beniaguev et al., 2021). Our focus on estimating thresholds for activation allowed a simpler implementation that resulted in low prediction error, indicating the CNNs learned the dependency of activation thresholds on E-field spatial distributions without requiring explicit representation of underlying neural dynamics, i.e., voltage and current time courses in any compartment. Still, these studies suggest ANNs can accurately represent the spatiotemporal computations performed by spatially extended neuron models. In our approach, pulse waveform was implicit in the training data, and this requires separate CNNs to be trained on threshold data with other pulse waveforms. For most TMS applications, this approach is likely acceptable, due to the limited range of pulse waveforms produced by conventional TMS devices (e.g., monophasic and biphasic pulses). However, a time-dependent approach is necessary to estimate thresholds for novel pulse waveforms, such as those generated by the specific inductance and resistance of novel coils or by TMS devices with controllable pulse shape (Peterchev et al., 2014, 2013), without requiring separate training sets and CNNs for discrete waveform selections. Additionally, the calculation of “ground truth” thresholds was conducted with quiescent neurons, and endogenous activity can alter the response to TMS experimentally and shift the thresholds of individual neurons (Di Lazzaro et al., 2008, 1999; Goetz and Peterchev, 2012). Combining 3D convolutional layers to encode the interaction between the extracellular E-field and the neuron morphology with recurrent layers to capture temporal dynamics of the pulse and/or the neural membrane could combine the advantages of both approaches and is likely an important direction for future research.

The CNNs provided several orders of magnitude reductions in required computation, making it feasible to incorporate neural response models in E-field simulation packages for use on commonly available computational resources. Simulating the TMS-induced E-field in head models with 1st order FEM, as used in SimNIBS (Thielscher et al., 2015), requires on the order of 1–2 min on a typical computer (Saturnino et al., 2019). Estimating the response of the full population we modeled in the ernie mesh (3 layers, 5000 neurons per layer, 12 rotations) would add less than 3 min on a single CPU. This time would be dramatically reduced by using a graphics processing unit (GPU), but even if a GPU is not available, further speedup on CPUs is feasible by optimization of the CNN implementation. The computational demands of estimation can be reduced further by estimating statistics, e.g., the median, of the activation threshold across the clones and rotations of each cell type.

In conclusion, subject-specific head models of the E-field can support accurate dosing and targeting of cortical structures by TMS; however, these models alone cannot predict the physiological response. Combining E-field models with biophysically realistic neuron models addresses this limitation, but calculating the neural response is extremely computationally demanding (Aberra et al., 2020). Combining ANN estimators of the neural response with fast approaches to E-field computation (Gomez et al., 2021; Stenroos and Koponen, 2019; Yokota et al., 2019) may enable more TMS users to adopt these multi-scale biophysically-based models in research and clinical applications.

Supplementary Material

Acknowledgments

We thank Dr. Boshuo Wang, Dr. Nicole Pelot, Minhaj Hussein, and Dr. David Carlson, for their technical assistance and helpful discussions. Dr. Wang also assisted in revising early drafts of the manuscript. We also thank the Duke Compute Cluster team for computational support. This work was supported by NIH Grant Nos. R01NS088674, R01NS117405, and R01MH128422, and NSF Graduate Research Fellowship DGF Grant No. 1106401. The content is solely the responsibility of the authors and does not necessarily represent the official views of the funding agencies.

Footnotes

A preliminary version of this work was deposited in bioRxiv on May 20, 2022 DOI:10.1101/2022.05.18.490331.

The estimate of 154 million neurons was calculated based on a neocortical neuron density of 120,000 per mm2 (through the cortical depth) (Pakkenberg and Gundersen, 1997) and the surface area of the precentral gyrus of 1,280 mm2 in the SimNIBS v1.0 almi5 example head mesh (Thielscher et al., 2015).

Single CPU run times in NEURON varied between the different CPUs tested, with faster serial run times on the Macbook Pro (shown in Figure 9). On the HPC node, single CPU run times were 6.6 ± 0.3 sec for the L2/3 PC, 10.5 ± 0.3 sec for the L4 LBC, and 11.2 ± 0.2 sec for the L5 PC.

Declaration of Competing Interest

A.V.P. is an inventor on patents on transcranial magnetic stimulation technology and in the past 3 years has received equity options and served on the scientific advisory board of Ampa and has received research funding, travel support, patent royalties, consulting fees, equipment loans, hardware donations, and/or patent application support from Rogue Research, Magstim, MagVenture, Neuronetics, BTL Industries, Soterix Medical, Magnetic Tides, and Ampa.

Credit authorship contribution statement

Aman S. Aberra: Conceptualization, Methodology, Software, Investigation, Formal analysis, Data curation, Writing – original draft, Visualization. Adrian Lopez: Methodology, Software, Investigation, Writing – review & editing. Warren M. Grill: Conceptualization, Methodology, Resources, Writing – review & editing, Supervision, Funding acquisition. Angel V. Peterchev: Conceptualization, Methodology, Resources, Writing – review & editing, Supervision, Project administration, Funding acquisition.

Supplementary materials

Supplementary material associated with this article can be found, in the online version, at doi:10.1016/j.neuroimage.2023.120184.

Data availability

I have shared the links to my data and code at the ‘Attach File’ step.

Data availability

The code and relevant data of this study can be downloaded at 10.5281/zenodo.7326454 and 10.5281/zenodo.7326394, respectively. They will also be made available on GitHub upon publication.

References

- Abadi M, Agarwal A, Barham P, Brevdo E, Chen Z, Citro C, Corrado GS, Davis A, Dean J, Devin M, Ghemawat S, Goodfellow I, Harp A, Irving G, Isard M, Jia Y, Jozefowicz R, Kaiser L, Kudlur M, Levenberg J, Mane D, Monga R, Moore S, Murray D, Olah C, Schuster M, Shlens J, Steiner B, Sutskever I, Talwar K, Tucker P, Vanhoucke V, Vasudevan V, Viegas F, Vinyals O, Warden P, Wattenberg M, Wicke M, Yu Y, Zheng X, 2016. TensorFlow: large-scale machine learning on heterogeneous distributed systems. arXiv. 265–283. doi: 10.48850/arXiv.1603.04467. [DOI] [Google Scholar]

- Aberra AS, Peterchev AV, Grill WM, 2018. Biophysically realistic neuron models for simulation of cortical stimulation. J. Neural Eng. 15, 066023. doi: 10.1088/1741-2552/aadbb1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Aberra AS, Wang B, Grill WM, Peterchev AV, 2020. Simulation of transcranial magnetic stimulation in head model with morphologically-realistic cortical neurons. Brain Stimul. 13, 175–189. doi: 10.1016/j.brs.2019.10.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Agudelo-Toro A, Neef A, 2013. Computationally efficient simulation of electrical activity at cell membranes interacting with self-generated and externally imposed electric fields. J. Neural Eng. 10, 1–19. doi: 10.1088/1741-2560/10/2/026019. [DOI] [PubMed] [Google Scholar]

- Akbar MN, Yarossi M, Martinez-Gost M, Sommer MA, Dannhauer M, Rampersad S, Brooks D, Tunik E, Erdomu ş D, 2020. Mapping motor cortex stimulation to muscle responses: a deep neural network modeling approach. In: Proceedings of the ACM International Conference Proceeding Series, pp. 101–106. doi:10.1145/3389189.3389203. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Alsallakh B, Kokhlikyan N, Miglani V, Yuan J, Reblitz-Richardson O, 2020. Mind the Pad – CNNs can Develop Blind Spots 1–15. [Google Scholar]

- Barker AT, Jalinous R, Freeston IL, 1985. Non-invasive magnetic stimulation of human motor cortex. Lancet 1, 1106–1107. doi: 10.1016/S0140-6736(85)92413-4. [DOI] [PubMed] [Google Scholar]

- Bartal Y, Fandina N, Neiman O, 2019. Dimensionality reduction: theoretical perspective on practical measures. Adv. Neural. Inf. Process. Syst 32. [Google Scholar]

- Beniaguev D, Segev I, London M, 2021. Single cortical neurons as deep artificial neural networks. Neuron 109, 2727–2739. doi: 10.1016/j.neuron.2021.07.002, e3. [DOI] [PubMed] [Google Scholar]

- Bergstra J, Bengio Y, 2012. Random search for hyper-parameter optimization. J. Mach. Learn. Res. 13, 281–305. [Google Scholar]

- Blumberger DM, Vila-Rodriguez F, Thorpe KE, Feffer K, Noda Y, Giacobbe P, Knyahnytska Y, Kennedy SH, Lam RW, Daskalakis ZJ, Downar J, 2018. Effectiveness of theta burst versus high-frequency repetitive transcranial magnetic stimulation in patients with depression (THREE-D): a randomised non-inferiority trial. Lancet 391, 1683–1692. doi: 10.1016/S0140-6736(18)30295-2. [DOI] [PubMed] [Google Scholar]

- Bossetti CA, Birdno MJ, Grill WM, 2008. Analysis of the quasi-static approximation for calculating potentials generated by neural stimulation. J. Neural Eng. 5, 44–53. doi: 10.1088/1741-2560/5/1/005. [DOI] [PubMed] [Google Scholar]

- Bungert A, Antunes A, Espenhahn S, Thielscher A, 2016. Where does TMS stimulate the motor cortex? combining electrophysiological measurements and realistic field estimates to reveal the affected cortex position. Cereb. Cortex 27, 5083–5094. doi: 10.1093/cercor/bhw292. [DOI] [PubMed] [Google Scholar]

- Carmi L, Tendler A, Bystritsky A, Hollander E, Blumberger DM, Daskalakis J, Ward H, Lapidus K, Goodman W, Casuto L, Feifel D, Barnea-Ygael N, Roth Y, Zangen A, Zohar J, 2019. Efficacy and safety of deep transcranial magnetic stimulation for obsessive-compulsive disorder: a prospective multicenter randomized double-blind placebo-controlled trial. Am. J. Psychiatry 176, 931–938. doi: 10.1176/appi.ajp.2019.18101180. [DOI] [PubMed] [Google Scholar]

- Chaturvedi A, Luján JL, McIntyre CC, 2013. Artificial neural network based characterization of the volume of tissue activated during deep brain stimulation. J. Neural Eng. 10. doi: 10.1088/1741-2560/10/5/056023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Destrieux C, Fischl B, Dale A, Halgren E, 2010. Automatic parcellation of human cortical gyri and sulci using standard anatomical nomenclature. Neuroimage 53, 1–15. doi: 10.1016/j.neuroimage.2010.06.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Di Lazzaro V, Restuccia D, Oliviero A, Profice P, Ferrara L, Insola A, Mazzone P, Tonali PA, Rothwell JC, 1999. Effects of voluntary contraction on descending volleys evoked by transcranial electrical stimulation over the motor cortex hand area in conscious humans. Exp. Brain Res. 124, 525–528. doi: 10.1007/s002210050649. [DOI] [PubMed] [Google Scholar]

- Di Lazzaro V, Ziemann U, Lemon RN, 2008. State of the art: physiology of transcranial motor cortex stimulation. Brain Stimul. 1, 345–362. doi: 10.1016/j.brs.2008.07.004. [DOI] [PubMed] [Google Scholar]

- Dinur-Klein L, Dannon P, Hadar A, Rosenberg O, Roth Y, Kotler M, Zangen A, 2014. Smoking cessation induced by deep repetitive transcranial magnetic stimulation of the prefrontal and insular cortices: a prospective, randomized controlled trial. Biol. Psychiatry 76, 742–749. doi: 10.1016/j.biopsych.2014.05.020. [DOI] [PubMed] [Google Scholar]

- George MS, 2019. Whither TMS: a one-trick pony or the beginning of a neuroscientific revolution? Am. J. Psychiatry 176, 904–910. doi: 10.1176/appi.ajp.2019.19090957. [DOI] [PMC free article] [PubMed] [Google Scholar]

- George MS, Lisanby SH, Avery D, McDonald WM, Durkalski V, Pavlicova M, Anderson B, Nahas Z, Bulow P, Zarkowski P, Holtzheimer PE, Schwartz T, Sackeim HA, 2010. Daily left prefrontal transcranial magnetic stimulation therapy for major depressive disorder. Arch. Gen. Psychiatry 67, 507–516. doi: 10.1001/archgenpsychiatry.2010.46. [DOI] [PubMed] [Google Scholar]

- Glorot X, Bengio Y, 2010. Understanding the difficulty of training deep feedforward neural networks. J. Mach. Learn. Res. 9, 249–256. [Google Scholar]

- Goetz SM, Peterchev AV, 2012. A model of variability in brain stimulation evoked responses. In: Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society. EMBS, pp. 6434–6437. doi: 10.1109/EMBC.2012.6347467. [DOI] [PubMed] [Google Scholar]

- Gomez LJ, Dannhauer M, Peterchev AV, 2021. Fast computational optimization of TMS coil placement for individualized electric field targeting. Neuroimage 228, 117696. doi: 10.1016/j.neuroimage.2020.117696. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gomez-Tames J, Laakso I, Murakami T, Ugawa Y, Ugawa Y, Hirata A, 2020. TMS activation site estimation using multiscale realistic head models. J. Neural Eng. 17. doi: 10.1088/1741-2552/ab8ccf. [DOI] [PubMed] [Google Scholar]

- Hines ML, Carnevale NT, 1997. The NEURON simulation environment. Neural Comput. 9, 1179–1209. doi: 10.1162/neco.1997.9.6.1179. [DOI] [PubMed] [Google Scholar]

- Huang YZ, Lu MK, Antal A, Classen J, Nitsche M, Ziemann U, Ridding MC, Hamada M, Ugawa Y, Jaberzadeh S, Suppa A, Paulus W, Rothwell JC, 2017. Plasticity induced by non-invasive transcranial brain stimulation: a position paper. Clin. Neurophysiol. 128, 2318–2329. doi: 10.1016/j.clinph.2017.09.007. [DOI] [PubMed] [Google Scholar]

- Islam MA, Kowal M, Jia S, Derpanis KG, Bruce NDB, 2021. Position, padding and predictions: a deeper look at position information in CNNs 1–19. [Google Scholar]

- Kallioniemi E, Säisänen L, Könönen M, Awiszus F, Julkunen P, 2014. On the estimation of silent period thresholds in transcranial magnetic stimulation. Clin. Neurophysiol. 125, 2247–2252. doi: 10.1016/j.clinph.2014.03.012. [DOI] [PubMed] [Google Scholar]

- Kayhan OS, van Gemert JC, 2020. On translation invariance in CNNs: convolutional layers can exploit absolute spatial location. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 14262–14273. doi: 10.1109/CVPR42600.2020.01428. [DOI] [Google Scholar]

- Kingma DP, Ba JL, 2015. Adam: a method for stochastic optimization. Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015 - Conference Track Proceedings 1–15. [Google Scholar]

- Kolar D, 2017. Current status of electroconvulsive therapy for mood disorders: a clinical review. Evid. Based Ment. Health 20, 12–14. doi: 10.1136/eb-2016-102498. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lathuilière S, Mesejo P, Alameda-Pineda X, Horaud R, 2018. A Comprehensive Analysis of Deep Regression. arXiv 42, 2065–2081. [DOI] [PubMed] [Google Scholar]

- Lecun Y, Bengio Y, Hinton G, 2015. Deep learning. Nature 521, 436–444. doi: 10.1038/nature14539. [DOI] [PubMed] [Google Scholar]

- Lefaucheur JP, Andre-Obadia N, Antal A, Ayache SS, Baeken C, Benninger DH, Cantello RM, Cincotta M, de Carvalho M, De Ridder D, Devanne H, Di Lazzaro V, Filipovic SR, Hummel FC, Jaaskelainen SK, Kimiskidis VK, Koch G, Langguth B, Nyffeler T, Oliviero A, Padberg F, Poulet E, Rossi S, Rossini PM, Rothwell JC, Schonfeldt-Lecuona C, Siebner HR, Slotema CW, Stagg CJ, Valls-Sole J, Ziemann U, Paulus W, Garcia-Larrea L, 2014. Evidence-based guidelines on the therapeutic use of repetitive transcranial magnetic stimulation (rTMS). Clin. Neurophysiol. 125, 2150–2206. [DOI] [PubMed] [Google Scholar]

- Levkovitz Y, Isserles M, Padberg F, Lisanby SH, Bystritsky A, Xia G, Tendler A, Daskalakis ZJ, Winston JL, Dannon P, Hafez HM, Reti IM, Morales OG, Schlaepfer TE, Hollander E, Berman JA, Husain MM, Sofer U, Stein A, Adler S, Deutsch L, Deutsch F, Roth Y, George MS, Zangen A, 2015. Efficacy and safety of deep transcranial magnetic stimulation for major depression: a prospective multicenter randomized controlled trial. World Psychiatry 14, 64–73. doi: 10.1002/wps.20199. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Li H, Deng ZD, Oathes D, Fan Y, 2022a. Computation of transcranial magnetic stimulation electric fields using self-supervised deep learning. Neuroimage 264, 119705. doi: 10.1016/j.neuroimage.2022.119705. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Li H, Hou J, Adhikari B, Lyu Q, Cheng J, 2017. Deep learning methods for protein torsion angle prediction. BMC Bioinf. 18, 1–13. doi: 10.1186/s12859-017-1834-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Li Z, Peterchev AV, Rothwell JC, Goetz SM, 2022b. Detection of motor-evoked potentials below the noise floor: rethinking the motor stimulation threshold. J. Neural Eng. 19, 056040. doi: 10.1088/1741-2552/ac7dfc. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Markram H, Muller E, Ramaswamy S, Reimann MW, Abdellah M, Sanchez CA, Ailamaki A, Alonso-Nanclares L, Antille N, Arsever S, Kahou GAA, Berger TK, Bilgili A, Buncic N, Chalimourda A, Chindemi G, Courcol JD, Delalondre F, Delattre V, Druckmann S, Dumusc R, Dynes J, Eilemann S, Gal E, Gevaert ME, Ghobril JP, Gidon A, Graham JW, Gupta A, Haenel V, Hay E, Heinis T, Hernando JB, Hines ML, Kanari L, Keller D, Kenyon J, Khazen G, Kim Y, King JG, Kisvarday Z, Kumbhar P, Lasserre S, Le Bé JV, Magalhães BRC, Merchán-Pérez A, Meystre J, Morrice BR, Muller J, Muñoz-Céspedes A, Muralidhar S, Muthurasa K, Nachbaur D, Newton TH, Nolte M, Ovcharenko A, Palacios J, Pastor L, Perin R, Ranjan R, Riachi I, Rodríguez J−R., Riquelme JL., Rössert C., Sfyrakis K, Shi Y, Shillcock JC., Silberberg G, Silva R., Tauheed F., Telefont M, Toledo-Rodriguez M, Tränkler T, Van Geit W, Díaz JV., Walker R, Wang Y., Zaninetta SM, DeFelipe J, Hill SL, Segev I, Schürmann F, 2015. Reconstruction and simulation of neocortical microcircuitry. Cell 163, 456–492. doi: 10.1016/j.cell.2015.09.029. [DOI] [PubMed] [Google Scholar]

- Nagarajan SS, Durand DM, 1996. A generalized cable equation for magnetic stimulation of axons. IEEE Trans. Biomed. Eng. 43, 304–312. doi: 10.1109/10.486288. [DOI] [PubMed] [Google Scholar]

- Niepert M, Ahmed M, Kutzkov K, 2016. Learning convolutional neural networks for graphs. In: Proceedings of the 33rd International Conference on Machine Learning, 1 doi: 10.1109/ITC.2010.5608729. [DOI] [Google Scholar]

- Numssen O, Zier AL, Thielscher A, Hartwigsen G, Knösche TR, Weise K, 2021. Efficient high-resolution TMS mapping of the human motor cortex by nonlinear regression. Neuroimage 245, 118654. doi: 10.1016/j.neuroimage.2021.118654. [DOI] [PubMed] [Google Scholar]

- Oláh VJ, Pedersen NP, Rowan MJ, 2022. In: Ultrafast Simulation of Large-Scale Neocortical Microcircuitry With Biophysically Realistic Neurons, 11. eLife, p. e79535. doi: 10.7554/eLife.79535. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Olah VJ, Pedersen NP, Rowan MJM, 2021. Ultrafast Large-Scale Simulations of Biophysically Realistic Neurons Using Deep Learning. bioRxiv 1–35. [Google Scholar]

- Opitz A, Windhoff M, Heidemann RM, Turner R, Thielscher A, 2011. How the brain tissue shapes the electric field induced by transcranial magnetic stimulation. Neuroimage 58, 849–859. doi: 10.1016/j.neuroimage.2011.06.069. [DOI] [PubMed] [Google Scholar]