Abstract

Body ownership is the multisensory perception of a body as one’s own. Recently, the emergence of body ownership illusions like the visuotactile rubber hand illusion has been described by Bayesian causal inference models in which the observer computes the probability that visual and tactile signals come from a common source. Given the importance of proprioception for the perception of one’s body, proprioceptive information and its relative reliability should impact this inferential process. We used a detection task based on the rubber hand illusion where participants had to report whether the rubber hand felt like their own or not. We manipulated the degree of asynchrony of visual and tactile stimuli delivered to the rubber hand and the real hand under two levels of proprioceptive noise using tendon vibration applied to the antagonist flexor and extensor muscles of the elbow (biceps and triceps). As hypothesized, the probability of the emergence of the rubber hand illusion increased with proprioceptive noise. Moreover, this result, well fitted by a Bayesian causal inference model, was best described by a change in the a priori probability of a common cause for vision and touch. These results offer new insights into how proprioceptive uncertainty shapes the multisensory perception of one’s own body.

Keywords: Bodily awareness, Multisensory integration, Psychophysics, Bayesian causal inference, Bodily illusion, Proprioception

1. Introduction

Sensory signals inform us about our own bodies as much as about our surroundings. Visual, tactile, proprioceptive, and other body-related signals contribute to the perception of our own body and blend seamlessly together into a coherent experience of our physical self (Ehrsson, 2020, pp. 179–200). For example, lift your hand in front of your face and wiggle your fingers. You not only see your fingers move and feel them move as separate sensations and events but also experience the visual and somatosensory impressions as part of a unitary entity that is your own hand. This feeling that body parts are one’s own is referred to as ‘body ownership’ (Ehrsson, 2020); it is perceptual in nature and closely related to multisensory bodily awareness (Ehrsson, 2012, 2020), somatosensory processing (Tsakiris, 2010) and the kinesthetic senses (Proske & Gandevia, 2018). Body ownership is also a crucial component of bodily self-consciousness (Blanke, Slater, & Serino, 2015) and the sense of self (Vignemont, 2018) and is considered a key dimension in the embodiment of prosthetic limbs (Collins et al., 2017; Rognini et al., 2019; Zbinden et al., 2022) and virtual avatars in computer-generated environments (Kilteni et al., 2012).

The sense of body ownership can be studied under controlled laboratory conditions in healthy individuals using bodily illusion paradigms (Ehrsson, 2023), such as the famous rubber hand illusion (Botvinick & Cohen, 1998). The rubber hand illusion is elicited using correlated visuotactile stimulation: with synchronous and repeated stroking of the rubber hand, which is in full view of the participant, and the participant’s corresponding real hand, which is hidden, most participants begin, after some time, to experience a perceptual illusion where proprioceptive and tactile sensations seem to originate from the rubber hand and the rubber hand feels like part of the participant’s own body (Botvinick & Cohen, 1998). Extensive literature has shown that body ownership is constrained by temporal (Chancel & Ehrsson, 2020; Costantini et al., 2020; Shimada et al., 2009) and spatial (Kalckert & Ehrsson, 2014; Lloyd, 2007) limits beyond which the conflict between different sensory signals is too great for them to be integrated, which, in turn, disrupts the body ownership percept (Blanke et al., 2015; Ehrsson, 2012, p. 18; Ehrsson et al., 2004); other rules that determine body ownership are a humanoid body shape (Petkova & Ehrsson, 2008; Tsakiris, 2010; Tsakiris & Haggard, 2005), matching seen and felt arm orientation (Ehrsson et al., 2004; Ide, 2013; Tsakiris & Haggard, 2005), and matching tactile (Chancel & Ehrsson, 2020; Crucianelli et al., 2013; Schütz-Bosbach et al., 2009; Ward et al., 2015) and thermosensory properties (Crucianelli & Ehrsson, 2022) of seen and felt events on the body surface. Notably, the rubber hand illusion does not arise when the asynchrony between the visual and tactile stimulation becomes too great; in most participants, asynchronies longer than 200–300 ms significantly reduce the illusion, and delays greater than 500 ms typically eliminate the illusion.

These observations are well aligned with what is known of the general principles governing multisensory perception of external objects and events: sensory signals from different modalities will be integrated or segregated depending on how spatially and temporally congruent they are (Ernst & Bülthoff, 2004; Stein, 2012; Stein & Meredith, 1993). Computational behavioral studies suggest that multisensory integration does not simply occur as a “passive” process where signals that meet fixed temporal and spatial rules are bound together; instead, the brain’s perceptual system seems to work more as an “inference machine” that infers how likely it is for the different sensory signals to come from one common cause given the spatial proximity, simultaneity and temporal correlation of the sensory signals, their relative uncertainty, and prior constraints extracted from the environment and previous experience. The outcome of this probabilistic decision process determines whether the sensory signals should be combined or segregated and the extent of the multisensory integration. This probabilistic framework for sensory perception is called Bayesian causal inference (Körding et al., 2007; Sato et al., 2007) and has been applied to various multisensory paradigms related to the perception of events and objects in the external environment (Aller & Noppeney, 2019; Kayser & Shams, 2015; Rohe & Noppeney, 2015); for example, the integration of vision and touch in object size and shape perception (Ernst & Banks, 2002), perception of visual and tactile motion (Gori et al., 2011), and the spatial localization of visual and tactile events (Badde, Navarro, & Landy, 2020) all seem to follow Bayesian weighting and Bayesian causal inference principles.

This computational framework, efficiently modeling multisensory integration for the perception of external objects and events, can also be applied to the multisensory perception of one’s own body and the sense of body ownership (Chancel, Ehrsson, & Ma, 2022; Ehrsson & Chancel, 2019; Fang et al., 2019; Kilteni et al., 2015; Samad et al., 2015). For example, the probability of the emergence of the rubber hand illusion for a given degree of visuotactile asynchrony can be described by an inference of a common cause for vision and somatosensation according to the Bayesian principles, i.e., it takes into account the trial-by-trial visuotactile uncertainty (Chancel, Ehrsson, et al., 2022; Fang et al., 2019; Samad et al., 2015). In the Bayesian computation framework of multisensory integration, sensory reliability plays a key role, since uncertainty influences the probabilistic decision process and perceptual threshold in a way that is not captured in models based on “fixed” temporal and spatial rules; however, we still know very little about how sensory uncertainty affects the rubber hand illusion. To the best of our knowledge, only visual uncertainty has been experimentally manipulated in earlier work (Chancel, Ehrsson, et al., 2022), so new studies investigating the effects of somatosensory noise are needed to test the model’s key predictions.

In the classical visuotactile rubber hand illusion, the distance between the rubber hand and the participant’s real hand significantly impacts the body ownership percept (Chancel & Ehrsson, 2020; Fan et al., 2021; Kalckert & Ehrsson, 2014; Lloyd, 2007). Thus, the spatial conflict between the visual (sight of the rubber hand) and proprioceptive (sensation from the real hand) signals influences the resulting illusion, as does the temporal congruence or incongruence of the tactile and visual stimuli as described above. The greater the spatial visuoproprioceptive conflict is, the weaker the illusion (Chancel & Ehrsson, 2020; Fan et al., 2021; Kalckert & Ehrsson, 2014; Lloyd, 2007). Notably, the influence of proprioceptive precision (i.e., reliability) on the body ownership percept elicited in the rubber hand illusion has recently been questioned (Litwin et al., 2020; Motyka & Litwin, 2019), which is at odds with a Bayesian account of the sensory processes involved in body ownership (Litwin, 2020). However, in these studies, proprioceptive reliability was not experimentally manipulated but indirectly inferred in a separate hand localization task and used to investigate individual differences in illusion strength. Such individual differences may not directly relate to the probabilistic multisensory processes that determine the illusion based on proprioceptive uncertainty “online”, i.e., when individual participants' brains must decide whether the visual and somatosensory signals should be combined or segregated in a trial-by-trial fashion. Given the crucial role of proprioception in bodily awareness and the rubber hand illusion, it is important to clarify how proprioceptive uncertainty affects the sense of body ownership.

In the present study, we used an experimental paradigm we recently developed to quantitatively apply a Bayesian causal inference model to the visuotactile rubber hand illusion (Chancel, Ehrsson, et al., 2022) to investigate the contribution of the proprioceptive signal’s reliability to the elicitation of illusory hand ownership. In this paradigm, healthy participants were repeatedly exposed to 12-sec trials of visuotactile stimulation delivered to the rubber hand (in view) and their real hand (out of view) with systematic and subtle variations in the degree of asynchrony (0, ±150, ±300 and ±500 ms). After each trial, participants judged whether the rubber hand felt like their own hand or not (yes/no detection-like judgments). Using this paradigm, we first aimed to assess the impact of proprioceptive uncertainty on the visuotactile rubber hand illusion in quantitative terms. To reduce the reliability of the proprioceptive signals from the forearm, we delivered simultaneous vibration (20 Hz) of the participants' right biceps and triceps muscles (“covibration”) in half of the illusion trials. This muscle vibration stimulation degrades the reliability of the proprioceptive signals by targeting and masking the natural discharge of the muscle spindles (Calvin-Figuière, Romaiguère, Gilhodes, & Roll, 1999; Chancel, Blanchard, Guerraz, Montagnini, & Kavounoudias, 2016; Chancel, Brun, Kavounoudias, & Guerraz, 2016; Roll et al., 1989). Thus, the signal from the muscle spindle afferents of one muscle is cancelled by the corresponding message from the antagonist muscle, leading to reduced precision regarding arm posture and movement perception when the covibration is applied (Gilhodes et al., 1986). 
According to the Bayesian hypothesis, when covibration is delivered, the added proprioceptive noise should lower the relative weight of the proprioceptive signal when it is integrated with the visual signal to estimate the participant’s hand position (Ernst & Bülthoff, 2004). As a consequence, the participant’s perceived hand location should be closer to that of the rubber hand (Samad et al., 2015). Moreover, such a loss of proprioceptive reliability should decrease the relative contribution of the visuoproprioceptive estimate to the illusory body ownership percept compared with that of the visuotactile estimate.
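The reliability weighting invoked here can be illustrated with a minimal Python sketch of forced-fusion (maximum-likelihood) cue combination in the style of Ernst and Banks (2002). The sketch assumes that both position cues are Gaussian and are always fully integrated, so it leaves out the causal inference step. The coordinate (height above the real hand, with the rubber hand seen 15 cm above it) follows the setup in Section 2.2, but the noise values are hypothetical.

```python
def fuse_position_cues(mu_vis, sigma_vis, mu_prop, sigma_prop):
    """Reliability-weighted fusion of a visual and a proprioceptive position cue.

    Each cue is treated as a Gaussian estimate (mean, SD); reliability is 1 / SD**2.
    Returns the fused mean, the fused SD and the weight given to vision.
    """
    r_vis = 1.0 / sigma_vis ** 2
    r_prop = 1.0 / sigma_prop ** 2
    w_vis = r_vis / (r_vis + r_prop)          # visual weight grows as proprioception gets noisier
    mu = w_vis * mu_vis + (1.0 - w_vis) * mu_prop
    sigma = (1.0 / (r_vis + r_prop)) ** 0.5   # fused estimate is more precise than either cue
    return mu, sigma, w_vis


# Hypothetical values: heights in cm above the real hand (rubber hand seen at 15 cm).
for label, sigma_prop in (("no noise", 2.0), ("noise", 4.0)):
    mu, sigma, w_vis = fuse_position_cues(15.0, 1.0, 0.0, sigma_prop)
    print(f"{label}: fused estimate {mu:.1f} cm, visual weight {w_vis:.2f}")
```

With these illustrative numbers, the fused estimate lies 12.0 cm above the real hand when the proprioceptive SD is 2 cm (visual weight 0.80). It shifts to 14.1 cm, closer to the rubber hand, when the proprioceptive SD is doubled (visual weight 0.94).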

Thus, we first tested the hypothesis that adding proprioceptive uncertainty by muscle covibration (proprioceptive noise condition) would increase the number of rubber hand ownership reports compared to the condition without covibration (no noise condition). Our behavioral results supported this prediction, thus demonstrating a direct contribution of proprioceptive uncertainty to the rubber hand illusion. Second, we fitted a Bayesian causal inference model of body ownership (Chancel, Ehrsson, et al., 2022) to the participants' responses to test the validity of this computational framework with respect to the proprioceptive noise manipulation. We also used this model-fitting approach to investigate which component of the model can explain how changes in proprioceptive reliability led to changes in perceived illusory rubber hand ownership. Specifically, we assessed whether the behavioral differences observed in response to the proprioceptive uncertainty manipulation resulted from a change at the level of the sensory measurements, the prior information, or a combination of both. Our model-fitting results revealed that proprioceptive noise influences the body ownership percept elicited by the visuotactile stimulation not by affecting the processing of visuotactile signals directly but by changing the prior probability of a common cause for vision and touch, which, in turn, influences the causal structure inferred for vision and touch. Finally, we discuss our findings with respect to alternative hierarchical Bayesian models of body ownership that implement causal inference by combining spatial (visuoproprioceptive) and temporal (visuotactile) estimates after an initial stage of separate processing of these respective signals.

2. Methods

2.1. Participants

We report how we determined our sample size, all data exclusions, all inclusion/exclusion criteria, whether inclusion/exclusion criteria were established prior to data analysis, all manipulations, and all measures in the study. Thirty-one healthy, naïve participants were recruited for this experiment (16 males, age 27 ± 8 years). A power analysis based on our previous study using visual noise required a sample of 20 participants (Chancel, Ehrsson, et al., 2022). However, because the type of sensory noise was different and because the previous study excluded individuals who had a weak rubber hand illusion, which we do not do in the current study, we chose to increase this minimum sample size by approximately 50%. All volunteers provided their written informed consent prior to their participation. All participants received 150 SEK as compensation for each hour spent on the experiment. All experiments were approved by the Swedish Ethical Review Authority.

2.2. Experimental setup

During the main experiment, the participant’s right hand lay hidden, palm down, on a flat support surface beneath a table (30 cm lateral to the body midline), while, on this table (15 cm above the real hand), a right male cosmetic prosthetic hand (Natural Definition Glove, Color Y02, Fillauer LLC, Chattanooga, USA) filled with gypsum (hereafter, “the rubber hand”) was placed in the same orientation as the real hand, aligned with the participant’s arm (Fig. 1A). A black cloth covered the gap between the rubber hand and the participant’s body. The participant’s left hand rested on his or her lap. A chin rest and an elbow rest (Ergorest Oy®, Finland) ensured that the participant’s head and right arm remained in a steady and relaxed posture throughout the experiments. Two robot arms (designed in our laboratory by Martti Mercurio and Marie Chancel) applied tactile stimuli (taps) to the index finger of the rubber hand and that of the participant’s hidden real right hand. Each robot arm was made of three parts: two 17-cm-long, 3-cm-wide metal pieces and a metal slab (10 × 20 cm) as a support. The joint between the two metal pieces and the one between the proximal piece and the support were powered by two HS-7950TH Ultra Torque servos that included 7.4 V optimized coreless motors (Hitec Multiplex®, USA). The distal metal piece ended with a ring containing a plastic tube (diameter: 7 mm) that was used to touch the rubber hand and the participant’s real hand.

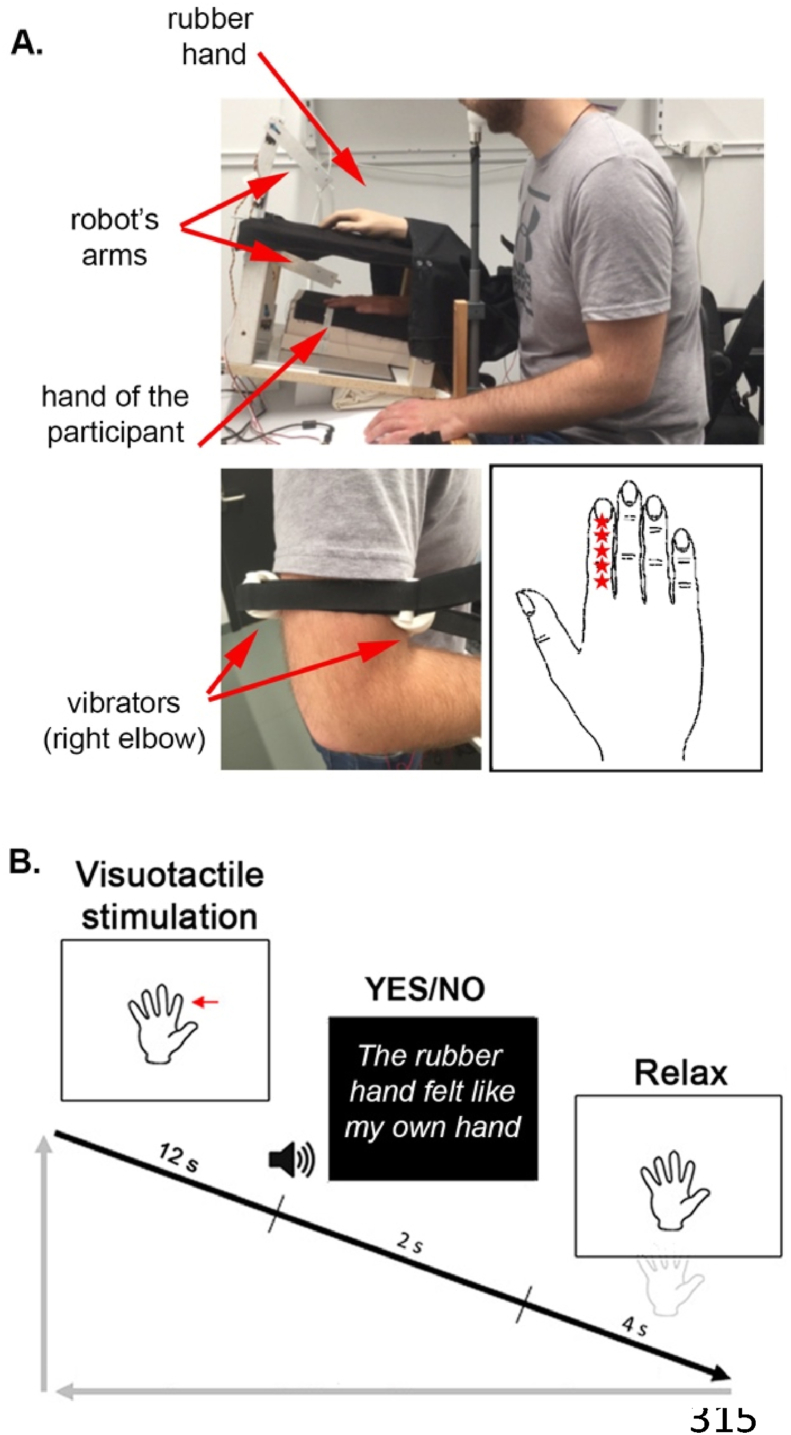

Fig. 1.

Experimental setup (A) and experimental procedure (B) for the ownership judgment task. A participant’s real right hand was hidden under a table while they saw a life-sized cosmetic prosthetic right hand (rubber hand) on this table, and two vibrators were strapped on the participant’s right arm near the elbow over the biceps and triceps muscle tendons. Using this electromechanical vibratory apparatus, two proprioceptive noise conditions were tested: no noise (vibrators off) and noise (vibrators on, 20 Hz). Five different locations (red stars) were stimulated in a pseudorandomized order on the participant's and the rubber hand's index fingers (A). In each trial, the rubber hand and the real hand were touched six times over a 12-sec period, either synchronously or with the rubber hand touched slightly earlier or later at a degree of asynchrony that was systematically manipulated (±150 ms, ±300 ms or ±500 ms). The participant was then required to answer, in a yes/no form, whether the rubber hand felt like their own hand (B).

An electromechanical vibratory apparatus (designed in our laboratory by Martti Mercurio and Marie Chancel using 28 mm vibration motors from Precision Microdrive, Model No. 328–400) was attached to the right biceps and triceps tendons with straps (Fig. 1A). Microneurographic studies (the recording of afferent units via a microelectrode inserted in a human superficial nerve) have shown that low-amplitude vibration preferentially activates muscle spindle endings; this stimulation masks the spontaneous discharges recorded in the absence of vibratory stimulation (Roll et al., 1989). When applied to a single muscle group, vibration stimulation can elicit an illusory sensation of limb movement (Blanchard, Roll, Roll, & Kavounoudias, 2011; Goodwin et al., 1972; Kavounoudias et al., 2008; Naito et al., 1999; Proske & Gandevia, 2012), but any illusion is cancelled when a concomitant vibration of equal frequency and amplitude is applied to antagonist muscles. Therefore, by equally costimulating antagonist muscles of the elbow, we expected to disturb proprioceptive afferents without inducing any illusory sensation of movement. In half of the trials, the muscle afferents of the right arm were masked by switching on the vibrators (frequency: 20 Hz) immediately before the visuotactile stimulation of the rubber hand and the real hand started. When the trial was finished, the vibrators were switched off. A frequency of 20 Hz was chosen to mask natural discharges of muscle spindles without inducing illusory arm movement sensations (Naito et al., 1999) while being low enough to avoid skin irritation and unpleasant skin sensations for the duration of the experiment.
As noted above, the efficiency of 20 Hz covibration in masking natural muscle spindle discharges and reducing proprioceptive reliability has been shown in previous work (Calvin-Figuière et al., 1999; Chancel, Blanchard, et al., 2016; Chancel, Brun, et al., 2016; Gilhodes et al., 1986). We therefore used an identical covibration stimulation for all participants, since we had a within-subject design and performed individual-level model fitting. Note that we did not measure the resulting behavioral effect of the covibration on each participant’s unisensory hand localization ability. The relative increase in proprioceptive uncertainty may therefore have varied across participants, contributing to individual differences in the magnitude of the proprioceptive noise manipulation’s effect on the rubber hand illusion. During the experiment, the participants wore earphones playing white noise to block any auditory information from the robots' movements, which might otherwise interfere with the rubber hand illusion (Radziun & Ehrsson, 2018).

2.3. Procedure

For each trial, participants were asked to decide whether the rubber hand felt like their own hand, i.e., whether they felt a body ownership illusion (same task as used in Chancel, Iriye, & Ehrsson, 2022) (Fig. 1B). Each trial followed the same sequence: the robots repeatedly tapped the index fingers of the rubber hand and the actual hand six times each for a total period of 12 sec (.5 Hz) in five different locations in a randomized order (‘stimulation phase’): just proximal to the nail on the distal phalanx, on the distal interphalangeal joint, on the middle phalanx, on the proximal interphalangeal joint, and on the proximal phalanx (Fig. 1A). Then, the robots stopped while the participants heard a tone instructing them to verbally report whether the rubber hand felt like theirs (by saying “yes” or “no”). A period of 12 sec was chosen because earlier studies with individuals susceptible to the illusion have shown that the illusion is typically elicited within this time period when fully synchronous visuotactile stimulations are delivered (Chancel, Iriye, et al., 2022; Ehrsson et al., 2004; Lloyd, 2007). Different locations on the finger were chosen to avoid irritation of the skin during the long psychophysics session and to follow the procedure of earlier studies that often stimulated different parts of the hand and fingers to elicit the rubber hand illusion (e.g., Guterstam et al., 2011). During this period of stimulation, the participants were instructed to look at the rubber hand where the robot was touching it and focus on the rubber hand.

After the stimulation period, the participants were asked to wiggle their right fingers. This prevented numbness or muscle stiffness from keeping the hand still and eliminated any possible carry-over effect (Abdulkarim, Hayatou, & Ehrsson, 2021) on the next stimulation period by breaking the illusory ownership sensations (the movement of the real hand while the rubber hand stays still abolishes the rubber hand illusion). They also relaxed their gaze, i.e., they were allowed to look away from the rubber hand. Five seconds later, a second tone informed them that the next trial was about to start; the trial started 1 sec after this sound cue. Every five trials, before the next trial commenced, the participant was reminded to focus on the rubber hand during the stimulation phase.

Two variables were manipulated in this experiment: (1) the synchronicity between the taps that are seen and the ones that are felt by the participants (= asynchrony conditions) and (2) the level of proprioceptive noise (= noise conditions). (1) Seven different asynchrony conditions were tested. The touches applied to the rubber hand could be synchronized with the touches on the participant’s real hand, or these touches could be delayed or advanced by 150, 300, or 500 ms. For the rest of this article, negative values of asynchrony (−150, −300, −500 ms) mean that the rubber hand was touched first, and positive values of asynchrony (+150, +300, +500 ms) mean that the participant’s hand was touched first. (2) Two different proprioceptive noise conditions were tested: without (no noise) or with (noise) proprioceptive noise, corresponding to the vibrators being off or on, respectively.
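To make the sign convention concrete, the following Python sketch computes tap onset times for one trial. The even 2-sec spacing (six taps at .5 Hz over 12 sec) comes from Section 2.3. Placing the earliest tap at time zero, and the function name `tap_onsets`, are illustrative assumptions, not details of the robot control software.

```python
def tap_onsets(asynchrony_ms, n_taps=6, period_s=2.0):
    """Return (rubber_hand, real_hand) tap onset times in seconds for one trial.

    Sign convention from the text: negative asynchrony = rubber hand touched first,
    positive asynchrony = participant's real hand touched first.
    """
    lag_s = asynchrony_ms / 1000.0
    real = [i * period_s for i in range(n_taps)]   # six taps, one every 2 s
    rubber = [t + lag_s for t in real]             # rubber-hand taps shifted by the asynchrony
    t0 = min(real[0], rubber[0])                   # earliest tap of either hand defines t = 0
    return [t - t0 for t in rubber], [t - t0 for t in real]


rubber, real = tap_onsets(-500)   # rubber hand first: rubber[0] = 0.0, real[0] = 0.5
```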

Each condition was repeated 16 times, leading to a total of 224 judgments per participant and per task. These trials were randomly divided into four experimental blocks per task, each lasting 15 min. The proportion of answers stating that “the rubber hand felt like my hand” at different asynchronies was collected to be compared between conditions and fitted by our causal inference models (see further below).
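The design arithmetic (7 asynchronies × 2 noise conditions × 16 repetitions = 224 trials, i.e., 56 trials per block) can be checked with a short Python sketch of a randomized schedule. Shuffling the full trial list and cutting it into four equal blocks is an assumption. The text states only that trials were randomly divided into blocks, not whether conditions were balanced within blocks.

```python
import itertools
import random

ASYNCHRONIES_MS = (-500, -300, -150, 0, 150, 300, 500)
NOISE_CONDITIONS = ("no_noise", "noise")


def make_schedule(n_reps=16, n_blocks=4, seed=None):
    """Return n_blocks lists of (asynchrony_ms, noise) trials in random order."""
    rng = random.Random(seed)
    trials = [
        condition
        for condition in itertools.product(ASYNCHRONIES_MS, NOISE_CONDITIONS)
        for _ in range(n_reps)
    ]  # 7 x 2 x 16 = 224 trials
    rng.shuffle(trials)
    block_size = len(trials) // n_blocks  # 56 trials per block
    return [trials[i * block_size:(i + 1) * block_size] for i in range(n_blocks)]


blocks = make_schedule(seed=1)
```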

2.4. Data analysis

For each degree of asynchrony, the percentage of trials in which the participants felt like the rubber hand was theirs was determined. The behavioral impact of the visuotactile asynchrony and proprioceptive noise was assessed by a two-way repeated-measures ANOVA. We also performed a paired t test on the total percentage of “yes” answers across all asynchrony conditions to focus only on the influence of proprioceptive noise on the participants' perception regardless of the magnitude of the visuotactile asynchrony.
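The trial-level bookkeeping behind these analyses can be sketched in Python as follows. This is an illustration that assumes a hypothetical data format (one `(asynchrony_ms, noise, said_yes)` tuple per trial), not the authors' analysis code. It computes per-condition proportions of “yes” answers and the paired t statistic used for the noise comparison. The repeated-measures ANOVA and the p-values (available, e.g., through `scipy.stats.ttest_rel`) are left out to keep the sketch dependency-free.

```python
from collections import defaultdict
from statistics import mean, stdev


def proportion_yes(trials):
    """Proportion of 'yes' answers per (asynchrony_ms, noise) cell for one participant.

    `trials` is an iterable of (asynchrony_ms, noise, said_yes) tuples (hypothetical format).
    """
    counts = defaultdict(lambda: [0, 0])  # cell -> [n_yes, n_trials]
    for asynchrony_ms, noise, said_yes in trials:
        cell = counts[(asynchrony_ms, noise)]
        cell[0] += int(said_yes)
        cell[1] += 1
    return {cell: n_yes / n for cell, (n_yes, n) in counts.items()}


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for per-participant values x and y."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / n ** 0.5)
    return t, n - 1
```

For the noise comparison, `x` and `y` would hold each participant's overall proportion of “yes” answers pooled across asynchronies in the noise and no noise conditions, respectively. With 31 participants, this gives the 30 degrees of freedom reported in Section 3.1.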

2.5. Modelling

In a previous behavioral and computational study, Chancel, Ehrsson, et al. (2022) developed a Bayesian causal inference model that successfully describes how the perceptual system infers a common cause of visual and tactile signals originating from one’s own hand and how this relates to the subjective feeling of hand ownership in the rubber hand illusion. We used the same Bayesian causal inference (BCI) model and the same procedure to fit it to the participants' answers in the present study. Here, the aim was to investigate the effect of proprioceptive noise on body ownership within this Bayesian computational framework and to clarify which components of the model are impacted by the experimental manipulation of proprioceptive uncertainty. Below, we briefly describe our modeling approach; more details can be found in Chancel, Ehrsson, et al. (2022).

Bayesian inference is based on a generative model, which is a statistical model of the world that the observer believes gives rise to observations. By “inverting” this model for a given set of observations, the observer can make an “educated guess” about a hidden state. In our case, the model contained three variables: the causal structure category ($C$), the tested asynchrony ($s$), and the measurement of this asynchrony ($x$) by the participant. Even though the true frequency of synchronous stimulation ($C = 1$) was 1/7 = .14, we allowed it to be a free parameter, which we denoted as $p_{\text{same}}$. Next, we assumed that for the observer, when $C = 1$, the asynchrony $s$ was always 0. When $C = 2$, the true asynchrony took one of several discrete values; we did not presuppose that the observer knew these values or their probabilities but instead assumed that asynchrony was normally distributed with the correct standard deviation of 348 ms (i.e., the true standard deviation of the stimuli used in this experiment). In other words, $p(s \mid C = 2) = \mathcal{N}(s; 0, \sigma_s^2)$ with $\sigma_s = 348$ ms. Next, we assumed that the observer made a noisy measurement $x$ of the asynchrony. We made the standard assumption (inspired by the central limit theorem) that this noise adhered to the following normal distribution:

$$p(x \mid s) = \mathcal{N}(x; s, \sigma^2)$$

where the variance $\sigma^2$ depends on the sensory noise for a given trial.
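The observer's assumed generative model can be sampled directly. Doing so makes explicit that it differs from the experiment's discrete set of asynchronies: under $C = 2$, asynchronies are drawn from a continuous Gaussian. In this minimal Python sketch, `sample_trial` is a hypothetical helper. The names `p_same`, `sigma_x` (the measurement noise $\sigma$) and `sigma_s` = 348 ms follow the definitions above.

```python
import random


def sample_trial(p_same, sigma_x, sigma_s=348.0, rng=None):
    """Draw (C, s, x) from the observer's assumed generative model (times in ms)."""
    rng = rng or random.Random()
    c = 1 if rng.random() < p_same else 2            # causal structure: 1 = common cause
    s = 0.0 if c == 1 else rng.gauss(0.0, sigma_s)   # C = 1 implies zero asynchrony
    x = rng.gauss(s, sigma_x)                        # noisy measurement of s
    return c, s, x


# Hypothetical parameter values, for illustration only.
draws = [sample_trial(0.3, 100.0, rng=random.Random(seed)) for seed in range(5)]
```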

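From this generative model, we turned to inference. Visual and tactile inputs are to be integrated, leading to the emergence of the rubber hand illusion, if the observer infers a common cause ($C = 1$) for both sensory inputs. On a given trial, the model observer uses $x$ to infer the category $C$. Specifically, the model observer computes the posterior probabilities of both categories, $p(C = 1 \mid x)$ and $p(C = 2 \mid x)$, i.e., the belief that the category was $C = 1$ or $C = 2$, respectively. Then, the observer would report “yes, it felt like the rubber hand was my own hand” if the former probability were higher, or in other words, if $d > 0$, where

$$d = \log\frac{p(C = 1 \mid x)}{p(C = 2 \mid x)} = \log\frac{p_{\text{same}}}{1 - p_{\text{same}}} + \frac{1}{2}\log\frac{\sigma^2 + \sigma_s^2}{\sigma^2} - \frac{\sigma_s^2\, x^2}{2\sigma^2(\sigma^2 + \sigma_s^2)}$$

The decision rule $d > 0$ is thus equivalent to

$$|x| < k$$

where

$$k = \sqrt{\frac{2\sigma^2(\sigma^2 + \sigma_s^2)}{\sigma_s^2}\left(\log\frac{p_{\text{same}}}{1 - p_{\text{same}}} + \frac{1}{2}\log\frac{\sigma^2 + \sigma_s^2}{\sigma^2}\right)}$$

where $\sigma$ is the sensory noise level of the trial under consideration. Consequently, the decision criterion changes as a function of the sensory noise affecting the observer’s measurement. The output of the BCI model is the probability of the observer reporting the visual and tactile inputs as emerging from the same source when presented with a specific asynchrony value $s$:

$$p(\text{“yes”} \mid s) = \frac{\lambda}{2} + (1 - \lambda)\left[\Phi\!\left(\frac{k - s}{\sigma}\right) - \Phi\!\left(\frac{-k - s}{\sigma}\right)\right]$$

Here, the additional parameter $\lambda$ reflects the probability of the observer lapsing, i.e., randomly guessing, and $\Phi$ denotes the standard normal cumulative distribution function. This equation is a prediction of the observer’s response probabilities and can thus be fitted to a participant’s behavioral responses.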
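The decision bound $k$ and the predicted response probability translate directly into code. The Python sketch below assumes the equations above, computes $\Phi$ from `math.erf`, and treats the case where the expression under the square root is not positive as $k = 0$. The observer then never infers a common cause, so only lapses produce “yes” answers. Function names and any parameter values passed to them are hypothetical.

```python
import math


def decision_bound(p_same, sigma, sigma_s=348.0):
    """Bound k such that the observer reports a common cause when |x| < k (ms)."""
    prior_term = math.log(p_same / (1.0 - p_same))
    var_sum = sigma ** 2 + sigma_s ** 2
    rhs = prior_term + 0.5 * math.log(var_sum / sigma ** 2)
    if rhs <= 0.0:
        return 0.0  # common cause never favored: only lapses yield 'yes'
    return math.sqrt(2.0 * sigma ** 2 * var_sum / sigma_s ** 2 * rhs)


def norm_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def p_yes(s, p_same, sigma, lapse, sigma_s=348.0):
    """Predicted probability of a 'yes' (ownership) report at asynchrony s (ms)."""
    k = decision_bound(p_same, sigma, sigma_s)
    p_common = norm_cdf((k - s) / sigma) - norm_cdf((-k - s) / sigma)  # P(|x| < k | s)
    return lapse / 2.0 + (1.0 - lapse) * p_common
```

In this sketch, raising `p_same` while holding `sigma` fixed widens the bound $k$ and lifts the predicted curve at every asynchrony. Changing `sigma`, by contrast, alters both the bound and the spread of the measurements.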

Thus, our BCI model has five free parameters. The first two, $p_{\text{same}}^{\text{no noise}}$ and $p_{\text{same}}^{\text{noise}}$, are the prior probability of a common cause for vision and touch, independent of any sensory stimulation, in the no noise and noise conditions, respectively. The next two, $\sigma^{\text{no noise}}$ and $\sigma^{\text{noise}}$, are the noise impacting the measurement $x$ in the no noise and noise conditions, respectively. The fifth, $\lambda$, is a lapse rate that accounts for random guesses and unintended responses. It is common to the two proprioceptive noise conditions because it depends on the participant’s attention level during the experimental session, in which both conditions are tested in a pseudorandom order. We assumed a value of 348 ms for $\sigma_s$, i.e., we set $\sigma_s$ equal to the actual standard deviation of the asynchronies used in the experiment. Model fitting was performed using maximum-likelihood estimation implemented in MATLAB (MathWorks). We used the Bayesian adaptive direct search (BADS) algorithm (Acerbi & Ma, 2017, pp. 1836–1846) with 100 different initial parameter combinations per participant. The overall goodness of fit was assessed using the coefficient of determination, $R^2$ (Nagelkerke, 1991), defined as

$$R^2 = 1 - \exp\!\left(-\frac{2}{n}\left(\log L_{\text{fit}} - \log L_0\right)\right)$$

where $\log L_{\text{fit}}$ and $\log L_0$ denote the log-likelihoods of the fitted and null models, respectively, and $n$ is the number of data points. For the null model, we assumed that an observer randomly chose one of the two response options, i.e., we assumed a discrete uniform distribution with a probability of .5. Because the models' responses were discretized in our case to relate them to the two discrete response options, the coefficient of determination was divided by the maximum coefficient (Nagelkerke, 1991), defined as

$$R^2_{\max} = 1 - \exp\!\left(\frac{2}{n}\log L_0\right)$$
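Goodness of fit can be computed from a model's predicted probabilities of a “yes” response and the observed binary responses. The Python sketch below implements the Bernoulli log-likelihood, the Nagelkerke $R^2$ with the $p = .5$ null model, and its normalization by $R^2_{\max}$. It does not reproduce the fitting itself, which used BADS in MATLAB, and the function names are hypothetical.

```python
import math


def log_likelihood(p_yes, responses, eps=1e-12):
    """Bernoulli log-likelihood of binary responses (True = 'yes') given predicted P('yes')."""
    total = 0.0
    for p, said_yes in zip(p_yes, responses):
        p = min(max(p, eps), 1.0 - eps)  # guard against log(0)
        total += math.log(p) if said_yes else math.log(1.0 - p)
    return total


def normalized_nagelkerke_r2(loglik_fit, n):
    """Nagelkerke R^2 against a p = .5 null model, divided by its maximum R^2_max."""
    loglik_null = n * math.log(0.5)
    r2 = 1.0 - math.exp(-2.0 / n * (loglik_fit - loglik_null))
    r2_max = 1.0 - math.exp(2.0 / n * loglik_null)
    return r2 / r2_max
```

With this null model, $R^2_{\max} = 1 - .5^2 = .75$. The unnormalized coefficient therefore cannot exceed .75 for binary responses, which is why the division is needed.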

3. Results

3.1. Behavioral results

As described above, participants performed a detection-like task on the ownership they felt toward a rubber hand; the tactile stimulation they felt on their hidden real hand was synchronized or systematically delayed or advanced with respect to the taps applied to the rubber hand that they saw. Moreover, during half of the trials, the reliability of the proprioceptive signals from the participants' elbow was degraded by covibration of the biceps and triceps muscles. For each degree of asynchrony and each degree of proprioceptive noise, the percentage of trials in which the participants felt that the rubber hand was theirs was determined.

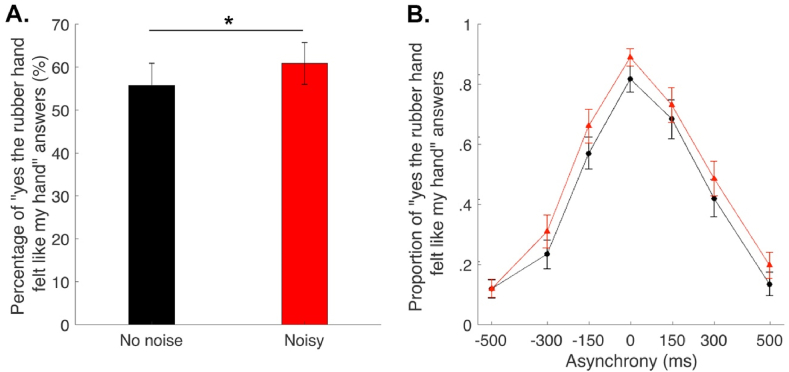

The results showed that the rubber hand illusion was successfully induced in the synchronous condition: the participants reported perceiving the rubber hand as their own hand in 88 ± 4% (mean ± SEM) of the 16 trials when the visual and tactile stimulations were synchronous; more precisely, 84 ± 4% and 91 ± 3% of responses were “yes” responses in the no noise and noise conditions (noise experimentally added to the natural proprioceptive signal), respectively. Moreover, for every participant, increasing the asynchrony between the seen and felt taps decreased the prevalence of the illusion. When the rubber hand was touched 500 ms before the real hand, the illusion was reported in only 12 ± 3% of the 16 trials (no noise: 11 ± 3%, noise: 14 ± 3%). When the rubber hand was touched 500 ms after the real hand, it was reported in only 17 ± 4% of the 16 trials (no noise: 15 ± 4%, noise: 19 ± 4%).

As hypothesized, we observed a significant main effect of proprioceptive noise [F (1, 390) = 8.71, P = .003] and a significant main effect of asynchrony [F (6, 390) = 156.8, P < .001; Fig. 2B]. Moreover, participants answered “yes [the rubber hand felt like my own hand]” more often when their proprioceptive inputs were less reliable. This is shown by the significant increase in the overall proportion of “yes” answers (pooled across all asynchronies) in the noise condition compared with the no noise condition [t (30) = 5.22, P < .001, 95% CI [3.89; 8.88]; Fig. 2A]. However, the interaction between proprioceptive noise and asynchrony was not significant [F (6, 390) = 1.12, P = .15], i.e., the impact of proprioceptive noise on the rubber hand illusion did not significantly differ depending on the degree of asynchrony between the seen and felt touches. This lack of interaction suggests that the change in proprioceptive noise does not directly influence the specific contribution of visuotactile asynchrony to illusory hand ownership. Instead, it is more in line with an effect on visuoproprioceptive integration, that is, on the contribution of the spatial incongruence between the seen and felt locations of the rubber hand and the real hand.

Fig. 2.

Elicitation of the rubber hand illusion at different levels of proprioceptive noise. A. Bars represent the percentage of trials in which participants answered ‘yes [the rubber hand felt like my own hand]’ under the no noise (black) and the proprioceptive noise (red) conditions. There was a significant increase in the proportion of ‘yes’ answers when proprioceptive noise was applied. B. Dots and triangles represent the mean reported proportion of elicited rubber hand illusions (±SEM) at each asynchrony for the no noise (black) and the proprioceptive noise (red) conditions, respectively. ∗P < .05.

The next step was to examine whether the BCI model we previously designed (Chancel, Ehrsson, et al., 2022) could capture the participants' reports equally well in both proprioceptive noise conditions. By fitting individual responses with the BCI model, we also investigated how this computational framework would account for the increased emergence of the rubber hand illusion with noisier proprioceptive information, given the absence of an interaction between proprioceptive noise level and asynchrony.

3.2. Computational results

We applied our BCI model to the participants' answers in both proprioceptive noise conditions. Separate parameter values were estimated for each condition for the a priori probability that the tactile and visual signals came from a common source and for the sensory noise affecting the participant’s measurement of the stimulation. The overall goodness of fit of our model was satisfactory (mean ± SEM: pseudo-R2 = .61 ± .03, Fig. 3A) and similar to the values observed in our previous studies using the same model (Chancel, Ehrsson, et al., 2022).

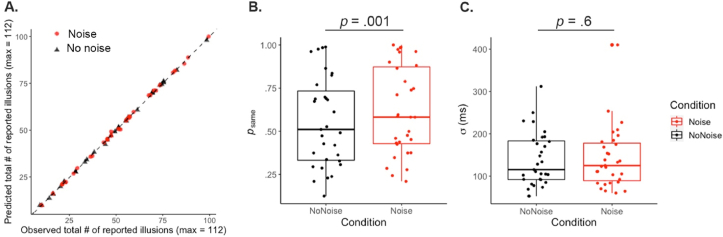

Fig. 3.

BCI model predictions and parameter estimates. A. This plot displays the total number of “yes [it felt like the rubber hand I saw was my own hand]” answers predicted by the BCI model against the observed total number of “yes” answers across all tested values of visuotactile asynchrony; each dot corresponds to one participant, with the black triangles being for the no noise condition and the red dots being for the noise condition. At the computational level, more proprioceptive noise led to a higher estimated a priori probability for vision and touch to have a common cause in the noise condition (red) than in the no noise condition (black, B). However, adding proprioceptive uncertainty did not significantly alter the visuotactile sensory noise estimate (C). The lower and upper edges of each box correspond to the first and third quartiles, respectively, with the thick horizontal lines representing the medians. The upper and lower whiskers extending from the boxes indicate the maximum and minimum values.

Comparing the parameter values estimated in the two proprioceptive conditions, we observed that the prior probability of touch and vision coming from a common source was significantly higher in the noise condition than in the no noise condition (mean ± SEM: no noise = .55 ± .05; noise = .62 ± .05; t = −3.51, df = 30, P = .001, 95% CI = [−.10; −.03]; Fig. 3B), whereas the sensory noise did not significantly differ (mean ± SEM: no noise = 139 ± 11 ms; noise = 142 ± 13 ms; t = −.54, df = 30, P = .60, 95% CI = [−16.45; 9.62]; Fig. 3C). This result suggests that the sensory noise taken into account in the Bayesian decision threshold governing the emergence of the rubber hand illusion reflects only trial-to-trial variations in visuotactile noise, not variations in proprioceptive noise.
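To illustrate why a change in the prior alone produces this pattern, here is a minimal Python sketch of a BCI observer for a visuotactile asynchrony detection task. It is not the fitted model: as a simplifying assumption, asynchronies under separate causes are drawn from a zero-mean Gaussian with a hypothetical width `sigma_s`, and the names `p_same`, `sigma`, and `lapse` (a lapse rate) are illustrative. The observer answers “yes” when the posterior probability of a common cause exceeds .5, which amounts to the measured asynchrony falling within ±k. A higher prior widens this bound and so raises the probability of “yes” at every asynchrony, whereas a change in `sigma` would also alter the bound and the slope of the curve.

```python
import math


def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def decision_bound(p_same, sigma, sigma_s):
    """Largest |x| (ms) for which P(common cause | x) > 0.5.

    Assumes x ~ N(0, sigma^2) under a common cause and
    x ~ N(0, sigma^2 + sigma_s^2) under separate causes.
    Returns 0.0 if the observer would never infer a common cause.
    """
    var_common = sigma ** 2
    var_separate = sigma ** 2 + sigma_s ** 2
    a = 1.0 / var_common - 1.0 / var_separate
    rhs = 2.0 * math.log(p_same / (1.0 - p_same)) + math.log(var_separate / var_common)
    return math.sqrt(rhs / a) if rhs > 0 else 0.0


def p_yes(asynchrony, p_same, sigma, lapse, sigma_s=400.0):
    """Predicted probability of a "yes" (ownership) report at a given asynchrony (ms)."""
    k = decision_bound(p_same, sigma, sigma_s)
    # Probability that the measurement x ~ N(asynchrony, sigma^2) lands in [-k, k].
    inside = normal_cdf((k - asynchrony) / sigma) - normal_cdf((-k - asynchrony) / sigma)
    # Lapses are assumed to produce "yes" or "no" with equal probability.
    return lapse / 2.0 + (1.0 - lapse) * inside


if __name__ == "__main__":
    for s in (-500, -300, -150, 0, 150, 300, 500):  # hypothetical asynchrony grid
        lo_prior = p_yes(s, p_same=0.55, sigma=140.0, lapse=0.05)
        hi_prior = p_yes(s, p_same=0.62, sigma=140.0, lapse=0.05)
        print(f"{s:+5d} ms  p_same=.55: {lo_prior:.3f}  p_same=.62: {hi_prior:.3f}")
```

With `sigma` fixed at 140 ms and priors of .55 and .62 (of the order of the estimates reported above, used purely for illustration), the second column exceeds the first at every asynchrony, i.e., a prior change shifts the whole curve up without requiring any change in sensory noise.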

Given these results and for the sake of parsimony, we tested a second version of the BCI model, called BCI∗, in which a single sensory noise value was estimated for both proprioceptive uncertainty conditions. This model therefore had four free parameters, one fewer than the main model. Its goodness of fit was of the same order of magnitude as that of our main model (mean ± SEM: pseudo-R2 = .61 ± .03). To compare the two versions of the model, we used the Akaike information criterion (AIC; Akaike, 1973) and the Bayesian information criterion (BIC; Schwarz, 1978): both reward goodness of fit while penalizing the number of free parameters, and lower values indicate the preferred model. The BIC penalizes free parameters more heavily than the AIC. We calculated AIC and BIC values for each model version and participant, computed the difference between models for each participant, and summed these differences across participants. We estimated confidence intervals by bootstrapping: 31 AIC (or BIC) differences were drawn with replacement from the participants' actual differences and summed, and this procedure was repeated 10,000 times to obtain the 95% CI. Both criteria favored the more parsimonious BCI∗ model (summed difference [95% CI]: AIC(BCI∗) − AIC(BCI) = −44 [−50; −36]; BIC(BCI∗) − BIC(BCI) = −149 [−156; −142]): assuming a shared level of sensory uncertainty across conditions did not reduce the model's ability to capture body ownership reports in our experiment. This result confirms that the behavioral differences between the noise and no noise conditions are well captured at the computational level by a difference in the prior rather than by a change in the sensory parameters.
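The model comparison procedure above can be sketched in Python as follows, assuming each model's fit yields a minimized negative log-likelihood per participant. The helper names, the toy likelihoods, the trial count, and the use of a simple percentile bootstrap are illustrative assumptions rather than the authors' analysis code. A negative summed difference favors BCI∗.

```python
import math
import random


def aic(neg_log_lik, n_params):
    """Akaike information criterion from a minimized negative log-likelihood."""
    return 2 * n_params + 2 * neg_log_lik


def bic(neg_log_lik, n_params, n_trials):
    """Bayesian information criterion; the penalty grows with log(n_trials)."""
    return n_params * math.log(n_trials) + 2 * neg_log_lik


def bootstrap_sum_ci(diffs, n_boot=10_000, alpha=0.05, seed=0):
    """Summed per-participant differences and a percentile bootstrap CI for the sum."""
    rng = random.Random(seed)
    n = len(diffs)
    # Resample participants with replacement and sum their differences, n_boot times.
    sums = sorted(sum(rng.choices(diffs, k=n)) for _ in range(n_boot))
    lo = sums[round(alpha / 2 * (n_boot - 1))]
    hi = sums[round((1 - alpha / 2) * (n_boot - 1))]
    return sum(diffs), (lo, hi)


if __name__ == "__main__":
    rng = random.Random(1)
    n_trials = 210  # hypothetical number of trials per participant
    # Toy negative log-likelihoods for 31 hypothetical participants (not real data).
    nll_bci = [rng.uniform(60.0, 90.0) for _ in range(31)]
    nll_star = [x + rng.uniform(0.0, 1.0) for x in nll_bci]  # BCI* fits slightly worse
    d_aic = [aic(s, 4) - aic(f, 5) for s, f in zip(nll_star, nll_bci)]
    d_bic = [bic(s, 4, n_trials) - bic(f, 5, n_trials) for s, f in zip(nll_star, nll_bci)]
    print("AIC(BCI*) - AIC(BCI):", bootstrap_sum_ci(d_aic))
    print("BIC(BCI*) - BIC(BCI):", bootstrap_sum_ci(d_bic))
```

Because BCI∗ has one parameter fewer, each participant's AIC difference gains 2 points in its favor and each BIC difference gains log(n_trials) points, so the simpler model wins unless dropping the parameter costs more likelihood than that penalty.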

4. Discussion

In the present study, participants performed a body ownership detection task based on the rubber hand illusion: they were required to answer “yes” or “no” when asked whether the hand they saw felt like their own hand after receiving visuotactile stimulations (six consecutive touches on the rubber hand and the real hand) of varying asynchrony over a 12-s period. While the rubber hand and the participants' real hand remained at the same locations throughout the experiment, in half of the conditions, the reliability of the proprioceptive signal coming from the participants' hand was reduced using covibration of the participants' biceps and triceps muscles at the elbow joint (Calvin-Figuière et al., 1999; Gilhodes et al., 1986; Goodwin et al., 1972; Roll et al., 1989). In line with our hypothesis, we found that this proprioceptive noise condition was associated with an increase in the frequency of rubber hand illusion detection judgments. At the computational level, the participants' illusory body ownership detection responses were well predicted by a BCI model, and the increase in the probability of emergence of the rubber hand illusion when the proprioceptive precision decreased was captured by an increase in the a priori probability that the tactile and visual signals came from a common source. Collectively, these findings demonstrate that proprioceptive uncertainty influences the rubber hand illusion, pinpoint a possible computational mechanism for this effect, and provide valuable support for the BCI model of multisensory perception of one’s own body (Chancel, Ehrsson, et al., 2022; Fang et al., 2019; Samad et al., 2015).

4.1. Rubber hand illusion and proprioception

The contribution of multiple sensory modalities to body ownership has been shown by numerous studies that successfully induced bodily illusions using visuotactile (e.g., Botvinick & Cohen, 1998; Ehrsson et al., 2004; Longo et al., 2008; Tsakiris & Haggard, 2005), visuoproprioceptive (e.g., Fang et al., 2019; Kalckert & Ehrsson, 2012, 2014; Metral & Guerraz, 2019), tactile–proprioceptive (Aimola Davies, White, & Davies, 2013; Bono & Haggard, 2019; Ehrsson, Holmes, & Passingham, 2005; Gallagher et al., 2020), and visuo-thermosensory (Crucianelli & Ehrsson, 2022) stimulation, sometimes even combined with auditory (Radziun & Ehrsson, 2018) or interoceptive inputs (e.g., Crucianelli et al., 2018; Suzuki et al., 2013). This multisensory perception of one’s own body, similar to the exteroceptive perception of objects and events, is governed by spatial and temporal principles (Fetsch et al., 2013; Stein & Meredith, 1993). The current study investigated how manipulation of proprioceptive noise influences the sense of body ownership in the rubber hand illusion. Although visuoproprioceptive spatial incongruence (see next paragraph) and incongruent visual and proprioceptive feedback from finger movements influence the rubber hand illusion (Butler, Héroux, & Gandevia, 2017; Héroux et al., 2013; Kalckert & Ehrsson, 2012; Walsh et al., 2011), the specific contribution of muscle spindle afferent signals has not received much attention. Héroux et al. (2013) applied digital anesthesia to minimize cutaneous feedback during a nonvisual version of the rubber hand illusion (Ehrsson, Holmes, & Passingham, 2005) elicited by congruent tactile and proprioceptive information during illusory self-touch, providing evidence that muscle spindle signals contribute to the sense of body ownership in this paradigm.
Thus, our finding that covibration of biceps and triceps muscles, which stimulates muscle spindle receptors but in such a way as to degrade proprioceptive information, influenced the rubber hand illusion provides further evidence for the role of muscle spindle signals in the sense of body ownership.

As mentioned, several studies have shown that the magnitude of the spatial visuoproprioceptive conflict between the seen location of the rubber hand and the felt location of the real hand affects the body ownership percept induced by visuotactile stimulation in the rubber hand illusion (Kalckert & Ehrsson, 2014; Kalckert et al., 2019; Lloyd, 2007; Preston, 2013; Qureshi et al., 2018; Zopf et al., 2010). In a recent study, we manipulated this visuoproprioceptive spatial conflict by changing the position of the rubber hand while participants performed a discrimination task in which they had to choose which of two rubber hands felt more like their own (Chancel & Ehrsson, 2020). In that study, both rubber hands could be touched synchronously with the participant’s real hand, or one rubber hand could be touched with a delay of up to 200 ms. This psychophysical approach allowed us to calculate the delay at which the two rubber hands were perceived as equally owned by the participant. This point of subjective equality in body ownership was significantly affected by a change in the position of the rubber hand, and a trade-off between temporal and spatial conflict could be calculated. However, the minimal difference in visuotactile asynchrony needed for the participant to reliably discriminate between the two competing hand-ownership percepts (the discrimination threshold) was not altered by a change in the position of the rubber hand, suggesting that the sensory information from visuotactile correlations is processed along the temporal dimension only and is not mixed with visuoproprioceptive information processed along the spatial dimension.
The present findings add to this conclusion by showing that even when the spatial visuoproprioceptive conflict remains the same, as in our conditions where the positions of the rubber hand and the real hand were always constant (15 cm apart in the vertical plane), an increase in proprioceptive uncertainty most likely affects the way visual and proprioceptive inputs are combined and leads to a stronger body ownership percept. As predicted, when proprioceptive uncertainty was increased by muscle covibration, participants more often reported that the hand they saw felt like their own hand, i.e., visual and tactile signals were more often integrated for the same level of temporal conflict. Note that no interaction between the level of asynchrony and the proprioceptive noise condition was observed, suggesting that temporally correlated visuotactile cues and spatially congruent visuoproprioceptive cues were processed relatively independently at low levels of sensory processing. Such a partial dissociation between spatial and temporal cues has previously been shown in other perceptual domains; for example, in the visual domain, temporal information in a virtual environment influences the perceived size of a virtual room but not the perceived time needed to travel the distance between two landmarks (Riemer et al., 2018).

The observation of a significant impact of proprioceptive uncertainty on the visuotactile rubber hand illusion is at odds with a previous study of the influence of proprioceptive precision on this illusion (Motyka & Litwin, 2019). The authors of that work measured their participants' proprioceptive precision with a task requiring active reproduction of their arm’s position (precision was operationalized as inversely related to the variance of the position estimates). They found that this precision had little relationship to the strength of the visuotactile rubber hand illusion and concluded that their results were in opposition to Bayesian models of body ownership, such as the one proposed by Samad and colleagues (2015). More recently, another study investigated the link between proprioceptive imprecision and the rubber hand illusion, again with mixed results (Botan, Salisbury, Critchley, & Ward, 2021): the authors found a correlation between proprioceptive imprecision and proprioceptive drift in some of their experimental conditions, but this effect was driven by a subgroup of participants classified as vicarious pain responders. However, in both studies, variations in proprioceptive precision were considered between participants, i.e., as an individual difference, whereas in the present study, we experimentally manipulated proprioceptive precision within participants. This causal manipulation allowed us to investigate the effect of proprioceptive uncertainty on the rubber hand illusion in each participant. Furthermore, unlike the previous studies, we directly tested the Bayesian model of body ownership by fitting it to individual participants' response profiles.
Moreover, the conclusions presented by Motyka and Litwin (2019) were based on a negative finding, which may be inconclusive if individual differences in proprioceptive precision are small or if proprioceptive precision and changes in the rubber hand illusion are not quantified with sufficiently sensitive methods. We believe that the current results resolve this debate by establishing that changes in proprioceptive uncertainty do affect the rubber hand illusion, in line with the predictions of BCI models.

4.2. Sensory uncertainty in causal inference of body ownership

The present behavioral results support a probabilistic account of body ownership based on Bayesian principles (Chancel, Ehrsson, et al., 2022; Fang et al., 2019; Kilteni et al., 2015; Samad et al., 2015). According to this computational framework, the extent to which a sensory input contributes to the final percept depends on its relative weight, which is proportional to its reliability, that is, inversely related to its uncertainty (Ernst & Bülthoff, 2004). As explained when developing our hypothesis in the Introduction, the increase in proprioceptive uncertainty in the noise condition is thought to have lowered the contribution of the proprioceptive signal relative to the visual signal in the combined visuoproprioceptive estimate. According to the Bayesian framework, such a change in proprioceptive uncertainty results in the participant perceiving their hand as closer to the rubber hand, i.e., closer to the source of the visual signal, and in a more uncertain hand position estimate (Ernst & Bülthoff, 2004); this in turn promotes a stronger feeling that the seen rubber hand is one’s own. This latter prediction fits the behavioral difference in illusory hand ownership reports between conditions. Moreover, the Bayesian causal inference model we previously designed for body ownership based on visuotactile asynchrony (Chancel, Ehrsson, et al., 2022) captured the body ownership reports equally well in both experimental conditions and offers insight into the nature of the influence of proprioceptive uncertainty on body ownership. More specifically, increasing proprioceptive uncertainty in the noise condition resulted in a higher a priori probability of integrating vision and touch than in the no noise condition, independently of the level of visuotactile asynchrony.
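The reliability-weighting argument above can be made concrete with the standard inverse-variance cue-combination rule (Ernst & Bülthoff, 2004). The Python sketch below places the seen rubber hand and the felt real hand 15 cm apart along one axis, as in our setup; the variances (in cm²) and the function name `combine_cues` are hypothetical, chosen only to show the direction of the effect. Raising the proprioceptive variance pulls the combined estimate toward the rubber hand and increases its variance.

```python
def combine_cues(x_vis, var_vis, x_prop, var_prop):
    """Inverse-variance (reliability-weighted) fusion of a visual and a proprioceptive cue.

    Returns (combined estimate, combined variance, weight given to vision).
    """
    rel_vis, rel_prop = 1.0 / var_vis, 1.0 / var_prop  # reliability = 1 / variance
    w_vis = rel_vis / (rel_vis + rel_prop)
    estimate = w_vis * x_vis + (1.0 - w_vis) * x_prop
    variance = 1.0 / (rel_vis + rel_prop)
    return estimate, variance, w_vis


if __name__ == "__main__":
    RUBBER_HAND_CM, REAL_HAND_CM = 0.0, 15.0  # seen vs felt position, 15 cm apart
    for label, var_prop in (("no noise", 4.0), ("noise", 16.0)):  # hypothetical cm^2
        est, var, w = combine_cues(RUBBER_HAND_CM, 1.0, REAL_HAND_CM, var_prop)
        print(f"{label}: estimate {est:.2f} cm from rubber hand, variance {var:.2f}, w_vis {w:.2f}")
```

The combined variance is always smaller than either single-cue variance, but it still grows when proprioception becomes noisier, which is the sense in which the hand position estimate becomes more uncertain.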
Such an increase in illusory body ownership when the reliability of the proprioceptive input decreases bears similarities to the increase in hand movement perception caused by decreased proprioceptive reliability (Chancel, Blanchard, et al., 2016), which may thus indicate a general principle of multisensory bodily awareness. As mentioned, our previous rubber hand illusion study (Chancel, Ehrsson, et al., 2022) manipulated the reliability of the visual signals through the presentation of visual noise in augmented-reality glasses worn by the participants and highlighted the importance of visual uncertainty for body ownership; together with the current findings, these studies underscore the critical role of sensory uncertainty in the perception of one’s own body in support of the Bayesian theory of perception.

4.3. Computational mechanisms and alternative models

But how is visual, tactile, and proprioceptive information combined in the rubber hand illusion, and at what computational stage of the causal inference process? The causal inference model we used in the current study was designed to describe how temporally congruent or incongruent visual and tactile stimuli are inferred to have a common origin in one’s own hand (Chancel, Ehrsson, et al., 2022); it allowed effective model testing against psychophysical data in which temporal visuotactile incongruence was manipulated, in the presence or absence of experimentally manipulated proprioceptive noise. The effect of this latter manipulation is captured by the change in the prior probability of a common cause of vision and touch discussed above. However, this model and the current psychophysics task were not designed to examine how the noise manipulation influences proprioceptive signal processing. Thus, the mechanism whereby proprioception of varying reliability causes these changes in illusory hand ownership remains unclear; the prior captures information extracted from the body and the environment but does not describe the mechanism in detail. Therefore, to advance our theoretical understanding with future model-testing experiments in mind, we next discuss and compare three more complex Bayesian causal inference models that include proprioceptive information processing and can be seen as possible extensions of the Chancel, Ehrsson, et al. (2022) model.

Let us first consider a parsimonious model (Fig. 4A) in which visual, tactile, and proprioceptive information is combined or segregated in a single causal inference process, with no distinction between temporal and spatial congruencies or between types of sensory information. Such a model would use a single multisensory noise estimate based on the three unisensory noise estimates and a single causal inference process, and it would assume a tight relationship between tactile and proprioceptive processing from the arm (Kavounoudias et al., 2008). According to this model, increasing proprioceptive uncertainty would increase multisensory uncertainty, which should affect the causal inference of the tactile and visual signals and change the shape of the function relating the probability of the illusion to the level of asynchrony in a characteristic way, similar to the visual noise manipulation in our previous study (Chancel, Ehrsson, et al., 2022). However, this is not what we found in our data, and thus the current results seem to reject this first hypothetical model.

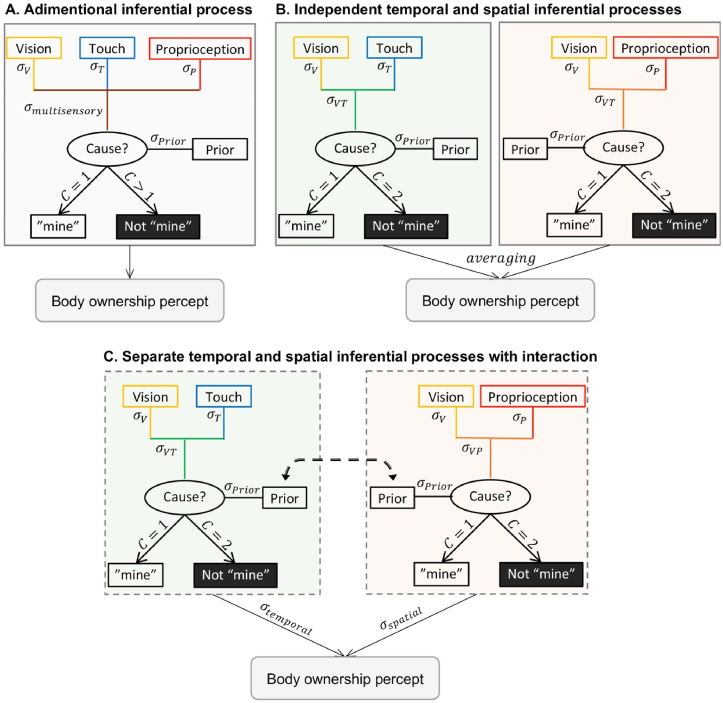

Fig. 4.

Theoretical models for the integration of visual, tactile, and proprioceptive signals into a body ownership percept. In the first model (A), the most likely causal structure (common cause, C = 1, or different causes, C > 1) for the visual, tactile, and proprioceptive signals is inferred from the combination of the three sensory cues and the corresponding sensory uncertainty (resulting from the combination of the unisensory noise levels), without distinction between temporal and spatial dimensions. From this Bayesian causal inference process, the participant perceives the hand in front of them as theirs or not, depending on whether a common cause is inferred. The lack of a significant change in the sensory uncertainty parameter estimates between our no noise and noise conditions argues against this model. Instead, our results suggest that bimodal congruencies along the temporal (visuotactile) and spatial (visuoproprioceptive) dimensions lead to separate estimates, which are subsequently merged into a single multisensory estimate that corresponds to the subjective feeling of body ownership. The last two models share this hierarchical structure but differ in their assumptions about how the spatial and temporal estimates interact. In the model of Samad and colleagues (2015), the two estimates are assumed to be fully independent, and the final percept is computed as an average of these two estimates (B). Alternatively, we propose a model in which the estimates influence each other via a modulation of their respective priors; additionally, when the estimates are combined, each contributes to the final sense of body ownership according to its relative reliability (C).

Instead, our computational results are consistent with a hierarchical Bayesian inference model in which spatial and temporal estimates are combined only after a causal structure has been inferred from the sensory inputs for each dimension separately (Fig. 4B). This probabilistic model would be similar to the theoretical model of the rubber hand illusion proposed by Samad and colleagues (2015), which was supported by simulated data and qualitative group results from rubber hand illusion experiments. Samad et al. (2015) proposed separate spatial visuoproprioceptive and temporal visuotactile estimates computed through two parallel causal inference processes, whose resulting estimates are combined by model averaging into a unified body ownership percept that incorporates both spatial and temporal dimensions from all three senses. Our observation that increased proprioceptive uncertainty changed the prior for body ownership, rather than the parameter representing sensory uncertainty in our Bayesian causal inference model, agrees with this idea of relatively independent processing of spatial visuoproprioceptive and temporal visuotactile information at the initial level, before the two estimates are combined into a multisensory estimate of body ownership at a higher level. This view would also be consistent with the lack of a significant interaction between the proprioceptive noise condition and asynchrony in the body ownership reports.

However, it is unlikely that the visuotactile and visuoproprioceptive estimates are totally independent, as both refer to the more general causal scenario of the hand in view being one’s own explaining all the visual, tactile, and proprioceptive signals. Thus, we suggest that each of the two estimates influences the other estimate via an interaction mechanism at the level of the prior (Fig. 4C). Moreover, instead of a simple averaging of the two estimates as in Samad et al. (2015), a brain functioning as a Bayesian inference machine would take into account the uncertainty affecting each individual estimate when combining them, thus producing a weighted average (Fig. 4C). We have recently proposed a similar weighted averaging mechanism in a hierarchical Bayesian causal inference model explaining how visuotactile and visuovestibular estimates are combined in a full-body ownership illusion (Mattsson et al., 2022). However, the current data cannot distinguish between the two versions of the hierarchical BCI model presented in Fig. 4B and C; testing which of these two models best describes the rubber hand illusion will require future experiments that include systematic manipulation of the spatial visuoproprioceptive incongruence information (e.g., systematic and gradual manipulation of the rubber hand’s position relative to the real hand, equivalent to the current manipulation of visuotactile asynchrony). Nevertheless, the present study advances our theoretical understanding of the Bayesian computational principles that determine the sense of body ownership and multisensory integration of bodily signals and establishes a role for proprioceptive uncertainty in this probabilistic process.
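The difference between the combination rules of Fig. 4B and 4C can be illustrated with a short Python sketch. As simplifying assumptions, each branch of the hierarchy is summarized by a probability that the hand is one's own plus a variance standing in for that estimate's uncertainty; the function name `combine_ownership` and all numbers are hypothetical, and the prior-level interaction proposed for Fig. 4C is not modeled. With equal variances the two rules coincide; otherwise, the weighted rule lets the more reliable branch dominate.

```python
def combine_ownership(p_temporal, var_temporal, p_spatial, var_spatial, weighted=True):
    """Merge temporal (visuotactile) and spatial (visuoproprioceptive) ownership estimates.

    weighted=False: simple average, as in the Fig. 4B scheme.
    weighted=True: inverse-variance weighting, as proposed for Fig. 4C
    (the prior-level interaction of Fig. 4C is not modeled here).
    """
    if not weighted:
        return 0.5 * (p_temporal + p_spatial)
    rel_t, rel_s = 1.0 / var_temporal, 1.0 / var_spatial
    w_t = rel_t / (rel_t + rel_s)
    return w_t * p_temporal + (1.0 - w_t) * p_spatial


if __name__ == "__main__":
    # Hypothetical values: synchronous touches but a spatial offset;
    # the temporal estimate is assumed to be three times more reliable.
    args = dict(p_temporal=0.9, var_temporal=1.0, p_spatial=0.4, var_spatial=3.0)
    print("simple average (4B):", combine_ownership(**args, weighted=False))
    print("weighted average (4C):", combine_ownership(**args, weighted=True))
```

Distinguishing these rules empirically would require varying the reliability of one branch, for instance by gradually manipulating the rubber hand's position, as suggested above.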

4.4. Possible neural mechanisms of proprioceptive uncertainty affecting body ownership

What neural mechanisms could underlie the current behavioral findings? In a recent fMRI study using the current rubber hand illusion detection task (but without sensory noise manipulation), we observed that activity in the posterior parietal cortex follows the current BCI model’s predictions of body ownership (Chancel, Iriye, et al., 2022). Moreover, Fang et al. (2019) found that neuronal activity in the premotor cortex of monkeys fit a causal inference model of visuoproprioceptive integration in a version of the rubber hand illusion based on arm-pointing movements. These findings converge with many human imaging studies showing the importance of the premotor and parietal cortices in the multisensory processing of signals from one’s own body (Brozzoli, Gentile, & Ehrsson, 2012; Ehrsson et al., 2004; Gentile et al., 2011, 2013; Guterstam et al., 2019; Kavounoudias et al., 2008; Limanowski & Blankenburg, 2016; Macaluso & Driver, 2001) and with electrophysiological recordings in nonhuman primates that have identified multisensory neurons in these areas responding to visual, tactile, and proprioceptive signals (Avillac, Ben Hamed, & Duhamel, 2007; Fang et al., 2019; Graziano, Cooke, & Taylor, 2000; Graziano & Gross, 1993; Grefkes & Fink, 2005). Future studies should directly investigate the impact of proprioceptive (and visual) uncertainty on the neural signatures of body ownership in the posterior parietal cortex (Chancel, Iriye, et al., 2022) and premotor cortex (Fang et al., 2019) to better understand how spatial visuoproprioceptive information and temporal visuotactile information are processed and combined at the cerebral level when building a coherent multisensory percept of one’s own body.

4.5. Possible clinical relevance for psychiatric and neurodevelopmental disorders

Computational approaches to multisensory perception make it possible to account for individual differences within a common theoretical framework and to link these differences to specific computational processes within the Bayesian causal inference model. For example, it has been proposed that people with autism spectrum disorders may rely less on prior information in the context of multisensory processing (Pellicano & Burr, 2012), and that understanding complex multisensory behaviors requires models that can capture individual variability in the reliance on different sources of information (Paton et al., 2012). Thus, future studies could investigate how individual differences in psychopathological traits related to schizophrenia (Asai, Mao, Sugimori, & Tanno, 2011; Torregrossa & Park, 2022), eating disorders (Eshkevari et al., 2012; Preston & Ehrsson, 2014), and autism spectrum disorders (Cascio, Foss-Feig, Burnette, Heacock, & Cosby, 2012; Ide & Wada, 2016) relate to variations in different computational processes within the current Bayesian probabilistic modeling framework.

5. Conclusion

The current results suggest that proprioceptive uncertainty promotes the rubber hand illusion and support Bayesian probabilistic models of body ownership. These findings extend previous empirical work on the impact of visual uncertainty on body ownership and underscore the critical role of sensory uncertainty in bodily awareness more generally. The computational modeling of the behavioral results further advances our understanding of interactions between visuotactile integration and visuoproprioceptive integration across temporal and spatial dimensions in the multisensory perception of one’s own body. We conclude that the sense of bodily self is based on uncertainty and probabilistic principles, similar to the perception of events and objects in the external world (Cao, Summerfield, Park, Giordano, & Kayser, 2019; Dokka et al., 2019; Kayser & Shams, 2015; Körding et al., 2007; Rohe et al., 2019; Sato et al., 2007; Shams & Beierholm, 2022).

Data Availability Declaration

Data are available here: https://osf.io/qjf5h. Analysis code can be provided upon request. No part of the study procedures or analyses was pre-registered prior to the research being conducted.

Author contributions

The experiment was performed at the Neuroscience department at Karolinska Institute. MC and HHE contributed to the conception and design of the work and interpretation of results, and they wrote the article together. MC conducted data collection, data analysis, and computational modeling. Both authors approved the final version of the manuscript; they agree to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved; and all persons designated as authors qualify for authorship, and all those who qualify for authorship are listed.

Funding

This project was funded by the Swedish Research Council (#2017-03135), Göran Gustafssons Foundation and Horizon 2020 European Research Council (Advanced Grant SELF-UNITY #787386).

Open Practices Section

The study in this article earned an Open Data badge for transparent practices. The data for this study are available at: https://osf.io/qjf5h

Acknowledgments

We would like to thank Martti Mercurio for designing the robot and the mechanical vibrator system and software. We would also like to thank Linnéa Påvenius for her help during data acquisition and Prof. Anne Kavounoudias for her help in designing the mechanical vibrators used in this study.

Reviewed 23 February 2023

Action editor Ryo Kitada

References

- Abdulkarim Z., Hayatou Z., Ehrsson H.H. Sustained rubber hand illusion after the end of visuotactile stimulation with a similar time course for the reduction of subjective ownership and proprioceptive drift. Experimental Brain Research. 2021;239(12):3471–3486. doi: 10.1007/s00221-021-06211-8.

- Acerbi L., Ma W.J. Practical Bayesian optimization for model fitting with Bayesian adaptive direct search. In: Guyon I., Luxburg U.V., Bengio S., Wallach H., Fergus R., Vishwanathan S., et al., editors. Advances in neural information processing systems. Vol. 30. Curran Associates, Inc; 2017. http://papers.nips.cc/paper/6780-practical-bayesian-optimization-for-model-fitting-with-bayesian-adaptive-direct-search.pdf

- Aimola Davies A.M., White R.C., Davies M. Spatial limits on the nonvisual self-touch illusion and the visual rubber hand illusion: Subjective experience of the illusion and proprioceptive drift. Consciousness and Cognition. 2013;22(2):613–636. doi: 10.1016/j.concog.2013.03.006.

- Akaike H. Information theory and an extension of the maximum likelihood principle. In: Second international symposium on information theory. Academiai Kiado; 1973. pp. 267–281.

- Aller M., Noppeney U. To integrate or not to integrate: Temporal dynamics of hierarchical Bayesian causal inference. PLOS Biology. 2019;17(4) doi: 10.1371/journal.pbio.3000210. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Asai T., Mao Z., Sugimori E., Tanno Y. Rubber hand illusion, empathy, and schizotypal experiences in terms of self-other representations. Consciousness and Cognition: An International Journal. 2011;20:1744–1750. doi: 10.1016/j.concog.2011.02.005. [DOI] [PubMed] [Google Scholar]

- Avillac M., Ben Hamed S., Duhamel J.-R. Multisensory integration in the ventral intraparietal area of the macaque monkey. Journal of Neuroscience. 2007;27(8):1922–1932. doi: 10.1523/JNEUROSCI.2646-06.2007.

- Badde S., Navarro K.T., Landy M.S. Modality-specific attention attenuates visual-tactile integration and recalibration effects by reducing prior expectations of a common source for vision and touch. Cognition. 2020;197. doi: 10.1016/j.cognition.2019.104170.

- Blanchard C., Roll R., Roll J.-P., Kavounoudias A. Combined contribution of tactile and proprioceptive feedback to hand movement perception. Brain Research. 2011;1382:219–229. doi: 10.1016/j.brainres.2011.01.066.

- Blanke O., Slater M., Serino A. Behavioral, neural, and computational principles of bodily self-consciousness. Neuron. 2015;88(1):145–166. doi: 10.1016/j.neuron.2015.09.029.

- Bono D., Haggard P. Where is my mouth? Rapid experience-dependent plasticity of perceived mouth position in humans. European Journal of Neuroscience. 2019;50(11):3814–3830. doi: 10.1111/ejn.14508.

- Botan V., Salisbury A., Critchley H.D., Ward J. Vicarious pain is an outcome of atypical body ownership: Evidence from the rubber hand illusion and enfacement illusion. The Quarterly Journal of Experimental Psychology: QJEP. 2021;74(11):1888–1899. doi: 10.1177/17470218211024822.

- Botvinick M., Cohen J. Rubber hands “feel” touch that eyes see. Nature. 1998;391(6669):756. doi: 10.1038/35784.

- Brozzoli C., Gentile G., Ehrsson H.H. That’s near my hand! Parietal and premotor coding of hand-centered space contributes to localization and self-attribution of the hand. The Journal of Neuroscience. 2012;32(42):14573–14582. doi: 10.1523/JNEUROSCI.2660-12.2012.

- Butler A.A., Héroux M.E., Gandevia S.C. Body ownership and a new proprioceptive role for muscle spindles. Acta Physiologica (Oxford, England). 2017;220(1):19–27. doi: 10.1111/apha.12792.

- Calvin-Figuière S., Romaiguère P., Gilhodes J.C., Roll J.P. Antagonist motor responses correlate with kinesthetic illusions induced by tendon vibration. Experimental Brain Research. 1999;124(3):342–350. doi: 10.1007/s002210050631.

- Cao Y., Summerfield C., Park H., Giordano B.L., Kayser C. Causal inference in the multisensory brain. Neuron. 2019;102(5):1076–1087.e8. doi: 10.1016/j.neuron.2019.03.043.

- Cascio C.J., Foss-Feig J.H., Burnette C.P., Heacock J.L., Cosby A.A. The rubber hand illusion in children with autism spectrum disorders: Delayed influence of combined tactile and visual input on proprioception. Autism: the International Journal of Research and Practice. 2012;16(4):406–419. doi: 10.1177/1362361311430404.

- Chancel M., Blanchard C., Guerraz M., Montagnini A., Kavounoudias A. Optimal visuotactile integration for velocity discrimination of self-hand movements. Journal of Neurophysiology. 2016;116(3):1522–1535. doi: 10.1152/jn.00883.2015.

- Chancel M., Brun C., Kavounoudias A., Guerraz M. The kinaesthetic mirror illusion: How much does the mirror matter? Experimental Brain Research. 2016;234(6):1459–1468. doi: 10.1007/s00221-015-4549-5.

- Chancel M., Ehrsson H.H. Which hand is mine? Discriminating body ownership perception in a two-alternative forced-choice task. Attention, Perception & Psychophysics. 2020. doi: 10.3758/s13414-020-02107-x.

- Chancel M., Ehrsson H.H., Ma W.J. Uncertainty-based inference of a common cause for body ownership. eLife. 2022;11. doi: 10.7554/eLife.77221.

- Chancel M., Iriye H., Ehrsson H.H. Causal inference of body ownership in the posterior parietal cortex. Journal of Neuroscience. 2022. doi: 10.1523/JNEUROSCI.0656-22.2022.

- Collins K.L., Guterstam A., Cronin J., Olson J.D., Ehrsson H.H., Ojemann J.G. Ownership of an artificial limb induced by electrical brain stimulation. Proceedings of the National Academy of Sciences of the United States of America. 2017;114(1):166–171. doi: 10.1073/pnas.1616305114.

- Costantini M., Salone A., Martinotti G., Fiori F., Fotia F., Di Giannantonio M., et al. Body representations and basic symptoms in schizophrenia. Schizophrenia Research. 2020;222:267–273. doi: 10.1016/j.schres.2020.05.038.

- Crucianelli L., Ehrsson H.H. Visuo-thermal congruency modulates the sense of body ownership. Communications Biology. 2022;5(1). doi: 10.1038/s42003-022-03673-6.

- Crucianelli L., Krahé C., Jenkinson P.M., Fotopoulou A. Interoceptive ingredients of body ownership: Affective touch and cardiac awareness in the rubber hand illusion. Cortex. 2018;104:180–192. doi: 10.1016/j.cortex.2017.04.018.

- Crucianelli L., Metcalf N.K., Fotopoulou A., Jenkinson P.M. Bodily pleasure matters: Velocity of touch modulates body ownership during the rubber hand illusion. Frontiers in Psychology. 2013;4. doi: 10.3389/fpsyg.2013.00703.

- Dokka K., Park H., Jansen M., DeAngelis G.C., Angelaki D.E. Causal inference accounts for heading perception in the presence of object motion. Proceedings of the National Academy of Sciences of the United States of America. 2019;116(18):9060–9065. doi: 10.1073/pnas.1820373116.

- Ehrsson H.H. Bodily illusions. In: Alsmith A.J.T., Longo M.R., editors. The Routledge Handbook of Bodily Awareness. Routledge; New York: 2023. pp. 201–229.

- Ehrsson H.H. The concept of body ownership and its relation to multisensory integration. In: Stein B.E., editor. The New Handbook of Multisensory Processes. MIT Press; Cambridge, Massachusetts: 2012. pp. 775–792.

- Ehrsson H.H. Multisensory processes in body ownership. In: Sathian K., Ramachandran V.S., editors. Multisensory Perception: From Laboratory to Clinic. Elsevier Inc./Academic Press; San Diego: 2020. pp. 179–200.

- Ehrsson H.H., Chancel M. Premotor cortex implements causal inference in multisensory own-body perception. Proceedings of the National Academy of Sciences of the United States of America. 2019. doi: 10.1073/pnas.1914000116.

- Ehrsson H.H., Spence C., Passingham R.E. That's my hand! Activity in premotor cortex reflects feeling of ownership of a limb. Science. 2004;305(5685):875–877. doi: 10.1126/science.1097011.

- Ehrsson H.H., Holmes N.P., Passingham R.E. Touching a rubber hand: Feeling of body ownership is associated with activity in multisensory brain areas. Journal of Neuroscience. 2005;25(45):10564–10573. doi: 10.1523/JNEUROSCI.0800-05.2005.

- Ernst M.O., Banks M.S. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. 2002;415(6870):429–433. doi: 10.1038/415429a.

- Ernst M.O., Bülthoff H.H. Merging the senses into a robust percept. Trends in Cognitive Sciences. 2004;8(4):162–169. doi: 10.1016/j.tics.2004.02.002.

- Eshkevari E., Rieger E., Longo M.R., Haggard P., Treasure J. Increased plasticity of the bodily self in eating disorders. Psychological Medicine. 2012;42(4):819–828. doi: 10.1017/S0033291711002091.

- Fan C., Coppi S., Ehrsson H.H. The supernumerary rubber hand illusion revisited: Perceived duplication of limbs and visuotactile events. Journal of Experimental Psychology: Human Perception and Performance. 2021;47(6):810–829. doi: 10.1037/xhp0000904.

- Fang W., Li J., Qi G., Li S., Sigman M., Wang L. Statistical inference of body representation in the macaque brain. Proceedings of the National Academy of Sciences of the United States of America. 2019;116(40):20151–20157. doi: 10.1073/pnas.1902334116.

- Fetsch C.R., DeAngelis G.C., Angelaki D.E. Bridging the gap between theories of sensory cue integration and the physiology of multisensory neurons. Nature Reviews Neuroscience. 2013;14(6):429–442. doi: 10.1038/nrn3503.

- Gallagher M., Colzi C., Sedda A. Dissociation of proprioceptive drift and feelings of ownership in the somatic rubber hand illusion. Acta Psychologica. 2020;212. doi: 10.1016/j.actpsy.2020.103192.

- Gentile G., Guterstam A., Brozzoli C., Ehrsson H.H. Disintegration of multisensory signals from the real hand reduces default limb self-attribution: An fMRI study. The Journal of Neuroscience. 2013;33(33):13350–13366. doi: 10.1523/JNEUROSCI.1363-13.2013.

- Gentile G., Petkova V.I., Ehrsson H.H. Integration of visual and tactile signals from the hand in the human brain: An fMRI study. Journal of Neurophysiology. 2011;105(2):910–922. doi: 10.1152/jn.00840.2010.

- Gilhodes J.C., Roll J.P., Tardy-Gervet M.F. Perceptual and motor effects of agonist-antagonist muscle vibration in man. Experimental Brain Research. 1986;61(2):395–402. doi: 10.1007/BF00239528.

- Goodwin G.M., McCloskey D.I., Matthews P.B. Proprioceptive illusions induced by muscle vibration: Contribution by muscle spindles to perception? Science. 1972;175(4028):1382–1384. doi: 10.1126/science.175.4028.1382.

- Gori M., Mazzilli G., Sandini G., Burr D. Cross-sensory facilitation reveals neural interactions between visual and tactile motion in humans. Frontiers in Psychology. 2011;2. doi: 10.3389/fpsyg.2011.00055.

- Graziano M.S., Cooke D.F., Taylor C.S. Coding the location of the arm by sight. Science. 2000;290(5497):1782–1786. doi: 10.1126/science.290.5497.1782.

- Graziano M.S.A., Gross C.G. A bimodal map of space: Somatosensory receptive fields in the macaque putamen with corresponding visual receptive fields. Experimental Brain Research. 1993;97(1). doi: 10.1007/BF00228820.

- Grefkes C., Fink G.R. The functional organization of the intraparietal sulcus in humans and monkeys. Journal of Anatomy. 2005;207(1):3–17. doi: 10.1111/j.1469-7580.2005.00426.x.

- Guterstam A., Collins K.L., Cronin J.A., Zeberg H., Darvas F., Weaver K.E., et al. Direct electrophysiological correlates of body ownership in human cerebral cortex. Cerebral Cortex. 2019;29(3):1328–1341. doi: 10.1093/cercor/bhy285.

- Guterstam A., Petkova V.I., Ehrsson H.H. The illusion of owning a third arm. PLOS One. 2011;6(2). doi: 10.1371/journal.pone.0017208.

- Héroux M.E., Walsh L.D., Butler A.A., Gandevia S.C. Is this my finger? Proprioceptive illusions of body ownership and representation. The Journal of Physiology. 2013;591(Pt 22):5661–5670. doi: 10.1113/jphysiol.2013.261461.

- Ide M. The effect of “anatomical plausibility” of hand angle on the rubber-hand illusion. Perception. 2013;42(1):103–111. doi: 10.1068/p7322.

- Ide M., Wada M. Periodic visuotactile stimulation slowly enhances the rubber hand illusion in individuals with high autistic traits. Frontiers in Integrative Neuroscience. 2016;10. doi: 10.3389/fnint.2016.00021.

- Kalckert A., Ehrsson H.H. Moving a rubber hand that feels like your own: A dissociation of ownership and agency. Frontiers in Human Neuroscience. 2012;6. doi: 10.3389/fnhum.2012.00040.

- Kalckert A., Ehrsson H.H. The spatial distance rule in the moving and classical rubber hand illusions. Consciousness and Cognition. 2014;30:118–132. doi: 10.1016/j.concog.2014.08.022.

- Kalckert A., Perera A.T.-M., Ganesan Y., Tan E. Rubber hands in space: The role of distance and relative position in the rubber hand illusion. Experimental Brain Research. 2019;237(7):1821–1832. doi: 10.1007/s00221-019-05539-6.

- Kavounoudias A., Roll J.P., Anton J.L., Nazarian B., Roth M., Roll R. Proprio-tactile integration for kinesthetic perception: An fMRI study. Neuropsychologia. 2008;46(2):567–575. doi: 10.1016/j.neuropsychologia.2007.10.002.

- Kayser C., Shams L. Multisensory causal inference in the brain. PLOS Biology. 2015;13(2). doi: 10.1371/journal.pbio.1002075.

- Kilteni K., Groten R., Slater M. The sense of embodiment in virtual reality. Presence: Teleoperators and Virtual Environments. 2012;21(4):373–387. doi: 10.1162/PRES_a_00124.

- Kilteni K., Maselli A., Kording K.P., Slater M. Over my fake body: Body ownership illusions for studying the multisensory basis of own-body perception. Frontiers in Human Neuroscience. 2015;9:141. doi: 10.3389/fnhum.2015.00141.

- Körding K.P., Beierholm U., Ma W.J., Quartz S., Tenenbaum J.B., Shams L. Causal inference in multisensory perception. PLOS One. 2007;2(9):e943. doi: 10.1371/journal.pone.0000943.

- Limanowski J., Blankenburg F. Integration of visual and proprioceptive limb position information in human posterior parietal, premotor, and extrastriate cortex. The Journal of Neuroscience. 2016;36(9):2582–2589. doi: 10.1523/JNEUROSCI.3987-15.2016.

- Litwin P. Extending Bayesian models of the rubber hand illusion. Multisensory Research. 2020;33(2):127–160. doi: 10.1163/22134808-20191440.

- Litwin P., Zybura B., Motyka P. Tactile information counteracts the attenuation of rubber hand illusion attributable to increased visuo-proprioceptive divergence. PLOS One. 2020;15(12). doi: 10.1371/journal.pone.0244594.