Abstract

Background Medical data can be difficult for patients to comprehend, but only a limited number of patient-friendly terms and definitions are available to clarify medical concepts. Therefore, we developed an algorithm that generalizes diagnoses to broader concepts that do have patient-friendly terms and definitions in SNOMED CT. We implemented these generalizations, together with diagnosis clarifications based on already available synonyms and definitions, in the problem list of a hospital patient portal.

Objective We aimed to assess the extent to which the clarifications cover the diagnoses in the problem list, the extent to which clarifications are used and appreciated by patient portal users, and to explore differences in viewing problems and clarifications between subgroups of users and diagnoses.

Methods We measured the coverage of diagnoses by clarifications, usage of the problem list and the clarifications, and user, patient and diagnosis characteristics with aggregated, routinely available electronic health record and log file data. Additionally, patient portal users provided quantitative and qualitative feedback about the clarification quality.

Results Of all patient portal users who viewed diagnoses on their problem list ( n = 2,660), 89% had one or more diagnoses with clarifications. Of the users for whom clarifications were available, 55% viewed them. Users who rated the clarifications ( n = 108) considered them to be of good quality on average, with a median rating per patient of 6 (interquartile range: 4–7; from 1, very bad, to 7, very good). Users commented that they found the clarifications clear and recognized them from their own experience, but sometimes also found them incomplete or disagreed with the diagnosis itself.

Conclusion This study shows that the clarifications are used and appreciated by patient portal users. Further research and development will be dedicated to the maintenance and further quality improvement of the clarifications.

Keywords: diagnoses, electronic health records, patient portals, patient-friendly terms, SNOMED CT

Background and Significance

Medical data can be difficult for patients to comprehend; nonetheless, patients increasingly access their electronic health records (EHRs) through patient portals and personal health records. 1 2 3 Many patients prefer patient-friendly, lay language and may require simpler synonyms, definitions, and explanations to clarify medical terminology. 2 3 4 5 6 Previous research has shown that clarifications increase the comprehension of deidentified notes. 7 8 9 10 However, these studies were not performed with patients' own EHRs, did not report the coverage of their tools in clinical practice (i.e., how many difficult terms their functionality covered), and did not assess how much the clarification functionality was used in clinical practice, which is important for determining whether it fulfils patients' information needs. In an earlier study, we found that patients appreciate clarifications of their personal EHR notes, but that the functionality clarified only a few difficult terms, owing to the limited coverage of the terminology used and issues with free-text annotation. 11 Ideally, data are encoded with medical terminology systems, so that no ambiguity arises in identifying the medical concepts represented by the terms in a text ( Supplementary Appendix 1 , available in the online version).

Fortunately, the SNOMED CT Netherlands edition (Nictiz, The Hague) contains the patient-friendly Dutch language reference set (PFRS), which "states which descriptions are appropriate to show to patients, caregivers and other stakeholders who have not received care-related training." This provides an opportunity to clarify medical data for patients. In the Netherlands, SNOMED CT is not used for all types of medical data, but diagnoses in particular are registered with the interface terminology Diagnosethesaurus (DT; DHD, Utrecht, Netherlands). DT diagnoses are mapped to SNOMED CT clinical findings and disorders, through which PFRS descriptions can be obtained. For instance, "phlebitis" in Table 1 can be clarified with a synonym and a definition from the PFRS. However, the coverage of DT diagnoses by PFRS descriptions was low: 1.2% in 2018. 12 Therefore, we developed an algorithm that employs the SNOMED CT hierarchy to generalize diagnoses to one or more supertype concepts that do have patient-friendly terms and definitions in the PFRS. 12 13 For instance, "pulmonic valve regurgitation" in Table 1 can be generalized to the concept heart valve regurgitation, so that the PFRS synonym "leaky heart valve" and the PFRS definition can be provided as a clarification. We showed that this algorithm significantly increases the coverage of diagnoses by clarifications, to 71%. 12 The algorithm is especially relevant for languages such as Dutch, which have limited resources for medical terminology and language processing, 14 because only a few patient-friendly terms are needed to clarify a large number of medical concepts. 12 Two raters with a medical and terminological background validated these generalizations and considered more than 80% of the clarifications correct and acceptable for use in clinical practice. 15 However, the clarifications had not yet been evaluated by actual patient portal users in a real-life setting.
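The generalization step can be illustrated with a small sketch. It assumes a toy is-a hierarchy and a toy set of patient-friendly terms (the names `IS_A`, `PATIENT_FRIENDLY`, `ancestors`, and `generalize` are hypothetical), and one plausible search rule: climb each branch breadth-first, stop at the first supertype that has a patient-friendly term, and keep only the most specific hits. It is not the algorithm described in references 12 and 13, whose exact rules may differ.

```python
from collections import deque

# Hypothetical is-a edges (child -> list of parents). A toy excerpt for
# illustration only, not actual SNOMED CT content.
IS_A = {
    "pulmonic valve regurgitation": ["heart valve regurgitation", "disorder of pulmonary valve"],
    "disorder of pulmonary valve": ["heart valve disorder"],
    "heart valve regurgitation": ["heart valve disorder"],
    "heart valve disorder": ["heart disease"],
    "heart disease": [],
}

# Hypothetical stand-ins for PFRS patient-friendly synonyms.
PATIENT_FRIENDLY = {
    "heart valve regurgitation": "leaky heart valve",
    "heart disease": "heart disease",
}


def ancestors(concept, is_a):
    """Return all transitive supertypes of a concept."""
    found, stack = set(), list(is_a.get(concept, []))
    while stack:
        parent = stack.pop()
        if parent not in found:
            found.add(parent)
            stack.extend(is_a.get(parent, []))
    return found


def generalize(concept, is_a, friendly):
    """Return the most specific supertypes that have a patient-friendly term.

    A concept with its own term is returned as is. Otherwise the hierarchy is
    searched breadth-first; a branch stops at its first clarified supertype,
    and hits that are supertypes of other hits are dropped afterwards.
    """
    if concept in friendly:
        return [concept]
    hits, seen = set(), set()
    queue = deque(is_a.get(concept, []))
    while queue:
        node = queue.popleft()
        if node in seen:
            continue
        seen.add(node)
        if node in friendly:
            hits.add(node)  # stop climbing this branch
        else:
            queue.extend(is_a.get(node, []))
    redundant = set()
    for hit in hits:
        redundant |= ancestors(hit, is_a)
    return sorted(hits - redundant)


for supertype in generalize("pulmonic valve regurgitation", IS_A, PATIENT_FRIENDLY):
    print(f"A type of {PATIENT_FRIENDLY[supertype]}.")
```

Here "heart disease" is also reached via the pulmonary valve branch, but it is discarded because it is a supertype of the more specific hit "heart valve regurgitation".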

Table 1. Examples of diagnoses registered in Dutch problem lists of medical records and their corresponding clarifications that can be displayed after clicking on the diagnosis description or info button.

| Medical diagnosis description | Clarification |
|---|---|
| Phlebitis | Another word for "phlebitis" is vein inflammation: inflammation of a vein, which makes it red, swollen, and painful. |
| Pulmonic valve regurgitation | A type of leaky heart valve. Leaky heart valve: this is a heart valve that closes poorly so that oxygen-rich blood no longer flows properly through the body. This causes complaints such as shortness of breath, fatigue after exertion, and dizziness. |
| Congenital cyst of adrenal gland | A type of inborn abnormality and hormonal disorder. Cyst: cavities in the body filled with liquid. |
| Lowe syndrome | A type of inborn abnormality, mental disorder, and disorder of brain, kidney, eye, and metabolism. It is hereditary. |

Objective

The current study aims to evaluate the implementation of these diagnosis clarifications in a patient portal problem list, which contains diagnoses, complications, and attention notes. First, we aimed to evaluate to what extent the clarification functionality met patient portal users' information needs, by assessing the coverage of their diagnoses by clarifications and by analyzing to what extent users actually used the clarification functionality when viewing their problem list. Second, we aimed to evaluate the quality of the clarifications from the users' perspective and to explore differences in user, patient, and diagnosis characteristics between users who viewed the problem list and those who used the clarification functionality.

Study Context

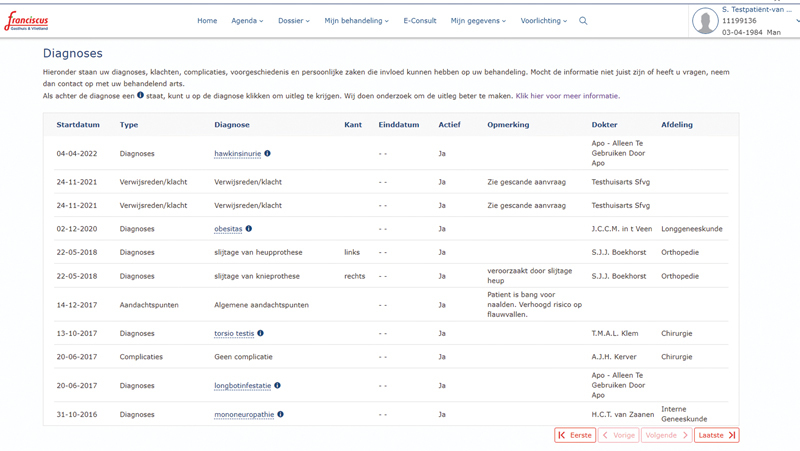

The study was performed at the teaching hospital Franciscus Gasthuis & Vlietland (Franciscus). 16 The hospital used the health information system HiX and its patient portal (version 6.2; ChipSoft B.V., Amsterdam, Netherlands). Patients, or their authorized proxies, use the patient portal, for instance, to view their medical data, schedule appointments, securely message their health care provider, and complete questionnaires. Proxy users can be anyone authorized by the hospital or the patients (depending on their age), such as informal caregivers, case managers, or the parents of a child. The diagnosis clarifications were implemented in the problem list as illustrated in Fig. 1 . The description of the diagnosis was highlighted, underlined, and provided with an info icon if a clarification was available. When clicked, the diagnosis description and a clarification of the diagnosis were displayed. Figs. 2 and 3 illustrate the clarifications and functionality to provide feedback. Details on the terminology implemented in the system are provided in Supplementary Appendix 1 (available in the online version).

Fig. 1.

Problem list with diagnoses, complications, and attention notes. Diagnoses with clarifications have info buttons: the diagnosis is highlighted, underlined, and followed by an information icon. Users can click on the info button to view a pop-up with a diagnosis clarification. (All data presented in Fig. 1 are fictitious.)

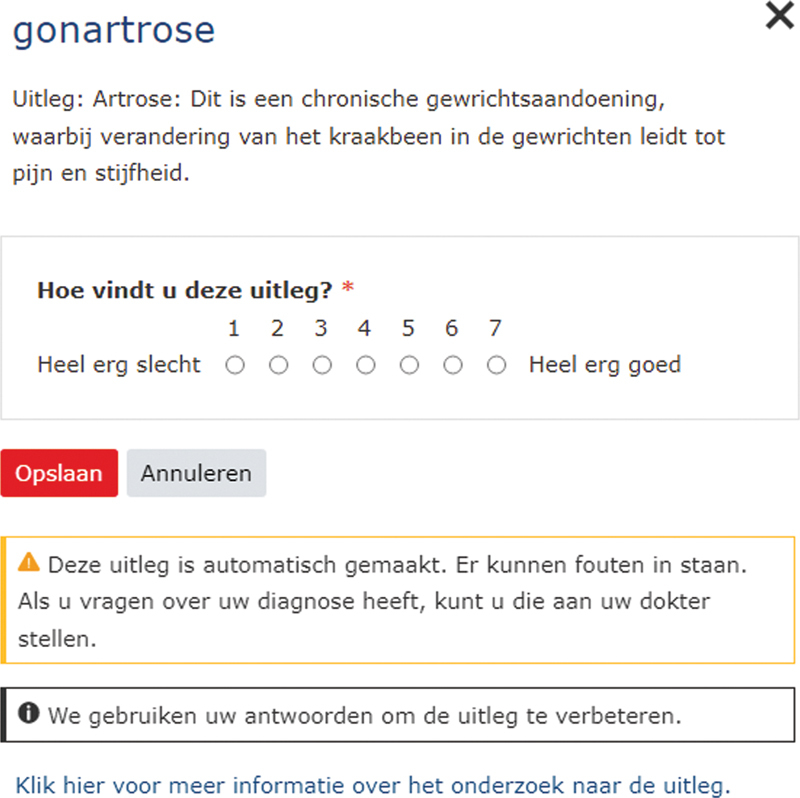

Fig. 2.

Example of diagnosis clarification for the diagnosis “Osteoarthritis of knee.” This clarification defines the supertype osteoarthritis. Users can provide a rating of the clarification from (1) very bad to (7) very good. Below, a warning is provided that the clarification was generated automatically, and questions can be addressed to the clinician. Additionally, information is provided that the feedback is used to improve the clarifications and a link is provided to gain further information about the research.

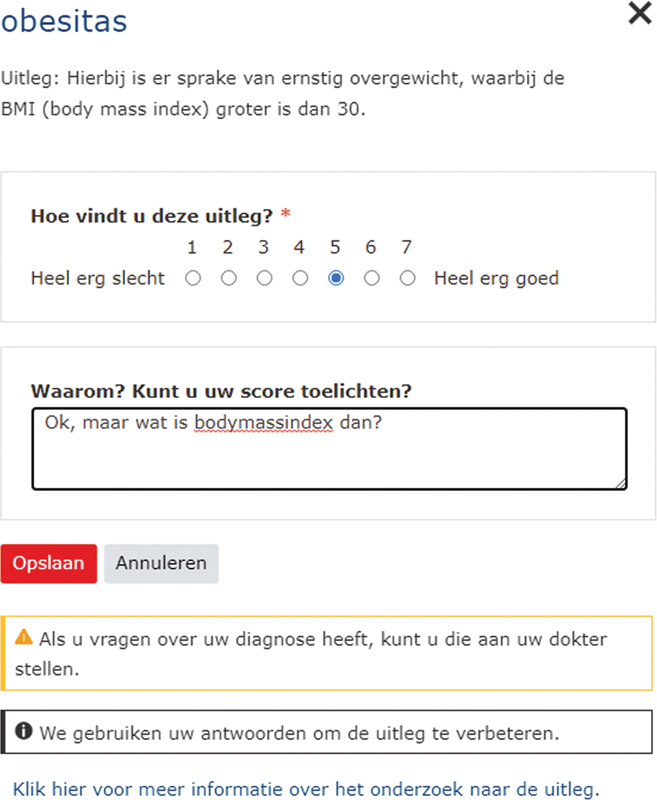

Fig. 3.

Example of diagnosis clarification for the diagnosis "Obesity." This clarification consists of a definition from the Dutch SNOMED CT patient-friendly extension. Users can explain their rating in free text.

Methods

Study Design

We performed a prospective postimplementation evaluation study with quality improvement feedback and the reuse of routinely collected data.

Participants

All patient portal users were included during the 9-week study period, from Monday, April 4 to Monday, June 6, 2022. We analyzed usage data on logins into the patient portal, problem list views, diagnoses displayed on the problem list, the number of diagnoses with clarifications, and the info buttons that were clicked on. Users could log in, view the problem list, display clarifications, and provide feedback multiple times. Each of these steps therefore yielded a convenience sample of the users who reached it. We refer to these steps as "conversion steps," and to the percentage of users who proceed from one step (e.g., logging in) to the next (e.g., viewing the problem list) as the conversion rate.

Conversion steps:

1. Log in to the patient portal.
2. View the problem list.
3. Click on the info button to view the clarification.
4. Provide feedback on the clarification.

Outcome Measures

The coverage of diagnoses by clarifications was measured as the diagnosis clarification token coverage: 17 the number of diagnoses with a clarification divided by the total number of diagnoses viewed on the problem list. The use of the clarification functionality was measured as the info button click conversion: the number of info buttons clicked on divided by the total number of info buttons viewed. For each conversion step from login to rating the clarifications, we reported the percentage of users who converted to that step, the number of actions (i.e., logins, views, clicks, ratings) they performed, and the number of unique problems, diagnoses, and info buttons on which the actions were performed. We aggregated user, patient, and diagnosis characteristics for each step to compare the conversion rates between subgroups. User characteristics were the user type (patient or proxy account) and the age group, gender, latest diagnosis year, and number of diagnoses of the patient for whom the user used the patient portal. Diagnosis characteristics were the DT concept, clarification type, and medical specialty.
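The two measures above are simple ratios. The sketch below computes them pooled over all diagnoses and per patient (median and IQR), using hypothetical counts for four patients rather than study data; the variable names are illustrative, and `statistics.quantiles` with `method="inclusive"` is assumed to match the linear-interpolation quartiles of R's default `quantile()` type.

```python
import statistics

# Hypothetical per-patient counts, not study data:
# (diagnoses viewed, diagnoses with an info button, info buttons clicked)
patients = {
    "p1": (2, 2, 1),
    "p2": (4, 3, 3),
    "p3": (1, 1, 0),
    "p4": (3, 2, 2),
}

viewed = sum(v for v, _, _ in patients.values())
with_button = sum(b for _, b, _ in patients.values())
clicked = sum(c for _, _, c in patients.values())

# Pooled measures over all diagnoses and info buttons.
token_coverage = with_button / viewed      # diagnoses with clarification / diagnoses viewed
click_conversion = clicked / with_button   # info buttons clicked / info buttons viewed

# Per-patient measures; click conversion is only defined when a button was shown.
coverage_per_patient = [b / v for v, b, _ in patients.values() if v > 0]
clicks_per_patient = [c / b for _, b, c in patients.values() if b > 0]


def median_iqr(values):
    """Median and quartiles with linear interpolation ('inclusive' method)."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return median, q1, q3


print(f"token coverage {token_coverage:.1%}, click conversion {click_conversion:.1%}")
print("coverage per patient: median %.2f (IQR %.2f-%.2f)" % median_iqr(coverage_per_patient))
```

Pooled and per-patient values can differ noticeably, which is why both are reported: patients with many diagnoses weigh more heavily in the pooled ratio.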

Data Acquisition

We prospectively reused EHR and audit trail data to derive which diagnoses were viewed by patients, by which account types, and for which diagnoses users clicked on the info button. We retrospectively reused diagnoses, age, and gender already registered in the EHR to explore differences in user, patient, and diagnosis characteristics. The feedback questions asked were simple and minimally invasive: (1) Please rate this explanation (1: very bad; 7: very good)? (2) Why? Can you explain your score? The questionnaire functionality of the EHR was used for this purpose. The feedback was monitored by the hospital staff, to assess whether it contained questions or if any issues arose that needed to be addressed.

Aggregated data on all patient portal users during the study period were exported from the EHR. To protect the privacy of the patients, variables such as gender and age were aggregated in separate tables so they could not be combined. Free text from the feedback provided was anonymized by an authorized hospital functionary. Anonymization was performed by removing directly identifying data, such as dates, places, and names of patients, clinicians, or others. EHR, audit trail, and free-text data were made available by the hospital without any directly identifying personal information.
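Keeping each variable in its own marginal table, as described above, means that no cross-tabulation (e.g., gender by age group) is ever produced. A minimal sketch of that aggregation, with hypothetical rows and a hypothetical `marginal_tables` helper:

```python
from collections import Counter

# Hypothetical user-level rows; only the aggregated tables would leave the hospital.
rows = [
    {"gender": "female", "age_group": "30-39"},
    {"gender": "male", "age_group": "60-69"},
    {"gender": "female", "age_group": "60-69"},
]


def marginal_tables(rows, variables):
    """Count each variable separately; no joint (cross-tabulated) counts are kept."""
    return {var: Counter(row[var] for row in rows) for var in variables}


tables = marginal_tables(rows, ["gender", "age_group"])
print(tables["gender"])
print(tables["age_group"])
```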

Statistical Analysis

Conversion rates were calculated and aggregated at the levels of the different outcome measures. For the number of actions, we reported the interquartile ranges (IQRs) and the maximum number of actions per user. We calculated the diagnosis clarification token coverage and the info button click conversion rate in total, and also per patient, reporting the median and IQR across patients. For the clarification quality ratings, we used the median and IQR of the median rating per patient and per clarification. The difference in ratings between clarifications with patient-friendly synonyms and definitions and clarifications with generalizations to concepts with patient-friendly synonyms and definitions was tested with the Mann–Whitney U test. 18 Two authors (H.J.T.v.M. and G.E.G.H.) analyzed the comments thematically and summarized them narratively; any differences were discussed until consensus was reached. Differences in the proportions of problem list views and info button clicks between subgroups defined by user, patient, and diagnosis characteristics were tested with the Fisher exact test. 19 The p -values were corrected for the false discovery rate with the Benjamini–Yekutieli method. 20 Odds ratios (ORs) were calculated post hoc for each variable to estimate the associations between the characteristics and the views and clicks, comparing the odds for a particular category (e.g., male patients) with those for a reference group (e.g., female patients). We took the largest group as the reference group. Data were analyzed using the R programming language (R Foundation for Statistical Computing, Vienna, Austria; Version: 4.2.1, 2022–06–23) in RStudio (RStudio Inc., Boston, Massachusetts, United States; Version: 2022.07.1). See the R script in Supplementary Appendix 2 (available in the online version).
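For readers unfamiliar with the Benjamini–Yekutieli correction, the sketch below implements the adjustment rule used by R's `p.adjust(method = "BY")`: each sorted p-value is multiplied by m·c(m)/rank, with c(m) = Σ 1/k, and a running minimum is taken from the largest p-value down, capped at 1. The input p-values are hypothetical and the function name is illustrative; this is not the study's R script.

```python
def benjamini_yekutieli(pvalues):
    """Return Benjamini-Yekutieli adjusted p-values in the input order.

    Mirrors the rule of R's p.adjust(method = "BY"): p_(i) * m * c(m) / i,
    with c(m) = sum_{k=1..m} 1/k, followed by a cumulative minimum taken
    from the largest p-value downwards and a cap at 1.
    """
    m = len(pvalues)
    c_m = sum(1.0 / k for k in range(1, m + 1))
    order = sorted(range(m), key=lambda i: pvalues[i])  # indices, ascending p
    adjusted = [0.0] * m
    running_min = 1.0  # starting at 1 also enforces the cap
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, pvalues[idx] * m * c_m / rank)
        adjusted[idx] = running_min
    return adjusted


# Hypothetical raw p-values from four Fisher exact tests.
print(benjamini_yekutieli([0.01, 0.04, 0.03, 0.20]))
```

The extra factor c(m) makes this correction more conservative than Benjamini–Hochberg, which is consistent with the concern in the Discussion that some associations may have been discarded unnecessarily.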

Results

Study Population

In total, users logged in at least once for 19,961 patients during the 9-week study period. Users logged in for patients of all age groups; the largest group consisted of patients in their 30s (18.1%), followed by patients in their 60s (17.4%) and 50s (16.5%). Relatively more logins were for women (61.8%), and few users logged in with proxy accounts (0.2%). Table 2 shows the user characteristics for each step. Supplementary Appendix 3 (available in the online version) contains the complete results dataset.

Table 2. Number of patients for whom users logged in, by patient characteristic and user account type, and the number for whom users viewed the problem list, viewed info buttons on the problem list, clicked on info buttons on the problem list, and provided feedback. Percentages are of the total number of patients in each column.

| Characteristic | Value | Logged in, n (%) | Viewed problem list, n (%) | Viewed info buttons on list, n (%) | Clicked on info buttons, n (%) | Provided feedback, n (%) |
|---|---|---|---|---|---|---|
| Age | 00–09 | 373 (1.9) | 130 (2.0) | 54 (2.3) | 32 (2.5) | 2 (2) |
| | 10–19 | 492 (2.5) | 166 (2.5) | 58 (2.5) | 33 (2.6) | 0 (0) |
| | 20–29 | 2,280 (11.4) | 764 (11.7) | 181 (7.7) | 95 (7.4) | 6 (6) |
| | 30–39 | 3,612 (18.1) | 1,085 (16.6) | 265 (11.2) | 138 (10.7) | 7 (6) |
| | 40–49 | 2,807 (14.1) | 929 (14.2) | 333 (14.1) | 191 (14.8) | 17 (15) |
| | 50–59 | 3,284 (16.5) | 1,110 (17.0) | 442 (18.7) | 259 (20.1) | 18 (16) |
| | 60–69 | 3,478 (17.4) | 1,193 (18.3) | 487 (20.6) | 264 (20.5) | 28 (25) |
| | 70–79 | 2,861 (14.3) | 923 (14.1) | 417 (17.7) | 218 (16.9) | 25 (23) |
| | 80–89 | 711 (3.6) | 211 (3.2) | 114 (4.8) | 56 (4.3) | 5 (5) |
| | 90– | 63 (0.3) | 19 (0.3) | 12 (0.5) | 5 (0.4) | 0 (0) |
| Age subgroup | 00 | 17 (0.1) | 7 (0.1) | 2 (0.1) | 1 (0.1) | 0 (0) |
| | 01–09 | 356 (1.8) | 123 (1.9) | 52 (2.2) | 31 (2.4) | 2 (2) |
| | 10–11 | 54 (0.3) | 14 (0.2) | 3 (0.1) | 1 (0.1) | 0 (0) |
| | 12–15 | 131 (0.7) | 51 (0.8) | 24 (1.0) | 15 (1.2) | 0 (0) |
| | 16–17 | 94 (0.5) | 33 (0.5) | 13 (0.6) | 6 (0.5) | 0 (0) |
| | 18–19 | 213 (1.1) | 68 (1.1) | 18 (0.8) | 11 (0.9) | 0 (0) |
| Gender | Male | 7,629 (38.2) | 2,375 (36.4) | 856 (36.2) | 471 (36.5) | 46 (43) |
| | Female | 12,332 (61.8) | 4,155 (63.6) | 1,507 (63.8) | 820 (63.5) | 62 (57) |
| Account | Proxy | 42 (0.2) | 12 (0.2) | 7 (0.3) | 2 (0.2) | 0 (0) |
| | Patient | 19,923 (99.8) | 6,519 (99.8) | 2,357 (99.8) | 1,289 (99.9) | 108 (100) |
| Total | | 19,961 (100.0) | 6,530 (100.0) | 2,363 (100.0) | 1,291 (100.0) | 108 (100) |

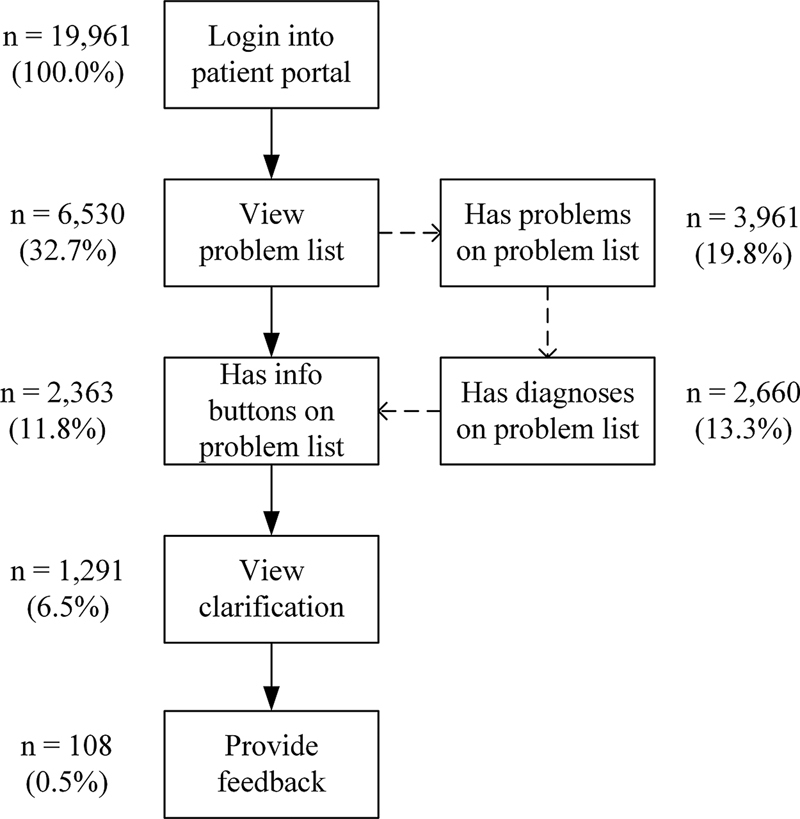

Problem List and Clarification Views

Fig. 4 and Table 3 show the conversion rates and the number of actions performed at each step, providing detailed insight into usage patterns. The problem list was viewed for 6,530 patients (32.7% of the patients for whom users had logged in), DT-encoded diagnoses on the problem list were viewed for 2,660 patients (13.3%), and info buttons on which users could have clicked were viewed for 2,363 patients (11.8%). Hence, for 88.8% (2,363/2,660) of the patients whose DT-encoded diagnoses were viewed, at least one info button was available to view a clarification. When info buttons were available, the problem list contained a median of 1 info button (IQR: 1–2; maximum: 10). The diagnosis clarification token coverage was 81.7% (4,069/4,977), and the median coverage per patient was 100% (IQR: 75–100%). Users clicked on one or more info buttons for the diagnoses of 1,291 patients, which is 54.6% of the patients for whom info buttons were viewed and 6.5% of the patients for whom users had logged in. Per patient, users clicked a median of two times (IQR: 1–3; maximum: 31) on a median of one info button (IQR: 1–1; maximum: 8). The info button click conversion rate over all info buttons viewed was 43.5% (1,770/4,069), with a median click conversion of 50% (IQR: 0–100%) per patient. Of the patients for whom an info button was clicked, a rating was provided for 108 (8.4%), which is 0.5% of the patients for whom users had logged in.

Fig. 4.

The number and percentage (conversion rates) of patients for whom users went through the conversion steps. Not all patients had problems, diagnoses, or info buttons on their problem list, which is illustrated with the dashed arrows.
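The conversion rates reported above follow directly from the patient counts per step. This sketch recomputes them from the counts in Table 3, both relative to all patients for whom users logged in and relative to the preceding step; the function name is illustrative.

```python
# Patients per conversion step, as reported in Table 3.
STEPS = [
    ("Logged in", 19_961),
    ("Viewed problem list", 6_530),
    ("Viewed problems", 3_961),
    ("Viewed diagnoses", 2_660),
    ("Viewed info buttons", 2_363),
    ("Clicked on info buttons", 1_291),
    ("Rated clarifications", 108),
]


def conversion_rates(steps):
    """Return (step, n, % of logged-in patients, % of the previous step)."""
    base = steps[0][1]
    previous = base
    rates = []
    for name, n in steps:
        rates.append((name, n, 100 * n / base, 100 * n / previous))
        previous = n
    return rates


for name, n, overall, step in conversion_rates(STEPS):
    print(f"{name}: {n:,} patients ({overall:.1f}% overall, {step:.1f}% of previous step)")
```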

Table 3. Number and percentage of patients for whom each conversion step was performed (logging in, viewing the problem list, viewing problems, viewing diagnoses, viewing info buttons, clicking on an info button, and rating a clarification), relative to all patients for whom users logged in. For each step, the table also shows the total number of actions, the minimum, first quartile (25%), median, third quartile (75%), and maximum number of actions per patient, and the average number of actions per patient. Rows labeled Problems or Diagnoses count the distinct problems or diagnoses on which the actions were performed (e.g., 133 ratings were provided for 127 diagnoses).

| Step | Patients, n | Patients, % | Level | Total | Min | 25% | Median | 75% | Max | Average |
|---|---|---|---|---|---|---|---|---|---|---|
| Logged in | 19,961 | 100.0 | Logins | 69,112 | 1 | 1 | 2 | 3 | 260 | 3.5 |
| Viewed problem list | 6,530 | 32.7 | Views | 17,414 | 1 | 1 | 2 | 3 | 63 | 2.7 |
| Viewed problems | 3,961 | 19.8 | Views | 34,539 | 1 | 2 | 4 | 9 | 474 | 8.7 |
| | | | Problems | 11,145 | 1 | 1 | 2 | 4 | 20 | 2.8 |
| Viewed diagnoses | 2,660 | 13.3 | Views | 16,012 | 1 | 2 | 3 | 6 | 197 | 6.0 |
| | | | Diagnoses | 4,977 | 1 | 1 | 1 | 2 | 16 | 1.9 |
| Viewed info buttons | 2,363 | 11.8 | Views | 13,235 | 1 | 1 | 3 | 6 | 165 | 5.6 |
| | | | Diagnoses | 4,069 | 1 | 1 | 1 | 2 | 10 | 1.7 |
| Clicked on info buttons | 1,291 | 6.5 | Clicks | 2,979 | 1 | 1 | 2 | 3 | 31 | 2.3 |
| | | | Diagnoses | 1,770 | 1 | 1 | 1 | 1 | 8 | 1.4 |
| Rated clarifications | 108 | 0.5 | Ratings | 133 | 1 | 1 | 1 | 1 | 4 | 1.2 |
| | | | Diagnoses | 127 | 1 | 1 | 1 | 1 | 4 | 1.2 |

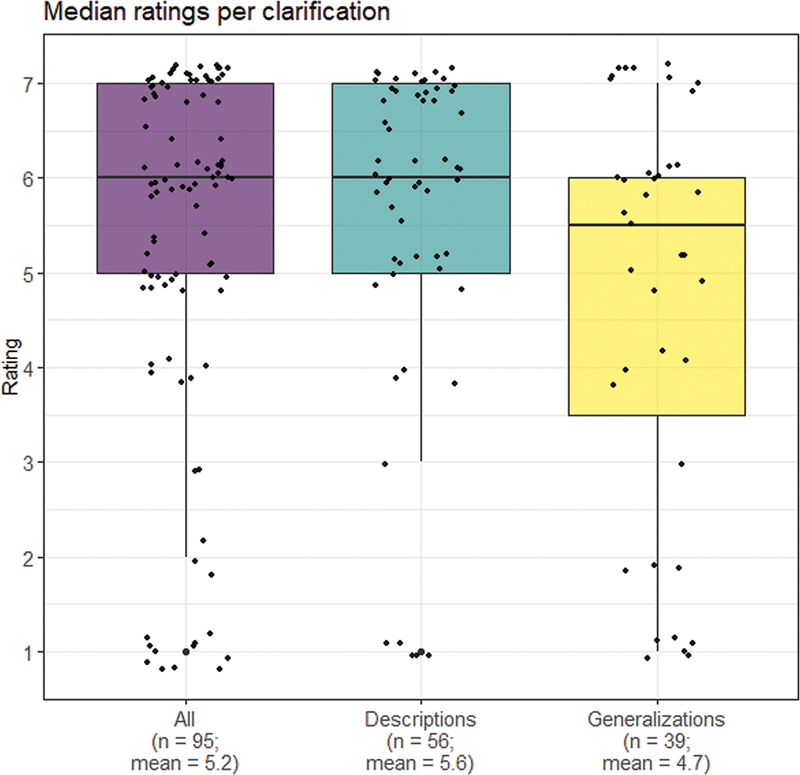

Clarification Quality Ratings

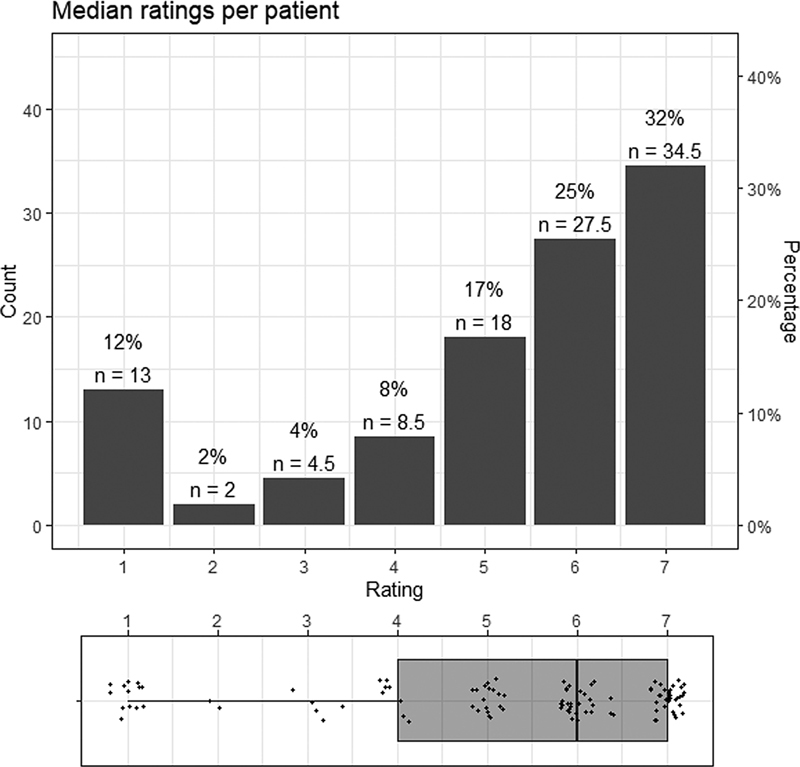

A total of 108 users rated the quality of the clarifications for 127 diagnoses (103 unique diagnoses with 95 unique clarifications). The median rating per patient was 6 (IQR: 4–7; Fig. 5 ). Clarifications with synonyms and definitions of the diagnosis itself were rated higher than clarifications with generalizations to supertypes (median: 6 vs. 5.5; p = 0.0379; Fig. 6 ). Users commented on 66 of the 127 diagnoses (52%). We identified 16 themes in the comments; the most common were that users found the clarification clear ( n = 25; 38%) or incomplete ( n = 10; 15%), provided input for improvement ( n = 10; 15%), found the clarification unclear ( n = 5; 8%), or disagreed with the diagnosis rather than the clarification ( n = 4; 6%). Additionally, some users ( n ≤ 3; ≤5%) commented that they recognized the clarification from their own experience, found the clarification right or useful, asked for a solution for their health problem, disagreed with the treatment, clarification, and/or diagnosis (sometimes without a clear distinction), or mentioned alternative sources of clarification.

Fig. 5.

Bar plot and box plot of the median ratings per patient. For each rating from (1) very bad to (7) very good, the bar plot shows the number of patients ( n ) with that median rating and the corresponding percentage. The box plot below the bar plot shows the median (median: 6), interquartile range (IQR: 4–7), and jittered scatter of the ratings.

Fig. 6.

Box plots of ratings from (1) very bad to (7) very good for all clarifications (“All,” left), clarifications with patient-friendly synonyms and definitions (“Descriptions,” center), and clarifications with generalization to supertypes with patient-friendly synonyms and definitions (“Generalizations,” right).
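The Mann–Whitney U comparison can be sketched in a few lines. The version below uses average ranks for ties and a two-sided normal approximation with tie and continuity correction, which is, to our understanding, what R's `wilcox.test` falls back to when ties are present. The ratings are hypothetical, not the study data, the function names are illustrative, and an exact test may give a different p-value for small samples.

```python
import math


def average_ranks(values):
    """1-based ranks, with tied values receiving the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """U statistic for x and a two-sided normal-approximation p-value
    with tie and continuity correction (both groups must be non-empty)."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    ranks = average_ranks(list(x) + list(y))
    u = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    tie_sizes = {}
    for r in ranks:  # equal values share a rank, so rank counts give tie sizes
        tie_sizes[r] = tie_sizes.get(r, 0) + 1
    tie_term = sum(t**3 - t for t in tie_sizes.values()) / (n * (n - 1))
    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - tie_term))
    diff = u - n1 * n2 / 2
    z = (diff - math.copysign(0.5, diff)) / sigma if diff else 0.0
    p = math.erfc(abs(z) / math.sqrt(2))  # = 2 * (1 - Phi(|z|))
    return u, p


# Hypothetical 1-7 ratings: own-description clarifications vs generalizations.
descriptions = [7, 6, 6, 5]
generalizations = [5, 4, 6, 3]
print(mann_whitney_u(descriptions, generalizations))
```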

Differences between Subgroups

After correction for the false discovery rate (see Supplementary Table S2 in Supplementary Appendix 4 , available in the online version), the proportion of users who viewed the problem list differed significantly by gender ( p = 0.0037) and by latest diagnosis year ( p = 0.0037). The odds of viewing the problem list were lower for male than for female patients (OR: 0.89; confidence interval [CI]: 0.84–0.95) and higher for patients whose latest diagnosis was in 2022 (the year of the study) than for patients without a diagnosis (OR: 1.35; CI: 1.20–1.53). The proportion of users who clicked on an info button differed significantly by latest diagnosis year ( p = 0.0003) and medical specialty ( p = 0.0037). The odds of clicking on an info button were higher for patients whose latest diagnosis was in 2022 (OR: 3.08; CI: 2.30–4.15) or 2021 (OR: 1.33; CI: 1.02–1.74) than in 2020. Compared with orthopaedics, the odds of clicking were lower for ear, nose and throat surgery (OR: 0.78; CI: 0.61–0.99), dermatology (OR: 0.56; CI: 0.42–0.74), surgery (OR: 0.75; CI: 0.56–0.99), ophthalmology (OR: 0.64; CI: 0.48–0.85), urology (OR: 0.52; CI: 0.34–0.78), plastic surgery (OR: 0.51; CI: 0.28–0.93), and gynecology (OR: 0.51; CI: 0.26–0.98). See Supplementary Appendix 4 and Supplementary Tables S3–S8 (available in the online version) for the proportions and ORs of the subgroup variables.
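The odds ratios compare the odds of an outcome in one category with those in the reference (largest) category. As an illustration, the sketch below computes the sample OR for viewing the problem list, male versus female patients, from the counts in Table 2, with a Woolf (log-scale Wald) interval. The interval method and the 95% level are assumptions: the study's R script may have used the conditional estimate and exact interval of Fisher's test, which can differ slightly. With these counts, the sketch reproduces the reported OR of 0.89 (0.84–0.95) to two decimals.

```python
import math


def odds_ratio_wald(a, b, c, d, z=1.959963984540054):
    """Sample odds ratio (a/b) / (c/d) with a Woolf (log-scale Wald) interval.

    a, b: category of interest, with / without the outcome
    c, d: reference category, with / without the outcome
    z:    normal quantile; the default gives a 95% interval
    """
    log_or = math.log((a * d) / (b * c))
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    return math.exp(log_or), math.exp(log_or - z * se), math.exp(log_or + z * se)


# Problem list views from Table 2; female patients (largest group) are the reference.
male_viewed, male_total = 2_375, 7_629
female_viewed, female_total = 4_155, 12_332

or_, lower, upper = odds_ratio_wald(
    male_viewed, male_total - male_viewed,
    female_viewed, female_total - female_viewed,
)
print(f"OR {or_:.2f} (95% CI {lower:.2f}-{upper:.2f})")  # OR 0.89 (95% CI 0.84-0.95)
```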

Unexpected Observations

During monitoring, we noticed two unexpected events. One user (rating: 5) wrote: "I have this pain already for [x] years, why can they not do anything about it, life keeps getting more unbearable." The hospital checked whether the patient required follow-up, but a follow-up was already scheduled, so no further action was deemed necessary. In a second case (rating: 1), a user commented that they did not have the diagnosis and that the clinician had confirmed this.

Discussion

This study provided insight into patient portal users' information needs by measuring and evaluating the actual coverage and use of a clarification functionality for the problem list. The coverage of diagnoses by clarifications was high: almost 90% of patients had clarifications for one or more diagnoses on their problem list. More than half of the users who could use the info buttons clicked on them during the study period, and per patient, users clicked on a median of half of the info buttons available on their problem list. Overall, the clarifications were rated as being of good quality. Clarifications based on synonyms and definitions of supertypes were rated lower than clarifications with synonyms and definitions of the diagnoses themselves. The odds that the problem list was viewed were higher for female patients and for patients with a more recent diagnosis. The odds that info buttons were clicked to view clarifications were higher for patients with a recent diagnosis and lower (compared with orthopaedics) for diagnoses from ear, nose and throat surgery, dermatology, surgery, ophthalmology, urology, plastic surgery, and gynecology.

Previous similar studies have not evaluated clarifications in clinical practice; instead, they relied on online surveys, 21 laboratory settings, 7 10 21 or expert evaluation only. 22 23 24 Additionally, previous studies did not use personal medical data and focused on notes rather than encoded diagnoses. The current study is therefore novel in that we prospectively evaluated clarifications in a live patient portal with patients' personal medical data, showing that end-users use and appreciate the clarification functionality. Patients have been reported to find errors in their notes and to consider some medical record content judgmental and offensive. 25 26 27 The clarifications appear to help users verify whether a diagnosis is correct, as the second example in the "Unexpected Observations" section illustrates. Some authors 25 28 argue that medical jargon should be replaced by language that is less belittling and that does not portray patients as passive, childish, or blameworthy. The solution evaluated in the present study, however, does not require clinicians to change the way they register their data. It combines the strengths of professional phrasing, as the content was already encoded with terminology systems, with the clarification provided by the functionality.

To our knowledge, this is the first study to evaluate clarifications in a patient portal. Reusing existing log and EHR data provides a more representative picture of users and their behavior than asking patients or laypeople to fill out surveys or using fabricated, nonpersonal data, 7 10 21 because we were able to include a wide variety of users in the convenience samples of each conversion step. The brief quality ratings were minimally invasive for end-users. Some users disagreed with the diagnosis, and one with their treatment, and accordingly rated the clarification as very bad. Conversely, one user commented that the clarification was a good addition to a drawing the clinician had made and rated the clarification as very good. Where users did not comment, we could not verify whether they based their rating on the clarification only or also on the diagnosis or their experience with the clinician. The ratings may thus reflect a mix of clarification quality, data quality, and experience with the clinician. Without the users' permission, we could not obtain individual patient data to fit a multivariable model; this research was therefore limited to aggregate data, and associations could not be adjusted for confounders. The aggregate data provided insight into different user groups. However, the few differences in conversion we found between users and diagnoses were based on sample sizes that decreased along the conversion steps, and some differences might have arisen by chance owing to multiple testing or reflect confounding. By minimizing the false discovery rate, we might have unnecessarily discarded associations such as that between age and problem list viewing (e.g., the problem list appears to be viewed significantly more often for patients in their 60s than for patients in their 30s). Nevertheless, the rich descriptive dataset provides some insight for further studies.

This study shows that, from the perspective of actual patient portal users, generalization is a useful technique for generating clarifications. For terminology developers, the approach may make terms and definitions more maintainable, because they can be reused across several medical concepts. Future research should investigate tailoring clarifications to end-users, especially at a more accessible language level, and developing clarifications for particular diagnosis classes, to improve both the clarifications and the functionality. The coverage of the current system can be increased by updating the terminology versions, developing clarifications for other types of medical data, and applying other clarification methods, such as using SNOMED CT relationships other than is-a relationships, for instance the finding site (e.g., pancreas) and associated morphology (e.g., inflammation), to clarify concepts (e.g., deriving "inflammation of the pancreas" from "pancreatitis"). The associations found suggest differences in usage between groups, which might reflect different information needs. The unexpected observations imply that requests for free-text feedback on diagnosis clarifications should be accompanied by follow-up, as patients sometimes do not understand or agree with the diagnosis. The hospital decided to continue showing the clarifications after the study period, but without asking for free-text feedback, because no solution was yet in place for ongoing follow-up and for anonymizing free text so that the feedback could be shared to improve the clarifications. Health care institutions should determine how to deal with these issues before implementing such functionality, as user input can help improve medical record accuracy and clarification quality.
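The proposed use of attribute relationships can be sketched as a template over the finding site and associated morphology of a concept. The attribute values and lay terms below are hypothetical placeholders, not SNOMED CT or PFRS content, the function name is illustrative, and the template "<morphology> of <site>" is only one possible phrasing.

```python
# Hypothetical attribute values and lay terms; placeholders, not SNOMED CT/PFRS content.
ATTRIBUTES = {
    "pancreatitis": {"finding site": "pancreas", "associated morphology": "inflammation"},
}
LAY_TERMS = {"pancreas": "the pancreas", "inflammation": "inflammation"}


def clarify_by_attributes(concept, attributes, lay_terms):
    """Build '<morphology> of <site>' when both attribute values have a lay term."""
    attrs = attributes.get(concept, {})
    site = attrs.get("finding site")
    morphology = attrs.get("associated morphology")
    if site in lay_terms and morphology in lay_terms:
        return f"{lay_terms[morphology]} of {lay_terms[site]}"
    return None  # fall back to another clarification method, e.g., generalization


print(clarify_by_attributes("pancreatitis", ATTRIBUTES, LAY_TERMS))
```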

Conclusion

The coverage of diagnoses by clarifications based on an algorithm that generalizes diagnoses to concepts with patient-friendly terms and definitions was high, and more than half of the users for whom clarifications were available used the clarification functionality. Overall, users considered the clarifications to be good, but they also identified opportunities for improving the clarity and completeness of some clarifications. Future research should address the improvement of clarification coverage and quality, and further investigate differences between subgroups to assess the needs of specific user groups and prioritize areas of improvement.

Clinical Relevance Statement

While medical data have traditionally been recorded for clinical purposes and for clinicians only, patients, who often have no medical training, now access their health records. This study presents a generic solution to make medical data, in particular diagnoses, more understandable for patients without creating an additional administrative burden for clinicians, because clarifications are provided for data that are already routinely recorded in health records. The functionality is used and appreciated by patient portal users.

Multiple-Choice Questions

1. What method was applied to increase the coverage of diagnoses by clarifications?

a. Synonymy

b. Generalization

c. Definition

d. Patient education

Correct Answer: The correct answer is option b. The coverage of diagnoses by clarifications was increased 9.4 times by generalizing diagnoses to more general concepts with patient-friendly terms and definitions.

2. What proportion of patient portal users in this study actually viewed diagnosis clarifications by clicking on an info button?

a. Less than one-third.

b. More than ninety percent.

c. More than half.

d. Less than ten percent.

Correct Answer: The correct answer is option c. More than half of the users who viewed their problem list and had one or more info buttons to click on clicked on an info button to view a diagnosis clarification.

3. Some groups of patients and diagnoses had significantly different odds of viewing clarifications. For which patients or diagnoses were the odds of viewing clarifications lower?

a. Diagnoses from dermatology

b. Patients with a recent diagnosis

c. Diagnoses from orthopaedics

d. Female patients

Correct Answer: The correct answer is option a. The odds ratio of viewing clarifications of dermatology diagnoses compared with orthopaedic diagnoses was 0.56 (CI: 0.42–0.74). By contrast, the odds of viewing clarifications were higher for patients with a recent diagnosis and did not differ between female and male patients.

Acknowledgments

The authors thank Mark de Keizer, Thabitha Scheffers-Buitendijk, Savine Martens, Daniël Huliselan, Theo van Mens, Leonieke van Mens, and Arthur Schrijer for their contributions to this study.

Conflict of Interest H.J.T.v.M., G.E.G.H., and R.N. are or have been employed by ChipSoft. ChipSoft is a software vendor that develops the health information system HiX. R.J.B. is employed at the Franciscus hospital, where the study was performed. The authors declare that they have no further conflicts of interest in the research. This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Protection of Human and Animal Subjects

The study was performed in compliance with the World Medical Association Declaration of Helsinki on Ethical Principles for Medical Research Involving Human Subjects. A waiver from the Medical Research Ethics Committee of Amsterdam UMC, location AMC, was obtained on June 3, 2021, and filed under reference number W21_259 # 21.285. It confirmed that the Medical Research Involving Human Subjects Act (in Dutch: “WMO”) does not apply to the study and that official approval of this study by the ethics committee was therefore not required under Dutch law. Approval from the Data Protection Officer and the Scientific Bureau of Franciscus was obtained on March 10, 2022 (reference 2021–109). The final research protocol was registered at the ISRCTN [29]. No consent for publication was required because no individual person's data were published.

Supplementary Material

References

1. Keselman A, Smith C A. A classification of errors in lay comprehension of medical documents. J Biomed Inform. 2012;45(06):1151–1163. doi: 10.1016/j.jbi.2012.07.012.

2. Irizarry T, DeVito Dabbs A, Curran C R. Patient portals and patient engagement: a state of the science review. J Med Internet Res. 2015;17(06):e148. doi: 10.2196/jmir.4255.

3. Janssen S L, Venema-Taat N, Medlock S. Anticipated benefits and concerns of sharing hospital outpatient visit notes with patients (Open Notes) in Dutch hospitals: mixed methods study. J Med Internet Res. 2021;23(08):e27764. doi: 10.2196/27764.

4. Antonio M G, Petrovskaya O, Lau F. The state of evidence in patient portals: umbrella review. J Med Internet Res. 2020;22(11):e23851. doi: 10.2196/23851.

5. Hemsley B, Rollo M, Georgiou A, Balandin S, Hill S. The health literacy demands of electronic personal health records (e-PHRs): an integrative review to inform future inclusive research. Patient Educ Couns. 2018;101(01):2–15. doi: 10.1016/j.pec.2017.07.010.

6. Woollen J, Prey J, Wilcox L. Patient experiences using an inpatient personal health record. Appl Clin Inform. 2016;7(02):446–460. doi: 10.4338/ACI-2015-10-RA-0130.

7. Lalor J P, Hu W, Tran M, Wu H, Mazor K M, Yu H. Evaluating the effectiveness of NoteAid in a community hospital setting: randomized trial of electronic health record note comprehension interventions with patients. J Med Internet Res. 2021;23(05):e26354. doi: 10.2196/26354.

8. Zeng-Treitler Q, Goryachev S, Kim H, Keselman A, Rosendale D. Making texts in electronic health records comprehensible to consumers: a prototype translator. AMIA Annu Symp Proc. 2007:846–850.

9. Kandula S, Curtis D, Zeng-Treitler Q. A semantic and syntactic text simplification tool for health content. AMIA Annu Symp Proc. 2010;2010:366–370.

10. Ramadier L, Lafourcade M. Radiological Text Simplification Using a General Knowledge Base. Cham: Springer International Publishing; 2018. pp. 617–627.

11. van Mens H JT, van Eysden M M, Nienhuis R, van Delden J JM, de Keizer N F, Cornet R. Evaluation of lexical clarification by patients reading their clinical notes: a quasi-experimental interview study. BMC Med Inform Decis Mak. 2020;20 10:278. doi: 10.1186/s12911-020-01286-9.

12. van Mens H JT, de Keizer N F, Nienhuis R, Cornet R. Clarifying diagnoses to laymen by employing the SNOMED CT hierarchy. Stud Health Technol Inform. 2018;247:900–904.

13. SNOMED CT Netherlands National Release Center. SNOMED CT Patient-Friendly Extension Release. The Hague, The Netherlands: Nictiz; 2022.

14. Cornet R, Van Eldik A, De Keizer N. Inventory of tools for Dutch clinical language processing. Stud Health Technol Inform. 2012;180:245–249.

15. van Mens H JT, Martens S SM, Paiman E HM. Diagnosis clarification by generalization to patient-friendly terms and definitions: validation study. J Biomed Inform. 2022;129:104071. doi: 10.1016/j.jbi.2022.104071.

16. Franciscus Gasthuis & Vlietland. Jaarverslag 2021 (Annual Report). 2021.

17. Cornet R, de Keizer N F, Abu-Hanna A. A framework for characterizing terminological systems. Methods Inf Med. 2006;45(03):253–266.

18. Nachar N. The Mann-Whitney U: a test for assessing whether two independent samples come from the same distribution. Tutor Quant Methods Psychol. 2008;4:13–20.

19. Patefield W M. Algorithm AS 159: an efficient method of generating random R × C tables with given row and column totals. J R Stat Soc Ser C Appl Stat. 1981;30:91–97.

20. Benjamini Y, Yekutieli D. The control of the false discovery rate in multiple testing under dependency. Ann Stat. 2001;29:1165–1188.

21. Lalor J P, Woolf B, Yu H. Improving electronic health record note comprehension with NoteAid: randomized trial of electronic health record note comprehension interventions with crowdsourced workers. J Med Internet Res. 2019;21(01):e10793. doi: 10.2196/10793.

22. Alfano M, Lenzitti B, Lo Bosco G, Muriana C, Piazza T, Vizzini G. Design, development and validation of a system for automatic help to medical text understanding. Int J Med Inform. 2020;138:104109. doi: 10.1016/j.ijmedinf.2020.104109.

23. Bala S, Keniston A, Burden M. Patient perception of plain-language medical notes generated using artificial intelligence software: pilot mixed-methods study. JMIR Form Res. 2020;4(06):e16670. doi: 10.2196/16670.

24. Chen J, Druhl E, Polepalli Ramesh B. A natural language processing system that links medical terms in electronic health record notes to lay definitions: system development using physician reviews. J Med Internet Res. 2018;20(01):e26. doi: 10.2196/jmir.8669.

25. Fernández L, Fossa A, Dong Z. Words matter: what do patients find judgmental or offensive in outpatient notes? J Gen Intern Med. 2021;36(09):2571–2578. doi: 10.1007/s11606-020-06432-7.

26. Chimowitz H, Gerard M, Fossa A, Bourgeois F, Bell S K. Empowering informal caregivers with health information: OpenNotes as a safety strategy. Jt Comm J Qual Patient Saf. 2018;44(03):130–136. doi: 10.1016/j.jcjq.2017.09.004.

27. Wright A, Feblowitz J, Maloney F L. Increasing patient engagement: patients' responses to viewing problem lists online. Appl Clin Inform. 2014;5(04):930–942. doi: 10.4338/ACI-2014-07-RA-0057.

28. Cox C, Fritz Z. Presenting complaint: use of language that disempowers patients. BMJ. 2022;377:e066720. doi: 10.1136/bmj-2021-066720.

29. van Mens H JT, Hannen G, Nienhuis R, Bolt R J, de Keizer N F, Cornet R. Better explanations for diagnoses in your medical record: use and ratings of an information button to clarify diagnoses in a hospital patient portal. ISRCTN; 2022. https://doi.org/10.1186/ISRCTN59598141
