Abstract

Indecipherable black boxes are common in machine learning (ML), but applications increasingly require explainable artificial intelligence (XAI). The core of XAI is to establish transparent and interpretable data-driven algorithms. This work provides concrete tools for XAI in situations where prior knowledge must be encoded and untrustworthy inferences flagged. We use the “learn to optimize” (L2O) methodology wherein each inference solves a data-driven optimization problem. Our L2O models are straightforward to implement, directly encode prior knowledge, and yield theoretical guarantees (e.g. satisfaction of constraints). We also propose the use of interpretable certificates to verify whether model inferences are trustworthy. Numerical examples are provided for dictionary-based signal recovery, CT imaging, and arbitrage trading of cryptoassets. Code and additional documentation can be found at https://xai-l2o.research.typal.academy.

Subject terms: Computational science, Computer science

Introduction

A paradigm shift in machine learning is to construct explainable and transparent models, often called explainable AI (XAI)1. This is crucial for sensitive applications like medical imaging and finance (e.g. see recent work on the role of explainability2–5). Yet, many commonplace models (e.g. fully connected feed-forward networks) offer limited interpretability. Prior XAI works give explanations via tools like sensitivity analysis5 and layer-wise relevance propagation6,7, but these neither quantify trustworthiness nor necessarily shed light on how to correct “bad” behaviours. Our work shows how learning to optimize (L2O) can be used to directly embed explainability into models.

The scope of this work is machine learning (ML) applications where domain experts can create approximate models by hand. In our setting, the inference of a model $\mathcal{N}_\Theta$ with input $d$ solves an optimization problem. That is, we use

$$\mathcal{N}_\Theta(d) \triangleq \operatorname*{argmin}_{x \in \mathcal{C}_\Theta(d)} f_\Theta(x; d), \qquad (1)$$

where $f_\Theta$ is a function and $\mathcal{C}_\Theta(d)$ is a constraint set (e.g. encoding prior information like physical quantities), and each (possibly) includes dependencies on weights $\Theta$. Note the model is implicit since its output is defined by an optimality condition rather than an explicit computation. To clarify the scope of the word explainable in this work, we adopt the following conventions. We say a model is explainable provided a domain expert can identify the core design elements of a model and how they translate to expected inference properties. We say a particular inference is explainable provided its properties can be linked to the model’s design and intended use. Explainable models and inferences are achieved via L2O with our proposed certificates.

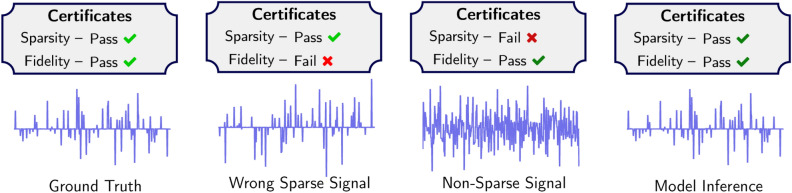

A standard practice in software engineering is to code post-conditions after function calls return. Post-conditions are criteria used to validate what the user expects from the code and ensure code is not executed under the wrong assumptions8. We propose using these for ML model inferences (see Fig. 1 and Supplementary Fig. A1). These conditions enable the use of certificates with labels (pass, warning, or fail) to describe each model inference. We define an inference to be trustworthy provided it satisfies all provided post-conditions.

Figure 1.

The L2O model is composed of parts (shown as colored blocks) based on prior knowledge or data. L2O inferences solve the optimization problem for given model inputs. Certificates label whether each inference is consistent with training. If so, it is trustworthy; otherwise, the failed certificates point to the faulty model part.

Two ideas, optimization and certificates, form a concrete notion of XAI. Prior and data-driven knowledge can be encoded via optimization, and this encoding can be verified via certificates. To illustrate, consider inquiring why a model generated a “bad” inference (e.g. an inference that disagrees with observed measurements). The first diagnostic step is to check certificates. If no failures occurred, the model was not designed to handle the instance encountered. In this case, the model in (1) can be redesigned to encode prior knowledge of the situation. Alternatively, each failed certificate shows a type of error and often corresponds to portions of the model (see Figs. 1 and 2). The L2O model allows debugging of algorithmic implementations and assumptions to correct errors. In a sense, this setup enables one to manually backpropagate errors to fix models (similar to training).

Figure 2.

Left shows a learning to optimize (L2O) model. Colored blocks denote prior knowledge and data-driven terms. Middle shows an iterative algorithm formed from the blocks (e.g. via proximal/gradient operators) to solve the optimization problem. Right shows a trained model’s inference and its certificates. Certificates identify whether properties of inferences are consistent with training data. Each label is associated with properties of specific blocks (indicated by labels next to blocks in the right schematic). Labels take values pass, warning, or fail, and these values identify whether inference features for model parts are trustworthy.

Contributions

This work brings new explainability and guarantees to deep learning applications using prior knowledge. We propose novel implicit L2O models with intuitive design, memory efficient training, inferences that satisfy optimality/constraint conditions, and certificates that either indicate trustworthiness or flag inconsistent inference features.

Related works

Closely related to our work is deep unrolling, a subset of L2O wherein models consist of a fixed number of iterations of a data-driven optimization algorithm. Deep unrolling has garnered great success and provides intuitive model design. We refer readers to recent surveys9–12 for further L2O background. Downsides of unrolling are growing memory requirements with unrolling depth and a lack of guarantees.

Implicit models circumvent these two shortcomings by defining models using an equation (e.g. as in (1)) rather than prescribing a fixed number of computations as in deep unrolling. This enables inferences to be computed by iterating until convergence, thereby enabling theoretical guarantees. Memory-efficient training techniques were also developed for this class of models, which have been applied successfully in games13, music source separation14, language modeling15, segmentation16, and inverse problems17,18. The recent work18 most closely aligns with our L2O methodology.

Related XAI works use labels/cards. Model Cards19 document intended and appropriate uses of models. Care labels20,21 are similar, testing properties like expressivity, runtime, and memory usage. FactSheets22 are modeled after supplier declarations of conformity and aim to identify models’ intended use, performance, safety, and security. These works provide statistics at the distribution level, complementing our work for trustworthiness of individual inferences.

Explainability via optimization

Model design

The design of L2O models is naturally decomposed into two steps: optimization formulation and algorithm choice. The first step is to identify a tentative objective to encode prior knowledge via regularization (e.g. sparsity) or constraints (e.g. unit simplex for classification). We may also add terms that are entirely data-driven. Informally, this step identifies a special case of (1) of the form

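$$\mathcal{N}_\Theta(d) = \operatorname*{argmin}_{x} \ \underbrace{\textstyle\sum_{i} f_i(x; d)}_{\text{prior knowledge}} \;+\; \underbrace{\textstyle\sum_{j} g_j(x; d, \Theta)}_{\text{data-driven}}, \qquad (2)$$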

where the constraints are encoded in the objective using indicator functions, equaling 0 when a constraint is satisfied and $+\infty$ otherwise. The second design step is to choose an algorithm for solving the chosen optimization problem (e.g. proximal-gradient or ADMM23). We use iterative algorithms, and the update formula for each iteration is given by a model operator $T_\Theta(\cdot\,; d)$. Updates are typically composed in terms of gradient and proximal operations. Some parameters (e.g. step sizes) may be included in the weights $\Theta$ to be tuned during training. Given data $d$, computation of the inference is completed by generating a sequence $\{x_d^k\}$ via the relation

$$x_d^{k+1} = T_\Theta(x_d^k; d), \quad \text{for all } k \in \mathbb{N}. \qquad (3)$$

By design, $\{x_d^k\}$ converges to a solution of (1), and we set

$$\mathcal{N}_\Theta(d) = \lim_{k \to \infty} x_d^k. \qquad (4)$$

In our context, each model inference is defined to be an optimizer as in (1). Hence properties of inferences can be explained via the optimization model (1); note this is unlike black-box models, where one has no way of explaining why a particular inference is made. The iterative algorithm is applied successively until stopping criteria are met (i.e. in practice we choose an iterate $K$, possibly dependent on $d$, so that $\mathcal{N}_\Theta(d) \approx x_d^K$). Because $\{x_d^k\}$ converges, we may adjust stopping criteria to approximate the limit to arbitrary precision, which implies we may provide guarantees on model inferences (e.g. satisfying a linear system of equations to a desired precision13,17,18). The properties of the implicit L2O model (1) are summarized in Table 1.
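To make the evaluation loop (3)–(4) concrete, here is a minimal sketch in plain Python, assuming a residual-based stopping rule $\lVert x_d^{k+1} - x_d^k \rVert \le \text{tol}$. The function name `fixed_point_inference`, the tolerance, and the toy contraction $T(x) = 0.5x + d$ are illustrative assumptions, not the authors' implementation.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]


def fixed_point_inference(
    T: Callable[[Vector], Vector],
    x0: Vector,
    tol: float = 1e-8,
    max_iters: int = 10_000,
) -> Tuple[Vector, int]:
    """Iterate x^{k+1} = T(x^k) until ||x^{k+1} - x^k|| <= tol.

    T plays the role of the model operator T_Theta(.; d) with the data d
    already fixed. Returns the last iterate x^K and the number of steps K.
    """
    x = list(x0)
    for k in range(1, max_iters + 1):
        x_next = T(x)
        residual = math.sqrt(sum((a - b) ** 2 for a, b in zip(x_next, x)))
        x = x_next
        if residual <= tol:
            return x, k
    return x, max_iters  # stopping criterion not met; a caller could flag this


# Toy operator (hypothetical): T(x) = 0.5 x + d is a contraction with unique fixed point 2 d.
d = [1.0, -2.0]
x_K, K = fixed_point_inference(lambda x: [0.5 * xi + di for xi, di in zip(x, d)], [0.0, 0.0])
print(x_K, K)
```

For a contraction with factor $c$, the distance from $x_d^K$ to the fixed point is at most $\tfrac{c}{1-c}$ times the last residual, which is one way stopping criteria translate into precision guarantees.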

Table 1.

Summary of design features and corresponding model properties. Design features yield additive properties, as indicated by “+ (above).” Proposed implicit L2O models with certificates have intuitive design, memory efficient training, inferences that satisfy optimality/constraint conditions, certificates of trustworthiness, and explainable errors.

| Obtainable model property | L2O | Implicit | Flags |
|---|---|---|---|
| Intuitive design | | | |
| Memory efficient | | | |
| Satisfy constraints + (above) | | | |
| Trustworthy inferences | | | |
| Explainable errors + (above) | | | |

Example of model design

To make the model design procedure concrete, we illustrate this process on a classic problem: sparse recovery from linear measurements. These problems appear in many applications such as radar imaging24 and speech recognition25. Here the task is to estimate a signal $x^\star$ via access to linear measurements $d$ satisfying $d = Ax^\star$ for a known matrix $A$.

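Step 1: Choose model. Since true signals are known to be sparse, we include $\ell_1$ regularization. To comply with measurements, we add a least-squares fidelity term. Lastly, to capture hidden features of the data distribution, we also add a data-driven regularization. Putting these together gives the problem

$$\mathcal{N}_\Theta(d) = \operatorname*{argmin}_{x} \ \lVert x \rVert_1 + \tfrac{1}{2}\lVert Ax - d \rVert^2 + \tfrac{1}{2}\lVert \Theta_1 x - \Theta_2 d \rVert^2, \qquad (5)$$

where $\Theta = (\Theta_1, \Theta_2)$ and $\Theta_1$ and $\Theta_2$ are two tunable matrices. This model encodes a balance of three terms (sparsity, fidelity, and data-driven regularization), each quantifiable via (5).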

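Step 2: Choose algorithm. The proximal-gradient scheme generates a sequence $\{x^k\}$ converging to a limit $x^\star$ which solves (5). By simplifying and combining terms, the proximal-gradient method can be written via the iteration

$$x^{k+1} = \eta_\alpha\!\left(x^k - \alpha W \begin{bmatrix} x^k \\ d \end{bmatrix}\right), \qquad (6)$$

where $\alpha > 0$ is a step size, $W = \begin{bmatrix} A^\top A + \Theta_1^\top \Theta_1 & -(A^\top + \Theta_1^\top \Theta_2) \end{bmatrix}$ is a matrix defined in terms of $A$, $\Theta_1$, and $\Theta_2$, and $\eta_\theta$ is the shrink operator given by

$$\eta_\theta(x)_i = \operatorname{sign}(x_i) \max\{\lvert x_i \rvert - \theta, 0\}. \qquad (7)$$

From the update on the right hand side of (6), we see the step size can be “absorbed” into the tunable matrix $W$ and the shrink function parameter can be set to a tunable scalar $\theta$. That is, this example model has weights $\Theta = (W, \theta)$ with model operator

$$T_\Theta(x; d) = \eta_\theta\!\left(x - W \begin{bmatrix} x \\ d \end{bmatrix}\right), \qquad (8)$$

which resembles the updates of previous L2O works26–28. Inferences are computed via a sequence $\{x_d^k\}$ with updates

$$x_d^{k+1} = \eta_\theta\!\left(x_d^k - W \begin{bmatrix} x_d^k \\ d \end{bmatrix}\right), \quad \text{for all } k \in \mathbb{N}. \qquad (9)$$

The model inference is the limit of this sequence, $\mathcal{N}_\Theta(d) = \lim_{k \to \infty} x_d^k$.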
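The sketch below runs the shrink-based update on a tiny instance, assuming (5) takes the form $\lVert x \rVert_1 + \tfrac12\lVert Ax-d\rVert^2 + \tfrac12\lVert\Theta_1 x - \Theta_2 d\rVert^2$ and that $W$ acts on the stacked vector $[x; d]$. The helper names (`shrink`, `build_W`, `model_operator`) and the numeric matrices are hypothetical; in a trained model $W$ and $\theta$ would be learned rather than built from $A$, $\Theta_1$, $\Theta_2$.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def shrink(v: Vector, theta: float) -> Vector:
    """Shrink (soft-thresholding) operator eta_theta, applied entrywise."""
    return [vi - theta if vi > theta else vi + theta if vi < -theta else 0.0 for vi in v]


def matvec(M: Matrix, v: Vector) -> Vector:
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in M]


def build_W(A: Matrix, Th1: Matrix, Th2: Matrix, alpha: float) -> Matrix:
    """Return alpha * [A^T A + Th1^T Th1, -(A^T + Th1^T Th2)], acting on [x; d]."""
    m, n, q = len(A), len(A[0]), len(Th1)
    W = []
    for i in range(n):
        gram = [sum(A[k][i] * A[k][j] for k in range(m))
                + sum(Th1[k][i] * Th1[k][j] for k in range(q)) for j in range(n)]
        data = [A[j][i] + sum(Th1[k][i] * Th2[k][j] for k in range(q)) for j in range(m)]
        W.append([alpha * g for g in gram] + [-alpha * h for h in data])
    return W


def model_operator(x: Vector, d: Vector, W: Matrix, theta: float) -> Vector:
    """T_Theta(x; d) = eta_theta(x - W [x; d])."""
    Wz = matvec(W, x + d)  # list concatenation stacks x and d
    return shrink([xi - wi for xi, wi in zip(x, Wz)], theta)


# Hypothetical toy instance: one measurement of a 2-entry signal.
A = [[1.0, 1.0]]
Th1 = [[0.5, 0.0], [0.0, 0.5]]
Th2 = [[1.0], [0.0]]
alpha = 0.4  # below 1 / ||A^T A + Th1^T Th1|| = 1 / 2.25, so the iteration converges
W, theta = build_W(A, Th1, Th2, alpha), alpha  # theta = alpha before any training
d = [2.0]

x = [0.0, 0.0]
for _ in range(2000):
    x = model_operator(x, d, W, theta)
print(x)  # approximately [1.6, 0.0], the minimizer of (5) for this instance
```

The limit can be checked by hand: at $x = (1.6, 0)$ the smooth gradient is $(-1, -0.4)$, so the subgradient conditions of the $\ell_1$ term hold.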

Convergence

Evaluation of the model is well-defined and tractable under a simple assumption. By a classic result29, it suffices to ensure, for all $d$, that $T_\Theta(\cdot\,; d)$ is averaged, i.e. there are $\alpha \in (0, 1)$ and $Q$ such that $T_\Theta(\cdot\,; d) = (1 - \alpha)\mathrm{I} + \alpha Q$, where $Q$ is 1-Lipschitz. When this property holds (and a fixed point exists), the sequence in (3) converges to a solution $\mathcal{N}_\Theta(d)$. This may appear to be a strong assumption; however, common operations in convex optimization algorithms (e.g. proximal and gradient descent updates) are averaged. For entirely data-driven portions of $T_\Theta$, several activation functions are 1-Lipschitz30,31 (e.g. ReLU and softmax), and libraries like PyTorch32 include functionality to force affine mappings to be 1-Lipschitz (e.g. spectral normalization). Furthermore, by making $T_\Theta(\cdot\,; d)$ a contraction, a unique fixed point is obtained. We emphasize, even without forcing $T_\Theta$ to be averaged, $\{x_d^k\}$ is often observed to converge in practice15,17,18 upon tuning the weights $\Theta$.
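A small numerical illustration of why averaging matters, as a sketch: a 90° rotation $Q$ is 1-Lipschitz but its plain iterates cycle forever, while the averaged operator $T = \tfrac12 \mathrm{I} + \tfrac12 Q$ drives iterates to the fixed point $0$. The choice of $Q$, $\alpha = 0.5$, and the helper names are assumptions made only for this toy example (here $T$ even happens to be a contraction).

```python
import math
from typing import Callable, List

Vector = List[float]


def rotate90(x: Vector) -> Vector:
    """Q: rotation by 90 degrees in the plane; 1-Lipschitz, unique fixed point 0."""
    return [-x[1], x[0]]


def averaged(Q: Callable[[Vector], Vector], alpha: float) -> Callable[[Vector], Vector]:
    """Return T = (1 - alpha) I + alpha Q, which is averaged when Q is 1-Lipschitz."""
    return lambda x: [(1 - alpha) * xi + alpha * qi for xi, qi in zip(x, Q(x))]


def iterate(T: Callable[[Vector], Vector], x0: Vector, iters: int) -> Vector:
    x = list(x0)
    for _ in range(iters):
        x = T(x)
    return x


x0 = [1.0, 0.0]
x_plain = iterate(rotate90, x0, 100)               # cycles with period 4, never converges
x_avg = iterate(averaged(rotate90, 0.5), x0, 100)  # converges to the fixed point 0
print(x_plain, math.hypot(*x_avg))
```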

Trustworthiness certificates

Explainable models justify whether each inference is trustworthy. We propose providing justification in the form of certificates, which verify that various properties of the inference are consistent with those of the model inferences on training data and/or prior knowledge. Each certificate is a tuple of the form (name, label) with a property name and a corresponding label which has one of three values: pass, warning, or fail (see Fig. 3). Each certificate label is generated in two steps. The first is to apply a function that maps inferences (or intermediate states) to a nonnegative scalar value $v$ quantifying a property of interest. The second step is to map this scalar to a label. Labels are generated via the flow:

$$\text{inference } x \;\longmapsto\; \text{property value } v \in [0, \infty) \;\longmapsto\; \text{label} \in \{\text{pass}, \text{warning}, \text{fail}\}. \qquad (10)$$

Figure 3.

Example inferences for test data $d$. The sparsified version $Kx$ of each inference $x$ is shown (cf. Fig. 5) along with certificates. Ground truth was taken from the test dataset of the implicit dictionary experiment. The second from left is sparse and inconsistent with measurement data. The second from right complies with measurements but is not sparse. The rightmost is generated using our proposed model (IDM), which approximates the ground truth well and is trustworthy.

Property value functions

Several quantities may be used to generate certificates. In the model design example above, a sparsity property can be quantified by counting the number of nonzero entries in a signal, and a fidelity property can use the relative error (see Fig. 3). To be most effective, property values are chosen to coincide with the optimization problem used to design the L2O model, i.e. to quantify structure of prior and data-driven knowledge. This enables each certificate to clearly validate a portion of the model (see Fig. 2). Since various concepts are useful for different types of modeling, we provide a brief (and non-comprehensive) list of concepts and possible corresponding property values in Table 2.

Table 2.

Certificate examples. Each certificate is tied to a high-level concept and then quantified in a formula. For classifier confidence, we assume $x$ is in the unit simplex. The proximal $\operatorname{prox}_{J_\Theta}$ is a data-driven update for a regularizer $J_\Theta$ with weights $\Theta$.

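| Concept | Quantity | Formula |
|---|---|---|
| Sparsity | Nonzeros | $\lVert x \rVert_0$ |
| Measurements | Relative error | $\lVert Ax - d \rVert / \lVert d \rVert$ |
| Constraints | Distance to set | $\lVert x - P_{\mathcal{C}}(x) \rVert$ |
| Smooth images | Total variation | $\lVert \nabla x \rVert_1$ |
| Classifier confidence | Probability short of one-hot label | $1 - \max_i x_i$ |
| Convergence | Iterate residual | $\lVert x - T_\Theta(x; d) \rVert$ |
| Regularization | Proximal residual | $\lVert x - \operatorname{prox}_{J_\Theta}(x) \rVert$ |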
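The property value functions of Table 2 are short to write down. The plain-Python sketch below is one possible set of implementations; the anisotropic form of total variation, the box $[0, 1]^n$ as the constraint set, and all function names are assumptions for illustration. A learned proximal operator would be passed to `operator_residual` as an ordinary callable.

```python
import math
from typing import Callable, List

Vector = List[float]


def norm(v: Vector) -> float:
    return math.sqrt(sum(vi * vi for vi in v))


def nonzeros(x: Vector, tol: float = 0.0) -> int:
    """Sparsity: number of entries with magnitude above tol (tol=0 gives ||x||_0)."""
    return sum(1 for xi in x if abs(xi) > tol)


def relative_error(Ax: Vector, d: Vector) -> float:
    """Measurements: ||Ax - d|| / ||d|| (assumes d is nonzero)."""
    return norm([a - b for a, b in zip(Ax, d)]) / norm(d)


def distance_to_box(x: Vector, lo: float = 0.0, hi: float = 1.0) -> float:
    """Constraints: distance from x to the box [lo, hi]^n, i.e. ||x - P_C(x)||."""
    return norm([xi - min(max(xi, lo), hi) for xi in x])


def total_variation(img: List[Vector]) -> float:
    """Smooth images: anisotropic TV, summing absolute horizontal and vertical differences."""
    rows, cols = len(img), len(img[0])
    horiz = sum(abs(img[i][j + 1] - img[i][j]) for i in range(rows) for j in range(cols - 1))
    vert = sum(abs(img[i + 1][j] - img[i][j]) for i in range(rows - 1) for j in range(cols))
    return horiz + vert


def confidence_gap(probs: Vector) -> float:
    """Classifier confidence: probability short of a one-hot label, 1 - max_i x_i."""
    return 1.0 - max(probs)


def operator_residual(x: Vector, F: Callable[[Vector], Vector]) -> float:
    """||x - F(x)||: iterate residual if F = T_Theta(.; d), proximal residual if F = prox."""
    return norm([a - b for a, b in zip(x, F(x))])
```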

One property concept deserves particular attention: data-driven regularization. This regularization is important for discriminating between inference features that are qualitatively intuitive but difficult to quantify by hand. Rather than approximating a regularizer itself, implicit L2O models directly approximate its gradients/proximals. These provide a way to measure regularization indirectly via gradient norms/residual norms of proximals. Moreover, these norms (e.g. see last row of Table 2) are easy to compute and equal zero only at local minima of regularizers. To our knowledge, this is the first work to quantify trustworthiness using the quality of inferences with respect to data-driven regularization.

Certificate labels

Typical certificate labels should follow a trend where inferences often obtain a pass label to indicate trustworthiness, while warnings occur occasionally and failures are obtained only in extreme situations. Let the samples of model inference property values $v$ come from a distribution $\mathcal{D}$. We pick property value functions for which small values are desirable and the distribution tail consists of larger $v$. Intuitively, smaller property values $v$ resemble the property values of inferences from training and/or test data. Thus, labels are assigned according to the probability of observing a value less than or equal to $v$, i.e. we evaluate the cumulative distribution function (CDF) defined for probability measure $\mathbb{P}$ by

$$F(v) \triangleq \mathbb{P}_{v' \sim \mathcal{D}}\left[\, v' \le v \,\right]. \qquad (11)$$

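Labels are chosen according to the task at hand. Let $p_{\text{pass}}$, $p_{\text{warn}}$, and $p_{\text{fail}}$ be the probabilities for pass, warning, and fail labels, respectively, with $p_{\text{pass}} + p_{\text{warn}} + p_{\text{fail}} = 1$. Labels are made for $v$ via

$$\text{label}(v) = \begin{cases} \text{pass} & \text{if } F(v) \le p_{\text{pass}}, \\ \text{warning} & \text{if } p_{\text{pass}} < F(v) \le p_{\text{pass}} + p_{\text{warn}}, \\ \text{fail} & \text{if } F(v) > p_{\text{pass}} + p_{\text{warn}}. \end{cases} \qquad (12)$$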
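The labeling rule can be sketched as a three-way threshold on the CDF value, assuming (12) assigns fail to the upper tail $F(v) > p_{\text{pass}} + p_{\text{warn}} = 1 - p_{\text{fail}}$. The function name and the thresholds $p_{\text{pass}} = 0.85$, $p_{\text{warn}} = 0.10$ are hypothetical choices; they give $p_{\text{fail}} = 0.05$, matching the 95% cutoff used for fails in the CT experiment.

```python
def certificate_label(cdf_value: float, p_pass: float, p_warn: float) -> str:
    """Map a CDF value F(v) in [0, 1] to a certificate label.

    p_fail is implicitly 1 - p_pass - p_warn: values whose CDF exceeds
    p_pass + p_warn lie in the upper tail and are flagged as failures.
    """
    if not 0.0 <= cdf_value <= 1.0:
        raise ValueError("cdf_value must lie in [0, 1]")
    if cdf_value <= p_pass:
        return "pass"
    if cdf_value <= p_pass + p_warn:
        return "warning"
    return "fail"


for F_v in (0.50, 0.90, 0.99):
    print(F_v, certificate_label(F_v, p_pass=0.85, p_warn=0.10))
```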

The remaining task is to estimate the CDF value for a given . Recall we assume access is given to property values from ground truths or inferences on training data, where N is the number of data points. To this end, given an value, we estimate its CDF value via the empirical CDF:

| 13a |

| 13b |

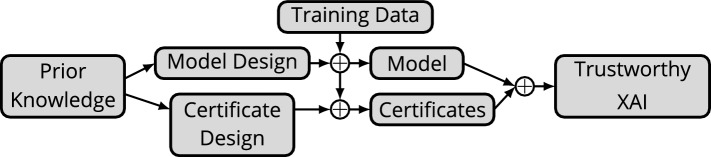

where denotes set cardinality. Figure 4 shows how these certificates can be combined with the L2O methodology.
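The empirical CDF in (13b) reduces to counting reference values, which a sorted list plus binary search does in $O(\log N)$ per query. The sketch below uses Python's standard `bisect` module; the class name, the `fail_rate` helper (estimating the share of test values whose CDF exceeds 0.95, in the spirit of the fail percentages reported in Table 3), and the toy reference values are illustrative assumptions.

```python
from bisect import bisect_right
from typing import List


class EmpiricalCDF:
    """F_N(v) = |{i : v_i <= v}| / N, built from reference property values v_1, ..., v_N."""

    def __init__(self, reference_values: List[float]):
        if not reference_values:
            raise ValueError("need at least one reference value")
        self.sorted_values = sorted(reference_values)

    def __call__(self, v: float) -> float:
        # bisect_right returns how many sorted reference values are <= v.
        return bisect_right(self.sorted_values, v) / len(self.sorted_values)


def fail_rate(test_values: List[float], cdf: EmpiricalCDF, threshold: float = 0.95) -> float:
    """Fraction of test property values whose estimated CDF value exceeds threshold."""
    return sum(cdf(v) > threshold for v in test_values) / len(test_values)


# Toy reference values 1, ..., 100 (e.g. property values of inferences on training data).
F_N = EmpiricalCDF([float(i) for i in range(1, 101)])
print(F_N(50.0), F_N(0.5), fail_rate([10.0, 96.0, 200.0, 40.0], F_N))
```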

Figure 4.

This diagram illustrates relationships between certificates, models, training data, and prior knowledge. Prior knowledge is embedded directly into model design via the L2O methodology. This also gives rise to quantities to measure for certificate design. The designed model is tuned using training data to obtain the “optimal” L2O model (shown by arrows touching top middle sign). The certificates are tuned to match the test samples and/or model inferences on training data (shown by arrows with bottom middle sign). Together the model and certificates yield inferences with certificates of trustworthiness.

Certificate implementation

As noted in the introduction, trustworthiness certificates are evidence that an inference satisfies post-conditions (i.e. passes various tests). Thus, they are to be used in code in the same manner as in standard software engineering practice. Consider the snippet of code in Supplementary Fig. A1. As usual, an inference is generated by calling the model. However, alongside the inference, certificates are returned that label whether the inference passes tests that identify consistency with training data and prior knowledge.
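As a sketch of the calling pattern only (not the code in Supplementary Fig. A1), the wrapper below returns an inference together with `(name, label)` certificates and lets the caller act on a failed post-condition. The names `infer_with_certificates` and `make_cdf`, the toy model, the single "magnitude" property, and the thresholds are all hypothetical.

```python
from bisect import bisect_right
from typing import Callable, Dict, List, Tuple

Vector = List[float]


def make_cdf(reference_values: List[float]) -> Callable[[float], float]:
    """Empirical CDF of reference property values (e.g. from training inferences)."""
    ref = sorted(reference_values)
    return lambda v: bisect_right(ref, v) / len(ref)


def infer_with_certificates(
    model: Callable[[Vector], Vector],
    d: Vector,
    properties: Dict[str, Callable[[Vector, Vector], float]],
    cdfs: Dict[str, Callable[[float], float]],
    p_pass: float = 0.85,
    p_warn: float = 0.10,
) -> Tuple[Vector, List[Tuple[str, str]]]:
    """Return the inference x = model(d) and one (name, label) certificate per property."""
    x = model(d)
    certificates = []
    for name, property_value in properties.items():
        F_v = cdfs[name](property_value(x, d))
        if F_v <= p_pass:
            label = "pass"
        elif F_v <= p_pass + p_warn:
            label = "warning"
        else:
            label = "fail"
        certificates.append((name, label))
    return x, certificates


def toy_model(d: Vector) -> Vector:
    return [2.0 * di for di in d]


properties = {"magnitude": lambda x, d: sum(abs(xi) for xi in x)}
cdfs = {"magnitude": make_cdf([float(i) for i in range(100)])}

x, certs = infer_with_certificates(toy_model, [1.0, 2.0], properties, cdfs)
if any(label == "fail" for _, label in certs):
    print("post-condition failed; discard inference", certs)
else:
    print("trustworthy inference", x, certs)
```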

Experiments

Each numerical experiment shows an application of novel implicit L2O models, which were designed directly from prior knowledge. Associated certificates of trustworthiness are used to emphasize the explainability of each model and illustrate use-cases of certificates. Experiments were coded using Python with the PyTorch library32, the Adam optimizer33, and, for ease of re-use, were run via Google Colab. We emphasize these experiments are for illustration of intuitive and novel model design and trustworthiness and are not benchmarked against state-of-the-art models. The datasets generated and/or analysed during the current study are available in the following repository: github.com/typal-research/xai-l2o. All methods were performed in accordance with the relevant guidelines and regulations.

Algorithms

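To illustrate evaluation of the L2O models used herein, we begin with an example L2O model and algorithm. Specifically, models used for the first two experiments take the form

$$\mathcal{N}_\Theta(d) = \operatorname*{argmin}_{x} \ f(Kx) + g(x) \quad \text{s.t.} \quad \lVert Mx - d \rVert \le \varepsilon, \qquad (14)$$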

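where $K$ and $M$ are linear operators, $\varepsilon > 0$ is a noise tolerance, and $f$ and $g$ are proximable functions. Introducing auxiliary variables $w$ and $p$ and dual variable $\nu = (\nu_1, \nu_2)$, linearized ADMM34 (L-ADMM) can be used to iteratively update the tuple of variables $(p, w, \nu_1, \nu_2, x)$ via

$$p^{k+1} = \operatorname{prox}_{\lambda f}\left(Kx^k + \nu_1^k\right), \qquad (15\text{a})$$

$$w^{k+1} = P_{B(d, \varepsilon)}\left(Mx^k + \nu_2^k\right), \qquad (15\text{b})$$

$$\nu_1^{k+1} = \nu_1^k + Kx^k - p^{k+1}, \qquad (15\text{c})$$

$$\nu_2^{k+1} = \nu_2^k + Mx^k - w^{k+1}, \qquad (15\text{d})$$

$$r^k = K^\top\left(Kx^k - p^{k+1} + \nu_1^{k+1}\right) + M^\top\left(Mx^k - w^{k+1} + \nu_2^{k+1}\right), \qquad (15\text{e})$$

$$x^{k+1} = \operatorname{prox}_{\beta g}\left(x^k - \tfrac{\beta}{\lambda} r^k\right), \qquad (15\text{f})$$

where $P_{B(d, \varepsilon)}$ is the Euclidean projection onto the Euclidean ball of radius $\varepsilon$ centered at $d$, $\operatorname{prox}_{f}$ is the proximal operator for a function $f$, and the scalars $\lambda, \beta > 0$ are appropriate step sizes. Further details, definitions, and explanations are available in the appendices. We note the updates are ordered so that $x^{k+1}$ is the final step to make it easy to backprop through the final update.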
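Two closed-form building blocks appear in L-ADMM for the models used here: the projection onto the ball $B(d, \varepsilon)$ and, for the IDM choice $f = \lVert \cdot \rVert_1$, the proximal operator of the $\ell_1$ norm (soft-thresholding). A minimal plain-Python sketch follows; the function names are illustrative.

```python
import math
from typing import List

Vector = List[float]


def project_ball(y: Vector, center: Vector, radius: float) -> Vector:
    """Euclidean projection of y onto the closed ball B(center, radius)."""
    diff = [yi - ci for yi, ci in zip(y, center)]
    dist = math.sqrt(sum(t * t for t in diff))
    if dist <= radius:
        return list(y)  # already feasible
    scale = radius / dist  # pull y radially back onto the sphere
    return [ci + scale * t for ci, t in zip(center, diff)]


def prox_l1(v: Vector, lam: float) -> Vector:
    """prox of lam * ||.||_1, i.e. entrywise soft-thresholding."""
    return [vi - lam if vi > lam else vi + lam if vi < -lam else 0.0 for vi in v]


print(project_ball([3.0, 4.0], [0.0, 0.0], 1.0))  # [0.6, 0.8] up to rounding
print(prox_l1([0.3, -2.0], 0.5))                  # [0.0, -1.5]
```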

Implicit model training

Standard backpropagation cannot be used for implicit models as it requires memory capacities beyond existing computing devices. Indeed, storing gradient data for each iteration in the forward propagation (see (3)) scales the memory during training linearly with respect to the number of iterations. Since the limit solves a fixed point equation, implicit models can be trained by differentiating implicitly through the fixed point to obtain a gradient. This implicit differentiation requires further computations and coding. Instead of computing exact gradients, we utilize Jacobian-Free Backpropagation (JFB)35 to train models. JFB further simplifies training by only backpropagating through the final iteration, which was proven to yield preconditioned gradients. JFB trains using fixed memory (with respect to the $K$ steps used to estimate $\mathcal{N}_\Theta(d)$) and avoids numerical issues arising from computing exact gradients36, making JFB and its variations37,38 apt for training implicit models.
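A scalar toy example can show the difference between implicit differentiation and JFB. It assumes the operator $T(x;\theta) = 0.5x + \theta$, whose fixed point is $x^\star = 2\theta$, and the loss $\tfrac12(x^\star - y)^2$. The exact gradient carries the factor $(1 - \partial T/\partial x)^{-1} = 2$; JFB drops it and differentiates only the final update, giving a rescaled (preconditioned) gradient with the same sign. Everything here is an illustrative assumption; in an autodiff framework such as PyTorch, this usually amounts to running the iterations under `torch.no_grad()` and then applying one tracked application of $T$.

```python
def T(x: float, theta: float) -> float:
    """Toy model operator; a 0.5-contraction in x with fixed point x* = 2 * theta."""
    return 0.5 * x + theta


def fixed_point(theta: float, iters: int = 200) -> float:
    # Forward pass: iterate to (numerical) convergence; nothing is stored for backprop.
    x = 0.0
    for _ in range(iters):
        x = T(x, theta)
    return x


def implicit_gradient(theta: float, y: float) -> float:
    """Exact gradient of 0.5 * (x* - y)^2: dx*/dtheta = (1 - dT/dx)^{-1} dT/dtheta = 2."""
    x_star = fixed_point(theta)
    return (x_star - y) * 1.0 / (1.0 - 0.5)


def jfb_gradient(theta: float, y: float) -> float:
    """JFB: differentiate only the final update T(x*, theta), treating x* as a constant."""
    x_star = fixed_point(theta)
    return (x_star - y) * 1.0  # dT/dtheta = 1


theta, y, lr = 0.0, 3.0, 0.5
for _ in range(50):
    theta -= lr * jfb_gradient(theta, y)
print(theta, fixed_point(theta))  # theta -> 1.5, so the fixed point matches y = 3
```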

Implicit dictionary learning

Setup

In practice, high dimensional signals often approximately admit low dimensional representations39–44. For illustration, we consider a linear inverse problem where true data admit sparse representations. Here each signal $x^\star$ admits a sparse representation $s^\star$ via a transformation $M$ (i.e. $x^\star = Ms^\star$). A matrix $A$ is applied to each signal to provide linear measurements $d = Ax^\star$. Our task is to recover $x^\star$ given knowledge of $A$ and $d$ without the matrix $M$. Since the linear system $Ax = d$ is highly under-determined, schemes solely minimizing measurement error (e.g. least squares approaches) fail to recover true signals; additional knowledge is essential.

Model design

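All convex regularization approaches are known to lead to biased estimators whose expectation does not equal the true signal46. However, the seminal work47 of Candès and Tao shows $\ell_1$ minimization (rather than additive $\ell_1$ regularization) enables exact recovery under suitable assumptions. Thus, we minimize the $\ell_1$ norm of a sparsified signal subject to linear measurement constraints (up to a tolerance $\varepsilon$) via the implicit dictionary model (IDM)

$$\mathcal{N}_\Theta(d) = \operatorname*{argmin}_{x} \ \lVert Kx \rVert_1 \quad \text{s.t.} \quad \lVert Ax - d \rVert \le \varepsilon. \qquad (16)$$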

The square matrix $K$ is used to leverage the fact that $x$ has a low-dimensional representation by transforming $x$ into a sparse vector. Linearized ADMM34 (L-ADMM) is used to create a sequence $\{x_d^k\}$ as in (3). The model weights $\Theta$ include $K$. If it exists, the matrix $K^{-1}$ is known as a dictionary and $Kx$ is the corresponding sparse code; hence the name IDM for (16). We emphasize $K$ is learned during training and is different from $M$, but these matrices are related since we aim for $Kx$ to be sparse whenever $x$ is a true signal generated from a sparse representation via $M$. Note we use L-ADMM to provably solve (16), and $\mathcal{N}_\Theta$ is easy to train. More details are in Appendix C.

Discussion

IDM combines intuition from dictionary learning with a reconstruction algorithm. Two properties are used to identify trustworthy inferences: sparsity and measurement compliance (i.e. fidelity). Sparsity and fidelity are quantified via the $\ell_1$ norm of the sparsified inference (i.e. $\lVert Kx \rVert_1$) and the relative measurement error. Figure 5 shows that training the model yields a sparsifying transformation $K$. Figure 3 shows the proposed certificates identify “bad” inferences that might, at first glance, appear to be “good” due to their compatibility with constraints. Lastly, observe that the utility of learning $K$, rather than approximating $M$, is that $K$ makes it easy to check whether an inference admits a sparse representation; using $M$ to check for sparsity is nontrivial.

Figure 5.

Training IDM yields sparse representation of inferences. Diagram shows a sample true data x (left) from test dataset and its sparsified representation Kx (right).

CT image reconstruction

Setup

Comparisons are provided for low-dose CT examples derived from the Low-Dose Parallel Beam (LoDoPab) dataset48, which has publicly available phantoms derived from actual human chest CT scans. CT measurements are simulated with a parallel beam geometry and a sparse-angle setup of only 30 angles and 183 projection beams, giving 5490 equations and 16,384 unknowns. We add Gaussian noise to each individual beam measurement. Images have resolution $128 \times 128$. To make errors easier to contrast between methods, the linear systems here are under-determined and have more noise than those in some similar works. Image quality is measured using the Peak Signal-to-Noise Ratio (PSNR) and structural similarity index measure (SSIM). The training loss was mean squared error. Training/test datasets have 20,000/2000 samples.

Model design

The model for the CT experiment extends the IDM. In practice, it has been helpful to utilize a sparsifying transform49,50. We accomplish this via a linear operator $K$, which is applied to $x$, and this product is then fed into a data-driven regularizer $J_\theta$ with parameters $\theta$. We additionally ensure compliance with measurements from the Radon transform matrix $A$, up to a tolerance $\varepsilon$. In our setting, all pixel values are also known to be in the interval [0, 1]. Combining our prior knowledge yields the implicit L2O model

$$\mathcal{N}_\Theta(d) = \operatorname*{argmin}_{x \in [0,1]^n} \ J_\theta(Kx) \quad \text{s.t.} \quad \lVert Ax - d \rVert \le \varepsilon. \qquad (17)$$

Here $\mathcal{N}_\Theta$ has weights $\Theta$ consisting of $K$, $\theta$, and the step sizes in L-ADMM. More details are in Appendix D.

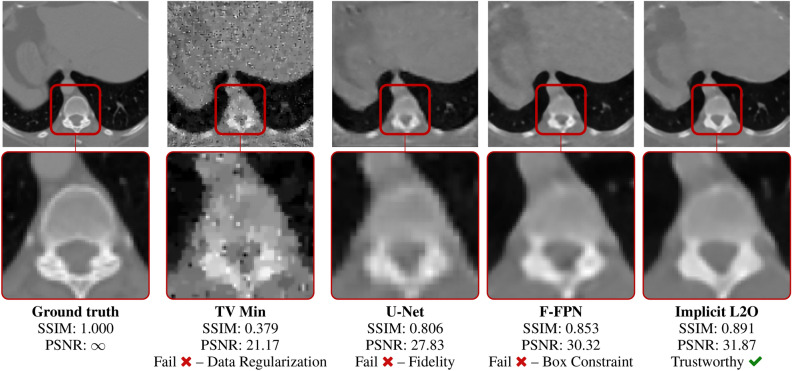

Discussion

Comparisons of our method (Implicit L2O) with U-Net45, F-FPNs17, and total variation (TV) minimization are given in Fig. 6 and Table 3. Table 3 shows the average PSNR and SSIM of the reconstructions. Our model obtains the highest average PSNR and SSIM values on the test data while using 11% and 62% as many weights as U-Net and F-FPN, respectively, indicating greater efficiency of the implicit L2O framework. Moreover, the L2O model is designed with three features: compliance with measurements (i.e. fidelity), valid pixel values, and data-driven regularization. Table 3 also shows the percentage of “fail” labels for these property values. Here, an inference fails if its property value is larger than 95% of the property values from the training/true data, i.e. we choose $p_{\text{pass}} + p_{\text{warn}} = 0.95$ and $p_{\text{fail}} = 0.05$ in (12). For the fidelity, our model never fails (due to incorporating the constraint into the network design). Our network fails 5.70% of the time for the data-driven regularization property. Overall, the L2O model generates the most trustworthy inferences. This is intuitive, as this model outperforms the others and, unlike them, was specifically designed to embed all of our knowledge. To provide better intuition of the certificates, we also show the certificate labels for an image from the test dataset in Fig. 6. The only image to pass all provided tests is the one produced by the proposed implicit L2O model. This knowledge can help identify trustworthy inferences. Interestingly, the data-driven regularization enabled certificates to detect and flag “bad” TV minimization features (e.g. visible staircasing effects51,52). This highlights a novel capability of certificates: these features are intuitive, yet, to our knowledge, no prior method quantified them.

Figure 6.

Reconstructions on test data computed via U-Net45, TV minimization, F-FPNs17, and Implicit L2O (left to right). Bottom row shows expansion of region indicated by red box. Pixel values outside [0, 1] are flagged. Fidelity is flagged when images do not comply with measurements, and regularization is flagged when texture features of images are sufficiently inconsistent with true data (e.g. grainy images). Labels are provided beneath each image (n.b. fail is assigned to images that are worse than 95% of L2O inferences on training data). Shown comparison methods fail while the Implicit L2O image passes all tests.

Table 3.

Average PSNR/SSIM for CT reconstructions on the 2000 image LoDoPab testing dataset. Reported from original work17. U-Net was trained with filtered backprojection as in prior work45. Three properties are used to check trustworthiness: box constraints, compliance with measurements (i.e. fidelity), and data-driven regularization (via the proximal residual in Table 2). Failed sample percentages are numerically estimated via (13). Sample property values “fail” if they perform worse than 95% of the inferences on the training data, i.e. their CDF value exceeds 0.95. Implicit L2O yields the most passes on test data.

| Method | Avg. PSNR | Avg. SSIM | Box constraint fail | Fidelity fail | Data Reg. fail | # Params |
|---|---|---|---|---|---|---|
| U-Net | 27.32 dB | 0.761 | 5.75% | 96.95% | 3.20% | 533,593 |
| TV Min | 28.52 dB | 0.765 | 0.00% | 0.00% | 25.40% | 4 |
| F-FPN | 30.46 dB | 0.832 | 47.15% | 0.40% | 5.05% | 96,307 |
| Implicit L2O | 31.73 dB | 0.858 | 0.00% | 0.00% | 5.70% | 59,697 |

Optimal cryptoasset trading

Setup

Ethereum is a blockchain technology anyone can use to deploy permanent and immutable decentralized applications. This technology enables creation of decentralized finance (DeFi) primitives, which can give censorship-resistant participation in digital markets and expand the use of stable assets53,54 and exchanges55–57 beyond the realm of traditional finance. Popularity of cryptoasset trading (e.g. GRT and Ether) is exploding with the DeFi movement58,59.

Decentralized exchanges (DEXs) are a popular entity for exchanging cryptoassets (subject to a small transaction fee), where trades are conducted without the need for a trusted intermediary to facilitate the exchange. Popular examples of DEXs are constant function market makers (CFMMs)60, which use mathematical formulas to govern trades. To ensure CFMMs maintain sufficient net assets, trades within CFMMs maintain constant total reserves (as defined by a function $\varphi$). A transaction in a CFMM tendering $x$ assets in return for $y$ assets with reserves $r$ is accepted provided

$$\varphi(r + \gamma x - y) \ge \varphi(r), \qquad (18)$$

with $\gamma \in (0, 1]$ a trade fee parameter. Here $x, y, r \in \mathbb{R}^n$ with each vector nonnegative and $i$-th entry giving an amount for the $i$-th cryptoasset type (e.g. Ether, GRT). Typical choices61 of $\varphi$ are weighted sums and products, i.e.

$$\varphi(r) = \sum_{i=1}^n w_i r_i \quad \text{and} \quad \varphi(r) = \prod_{i=1}^n r_i^{w_i}, \qquad (19)$$

where $w \in \mathbb{R}^n$ has positive entries. Figure 7 shows an example of a CFMM network.
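The acceptance rule (18) with the two choices of $\varphi$ in (19) can be checked directly. In this sketch the function names, the two-asset pool with reserves $(100, 100)$, unit weights, and $\gamma = 0.997$ (a 0.3% fee) are hypothetical values chosen only to illustrate one accepted and one rejected trade; post-trade reserves are also required to stay nonnegative.

```python
import math
from typing import Callable, List

Vector = List[float]


def weighted_sum(r: Vector, w: Vector) -> float:
    return sum(wi * ri for wi, ri in zip(w, r))


def weighted_product(r: Vector, w: Vector) -> float:
    return math.prod(ri ** wi for ri, wi in zip(r, w))


def trade_accepted(phi: Callable[[Vector, Vector], float], r: Vector, x: Vector,
                   y: Vector, gamma: float, w: Vector) -> bool:
    """Check phi(r + gamma x - y) >= phi(r), with nonnegative post-trade reserves."""
    new_r = [ri + gamma * xi - yi for ri, xi, yi in zip(r, x, y)]
    if any(v < 0 for v in new_r):
        return False  # cannot withdraw more than the reserves hold
    return phi(new_r, w) >= phi(r, w)


r, w, gamma = [100.0, 100.0], [1.0, 1.0], 0.997  # two-asset product CFMM, 0.3% fee
print(trade_accepted(weighted_product, r, [10.0, 0.0], [0.0, 9.0], gamma, w))   # True
print(trade_accepted(weighted_product, r, [10.0, 0.0], [0.0, 10.0], gamma, w))  # False
```

Here tendering 10 units of the first asset leaves post-fee reserves $(109.97, 91)$ with product $\approx 10007 \ge 10^4$, whereas asking for 10 units back gives $\approx 9897 < 10^4$.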

Figure 7.

Network with 5 CFMMs and 3 tokens; structure replicates an experiment in recent work63. Black lines show available tokens for trade in each CFMM.

This experiment aims to maximize arbitrage. Arbitrage is the simultaneous purchase and sale of equivalent assets in multiple markets to exploit price discrepancies between the markets. This can be a lucrative endeavor with cryptoassets62. For a given snapshot in time, our arbitrage goal is to identify a collection of trades that maximize the cryptoassets obtainable by trading between different exchanges, i.e. solve the (informal) optimization problem

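$$\max_{(x, y)} \ \text{cryptoassets obtained} \quad \text{s.t.} \quad (x, y) \in \{\text{valid trades}\}. \qquad (20)$$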

The set of valid trades is all trades satisfying the transaction rules for CFMMs given by (18) with nonnegative values for tokens tendered and received (i.e. $x, y \ge 0$). Prior works61,63 deal with an idealistic noiseless setting while recognizing executing trades is not without risk (e.g. noisy information, front running64, and trade delays). To show implications of trade risk, we incorporate noise in our trade simulations by adding noise to CFMM asset observations, which yields noisy observed data $d$. Also, we consider trades with CFMMs where several assets can be traded simultaneously rather than restricting to pairwise swaps.

Model design

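The aim is to create a model that infers a trade $(x, y)$ maximizing utility. For a nonnegative vector $p$ of reference price valuations, this utility $U$ is the net change in asset values provided by the trade, i.e.

$$U(x, y) = \Big\langle p, \ \sum_{j} A_j^\top (y_j - x_j) \Big\rangle, \qquad (21)$$

where $A_j$ is a matrix mapping global coordinates of an asset vector to the coordinates of the $j$-th CFMM (see Appendix E for details) and $(x_j, y_j)$ is the portion of the trade made with the $j$-th CFMM. For noisy data $d$, trade predictions can include a “cost of risk.” This is quantified by regularizing the trade utility, i.e. introducing a penalty term. For matrices $\Theta_j$, we model risk by a simple quadratic penalty via

$$R_\Theta(x, y) = \sum_{j} \left\lVert \Theta_j \begin{bmatrix} x_j \\ y_j \end{bmatrix} \right\rVert^2. \qquad (22)$$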

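The implicit L2O model infers optimal trades via $\mathcal{N}_\Theta(d)$, i.e.

$$\mathcal{N}_\Theta(d) = \operatorname*{argmin}_{(x, y) \in \mathcal{C}(d)} \ -U(x, y) + R_\Theta(x, y), \qquad (23)$$

where $\mathcal{C}(d)$ encodes constraints for valid transactions. The essence of $\mathcal{N}_\Theta$ is to output solutions to (20) that account for transaction risks. A formulation of Davis-Yin operator splitting65 is used for model evaluation. Further details of the optimization scheme are in Appendix E.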
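The utility in (21) sums each CFMM's net received-minus-tendered amounts in global token coordinates and prices them with $p$. This sketch assumes each $A_j$ is a 0/1 selection matrix, stored compactly as the list of global token indices held by CFMM $j$, so applying $A_j^\top$ scatters local amounts back to global positions. The function name, prices, and 3-token network are hypothetical.

```python
from typing import List, Sequence, Tuple

Vector = List[float]


def utility(p: Vector, trades: Sequence[Tuple[Vector, Vector]],
            index_maps: Sequence[Sequence[int]]) -> float:
    """U(x, y) = <p, sum_j A_j^T (y_j - x_j)>.

    Each A_j is represented by index_maps[j], the global indices of the tokens
    held by CFMM j, so A_j^T scatters local amounts back to global coordinates.
    """
    net = [0.0] * len(p)
    for (x_j, y_j), idx in zip(trades, index_maps):
        for local, glob in enumerate(idx):
            net[glob] += y_j[local] - x_j[local]
    return sum(pi * ni for pi, ni in zip(p, net))


# Hypothetical 3-token network with two CFMMs trading tokens (0, 1) and (1, 2).
p = [1.0, 2.0, 3.0]
trades = [([1.0, 0.0], [0.0, 1.0]), ([1.0, 0.0], [0.0, 0.5])]
print(utility(p, trades, [[0, 1], [1, 2]]))  # 0.5
```

In this example token 1 is received from the first CFMM and tendered to the second, so it nets to zero; the utility comes from giving up one unit of token 0 (price 1) for half a unit of token 2 (price 3).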

Discussion

The L2O model contains three core features: profit, risk, and trade constraints. The model is designed to output trades that satisfy the provided constraints, but note these constraints are computed from noisy data and thus cannot be used to determine a priori whether a trade will be executed. For this reason, fail flags identify conditions under which a trader should be warned to abort a trade (due to an “invalid trade”). This can avoid wasting transaction fees (i.e. gas costs). Figure 8 shows an example of two trades, where the analytic method proposes a large trade that is not executed since it violates the trade constraints (due to noisy observations). The L2O method proposes a small trade that yielded arbitrage profits (i.e. $U > 0$) and has pass certificates. Comparisons between the analytic and L2O models are provided in Table 4. Although the analytic method has “ideal” structure, it performs much worse than the L2O scheme. In particular, no trades are executable by the analytic scheme since the noise always makes the proposed transactions fail to satisfy the actual CFMM constraints. Consistent with this, every trade proposed by the analytic method is flagged as risky in Table 4. The noise is Gaussian with magnitude on the order of 0.2% of the asset totals.

Figure 8.

Example of proposed L2O (left) and analytic (right) trades with noisy data d. Blue and green lines show the proposed cryptoassets x and y to tender and receive, respectively (line widths show magnitude). The analytic method is unable to account for trade risks, so it proposes large trades that are not executed (giving an executed utility of zero). This can be anticipated by the failed trade risk certificate. In contrast, the L2O trade is profitable (utility is 0.434) and is executed, consistent with its passing trade risk label.

Table 4.

Averaged results on test data for trades in the CFMM network. The analytic method always predicts a profitable trade but fails to satisfy the constraints (due to noise). This failure is predicted by the “risk” certificate and reflected by the 0% trade execution rate. In contrast, the L2O scheme makes conservative predictions regarding constraints, which limits profitability. However, when trades are screened with these certificates, the executed L2O trades are always profitable and satisfy the constraints.

| Method | Predicted utility | Executed utility | Trade execution | Risk fail | Profitable fail | # Params |
|---|---|---|---|---|---|---|
| Analytic | 11.446 | 0.00 | 0.00% | 100.00% | 0% | 0 |
| Implicit L2O | 0.665 | 0.6785 | 88.20% | 3.6% | 11.80% | 126 |

Conclusions

Explainable ML models can be concretely developed by fusing certificates with the L2O methodology. The implicit L2O methodology enables prior and data-driven knowledge to be directly embedded into models, thereby providing clear and intuitive design. This approach is theoretically sound and compatible with state-of-the-art ML tools. The L2O model also enables construction of our certificate framework with easy-to-read labels, certifying whether each inference is trustworthy. In particular, our certificates provide a principled scheme for detecting inferences with “bad” features via data-driven regularization. Thanks to this optimization-based model design, in which inferences can be defined by fixed point conditions, failed certificates can be used to discard untrustworthy inferences and may help debug the architecture. This reveals how tightly implicit L2O and certificates are interwoven. Our experiments illustrate these ideas in three different settings, presenting novel model designs and interpretable results. Future work will study extensions to physics-based applications where PDE-based physics can be integrated into the model66–68.

Supplementary Information

Acknowledgements

The authors thank Wotao Yin, Stanley Osher, Daniel McKenzie, Qiuwei Li, and Luis Tenorio for many fruitful discussions. Howard Heaton and Samy Wu Fung were supported by AFOSR MURI FA9550-18-1-0502 and ONR grants: N00014-18-1-2527, N00014-20-1-2093, and N00014-20-1-2787. Samy Wu Fung was also partially funded by the National Science Foundation award number DMS-2309810.

Author contributions

H.H. and S.W.F. performed the research and wrote the manuscript. H.H. created each figure except for Fig. 6. S.W.F. created Fig. 6.

Data availability

The datasets generated and/or analysed during the current study are available in the following repository: github.com/typal-research/xai-l2o.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Howard Heaton, Email: research@typal.academy.

Samy Wu Fung, Email: swufung@mines.edu.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-023-36249-3.

References

- 1. Van Lent, M., Fisher, W., Mancuso, M. An explainable artificial intelligence system for small-unit tactical behavior. In Proceedings of the National Conference on Artificial Intelligence, 900–907 (AAAI Press; MIT Press, 1999).

- 2. Arrieta, A. B., Díaz-Rodríguez, N., Del Ser, J., Bennetot, A., Tabik, S., Barbado, A., García, S., Gil-López, S., Molina, D., Benjamins, R., et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 58, 82–115 (2020). https://doi.org/10.1016/j.inffus.2019.12.012.

- 3. Adadi, A., Berrada, M. Peeking inside the black-box: A survey on explainable artificial intelligence (XAI). IEEE Access 6, 52138–52160 (2018). https://doi.org/10.1109/ACCESS.2018.2870052.

- 4. Došilović, F. K., Brčić, M., Hlupić, N. Explainable artificial intelligence: A survey. In 2018 41st International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), 0210–0215 (IEEE, 2018).

- 5. Samek, W., Müller, K.-R. Towards explainable artificial intelligence. In Explainable AI: Interpreting, Explaining and Visualizing Deep Learning, 5–22 (Springer, 2019).

- 6. Bach, S., Binder, A., Montavon, G., Klauschen, F., Müller, K.-R., Samek, W. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PLoS One 10(7), e0130140 (2015). https://doi.org/10.1371/journal.pone.0130140.

- 7. Montavon, G., Binder, A., Lapuschkin, S., Samek, W., Müller, K.-R. Layer-wise relevance propagation: an overview. In Explainable AI: Interpreting, Explaining and Visualizing Deep Learning, 193–209 (2019).

- 8. Anaya, M. Clean Code in Python: Refactor Your Legacy Code Base (Packt Publishing Ltd, 2018).

- 9. Amos, B. Tutorial on amortized optimization for learning to optimize over continuous domains (2022). arXiv preprint arXiv:2202.00665.

- 10. Chen, T., Chen, X., Chen, W., Heaton, H., Liu, J., Wang, Z., Yin, W. Learning to optimize: A primer and a benchmark (2021). arXiv preprint arXiv:2103.12828.

- 11. Shlezinger, N., Whang, J., Eldar, Y. C., Dimakis, A. G. Model-based deep learning (2020). arXiv preprint arXiv:2012.08405.

- 12. Monga, V., Li, Y., Eldar, Y. C. Algorithm unrolling: Interpretable, efficient deep learning for signal and image processing. IEEE Signal Process. Mag. 38(2), 18–44 (2021). https://doi.org/10.1109/MSP.2020.3016905.

- 13. Heaton, H., McKenzie, D., Li, Q., Fung, S. W., Osher, S., Yin, W. Learn to predict equilibria via fixed point networks (2021). arXiv preprint arXiv:2106.00906.

- 14. Koyama, Y., Murata, N., Uhlich, S., Fabbro, G., Takahashi, S., Mitsufuji, Y. Music source separation with deep equilibrium models (2021). arXiv preprint arXiv:2110.06494.

- 15. Bai, S., Kolter, J. Z., Koltun, V. Deep equilibrium models (2019). arXiv preprint arXiv:1909.01377.

- 16. Bai, S., Koltun, V., Kolter, J. Z. Multiscale deep equilibrium models (2020). arXiv preprint arXiv:2006.08656.

- 17. Heaton, H., Fung, S. W., Gibali, A., Yin, W. Feasibility-based fixed point networks (2021). arXiv preprint arXiv:2104.14090.

- 18. Gilton, D., Ongie, G., Willett, R. Deep equilibrium architectures for inverse problems in imaging (2021). arXiv preprint arXiv:2102.07944.

- 19. Mitchell, M., Wu, S., Zaldivar, A., Barnes, P., Vasserman, L., Hutchinson, B., Spitzer, E., Raji, I. D., Gebru, T. Model cards for model reporting. In Proceedings of the Conference on Fairness, Accountability, and Transparency, 220–229 (2019).

- 20. Morik, K., Kotthaus, H., Heppe, L., Heinrich, D., Fischer, R., Pauly, A., Piatkowski, N. The care label concept: A certification suite for trustworthy and resource-aware machine learning (2021). arXiv preprint arXiv:2106.00512.

- 21. Morik, K., Kotthaus, H., Heppe, L., Heinrich, D., Fischer, R., Mücke, S., Pauly, A., Jakobs, M., Piatkowski, N. Yes We Care!–Certification for machine learning methods through the care label framework (2021). arXiv preprint arXiv:2105.10197.

- 22. Arnold, M., Bellamy, R. K., Hind, M., Houde, S., Mehta, S., Mojsilović, A., Nair, R., Ramamurthy, K. N., Olteanu, A., Piorkowski, D., et al. FactSheets: Increasing trust in AI services through supplier’s declarations of conformity. IBM J. Res. Dev. 63(4/5), 1–6 (2019). https://doi.org/10.1147/JRD.2019.2942288.

- 23. Deng, W., Yin, W. On the global and linear convergence of the generalized alternating direction method of multipliers. J. Sci. Comput. 66(3), 889–916 (2016). https://doi.org/10.1007/s10915-015-0048-x.

- 24. Siddamal, K., Bhat, S. P., Saroja, V. A survey on compressive sensing. In 2015 2nd International Conference on Electronics and Communication Systems (ICECS), 639–643 (IEEE, 2015).

- 25. Gemmeke, J. F., Van Hamme, H., Cranen, B., Boves, L. Compressive sensing for missing data imputation in noise robust speech recognition. IEEE J. Sel. Top. Signal Process. 4(2), 272–287 (2010). https://doi.org/10.1109/JSTSP.2009.2039171.

- 26. Liu, J., Chen, X. ALISTA: Analytic weights are as good as learned weights in LISTA. In International Conference on Learning Representations (ICLR) (2019).

- 27. Gregor, K., LeCun, Y. Learning fast approximations of sparse coding. In Proceedings of the 27th International Conference on International Conference on Machine Learning, 399–406 (2010).

- 28. Chen, X., Liu, J., Wang, Z., Yin, W. Theoretical linear convergence of unfolded ISTA and its practical weights and thresholds (2018). arXiv preprint arXiv:1808.10038.

- 29. Krasnosel’skii, M. Two remarks about the method of successive approximations. Uspekhi Mat. Nauk 10, 123–127 (1955).

- 30. Combettes, P. L., Pesquet, J.-C. Lipschitz certificates for layered network structures driven by averaged activation operators. SIAM J. Math. Data Sci. 2(2), 529–557 (2020). https://doi.org/10.1137/19M1272780.

- 31. Gao, B., Pavel, L. On the properties of the softmax function with application in game theory and reinforcement learning (2017). arXiv preprint arXiv:1704.00805.

- 32. Paszke, A., Gross, S., Massa, F., Lerer, A., Bradbury, J., Chanan, G., Killeen, T., Lin, Z., Gimelshein, N., Antiga, L., et al. PyTorch: An imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 32, 8026–8037 (2019).

- 33. Kingma, D. P., Ba, J. Adam: A method for stochastic optimization (2014). arXiv preprint arXiv:1412.6980.

- 34. Ryu, E., Yin, W. Large-Scale Convex Optimization: Algorithm Designs via Monotone Operators (Cambridge University Press, 2022).

- 35. Fung, S. W., Heaton, H., Li, Q., McKenzie, D., Osher, S., Yin, W. JFB: Jacobian-free backpropagation for implicit networks (2021). arXiv preprint arXiv:2103.12803.

- 36. Bai, S., Koltun, V., Kolter, J. Z. Stabilizing equilibrium models by Jacobian regularization. In Proceedings of the 38th International Conference on Machine Learning, vol. 139 of Proceedings of Machine Learning Research (eds. Meila, M., Zhang, T.), 554–565 (PMLR, 2021).

- 37. Geng, Z., Zhang, X.-Y., Bai, S., Wang, Y., Lin, Z. On training implicit models. In Thirty-Fifth Conference on Neural Information Processing Systems (2021).

- 38. Huang, Z., Bai, S., Kolter, J. Z. Implicit2: Implicit layers for implicit representations. Adv. Neural Inf. Process. Syst. 34 (2021).

- 39. Osher, S., Shi, Z., Zhu, W. Low dimensional manifold model for image processing. SIAM J. Imag. Sci. 10(4), 1669–1690 (2017). https://doi.org/10.1137/16M1058686.

- 40. Zhang, Z., Xu, Y., Yang, J., Li, X., Zhang, D. A survey of sparse representation: Algorithms and applications. IEEE Access 3, 490–530 (2015). https://doi.org/10.1109/ACCESS.2015.2430359.

- 41. Carlsson, G., Ishkhanov, T., De Silva, V., Zomorodian, A. On the local behavior of spaces of natural images. Int. J. Comput. Vis. 76(1), 1–12 (2008). https://doi.org/10.1007/s11263-007-0056-x.

- 42. Lee, A. B., Pedersen, K. S., Mumford, D. The nonlinear statistics of high-contrast patches in natural images. Int. J. Comput. Vis. 54(1–3), 83–103 (2003). https://doi.org/10.1023/A:1023705401078.

- 43. Peyré, G. Image processing with nonlocal spectral bases. Multiscale Model. Simul. 7(2), 703–730 (2008). https://doi.org/10.1137/07068881X.

- 44. Peyré, G. Manifold models for signals and images. Comput. Vis. Image Underst. 113(2), 249–260 (2009). https://doi.org/10.1016/j.cviu.2008.09.003.

- 45. Jin, K. H., McCann, M. T., Froustey, E., Unser, M. Deep convolutional neural network for inverse problems in imaging. IEEE Trans. Image Process. 26(9), 4509–4522 (2017). https://doi.org/10.1109/TIP.2017.2713099.

- 46. Fan, J., Li, R. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 96(456), 1348–1360 (2001). https://doi.org/10.1198/016214501753382273.

- 47. Candès, E. J., Romberg, J., Tao, T. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 52(2), 489–509 (2006). https://doi.org/10.1109/TIT.2005.862083.

- 48. Leuschner, J., Schmidt, M., Baguer, D. O., Maaß, P. The LoDoPaB-CT dataset: A benchmark dataset for low-dose CT reconstruction methods (2019). arXiv preprint arXiv:1910.01113.

- 49. Jiang, C., Zhang, Q., Fan, R., Hu, Z. Super-resolution CT image reconstruction based on dictionary learning and sparse representation. Sci. Rep. 8(1), 1–10 (2018). https://doi.org/10.1038/s41598-018-27261-z.

- 50. Xu, Q., Yu, H., Mou, X., Zhang, L., Hsieh, J., Wang, G. Low-dose X-ray CT reconstruction via dictionary learning. IEEE Trans. Med. Imaging 31(9), 1682–1697 (2012). https://doi.org/10.1109/TMI.2012.2195669.

- 51. Ring, W. Structural properties of solutions to total variation regularization problems. ESAIM Math. Model. Numer. Anal. 34(4), 799–810 (2000). https://doi.org/10.1051/m2an:2000104.

- 52. Chan, T., Marquina, A., Mulet, P. High-order total variation-based image restoration. SIAM J. Sci. Comput. 22(2), 503–516 (2000). https://doi.org/10.1137/S1064827598344169.

- 53. Kamvar, S., Olszewski, M., Reinsberg, R. Celo: A multi-asset cryptographic protocol for decentralized social payments. White Paper (2019). storage.googleapis.com/celo whitepapers/Celo A Multi Asset Cryptographic Protocol for Decentralized Social Payments.pdf.

- 54. Project, M. The Maker Protocol: MakerDAO’s Multi-Collateral Dai (MCD) System (2020). White Paper. storage.googleapis.com/celo whitepapers/Celo A Multi Asset Cryptographic Protocol for Decentralized Social Payments.pdf.

- 55. Zhang, Y., Chen, X., Park, D. Formal specification of constant product (xy = k) market maker model and implementation. White Paper (2018).

- 56. Warren, W., Bandeali, A. 0x: An open protocol for decentralized exchange on the Ethereum blockchain. White Paper (2017). github.com/0xProject/whitepaper.

- 57. Hertzog, E., Benartzi, G., Benartzi, G. Bancor protocol. White Paper (2017). storage.googleapis.com/website-bancor/2018/04/01ba8253-bancor_protocol_whitepaper_en.pdf (accessed 24 Apr 2022).

- 58. Werner, S. M., Perez, D., Gudgeon, L., Klages-Mundt, A., Harz, D., Knottenbelt, W. J. SoK: Decentralized finance (DeFi) (2021). arXiv preprint arXiv:2101.08778.

- 59. Schär, F. Decentralized finance: On blockchain- and smart contract-based financial markets. Federal Reserve Bank of St. Louis Review (2021).

- 60. Angeris, G., Chitra, T. Improved price oracles: Constant function market makers. In Proceedings of the 2nd ACM Conference on Advances in Financial Technologies, 80–91 (2020).

- 61. Angeris, G., Agrawal, A., Evans, A., Chitra, T., Boyd, S. Constant function market makers: Multi-asset trades via convex optimization (2021). arXiv preprint arXiv:2107.12484.

- 62. Makarov, I., Schoar, A. Trading and arbitrage in cryptocurrency markets. J. Financ. Econ. 135(2), 293–319 (2020). https://doi.org/10.1016/j.jfineco.2019.07.001.

- 63. Angeris, G., Chitra, T., Evans, A., Boyd, S. Optimal routing for constant function market makers (2021).

- 64. Daian, P., Goldfeder, S., Kell, T., Li, Y., Zhao, X., Bentov, I., Breidenbach, L., Juels, A. Flash boys 2.0: Frontrunning, transaction reordering, and consensus instability in decentralized exchanges (2019). arXiv preprint arXiv:1904.05234.

- 65. Davis, D., Yin, W. A three-operator splitting scheme and its optimization applications. Set-Valued Var. Anal. 25(4), 829–858 (2017). https://doi.org/10.1007/s11228-017-0421-z.

- 66. Raissi, M., Perdikaris, P., Karniadakis, G. E. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 378, 686–707 (2019). https://doi.org/10.1016/j.jcp.2018.10.045.

- 67. Ruthotto, L., Osher, S. J., Li, W., Nurbekyan, L., Fung, S. W. A machine learning framework for solving high-dimensional mean field game and mean field control problems. Proc. Natl. Acad. Sci. 117(17), 9183–9193 (2020). https://doi.org/10.1073/pnas.1922204117.

- 68. Lin, A. T., Fung, S. W., Li, W., Nurbekyan, L., Osher, S. J. Alternating the population and control neural networks to solve high-dimensional stochastic mean-field games. Proc. Natl. Acad. Sci. 118(31) (2021).
