Abstract

We develop new quasi-experimental tools to measure disparate impact, regardless of its source, in the context of bail decisions. We show that omitted variables bias in pretrial release rate comparisons can be purged by using the quasi-random assignment of judges to estimate average pretrial misconduct risk by race. We find that two-thirds of the release rate disparity between white and Black defendants in New York City is due to the disparate impact of release decisions. We then develop a hierarchical marginal treatment effect model to study the drivers of disparate impact, finding evidence of both racial bias and statistical discrimination.

Keywords: C26, J15, K42

1. Introduction

Racial disparities are pervasive throughout much of the U.S. criminal justice system. Black individuals are, for example, more likely than white individuals to be searched by the police, charged with a serious crime, detained before trial, convicted of an offense, and incarcerated.1 Such racial disparities are often taken as evidence of discrimination, driven by racially biased preferences or stereotypes. But this interpretation overlooks at least two alternative explanations. First, the observed disparities may reflect legally relevant differences in criminal behavior that are partially observed by police officers, prosecutors, and judges but not by the econometrician. Second, the observed disparities may be driven by statistical discrimination, instead of or alongside racially biased preferences and stereotypes.2 Distinguishing between these explanations for racial disparities and correctly measuring racial discrimination remains difficult, hampering efforts to formulate appropriate policy responses.

This paper develops new quasi-experimental tools to estimate disparate impact, a broad and legally based definition of discrimination encompassing both racial bias and statistical discrimination. We develop these tools in the context of bail, where the sole legal objective of judges is to allow most defendants to be released before trial while minimizing the risk of pretrial misconduct (such as failing to appear in court or being arrested for a new crime). Bail judges thus risk violating U.S. anti-discrimination law if they release white and Black defendants with the same objective misconduct potential at different rates.3 Correspondingly, we measure disparate impact as the difference in a judge’s release rates between white and Black individuals with identical misconduct potential. This measure is consistent with the legal theory of disparate impact, as well as economic notions of discrimination that compare white and Black individuals with the same productivity (Aigner and Cain, 1977) and notions of algorithmic discrimination that compare equally “qualified” white and Black individuals (Berk et al., 2018).

Estimating the disparate impact of release decisions among white and Black defendants is fundamentally challenging. Observed disparities do not adjust for unobserved misconduct potential and can therefore suffer from omitted variables bias (OVB) when there are unobserved racial differences in misconduct risk.4 Observational comparisons can also suffer from included variables bias (IVB) when they adjust for non-race characteristics, such as criminal history and crime type, that can mediate disparate impact. Randomized audit studies (e.g., Bertrand and Mullainathan, 2004; Ewens, Tomlin and Choon Wang, 2014) can test whether decision-makers treat fictitious white and Black individuals with the same non-race characteristics in the same way, but do not capture disparate impact that arises via non-race characteristics and are infeasible in high-stakes and face-to-face settings such as bail decisions. Outcome-based tests can detect one potential driver of disparate impact—racial bias at the margin of release decisions (e.g., Arnold, Dobbie and Yang, 2018; Marx, Forthcoming)—but cannot detect accurate statistical discrimination or measure the overall extent of disparate impact.

Our primary methodological contribution is to show that disparate impact in bail decisions, regardless of its source, can be measured by leveraging the quasi-random assignment of decision-makers (such as bail judges) to white and Black individuals. This approach proceeds in two steps. First, we show that to purge OVB from observational release rate comparisons we need only to measure average white and Black misconduct risk. Intuitively, the OVB in observational comparisons comes from the correlation between defendant race and unobserved misconduct potential in each judge’s defendant pool. When judges are as-good-as-randomly assigned, this correlation is common to all judges and is furthermore a simple function of misconduct risk (i.e., average misconduct potential) by race. We can therefore use estimates of race-specific misconduct risk to rescale observational release rate comparisons in such a way that makes released white and Black defendants comparable in terms of misconduct potential within each as-good-as-randomly assigned judge’s defendant pool. The rescaled comparisons avoid OVB by revealing the rates at which each judge releases white and Black defendants with the same objective misconduct potential. Our rescaling approach further avoids IVB by conditioning on pretrial misconduct potential directly, instead of conditioning on non-race characteristics that can mediate disparate impact. The key econometric challenge is then to estimate the average misconduct risk parameters, which is difficult since misconduct outcomes are only selectively observed among the subset of defendants who a judge endogenously releases before trial.

In the second step of our approach, we estimate the required average misconduct risk inputs from quasi-experimental variation in pretrial release and misconduct rates. To build intuition for this step, consider an idealized setting with an as-good-as-randomly assigned bail judge who is supremely lenient in that she releases nearly all defendants assigned to her. The supremely lenient judge’s release rates among white and Black defendants are close to one, meaning (by as-good-as-random assignment) that the misconduct rates among her released white and Black defendants are close to the average misconduct risk inputs. In practice, we do not observe such a supremely lenient judge. Instead, we estimate average misconduct risk by extrapolating observed release misconduct rates across observed quasi-randomly assigned judges with high release rates. Importantly, we do not require a model of judge decision-making for either our approach to extrapolating pretrial misconduct risk or to estimating discrimination from these extrapolations. Our model-free approach to measuring disparate impact only requires that the statistical extrapolations and judges’ legal objective are well-specified.

We use our quasi-experimental approach to measure the disparate impact of release decisions in New York City (NYC), home to one of the largest pretrial systems in the country. Our most conservative estimates show that approximately two-thirds of the average release rate disparity between white and Black defendants is due to the disparate impact of release decisions (62 percent, or 4.2 percentage points out of 6.8 percentage points), with the remaining one-third attributable to OVB. The average release rate disparity due to disparate impact shrinks by 17 percent (0.7 percentage points out of 4.2 percentage points) when we condition on observable characteristics such as criminal history and crime type, additionally highlighting the importance of IVB in this setting. Our main finding applies to most defendant subgroups and is robust to different extrapolations of average misconduct risk, specifications of pretrial misconduct, classifications of pretrial release, and definitions of defendant race. Judge-specific estimates further show that the vast majority of bail judges make decisions with nonzero disparate impact (87 percent, by our most conservative estimate), with higher levels among more stringent judges, judges assigned a lower share of cases with Black defendants, and judges who are not newly appointed in our sample period.

Our second methodological contribution is to develop a hierarchical marginal treatment effect (MTE) model that imposes additional structure on the quasi-experimental variation to investigate the drivers of disparate impact in NYC bail decisions. The model allows us to decompose disparate impact into components due to racial bias and statistical discrimination, two drivers that have historically been the focus of the economics literature. The model specifies a joint distribution of judge preferences for releasing defendants of a given race and judge skill at inferring misconduct potential by race. The distributions of judge preferences and skill imply a distribution of judge- and race-specific MTE curves that can be used to test for racial bias at the margin of release and measure racial differences in average risk or signal quality that generate statistical discrimination. The model also allows racial disparities in the quality of misconduct signals to generate indirect (or “systemic”) discrimination, in the absence of racial bias or statistical discrimination (Bohren, Hull and Imas, 2022).

We estimate the distribution of judge MTE curves using a tractable simulated minimum distance (SMD) procedure that matches moments of the quasi-experimental variation in pretrial release and misconduct rates. Model estimates show evidence of both racial bias and statistical discrimination in NYC, with the latter coming from a higher level of average risk (that exacerbates disparate impact) and less precise risk signals (that alleviates disparate impact) for Black defendants. The finding of statistical discrimination implies that outcome-based tests of racial bias (as in Arnold, Dobbie and Yang (2018)) would miss important sources of disparate impact in this setting.

We conclude by using our MTE model to investigate whether disparate impact can be reliably targeted, and potentially reduced, with existing data. We simulate counterfactuals in which judges can be subjected to race-specific release rate quotas that eliminate the disparate impact in release decisions, as estimated by a policymaker. We find that targeting the most discriminatory NYC judges with a quota based on our quasi-experimental estimates can reduce the average level of disparate impact by 36 percent, and that targeting all judges with such a quota can essentially eliminate disparate impact despite the noise in our estimation procedure. By comparison, targeting judges with a quota based on observational release rate disparities can lead to a small but non-zero level of disparate impact against white defendants, due to the OVB in observed release rates.

This paper complements a recent empirical literature that uses quasi-experimental variation to test for racial bias in the criminal justice system, which is one potential driver of the disparate impact we measure. Arnold, Dobbie and Yang (2018) use the release tendencies of quasi-randomly assigned bail judges to test for racial bias using a conventional MTE framework, while Marx (Forthcoming) uses a similar approach to test for racial bias at the margin of police stops under a weaker first-stage monotonicity assumption. The outcome-based tests developed by Arnold, Dobbie and Yang (2018) and Marx (Forthcoming) detect racial bias from taste-based discrimination or inaccurate stereotypes, but cannot detect accurate statistical discrimination or measure the magnitude of any disparate impact. Our primary contribution to this literature is to show how quasi-experimental judge assignment can be used to measure these magnitudes and detect all possible disparate impact violations of U.S. anti-discrimination law, regardless of their source. Our secondary contribution is to show how to investigate the drivers of disparate impact by imposing alternative structure on the quasi-experimental variation, providing a way to quantify the relative importance of the racial bias detected in the outcome-based tests of Arnold, Dobbie and Yang (2018) and Marx (Forthcoming).5

Methodologically, this paper builds on a recent literature on estimating average treatment effects (ATEs) and MTEs with multiple discrete instruments (Kowalski, 2016; Brinch, Mogstad and Wiswall, 2017; Mogstad, Santos and Torgovitsky, 2018; Hull, 2020). An important feature of our approach is that we do not impose the usual first-stage monotonicity assumption, which has received scrutiny both in general (Mogstad, Torgovitsky and Walters, 2020) and in the specific context of so-called “judge designs” (Mueller-Smith, 2015; Frandsen, Lefgren and Leslie, 2019; Norris, 2019). Our extrapolation-based solution to estimating mean misconduct risk (which can be viewed as an ATE) without imposing monotonicity is most closely related to Hull (2020), who considers non-parametric extrapolations of quasi-experimental moments in the spirit of “identification at infinity” in sample selection models (Chamberlain, 1986; Heckman, 1990; Andrews and Schafgans, 1998). Our hierarchical MTE framework is closely related to the contemporaneous work of Chan, Gentzkow and Yu (2021), who use a similar model to study variation in physician preferences and skill when making pneumonia diagnoses.

The remainder of the paper is organized as follows. Section 2 describes how U.S. anti-discrimination laws motivate our approach and provides an overview of the NYC pretrial system. Section 3 outlines the conceptual framework underlying our analysis. Section 4 describes our data and documents pretrial release rate differences for Black and white defendants. Section 5 develops and implements our quasi-experimental approach to measuring disparate impact in bail decisions. Section 6 develops and estimates our hierarchical MTE model to explore the drivers of disparate impact and conduct policy counterfactuals. Section 7 concludes.

2. Setting

2.1. Disparate Impact and U.S. Anti-Discrimination Law

The two main legal doctrines of discrimination in the United States are disparate impact and disparate treatment, with each requiring distinct statistical and non-statistical evidence. In this section we first discuss disparate impact and motivate an idealized statistical measure, which we formalize and estimate in this paper. We then compare disparate impact to disparate treatment, which generally requires non-statistical evidence to establish or strongly suggest discriminatory intent under the law. We emphasize that our empirical analysis draws on the legal doctrine of disparate impact and not alternative definitions that use the same terminology to refer to, for example, unconditional disparities in treatments or outcomes between groups.

The disparate impact doctrine concerns the discriminatory effects of a policy or practice, rather than a decision-maker’s intent. Under this doctrine, a policy or practice is discriminatory if it leads to an adverse impact on a protected class and either the decision-maker cannot offer a substantial legitimate justification or if it can be shown that such a justification could be reasonably achieved by less disparate means. The disparate impact standard was formalized in the landmark U.S. Supreme Court case of Griggs v. Duke Power Co. (1971). This case began in 1965, when the Duke Power Company instituted a policy requiring employees to have a high school diploma in order to be considered for promotion. The policy had the effect of drastically limiting the eligibility of Black employees, despite being race-neutral. The Court found that these promotion disparities reflected illegal discrimination, since having a high school diploma had little to no relationship to a worker’s productivity at Duke Power (the “legitimate justification” in this setting). Notably, the employer’s motivation for instituting the diploma requirement was irrelevant to the Court’s decision, as was the fact that the policy was applied equally to white and Black employees.6 Subsequent court decisions, such as Albemarle Paper Co. v. Moody (1975), have clarified that policies like the diploma requirement in the Griggs v. Duke Power Co. case remain illegal even when they are related to a worker’s productivity—provided there is an alternative policy that could reasonably achieve the same goal by less disparate means.

An important question in interpreting the disparate impact doctrine, both in general and in our specific context of pretrial decisions, is how to define a legitimate justification for potential disparities. In the employment context, the U.S. Supreme Court has consistently found that an employer charged with a disparate impact must show that their hiring practices “bear a demonstrable relationship to successful performance of the jobs for which it was used” (Griggs v. Duke Power Co., 1971). In the lending context, guidance issued by various U.S. banking regulators has similarly explained that legitimate justifications are typically related to cost, profitability, soundness, or other measurable objectives of the lender (see, e.g., the Interagency Fair Lending Examination Procedures). We interpret these decisions as saying that the legitimate justification that defines disparate impact is based on objective potential outcomes, such as worker productivity in an employment context, profits in a lending context, or (as we discuss more below) pretrial misconduct in the pretrial context.

The ideal statistical test for disparate impact would therefore compare the treatment of different protected groups with identical potential for achieving a given relevant outcome. In the context of bail decisions, discussed further below, this means that we would like to compare the release decisions of white and Black defendants with identical pretrial misconduct potential. The finding of disparities conditional on misconduct potential would likely be necessary (though perhaps not sufficient) evidence to win a disparate impact case—depending on, for example, whether or not it can be shown that there is a decision rule yielding less of a conditional disparity while achieving similar or better outcomes.

By design, the ideal statistical test will measure disparate impact coming from both “direct” discrimination on the basis of race itself and “indirect” discrimination from non-race characteristics—as with the race-neutral policy considered in Griggs v. Duke Power Co. (1971). For example, a bail judge using defendant criminal history to accurately predict pretrial misconduct potential in a race-neutral way may make release decisions with disparate impact because she fails to take into account existing racial disparities in criminal history (e.g., due to discrimination in policing).7 The statistical measure of disparate impact we develop below captures such indirect discrimination by comparing the release rates of white and Black defendants with identical pretrial misconduct potential, without conditioning on other non-race characteristics like criminal history. Our measure also quantifies the extent of such disparate impact, not just its presence, allowing for the comparisons of different decision-making rules (e.g., bail judges vs. algorithmic decision rules) that may serve as the basis for disparate impact litigation.

The disparate treatment doctrine contrasts with disparate impact by prohibiting polices or practices motivated by a “discriminatory purpose” and thus requiring proof of intent.8 There are two competing views on the ideal statistical test for disparate treatment, with broad agreement that statistical evidence alone is insufficient because of the need to show intent. The first view is that one would still like to compare the treatment of different groups with identical potential outcomes, as in a disparate impact case, but augment this comparison with non-statistical evidence showing or strongly suggesting intent.9 The second view is that we would like to compare the treatment of different groups with identical observable characteristics using, for example, a well-designed audit study or observational analysis that controls for all observable differences between groups. Such a test would reveal whether the decision-maker is impartial with respect to protected attributes such as race (i.e., is “race-blind”) and may, along with proof of intent, be enough to establish disparate treatment.

2.2. The New York City Pretrial System

We study disparate impact in the New York City pretrial system, which is one of the largest pretrial systems in the country. U.S. pretrial systems are meant to allow most criminal defendants to be released from legal custody while minimizing the risk of pretrial misconduct. Bail judges in both NYC and the country as a whole are granted considerable discretion in determining which defendants should be released before trial, but they cannot discriminate against minorities and other protected classes even when membership in a protected class contains information about the underlying risk of criminal misconduct (Yang and Dobbie, 2020). Judges are also not meant to assess guilt or punishment when determining which individuals should be released from custody, nor are they meant to consider the political consequences of their bail decisions.

In NYC, bail conditions are set by a judge at an arraignment hearing held shortly after an arrest. These hearings usually last a few minutes and are held through a videoconference to the detention center. The judge typically receives detailed information on the defendant’s current offense and prior criminal record, as well as a release recommendation based on a six-item checklist developed by a local nonprofit (New York City Criminal Justice Agency Inc., 2016). The judge then has several options in setting bail conditions. First, she can release defendants who show minimal risk on a promise to return for all court appearances, known broadly as release on recognizance (ROR) or release without conditions. Second, she can require defendants to post some sort of bail to be released. The judge can also send higher-risk defendants to a supervised release program as an alternative to cash bail. Finally, the judge can detain defendants pending trial by denying bail altogether. Cases such as murder, kidnapping, arson, and high-level drug possession and sale almost always result in a denial of bail, for example, though these cases make up only about 0.8 percent of our sample.

We exploit three features of the pretrial system in our analysis. First, the legal objective of bail judges is both narrow and measurable among the set of released defendants for whom pretrial misconduct outcomes are observed (although not among detained defendants, for whom such outcomes are unobserved). This narrow legal objective yields a natural approach to measuring disparate impact from the difference in a judge’s release rates between white and Black defendants with identical misconduct potential. Second, bail judges can be effectively viewed as making binary decisions, releasing low-risk defendants (generally by ROR or setting a low cash bail amount) and detaining high-risk defendants (generally by setting a high cash bail amount). We explore alternative characterizations of bail decisions in our analysis, such as viewing judges as deciding between release without conditions and any cash bail amount. Third, the case assignment procedures used in most jurisdictions, including NYC, generate quasi-random variation in judge assignment for defendants arrested at the same time and place. The quasi-random variation in judge assignment, in turn, generates quasi-experimental variation in the probability that a defendant is released before trial which we exploit in our analysis.

There are two differences between the NYC pretrial system and other pretrial systems around the country that are potentially relevant for our analysis. First, New York instructs judges to only consider the risk that defendants will not appear for a required court appearance when setting bail conditions (a so-called failure to appear, or FTA), not the risk of new criminal activity as in most states (§510.10 of New York Criminal Procedure Law). We explore robustness to this narrower definition of pretrial misconduct in our analysis. Second, many defendants in NYC will never have bail set, either because the police gave them a desk appearance ticket that does not require an arraignment hearing or because the case was dismissed or otherwise disposed at the arraignment hearing before bail was set. However, the decision of whether or not to issue a desk appearance ticket is made before the bail judge is assigned, and cases should only be dismissed or otherwise disposed at arraignment if there is a clear legal defect in the case (Leslie and Pope, 2017). We show below that there is no relationship between the assigned bail judge and the probability that a case exits our sample due to case disposal or dismissal at arraignment, and exclude these cases from our analysis.

3. Conceptual Framework

3.1. Formalizing Disparate Impact

We formalize the disparate impact standard in a setting where a set of decision-makers j make binary decisions Dij ∈ {0, 1} across a population of individuals i. Each decision-maker’s goal is to align Dij with a latent binary state which captures the legitimate justification for setting Dij = 1.10 In the context of bail decisions, Dij = 1 indicates that judge j would release defendant i if assigned to her case (with Dij = 0 otherwise) while indicates that the defendant would subsequently fail to appear in court or be rearrested for a new crime if released (with otherwise). Each judge’s objective is to release individuals without misconduct potential and detain individuals with misconduct potential, but may differ in their predictions of which individuals fall into which category. We note that Dij is defined as the potential decision of judge j for defendant i, setting aside for now the judge assignment process which yields actual release decisions from these latent variables.

We measure disparate impact, both overall and for each judge, by the average release rate disparity between white and Black defendants with identical misconduct potential. To build up to this measure, let Ri ∈ {w, b} index the race of white and Black defendants and define:

| (1) |

as the release rate disparity among white and Black defendants without misconduct potential and:

| (2) |

as the release rate disparity among white and Black defendants with misconduct potential. Each Δjy parameter can be understood as capturing racial differences in the tendency of judge j to correctly and incorrectly classify individuals by their misconduct potential. The average level of disparate impact in judge j’s decisions is then given by:

| (3) |

with weights given by the average misconduct risk in the population, . The system-wide level of disparate impact is given by the case-weighted average of Δj across all judges.

We say that judge j discriminates against Black defendants when Δj > 0, that she discriminates against white defendants when Δj < 0, and that she does not discriminate against either Black or white defendants when Δj = 0, again recognizing that the Dij capture a judge’s potential release decisions. By holding the potential defendant population fixed, estimates of Δj can be used to calculate both the average level of disparate impact in a bail system as well as any variation in the level of disparate impact across judges. We choose the weights such that Δj captures the expected level of disparate impact in a pool of defendants where pretrial misconduct potential is unknown. We explore robustness to other weighted averages of Δj0 and Δj1 below.

By design, our measure of disparate impact, Δj, captures the discriminatory effects of judge j’s release decisions rather than any discriminatory intent underlying her decisions. As noted in Section 2.1 the disparate impact measured by Δj can arise from direct discrimination, via the conscious or unconscious use of defendant race, as well as indirect discrimination through the conscious or unconscious use of non-race characteristics that are correlated with race. Importantly, this measure is not meant to test whether judges treat fictitious white and Black individuals with the same non-race characteristics in the same way, as in a randomized audit study measuring such direct discrimination. As we discuss more below, conditioning on non-race characteristics beyond pretrial misconduct potential can bias our measure when disparate impact arises through these characteristics.11

The economics literature has historically focused on two potential drivers of racial discrimination, though it has not always been clear on how they can manifest as disparate impact. The first driver is racial bias, in which judges discriminate against Black defendants at the margin of pretrial release due to either racial preferences (Becker, 1957) or some form of inaccurate beliefs or stereotypes (Bohren et al., 2020; Bordalo et al., 2016). The second theoretical driver is statistical discrimination, in which judges act on accurate risk predictions but discriminate due to racial differences in average risk or the precision of received risk signals (Phelps, 1972; Arrow, 1973; Aigner and Cain, 1977). In Section 6, we formalize these potential drivers of Δj with a simple decision-making model. The model further allows disparate impact to arise from systemic differences in the distribution of non-race characteristics judges use to form misconduct predictions—a channel not usually considered in economic analyses of direct discrimination (Bohren, Hull and Imas, 2022). Estimates of this model allow us to quantify the role of each channel in driving our main estimates of disparate impact.

We emphasize that our analysis of disparate impact is not premised on the idea that the differences in misconduct potential which we condition on are innate or unaffected by discrimination at other points of the criminal justice system (or society as a whole). Differences in could, for example, be driven by various systemic factors such as the over-policing of Black neighborhoods relative to white neighborhoods, discrimination in the types of crimes that are reported to and investigated by the police, discrimination in local housing and labor markets, and so on. Thus a finding of Δj = 0 need not suggest pretrial release decisions are unaffected by disparate impact, only that there is no disparate impact conditional on these other potentially discriminatory systems and conditions. A finding of Δj ≠ 0 in turn isolates only one form of disparate impact in bail decisions, which may be reliably targeted and potentially reduced by policy, holding fixed other potentially harder to quantify forms of discrimination.

3.2. Empirical Challenges

Observational disparity analyses, whether in bail decisions or another area of the criminal justice system, often come from “benchmarking” regressions of decisions (such as pretrial release) on an indicator for an individual’s race and potentially other controls for the observed non-race characteristics (e.g., Gelman, Fagan and Kiss, 2007; Abrams, Bertrand and Mullainathan, 2012). Since such analyses cannot control for unobserved misconduct potential, they may suffer from omitted variables bias (OVB) when viewed as a measure of disparate impact. They may further suffer from included variables bias (IVB) when controlling for non-race characteristics through which disparate impact arises.

We formalize these empirical challenges in an idealized version of our setting with complete random assignment of judges to defendants. Let Zij = 1 if defendant i is assigned to judge j, let indicate the defendant’s release status, and let indicate the observed pretrial misconduct outcome. The expression for observed misconduct reflects the fact that an individual who is detained (Di = 0) cannot fail to appear in court or be rearrested for a new crime, such that Yi = 0 when Di = 0 regardless of individual i’s misconduct potential . The econometrician observes (Ri, Zi1, …, ZiJ, Di, Yi) for each defendant, and records whether the defendant is white in an indicator Wi = 1[Ri = w]. Under complete random assignment, each Zij is independent of .

We first formalize the OVB challenge by considering a simple judge-specific benchmarking regression of release decisions on judge-by-race interactions and judge main effects:

| (4) |

We omit the constant term from this regression in order to include all judge fixed effects, and for now abstract away from other controls. The interaction coefficients measure differences in judge release rates for white defendants relative to Black defendants, and under random judge assignment:

| (5) |

The difference between these regression coefficients and our disparate impact measure, ξj = αj−Δj, measures OVB in the simple benchmarking analysis. To unpack ξj, note first that we can write:

| (6) |

where gives the race- and judge-specific release rate of defendants with or without misconduct potential and gives the average misconduct risk among individuals of race r. In contrast, with Δj0 = δjw0 −δjb0 and Δj1 = δjw1 −δjb1, the disparate impact of judge j’s decisions given by Equation (3) can be written:

| (7) |

where is the average misconduct risk across all defendants, with pr = Pr(Ri = r) denoting racial shares. The difference in these expressions shows OVB can be written:

| (8) |

where the second line follows by definition of the population risk . The regression coefficient αj will be biased upward for Δj when ξj > 0 and biased downward when ξj < 0.

Two key insights follow from the OVB formula in Equation (8). First, the simple benchmarking regression (4) will generally yield biased estimates of disparate impact. The exception is when either judge release decisions are uncorrelated with misconduct potential (so δjr0 = δjr1 for each race r) or when misconduct potential is uncorrelated with defendant race (so μb = μw). Both scenarios are unlikely in practice.12 Second, Equation (8) suggests a potential avenue for addressing OVB and measuring disparate impact when bail judges are as-good-as-randomly assigned, using familiar econometric objects. One of the terms driving the bias of each αj is the difference in race-specific misconduct risk in the population, μb − μw, which is common to all judges. With capturing defendant i’s potential for pretrial misconduct when released and Yi = 0 for all detained individuals, each can be understood as an average treatment effect (ATE) of pretrial release on pretrial misconduct among individuals of race r. We show in Section 5 how such ATEs can be estimated from quasi-experimental judge assignment and used to purge OVB from benchmarking estimates, recovering estimates of Δj.

We can similarly formalize the potential for IVB in observational analyses by considering a simple case where white and Black misconduct risk are equal, μw = μb, so there is no OVB in the simple benchmarking regression: αj = Δj. Appendix B.2 shows how adjusting for some binary non-race characteristic Xi (such as an indicator for crime type) in this scenario yields an analogous formula for bias in the “overcontrolled” disparity :

| (9) |

where δjr,X=x = E[Dij | Ri = r,Xi = x] gives the race- and X-specific release rate of judge j and gives the race-specific average of Xi. Here, IVB arises whenever the judge’s decisions are correlated with the included non-race characteristic (so δjw,X=0 ≠ δjw,X=1 and this characteristic is correlated with race (so ). Similar IVB formulas can be derived when μw ≠ μb but when the econometrician has adjusted for so white and Black misconduct risk are conditionally comparable. We avoid IVB in our empirical strategy by making such an adjustment but not conditioning on non-race characteristics like crime type or criminal history.

4. Data and Observational Comparisons

4.1. Sample and Summary Statistics

Our analysis of disparate impact in bail decisions is based on the universe of 1,458,056 arraignments made in NYC between November 1, 2008 and November 1, 2013. The data contain information on a defendant’s gender, race, date of birth, and county of arrest, as well as the (anonymized) identity of the assigned bail judge. In our primary analysis, we categorize defendants as white (including both non-Hispanic and Hispanic white individuals), Black (including both non-Hispanic and Hispanic Black individuals), or neither. We explore alternative categorizations of race in robustness checks below.

In addition to detailed demographics, our data contain information on each defendant’s current offense, history of prior criminal convictions, and history of past pretrial misconduct (both rearrests and FTA). We also observe whether the defendant was released at the time of arraignment and whether this release was due to release without conditions or some form of money bail. We categorize defendants as either released (including both release without conditions and with paid cash bail) or detained (including cash bail that is not paid) at the first arraignment, though we again explore robustness to other categorizations of the initial pretrial release decision below. Finally, we observe whether a defendant subsequently failed to appear for a required court appearance or was subsequently arrested for a new crime before case disposition. We take either form of pretrial misconduct as the primary outcome of our analysis, but again explore robustness to other measures below.

We make four key restrictions to arrive at our estimation sample. First, we drop cases where the defendant is not charged with a felony or misdemeanor (N=26,057). Second, we drop cases that were disposed at arraignment (N=364,051) or adjourned in contemplation of dismissal (N=230,517). This set of restrictions drops cases that are likely to be dismissed by virtually every judge: Appendix Table A1 confirms that judge assignment is not systematically related to case disposal or case dismissal. Third, we drop cases in which the defendant is assigned a cash bail of $1 (N=1,284). This assignment occurs in cases in which the defendant is already serving time in jail on an unrelated charge; the $1 cash bail is set so that the defendant receives credit for served time, and does not reflect a new judge decision. Fourth, we drop defendants who are non-white and non-Black (N=45,529). Finally, we drop defendants assigned to judges with fewer than 100 cases (N=3,785) and court-by-time cells with fewer than 100 cases, only one unique judge, or only Black or only white defendants for a given judge (N=191,647), where a court-by-time cell is defined by the assigned courtroom, shift, day-of-week, month and year (e.g., the Wednesday night shift in Courtroom A of the Kings County courthouse in January 2012). The final sample consists of 595,186 cases, 367,434 defendants, and 268 judges.13

Table 1 summarizes our estimation sample, both overall and by race. Panel A shows that 73.0 percent of defendants are released before trial. A defendant is defined as released before trial if either the defendant is released without conditions (ROR) or the defendant posts the required bail amount before disposition. The vast majority of these releases are without conditions, with only 14.4 percent of defendants being released after being assigned money bail. White defendants are more likely to be released before trial than Black defendants, with a 76.7 percent release rate relative to a 69.5 percent release rate. Among released defendants, however, the distribution of release conditions (e.g., the ROR share) is virtually identical across race.

Table 1:

Descriptive Statistics

| All Defendants | White Defendants | Black Defendants | |

|---|---|---|---|

|

|

|||

| Panel A: Pretrial Release | (1) | (2) | (3) |

|

|

|||

| Released Before Trial | 0.730 | 0.767 | 0.695 |

| Share ROR | 0.852 | 0.852 | 0.851 |

| Share Money Bail | 0.144 | 0.144 | 0.145 |

| Share Other Bail Type | 0.004 | 0.004 | 0.004 |

| Share Remanded | 0.000 | 0.000 | 0.000 |

| Panel B: Defendant Characteristics | |||

| White | 0.478 | 1.000 | 0.000 |

| Male | 0.821 | 0.839 | 0.804 |

| Age at Arrest | 31.97 | 32.06 | 31.89 |

| Prior Rearrest | 0.229 | 0.204 | 0.253 |

| Prior FTA | 0.103 | 0.087 | 0.117 |

| Panel C: Charge Characteristics | |||

| Number of Charges | 1.150 | 1.184 | 1.118 |

| Felony Charge | 0.362 | 0.355 | 0.368 |

| Misdemeanor Charge | 0.638 | 0.645 | 0.632 |

| Any Drug Charge | 0.256 | 0.257 | 0.256 |

| Any DUI Charge | 0.046 | 0.067 | 0.027 |

| Any Violent Charge | 0.143 | 0.124 | 0.160 |

| Any Property Charge | 0.136 | 0.127 | 0.144 |

| Panel D: Pretrial Misconduct, When Released | |||

| Pretrial Misconduct | 0.299 | 0.266 | 0.332 |

| Share Rearrest Only | 0.499 | 0.498 | 0.499 |

| Share FTA Only | 0.281 | 0.296 | 0.269 |

| Share Rearrest and FTA | 0.220 | 0.205 | 0.232 |

|

| |||

| Total Cases | 595,186 | 284,598 | 310,588 |

| Cases with Defendant Released | 434,201 | 218,256 | 215,945 |

Notes. This table summarizes the NYC analysis sample. The sample consists of bail hearings that were quasi-randomly assigned judges between November 1, 2008 and November 1, 2013, as described in the text. Information on demographics and criminal outcomes is derived from court records as described in the text. Pretrial release is defined as meeting the bail conditions set by the first assigned bail judge. ROR (released on recognizance) is defined as being released without any conditions. FTA (failure to appear) is defined as failing to appear at a mandated court date.

Observed release rate disparities will generally not measure disparate impact when white and Black defendants have different misconduct rates. Suggestive evidence of such OVB is found in Panel B of Table 1. Black defendants are, for example, 4.9 percentage points more likely to have been arrested for a new crime before trial in the past year compared to white defendants, as well as 3.0 percentage points more likely to have a prior FTA in the past year. Panel C further shows that Black and white defendants tend to have different crime types. Black defendants are 1.3 percentage points more likely to have been charged with a felony compared to white defendants, as well as 3.6 percentage points more likely to have been charged with a violent crime. Finally, Panel D shows that Black defendants who are released are 6.6 percentage points more likely to be rearrested or have an FTA than white defendants who are released (though the composition of such misconduct is similar). Importantly, and in contrast to the other statistics in Table 1, the risk statistics in Panel D are only measured among released defendants. Pretrial misconduct potential is, by definition, unobserved among detained individuals despite being the key legal objective for bail judges.

4.2. Quasi-Experimental Judge Assignment

Our empirical strategy exploits variation in pretrial release from the quasi-random assignment of judges who vary in the leniency of their bail decisions. There are three features of the NYC pretrial system that make it an appropriate setting for this research design.

First, NYC uses a rotation calendar system to assign judges to arraignment shifts in each of the five county courthouses in the city, generating quasi-random variation in bail judge assignment for defendants arrested at the same time and in the same place. Each county courthouse employs a supervising judge to determine the schedule that assigns bail judges to the day (9 a.m. to 5 p.m.) and night arraignment shift (5 p.m. to 1 a.m.) in one or more courtrooms within each courthouse. Individual judges can request to work certain days or shifts but, in practice, there is considerable variation in judge assignments within a given arraignment shift, day-of-week, month, and year cell.

Second, there is limited scope for influencing which bail judge will hear any given case, as most individuals are brought for arraignment shortly after their arrest. Each defendant’s arraignment is also scheduled by a coordinator, who seeks to evenly distribute the workload to each open courtroom at an arraignment shift. Combined with the rotating calendar system described above and the processing time required before the arraignment, it is unlikely that police officers, prosecutors, defense attorneys, or defendants could accurately predict which judge is presiding over any given arraignment.

Finally, the rotation schedule used to assign bail judges to cases does not align with the schedule of any other actors in the criminal justice system. For example, different prosecutors and public defenders handle matters at each stage of criminal proceedings and are not assigned to particular bail judges, while both trial and sentencing judges are assigned to cases via different processes. As a result, we can study the effects of being assigned to a given bail judge as opposed to, for example, the effects of being assigned to a given set of bail, trial, and sentencing judges.

Appendix Table A3 verifies the quasi-random assignment of judges to bail cases in the estimation sample. Each column reports coefficient estimates from an ordinary least squares (OLS) regression of judge leniency on various defendant and case characteristics, with court-by-time fixed effects that control for the level of quasi-experimental bail judge assignment. We measure leniency using the leave-one-out average release rate among all other defendants assigned to a defendant’s judge. Following the standard approach in the literature (e.g., Arnold, Dobbie and Yang, 2018; Dobbie, Goldin and Yang, 2018), we construct the leave-one-out measure by first regressing pretrial release on court-by-time fixed effects and then using the residuals from this regression to construct the leave-one-out residualized release rate. By first residualizing on court-by-time effects, the leave-one-out measure captures the leniency of a judge relative to judges assigned to the same court-by-time cells. Most coefficients in this balance table are small and not statistically significantly different from zero, both overall and by defendant race. A joint F-test fails to reject the null of quasi-random assignment at conventional levels of statistical significance, albeit only marginally in certain specifications, with a p-value equal to 0.300 among white defendants and 0.101 among Black defendants.14

Appendix Table A4 further verifies that the assignment of different judges meaningfully affects the probability an individual is released before trial. Each column of this table reports coefficient estimates from an OLS regression of an indicator for pretrial release on judge leniency and court-by-time fixed effects. A one percentage point increase in the predicted leniency of an individual’s judge leads to a 0.96 percentage point increase in the probability of release, with a somewhat smaller first-stage effect for white defendants and a somewhat larger effect for Black defendants.

4.3. Observational Comparisons

Table 2 investigates the system-wide level of observed racial disparity in NYC pretrial release rates. We first estimate OLS regressions of the form:

| (10) |

where Di is an indicator equal to one if defendant i is released, Wi is an indicator for the defendant being white, and Xi is a vector of controls. Column 1 of Table 2 omits any controls in Xi, column 2 adds court-by-time fixed effects to adjust for unobservable differences at the level of quasi-experimental bail judge assignment to Xi, and column 3 further adds the defendant and case observables from Table 1. Such regressions generally follow the conventional benchmarking approach from the literature (e.g., Gelman, Fagan and Kiss, 2007; Abrams, Bertrand and Mullainathan, 2012), where we again note that because of potential of both OVB and IVB the defendant and case observables included in column 3 can lead us to either over- or understate the true level of disparate impact in bail decisions.

Table 2:

Observational Release Rate Disparities

| (1) | (2) | (3) | |

|---|---|---|---|

|

|

|||

| White | 0.072 (0.005) | 0.068 (0.005) | 0.052 (0.004) |

| Male | −0.092 (0.004) | ||

| Age at Arrest | −0.005 (0.000) | ||

| Prior Rearrest | −0.068 (0.004) | ||

| Prior FTA | −0.208 (0.005) | ||

| Felony Charge | −0.171 (0.005) | ||

| Any Drug Charge | −0.057 (0.007) | ||

| Any DUI Charge | 0.119 (0.004) | ||

| Any Violent Charge | −0.146 (0.007) | ||

| Any Property Charge | −0.072 (0.005) | ||

|

| |||

| Court × Time FE | No | Yes | Yes |

| Case/Defendant Observables | No | No | Yes |

| Mean Release Rate | 0.730 | 0.730 | 0.730 |

| Cases | 595,186 | 595,186 | 595,186 |

Notes. This table reports OLS estimates of regressions of an indicator for pretrial release on defendant characteristics. The regressions are estimated on the sample described in the notes to Table 1. Robust standard errors, two-way clustered at the individual and the judge level, are reported in parentheses.

Table 2 documents both statistically and economically significant release rate disparities between white and Black defendants in NYC. The unadjusted white-Black release rate difference α is estimated in column 1 at 7.2 percentage points, with a standard error (SE) of 0.5 percentage points. This release rate gap is around 10 percent of the mean release rate of 73 percent. The release rate gap falls slightly, to 6.8 percentage points (SE: 0.5), when we control for court-by-time fixed effects. The gap falls by an additional 24 percent, to 5.2 percentage points (SE: 0.4), when we add defendant and case observables. These estimates are similar in magnitude to the association, reported in column 3, between the probability of release and having an additional drug charge (−5.7 percentage points) or pretrial arrest (−6.8 percentage points) in the past year.

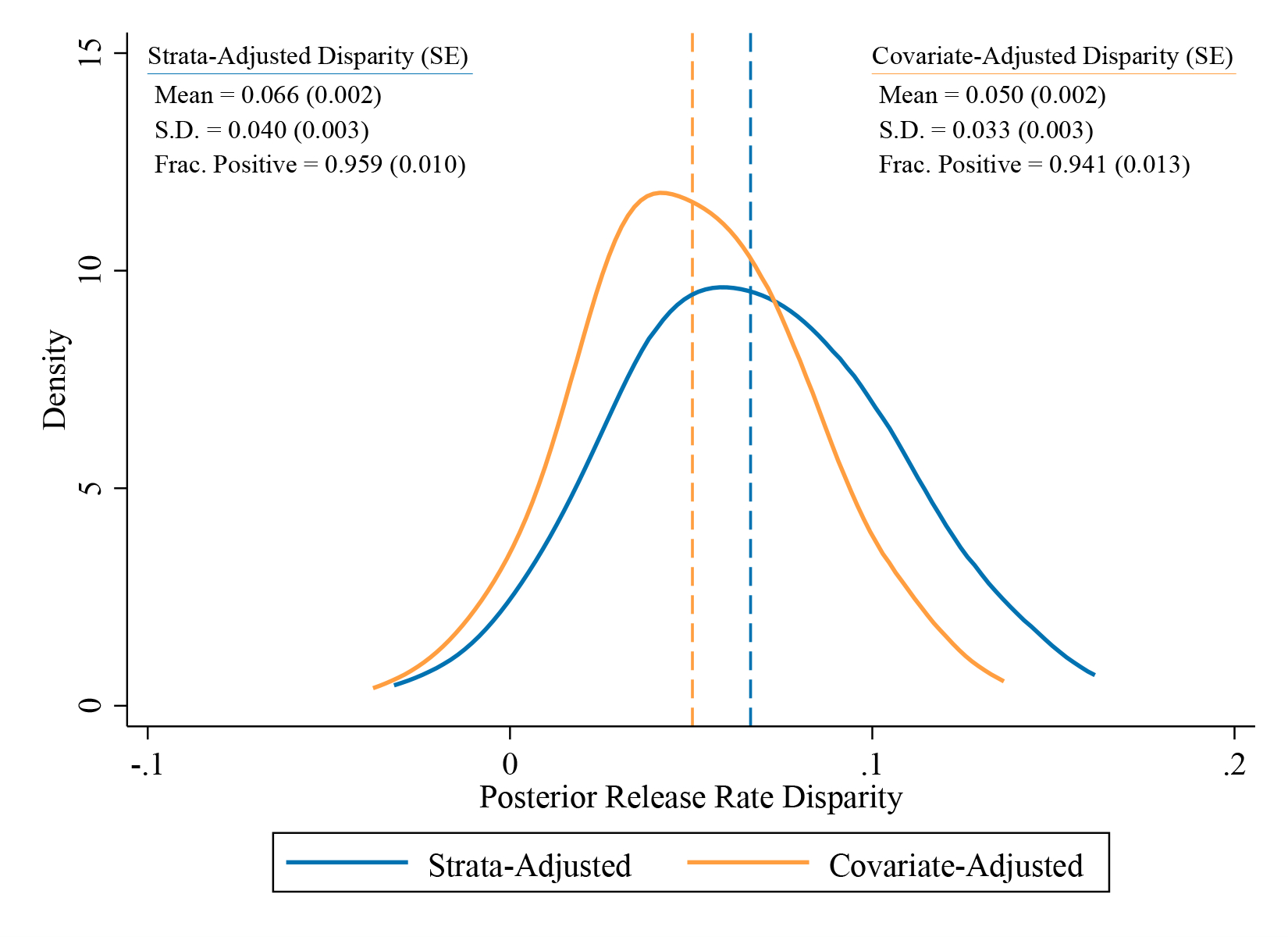

Figure 1 summarizes the distribution of judge-specific release rate disparities across the 268 bail judges in our sample. We estimate judge-specific disparities from OLS regressions of the form:

| (11) |

where Di is again an indicator equal to one if defendant i is released, WiZij is the interaction between an indicator for the defendant being white and the fixed effects for each judge, Zij are the noninteracted fixed effects for each judge, and Xi is again a control vector. We estimate Equation (11) with Xi demeaned, such that αj captures the regression-adjusted difference in release rates for white and Black individuals assigned to judge j. Figure 1 then plots empirical Bayes estimates of the posterior distribution of αj across judges, using the posterior average effect approach of Bonhomme and Weidner (2020) (see Appendix B.3 for details). We show the distribution when adjusting only for the main judge fixed effects and court-by-time fixed effects, following column 2 of Table 2, as well as the distribution when we add both defendant and case observables and court-by-time fixed effects, following column 3 of Table 2. We also report estimates of the prior mean and standard deviation of αj across judges, as well as the fraction of judges with positive αj (again following the posterior average effect approach of Bonhomme and Weidner (2020)).

Figure 1:

Observational Release Rate Disparities

Notes. This figure plots the posterior distribution of observational release rate disparities for the 268 judges in our sample. We estimate disparities by OLS regressions of an indicator for pretrial release on white×judge fixed effects, controlling for judge main effects. The strata-adjusted disparity regression controls only for the main judge fixed effects and court-by-time fixed effects. The covariate-adjusted disparity regression adds the baseline controls from Table 2. The distribution of judge disparities, and fractions of positive disparities, are computed from these estimates as posterior average effects; see Appendix B.3 for details. Means and standard deviations refer to the estimated prior distribution.

The posterior distributions of release rate disparities in Figure 1 are both located well above zero, revealing that nearly all judges in our sample release white defendants at a higher rate than Black defendants. We estimate that 95.9 percent (SE: 1.0) of judges in our sample release a larger share of white defendants in the specification that adjusts for court-by-time fixed effects, while 94.1 percent (SE: 1.3) are estimated to release a larger share of white defendants when we additionally adjust for defendant and case observables. Figure 1 nevertheless shows considerable variation in the magnitude of the release rate disparities across judges. The standard deviation of αj is estimated at 4.0 percentage points (SE: 0.3) when we adjust for court-by-time fixed effects, and 3.3 percentage points (SE: 0.3) when we additionally adjust for defendant and case observables. The average judge-specific disparities, which differ from the system-wide averages in Table 2 due to differences in weighting, are 6.6 percentage points (SE: 0.2) when we adjust for court-by-time fixed effects, and 5.0 percentage points (SE: 0.2) when we additionally adjust for defendant and case observables.

Together, the results from Table 2 and Figure 1 confirm large and pervasive racial disparities in NYC release decisions, both in the raw data and after accounting for observable differences between white and Black defendants. These observational estimates suggest that there may be a disparate impact of release decisions, but are not conclusive as we cannot directly adjust for unobserved misconduct potential and could thus either over- or understate the true level and distribution of disparate impact across judges in the NYC pretrial system. We next develop and apply a quasi-experimental approach to adjust for unobserved misconduct potential directly and measure disparate impact.

5. Quasi-Experimental Estimates of Disparate Impact

5.1. Methods

We estimate the disparate impact in NYC pretrial release decisions by rescaling the observational release rate comparisons in Figure 1 using quasi-experimental estimates of average white and Black misconduct risk. This quasi-experimental approach does not require a model of judge decision-making, only that average misconduct risk among white and Black defendants can be accurately extrapolated from the quasi-experimental data.

The first key insight underlying our approach is that when judges are as-good-as-randomly assigned, the problem of measuring disparate impact in release decisions for individual judges reduces to the problem of estimating the average misconduct risk among the full population of Black and white defendants. The source of OVB in an observational benchmarking comparison is the correlation between race and unobserved misconduct potential among a given judge’s pool of white and Black defendants. Under quasi-random judge assignment, this correlation is common to all judges and captured by race-specific population misconduct risk. Thus, given estimates of these race-specific risk parameters, observed release outcomes can be appropriately rescaled to make released white and Black defendants comparable in terms of their unobserved misconduct potential.

The rescaling that purges OVB from observational comparisons is given by expanding the conditional release rates from the definition of disparate impact in Equation (7):

| (12) |

| (13) |

where the third equalities in both lines follow from quasi-random judge assignment and the definition of mean risk . Substituting these expressions into Equation (7) yields:

| (14) |

where:

| (15) |

The rewritten definition in Equation (14) shows that the disparate impact in judge j’s release decisions Δj is given by the αj coefficients in a simple benchmarking regression, where the release decisions Di of each individual are rescaled by a positive factor Ωi. This Ωi reweights the sample to make released white and Black defendants comparable in terms of their unobserved misconduct potential. It therefore reveals the extent to which each judge discriminates against white and Black defendants with identical misconduct potential, even though misconduct potential is unobserved and cannot be directly conditioned on.15 Equation (15) shows that Ωi is a function of observed misconduct outcomes Yi and the unobserved average race-specific misconduct risk parameters μr, where again . The key econometric challenge is therefore to estimate average misconduct risk μr among the full population of white and Black defendants.

The second key insight underlying our approach is that the average race-specific misconduct risk parameters that enter Equation (14) can be estimated from quasi-experimental variation in pretrial release and misconduct rates. To build intuition for this approach, consider a setting with as-good-as-random judge assignment and a supremely lenient bail judge j∗ who releases nearly all defendants regardless of their race or potential for pretrial misconduct. This supremely lenient judge’s race-specific release rate among both Black and white defendants is close to one:

| (16) |

making the race-specific misconduct rate among defendants she releases close to the race-specific average misconduct risk in the full population:

| (17) |

where the first equality in both expressions follows by quasi-random assignment. Without further assumptions, the decisions of a supremely lenient and quasi-randomly assigned judge can therefore be used to estimate the average misconduct risk parameters needed for our disparate impact measure.

In the absence of such a supremely lenient judge, the required average misconduct risk parameters can be estimated using model-based or statistical extrapolations of release and misconduct rate variation across quasi-randomly assigned judges. This approach is conceptually similar to how average potential outcomes at a treatment cutoff can be extrapolated from nearby observations in a regression discontinuity (RD) design, particularly “donut RD” designs in which data in some window of the treatment cutoff is excluded. Here, released misconduct rates are extrapolated from quasi-randomly assigned judges with high leniency to the release rate cutoff of one given by a hypothetical supremely lenient judge. Mean risk estimates may, for example, come from the vertical intercept, at one, of linear, quadratic, or local linear regressions of estimated released misconduct rates on estimated release rates E[Dij | Ri = r] across judges j within each race r. As we show below, extrapolations may also come from a model of judge behavior. Absent any extrapolations, conservative bounds on mean risk may be obtained from the released misconduct rates of highly (but not supremely) lenient judges. Each of these approaches build on recent advances in ATE estimation with multiple discrete instruments (e.g., Brinch, Mogstad and Wiswall, 2017; Mogstad, Santos and Torgovitsky, 2018; Hull, 2020) and a long literature on “identification at infinity” in sample selection models (e.g., Chamberlain, 1986; Heckman, 1990; Andrews and Schafgans, 1998).16

A further practical complication arises in our setting, with NYC bail judges only quasi-randomly assigned conditional on court-by-time effects. Some adjustment for these strata is generally needed to estimate the potential judge- and race-specific release rates E[Dij | Ri = r] and released misconduct rates that enter our mean risk estimation. We use linear regression adjustment, which tractably incorporates the large number of court-by-time effects under an auxiliary linearity assumption. Specifically, we estimate release rates from the earlier benchmarking regression in Equation (11) and estimate released misconduct rates from the analogous OLS regression:

| (18) |

among released individuals (Di = 1), where again Xi contains demeaned court-by-time fixed effects. Here, ζj and ρj + ζj estimate and , respectively, just as ϕj and αj + ϕj estimate E[Dij | Ri = w] and E[Dij | Ri = b] in Equation (11).

The linear covariate adjustment in Equation (11) is appropriate when release rates are linear in the court-by-time effects for each judge and race, with constant coefficients: i.e., when . Similarly, a sufficient condition for Equation (18) to consistently estimate released misconduct rates is . Intuitively, both conditions require the court-by-time effects to shift judge actions similarly across the judges j and two races r. A judge who is lenient for a given race in one courtroom and time period is thus restricted to still be lenient in different courtrooms and time periods.17 Below, we relax this restriction in robustness checks that allow the control coefficients, β and γ, to vary flexibly by judge and race. We do this by separating the estimation of Equations (11) and (18) by borough and by interacting the judge effects with linear and quadratic functions of time.

5.2. Results

Mean Risk by Race

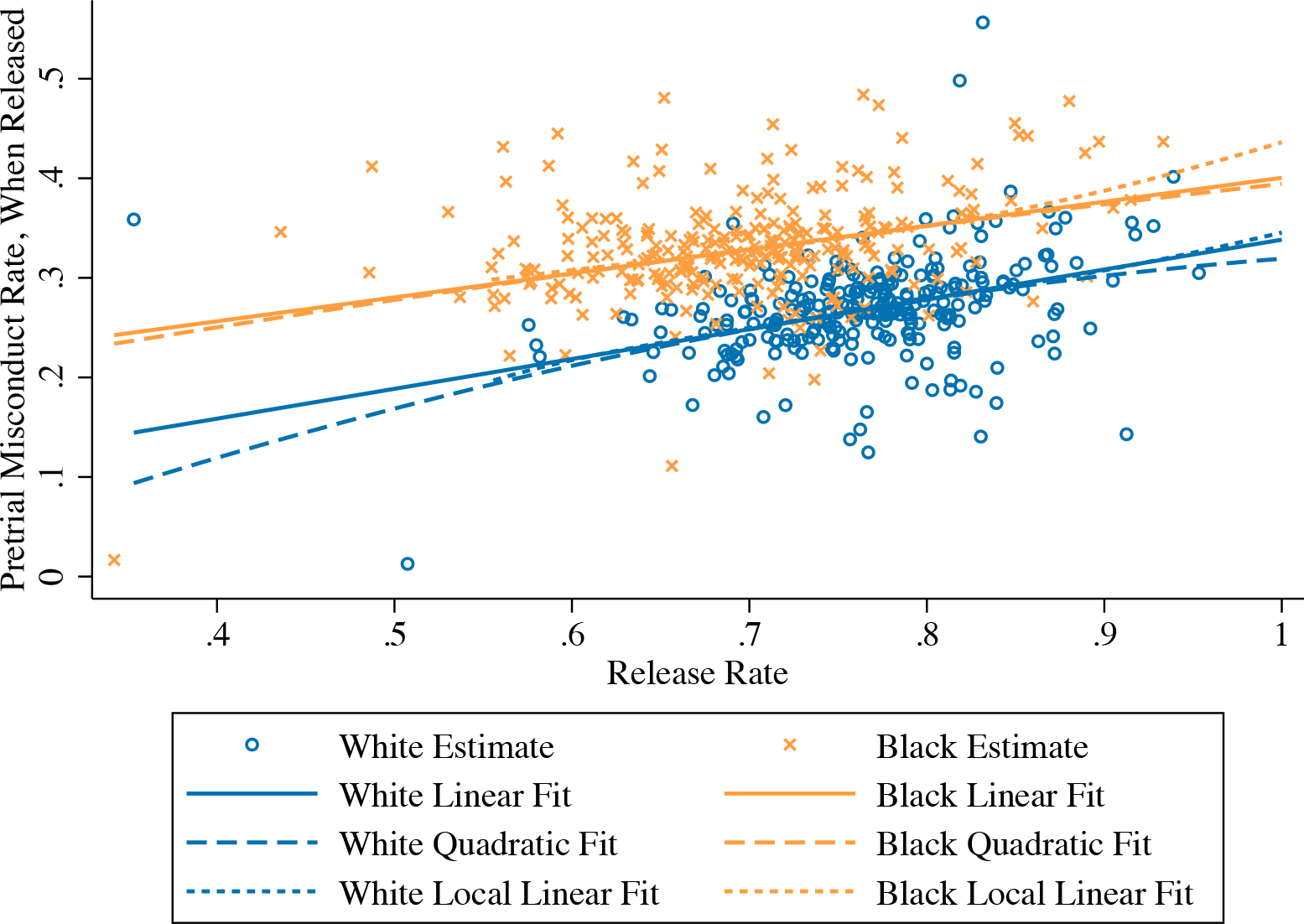

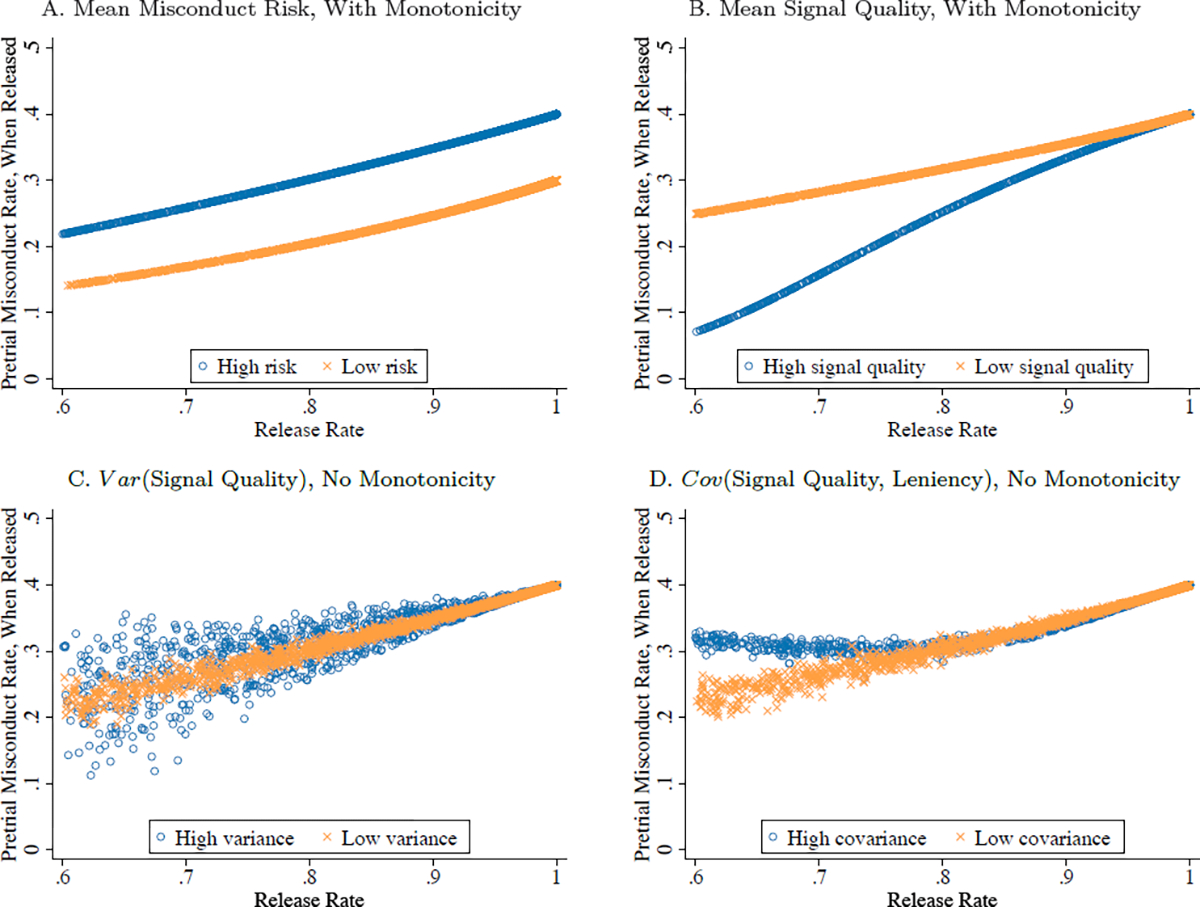

Figure 2 illustrates our extrapolation-based estimation of the mean risk parameters in NYC. The horizontal axis plots estimates of the regression-adjusted judge- and race-specific release rates. We find sizable variation across judges within each race, with several judges releasing a high fraction of white or Black defendants.18 Released misconduct rates, plotted on the vertical axis, tend to increase with judge leniency for both races—as would be predicted by a behavioral model in which the more lenient judges release riskier defendants at the margin. This pattern is shown by the two solid lines in Figure 2, representing the race-specific lines-of-best-fit through the first-step estimates. The lines-of-best-fit are obtained by OLS regressions of judge-specific released misconduct rate estimates on judge-specific release rate estimates, with the judge-level regressions weighted inversely by the variance of misconduct rate estimation error. We also plot curves-of-best-fit from judge-level quadratic and local linear specifications as dashed and dotted lines, respectively, with both specifications again weighted inversely by the variance of misconduct rate estimation error. The simple linear specification fits the local IV variation well, with quadratic and local linear specifications yielding similar fits across much of the leniency distribution.

Figure 2:

Judge-Specific Release Rates and Conditional Misconduct Rates

Notes. This figure plots race-specific release rates for the 268 judges in our sample against rates of pretrial misconduct among the set of released defendants. All estimates adjust for court-by-time fixed effects. The figure also plots race-specific linear, quadratic, and local linear curves of best fit, obtained from judge-level regressions that inverse-weight by the variance of the estimated misconduct rate among released defendants. The local linear regressions use a Gaussian kernel with a race-specific rule-of-thumb bandwidth.

The vertical intercepts of the different curves-of-best-fit, at one, provide different estimates of the race-specific mean risk parameters μr. These estimates and associated SEs are reported in Panel A of Table 3 (all SEs in this and subsequent sections are obtained from a bootstrap procedure which accounts for the first-step estimation of the judge- and race-specific release rates and released misconduct rates). The simplest linear extrapolation, summarized in column 1, yields precise mean risk estimates of 0.338 (SE: 0.007) for white defendants and 0.400 (SE: 0.006) for Black defendants. This extrapolation suggests that the average misconduct risk within the population of potential Black defendants is 6.2 percentage points higher than among the population of potential white defendants in NYC. Per Section 3.2, such a racial gap in misconduct risk is likely to generate OVB in observational release rate comparisons.

Table 3:

Mean Risk and Disparate Impact Estimates

| Linear Extrapolation | Quadratic Extrapolation | Local Linear Extrapolation | |

|---|---|---|---|

|

|

|||

| Panel A: Mean Risk by Race | (1) | (2) | (3) |

|

|

|||

| White Defendants | 0.338 (0.007) | 0.319 (0.021) | 0.346 (0.014) |

| Black Defendants | 0.400 (0.006) | 0.394 (0.021) | 0.436 (0.016) |

| Panel B: System-Wide Disparate Impact | |||

| Mean Across Cases | 0.054 (0.002) | 0.054 (0.007) | 0.042 (0.006) |

| Panel C: Judge-Level Disparate Impact | |||

| Mean Across Judges | 0.054 (0.003) | 0.054 (0.007) | 0.042 (0.006) |

| Std. Dev. Across Judges | 0.038 (0.003) | 0.037 (0.003) | 0.037 (0.003) |

| Fraction Positive | 0.929 (0.016) | 0.931 (0.036) | 0.873 (0.036) |

|

| |||

| Judges | 268 | 268 | 268 |

Notes. This table summarizes estimates of mean risk and disparate impact from different extrapolations of the variation in Figure 2. Panel A reports estimates of race-specific average misconduct risk, Panel B reports estimates of system-wide (case-weighted) disparate impact, and Panel C reports empirical Bayes estimates of summary statistics for the judge-level disparate impact prior distribution. To estimate mean risk, column 1 uses a linear extrapolation of the variation in Figure 2, while column 2 uses a quadratic extrapolation and column 3 uses a local linear extrapolation with a Gaussian kernel and a rule-of-thumb bandwidth. Robust standard errors, two-way clustered at the individual and judge level, are obtained by a bootstrapping procedure and appear in parentheses.

The quadratic and local linear extrapolations of the quasi-experimental variation yield similar race-specific mean risk estimates, as can be seen from Figure 2. The quadratic fit suggests a slight nonlinearity in the relationship between judge leniency and released misconduct rates, with a slightly concave dashed curve for white defendants and a more linear dashed curve for Black defendants. Column 2 of Table 3 shows that the former nonlinearity translates to a somewhat lower estimate of white mean risk, at 0.319 (SE: 0.021), with a similar estimate of Black mean risk, at 0.394 (SE: 0.021). Near one, the local linear fit of Figure 2 coincides with the linear fit for white defendants and is above both the quadratic and linear fit for Black defendants, yielding mean risk estimates in column 3 of 0.346 (SE: 0.014) and 0.436 (SE: 0.016), respectively. The implied racial gap in risk—and thus the potential for OVB—rises with these more flexible extrapolations, to 7.5 percentage points in column 2 and 9.0 percentage points in column 3. We take the most flexible local linear extrapolation as our baseline specification in NYC, which we show below gives the most conservative estimate of average disparate impact. We explore robustness to a wide range of alternative mean risk estimates below.

The extrapolations in Figure 2 yield accurate mean risk estimates when judge release rules are accurately parameterized or when there are many highly lenient judges. Appendix Figure A1 validates our extrapolations by plotting race-specific extrapolations of average predicted misconduct outcomes, among released defendants, in place of actual released misconduct averages in Figure 2. We first construct predicted misconduct outcomes using the fitted values from an OLS regression of actual pretrial misconduct on the controls in column 3 of Table 2 in the subsample of released defendants. Appendix Figure A1 then plots estimates of and E[Dij = 1 | Ri = r], constructed as in Figure 2. Since can be computed for the entire sample, we also include in this figure the overall averages that are analogous to the race-specific mean risk parameters of interest. Figure A1 shows that each of the linear, quadratic, and local linear extrapolations of predicted misconduct rates yields similar and accurate estimates of the overall actual averages. The 95 percent confidence intervals of the local linear extrapolations, for example, include the actual Black average and only narrowly exclude the actual white average. These results build confidence for the extrapolations of actual pretrial misconduct outcomes in this setting.19

Disparate Impact

Panels B and C of Table 3 summarize the estimates of disparate impact Δj given the corresponding ATE estimates in Panel A. These estimates are obtained from the sample analogue of Equation (7), noting that a judge’s release rate conditional on no misconduct potential can be written:

| (19) |

and similarly for her release rate condition on misconduct potential δjr1. We use the regression-adjusted estimates of E[Dij | Ri = r] and from Figure 2 and the sample share of Black defendants to complete the formula for Δj. Case-weighted averages of the resulting Δj estimates, reported in Panel B, estimate system-wide disparate impact. We also compute empirical Bayes posteriors of the distribution of Δj, again following Bonhomme and Weidner (2020). Summary statistics for the judge-level prior distribution (estimated as in Figure 1) are given in Panel C.

We find that approximately two-thirds of the system-wide release rate disparity between white and Black defendants in NYC is explained by disparate impact in release decisions, with about one-third explained by unobserved differences in pretrial misconduct risk (i.e., OVB). The local linear extrapolations yield the most conservative estimate of system-wide disparate impact in Table 3, implying that 62 percent (4.2 percentage points) of the case-weighted average disparity of 6.8 percentage points in Table 2 can be explained by disparate impact in release decisions. By comparison, both the linear and quadratic extrapolation-based estimates of race-specific mean risk imply that 79 percent (5.4 percentage points) of the average benchmarking disparity can be explained by disparate impact. We thus find that unobservable differences in defendant risk can explain 21 to 38 percent (1.4 to 2.6 percentage points) of the average disparity that remains after adjusting for court-by-time fixed effects.

We also find that IVB has a meaningful role in observational comparisons that adjust for non-race characteristics. Panels B and C of Appendix Table A8 show that adjusting the estimated release rates and released misconduct rates by the defendant and case characteristics in column 3 of Table 2 leads to smaller disparate impact estimates.20 With the local linear extrapolation, for example, the average disparate impact estimate shrinks by 17 percent (0.7 percentage points, out of 4.2 percentage points) compared to our baseline specification in Table 3. The reduction in the disparate impact estimate suggests that some of the findings in Table 3 are mediated by these defendant or case observables. As discussed above, our rescaling approach avoids such IVB concerns by conditioning on pretrial misconduct potential itself, rather than conditioning on these types of non-race characteristics.

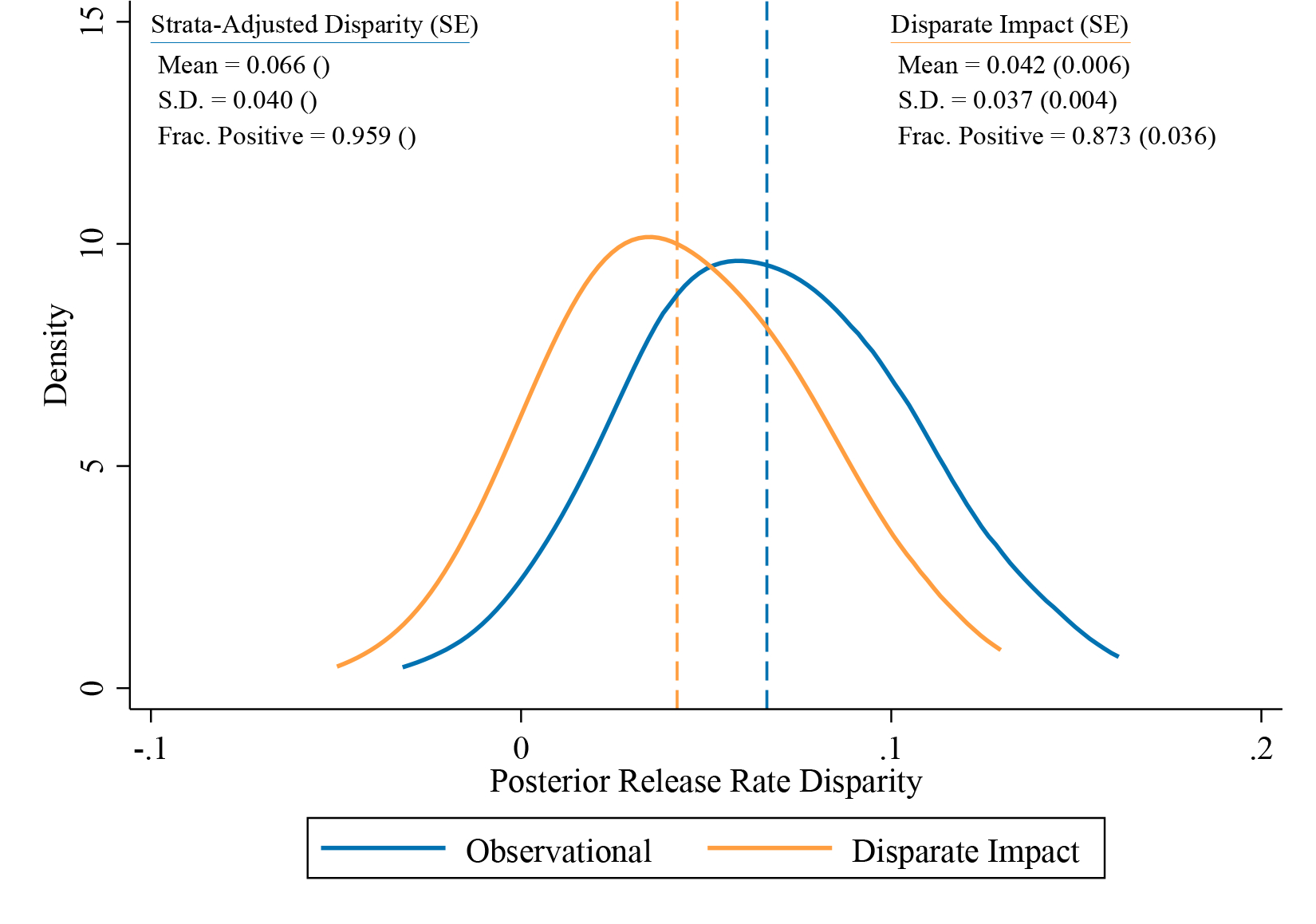

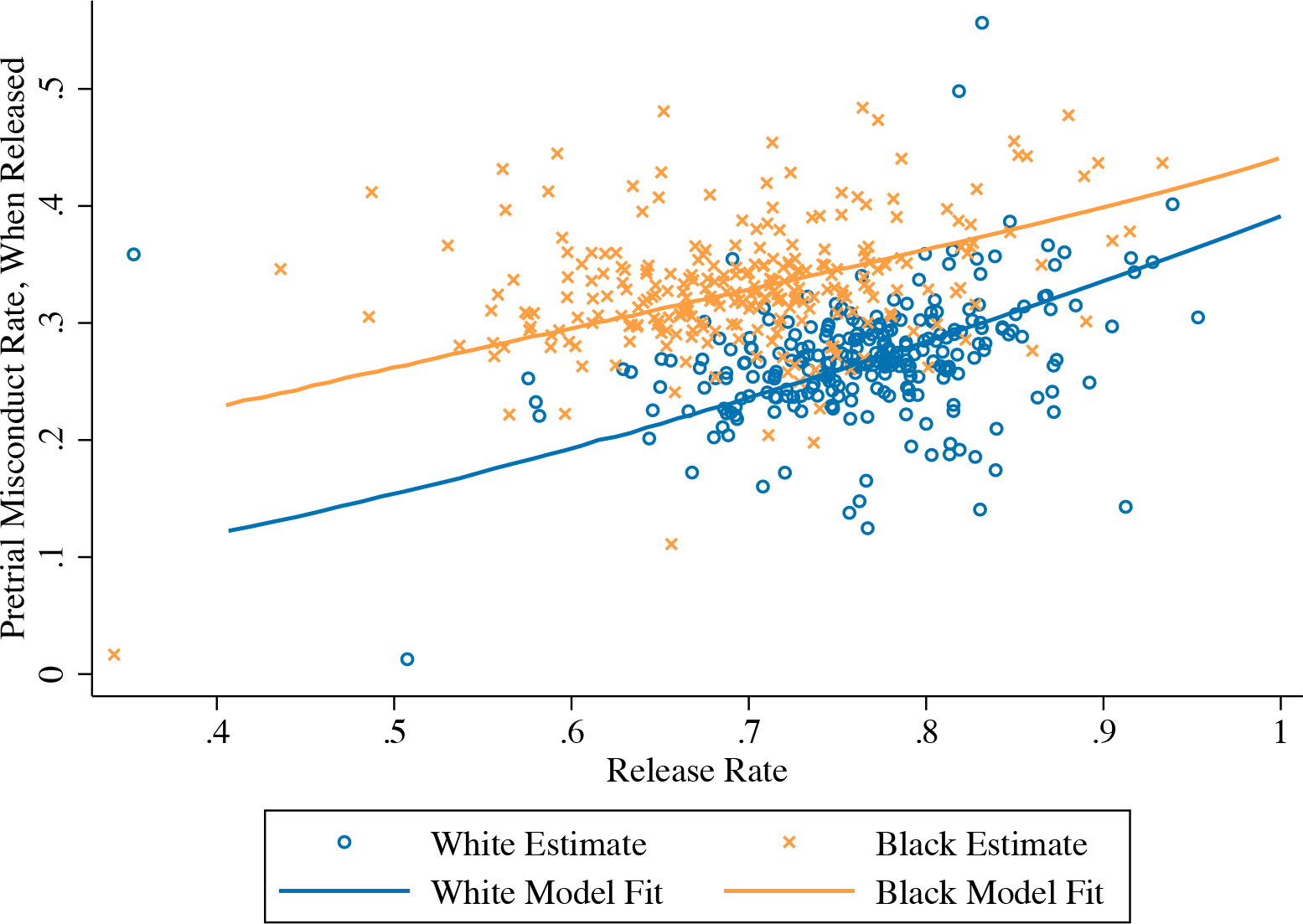

Figure 3 plots the full posterior distribution of judge-level disparate impact, paralleling Figure 1, again using the most conservative local linear estimates of mean risk and returning to the baseline court-by-time fixed effect adjustment. For comparison, we also include the posterior distribution of observed racial disparities from our benchmarking model that adjusts only for the court-by-time fixed effects. The former distribution is shifted evenly to the left of the latter distribution, consistent with nontrivial OVB across the judge-specific estimates. Around 62 percent of the judge-weighted average benchmarking disparity (4.2 percentage points, out of 6.6 percentage points) is found to be due to disparate impact in release decisions, the same as the case-weighted decomposition from Panel B of Table 3. The standard deviation of judge-specific disparate impact estimates remains large, at 3.7 percentage points, though it shrinks somewhat from the 4.0 percentage point standard deviation of observed release rate disparities. The clear majority of NYC judges have positive Δj, at 87.3 percent, though this share is also smaller than the 95.9 percent predicted by the benchmarking model. Panel C of Table 3 shows that these statistics are similar across different mean risk estimates.

Figure 3:

Observational Disparities and Disparate Impact Estimates

Notes. This figure plots the posterior distribution of observational disparities and disparate impact estimates for the 268 judges in our sample. Strata-adjusted disparities are estimated by the coefficients of an OLS regression of an indicator for pretrial release on white×judge fixed effects, controlling for judge main effects and court-by-time fixed effects. Disparate impact is estimated as described in Section 5, using the local linear extrapolations from Figure 2 to estimate the mean risk of each race. The distribution of judge disparities and disparate impact estimates, and fractions of positive disparities and disparate impact estimates, are computed from these estimates as posterior average effects; see Appendix B.3 for details. Means and standard deviations refer to the estimated prior distribution.

We explore patterns in this heterogeneity by regressing the judge-level disparate impact estimates on judge observables. Specifically, in columns 1–5 of Appendix Table A9 we regress the Δj estimates on indicators for whether a judge is newly appointed during our sample period, exhibits above-average leniency, or has an above-median share of Black defendants (as measured before the adjustment for court-by-time fixed effects, which makes Black defendant shares balanced across judges). We weight all regressions by estimates of the inverse variance of the disparate impact estimates, with similar results obtained from weighting by judge caseload. We find significantly lower levels of disparate impact among newly appointed judges, more lenient judges, and judges with a higher share of Black defendants. We also find that judges who primarily see cases in the Manhattan, Queens, and Richmond county courtrooms tend to exhibit higher levels of disparate impact, while those who primarily see cases in Brooklyn (the omitted category) and the Bronx have lower levels of disparate impact. Columns 6–7 of Appendix Table A9 further investigate the persistence of our disparate impact measure over time by computing separate Δj estimates in the first and second half of cases that each judge sees in our sample period, recomputing the race-specific mean risk estimates in each half, and estimating OLS regressions of current disparate impact estimates on lagged disparate impact posteriors and judge observables. We compute posteriors via a conventional empirical Bayes “shrinkage” procedure, detailed in Appendix B.3, and again weight by estimates of the inverse variance of the disparate impact estimates. We find that the judge-specific disparate impact estimates are highly correlated over time, with an autoregressive coefficient of 0.86. Lagged disparate impact alone explain about 29 percent of the variation in current disparate impact, with the lagged disparity and observable judge characteristics explaining about 43 percent.21

We further explore heterogeneity in the disparate impact estimates across defendants, using a conditional version of our baseline local linear approach that restricts to defendants with a particular criminal record or charge. For this more fine-grained analysis we restrict attention to judges who see at least 25 cases involving defendants with the indicated criminal record or charge in each specification. Appendix Table A10 shows we find disparate impact against Black defendants in each subgroup, with point estimates for the extent of disparate impact ranging from 1.0 percentage points for defendants charged with a property offense and 2.4 percentage points for defendants charged with a DUI and defendants without a prior criminal charge, to 3.0 percentage points for defendants charged with a felony, 4.6 percentage points for defendants charged with a misdemeanor, 5.5 percentage points for defendants charged with a drug offense, and 10.7 percentage points for defendants charged with a violent offense. The estimates are generally precisely estimated, with the exception of felony offenses and violent offenses where we obtain noisy estimates of the mean risk inputs.

Overall, our estimates show that there are both statistically and economically significant disparities in the release rates of Black and white defendants with identical potential for pretrial misconduct. The most conservative estimate in Table 3, for example, implies that the disparate impact in release rates could be closed if NYC judges released roughly 2,609 more Black defendants each year (or detained roughly 2,609 more white defendants). Using an estimate from Dobbie, Goldin and Yang (2018), releasing this many defendants would lead to around $78 million in recouped earnings and government benefits annually. We can also compare the average disparate impact in release rates to other observed determinants of pretrial release. Table 2 shows, for example, that the most conservative 4.2 percentage point disparate impact estimate corresponds to more than half of the decreased probability in release associated with having an additional pretrial arrest in the past year (−6.8 percentage points).

5.3. Robustness and Extensions

We verify the robustness of our main results to several deviations from the baseline specification, exploring alternative estimates of mean risk, weighting schemes, adjustments for court-by-time strata, definitions of pretrial misconduct, classifications of pretrial release, and definitions of defendant race.

Mean Risk Estimates:

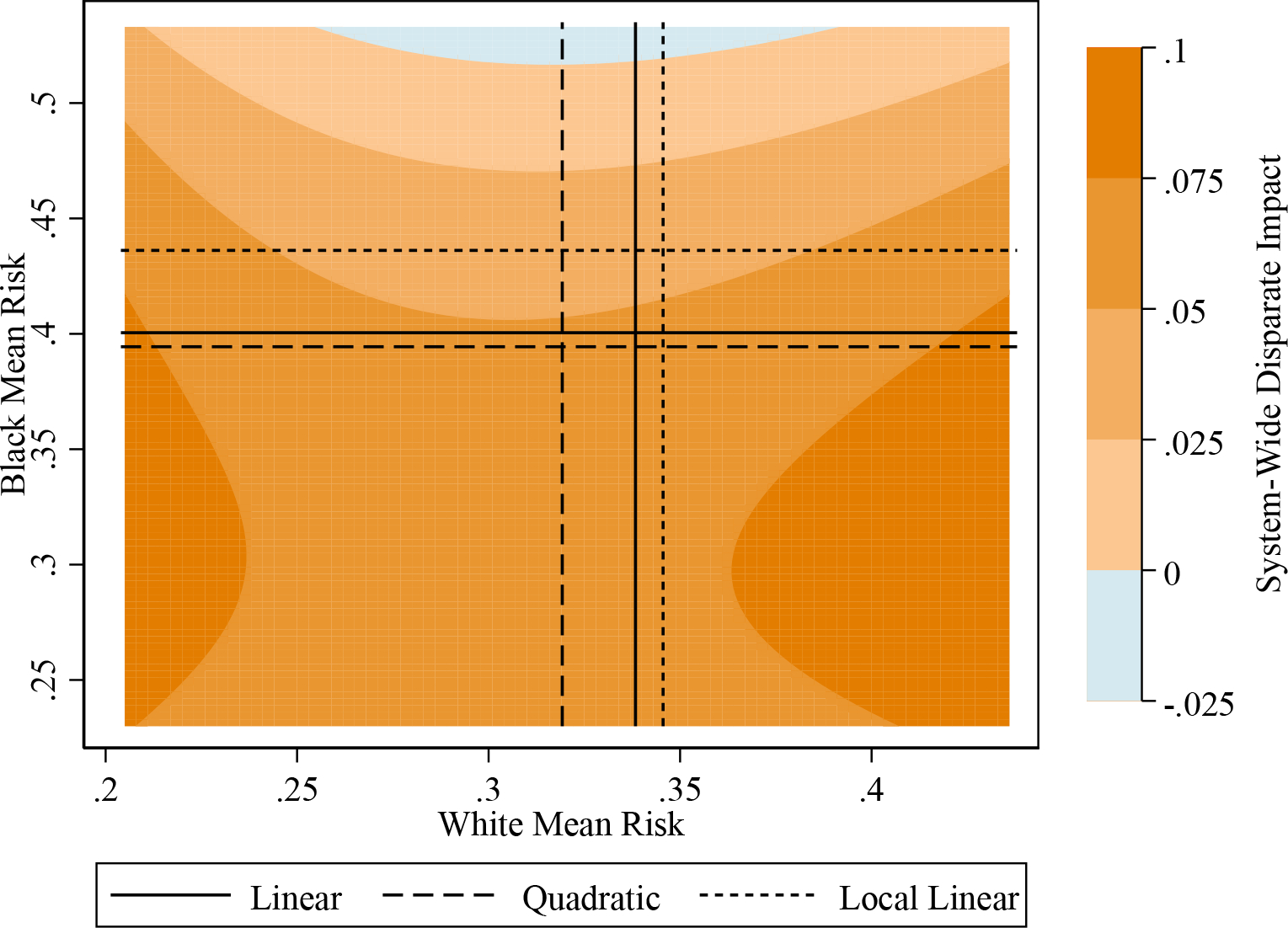

Figure 4 examines the sensitivity of our main results to different values of the mean misconduct risk inputs, showing that our finding of pervasive disparate impact does not depend on any particular extrapolation of the released misconduct rates in Figure 2. We first compute the range of possible mean risk parameters given the observed misconduct and release rates in the sample. Since , a lower bound on is given by race r’s unconditional average misconduct rate . Similarly, an upper bound on is given by . Plugging the rates from Table 1 into these formulas, we obtain white and Black mean risk bounds of μw ∈ [0.204, 0.437] and μb ∈ [0.231, 0.536]. We then plot in Figure 4 the range of system-wide disparate impact obtained from different pairs of white and Black mean risk in these bounds.

Figure 4:

Sensitivity Analysis