Abstract

Objective:

We assessed the feasibility and validity of remote researcher-led administration and self-administration of modified versions of two cognitive tasks sensitive to ADHD, a four-choice reaction time task (Fast task) and a combined Continuous Performance Test/Go No-Go task (CPT/GNG), through a new remote measurement technology system.

Method:

We compared the cognitive performance measures (mean and variability of reaction times (MRT, RTV), omission errors (OE) and commission errors (CE)) at a remote baseline researcher-led administration and three remote self-administration sessions between participants with and without ADHD (n = 40).

Results:

The most consistent group differences were found for RTV, MRT and CE at the baseline researcher-led administration and the first self-administration, with 8 of the 10 comparisons statistically significant and all comparisons indicating medium to large effect sizes.

Conclusion:

Remote administration of cognitive tasks successfully captured the difficulties with response inhibition and regulation of attention, supporting the feasibility and validity of remote assessments.

Keywords: ADHD, remote monitoring, attention regulation, response inhibition, RADAR-base

Introduction

Extensive past research links attention deficit hyperactivity disorder (ADHD), in both children and adults, to a cognitive profile that indicates difficulties with the regulation of attention and aspects of executive functioning, such as response inhibition (Franke et al., 2018; Pievsky & McGrath, 2018). Cognitive tasks that are particularly sensitive to differences between people with and without ADHD include the Continuous Performance Test (CPT) and the Go/No-Go (GNG) task, as well as simple four-choice reaction time tasks (Cheung et al., 2016; Kuntsi et al., 2010).

While cognitive task administration typically involves the participant attending an assessment session at a clinic or a research center, the possibility of remote administration of cognitive tasks has immense appeal. Remote self-administration of cognitive tasks would enable long-term monitoring for either research or clinical purposes. The Covid-19 pandemic has further illustrated the importance of remote data collection methods for times when in-person visits to clinics may not be feasible. Diversity and inclusion of participants can also improve, as remote assessments are more accessible to less mobile individuals or those living in isolated areas. Yet remote self-administration of cognitive tasks is not without challenges; its validity must be demonstrated.

We have recently developed a new remote measurement technology (RMT) system for adults and adolescents (ages 16+) with ADHD that also incorporates two cognitive tasks for remote self-administration. The initial goal for the development of the ADHD Remote Technology (ART) system is to enable long-term, real-world monitoring of symptoms, impairments, and health-related behaviors. ART is linked to the open source mobile-health platform RADAR-base (Ranjan et al., 2019; Stewart et al., 2018) and consists of both active and passive monitoring using mobile and web technologies. Passive monitoring, which requires no active input from the participant, is continuous using smartphone sensors and a wearable device. Active monitoring involves the participant completing questionnaires or other tasks on a smartphone Active App, and the self-administration of two cognitive tasks using a laptop or PC.

The cognitive tasks in the ART system consist of modified versions of the Fast task and a combined CPT/GNG task. The Fast task is a four-choice reaction time task that probes attention regulation (see Methods for a full description of the task). Individuals with ADHD show increased reaction time variability (RTV) on the task, which is associated with neurophysiological measures of attention allocation and arousal dysregulation (Cheung et al., 2017; James et al., 2016). Mean reaction time (MRT) on the task is also slower among individuals with ADHD. Our previous research indicates very high phenotypic and genetic/familial correlations (.8–.9) between RTV and MRT, suggesting that they capture largely the same process on both the Fast task and the GNG task (Kuntsi et al., 2010, 2014). The combined CPT/GNG task probes attentional processes (omission errors (OE), RTV) and response inhibition (commission errors (CE)). The version we have developed for ART is a modified version of a “CPT-OX” task we have used previously in studies on ADHD (Cheung et al., 2016; McLoughlin et al., 2010). It consists of a low target probability condition (“CPT”: 1:5) and a high target probability condition (“GNG”: 5:1). Our previous larger-scale studies have shown differences between ADHD and comparison groups on OE, CE, MRT, and RTV on CPT-OX and GNG tasks (Cheung et al., 2016; Kuntsi et al., 2010), while in our smaller-scale study using the CPT-OX, group differences emerged for OE, MRT, and RTV but not for CE, although a medium effect size was also observed for CE (McLoughlin et al., 2010). Overall, the Fast and CPT/GNG tasks enable measurement of cognitive performance differences in people with ADHD that are linked to genetic risk for ADHD (Kuntsi et al., 2010; Vainieri et al., 2021) and capture markers of ADHD persistence/remission (James et al., 2016, 2020; Michelini et al., 2016, 2019; Vainieri et al., 2020).

As part of a 10-week remote monitoring pilot study of the ADHD Remote Technology (ART) system, which involved an initial remote baseline administration by a researcher and three subsequent remote self-administrations of the cognitive tasks, we addressed the following research questions and tested the following hypotheses:

Remote administration by researcher: Does remote administration of the Fast task and the CPT/GNG task by a researcher produce the ADHD-control differences expected based on past research? Our hypothesis is that participants with ADHD perform less well than control participants on each of the outcome variables (RTV, MRT, CE, and OE) at baseline administration by the researcher. The pilot study was carried out during the Covid-19 pandemic, which required remote (rather than in-person) baseline assessments by the researcher. The demonstration of the validity of such remote assessments is therefore the first step.

Remote self-administration: Does remote self-administration of the Fast task and the CPT/GNG task produce similar ADHD-control differences as observed during the baseline researcher-administration of the tasks? Our hypothesis is that participants with ADHD perform less well than control participants on each of the outcome variables (RTV, MRT, CE, and OE) at study week 2 (first self-administration).

Group differences at study weeks 6 and 10: Are similar ADHD-control group differences still observed at study weeks 6 and 10? We have no directional hypothesis here, as it is uncertain how participants may cope with the repeated administration of these typical ADHD tasks that are designed to be challenging for people with ADHD and are therefore purposefully rather monotonous.

Methods

Participants

We recruited 20 individuals with ADHD and 20 control participants between the ages of 16 and 39 into the study (Table 1). Participants were recruited from previous studies (where they had indicated that they were willing to be contacted regarding future research studies), via the Attention Deficit Disorder Information and Support Service (ADDISS), social media, King’s Volunteer circular and on the “Call for Participants” website (https://www.callforparticipants.com/). Exclusion criteria for the individuals with ADHD were: (1) having psychosis, major depression, mania, drug dependency, or a major neurological disorder, (2) any other major medical condition which might impact upon the individual’s ability to participate in normal daily activity, (3) pregnancy, (4) IQ of <70 and (5) not currently taking medication for their ADHD. Exclusion criteria for the control group were: (1) meeting diagnostic criteria for ADHD based on the self-report on Barkley Adult ADHD Rating scale on current symptoms (BAARS-IV) and Barkley ADHD functional impairment questionnaire, (2) having psychosis, major depression, mania, drug dependency, or a major neurological disorder, (3) any other major medical condition which might impact upon the individual’s ability to participate in normal daily activity, (4) pregnancy, and (5) IQ of <70.

Table 1.

Demographics Divided by Group, with Tests for Differences Between Participants With ADHD and Controls.

| | ADHD (n = 20) | Control (n = 20) | p-Value |
|---|---|---|---|
| Gender, female % | 75 | 75 | 1.0 |
| Age, mean (SD) | 27.49 (6.04) | 27.79 (6.17) | .88 |
| WASI-II vocabulary subscale, mean (SD) | 57.85 (7.53) | 56.80 (8.35) | .68 |

The study was approved by the North East—Tyne and Wear South Research Ethics Committee (REC reference: 20/NE/0034). Informed consent was obtained from participants before the assessments started. Participants were compensated £30 after completion of the baseline sessions, £20 after the first remote active monitoring follow-up (end of week 5) and a further £50 at study endpoint (end of week 10).

Procedure

ART-pilot is an observational non-randomized, non-interventional study, using commercially available wearable technology and smartphone sensors, representing no change to the usual care or treatments of participants due to participation.

Participants attended two remote baseline sessions with a research worker, using Microsoft Teams. The first remote baseline session with the participants with ADHD included the administration of the following assessments: (1) the Diagnostic Interview for ADHD (DIVA) in adults (Kooij & Francken, 2010) to confirm ADHD diagnosis, (2) the vocabulary and digit span subtests from the Wechsler Abbreviated Scale of Intelligence (WASI-II) (Wechsler, 2011) and the Wechsler Adult Intelligence Scale (WAIS-IV; Wechsler et al., 2008), respectively, and (3) web-based REDCap (https://projectredcap.org) baseline questionnaires. The second session was administered once participants had received their wearable device and smartphone by post, approximately a week after the first session. The second session included: (1) administration of two cognitive tasks (the Fast task and the combined cued continuous performance test (CPT-OX) and Go/NoGo (GNG) task), and (2) a training session on the use of the wearable device and the smartphone Passive and Active Apps. The participant also received a leaflet summarizing key information (Participant Technology User Guide) and researcher contact details for future reference. Each session lasted approx. 1.5 hr. Control participants were assessed in the same way, except that instead of the full ADHD diagnostic interview, they completed the ADHD symptom and impairment questionnaire (Barkley & Murphy, 2006).

Immediately after the second baseline session, participants began a 10-week remote monitoring period comprising passive and active monitoring. Passive monitoring involved using the RADAR-base smartphone Passive App and a wrist-worn activity monitor (Fitbit Charge 3) (Sun et al., 2020, 2022; Zhang et al., 2021, 2022).

Active monitoring involved the participant completing clinical symptom questionnaires on the RADAR-base smartphone Active App, which are beyond the scope of the current analysis, and the two cognitive tasks on their home PC or laptop. Active monitoring took place three times: 2 weeks (the first remote self-administered assessment), 6 weeks (the second) and 10 weeks (the third) after the baseline remote researcher-led session. We asked participants to complete the cognitive tasks in a quiet environment, free from distraction. Participants received an event notification on the Active App reminding them that it was time to complete their questionnaires and the cognitive tasks, and were asked to complete the cognitive tasks within 3 days of receiving it. Where a participant had to delay completing the tasks (e.g., until the weekend), the data were nonetheless included in the analysis. Each participant was in the study for 10 weeks.

Measures

The Fast Task

The Fast task is a computerized four-choice RT task, which measures performance under a slow-unrewarded and a fast-incentive condition (Andreou et al., 2007; Kuntsi et al., 2006). In both conditions speed and accuracy were emphasized equally. The baseline (slow unrewarded) condition followed a standard warned four-choice reaction-time task. A warning signal (four empty circles, arranged side by side) first appeared on the screen. At the end of the fore-period lasting 8 s (presentation interval for the warning signal), the circle designated as the target signal for that trial was filled (colored) in. The participant was asked to make a compatible choice by pressing the response keys (F, G, H, and I using a QWERTY keyboard) that directly corresponded in position to the location of the target stimulus. Following a response, the stimuli disappeared from the screen and a fixed inter-trial interval of 2.5 s followed. If the participant did not respond within 10 s, the trial terminated. First, a practice session was administered, during which the participant had to respond correctly to five consecutive trials. For the ART program we shortened the baseline condition from 72 trials (used in our previous research) to 58 trials, equating to approx. 5 min reduction in length of administration. The task further includes a second condition that uses a fast event rate (fore-period of 1 s) and incentives. This condition started immediately after the baseline condition and consisted of 80 trials, with a fixed inter-trial interval of 2.5 s following the response. The baseline condition and the fast-incentive condition of the Fast task took approximately 5 and 10 min to complete, respectively. The participants were told to respond as quickly as possible to each target. Correct responses within a specified time window were followed by a feedback stimulus, a smiley face, indicating a correct response. 
Participants were rewarded with smiley-face feedback when they responded faster than their own MRT from the baseline (first) condition on three consecutive trials. The smiley faces appeared below the circles in the middle of the screen and were updated continuously. We obtained performance measures of mean reaction time (MRT) and reaction time variability (RTV; SD of RTs). Performance measures were calculated on correctly answered trials only.
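A minimal sketch of how MRT and RTV could be derived for one Fast task condition, assuming each trial is stored as a hypothetical `(rt_ms, is_correct)` pair and that RTV uses the sample SD (n - 1 denominator); neither the data layout nor the SD convention is specified in the text. The hypothetical `reward_trials` helper encodes one reading of the smiley-face rule (feedback after three consecutive responses faster than the baseline-condition MRT, with the run counter reset after each reward); the reset behaviour in particular is an assumption, not the ART implementation.

```python
from statistics import mean, stdev
from typing import Iterable, List, Optional, Sequence, Tuple


def fast_task_measures(trials: Iterable[Tuple[float, bool]]) -> Tuple[float, float]:
    """Return (MRT, RTV) in ms using correctly answered trials only."""
    correct_rts = [rt for rt, is_correct in trials if is_correct]
    if len(correct_rts) < 2:
        raise ValueError("at least two correct trials are needed to compute RTV")
    # RTV is the SD of RTs; the sample SD (n - 1 denominator) is an assumption here
    return mean(correct_rts), stdev(correct_rts)


def reward_trials(rts_ms: Sequence[Optional[float]], baseline_mrt_ms: float,
                  run_length: int = 3) -> List[int]:
    """Indices of fast-incentive trials that would trigger smiley-face feedback.

    `None` marks an incorrect or missed response, which breaks the run.
    Resetting the run after each reward is an assumption, not a documented rule.
    """
    rewarded: List[int] = []
    run = 0
    for i, rt in enumerate(rts_ms):
        run = run + 1 if rt is not None and rt < baseline_mrt_ms else 0
        if run == run_length:
            rewarded.append(i)
            run = 0
    return rewarded


# Hypothetical baseline-condition trials: (reaction time in ms, correct?)
baseline = [(450.0, True), (520.0, True), (900.0, False), (610.0, True)]
mrt, rtv = fast_task_measures(baseline)
print(f"MRT = {mrt:.1f} ms, RTV = {rtv:.1f} ms")
print(reward_trials([400, 420, 410, 600, 390, 380, 370], baseline_mrt_ms=mrt))
```

Here the incorrect 900 ms trial is ignored, and the fast-incentive sequence earns feedback on the third and seventh trials under the assumed reset rule.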

Combined Cued Continuous Performance Test (CPT-OX) and Go/NoGo (GNG) Task

The combined CPT/GNG task probes attention and response inhibition. The version we have developed for ART is partly based on a CPT-OX task we have used previously (Cheung et al., 2016; Doehnert et al., 2008; Valko et al., 2009) but further incorporates two conditions that differ by the target to non-target ratio: a low target probability condition (“CPT”: 1:5) and a high target probability condition (“GNG”: 5:1). The test consists of 400 letters presented for 150 ms with a stimulus onset asynchrony of 1.65 s in a pseudo-randomized order. The CPT and GNG conditions took approximately 11 min each to complete. Participants were instructed to press a space bar with the index finger of their dominant hand as fast as possible every time the cue was followed directly by the letter X [(O–X) target sequence] but had to withhold responses to O-not-X sequences (NoGo trials). Speed and accuracy were emphasized equally. We obtained performance measures of MRT, RTV, commission errors (CE) and omission errors (OE). MRT and RTV were calculated across correctly answered Go trials; CE were responses to Cue, NoGo and distractor stimuli or Go stimuli not following a Cue; and OE were non-responses to Go trials.
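The scoring rules above can be made concrete with a short sketch. It assumes a hypothetical representation in which each stimulus is a letter plus an optional reaction time (`None` when there was no response), and it applies the stated definitions literally: a Go trial is an X immediately preceded by the cue O, OE counts unanswered Go trials, CE counts responses to any other stimulus (cue, NoGo, distractor, or an X not preceded by a cue), and MRT and RTV use correctly answered Go trials. How the ART software handles the two target-probability conditions internally is not described here, so this is an illustration rather than its implementation.

```python
from dataclasses import dataclass
from statistics import mean, stdev
from typing import Dict, List, Optional

CUE, TARGET = "O", "X"  # cue and target letters from the O-X task description


@dataclass
class Stimulus:
    letter: str
    rt_ms: Optional[float] = None  # None when the participant did not press the space bar


def score_cpt_gng(stream: List[Stimulus]) -> Dict[str, float]:
    """Score a stimulus stream with the OE/CE/MRT/RTV definitions given in the text."""
    go_rts: List[float] = []
    omissions = commissions = 0
    for i, stim in enumerate(stream):
        follows_cue = i > 0 and stream[i - 1].letter == CUE
        is_go = follows_cue and stim.letter == TARGET
        responded = stim.rt_ms is not None
        if is_go and responded:
            go_rts.append(stim.rt_ms)  # correct Go response
        elif is_go:
            omissions += 1             # missed Go trial (omission error)
        elif responded:
            commissions += 1           # response to cue, NoGo, distractor or uncued X
    return {
        "MRT": mean(go_rts) if go_rts else float("nan"),
        "RTV": stdev(go_rts) if len(go_rts) > 1 else float("nan"),
        "OE": omissions,
        "CE": commissions,
    }


# Hypothetical stream: two correct Go responses, one missed Go, one response to an uncued X
stream = [
    Stimulus("O"), Stimulus("X", 300.0),
    Stimulus("O"), Stimulus("A"),
    Stimulus("O"), Stimulus("X"),
    Stimulus("B"), Stimulus("X", 250.0),
    Stimulus("O"), Stimulus("X", 400.0),
]
print(score_cpt_gng(stream))
```

The withheld response to the O-A (NoGo) sequence is correct and adds nothing to either error count.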

Barkley Adult ADHD Rating Scale on Current Symptoms (BAARS-IV) and Barkley ADHD Functional Impairment Scale (BFIS)—Self-Report Form

The BAARS-IV is an empirically developed self-report measure, based on DSM diagnostic criteria, for assessing current ADHD symptoms (Barkley, 2011a; Barkley & Murphy, 2006). The scale includes the 18 diagnostic ADHD symptoms (nine items in each of the inattention and hyperactivity/impulsivity domains), with a reported alpha of .92. Each item is rated on a 4-point scale (0 = “never or rarely,” 1 = “sometimes,” 2 = “often,” and 3 = “very often”). Inattention symptoms are the odd-numbered items and hyperactive-impulsive symptoms the even-numbered items, and the two domains are scored separately. A symptom is recorded as present if rated “often” (2) or “very often” (3). For the present study, and consistent with DSM-5 criteria, a count of five or more symptoms in the inattention or the hyperactivity-impulsivity domain was required to meet the symptom criterion.

The BFIS is a 10-item scale that assesses the level of functional impairment commonly associated with ADHD symptoms in five areas of everyday life: family/relationships, work/education, social interaction, leisure activities, and management of daily responsibilities. The BFIS has a reported alpha of .92 (Barkley, 2011b). Each item is rated on the same 4-point scale (0 = “never or rarely,” 1 = “sometimes,” 2 = “often,” and 3 = “very often”). As for the BAARS-IV, when calculating the BFIS self-report total score, an impairment is recorded as present if rated “often” (2) or “very often” (3). For the BAARS-IV to be suitable as a monitoring measure, we changed the wording from “during the past 6 months” to “during the past 2 weeks” for each item.
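As an illustration of the scoring rules described for the BAARS-IV and BFIS, the sketch below counts items rated “often” (2) or “very often” (3). Inattention uses the odd-numbered BAARS-IV items and hyperactivity-impulsivity the even-numbered ones, with five or more symptoms in either domain meeting the symptom criterion. The function names are hypothetical, and treating the BFIS total as a simple count of items rated 2 or 3 is our reading of the text rather than the published scoring manual.

```python
from typing import Dict, Sequence

PRESENT = 2          # "often" (2) or "very often" (3) counts as present
SYMPTOM_CUTOFF = 5   # five or more symptoms in a domain


def _check(ratings: Sequence[int], n_items: int) -> None:
    if len(ratings) != n_items or any(r not in (0, 1, 2, 3) for r in ratings):
        raise ValueError(f"expected {n_items} ratings coded 0-3")


def score_baars(ratings: Sequence[int]) -> Dict[str, object]:
    """Count BAARS-IV symptoms; ratings[0] is item 1 and ratings[17] is item 18."""
    _check(ratings, 18)
    inattention = sum(r >= PRESENT for r in ratings[0::2])  # items 1, 3, ..., 17
    hyperactive = sum(r >= PRESENT for r in ratings[1::2])  # items 2, 4, ..., 18
    return {
        "inattention": inattention,
        "hyperactive_impulsive": hyperactive,
        "meets_symptom_criterion": max(inattention, hyperactive) >= SYMPTOM_CUTOFF,
    }


def score_bfis(ratings: Sequence[int]) -> int:
    """Assumed BFIS total: number of the 10 items rated 'often' or 'very often'."""
    _check(ratings, 10)
    return sum(r >= PRESENT for r in ratings)


# Hypothetical respondent: five inattention and four hyperactive-impulsive symptoms
print(score_baars([2, 0] * 5 + [1, 3] * 4))
print(score_bfis([0, 1, 2, 3, 0, 0, 2, 1, 3, 0]))
```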

The Diagnostic Interview for ADHD in Adults (DIVA)

The Diagnostic Interview for ADHD in Adults (DIVA) is a validated structured interview for ADHD (Kooij & Francken, 2010). The DIVA was conducted by trained researchers to assess DSM-5 criteria for adult ADHD symptoms and impairment. The DIVA is divided into categories of inattention symptoms, hyperactive-impulsive symptoms, and impairments. For each of these areas, questions are asked about current symptoms and symptoms experienced in childhood (ages 5–12). Each item is scored affirmatively if the behavioral symptom was present “often” within the past 6 months.

Verbal IQ

The vocabulary subtest of the Wechsler Abbreviated Scale of Intelligence (WASI-II) (Wechsler, 2011) was administered to all participants to derive an estimate of verbal IQ.

Short-Term and Working Memory Assessment

The digit span subtest of the Wechsler Adult Intelligence Scale (WAIS-IV; Wechsler et al., 2008) was administered to all participants to measure short-term verbal memory (digit span forward) and working memory (digit span backward).

Statistical analyses

We used independent t-tests to examine group differences in age and WASI-II vocabulary subscale score, and a chi-square test to test for a group difference in gender. We compared performance on the cognitive tasks between participants with ADHD and controls at four time points (baseline, week 2, week 6, and week 10). Specifically, we calculated MRT, RTV, OE, and CE for the combined conditions of the CPT/GNG task, and MRT and RTV for the combined conditions of the Fast task. Data points were excluded for uncompleted tasks, implausible reaction times (<150 ms), and a proportion of correct responses lower than 50% (CPT/GNG task only). The number of excluded data points is given in Table 2. Normality of the derived performance measures was examined using the Shapiro-Wilk test, chosen for its power in detecting non-normality (Wah & Razali, 2011); a p-value ≥ .05 was taken as consistent with a normal distribution. Because the data in the two groups at the four time points were not all normally distributed, we used Wilcoxon rank-sum tests, which are robust to the non-normal distributions observed at our sample sizes. A statistically significant difference was defined as p < .05. To inform future larger-scale studies, we did not correct for multiple testing, in order to reduce the chance of type II errors (i.e., false negative results) and to avoid overcorrection for multiple comparisons involving correlated variables. Effect sizes for the Wilcoxon rank-sum test were estimated as r = Z/√N, the standardized Z value divided by the square root of the total number of observations, with thresholds of 0.1, 0.3, and 0.5 for small, medium, and large effects, respectively (Fritz et al., 2012). To quantify the variability of each measure over time, we calculated the coefficient of variation for each group as the standard deviation of the group medians across the four time points divided by the mean of those medians.
All the statistical analyses were implemented in Python 3.7.4.
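A minimal sketch of the per-comparison analysis, assuming SciPy is available (the text states only that Python 3.7.4 was used, not which libraries). `scipy.stats.shapiro` gives the Shapiro-Wilk p-value, and `scipy.stats.ranksums` performs the Wilcoxon rank-sum test using the normal approximation, so its statistic can serve as Z in the effect size r = |Z|/√N of Fritz et al. (2012), with N the total number of participants in the comparison. The function names and synthetic data are illustrative only; the final lines back-calculate r from a reported p-value as a consistency check against Table 3.

```python
import math
from typing import Dict, Sequence

import numpy as np
from scipy import stats


def effect_label(r: float) -> str:
    """Label |r| with the 0.1 / 0.3 / 0.5 thresholds of Fritz et al. (2012)."""
    r = abs(r)
    if r >= 0.5:
        return "large"
    if r >= 0.3:
        return "medium"
    if r >= 0.1:
        return "small"
    return "negligible"


def compare_groups(adhd: Sequence[float], control: Sequence[float]) -> Dict[str, object]:
    """Shapiro-Wilk normality check plus Wilcoxon rank-sum test with effect size r."""
    a = np.asarray(adhd, dtype=float)
    c = np.asarray(control, dtype=float)
    # p >= .05 in both groups is read as no evidence against normality
    both_normal = all(stats.shapiro(g)[1] >= 0.05 for g in (a, c))
    # ranksums uses the normal approximation, so its statistic is a Z value
    z, p = stats.ranksums(a, c)
    r = abs(z) / math.sqrt(a.size + c.size)  # r = |Z| / sqrt(N), N = total participants
    return {"both_normal": both_normal, "z": float(z), "p": float(p),
            "r": r, "effect": effect_label(r)}


# Synthetic, illustrative reaction times (ms) for 20 ADHD and 19 control participants
rng = np.random.default_rng(0)
print(compare_groups(rng.normal(560, 90, 20), rng.normal(480, 60, 19)))

# Consistency check against Table 3 (Fast task RTV at baseline, 20 + 19 participants):
# a two-sided p of .02 corresponds to |Z| of about 2.33, giving r of about .37
z_from_p = stats.norm.isf(0.02 / 2)
print(round(z_from_p / math.sqrt(39), 2))  # prints 0.37
```

Dividing by N rather than √N would give r ≈ .06 for the same comparison, far below the tabulated .37, which is why the square root matters.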

Table 2.

Data Exclusion Grouped by Tasks and Exclusion Criteria.

| Task | Criteria | ADHD | Control |
|---|---|---|---|
| Fast task | Uncompleted task | 14 (17.5%)ᵃ | 1 (1.3%) |
| | Implausible reaction timeᵇ | 0 | 1 (1.3%) |
| CPT/GNG | Uncompleted task | 16 (20%) | 3 (3.9%) |
| | Implausible reaction time | 0 | 1 (1.3%) |
| | Low proportion of correct responsesᶜ | 3 (3.9%) | 0 |

ᵃ Out of the 80 tasks across the four time points for 20 participants.

ᵇ Implausible reaction time (<150 ms).

ᶜ Proportion of correct responses lower than 50%.
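The exclusion criteria in Table 2 can be expressed as a simple filter. This sketch assumes a hypothetical per-administration record holding completion status, the derived MRT, and the proportion of correct responses, and it reads “implausible reaction time (<150 ms)” as applying to the derived MRT, which the text does not state explicitly.

```python
from dataclasses import dataclass
from typing import Optional

MIN_PLAUSIBLE_RT_MS = 150.0    # reaction times below this are treated as implausible
MIN_PROPORTION_CORRECT = 0.5   # applied to the CPT/GNG task only


@dataclass
class TaskRecord:
    task: str                  # "Fast" or "CPT/GNG" (hypothetical labels)
    completed: bool
    mrt_ms: float              # assumed to be the derived mean reaction time
    proportion_correct: float


def exclusion_reason(rec: TaskRecord) -> Optional[str]:
    """Return the first exclusion criterion a record meets, or None to keep it."""
    if not rec.completed:
        return "uncompleted task"
    if rec.mrt_ms < MIN_PLAUSIBLE_RT_MS:
        return "implausible reaction time"
    if rec.task == "CPT/GNG" and rec.proportion_correct < MIN_PROPORTION_CORRECT:
        return "low proportion of correct responses"
    return None


# Percentages in Table 2 are relative to 80 scheduled tasks per group
# (20 participants x four time points), e.g. 14 uncompleted Fast tasks:
print(f"{100 * 14 / 80:.1f}%")  # prints 17.5%
```

Checking the criteria in a fixed order means each excluded administration is counted under a single row of the table.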

Results

The groups did not differ in gender, age, or verbal IQ (Table 1).

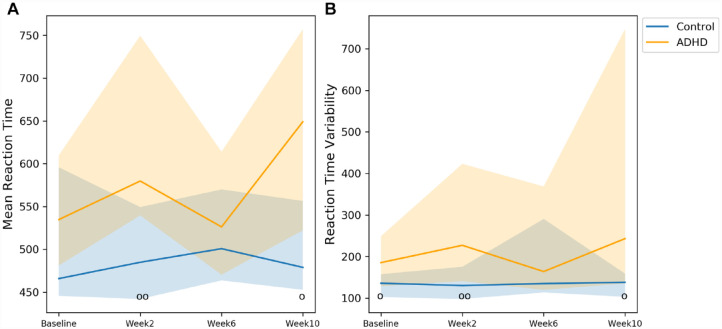

On the Fast task, significant group differences were detected in RTV at baseline, in MRT and RTV at week 2, and in MRT and RTV at week 10 (Figure 1 and Table 3). The corresponding effect sizes were all medium. The coefficients of variation were 0.09 and 0.03 for MRT and 0.15 and 0.02 for RTV, for participants with ADHD and controls, respectively.

Figure 1.

Comparisons between participants with ADHD and controls in the Fast task. The markers o and oo represent p < .05 and p < .01, respectively. Solid line: median; shade: 25th percentile to 75th percentile.

Table 3.

p-Values, Effect Sizes and Numbers of Participants in each Comparison in the Fast Task.

| Variable | p-Value | Effect size | ADHD/Controlsᵃ | Median (IQR)ᵇ |
|---|---|---|---|---|
| Baseline | | | | |
| MRTᶜ | .10 | .26 | 20/19 | 534.76 (128.62)/465.91 (150.12) |
| RTVᵈ | .02* | .37 | 20/19 | 185.44 (120.35)/135.92 (55.03) |
| Week 2 | | | | |
| MRT | .004* | .47 | 17/20 | 579.83 (209.84)/484.87 (107.28) |
| RTV | .006* | .44 | 17/20 | 227.14 (282.25)/130.44 (77.38) |
| Week 6 | | | | |
| MRT | .55 | .10 | 14/20 | 526.25 (143.55)/500.8 (106.24) |
| RTV | .48 | .12 | 14/20 | 163.93 (248.83)/135.07 (176.86) |
| Week 10 | | | | |
| MRT | .03* | .37 | 15/19 | 649.11 (234.93)/478.92 (103.72) |
| RTV | .03* | .38 | 15/19 | 243.18 (611.90)/138.16 (55.75) |

ᵃ Number of participants who completed the tasks.

ᵇ Median and interquartile range (IQR) of participants with ADHD/controls.

ᶜ Mean reaction time.

ᵈ Reaction time variability.

Asterisks mark statistically significant differences (p < .05).

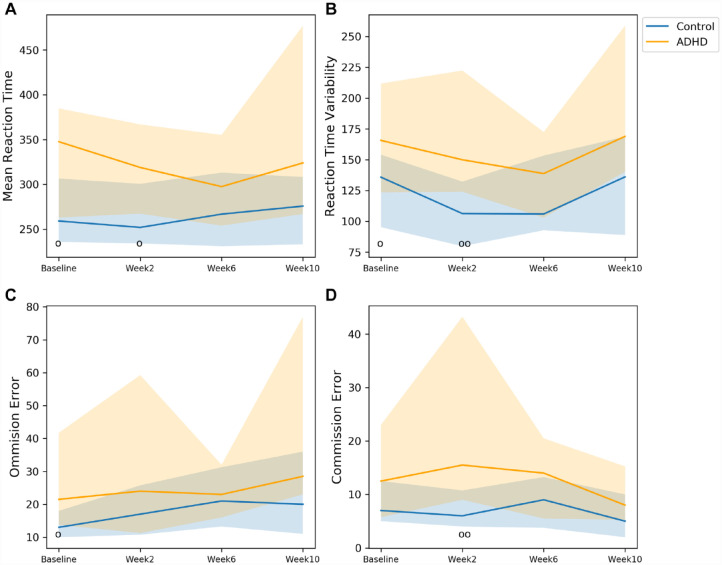

On the CPT/GNG task, significant group differences were found for MRT, RTV, and OE at baseline and for MRT, RTV, and CE at week 2 (Figure 2 and Table 4). Effect sizes for these significant group differences ranged from medium to large. Although not statistically significant, the group differences in CE at baseline and in MRT and RTV at week 10 also showed medium effect sizes. The coefficients of variation were 0.06 and 0.03 for MRT, 0.08 and 0.12 for RTV, 0.11 and 0.18 for OE, and 0.22 and 0.22 for CE, for participants with ADHD and controls, respectively.

Figure 2.

Comparisons between participants with ADHD and controls in the CPT/GNG task. The markers o and oo represent p < .05 and p < .01, respectively. Solid line: median; shade: 25th percentile to 75th percentile.

Table 4.

p-Values, Effect Sizes and Numbers of Participants in the CPT/GNG Task.

| Variable | p-Value | Effect size | ADHD/Controlsᵃ | Median (IQR)ᵇ |
|---|---|---|---|---|
| Baseline | | | | |
| MRTᶜ | .02* | .37 | 18/19 | 347.68 (121.88)/259.12 (70.84) |
| RTVᵈ | .02* | .39 | 18/19 | 165.73 (88.41)/135.86 (58.86) |
| OE | .02* | .39 | 18/19 | 21.5 (28.0)/13.0 (8.0) |
| CE | .07 | .30 | 18/19 | 12.5 (17.25)/7.0 (7.5) |
| Week 2 | | | | |
| MRT | .02* | .39 | 16/20 | 318.92 (99.57)/251.97 (66.50) |
| RTV | .007* | .45 | 16/20 | 150.02 (98.39)/106.3 (52.41) |
| OE | .26 | .19 | 16/20 | 24.0 (48.0)/17.0 (15.0) |
| CE | .002* | .51 | 16/20 | 15.5 (34.25)/6.0 (6.75) |
| Week 6 | | | | |
| MRT | .29 | .18 | 15/20 | 297.53 (101.28)/266.80 (82.11) |
| RTV | .19 | .22 | 15/20 | 138.82 (69.50)/105.98 (60.67) |
| OE | .50 | .11 | 15/20 | 23.0 (16.0)/21.0 (18.0) |
| CE | .13 | .25 | 15/20 | 14.0 (15.0)/9.0 (9.5) |
| Week 10 | | | | |
| MRT | .11 | .30 | 12/17 | 323.99 (210.21)/275.78 (75.19) |
| RTV | .06 | .35 | 12/17 | 168.98 (118.57)/136.12 (79.88) |
| OE | .14 | .28 | 12/17 | 28.5 (54.0)/20.0 (25.0) |
| CE | .29 | .20 | 12/17 | 8.0 (10.0)/5.0 (8.0) |

ᵃ Number of participants who completed the tasks.

ᵇ Median and interquartile range (IQR) of participants with ADHD/controls.

ᶜ Mean reaction time.

ᵈ Reaction time variability.

Asterisks mark statistically significant differences (p < .05).
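The coefficients of variation reported in the Results can be recomputed from the group medians in Tables 3 and 4. The text does not say whether the SD uses an n or n - 1 denominator; the population SD (`ddof=0`, NumPy's default) reproduces every reported value (for example 0.09 for Fast task MRT in the ADHD group), whereas the sample SD would give 0.10 for that entry, so `ddof=0` is our inference rather than a documented choice. The dictionary and function names are illustrative.

```python
import numpy as np

# Group medians at baseline, week 2, week 6 and week 10, copied from Tables 3 and 4,
# stored as (ADHD medians, control medians)
MEDIANS = {
    ("Fast", "MRT"): ([534.76, 579.83, 526.25, 649.11], [465.91, 484.87, 500.80, 478.92]),
    ("Fast", "RTV"): ([185.44, 227.14, 163.93, 243.18], [135.92, 130.44, 135.07, 138.16]),
    ("CPT/GNG", "MRT"): ([347.68, 318.92, 297.53, 323.99], [259.12, 251.97, 266.80, 275.78]),
    ("CPT/GNG", "RTV"): ([165.73, 150.02, 138.82, 168.98], [135.86, 106.30, 105.98, 136.12]),
    ("CPT/GNG", "OE"): ([21.5, 24.0, 23.0, 28.5], [13.0, 17.0, 21.0, 20.0]),
    ("CPT/GNG", "CE"): ([12.5, 15.5, 14.0, 8.0], [7.0, 6.0, 9.0, 5.0]),
}


def coefficient_of_variation(values) -> float:
    """SD of the four group medians divided by their mean (population SD, ddof=0)."""
    v = np.asarray(values, dtype=float)
    return float(np.std(v, ddof=0) / np.mean(v))


for (task, measure), (adhd, control) in MEDIANS.items():
    print(f"{task} {measure}: ADHD {coefficient_of_variation(adhd):.2f}, "
          f"control {coefficient_of_variation(control):.2f}")
```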

Discussion

As part of a pilot study using our new ADHD Remote Technology (ART) system, we show that both remote researcher-led administration and self-administration of cognitive tasks capture the difficulties people with ADHD have with the regulation of attention and response inhibition, supporting the feasibility and validity of remote assessments. Reaction time variability (RTV), mean reaction time (MRT) and commission errors (CE) were the variables most consistently sensitive to ADHD-control group differences at the remote baseline researcher-led administration and the first remote self-administration, with medium to large effect sizes (statistically significant for 8 of the 10 comparisons). Subsequent remote self-administrations of the tasks showed that group differences became small and non-significant at week 6, but emerged again as significant for the Fast task RT variables at week 10.

The remote researcher-led cognitive assessments showed the expected ADHD-control group differences (Cheung et al., 2016; Kuntsi et al., 2010; McLoughlin et al., 2010) on most cognitive variables at baseline. On the CPT/GNG task, participants with ADHD performed less well than control participants on RTV, MRT, and OE, with the CE difference at trend level (p = .07); all effect sizes were medium. On the Fast task, a significant group difference with a medium effect size emerged for RTV. Overall, these results indicate that remote researcher-led administration of the Fast task and CPT/GNG task is a valid alternative to traditional in-person administration with adults and young people with and without ADHD.

The first remote self-administration (week 2) produced ADHD-control differences broadly similar to those observed at the researcher-led baseline administration. At week 2, the group differences in CPT/GNG task RTV and MRT and in Fast task RTV remained significant, although the OE difference was no longer significant. In addition, the group differences in Fast task MRT and CPT/GNG task CE now reached significance. Effect sizes were medium to large. That remote self-administration of cognitive tasks can successfully capture the difficulties with attention regulation and response inhibition in adults and young people with ADHD is highly promising for future remote measurement studies. Aspects of the setup and research process that may have contributed to this successful outcome include the training on task administration provided during the baseline researcher-led session and clear written task instructions.

Further repeated remote self-administrations of the tasks resulted in significant group differences at week 10 but not at week 6. For the Fast task, group differences in both RTV and MRT were significant at week 10, with medium effect sizes. For the CPT/GNG task, no group comparison was statistically significant at week 10, but medium effect sizes were nonetheless observed for MRT and RTV (p = .11 and .06, respectively). Because fewer tasks were completed at week 10, with data available from only 12 participants with ADHD, the statistical power of the week 10 CPT/GNG analyses of MRT and RTV was substantially reduced. The variability in these results, with group differences at week 10 but not at week 6, might relate to factors that influence how well participants cope with the repeated administration of tasks that are purposefully rather monotonous. Future research with larger samples may investigate this variability further.
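The effect of the smaller week 10 sample on power can be illustrated with a Monte Carlo sketch. Everything about the scenario is an assumption chosen for illustration: normally distributed scores with equal SDs, a hypothetical true group difference of 0.8 SD, and the CPT/GNG completer counts at baseline (18/19) and week 10 (12/17) taken from Table 4. The printed powers are simulation estimates for this hypothetical scenario, not estimates for the study data, and `simulated_power` is an illustrative helper rather than part of the study's analysis.

```python
import numpy as np
from scipy import stats


def simulated_power(n_adhd: int, n_control: int, shift_sd: float,
                    n_sims: int = 2000, alpha: float = 0.05, seed: int = 1) -> float:
    """Monte Carlo power of a two-sided Wilcoxon rank-sum test.

    Hypothetical scenario: both groups are normal with SD 1 and the ADHD group's
    mean is shifted by `shift_sd` standard deviations.
    """
    rng = np.random.default_rng(seed)
    significant = 0
    for _ in range(n_sims):
        adhd = rng.normal(shift_sd, 1.0, n_adhd)
        control = rng.normal(0.0, 1.0, n_control)
        if stats.ranksums(adhd, control).pvalue < alpha:
            significant += 1
    return significant / n_sims


# CPT/GNG completers at baseline (18/19) versus week 10 (12/17), same assumed shift
baseline_power = simulated_power(18, 19, shift_sd=0.8)
week10_power = simulated_power(12, 17, shift_sd=0.8)
print(f"baseline: {baseline_power:.2f}, week 10: {week10_power:.2f}")
```

Under these assumptions the same true difference is detected noticeably less often with the week 10 group sizes, which is the sense in which the non-significant week 10 CPT/GNG results should be read cautiously.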

A limitation of the study is the modest sample size, together with missing remote monitoring data, particularly in the ADHD group. The sample size was nonetheless adequate for this pilot study: the many significant group differences despite modest statistical power reflect the medium to large effect sizes. Future studies with larger samples are required to establish whether, with improved statistical power, significant group differences emerge for those variables (especially CE) that did not show significant group differences in this pilot study. Another limitation is that we were unable to randomize the order in which the cognitive tasks were presented, owing to our programming settings. We have addressed this in our new, ongoing European Commission-funded clinical study “ART-CARMA” (ADHD Remote Technology study of cardiometabolic risk factors and medication adherence; Denyer et al., 2022b), which involves remote monitoring of 300 adults with ADHD over a period of 12 months.

The reasons why some remote monitoring data were missing, particularly for the ADHD group, are of interest. We conducted endpoint debrief interviews with participants in the pilot study to understand the barriers to and facilitators of RMT (Denyer et al., 2022a, in press). The interviews were analyzed using thematic analysis, and barriers and facilitators to RMT were compared between individuals with ADHD and control participants. More individuals with ADHD than controls described the cognitive tasks as a “perceived cost.” This finding is to be expected given the demands the cognitive tasks place on individuals who have difficulty sustaining attention on demanding tasks; indeed, the tasks were specifically designed to be challenging for individuals with ADHD. An implication for future remote monitoring studies is therefore to consider longer intervals between repeated administrations of relatively long cognitive tasks, such as those employed here. We have already incorporated this consideration into the “ART-CARMA” study, where the remote self-administration of the cognitive tasks takes place at 6-month intervals (Denyer et al., 2022b).

Another topic that could particularly benefit in the future from remote long-term monitoring of people with ADHD, including cognitive tasks, is the investigation of cognitive differences that are markers of remission (improving when ADHD remits) versus those that are enduring (observed in individuals with a past ADHD diagnosis irrespective of later outcome). Using the Fast task and a CPT-OX task, our previous ADHD follow-up study indicated that attention-vigilance measures, including RTV, were markers of remission, whereas executive control measures were not sensitive to ADHD outcome (persistence/remission; James et al., 2016, 2020; Michelini et al., 2016, 2019; Vainieri et al., 2020). IQ further moderated ADHD outcome. Yet the data from our study and most other previous follow-up studies (Franke et al., 2018) are limited to few (mostly just two) time points, so little is known about whether changes in cognitive impairments are stable or temporary. Having now shown the feasibility and validity of home self-administration of the cognitive tasks, this method can support remote administration in future studies on the persistence and remission of ADHD.

Remote monitoring technologies and the mobile-health platform infrastructures supporting them are bringing major advances and opportunities for longitudinal research. One advantage is the possibility of collecting data simultaneously, in the real world, on a wide range of both novel and conventional measures. Our data show that remote self-administration of cognitive tasks designed for ADHD research is a feasible part of such remote data collection for studies on adults and young people with ADHD, supporting their inclusion in the ART system.

Author Biographies

Shaoxiong Sun is a Senior Research Associate at King’s College London. His research has focused on data analytics for mobile health data collected via smartphones and wearable devices. He has been involved in the RADAR-CNS project for the remote monitoring of participants with central nervous system diseases, including depression, multiple sclerosis, and epilepsy. His PhD focused on physiological monitoring through the analysis of biomedical signals, including photoplethysmography and electrocardiography.

Hayley Denyer is a PhD student on the Medical Research Council Doctoral Training Partnership (MRC-DTP) at the Social, Genetic and Developmental Psychiatry (SGDP) Centre at King’s College London. Her PhD focuses on the application of remote measurement technology to identify persisting impairments and targets for intervention in ADHD and other at-risk populations.

Heet Sankesara is a Software Developer and Data Scientist in the Department of Biostatistics and Health Informatics at King’s College London. His work focuses on data analysis and machine learning-based algorithms applied to real-world health data.

Qigang Deng is a PhD student at King’s College London. His special areas of interest are ADHD, the relative age effect, and remote technology for monitoring ADHD symptoms.

Yatharth Ranjan, MSc., is a Computer Scientist with keen interests in mHealth, mobile application development, and streaming applications. He is currently developing the RADAR-base data collection platform. He is also enrolled in a part-time PhD on novel software and analytical solutions for scalable, interoperable, precise, just-in-time remote collection and monitoring of mHealth data from smartphones and wearables across disorder areas, including COVID-19.

Pauline Conde is a Software Engineer on the RADAR-base platform under the PhiDataLab group. Her background is in computer science and software engineering.

Zulqarnain Rashid is a Senior Research Fellow and Technical Lead in the Institute of Psychiatry, Psychology & Neuroscience. He joined King’s College in November 2017 and is currently involved in coordinating, collecting, and analyzing wearables and smartphone data in different disease areas including ADHD. He designed and developed assistive mobile interfaces for people with severe communication and physical disabilities in the context of Augmentative and Alternative Communication (AAC). His PhD contributed toward the independent living of wheelchair users under the paradigm of Internet of Things (IoT).

Rebecca Bendayan, PhD, is an Affiliate Researcher at the Institute of Psychiatry, Psychology and Neuroscience at King’s College London, Honorary Researcher at South London and Maudsley NHS Trust, and Senior Scientific Consultant for projects with social impact.

Philip Asherson is an Emeritus Professor at the SGDP Centre, Institute of Psychiatry, Psychology and Neuroscience, King’s College London. He has been a leading clinical academic and opinion leader on the diagnosis and management of ADHD in adults. He retains close collaborative links with senior academic staff at the IoPPN for ongoing research, dissemination of research findings, clinical guideline development, and training, and remains actively engaged in research grant development with colleagues in his area of expertise.

Andrea Bilbow, OBE, is the founder and CEO of the leading patient advocacy organization for ADHD in the UK (ADDISS). She has worked for 25 years supporting patients with ADHD and their families, organizing conferences, and collaborating with research consortia across Europe, and has coauthored many articles on ADHD. Andrea is the immediate past president of ADHD Europe, an umbrella organization in Europe working with many patient advocacy groups, raising awareness and campaigning for better access to diagnosis and treatment.

Dr Madeleine (Maddie) Groom is an Associate Professor of Applied Developmental Cognitive Neuroscience at the University of Nottingham, U.K., and Director of the Centre for ADHD and Neurodevelopmental Disorders Across the Lifespan (CANDAL) in the Institute of Mental Health, Nottingham, U.K. Her research focuses on understanding the mechanisms underpinning neurodevelopmental conditions, including attention deficit hyperactivity disorder (ADHD), Tourette’s Syndrome, and autism, and in improving the assessment and monitoring of these conditions in clinical and nonclinical settings.

Chris Hollis, PhD, FRCPsych, is Professor of Child and Adolescent Psychiatry and Digital Mental Health at the University of Nottingham, Director of the NIHR MindTech MedTech Co-operative, NIHR Nottingham Biomedical Research Centre Mental Health & Technology Theme Lead, and Consultant in Developmental Neuropsychiatry with Nottinghamshire Healthcare NHS Foundation Trust. He has published more than 250 articles on ADHD, neurodevelopmental disorders, and digital mental health. He is a member of the European Network for Hyperkinetic Disorders (Eunethydis) and the European ADHD Guideline Group.

Amos A. Folarin is the Senior Software Development Group Leader at the Department of Biostatistics and Health Informatics of King’s College London. He manages a team of software developers and data scientists working on several mobile health (mHealth) and clinical informatics initiatives. His research is primarily focused on creating novel, noninvasive methods using remote monitoring and sensor technologies to capture rich patient data in real-world settings. His goal is to bring forward a more responsive and efficient standard of individualized care.

Richard J. B. Dobson is the Head of the Department of Biostatistics and Health Informatics, one of the largest mental health informatics and methodology research groups in the world, with expertise in computer and data science, machine learning and AI, software development, engineering, trials, and hospital IT. He is motivated by the integration of data from patient records with genomics data and mobile health for a better understanding of the interplay between mental and physical health. He is the cochair of the Centre for Translational Informatics, PI for the HDRUK Text Analytics programme, and coinvestigator for the national Mental Health Data Hub (DATAMIND). His group led award-winning technology components of several programs, including RADAR-CNS, BigData@Heart, and AIMS-2-Trials (>€50m). He has implemented analytics platforms in hospitals for direct patient care (e.g., PI NHSX AI Award £1.5m; London AI Centre for Value-Based Healthcare £26m).

Jonna Kuntsi is Professor of Developmental Disorders and Neuropsychiatry at King’s College London. Her research interests include remote measurement technology for ADHD and related disorders, long-term outcomes (remission versus persistence of ADHD in adulthood), preterm birth as a risk factor for ADHD, and impairments in cognitive and neural function.

Footnotes

The author(s) declared the following potential conflicts of interest with respect to the research, authorship, and/or publication of this article: Prof. Jonna Kuntsi has given talks at educational events sponsored by Medice; all funds are received by King’s College London and used for studies of ADHD. Prof. Philip Asherson has received funding for research by Vifor Pharma and has given sponsored talks and been an advisor for Shire, Janssen-Cilag, Eli Lilly, Flynn Pharma, and Pfizer, regarding the diagnosis and treatment of ADHD; all funds are received by King’s College London and used for studies of ADHD. The other authors report no conflicts of interest.

Funding: We thank all who made this research possible: all participants who contributed their time to the study; and the funders. The ADHD Remote Technology (ART) pilot study was funded by a King’s Together Strategic Grant. This study was funded in part by the National Institute for Health Research (NIHR) Maudsley Biomedical Research Centre at South London and Maudsley NHS (National Health Service) Foundation Trust and King’s College London. Hayley Denyer is supported by the UK Medical Research Council (MR/N013700/1) and King’s College London member of the MRC Doctoral Training Partnership in Biomedical Sciences. Qigang Deng is supported by the China Scholarship Council (201908440362).

ORCID iD: Shaoxiong Sun https://orcid.org/0000-0003-3652-5266

References

- Andreou P., Neale B. M., Chen W., Christiansen H., Gabriels I., Heise A., Meidad S., Muller U. C., Uebel H., Banaschewski T., Manor I., Oades R., Roeyers H., Rothenberger A., Sham P., Steinhausen H. C., Asherson P., Kuntsi J. (2007). Reaction time performance in ADHD: Improvement under fast-incentive condition and familial effects. Psychological Medicine, 37(12), 1703–1715. 10.1017/S0033291707000815

- Barkley R. A. (2011a). Barkley Adult ADHD Rating Scale—IV (BAARS-IV). Guilford Press.

- Barkley R. A. (2011b). Barkley Functional Impairment Scale (BFIS for Adults). Guilford Press.

- Barkley R. A., Murphy K. R. (2006). Attention-deficit hyperactivity disorder: A clinical workbook. Guilford Press.

- Cheung C. H. M., McLoughlin G., Brandeis D., Banaschewski T., Asherson P., Kuntsi J. (2017). Neurophysiological correlates of attentional fluctuation in attention-deficit/hyperactivity disorder. Brain Topography, 30(3), 320–332. 10.1007/s10548-017-0554-2

- Cheung C. H. M., Rijsdijk F., McLoughlin G., Brandeis D., Banaschewski T., Asherson P., Kuntsi J. (2016). Cognitive and neurophysiological markers of ADHD persistence and remission. The British Journal of Psychiatry, 208(6), 548–555. 10.1192/bjp.bp.114.145185

- Denyer H., Adanijo A., Deng Q., Asherson P., Bilbow A., Folarin A., Groom M., Hollis C., Wykes T., Dobson R., Kuntsi J., Simblett S. (2022a). The acceptability of remote measurement technology in the long-term monitoring of individuals with ADHD—A qualitative analysis. Neuroscience Applied, 1, 100438. 10.1016/j.nsa.2022.100438

- Denyer H., Deng Q., Adanijo A., Asherson P., Bilbow A., Folarin A., Groom M., Hollis C., Wykes T., Dobson R., Kuntsi J., Simblett S. (in press). Barriers to and facilitators of using remote measurement technology in the long-term monitoring of individuals with ADHD: A qualitative analysis. JMIR Formative Research.

- Denyer H., Ramos-Quiroga J. A., Folarin A., Ramos C., Nemeth P., Bilbow A., Woodward E., Whitwell S., Müller-Sedgwick U., Larsson H., Dobson R. J., Kuntsi J. (2022b). ADHD remote technology study of cardiometabolic risk factors and medication adherence (ART-CARMA): A multi-centre prospective cohort study protocol. BMC Psychiatry, 22(1), 813. 10.1186/s12888-022-04429-6

- Doehnert M., Brandeis D., Straub M., Steinhausen H. C., Drechsler R. (2008). Slow cortical potential neurofeedback in attention deficit hyperactivity disorder: Is there neurophysiological evidence for specific effects? Journal of Neural Transmission, 115(10), 1445–1456. 10.1007/s00702-008-0104-x

- Franke B., Michelini G., Asherson P., Banaschewski T., Bilbow A., Buitelaar J. K., Cormand B., Faraone S. V., Ginsberg Y., Haavik J., Kuntsi J., Larsson H., Lesch K. P., Ramos-Quiroga J. A., Réthelyi J. M., Ribases M., Reif A. (2018). Live fast, die young? A review on the developmental trajectories of ADHD across the lifespan. European Neuropsychopharmacology, 28(10), 1059–1088. 10.1016/j.euroneuro.2018.08.001

- Fritz C. O., Morris P. E., Richler J. J. (2012). Effect size estimates: Current use, calculations, and interpretation. Journal of Experimental Psychology: General, 141(1), 2–18. 10.1037/a0024338

- James S. N., Cheung C. H. M., Rijsdijk F., Asherson P., Kuntsi J. (2016). Modifiable arousal in attention-deficit/hyperactivity disorder and its etiological association with fluctuating reaction times. Biological Psychiatry: Cognitive Neuroscience and Neuroimaging, 1(6), 539–547. 10.1016/j.bpsc.2016.06.003

- James S. N., Cheung C. H. M., Rommel A. S., McLoughlin G., Brandeis D., Banaschewski T., Asherson P., Kuntsi J. (2020). Peripheral hypoarousal but not preparation-vigilance impairment endures in ADHD remission. Journal of Attention Disorders, 24(13), 1944–1951. 10.1177/1087054717698813

- Kooij J. J. S., Francken M. H. (2010). DIVA 2.0 Diagnostisch Interview Voor ADHD bij volwassenen [DIVA 2.0 Diagnostic Interview for ADHD in adults]. DIVA Foundation. http://www.divacenter.eu/DIVA.aspx

- Kuntsi J., Pinto R., Price T. S., van der Meere J. J., Frazier-Wood A. C., Asherson P. (2014). The separation of ADHD inattention and hyperactivity-impulsivity symptoms: Pathways from genetic effects to cognitive impairments and symptoms. Journal of Abnormal Child Psychology, 42(1), 127–136. 10.1007/s10802-013-9771-7

- Kuntsi J., Rogers H., Swinard G., Börger N., van der Meere J., Rijsdijk F., Asherson P. (2006). Reaction time, inhibition, working memory and ‘delay aversion’ performance: Genetic influences and their interpretation. Psychological Medicine, 36(11), 1613–1624. 10.1017/S0033291706008580

- Kuntsi J., Wood A. C., Rijsdijk F., Johnson K. A., Andreou P., Albrecht B., Arias-Vasquez A., Buitelaar J. K., Mcloughlin G., Rommelse N. N., Sergeant J. A., Sonuga-Barke E. J., Uebel H., van der Meere J. J., Banaschewski T., Gill M., Manor I., Miranda A., Mulas F., . . . Asherson P. (2010). Separation of cognitive impairments in attention deficit hyperactivity disorder into two familial factors. Archives of General Psychiatry, 67(11), 1159–1167. 10.1001/archgenpsychiatry.2010.139

- McLoughlin G., Albrecht B., Banaschewski T., Rothenberger A., Brandeis D., Asherson P., Kuntsi J. (2010). Electrophysiological evidence for abnormal preparatory states and inhibitory processing in adult ADHD. Behavioral and Brain Functions, 6(1), 66. 10.1186/1744-9081-6-66

- Michelini G., Jurgiel J., Bakolis I., Cheung C. H. M., Asherson P., Loo S. K., Kuntsi J., Mohammad-Rezazadeh I. (2019). Atypical functional connectivity in adolescents and adults with persistent and remitted ADHD during a cognitive control task. Translational Psychiatry, 9(1), 137. 10.1038/s41398-019-0469-7

- Michelini G., Kitsune G. L., Cheung C. H., Brandeis D., Banaschewski T., Asherson P., McLoughlin G., Kuntsi J. (2016). Attention-deficit/hyperactivity disorder remission is linked to better neurophysiological error detection and attention-vigilance processes. Biological Psychiatry, 80(12), 923–932. 10.1016/j.biopsych.2016.06.021

- Pievsky M. A., McGrath R. E. (2018). The neurocognitive profile of attention-deficit/hyperactivity disorder: A review of meta-analyses. Archives of Clinical Neuropsychology, 33(2), 143–157. 10.1093/arclin/acx055

- Ranjan Y., Rashid Z., Stewart C., Conde P., Begale M., Verbeeck D., Boettcher S., Dobson R., Folarin A. (2019). RADAR-base: Open source mobile health platform for collecting, monitoring, and analyzing data using sensors, wearables, and mobile devices. JMIR mHealth and uHealth, 7(8), e11734. 10.2196/11734

- Stewart C. L., Rashid Z., Ranjan Y., Sun S., Dobson R. J., Folarin A. A. (2018). RADAR-base: Major depressive disorder and epilepsy case studies. In Proceedings of the 2018 ACM International Joint Conference and 2018 International Symposium on Pervasive and Ubiquitous Computing and Wearable Computers (pp. 1735–1743). ACM, Singapore. 10.1145/3267305.3267540

- Sun S., Folarin A. A., Ranjan Y., Rashid Z., Conde P., Stewart C., Cummins N., Matcham F., Dalla Costa G., Simblett S., Leocani L., Lamers F., Sørensen P. S., Buron M., Zabalza A., Guerrero Pérez A. I., Penninx B. W., Siddi S., Haro J. M., . . . Dobson R. J. (2020). Using smartphones and wearable devices to monitor behavioral changes during COVID-19. Journal of Medical Internet Research, 22(9), e19992. 10.2196/19992

- Sun S., Folarin A. A., Zhang Y., Cummins N., Liu S., Stewart C., Ranjan Y., Rashid Z., Conde P., Laiou P., Sankesara H., Dalla Costa G., Leocani L., Sørensen P. S., Magyari M., Guerrero A. I., Zabalza A., Vairavan S., Bailon R., . . . Dobson R. J. (2022). The utility of wearable devices in assessing ambulatory impairments of people with multiple sclerosis in free-living conditions. Computer Methods and Programs in Biomedicine, 227, 107204. 10.1016/j.cmpb.2022.107204

- Vainieri I., Martin J., Rommel A. S., Asherson P., Banaschewski T., Buitelaar J., Cormand B., Crosbie J., Faraone S. V., Franke B., Loo S. K., Miranda A., Manor I., Oades R. D., Purves K. L., Ramos-Quiroga J. A., Ribasés M., Roeyers H., Rothenberger A., . . . Kuntsi J. (2021). Polygenic association between attention-deficit/hyperactivity disorder liability and cognitive impairments. Psychological Medicine, 52, 3150–3158. 10.1017/S0033291720005218

- Vainieri I., Michelini G., Adamo N., Cheung C. H. M., Asherson P., Kuntsi J. (2020). Event-related brain-oscillatory and ex-Gaussian markers of remission and persistence of ADHD. Psychological Medicine, 52(2), 352–361. 10.1017/S0033291720002056

- Valko L., Doehnert M., Müller U. C., Schneider G., Albrecht B., Drechsler R., Maechler M., Steinhausen H. C., Brandeis D. (2009). Differences in neurophysiological markers of inhibitory and temporal processing deficits in children and adults with ADHD. Journal of Psychophysiology, 23(4), 235–246. 10.1027/0269-8803.23.4.235

- Wah Y., Razali N. (2011). Power comparisons of Shapiro-Wilk, Kolmogorov-Smirnov, Lilliefors and Anderson-Darling tests. Journal of Statistical Modeling and Analytics, 2, 21–33.

- Wechsler D. (1999). Wechsler Abbreviated Scale of Intelligence. PsychCorp.

- Wechsler D. (2011). Wechsler Abbreviated Scale of Intelligence—Second Edition (WASI-II). APA PsycTests. 10.1037/t15171-000

- Wechsler D. (2008). Wechsler Adult Intelligence Scale–Fourth Edition (WAIS–IV). NCS Pearson.

- Zhang Y., Folarin A. A., Sun S., Cummins N., Bendayan R., Ranjan Y., Rashid Z., Conde P., Stewart C., Laiou P., Matcham F., White K. M., Lamers F., Siddi S., Simblett S., Myin-Germeys I., Rintala A., Wykes T., Haro J. M., . . . Dobson R. J. (2021). Relationship between major depression symptom severity and sleep collected using a wristband wearable device: Multicenter longitudinal observational study. JMIR mHealth and uHealth, 9(4), e24604–e24615. 10.2196/24604

- Zhang Y., Folarin A. A., Sun S., Cummins N., Vairavan S., Bendayan R., Ranjan Y., Rashid Z., Conde P., Stewart C., Laiou P., Sankesara H., Matcham F., White K. M., Oetzmann C., Ivan A., Lamers F., Siddi S., Vilella E., . . . Dobson R. J. (2022). Longitudinal relationships between depressive symptom severity and phone-measured mobility: Dynamic structural equation modeling study. JMIR Mental Health, 9(3), e34898. 10.2196/34898