Summary

How do humans blink while driving a vehicle? Although gaze control patterns have been previously reported in relation to successful steering, eyeblinks that disrupt vision are believed to be randomly distributed during driving or are ignored. Herein, we demonstrate that eyeblink timing shows reproducible patterns during real formula car racing driving and is related to car control. We studied three top-level racing drivers. Their eyeblinks and driving behavior were acquired during practice sessions. The results revealed that the drivers blinked at surprisingly similar positions on the courses. We identified three factors underlying the eyeblink patterns: the driver’s individual blink count, lap pace associated with how strictly they followed their pattern on each lap, and car acceleration associated with when/where to blink at a moment. These findings suggest that the eyeblink pattern reflected cognitive states during in-the-wild driving and experts appear to change such cognitive states continuously and dynamically.

Subject areas: Behavioral neuroscience, Sensory neuroscience, Cognitive neuroscience

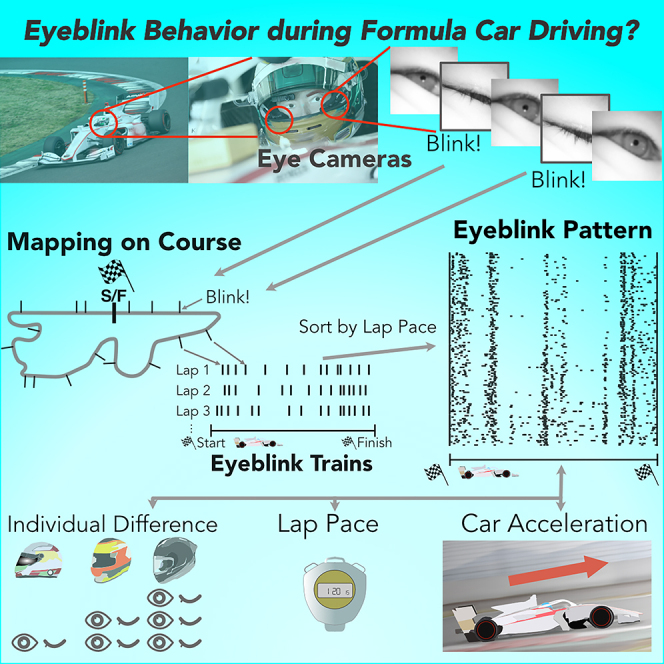

Graphical abstract

Highlights

- Professional formula car drivers blinked at similar locations in circuits

- Individual and car-control-related components are associated with eyeblink patterns

- Eyeblink patterns presumably reflect cognitive state changes during formula car driving


Introduction

Vision is critical for efficient locomotion in humans. Many studies have shown that humans use effective eye movement strategies with eyes open to sample critical information during natural tasks, from making tea to batting a ball,1,2,3 revealing functional coupling between action and gaze direction. Human locomotion is not an exception.4,5,6 For approximately 30 years, driving, in particular, has been discussed in terms of reproducible gaze strategies used for efficient steering.7,8 However, few studies have addressed the impact and modulation of eyeblinks. Spontaneous eyeblinks, which involve unavoidable and frequent vision loss, have been considered to be randomly distributed9 or have simply been neglected, particularly in the context of visuomotor control. Humans blink 10–30 times per minute,10,11 and one eyeblink involves vision loss for about 200 ms.12 Thus, people with a mean eyeblink rate of 30/min can lose up to 10% of vision time.13 At this level, irrespective of the skill of gaze direction control, poor allocation of eyeblink timing may undermine visuomotor performance.14
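The 10% figure follows from simple arithmetic. A minimal Python sketch of that calculation is shown below; the function name `vision_loss_fraction` is ours, and the blink rate and the roughly 200 ms blink duration are the values quoted above.

```python
def vision_loss_fraction(blinks_per_minute, blink_duration_s=0.2):
    """Fraction of each minute during which vision is interrupted by blinks."""
    return blinks_per_minute * blink_duration_s / 60.0


# Range of typical blink rates quoted in the text (10 to 30 per minute).
for rate in (10, 20, 30):
    print(rate, round(vision_loss_fraction(rate), 3))
```

At 30 blinks per minute, the lost time is 30 × 0.2 s = 6 s in every 60 s.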

Laboratory studies have revealed that humans blink much more often than necessary to keep the surface of the eye moist.15 Additionally, dopaminergic activity16 and cognitive states17,18 can influence the timing of eyeblinks. One of the most important variables determining the probability of an eyeblink is the extent of attention to the task.17,19,20,21 Humans suppress eyeblinks to avoid loss of visual information at critical moments14,18,22 and the accompanying perceptual distortion.23,24,25 Because drivers pay attention to visual features, such as optical flow, tangent points of corners, and near and far fields of vision, to trace the correct path effectively,5,8,26 they may modulate their eyeblink timing so as to avoid missing critical visual features. Additionally, the level of required attention is likely to vary from time to time during driving,19 which may result in eyeblink timing modulation. Since attention to non-visual information also affects eyeblink rates,21 eyeblink timing may be determined not only by visual demand but also by other cognitive factors, such as cognitive load.

However, laboratory experiment results cannot simply be extrapolated because the development of the related cognitive states during arbitrary driving over time is unknown. Moreover, the interactions between the cognitive effects, particularly those associated with motor control,27 are poorly understood. Here, we used real Formula car driving, not a simulator, as an extreme example in which the cost of even slight vision loss is maximally emphasized because it can lead to a fatal crash. Expert car drivers drive the same courses repeatedly in an exact, stable driving manner,13 and the driving environment can be better controlled than on a public road with pedestrians and obstacles. We considered that the properties of Formula racing car driving may help identify the relationships between driving and eyeblink behavior.

In this study, we analyzed eyeblink timing and car control data to answer two questions. First, are there any reproducible eyeblink patterns during Formula car driving? Second, if the pattern exists, can it be explained by known blink-modulating effects? Importantly, if applicable effects were found, then this model may serve to capture the hard-to-reach temporal evolution of cognitive states during in-the-wild driving. In terms of eyeblink pattern formation, cognitive states for sensorimotor control would change rapidly according to the course topology.19 We hypothesized that eyeblinks may reflect such changes and then expected that eyeblinks would show spatial patterns along the course.

Results

Spatial reproducibility of eyeblinks during driving

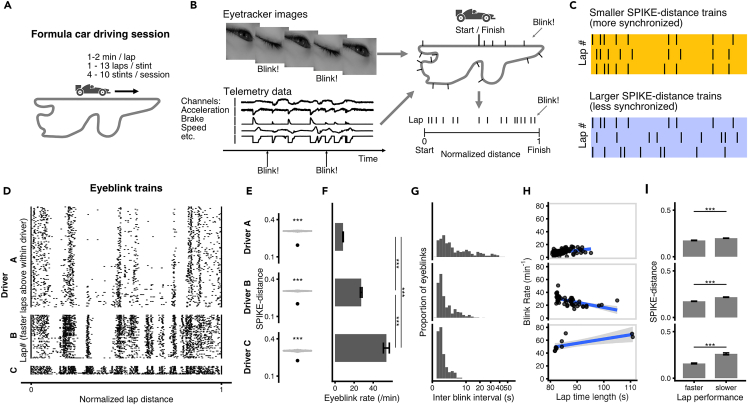

Eyeblink patterns and the driving behavior of three elite professional Formula car drivers were naturalistically observed on three circuits (Fuji, Suzuka, and Sugo; see the STAR Methods section for more course details). The observations were made during free practice sessions before racing or during dedicated car testing sessions. A session consists of one or more stints. Each stint (a sequence of leaving the garage, driving the course for several laps, and returning to the garage) lasted several minutes or longer (Figure 1A). The eye tracker video was synchronized with car behavioral data collected by the vehicle’s telemetry system (Figure 1B). The drivers were aware that we were conducting eyemetric observations, but they were not told the purpose of our observation in detail to prevent conscious control of eyeblinks. We first calculated eyeblink locations from eyeblink timing and telemetry data (Figure 1B) and then normalized the distance from the start/finish line to the maximum traveled distance in the lap (Figures 1B and 1D). Next, we tested how similar eyeblink locations were between laps using the SPIKE-distance28 (Figures 1C and 1E) within driver and course combinations. Indeed, for all eight driver and course combinations, the actual SPIKE-distance values were significantly lower than those of shifted null eyeblink trains in which we had circularly shifted eyeblink timing (p < 0.001; Figures 1E, S1B, and S1D), suggesting that all three drivers blinked at track- and driver-specific locations.
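The location normalization and the circular-shift null described above can be sketched as follows. This is an illustrative sketch, not the authors' code: the function names and the telemetry layout (parallel lists of sample times and traveled distances for one lap) are assumptions, and the SPIKE-distance itself is not implemented here.

```python
from bisect import bisect_right


def distance_at(t, times, distances):
    """Linearly interpolate traveled distance at time t from telemetry samples."""
    if t <= times[0]:
        return distances[0]
    if t >= times[-1]:
        return distances[-1]
    i = bisect_right(times, t)  # times[i - 1] <= t < times[i]
    t0, t1 = times[i - 1], times[i]
    d0, d1 = distances[i - 1], distances[i]
    return d0 + (d1 - d0) * (t - t0) / (t1 - t0)


def normalized_blink_locations(blink_times, times, distances):
    """Map blink times to positions in [0, 1] along one lap."""
    lap_length = max(distances)  # maximum traveled distance in the lap
    return [distance_at(t, times, distances) / lap_length for t in blink_times]


def circular_shift(locations, shift):
    """Shift normalized locations around the lap, wrapping at the start/finish line."""
    return sorted((x + shift) % 1.0 for x in locations)


# Hypothetical telemetry for one short lap (seconds, meters).
times = [0.0, 1.0, 2.0, 3.0, 4.0]
distances = [0.0, 100.0, 250.0, 400.0, 500.0]
locations = normalized_blink_locations([0.5, 2.5], times, distances)
print(locations, circular_shift(locations, 0.5))
```

A null distribution would then be built by computing the lap-to-lap dissimilarity on many shifted trains and comparing it with the value from the unshifted data.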

Figure 1.

Driving task and eyeblink signatures during Formula car driving

(A) Formula car driving task.

(B) From the acquired eyetracker video images and car telemetry data, the eyeblink distance from the start/finish line was calculated and then was normalized to the maximum traveled distance in the lap.

(C) Depiction of smaller SPIKE-distance vs. larger SPIKE-distance spike trains.

(D–I) Data of three drivers in one representative course (Fuji). Upper: Driver A, middle: Driver B, and lower: Driver C. (D) Raster plot of eyeblinks, (E) Real value (black) and null distribution (gray) of SPIKE-distance, (F) Eyeblink rates per lap, (G) Interblink-interval distribution, (H) Correlation between eyeblink rate and lap time length, and (I) Piecewise SPIKE-distances within faster (lap time < the median of lap times) and slower laps (lap time > the median of lap times). Error bars indicate across-lap mean ± SEM. Asterisks indicate statistically significant differences between conditions using the notation: ∗∗∗ for p < 0.001.

Eyeblink patterns depend on individuality, lap time, and car acceleration

Eyeblink frequency substantially varies across individuals.10,11,14 These variations were also observed in the professional drivers while driving the courses (mean ± SEM: 8.4 ± 0.3, 27.4 ± 0.9, 53.8 ± 3.1 per minute for course Fuji; Levene’s test indicated unequal variance for eyeblink rate variability: p < 0.001; Kruskal-Wallis test indicated eyeblink rate differed among the drivers: p < 0.001; all pairwise t-tests with Bonferroni correction revealed that they differed between each of all driver combinations: p < 0.001; Figure 1F) (similarly to course Fuji, mean ± SEM: 7.9 ± 0.3, 28.0 ± 0.7, 47.3 ± 2.5 per minute for the entire three courses; Levene’s test indicated unequal variance for eyeblink rate variability: p < 0.001; Kruskal-Wallis test indicated eyeblink rate differed among the drivers: p < 0.001; all pairwise t-tests with Bonferroni correction revealed that they differed between each of all driver combinations: p < 0.001; Figure S2). Therefore, the number of eyeblinks included in the eyeblink patterns could be predicted for each individual. Additionally, the literature on interblink interval (IBI) has highlighted that the eyeblink distributions differ across participants, even during the same task, and that interaction between task visual demand fluctuation and individual physiological properties (e.g., eye dryness and irritation) could partially explain the difference in distribution shape.14 Figure 1G displays the IBI distribution shapes of each driver. The distribution tail lengths were notably different, and the order agreed with the eyeblink rate order. The IBI distribution shapes of Drivers B and C were unimodal, while that of Driver A was multimodal, indicating differences in physiological properties and/or fluctuation of visual demand.
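The two descriptive quantities used in this paragraph, the across-lap mean ± SEM of the eyeblink rate and the interblink intervals, can be computed as in the following sketch. The function names and the example numbers are hypothetical and are not taken from the dataset.

```python
from math import sqrt
from statistics import mean, stdev


def mean_sem(values):
    """Return (mean, standard error of the mean) for per-lap values."""
    return mean(values), stdev(values) / sqrt(len(values))


def interblink_intervals(blink_times):
    """Differences between consecutive blink onset times, in seconds."""
    ordered = sorted(blink_times)
    return [b - a for a, b in zip(ordered, ordered[1:])]


# Hypothetical per-lap blink rates (per minute) and blink onset times (s).
m, sem = mean_sem([8.0, 9.0, 8.5, 7.5])
print(round(m, 2), round(sem, 2))
print(interblink_intervals([1.0, 3.5, 4.0]))
```

A histogram of the intervals per driver would give an IBI distribution of the kind described above.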

We next attempted to capture the relationship between a lap-scale driving property and eyeblink behavior. It has been suggested that the eyeblink rate is modulated by the task demand.19,29 In race car driving, the task demand would increase with improving lap pace, and consequently, drivers’ eyeblink rate might become lower. To test this, we correlated lap time with the eyeblink rate of each driver and course combination. Although the eyeblink rates correlated positively with lap times in three of the eight combinations, as expected, the tests for another four combinations showed no significance (Figures 1H and S3). In the remaining combination, the eyeblink rate correlated negatively with lap time. Therefore, it was difficult to identify a consistent effect of lap performance on the eyeblink rate. Eyeblink patterns are synchronized when people are highly engaged in a task.30,31,32 If the engagement in driving increased as the lap pace became faster, eyeblinks might become more synchronized. To test this, we compared SPIKE-distances between faster and slower laps, relative to the median of lap times (Figures 1I and S4). The effect was significant in six of eight combinations (q < 0.001 false discovery rate-corrected; Figure S4), whereas no difference was detected in the other two combinations because of the small sample size. The effect sizes in Fuji were 0.35, 1.4, and 3.5 (Cohen’s d) for Drivers A, B, and C, respectively. For the other combinations, effect sizes are reported in the Figure S4 legend. Altogether, these results suggest that, on a per-lap scale, lap performance affected the reproducibility of spatial eyeblink patterns but not eyeblink frequency.
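The median split of laps and the effect-size calculation can be sketched as below. This is a minimal illustration under stated assumptions: the function names are ours, and the pooled-standard-deviation form of Cohen's d is one common definition that may differ from the variant used in the analysis.

```python
from math import sqrt
from statistics import mean, median, variance


def split_by_median(lap_times):
    """Return indices of faster (< median) and slower (> median) laps."""
    med = median(lap_times)
    faster = [i for i, t in enumerate(lap_times) if t < med]
    slower = [i for i, t in enumerate(lap_times) if t > med]
    return faster, slower


def cohens_d(a, b):
    """Cohen's d using a pooled standard deviation (assumed definition)."""
    na, nb = len(a), len(b)
    pooled = sqrt(((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2))
    return (mean(a) - mean(b)) / pooled


# Hypothetical lap times in seconds; a lap exactly at the median joins neither group.
print(split_by_median([90.0, 92.0, 91.0, 95.0, 93.0]))
```

The dissimilarity values computed within the faster group and within the slower group would then be passed to `cohens_d` as the two samples.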

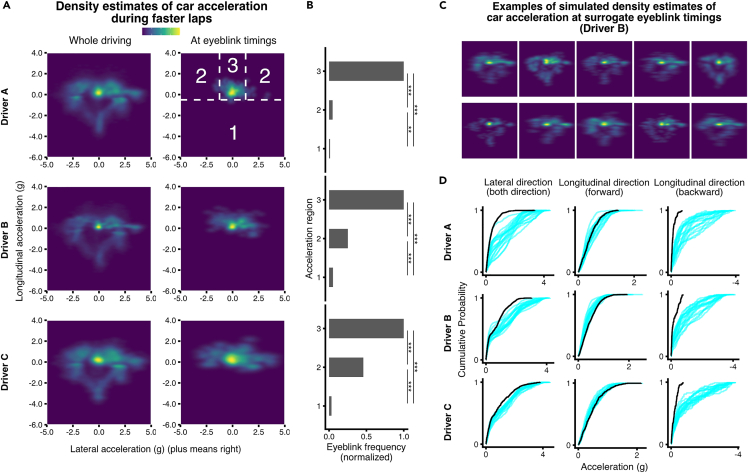

Individuality significantly explained baseline eyeblink frequency, and lap pace affected how strictly eyeblink spatial patterns were followed in each lap. However, it was not clear how drivers implicitly determined when and where to blink on a shorter timescale, or whether any specific driving behaviors determined when or where to blink. It can be speculated that a momentary increase in cognitive demand could lead to short-term eyeblink suppression,14,21,22 which could be linked to short-timescale driving behavior. Much of the skill of a racing driver involves keeping the tire grip force close to the limit.33 When it is below the limit, performance degrades, and when it is over the limit, the car will crash. Therefore, we hypothesized that drivers would constantly estimate the grip force limit by integrating various sensory information, which would demand higher cognitive effort near the grip force limit and would thus suppress eyeblinks. To test this, we utilized “g-g” diagrams.33,34,35 A “g-g” diagram is a two-dimensional density estimate of the time spent at each car acceleration value and is used by engineers as an alternative way of visualizing tire grip force. To create a “g-g” diagram, the car acceleration value at each time point is decomposed into two orthogonal components relative to the car heading direction, the “forward-and-backward” and “sideways” components; these are projected onto a two-dimensional plane, and the density of the points is estimated. These directions are called longitudinal and lateral, respectively.33 If a higher grip force and consequently higher acceleration create a higher cognitive demand in drivers, their active “g-g” diagram region at the eyeblink timing would be biased toward the origin when compared with that of overall driving.
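The construction of a “g-g” diagram can be illustrated with a short sketch. Several points are assumptions made for illustration: the heading angle is taken to be measured counterclockwise from the world x axis, lateral acceleration is positive to the driver's left, and a simple two-dimensional histogram stands in for the density estimate. Telemetry systems often log longitudinal and lateral acceleration directly, in which case the rotation step is unnecessary.

```python
from collections import Counter
from math import cos, floor, sin


def to_car_frame(ax, ay, heading_rad):
    """Rotate a world-frame acceleration into (longitudinal, lateral) components."""
    longitudinal = ax * cos(heading_rad) + ay * sin(heading_rad)
    lateral = -ax * sin(heading_rad) + ay * cos(heading_rad)
    return longitudinal, lateral


def gg_histogram(samples, bin_width=0.5):
    """Normalized counts per (lateral bin, longitudinal bin) cell.

    `samples` is a list of (longitudinal, lateral) pairs; the result maps each
    occupied cell to the fraction of samples that fall into it.
    """
    counts = Counter(
        (floor(lat / bin_width), floor(lon / bin_width)) for lon, lat in samples
    )
    total = sum(counts.values())
    return {cell: n / total for cell, n in counts.items()}


# Hypothetical samples in units of g: two near the origin, one under hard braking
# combined with cornering.
print(gg_histogram([(0.1, 0.1), (0.2, 0.3), (-1.2, 2.0)]))
```

Building one histogram from all telemetry samples and another from only the samples at eyeblink timing allows the two active regions to be compared.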

Indeed, Figure 2A shows that the active region of “g-g” diagrams at eyeblink timing was seemingly biased to the origin compared to the whole driving stint during faster laps. The same trend held true across courses (Figure S5) and lap pace (Figure S8). To confirm this point, we defined three acceleration regions, as shown in Figure 2A top-right. Chi-square goodness-of-fit tests were performed against the null hypothesis that drivers generated eyeblinks irrespective of car acceleration; under this hypothesis, the eyeblink count distribution across the three acceleration regions would not differ significantly from the distribution of time spent in each region over the whole driving. The chi-square tests with 2 degrees of freedom revealed that the drivers did not blink uniformly across the acceleration regions (Bonferroni corrected p < 0.001 for all combinations; Figure 2B). The order of frequency in the regions was 3 > 2 > 1 (post-hoc pairwise proportion test, Bonferroni corrected: p < 0.01 for all combinations).
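The goodness-of-fit test can be sketched in a few lines. The expected blink count in each region is the total blink count multiplied by the fraction of driving time spent in that region. For 2 degrees of freedom the chi-square survival function has the closed form exp(−x/2), so no statistics library is needed. The counts and time fractions below are hypothetical, and the function names are ours.

```python
from math import exp


def chi_square_gof(blink_counts, time_fractions):
    """Goodness-of-fit statistic of blink counts against time spent per region."""
    total = sum(blink_counts)
    expected = [total * f for f in time_fractions]
    return sum((o - e) ** 2 / e for o, e in zip(blink_counts, expected))


def p_value_df2(statistic):
    """Survival function of the chi-square distribution with 2 degrees of freedom."""
    return exp(-statistic / 2.0)


# Hypothetical example: Regions 1 to 3 take 20%, 30%, and 50% of driving time,
# but contain 5, 15, and 80 of 100 blinks.
stat = chi_square_gof([5, 15, 80], [0.2, 0.3, 0.5])
print(stat, p_value_df2(stat))
```

A Bonferroni correction would multiply each p value by the number of driver and course combinations tested, capping the result at 1.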

Figure 2.

Drivers suppress eyeblinks in relation to car acceleration

(A and B) Upper: Driver A, middle: Driver B, and lower: Driver C. (A) Two-dimensionally estimated density of vehicle acceleration by three drivers throughout driving (left) and at eyeblink timing (right). (B) Eyeblink frequency (number of eyeblinks generated/number of samples in each acceleration Region 1–3) during faster laps. We normalized eyeblink frequency to each driver’s eyeblink frequency in Region 3.

(C) Ten representative examples of two-dimensionally estimated density of vehicle acceleration (shift from −0.45 to 0.45) at the eyeblink timing of Driver B, which corresponds to the right middle figure in A.

(D) Empirical cumulative distribution functions in each acceleration direction from data obtained in fast laps at the Fuji course for the three drivers. The solid black lines indicate actual data. Blue lines indicate surrogate data. Asterisks indicate statistically significant differences between conditions using the notation: ∗∗ for p < 0.01 and ∗∗∗ for p < 0.001.

One might consider that because the acceleration patterns were tightly coupled with geolocation factors unique to the courses, which solely determined the eyeblink locations, the accelerations at the blink timing were biased, i.e., drivers blinked because they arrived at certain locations on the course, not because drivers experienced this acceleration. To examine this question, we compared actual “g-g” diagrams to those of null data: we circularly shifted eyeblink trains within each driver and course combination by 20 levels from −0.5 to +0.5 in the normalized distance and calculated the acceleration at the shifted eyeblink locations. These shifted train-simulated eyeblink patterns tightly correlated with the geolocation but were no longer related to acceleration. If the active region at the eyeblink timing was concentrated near the origin on the “g-g” diagram for the null data as well, the eyeblink timing should be affected solely by the geolocation. However, this was not the case. As shown in Figure 2C, the shifted “g-g” diagrams were not suppressed during higher lateral acceleration and higher longitudinal deceleration i.e., in Regions 1 and 2. This was more evident when comparing the cumulative distribution function of the accelerations at the eyeblink timing (Figure 2D). The acceleration at the eyeblink timing is significantly biased to zero, except for the positive longitudinal direction.
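The surrogate procedure in this paragraph can be sketched as follows. The data layout is an assumption made for illustration: `lap_profile` is a hypothetical list holding one acceleration value per equal-width bin of normalized distance, and the shifts are supplied explicitly by the caller.

```python
def acceleration_at(location, lap_profile):
    """Look up acceleration in the profile bin containing a normalized location."""
    n_bins = len(lap_profile)
    return lap_profile[min(int(location * n_bins), n_bins - 1)]


def surrogate_accelerations(blink_locations, lap_profile, shifts):
    """For each shift, wrap blink locations around the lap and read acceleration."""
    result = {}
    for shift in shifts:
        shifted = [(x + shift) % 1.0 for x in blink_locations]
        result[shift] = [acceleration_at(x, lap_profile) for x in shifted]
    return result


# Hypothetical lap with four bins: two low-acceleration straights (bins 0 and 2)
# and two high-acceleration corners (bins 1 and 3). Blinks fall on the straights.
profile = [0.2, 3.0, 0.3, 2.5]
print(surrogate_accelerations([0.1, 0.6], profile, [0.0, 0.25, -0.25]))
```

In this toy case the unshifted blinks coincide with low acceleration, whereas the shifted trains sample the corners, which mirrors the contrast between the actual and null “g-g” diagrams.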

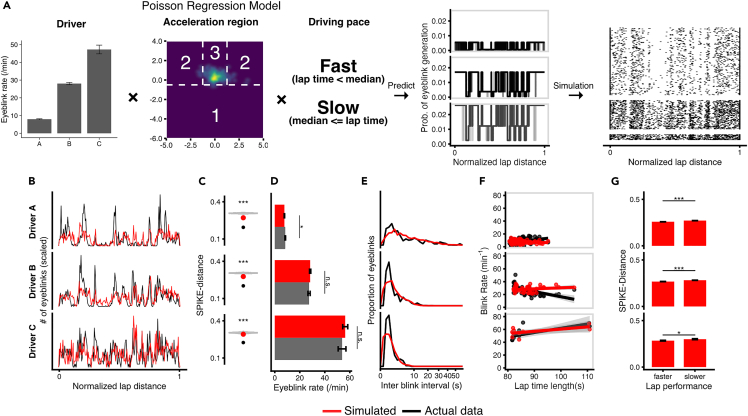

Based on the examinations above, we fitted a Poisson regression model to the three-way contingency table of the eyeblink generation probability, assuming that the drivers generated eyeblinks moment-by-moment based on the three factors stochastically.14 The three factors were individuality, lap performance, and acceleration region in Figure 2A (Figure 3A; details are in the STAR Methods section). Then, we predicted the moment-by-moment eyeblink generation probability (second from the right in Figure 3A). Finally, we obtained simulated eyeblink trains (the rightmost panel of Figure 3A) by drawing random values from binomial distributions with parameters p, the moment-by-moment estimated eyeblink generation probability, and n = 1. Simulation results replicated most of the eyeblink characteristics (Figures 3B–3G), but spatial synchronization features were notably degraded (Figures 3B and 3C): simulated SPIKE-distances were larger than those in the real data and the peaks of spatial eyeblink distribution for Drivers A and B were lower (Figure 3B).
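The prediction and simulation steps can be illustrated with a minimal sketch. It assumes a log-linear rate with additive effects of driver, lap pace, and acceleration region, as in a Poisson regression with a log link; the coefficient values, the dictionary layout, and the function names are hypothetical, and the fitting step is omitted.

```python
import random
from math import exp, log


def blink_probability(coef, driver, pace, region, dt):
    """Per-sample blink probability from a log-linear (Poisson-type) rate model."""
    rate_per_s = exp(
        coef["intercept"]
        + coef["driver"][driver]
        + coef["pace"][pace]
        + coef["region"][region]
    )
    return min(1.0, rate_per_s * dt)


def simulate_blinks(probabilities, seed=0):
    """Draw one Bernoulli outcome (binomial with n = 1) per telemetry sample."""
    rng = random.Random(seed)
    return [1 if rng.random() < p else 0 for p in probabilities]


# Hypothetical coefficients: baseline 0.5 blinks/s, suppressed in Regions 1 and 2.
coef = {
    "intercept": log(0.5),
    "driver": {"A": 0.0},
    "pace": {"fast": 0.0, "slow": 0.0},
    "region": {1: log(0.2), 2: log(0.5), 3: 0.0},
}
regions = [3, 3, 2, 1, 1, 2, 3]  # acceleration region at each telemetry sample
probs = [blink_probability(coef, "A", "fast", r, dt=0.01) for r in regions]
print(probs, simulate_blinks(probs))
```

Because each sample is drawn independently given its probability, a simulation of this kind carries no memory of previous blinks, which is one reason simulated trains may be less synchronized than real ones.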

Figure 3.

Three regressors in a Poisson generalized linear model (GLM) replicate many features of eyeblinks during Formula car driving

(A) A Poisson GLM with three regressors (individual, acceleration region, and lap driving pace) predicts the moment-by-moment probability of eyeblinks (one lap is represented by one thin gray line) (middle right). We simulated data by generating random values corresponding to each telemetry data moment that followed a binomial distribution with p = (predicted moment-by-moment probability) and n = 1. A raster plot for one simulated dataset is shown on the right.

(B–G) Signatures of one simulated eyeblink dataset (red) and real data (black). Similar to Figure 1, but for (B), a spatial histogram of eyeblink count is used instead of a raster plot. (C) SPIKE-distance of actual data (black circle), surrogate data generated from actual data (gray violin plot), and mean SPIKE-distance of 20 simulated datasets (red circle). Asterisks indicate the nonparametric statistical significance of the synchronization level of the simulated mean SPIKE-distance compared to the actual surrogate data. (D) Eyeblink rates of actual and simulated data. The error bar indicates one standard error. (E) Actual and simulated histograms of interblink-interval frequency. (F) Correlation between lap time length and blink rate in each lap for actual and simulated data. (G) Piecewise SPIKE-distances within faster and slower laps. Error bars indicate across-lap mean ± SEM. Asterisks indicate statistically significant differences between conditions using the notation: n.s. for not significant, ∗ for p < 0.05 and ∗∗∗ for p < 0.001.

Discussion

Our findings revealed eyeblink signatures during the naturalistic extreme sensorimotor activity of Formula car driving. There were hidden task-related spatial structures underlying the eyeblink pattern. Our analysis extrapolated findings from the known individual differences in eyeblink rate11,36 (Figures 1F and S2) and eyeblink synchronicity for engagement31,32 (Figures 1I and S4) and showed a correlation between short-term cognitive demand and eyeblink generation probability (Figures 2A and 2B).14,22 These findings were valid and underlay the spatial pattern, but cast doubt37,38 on the effect of the relationship between longer-term cognitive demand and eyeblink rate19 (Figure 1H) in a real-world extreme sensorimotor task.

Drew19 noticed relationships between eyeblink behavior and motor control. However, that study did not quantify motor control behavior in detail and the task environment was limited to a laboratory setting. Few studies on the relationship between eyeblinks and motor control were subsequently conducted. Research initiated by Land and Lee4 on eye movement, gaze control, motor control, and the task objective (mainly curve negotiation) in real vehicles continued,1,5,8 although blinking was not explicitly mentioned in those studies. Eyeblinks (and other artifacts) have been reported to result in more than 10% of eye tracker information loss9,13 in simulator conditions. Complete gaze patterns were eventually identified on a simulation basis,9,13 but the graph was interpolated, perhaps indicating that the authors considered that eyeblinks were distributed randomly.9 However, it may be reasonable to assume that, based on our data, the gaze direction data availability was somewhat biased and the gaze data were lost in specific situations (Figures 1D, S1A, and S1C). This raises the question of whether all estimates of gaze direction data should be treated equally. For a future study, in addition to spatiotemporal sampling strategies indicated by gaze direction, spatiotemporal sampling by blinking should be examined concurrently.

Observing driving at a variety of lap paces allowed us to examine how lap paces affected the lap scale eyeblink features. Cognitive demand is known to affect eyeblink frequency,16 while engagement in stimuli is known to affect eyeblink timing pattern similarity.31 With driving at a faster lap pace, cognitive demand and engagement in driving would increase, and a decrease in eyeblink rate and an increase in eyeblink pattern similarity were assumed. Our preliminary results32 suggested that the relationship between lap pace and eyeblink timing pattern similarity was more robust than the relationship between lap pace and eyeblink frequency. This was replicated in the larger dataset of this study (Figures 1I, S4, 1H, and S3). We are still determining the exact reason for the inconsistent relationship between the eyeblink rate and lap times. There have been numerous reports on the relationship between eyeblink rate and environment/stimulus factors (See review16). Although studies suggest the general trend that more cognitive demand leads to fewer eyeblinks, a considerable number of studies report no relationship between the eyeblink rate and environment/stimulus factors, depending on the specific environment or stimulus. Therefore, the inconsistency of the trends among drivers and courses during Formula car driving may not be so surprising.

One key conceptual issue of our eyeblink generation model was whether acceleration is a proxy of cognitive demand, as assumed in Figure 2. One might argue that drivers reflexively respond to sensory input, such as vestibular input or covarying visual flow. However, in course Sugo only (Figure S5B), where all laps were observed under wet road conditions, the active regions at eyeblink timing were much more biased to the origin than those for other courses. This was in line with the assumption that the grip level relative to the grip limit is important, rather than the car acceleration value per se, and suggests that there would be top-down modulation when evaluating acceleration or other confounders.

Our naturalistic observation method did not allow us to discern what the acceleration-based eyeblink generation bias truly represents during sport driving. However, task-related visual demand bias would be a promising candidate. Laboratory psychophysical studies have revealed that, when performing a visual detection task, participants rapidly suppress eyeblinks according to the momentary increase of visual demand.14,18,22,39 Human factor studies have revealed the importance of visual features through gaze strategies during curve negotiations in real life and in simulators.7,40 Taken together, curve driving may impose more visual demand than straight driving, resulting in the observed acceleration-related eyeblink bias. In addition, the suppression of eye blinking early in a curve or during longitudinal deceleration (Figure 2A) is consistent with research showing that visual information early in the curve is more important than that later in the curve.41 Thus, a general rule of thumb, “people blink on straights because they can; they cannot in curves because they have to care about visual features for stable driving,” would fit Formula car driving.

As another possibility, task-related auditory demand bias would also be a candidate. Because auditory task demand is known to momentarily lower eyeblink generation probability,21,39 the drivers may have listened carefully to something when experiencing an acceleration in Regions 1 and 2 compared to Region 3 (Figure 2A). Although, as far as we know, there is no research on whether specific auditory information enhances sports driving performance, it seems reasonable to assume that detecting changes in car/environment sound contributes to skilled driving. Vestibular and haptic sensations, force feedback from the steering wheel, and combinations of these could be similarly related to the eyeblink timing patterns; however, how they are related to each other is an open question. In addition, engagement change in relation to lap time may affect eyeblink generation timings (Figures 1I and S8; STAR Methods).

Short-term changes in the eyeblink pattern while driving were more evident around a curve than on a straight path (Figure 2A). Similar changes have previously been detected in the electroencephalography (EEG) power between straight and curve driving,42 and the eyeblink behavior and task-related EEG power changes may be closely related.43 It is important to note that eyeblink signatures are purely behavioral but provide a clue to cognitive state changes with a comparable time and spatial resolution to EEG.

Our three-factor-model simulation results replicated key eyeblink signatures, but the spatial synchrony was notably degraded (Figures 3B and 3G). We can consider several reasons for this gap. First, there may be a relation to eye physiology.44 For example, after prolonged eyeblink suppression, the probability of eyeblink generation could be higher owing to eye-surface irritation. As a result, eyeblinks might synchronize more after suppression than in our model. This physiological effect, which was excluded in the short-cycle task of Hoppe et al.,14 may have manifested itself in the longer inhibition time required for this driving task. The second reason may be the cognitive effort and the time course of eyeblink probability development around this phenomenon.21,39 For short-term cognitive effort, eyeblink generation probability decreases during effort, increases immediately afterward, and then returns to the baseline value. The mechanism of this effect is unclear, but for the latter increase, active disengagement of attention45 and dopaminergic activity in response to reward16 might be related. Finally, geolocation-specific reasons that we could not capture from our observation may be responsible. For example, drivers might look at some visual features at specific locations and suppress eyeblinks with the increase in visual demand. This was confirmed through changes in eyeblink generation probability in the absence of accompanying acceleration changes at a given location (Figure S6). Therefore, the location-dependent factor appears to have a considerable impact on eyeblink generation, while most of the features of eye blinking can be explained by individuality, lap performance, and car acceleration.

In conclusion, our investigations confirmed repeatable eyeblink patterns along the racecourse. The results indicated that the patterns reflected one individual-specific longer timescale feature and two shared-among-individuals features that reflect cognitive states during driving on shorter time scales. Moreover, our results show that expert drivers continuously shift these cognitive states while sport driving.

Limitations of the study

The limitations of observing blinking behavior during race driving in real Formula cars, rather than in simulators, need to be acknowledged.40

This study involved only three drivers because race driving is organized on only dozens of days a year and because drivers eligible to drive Formula cars in real race environments are scarce. However, the effects related to lap performance (Figures 1I and S4) and acceleration (Figures 2 and S5) were common among the drivers. First, regarding the population to which our results are generalizable, because all the drivers had great driving skills, our results would be generalizable to skilled drivers. Next, the lower bounds of the prevalence of the effects that showed interparticipant agreement could be as small as 30% according to a framework named conjunction analysis.46 Conjunction analysis is a method for determining the confidence interval for the frequency of a specific outcome based on observation of the outcome N times out of N total instances, which in this case was three out of three.

The drivers had to meet criteria to enter the race activity, but we did not set any particular criteria for our observation. We acknowledge that this was not optimal for experimental control among observations. However, our objective was to observe drivers in real race driving situations, so setting our own criteria might have disturbed the situation. Several of the criteria that drivers must meet to enter the races could contribute to improving experimental control. For example, although we neither prohibited nor monitored chemical substances during the observation period, the drivers needed to comply with anti-doping47 regulations, which prohibit performance-enhancing drugs, substances that pose a risk to the athlete’s body, and other stimulants. No violations of the regulations by the drivers have been reported. Alcohol is prohibited in this scheme, but caffeine is not. According to previous research, caffeine intake significantly impacts the eyeblink conditioning process depending on the dosage.48 However, to the best of our knowledge, caffeine has no impact on eyeblink rate,49 and no study has been found on the relationship between caffeine and eyeblink timing. It cannot be ruled out that caffeine affected our results through an unreported association, so future studies should consider monitoring caffeine intake.

Eye movement and gaze direction behavior were not analyzed simultaneously with blinking behavior. This was because we could not identify reliable pupil locations and gaze directions from the acquired data owing to the variable illumination conditions, the slippage of the eye trackers, which were attached to the drivers’ helmets rather than to their heads, and suboptimal camera angles (see STAR Methods and Figure S7). A future study including simultaneous recording would reveal the association between eyeblink and gaze behavior during driving.

The contrast of drivers’ eye blinking patterns seems far more evident than that in other passive tasks.18,31,50 However, ecological validity, and consequentially, drivers’ expertise, their deep knowledge of the courses, risk of fatality of the race driving task, intensity of sensory information, and active motor control involved may all contribute to the clarity of the patterns, although it is impossible to disentangle them from the results in this project, necessitating further study.

STAR★Methods

Key resources table

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Software and algorithms | | |
| R software (4.1.2) | R Core Team | https://www.r-project.org/ |
| Python Programming Language (3.6.10) | Python Software Foundation | https://www.python.org/ |
| Other | | |
| Pupil Core | Pupil Labs | https://pupil-labs.com/products/core/ |

Resource availability

Lead contact

Further information and requests for resources should be directed to and will be fulfilled by the lead contact, Ryota Nishizono (ryota.nishizono.kw@hco.ntt.co.jp).

Materials availability

This study did not generate new unique reagents or materials.

Experimental model and subject details

Three male Asian drivers, aged 23–33 years, participated in this study. They drove for a top Japanese formula car racing team. All drivers had started car racing in early childhood. There were no specific criteria for drivers to enter our experiments, but there were criteria to enter the racing activities we observed, for example, driving skills above a certain level and compliance with anti-doping rules.47 Before the study, the testing procedure was verbally explained and written informed consent was obtained. This study was approved by the ethics committee board of NTT Communication Science Laboratories (Atsugi, Japan; approval code H30-002) and was conducted in accordance with the Declaration of Helsinki.

Method details

Driving course and equipment

Three circuits (Fuji,51 Suzuka,52 and Sugo53) were used in this study. The track lengths were 4563 m, 5807 m, and 3737 m, the track widths were 15–25 m, 10–16 m, and 10–12 m, and there were 16, 18, and 11 corners, respectively. Except at Sugo, the recordings were obtained under dry road conditions. All drivers drove a Dallara SF19 formula car54 (Chassis: Dallara Automobili, Stradella, Italy; Engine: HR-417E, HONDA MOTOR CO. LTD., Tokyo, Japan & M-TEC COMPANY LTD., Asaka, Japan). The car weight was approximately 670 kg, including the driver. The engine power was over 405 kW and the top speed was approximately 300 km/h.

Eyeblink and car control behavior recording

An image-based technique was used to collect eyeblink data because the time required for attaching and removing electrodes for electromyography or electrooculography was too long for the drivers’ schedule. We used a binocular eye tracker (Pupil Core,55 Pupil Labs GmbH, Berlin, Germany) configured at 120 Hz and typically 400 × 400 pixels. The world camera of this eye tracker recorded the outward view at 60 Hz. The resulting images were used as supplementary data to monitor the outward situation. The world camera and two eye cameras were placed on a 3D-printed frame that was then mounted on the edge of the helmet (Figure S7A). Image data were collected using a smartphone (Figure S7B) and were processed offline. The driving recordings obtained totaled 7.9 h, or 304 laps. In one driving session, one eye camera failed completely, so we relied solely on the video from the opposite eye. In another driving session, one eye camera did not work for about 2 min, so we relied solely on the opposite camera for that period. For the other parts, our visual inspection did not reveal any apparent data loss, jitter, or noise in this dataset.

The car behavior was recorded using a car data logging system (Cosworth Ltd., Northampton, UK). The recorded data were exported at a sample rate of 50 Hz. Data were annotated using lap triggers that were triggered by an infrared (IR) transmitter (C16S IR Timing Beacon Receiver, Cosworth Ltd.) on the course. The IR transmitter was located close to the start/finish line by each circuit official. The same IR receiver was used to record the lap information in the eye-tracking data. It was installed in the car next to the car behavior lap-timing receiver (Figure S7C). A microcomputer (PIC16F1705, Microchip Technology, Inc., Novi, MI, USA) generated a 1000-Hz sine wave upon receiving the trigger signal. This sine wave was recorded by our eye-camera-recorder smartphone as a sound signal. This sound was used to synchronize the eye images and the car data logging system.

Quantification and statistical analysis

Eyeblink detection

Image feature-based approaches,56 such as the default eyeblink detection method of the Pupil Labs app,57 did not perform optimally because of the wide variations in outdoor lighting conditions,58 the non-optimal camera angle for pupil tracking, and frequent slippage of the cameras.59 On the other hand, manually detecting eyeblinks would be labor intensive, given that there were approximately 6.8 million images to be annotated for 7.9-h videos of both eyes at 120 Hz. Therefore, we decided to use a supervised machine learning algorithm. This algorithm was similar to the one in the study by Cortacero et al.,60 except that we used eye tracker images as input. In the first stage of the pipeline, our convolutional neural network (CNN) classifier predicted whether an eye image from the recorded video was a closed-eye image. ResNet-5061 was used as a backbone, followed by fully connected layers that output the closedness of each eye. We used the binary cross-entropy loss function. Data from 4 of the 18 driving sessions, involving two drivers and 2 days, were manually coded as closed or open eyes. A randomly sampled set of 9576 images (3545 closed vs. 6031 open) was used as the dataset. Overall, 90% of the randomly sampled images were used as the training dataset and the remaining 10% were used as the validation set. The fine-tuned model achieved a 0.1% error rate on the validation set. We applied the model to all available eye images obtained during the driving sessions. In this step, the output was a value from zero to one representing eye closedness confidence (softmax output of our CNN model). Then, in the second stage, open/closed binarization was performed by thresholding at 0.6. We checked the length of the runs of closed-eye images and retained only the runs shorter than 500 ms, assuming these to reflect eyeblinks.
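The thresholding and run-length step described above can be illustrated with a minimal sketch. It is not the code used in this study: the function name `detect_blinks`, the use of `>=` at the threshold, and the handling of a run that reaches the end of the video are assumptions made for illustration. Only the 120-Hz frame rate, the 0.6 threshold, and the 500-ms limit come from the text.

```python
FRAME_RATE_HZ = 120      # eye camera rate stated in the text
CLOSED_THRESHOLD = 0.6   # binarization threshold stated in the text
MAX_BLINK_MS = 500       # closed-eye runs shorter than this are kept as eyeblinks


def detect_blinks(closedness, frame_rate_hz=FRAME_RATE_HZ,
                  threshold=CLOSED_THRESHOLD, max_blink_ms=MAX_BLINK_MS):
    """Return (onset_frame, n_frames) for each closed-eye run kept as an eyeblink.

    `closedness` is a sequence of per-frame closedness scores in [0, 1].
    Treating scores >= threshold as closed is an assumption of this sketch.
    """
    blinks = []
    run_start = None
    # A trailing open frame (0.0) flushes a run that reaches the end of the video.
    for frame, score in enumerate(list(closedness) + [0.0]):
        is_closed = score >= threshold
        if is_closed and run_start is None:
            run_start = frame                      # first closed frame of a run
        elif not is_closed and run_start is not None:
            n_frames = frame - run_start
            duration_ms = 1000.0 * n_frames / frame_rate_hz
            if duration_ms < max_blink_ms:         # discard long eye closures
                blinks.append((run_start, n_frames))
            run_start = None
    return blinks
```

For example, a 12-frame closed run (100 ms) would be kept, whereas a 70-frame run (about 583 ms) would be discarded as too long to be an eyeblink.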

For validation, 20 samples were randomly extracted for each combination of session, eye (left/right), and model-defined open/closed prediction. We manually coded these sampled images. The accuracy of open-eye detection was 99% and that of closed-eye detection was 92%. In one driving session, our model misclassified more than 10% of eyes as closed owing to malalignment and the exposure setting of the cameras; thus, we used the manually coded data as an alternative. If both cameras operated appropriately, we increased robustness by requiring that both eyes be judged as closed. Finally, the onset of an eyeblink was defined as the time of the first frame of a run in which eye blinking occurred.

A set of data from one course (Sugo) was manually coded and compared with the output of our auto-detection algorithm to confirm its accuracy. During manual coding, eyeblink onsets were annotated using the Pupil Player annotation tool (Pupil Labs GmbH). The eye video-sampling rate was 120 Hz; however, the sampling rate for annotation was limited to 60 Hz (i.e., 16 ms interval), the same as that of the world camera. Eyeblink onset was annotated at the first frame in which the eyelid rapidly moved downward to generate an eyeblink. Therefore, we considered the exact eyeblink onset, defined as the point at which the eyelids started to move fast, to lie within 16 ms before the annotated time. In contrast, the eyeblink onset timing given by our auto-detection algorithm depended on the threshold value in the second stage of our pipeline.

The result was as follows. For Driver C, a human annotator detected 760 eyeblinks, while the auto-detector detected 764 eyeblinks. The annotations made by the human annotator preceded the nearest annotations made automatically by a median of 30.4 ms. For Driver A, a human annotator detected 171 eyeblinks, while our auto-detector detected 174 eyeblinks. The annotations made by the human annotator preceded the nearest annotations made automatically by a median of 25.6 ms.
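The comparison between manual and automatic onsets can be sketched as follows. This is an illustrative reading of "nearest annotation", not the analysis code of this study; the function name `median_lead_ms` and the example values are hypothetical.

```python
from statistics import median


def median_lead_ms(human_onsets_ms, auto_onsets_ms):
    """Median of (nearest automatic onset - human onset), in milliseconds.

    A positive value means the human annotation preceded the automatic one.
    Pairing each human onset with the closest automatic onset in time is an
    assumption of this sketch.
    """
    leads = []
    for human in human_onsets_ms:
        nearest = min(auto_onsets_ms, key=lambda auto: abs(auto - human))
        leads.append(nearest - human)
    return median(leads)


# Hypothetical onsets (ms from the start of a recording).
human = [1000, 5000, 9000]
auto = [1030, 5025, 9040, 12000]   # the last detection has no human counterpart
print(median_lead_ms(human, auto))
```

In this toy input, the unmatched automatic detection at 12000 ms does not affect the result because only human onsets are iterated over.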

(Dis)similarity measures for eyeblink trains

To assess the blink pattern similarity among laps statistically, we introduced a distance measure: the SPIKE-distance.28 This measure was originally developed to quantify the spike timing asynchrony of multiple firing neurons but is applicable to general event trains. SPIKE-distance decreases when event trains are more synchronized (Figure 1C). Eyeblink locations of each lap were treated as an “eyeblink train” (Figure 1B), analogous to neural spike trains. The eyeblink trains in one course are shown in Figure 1D. We tested the SPIKE-distance values for each driver and course combination against a null distribution of permutations, in which we circularly shifted eyeblink timing within each lap. The real SPIKE-distance and null distribution corresponding to Figure 1D are depicted in Figure 1E. Eyeblink position trains are formulated as follows.

$$B_j = \left\{ d_1^{(j)}, d_2^{(j)}, \ldots, d_{M_j}^{(j)} \right\} \qquad \text{(Equation 1)}$$

where $d$ is the normalized lap distance of the eyeblink position, and $M_j$ is the number of eyeblinks in lap $j$. Eyeblink train (dis)similarity refers to the distance between $B_k$ and $B_l$ for laps $k$ and $l$ as a measure in this context. When $B_k = B_l$, the distance is 0.

The surrogate data preserved the overall mean eyeblink rate, the eyeblink-to-eyeblink intervals,17 and the eyeblink timing sequences, but the information about where eyeblinks increased or decreased along the course was lost. We circularly shifted the eyeblink timing trains as follows:

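$$\tilde{t}_i^{(j,k)} = \left( t_i^{(j)} + \delta^{(j,k)} \right) \bmod T_j, \quad i = 1, \ldots, M_j, \quad j = 1, \ldots, N \qquad \text{(Equation 2)}$$

where $t_i^{(j)}$ is the eyeblink timing from the start/finish, $\tilde{t}_i^{(j,k)}$ is the $k$th circularly shifted $t_i^{(j)}$, and $M_j$ is the number of eyeblinks in lap $j$. $N$ is the number of laps for a driver at a course, $T_j$ is the time taken to complete lap $j$, and $\delta^{(j,k)}$ was drawn from the uniform distribution on $[0, T_j]$. Then, these circularly shifted eyeblink lap timings were converted into normalized lap distances by linear interpolation or extrapolation combined with the corresponding telemetry data, yielding $\tilde{d}_i^{(j,k)}$.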
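A minimal sketch of the circular-shift surrogate is given below, assuming that each lap receives one common random offset and that shifted times wrap around at the lap time. The function names `circular_shift` and `make_surrogates` are hypothetical, and the conversion from shifted times to normalized lap distance via telemetry is omitted.

```python
import random


def circular_shift(blink_times_s, lap_time_s, rng=random):
    """Shift all eyeblink times of one lap by a common offset, wrapping at the lap end.

    The offset is drawn uniformly from [0, lap_time_s]. Using one offset per
    lap and a modulo wrap is an assumption of this sketch.
    """
    offset = rng.uniform(0.0, lap_time_s)
    return sorted((t + offset) % lap_time_s for t in blink_times_s)


def make_surrogates(laps, n_surrogates, seed=0):
    """Build surrogate datasets from laps given as (blink_times_s, lap_time_s) pairs.

    Each surrogate contains one independently shifted copy of every lap.
    """
    rng = random.Random(seed)
    return [[circular_shift(times, lap_time, rng) for times, lap_time in laps]
            for _ in range(n_surrogates)]


# Hypothetical data: two laps with eyeblink times in seconds from the start/finish line.
laps = [([1.0, 10.0, 40.0], 85.0), ([5.0, 50.0], 86.5)]
surrogates = make_surrogates(laps, n_surrogates=3, seed=1)
```

Because every eyeblink in a lap moves by the same amount, the number of eyeblinks and the circular intervals between them are unchanged, while their alignment with positions on the course is randomized. A distance measure computed on each surrogate would then form the null distribution.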

Generalized linear model fitting and eyeblink pattern simulation

Each time point of telemetry data, annotated as blinking or not (e.g., time h:mm:ss.sss 0:50:10.000; Driver A; acceleration at Region 1 in Figure 2A; fast lap pace; not blinking), was classified into 18 levels based on three factors: 3 individuals × 3 acceleration regions × 2 lap paces (Figure 3A). Assuming that drivers generate eyeblinks moment-by-moment according to these factors (Figure 3A), we made a three-way contingency table of the frequency at each combination of factor levels and fitted a Poisson regression model to account for the contingency table as follows:

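$$n_{ijk} \sim \mathrm{Poisson}(\mu_{ijk}), \qquad \log \mu_{ijk} = \beta_0 + \beta_i^{D} + \beta_{ij}^{DA} + \beta_{kj}^{LA} \qquad \text{(Equation 3)}$$

where $i$, $j$, and $k$ are the factor levels of driver ($D$), acceleration region ($A$), and lap pace ($L$), respectively. $n_{ijk}$ represents the number of eyeblinks when the factor levels were $i$, $j$, and $k$. Fitting was done using the R62 built-in glm (generalized linear model) function with a log link function.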
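The construction of the three-way contingency table can be sketched as below. The tuple layout, the level labels, and the function name `blink_contingency` are hypothetical. The per-cell sample count is tallied alongside the eyeblink count only for illustration, since the text does not state how exposure time per cell was handled in the fit.

```python
from collections import Counter


def blink_contingency(samples):
    """Tally eyeblinks and telemetry samples per (driver, region, lap_pace) cell.

    `samples` is an iterable of (driver, region, lap_pace, is_blink_onset)
    tuples, one per telemetry sample. Returns (blink_counts, sample_counts).
    """
    blink_counts, sample_counts = Counter(), Counter()
    for driver, region, lap_pace, is_blink in samples:
        cell = (driver, region, lap_pace)
        sample_counts[cell] += 1
        if is_blink:
            blink_counts[cell] += 1
    return blink_counts, sample_counts


# Hypothetical telemetry samples (labels are illustrative only).
samples = [
    ("A", "Region 1", "fast", False),
    ("A", "Region 1", "fast", True),
    ("A", "Region 3", "slow", True),
    ("B", "Region 2", "fast", False),
]
blinks, totals = blink_contingency(samples)
```

With 3 drivers, 3 acceleration regions, and 2 lap paces, the full table has 18 cells. The eyeblink counts per cell are the responses that a Poisson regression such as the one described here would model.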

Three terms were entered into the GLM: the driver term, the driver × acceleration region term, and the lap pace × acceleration region term. The rationale for this decision was as follows. The driver term was included based on the examination of the association between eyeblink rates and individuality (Figures 1F and 1H). Regarding the driver × acceleration region term, visual inspection revealed that the effect of the acceleration region in Figure 2A on eyeblink frequency was not consistent among the drivers (Figures 2B and S8), while the ordering of eyeblink frequency across acceleration regions (Region 3 > Region 2 > Region 1) was maintained. The chi-squared test confirmed that the driver factor was not jointly independent63 of the response (eyeblink or no eyeblink) and the acceleration region factor (p < 0.001). Therefore, an association between driver and acceleration region was assumed. Finally, regarding the lap pace × acceleration region term, visual inspection revealed that the effect of the acceleration region in Figure 2A on eyeblink frequency differed by lap pace (Figure S8), while the ordering of eyeblink frequency across acceleration regions (Region 3 > Region 2 > Region 1) was maintained. The chi-squared test confirmed that the lap pace factor was not jointly independent of the response and the acceleration region factor (p < 0.001). Therefore, an association between lap pace and acceleration region was assumed.

The fitted coefficients are described in Table S1. The coefficients reflect the characteristics shown in Figures 2B and S8C. The model had 6 degrees of freedom. Then, we predicted the eyeblink probability moment-by-moment using the obtained model (second from the right in Figure 3A). Finally, we acquired simulated eyeblink trains (rightmost in Figure 3A) by generating random values that followed a binomial distribution with parameters (p: the predicted eyeblink probability; n: 1) for each telemetry data sample.
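The final simulation step, one binomial draw with n = 1 per telemetry sample, can be sketched as follows. The function name `simulate_blink_train`, the lookup table `cell_probability`, and the probability values are hypothetical. How the per-sample probability is obtained from the fitted model is not reproduced here.

```python
import random


def simulate_blink_train(sample_cells, cell_probability, seed=None):
    """Draw one Bernoulli (binomial, n = 1) outcome per telemetry sample.

    `sample_cells` lists the factor-level cell of each telemetry sample in
    time order; `cell_probability` maps each cell to a predicted per-sample
    eyeblink probability. Returns a list of 0/1 values (1 = eyeblink).
    """
    rng = random.Random(seed)
    train = []
    for cell in sample_cells:
        p = cell_probability[cell]
        # rng.random() lies in [0, 1), so p = 0 never fires and p = 1 always fires.
        train.append(1 if rng.random() < p else 0)
    return train


# Hypothetical per-sample probabilities for two cells of one driver.
cell_probability = {("A", "Region 1", "fast"): 0.001,
                    ("A", "Region 3", "fast"): 0.02}
cells = [("A", "Region 1", "fast")] * 500 + [("A", "Region 3", "fast")] * 500
simulated = simulate_blink_train(cells, cell_probability, seed=42)
```

Repeating the draw with different seeds would yield a set of simulated eyeblink trains that can be compared with the measured trains using the same (dis)similarity measure.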

Acknowledgments

We appreciate the collaboration of DOCOMO TEAM DANDELION RACING and the drivers who allowed the measurements. We thank Toshirou Fujimoto and Norihiro Ban for experimental assistance.

Author contributions

R.N. and N.S. conceived and designed the research. R.N. and N.S. conducted the experiments. R.N. analyzed the results. R.N. drafted the manuscript. All authors discussed the results and contributed to the final manuscript.

Declaration of interests

R.N. has patent pending to Nippon Telegraph and Telephone Corporation.

Published: May 19, 2023

Footnotes

Supplemental information can be found online at https://doi.org/10.1016/j.isci.2023.106803.

Supplemental information

Data and code availability

- DATA: The raw experimental data reported in this study cannot be deposited in a public repository for three reasons. First, they include personally identifiable biometric data. Second, technological developments in blink pattern analysis could reveal the drivers’ competitive characteristics in motorsport, which may disadvantage our participants, who were still active professionals at the time of writing. Third, disclosing car telemetry data, such as acceleration, to the public may disadvantage the team participating in the experiment in terms of their equipment development. Therefore, we adopted an “available upon request” policy for data requests.

- CODE: This paper does not report original code.

- Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon reasonable request.

References

- 1. Land M.F., McLeod P. From eye movements to actions: how batsmen hit the ball. Nat. Neurosci. 2000;3:1340–1345. doi: 10.1038/81887.

- 2. Hayhoe M.M., Shrivastava A., Mruczek R., Pelz J.B. Visual memory and motor planning in a natural task. J. Vis. 2003;3:49–63. doi: 10.1167/3.1.6.

- 3. Hayhoe M., Ballard D. Eye movements in natural behavior. Trends Cogn. Sci. 2005;9:188–194. doi: 10.1016/j.tics.2005.02.009.

- 4. Land M.F., Lee D.N. Where we look when we steer. Nature. 1994;369:742–744. doi: 10.1038/369742a0.

- 5. Land M.F., Tatler B.W. Steering with the head: the visual strategy of a racing driver. Curr. Biol. 2001;11:1215–1220. doi: 10.1016/S0960-9822(01)00351-7.

- 6. Matthis J.S., Yates J.L., Hayhoe M.M. Gaze and the control of foot placement when walking in natural terrain. Curr. Biol. 2018;28:1224–1233.e5. doi: 10.1016/j.cub.2018.03.008.

- 7. Lappi O. Future path and tangent point models in the visual control of locomotion in curve driving. J. Vis. 2014;14:21. doi: 10.1167/14.12.21.

- 8. Lappi O., Mole C. Visuomotor control, eye movements, and steering: a unified approach for incorporating feedback, feedforward, and internal models. Psychol. Bull. 2018;144:981–1001. doi: 10.1037/bul0000150.

- 9. Rendon-Velez E., van Leeuwen P.M., Happee R., Horváth I., van der Vegte W., de Winter J. The effects of time pressure on driver performance and physiological activity: a driving simulator study. Transp. Res. Part F: Traffic Psychol. Behav. 2016;41:150–169. doi: 10.1016/j.trf.2016.06.013.

- 10. Cruz A.A.V., Garcia D.M., Pinto C.T., Cechetti S.P. Spontaneous eyeblink activity. Ocul. Surf. 2011;9:29–41. doi: 10.1016/S1542-0124(11)70007-6.

- 11. McMonnies C. Encyclopedia of the Eye. Academic Press; 2010. Blinking mechanisms; pp. 202–208.

- 12. Riggs L.A., Volkmann F.C., Moore R.K. Suppression of the blackout due to blinks. Vis. Res. 1981;21:1075–1079. doi: 10.1016/0042-6989(81)90012-2.

- 13. van Leeuwen P.M., de Groot S., Happee R., de Winter J.C.F. Differences between racing and non-racing drivers: a simulator study using eye-tracking. PLoS One. 2017;12:e0186871. doi: 10.1371/journal.pone.0186871.

- 14. Hoppe D., Helfmann S., Rothkopf C.A. Humans quickly learn to blink strategically in response to environmental task demands. Proc. Natl. Acad. Sci. USA. 2018;115:2246–2251. doi: 10.1073/pnas.1714220115.

- 15. Kaminer J., Powers A.S., Horn K.G., Hui C., Evinger C. Characterizing the spontaneous blink generator: an animal model. J. Neurosci. 2011;31:11256–11267. doi: 10.1523/JNEUROSCI.6218-10.2011.

- 16. Jongkees B.J., Colzato L.S. Spontaneous eye blink rate as predictor of dopamine-related cognitive function: a review. Neurosci. Biobehav. Rev. 2016;71:58–82. doi: 10.1016/j.neubiorev.2016.08.020.

- 17. Ponder E., Kennedy W.P. On the act of blinking. Q. J. Exp. Physiol. 1927;18:89–110. doi: 10.1113/expphysiol.1927.sp000433.

- 18. Nakano T., Yamamoto Y., Kitajo K., Takahashi T., Kitazawa S. Synchronization of spontaneous eyeblinks while viewing video stories. Proc. Biol. Sci. 2009;276:3635–3644. doi: 10.1098/rspb.2009.0828.

- 19. Drew G.C. Variations in reflex blink-rate during visual-motor tasks. Q. J. Exp. Psychol. 1951;3:73–88. doi: 10.1080/17470215108416.

- 20. Stern J.A., Walrath L.C., Goldstein R. The endogenous eyeblink. Psychophysiology. 1984;21:22–33. doi: 10.1111/j.1469-8986.1984.tb02312.x.

- 21. Oh J., Jeong S.-Y., Jeong J. The timing and temporal patterns of eye blinking are dynamically modulated by attention. Hum. Mov. Sci. 2012;31:1353–1365. doi: 10.1016/j.humov.2012.06.003.

- 22. Shultz S., Klin A., Jones W. Inhibition of eye blinking reveals subjective perceptions of stimulus salience. Proc. Natl. Acad. Sci. USA. 2011;108:21270–21275. doi: 10.1073/pnas.1109304108.

- 23. Volkmann F.C., Riggs L.A., Moore R.K. Eyeblinks and visual suppression. Science. 1980;207:900–902. doi: 10.1126/science.7355270.

- 24. Maus G.W., Goh H.L., Lisi M. Perceiving locations of moving objects across eyeblinks. Psychol. Sci. 2020;31:1117–1128. doi: 10.1177/0956797620931365.

- 25. Teichmann L., Edwards G., Baker C.I. Resolving visual motion through perceptual gaps. Trends Cogn. Sci. 2021;25:978–991. doi: 10.1016/j.tics.2021.07.017.

- 26. Gibson J.J. Visually controlled locomotion and visual orientation in animals. Br. J. Psychol. 1958;49:182–194. doi: 10.1111/j.2044-8295.1958.tb00656.x.

- 27. Kobayashi A., Kimura T. Compensative movement ameliorates reduced efficacy of rapidly-embodied decisions in humans. Commun. Biol. 2022;5:294. doi: 10.1038/s42003-022-03232-z.

- 28. Mulansky M., Kreuz T. PySpike: a Python library for analyzing spike train synchrony. SoftwareX. 2016;5:183–189. doi: 10.1016/j.softx.2016.07.006.

- 29. Maffei A., Angrilli A. Spontaneous eye blink rate: an index of dopaminergic component of sustained attention and fatigue. Int. J. Psychophysiol. 2018;123:58–63. doi: 10.1016/j.ijpsycho.2017.11.009.

- 30. Ranti C., Jones W., Klin A., Shultz S. Blink rate patterns provide a reliable measure of individual engagement with scene content. Sci. Rep. 2020;10:8267. doi: 10.1038/s41598-020-64999-x.

- 31. Nakano T., Miyazaki Y. Blink synchronization is an indicator of interest while viewing videos. Int. J. Psychophysiol. 2019;135:1–11. doi: 10.1016/j.ijpsycho.2018.10.012.

- 32. Nishizono R., Saijo N., Kashino M. Proceedings of ACM Symposium on Eye Tracking Research and Applications Short Papers. 2021. Synchronization of spontaneous eyeblink during formula car driving; pp. 1–6.

- 33. Lappi O., Dove A. 2022. The Science of the Racer’s Brain (Otto Lappi).

- 34. Rice R.S. Measuring car-driver interaction with the gg diagram. SAE Technical Paper. 1973. doi: 10.4271/730018.

- 35. Milliken W.F., Milliken D.L. Society of Automotive Engineers, Warrendale; 1995. Race Car Vehicle Dynamics.

- 36. Nakano T. Blink-related dynamic switching between internal and external orienting networks while viewing videos. Neurosci. Res. 2015;96:54–58. doi: 10.1016/j.neures.2015.02.010.

- 37. Cho Y. Proceedings of the CHI Conference on Human Factors in Computing Systems. 2021. Rethinking eye-blink: assessing task difficulty through physiological representation of spontaneous blinking; pp. 1–12.

- 38. Bacher L.F., Smotherman W.P. Spontaneous eye blinking in human infants: a review. Dev. Psychobiol. 2004;44:95–102. doi: 10.1002/dev.10162.

- 39. Brych M., Händel B. Disentangling top-down and bottom-up influences on blinks in the visual and auditory domain. Int. J. Psychophysiol. 2020;158:400–410. doi: 10.1016/j.ijpsycho.2020.11.002.

- 40. Lappi O. Gaze strategies in driving–an ecological approach. Front. Psychol. 2022;13:821440. doi: 10.3389/fpsyg.2022.821440.

- 41. Cavallo V., Brun-Dei M., Laya O., Neboit M. Vision in Vehicles II. Second International Conference on Vision in Vehicles, Applied Vision Association, Ergonomics Society, Association of Optometrists. 1988. Perception and anticipation in negotiating curves: the role of driving experience.

- 42. Rito Lima I., Haar S., Di Grassi L., Faisal A.A. Neurobehavioural signatures in race car driving: a case study. Sci. Rep. 2020;10:11537–11539. doi: 10.1038/s41598-020-68423-2.

- 43. Wascher E., Arnau S., Gutberlet M., Chuang L.L., Rinkenauer G., Reiser J.E. Visual demands of walking are reflected in eye-blink-evoked EEG-activity. Appl. Sci. 2022;12:6614. doi: 10.3390/app12136614.

- 44. Nomura R., Liang Y.-Z., Morita K., Fujiwara K., Ikeguchi T. Threshold-varying integrate-and-fire model reproduces distributions of spontaneous blink intervals. PLoS One. 2018;13:e0206528. doi: 10.1371/journal.pone.0206528.

- 45. Nakano T., Kato M., Morito Y., Itoi S., Kitazawa S. Blink-related momentary activation of the default mode network while viewing videos. Proc. Natl. Acad. Sci. USA. 2013;110:702–706. doi: 10.1073/pnas.1214804110.

- 46. Laurens J. The statistical power of three monkeys. Preprint at bioRxiv. 2022. doi: 10.1101/2022.05.10.491373.

- 47. Japan Automobile Federation. JAF Anti Doping Rules. https://motorsports.jaf.or.jp/regulations/information/anti-doping

- 48. Flaten M.A. Caffeine-induced arousal modulates somatomotor and autonomic differential classical conditioning in humans. Psychopharmacology. 1998;135:82–92. doi: 10.1007/s002130050488.

- 49. Berenbaum H., Williams M. Extraversion, hemispatial bias, and eyeblink rates. Pers. Individ. Dif. 1994;17:849–852. doi: 10.1016/0191-8869(94)90052-3.

- 50. Wiseman R.J., Nakano T. Blink and you’ll miss it: the role of blinking in the perception of magic tricks. PeerJ. 2016;4. doi: 10.7717/peerj.1873.

- 51. Fuji International Speedway Co., Ltd. Fuji SpeedWay Guide. https://www.fsw.tv/en/index.html

- 52. Honda Mobilityland Corporation. Suzuka Circuit Course Guide. https://www.suzukacircuit.jp/en/course/

- 53. Sugo International Racing Course. https://www.sportsland-sugo.co.jp/course/c-racing/

- 54. Japan Race Promotion Inc. About SUPER FORMULA. https://superformula.net/sf2/about2021

- 55. Kassner M., Patera W., Bulling A. Adjunct Proceedings of the ACM International Joint Conference on Pervasive and Ubiquitous Computing. 2014. Pupil: an open source platform for pervasive eye tracking and mobile gaze-based interaction; pp. 1151–1160.

- 56. Schmidt J., Laarousi R., Stolzmann W., Karrer-Gauß K. Eye blink detection for different driver states in conditionally automated driving and manual driving using EOG and a driver camera. Behav. Res. Methods. 2018;50:1088–1101. doi: 10.3758/s13428-017-0928-0.

- 57. Pupil Labs. Pupil Labs blink detector manual. https://docs.pupil-labs.com/core/software/pupil-player/#blink-detector

- 58. Schweizer T., Wyss T., Gilgen-Ammann R. Eyeblink detection in the field: a proof of concept study of two mobile optical. Mil. Med. 2022;187:e404–e409. doi: 10.1093/milmed/usab032.

- 59. Niehorster D.C., Santini T., Hessels R.S., Hooge I.T.C., Kasneci E., Nyström M. The impact of slippage on the data quality of head-worn eye trackers. Behav. Res. Methods. 2020;52:1140–1160. doi: 10.3758/s13428-019-01307-0.

- 60. Cortacero K., Fischer T., Demiris Y. IEEE/CVF International Conference on Computer Vision Workshop. IEEE; 2019. RT-BENE: a dataset and baselines for real-time blink estimation in natural environments; pp. 1159–1168.

- 61. He K., Zhang X., Ren S., Sun J. Proceedings of IEEE Conference on Computer Vision and Pattern Recognition. IEEE; 2016. Deep residual learning for image recognition; pp. 770–778.

- 62. R Core Team. 2021. R: A Language and Environment for Statistical Computing. https://www.R-project.org/

- 63. Agresti A. John Wiley & Sons; 2012. Categorical Data Analysis.
