Abstract

Statistical models, often based on electronic health records (EHRs), can accurately identify patients at high risk of suicide attempt or death, leading to health system implementation of risk prediction models as a component of population-based suicide prevention. At the same time, some have questioned whether statistical predictions can really inform clinical decisions. Appropriately reconciling statistical algorithms with traditional clinician assessment depends on whether predictions from those two methods are competing, complementary, or merely duplicative. In June 2019, the National Institute of Mental Health convened a meeting regarding Suicide Risk Algorithm Applications in Healthcare Settings. Participants in that meeting here summarize key issues regarding the potential clinical application of prediction models. This report attempts to clarify the key conceptual and technical differences between traditional clinician risk prediction and predictions from statistical models, reviews the limited evidence available regarding both the accuracy of and concordance between these alternative methods of prediction, presents a conceptual framework for understanding agreement and disagreement between clinician and statistical prediction, and identifies priorities for improving data regarding suicide risk as well as priority questions for future research. The future of suicide risk assessment will likely combine statistical prediction with traditional clinician assessment, but research is needed to determine the optimal combination of those methods.

Introduction

Despite increasing attention to suicide prevention, US suicide rates continue to rise – increasing by over one-third since 1999 1. Over three-quarters of people attempting suicide or dying by suicide had some outpatient healthcare visit during the prior year 2, 3. Healthcare encounters are a natural setting for identifying and addressing risk of suicidal behavior4, but effective prevention requires accurate identification of risk. Unfortunately, assessment based on traditional risk factors is not accurate enough to direct interventions to people at highest risk5, 6. Recent research demonstrates that statistical models, often based on electronic health records (EHRs), more accurately identify patients at high risk in mental health, general medical, and emergency department settings7–12. Motivated by that research, some healthcare systems have implemented risk prediction models as a component of population-based suicide prevention13. At the same time, some have questioned whether statistical predictions can really inform clinician decisions given concerns regarding high false-positive rates, potential for false labelling, and lack of transparency or interpretability14.

Current 13 and planned implementations of suicide risk prediction models typically call for delivering statistical predictions to treating clinicians. Clinicians then assess risk and implement specific prevention strategies (e.g., collaborative safety planning15) or treatments (e.g., Cognitive-Behavioral Therapy16 or Dialectical Behavior Therapy17). This sequence presumes that clinician assessment improves on a statistical prediction. Whether or not a clinician assessment adds to statistical risk predictions, however, depends on whether those two methods are competing, complementary, or simply duplicative.

In June 2019, the National Institute of Mental Health convened a meeting regarding Suicide Risk Algorithm Applications in Healthcare Settings23. That meeting considered a range of clinical, technical, and ethical issues regarding implementation of prediction models. As participants in that meeting, we here present our conclusions and recommendations regarding one central issue: how algorithms or statistical predictions might complement, replace, or compete with traditional clinician prediction. First, we attempt to clarify conceptual and practical differences between clinician prediction and predictions from statistical models. Second, we review the limited evidence available regarding accuracy of and concordance between these alternative methods. Third, we present a conceptual framework for understanding agreement and disagreement between clinician and statistical predictions. Finally, we identify priorities for improving data regarding suicide risk and priority questions for research.

While we focus on prediction of suicidal behavior, clinicians can expect to encounter more frequent use of statistical models to predict other clinical events, such as psychiatric hospitalization24 or response to antidepressant treatment25. We hope the framework we describe can inform research and policy regarding the integration of statistical prediction and clinician judgment to improve mental health care.

Defining and Distinguishing Statistical and Clinician Prediction

We define statistical prediction as the use of empirically derived statistical models to predict or stratify risk of suicidal behavior. Those models might be developed using traditional regression or various statistical learning methods, but the defining characteristic is the development of a statistical model from a large dataset with the aim of applying that model to future practice. We define clinician prediction as a treating clinician’s estimate of risk during a clinical encounter. As summarized in Table 1, statistical and clinician predictions typically differ in the predictors considered, the process for developing predictions, the nature of the resulting prediction, and the practicalities of implementation.

Table 1 – Contrasting statistical and clinical prediction of suicide risk

| DISTINGUISHING CHARACTERISTICS | STATISTICAL PREDICTION | CLINICAL PREDICTION |
|---|---|---|
| INPUTS | | |
| Data sources | EHRs and other computerized databases | Clinical observations, clinician review of available records |
| Number of possible predictors | Hundreds or thousands | Typically fewer than 10 |
| PROCESS | | |
| Combining and weighting predictors | Statistical optimization, often involving machine learning | Clinician judgment regarding relevance and weighting of risk factors |
| Accommodating heterogeneity | Interactions or tree-based approaches to identify subgroup-specific predictors | Clinician judgment regarding applicability of specific risk factors to individuals |
| Balancing sensitivity and specificity | Explicit assessment of performance at varying thresholds (may also evaluate alternative loss or cost functions) | Clinician judgment regarding importance of false positive and false negative errors |
| OUTPUTS | | |
| Product | Unidimensional prediction – often a continuous score | Clinical formulation |
| Use in treatment decisions | Not intended to identify causal relationships or treatment targets | Aims to identify causal relationships and treatment targets |
| IMPLEMENTATION | | |
| Timing | Typically computed prior to clinical encounters | Formulated during clinical encounters |
| Scale | Batch calculation for large populations | Distinct assessments for each individual |

Inputs for generating predictions

Data available to statistical models are both broader and narrower than data available to human clinicians. Statistical models to predict suicidal behavior typically consider hundreds or thousands of potential predictors extracted from health records data (EHRs, insurance claims, hospital discharge data) 26. Those data most often include diagnoses assigned, prescriptions filled, and types of services used, but could also include information extracted from clinical text or laboratory data27, 28. They may also consider “big data” not typically included in health records, such as genomic information, environmental data, online search data, or social media postings29, 30. In contrast, clinician reasoning is typically limited to a handful of predictors rather than hundreds or thousands. Clinicians, however, can consider any data available during a clinical encounter, ranging from unstructured clinical interviews to standardized assessment tools21, 31–33. Most important, clinician assessment usually considers narrative data not systematically recorded in EHRs, such as stressful life events, and subjective information such as clinicians’ observations of thought process or nonverbal cues34, 35.

Process for generating predictions

Clinician and statistical prediction differ fundamentally in accommodating high dimensionality and heterogeneity of predictive relationships. Statistical methods select among large numbers of correlated predictors and assign appropriate weights in ways that a human clinician cannot easily replicate. Those statistical methods for dimensionality reduction do involve choices and assumptions that may be obscure to clinicians and patients. In contrast, clinician prediction relies on individual judgment to select salient risk factors for individuals and assign each factor greater or lesser importance. Statistical prediction reduces dimensionality empirically, while clinician prediction reduces dimensionality using theory, heuristics, and individual judgment.

Statistical models account for heterogeneity or complexity by allowing non-linear relationships and complex interactions between predictors. Clinician prediction may consider interactions among risk factors, but in a subjective rather than quantitative sense. For example, clinicians might be less reassured by the absence of suicidal ideation in a patient with a history of an unplanned suicide attempt.

Statistical predictions typically produce a continuous risk score rather than a dichotomous classification. Selecting a threshold on that continuum must explicitly balance false negative and false positive errors. A higher threshold (less sensitive, more specific) is appropriate when considering an intervention that is potentially coercive or harmful. Machine learning methods for developing risk scores also permit explicit choices regarding loss or cost functions that emphasize accuracy in different portions of the risk spectrum. While clinician prediction may consider the relative importance of false negative and false positive errors, that consideration is not usually explicit or quantitative.
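To make the threshold trade-off concrete, the following minimal Python sketch sweeps several thresholds over a continuous risk score and reports sensitivity, specificity, and positive predictive value (PPV) at each. The scores, outcomes, and threshold values are invented for illustration, and the function name `metrics_at_threshold` is hypothetical, not part of any cited model.

```python
from dataclasses import dataclass


@dataclass
class ThresholdMetrics:
    threshold: float
    sensitivity: float  # share of patients with the outcome who are flagged
    specificity: float  # share of patients without the outcome who are not flagged
    ppv: float          # share of flagged patients who have the outcome


def metrics_at_threshold(scores, outcomes, threshold):
    """Flag score >= threshold as high risk and summarize the resulting 2x2 table."""
    tp = fp = tn = fn = 0
    for score, event in zip(scores, outcomes):
        flagged = score >= threshold
        if flagged and event:
            tp += 1
        elif flagged:
            fp += 1
        elif event:
            fn += 1
        else:
            tn += 1
    nan = float("nan")
    return ThresholdMetrics(
        threshold=threshold,
        sensitivity=tp / (tp + fn) if tp + fn else nan,
        specificity=tn / (tn + fp) if tn + fp else nan,
        ppv=tp / (tp + fp) if tp + fp else nan,
    )


# Hypothetical predicted probabilities and observed outcomes (1 = suicidal behavior).
scores = [0.02, 0.05, 0.08, 0.10, 0.15, 0.20, 0.30, 0.45, 0.60, 0.80]
outcomes = [0, 0, 0, 1, 0, 0, 1, 0, 1, 1]

for t in (0.05, 0.25, 0.50):
    m = metrics_at_threshold(scores, outcomes, t)
    print(f"threshold={t:.2f} sens={m.sensitivity:.2f} "
          f"spec={m.specificity:.2f} ppv={m.ppv:.2f}")
```

On these toy data, raising the threshold from 0.25 to 0.50 lowers sensitivity from 0.75 to 0.50 while raising specificity from about 0.83 to 1.00, the pattern expected for a higher, more specific threshold.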

Outputs or products

Statistical prediction typically has narrower goals than clinician prediction. Statistical models simply identify associations in large samples and combine those associations to optimize a unidimensional prediction: the probability of suicidal behavior during some specific time period. Resulting statistical models are optimized for prediction but often have little value for explanation or causal inference. Even when statistical models consider potentially modifiable risk factors (e.g., alcohol use), statistical techniques used for prediction models are not well-suited to assess causality or mechanism. In contrast, clinician prediction considers psychological states or environmental factors to generate a multidimensional formulation of risk. Explanation and interpretation are central goals of clinician prediction.

Because statistical prediction values optimal prediction over interpretation, statistical models can paradoxically identify appropriate treatments (such as starting a mood stabilizer medication) as risk factors. Recent discussion underlines the importance of properly modeling interventions in prognostic models to prevent this very phenomenon36. In contrast, clinician prediction often aims to identify modifiable risk factors as treatment targets. For example, hallucinations regarding self-harm would be both an indicator of risk and a target for treatment.
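The mechanism behind this paradox, often called confounding by indication, can be shown with simple expected-count arithmetic. In the hypothetical population below, a treatment is assumed to lower risk by 40% in every severity stratum but is given mostly to severe patients; all counts, risks, and names are invented for illustration.

```python
from fractions import Fraction

# Hypothetical strata: (patients, baseline risk without treatment, share treated).
STRATA = {
    "severe": (1_000, Fraction(10, 100), Fraction(80, 100)),
    "mild": (9_000, Fraction(1, 100), Fraction(10, 100)),
}
# Assumption: treatment multiplies risk by 0.6 (a 40% reduction) in every stratum.
TREATMENT_RISK_RATIO = Fraction(60, 100)


def crude_risks(strata, risk_ratio):
    """Expected event risk among all treated vs. all untreated patients, pooled over strata."""
    treated_n = treated_events = Fraction(0)
    untreated_n = untreated_events = Fraction(0)
    for n, base_risk, share_treated in strata.values():
        n_treated = n * share_treated
        n_untreated = n - n_treated
        treated_n += n_treated
        treated_events += n_treated * base_risk * risk_ratio
        untreated_n += n_untreated
        untreated_events += n_untreated * base_risk
    return treated_events / treated_n, untreated_events / untreated_n


risk_treated, risk_untreated = crude_risks(STRATA, TREATMENT_RISK_RATIO)
print(f"crude risk, treated:   {float(risk_treated):.4f}")    # about 0.0314
print(f"crude risk, untreated: {float(risk_untreated):.4f}")  # about 0.0122
```

Here the crude risk among treated patients (53.4 expected events per 1,700) is roughly 2.6 times that among untreated patients (101 per 8,300), even though treatment lowers risk within each stratum by construction. A model given treatment status without adequate adjustment for severity would therefore learn treatment as a risk factor.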

Implementing predictions in practice

The above-described differences in inputs and process have practical consequences for implementation. Statistical prediction occurs outside of a clinical encounter using data readily accessible to statistical models. Clinician prediction occurs in real time during face-to-face interactions. Statistical prediction is performed in large batches, with accompanying economies of scale. Clinician prediction is, by definition, a hand-crafted activity. Detailed clinician assessment may not be feasible in primary care or other settings where clinician time or specialty expertise is limited. In contrast, statistical prediction may not be feasible in settings without access to comprehensive electronic records.

The boundary between statistical prediction and clinician prediction is not crisply defined. Statistical prediction models may consider subjective information extracted from clinical text37. Clinician prediction may include use of risk scores calculated from structured assessments or checklists.6 Additionally, clinicians determine what information is entered into medical records and thus available for statistical predictions. Despite this imprecise boundary, we believe the distinction between statistical and clinician prediction has both practical and conceptual importance.

Empirical Evidence Regarding Accuracy and Generalizability of Clinician and Statistical Prediction

Little systematic information is available regarding the accuracy of clinicians’ predictions in everyday practice. Outside of research settings, clinicians’ assessments are not typically recorded in any form allowing formal assessment of accuracy or comparison to a statistical prediction. Nock and colleagues38 reported that psychiatric emergency department clinicians’ predictions were not significantly associated with probability of repeat suicidal behavior among patients presenting after a suicide attempt. A meta-analysis by Franklin and colleagues found that commonly considered risk factors (e.g., mental health diagnoses, medical illness, prior suicidal behavior) have only modest associations with risk of suicide attempt or suicide death5. That finding, however, may not apply to clinicians’ assessments using all data available during a clinical encounter. Any advantage of clinician prediction probably derives from use of richer information (e.g., facial expression, tone of voice) and from clinicians’ ability to assess risk in novel individual situations. No published data examine accuracy of real-world clinicians’ prediction in terms of sensitivity, positive predictive value, or overall accuracy (Area Under the Curve or AUC).

Some systematic data are available regarding accuracy of standard questionnaires or structured clinician assessments. For example, outpatients reporting thoughts of death or self-harm “nearly every day” through the PHQ-9 depression questionnaire39 were eight to ten times more likely to attempt or die by suicide in the following 30 days than those reporting such thoughts “not at all”31. Among Veterans Health Administration outpatients, the corresponding odds ratio was approximately three40. Nevertheless, this screening measure has significant shortcomings in both sensitivity and positive predictive value. More than one-third of suicidal behavior within 30 days of completing a PHQ-9 questionnaire occurs among those reporting suicidal ideation “not at all”31. One-year risk of suicide attempt among those reporting suicidal ideation “nearly every day” was only four percent. The Columbia Suicide Severity Rating Scale has been reported to predict suicide attempts among outpatients receiving mental health treatment, when administered either by clinician interview or electronic self-report20, 21. The Columbia scale has shown overall accuracy (AUC) of approximately 80% in predicting future suicide attempts among US veterans entering mental health care41 and people seen in emergency departments for self-harm42.
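The apparent tension between a large odds ratio and weak sensitivity and positive predictive value can be checked with a 2×2 table. The counts below are hypothetical (not the data from the cited studies) and compare only the two extreme response categories of a single screening item; `two_by_two` is an illustrative helper name.

```python
def two_by_two(a, b, c, d):
    """Summaries for a binary screen vs. a binary outcome.

    a: screen positive, event      b: screen positive, no event
    c: screen negative, event      d: screen negative, no event
    """
    return {
        "odds_ratio": (a * d) / (b * c),
        "sensitivity": a / (a + c),  # share of events that screened positive
        "ppv": a / (a + b),          # share of positive screens followed by an event
    }


# Hypothetical counts: "nearly every day" (positive) vs. "not at all" (negative).
stats = two_by_two(a=40, b=960, c=50, d=9_950)
for name, value in stats.items():
    print(f"{name}: {value:.3f}")
```

With these invented counts the odds ratio is about 8.3, yet the positive response captures fewer than half of events (sensitivity about 0.44), and only 4% of those responding positively go on to have an event.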

Statistical models developed for a range of clinical situations (active duty soldiers9, 43, large integrated health systems11, academic health systems7, 44, emergency departments12, and the Veterans Health Administration8) all appear substantially more accurate than predictions based on clinical risk factors or structured questionnaires. Overall classification accuracy as measured by AUC typically exceeds 80% for statistical prediction of suicide attempt or suicide death, clearly exceeding the AUC values of 55% to 60% for predictions using clinical risk factors. Overall accuracy of statistical models7–9, 11 also exceeds that reported for self-report questionnaires31, 40 or structured assessments20, 21, 41, 42. As an indicator of practical utility, nearly half of suicide attempts and suicide deaths occur in patients or visits with computed risk scores in the highest 5%7–9, 11.
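Both summary measures used in this paragraph can be computed directly from a list of scores and outcomes. The sketch below computes AUC as the probability that a randomly chosen patient with the outcome scores higher than one without it (the Mann-Whitney formulation, counting ties as one half), and the share of events captured in the highest-scoring fraction of records. Data and function names are hypothetical, and the pairwise loop is quadratic, so it suits only small illustrations.

```python
def auc(scores, outcomes):
    """Probability that a random event case outscores a random non-event case (ties = 1/2)."""
    pos = [s for s, y in zip(scores, outcomes) if y]
    neg = [s for s, y in zip(scores, outcomes) if not y]
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def top_fraction_capture(scores, outcomes, fraction):
    """Share of all events occurring among the highest-scoring `fraction` of records."""
    k = max(1, round(len(scores) * fraction))
    ranked = sorted(zip(scores, outcomes), key=lambda pair: pair[0], reverse=True)
    captured = sum(y for _, y in ranked[:k])
    return captured / sum(outcomes)


# Hypothetical integer risk scores 0..19; events occur at scores 5, 12, 18, and 19.
scores = list(range(20))
outcomes = [1 if s in (5, 12, 18, 19) else 0 for s in scores]

print(f"AUC: {auc(scores, outcomes):.2f}")
print(f"events in top 5%: {top_fraction_capture(scores, outcomes, 0.05):.2f}")
```

Here AUC is 0.75, and the top 5% of records (one of 20) captures one of the four events.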

Comparison of overall accuracy across samples (clinician prediction assessed in one sample, statistical prediction in a different sample), however, cannot inform decisions about how the two methods can be combined to facilitate suicide prevention. The future of suicide risk assessment will likely involve some combination of statistical and clinician prediction45. Rather than framing a competition between the two methods, we should determine the optimal form of collaboration. Informing that collaboration requires detailed knowledge regarding how alternative methods agree or disagree.

Few data are available from direct comparisons of statistical predictions and clinician assessments within the same patient sample. Among ambulatory and emergency department patients undergoing suicide risk assessment, a statistical prediction from health records was substantially more accurate than clinicians’ assessments using an 18-item checklist10. Of patients identified as high risk by the Veterans Health Administration prediction model, the proportion “flagged” as high risk by treating clinicians ranged from approximately one-fifth for those with statistical predictions above the 99.9th percentile to approximately one-tenth for those with statistical predictions above the 99th percentile46. In outpatients treated in seven large health systems, adding item 9 of the PHQ-9 to statistical prediction models had no effect for mental health specialty patients and only a slight effect for primary care patients47.

While these data suggest substantial discordance between statistical predictions and clinician predictions, they do not identify patient, provider, or health system characteristics associated with disagreement. Neither do available data allow us to examine how statistical and clinician prediction agree using higher or lower risk thresholds or over shorter versus longer time periods.

Theoretically, statistical prediction can consider information available within and beyond EHRs. In statistical prediction models reported to date 7–11, however, the strongest predictors remain mental health diagnoses, mental health treatments, and record of previous self-harm. Thus, statistical predictions still rely primarily on data created and recorded by human clinicians. Any advantage of statistical prediction derives not from access to unique data but from rapid complex calculation not possible for human clinicians.

Prediction models based on EHR data could replicate or institutionalize bias or inequity in healthcare delivery48. Given large racial and ethnic differences in suicide mortality49, a statistical model considering race and ethnicity would yield lower estimates of risk for some traditionally underserved groups47. A model not allowed to consider race would yield less accurate predictions. If and how suicide risk predictions should consider race and ethnicity is a complex question. But the role of race and ethnicity in statistical predictions is at least subject to inspection, while biases of individual clinicians cannot be directly examined or easily remedied.

Any prediction involves applying knowledge based on prior experience. For either statistical or clinician predictions to support clinical decisions, knowledge regarding risk of suicidal behavior developed in one place at one time should still serve when transported to a later time, a different clinical setting, or a different patient population. Published data regarding transportability or generalizability, however, are sparse. By understanding the logistical and conceptual distinctions between clinician and statistical prediction, we can identify specific concerns regarding generalizability for each method. Because clinician prediction depends on the skill and judgment of human clinicians to assess risk, we must consider how clinicians’ abilities might vary across practice settings and how clinicians’ decisions might be influenced by the practice environment. Because statistical prediction usually depends on data elements from EHRs to represent underlying risk states, we must consider how differences in clinical practice, documentation practices, or data systems would affect how specific EHR data elements relate to actual risk47. Clinical practice or documentation could vary between health care settings or within one health care setting over time50. Even if statistical methods apply equally well across clinical settings, the resulting models may differ in predictors selected and weights applied51.

Understanding Disagreement Between Statistical and Clinician Prediction

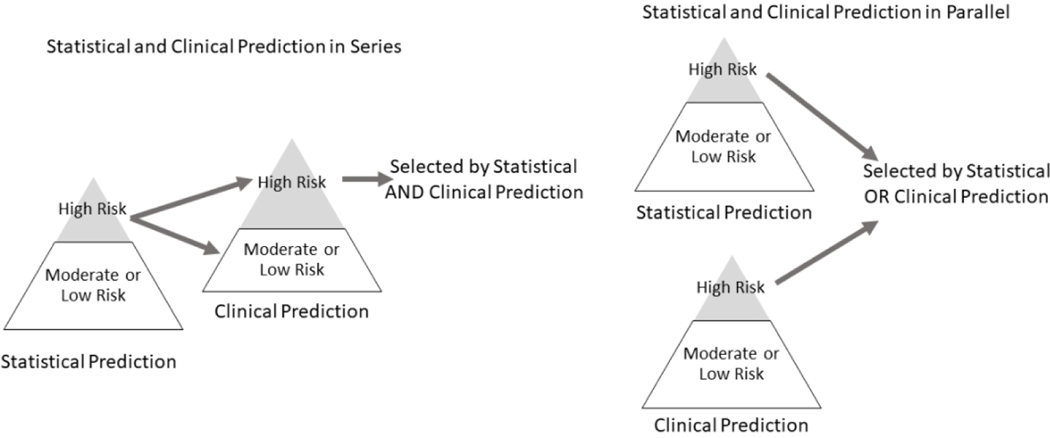

Current13 and planned implementations of statistical prediction models have typically called for a two-step process with statistical and clinician prediction operating in series. That scheme is shown in the left portion of Figure 1, simplifying risk as a dichotomous state. Statistical predictions are delivered to human clinicians who then evaluate risk and initiate appropriate interventions. This two-step process may or may not be reasonable, depending on how we understand the relationship between statistical and clinician risk predictions. Those two processes may act to estimate the same or different risk states or estimands. Understanding similarities and differences in the risk states or estimands of statistical and clinician prediction is essential to optimally combining these potentially complementary tools.

Figure 1.

Alternative logical combinations of statistical and clinical prediction. In a series arrangement, clinical assessment would focus on those already identified as at risk by a statistical model. Any subsequent intervention would be limited to those considered high risk by both statistical prediction and clinical assessment. In a parallel arrangement, clinical assessment would occur regardless of statistical prediction. Any subsequent intervention would be provided to those considered high risk by either statistical or clinical prediction.

If statistical and clinician prediction are imperfect measures of the same underlying risk state or estimand, then applying these tools in series may be appropriate. Even if clinician prediction is less accurate on average than statistical prediction, combining the two may improve overall accuracy. Statistical predictions can be calculated efficiently at large scale, while clinician prediction at the level of individual encounters is more resource intensive. Consequently, it would be practical to compute statistical predictions for an entire population and reserve detailed clinician assessment and clinician prediction for those above a specific statistical threshold (Figure 1 left). Such an approach would be especially desirable if clinician prediction were more accurate for patients in the upper portion of the risk distribution (i.e., those identified by a statistical model as needing further clinician assessment).

We can, however, identify a range of scenarios in which a series arrangement would not be optimal. Statistical and clinician prediction could identify different underlying risk states or estimands. For example, statistical models might be more accurate for identifying sustained or longer-term risk, while clinician assessment could be more accurate for identifying short-term or imminent risk. Alternatively, statistical and clinician predictions could identify distinct pathways to the same outcome. For example, clinician prediction might better identify unplanned suicide attempts while statistical prediction might identify suicidal behavior following a sustained period of suicidal ideation. Finally, statistical and clinician prediction might identify risk in different populations or subgroups. For example, statistical models could be more accurate for identifying risk in those with a known mental disorder, while clinician assessment could be more accurate for identifying risk among those without a mental health diagnosis. In any of these scenarios, we should implement statistical and clinician prediction in parallel rather than in series. That parallel logic is illustrated in the right portion of Figure 1. We would not limit clinician assessment to those identified by a statistical model or allow clinician assessment to override an alert based on a statistical model. Instead, we would consider either as an indicator of risk.
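The series and parallel arrangements of Figure 1 amount to combining two binary flags with AND (series) or OR (parallel). Assuming each method yields a yes/no high-risk flag, the minimal sketch below compares the sensitivity and specificity of each combination on invented records; the helper name `sens_spec` is hypothetical.

```python
def sens_spec(flags, outcomes):
    """Sensitivity and specificity of a binary flag against a binary outcome."""
    pairs = list(zip(flags, outcomes))
    tp = sum(1 for f, y in pairs if f and y)
    fn = sum(1 for f, y in pairs if not f and y)
    tn = sum(1 for f, y in pairs if not f and not y)
    fp = sum(1 for f, y in pairs if f and not y)
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical records: (statistical flag, clinician flag, event)
records = [
    (True, True, True), (True, False, True), (False, True, True), (False, False, True),
    (True, True, False), (True, False, False), (False, True, False),
    (False, False, False), (False, False, False), (False, False, False),
]
stat = [r[0] for r in records]
clin = [r[1] for r in records]
events = [r[2] for r in records]

series = [s and c for s, c in zip(stat, clin)]    # intervene only if both flag risk
parallel = [s or c for s, c in zip(stat, clin)]   # intervene if either flags risk

for label, flags in [("statistical", stat), ("clinician", clin),
                     ("series", series), ("parallel", parallel)]:
    sens, spec = sens_spec(flags, events)
    print(f"{label:12s} sensitivity={sens:.2f} specificity={spec:.2f}")
```

In this toy example each method alone has sensitivity 0.50 and specificity about 0.67; the series rule trades sensitivity (0.25) for specificity (about 0.83), while the parallel rule does the reverse (0.75 and 0.50).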

Some more complex combination of statistical and clinician prediction methods might lead to optimal prediction of risk. Logical combination of two imperfect measures may improve both accuracy and efficiency52. Clinician assessment might be guided or informed by results of statistical predictions, with tailoring of clinician assessment based on patterns detected by statistical models. For example, clinicians might employ specific risk assessment tools for patients identified because of substance use disorder and different tools for patients identified because of prior suicide attempt.

Effective prevention requires more than accurate risk predictions. Both statistical and clinician prediction aim to guide the delivery of preventive interventions, most likely delivered by treating clinicians. The discussion above presumes that clinician and statistical prediction aim to inform similar types of preventive interventions. But just as clinician and statistical predictions may identify different types of risk, they may be better suited to inform different types of preventive interventions. However, for both clinician and statistical predictions, we again caution against confusing predictive relationships with causal processes or intervention targets. If recent benzodiazepine use is selected as an influential statistical predictor, that does not imply that benzodiazepines cause risk or that interventions focused on benzodiazepine use would reduce risk. Clinicians may be better able to identify unmet treatment needs than are statistical models, but correlation does not equal causation (or imply treatment effectiveness) for either clinician or statistical predictions. Caution is warranted regarding causal inference even for risk factors typically considered to be modifiable, such as alcohol use or severity of anxiety symptoms.

Priorities for Future Research

Rather than framing a competition between statistical and clinician predictions of suicide risk, future research should address how these approaches can be combined. At this time, we cannot distinguish between the scenarios described above in order to rationally combine statistical and clinician prediction. We can, however, identify several specific questions that should be addressed by future research.

Addressing any of these questions, however, will require accurate data regarding assessment of risk by real-world clinicians in actual practice. Creating those data would depend on routine documentation of clinicians’ judgments based on all data at hand. This could be accomplished through use of some standard scale allowing for individual clinician judgment in integrating all available information. The widely used Clinical Global Impressions and Global Assessment of Functioning scales are examples of such clinician-rated standard measures of symptom severity and disability. Clearer documentation regarding clinicians’ assessment of risk would seem an essential component of high-quality care for people at risk for suicidal behavior, but changing documentation standards would likely require action from health systems and/or payers. In addition, data regarding clinicians’ assessment of risk should be linked with accurate and complete data regarding subsequent suicide attempts and suicide deaths. Systematic identification of suicidal behavior outcomes is essential for effective care delivery and quality improvement, independent of any value for research. With an adequate data infrastructure in place, we can then address specific questions regarding the relationship between statistical and clinician predictions of suicidal behavior.

First, we must quantify agreement and disagreement between statistical and clinician predictions using a range of risk thresholds. Addressing this question would require linking data from clinicians’ assessments to statistical predictions using data available prior to that clinical encounter. Any quantification of agreement should include the same at-risk population and should consider the same outcome definition(s) and outcome period(s). Ideally, quantification of agreement should consider risk predictions at the encounter level (how methods agree in identifying high-risk patients during a health care visit) and at the patient level (how methods agree in identifying high-risk patients in a defined population).
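One way to quantify agreement across thresholds is to dichotomize the statistical score at several cut points and compare it with a clinician's high-risk flag using percent agreement and Cohen's kappa, alongside the proportion of statistically flagged patients also flagged by clinicians. Kappa is our choice of agreement statistic for illustration, not one specified above; the data and the helper name `agreement` are hypothetical.

```python
def agreement(stat_flags, clin_flags):
    """Percent agreement, Cohen's kappa, and share of statistical flags also flagged by clinicians."""
    n = len(stat_flags)
    pairs = list(zip(stat_flags, clin_flags))
    both = sum(1 for s, c in pairs if s and c)
    neither = sum(1 for s, c in pairs if not s and not c)
    observed = (both + neither) / n
    p_stat = sum(stat_flags) / n
    p_clin = sum(clin_flags) / n
    # Agreement expected by chance if the two flags were independent.
    expected = p_stat * p_clin + (1 - p_stat) * (1 - p_clin)
    kappa = (observed - expected) / (1 - expected) if expected < 1 else float("nan")
    n_stat = sum(stat_flags)
    share_clin = both / n_stat if n_stat else float("nan")
    return observed, kappa, share_clin


# Hypothetical statistical scores and clinician high-risk flags for ten encounters.
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05]
clin_flags = [True, False, True, False, False, False, True, False, False, False]

for threshold in (0.65, 0.35):
    stat_flags = [s >= threshold for s in scores]
    obs, kappa, share = agreement(stat_flags, clin_flags)
    print(f"threshold={threshold:.2f} agreement={obs:.2f} "
          f"kappa={kappa:.2f} clinician-also-flagged={share:.2f}")
```

On these invented data, kappa falls from about 0.52 at the stricter threshold to about 0.07 at the looser one, showing why agreement should be reported across a range of thresholds rather than at a single cut point.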

Second, after quantifying overall discordance between predictions, we should examine actual rates of suicidal behavior in those who are concordant (i.e., high or low risk by both measures) and discordant (high statistical risk but not high risk by clinician assessment OR high risk by clinician assessment but not high statistical risk). Addressing this question would require linking data regarding both statistical and clinician prediction to population-based data regarding subsequent suicide attempt or death. If these two methods are imperfect measures of the same risk state, then we would expect risk in both discordant groups to be lower than that in the concordant high-risk group. Risk in either discordant group equal to or higher than that in the concordant high-risk group would suggest that one measure identifies a risk state not identified by the alternative measure.
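The concordance analysis just described reduces to computing event rates in the four cells defined by the two flags. This sketch, using hypothetical records and helper names, also checks the pattern expected if both methods measure the same risk state: each discordant group has lower risk than the concordant high-risk group.

```python
from collections import defaultdict


def rates_by_concordance(records):
    """records: iterable of (stat_flag, clin_flag, event). Returns event rate per cell."""
    counts = defaultdict(lambda: [0, 0])  # cell label -> [patients, events]
    for stat, clin, event in records:
        cell = ("stat+" if stat else "stat-") + "/" + ("clin+" if clin else "clin-")
        counts[cell][0] += 1
        counts[cell][1] += event
    return {cell: events / n for cell, (n, events) in counts.items()}


def consistent_with_shared_state(rates):
    """True if both discordant cells have lower risk than the concordant high-risk cell."""
    high = rates["stat+/clin+"]
    return rates["stat+/clin-"] < high and rates["stat-/clin+"] < high


# Hypothetical records: (statistical flag, clinician flag, event)
records = (
    [(1, 1, 1)] * 2 + [(1, 1, 0)] * 2      # concordant high risk
    + [(1, 0, 1)] + [(1, 0, 0)] * 3        # statistical only
    + [(0, 1, 1)] + [(0, 1, 0)] * 3        # clinician only
    + [(0, 0, 0)] * 8                      # concordant low risk
)

rates = rates_by_concordance(records)
for cell in sorted(rates):
    print(f"{cell}: {rates[cell]:.2f}")
print("consistent with a shared risk state:", consistent_with_shared_state(rates))
```

Here both discordant groups (25%) fall below the concordant high-risk group (50%); a discordant rate at or above 50% would instead point toward a risk state captured by only one method.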

Third, we should quantify disagreement and compare performance within distinct vulnerable subgroups: those with specific diagnoses (e.g., psychotic disorders, substance use disorders), groups known to be at high risk (e.g., older men, Native Americans), or groups for whom statistical prediction models do not perform as well (e.g., people with minimal or no history of mental health diagnosis or treatment). Either statistical prediction or clinician prediction may be superior in any specific subgroup.
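Subgroup comparisons can reuse the same flags, stratified by a grouping variable. The minimal sketch below computes, within each subgroup, the sensitivity of the statistical and clinician flags; the subgroup labels `prior_dx` and `no_prior_dx`, the helper name, and all records are hypothetical.

```python
from collections import defaultdict


def sensitivity_by_subgroup(records):
    """records: iterable of (subgroup, stat_flag, clin_flag, event).

    Returns {subgroup: (statistical sensitivity, clinician sensitivity)}.
    """
    tally = defaultdict(lambda: {"events": 0, "stat": 0, "clin": 0})
    for group, stat, clin, event in records:
        if not event:
            continue  # sensitivity considers only patients with the outcome
        t = tally[group]
        t["events"] += 1
        t["stat"] += stat
        t["clin"] += clin
    return {g: (t["stat"] / t["events"], t["clin"] / t["events"]) for g, t in tally.items()}


# Hypothetical records: (subgroup, statistical flag, clinician flag, event)
records = [
    ("prior_dx", 1, 1, 1), ("prior_dx", 1, 0, 1), ("prior_dx", 1, 0, 1), ("prior_dx", 0, 0, 1),
    ("no_prior_dx", 1, 1, 1), ("no_prior_dx", 0, 1, 1), ("no_prior_dx", 0, 1, 1),
    ("no_prior_dx", 0, 0, 1),
    ("prior_dx", 1, 1, 0), ("no_prior_dx", 0, 0, 0),
]

for group, (stat_sens, clin_sens) in sensitivity_by_subgroup(records).items():
    print(f"{group}: statistical={stat_sens:.2f} clinician={clin_sens:.2f}")
```

In these invented data the statistical flag is more sensitive among patients with a prior diagnosis (0.75 vs 0.25) and the clinician flag among those without (0.75 vs 0.25), illustrating how either method may be superior in a specific subgroup.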

Fourth, we should examine how predictions operate in series (i.e., how one prediction method adds meaningfully to the other). As discussed above, practical considerations argue for statistical prediction to precede clinician assessment. That sequence presumes that clinician assessment adds value to predictions identified by a statistical model. Previous research regarding accuracy of clinician prediction has typically examined overall accuracy and not accuracy conditional on a prior statistical prediction. Relevant questions include: Does clinician assessment improve risk stratification among those identified as high-risk by a statistical model? How often does clinician assessment identify risk not identified by a statistical model? Is clinician prediction of risk more or less accurate in subgroups with specific patterns of empirically derived predictors (e.g., young people with a recent diagnosis of psychotic disorder)?

Finally, we should examine the effects of specific interventions among those identified by clinician and statistical predictions of risk. Clinical trials demonstrating the risk-reducing effects of either psychosocial or pharmacologic interventions have typically included participants identified by clinicians or selected according to clinical risk factors. We should not presume that those interventions would prove equally effective in people identified by statistical predictions. Addressing this question would likely require large pragmatic trials, not limited to those who volunteer to receive suicide prevention services. If statistical prediction identifies different types or pathways of risk, people identified by statistical models might experience different benefits or harms from treatments developed and tested in clinically identified populations.

Conclusions

We anticipate both that statistical prediction tools will see increasing use and that clinician prediction will continue to improve with development of more efficient and accurate assessment tools. Consequently, the future of suicide risk prediction will likely involve some combination of these two methods. Rational combination of traditional clinician assessment and new statistical tools will require both clear understanding of the potential strengths and weaknesses of these alternative methods and empirical evidence regarding how those strengths and weaknesses affect clinical utility across a range of patient populations and care settings.

Acknowledgments

Authors have received grant support from the National Institute of Mental Health, the National Institute on Drug Abuse, the Food and Drug Administration, the Agency for Healthcare Policy and Research, the Department of Veterans Affairs, the Tommy Fuss Fund, Bristol-Myers Squibb, and Pfizer.

References

- 1. Hedegaard H, Curtin SC, Warner M. Increase in suicide mortality in the United States, 1999–2018. In: US Department of Health and Human Services, editor. Hyattsville, MD: National Center for Health Statistics; 2020.

- 2. Ahmedani BK, Simon GE, Stewart C, Beck A, Waitzfelder BE, Rossom R, Lynch F, Owen-Smith A, Hunkeler EM, Whiteside U, Operskalski BH, Coffey MJ, Solberg LI. Health care contacts in the year before suicide death. J Gen Intern Med. 2014;29(6):870–7. PMCID: PMC4026491.

- 3. Ahmedani BK, Stewart C, Simon GE, Lynch F, Lu CY, Waitzfelder BE, Solberg LI, Owen-Smith AA, Beck A, Copeland LA, Hunkeler EM, Rossom RC, Williams K. Racial/Ethnic differences in health care visits made before suicide attempt across the United States. Med Care. 2015;53(5):430–5. PMCID: PMC4397662.

- 4. Patient Safety Advisory Group. Detecting and treating suicidal ideation in all settings. The Joint Commission Sentinel Event Alerts. 2016;56.

- 5. Franklin JC, Ribeiro JD, Fox KR, Bentley KH, Kleiman EM, Huang X, Musacchio KM, Jaroszewski AC, Chang BP, Nock MK. Risk factors for suicidal thoughts and behaviors: A meta-analysis of 50 years of research. Psychol Bull. 2017;143(2):187–232.

- 6. Warden S, Spiwak R, Sareen J, Bolton JM. The SAD PERSONS scale for suicide risk assessment: a systematic review. Arch Suicide Res. 2014;18(4):313–26.

- 7. Barak-Corren Y, Castro VM, Javitt S, Hoffnagle AG, Dai Y, Perlis RH, Nock MK, Smoller JW, Reis BY. Predicting Suicidal Behavior From Longitudinal Electronic Health Records. Am J Psychiatry. 2017;174(2):154–62.

- 8. Kessler RC, Hwang I, Hoffmire CA, McCarthy JF, Petukhova MV, Rosellini AJ, Sampson NA, Schneider AL, Bradley PA, Katz IR, Thompson C, Bossarte RM. Developing a practical suicide risk prediction model for targeting high-risk patients in the Veterans Health Administration. Int J Methods Psychiatr Res. 2017;26(3). PMCID: PMC5614864.

- 9. Kessler RC, Stein MB, Petukhova MV, Bliese P, Bossarte RM, Bromet EJ, Fullerton CS, Gilman SE, Ivany C, Lewandowski-Romps L, Millikan Bell A, Naifeh JA, Nock MK, Reis BY, Rosellini AJ, Sampson NA, Zaslavsky AM, Ursano RJ, Army SC. Predicting suicides after outpatient mental health visits in the Army Study to Assess Risk and Resilience in Servicemembers (Army STARRS). Mol Psychiatry. 2016 (epub July 19).

- 10. Tran T, Luo W, Phung D, Harvey R, Berk M, Kennedy RL, Venkatesh S. Risk stratification using data from electronic medical records better predicts suicide risks than clinician assessments. BMC Psychiatry. 2014;14:76. PMCID: PMC3984680.

- 11. Simon GE, Johnson E, Lawrence JM, Rossom RC, Ahmedani B, Lynch FL, Beck A, Waitzfelder B, Ziebell R, Penfold RB, Shortreed SM. Predicting Suicide Attempts and Suicide Deaths Following Outpatient Visits Using Electronic Health Records. Am J Psychiatry. 2018:appiajp201817101167. PMCID: PMC6167136.

- 12. Sanderson M, Bulloch AG, Wang J, Williams KG, Williamson T, Patten SB. Predicting death by suicide following an emergency department visit for parasuicide with administrative health care system data and machine learning. EClinicalMedicine. 2020;20:100281. PMCID: PMC7152812.

- 13. Reger GM, McClure ML, Ruskin D, Carter SP, Reger MA. Integrating Predictive Modeling Into Mental Health Care: An Example in Suicide Prevention. Psychiatr Serv. 2018:appips201800242.

- 14. Belsher BE, Smolenski DJ, Pruitt LD, Bush NE, Beech EH, Workman DE, Morgan RL, Evatt DP, Tucker J, Skopp NA. Prediction Models for Suicide Attempts and Deaths: A Systematic Review and Simulation. JAMA Psychiatry. 2019.

- 15. Miller IW, Camargo CA Jr., Arias SA, Sullivan AF, Allen MH, Goldstein AB, Manton AP, Espinola JA, Jones R, Hasegawa K, Boudreaux ED, Investigators E-S. Suicide Prevention in an Emergency Department Population: The ED-SAFE Study. JAMA Psychiatry. 2017.

- 16. Brown GK, Ten Have T, Henriques GR, Xie SX, Hollander JE, Beck AT. Cognitive therapy for the prevention of suicide attempts: a randomized controlled trial. JAMA. 2005;294(5):563–70.

- 17. Linehan MM, Comtois KA, Murray AM, Brown MZ, Gallop RJ, Heard HL, Korslund KE, Tutek DA, Reynolds SK, Lindenboim N. Two-year randomized controlled trial and follow-up of dialectical behavior therapy vs therapy by experts for suicidal behaviors and borderline personality disorder. Arch Gen Psychiatry. 2006;63(7):757–66.

- 18. Oquendo MA, Baca-Garcia E. Suicidal behavior disorder as a diagnostic entity in the DSM-5 classification system: advantages outweigh limitations. World Psychiatry. 2014;13(2):128–30. PMCID: PMC4102277.

- 19. Schuck A, Calati R, Barzilay S, Bloch-Elkouby S, Galynker I. Suicide Crisis Syndrome: A review of supporting evidence for a new suicide-specific diagnosis. Behav Sci Law. 2019;37(3):223–39.

- 20. Mundt JC, Greist JH, Jefferson JW, Federico M, Mann JJ, Posner K. Prediction of suicidal behavior in clinical research by lifetime suicidal ideation and behavior ascertained by the electronic Columbia-Suicide Severity Rating Scale. J Clin Psychiatry. 2013;74(9):887–93.

- 21. Posner K, Brown GK, Stanley B, Brent DA, Yershova KV, Oquendo MA, Currier GW, Melvin GA, Greenhill L, Shen S, Mann JJ. The Columbia-Suicide Severity Rating Scale: initial validity and internal consistency findings from three multisite studies with adolescents and adults. Am J Psychiatry. 2011;168(12):1266–77. PMCID: PMC3893686.

- 22. Simon GE, Shortreed SM, Johnson E, Beck A, Coleman KJ, Rossom RC, Whiteside US, Operskalski BH, Penfold RB. Between-visit changes in suicidal ideation and risk of subsequent suicide attempt. Depress Anxiety. 2017. PMCID: PMC5870867.

- 23. National Institute of Mental Health. Identifying Research Priorities for Risk Algorithms Applications in Healthcare Settings to Improve Suicide Prevention. 2019. [updated 2019; cited October 15, 2019]; Available from: https://www.nimh.nih.gov/news/events/2019/risk-algorithm/index.shtml.

- 24. Burningham Z, Leng J, Peters CB, Huynh T, Halwani A, Rupper R, Hicken B, Sauer BC. Predicting Psychiatric Hospitalizations among Elderly Veterans with a History of Mental Health Disease. EGEMS (Wash DC). 2018;6(1):7. PMCID: PMC5982950.

- 25. Chekroud AM, Gueorguieva R, Krumholz HM, Trivedi MH, Krystal JH, McCarthy G. Reevaluating the Efficacy and Predictability of Antidepressant Treatments: A Symptom Clustering Approach. JAMA Psychiatry. 2017;74(4):370–8. PMCID: PMC5863470.

- 26. Beam AL, Kohane IS. Big Data and Machine Learning in Health Care. JAMA. 2018;319(13):1317–8.

- 27. Oh KY, Van Dam NT, Doucette JT, Murrough JW. Effects of chronic physical disease and systemic inflammation on suicide risk in patients with depression: a hospital-based case-control study. Psychol Med. 2020;50(1):29–37.

- 28. McCoy TH Jr., Castro VM, Roberson AM, Snapper LA, Perlis RH. Improving Prediction of Suicide and Accidental Death After Discharge From General Hospitals With Natural Language Processing. JAMA Psychiatry. 2016;73(10):1064–71.

- 29. Bryan CJ, Butner JE, Sinclair S, Bryan ABO, Hesse CM, Rose AE. Predictors of Emerging Suicide Death Among Military Personnel on Social Media Networks. Suicide Life Threat Behav. 2018;48(4):413–30.

- 30. Barros JM, Melia R, Francis K, Bogue J, O’Sullivan M, Young K, Bernert RA, Rebholz-Schuhmann D, Duggan J. The Validity of Google Trends Search Volumes for Behavioral Forecasting of National Suicide Rates in Ireland. Int J Environ Res Public Health. 2019;16(17). PMCID: PMC6747463.

- 31. Simon GE, Coleman KJ, Rossom RC, Beck A, Oliver M, Johnson E, Whiteside U, Operskalski B, Penfold RB, Shortreed SM, Rutter C. Risk of suicide attempt and suicide death following completion of the Patient Health Questionnaire depression module in community practice. J Clin Psychiatry. 2016;77(2):221–7. PMCID: PMC4993156.

- 32. Horowitz LM, Bridge JA, Teach SJ, Ballard E, Klima J, Rosenstein DL, Wharff EA, Ginnis K, Cannon E, Joshi P, Pao M. Ask Suicide-Screening Questions (ASQ): a brief instrument for the pediatric emergency department. Arch Pediatr Adolesc Med. 2012;166(12):1170–6.

- 33. Boudreaux ED, Camargo CA Jr., Arias SA, Sullivan AF, Allen MH, Goldstein AB, Manton AP, Espinola JA, Miller IW. Improving Suicide Risk Screening and Detection in the Emergency Department. Am J Prev Med. 2016;50(4):445–53. PMCID: PMC4801719.

- 34. Assessment and Management of Risk for Suicide Working Group. The VA/DoD Clinical Practice Guideline for Assessment and Management of Patients at Risk for Suicide. Washington, DC: Department of Veterans Affairs and Department of Defense; 2019.

- 35. American Psychiatric Association. Practice Guidelines for the Psychiatric Evaluation of Adults, Third Edition. Washington, DC: American Psychiatric Association; 2015.

- 36. Lenert MC, Matheny ME, Walsh CG. Prognostic models will be victims of their own success, unless. J Am Med Inform Assoc. 2019.

- 37. Leonard Westgate C, Shiner B, Thompson P, Watts BV. Evaluation of Veterans’ Suicide Risk With the Use of Linguistic Detection Methods. Psychiatr Serv. 2015;66(10):1051–6.

- 38. Nock MK, Park JM, Finn CT, Deliberto TL, Dour HJ, Banaji MR. Measuring the suicidal mind: implicit cognition predicts suicidal behavior. Psychol Sci. 2010;21(4):511–7. PMCID: PMC5258199.

- 39. Kroenke K, Spitzer RL, Williams JB, Lowe B. The Patient Health Questionnaire Somatic, Anxiety, and Depressive Symptom Scales: a systematic review. Gen Hosp Psychiatry. 2010;32(4):345–59.

- 40. Louzon SA, Bossarte R, McCarthy JF, Katz IR. Does Suicidal Ideation as Measured by the PHQ-9 Predict Suicide Among VA Patients? Psychiatr Serv. 2016;67(5):517–22.

- 41. Katz I, Barry CN, Cooper SA, Kasprow WJ, Hoff RA. Use of the Columbia-Suicide Severity Rating Scale (C-SSRS) in a large sample of Veterans receiving mental health services in the Veterans Health Administration. Suicide Life Threat Behav. 2019.

- 42. Lindh AU, Dahlin M, Beckman K, Stromsten L, Jokinen J, Wiktorsson S, Renberg ES, Waern M, Runeson B. A Comparison of Suicide Risk Scales in Predicting Repeat Suicide Attempt and Suicide: A Clinical Cohort Study. J Clin Psychiatry. 2019;80(6).

- 43. Kessler RC, Warner CH, Ivany C, Petukhova MV, Rose S, Bromet EJ, Brown M 3rd, Cai T, Colpe LJ, Cox KL, Fullerton CS, Gilman SE, Gruber MJ, Heeringa SG, Lewandowski-Romps L, Li J, Millikan-Bell AM, Naifeh JA, Nock MK, Rosellini AJ, Sampson NA, Schoenbaum M, Stein MB, Wessely S, Zaslavsky AM, Ursano RJ, Army SC. Predicting suicides after psychiatric hospitalization in US Army soldiers: the Army Study To Assess Risk and rEsilience in Servicemembers (Army STARRS). JAMA Psychiatry. 2015;72(1):49–57. PMCID: PMC4286426.

- 44. Walsh CG, Ribeiro JD, Franklin JC. Predicting Risk of Suicide Attempts Over Time Through Machine Learning. Clinical Psychological Science. 2017;5(3):457–69.

- 45. Simon GE. Big Data From Health Records in Mental Health Care: Hardly Clairvoyant but Already Useful. JAMA Psychiatry. 2019;76(4):349–50.

- 46. McCarthy JF, Bossarte RM, Katz IR, Thompson C, Kemp J, Hannemann CM, Nielson C, Schoenbaum M. Predictive Modeling and Concentration of the Risk of Suicide: Implications for Preventive Interventions in the US Department of Veterans Affairs. Am J Public Health. 2015;105(9):1935–42.

- 47. Simon GE, Shortreed SM, Johnson E, Rossom RC, Lynch FL, Ziebell R, Penfold RB. What health records data are required for accurate prediction of suicidal behavior? J Am Med Inform Assoc. 2019 Sep 16. PMCID: PMC6857508.

- 48. Obermeyer Z, Powers B, Vogeli C, Mullainathan S. Dissecting racial bias in an algorithm used to manage the health of populations. Science. 2019;366(6464):447–53.

- 49. Hedegaard H, Curtin SC, Warner M. Suicide Mortality in the United States, 1999–2017. NCHS Data Brief. 2018(330):1–8.

- 50. Lu CY, Stewart C, Ahmed AT, Ahmedani BK, Coleman K, Copeland LA, Hunkeler EM, Lakoma MD, Madden JM, Penfold RB, Rusinak D, Zhang F, Soumerai SB. How complete are E-codes in commercial plan claims databases? Pharmacoepidemiol Drug Saf. 2014;23(2):218–20. PMCID: PMC4100538.

- 51. Barak-Corren Y, Castro VM, Nock MK, Mandl KD, Madsen EM, Seiger A, Adams WG, Applegate RJ, Bernstam EV, Klann JG, McCarthy EP, Murphy SN, Natter M, Ostasiewski B, Patibandla N, Rosenthal GE, Silva GS, Wei K, Weber GM, Weiler SR, Reis BY, Smoller JW. Validation of an Electronic Health Record-Based Suicide Risk Prediction Modeling Approach Across Multiple Health Care Systems. JAMA Netw Open. 2020;3(3):e201262.

- 52. Denchev P, Kaltman JR, Schoenbaum M, Vitiello B. Modeled economic evaluation of alternative strategies to reduce sudden cardiac death among children treated for attention deficit/hyperactivity disorder. Circulation. 2010;121(11):1329–37.