Abstract

Ill-posed linear inverse problems appear frequently in various signal processing applications. It can be very useful to have theoretical characterizations that quantify the level of ill-posedness for a given inverse problem and the degree of ambiguity that may exist about its solution. Traditional measures of ill-posedness, such as the condition number of a matrix, provide characterizations that are global in nature. While such characterizations can be powerful, they can also fail to provide full insight into situations where certain entries of the solution vector are more or less ambiguous than others. In this work, we derive novel theoretical lower- and upper-bounds that apply to individual entries of the solution vector, and are valid for all potential solution vectors that are nearly data-consistent. These bounds are agnostic to the noise statistics and the specific method used to solve the inverse problem, and are also shown to be tight. In addition, our results also lead us to introduce an entrywise version of the traditional condition number, which provides a substantially more nuanced characterization of scenarios where certain elements of the solution vector are less sensitive to perturbations than others. Our results are illustrated in an application to magnetic resonance imaging reconstruction, and we include discussions of practical computation methods for large-scale inverse problems, connections between our new theory and the traditional Cramér-Rao bound under statistical modeling assumptions, and potential extensions to cases involving constraints beyond just data-consistency.

Keywords: Inverse Problems, Performance Bounds, Data-Consistency Constraints, Condition Numbers, Characterization of Ill-Posedness

I. Introduction

This paper concerns the classical and well-studied finite-dimensional linear inverse problem of estimating a true unknown signal vector from a noisy data vector obtained as

| (1) |

where is the known system matrix and represents an unknown noise perturbation. In many practical scenarios, this inverse problem is ill-posed, meaning that the original value of might be ambiguous even in the absence of noise, and/or that solutions may be highly sensitive to noise.

While there are many existing theoretical results that characterize ambiguity for this inverse problem, these characterizations are frequently phrased in terms of global bounds on the estimation error as a function of the size of the noise [1]-[5]. To be concrete, if we let be the estimate of obtained through some estimation procedure, it is common to see global error bounds of the form

| (2) |

where denotes the standard Euclidean norm and the constant will depend on the characteristics of the estimation procedure, the characteristics of the matrix , and potentially also the characteristics of the original vector and the data vector . Examples of this type of bound include:

- If has full column rank and is obtained by least-squares as , then condition number analysis shows that [1]-[5]

| (4) |

where is the ideal measurement that would have been obtained in the absence of noise, and is the condition number that provides a measure of the sensitivity of the inverse problem to perturbations of the data. The best (smallest) possible condition number is , while large values of the condition number imply that even relatively small amounts of noise can lead to potentially large relative errors.

- Many error bounds have been derived that apply under special structural assumptions about , such as sparsity [7] or low-rank [8] assumptions. While we make no attempt to be comprehensive, a typical example is the work of Donoho [9], which showed that if and if is sufficiently sparse, then using subject to will result in a solution that obeys a bound with the same form as Eq. (2) for most large underdetermined matrices .

We refer to all of these as global bounds because they only provide insight into the aggregate error across all of the entries of the vector , in contrast to an entrywise bound that would provide insight into the potential errors for each of the individual entries of the vector .

In practical applications, there are many situations where certain entries of are easier to estimate than others and also many situations where certain entries of are more important to estimate accurately. In such situations, global performance bounds can be misleading, and it can be very valuable to know which entries of an obtained solution vector are more trustworthy. For example, this situation occurs frequently in biomedical imaging applications, where the data acquisition procedure can capture more information about certain image voxels than it does for others, leading to spatially-varying ambiguity about the reconstructed image. In such applications, this spatially-varying ambiguity has often previously been evaluated using statistical characterizations like variance maps or Cramér-Rao bounds [10]-[16]. Use of such techniques generally requires either explicit modeling of the noise statistics or multiple repetitions of the same data collection procedure to enable empirical variance estimation, and can involve restrictive assumptions about the matrix (e.g., that it has full column rank).

In this work, we derive novel tight entrywise bounds that apply to every nearly data-consistent vector , can be used with arbitrary matrices (including rank-deficient matrices), are agnostic to the estimation method used to obtain , and only require knowledge about the size of (via an upper bound on ) without requiring an accurate statistical model or multiple repetitions of the data collection procedure. To be precise about what we mean by “nearly data-consistent solutions,” we will define as the set of potential solution vectors that are consistent with the data vector within a tolerance of :

| (5) |

Our theoretical results will provide tight entrywise bounds that must be satisfied by all of the elements of . Notably, if and data is acquired according to Eq. (1), then the true value must belong to , and our entrywise bounds on must also be bounds for .

Our results (which will be given formally in the sequel as Thm. 1 and its corollaries) will allow us to make useful statements such as: If , then , the ith entry of , must belong to a fixed interval , where the values of , and can be computed explicitly based on the values of , , , and . The values we obtain for and are also tight in the sense that we can easily identify specific values of that achieve the minimal and maximal values of . We are not aware of existing characterizations of this form, and we believe they enable fundamental new insights into the ambiguities associated with ill-posed inverse problems.

In addition, our results allow us to define an entrywise condition number for the entry of . This entrywise condition number (given formally in Corollary 4) will be used in a similar way to the condition number from Eq. (4), but will provide bounds that are generally less pessimistic than Eq. (4) (with ) and which explicitly capture the fact that some entries of can be substantially better conditioned than others.

During the final stages of writing this paper, we became aware of mathematical literature involving componentwise condition numbers [17]-[21]. Componentwise theory is similar to our proposed entrywise theory in the sense that they both focus on the individual entries of the vector . However, there are also important differences, including the fact that componentwise theory is frequently used to obtain a single global bound that summarizes the worst-case relative error across all of the individual entries of the vector, while we provide a finer-grained analysis by defining separate bounds for each of the entries of individually. One exception to this is the componentwise theory by Chandrasekaran and Ipsen [19], who, like us, also focus on the individual entries of . Interestingly, Chandrasekaran and Ipsen also describe a version of a componentwise condition number that happens to perfectly match the definition of the entrywise condition number proposed in this work. Nevertheless, the literature on componentwise theory (including Ref. [19]) generally considers different problem setups, asks different kinds of questions, and uses very different proof techniques compared to our work, offering perspectives that are both distinct and complementary.

This paper is organized as follows. We start by introducing some additional notation in Sec. II. This is followed by a presentation of our principal theoretical results in Sec. III. To illustrate the usefulness of our theory, we apply it to characterize an inverse problem from a biomedical imaging application in Sec. IV. We conclude the paper with a discussion of several additional topics in Sec. V, including practical computational considerations for large problem sizes, connections between our proposed bounds and Cramér-Rao bounds under white Gaussian noise assumptions, and interesting potential extensions to common scenarios where additional constraints are available beyond just near data-consistency (e.g., sparsity constraints, low-rank constraints, manifold constraints, etc.).

II. Notation

In addition to the notation that we have already introduced, the following notation is also used throughout the paper. We use and to respectively denote the range space and nullspace of the matrix . For a subspace , we use to denote its orthogonal complement, use to denote its orthogonal projection matrix, and use to denote its dimension.

For a matrix of rank , we can use the singular value decomposition (SVD) to express as , where and are matrices with orthonormal columns (corresponding to the left and right singular vectors, respectively), and is a diagonal matrix with diagonal element equal to . The columns of form an orthonormal basis for , while the columns of form an orthonormal basis for . We can also write the matrix using the extended SVD as

| (6) |

where and are also matrices with orthonormal columns. The columns of form an orthonormal basis for and satisfy , while the columns of form an orthonormal basis for and satisfy .

We use to denote the identity matrix. We denote the Moore-Penrose pseudoinverse of the matrix as . If is square and nonsingular, then . If has full column rank, then . Note that , and that .

For the positive semidefinite matrix , we define its square-root as .

We will use to denote the vector whose entry is equal to one, with all the other entries equal to zero (i.e., is the column of ).

III. Main Results

Our main theoretical results are given by the following theorem and its corollaries.

Theorem 1: Let denote the set of all possible values for (the entry of ) for vectors that belong to the set of nearly data-consistent solutions :

If is orthogonal to , then is the finite interval , with

and midpoint . Conversely, if is not orthogonal to , then is unbounded with .

This theorem is valid for arbitrary , , and , and shows that, depending on how the nullspace of the matrix interacts with the vector , the value of is either restricted to a known interval of length or is entirely unconstrained and can be an arbitrary real number.

While Thm. 1 applies to the general case, simplifications occur under assumptions about the rank of . Specifically:

If has full column rank (i.e., ), then is always orthogonal to , and is always a finite interval.

If has full row rank (i.e., ), then is always zero. This implies that is a finite interval of length when is orthogonal to , and is unbounded with when is not orthogonal to .

If is square and non-singular (i.e., ), then is always the finite interval of length with midpoint .

It should also be noted that Thm. 1 will still be valid if the vector is substituted everywhere with an arbitrary nonzero vector . This allows the use of these kinds of bounds in the case where we are potentially interested in the range of values for a weighted linear combination of the entries of in the form . For example, in practical imaging applications, if voxel appears to have a larger value than a neighboring voxel in the reconstructed image, it may be worthwhile to know tight upper and lower bounds on the value of across all , which would allow direct insight into whether voxel is always larger than voxel within the class of nearly data-consistent images. This could easily be achieved using the weight vector .
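The interval described by Thm. 1 can be sketched numerically as follows. This is a minimal NumPy illustration, not the implementation used for the results in this paper. The names `A`, `b`, `eps`, and `w`, the function `linear_functional_bounds`, and the tolerance `tol` are assumptions introduced here. The midpoint and half-width formulas are the ones that follow from the change of variables in the proof: the midpoint is the pseudoinverse solution applied to the weight vector, and the half-width is the remaining residual budget times the norm of the transposed pseudoinverse applied to the weight vector.

```python
import numpy as np


def linear_functional_bounds(A, b, eps, w, tol=1e-10):
    """Range of w @ x over all x with ||A @ x - b||_2 <= eps.

    Returns (lower, upper). The range is (-inf, inf) when w has a
    component in the nullspace of A.
    """
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    w = np.asarray(w, dtype=float)
    A_pinv = np.linalg.pinv(A)

    # Component of w lying in null(A), i.e. (I - A^+ A) w.
    w_null = w - A_pinv @ (A @ w)
    if np.linalg.norm(w_null) > tol * np.linalg.norm(w):
        return -np.inf, np.inf

    # Part of b outside range(A); no choice of x can reduce it.
    b_perp = b - A @ (A_pinv @ b)
    slack_sq = eps**2 - b_perp @ b_perp
    if slack_sq < 0:
        raise ValueError("eps is too small: no x satisfies the constraint")

    midpoint = w @ (A_pinv @ b)
    half_width = np.sqrt(slack_sq) * np.linalg.norm(A_pinv.T @ w)
    return midpoint - half_width, midpoint + half_width


# Small synthetic example (all values are illustrative).
rng = np.random.default_rng(0)
A = rng.standard_normal((8, 3))
x_true = rng.standard_normal(3)
n = 0.1 * rng.standard_normal(8)
b = A @ x_true + n
eps = 1.01 * np.linalg.norm(n)  # any upper bound on the noise norm

e0, e1 = np.eye(3)[0], np.eye(3)[1]
lo0, hi0 = linear_functional_bounds(A, b, eps, e0)         # bounds on x[0]
lo_d, hi_d = linear_functional_bounds(A, b, eps, e0 - e1)  # bounds on x[0] - x[1]
```

If `lo_d` and `hi_d` have the same sign, then the first entry is consistently larger (or smaller) than the second across every nearly data-consistent vector, which is the voxel-comparison use case described above. The explicit pseudoinverse is only practical for small problems.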

Before giving the proof of Thm. 1, we will first remark that minor manipulations of Thm. 1 also lead to the following corollaries. (As with Thm. 1, these corollaries are also valid if the vector is replaced everywhere with an arbitrary nonzero vector ).

Corollary 1: Assume that noisy data is measured according to Eq. (1), and that the noise is known to obey . Let denote the entry of the original true vector . Then , with defined as in Thm. 1.

Corollary 2: Assume that noisy data is measured according to Eq. (1), and that the noise is known to obey . Also assume that an estimation procedure is used to obtain an estimate of , and that this estimate is nearly data-consistent such that . Let and denote the entries of the estimate and the original true vector, respectively. Then if is orthogonal to , we must have

Corollary 3: Assume that noisy data is measured according to Eq. (1), that has full column rank, and that is obtained using . Then

Note that Cor. 3 can be viewed as an entrywise analogue of the spectral norm bound from Eq. (3). Moreover, Cor. 3 provides a bound that is generally better and never worse than Eq. (3), since we always have that .

Corollary 4: Assume that noisy data is measured according to Eq. (1), that has full column rank, and that is obtained using . Then

where we define as the entrywise condition number for the entry of .

Note that Cor. 4 can be viewed as an entrywise analogue of the condition number bound from Eq. (4). In addition, Cor. 4 provides a bound that is generally better and never worse than Eq. (4), since we always have that (which can be established from the fact that ).
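The comparison between the entrywise and global quantities can be illustrated on a toy matrix. The sketch below assumes that the entrywise constant in Cor. 3 is the Euclidean norm of the corresponding row of the pseudoinverse, and that the entrywise condition number in Cor. 4 is that row norm multiplied by the spectral norm of the system matrix. This reading is consistent with the derivation remarks in this section, but the function name `entrywise_sensitivities` and the example matrix are assumptions introduced here.

```python
import numpy as np


def entrywise_sensitivities(A):
    """Return (row_norms, kappa_entry, kappa_global) for a full-column-rank A."""
    A = np.asarray(A, dtype=float)
    A_pinv = np.linalg.pinv(A)
    # ||(A^+)^T e_i||_2 is the Euclidean norm of row i of A^+.
    row_norms = np.linalg.norm(A_pinv, axis=1)
    spectral_norm_A = np.linalg.norm(A, 2)
    kappa_entry = spectral_norm_A * row_norms
    kappa_global = spectral_norm_A * np.linalg.norm(A_pinv, 2)
    return row_norms, kappa_entry, kappa_global


# Illustrative matrix: the second unknown is measured 1000x more weakly.
A = np.array([[1.0, 0.0],
              [0.0, 1e-3],
              [0.0, 0.0]])
row_norms, kappa_entry, kappa_global = entrywise_sensitivities(A)
print(kappa_entry, kappa_global)
```

In this example the global condition number is 1000, which is also the entrywise value for the weakly measured second unknown, while the first unknown has an entrywise condition number of 1. The global number alone would hide the fact that the first entry is perfectly conditioned.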

The proof of Thm. 1 is given below.

Proof of Thm. 1

We first decompose as . Due to orthogonality between and (invoking the Pythagorean theorem for ), we can rewrite as

| (7) |

with and . Note that must be smaller than for to be a nonempty set.

Using the definition of the -norm, we can simplify the previous expression to

| (8) |

This set has the form of an ellipsoid [22], and it is well-known that if is nonsingular, then the volume of this ellipsoid is [23]

| (9) |

where denotes the gamma function (and should not be confused with the set ). Clearly, if the volume of this ellipsoid is large, then there will be many vectors that are nearly data-consistent, and the solution to the inverse problem will have more potential ambiguity. If were singular, then the ellipsoid would be degenerate and would extend infinitely along directions aligned with the nullspace of , causing to have infinite volume. However, these statements only provide insight into the global ambiguity of the solution, and our goal in this work is to characterize the ambiguities associated with the individual entries of the elements of , which may be much smaller for some entries than a global characterization would suggest.

Invoking the SVD , we have

| (10) |

Furthermore, because of the orthonormality and mutual orthogonality properties of and , it is possible to uniquely represent an arbitrary vector as , where with , and with . We can then write

| (11) |

A simple change of variables then results in

| (12) |

Notably, the component (which corresponds to the part of that is in the nullspace of ) is completely unconstrained, and can be arbitrarily large. This results in a degenerate ellipsoid as described previously.

Given this geometric characterization of the set of nearly data-consistent solutions, we can now consider the behavior of the individual entries of . Substituting the results of Eq. (12) into the definition of as given in Thm 1, we obtain

| (13) |

At this point, there are two cases to consider.

1). Case 1:

First, consider the case where is not orthogonal to , such that . Then, because is unconstrained, we must have

| (14) |

indicating that the entry of is unbounded and could take on arbitrary values. In particular, for an arbitrary , we can achieve for by choosing with .

2). Case 2:

In the second case, assume that is orthogonal to such that . In this case, we have the simplification

| (15) |

The maximum and minimum possible values of under the constraint that are respectively given by . Note also that the extremal values of are achieved by taking , which satisfies , and that simple rescaling of this choice of by a real scalar with modulus less than one will allow us to achieve any real value in between the upper and lower bounds. We therefore find that is simply the finite interval , with and as given in the statement of the theorem.

This completes the proof of Thm. 1. □
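The tightness argument in Case 2 is constructive, and the extremal vectors can be built explicitly. The sketch below is one way to do so under the assumption that the chosen coordinate vector is orthogonal to the nullspace. It starts from the pseudoinverse solution and moves along the direction obtained by applying the pseudoinverse to its own row for the chosen entry, scaled to use up the whole residual budget. The function name `extremal_solution` and the test data are assumptions introduced here.

```python
import numpy as np


def extremal_solution(A, b, eps, i, upper=True):
    """A vector x with ||A @ x - b||_2 = eps whose entry i attains the bound."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    A_pinv = np.linalg.pinv(A)
    b_perp = b - A @ (A_pinv @ b)
    slack_sq = eps**2 - b_perp @ b_perp
    if slack_sq < 0:
        raise ValueError("eps is too small: no x satisfies the constraint")
    row = A_pinv[i]  # this is (A^+)^T e_i
    direction = A_pinv @ row / np.linalg.norm(row)
    sign = 1.0 if upper else -1.0
    return A_pinv @ b + sign * np.sqrt(slack_sq) * direction


rng = np.random.default_rng(1)
A = rng.standard_normal((6, 3))
b = rng.standard_normal(6)
eps = 1.5 * np.linalg.norm(b - A @ np.linalg.pinv(A) @ b)
x_up = extremal_solution(A, b, eps, i=2, upper=True)
x_dn = extremal_solution(A, b, eps, i=2, upper=False)
residual_up = np.linalg.norm(A @ x_up - b)  # equals eps up to rounding
```

Both vectors sit on the boundary of the nearly data-consistent set, which matches the observation that the bounds are attained rather than merely approached.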

While most of the corollaries are trivial manipulations of Thm. 1, we will note that the derivation of Cor. 4 is based on the combination of Cor. 3 with the fact that .

IV. Illustrative Example

We illustrate the utility of our new theoretical results by applying them to an example application: image reconstruction in multi-channel magnetic resonance imaging (MRI). Specifically, under the sensitivity encoding model of multi-channel MRI [11], [24], we assume that we are interested in reconstructing a discrete image defined on an -voxel grid, where the value of the image at each voxel is complex-valued. We use to represent the unknown voxel values. Data measurements are then obtained from this image from a set of channels, where the data from the channel is represented as

| (16) |

where represents the noise for the channel, is a diagonal matrix whose diagonal entries are equal to the corresponding values of the spatially-varying sensitivity profile for the channel, and represents an operator that performs Fourier transformation followed by some form of sampling. Typically, the sampling rate is chosen to be below the Nyquist rate to allow accelerated data acquisition, resulting in . This allows us to represent the inverse problem as

| (17) |

where is the vertical concatenation of the vectors, is the vertical concatenation of the vectors, and is the vertical concatenation of the matrices, with .

An important observation is that the matrices and vectors in our example inverse problem are all complex-valued, while our theoretical results are all given assuming the real-valued case. We chose to present our theory for the real-valued case because there exists a standard ordering for real-numbers that enables a simple discussion of lower- and upper-bounds, while the complex-valued case would be much more complicated. However, in order to apply our theory to this scenario, we need to transform the complex-valued problem statement into an equivalent real-valued problem statement. This is easily done by separating each complex-value into its real- and imaginary-components, and then working with an equivalent purely-real expression of the inverse problem (see, e.g., [25], [26]). We have used this real-valued transformation approach in the results that follow. For simplicity and to avoid introducing new notation, we will simply refer to these now real-valued variables using the same notation we used for the complex-valued case (i.e., , , , etc.). From this point forward, all variables should now be interpreted as real-valued.
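A common way to carry out this real-valued transformation is sketched below. It is the standard block construction, and is shown only as an illustration: the exact construction used in [25], [26] may differ in ordering or scaling, and the function names `complex_to_real_system` and `stack_real_imag` are assumptions introduced here.

```python
import numpy as np


def complex_to_real_system(A, b):
    """Real-valued equivalent of a complex linear system A @ x = b."""
    A = np.asarray(A, dtype=complex)
    b = np.asarray(b, dtype=complex)
    # [Re(b); Im(b)] = [[Re(A), -Im(A)], [Im(A), Re(A)]] @ [Re(x); Im(x)]
    A_real = np.block([[A.real, -A.imag],
                       [A.imag, A.real]])
    b_real = np.concatenate([b.real, b.imag])
    return A_real, b_real


def stack_real_imag(x):
    """Stack the real parts of x on top of its imaginary parts."""
    x = np.asarray(x, dtype=complex)
    return np.concatenate([x.real, x.imag])


rng = np.random.default_rng(2)
A = rng.standard_normal((5, 3)) + 1j * rng.standard_normal((5, 3))
x = rng.standard_normal(3) + 1j * rng.standard_normal(3)
A_real, b_real = complex_to_real_system(A, A @ x)
```

With this stacking, the first half of the real-valued unknown vector holds the real parts of the voxels and the second half holds the imaginary parts, so entrywise bounds can be read off separately for each part.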

In our illustrative example application, we use real fully-sampled 32-channel MRI brain data acquired at our institution, which we retrospectively subsample to emulate an accelerated data acquisition procedure as illustrated in Fig. 1, with . Note that even though the matrix is underdetermined because , the overall system is still overdetermined because . We have determined numerically that the matrix has full column rank.

Fig. 1.

The (a) original fully-sampled 32-channel MRI brain data and (b) the retrospectively subsampled version of the data used for the illustrative MRI reconstruction example. The top row shows coil-combined magnitude images, with zero-filling of the missing Fourier data samples in the subsampled case. The bottom row shows the corresponding coil-combined Fourier magnitudes. The Fourier sampling pattern captures every fourth phase-encoding line, while also measuring the central (low-frequency) 24 phase encoding lines.

As can be seen in Fig. 1, our subsampling scheme is designed such that undersampling only occurs along one dimension of the 2D Fourier domain (i.e., the phase encoding dimension in MRI terminology), while the remaining dimension (i.e., the readout dimension in MRI terminology) is fully sampled at the Nyquist rate. This structure implies that one can apply a unitary inverse Fourier transform operator along the fully-sampled readout dimension as a preprocessing step to completely decouple the reconstruction of each image row [27]. Reconstructing each image row independently enables substantial reductions in computational complexity, and the results we present later in this section are all based on this row-decoupled approach.

In our illustrative example, we have used ESPIRiT [28] with circular neighborhoods [29] to estimate the sensitivity profiles needed to form the matrices from the fully-sampled central Fourier data. In addition, we incorporate a phase estimate directly into the sensitivity maps so that the reconstructed image will be approximately real-valued [25], [30]-[33]. Our formulation also uses prior knowledge of the support of the image and does not estimate voxel locations that are already known to be zero, which is achieved by removing the corresponding columns from the matrix [11]. We have also used direct measurements of the multi-channel thermal noise to prewhiten the channels, which allows all thermal noise samples to be treated as independent and identically distributed zero-mean circularly-symmetric complex Gaussian noise [24].

Our illustration will start by calculating the theoretical lower and upper bounds from Thm. 1 for this inverse problem. However, before this can be done, it is important to select an appropriate value of . Ideally, the noise perturbation in MRI would be purely due to thermal (white Gaussian) noise, such that the distribution of would follow the chi distribution, which has a well-characterized cumulative distribution function (CDF). If this were the case, then a reasonable approach for selecting might be to choose based on the CDF, e.g., choose so that the probability of observing is less than 1%. Unfortunately, this approach for determining turns out to be inappropriate for this data, since we observe empirically that we would have under this approach to choosing . The reason this approach fails is that we are working with real data, and there are many sources of error in the data beyond just the thermal noise, including minor motion of the human subject during the scan, flow of the subject’s blood and cerebrospinal fluid during the scan, and spin relaxation during the acquisition. All of these contribute to , causing it to be much larger than would be predicted from the statistics of thermal noise. This example also helps to underscore the point from the introduction that it can be important to have theoretical bounds that are not heavily dependent on statistical noise modeling assumptions, since these assumptions can fail for practical real-world data.

To overcome this practical issue, we have used a heuristic (and likely suboptimal) approach for choosing , that is nevertheless good enough for our illustration despite its imperfections. Specifically, we observe that, based on our inverse problem model from Eq. (1), the vector should not contain any of the true signal, and consists entirely of the projection of the noise onto the subspace . If we make the assumption that the noise energy distributes relatively evenly across all subspaces of and that and are large enough that concentration of measure principles can be applied, then we might reasonably expect that

| (18) |

In what follows, we therefore set . Before moving on, we would like to reemphasize that this choice is heuristic and not rigorously justified, and many other ways of choosing could have been used instead. Different choices of would result in a very simple and predictable change in the lower- and upper-bounds and , and would have no effect on other properties that may be of interest such as the entrywise spectral norm-type bound from Cor. 3 or the entrywise condition number from Cor. 4.
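One way to turn the even-energy assumption into a number is sketched below. If the noise energy spreads evenly over all directions of the data space, then the part of the data lying outside the range of the system matrix carries a fraction of the noise energy equal to the dimension of that complement divided by the total number of measurements, so rescaling its norm accordingly gives an estimate of the noise norm. This scaling is an assumption of the sketch and may differ from the exact expression used for the results in this section. The function name `heuristic_eps` is likewise hypothetical.

```python
import numpy as np


def heuristic_eps(A, b):
    """Estimate the noise norm from the part of b outside range(A)."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    m = A.shape[0]
    r = np.linalg.matrix_rank(A)
    if r >= m:
        raise ValueError("range(A) is the whole data space; nothing to extrapolate from")
    b_perp = b - A @ (np.linalg.pinv(A) @ b)
    # Assumed scaling: out-of-range energy is (m - r) / m of the total.
    return np.sqrt(m / (m - r)) * np.linalg.norm(b_perp)


# Synthetic check with white Gaussian noise (illustrative sizes).
rng = np.random.default_rng(3)
A = rng.standard_normal((2000, 50))
n = rng.standard_normal(2000)
b = A @ rng.standard_normal(50) + n
eps = heuristic_eps(A, b)
ratio = eps / np.linalg.norm(n)  # close to 1 when the assumption holds
```

For structured errors such as motion or flow, the even-energy assumption need not hold, which is one reason this choice should be regarded as heuristic.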

Figure 2 depicts the entrywise lower- and upper-bounds we obtain on the real- and imaginary-parts of the image based on our choice of . In this example, the lower-bounds and upper-bounds are relatively far separated from one another for most voxels of the images, suggesting that near data-consistency constraints themselves may be insufficient to guarantee accurate estimation of the image voxel amplitudes. Of course, it should also be kept in mind that these are worst-case bounds that are independent of any specific image estimation method, and that the practical performance of a specific estimator in the presence of typical (i.e., not worst-case) may be better. On the other hand, the fact that there can be this level of variation within the set of near data-consistent images may also encourage an experimenter to consider the use of noise-mitigation strategies so that can be reduced, and/or the redesign of the data acquisition matrix so that can be made smaller.

Fig. 2.

Illustration of spatial maps of the entrywise lower-bounds and upper-bounds for the (a,b) real and (c,d) imaginary parts of nearly data-consistent images , as obtained by applying Thm. 1 to the multi-channel MRI reconstruction scenario.

An important thing to keep in mind is that the spatial maps of and shown in Fig. 2 may happen to look like realistic MRI images, but they are not themselves elements of . In particular, these maps only provide entrywise bounds, and it is unlikely that these bounds could be achieved by all voxels simultaneously. To illustrate this point, Fig. 3 gives examples of two images belonging to that achieve the upper- or lower-bounds for specific choices of voxels (using the technique described in the proof of Thm. 1 to identify the extremal cases).

Fig. 3.

Examples of two different nearly data-consistent images that achieve the (a) upper- and (b) lower-bounds from Fig. 2 for a specific column of voxels (indicated with red arrows). For simplicity, only the real parts of the images are shown (the imaginary parts of both images are close to zero).

As mentioned in Sec. III, we can also replace in Thm. 1 with a nonzero vector to construct bounds on linear combinations of the entries of . We illustrate this point by showing spatial maps of the upper- and lower-bounds on the difference between adjacent voxels ( as discussed in Sec. III, where corresponds to the voxel position in the image and corresponds to the voxel immediately to the right of the voxel) in Fig. 4. Interestingly, we observe that there are only a few voxels that are consistently larger or smaller than their neighbors (i.e., the lower-bounds and upper-bounds have consistent sign) within the set of nearly data-consistent solutions.

Fig. 4.

Spatial maps of the (a) lower-bounds and (b) upper-bounds for the difference between adjacent voxels, with .

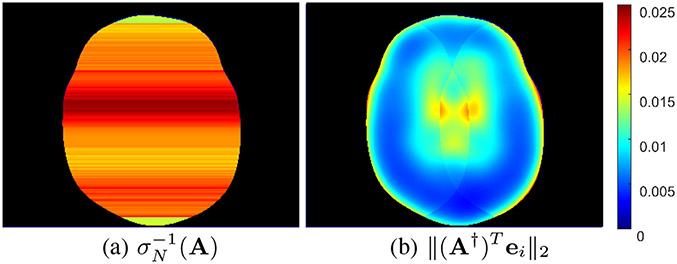

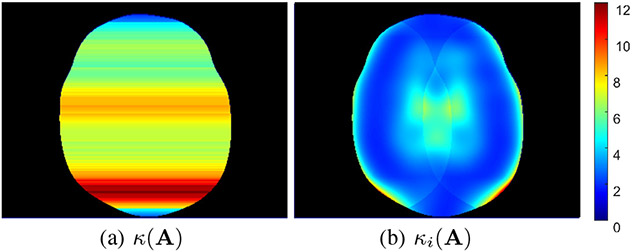

In our final set of illustrations, we compare the global bounds from Eqs. (3) and (4) against our new entrywise bounds from Cors. 3 and 4. Specifically, Fig. 5 focuses on the spectral norm-type bounds from Eq. (3) and Cor. 3, while Fig. 6 focuses on the condition number bounds from Eq. (4) and Cor. 4. In order to show spatial maps of the global bounds in these figures, we have made use of the equivalence of norms on finite dimensional spaces [1], [5]. Specifically, this equivalence implies that

| (19) |

for arbitrary , where is the standard infinity norm. This relationship immediately allows a global bound in the form of Eq. (2) to be converted into a bound on the individual elements of :

| (20) |

which allows us to depict spatial maps of the global bounds.

Fig. 5.

Spatial maps of the spectral norm-type (a) global bounds and (b) entrywise bounds . Note that because we use decoupling to solve for each image row independently, we have a different global bound for each image row.

Fig. 6.

Spatial maps of the (a) traditional condition number and the (b) entrywise condition number . Note that because we use decoupling to solve for each image row independently, we have a different condition number for each image row.

As can be seen from Figs. 5 and 6, our proposed entrywise bounds are usually better and never worse than the global bounds, consistent with our theoretical expectations. In addition, the entrywise characterizations both do a good job of capturing the fact that the values of certain voxels can be substantially less sensitive to noise perturbations than others, while the traditional global characterizations lack this nuance and are more pessimistic.

V. Discussion

A. Efficient Computations for Large Problems

The new theoretical characterizations presented in this paper all depend on the ability to compute the numerical value of . When the problem size is small enough, it is possible to explicitly calculate the SVD of , at which point this calculation becomes trivial. However, in many practical scenarios, the matrix is too big to be stored in memory, and it is necessary to work with functions that compute matrix-vector multiplications with or rather than working directly with the matrix .

In situations like this, there exist well-established iterative procedures that allow computation of the quantity for arbitrary vectors and arbitrary matrices . For example, starting from zero initialization , the Landweber iteration [2], [34]-[36]

| (21) |

will satisfy as as long as . Furthermore, if the value of is known where is the rank of , then it is also possible to use this value to precompute the number of iterations that are needed so that approximates within a prescribed level of accuracy. This type of approach allows for simple computation of .
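A matrix-free version of this computation might look as follows. The sketch uses the textbook Landweber update with a fixed step size, started from zero, which converges to the pseudoinverse solution when the step size lies strictly between zero and two divided by the squared spectral norm of the matrix. The function name `landweber`, the fixed iteration count, and the small example matrix are assumptions introduced here. To obtain the transposed-pseudoinverse quantity needed by the bounds, the iteration is run on the transposed matrix, which simply swaps the roles of the two matrix-vector products.

```python
import numpy as np


def landweber(matvec, rmatvec, y, n_unknowns, step, n_iter):
    """Approximate pinv(A) @ y using only products with A and A.T."""
    x = np.zeros(n_unknowns)
    for _ in range(n_iter):
        x = x + step * rmatvec(y - matvec(x))
    return x


A = np.array([[2.0, 0.0],
              [1.0, 1.0],
              [0.0, 1.0]])
step = 1.0 / np.linalg.norm(A, 2) ** 2  # satisfies 0 < step < 2 / ||A||_2^2

# (A^+)^T e_i equals pinv(A.T) @ e_i, so the iteration is applied to A.T:
# "matvec" multiplies by A.T and "rmatvec" multiplies by A.
i = 1
e_i = np.eye(A.shape[1])[i]
z = landweber(lambda v: A.T @ v, lambda v: A @ v, e_i,
              n_unknowns=A.shape[0], step=step, n_iter=500)
row_norm = np.linalg.norm(z)  # approximates ||(A^+)^T e_i||_2
```

In a genuinely large-scale setting the spectral norm would not be computed from a stored matrix. It could instead be estimated with a few power iterations using the same two matrix-vector products.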

However, this type of approach quickly becomes computationally burdensome if we wish to compute for a large number of different values. Fortunately, it is also possible to use stochastic methods to approximately compute for all simultaneously. In particular, let for be a collection of independent and identically distributed random vectors with zero mean and covariance matrix equal to , and let

| (22) |

where our notation assumes that the magnitude-squaring operation is applied separately to each entry of the vector. Then it is not hard to show that the expected value of entry of is equal to , and that converges to its expected value in the limit as . This allows approximate calculation of for all possible values of simultaneously, but only requiring the computation of for . If , then this can represent a massive improvement in computational complexity over directly calculating for each . Notably, this approach is quite similar to stochastic techniques designed to efficiently estimate the diagonal entries of spatially-varying covariance matrices [14].
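The stochastic estimator can be sketched in a few lines. The example below uses random sign (Rademacher) probe vectors, which have zero mean and identity covariance. This particular probe distribution, the function name `stochastic_row_norms_sq`, and the problem sizes are assumptions introduced here. For clarity the pseudoinverse is applied with an explicit matrix, whereas in a large-scale setting `apply_pinv` would be an iterative solver.

```python
import numpy as np


def stochastic_row_norms_sq(apply_pinv, m, n_probes, rng):
    """Monte Carlo estimate of ||(A^+)^T e_i||_2^2 for every i at once."""
    total = 0.0
    for _ in range(n_probes):
        v = rng.choice([-1.0, 1.0], size=m)  # zero mean, identity covariance
        total = total + apply_pinv(v) ** 2   # entrywise squaring
    return total / n_probes


rng = np.random.default_rng(4)
A = rng.standard_normal((20, 4))
A_pinv = np.linalg.pinv(A)
estimate = stochastic_row_norms_sq(lambda v: A_pinv @ v, m=20,
                                   n_probes=5000, rng=rng)
exact = np.linalg.norm(A_pinv, axis=1) ** 2
```

The estimate is unbiased because the expected value of the squared entry is the sum of squares of the corresponding pseudoinverse row. Its accuracy improves at the usual Monte Carlo rate as the number of probes grows.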

B. Relationship to Cramér-Rao Bounds

The theoretical bounds we obtained in this paper were derived without making any assumptions about the statistical characteristics of , which can be useful when the distribution of is unknown. Interestingly, if we make stronger assumptions about the statistical characteristics of , then we can establish direct links between the traditional Cramér-Rao bound from estimation theory [39] and a quantity that appears prominently in our theoretical bounds, namely .

To derive Cramér-Rao bounds, we will now make the assumption that is a zero-mean Gaussian random vector with covariance matrix . In this case, assuming that , it can be shown that the entrywise Fisher information corresponding to the quantity is equal to [40]. This means that the entrywise Cramér-Rao bound for (which is the inverse of the entrywise Fisher information, as well as a lower bound on the variance of any unbiased estimator of [39]) is equal to . This establishes a clear parallel between Cramér-Rao bounds and our theoretical characterization.
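The following short numerical sketch illustrates this kind of parallel under assumptions that are ours rather than the paper's: a real-valued system matrix with full column rank and white Gaussian noise with standard deviation `noise_std`. In that setting, the standard Cramér-Rao bound for each entry is the corresponding diagonal entry of the inverse Fisher information matrix, and it coincides with the noise variance times the squared norm of the matching row of the pseudoinverse. The function names are hypothetical.

```python
import numpy as np


def entrywise_crb(A, noise_std):
    """Diagonal of the inverse Fisher information for y = A x + n,
    with n ~ N(0, noise_std^2 I) and A of full column rank (assumed)."""
    fisher = A.conj().T @ A / noise_std ** 2
    return np.real(np.diag(np.linalg.inv(fisher)))


def pinv_row_energies(A):
    """Squared Euclidean norm of each row of the pseudoinverse of A."""
    return np.sum(np.abs(np.linalg.pinv(A)) ** 2, axis=1)
```

For a full-column-rank matrix, `entrywise_crb(A, noise_std)` and `noise_std ** 2 * pinv_row_energies(A)` agree up to floating-point error, since the pseudoinverse times its own conjugate transpose equals the inverse of the Gram matrix.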

This relationship is potentially interesting because minimization of the Cramér-Rao bound is a common objective in methods to design optimally-efficient matrices [40]. For example, this type of Cramér-Rao optimization approach has been explored to optimize data acquisition protocols in MRI applications with linear data acquisition models [16], [41], [42]. While this approach has historically been motivated by statistical variance-minimization arguments, our theoretical results demonstrate that this approach can also be justified based on deterministic principles, without requiring strong assumptions about the nature of the noise . Specifically, minimizing the Cramér-Rao bound for under white Gaussian noise assumptions implicitly has the same effect as minimizing the length of the interval [] for under near data-consistency constraints, regardless of the actual distribution of the noise.

It should be noted that we have only established a relationship between our new entrywise bounds and entrywise Cramér-Rao bounds for linear models in the form of Eq. (1). There are also many applications where nonlinear data acquisition procedures are optimized by minimizing Cramér-Rao bounds (e.g., a few MRI examples include [43]-[50]). Based on our experience with the linear case, we suspect that it may be possible to rigorously justify the goodness of such design approaches for nonlinear models without making strong statistical assumptions, and believe that this could be an interesting topic for future research.

C. Extensions to Constrained Reconstruction?

One of the features of our theory is that our bounds rely entirely on near data-consistency constraints , and can be agnostic to the specific estimation methods that are used to obtain or any additional prior information that we may have about . In practice, modern estimation methods often rely on more than just near data-consistency, and also impose other forms of constraints when solving an inverse problem. For example, in modern MRI reconstruction, it is common to look for reconstructed images that are not only nearly data-consistent, but which also obey sparsity constraints [51]-[55], low-rank constraints [56]-[61], autoregressive/structured low-rank constraints [62]-[66], or even manifold constraints that could be learned by applying machine learning methods to large databases of previous images [67]-[69]. (See also [70], [71] for additional historical context.)

These kinds of additional constraints are all expected to reduce ambiguity in the solution to the inverse problem. As such, rather than just understanding entrywise ambiguity for the set of nearly data-consistent solutions , it may be even better to be able to identify entrywise ambiguity for solutions that are both nearly data-consistent and obey other constraints, i.e., , where is a set that might represent a sparsity constraint, a low-rank constraint, a manifold constraint, etc.

This is a difficult problem and a rigorous investigation of the interplay between and different types of complicated constraint sets would go far beyond the scope of this paper, though we expect this to be a promising direction for future exploration. We especially believe that developing interval bounds of the form for may be important for building trust in machine-learning methods designed to solve inverse problems, given the potential for instability and limited resolving power that has been observed with some of these kinds of methods [72], [73].

Acknowledgments

This work was supported in part by NIH research grants R01-MH116173 and R01-NS074980.

Biography

Justin P. Haldar (Senior Member, IEEE) received the Ph.D. in Electrical and Computer Engineering from the University of Illinois at Urbana-Champaign in 2011. He is now an Associate Professor in the Signal and Image Processing Institute and the Ming Hsieh Department of Electrical and Computer Engineering at the University of Southern California. His research interests include inverse problems, signal processing, computational imaging, and applications in biomedical imaging (especially magnetic resonance imaging). His research has been recognized with honors such as the NSF CAREER award, the IEEE ISBI best paper award, and the IEEE EMBC first-place student paper award, among others. He is an Associate Editor for the IEEE Transactions on Medical Imaging (2014-Present) and a Senior Area Editor for the IEEE Transactions on Computational Imaging (2021-Present; previously Associate Editor from 2018-2021), and has twice received (in 2019 and 2022) the Outstanding Editorial Board Award from the IEEE Signal Processing Society. He has also been active in the IEEE Signal Processing Society’s Technical Committees on Computational Imaging (Chair Elect, 2024-2025; Vice Chair, 2023; Advisory Member, 2022; Member, 2016-2021) and Bio Imaging and Signal Processing (Member, 2014-2019; Associate Member, 2011-2013).

Footnotes

¹ Note that the condition number bound that we have presented assumes that belongs to the range of , which results in a simpler expression than would be obtained otherwise [1], [4], [5].

² Other iterative algorithms like the conjugate gradient method [37] or LSQR [38] could also be employed to determine for arbitrary vectors . These methods will generally converge faster than Landweber iteration, although it can be important to watch out for numerical stagnation issues that may prevent these algorithms from converging to the desired value of .

³ While it is common to truncate the iterations early to take advantage of semiconvergence phenomena when using Landweber iteration to solve inverse problems [2], [35], [36], it is important to not use early-stopping criteria when computing our theoretical bounds, as this can lead to an underestimation of the ambiguity in the solution to the inverse problem.

⁴ Readers from the MRI community may benefit from knowing that the Cramér-Rao bound is highly related to the g-factor from the MRI literature [11], [14]. Specifically, the g-factor can simply be viewed as the normalized voxelwise ratio between the Cramér-Rao bounds for subsampled and fully-sampled acquisitions.

References

- [1].Golub G and van Loan C, Matrix Computations, 3rd ed. London: The Johns Hopkins University Press, 1996.

- [2].Bertero M and Boccacci P, Introduction to Inverse Problems in Imaging. London: Institute of Physics Publishing, 1998.

- [3].Moon TK and Stirling WC, Mathematical Methods and Algorithms for Signal Processing. Upper Saddle River: Prentice Hall, 2000.

- [4].Heath MT, Scientific Computing: An Introductory Survey, 2nd ed. Boston: McGraw-Hill, 2002.

- [5].Bresler Y, Basu S, and Couvreur C, “Hilbert spaces and least squares methods for signal processing,” 2008, unpublished manuscript.

- [6].Luenberger DG, Optimization by Vector Space Methods. New York: John Wiley & Sons, 1969.

- [7].Candès EJ and Wakin MB, “An introduction to compressive sampling,” IEEE Signal Process. Mag, vol. 25, pp. 21–30, 2008.

- [8].Davenport MA and Romberg J, “An overview of low-rank matrix recovery from incomplete observations,” IEEE J. Sel. Topics Signal Process, vol. 10, pp. 608–622, 2016.

- [9].Donoho DL, “For most large underdetermined systems of equations, the minimal ℓ1-norm near-solution approximates the sparsest near-solution,” Comm. Pure Appl. Math, vol. 59, pp. 907–934, 2006.

- [10].Fessler JA, “Mean and variance of implicitly defined biased estimators (such as penalized maximum likelihood): Applications to tomography,” IEEE Trans. Image Process, vol. 5, pp. 493–506, 1996.

- [11].Pruessmann KP, Weiger M, Scheidegger MB, and Boesiger P, “SENSE: Sensitivity encoding for fast MRI,” Magn. Reson. Med, vol. 42, pp. 952–962, 1999.

- [12].Qi J and Leahy RM, “Resolution and noise properties of MAP reconstruction for fully 3-D PET,” IEEE Trans. Med. Imag, vol. 19, pp. 493–506, 2000.

- [13].Stayman JW and Fessler JA, “Efficient calculation of resolution and covariance for penalized-likelihood reconstruction in fully 3-D SPECT,” IEEE Trans. Med. Imag, vol. 23, pp. 1543–1555, 2004.

- [14].Robson PM, Grant AK, Madhuranthakam AJ, Lattanzi R, Sodickson DK, and McKenzie CA, “Comprehensive quantification of signal-to-noise ratio and g-factor for image-based and k-space-based parallel imaging reconstructions,” Magn. Reson. Med, vol. 60, pp. 895–907, 2008.

- [15].Ahn S and Leahy RM, “Analysis of resolution and noise properties of nonquadratically regularized image reconstruction methods for PET,” IEEE Trans. Med. Imag, vol. 27, pp. 413–424, 2008.

- [16].Haldar JP and Kim D, “OEDIPUS: An experiment design framework for sparsity-constrained MRI,” IEEE Trans. Med. Imag, vol. 38, pp. 1545–1558, 2019.

- [17].Higham NJ, “A survey of componentwise perturbation theory in numerical linear algebra,” in Mathematics of Computation 1943–1993: A Half-Century of Computational Mathematics, Gautschi W, Ed. Providence: American Mathematical Society, 1994, pp. 49–77.

- [18].Cucker F, Diao H, and Wei Y, “On mixed and componentwise condition numbers for Moore-Penrose inverse and linear least squares problems,” Math. Comput, vol. 76, pp. 947–963, 2007.

- [19].Chandrasekaran S and Ipsen ICF, “On the sensitivity of solution components in linear systems of equations,” SIAM J. Matrix Anal. Appl, vol. 16, pp. 93–112, 1995.

- [20].Baboulin M and Gratton S, “Using dual techniques to derive componentwise and mixed condition numbers for a linear function of a least squares solution,” BIT Numer. Math, vol. 49, pp. 3–19, 2009.

- [21].Diao H-A, Liang L, and Qiao S, “A condition analysis of the weighted linear least squares problem using dual norms,” Linear Multilinear Algebra, vol. 66, pp. 1085–1103, 2018.

- [22].Boyd S and Vandenberghe L, Convex Optimization. Cambridge: Cambridge University Press, 2004.

- [23].Friendly M, Monette G, and Fox J, “Elliptical insights: Understanding statistical methods through elliptical geometry,” Stat. Sci, vol. 28, pp. 1–39, 2013.

- [24].Pruessmann KP, Weiger M, Börnert P, and Boesiger P, “Advances in sensitivity encoding with arbitrary k-space trajectories,” Magn. Reson. Med, vol. 46, pp. 638–651, 2001.

- [25].Bydder M and Robson MD, “Partial Fourier partially parallel imaging,” Magn. Reson. Med, vol. 53, pp. 1393–1401, 2005.

- [26].Kim TH and Haldar JP, “Efficient iterative solutions to complex-valued nonlinear least-squares problems with mixed linear and antilinear operators,” Optim. Eng, vol. 23, pp. 749–768, 2022.

- [27].Kyriakos WE, Panych LP, Kacher DF, Westin C-F, Bao SM, Mulkern RV, and Jolesz FA, “Sensitivity profiles from an array of coils for encoding and reconstruction in parallel (SPACE-RIP),” Magn. Reson. Med, vol. 44, pp. 301–308, 2000.

- [28].Uecker M, Lai P, Murphy MJ, Virtue P, Elad M, Pauly JM, Vasanawala SS, and Lustig M, “ESPIRiT – an eigenvalue approach to autocalibrating parallel MRI: Where SENSE meets GRAPPA,” Magn. Reson. Med, vol. 71, pp. 990–1001, 2014.

- [29].Lobos RA and Haldar JP, “On the shape of convolution kernels in MRI reconstruction: Rectangles versus ellipsoids,” Magn. Reson. Med, vol. 87, pp. 2989–2996, 2022.

- [30].Willig-Onwuachi JD, Yeh EN, Grant AK, Ohliger MA, McKenzie CA, and Sodickson DK, “Phase-constrained parallel MR image reconstruction,” J. Magn. Reson, vol. 176, pp. 187–198, 2005.

- [31].Lew C, Pineda AR, Clayton D, Spielman D, Chan F, and Bammer R, “SENSE phase-constrained magnitude reconstruction with iterative phase refinement,” Magn. Reson. Med, vol. 58, pp. 910–921, 2007.

- [32].Haldar JP, Wedeen VJ, Nezamzadeh M, Dai G, Weiner MW, Schuff N, and Liang Z-P, “Improved diffusion imaging through SNR-enhancing joint reconstruction,” Magn. Reson. Med, vol. 69, pp. 277–289, 2013.

- [33].Blaimer M, Heim M, Neumann D, Jakob PM, Kannengiesser S, and Breuer FA, “Comparison of phase-constrained parallel MRI approaches: analogies and differences,” Magn. Reson. Med, vol. 75, pp. 1086–1099, 2016.

- [34].Landweber L, “An iteration formula for Fredholm integral equations of the first kind,” Am. J. Math, vol. 73, pp. 615–624, 1951.

- [35].Hansen PC, Rank-Deficient and Discrete Ill-Posed Problems. Philadelphia: SIAM, 1998.

- [36].Vogel CR, Computational Methods for Inverse Problems. Philadelphia: SIAM, 2002.

- [37].Hestenes MR and Stiefel E, “Methods of conjugate gradients for solving linear systems,” J. Res. Natl. Bur. Stand, vol. 49, pp. 409–436, 1952.

- [38].Paige CC and Saunders MA, “LSQR: An algorithm for sparse linear equations and sparse least squares,” ACM Trans. Math. Soft, vol. 8, pp. 43–71, 1982.

- [39].Kay SM, Fundamentals of Statistical Signal Processing, Volume I: Estimation Theory. Upper Saddle River: Prentice Hall, 1993.

- [40].Pukelsheim F, Optimal Design of Experiments. New York: John Wiley & Sons, 1993.

- [41].Reeves SJ and Zhe Z, “Sequential algorithms for observation selection,” IEEE Trans. Signal Process, vol. 47, pp. 123–132, 1999.

- [42].Xu D, Jacob M, and Liang Z-P, “Optimal sampling of k-space with Cartesian grids for parallel MR imaging,” in Proc. Int. Soc. Magn. Reson. Med, 2005, p. 2450.

- [43].Jones JA, Hodgkinson P, Barker AL, and Hore PJ, “Optimal sampling strategies for the measurement of spin-spin relaxation times,” J. Magn. Reson. B, vol. 113, pp. 25–34, 1996.

- [44].Marseille GJ, Fuderer M, de Beer R, Mehlkopf AF, and van Ormondt D, “Reduction of MRI scan time through nonuniform sampling and edge-distribution modeling,” J. Magn. Reson. B, vol. 103, pp. 292–295, 1994.

- [45].Cavassila S, Deval S, Huegen C, van Ormondt D, and Graveron-Demilly D, “Cramér-Rao bounds: an evaluation tool for quantitation,” NMR Biomed., vol. 14, pp. 278–283, 2001.

- [46].Pineda AR, Reeder SB, Wen Z, and Pelc NJ, “Cramér-Rao bounds for three-point decomposition of water and fat,” Magn. Reson. Med, vol. 54, pp. 625–635, 2005.

- [47].Alexander DC, “A general framework for experiment design in diffusion MRI and its application in measuring direct tissue-microstructure features,” Magn. Reson. Med, vol. 60, pp. 439–448, 2008.

- [48].Haldar JP, Hernando D, and Liang Z-P, “Super-resolution reconstruction of MR image sequences with contrast modeling,” in Proc. IEEE Int. Symp. Biomed. Imag., 2009, pp. 266–269.

- [49].De Naeyer D, De Deene Y, Ceelen WP, Segers P, and Verdonck P, “Precision analysis of kinetic modelling estimates in dynamic contrast enhanced MRI,” Magn. Reson. Mater. Phy, vol. 24, pp. 51–66, 2011.

- [50].Zhao B, Haldar JP, Liao C, Ma D, Jiang Y, Griswold MA, Setsompop K, and Wald LL, “Optimal experiment design for magnetic resonance fingerprinting: Cramer-Rao bound meets spin dynamics,” IEEE Trans. Med. Imag, vol. 81, pp. 1620–1633, 2019.

- [51].Lustig M, Donoho D, and Pauly JM, “Sparse MRI: The application of compressed sensing for rapid MR imaging,” Magn. Reson. Med, vol. 58, pp. 1182–1195, 2007.

- [52].Block KT, Uecker M, and Frahm J, “Undersampled radial MRI with multiple coils. iterative image reconstruction using a total variation constraint.” Magn. Reson. Med, vol. 57, pp. 1086–1098, 2007.

- [53].Trzasko J and Manduca A, “Highly undersampled magnetic resonance image reconstruction via homotopic ℓ0-minimization,” IEEE Trans. Med. Imag, vol. 28, pp. 106–121, 2009.

- [54].Jung H, Sung K, Nayak KS, Kim EY, and Ye JC, “k-t FOCUSS: A general compressed sensing framework for high resolution dynamic MRI,” Magn. Reson. Med, vol. 61, pp. 103–116, 2009.

- [55].Haldar JP, Hernando D, and Liang Z-P, “Compressed-sensing MRI with random encoding,” IEEE Trans. Med. Imag, vol. 30, pp. 893–903, 2011.

- [56].Liang Z-P, “Spatiotemporal imaging with partially separable functions,” in Proc. IEEE Int. Symp. Biomed. Imag., 2007, pp. 988–991.

- [57].Haldar JP and Liang Z-P, “Spatiotemporal imaging with partially separable functions: A matrix recovery approach,” in Proc. IEEE Int. Symp. Biomed. Imag., 2010, pp. 716–719.

- [58].Lingala SG, Hu Y, DiBella E, and Jacob M, “Accelerated dynamic MRI exploiting sparsity and low-rank structure: k-t SLR,” IEEE Trans. Med. Imag, vol. 30, pp. 1042–1054, 2011.

- [59].Zhao B, Haldar JP, Christodoulou AG, and Liang Z-P, “Image reconstruction from highly undersampled (k, t)-space data with joint partial separability and sparsity constraints,” IEEE Trans. Med. Imag, vol. 31, pp. 1809–1820, 2012.

- [60].Trzasko J and Manduca A, “Local versus global low-rank promotion in dynamic MRI series reconstruction,” in Proc. Int. Soc. Magn. Reson. Med, 2011, p. 4371.

- [61].Otazo R, Candès E, and Sodickson DK, “Low-rank plus sparse matrix decomposition for accelerated dynamic MRI with separation of background and dynamic components,” Magn. Reson. Med, vol. 73, pp. 1125–1136, 2015.

- [62].Haldar JP and Setsompop K, “Linear predictability in magnetic resonance imaging reconstruction: Leveraging shift-invariant Fourier structure for faster and better imaging,” IEEE Signal Process. Mag, vol. 37, pp. 69–82, 2020.

- [63].Shin PJ, Larson PEZ, Ohliger MA, Elad M, Pauly JM, Vigneron DB, and Lustig M, “Calibrationless parallel imaging reconstruction based on structured low-rank matrix completion,” Magn. Reson. Med, vol. 72, pp. 959–970, 2014.

- [64].Haldar JP, “Low-rank modeling of local k-space neighborhoods (LORAKS) for constrained MRI,” IEEE Trans. Med. Imag, vol. 33, pp. 668–681, 2014.

- [65].Jin KH, Lee D, and Ye JC, “A general framework for compressed sensing and parallel MRI using annihilating filter based low-rank Hankel matrix,” IEEE Trans. Comput. Imaging, vol. 2, pp. 480–495, 2016.

- [66].Ongie G and Jacob M, “Off-the-grid recovery of piecewise constant images from few Fourier samples,” SIAM J. Imaging Sci, vol. 9, pp. 1004–1041, 2016.

- [67].Knoll F, Hammernik K, Zhang C, Moeller S, Pock T, Sodickson DK, and Akcakaya M, “Deep-learning methods for parallel magnetic resonance imaging reconstruction: A survey of the current approaches, trends, and issues,” IEEE Signal Process. Mag, vol. 37, pp. 128–140, 2020.

- [68].Liang D, Cheng J, Ke Z, and Ying L, “Deep magnetic resonance imaging reconstruction: Inverse problems meet neural networks,” IEEE Signal Process. Mag, vol. 37, pp. 141–151, 2020.

- [69].Sandino CM, Cheng JY, Chen F, Mardani M, Pauly JM, and Vasanawala SS, “Compressed sensing: From research to clinical practice with deep neural networks,” IEEE Signal Process. Mag, vol. 37, pp. 117–127, 2020.

- [70].Liang Z-P, Boada F, Constable T, Haacke EM, Lauterbur PC, and Smith MR, “Constrained reconstruction methods in MR imaging,” Rev. Magn. Reson. Med, vol. 4, pp. 67–185, 1992.

- [71].Haldar JP and Liang Z-P, ““Early” constrained reconstruction methods,” in Magnetic Resonance Image Reconstruction: Theory, Methods, and Applications, Doneva M, Akcakaya M, and Prieto C, Eds. London: Academic Press, 2022, ch. 5, pp. 105–125.

- [72].Antun V, Renna F, Poon C, Adcock B, and Hansen AC, “On instabilities of deep learning in image reconstruction and the potential costs of AI,” Proc. Natl. Acad. Sci. USA, vol. 117, pp. 30088–30095, 2020.

- [73].Chan C-C and Haldar JP, “Local perturbation responses and checker-board tests: Characterization tools for nonlinear MRI methods,” Magn. Reson. Med, vol. 86, pp. 1873–1887, 2021.