Abstract

Multiscale modeling is an effective approach for investigating multiphysics systems with widely disparate feature sizes, in which models with different resolutions or heterogeneous descriptions are coupled to predict the system's response. The lower-fidelity (coarse) solver simulates regions with homogeneous features, whereas the expensive high-fidelity (fine) model resolves microscopic features with a refined discretization, often making the overall cost prohibitively high, especially for time-dependent problems. In this work, we explore multiscale modeling with machine learning and employ DeepONet, a neural operator, as an efficient surrogate of the expensive solver. DeepONet is trained offline on data acquired from the fine solver to learn the underlying, and possibly unknown, fine-scale dynamics. In the coupling stage, it is then coupled with standard PDE solvers to predict the multiscale system under new boundary/initial conditions. Because the DeepONet inference cost is negligible, the proposed framework significantly reduces the computational cost of multiscale simulations and readily accommodates a variety of interface conditions and coupling schemes. We present various benchmarks, including static and time-dependent problems, to assess accuracy and efficiency. We also demonstrate the feasibility of coupling a continuum model (the finite element method, FEM) with a neural operator serving as a surrogate of a particle system (smoothed particle hydrodynamics, SPH) to predict the mechanical response of anisotropic and hyperelastic materials. What makes this approach unique is that a well-trained, over-parametrized DeepONet generalizes well and makes predictions at negligible cost.

Keywords: Machine learning, Neural operator, DeepONet, Concurrent multiscale coupling, Finite element model, Domain decomposition

1. Introduction

Predicting and monitoring complex systems in which small-scale dynamics and interactions affect global behavior are ubiquitous tasks in science and engineering [1–5]. In disciplines ranging from material fracture [6] to design problems [7], microscale models have proven capable of representing detailed material response. However, despite their improved accuracy, the usability of microscopic models is often limited by computational challenges. Specifically, microscopic models require fine spatio-temporal resolution to resolve small-scale details and capture the underlying stochastic dynamics, which leads to prohibitive computational expense. Therefore, simulating material dynamics at the meso- or macroscale with microscopic models remains largely out of reach. In addition, although bottom-up approaches such as fine-grained atomistic models have provided important insights into microscale processes, they generally do not scale up to finite-size samples [8–10]. These challenges call for efficient mathematical models and algorithms that capture small-scale behavior while remaining computationally expedient.

Over the last two decades, a variety of multiscale approaches have been proposed to address these challenges. Microscopic effects are often localized, whereas a continuum model can accurately describe the system in the rest of the domain; solving this continuum model with well-established numerical methods reduces the computational cost. Following this domain-decomposition strategy, several works have combined continuum and microscopic models to simulate multiscale systems [11–19]. Another approach replaces the fine-scale model with a fast surrogate, for example through homogenization [20–25]. For instance, [26] considered an approximation of a partial differential equation (PDE) with small-scale oscillations in its coefficients, in essence replacing these coefficients with effective properties so that the resulting solutions adequately approximate those of the original problem. However, quantifying effective properties is challenging, both because the parameter values may be difficult to acquire and because the homogenized PDE model may be less accurate than the original microscopic model.

Recently, deep learning algorithms have been proposed for simulating physical problems [27–31]. In particular, neural networks have been combined with standard numerical models to address the aforementioned challenges in multiscale modeling [2,32–37]. In [33], Arbabi et al. proposed a data-driven method that trains deep neural networks to learn coarse-scale partial differential operators from fine-scale data. In [38], Bhatia et al. presented a multiscale modeling paradigm that couples models at different scales using dynamic importance sampling: a machine learning model dynamically samples the phase space, enabling automatic feedback from the micro- to the macroscale. In [39], Masi and collaborators developed a thermodynamics-based artificial neural network (TANN) and applied it to multiscale modeling of materials with microstructure. Their results showed that TANN can implicitly learn the constitutive model from data and predict the corresponding stress fields from state variables. The authors also demonstrated that TANN can solve boundary value problems in a multiscale system with complex microstructure using a double-scale homogenization scheme. Other applications of machine learning in multiscale modeling include parameter inference [40–43], uncertainty quantification [44,45], and data-driven modeling [24,36,44,46–48].

In the last few years, a new family of machine learning models, deep neural operators, has been proposed to learn the solution operator of a PDE system implicitly [49–52]. Unlike another class of scientific machine learning methods, physics-informed neural networks (PINNs) [53], neural operators can solve a PDE system for a new instance of interface conditions or model parameters without retraining [54–60]. This computational advantage enables neural operators to serve as efficient surrogate models in multiscale coupling, especially for time-dependent multiscale problems, which remain prohibitively expensive today. Among state-of-the-art neural operators, the Deep Operator Network (DeepONet) stands out for its generalization capability and flexibility: it can learn the solution operator in irregular domains, even in the presence of noise [61]. Another option is the Fourier neural operator (FNO), which is fast but is limited to simple geometries and structured data [49,50]. Herein, we choose DeepONet as the surrogate model. For a more thorough comparison between DeepONet and FNO, we refer the reader to [61,62].

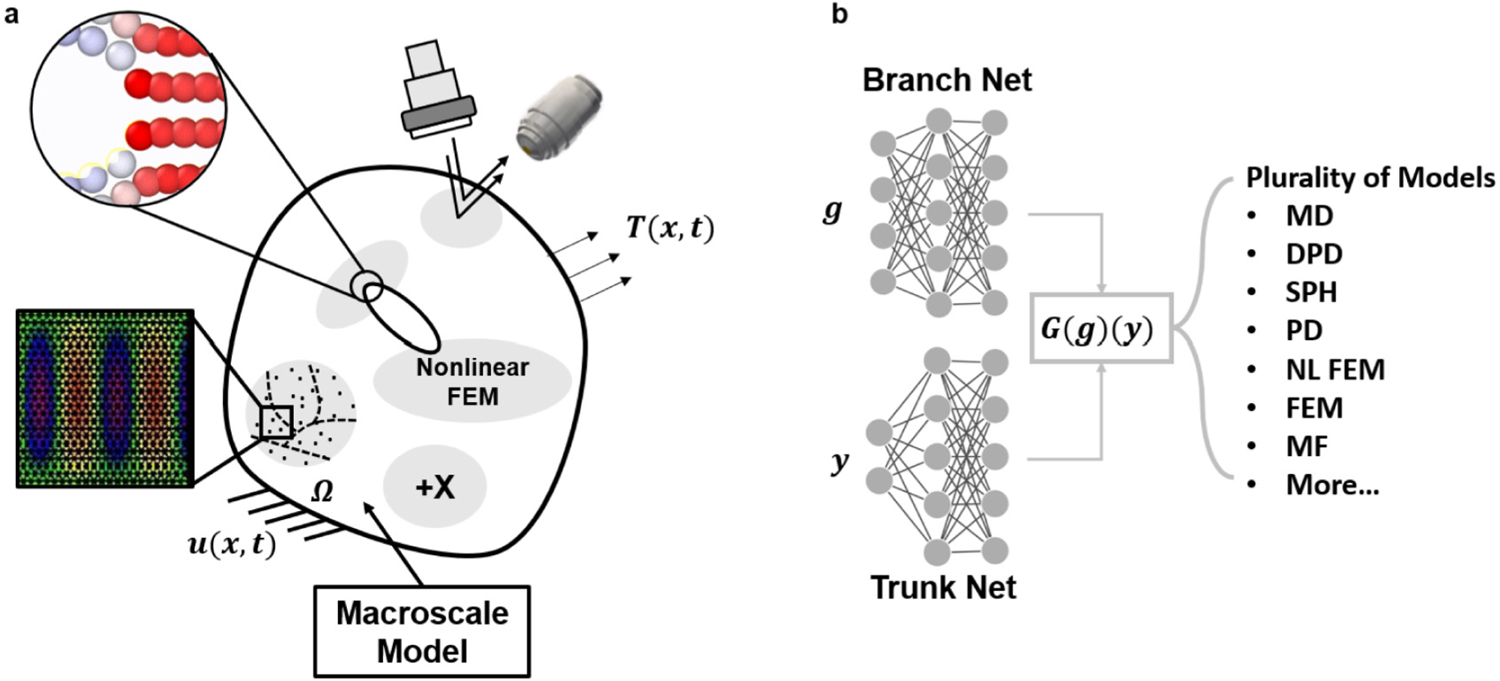

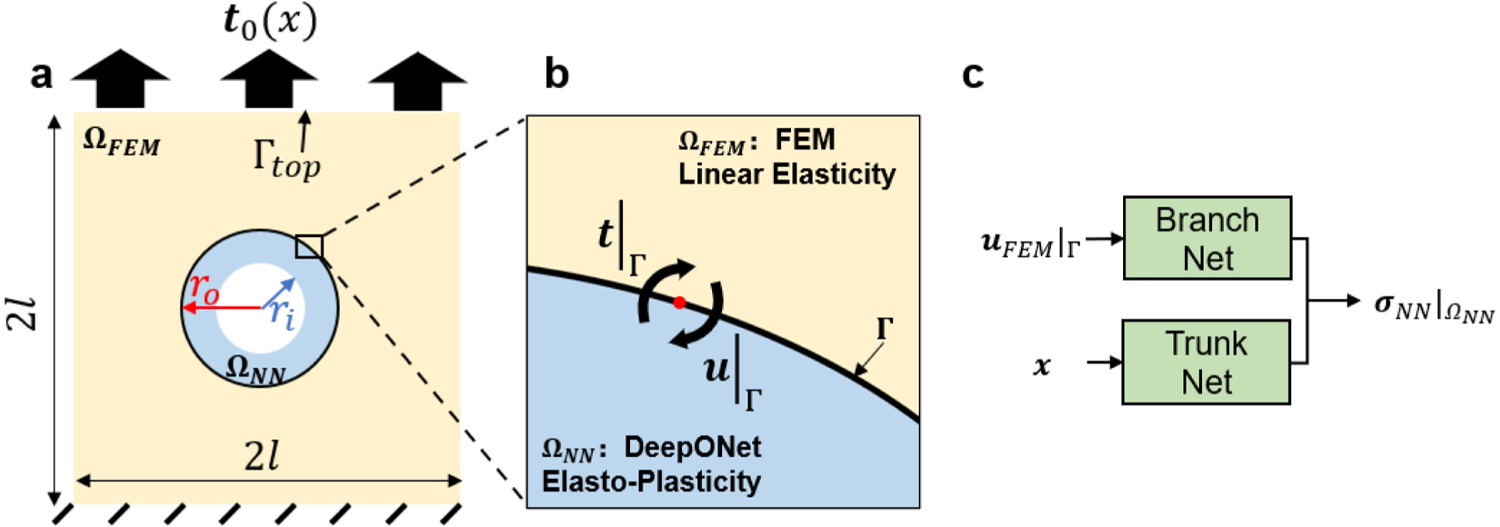

The present work addresses the aforementioned challenges by developing a new multiscale coupling framework, illustrated in Fig. 1. Specifically, the framework couples a surrogate of the microscopic system (DeepONet) with a standard finite element method (FEM) that represents the macroscopic model. The framework is flexible in the choice of domain decomposition algorithm, the type of interface boundary condition, and the nature of the multiphysics problem (static or time-dependent). In addition, the surrogate model can learn from a variety of fine-resolution microscopic systems or from experimental data (Fig. 1(b)). Our approach is the first attempt to couple neural operators with standard numerical solvers using a concurrent coupling method.

Fig. 1.

Schematic of multiscale modeling with DeepONet. (a) In a multiscale mechanics system, traction acts on the boundary of the domain, which is decomposed into sub-domains described by a variety of microscopic models. A macroscopic model describes the response in the bulk region, whereas nonlinear, microscopic, or data-driven models capture the detailed response in small regions. (b) DeepONet, composed of a branch net and a trunk net, can learn the response of the microscopic systems (from simulated or multi-modal data) and serve as a surrogate in the multiscale system. MD: molecular dynamics, DPD: dissipative particle dynamics, SPH: smoothed particle hydrodynamics, PD: peridynamics, NL FEM: nonlinear finite element, FEM: finite element, MF: multifidelity.

The paper is organized as follows. In Section 2, we briefly introduce DeepONet and the adopted coupling algorithms. In Section 3, we report the coupling performance for a series of benchmarks, including a 2D Poisson equation, a 1D heat equation, and uniaxial tension problems with elastoplastic and hyperelastic materials. We conclude with a brief discussion of the implications of the presented model in Section 4. In the appendices, we give an overview of the smoothed particle hydrodynamics (SPH) method, present additional results for FEM and SPH simulations, and provide further details on network training and data generation.

2. Methodology

In this section, we introduce the general architecture of DeepONet (Section 2.1) and the domain decomposition methods for the coupling framework (Section 2.2).

2.1. Deep Operator Network (DeepONet)

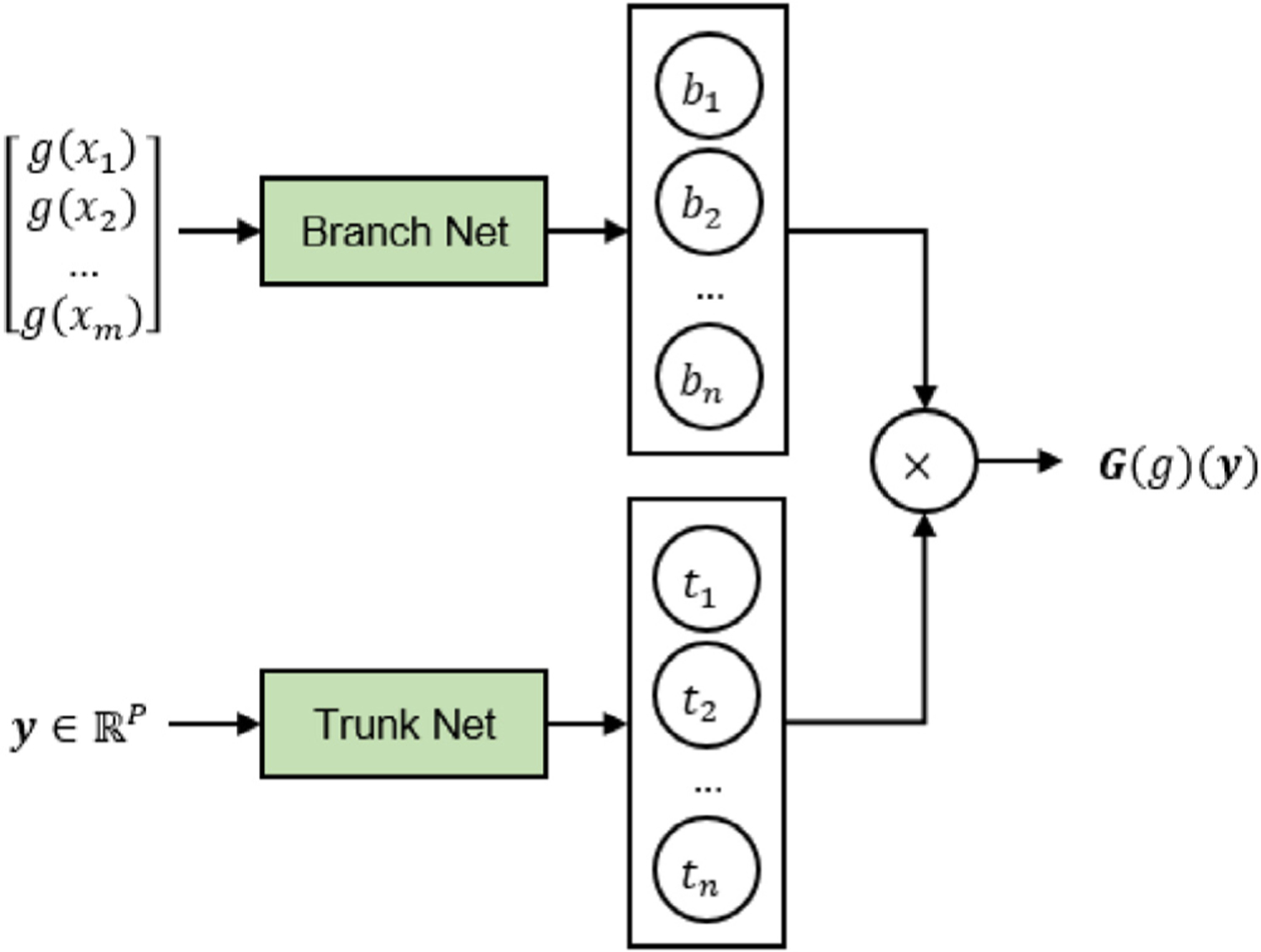

We briefly summarize the general architecture of DeepONet employed in this work. Let $D \subset \mathbb{R}^d$ be a bounded open set, which is the domain of our input and output functions; DeepONet can learn a general continuous operator between two Banach spaces of functions taking values in $\mathbb{R}^{d_u}$ and $\mathbb{R}^{d_s}$, respectively. We denote the input and output function spaces as $\mathcal{U}$ and $\mathcal{S}$. The network aims to approximate a mapping $\mathcal{G}: \mathcal{U} \to \mathcal{S}$ between an input function $u \in \mathcal{U}$ and its corresponding output function $s = \mathcal{G}(u) \in \mathcal{S}$. Although DeepONet can learn mappings between vector-valued functions, for simplicity of exposition we focus on scalar-valued functions in the following. For any $y \in D$, $\mathcal{G}(u)(y)$ is the evaluation of the function $\mathcal{G}(u)$ at $y$. As shown in Fig. 2, the branch network takes a function $u$ in its discrete representation at $m$ locations $\{x_1, \dots, x_m\}$ as input and yields an array of operator features, $[b_1, \dots, b_p]$, as its output. The trunk network takes $y$ as input and yields another array of operator features, $[t_1, \dots, t_p]$. Finally, $\mathcal{G}(u)(y)$ can be approximated by [51,63]:

$$\mathcal{G}(u)(y) \approx \sum_{k=1}^{p} b_k\big(u(x_1), u(x_2), \dots, u(x_m)\big)\, t_k(y). \tag{1}$$

In this work, we adopt fully-connected neural networks as the architecture of both sub-networks. We refer the readers to [51,64] for theoretical analysis and error estimations of DeepONet.
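To make Eq. (1) concrete, the following minimal NumPy sketch evaluates an untrained DeepONet with fully-connected branch and trunk nets and combines their outputs by a dot product. The layer widths, tanh activation, random initialization, and helper names (`mlp_init`, `mlp_apply`, `deeponet`) are illustrative assumptions rather than the configuration used in this work (see Appendix C for that), and training is omitted.

```python
import numpy as np

def mlp_init(sizes, rng):
    """Random (untrained) weights and zero biases for a fully-connected net."""
    return [(rng.standard_normal((m, n)) / np.sqrt(m), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def mlp_apply(params, x):
    """tanh hidden layers followed by a linear output layer."""
    for W, b in params[:-1]:
        x = np.tanh(x @ W + b)
    W, b = params[-1]
    return x @ W + b

def deeponet(branch, trunk, u_sensors, y):
    """Eq. (1): G(u)(y) ~ sum_k b_k(u(x_1), ..., u(x_m)) t_k(y).

    u_sensors: (N, m) input functions sampled at m fixed sensor locations
    y:         (P, d) evaluation points
    returns:   (N, P) prediction for every (function, point) pair
    """
    b = mlp_apply(branch, u_sensors)   # (N, p) branch features
    t = mlp_apply(trunk, y)            # (P, p) trunk features
    return b @ t.T                     # dot product over the p features

rng = np.random.default_rng(0)
m, p, d = 50, 32, 2                    # sensors, latent features, trunk input dimension
branch = mlp_init([m, 64, 64, p], rng)
trunk = mlp_init([d, 64, 64, p], rng)
u = rng.standard_normal((4, m))        # 4 input functions
y = rng.uniform(size=(100, d))         # 100 query points in the unit square
G = deeponet(branch, trunk, u, y)
print(G.shape)                         # (4, 100)
```

Evaluating the trunk net once per query point and reusing it across all input functions is what makes inference cheap once the network is trained.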

Fig. 2.

Schematic architecture of DeepONet. DeepONet learns the mapping operator $\mathcal{G}$ from an input function $u$ to its corresponding output function $\mathcal{G}(u)$. The inputs of the branch and trunk nets are $[u(x_1), \dots, u(x_m)]$ and $y$, which represent a discretized function from $D$ to $\mathbb{R}$ and information such as coordinates and time, respectively. Note that the output dimension of the trunk net, $p$, is the same as that of the branch net. The final output is computed as the dot product of $[b_1, \dots, b_p]$ and $[t_1, \dots, t_p]$.

Regarding the loss function, we adopt the mean squared error (MSE), which measures the squared $L^2$ norm of the difference between model predictions and training data. Given $N$ sample input functions $u^{(i)}$, $i = 1, \dots, N$, and the ground truth of their corresponding output functions on a set of points $\{y_j\}_{j=1}^{P}$, the loss is expressed as:

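$$\mathcal{L}(\theta) = \frac{1}{NP} \sum_{i=1}^{N} \sum_{j=1}^{P} \left( \mathcal{G}_\theta\big(u^{(i)}\big)(y_j) - s_j^{(i)} \right)^2 + \mathcal{L}_r, \tag{2}$$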

where $\mathcal{G}_\theta$ is the operator learned by DeepONet, $s_j^{(i)}$ denotes the data measurement for the $i$th output function at the $j$th evaluation point, and $\mathcal{L}_r$ is an additional loss term for regularization (see Section 3.1 and Eq. (11)).
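A minimal sketch of the loss in Eq. (2) follows, assuming the regularization term is itself a weighted mean squared error on some derived quantity (as with the normal-derivative term used in Section 3.1). The function name `deeponet_loss` and its arguments are hypothetical.

```python
import numpy as np

def deeponet_loss(pred, target, reg_pred=None, reg_target=None, weight=1.0):
    """Mean squared error over all (function, point) pairs, plus an optional
    weighted MSE regularizer on a derived quantity (assumed form of L_r)."""
    pred, target = np.asarray(pred, float), np.asarray(target, float)
    data_term = np.mean((pred - target) ** 2)       # (1/NP) * sum_i sum_j (...)^2
    if reg_pred is None:
        return data_term
    reg_pred, reg_target = np.asarray(reg_pred, float), np.asarray(reg_target, float)
    return data_term + weight * np.mean((reg_pred - reg_target) ** 2)

# N = 2 functions, P = 2 points each; only one entry is off by 1
print(deeponet_loss([[1.0, 2.0], [3.0, 4.0]], [[1.0, 2.0], [3.0, 5.0]]))  # 0.25
```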

2.2. Coupling methods

Here we introduce the procedure for coupling DeepONet with a numerical model. Consider a system defined on a computational domain; we decompose the domain into two subdomains according to the features in each. These two subdomains are described by a macroscopic model (Model I) and a microscopic model (Model II), respectively. In this paper, we choose FEM as Model I. Nonetheless, the proposed procedure can be generalized to couple other mesh-based models [65–68] or particle (meshfree) models [69–72], as shown in Fig. 1(b). Model II is a DeepONet, which serves as a surrogate for the microscopic, fine-scale model.

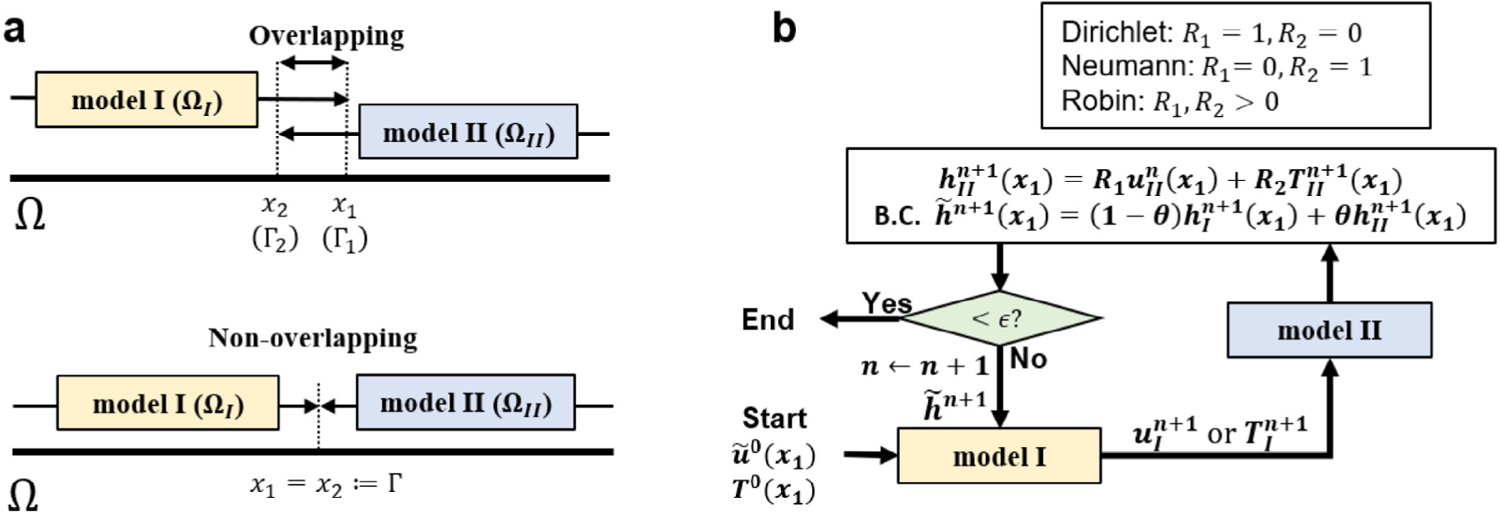

The domain decomposition can be either overlapping or non-overlapping. Fig. 3(a) shows a one-dimensional illustration of the domain decomposition method. In the overlapping setting, one interface point is the internal boundary of Model I and another is the boundary of Model II; the region between them is the overlap. The two models communicate by exchanging interface conditions at these two boundaries. In the non-overlapping setting, the two boundaries coincide, and the two models exchange interface information at this single interface. Fig. 3(b) and Algorithm 1 summarize the iterative procedure of the coupling framework with a Robin-type boundary condition [73]. At the $k$th iteration, we use quantities from the previous iteration to compute the current ones. To initiate the coupling procedure ($k = 0$), we start with an initial guess of the interface solution value and derivative-related information on the internal boundary of Model I. We then take a linear combination of these quantities to form a Robin-type boundary condition, which is applied to Model I. The method proceeds by solving for the solution of Model I and interpolating the computed solution or its derivatives on the boundary of Model II. The interpolated information is then transmitted to Model II as its boundary condition. Next, we solve for the solution of Model II with the transmitted interface condition and interpolate it on the internal boundary of Model I. If the stopping criterion

| (3) |

is satisfied, the coupling result is considered to be converged. The solution for the two domains is:

| (4) |

| (5) |

If the solution has not converged, we update the interface information through a relaxation step that blends the Robin boundary data from Models I and II. Here, the relaxation parameter can be either fixed or updated according to Aitken's rule [73,74]. We then start a new iteration by transmitting the updated boundary condition to Model I and repeating the procedure above. This procedure is repeated until the stopping criterion is satisfied. These coupling algorithms originate from the classical Schwarz coupling methods [75–77] and iterative patching algorithms [77].
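The iteration above can be illustrated on a toy problem. The sketch below is a stand-in under stated assumptions, not the authors' implementation: it couples two finite-difference solvers for $-u'' = 1$ on $[0, 1]$ with homogeneous Dirichlet ends, using overlapping subdomains $[0, 0.6]$ (playing Model I) and $[0.4, 1]$ (playing Model II, the role a trained DeepONet would take) with Dirichlet–Dirichlet exchange. The interface value is updated either with a fixed relaxation parameter or with the scalar form of Aitken's dynamic relaxation. The stopping rule (absolute interface residual below `tol`), the grids, and all function names are hypothetical choices for illustration.

```python
import numpy as np

def solve_poisson_1d(x, f, ua, ub):
    """Second-order finite differences for -u'' = f on a uniform grid x with u(x[0]) = ua, u(x[-1]) = ub."""
    n = len(x) - 2                                  # interior unknowns
    h = x[1] - x[0]
    A = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2
    rhs = np.asarray(f(x[1:-1]), dtype=float).copy()
    rhs[0] += ua / h**2                             # Dirichlet data moved to the right-hand side
    rhs[-1] += ub / h**2
    u = np.empty(len(x))
    u[0], u[-1] = ua, ub
    u[1:-1] = np.linalg.solve(A, rhs)
    return u

def couple(theta=0.5, aitken=False, a=0.4, b=0.6, tol=1e-10, max_iter=500):
    """Overlapping Dirichlet-Dirichlet coupling of two subdomain solvers with relaxation."""
    f = lambda x: np.ones_like(x)
    x1 = np.linspace(0.0, b, 61)                    # "Model I" grid on [0, b]
    x2 = np.linspace(a, 1.0, 61)                    # "Model II" grid on [a, 1]
    g = 0.0                                         # initial guess of u at x = b
    omega, r_old = theta, None
    for k in range(1, max_iter + 1):
        u1 = solve_poisson_1d(x1, f, 0.0, g)        # Model I with u(b) = g
        ga = np.interp(a, x1, u1)                   # interpolate Model I at x = a
        u2 = solve_poisson_1d(x2, f, ga, 0.0)       # Model II with u(a) = ga
        r = np.interp(b, x2, u2) - g                # interface residual at x = b
        if abs(r) < tol:                            # hypothetical stopping rule
            return x1, u1, x2, u2, k
        if aitken and r_old is not None:
            omega = -omega * r_old / (r - r_old)    # scalar Aitken relaxation
        g = g + omega * r                           # relaxed interface update
        r_old = r
    raise RuntimeError("coupling did not converge")

x1, u1, x2, u2, k_fixed = couple(theta=0.5)
*_, k_aitken = couple(aitken=True)
print(k_fixed, k_aitken)
```

For this linear toy problem, the Aitken update acts like a secant step on the interface value, so it reaches the tolerance within a few iterations, whereas the fixed-parameter iteration reduces the interface residual by a constant factor per iteration.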

Fig. 3.

A flexible framework for domain decomposition. The proposed coupling framework can adopt either (a) overlapping or non-overlapping domain decomposition, coupled with Dirichlet, Neumann, or Robin boundary conditions at the interface. As an illustration, (b) presents a Robin-type boundary condition imposed on Model I, with the interfacial solution updated by a relaxation scheme. Dirichlet and Neumann boundary conditions can be imposed by adjusting the two Robin coefficients. The relaxation parameter is either fixed or updated dynamically. Model II supplies the Neumann-related information at the interface, e.g., traction or derivatives.

3. Results

In this section, we present simulation results for four benchmark problems, namely the 2D Poisson equation, the 1D heat equation, 2D elastoplasticity, and 2D hyperelasticity, to demonstrate the applicability of our method. Table 1 lists the detailed settings of these four examples, including the choices of Model I and Model II, the interface conditions, and whether the domains overlap. We studied four types of interface conditions, namely Dirichlet–Dirichlet (D-D), Robin–Dirichlet (R-D), Neumann–Dirichlet (N-D), and Robin–Neumann (R-N), where the first letter denotes the boundary condition for Model I and the second letter that for Model II. For each problem, our framework consists of two stages. In the first (offline) stage, we train a DeepONet as a surrogate of Model II. Then, in the online stage, we couple Model I with the DeepONet using the proposed coupling method. The training data for elastoplasticity (Section 3.3) and hyperelasticity (Section 3.4) are generated by Abaqus [78] and an SPH solver [79], respectively. All other uses of FEM (including the FEM solver for Model I and the generation of training data for Model II) rely on the FEniCS package [80] with second-order Lagrange polynomials. For all experiments, the networks are trained on an NVIDIA GeForce RTX 2060 GPU. The FEM simulations are performed on two 6-core Intel Core i7-8700 CPUs.

Table 1.

Setup for the four examples.

| Problem | Model I | Model II/Training data | Interface condition | Overlap |
|---|---|---|---|---|
| 2D Poisson | FEM | DeepONet/FEM | D-D, R-D | Yes |
| 1D Heat | FEM | DeepONet/FEM | N-D | No |
| 2D Elastoplasticity | Linear elastic FEM | DeepONet/Elastoplastic FEM | N-D | No |
| 2D Hyperelasticity | Hyperelastic FEM | DeepONet/Hyperelastic SPH | N-D, R-D/R-N | No |

D-D: Dirichlet–Dirichlet, R-D: Robin–Dirichlet, N-D: Neumann–Dirichlet, R-N: Robin–Neumann.

| Algorithm 1 Coupling Method. , . If non-overlapping, , else . |
|---|
| Initialization: Set Model I with and Model II with |
| Main Loop: |
| for do |
| Model I (FEM): |
| • Receive the interface information from Model II . |
| • Solve for from Model I. |
| • Calculate or and pass it to Model II . |
| Model II (NN): |
| • Receive the interface information or from Model I . |
| • Solve for from Model II. |
| • Calculate . |
| • Calculate and pass it to Model I . |
| If converged, stop; |
| end for |

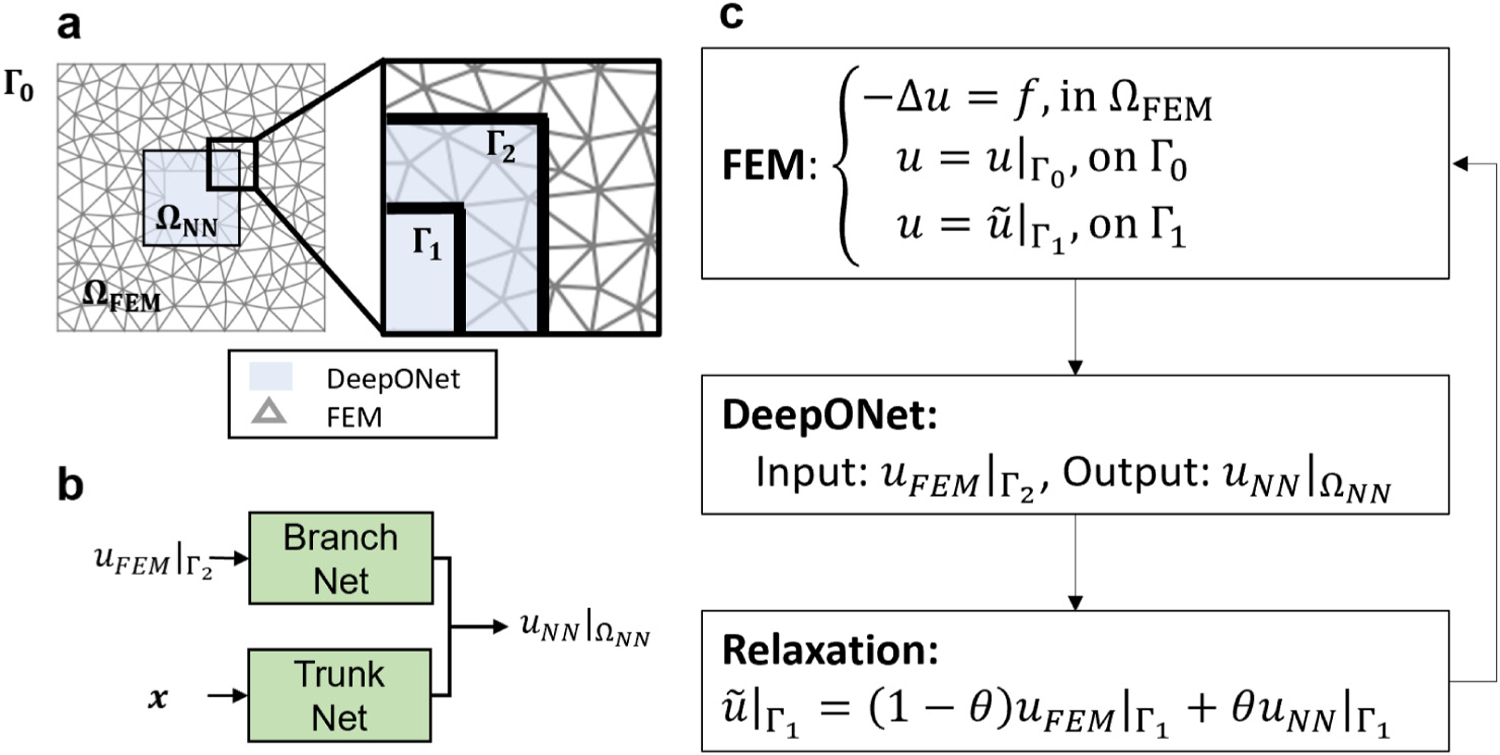

3.1. Poisson equation

We first study the feasibility of the proposed framework for solving a static problem. Let us consider a Poisson equation described by the PDE system in :

| (6) |

| (7) |

where we set for all . As shown in Fig. 4(a), we decompose one domain into two overlapping subdomains, namely (with an internal boundary ) and (with a boundary ). In , the system is governed by Eqs. (6) and (7) with a boundary condition

| (8) |

In this example, we first verify the efficacy of the coupling framework with a D-D interface condition. For this setup, in , the system is governed by Eqs. (6) and (7) with input

| (9) |

After presenting the results for the D-D case, we report parametric studies to show how other factors influence the convergence rate.

Fig. 4.

Setup of the Poisson equation. (a) A 1 × 1 unit square is decomposed into two overlapping regions, (light blue) and (meshed). is bounded by and , whereas is a 0.3 × 0.3 square in the center with boundary . (b) DeepONet takes as input and yields the solution in , denoted as . (c) Formulation of an overlapping D-D method. Given the boundary condition on , the coupling framework iteratively updates the corresponding boundary condition on . The relaxation parameter is either fixed or updated based on Aitken's rule [73].

In the offline stage, we train a DeepONet as a surrogate of FEM to predict the solution in . First, we sample a set of boundary conditions from a random field with , a parameter in the correlation function in Eq. (D.1); qualitatively, a smaller value yields a less smooth function. More details of the random field generation are provided in Appendix D. We then solve the Poisson equation with the randomly sampled boundary conditions using FEM and collect the computed results in as training cases. Following this process, we generate 1000 cases as the training dataset of DeepONet. As shown in Fig. 4(b), the branch network input is an interpolation of the FEM solution on , while the trunk network takes the coordinate as input. The network output is . More details on the network training are given in Appendix C.
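The sampling step can be sketched as follows. Because Eq. (D.1) is not reproduced in this section, the code assumes a squared-exponential covariance with correlation length `length_scale`; the 1D parameterization `s` of the boundary, the jitter value, and the function name are also assumptions.

```python
import numpy as np

def sample_grf(s, length_scale, n_samples, rng, jitter=1e-8):
    """Zero-mean, unit-variance Gaussian random field samples at points s.

    Assumes the squared-exponential covariance k(s, s') = exp(-(s - s')^2 / (2 l^2)).
    Returns an array of shape (n_samples, len(s)).
    """
    d = s[:, None] - s[None, :]
    K = np.exp(-0.5 * (d / length_scale) ** 2)
    L = np.linalg.cholesky(K + jitter * np.eye(len(s)))   # jitter keeps K numerically positive definite
    z = rng.standard_normal((len(s), n_samples))
    return (L @ z).T

rng = np.random.default_rng(0)
s = np.linspace(0.0, 1.0, 50)            # hypothetical 1D parameterization of the boundary
smooth = sample_grf(s, 0.5, 1000, rng)   # larger correlation length: smoother curves
rough = sample_grf(s, 0.05, 1000, rng)   # smaller correlation length: rougher curves
```

Each sampled curve would then be mapped onto the boundary and used as Dirichlet data for one FEM training run.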

After the offline training is complete, we proceed to the coupling stage, as shown in Fig. 4(c). Given a fixed boundary condition on , we initiate the coupling framework with an initial guess of on , which is typically zero. We then solve the governing equation in with FEM and interpolate the solution on (denoted as ), which is used as the input of the branch network. With this input, the DeepONet predicts , the solution of the Poisson equation in . A relaxation scheme then updates the interface condition on as , which is used as the FEM boundary condition in the next iteration. The coupling iteration continues until the solution converges.

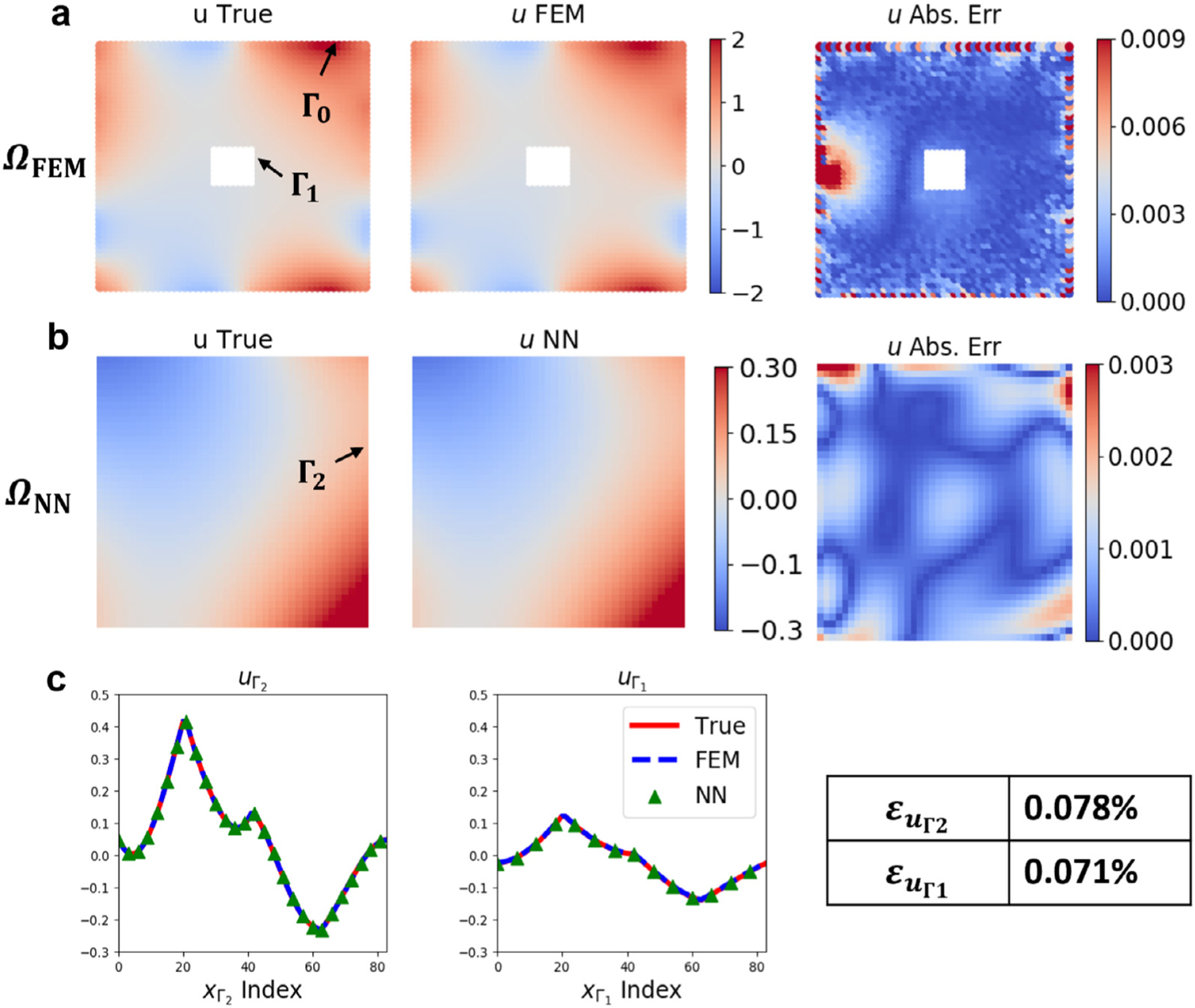

We show the performance of our coupling framework for the Poisson equation in Fig. 5. We fix the relaxation parameter as and impose a boundary condition on from the testing dataset (unseen by the DeepONet during training). Figs. 5(a–b) compare the FEM ground truth (first column; computed directly in ) with the predictions of the coupling framework (second column; first row in from FEM, second row in from DeepONet), together with their difference (third column). We observe good agreement between the coupling predictions and the ground truth. The maximum absolute error presented in the third column is less than 1%. We also note that the error is larger in , especially near its external boundary . This error is dominated by the interpolation accuracy of the FEM, not by the error of the coupling framework. Fig. 5(c) further shows a quantitative comparison between model predictions and the ground truth on and : the predictions from the coupled FEM/NN (blue dashed lines and green triangles, respectively) accurately reproduce the true solution (red lines), with a relative error of around 0.07%.

Fig. 5.

Results of coupling FEM and DeepONet for the Poisson equation. (a–b) Model predictions from the FEM and DeepONet with the corresponding absolute errors in and . (c) Model predictions at the interfaces ( and ) and the true solution (red line). The relative errors of model predictions at the interfaces are far less than 1%. Please see Fig. 4 for and .

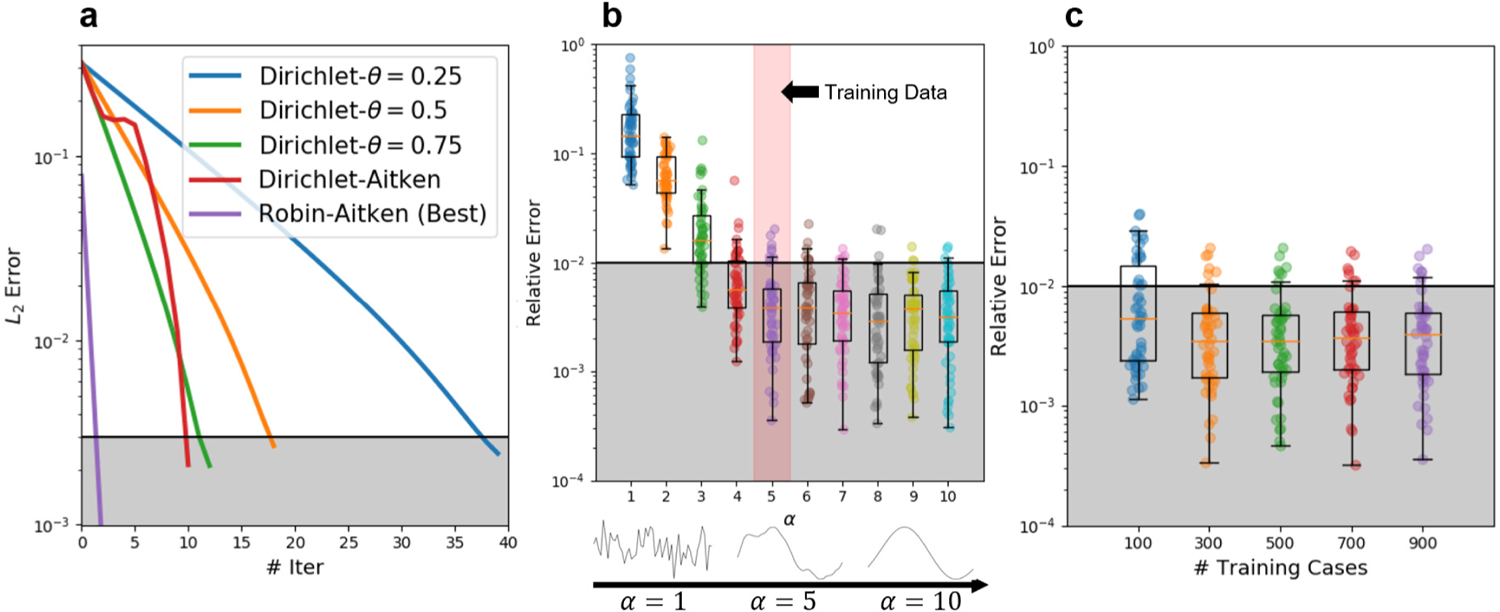

In addition to the results shown in Fig. 5, we conducted parametric studies of several factors that influence the performance of our framework: the convergence of the framework with different coupling methods (Fig. 6(a)), and the coupling accuracy as affected by the extrapolation capability of the DeepONet (Fig. 6(b)) and by the number of training cases (Fig. 6(c)). In Fig. 6(a), the errors for different boundary conditions are plotted against the iteration number; the shaded area indicates a relative error below 1%. Specifically, given the three values of the relaxation parameter (, 0.5, and 0.75), the solution errors with Dirichlet boundary conditions satisfy the stopping criterion (error less than $2 \times 10^{-3}$, or equivalently, relative error less than 1%) at iterations 37, 18, and 12, respectively. With Aitken's relaxation strategy for (see, e.g., [73]), the coupling error (denoted "Dirichlet-Aitken") converges slightly faster than with a fixed relaxation parameter . We also adopt a Robin-type boundary condition with a dynamically updated (Robin–Aitken; purple line). In this Robin case, the system in domain is governed by Eqs. (6) and (7) with a Robin boundary condition:

| (10) |

where we set and . Since the FEM needs both the solution and its normal derivative from DeepONet to update the Robin boundary condition, we adjust the training loss of the network accordingly: the regularization term in Eq. (2) is set as:

| (11) |

to regularize the partial derivative with respect to the normal direction of . In Eq. (11), the partial derivative term is computed by automatic differentiation of the network output with respect to the trunk net input [59,81–84]. We set the weight of the regularization term to 1. With the Robin boundary condition, the method takes only two iterations to reach a relatively small error.
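The normal derivative needed for the Robin data can be illustrated on a tiny DeepONet whose trunk net has a single tanh hidden layer. In practice this derivative comes from automatic differentiation, as stated above; the sketch below writes out the same chain rule by hand so the computation is explicit. The network shapes, the Robin coefficients `alpha` and `beta`, and all function names are hypothetical.

```python
import numpy as np

def deeponet_point(b, y, W, c, V):
    """G(y) = b . t(y) with a one-hidden-layer tanh trunk t(y) = tanh(y W + c) V."""
    return np.tanh(y @ W + c) @ V @ b

def output_and_normal_derivative(b, y, n, W, c, V):
    """Return G(y) and dG/dn = n . grad_y G, using the chain rule through the trunk net."""
    h = np.tanh(y @ W + c)                  # hidden activations, shape (H,)
    G = h @ V @ b
    grad = W @ ((1.0 - h**2) * (V @ b))     # d/dy of sum_k b_k t_k(y), shape (d,)
    return G, grad @ n

def robin_value(G, dG_dn, alpha, beta):
    """Robin-type combination alpha * u + beta * du/dn (coefficients are hypothetical)."""
    return alpha * G + beta * dG_dn

rng = np.random.default_rng(1)
d, H, p = 2, 16, 8
W, c = rng.standard_normal((d, H)), rng.standard_normal(H)
V, b = rng.standard_normal((H, p)), rng.standard_normal(p)  # b: branch output for one input function
y = np.array([0.35, 0.65])                 # a point on the boundary
n = np.array([0.6, 0.8])                   # unit normal at that point
G, dG_dn = output_and_normal_derivative(b, y, n, W, c, V)
```

In an autodiff framework, the `grad` line would be replaced by the gradient of the scalar output with respect to the trunk input; the Robin data is then the linear combination formed in `robin_value`.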

Fig. 6.

Parametric studies of the Poisson equation. (a) Given a boundary condition on , the convergence history of the coupling model varies with the coupling method (Robin or Dirichlet) and parameter . (b) The model is trained with data generated from a random field with . We test the generalization ability represented by the relative errors of the coupling model for cases corresponding to to 10. (c) Box plots of relative errors for DeepONets trained with 100 to 900 cases. Each column shows the coupling results by testing with 50 different cases. The shaded area indicates that the relative error on is less than 1%. Notice that we present the error with respect to the ground truth to show the convergence and accuracy of our framework.

In Fig. 6(b), we show the generalization ability of DeepONet and its impact on the accuracy of the coupling framework by testing with boundary conditions outside the training distribution. The minimal relative errors of are plotted against the correlation length of the random field used to generate the testing data. As the correlation length increases, the sampled curves become smoother, and vice versa. Note that we train the network on samples with and test its performance on ranging from 1 to 10, with 50 testing cases each. The relative errors drop from around 10% to less than 1% as increases from 1 to 5. For , the errors are statistically stable.

Fig. 6(c) shows the accuracy of the coupling framework as a function of the number of training cases for DeepONet, which governs its generalization ability. The median relative error of decreases slightly as the number of training cases increases from 100 to 300 and then remains statistically stable with further increases. These results suggest that beyond 300 training cases, the errors are dominated by the accuracy of boundary interpolation rather than by the generalization of DeepONet. The shaded area in (b–c) denotes a relative error of 1%.

3.2. Heat equation

Next, we investigate the performance of the coupling framework on a time-dependent problem, namely the 1D heat equation, with an N-D method. Consider a PDE system in :

| (12) |

| (13) |

| (14) |

where the thermal diffusivity is set as 0.1. As shown in Fig. 7(a), we decompose the computational domain into two non-overlapping subdomains: for FEM and for DeepONet. The interface is . For the FEM subdomain , we set a Dirichlet boundary condition at the interface :

| (15) |

where is the updated and relaxed solution at the interface. For the DeepONet subdomain , we impose the interfacial flux from FEM as input.

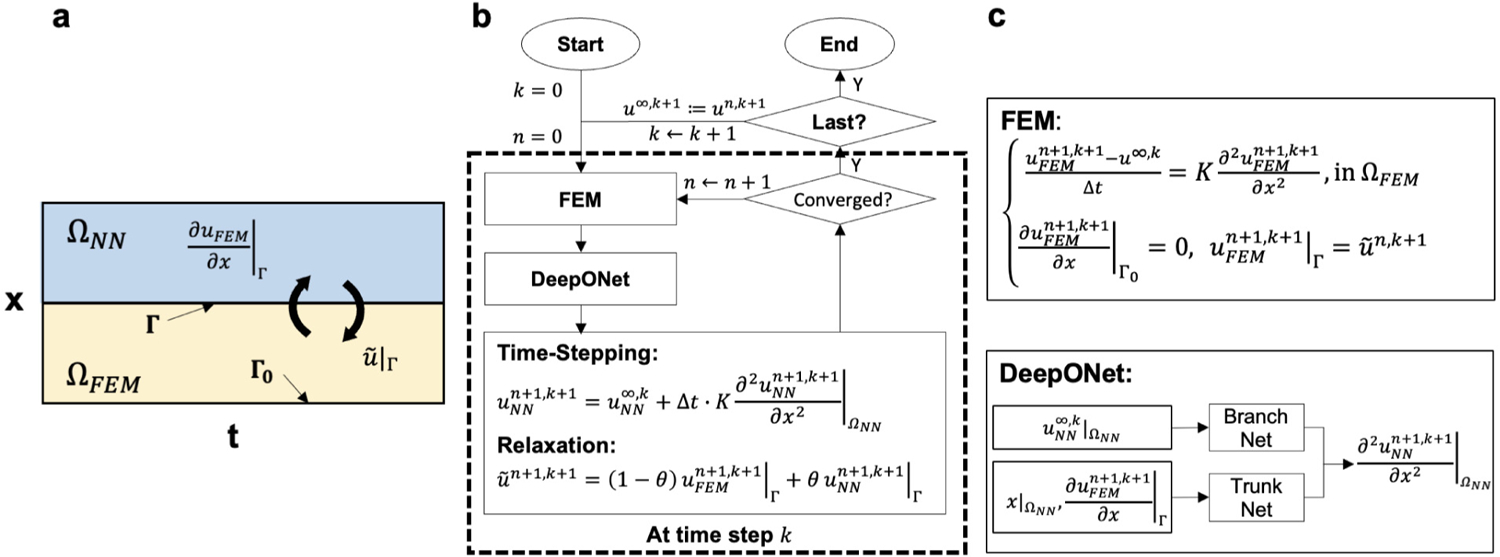

Fig. 7.

Setup of the time-dependent problem (heat equation). (a) The spatio-temporal domain is decomposed into two sub-domains with as the interface between FEM and NN domain. (b–c) Formulation of the coupling framework for the heat equation. The framework first solves for from FEM with updated information at the interface at th step. Then, the computed derivative at is transmitted into DeepONet, which predicts the spatial derivative in (c). The solution in is computed based on a time-stepping scheme, followed by a relaxation update (b). The initialization at and is described in the main text.

The coupling method is illustrated in Fig. 7(b–c). The solution process is a nested loop with indices and , where represents the time step and is the current iteration. To initiate the framework, we set both and to zero, with an initial guess of the interfacial flux for the FEM. The FEM solves for the solution in at time step given the solution at the previous time step, denoted as . We then transmit the flux at , , to DeepONet as part of the trunk net input (Fig. 7(c)). The branch network takes the system solution in at the th time step as input. The DeepONet output approximates the diffusion term . The solution in is then calculated from the semi-discretized heat equation with the backward Euler method: . Next, the interfacial flux is updated by the relaxation scheme:
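The semi-discrete update can be sketched with a stand-in for the trained network. Here `surrogate_diffusion` is a hypothetical placeholder that produces the diffusion term at the new time level by an implicit finite-difference solve, which is the kind of quantity the DeepONet is trained to approximate; given that term, the update $u^{n+1} = u^n + \Delta t\, D^{n+1}$ (our notation) reproduces backward Euler. The grid, the interpretation of the flux as $\partial u / \partial x$ at the interface node, and the homogeneous Dirichlet condition one grid spacing beyond the far end are assumptions for illustration only.

```python
import numpy as np

def laplacian_flux_dirichlet(n, h):
    """FD Laplacian on nodes x_0..x_{n-1}: ghost node at x_0 for a flux condition, u = 0 beyond x_{n-1}."""
    L = (-2.0 * np.eye(n) + np.eye(n, k=1) + np.eye(n, k=-1)) / h**2
    L[0, 1] = 2.0 / h**2                        # ghost node u_{-1} = u_1 - 2 h q eliminated
    return L

def surrogate_diffusion(u_old, q, h, alpha, dt):
    """Hypothetical stand-in for the trained DeepONet: maps (u^n, q) to alpha * u_xx at t^{n+1},
    where q is du/dx at the interface node x_0."""
    n = len(u_old)
    L = laplacian_flux_dirichlet(n, h)
    bc = np.zeros(n)
    bc[0] = -2.0 * q / h                        # flux contribution of the ghost node
    u_new = np.linalg.solve(np.eye(n) / dt - alpha * L, u_old / dt + alpha * bc)
    return alpha * (L @ u_new + bc)

def advance(u_old, q, h, alpha, dt):
    """Semi-discrete update u^{n+1} = u^n + dt * D^{n+1}, with D supplied by the surrogate."""
    return u_old + dt * surrogate_diffusion(u_old, q, h, alpha, dt)

x = np.linspace(0.5, 1.0, 51)                   # hypothetical surrogate subdomain
h, alpha, dt = x[1] - x[0], 0.1, 0.01
u0 = np.exp(-((x - 0.75) / 0.1) ** 2)
u1 = advance(u0, 0.0, h, alpha, dt)             # one step with zero interfacial flux
```

In the coupled setting, `q` would be the relaxed interfacial flux of the current inner iteration, and the call would be repeated until that flux converges before moving to the next time step.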

| (16) |

In this example, we fix the relaxation parameter . If the updated interfacial flux has not yet converged, we continue to the next iteration; otherwise, we proceed to the next time step in the outer loop and restart the inner loop with the iteration index reset to .

Herein, we summarize the training procedure of DeepONet. We generate 1000 initial conditions in from a Gaussian random field with constant mean 0 and correlation length 0.3. For each case, we randomly sample the flux at times from a uniform distribution on [−3, 3] as the boundary conditions. The training data of DeepONet are then generated by running FEM simulations on with the sampled initial/boundary conditions. Note that training a DeepONet on a spatio-temporal domain with diverse boundary/initial conditions is data-demanding, since one needs to sample the entire spatio-temporal domain. Moreover, the performance of the framework may deteriorate when the network extrapolates outside the training domain. We alleviate these challenges by training the network to learn the implicit spatial derivative operator and using an explicit method to advance in time. In practice, we acquire the spatial derivative in from FEM simulations running from to 1.0 with . The simulated spatial derivative is then collected and used for DeepONet training. For more details on the network training, we refer the reader to Appendix C.

The coupling results are shown in Fig. 8, where (a) shows the predictions of both models in the spatio-temporal domain . In Fig. 8(b), the DeepONet solution at is plotted against time. The DeepONet prediction agrees well with the ground truth even in the temporal region outside the training dataset , indicating that the proposed framework extrapolates well in time. The mean squared errors (MSE) of both models in the coupling framework are plotted against time in Fig. 8(c): the coupling framework remains stable and accurate over long-time integration. Although the prediction errors grow with , they accumulate at a relatively low rate, demonstrating that the proposed coupling framework is capable of solving time-dependent systems. Fig. 8(c) may misleadingly suggest that, from onward, DeepONet outperforms FEM, since the MSE of DeepONet is lower than that of FEM. In our concurrent coupling framework, however, the errors in FEM are induced by the oscillatory errors from DeepONet, so the errors of FEM and DeepONet are of similar magnitude. One may observe slightly better accuracy from DeepONet in general, but such observations are problem-dependent and do not indicate that the data-driven DeepONet generalizes better than FEM.

Fig. 8.

Results of coupling FEM and DeepONet for the heat equation. (a) Model predictions from DeepONet and FEM. DeepONet predicts the solution in while FEM predicts the solution for . The black line at denotes the interface of the two domains. The solution at (indicated by the white dashed line) is presented in (b). (c) The mean square errors of FEM (red) and DeepONet (blue) vs. time (t).

3.3. Elastoplasticity

In this section, we test the proposed framework for predicting the elastoplastic behavior of a solid material. As shown in Fig. 9(a), we consider a plane strain problem for a square-shaped solid of size with a circular void of radius , where vertical tension is applied on its top edge. In this example, we take , . For linear elastic materials, the kinematics, constitutive relation, and equilibrium equations are as follows:

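$$\boldsymbol{\varepsilon} = \tfrac{1}{2}\left(\nabla \mathbf{u} + \nabla \mathbf{u}^{\top}\right), \tag{17}$$

$$\boldsymbol{\sigma} = \lambda\, \mathrm{tr}(\boldsymbol{\varepsilon})\, \mathbf{I} + 2\mu\, \boldsymbol{\varepsilon}, \tag{18}$$

$$\nabla \cdot \boldsymbol{\sigma} + \mathbf{f} = \mathbf{0}, \tag{19}$$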

where $\boldsymbol{\varepsilon}$, $\mathbf{u}$, and $\boldsymbol{\sigma}$ are the strain, displacement, and (Cauchy) stress; $\lambda$ and $\mu$ are the Lamé moduli, which we take as and ; and $\mathbf{I}$ is the identity tensor. In this example, the solid is subject to no body load, so we set the body force term $\mathbf{f}$ to zero.
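Eqs. (17) and (18) at a single material point can be evaluated as below. The sketch assumes plane strain, for which $\varepsilon_{zz} = 0$ and the in-plane stress follows from the 2 × 2 blocks of the same formulas (the out-of-plane stress $\sigma_{zz} = \lambda\,\mathrm{tr}(\boldsymbol{\varepsilon})$ is not returned). The Lamé values in the usage line are placeholders, not the values used in this example, and the function names are hypothetical.

```python
import numpy as np

def small_strain(grad_u):
    """Eq. (17): symmetric part of the displacement gradient."""
    return 0.5 * (grad_u + grad_u.T)

def linear_elastic_stress(eps, lam, mu):
    """Eq. (18): sigma = lam * tr(eps) I + 2 mu eps (2 x 2 in-plane block under plane strain)."""
    return lam * np.trace(eps) * np.eye(eps.shape[0]) + 2.0 * mu * eps

grad_u = np.array([[1e-3, 2e-3],
                   [0.0,  0.0]])
sigma = linear_elastic_stress(small_strain(grad_u), lam=1.0, mu=1.0)   # placeholder moduli
```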

Fig. 9.

Setup of the elastoplasticity problem. (a) A solid plate is clamped on the bottom edge with a traction distributed on the top edge. (b) The yellow region is modeled with linear elasticity, whereas the blue region is modeled with elastoplasticity. Interfacial displacement (Dirichlet boundary condition) computed from FEM is transmitted to DeepONet as input. (c) The DeepONet estimates the stress components in , based on which the interfacial traction can be calculated accordingly. The predicted traction is updated and provided to FEM as a Neumann-type boundary condition. In this example, we set the interior circle radius , the interface circle radius , and the plate size .

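To model the plastic behavior of the material, we consider small-deformation, rate-independent elastoplasticity with isotropic hardening. The additive decomposition of the strain tensor reads $\boldsymbol{\varepsilon} = \boldsymbol{\varepsilon}^{e} + \boldsymbol{\varepsilon}^{p}$, where $\boldsymbol{\varepsilon}^{e}$ and $\boldsymbol{\varepsilon}^{p}$ are the elastic and plastic strains, respectively. The elastic strain is related to the stress by

$$\boldsymbol{\sigma} = \lambda\,\mathrm{tr}(\boldsymbol{\varepsilon}^{e})\,\mathbf{I} + 2\mu\,\boldsymbol{\varepsilon}^{e}. \tag{20}$$

The plastic strain is purely deviatoric (i.e., $\mathrm{tr}(\boldsymbol{\varepsilon}^{p}) = 0$). We define the deviatoric stress $\mathbf{s}$, the increment of the equivalent plastic strain $dp$, and the equivalent tensile stress (von Mises stress) $\sigma_{eq}$ as

$$\mathbf{s} = \boldsymbol{\sigma} - \tfrac{1}{3}\,\mathrm{tr}(\boldsymbol{\sigma})\,\mathbf{I}, \tag{21}$$

$$dp = \sqrt{\tfrac{2}{3}\, d\boldsymbol{\varepsilon}^{p} : d\boldsymbol{\varepsilon}^{p}}, \tag{22}$$

$$\sigma_{eq} = \sqrt{\tfrac{3}{2}\, \mathbf{s} : \mathbf{s}}, \tag{23}$$

respectively. The flow direction and the increment of the plastic strain are given by

$$\mathbf{n} = \frac{3}{2}\,\frac{\mathbf{s}}{\sigma_{eq}}, \tag{24}$$

$$d\boldsymbol{\varepsilon}^{p} = dp\,\mathbf{n}. \tag{25}$$

Then, the yield function can be defined as

$$f(\boldsymbol{\sigma}, p) = \sigma_{eq} - \sigma_0 - R(p), \tag{26}$$

where the linear strain-hardening function is taken as

$$R(p) = H\,p. \tag{27}$$

The initial strength $\sigma_0$ and the hardening modulus $H$ are two constant material parameters. The aforementioned mechanical quantities are subject to the Kuhn–Tucker complementarity conditions:

$$f \le 0, \qquad dp \ge 0, \qquad f\,dp = 0. \tag{28}$$

In addition, when $dp > 0$, the consistency condition $df = 0$ must also be satisfied.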
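The return-mapping step implied by Eqs. (20)-(28) can be sketched as follows. This is a minimal radial-return update, not the authors' FEM solver; it assumes the linear-hardening form $f = \sigma_{eq} - \sigma_0 - Hp$, treats plane strain with 3 × 3 tensors whose out-of-plane strain is zero, and the parameter values used to exercise it are hypothetical.

```python
import numpy as np

def j2_return_map(eps, eps_p_old, p_old, lam, mu, sig0, H):
    """One strain-driven radial-return update for small-strain J2 plasticity
    with linear isotropic hardening (3x3 tensors; plane strain => eps[2,2] = 0)."""
    I = np.eye(3)
    eps_e_trial = eps - eps_p_old                                      # elastic predictor
    sig_trial = lam * np.trace(eps_e_trial) * I + 2.0 * mu * eps_e_trial   # Eq. (20)
    s_trial = sig_trial - np.trace(sig_trial) / 3.0 * I               # Eq. (21)
    seq_trial = np.sqrt(1.5 * np.sum(s_trial * s_trial))              # Eq. (23)
    f_trial = seq_trial - sig0 - H * p_old                            # Eqs. (26)-(27)
    if f_trial <= 0.0:
        return sig_trial, eps_p_old, p_old      # elastic step: dp = 0
    dp = f_trial / (3.0 * mu + H)               # enforces f = 0 after the update
    n = 1.5 * s_trial / seq_trial               # flow direction, Eq. (24)
    eps_p = eps_p_old + dp * n                  # Eq. (25); n is deviatoric
    sig = sig_trial - 2.0 * mu * dp * n         # pressure unchanged since tr(n) = 0
    return sig, eps_p, p_old + dp
```

Because $\mathbf{n}$ is deviatoric, only the deviatoric stress is corrected, and the updated state satisfies $f = 0$ exactly, which is the discrete counterpart of the consistency condition.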

Due to the setup of our boundary value problem, plastic deformation concentrates around the void, whereas the material response is dominated by elastic behavior away from the void. Hence, we decompose the square domain into two non-overlapping subdomains (see Fig. 9(a–b)): the internal region, an annulus bounded by the void and the interface circle, which is modeled by DeepONet as a surrogate for the solid's elastoplastic response; and the external region, which is modeled by FEM with linear elasticity. The two regions share a common interface, namely the outer circle of the annulus. In the internal region, we train several DeepONets as surrogates for the individual stress components to capture the plastic behavior. First, we sample 1000 displacement boundary conditions on the top edge using the sampling method described in Appendix D.2 and use them as boundary conditions. Then, we solve for the displacement and stress fields in the entire domain with an FEM solver based on the elastoplasticity model described above. Next, we collect the simulation results in the internal region as training data for the DeepONets. As depicted in Fig. 9(c), DeepONet predicts the corresponding Cauchy stresses in the internal region, taking the interfacial displacement as input. In 2D problems, the Cauchy stress at each material point is a 2 × 2 symmetric matrix, so only three components need to be predicted, namely $\sigma_{xx}$, $\sigma_{yy}$, and $\sigma_{xy}$. For each of these components, we train an independent DeepONet as its surrogate. The traction at the interface is then calculated from the stresses predicted by the DeepONets. More details regarding the training of these networks are presented in Appendix C.
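Computing the interfacial traction from the three predicted stress components amounts to $\mathbf{t} = \boldsymbol{\sigma}\mathbf{n}$ on the interface circle. The sketch below assumes the circle is centered at the origin, so the radial unit normal is $(\cos\theta, \sin\theta)$; `sign=-1.0` flips it to the outward normal of the external (FEM) region. The function name and signature are hypothetical.

```python
import numpy as np

def interface_traction(sxx, syy, sxy, theta, sign=1.0):
    """Traction t = sigma @ n at polar angles theta on a circle centred at the origin.

    sxx, syy, sxy: stress components at the interface nodes (scalars or arrays).
    sign=+1 uses the radially outward normal; sign=-1 the inward one.
    """
    n = sign * np.stack([np.cos(theta), np.sin(theta)], axis=-1)
    tx = sxx * n[..., 0] + sxy * n[..., 1]
    ty = sxy * n[..., 0] + syy * n[..., 1]
    return tx, ty
```

For a hydrostatic state, the traction is simply the pressure times the normal; for pure shear at $\theta = 0$, it is purely tangential.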

Then, we employ the trained DeepONets in the coupling framework. As depicted in Fig. 9, DeepONets and FEM communicate at the interface by exchanging displacement and traction. The interfacial displacement is computed by FEM and transmitted to the DeepONets as the input of the branch network. The networks then predict the corresponding stresses in the internal region, from which the traction at the interface is calculated. In the next iteration, this traction is imposed as the boundary condition of the FEM model. In this example, we apply a relaxation scheme to the traction predicted by the DeepONets, with the relaxation parameter fixed at 0.5.
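The effect of the relaxation parameter can be illustrated with a deliberately simple, hypothetical stand-in for the coupled problem: two linear springs in series. A "FEM" spring (stiffness `k1`, fixed at one end) receives the interface force as a Neumann condition, and a "surrogate" spring (stiffness `k2`, prescribed far-end displacement `U`) returns the force for a given interface displacement. This is only a toy analogue of the relaxed Dirichlet-Neumann iteration, not the paper's implementation.

```python
def relaxed_nd_coupling(k1, k2, U, theta=0.5, tol=1e-10, max_iter=100):
    """Relaxed Dirichlet-Neumann iteration on a two-spring toy problem.

    Returns (interface displacement, iterations used, increment history).
    """
    t = 0.0                                   # initial guess for the interface force
    history = []
    for it in range(max_iter):
        u = t / k1                            # "FEM": Neumann solve, k1 * u = t
        t_new = k2 * (U - u)                  # "surrogate": force for Dirichlet data u
        t_next = theta * t_new + (1.0 - theta) * t   # relaxation of the traction
        history.append(abs(t_next - t))
        if abs(t_next - t) < tol:
            return t_next / k1, it + 1, history
        t = t_next
    return t / k1, max_iter, history
```

With `k1 = 1` and `k2 = 2`, the unrelaxed iteration (`theta=1.0`) amplifies the interface error by a factor of 2 per step and never meets the tolerance, whereas `theta=0.5` reduces the error factor to 0.5 and converges to the exact interface displacement `k2*U/(k1+k2) = 2/3`.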

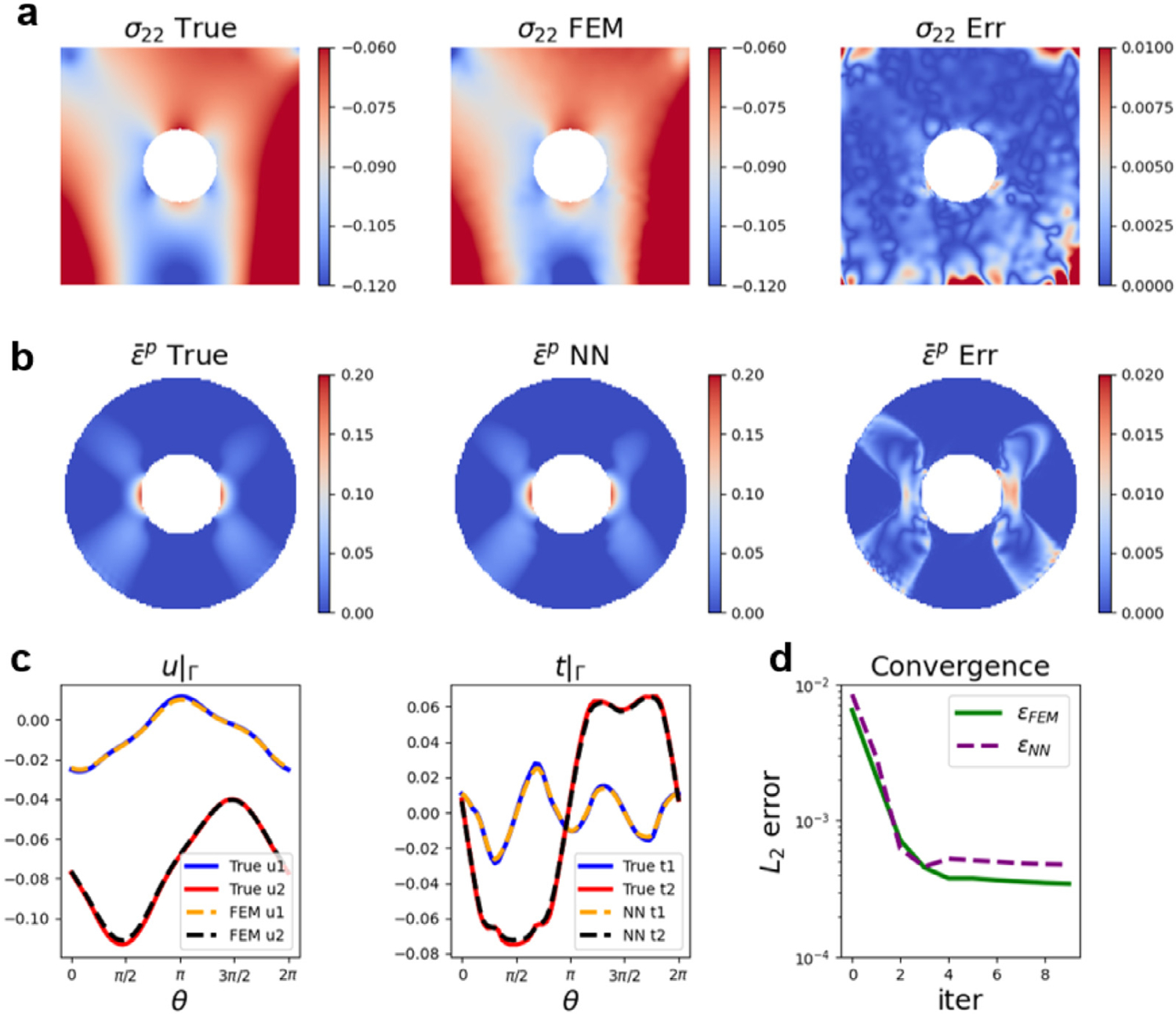

We present the coupling results obtained with an N-D interface condition in Fig. 10. In Figs. 10(a–b), we plot the ground-truth solution from FEM in the first column, the predictions from our coupling framework in the second column, and their differences in the third column. Plot (a) shows the normal stress in the vertical direction, $\sigma_{yy}$, in the external (FEM) region, and plot (b) shows the equivalent plastic strain in the internal (DeepONet) region. Although the coupling framework generally reproduces the stress component well in the bulk of the external region, relatively large errors appear at the top edge and the bottom corners. These errors originate either from numerical interpolation in the FEM solver or from reduced solution regularity. Away from these regions, the errors remain low, with a maximum relative error below 10%. In the internal region, the profile of the equivalent plastic strain is well captured by the coupling scheme, with a maximum relative error below 10%. These results demonstrate that the surrogate model predicts the plasticity in the region of interest well. For further quantitative verification, Fig. 10(c) plots the ground-truth and predicted displacement and traction components on the interface as functions of the polar angle $\theta$. The solid lines denote the ground-truth results from the nonlinear FEM, and the dashed lines denote the predictions of the framework. The predictions match the ground truth well, with relative errors below 2%. Fig. 10(d) shows the convergence of the coupling framework: the errors between the model predictions and the ground truth reach a plateau at the fourth iteration.
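The relative errors quoted here are assumed to be discrete relative $L^2$ errors over the interface nodes, with predictions and ground truth sampled at the same points. Under that assumption, the metric is simply:

```python
import numpy as np

def relative_l2_error(pred, true):
    """||pred - true|| / ||true|| over all nodes (and components, if stacked)."""
    pred = np.asarray(pred, dtype=float)
    true = np.asarray(true, dtype=float)
    return np.linalg.norm(pred - true) / np.linalg.norm(true)
```

Passing arrays of shape `(n_nodes, 2)` treats both displacement (or traction) components together via the Frobenius norm.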

Fig. 10.

Results of coupling FEM and DeepONet in elastoplasticity. (a–b) Results of the normal stress in the y direction ($\sigma_{yy}$) and the equivalent plastic strain. From left to right: true value, FEM/DeepONet predicted value from coupling, and their absolute errors. (c) Displacement and traction at the interface. True values and predicted values from coupling are presented. Solid lines: displacement and traction of true data in x (blue) and y (red) directions. Dashed lines: displacement and traction of the model predictions in x (yellow) and y (black) directions. (d) The history of the relative errors of the coupling model at the interface, for the displacement (from FEM) and the traction (from DeepONet). Notice that we present the error with respect to the ground truth to show the convergence and accuracy of our framework.

3.4. Hyperelasticity

In the previous examples, DeepONet was trained on data generated by FEM and was coupled with another FEM model in the online stage. These examples illustrated the capability of our coupling framework in solving both static and dynamic problems. In this section, we demonstrate the capability of our framework to concurrently couple a continuum model (FEM) with a surrogate of smoothed particle hydrodynamics (SPH), which describes a microscopic particle system. We use the coupled framework to predict the mechanics of a hyperelastic material. We first derive the energy minimization formulation of the continuum model. We denote by $\Psi$ the strain energy density of the hyperelastic model and seek a displacement field $\mathbf{u}$ that minimizes the total potential energy $\Pi$:

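$$\min_{\mathbf{u}}\; \Pi(\mathbf{u}) = \int_{\Omega} \Psi(\mathbf{F})\,d\Omega - \int_{\Omega} \mathbf{b}\cdot\mathbf{u}\,d\Omega - \int_{\Gamma_N} \bar{\mathbf{t}}\cdot\mathbf{u}\,d\Gamma, \qquad \mathbf{F} = \mathbf{I} + \nabla\mathbf{u}. \tag{29}$$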

Here, $\mathbf{b}$ denotes the body force in $\Omega$, and $\bar{\mathbf{t}}$ is the traction load applied on the Neumann boundary $\Gamma_N$. Hence, the total potential energy is the integral of the strain energy density $\Psi$ over the entire domain $\Omega$, reduced by the work done by the body force $\mathbf{b}$ and the traction $\bar{\mathbf{t}}$.

We use the Holzapfel–Gasser–Ogden (HGO) model [85] to describe the constitutive behavior of the material in this example. The material is hyperelastic and anisotropic, reinforced by fibers that may be oriented in different directions. Its strain energy density is:

| (30) |

where $\langle\cdot\rangle$ denotes the Macaulay bracket. In this model, the fiber strain $E_i$ of the two fiber groups is expressed as:

| (31) |

where $k_1$ and $k_2$ are the fiber modulus and the exponential coefficient, respectively, $I_1$ is the first principal invariant, and $I_{4i}$ is the fourth (pseudo-)invariant corresponding to the $i$-th fiber group. Mathematically, for the $i$-th fiber group oriented at an angle $\alpha_i$ from the reference direction, $I_{4i} = \mathbf{a}_i \cdot (\mathbf{C}\,\mathbf{a}_i)$, where $\mathbf{C} = \mathbf{F}^{\top}\mathbf{F}$ is the right Cauchy–Green tensor and $\mathbf{a}_i = (\cos\alpha_i, \sin\alpha_i)^{\top}$. In our simulations, we consider a material with fiber reinforcement in the vertical direction (see Fig. 11(a) for an illustration); therefore, we set $\alpha_i = \pi/2$ for both fiber groups. In Eq. (31), the fiber dispersion is denoted by $\kappa$, whose value ranges from 0 to an upper bound: $\kappa = 0$ means no fiber dispersion, whereas the upper bound represents isotropic fiber dispersion. In this example, we consider vertically oriented fibers with no dispersion ($\kappa = 0$). All parameter values in this example are summarized in Table 2.
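The fiber term can be evaluated for a given deformation gradient as sketched below. It assumes the standard GOH-type form $\frac{k_1}{2k_2}\left[\exp(k_2\langle E_i\rangle^2) - 1\right]$ with $E_i = \kappa(I_1 - 3) + (1 - 3\kappa)(I_{4i} - 1)$, uses a 3 × 3 plane-strain $\mathbf{F}$, and omits the isotropic and volumetric parts of Eq. (30). The usage check takes $k_1$, $k_2$, and $\alpha$ from Table 2; the function name is hypothetical.

```python
import numpy as np

def hgo_fiber_energy(F, alpha, k1, k2, kappa=0.0):
    """Strain energy of one fiber family (assumed GOH-type form), F is 3x3."""
    C = F.T @ F                                          # right Cauchy-Green tensor
    a = np.array([np.cos(alpha), np.sin(alpha), 0.0])    # in-plane fiber direction
    I1 = np.trace(C)
    I4 = a @ C @ a                                       # squared fiber stretch
    E = kappa * (I1 - 3.0) + (1.0 - 3.0 * kappa) * (I4 - 1.0)   # fiber strain
    E_plus = max(E, 0.0)                                 # Macaulay bracket: no fiber compression
    return k1 / (2.0 * k2) * (np.exp(k2 * E_plus**2) - 1.0)
```

Stretching the plate vertically by 10% (`F = diag(1, 1.1, 1)`) gives $E = 0.21$ and a positive fiber energy, whereas a 10% vertical compression gives zero fiber energy because the Macaulay bracket switches the fibers off.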

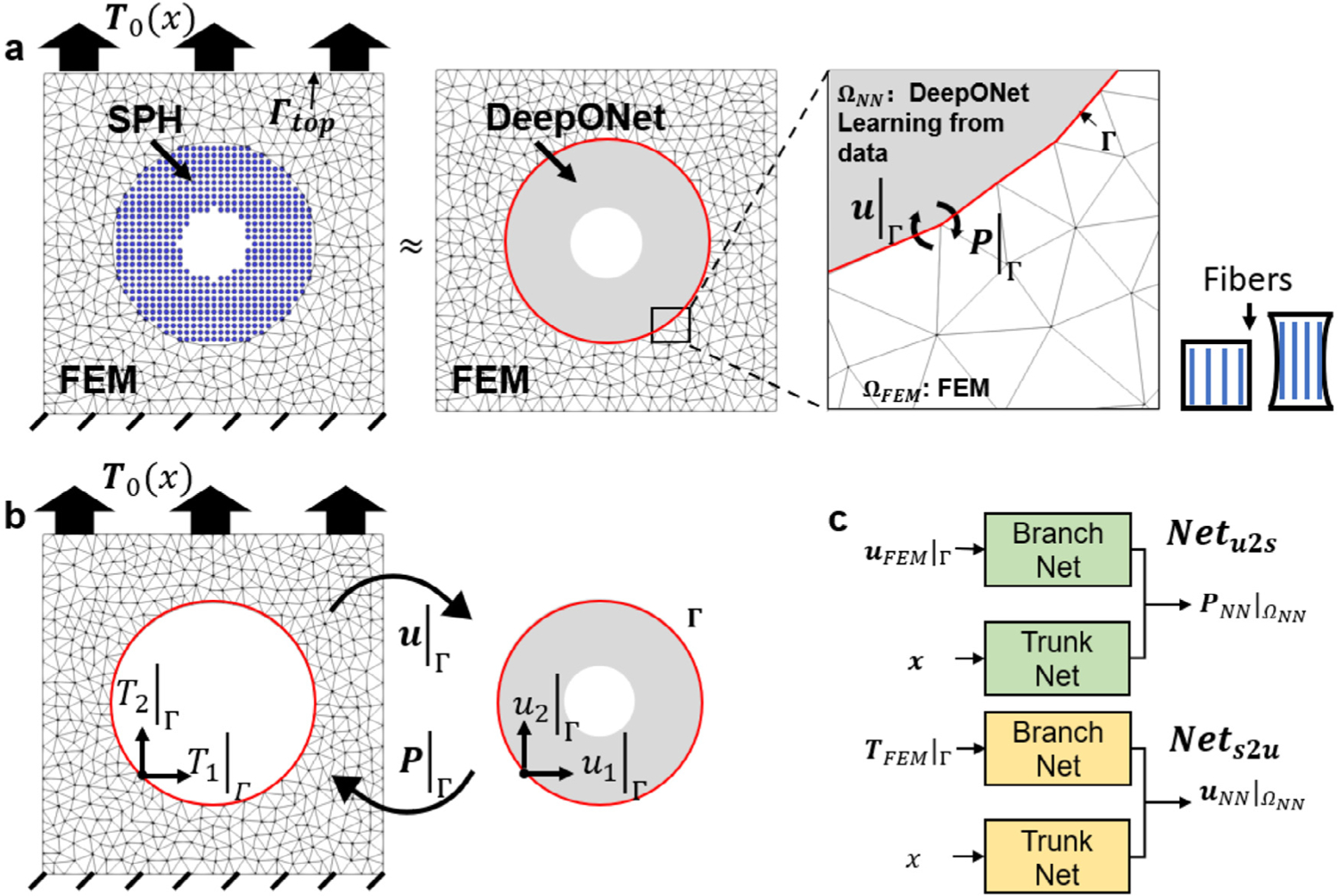

Fig. 11.

Setup of the hyperelasticity problem. (a) A unit square is decomposed into an internal and an external subdomain. We impose a non-uniform traction boundary condition on the top edge, fix the displacement at the bottom, and train multiple DeepONets to represent the mechanics of an SPH model. The material is reinforced by fibers in the vertical direction. Information at the interface (displacement $\mathbf{u}$ and first Piola–Kirchhoff stress $\mathbf{P}$) is transmitted between DeepONet and FEM. (b) Traction and displacement in different directions are exchanged at the interface. FEM predicts the external domain, while the DeepONet is trained on SPH data. (c) Two types of DeepONet are proposed to predict the mechanics of the system: predicting stresses from displacement information (green boxes) and vice versa (yellow boxes).

Table 2.

Parameter values of the HGO model.

| Parameter | μ | K | k₁ | k₂ | α |
|---|---|---|---|---|---|
| Value | 0.3846 | 0.8333 | 0.1 | 1.5 | π/2 |

The values of k₁, k₂, and α are the same for both fiber groups (i = 1 and 2).

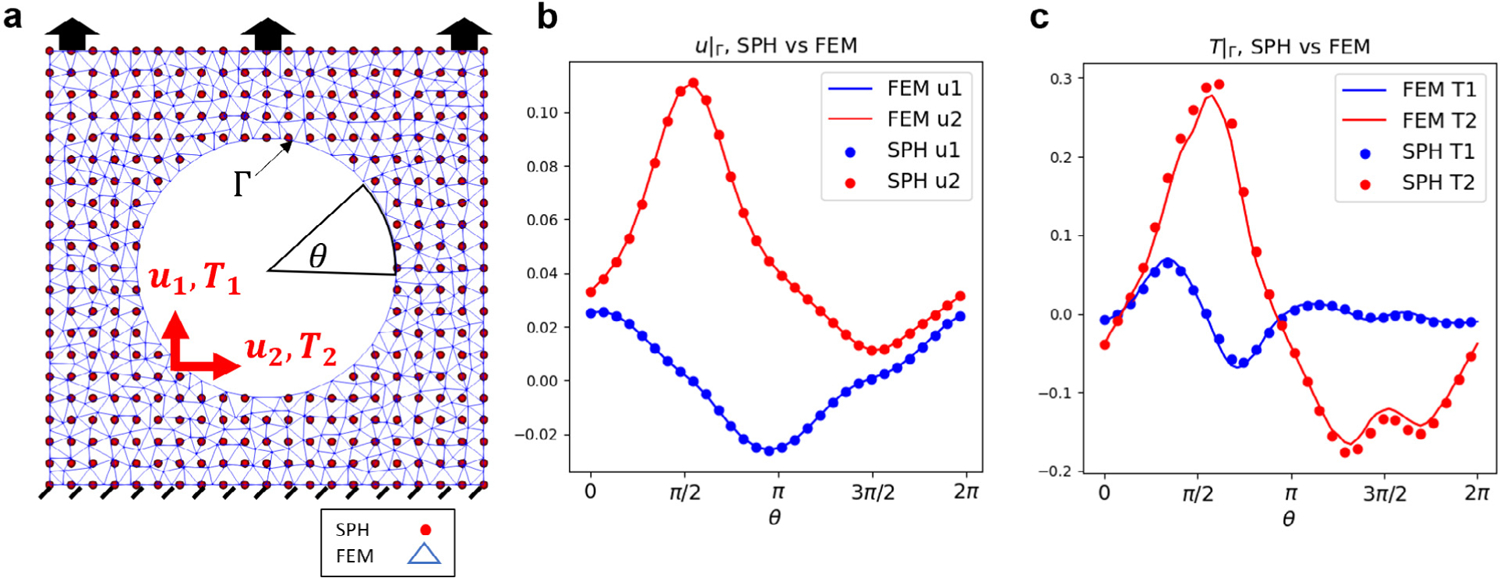

We now present the problem setup of this example. As depicted in Fig. 11(a), we consider a 2D unit square plate with a centered circular void of radius 0.1. The plate deforms under a uniaxial tension applied on its top edge, and the bottom edge is clamped. We model the material response of the entire domain using an SPH model, whose solution is taken as the ground truth. More details of the SPH model are provided in Appendix A. To develop a coupling model, we consider a setting similar to the previous example and decompose the entire domain into two non-overlapping subdomains. We then train DeepONets on SPH data to obtain a surrogate for the internal domain, while the external region is described by a continuum FEM model. The models in the two domains communicate by exchanging interface conditions on their common interface. Fig. 11(b) presents a schematic of the information transmission in the coupling framework with an N-D method. At the $k$-th iteration, the FEM solver receives an updated distributed traction on the interface from the DeepONets and solves for the updated displacement field. The FEM then transmits the updated interfacial displacement to the DeepONets. With this displacement as input, the DeepONets estimate the first Piola–Kirchhoff (PK1) stresses in the internal domain. From the predicted PK1 stresses, we then calculate the surface traction on the interface and other associated quantities, such as the equivalent plastic strain and von Mises stress. At the $(k+1)$-th iteration, the interfacial solution is updated accordingly.

Next, we briefly describe the training process of DeepONet. To generate the training and testing datasets, we sample 1000 different traction loadings on the top edge from a random field (see the algorithm in Appendix D.1). For each sampled traction loading, we perform an SPH simulation to obtain the solution in the entire domain and collect the corresponding displacement and PK1 stress fields in the internal domain. Of these 1000 samples, 900 are used as training data and the remaining 100 as testing data. As depicted in Fig. 11(c), we consider two approaches for network training. In the first approach, the network takes the interfacial displacement as input and predicts the PK1 stress as output. In the second approach, the interfacial traction is the network input and the displacement field is the output. These approaches provide flexibility in imposing different interface conditions. We adopt the first approach in the N-D method and combine the two approaches in the R-D/R-N method.

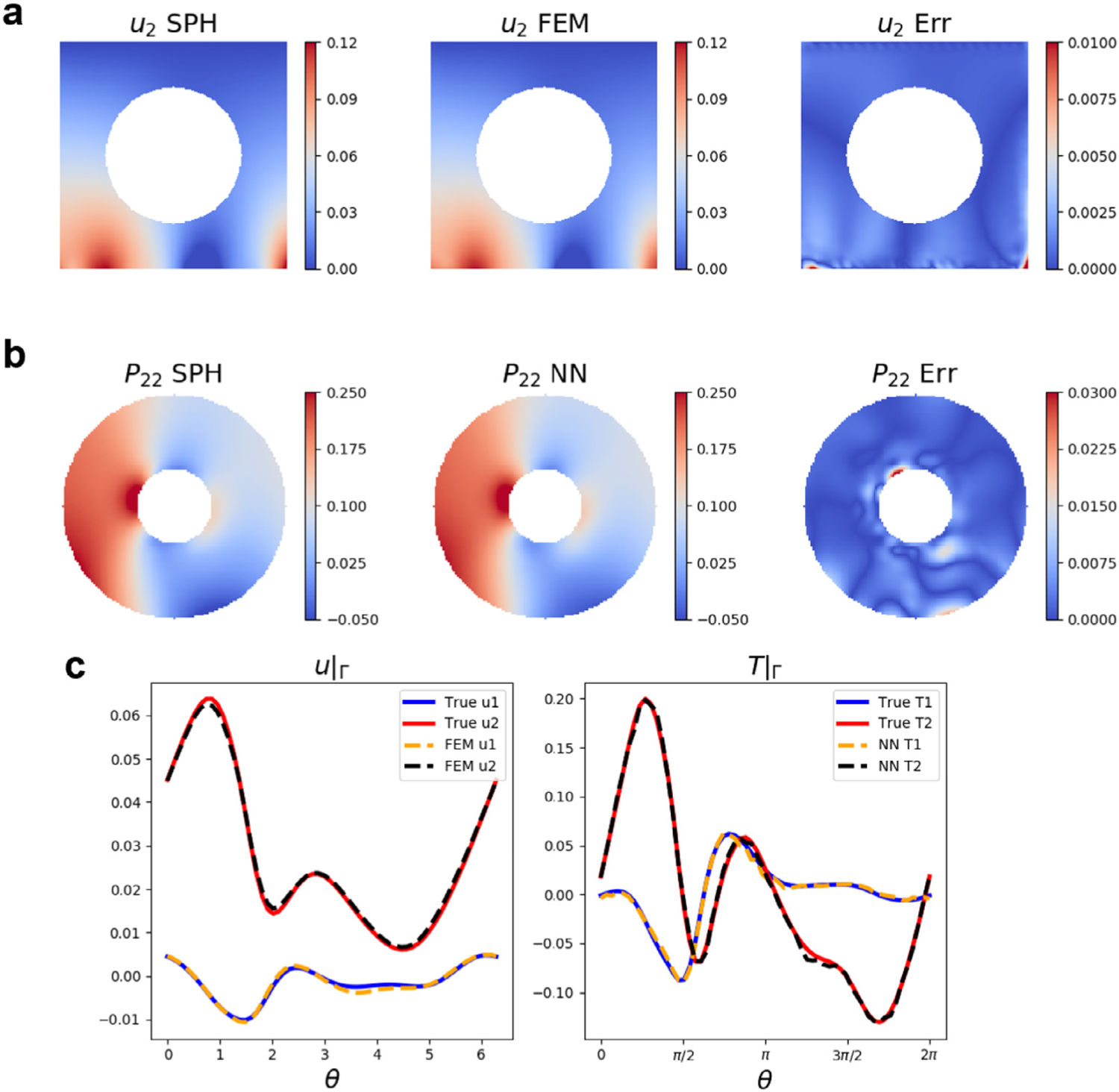

Fig. 12 shows the coupling results of a typical testing case with an N-D method. In Fig. 12(a–b), we compare the vertical displacement (first row) and a PK1 stress component (second row) between the SPH ground truth (first column) and the FEM/DeepONet prediction (second column); the prediction errors are displayed in the third column. The coupling results match the ground-truth solution well, with the largest prediction errors located near the bottom corners. These errors are partly induced by numerical interpolation in the FEM and by the reduced solution regularity in that region. To further examine the prediction accuracy, Fig. 12(c) presents a quantitative comparison of the displacement and traction on the interface as functions of the polar angle $\theta$. Despite some numerical discrepancies between SPH and FEM (see Appendix B.1), the FEM/DeepONet predictions (dashed lines) successfully reproduce the SPH results (solid lines). Our proposed method therefore captures the mechanics of SPH at a substantially reduced cost: an SPH simulation takes approximately 4 h, whereas running its surrogate takes a fraction of a second (Table 3). Admittedly, the cost of SPH is inflated because we use a time-dependent SPH solver to simulate a static problem. Nonetheless, replacing a particle model with its surrogate in a multiscale coupling framework offers clear advantages in both efficiency and ease of implementation.

Fig. 12.

Results of coupling FEM and DeepONet in the hyperelasticity problem: (a) displacement and (b) PK1 stress component. From left to right: SPH prediction, FEM/DeepONet prediction from coupling, and their absolute differences. (c) Predicted displacement and traction at the interface. Predictions from both FEM and DeepONets are compared with the true data. Solid lines: displacement and traction of true data in x (blue) and y (red) directions. Dashed lines: displacement and traction of the model predictions in x (yellow) and y (black) directions.

Table 3.

Wall time comparison for SPH and DeepONet per iteration in the hyperelastic problem. The excessive cost of SPH is exacerbated because we use a time-dependent SPH solver to simulate a static problem.

| Model | Wall time |
|---|---|
| FEM-SPH | ~4 h |
| FEM-DeepONet | <1 s |

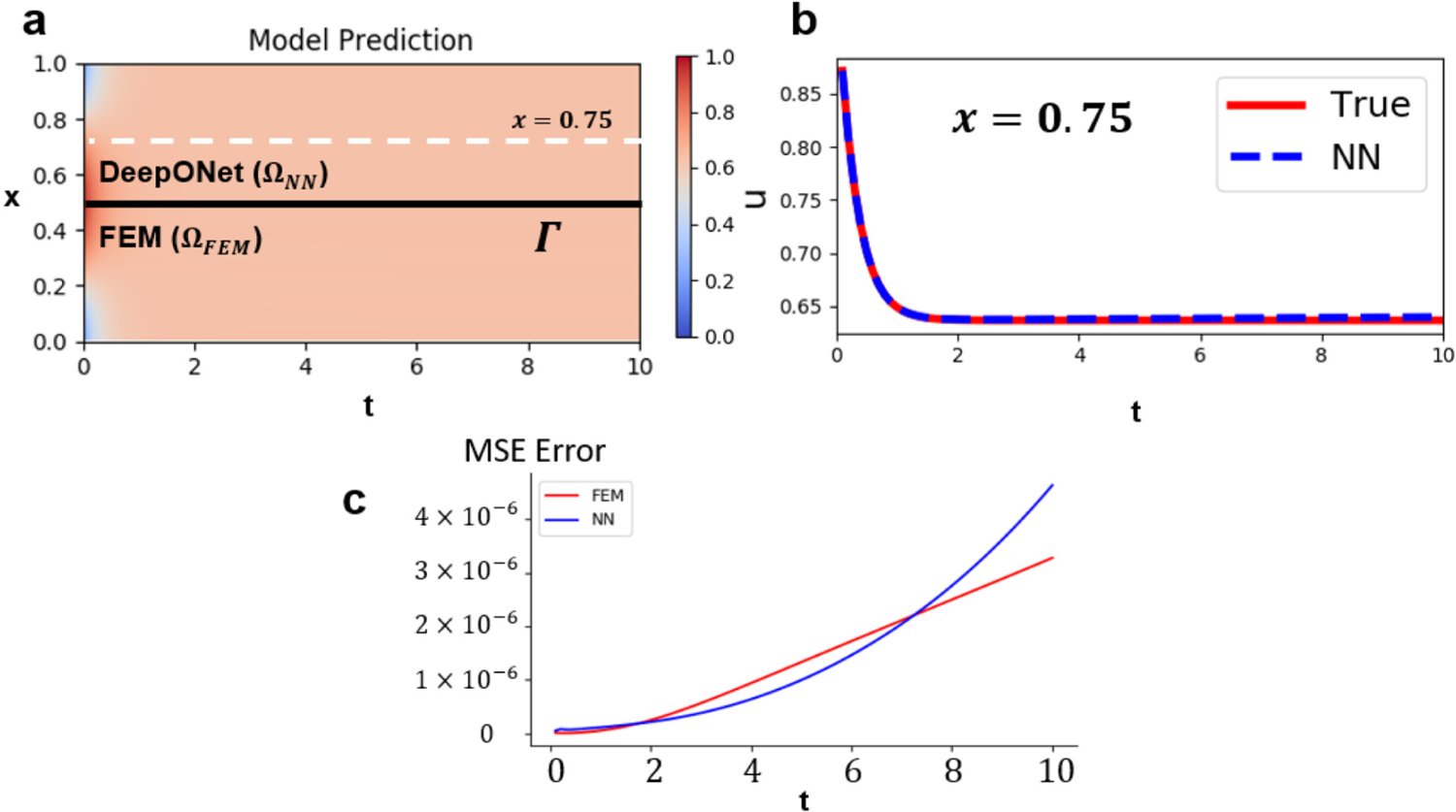

To further illustrate the flexibility and investigate the convergence rate of our coupling framework, we apply an R-D/R-N coupling method within our framework for a range of values of the Robin coefficient $R$. Here, R-D/R-N means that the FEM model, subject to a Robin-type boundary condition, transmits its interfacial displacement and traction separately to the two types of DeepONets, whose outputs update the Robin condition for the next step. The continuum model with a Robin boundary condition is modified as:

| (32) |

Here, $\mathbf{g}$ is the Robin boundary data applied on the interface, which we define as:

| (33) |

where $R$ is the (positive) Robin coefficient, and the traction and displacement are known quantities.

The coupling procedure changes accordingly. At the $k$-th iteration, we first solve the FEM model with the current Robin boundary condition. Then, we transmit the interfacial traction and displacement of FEM to the traction-input and displacement-input DeepONets, respectively. The displacement and traction estimated by the DeepONets are then used to update the Robin boundary condition, with a fixed relaxation parameter.
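The R-D/R-N update can be sketched on the same kind of hypothetical two-spring toy (a "FEM" spring of stiffness `k1` and a "surrogate" spring of stiffness `k2` with prescribed far-end displacement `U`). The sketch assumes Eq. (33) has the common form $g = t + R\,u$, with the "FEM" spring solving $k_1 u = g - R u$ and the two "networks" replaced by exact spring responses; it illustrates the information flow only and is not the paper's implementation.

```python
def robin_coupling(k1, k2, U, R, theta=0.5, tol=1e-10, max_iter=200):
    """Relaxed R-D/R-N iteration on a two-spring toy; returns (u, iterations)."""
    g = 0.0                                   # initial Robin data
    for it in range(max_iter):
        u_fem = g / (k1 + R)                  # "FEM" with Robin BC: k1*u = g - R*u
        t_fem = g - R * u_fem                 # interfacial force on the FEM side (= k1*u_fem)
        t_net = k2 * (U - u_fem)              # "displacement-input network": u -> force
        u_net = U - t_fem / k2                # "traction-input network": force -> u
        g_new = t_net + R * u_net             # assumed Robin data g = t + R*u
        g_next = theta * g_new + (1.0 - theta) * g   # fixed relaxation
        if abs(g_next - g) < tol:
            return g_next / (k1 + R), it + 1
        g = g_next
    return g / (k1 + R), max_iter
```

With `R=0` the scheme reduces to the relaxed N-D iteration. In this toy both `R=0.25` and `R=0` converge to the exact value 2/3 for `k1=1`, `k2=2`, `U=1`; how the rate depends on $R$ is problem-specific, so this should not be read as reproducing Fig. 13.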

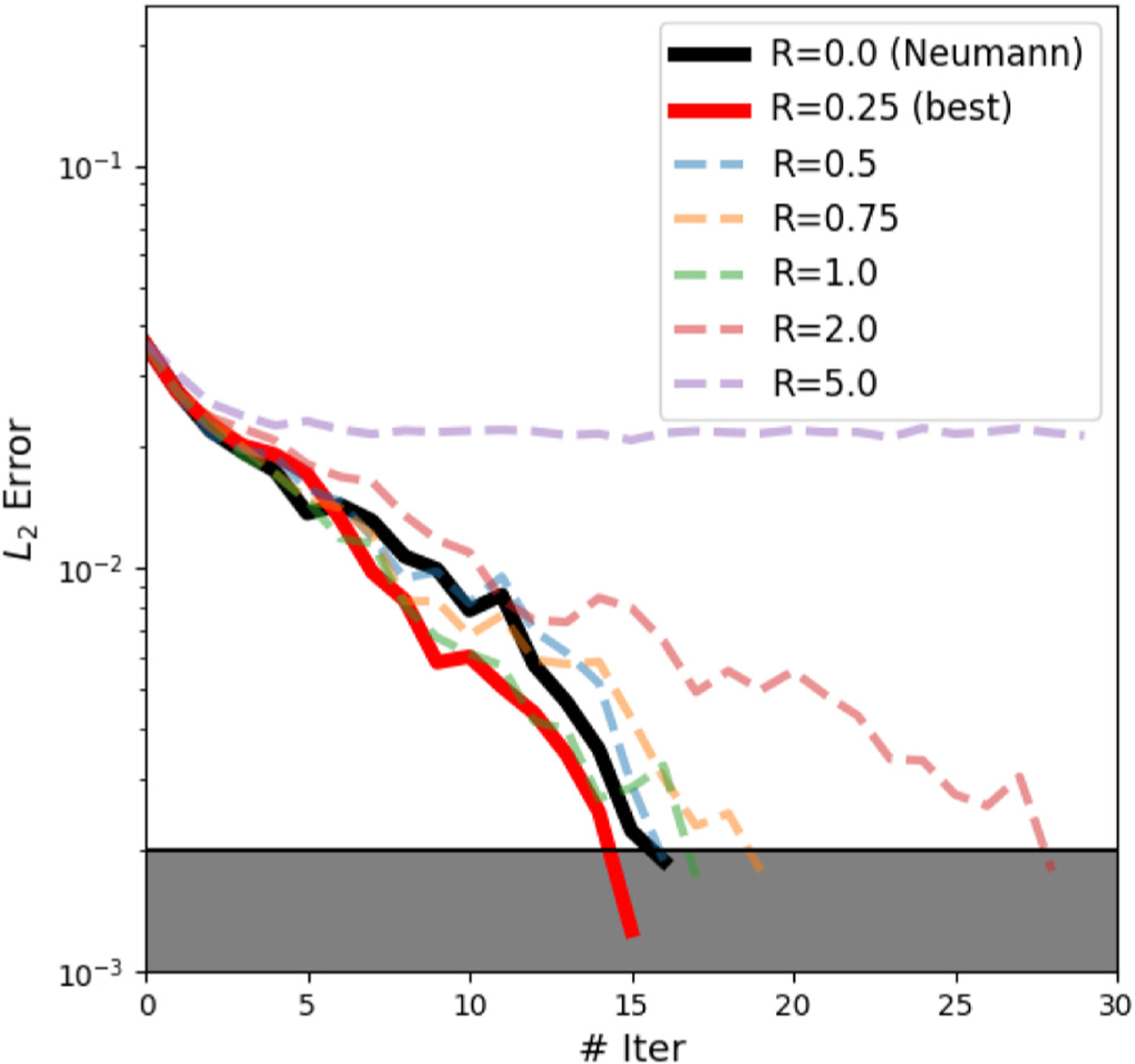

We further study the convergence of the interfacial displacement errors for different values of $R$. In Fig. 13, we highlight the results of the N-D coupling method with the black line and the results of R-D/R-N with $R = 0.25$ (the best result) in red. The shaded area indicates a relative error below 2% for the test case. Due to the nonlinearity of the problem, the coupling framework generally takes more iterations to reach the stopping criterion. With a sub-optimal Robin coefficient (purple line in Fig. 13), the R-D/R-N coupling fails to converge. This again demonstrates the importance of choosing an appropriate coupling method.

Fig. 13.

Relative errors of the interfacial displacement in the hyperelastic multiscale model with a Robin boundary condition. The red line, with Robin coefficient R = 0.25, converges fastest among the test cases. The black solid line shows the relative error history with a Neumann boundary condition (R = 0). The shaded area indicates a relative error below 2%. Notice that we present the error with respect to the ground truth to show the convergence and accuracy of our framework.

4. Discussion

In this paper, we propose an efficient concurrent coupling framework for multiscale modeling of mechanics problems. Instead of coupling an expensive microscopic model, we employ a deep neural operator, DeepONet, as a surrogate to approximate the microscopic solution in the domain with fine-scale features. The response in the coarse-scale domain is simulated by a standard numerical model, such as the finite element method. The two models are coupled concurrently by exchanging information at the interface until convergence. To verify the performance of this framework, we study four benchmarks, including static and dynamic problems for different materials. We have also demonstrated that the framework readily accommodates various interface conditions. The results show that, with a properly chosen Robin coefficient, cases with a Robin boundary condition tend to converge faster than those with a Neumann/Dirichlet boundary condition. Moreover, the predictions of the coupled model agree well with the true solution obtained from a numerical method, indicating the generalization ability of DeepONet and the accuracy of the proposed framework.

In addition, using a neural operator as a surrogate enables the model to be trained directly from data. Such a model is particularly promising for learning the dynamics of complex materials without explicit constitutive models. Moreover, coupling DeepONet with FEM substantially improves the computational efficiency of multiscale modeling: DeepONet predicts the expensive microscopic behavior in a fraction of a second. Hence, the overall computational cost of the proposed framework could be orders of magnitude lower than that of existing multiscale coupling methods. In addition, the surrogate model can be trained on partial information: it can predict quantities only at the interface and neglect the dynamics inside the microscopic region. The overall framework then behaves as an artificial-intelligence-based boundary condition, which could further improve efficiency, especially when only the macroscopic dynamics are of interest.

As a further note, we point out the keys to building a successful neural-operator-based coupling framework with reliable convergence, together with possible pitfalls to avoid. First, the generalization ability of DeepONet determines whether the coupled iteration converges and how fast. An accurate neural operator requires fewer iterations to converge, whereas a poorly trained model could lead to slow convergence or even divergence. Second, data normalization is another key to convergence. In our experiments, the training data span disparate scales, ranging from 10⁻³ to 10¹, and proper normalization of the training data is essential to the convergence of the numerical iterations. In addition, choosing a proper coupling strategy, such as the right Robin coefficient, plays a critical role in achieving fast numerical convergence, as it affects the condition number of the stiffness matrix in FEM and of the coupled system [86]. A large condition number may lead to slow convergence or even divergence of the coupling framework (see Section 3.4 and [87,88]).
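The paper does not state which normalization it uses. As one common choice (an assumption here), per-feature standardization fitted on the training set only, with the inverse map applied to network outputs before they are passed back to FEM, can be sketched as:

```python
import numpy as np

def fit_standardizer(x_train, eps=1e-12):
    """Per-feature mean and std from training data; constant features keep std = 1."""
    mean = x_train.mean(axis=0)
    std = x_train.std(axis=0)
    std = np.where(std > eps, std, 1.0)
    return mean, std

def standardize(x, mean, std):
    return (x - mean) / std

def unstandardize(z, mean, std):
    return z * std + mean
```

Fitting the statistics on training data only, and reusing them unchanged during the coupling iterations, keeps the surrogate's inputs on the scale it was trained on.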

Certainly, further improvements and directions can be considered in the future. Because it learns directly from data, DeepONet can learn from data generated at different scales, as demonstrated in Section 3.4. In the future, it would be interesting to develop data-driven models from noisy data, such as molecular dynamics and dissipative particle dynamics, as presented in Fig. 1. In addition, bridging disparate characteristic length and time scales is another challenge for multiscale modeling with machine learning: the time and length scales of a microscopic model are usually orders of magnitude smaller than those of the continuum model, which complicates network training and long-term prediction. The coupling results may drift away from the true solution or even diverge due to inaccurate predictions from surrogate models. Another improvement would be to consider microstructures and geometric variations (see [39]). Developing an operator-learning neural network that can predict dynamics under different geometric variations would greatly broaden the applicability of the proposed method. Simulating fracture progression [89] is another natural and promising application of the multiscale coupling framework: as a fracture progresses, the region of interest that contains the damage may move along with the crack tip. Such an extension is particularly useful for problems with discontinuities and/or multiple phases, such as fracture, phase transition, and shock-wave capturing. Our framework can also be extended to employ other coupling methods, such as quasicontinuum [90] and multigrid [91] methods, or to serve as a surrogate model in the heterogeneous multiscale method (HMM) [92].

Acknowledgments

MY, EZ, and GEK acknowledge the support by grant U01 HL142518 from the National Institutes of Health, United States. YY would like to acknowledge the support by the National Science Foundation, United States under award DMS 1753031.

Appendix A. Smoothed particle hydrodynamics

In this section, we briefly introduce the basic formulation of Total Lagrangian Smoothed Particle Hydrodynamics (TLSPH). We refer the reader to [79,93] for more details. In the SPH framework, physical quantities are approximated using information from neighboring particles within a kernel. A function $f$ at $\mathbf{X}$ can be approximated by $\langle f(\mathbf{X})\rangle$ through the integral

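$$\langle f(\mathbf{X})\rangle = \int_{\Omega} f(\mathbf{X}')\,W(\mathbf{X}-\mathbf{X}', h)\,d\mathbf{X}' \tag{A.1}$$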

where $W$ is a weighting kernel, chosen as a third-order polynomial

| (A.2) |

denotes the radius of the integration kernel; is defined as the distance between and the reference point in the kernel with in two dimensions or in three dimensions. SPH numerically approximates the integration as

$$f(\mathbf{X}_i) \approx \sum_{j} V_j\, f(\mathbf{X}_j)\, W(\mathbf{X}_i - \mathbf{X}_j, h) \tag{A.3}$$

where $V_j$ is the volume of particle $j$. The gradient of $f$ with respect to the reference coordinate is

| (A.4) |

with

| (A.5) |

For a vector , its gradient to the referential coordinate is approximated as the following using the integration rule:

| (A.6) |

We then introduce two ad hoc corrections to ensure symmetry and first-order completeness [94]. The symmetrization correction reads

| (A.7) |

The second correction guarantees first-order completeness [95]; the corrected gradient tensor is defined as

| (A.8) |

where the shape tensor is

| (A.9) |
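The first-order completeness correction can be illustrated with the standard shape-tensor construction below. The kernel is assumed to be the 2D cubic "spiky" form $W(r) = c(1 - r/h)^3$ for $r < h$ with $c = 10/(\pi h^2)$, since Eq. (A.2) is only described as a third-order polynomial, and the correction may differ in detail from Eqs. (A.8)-(A.9). By construction, the corrected gradient reproduces linear fields exactly, which the demo checks on a jittered particle grid with illustrative values.

```python
import numpy as np

def kernel_grad(Xi, Xj, h):
    """Gradient w.r.t. X_i of W(|X_i - X_j|, h) for the assumed kernel
    W(r) = c (1 - r/h)^3 (r < h), c = 10 / (pi h^2); requires r > 0."""
    d = Xi - Xj                                   # (m, 2)
    r = np.linalg.norm(d, axis=1)
    c = 10.0 / (np.pi * h**2)                     # unit integral of W in 2D
    dWdr = np.where(r < h, -3.0 * c / h * (1.0 - r / h) ** 2, 0.0)
    return (dWdr / r)[:, None] * d

def corrected_gradient(X, V, f, i, h):
    """First-order-complete SPH gradient of a scalar field f at particle i."""
    dX = X - X[i]                                 # X_j - X_i
    r = np.linalg.norm(dX, axis=1)
    nbr = (r > 0.0) & (r < h)                     # neighbours, excluding i itself
    gW = kernel_grad(X[i], X[nbr], h)
    # Shape tensor L = sum_j V_j gradW_ij (outer) (X_j - X_i); L ~ I for a full, dense kernel.
    L = np.einsum("j,ja,jb->ab", V[nbr], gW, dX[nbr])
    raw = np.einsum("j,j,ja->a", V[nbr], f[nbr] - f[i], gW)   # uncorrected estimate
    return np.linalg.solve(L, raw)                # corrected: exact for linear f

# Demo on a jittered 21 x 21 particle grid (illustrative values only).
rng = np.random.default_rng(0)
dx = 0.05
g = np.arange(21) * dx
X = np.array([[x, y] for y in g for x in g]) + rng.uniform(-0.01, 0.01, (441, 2))
V = np.full(len(X), dx**2)                        # particle volumes
a = np.array([1.5, -0.7])
f = 2.0 + X @ a                                   # linear field with gradient a
grad = corrected_gradient(X, V, f, i=220, h=3 * dx)
```

For a linear field, the raw sum equals $\mathbf{L}\,\mathbf{a}$, so solving with the shape tensor recovers $\mathbf{a}$ regardless of particle disorder; the uncorrected estimate is only approximately $\mathbf{a}$.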

We now apply the SPH approximation to the governing equation of the solid at the continuum level. The equilibrium equation is:

| (A.10) |

where $\mathbf{P}$ is the first Piola–Kirchhoff stress tensor, $\mathbf{b}$ the body force vector, $\rho_0$ the referential mass density, and $\mathbf{a}$ the acceleration. We define the deformation gradient as

$$\mathbf{F} = \frac{\partial \mathbf{x}}{\partial \mathbf{X}} \tag{A.11}$$

From basic continuum mechanics,

| (A.12) |

As shown in [96], the internal forces arise from the divergence of the first Piola–Kirchhoff stress $\mathbf{P}$:

| (A.13) |

where the internal force is expressed as

| (A.14) |

We adopt another correction to suppress spurious hourglass modes [79]

| (A.15) |

We adopt the strain energy function for a fiber-enhanced tissue proposed in [85]

| (A.16) |

where the principal invariants are defined as

| (A.17) |

The parameters are set the same as the FEM model in Section 3.4. We refer the reader to [93,97] for more details.

Fig. B.14.

Comparison of SPH and FEM. (a) We impose the same Dirichlet boundary condition on the top and internal circle for a FEM and SPH model. (b–c) Interfacial displacement and traction of both models.

Fig. C.15.

Network size for various problems. We tabulate the branch/trunk network size for the four examples in Section 3 with the number of training epochs.

Appendix B. Accuracy of FEM and SPH

B.1. Accuracy of SPH

To compare the numerical solutions of the FEM and SPH models, we present their results for the same benchmark problem, set up as shown in Fig. B.14(a). For both models, we impose a distributed traction boundary condition on the top edge and a displacement (Dirichlet-type) boundary condition at the interface. Fig. B.14(b) plots the interfacial displacements in the $x$ and $y$ directions against the polar angle $\theta$, and Fig. B.14(c) presents the corresponding interfacial tractions. The solid lines are the FEM tractions in the $x$ and $y$ directions, and the dashed lines denote the SPH results. The results agree well in general, with some local deviations along the interface due to the different numerical integration methods. This discrepancy partly contributes to the overall errors observed in Section 3.4.

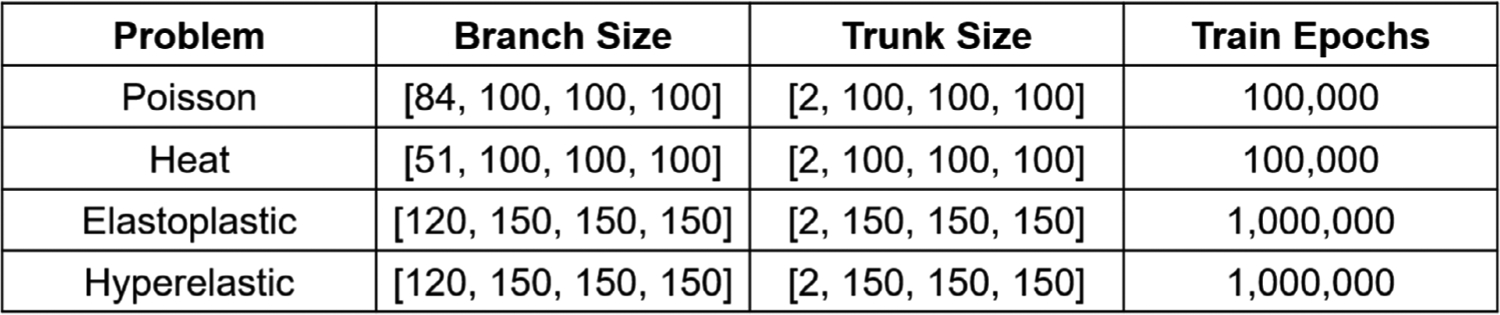

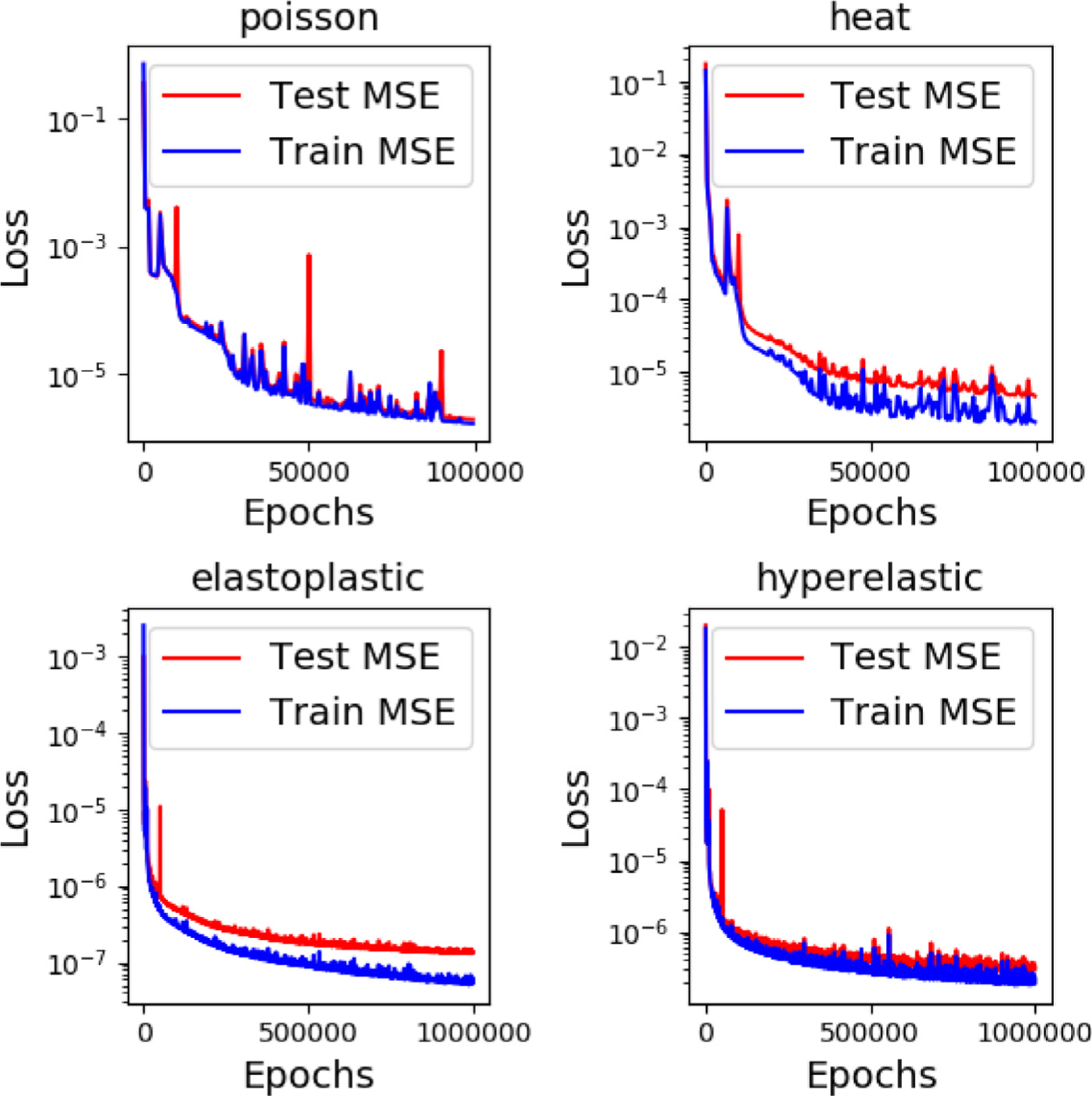

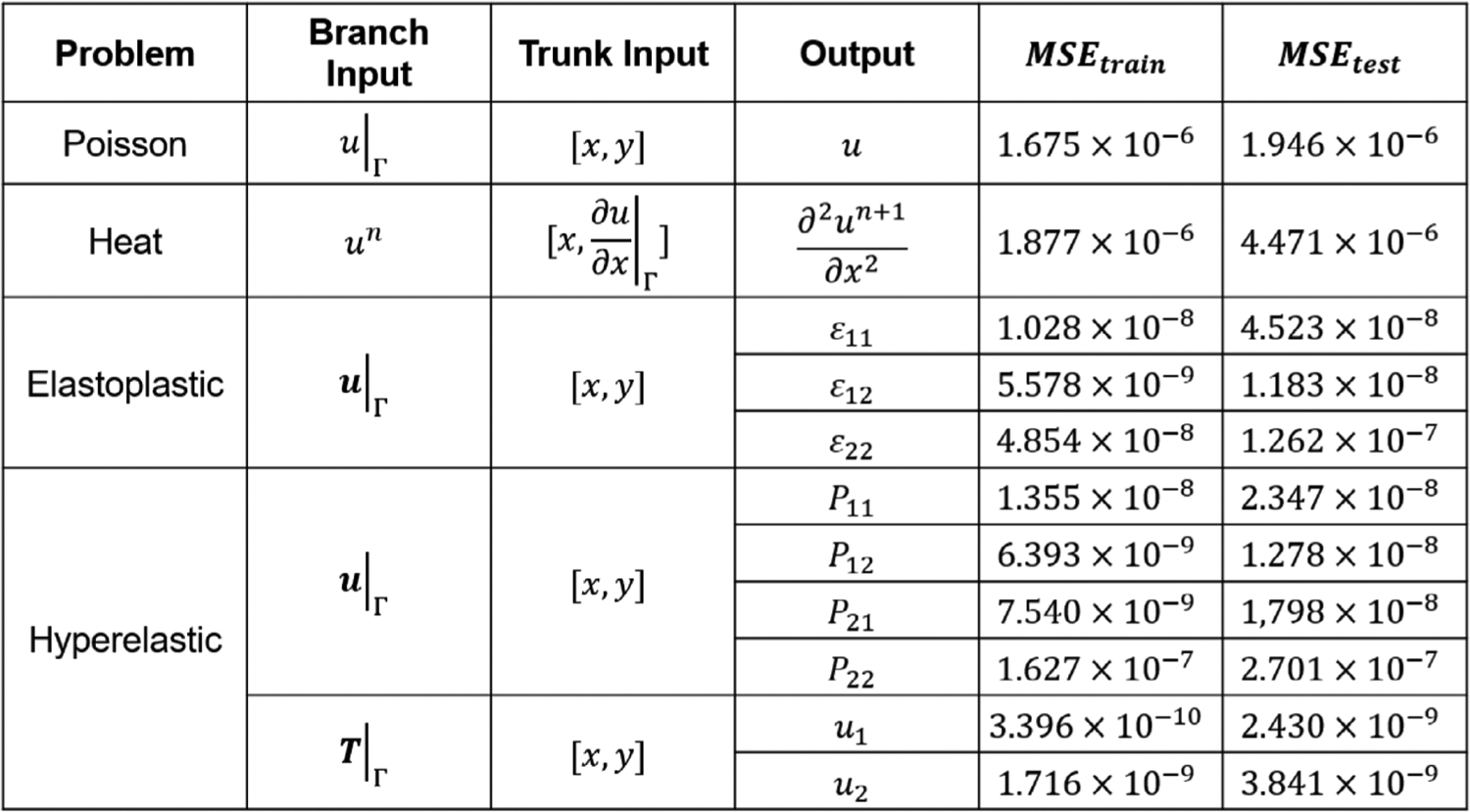

Appendix C. Network training details

We explain the details of network training in this section. The network architecture for each problem is presented in Fig. C.15. We adopt a fully connected neural network (FNN) with four layers for all sub-networks. The input-layer dimension of the branch net depends on the number of points at the interface, which ranges from 51 to 120 in our examples. The input layer is followed by three hidden layers, for which we use the hyperbolic tangent as the activation function. Each hidden layer has 100 or 150 neurons, as shown in Fig. C.15. To minimize the loss function, we train for 100,000 epochs in the heat and Poisson examples and 1,000,000 epochs in the other two examples. Fig. C.16 illustrates the error history of a few cases; each case shows a relatively small gap between the training and testing errors. The low testing errors indicate a good generalization capability of the networks. Fig. C.17 further tabulates the input/output of each network together with the MSEs of the training and testing data.
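For orientation, an untrained forward pass of a DeepONet with the layer layout described above (tanh hidden layers of 100 neurons, a branch input of interface values) can be sketched in NumPy. The latent width `p = 100`, the Xavier-style initialization, and 2D coordinates as trunk input are assumptions; training (optimizer, loss, epochs) is omitted.

```python
import numpy as np

def init_fnn(sizes, rng):
    """List of (W, b) pairs with Xavier-style normal initialization."""
    return [(rng.normal(0.0, np.sqrt(2.0 / (m + n)), (m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def fnn(params, x):
    for W, b in params[:-1]:
        x = np.tanh(x @ W + b)            # tanh hidden layers
    W, b = params[-1]
    return x @ W + b                      # linear output layer of width p

def deeponet(branch, trunk, bias, u_interface, y):
    """G(u)(y) = sum_k b_k(u) * t_k(y) + bias."""
    b = fnn(branch, u_interface)          # (p,) from interface values
    t = fnn(trunk, y)                     # (n_points, p) from query coordinates
    return t @ b + bias

rng = np.random.default_rng(0)
m, p = 51, 100                            # 51 interface points; p is assumed
branch = init_fnn([m, 100, 100, 100, p], rng)
trunk = init_fnn([2, 100, 100, 100, p], rng)
u_int = rng.standard_normal(m)            # stand-in interfacial displacement samples
y = rng.uniform(0.0, 1.0, (200, 2))       # query points in the subdomain
out = deeponet(branch, trunk, 0.0, u_int, y)   # one prediction per query point
```

In the elastoplastic case, one such network would be trained per stress component, each sharing this structure.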

Fig. C.16.

History of training/testing errors of DeepONet for different problems.

We define the following metrics proposed in [98] to quantitatively characterize the efficiency of our framework.

| (C.1) |

Here, the training ratio is the time cost of training the DeepONet divided by the product of the number of training simulations and the time cost of each simulation. The prediction ratio is the time of one DeepONet forward prediction divided by the time of one run of a standard numerical solver. The break-even point is the number of evaluations at which training a DeepONet costs as much as using the numerical solver; beyond this point, surrogate modeling is more efficient than a classical PDE solver. Taking the elastoplasticity case as an example, each elastoplastic FEM simulation takes approximately on a single CPU, and we generated samples as the training data. The DeepONet training takes 62 min on a single GPU, and each forward evaluation with DeepONet takes 0.0014 s. Using this information, we obtained , , and . That is, as long as the resulting surrogate model is used more than 1124 times in prediction tasks, DeepONet is the more efficient choice.
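Since Eq. (C.1) is not reproduced here, the sketch below uses one plausible set of definitions, all of which are assumptions: $r_{train} = T_{train}/(N\,T_{sim})$, $r_{pred} = T_{pred}/T_{sim}$, and a break-even count $n$ solving $n\,T_{sim} = N\,T_{sim} + T_{train} + n\,T_{pred}$, i.e., counting the cost of generating the training data. The definitions in [98] may differ.

```python
def efficiency_metrics(t_train, t_sim, t_pred, n_train):
    """Training ratio, prediction ratio, and break-even number of evaluations
    (assumed definitions; all times in the same unit)."""
    r_train = t_train / (n_train * t_sim)     # training cost relative to data generation
    r_pred = t_pred / t_sim                   # one surrogate call vs. one solver run
    if r_pred >= 1.0:
        return r_train, r_pred, float("inf")  # surrogate never pays off
    # n * t_sim = n_train * t_sim + t_train + n * t_pred
    n_be = n_train * (1.0 + r_train) / (1.0 - r_pred)
    return r_train, r_pred, n_be
```

For example, with 100 training runs of 10 s each, 500 s of training, and a negligible prediction cost, the surrogate breaks even after 150 evaluations.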

Fig. C.17.

Details of network setup (inputs/outputs and MSE errors of training/testing) for each example in Section 3.

Appendix D. Data generation

D.1. Random field generation

We provide an overview of the algorithm for generating random fields using the fast Fourier transform (FFT). Let $\xi$ be a Gaussian white noise random field on . A random field can be sampled by

| (D.1) |

where $\mathcal{F}$ and $\mathcal{F}^{-1}$ denote the Fourier transform and its inverse, and $\phi(|\mathbf{k}|)$ represents a correlation function, where $|\mathbf{k}|$ is the norm of the wave number $\mathbf{k}$. In Sections 3.1, 3.2, and 3.4, we adopt this method to generate the training data with . We refer the reader to [99] for more theoretical details.
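A minimal 1D version of FFT-based sampling is sketched below: white noise is transformed, scaled by the square root of a spectral density, and transformed back. The Gaussian-shaped spectral density and the `length_scale` parameter are assumptions, not the correlation function used in the paper.

```python
import numpy as np

def sample_random_field(n, length_scale, rng):
    """Sample a smooth, stationary random field on n uniform points of [0, 1)."""
    xi = rng.standard_normal(n)                        # Gaussian white noise on the grid
    k = 2.0 * np.pi * np.fft.rfftfreq(n, d=1.0 / n)    # angular wave numbers
    phi = np.exp(-0.5 * (k * length_scale) ** 2)       # assumed spectral density
    return np.fft.irfft(np.sqrt(phi) * np.fft.rfft(xi), n=n)   # filter, then invert
```

A larger `length_scale` damps high wave numbers more strongly and yields smoother boundary loadings; the sampled field would then be interpolated onto the boundary nodes where the loading is imposed.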

D.2. Sampling in elastoplasticity

In the case of elastoplasticity, the non-uniform tension (see Fig. 9) is generated by

| (D.2) |

where are independent normal random variables. We choose the standard deviation as 0.05 to make sure that the dataset contains similar numbers of cases with and without plastic deformation.


Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- [1].Weinan E, Principles of Multiscale Modeling, Cambridge University Press, 2011. [Google Scholar]

- [2].Alber M, Tepole AB, Cannon WR, De S, Dura-Bernal S, Garikipati K, Karniadakis GE, Lytton WW, Perdikaris P, Petzold L, et al. , Integrating machine learning and multiscale modeling—perspectives, challenges, and opportunities in the biological, biomedical, and behavioral sciences, NPJ Digit. Med 2 (1) (2019) 1–11. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [3].Dobson M, Luskin M, Ortner C, Stability, instability, and error of the force-based quasicontinuum approximation, Arch. Ration. Mech. Anal 197 (1) (2010) 179–202. [Google Scholar]

- [4].Tinsley Oden J, Prudhomme S, Romkes A, Bauman PT, Multiscale modeling of physical phenomena: Adaptive control of models, SIAM J. Sci. Comput 28 (6) (2006) 2359–2389. [Google Scholar]

- [5].Bazilevs Y, Calo V, Cottrell J, Hughes T, Reali A, Scovazzi G, Variational multiscale residual-based turbulence modeling for large eddy simulation of incompressible flows, Comput. Methods Appl. Mech. Engrg 197 (1–4) (2007) 173–201. [Google Scholar]

- [6].Holian BL, Ravelo R, Fracture simulations using large-scale molecular dynamics, Phys. Rev B 51 (17) (1995) 11275. [DOI] [PubMed] [Google Scholar]

- [7].Fish J, Wagner GJ, Keten S, Mesoscopic and multiscale modelling in materials, Nature Mater. 20 (6) (2021) 774–786. [DOI] [PubMed] [Google Scholar]

- [8].Wang Y, Li Z, Xu J, Yang C, Karniadakis GE, Concurrent coupling of atomistic simulation and mesoscopic hydrodynamics for flows over soft multi-functional surfaces, Soft Matter 15 (8) (2019) 1747–1757. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [9].Zhang T, Li X, Gao H, Fracture of graphene: a review, Int. J. Fract 196 (1–2) (2015) 1–31. [Google Scholar]

- [10].Jing N, Xue Q, Ling C, Shan M, Zhang T, Zhou X, Jiao Z, Effect of defects on Young’s modulus of graphene sheets: a molecular dynamics simulation, RSC Adv. 2 (24) (2012) 9124–9129. [Google Scholar]

- [11]. Ortiz M, A method of homogenization of elastic media, Internat. J. Engrg. Sci 25 (7) (1987) 923–934.

- [12]. Lin P, Theoretical and numerical analysis for the quasi-continuum approximation of a material particle model, Math. Comp 72 (242) (2003) 657–675.

- [13]. Xiao S, Belytschko T, A bridging domain method for coupling continua with molecular dynamics, Comput. Methods Appl. Mech. Engrg 193 (17–20) (2004) 1645–1669.

- [14]. Nie X, Chen S, Robbins M, et al., A continuum and molecular dynamics hybrid method for micro- and nano-fluid flow, J. Fluid Mech 500 (2004) 55–64.

- [15]. Tran JS, Schiavazzi DE, Ramachandra AB, Kahn AM, Marsden AL, Automated tuning for parameter identification and uncertainty quantification in multi-scale coronary simulations, Comput. & Fluids 142 (2017) 128–138.

- [16]. Kevrekidis IG, Samaey G, Equation-free multiscale computation: Algorithms and applications, Annu. Rev. Phys. Chem 60 (2009) 321–344.

- [17]. Theodoropoulos C, Qian Y-H, Kevrekidis IG, “Coarse” stability and bifurcation analysis using time-steppers: A reaction-diffusion example, Proc. Natl. Acad. Sci 97 (18) (2000) 9840–9843.

- [18]. Kevrekidis IG, Gear CW, Hyman JM, Kevrekidis PG, Runborg O, Theodoropoulos C, et al., Equation-free, coarse-grained multiscale computation: enabling microscopic simulators to perform system-level analysis, Commun. Math. Sci 1 (4) (2003) 715–762.

- [19]. D’Elia M, Littlewood D, Trageser J, Perego M, Bochev P, An optimization-based strategy for peridynamic-FEM coupling and for the prescription of nonlocal boundary conditions, 2021, arXiv preprint arXiv:2110.04420.

- [20]. Zohdi TI, Homogenization methods and multiscale modeling, in: Encyclopedia of Computational Mechanics Second Edition, Wiley Online Library, 2017, pp. 1–24.

- [21]. Bensoussan A, Lions J-L, Papanicolaou G, Asymptotic Analysis for Periodic Structures, Vol. 374, American Mathematical Soc., 2011.

- [22]. Weinan E, Engquist B, Multiscale modeling and computation, Notices Amer. Math. Soc 50 (9) (2003) 1062–1070.

- [23]. Efendiev Y, Galvis J, Hou TY, Generalized multiscale finite element methods (GMsFEM), J. Comput. Phys 251 (2013) 116–135.

- [24]. You H, Yu Y, Silling S, D’Elia M, A data-driven peridynamic continuum model for upscaling molecular dynamics, Comput. Methods Appl. Mech. Engrg 389 (2022) 114400.

- [25]. Blumers A, Yin M, Nakajima H, Hasegawa Y, Li Z, Karniadakis GE, Multiscale parareal algorithm for long-time mesoscopic simulations of microvascular blood flow in zebrafish, Comput. Mech 68 (2021) 1131–1152.

- [26]. Milton GW, The Theory of Composites, Cambridge University Press, 2002.

- [27]. Carleo G, Cirac I, Cranmer K, Daudet L, Schuld M, Tishby N, Vogt-Maranto L, Zdeborová L, Machine learning and the physical sciences, Rev. Modern Phys 91 (4) (2019) 045002.

- [28]. Karniadakis GE, Kevrekidis IG, Lu L, Perdikaris P, Wang S, Yang L, Physics-informed machine learning, Nat. Rev. Phys 3 (6) (2021) 422–440.

- [29]. Zhang L, Han J, Wang H, Car R, Weinan E, Deep potential molecular dynamics: a scalable model with the accuracy of quantum mechanics, Phys. Rev. Lett 120 (14) (2018) 143001.

- [30]. Cai S, Mao Z, Wang Z, Yin M, Karniadakis GE, Physics-informed neural networks (PINNs) for fluid mechanics: A review, Acta Mech. Sinica (2022) 1–12.

- [31]. Pfau D, Spencer JS, Matthews AG, Foulkes WMC, Ab initio solution of the many-electron Schrödinger equation with deep neural networks, Phys. Rev. Res 2 (3) (2020) 033429.

- [32]. Wang K, Sun W, A multiscale multi-permeability poroplasticity model linked by recursive homogenizations and deep learning, Comput. Methods Appl. Mech. Engrg 334 (2018) 337–380.

- [33]. Arbabi H, Bunder JE, Samaey G, Roberts AJ, Kevrekidis IG, Linking machine learning with multiscale numerics: data-driven discovery of homogenized equations, JOM 72 (12) (2020) 4444–4457.

- [34]. Rahman AS, Hosono T, Quilty JM, Das J, Basak A, Multiscale groundwater level forecasting: coupling new machine learning approaches with wavelet transforms, Adv. Water Resour 141 (2020) 103595.

- [35]. Peng GC, Alber M, Tepole AB, Cannon WR, De S, Dura-Bernal S, Garikipati K, Karniadakis GE, Lytton WW, Perdikaris P, et al., Multiscale modeling meets machine learning: What can we learn? Arch. Comput. Methods Eng 28 (3) (2021) 1017–1037.

- [36]. Regazzoni F, Dedè L, Quarteroni A, Machine learning of multiscale active force generation models for the efficient simulation of cardiac electromechanics, Comput. Methods Appl. Mech. Engrg 370 (2020) 113268.

- [37]. Chattopadhyay A, Hassanzadeh P, Subramanian D, Data-driven predictions of a multiscale Lorenz 96 chaotic system using machine-learning methods: reservoir computing, artificial neural network, and long short-term memory network, Nonlinear Process. Geophys 27 (3) (2020) 373–389.

- [38]. Bhatia H, Carpenter TS, Ingólfsson HI, Dharuman G, Karande P, Liu S, Oppelstrup T, Neale C, Lightstone FC, Van Essen B, et al., Machine-learning-based dynamic-importance sampling for adaptive multiscale simulations, Nat. Mach. Intell 3 (5) (2021) 401–409.

- [39]. Masi F, Stefanou I, Thermodynamics-based artificial neural networks (TANN) for multiscale modeling of materials with inelastic microstructure, 2021, arXiv preprint arXiv:2108.13137.

- [40]. Wu L, Zulueta K, Major Z, Arriaga A, Noels L, Bayesian inference of non-linear multiscale model parameters accelerated by a deep neural network, Comput. Methods Appl. Mech. Engrg 360 (2020) 112693.

- [41]. Pled F, Desceliers C, Zhang T, A robust solution of a statistical inverse problem in multiscale computational mechanics using an artificial neural network, Comput. Methods Appl. Mech. Engrg 373 (2021) 113540.

- [42]. Xu X, D’Elia M, Glusa C, Foster JT, Machine-learning of nonlocal kernels for anomalous subsurface transport from breakthrough curves, 2022, arXiv preprint arXiv:2201.11146.

- [43]. Park J, Zhu X, Physics-informed neural networks for learning the homogenized coefficients of multiscale elliptic equations, 2022, arXiv preprint arXiv:2202.09712v1.

- [44]. Chan S, Elsheikh AH, A machine learning approach for efficient uncertainty quantification using multiscale methods, J. Comput. Phys 354 (2018) 493–511.

- [45]. Rocha I, Kerfriden P, van der Meer F, On-the-fly construction of surrogate constitutive models for concurrent multiscale mechanical analysis through probabilistic machine learning, J. Comput. Phys.: X 9 (2021) 100083.

- [46]. Pyrialakos S, Kalogeris I, Sotiropoulos G, Papadopoulos V, A neural network-aided Bayesian identification framework for multiscale modeling of nanocomposites, Comput. Methods Appl. Mech. Engrg 384 (2021) 113937.

- [47]. Ingólfsson HI, Neale C, Carpenter TS, Shrestha R, López CA, Tran TH, Oppelstrup T, Bhatia H, Stanton LG, Zhang X, et al., Machine learning-driven multiscale modeling reveals lipid-dependent dynamics of RAS signaling proteins, Proc. Natl. Acad. Sci 119 (1) (2022).

- [48]. Liu B, Kovachki N, Li Z, Azizzadenesheli K, Anandkumar A, Stuart AM, Bhattacharya K, A learning-based multiscale method and its application to inelastic impact problems, J. Mech. Phys. Solids 158 (2022) 104668.

- [49]. Li Z, Kovachki N, Azizzadenesheli K, Liu B, Bhattacharya K, Stuart A, Anandkumar A, Fourier neural operator for parametric partial differential equations, 2020, arXiv preprint arXiv:2010.08895.

- [50]. Li Z, Kovachki N, Azizzadenesheli K, Liu B, Bhattacharya K, Stuart A, Anandkumar A, Neural operator: Graph kernel network for partial differential equations, 2020, arXiv preprint arXiv:2003.03485.

- [51]. Lu L, Jin P, Pang G, Zhang Z, Karniadakis GE, Learning nonlinear operators via DeepONet based on the universal approximation theorem of operators, Nat. Mach. Intell 3 (3) (2021) 218–229.

- [52]. You H, Yu Y, D’Elia M, Gao T, Silling S, Nonlocal kernel network (NKN): a stable and resolution-independent deep neural network, 2022, arXiv preprint arXiv:2201.02217.

- [53]. Raissi M, Perdikaris P, Karniadakis GE, Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations, J. Comput. Phys 378 (2019) 686–707.

- [54]. Cai S, Wang Z, Lu L, Zaki TA, Karniadakis GE, DeepM&Mnet: Inferring the electroconvection multiphysics fields based on operator approximation by neural networks, J. Comput. Phys 436 (2021) 110296.

- [55]. Lin C, Maxey M, Li Z, Karniadakis GE, A seamless multiscale operator neural network for inferring bubble dynamics, J. Fluid Mech 929 (2021).

- [56]. Yin M, Ban E, Rego BV, Zhang E, Cavinato C, Humphrey JD, Karniadakis GE, Simulating progressive intramural damage leading to aortic dissection using DeepONet: an operator-regression neural network, J. R. Soc. Interface 19 (2021) 20140397.

- [57]. Lin C, Li Z, Lu L, Cai S, Maxey M, Karniadakis GE, Operator learning for predicting multiscale bubble growth dynamics, J. Chem. Phys 154 (10) (2021) 104118.

- [58]. Li Z, Kovachki N, Azizzadenesheli K, Liu B, Bhattacharya K, Stuart A, Anandkumar A, Multipole graph neural operator for parametric partial differential equations, 2020, arXiv preprint arXiv:2006.09535.

- [59]. Goswami S, Yin M, Yu Y, Karniadakis GE, A physics-informed variational DeepONet for predicting crack path in quasi-brittle materials, Comput. Methods Appl. Mech. Engrg 391 (2022) 114587.

- [60]. Mao Z, Lu L, Marxen O, Zaki TA, Karniadakis GE, DeepM&Mnet for hypersonics: Predicting the coupled flow and finite-rate chemistry behind a normal shock using neural-network approximation of operators, J. Comput. Phys 447 (2021) 110698.

- [61]. Lu L, Meng X, Cai S, Mao Z, Goswami S, Zhang Z, Karniadakis GE, A comprehensive and fair comparison of two neural operators (with practical extensions) based on FAIR data, 2021, arXiv preprint arXiv:2111.05512.

- [62]. Kovachki N, Li Z, Liu B, Azizzadenesheli K, Bhattacharya K, Stuart A, Anandkumar A, Neural operator: Learning maps between function spaces, 2021, arXiv preprint arXiv:2108.08481.

- [63]. Chen T, Chen H, Universal approximation to nonlinear operators by neural networks with arbitrary activation functions and its application to dynamical systems, IEEE Trans. Neural Netw 6 (4) (1995) 911–917.

- [64]. Lanthaler S, Mishra S, Karniadakis GE, Error estimates for DeepONets: A deep learning framework in infinite dimensions, 2021, arXiv preprint arXiv:2102.09618.

- [65]. Patera AT, A spectral element method for fluid dynamics: laminar flow in a channel expansion, J. Comput. Phys 54 (3) (1984) 468–488.

- [66]. Karniadakis GE, Sherwin S, Spectral/hp Element Methods for Computational Fluid Dynamics, Oxford University Press, 2005.

- [67]. Hughes TJ, The Finite Element Method: Linear Static and Dynamic Finite Element Analysis, Courier Corporation, 2012.

- [68]. Versteeg HK, Malalasekera W, An Introduction to Computational Fluid Dynamics: The Finite Volume Method, Pearson Education, 2007.

- [69]. Monaghan JJ, Smoothed particle hydrodynamics, Annu. Rev. Astron. Astrophys 30 (1) (1992) 543–574.

- [70]. Espanol P, Warren P, Statistical mechanics of dissipative particle dynamics, Europhys. Lett 30 (4) (1995) 191.

- [71]. Groot RD, Warren PB, Dissipative particle dynamics: Bridging the gap between atomistic and mesoscopic simulation, J. Chem. Phys 107 (11) (1997) 4423–4435.

- [72]. Rapaport DC, The Art of Molecular Dynamics Simulation, Cambridge University Press, 2004.

- [73]. Yu Y, Bargos FF, You H, Parks ML, Bittencourt ML, Karniadakis GE, A partitioned coupling framework for peridynamics and classical theory: analysis and simulations, Comput. Methods Appl. Mech. Engrg 340 (2018) 905–931.

- [74]. Mok D, Wall W, Ramm E, Accelerated iterative substructuring schemes for instationary fluid-structure interaction, Comput. Fluid Solid Mech 2 (2001) 1325–1328.

- [75]. Lions P-L, et al., On the Schwarz alternating method. I, in: First International Symposium on Domain Decomposition Methods for Partial Differential Equations, Vol. 1, Paris, France, 1988, p. 42.

- [76]. Mota A, Tezaur I, Alleman C, The Schwarz alternating method in solid mechanics, Comput. Methods Appl. Mech. Engrg 319 (2017) 19–51.

- [77]. Funaro D, Quarteroni A, Zanolli P, An iterative procedure with interface relaxation for domain decomposition methods, SIAM J. Numer. Anal 25 (6) (1988) 1213–1236.

- [78]. Abaqus, Abaqus 2020 Documentation, Dassault Systèmes, 2020.

- [79]. Ganzenmüller GC, An hourglass control algorithm for Lagrangian smooth particle hydrodynamics, Comput. Methods Appl. Mech. Engrg 286 (2015) 87–106.

- [80]. Alnæs M, Blechta J, Hake J, Johansson A, Kehlet B, Logg A, Richardson C, Ring J, Rognes ME, Wells GN, The FEniCS project version 1.5, Arch. Numer. Softw 3 (100) (2015).

- [81]. Wang S, Wang H, Perdikaris P, Learning the solution operator of parametric partial differential equations with physics-informed DeepONets, Sci. Adv 7 (40) (2021) eabi8605.

- [82]. Yin M, Zheng X, Humphrey JD, Karniadakis GE, Non-invasive inference of thrombus material properties with physics-informed neural networks, Comput. Methods Appl. Mech. Engrg 375 (2021) 113603.

- [83]. Zhang E, Yin M, Karniadakis GE, Physics-informed neural networks for nonhomogeneous material identification in elasticity imaging, 2020, arXiv preprint arXiv:2009.04525.

- [84]. Zhang E, Dao M, Karniadakis GE, Suresh S, Analyses of internal structures and defects in materials using physics-informed neural networks, Sci. Adv 8 (7) (2022) eabk0644.

- [85]. Holzapfel GA, Gasser TC, Ogden RW, A new constitutive framework for arterial wall mechanics and a comparative study of material models, J. Elast. Phys. Sci. Solids 61 (1) (2000) 1–48.

- [86]. Dijkstra W, Mattheij R, The condition number of the BEM-matrix arising from Laplace’s equation, Electron. J. Bound. Elem 4 (2) (2006).