Abstract

Digital evidence is essential to criminal investigations and prosecutions, but its use is fraught with challenges: rapid changes in technology, the need to communicate those changes to stakeholders, and a sociopolitical landscape that leaves little room for error, particularly regarding electronic data privacy. In the criminal justice system, these challenges can affect the admissibility of evidence and its proper introduction at trial, as well as how cases are charged and resolved. A survey of 50 United States (U.S.)-based prosecutors, contextualized by data from a second survey of 51 U.S.-based investigators, explores these issues for the present and future, finding that crucial factors include training, prosecutors who specialize in digital evidence issues, and strong relationships between prosecutors and investigators.

Keywords: Prosecution, Digital evidence, Forensic service, Training, Decision making, Case building, Criminal justice

1. Introduction

By one estimate, digital evidence is a factor in about 90% of criminal cases [1]. As law enforcement investigations themselves become more digitized, their complexity rises along with the volume of data being managed [2]. Yet the traditional criminal justice system has struggled to adapt. With digital forensics having evolved as an investigative rather than a forensic discipline [3], emphasis on law enforcement success may have come at the expense of fair-trial discussions and requirements [2].

For example, factors including metadata validation, “the lack of consensus regarding the needs in digital evidence processing,” the insufficiency of “methods and tools from ten years ago,” and “interconnected criminal justice issues that go beyond law enforcement's typical role in collecting evidence” are all part of “a multifaceted challenge” which prosecutors contend with by proxy: “Compared with the chain of custody for physical evidence, that for digital evidence is much more complex, volatile, and difficult to reliably maintain. Prosecutors must prove that only authorized persons had access to the evidence and guarantee that copies and analyses were made by authorized manipulations and using acceptable methods.” Another challenge includes digital evidence backlogs, which could in turn complicate prosecutors' decision-making: to charge, for one, or plea bargain, for another [4].

Yet in spite of an estimated 11,000 digital forensics laboratories across the United States [5], prosecutors often have a poor understanding of digital data's relevance to their cases, or how to use the evidence [4]. Of course, defendants always have the right to challenge the evidence against them, and a judge can reject a plea for insufficient evidence [6]. However, whether defendants and their attorneys have the technical ability or financial resources to meaningfully contest digital evidence, and whether judges can effectively evaluate the issues [7], could render these kinds of challenges moot [8].

At the same time, so few cases ever make it to trial [9] that the chances are slim that any evidence, digital or not, would be admitted. One review of 145 cases appealed in U.S. federal circuit courts between 2010 and 2015 found that only 22 “were based on the science of computer forensics, including probative value, authenticity, hearsay, relevancy, and scientific merit” [10].

These kinds of issues contribute to a fraught landscape for prosecutors, whose offices have come under greater scrutiny in recent years [11], including for their approach to certain kinds of forensic evidence [12]. Indeed, noted one report on prosecutor priorities: “Prosecutors are expected to deliver fair and legitimate justice in their decisionmaking while balancing aspects of budgets and resources, working with increasingly larger volumes of digital and electronic evidence that have developed from technological advancements (such as social media platforms), partnering with communities and other entities, and being held accountable for their actions and differing litigation strategies” [13].

The extent to which digital evidence factors into any of these “big picture” issues is unknown. But as digital evidence is used in increasingly controversial prosecutions [14], and while the U.S.'s roughly two million prisoners continue to lead the world in total number of people incarcerated [15], it is worth questioning whether the U.S. justice system has reached a point where the effectiveness of its current processes and procedures must be rethought. Toward that end, the stark gap between the extent to which digital evidence is relied upon and its apparent reliability was the foundation for the research described in this paper. In particular, how prosecutors and investigators around the country interact with digital evidence, and with one another, has perhaps never been more germane to a free society's notions of justice. This research, combining two surveys and a set of follow-up interviews, sought to better understand these interactions.

1.1. Definitions

The following terms are used throughout this paper and are defined as follows:

- Digital evidence/digital forensic evidence consists of data captured from digital devices, used to investigate and prosecute criminal cases.
- Digital data consists of data captured from devices but not necessarily used as evidence.
- Third party data/evidence refers to data captured from platforms operated by a third party, such as a cellular service or social media company.
- Low-hanging fruit refers to digital data that is easy to obtain, either from a device or a third-party platform e.g. a publicly facing (unlocked) social media account.

As a caveat, however, these definitions were not provided to survey respondents, though the terms “digital evidence” and “third party data” were differentiated in some questions. That said, some interviewees described how they had interpreted the questions. For example, one respondent said that, to her, “digital evidence” means social media, phones, and computers as opposed to digital surveillance videos and cellular tower sites. Another differentiated between seizures of device data from someone's home, for example, and seizures of bank records or other third-party data which may be in a digital format but are fundamentally more about traditional forensic accounting or other investigative methods.

2. Methodology

Survey participants were recruited from two different e-mail listservs—one dedicated to high tech crime investigation, and the other dedicated to prosecutors specializing in digital evidence—as well as from a prosecutor-oriented training course at the National Computer Forensics Institute. As a result, the prosecutor and investigator respondents are not necessarily connected by jurisdiction.

The surveys were built using Google Forms. With moderators' approval, emails were sent to the two listservs describing the authors, the project, the survey, and the intended outcome (to assess training needs as well as assist in policy development). Respondents were informed that results would be anonymized, though they would have the option to provide their email if they agreed to be available for comment. They were also informed that results would be available for review by any participant and might be the subject of a publication or publications. None of the respondents were compensated for participating in the survey.

The original survey consisted of eight questions for both respondent groups, 29 questions for prosecutors, and 21 questions for investigators. First, a series of demographic questions was asked regarding where respondents were located, the size of the population they serve, how many people are employed in their own agency, whether those people specialize or can be considered generalists, whether they can rely on a prosecutor dedicated to high tech, and the respondent's own years of experience.

These and the following process-oriented questions consisted primarily of multiple choice, with a mix of “pick one” and “check all that apply.” The former included some questions in which respondents were asked to estimate how much of their time digital evidence factors into, segmented into percent ranges (e.g. 0–20%, 20–40%). Additionally, several of the questions asked respondents to rate their experiences or opinions on scales of 1–5, “never” to “usually,” etc.

Only the first eight questions required responses. Respondents had the option to skip questions within their own sections. Although some respondents skipped some questions, especially when they weren't relevant to the respondent's own experience, this was not a common occurrence and none of the respondents failed to complete the full survey.

Because the survey was an independent project that started as a way to obtain data for journalism research, the author neither sought nor obtained approval from an Institutional Review Board to work with human subjects. However, all participants were made aware that the survey results as well as interview participants’ comments were intended for publication.

A link to each Google Form was sent in September 2019, and the data collection period lasted through the end of October. Two reminder emails were sent during this time. A total of 55 respondents – 51 investigators, and four prosecutors – came from the first survey. From the prosecutor-oriented survey came a total of 46 respondents, to which the responses from the four prosecutors in the original survey were added.

In sum, results from 51 investigators and 50 prosecutors were evaluated. The investigator respondents consisted of detectives performing a blend of investigative and digital forensic work, though they were not queried on the extent to which they themselves specialize in digital forensics.

The survey included an open answer field where respondents could provide their email address for follow-up. Of the investigator respondents, 13 provided email addresses where they could be contacted, though for lack of time, no follow-up interviews were conducted with this cohort. Of the prosecutor respondents, 15 provided email addresses where they could be reached.

Of these 15, 11 participated in follow-up interviews between December 2020 and March 2021, with some additional follow-ups in June and July 2021. Interview questions sought context for these interviewees’ survey responses, to lend insight into what might have driven them. Interviewees were informed that their names and organizations might be directly quoted, and were given the opportunity to either not participate in the interviews, or to have their identities anonymized. Interviews were recorded and transcribed, and interviewees had the opportunity to review and approve their quotes for inclusion in the final publication.
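As a quick consistency check, the respondent counts reported in this section can be tallied in a few lines. The sketch below is illustrative only: the variable and function names are hypothetical and are not part of the survey instrument, and the figures are simply those stated in the text above.

```python
# Respondent counts as stated in the Methodology text.
# All names here are hypothetical, chosen for illustration.
first_survey = {"investigators": 51, "prosecutors": 4}
prosecutor_survey = {"prosecutors": 46}


def pooled_counts(*surveys):
    """Sum respondent counts per group across several surveys."""
    totals = {}
    for survey in surveys:
        for group, count in survey.items():
            totals[group] = totals.get(group, 0) + count
    return totals


totals = pooled_counts(first_survey, prosecutor_survey)

# Prosecutors who offered an email address, and those later interviewed.
offered_followup = 15
interviewed = 11
interview_share = interviewed / offered_followup

print(totals)
print(f"Share of contactable prosecutors interviewed: {interview_share:.0%}")
```

Pooling the four prosecutors from the first survey with the 46 from the prosecutor-oriented survey gives the 50 prosecutors evaluated alongside the 51 investigators.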

3. Survey respondent demographics and experience

Rather than a 1:1 comparison between prosecutors and the investigators they work with, the survey sought to attain a general sense of what each group was experiencing in their regional locations, communities, and offices.

3.1. Prosecutor and investigator demographics

Generally speaking, the prosecutors who responded to the survey came from larger jurisdictions (some including state attorney general offices) than the investigators, who represented a broader range of local, county, and state agencies serving different population sizes.

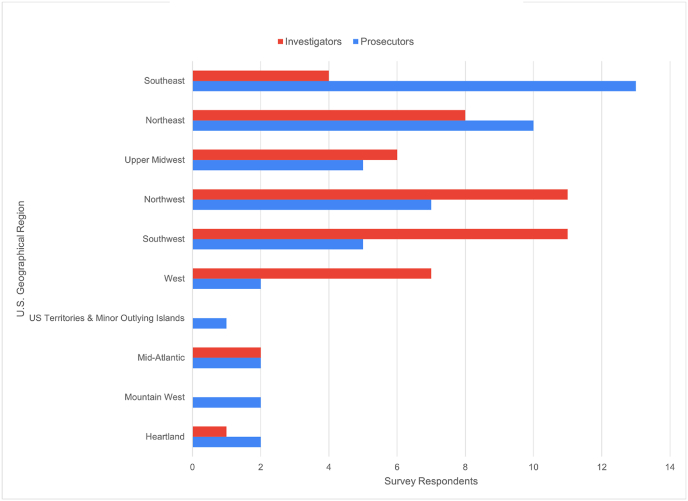

More than half of the prosecutor survey respondents were located on the US East Coast. A little less than one-third responded from the West Coast, with the remainder in the middle of the country. In contrast, more than half of the investigator respondents came from the US West Coast. East Coast-based investigators accounted for fewer than one-quarter of responses, and again, the remaining respondents were based in the middle of the country (see Fig. 1).

Fig. 1.

A higher proportion of prosecutors—nearly 50 percent—responded from the eastern part of the United States, and were about evenly split between the Northeast and Southeast. That proportion was reversed for investigators, who responded in similar proportions from the West.

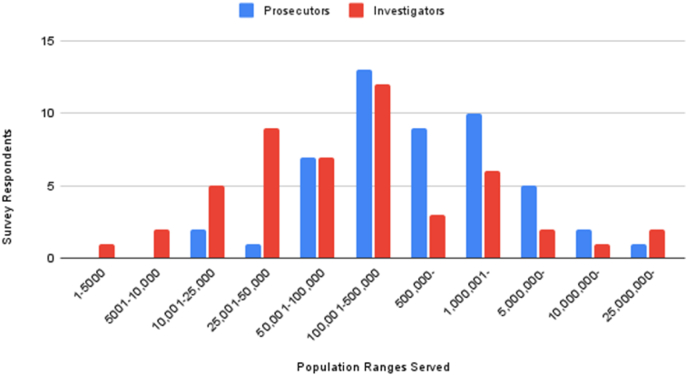

However, regional locations didn't suggest that most respondents came from metropolitan population centers. Most respondents represented mid-size communities, working in city, county, or district attorney's offices or local, county, or state law enforcement agencies; none of the respondents came from federal law enforcement or U.S. attorneys' offices (see Fig. 2).1

Fig. 2.

More prosecutors from larger jurisdictions responded to the survey, while the investigator respondents represented a broader range of populations served.

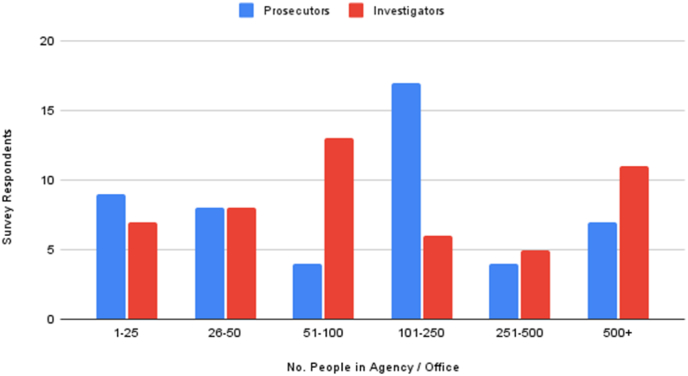

Along similar lines, about 40% of the prosecutor respondents came from offices with under 100 people, but about half came from much larger organizations. The proportions were roughly similar for investigators (see Fig. 3).

Fig. 3.

More than half of the prosecutor respondents came from offices staffed by 100+ people, but the same proportion came from much smaller offices. The investigators were somewhat more evenly distributed.

3.2. Experience with digital evidence

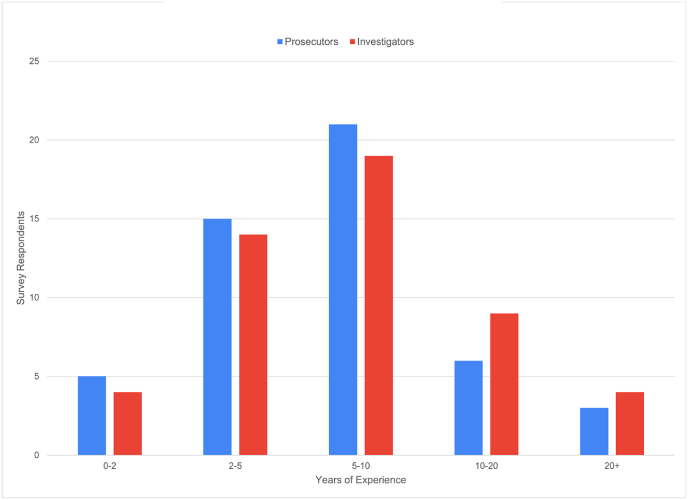

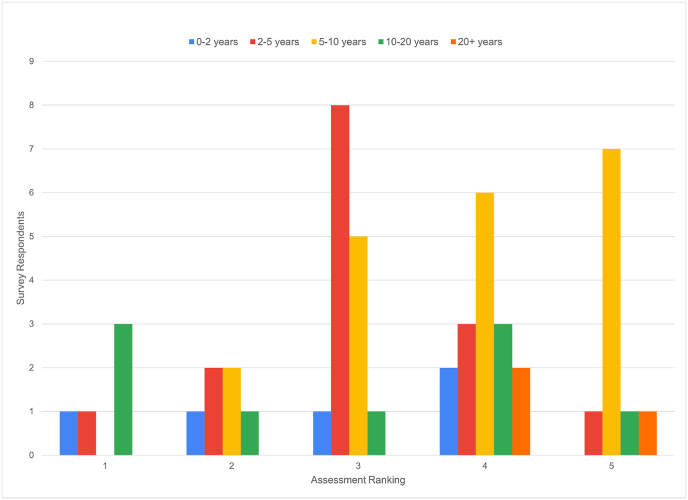

When it came to longevity, the proportions of experience were roughly the same across both groups. Very few respondents had fewer than two years, or more than 20 years, of experience with digital evidence. The vast majority of both groups had between two and 10 years of experience, roughly corresponding with the degree to which digital evidence has become integral to criminal cases. Still, the investigators tended to have more years of experience than the prosecutors (see Fig. 4).

Fig. 4.

The overwhelming majority of prosecutor respondents had between two and ten years of experience with digital evidence, but more investigators have greater longevity of experience than prosecutors.

Whether the slight experience gap between prosecutor and investigator respondents is good, bad, or neutral is unclear. On one hand, technology changes so rapidly and in such profound ways that experience may matter less than good technical skills and a hunger for continuous learning. Several prosecutors reflected in follow-up interviews that they are computing or technology enthusiasts.

Others found themselves in the digital technology niche as a result of assignments or cases they worked, then, recognizing its importance, grew their expertise from there. For example, they read articles or communicate with forensic examiners about why it may not be possible to retrieve a piece of evidence, or self-educate on legal or constitutional, rather than technological or procedural questions.

On the other hand, prosecutors and investigators with fewer years of experience may not have the perspective on digital trends that those with longer experience do. Thus, because the main purpose of this research was to learn whether prosecutors make different decisions (charge, plea, or dismiss cases) based on digital evidence, the survey questioned whether the respondents’ experience included access to colleagues who specialize in law pertaining to digital evidence.

Specialization is understood to enable prosecutors' offices to more effectively and consistently implement and even innovate on best practices [16], including the support of “more-efficient case development and proceedings in complex cases” [13]. Although the survey didn't specify what “specialist” meant, in a digital evidence context, a specialist prosecutor may offer a variety of types of assistance including:

- Assisting with less widely used devices or platforms.
- Answering questions on the significance of a piece or set of data to their case.
- Developing search warrant and subpoena templates for investigators to use to obtain records from third party companies like internet or electronic service providers and telecoms.
- Coming up with protocols for introducing digital evidence at trial, and/or even training prosecutors in their state on understanding different aspects of digital evidence.
- Communicating the reliability of digital evidence.
- Helping other prosecutors understand how to lay a foundation to introduce digital evidence.
- Navigating a defense challenge to digital evidence in the middle of trial.
- Reviewing electronic warrants.

J.D., an assistant state's attorney in a Southeastern state, argues that for prosecutors, specializing comes down to the ability to more quickly identify what might be important. “An investigator can be very good,” J.D. explained, “but there may be something specific that I want for trial or that I think sheds light on something that they may not know to look for.” In turn, his involvement can help to ascertain what kind of legal process will be needed and thus, potentially obtain evidence more quickly. Further, understanding digital evidence allows J.D. to internalize the full spectrum of evidence to figure out what to introduce in court and how to present it, from juror-friendly chat conversations to timelines.

Most survey respondents, representing about two-thirds of both prosecutor and investigator groups, are generalists working multiple types of cases. In larger jurisdictions, investigators and prosecutors both reflected the presence of general crime or trial assignments alongside specialized ones organized by type of crime (homicides, fraud, gangs, and sex crimes, among others) rather than by type of evidence.

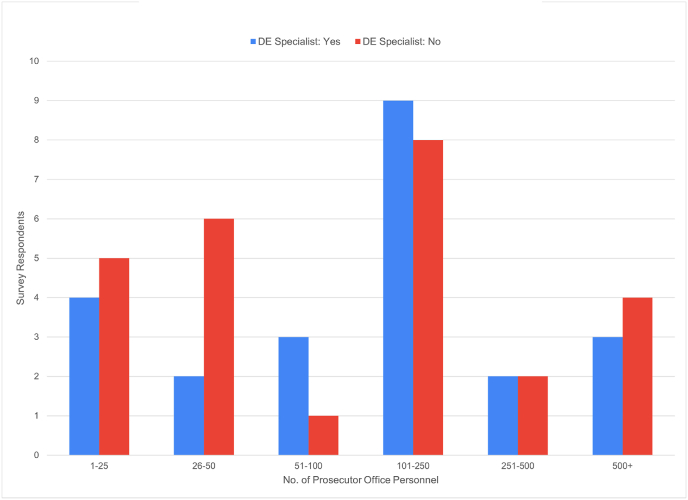

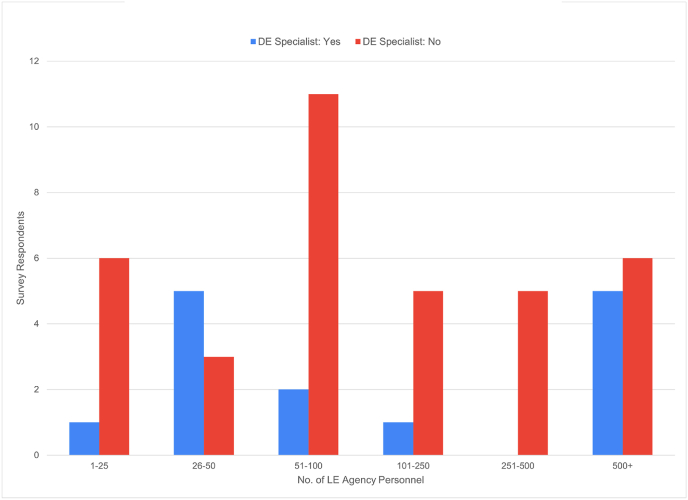

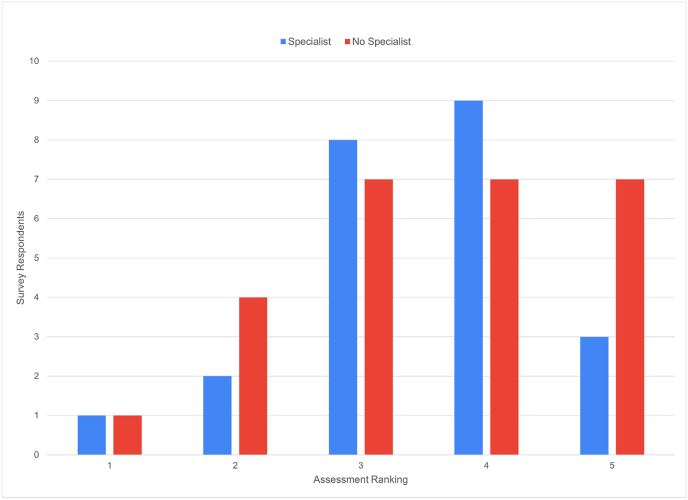

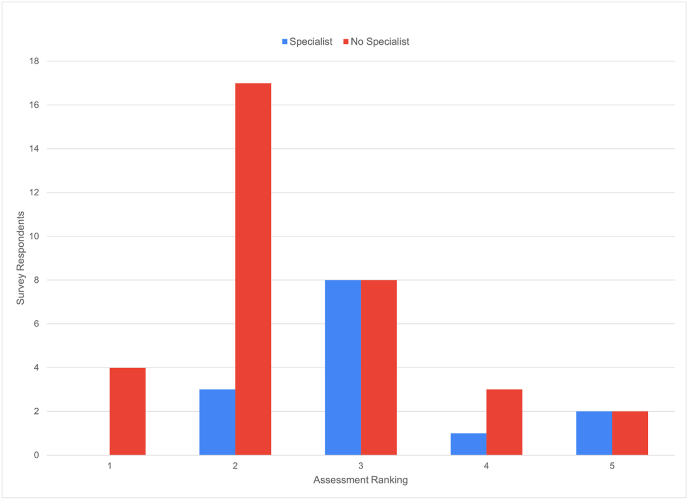

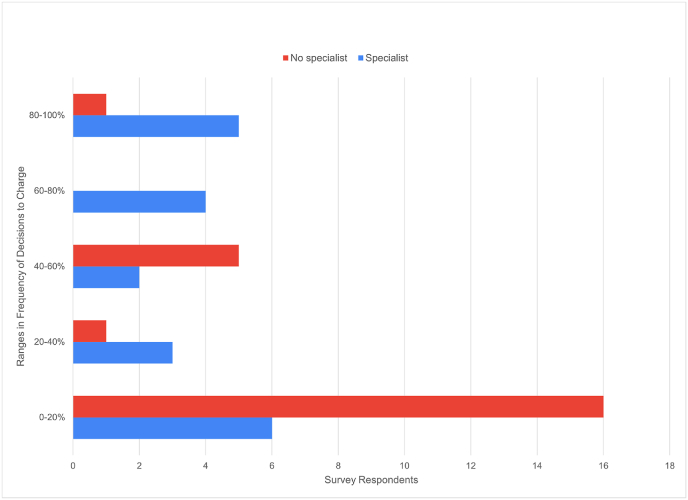

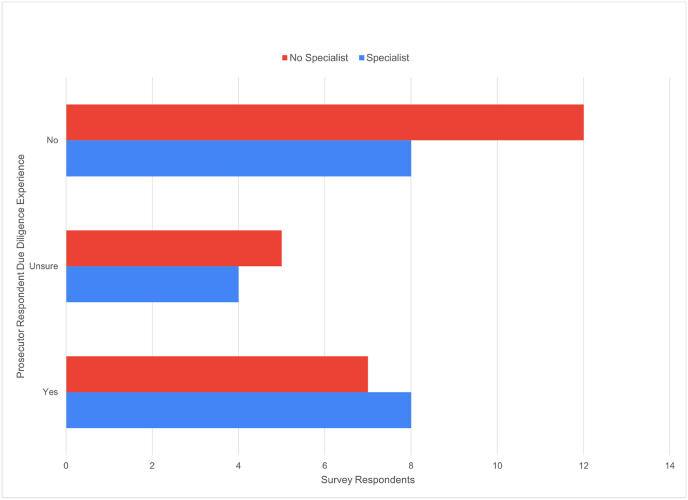

Even so, by a substantial margin, more prosecutor than investigator survey respondents said their agency had a prosecutor dedicated to digital evidence. Investigators overwhelmingly, even in larger agencies, said they have no such dedicated prosecutor to rely on (see Fig. 5, Fig. 6).

Fig. 5.

Prosecutor respondents from mid-size offices (those with between 51 and 250 prosecutors) most often reflected the existence of a digital evidence specialist.

Fig. 6.

In contrast to the prosecutor respondents, the investigator respondents, even in larger agencies, said they have no prosecutor dedicated to digital evidence issues.

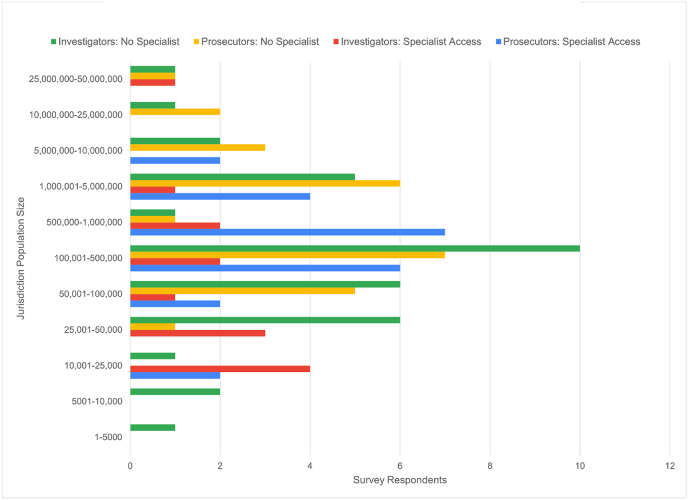

A better sense of these disparities comes from comparing responses across jurisdictional populations. Although to some extent, the responses reflect the number of respondents from each population size, they also show surprising inconsistency (see Fig. 7).

Fig. 7.

Comparing responses from the two groups reflects disparities in the extent to which investigators can rely on prosecutors from various jurisdictional sizes.

For example, far fewer investigators from jurisdictions serving between 100,000 and 1,000,000 people reflected that they could rely on a prosecutor specializing in digital evidence, even though more prosecutors from similarly sized jurisdictions said their offices had such a specialist on staff.

Follow-up interviews provided more context into these disparities. First, prosecutor specialists function in dedicated roles and units in only some district attorneys’ offices. They might advise all prosecutors, or else be limited to devices or platforms that were either the instrument or the target of crime: cyberstalking, child exploitation, or intellectual property theft.

In smaller jurisdictions, interviewees reflected that a dedicated prosecutor specialist might exist, but work centrally. In one northeastern state with a population of about 1.3 million, for instance, some 80 prosecutors can turn to a specialist at the attorney general's office, who serves as legal counsel for the state police computer crimes unit.

Still other specialists provide legal advice in an informal capacity, especially if their colleagues know them from having acted in an officially designated role, as a training provider, or in a related activity. J.S., an assistant attorney general in a Mountain West state, is one example of a prosecutor who has “developed an interest or an expertise in something through working a specific case or kind of case in the past,” whose colleagues then seek out their advice and recommendations.

Again, however, these technology-focused prosecutor respondents are the exception, not the rule. J.D. believes many prosecutors are overwhelmed by what they don't understand, so they place more trust in law enforcement analysts, who may or may not have the experience they need, nor the legal expertise, to carry a case.

Still, one problem remains for those who specialize: the potential for liability. Prosecutors engaged in the prosecutorial function have absolute immunity from liability for Constitutional violations, while investigators are subject to qualified immunity. V.J., a prosecutor on the West Coast, observed that courts take a narrow view of a prosecutor's specialty. Thus, he said, when prosecutors offer legal counsel to investigators, such as reviewing language for legal process, they are engaging in an investigative function. V.J. added that judges reviewing a prosecutor's immunity apply a standard of whether a prosecutor knew better, or should have known better, when they stepped outside of their role. Under that standard, prosecutors can be liable for deficiencies in a search warrant they review.

Other issues may also be in play. Notably, few respondents—about one-quarter of investigators, compared to less than one-fifth of prosecutors—have more than 10 years of experience in a field whose roots stretch back to the 1980s.

Attrition is likely one part of the problem. Prosecutors’ offices broadly struggle with recruitment and retention [13], and the role of a prosecutor itself is complex and dynamic [17]. Moreover, prosecutor workloads shift, and are difficult to measure [16]. Burnout, a contributing factor in reassignments and resignations alike, is common in this landscape [18]. Among those who investigate and prosecute child exploitation, burnout is especially high [19].

Burned out or not, though, those who build a deep skillset in digital evidence handling or law can find the chance to command higher salaries in the private sector [20] doubly appealing. But C.D., a prosecutor in a northeastern state, said such attrition can compound an existing problem in the United States: a nationwide shortage of trained, qualified forensic examiners [21].

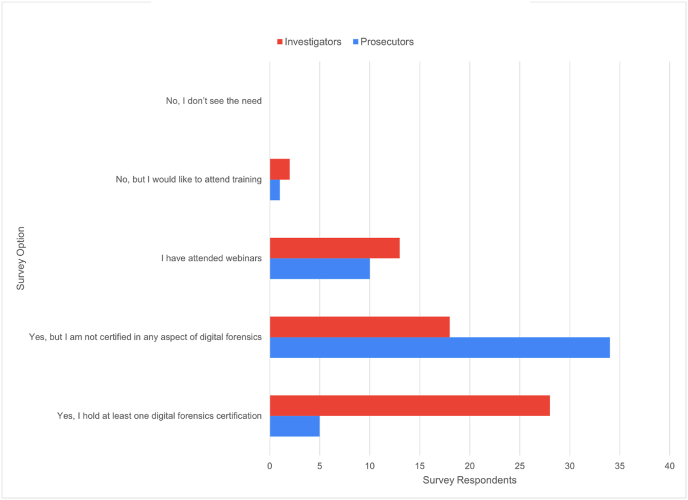

3.3. Digital evidence training

Whether or not prosecutors specialize, training is generally understood to be the main avenue by which investigators and attorneys can gain a better understanding of investigators’ processes and tools [22], more effectively apply reasoning in line with scientific as well as legal principles [23], and bridge gaps in their own perceptions of digital evidence and its value [4]. As B.H., a deputy district attorney working in a Mountain West state, put it: “[B]eing able, as a prosecutor, to do the same trainings that investigators do is incredibly helpful, both in preparing for trial and for being better able to advise law enforcement.”

Yet law schools generally do not cover digital evidence issues [23]. The survey asked not only whether prosecutors had attended training on digital evidence, but also about factors that might keep them from training. The survey results also describe these barriers in terms of demographic factors such as location and population size served.

Nearly 80% of the prosecutor respondents said they had attended training, though only four had obtained some level of certification in digital forensics (see Fig. 8). Indeed, even prosecutors who said they filled a specialist role at their agency weren't certified in any aspect of digital forensics.

Fig. 8.

The vast majority of prosecutor respondents—again, those solicited from technology-oriented e-mail listservs—have attended at least some training to familiarize themselves with digital evidence. Investigators, also solicited from a technology-oriented listserv, were far more likely than the prosecutors to have obtained some level of certification in their training.

In contrast, investigator respondents were far more likely to have obtained some level of certification in their training.

However, the prosecutor respondents don't see a need for the same type of certification training the investigators receive. “I just need to make sure that I understand the evidence and how it works, so that I can properly explain it to a jury,” said L.H., a prosecutor in the Pacific Northwest. “The certifications are really needed by my forensic experts …. it's part of their training that supports their testimony.”

Yet training is important for prosecutors, too. Limited training introduces risks that prosecutors will not be able to adequately prepare themselves or their witnesses or, more broadly, that they will attempt processes or procedures which could compromise their cases [24].

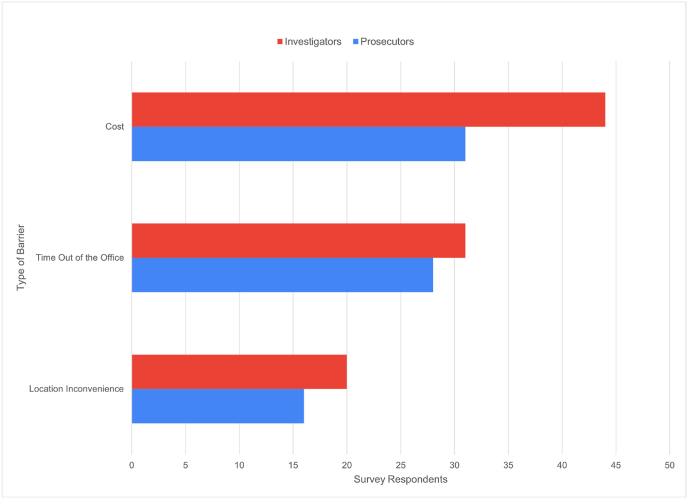

Even so, survey respondents reflected significant barriers to training. Cost, time away from the office (including staffing coverage), and inconvenience in location were all factors, with most respondents citing more than one factor as problematic (see Fig. 9). Location inconvenience is itself related to time away from the office. Courses tend to be offered in locations convenient to a region's trainees, but that doesn't make them local. Prosecutors and investigators in rural locations may need to factor in travel time to a training location that could be an hour or more away.

Fig. 9.

Prosecutors cited location inconvenience as more of a barrier than cost, while the reverse was true for investigators. Both groups cited time out of the office roughly equally.

These three factors weren't the only barriers to training. Survey respondents and interview follow-ups revealed additional complications. For one: curriculum that's overly simplistic, or targeted only to some types of crimes. Reflected one respondent: “I do financial crimes, but most digital evidence courses are full of child crimes folks who have very different issues.” Some prosecutors additionally identified a lack of information about available training, or infrequency of training. Only a single respondent reflected their agency was “very supportive” of training.

Additionally, training can be time-consuming, a degree of commitment that many prosecutors may not be able to make for the skillset they need, said C.D. As a result, unless a prosecutor has a long-term plan to leave for the private sector and intends to rely on certifications to market themselves, certification training (in contrast to more generalized training that confers the type of competency L.H. referred to) doesn't hold a lot of value, which C.D. said is a problem in itself.

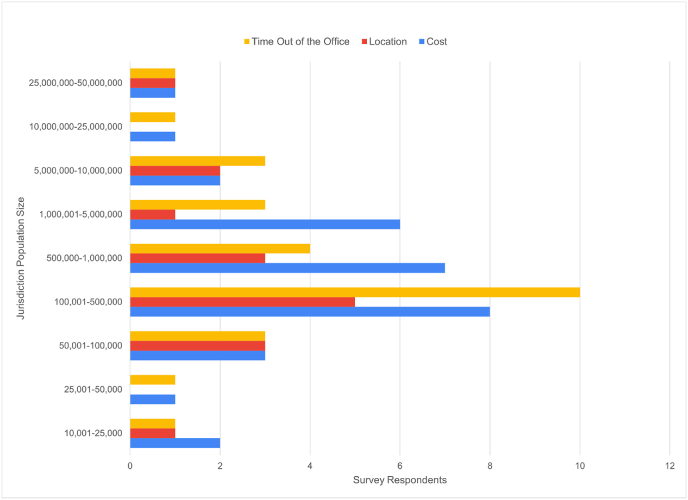

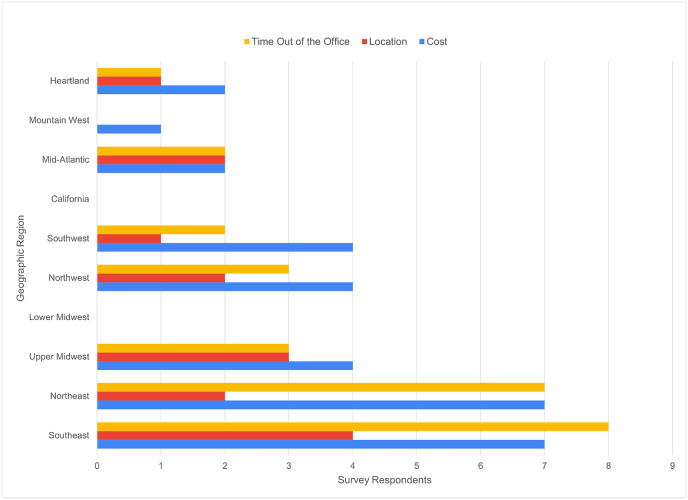

Breaking prosecutors’ barriers down further by population size and location, the survey results reveal that across community sizes and regions, location was less of a factor for the prosecutor respondents than cost and time out of the office (see Fig. 10, Fig. 11).

Fig. 10.

Across population sizes served, prosecutor respondents cited cost and time away from the office as much greater barriers to their training attendance than location. Note: blank/missing data indicates that no respondents from these areas reported these factors applied to them.

Fig. 11.

Cost and time away from the office are, across U.S. regions as well as population sizes served, much greater barriers than location to prosecutor respondents' training attendance. Note: blank/missing data indicates that no respondents from these areas reported these factors applied to them.

Whether in-person or online, training tends to be pricey: anywhere from about $1,200 for a two-day live remote course [25] to $3,000 or more for one lasting four days [26], not counting the cost of travel, hotel accommodations, and expenses for a multi-day trip [27].

Of course, both live and on-demand online training, accelerated by the COVID-19 pandemic, could alleviate some of these issues [23]. This survey was conducted about six months into the pandemic, so the results may indicate that location was less of a problem than in previous years. At the same time, however, virtual formats could be challenging for some [28].

In general, prosecutors’ lack of access to training on digital evidence specifically may be related to the fact that only a handful of nonprofit, grant- or federally-funded entities [29] currently offer it targeted to prosecutors, relative to for-profit training offered by vendors.

It likely wouldn't be feasible for vendors to offer training to prosecutors specifically. For example, Cellebrite offers “Legal Professionals Training” [30]. However, course materials say nothing about whether the course offers continuing legal education (CLE) credits, which are annual state bar requirements for U.S.-based attorneys to continue practicing law [31].

Organizations such as the National District Attorneys Association (NDAA) and its state-level counterparts may offer some training on digital evidence, but appealing to a very broad range of prosecutors means balancing that topic with many others. For example, the NDAA's list of resources includes topics ranging from drug policy to trial advocacy to traffic law, in addition to topics like child abuse and violence against women [32]. In other words, training is both critically necessary and critically underresourced.

(Worth mentioning: an investigator's comment that “My agency has allowed me to attend some pretty advanced training, so I can't say that 'barriers' really applies to me.” “Allowed” is striking language considering that training and proficiency help to improve an expert's credibility and overall performance. Through that lens, advanced training should be a requirement.)

4. Survey results and discussion

The findings on the extent to which prosecutors specialize and/or receive training on digital evidence issues are germane to the way they assess the evidence for its relevance, strength, authenticity, and admissibility, as well as how it fits with other pieces of evidence.

In particular, this research sought to ascertain whether a relationship existed between the degree to which prosecutors understand digital evidence, and how they rated their relationships and case-building efforts with investigators, judges, and others.

Thus some of the survey questions asked about various types of digital evidence and their relevance to different types of cases. After first asking how often prosecutors encounter digital evidence in their cases, and how this frequency compared with the frequency investigators reported, the survey then drilled down into the broad types of cases hypothesized to be reliant upon digital evidence. The survey also differentiated between digital forensic evidence and digital third-party evidence.

4.1. Digital evidence assessment and case-building

The questions asked in this portion of the survey sought a sense for how investigators and prosecutors approach, and rely on, digital evidence to make decisions around individuals’ innocence, guilt, and liberty. In particular, the research was intended to explore the importance of digital evidence relative to other forms of evidence.

For example, said V.J.: “Generally speaking, digital evidence tends to corroborate what a witness or victim reports, and either the digital evidence doesn't exist, or it is not able to provide that corroboration, or the digital evidence exists and it tends to corroborate the opposite. It could show that the witness is mistaken, or not truthful.”

Communication between prosecutors and investigators can address these kinds of procedural and substantive issues with cases and evidence, as well as help with trial preparation [33]. In addition, prosecutor-investigator communication facilitates the service of legal process on companies that hold responsive digital data [22].

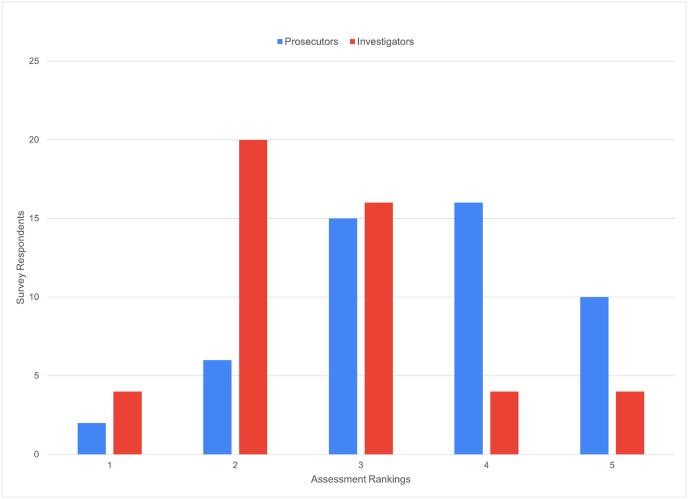

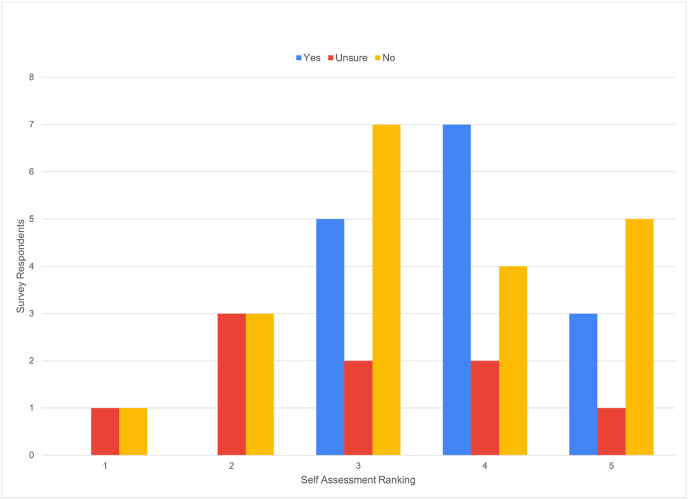

To that end, the survey asked the prosecutors to rate how involved they were in assessing digital evidence, and likewise asked the investigators to rate the involvement of the prosecutors they work with. The 1 to 5 rating scale defined 1 as minimal involvement (taking reports at face value and hardly ever asking questions) and 5 as active engagement (going through the data extensively as a team and understanding how the data supports the investigators’ decisions).

Most of the prosecutor respondents—again, recruited from listservs dedicated to technology in criminal justice—rated themselves either a 3 or a 4. In contrast, investigators’ responses rated the prosecutors they work with at only a 2 or 3 (see Fig. 12).

Fig. 12.

Investigator respondents feel less engaged with their prosecutors than prosecutor respondents do with their forensic investigators.

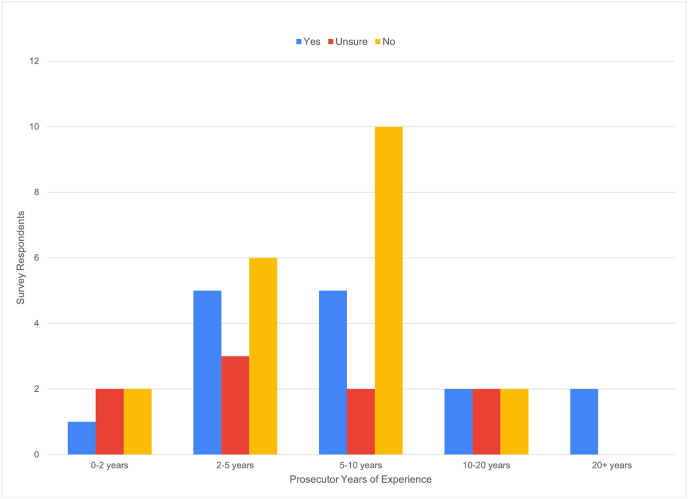

More context comes from cross-referencing the prosecutors’ self-assessment with their years of experience working with digital evidence. Those who said they were most involved tended to have between five and 10 years of experience. Further, although few of the survey respondents had more than 20 years working with digital evidence, those who did rated themselves a 4 or 5. Prosecutors with 10–20 years of experience, meanwhile, were much more divided in their self-ratings, with equal numbers reporting both greater and lesser involvement with investigators (see Fig. 13).

Fig. 13.

How prosecutors rate their involvement with digital evidence depends on their experience levels.
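The cross-referencing described above amounts to a simple cross-tabulation of two survey answers. The following is a minimal sketch of that idea, not the authors' actual analysis: the bracket labels, the `responses` list, and both function names are hypothetical, and the values are invented purely for illustration.

```python
from collections import Counter

# Hypothetical (experience_bracket, self_rating) pairs.
# These are illustrative values only, NOT the survey's data.
responses = [
    ("<5", 3), ("5-10", 4), ("5-10", 5), ("10-20", 2),
    ("10-20", 4), ("20+", 4), ("20+", 5), ("<5", 3),
]


def cross_tabulate(pairs):
    """Count how many respondents fall into each (bracket, rating) cell."""
    return Counter(pairs)


def rating_distribution(pairs, bracket):
    """Return {rating: count} for one experience bracket, for ratings 1..5."""
    table = cross_tabulate(pairs)
    return {rating: table[(bracket, rating)] for rating in range(1, 6)}


# Inspect how self-ratings are spread within one experience bracket.
print(rating_distribution(responses, "10-20"))
```

With a table like this, a divided bracket shows up as counts spread across low and high ratings rather than clustered at one end of the scale.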

Whether the prosecutors had access to a digital evidence specialist didn't appear to affect their self-ratings. Respondents with access to a specialist were only somewhat more likely to report greater involvement in assessing digital evidence, and even those without a specialist reported a relatively high degree of engagement (see Fig. 14).

Fig. 14.

Prosecutor respondents' access to a digital evidence specialist didn't appear to affect their self-ratings.

Because investigator respondents, again, largely reported they had no access to a prosecutor digital evidence specialist, their access or lack thereof had no bearing on their prosecutors’ involvement with their cases (see Fig. 15).

Fig. 15.

Whether investigators had access to a prosecutor specializing in digital evidence did not appear to improve the likelihood of better prosecutor engagement with the investigators' cases.

Further context for these insights comes from survey questions regarding how often respondents in both groups encounter digital evidence overall, the types of evidence they encounter, and the kinds of cases that rely on digital evidence.

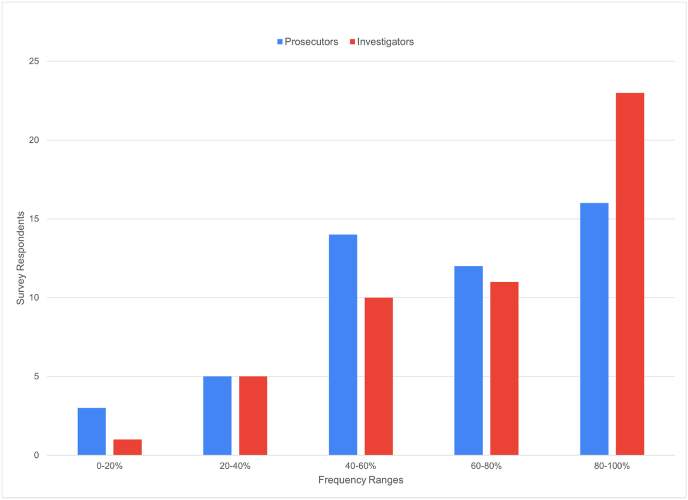

Fewer than one-third of the prosecutor respondents, but nearly half of the investigator respondents, said they encounter digital evidence 80–100% of the time. About one-quarter of each group said they see digital data 60–80% of the time, while about 30% of prosecutors and 20% of investigators said they encountered it only about half the time (see Fig. 16).

Fig. 16.

More investigators than prosecutors say they almost always encounter digital data on their cases.

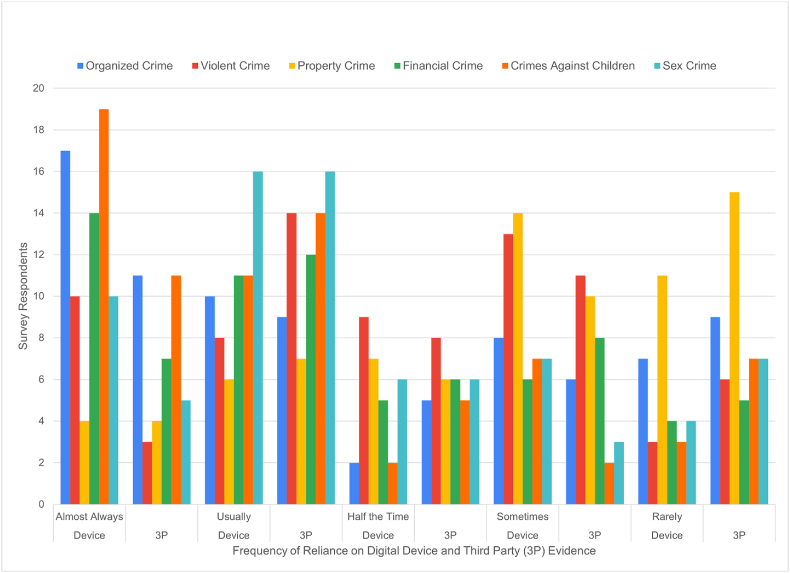

Encountering digital evidence is, of course, not the same as relying on it to build cases. Asked how frequently they relied on digital forensic evidence to build six different types of cases, respondents answered as follows:

- Respondents from both groups “almost always” rely on this type of evidence for cases involving crimes against children, organized crime, and sex offenses.
- However, most of the respondents from each group “sometimes” or “rarely” rely on digital forensic data for property crimes.
- Fewer prosecutors than investigators “almost always” or “usually” rely on digital forensic data for violent crime. Instead, they use this evidence “about half the time” or “sometimes” when building these cases.
- More prosecutors “almost always” or “usually” rely on digital forensic data for financial crimes cases.
- Digital forensic data also factors more strongly for investigators of organized crime than it does for prosecutors, who generally reflected that they use it for those cases only “sometimes” or “rarely.”
- A slightly higher proportion of prosecutors reflected that they “rarely” rely on digital forensic data across all types of cases.

Digital devices are not the only sources of digital evidence, particularly when the devices are damaged beyond repair, encrypted, and/or otherwise inaccessible or unreadable to investigators. In recent years, “third party” data from companies such as wireless telecom providers, social media companies, online gaming platforms, etc. has become increasingly appealing to law enforcement [34]. The data might consist of subscriber information that can be obtained via subpoena, customer records that require a court order, or content that demands a search warrant [35].

Even when a device is unlocked and forensics can offer a complete picture of the data existing on the device, third-party data can be valuable to corroborate and authenticate that evidence; for example, by helping to put a suspect behind a device at the time and/or place an incriminating message was sent or video was shot.

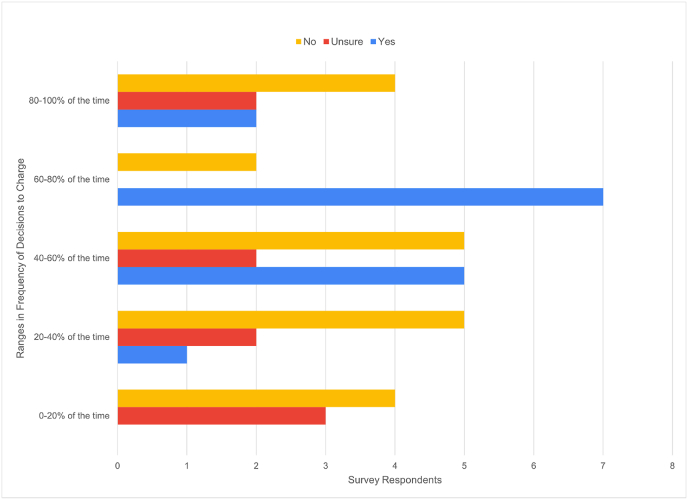

For these reasons, the survey also asked both groups about the extent to which they rely on third-party data for the six types of cases (see Fig. 17).

Fig. 17.

Prosecutors are more likely to rely on digital and third-party data as evidence of crimes with a strong digital element, such as crimes against children, organized crime, and financial crime.

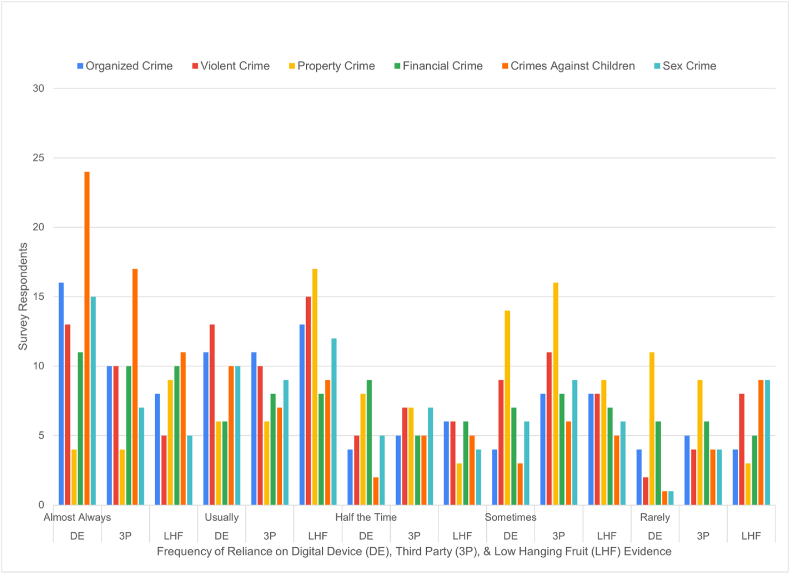

Investigators were additionally asked about their reliance on a third form of digital evidence for the six types of cases: the “low-hanging fruit” video, images, text messages, or other data that can come, say, from a device found at the scene of a crime and which may or may not require extensive forensic analysis [36].

Investigators’ responses indicated that “low hanging fruit” factors heavily in case-building for property crimes, violent crimes, crimes against children, and organized crimes, and only to a lesser extent for sex offenses and financial crimes (see Fig. 18).

Fig. 18.

Investigators' reliance on digital forensic evidence for 6 different types of cases.

It should be noted that investigators' reliance on low-hanging fruit doesn't mean this type of evidence leads straight to convictions. Instead, the data needs to “put the suspect behind the keyboard” [37]. Victim and witness devices and accounts, examined alongside suspects', as well as physical trace evidence, medical or bank records, eyewitness statements, etc., all factor in.

The interviewees indicated that other factors, including how easy digital evidence is to obtain, and whether the seriousness of the case warrants additional effort when evidence is harder to obtain, also apply. For one, third-party data can take more time and effort to obtain. Whether owing to “size and lack of organization” or to provider pushback on legal process, these delays can hinder case-building.

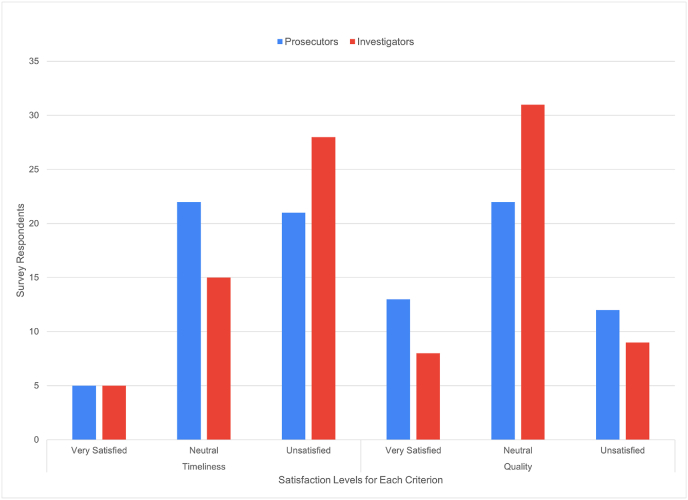

To that end, asked about their levels of satisfaction with both the timeliness and the quality of data returns from electronic service providers (ESPs), both prosecutors and investigators reflected concerns, particularly around returns’ timeliness (see Fig. 19). In responses to an open-ended survey question, investigators reported delays of three to five weeks, up to six to eight months, or in one case, a full year.

Fig. 19.

Prosecutors are slightly more concerned with warrant returns' timeliness than their quality. More investigators are unsatisfied with warrant returns' timeliness, but their level of concern about quality is about the same as prosecutors'.

Overall, the survey results indicate that digital evidence that could help build cases and corroborate other evidence is simultaneously plentiful and elusive. How this contradiction affects prosecutor decision-making, then, has profound implications.

4.2. Prosecutor decision making

Prosecutors make many decisions about their cases, largely based around two questions: whether they can prove a case, and whether they should prove the case [38]. These decisions include screening cases, charging criminal suspects, determining what evidence to introduce at trial and how, offering plea bargains to defendants, making sentencing recommendations, and dismissing charges.

The survey focused specifically on how often digital evidence factored in making these four decisions:

- Charging
- Introducing/getting evidence admitted
- Plea bargaining
- Dismissing charges

In particular, because plea bargaining² is estimated to resolve more than 90% of criminal cases at both federal and state levels [39], this research sought to find out whether, and to what extent, digital evidence influences a practice with such a profound impact on both the criminal justice system and the defendants within it.

B.H. provided greater detail on the role of digital evidence in his decision-making, which he said in his experience falls into three categories:

1. Defense attorneys asking questions and sharing information that police and prosecutors didn't already have. In those cases, police can follow up with a fresh search warrant based on the new information.
2. Evidence that supports or refutes the defendant's version of events, including from victims' or witnesses' devices or online accounts. Sometimes, new evidence also results from conversations with defense attorneys.
3. Technological advancements in forensic tools (sometimes within a matter of the weeks or months it takes for a case to go to trial) that mean evidence can be recovered and parsed that couldn't be previously.

K.T., a prosecutor in a northeastern metropolitan location, pointed out that decision-making can depend on the quality of evidence. “In my experience when we get into a phone, it's two extremes of either, ‘This [device] has everything that we could possibly think of,’ or ‘There's absolutely nothing,’” she said.

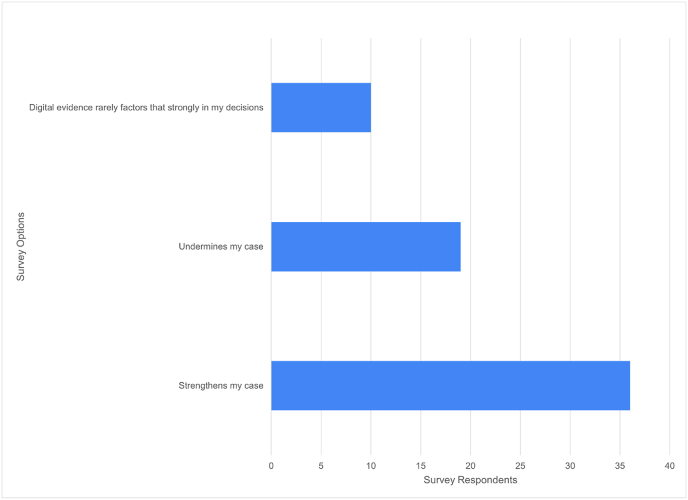

Asked whether they make these decisions based on whether digital evidence strengthens or undermines their cases—or whether digital evidence even factors that strongly for them—survey respondents were able to pick more than one choice.

Most respondents decide based on whether the evidence strengthens their cases. Fewer than half of all respondents were concerned about digital evidence undermining their cases; fewer than one-quarter said digital evidence rarely factored that strongly in their decisions (see Fig. 20).

Fig. 20.

Digital evidence factors most strongly in survey respondents' decisions to charge, introduce, plea bargain, and/or dismiss when it comes to strengthening their cases.

At the same time, though, charging decisions are based on more than just digital evidence [40], and the interviewees reflected that digital evidence factors into charging decisions only when it is the best or only evidence of an offense—not when it corroborates other evidence. J.S. reflected that it can be more important to tie the defendant to the crime by showing evidence on their device, email account, financial records, etc. Additionally, whether digital evidence is the “best or only” evidence of an offense ties to its quality and its admissibility. For example, printouts from a social media site could provide important leads, but may not be readily authenticated as evidence [41].

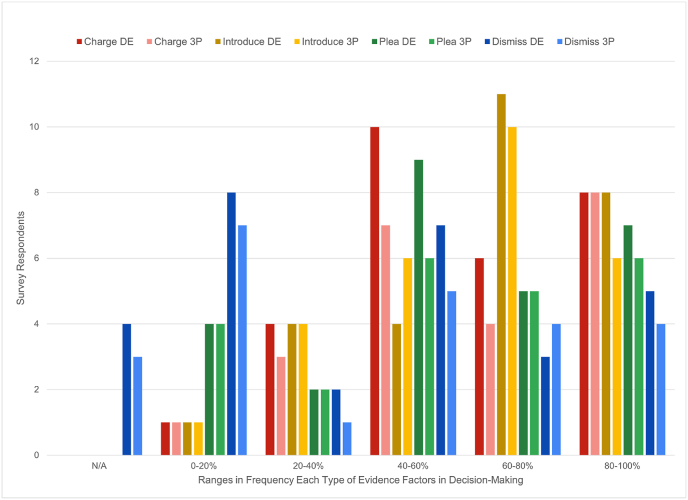

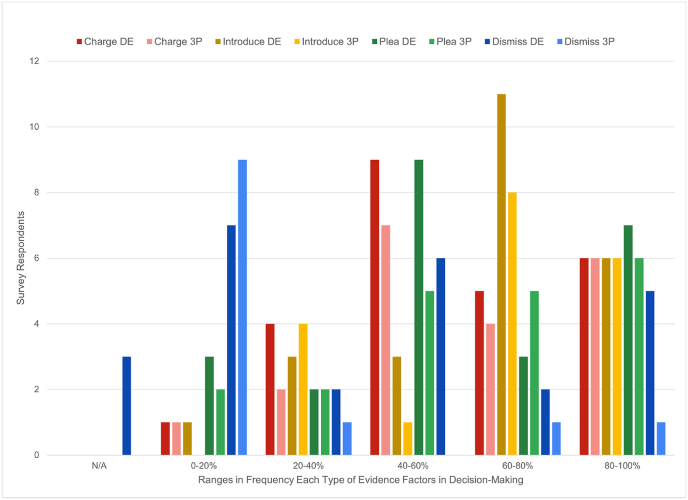

4.2.1. Prosecutor decisions based on crime types

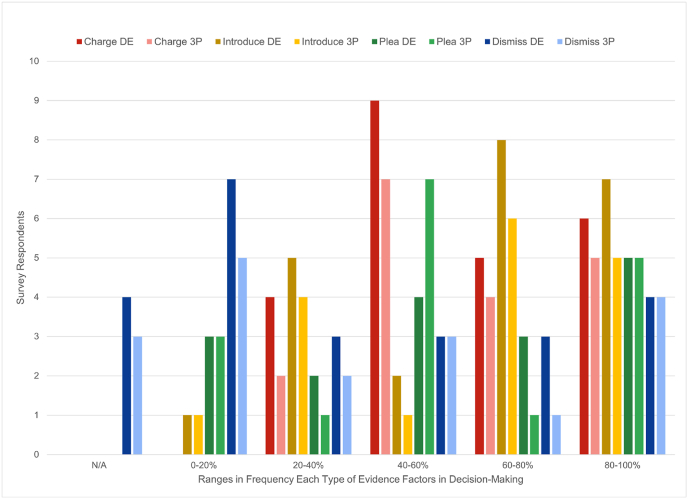

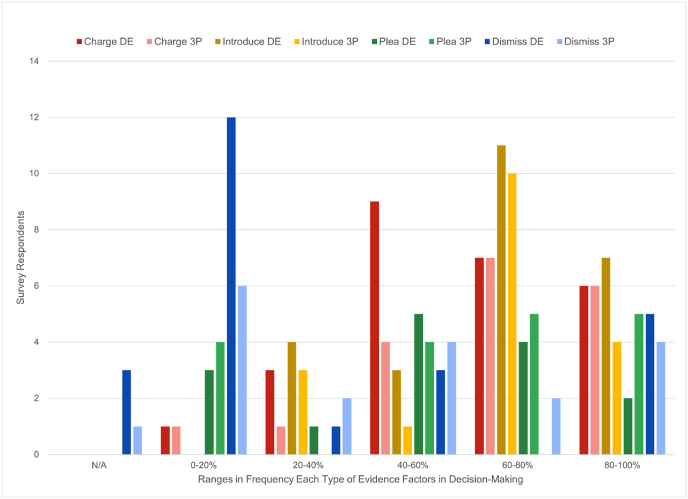

That digital evidence is most important when it strengthens prosecutions offers context for crime-specific data. In particular, the survey results were used to determine whether the frequency with which prosecutors encounter digital evidence affected the four types of decisions for each of the six crime types (see Figs. 21–26). Cross-referencing the frequencies at which survey respondents said they encountered digital and third-party evidence with how often they said they used it to make decisions for each crime type revealed that:

- Even though more prosecutor respondents than investigators “usually” rely on third-party data (see Fig. 17, Fig. 18), it still factors less in the prosecutors' decision making than digital forensic evidence. The exception is plea bargaining, where third-party data factors more than digital evidence among prosecutors who see digital evidence about half the time.
- Those who encounter digital evidence only about half the time seek to introduce it at trial far less often than they use it to charge or plead cases, and less often than those who encounter it more than 60% of the time.
- When prosecutors encounter digital evidence 60–80% of the time, they're more likely to seek to introduce it than other respondents are, even those who see it 80–100% of the time.
- Across the six different crime types, prosecutors seek to introduce digital forensic data and third-party data about equally: for the majority, 60–80% of the time.
- Digital forensic and third-party evidence factored in dismissal decisions more strongly when the survey respondents encountered such evidence less than 20% of the time.
- For prosecutors who encounter digital evidence more than 20% of the time, however, the evidence factored in dismissals less often than charging, introducing, or plea bargaining.
- More survey respondents answered “not applicable” to the dismissal question than to the other decision questions, and nearly half the respondents said digital evidence very rarely affected their decision to dismiss cases.

Fig. 21.

Prosecutors of financial crimes use digital and third-party evidence to make decisions about evenly when they encounter it more than 80% of the time, but the usefulness of this evidence to their decisions varies more widely when they encounter it less often.

Fig. 22.

Prosecutors of organized crimes are somewhat less consistent in their use of digital and third-party evidence than financial crimes prosecutors.

Fig. 23.

Prosecutors of violent crimes use digital and third-party evidence to make decisions about evenly when they encounter it more than 80% of the time, but the usefulness of this evidence to their decisions varies more widely when they encounter it less often.

Fig. 24.

Respondents who reflected they prosecute property crimes were very few. For this limited cohort, digital evidence factored more heavily than third-party evidence among those encountering both types 0–20% and 80–100% of the time. Among those encountering digital evidence 20–40% of the time, though, third-party evidence was relied upon more.

Fig. 25.

Prosecutors who encounter digital evidence 60–80% of the time in crimes against children cases seek to introduce it at higher rates than they use it for charging, plea bargaining, or dismissing.

Fig. 26.

Just as for violent crimes, prosecutors of sex crimes use digital and third-party evidence to make decisions about evenly when they encounter it more than 80% of the time, but the usefulness of this evidence to their decisions varies more widely when they encounter it less often.

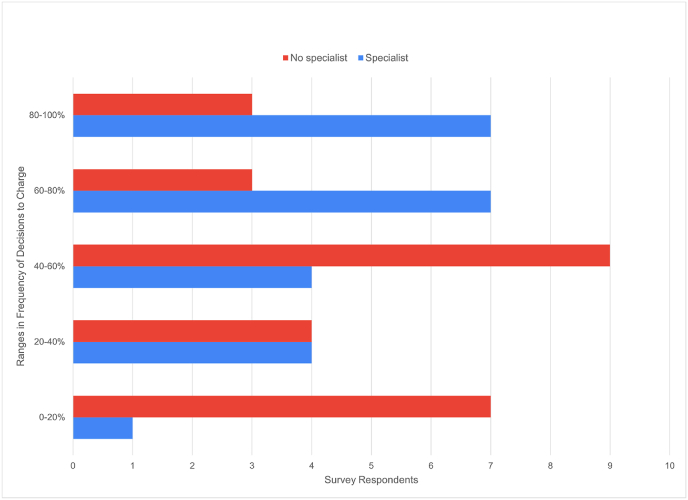

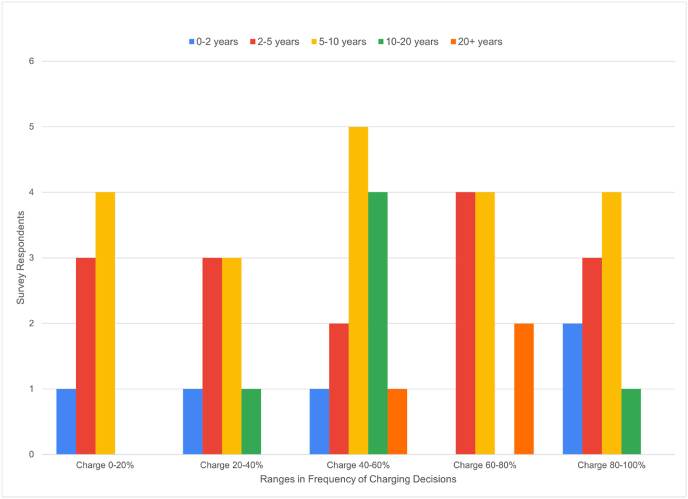

4.2.2. Decisions based on experience level

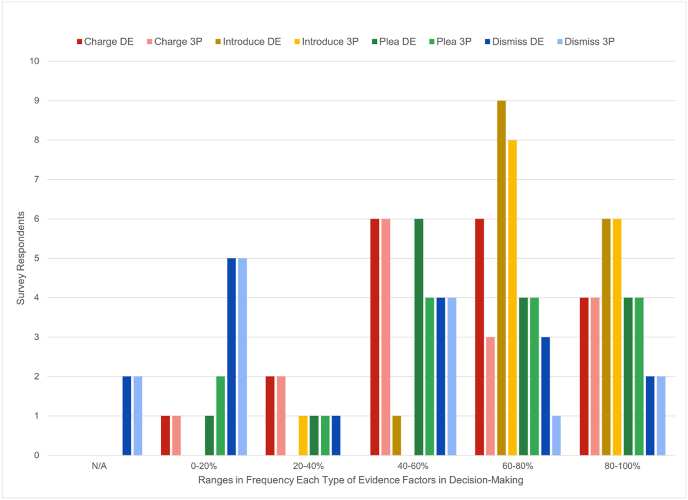

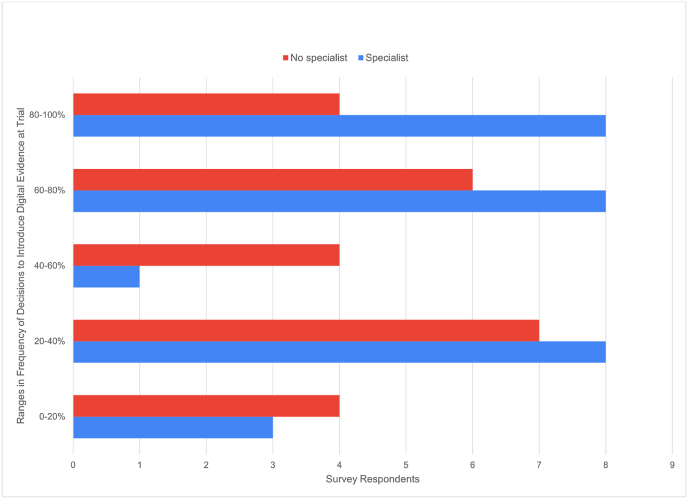

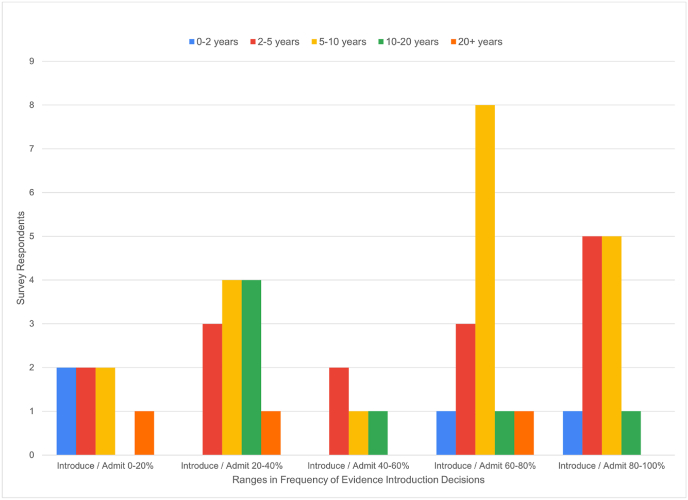

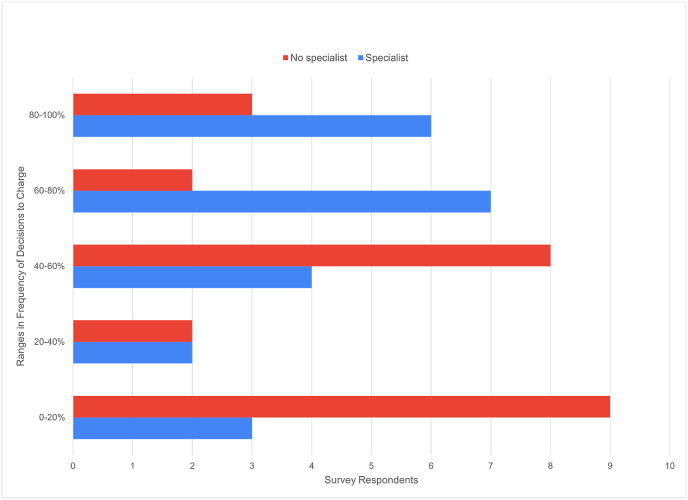

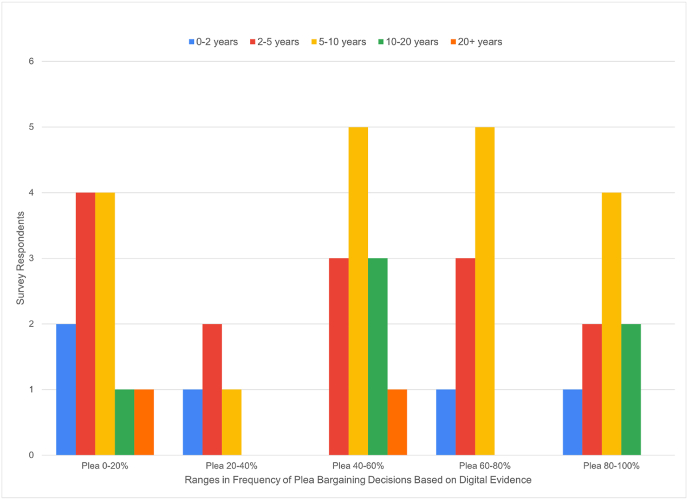

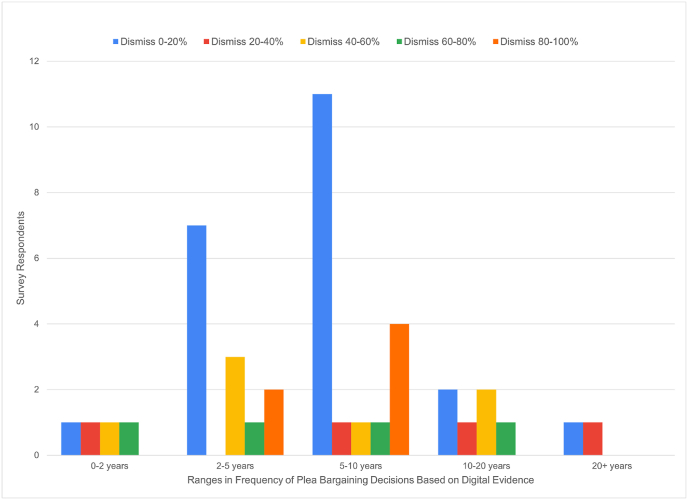

Another goal of the research was to see whether two factors influenced respondents’ decision-making: their level of experience, and whether they had access to a digital evidence specialist. Across the board, the results show that access to a specialist appears to influence decisions to charge, plea bargain, and dismiss cases to a greater extent than experience alone (see Figs. 27–34):

- When their office has access to a digital evidence specialist, more than half of these prosecutors (about one-quarter of the total survey respondents) use digital data to charge and to plea bargain more than 60% of the time.
- The prosecutors with more than 10 years of digital evidence experience generally appear to use digital evidence in decisions to charge, introduce, plea bargain, or dismiss less frequently than those with less experience.
- Although access to a digital evidence specialist appears to influence prosecutors' decisions to introduce the evidence, the influence isn't as strong as it is on the other decisions.

Fig. 27.

How strongly digital evidence factors in the decision to charge a suspect, correlated to whether the prosecutor's office has a digital evidence specialist.

Fig. 28.

How experienced prosecutors are with digital evidence doesn't appear to have much bearing on their charging decisions.

Fig. 29.

How strongly digital evidence factors in the decision to introduce it, correlated to whether the prosecutor's office has a digital evidence specialist.

Fig. 30.

The decision to introduce digital evidence doesn't appear to be influenced by experience level, though those with longer experience appear to introduce digital evidence less frequently than those with less experience.

Fig. 31.

The presence of a digital evidence specialist in survey respondents' offices appeared to influence their use of digital evidence in plea bargaining in proportions similar to its influence on their charging decisions.

Fig. 32.

The decision to plea bargain based on digital evidence doesn't appear to be influenced by experience level.

Fig. 33.

As with the other decisions, although having a digital evidence specialist may help strengthen prosecutors' confidence in their decisions, it isn't necessarily a predictor.

Fig. 34.

Respondents' years of experience did not appear to have any influence over whether digital evidence drove their case dismissal decisions.

The prosecutor interviewees offered insights that indicate the extent to which experience factors in their decision-making.

Introducing the data, said K.T., comes down to “a balancing test between how much the evidence is going to help move the [case] forward” versus its complexity and, therefore, the amount of education that will be needed to help the jury understand the evidence and its relevance.

Juror demographics can factor heavily into this kind of decision. A.B., a district attorney in a small northern Midwestern county, said if digital evidence was strong enough to use as a basis to charge, then defendants typically take a plea bargain, which can achieve a result similar to going to trial. Because her district is populated largely by retired seniors, the plea bargain eliminates the need to call in an expert to explain digital evidence.

However, the strength of evidence also affects plea bargaining decisions. As J.S. put it: “If you had really great digital evidence that was directly on point, then you've got a much stronger case and potentially a different position in a plea bargain than if you've got digital evidence that is just adjacent or corroborating or maybe has admissibility issues. Then you've got to approach that process differently.”

In some jurisdictions, the law allows for only a few days between an arrest and a decision whether to bring or drop charges. This time limitation can restrict investigators’ ability to collect, much less analyze, relevant evidence [13].

That's been J.D.'s experience in his state, where charging and plea decisions are made simultaneously for felony cases. He's typically already aware of what digital evidence exists or doesn't exist in order to make decisions, but not always. “Sometimes [the digital evidence] comes later or maybe we've downloaded a cell phone, but no one's had time to analyze it yet,” J.D. explained. “In those cases I have to make the charging decision and the plea offer without the benefit of digital evidence.”

4.3. Digital evidence at trial and beyond

How prosecutors approach any evidence—how they build cases and relationships with investigators as witnesses, and how they make decisions to charge, introduce, plea bargain, and dismiss cases—ultimately comes down to how they anticipate judges and juries might perceive the evidence.

“I see my primary job in this area is … to give the fact finder, whether it's a judge or a jury, enough of a basis and understanding of the technology to make an informed decision about whether the digital evidence is admissible and how much weight to give it [relative to other forms of evidence],” said C.D.

However, he added, this task is becoming increasingly difficult as technology advances. Too much science, and judges and jurors can become bored and frustrated; not enough, and defense attorneys can sow seeds of reasonable doubt, regardless of whether they themselves understand digital data [4].

These outcomes often depend on the prosecutor's effectiveness in showing the evidence is what it purports to be; that it is authentic. A series of survey questions therefore asked about the admissibility of digital forensic evidence, prosecutors' sense of investigative due diligence in handling that evidence, and whether they thought technological advancement could ever outstrip a) their ability to demonstrate authenticity and b) jurors' ability to weigh the evidence appropriately.

As the prosecutor interviewees reflected:

- Jurors might be predisposed to trust scientific processes, even if they don't understand them, relative to eyewitness testimony or other forms of evidence; conversely, they may be more skeptical of what they don't understand.
- Juror demographics can play a significant role. As discussed previously, juries composed primarily of retirees, for example, may be less amenable to learning about how technology works than juries in locations where technology is a fact of life.
- Even when a jury is composed of a defendant's peers, lifestyle variances can mean defendants use their devices and accounts in drastically different ways. Jurors thus can be challenged to make the connections they need to understand how the evidence (for example, hours' worth of livestreaming) fits the case at hand.
- Forensic witness expertise on the stand depends in part on examiners' own training and experience, and in part on a prosecutor's ability to prepare them to testify. Good expert witnesses can help to bridge any gaps between familiarity and technical detail.

“After many trials I realize that I don't know how jurors' thought processes work in many regards,” H.W., a West Coast-based prosecutor, said. “Moreover, every jury is different.”

The tradeoffs between the complexity and probative value of digital evidence affect trial strategies to varying degrees. Sometimes, said B.H., jurors need only a rudimentary overview of how, say, mobile forensics software works and why it's reliable, without needing to get into the differences between logical, file system, and physical extractions.

Other times, jurors need more detail. B.H. and L.H. have both walked jurors through technical details in trials. “[W]e knew [the jurors] were going to see it and we didn't want them to go back and deliberate and [ask] why is there all this other stuff in there,” B.H. recalled. L.H. agreed: “[We were] essentially trying to answer every possible question that jurors could have about it in testimony, because obviously they don't have enough chance to ask us questions directly.”

But these strategies need to be carefully applied. “If you start putting all these cell phone records, all these phone records, all of these videos … before [jurors] over a period of a week or two, it's a lot for them to digest,” said J.D. That's why H.W. focuses his experts' testimony on key evidence, only addressing “boring” authentication issues on redirect in the event the defense presents evidence of tampering.

So much effort can go into educating juries, H.W. added, that it behooves digital forensics practitioners to adhere to accepted scientific processes. “We spend a lot of time teaching the ‘proper’ way to do things, often because of fear of what a jury would think if the weaknesses of the practice [are] exposed. For example, if a hash does not match it opens the door to speculation that the government planted data or spoiled it,” he explained.

The “‘proper’ way to do things” (the quality assurance standards and best practices that guide how data is preserved, collected, analyzed, and presented) forms the foundation of admissible evidence [42]. Yet:

“New advances in computer forensics technology will continually raise reliability issues, particularly as new techniques are deployed in the field without extensive review and testing seen in nontechnological scientific fields ….

“Digital device technology is changing at lightning speed, as is the technology to extract and analyze data from those devices. This poses serious problems for meeting requirements of Daubert—i.e., being able to demonstrate that digital evidence presented in court is reliable.” [4].

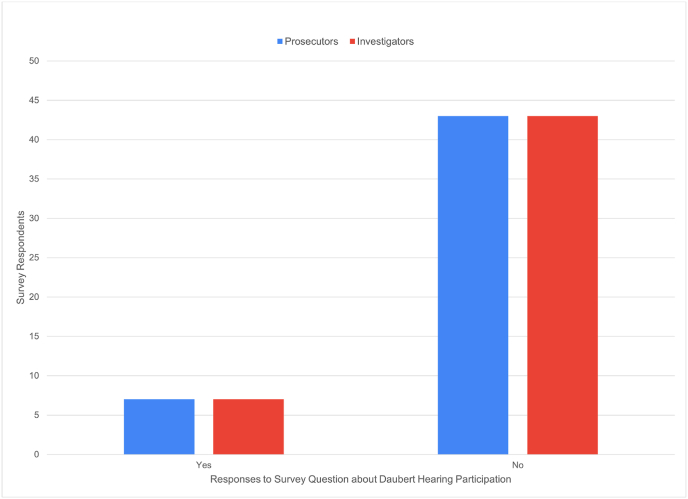

The “requirements of Daubert” refers to a legal standard for the introduction of scientific evidence.³ Typically, pretrial hearings establish whether a tool or method has met this (or an equivalent) standard. However, the likelihood of a Daubert hearing depends in large part on a state's defense bar as well as its bench: whether defense attorneys understand the evidence well enough to challenge it, and how well judges tolerate challenges [43].

The first hurdle for whether scientific evidence can be considered admissible is its methodology's validity in the scientific community. Different legal standards are applied, depending on the state.⁴

Few survey respondents had ever participated in a pretrial scientific admissibility hearing (see Fig. 35). Respondents who answered “yes” to this question followed up by describing, in an open-ended short-answer field, that the hearings they participated in were for mobile forensics analysis, including Cellebrite usage and admission of cell site locations specifically; metadata; peer-to-peer investigations; and to establish foundation and provide general education to judges.

Fig. 35.

Largely, neither prosecutors nor investigators have participated in admissibility hearings.

As long as the methods involve generally accepted forensic tools, said H.W., then courts do not need to hold these types of hearings. J.D. agreed: “I don't tend to see that we distrust the technology and therefore you should keep it out under Daubert,” he said. Because digital evidence can support the defense as well, he noted, defense attorneys frequently ask for clients' phone forensic images, and look for other ways to challenge the evidence. Within the context of digital forensics admissibility hearings, H.W. said, these other challenges might include “whether the tools were used in a legal manner, whether the tools were used correctly and whether the sponsoring witness has the expertise and factual foundation to give an opinion about their findings.”

Thus, said C.D., it isn't just a matter of forensic processes, but also the tools and the level of expertise required to understand them along with the ability to verify the data they reveal as a true and accurate copy of what's on the device [44].
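H.W.'s hash example and C.D.'s point about verifying a “true and accurate copy” both rest on comparing cryptographic digests. The following is a minimal sketch of that comparison, assuming SHA-256 and a digest recorded at acquisition time; the function names, the file path, and the choice of algorithm are illustrative assumptions, not a description of any particular forensic tool or lab procedure.

```python
import hashlib


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read fixed-size chunks so large forensic images fit in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_recorded_hash(path, recorded_hex):
    """Return True if the file's current digest equals the recorded one.

    `recorded_hex` stands in for the value noted at acquisition time
    (hypothetical; where that value is stored is outside this sketch).
    """
    return sha256_of_file(path) == recorded_hex.strip().lower()
```

A call such as `matches_recorded_hash("image.dd", recorded_hex)` (with a hypothetical path) returning `False` is the kind of mismatch that, in H.W.'s words, “opens the door to speculation”; a match supports, but does not by itself establish, that the working copy is unaltered.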

Yet, C.D. added, relying on scientific principles and methods to determine admissibility—even in general terms, leaving case-specific facts for a judge or jury to assess [7]—is a skill in short supply, in part because so few cases ever go to trial. While forensic methods change [4], new technology, such as deepfake videos and “viral” social-media misinformation campaigns, can introduce reasonable doubt in ways that could be difficult to challenge [45].

These factors are compounded by the advent of tools designed to save investigative time and effort [23], as well as to safeguard investigators' mental health [46], through automation. The risks that accompany these benefits include the inability to evaluate the evidentiary value of evidence, the potential to overestimate the technical competence of the person performing the triage, and the possibility that inaccurate interpretation of evidence could “snowball” into a cascade of poor decisions, all without the prosecutor's awareness [23]. Relying more on the tool and not on their own decision making or investigative skill, said C.D., means forensic examiners are less able to explain how the tool works. “And that makes it more and more challenging for me to do my job putting them on the witness stand,” he said.

Compounding this challenge, C.D. continued, is the departure of professionals seeking career advancement. Forensic examiners must ultimately leave the forensic lab, either through promotion in a law enforcement agency, or to find better-paying work in the private sector. These departures, C.D. observed, affect prosecutions on two levels: not just the knowledge in itself, but also whether trained, experienced witnesses are available to testify. “We lose our institutional knowledge—real valuable people—because they can go make more money [in the private sector],” he explained, adding: “We've invested hundreds of thousands of dollars in training for them and then we lose them …. That's just maddening.”

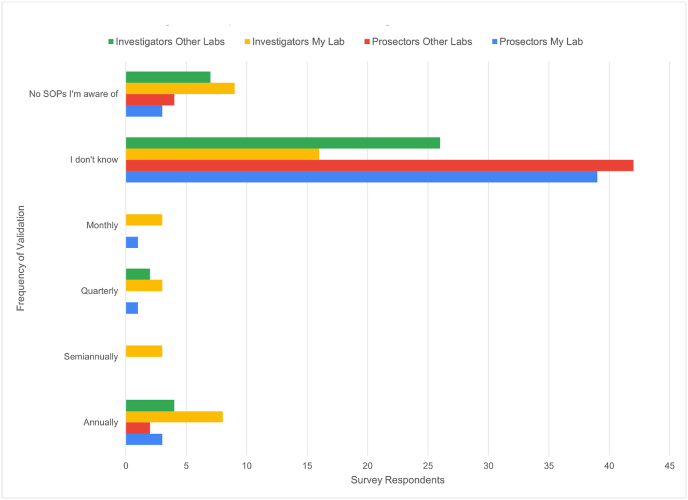

As a measure of survey respondents' awareness of the processes that go into fulfilling the requirements for scientific evidence, both survey groups were asked how familiar they were with their own labs', and others’, validation SOPs (see Fig. 36).

Fig. 36.

Respondents in both surveys overwhelmingly reflected that they don't know whether their labs have any SOPs for regular validation and verification.

This sort of “background information” on a lab's processes may not appear to have much relevance to individual cases. In fact, in her state, L.H. said, attorney questions about lab SOPs are a “one-off,” with attorneys asking experts whether everything, including their certification, is up to date.

However, processes and procedures define the chain of custody for digital evidence. Moreover, given the rapid pace of change in the industry, these processes and procedures are (or should be) dynamic. To be able to refute challenges, prosecutors need to know whether policies have been followed; if not, where the deviation occurred and why; and which practices were in use and applied to the evidence in question [33]. Not holding forensic examiners accountable in this way can risk entire convictions [47].

Understanding these processes can benefit prosecutors in other ways as well. First, prosecutors can get a better sense of their expert witnesses’ expertise [48], establishing their knowledge and comfort level with scientific processes like validation [49]. This knowledge helps them to know how and to what extent to prepare witnesses to testify, as well as to prepare for potential defense objections.

Second, prosecutor knowledge, when applied in their conversations with investigators and forensic examiners, can help them support due diligence so that they can be more confident that:

- The inculpatory data is what it purports to be.
- Any reasons for digital evidence not being correctly parsed or interpreted [50], e.g., due to tool or user error, are adequately explained.
- Any exculpatory data has been identified for discovery [33].
- Any absence of exculpatory evidence can be adequately explained [51].

In the event that a defense attorney brings new data to light that law enforcement didn't have access to six months previously, the prosecutor can more readily communicate with forensic examiners to revisit their evidence [51].

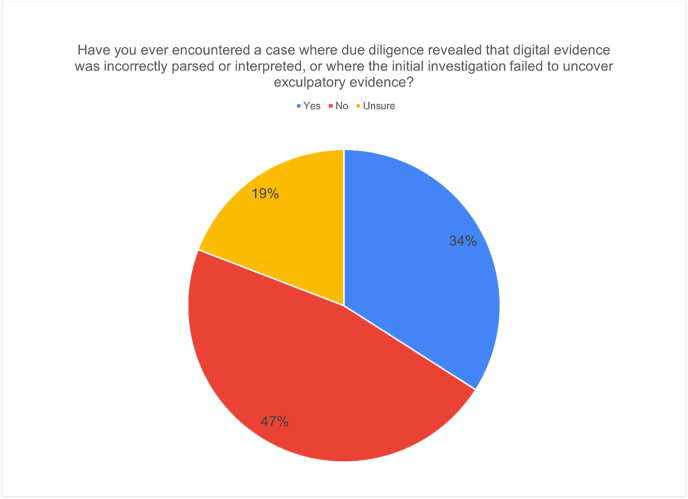

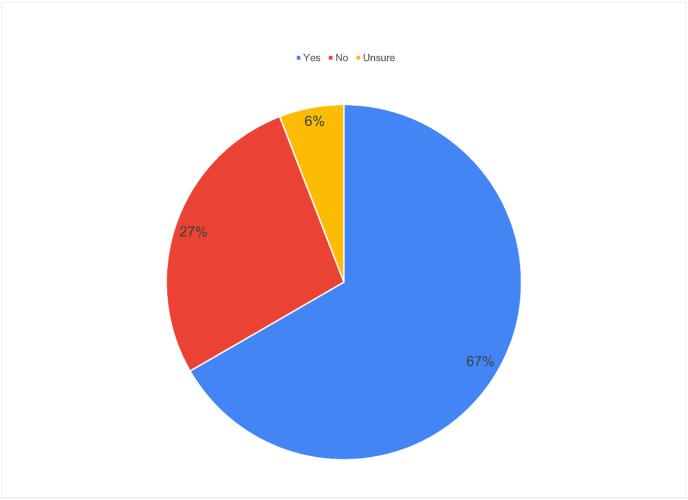

The survey asked whether respondents had ever encountered a case where the kind of due diligence described above revealed that digital evidence was incorrectly parsed or interpreted, or where the initial investigation failed to uncover exculpatory evidence.

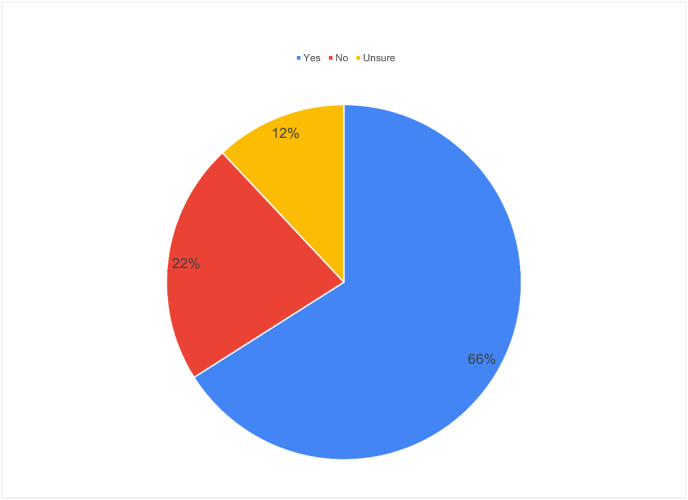

Asked whether they had ever encountered cases like these, either before or after they charged a defendant, the prosecutor respondents gave mixed responses (see Fig. 37). Only about one-third ever had, but another one-fifth said they were unsure. One prosecutor responded that they had not found incorrectly parsed or interpreted digital evidence—but they had encountered at least one initial investigation that failed to uncover exculpatory evidence.

Fig. 37.

More than half of prosecutor respondents had encountered—or couldn't be sure if they had encountered—at least one case where digital evidence didn't provide an accurate picture of what happened.

Going deeper into the survey responses, years of experience with digital evidence don't appear to indicate whether the prosecutors had encountered a problem with due diligence (see Fig. 38). The numbers may reflect how many survey respondents fell into each experience bracket more than they reflect how often such issues were encountered.

Fig. 38.

Years of experience didn't seem to indicate the likelihood that a prosecutor either would have encountered a due diligence issue, or couldn't be certain.

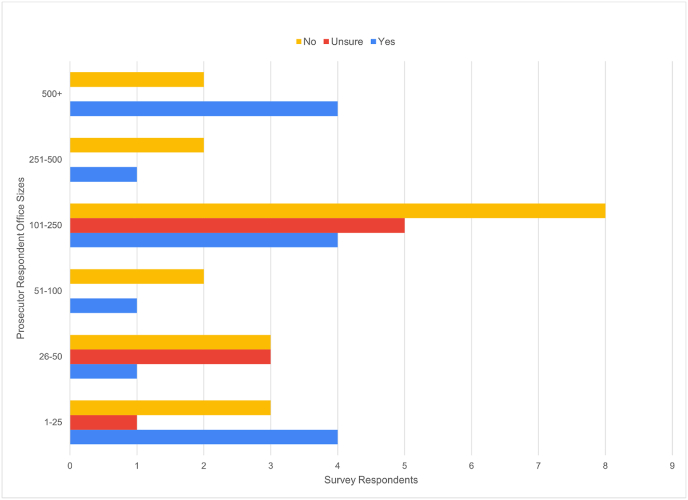

Likewise, office size didn't appear to correlate with encountering due diligence issues. Survey respondents across all office sizes had encountered at least one such case. Notably, none of the respondents from the largest offices said they were uncertain about having encountered any issues (see Fig. 39).

Fig. 39.

Office size doesn't appear to predict whether prosecutor respondents had encountered issues with digital evidence due diligence.

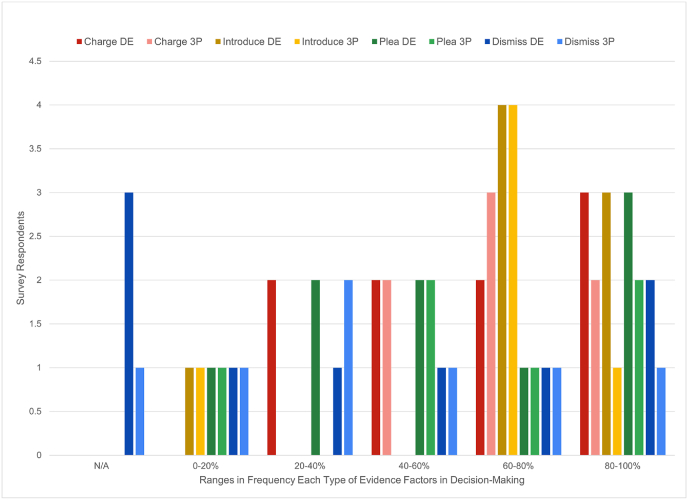

However, cross-referencing due diligence responses with prosecutor involvement with digital evidence reveals that the more engaged respondents say they are with investigators, the more likely they are to spot due diligence issues. They are also somewhat more likely to be certain that they haven't encountered due diligence issues (see Fig. 40).

Fig. 40.

Respondents who are more engaged with investigators are more likely both to have encountered due diligence issues and to be certain that they hadn't.

Respondents who said they didn't have access to a digital evidence specialist appeared slightly more likely to say they hadn't encountered due diligence issues, but those who do have access didn't seem to encounter significantly more of those issues (see Fig. 41).

Fig. 41.

Access to a digital evidence specialist doesn't appear to correlate to whether survey respondents encountered due diligence issues.

As to the extent to which due-diligence issues could affect prosecutor decision-making, the data is inconclusive (see Fig. 42, Fig. 43).

Fig. 42.

Whether prosecutor respondents had ever encountered due diligence issues didn't appear to influence their decisions to charge.

Fig. 43.

Whether prosecutor respondents had ever encountered due diligence issues didn't appear to influence their decisions to plea bargain, either.

What due-diligence issues highlight is that, as straightforward as digital data might appear, its interpretation is still performed by humans, using tools likewise developed by humans [23]. Thus, while digital forensic examiners are independent in the sense that they analyze and interpret evidence that is relevant to the case and its context, they are no more bias-free than their counterparts in physical forensic sciences [52]. Prosecutors must thus take care that their relationships don't devolve into tunnel vision and other confirmation biases, where the evidence supports an investigator's case hypothesis whether right or wrong [53].

Some of this risk can be mitigated through screening cases up front. Doing so allows investigators to communicate with prosecutors about what evidence was found or not found, or questions they're able or unable to answer. These pieces of information, said B.H., help prosecutors decide whether to move forward.

That strategy assumes, however, that prosecutors and investigators know how and what to communicate. Given criticisms of the shortcomings in more conventional forensic sciences, including DNA testing [54] or fingerprint analysis methodology [55], prosecutors who don't understand technology can't keep up with its advances, or the resulting adaptations in forensic processes [33].

For example, consider that a digital forensic tool's performance relies on its ability to parse data structures [56], such as the SQLite databases that underlie most mobile apps. “How the tables are structured are very different from database to database,” B.H. explained. New apps and databases, as well as regular app updates, make it possible for digital forensics tools to be outpaced [50].
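B.H.'s point about table structure can be made concrete with a minimal sketch. It assumes two invented in-memory SQLite databases standing in for two different messaging apps. The table and column names (`messages`, `chat_log`, and so on) and the `parse_messages` function are hypothetical and do not correspond to any real app or forensic tool. The sketch shows only how a parser written for one layout can return nothing when pointed at another.

```python
import sqlite3


def make_db(schema_sql, insert_sql, row):
    """Create an in-memory SQLite database holding a single example row."""
    conn = sqlite3.connect(":memory:")
    conn.execute(schema_sql)
    conn.execute(insert_sql, row)
    conn.commit()
    return conn


def list_schema(conn):
    """Return {table name: [column names]} for every table in the database."""
    tables = [r[0] for r in conn.execute(
        "SELECT name FROM sqlite_master WHERE type='table' ORDER BY name")]
    # PRAGMA table_info yields one row per column; index 1 is the column name.
    return {t: [c[1] for c in conn.execute(f'PRAGMA table_info("{t}")')]
            for t in tables}


def parse_messages(conn):
    """Hypothetical parser that only understands the layout of 'app A'."""
    try:
        return conn.execute("SELECT sender, body FROM messages").fetchall()
    except sqlite3.OperationalError:
        # The expected table or columns are missing, so nothing is parsed.
        return None


# Two invented apps that store the same kind of data in different layouts.
app_a = make_db(
    "CREATE TABLE messages (sender TEXT, body TEXT, sent_at INTEGER)",
    "INSERT INTO messages VALUES (?, ?, ?)",
    ("alice", "hello", 1600000000),
)
app_b = make_db(
    "CREATE TABLE chat_log (author_id INTEGER, payload TEXT, ts REAL)",
    "INSERT INTO chat_log VALUES (?, ?, ?)",
    (7, "hello", 1600000000.0),
)

for label, conn in (("app A", app_a), ("app B", app_b)):
    print(label, list_schema(conn), parse_messages(conn))
```

In this sketch the message in the second database is still present. The parser simply does not recognize where it is stored, which is one way that data can go unreported without any indication that it existed.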

The result, B.H. said, is that the amount of data that can be parsed “ebbs and flows.” “So by the time GrayKey can crack an iPhone 11, the iPhone 12 is already out, and it can't crack the iPhone 12 yet,” K.T. explained. “On cross examination, any good defense attorney is going to ask, ‘Well, this can crack every phone, right?’ And they're just sowing doubt about the reliability of the [forensic] technology.” At that point, said L.H., the issue isn't so much the science as it is variables like the access that other people besides the defendant had to a device, which can help a defense claim that the evidence doesn't definitively place the defendant behind the device.

K.T. said the ability to communicate about issues like this comes down to good observational skills, in particular the ability to compare reports across cases. “It's looking at the [digital forensic] reports and saying, ‘Wait a second, I should have these photos on here, and I don't,’” she explained. “It's stuff that should be there if the request that I made was fully executed. Or I should know why it's not there. And I've had to do follow-ups that have resulted in, ‘We didn't have that [or] we didn't include that,’ whatever variation.” B.H. concurred, calling forensic tool parsing errors “very noticeable.”

Yet C.D. sees less and less testing as technology continues to advance. Although the verification of data is more likely to happen when a case goes to trial, said B.H., jury trials have become vanishingly rare—by his estimate, just twice per year on cases that rely heavily on digital evidence. “That's just because usually the evidence is what it is. It's not like an eyewitness with, ‘Did you really see it? Is it really him?’” he explained.

But the result, said H.W., is that plea bargaining practices ensure that “the tools that are involved aren't put to the test.” In turn, said B.H., forensic examiners might not feel the need to verify a tool's results, particularly if their software has been “very reliable for a very long period of time.” B.H. added: “And so the odds that we're going to go back and check the hex [code] [to see] exactly what's going on is going to be less often in that situation,” especially if the data makes logical sense.
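As a rough illustration of what “checking the hex” can involve, the sketch below decodes a timestamp directly from raw bytes and compares it with the value a tool reported. Everything in it is an assumption made for illustration: the record layout (a 4-byte tag, a 4-byte little-endian Unix timestamp, then text), the names `hex_dump` and `decode_timestamp`, and the reported value are all hypothetical, and real artifacts use many different encodings.

```python
import struct
from datetime import datetime, timezone


def hex_dump(data, width=16):
    """Render bytes as offset, hex values, and printable ASCII, one row per line."""
    lines = []
    for off in range(0, len(data), width):
        chunk = data[off:off + width]
        hex_part = " ".join(f"{b:02x}" for b in chunk)
        ascii_part = "".join(chr(b) if 32 <= b < 127 else "." for b in chunk)
        lines.append(f"{off:08x}  {hex_part:<{width * 3}} {ascii_part}")
    return "\n".join(lines)


def decode_timestamp(data, offset):
    """Read a 4-byte little-endian Unix timestamp at the given offset (assumed layout)."""
    (seconds,) = struct.unpack_from("<I", data, offset)
    return datetime.fromtimestamp(seconds, tz=timezone.utc)


# Hypothetical raw record: 4-byte tag, 4-byte timestamp, message text.
record = b"MSG1" + struct.pack("<I", 1600000000) + b"hello"

# Value that a (hypothetical) forensic tool reported for this record.
tool_reported = datetime(2020, 9, 13, 12, 26, 40, tzinfo=timezone.utc)

manual = decode_timestamp(record, 4)
matches = manual == tool_reported

print(hex_dump(record))
print("manual decode:", manual.isoformat())
print("agrees with tool:", matches)
```

A mismatch in a check like this would not by itself show which value is wrong. It would only flag that the tool's interpretation and the manual reading disagree and need to be explained.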

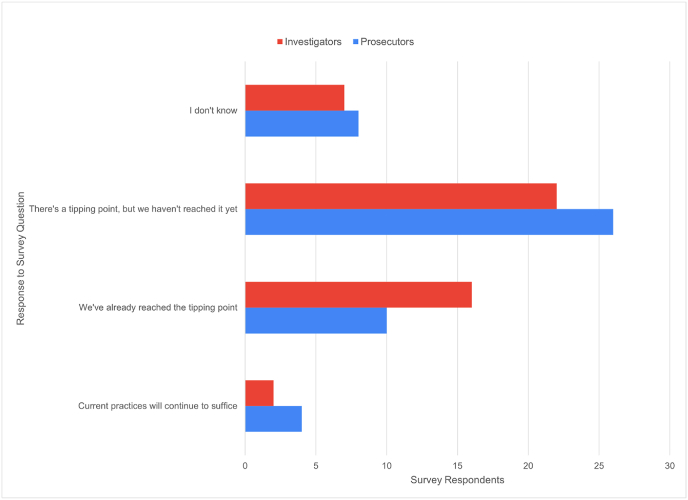

Indeed, whether rapidly advancing technology would continue to be admissible in court was a concern for most of the prosecutor respondents, even if they didn't believe a tipping point, where technological advances outpace the ability to educate jurors about them in court, had yet been reached. Of note: about one-third of the investigators, compared to one-fifth of the prosecutors, thought the tipping point had already been reached. Very few in each group believed that current practices would continue to suffice, and about 15% said they didn't know (see Fig. 44, Fig. 45, Fig. 46).

Fig. 44.

About two-thirds of prosecutor respondents expressed concern about digital evidence admissibility.

Fig. 45.

Although more prosecutors were unsure about jurors' ability to weigh digital evidence appropriately compared to those who were unsure about its admissibility, the majority of respondents again expressed concern about jurors' ability to weigh digital evidence in deliberations.

Fig. 46.

Prosecutors generally believe technology hasn't yet outstripped forensic tools' explainability. Many more investigators than prosecutors believe the technological tipping point has already been reached.

The trend away from demonstrating digital forensics' scientific foundations in court comes at a time when technology is becoming more complex and abstract. Artificial intelligence is perhaps the best example of complexity; reportedly, even experts in the field can find it difficult to explain the technology [57]. B.H. said it's more useful as an investigative tool, “… trying to [go from 2 million videos or images down to 200] as opposed to spending nine years looking through every video on somebody's phone,” he explained, “but I would never charge off that.”

That said, he and other prosecutors have seen more litigation around discovery of, first, how the software works—for which typically all that's needed is for an expert to come in and explain—and, second, how investigators identify [evidence such as] explicit or exploitative images. Indeed, most digital forensic tools are a proprietary “black box” disallowing the examination or testing of source code [10]. The same is true of some methods used by private-sector forensic labs, which often rely on exploiting vulnerabilities in hardware or software [58,59].

Judges' determination of whether the evidence is allowable at trial could depend on defense attorneys' knowledge of digital evidence. The survey didn't explore this variable, but the prosecutor interviewees anecdotally suggest wide variances depending mainly on location. For instance, some interviewees mentioned experience with challenges mounted by better resourced defense attorneys from large metropolitan areas. Others said defense attorneys in their states generally didn't seem to be well versed in digital evidence issues at all, resulting in fewer challenges. A.B., a former public defender and now a prosecutor in the upper Midwest, said in spite of many public defenders' relative youth, their knowledge about the evidentiary aspects of digital data often takes a back seat to their general knowledge about the technology. Other times, said B.H., attorneys might be knowledgeable “just enough to know to challenge something, but not educated enough to really know what the issue is.” That, he added, is a matter of training: as limited as opportunities are for prosecutors, they're even more limited for criminal defense attorneys.

In general, said J.S.: “Some [defense attorneys] are fielding very impressive and sophisticated challenges to evidence [with] relatively creative and interesting legal arguments …. But there are also a lot of lawyers who are cutting and pasting stock motions, making very vague general allegations.”

That vagueness, J.S. continued, could be problematic for case law. Cogent arguments force prosecutors to research and respond, while “generic throw everything at the wall and see what sticks” motions make for a trial tactic that can confuse judges. In turn, judges’ lack of knowledge about digital forensic tools and techniques often leads to skepticism around validity [4].

Aggressive pretrial motion practices can help, said H.W., a strategy that B.H. agreed has served him well. “If a judge was having to make a call at the moment they were first hearing about it, they would likely come to a different answer as opposed to [being] able to take some time to think about it and hear from an expert,” he explained.

Indeed, how well judges understand any kind of scientific evidence has bearing on their role as “gatekeepers … obligated to determine whether the methods and principles underlying proffered expert testimony are … reliable and valid” [7]. Improving judge training is one solution, of course, but so is timely, systematic, standards-based tool and technique validation and evaluation [4].

One systemic solution could be “a new National Digital Evidence Policy, to be spearheaded by a National Digital Evidence Office” to coordinate and connect law enforcement efforts to obtain digital evidence in a way that would still support civil liberties and ensure transparency [28]. Still, laws that would establish and fund such an office – and any other such initiatives – remain behind the times because legislators are used to a pace that doesn't keep up with technology.

Indeed, U.S. judges are dealing with markedly wide variances in how digital evidence is treated across states. For example, said B.H.: “States like California and New York, on one extreme, have very encompassing state statutes … that [affect] almost every step of those investigations. And then you have states [that are almost] a developing country where there's not a lot.” Attorneys in the latter, he added, end up turning to federal case law to build their arguments.

In part, B.H. said, that's because technology-based crime was for many years considered the domain of federal law enforcement. “It's really been in the last 10 years where the states have [realized] there's too much of it [and] are racing to catch up,” he explained.

For example, child exploitation offenses that don't meet federal thresholds – when the offender is located in the same state as the victim(s) – come to local law enforcement and prosecutors via reports from the National Center for Missing and Exploited Children (NCMEC) CyberTipline. NCMEC reported nearly 22 million tips in 2020, an increase of 28% from 2019, and more than 29 million tips in 2021 [60].

Even so, B.H. said many lawmakers approach criminal law updates in terms of more conventional crimes. “When we sit down and [say], ‘Hey, it's really bad that that's been unchanged in 10 years,’ you can get weird faces,” he explained. For example, although many states have criminalized intimate image abuse (“revenge porn”), many still don't regard it as a felony [61].

“The law was not written with this type of evidence in mind,” said L.H. “Sometimes that creates a conflict where it's unclear how some aspects of the law would apply to digital evidence … on a strict analysis versus a more relaxed analysis, reasonable minds can differ on some of those issues.” She pointed to self-driving cars as an example. “I don't know about the legal ability to differentiate between a motorist's liability in a collision, versus a malfunction with the computer, and how that will affect litigations in the future,” she said, anticipating that these kinds of issues will only accelerate as more data sources become available. “Judges can initiate local court rules,” she said. “But I don't know if they are ready to, or fully understand how that would work.”