Artificial intelligence (AI) refers to computers and machines capable of performing high-level executive functions in a way that mimics human intelligence. Machine learning (ML) is a branch of AI in which systems improve by learning from data, identifying patterns and making decisions without being explicitly programmed to do so. AI is already part of everyday life and is used in areas including healthcare, banking and industry. In particular, AI has numerous applications in medicine, such as risk prediction, robotic surgery, automated diagnostic imaging and clinical research.[1,2] Cardiology is at the forefront of the AI revolution, with many potential applications. Although concerns about the credibility of AI are more pressing in healthcare than in other fields, the potential benefits of well-integrated AI tools for medicine in general, and for cardiology in particular, are considerable.

The benefits of AI in cardiology span a broad spectrum of applications, from primary prevention and diagnosis to invasive management.[3,4] ML algorithms may screen for cardiovascular disease, sometimes outperforming traditional risk assessment tools. For example, despite using input variables (i.e., risk factors) similar to those of the American College of Cardiology/American Heart Association (ACC/AHA) risk calculator, an ML-based algorithm correctly identified 13% more high-risk patients and avoided unnecessary lipid-lowering therapy in 25% of low-risk patients.[5]

In cardiac imaging, AI-assisted tools may reduce costs and improve efficiency at the stages of image acquisition, processing and interpretation.[6] Furthermore, combining the accuracy of contemporary cardiovascular imaging technologies with large datasets from electronic health records is likely to improve diagnostic and decision-making tools. For instance, ML models have accurately quantified ejection fraction and longitudinal strain and identified hypertrophic cardiomyopathy, cardiac amyloidosis and pulmonary arterial hypertension.[7] In cardiac electrophysiology, automated electrocardiogram (ECG) interpretation is now ubiquitous, and modern ML algorithms can identify wave morphologies and calculate key parameters such as heart rate, interval durations and axis.[8] Models to detect common rhythm and conduction abnormalities, such as bundle branch block and premature ventricular contractions, have achieved accuracy greater than 98%, while models to detect ischaemic changes have achieved a sensitivity of 90.8%.[9,10]
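Heart rate, one of the ECG parameters mentioned above, is conventionally derived from the intervals between successive R peaks (RR intervals): the rate in beats per minute is 60 divided by the mean RR interval in seconds. The minimal Python sketch below illustrates only that final arithmetic step and assumes the R-peak timestamps have already been detected upstream (the step where an ML model or signal-processing algorithm would typically be applied). The function name `heart_rate_bpm` is hypothetical and is not taken from any of the cited systems.

```python
from statistics import mean


def heart_rate_bpm(r_peak_times_s: list[float]) -> float:
    """Estimate mean heart rate (beats/min) from R-peak times in seconds.

    Hypothetical helper: assumes R peaks were already detected upstream.
    """
    if len(r_peak_times_s) < 2:
        raise ValueError("at least two R peaks are needed to form an RR interval")
    # RR intervals: time between each pair of consecutive R peaks
    rr_intervals = [b - a for a, b in zip(r_peak_times_s, r_peak_times_s[1:])]
    if any(rr <= 0 for rr in rr_intervals):
        raise ValueError("R-peak times must be strictly increasing")
    # 60 seconds per minute divided by the mean beat-to-beat interval
    return 60.0 / mean(rr_intervals)


print(heart_rate_bpm([0.0, 0.5, 1.0, 1.5]))  # 120.0
```

With R peaks every 0.5 s, the mean RR interval is 0.5 s and the estimated rate is 120 beats per minute. Real systems must also handle noise, missed or spurious beats and irregular rhythms, which this sketch ignores.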

Applications of AI in diagnostic cardiology are already relevant today and, although interventional cardiology lags behind, many promising avenues are being explored.

Clinical Decision Making

Risk stratification and prognostication are essential to clinical decision-making in patients with acute coronary syndrome (ACS). Current ACS risk scores, such as the Global Registry of Acute Coronary Events (GRACE) and Thrombolysis In Myocardial Infarction (TIMI) scores, are based on algorithms developed from data collected more than a decade ago and therefore do not reflect contemporary clinical practice.[4]

In an observational study of patients with suspected myocardial infarction (MI), an ML-based model outperformed the European Society of Cardiology 0/3-hour pathway in predicting the likelihood of acute MI, using sex, age, and absolute troponin I concentrations and their changes over time.[11] In another study of patients with acute chest pain, AI-powered fractional flow reserve derived from computed tomography (FFRCT) successfully triaged patients and helped avoid unnecessary downstream testing.[12]

Implementing AI-powered algorithms could help guide patient triage in emergency settings and give interventionalists a tool for risk assessment and prognostication. Although these applications of AI in emergency medicine and interventional cardiology are still at the testing stage, they could become valuable tools to support clinical decision-making and may drive a paradigm shift towards precision medicine.

Non-invasive Coronary Artery Imaging

For diagnosing coronary artery disease (CAD), ML applications in coronary CT angiography (cCTA) have shown promise and might reduce the need for invasive diagnostic procedures.

To rule out significant CAD, adding ML-based FFRCT analysis to cCTA performed during pre-procedural evaluation for transcatheter aortic valve replacement (TAVR) improved diagnostic performance by correctly reclassifying patients with signs of obstructive CAD, achieving a negative predictive value of 94.9%.[13] Additionally, ML-based automated coronary artery calcium scoring has performed comparably to experienced radiologists.[14] In contrast, a comparison of dynamic CT myocardial perfusion imaging with ML-based FFRCT found that the former outperformed the latter in identifying ischaemia-causing lesions.[15]
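Performance figures such as sensitivity and negative predictive value (NPV), which appear throughout the studies discussed in this article, are all derived from the same 2×2 table of test results against a reference standard. The minimal Python sketch below sets out those standard definitions; the class name `ConfusionMatrix` and the counts in the example are hypothetical and do not come from any cited study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    """Counts of test results against a reference standard (hypothetical)."""
    tp: int  # disease present, test positive
    fp: int  # disease absent, test positive
    tn: int  # disease absent, test negative
    fn: int  # disease present, test negative

    def sensitivity(self) -> float:
        # Proportion of diseased patients that the test correctly flags
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        # Proportion of non-diseased patients that the test correctly clears
        return self.tn / (self.tn + self.fp)

    def ppv(self) -> float:
        # Probability of disease given a positive test
        return self.tp / (self.tp + self.fp)

    def npv(self) -> float:
        # Probability of no disease given a negative test
        return self.tn / (self.tn + self.fn)


# Hypothetical counts for illustration only
m = ConfusionMatrix(tp=90, fp=20, tn=80, fn=10)
print(f"sensitivity {m.sensitivity():.3f}")  # sensitivity 0.900
print(f"specificity {m.specificity():.3f}")  # specificity 0.800
print(f"PPV {m.ppv():.3f}")  # PPV 0.818
print(f"NPV {m.npv():.3f}")  # NPV 0.889
```

Unlike sensitivity and specificity, PPV and NPV depend on disease prevalence in the tested population, so an NPV reported in one cohort (for example, patients undergoing pre-TAVR evaluation) may not transfer directly to a population with a different prevalence of CAD.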

The varying results of ML models in non-invasive imaging highlight the need to critically appraise each model individually.

Applications in the Cath Lab

Several projects are under way that might reshape how procedures are performed and ease the practical difficulties that interventionalists face in the cath lab.[16]

AI could function as a voice-powered virtual assistant, allowing interventionalists to navigate a patient’s medical record or image library.[16] In addition to providing hands-free equipment control, AI could facilitate the use of augmented reality in the cath lab, easing the burden of viewing multiple monitors.[16] For example, a system approved by the US Food and Drug Administration (FDA) can display a patient’s coronary anatomy in virtual form, and a project is under way to develop an augmented reality hologram that would allow real-time viewing and assessment of a patient’s coronary anatomy.[16]

Furthermore, robotically assisted procedures have been used successfully in interventional cardiology and might make common procedures such as percutaneous coronary intervention easier, more precise and more consistent.[17] The adoption of complete robotic systems could allow the interventionalist to perform procedures at a distance, reducing physician radiation exposure and opening the possibility of fully remote procedures.[17]

What are the Challenges?

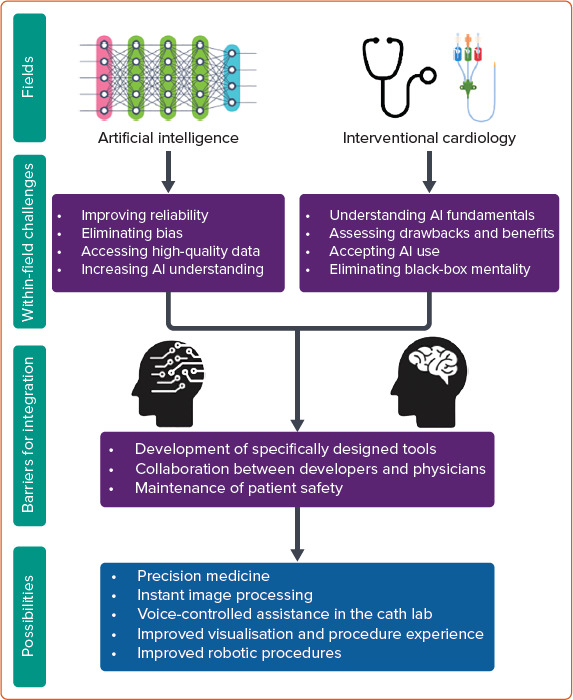

While AI has the potential to transform interventional cardiology both in the clinic and in the cath lab, its routine use in practice is still a long way off. Both interventional cardiology and AI need to evolve before they can be successfully integrated, and limitations within each field must be actively addressed (Figure 1).

Figure 1: Intersection of Artificial Intelligence with Interventional Cardiology.

AI = artificial intelligence.

One issue is that AI does not fundamentally understand what it is analysing; instead, it derives its outputs from associations and patterns, which makes it vulnerable to errors.[18] Like current risk models, ML models are vulnerable to bias, and bias embedded in an ML model may not be fully recognised by clinicians, particularly with unsupervised learning.[4] AI models can draw associations based on race and make racially driven assumptions that could exacerbate racial inequities in healthcare.[19] Furthermore, ML models are specific to the data on which they are trained, which can compromise their external validity in other populations and settings.

Lastly, and most importantly, AI technology specific to interventional cardiology does not yet exist in a viable form, so there is a long road ahead in developing and validating such technologies.

As AI continues to be integrated into different fields of medicine, clinicians and trainees must be educated on the basics of AI and on the specific benefits and drawbacks of each AI-powered technology. Rather than viewing AI as a black box, clinicians should understand how it works and why it might have arrived at a given output. Furthermore, clinicians must critically appraise each AI tool individually, as the validation of one tool does not imply the validation of another.

Interventional cardiologists must temper their expectations and understand that, although AI can be helpful in clinical practice, it cannot replace human intuition. Advice on treatment strategy and prognosis derived from AI must be critically appraised using sound clinical judgement; AI must be viewed as a tool rather than an authority.

The application of AI to healthcare raises new legal and ethical questions regarding patients’ rights to privacy and informed consent, particularly given the inevitable commercialisation of AI algorithms as they are integrated into clinical practice. The Health Insurance Portability and Accountability Act (HIPAA) Privacy Rule permits patient data to be shared for research and commercial purposes without patient authorisation, provided that the data are de-identified.[20] However, this protection may fall short in the era of AI, as data thought to be de-identified can potentially be re-identified when multiple datasets are combined.
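Re-identification by combining datasets is often described as a linkage attack: records stripped of names can still carry quasi-identifiers (such as ZIP code, birth year and sex) that, taken together, match a single person in another dataset that does include names. The minimal Python sketch below illustrates the idea with entirely fictional records; the field names, values and the `link` and `quasi_key` functions are hypothetical and are not drawn from any real dataset or from the cited work.

```python
from collections import defaultdict

# Fictional "de-identified" clinical extract: names removed, but
# quasi-identifiers (ZIP code, birth year, sex) retained.
clinical = [
    {"zip": "12345", "birth_year": 1958, "sex": "F", "diagnosis": "acute MI"},
    {"zip": "12345", "birth_year": 1961, "sex": "M", "diagnosis": "stable angina"},
    {"zip": "12346", "birth_year": 1958, "sex": "F", "diagnosis": "heart failure"},
]

# Fictional second dataset that lists names alongside the same fields.
public = [
    {"name": "A. Smith", "zip": "12345", "birth_year": 1958, "sex": "F"},
    {"name": "B. Jones", "zip": "12345", "birth_year": 1961, "sex": "M"},
    {"name": "C. Brown", "zip": "12345", "birth_year": 1961, "sex": "M"},
    {"name": "D. Lee", "zip": "12346", "birth_year": 1958, "sex": "F"},
]

QUASI_IDENTIFIERS = ("zip", "birth_year", "sex")


def quasi_key(record: dict) -> tuple:
    """Combine the quasi-identifier fields into a single lookup key."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)


def link(clinical_rows: list[dict], public_rows: list[dict]) -> list[tuple[str, str]]:
    """Return (name, diagnosis) pairs for clinical records whose
    quasi-identifiers match exactly one person in the public dataset."""
    names_by_key: dict[tuple, list[str]] = defaultdict(list)
    for row in public_rows:
        names_by_key[quasi_key(row)].append(row["name"])

    reidentified = []
    for row in clinical_rows:
        candidates = names_by_key.get(quasi_key(row), [])
        if len(candidates) == 1:  # a unique match re-identifies the record
            reidentified.append((candidates[0], row["diagnosis"]))
    return reidentified


for name, diagnosis in link(clinical, public):
    print(name, "->", diagnosis)
# A. Smith -> acute MI
# D. Lee -> heart failure
```

With these fictional records, the first and third clinical records each match exactly one named person and are re-identified, whereas the second matches two people and remains ambiguous. Generalising or suppressing quasi-identifiers so that every combination is shared by several individuals (the idea behind k-anonymity) is one way to reduce this risk.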

Furthermore, surveys of 4,000 American adults and 408 UK adults found that 72% and 78% of respondents, respectively, were comfortable sharing health data with physicians and healthcare institutions, whereas only 11% and 26.4%, respectively, were comfortable sharing health data with private companies such as technology firms.[21]

While the public’s relatively high trust in healthcare institutions is encouraging, the distinction blurs when data are shared with private, for-profit companies, a step that may prove necessary to develop technology that can be integrated into clinical practice.

De-identification technology must improve quickly enough to outpace private companies’ ability to re-identify patient data. Additionally, large databases of patient data are often repurposed, for example when shared with private companies; healthcare institutions collecting data could therefore include a mechanism to notify patients of such uses and offer them the option to withdraw consent. Although such a mechanism may not be legally required under HIPAA and may introduce consent bias into databases, the need to protect patient data and maintain public trust may outweigh these downsides.[22]

Ultimately, the greatest ethical and legal concerns about AI in healthcare centre on ensuring patient privacy under HIPAA and maintaining public trust as the lines between healthcare institutions and private companies blur during technology development. The current framework of institutional ethics oversight is equipped to handle the new challenges of de-identifying patient data under HIPAA and sharing it in good faith with private commercial enterprises; however, it must adapt rapidly or risk falling behind.

Conclusion

Although the integration of AI-powered tools into interventional cardiology is still in its early stages, it appears inevitable and has the potential to shape clinical practice over the coming decades. Nonetheless, there are many barriers to overcome and potential pitfalls that could compromise patient safety. Interventionalists must actively pursue AI, and it must become an active area of education and research for physicians and trainees. Furthermore, interventionalists must collaborate with engineers and data scientists to ensure that resources are invested in tools that meet the actual needs of clinicians and have practical applications. Every major innovation in interventional cardiology has required several years of development and refinement, and AI will be no exception.

References

- 1. Lin S. A clinician’s guide to artificial intelligence (AI): why and how primary care should lead the health care AI revolution. J Am Board Fam Med. 2022;35:175–84. doi: 10.3122/jabfm.2022.01.210226.

- 2. Krittanawong C, Zhang H, Wang Z et al. Artificial intelligence in precision cardiovascular medicine. J Am Coll Cardiol. 2017;69:2657–64. doi: 10.1016/j.jacc.2017.03.571.

- 3. Lindholm D, Holzmann M. Machine learning for improved detection of myocardial infarction in patients presenting with chest pain in the emergency department. J Am Coll Cardiol. 2018;71:A225. doi: 10.1016/S0735-1097(18)30766-6.

- 4. Ben Ali W, Pesaranghader A, Avram R et al. Implementing machine learning in interventional cardiology: the benefits are worth the trouble. Front Cardiovasc Med. 2021;8:711401. doi: 10.3389/fcvm.2021.711401.

- 5. Kakadiaris IA, Vrigkas M, Yen AA et al. Machine learning outperforms ACC/AHA CVD risk calculator in MESA. J Am Heart Assoc. 2018;7:e009476. doi: 10.1161/JAHA.118.009476.

- 6. Dey D, Slomka PJ, Leeson P et al. Artificial intelligence in cardiovascular imaging: JACC state-of-the-art review. J Am Coll Cardiol. 2019;73:1317–35. doi: 10.1016/j.jacc.2018.12.054.

- 7. Zhang J, Gajjala S, Agrawal P et al. Fully automated echocardiogram interpretation in clinical practice. Circulation. 2018;138:1623–35. doi: 10.1161/CIRCULATIONAHA.118.034338.

- 8. Al’Aref SJ, Anchouche K, Singh G et al. Clinical applications of machine learning in cardiovascular disease and its relevance to cardiac imaging. Eur Heart J. 2019;40:1975–86. doi: 10.1093/eurheartj/ehy404.

- 9. Zhao Q, Zhang L. ECG feature extraction and classification using wavelet transform and support vector machines. International Conference on Neural Networks and Brain. 2005. pp. 1089–92.

- 10. Afsar FA, Arif M, Yang J. Detection of ST segment deviation episodes in ECG using KLT with an ensemble neural classifier. Physiol Meas. 2008;29:747–60. doi: 10.1088/0967-3334/29/7/004.

- 11. Than MP, Pickering JW, Sandoval Y et al. Machine learning to predict the likelihood of acute myocardial infarction. Circulation. 2019;140:899–909. doi: 10.1161/CIRCULATIONAHA.119.041980.

- 12. Eberhard M, Nadarevic T, Cousin A et al. Machine learning-based CT fractional flow reserve assessment in acute chest pain: first experience. Cardiovasc Diagn Ther. 2020;10:820–30. doi: 10.21037/cdt-20-381.

- 13. Gohmann RF, Pawelka K, Seitz P et al. Combined cCTA and TAVR planning for ruling out significant CAD: added value of ML-based CT-FFR. JACC Cardiovasc Imaging. 2022;15:476–86. doi: 10.1016/j.jcmg.2021.09.013.

- 14. Takx RA, de Jong PA, Leiner T et al. Automated coronary artery calcification scoring in non-gated chest CT: agreement and reliability. PLoS One. 2014;9:e91239. doi: 10.1371/journal.pone.0091239.

- 15. Li Y, Yu M, Dai X et al. Detection of hemodynamically significant coronary stenosis: CT myocardial perfusion versus machine learning CT fractional flow reserve. Radiology. 2019;293:305–14. doi: 10.1148/radiol.2019190098.

- 16. Sardar P, Abbott JD, Kundu A et al. Impact of artificial intelligence on interventional cardiology: from decision-making aid to advanced interventional procedure assistance. JACC Cardiovasc Interv. 2019;12:1293–303. doi: 10.1016/j.jcin.2019.04.048.

- 17. Beyar R, Davies JE, Cook C et al. Robotics, imaging, and artificial intelligence in the catheterisation laboratory. EuroIntervention. 2021;17:537–49. doi: 10.4244/EIJ-D-21-00145.

- 18. Heaven D. Why deep-learning AIs are so easy to fool. Nature. 2019;574:163–6. doi: 10.1038/d41586-019-03013-5.

- 19. Noseworthy PA, Attia ZI, Brewer LC et al. Assessing and mitigating bias in medical artificial intelligence: the effects of race and ethnicity on a deep learning model for ECG analysis. Circ Arrhythm Electrophysiol. 2020;13:e007988. doi: 10.1161/CIRCEP.119.007988.

- 20. Cohen IG, Mello MM. HIPAA and protecting health information in the 21st century. JAMA. 2018;320:231–2. doi: 10.1001/jama.2018.5630.

- 21. Aggarwal R, Farag S, Martin G et al. Patient perceptions on data sharing and applying artificial intelligence to health care data: cross-sectional survey. J Med Internet Res. 2021;23:e26162. doi: 10.2196/26162.

- 22. Ploug T. In defence of informed consent for health record research – why arguments from ‘easy rescue’, ‘no harm’ and ‘consent bias’ fail. BMC Med Ethics. 2020;21:75. doi: 10.1186/s12910-020-00519-w.