Abstract

Tumor heterogeneity is a complex and widely recognized trait that poses significant challenges in developing effective cancer therapies. In particular, many tumors harbor a variety of subpopulations with distinct therapeutic response characteristics. Characterizing this heterogeneity by determining the subpopulation structure within a tumor enables more precise and successful treatment strategies. In our prior work, we developed PhenoPop, a computational framework for unravelling the drug-response subpopulation structure within a tumor from bulk high-throughput drug screening data. However, the deterministic nature of the underlying models driving PhenoPop restricts the model fit and the information it can extract from the data. To address this limitation, we propose a stochastic model based on the linear birth-death process. The variance of our model changes over the course of the experiment, so that the model uses more information from the data and provides more robust estimates. In addition, the newly proposed model can be readily adapted to situations where the experimental data exhibit positive time correlation. We test our model on simulated data (in silico) and experimental data (in vitro), and the results support our claims about its advantages.

1. Introduction

In recent years the design of personalized anti-cancer therapies has been greatly aided by the use of high throughput drug screens (HTDS) [21, 9]. In these studies a large panel of drugs is tested against a patient’s tumor sample to identify the most effective treatment [22, 23, 18, 3]. HTDS output observed cell viabilities after initial populations of tumor cells are exposed to each drug at a range of dose concentrations. The relative ease of performing and analyzing such large sets of simultaneous drug-response assays has been driven by technological advances in culturing patient tumor cells in vitro, and robotics and computer vision improvements. In principle, this information can be used to guide the choice of therapy and dosage for cancer patients, facilitating more personalized treatment strategies.

However, due to the evolutionary process by which they develop, tumors often harbor many different subpopulations with distinct drug-response characteristics by the time of diagnosis [16]. This tumor heterogeneity can confound results from HTDS since the combined signal from multiple tumor subpopulations results in a bulk drug sensitivity profile that may not reflect the true drug response characteristics of any individual cell in the tumor. Small clones of drug-resistant subpopulations may be difficult to detect in a bulk drug response profile, but these clones may be clinically significant and drive tumor recurrence after drug-sensitive populations are depleted. As a result of the complex heterogeneities present in most tumors, care must be taken in the analysis and design of HTDS to ensure that beneficial treatments result from the HTDS. In recent work we developed a method, PhenoPop, that leverages HTDS data to probe tumor heterogeneity and population substructure with respect to drug sensitivity [15]. In particular, for each drug, PhenoPop characterizes i) the number of phenotypically distinct subpopulations present, ii) the relative abundance of those subpopulations and iii) each subpopulation’s drug sensitivity. This method was validated on both experimental and simulated datasets, and applied to clinical samples from multiple myeloma patients.

In the current work, we develop novel theoretical results and computational strategies that improve PhenoPop by addressing important theoretical and practical limitations. The original PhenoPop framework was powered by an underlying deterministic population dynamic model of tumor cell growth and response to therapy. Here we introduce a more sophisticated version of PhenoPop that utilizes stochastic linear birth-death processes, which are widely used to model the dynamics of growing cellular populations [20, 14, 6], as the underlying population dynamic model powering the method. This new framework addresses several important practical limitations of the original approach: First, our original framework assumed two fixed levels of observational noise; here, the use of an underlying stochastic population dynamic model enables an improved model of observational noise that more accurately captures the characteristics of HTDS data, and reflects the observed dependence of noise amplitude on population size (see Figure 1). Second, this framework allows for natural correlations in observation noise that are tailored to fit specific experimental platforms. Rather than assuming that all HTDS observations are independent, we may consider data generated using live-cell imaging techniques where the same cellular population is studied at multiple time points, resulting in observational noise that is correlated in time. By using these stochastic processes to model the underlying populations, we obtain an improved variance and correlation structure that more accurately models the data and enables more accurate estimators with smaller confidence intervals.

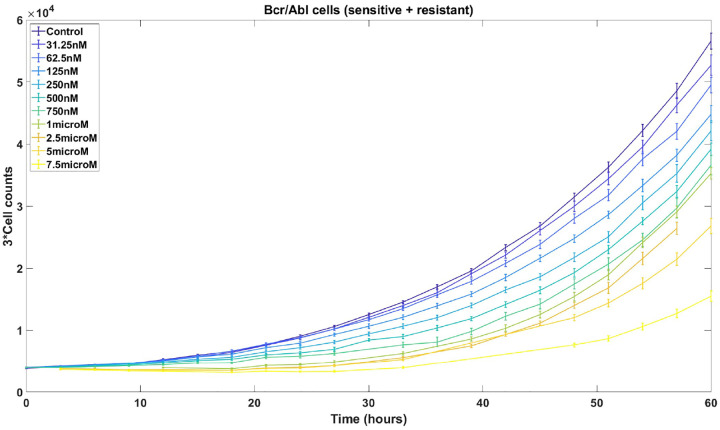

Figure 1:

One to one mixtures of imatinib-sensitive and resistant Ba/F3 cells are counted at 14 different time points under 11 different concentrations of imatinib. Error bars, based on 14 replicates with outliers removed, depict the sample standard deviation, which increases with cell count.

The rest of the paper is organized as follows. In Section 2, we review the existing PhenoPop method and introduce the new estimation framework based on a stochastic birth-death process model of the underlying population dynamics. We propose two distinct statistical approaches in the new framework, aimed at analyzing data from endpoint vs. time series (e.g. live-cell imaging) HTDS. In Section 3, we conduct a comprehensive investigation of our newly proposed methods and compare them with the PhenoPop method on both in silico and in vitro data. Finally, we summarize the results of the investigation and discuss the advantages of the new framework in Section 4.

2. Data and model formulation

The central problem we address is to infer the presence of subpopulations with different drug sensitivities using data on the drug response of bulk cellular populations. Here the term ‘bulk cellular population’ refers to the aggregate of all subpopulations within the tumor. For each given drug, we assume that the data is in the standard format of total cell counts at a specified collection of time points and drug concentrations . Furthermore, assume that for each dose-time pair, independent experimental replicates are performed. We denote the observed cell count of replicate at dose and time by , and denote the total dataset by

2.1. PhenoPop for drug response deconvolution in cell populations

In [15], we introduced a statistical framework for identifying the subpopulation structure of a heterogeneous tumor based on drug screen measurements of the total tumor population. Here, we briefly review the statistical framework and the resulting HTDS deconvolution method (PhenoPop). First, define the Hill equation with parameters $(b, E, n)$ as

$$H(x; b, E, n) = b + \frac{1 - b}{1 + (x/E)^n},$$

where $b \in (0, 1)$ and $E, n > 0$. A homogeneous cell population treated continuously with drug dose $x$ is assumed to grow at exponential rate $\alpha + \log(H(x; b, E, n))$ per unit time. If the population has initial size $f_0$, the population size at time $t$ is given by

$$f(t, x) = f_0 \exp\left[t\left(\alpha + \log(H(x; b, E, n))\right)\right].$$

Note that $H(0; b, E, n) = 1$ and $H(x; b, E, n) \to b$ as $x \to \infty$. Therefore, the population grows at exponential rate $\alpha$ in the absence of drug and at rate $\alpha + \log(b)$ for an arbitrarily large drug dose $x$. The parameter $E$ represents the dose at which the drug has half the maximum effect, and $n$ represents the steepness of the dose-response curve $H$.
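The dose-response behavior described above can be illustrated with a minimal sketch. It assumes the standard Hill form $b + (1-b)/(1+(x/E)^n)$, which is consistent with the limits stated in the text; the function names and all parameter values are hypothetical and chosen only for illustration.

```python
import math


def hill(x, b, E, n):
    """Hill dose-response value: equals 1 at dose 0 and tends to b as x grows."""
    return b + (1.0 - b) / (1.0 + (x / E) ** n)


def population_size(t, x, f0, alpha, b, E, n):
    """Deterministic population size at time t under constant dose x."""
    return f0 * math.exp(t * (alpha + math.log(hill(x, b, E, n))))


# Hypothetical parameter values (not taken from the paper).
b, E, n, alpha, f0 = 0.85, 0.1, 3.0, 0.05, 1000.0
for dose in (0.0, E, 10.0):
    print(dose, hill(dose, b, E, n), population_size(24.0, dose, f0, alpha, b, E, n))
```

At dose $x = E$ the Hill value lies halfway between 1 and $b$, which is the sense in which $E$ is the dose of half-maximal effect.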

For a heterogeneous cell population, each subpopulation is assumed to follow the aforementioned growth model with subpopulation-specific parameters and . Assume there are distinct subpopulations. Then, under drug dose , the number of cells in population at time is given by

To ease notation, the dose-response function for population will be denoted by in what follows. The initial size of population is , where is the known initial total population size and is the unknown initial fraction of population . The total population size at time is then given by

A statistical model for the observed data is obtained by adding independent Gaussian noise to the deterministic growth model prediction. The variance of the Gaussian noise is given by

The variance is allowed to depend on time and dose, since at large time points and low doses, a larger variance is expected due to larger cell counts [15]. Thus, the statistical model for the observation is given by

where are independent random variables with the normal distribution . This model has the parameter set

| (1) |

The initial fractions of the subpopulations and the parameters governing the drug responses of the subpopulations are unknown. In addition, the variance levels and are unknown. In practice, the precise values of the thresholds and have minimal effect on the performance of PhenoPop. Therefore, and are treated as known.

The goal of the PhenoPop algorithm is to use the experimental data to estimate the unknown parameters and the number of subpopulations . The parameters are estimated via maximum likelihood estimation, where the likelihood function is given by

| (2) |

The likelihood function describes the probability of observing the data as a function of the parameter vector for a given number of subpopulations. The number of subpopulations is then estimated by comparing the negative log likelihood across candidate values of via the elbow method or Akaike/Bayesian Information criteria. For further information, we refer to [15].
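The model-selection step can be sketched as follows, using the textbook definitions of the Akaike and Bayesian information criteria. The negative log-likelihood values and parameter counts below are made up for illustration, and the helper names are hypothetical; this is not the PhenoPop implementation.

```python
import math


def aic(neg_log_lik, n_params):
    """Akaike information criterion from a minimized negative log-likelihood."""
    return 2.0 * n_params + 2.0 * neg_log_lik


def bic(neg_log_lik, n_params, n_obs):
    """Bayesian information criterion from a minimized negative log-likelihood."""
    return n_params * math.log(n_obs) + 2.0 * neg_log_lik


def select_num_subpopulations(fits, n_obs, criterion="bic"):
    """fits maps a candidate S to (minimized negative log-likelihood, number of free parameters)."""
    scores = {}
    for S, (nll, k) in fits.items():
        scores[S] = bic(nll, k, n_obs) if criterion == "bic" else aic(nll, k)
    best = min(scores, key=scores.get)
    return best, scores


# Hypothetical fit results for S = 1, 2, 3 (illustrative numbers only).
fits = {1: (5200.0, 6), 2: (4900.0, 11), 3: (4897.0, 16)}
best_S, scores = select_num_subpopulations(fits, n_obs=1000)
print(best_S, scores)
```

In this made-up example, moving from two to three subpopulations barely lowers the negative log-likelihood, so the penalty on the extra parameters favors two subpopulations.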

Limitations.

The assumption of the PhenoPop algorithm that the Gaussian observation noise has two levels of variance is made for methodological simplicity and does not reflect an observed bifurcation of experimental noise levels. It would be more natural to assume that the noise level is directly proportional to the cell count, as indicated by the experimental data shown in Figure 1. In addition, PhenoPop assumes that all observations are statistically independent. However, if cells are counted using techniques such as live-cell imaging (time-lapse microscopy), then observations of the same well at different time points will be positively correlated. Both of these limitations can be addressed by modeling the cellular populations with stochastic processes, as we will now show.

2.2. Linear birth-death process

A natural extension of PhenoPop [15] is to use a stochastic linear birth-death process to model the cell population dynamics. In the model, a cell in subpopulation $i$ (type-$i$ cell) divides into two cells at rate $\beta_i$ and dies at rate $\nu_i$. This means that during a short time interval of length $\Delta t$, a type-$i$ cell divides with probability $\beta_i \Delta t$ and dies with probability $\nu_i \Delta t$. The death rate of type-$i$ cells is assumed dose-dependent according to

$$\nu_i(x) = \nu_i - \log(H_i(x)),$$

where $H_i$ is the dose-response function of subpopulation $i$. The net birth rate of type-$i$ cells is then given by

$$\lambda_i(x) = \beta_i - \nu_i(x) = \beta_i - \nu_i + \log(H_i(x)).$$

Using the substitution $\alpha_i = \beta_i - \nu_i$, we see that the drug affects the net birth rate of the stochastic model the same way it affects the growth rate of the deterministic population model of PhenoPop. Note however that here, the drug is assumed to act via a cytotoxic mechanism, that is, higher doses lead to higher death rates. Our framework can easily account for cytostatic effects, where higher doses lead to lower cell division rates, but we focus on cytotoxic therapies for simplicity.

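Let $X_i(t, x)$ denote the number of cells in subpopulation $i$ at time $t$ under drug dose $x$. The mean and variance of the subpopulation size at time $t$ are given by

$$\mathbb{E}[X_i(t, x)] = n p_i\, e^{\lambda_i(x) t}, \tag{3}$$

$$\mathrm{Var}[X_i(t, x)] = n p_i\, \frac{\beta_i + \nu_i(x)}{\lambda_i(x)} \left(e^{2 \lambda_i(x) t} - e^{\lambda_i(x) t}\right), \tag{4}$$

where $n p_i$ is the initial size of subpopulation $i$, $\beta_i$ and $\nu_i(x)$ are its birth rate and dose-dependent death rate, and $\lambda_i(x) = \beta_i - \nu_i(x) \neq 0$ is its net birth rate.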
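As a sanity check on the moments of a linear birth-death process, the following sketch compares the standard closed-form mean and variance with a Gillespie simulation. The rates, initial count, and function names are hypothetical; `death` stands for the effective (dose-dependent) death rate at a fixed dose.

```python
import math
import random


def bd_mean(n0, birth, death, t):
    """Mean size of a linear birth-death process started from n0 cells."""
    return n0 * math.exp((birth - death) * t)


def bd_variance(n0, birth, death, t):
    """Variance of a linear birth-death process started from n0 cells."""
    lam = birth - death
    if lam == 0.0:
        return 2.0 * n0 * birth * t  # limiting case of zero net growth
    return n0 * (birth + death) / lam * (math.exp(2 * lam * t) - math.exp(lam * t))


def simulate_bd(n0, birth, death, t_end, rng):
    """Gillespie simulation; returns the cell count at time t_end."""
    count, t = n0, 0.0
    while count > 0:
        t += rng.expovariate(count * (birth + death))  # waiting time to next event
        if t > t_end:
            break
        if rng.random() < birth / (birth + death):
            count += 1  # division
        else:
            count -= 1  # death
    return count


# Hypothetical values for illustration only.
rng = random.Random(0)
n0, birth, death, t_end = 50, 0.5, 0.3, 1.0
samples = [simulate_bd(n0, birth, death, t_end, rng) for _ in range(2000)]
emp_mean = sum(samples) / len(samples)
emp_var = sum((s - emp_mean) ** 2 for s in samples) / (len(samples) - 1)
print(emp_mean, bd_mean(n0, birth, death, t_end))
print(emp_var, bd_variance(n0, birth, death, t_end))
```

Unlike the two-level noise model of PhenoPop, the variance here grows with the population size, which is the behavior seen in Figure 1.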

Next, denote the total population size at time under drug dose by

with mean and variance

Note that the mean size of the total population under the stochastic model equals the total population size under the deterministic model of PhenoPop, again with the substitution . However, the stochastic model introduces variability in the population dynamics at each time point arising from the stochastic nature of cell division and cell death. To account for experimental measurement error, we add independent Gaussian noise to each observation of the stochastic model. As a result, the new statistical model for each observation is

| (5) |

where are independent copies of for , and are i.i.d. random variables with the normal distribution , independent of the ’s. The model parameter set is now

| (6) |

In comparison with PhenoPop, on the one hand, the growth rate parameter for each subpopulation has been replaced by the birth and death rates and . On the other hand, there is only one parameter for the observation noise as opposed to four parameters for PhenoPop.

Under the new statistical model, the likelihood function is

| (7) |

where we assume that observations at different doses and from distinct replicates are independent, and represents an infinitesimally small interval around . We now discuss two different forms this likelihood function can take, depending on whether the data collected at different time points are correlated or not.

2.2.1. End-point experiments

For many common cell counting techniques, e.g. CellTiter-Glo [13], the experiment must be stopped to perform the viability assay. In this case, observations at different time points are actually observations of different cell populations exposed to drugs for different amounts of time, and can therefore be treated as independent. Thus, the likelihood function can be written as

We note that the distribution of can be computed exactly. However, for faster computation, one can approximate the distribution by a Gaussian distribution. To that end, consider the centered and normalized process

| (8) |

A straightforward application of the central limit theorem gives the following result.

Proposition 1 For and , as .

Note that $\Rightarrow$ denotes convergence in distribution. The proof of this result is omitted, since it is a consequence of the more general Proposition 2.

Based on Proposition 1, we obtain the likelihood function

| (9) |

The right-hand side of (9) depends on the model parameters via the mean and variance functions and . As in [15], one can maximize this expression over the parameter set to obtain maximum likelihood estimates of the model parameters. The optimization problem for the new likelihood is more difficult to solve than the corresponding problem for the PhenoPop likelihood in (2), since the variance of the data now depends on the dose-response parameters for the subpopulations. However, the numerical optimization software we employ is able to deal with this more complex dependence on the model parameters, see Appendix 5.1.
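A minimal sketch of an end-point negative log-likelihood under the Gaussian approximation is given below. It assumes that each observation is treated as normal with the total birth-death mean, and with variance equal to the summed subpopulation variances plus the observation-noise variance $c^2$ (subpopulations are assumed to evolve independently). The dictionary keys, function names, and parameter values are hypothetical.

```python
import math


def hill(x, b, E, n):
    return b + (1.0 - b) / (1.0 + (x / E) ** n)


def total_mean_var(t, x, n0, subpops):
    """Mean and variance of the total count, summed over independent subpopulations."""
    mean, var = 0.0, 0.0
    for s in subpops:
        nu_x = s["nu"] - math.log(hill(x, s["b"], s["E"], s["n"]))  # dose-dependent death rate
        lam = s["beta"] - nu_x  # net birth rate
        init = n0 * s["p"]  # initial subpopulation size
        mean += init * math.exp(lam * t)
        if lam == 0.0:
            var += 2.0 * init * s["beta"] * t
        else:
            var += init * (s["beta"] + nu_x) / lam * (math.exp(2 * lam * t) - math.exp(lam * t))
    return mean, var


def endpoint_nll(data, n0, subpops, c):
    """Negative log-likelihood for independent observations (t, x, count)."""
    nll = 0.0
    for t, x, count in data:
        mean, var = total_mean_var(t, x, n0, subpops)
        total_var = var + c ** 2
        nll += 0.5 * math.log(2 * math.pi * total_var) + (count - mean) ** 2 / (2 * total_var)
    return nll


# Hypothetical two-subpopulation parameters for illustration.
subpops = [
    {"p": 0.4, "beta": 0.5, "nu": 0.45, "b": 0.85, "E": 0.07, "n": 3.0},
    {"p": 0.6, "beta": 0.5, "nu": 0.45, "b": 0.85, "E": 1.50, "n": 3.0},
]
m, v = total_mean_var(24.0, 0.5, 1000.0, subpops)
print(endpoint_nll([(24.0, 0.5, m)], 1000.0, subpops, c=5.0))
```

Because the variance term depends on the dose-response parameters, changing those parameters shifts both the center and the spread of each Gaussian factor, which is why this objective is harder to optimize than the PhenoPop likelihood.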

2.2.2. Live-cell imaging techniques

Live-cell imaging techniques enable the experimenter to obtain cell counts for the same population across multiple different time points. For such datasets, observations of the same sample at different time points will be positively correlated. In this case, we must compute the joint distribution

| (10) |

for each . To ease notation, we will temporarily suppress dependence on the dose.

We first note that is not a Markov process, since the total cell count at each time point does not include information on the sizes of the individual subpopulations. Computing (10) exactly requires summing over the possible sizes of the subpopulations at each time point, which is computationally intensive. It is possible to speed up the computation using tools from hidden Markov models, which reduces the computational complexity to . However, this is still computationally infeasible since , resulting in a 1 second computation time to evaluate a single likelihood. The computational details are provided in Appendix 5.5.

A more efficient approach is to use a Gaussian approximation. For the centered and normalized process from (8), define the vector of observations across time points

By assuming that the set , number of subpopulations and initial proportion of each subtype are independent of the initial total cell count , we derive the following approximation for :

Proposition 2 As ,

where the element of the covariance matrix is given by

The proof of this proposition is given in Appendix 5.3. In Appendix 5.4, we relax the assumption that the initial proportion is independent of the initial total cell count, and a similar result still follows. In future work, we plan to relax the assumption that is independent of .

We now reintroduce dose dependence. For each , define the vector

and the identity matrix . Based on Proposition 2, the following approximation is used to compute the likelihood in expression (7):

The likelihood function is thus given by

| (11) |

Note that the computational complexity of evaluating the above likelihood is independent of , alleviating the computational burden associated with an exact evaluation of the likelihood.

The difference between the likelihood function for endpoint data and for live-cell imaging data lies in the structure of the covariance matrix for the observation vector . For , observations made at different time points are assumed independent, meaning that the covariance matrix is diagonal. For live-cell imaging data, the covariance matrix is not diagonal. Accurately accounting for time correlations in the likelihood (11) can improve the accuracy of parameter estimates, as we will discuss in Section 3. However, it does come at a cost, since it is obviously more computationally expensive to calculate the inverses and determinants present in . As a result, the optimization of can be more difficult than the optimization of .
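The contrast between a diagonal and a full covariance matrix can be made concrete with a short sketch for a single population. It uses a standard property of linear birth-death processes, namely that the covariance between times $s \le t$ equals the variance at time $s$ multiplied by $e^{\lambda (t - s)}$; we assume, but do not verify here, that this matches the covariance in Proposition 2. All names and values are hypothetical.

```python
import numpy as np


def bd_var(t, n0, birth, death):
    """Variance of a linear birth-death process at time(s) t."""
    lam = birth - death
    t = np.asarray(t, dtype=float)
    if lam == 0.0:
        return 2.0 * n0 * birth * t
    return n0 * (birth + death) / lam * (np.exp(2 * lam * t) - np.exp(lam * t))


def bd_covariance(times, n0, birth, death):
    """Covariance matrix across time points: Var at the earlier time times exp(lam * gap)."""
    times = np.asarray(times, dtype=float)
    earlier = np.minimum.outer(times, times)
    gap = np.abs(np.subtract.outer(times, times))
    return bd_var(earlier, n0, birth, death) * np.exp((birth - death) * gap)


def gaussian_loglik(obs, mean, cov):
    """Multivariate normal log-density, using slogdet and solve for stability."""
    resid = np.asarray(obs, dtype=float) - np.asarray(mean, dtype=float)
    _, logdet = np.linalg.slogdet(cov)
    quad = resid @ np.linalg.solve(cov, resid)
    return -0.5 * (len(resid) * np.log(2 * np.pi) + logdet + quad)


# Hypothetical values for illustration.
times = np.array([1.0, 2.0, 3.0])
n0, birth, death, c = 1000.0, 0.5, 0.3, 10.0
mean = n0 * np.exp((birth - death) * times)
cov_live = bd_covariance(times, n0, birth, death) + c ** 2 * np.eye(len(times))
cov_endpoint = np.diag(np.diag(cov_live))  # independence across time points
print(gaussian_loglik(mean + 20.0, mean, cov_live))
print(gaussian_loglik(mean + 20.0, mean, cov_endpoint))
```

The two covariance matrices share the same diagonal; only the off-diagonal entries, which encode the positive time correlation, distinguish the live-cell imaging likelihood from the end-point one.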

2.2.3. Accuracy of Gaussian approximation

Proposition 2 states that the centered and normalized process is approximately Gaussian . However, in the derivation of the likelihood function (11), the distribution of the total cell number is approximated with a Gaussian distribution , whose mean and variance increase linearly with . To verify that the error in this approximation is reasonable for large , we will now compare the distributions of and using a well-known measure of the distance between two distributions.

The energy distance, introduced in [24], is a measure of the distance between probability distributions, which has previously been shown to be related to Cramér's distance [5, 24]. The energy distance has been utilized in several statistical tests [2] and is easily computed for multivariate distributions. For probability distributions $F$ and $G$ on $\mathbb{R}^d$, we define their energy distance as

$$\mathcal{E}(F, G) = 2\,\mathbb{E}\|X - Y\| - \mathbb{E}\|X - X'\| - \mathbb{E}\|Y - Y'\|, \tag{12}$$

where all random variables are independent, $X$ and $X'$ are distributed according to $F$, $Y$ and $Y'$ are distributed according to $G$, and $\|\cdot\|$ denotes the Euclidean norm.

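Since it is impractical to evaluate (12) directly, we approximate the true energy distance by the empirical energy distance. For two sets of i.i.d. realizations $\{x_i\}_{i=1}^{N}$ and $\{y_j\}_{j=1}^{M}$, the empirical energy distance is

$$\hat{\mathcal{E}} = \frac{2}{NM} \sum_{i=1}^{N} \sum_{j=1}^{M} \|x_i - y_j\| - \frac{1}{N^2} \sum_{i=1}^{N} \sum_{j=1}^{N} \|x_i - x_j\| - \frac{1}{M^2} \sum_{i=1}^{M} \sum_{j=1}^{M} \|y_i - y_j\|. \tag{13}$$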

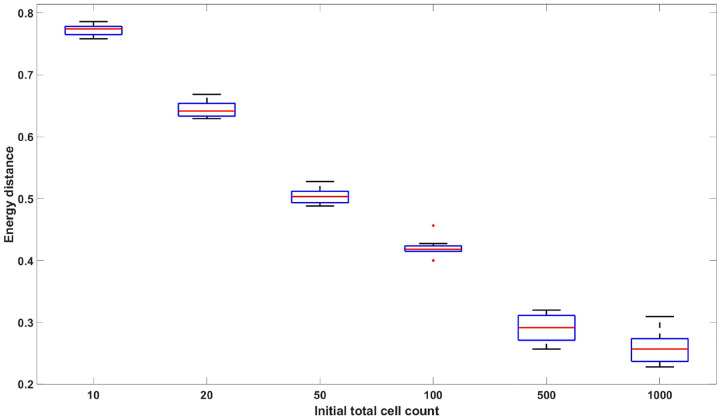

Denote the distribution of by and the normal distribution by . Let be i.i.d. samples from the distribution , and let be i.i.d samples from . We can then compute using (13). In Figure 2, we plot with varying initial cell counts. The plot shows a monotonic decrease in the empirical energy distance as a function of the initial cell count, which indicates that the distribution of is reasonably approximated by a Gaussian distribution for large values of the initial cell count.
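The empirical energy distance is simple to compute from two sample sets. The sketch below uses the standard plug-in estimator (all pairs included, self-pairs contributing zero); the sample sizes, dimension, and distributions are hypothetical stand-ins and are not the simulated birth-death data used for Figure 2.

```python
import numpy as np


def mean_pairwise_distance(a, b):
    """Average Euclidean distance over all pairs (row of a, row of b)."""
    diff = a[:, None, :] - b[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1)).mean()


def empirical_energy_distance(x, y):
    """Plug-in energy distance; x and y have shape (num_samples, dimension)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return (2.0 * mean_pairwise_distance(x, y)
            - mean_pairwise_distance(x, x)
            - mean_pairwise_distance(y, y))


# Hypothetical samples: 7-dimensional vectors, e.g. one coordinate per time point.
rng = np.random.default_rng(0)
x = rng.normal(size=(200, 7))
same = rng.normal(size=(200, 7))              # drawn from the same distribution as x
shifted = rng.normal(loc=1.0, size=(200, 7))  # drawn from a shifted distribution
print(empirical_energy_distance(x, same))
print(empirical_energy_distance(x, shifted))
```

Samples from the same distribution give a value near zero, while a shift in the mean gives a clearly larger value, which is the behavior the comparison in Figure 2 relies on.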

Figure 2:

Empirical energy distance between linear birth-death simulated data and multivariate normal distributed data with respect to varying initial cell count: [10, 20, 50, 100, 500, 1000]. The data consists of replicates and 7 time points . No drug effect is assumed. The parameters used to generate the data are . The box plot represents the values from 10 distinct datasets. The figure demonstrates that the distribution of the linear birth-death process converges to the multivariate normal distribution with mean and covariance given by Proposition 2 as the initial cell count increases.

3. Numerical results

In this section, we use our new statistical methods to analyze both simulated (in silico) and experimental (in vitro) live cell imaging data. We apply both the simpler end-point estimation procedure (“end-points method”), based on the likelihood in (9), and the more complex live cell imaging procedure (“live cell image method”), based on the likelihood in (11). The performance of the new methods is compared with the existing PhenoPop algorithm. In all analyses it is assumed that the observation at time represents the known starting population size, i.e. .

3.1. Application to simulated data

We first apply our estimation methods to simulated (in silico) data. In Appendix 5.1, we provide details of the data generation and the parameter estimation for these in silico experiments.

3.1.1. Examples with 2 subpopulations

For illustrative purposes, we begin with a case study involving an artificial tumor with two subpopulations. Data is generated using a parameter vector selected uniformly at random from the ranges in Table 1. We assume that one tumor subpopulation is drug-sensitive and the other is drug-resistant. These subpopulations are indicated by the subscripts and , respectively.

Table 1:

Range for parameter generation of experiments with 2 subpopulations

| Range | [0.3, 0.5] | [0, 1] | [0.8, 0.9] | [0.05, 0.1] | [0.75, 2.5] | [1.5, 5] | [0, 10] |

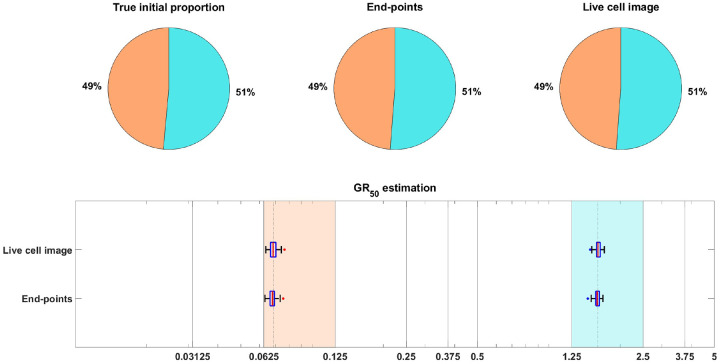

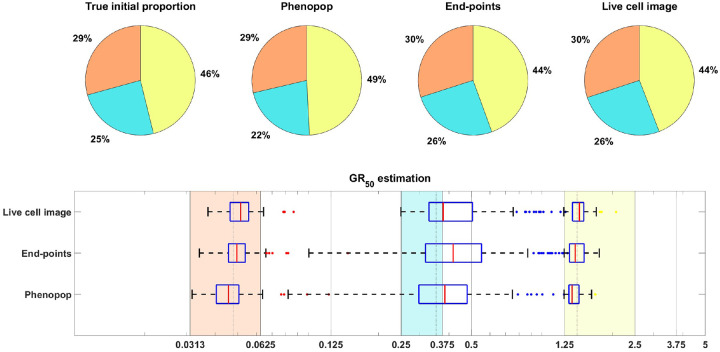

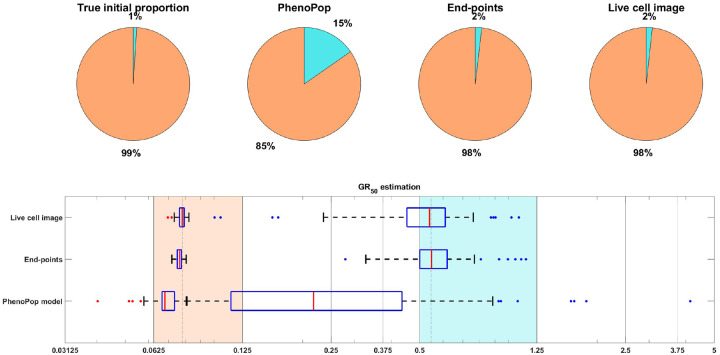

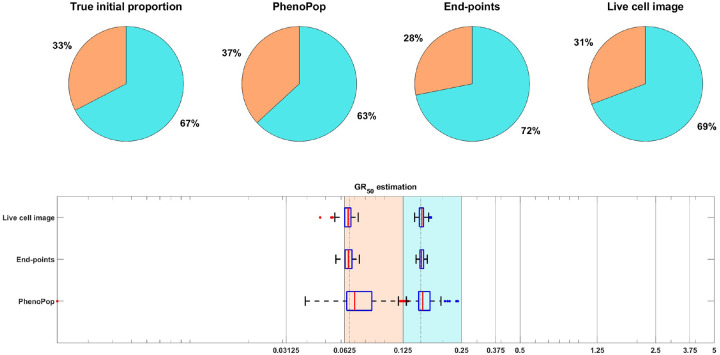

As in [15], we focus on inferring the initial proportion of sensitive cells, as well as the dose for each subpopulation. The is the dose at which the drug has half the maximal effect on the cell death rate, as is further explained in Appendix 5.1. Informally, the dose for each subpopulation is a measure of the subpopulation’s sensitivity to the drug. To assess the uncertainty in the parameter estimation, we compute maximum likelihood estimates for 100 bootstrapped datasets, as described in Appendix 5.1. The results of the case study are shown in Figure 3, where we see that both the live cell image method and the end-points method are able to recover the initial proportion of the sensitive population and the dose for each subpopulation accurately.

Figure 3:

Estimation of the initial proportion and for 2 subpopulations using the end-points method and the live cell image method on simulated data. The parameter vector and observation noise used in this example are . The pie chart illustrates the average of all bootstrap estimates for the initial proportion, while the box plot summarizes the distribution of the estimates for the ’s. The vertical dashed lines in the box plot correspond to the true values employed to generate the data, while the vertical solid lines indicate the concentration levels at which the data were collected. Each color in the plot represents a distinct subpopulation: orange for sensitive and blue for resistant. The shaded areas in the box plot indicate the concentration intervals where the true ’s are located, and the colored dots mark outliers in the estimation of the for each subpopulation, with red for sensitive and blue for resistant. This example demonstrates that our newly proposed models can accurately recover the initial proportion and values with high precision.

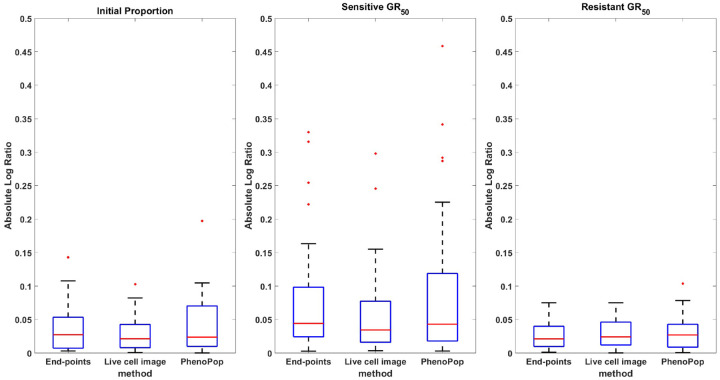

We next evaluate the performance of the estimation methods across 30 simulated datasets, where each parameter vector for is sampled from the ranges in Table 1. We furthermore compare the performance of the two new methods with the performance of PhenoPop. The error in the estimation of each parameter is measured by considering the absolute log ratio between the point estimate and the true value for the parameter,

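$$\left| \log\left( \frac{\hat{\theta}}{\theta} \right) \right|, \tag{14}$$

where $\hat{\theta}$ denotes the point estimate and $\theta$ the true value of the parameter.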

This metric is chosen to address the logarithmic scale associated with the dose.
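The error metric can be written in a few lines. The sketch below assumes the metric is the absolute value of the logarithm of the ratio of estimate to truth, as described in the text; the function name and the numerical values are hypothetical.

```python
import math


def absolute_log_ratio(estimate, truth):
    """Absolute log ratio between a positive point estimate and the positive true value."""
    return abs(math.log(estimate / truth))


# Over- and under-estimating by the same factor gives the same error.
print(absolute_log_ratio(0.2, 0.1), absolute_log_ratio(0.05, 0.1))
```

An estimate that is twice the true value and one that is half the true value receive the same error, which suits parameters such as doses that are naturally compared on a logarithmic scale.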

In Figure 4, a box plot of the estimation errors for the three methods across the 30 datasets is presented. Note that all three parameters are estimated accurately using all three methods. In addition, the error in estimating the sensitive is larger than the error in estimating the resistant for all three methods. One possible reason is that the initial proportion of sensitive cells is , so the experimental data contains less information on the sensitive subpopulation. Later experimental results will lend further support to this hypothesis.

Figure 4:

Absolute log ratio accuracy of three estimators using the PhenoPop, end-points and live cell image methods. The results are summarized based on 30 different simulated datasets. This figure demonstrates that there are no significant differences in estimation accuracy among these three methods when the true parameters fall within the range described in Table 1.

We next compare the estimation precision of the three methods. Specifically, we will compare the widths of the 95% confidence intervals for the three parameters between the three different methods. Since values vary significantly across the 30 generated datasets, we normalize the CI width for each method by dividing it by the sum of the CI widths of all three methods for the same dataset.
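The normalization described above can be sketched as follows. The percentile-based interval is an assumption made for illustration (the bootstrap procedure itself is described in Appendix 5.1), and the method labels, function names, and bootstrap estimates are hypothetical.

```python
import numpy as np


def percentile_ci_width(bootstrap_estimates, level=0.95):
    """Width of a percentile confidence interval from bootstrap estimates (assumed CI type)."""
    tail = 100.0 * (1.0 - level) / 2.0
    lower, upper = np.percentile(bootstrap_estimates, [tail, 100.0 - tail])
    return upper - lower


def normalized_ci_widths(widths):
    """Divide each method's CI width by the sum of the widths of all methods for one dataset."""
    total = sum(widths.values())
    return {method: width / total for method, width in widths.items()}


# Hypothetical bootstrap estimates of one parameter on one dataset.
rng = np.random.default_rng(1)
widths = {
    "PhenoPop": percentile_ci_width(rng.normal(0.40, 0.030, size=100)),
    "end-points": percentile_ci_width(rng.normal(0.40, 0.020, size=100)),
    "live cell image": percentile_ci_width(rng.normal(0.40, 0.010, size=100)),
}
print(normalized_ci_widths(widths))
```

After normalization the three widths for a dataset sum to one, so methods can be compared across datasets whose parameter values, and hence raw interval widths, differ by orders of magnitude.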

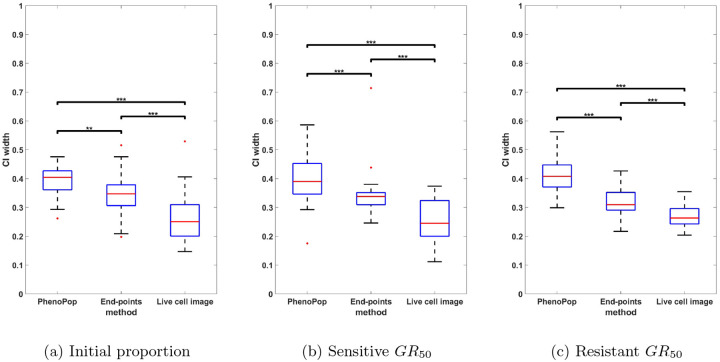

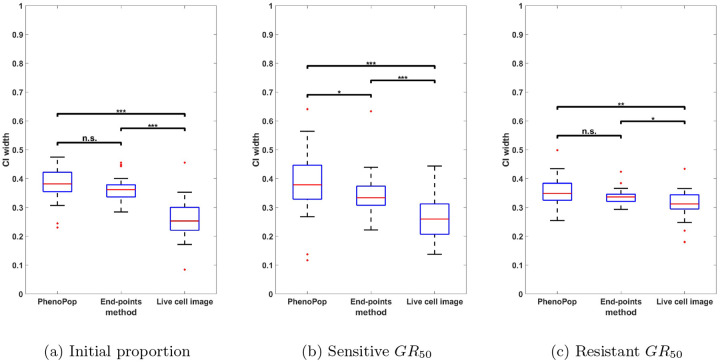

In Figure 5, the normalized CIs for are compared between the three methods across the 30 datasets. First, note that for the initial proportion , the live cell image method has significantly narrower CIs than the other two methods. Additionally, there is a small but statistically significant difference between the CI widths for the end-points method and the PhenoPop method. For the sensitive index, the live cell image method again has significantly narrower confidence intervals than the other two methods, and the end-points method has significantly narrower confidence intervals than the PhenoPop method. The results are similar for the resistant index. It is worth mentioning that for at least 28 out of the 30 datasets, the true parameters were located within the confidence intervals for all three methods.

Figure 5:

Comparison of the normalized CI widths of three estimators estimated from three different methods. The -axis represents the normalized CI width. The box plot summarizes the results across 30 different simulated datasets. The significance bar indicates the p-values derived from the Wilcoxon rank-sum test, with significance levels denoted as *** ≤ 0.001 ≤ ** ≤ 0.01 ≤ * ≤ 0.05. This figure demonstrates that the newly proposed models exhibit significant advantages in estimation precision, with the live cell image method demonstrating the highest level of precision.

In summary, the end-points and live cell image methods provide a significant improvement in estimator precision over the PhenoPop method for all three parameters, and furthermore, the live cell image method has the best precision out of all three methods.

3.1.2. Illustrative example with 3 subpopulations

In this section, we examine a case study involving an artificial tumor with 3 subpopulations. The subpopulations are assumed sensitive, moderate, and resistant with respect to the drug, and they are denoted using the subscripts , and , respectively. Data is generated using a parameter vector selected uniformly at random from the ranges in Table 2. Those parameters not listed in Table 2 are selected as in Table 1.

Table 2:

Modified range of parameters

| Range | [0.167, 0.333] | [0.0313, 0.0625] | [0.25, 0.375] | [1.25, 2.5] |

Figure 6 shows estimation results for the initial proportions and the doses of the three subpopulations. Note that the end-points and live cell image methods provide more accurate estimates of the initial proportion for each subpopulation than PhenoPop. Furthermore, when estimating the for each subpopulation, the inter-quartile range (IQR) of 100 bootstrapped estimates covers the true value for all three methods. However, the estimation for the of the moderate subpopulation with is less precise than for the other two subpopulations, i.e., the IQR is wider. This is likely due to confounding between the moderate subpopulation and the other two subpopulations.

Figure 6:

Estimation of the initial proportion and for 3 subpopulations using the three estimation methods. The parameter vector and the observation noise in this example are . The pie chart illustrates the average of all bootstrap estimates for the initial proportion, while the box plot summarizes the distribution of all estimates for the ’s. The vertical dashed lines in the box plot correspond to the true values employed to generate the data, while the vertical solid lines indicate the concentration levels at which the data were collected. Each color in the plot represents a distinct subpopulation: orange for sensitive, blue for moderate, and yellow for resistant. The shaded areas in the box plot indicate the concentration intervals where the true ’s are located, and the colored dots mark outliers in the estimation of the for each subpopulation, with red for sensitive, blue for moderate, and yellow for resistant.

It is worth noting that for the 3 subpopulation example, the number of datapoints is the same as for the 2 subpopulation examples, since only total cell counts are observed at each time point. Furthermore, when computing maximum likelihood estimates for 3 subpopulations, we solved each optimization problem the same number of times as for 2 subpopulations. Overall, our conclusion is that all three methods can provide reasonable estimates of the true initial proportion and the of each subpopulation for 3 subpopulations. However, achieving equivalent levels of accuracy and precision as for 2 subpopulations may require a greater computational effort or the collection of more data, given that the 3 subpopulation model is more complex and has more parameters.

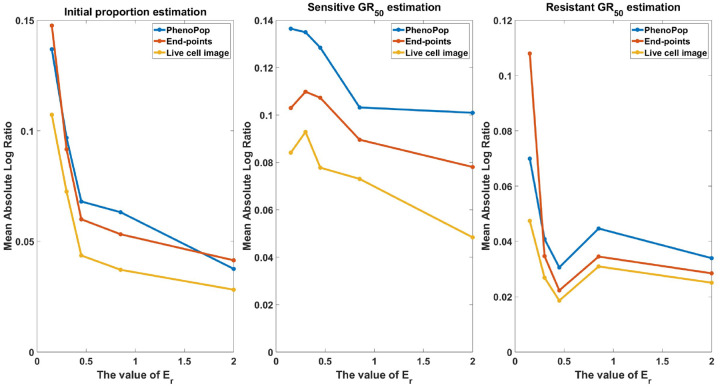

3.1.3. Performance in challenging conditions

In the previous work [15], three conditions under which the performance of PhenoPop deteriorates were identified: the case of a large observation noise, a small initial fraction of resistant cells, and similar drug-sensitivity of both subpopulations. We now investigate the performance of the end-points and live cell image methods in these conditions and compare to the performance of PhenoPop.

Large observation noise:

We first consider the case of large observation noise. Note that in the PhenoPop method, the only source of variability in the statistical model is the additive Gaussian noise. In the end-points and live cell image methods, however, there is an underlying stochastic process governing the population dynamics with an added Gaussian noise term. Thus, whereas PhenoPop deals with high levels of noise by adjusting the variance of the Gaussian term, the two new methods may also try to adjust the subpopulation growth and dose response parameters. For data with high levels of noise, this can make estimation harder for the two new methods than for PhenoPop.

We begin by considering a case study where the noise level is set to , and other parameters are chosen uniformly at random according to Table 1. The results are shown in Figure 7. For each method, the initial proportion is estimated with good accuracy, and the IQR of 100 bootstrap estimates for covers the true value. However, compared with the estimation in Figure 3, the estimation precision of the end-points method and live cell image method has degraded. In addition, observe that the IQRs of the three methods have about the same width, which implies the precision advantage observed in Section 3.1.1 disappears under a very large observation noise.

Figure 7:

An illustrative example under the high observation noise scenario, i.e. . The parameter vector and the observation noise in this example are . Results are presented as in Figure 3. This example demonstrates that all three methods are capable of recovering the initial proportion and even under the high observation noise scenario.

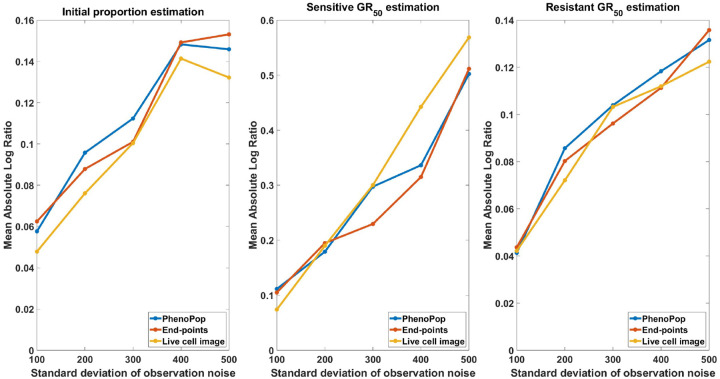

We next evaluate estimation performance across 30 simulated datasets for each noise value . Figure 8 shows the mean absolute log ratio across the 30 datasets for each parameter , each noise level and each estimation method. As expected, the estimation error increases for all three methods as a function of the observation noise. In fact, all three methods show a similar response to increasing levels of noise.

Figure 8:

Estimation error of with respect to varying standard deviation of observation noise. The metric of estimation error is the mean absolute log ratio of estimates across 30 simulated datasets, each generated from a distinct parameter set. The value of the observation noise parameter, , in these 30 generating parameter sets was assigned to 5 different values in the set to generate the line plot. Three different line plots correspond to three different methods, as indicated by the figure legends. This figure demonstrates that the estimations of the three methods deteriorate as the level of observation noise increases.

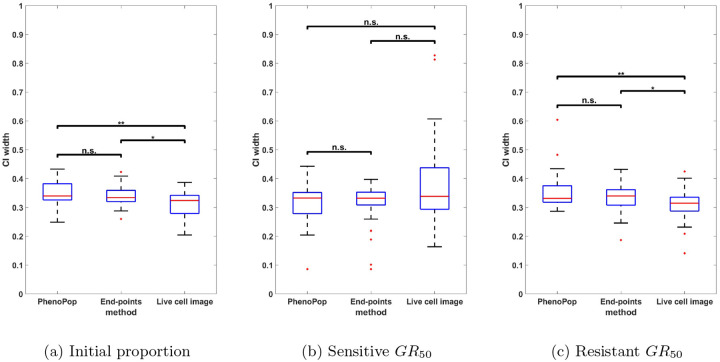
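The error metric plotted in Figure 8 can be sketched in a few lines. This is a minimal Python illustration, assuming that the mean absolute log ratio is the average of |log(estimate / true value)| over the simulated datasets; the function name and the example numbers are hypothetical and are not taken from the authors' MATLAB code.

```python
import math

def mean_abs_log_ratio(estimates, truths):
    """Average of |log(estimate / truth)| over paired datasets.

    Assumption: each dataset contributes one positive estimate and one
    positive true value of the same parameter.
    """
    if len(estimates) != len(truths) or not estimates:
        raise ValueError("need equally sized, non-empty inputs")
    total = 0.0
    for est, true in zip(estimates, truths):
        total += abs(math.log(est / true))
    return total / len(estimates)

# Hypothetical example: over- and under-estimating by a factor of 2 are
# penalized equally, and a perfect estimate contributes zero.
example = mean_abs_log_ratio([2.0, 0.5, 1.0], [1.0, 1.0, 1.0])
print(example)
```

A ratio-based metric of this kind is scale free, which makes errors comparable across parameters that live on very different scales.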

We next compare the widths of 95% confidence intervals for the three parameters when the standard deviation of the observation noise is 10% and 50% of the initial cell count, using 30 datasets for each noise level. The results are shown in Figures 9 and 10, respectively. At the 10% noise level (Figure 9), the precision advantage of the live cell image method over the other two methods is less pronounced than in Figure 5, especially for the resistant $GR_{50}$. At the 50% noise level (Figure 10), the advantage disappears for the sensitive $GR_{50}$. Importantly, however, Figure 9 shows that the precision advantage of the live cell image method remains statistically significant for all three parameters for an observation noise as large as 10% of the initial cell count. For reference, the standard deviation of observation noise reported for common automated and semi-automated cell counting techniques ranges from 1% to 15% [4, 19].

Figure 9:

Comparison of the normalized CI widths of the three estimators using the three different estimation methods, when the observation noise parameter is set to . The -axis represents the normalized CI width. The box plot summarizes the results across 30 different datasets. The significance bar indicates the p-values derived from the Wilcoxon rank-sum test, with significance levels denoted as *** ≤ 0.001 ≤ ** ≤ 0.01 ≤ * ≤ 0.05. This figure demonstrates the advantages of the live cell image method in estimation precision are preserved even when the standard deviation of observation noise is 10% of the initial cell count.

Figure 10:

Comparison of the normalized CI widths of three estimators using the three different estimation methods, when the observation noise parameter is set to . Results are presented as in Figure 9. This figure demonstrates that the advantages of the live cell image method in estimation precision become less significant as the standard deviation of observation noise increases to 50% of the initial cell count.

Small resistant subpopulation:

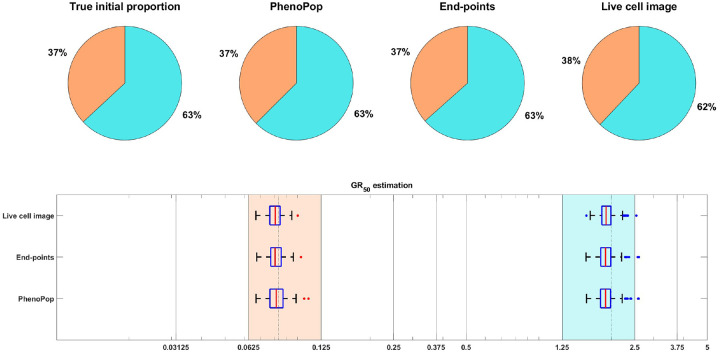

For the datasets investigated in Section 3.1.1, the initial proportion of sensitive cells was constrained to be in [0.3, 0.5]. We now consider the setting of a small resistant subpopulation. We begin with a case study in Figure 11, where the initial proportion of sensitive cells is set to 0.99 and the other parameters are sampled according to Table 1. For both the sensitive and resistant subpopulations, the IQR for the $GR_{50}$ dose under PhenoPop does not cover the true value, whereas the IQR for the live cell image method does. The IQR for the end-points method covers the true resistant $GR_{50}$, but only barely covers the true sensitive $GR_{50}$. In addition, the end-points and live cell image methods have significantly narrower IQRs than PhenoPop. Finally, note that the estimate of the initial proportion of resistant cells is much more accurate for the end-points and live cell image methods. Thus, while PhenoPop provides a reasonable estimate of the $GR_{50}$ of the sensitive subpopulation, which is the dominant subpopulation in this scenario, inferring the population composition and the $GR_{50}$ of the minority resistant subpopulation requires the more powerful end-points and live cell image methods.

Figure 11:

An illustrative example under the unbalanced initial proportion scenario, i.e. . The parameter vector and the observation noise in this example are . Results are presented as in Figure 3. This example demonstrates that our newly proposed model can accurately estimate parameters even when the initial proportion of the resistant subpopulation is negligible, while the PhenoPop method fails to estimate the parameters accurately.

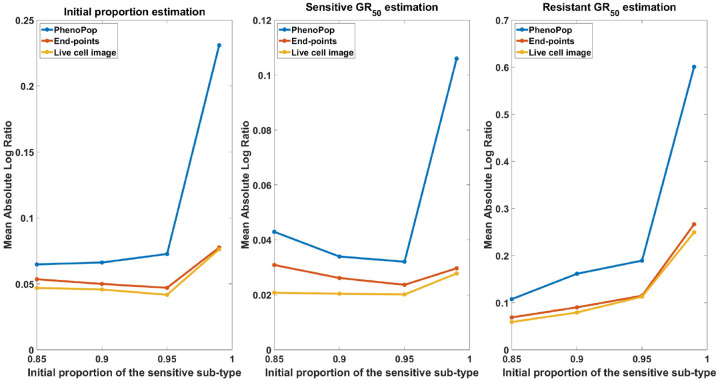

In Figure 12, we show the mean absolute log ratio for each parameter across 100 datasets for each of the four values of the initial proportion considered. Note that both the end-points and live cell image methods have significantly smaller errors than PhenoPop, and that the difference becomes more pronounced as the initial proportion of resistant cells decreases. Also note that the error in estimating the sensitive $GR_{50}$ is smaller than for the resistant $GR_{50}$, opposite to the results of Figure 4, where the initial proportion of sensitive cells was in [0.3, 0.5]. This further reinforces the hypothesis stated in Section 3.1.1 that the initial proportion of a subpopulation impacts the precision of estimating the $GR_{50}$ for that subpopulation.

Figure 12:

Estimation error of with respect to varying resistant initial proportions. The metric of estimation error is the mean absolute log ratio across 100 simulated datasets, each generated from a distinct parameter set. The value of in these 100 generating parameter sets was assigned to 4 different values in the set to generate the line plot. Three different line plots correspond to three different methods, as indicated by the figure legends. This figure demonstrates the advantages of estimation accuracy provided by the newly proposed methods when the initial proportion of the resistant subpopulation decreases toward 0.

Similar subpopulation sensitivity:

For the datasets investigated in Section 3.1.1, the $GR_{50}$'s for the two subpopulations were assumed to be significantly different. We now consider the case where the two $GR_{50}$'s are similar. Figure 13 shows the results of a case study in which the two subpopulations have similar drug sensitivity and the other parameters are selected according to Table 1. Note that all three methods successfully recover the initial proportion and the two $GR_{50}$'s, and that the IQRs for the live cell image method are significantly narrower than for PhenoPop. For brevity, we omit the plots that depict the statistical comparison of confidence interval widths. In Figure 14, we perform estimation across 80 datasets for each of five levels of similarity between the two subpopulations' drug sensitivities, with the other parameters sampled from Table 1. As expected, the accuracy in estimating the parameters improves as the sensitive and resistant $GR_{50}$'s become more different. We note, however, that the live cell image method has the lowest mean error when estimating the parameters, with all three methods showing similar degradation as the two subpopulations become more phenotypically similar.

Figure 13:

An illustrative example under the similar subpopulation sensitivity scenario. The parameter vector and the observation noise in this example are . Results are presented as in Figure 3. This example demonstrates that all three methods are capable of recovering the initial proportion and even when two subpopulations have similar drug sensitivity, while the newly proposed methods exhibit superior estimation precision compared to the PhenoPop method.

Figure 14:

Estimation error of with respect to varying similarity between subpopulation drug sensitivities. The metric of estimation error is the mean absolute log ratio across 80 simulated datasets, each generated from a distinct parameter set. The value of in these 80 generating parameter sets was assigned to 5 different values in the set to generate the line plot. Three different line plots correspond to three different methods, as indicated by the figure legends. This figure demonstrates that the estimation accuracy of the three methods improves as the discrepancy of drug sensitivity between the two subpopulations increases, with the live cell image method exhibiting the smallest average error among the three methods.

3.2. Application to in vitro data

We conclude by evaluating the performance of our two new methods on in vitro experimental data. The data consists of different mixtures of imatinib sensitive and resistant cells. In the experiments, cells were exposed to 11 different concentrations of imatinib and they were observed at 14 different time points. For each drug concentration, 14 independent replicates were performed starting with roughly 1000 cells. Cell counts were obtained using a live-cell imaging technique. Four datasets were produced with different starting ratios between sensitive and resistant cells: 1 : 1, 1 : 2, 2 : 1 and 4 : 1. These datasets are denoted BF11, BF12, BF21 and BF41, respectively. See [15] for further details on the experimental methods for generating the data.

In [15], we showed that the PhenoPop method can accurately identify the initial proportion of sensitive cells and both subpopulations’ $GR_{50}$ indices from the datasets. Here, we apply the two new estimation methods to the datasets and compare how well the models fit the data. Model fits are assessed using the Akaike Information Criterion (AIC), which for a statistical model with parameters $\theta$ and likelihood function $\mathcal{L}$ is given by

$$\mathrm{AIC} = 2|\theta| - 2\log \mathcal{L}(\hat{\theta}).$$

Here, $\hat{\theta}$ is the maximum likelihood estimate and $|\theta|$ is the cardinality of the vector $\theta$. When comparing the three methods, the one with the lowest AIC is preferred.

Results are shown in Table 3. The AIC values of the end-points method (EP) and live cell image method (LC) are clearly lower than for the PhenoPop method (PP), indicating that the two new methods are superior for fitting the experimental datasets. As discussed in Section 2, the newly proposed methods have more sophisticated variance structures, which is likely the reason why they are able to provide a better fit to the datasets. Finally, we note that the end-points method has superior AIC scores to the live cell image method for three out of four of the experimental conditions. Therefore, it is not clear which of these two methods is more appropriate for fitting these datasets.

Table 3:

AIC scores of three methods: PhenoPop method (PP), end-points method (EP), and live cell image method (LC) for the four experimental datasets BF11, BF12, BF21 and BF41.

| DATA | PP (AIC) | EP (AIC) | LC (AIC) |
|---|---|---|---|
| BF11 | 28502 | 26300 | 25294 |
| BF12 | 30485 | 26816 | 27311 |
| BF21 | 27928 | 24064 | 24182 |
| BF41 | 28912 | 24066 | 24574 |
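The model comparison in Table 3 can be reproduced mechanically. The following Python sketch assumes the standard AIC definition (twice the number of parameters minus twice the maximized log-likelihood) and simply picks the lowest-AIC method per dataset; the AIC values are copied from Table 3, while the function and variable names are illustrative only.

```python
def aic(max_log_likelihood, num_params):
    """Akaike Information Criterion: 2k - 2 * log L(theta_hat)."""
    return 2 * num_params - 2 * max_log_likelihood

# AIC scores copied from Table 3
# (PP = PhenoPop, EP = end-points, LC = live cell image).
table3 = {
    "BF11": {"PP": 28502, "EP": 26300, "LC": 25294},
    "BF12": {"PP": 30485, "EP": 26816, "LC": 27311},
    "BF21": {"PP": 27928, "EP": 24064, "LC": 24182},
    "BF41": {"PP": 28912, "EP": 24066, "LC": 24574},
}

# The preferred method for each dataset is the one with the lowest AIC.
best = {name: min(scores, key=scores.get) for name, scores in table3.items()}
for name, method in best.items():
    print(name, method)
```

Because AIC penalizes each additional parameter by 2, a model with a richer variance structure is only preferred when its gain in log-likelihood outweighs that penalty.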

4. Discussion

In this work, we have proposed two methods for analyzing data from heterogeneous cell mixtures. In particular, we are interested in the setting where a mixture of at least two distinct cell subpopulations is exposed to a given drug at various concentrations. We then use the dose response curve of the composite population to learn about the two subpopulations. In particular, we are interested in estimates of the different subpopulations’ initial prevalence and also their distinct dose response curves. The challenge of this problem is that we do not observe direct information about the subpopulations, but instead only information about the dose response of the composite population.

This work is an extension of our prior work in [15]. The novelty of the current work is that we introduce a more realistic variance structure to our statistical model. We create a new variance structure by building our model using linear birth-death processes. In particular, we model each subpopulation as a linear birth-death process with a unique birth rate and a unique dose-dependent death rate. The dose dependence of the death rate is captured using a 3-parameter Hill function. Our observed process is then a sum of independent birth-death processes. Our goal is then to estimate the initial proportion of the subpopulations, as well as their birth rates and the parameters governing the dose response in their death rates.

Counting cells in in vitro experiments can generally be conducted in one of two fashions. In the first approach, cell numbers can only be estimated at the end of the experiment because the mechanism for estimating cell numbers requires killing the cells. In the second approach, cells are counted via live imaging techniques and the cells can be counted at multiple time points. When dealing with multiple time point data from cells collected via the first approach we can assume that observations at different time points are independent because they are the result of different experiments. However, when dealing with data from the second approach we can no longer make that assumption because the cell counts at different time points are from the same population and there is a positive correlation between those measurements. As a result of this differing structure we develop two methods, one that assumes independent observations at each time point, and one that assumes all the time points for a given dose are correlated. Evaluating the likelihood function under the second approach is not trivial at first glance since it requires evaluating the likelihood function of a sample path of a non-Markovian process (the total cell count). We are able to get around this difficulty by using a central limit theorem argument to approximate the exact likelihood function with a Gaussian likelihood.

In this work we compared three different methods: the PhenoPop method from [15], the end-points method (which assumes measurements are independent in time), and the live cell image method (which assumes time correlations). We first performed this comparison using simulated data. We generated our data by simulating linear birth-death processes and then adding independent Gaussian noise terms to the simulations. We mainly focused on a mixture of two subpopulations, and we were interested in estimating three features of the mixed population: the initial proportion of sensitive cells, the $GR_{50}$ of the sensitive cells, and the $GR_{50}$ of the resistant cells. Our first test for the simulated data was to look at confidence interval widths as a measure of estimator precision. In this study, we found that the live cell image method had significantly narrower confidence intervals than the other methods for estimating all three features. We next investigated the performance of our three methods in the setting of small resistant subpopulations, where less than 15% of initial cells are resistant. We found that in this small resistant fraction setting the live cell image method provides a significant improvement in accuracy over the original PhenoPop method. Furthermore, this improvement increases as the initial fraction of resistant cells goes to zero. We also compared the performance of the methods for simulations with increased levels of additive noise and subpopulations with similar dose response curves. In the scenario of subpopulations with similar dose response curves, we found that the live cell image method has the lowest mean error among the three methods. For increasing additive noise, all three methods perform similarly in terms of estimation accuracy. However, the live cell image method maintains its precision advantage over the other two methods for an observation noise of 10% of the initial cell count, while the advantage disappears for a 50% noise level.

We finally compared the three methods using in vitro data. In particular, we used data from our previous work [15] that considered different seeding mixtures of imatinib sensitive and resistant tumor cells. We then used all three methods to fit this data and used AIC as a model selection tool. We found that live cell image and end-points methods had significantly better scores than PhenoPop for all four initial mixtures studied. Interestingly the end-points method had lower AIC scores for three out of the four mixtures studied even though this data was generated using live-cell imaging techniques.

In our statistical model, there are several important features of cell biology that we have left out. For example, one type of cell may transition to another type of cell via a phenotypic switching mechanism (see e.g., [10, 11]). We believe that our current methods should be able to handle this type of switching with little modification since the underlying stochastic model will be very similar, i.e., a multi-type branching process. Another way the cell types can interact is via competition for scarce resources as the populations approach their carrying capacity. These types of interactions will require new statistical models since the underlying stochastic processes will no longer be linear birth-death processes. Another interesting direction of future work is to quantify the limits of when we can identify distinct subpopulations. For example, if the resistant subpopulation is present at fraction , what observation set would allow us to identify the presence of this subpopulation? Finally, our stochastic model assumes that the time between cell divisions is exponential, but this is of course a great simplification. At the cost of a more complex model, it would be possible to incorporate states for the different stages of the cell cycle. We leave this as an open question for future investigation.

Acknowledgements

The work of C. Wu was supported in part with funds from the Norwegian Centennial Chair Program. The work of K. Leder was supported in part with funds from NSF award CMMI 2228034 and Research Council of Norway Grant 309273. The work of EBG and JF was supported in part by NIH grant R01 CA241137, NSF DMS 2052465, NSF CMMI 2228034, and Research Council of Norway Grant 309273. The work of J.M. Enserink and D.S. Tadele was supported by grants from the Norwegian Health Authority South-East, grant numbers 2017064, 2018012, and 2019096; the Norwegian Cancer Society, grant numbers 182524 and 208012; and the Research Council of Norway through its Centers of Excellence funding scheme (262652) and through grants 261936, 294916 and 314811.

5. Appendix

5.1. Details of the numerical experiments

We define some of the basic algorithms and formulas used in the numerical results.

Generation of simulated data

To simulate data, the parameter set (as defined in (6)) is selected uniformly at random from a subset of the parameter space given in Table 1. Note that one can obtain the generating parameter set (defined in (1)) from directly by setting for each subpopulation. Based on the parameter set , simulated data is generated according to the statistical model specified in equation (5). Note that data is collected from the simulation continuously during the course of the experiment to replicate the live-cell imaging experiments.
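The data-generating mechanism can be illustrated with a short simulation. The Python sketch below is not the authors' MATLAB code: it simulates two independent linear birth-death processes at a single fixed dose with a Gillespie-type algorithm, records the total count at the observation times of one replicate, and adds independent Gaussian noise. All numerical values (rates, initial count, time grid, noise level) are hypothetical, and the dose-dependent death rates are passed in directly rather than computed from a Hill function.

```python
import random

def simulate_birth_death(n0, birth, death, obs_times, rng):
    """Gillespie simulation of one linear birth-death process.

    Each cell divides at rate `birth` and dies at rate `death`; the
    population size is recorded at the (increasing) observation times.
    """
    counts = []
    t, n = 0.0, n0
    total_rate = birth + death
    for t_obs in obs_times:
        while n > 0 and total_rate > 0:
            wait = rng.expovariate(n * total_rate)
            if t + wait > t_obs:
                break  # next event falls after this observation time
            t += wait
            n += 1 if rng.random() < birth / total_rate else -1
        t = t_obs  # valid restart point by memorylessness
        counts.append(n)
    return counts

def simulate_observation(n_total, p_sensitive, rates_s, rates_r,
                         obs_times, noise_sd, rng):
    """Noisy total cell count of a sensitive/resistant mixture."""
    n_s = round(n_total * p_sensitive)
    n_r = round(n_total * (1.0 - p_sensitive))
    path_s = simulate_birth_death(n_s, rates_s[0], rates_s[1], obs_times, rng)
    path_r = simulate_birth_death(n_r, rates_r[0], rates_r[1], obs_times, rng)
    return [s + r + rng.gauss(0.0, noise_sd) for s, r in zip(path_s, path_r)]

# Hypothetical settings: 13 equally spaced time points, one dose level.
times = [3.0 * k for k in range(13)]
observed = simulate_observation(
    n_total=1000, p_sensitive=0.4,
    rates_s=(0.05, 0.08),   # (birth, death) of sensitive cells at this dose
    rates_r=(0.05, 0.02),   # (birth, death) of resistant cells at this dose
    obs_times=times, noise_sd=10.0, rng=random.Random(0),
)
print(observed)
```

Recording one trajectory at every time point, rather than simulating a fresh population per time point, is what produces the positive time correlation that the live cell image method is designed to exploit.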

Maximum likelihood estimation (MLE)

The maximum likelihood estimation was conducted by minimizing the negative log-likelihood, subject to constraints that were placed on the range of each parameter. The optimization was based on the MATLAB Optimization Toolbox [17] function fmincon with the sequential quadratic programming (sqp) solver. Due to the non-convexity of the negative log-likelihood function, we performed the optimization starting from 100 uniformly sampled initial points within a feasible region. The feasible region sets limitations on the parameters based on prior knowledge about them. For simulation studies, the feasible region is given by Table 4, and for the in vitro data the feasible region is specified by Table 5. Among all the resulting local optima, the parameter set with the lowest negative log-likelihood is taken as the estimated result.
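The multi-start strategy described above can be sketched as follows. This is a simplified Python illustration, not the authors' procedure: the local solver is a derivative-free compass search that stands in for fmincon with the sqp algorithm, and the objective is a toy non-convex function rather than the negative log-likelihood. Only the overall pattern (uniform starts inside a box, local optimization from each, keep the best local optimum) mirrors the text.

```python
import random

def compass_search(f, x0, bounds, step=0.5, tol=1e-8, max_iter=100000):
    """Bounded local minimization by coordinate-wise trial steps."""
    x, fx = list(x0), f(x0)
    for _ in range(max_iter):
        if step <= tol:
            break
        improved = False
        for i in range(len(x)):
            for delta in (step, -step):
                y = list(x)
                # Clip the trial point to the feasible box.
                y[i] = min(max(y[i] + delta, bounds[i][0]), bounds[i][1])
                fy = f(y)
                if fy < fx:
                    x, fx, improved = y, fy, True
        if not improved:
            step *= 0.5  # shrink the step once no neighbor improves
    return x, fx

def multi_start_minimize(f, bounds, n_starts=100, seed=0):
    """Run the local search from uniform random starts; keep the best."""
    rng = random.Random(seed)
    best_x, best_f = None, float("inf")
    for _ in range(n_starts):
        x0 = [rng.uniform(lo, hi) for lo, hi in bounds]
        x, fx = compass_search(f, x0, bounds)
        if fx < best_f:
            best_x, best_f = x, fx
    return best_x, best_f

def toy_objective(x):
    """Non-convex toy function with a local and a global minimum."""
    return (x[0] ** 2 - 1.0) ** 2 + 0.3 * x[0] + x[1] ** 2

best_x, best_f = multi_start_minimize(toy_objective, [(-2.0, 2.0), (-2.0, 2.0)])
print(best_x, best_f)
```

A single start in the wrong basin would return the local minimum near x[0] = 0.96; using many starts makes it very likely that at least one run reaches the global minimum near x[0] = -1.04.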

Table 4:

Feasible interval for each parameter.

| Range | [0, 0.5] | [0, 1] | [0.27, 1] | [0, 10] | [0, 10] | [0, 2500] | [0, 10] |

Table 5:

Optimization Feasible region

| Range | [0, 1] | [0, 1] | [0.878, 1] | [0, 50] | [0.001, 20] | [0, 2500] | [0, 100] |

Bootstrapping

In the simulated experiments, bootstrapping is used to quantify the uncertainty in the MLE estimator. In particular, 20 independent replicates of data measured at 11 concentration values and 13 time points are generated from the parameters at the beginning of the experiment. Then bootstrapping is employed to randomly re-sample 13 replicates from those 20 replicates with replacement 100 times. With 13 randomly sampled replicates it is possible to create an MLE for the parameter set . Since there are now 100 MLE’s for it is possible to construct confidence intervals as well by using the empirical quantiles of the estimators.
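The resampling scheme just described can be sketched as below. This Python example is illustrative only: each replicate is reduced to a single hypothetical number and the sample mean stands in for the maximum likelihood estimator, whereas in the paper each replicate is a full concentration-by-time data array and the estimator is the MLE. The resampling sizes (13 out of 20 replicates, 100 repetitions) follow the text.

```python
import random
import statistics

def bootstrap_estimates(replicates, estimator, n_resample=13, n_boot=100, seed=0):
    """Re-estimate on n_boot resamples drawn with replacement."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_boot):
        sample = rng.choices(replicates, k=n_resample)  # with replacement
        estimates.append(estimator(sample))
    return estimates

def empirical_interval(estimates):
    """Empirical 2.5% and 97.5% quantiles, i.e. a 95% interval."""
    cuts = statistics.quantiles(estimates, n=40, method="inclusive")
    return cuts[0], cuts[-1]

def interquartile_range(estimates):
    """Empirical 25% and 75% quantiles."""
    q1, _, q3 = statistics.quantiles(estimates, n=4, method="inclusive")
    return q1, q3

# Hypothetical stand-in data: 20 replicates, each summarized by one number.
data_rng = random.Random(1)
replicates = [data_rng.gauss(1000.0, 50.0) for _ in range(20)]

estimates = bootstrap_estimates(replicates, statistics.mean)
ci_low, ci_high = empirical_interval(estimates)
iqr_low, iqr_high = interquartile_range(estimates)
print(ci_low, ci_high, iqr_low, iqr_high)
```

The width of the resulting interval, normalized as in the figures, is what serves as the measure of estimator precision.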

Our goal is to estimate the number of subpopulations, initial mixture proportion , and the drug sensitivity of each cellular subpopulation. The $GR_{50}$, introduced in [12], is a summary metric of drug sensitivity. It is defined as the concentration at which a drug’s effect on cell growth is half the observed effect. Note that at the maximum concentration level, the drug may not reach its theoretical maximum effect.

In the context of the model, the can be defined as below. Denote the maximum dosage applied as , and define the half-maximum effect for subpopulation as . The explicit formula for the is then for subpopulation :

When , we will denote the higher as either the resistant or , and the lower as either the sensitive or . In this setting, parameters for the sensitive subpopulation and the resistant subpopulation, respectively, are denoted by subscripts and , e.g. and .

Initial conditions.

The initial number of cells is set as , and the initial size of each subpopulation is set by rounding to the nearest integer for subpopulation . The following drug concentration levels are used

and we collect the cell count data at the time points:

For these specific concentration levels and time points, we have chosen the threshold values of and in the PhenoPop model.

Optimization feasible region

When performing numerical optimization the parameters are restricted to a physically realistic region. Unless otherwise noted, the optimization was performed using 100 uniformly sampled initial points from Table 4.

5.2. Estimation on in vitro experimental data

When solving the maximum likelihood optimization problems for the in vitro data of Section 3.2, the optimization feasible region was chosen to be the same as the feasible region used in paper [15] for the data, i.e.,

We solved each optimization problem 500 times starting from randomly chosen initial points.

5.3. Proof of proposition 2

Given a set of time points , we first show for any that:

where is a random variable that has normal distribution and the here is the variance of the subpopulation linear birth-death process defined in equation (4). Following an argument from Ethier and Kurtz [7], we have the decomposition

By assuming the initial proportion for sub-type is independent of and using the Law of large numbers, as , we have

By assuming that the maximum number of time points and the length of the time interval for any are both bounded and do not depend on , the Law of large numbers also ensures that for any the will diverge to infinity when . Therefore, we may apply the Central Limit Theorem to the following term:

Thus, we conclude that

Next we show that the random vector converges to the random vector , which has the multivariate normal distribution. We can obtain the distribution for from the independence between and for all :

where

Then we use the Cramér-Wold device: given a constant vector , we have

Thus, we show that .

5.4. Proof of proposition 2 if initial proportions can go to 0 with

In proving Proposition 2, we made the assumption that the initial proportions for are not dependent on the initial cell count . In this sub-section, we aim to relax this assumption and demonstrate a similar result. Note we will assume that all other inputs are still independent of , i.e., and .

In particular, we will allow to depend on , and allow for . We denote two sets of subpopulations and , where

Due to , and being fixed with , we know that the set cannot be empty. Then, following the same pattern as the proof of Proposition 2, we derive:

Next, we may consider these two double sums separately. For , because the diverges to infinity as , the first double sum will converge to the same limit we established in Proposition 2. For will stay bounded. Therefore, in the second double sum, we will have converge to 0 as , which makes the second double sum vanish. In conclusion, we have

Note that this convergence result will lead to the same realization in practice, i.e., we will define the covariance by summing over all subtypes. This is because in practice we only have and we cannot actually assume subpopulations have zero contribution to the covariance.

5.5. Exact path likelihood computation

Here we show how to calculate the exact likelihood of a sample path observation of the total cell count of multiple heterogeneous birth-death processes. While we do not use this approach for likelihood evaluation in the current manuscript we report it here to show that it is not feasible.

We first consider the following joint probability of a homogeneous linear birth-death process:

For ease of notation define the transition probability . It is important to note that evaluating is the most computation-demanding task when evaluating the joint probability. As a result, we mainly consider the number of evaluations of this transition probability. The analytical form for this transition probability was derived in [1]:

| (15) |

where

Note that numerical evaluation of (15) is computationally expensive due to the presence of multiple factorial terms. We use Gosper's refinement of the Stirling formula [8] to approximate these factorials:

$$n! \approx \sqrt{\left(2n + \tfrac{1}{3}\right)\pi}\,\left(\frac{n}{e}\right)^{n}.$$

We find that this approximation leads to good performance in our examples. It is thus straightforward to evaluate the path likelihood for the case of a homogeneous linear birth-death process.
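As a hedged illustration of these two ingredients, the Python sketch below implements the standard closed-form transition probability of a linear birth-death process, which we assume matches (15) up to notation, together with Gosper's approximation to log n!. The function names and parameter values are illustrative assumptions and this is not the authors' MATLAB implementation. The exact version uses integer binomial coefficients and is only practical for small counts; for counts in the thousands the terms must be handled on the log scale, which is where an approximation such as Gosper's becomes useful.

```python
import math

def transition_prob(i, j, t, birth, death):
    """P(X_t = j | X_0 = i) for a linear birth-death process."""
    if i == 0:
        return 1.0 if j == 0 else 0.0  # zero is absorbing
    if birth == death:
        alpha = beta = birth * t / (1.0 + birth * t)
    else:
        growth = math.exp((birth - death) * t)
        denom = birth * growth - death
        alpha = death * (growth - 1.0) / denom  # extinction prob. of one lineage
        beta = birth * (growth - 1.0) / denom
    gamma = 1.0 - alpha - beta
    total = 0.0
    for k in range(min(i, j) + 1):
        total += (math.comb(i, k) * math.comb(i + j - k - 1, i - 1)
                  * alpha ** (i - k) * beta ** (j - k) * gamma ** k)
    return total

def gosper_log_factorial(n):
    """log(n!) via Gosper's refinement of Stirling's formula."""
    if n == 0:
        return 0.5 * math.log(math.pi / 3.0)
    return 0.5 * math.log((2.0 * n + 1.0 / 3.0) * math.pi) + n * math.log(n) - n

# Hypothetical rates: the probabilities over j should sum to one and the
# mean should equal i * exp((birth - death) * t).
probs = [transition_prob(3, j, 1.0, 0.5, 0.3) for j in range(200)]
mean = sum(j * p for j, p in enumerate(probs))
print(sum(probs), mean, 3 * math.exp(0.2))

# Accuracy of Gosper's formula against the exact log-factorial.
print(gosper_log_factorial(10), math.lgamma(11))
```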

If we instead have observations of a sum of birth-death processes, the evaluation of the path likelihood is much more difficult. In particular, the sum of the birth-death processes is no longer a Markov process, and we must therefore sum over the possible values of our unobserved subpopulations. Specifically, if we have two subpopulations, we can write equation (10) as

| (16) |

Note that the last equality is due to the assumption that the subpopulations grow independently, so we can decompose the joint probability of the mixture cell count into a summation of joint probabilities of homogeneous linear birth-death processes. It is not hard to see that if we naively evaluate the above sum, the number of computations of the homogeneous joint probability is around . Since many examples have , this is clearly an infeasible approach.

In order to avoid the exponential dependence on the number of time points, one option is to use techniques from Hidden Markov Models (HMM). The main assumption of HMM is the Markov property of the hidden process, and that the hidden process relates to the observable process according to a specified distribution . Recall that the time series of observed total cell count is given by and denote the time series of the subpopulations as . Then in the HMM is the observable process, and is the hidden Markov process due to the Markov property of the linear birth-death process. Notice that the relationship between the hidden process and the observable process can be defined as

We can translate the live-cell imaging experiment into an HMM and significantly reduce the computational cost of evaluating the exact likelihood function (7). In particular, we can use popular HMM techniques, such as the forward-backward procedure, to reduce the total number of evaluations of the transition probability (15) to for one replicate at one dosage level, where is the number of hidden states. Specifically, we need to calculate the by transition matrix for every time point, and if we assume the time intervals have identical length, we can reduce the upper bound to . However, the number of hidden states depends on both the maximum total number of cells observed at each time point and the number of subpopulations . As we will now show, this is unfortunately not a sufficient reduction in computational complexity. In particular, assume we observe total cells. In that case, the number of hidden states is given by , and assuming that , we have that as . With only two subpopulations this results in computational complexity of , and in many experiments, we might have leading to an extremely high computational burden. If we would end up with the far worse computational complexity of .
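To make the forward recursion concrete, the Python sketch below evaluates the exact path likelihood in a deliberately simplified special case: two subpopulations, no observation noise, and a known initial composition. Under these assumptions the hidden state at each time point can be taken to be the size of the first subpopulation, since the second is the observed total minus the first. This is an illustration under stated assumptions rather than the general HMM formulation discussed above, and the function names and rates are hypothetical.

```python
import math

def transition_prob(i, j, t, birth, death):
    """P(X_t = j | X_0 = i) for a linear birth-death process."""
    if i == 0:
        return 1.0 if j == 0 else 0.0
    if birth == death:
        alpha = beta = birth * t / (1.0 + birth * t)
    else:
        growth = math.exp((birth - death) * t)
        denom = birth * growth - death
        alpha = death * (growth - 1.0) / denom
        beta = birth * (growth - 1.0) / denom
    gamma = 1.0 - alpha - beta
    return sum(math.comb(i, k) * math.comb(i + j - k - 1, i - 1)
               * alpha ** (i - k) * beta ** (j - k) * gamma ** k
               for k in range(min(i, j) + 1))

def path_likelihood_two_types(totals, dts, n1_init, rates1, rates2):
    """Likelihood of observed totals x_0, ..., x_T for the sum of two
    independent linear birth-death processes (forward recursion).

    Hidden state at each time: size n1 of subpopulation 1; the size of
    subpopulation 2 is the observed total minus n1.
    """
    forward = {n1_init: 1.0}
    for step, dt in enumerate(dts):
        x_prev, x_next = totals[step], totals[step + 1]
        new_forward = {}
        for n1 in range(x_next + 1):
            prob = 0.0
            for m1, weight in forward.items():
                prob += (weight
                         * transition_prob(m1, n1, dt, rates1[0], rates1[1])
                         * transition_prob(x_prev - m1, x_next - n1, dt,
                                           rates2[0], rates2[1]))
            if prob > 0.0:
                new_forward[n1] = prob
        forward = new_forward
    return sum(forward.values())

# Sanity check with hypothetical numbers: if both subpopulations share the
# same rates, their sum is itself a linear birth-death process, so the path
# likelihood must factor into homogeneous transition probabilities.
rates = (0.5, 0.3)
mixture = path_likelihood_two_types([6, 7, 5], [1.0, 1.0], 2, rates, rates)
homogeneous = (transition_prob(6, 7, 1.0, *rates)
               * transition_prob(7, 5, 1.0, *rates))
print(mixture, homogeneous)
```

Even in this restricted setting, each time step requires a number of transition-probability evaluations that grows with the product of consecutive total counts, and each evaluation is itself a sum over many terms. The cost therefore rises quickly with cell counts in the thousands, and the simplification used here is no longer available once observation noise is included, because the totals are then not observed exactly.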

In conclusion, using a naive approach to compute the joint probability of mixture cell count would require many computations of transition probability, which is clearly infeasible when many cell counts are in the thousands with over 10 observed time points. We also discuss an HMM based approach to evaluating the likelihood that results in a significant reduction in the computational burden for evaluating this likelihood. In particular, with this approach we can reduce the number of computations of the transition probability to . Unfortunately we might have . Note that this will be the complexity for evaluating the likelihood of one single replicate at a single dose, so taking into account that we can have more than 10 different doses, with more than 10 replicates at each dose we see that unfortunately, this HMM approach is computationally infeasible. In addition, this HMM approach will have significantly worse computational complexity after we include observation noise terms and/or more than 2 subpopulations.

5.6. Data and code availability

All data and code used for running experiments, model fitting, and plotting is available on a GitHub repository at https://github.com/chenyuwu233/PhenoPop_stochastic. The required Matlab version is Matlab R2022a or newer.

References

- [1].Bailey Norman TJ. The elements of stochastic processes with applications to the natural sciences, volume 25. John Wiley & Sons, 1991. [Google Scholar]

- [2].Baringhaus L. and Franz C.. On a new multivariate two-sample test. Journal of Multivariate Analysis, 88(1):190–206, 2004. [Google Scholar]

- [3].de Campos Cecilia Bonolo, Meurice Nathalie, Petit Joachim L, Polito Alysia N, Zhu Yuan Xiao, Wang Panwen, Bruins Laura A, Wang Xuewei, Lopez Armenta Ilsel D, Darvish Susie A, et al. “direct to drug” screening as a precision medicine tool in multiple myeloma. Blood cancer journal, 10(5):1–16, 2020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [4].Cadena-Herrera Daniela, Esparza-De Lara Joshua E., Ramírez-Ibañez Nancy D., López-Morales Carlos A., Pérez Néstor O., Flores-Ortiz Luis F., and Medina-Rivero Emilio. Validation of three viable-cell counting methods: Manual, semi-automated, and automated. Biotechnology Reports, 7:9–16, 2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [5].Cramér Harald. On the composition of elementary errors. Scandinavian Actuarial Journal, 1928(1):141–180, 1928. [Google Scholar]

- [6].Durrett Rick. Branching process models of cancer. Springer International Publishing, 2014. [Google Scholar]

- [7].Ethier Stewart N and Kurtz Thomas G. Markov processes: characterization and convergence. John Wiley & Sons, 2009. [Google Scholar]

- [8].Gosper R William Jr. Decision procedure for indefinite hypergeometric summation. Proceedings of the National Academy of Sciences, 75(1):40–42, 1978. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [9].Grandori Carla and Kemp Christopher J. Personalized cancer models for target discovery and precision medicine. Trends in cancer, 4(9):634–642, 2018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [10].Gunnarsson Einar Bjarki, De Subhajyoti, Leder Kevin, and Foo Jasmine. Understanding the role of phenotypic switching in cancer drug resistance. Journal of theoretical biology, 490:110162, 2020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [11].Gunnarsson Einar Bjarki, Foo Jasmine, and Leder Kevin. Statistical inference of the rates of cell proliferation and phenotypic switching in cancer. Journal of Theoretical Biology, 568:111497, 2023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [12]. Hafner Marc, Niepel Mario, Chung Mirra, and Sorger Peter K. Growth rate inhibition metrics correct for confounders in measuring sensitivity to cancer drugs. Nature Methods, 13(6):521–527, 2016.

- [13]. Hannah Rita, Beck Michael, Moravec Richard, and Riss T. CellTiter-Glo™ luminescent cell viability assay: a sensitive and rapid method for determining cell viability. Promega Cell Notes, 2:11–13, 2001.

- [14]. Kimmel M and Axelrod DE. Branching processes in biology. Springer, New York, 2002.

- [15]. Köhn-Luque Alvaro, Myklebust Even Moa, Tadele Dagim Shiferaw, Giliberto Mariaserena, Schmiester Leonard, Noory Jasmine, Harivel Elise, Arsenteva Polina, Mumenthaler Shannon M., Schjesvold Fredrik, Taskén Kjetil, Enserink Jorrit M., Leder Kevin, Frigessi Arnoldo, and Foo Jasmine. Phenotypic deconvolution in heterogeneous cancer cell populations using drug-screening data. Cell Reports Methods, page 100417, 2023.

- [16]. Marusyk Andriy, Almendro Vanessa, and Polyak Kornelia. Intra-tumour heterogeneity: a looking glass for cancer? Nature reviews cancer, 12(5):323–334, 2012.

- [17]. Matlab optimization toolbox, R2022a. The MathWorks, Natick, MA, USA.

- [18]. Matulis Shannon M, Gupta Vikas A, Neri Paola, Bahlis Nizar J, Maciag Paulo, Leverson Joel D, Heffner Leonard T, Lonial Sagar, Nooka Ajay K, Kaufman Jonathan L, et al. Functional profiling of venetoclax sensitivity can predict clinical response in multiple myeloma. Leukemia, 33(5):1291–1296, 2019.

- [19]. Mumenthaler Shannon M, Foo Jasmine, Leder Kevin, Choi Nathan C, Agus David B, Pao William, Mallick Parag, and Michor Franziska. Evolutionary modeling of combination treatment strategies to overcome resistance to tyrosine kinase inhibitors in non-small cell lung cancer. Molecular pharmaceutics, 8(6):2069–2079, 2011.

- [20]. Pakes Anthony G. Ch. 18. Biological applications of branching processes. Handbook of statistics, 21:693–773, 2003.

- [21]. Pauli Chantal, Hopkins Benjamin D, Prandi Davide, Shaw Reid, Fedrizzi Tarcisio, Sboner Andrea, Sailer Verena, Augello Michael, Puca Loredana, Rosati Rachele, et al. Personalized in vitro and in vivo cancer models to guide precision medicine. Cancer discovery, 7(5):462–477, 2017.

- [22]. Pemovska Tea, Kontro Mika, Yadav Bhagwan, Edgren Henrik, Eldfors Samuli, Szwajda Agnieszka, Almusa Henrikki, Bespalov Maxim M, Ellonen Pekka, Elonen Erkki, et al. Individualized systems medicine strategy to tailor treatments for patients with chemorefractory acute myeloid leukemia. Cancer discovery, 3(12):1416–1429, 2013.

- [23]. Pozdeyev Nikita, Yoo Minjae, Mackie Ryan, Schweppe Rebecca E, Tan Aik Choon, and Haugen Bryan R. Integrating heterogeneous drug sensitivity data from cancer pharmacogenomic studies. Oncotarget, 7(32):51619, 2016.

- [24]. Székely Gábor J. E-statistics: The energy of statistical samples. Bowling Green State University, Department of Mathematics and Statistics Technical Report, 3(05):1–18, 2003.

Data Availability Statement

All data and code used for running experiments, model fitting, and plotting are available in a GitHub repository at https://github.com/chenyuwu233/PhenoPop_stochastic. Matlab R2022a or newer is required.