Abstract

Objective

We aim to assess the effectiveness of a cataract surgery outcome monitoring tool used for continuous quality improvement. The objectives are to study: (1) the quality parameters, (2) the monitoring process followed and (3) the impact on outcomes.

Design and procedures

In this retrospective observational study we evaluated a quality improvement (QI) method which has been practiced at the focal institution since 2012: internal benchmarking of cataract surgery outcomes (CATQA). We evaluated quality parameters, procedures followed and clinical outcomes. We created tables and line charts to examine trends in key outcomes.

Setting

Aravind Eye Care System, India.

Participants

Phacoemulsification surgeries performed on 718 120 eyes at 10 centres (five tertiary and five secondary eye centres) from 2012 to 2020 were included.

Interventions

Internal benchmarking of surgery outcome parameters to assess variations among the hospitals and to compare each hospital with the best-performing hospital.

Outcome measures

Intraoperative complications, unaided visual acuity (VA) at postoperative follow-up visit and residual postoperative refractive error (within ±0.5D).

Results

Over the study period the intraoperative complication rate decreased from 1.2% to 0.6%, surgeries with uncorrected VA of 6/12 or better increased from 80.8% to 89.8%, and surgeries with postoperative refractive error within ±0.5D increased from 76.3% to 87.3%. Variability in outcome measures across hospitals declined. Additionally, benchmarking was associated with improvements in facilities, protocols and processes.

Conclusion

Internal benchmarking was found to be an effective QI method that enabled the practice of evidence-based management and allowed for harnessing the available information. Continuous improvement in clinical outcomes requires systematic and regular review of results, identifying gaps between hospitals, comparisons with the best hospital and implementing lessons learnt from peers.

Keywords: ophthalmology, cataract and refractive surgery, quality in health care

STRENGTHS AND LIMITATIONS OF THIS STUDY.

The study is based on comprehensive data of eye hospitals that have been benchmarking outcomes for continuous improvement for the past decade.

Relatively complete data on all factors that influence quality of surgical outcomes were gathered and included in this study.

Although the process is based on eye hospitals, it can be applied usefully to other clinical disciplines.

Benchmarking results must be interpreted carefully, considering inclusion and exclusion criteria followed by hospitals and the definitions of outcome variables.

Since a retrospective, observational study design was employed, not all confounding factors can be ruled out.

Introduction

Quality healthcare increases the likelihood of desirable health outcomes. High quality of healthcare services is essential to create trust1 and increase demand.2 3 Delivering quality healthcare services is also important for Universal Health Coverage.1 Further, intensifying competition in healthcare markets4 is increasing pressure on providers to deliver high quality, cost-effective and patient-centred care.5

In the context of eye health, cataract is the leading cause of blindness in the world, accounting for 45.5% of all blindness, and the second leading cause of moderate to severe visual impairment.6 The success of cataract surgery is generally equated to achieving a threshold level of postoperative best corrected distance visual acuity (BCVA). However, significant concerns remain about quality of surgical outcomes, especially in developing countries.7 For instance, a summary8 of eight population-based studies in sub-Saharan Africa reports that the percentage of eyes with ‘good’ vision, defined by the WHO9 as postoperative visual acuity (VA) ≥6/18, ranged from 23% to 59% compared with the recommended level of 90%. The same summary also reports that the percentage of eyes that had ‘poor’ vision (WHO definition is postoperative VA<6/60) after surgery ranged from 23% to 64% compared with the recommended level of <5%.

The use of health information systems that enable evidence-based management is a critical foundational element to deliver quality healthcare services.1 Measurement and reporting of outcomes is crucial for a hospital to learn and improve care over time.

Background and context

The Aravind Eye Care System (Aravind; AECS) is a network of 14 specialty eye-care hospitals in Southern India. In 2019–2020, Aravind hospitals served over 4.6 million outpatient visits and performed 515 000 treatment procedures including 317 500 cataract surgeries. A third of the cataract surgeries are performed on patients brought in as part of outreach programmes. These programmes are conducted in remote areas, primarily on weekends. Because Aravind is a postgraduate training and research institute, a significant share of cataract surgeries is performed by senior postgraduate students (15%) and postgraduate fellows (25%) who are undergoing specialisation training. The volume of surgeries performed by each surgeon varies from 250 to 3500 a year. Moreover, as referral centres, the tertiary hospitals treat patients with advanced conditions and comorbidities referred by their satellite centres and other eye care providers. Given all these factors, continuous quality improvement (CQI) is critical to ensure that outcomes are not compromised.

In 1999, Aravind began using its own software tool to track quality parameters and improve cataract surgery outcomes. While each hospital in the network was able to generate reports and improve outcomes, a casual comparison of outcomes across hospitals revealed a significant difference; this prompted the need for further actions for improvement.

While measuring outcomes that report the current status is necessary, comparing outcomes with peers both inside and outside the organisation helps to identify variations and hence generate opportunities to improve outcomes.4 CQI is practiced in hospitals by leveraging variability to optimise clinical care, reduce costs and enhance customer service quality.10 A systematic review of quality improvement (QI) methods11 for health outcomes identified six commonly used methods: benchmarking, collaborative care model, chronic care model, information technology (IT) driven interventions, plan-do-check-act, and learning and leadership collaborative.

Rationale

QI is not a one-time event. What is a standard of excellence today may be the expected minimum norm of tomorrow. For instance, in 2021 the WHO revised the VA threshold for a good visual outcome following cataract surgery to 6/12 or better from the previous norm of 6/18 or better.12 Therefore, improvement should be an ongoing process, and benchmarking should be considered one part of that process.13 A hospital can benchmark against itself by measuring variation in outcomes and tracking over time using control charts.14 Understanding the variation and its cause and taking appropriate actions would help to raise the bar and improve the outcome.14

Benchmarking involves ascertaining the gap between an organisation's own performance and that of the best performing organisations. It provides an opportunity to learn new working methods and practices from others, and subsequently to adapt and adopt appropriate practices in one's own setting.13 Existing literature primarily focuses on developing benchmarks15–17 as a one-time exercise,11 18 19 and comparing with published reports.20 Benchmarking is often described as comparing measurements in a limited time frame, but it also emphasises gathering indicators over the long term, making this a real CQI approach.21

To exploit the opportunities of benchmarking in improving quality, Aravind upgraded its Cataract Surgical Quality Assurance (CATQA) platform as a benchmarking tool in 2011, thus allowing hospitals and surgeons to compare themselves against each other and against the best performer within the Aravind network. This initiative aimed to narrow the variation between hospitals and between surgeons, so that quality of care could be improved across the system in a standard, consistent and continuous manner.

Benchmarking has been discussed in a variety of disciplines; however, there has been little research on CQI in the healthcare sector. A successful implementation of QI initiatives involves several factors that have been discussed.22–25 The objective of this study was to present and evaluate an internal benchmarking system whose goal is to improve quality of outcomes of cataract surgery in the network of eye hospitals of the AECS.

Methods

Design

We conducted a longitudinal retrospective observational study to evaluate the QI methods practiced in a network of hospitals of AECS, India.

Setting

AECS was established in 1976 in Madurai, India and currently has a network of 7 tertiary, 7 secondary, 6 community and 108 primary eye care centres across Tamil Nadu, Andhra Pradesh and Pondicherry states in India. Since its inception, AECS has been serving over half of its patients at deeply subsidised prices or for free. An online hospital management system (HMS) was implemented in 1991 to automate the patient care functions, capture necessary data and make the information available for real-time monitoring, planning and decision-making.

eyeNotes, a comprehensive electronic medical record (EMR) system, was introduced in 2016. It was developed by AECS’s in-house information technology team, using Microsoft (MS) technology (ASP.NET) and Google Angular for the frontend, with an MS SQL Server 2016 database at the backend. HTML, MS SQL Server Reporting Services and Google Charts were used for reports and dashboards. Using eyeNotes, all the findings of clinical examinations and investigations are recorded in a structured way as part of examination processes. A/Scan, B/Scan and other investigation reports from the equipment are inserted into eyeNotes in real time. Surgery notes, including any intraoperative complications, are entered immediately after the surgery. Immediate postoperative findings are recorded by the examining doctor. eyeNotes has been undergoing regular upgrades based on feedback from the users. The CATQA database underwent few changes during the study period.

Intervention

Introduction of benchmarking

In 2011, Aravind’s internal IT team upgraded the Cataract Surgical Quality Assurance (CATQA) system into a benchmarking tool and deployed it in the cloud. Benchmarking parameters for this study were selected from existing outcome monitoring variables, and some additional variables were included to make the system more comprehensive. Data are uploaded as Microsoft Excel files, which are populated with information extracted from HMSs and EMRs.

Quality parameters selected for benchmarking

Benchmarking is done for a number of outcome variables, with the option of filtering the outputs either individually or combined across factors that affect outcomes. Details of the parameters and filters included for benchmarking are shown in table 1.

Table 1.

List of outcome variables and variables to filter the outputs

| No. | Quality parameters (facts) | Description of the parameter |
| --- | --- | --- |
| 1 | Preoperative uncorrected visual acuity in operated eye (<6/60) | To measure the proportion of patients with poor preoperative visual acuity |
| 2 | Cataract diagnosis in operated eye | To measure the proportion of patients with advanced conditions of cataract (mature cataract, hyper mature cataract, etc) who underwent surgery |
| 3 | Surgical procedure | Phacoemulsification (Phaco), manual small incision (SICS), extra-capsular extraction (ECCE), femto laser assisted (FLACS) and others |
| 4 | Anaesthesia | General, local or topical anaesthesia |
| 5 | Anaesthesia complications | These include the multitude of ocular or systemic complications that could occur during or after administration of local injectable or topical anaesthesia |
| 6 | Intraoperative complications | Complications occurring during the surgery |
| 7 | Postoperative complications | Postoperative complications noted a few hours after surgery or on first postoperative day |
| 8 | Re-surgeries | Procedures performed to manage complications occurring intraoperatively or postoperatively (immediately or later, but within 6 months) to enhance the outcome of surgery |
| 9 | Immediate postoperative (day 1 or discharge) pinhole visual acuity | Visual acuity measured at the time of discharge (or day after surgery for day-care patients) |
| 10 | Postoperative follow-up visit (2–8 weeks) | Whether patient was examined 2–8 weeks after cataract surgery |
| 11 | Complications at follow-up | Complications developed after discharge and found during the follow-up examination |
| 12 | Uncorrected distance visual acuity at follow-up visit | Uncorrected distance visual acuity in the operated eye |
| 13 | Best corrected distance visual acuity at follow-up visit | Best corrected distance visual acuity in the operated eye |
| 14 | Spherical equivalent | Spherical+(0.5×Cylinder) of the refraction |
| 15 | Infection | Patient is identified with endophthalmitis |
| 16 | Culture test | Result of the culture test |
| 17 | Visual recovery after treated infection | Visual acuity after managing the infection |

| No. | Filter options (dimensions) | Description of filters |
| --- | --- | --- |
| 1 | Period | Duration of report |
| 2 | Patient source | Paying, free (walk-in), outreach |
| 3 | Surgical procedure | Phaco, SICS, ECCE, others |
| 4 | Lens type | PMMA (polymethyl methacrylate), acrylic, aspheric, toric, multifocal, etc |
| 5 | Surgeon type | Medical officer/consultants, fellows, residents, trainees |
| 6 | Surgeon | Name of the surgeon |
| 7 | Surgery volume | Number of cataract surgeries performed by a surgeon in a year |

Several factors that have not been measured, measured inadequately or are misspecified, such as surgeons’ skill, clinical protocol, patient selection, data definition and data source, can confound the outcome of cataract surgery.26 Therefore, all relevant variables as well as details of all patients who have undergone cataract surgery are included in Aravind’s benchmarking platform. Across the system, the surgeon mix has been maintained consistently. All hospitals used standardised protocols and forms for recording findings. With these measures, the risks associated with uneven collection and definition of data, and the chance of including patients selectively, are reduced.

Continuous outcomes monitoring and improvement process

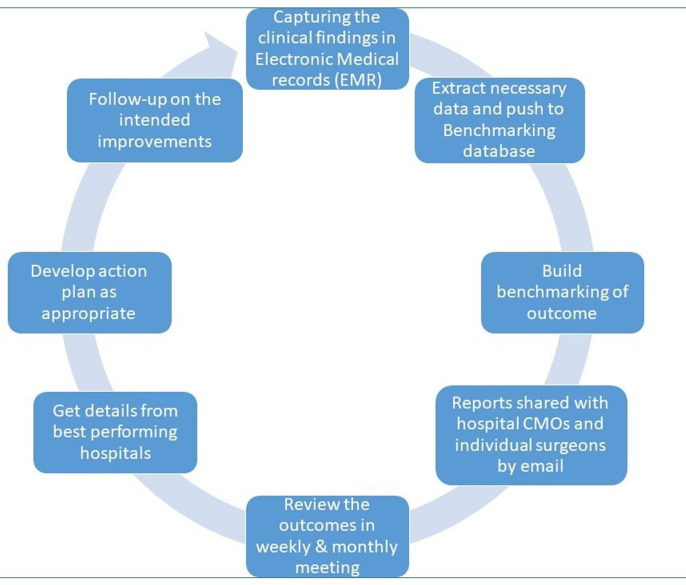

The following processes are used in all AECS hospitals. The process flow is given in figure 1.

Figure 1.

Outcome improvement process flow.

Data extraction and uploading

Data from EMRs is extracted and uploaded two times per surgery into the benchmarking platform. Data up to the point of discharge is extracted during the first week following surgery. A second extraction is performed at the beginning of the eighth week following surgery to ensure that all data has been included for patients who have returned to the clinic for routine follow-up within 49 days after surgery.
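The two-stage extraction timing described above can be sketched in Python. This is only an illustration under stated assumptions: the function and constant names are hypothetical, and placing the second extraction on day 50 (the first day of the eighth week, counting the day after surgery as day 1) is our reading of the text rather than a documented rule of the platform.

```python
from datetime import date, timedelta

# Assumed constants read off the text: discharge data are pulled during the
# first week, follow-up visits count if they occur within 49 days of surgery.
FIRST_EXTRACTION_WINDOW_DAYS = 7
FOLLOW_UP_WINDOW_DAYS = 49


def extraction_schedule(surgery_date: date) -> dict:
    """Return the latest date for the first pull and the earliest for the second."""
    return {
        "first_extraction_by": surgery_date
        + timedelta(days=FIRST_EXTRACTION_WINDOW_DAYS),
        # Day 50 is assumed to be the start of the eighth week, so that
        # visits made on day 49 are already recorded when data are pulled.
        "second_extraction_from": surgery_date
        + timedelta(days=FOLLOW_UP_WINDOW_DAYS + 1),
    }


def follow_up_in_window(surgery_date: date, visit_date: date) -> bool:
    """True if a follow-up visit falls within 49 days after surgery."""
    elapsed_days = (visit_date - surgery_date).days
    return 0 < elapsed_days <= FOLLOW_UP_WINDOW_DAYS
```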

Data verification

After uploading the data, a summary report that gives counts of all the variables is generated in the benchmarking platform which is cross verified by the respective hospital with their own reports. In the event of discrepancies in the counts of any variable, a detailed checklist of patients is generated and verified against the EMR database. Each data set is verified and approved by the personnel who generate it; for instance, data on intraoperative complications is generated by staff at the operating theatres and data on postoperative complications by staff at the ward or outpatient clinic.
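The count-based cross-check described above could look roughly like the following Python sketch. The function name and the variable names in the example are hypothetical; the actual CATQA routine is not described in enough detail to reproduce, so this shows only the general idea of flagging variables whose counts differ between the platform summary and the hospital's own report.

```python
def find_count_discrepancies(platform_counts: dict, hospital_counts: dict) -> dict:
    """Return {variable: (platform_count, hospital_count)} for every mismatch.

    A variable missing from one side is treated as a count of zero there,
    so it is reported as a discrepancy unless the other side is also zero.
    """
    discrepancies = {}
    for variable in sorted(set(platform_counts) | set(hospital_counts)):
        platform_n = platform_counts.get(variable, 0)
        hospital_n = hospital_counts.get(variable, 0)
        if platform_n != hospital_n:
            discrepancies[variable] = (platform_n, hospital_n)
    return discrepancies


# Illustrative counts only; these are not study data.
platform = {"surgeries": 1200, "intraoperative_complications": 9}
hospital = {"surgeries": 1200, "intraoperative_complications": 10}
mismatches = find_count_discrepancies(platform, hospital)
```

Any variable returned here would be the trigger for generating the detailed patient checklist and verifying it against the EMR database.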

Data processing for benchmarking of quality parameters

Once the data is uploaded and verified, an internal software routine processes the data to generate summary reports for various parameters (facts) and filters (dimensions); this is referred to as building a data mart (warehousing). In the event of data being uploaded again for the same period for any reason, the process is repeated. This process enables users to access reports in less than a minute.
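As a rough illustration of why pre-aggregation makes reports fast, the sketch below builds a small in-memory summary keyed by filter dimensions. It is a simplified stand-in, not the platform's routine: the record fields (`hospital`, `surgeon_type`, `intraop_complication`) and function names are assumptions made for the example.

```python
from collections import defaultdict


def build_data_mart(records, dimensions, fact):
    """Pre-aggregate one yes/no fact by every combination of the dimensions.

    Each cell stores the number of surgeries and the number of surgeries in
    which the fact was recorded, so rates can be read off without rescanning
    the raw records.
    """
    mart = defaultdict(lambda: {"surgeries": 0, "events": 0})
    for record in records:
        key = tuple(record[name] for name in dimensions)
        mart[key]["surgeries"] += 1
        if record[fact]:
            mart[key]["events"] += 1
    return dict(mart)


def rate_percent(cell):
    """Event rate of one pre-aggregated cell, as a percentage."""
    return 100.0 * cell["events"] / cell["surgeries"]


# Illustrative records only; these are not study data.
records = [
    {"hospital": "H1", "surgeon_type": "consultant", "intraop_complication": True},
    {"hospital": "H1", "surgeon_type": "consultant", "intraop_complication": False},
    {"hospital": "H2", "surgeon_type": "fellow", "intraop_complication": False},
]
mart = build_data_mart(records, ("hospital", "surgeon_type"), "intraop_complication")
```

Re-uploading data for a period then amounts to calling `build_data_mart` again on the corrected records, which mirrors the repeat-processing step described above.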

Communication email

After completing the data processing, an email (online supplemental figure 1) with the surgery results is sent to each surgeon who performed surgery during a given month. This report includes surgery volume, complication rate, and uncorrected and best-corrected visual outcomes on the follow-up visit. A hyperlink is included in this communication to access complete benchmark performance details, which allows a surgeon to compare their own outcome with either all the other surgeons or with the respective peer group, that is, a postgraduate can compare the scores with all the surgeons or only with postgraduates. The trend chart (online supplemental figure 2) compares the surgeon’s or hospital’s performance with the best and average scores over the past 6 months.
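The peer comparison offered through the hyperlink can be sketched as follows. This is a minimal illustration, assuming a single score per surgeon and hypothetical surgeon labels; how CATQA actually computes and presents the comparison is not specified beyond the description above.

```python
def benchmark_against_peers(scores, surgeon_types, surgeon,
                            peer_group_only=False, higher_is_better=True):
    """Compare one surgeon's score with the average and best of a group.

    scores: {surgeon: score}, e.g. % of eyes with uncorrected VA of 6/12 or better.
    surgeon_types: {surgeon: type}, e.g. "consultant" or "postgraduate".
    peer_group_only: if True, compare only with surgeons of the same type.
    """
    if peer_group_only:
        own_type = surgeon_types[surgeon]
        group = [s for s in scores if surgeon_types[s] == own_type]
    else:
        group = list(scores)
    values = [scores[s] for s in group]
    best = max(values) if higher_is_better else min(values)
    own = scores[surgeon]
    gap = best - own if higher_is_better else own - best
    return {
        "own": own,
        "average": sum(values) / len(values),
        "best": best,
        "gap_to_best": gap,
    }


# Illustrative scores only; these are not study data.
scores = {"A": 90.0, "B": 80.0, "C": 70.0, "D": 60.0}
types = {"A": "consultant", "B": "consultant",
         "C": "postgraduate", "D": "postgraduate"}
vs_all = benchmark_against_peers(scores, types, "C")
vs_peers = benchmark_against_peers(scores, types, "C", peer_group_only=True)
```

For a measure where lower is better, such as a complication rate, `higher_is_better=False` makes the lowest value the benchmark.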


Internal review meetings

The head of the cataract clinic meets weekly with surgeons, especially those who have had complications during surgery, as well as operating room, ward and outpatient clinic nurses. In these meetings, medical records of patients with complications are reviewed. A monthly meeting is also held with surgeons, operating room nurses, ward nurses, biometry staff and key staff from outpatient clinics. A monthly meeting agenda typically includes the confirmation of minutes of the last meeting, the status of action taken on the minutes, a review of quality parameters for the hospital, and benchmark reports of complications, visual outcome, spherical equivalent and infection rates for the entire hospital.

Sharing of better practices

The gaps identified from the benchmarking reports are discussed at the monthly meeting as well as at the weekly meeting of cataract clinic heads from all the centres. Factors contributing to the results of the best-performing hospitals are discussed. If a variation persists or is present at multiple sites, the Director of Quality conducts a detailed review of the protocol, facilities, etc so that the necessary changes can be implemented.

Follow-up on the intended improvements

The implementation plans are developed in accordance with inputs received and needs at each hospital. During the internal meeting, the status of the plans is discussed, and the results of the actions are tracked in benchmarking reports.

Measures

The hospital report compares performance of the focal hospital with the overall average of all the hospitals and the best performing hospital on the key outcomes shown below (online supplemental figure 3). The surgeon level outcome report follows the same format.


Preoperative conditions: % of eyes with advanced cataracts, % of eyes with poor vision.

Adverse events: % of eyes with intraoperative and postoperative complications.

Visual outcome: VA groups were created following the WHO classification. The following measures of VA are used: (1) pinhole VA at discharge or on the first postoperative day and (2) uncorrected and best-corrected VA at the follow-up visit between 7 and 49 days after surgery.

Accuracy of biometry: % of surgeries with a postoperative spherical equivalent (Spherical+(0.5×Cylinder)) within ±0.5D.

Infection: number of endophthalmitis cases per 10 000 surgeries.
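The biometry accuracy measure can be made concrete with a short Python sketch of the spherical equivalent formula given above. The function names are hypothetical, and treating a value of exactly ±0.5D as within range is an assumption; the source does not say whether the boundary is inclusive.

```python
def spherical_equivalent(sphere: float, cylinder: float) -> float:
    """Spherical equivalent in dioptres: Spherical + (0.5 x Cylinder)."""
    return sphere + 0.5 * cylinder


def percent_within_target(refractions, tolerance: float = 0.5) -> float:
    """Percentage of eyes whose spherical equivalent lies within +/- tolerance.

    refractions: iterable of (sphere, cylinder) pairs in dioptres.
    The boundary is counted as within range (an assumption).
    """
    refractions = list(refractions)
    within = sum(
        1 for sphere, cylinder in refractions
        if abs(spherical_equivalent(sphere, cylinder)) <= tolerance
    )
    return 100.0 * within / len(refractions)


# Illustrative refractions only; these are not study data.
example = [(0.25, -0.5), (1.0, -0.5), (-0.25, -0.5), (0.0, -1.5)]
share = percent_within_target(example)
```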

Data analysis and reporting

Microsoft Excel was used to create a comparative report across hospitals and to calculate the average, SD and coefficient of variation (CV) for the selected outcome variables. We used the Standards for Quality Improvement Reporting Excellence guidelines to inform the presentation of this QI report.
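For readers who wish to reproduce the variability summary outside Excel, a minimal Python equivalent is sketched below. It assumes the sample SD (as Excel's `STDEV.S` would give); the source does not state whether the sample or population formula was used, and `statistics.pstdev` can be substituted for the latter.

```python
from statistics import mean, stdev


def variability_summary(hospital_rates):
    """Average, SD and coefficient of variation (CV, in %) across hospitals.

    hospital_rates: one value per hospital for a given year, for example the
    intraoperative complication rate in percent.
    """
    average = mean(hospital_rates)
    sd = stdev(hospital_rates)  # sample SD; an assumption, see lead-in
    return {"mean": average, "sd": sd, "cv_percent": 100.0 * sd / average}


# Illustrative rates only; these are not study data.
summary = variability_summary([1.0, 2.0, 3.0])
```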

Patient and public involvement

None.

Results

For the complete study period of 2012–2020, data were available for 10 eye hospitals, which performed 718 120 phacoemulsification cataract surgeries. To evaluate the effectiveness of internal benchmarking, we selected the following outcome variables to present in this study: intraoperative complications, unaided VA and residual postoperative refractive error at the postoperative follow-up visit. We analysed the trends in these three key outcome variables.

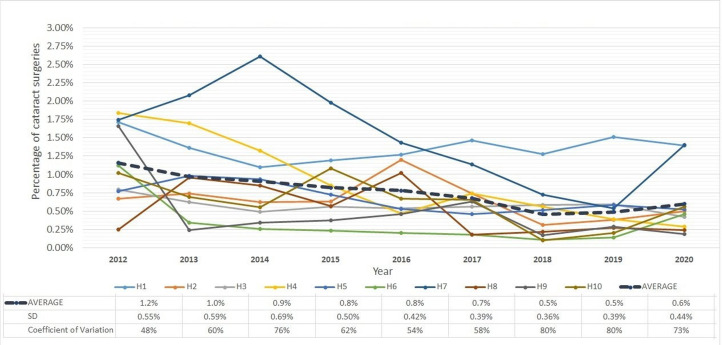

Intraoperative complications

Intraoperative complications are among the most important factors affecting the visual outcome of cataract surgery. Additionally, they are often a predictor of postoperative complications. Managing high-risk cases by assigning a surgeon with the right level of experience could reduce the likelihood of complications, although the risk can never be completely eliminated.27 Results of a comparative analysis of intraoperative complications are presented in figure 2 and the data table of the figure in online supplemental table 1. The average complication rate across hospitals halved from 1.2% in 2012 to 0.6% in 2020. The SD across hospitals also showed a declining trend, indicating reduced variability. Nevertheless, the CV increased over the study period because the average declined faster than the SD.
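The relationship between the falling SD and the rising CV can be written out explicitly. Writing $\bar{x}_t$ for the average complication rate across hospitals in year $t$ and $s_t$ for the corresponding SD (our notation, not the study's), the CV rises exactly when the SD shrinks proportionally less than the average. With the reported averages of 1.2% and 0.6%, this means the CV increases whenever the 2020 SD is more than half of the 2012 SD:

```latex
\[
\mathrm{CV}_t = \frac{s_t}{\bar{x}_t},
\qquad
\mathrm{CV}_{2020} > \mathrm{CV}_{2012}
\iff
\frac{s_{2020}}{s_{2012}} > \frac{\bar{x}_{2020}}{\bar{x}_{2012}}
= \frac{0.6}{1.2} = 0.5 .
\]
```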

Figure 2.

Intraoperative complications rate in cataract surgery in 10 study hospitals (H1–H10).


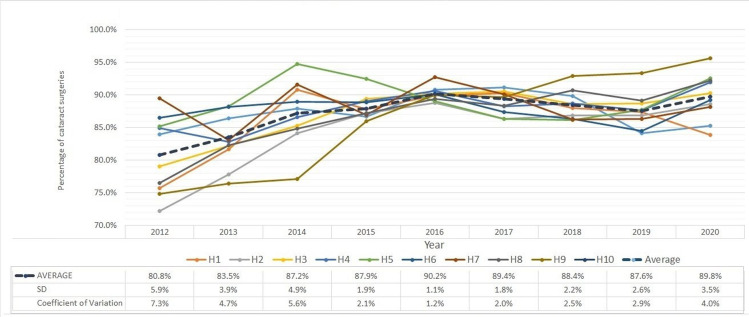

Unaided VA at postoperative follow-up visit

Good unaided visual outcomes are more likely to be achieved in surgeries without complications and with accurate biometric measurements. Figure 3 shows the percentage of patients who gained 6/12 vision or better without correction and the data table of the figure is presented in online supplemental table 1. On average all study hospitals improved in terms of this outcome measure over the study period. Further, both the SD and CV showed declining trends indicating reduced variability.

Figure 3.

Percentage of patients with uncorrected visual acuity (≥6/12 (20/40)) at postoperative follow-up visit (2–7 weeks) in 10 study hospitals (H1–H10).

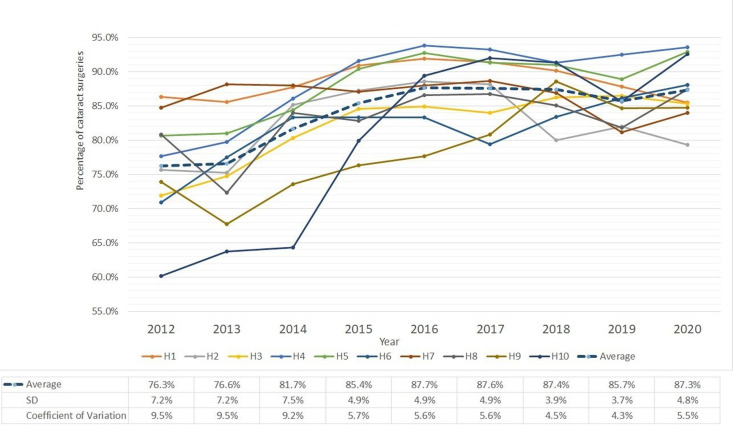

Residual postoperative refractive error (within ±0.5D)

Postoperative refractive error can result from inaccurate biometric measurements, from use of the wrong intraocular lens (IOL) power, or from the surgery itself. Figure 4 shows the percentage of surgeries within ±0.5D refractive error (without adjusting target refraction) and the data table of the figure is presented in online supplemental table 1. The positive trend in the average is consistent with the improvement in accuracy of biometry in recent years. Moreover, both the SD and CV showed declining trends, indicating reduced variability across hospitals.

Figure 4.

Percentage of patients with spherical equivalent (within ±0.5D) at postoperative follow-up visit in 10 study hospitals (H1–H10).

Note that COVID-19 lockdowns in 2020 resulted in a larger fraction of patients with advanced conditions being operated on, which led to more variability in all three outcome measures studied—intraoperative complications, unaided VA at postoperative follow-up visit and residual post-operative refractive error.

Besides clinical outcomes, internal benchmarking has also resulted in improvement in processes, inputs and resources. The following are examples of the significant changes that were introduced in processes and resources due to benchmarking.

Standardisation of refraction: this was achieved by fixing the correct distance for refraction, upgrading refraction charts with self-illuminated charts and refining protocols on measuring postoperative patients by introducing a time gap after removing the eye pad and encouraging patients to read as many letters as possible. These changes were implemented both at the base hospitals and at outreach sites.

Design improvements for IOLs: the system detected variations in postoperative visual outcome and related them to a specific IOL model. As a result of the evidence obtained, the IOL manufacturing firm diagnosed the problem as using the wrong A-constant which they subsequently corrected.

Biometry equipment upgrade: this upgrade made it easier for technicians to interact with patients and ensure the measurements were accurate.

Strengthened postoperative counselling: patients with poor visual outcomes on discharge were counselled again about the importance of a follow-up visit.

Discussion

Continuous improvement requires a commitment to learning from a structured and evidence-based approach to managing, taking into account one’s own experience as well as others’ best practices.28

In our analysis of outcomes from cataract surgeries over the 9-year study period, we found significant improvements in all quality parameters. The study hospitals’ performance and outcomes improved across the board. The percentage of patients with good visual outcomes was better than WHO guidelines.9 In addition, the percentage of complications was lower than the percentage reported by hospitals in developed countries.29–31 Moreover, residual postoperative refractive error was reduced and remained well within acceptable limits. A noteworthy finding was the reduced variation and greater consistency in outcomes across hospitals over time, as expected with CQI and aided by internal benchmarking.

Internal benchmarking establishes performance standards within an organisation.32 It demonstrates successes within a hospital’s own culture and environment, establishes a communication channel and network for highlighting and sharing improvements and innovations, and stimulates internal competition. It is faster and less complex than external benchmarking. It does not present the challenge of obtaining confidential data; further, internal partners often use a common or similar database and employ uniform definitions of variables. Internal benchmarking is significantly less expensive compared with external benchmarking. Furthermore, it is often the starting point for all benchmarking processes since it is essential to know about internal business processes, services or products before embarking on an external benchmarking exercise.33 Using external benchmarks makes sense only when we have access to the details of the process involved in achieving a better outcome, so that a hospital can adopt them and improve the outcomes.

AECS implemented a number of strategies to achieve these improvements besides implementing a benchmarking platform: standardised clinical protocols, simplified forms for data collection, creation of a data quality team, implementation of an EMR to record data in real-time, development of a data warehouse and benchmarking platform, making information easily accessible to the right people, monthly email to individual surgeons with outcome summary, performing systematic reviews to identify gaps and opportunities for improvement, and implementing improvements. These strategies were developed at different times primarily based on monitoring results.

A benchmarking process based on evidence-based outcome monitoring gives an opportunity to evaluate variations and take appropriate measures to achieve better outcomes, such as changing processes and upgrading inputs, for example, standardising equipment across the system, choosing the right IOL and training. Specific interventions at Aravind and their results are as follows. After immersion biometry was introduced in 2013 and implemented in all centres in the following years, prediction error declined significantly in the year that followed and thereafter.34 Since 2012, LED-illuminated vision charts have been introduced in eye camps, and vision drum charts have been replaced with digital vision charts at base hospitals. These changes have led to improvement in refraction quality. Similarly, the analysis of outcomes based on residual spherical equivalent for individual IOLs prompted a change in the A-constant of the Aurovue IOL (a hydrophobic acrylic IOL) from 118.4 to 118.7. This change helped to improve the refractive outcome: the percentage of surgeries within ±0.5D residual spherical equivalent increased from 81.5% in 2014 to 95% in the following years. The corresponding chart is included as online supplemental figure 4.

QI is a journey that requires continuous feedback to ensure alignment. Monitoring surgical performance is an important tool to assess trainee progress, explain poor surgical outcomes, refine protocols and strengthen training.35–38 Internal learnings can be accepted and implemented more easily since the results are backed by evidence. Following standard protocols and processes is the key to delivering care consistently across the organisation and improving efficiency.

Hospital networks, whether government, missionary or private, have unique opportunities for learning and improving their outcomes through internal benchmarking and also reducing variability within the network. Funding organisations that support hospitals also have the opportunity to encourage such a benchmarking process among the hospitals they fund to induce cross-learning for overall improvement.

If learning is what makes a hospital outstanding in its field, benchmarking is a way of sharing the experience of improvements among staff members and creating healthy competition among them. To have the desired effect on performance, Gudmundsson et al emphasise that findings of benchmarking must be communicated to stakeholders within the organisation.39 Benchmarking encourages users to identify the root cause of variation. Benchmarking as a tool for continuous improvement at AECS has shown both improvement in outcomes and reduced variation among hospitals.

This study’s main strength was the use of comprehensive data of eye hospitals that have been benchmarking outcomes for continuous improvement for the past decade. Even though the process is based on eye hospitals, it can be applied usefully to other clinical disciplines. However, benchmarking results must be interpreted carefully, taking into account inclusion and exclusion criteria followed by hospitals and the definitions of outcome variables. We recognise that to conclusively establish the impact of benchmarking, a randomised controlled study would be required. The retrospective, observational design in the current study relies on time trends to assess the impact and therefore cannot fully rule out alternative explanations. As a result, our findings are suggestive rather than conclusive. Furthermore, while benchmarking shows opportunities for improvement, actual improvement can only occur when the causes of deficiencies are identified and addressed. A limitation of this study is that it did not discuss in depth the change management process that was followed for improvement. This will be a subject of further research.

Conclusion

Benchmarking is a QI method that has proven to be very valuable in operationalising evidence-based management. Benchmarking results invite the attention of the users to focus on analysing and improving inputs and processes for better outcomes. Internal benchmarking allows hospitals to learn from their peers inside the organisation. Analysing the root cause for variation, implementing learnings and regular monitoring ensure continuous improvement in outcomes. The practice of internal benchmarking builds the organisation’s capacity to confidently engage in external benchmarking.


Footnotes

Contributors: G-BSB, TDR, CW and FvM conceived and designed the study. G-BSB acquired the data and performed the study. G-BSB, SG, RDR, TDR and FvM analysed and interpreted the data. G-BSB wrote the manuscript. G-BSB, SG, RDR, TDR, HM, CW, SVR and FvM reviewed the manuscript. All authors read and approved the manuscript. G-BSB acts as guarantor.

Funding: The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: None declared.

Patient and public involvement: Patients and/or the public were not involved in the design, or conduct, or reporting, or dissemination plans of this research.

Provenance and peer review: Not commissioned; externally peer reviewed.

Supplemental material: This content has been supplied by the author(s). It has not been vetted by BMJ Publishing Group Limited (BMJ) and may not have been peer-reviewed. Any opinions or recommendations discussed are solely those of the author(s) and are not endorsed by BMJ. BMJ disclaims all liability and responsibility arising from any reliance placed on the content. Where the content includes any translated material, BMJ does not warrant the accuracy and reliability of the translations (including but not limited to local regulations, clinical guidelines, terminology, drug names and drug dosages), and is not responsible for any error and/or omissions arising from translation and adaptation or otherwise.

Data availability statement

Data are available upon reasonable request.

Ethics statements

Patient consent for publication

Not applicable.

Ethics approval

The study was performed with adherence to the tenets of the Declaration of Helsinki. Ethical clearance was obtained from the institutional ethics committee at Aravind Eye Hospital, Madurai (reference number: RET202100350).
