Abstract

A central aspect of human experience and communication is understanding events in terms of agent (“doer”) and patient (“undergoer” of action) roles. These event roles are rooted in general cognition and prominently encoded in language, with agents appearing as more salient and preferred over patients. An unresolved question is whether this preference for agents already operates during apprehension, that is, the earliest stage of event processing, and if so, whether the effect persists across different animacy configurations and task demands. Here we contrast event apprehension in two tasks and two languages that encode agents differently; Basque, a language that explicitly case-marks agents (‘ergative’), and Spanish, which does not mark agents. In two brief exposure experiments, native Basque and Spanish speakers saw pictures for only 300 ms, and subsequently described them or answered probe questions about them. We compared eye fixations and behavioral correlates of event role extraction with Bayesian regression. Agents received more attention and were recognized better across languages and tasks. At the same time, language and task demands affected the attention to agents. Our findings show that a general preference for agents exists in event apprehension, but it can be modulated by task and language demands.

Keywords: event apprehension, eye tracking, event roles, agents, patients, case marking, Basque, Spanish, brief exposure paradigm

INTRODUCTION

To understand the complex reality of everyday life, we need to attend to the events unfolding around us. Events are dynamic interactions that develop over space and time (Altmann & Ekves, 2019; Richmond & Zacks, 2017). A crucial component of events is their participants or event roles, as well as the interaction that binds them. The most basic event roles are the doer of the action (“agent”) and the undergoer to whom the action is done (“patient”). The action in the event is defined by the specific relationship between these two roles (the event type, e.g., seeing or kicking).

Humans have been proposed to categorize critical information in events using event models (Zacks, 2020). These models are mental representations in memory used to segment the perceived ongoing activity into structured events (Radvansky & Zacks, 2011; Zacks et al., 2007), probably using specialized neural mechanisms (Baldassano et al., 2018; Stawarczyk et al., 2021). Event models are believed to store information on the abstract structure of events, including the event roles.

When exposed to events, humans can extract event role information in a quick and effortless way (Hafri et al., 2018). Hafri et al. (2013) presented participants with events for as short as 37 and 73 ms, and then asked a probe question about the event type, the agent, or the patient. They found that the event type and event roles could be recognized even with the shortest presentation time. Rissman and Majid (2019) review a range of experimental studies with adults, children and infants, and conclude that there is a universal bias to distinguish agent and patient roles.

The agent and patient event roles are asymmetric in their cognitive status, and so far the evidence suggests that agents are more salient. The agent role is characterized by distinguishing perceptual features, such as outstretched limbs (Hafri et al., 2013; Rissman & Majid, 2019). In contrast, the patient role is defined by the lack of these features, resulting in a more diffuse category (Dowty, 1991). When looking at pictures of events, humans tend to inspect agents more thoroughly than patients or other event elements (Cohn & Paczynski, 2013). Furthermore, the agent role is preferentially attended to in all stages of development in humans (Cohn & Paczynski, 2013; Galazka & Nyström, 2016; New et al., 2007), a preference shared with other animals (V. A. D. Wilson et al., 2022). Taken together, the reported evidence suggests that agents take a privileged position in the basic mechanisms of event processing (Dobel et al., 2007; Gerwien & Flecken, 2016; F. Wilson et al., 2011).

This is consistent with how humans attend to scenes in general: In the inspection of real-world scenes, conceptually relevant information guides attention (Henderson et al., 2009, 2018; Rehrig et al., 2020). This happens in a top-down fashion, that is, by higher-order cognitive representations affecting the information uptake. In the inspection of events specifically, the agent is arguably the conceptually most relevant or salient element, and would therefore guide visual attention in a top-down fashion. We use the term “agent preference” to refer to the presumably privileged status of agents in event cognition.

The agent preference finds parallels in other domains, too. When communicating about events, agents occupy privileged positions in how they are expressed. Semantic role categories in language are organized hierarchically, and theories converge on ranking agents the highest in this hierarchy for predicting the morphosyntactic properties of event role expressions (e.g., Bresnan, 1982; Fillmore, 1968; Gruber, 1965; Van Valin, 2006). Agents also play an important role in generating predictions in incremental sentence processing since they tend to be the expected default interpretation for noun phrases (Bickel et al., 2015; Demiral et al., 2008; Haupt et al., 2008; Kamide et al., 2003; Matzke et al., 2002; Sauppe, 2016). When gesturing about events, naïve participants across cultures tend to place the agent first, independently of the word order of their language (Gibson et al., 2013; Goldin-Meadow et al., 2008; Hall et al., 2013; Schouwstra & de Swart, 2014). This mirrors the tendency for placing agents first across languages (Dryer, 2013; Napoli & Sutton-Spence, 2014). In sum, this evidence supports the idea that there is a general preference for agents in cognition.

However, many studies investigating attention to events were not designed to specifically address this cognitive bias. Their findings can therefore only be interpreted indirectly and also might be at least partially confounded by other visual properties. Most studies on event cognition have used non-human and smaller-sized patients (Dobel et al., 2007; Gerwien & Flecken, 2016; Ünal, Richards, Trueswell, & Papafragou, 2021), such as, for example, an event in which a woman cuts a potato. Size, animate motion, and human features attract visual attention (Frank et al., 2009; Pratt et al., 2010; Wolfe & Horowitz, 2017) and this might have biased the attention toward agents in these studies.

So far, two studies have attempted to account for animacy when testing attention allocation in events (Cohn & Paczynski, 2013; Hafri et al., 2018). In these experiments, both agents and patients were human and of similar size. Cohn and Paczynski (2013) measured looking times to the event roles in cartoon strips that were presented frame by frame. They found longer looking times for agents than for patients and argued that the advantage for agents stemmed from them being the initiators of the action. Hafri et al. (2018) also used human agents and patients in their stimuli, but unlike Cohn and Paczynski (2013), they did not find a preference for agents. When viewing event photographs for short durations, participants responded faster to low-level features in patients than in agents. This is potential counter-evidence against the agent preference, opening the possibility that this preference is not a cognitive bias per se, but rather a side effect of an animacy bias, in line with findings from emergent sign languages (Meir et al., 2017). An agent preference could also emerge from conscious decision-making and only in a later time frame when attending to human-human interactions. This would explain why Cohn and Paczynski (2013) found an agent preference in a self-paced task, while Hafri et al. (2018) did not find such a preference when using brief stimulus presentation times and high time pressure. Hence, it remains unknown whether the agent preference operates independently of animacy, and whether it arises in the earliest stages of attending to events.

In the present work, we investigate whether an agent preference in event cognition is detectable in early visual attention. We include both human and non-human patients in the stimuli, as well as patients of different sizes. Following Dobel et al. (2007) and Hafri et al. (2018), we focus on the apprehension phase of processing events. We define event apprehension as the phase in which the gist of an event is obtained, covering approximately up to the first 400 ms after seeing an event picture (Griffin & Bock, 2000). We chose the apprehension phase specifically because it captures the earliest and most spontaneous allocation of visual attention. During apprehension, agent and patient roles are extracted spontaneously and independently of an explicit goal, that is, also when the task requires only the extraction of low-level features (such as color) and does not encourage the processing of event roles (Hafri et al., 2013, 2018). If there is a general agent preference in event cognition, it should be detectable already in this phase.

To target event apprehension, we adapted the brief exposure paradigm from Dobel et al. (2007) and Greene and Oliva (2009). In this paradigm, pictures of events are presented for only very short periods of time, typically between 30 and 300 ms, depending on the screen position in which the picture appears (Dobel et al., 2007, 2011). Because planning and launching a saccade already takes between 150 and 200 ms (R. H. S. Carpenter & Williams, 1995; Duchowski, 2007; Pierce et al., 2019), viewers need to make quick decisions about what to look at. These decisions are arguably based on prior information and task-related knowledge (Gerwien & Flecken, 2016).

As well as probing for an agent preference in the earliest time window of attention, we also test whether this preference persists across different languages and task configurations. Indeed, the agent preference is likely to interact with other top-down cues that guide visual attention, such as knowledge of the event, prior experiences, and task demands (e.g., Summerfield & de Lange, 2014). An important task is producing sentences in a specific language, because language can exert a top-down influence on the way events are inspected (Norcliffe & Konopka, 2015). For example, Norcliffe et al. (2015) used a picture description experiment with speakers of languages with different word order (subject-initial vs. verb-initial), and showed that speakers of verb-initial sentences in Tzeltal (Mayan) prioritized attending to verb- or action-related information over agents (cf. also Sauppe et al., 2013).

In our study, we test whether an agent preference persists across two languages that mark agents differently, namely Basque and Spanish. Basque has a case marker (conventionally known as ‘ergative’) specifically for agent noun phrases, while Spanish does not have an agent-specific case marker. This means that in Basque, agentive subjects are overtly marked (-k in the examples in 1), and non-agentive subjects are left unmarked. In Spanish, by contrast, both agent and patient subjects are treated alike, independently of their event role (carrying the unmarked nominative case, similar to English and German). This difference is illustrated in the following sentences in Basque and Spanish. In Basque, only agentive subjects (1a–b in contrast to 1c) receive the ergative case marker. In Spanish (2), instead, all subjects are unmarked (no ergative case marking).

-

(1)

Basque1

-

a.

Emma-k mahaia altxatu du

Emma-erg table lift aux

‘Emma lifted the table.’

-

b.

Emma-k borrokatu du

Emma-erg fight aux

‘Emma fought.’

-

c.

Emma-∅ iritsi da

Emma-nom arrive aux

‘Emma arrived.’

-

a.

-

(2)

Spanish

-

a.

Emma-∅ ha levantado la mesa

Emma-nom aux lift det table

‘Emma lifted the table.’

-

b.

Emma-∅ ha luchado

Emma-nom aux fight

‘Emma fought.’

-

c.

Emma-∅ ha llegado

Emma-nom aux arrive

‘Emma arrived.’

-

a.

Given this case marking system, speakers must commit to the agentivity of the subject noun phrase early on when planning a sentence in Basque, because they need this information to decide on its case marker (Egurtzegi et al., 2022; Sauppe et al., 2021). This may increase the need to search for agents in events, especially in languages such as Basque, where agency is the critical feature. Hence, the tendency to inspect agents might increase when planning sentences in Basque due to the demands imposed by case marking. In contrast, for Spanish speakers, agent-related information is not necessary to plan the subject argument of the sentence, because the case marking will not be affected. This means that they could defer making a decision for building a description of event roles to a later point in time and thus maintain more flexibility (Bock & Ferreira, 2014; F. Ferreira & Swets, 2002; V. S. Ferreira, 1996).

In our experiments, we tested native Basque and Spanish speakers in an event description task to investigate whether the agent preference persists across these two different case marking settings. This way, we explored whether an agent preference arises in a language production task, independently of language-specific grammatical features.

In addition, we also tested Basque and Spanish participants in a task that does not require sentence planning. Most previous studies that provide evidence for an agent preference involved a task that required participants to describe events (Gerwien & Flecken, 2016; Sauppe & Flecken, 2021). Given that most languages are agent-initial, it is possible that general sentence planning mechanisms give rise to the agent preference. In our experiments, we introduced a task manipulation to explore whether an agent preference emerges also in the absence of sentence planning demands.

Hence, participants in our experiments undertook two tasks: after being presented an event photograph for 300 ms, they either produced a sentence to describe the event (the event description task) or decided whether a probe picture matched an event participant from the briefly presented target picture (the probe recognition task). The event description task required the linguistic encoding of the event with the corresponding language-specific differences between the Basque-and Spanish-speaking groups. By contrast, the probe recognition task only demanded selective attention to event roles, with no linguistic response. It is still possible that participants covertly recruited or activated language in the probe recognition task (Ivanova et al., 2021), but this task did not require any sentence planning, which probably decreased the activation or use of language.

In Experiment 1, Basque and Spanish speakers participated on the internet and we measured their accuracy and reaction times for each event role in both tasks. In Experiment 2, participants were tested in a laboratory setting, and we used eye tracking to record the eye gaze to the event pictures during the brief exposure period. First fixations have been argued to closely reflect the processes underlying event apprehension (Gerwien & Flecken, 2016), as viewers collect parafoveal information on the event structure and use it to decide on the location of their first fixation to the picture. In comparison, the accuracy and reaction time of the response (the verbal description or the decision on the probe) reflect the outcome of the apprehension stage together with additional cognitive processes, such as memory, post-hoc reasoning, and judgment processes demanded by the task (Firestone & Scholl, 2016). Nevertheless, accuracy and reaction times contain valuable information on the apprehension phase (Hafri et al., 2013, 2018). Thus, we used fixations to pictures, accuracy, and reaction times as three different measures of participants’ attention allocation and information uptake during event apprehension. This procedure allows us to tackle two research questions: Is there an agent preference in attention patterns in visual apprehension? Does this preference persist across different language and task configurations? Based on the findings presented by Cohn and Paczynski (2013), we predicted that if there is a general agent preference in cognition, it should already be detectable in the earliest and most spontaneous stage of attention allocation. In the current experiments, agents thus should receive more visual attention than other event elements across both languages and tasks tested.

EXPERIMENT 1

Methods

Participants.

Native speakers of Basque (N = 90) and Spanish (N = 88) participated in an online study.2 Social media were used to advertise the study and recruit participants, and monetary prizes were raffled for participation. All participants reported that their native language was still the most common or one of the most common languages in their daily life at the time of participation. The experiment was approved by the ethics committees of the Faculty of Arts and Social Sciences of the University of Zurich (Approval Nr. 19.8.11) and the University of the Basque Country (Approval Nr. M10/2020/007), and all participants gave their informed written consent. All procedures were performed following the ethical standards of the 1964 Helsinki declaration and its later amendments.

Materials and procedure.

Stimuli consisted of 48 photographic gray-scale images depicting transitive, two-participant events with a human agent (see Figure 1 for an example). In half of the events, the patient was human (e.g., with actions such as “hit” or “greet”) and in the other half, an inanimate object was the patient (e.g., with actions such as “wipe” or “hammer”). Ten intransitive events featured a sole participant performing an action and were included as fillers. Within intransitive events, half of them featured an agent-like participant (e.g., “jump”), and the other half a patient-like participant (e.g., “fall down”). A full list of events is presented in Table A1. Photographs depicted the midpoints of events. Static images have been found to spontaneously convey motion information (Guterstam & Graziano, 2020; Kourtzi & Kanwisher, 2000; Krekelberg et al., 2005) so that participants could automatically represent the depicted event sequences as a whole.

Figure 1. .

Four example stimuli, depicting the events “brush”, “water”, “kick” and “read”. All events were photographed in an indoor setting against a white wall and actors performed the events either standing or sitting at a white table.

The events were portrayed by four different actors (two males, two females). Four versions of each event were photographed, one with each of the four actors as the agent. In the case of events with human patients, the respective actor of the patient was counterbalanced between events, so that the identity of the patient was independent of the identity of the agent. A horizontally mirrored version of each picture was also created to counterbalance the agent’s position across experimental participants. This led to a total of 232 stimulus pictures of 58 events.

The stimulus pictures were distributed over four lists. The set of events was identical across the lists, but with different combinations of actors in agent and patient roles across lists. The lists were used as blocks in the experiment, and participants were randomly assigned two out of the four blocks, with a total of 116 event pictures. The order of events within the blocks was randomized for each participant.

Participants responded to a short demographic questionnaire before the experiment, which included information on gender, age, language acquired from each of their parents, and their most commonly used language. The experiment was programmed in PsychoPy and exported to PsychoJS (Peirce et al., 2019). The experimental sessions were run in full-screen mode on Pavlovia (https://pavlovia.org). Pavlovia offers high temporal resolution (Anwyl-Irvine et al., 2021; Bridges et al., 2020), which ensured that the duration of the stimulus presentation was approximately 300 ms. Mobile phones and tablets were not allowed; participants were directed to the Pavlovia experiment through Psytoolkit (Stoet, 2010, 2017), which enabled blocking access from mobile devices.

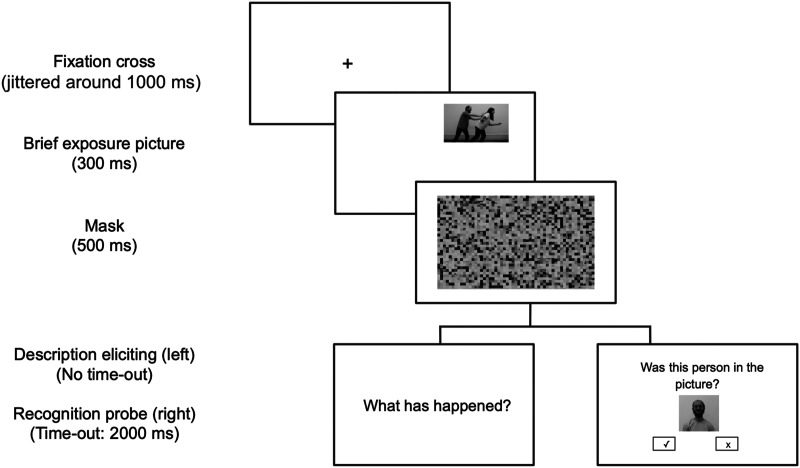

Trials began with a fixation cross (with a jittered duration time between 800 ms and 1200 ms), followed by the target event picture, which was displayed for 300 ms in one of the four corners of the screen. The orientation (agent left or right) and the picture’s screen position were counterbalanced within the same event types, so that each combination occurred equally often. A mask image appeared immediately after the event picture for a duration of 500 ms (cf. Figure 2). The mask was used to deprive participants of the ability to use their visuospatial sketchpad memory to reconstruct the image (Baddeley, 2007). Following the mask display, participants were prompted to perform an event description or a probe recognition task. The tasks were administered in separate blocks and the order in which the tasks were presented was counterbalanced across participants.

Figure 2. .

Trial structure. The trial procedure was the same until the task and it consisted of a fixation cross, the brief exposure image, and the mask image. Then, in the event description task, the participants were prompted with a question to describe the event image (Zer gertatu da? in Basque and ¿Qué ha ocurrido? in Spanish). In the probe recognition task, a probe image was displayed and participants had to press a button to answer whether that person or object was present in the event.

The event description blocks started with the presentation of the four actors and their names. Participants were instructed to learn the four names and use them to describe the events they would see (e.g., “Emma has kicked Tim”). Participants in both languages were instructed to describe events as if they were just completed to elicit sentences in the perfective aspect. This ensured that Basque speakers produced sentences with ergative case marking because there is no ergative marking in the progressive aspect (Laka, 2006). Six example trials were also provided, where sample written descriptions were displayed after the event pictures. The participants also completed six practice trials before proceeding to critical trials.

In the probe recognition task, participants indicated by a button press whether the actor or object in a probe picture had been present in the target event picture or not. The probes showed the agent of the event, the patient of the event, or another actor or object not present in the target picture. Participants were required to respond within 2000 ms, and six practice trials with longer time-outs (7000 ms, down to 3000 ms) were included prior to critical trials.

No feedback was provided for the practice trials in either of the tasks. Figure 2 provides a graphical representation of the trial structure. The experiment sessions lasted approximately 30 minutes. All instructions were provided in Basque or Spanish, respectively.

Data processing and analyses.

Nine participants who reported not speaking the same language with both parents were excluded. Data from two participants were lost due to technical errors in data saving. In total, data from 84 native Basque speakers (age range = 18–66 years, mean age = 31.4 years, SD = 11.4 years, 55 female) and 82 native Spanish speakers (age range = 18–68 years, mean age = 33.1, SD = 11.7 years, 48 female) were available for analysis.

Following the previous literature on the brief exposure paradigm (Dobel et al., 2007; Hafri et al., 2013), we relied on behavioral measures (task specificity, accuracy, and reaction time) as possible windows to event apprehension. In the event description task, written responses largely followed the agent-patient word order patterns, canonical in both languages (see details in Table C1). A native speaker of Basque (A.I.-I.) coded agent, patient, and action specificity for each response, specifying whether the description was specific, general, or inaccurate. We considered answers as specific if the name of the event role participant (“Emma”) or object (“bowl”) was correct and as general if the description contained correct general features, such as gender or category (e.g., “Lisa” or “One girl” for “Emma”, “pan” for “bowl”). The descriptions that were incorrect (“Tim” instead of “Emma”) or uninformative (“someone”) were coded as inaccurate. Event description trials were excluded from analysis if the intended target verb and event roles were inverted (e.g., “Emma has listened to Lisa” instead of “Lisa has shouted to Emma”), if the description was reciprocal (e.g., “Emma and Lisa have shouted to each other”) or if the sentence was not described in perfective aspect (in total, 8% of all event description trials).

For the probe recognition task, we analyzed the trials in which the probes matched either the agent or the patient of the previous event picture (half of all probe recognition trials). The other half of the probe recognition trials showed a foil that was not present in the event picture. We included these foils to ensure that the number of trials requiring a “true” or a “false” answer was balanced, but they were not informative about event role-related accuracy and hence were not included in the analyses. In addition, trials without responses and trials with response times shorter than 200 ms or 2.5 standard deviations longer than the mean were excluded (in total, 6.5% of all probe recognition trials).

Participants were additionally excluded from analyses if they performed with overall low accuracy, separately for each task. For the event description analyses, three participants who had less than 60% specific agent answers and five participants who had less than 50% trials remaining after applying the other exclusion criteria were excluded. For the probe recognition analyses, we excluded four participants who had less than 50% trials left after applying the other exclusion criteria or whose overall accuracy was below 60%. We applied these exclusion criteria to ensure that participants in the analyses understood and followed task instructions, and were not performing at chance. For the probe recognition task, we additionally checked that the participants had above-chance accuracy also for the trials with foils (“false” trials). We found that participants correctly rejected foil trials on average in 86% of trials (SE = 0.7%). This ensures that the results from the critical trials (“true” trials) were informative and not driven by a bias to simply answer positively. Figure B1 shows that all participants had above-chance accuracy for the whole set of trials in probe recognition.

On balance, data from 157 participants for the event description task (7071 trials) and from 163 participants (3739 trials) for the probe recognition task were included in statistical analyses.

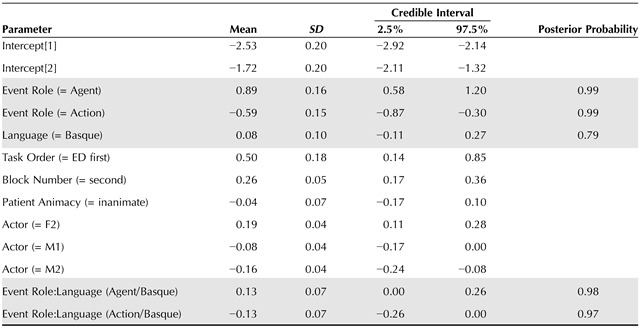

Statistical analyses were conducted in R (R Core Team, 2022) using hierarchical Bayesian regression through the brms (Bürkner, 2017, 2018) interface to Stan (B. Carpenter et al., 2017). Post-hoc contrasts between the predictor factor levels were extracted with the emmeans package (Lenth, 2020). A cumulative ordinal model with a logit link function was fit to jointly model agent, patient, and action specificity for event description trials (ranking specific > general > inaccurate). For probe recognition analyses, a Bernoulli model with a logit link function was fit to model accuracy in response to agent and patient probes. In both models, event role, language, and their interaction were predictors of interest; identity of the agent role actor, animacy of the patient, and task order were included as nuisance predictors (Sassenhagen & Alday, 2016). Animacy is known to attract visual attention (Frank et al., 2009), and therefore we included it as a covariate to capture its potentially large effects. This ensured that any evidence in favor of the agent preference was not driven by differences between agent and patient animacy in events depicting human-object interactions. We modeled log-transformed reaction times in the probe recognition task with a Gaussian model with an identity link function. Language, event role, and their interaction were the critical predictors, and animacy of the patient, trial accuracy, and task order were included as nuisance predictors. We included random intercepts and slopes for language and event role by participant and by event type in all models. Student-t distributed priors (df = 5, μ = 0, σ = 2) were used for the intercept and all population-level predictors in all models. Default priors (Student-t, df = 3, μ = 0, σ = 2.5) were used for group-level predictors. In all models, the block number and task order were standardized (z-transformed) and all other predictors were sum-coded (−1, 1).

When reporting the parameter estimates of interest (), we provide the mean and standard error of the posterior draws. We additionally include the posterior probability of the hypothesis that the estimate is smaller than or larger than 0. This is equal to the proportion of draws from the posterior distribution that fall on the same side of 0 as the mean of the posterior distribution, which is a direct indication of the strength of the evidence (Kruschke, 2015). We visualize this information with posterior density plots for each parameter of interest (Figure 3B and Figure 4C–D).

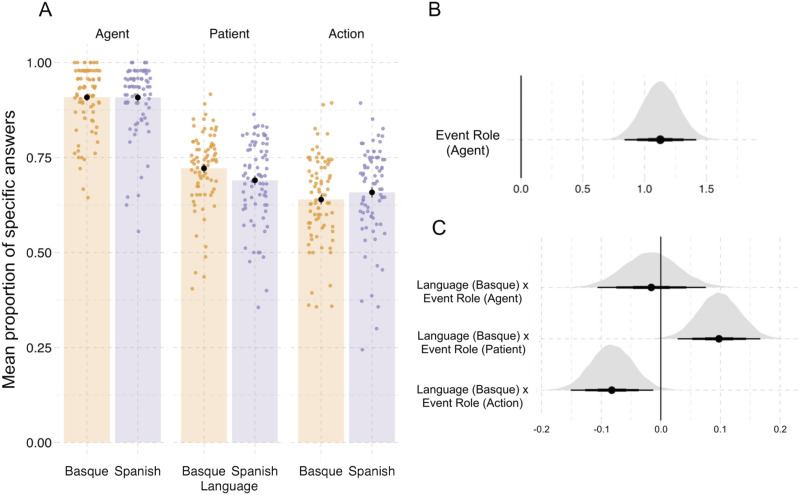

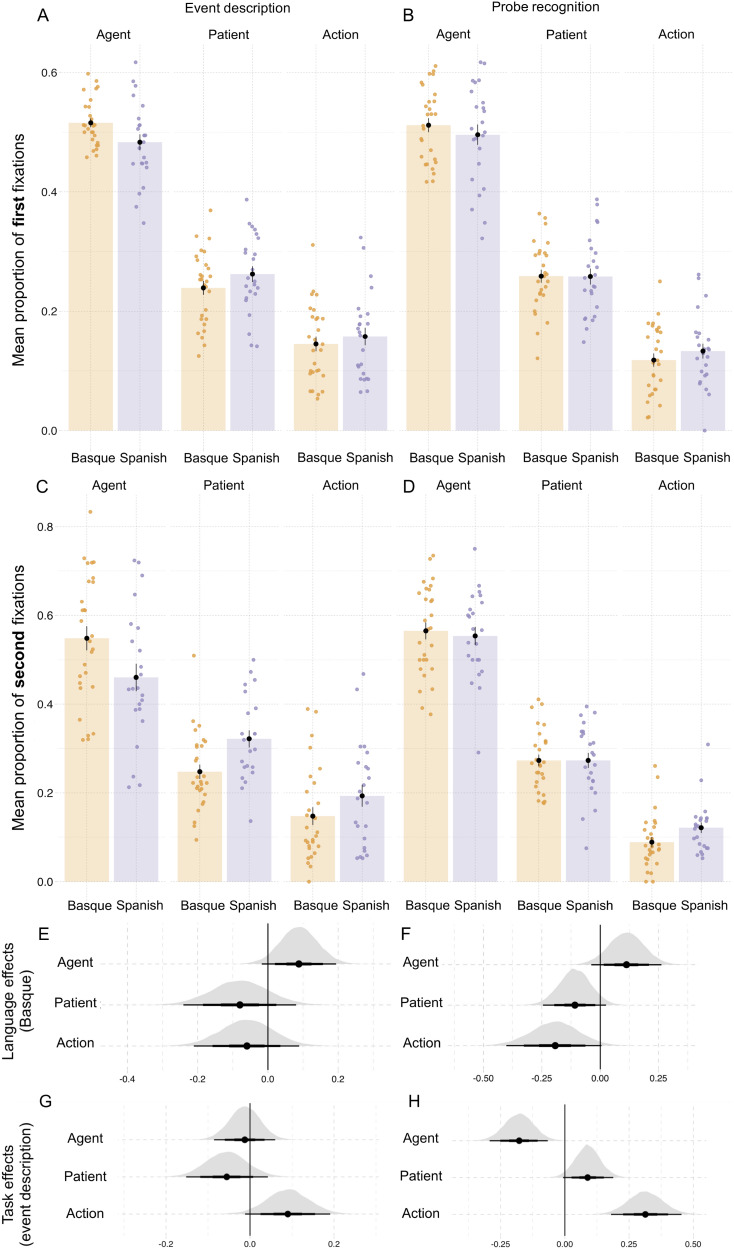

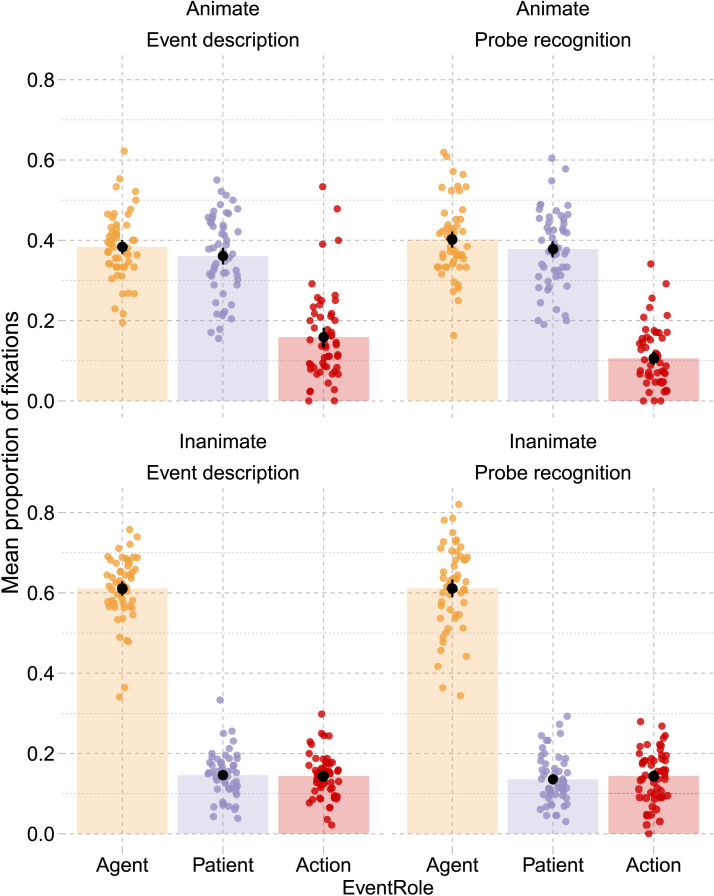

Figure 3. .

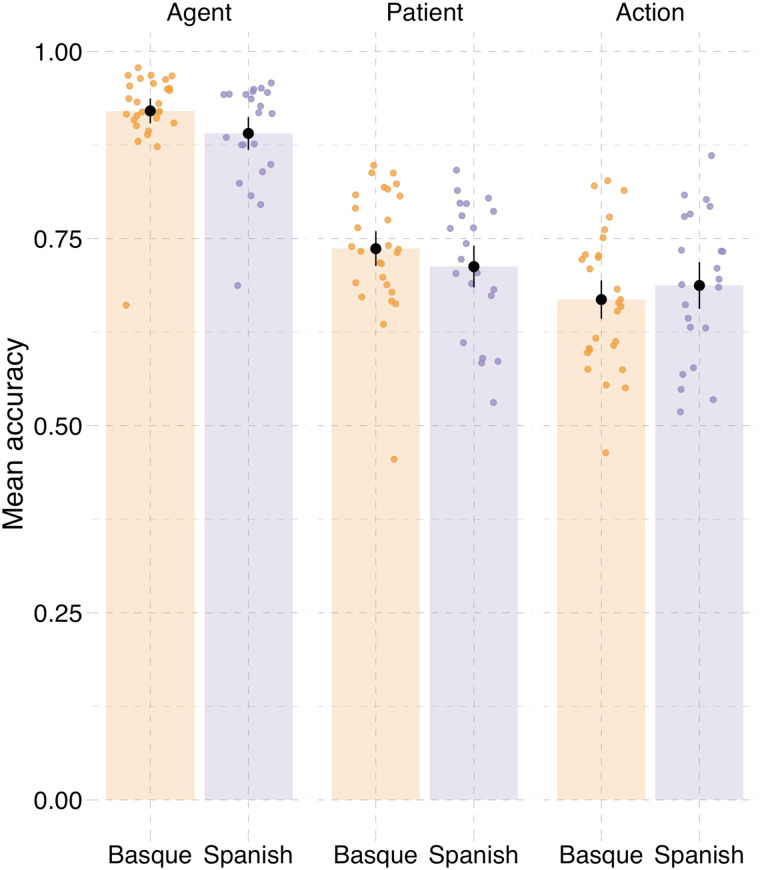

Results from event description task in Experiment 1. (A) Specificity in event description, showing the proportion of specific answers (in contrast to general and inaccurate answers). Individual dots represent participant means, black dots represent means of participant means, and error bars on the black dots indicate 1 standard error of the mean. Figure F1 in the Appendix shows fitted values from the Bayesian regression model. (B) Posterior estimates for the predictor event role from the Bayesian regression model (Table D1). (C) Posterior estimates of interactions between each of the event roles (agent, patient, action) and language from the same Bayesian regression model. Black horizontal lines represent the 50%, 80%, and 95% credible intervals.

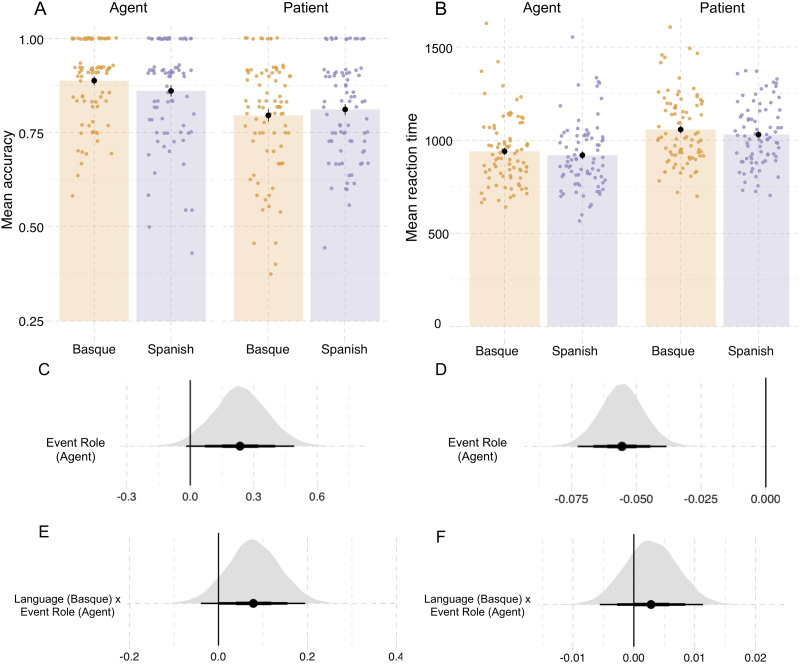

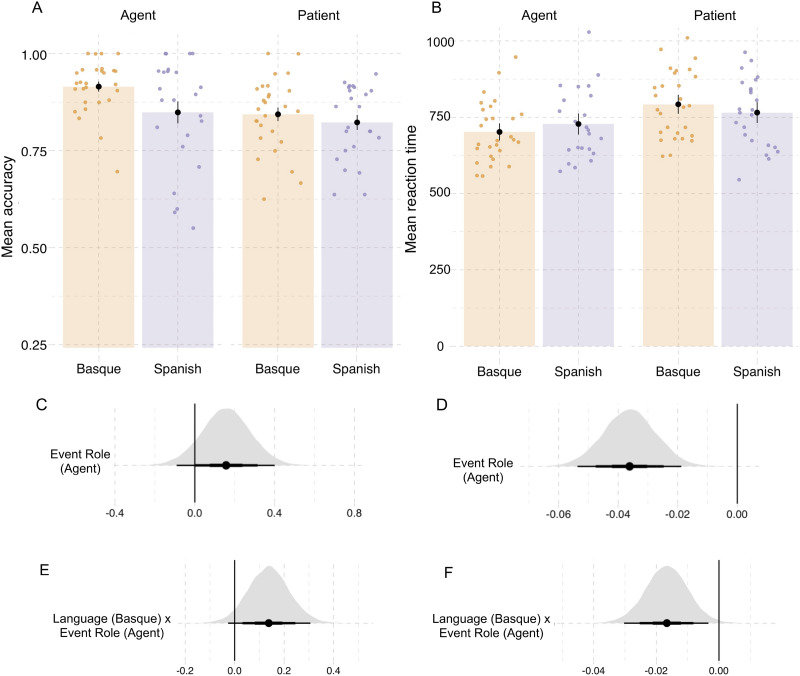

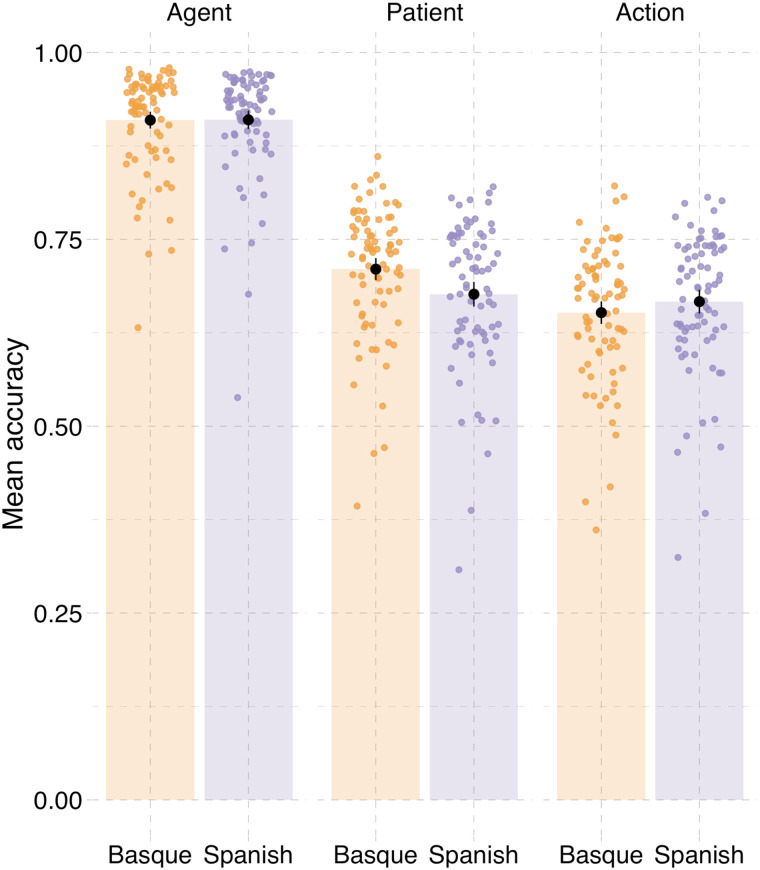

Figure 4. .

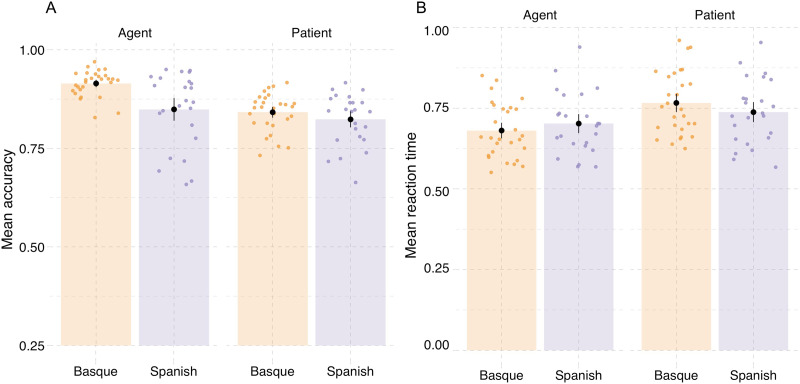

Results from probe recognition task in Experiment 1. (A) Recognition accuracy for probe pictures. (B) Reaction times (in milliseconds). Individual dots represent participant means, black dots represent the mean of participant means, and error bars indicate 1 standard error of the mean. Figure F2 in the Appendix shows fitted values from the Bayesian regression model. (C and D) Posterior estimates for the predictor event role from the Bayesian regression models for accuracy and reaction time, respectively (Tables D2 and D3). (E and F) Posterior estimates of the interaction between language and event role from the same models for accuracy and reaction time, respectively. Point intervals represent the 50%, 80%, and 95% credible intervals.

Results

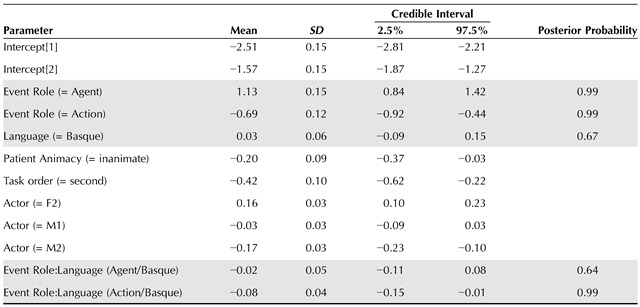

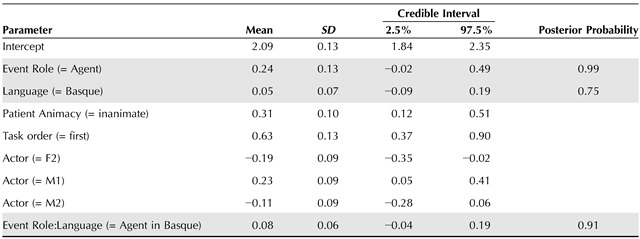

The results from the event description task are shown in Figure 3 and from the probe recognition task in Figure 4; regression model summaries are presented in Tables D1, D2, and D3. Compared to patients, agents were described with greater specificity (Agent: mean = 1.13, SE = 0.15, P( > 0) = 0.99; see Table 1 and Figure 3). Participants also recognized agents with greater accuracy than patients in probe recognition (Agent: mean = 0.24, SE = 0.13, P( > 0) = 0.99; see Table 1 and Figure 4A).

Table 1. .

Proportion of specific or accurate responses in event description and probe recognition tasks, as well as reaction times (in ms) for probe recognition in Experiment 1; standard deviations in parentheses.

| Event Role | Language | Event description | Probe recognition | |

|---|---|---|---|---|

| Proportion of specific responses | Proportion of correct responses | Reaction time | ||

| Agent | Basque | 0.90 (0.08) | 0.89 (0.10) | 939 (183) |

| Agent | Spanish | 0.91 (0.09) | 0.86 (0.13) | 728 (113) |

| Patient | Basque | 0.72 (0.09) | 0.79 (0.14) | 1057 (189) |

| Patient | Spanish | 0.69 (0.10) | 0.81 (0.13) | 1030 (168) |

| Action | Basque | 0.64 (0.11) | — | — |

| Action | Spanish | 0.65 (0.12) | — | — |

In line with the accuracy results, participants in both languages responded faster to agent probes compared to patient probes (EventRole = −0.055, SE = 0.008, P( < 0) = 0.99; Table 1, Figure 4B).

The higher specificity, accuracy, and faster reaction times for agents were detectable despite the high variability between participants and events (for event description Participant: mean = 0.63, SE = 0.05, P( > 0) = 0.99; Event: mean = 0.91, SE = 0.10, P( > 0) = 0.99; for probe recognition: Participant: mean = 0.53, SE = 0.08, P( > 0) = 0.99; Event: mean = 0.69, SE = 0.10, P( > 0) = 0.99). This high variability was expected due to the online setting, which allows only limited control of participants’ behavior.

On top of these effects for event roles, we also found an interaction between language and event role in both tasks. In event description, Basque speakers described patients with higher specificity than Spanish speakers (Language×Patient = 0.09, SE = 0.03, P( > 0) = 0.99; see Figure 3). By contrast, Spanish speakers described action verbs more precisely than Basque speakers (Language×Action = −0.08, SE = 0.04, P( < 0) = 0.99). There were no notable differences in the specificity of agent descriptions between languages (Language×Agent = −0.02, SE = 0.05, P( < 0) = 0.64).

In the probe recognition task (see Figure 4A; Table D2), the interaction between language and event role showed that the Basque participants were more accurate when responding to agent probes than the Spanish participants (Language×EventRole: mean = 0.08, SE = 0.06, P( > 0) = 0.91). In contrast, there was no substantial evidence for an interaction between language and event role in the reaction times (Language×EventRole = 0.002, SE = 0.004, P( > 0) = 0.75; Table D3).

Discussion

Speakers of Spanish and Basque both showed a general preference for agents in both tasks, reflected in higher specificity and accuracy for agents, as well as faster reaction times. This effect was consistent across languages and participants, even when the animacy of the patient was controlled for in the statistical analysis. This matches the results reported by Cohn and Paczynski (2013) and provides evidence for an agent preference in human cognition (New et al., 2007; Rissman & Majid, 2019). We will return to this finding in the General Discussion.

In addition to the general preference for agents over patients, there were differences between speakers of the two languages in their attention to event roles. In the probe recognition task, the Basque participants were more accurate than the Spanish participants in responding to agent probes, and less accurate than the Spanish participants in responding to patient probes. By contrast, we did not find any effect on the specificity of agent descriptions in the event description task. However, Basque speakers were more specific than Spanish speakers in describing patients.

Hence, we found effects of language on how speakers apprehended the event roles, although these were not consistent across tasks. A possible explanation for the divergent language effects between tasks could be the different time frames each task measured and the ability of the tasks to reflect event apprehension more or less directly (Firestone & Scholl, 2016). For event descriptions, the time required to type the responses could have led to memory decay (Gold et al., 2005; Hesse & Franz, 2010) and a deteriorated ability to reflect the apprehended information. Producing a sentence is a complex task and could also have contributed to memory distortion (Baddeley et al., 2011; Vandenbroucke et al., 2011). When producing descriptions, the words (lexical forms) of the agent and other parts of the sentence are usually retrieved in order of mention (Griffin & Bock, 2000; Meyer et al., 1998; Roeser et al., 2019). This potentially leaves the later elements of the sentence with a less clear memory trace. In Spanish, the patient is mentioned last (SVO order), while patients usually occupy the sentence-medial position in Basque (SOV order). This difference in word order could have interfered with the specificityof responses, because Basque speakers were able to “offload” patient information earlier (Baddeley et al., 2009, 2011). In fact, word order is known to influence the time course of sentence planning (Norcliffe et al., 2015; Nordlinger et al., 2022; Santesteban et al., 2015). In our experiment, all descriptions were agent-initial, and variations in word order were present only later in the sentence. Therefore, it does not appear likely that word order had an effect as early as in the apprehension phase, but we suggest that it affected how participants recalled and linearized the patient and action information when formulating their responses. It is also possible that additional post-hoc processes influenced the results because participants were not under time pressure to provide their answers.

By contrast, the time pressure was high in the probe recognition task because of the time-out. Participants responded by pressing the button on average 974 ms after the onset of the event picture (cf. Figure 2). This much shorter time between stimulus presentation and completed response may have reduced the effect of post-hoc cognitive processes, suggesting that the accuracy in this task better reflects the attention patterns during the event apprehension phase.

Therefore, the results from each task may reflect different stages of processing events: probe recognition accuracy would represent the outcome of event apprehension, and specificity in event descriptions would reflect a combination of event apprehension, possibly influenced by task demands and word order differences. These inherent differences between the tasks and the proneness of behavioral responses to be influenced by post-hoc processes make it necessary to further explore the event apprehension and to move beyond behavioral measures for doing so.

A way to bypass these problems and obtain a more direct measure of apprehension is to use eye tracking. Gaze allocation patterns to the briefly presented event pictures are considered direct reflexes of the event apprehension process (Gerwien & Flecken, 2016). When presented with an event picture (as in Figure 2), viewers collect parafoveal information on the event structure and use this coarse representation to decide where on the event picture to fixate first. Therefore, first fixations to event pictures can be reliably linked to the event apprehension process and provide an alternative to relying solely on offline measures. Measuring gaze allocation also provides a direct way of comparing the agent preference across tasks (in contrast to the behavioral task-specific measures in Experiment 1).

EXPERIMENT 2

We adapted the design from Experiment 1 for the laboratory and introduced eye tracking to measure how participants directed their overt visual attention during event apprehension. Consequently, the main measure in Experiment 2 was the location of the first fixation in the stimulus pictures. We adapted the response modalities in the tasks to elicit faster responses, by requiring oral responses in the event description task and by reducing the time-out in the probe recognition task to 1500 ms. We predicted that the agent preference would be detectable in the fixation patterns and behavioral correlates, replicating and further characterizing the agent preference found in Experiment 1.

Methods

Participants.

Native speakers of Basque (N = 38) and Spanish (N = 36) were recruited (age range = 18–40, mean age = 29, 49 female) and received monetary compensation for their participation. All Basque speakers and 29 Spanish speakers were tested in Arrasate (Basque Country); the other Spanish speakers participated in Zurich (Switzerland), due to constraints induced by the COVID-19 pandemic. In both locations, laboratories were set up ad hoc in school or university facilities and the same technical equipment was used. The experiment was approved by the ethics committees of the Faculty of Arts and Social Sciences of the University of Zurich (Approval Nr. 19.8.11) and the University of the Basque Country (Approval Nr. M10/2020/007). All participants gave written informed consent. All procedures are performed following the ethical standards of the 1964 Helsinki declaration and its later amendments.

Materials and procedure.

The stimuli were the same as in Experiment 1. The procedure followed mainly that of Experiment 1 but was adapted to the on-site setting and the eye tracking methodology. The experiment featured two consecutive blocks per task (instead of only one block per task in Experiment 1) with a self-timed pause between the tasks, i.e., after the second block. To characterize the two groups of participants (Basque speakers and Spanish speakers), individual differences measures were administered (see Table 2). Participants completed the Digit-Symbol Substitution Task from the Wechsler Adult Intelligence Scale (Wechsler, 1997) as a measure of perceptual and processing speed (Hoyer et al., 2004; Huettig & Janse, 2016; Salthouse, 2000). Participants also completed a lexical decision task to assess their lexical knowledge of Basque and Spanish (de Bruin et al., 2017) and completed a detailed questionnaire that collected information on their demographic profile, educational background, and linguistic habits.

Table 2. .

Language profiles and measures of linguistic competence and processing speed of participants in Experiment 2. Self-reported proficiency and lexical decision accuracy means could range from 1–10. The Digit Symbol Substitution Test scores ranged between 48–85. Standard deviations are given in parentheses.

| Measure | Basque group | Spanish group |

|---|---|---|

| Self reported proficiency in Basque | 9.08 (0.86) | 3.72 (3.61) |

| Self reported proficiency in Spanish | 7.64 (1.14) | 9.65 (0.47) |

| Basque lexical decision task accuracy mean | 8.97 (0.47) | 5.88 (2.2) |

| Spanish lexical decision task accuracy mean | 8.88 (0.75) | 8.88 (0.83) |

| Digit Symbol Substitution Test results | 68.5 (10.34) | 64.5 (10.32) |

Apparatus and data recording.

The experiment was programmed in E-Prime 2.0 (Schneider et al., 2002) and displayed on a 15.6″ computer screen with a resolution of 1920 × 1080 pixels. The participants placed their heads on a chin rest so that their eyes were at a distance of approximately 65 cm from the screen. The stimulus pictures subtended a visual angle of 11.73° horizontally (560 pixels) and 7.48° vertically (349 pixels). The center of each picture was 12.06° away from the central fixation cross on which the participants fixated at stimulus onset. Eye movements were recorded with a SMI RED250 mobile eye tracker (Sensomotoric Instruments, Teltow, Germany) sampling at 250 Hz. Button presses in the probe recognition task were recorded with a RB-844 response box (Cedrus, San Pedro, USA).

Data processing and analysis.

Data from six participants were lost due to technical errors or their inability to complete the experiment session. Twelve additional participants were excluded from the analysis due to the potentially heavy influence of the respective other language. This was determined to be the case when they reported using the respective other language frequently or preferentially (i.e., Spanish for native speakers of Basque or Basque for native speakers of Spanish), or when they scored equal or higher in the Basque lexical decision task than in the Spanish lexical decision task. The latter criterion was only applied to Spanish participants, because Basque speakers are usually very close or at the same level of performance as Spanish speakers due to diglossia. This exclusion criterion was applied to reduce the influence of possibly balanced bilinguals (Morales et al., 2015; Olguin et al., 2019; Yow & Li, 2015). The processing speed measures were similar in both language groups (cf. Table 2). On balance, 52 participants were included in the analyses (NBasque = 28 Basque, NSpanish = 24).

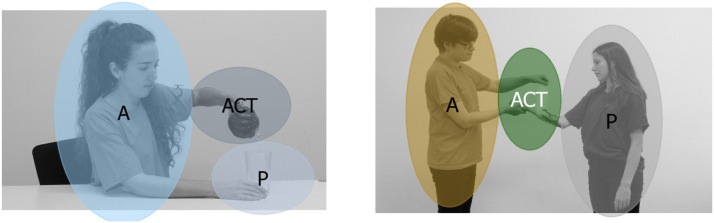

For each event picture, areas of interest for agents and patients were manually defined in the eye tracker manufacturer’s SMI BeGaze software (version 3.4). The areas of interest covered the face and upper part of the body for human characters and the whole object for inanimate patients (see Figure 5). We also defined an action area that encompassed the extended limbs of the agent (usually their hands) or the instruments involved in the action.3 The areas of interest for agents and patients were at least 30 pixels apart (mean = 74 pixels, SD = 38 pixels, corresponding to a visual angle of 1.85°) to avoid that fixations were assigned to the wrong area of interest due to measurement error.4 Fixations were detected using the algorithm implemented in SMI BeGaze.

Figure 5. .

Examples of agent (A), patient (P) and action (ACT) areas of interest for two stimuli. Areas of interest were not visible to participants.

For the eye tracking analyses, trials were excluded if participants’ fixations landed more than 100 pixels (visual angle of 2.5°) away from the edges of the target picture. Trials were also excluded if no response was given or, in the event description task, if the target verb and event roles were inverted (“Lisa heard Tim” instead of “Tim shouted to Lisa”). In total, 8821 trials were included in the first fixations analyses (93% of all trials).

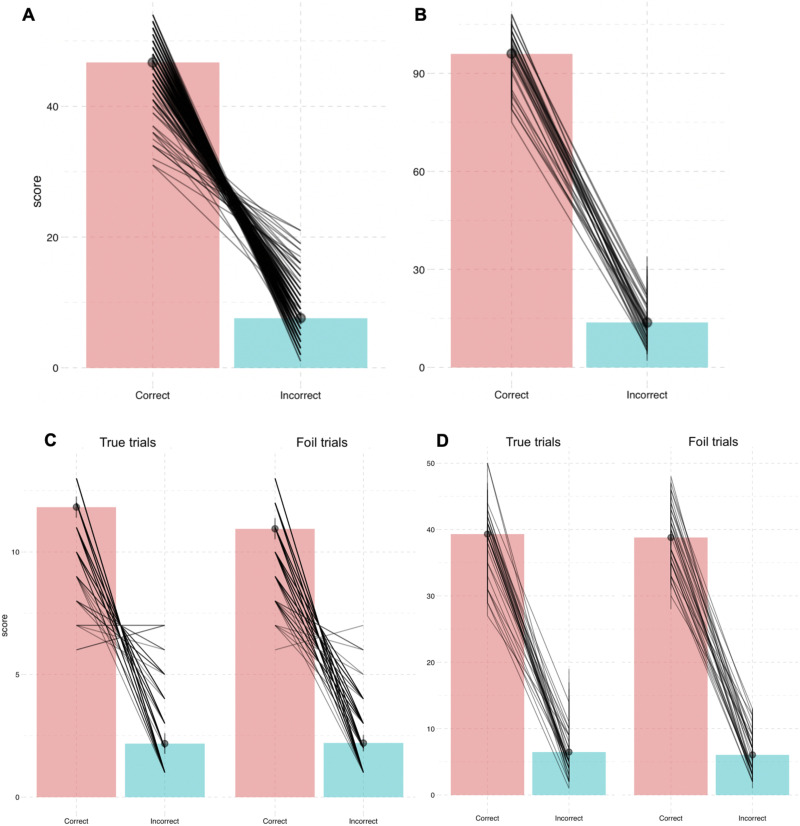

The analysis of first fixations was the main measurement in Experiment 2, given that these fixations provide the most direct window into the visual event apprehension process (Gerwien & Flecken, 2016; Sauppe & Flecken, 2021). In addition, we also conducted an exploratory analysis of second fixations on the event pictures. Participants fixated first on the picture on average 192 ms (SD = 22 ms) after exposure. In some trials, participants subsequently launched another saccade, on average 318 ms after stimulus onset (SD = 29 ms), with a mean duration of 283 ms (SD = 129 ms). Because these saccades were launched when the brief exposure time was almost over, second fixations generally landed on the subsequently presented mask image. However, programming and executing a saccade takes between 100 and 200 ms (R. H. S. Carpenter & Williams, 1995; Duchowski, 2007; Pierce et al., 2019), which means that the second fixations were planned while the event picture was still visible, and often even during the execution of the first saccade, i.e., before the eyes landed on the picture for the first fixation. Thus, it seems likely that the second fixations are generated by similar mechanisms as the first fixations. This suggests that the second fixations, although not landing on the briefly exposed picture, may provide additional information on the event apprehension process (cf. Altmann, 2004; F. Ferreira et al., 2008, for additional discussion of the usefulness of “looking at nothing”). Analyses of second fixations were conducted on a subset of trials that exhibited second fixations that were directed at least 50 pixels away from the first fixation’s location. This distance threshold ensures that the second fixations represented genuine new fixations and not just measurement and classification errors. In sum, 4956 trials were analyzed for second fixations.

For specificity, accuracy, and response time analyses, the exclusion criteria and statistical modeling were identical to those in Experiment 1. In the event description analysis, 4663 trials were included (75% of all trials); in the probe recognition analyses, 2387 trials were included (95% of all trials).

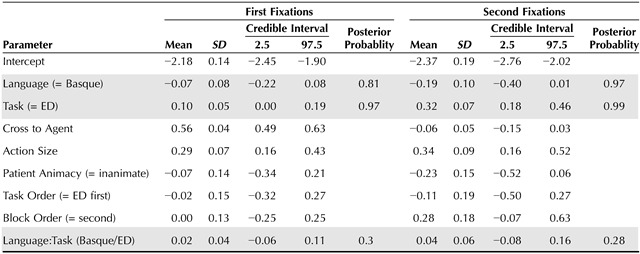

As for Experiment 1, statistical analyses were conducted in R (R Core Team, 2022) using hierarchical Bayesian regression models through the brms (Bürkner, 2017, 2018) interface to Stan (B. Carpenter et al., 2017). Eye tracking analyses modeled the likelihood of fixating on the agent, patient, or action areas of interest. We fitted Bernoulli models for agent, patient, and action fixations separately. Models for fixations were fitted jointly for both tasks. Language, task, and their interaction were the predictors of interest. We included the following as nuisance predictors: the size of the target area of interest in pixels, the animacy of the patient, the task order, the closeness of the agent to the fixation cross, and the block order within each task. For the second fixations analyses, we also included the area of interest to which the first fixation was directed as a nuisance predictor to account for the correlation between fixation locations on such short time scales (Barr, 2008). The size of the areas of interest, the order of tasks, and the number of blocks were standardized (z-transformed) and categorical predictors were sum-coded (−1, 1). We included random intercepts and slopes for language and task by participant and item. We used Student-t distributed priors (df = 5, μ = 0, σ = 2) for the intercept and all population-level predictors. For random intercepts and slopes, we used a default prior (Student-t, 3 degrees of freedom, mean = 0, scale = 2.5). For accuracy and response time analyses, statistical models were specified like those in Experiment 1.

Results

Fixations.

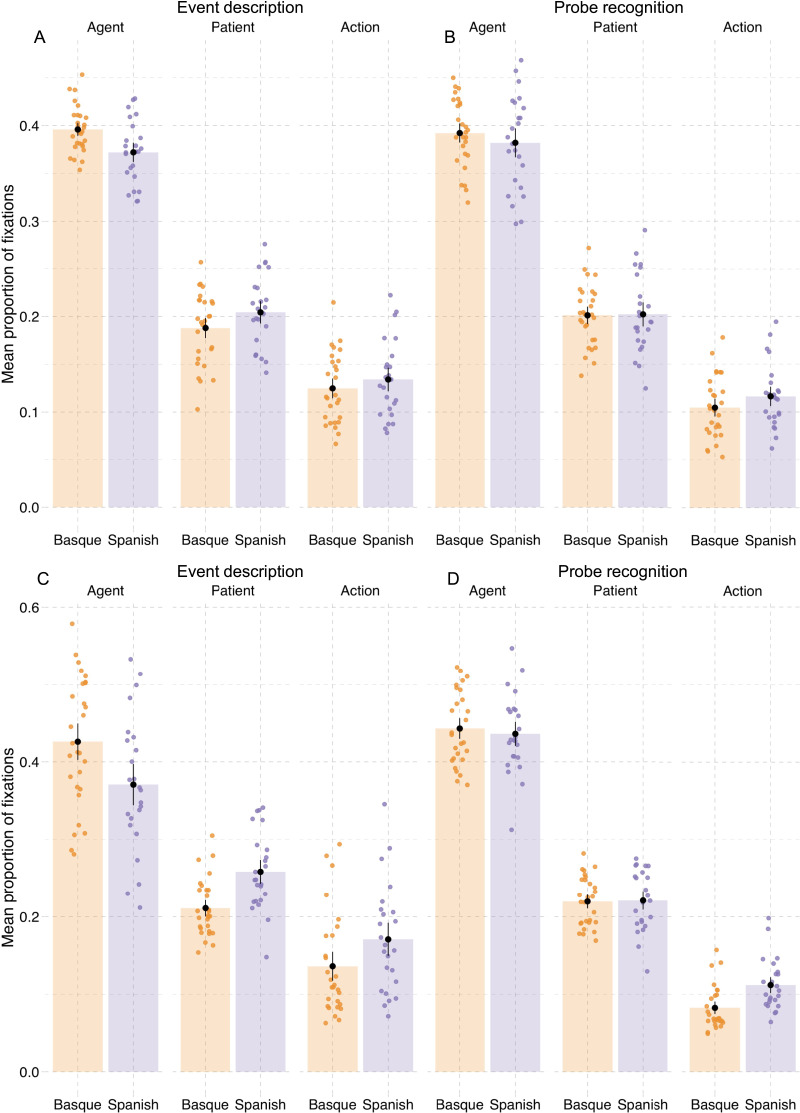

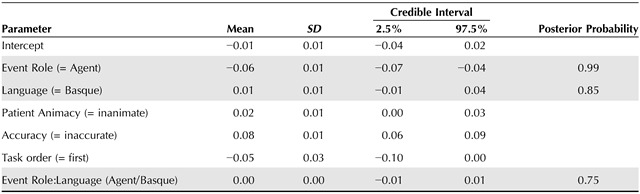

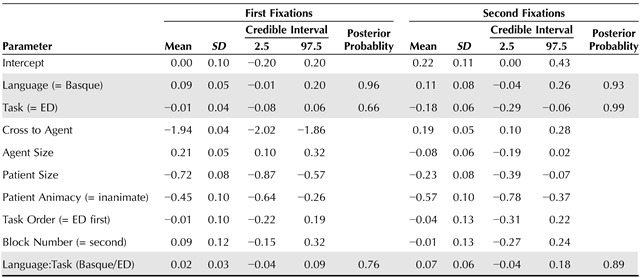

Overall, first fixations were directed primarily to agents (50.2% versus 25.2% to patients and 13.8% to actions). This effect was present in both language groups in both tasks (see Figure 6A–B). The animacy of the patient had a large effect on first fixations, but we still found more agent than patient fixations in the events where patients were animate (see Figure E1). For the second fixations, we again found a higher proportion of fixations to agents across language groups and tasks, although to a lesser degree than in first fixations (see Figure 6C–D).

Figure 6. .

Proportions of fixations to areas of interest in Experiment 2. (A and B) First fixations. (C and D) Second fixations. Colored dots represent individual participant means and black dots represent the means of participant means; error bars indicate 1 standard error of the mean. (E and F) Posterior distributions of the estimates for the predictor language, for each of the three event roles (agent, patient, action, from separate models, cf. Tables D4, D5, D6) for first and second fixations, respectively. (G and H) Posterior distributions of the estimates for the predictor task, for each of the three event roles for first and second fixations, respectively. Point ranges represent the 50%, 80%, and 95% credible intervals. Corresponding plots showing fitted values from the regression models are shown in Figure F3.

Beyond a general preference to fixate on agents first, we also found effects of language and task on first fixations. The proportions of first fixations to the three areas of interest varied between the language groups (Figure 6A–B, Table 3). Basque speakers fixated more on agents than the Spanish speakers in both the event description and the probe recognition task (Language = 0.09, SE = 0.05, P( > 0) = 0.96; Table D4). In turn, Spanish participants seemed to fixate more on patients (Language = −0.08, SE = 0.08, P( < 0) = 0.84; Table D5) and actions (Language = −0.07, SE = 0.08, P( < 0) = 0.81; Table D6), but these effects and the evidence for them were weaker.

Table 3. .

Proportions of fixations in Experiment 2 (means of participant means, standard deviations in parentheses).

| Event Role | Language | First fixations | Second fixations |

|---|---|---|---|

| Agent | Basque | 0.51 (0.03) | 0.55 (0.10) |

| Agent | Spanish | 0.49 (0.06) | 0.51 (0.10) |

| Patient | Basque | 0.24 (0.04) | 0.25 (0.05) |

| Patient | Spanish | 0.26 (0.05) | 0.29 (0.07) |

| Action | Basque | 0.13 (0.05) | 0.11 (0.08) |

| Action | Spanish | 0.14 (0.05) | 0.16 (0.07) |

For second fixations, language-related effects were similar to those found for the first fixations (Figure 6C–D), but more consistent across all event roles. Basque speakers looked more towards agents (Language = 0.11, SE = 0.08, P( > 0) = 0.93; Table D4), while the Spanish speakers were more likely to fixate on the patient (Language = −0.11, SE = 0.07, P( < 0) = 0.94; Table D5) and the action (Language = −0.19, SE = 0.10, P( < 0) = 0.97; Table D6).

Fixation patterns were also affected by the task. Participants in both language groups launched more first fixations to the action (Task = 0.10, SE = 0.05, P( < 0) = 0.97) and fewer fixations to patients (Task = −0.06, SE = 005, P( > 0) = 0.89) in the event description task compared to probe recognition. In second fixations, participants in both languages were more likely to fixate on actions (Task = 0.32, SE = 0.07, P( > 0) = 0.99) and less likely to fixate on agents (Task = −0.18, SE = 0.06, P( < 0) = 0.99) in the event description task compared to probe recognition.

We did not find that language effects substantially differed by task in first fixations for any of the event roles (Language×Task < 0.02, P( < 0) < 0.76 in all models). In contrast, language effects in second fixations more clearly varied by task: language differences were present in the event description task but not in the probe recognition task for agent fixations (Language×Task = 0.07, SE = 0.06, P( > 0) = 0.89) and patient fixations (Language×Task = −0.08, SE = 0.05, P( < 0) = 0.97). We did not find sufficient evidence for an interaction between task and language for action fixations (Language×Task = 0.04, SE = 0.06, P( < 0) = 0.72).

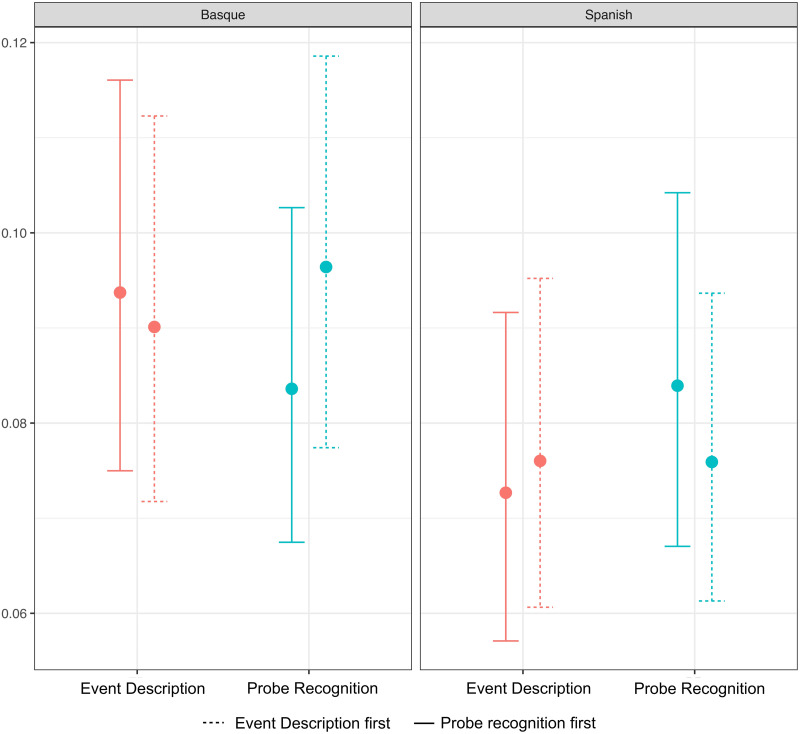

Additionally, we ran a supplementary analysis of first and second fixations to check whether the order of tasks (having event description task first or second) affected fixation patterns. For that, we fitted a model including a three way interaction between language, task and task order, keeping the rest of the model structure the same as in previous models. For first fixations to agents, we found evidence that task-order affected fixations (Language×Task×Task−Order = 0.09, SE = 0.06, P( > 0) = 0.92); in Basque, there were more fixations to agents during probe recognition when it was the second task. In contrast, there was no such effect for Spanish speakers, i.e., fixations to agents were not affected in probe recognition when this task followed the event description task (see Figure G1 in Appendix G). For first fixations to actions, we found some evidence for an effect of task order in the opposite direction (Language×Task×Task−Order = −0.08, SE = 0.08, P( < 0) = 0.84). This suggests that Basque speakers fixated more in the action area in probe recognition when this task was first, with no such effect of task order in Spanish. However, the evidence for this effect was rather weak. We did not find evidence that task order affected first fixations to patients (Language×Task×Task−Order = −0.02, SE = 0.09, P( > 0) = 0.58). Similarly, we did not find evidence that task-order affected second fixations for any of the event roles (P( > 0) ≤ 0.80 for all models).

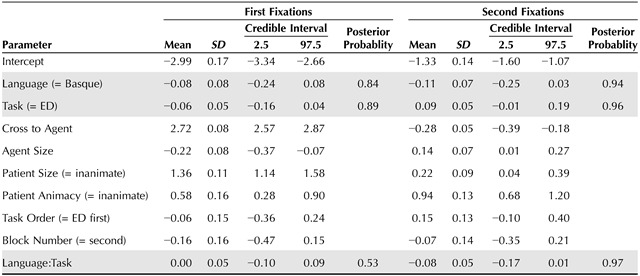

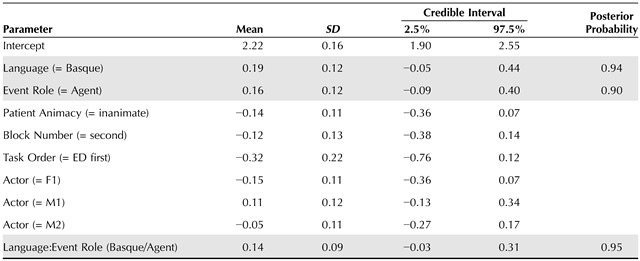

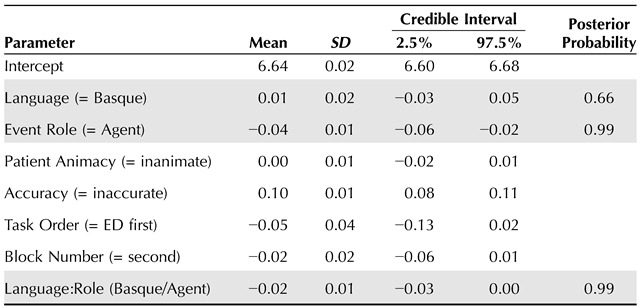

Specificity, accuracy and reaction times.

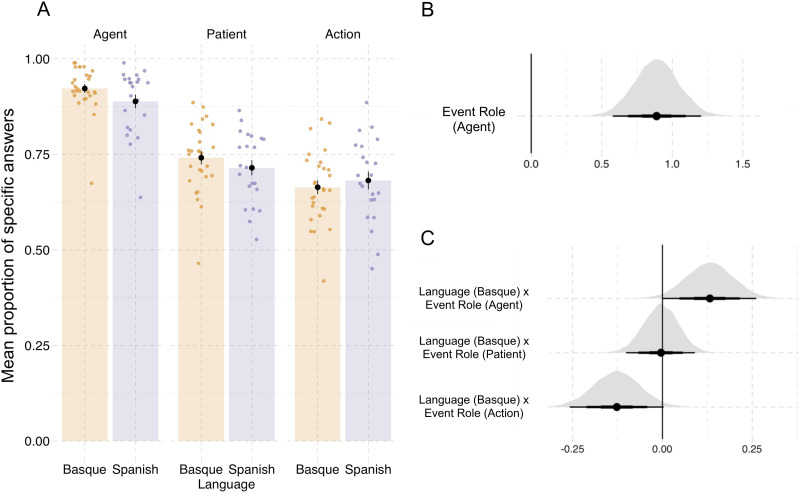

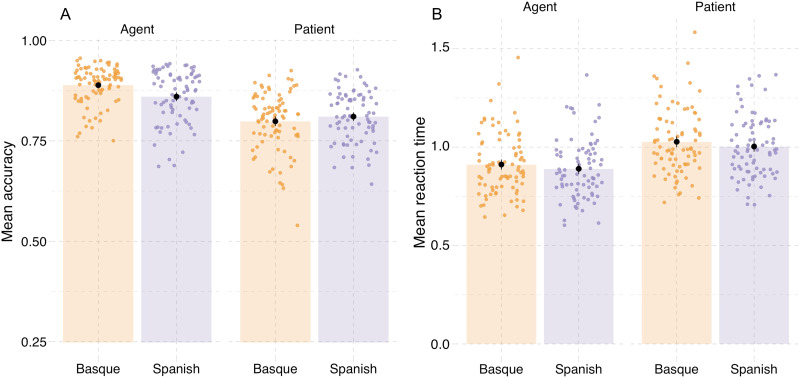

In both tasks, participants were overall more specific and accurate when describing agents or responding to agent probes compared to patients (event description specificity, Agent: mean = 0.89, SE = 0.16, P( > 0) = 0.99; probe recognition accuracy, Agent: mean = 0.16, SE = 0.12, P( > 0) = 0.90). Participants were also faster overall in responding to agent probes, compared to patient probes (Agent: mean = −0.04, SE = 0.01, P( < 0) = 0.99; see Figure 8, Table 4).

Figure 8. .

Results from the probe recognition task in Experiment 2. (A) Accuracy of probe detection. (B) Reaction times (button presses) in milliseconds. Individual dots represent participant means and black dots show means of participant means. (C and D) Posterior estimates for the predictor event role from the Bayesian regression models for accuracy and reaction time, respectively (Tables D8 and D9). (E and F) Posterior estimates of the interaction between language and event role from the same models for accuracy and reaction time, respectively. Point intervals represent the 50%, 80%, and 95% credible intervals. Equivalent figures plotting fitted values are shown in Figure F5.

Table 4. .

Proportion of specific or accurate responses in event description and probe recognition tasks in Experiment 2; standard deviations in parentheses.

| Event Role | Language | Event description | Probe recognition | |

|---|---|---|---|---|

| Proportion of specific responses | Proportion of correct responses | Reaction time | ||

| Agent | Basque | 0.92 (0.06) | 0.91 (0.06) | 701 (98) |

| Agent | Spanish | 0.88 (0.08) | 0.84 (0.14) | 728 (113) |

| Patient | Basque | 0.74 (0.09) | 0.84 (0.09) | 792 (109) |

| Patient | Spanish | 0.71 (0.09) | 0.82 (0.09) | 765 (112) |

| Action | Basque | 0.66 (0.09) | ||

| Action | Spanish | 0.68 (0.10) | ||

We additionally found interactions between event roles and language in behavioral measures. In event description (Figure 7, Table 4), Basque speakers were more specific than Spanish speakers when describing agents (Language×Agent = 0.13, SE = 0.07, P( > 0) = 0.98; Table D7), while less specific when describing actions (Language×Action = −0.13, SE = 0.07, P( < 0) = 0.97). We did not find differences in the specificity of the patient descriptions (Language×Patient = 0.00, SE = 0.04, P( < 0) = 0.53).

Figure 7. .

(A) Proportion of Specific answers (in contrast to General or Inaccurate answers) in event description by language and event role in Experiment 2. Individual dots represent participant means. Black dots represent means of participant means; error bars in indicate 1 standard error of the mean. (B) Posterior estimates for the predictor event role from the Bayesian regression model (Table D7). (C) Posterior estimates of interactions between each of the event roles (agent, patient, action) and language from the same Bayesian regression model. The three levels in the thickness of the point intervals represent the 50%, 80% and 95% credible intervals. Equivalent figures plotting fitted values are included in the Appendix, Figure F4.

In probe recognition (Figure 8, Table 4), Basque participants were overall more accurate than Spanish participants (Language = 0.19, SE = 0.12, P( > 0) = 0.94; Table D8). Furthermore, the Basque participants were more accurate than Spanish participants in responding to agent probes compared to patient probes (Language×EventRole: mean = 0.14, SE = 0.08, P( > 0) = 0.95).

This correlated with the effects in reaction times. Basque speakers were on average 27 ms faster responding to agent trials and 26 ms slower responding to patient trials than participants in the Spanish group (Language×EventRole: mean = −0.016, SE = 0.006, P( < 0) = 0.99, Figure 8B, Tables 4 and D9).

Discussion

The preference for agents observed in behavioral measures in Experiment 1 was replicated in Experiment 2. Fixation data provided additional evidence and expanded it to a measure that more closely reflects event apprehension processes. Eye movements (and especially first fixations) are a direct measure of the reflexes of event apprehension and are therefore assumed not to be as susceptible to post-hoc processes as the other behavioral measures. Hence, our results point to an agent preference in the earliest stage of event processing, across both languages and tasks tested.

Experiment 2 additionally provided evidence that language and task demands can modulate attention to event roles. Effects were small in size, but seemed to show that Basque speakers devoted more overt visual attention to agents than Spanish speakers, and, in turn, that Spanish speakers inspected patients and actions more often than Basque speakers. The effects were clearest in the measurements for the agent role. We also found a trend for patients and actions, although the effects for these roles were less strong and clear. The presence of effects between language groups suggests that the case marking of agents in language can modulate visual attention during event apprehension.

As for task effects, fixation data in Experiment 2 allowed a direct comparison of the effect of the upcoming task on event apprehension (unlike in Experiment 1, which relied solely on task-specific behavioral measures). Task effects showed that participants inspected action areas more in the probe recognition than in event descriptions, while the opposite was true for agents.

In fact, the gaze allocation patterns in Experiment 2 seem to provide detailed information on the subprocesses underlying event apprehension and how task demands would affect them. The fixation distributions suggest that there is an initial phase of apprehending the depicted event that is less susceptible to task demands. This is followed by a second phase that is more malleable and more strategically tuned to the task. When presented with the picture, the participants had access to coarse-grained, parafoveally perceived information to launch their first fixation. The coarse-grained nature of the information available before fixating on the picture may have limited the influence of task-specific demands for choosing the specific fixation location. By contrast, to decide on the location of the second fixation, participants benefited from a closer view of the event. This means that they had the opportunity to fine-tune their gaze to task-relevant areas. Indeed, the second fixations showed larger effects of task (and also language-task interactions). Participants in both language groups made more second fixations on action areas in the event description task than in the probe recognition task. This was most likely because the action information was crucial for the description task but not necessary for the recognition task. This suggests that participants were able to monitor their gaze more strategically and adapt to the task demands for the second fixations.

Thus, it is likely that the second fixations reflect a more flexible and refined process compared to the first fixations, with an increased influence of top-down factors such as the task and language requirements. A first fixation might suffice to extract coarse event-level information, including general information about the identity of event roles (cf. Dobel et al., 2011; Flecken et al., 2015). A second fixation, in turn, serves to further inspect the most relevant event areas in a strategic fashion.

An open question in this context is the benefit of launching the second fixations given their timing. In the majority of the trials, the brief exposure picture had already disappeared by the time this fixation landed on its target so that participants did not profit from additional foveally obtained visual information. Nonetheless, directing the gaze towards an “empty” area might have helped participants retrieve valuable information stored in their visual working memory about the event element previously located in that space (Altmann, 2004; F. Ferreira et al., 2008; Staudte & Altmann, 2017). Second fixations could thus reflect a memory retrieval process aimed at maximizing the visual representation of the event to perform more accurately in the task. Alternatively, second fixations might have been planned in the hope of still obtaining more visual input from the picture, as these were often planned during the execution of the first saccade.

GENERAL DISCUSSION

Our two experiments show evidence of an agent preference in visual attention across tasks and languages. This is reflected in early fixations to event participants, as well as in accuracy and reaction times. This indicates that event apprehension is driven by a deeply rooted cognitive bias to inspect agents in the first place. In addition to an agent preference, we also found that language-specific and general task demands modulated overt visual attention allocation. Basque speakers directed slightly more attention to agents than Spanish speakers in the event apprehension process. Overall, there were fewer fixations to agents and more fixations to actions in the event description task than in the probe recognition task. Together, these findings suggest that the agent preference is an early and robust mechanism in event cognition and that it interacts with other top-down mechanisms. In the following sections, we discuss these findings and their implications.

The Agent Preference as a Cognitive Universal

Speakers of Basque and Spanish allocated more attention to and were more accurate with agents than with patients or actions. This effect was robust and consistent across all measures in both experiments. Fixation data showed that this preference already emerged in the first fixations. The decision for the first saccade is made within the first 50–100 ms after the event picture is available and thus before the influence of any potential post-hoc reasoning. This early timing indicates that the preference for agents is spontaneous, as expected if it is a general cognitive bias.

The preference for agents also persisted when the patients were human, demonstrating that animacy (or humanness) was not the only factor driving this effect. Previous studies have used events that involved inanimate and smaller-sized patients, in events such as a woman cutting a potato (Gerwien & Flecken, 2016; Sauppe & Flecken, 2021). Character size (Wolfe & Horowitz, 2017) and animate motion (Pratt et al., 2010) are known to drive visual search and probably biased visual attention towards the agents in these previous studies. In the current study, we included larger-sized inanimate patients (e.g., a large plant) and animate patients (e.g., in kicking or greeting events), and still found a preference for agents. Our findings thus show that the agent preference is critically sensitive to event role information, and not animacy or salience alone.

The preference for agents was also consistent across tasks. In the event description task, this was expected because participants had to attend to the event to produce a written or oral description. Given that the canonical word order of Basque and Spanish is agent-initial, it is likely that the early attention to agents was driven by sentence planning demands. In picture description studies, agents are fixated more during early planning for the preparation of agent-initial sentences compared to patient-initial sentences (Griffin & Bock, 2000; Norcliffe et al., 2015; Nordlinger et al., 2022; Sauppe, 2017). Early agent fixations in the event description task are thus in line with what Slobin (1987) termed “thinking for speaking” because they constitute a preparatory step for sentence production.

However, the results of the probe recognition task also showed a preference for agents. This was the case even though the participants were not required to produce any linguistic output and could solve the task (answering by a button press whether a person or object had appeared in the previously shown target picture) without employing language. Thus, the preference for agents when visually scanning the picture in this task cannot be explained by the preparatory demands of sentence planning. The probe pictures were balanced with respect to whether they showed the agent or the patient, so neither event role was privileged in the experimental design. The finding that agents were still preferred thus points to a general top-down bias to search for agents, independent of task demands.

This result is consistent with the findings in Cohn and Paczynski (2013), who presented participants with depictions of agents and patients in cartoon strips. They measured looking times to each role while participants performed a non-linguistic task (rating how easy the event was to understand). Cohn and Paczynski found longer viewing times for agents than for patients and interpreted this as evidence that agents were initiating the building of event structures.

By contrast, our results are inconsistent with the findings by Hafri et al. (2018), who asked participants to indicate by button press whether a target actor appeared on the right or left side. This elicited longer response times for agents than for patients. In our study, however, response times were shorter for agents than for patients. The difference in the results patterns could be related to the level of role encoding encouraged by each task. In Hafri et al.’s study, the target actor that the participants had to identify was defined by a low-level feature (the gender or color of clothing). Instead, our probe recognition task required participants to recognize whether a character or object was present in the previously presented event. This means that participants could not only rely on low-level visual features but had to encode character information as a whole to answer the task, especially in events with human agents and human patients. This may have required participants to pay more attention to the event roles and their relations. Nonetheless, our results and the results from Hafri et al. (2018) converge in showing that event roles are detected and processed spontaneously, even when this is not necessary for the task.

On another level, agency is likely composed of multiple factors or cues (such as animacy or body posture) that each attract attention individually (Cohn & Paczynski, 2013; Gervais et al., 2010; Hafri et al., 2013; Verfaillie & Daems, 1996). The prominence of agents could also be related to their role as the initiator of the action. Cohn and Paczynski (2013) argue that agents initiate the building of event representations and provide anticipatory information on the rest of the event. Similarly, it has been proposed that agents are at the head of the causal chain that affects patients (cf. also Dowty, 1991; Kemmerer, 2012; Langacker, 2008). Active body postures are also known to attract attention and cue the processing of agents (Gervais et al., 2010). Our findings do not provide information on these individual mechanisms, but do show that agents on the whole are preferentially attended to already at the apprehension stage. Future research should test the degree to which the agent preference is based on each of its underlying factors.

The preference for agents in our experiments is, furthermore, consistent with the findings of an agent preference in grammar (Dryer, 2013; Fillmore, 1968; Napoli & Sutton-Spence, 2014; V. A. D. Wilson et al., 2022) and sentence comprehension (Bickel et al., 2015; Demiral et al., 2008; Haupt et al., 2008; Wang et al., 2009). V. A. D. Wilson et al. (2022) propose that grammar arises from general principles of event cognition. Based on evidence from primates and other species, V. A. D. Wilson et al. suggest that agent-based event representations are phylogenetically old and were already present in the ancestors of modern humans. From this it follows that an agent preference should be a likely candidate for a genuinely universal trait in human cognition (Henrich et al., 2010; Rad et al., 2018; Spelke & Kinzler, 2007). While our results are consistent with this, future studies should expand the sample and explore whether the agent preference not only generalizes across different languages but also across different cultural and social traditions (Henrich et al., 2010). This is crucial to ensure that this preference exists in the diversity of human populations and is not the by-product of Western education or cultural practices. Future research should also test the domain generality of the agent preference, for example, in lower-level perceptual tasks, and probe its neurobiological underpinning, with regard to the time courses and spatial distributions of neural activity (Kemmerer, 2012).

The Agent Preference as Modulated by General and Language-Specific Task Demands

In addition to a general preference for agents, our results also showed modulations of the attention to event roles following language and task differences. These effects were smaller and less consistent than the main effects for agents. Albeit weaker, this evidence is still valuable and seems to point to a more nuanced picture of the processes underlying visual attention in event apprehension, where the agent preference interacts with other top-down mechanisms.

On the level of general task effects, we found that fixations to actions increased and fixations to agents decreased in event description compared to probe recognition, in both Basque and Spanish. These differences in visual attention probably reflect the diverging affordances required by each task. In event description, participants needed to obtain information on the relationship between event roles to plan the verb and describe the event as a whole (Griffin & Bock, 2000; Norcliffe & Konopka, 2015; Sauppe, 2017). This probably biased participants to the action area in the event and attenuated the general agent preference. In contrast, the probe recognition task only required identifying one of the event roles, and the relationship between the agent and the patient was irrelevant to the task. This likely explains the increased attention to agents in this task. Hence, general task demands exert a top-down influence on event apprehension. Knowing what kind of information is required to perform a task allows viewers to adapt the uptake of visual information. This supports the idea that event perception is guided by prior expectations and context (Gilbert & Li, 2013; Henderson et al., 2007).

Producing language is also a task demand, and it impacted how participants inspected events during apprehension. Basque speakers paid more overt visual attention to agents than Spanish speakers. A general tendency to first look at agents was also observedamong Spanish speakers, but to a lesser extent than among Basque speakers. The language effects were small, although the size of the effects is within the range observed in previous brief exposure studies (Gerwien & Flecken, 2016; Hafri et al., 2018; Sauppe & Flecken, 2021).

Language effects were consistent across fixations and the behavioral measures in Experiment 2. Although event apprehension has been argued to be a prelinguistic process (i.e., to take place before language-related processes start, Griffin & Bock, 2000), our evidence seems to show that it is impacted by grammatical differences between languages.

When describing event pictures, Basque speakers need to decide early on which role the subject has, presumably based on information about agency in the picture (such as body posture). Obtaining information about agency would be necessary to commit to a sentence structure and to prepare the first noun phrase, i.e., to decide whether to use the ergative case or not at the beginning of the planning process (Griffin & Bock, 2000; Norcliffe & Konopka, 2015). In comparison, Spanish speakers could plan the subject noun phrase without committing to whether it is an agent or not because the form of the noun phrase remains the same in either situation. This might be especially so when time pressure is high and speakers plan sentences highly incrementally, i.e., in small units (F. Ferreira & Swets, 2002). Spanish allows greater flexibility in incremental sentence planning (at least for this particular grammatical feature), and so speakers of this language can defer their decision on which sentence structure to produce and the semantic role of the first noun phrase (Norcliffe et al., 2015; Stallings et al., 1998). This may allow Spanish speakers to attend more to other aspects of events during early visual inspection (van de Velde et al., 2014; Wagner et al., 2010).

This might explain the higher proportion of fixations to agents in Basque than in Spanish. This interpretation is consistent with previous picture description studies with ergative-marked sentences. In eye tracked picture description studies on Hindi and Basque, Sauppe et al. (2021) and Egurtzegi et al. (2022) showed that speakers looked more towards agent referents in the relational-structural encoding phase (approximately the first 800 ms) of sentence planning when preparing sentences with unmarked agents as first noun phrases. In comparison, when planning sentences with ergative-marked agent noun phrases, speakers distributed their visual attention more between agents and the rest of the scene. Sauppe et al. and Egurtzegi et al. propose that this difference in gaze behavior arises from speakers’ need to commit to the agentivity and thereby the case marking of first noun phrases earlier when planning ergative marked sentences. In our experiments, participants would obtain rough information on event roles from parafoveal vision, and they would be guided by the agent preference for launching their first fixation. In this process, Basque speakers would be influenced even more by thiscognitive bias, given their sentence planning demands (Egurtzegi et al., 2022). Hence, language demands would exert a pressure and modulate the attention to events via rapid cognition.

However, the effects of language on the attention to event roles need to be considered cautiously in the current experiments for several reasons. On the one hand, the behavioral results in the event description task in Experiment 1 yielded a different pattern of language effects than those in Experiment 2: we found higher agent specificity for Basque speakers in Experiment 2, but not in Experiment 1. Although this might be due to the changes introduced in the task modality (written vs. oral responses), it is not clear whether this can fully explain the divergence in language effects. In addition, the language manipulation we employed was between groups. Hence, it could be that the differences between Basque and Spanish speakers in our experiments were caused by cultural, educational, or other differences between the groups. In our experiments, the participants came from the same general population and their educational level was also largely similar. This reduces the possibility that group differences caused the effect; however, only a within-group manipulation with highly proficient, fully balanced bilingual participants could rule it out.