Abstract

Two fundamental difficulties when learning novel categories are deciding 1) what information is relevant, and 2) when to use that information. To overcome these difficulties, humans continuously make choices about which dimensions of information to selectively attend to, and monitor their relevance to the current goal. Although previous theories have specified how observers learn to attend to relevant dimensions over time, those theories have largely remained silent about how attention should be allocated on a within-trial basis, which dimensions of information should be sampled, and how the temporal ordering of information sampling influences learning. Here, we use the Adaptive Attention Representation Model (AARM) to demonstrate that a common set of mechanisms can be used to specify: 1) how the distribution of attention is updated between trials over the course of learning; and 2) how attention dynamically shifts among dimensions within-trial. We validate our proposed set of mechanisms by comparing AARM’s predictions to observed behavior across four case studies, which collectively encompass different theoretical aspects of selective attention. We use both eye-tracking and choice response data to provide a stringent test of how attention and decision processes dynamically interact during category learning. Specifically, how does attention to selected stimulus dimensions gives rise to decision dynamics, and in turn, how do decision dynamics influence our continuous choices about which dimensions to attend to via gaze fixations?

Keywords: categorization, learning, decision dynamics, eye tracking

Introduction

When asked to describe an object, we instinctively do so in terms of its components, or dimensions. To describe a jacket, we might note dimensions like its color or size, where its pockets are placed, or any insignia it has. When assigning objects to different categories, certain dimensions are often more relevant than others depending on the demands of the task. Distinguishing between spring and winter jackets, for example, might require us to specifically note dimensions like material, thickness, and types of closures, whereas distinguishing between formal and casual jackets might depend on dimensions like length and style.

How do we figure out which dimensions are relevant to a particular task, and how do we use that information to categorize new items? Theoretical accounts of category learning have suggested that over the course of experience with many items, humans gradually build up associations between features (i.e. “linen” and “wool” could be considered to be features of the “material” dimension) and the available category labels (i.e. spring and winter jackets). As more pairings between stimuli and category labels are presented, the observer learns that a subset of dimensions are particularly relevant for identifying category membership among all sources of information that are available.

Several models have described learning as a process of selectively attending to the most category-diagnostic dimensions to support an increase in accuracy across trials (e.g. Kruschke, 1992; Love, Medin, & Gureckis, 2004; R. Nosofsky, 1986). Although attention is often described as a latent mechanism, the general mode of learning via selective attention has garnered theoretical support from eye-tracking work. Results consistently show an increase in the proportions of fixations to task-relevant dimensions, which co-occur with increasing categorization accuracy (McColeman et al., 2014; Rehder & Hoffman, 2005a, 2005b). Despite these findings, the impact of learning on subsequent, generalized behaviors of information sampling and decision-making has remained under-explored. In other words, how does the knowledge we acquire through learning, such as memories of previous items and the task-relevance of individual dimensions, impact the manner in which we seek out information about new stimuli?

As suggested by Rehder and Hoffman (2005a, 2005b), one might reasonably assume that dimensions are fixated during each trial in proportion to their respective attention weights. Intriguing experimental work by Blair and colleagues (Blair, Watson, Walshe, & Maj, 2009; Chen, Meier, Blair, Watson, & Wood, 2013; McColeman et al., 2014; Meier & Blair, 2013), however, has indicated that there might be more to the story. In the paradigm illustrated in Figure 1A, stimuli were constructed using a hierarchical category structure where one superordinate dimension (i.e. rotation of the green square) indicated which of two subordinate dimensions was relevant to each trial (i.e. rotation of the orange triangle or the purple cross). While fixations were evenly distributed across dimensions early in the task, participants soon learned to consistently orient to the superordinate dimension as each new trial was presented (Figure 1B–C). Importantly, participants subsequently fixated to one subordinate dimension and ignored the other, depending on the feature identity of the superordinate dimension. In other words, participants tended to only fixate to the two dimensions that were relevant to each trial before making a response, despite all dimensions being equally predictive of category membership on average. These results indicate that humans not only prioritize the most relevant dimensions to make accurate categorization decisions, but also make ongoing decisions within-trial about which sources of information to sample next and when to terminate the sampling process with a response.

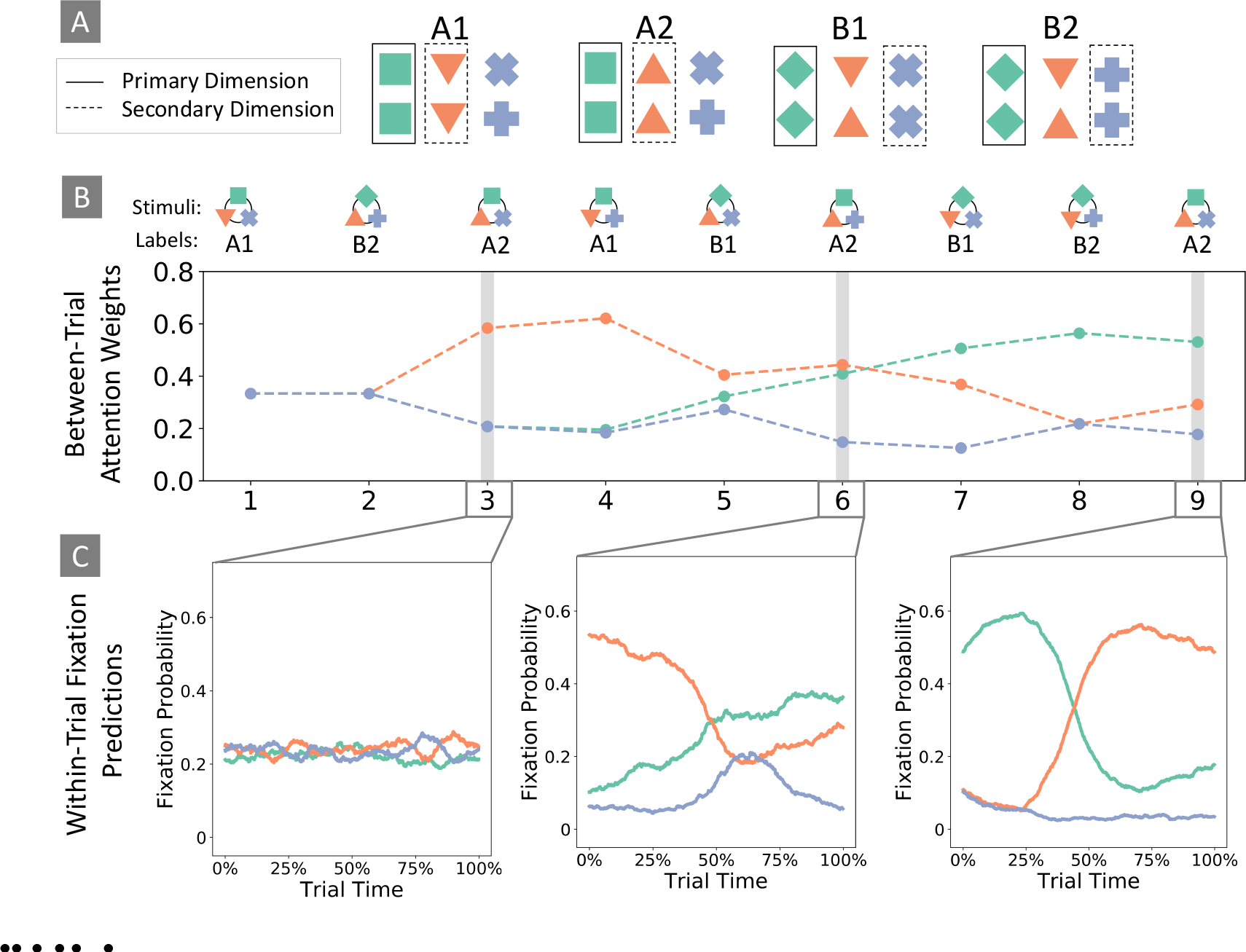

Figure 1. Within- and Between-trial Dynamics.

(A) Illustration of a hierarchical stimulus structure. Feature values (i.e. 0° or 45° rotation) in the superordinate dimension (green squares) indicated which of the two subordinate dimensions (orange triangles or purple crosses) were relevant for identifying category membership. (B) Attention weights generated by AARM’s between-trial module, given the sequence of stimuli shown in the top row. Weights were normalized for illustration. Line colors correspond to the colors of the stimulus dimensions. (C) 100 sequences of dimension fixations were generated using the within-trial module. Plots show mean fixation probabilities to each dimension as a function of the percentage of time within-trial, between stimulus onset and self-termination. Within-trial attention weights were initialized according to the outputs of the between-trial module for the relevant stimulus.

The goal of the current article is to establish a common set of mechanisms for allocating attention to relevant dimensions between-trials over the course of learning, and sampling sources of information within-trials over the course of individual decisions. We focus on the Adaptive Attention Representation Model (AARM), which was described and validated using data from five benchmark category learning paradigms in our previous work (Galdo, Weichart, Sloutsky, & Turner, 2021). AARM inherits its conceptual basis from context theory, which suggests that the feature and category information associated with previously-experienced items are stored in memory as discrete episodic traces (Medin & Schaffer, 1978). As a dynamic extension to the Generalized Context Model (GCM; R. Nosofsky, 1986), AARM describes how category representations are formed according to the similarity between new stimuli and stored exemplars, and are influenced by attention. The amount of attention allocated to each dimension is updated according to trial-level feedback, in a manner that is intended to optimize future responses with respect to the learner’s goals.

One major innovation of AARM is that it can be fit to both choice and eye-tracking data simultaneously, such that model-generated attention weights are informed by observed proportions of fixations to each dimension. With these constraints in place, Galdo et al. (2021) demonstrated that AARM could predict increasing proportions of fixations to task-relevant dimensions that co-occurred with increasing accuracy across paradigms of varying complexity (e.g. McColeman et al., 2014; Shepard, Hovland, & Jenkins, 1961). Like similar adaptive attention models of category learning (ALCOVE: Kruschke, 1992; SUSTAIN: Love et al., 2004), however, trial-level attention updates in AARM occur only after feedback has been observed. While attention weights on Trial may covary with proportions of fixations on Trial on average, the standard model lacks the specificity required to predict stimulus-dependent effects of information sampling like those observed by Blair et al. (2009). Here, we therefore extend the mechanisms of AARM that were presented by Galdo et al. (2021) to explain how humans use the knowledge acquired through experience to construct a representation of a new stimulus.

As illustrated in Figure 3, the current work presents the AARM framework as two interrelated modules: 1) a between-trial module to account for feedback-mediated changes in accuracy and attention; and 2) a within-trial module to account for information sampling and decision dynamics. Using insights from accumulation-to-bound decision models (e.g. Ratcliff, 1978) and theoretical notions of pattern completion (Estes, 1994), the within-trial module of AARM makes predictions about how participants use principles of attention to decide which dimensions of information to sample (i.e. via fixations), when to sample them, and when to make a response. Taking both modules of AARM together, the current article provides a comprehensive theoretical and computational framework for explaining how knowledge acquisition is fundamentally shaped by the experiences of the learner. Before introducing the mathematical details of AARM, we will first introduce four assumptions that are central to our approach.

Figure 3. Within- and Between-trial Modules of AARM.

(A) Between-trial updates to the category representation occur via influences of attention and decision components from the previous trial, in the context of feedback. (B) Within-trial updates require dynamic interactions among representation, attention, and decision components. First, the representation guides attention to a relevant dimension (1). Attention drives an encoding process for a fixated feature (2) to then update the amount of evidence (3) for each of a set of category responses. The representation is consulted (4) to guide subsequent attentional deployment.

Attention is the Mechanism of Learning

Categorization tasks provide a unique opportunity to study the relationship between learning and attention. From work with animals (Hall, 1991; Le Pelley, 2004) and humans (Bonardi, Graham, Hall, & Mitchell, 2005; Kruschke, 1996) demonstrating that learned dimension reliability influences how future stimuli are represented, we gain insight into how attention changes over the course of a task. In a standard type of categorization paradigm, stimuli are designed from a common set of dimensions, each of which can take on one of a unique set of possible feature values. In experiments conducted by Kruschke (1996), for example, stimuli were line drawings of box cars consisting of three dimensions, each of which could take on two possible features: height (tall or short), door position (left or right), and wheel color (black or white). Participants were asked to assign stimuli to arbitrary categories (e.g. categories ‘A’ and ‘B’) without receiving explicit instructions about how each category was defined. Instead, participants learned the experimentally-defined feature-to-category mapping through trial-and-error with corrective feedback, and learning was assessed through changes in accuracy over multiple trials.

For the sake of illustration, consider an example in which tall box cars belong to category A, and short box cars belong to category B. Assuming features are counterbalanced across dimensions, the only way a participant can achieve perfect accuracy is by categorizing stimuli according to the “height” dimension. Although a participant can categorize stimuli on the basis of another dimension like wheel color and be correct on a subset of trials by chance, humans do indeed achieve ceiling-level accuracy in these types of tasks when given sufficient training. In addition to simple “component” mappings (e.g. tall box cars belong to category A; short box cars belong to category B) humans can learn more complex “compound” mappings as well (XOR categories; e.g. short, black-wheeled and tall, white-wheeled box cars belong to category A; tall, black-wheeled and short, white-wheeled box cars belong to category B; Shepard et al., 1961). In general, findings across category learning studies have indicated that human learners 1) gradually acquire knowledge about which dimensions are relevant to the task; and 2) make categorization decisions according to which dimensions are perceived to be most relevant (see Ashby & Maddox, 2005; Markman & Ross, 2003, for review).

Category learning models often explain learning as a gradual shift in how stimuli are represented in psychological space. The influential GCM and its offspring have described successful categorization as a process of “stretching” multidimensional stimulus representations along relevant dimensions and “shrinking” them along irrelevant dimensions (Kruschke, 1992; Lamberts, 2000; R. Nosofsky, 1986; R. Nosofsky & Palmeri, 1997). As such, stimuli that differ along the relevant dimensions will be perceived as being more dissimilar to one another (i.e. belonging to different categories) than items that differ along the irrelevant dimensions. This manipulation of the psychological object representation comprises the definition of attention among many category learning models, such that allocating attention to a particular dimension distorts the representation across trials accordingly.

In GCM, categorization decisions are based on the perceived similarity between a stimulus probe and memory traces for exemplars with known category labels. The typical use of GCM in explaining attentional phenomena, however, has been to freely estimate attention weights independently across different blocks of an experiment. The model suggests that attention is distributed in a way that maximizes differences between categories and minimizes differences within categories, but does not specify a mechanism through which learning occurs. Instead, attention is allocated based on the properties of the category structure, and learning is retrospectively inferred. In the current article, we use intuitions from GCM to outline specific hypotheses about how learning and attention interact, suggesting that attention itself is the mechanism for learning.

The between-trial module of AARM uses gradient-based mechanisms to update attention upon observation of category feedback. Because the attention vector weights the influence of plausible feature-to-category mappings when the observer assigns an item to a category, gradient-based updating serves to reallocate attention on every trial in a manner that reduces the likelihood of future errors. As mentioned in the Introduction, our previous work demonstrated that AARM’s combination of iterative exemplar storage and attention updating were sufficient for predicting learning-related behaviors across paradigms of varying difficulty (Galdo et al., 2021; Shepard et al., 1961). Here, we additionally describe attention as the mechanism by which information is sampled from individual stimuli, such that fixations at each within-trial timestep are calculated directly from the model’s latent distribution of attention.

AARM’s specification of attention as the mechanism for learning departs conceptually from alternative rule-based classification and Bayesian updating accounts. Rule-based classification models seek to identify the boundary between categories, such that the category label can be determined through a conditional relation or weighted combination of feature values within the current stimulus (Goldberg & Jerrum, 1995; Vapnik, 1998). By contrast, the Bayesian approach is to construct an internal model of each category through iterative belief-updating, and assume that a latent category variable is responsible for generating a distribution of feature values (J. Anderson, 1991a; Oaksford & Chater, 1998; Tenenbaum & Griffiths, 2001). The Sampling Emergent Attention model (SEA; Braunlich & Love, 2021) combines intuitions from rule-based and Bayesian learning accounts to account for both information sampling and learning behaviors in the context of categorization problems. Like AARM, SEA consists of two interrelated parts: 1) a concept-learning component, which sorts stimuli into clusters (i.e. J. Anderson, 1991a) and determines the probability that a new item belongs to each one; and 2) a utility-sensitive sampling component, which performs preposterior analysis to balance the expected information gain of each dimension against a prespecified cost of additional sampling.

Because SEA provides a similarly comprehensive account of within-trial dynamics, we will refer to it throughout the introductory sections to provide theoretical contrast. In particular, we describe SEA as comprising a “rational” alternative to AARM’s “mechanistic” approach. As described by Sakamoto, Jones, and Love (2008), rational theories assume that humans learn to behave optimally within the constraints of the environment. Mechanistic theories, by contrast, aim to predict behavior by defining how information is processed and represented in the brain. For example, parameters representing costs in SEA are primarily used to instantiate different goals (e.g. responding accurately vs. responding quickly), but also comprise the time and effort involved in the perceptual encoding and processing of a stimulus feature. As such, if the observer elects to sample information from a dimension as a result of preposterior analysis, the relevant feature value is automatically used to update the observer’s state of belief about the identity of the stimulus. Feature encoding in SEA is therefore considered to be rational because it uses all known information about the task environment to select the action that will maximize gain and minimize loss: sample information, or make a choice. AARM’s within-trial module instead samples information from the dimension with the largest attention weight at each timestep. Attention weights are updated continuously throughout the trial, relative to an evolving working representation of the stimulus. Using familiar terms from the visual search literature (see Itti & Koch, 2001, for review), overt attention (i.e. describing the movement of the eyes) in AARM is explicitly linked to endogenous covert attention (i.e. reflecting latent, goal-directed processing). Encoding a feature value occurs as a function of the cumulative covert attention that is applied to an overtly attended spatial location. We consider feature encoding in AARM to be mechanistic by Sakamoto et al.’s definition because it occurs as a direct consequence of latent theoretical subprocesses. Whereas rational approaches are often considered to have an advantage of precision in terms of the predicted behavior and justification (J. Anderson, 1991b), mechanistic models are more appropriate for generating novel predictions and understanding nuanced behaviors (Sakamoto et al., 2008). Given the relative merits of each, we use this distinction to highlight how AARM’s mechanisms give rise to detailed predictions in various novel contexts.

Attention is Not a Zero-sum Game

Since seminal work by Sutherland and Mackintosh (1971), attention has often been understood as a fixed-quantity resource that observers use until its limit is reached. The authors presented the inverse hypothesis of animal learning, which described stimulus dimensions in terms of attention units that were modulated by reinforcement (e.g., food reward for correct category discrimination; Mackintosh & Little, 1969). Importantly, the theory imposed the constraint that attention activation across all dimensions must sum to a constant value, such that increasing the strength of one unit will decrease the strength of the others. Follow-up empirical and theoretical work by Mackintosh (1975), however, rejected the inverse hypothesis in light of evidence that attending to one dimension did not prevent learning of a second dimension in complex stimuli. Nevertheless, the convention of treating attention as a “zero-sum game” persists across many contemporary category learning models, such that attention weights across dimensions are constrained to sum to a constant of one (chosen arbitrarily by, for example, GCM). Similar intuitions about attention being represented as a constant sum have appeared in perceptual work as well; for example, the assumption that attending to a target stimulus in an array requires equivalent inhibition of distractors (White, Ratcliff, & Starns, 2011).

While we do not contest that attentional capacity limitations exist (i.e. as demonstrated in: Brydges et al., 2012; Janssens, De Loof, Boehler, Pourtois, & Verguts, 2018; Muller & von Muhlenen, 2000; Muller, von Muhlenen, & Geyer, 2007), there is little empirical evidence to suggest that the reserve of attention remains fixed across trials and tasks such that a sum-to-constant constraint is justified. Instead, an expansive literature has shown that task difficulty, perceptual load, and parallel processing affect the extent to which the capacity of the attention system becomes a limiting factor (see Chun, Golomb, & Turk-Browne, 2011, for review). For example, Lavie and colleagues (Lavie, 1995; Lavie & Cox, 1997; Lavie & Tsal, 1994) have shown that both relevant and irrelevant items are processed in visual search tasks when perceptual load is low, and inhibition of task-irrelevant items only occurs when perceptual load is sufficiently high. The sum-to-constant constraint, however, implies that the capacity limit is reached across tasks, regardless of difficulty.

Other studies have noted fluctuations in attention related to the stimuli themselves, including perceptual and emotional salience (Theeuwes, 1992, 2010), novelty (Johnston & Schwarting, 1997), and motion (B. Anderson, Laurent, & Yantis, 2011; Yantis & Egeth, 1999). For example, visual search work showed that the presence of high-salience, task-irrelevant cues significantly impaired subsequent overt attention to task-relevant targets relative to low-salience cues (Baker, Kim, & Hoffman, 2021; Most, Chun, Widders, & Zald, 2005). One interpretation of the results is that a greater quantity of covert attention continued to be allocated to the high-salience cues despite being removed from the screen before the target even appeared. Considering findings of flexible attention together, it is potentially overly constraining to assume that all attention is known and is entirely allocated to the stimuli intended by a given experimental manipulation, as would be required for inhibition to occur in the presence of a sum-to-constant constraint.

In line with connectionist models such as ALCOVE (Kruschke, 1992) and SUSTAIN (Love et al., 2004) which will be reviewed in detail below, AARM does not adhere to a sum-to-one constraint. Instead, attention to each dimension can fluctuate within- and between-trials depending on a learned history of predictive reliability, and the sum reserve of available attention is unconstrained. In previous work, Galdo et al. (2021) used model-fitting and comparison methods to evaluate various forms of attentional constraints during category learning. In addition to the standard sum-to-constant constraint, the authors implemented the following within AARM’s basic between-trial structure: 1) a norm-to-constant constraint, which allows for different forms of competition between dimensions in addition to the assumption of fixed-quantity attention (e.g. EXIT; Kruschke, 2001; Paskewitz & Jones, 2020); 2) LASSO regularization, which limits the number of dimensions that can be attended within a trial (Park & Casella, 2008); and 3) Ridge regularization, which imposes an upper bound on attention to individual dimensions (Busemeyer & Townsend, 1993). The results provided evidence against fixed-quantity attention constraints across five studies, with the model variant containing LASSO regularization and between-dimension competition performing the best overall. These results are considered to be consistent with findings from other empirical and modeling work, which similarly demonstrated that humans prefer to form representations based on a subset of the available dimensions (Lee, 2001; Shepard & Arabie, 1979; Sloutsky, 2003; Tversky, 1977; Ullman, Vidal-Naquet, & Sali, 2002). Galdo et al. (2021) therefore concluded that humans demonstrate a bias toward parsimonious solutions during learning, but nevertheless maintain some ability to flexibly allocate attention in order to improve performance.

We designed the within-trial module of AARM with these results in mind. While it is reasonable that capacity limitations or other factors could manifest in reduced sampling after training, the between-trial module is insufficient for explaining how humans decide when to terminate the information sampling process and commit to a choice during individual trials. The within-trial module predicts self-termination through a combination of stochastic feature imputation and thresholded evidence accumulation. In the decision-making literature, accumulation-to-bound models specify mechanisms through which an observer samples information from a stimulus through time, and a response is made when evidence in favor of a particular choice exceeds a prespecified threshold. Unlike standard implementations (Brown & Heathcote, 2008; Ratcliff, 1978; Usher & McClelland, 2001) or extensions to multi-attribute choice (Busemeyer & Townsend, 1993; Krajbich, Armel, & Rangel, 2010; Trueblood, Brown, & Heathcote, 2014), however, AARM makes no assumption that moment-to-moment samples of information are independent, but rather are integrated with information from other dimensions to activate memories of exemplars and contribute evidence toward a category response. To determine which sources of information to sample, AARM first forms expectations about which features might occur in each dimension (based on past exemplars), and randomly draws potential feature values into a working representation of the stimulus. The observer then orients to dimensions that provide additional evidence in favor of the leading category option at each timestep, and updates the working representation as features are encoded. This “confirmatory search” behavior naturally arises from the within-trial module’s gradient-based mechanisms for updating attention, as will be discussed in the Attention as an Optimization Problem section below. For now, it is sufficient to establish that AARM continuously reorients attention to encode stimulus features into its working representation, and self-terminates when it samples enough information to surpass a decision threshold.

Although SEA’s calculations are driven by predicted utility rather than a theoretical measure of attention, it is worth noting that SEA does not impose explicit constraints on its estimates of utility. The model instead implements parsimonious resource expenditure by 1) comparing the predicted utility of sampling a dimension to an expected cost; and 2) limiting the depth of forward search when predicting utility (i.e. what Braunlich and Love (2021) refer to as a “mypoic” rather than full preposterior analysis). Through ongoing utility calculations, SEA predicts self-termination when the potential gain of sampling any dimension no longer exceeds the potential cost of time and energy. Although this strategy is relatively efficient for low-dimensional stimuli, preposterior analysis requires the observer to determine the likelihood and category association of every possible combination of feature values across dimensions. This quickly incurs high computational cost as more dimensions are added, even when using the myopic strategy of only making predictions one step into the future. Although this forward computing is necessary to fulfill SEA’s intended purpose of identifying optimal sampling trajectories, AARM’s approach incorporates human-like biases in the interest of approximating observed behavior. Its approach is therefore readily extendable to tasks involving higher-dimensional stimuli, given that expected feature values are spontaneously retrieved from memory rather than being exhaustively considered.

In this way, AARM is similar to extensions to GCM that allow for sequential acquisition and retrieval of information. In the extended generalized context model (EGCM-RT Lamberts, 2000), stimulus dimensions are sampled sequentially to facilitate a gradual formation of a category representation through time. Similarly, the Exemplar-based Random Walk model (EBRW; R. Nosofsky & Palmeri, 1997) samples exemplars from memory and makes a decision when evidence surpasses a threshold. Unlike AARM, however, neither EGCM-RT nor EBRW have mechanisms for prioritizing dimensions according to task-relevance, strategically reorienting to additional dimensions within-trial, or self-terminating the sampling process. Instead, both models sample and encode all available stimulus information before making a choice. Relative to these examples, only AARM and SEA can account for these effects, all of which were observed in the studies of Blair et al. (2009).

Attention as an Optimization Problem

Alhough GCM made a major theoretical contribution by relating attention to learning, an open question remained as to how attention would change as learning occurred. After a few early attempts to solve this problem (Estes, 1986; Gluck & Bower, 1988), perhaps the most complete theoretical description was provided by ALCOVE (Kruschke, 1992). ALCOVE combines exemplar-like representations used by GCM with an adaptive reinforcement policy engineered by a connectionist architecture. The model consists of three layers, connected by intervening sets of weights: an input layer contains the stimulus features, a hidden layer contains a set of exemplars, and an output layer contains the model’s representation of a response probability. The set of weights that connect the latter two layers are referred to as “attention,” given that they fulfill a similar purpose to the attention weights in GCM. As in the typical connectionist approach, back propagation is used to alter both sets of weights after each new experience by minimizing a loss function that compares the response probability output from the model to a vector representing the true category label (e.g., provided by feedback). Over time, adjustments to the attention weights minimize the total number of categorization errors. This updating process can be thought of as a first-order optimization process, solved by gradient descent. The intuition of the problem solved by ALCOVE is that attention weights should move to a location in the abstract, multidimensional attention space that minimizes the squared loss function over time. Later, a similar procedure was assumed by SUSTAIN (Love et al., 2004).

Although ALCOVE has many similarities to GCM, a major departure is that it does not allow for explicit storage of new episodic events as they are experienced. Instead, ALCOVE presupposes that a set of basis exemplars are specified prior to learning, and the connection weights between experienced events and these basis exemplars are adjusted through time. As clarified by Turner (2019), most learning models take one of two forms: an “instance” representation, or a “strength” representation. The former consists of a class of models that assume that each new experience is captured in episodic memory, creating an “instance” of the event (Estes, 1994; Logan, 1988, 2002; Medin & Schaffer, 1978; R. Nosofsky, 1986). The latter consists of a class of models that simply adjusts a set of weights according to a rule, leaving no permanent storage of those events for future retrieval (D. Cohen, Dunbar, & McClelland, 1990; Rumelhart & McClelland, 1988). By this definition, ALCOVE is a strength-based model because it learns by modifying its weight structures over time.

When making efforts to distinguish between these two classes of theories, one pervasive problem is the confound between attention and representation. Specifically, encoded information affects the representation of the feature-to-category map, and this representation can subsequently drive the deployment of selective attention. In this way, an introspective learner may wonder during a task “Am I attending this dimension because I have learned that it is relevant, or is this dimension only relevant because I have attended to it before?” Assuming prototypical structure, strength-based models incur major theoretical limitations due to their lack of an explicit encoding structure for experienced events.

Recent research has begun to elucidate the interactions between the information that is stored, and the search for subsequent information. For example, Rich and Gureckis (2018) have shown that when only a subset of information is attended, subjects can fall into “learning traps” by inappropriately generalizing information to unattended dimensions. In other work, Turner et al. (2021) have shown that selective attention can cause subjects to falsely believe that one dimension is more relevant than it actually is, which can potentially eliminate a learner’s willingness to explore new dimensions of information. These results suggested that increasingly-selective deployment of attention across trials could be explained by the individual-specific history of encoded features and their learned relevance. The notion that attention orients based on an individual’s “selection history” has become a popular way of thinking about how selective attention should be deployed in response to one’s knowledge and one’s goals (Awh, Vogel, & Oh, 2006). If we apply such logic in the context of category learning, there clearly becomes a need to specify which experiences enter into an observer’s representation when determining how attention should orient.

To this end, AARM’s within-trial module enables confirmatory information search, which is well-documented in human learning (Lefebvre, Summerfield, & Bogacz, 2022; Nickerson, 1998; Talluri, Urai, Tsetsos, Usher, & Donner, 2018). Using similar mechanisms to ALCOVE, AARM’s between-trial module updates attention on each trial with respect to the correct category label, as provided by feedback. To extend the same mechanisms to account for sampling and decision dynamics, within-trial attention is first initialized with weights inherited from the previous trial. Attention is then updated at each timestep with respect to the category label that currently has the most evidence, given that the true category label is not known until after within-trial processes terminate in a response. As such, the observer reorients to dimensions that are expected to provide additional evidence for the category that is believed to be correct, given the current state of knowledge about the stimulus and previous exemplars.

By contrast, SEA specifies unbiased information search via preposterior analysis. The observer elects to sample dimensions that are expected to serve the overall goal (i.e. increase the probability of making a correct category response) in excess of the potential cost. Broader sampling beyond the relevant dimensions is made possible in the model by adjustment of an exploration parameter. The distinction between confirmatory and unbiased information search exemplifies the differences between AARM and SEA and their respective purposes. For example, developmental work has shown that while adults tend to categorize new items according to a perfectly-reliable dimension, children often make decisions in consideration of multiple dimensions with less regard for overall reliability (Blanco & Sloutsky, 2019; Deng & Sloutsky, 2015). While AARM can be used to identify which cognitive mechanisms potentially account for observed group-level differences in behavior, SEA can be used to assess the efficiency of the two strategies relative to rational predictions for behavior. Although confirmatory search in AARM provides a natural extension to between-trial mechanisms related error-minimization to account for the unsupervised aspects of within-trial dynamics, this notable departure from optimal sampling may have limitations beyond the context of category learning. These potential limitations and directions for future investigation on its relevance to human behavior are addressed in the General Discussion.

ALCOVE, SUSTAIN, AARM, and SEA all fundamentally specify learning as an optimization problem with respect to the observer’s goals, but use different mechanisms to solve it. Given that the models make very clear predictions for how dimensions of information are attended over time in order to predict learning (Braunlich & Love, 2021; Galdo et al., 2021; Kruschke, 1992; Love et al., 2004; Mack, Love, & Preston, 2016; Mack, Preston, & Love, 2013), constraining and adjucating between their respective theoretical assumptions potentially requires insights beyond what behavioral data alone can provide.

The Necessity of Eye-tracking Data

A central theme of this article is to use measures of gaze fixation as a guide for developing a model of category learning that considers both between- and within-trial dynamics. We are certainly not the first to use eye tracking data to shed light on theories of category learning (see Lai et al., 2013, for review). To investigate the connection between latent and observable correlates of attention, Rehder and Hoffman (2005a) collected eye tracking data while participants completed category learning tasks with different levels of complexity (Shepard et al., 1961). The authors demonstrated that eye tracking data can distinguish among alternative model-based assumptions about how attention is allocated at the beginning of the task as opposed to the end after learning has occurred. ALCOVE (Kruschke, 1992), for example, predicts that observers initially distribute attention evenly across all dimensions before identifying which dimensions are most relevant. An alternative theory outlined by the rule-plus-exception model (RULEX R. Nosofsky, Palmeri, & McKinley, 1994) assumes that observers implicitly form and test hypotheses during learning, and therefore predicts that observers would initially attend to a single dimension until its relevance could be sufficiently ascertained. It is important to note that these divergent assumptions could not have been examined with a measure as coarse as trial-level accuracy. One reason is that the distinction between pre- and post-learning was essential to the question of interest. Given that only the first trial contains information about attention in the absence of learning, using accuracy as the outcome measure would require conclusions to be heavily based on what is effectively a single data point. A second reason is that observers could use either an ALCOVE-like strategy of distributing attention evenly across dimensions, or a RULEX-like strategy of fixating on one dimension at random, and the predicted accuracy would be approximately equivalent on average. With eye-tracking data, however, Rehder and Hoffman identified fixation probabilities that were consistent with ALCOVE rather than RULEX: when considered in aggregate, participants fixated to all dimensions with approximately equal probability at the beginning of the task and attended only to the most relevant dimensions toward the end.

While the results of Rehder and Hoffman (2005a) relied on trial-level fixation probabilities, additional evidence suggests that gaze fixation data can be used as a continuous measure of within-trial attention as well (Blair et al., 2009; Chen et al., 2013; Krajbich et al., 2010; Krajbich & Rangel, 2011; Rehder & Hoffman, 2005a; S. Smith & Krajbich, 2019a, 2019b; Thomas, Molter, Krajbich, Heekeren, & Mohr, 2019). In work by Blair et al. (2009), gaze fixation data was recorded while participants completed a category learning task with hierarchically-organized stimulus dimensions (see Figure 1). As described in the Introduction of the current article, the feature value in one superordinate dimension indicated which of two subordinate dimensions would be relevant for determining the category label for each stimulus.

If one were to fit a model like ALCOVE, SUSTAIN, or AARM’s between-trial module to data from an experiment like this (see Palmeri, 1999, for an application of ALCOVE), we should expect the superordinate dimension to be preferentially weighted because it is relevant across all trials. The two subordinate dimensions would be weighted equally, but would receive lower weights than the superordinate dimension because they are each only relevant to 50% of trials (Figure 1B). If one were to predict proportions of gaze fixations directly from these attention weights, one might expect a high probability of fixating to the superordinate dimension, and lower, but equal, probabilities of fixating to the two subordinate dimensions. In reality, Blair et al. (2009) noted distinct stimulus effects on the trajectory of within-trial fixations, such that participants conditionally fixated to only one subordinate dimension per trial after observing the feature identity of the superordinate dimension (see Chen et al., 2013; McColeman et al., 2014; Meier & Blair, 2013, for replication). The results suggest that in addition to using learned information about dimension relevance to sample information, humans additionally prioritize dimensions dynamically within a trial in response to the stimulus itself. Although Braunlich and Love (2021) demonstrated that SEA could predict a reduction in the number of dimensions sampled within-trial across learning instances, the computationally-parsimonious “myopic” variant of SEA does not predict the ordering effects observed by Blair et al. (2009). Given that SEA considers all dimensions to have equal utility on average, it does not produce preferential orienting behaviors that are consistent with the hierarchical structure of the task. As we will show in Case Study 2, however, AARM’s within-trial module can produce stimulus-dependent information prioritization effects through its combination of attention-mediated orientation and confirmatory information search.

In light of empirical and theoretical work indicating that the hierarchical organization of information is ubiquitous in human learning (e.g. Barto & Mahadevan, 2003; Botvinick, 2012; Botvinick, Niv, & Barto, 2009), we suggest that the within-trial attention effects that emerge from hierarchical category structures can potentially make a more general statement about how humans sample information from naturalistic environments. For example, contextual features of the environment may serve as a set of superordinate dimensions for deciding which sources of information to attend when making judgements about new examples of recognizable objects that people encounter in everyday life. We therefore place particular emphasis on hierarchical category structures in the present article, given the distinct patterns of within-trial fixations observed by Blair et al. (2009) and theoretical generalizability to naturalistic attention and categorization principles.

As a final example to motivate the use of eye-tracking data in developing our theory of category learning, it is relevant to note that multiple modes of information sampling could yield inseparable patterns of behavior under certain conditions (Figure 2). Consider two hypothetical learners who are assigning four-dimensional stimuli to categories A and B. One dimension (D1) is perfectly reliable for determining category membership, such that an observer could achieve 100% accuracy by learning the appropriate D1 feature-to-category mapping (e.g., when , respond category “A”, and when , respond category “B”). The three other dimensions (D2, D3, and D4) are each 75% reliable for determining category membership. Learner 1 is very efficient; they identified the most reliable dimension, and exclusively sampled information from D1 after gaining experience with the task. In Figure 2A–B, we show an example in which a learner fixated to D1, and concurrently accumulated considerable evidence to support a Category “A” decision.

Figure 2. Information sampling and decision dynamics.

Hypothetical fixation paths were generated by AARM’s within-trial module, such that one of four spatially segregated dimensions was fixated at each timestep up to a response. Left panels show the probabilities of fixating to each dimension (y-axis), plotted as a function of percentage of time within-trial between stimulus onset and response (x-axis). Right panels show the decision evidence for each of two possible category choices as a result of the information sampling behavior (i.e. fixation paths) in corresponding left panels. Choice probability (y-axis) is plotted as a function of absolute time in milliseconds (x-axis). Dotted lines indicate when self-termination (i.e. a response) occurred. Each row shows the timecourses of fixations and decision evidence for: (A) a hypothetical subject who learned to attend to the deterministic (100% predictive of category; D1) dimension; (B) a hypothetical subject who received conflicting evidence across three probabilistic dimensions (D2, D3, and D4). Although each simulation reflects different information sampling behaviors, category A was selected in both examples.

By contrast to Learner 1, Learner 2 happened not to notice that D1 was the most reliable dimension. Instead, they found that by attending to some combination of D2, D3, and D4, they could achieve very high accuracy that in fact rivaled that of Learner 1. Figure 2C–D shows an example in which a learner prioritized D4, which provided some initial evidence for a “B” response. Sampling information from D2 subsequently contradicted the information in D4, and created uncertainty in the choice. To resolve this conflict, the learner sampled information from D3, which provided sufficient information for making an “A” response. Given that these two divergent learning profiles could yield identical accuracy, responses alone may say very little about whether or not a model is accurately capturing which dimensions are being attended. While different modes of information sampling could of course be dissociated by clever task design (e.g. Blanco & Sloutsky, 2019; Deng & Sloutsky, 2015), measures of attention such as those provided by eye tracking data provide strong constraints on how attention is deployed over time within a trial. In particular, a viable model of category learning that uses latent attention to predict behavioral changes should be able to account for multiple modes of observable attention allocation as well. We will further explore the impact of different sampling paths on response probability in Case Study 1.

The examples highlighted in this section seek to clarify that there are at least two problems in using behavioral data as the lone metric for validating the assumptions of attentional deployment. First, when stimuli are multidimensional, it is possible for many patterns of attention allocation to produce identical responses. Rehder and Hoffman (2005a) showed that eye tracking data could be used to support a broad distribution of attention early in the learning period, as opposed to a systematic testing of one dimension at a time. Relatedly, Figure 2 illustrated how different sequences of fixation patterns within a trial could ultimately produce the same category choice. Second, the determination of relevance may be highly contextualized within a trial, based on the properties of the stimulus itself rather than the feedback about the stimulus. A particularly striking example comes from hierarchical category learning experiments in which participants fixate to dimensions in a stimulus-dependent manner before feedback is even observed (Figure 1, Blair et al., 2009).

Despite overwhelming evidence that eye-tracking data provide a rich source of information about the timecourse of selective attention during individual decisions, few efforts have been made to extend the logic of categorization models to account for within-trial dynamics (but see Braunlich & Love, 2021). Taking these findings together, we assert that gaze fixations serve as a viable, necessary means for evaluating category learning models in terms of predicted attention allocation. By using eye-tracking data in the current work, we are equipped to examine the theoretical mechanisms put forth by AARM using a new standard of specificity, to which other models of category learning have been infrequently subjected.

Summary and Outline

We believe that no theory of human learning would be complete without a description about how selective attention should be deployed. The introductory sections have supported the notion that attention is a critical component for learning problems: it accelerates learning by identifying which dimensions are relevant, and thereby limits the time-consuming search for information when making decisions. Our conceptualization of how attention should be deployed follows those of Kruschke (1992), Love et al. (2004), and Galdo et al. (2021) by treating attention as an optimization problem. At face value, the problem of optimizing attention should be similar at the between- and within-trial level, but there are important differences in this optimization problem that make for an interesting challenge. Across trials, the learning problem is well defined: one needs only to specify how attention should be modified in response to feedback (i.e. supervised learning). However, within a trial, the problem is made more complex because the learner does not know the true category label until after they make a response, but must nevertheless decide which dimensions to sample (i.e. unsupervised learning).

The gold standard in solving these problems is some type of forward computing, where relevance is determined by considering all possible values of a dimension and then aggregating the results to form an expected utility of each (Nelson & Cottrell, 2007; Yang & Lengyel, 2016). SEA is perhaps the most striking display of this approach, wherein the utility of sampling is computed for all dimensions prior to making a decision to act (e.g., sample a dimension or make a response). Although this approach has considerable promise, one potential weakness is that it assumes an incredible amount of computation at each moment in time to assess the potential utility of every sampling outcome. It is possible that humans do indeed make these computations, but it certainly is not an economical approach if suitable heuristic alternatives were available, particularly in consideration of high-dimensional stimuli.

By contrast, AARM focuses on the representation of the current stimulus information rather than on the utility of would-be collected information. The rationale behind this strategy is that subjects maintain a sense of the distribution of features that occur within each dimension, and they use this distribution to form expectations about the current stimulus. By dynamically updating the expectations, a “working” representation can subserve attention and the search for subsequent information. To solve the unsupervised aspect of this problem, we critically assume that information is sought after in a confirmatory manner until category evidence surpasses a decision threshold. This assumption appears to be vital to our approach, as it naturally extends the between-trial module of AARM (Galdo et al., 2021) to account for within-trial dynamics.

To articulate our proposed framework, we consider how latent attention is updated between-trials to facilitate learning, and within-trials to facilitate individual categorization decisions. Although details and justification will be provided in the sections to follow, our theoretical framework can be summarized by the following core components:

Both within- and between-trial dynamics are described by a common set of mechanisms. Interactions among attention, representations, and decisions extend across timescales to account for how humans acquire information about individual stimuli, and learn the relevant information for distinguishing between categories.

Over the course of learning, humans form simplified stimulus representations composed of the dimensions that are most relevant to the current task. Within-trial dynamics of information sampling and decision making describe how these simplified representations are formed, such that only a subset of information needs to be attended before a categorization response is made.

Attention is optimized with respect to the current goal. Gradient-based mechanisms typically require the observation of feedback to update the attention weighting structure between-trials. If we extend the same logic to the within-trial level, we must define how the observer orients attention before the correct category label is known. We therefore describe how representations gradually evolve within-trial according to experience-based predictions and confirmatory information search.

Hierarchical category structures are ideal for studying within-trial dynamics, due to an implicit temporal ordering of relevant information. In addition to giving rise to gaze prioritization effects in an experimental setting, hierarchical structures are ubiquitous in nature. In particular, we suggest that in real-world scenarios, learners use environmental context as a superordinate cue for processing the dimensions of new stimuli.

To explicate these theoretical components, the remainder of this article is organized as follows. First, we discuss the mathematical details of AARM in terms of two separable but interacting modules. We begin with a description of how AARM is applied to between-trial learning, and then describe how expectations about features can be managed dynamically to create a working representation of the stimulus probe. We then describe how attention orients to confirm the existing beliefs about a stimulus to complete our description of within-trial dynamics. Second, we examine AARM’s ability to capture important empirical effects by simulating its behavior in four case studies. The case studies examine how attention is deployed in several unique situations: (1) when expectations are violated, (2) when relevance is contextualized within a stimulus (e.g., as in the hierarchical category learning task), (3) when multiple stimulus dimensions occupy the same location in space, and (4) when learning of stimulus dimensions occurs incidentally (e.g., dimensions are not relevant to the learning process but become relevant when tested). Third, we close with a discussion about future directions and alternative mechanisms.

Model Specification

We define the dynamics of category learning in terms of three components, as shown in Figure 3: 1) the representation describes how information is stored and maintained through a set of experiences; 2) attention describes what information contributes to the representation; and 3) the decision describes how information is used to generate an action (e.g. a response). To present the details of AARM, we separate our description into distinct between- and within-trial updating processes. First, as described by Galdo et al. (2021), the model updates its representation of stimuli in response to feedback on each successive trial (i.e., a between-trial update). This type of update entails (1) storing a new episodic trace containing the stimulus information on the current trial, (2) storing information about the category label (e.g., from feedback), and (3) updating the quantity of attention that is allocated to each dimension.

Second, the model maintains a representation of the current stimulus probe, which it updates through time as it encodes new information about each feature (i.e., a within-trial update). This type of update entails (1) an encoding process where attention is applied to a stimulus dimension in order to access the feature value contained therein, (2) an imputation process where the model uses the available information to form expectations about which feature values will occur in unattended dimensions, and (3) an attention rule that allows the model to reorient according to its updated knowledge and expectations about the current stimulus probe. We begin with a general overview of the between-trial module, and expand this model structure to accommodate within-trial dynamics. We then close this section by explaining how this expanded structure relates back to dynamics that occur across trials. For reference, a notation table and parameter definitions are provided in Appendix B.

Between-trial Updating Rule

The relevant mechanisms of AARM’s between-trial updating rule will be provided here, but we refer the reader to Galdo et al. (2021) for additional details. On each trial of a categorization task, the observer is asked to assign a -dimensional stimulus to one of categories. To do this, the observer is thought to retrieve memories of previously-experienced exemplars and their associated category labels (i.e. as supplied by corrective feedback).

As in GCM, AARM assumes that memories of stored exemplars are “activated” in proportion to their similarity to the current stimulus. Similarity is computed by way of a factorizable exponential kernel (R. Nosofsky, 1986; Shepard, 1987), such that activation of the nth exemplar in response to probe is

| (1) |

Here, is the specificity of the between-trial similarity kernel function, contains the memory strength associated with each exemplar, and quantifies the attention allocated to each of the stimulus dimensions. Although Galdo et al. (2021) assumed was determined by a weighting function that incorporates primacy and recency biases (Pooley, Lee, & Shankle, 2011), here we assumed all exemplars had equivalent memory strength to provide constraint in our simulation case studies.

The probability of choosing category is the summed similarity of the exemplars associated with that category, normalized by the total across all exemplars (i.e., a weighted average). Specifically, the choice probability associated with Category is

| (2) |

where is an indicator function returning a one if the statement is true and a zero otherwise.

After a response is made and feedback is observed, two actions occur. First, the features of stimulus are stored in exemplar matrix as a memory trace, and the true category label is stored in feedback matrix . Second, attention is updated in the direction of an error-gradient, similarly to the adaptive attention models described in the Attention as an optimization problem section (i.e. ALCOVE and SUSTAIN):

| (3) |

Here, is a positive constant describing a between-trial learning rate, and is a shorthand denoting a “gradient operator” for computing the set of partial derivatives of a loss function with respect to each element of the vector :

To define the loss function, ALCOVE and SUSTAIN use the so-called “humble teacher” rule, which allows for variability in category activation between exemplars. Specifically, more category-typical exemplars elicit greater activation than those that are more peripheral (Kruschke, 1992). For the purposes of our previous work on simplicity biases in human learning (Galdo et al., 2021), we instead selected a cross-entropy loss function because it allows for faster training and more reliable extension to multiclass problems than squared-loss alternatives (Demirkaya, Chen, & Symak, 2020). Given successful fits to behavioral and eye-tracking data with our previous specification for between-trial attention updating, we apply cross-entropy loss in the current work as well.

When using a soft-max rule (such as Luce-choice), the cross entropy loss function is simply the negative log likelihood of correct classification (Goodfellow, 2016):

where is the choice probability associated with the feedback given on the ith Trial (i.e., the correct response). Hence, to derive the gradient, we need only take the partial derivative of Equation 2 with respect to along each of the dimensions. We provide this derivation in Appendix A. Our update equation for the attention vector after observing feedback therefore becomes

| (4) |

We stress that this updating procedure departs from strength-based connectionist architectures in which back propagation solutions provide the rule to update the attention vector and hidden layer weights. As shown by Galdo et al. (2021), simply defining how attention should be updated across time within an instance representation is sufficient to capture categorization behavior.

Within-trial Updating Rule

Figure 3B illustrates the important components of the within-trial updating process, as well as its “default” temporal order (i.e., nodes with numbers). Upon stimulus presentation, a set of initial attention weights inherited from the previous trial of the between-trial module first dictates which information will be sampled, a process by which the eyes are oriented to the location of the prioritized dimension (Node 1 in Figure 3B). Once the eyes have fixated upon the intended dimension, an encoding process is initiated for the feature residing in that dimension. After a feature has been encoded, that information is passed to the representation (Node 2 in Figure 3B). Similarity-based activation of the stored exemplars is then used to calculate the total evidence for each category response (Node 3 in Figure 3B). The state of accumulated evidence at each moment in time is used to determine which dimension to orient to next. The model reorients attention in a confirmatory manner, according to which dimension is most likely to provide further evidence that would support whichever choice currently has the largest amount of supporting evidence. This dynamic process self-terminates and makes a response when a sufficient amount of evidence has accumulated for an option.

For ease of exposition, we organize this section into the following four stages of processing: Stimulus Encoding, Exemplar Activation, Evidence for Category Response, and Attention Orientation. Figure 4 provides an illustrative example of how each of these components contribute to within-trial dynamics, and we will use this figure as a working example to facilitate descriptions of each component.

Figure 4. Illustration of Within-trial Dynamics.

(A) An example stimulus is presented on the screen, and a stimulus dimension is sampled for processing (e.g. prioritized from the between-trial module). (B) The observer generates a working representation of the stimulus and predicts what features might occur in each dimension. As a feature is attended, predictions are replaced with true feature values. (C) Previously-stored exemplars are activated in proportion to their similarity to the probe. (F) The category labels associated with retrieved exemplars accrue noisy response evidence. (E) Attention updates to discriminate among the currently most active category options. (D) Gaze fixations are determined from the attention process, resulting in reorientation to new dimensions as needed to sample more category-relevant information.

Stimulus Encoding

Memory theories often describe the psychological representations of stored items or events as memory “traces,” which are organized into discrete features of perceptual, contextual, and conceptual information. While the contents of a memory trace cannot be directly observed, recall and recognition paradigms provide insight into which features are encoded under various conditions. For example, if a lure item is falsely recognized among previously-studied targets at test, it indicates overlap between the features of the lure and some subset of target memory traces (Deese, 1959; Roediger & McDermott, 1995). Additional work has shown that the distribution of features across stored traces and the extent to which they can be associated with one another influence which information will be encoded and subsequently retrieved (Dosher, 1984; Dosher & Rosedale, 1991; Greene & Tussing, 2001). With these insights in mind, a recent dynamic model of encoding and retrieval (Cox & Criss, 2020; Cox & Shiffrin, 2017) described trace formation as a time-varying process. Specifically, the iterative encoding of individual probe features selectively activates memory traces on the basis of similarity, and drives an evolving familiarity signal toward a recognition threshold. Similarly, AARM’s within-trial module was designed to build up an informative representation of the probe throughout the trial, using retrieval of previous exemplars to drive an evidence accumulation signal for making a category response.

Our specification of encoding in AARM’s within-trial module builds upon mechanisms of prediction and pattern completion observed in the hippocampus, in which previously-observed item representations are reinstated during encoding in order to fill-in missing information or properly orthogonalize overlapping cues (see Bowman & Zeithamova, 2020; Hunsaker & Kesner, 2013, for review). Prior to encoding any information about a new stimulus, we assume that a working representation is populated by experience-based expectations of feature values. Expectations are gradually replaced with the true features of the stimulus as they are attended and concurrently encoded over the course of the trial.

To incorporate the logic of pattern completion into AARM’s encoding mechanism, we follow a procedure outlined by Estes (1994). Borrowing his example, suppose an observer experiences the following three-dimensional stimuli: , , and . Suppose on Trial 4, the observer is presented with a partial stimulus and is asked to guess which feature value will occur in Dimension 3. We assume that an observer will predict the feature value based on memories of previous items and the current state of knowledge about the stimulus. To make and evaluate predictions, one can impute each feature value that was previously-observed in Dimension 3 (i.e. [1,2]) into the partial stimulus, and evaluate which feature value is more likely to represent the missing information.

Starting arbitrarily with a candidate feature value of 1 as shown in the table below, we compare the imputed stimulus (i.e. 111) to all stored exemplars and indicate whether the feature values match or mismatch in each dimension. In the Comparison column, “matches” and “mismatches” are indicated by values of 1 and respectively, where represents a baseline level of perceptual discriminability. We then compute the product across comparison values to determine the Similarity column below (Medin & Schaffer, 1978). Finally, we compute the sum similarity across all stored exemplars to determine the activation of imputed stimulus 111:

| Imputed Stimulus | Stored Exemplars | Comparison | Similarity |

|---|---|---|---|

|

| |||

| 111 | 111 | 1 | |

| 111 | 121 | ||

| 122 | |||

|

| |||

| Sum: | |||

Similarly, we can calculate the activation when “2” is the missing value using the same strategy:

| Imputed Stimulus | Stored Exemplars | Comparison | Similarity |

|---|---|---|---|

|

| |||

| 111 | |||

| 112 | 121 | ||

| 122 | |||

|

| |||

| Sum: | |||

The probability of selecting a value is simply the activation of its respective imputed stimulus, normalized by the total activation across all candidates. In our example, the probability that the stimulus has a feature value of 1 in Dimension 3 (i.e., ) is

As long as is small enough to indicate sufficient perceptual discriminability among candidate feature values, will approach 1 in the current example. In other words, when asked to complete the partial stimulus , the observer is most likely to respond “1”.

In extending the intuition of Estes’s example for the purposes of dynamic encoding, it is necessary to distinguish between the “true” identity of the stimulus and a “working” representation that changes through time. As in our description of the between-trial module, we use the notation to denote the true identity of the probe on the ith trial. We denote the working representation of the stimulus probe at Timestep of Trial as , and omit the “” trial notation for convenience. We next require a general expression for the probability that a candidate feature value will occur in a particular dimension. We define the set of unique feature values that were previously observed in Dimension as . We then use the equation

| (5) |

to calculate activation of stored feature value in response to an imputed feature value , drawn from . This equation is a more general form of the exemplar activation calculation provided in Equation 1. Here, however, is the within-trial specificity of the similarity kernel function, indicates the encoding status of exemplar features, and indicates the attention weight at moment . We will describe how attention changes through time in the Attention Orientation section, but for now it is sufficient to acknowledge that attention is updated throughout the trial and will affect how stimuli are encoded.

Using the relation , the probability that the “true” feature value is equal to is

| (6) |

Note that Equation 6 takes the same form as the feature probability calculation from Estes’s example. Here, the numerator is simply the activation associated with an imputed feature value , and the denominator is the total activation associated with all values in . To specify a feature value in the working representation at moment , we randomly draw a value from the distribution defined by the probability mass function in Equation 6. Importantly, a new value of is re-drawn at each timestep within the trial, such that the working representation is non-stationary. This imputation process continues until sufficient attention has been applied to Dimension for a true feature value to be encoded, at which point is predominantly represented in (see Attention Orientation section). Although Equation 6 expresses the pattern matching probabilities for discrete feature values, it can easily be extended to continuous values by replacing the summation over the set to be an integration over the space , in the same way that the similarity kernel (e.g., Equation 5) was generalized from Medin and Schaffer’s 1978 context model to Nosofsky’s 1986 GCM. We demonstrate an extension to a paradigm with continuously-valued dimensions in Case Study 3.

Our specification of a prediction-based working representation is somewhat related to utility predictions in SEA. Both models assume the observer maintains an ongoing sense of what features might occur in each dimension, with an associated likelihood of occurrence that depends on the state of knowledge about the current stimulus. One critical distinction is how each model uses these insights to decide which sources of information to sample. While SEA requires a pairwise assessment of every possible combination of features in order to determine a single utility prediction for each dimension, AARM’s working representation is more reflective of spontaneous, noisy retrieval of features that are unbounded by specific exemplar representations. As such, our approach has a similar intuition to Monte Carlo algorithms in which probability distributions are approximated through repeated sampling, some specifications of which can be recursively updated as more information is obtained (Doucet, de Freitas, & Gordon, 2001; Gilks, Richardson, & Spiegelhalter, 1996). Random sampling approaches have been suggested to provide an advantage of cognitive plausibility over rational models on the grounds of computational parsimony (Sanborn, Griffiths, & Navarro, 2010). Relative to SEA, the feature imputation strategy in AARM is arguably more consistent with the capabilities of resource-limited humans because there is no requirement that every possible feature combination is assessed within the working representation. Expected or observed feature values are instead drawn from a distribution, and attention and decision components update accordingly.

Our specification is also similar to other extensions to GCM that were designed to characterize the timecourse of stimulus encoding during category learning tasks (Brockdorff & Lamberts, 2000; A. Cohen & Nosofsky, 2003; Lamberts, 2000). As mentioned previously, the EGCM-RT (Lamberts, 2000) incorporated a stochastic stimulus representation mechanism into GCM, which results in a similarity output that changes throughout the trial as probe dimensions are encoded. Unlike AARM, however, EGCM-RT does not specify a precise order in which dimensions should be encoded, only that encoding is sequential and that all feature values of the stimulus need to be encoded before a response is made. A variant of EBRW (R. Nosofsky & Palmeri, 1997) for perceptual encoding (EBRW-PE A. Cohen & Nosofsky, 2003) contains similar stochastic dimension-sampling mechanisms, such that exemplars race toward a threshold at rates that are proportional to their total similarity to the probe. At each timestep within a trial, there is an increasing probability that a feature will be encoded and thus included in the continuous similarity calculation. As such, encoding a feature value within the stimulus representation is strictly probabilistic, whereas AARM offers a mechanism for encoding individual feature values that is driven by attention and is gated by gaze fixations.

Instead of populating the working representation with random draws from an expected distribution of feature values, an alternative approach would have been to define the working representation as an empty vector prior to encoding. The retrieving effectively from memory model (REM; Shiffrin & Steyvers, 1997), for example, assumes that observers begin with an empty trace consisting of a vector with all zeros. Over time, the zero elements of the trace are replaced with samples from a pre-specified distribution (e.g., a Geometric distribution) with properties intended to reflect the details of the stimulus set. In the context of a model designed to capture within-trial dynamics, however, we found that the expectation-formation component of the working representation was essential for the model to reorient to additional dimensions after processing the first. In hierarchical paradigms like the one illustrated in Figure 1 (Blair et al., 2009), various iterations of the model in which the stimulus representation was initialized with an uninformed (e.g. zero or average) basis vector provided no impetus for the model to reorient to one dimension over the other. As we will show in Case Study 2, our implementation achieves human-like reorientation to the stimulus-relevant subordinate dimension by updating its feature predictions after initial encoding, and fixating to a second dimension through confirmatory search.

Figure 4B illustrates how the encoding dynamics occur in AARM’s within-trial module after initial orientation to a dimension (e.g. food source; Figure 4A) when an observer is categorizing images of animals. Before a new image is even presented, the observer has some expectation about what feature values each dimension could possibly take on, given their experience with previous stimuli. After the food source dimension is sufficiently attended and the observer encodes the “true” feature value (e.g. acorn), the working representation of the stimulus is updated to accommodate this information. As shown in Figure 4C and discussed below, this shift in the probe representation directly affects which stored exemplars are subsequently activated to facilitate the reorientation of attention.

Exemplar Activation

We assume that encoding (and by extension, attention) is the primary mechanism driving memory activation of previously-stored exemplars. This is in contrast to EBRW (R. Nosofsky & Palmeri, 1997) which assumes that the similarity of previously stored exemplars to the stimulus probe is what dictates how frequently each exemplar is retrieved. In AARM, attention is what guides the similarity computation itself, causing potentially rapid nonlinear activation in both the activation of past exemplars and the evidence for a category response.

Exemplars are activated in a nearly identical way as described in the between-trial case (see Equation 1), with the one exception that activation is based on the working representation of the stimulus probe, , and not the true contents of the stimulus probe itself. In addition, activation is expressed as a function of time, given by

| (7) |

where we denote the attentional state at Time as .

As discussed in the previous section, the working stimulus representation in AARM’s within-trial module is non-stationary and gradually comes to resemble the stimulus’s true identity as features are encoded. As a consequence, the distribution of expected feature values in Equation 6 will change dynamically through time and affect which dimensions are prioritized, given the information available at Time . Pertaining to the hierarchical paradigm shown in Figure 1 (Blair et al., 2009), Figure 5 shows how attention and exemplar activation mutually impact one another. At the beginning of the trial, memories for all exemplars are equally active (left panel, ). Attention initially orients to the D1 dimension (right panel; x-axis) per weights inherited form the between-trial module. As the working representation is updated with D1 feature information, there is a concurrent retrieval bias for exemplars belonging to “A” categories (left panel, and ). When attention then updates again, the observer will reorient to D2 in an effort to distinguish between the categories associated with the most active exemplars (right panel; y-axis). When sufficient attention is applied to encode the feature value of D2, exemplars with similar features in both D1 and D2 are selectively activated (left panel, and ).

Figure 5. Illustration of Attention Gradient.

(A) Heatmaps show the activation of each unique exemplar in the task paradigm shown in Figure 1. Y-axis labels show trial numbers and the feedback associated with each exemplar. Activation at 4 different time points within an individual trial are shown, given a probe with a true category label of A2. (B) The plot shows the progression of within-trial attention weights assigned to dimensions D1 and D2, which are the relevant dimensions for determining the category membership of the given stimulus. As time progresses (as indicated by black arrows), the attention weights (x- and y-axis values) move in a direction to support a category response (contour values).