Abstract

The last few years have witnessed significant advances in developing machine learning methods for predicting molecular energetics, including electronic energies calculated with high-level quantum mechanical methods and experimental properties such as solvation free energy and logP. Typically, a task-specific machine learning model is developed for each prediction task. In this work, we present sPhysNet-MT-ens5, a multitask deep ensemble model that can simultaneously and accurately predict the electronic energies of molecules in the gas, water, and octanol phases, as well as transfer free energies at both the calculated and experimental levels. On the calculated dataset Frag20-solv-678k, which is developed in this work and contains 678,916 molecular conformations with up to 20 heavy atoms and their properties calculated at the B3LYP/6-31G* level of theory with continuum solvent models, sPhysNet-MT-ens5 predicts density functional theory (DFT)-level electronic energies directly from force field-optimized geometries within chemical accuracy. On the experimental datasets, sPhysNet-MT-ens5 achieves state-of-the-art performance, predicting experimental hydration free energy with an RMSE of 0.620 kcal/mol on the FreeSolv dataset and experimental logP with an RMSE of 0.393 on the PHYSPROP dataset. Furthermore, sPhysNet-MT-ens5 provides a reasonable estimate of model uncertainty that correlates with prediction error. Finally, by analyzing the atomic contributions to its predictions, we find that the model is aware of the chemical environment of each atom, assigning atomic contributions consistent with chemical knowledge.


Introduction

Accurate and rapid prediction of molecular energetics has long been of great interest in computational chemistry. In principle, molecular energetics can be calculated by solving the Schrödinger equation of the system, $\hat{H}(Z, R)\,\Psi = E\,\Psi$, where $Z$ is the set of nuclear charges and $R$ is the set of atomic positions. Unfortunately, the exact solution of this equation is intractable for most chemical systems, and numerical approximations require a tradeoff between accuracy and computational efficiency.1–4 In recent years, researchers have shown great interest in developing machine learning (ML) approaches for energetics prediction.5–10 Instead of explicitly solving the Schrödinger equation, $(Z, R) \rightarrow \Psi \rightarrow E$, ML methods11–22 can learn the mapping from geometry to energetics, $(Z, R) \rightarrow E$, directly. Recently, deep learning (DL) methods, which directly take atomic coordinates and atom types as input and predict molecular energetics in an end-to-end fashion, have gained increasing popularity. In 2017, the deep tensor neural network (DTNN)23 achieved a mean absolute error (MAE) of less than 1 kcal/mol (chemical accuracy) on the QM9 benchmark dataset,24–26 which contains DFT-minimized structures and gas-phase molecular properties calculated at the B3LYP/6-31G(2df,p) level of theory for 133,885 molecules composed of H, C, N, O, and F with up to nine heavy atoms. Subsequently, many DL models, such as MPNN,27 SchNet,28 HIP-NN,29 MGCN,30 DTNN_7ib,31 PhysNet,32 DimeNet,33 and DimeNet++,34 have been developed for end-to-end prediction of molecular energetics, with DimeNet++ achieving an MAE as low as 0.15 kcal/mol on QM9.
However, these highly accurate DL models developed on the QM9 dataset require DFT-optimized geometries as input for accurate energetics prediction, so the original motivation for developing ML and DL models, reducing the computational cost of high-level quantum mechanical (QM) calculations, is not fully achieved. To address this problem, we previously demonstrated31 that DFT-level electronic energies can be predicted directly from geometries optimized with the Merck Molecular Force Field3 (MMFF). We then further pushed the limits of molecular energetics prediction by developing the Frag20 dataset,35 which contains over half a million molecules composed of H, B, C, N, O, F, P, S, Cl, and Br with up to 20 heavy atoms. The resulting DL model, sPhysNet, predicts DFT-level energies directly from MMFF-optimized geometries and achieves close to chemical accuracy on real-world datasets, making it a promising tool for exploring chemical space with 3D molecular geometries. On the other hand, experimental transfer free energies, such as hydration free energy and logP, are typically treated as separate tasks by ML models with completely different architectures.36–43

In this work, motivated by traditional molecular modeling methods that exploit the direct relationship between absolute energies and transfer energies,44–47 we present sPhysNet-MT, a multitask DL model that predicts molecular electronic energies in the gas, water, and octanol phases directly, and the corresponding transfer free energies indirectly, from MMFF-optimized molecular geometries. Unlike previous multitask models for less correlated tasks, which often perform worse than their single-task variants,32, 33 well-correlated tasks facilitate simultaneous prediction,48, 49 and the tasks in sPhysNet-MT benefit from mutual learning, which helps the model achieve better performance. After training on our Frag20-solv-678k dataset, which contains over 678,000 molecular conformations labeled with DFT-level energetics in the gas, water, and octanol phases, sPhysNet-MT-ens5, a deep ensemble of five sPhysNet-MT models, achieves an MAE of less than 0.6 kcal/mol on molecular electronic energies and less than 0.2 kcal/mol on the transfer free energies between phases. For experimental energetics, we build a multitask training set that combines experimental hydration free energies from the FreeSolv50 dataset and experimental logP values from PHYSPROP51, 52 to make full use of the limited experimental data. After fine-tuning53 on this experimental dataset, sPhysNet-MT-ens5 simultaneously predicts hydration free energy with an MAE of 0.359 kcal/mol and an RMSE (root-mean-squared error) of 0.620 kcal/mol, as well as logP with an MAE of 0.242 and an RMSE of 0.393, from MMFF-optimized molecular geometries, achieving state-of-the-art performance on both tasks. Further analysis shows that the model is aware of the chemical environment of each atom, assigning atomic contributions consistent with chemical knowledge. The source code and datasets are freely available at https://www.nyu.edu/projects/yzhang/IMA.

Dataset

Multitask training set for calculated energetics: Frag20-solv-678k.

To simultaneously predict both molecular electronic energies and transfer free energies, we develop Frag20-solv-678k, a multitask training set that contains 678,916 conformations and their calculated energetics in the gas, water, and octanol phases (Table 1, Supplementary Figure 1). The conformations come from three sources: 565,896 conformations are generated from the 565,438 unique molecules in the Frag20 dataset,35 which contains molecules with up to 20 heavy atoms carefully selected from ZINC54–56 and PubChem57, 58 based on the number of heavy atoms, extended functional groups, and fragment frequency;35 39,816 conformations are generated from 33,572 unique molecules in the CSD20 dataset, which contains molecules with up to 20 heavy atoms selected from the Cambridge Structural Database;59 and the remaining 73,204 conformations (Conf20) are generated in this work from 11,939 unique molecules selected from the CSD20 dataset. The distributions of the number of heavy atoms and elements in the training datasets are shown in Supplementary Figure 1. More details on the construction of Frag20-solv-678k are given in the Supplementary Information.

Table 1. Datasets that are used for model training and evaluation.

| | | Frag20 | CSD20 | Conf20 | Frag20-solv-678k (Together) | FreeSolv | PHYSPROP | FreeSolv-PHYSPROP-14k (Together) |
|---|---|---|---|---|---|---|---|---|
| Source | | Ref. 35 | Refs. 35, 59 | This work | This work | Ref. 50 | Ref. 60 | This work |
| #Mols | | 565,438 | 33,572 | 11,939 | 599,010 | 640 | 14,500 | 14,339 |
| #Confs | | 565,896 | 39,816 | 73,204 | 678,916 | 640 | 14,500 | 14,339 |
| Geometries | MMFF | Y | Y | Y | Y | Y | Y | Y |
| | DFT | Y | Y | Y | Y | Y | N | N |
| Properties | $E_{\text{gas}}$ | DFT | DFT | DFT | DFT | DFT | DFT | DFT |
| | $E_{\text{wat}}$ | DFT | DFT | DFT | DFT | --- | --- | CAL |
| | $E_{\text{oct}}$ | DFT | DFT | DFT | DFT | --- | --- | CAL |
| | $\Delta G_{\text{hyd}}$ | CAL | CAL | CAL | CAL | EXP | --- | EXP |
| | $\Delta G_{\text{gas}\rightarrow\text{oct}}$ | CAL | CAL | CAL | CAL | --- | --- | CAL |
| | $\Delta G_{\text{oct}\rightarrow\text{wat}}$ | CAL | CAL | CAL | CAL | --- | EXP | EXP |

$E_{\text{gas}}$: gas-phase electronic energy; $E_{\text{wat}}$: water-phase electronic energy; $E_{\text{oct}}$: octanol-phase electronic energy. The $\Delta G$ terms are the transfer free energies (gas to water, gas to octanol, and octanol to water) defined in Equation 1. DFT: the property is calculated with DFT; EXP: the property is measured experimentally; CAL: the property is calculated indirectly from Equation 1 or Equation 3.

The label generation protocol is shown in Figure 1. We perform single-point energy calculations at the B3LYP/6-31G* level with Gaussian16,61 in the gas ($E_{\text{gas}}$), water ($E_{\text{wat}}$), and 1-octanol ($E_{\text{oct}}$) phases, with the solvents modeled by the SMD universal solvation model.62 The calculated single-point electronic energies are then used to compute the transfer free energies between phases, resulting in six labels for each of the 678,916 conformations. The transfer free energies are defined as:

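$$
\begin{aligned}
\Delta G_{\text{hyd}} &= E_{\text{wat}} - E_{\text{gas}} \\
\Delta G_{\text{gas}\rightarrow\text{oct}} &= E_{\text{oct}} - E_{\text{gas}} \\
\Delta G_{\text{oct}\rightarrow\text{wat}} &= E_{\text{wat}} - E_{\text{oct}}
\end{aligned}
\tag{1}
$$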
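As a concrete illustration of how three single-point energies become six training labels, the minimal sketch below applies the differences of Equation 1 to one conformation. The function name, dictionary keys, and energy values are hypothetical and not taken from the authors' code; all inputs are assumed to share one unit (kcal/mol here), and each transfer term follows the convention ΔG(A→B) = E_B − E_A.

```python
def six_labels(e_gas: float, e_wat: float, e_oct: float) -> dict:
    """Return the three phase energies plus the three transfer free energies.

    Inputs must share a single unit; outputs are in that same unit.
    Sign convention: dG(A -> B) = E_B - E_A.
    """
    return {
        "E_gas": e_gas,
        "E_wat": e_wat,
        "E_oct": e_oct,
        "dG_hyd": e_wat - e_gas,         # gas -> water (hydration)
        "dG_gas_to_oct": e_oct - e_gas,  # gas -> octanol
        "dG_oct_to_wat": e_wat - e_oct,  # octanol -> water
    }


# Hypothetical energies (kcal/mol) for a single conformation.
labels = six_labels(e_gas=-100.0, e_wat=-105.0, e_oct=-107.0)
print(labels["dG_hyd"], labels["dG_gas_to_oct"], labels["dG_oct_to_wat"])  # -5.0 -7.0 2.0
```

Only two of the three transfer terms are independent: the gas-to-octanol and octanol-to-water terms always add up to the hydration term (−7.0 + 2.0 = −5.0 in this example).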

Figure 1.

Frag20-solv-678k dataset generation protocol. A. 3D geometry generation protocol. B. Single-point energy calculations in three phases. C. Post-processing to compute the transfer free energy terms.

$E_{\text{gas}}$: gas-phase electronic energy; $E_{\text{wat}}$: water-phase electronic energy; $E_{\text{oct}}$: octanol-phase electronic energy. The $\Delta G$ terms are defined in Equation 1.

Multitask training set for experimental energetics: FreeSolv-PHYSPROP-14k.

To make full use of the limited experimental data for multitask training, we combine the compounds in the FreeSolv50 and PHYSPROP51, 52 datasets into a multitask dataset of experimental transfer free energies (Table 1). The FreeSolv50 dataset contains experimental hydration free energies ($\Delta G_{\text{hyd}}$) for about 640 fragment-like neutral molecules, which were selected from prior literature and curated to correct a number of errors and redundancies. The PHYSPROP51, 52 dataset contains about 14,500 molecules and their experimental logP values, further curated following an established protocol60, 63 that includes removal of inorganics and mixtures, structural conversion and cleaning, normalization of specific chemotypes, removal of duplicates, and manual inspection. The experimental octanol-to-water transfer free energy ($\Delta G_{\text{oct}\rightarrow\text{wat}}$) is calculated from experimental logP with the following thermodynamic relation:47

$$\Delta G_{\text{oct}\rightarrow\text{wat}} = RT\ln(10)\,\log P \approx 2.303\,RT\log P \tag{2}$$

where $R$ is the ideal gas constant and $T$ is the temperature, taken as 298.15 K in this work.
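The conversion between logP and a transfer free energy is simple arithmetic, sketched below under the convention ΔG_oct→wat = RT ln(10) logP, so that lipophilic compounds (logP > 0) have a positive octanol-to-water transfer free energy. The function names are hypothetical; R is taken as 1.987204 × 10⁻³ kcal/(mol·K) and T as 298.15 K.

```python
import math

R_KCAL = 1.987204e-3  # ideal gas constant, kcal/(mol*K)
T_DEFAULT = 298.15    # K, the temperature assumed in this work


def logp_to_dg(logp: float, temperature: float = T_DEFAULT) -> float:
    """Octanol-to-water transfer free energy (kcal/mol) from logP."""
    return R_KCAL * temperature * math.log(10.0) * logp


def dg_to_logp(dg: float, temperature: float = T_DEFAULT) -> float:
    """Inverse conversion: logP from an octanol-to-water transfer free energy."""
    return dg / (R_KCAL * temperature * math.log(10.0))


print(round(logp_to_dg(1.0), 3))  # 1.364 (kcal/mol per log unit at 298.15 K)
```

At 298.15 K, one log unit of logP corresponds to about 1.364 kcal/mol, so the logP RMSE of 0.393 reported for sPhysNet-MT-ens5 corresponds to roughly 0.54 kcal/mol in transfer free energy.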

As shown in Figure 2A and B, for each compound in the combined dataset, conformations are generated with ETKDG and optimized with MMFF. Unlike Frag20, where only one conformation is generated per molecule, up to 300 conformations are generated here for each molecule, and the one with the lowest MMFF energy is selected.

Figure 2.

Experimental dataset generation protocol. A. Up to 300 conformations are generated with ETKDG64 in RDKit65 and optimized with MMFF94. B. The conformation with the lowest MMFF energy is selected for each compound. C. Label calculation for molecules present in both datasets.

As shown in Figure 2C, if a molecule is present in both datasets, both its experimental hydration free energy and its octanol-to-water transfer free energy (from logP) are available, so all six labels can be calculated once its gas-phase electronic energy is known. For these molecules, a gas-phase single-point DFT calculation is performed to obtain the gas-phase electronic energy, and the electronic energies in the other phases are calculated with the following equations, derived from Equation 1:

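$$
\begin{aligned}
E_{\text{wat}} &= E_{\text{gas}} + \Delta G_{\text{hyd}} \\
E_{\text{oct}} &= E_{\text{gas}} + \Delta G_{\text{hyd}} - \Delta G_{\text{oct}\rightarrow\text{wat}} \\
\Delta G_{\text{gas}\rightarrow\text{oct}} &= \Delta G_{\text{hyd}} - \Delta G_{\text{oct}\rightarrow\text{wat}}
\end{aligned}
\tag{3}
$$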
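The label bookkeeping for a molecule present in both datasets can be sketched as follows. This is an illustrative sketch rather than the authors' code: the function name is hypothetical, energies are assumed to be in kcal/mol, and logP is converted with ΔG_oct→wat = RT ln(10) logP at 298.15 K. The three transfer terms use the convention ΔG(A→B) = E_B − E_A.

```python
import math

# RT ln(10) at 298.15 K in kcal/mol, assuming dG_oct->wat = RT ln(10) logP
RT_LN10 = 1.987204e-3 * 298.15 * math.log(10.0)


def labels_for_shared_molecule(e_gas: float, dg_hyd: float, logp: float) -> dict:
    """Complete all six labels for a molecule found in both FreeSolv and PHYSPROP.

    e_gas: gas-phase DFT single-point energy (kcal/mol)
    dg_hyd: experimental hydration free energy (kcal/mol)
    logp: experimental logP
    """
    dg_oct_to_wat = RT_LN10 * logp
    e_wat = e_gas + dg_hyd         # from dG_hyd = E_wat - E_gas
    e_oct = e_wat - dg_oct_to_wat  # from dG_oct->wat = E_wat - E_oct
    return {
        "E_gas": e_gas,
        "E_wat": e_wat,
        "E_oct": e_oct,
        "dG_hyd": dg_hyd,
        "dG_gas_to_oct": e_oct - e_gas,  # equals dG_hyd - dG_oct->wat
        "dG_oct_to_wat": dg_oct_to_wat,
    }


# Hypothetical inputs: E_gas = -1000 kcal/mol, dG_hyd = -5 kcal/mol, logP = 1.
lab = labels_for_shared_molecule(-1000.0, -5.0, 1.0)
print(round(lab["E_oct"], 3))
```

Only the gas-phase energy requires a new DFT calculation; everything else follows from the two experimental values.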

For molecules present in only one dataset, no further calculation is performed, and all labels other than the corresponding experimental transfer free energy are marked as unavailable. The combined FreeSolv-PHYSPROP dataset contains 14,339 molecules: 13,700 only in the PHYSPROP dataset, 165 only in the FreeSolv dataset, and 474 in both datasets.

Model and Training

sPhysNet-MT model.

Inspired by the thermodynamic relationships between the properties described in Equation 1 and Equation 2, we develop sPhysNet-MT, a multitask DL model that predicts electronic energies directly and transfer free energies indirectly.

Architecture.

Figure 3 shows an overview of sPhysNet-MT. First, each of the N atoms in a molecule is mapped to a vector through an embedding matrix (node embedding), and the distance between each atom pair is expanded into a vector with radial basis functions (RBFs) (edge embedding). The node embeddings are then updated by three interaction modules consisting of message passing, residual layers, and gate layers. The final node embeddings pass through the output layer to predict the target properties. For each input conformation, sPhysNet-MT directly predicts three values, the gas-phase electronic energy $E_{\text{gas}}$, the water-phase electronic energy $E_{\text{wat}}$, and the octanol-phase electronic energy $E_{\text{oct}}$, and calculates the transfer free energies from them with Equation 1.

Figure 3.

Overview of the sPhysNet-MT model architecture and training procedure. A. The architecture of sPhysNet-MT. The model takes the 3D coordinates and the atomic number of each atom and predicts the electronic energies in three phases. B. The loss function for multitask training (Equation 4). C and D. The single-task scenarios in which only part of the energetics is available in the experimental dataset.

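Figure 4 shows the detailed architecture of each layer in sPhysNet-MT. The embedding layer contains an embedding matrix $\mathbf{W}^{\text{emb}} \in \mathbb{R}^{94 \times F}$, where 94 is the maximum supported atomic number and $F$ is the feature dimension. Each atom with atomic number $Z_i$ is encoded into the vector $\mathbf{x}_i^{0} = \mathbf{W}^{\text{emb}}_{Z_i}$, where $i$ is the atom index in a molecule of $N$ atoms. The Euclidean distance $r_{ij}$ between each atom pair within a cutoff $r_{\text{cut}}$ is expanded into a vector using RBFs (Figure 4A) of the form:

$$e_k(r_{ij}) = \phi(r_{ij})\,\exp\!\left(-\beta_k\left(\exp(-r_{ij}) - \mu_k\right)^2\right)$$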

Figure 4.

The detailed architecture of the layers in sPhysNet-MT. A. RBF expansion layer: distances between atom pairs are expanded into vectors. B. Embedding layer: atoms are embedded into vectors based on their atomic numbers. C. Interaction layer: sPhysNet-MT contains three interaction layers with message passing, residual layers, and gate layers. D. Output layer: the updated node embeddings pass through the output layer to predict the target properties. E. Residual layer architecture used in C and D.

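where $k = 1, \dots, K$ and $K$ is the total number of RBFs, so that each pairwise distance is mapped to a vector $\mathbf{e}(r_{ij}) \in \mathbb{R}^{K}$. $\mu_k$ and $\beta_k$ are learnable parameters that specify the center and width of $e_k$. The centers are initialized to $K$ equally spaced values between $\exp(-r_{\text{cut}})$ and 1, where $r_{\text{cut}}$ is the cutoff radius, and all widths are initialized to $\left(2K^{-1}\left(1 - \exp(-r_{\text{cut}})\right)\right)^{-2}$. $\phi$ is a smooth cutoff function defined as:

$$
\phi(r_{ij}) =
\begin{cases}
1 - 6\left(\dfrac{r_{ij}}{r_{\text{cut}}}\right)^5 + 15\left(\dfrac{r_{ij}}{r_{\text{cut}}}\right)^4 - 10\left(\dfrac{r_{ij}}{r_{\text{cut}}}\right)^3, & r_{ij} < r_{\text{cut}} \\
0, & r_{ij} \geq r_{\text{cut}}
\end{cases}
$$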
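To make the edge embedding concrete, the sketch below evaluates the RBF expansion for a single distance at its initial parameter values. It assumes the PhysNet-style form e_k(r) = φ(r) exp(−β_k (exp(−r) − μ_k)²) with the quintic cutoff φ(r) = 1 − 6x⁵ + 15x⁴ − 10x³, x = r/r_cut. Because this text does not give the cutoff radius or the number of RBFs, `r_cut=10.0` and `n_rbf=64` in the usage line are illustrative placeholders, and in the real model μ_k and β_k are trained rather than fixed.

```python
import math


def cutoff(r: float, r_cut: float) -> float:
    """Smooth cutoff: 1 at r = 0, decays to 0 at r = r_cut, and 0 beyond."""
    if r >= r_cut:
        return 0.0
    x = r / r_cut
    return 1.0 - 6.0 * x**5 + 15.0 * x**4 - 10.0 * x**3


def rbf_expand(r: float, r_cut: float, n_rbf: int) -> list:
    """Expand one interatomic distance into n_rbf features (requires n_rbf >= 2).

    Uses the initial parameter values: centers equally spaced between
    exp(-r_cut) and 1, and a shared width (2 / n_rbf * (1 - exp(-r_cut)))**-2.
    """
    low = math.exp(-r_cut)
    centers = [low + (1.0 - low) * k / (n_rbf - 1) for k in range(n_rbf)]
    width = (2.0 / n_rbf * (1.0 - low)) ** -2
    phi = cutoff(r, r_cut)
    return [phi * math.exp(-width * (math.exp(-r) - mu) ** 2) for mu in centers]


features = rbf_expand(1.5, r_cut=10.0, n_rbf=64)  # illustrative values only
print(len(features))  # 64
```

Expanding exp(−r) rather than r itself places more centers at short distances, where the energy varies most rapidly, and the cutoff makes every feature vanish smoothly at r_cut.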

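The atom and edge embeddings then pass through three interaction layers, as shown in Figure 4C. The node embedding of each atom is first updated through message passing, based on the node embeddings of its neighbors and the expanded distances:

$$\tilde{\mathbf{x}}_i^{l} = \sigma\!\left(\mathbf{W}_I\,\sigma(\mathbf{x}_i^{l}) + \mathbf{b}_I\right) + \sum_{j \neq i,\; r_{ij} < r_{\text{cut}}} \left(\mathbf{G}\,\mathbf{e}(r_{ij})\right) \circ \sigma\!\left(\mathbf{W}_J\,\sigma(\mathbf{x}_j^{l}) + \mathbf{b}_J\right)$$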

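where $\mathbf{W}_I$, $\mathbf{W}_J$, $\mathbf{b}_I$, $\mathbf{b}_J$, and $\mathbf{G}$ are trainable parameters, $\circ$ denotes the Hadamard product, and $\sigma$ is the shifted softplus activation function, applied element-wise:

$$\sigma(x) = \ln\left(1 + e^{x}\right) - \ln 2$$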

The updated atom embeddings then pass through a residual layer (Figure 4E), an activation, a linear layer, a gating layer controlled by a learnable gating vector $\mathbf{u}$, and another residual layer. The output of each interaction layer is used as the input to the next interaction layer or, after the last interaction layer, to the output layer.

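After the three interaction layers, an output layer predicts the properties from the atom embeddings. The final linear transformation in the output layer uses a matrix $\mathbf{W}^{\text{out}} \in \mathbb{R}^{F \times 3}$ to produce raw atom-level predictions of the electronic energies in the gas, water, and octanol phases, $\hat{E}_{\text{gas},i}$, $\hat{E}_{\text{wat},i}$, and $\hat{E}_{\text{oct},i}$. The raw predictions are then scaled and shifted before being summed into the final molecular properties:

$$E_{p} = \sum_{i=1}^{N}\left(s^{p}_{Z_i}\,\hat{E}_{p,i} + b^{p}_{Z_i}\right), \qquad p \in \{\text{gas}, \text{wat}, \text{oct}\}$$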

where $s^{p}_{Z_i}$ and $b^{p}_{Z_i}$ are learnable element-specific scale and shift parameters, and $N$ is the total number of atoms in the molecule.
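The per-atom readout and its link to the transfer free energies can be sketched in plain Python as follows. The data layout (per-phase tuples, parameters keyed by atomic number) and all numbers in the usage lines are hypothetical; in the model, the raw values come from the output layer and the scale and shift parameters are learned.

```python
PHASES = ("gas", "wat", "oct")


def readout(atomic_numbers, raw, scale, shift):
    """Scale, shift, and sum atom-level outputs into molecular energies.

    atomic_numbers: [Z_1, ..., Z_N]
    raw: N tuples (gas, wat, oct) of raw atom-level outputs
    scale, shift: dicts mapping Z -> (gas, wat, oct) element-specific parameters
    Returns (molecular properties, per-atom contributions).
    """
    contrib = [
        tuple(scale[z][p] * r[p] + shift[z][p] for p in range(3))
        for z, r in zip(atomic_numbers, raw)
    ]
    mol = {name: sum(c[p] for c in contrib) for p, name in enumerate(PHASES)}
    # Transfer free energies follow from the summed phase energies.
    mol["dG_hyd"] = mol["wat"] - mol["gas"]
    mol["dG_gas_to_oct"] = mol["oct"] - mol["gas"]
    mol["dG_oct_to_wat"] = mol["wat"] - mol["oct"]
    return mol, contrib


# Hypothetical two-atom example: one H (Z=1) and one O (Z=8).
mol, contrib = readout(
    [1, 8],
    [(1.0, 2.0, 3.0), (0.5, 0.5, 0.5)],
    scale={1: (1.0, 1.0, 1.0), 8: (2.0, 2.0, 2.0)},
    shift={1: (0.0, 0.0, 0.0), 8: (1.0, 1.0, 1.0)},
)
print(mol["dG_hyd"])  # 1.0
```

Because the readout is a plain sum, the per-atom differences (for example `c[1] - c[0]` for each atom's contribution `c`) add up exactly to the molecular hydration free energy; this additivity is what makes an atom-level contribution analysis possible.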

Training on calculated data.

Since all three electronic energies and all three transfer free energies are available in the calculated dataset, the training objective is to minimize the loss function:

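$$\mathcal{L} = \mathcal{L}_{E} + w\,\mathcal{L}_{\Delta G} \tag{4}$$

$$\mathcal{L}_{E} = \frac{1}{3}\sum_{p \in \{\text{gas},\,\text{wat},\,\text{oct}\}} \text{MAE}\left(\hat{E}_{p}, E_{p}\right) \tag{5}$$

$$\mathcal{L}_{\Delta G} = \frac{1}{3}\sum_{t \in \{\text{hyd},\;\text{gas}\rightarrow\text{oct},\;\text{oct}\rightarrow\text{wat}\}} \text{MAE}\left(\Delta\hat{G}_{t}, \Delta G_{t}\right) \tag{6}$$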

Here $\mathcal{L}_{E}$ is the mean absolute error (MAE) of the three directly predicted terms and $\mathcal{L}_{\Delta G}$ is the MAE of the three transfer free energy terms. $w$ is a hyperparameter that makes the model focus more on the energy difference terms than on the direct terms. We also tried other values, including 10, 50, and 100, and observed very similar results. All $E$ and $\Delta G$ terms are in electron volts (eV) during training. The detailed optimization hyperparameters are listed in Supplementary Table 1.
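A minimal sketch of the multitask loss follows, assuming that the direct-energy term and the transfer term are each the average of three per-target MAEs, and that the transfer terms are derived from predicted and reference energies by simple differences. The weight is left as a parameter because its value is not given in this text; the batch layout and function names are hypothetical.

```python
from typing import Dict, List

Batch = Dict[str, List[float]]
ENERGY_KEYS = ("E_gas", "E_wat", "E_oct")


def mae(pred: List[float], target: List[float]) -> float:
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def transfer_terms(e: Batch) -> Batch:
    """Transfer free energies as phase-energy differences, element-wise over a batch."""
    return {
        "dG_hyd": [w - g for g, w in zip(e["E_gas"], e["E_wat"])],
        "dG_gas_to_oct": [o - g for g, o in zip(e["E_gas"], e["E_oct"])],
        "dG_oct_to_wat": [w - o for o, w in zip(e["E_oct"], e["E_wat"])],
    }


def multitask_loss(pred: Batch, target: Batch, weight: float) -> float:
    """Direct-energy MAE plus `weight` times the transfer-free-energy MAE."""
    loss_e = sum(mae(pred[k], target[k]) for k in ENERGY_KEYS) / 3
    tp, tt = transfer_terms(pred), transfer_terms(target)
    loss_dg = sum(mae(tp[k], tt[k]) for k in tp) / 3
    return loss_e + weight * loss_dg


pred = {"E_gas": [1.0], "E_wat": [2.0], "E_oct": [3.0]}
target = {"E_gas": [0.0], "E_wat": [2.0], "E_oct": [3.0]}
print(multitask_loss(pred, target, weight=10.0))
```

In this toy case, a single error in the gas-phase energy is counted once in the direct term but propagates into two of the three transfer terms, which illustrates how the tasks are coupled through the loss.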

Although Frag20-solv-678k provides both MMFF- and DFT-optimized geometries, a model that requires DFT-optimized geometries as input would be impractical to use, as discussed above. Therefore, we use MMFF-optimized geometries as the input for training.

We randomly split Frag20-solv-678k into training, validation, and test sets of 658,916, 10,000, and 10,000 conformations, respectively. In each epoch, the model is trained on the training set and evaluated on the validation set, and the model with the lowest validation loss is saved as the best model for testing. No early stopping is used; the model is trained until it reaches either the time limit (36 hours on a Tesla V100-SXM2-16GB GPU) or 1000 epochs, whichever comes first.
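The split and checkpoint-selection logic can be sketched as follows. The seed and helper names are hypothetical, and the actual training loop (optimizer, per-epoch evaluation, time limit) is omitted.

```python
import random


def random_split(n_total: int, n_val: int, n_test: int, seed: int = 0):
    """Shuffle indices 0..n_total-1 and cut them into train/validation/test lists."""
    idx = list(range(n_total))
    random.Random(seed).shuffle(idx)
    test = idx[:n_test]
    val = idx[n_test:n_test + n_val]
    train = idx[n_test + n_val:]
    return train, val, test


def best_epoch(val_losses):
    """Index of the epoch with the lowest validation loss (the checkpoint kept)."""
    return min(range(len(val_losses)), key=val_losses.__getitem__)


train, val, test = random_split(678_916, n_val=10_000, n_test=10_000)
print(len(train), len(val), len(test))  # 658916 10000 10000
```

Selecting the checkpoint by validation loss, rather than stopping early, lets training run to the time or epoch limit while still keeping the best-generalizing weights.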

Training on experimental data.

The model is initialized with the weights of the model trained on Frag20-solv-678k and is trained with the same loss function as Equation 4. However, as shown in Figure 2C, only 474 molecules have all six labels available. For the remaining 165 molecules in FreeSolv and 13,700 molecules in PHYSPROP, only the corresponding experimental transfer free energy is available; therefore, we mask out the unavailable terms when calculating the loss.
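One way to implement the masking is sketched below, assuming missing labels are stored as `None`; this representation and the helper name are hypothetical. Each masked term would stand in for the corresponding MAE in the multitask loss.

```python
from typing import List, Optional


def masked_mae(pred: List[float], target: List[Optional[float]]) -> float:
    """MAE over entries whose label is available (missing labels are None).

    Returns 0.0 when no entry in the batch has a label, so the term simply
    contributes nothing to the total loss.
    """
    pairs = [(p, t) for p, t in zip(pred, target) if t is not None]
    if not pairs:
        return 0.0
    return sum(abs(p - t) for p, t in pairs) / len(pairs)


# Hypothetical hydration free energies: the second molecule is PHYSPROP-only,
# so it has no hydration label and is skipped.
print(masked_mae([-5.2, -3.0, -7.1], [-5.0, None, -7.5]))
```

Averaging only over available labels keeps the scale of each term independent of how many molecules in a batch happen to lack that label.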

Because there are far fewer hydration free energy data than logP data, we apply random oversampling66 so that molecules from the two datasets are sampled with more comparable probabilities during training. Apart from oversampling, the model is optimized with slightly different hyperparameters, listed in Supplementary Table 1.
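A minimal sketch of random oversampling follows, assuming the goal is to equalize how often each source is seen per epoch. The exact balancing ratio and how molecules present in both datasets are grouped are not specified in this text; the grouping, names, and toy sizes below are hypothetical.

```python
import random
from typing import Dict, List


def oversample(indices_by_source: Dict[str, List[int]], seed: int = 0) -> List[int]:
    """Random oversampling: draw extra copies (with replacement) from smaller
    sources until every source contributes as many samples as the largest one."""
    rng = random.Random(seed)
    target = max(len(idx) for idx in indices_by_source.values())
    epoch: List[int] = []
    for idx in indices_by_source.values():
        extra = [rng.choice(idx) for _ in range(target - len(idx))]
        epoch.extend(idx + extra)
    rng.shuffle(epoch)
    return epoch


# Toy sizes: 3 hydration-labelled molecules vs 10 logP-only molecules.
epoch = oversample({"hydration": [0, 1, 2], "logp": list(range(3, 13))})
print(len(epoch))  # 20
```

Every original molecule still appears at least once per epoch; only the minority source is repeated.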

Deep Ensemble.

Deep ensembles67 are used to further improve model performance and to provide an estimate of model uncertainty. Five models are independently initialized at random and trained with identical hyperparameters and training data. At prediction time, the average of the five models' predictions is used as the ensemble prediction, and their standard deviation is used as the ensemble model uncertainty.
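The aggregation step of the deep ensemble can be sketched as follows. Stand-in callables replace the trained networks, and the use of the population standard deviation (`statistics.pstdev`) rather than the sample version is an assumption, since the text does not specify which is used.

```python
import statistics
from typing import Callable, Sequence, Tuple


def ensemble_predict(models: Sequence[Callable[[float], float]], x: float) -> Tuple[float, float]:
    """Return (mean, standard deviation) of the members' predictions for input x."""
    preds = [model(x) for model in models]
    return statistics.mean(preds), statistics.pstdev(preds)


# Five hypothetical "models" that disagree by fixed offsets. The default
# argument binds each offset at definition time (avoids late binding).
members = [lambda x, d=d: x + d for d in (-2.0, -1.0, 0.0, 1.0, 2.0)]
mean, std = ensemble_predict(members, 10.0)
print(mean, round(std, 4))  # 10.0 1.4142
```

The spread reflects disagreement between independently trained members; it is an estimate of model uncertainty, not of noise in the reference labels.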

In this paper, sPhysNet-MT-ens5 stands for a deep ensemble of five independent models and sPhysNet-MT stands for a single model.

Results and Discussion

Calculated Energetics Prediction.

After training sPhysNet-MT and sPhysNet-MT-ens5 as described above, we evaluate the models on the held-out test set of 10,000 conformations from Frag20-solv-678k. As shown in Table 2, sPhysNet-MT predicts electronic energies with MAEs of about 0.7 kcal/mol and transfer free energies with MAEs of at most about 0.2 kcal/mol. The deep ensemble further improves performance on all tasks: sPhysNet-MT-ens5 predicts electronic energies with MAEs below 0.6 kcal/mol and the three transfer free energy terms with MAEs of 0.187, 0.145, and 0.079 kcal/mol, respectively.

Table 2.

The test performance on the Frag20-solv-678k dataset. sPhysNet-MT-ens5 is a deep ensemble of five sPhysNet-MT models. The errors are reported in kcal/mol.

| Model | Metric | $E_{\text{gas}}$ | $E_{\text{wat}}$ | $E_{\text{oct}}$ | $\Delta G_{\text{hyd}}$ | $\Delta G_{\text{gas}\rightarrow\text{oct}}$ | $\Delta G_{\text{oct}\rightarrow\text{wat}}$ |
|---|---|---|---|---|---|---|---|
| sPhysNet-MT | MAE | 0.719 | 0.691 | 0.695 | 0.205 | 0.161 | 0.086 |
| | RMSE | 1.143 | 1.074 | 1.087 | 0.377 | 0.300 | 0.139 |
| sPhysNet-MT-ens5 | MAE | 0.599 | 0.571 | 0.573 | 0.187 | 0.145 | 0.079 |
| | RMSE | 0.993 | 0.932 | 0.943 | 0.357 | 0.283 | 0.131 |

Experimental Energetics Prediction.

Following the multitask training strategy described above, we further fine-tune the model to predict experimental properties. For consistency with previous publications, we perform 50 independent runs with random splits and report the mean and standard deviation of the performance on the test splits. As shown in Table 3, sPhysNet-MT-ens5 predicts experimental hydration free energy with an RMSE of 0.620±0.140 kcal/mol and logP with an RMSE of 0.393±0.012, achieving state-of-the-art performance on both tasks.

Table 3.

The test performance on the FreeSolv-PHYSPROP dataset. Each model is trained on 50 random splits, and the mean and standard deviation are reported. sPhysNet-MT-ens5 is a deep ensemble of five sPhysNet-MT models. Errors in hydration free energy are reported in kcal/mol and errors in logP in log units.

| Ref. | Model | Hydration (FreeSolv) MAE | Hydration (FreeSolv) RMSE | logP (PHYSPROP) MAE | logP (PHYSPROP) RMSE |
|---|---|---|---|---|---|
| This work | sPhysNet-MT | 0.392±0.064 | 0.663±0.135 | 0.262±0.006 | 0.421±0.017 |
| This work | sPhysNet-MT-ens5 | 0.359±0.063 | 0.620±0.140 | 0.242±0.004 | 0.393±0.012 |
| | *Previous hydration free energy prediction models* | | | | |
| 36 | SVM | --- | 0.852±0.171 | --- | --- |
| 36 | XGBoost | --- | 1.025±0.185 | --- | --- |
| 36 | RF | --- | 1.143±0.230 | --- | --- |
| 36 | DNN | --- | 1.013±0.197 | --- | --- |
| 36 | GCN | --- | 1.149±0.262 | --- | --- |
| 36 | GAT | --- | 1.304±0.272 | --- | --- |
| 36 | MPNN | --- | 1.327±0.279 | --- | --- |
| 36 | Attentive FP | --- | 1.091±0.191 | --- | --- |
| 40 | 3DGCN | 0.575±0.053 | 0.824±0.140 | --- | --- |
| 42 | AGBT | 0.594±0.090 | 0.994±0.217 | --- | --- |
| 39 | D-MPNN | --- | 0.998±0.207 | --- | --- |
| 37, 68 | weave | --- | 1.220±0.280 | --- | --- |
| 43 | A3D-PNAConv | 0.417±0.066 | 0.719±0.168 | --- | --- |
| 69 | FML | 0.570 | --- | --- | --- |
| | *Previous logP prediction models* | | | | |
| 70 | QSPR | --- | --- | --- | 0.78 |
| 60 | GraphCNN | --- | --- | --- | 0.56 |
| 71 | | --- | --- | --- | 0.47±0.02 |
| 71 | | --- | --- | --- | 0.50±0.02 |
| 72 | OPERA | --- | --- | --- | 0.78 |

Atom contribution analysis for model prediction explanation.

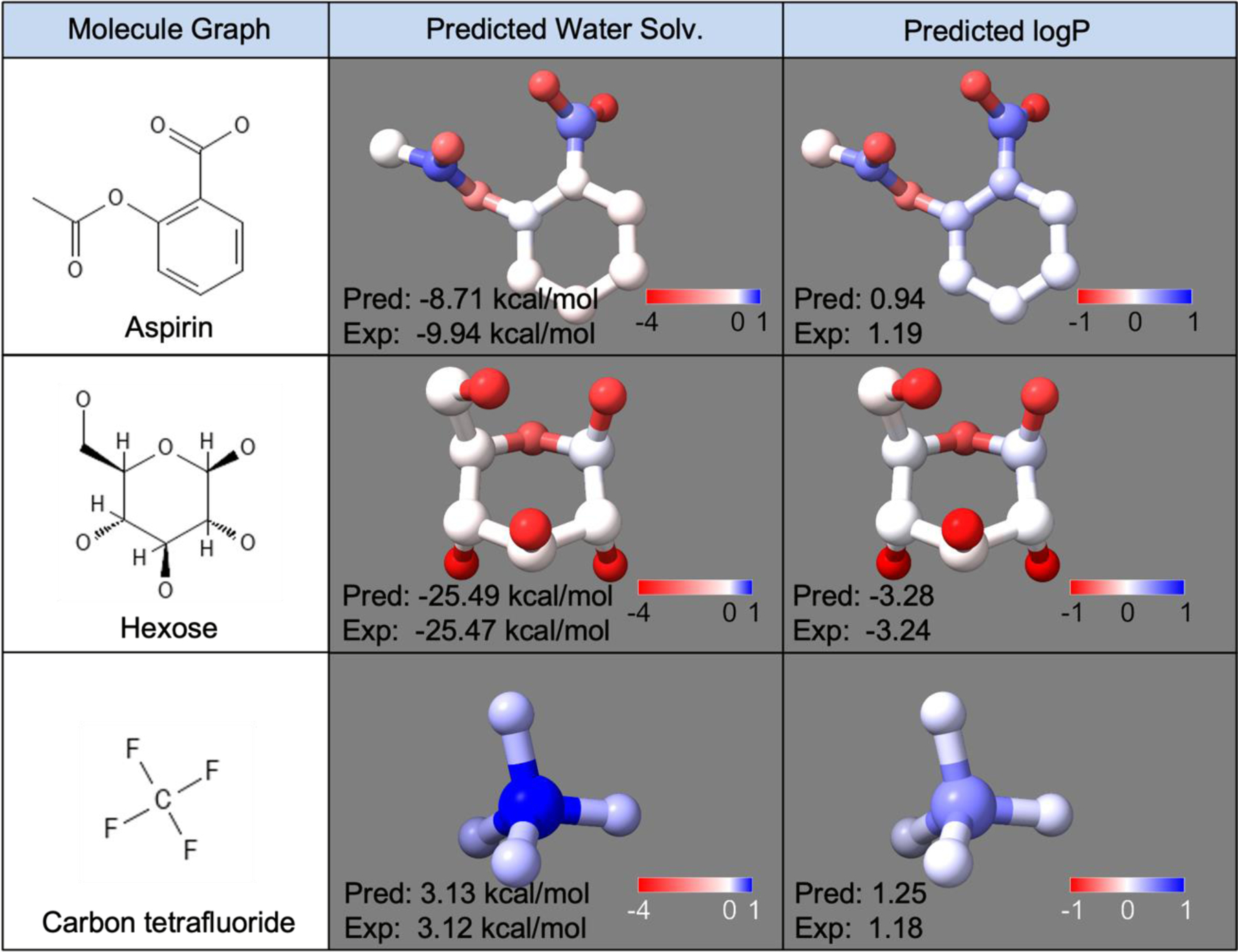

One disadvantage of DL models is that they are difficult to interpret because of their complex architectures and huge numbers of parameters. Fortunately, sPhysNet-MT-ens5 directly predicts atom-level energetics and sums them to obtain molecular energetics, which allows us to decompose a model prediction into atom-level contributions for further interpretation. As shown in Figure 5, we select three examples and visualize the predicted atomic contributions for both tasks. For aspirin (row 1), most of the hydration free energy is contributed by the four oxygen atoms, and the hydroxyl oxygen has the largest contribution, which is reasonable because it can dissociate or form hydrogen bonds. The two carbonyl oxygen atoms also show strong contributions, consistent with their role as hydrogen bond acceptors. For hexose (row 2), all hydroxyl oxygens are assigned strong contributions to the hydration free energy, and the single ether oxygen is assigned a relatively weaker (lighter-colored) contribution. In contrast, carbon tetrafluoride is an extremely nonpolar compound, and all of its atoms show positive contributions to the solvation free energy. The logP contributions show trends very similar to those of the hydration free energy prediction. In summary, the atomic contribution analysis shows that the model is aware of different atom types and chemical environments, making predictions consistent with chemical knowledge. Note that the atomic contribution analysis is used solely to explain model predictions and is different from energy decomposition analysis.73, 74

Figure 5.

The atomic contributions assigned by sPhysNet-MT-ens5 on both hydration free energy prediction and logP prediction for aspirin, hexose, and carbon tetrafluoride.

Uncertainty analysis.

Uncertainty quantification is an active research topic for DL models.29, 34, 75–85 As a deep ensemble model,67 sPhysNet-MT-ens5 provides a measure of model uncertainty. Supplementary Figure 2 and Supplementary Figure 3 show the relationship between prediction errors and uncertainties on the calculated and experimental datasets, respectively. As the model uncertainty increases, both the cumulative and the interval errors increase, which means that molecules with higher uncertainties have higher expected errors than molecules with lower uncertainties.
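One plausible way to compute cumulative and interval errors is to rank samples by ensemble uncertainty and bin them, as in the sketch below. The equal-count binning, the number of bins, and the toy data are assumptions; the text does not state how the curves in Supplementary Figures 2 and 3 were binned.

```python
from typing import List, Tuple


def error_by_uncertainty(errors: List[float], uncertainties: List[float],
                         n_bins: int = 4) -> List[Tuple[float, float]]:
    """Sort samples by uncertainty and return (interval MAE, cumulative MAE) per bin.

    Bins hold roughly equal numbers of samples; requires len(errors) >= n_bins.
    interval MAE: mean |error| within the bin.
    cumulative MAE: mean |error| over all samples up to and including the bin.
    """
    order = sorted(range(len(errors)), key=lambda i: uncertainties[i])
    abs_err = [abs(errors[i]) for i in order]
    n = len(abs_err)
    edges = [round(k * n / n_bins) for k in range(n_bins + 1)]
    rows = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        rows.append((sum(abs_err[lo:hi]) / (hi - lo), sum(abs_err[:hi]) / hi))
    return rows


# Invented toy data in which larger uncertainty goes with larger error.
errs = [0.1, -0.2, 0.3, -0.4, 0.5, -0.6, 0.7, -0.8]
uncs = [0.05, 0.1, 0.15, 0.2, 0.25, 0.3, 0.35, 0.4]
for interval, cumulative in error_by_uncertainty(errs, uncs):
    print(f"{interval:.2f} {cumulative:.2f}")
```

If the uncertainty is informative, both columns grow from the first bin to the last, which is the trend described above.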

Conclusion

In this work, we present sPhysNet-MT-ens5, a deep ensemble model that can simultaneously predict electronic energies and transfer free energies, unifying tasks that other ML and DL models typically treat separately. On the calculated dataset, the model benefits from mutual learning and achieves chemical accuracy on both electronic energies and transfer free energies with MMFF-optimized geometries as input. On the experimental dataset, the model makes full use of the limited experimental data through multitask learning and outperforms existing models on both experimental hydration free energy and logP prediction. Decomposing the predictions into atomic contributions further reveals that the model learns reasonable chemical knowledge, assigning meaningful values to different atoms based on their properties and chemical environments. Our work not only provides a useful computational model for predicting electronic energies and transfer free energies directly from MMFF-optimized geometries, but also offers a multitask protocol for designing ML and DL models that learn more robust molecular representations.

Our method also has several limitations that warrant future research. As discussed by Ulrich et al.,71 if a compound is ionizable, its octanol-water distribution coefficient (logD) depends on the pH of the solution and the pKa of the compound, and a more complex model would be needed for logD prediction. Relatedly, sPhysNet-MT does not explicitly take the total charge of the system as input; as a result, it would be challenging for the model to predict the properties of ionizable compounds, which tend to be charged in aqueous solution. The model should therefore be extended to handle charged molecules so that it can deal with systems containing ionizable compounds.

Supplementary Material

ACKNOWLEDGMENT

This work was supported by the U.S. National Institutes of Health (R35-GM127040). We thank NYU-ITS for providing computational resources.


ASSOCIATED CONTENT

SUPPORTING INFORMATION

Training hyperparameters (Supplementary Table 1); The distribution of number of heavy atoms and elements in the training dataset (Supplementary Figure 1); Uncertainty analysis on calculated dataset (Supplementary Figure 2); Uncertainty analysis on experimental dataset (Supplementary Figure 3); DFT calculated vs. experimental hydration free energy (Supplementary Figure 4); DFT calculated vs. experimental logP (Supplementary Figure 5); DFT calculated vs. experimental hydration free energy with different optimized geometries (Supplementary Figure 6); Frag20-solv-678k Dataset Construction Details and Discussions. (PDF)

This material is available free of charge via the Internet at http://pubs.acs.org.

The authors declare no competing financial interest.

References

- (1).Rossi M; Chutia S; Scheffler M; Blum V Validation Challenge of Density-Functional Theory for Peptides—Example of Ac-Phe-Ala 5 -LysH +. J Phys Chem 2014, 118 (35), 7349–7359. DOI: 10.1021/jp412055r. [DOI] [PubMed] [Google Scholar]

- (2).Hawkins PCD Conformation Generation: The State of the Art. J Chem Inf Model 2017, 57 (8), 1747–1756. DOI: 10.1021/acs.jcim.7b00221. [DOI] [PubMed] [Google Scholar]

- (3).Halgren TA Merck molecular force field. II. MMFF94 van der Waals and electrostatic parameters for intermolecular interactions. J. Comput. Chem 1996, 17 (5‐6), 520–552. DOI: . [DOI] [Google Scholar]

- (4).Bannwarth C; Ehlert S; Grimme S GFN2-xTB—An Accurate and Broadly Parametrized Self-Consistent Tight-Binding Quantum Chemical Method with Multipole Electrostatics and Density-Dependent Dispersion Contributions. J Chem Theory Comput 2019, 15 (3), 1652–1671. DOI: 10.1021/acs.jctc.8b01176. [DOI] [PubMed] [Google Scholar]

- (5).Huang B; Lilienfeld OA v. Ab Initio Machine Learning in Chemical Compound Space. Chem Rev 2021, 121 (16), 10001–10036. DOI: 10.1021/acs.chemrev.0c01303. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (6).Lilienfeld O. A. v.; Müller K-R; Tkatchenko A Exploring chemical compound space with quantum-based machine learning. Nat Rev Chem 2020, 4 (7), 347–358. DOI: 10.1038/s41570-020-0189-9. [DOI] [PubMed] [Google Scholar]

- (7).Musil F; Grisafi A; Bartók AP; Ortner C; Csányi G. b.; Ceriotti M Physics-Inspired Structural Representations for Molecules and Materials. Chem Rev 2021, 121 (16), 9759–9815. DOI: 10.1021/acs.chemrev.1c00021. [DOI] [PubMed] [Google Scholar]

- (8).Langer MF; Goeßmann A; Rupp M Representations of molecules and materials for interpolation of quantum-mechanical simulations via machine learning. Npj Comput Mater 2022, 8 (1), 41. DOI: 10.1038/s41524-022-00721-x. [DOI] [Google Scholar]

- (9).Keith JA; Vassilev-Galindo V; Cheng B; Chmiela S; Gastegger M; Müller K-R; Tkatchenko A Combining Machine Learning and Computational Chemistry for Predictive Insights Into Chemical Systems. Chem Rev 2021, 121 (16), 9816–9872. DOI: 10.1021/acs.chemrev.1c00107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (10).Haghighatlari M; Li J; Heidar-Zadeh F; Liu Y; Guan X; Head-Gordon T Learning to Make Chemical Predictions: The Interplay of Feature Representation, Data, and Machine Learning Methods. Chem 2020, 6 (7), 1527–1542. DOI: 10.1016/j.chempr.2020.05.014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (11).Rupp M; Tkatchenko A; Müller K-R; Lilienfeld O. A. v. Fast and Accurate Modeling of Molecular Atomization Energies with Machine Learning. Phys Rev Lett 2012, 108 (5), 058301. DOI: 10.1103/physrevlett.108.058301. [DOI] [PubMed] [Google Scholar]

- (12).Montavon G; Rupp M; Gobre V; Vazquez-Mayagoitia A; Hansen K; Tkatchenko A; Müller K-R; Lilienfeld O. A. v. Machine learning of molecular electronic properties in chemical compound space. New J Phys 2013, 15 (9), 095003. DOI: 10.1088/1367-2630/15/9/095003. [DOI] [Google Scholar]

- (13).Hansen K; Montavon G; Biegler F; Fazli S; Rupp M; Scheffler M; Lilienfeld O. A. v.; Tkatchenko A; Müller K-R Assessment and Validation of Machine Learning Methods for Predicting Molecular Atomization Energies. J Chem Theory Comput 2013, 9 (8), 3404–3419. DOI: 10.1021/ct400195d. [DOI] [PubMed] [Google Scholar]

- (14).Hirn M; Poilvert N; Mallat S Quantum Energy Regression using Scattering Transforms. arXiv:1502.02077 2015. [Google Scholar]

- (15).Hansen K; Biegler F; Ramakrishnan R; Pronobis W; von Lilienfeld OA; Müller K-R; Tkatchenko A Machine Learning Predictions of Molecular Properties: Accurate Many-Body Potentials and Nonlocality in Chemical Space. J Phys Chem Lett 2015, 6 (12), 2326–2331. DOI: 10.1021/acs.jpclett.5b00831. [DOI] [PMC free article] [PubMed] [Google Scholar]

- (16).Bartók AP; Payne MC; Kondor R; Csányi G Gaussian Approximation Potentials: The Accuracy of Quantum Mechanics, without the Electrons. Phys Rev Lett 2010, 104 (13), 136403. DOI: 10.1103/physrevlett.104.136403. [DOI] [PubMed] [Google Scholar]

- (17).Bartók AP; Kondor R; Csányi G On representing chemical environments. Physical Review B 2013, 87 (18), 184115. DOI: 10.1103/physrevb.87.184115. [DOI] [Google Scholar]

- (18).Behler J Constructing high‐dimensional neural network potentials: A tutorial review. Int J Quantum Chem 2015, 115 (16), 1032–1050. DOI: 10.1002/qua.24890.

- (19).Behler J Atom-centered symmetry functions for constructing high-dimensional neural network potentials. J Chem Phys 2011, 134 (7), 074106. DOI: 10.1063/1.3553717.

- (20).Behler J Neural network potential-energy surfaces in chemistry: a tool for large-scale simulations. Phys Chem Chem Phys 2011, 13 (40), 17930–17955. DOI: 10.1039/c1cp21668f.

- (21).Smith JS; Isayev O; Roitberg AE ANI-1: an extensible neural network potential with DFT accuracy at force field computational cost. Chem Sci 2017, 8 (4), 3192–3203. DOI: 10.1039/c6sc05720a.

- (22).Haghighatlari M; Li J; Guan X; Zhang O; Das A; Stein CJ; Heidar-Zadeh F; Liu M; Head-Gordon M; Bertels L; Hao H; Leven I; Head-Gordon T NewtonNet: a Newtonian message passing network for deep learning of interatomic potentials and forces. Digital Discov 2022, 1 (3), 333–343. DOI: 10.1039/d2dd00008c.

- (23).Schütt KT; Arbabzadah F; Chmiela S; Müller KR; Tkatchenko A Quantum-chemical insights from deep tensor neural networks. Nat Commun 2017, 8 (1), 13890. DOI: 10.1038/ncomms13890.

- (24).Ruddigkeit L; van Deursen R; Blum LC; Reymond J-L Enumeration of 166 Billion Organic Small Molecules in the Chemical Universe Database GDB-17. J Chem Inf Model 2012, 52 (11), 2864–2875. DOI: 10.1021/ci300415d.

- (25).Ramakrishnan R; Dral PO; Rupp M; von Lilienfeld OA Quantum chemistry structures and properties of 134 kilo molecules. Sci Data 2014, 1 (1), 140022. DOI: 10.1038/sdata.2014.22.

- (26).Glavatskikh M; Leguy J; Hunault G; Cauchy T; Mota BD Dataset’s chemical diversity limits the generalizability of machine learning predictions. J Cheminform 2019, 11 (1), 69. DOI: 10.1186/s13321-019-0391-2.

- (27).Gilmer J; Schoenholz SS; Riley PF; Vinyals O; Dahl GE Neural Message Passing for Quantum Chemistry. In Proceedings of the 34th International Conference on Machine Learning, Proceedings of Machine Learning Research; 2017.

- (28).Schütt KT; Sauceda HE; Kindermans PJ; Tkatchenko A; Müller KR SchNet – A deep learning architecture for molecules and materials. J Chem Phys 2018, 148 (24), 241722. DOI: 10.1063/1.5019779.

- (29).Lubbers N; Smith JS; Barros K Hierarchical modeling of molecular energies using a deep neural network. J Chem Phys 2018, 148 (24), 241715. DOI: 10.1063/1.5011181.

- (30).Lu C; Liu Q; Wang C; Huang Z; Lin P; He L Molecular Property Prediction: A Multilevel Quantum Interactions Modeling Perspective. Proceedings of the AAAI Conference on Artificial Intelligence 2019, 33, 1052–1060. DOI: 10.1609/aaai.v33i01.33011052.

- (31).Lu J; Wang C; Zhang Y Predicting Molecular Energy Using Force-Field Optimized Geometries and Atomic Vector Representations Learned from an Improved Deep Tensor Neural Network. J Chem Theory Comput 2019, 15 (7), 4113–4121. DOI: 10.1021/acs.jctc.9b00001.

- (32).Unke OT; Meuwly M PhysNet: A Neural Network for Predicting Energies, Forces, Dipole Moments, and Partial Charges. J Chem Theory Comput 2019, 15 (6), 3678–3693. DOI: 10.1021/acs.jctc.9b00181.

- (33).Gasteiger J; Groß J; Günnemann S Directional Message Passing for Molecular Graphs. arXiv:2003.03123 2020.

- (34).Klicpera J; Giri S; Margraf JT; Günnemann S Fast and Uncertainty-Aware Directional Message Passing for Non-Equilibrium Molecules. arXiv:2011.14115 2020.

- (35).Lu J; Xia S; Lu J; Zhang Y Dataset Construction to Explore Chemical Space with 3D Geometry and Deep Learning. J Chem Inf Model 2021, 61 (3), 1095–1104. DOI: 10.1021/acs.jcim.1c00007.

- (36).Jiang D; Wu Z; Hsieh CY; Chen G; Liao B; Wang Z; Shen C; Cao D; Wu J; Hou T Could graph neural networks learn better molecular representation for drug discovery? A comparison study of descriptor-based and graph-based models. J Cheminform 2021, 13 (1), 12. DOI: 10.1186/s13321-020-00479-8.

- (37).Wu Z; Ramsundar B; Feinberg EN; Gomes J; Geniesse C; Pappu AS; Leswing K; Pande V MoleculeNet: a benchmark for molecular machine learning. Chem Sci 2017, 9 (2), 513–530. DOI: 10.1039/c7sc02664a.

- (38).Xiong Z; Wang D; Liu X; Zhong F; Wan X; Li X; Li Z; Luo X; Chen K; Jiang H; Zheng M Pushing the Boundaries of Molecular Representation for Drug Discovery with the Graph Attention Mechanism. J Med Chem 2020, 63 (16), 8749–8760. DOI: 10.1021/acs.jmedchem.9b00959.

- (39).Yang K; Swanson K; Jin W; Coley C; Eiden P; Gao H; Guzman-Perez A; Hopper T; Kelley B; Mathea M; Palmer A; Settels V; Jaakkola T; Jensen K; Barzilay R Analyzing Learned Molecular Representations for Property Prediction. J Chem Inf Model 2019, 59 (8), 3370–3388. DOI: 10.1021/acs.jcim.9b00237.

- (40).Cho H; Choi IS Enhanced Deep‐Learning Prediction of Molecular Properties via Augmentation of Bond Topology. ChemMedChem 2019, 14 (17), 1604–1609. DOI: 10.1002/cmdc.201900458.

- (41).Lo Y-C; Rensi SE; Torng W; Altman RB Machine learning in chemoinformatics and drug discovery. Drug Discov Today 2018, 23 (8), 1538–1546. DOI: 10.1016/j.drudis.2018.05.010.

- (42).Chen D; Gao K; Nguyen DD; Chen X; Jiang Y; Wei G-W; Pan F Algebraic graph-assisted bidirectional transformers for molecular property prediction. Nat Commun 2021, 12 (1), 3521. DOI: 10.1038/s41467-021-23720-w.

- (43).Zhang D; Xia S; Zhang Y Accurate Prediction of Aqueous Free Solvation Energies Using 3D Atomic Feature-Based Graph Neural Network with Transfer Learning. J Chem Inf Model 2022, 62 (8), 1840–1848. DOI: 10.1021/acs.jcim.2c00260.

- (44).Klamt A; Eckert F; Arlt W COSMO-RS: An Alternative to Simulation for Calculating Thermodynamic Properties of Liquid Mixtures. Annu Rev Chem Biomol Eng 2010, 1 (1), 101–122. DOI: 10.1146/annurev-chembioeng-073009-100903.

- (45).Bannan CC; Calabró G; Kyu DY; Mobley DL Calculating Partition Coefficients of Small Molecules in Octanol/Water and Cyclohexane/Water. J Chem Theory Comput 2016, 12 (8), 4015–4024. DOI: 10.1021/acs.jctc.6b00449.

- (46).Kundi V; Ho J Predicting Octanol–Water Partition Coefficients: Are Quantum Mechanical Implicit Solvent Models Better than Empirical Fragment-Based Methods? J Phys Chem B 2019, 123 (31), 6810–6822. DOI: 10.1021/acs.jpcb.9b04061.

- (47).Warnau J; Wichmann K; Reinisch J COSMO-RS predictions of logP in the SAMPL7 blind challenge. J Comput Aid Mol Des 2021, 35 (7), 813–818. DOI: 10.1007/s10822-021-00395-5.

- (48).Wu K; Zhao Z; Wang R; Wei GW TopP–S: Persistent homology‐based multi‐task deep neural networks for simultaneous predictions of partition coefficient and aqueous solubility. J Comput Chem 2018, 39 (20), 1444–1454. DOI: 10.1002/jcc.25213.

- (49).Jiang J; Wang R; Wang M; Gao K; Nguyen DD; Wei G-W Boosting Tree-Assisted Multitask Deep Learning for Small Scientific Datasets. J Chem Inf Model 2020, 60 (3), 1235–1244. DOI: 10.1021/acs.jcim.9b01184.

- (50).Mobley DL; Guthrie JP FreeSolv: a database of experimental and calculated hydration free energies, with input files. J Comput Aid Mol Des 2014, 28 (7), 711–720. DOI: 10.1007/s10822-014-9747-x.

- (51).Beauman J; Howard P Physprop database. Syracuse Research, Syracuse, NY, USA: 1995.

- (52).Leo A; Hoekman D Exploring QSAR; American Chemical Society, 1995.

- (53).Pan SJ; Yang Q A Survey on Transfer Learning. IEEE Trans Knowl Data Eng 2009, 22 (10), 1345–1359. DOI: 10.1109/tkde.2009.191.

- (54).Irwin JJ; Shoichet BK ZINC – a free database of commercially available compounds for virtual screening. J Chem Inf Model 2005, 45 (1), 177–182. DOI: 10.1021/ci049714+.

- (55).Irwin JJ; Sterling T; Mysinger MM; Bolstad ES; Coleman RG ZINC: A Free Tool to Discover Chemistry for Biology. J Chem Inf Model 2012, 52 (7), 1757–1768. DOI: 10.1021/ci3001277.

- (56).Sterling T; Irwin JJ ZINC 15 – Ligand Discovery for Everyone. J Chem Inf Model 2015, 55 (11), 2324–2337. DOI: 10.1021/acs.jcim.5b00559.

- (57).Kim S; Chen J; Cheng T; Gindulyte A; He J; He S; Li Q; Shoemaker BA; Thiessen PA; Yu B; Zaslavsky L; Zhang J; Bolton EE PubChem 2019 update: improved access to chemical data. Nucleic Acids Res 2018, 47 (D1), D1102–D1109. DOI: 10.1093/nar/gky1033.

- (58).Kim S; Chen J; Cheng T; Gindulyte A; He J; He S; Li Q; Shoemaker BA; Thiessen PA; Yu B; Zaslavsky L; Zhang J; Bolton EE PubChem in 2021: new data content and improved web interfaces. Nucleic Acids Res 2020, 49 (D1), D1388–D1395. DOI: 10.1093/nar/gkaa971.

- (59).Groom CR; Bruno IJ; Lightfoot MP; Ward SC The Cambridge Structural Database. Acta Crystallogr Sect B Struct Sci Cryst Eng Mater 2016, 72 (2), 171–179. DOI: 10.1107/s2052520616003954.

- (60).Korshunova M; Ginsburg B; Tropsha A; Isayev O OpenChem: A Deep Learning Toolkit for Computational Chemistry and Drug Design. J Chem Inf Model 2021, 61 (1), 7–13. DOI: 10.1021/acs.jcim.0c00971.

- (61).Gaussian 16 Rev. C.01; Wallingford, CT, 2016. https://gaussian.com/gaussian16/ (accessed 2022-06-15).

- (62).Marenich AV; Cramer CJ; Truhlar DG Universal Solvation Model Based on Solute Electron Density and on a Continuum Model of the Solvent Defined by the Bulk Dielectric Constant and Atomic Surface Tensions. J Phys Chem B 2009, 113 (18), 6378–6396. DOI: 10.1021/jp810292n.

- (63).Fourches D; Muratov E; Tropsha A Trust, But Verify: On the Importance of Chemical Structure Curation in Cheminformatics and QSAR Modeling Research. J Chem Inf Model 2010, 50 (7), 1189–1204. DOI: 10.1021/ci100176x.

- (64).Riniker S; Landrum GA Better Informed Distance Geometry: Using What We Know To Improve Conformation Generation. J Chem Inf Model 2015, 55 (12), 2562–2574. DOI: 10.1021/acs.jcim.5b00654.

- (65).RDKit: Open-Source Cheminformatics Software; 2016. http://www.rdkit.org (accessed 2022-06-15).

- (66).Mohammed R; Rawashdeh J; Abdullah M Machine learning with oversampling and undersampling techniques: overview study and experimental results. In 2020 11th International Conference on Information and Communication Systems (ICICS), 2020; IEEE: pp 243–248.

- (67).Lakshminarayanan B; Pritzel A; Blundell C Simple and scalable predictive uncertainty estimation using deep ensembles. Advances in Neural Information Processing Systems 2017, 30.

- (68).Kearnes S; McCloskey K; Berndl M; Pande V; Riley P Molecular graph convolutions: moving beyond fingerprints. J Comput Aid Mol Des 2016, 30 (8), 595–608. DOI: 10.1007/s10822-016-9938-8.

- (69).Weinreich J; Browning NJ; von Lilienfeld OA Machine learning of free energies in chemical compound space using ensemble representations: Reaching experimental uncertainty for solvation. J Chem Phys 2021, 154 (13), 134113. DOI: 10.1063/5.0041548.

- (70).Tetko IV; Tanchuk VY; Villa AEP Prediction of n-Octanol/Water Partition Coefficients from PHYSPROP Database Using Artificial Neural Networks and E-State Indices. J Chem Inf Comput Sci 2001, 41 (5), 1407–1421. DOI: 10.1021/ci010368v.

- (71).Ulrich N; Goss K-U; Ebert A Exploring the octanol–water partition coefficient dataset using deep learning techniques and data augmentation. Commun Chem 2021, 4 (1), 90. DOI: 10.1038/s42004-021-00528-9.

- (72).Mansouri K; Grulke CM; Judson RS; Williams AJ OPERA models for predicting physicochemical properties and environmental fate endpoints. J Cheminform 2018, 10 (1), 10. DOI: 10.1186/s13321-018-0263-1.

- (73).Phipps MJS; Fox T; Tautermann CS; Skylaris C-K Energy decomposition analysis approaches and their evaluation on prototypical protein–drug interaction patterns. Chem Soc Rev 2015, 44 (10), 3177–3211. DOI: 10.1039/c4cs00375f.

- (74).Andrés J; Ayers PW; Boto RA; Carbó‐Dorca R; Chermette H; Cioslowski J; Contreras‐García J; Cooper DL; Frenking G; Gatti C; Heidar‐Zadeh F; Joubert L; Pendás ÁM; Matito E; Mayer I; Misquitta AJ; Mo Y; Pilmé J; Popelier PLA; Rahm M; Ramos‐Cordoba E; Salvador P; Schwarz WHE; Shahbazian S; Silvi B; Solà M; Szalewicz K; Tognetti V; Weinhold F; Zins ÉL Nine questions on energy decomposition analysis. J Comput Chem 2019, 40 (26), 2248–2283. DOI: 10.1002/jcc.26003.

- (75).Soleimany AP; Amini A; Goldman S; Rus D; Bhatia SN; Coley CW Evidential Deep Learning for Guided Molecular Property Prediction and Discovery. ACS Cent Sci 2021, 7 (8), 1356–1367. DOI: 10.1021/acscentsci.1c00546.

- (76).Nigam A; Pollice R; Hurley MFD; Hickman RJ; Aldeghi M; Yoshikawa N; Chithrananda S; Voelz VA; Aspuru-Guzik A Assigning confidence to molecular property prediction. Expert Opin Drug Discov 2021, 16 (9), 1009–1023. DOI: 10.1080/17460441.2021.1925247.

- (77).Zhu H; Foley TL; Montgomery JI; Stanton RV Understanding Data Noise and Uncertainty through Analysis of Replicate Samples in DNA-Encoded Library Selection. J Chem Inf Model 2022, 62 (9), 2239–2247. DOI: 10.1021/acs.jcim.1c00986.

- (78).Scalia G; Grambow CA; Pernici B; Li Y-P; Green WH Evaluating Scalable Uncertainty Estimation Methods for Deep Learning-Based Molecular Property Prediction. J Chem Inf Model 2020, 60 (6), 2697–2717. DOI: 10.1021/acs.jcim.9b00975.

- (79).Hirschfeld L; Swanson K; Yang K; Barzilay R; Coley CW Uncertainty Quantification Using Neural Networks for Molecular Property Prediction. J Chem Inf Model 2020, 60 (8), 3770–3780. DOI: 10.1021/acs.jcim.0c00502.

- (80).Ryu S; Kwon Y; Kim WY A Bayesian graph convolutional network for reliable prediction of molecular properties with uncertainty quantification. Chem Sci 2019, 10 (36), 8438–8446. DOI: 10.1039/c9sc01992h.

- (81).Maddox WJ; Izmailov P; Garipov T; Vetrov DP; Wilson AG A simple baseline for Bayesian uncertainty in deep learning. Advances in Neural Information Processing Systems 2019, 32.

- (82).Zhang Y; Lee AA Bayesian semi-supervised learning for uncertainty-calibrated prediction of molecular properties and active learning. Chem Sci 2019, 10 (35), 8154–8163. DOI: 10.1039/c9sc00616h.

- (83).Schwaller P; Laino T; Gaudin T; Bolgar P; Hunter CA; Bekas C; Lee AA Molecular Transformer: A Model for Uncertainty-Calibrated Chemical Reaction Prediction. ACS Cent Sci 2019, 5 (9), 1572–1583. DOI: 10.1021/acscentsci.9b00576.

- (84).Gal Y; Hron J; Kendall A Concrete dropout. Advances in Neural Information Processing Systems 2017, 30.

- (85).Gal Y; Ghahramani Z Dropout as a Bayesian Approximation: Representing Model Uncertainty in Deep Learning. In Proceedings of The 33rd International Conference on Machine Learning, Proceedings of Machine Learning Research; 2016.
