Introduction: Artificial Intelligence and Health Equity

Artificial intelligence (AI) and machine learning (ML) technologies can leverage massive amounts of data for predictive modeling in a wide variety of fields and are increasingly used to inform complex decision-making and clinical processes in healthcare.1 Examples include computer vision-assisted screening mammography to improve breast cancer detection,2 models that predict respiratory decompensation in patients with COVID-19,3 and AI tools that predict length of stay, facilitate resource allocation and lower healthcare costs.4

In gastroenterology and hepatology, opportunities for AI and ML (AI/ML) implementation are burgeoning. Recent studies have explored AI applications such as computer-aided detection and diagnosis of premalignant and malignant gastrointestinal lesions, prediction of treatment response in patients with inflammatory bowel disease (IBD), histopathologic analysis of biopsy specimens, assessment of liver fibrosis severity in chronic liver disease, and models for liver transplant allocation, among others.5,6,7,8

AI-based systems are increasingly making the transition from research to the bedside and have the potential to revolutionize patient care. However, these advances must be matched by regulatory and ethical frameworks, such as those being developed by the Food and Drug Administration (FDA) and other agencies, to oversee the intended and unintended consequences of their use.9 Concerns have already been raised about the biases and health inequities that can be introduced or amplified when computer algorithms are applied in healthcare.10 For instance, a commercial algorithm applied to approximately 200 million patients in the United States (U.S.) was racially biased: White patients were preferentially enrolled in “high-risk care management programs” compared with Black patients who had similar risk scores, resulting in fewer healthcare dollars spent on Black patients.11 Another study demonstrated that an ML algorithm predicting intensive care unit (ICU) mortality and 30-day psychiatric readmission had poorer predictive performance for women and for patients with public insurance.12

Prior to deploying and scaling AI/ML tools, it is critical to ensure that the risk of bias is minimized and opportunities to promote health equity are amplified. In this work, we identify areas in gastroenterology and hepatology where algorithms could exacerbate disparities, and offer potential areas of opportunity for advancing health equity through AI/ML. For the purposes of this paper, health equity is centered on distributive justice to eliminate systematic racial, ethnic and sex disparities.13

Five Key Mechanisms Through Which AI/ML Can Contribute to Health Inequities

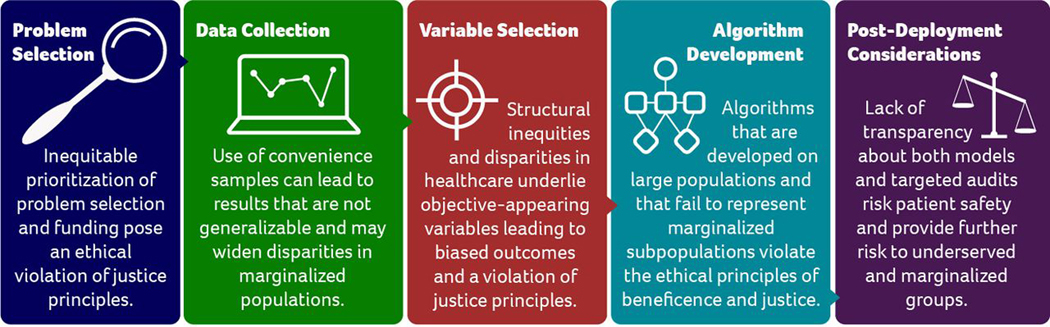

Early efforts to promote responsible and ethical applications of AI and ML in clinical medicine have revealed several mechanisms through which algorithms can introduce bias and exacerbate health inequities (Figure 1).10,14 It is essential for researchers and clinicians in gastroenterology and hepatology to understand these mechanisms as new technologies are being applied to our field.

FIGURE 1:

Mechanisms Through Which AI Contributes to Health Inequities

Adapted from Chen I, Pierson E, Rose S, Joshi S, Ferryman K, Ghassemi M. Ethical Machine Learning in Healthcare. Annual Review of Biomedical Data Science. 2020;4

The first theme is disparities in clinical or research problem selection.14 Research questions often target concerns in majority populations because of unequal funding availability and/or unequal interest in the problem among industry, researchers, funders and grant review committees. As a result, there are critical racial, ethnic and sex disparities in the research problems that are prioritized and funded in AI/ML.14,15

The second theme is bias in data collection,14 where collected data may capture a disproportionate share of one population group over another. This can result in algorithms that are not widely generalizable,14,16–19 especially for individuals from traditionally underrepresented and marginalized groups that are not commonly or appreciably represented in research databases.

The third theme is bias due to variable selection.14 Variables and outcome measures may appear unbiased on initial evaluation even though they are proxies for, or confounded by, explicit or implicit biases against underrepresented or marginalized groups.

The fourth theme is bias in algorithm development.14 In this case, the assumptions made by the research team lead to inherently biased models or to models that are overfitted to narrow training data.13,15,17 Additionally, the performance metrics used in AI/ML model training, such as the area under the curve (AUC), are not inherently optimal for equitable performance in diverse populations.14,20 A single pooled metric summarizes performance across the whole dataset and can therefore mask poor performance in smaller subgroups.
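To illustrate how a pooled AUC can hide subgroup underperformance, the minimal sketch below computes a rank-based (Mann-Whitney) AUC on synthetic scores for a large majority group and a small minority group. All scores and labels are invented for illustration; the sketch shows only that a pooled metric can look excellent while the model performs no better than chance in an under-represented subgroup.

```python
"""Sketch: a pooled AUC can look excellent while a small subgroup is poorly served.

All scores and labels below are synthetic and purely illustrative.
"""
from itertools import product


def auc(scores, labels):
    """Rank-based AUC: probability a random positive outscores a random negative (ties count 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p, n in product(pos, neg))
    return wins / (len(pos) * len(neg))


# Hypothetical majority group (large): the model separates cases from non-cases well.
majority_scores = [0.9, 0.8, 0.85, 0.7, 0.95, 0.2, 0.3, 0.1, 0.25, 0.4] * 9
majority_labels = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0] * 9

# Hypothetical minority group (small): scores barely separate cases from non-cases.
minority_scores = [0.5, 0.35, 0.45, 0.3, 0.55, 0.5, 0.6, 0.4, 0.35, 0.45]
minority_labels = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]

pooled = auc(majority_scores + minority_scores, majority_labels + minority_labels)
by_group = {
    "majority": auc(majority_scores, majority_labels),
    "minority": auc(minority_scores, minority_labels),
}
print(f"pooled AUC: {pooled:.2f}")
for group, value in by_group.items():
    print(f"{group} AUC: {value:.2f}")
```

Because the minority group contributes only a small fraction of case/non-case pairs, its poor discrimination barely moves the pooled value; reporting AUC by subgroup surfaces the gap.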

Finally, inequities may result from post-deployment considerations.14 Even an apparently unbiased AI tool may lead to biased behavior when deployed in the clinical setting. It is important to consider 1) how a tool will perform for a disease whose characteristics have different conditional distributions across subpopulations and 2) the potentially negative human-computer interactions that may occur. For instance, providers may follow an AI/ML-generated treatment recommendation when it confirms their biased beliefs but disregard recommendations that do not conform to those beliefs.14,21 The impacts of the algorithm should be evaluated in population subgroups, not only at the population level, to assess differences in clinical behavior, performance and outcomes by sociodemographic factors.14

AI in Gastroenterology and Hepatology: Impacts on Health Equity

We have chosen clinical examples from the literature to illustrate the specific ways in which existing AI/ML algorithms may already exacerbate bias and inequities in gastroenterology and hepatology (Table 1).

Table 1:

Types of bias observed in artificial intelligence in clinical medicine

| Theme | Definition | Examples |
|---|---|---|
| Problem selection | Differential research priority and funding for issues that affect marginalized groups. | • AI has been used extensively to detect Barrett’s esophagus and esophageal adenocarcinoma, which mainly affect White individuals. • In contrast, AI applications in esophageal squamous cell carcinoma, which is more prevalent in underserved populations, are under-researched. |
| Data collection | Inadequate representation of underserved groups in training datasets results in biased algorithms that yield inaccurate outputs for these subgroups. | • A model trained on Veterans Health Administration electronic health data to predict IBD flares may generate incorrect predictions for non-White populations that are underrepresented in the training dataset. |
| Variable selection | Seemingly objective predictor and outcome variables included in a model may be confounded by, or serve as proxies for, factors that lead to biased results. | • MELD exception points may appropriately prioritize patients with hepatocellular carcinoma (HCC) on the transplant waitlist; however, this is confounded by the higher prevalence of HCC in men, which leads to lower transplant rates for women. • The inclusion of serum creatinine in the MELD leads to lower scores and transplant priority for women, as serum creatinine underestimates renal dysfunction in women. |
| Algorithm development | Models are developed to recreate patterns in the training dataset and may not account for systemic biases. | • Racial and ethnic minorities are less likely to be referred for liver transplant and more likely to be offered a lower-quality allograft. Predictive models could learn these patterns and propagate existing disparities. |
| Post-deployment considerations | Apparently unbiased AI tools may lead to biased outcomes when deployed in real life, for example because of differing conditional distributions of outcomes of interest across subpopulations. | • Computer vision has been shown to aid detection of traditional adenomas; however, there are limited data on proximal and sessile serrated lesions, which are more prevalent in Black individuals. |

Esophageal Cancer

Prevention and early recognition of esophageal cancer are areas in which AI may hold particular promise, and there has been meaningful research progress. In the U.S., the vast majority of esophageal cancer research focuses on technologies (with and without AI) to improve the early identification and treatment of Barrett’s esophagus and esophageal adenocarcinoma (EAC).22–26 Unfortunately, this emphasis on EAC in the U.S. primarily benefits White populations, who have the highest incidence of and mortality from EAC.27

Esophageal squamous cell carcinoma (ESCC) is more common than EAC worldwide and has higher incidence and mortality in non-White populations in the U.S. and globally.28,29 Specifically, Black individuals in the U.S. have the highest incidence of ESCC at 4.9 per 100,000 people, followed by Asian individuals at 1.9 per 100,000, with White individuals having the lowest rate at 1.4 per 100,000. In contrast, EAC incidence is 2.3 per 100,000 among White individuals and 0.5 per 100,000 among Black and Asian individuals.28 Thus, ESCC incidence among Black individuals exceeds EAC incidence in any group, and among Black and Asian individuals ESCC is several times more common than EAC. Furthermore, AI research in ESCC has largely been performed in Asian countries; it is therefore uncertain whether the findings generalize to Black individuals or other population subgroups globally.30–34

This research disparity may reflect clinical or research problem selection bias (theme 1); both researchers and funders should work to ensure more equity in problem selection. AI-based tools for early recognition of esophageal cancer and precursor lesions have the potential to save many lives, but in their current state they will largely benefit White patients rather than individuals from underrepresented groups. It is imperative that we pursue inclusive AI/ML research questions to avoid preferential development of technologies that consistently benefit one group over others.

Inflammatory Bowel Disease (IBD)

In IBD, AI/ML computer vision tools have been developed for endoscopic assessment of disease severity, to distinguish colitis from neoplasia, and to differentiate sporadic adenomas from non-neoplastic lesions.6,35 AI algorithms have also been trained to predict treatment response and assess risk of disease recurrence.35–37 AI has the potential to play an important role in IBD treatment decisions by predicting response earlier in the treatment course and guiding personalized therapy choices.

However, many AI/ML models in IBD have been developed in largely White populations. For example, one study used AI to predict future corticosteroid use and hospitalizations in a cohort of 20,368 patients with IBD at the Veterans Health Administration (VA).36 The authors concluded that their model had the potential to predict IBD flares, improve patient outcomes and reduce healthcare costs. They also noted that their algorithm would be easy to implement at the point of care to tailor therapy for individual patients. However, the population used to derive the model was 93% male, which may make its predictions less reliable for female patients with IBD. The algorithm also included race as a predictor, although the dataset was racially skewed: the study population was 70% White, 8% Black, 1.7% Other and 19% Unknown. The study was replicated in a large insurance-based cohort of 95,878 patients; although women were better represented (57.1%), this population was still predominantly White (87.7%).38

While IBD was previously thought to predominantly affect White individuals, its incidence is now increasing in other racial and ethnic groups in the U.S. and worldwide.39–41 In addition, IBD management and outcomes are worse for Black and Latino patients than for White patients, which should prompt increased research and clinical decision support for these groups.42,43 In the VA study, the proportions of Hispanic/Latinx and South and East Asian patients were not reported, even though these groups make up an increasingly large share of the IBD population. While this study may benefit the patient population served by the VA, it suggests that even very large cohorts may carry entrenched patterns of bias in data collection (theme 2): algorithms that do not reflect the rich diversity of IBD patients can result in biased systems, care and outcomes, particularly if extrapolated to the general population.

Liver Transplantation

There are numerous opportunities for AI/ML applications in hepatology, including the assessment of hepatic fibrosis progression, detection of nonalcoholic fatty liver disease, identification of patients at risk of hepatocellular carcinoma (HCC) and optimization of organ transplant protocols.6,44 As we explore the complex and opaque nature of emerging AI clinical prediction tools, it is important to recognize that bias can be encoded even in conventional prediction models, including simple, rule-based algorithms.

Prior to the adoption of the Model for End-Stage Liver Disease (MELD) score in 2002, the liver allocation process was fraught with variability, subjectivity and opportunities for manipulation, which resulted in inequities.45 To address these shortcomings and standardize organ allocation, the United Network for Organ Sharing (UNOS) turned to the MELD, an algorithmic model that predicts three-month survival in patients with cirrhosis, as a fairer way to prioritize patients for liver transplantation.46 The variables included in the model appear to be objective laboratory values: bilirubin, creatinine, international normalized ratio (INR) and sodium. However, creatinine underestimates renal dysfunction in women, who on average have lower muscle mass and therefore lower serum creatinine at the same level of kidney function; this leads to lower MELD scores for women than for men with similar disease severity. This underestimation undermines equitable organ allocation for liver transplant.47,48,49
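The creatinine effect described above can be made concrete with a worked calculation. The sketch below assumes the MELD-Na formula as commonly published by UNOS/OPTN (laboratory values below 1.0 set to 1.0, creatinine capped at 4.0 or set to 4.0 for patients on dialysis, sodium bounded to 125 to 137 mmol/L, sodium adjustment applied only when the initial MELD exceeds 11). It is illustrative only, does not reflect any later revision of the score, and should be checked against current OPTN policy; the patient values are hypothetical.

```python
import math


def meld_na(bilirubin, inr, creatinine, sodium, on_dialysis=False):
    """MELD-Na sketch. Units: bilirubin and creatinine in mg/dL, sodium in mmol/L."""
    # Floors and caps applied before taking logarithms (as commonly published by UNOS/OPTN).
    bilirubin = max(bilirubin, 1.0)
    inr = max(inr, 1.0)
    creatinine = 4.0 if on_dialysis else min(max(creatinine, 1.0), 4.0)
    sodium = min(max(sodium, 125.0), 137.0)

    # Initial MELD, scaled by 10 and rounded to one decimal place.
    meld = 10 * (
        0.957 * math.log(creatinine)
        + 0.378 * math.log(bilirubin)
        + 1.120 * math.log(inr)
        + 0.643
    )
    meld = round(meld, 1)

    # Sodium adjustment applies only when the initial MELD exceeds 11.
    if meld > 11:
        meld = meld + 1.32 * (137 - sodium) - 0.033 * meld * (137 - sodium)
    return round(meld, 1)  # policy reports a whole number; one decimal kept here for comparison


# Hypothetical patients with identical bilirubin, INR and sodium. The lower creatinine
# stands in for a woman assumed to have kidney function similar to the man's.
man = meld_na(bilirubin=3.0, inr=1.8, creatinine=1.4, sodium=135)
woman = meld_na(bilirubin=3.0, inr=1.8, creatinine=1.0, sodium=135)
print(f"man: {man}, woman: {woman}, difference: {man - woman:.1f} points")
```

Under these assumptions the only input that differs is serum creatinine, yet the woman's score is about three points lower, which would place her lower on the waitlist despite comparable kidney function.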

A similar example occurs with MELD exception points— a system where patients with certain conditions that confer excess risk beyond that captured by the lab variables that comprise MELD (such as HCC) may accumulate points and advance their position on the transplant waitlist.50 Review of data from Organ Procurement and Transplantation Network (OPTN) registries shows that at similar listing priority, patients with MELD exception points are less likely to die on the waitlist, more likely to receive a transplant and less likely to be women.49,51,52 Part of this discrepancy is because HCC—the indication for MELD exception points in approximately 70% of patients49,52— is 2 to 4 times more common in men.53,54 Therefore, the inclusion of this variable in the model inadvertently deprioritizes women and perpetuates sex disparities in liver transplantation: women are up to 20% less likely to receive a liver transplant and 8.6% more likely to die on the transplant waitlist.52,55

While conventional prediction models use a few variables that appear transparent, high-capacity ML algorithms may draw on innumerable variables from large volumes of data and identify highly complex nonlinear patterns that are less comprehensible (i.e., black box models), with the promise of increased predictive accuracy.44,56 Regardless of the type of model, the variables selected as inputs (theme 3), in conventional and AI-based models alike, may appear objective at face value but can unwittingly introduce bias and lead to inequitable outcomes, as illustrated with the MELD.

Bias due to variable selection is intrinsically related to the introduction of bias during algorithm development. Datasets used for predictive modeling may have unintended encoded biases, which has the potential to generate biased algorithms in the algorithm development phase (theme 4) as ML models aim to fit the datasets on which they train. For example, review of OPTN registry data reveals that medically underserved groups are less likely to be referred for liver transplant, less likely to undergo liver transplantation and more likely to receive lower quality allografts compared to White patients.57 Predictive models trained on such datasets could recreate these biases and amplify existing racial and ethnic liver transplantation disparities when deployed. Assigning transplant priorities based on predicted outcomes from biased models has huge ramifications for health equity in organ allocation. Identifying and rectifying biases after the model has been deployed can prove to be difficult— it took several years to show that an estimated glomerular filtration rate equation widely used to assign renal transplant priorities was biased against Black patients.58 Therefore, it is imperative that fairness and potential biases are addressed upfront.

Colorectal Cancer (CRC) Screening

CRC prevention and control are major public health contributions of gastroenterologists. Effective colorectal cancer screening depends significantly on the endoscopist’s ability to identify and remove high-risk colon and rectal polyps during colonoscopy. Adenoma detection rate (ADR) is a validated measure of colonoscopy quality and a significant predictor of interval CRC risk.59 Wide variability in ADR has been observed among endoscopists,60 which reduces the effectiveness of colonoscopy in preventing CRC incidence and death. Advances in machine learning have led to the application of computer vision to aid polyp detection during colonoscopy, with data supporting the use of computer-aided detection (CADe) to increase ADR.61,62 Recently, the U.S. Food and Drug Administration approved the first AI software based on ML to assist clinicians in the detection of colorectal polyps.9 However, when implementing these tools, it is important to consider the conditional distributions (theme 5) of colorectal polyps across subpopulations.

Both proximal (right-sided) lesions and sessile serrated lesions (sessile serrated polyps and serrated adenomas) are more challenging to detect than traditional adenomas because they can be flat and subtle.63 Data on the sensitivity of CADe for proximal and sessile serrated lesions are limited, and one study suggests lower sensitivity for sessile serrated lesions.64 Because Black patients are more likely to have proximal polyps65,66,67,68 and sessile serrated lesions,69,70 CADe models trained primarily on traditional adenomas may have higher miss rates for precancerous lesions and be less effective for Black patients. Given that Black individuals have 20% higher CRC incidence, 40% higher CRC mortality and 30% higher interval CRC risk, CADe could exacerbate existing racial disparities if its ability to detect high-risk polyps is reduced among Black individuals or other patient populations.71,72
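A back-of-the-envelope calculation shows why lesion mix matters. If a CADe system's sensitivity differs by lesion type, its overall sensitivity in a population is the average of the per-type sensitivities weighted by that population's lesion mix (the law of total probability). The sketch below uses hypothetical sensitivities and lesion proportions for two populations; none of the numbers are published estimates.

```python
"""Sketch: same per-lesion CADe sensitivity, different lesion mix, different miss rate.

All sensitivities and lesion-mix proportions are hypothetical placeholders, not
published estimates.
"""

# Hypothetical per-lesion-type sensitivity of a CADe system.
SENSITIVITY = {"conventional_adenoma": 0.90, "sessile_serrated": 0.70}

# Hypothetical share of precancerous lesions by type in two patient populations.
LESION_MIX = {
    "population_A": {"conventional_adenoma": 0.85, "sessile_serrated": 0.15},
    "population_B": {"conventional_adenoma": 0.65, "sessile_serrated": 0.35},
}


def expected_sensitivity(mix, sensitivity):
    """Weight each lesion type's sensitivity by its share of lesions."""
    assert abs(sum(mix.values()) - 1.0) < 1e-9, "lesion shares must sum to 1"
    return sum(share * sensitivity[lesion] for lesion, share in mix.items())


for population, mix in LESION_MIX.items():
    sens = expected_sensitivity(mix, SENSITIVITY)
    print(f"{population}: sensitivity {sens:.2f}, miss rate {1 - sens:.2f}")
```

Under these assumed numbers, the same device misses about 17% of precancerous lesions in the population with more serrated lesions versus 13% in the other, even though nothing about the device itself has changed.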

It is essential to determine whether these AI/ML-powered models are adequately trained to detect the high-risk polyps that are more common in Black populations and in other populations that suffer disproportionately from CRC. Optimizing and validating these models, and their miss rates, across multiple diverse populations could reduce variability in colonoscopy quality and narrow racial disparities in CRC, especially as these technologies gain FDA approval and are applied in diverse community settings. However, medically underserved and vulnerable populations often face barriers to accessing these clinically indicated tests in the first place. For instance, Black patients are less likely to receive colonoscopy screening and surveillance,73–76 surveillance imaging for hepatocellular carcinoma77,78 and cross-sectional abdominal staging scans for pancreatic cancer.79 This challenge further limits opportunities for AI research to optimize these tests for diverse populations and promote health equity.

Increasing Equity in AI: Potential Solutions

It is imperative that we identify and implement pragmatic solutions to prioritize and optimize health equity in AI/ML development and application in gastroenterology and hepatology. Tools are needed to debias data collection, model training, model outputs and clinical application. The recent increased focus on equity in health and healthcare has motivated discussion about how to achieve these goals, and these approaches are urgently relevant to our field.11,14,20,80 Potential solutions to the equity challenges highlighted here include incorporating a health equity lens early and often in AI/ML research and development, increasing the diversity of patients involved in AI/ML clinical trials, establishing regulatory standards for reporting, and auditing algorithms before and after deployment (Table 2).

Table 2:

Approaches to eliminate bias in AI/ML

| Approach | Description |
|---|---|
| Appropriate research expertise | Involve health equity experts in the conception, development and deployment of AI/ML. |
| Diverse study populations | Diversify study populations to adequately represent marginalized populations in training datasets. Convenience samples, such as electronic health record and claims datasets, may not adequately represent marginalized groups. |
| Diverse study settings | Expand research to non-conventional settings where traditionally under-represented and vulnerable populations can be easily reached, such as community health centers, faith-based organizations, barbershops, community service organizations and other settings. |
| Regulatory measures | Define fair, clear, specific and quantifiable regulatory measures of inequitable outcomes. Require researchers to report descriptive data on study populations by sex, race and ethnicity, provided privacy is protected. Standards should be consistent across regulatory bodies, peer-reviewed scientific journals and gastroenterology/hepatology professional societies. |
| Pre-deployment auditing | Mandate auditing processes and sensitivity analyses to assess algorithmic performance across subpopulations in the pre-deployment phase. |
| Post-deployment auditing | Establish auditing processes to assess algorithmic performance across subpopulations after deployment, and pathways to rapidly mitigate bias if it is discovered. |

First, a health equity approach to AI/ML requires technically diverse research teams that are aware of how bias can creep into every stage of the research continuum. Beyond this, gastroenterology and hepatology research teams that use AI/ML methods should engage health equity experts at the outset so that potential sources of bias are identified early and addressed robustly and effectively.

Second, it is vitally important to increase the diversity of patient populations involved in algorithm development and validation in gastroenterology and hepatology. AI research in our field is currently limited by overfitting and spectrum bias. Overfitting occurs when models are closely tailored to a training set, which can reduce the model's generalizability when it is applied to other datasets.81 Spectrum bias occurs when the datasets used to develop models do not reflect the diversity of the population they are meant to serve.81,82 Datasets used for AI in gastroenterology and hepatology are often collected through retrospective or case-control designs, which pose a risk of spectrum bias.81,82 Ideally, all algorithms should be developed and tested in a population that reflects the racial, ethnic, age, sex and gender diversity of our society to maximize generalizability in routine practice. Historically, research studies have not been conducted in settings that regularly serve these populations, resulting in their ongoing exclusion. Therefore, it is critical to consider where marginalized populations receive care and how best to reach them, in both AI and non-AI contexts. Partnerships between gastroenterology practices and the clinics and health centers that care for these populations can be leveraged to extend reach and promote generalizability in research studies that aim to advance equity. In addition, non-traditional settings where vulnerable populations routinely receive services should be considered to diversify representation in AI/ML studies and ensure equitable algorithm performance. Furthermore, models should be externally validated in new patient populations and datasets to limit the potential for spectrum bias and overfitting.81

Beyond diversifying the training data, it is crucial that the labels used in prediction models (the outcome variables a model is trained to predict) represent the desired outcome alone and are independent of societal inequities.11 For example, using healthcare costs as a proxy label for health needs can encode unequal access to care into a model's predictions.11 It is also important to carefully consider the different conditional distributions of labels across subgroups and any variations in how labels are classified and measured; these may suggest a need to optimize benchmarks or to develop separate models for different subgroups.83 Documentation tools such as Datasheets for Datasets84 and Model Cards for Model Reporting85 exist, but identifying the precise cause of bias can be challenging and requires careful audits by multidisciplinary teams.
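The effect of a proxy label can be reproduced in a few lines. The sketch below builds a synthetic cohort in which two groups have identical illness burden but, by assumption, group B incurs 15% less spending at the same illness level (for example, because of unequal access to care). It then enrolls the top 10% of patients ranked either by the proxy label (cost) or by the target (illness). The cohort, spending rates and 10% cutoff are all hypothetical.

```python
"""Sketch: choosing a proxy label (cost) instead of the target (illness) shifts who is selected.

Synthetic data only. Assumption for illustration: both groups have identical illness
burden, but group B incurs 15% lower spending at the same illness level.
"""

SPENDING_PER_ILLNESS_UNIT = {"A": 1000, "B": 850}  # hypothetical dollars


def make_cohort():
    cohort = []
    for i in range(1000):
        group = "A" if (i // 100) % 2 == 0 else "B"  # 500 patients per group
        illness = i % 100  # same illness distribution (0-99) in both groups
        cost = illness * SPENDING_PER_ILLNESS_UNIT[group]
        cohort.append({"group": group, "illness": illness, "cost": cost})
    return cohort


def share_of_group_b(cohort, label, top_fraction=0.10):
    """Rank patients by `label`, enroll the top fraction, and return the share from group B."""
    n_selected = int(len(cohort) * top_fraction)
    selected = sorted(cohort, key=lambda p: p[label], reverse=True)[:n_selected]
    return sum(p["group"] == "B" for p in selected) / n_selected


cohort = make_cohort()
print(f"group B share when ranked by illness: {share_of_group_b(cohort, 'illness'):.0%}")
print(f"group B share when ranked by cost:    {share_of_group_b(cohort, 'cost'):.0%}")
```

With these assumptions, ranking by illness enrolls the two groups equally (50% from group B), whereas ranking by cost enrolls only 15% from group B, even though group membership is never used as an input.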

A third focus should be on regulatory standards. Mandating explicit reporting of descriptive data of the patient populations used in AI/ML development—such as race, ethnicity, income, insurance, and sex—is a necessary step, as long as privacy is protected. Doing so enables a clear assessment of appropriate representation in the algorithm’s training dataset and the generalizability of its results. This type of descriptive data will also provide insight regarding which algorithms and models may not represent certain patient groups adequately.
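Once descriptive data are reported, a simple representation check becomes possible. In the sketch below, the training-cohort shares are those reported for the VA IBD cohort described above. The reference-population shares and the 0.8 flagging threshold are hypothetical placeholders that a reviewer would replace with figures for the population the algorithm is intended to serve.

```python
"""Sketch: turning reported cohort demographics into a simple representation check.

Training shares: VA IBD cohort as reported above. Reference shares and the 0.8
threshold are hypothetical placeholders.
"""

TRAINING_SHARES = {
    "sex": {"male": 0.93, "female": 0.07},
    "race": {"White": 0.70, "Black": 0.08, "Other": 0.017, "Unknown": 0.19},
}

REFERENCE_SHARES = {  # hypothetical intended-use population
    "sex": {"male": 0.50, "female": 0.50},
    "race": {"White": 0.60, "Black": 0.15, "Other": 0.25},
}

FLAG_BELOW = 0.8  # hypothetical: flag groups at <80% of their reference share


def representation_report(training, reference, flag_below=FLAG_BELOW):
    """Return (attribute, group, ratio, flagged) rows; ratio = training share / reference share."""
    rows = []
    for attribute, ref in reference.items():
        for group, ref_share in ref.items():
            ratio = training[attribute].get(group, 0.0) / ref_share
            rows.append((attribute, group, round(ratio, 2), ratio < flag_below))
    return rows


for attribute, group, ratio, flagged in representation_report(TRAINING_SHARES, REFERENCE_SHARES):
    print(f"{attribute:<5} {group:<6} ratio={ratio:<5} {'UNDER-REPRESENTED' if flagged else ''}")
print(f"race recorded as Unknown: {TRAINING_SHARES['race']['Unknown']:.0%}")
```

Here women and the Black and Other race groups are flagged. The 19% of patients with unknown race is reported separately, because missing demographic data limit any such assessment.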

Fourth, there must be robust processes in the pre-deployment phase to audit model outputs and ensure equal algorithmic performance across diverse patient populations. Sensitivity analyses evaluating algorithmic performance in subgroups can identify biased models with inequitable outcomes. Preemptive efforts to adjust models before deployment and mass dissemination can protect marginalized subpopulations from inequitable outcomes and may also save costs: the excess cost of racial health disparities in the United States has been estimated at approximately $230 billion over a four-year period.86 To be effective, the definition of “inequitable outcome” set by regulators must be fair, clear, specific and quantifiable. Ongoing surveillance after deployment is also imperative to monitor for unintended consequences of AI/ML and to confirm that algorithms perform without bias in practice. Of note, access to the training data and prediction methodologies of most large-scale AI/ML algorithms is frequently restricted, limiting independent efforts to assess algorithmic biases and how they arose.11 This reality underscores the importance of deidentified, open-access data sharing in accordance with the FAIR data principles87 (findability, accessibility, interoperability and reusability), which could be instrumental in promoting health equity by revealing which AI/ML algorithms could perpetuate or exacerbate disparities.
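One way to make “inequitable outcome” quantifiable is to specify, before deployment, which subgroup metrics will be compared and what gap is tolerable. The sketch below uses an equalized-odds style rule: the largest between-group difference in true-positive rate and in false-positive rate must not exceed a tolerance. The choice of criterion, the 0.05 tolerance and the validation data are hypothetical; other fairness criteria (for example, calibration within groups) may suit a given clinical use better.

```python
from collections import defaultdict


def rates_by_group(y_true, y_pred, groups):
    """Per-group true-positive and false-positive rates.

    Assumes every group contains at least one positive and one negative case;
    otherwise the division below would fail.
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "fp": 0, "tn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth:
            counts[group]["tp" if pred else "fn"] += 1
        else:
            counts[group]["fp" if pred else "tn"] += 1
    return {
        group: {
            "tpr": c["tp"] / (c["tp"] + c["fn"]),  # sensitivity
            "fpr": c["fp"] / (c["fp"] + c["tn"]),  # 1 - specificity
        }
        for group, c in counts.items()
    }


def audit(y_true, y_pred, groups, tolerance=0.05):
    """Pass only if the largest between-group gap in TPR and in FPR is within `tolerance`."""
    rates = rates_by_group(y_true, y_pred, groups)
    gaps = {}
    for metric in ("tpr", "fpr"):
        values = [r[metric] for r in rates.values()]
        gaps[metric] = max(values) - min(values)
    passed = all(gap <= tolerance for gap in gaps.values())
    return rates, gaps, passed


# Hypothetical validation results: 10 positive and 10 negative cases per group.
y_true = ([1] * 10 + [0] * 10) * 2
y_pred = (
    [1] * 9 + [0] * 1 + [1] * 1 + [0] * 9  # group A: 9/10 detected, 1 false positive
    + [1] * 6 + [0] * 4 + [1] * 1 + [0] * 9  # group B: 6/10 detected, 1 false positive
)
groups = ["A"] * 20 + ["B"] * 20

rates, gaps, passed = audit(y_true, y_pred, groups)
print(rates)
print(f"TPR gap {gaps['tpr']:.2f}, FPR gap {gaps['fpr']:.2f}, audit passed: {passed}")
```

In this hypothetical example, group B's sensitivity is 0.6 versus 0.9 in group A, so the audit fails even though false-positive rates are identical. The same function could be rerun on post-deployment data for ongoing surveillance.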

Finally, combining AI/ML models with physician clinical decision-making, that is, an augmented intelligence approach with a physician-in-the-loop configuration, may help generate ethical and equitable AI/ML tools.88 Augmented intelligence may be useful in both directions: AI/ML models can standardize approaches where there is considerable provider variability, while physician interaction can help limit biases that may arise from these tools. However, this must be done carefully, because biases can also arise from physician interaction with prediction models, including automation bias (overreliance on prediction models), feedback loops, dismissal bias (conscious or unconscious desensitization) and allocation discrepancy.89

While these efforts can minimize bias and create more ethical AI tools, they do not serve as substitutes for repairing medical mistrust90,91 and certainly do not obviate the structural changes needed to build a more equitable health system.92–94

Conclusions

We describe five themes to illustrate how AI/ML can lead to inequities in gastroenterology and hepatology, examples of their impact on health equity and several actionable solutions to ensure equity in AI. By 2045, White individuals are projected to comprise less than 50% of the U.S. population; this work is therefore critical as AI/ML becomes more common globally and the U.S. becomes more diverse.95

Our primary limitation was the inability to measure or quantify inequities in each clinical example provided. Although each example relates directly to one of the major mechanisms of inequity in AI/ML, the degree to which each specific example led to bias cannot be directly measured. In addition, we did not have access to all of the model information used to develop the algorithms discussed, nor to robust cost information that would enable a review of the cost implications of current AI approaches. This highlights the importance of transparency, so that researchers can access the data and inputs included in each algorithm to advance equity. Lastly, the examples provided in this paper are not an exhaustive list; rather, they are strong and relevant illustrations of how prediction models and AI/ML algorithms in gastroenterology and hepatology can lead to biased systems and inequitable health outcomes.

This paper has several key strengths. First, we provide clear and actionable solutions to address health equity in machine learning that can be used by researchers and clinicians alike. Second, we provide concise themes that illustrate how AI/ML can lead to health inequity in gastroenterology and hepatology, each matched to specific examples. Our overarching goal is to increase attention to an important potential downside of AI as its use becomes more prevalent and pervasive in gastroenterology and hepatology.

Here, we adapt a framework for considering equity in AI/ML algorithms used in gastroenterology and hepatology and provide a platform for discussion of an increasingly relevant topic. In other fields of medicine, we have begun to reassess prediction models and algorithms through a health equity lens. The field of gastroenterology and hepatology has already taken a leading role in clinical applications of AI in medicine, and it is therefore especially important that, as a field, we also take a leading role in ensuring that equity considerations are emphasized. This framework will help gastroenterology and hepatology researchers and clinicians prioritize equity in AI/ML development, implementation and evaluation so that every patient has the opportunity to benefit from the technologic advances that the future brings.

Key Messages.

Artificial intelligence (AI) and machine learning (ML) systems are increasingly used in medicine to improve clinical decision making and health care delivery.

In gastroenterology and hepatology, studies have explored a myriad of opportunities for AI/ML applications which are already making the transition to bedside.

Despite these advances, there are challenges vis-à-vis biases and health inequities introduced or exacerbated by these technologies. If unrecognized, these technologies could generate or worsen systematic racial, ethnic and sex disparities when deployed on a large scale.

AI/ML could contribute to health inequities in gastroenterology and hepatology through several mechanisms, with examples in esophageal cancer, inflammatory bowel disease, liver transplantation, colorectal cancer screening and other areas.

This review adapts a framework for ethical AI/ML development and application to gastroenterology and hepatology such that clinical practice is advanced while minimizing bias and optimizing health equity.

Grant Support:

FPM is supported by the UCLA Jonsson Comprehensive Cancer Center and the Eli and Edythe Broad Center of Regenerative Medicine and Stem Cell Research Ablon Scholars Program.

Abbreviations:

- AI: artificial intelligence
- ML: machine learning
- IBD: inflammatory bowel disease
- FDA: Food and Drug Administration
- ICU: intensive care unit
- AUC: area under the curve
- U.S.: United States
- EAC: esophageal adenocarcinoma
- ESCC: esophageal squamous cell carcinoma
- HCC: hepatocellular carcinoma
- MELD: Model for End-Stage Liver Disease
- OPTN: Organ Procurement and Transplantation Network
- INR: international normalized ratio
- CRC: colorectal cancer
- ADR: adenoma detection rate
- CADe: computer-aided detection

Footnotes

Author Conflict of Interest Statement: T. Berzin is a consultant for Wision AI, Docbot AI, Medtronic, and Magentiq Eye. F. May is a consultant for Medtronic and receives research funding from Exact Sciences.

Disclaimer: The opinions and assertions contained herein are the sole views of the authors and are not to be construed as official or as reflecting the views of Massachusetts General Hospital, Beth Israel Deaconess Medical Center, Harvard University, Massachusetts Institute of Technology, University of California, the Department of Veterans Affairs, or the United States government.

REFERENCES

- 1. Jiang F, Jiang Y, Zhi H, et al. Artificial intelligence in healthcare: past, present and future. Stroke Vasc Neurol. Dec 2017;2(4):230–243. doi: 10.1136/svn-2017-000101

- 2. Shen L, Margolies LR, Rothstein JH, Fluder E, McBride R, Sieh W. Deep Learning to Improve Breast Cancer Detection on Screening Mammography. Sci Rep. Aug 2019;9(1):12495. doi: 10.1038/s41598-019-48995-4

- 3. Burdick H, Lam C, Mataraso S, et al. Prediction of respiratory decompensation in Covid-19 patients using machine learning: The READY trial. Comput Biol Med. Sep 2020;124:103949. doi: 10.1016/j.compbiomed.2020.103949

- 4. Nordling L. A fairer way forward for AI in health care. Nature. Sep 2019;573(7775):S103–S105. doi: 10.1038/d41586-019-02872-2

- 5. Pannala R, Krishnan K, Melson J, et al. Artificial intelligence in gastrointestinal endoscopy. VideoGIE. Dec 2020;5(12):598–613. doi: 10.1016/j.vgie.2020.08.013

- 6. Le Berre C, Sandborn WJ, Aridhi S, et al. Application of Artificial Intelligence to Gastroenterology and Hepatology. Gastroenterology. Jan 2020;158(1):76–94.e2. doi: 10.1053/j.gastro.2019.08.058

- 7. Chen B, Garmire L, Calvisi DF, Chua MS, Kelley RK, Chen X. Harnessing big ‘omics’ data and AI for drug discovery in hepatocellular carcinoma. Nat Rev Gastroenterol Hepatol. Apr 2020;17(4):238–251. doi: 10.1038/s41575-019-0240-9

- 8. Ahn JC, Connell A, Simonetto DA, Hughes C, Shah VH. The application of artificial intelligence for the diagnosis and treatment of liver diseases. Hepatology. Oct 2020. doi: 10.1002/hep.31603

- 9. FDA. Artificial Intelligence and Machine Learning in Software as a Medical Device. 2021. https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-software-medical-device#transforming

- 10. Obermeyer Z, Nissan R, Stern M, Eaneff S, Bembeneck EJ, Mullainathan S. Algorithmic Bias Playbook. University of Chicago Booth, Center for Applied Artificial Intelligence; 2021.

- 11. Obermeyer Z, Powers B, Vogeli C, Mullainathan S. Dissecting racial bias in an algorithm used to manage the health of populations. Science. Oct 25 2019;366(6464):447–453. doi: 10.1126/science.aax2342

- 12. Chen IY, Szolovits P, Ghassemi M. Can AI Help Reduce Disparities in General Medical and Mental Health Care? AMA J Ethics. Feb 2019;21(2):E167–179. doi: 10.1001/amajethics.2019.167

- 13. Braveman P, Gruskin S. Defining equity in health. J Epidemiol Community Health. Apr 2003;57(4):254–8. doi: 10.1136/jech.57.4.254

- 14. Chen I, Pierson E, Rose S, Joshi S, Ferryman K, Ghassemi M. Ethical Machine Learning in Healthcare. Annual Review of Biomedical Data Science. 2020;4.

- 15. Konkel L. Racial and Ethnic Disparities in Research Studies: The Challenge of Creating More Diverse Cohorts. Environ Health Perspect. Dec 2015;123(12):A297–302. doi: 10.1289/ehp.123-A297

- 16. Vollmer S, Mateen BA, Bohner G, et al. Machine learning and artificial intelligence research for patient benefit: 20 critical questions on transparency, replicability, ethics, and effectiveness. BMJ. Mar 2020;368:l6927. doi: 10.1136/bmj.l6927

- 17. Polit DF, Beck CT. Generalization in quantitative and qualitative research: myths and strategies. Int J Nurs Stud. Nov 2010;47(11):1451–8. doi: 10.1016/j.ijnurstu.2010.06.004

- 18. Noseworthy PA, Attia ZI, Brewer LC, et al. Assessing and Mitigating Bias in Medical Artificial Intelligence: The Effects of Race and Ethnicity on a Deep Learning Model for ECG Analysis. Circ Arrhythm Electrophysiol. Mar 2020;13(3):e007988. doi: 10.1161/CIRCEP.119.007988

- 19. Gianfrancesco MA, Tamang S, Yazdany J, Schmajuk G. Potential Biases in Machine Learning Algorithms Using Electronic Health Record Data. JAMA Intern Med. Nov 2018;178(11):1544–1547. doi: 10.1001/jamainternmed.2018.3763

- 20. Vyas DA, Eisenstein LG, Jones DS. Hidden in Plain Sight - Reconsidering the Use of Race Correction in Clinical Algorithms. N Engl J Med. Aug 2020;383(9):874–882. doi: 10.1056/NEJMms2004740

- 21. Grote T, Berens P. On the ethics of algorithmic decision-making in healthcare. J Med Ethics. Mar 2020;46(3):205–211. doi: 10.1136/medethics-2019-105586

- 22. Swager AF, Tearney GJ, Leggett CL, et al. Identification of volumetric laser endomicroscopy features predictive for early neoplasia in Barrett’s esophagus using high-quality histological correlation. Gastrointest Endosc. May 2017;85(5):918–926.e7. doi: 10.1016/j.gie.2016.09.012

- 23. van der Sommen F, Zinger S, Curvers WL, et al. Computer-aided detection of early neoplastic lesions in Barrett’s esophagus. Endoscopy. Jul 2016;48(7):617–24. doi: 10.1055/s-0042-105284

- 24. van der Sommen F, Klomp SR, Swager AF, et al. Predictive features for early cancer detection in Barrett’s esophagus using Volumetric Laser Endomicroscopy. Comput Med Imaging Graph. Jul 2018;67:9–20. doi: 10.1016/j.compmedimag.2018.02.007

- 25. Ebigbo A, Mendel R, Probst A, et al. Real-time use of artificial intelligence in the evaluation of cancer in Barrett’s oesophagus. Gut. Apr 2020;69(4):615–616. doi: 10.1136/gutjnl-2019-319460

- 26. Hashimoto R, Requa J, Dao T, et al. Artificial intelligence using convolutional neural networks for real-time detection of early esophageal neoplasia in Barrett’s esophagus (with video). Gastrointest Endosc. Jun 2020;91(6):1264–1271.e1. doi: 10.1016/j.gie.2019.12.049

- 27. Islami F, DeSantis CE, Jemal A. Incidence Trends of Esophageal and Gastric Cancer Subtypes by Race, Ethnicity, and Age in the United States, 1997–2014. Clin Gastroenterol Hepatol. Feb 2019;17(3):429–439. doi: 10.1016/j.cgh.2018.05.044

- 28. Chen S, Zhou K, Yang L, Ding G, Li H. Racial Differences in Esophageal Squamous Cell Carcinoma: Incidence and Molecular Features. Biomed Res Int. 2017;2017:1204082. doi: 10.1155/2017/1204082

- 29. Zhang Y. Epidemiology of esophageal cancer. World J Gastroenterol. Sep 2013;19(34):5598–606. doi: 10.3748/wjg.v19.i34.5598

- 30. Ohmori M, Ishihara R, Aoyama K, et al. Endoscopic detection and differentiation of esophageal lesions using a deep neural network. Gastrointest Endosc. Feb 2020;91(2):301–309.e1. doi: 10.1016/j.gie.2019.09.034

- 31. Horie Y, Yoshio T, Aoyama K, et al. Diagnostic outcomes of esophageal cancer by artificial intelligence using convolutional neural networks. Gastrointest Endosc. Jan 2019;89(1):25–32. doi: 10.1016/j.gie.2018.07.037

- 32. Cai SL, Li B, Tan WM, et al. Using a deep learning system in endoscopy for screening of early esophageal squamous cell carcinoma (with video). Gastrointest Endosc. Nov 2019;90(5):745–753.e2. doi: 10.1016/j.gie.2019.06.044

- 33. Tokai Y, Yoshio T, Aoyama K, et al. Application of artificial intelligence using convolutional neural networks in determining the invasion depth of esophageal squamous cell carcinoma. Esophagus. Jul 2020;17(3):250–256. doi: 10.1007/s10388-020-00716-x

- 34. Guo L, Xiao X, Wu C, et al. Real-time automated diagnosis of precancerous lesions and early esophageal squamous cell carcinoma using a deep learning model (with videos). Gastrointest Endosc. Jan 2020;91(1):41–51. doi: 10.1016/j.gie.2019.08.018

- 35. Kohli A, Holzwanger EA, Levy AN. Emerging use of artificial intelligence in inflammatory bowel disease. World J Gastroenterol. Nov 2020;26(44):6923–6928. doi: 10.3748/wjg.v26.i44.6923

- 36. Waljee AK, Lipson R, Wiitala WL, et al. Predicting Hospitalization and Outpatient Corticosteroid Use in Inflammatory Bowel Disease Patients Using Machine Learning. Inflamm Bowel Dis. Dec 2017;24(1):45–53. doi: 10.1093/ibd/izx007

- 37. Waljee AK, Liu B, Sauder K, et al. Predicting Corticosteroid-Free Biologic Remission with Vedolizumab in Crohn’s Disease. Inflamm Bowel Dis. May 2018;24(6):1185–1192. doi: 10.1093/ibd/izy031

- 38. Gan RW, Sun D, Tatro AR, et al. Replicating prediction algorithms for hospitalization and corticosteroid use in patients with inflammatory bowel disease. PLoS One. 2021;16(9):e0257520. doi: 10.1371/journal.pone.0257520

- 39. Aniwan S, Harmsen WS, Tremaine WJ, Loftus EV. Incidence of inflammatory bowel disease by race and ethnicity in a population-based inception cohort from 1970 through 2010. Therap Adv Gastroenterol. 2019;12:1756284819827692. doi: 10.1177/1756284819827692

- 40. Afzali A, Cross RK. Racial and Ethnic Minorities with Inflammatory Bowel Disease in the United States: A Systematic Review of Disease Characteristics and Differences. Inflamm Bowel Dis. Aug 2016;22(8):2023–40. doi: 10.1097/MIB.0000000000000835

- 41. Ng SC, Shi HY, Hamidi N, et al. Worldwide incidence and prevalence of inflammatory bowel disease in the 21st century: a systematic review of population-based studies. Lancet. Dec 2017;390(10114):2769–2778. doi: 10.1016/S0140-6736(17)32448-0

- 42. Dos Santos Marques IC, Theiss LM, Wood LN, et al. Racial disparities exist in surgical outcomes for patients with inflammatory bowel disease. Am J Surg. Apr 2021;221(4):668–674. doi: 10.1016/j.amjsurg.2020.12.010

- 43. Barnes EL, Loftus EV, Kappelman MD. Effects of Race and Ethnicity on Diagnosis and Management of Inflammatory Bowel Diseases. Gastroenterology. Feb 2021;160(3):677–689. doi: 10.1053/j.gastro.2020.08.064

- 44. Spann A, Yasodhara A, Kang J, et al. Applying Machine Learning in Liver Disease and Transplantation: A Comprehensive Review. Hepatology. Mar 2020;71(3):1093–1105. doi: 10.1002/hep.31103

- 45. Merion RM, Sharma P, Mathur AK, Schaubel DE. Evidence-based development of liver allocation: a review. Transpl Int. Oct 2011;24(10):965–72. doi: 10.1111/j.1432-2277.2011.01274.x

- 46. Ahearn A. Ethical Dilemmas in Liver Transplant Organ Allocation: Is it Time for a New Mathematical Model? AMA J Ethics. Feb 01 2016;18(2):126–32. doi: 10.1001/journalofethics.2016.18.2.nlit1-1602

- 47. Cholongitas E, Marelli L, Kerry A, et al. Female liver transplant recipients with the same GFR as male recipients have lower MELD scores--a systematic bias. Am J Transplant. Mar 2007;7(3):685–92. doi: 10.1111/j.1600-6143.2007.01666.x

- 48. Mindikoglu AL, Regev A, Seliger SL, Magder LS. Gender disparity in liver transplant waiting-list mortality: the importance of kidney function. Liver Transpl. Oct 2010;16(10):1147–57. doi: 10.1002/lt.22121

- 49. Allen AM, Heimbach JK, Larson JJ, et al. Reduced Access to Liver Transplantation in Women: Role of Height, MELD Exception Scores, and Renal Function Underestimation. Transplantation. Oct 2018;102(10):1710–1716. doi: 10.1097/TP.0000000000002196

- 50. Martin P, DiMartini A, Feng S, Brown R, Fallon M. Evaluation for liver transplantation in adults: 2013 practice guideline by the American Association for the Study of Liver Diseases and the American Society of Transplantation. Hepatology. Mar 2014;59(3):1144–65. doi: 10.1002/hep.26972

- 51. Massie AB, Caffo B, Gentry SE, et al. MELD Exceptions and Rates of Waiting List Outcomes. Am J Transplant. Nov 2011;11(11):2362–71. doi: 10.1111/j.1600-6143.2011.03735.x

- 52. Nephew LD, Goldberg DS, Lewis JD, Abt P, Bryan M, Forde KA. Exception Points and Body Size Contribute to Gender Disparity in Liver Transplantation. Clin Gastroenterol Hepatol. Aug 2017;15(8):1286–1293.e2. doi: 10.1016/j.cgh.2017.02.033

- 53. Petrick JL, Braunlin M, Laversanne M, Valery PC, Bray F, McGlynn KA. International trends in liver cancer incidence, overall and by histologic subtype, 1978–2007. Int J Cancer. Oct 2016;139(7):1534–45. doi: 10.1002/ijc.30211

- 54. Singal AG, El-Serag HB. Hepatocellular Carcinoma From Epidemiology to Prevention: Translating Knowledge into Practice. Clin Gastroenterol Hepatol. Nov 2015;13(12):2140–51. doi: 10.1016/j.cgh.2015.08.014

- 55. Locke JE, Shelton BA, Olthoff KM, et al. Quantifying Sex-Based Disparities in Liver Allocation. JAMA Surg. Jul 2020;155(7):e201129. doi: 10.1001/jamasurg.2020.1129

- 56. Nitski O, Azhie A, Qazi-Arisar FA, et al. Long-term mortality risk stratification of liver transplant recipients: real-time application of deep learning algorithms on longitudinal data. Lancet Digit Health. May 2021;3(5):e295–e305. doi: 10.1016/S2589-7500(21)00040-6

- 57. Wahid NA, Rosenblatt R, Brown RS. A Review of the Current State of Liver Transplantation Disparities. Liver Transpl. Feb 2021;27(3):434–443. doi: 10.1002/lt.25964

- 58. Ahmed S, Nutt CT, Eneanya ND, et al. Examining the Potential Impact of Race Multiplier Utilization in Estimated Glomerular Filtration Rate Calculation on African-American Care Outcomes. J Gen Intern Med. Feb 2021;36(2):464–471. doi: 10.1007/s11606-020-06280-5

- 59. Corley DA, Jensen CD, Marks AR, et al. Adenoma detection rate and risk of colorectal cancer and death. N Engl J Med. Apr 2014;370(14):1298–306. doi: 10.1056/NEJMoa1309086

- 60. Corley DA, Jensen CD, Marks AR. Can we improve adenoma detection rates? A systematic review of intervention studies. Gastrointest Endosc. Sep 2011;74(3):656–65. doi: 10.1016/j.gie.2011.04.017

- 61. Repici A, Badalamenti M, Maselli R, et al. Efficacy of Real-Time Computer-Aided Detection of Colorectal Neoplasia in a Randomized Trial. Gastroenterology. Aug 2020;159(2):512–520.e7. doi: 10.1053/j.gastro.2020.04.062

- 62. Hassan C, Spadaccini M, Iannone A, et al. Performance of artificial intelligence in colonoscopy for adenoma and polyp detection: a systematic review and meta-analysis. Gastrointest Endosc. Jan 2021;93(1):77–85.e6. doi: 10.1016/j.gie.2020.06.059

- 63. Rex DK, Boland CR, Dominitz JA, et al. Colorectal cancer screening: Recommendations for physicians and patients from the U.S. Multi-Society Task Force on Colorectal Cancer. Gastrointest Endosc. Jul 2017;86(1):18–33. doi: 10.1016/j.gie.2017.04.003

- 64. Zhou G, Xiao X, Tu M, et al. Computer aided detection for laterally spreading tumors and sessile serrated adenomas during colonoscopy. PLoS One. 2020;15(4):e0231880. doi: 10.1371/journal.pone.0231880

- 65. Jackson CS, Vega KJ. Higher prevalence of proximal colon polyps and villous histology in African-Americans undergoing colonoscopy at a single equal access center. J Gastrointest Oncol. Dec 2015;6(6):638–43. doi: 10.3978/j.issn.2078-6891.2015.096

- 66. Nouraie M, Hosseinkhah F, Brim H, Zamanifekri B, Smoot DT, Ashktorab H. Clinicopathological features of colon polyps from African-Americans. Dig Dis Sci. May 2010;55(5):1442–9. doi: 10.1007/s10620-010-1133-5

- 67. Thornton JG, Morris AM, Thornton JD, Flowers CR, McCashland TM. Racial variation in colorectal polyp and tumor location. J Natl Med Assoc. Jul 2007;99(7):723–8.

- 68. Devall M, Sun X, Yuan F, et al. Racial Disparities in Epigenetic Aging of the Right vs Left Colon. J Natl Cancer Inst. Dec 2020. doi: 10.1093/jnci/djaa206

- 69. Nouraie M, Ashktorab H, Atefi N, et al. Can the rate and location of sessile serrated polyps be part of colorectal Cancer disparity in African Americans? BMC Gastroenterol. May 2019;19(1):77. doi: 10.1186/s12876-019-0996-y

- 70. Ashktorab H, Delker D, Kanth P, Goel A, Carethers JM, Brim H. Molecular Characterization of Sessile Serrated Adenoma/Polyps From a Large African American Cohort. Gastroenterology. Aug 2019;157(2):572–574. doi: 10.1053/j.gastro.2019.04.015

- 71. DeSantis CE, Miller KD, Goding Sauer A, Jemal A, Siegel RL. Cancer statistics for African Americans, 2019. CA Cancer J Clin. May 2019;69(3):211–233. doi: 10.3322/caac.21555

- 72. Fedewa SA, Flanders WD, Ward KC, et al. Racial and Ethnic Disparities in Interval Colorectal Cancer Incidence: A Population-Based Cohort Study. Ann Intern Med. Jun 2017;166(12):857–866. doi: 10.7326/M16-1154

- 73. Almario CV, May FP, Ponce NA, Spiegel BM. Racial and Ethnic Disparities in Colonoscopic Examination of Individuals With a Family History of Colorectal Cancer. Clin Gastroenterol Hepatol. Aug 2015;13(8):1487–95. doi: 10.1016/j.cgh.2015.02.038

- 74. Benarroch-Gampel J, Sheffield KM, Lin YL, Kuo YF, Goodwin JS, Riall TS. Colonoscopist and primary care physician supply and disparities in colorectal cancer screening. Health Serv Res. Jun 2012;47(3 Pt 1):1137–57. doi: 10.1111/j.1475-6773.2011.01355.x

- 75. Laiyemo AO, Doubeni C, Pinsky PF, et al. Race and colorectal cancer disparities: health-care utilization vs different cancer susceptibilities. J Natl Cancer Inst. Apr 21 2010;102(8):538–46. doi: 10.1093/jnci/djq068

- 76. Lansdorp-Vogelaar I, Kuntz KM, Knudsen AB, van Ballegooijen M, Zauber AG, Jemal A. Contribution of screening and survival differences to racial disparities in colorectal cancer rates. Cancer Epidemiol Biomarkers Prev. May 2012;21(5):728–36. doi: 10.1158/1055-9965.EPI-12-0023

- 77. Goldberg DS, Taddei TH, Serper M, et al. Identifying barriers to hepatocellular carcinoma surveillance in a national sample of patients with cirrhosis. Hepatology. Mar 2017;65(3):864–874. doi: 10.1002/hep.28765

- 78. Singal AG, Li X, Tiro J, et al. Racial, social, and clinical determinants of hepatocellular carcinoma surveillance. Am J Med. Jan 2015;128(1):90.e1–7. doi: 10.1016/j.amjmed.2014.07.027

- 79. Riall TS, Townsend CM, Kuo YF, Freeman JL, Goodwin JS. Dissecting racial disparities in the treatment of patients with locoregional pancreatic cancer: a 2-step process. Cancer. Feb 15 2010;116(4):930–9. doi: 10.1002/cncr.24836

- 80. Gao Y, Cui Y. Deep transfer learning for reducing health care disparities arising from biomedical data inequality. Nat Commun. Oct 12 2020;11(1):5131. doi: 10.1038/s41467-020-18918-3

- 81. Yang YJ, Bang CS. Application of artificial intelligence in gastroenterology. World J Gastroenterol. Apr 14 2019;25(14):1666–1683. doi: 10.3748/wjg.v25.i14.1666

- 82. Chen H, Sung JJY. Potentials of AI in medical image analysis in Gastroenterology and Hepatology. J Gastroenterol Hepatol. Jan 2021;36(1):31–38. doi: 10.1111/jgh.15327

- 83. Suresh H, Guttag J. Understanding Potential Sources of Harm throughout the Machine Learning Life Cycle. MIT Case Studies in Social and Ethical Responsibilities of Computing; 2021.

- 84. Gebru T, Morgenstern J, Vecchione B, et al. Datasheets for Datasets. Communications of the ACM. 2021:86–92.

- 85. Mitchell M, Wu S, Zaldivar A, et al. Model Cards for Model Reporting. Proceedings of the Conference on Fairness, Accountability, and Transparency; 2019:220–229.

- 86. LaVeist TA, Gaskin D, Richard P. Estimating the economic burden of racial health inequalities in the United States. Int J Health Serv. 2011;41(2):231–8. doi: 10.2190/HS.41.2.c

- 87. Wilkinson MD, Dumontier M, Aalbersberg IJ, et al. The FAIR Guiding Principles for scientific data management and stewardship. Sci Data. Mar 15 2016;3:160018. doi: 10.1038/sdata.2016.18

- 88. Crigger E, Reinbold K, Hanson C, Kao A, Blake K, Irons M. Trustworthy Augmented Intelligence in Health Care. J Med Syst. Jan 12 2022;46(2):12. doi: 10.1007/s10916-021-01790-z

- 89. Rajkomar A, Hardt M, Howell MD, Corrado G, Chin MH. Ensuring Fairness in Machine Learning to Advance Health Equity. Ann Intern Med. Dec 18 2018;169(12):866–872. doi: 10.7326/M18-1990

- 90. DeCamp M, Tilburt JC. Why we cannot trust artificial intelligence in medicine. Lancet Digit Health. Dec 2019;1(8):e390. doi: 10.1016/S2589-7500(19)30197-9

- 91. Ryan M. In AI We Trust: Ethics, Artificial Intelligence, and Reliability. Sci Eng Ethics. Oct 2020;26(5):2749–2767. doi: 10.1007/s11948-020-00228-y

- 92. Char DS, Shah NH, Magnus D. Implementing Machine Learning in Health Care - Addressing Ethical Challenges. N Engl J Med. Mar 15 2018;378(11):981–983. doi: 10.1056/NEJMp1714229

- 93. Kerasidou A. Artificial intelligence and the ongoing need for empathy, compassion and trust in healthcare. Bull World Health Organ. Apr 01 2020;98(4):245–250. doi: 10.2471/BLT.19.237198

- 94. Baron RJ, Khullar D. Building Trust to Promote a More Equitable Health Care System. Ann Intern Med. Apr 2021;174(4):548–549. doi: 10.7326/M20-6984

- 95. Bahrampour T, Mellnik T. Census data shows widening diversity; number of White people falls for first time. Washington Post; 2021.