Abstract

In this article, we introduce Semantic Interior Mapology (SIM), a web app that allows anyone to quickly trace the floor plan of a building, generating a vectorized representation that can be automatically converted into a tactile map at the desired scale. The design of SIM is informed by a focus group with seven blind participants. Maps generated by SIM at two different scales were tested in a user study with 10 participants, who were asked to perform a number of tasks designed to ascertain the spatial knowledge acquired through map exploration. These tasks included cross-map pointing and path finding, and determination of turn direction/walker orientation during imagined path traversal. By and large, participants were able to successfully complete the tasks, suggesting that these types of maps could be useful for pre-journey spatial learning.

Keywords: Tactile maps

1. INTRODUCTION

Tactile maps have been used to convey spatial concepts to blind people for decades. When used by orientation and mobility (O&M) professionals, these spatial displays represent important tools for teaching blind individuals about key routes through their neighborhood or city or for conveying the global layout of an environment that is otherwise hard to apprehend using nonvisual sensing [1, 2]. As with traditional visual maps, tactile maps can be used to convey information at different scales, for example, a floor plan versus a world map, and for different purposes, for example, to support navigation or to learn spatial relations [3].

Unfortunately, creating a tactile map by hand can be time-consuming and requires specific expertise, which may be one of the reasons why tactile maps are not universally available. Thus, methods for the automatic production of tactile maps are highly desirable. For example, the TMAP system [4], hosted by the San Francisco Lighthouse for the Blind and Visually Impaired, allows anyone to specify the boundaries of an urban area, then automatically generates a file that can be rendered as a tactile map. While TMAP and similar systems have been well received by the blind community, there is no equivalent system for indoor maps (although the issue has been addressed by a few research projects; see Section 2.2.2). Outdoor environments often have consistent street networks, clear street naming, and building addressing conventions to help mentally organize the space and scaffold the information being perceived into a logical mental schema. By contrast, indoor environments lack many of these naming conventions and environmental regularities, have many occlusions from walls and ceilings that limit perceptual access, and often have multiple floors that complicate learning and integration of the space into a coherent mental representation [5]. Research has shown that pre-travel learning of building layouts from tactile maps (using both tactile map overlays and digital haptic maps) can help overcome these problems and supports accurate cognitive map development and subsequent wayfinding behavior once in the physical space [6, 7]. 
Post-test surveys from studies investigating the use of accessible indoor maps by blind participants suggest that access to tactile maps improved blind users’ confidence in learning the space compared with situations in which access to these spatial supports was not available [7]; that tactile map usage improved perceived knowledge of the direction between pairs of indoor locations [6]; and that learning tactile maps positively correlated with post-test questions assessing allocentric knowledge [8].

In this contribution, we introduce Semantic Interior Mapology (SIM), a web tool designed to support the creation of tactile maps of indoor buildings, which can be embossed on a regular Braille printer or rendered on microcapsule paper. SIM “democratizes” the tactile map design process by enabling anyone with access to a floor plan of a building in image format to generate maps with a standardized set of symbols and primitives. SIM supports two main pipelined processes: (1) vectorization of the floor plan, which can be geo-registered to facilitate indoor-outdoor transitions (for example, to generate a map that includes a portion of a building and of the sidewalk area); and (2) generation of an embossable tactile map at the desired scale. While most sighted people are accustomed to using digital maps that can be easily zoomed in and out to show the environment at different scales and granularities [9, 10], tactile maps are traditionally based on hardcopy output (using paper, plastic, or metal sheets) and are thus fixed in scale and resolution. The limited research studying nonvisual zooming based on accessible digital haptic maps suggests that while nonvisual zooming is possible, it is best done using functional zoom levels related to intuitive transitions of map information [11, 12]. Although poorly studied, considering scale is particularly relevant to tactile maps, as all such displays are limited in the amount of information being presented (due to the inherently lower resolution of touch than vision [13]). Most current hardcopy maps resolve this problem by either being embossed on many pages or by being rendered in a very large format. However, these approaches require significant spatial and temporal integration, which is cognitively effortful and error-prone using touch [14]. The ability to choose a scale level is an important feature of SIM. SIM supports three scale levels: Structure, Section, and Room level. 
Each scale level defines the set of symbols that can be embossed without excessive cluttering. Large-scale maps afford a general view of the layout of the building (rooms, corridors), though in many cases they do not have space for embossing the room numbers. Smaller-scale maps allow for rendering of more details, such as large appliances and possibly furniture.

In order to evaluate the quality of indoor maps generated by SIM, we conducted a user study with 10 blind participants, who were asked to explore tactile maps (embossed on paper) of a real campus building at two different scales. Participants performed a series of tasks testing their ability to read and decipher the maps as well as to form an accurate spatial understanding of the layouts they were exploring. Some questions asked them to imagine standing at a given location and to point to another location, either on the same map or on the map with a different scale. Other tasks asked them to find walkable paths within and between the two map scales, and, as they imagined walking, to describe the direction of each turn along the path (from an egocentric frame of reference) as well as indicating their cardinal orientation after the turn (expressed in an allocentric frame). An egocentric frame references locations with respect to the individual’s current location and orientation (for example, “an object in front of me”); an allocentric frame defines locations with respect to a fixed, “world” reference frame (for example, using cardinal direction).

The map engagement activities considered in this study are of interest, as they require a scale transition that necessitates integrating the two maps into a common frame of reference, that is, consolidating the different map scales into a globally coherent cognitive map in memory. The experiment was conducted remotely (via phone or Zoom) due to COVID-19–related social distancing requirements. We employed a think-aloud procedure [15], in which we asked participants to articulate what they were thinking and doing as they explored the map and engaged in targeted spatial tasks. Their responses were recorded and later evaluated in an informal protocol analysis to capture process-level data about which cues were used, which were most helpful, and how the participant approached each task given the information available on the map. Such qualitative data are not exposed by traditional map reading metrics, which tend to emphasize the outcome of learning (for example, whether the user correctly navigated a route with the aid of a map, or built an accurate mental representation of the space from it). This focus often ignores the process of how people actually use the map, which is important for understanding the information used and the thought processes employed during map reading and when performing key spatial tasks.

All participants except for one successfully completed all tasks. They correctly answered questions about the maps they explored, except for one question that asked participants to find all rooms with two doors (only three participants found all such rooms). All were able to discover walkable paths between two points (within and between map scales). However, in two cases, these paths were longer than necessary, and in one case a path was unrealizable (it would go through a wall). These results, along with the good scores from a Likert-scale exit questionnaire, suggest that multi-scale maps produced with SIM are easy to read and interpret.

2. RELATED WORK

2.1. Tactile Maps for Spatial Learning

Spatial learning with a tactile map is often compared to unaided in situ navigation, with performance evaluated on the accuracy of learning routes, relations between landmarks and key points of interest, or global structure, for example, a cognitive map [16-18]. In recent years, there has been a move from the development and use of paper-based tactile maps to accessible digital maps based on combinations of auditory and haptic cues. These maps have been rendered using vibrotactile stimulation [7, 19], force feedback information [20, 21], dynamic pin arrays [22], or by combining traditional tactile maps overlaid on a digital touchpad [23-25]. Whereas these digital maps have a range of benefits (they are interactive, multimodal, and easily updated), they also have drawbacks, including requiring some form of technology to use (which is often expensive). Importantly, with the exception of maps deployed on smart devices, they are not able to be used in situ as the equipment is not portable (see [26] for a review of dynamic haptic displays). For these reasons, most accessible digital maps have been used as a spatial learning tool for offline, pre-journey learning or for studying more theoretical investigations of spatial cognition without vision. A proposal has been presented recently [27] for complementing information from offline maps learned before visiting a building with pin-matrix maps installed within the building that can be accessed on-site.

We should emphasize that map learning, as elicited by exploring a tactile map, is different from route learning, which can be facilitated by real-time turn-by-turn navigation systems for traveling routes (global positioning system [GPS]–based outdoors or using various sensors indoors, e.g., Bluetooth/WiFi/UWB/magnetometer). These systems have been studied with blind users in indoor settings and work well, as described in [28-32]. They convey route information and have been shown to support route learning. Map learning, our interest here, leads to very different types of spatial knowledge (environmental learning and cognitive map development) than route following [33], and employs different underlying neural networks [34]. For this type of “survey” learning, one needs access to a map to convey global layout, spatial relations, and provide an allocentric frame of reference.

2.2. Tactile Map Production

2.2.1. Outdoor Maps from Geographical Information Systems (GIS) Data.

In the field of accessible map design, there has been substantial interest in the automatic generation of tactile maps for outdoor environments from GIS datasets [35-41]. In particular, the TMAP project [4], hosted by the San Francisco Lighthouse for the Blind and Visually Impaired, offers an online service for on-demand tactile map production. Anyone can register for the service, use TMAP’s web page to input a specific location and the desired area, and download a tactile map in SVG format, which can be rendered on Braille paper (using a regular Braille embosser) or on microcapsule paper (a process that involves a regular inkjet printer and a tactile image enhancer [42]).

2.2.2. Indoor Maps.

Automatic map generation for indoor environments has received comparatively little attention [43-47]. In most cases, indoor maps are still created using “tricks of the trade,” relying on the cartographer’s own experience rather than on a systematic approach [48, 49]. A main problem with automatic indoor map production is the lack of available geographical data. If a floor plan is already available in a vectorized format such as AutoCAD [45] or OpenStreetMap (OSM) [50], then transferring geographical information to a tactile map is conceptually simple (although it should be noted that indoor spaces are structurally and semantically more complex than typical urban outdoor areas [5]). An alternative to the floor plan tracing system described in this contribution is offered by algorithms that can automatically vectorize a rasterized floor plan [51-53]. Madugalla et al. [54] used similar algorithms to transform an image of a floor plan into a tactile map. In addition, their system creates a natural language description as well as a GraVVITAS format presentation [55] of the floor plan. Automatic floor plan vectorization is very desirable; however, when trying a few of these conversion algorithms, we found that they are generally sensitive to the characteristics of the original image, often requiring hand editing of the results (as also noted in [54]). This is compounded by the fact that available floor plans are often of poor quality (e.g., hand-drawn) and may use a wide variety of graphical symbols to represent spatial primitives. SIM’s tracing mechanism was designed to enable quick vectorization of floor plans regardless of the quality of the available image.

2.2.3. Tactile Symbols.

Due to the reduced resolution of tactile sensing (e.g., to be able to distinguish two points from each other via touch, they need to be at least 2.4 mm apart [56]), the tactile symbols used in a map must satisfy specific constraints on minimum size and separation [57]. The Braille Authority of North America (BANA) provides the following recommendations [58]: the minimum diameter of point symbols should be 1/4 inch (6.35 mm); the space between a point symbol and any other component should be at least 1/8 inch (3.17 mm); lines should be a minimum of 1/2 inch (12.7 mm) in length; and area symbols should have a size of at least 1/4 square inch (40.32 mm²). If the height of the tactile symbols can be controlled (e.g., using a Braille embosser), the standard recommendations are for 1.5 mm height for point symbols, 1 mm height for line symbols, and 0.5 to 1 mm height for area symbols [49].
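The size constraints above lend themselves to a simple automated check. The following is a minimal sketch, not part of SIM: the constant and function names are hypothetical, and only the numeric thresholds are taken from the recommendations quoted above.

```python
MM_PER_INCH = 25.4

# Minimum sizes quoted above, converted from inches to millimeters.
MIN_POINT_DIAMETER_MM = 0.25 * MM_PER_INCH   # 1/4 inch = 6.35 mm
MIN_CLEARANCE_MM = 0.125 * MM_PER_INCH       # 1/8 inch = 3.175 mm
MIN_LINE_LENGTH_MM = 0.5 * MM_PER_INCH       # 1/2 inch = 12.7 mm


def point_symbol_ok(diameter_mm: float, clearance_mm: float) -> bool:
    """True if a point symbol meets the minimum diameter and clearance."""
    return (diameter_mm >= MIN_POINT_DIAMETER_MM
            and clearance_mm >= MIN_CLEARANCE_MM)


def line_symbol_ok(length_mm: float) -> bool:
    """True if a line symbol meets the minimum length."""
    return length_mm >= MIN_LINE_LENGTH_MM


def square_area_mm2(side_inch: float) -> float:
    """Area in mm^2 of a square with the given side length in inches."""
    return (side_inch * MM_PER_INCH) ** 2
```

For reference, `square_area_mm2(0.25)` evaluates to about 40.32, so the 40.32 mm² figure quoted above corresponds to a square 1/4 inch (6.35 mm) on a side.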

In choosing tactile symbols, one needs to strike a balance between the need to represent multiple semantic entities, the reader’s ability to discriminate between tactile patterns, and the reader’s ability to memorize the meaning of different symbols. There is no accepted standard reference for tactile symbols [59], though symbol sets have been proposed for outdoor scenarios [60, 61]. Research on tactile symbol design has focused on issues such as meaningfulness [62-67] and distinctiveness [68, 60].

3. SIM: A WEB TOOL TO GENERATE TACTILE MAPS OF BUILDINGS

In this section we introduce the SIM web tool (https://sim.soe.ucsc.edu) for tactile map authoring. Creating a tactile map with SIM comprises two tasks. The first task is the generation of a vectorized map, which can be geo-registered and exported to GeoJSON for easy visualization (Section 3.1). In SIM, this is obtained by manually tracing (using an intuitive and efficient interface) a floor plan of the building, which is assumed to be available in a standard graphical format (e.g., JPEG). Note that in many cases, floor plans can be downloaded from an organization’s web site (e.g., hospitals, schools, shopping malls). The SIM tracing subsystem generates vectorized representation using specific primitives. SIM does not currently support direct input of indoor maps already vectorized; conversion from other formats to SIM’s internal representation is the object of future work. Once a vectorized map has been obtained, the second task is the conversion of this map into an embossable file, using standardized tactile symbols (Section 3.2). This procedure is completely automatic; the user only needs to select the desired scale level for rendering and the desired portion of the building to be rendered. The SIM tracing system was originally described in [69]; the subsystem for conversion to tactile maps was originally presented in [70].

3.1. Map Tracing and Geo-Registration

3.1.1. Spatial Primitives.

SIM represents a building layout in terms of the following elements. Here, “polylines” represent connected chains of line segments. Unlike polygons, polylines do not necessarily form closed contours. By “trace” we mean the intersection of a planar surface (a wall or a door) and the ground plane.

Spaces, represented as two-dimensional (2-D) polygons, encode any floor areas such as rooms, corridors/hallways, or portions thereof. They are normally (though not necessarily) enclosed, at least partially, by walls. Spaces can be given names if desired (e.g., an office number). Each space has a type, selected from a standard list (currently including default, corridor, stairs, elevators). In addition, spaces can be assigned any number of custom properties (name/argument pairs).

Walls are the 2-D traces (represented as segments) of vertical wall segments. A long wall that intersects multiple other walls can be represented as a single unit or as a sequence of collinear wall segments, terminating at the junction with other intersecting walls.

Entrances are the 2-D traces (represented as segments) of doors. Geometrically, they are sub-segments of wall segments.

Point features represent any localized feature of interest for which the spatial extent is not relevant. A point feature can be given a name and any number of custom properties.

Extended features, represented as 2-D polylines, are used to record the contours of furniture, cubicles, and similar relevant entities. Note that point and extended features can be defined anywhere in the building, although normally they are fully contained within a space.
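As an illustration of how these primitives relate to one another, the sketch below models them as plain Python data classes. All class, field, and function names are hypothetical and chosen for this example; they are not SIM's internal representation. The helper `is_subsegment` checks the geometric condition stated above, namely that an entrance is a sub-segment of a wall segment.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]


@dataclass
class Segment:
    """A 2-D trace on the ground plane (used for walls and entrances)."""
    start: Point
    end: Point


@dataclass
class Space:
    polygon: List[Point]                  # closed 2-D polygon
    space_type: str = "default"           # default, corridor, stairs, elevators
    name: Optional[str] = None            # e.g., an office number
    properties: Dict[str, str] = field(default_factory=dict)


@dataclass
class PointFeature:
    position: Point
    name: Optional[str] = None
    properties: Dict[str, str] = field(default_factory=dict)


@dataclass
class ExtendedFeature:
    polyline: List[Point]                 # not necessarily a closed contour
    properties: Dict[str, str] = field(default_factory=dict)


def _lies_on(p: Point, seg: Segment, tol: float = 1e-9) -> bool:
    """True if point p lies on segment seg (collinear and between endpoints)."""
    (ax, ay), (bx, by), (px, py) = seg.start, seg.end, p
    cross = (bx - ax) * (py - ay) - (by - ay) * (px - ax)
    if abs(cross) > tol:
        return False
    dot = (px - ax) * (bx - ax) + (py - ay) * (by - ay)
    return -tol <= dot <= (bx - ax) ** 2 + (by - ay) ** 2 + tol


def is_subsegment(entrance: Segment, wall: Segment) -> bool:
    """True if both endpoints of the entrance lie on the wall segment."""
    return _lies_on(entrance.start, wall) and _lies_on(entrance.end, wall)
```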

Tracing a building layout thus requires identifying spaces, wall segments, and entrances, and, if desired, point and extended features. While this could be accomplished by drawing shapes and walls using graphic design software such as PowerPoint or Illustrator, the quality of the result would depend on the user’s ability to precisely mark the endpoints of walls and the corners of spaces. SIM facilitates this operation through a simple interface that leverages the notion that in most buildings, walls are aligned along one of two perpendicular axes. While SIM supports tracing walls at any angle, it is particularly effective when interconnected walls form a 90° angle and when multiple co-planar wall segments are present, as often happens in regular buildings (see Figure 3). In the following, we describe SIM’s unique procedure for defining spaces, walls and entrances. Point and extended features can be defined using standard point-and-click modalities.

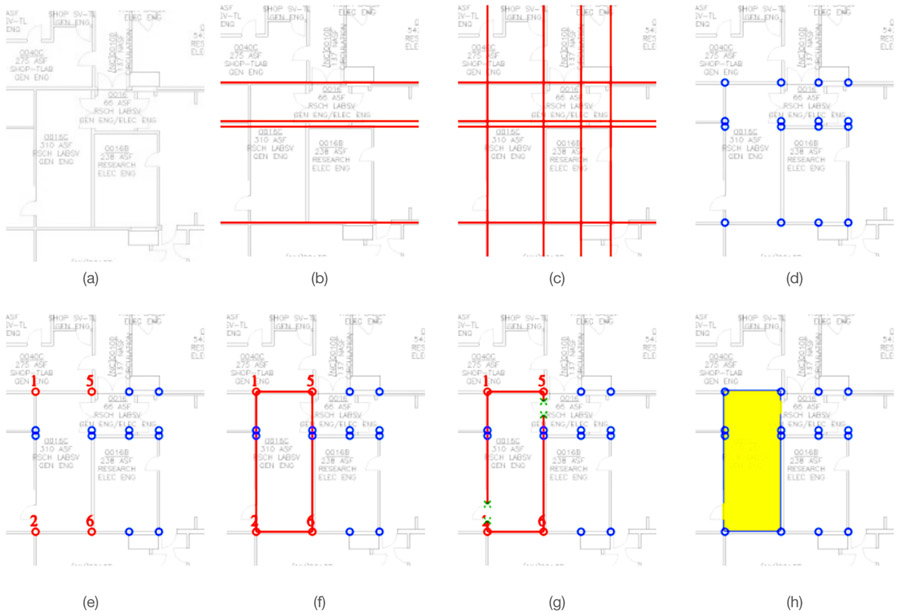

Fig. 3.

Sample results of building tracing with SIM starting from the maps shown in the left column. The right column shows the rendering of the geo-registered GeoJSON files using the MapboxGL JS engine.

3.1.2. Tracing a Floor Plan with SIM.

A SIM session starts by uploading an image file of the floor plan to be traced, which can then be properly scaled and rotated such that its main axes are aligned with Cartesian screen axes. (A grid overlay is provided to facilitate correct alignment.) Then, for each direction (horizontal or vertical, selectable through a switch), the user can shift-click on any point contained in a wall segment visible in the underlying floor plan. Each shift-click generates a line in the chosen orientation (Figures 1(b) and 1(c)), where each such line “contains” one or more collinear wall segments. The system automatically generates all line intersections, which represent potential wall connections (corners) or possibly wall endpoints. At any time, one can select to only see the line intersections (shown as circles in Figure 1(d)). An individual space can be generated by identifying the corners or endpoints of the walls surrounding the space. This is obtained by clicking on the line intersections associated with these corners (Figure 1(e)). In addition, users are prompted to identify which pairs of these intersections correspond to wall segments (Figure 1(f)) and to mark the endpoints of any entrances on existing wall segments (Figure 1(g)). Note that different layouts, including walls that do not terminate in another wall, spaces without surrounding walls, and diagonal walls, can be easily accommodated by generating “ghost” vertical or horizontal lines (Figure 2).

Fig. 1.

Tracing a map with SIM. (a) A portion of the floor plan. (b) Horizontal lines are generated via shift-clicks. (c) Vertical lines are generated next. (d) All line intersections (potential wall corners or endpoints) are automatically computed and displayed. (e) The user clicks on the appropriate line intersections that identify the boundary of a space (Room 310). These intersections are automatically assigned ID numbers. (f) The user then selects the walls surrounding the space by choosing the intersection pairs (#1, #5), (#5, #6), (#6, #2), (#2, #1). (g) The user defines one entrance in the wall segment (#1, #2) and one in the wall segment (#5, #6). (h) At this point, the space can be recorded and the user can move on to defining a new space.

Fig. 2.

(a) A diagonal wall can be created by joining the intersections of lines representing real or “ghost” walls. (b) A space does not need to be enclosed by walls.

We found this tracing strategy to be simple and intuitive, especially in the case of floor plans with repetitive layouts, where many similar spaces aligned with each other (such as those in Figure 3) can be recorded very rapidly and accurately. This is because the trace of multiple coplanar walls can be represented with a single line, and because wall segments and corners are reused for adjacent spaces, which reduces the number of required input selections.
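The economy of this strategy can be seen in a small sketch. Because every horizontal line crosses every vertical line exactly once, the candidate corners follow directly from the two lists of line positions. The function names below are hypothetical, and snapping a click to the nearest intersection is an assumption made for illustration rather than a documented SIM behavior.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def line_intersections(horizontal_ys: Sequence[float],
                       vertical_xs: Sequence[float]) -> List[Point]:
    """All crossings between horizontal lines (y = const) and vertical lines (x = const)."""
    return [(x, y)
            for y in sorted(set(horizontal_ys))
            for x in sorted(set(vertical_xs))]


def snap_to_intersection(click: Point, intersections: Sequence[Point]) -> Point:
    """Return the intersection closest to a click (assumed selection behavior)."""
    cx, cy = click
    return min(intersections, key=lambda p: (p[0] - cx) ** 2 + (p[1] - cy) ** 2)


# Three aligned rooms in a row: 2 horizontal lines and 4 vertical lines
# yield 8 shared corners, instead of 12 corners marked room by room.
corners = line_intersections([0.2, 0.5], [0.1, 0.3, 0.5, 0.7])
```

In this example, six shift-clicks produce all eight corners of the three rooms, and the interior corners are shared by adjacent rooms.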

The geometric primitives obtained through this procedure (spaces, walls, entrances, point and extended features) are stored in a sim file. Our sim format is inspired by the Polygon File Format (PLY [71]), which represents three-dimensional (3-D) objects as lists of flat polygons. A PLY file contains a list of vertices and a list of polygons, where each polygon is defined as an ordered list of vertex IDs. Similarly, a sim file contains a list of wall corners and a list of entrance corners. Each space is assigned an ordered list of wall corner IDs and a (possibly empty) list of entrance corner IDs, with flags indicating whether two adjacent corners are joined by a wall segment. Space attributes are also recorded with each space. Details of the structure of a sim file can be found in [69]. Importantly, all geometric entities in a sim file are expressed in Cartesian coordinates. The spatial coordinate units are normalized such that the horizontal coordinate is 0 at the left edge of the floor plan and 1 at its right edge, whereas the vertical coordinate is 0 at the top edge of the floor plan and 1 at its bottom edge.
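The indexed, PLY-like organization and the normalized coordinate convention can be sketched as follows. The dictionary layout and key names are hypothetical (the actual sim file structure is given in [69]), and the corner coordinates are made-up values; the corner IDs mirror the Room 310 example of Figure 1.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def normalize(px: float, py: float, image_width: float, image_height: float) -> Point:
    """Map pixel coordinates (origin at top left) to normalized coordinates.

    x runs from 0 (left edge) to 1 (right edge);
    y runs from 0 (top edge) to 1 (bottom edge).
    """
    return px / image_width, py / image_height


# Shared list of wall corners, indexed by ID (illustrative values).
wall_corners: Dict[int, Point] = {
    1: (0.10, 0.20), 2: (0.30, 0.20), 5: (0.10, 0.40), 6: (0.30, 0.40),
}

# A space refers to corners by ID; wall_flags[i] tells whether corner i and
# the next corner in the ordered list (wrapping around) are joined by a wall.
room_310 = {
    "name": "310",
    "type": "default",
    "corner_ids": [1, 5, 6, 2],
    "wall_flags": [True, True, True, True],
}


def wall_segments(space: dict, corners: Dict[int, Point]) -> List[Tuple[Point, Point]]:
    """Expand a space's corner IDs and wall flags into explicit wall segments."""
    ids = space["corner_ids"]
    segments = []
    for i, has_wall in enumerate(space["wall_flags"]):
        if has_wall:
            a, b = ids[i], ids[(i + 1) % len(ids)]
            segments.append((corners[a], corners[b]))
    return segments
```

Storing corners once and referring to them by ID means that adjacent spaces share the same corner entries, which matches the reuse of wall segments and corners during tracing.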

3.1.3. Geo-registration.

The information contained in a sim file can easily be converted to other formats. SIM contains a conversion tool to GeoJSON, which is used to display the building structure in 3-D using the MapboxGL JS engine (Figure 3) [69]. GeoJSON [72] encodes geographic information using a variety of elementary Geometry types. Each Geometry type object contains position in terms of geodetic coordinates (latitude, longitude, and possibly elevation). Additional properties can be encoded using a Feature type. Note that, while the geometric primitives in a sim file are expressed as Cartesian coordinates, GeoJSON uses WGS84 geodetic coordinates (latitude, longitude). Hence, a coordinate reference system conversion (geo-registration) is required. Geo-registration in SIM is obtained through a procedure that asks the user to input the geodetic coordinates of at least four wall corners in the building, normally chosen from the building’s external walls. These geodetic coordinates can be easily obtained, for example, from web map applications such as Google Maps or Apple Maps. Provided that the external building walls are visible in the “satellite” view mode, one can just click on the considered wall corners and copy the coordinates generated by the web map.
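One plausible way to realize such a conversion is sketched below. The text does not specify which transformation SIM fits to the corner correspondences; this example assumes a least-squares affine map from normalized floor plan coordinates to (longitude, latitude), which is a reasonable approximation over the extent of a single building. All function names are hypothetical. The only GeoJSON conventions relied upon are that positions are ordered longitude first and that polygon rings repeat their first position at the end.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def solve3(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve a nonsingular 3x3 system by Gaussian elimination with partial pivoting."""
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, 3):
            f = M[r][col] / M[col][col]
            for c in range(col, 4):
                M[r][c] -= f * M[col][c]
    x = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        x[i] = (M[i][3] - sum(M[i][j] * x[j] for j in range(i + 1, 3))) / M[i][i]
    return x


def fit_affine(xy: Sequence[Point], lonlat: Sequence[Point]) -> List[List[float]]:
    """Least-squares affine map (x, y) -> (lon, lat) from non-collinear corner pairs."""
    rows = [(x, y, 1.0) for x, y in xy]
    AtA = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    coeffs = []
    for k in range(2):  # k = 0: longitude, k = 1: latitude
        Atb = [sum(r[i] * t[k] for r, t in zip(rows, lonlat)) for i in range(3)]
        coeffs.append(solve3(AtA, Atb))
    return coeffs


def apply_affine(coeffs: List[List[float]], point: Point) -> List[float]:
    """Return [lon, lat] for a normalized floor plan point."""
    x, y = point
    return [c[0] * x + c[1] * y + c[2] for c in coeffs]


def space_to_feature(polygon: Sequence[Point], coeffs, properties: dict) -> dict:
    """Build a GeoJSON Feature with a Polygon geometry for one space."""
    ring = [apply_affine(coeffs, p) for p in polygon]
    ring.append(list(ring[0]))  # close the linear ring
    return {
        "type": "Feature",
        "geometry": {"type": "Polygon", "coordinates": [ring]},
        "properties": properties,
    }
```

Because the vertical floor plan coordinate grows downward while latitude grows northward, the fitted latitude coefficient for y is negative when the plan is oriented with North up; the fit absorbs this flip, along with any rotation of the plan, without special handling.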

3.2. Conversion to Embossable Format

The SIM web tool enables conversion of a traced map into a format that can be fed to a regular embosser (if using Braille paper) or to a regular printer (if using microcapsule paper) to produce a tactile map. The different types of primitives (spaces, walls, entrances, point and extended features) encoded in a sim file are rendered using appropriate tactile symbols. Our current choice for tactile symbols was guided by results from prior research [60, 67, 68] and validated through a focus group with blind participants [73].

Following BANA recommendations [58], our map authoring tool renders walls as raised segments with a length of at least 13 mm, with a minimum distance of 5 mm between segments. For easy discrimination, symbols representing different features have a minimum diameter of 6.5 mm, with a minimum distance between two symbols of 12 mm. SIM produces tactile maps that can be printed on an 11.5 in × 11 in (292 mm × 279 mm) embosser sheet, with a 0.5 in (13 mm) margin on all sides. In our experiments, we embossed these maps on a ViewPlus Max Braille embosser with a resolution of 20 DPI.
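These figures determine how much of a building fits on one sheet. The sketch below works through the arithmetic; the function names and the example building dimensions are hypothetical, and the text does not state how SIM treats walls that would fall below the 13 mm minimum at a given scale.

```python
MM_PER_INCH = 25.4
SHEET_IN = (11.5, 11.0)     # sheet width and height, in inches
MARGIN_IN = 0.5             # margin on every side, in inches
EMBOSSER_DPI = 20           # dots per inch
MIN_WALL_MM = 13.0          # minimum rendered wall length


def printable_area_in():
    """Printable width and height in inches after removing the margins."""
    return SHEET_IN[0] - 2 * MARGIN_IN, SHEET_IN[1] - 2 * MARGIN_IN


def printable_area_dots():
    """Printable width and height in embosser dots."""
    w, h = printable_area_in()
    return round(w * EMBOSSER_DPI), round(h * EMBOSSER_DPI)


def fit_scale_mm_per_m(width_m: float, height_m: float) -> float:
    """Largest uniform scale (mm on paper per meter) that fits the area on one sheet."""
    w_in, h_in = printable_area_in()
    return min(w_in * MM_PER_INCH / width_m, h_in * MM_PER_INCH / height_m)


def shortest_wall_m(scale_mm_per_m: float) -> float:
    """Real-world wall length that maps to exactly the 13 mm minimum."""
    return MIN_WALL_MM / scale_mm_per_m
```

The printable area is 10.5 in × 10 in, or 210 × 200 dots at 20 DPI, with dots spaced 1.27 mm apart. For a hypothetical 60 m × 40 m building rendered on one sheet, the scale is about 4.4 mm per meter, so walls shorter than roughly 2.9 m would fall below the 13 mm minimum, which illustrates why coarser scale levels must omit detail.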

3.2.1. Tactile Symbols.

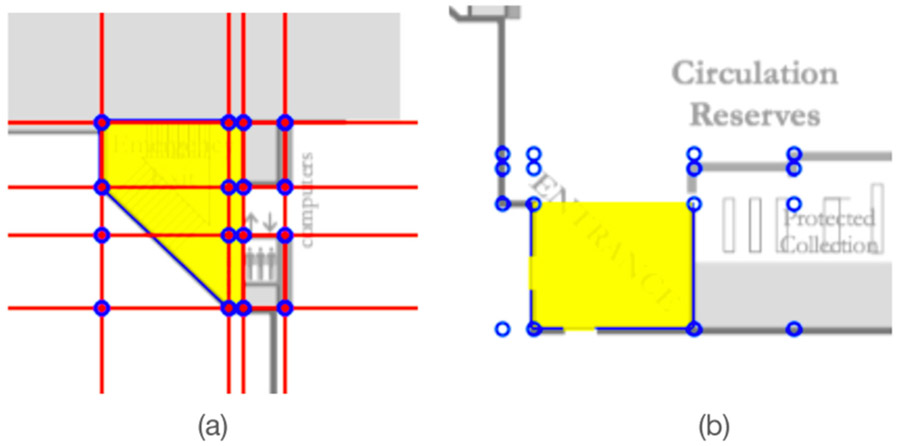

The tactile symbols and patterns used to represent geometric primitives are shown in Figure 5(d). We purposely chose to represent only a small taxonomy of primitives, with symbols that are easy to memorize. Note that, as explained later, the type of primitives represented in the map depends on the chosen scale for the map.

Fig. 5.

(a) A structure-level map. (b) A section-level map. (c) A room-level map. (d) The tactile symbols used by SIM. Text in black ink added to the figure. Maps (b) and (c) were featured in our user study.

Spaces are represented in different ways depending on their type: default type spaces (typically, rooms) are rendered as untextured areas; corridors are rendered as small dot textured areas [67]; stairs are shown by parallel segments as suggested in [68]; elevators are shown with a triangular symbol on an otherwise untextured area. For spaces that have a label (e.g., an office number), their label is reproduced in Braille. Walls are represented by thin segments. Entrances are shown as small empty circles. In a prior implementation of our map authoring tool, which was tested with a focus group [73], entrances were represented with a “wedge” (itself derived from a “square arch” symbol proposed in [60]). We decided to change the symbol for entrance to a circle based on feedback from the focus group.

Point features are represented with symbols that depend on the feature type. For example, in the case shown in Figure 5(d), a water fountain is shown as a textured full circle, with a texture that is distinctively stronger than that used for corridors. Finally, extended features are shown with raised edges. A short description of the extended feature, provided as a property, is displayed when possible either inside the feature itself (e.g., see the symbol for a table shown in Figure 5(d)), or in the immediate vicinity (e.g., see the fridge and shelf in the lower part of Figure 5(c)).

3.2.2. Multi-scale Rendering.

A map designer needs to select the “scale” at which to represent the map. This is a familiar concept to anyone who has ever looked at an onscreen map (such as Google Maps), which enables seamless rendering over a wide range of scales. This is particularly important for tactile maps, whose spatial resolution is constrained by the limited human tactile resolution. For example, if a large building is rendered on a single sheet of paper, there may not be enough resolution to display important details. In this case, it may be useful to generate tactile maps at different scales (e.g., Madugalla et al. [54] created maps of buildings and individual rooms, with their contents).

Changing the scale of a map almost invariably involves some form of generalization [74-76]. Generalization operators remove, join, exaggerate or diminish map features in order to increase visibility while maintaining some degree of faithfulness to the original map [77, 74]. Generalization is necessary each time a feature (e.g., the contour of an object) becomes too small to be perceived correctly, or objects become too densely packed. For example, when zooming out, small objects may disappear, text labels may be removed, and contours may be simplified by removing small details. For tactile maps, which have a much smaller spatial resolution than computer screens, these forms of generalization are even more critical. The goal should be to maximize the information displayed for a given rendering scale level while ensuring good comprehension of the map (which is compromised when maps are too detailed [78]). For example, Touya et al. [37] proposed to use classic cartographic generalization for the automatic generation of tactile maps of the outdoors. Madugalla et al. [54] created maps of buildings along with those of individual rooms, including their contents. The work in [79] used a touch-sensitive pin-matrix display to represent urban geographical data in two possible scales, with the street names and points of interest displayed only at the larger scale. Prescher et al. [80] used a low-resolution refreshable pin array with zoom. To facilitate identification of the location of a zoomed-in (smaller scale) area within a larger scale map, they used a minimap (i.e., an overview indicating the location of the zoomed-in area within the map).

SIM defines three scale levels, each characterized by a set of available symbols:

Structure level. This scale level is used to provide a bird’s-eye view of a whole building (possibly embossed over multiple sheets in the case of elongated buildings; see Figure 5(a)). To make the best use of the available area and resolution, space labels and entrance symbols are not rendered. The structure-level scale would be appropriate to display the general layout of a large building but not to identify specific features. Note that we did not use a structure-level map in our user study, described later in Section 4, as we were interested in studying how users of our maps could find specific features and routes.

Section level. In this case, a certain portion of the building (selectable on SIM via a draggable and resizable rectangle; see Figure 4(b)) is reproduced. This level is used when the scale is small enough to afford rendering of doors and space labels (provided that the space label can fit within the space itself). Point and extended features are also rendered if there is enough room to reproduce their label without overlapping with other elements. An example of a section-level map, which was used in our user study, is shown in Figure 5(b). Note that the water fountain (a point feature) was reproduced, but other extended features such as the cubicles in laboratories were not. In order to fit the Braille version of the two-digit number of each office within the perimeter of the room, we removed the number sign prefix that denotes numbers in Braille.

Room level. This scale level is used to reproduce a room and its interior. For example, Figure 5(c) shows a map used in our user study, representing a laboratory with several cubicles and furniture (represented by extended features).
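The per-level rendering rules described above can be summarized in a small sketch. This is not SIM's actual implementation: the `Feature` type, the `label_fits` flag, and the feature kind names are hypothetical, and the treatment of feature kinds that the text does not explicitly mention (e.g., doors at structure level) is an assumption.

```python
from dataclasses import dataclass
from enum import Enum


class ScaleLevel(Enum):
    STRUCTURE = "structure"
    SECTION = "section"
    ROOM = "room"


@dataclass
class Feature:
    # Hypothetical kinds: "wall", "door", "entrance", "space_label",
    # "point" (e.g., water fountain), "extended" (e.g., cubicle, table).
    kind: str
    # Hypothetical flag: True if the feature's label fits without overlap.
    label_fits: bool = True


def is_rendered(feature: Feature, level: ScaleLevel) -> bool:
    """Decide whether a feature is embossed at a given scale level."""
    if feature.kind == "wall":
        return True  # building outline and walls appear at every level
    if level is ScaleLevel.STRUCTURE:
        # Space labels and entrance symbols are not rendered; omitting
        # the remaining non-wall features here is an assumption.
        return False
    if level is ScaleLevel.SECTION:
        if feature.kind in ("door", "entrance"):
            return True
        # Labels and point/extended features only if their label fits.
        return feature.label_fits
    # Room level: the room and its interior are reproduced (assumed: all).
    return True
```

For example, under these assumptions a water fountain whose label fits is rendered at section level, whereas a cubicle whose label does not fit is deferred to the room-level map.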

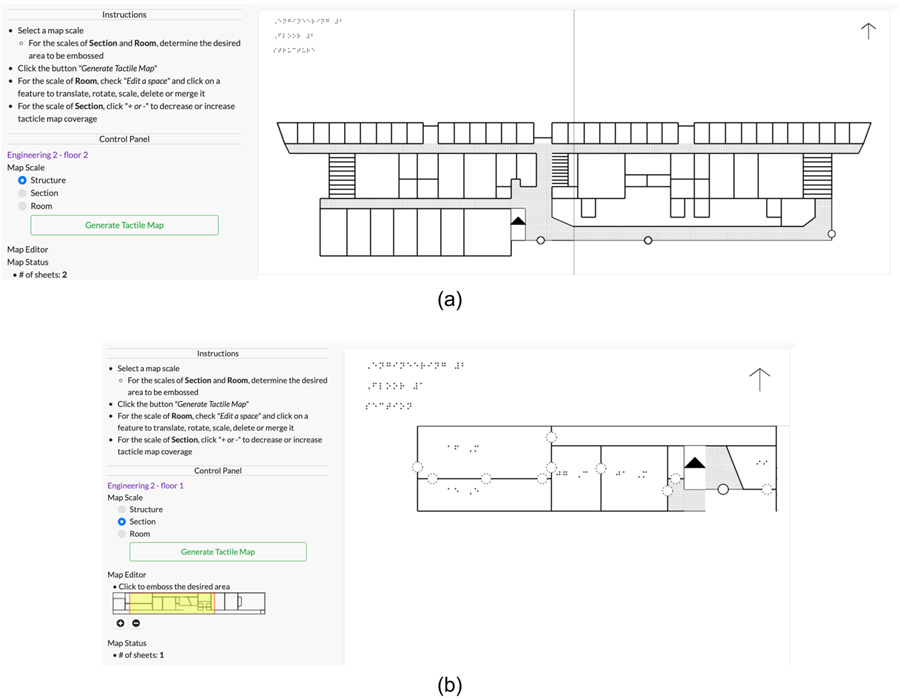

Fig. 4.

Screenshots from the SIM web application showing tactile map generation from a previously traced floor plan. (a) Structure-level map. (b) Section-level map.

The embossed maps produced by SIM contain a header where building and scale-level information can be displayed. For the two maps used in our user study, we simply marked the section-level map as “Full” and the room-level map as “Room 09.” Each map has a North-pointing arrow embossed at the top right corner, which was used to orient the participants.

3.3. Initial Evaluation

A preliminary evaluation of the quality of these maps for tactile exploration was conducted by one of the authors of this article, himself a congenitally blind individual. We also conducted a focus group with seven blind participants, who explored early versions of maps produced by SIM over all three scale levels. The focus group study was presented in an earlier paper [73]. This focus group was not meant to be a rigorous study of the effectiveness of our tactile maps. Rather, it was a vehicle to elicit a discussion on the potential and drawbacks of automated, multi-scale tactile map production. Participants were first asked to familiarize themselves with copies of the maps that were taken to the meeting. They were asked to identify and locate several features in the maps: entrances, staircases, corridors, office spaces, office doors, office numbers, and furniture. Then, a discussion started, facilitated by the experimenters. The focus group transcript was analyzed independently by the two experimenters, who then reached a consensus on the set of topics used to code the relevant parts of the conversation. Here are the main themes that emerged from this analysis:

Perceived utility of the maps for indoor pre-journey learning: While several participants felt that these maps could be useful for supporting independent travel, some had concerns about the map size and practicality of consulting these maps during a trip. Some participants also pointed out that, while independence is important, there often are people nearby who can offer help.

What can be learned from a map? There was some debate as to whether tactile maps can provide more information than, for example, a verbal explanation of the layout of the space. One participant said that it would be useful to consult a map after visiting a place to obtain confirmation that a correct mental image was built. Another topic of discussion was about the importance of metric information. While some felt that information about the size of spaces as provided by the map can be useful, others said that knowing the actual extent of spaces is less important than, for example, knowing whether one needs to turn right or left to reach a destination.

One or more scales? Several participants appreciated the need for rendering maps at multiple scales. Some pointed out that the first time one visits a building, the larger-scale map would be most useful. After familiarizing oneself with the space, maps at smaller scales can be used for discovering more specific properties of the location. There was disagreement about the utility of the Room-level map. While some participants thought that all three scales would be important to have, others felt that they would not take the time to explore the Room-level map, as they would be able to acquire good spatial knowledge of the room layout by direct exploration.

Universal map access: Participants pointed out that the tactile symbols used should be easy to understand and that specific symbols (e.g., a building’s entrance) should catch the attention of the reader. Participants also commented on the trade-off of using Braille characters in lieu of symbols (e.g., to label an entrance). While Braille may take more space, it is easier to interpret—but only for those who know Braille. Indeed, it was noted that many of the potential users of these maps may not be able to read Braille. (It should be noted that only a minority of people who are blind, possibly as few as 10% [81], can read Braille.)

4. MAP EXPLORATION: AN EXPERIMENTAL STUDY

In order to evaluate the usability and effectiveness of the tactile maps produced by SIM for indoor spaces, we conducted a study with 10 blind participants, who performed a series of tasks testing their ability to read and decipher the maps as well as to form an accurate spatial understanding of the layouts they were exploring. Our interest was (1) to determine whether people could learn the two map scales and integrate knowledge across scales when performing the pointing/navigation tasks, (2) to understand what type of language they used to describe the paths and their updated orientation on these paths as they moved, and (3) to determine whether they were able to find shortcuts and alternative routes through rooms that deviated from the walking paths along hallways. The experiment was conducted remotely (via phone or Zoom) due to COVID-19–related social distancing requirements. When connecting with Zoom, we did not ask our participants to use the camera due to the expected difficulty of aiming the camera correctly at the maps being explored. Due to the remote nature of the study, we did not ask our participants to reconstruct the maps from memory as in [54, 60], as this would have been exceedingly difficult to implement through a remote audio-only connection. All tasks were performed perceptually, that is, while accessing the maps, and no purely memory-based tasks were considered. This is acceptable, in our view, as our focus was on behaviors related to map access and learning, rather than behaviors based on its representation in memory.

4.1. Participants

We recruited 10 blind participants for this study (5 self-identifying as female, 5 as male) through a local blindness organization. All participants were totally blind or had only some residual light perception. Their ages ranged from 51 to 73 (median: 66). Eight participants were blind since birth, while two acquired blindness late in life. All participants except for P1 were proficient Braille readers. P1, who had not been using Braille in a while, often had difficulties reading labels with Braille content in the maps. All of them stated that they had previously accessed tactile maps, although experience level with tactile graphics varied widely across participants.

4.2. Apparatus

For this study, we used the section-level and room-level maps shown in Figures 5(b) and 5(c). In addition, we generated two simple “practice maps” (not shown here), one at section scale (“Sample Full”) containing four adjacent rooms surrounded by a hallway and one at room scale (“Sample Room 24”) containing two cubicles, a table, and a shelf. Each map had its title embossed in the top left corner and a North-pointing arrow embossed at the top right corner, which were used to orient the participants.

Following the protocol approved by our Institutional Review Board, each participant was mailed a copy of the tactile maps in advance and asked not to open the envelope before the beginning of the test. The envelope contained the four maps and the “key” sheet (Figure 5(d)). Communication with the participants was through their phone (P1, P2, P4, P5) or using Zoom (with their camera off). Participants were advised in advance to reserve a large table on which they could lay the maps.

4.3. Modalities

4.3.1. Task Categories.

During the study, participants were asked to perform a number of tasks while examining the maps. These tasks can be grouped into four categories.

Map Exploration Tasks: Participants were asked several questions designed to evaluate their ability to correctly read the maps, locate details of interest, and assess the relative location and distance of items in the map. These tasks are important for identifying the salience and utility of the included map information, and to validate the choice of tactile symbols.

Pointing Tasks: Participants were asked to imagine standing in certain locations on the map, with their body facing a certain cardinal direction. Then, they were asked to point to a certain location on the same map or on the other map at a different scale level by imagining raising their arm towards that location and to specify the direction of their arm with respect to their body. These tasks are important for assessing the mental representation (cognitive map) built up from map exploration, as pointing requires a Euclidean judgment that is independent of the routes learned on the map. Being able to do so between map scales also reflects accurate consolidation of the two maps into a unified representation in memory.

Cross-Map Path Finding Tasks: Participants were asked to find a walkable route from one location on one map at a certain scale (section or room) to another location on the map at another scale. Performance on this task reflects whether participants can accurately ‘read’ the map and determine/execute routes between requested locations, which is important for real-world wayfinding behavior. These data also speak to whether they could seamlessly cross scale boundaries in planning/traversing this route, which, if successful, also suggests an integrated map representation in memory.

Turn Direction/Walking Orientation Tasks: Participants were asked to imagine walking on a path in the map. At each turn in the path, they were asked to declare whether they would turn left or right, and to state the cardinal orientation that their body would be facing after the turn. These tasks sought to verify whether participants were able to maintain awareness of their (allocentric) orientation while imagining traversing the path and simultaneously updating their first-person (egocentric) frame as they walked the route. The use of mixed-reference frames is common in verbal descriptions [82, 83] and is often used in speech-based wayfinding apps. However, this is not well studied when accessing tactile maps, as most studies do not require the participants to talk their way along the routes that they are feeling, as we have done here. With our method, we were able to assess whether people felt immersed in the map as they walked the routes, for example, whether they used a first-person egocentric perspective while still maintaining the global (allocentric) perspective that is afforded by accessing a map.

4.3.2. Key Sheet and Practice Maps.

Upon opening the envelope and extracting the embossed sheets, participants were advised to lay the maps on the table, oriented such that the arrow was positioned on the top right corner, pointing up. They were informed that maps would be provided at two different scales, and the type of spatial information represented at each scale was explained. Then, they were asked to find the “key sheet” containing an explanation of the tactile symbols used and to explore each symbol as guided by the experimenter. They were asked to describe the shape of each symbol and to read the accompanying text. After exploring the key sheet, participants were asked to find the “Sample Full” map. They were encouraged to explore this practice map, were asked to locate all key map elements, and were invited to ask questions if something was not clear. Then, participants were asked to find the “Sample Room 24” map. The experimenter pointed out that this map represents the “zoomed-in” version of Room 24 in the practice map that they had just explored. They were asked to explore the room and to find the table, shelf, and cubicles. Once the participants felt comfortable with both practice maps, they were asked to put them away and were informed that the actual study would begin. The duration of the practice phase ranged from approximately 5 to 10 minutes. All participants were able to perform the practice tasks.

4.3.3. Task Execution.

Map Exploration—Pointing Tasks. Participants started by finding the “Room 09” map and were informed that this map was at room-level scale. Participants were asked to answer the questions ME1 to ME5 listed in Table 2 while exploring this map (map exploration task; Section 4.3.1). Once completed, participants were asked to find the section-level map titled “Full.” The experimenter explained that this map represented a real office building and asked the participants to note that the map contained three horizontal rows of offices. In addition, the experimenter pointed out that the building contained a hallway that formed a loop, and asked the participants to verify that it was possible to walk continuously along this hallway. We felt that this initial phase was necessary to ensure that participants were exploring the correct map (remember that all communication was via audio only). At this point, participants were asked to answer map exploration questions ME6 to ME12 in Table 2.

Table 2.

Map Exploration (ME) Questions

| Room 09 Questions | |
|---|---|
| ME1 | Can you name all of the cubicles that are in the room? |
| ME2 | How many shelves are in the room, and which cubicle is closer to each shelf? |
| ME3 | Is Cube B to the East or to the West with respect to Cube E? |
| ME4 | If starting from the door, would it be shorter to walk to Cube C or to Cube D? |
| ME5 | If starting from the table, would it be shorter to walk to Cube A or to Cube F? |
| Full Building Questions | |
| ME6 | Can you name the rooms that are along the South edge of the building? |
| ME7 | How many exit doors are there at the North edge of the building? For each such exit, what is its closest room or rooms? |
| ME8 | How many exit doors are at the South edge of the building? For each such exit, what is its closest room or rooms? |
| ME9 | Can you name all rooms that have two doors? For each of these rooms, can you identify if one of the doors leads to another room? |
| ME10 | Is Room 47 to the East or to the West of the water fountain? |
| ME11 | Would it be shorter to walk from Room 47 to the North exit or to the South exit? |
| ME12 | Would it be shorter to walk from Room 09 to the West or to the East staircase? |

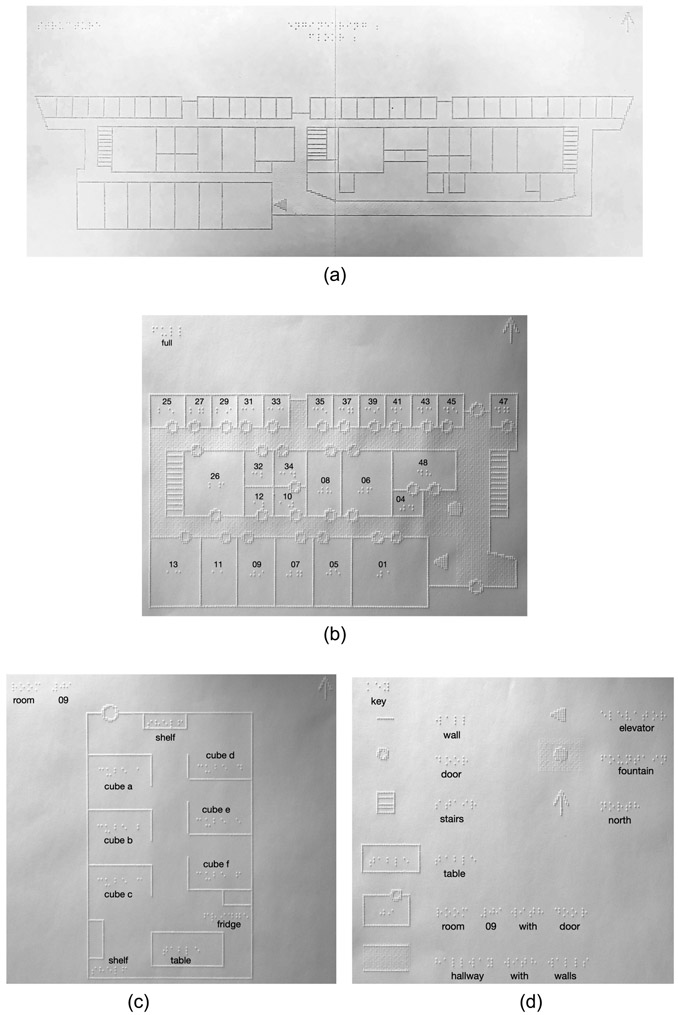

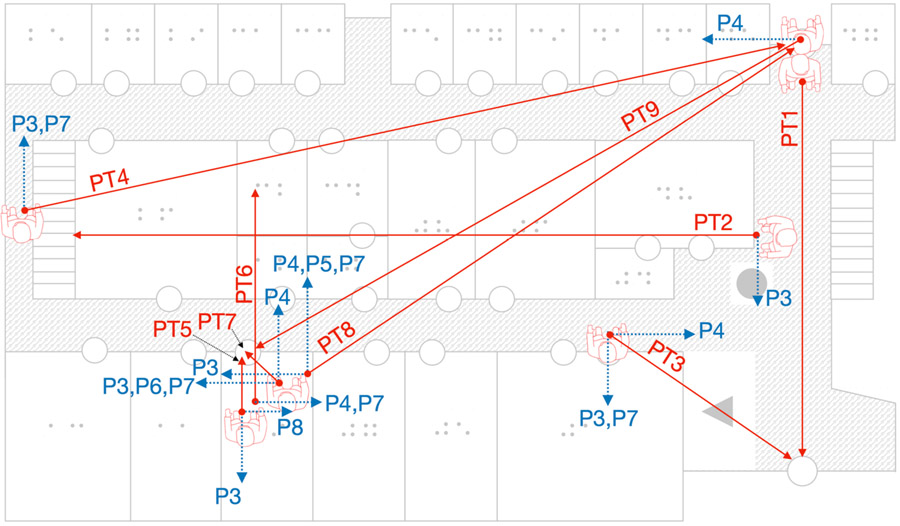

The next set of questions (PT1–PT4 in Table 3; see also Figure 6(a)) referred to pointing tasks (Section 4.3.1). Participants were asked to use whichever response mode they were most comfortable with to express relative directions, including clockface directions (e.g., at 2 o’clock); relative cardinal directions (e.g., NW from me); or descriptive directions (e.g., in front of me, slightly to the right).

Fig. 6.

A graphical illustration of the pointing direction tasks of Table 3. Each red arrow (labeled with the associated pointing task; e.g., PT3) starts from the location where participants were asked to imagine standing (with a body contour indicating the imagined body orientation), and ends at the location they were asked to point to. The dashed blue arrows display the orientations expressed by the participants with an error of 45° or more. These arrows are labeled with the ID of the participants (e.g., P4,P7).

Cross-Map Path Finding—Turn Direction/Walking Orientation Tasks.

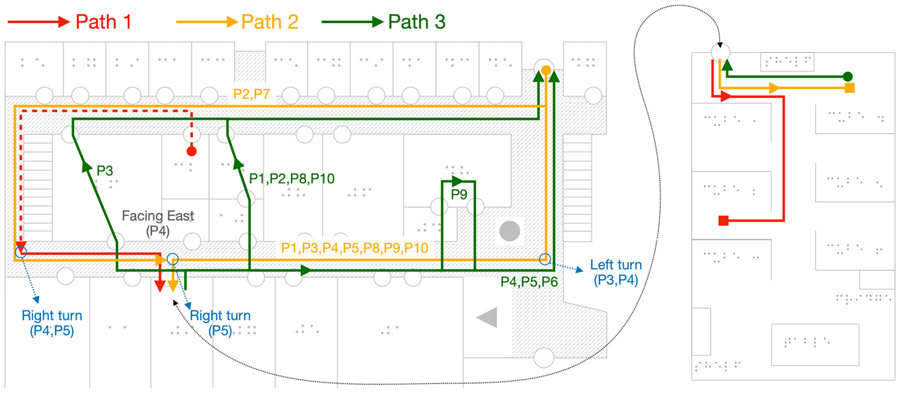

Participants were first given an example of these tasks, for a route (Path 1; see Figure 7) that began from Room 32 (on the “Full” map) and ended in Cube B of Room 09 (on the “Room 09” map). While verbally guided by the experimenter, participants were asked to follow this path with their finger as their ego point as if they were walking it. The experimenter started by asking them to imagine that they were in Room 32, facing North. They should then exit through the door and find themselves in the hallway. They should turn left, after which their body would be facing West. They would walk along the hallway, then they would turn left (now facing South), and continue to walk down the hallway, passing the staircase to their left.

Fig. 7.

The routes found by the participants (with the “guided” component of Path 1 shown as a dashed line). Where different routes were found (for Path 2 and Path 3), the ID of the participants is shown next to each path. Incorrect turn directions expressed by the participants are shown in blue, along with the participants’ IDs. Starting locations are shown as circles, while destinations are shown as squares.

At this point, the practice component ended, and the participants were asked to continue traversing the path. They were asked to verbally indicate whether they would turn left or right at each intersection and to say what cardinal orientation their body would be facing after the turn. Upon arriving at the door of Room 09, participants were asked to take out the “Room 09” map, and to continue the imaginary path traversal (still mentioning turning direction and body orientation at each turn) until they entered Cube B in the room. Two pointing questions were then given (PT5 and PT6 in Table 3), one involving a within-map pointing judgment and the other a cross-map judgment. Participants were encouraged to use both maps (“Full” and “Room 09”) when reasoning about how to answer these pointing questions.

Table 3.

Pointing (PT) Questions

| After Exploration of Full Building | |
|---|---|
| PT1 | Suppose you entered the building from the North exit/entrance door. As you enter, you will be facing South. Point to the direction of the South exit/entrance door. |
| PT2 | Suppose you are standing next to the water fountain and that you are facing West. Point to the direction of the Western staircase. |
| PT3 | Suppose you are exiting one of the doors of Room 01 (top right corner). You will be facing North. Point to the direction of the South exit/entrance door. |
| PT4 | Suppose you are in the hallway to the West of the Western staircase. You are facing North. Point to the direction of the North exit/entrance door. |
| After Completion of Path 1 | |
| PT5 | Suppose that you are in the center of Cube B, your body facing North. Point to the direction of the entrance door of Room 09 (the room you are in). |
| PT6 | Suppose that you are in the center of Cube B, your body facing North. Point to the direction of Room 32. |
| After Completion of Path 2 | |
| PT7 | Suppose that you are in the center of Cube D, your body facing North. Point to the direction of the entrance door of Room 09 (the room you are in). |
| PT8 | Suppose that you are in the center of Cube D, your body facing North. Point to the direction of the North exit door. |
| After Completion of Path 3 | |
| PT9 | Suppose that you are at the North exit door, your body facing North. Point to the direction of the door of Room 09. |

Participants were then asked to find by themselves and describe another cross-map path (Path 2 in Figure 7) from the North exit door of the building to Cube D of Room 09. Once there, they were asked pointing questions PT7 and PT8 from Table 3, for which, again, the second question required cross-map judgment.

Finally, participants were asked to imagine walking from Cube D of Room 09 to the North exit door. However, this time they were encouraged to find a route that included traversing a path through internal rooms with communicating doors rather than simply walking via the hallways (Path 3). Once they arrived at the North exit door, they were asked question PT9 in Table 3.

4.3.4. Exit Survey.

Having concluded all tasks, participants were asked to report on their experience with exploration of these maps through a set of seven Likert scale questions (Table 4). Then, they were asked the following open-ended questions: (1) What suggestions do you have for improvements to the maps? (2) Do you think that access to similar tactile maps of new buildings would be beneficial in terms of improving your confidence when traveling to new places?

Table 4.

Likert Scale Exit Questionnaire and Scores

| Statement | P1 | P2 | P3 | P4 | P5 | P6 | P7 | P8 | P9 | P10 | Med |
|---|---|---|---|---|---|---|---|---|---|---|---|
| S1: The Full map was easy to read. | 3 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 |
| S2: The Room 09 map was easy to read. | 4 | 5 | 5 | 5 | 5 | 4 | 5 | 5 | 5 | 5 | 5 |
| S3: The symbols were easy to identify. | 5 | 4 | 4 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 |
| S4: Finding a certain room in the Full map was easy. | 3 | 5 | 5 | 3 | 5 | 5 | 5 | 5 | 5 | 5 | 5 |
| S5: Finding a path in the map was easy. | 4 | 5 | 4 | 4 | 3 | 5 | 5 | 5 | 5 | 5 | 5 |
| S6: When asked to imagine to follow a path in the map, I could correctly identify the orientation of my body at all times. | 5 | 5 | 3 | 3 | 5 | 5 | 5 | 5 | 5 | 5 | 5 |
| S7: When asked to imagine that I was following a path with a transition from the Full map to the Room 09 map, this transition was easy for me to follow. | 4 | 5 | 4 | 4 | 5 | 5 | 4 | 5 | 5 | 5 | 5 |

5. RESULTS

5.1. General Observations

The study went smoothly, and no participants complained about any difficulties with the remote modality. All participants completed all tasks except for P7, who became tired and asked to stop the experiment before the last task (Path 3). She complained of her fingers becoming “numb” after a while. The experimenter mistakenly neglected to ask the first four pointing questions to P5. The overall time to complete the study (including the exit survey) ranged from 79 minutes (P10) to 130 minutes (P1), with a median time of 92 minutes.

5.2. Map Exploration

In the room-scale map (“Room 09”), all participants except for P4 correctly answered the questions ME1 to ME5. P4 had some difficulties answering ME4. She was initially unable to find a path that would take her from the door to Cube C. She commented: “Wherever I go, I see walls.” She also incorrectly thought (ME5) that it would be easier to reach Cube A than Cube F starting from the table. In the section-level map (“Full”), some participants had difficulties locating the North exit door. For example, P2 could not find it at first, then explained that he was looking at the top edge of the map, whereas the exit door was located in a sort of alcove. We also noted that while some participants seemed to have memorized the location of relevant spatial features (e.g., the exit doors), others had to search for the same feature again when required by a question. Remarkably, all participants gave the correct answers to all map exploration questions on the “Full” map, except for ME9, which asked for the rooms with two doors. P1, P8, and P10 found all seven two-door rooms, while all other participants missed 4.7 such rooms on average.

5.3. Pointing

We found that it was necessary to remind the participants that the pointing direction to a certain location could be different from the actual walking direction (i.e., unlike a walking direction, a pointing direction could go through a wall). It appears some participants were not used to this “bird’s eye direction” concept. Similar confusion was found with blind individuals when pointing between known locations in their house and neighborhood, where people point along known routes rather than along the straight-line (Euclidean) direction between locations [84]. Six participants used clockface directions for their pointing judgments. Only P5 used relative cardinal directions, while P4, P7, and P8 preferred plain terms such as “to my right.” Note that clockface units have a resolution of 30°, while cardinal directions normally have a resolution of 45° (e.g., “NW”). We also assume that plain English directions can be expressed with a resolution of 45° (e.g., “in front of me to the right”). We thus consider a pointing direction with an error of 45° or larger to be incorrect.
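The scoring rule above can be sketched as follows. This is a minimal illustration, not the analysis code used in the study: the function names are hypothetical, and it assumes that all responses are first converted to egocentric bearings in degrees, measured clockwise from straight ahead (so that 12 o'clock is 0° and 3 o'clock is 90°).

```python
def clockface_to_degrees(hour: int) -> float:
    """Convert a clockface direction to an egocentric bearing.

    Each clock hour spans 30 degrees; 12 o'clock is straight ahead (0).
    """
    return (hour % 12) * 30.0


def angular_error(reported_deg: float, correct_deg: float) -> float:
    """Smallest absolute angle between two bearings, in [0, 180]."""
    diff = abs(reported_deg - correct_deg) % 360.0
    return min(diff, 360.0 - diff)


def is_incorrect(reported_deg: float, correct_deg: float,
                 threshold_deg: float = 45.0) -> bool:
    """A response is scored incorrect if its error is 45 degrees or larger."""
    return angular_error(reported_deg, correct_deg) >= threshold_deg
```

With this rule, a response of "2 o'clock" (60°) for a target directly to the right (90°) has an error of 30° and counts as correct, whereas "in front of me to the right" (45°) for the same target has an error of exactly 45° and counts as incorrect.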

Overall, we recorded 7 incorrect directions for the 5 questions in the “Full” map (PT1–PT4 and PT9), 6 incorrect directions for the 2 questions in the “Room 09” map (PT5, PT7), and 6 incorrect directions for the 2 cross-map questions (PT6, PT8). Only in one case (P3 for PT5) was the direction error equal to 180°, whereas in four cases (P8 for PT5; P4 and P7 for PT6; and P3 for PT2) the error was 90°. Three participants (P3, P4, P7) were responsible for 89% of the incorrect pointing directions.

Figure 6 provides a graphic representation of the pointing tasks and of the reported errors. The red arrows in this figure show the correct pointing directions for each question (PT1–PT9), along with the imagined body location and orientation for each question. Blue dashed arrows represent incorrect pointing directions.

5.4. Path Finding

Figure 7 shows the paths found by the participants. Almost all participants were able to find the most direct route for Path 2. Only P2 and P7 found a longer path, going counterclockwise and essentially looping around the building. P2 quickly realized that there was a shorter path; he finished describing the first, longer path, then described the shorter path. For Path 3, participants found four different routes. Note that one of these routes (for P9) is unrealizable as it would go through a wall. Also note that the path selected by P4, P5, and P6 did not go through interconnecting rooms, even though this was explicitly requested by the question.

5.5. Turn Direction/Walking Orientation

Most participants needed to be periodically reminded to clearly spell out both egocentric turn directions and allocentric orientation. In many cases, a participant would, for example, say: “I turn East” instead of “I turn left, then I will be facing East.” Aside from that, only two participants (P4 and P5) had problems with this task. Although P5 failed to give correct turning directions in only two cases, she had to reason carefully at each turn. It appears that the fact that both Path 1 and Path 2 were going South made this task challenging, as she commented: “Much easier when I am going North than when going backwards”; “When I go South, it screws me up.” At one point, she said: “If I am walking South then turn left, I am facing West but this is not where my finger is.” Eventually, after thinking aloud, she corrected herself and said: “East.” Later, she said: “It’s really hard because I am going down the map. If I was walking, it would be the other way.” A similar sentiment was expressed by P3, who said that he needed to remind himself that when walking South, if turning left you are facing East (something he called “reversing orientation”). While walking along Path 2, P4 produced two consecutive incorrect turn directions, commenting: “I cannot translate on the table what my body is doing in space.” All incorrect turn directions expressed by the participants are reported in Figure 7 (shown in blue).
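The relation between egocentric turns and allocentric headings that participants had to track can be made explicit with a short sketch. This is purely illustrative (the names `HEADINGS`, `turn`, and `follow` are hypothetical, and only 90° turns are modeled); it is not part of the study protocol.

```python
# Cardinal headings listed in clockwise order.
HEADINGS = ["North", "East", "South", "West"]


def turn(heading: str, direction: str) -> str:
    """Return the new cardinal heading after a 90-degree left or right turn."""
    step = {"right": 1, "left": -1}[direction]
    return HEADINGS[(HEADINGS.index(heading) + step) % 4]


def follow(start: str, turns: list[str]) -> list[str]:
    """Return the heading after each turn along a path."""
    headings = []
    current = start
    for direction in turns:
        current = turn(current, direction)
        headings.append(current)
    return headings


# Guided start of Path 1: facing North in Room 32, then two left turns.
path1_start = follow("North", ["left", "left"])
```

For the guided portion of Path 1, this yields West and then South, matching the experimenter's description. It also reproduces the case that P3 and P5 found counterintuitive: walking South and turning left leaves the walker facing East, even though East is to the right of the finger moving down the map.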

5.6. Exit Survey

For each statement in the exit questionnaire (Table 4), the median recorded Likert score was 5. Even accounting for some inherent bias (e.g., the desire not to disappoint the experimenter [85]), this result is encouraging. Two statements (S4 and S6) each received two ‘3’ scores. The first statement concerned the ease of finding a room in the Full map; the second referred to tracking one’s own orientation in an imagined traversal.
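The medians reported above can be recomputed directly from the scores in Table 4. The snippet below is a simple check using Python's standard library; the variable names are ours.

```python
from statistics import median

# Likert scores from Table 4, in participant order P1..P10.
SCORES = {
    "S1": [3, 5, 5, 5, 5, 5, 5, 5, 5, 5],
    "S2": [4, 5, 5, 5, 5, 4, 5, 5, 5, 5],
    "S3": [5, 4, 4, 5, 5, 5, 5, 5, 5, 5],
    "S4": [3, 5, 5, 3, 5, 5, 5, 5, 5, 5],
    "S5": [4, 5, 4, 4, 3, 5, 5, 5, 5, 5],
    "S6": [5, 5, 3, 3, 5, 5, 5, 5, 5, 5],
    "S7": [4, 5, 4, 4, 5, 5, 4, 5, 5, 5],
}

# Median score per statement.
medians = {statement: median(values) for statement, values in SCORES.items()}

# Statements that received exactly two scores of 3.
two_threes = [s for s, values in SCORES.items() if values.count(3) == 2]
```

Here every entry of `medians` equals 5, and `two_threes` contains only S4 and S6, consistent with the summary in the text.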

Concerning the first open-ended question (Section 4.3.4), several participants expressed satisfaction with the clarity of the map and of its symbols (including the arrow pointing North at the top of the map). P1 noted that compared with other maps she accessed before (possibly thermoform maps or maps created with microcapsule paper), these were crisper and easier to read. However, P9 thought that the practice of removing the number sign prefix for the room labels to economize on space (as explained in Section 3.2.2) could result in ambiguous reading for Braille readers. P6 commented that the Full map had large portions of “open space” that can be confusing.

The need for proficiency in Braille was pointed out by P8, as well as the need for “map skills,” with one participant (P4) suggesting that similar maps could be employed in a course on map literacy and wayfinding, maybe in the form of games. Suggestions for different symbols included removing texture in hallway areas (reserving it for other features; P10) and orienting the triangular symbol for the elevator so that it would point up (P2, P9). P2 suggested that a “clear” space in the map could indicate a walkable area (he shared that at the beginning of the study, it was not clear to him whether one could walk on an area marked as textured). P9 noted that it would be useful if the map indicated whether a staircase leads up or down and if there is a chance that one could end up underneath the staircase when walking in the space. P9 suggested that the map should contain information about the area immediately adjacent to the building near the exit doors; P1 commented that it would be necessary to indicate the location of restrooms in the building. P10 thought that the maps could contain more detail while acknowledging the difficulty of creating many different recognizable symbols. Others (P2, P6) also observed that the room-level map could contain more detail, such as the height of a shelf.

P6 commented about the importance of accurate scale: “Sometimes the scale between map and world don’t match.” The need for some form of metric distance information was brought up by P2, whereas P10 suggested that adding something like a bar scale would help with metric measurements.

Regarding the second question, all participants but for P5 thought that these maps may be useful, especially if available before a visit (given their large size, it would be hard to carry them around, as noted by P7). P5 said: “I never had a map before visiting a building—it would help some people, not sure for me … My spatial orientation is not good—mediocre—can’t picture visually—that’s why I like these maps.” P10 thought that the room-scale map was unnecessary because “you can figure it out when you are there” and because room layouts may change. P9, an accomplished traveler, said that he is normally able to “put the pieces together” when he visits a new place. He thought that these maps would not increase his confidence, but rather his enjoyment of going to new places. As he put it: “I am confident already, but I could really enjoy and make less of my focus, energy, and cognitive resources. A map can go a long way… puts me more in charge.”

5.7. Discussion

Whereas most accessible map learning studies focus on cognitive map development and global spatial learning, a number of studies have specifically investigated route learning from embossed tactile maps, with results demonstrating that the process of exploring a route by tracing it on the map, similar to our approach, leads to efficient learning and mental representation of route knowledge. For instance, studies in which blind participants were asked to first learn routes through a city environment on a tactile map and then go to the physical environment and attempt to navigate that same route from memory showed similar route accuracy performance when compared with participants who learned the route using verbal instructions during in situ navigation. However, the participants who were exposed to the map showed significantly better knowledge of surrounding spatial relations along the route and directions between locations than those who did not have prior map access [16, 86]. Although our procedure did not compare map learning to in situ route navigation, as our interest was in studying how the maps were used, these results support the use of route following on the map and suggest that this practice leads to the development of accurate spatial representations in memory that would support subsequent navigation, which we will investigate in future studies.

Our technique of having participants verbalize their thinking as they trace the routes through our maps is in the spirit of the think-aloud procedure used in a seminal wayfinding study with blind participants navigating through a complex university building [87]. Although these participants were asked to navigate and describe routes through a large public building that they had previously walked, as opposed to tracing routes on a map, they were asked to verbalize their mental process as they traveled the route, similar to our approach in this article. Results showed that blind participants were able to accurately plan and execute routes but that they used more detail for their wayfinding decisions and utilized more types of environmental information than their sighted counterparts on the same routes using the same think-aloud procedure. For instance, they described more information about how to maintain walking direction and find nearby architectural/environmental features, that is, similar elements to what we asked our participants to describe when walking through the map. These results bolster our design decision to use a verbalization procedure during route following on maps, which are most amenable to conveying the types of environmental relations found to be useful to blind learners in the Passini and Proulx study [87]. Although we did not include sighted participants in our map learning task, this comparison of performance as a function of the type and amount of information used on the map would be an interesting future extension to the work.

The most compelling result from this study is how well participants performed across all map reading, pointing, and navigation tasks. Although our data are descriptive, the findings clearly show that, with few exceptions, our blind participants were able to read the maps, make sense of the information presented, and determine routes between requested locations. The majority were also accurate at performing the Euclidean pointing tasks, even though such judgments require determining/imagining the straight-line relation between locations independent of the connecting route, which involves greater cognitive effort and reflects a high level of learning of global structure. Another surprise was how well participants did on tasks that required using both maps and operating across scales. We predicted that such scale transitions might be difficult because (1) they would be unfamiliar to most blind people, given that the vast majority of tactile maps are rendered at a fixed scale; and (2) they require more cognitive effort, as the spatial information on the two maps must be consolidated into a unitary frame of reference. The finding that performance on within-map navigation and pointing trials was not notably better than on between-map trials suggests that this parameter did not introduce additional effort or cognitive complexity, a finding supported by the limited research on haptic map zooming, albeit with small-scale digital maps [6, 11]. The current results suggest not only that learning from traditional hardcopy maps at different scales is possible but also that these transitions do not impair behavior. This opens the door to depicting environments at different levels of granularity on different maps, or within the same map if it could be dynamically zoomed.
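
To make the distinction between Euclidean and route-based judgments concrete, the following minimal sketch contrasts the straight-line bearing and distance between two map locations with the length of a connecting route. The coordinates, the function names, and the north-up convention are illustrative assumptions; they are not taken from the study materials or from SIM.

```python
import math

def bearing_deg(origin, target):
    """Bearing from origin to target, in degrees clockwise from north.

    Assumes a north-up map with x increasing to the east and y to the north
    (an illustrative convention, not one specified in the study).
    """
    dx = target[0] - origin[0]
    dy = target[1] - origin[1]
    return math.degrees(math.atan2(dx, dy)) % 360.0

def angular_error_deg(pointed, correct):
    """Smallest absolute difference between two bearings, in [0, 180]."""
    diff = abs(pointed - correct) % 360.0
    return min(diff, 360.0 - diff)

def route_length(waypoints):
    """Total length of a piecewise-linear route through the waypoints."""
    return sum(math.dist(a, b) for a, b in zip(waypoints, waypoints[1:]))

# Hypothetical locations: a start, a corridor corner, and a destination room.
start, corner, room = (0.0, 0.0), (0.0, 3.0), (4.0, 3.0)

straight_line = math.dist(start, room)            # Euclidean relation
along_route = route_length([start, corner, room])  # route relation
direction = bearing_deg(start, room)               # pointing direction
```

In this toy layout the route first heads north and then east, whereas the pointing judgment requires the single northeast direction that the route never follows, which is why Euclidean pointing is taken to reflect knowledge of global structure.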

Our use of a think-aloud procedure was informative. Not only did it confirm that our map symbols were understandable and intuitive, based on participants’ ability to articulate them as they moved through the map, but we also postulate that this procedure increased immersion in the map. That is, by verbalizing navigation actions, orientation, and the map elements they passed as they moved, participants reinforced their egocentric position on the map while still maintaining allocentric knowledge. It is likely that this engagement facilitated the pointing judgments that required participants to indicate directions when their egopoint on the map was 180° opposed to their physical body orientation, for example, when pointing to the South from the North side of the map. In such cases, most people make alignment errors because of the perceptual bias introduced by the mismatch between their imagined and physical positions [88], a phenomenon that has also been shown with blind participants learning tactile route maps [89]. Although three participants (P3, P4, and P5) mentioned this challenge, the similarity of pointing behavior and verbal descriptions, irrespective of direction, suggests that the combination of a tactile map with verbal instructions facilitated accurate learning.

It is notable that most participants did not find all rooms with two doors. When mistakes were made, they often related to internal doors providing connectivity between adjacent rooms. The reason is not clear from the data, but it is possible that participants were less accustomed to (or less comfortable with) searching the map away from the established corridors and were therefore less efficient at localizing these doors or considering them when asked to find alternative routes. The small tactile field of view also limits the spatial extent of the map that is perceived from a given haptic “glance,” meaning that tactile exploration must be extremely systematic to cover all aspects of the map, especially when exploring internal regions that are not immediately proximal to the corridors.

5.8. Limitations

While traditional hardcopy tactile maps, such as those we employed here, can be used during navigation, their large size and cumbersome nature mean that they are primarily used (and studied) as a spatial support for offline learning, for example, before travel, with an O&M instructor, or when not actually moving. This is because these maps are a large-format spatial support that requires a flat surface for reading by touch. In a real-world scenario, the O&M instructor might work with a blind client to learn such a map before going to the environment, or a blind user would explore the map before traveling to the space, following routes to a known destination or exploring the space and considering possible routes within the global context (as reviewed in [90]). This practice is not dissimilar to how our participants “walked” through the map to learn it in this study, except that they did not have a teacher to help them learn the map, as unassisted learning was part of what we were interested in measuring.

6. CONCLUSIONS

We introduced SIM, a web app that can be used to quickly trace a floor plan, available as an image, and transform it into a vectorized GeoJSON file. SIM automatically converts the traced floor plan into a format that can be used to generate a tactile map, using an embosser or microcapsule paper. SIM supports three different scale categories: Structure, Section, and Room. At the Section scale level, users can select the extent to be rendered on a single sheet. The three scale categories use different symbol taxonomies, in which the larger scale (Structure) renders only spaces and walls, whereas at smaller scales, details such as room numbers, doors, and appliances are rendered. The quality of maps generated by SIM was tested in a user study with 10 blind participants, who completed a number of exploration, pointing, and pathfinding tasks. Overall, the participants appreciated the clarity and understandability of the tactile maps, and in most cases were successful at the tasks presented to them. Future work on SIM will include expanding the taxonomy of spatial features that can be represented, facilitating map exploration across building floors (e.g., indicating ingress/egress and connectivity), and supporting Braille-less maps (by replacing information conveyed in Braille with carefully designed tactile symbols) for those who cannot read Braille. These new map features will be developed using a scenario-based design methodology [91] and tested with blind participants. We will also assess the effect of prior map learning on in situ exploration of buildings. Map learning has already been shown to be beneficial for route exploration outdoors [16, 60, 86]. We expect that similar benefits will be observed in indoor environments.
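
As an illustration of how scale-dependent symbol taxonomies could operate on a vectorized floor plan, the sketch below filters a small GeoJSON `FeatureCollection` by scale category. It is not SIM's implementation: the `kind` property, the `VISIBLE_KINDS` table, and the assumption that Section and Room render the same feature types are all hypothetical and chosen only for the example.

```python
# Hypothetical mapping from scale category to the feature kinds rendered.
# Only the Structure entry (spaces and walls) follows the description above;
# treating Section and Room identically is an assumption of this sketch.
VISIBLE_KINDS = {
    "Structure": {"space", "wall"},
    "Section": {"space", "wall", "door", "room_number", "appliance"},
    "Room": {"space", "wall", "door", "room_number", "appliance"},
}

def features_for_scale(floor_plan, scale):
    """Return a new FeatureCollection with only the features shown at `scale`."""
    kinds = VISIBLE_KINDS[scale]
    kept = [
        feature
        for feature in floor_plan["features"]
        if feature["properties"].get("kind") in kinds
    ]
    return {"type": "FeatureCollection", "features": kept}

# A tiny hand-written floor plan; the "kind" and "label" properties are made up.
floor_plan = {
    "type": "FeatureCollection",
    "features": [
        {"type": "Feature",
         "properties": {"kind": "space"},
         "geometry": {"type": "Polygon",
                      "coordinates": [[[0, 0], [4, 0], [4, 3], [0, 3], [0, 0]]]}},
        {"type": "Feature",
         "properties": {"kind": "wall"},
         "geometry": {"type": "LineString", "coordinates": [[0, 0], [4, 0]]}},
        {"type": "Feature",
         "properties": {"kind": "door"},
         "geometry": {"type": "Point", "coordinates": [2, 0]}},
        {"type": "Feature",
         "properties": {"kind": "room_number", "label": "101"},
         "geometry": {"type": "Point", "coordinates": [2, 1.5]}},
    ],
}

structure_map = features_for_scale(floor_plan, "Structure")
room_map = features_for_scale(floor_plan, "Room")
```

Keeping the taxonomy in a lookup table separate from the geometry means the same traced floor plan can be rendered at any scale category without retracing it.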

Table 1.

Participant Information

| Participant | Gender | Age | Blindness Onset | Experience with tactile maps |
|---|---|---|---|---|
| P1 | F | 70 | Late | Very little. Used a couple of times before. |
| P2 | M | 58 | Birth | A little experience at conferences he attended; sometimes they provided a tactile map of the convention hotel. |
| P3 | M | 68 | Undisclosed | Only city maps. Not buildings. |
| P4 | F | 63 | Birth | Topographic maps of the world but not wayfinding maps. O&M instructor created maps for intersections when she was a teenager. |
| P5 | F | 73 | Birth | Very little. Maybe a map with the U.S. states. |
| P6 | M | 70 | Birth | Used maps of a college campus. |
| P7 | F | 73 | Late | Remembered that years ago, her O&M instructor drew on her hand the entrances of the stores in a mall. She never forgot it. |
| P8 | F | 66 | Birth | As a child, she used a map of the San Diego Zoo. She also had maps that showed the shape of all U.S. states. Remembered also using a map at a hospital. |
| P9 | M | 62 | Early | As a child, he had a set of atlases of U.S. states, plus maps of different continents. |
| P10 | M | 50 | Blind | Quite a bit. Used maps in school. Later, used a map of his city neighborhood and university campus. Has tactile maps of the U.S. and of all continents. Also tested maps produced by TMAP [4]. |

CCS Concepts: Human-centered computing → Empirical studies in accessibility; Accessibility systems and tools;

ACKNOWLEDGMENTS

The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health or of the National Science Foundation.

Research reported in this publication was supported by the National Eye Institute of the National Institutes of Health under award number R01EY030952 and by the National Science Foundation under grant no. CHS-1910603.

REFERENCES

- [1] Bentzen BL. 1977. Orientation maps for visually impaired persons. Journal of Visual Impairment and Blindness 71, 5 (1977), 193–196.

- [2] Espinosa MA and Ochaita E. 1998. Using tactile maps to improve the practical spatial knowledge of adults who are blind. Journal of Visual Impairment & Blindness 92, 5 (1998), 338–345.

- [3] Andrews SK. 1983. Spatial cognition through tactual maps. In Proceedings of the 1st International Symposium on Maps and Graphics for the Visually Handicapped, Washington, DC.

- [4] Miele J, Landau S, and Gilden D. 2006. Talking TMAP: Automated generation of audio-tactile maps using Smith-Kettlewell’s TMAP software. British Journal of Visual Impairment 24, 2 (2006), 93–100.

- [5] Giudice NA, Walton LA, and Worboys M. 2010. The informatics of indoor and outdoor space: A research agenda. In Proceedings of the 2nd ACM SIGSPATIAL International Workshop on Indoor Spatial Awareness, San Jose, CA.

- [6] Adams R, Pawluk D, Fields M, and Clingman R. 2015. Multimodal Application for the Perception of Spaces (MAPS). In Proceedings of the 17th International ACM SIGACCESS Conference on Computers & Accessibility, Lisbon, Portugal.

- [7] Giudice NA, Guenther BA, Jensen NA, and Haase KN. 2020. Cognitive mapping without vision: Comparing wayfinding performance after learning from digital touchscreen-based multimodal maps vs. embossed tactile overlays. Frontiers in Human Neuroscience 14, 87 (2020), 87–97.

- [8] Brayda L, Leo F, Baccelliere C, Ferrari E, and Vigini C. 2018. Updated tactile feedback with a pin array matrix helps blind people to reduce self-location errors. Micromachines 9, 7 (2018), 351.

- [9] Dillemuth J, Goldsberry K, and Clarke KC. 2007. Choosing the scale and extent of maps for navigation with mobile computing systems. Journal of Location Based Services 1, 1 (2007), 46–61.

- [10] Montello DR. 1993. Scale and multiple psychologies of space. In European Conference on Spatial Information Theory, Island of Elba, Italy.

- [11] Palani H, Giudice U, and Giudice NA. 2015. Evaluation of non-visual zooming operations on touchscreen devices. In International Conference on Universal Access in Human–Computer Interaction, Toronto, Canada.

- [12] Rastogi R and Pawluk TDKJ. 2013. Intuitive tactile zooming for graphics accessed by individuals who are blind and visually impaired. IEEE Transactions on Neural Systems and Rehabilitation Engineering 21, 4 (2013), 655–663.

- [13] Loomis JM, Klatzky RL, and Giudice NA. 2013. Representing 3D space in working memory: Spatial images from vision, hearing, touch, and language. Multisensory Imagery 12, 4 (2013), 131–155.

- [14] Lederman SJ and Klatzky RL. 2009. Haptic perception: A tutorial. Attention, Perception, & Psychophysics 71, 7 (2009), 1439–1459.

- [15] Ericsson KA and Simon HA. 1984. Protocol analysis: Verbal reports as data. The MIT Press.

- [16] Blades M, Ungar S, and Spencer C. 1999. Map use by adults with visual impairments. The Professional Geographer 51, 4 (1999), 539–553.

- [17] Golledge RG. 1991. Tactual strip maps as navigational aids. Journal of Visual Impairment & Blindness 85, 7 (1991), 296–301.

- [18] Ungar S, Blades M, and Spencer C. 1997. Strategies for knowledge acquisition from cartographic maps by blind and visually impaired adults. The Cartographic Journal 34, 2 (1997), 93–110.

- [19] Poppinga B, Magnusson C, Pielot M, and Rassmus-Gröhn K. 2011. TouchOver map: Audio-tactile exploration of interactive maps. In Proceedings of the 13th International Conference on Human–Computer Interaction with Mobile Devices and Services, Stockholm, Sweden.

- [20] Kaklanis N, Votis K, and Tzovaras D. 2013. Open Touch/Sound Maps: A system to convey street data through haptic and auditory feedback. Computers & Geosciences 57 (2013), 59–67.

- [21] Rice M, Jacobson RD, Golledge RG, and Jones D. 2005. Design considerations for haptic and auditory map interfaces. Cartography and Geographic Information Science 32, 4 (2005), 381–391.

- [22] Zeng L and Weber G. 2015. Exploration of location-aware you-are-here maps on a pin-matrix display. IEEE Transactions on Human–Machine Systems 46, 1 (2015), 88–100.

- [23] Kane SK, Morris MR, and Wobbrock JO. 2013. Touchplates: Low-cost tactile overlays for visually impaired touch screen users. In Proceedings of the 15th International ACM SIGACCESS Conference on Computers and Accessibility, New York, NY.