Abstract

Recognition of human emotional states for affective computing based on electroencephalogram (EEG) signals is an active yet challenging domain of research. In this study, we propose an emotion recognition framework based on the two-dimensional valence-arousal model to classify High Arousal-Positive Valence (Happy) and Low Arousal-Negative Valence (Sad) emotions. In total, 34 features from the time, frequency, statistical, and nonlinear domains are studied for their efficacy using an Artificial Neural Network (ANN). The EEG signals from electrodes in different scalp regions, viz., frontal, parietal, temporal, and occipital, are studied for performance. It is found that the ANN trained using features extracted from the frontal region outperformed those trained on all other regions, with an accuracy of 93.25%. The results indicate that a smaller set of electrodes can be used for emotion recognition, which can simplify the acquisition and processing of EEG data. The developed system can greatly aid physicians in clinical practice involving emotional states, continuous monitoring, and the development of wearable sensors for emotion recognition.

Keywords: Artificial neural network, electroencephalogram, emotion recognition, valence-arousal model

Introduction

Emotion is one of the psychological states that is associated with physical, mental, and social well-being. Human emotion can be described in many ways. In response to an external stimulus such as audio, video, and external physical parameters, the emotional state can change consciously or subconsciously.[1] Emotion plays a vital role in day-to-day life. Emotion is often confused with related states such as “mood” and “affect.”[2] In a modern, sedentary, and virtual lifestyle, the excessive use of gadgets and psychosocial interactions can lead to emotion-related problems such as depression, anxiety, and bipolar disorder.[3]

In recent years, an innovative field of computation, known as affective computing, has been persistently used to make human–computer interaction (HCI) more natural.[4] Researchers describe emotion as a mental state that is influenced by strong or deep neural impulses. In general, physiological signals are used by the physician for the recognition of emotional states. In recent years, many authors have used biological signals such as blood volume pulse, galvanic skin resistance (GSR), electromyogram (EMG), skin temperature, electroencephalogram (EEG), and electrocardiogram (ECG) for recognition of emotional states.[5]

Emotion types are described in the literature by two models, namely the discrete emotion model and the two-dimensional valence-arousal model.[6] Ekman and Friesen[7] proposed a discrete model to analyze six basic emotions, namely fear, sadness, disgust, anger, happiness, and surprise. The two-dimensional bipolar model was proposed by Russell[8] to analyze human emotions. Based on Russell's two-dimensional model, the modified valence-arousal model was developed[9] and is shown in Figure 1.

Figure 1.

Valence-arousal emotion model[9]

In this model, valence and arousal are taken as the horizontal and vertical axes to form four quadrants to describe various emotions. The first quadrant represents the emotions with high valence and high arousal like “happiness.” The second quadrant indicates low valence and high arousal emotion like “anger.” The third and fourth quadrants represent emotions with low valence low arousal like “sadness” and high valence low arousal like “calmness,” respectively.

This article proposes a novel method for emotion recognition based on artificial neural networks (ANNs) that classify emotional states using only a minimum number of frontal electrodes. The approach is applied to time series of multichannel EEG signals from the Database for Emotion Analysis using Physiological Signals (DEAP).

In the current study, we have focused mainly on region-based classification of emotional states. The EEG from different regions of the scalp (frontal, occipital, temporal, and parietal) were analyzed for emotion classification, and the corresponding performance was evaluated mainly focusing on frontal electrodes.

The rest of this article is organized as follows:

Related work, Methodology, Results, Discussion, Conclusion, and References.

Related work

A number of authors have worked on EEG-based emotion recognition. Ko et al.[10] calculated the power spectral density (PSD) of EEG bands and compared it with standard values using a Bayesian network. Petrantonakis et al.[11] extracted features using Higher Order Crossing (HOC) and Hybrid Adaptive Filtering (HAF) analysis. The emotion-related EEG signal characteristics were efficiently extracted using the Hybrid Adaptive Filter, which provided a higher classification rate of 85.17%. Petrantonakis et al.[12] implemented the Higher Order Crossing-Emotion Classifier (HOC-EC) to test different types of classifiers, namely Mahalanobis distance, Support Vector Machine (SVM), K-Nearest Neighbor (KNN), and Quadratic Discriminant Analysis (QDA). In this study, HOC-EC achieved accuracies of 62.3% (using QDA) and 83.33% (using SVM).

Lin et al.[13] proposed 512-point short-time Fourier transform and SVM to classify four emotional states (joy, anger, sadness, and pleasure) to obtain an average classification accuracy of 82.29% ± 3.06% across 26 subjects. In a framework proposed by Murugappan et al.,[14] the discrete wavelet transform (DWT) is used to extract the features of EEG signal. The experimental results indicate that the maximum average classification rate of 83.26% and 75.21% using KNN and LDA is obtained, respectively. Petrantonakis PC et al.[15] implemented Higher-order crossings and cross-correlation as feature-vector for further classification using SVM for six different classification scenarios in the valence/arousal space and obtained highest classification rate of 82.91%.

In a work conducted by Islam et al.,[16] the frequency transformation using Discrete Fourier Transform (DFT) and statistical measures such as skewness and kurtosis on the EEG signals are used to analyze different human emotions. Liu, Yi-Hung et al,[17] employed Kernel Eigen Emotion Pattern (KEEP) for feature selection and adaptive SVM for classification of discrete emotions in order to achieve high average valence and arousal classification rate of 73.42% and 73.57% respectively.

Murugappan et al.[18] implemented combined surface Laplace transform and time-frequency analysis for feature extraction using KNN and LDA classifiers to detect the discrete emotions. It is found that the KNN outperformed in comparison with LDA by offering a maximum average classification rate of 78.04%.

Özerdem et al.[19] employed the discrete wavelet transform with a multilayer perceptron neural network (MLPNN) and the KNN algorithm to classify emotional states using EEG signals and obtained average overall accuracies of 77.14% and 72.92%, respectively. Zheng et al.[20] proposed identification of participant-independent and participant-dependent emotional states using stable patterns of the EEG signal and achieved an accuracy of 69.67% on the DEAP dataset for four valence/arousal states. Upadhyaya et al.[21] classified various neurological and physiological disorders using the EEG signal. In a recent study by Kumar et al.,[22] more than 30 features were extracted, and a backpropagation neural network was used for classifying the various emotional states, resulting in an average accuracy above 92.45%.

Most of the initial research on emotion recognition was carried out on observable verbal or nonverbal emotional expressions (facial expressions and patterns of body gestures). However, tone of voice and facial expression can be deliberately hidden or exaggerated, and a person who is physically disabled or introverted may not be able to express emotion through these channels. This makes such methods less reliable for measuring emotions. In contrast, emotion recognition methods based on physiological signals such as EEG, ECG, EMG, and GSR are more reliable because humans cannot intentionally control them. Among them, the EEG signal is generated directly by the central nervous system, which is closely related to the human emotional state. EEG-based emotion recognition has therefore drawn much attention from researchers. The studies reviewed above considered all regions of the scalp to recognize emotion using different classifiers.

Methodology

In this study, machine learning techniques are adopted to classify emotional states. Based on Russell's two-dimensional emotion model, states of emotion have been classified for each subject using EEG data. Figure 2 provides the block diagram of the proposed methodology.

Figure 2.

Block diagram of emotion classification using electroencephalogram

Data collection

The EEG data are taken from the Database for Emotion Analysis using Physiological Signals (DEAP), an online dataset that consists of peripheral biological signals as well as EEG recordings. The EEG signals were collected from 32 subjects while each watched 40 one-minute-long music videos. Thirty-two active AgCl electrodes were placed according to the international 10–20 system, and EEG was recorded at a sampling rate of 512 Hz. Each video clip was rated by the participants in terms of the level of valence, arousal, and dominance.

Preprocessing

The signals recorded over different areas on the scalp normally contain noise and artifacts. While reducing the artifacts from raw EEG signals can cause the loss of some useful information, preserving frequencies within alpha and beta bands can be achieved through a band-pass filter.

Feature extraction

Features are extracted from the selected ten electrodes (Fp1, F3, F4, Fp2, O1, O2, T7, T8, P3, and P4) shown in Figure 3 for both high arousal-positive valence (happy) and low arousal-negative valence (sad) emotional states.

Figure 3.

Electroencephalogram electrode placement

The designed feature vectors include mean, Hjorth parameters, Higuchi fractal dimension (FD), HOC, entropy, and band power which are described in the following:

Mean

Mean (m) is the sum of all EEG sample values divided by the number of samples:

where x(t) is the EEG signal with “t” as time index and “T” represents the total number of samples. It only finds the mean of EEG amplitude values over the sample length of the signal.
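Written out from the definition above, and using the same symbols x(t) and T, the mean amplitude can be expressed as follows (the equation number of the original layout is not reproduced here):

```latex
m = \frac{1}{T} \sum_{t=1}^{T} x(t)
```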

Hjorth parameters

The Hjorth parameters[29] describe the EEG signal in the time domain. They characterize the activity, mobility, and complexity of the EEG signal, which are described as follows:

The variance of the EEG signal is represented by the activity parameter and is calculated as:

where m is the mean of the signal. This parameter is a measure of the mean power representing the activity of the EEG signal.

Mobility of the EEG time series estimates the mean frequency. It is given by the square root of the ratio of the variance of the first derivative to the variance of the signal itself and is calculated as shown:

where x(t) and dx(t) are the signal and its first derivative, respectively, and var denotes variance.

Complexity is the Hjorth parameter that gives an estimate of the bandwidth of the EEG signal and is expressed as the ratio of mobilities given by:

where x(t) and dx(t) are the signal and its first derivative, respectively.
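The three Hjorth parameters described above can be sketched in a few lines of Python. This is a minimal illustration and not the authors' implementation: it assumes the derivative is approximated by the first difference of consecutive samples and that population variance is used, neither of which is stated in the text. The function names are chosen here for illustration.

```python
import math
from statistics import pvariance


def first_difference(x):
    """Approximate the first derivative by x[t] - x[t-1] (an assumption)."""
    return [b - a for a, b in zip(x, x[1:])]


def hjorth_parameters(x):
    """Return (activity, mobility, complexity) for a 1-D signal.

    activity   = var(x)
    mobility   = sqrt(var(dx) / var(x))
    complexity = mobility(dx) / mobility(x)
    """
    dx = first_difference(x)
    ddx = first_difference(dx)
    activity = pvariance(x)
    mobility = math.sqrt(pvariance(dx) / activity)
    mobility_of_derivative = math.sqrt(pvariance(ddx) / pvariance(dx))
    complexity = mobility_of_derivative / mobility
    return activity, mobility, complexity
```

For a pure sinusoid the complexity is close to 1, which matches the usual reading of complexity as a measure of deviation from a sine shape.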

Higuchi fractal dimension

FD is a ratio that denotes the structural complexity of the EEG signal. Fractals are the repetition of self-similar structures in a signal. They can be considered as smaller yardsticks that are used repetitively to measure the entire region. The fractal index can be a noninteger value. Higuchi[29] defined a new method to calculate the FD. To find FD using Higuchi's algorithm, the time-series EEG signal is decomposed into N samples:

A new time-series signal is constructed by picking every kth sample from the original time series:

where m = 1, 2, 3,…, k. Here, m denotes the starting point and k represents the interval between two successive samples taken from the original data.
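A compact sketch of Higuchi's procedure is given below. The text only describes the construction of the sub-sampled series, so the remaining steps (the normalized curve length for each starting point, averaging over starting points, and a least-squares fit of log L(k) against log(1/k)) follow the standard form of the algorithm and are assumptions with respect to this study. The maximum interval `k_max=8` is a hypothetical default, not a value reported by the authors.

```python
import math


def higuchi_fd(x, k_max=8):
    """Estimate the Higuchi fractal dimension of a 1-D sequence.

    Assumes k_max >= 2, len(x) much larger than k_max, and a non-constant
    signal (a constant signal has zero curve length, so log is undefined).
    """
    n = len(x)
    log_inv_k = []
    log_length = []
    for k in range(1, k_max + 1):
        lengths = []
        for m in range(k):  # 0-based starting point of the sub-series
            steps = (n - 1 - m) // k  # number of increments available
            if steps < 1:
                continue
            dist = sum(abs(x[m + i * k] - x[m + (i - 1) * k])
                       for i in range(1, steps + 1))
            # Normalize for unequal sub-series lengths, then scale by 1/k.
            lengths.append(dist * (n - 1) / (steps * k) / k)
        log_inv_k.append(math.log(1.0 / k))
        log_length.append(math.log(sum(lengths) / len(lengths)))
    # The least-squares slope of log L(k) versus log(1/k) is the FD estimate.
    mean_x = sum(log_inv_k) / len(log_inv_k)
    mean_y = sum(log_length) / len(log_length)
    num = sum((a - mean_x) * (b - mean_y)
              for a, b in zip(log_inv_k, log_length))
    den = sum((a - mean_x) ** 2 for a in log_inv_k)
    return num / den
```

A straight line gives an estimate of 1, while irregular signals approach 2, so the value reflects how densely the curve fills the plane.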

Higher-order crossings

HOC is a technique that gives information about the oscillatory pattern of the EEG signals.[12] When the time elapses, the signal shows many up and down amplitude patterns which can be analyzed by calculating the count of zero crossings around the x-axis.

HOC can be calculated as follows. The difference operator ∇ acts as a high-pass filter.

The sequence of high-pass filters is defined as:

$$S_k = \nabla^{k-1}, \quad k = 1, 2, 3, \ldots \tag{8}$$

Then, the corresponding HOC will be:

$$D_k = \mathrm{NZC}\left(S_k[x(t)]\right) \tag{9}$$

where NZC is the number of zero crossings.

The value of “x (t)” is the length of the sequence and is calculated with an iterative process.

A total of 21 HOC features are extracted, including the zero crossing of the original signal, before any filter is applied.
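The HOC sequence of Eqs. 8 and 9 can be illustrated with the short sketch below. It assumes that the signal is made zero-mean before counting and that a sample equal to zero is treated as nonnegative; both choices are assumptions, since the text does not specify them. The function names are hypothetical.

```python
def zero_crossings(x):
    """Count sign changes between consecutive samples (0 counts as >= 0)."""
    signs = [v >= 0 for v in x]
    return sum(a != b for a, b in zip(signs, signs[1:]))


def hoc_features(x, order=21):
    """Return [D_1, ..., D_order], where D_k counts the zero crossings of
    the signal after the difference operator has been applied k - 1 times.

    Assumes len(x) is larger than order.
    """
    mean = sum(x) / len(x)
    z = [v - mean for v in x]  # zero-mean series (assumed preprocessing)
    features = []
    for _ in range(order):
        features.append(zero_crossings(z))
        z = [b - a for a, b in zip(z, z[1:])]  # apply the operator once more
    return features
```

With `order=21`, the first entry is the zero-crossing count of the unfiltered signal, in line with the 21 HOC features mentioned above.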

Band power

The power features of EEG signals have been found more effective to recognize the emotional variation after stimulation. From the band filtered signals, power is calculated for each of the bands using Eq. 10.

where is the sequence length and is the EEG time-series signal.
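Eq. 10 is not reproduced in this text. A common definition of the average power of a band-filtered discrete signal is the mean of its squared samples, given here as an assumption rather than as the authors' exact formula. The symbols N (sequence length) and x_b(t) (the signal filtered to band b) are chosen for illustration.

```latex
P_b = \frac{1}{N} \sum_{t=1}^{N} x_b(t)^2
```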

Entropy

Singular value decomposition entropy (SVDE) indicates the number of eigenvectors that are needed to represent the EEG data. It measures the dimensionality of the EEG data. The SVD is performed on matrix Y to produce T singular values, p1, …, pT, known as the singular spectrum. The entropy is computed as in Eq. 11.
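Eq. 11 is not reproduced in this text. In its usual form, SVD entropy is the Shannon entropy of the normalized singular spectrum, as sketched below. The normalized values and the base-2 logarithm are assumptions, since the text does not state which convention the authors used.

```latex
\bar{p}_i = \frac{p_i}{\sum_{j=1}^{T} p_j}, \qquad
\mathrm{SVDE} = -\sum_{i=1}^{T} \bar{p}_i \log_2 \bar{p}_i
```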

A total of 34 features (mean – 1, Higuchi FD – 1, HOC – 21, Hjorth parameters – 3, entropy – 4, and band power – 4, with one entropy and one band power value per band) are extracted from each of the ten selected electrodes to classify the emotional states.

Classification using artificial neural network

ANNs are statistical learning models inspired by biological neural networks. In the present study, a fully connected feed-forward neural network is used, which updates its weights using the delta learning rule by backpropagating the error. The connection weights between neurons of different layers are updated during the training process. The objective of the learning process is to minimize the error between the target and the actual output of the network. In total, 136 features are used as input to the network, so there are 136 input neurons. The hidden layer has four neurons, and the output layer has two neurons for the two-class problem. The sigmoid function is used so that the model outputs a probability. A 10-fold cross-validation method is used for training and testing the ANN. The entire dataset is divided into ten disjoint partitions. In every fold, nine partitions are combined to form the training data, and the remaining partition is used as testing data. This process is repeated ten times so that each partition is used once as testing data. The performance is computed for each fold, and the average over all ten folds is reported. In this study, the overall accuracy is used as the performance measure.
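The 10-fold procedure described above can be sketched as follows. This is an illustrative outline only: the shuffling, the fixed seed, and the `evaluate_fold` callback (a hypothetical function that would train the network on the training indices and return the accuracy on the test indices) are assumptions and are not details reported in the study.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train, test) index lists for k disjoint partitions."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffling is an assumption
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, test


def cross_validated_accuracy(n_samples, evaluate_fold, k=10, seed=0):
    """Average the per-fold accuracy returned by evaluate_fold(train, test)."""
    scores = [evaluate_fold(train, test)
              for train, test in k_fold_indices(n_samples, k, seed)]
    return sum(scores) / len(scores)
```

Each sample appears in exactly one test partition, so the reported figure is an average over ten held-out evaluations rather than a single split.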

Results

In this study, the human emotional states, high arousal-positive valence (happy), and low arousal-negative valence (sad) are classified using the EEG signal acquired from the scalp consisting of activity of various regions (frontal, occipital, temporal, and parietal regions) of the brain. Further, the PSD is calculated from the group of electrodes from the scalp regions. The features such as mean, Hjorth parameter, HFD, band power of each activity band (namely theta, alpha, beta, and gamma), entropy in each activity band, and HOC are extracted. These features are further subjected to pattern classification using ANN and performance is evaluated.

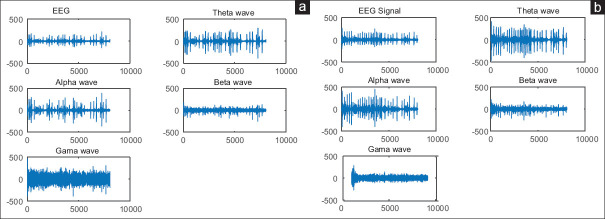

Figure 4a shows a typical EEG and its activity bands corresponding to the happy emotion. In the same way, Figure 4b shows a typical EEG and its theta, alpha, beta, and gamma decomposition corresponding to the sad emotion. It can be seen from Figure 4 that the amplitude of the EEG during the sad emotion is larger than that during the happy emotion.

Figure 4.

Electroencephalogram bands induced by (a) high arousal-positive valence and (b) low arousal-negative valence stimuli

Initially, the PSD is computed for the typical EEG with all 32 electrodes, and it is shown in Figure 5. In the same way, the PSD is computed for the typical EEG during happy emotion, and it is shown in Figures 6 and 7. Figure 6 shows the PSD of EEG during happy emotion for the selected ten electrodes, whereas Figure 7 shows the PSD of EEG signals from various regions (frontal, occipital, temporal, and parietal) of the scalp.

Figure 5.

A topological plot of the variation of power spectral density values across frequency bands considering all 32 electrodes

Figure 6.

Topological plot showing the variation of power spectral density values across frequency bands using the selected ten electrodes for the happy emotion

Figure 7.

Two-dimensional power spectral density plot of channels in electroencephalogram frequency bands

Similarly, PSD is computed for sad emotion and is depicted in Figures 8 and 9. Figure 8 shows the PSD of sad emotion for ten electrodes, whereas Figure 9 shows the PSD of electrodes of different regions of the scalp.

Figure 8.

A topological plot of the variation of power spectral density values across frequency bands considering selected ten electrodes for sad emotion

Figure 9.

Two-dimensional power spectral density plot of channels in electroencephalogram frequency bands for sad emotion

Table 1 shows the classification performance of EEG from various electrodes using ANN. When the features are extracted from all 32 electrodes, an accuracy of 85.85% is obtained. Similarly, when a subset of ten electrodes is used for EEG classification, an accuracy of 89.50% is obtained. In the same manner, the classification accuracy using the EEG from the different regions is shown in Table 1. It is found that EEG corresponding to the frontal region provided the highest accuracy of 93.25%.

Table 1.

Comparison of classifiers’ performance accuracy

| Region electrodes | ANN accuracy (%) | SVM accuracy (%) | KNN accuracy (%) |
|---|---|---|---|
| All 32 electrodes | 85.85±0.84 | 75.85±0.64 | 80.85±0.54 |
| Selected 10 (Fp1, F3, F4, Fp2, O1, O2, T7, T8, P3 and P4) | 89.50±0.89 | 79.50±0.89 | 81.50±0.69 |
| Occipital (O1, O2) | 87.44±0.79 | 77.33±0.69 | 79.41±0.59 |
| Temporal (T7, T8) | 84.21±0.68 | 74.11±0.48 | 76.71±0.68 |
| Parietal (P3, P4) | 90.16±0.81 | 83.66±0.61 | 87.76±0.41 |
| Frontal (Fp1, F3, F4, Fp2) | 93.25±0.56 | 85.35±0.66 | 87.25±0.76 |

SVM – Support vector machine; ANN – Artificial neural network; KNN – K-nearest neighbor

In a reported computational study[26] of EEG recorded under audio–video stimuli, it is postulated that the alpha, beta, and gamma frequency bands are more effective for emotion classification in both the valence and arousal dimensions. Since the delta band is associated with the unconscious mind and occurs during deep sleep, it is excluded in the present work. The EEG signal is down-sampled from 512 Hz to 256 Hz. Then, it is filtered through a high-pass filter with a cutoff frequency of 2 Hz using EEGLAB software.

To reduce the computations, a subset of electrodes are chosen based on experimentation. The PSD of the selected electrodes (Fp1, F3, F4, Fp2, O1, O2, T7, T8, P3, and P4) is computed. The variation in the PSD calculated from the DEAP dataset is illustrated in Figure 6. As the DEAP dataset produced variations in higher frequency bands, which are related to high cognitive functions, we are encouraged to further study whether the cognitive level has any influence on emotion recognition.

As seen in Figure 7, to reduce the computations, the PSD is calculated with a minimum number of electrodes at different scalp regions.

Finally, the experimental results demonstrate that the region-based classifications provide higher accuracy compared to selecting all 32 and 10 electrodes. In recent development, a number of neurophysiological studies have reported that there is a correlation between EEG signals and emotions. Studies showed that the frontal scalp seems to store more emotional activation compared to other regions of the brain such as temporal, parietal, and occipital.[27,28]

The experimental results of this study show that the frontal region gives the highest classification accuracy among the brain regions considered when classifying happy and sad emotions. The performance accuracy obtained from the ANN, SVM, and KNN classifiers is given in Table 1. Among the classifiers, the neural network showed the best performance, with an accuracy of 93.25%.

Discussion

Emotion is one of the physiological states which is expressed in different forms. Emotional balance is very much required for the physical, mental, and social well-being of the individual. Without the capability of emotion processing, understanding the psychological aspects or mental states is difficult. The recognition of emotions plays a key role in human communication enabling mutual sympathy. Among various patterns for the recognition of human emotion, EEG can be effectively used to quantitatively evaluate the different states of emotions. In this direction, a machine learning problem of classification of high arousal-positive valence (happy) and low arousal-negative valence (sad) emotions is undertaken in this study. We have obtained 93.25% accuracy while classifying the EEG signal of frontal electrodes. It is interesting to compare our results with the state-of-the-art methods available in the literature. Table 2 shows some prominent methods proposed in the literature by various authors. Chao et al.[23] adopted a deep learning framework for emotion recognition. In this context, a multiband feature matrix approach is proposed. Binary classification was performed for arousal, valence, and dominance that attained 68.28%, 66.73%, and 67.25% classification accuracy, respectively.

Table 2.

Assessment of accuracy of different studies using Database for Emotion Analysis using Physiological Signals (DEAP) data

| Papers | Emotion model | Features | Classification | Number of EEG channels | Accuracy (%) |
|---|---|---|---|---|---|
| Chao H et al.[23] | 3D | PSD | Deep learning | 32 | 66.73 |
| Nawaz et al.[24] | 2D | MSE | SVM | 32 | 72.10 |
| Nawaz et al.[25] | 3D | Statistical time domain | SVM | 14 | 78.06 |
| Zhong et al.[30] | 2D | MSE | CNN | 32 | 81.83 |
| Zheng et al.[31] | 2D | Statistical time domain | SVM | 12 | 86.65 |
| Maheshwari et al.[32] | 3D | Frequency domain | CNN | 14 | 98.91 |
| Mikhail et al.[39] | 2D | Frequency domain | SVM | 4 or 6 | 35.25 |
| Zhang and Chen[40] | 2D | Time and frequency | K-means | 12 | 90.00 |
| Gannouni et al.[42] | 2D | Time and frequency | QDN | 6 | 85.64 |
| Gannouni et al.[42] | 2D | Time and frequency | RNN | 6 | 91.22 |
| Proposed method | 2D | Time and frequency | ANN | 32 | 85.55 |
| Proposed method | 2D | Time and frequency | ANN | 10 | 89.50 |
| Proposed method | 2D | Time and frequency | ANN | 4 (Fp1, F3, F4, Fp2) | 93.25 |

PSD – Power spectral density; MSE – Multiscale sample entropy; EEG – Electroencephalogram; SVM – Support vector machine; CNN – Convolutional neural network; ANN – Artificial neural network; 3D – Three dimensional; 2D – Two dimensional; QDN – Quadrant discriminant classifier; RNN – Recurrent neural network

Nawaz et al.[24] used the empirical mode decomposition method to extract features for emotion recognition from the EEG signal. Using an SVM classifier, the classification accuracy obtained is 72.10% and 70.41% for the valence and arousal dimensions, respectively. In a framework proposed by Nawaz et al.,[25] statistical time domain features are extracted for emotion recognition. To improve the performance accuracy, principal component analysis is adopted. SVM is used to validate the efficacy of the features and achieved an overall best classification accuracy of 78.06%.

Zhong et al.[30] proposed a method of EEG access for emotion recognition based on a deep hybrid network and applied to the DEAP dataset for emotion recognition experiments, and the average accuracy for 3D emotion model (arousal, valence, and dominance) could achieve 79.77% on arousal, 83.09% on valence, and 81.83% on dominance.

Zheng and Lu[31] adopted deep belief networks (DBNs) for recognition of three emotions. The recognition considering 12 channels is relatively stable and obtained the best accuracy of 86.65% with the SVM classifier, which is even better than that of the original 62 channels.

Maheshwari et al.[32] used a rhythm-specific multichannel convolutional neural network (CNN)-based approach for automated emotion recognition using multichannel EEG signals. The authors considered a three-dimensional model (arousal, valence, and dominance) and 14 electrodes (AF3, F7, F3, FC5, T7, P7, O1, O2, P8, T8, FC6, F4, F8, and AF4). The EEG rhythms from the selected channels coupled with a deep CNN are used for emotion classification and obtained accuracy values of 98.91%, 98.45%, and 98.69% for the LV versus HV, LA versus HA, and LD versus HD emotion classification strategies, respectively, using the DEAP database. In the proposed method, a two-dimensional model (arousal and valence) and a minimal set of electrodes (only four frontal electrodes: Fp1, F3, F4, and Fp2) are considered instead of all scalp region electrodes. Both time and frequency domain features extracted from EEG bands such as alpha and beta show a good signature of emotion states.

Chen et al.[35] proposed an improved deep CNN model to achieve better accuracy in emotion recognition for binary classification (valence and arousal dimensions) of emotions on DEAP dataset. When compared to the four shallow classifiers (BT, SVM, LDA, and BLDA), the deep CNN models showed 3.58% and 3.29% higher accuracy in valence and arousal dimension, respectively, than the traditionally best BT classifier. In the work of Luo et al.,[36] three algorithms (DWT, variance, and FFT) for feature extraction are implemented on DEAP and SEED databases for four (valence, arousal, dominance, and liking) and three (positive, negative and neutral) emotional states, respectively. NeuCube SNN architecture is used for emotion classification that generates accuracies of 78% (valence), 74% (arousal), and 80% (dominance) in the DEAP dataset.

Chao and Liu[37] in their work employ DBN-GC models to obtain the high-level feature sequence of the multichannel EEG signals on DEAP, AMIGOS, and SEED datasets and CRF to DNBs for a 2D emotion classification. They obtained accuracies of 76.13% in arousal and 77.02% in valence dimension. The work of Zhang[38] proposed an expression-EEG interaction multimodal emotion recognition method using a deep automatic encoder. The model employed a decision tree for feature selection, facial expression recognition, BDAE for feature extraction, and LIBSVM for emotion classification. Thirteen healthy participants were shown 30 video clips from a video library of 90 clips. The model achieved an average emotion recognition rate of 85.71% for discrete emotion state type.

Mikhail et al.[39] proposed an approach that analyzes highly contaminated brain signals and extracts relevant features for the emotion detection task based on neuroscience findings. Using a reduced number of electrodes (four or six only), the approach reached an average classification accuracy of 33% for joy, 38% for anger, and 37.5% for sadness.

Zhang J et al.[40] performed the emotion classification using only a part of the EEG electrodes (12 EEG electrodes placed on frontal cortex) and achieved classification accuracy of 90%.

Despite significant development in the field of DL and its suitability to various applications, almost 59% of researchers have used an SVM with RBF kernels for BCIs. The present approach is found to have the highest accuracy with respect to all the three Arousal-Valence- Dominance (AVD) emotion indices.[41]

Gannouni et al.[42] proposed a competitive approach in this field and it outperformed that of previous studies. The quantitative discontinuity characterization obtained an average accuracy of 82.37%, and the recurrent neural networks reached an accuracy average of 91.22%.

The proposed methodology achieved the highest classification accuracy of 93.25% in valence-arousal dimension with improvement of the results obtained by Chao et al.[23] which is 66.73%, Nawaz et al.[24] which is 72.10%, Nawaz et al.[25] which is 78.06%, Zhong et al.[30] which is 83.41%, and Zheng and Lu[31] which is 86.65%. The framework based on region-based classification achieved satisfactory results for EEG emotion recognition. Previous studies have reported that emotional experiences are stored within the temporal region of the brain. The current evidence suggests that emotional response may also be influenced by different regions of the brain such as the parietal and frontal regions. In addition, the optimal selection of the electrodes should also be considered since many of the EEG devices have different numbers of electrode and placement, and hence, the numbers and selection of electrode position should be explored systematically to verify how it affects the emotion classification task. The results of recognition rate of emotion proved that EEG data comprise ample amount of information to differentiate among different emotional states. Time, frequency, and nonlinear domain features are more suitable feature sets for emotion recognition. A comparison of our proposed method with the latest study of emotion recognition that has also used DEAP dataset is given in Table 2. To the best of our knowledge, the popular publicly available emotional EEG datasets are MAHNOB HCI[33] and DEAP.[34]

In the current study, we explored time and frequency domain features of EEG signals for different emotion states. However, the PSD for various emotion states studied indicates promising features. Further, deep features from PSD can be extracted for the classification of emotion states.

Conclusion

In this article, an emotion recognition system is proposed based on the EEG signal. The results indicated that the extracted features are promising in recognizing human emotions. The performance of the ANN classifier is studied based on the features of EEG signals from the scalp that include frontal, occipital, temporal, and parietal regions. The results demonstrate that ANN provides the highest classification accuracy of 93.25% from the frontal region electrodes (Fp1, F3, F4, and Fp2) compared with all other regions. This improved accuracy suggests that the system could be used in applications such as wearable sensor design and biofeedback for monitoring stress and psychological well-being. Future work could extend the present study by including a greater number of features or by using more sophisticated analysis methods, such as event-related potentials or deep learning, to validate the current findings. The analysis could also be extended to a three-dimensional model that includes dominance in addition to valence and arousal.

Financial support and sponsorship

None.

Conflicts of interest

There are no conflicts of interest.

Acknowledgment

The authors are grateful to DEAP database for providing permission to use the data source. Further, the acknowledgment is extended to the Department of E and C and BME of MIT, Manipal Academy of Higher Education, for the facilities provided to carry out research work.

References

- 1.Scherer KR. What are emotions. And how can they be measured? Soc Sci Inf. 2005;44:695–729. [Google Scholar]

- 2.Frijda NH. The empirical status of the laws of emotion. Cogn Emot. 1992;6:467–77. [Google Scholar]

- 3.Mental Disorders. [[Last accessed on 2019 Nov 28]]. Available from: https://www.who.int/mediacentre/factsheets/fs396/en/

- 4.Picard RW. Affective computing: Challenges. Int J Hum Comput Int. 2003;59:55–64. [Google Scholar]

- 5. Bos DO. EEG-based emotion recognition. The influence of visual and auditory stimuli. Academia-CiteSeerx. 2006;56:1–17.

- 6. Gerber AJ, Posner J, Gorman D, Colibazzi T, Yu S, Wang Z, et al. An affective circumplex model of neural systems subserving valence, arousal, and cognitive overlay during the appraisal of emotional faces. Neuropsychologia. 2008;46:2129–39. doi: 10.1016/j.neuropsychologia.2008.02.032.

- 7. Ekman P, Friesen WV. Constants across cultures in the face and emotion. J Pers Soc Psychol. 1971;17:124–9. doi: 10.1037/h0030377.

- 8. Russell JA. Affective space is bipolar. J Pers Soc Psychol. 1979;37:345.

- 9. Russell JA. A circumplex model of affect. J Pers Soc Psychol. 1980;39:1161.

- 10. Ko KE, Yang HC, Sim KB. Emotion recognition using EEG signals with relative power values and Bayesian network. Int J Control Autom Syst. 2009;7:865.

- 11. Petrantonakis PC, Hadjileontiadis LJ. Emotion recognition from brain signals using hybrid adaptive filtering and higher order crossings analysis. IEEE Trans Affective Comput. 2010;1:81–97.

- 12. Petrantonakis PC, Hadjileontiadis LJ. Emotion recognition from EEG using higher order crossings. IEEE Trans Inf Technol Biomed. 2010;14:186–97. doi: 10.1109/TITB.2009.2034649.

- 13. Lin YP, Wang CH, Jung TP, Wu TL, Jeng SK, Duann JR, et al. EEG-based emotion recognition in music listening. IEEE Trans Biomed Eng. 2010;57:1798–806. doi: 10.1109/TBME.2010.2048568.

- 14. Murugappan M, Ramachandran N, Sazali Y. Classification of human emotion from EEG using discrete wavelet transform. J Biomed Eng. 2010;3:390.

- 15. Petrantonakis PC, Hadjileontiadis LJ. Adaptive emotional information retrieval from EEG signals in the time-frequency domain. IEEE Trans Signal Process. 2012;60:2604–16.

- 16. Islam M, Ahmed T, Mostafa SS, Yusuf MS, Ahmad M. Human Emotion Recognition Using Frequency & Statistical Measures of EEG Signal. In 2013 International Conference on Informatics, Electronics and Vision (ICIEV) 2013:1–6.

- 17. Liu YH, Wu CT, Kao YH, Chen YT. Single-trial EEG-based emotion recognition using kernel Eigen-emotion pattern and adaptive support vector machine. Annu Int Conf IEEE Eng Med Biol Soc. 2013;2013:4306–9. doi: 10.1109/EMBC.2013.6610498.

- 18. Murugappan M, Nagarajan R, Yaacob S. Appraising Human Emotions Using Time Frequency Analysis Based EEG Alpha Band Features. In 2009 Innovative Technologies in Intelligent Systems and Industrial Applications. 2009:70–5.

- 19. Özerdem MS, Polat H. Emotion recognition based on EEG features in movie clips with channel selection. Brain Inform. 2017;4:241–52. doi: 10.1007/s40708-017-0069-3.

- 20. Zheng WL, Zhu JY, Lu BL. Identifying stable patterns over time for emotion recognition from EEG. IEEE Trans Affect Comput. 2017;10:417–29.

- 21. Upadhyaya P, Bairy GM, Sudarshan VK. Electroencephalograms analysis and classification of neurological and psychological disorders. J Med Imaging Health Inf. 2015;5:1415–9.

- 22. Kumar GS, Sampathila N, Shetty H. Neural Network Approach for Classification of Human Emotions from EEG Signal. In Engineering Vibration, Communication and Information Processing

- 23. Chao H, Dong L, Liu Y, Lu B. Emotion recognition from multiband EEG signals using CapsNet. Sensors (Basel) 2019;19:2212. doi: 10.3390/s19092212.

- 24. Nawaz R, Nisar H, Voon YV. The effect of music on human brain: Frequency domain and time series analysis using electroencephalogram. IEEE Access. 2018:5191–205.

- 25. Nawaz R, Cheah KH, Nisar H, Yap VV. Comparison of different feature extraction methods for EEG-based emotion recognition. Biocybern Biomed Eng. 2020;40:910–26.

- 26. Sarno R, Munawar MN, Nugraha B. Real-time electroencephalography-based emotion recognition system. Int Rev Comput Softw IRECOS. 2016;11:456–65.

- 27. Zhuang N, Zeng Y, Tong L, Zhang C, Zhang H, Yan B. Emotion recognition from EEG signals using multidimensional information in EMD domain. Biomed Res Int. 2017;2017:8317357. doi: 10.1155/2017/8317357.

- 28. Hjorth B. EEG analysis based on time domain properties. Electroencephalogr Clin Neurophysiol. 1970;29:306–10. doi: 10.1016/0013-4694(70)90143-4.

- 29. Higuchi T. Approach to an irregular time series on the basis of the fractal theory. Physica D. 1988;31:277–83.

- 30. Zhong Q, Zhu Y, Cai D, Xiao L, Zhang H. Electroencephalogram access for emotion recognition based on a deep hybrid network. Front Hum Neurosci. 2020;14:589001. doi: 10.3389/fnhum.2020.589001.

- 31. Zheng WL, Lu BL. Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks. Front Hum Neurosci. 2015;7:162–75.

- 32. Maheshwari D, Ghosh SK, Tripathy RK, Sharma M, Acharya UR. Automated accurate emotion recognition system using rhythm-specific deep convolutional neural network technique with multi-channel EEG signals. Comput Biol Med. 2021;134:104428. doi: 10.1016/j.compbiomed.2021.104428.

- 33. Soleymani M, Pantic M, Pun T. Multimodal emotion recognition in response to videos. IEEE Trans Affect Comput. 2011;3:211–23.

- 34. Koelstra S, Muhl C, Soleymani M, Lee JS, Yazdani A, Ebrahimi T, et al. DEAP: A database for emotion analysis; using physiological signals. IEEE Trans Affect Comput. 2011;3:18–31.

- 35. Chen JX, Zhang PW, Mao ZJ, Huang YF, Jiang DM, Zhang YN. Accurate EEG-based emotion recognition on combined features using deep convolutional neural networks. IEEE Access. 2019;7:44317–28.

- 36. Luo Y, Fu Q, Xie J, Qin Y, Wu G, Liu J, et al. EEG-based emotion classification using spiking neural networks. IEEE Access. 2020;8:46007–16.

- 37. Chao H, Liu Y. Emotion recognition from multi-channel EEG signals by exploiting the deep belief-conditional random field framework. IEEE Access. 2020;8:33002–12.

- 38. Zhang H. Expression-EEG based collaborative multimodal emotion recognition using deep autoencoder. IEEE Access. 2020;8:164130–43.

- 39. Mikhail M, El-Ayat K, Coan JA, Allen JJ. Using minimal number of electrodes for emotion detection using brain signals produced from a new elicitation technique. Int J Auton Adapt Commun Syst. 2013;6:80–97.

- 40. Zhang J, Chen P. Selection of optimal EEG electrodes for human emotion recognition. IFAC Papers Online. 2020;53:10229–35.

- 41. Garg S, Patro RK, Behera S, Tigga NP, Pandey R. An overlapping sliding window and combined features based emotion recognition system for EEG signals. Appl Comput Inform. 2021. doi: 10.1108/ACI-05-2021-0130.

- 42. Gannouni S, Aledaily A, Belwafi K, Aboalsamh H. Emotion detection using electroencephalography signals and a zero-time windowing-based epoch estimation and relevant electrode identification. Sci Rep. 2021;11:7071. doi: 10.1038/s41598-021-86345-5.