Abstract

Our objective is to locate and provide a unique identifier for each mouse in a cluttered home-cage environment through time, as a precursor to automated behaviour recognition for biological research. This is a very challenging problem due to (i) the lack of distinguishing visual features for each mouse, and (ii) the close confines of the scene with constant occlusion, making standard visual tracking approaches unusable. However, a coarse estimate of each mouse’s location is available from a unique RFID implant, so there is the potential to optimally combine information from (weak) tracking with coarse information on identity. To achieve our objective, we make the following key contributions: (a) the formulation of the object identification problem as an assignment problem (solved using Integer Linear Programming), (b) a novel probabilistic model of the affinity between tracklets and RFID data, and (c) a curated dataset with per-frame Bounding Box (BB) and regularly spaced ground-truth annotations for evaluating the models. The probabilistic model in (b) is a crucial part of our approach, as it provides a principled probabilistic treatment of object detections given coarse localisation. Our approach achieves 77% accuracy on this animal identification problem, and is able to reject spurious detections when the animals are hidden.

Keywords: Localisation, Object identification, Group-housed mice, Linear programming

Introduction

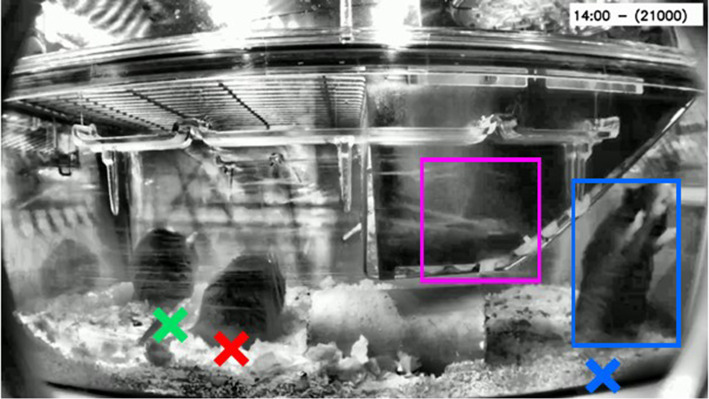

We are motivated by the problem of tracking and identifying group-housed mice in videos of a cluttered home-cage environment, as in Fig. 1. This animal identification problem goes beyond tracking to assigning unique identities to each animal, as a precursor to automatically annotating their individual behaviour (e.g. feeding, grooming) in the video, and analysing their interactions. It is also distinct from object classification (recognition) [1]: the mice have no distinguishing visual features and cannot be merely treated as different objects. Their visual similarity, as well as the close confines of the enriched cage environment make standard visual tracking approaches very difficult, especially when the mice are huddled or interacting together. We found experimentally that standard trackers alone break down into outputting short tracklets, with spurious and missing detections, and thus do not provide a persistent identity. However, we can leverage additional information provided by a unique Radio-Frequency Identification (RFID) implant in each mouse, to provide a coarse estimate of its location (on a grid). This setting is not unique to animal tracking and can be applied to more general objects. Similar situations arise, e.g. when identifying specific team-mates in robotic soccer [2] (where the object identification is provided by the weak self-localisation of each robot), or identifying vehicles observed by traffic cameras at a busy junction (as required for example for law-enforcement), making use of a weak location signal provided by cell-phone data.

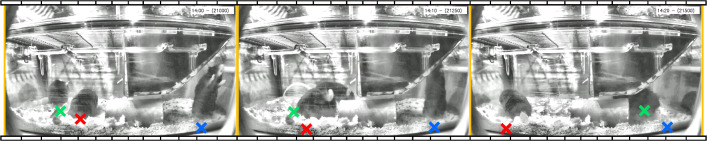

Fig. 1.

Sample frames from our dataset. To improve visualisation, the frames are processed with CLAHE [4] and brightened: our methods operate on the raw frames. The mice are visually indistinguishable, their identity being inferred through the RFID pickup, shown as Red/Green/Blue crosses projected into image-space. This is enough to distinguish the mice when they are well separated (left): however, as they move around, they are occluded by cage elements (Green in centre) or by cage mates (Green by Blue in right) and we have to reason about temporal continuity and occlusion dynamics

Our solution starts from training and running a mouse detector on each frame, and grouping the detections together with a tracker [3] to produce a set of tracklets, as shown in Fig. 3c. We then formulate an assignment problem to identify each tracklet as belonging to a mouse (based on RFID data) or a dummy object (to capture spurious tracklets). This problem is solved using Integer Linear Programming (ILP), see Fig. 3f. Tracking is used to reduce the complexity of the problem relative to a frame-wise approach and implicitly enforce temporal continuity for the solution.

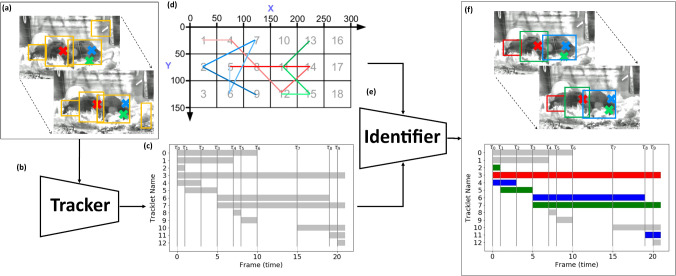

Fig. 3.

Our proposed architecture. Given per-frame detections of animals (a), a tracker (b) joins these into tracklets, shown (c) in terms of their temporal span. After discarding spurious detections based on a lifetime threshold, the identifier (e) combines these with coarse localisation traces over time (d), to produce identified Bounding Boxes (BBs) for each object throughout the video, shown (f) as coloured tracklets/BBs

The problem setup is not standard Multi-Object Tracking (MOT), since we go beyond tracking by identifying individual tracklets using additional RFID information which provides persistent unique identifiers for each animal. Our main contribution is thus to formulate this object identification as an assignment problem, and solve it using ILP. A key part of the model is a principled probabilistic treatment for modelling detections from the coarse localisation information, which is used to provide assignment weights to the ILP.

We emphasise that we are solving a real-world problem which is of considerable importance to the biological community (as per the International Mouse Phenotyping Consortium (IMPC) [5]), and which needs to scale up to thousands of hours of data in an efficient manner: this necessarily affects some of our design choices. More generally, with home-cage monitoring systems being more readily available to scientists [6], there are serious efforts in maximising their use. Consequently, there is a real need for analysis methods like ours, particularly where multiple animals are co-housed.

In this paper, we first frame our problem in the light of recent efforts (Sect. 2), indicating how our situation is quite unique in its formulation. We discuss our approach from a theoretical perspective in Sect. 3, postponing the implementation mechanics to Sect. 4. Finally, we showcase the utility of our solution through rigorous experiments (Sect. 5) on our dataset which allows us to analyse its merits in some depth. A more detailed description of the dataset, details for replicating our experimental setups (including parameter fine-tuning) as well as deeper theoretical insights are relegated to the Supplementary Material. The curated dataset, together with code and some video clips of our framework in action, is available at https://github.com/michael-camilleri/TIDe (see Sect. 6 and Appendix D for details).

Related work

Below we discuss related work w.r.t. MOT, re-identification (re-ID) and animal tracking. We defer discussion of our ILP formulation relative to other work after discussing the method in Sect. 3.2.

Multi-object tracking

There have been many recent advances in MOT [7], fuelled in part by the rise of specialised neural architectures [8–10]. Here the main challenge is keeping track of an undefined number of visually distinct objects (often people, as in, e.g. [11, 12]), over a short period of time (as they enter and exit a particular scene) [13]. However, our problem is not MOT because we need to identify (rather than just track) a fixed set of individuals using persistent, externally assigned identities, rather than relative ones (as in, e.g. [14]): i.e. our problem is not indifferent to label-switching. We care about the absolute identity assigned to each tracklet—even if we had input from a perfect tracker, there would still need to be a way to assign the anonymous tracklets to identities, as we do below.

There is another important difference between the current state-of-the-art in MOT (see e.g. [9, 14–19]) and our use-case. As the mice are essentially indistinguishable, we cannot leverage appearance information to distinguish them but must rely on external cues—the RFID-based quantised position. On top of this, there are also the challenges of operating in a constrained cage environment (which exacerbates the level of occlusion), and the need to consistently and efficiently track mice over an extended period of time (on the order of hours).

(Re-)Identification

Re-ID [20] addresses the transitory notion of identity by using appearance cues to join together instances of the same object across ‘viewpoints’. Obviously, this does not work when objects are visually indistinguishable, but there is also another key difference. The standard re-ID setup deals with associating together instances of the same object, often (but not necessarily) across multiple non-overlapping cameras/viewpoints [21]. In our specific setup, however, we wish to relate objects to an external identity (e.g. RFID-based position); our work thus sits on top of any algorithm that builds tracklets (such as that of Fleuret et al. [20]).

Animal Tracking

Although generally applicable, this work was conceived through a collaboration with the Mary Lyon Centre at MRC Harwell [22], and hence seeks to solve a very practical problem: analysis of group-housed mice in their enriched home-cage environment over extended periods of 3-day recordings. This setup is thus significantly more challenging than traditional animal observation models, which often side-step identification by involving single animals in a purpose-built arena [23–26] rather than our enriched home-cage. Recent work on multiple-animal tracking [16, 27–29] often uses visual features for identification which we cannot leverage (our subjects are visually identical): e.g. the CalMS21 [28] dataset uses recordings of pairs of visually distinct mice, while Marshall et al. [29] attach identifying visual markers to their subjects in the PAIR-R24M dataset. Most work also requires access to richer sources of colour/depth information [30, 31] rather than our single-channel Infra-Red (IR) feed, or employs top-mounted cameras [16, 31–34] which give a much less cluttered view of the animals. Indeed, systems such as idtracker.ai [35] or DeepLabCut [36, 37] do not work for our setup, since the mice often hide each other and keypoints on the animals (which are an integral part of the methods) are not consistently visible (besides requiring more onerous annotations). Finally we reiterate that our goal is to identify tracklets through fusion with external (RFID) information, which none of the existing frameworks support—for example the multi-animal version of DeepLabCut [37] only supports supervised identity learning for visually distinct individuals.

Methodology

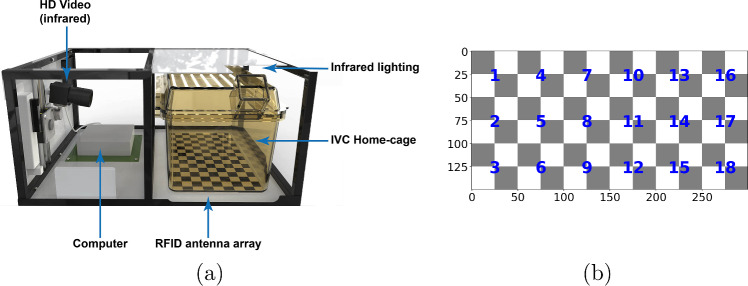

This section describes our proposed approach to identification of a fixed set of animals in video data. We explain the methodology through the running example of tracking group-housed mice. Specifically, consider a scenario in which we have continuous video recordings of mice housed in groups of three as shown in Fig. 1. The data, captured using a setup similar to that shown in Fig. 2a, consists of single-channel IR side-view video and coarse RFID position pickup from an antenna grid below the cage as in Fig. 2b. Otherwise, the mice have no visual markings for identification. Implementation details are deferred to Sect. 4.

Fig. 2.

The Actual Analytics Home-Cage Analysis system. a The rig used to capture mouse recordings (Illustration reproduced with permission from [22]). b The (numbered) points on the checkerboard pattern used for calibration (measurements are in mm): the numbers correspond also to the RFID antenna receivers on the baseplate

Overview

Our system takes in candidate detections about the fixed set of animals in the form of Bounding Boxes (BBs) and assigns an identity to each of them using a two-stage pipeline, as shown in Fig. 3. First, we use a Tracker [3] to join the detections across frames into tracklets based on optimising the Intersection-over-Union (IoU) between BBs in adjacent frames. This stage injects temporal continuity into the object identification, filters out spurious detections, and, as will be seen later, reduces the complexity of the identification problem. Then, an Identifier, using a novel ILP formulation, combines the tracklets with the coarse position information to identify which tracklet(s) belong to each of the known animals, based on a probabilistic weight model of object locations.

Tracking

We use the tracker to inject temporal continuity into our problem, encoding the prior that animals can only move a limited distance between successive frames. Subsequently, we formulate our object identification problem as assigning tracklets to animals over a recording interval indexed by $t$.

The tracker also serves the purpose of simplifying the optimisation problem. While BBs and positions are registered at the video frame rate, it is not necessary to run our optimisation problem (below) at this granularity. Rather, we group together the sequence of frames for which the same subset of tracklets is active: a new interval will begin when a new tracklet is spawned, or when an existing one disappears. The observation is thus broken up into $T$ intervals, indexed sequentially by $t \in \{1, \dots, T\}$, shown at the top of Fig. 3c.
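As an illustration of this temporal abstraction, the following minimal Python sketch splits a recording into intervals over which the set of active tracklets is constant. The function name `split_into_intervals`, the inclusive `(first_frame, last_frame)` representation of a tracklet lifetime and the tracklet labels are assumptions made for this example only.

```python
def split_into_intervals(lifetimes, num_frames):
    """Group frames into intervals with a constant set of active tracklets.

    lifetimes: dict mapping a (hypothetical) tracklet label to its inclusive
               (first_frame, last_frame) span.
    num_frames: total number of frames in the recording.
    Returns a list of (start_frame, end_frame, active_labels), ends inclusive.
    """
    # A new interval begins when a tracklet is spawned or just after one ends.
    cuts = {0, num_frames}
    for first, last in lifetimes.values():
        cuts.update((first, last + 1))
    cuts = sorted(c for c in cuts if 0 <= c <= num_frames)

    intervals = []
    for start, stop in zip(cuts[:-1], cuts[1:]):
        active = frozenset(
            name
            for name, (first, last) in lifetimes.items()
            if first <= start and last >= stop - 1
        )
        intervals.append((start, stop - 1, active))
    return intervals


# Three overlapping tracklets in a 20-frame clip yield five intervals.
example = split_into_intervals({"a": (0, 9), "b": (5, 14), "c": (12, 19)}, 20)
```

The number of intervals grows with the number of tracklet births and deaths rather than with the number of frames, which is what keeps the subsequent optimisation small.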

Object identification from tracklets

The tracker produces $I$ tracklets: to this we add $T$ special ‘hidden’ tracklets for which no BBs exist, and which serve to model occlusion at any time-step. The goal of the Identifier is then to assign object identities to the set of tracklets, $i \in \{1, \dots, I + T\}$, of size $I + T$. The subset of tracklets active at time $t$ is represented by $\mathcal{I}_t$. We assume there are $J$ animals we wish to track/identify (and for which we have access to coarse location through time); an extra dummy object $j = J + 1$ is a special outlier/background model which captures spurious tracklets in $\{1, \dots, I\}$.

We also define a utility $w_{ij}$, which is a measure of the quality of the match between tracklet $i$ and animal $j$: we assume that tracklets cannot switch identities and are assigned in their entirety to one animal (this is reasonable if we are liberal in breaking tracklets). The weight is typically a function of the BB features and animal position (see Sect. 3.3). Given the above, our ILP optimises the assignment matrix $A$ with elements $a_{ij} \in \{0, 1\}$ (where $a_{ij} = 1$ means ‘$i$ is assigned to $j$’), that maximises:

$$\max_{A} \sum_{i=1}^{I+T} \sum_{j=1}^{J+1} a_{ij}\, w_{ij} \tag{1}$$

subject to

$$\sum_{j=1}^{J+1} a_{ij} = 1 \quad \forall\, i \le I \tag{2}$$

$$\sum_{i \in \mathcal{I}_t} a_{ij} = 1 \quad \forall\, t,\ \forall\, j \le J \tag{3}$$

$$a_{i, J+1} = 0 \quad \forall\, i > I \tag{4}$$

Constraint (2) ensures that a generated tracklet is assigned to exactly one animal, which could be the outlier model. Constraint (3) enforces that each animal (excluding the outlier model) is assigned exactly one tracklet (which could be the ‘hidden’ tracklet) at any point in time, and finally, Eq. (4) prevents assigning the hidden tracklets to the outlier model. Unfortunately, these constraints mean that the resulting linear program is not Totally Unimodular [38], and hence does not automatically yield integral optima. Consequently, solving the ILP is in general NP-Hard, but we have found that due to our temporal abstraction, using a branch and cut approach efficiently finds the optimal solution on the size of our data without the need for any approximations.
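To make the constraints concrete, the sketch below solves a toy instance by exhaustive enumeration rather than with an ILP solver, which is only viable for a handful of tracklets. It assumes one hidden tracklet per interval, so that an animal is implicitly given the hidden tracklet whenever none of the active generated tracklets is assigned to it. The function name and all utility values are hypothetical.

```python
from itertools import product


def solve_by_enumeration(w, w_hidden, active, num_animals):
    """Exhaustively search tracklet-to-object assignments on a toy problem.

    w[i][j]:        utility of generated tracklet i for object j, where
                    j == num_animals denotes the outlier model.
    w_hidden[t][j]: utility of assigning interval t's hidden tracklet to animal j.
    active[t]:      set of generated tracklets alive during interval t.
    """
    best_score, best_assign = float("-inf"), None
    # Constraint (2): every generated tracklet gets exactly one object.
    for assign in product(range(num_animals + 1), repeat=len(w)):
        score = sum(w[i][j] for i, j in enumerate(assign))
        feasible = True
        # Constraint (3): one tracklet per animal per interval.
        for t, alive in enumerate(active):
            for j in range(num_animals):
                count = sum(1 for i in alive if assign[i] == j)
                if count > 1:
                    feasible = False  # two visible tracklets for one animal
                elif count == 0:
                    score += w_hidden[t][j]  # animal takes the hidden tracklet
        # Constraint (4) holds by construction: hidden never goes to the outlier.
        if feasible and score > best_score:
            best_score, best_assign = score, assign
    return best_assign, best_score


# Two animals, three tracklets, two intervals (log-probability-like utilities).
w = [[-1, -5, -4], [-6, -1, -4], [-6, -7, -2]]
w_hidden = [[-3, -3], [-3, -3]]
active = [{0, 1}, {1, 2}]
assignment, score = solve_by_enumeration(w, w_hidden, active, num_animals=2)
```

In this toy instance the third tracklet is best explained by the outlier model, and the first animal is treated as hidden in the second interval.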

Our ILP formulation of the object identification process is related to the general family of covering problems [39] as we show in our Supplementary Material (see Sect. B.1). In this respect, our problem is a generalisation of Exact-Set-Cover [40] in that we require every animal to be ‘covered’ by one tracklet at each point in time: i.e. we use the stronger equality constraint rather than the inequality in the general ILP (see Eq. B.2). Unlike the Exact-Set-Cover formulation, however, (a) we have multiple objects (animals) to be covered, (b) some objects need not be covered at all (outlier model), and (c) all detected tracklets must be used (although a tracklet can cover the ‘extra’ outlier model). Note that with respect to (a), this is not the same as Set Multi-Cover [41] in which the same object must be covered more than once: i.e. the required coverage in Eq. (B.2) is always 1 for us.

Assignment weighting model

The weight matrix, with elements $w_{ij}$, is the essential component for incorporating the quality of the match between tracklets and locations. It is easier to define the utility of assignments on a per-frame basis, aggregating these over the lifetime of the tracklet. The per-frame weight boils down to the level of agreement between the features of the BB and the position of the object at that frame, together with the presence of other occluders.

We can use any generative model for this purpose, with the caveat that we can only condition the observations (BBs) on fixed information which does not itself depend on the result of the ILP assignment. Specifically, we can use all the RFID information and even the presence of the tunnel, but not the locations of other BBs. The reason for this is that if we condition on the other detections, the weight will depend on the other assignments (e.g. whether a BB is assigned to a physical object or not), which invalidates the ILP formulation.

We define our weight model as the probability that an animal picked up by a particular RFID antenna j, or the outlier distribution, could have generated the BB i. For the sake of our ILP formulation, we also allow animal j to generate a hidden BB when it is not visible.

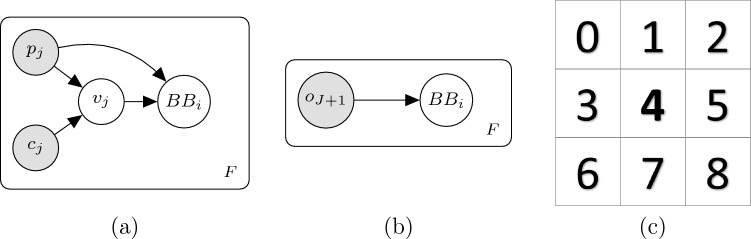

For objects of interest ($j \le J$), we employ the graphical model in Fig. 4a. Variable $p_j$ represents the position of object $j$: $c_j$ captures contextual information, which impacts the visibility of the BB and is a function of the RFID pickups of the other animals in the frame. The visibility, $v_j$, can take on one of three values: (a) clear (fully visible), (b) truncated (partial occlusion), and (c) hidden (object is fully occluded and hence no BB appears for it). The BB parameters $b_i$ are then conditioned on both the object position (for capturing the relative arrangement within the observation area) and the visibility as:

$$P(b_i \mid p_j, v_j) = \begin{cases} \mathbb{1}[v_j = \text{hidden}] & \text{if } i \text{ is a hidden tracklet} \\ 0 & \text{if } i \text{ is not hidden and } v_j = \text{hidden} \\ \mathcal{N}\left(b_i;\ \mu_{p_j, v_j}, \Sigma_{p_j, v_j}\right) & \text{otherwise} \end{cases} \tag{5}$$

where the parameters of the Gaussian are in general a function of object position and visibility. Note that in the top line of Eq. (5) we allow only a hidden object to generate a ‘hidden’ tracklet. Conversely, under the outlier model, Fig. 4b, the BB is modelled as a single broad distribution, capturing the probability of having spurious detections.

Fig. 4.

a Model for assigning $i$ to $j$ per-frame (frame index omitted to reduce clutter) when $j$ is an animal. Variable $p_j$ refers to the position of animal $j$, and $c_j$ captures contextual information. The visibility is represented by $v_j$, and the BB (actual or occluded) by $b_i$. b Model for assigning $i$ under the outlier model. c Neighbourhood definition for representing $c_j$

Bringing it all together, the per-frame weight is

$$w_{ij} = \begin{cases} \log P(b_i \mid p_j, c_j) & \text{if } j \le J \\ \log P_{\text{out}}(b_i) & \text{if } j = J + 1 \end{cases} \tag{6}$$

with:

$$P(b_i \mid p_j, c_j) = \sum_{v_j} P(b_i \mid p_j, v_j)\, P(v_j \mid p_j, c_j) \tag{7}$$

where we have dropped the frame index for clarity. The complete tracklet-object weight is then the sum of the log-probabilities over the tracklet’s visible lifetime.
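The aggregation of per-frame weights can be sketched as follows. This is a simplified illustration under stated assumptions, not the exact model: it assumes that the per-frame likelihood marginalises over the visibility states, it uses a diagonal rather than a full covariance, and the function names and data layout are hypothetical.

```python
import math


def log_gaussian_diag(x, mean, var):
    """Log-density of a diagonal-covariance Gaussian (a simplifying assumption)."""
    return sum(
        -0.5 * (math.log(2.0 * math.pi * v) + (xi - m) ** 2 / v)
        for xi, m, v in zip(x, mean, var)
    )


def frame_weight(bb, vis_probs, gaussians):
    """Log-probability that an animal generated `bb` in a single frame.

    bb:        4-vector [x, y, w, h], or None for a hidden BB.
    vis_probs: dict with the probability of 'clear', 'truncated' and 'hidden'.
    gaussians: dict mapping 'clear'/'truncated' to a (mean, variance) pair.
    """
    if bb is None:
        # Only a hidden animal may generate a hidden BB.
        prob = vis_probs["hidden"]
    else:
        # A visible BB is explained by either of the two visible states.
        prob = sum(
            vis_probs[v] * math.exp(log_gaussian_diag(bb, *gaussians[v]))
            for v in ("clear", "truncated")
        )
    return math.log(prob) if prob > 0.0 else float("-inf")


def tracklet_weight(bbs, vis_probs, gaussians):
    """Sum the per-frame log-probabilities over the tracklet's lifetime."""
    return sum(
        frame_weight(b, vp, g) for b, vp, g in zip(bbs, vis_probs, gaussians)
    )
```

Working in log-space means that a tracklet spanning many frames accumulates evidence additively, so long tracklets that agree with the RFID trace dominate the objective.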

Relation to other methods

A number of works (see e.g. [20, 42–44]) have used ILP to address the tracking problem; however, we reiterate that our task goes beyond tracking to data association with the external information. Indeed, in all of the above cited approaches, the emphasis is on joining the successive detections into contiguous tracklets, while our focus is on the subsequent step of identifying the tracklets by way of the RFID position.

Solving identification problems similar to ours is typically achieved through hypothesis filtering, e.g. Joint Probabilistic Data Association (JPDA) [42]. While we could have posed our problem in this framework (which would be a contribution in its own right, with the inclusion of the position-affinity model in Sect. 3.3), the cost of exact inference for such a technique grows exponentially with time. While there are approximation algorithms (e.g. [45]), our ILP formulation: (a) does not explicitly model temporal continuity (by delegating this to the tracker), (b) imposes the restriction that the (per-frame) weight of an animal-tracklet pair is a function only of the pair (i.e. independent of the other assignments), (c) estimates a Maximum-A-Posteriori (MAP) tracklet weight rather than maintaining a global distribution over all possible tracklets, but then (d) optimises for a globally consistent solution rather than running online frame-by-frame filtering. The combination of (a), (b) and (c) greatly reduces the complexity of the optimisation problem compared to JPDA, allowing us to optimise an offline global assignment (d) without the need for simplifications/approximations.

Implementation details

Detector

While the detector is not the core focus of our contribution, it is an important first component in our identification pipeline.

We employ the widely used FCOS object detector [46] with a ResNet-50 [47] backbone, although our method is agnostic to the type of detector as long as it outputs BBs. We replace the classifier layer of the pre-trained network with three categories (i.e. mouse, tunnel and background) and fine-tune the model on our data (see Supplementary Material Sect. C.1). In the final configuration, we accept all detections with confidence above 0.4, up to a maximum of 5 per image. We further discard BBs which fall inside the hopper area by thresholding on a maximum ratio of overlap (with hopper) to BB area of 0.4. This setup achieves a recall (at IoU = 0.5) of 0.90 on the held-out test-set (average precision is 0.87, COCO Mean Average Precision (mAP) = 0.60). Given our focus on the rest of the identification pipeline, we leave further optimisation over architectures to future work.
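The post-processing of the raw detections can be sketched as below. The thresholds are those quoted above, while the function names, the `(x1, y1, x2, y2, score)` tuple layout, the hopper rectangle and the choice of keeping the highest-scoring detections when more than five pass the confidence threshold are assumptions for illustration.

```python
def overlap_ratio(bb, region):
    """Fraction of the BB's area lying inside `region` (both as x1, y1, x2, y2)."""
    iw = max(0.0, min(bb[2], region[2]) - max(bb[0], region[0]))
    ih = max(0.0, min(bb[3], region[3]) - max(bb[1], region[1]))
    area = (bb[2] - bb[0]) * (bb[3] - bb[1])
    return (iw * ih) / area if area > 0 else 0.0


def filter_detections(detections, hopper, min_conf=0.4, max_dets=5,
                      max_hopper_ratio=0.4):
    """Keep confident detections, cap their number, then drop hopper hits.

    detections: list of (x1, y1, x2, y2, score) tuples.
    hopper:     (x1, y1, x2, y2) rectangle for the hopper (hypothetical values).
    """
    confident = sorted(
        (d for d in detections if d[4] > min_conf),
        key=lambda d: d[4],
        reverse=True,
    )[:max_dets]
    return [d for d in confident
            if overlap_ratio(d[:4], hopper) <= max_hopper_ratio]
```

Note that the overlap is normalised by the BB area rather than by the union, so a small BB entirely inside the hopper is rejected regardless of the hopper's size.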

Tracker

We use the SORT tracker of Bewley et al. [3] which maintains a ‘latent’ state of the tracklet (the centroid (x, y) of the BB, its area and the aspect ratio, together with the velocities for all but the aspect ratio) and assigns new detections in successive frames based on IoU. At each frame, the state of each active tracklet is updated according to the Kalman predictive model, and new detections matched to existing tracklets by the Hungarian algorithm [3] on the IoU (subject to a cutoff).
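The association step can be illustrated with the following sketch, which omits the Kalman filter and, for brevity, replaces the Hungarian algorithm with an exhaustive search (this yields the same optimum for the handful of BBs per frame considered here, but does not scale). The function names are hypothetical, and the default cutoff is the value we eventually selected in tuning.

```python
from itertools import permutations


def iou(a, b):
    """Intersection-over-Union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(predicted, detections, min_iou=0.8):
    """Match predicted tracklet boxes to detections, maximising the total IoU.

    Returns a list of (tracklet_index, detection_index) pairs; pairs whose
    IoU falls below `min_iou` are treated as unmatched.
    """
    n = len(predicted)
    # Padding with None lets any tracklet remain unmatched.
    slots = list(range(len(detections))) + [None] * n
    best_total, best_pairs = -1.0, []
    for perm in permutations(slots, n):
        pairs = [
            (t, d) for t, d in enumerate(perm)
            if d is not None and iou(predicted[t], detections[d]) >= min_iou
        ]
        total = sum(iou(predicted[t], detections[d]) for t, d in pairs)
        if total > best_total:
            best_total, best_pairs = total, pairs
    return best_pairs
```

Detections left unmatched would spawn new tracklets, while tracklets left unmatched are candidates for termination.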

Our plug’n’play approach would allow us to use other advanced trackers (e.g. [10, 43, 48, 49]), but we chose this tracker for its simplicity. Since most of the above methods leverage visual appearance information, and all mice are visually similar, it is likely that any marginal improvements would be outweighed by a bigger impact on scalability. In addition, previous research (e.g. [13]) has shown that simple methods often outperform more complex ones, but we leave experimentation with trackers as possible future research.

We do, however, make some changes to the architecture, in the light of the fact that we do not need online tracking, and hence can optimise for retrospective filtering. The first is that tracklets emit BBs from the start of their lifetime, and we do not wait for them to be active for a number of frames: this is because we can always filter out spurious detections at the end. Secondly, when filtering out short tracklets, we do this based on a minimum number of contiguous (rather than total) frames; again, we can do this because we have access to the entire lifetime of each tracklet. We also opt to emit the original detection as the BB rather than a smoothed trajectory, since we believe that the current model assuming a fixed aspect ratio might be too restrictive for our use-case (indeed, we plan to experiment with different models for the Kalman state in the future). Finally, we take the architectural decision to kill tracklets as soon as they fail to capture a detection in a frame: our motivation is that we prefer to have broken tracklets which we can join later using the identification rather than increasing the probability of a tracklet switching identity.

To optimise the two main parameters of the tracker—the IoU threshold for assigning new detections to existing tracklets, and the minimum lifetime of a tracklet to be considered viable—we used the combined training/validation set, and ran our ILP object identification end-to-end over a grid of parameters. The optimal results were obtained using a length cutoff of 2 frames and IoU threshold of 0.8 (see Supplementary Material Sect. C.2 for details).

Weight model

We represent BBs by the 4-vector containing the centroid [x, y] and size [width, height] of the axis-aligned BB. A hidden BB is denoted by the zero-vector and is handled explicitly in logic. The visibility, $v_j$, can be one of clear, truncated or hidden as already discussed, while the position, $p_j$, is an integer representing the one-of-18 antennas in which the mouse is picked up. For the context, $c_j$, we consider the RFID pickups of the other two mice, but we must represent them in an (identity) permutation-invariant way: i.e. the representation must be independent of the identity of the animal we are predicting for (all else being equal). To this end, we define a nine-point neighbourhood around the antenna $p_j$ as in Fig. 4c, where $p_j$ would fall in cell 4. Note that where the neighbourhood extends beyond the range of the antenna baseplate, these cells are simply ignored. The context $c_j$ is then simply the count of animals picked up in each cell (0, 1 or 2).
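A minimal sketch of the context representation is given below. The row-major numbering of the antennas on a 3 x 6 grid, the row-major numbering of the neighbourhood cells (with the centre at index 4) and the function name are assumptions for illustration; the actual layouts are those of Figs. 2b and 4c.

```python
ROWS, COLS = 3, 6  # assumed arrangement of the 18 antennas


def context_vector(own_antenna, other_antennas, cols=COLS):
    """Count the other animals in each cell of the nine-point neighbourhood.

    own_antenna:    antenna index (0..17) of the animal being modelled.
    other_antennas: antenna indices of the remaining animals.
    Returns nine counts; animals outside the neighbourhood are not counted,
    and cells beyond the baseplate simply stay at zero.
    """
    r0, c0 = divmod(own_antenna, cols)
    counts = [0] * 9
    for other in other_antennas:
        r, c = divmod(other, cols)
        dr, dc = r - r0, c - c0
        if abs(dr) <= 1 and abs(dc) <= 1:
            counts[(dr + 1) * 3 + (dc + 1)] += 1
    return counts


# One cage-mate on the same antenna, the other diagonally adjacent.
example_context = context_vector(7, [7, 0])
```

Because only counts are stored, swapping the identities of the two cage-mates leaves the representation unchanged, which is the permutation invariance required above.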

Under the outlier model (Fig. 4b), the BB centroids, [x, y], are drawn from a very wide Gaussian over the size of the image: the size, [w, h], is Gaussian with its mean and covariance fit on the training/validation annotations. For the non-outlier case (Fig. 4a), we model the visibility distribution using a Random Forest (RF): this proved to be the best model in terms of validation log-likelihood (this metric is preferable for a calibrated distribution). The mean of the multivariate Gaussian for the BB parameters at position p with visibility v in Eq. (5) is defined as follows. The centroid components [x, y] are the same irrespective of the visibility, and governed by a homography mapping between the antenna positions on the ground-plane and the annotated BB centroids in the image. The width and height, [w, h], are estimated independently for each row in the antenna arrangement (see Fig. 2b) and visibility (clear/truncated). This models occlusion and perspective projection but allows us to pool samples across pickups for statistical strength. The covariance matrix is estimated solely on a per-row basis and is independent of the visibility. Further details on fine-tuning the parameters appear in our Supplementary Material (Sect. C.3).

Solver

Unfortunately, our linear program is not Totally Unimodular [38] due to the constraints in Eqs. (2–4), and hence, we have to explicitly enforce integrality. Consequently, solving the ILP is in general NP-Hard, but we have found that due to our temporal abstraction, using a branch and cut approach efficiently finds the optimal solution on the size of our data. We model our problem through the Python MIP package [50], using the COIN-OR Branch and Cut [51] paired with the COIN-OR Linear Programming [52] solvers. Running on a conventional desktop (Intel Xeon E3-1245 @ 3.5 GHz with 32 GB RAM), the solver required on the order of a minute on average for each 30-minute segment.

Experiments

Dataset

Source

We apply and evaluate our method on a mouse dataset [22] provided by the Mary Lyon Centre at MRC Harwell, Oxfordshire (MLC at MRC Harwell). All the mice in the study are one-year-old males of the C57BL/6NTac strain. The mice are housed as groups of three in a cage and are continuously recorded for a period of 3 to 4 days at a time, with a 12-hour lights-on, 12-hour lights-off cycle. We have access to 15 distinct cages. The recordings are split into 30-minute periods, which form our unit of processing (segments). Since we are interested in their crepuscular rhythms, we selected the five segments straddling either side of the lights-on/off transitions at 07:00 and 19:00 every day. This gives us about 30 segments per cage.

The data (IR side-view video and RFID position pickup) is captured using one of four Home-Cage Analyser (HCA) rigs from Actual Analytics: a prototypical setup is shown in Fig. 2a. Video is recorded at 25 frames per second (FPS), while the antenna baseplate (18 cells, see Fig. 2b) is scanned at a rate of about 2 Hz and upsampled to the frame rate. The latter, however, is noisy due to mouse huddling and climbing. The video also suffers from non-uniform lighting, occasional glare/blurring and clutter, particularly due to a movable tunnel and bedding. Most crucially, however, being single strain (and consequently of the same colour), and with no external markings, the mice are visually indistinguishable.

Pre-processing

A calibration routine was used to map all detections into the same frame of reference using a similarity transform. In order to maintain axis-aligned BBs, we transform the four corners of the BB but then define the transformed BB to be the one which intersects the midpoints of each transformed edge (we can do this because the rotation component is minimal). A minimal number of segments which exhibited anomalous readings (e.g. spurious/missing RFID traces) that could not be resolved were discarded altogether (see Supplementary Material Sect. A.1).
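The BB re-alignment can be sketched as follows. The parameterisation of the similarity transform (scale, rotation angle in radians, translation) and the function names are assumptions for this example; the calibration values themselves are not reproduced here.

```python
import math


def similarity(point, scale, theta, tx, ty):
    """Apply a similarity transform (scale, rotation, translation) to a point."""
    x, y = point
    c, s = math.cos(theta), math.sin(theta)
    return (scale * (c * x - s * y) + tx, scale * (s * x + c * y) + ty)


def midpoint(a, b):
    return ((a[0] + b[0]) / 2.0, (a[1] + b[1]) / 2.0)


def transform_bb(bb, scale, theta, tx, ty):
    """Map an axis-aligned BB (x1, y1, x2, y2) through the transform.

    The four corners are transformed, and the output is the axis-aligned
    box passing through the midpoints of the four transformed edges.
    """
    x1, y1, x2, y2 = bb
    tl, tr, br, bl = (
        similarity(p, scale, theta, tx, ty)
        for p in ((x1, y1), (x2, y1), (x2, y2), (x1, y2))
    )
    top, right = midpoint(tl, tr), midpoint(tr, br)
    bottom, left = midpoint(br, bl), midpoint(bl, tl)
    xs = sorted((left[0], right[0]))
    ys = sorted((top[1], bottom[1]))
    return (xs[0], ys[0], xs[1], ys[1])
```

For a rotation by an angle θ, this construction shrinks the width and height by a factor of cos θ relative to a pure scaling, which is negligible for small rotations; taking the box enclosing the rotated corners would instead inflate it.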

Ground-truthing

Using the VIA tool [53], we annotate video frames at four-second intervals for three-minute ‘snippets’ at the beginning, middle and end of each segment, as well as on the minute throughout the segment. This gives a good spread of samples with enough temporal continuity to evaluate our models. The annotations consist of an axis-aligned BB for each visible mouse, labelling the identity as Red/Green/Blue and the level of occlusion as clear vs. truncated: hidden mice are by definition not annotated. A Difficult flag is set when it is hard to make out the mouse even for a human observer. Where the identity of any mouse cannot be ascertained with certainty, this is noted in the schema and the frame discarded in the final evaluation. Refer to Supplementary Material Sect. A.2 for further details.

Data splits

Our 15 cages were stratified into training (7), validation (3) and testing (5). This guarantees unbiased generalisation estimates, and shows that our method can work on entirely novel data (new cages/identities) without the need for additional annotations. We annotated a random subset of 7 segments (3.5 Hrs) for training, 6 (3 Hrs) for validation and 10 (5 Hrs) for testing (2 per cage). This yielded a total of 740 (training), 558 (validation) and 766 (testing) frames in which the mice could be unambiguously identified. We used all frames within the training/validation sets for optimising the weight model, but the rest of the architecture is trained and evaluated on three-minute snippets only, using 498, 398 and 699 frames, respectively. For testing, we remove snippets in which 50% or more of the frames show immobile mice or tentative/huddles due to severe occlusion.

Comparison methods

We compare the performance of our architecture to two simpler baseline models and off-the-shelf trackers. For overall statistics, we also report the maximum possible achievable performance given the detections.

The first of the baselines, Static (C), is a per-frame assignment (Hungarian algorithm) based on Euclidean distance between the centroids of the BBs and the projected RFID tags. The second model, Static (P), uses the probabilistic weights of Sect. 3.3, but is assigned on a per-frame basis.
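The Static (C) baseline can be sketched as below for a single frame. For brevity, the sketch uses an exhaustive search over permutations in place of the Hungarian algorithm (both return a minimum-cost assignment, but only the latter scales). The function name, and the convention that a mouse is left unassigned when there are fewer BBs than mice, are assumptions for illustration.

```python
import math
from itertools import permutations


def static_centroid_assignment(centroids, rfid_points):
    """Assign BBs to mice in one frame by minimising total Euclidean distance.

    centroids:   list of (x, y) BB centroids detected in the frame.
    rfid_points: list of (x, y) RFID positions projected into image-space,
                 one per mouse.
    Returns a dict {mouse_index: bb_index or None}.
    """
    n_bb, n_mice = len(centroids), len(rfid_points)
    best_cost = math.inf
    best = {j: None for j in range(n_mice)}
    if n_bb >= n_mice:
        # perm[j] is the BB chosen for mouse j; surplus BBs stay unused.
        for perm in permutations(range(n_bb), n_mice):
            cost = sum(math.dist(centroids[i], rfid_points[j])
                       for j, i in enumerate(perm))
            if cost < best_cost:
                best_cost, best = cost, dict(enumerate(perm))
    else:
        # perm[i] is the mouse chosen for BB i; the other mice get no BB.
        for perm in permutations(range(n_mice), n_bb):
            cost = sum(math.dist(centroids[i], rfid_points[j])
                       for i, j in enumerate(perm))
            if cost < best_cost:
                best_cost = cost
                best = {j: None for j in range(n_mice)}
                best.update({j: i for i, j in enumerate(perm)})
    return best
```

Since each frame is solved in isolation, nothing prevents the assignment from switching between consecutive frames, which is the weakness that the tracklet-level formulation is designed to address.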

Our problem is quite unique for the reasons enumerated in Sect. 2, and hence, off-the-shelf solutions do not typically apply. Most systems are either designed for singly housed mice [54, 55] or leverage different fur-colours/external markings [27, 56] to identify the mice. We ran some experiments with idtracker.ai [35], but it yielded no usable tracks: the poor lighting of the cage, coupled with the side-view camera, interfered with the background-subtraction method employed. Bourached et al. [57] showed that the widely used DeepLabCut [36] framework does not work on data from the Harwell lab, possibly because of the side-view causing extreme occlusion of key body parts. The newer version of DeepLabCut [37] supports multi-animal tracking, but for identification it requires supervision with annotated samples (full keypoints) for each new cage. Such a level of supervision would be highly onerous and impractical. In contrast, our method can be applied to new cages without retraining, as long as the visual configuration is similar (and indeed, we test it on cages on which we have not trained). As a proof of concept, we explored annotating body parts for one of the cages, but found it impossible to identify reproducible body parts due to the frequent occlusions. For this reason, we cannot report any results for any of the above methods.

It should be emphasised that there is a misalignment of goals when it comes to comparing our method with state-of-the-art MOT trackers—no matter the quality of the tracker, there is still the need for an identification stage, and hence, the comparison degenerates to optimising over tracker architectures (which is outside the scope of our research). For example, while DeepLabCut does support multi-animal scenarios, it requires a consistent identification across videos (possibly using visual markings) to support identifying individuals; otherwise, it generates simple tracklets.

However, by way of exploration, we can envision having a human-in-the-loop that seeds the track (if the tracker supports this) and provides the identifying information. To simulate this, we took each three-minute snippet, extracted the ground-truth annotation at the middle frame, and initialised a SiamMask [8] tracker per-mouse, which we ran forwards and backwards to either end of the snippet. We used this architecture as it is designed to be a general purpose tracker with no need for pre-training on new data, and hence was readily usable with the annotations we already had.

Evaluation

The standard MOT metrics (Multi-Object Tracking Precision (MOTP) and Multi-Object Tracking Accuracy (MOTA)) [58] do not sufficiently capture the needs of our problem, in that (a) we have a fixed set of objects we need to track (making the problem better defined), but (b) we also care about the absolute identity (i.e. permutations are not equivalent). While there is a similarity with object detection [59, 60] (using identity for object class), we know a priori that we cannot have more than one detection per ‘identity’ in each frame, and thus we modify existing metrics to suit our constraints.

Overall performance

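For each (annotated) frame f and animal j, we define a ground-truth BB, $G_j^{[f]}$; this is null ($\varnothing$) when the animal is hidden. The identifier itself outputs a single hypothesis $B_j^{[f]}$ for animal j, which can also be null. Subsequently, the ‘Overall Accuracy’, $A_O$, is the ratio of ground-truths that are correctly identified: i.e. where (a) the object is visible and the IoU between it and the identified BB is above a threshold, or (b) when the object is hidden and the identifier outputs null. Mathematically, this is:

$$A_O = \frac{1}{FJ} \sum_{f=1}^{F} \sum_{j=1}^{J} \phi_j^{[f]}, \tag{8}$$

where

$$\phi_j^{[f]} = \begin{cases} 1 & \text{if } \mathrm{IoU}\left(G_j^{[f]}, B_j^{[f]}\right) > \tau, \\ 1 & \text{if } G_j^{[f]} = \varnothing \wedge B_j^{[f]} = \varnothing, \\ 0 & \text{otherwise.} \end{cases} \tag{9}$$

In the above, F is the number of annotated frames and J the number of animals. We assume that the IoU is 0 if any of the BBs is null: we use an IoU threshold, $\tau$, of 0.5 for normal objects, and 0.3 for annotations marked as Difficult.

A separate score, the ‘Overall IoU’, captures the average overlap between correct assignments, and is defined only for objects which are visible:

$$\mathrm{IoU}_O = \frac{1}{\sum_{f} V^{[f]}} \sum_{f=1}^{F} \sum_{j=1}^{J} \mathrm{IoU}\left(G_j^{[f]}, B_j^{[f]}\right), \tag{10}$$

where $V^{[f]}$ represents the number of ground-truth visible mice in frame f: again, we assume that the IoU is 0 if any BB is null.

We also report some ‘binary’ metrics. The false negative rate (FNR) is given by the average number of times a visible object is not assigned a BB by the identifier:

$$\mathrm{FNR}_O = \frac{1}{\sum_{f} V^{[f]}} \sum_{f=1}^{F} \sum_{j=1}^{J} \mathbb{1}\left[G_j^{[f]} \neq \varnothing \wedge B_j^{[f]} = \varnothing\right], \tag{11}$$

where $\wedge$ is the logical and, and $\mathbb{1}[\cdot]$ is 1 when its argument holds and 0 otherwise. Note that this metric does not consider whether the predicted BB is correct: this is handled by the ‘Uncovered Rate’ which augments the FNR by measuring the average number of times a visible object is assigned a BB that does not ‘cover’ it:

$$U_O = \frac{1}{\sum_{f} V^{[f]}} \sum_{f=1}^{F} \sum_{j=1}^{J} \mathbb{1}\left[G_j^{[f]} \neq \varnothing \wedge B_j^{[f]} \neq \varnothing \wedge \mathrm{IoU}\left(G_j^{[f]}, B_j^{[f]}\right) \le \tau\right]. \tag{12}$$

Finally, the false positive rate (FPR) counts the (average) number of times a hidden object is wrongly assigned a BB:

$$\mathrm{FPR}_O = \frac{1}{\sum_{f} H^{[f]}} \sum_{f=1}^{F} \sum_{j=1}^{J} \mathbb{1}\left[G_j^{[f]} = \varnothing \wedge B_j^{[f]} \neq \varnothing\right], \tag{13}$$

where $H^{[f]}$ represents the number of animals hidden at frame f.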
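The overall metrics described above can be sketched in a few lines. This is an illustrative reading of the prose definitions, not the code in ‘Evaluation.py’: the function names, the box format `(x_min, y_min, x_max, y_max)` and the per-sample tuple layout are assumptions, and a hidden animal or a null output is represented by `None`.

```python
def iou(a, b):
    """IoU of two boxes (x_min, y_min, x_max, y_max); 0 if either is None (null)."""
    if a is None or b is None:
        return 0.0
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def ratio(count, total):
    """Rate with an explicit NaN when the normaliser is empty."""
    return count / total if total else float("nan")


def overall_metrics(samples, threshold=0.5, difficult_threshold=0.3):
    """samples: iterable of (gt_box, output_box, difficult), one per (frame, animal)."""
    correct = visible = hidden = fn = uncovered = fp = 0
    iou_sum = 0.0
    for gt, out, difficult in samples:
        thr = difficult_threshold if difficult else threshold
        if gt is None:                      # animal is hidden
            hidden += 1
            if out is None:
                correct += 1                # correctly reported as hidden
            else:
                fp += 1                     # hidden animal given a BB
        else:                               # animal is visible
            visible += 1
            overlap = iou(gt, out)
            iou_sum += overlap
            if out is None:
                fn += 1                     # visible animal given no BB
            elif overlap > thr:
                correct += 1
            else:
                uncovered += 1              # BB assigned but does not cover it
    return {
        "accuracy": ratio(correct, visible + hidden),
        "iou": ratio(iou_sum, visible),
        "fnr": ratio(fn, visible),
        "uncovered": ratio(uncovered, visible),
        "fpr": ratio(fp, hidden),
    }
```

Every sample falls into exactly one of the correct, uncovered, false-negative or false-positive cases, which is why the Uncovered rate and the FNR do not double count.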

Performance conditioned on detections

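The above metrics conflate the localisation of the BB (i.e. performance of the detector) with the identification error, which is what we actually seek to optimise. We thus define additional metrics conditioned on an oracle assignment of detections to ground-truth. Under the oracle, each candidate detection i is assigned to the ‘closest’ (by IoU) ground-truth annotation j using the Hungarian algorithm with a threshold of 0.5: this is relaxed to 0.3 for detections marked as Difficult. The identity of the detection, $\omega_i^{[f]}$, is then that of the assigned ground-truth, or null ($\varnothing$) if not assigned. Given the identity $\hat{\omega}_i^{[f]}$ assigned by our identifier to BB i, we define the Accuracy given Detections, $A_{GD}$, as the fraction of detections which are given the correct (same as oracle) assignment by the identifier (including null when required):

$$A_{GD} = \frac{1}{\sum_{f} I^{[f]}} \sum_{f=1}^{F} \sum_{i=1}^{I^{[f]}} \delta\left(\hat{\omega}_i^{[f]}, \omega_i^{[f]}\right), \tag{14}$$

where $I^{[f]}$ is the number of detections in frame f, and $\delta$ is the Kronecker delta (i.e. $\delta(a, b) = 1$ iff $a = b$ and 0 otherwise).

We also report the rate of Mis-Identifications (i.e. a wrong identity is assigned): these are BBs which are given an identity by the oracle and a different identity by the identifier:

$$\mathrm{MisID} = \frac{\sum_{f} \sum_{i} \mathbb{1}\left[\omega_i^{[f]} \neq \varnothing \wedge \hat{\omega}_i^{[f]} \neq \varnothing \wedge \hat{\omega}_i^{[f]} \neq \omega_i^{[f]}\right]}{\sum_{f} \sum_{i} \mathbb{1}\left[\omega_i^{[f]} \neq \varnothing\right]}, \tag{15}$$

where $\mathbb{1}[\cdot]$ is 1 when its argument holds and 0 otherwise. For the purpose of this metric, null assignments are not counted as erroneous, and we normalise the count by the number of non-null oracle assignments.

Always relative to the oracle, we also quote the FPR and FNR. The FPR is now defined as the fraction of BBs with a null oracle assignment that are assigned an identity:

$$\mathrm{FPR}_{GD} = \frac{\sum_{f} \sum_{i} \mathbb{1}\left[\omega_i^{[f]} = \varnothing \wedge \hat{\omega}_i^{[f]} \neq \varnothing\right]}{\sum_{f} \sum_{i} \mathbb{1}\left[\omega_i^{[f]} = \varnothing\right]}, \tag{16}$$

and conversely, the ratio of BBs with a non-null oracle assignment that are not identified defines the FNR:

$$\mathrm{FNR}_{GD} = \frac{\sum_{f} \sum_{i} \mathbb{1}\left[\omega_i^{[f]} \neq \varnothing \wedge \hat{\omega}_i^{[f]} = \varnothing\right]}{\sum_{f} \sum_{i} \mathbb{1}\left[\omega_i^{[f]} \neq \varnothing\right]}. \tag{17}$$

In fact, if we consider raw counts (rather than ratios), the sum of Mis-Identifications, FPR and FNR constitutes all the errors the system makes.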
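The statement that the three error types partition all mistakes can be checked with a small sketch. The function below is hypothetical (it is not taken from the released code) and assumes that the oracle and identifier identities are already available per detection, with `None` standing for a null assignment.

```python
def given_detection_metrics(pairs):
    """pairs: iterable of (oracle_identity, assigned_identity), one per detection."""
    correct = mis_id = fn = fp = 0
    n_identified = n_background = 0   # non-null / null oracle assignments
    for oracle, assigned in pairs:
        if oracle is None:
            n_background += 1
            if assigned is None:
                correct += 1          # spurious detection correctly rejected
            else:
                fp += 1               # background BB given an identity
        else:
            n_identified += 1
            if assigned is None:
                fn += 1               # genuine BB left unidentified
            elif assigned == oracle:
                correct += 1
            else:
                mis_id += 1           # genuine BB given the wrong identity
    total = n_identified + n_background
    # Every detection is either correct or exactly one of the three error types.
    assert correct + mis_id + fn + fp == total
    nan = float("nan")
    return {
        "counts": {"correct": correct, "mis_id": mis_id, "fn": fn, "fp": fp},
        "accuracy": correct / total if total else nan,
        "mis_id": mis_id / n_identified if n_identified else nan,
        "fnr": fn / n_identified if n_identified else nan,
        "fpr": fp / n_background if n_background else nan,
    }
```

The same bookkeeping holds for the test-set counts reported for our method in Table 3: 1980 + 191 + 121 + 212 = 2504 detections.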

Evaluation data-size

Table 1 summarises the size of each of the normalisers (within each dataset) that are used to compute the above metrics: the symbols used are the same as those used in the normalisers of Eqs. (8–17).

Table 1.

Number of samples for evaluating the models according to the Overall and Given Detections metrics in the Tuning (Train + Validation) and Test-sets, respectively

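| Dataset | Overall: all (FJ) | Overall: visible | Overall: hidden | Given detections: all | Given detections: oracle non-null | Given detections: oracle null |
|---|---|---|---|---|---|---|
| Tune | 2688 | 2541 | 147 | 2967 | 2235 | 732 |
| Test | 2097 | 2012 | 85 | 2504 | 1836 | 668 |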

Results

We comment on the quantitative performance of our approach. We also make available a sample of video clips showing our framework in action at https://github.com/michael-camilleri/TIDe: we comment on these in the Supplementary Material (see Sect. D).

Performance on the test-set

Table 2 shows the results of our model and comparative architectures evaluated on the held-out test-set (the number of samples in each case appears in Table 1), where our scheme clearly outperforms all other methods on all metrics apart from the overall FNR. Table 3, on the other hand, shows the raw counts of the same metrics as Table 2. Looking first at the left side of the table, the overall accuracy is quite high at 77%. To get a feel for what this means, consider that we are limited by the recall of the detector. In fact, the oracle, which represents an upper bound (given the detector), scores 92%. The FPR seems high, but in reality, the number of hidden samples (see Table 3) is only 85 (4%).

Table 2.

Comparative results on the test-set for the Overall Accuracy, and Accuracy given Detections

| Model | Overall: Accuracy | Overall: IoU | Overall: Uncovered | Overall: FNR | Overall: FPR | Given detections: Accuracy | Given detections: MisID | Given detections: FNR | Given detections: FPR |
|---|---|---|---|---|---|---|---|---|---|
| Static (C) | 0.659 | 0.626 | 0.286 | 0.036 | 0.788 | 0.623 | 0.178 | 0.122 | 0.590 |
| Static (P) | 0.716 | 0.666 | 0.219 | 0.045 | 0.741 | 0.694 | 0.138 | 0.100 | 0.496 |
| Ours | **0.767** | **0.694** | **0.145** | 0.070 | **0.659** | **0.791** | **0.104** | **0.066** | **0.317** |
| SiamMask | 0.637 | 0.565 | 0.336 | **0.000** | 1.000 | – | – | – | – |
| Oracle | 0.916 | 0.770 | 0.000 | 0.087 | 0.000 | – | – | – | – |

Static (C) is a baseline, Static (P) uses our weight model on a per-frame basis and the full temporal identifier is labelled Ours. We also report the overall performance of the SiamMask architecture as well as the oracle assignment as a theoretical upper bound on performance. The bold entry in each column shows the best-performing model for the respective score

Table 3.

Counts of performance metrics on the test-set (counterpart to Table 2)

| Model | Overall: Accuracy | Overall: Uncovered | Overall: FNR | Overall: FPR | Given detections: Accuracy | Given detections: MisID | Given detections: FNR | Given detections: FPR |
|---|---|---|---|---|---|---|---|---|
| Static (C) | 1382 | 575 | 73 | 67 | 1559 | 327 | 224 | 394 |
| Static (P) | 1502 | 441 | 91 | 63 | 1737 | 253 | 183 | 331 |
| Ours | **1608** | **292** | 141 | **56** | **1980** | **191** | **121** | **212** |
| SiamMask | 1335 | 677 | **0** | 85 | – | – | – | – |
| Normaliser | 2097 | 2012 | 2012 | 85 | 2504 | 1836 | 1836 | 668 |

IoU has no meaning as a count. The last row shows the normaliser which would be used in computing rates. Again, we show in bold, the best-performing score for each metric

The FNR is the only overall metric in which our method suffers, mostly because it prefers not to assign a BB rather than assign the wrong one: this is evidenced instead by the lower rate of Uncovered. Note that in moving from the baseline through to our final method, the performance strictly improves each time for all other metrics. Indeed, a big jump is achieved simply by the use of the weight model. On the other hand, the performance of the SiamMask [8] architecture is comparable to the Static (C) baseline. It should be noted that such a method would require a level of ground-truth annotation per video to jump-start the process (i.e. a human-in-the-loop), but then could have been a viable baseline given that it requires no fine-tuning, while our detector needed to be trained using annotations. However, its performance is inadequate, mostly because (a) it is not tailored to tracking mice and (b) it cannot reason about occlusion, leading to high levels of false positives.

The contrast between methods is starker when it comes to the analysis given detections (SiamMask cannot be compared because it does not utilise detections). This can be explained because, in the overall analysis, the performance of the detector acts as an equaliser between alternatives, while the analysis given detections teases out the capability of the identification system from the quality of the detector. Here the FPR is lower, although from the point of view of detections, there are more of them that should be null (1563 or 45%), and hence, the ability of the identification system to reject spurious detections is illustrated. Note that the number of background BBs (impacting the FPR) is higher. We also investigated outlier models which were fit explicitly to the outliers in our data, but validation-set evaluation yielded a drop in performance.

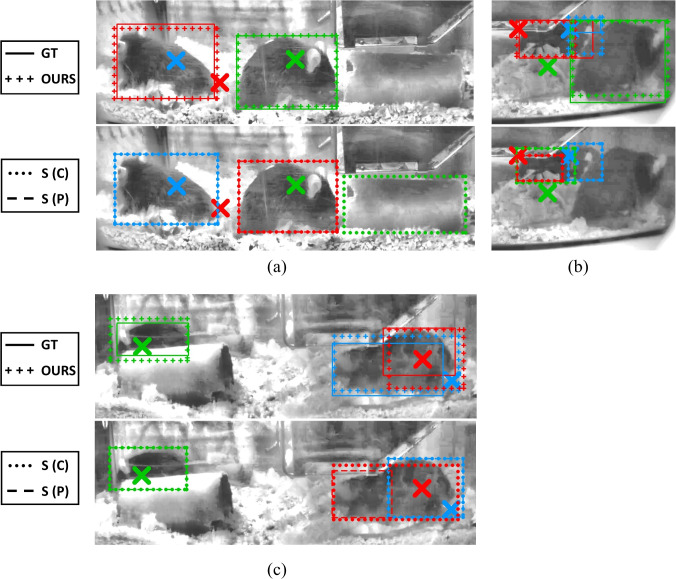

Example of successful identifications

Figure 5 shows a visualisation of the identification using our method (top) and the baseline/ablation models (below) for three sample frames. Panel (a) shows an occlusion scenario: the Blue mouse is entirely hidden by the Red one. This throws off the Static (C) method, to the extent that it classifies all mice incorrectly and picks up the tunnel as the Green mouse. The observation model in the Static (P) ablation is able to reject this spurious tunnel detection, but is confused by the other two detections, with the Red mouse marked Blue and the Green mouse identified as Red. Our method is able to use the temporal context to reason about this occlusion and correctly identifies Red/Green and rejects Blue as Hidden. A similar phenomenon is manifested in panel (c), where the temporal context present in our method (OURS) helps to correctly make out Red/Blue but the baseline/ablation get them mixed up: Static (P) particularly, uses the wrong BB altogether (which covers half a mouse).

Fig. 5.

Examples of successful identifications for OUR method (cropped to appropriate regions and brightened with CLAHE for clarity). Mouse pickups are marked by crosses of the appropriate colour. In each panel, a–c, the ground-truth (GT) and identifications according to our model (OURS) appear in the top row, while the bottom part shows the identifications due to the centroid-distance (S (C)) and static probabilistic (S (P)) models

Moving on to panel (b), this illustrates a particularly challenging scenario due to the three mice being in very close proximity under the hopper. In this case, annotation was only possible after repeated observations and following through of the RFID traces: indeed, while Green and Red are somewhat visible, Blue is almost fully occluded and is annotated as Difficult. Most significantly, however, the RFID pickups are shifted, although in the correct relative arrangement. The baseline/ablation methods completely miss out the Green mouse due to this, and while Blue is assigned a correct BB, Red is confused as Green, with the Red BB covering a spurious detection. Despite this, our method correctly identifies all three mice, even if the BBs for Red and Blue do not perfectly cover the extent of the respective mice.

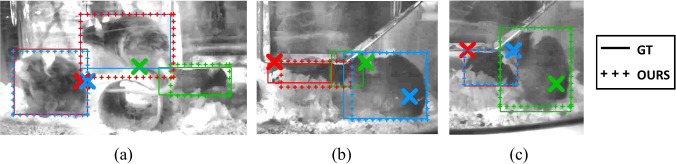

Sample failure cases

Despite outperforming the competition, our model is not perfect. We show some such failure cases in Fig. 6. Focusing first on panel (a), the switched identification between the Red and Blue mice happens due to a lag in the RFID pickup. In this case, Blue has climbed on the tunnel, but this is too high to be picked up by the baseplate and hence its position remains at its original location, occupying the same antenna space as Red. When this happens, symmetry is broken solely by the temporal tracking, but it appears this failed in this case.

Fig. 6.

Visualisation of failure cases illustrating a a switched identification, b a false negative and c a false positive. The markup follows the arrangement in Fig. 5

Panel (b) shows a challenging huddling scenario, with the mice in close proximity: indeed, the Green mouse is barely visible, as it is hidden by the Red mouse and the hopper. This produces a false negative: our method is unable to pick up the Green mouse, classifying it as Hidden, although it correctly identifies Red and Blue. A similar scenario appears in panel (c), although this time, the method also causes a false positive. In (c), the Red mouse is completely hidden behind the Blue/Green mice. Our method picks up Green correctly, but assigns Red’s BB to Blue, effectively incurring two errors: a false positive (Blue should be occluded) and a false negative (Red is not picked up).

The last example also illustrates the interdependent nature of the errors, a side-effect of the constraint that there is at most one mouse of each ‘colour’. This justifies our use of specific metrics to mitigate this overlap: note how our definitions of Uncovered (Eq. 12) and Misidentification (Eq. 15) explicitly discount such instances, as they would have already been captured by false negatives/positives.

Conclusions

We have proposed an ILP formulation for identifying visually similar animals in a crowded cage using weak localisation information. Using our novel probabilistic model of object detections, coupled with the tracker-based combinatorial identification, we are able to correctly identify the mice 77% of the time, even under very challenging conditions. Our approach is also applicable to other scenarios which require fusing external sources of identification to video data. We are currently looking towards extending the weight model to take into account the orientation of the animals as this allows us to reason about the relative position of the BB to the RFID pickup, as well as modelling the tunnel which can occlude the mice.

Supplementary Material. We make available the curated version of the dataset and code at https://github.com/michael-camilleri/TIDe, with instructions on how to use it. We provide two versions of the data: a detections-only subset to train and evaluate the detection component, as well as an identification subset. In both cases, we provide ground-truth annotations. For detections, we provide the pre-extracted frames. For identification, in addition to the raw video and position pickups, we also release the BBs as generated by our trained FCOS detector, allowing researchers to evaluate the methods without the need to re-train a detector. Further details about the repository are provided in Appendix D.

Acknowledgements

We thank our collaborators at the Mary Lyon Centre at MRC Harwell, Oxfordshire, especially Dr Sara Wells and Dr Pat Nolan for providing and explaining the data set.

Biographies

Michael P. J. Camilleri

is a PhD Student within the EPSRC Centre for Doctoral Training in Data Science at the University of Edinburgh, working with Prof. Chris Williams on Time-Series Modelling of mouse behaviour. Prior to this, he obtained an MSc in Data Science (University of Edinburgh), an MSc in Artificial Intelligence and Robotics (University of Edinburgh), and a BSc in Communications and Computer Engineering (University of Malta). His current research interest is at the cross-roads of probabilistic graphical models and temporal-series modelling. Throughout his career, he has worked with clinical (emergency response) data (DECOVID, BrainIT), mouse-behaviour data, robotics (part of the DARPA Robotics Challenge with team HKU), astronomy (pre-processing pipeline for the Square Kilometre Array), transport (DRT solutions at the University of Malta), and mobile gaming (RemoteFX cloud-computing, University of Malta).

Li Zhang

is a tenure-track Professor at the School of Data Science, Fudan University, where he directs the Zhang Vision Group. The aim of his group is to engage in state-of-the-art research in computer vision and deep learning. Previously, he was a Research Scientist at Samsung AI Center Cambridge and a Postdoctoral Research Fellow at the University of Oxford, where he was supervised by professor Philip H.S. Torr and professor Andrew Zisserman. Prior to joining Oxford, he read for his PhD in computer science under the supervision of professor Tao Xiang at Queen Mary University of London.

Rasneer S. Bains

is the Head of Phenotyping Technical Development at the Mary Lyon Centre at MRC Harwell. The main focus of her research is the establishment of passive phenotyping platforms that require minimum experimenter interaction with a focus on capturing non-evoked behaviours in mouse models of human diseases. She has a PhD in Neuroscience, an MSc in Medicines Management and a first Degree in B. Pharmacy.

Andrew Zisserman

FRS, is a Royal Society Research Professor and the Professor of Computer Vision Engineering at the Department of Engineering Science, University of Oxford, where he co-leads the Visual Geometry Group (http://www.robots.ox.ac.uk/~vgg/). His research is into visual recognition in images and videos.

Christopher K. I. Williams

did Physics at Cambridge, graduating in 1982 (BA in Physics and Theoretical Physics, Class I), and then did a further year of study known as Part III Maths (Distinction, 1983). He was interested in the “neural networks” field at that time, but it was difficult to find anyone to work with. He then switched directions for a while, doing an MSc in Water Resources at the University of Newcastle upon Tyne and going on to work in Lesotho, Southern Africa, in low-cost sanitation. In 1988, he returned to academia, studying neural networks/AI with Geoff Hinton at the University of Toronto (MSc 1990, PhD 1994). In September 1994, he moved to Aston University as a Research Fellow and was made a Lecturer in August 1995. He moved to the Department of Artificial Intelligence at the University of Edinburgh in July 1998, was promoted to Reader in the School of Informatics in October 2000, and Professor of Machine Learning in October 2005. He was Director of the Institute for Adaptive and Neural Computation (ANC) 2005–2012, founding Director of the CDT in Data Science 2013–2016, University Liaison Director for UoE to the Alan Turing Institute (2016–2018), and Director of Research for the School of Informatics (2018–2021). He was also elected a Fellow of the Royal Society of Edinburgh in 2021 and was program co-chair of the NeurIPS conference in 2009. He is currently a Fellow of the European Laboratory for Learning and Intelligent Systems (ELLIS) and a Turing Fellow at the Alan Turing Institute (UK).

Appendix A Dataset

In this section, we elaborate on the Dataset used in our work, including the annotation schema used.

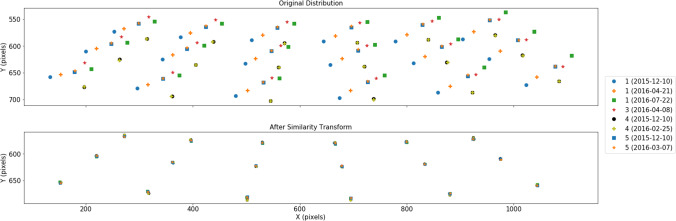

A.1 Data cleaning and calibration

We removed one of the cages as it had a non-standard setup. The RFID metadata was then filtered through consistency logic to ensure that the same mice were visible in all recordings from the same cage and that there was no missing data. In some cases, missing data due to huddling mice (which causes the RFID readings to be jumbled) could be filled in by looking at neighbouring periods: if the mice had not moved in between, the same position was filled in; otherwise, the segment was discarded. Segments in which there was a significant timing mismatch between recording time and RFID traces (indicating a possible invalid trace) were also discarded.

Since the data was captured using one of four different rigs, a calibration routine was required to bring all videos into the same frame of reference, simplifying downstream processing. We were given access to a number of calibration videos for each rig at multiple points throughout the data collection process (but always in between whole recordings), yielding 8 distinct configurations. Using a checkerboard pattern on the bottom of the rig (see Fig. 4b in main paper), we annotated 18 reproducible points, which we split into training (odd) and validation (even) sets. Note that the chosen points correspond to the centres of the RFID antennas on the baseplate, but this is immaterial to this calibration routine. We then used the training set of points to fit affine transforms of varying complexity and also optimised which setup to use as the prototype. We found that a Similarity transform proved ideal in terms of residuals on the validation set, as seen in Fig. 7.

Fig. 7.

Distribution of Calibration points across setups, before (top) and after (bottom) calibration
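To make the calibration step concrete, the sketch below fits a 2D similarity transform (uniform scale, rotation and translation) by least squares and scores it on held-out points. It is a generic closed-form fit written for illustration, not the routine used to produce Fig. 7; the function names are hypothetical, and the use of complex arithmetic is simply a compact way of expressing the transform as w = a·z + b.

```python
from math import sqrt


def fit_similarity(src, dst):
    """Least-squares similarity transform mapping src points onto dst points.

    Points are (x, y) tuples. Returns complex (a, b) such that
    dst ~= a * src + b, where |a| is the scale and arg(a) the rotation.
    """
    z = [complex(x, y) for x, y in src]
    w = [complex(x, y) for x, y in dst]
    z_mean = sum(z) / len(z)
    w_mean = sum(w) / len(w)
    # Complex linear regression on the centred points.
    num = sum((wi - w_mean) * (zi - z_mean).conjugate() for zi, wi in zip(z, w))
    den = sum(abs(zi - z_mean) ** 2 for zi in z)
    a = num / den
    b = w_mean - a * z_mean
    return a, b


def apply_similarity(a, b, points):
    """Map (x, y) points through w = a * z + b."""
    mapped = []
    for x, y in points:
        w = a * complex(x, y) + b
        mapped.append((w.real, w.imag))
    return mapped


def rms_residual(a, b, src, dst):
    """Root-mean-square distance between transformed src points and dst points."""
    mapped = apply_similarity(a, b, src)
    sq = [(mx - dx) ** 2 + (my - dy) ** 2 for (mx, my), (dx, dy) in zip(mapped, dst)]
    return sqrt(sum(sq) / len(sq))
```

With the 18 points numbered from 1, a fit on `points[0::2]` (odd-numbered) can be scored with `rms_residual` on `points[1::2]` (even-numbered), mirroring the training/validation split described above.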

A.2 Annotation schema

We annotated our data using the VIA tool [53]. The annotation requires drawing axis-aligned BBs around the mice and the tunnel: the latter was included since we expect future work to incorporate the presence of the tunnel in reasoning about occlusion. For each frame, we annotate all visible objects (mice/tunnel): hidden objects are defined by their missing an annotation. For the mice, we do not include the tail or the paws in the BB: the tunnel includes the opening. For each annotation, we specify:

The identity of the mouse as Red/Green/Blue: we allow specifying multiple tentative identities when it cannot be ascertained with certainty, or when the mice are huddling and immobile (in the latter case, the BB extends over the huddle).

The level of occlusion: Clear, Truncated or NA when it is difficult to judge/marginal. Note that for this purpose, truncation refers to the size of the BB relative to how it would look if the mouse were not occluded. In other words, it may be possible for a mouse to be partially occluded in such a way that the BB is not Truncated.

For detections which are hard to make out even for a human annotator, e.g. when the mouse is almost fully occluded, a ‘Difficult’ flag is set.

Our policy was to annotate frames at 4 s intervals. Within each 30-minute segment, we annotate three snippets, at 00:00–03:00, 13:32–16:28 and 27:00–29:56. The last snippet does not include 30:00, since the last recorded frame is just before the 30:00 min mark. In some segments, we alternatively/also annotate every minute on the minute, again with the caveat that the last annotation is at 29:56 to follow the 4 s rule.

Appendix B Theory

B.1 ILP formulation as covering problem

We show that our ILP formulation is a special case of the covering problem [39].

The general cover problem is defined as a linear program:

$$\min_{x} \; \sum_{e} c_e x_e \tag{B1}$$

$$\text{s.t.} \quad \sum_{e} d_{fe} x_e \ge b_f \quad \forall f, \tag{B2}$$

$$x_e \in \mathbb{Z}_{\ge 0} \quad \forall e, \tag{B3}$$

in which the constraint coefficients d, the objective costs c and the constraint values b are non-negative [39, p. 109]. Here $x_e$ represents an assignment variable and f iterates over constraints.

Let

$$x = \left[a_1, a_2, \ldots, a_I\right]^{\top} \tag{B4}$$

represent the flattened assignment matrix A, where $a_i$ are the rows of the original matrix. Index e is consequently the index over i/j in row-major order given by:

$$e = (i - 1)\,N + j, \tag{B5}$$

where N is the number of columns of A and i, j are indexed from 1.

If we assume that log-probabilities are always negative, our original objective, Eq. (1), involves maximising a sum of negative weights, which is equivalent to minimising the sum of the negated weights, and thus would fit the condition that the $c_e$’s in Eq. (B1) should be non-negative. In reality, probability densities may be greater than 1, and hence our assumption that the weight is always negative may not hold. However, we can circumvent this if we note that after computing all the weights, we can subtract a constant term such that all weights are non-positive. This means we can define c as:

$$c = \left[k - w_1, k - w_2, \ldots, k - w_I\right]^{\top}, \tag{B6}$$

where $w_i$ are again the rows of the original weight matrix. In this case, k would be the maximum value over W, added to all the elements of the negated weights, such that $c_e \ge 0$. Note that in our implementation we do not need to do this, since the solver is not impacted by the formulation as a covering problem.

As for the constraints of our original formulation, Eqs. (2–4), these are all summations over the assignment matrix (now represented by x) and the coefficients $d_{fe} \in \{0, 1\}$, encoding over which entries (in x) we perform summation (e.g. the set of visible tracklets active at time t). These are clearly non-negative as required in the covering problem formulation.
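The flattening and the constant shift discussed in this appendix can be illustrated on a toy weight matrix. The helper names below are hypothetical and indices are 0-based, as is usual in Python; the sketch only demonstrates that the shifted, negated weights are non-negative. Whether the shift leaves the optimal assignment unchanged depends on the number of selected entries being fixed by the constraints, which is assumed here rather than shown.

```python
def flatten_row_major(matrix):
    """Concatenate the rows of a matrix (list of lists) into one flat list."""
    return [value for row in matrix for value in row]


def flat_index(i, j, n_cols):
    """0-based row-major index of entry (i, j) in a matrix with n_cols columns."""
    return i * n_cols + j


def covering_costs(weights):
    """Turn weights to be maximised into non-negative costs to be minimised.

    Returns (costs, k), where k is the largest weight and costs[e] = k - w[e].
    """
    flat = flatten_row_major(weights)
    k = max(flat)
    return [k - w for w in flat], k
```

For example, `covering_costs([[-1.0, 0.5], [2.0, -3.0]])` gives `k = 2.0` and costs `[3.0, 1.5, 0.0, 5.0]`, so a weight above zero (as can happen with log-densities) no longer violates the non-negativity condition.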

B.2 Motivation for performance given detections

Consider the scenario in Fig. 8. The ground-truth assignment for the ‘Blue’ mouse is shown as a blue BB; however, the detector only outputs the BB in magenta. Clearly, the right thing for the identifier to do is to not assign the magenta BB to any mouse, especially if the system is to be used down the line to say predict mouse behaviour. However, under the definition of overall accuracy, whatever the identifier does, its score on this sample will always be 0.

Fig. 8.

Hypothetical scenario in which the performance of the identifier is overshadowed by the detector (only Blue ground-truth is shown to reduce clutter)

Our metrics conditioned on the oracle assignment are designed to handle such cases, and tease out the performance of the identifier from any shortcomings of the detector. Note how in the same example, the oracle assignment will reject the magenta BB as having no identity: hence, the identifier can now get a perfect score if it correctly rejects the BB. This is possible because of the key difference between the Overall Accuracy and the Accuracy given Detections: the former evaluates how well objects j are identified, but the latter measures identification performance for detections i.

Appendix C Fine-tuning

In this section, we comment on the various details of the methods employed, including how we came to choose certain configurations over others.

C.1 Fine-tuning the detector

We use the network pre-trained on COCO [60] with the typical “1x” training settings (see public codebase). For this, our grayscale images are replicated across the colour channels to yield RGB input to the model. We remove the COCO classifier layer of 81 classes and set a randomly initialised classifier with three categories (i.e. mouse, tunnel and background), and proceed to optimise the weights of the entire network. We investigate training using either: (a) the full set of 3247 annotated frames (BBs without identities), or (b) a reduced subset of 3152 frames, discarding frames marked as Difficult (see Sect. A.2). We set a constant learning rate of 0.0001 for 30,000 iterations with batch size 16, checkpointing every 1000 iterations. Evaluation on the held-out annotations from the validation set indicated that the best performing model was that trained on the reduced subset (b), for 11,000 iterations.

C.2 Fine-tuning tracker parameters

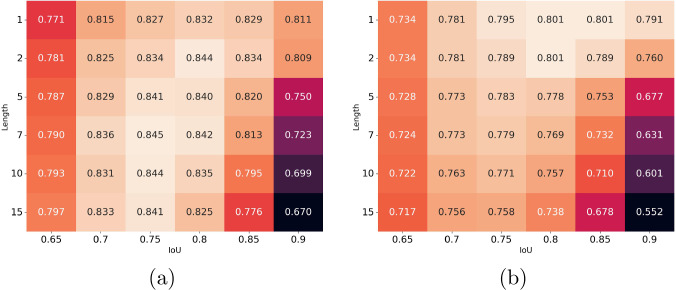

Using the combined training/validation set, we ran the identification process using our ILP method for different combinations of the parameters, in a grid-search over the tracker IoU threshold and the minimum tracklet length. The resulting accuracies appear in Fig. 9. In terms of the metric given detections (a), the best score is achieved by a single configuration. However, an alternative configuration is only marginally worse on this metric, and it is also among the optimal in terms of Overall Accuracy (b). We thus opted for the latter configuration as a compromise between the two scores.

Fig. 9.

Accuracy a given detections, and b overall, for various configurations of the tracker IoU threshold and the minimum tracklet length

C.3 Fine-tuning the weight model

Tuning the parameters of the weight model is perhaps the central aspect which governs the performance of our solution. We document this in some depth below.

Choice of context vector

Using data from the combined training/validation set, and ignoring marginal visibility outcomes, we compared the mutual information between various choices of summarising the configuration c and the annotated visibility, and settled on counting the number of occupancies in each cell as imparting the most information. This is natural, but we wanted to see if there was any simpler representation (such as leveraging left/right symmetry).
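The comparison of context summaries can be sketched with a plug-in estimate of mutual information between two discrete variables. The function below is a generic estimator written for illustration (its name and the example encodings are assumptions); any hashable summary of the configuration, such as a tuple of per-cell occupancy counts, can be passed as the first argument.

```python
from collections import Counter
from math import log


def mutual_information(contexts, visibilities):
    """Plug-in mutual information (in nats) between two paired discrete sequences."""
    n = len(contexts)
    joint = Counter(zip(contexts, visibilities))
    p_context = Counter(contexts)
    p_visibility = Counter(visibilities)
    # I(C; V) = sum_{c,v} p(c, v) * log( p(c, v) / (p(c) p(v)) )
    return sum(
        (count / n) * log(count * n / (p_context[c] * p_visibility[v]))
        for (c, v), count in joint.items()
    )
```

Candidate summaries are then ranked by calling the function once per summary on the same set of annotated samples; a summary that discards useful detail yields a lower value.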

Predicting visibility

Given the position and context, our next task is to generate a distribution over visibility. We explored using multinomial Logistic Regression (LR), Naïve Bayes (NB), decision trees, Random Forests (RFs) and three-layer Neural Networks (NNs), all of which we optimised using five-fold cross-validation on the combined training/validation data. Note that since we will be summing out the visibility, getting a calibrated distribution is the key requirement, and hence, when choosing/optimising the models, we used the held-out data log-likelihood as our objective. Our best score was achieved with an RF classifier, with 100 estimators, a maximum depth of 12, minimum samples per split at 5 and minimum samples at leaf nodes of 2. Despite the seemingly excessive depth, the mean number of leaf nodes per estimator was around 140 (and never going above 180), due to the regularising nature of the ensemble method. The chosen configuration was eventually retrained on the entire training/validation dataset and used in the final models.
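Since the selection criterion is the held-out log-likelihood rather than classification accuracy, a short sketch of that objective may help. The function name, the dictionary representation of a predicted distribution and the clipping constant `eps` are assumptions made for this example.

```python
from math import log


def mean_log_likelihood(predicted, labels, eps=1e-12):
    """Average log-probability assigned to the true labels on held-out data.

    predicted: list of dicts mapping each visibility class to its probability.
    labels: list of the annotated visibility classes, aligned with predicted.
    eps: floor applied before the log so a zero probability stays finite (assumption).
    """
    total = 0.0
    for distribution, label in zip(predicted, labels):
        total += log(max(distribution.get(label, 0.0), eps))
    return total / len(labels)
```

Unlike accuracy, this score penalises a model that is confidently wrong more heavily than one that hedges, which is why it favours calibrated distributions.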

Probability of BBs under the object model

As in Eq. (5) from the main paper, when the object is presumed hidden (i.e. the visibility is Hidden), the probability mass function is a Kronecker delta with probability 0 for all detections, and 1 for the hidden BB. It remains to define the parameters of the Gaussian distribution over visible BBs when the visibility is Clear or Truncated, which we fit to the training/validation set, discarding annotations marked as marginal.

We start with defining the means. In the case of the BB centroids, (x, y), we verified that the mean values per-antenna pickup were largely similar across clear/truncated visibilities. Subsequently, we fit a single model per-antenna. Given that there is limited data for some antennas, we sought to pool information from the geometrical arrangement of neighbouring antennas. To this end, we fit a homography [61] between the antenna positions on the baseplate (in world-coordinates, as they appear in Fig. 4b in main body of the paper), and the corresponding BB annotations (in image-space). The homography construct provides a principled way of sharing statistical strength across the planar arrangement.

On the other hand, in line with our intuition, a Hotelling $T^2$ test [62] on the sizes of the BBs, (w, h), yielded significant differences (at a 0.01 significance level) between BBs marked as clear or truncated in all but three of the antenna positions. Subsequently, we fit individual models per-visibility per-antenna. Again, we find the need to pool samples for statistical strength, and again we leverage the geometry of the cage. Specifically, our belief is that the sizes of the BBs will vary mostly with depth (into the cage) due to perspective, and are largely stable across antennas at the same depth (or conceptual row, in Fig. 4b). We thus estimate the mean sizes on a per-‘row’ basis, such that for example, antennas 1, 4, 7, 10, 13 and 16 share the same mean size.
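The per-row pooling of BB sizes can be sketched as a simple grouped mean. The mapping from antenna number to row used below, `(antenna - 1) % 3`, is an assumption inferred only from the example that antennas 1, 4, 7, 10, 13 and 16 share a row; the function names and the sample layout are likewise hypothetical.

```python
from collections import defaultdict

N_ROWS = 3  # assumption: inferred from antennas 1, 4, 7, ... sharing one row


def antenna_row(antenna):
    """Conceptual row (depth into the cage) of a 1-indexed antenna (assumed layout)."""
    return (antenna - 1) % N_ROWS


def mean_size_per_row(samples):
    """Mean BB size pooled per (row, visibility).

    samples: iterable of (antenna, visibility, width, height).
    Returns dict (row, visibility) -> (mean_width, mean_height).
    """
    sums = defaultdict(lambda: [0.0, 0.0, 0])
    for antenna, visibility, width, height in samples:
        acc = sums[(antenna_row(antenna), visibility)]
        acc[0] += width
        acc[1] += height
        acc[2] += 1
    return {key: (sw / n, sh / n) for key, (sw, sh, n) in sums.items()}
```

Pooling in this way lets sparsely observed antennas borrow statistics from the other antennas at the same depth, at the cost of ignoring any variation along a row.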

We next discuss estimating the covariance. In actuality, fitting both the homography on the centroids and the size-means on a per-row/per-visibility basis assumes uniform variance in all directions. However, we investigated computing a more general covariance matrix using the centred data given the ‘means’ inferred from the above step. We explored three scenarios: (a) uniform variance on all dimensions (spherical, as assumed by our mean models), (b) full covariance, pooled across antennas and visibilities, and (c) full covariance pooled across antennas but independently per visibility. We fit the statistics on the training set and compared the log-likelihood of the validation-set annotations: Table 4 shows that option (c) achieved the best scores and was chosen for our weight model.

Table 4.

Average log-likelihoods for the Gaussian distribution of BB geometries under different choices of the covariance matrix

| | Spherical | Pooled covariance | Per-visibility |
|---|---|---|---|
| Training LL | | | |
| Validation LL | | | |

The bold entry is the best performer on the validation-set
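The comparison between the three covariance options, (a) to (c), can be sketched as follows. This is an illustrative reconstruction rather than the code used for Table 4: it assumes the residuals are already centred on the fitted means, encodes visibility as 0 (clear) or 1 (truncated), and uses synthetic data, so the resulting numbers bear no relation to the reported ones.

```python
import numpy as np

def fit_cov(resid, spherical=False):
    """Maximum-likelihood covariance of already-centred residuals (rows = samples)."""
    cov = resid.T @ resid / len(resid)
    if spherical:
        # One shared variance for every dimension.
        cov = np.eye(resid.shape[1]) * np.trace(cov) / resid.shape[1]
    return cov

def gaussian_ll(resid, cov):
    """Per-sample log-density of a zero-mean Gaussian with covariance `cov`."""
    d = resid.shape[1]
    _, logdet = np.linalg.slogdet(cov)
    maha = np.einsum("ij,ij->i", resid @ np.linalg.inv(cov), resid)
    return -0.5 * (d * np.log(2 * np.pi) + logdet + maha)

def compare_covariances(train_r, train_v, val_r, val_v):
    """Average validation log-likelihood under options (a), (b) and (c)."""
    scores = {
        "spherical": gaussian_ll(val_r, fit_cov(train_r, spherical=True)).mean(),
        "pooled": gaussian_ll(val_r, fit_cov(train_r)).mean(),
    }
    # Assumes every visibility seen in validation also occurs in training.
    per_sample = np.empty(len(val_r))
    for vis in np.unique(train_v):
        mask = val_v == vis
        per_sample[mask] = gaussian_ll(val_r[mask], fit_cov(train_r[train_v == vis]))
    scores["per_visibility"] = per_sample.mean()
    return scores

def synth(rng, n):
    """Synthetic centred (x, y, w, h) residuals whose spread depends on visibility."""
    vis = rng.integers(0, 2, size=n)
    scales = np.where(vis[:, None] == 0, [3.0, 3.0, 2.0, 2.0], [12.0, 12.0, 8.0, 1.5])
    resid = rng.standard_normal((n, 4)) * scales
    resid[:, 3] += 0.5 * resid[:, 2]   # correlate height with width
    return resid, vis

rng = np.random.default_rng(0)
train_r, train_v = synth(rng, 4000)
val_r, val_v = synth(rng, 2000)
scores = compare_covariances(train_r, train_v, val_r, val_v)
best = max(scores, key=scores.get)
```

Selecting on held-out log-likelihood rather than training log-likelihood matters here, since the richer models always fit the training set at least as well as the spherical one.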

Probability of BBs under the outlier model

For the general outlier model, we fit a Gaussian independently on the centroid, (x, y) and size, (w, h) components. Ideally, we would use a uniform distribution over the size of the image for the former, but in the interest of uniformity with the size-model, we approximate it with a broad Gaussian. The parameters for the sizes are simply estimated on all BB annotations in our training/validation data.
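A minimal sketch of such an outlier density is given below. The factorisation into independent centroid and size Gaussians follows the description above, but the specific choices are assumptions made for illustration: the centroid Gaussian is centred on the image with a standard deviation equal to the image extent (controlled by a hypothetical `breadth` parameter), the image size of 1280 × 720 is invented, and the size annotations are synthetic.

```python
import numpy as np

def gaussian_logpdf(x, mean, cov):
    """Log-density of a multivariate Gaussian, evaluated row-wise."""
    x = np.atleast_2d(np.asarray(x, dtype=float))
    diff = x - mean
    d = diff.shape[1]
    _, logdet = np.linalg.slogdet(cov)
    maha = np.einsum("ij,ij->i", diff @ np.linalg.inv(cov), diff)
    return -0.5 * (d * np.log(2 * np.pi) + logdet + maha)

def fit_outlier_model(sizes, image_wh, breadth=1.0):
    """Broad centroid Gaussian (assumed form) plus a size Gaussian fit to annotations."""
    image_wh = np.asarray(image_wh, dtype=float)
    sizes = np.asarray(sizes, dtype=float)
    return {
        "c_mean": image_wh / 2,                       # centre of the image
        "c_cov": np.diag((breadth * image_wh) ** 2),  # wide enough to be near-flat
        "s_mean": sizes.mean(axis=0),
        "s_cov": np.cov(sizes, rowvar=False),
    }

def outlier_logpdf(model, boxes):
    """log p(x, y, w, h) = log p(x, y) + log p(w, h) under the independence assumption."""
    boxes = np.atleast_2d(np.asarray(boxes, dtype=float))
    return (gaussian_logpdf(boxes[:, :2], model["c_mean"], model["c_cov"])
            + gaussian_logpdf(boxes[:, 2:], model["s_mean"], model["s_cov"]))

# Synthetic (w, h) annotations and a hypothetical image size.
rng = np.random.default_rng(0)
sizes = rng.normal([60.0, 40.0], [10.0, 8.0], size=(500, 2))
model = fit_outlier_model(sizes, image_wh=(1280, 720))

# Same box size at the image centre and at the top-left corner.
log_p = outlier_logpdf(model, [[640, 360, 60, 40], [0, 0, 60, 40]])
```

With this breadth, moving a box from the centre to a corner changes the log-density only slightly, which is the sense in which the broad Gaussian approximates a uniform distribution over the image.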

Appendix D Description of the supporting material

We provide the Dataset, Code and Sample Video Clips at https://github.com/michael-camilleri/TIDe

D.1 Dataset

We have packaged a curated version of the dataset, containing the annotations, detections and position pickups as pandas DataFrames. Further details appear in the repository’s README.

D.2 Code

We make available the tracking, identification and evaluation implementations: note that the code is provided as is for research purposes and scrutiny of the algorithm, but is not guaranteed to work without the accompanying setup/frameworks.

‘Evaluation.py’: implements the various evaluation metrics,

‘Trackers.py’: implements our changes to the SORT Tracker and packages it for use with our code,

‘Identifiers.py’: implements the Identification problem and its solution.

D.3 Dataset sample

Clip 1

This shows a sample video clip of 30 s from our data. The original raw video appears on the left; on the right, we show the same video after being processed with CLAHE [4] to make it more visible to human viewers, together with the RFID-based position of each mouse overlaid as coloured dots. Note that our methods all operate on the raw video and not on the CLAHE-filtered version.

D.4 Sample tracking and identification videos

We present some illustrative video examples showing the BB tracking each mouse as given by our identification method, and comparisons with other techniques. Apart from the first clip, the videos are played at 5 FPS for clarity, and illustrate the four methods we are comparing in a four-window arrangement with Ours on the top-left, the baseline on the top-right, the static assignment with the probabilistic model bottom-left and the SiamMask tracking bottom-right. The frame number is displayed in the top-right corner of each sub-window. The videos are best viewed by manually stepping through the frames.

Clip 2

We start by showing a minute-long clip of our identifier in action, played at the nominal framerate of 25 FPS. This clip illustrates the difficulty of our setup, with the mice interacting with each other in very close proximity (e.g. Blue and Green in frames 42,375–42,570) and often partially/fully occluded by cage elements such as the hopper (e.g. Red in frames 42,430–42,500) or the tunnel (e.g. Blue in frames 42,990–43,020).

Clip 3

This clip shows the ability of our model to handle occlusion. Note for example how in frames 40,594–40,605, the relative arrangement of the RFID tags confuses the baseline (top-right), but the (static) probabilistic weight model is sufficient to reason about the occlusion dynamics and the three mice. Temporal continuity does, however, add an advantage: in the subsequent frames, 40,607–40,630, even the static assignment (bottom-left) mis-identifies the mice, mostly due to the lag in the RFID signal. SiamMask (bottom-right) fails to consistently track any of the mice, mostly because of occlusion and passage through the tunnel, which happens later on in the video (not shown here); this shows the need for reasoning about occlusions.

Clip 4

This clip shows the weight model successfully filtering out spurious detections. For clarity, we show only the static assignment (left) and the baseline (right). Note how, due to a change in the RFID antenna for Green, its BB often gets confused with noisy detections below the hopper (see e.g. frames 40,867–40,875); however, the weight model, and especially the outlier distribution, is able to reject these and assign the correct BB.

Clip 5

This shows another difficult case involving mice interacting and hiding each other. The SiamMask is unable to keep track of any of the mice consistently. While our method does occasionally lose the red mouse when it is severely occluded (e.g. frame 40,990), the baseline gets it completely wrong (mis-identifying green for red in, e.g. frames 40,990–41,008), mostly due to a lag in the RFID which also trips the static assignment with our weight model.

Clip 6

Finally, this shows an interesting scenario comparing our method (left) to the SiamMask tracker (right). The latter is certainly smoother in terms of the flow of BBs, with the tracking-by-detection approach understandably being more jerky. However, SiamMask’s inability to handle occlusion comes to the fore towards the end of the clip (frames 40,693–end), where the green track latches onto the face of the Blue mouse.

Funding

MC’s work was supported by the EPSRC Centre for Doctoral Training in Data Science, funded by the UK Engineering and Physical Sciences Research Council (Grant EP/L016427/1) and the University of Edinburgh. LZ was supported by NSFC (No. 62106050). RSB was supported by funding to MLC at MRC Harwell from the Medical Research Council UK (Grant A410). Funding was also provided by the EPSRC Programme Grant Seebibyte EP/M013774/1.

Declarations

Ethical approval

Data collection for all animal studies and procedures used in the mouse dataset were carried out at MLC at MRC Harwell, in accordance with the Animals (Scientific Procedures) Act 1986, UK, Amendment Regulations 2012 (SI 4 2012/3039) as indicated in [22].

Footnotes

See e.g. the TEATIME consortium https://www.cost-teatime.org/

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Michael P. J. Camilleri, Email: michael.p.camilleri@ed.ac.uk

Li Zhang, Email: lizhangfd@fudan.edu.cn

Rasneer S. Bains, Email: r.bains@har.mrc.ac.uk

Andrew Zisserman, Email: az@robots.ox.ac.uk

Christopher K. I. Williams, Email: ckiw@inf.ed.ac.uk

References

- 1. Russakovsky O, Deng J, Su H, Krause J, Satheesh S, Ma S, Huang Z, Karpathy A, Khosla A, Bernstein M, Berg AC, Fei-Fei L. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. (IJCV) 2015;115(3):211–252. doi: 10.1007/s11263-015-0816-y.

- 2. Silva J, Lau N, Rodrigues J, Azevedo JL, Neves AJR. Sensor and information fusion applied to a robotic soccer team. In: Baltes J, Lagoudakis MG, Naruse T, Ghidary SS, editors. RoboCup 2009: Robot Soccer World Cup XIII. Berlin: Springer; 2010. pp. 366–377.

- 3. Bewley, A., Ge, Z., Ott, L., Ramos, F., Upcroft, B.: Simple online and realtime tracking. In: Proceedings—International Conference on Image Processing, ICIP, vol. 2016-Augus, pp. 3464–3468. IEEE Computer Society (2016). 10.1109/ICIP.2016.7533003

- 4. Zuiderveld K. Contrast limited adaptive histogram equalization. In: Heckbert PS, editor. Graphics Gems. Cambridge: Academic Press; 1994. pp. 474–485.

- 5. Brown SDM, Moore MW. The International Mouse Phenotyping Consortium: past and future perspectives on mouse phenotyping. Mamm. Genome. 2012;23(9–10):632–640. doi: 10.1007/s00335-012-9427-x.

- 6. Baran SW, Bratcher N, Dennis J, Gaburro S, Karlsson EM, Maguire S, Makidon P, Noldus LPJJ, Potier Y, Rosati G, Ruiter M, Schaevitz L, Sweeney P, LaFollette MR. Emerging role of translational digital biomarkers within home cage monitoring technologies in preclinical drug discovery and development. Front. Behav. Neurosci. 2022. doi: 10.3389/fnbeh.2021.758274.

- 7. Leal-Taixé, L., Milan, A., Schindler, K., Cremers, D., Reid, I., Roth, S.: Tracking the trackers: an analysis of the state of the art in multiple object tracking. arXiv preprint cs.CV (2017). arXiv:1704.02781

- 8. Wang, Q., Zhang, L., Bertinetto, L., Hu, W., Torr, P.H.S.: Fast online object tracking and segmentation: a unifying approach. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1328–1338 (2019)

- 9. Zhang, Y., Wang, C., Wang, X., Zeng, W., Liu, W.: FairMOT: on the fairness of detection and re-identification in multiple object tracking. arXiv preprint cs.CV (2020). arXiv:2004.01888

- 10. Peng J, Wang C, Wan F, Wu Y, Wang Y, Tai Y, Wang C, Li J, Huang F, Fu Y. Chained-tracker: chaining paired attentive regression results for end-to-end joint multiple-object detection and tracking. In: Lecture Notes in Computer Science (including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). Glasgow: Springer; 2020. pp. 145–161.

- 11. Leal-Taixé, L., Milan, A., Reid, I., Roth, S., Schindler, K.: MOTChallenge 2015: towards a benchmark for multi-target tracking. arXiv preprint cs.CV (2015). arXiv:1504.01942

- 12. Dendorfer, P., Rezatofighi, H., Milan, A., Shi, J., Cremers, D., Reid, I., Roth, S., Schindler, K., Leal-Taixé, L.: MOT20: a benchmark for multi object tracking in crowded scenes. arXiv cs.CV (2003.09003) (2020). arXiv:2003.09003

- 13. Dave A, Khurana T, Tokmakov P, Schmid C, Ramanan D. TAO: a large-scale benchmark for tracking any object. In: Vedaldi A, Bischof H, Brox T, Frahm J-M, editors. Computer Vision—ECCV 2020. Cham: Springer; 2020. pp. 436–454.

- 14. Lan L, Wang X, Hua G, Huang TS, Tao D. Semi-online multi-people tracking by re-identification. Int. J. Comput. Vis. 2020;128(7):1937–1955. doi: 10.1007/s11263-020-01314-1.

- 15. Tang, S., Andriluka, M., Andres, B., Schiele, B.: Multiple people tracking by lifted multicut and person re-identification. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 3701–3710. IEEE, Honolulu, Hawaii (2017). 10.1109/CVPR.2017.394. http://ieeexplore.ieee.org/document/8099877/

- 16. Bergamini, L., Pini, S., Simoni, A., Vezzani, R., Calderara, S., D’Eath, R., Fisher, R.: Extracting accurate long-term behavior changes from a large pig dataset. In: Proceedings of the 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications—Volume 5: VISAPP, pp. 524–533. SciTePress, University of Edinburgh (2021)

- 17. Yu, S.-I., Yang, Y., Li, X., Hauptmann, A.G.: Long-term identity-aware multi-person tracking for surveillance video summarization. arXiv cs.CV (1604.07468) (2016). arXiv:1604.07468

- 18. Fagot-Bouquet L, Audigier R, Dhome Y, Lerasle F. Improving multi-frame data association with sparse representations for robust near-online multi-object tracking. In: Leibe B, Matas J, Sebe N, Welling M, editors. Computer Vision—ECCV 2016. Cham: Springer; 2016. pp. 774–790.

- 19. Tang S, Andriluka M, Schiele B. Detection and tracking of occluded people. Int. J. Comput. Vis. 2014;110(1):58–69. doi: 10.1007/s11263-013-0664-6.

- 20. Fleuret, F., Ben Shitrit, H., Fua, P.: Re-Identification for Improved People Tracking. Person Re-Identification, pp. 309–330. 10.1007/978-1-4471-6296-4_15

- 21. Ye M, Shen J, Lin G, Xiang T, Shao L, Hoi SCH. Deep learning for person re-identification: a survey and outlook. IEEE Trans. Pattern Anal. Mach. Intell. 2021. doi: 10.1109/TPAMI.2021.3054775.

- 22. Bains RS, Cater HL, Sillito RR, Chartsias A, Sneddon D, Concas D, Keskivali-Bond P, Lukins TC, Wells S, Acevedo Arozena A, Nolan PM, Armstrong JD. Analysis of individual mouse activity in group housed animals of different inbred strains using a novel automated home cage analysis system. Front. Behav. Neurosci. 2016;10:106. doi: 10.3389/fnbeh.2016.00106.

- 23. Qiao, M., Zhang, T., Segalin, C., Sam, S., Perona, P., Meister, M.: Mouse academy: high-throughput automated training and trial-by-trial behavioral analysis during learning. bioRxiv (2018). 10.1101/467878

- 24. Wiltschko AB, Johnson MJ, Iurilli G, Peterson RE, Katon JM, Pashkovski SL, Abraira VE, Adams RP, Datta SR. Mapping sub-second structure in mouse behavior. Neuron. 2015;88(6):1121–1135. doi: 10.1016/j.neuron.2015.11.031.

- 25. Tufail M, Coenen F, Mu T, Rind SJ. Mining movement patterns from video data to inform multi-agent based simulation. In: Cao L, Zeng Y, An B, Symeonidis AL, Gorodetsky V, Coenen F, Yu PS, editors. Agents and Data Mining Interaction. Cham: Springer; 2015. pp. 38–51.

- 26. Geuther BQ, Deats SP, Fox KJ, Murray SA, Braun RE, White JK, Chesler EJ, Lutz CM, Kumar V. Robust mouse tracking in complex environments using neural networks. Commun. Biol. 2019;2(1):1–11. doi: 10.1038/s42003-019-0362-1.